Abstract

Melanoma can be detected by visual examination because it occurs on the skin’s surface. Melanoma, the most severe type of skin cancer, affects the cells that produce melanin. However, the lack of expert opinion increases the processing time and cost of computer-aided skin cancer detection. We therefore aimed to use deep learning algorithms for automatic melanoma detection from dermoscopic images. A fuzzy-based GrabCut-stacked convolutional neural network (GC-SCNN) model was applied for image training. Image feature extraction and lesion classification were performed on several publicly available datasets. The fuzzy GC-SCNN coupled with support vector machines (SVM) achieved 99.75% classification accuracy, with 100% sensitivity and 100% specificity. In addition, we compared the model with existing techniques; the outcomes suggest that the proposed model detects and classifies lesion segments with higher accuracy and lower processing time than the other techniques.

Keywords: fuzzy logic, GrabCut, convolutional neural network, support vector machine, skin lesion

1. Introduction

The skin’s vital roles are to regulate body temperature and to protect against infections and injuries. Melanoma is a malignant growth of skin cells that can develop even on body parts that receive little or no sun exposure [1]. Around 5.4 million skin cancer cases are reported worldwide every year [2]. Several studies report an increase in the number of skin cancer cases in the United States, from 95,360 in 2017 to 207,390 in 2021 [3,4].

Early detection and prevention of skin cancer reduce mortality rates [5]. The diagnosis of skin cancer depends on dermatoscopic training and experience. Screening a skin lesion requires the patient’s clinical information, because relevant morphological features are not visible to the naked eye owing to similar pixel intensities and textures [6,7,8]. Dermatologists diagnose skin cancer using conventional criteria such as color, diameter, and asymmetry. Compared with these conventional approaches, imaging technology allows more accurate manual inspection of images while reducing time and cost [9,10].

Each skin lesion has its own shape, size, and border. Because of their intrinsic naivety, locality, and lack of adaptability, the low-level hand-crafted features used by traditional and machine learning (ML) methods have limited discriminative power. The existing literature reports automatic detection of skin lesions with ML models including gradient boosting [11], support vector machines (SVM) [12], and quadtree-based methods [13]. SVM has been used to classify features extracted from the gray-level co-occurrence matrix (GLCM) [14]. In [15], a K-nearest neighbor (KNN) approach with a Gaussian filter is used to extract the region of interest (ROI), which is then classified with SVM.
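To illustrate the kind of hand-crafted texture features that an SVM classifies in [14], the NumPy sketch below builds a normalized, symmetric GLCM for a single pixel offset and derives three common statistics (contrast, energy, homogeneity). The quantization to 8 grey levels, the single horizontal offset, and the choice of statistics are our assumptions for illustration, not the configuration used in [14]; in practice, the resulting feature vectors would be passed to an SVM.

```python
import numpy as np

def glcm(gray, levels=8, dx=1, dy=0):
    """Normalized, symmetric grey-level co-occurrence matrix for one offset (dx, dy >= 0)."""
    # Quantize 0..255 intensities into `levels` grey levels.
    q = (gray.astype(np.int64) * levels) // 256
    h, w = q.shape
    # Pair every pixel with its neighbour at offset (dy, dx).
    a = q[: h - dy, : w - dx].ravel()
    b = q[dy:, dx:].ravel()
    m = np.zeros((levels, levels), dtype=float)
    np.add.at(m, (a, b), 1.0)
    m = m + m.T  # count each pair in both directions
    return m / m.sum()

def glcm_features(p):
    """Contrast, energy, and homogeneity of a normalized GLCM."""
    i, j = np.indices(p.shape)
    contrast = np.sum(p * (i - j) ** 2)
    energy = np.sum(p ** 2)
    homogeneity = np.sum(p / (1.0 + np.abs(i - j)))
    return np.array([contrast, energy, homogeneity])
```

A flat patch yields zero contrast and maximal energy and homogeneity, whereas a fine checkerboard yields high contrast, which is why such statistics can separate smooth from textured lesion regions.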

In medical image analysis, deep learning (DL) automates the detection, classification, and diagnosis of several diseases. DL models are very effective on large datasets and have become especially viable for skin image analysis [16]. Some studies have compared the performance of DL models in detecting several categories of skin lesions [17]. Reports indicate that convolutional neural networks (CNNs) can outperform dermatologists in skin lesion classification [18,19,20,21]. These studies applied feature extraction to segmented images, which enabled quick diagnosis.

Other models, such as deep neural networks (DNN), CNNs, long short-term memory (LSTM) networks, and recurrent neural networks (RNN), also help to detect malignant skin cells [22,23]. CNNs have been shown to detect dangerous skin lesions in dermoscopy images, including nonmelanocytic and non-pigmented lesions that are difficult to screen [22]. In [23], a stacked CNN model with an improved loss function was proposed to detect skin lesions in the given datasets, with a reported classification accuracy of 94.8–98.4%. The main drawbacks of previous approaches are their processing time and the difficulty posed by skin lesion images, whose visual characteristics include inhomogeneous features and fuzzy boundaries.

Therefore, in this paper, we propose a fuzzy-based GrabCut-stacked convolutional neural network (GC-SCNN) model combined with an SVM classifier that uses an enhanced loss function. We test the accuracy of the resulting model and compare the enhanced fuzzy GC-SCNN with existing techniques for lesion classification. Furthermore, we aim to determine whether the model detects and classifies lesion segments with better accuracy and lower processing time than other models.

2. Methods

2.1. Dataset

We used several skin image datasets for melanoma detection, including PH2 (http://www.fc.up.pt/addi/, accessed on 18 March 2022) and the International Skin Imaging Collaboration (ISIC) 2018–2019 archives (http://isic-archive.com, accessed on 18 March 2022). ISIC 2018 contains 10,015 training images and 1512 test images covering seven lesion categories: melanoma, melanocytic nevus, basal cell carcinoma, actinic keratosis, benign keratosis, dermatofibroma, and vascular lesions. The ISIC 2019 dataset contains 25,331 training images and 8238 test images divided into nine categories: melanoma, melanocytic nevus, basal cell carcinoma, actinic keratosis, benign keratosis, dermatofibroma, vascular lesions, squamous cell carcinoma, and an unknown class. The PH2 dataset targets melanoma diagnosis, and the ISIC datasets are biased towards melanocytic lesions; both focus on melanocytic lesions and disregard non-melanocytic lesions. The images available in these datasets are clinical skin images rather than dermoscopic images, so there is a mismatch between the available training images and real-life data. This mismatch degrades the performance of automated diagnostic systems and makes building a classifier for multiple skin diseases more challenging.

HAM10000 (Human Against Machine with 10000 training images) serves as a benchmark dataset for comparing humans and machines. It consists of 10,015 dermatoscopic images of pigmented skin lesions in seven categories: Actinic Keratoses and Intraepithelial Carcinoma (AKIEC), Basal Cell Carcinoma (BCC), Benign Keratosis-like Lesions (BKL), Dermatofibroma (DF), Melanoma (MEL), Melanocytic Nevi (NV), and Vascular Lesions (VASC) [24]. We split the image dataset in an 80:20 ratio, using 80% of the images for training and 20% for testing.
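The text does not state whether the 80:20 split was stratified by class. Because HAM10000 is strongly imbalanced (NV dominates, while DF and VASC are rare), the NumPy sketch below assumes a per-class (stratified) split so that every category is represented in the test set; the function name and seed are illustrative.

```python
import numpy as np

def stratified_split(labels, test_fraction=0.2, seed=0):
    """Return (train_idx, test_idx), splitting each class roughly 80:20."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    train, test = [], []
    for cls in np.unique(labels):
        idx = np.flatnonzero(labels == cls)
        rng.shuffle(idx)
        n_test = int(round(test_fraction * len(idx)))
        test.extend(idx[:n_test])   # first 20% of this class -> test
        train.extend(idx[n_test:])  # remaining 80% -> train
    return np.sort(np.array(train)), np.sort(np.array(test))
```

A plain random split would also give roughly 80:20 overall, but with only about a hundred images in the rarest classes, per-class splitting avoids test sets that under-represent them by chance.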

2.2. Data Preprocessing

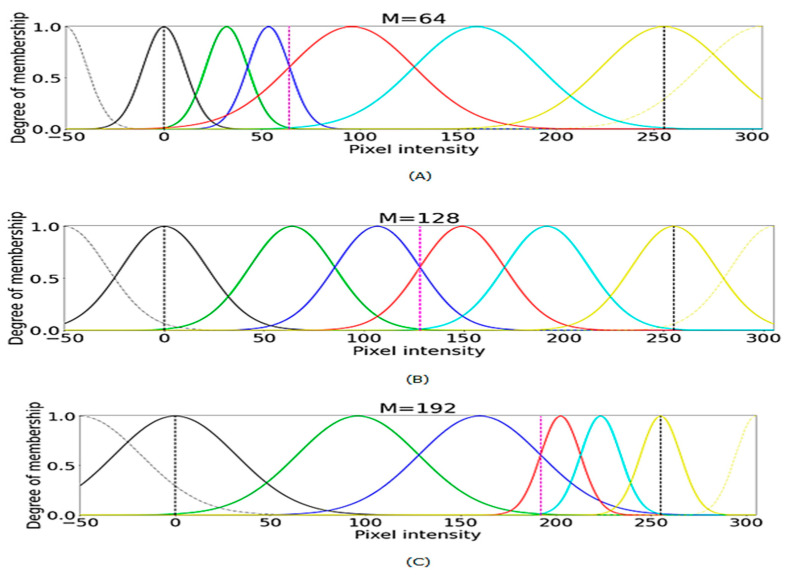

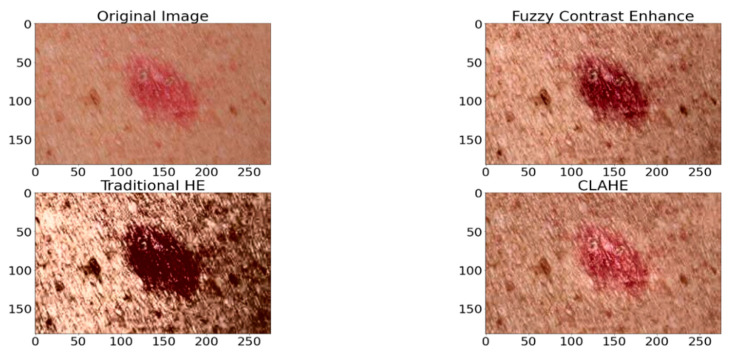

The original dermoscopy images ranged in size from 540 × 576 to 2016 × 3024 pixels; all images were resized to a uniform 256 × 256. Morphological filtering and markers were used to highlight the melanoma region and to remove skin hair; these morphological filters also sharpen the image. Erosion and dilation are the two basic morphological operators: dilation selects the brightest (maximum) value within the neighborhood defined by the structuring element, and erosion selects the darkest (minimum) value. Figure 1 shows the membership functions of the dermoscopic images for different channels. The dermoscopy images (Figure 2) were preprocessed to enhance them and to detect the lesion boundaries as follows.

Figure 1.

The degree of membership of three different channels with (A) M = 64, (B) M = 128, and (C) M = 192.

Figure 2.

Preprocessed Image.

- The pixels of the skin lesion image are mapped to the fuzzy domain. Let M be an m × n image and let M(p, q) be the intensity of pixel (p, q), which must be mapped to the fuzzy characteristic plane: $M = \bigcup_{p=1}^{m} \bigcup_{q=1}^{n} \mu_M(p,q) / M(p,q)$, with p = 1, …, m and q = 1, …, n, where $\mu_M(p,q) \in [0, 1]$ is the membership degree of the intensity level of pixel (p, q).

- The fuzzy-plane pixels are passed through a logarithmic function, $f(M(p,q)) = \log\left(1 + \dots\right)$, that maps them to the fuzzy domain, where $M_{\max}$ and $M_{\min}$ are the maximum and minimum intensities of the skin lesion image pixels.

- To enhance portions of the skin lesion image, the image is transformed with a trigonometric series based on fuzzy principles: $f(T(p,q)) = T(p,q) + f(M(p,q))^2$ for $0 \le f(M(p,q)) \le 0.5$, where T(p, q) is the trigonometric-series term with parameter $a = \pi\,(f(M(p,q)) - 0.5) + 1$.

- Defuzzification is expressed as $D = M_{\min} + \left((M_{\max} - M_{\min}) \times 2T(p,q) - 1\right)$.

- Finally, the enhancement is applied separately to each channel of the skin lesion image.
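Because the closed forms of the logarithmic mapping and of T(p, q) are not given in full above, the NumPy sketch below only illustrates the overall fuzzify, modify, defuzzify, channel-wise structure of this procedure under two explicit assumptions: the membership is taken as μ = log2(1 + (M − Mmin)/(Mmax − Mmin)), which maps [Mmin, Mmax] onto [0, 1], and the classic fuzzy contrast-intensification (INT) operator is substituted for the paper’s trigonometric term. It is not the authors’ exact transform.

```python
import numpy as np

def fuzzy_enhance_channel(ch):
    """Fuzzify -> intensify -> defuzzify one image channel (illustrative sketch)."""
    m = ch.astype(float)
    m_min, m_max = m.min(), m.max()
    if m_max == m_min:                      # flat channel: nothing to enhance
        return ch.copy()
    # Assumed logarithmic membership in [0, 1].
    mu = np.log2(1.0 + (m - m_min) / (m_max - m_min))
    # Classic INT operator (stand-in for the trigonometric term T(p, q)):
    # darkens memberships below 0.5 and brightens those above it.
    mu_int = np.where(mu <= 0.5, 2.0 * mu ** 2, 1.0 - 2.0 * (1.0 - mu) ** 2)
    # Defuzzify back to the channel's original intensity range.
    out = m_min + (m_max - m_min) * mu_int
    return np.clip(np.rint(out), 0, 255).astype(ch.dtype)

def fuzzy_enhance(img):
    """Apply the enhancement channel-wise to an H x W x C image."""
    return np.stack([fuzzy_enhance_channel(img[..., c]) for c in range(img.shape[-1])], axis=-1)
```

Since μ is 0 at Mmin and 1 at Mmax and the INT operator fixes both endpoints, each channel keeps its original intensity range while mid-range contrast is stretched.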

2.3. Image Segmentation

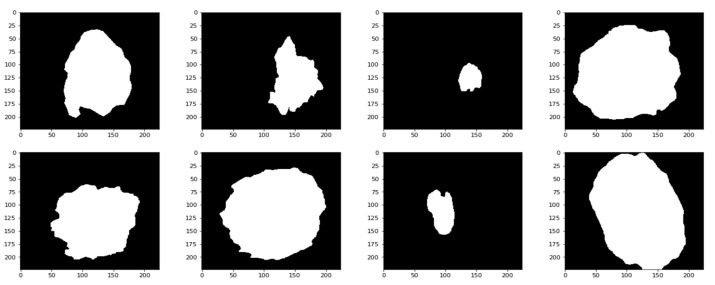

In this work, the fuzzy-preprocessed image is segmented with GrabCut (GC) segmentation. Figure 3 shows the segmentation results and the identified regions of interest.

Figure 3.

Segmented lesions.

Let the color image be represented as an array of pixels $z = (z_1, z_2, \dots, z_n)$, where each $z_i = (R_i, G_i, B_i)$, $i \in \{1, \dots, n\}$. During segmentation, each pixel receives a label $\alpha_i \in \{0, 1\}$ (background or foreground). A semi-automatically initialized trimap divides the pixels into three regions, the background, the foreground, and the uncertain pixels, denoted $Z_B$, $Z_F$, and $Z_U$. The parameters of the Gaussian mixture models (GMMs) with K components are estimated from the background and foreground pixels:

$\theta = \{\pi(\alpha, k), \mu(\alpha, k), \Sigma(\alpha, k)\}$, with $\alpha \in \{0, 1\}$ and $k = 1, \dots, K$, where $\pi$, $\mu$, and $\Sigma$ are the weights, means, and covariance matrices, and $\mathbf{k} = \{k_1, \dots, k_n\}$ with $k_i \in \{1, \dots, K\}$ assigns each pixel $z_i$ to a GMM component.

The segmentation energy can be expressed as $\mathcal{E}(\alpha, \mathbf{k}, \theta, z) = P(\alpha, \mathbf{k}, \theta, z) + R(\alpha, z)$, where P is the data term given by the GMM probability distribution of the pixels z, and R is a regularizing term that penalizes label changes between neighboring pixels according to their color similarity; R is defined over the set E of neighboring pixel pairs.
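To make the roles of P and R concrete, the NumPy sketch below evaluates the GrabCut energy for a given labeling α. It simplifies the model to K = 1 Gaussian per region and a 4-connected neighborhood E, and uses the contrast-sensitive Potts term of the original GrabCut formulation with γ = 50 and β = 1 / (2⟨‖z_m − z_n‖²⟩). The iterative graph-cut minimization is omitted; full implementations exist in libraries such as OpenCV (`cv2.grabCut`).

```python
import numpy as np

def region_nll(z, eps=1e-6):
    """Summed negative log-likelihood of pixels z (N x 3) under one fitted Gaussian."""
    mean = z.mean(axis=0)
    cov = np.cov(z, rowvar=False) + eps * np.eye(3)
    d = z - mean
    maha = np.einsum("ij,jk,ik->i", d, np.linalg.inv(cov), d)  # squared Mahalanobis distances
    _, logdet = np.linalg.slogdet(cov)
    return 0.5 * np.sum(maha + logdet + 3 * np.log(2 * np.pi))

def grabcut_energy(img, alpha, gamma=50.0):
    """Energy = P (data term) + R (contrast-sensitive Potts term over 4-neighbours)."""
    z = img.astype(float)
    flat, a = z.reshape(-1, 3), alpha.ravel()
    P = region_nll(flat[a == 0]) + region_nll(flat[a == 1])
    # Squared colour differences for horizontal and vertical neighbour pairs (the set E).
    diffs = [((z[:, 1:] - z[:, :-1]) ** 2).sum(-1), ((z[1:] - z[:-1]) ** 2).sum(-1)]
    cuts = [alpha[:, 1:] != alpha[:, :-1], alpha[1:] != alpha[:-1]]
    beta = 1.0 / (2.0 * np.mean(np.concatenate([d.ravel() for d in diffs])) + 1e-12)
    # Label changes are penalized less where neighbouring colours differ strongly.
    R = gamma * sum(np.sum(c * np.exp(-beta * d)) for c, d in zip(cuts, diffs))
    return P + R
```

A labeling that follows the true lesion boundary yields tight per-region Gaussians (small P) and cuts only high-contrast edges (small R), so its energy is lower than that of an arbitrary labeling.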

2.4. Feature Extraction

After segmentation, we applied the stacked CNN technique to extract features from the segmented image. The proposed hybrid approach learns nonlinear discriminative features from the dermoscopy images at different levels. Algorithm 1 presents the GC-SCNN algorithm. Because a CNN learns useful features automatically, we integrated three models: Inception-V3 [25], Xception [26], and VGG-19 [27]. In the first module, the pretrained Inception-V3, Xception, and VGG-19 models are fine-tuned on dermoscopy images to extract features from the segmented image. In the second module of the stacked CNN, six sub-models were obtained during training of the CNN models. We stacked all the sub-models together and then applied SVM classification to build a model that classifies the lesions. Algorithm 1 for GC-SCNN is as follows.

| Algorithm 1: GC-SCNN. |
|---|
| Input: segmented images |
| Output: skin cancer classification results |
| for k = 1 to length(segmented images) do |
| for j = 1 to 3 do |
| Pj = sub-model j.predict(segmented image) |
| end for |
| final = concatenation(P1, P2, P3) |
| end for |
| assess the SoftMax classifier on the feature vector final |
| stacked CNN = Train(final, label) |
| classification of skin cancer images: prediction = classification(stacked CNN, test set) |
| return prediction |
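The data flow of Algorithm 1 can be sketched in NumPy. Here three hypothetical frozen feature extractors (random linear maps followed by ReLU) stand in for the fine-tuned Inception-V3, Xception, and VGG-19 sub-models, their outputs P1, P2, P3 are concatenated into the vector `final`, and a softmax classifier trained by gradient descent is fitted on it. None of these helper names come from the paper; real backbones would be loaded from a deep learning framework.

```python
import numpy as np

def make_submodel(in_dim, out_dim, seed):
    """Hypothetical frozen feature extractor standing in for a fine-tuned CNN."""
    w = np.random.default_rng(seed).normal(size=(in_dim, out_dim)) / np.sqrt(in_dim)
    return lambda x: np.maximum(x @ w, 0.0)  # linear map + ReLU

def stacked_features(x, submodels):
    """Concatenate the sub-model outputs P1, P2, P3 into one feature vector per image."""
    return np.concatenate([m(x) for m in submodels], axis=1)

def train_softmax(f, y, n_classes, lr=0.1, epochs=500):
    """Multinomial logistic (softmax) regression trained by full-batch gradient descent."""
    w = np.zeros((f.shape[1], n_classes))
    b = np.zeros(n_classes)
    onehot = np.eye(n_classes)[y]
    for _ in range(epochs):
        logits = f @ w + b
        logits -= logits.max(axis=1, keepdims=True)   # numerical stability
        p = np.exp(logits)
        p /= p.sum(axis=1, keepdims=True)
        grad = (p - onehot) / len(y)                  # d(cross-entropy)/d(logits)
        w -= lr * f.T @ grad
        b -= lr * grad.sum(axis=0)
    return w, b

def predict(f, w, b):
    return np.argmax(f @ w + b, axis=1)
```

In use, the concatenated features are standardized with training-set statistics before fitting; in the paper’s pipeline, the enhanced SVM of Section 2.5 is the classifier applied to the stacked features.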

2.5. Lesion Classification

The SVM classifier takes the extracted features and classifies the lesion. First, the SVM computes a feature score by applying a linear mapping to the feature vectors and uses this score to calculate the loss. Because a lower loss yields better accuracy, we use an improved loss function [28] to calculate a weighted score for each pixel in the segmented lesion image. Algorithm 2 describes the enhanced SVM.

| Algorithm 2: Enhanced SVM algorithm. |
|---|
| Initialize the values in the training set |
| repeat for every i = 1 to N |
| calculate the loss function for all values |
| compare the extracted patches in the images |
| end for |
| repeat for every score vector i = 1 to N |
| compute SVM with imputed labels: argmax((w · xi) + b) |
| end for |
| evaluate for different weights and compute the output |

The enhanced SVM reduces the number of neurons, which minimizes overfitting, increases accuracy, and reduces processing time. The enhanced loss function reduces the load of segmented dermoscopy images fed to the enhanced SVM classifier, which further reduces processing time. Integrating the improved loss function into the existing SVM algorithm improves the classification of lesion segments based on their intensity and score vectors.
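The scoring and argmax steps of Algorithm 2 can be illustrated with a linear multiclass SVM. The NumPy sketch below uses the standard multiclass (Weston–Watkins) hinge loss trained by sub-gradient descent; the per-sample weights `sample_w` are a hypothetical placeholder for the improved loss function of [28], whose exact form is not reproduced here. With all weights equal to one, it reduces to an ordinary linear multiclass SVM.

```python
import numpy as np

def svm_loss_grad(W, b, X, y, sample_w, reg=1e-3):
    """Weighted multiclass hinge loss and its sub-gradients w.r.t. W and b."""
    n = len(y)
    scores = X @ W + b                                   # linear mapping -> class scores
    correct = scores[np.arange(n), y][:, None]
    margins = np.maximum(0.0, scores - correct + 1.0)    # hinge with margin 1
    margins[np.arange(n), y] = 0.0                       # no loss for the true class
    loss = np.sum(sample_w * margins.sum(axis=1)) / n + 0.5 * reg * np.sum(W * W)
    g = (margins > 0).astype(float)                      # d(loss)/d(scores), unweighted
    g[np.arange(n), y] = -g.sum(axis=1)
    g *= sample_w[:, None] / n                           # apply per-sample weights
    return loss, X.T @ g + reg * W, g.sum(axis=0)

def train_svm(X, y, n_classes, sample_w=None, lr=0.1, epochs=300):
    sample_w = np.ones(len(y)) if sample_w is None else sample_w
    W, b = np.zeros((X.shape[1], n_classes)), np.zeros(n_classes)
    for _ in range(epochs):
        loss, gW, gb = svm_loss_grad(W, b, X, y, sample_w)
        W -= lr * gW
        b -= lr * gb
    return W, b, loss

def predict(X, W, b):
    """Assign each sample to argmax over classes of (w · x + b)."""
    return np.argmax(X @ W + b, axis=1)
```

At initialization (W = 0, b = 0), every wrong class violates the margin by exactly 1, so the loss equals the number of classes minus one; training drives it down as the class scores separate.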

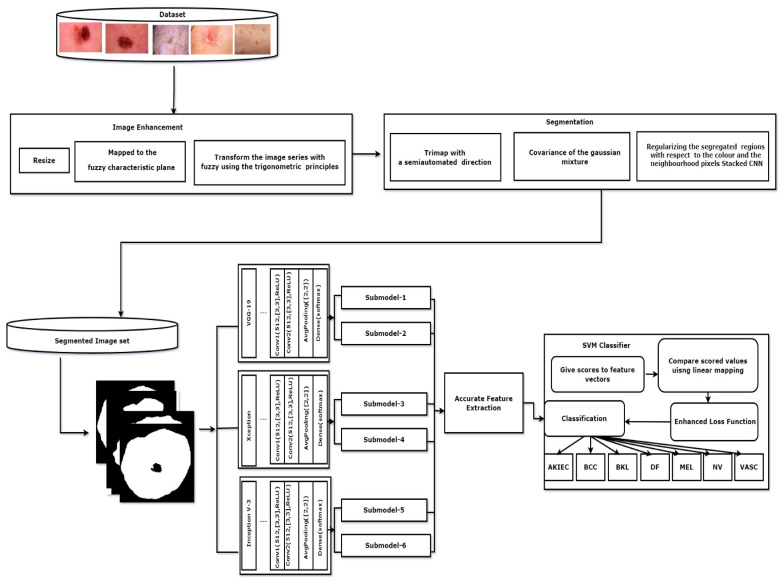

2.6. Experimental Framework

The proposed methodology classifies skin lesions as follows. First, the input images are preprocessed with fuzzy logic to enhance them and identify the lesion boundaries. Morphological operators are then applied to remove skin hair. Next, the images are segmented with the GrabCut technique, and features are extracted with the stacked CNN. Finally, the lesions are classified with the improved SVM classifier. Figure 4 illustrates the proposed experimental framework.
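The resizing and hair-removal steps of this pipeline can be sketched in NumPy: nearest-neighbor resizing to 256 × 256 (Section 2.2), grey-level dilation (window maximum) and erosion (window minimum), and hair removal by morphological closing, where pixels much darker than their closing (a large black-hat response) are treated as hair and replaced. The 5 × 5 square structuring element, the black-hat threshold of 30, and the replacement rule are illustrative assumptions; the paper does not specify them.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def resize_nearest(img, size=(256, 256)):
    """Nearest-neighbour resize of an H x W (x C) image."""
    h, w = img.shape[:2]
    rows = np.arange(size[0]) * h // size[0]
    cols = np.arange(size[1]) * w // size[1]
    return img[rows[:, None], cols]

def _windows(gray, k):
    pad = k // 2
    return sliding_window_view(np.pad(gray, pad, mode="edge"), (k, k))

def dilate(gray, k=5):
    return _windows(gray, k).max(axis=(-2, -1))  # brightest value in the k x k window

def erode(gray, k=5):
    return _windows(gray, k).min(axis=(-2, -1))  # darkest value in the k x k window

def remove_hair(gray, k=5, thresh=30):
    """Closing (dilate then erode) fills thin dark structures such as hairs."""
    closed = erode(dilate(gray, k), k)
    blackhat = closed.astype(int) - gray.astype(int)
    hair = blackhat > thresh
    out = gray.copy()
    out[hair] = closed[hair]
    return out, hair
```

Closing removes dark structures narrower than the structuring element while leaving larger dark regions intact, which is why a thin hair is erased but a lesion-sized blob survives.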

Figure 4.

Block diagram of the proposed model.

2.7. Performance Metrics

The following performance metrics measure the proposed model, where TP, TN, FP, and FN denote true positives, true negatives, false positives, and false negatives, respectively:

- Accuracy is the proportion of correct results among all cases: $\text{accuracy} = \dfrac{TP + TN}{TP + TN + FP + FN}$

- Sensitivity is the proportion of actual positives that are correctly identified: $\text{sensitivity} = \dfrac{TP}{TP + FN}$

- Specificity is the proportion of actual negatives that are correctly identified: $\text{specificity} = \dfrac{TN}{TN + FP}$
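For a multiclass task such as the seven HAM10000 categories, TP, TN, FP, and FN are usually computed per class in a one-vs-rest manner. The sketch below, an illustration rather than the authors’ evaluation code, derives them from a confusion matrix and applies accuracy = (TP + TN)/(TP + TN + FP + FN), sensitivity = TP/(TP + FN), and specificity = TN/(TN + FP) to each class.

```python
import numpy as np

def per_class_metrics(cm):
    """One-vs-rest accuracy, sensitivity, specificity per class.

    cm[i, j] is the number of samples of true class i predicted as class j.
    """
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    tp = np.diag(cm)
    fn = cm.sum(axis=1) - tp   # true class i, predicted as something else
    fp = cm.sum(axis=0) - tp   # predicted as i, true class is something else
    tn = total - tp - fn - fp
    accuracy = (tp + tn) / total
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return accuracy, sensitivity, specificity
```

For example, the two-class matrix [[8, 2], [1, 9]] gives sensitivities of 0.8 and 0.9 and specificities of 0.9 and 0.8; the overall multiclass accuracy is the trace divided by the total, here 17/20 = 0.85.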

3. Results

This section assesses the performance of the stacked CNN framework and compares it with existing models, using an 80:20 split of the dataset. For simulation, the enhanced fuzzy SCNN was implemented in Python using the Anaconda distribution on an Intel Core i5 3.4 GHz processor.

Deep learning models require a robust set of hyperparameters, and hyperparameter tuning improves their performance. Among the various hyperparameter optimization techniques, we used manual search: a variety of hyperparameter combinations were tested and the best model was selected. The tuned hyperparameters were the optimizer, learning rate, weight decay constant, number of dense layers, and batch size; Table 1 shows the effect of varying them on the stacked CNN. The optimization algorithm affects both training speed and prediction accuracy. Popular optimizers in deep learning include Root Mean Square Propagation (RMSProp), Adaptive Moment Estimation (Adam), stochastic gradient descent (SGD), Adaptive Gradient (AdaGrad), and Adadelta.
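The manual search summarized in Table 1 amounts to evaluating a grid of 2 × 4 × 2 × 2 × 2 = 64 configurations, with the number of epochs tied to the weight decay value as in the table. In the sketch below, `train_and_evaluate` is a hypothetical callback that would train the stacked CNN and return (loss, processing time in ms); ranking by lowest loss and then fastest time is our assumption, not a rule stated in the paper.

```python
import itertools

# Search space of Table 1; epochs are paired with the weight decay value there.
GRID = {
    "batch_size": [32, 64],
    "optimizer": ["RMSProp", "Adam", "AdaGrad", "Adadelta"],
    "dense_layers": [4, 5],
    "learning_rate": [1e-4, 1e-3],
    "weight_decay": [1e-4, 1e-3],
}
EPOCHS = {1e-4: 50, 1e-3: 100}

def manual_search(train_and_evaluate):
    """Evaluate every configuration; rank by loss, then by processing time."""
    keys = list(GRID)
    results = []
    for values in itertools.product(*GRID.values()):
        cfg = dict(zip(keys, values))
        cfg["epochs"] = EPOCHS[cfg["weight_decay"]]
        loss, time_ms = train_and_evaluate(cfg)
        results.append((loss, time_ms, cfg))
    results.sort(key=lambda r: (r[0], r[1]))
    return results
```

An exhaustive grid is affordable here because each axis has only two to four values; larger spaces would usually call for random or Bayesian search instead.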

Table 1.

Different hyperparameter tuning effects.

| Batch Size | Optimizer | Dense | Learning Rate | Weight Decay Values | Epoch | Loss | Processing Time (ms) |

|---|---|---|---|---|---|---|---|

| 32 | RMSProp | 4 | 0.0001 | 0.0001 | 50 | 6.50 | 4 |

| RMSProp | 4 | 0.0001 | 0.001 | 100 | 6.56 | 5 | |

| RMSProp | 4 | 0.001 | 0.0001 | 50 | 6.25 | 4 | |

| RMSProp | 4 | 0.001 | 0.001 | 100 | 6.57 | 5 | |

| 64 | RMSProp | 4 | 0.0001 | 0.0001 | 50 | 7.27 | 5 |

| RMSProp | 4 | 0.0001 | 0.001 | 100 | 7.37 | 6 | |

| RMSProp | 4 | 0.001 | 0.0001 | 50 | 7.52 | 5 | |

| RMSProp | 4 | 0.001 | 0.001 | 100 | 7.79 | 7 | |

| 32 | RMSProp | 5 | 0.0001 | 0.0001 | 50 | 8.46 | 6 |

| RMSProp | 5 | 0.0001 | 0.001 | 100 | 8.63 | 7 | |

| RMSProp | 5 | 0.001 | 0.0001 | 50 | 8.21 | 6 | |

| RMSProp | 5 | 0.001 | 0.001 | 100 | 8.35 | 7 | |

| 64 | RMSProp | 5 | 0.0001 | 0.0001 | 50 | 8.32 | 8 |

| RMSProp | 5 | 0.0001 | 0.001 | 100 | 8.34 | 7 | |

| RMSProp | 5 | 0.001 | 0.0001 | 50 | 8.25 | 7 | |

| RMSProp | 5 | 0.001 | 0.001 | 100 | 8.31 | 7 | |

| 32 | ADAM | 4 | 0.0001 | 0.0001 | 50 | 6.26 | 3 |

| ADAM | 4 | 0.0001 | 0.001 | 100 | 6.28 | 4 | |

| ADAM | 4 | 0.001 | 0.0001 | 50 | 6.27 | 4 | |

| ADAM | 4 | 0.001 | 0.001 | 100 | 6.55 | 5 | |

| 64 | ADAM | 4 | 0.0001 | 0.0001 | 50 | 7.04 | 4 |

| ADAM | 4 | 0.0001 | 0.001 | 100 | 7.06 | 5 | |

| ADAM | 4 | 0.001 | 0.0001 | 50 | 7.26 | 4 | |

| ADAM | 4 | 0.001 | 0.001 | 100 | 7.27 | 6 | |

| 32 | ADAM | 5 | 0.0001 | 0.0001 | 50 | 7.67 | 4 |

| ADAM | 5 | 0.0001 | 0.001 | 100 | 7.63 | 5 | |

| ADAM | 5 | 0.001 | 0.0001 | 50 | 7.21 | 4 | |

| ADAM | 5 | 0.001 | 0.001 | 100 | 7.35 | 6 | |

| 64 | ADAM | 5 | 0.0001 | 0.0001 | 50 | 8.02 | 4 |

| ADAM | 5 | 0.0001 | 0.001 | 100 | 8.14 | 5 | |

| ADAM | 5 | 0.001 | 0.0001 | 50 | 8.05 | 5 | |

| ADAM | 5 | 0.001 | 0.001 | 100 | 8.10 | 6 | |

| 32 | AdaGrad | 4 | 0.0001 | 0.0001 | 50 | 6.47 | 4 |

| AdaGrad | 4 | 0.0001 | 0.001 | 100 | 6.74 | 4 | |

| AdaGrad | 4 | 0.001 | 0.0001 | 50 | 6.25 | 5 | |

| AdaGrad | 4 | 0.001 | 0.001 | 100 | 6.55 | 5 | |

| 64 | AdaGrad | 4 | 0.0001 | 0.0001 | 50 | 7.14 | 5 |

| AdaGrad | 4 | 0.0001 | 0.001 | 100 | 7.06 | 6 | |

| AdaGrad | 4 | 0.001 | 0.0001 | 50 | 7.16 | 6 | |

| AdaGrad | 4 | 0.001 | 0.001 | 100 | 7.29 | 7 | |

| 32 | AdaGrad | 5 | 0.0001 | 0.0001 | 50 | 7.77 | 5 |

| AdaGrad | 5 | 0.0001 | 0.001 | 100 | 7.61 | 6 | |

| AdaGrad | 5 | 0.001 | 0.0001 | 50 | 7.23 | 6 | |

| AdaGrad | 5 | 0.001 | 0.001 | 100 | 7.32 | 7 | |

| 64 | AdaGrad | 5 | 0.0001 | 0.0001 | 50 | 8.06 | 5 |

| AdaGrad | 5 | 0.0001 | 0.001 | 100 | 8.18 | 5 | |

| AdaGrad | 5 | 0.001 | 0.0001 | 50 | 8.09 | 6 | |

| AdaGrad | 5 | 0.001 | 0.001 | 100 | 8.11 | 7 | |

| 32 | Adadelta | 4 | 0.0001 | 0.0001 | 50 | 6.69 | 4 |

| Adadelta | 4 | 0.0001 | 0.001 | 100 | 6.56 | 5 | |

| Adadelta | 4 | 0.001 | 0.0001 | 50 | 6.28 | 4 | |

| Adadelta | 4 | 0.001 | 0.001 | 100 | 6.47 | 4 | |

| 64 | Adadelta | 4 | 0.0001 | 0.0001 | 50 | 6.85 | 4 |

| Adadelta | 4 | 0.0001 | 0.001 | 100 | 7.44 | 4 | |

| Adadelta | 4 | 0.001 | 0.0001 | 50 | 7.16 | 5 | |

| Adadelta | 4 | 0.001 | 0.001 | 100 | 7.26 | 5 | |

| 32 | Adadelta | 5 | 0.0001 | 0.0001 | 50 | 7.67 | 6 |

| Adadelta | 5 | 0.0001 | 0.001 | 100 | 7.77 | 6 | |

| Adadelta | 5 | 0.001 | 0.0001 | 50 | 7.73 | 5 | |

| Adadelta | 5 | 0.001 | 0.001 | 100 | 7.31 | 7 | |

| 64 | Adadelta | 5 | 0.0001 | 0.0001 | 50 | 7.55 | 6 |

| Adadelta | 5 | 0.0001 | 0.001 | 100 | 8.08 | 7 | |

| Adadelta | 5 | 0.001 | 0.0001 | 50 | 8.19 | 5 | |

| Adadelta | 5 | 0.001 | 0.001 | 100 | 8.12 | 6 |

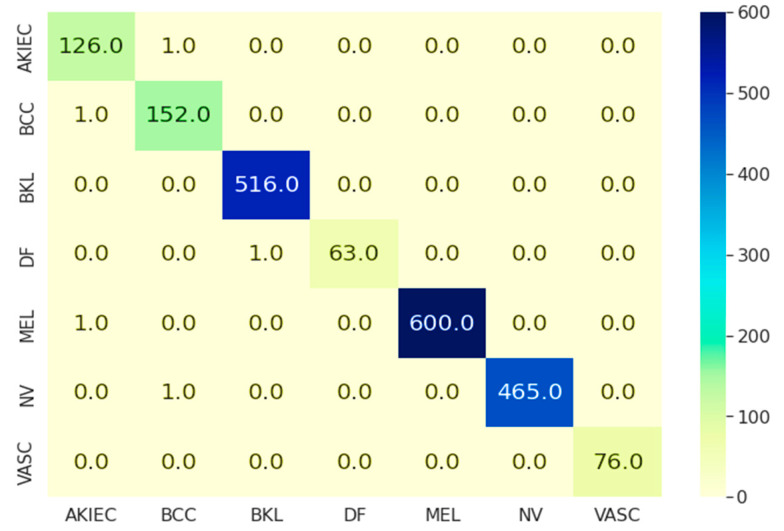

We varied the hyperparameters for each optimizer and compared the resulting loss and processing time. Among the optimizers, Adam performed best, followed by AdaGrad and Adadelta. We used two learning rates (0.0001 and 0.001), two weight decay constants (0.0001 and 0.001), 4 or 5 dense layers, and batch sizes of 32 and 64. With a batch size of 32, the Adam optimizer, 4 dense layers, and a learning rate and weight decay constant of 0.0001 each, we achieved a low loss (6.26) with the shortest processing time in Table 1 (3 ms). These hyperparameters were used to classify the skin lesions. The model was trained on seven skin lesion categories, and its performance was assessed with the confusion matrix shown in Figure 5. The AKIEC, BCC, and BKL classes were predicted with 99.21%, 99.34%, and 100% accuracy, respectively, and the DF, MEL, NV, and VASC classes with 98.437%, 99.83%, 99.78%, and 100% accuracy, respectively. The overall model accuracy was 99.75%. Sensitivity (true positive rate) and specificity (true negative rate) both reached 100%, which is higher than in previous studies.

Figure 5.

Confusion matrix.

Table 2 compares existing models with the GC-SCNN on the HAM10000 test dataset. Tables 3 and 4 compare the proposed model with state-of-the-art approaches on the ISIC2018 and ISIC2019 datasets. With accuracies of 99.78% (ISIC 2018) and 99.51% (ISIC 2019), the proposed model outperforms the best competing methods by approximately 1 and 2.5 percentage points, respectively.

Table 2.

HAM10000 comparison of classification.

Table 3.

ISIC2018 comparison of classification.

| Project | Accuracy (%) | Sensitivity (%) | Specificity (%) |
|---|---|---|---|
| Gessert et al. [33] | 98.70 | 80.9 | 98.4 |
| Ailin et al. [34] | 98.20 | 89.5 | 98.1 |
| Khan et al. [35] | 89.80 | 89.7 | 94.5 |
| Mohamed et al. [36] | 92.70 | 72.42 | 97.14 |
| Huang et al. [37] | 85.80 | 69.04 | 95.92 |
| Liu et al. [38] | 92.54 | 71.47 | 92.72 |
| Gu et al. [39] | 91.4 | 83.74 | 93.24 |
| Zhou et al. [40] | 92.55 | 84.67 | 93.63 |
| Gan et al. [41] | 93.81 | 90.14 | 98.36 |
| Proposed | 99.78 | 100 | 100 |

Table 4.

ISIC2019 comparison of classification.

| Project | Accuracy (%) | Sensitivity (%) | Specificity (%) |
|---|---|---|---|
| Gessert et al. [33] | 92.3 | 80.9 | 98.4 |
| Ailin et al. [34] | 91.5 | 89.5 | 98.1 |
| Ahmed et al. [42] | 94 | 89.7 | 94.5 |
| Pacheco et al. [43] | 92 | 72.42 | 97.14 |
| Molina et al. [44] | 97 | 69.04 | 95.92 |
| Kassem et al. [45] | 94 | 71.47 | 92.72 |
| Iqbal et al. [46] | 90 | 83.74 | 93.24 |
| Pulgarin et al. [47] | 92 | 89.53 | 93.57 |
| Proposed | 99.51 | 100 | 100 |
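The improvement figures quoted for ISIC 2018 and ISIC 2019 can be checked directly against Tables 3 and 4. The short snippet below uses only the tabulated accuracies and computes the gap between the proposed model and the best competing method, in percentage points.

```python
# Accuracies (%) of the competing methods and of the proposed model, from Tables 3 and 4.
baselines = {
    "ISIC2018": [98.70, 98.20, 89.80, 92.70, 85.80, 92.54, 91.4, 92.55, 93.81],
    "ISIC2019": [92.3, 91.5, 94, 92, 97, 94, 90, 92],
}
proposed = {"ISIC2018": 99.78, "ISIC2019": 99.51}

def margin_over_best(dataset):
    """Percentage-point gap between the proposed model and the best baseline."""
    return round(proposed[dataset] - max(baselines[dataset]), 2)

print(margin_over_best("ISIC2018"), margin_over_best("ISIC2019"))  # 1.08 2.51
```

The gaps of 1.08 points (over Gessert et al. [33]) and 2.51 points (over Molina et al. [44]) correspond to the stated improvements of about 1 and 2.5 points.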

4. Discussion

This paper presents an automatic skin lesion detection method based on fuzzy GC-SCNN. We used fuzzy logic for boundary detection, GrabCut for segmentation, stacked CNNs for feature extraction, and enhanced SVMs for lesion classification. The enhanced fuzzy GC-SCNN with SVM was compared with existing techniques at different stages of lesion classification. The results show that the proposed model classified lesion segments more accurately and faster than the other models and produced very few false positives and false negatives.

Skin lesion detection and classification performance is typically affected by discriminant feature selection [48]. The existing literature on this topic does not elaborate on image processing steps and does not address the uncertainty of detecting lesion boundaries. For example, in [49], the authors used orthogonal matching with a fixed wavelet grid network to enhance, segment, and classify dermoscopic images and obtained an accuracy of 91.82%. Combining SVM, SMOTE, and ensemble classifiers with color and texture features extracted from dermoscopy images achieved 93.83% accuracy [50]. Color and texture features have also been extracted with the gray-level co-occurrence matrix (GLCM) technique and classified with SVM [51].

Some studies have improved the accuracy of malignant skin cell prediction through threshold-based segmentation, ABCD feature extraction, and multiscale lesion-biased techniques [52,53,54]. A multi-track CNN model was developed for skin lesion classification and achieved 85.8% and 79.15% accuracy over five and ten classes, respectively [55,56]. Ensemble-based deep learning, in contrast, improved skin lesion classification performance, with a reported accuracy of approximately 90% [57,58]. Nevertheless, the above-mentioned studies applied a single model, which can limit accuracy; by stacking different models, we could improve it.

A study based on Delaunay triangulation used two parallel processes to detect skin lesions [59]. A backpropagation multilayer neural network was used to detect and classify melanoma using 3D color texture features from dermoscopy images [60]. Transfer learning with CNN models pre-trained on ImageNet, such as ResNet-101, NASNet-Large, and GoogLeNet, achieved 88.33% accuracy [61]. A disadvantage of all these approaches is that they require prolonged analysis, which hinders real-time medical diagnosis. Our method of detecting lesion boundaries via fuzzy image processing addresses these limitations.

Our study is also in line with [62], in which the authors applied transfer learning to train a model on the HAM10000 dataset. They implemented ResNet50 models without data preprocessing or manual feature selection, which resulted in significantly lower accuracy and high processing time. The enhanced fuzzy SCNN with SVM improved classification accuracy by reducing the loss and achieved 99.75% accuracy. Using the same dataset for the newly developed and existing models, we found that minimizing overfitting in the SVM classifier improved classification performance. The modified loss function improved lesion classification, reducing processing time by 25–35 ms and increasing accuracy by 2–5%.

The proposed solution identified and classified seven significant lesion types in dermoscopy images. Although it achieved the highest accuracy among the compared methods, we focused on a limited set of lesions and neglected minute lesions. Future work will improve the feature extraction techniques with latent factor analysis to detect minute lesions [63,64]. Incorporating more lesion types and reducing noise through the neural network architecture could further increase the model’s significance.

5. Conclusions

Human skin protects against environmental pollution, but ultraviolet radiation increases the risk of melanoma. We proposed a deep learning framework to segment, detect, and classify skin lesions in dermoscopy images for melanoma detection, and evaluated it on the publicly available HAM10000 dataset, which consists of seven lesion categories. Our model outperformed the existing models. The fuzzy boundary detection reduced the uncertainty in lesion boundary detection, which lowered both the loss and the processing time. The measured prediction time of the proposed model for lesion detection is 2.513 ms. In conclusion, the results suggest that the proposed model is computationally efficient.

Author Contributions

Conceptualization, U.B. and G.B.; methodology, U.B.; software, G.B.; validation, G.B. and U.B.; formal analysis, U.B.; investigation, U.B.; resources, G.B.; data curation, U.B.; writing—original draft preparation, U.B.; writing—review and editing, G.B.; visualization, U.B.; supervision, G.B.; project administration, G.B.; funding acquisition, G.B. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

No author has any potential conflict of interest during the preparation and submission of the manuscript.

Funding Statement

This research received no external funding.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Rogers H.W., Weinstock M.A., Feldman S.R., Coldiron B.M. Incidence estimate of non melanoma skin cancer in the U.S population. JAMA Dermatol. 2015;155:1081–1086. doi: 10.1001/jamadermatol.2015.1187. [DOI] [PubMed] [Google Scholar]

- 2.Ali S., Miah S., Haque J., Rahman M., Islam K. An enhanced technique of skin cancer classification using deep convolutional neural network with transfer learning models. Mach. Learn. Appl. 2021;5:100036. doi: 10.1016/j.mlwa.2021.100036. [DOI] [Google Scholar]

- 3.Nasir M., Attique Khan M., Sharif M., Lali U., Saba T., Iqbal T. An improved strategy for skin lesion detection and classification using uniform segmentation and feature selection based approach. Microsc. Res. Tech. 2018;81:528–543. doi: 10.1002/jemt.23009. [DOI] [PubMed] [Google Scholar]

- 4.Skin Cancer. [(accessed on 12 December 2021)]. Available online: https://www.skincancer.org/skin-cancer-information/skin-cancer-facts/

- 5.Khan M.A., Muhammad K., Sharif M., Akram T., de Albuquerque V.H.C. Multi-Class Skin Lesion Detection and Classification via Teledermatology. IEEE J. Biomed. Health Inform. 2021;25:4267–4275. doi: 10.1109/JBHI.2021.3067789. [DOI] [PubMed] [Google Scholar]

- 6.Pacheco A.G., Krohling R.A. The impact of patient clinical information on automated skin cancer detection. Comput. Biol. Med. 2020;116:103545. doi: 10.1016/j.compbiomed.2019.103545. [DOI] [PubMed] [Google Scholar]

- 7.Pacheco A.G., Sastry C.S., Trappenberg T., Oore S., Krohling R.A. On out-of-Distribution Detection Algorithms with deep neural skin cancer classifiers; Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops; Seattle, WA, USA. 14–19 June 2020; pp. 1–10. [Google Scholar]

- 8.Abdelhalim I.S.A., Mohamed M.F., Mahdy Y.B. Data augmentation for skin lesion self attention bassed progressive generative adversial network. Expert Syst. Appl. 2021;165:113922. doi: 10.1016/j.eswa.2020.113922. [DOI] [Google Scholar]

- 9.Liu X., Chen C.H., Karvela M., Toumazou C. A DNA based intelligent expert system for personalised skin helath tecommentdations. IEEE J. Biomed. Health Inform. 2020;24:3276–3284. doi: 10.1109/JBHI.2020.2978667. [DOI] [PubMed] [Google Scholar]

- 10.Duggani K., Nath M.K. A technical review report on deep learning approach for skin cancer detection and segmentation. Data Anal. Manag. 2021;54:87–99. doi: 10.1007/978-981-15-8335-3_9. [DOI] [Google Scholar]

- 11.Khan I.U., Aslam N., Anwar T., Aljameel S.S., Ullah M., Khan R., Rehman A., Akhtar N. Remote diagnosis and triaging model for skin cancer usign efficientnet and extreme gradient boosting. Complexity. 2021;2021:5591614. doi: 10.1155/2021/5591614. [DOI] [Google Scholar]

- 12.Alquran H., Qasmieh A., Alqudah M., Alhammouri S., Alawneh E., Abughazaleh A., Hasayen F. The melanoma skin cancer detection and classification using support vector machine; Proceedings of the International Conference on Applied Electrical Engineering and Computational Technology (AEECT); Aqaba, Jordan. 11–13 October 2017; pp. 1–5. [Google Scholar]

- 13.Mahmuei S.S., Aldeen M., Stoecker W.W., Garnavi R. Biologically inspired quadtree color detection in dermoscopy images of melanoma. IEEE J. Biomed. Health Inform. 2019;23:570–577. doi: 10.1109/JBHI.2018.2841428. [DOI] [PubMed] [Google Scholar]

- 14.Hameed N., Hameed F., Shabut A., Khan S., Cirstea S., Hossain A. An intelligent computer aided scheme for classifying multiple skin lesions. Computers. 2019;8:62. doi: 10.3390/computers8030062. [DOI] [Google Scholar]

- 15.Khan M.Q., Hussain A., Rehman S.U., Khan U., Maqsood M., Mehmood K., Khan M.A. Classification of melanoma and nevus in digital images for diagnosis of skin cancer. IEEE Access. 2019;7:90132–90144. doi: 10.1109/ACCESS.2019.2926837. [DOI] [Google Scholar]

- 16.Celebi M.E., Codella N., Halpern A. Dermoscopy Image Analysis: Overview and Future Directions. IEEE J. Biomed. Health Inform. 2019;23:474–478. doi: 10.1109/JBHI.2019.2895803. [DOI] [PubMed] [Google Scholar]

- 17.Guha S.R., Haque S.R. Performance comparison of machine learning based classification of skin diseases from skin lesion images; Proceedings of the International Conference of Communication Computational Electronics System; Coimbatore, India. 21–22 October 2020; pp. 15–25. [Google Scholar]

- 18.Heckler A., Utikal S., Enk H.A., Hauschild M., Weichenthal M., Maron R.C., Berking C., Haferkamp S., Klode J., Schadendorf D., et al. Superior skin cancer classification by the combination of human and artificial intelligence. Eur. J. Cancer. 2019;120:114–121. doi: 10.1016/j.ejca.2019.07.019. [DOI] [PubMed] [Google Scholar]

- 19.Seeja R.D., Suresh A. Deep learning based skin lesion segmentation and classification of melanoma using support vector machine (SVM) Asian Pac. J. Cancer Prev. 2019;20:1555–1561. doi: 10.31557/APJCP.2019.20.5.1555. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Esteva A., Kuprel B., Novoa R.A., Ko J., Swetter S.M., Blau H.M., Thrun S. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542:115–118. doi: 10.1038/nature21056.

- 21.Brinker T.J., Hekler A., Utikal J.S., Grabe N., Schadendorf D., Klode J., Berking C., Steeb T., Enk H., Von Kalle C. Skin cancer classification using convolutional neural networks: Systematic review. J. Med. Internet Res. 2018;20:e11936. doi: 10.2196/11936.

- 22.Tschandl P., Rosendahl C., Akay B.N., Argenziano G., Blum A., Braun R.P., Cabo H., Gourhant J.Y., Kreusch J., Lallas A., et al. Expert-Level Diagnosis of Nonpigmented Skin Cancer by Combined Convolutional Neural Networks. JAMA Dermatol. 2019;155:58–65. doi: 10.1001/jamadermatol.2018.4378.

- 23.Saba T., Khan M.A., Rehman A., Sainte S.L.M. Region Extraction and Classification of Skin cancer: A Heterogeneous Framework of Deep CNN features Fusion and Reduction. J. Med. Syst. 2019;43:289. doi: 10.1007/s10916-019-1413-3.

- 24.HAM10000 dataset. (accessed on 18 March 2022). Available online: https://dataverse.harvard.edu/dataset.xhtml?persistentId=doi:10.7910/DVN/DBW86T.

- 25.Wang C., Chen D., Hao L., Liu X., Zeng Y., Chen J., Zhang G. Pulmonary image classification based on Inception-v3 transfer learning model. IEEE Access. 2019;7:146533–146541. doi: 10.1109/ACCESS.2019.2946000.

- 26.Chollet F. Xception: Deep Learning with Depthwise Separable Convolutions; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR-2017); Honolulu, HI, USA. 21–26 July 2017; pp. 1800–1807.

- 27.Krizhevsky A., Sutskever I., Hinton G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012;25:1097–1105.

- 28.Usharani B. ILF-LSTM: Enhanced loss function in LSTM to predict the sea surface temperature. Soft Comput. 2022:1433–7479. doi: 10.1007/s00500-022-06899-y.

- 29.Kassem M.A., Hosny K.M., Fouad M.M. Skin lesion classification into eight classes for ISIC2019 using deep convolutional neural networks and transfer learning. IEEE Access. 2020;8:114822–114832. doi: 10.1109/ACCESS.2020.3003890.

- 30.Chaturvedi S.S., Gupta K., Prasad P.S. Skin Lesion Analyser: An efficient seven-way multi-class skin cancer classification using MobileNet; Proceedings of the International Conference on Advanced Machine Learning Technologies and Applications; Cairo, Egypt. 20–22 March 2020; pp. 165–276.

- 31.Khan M.A., Sharif M., Akram T., Damasevicius R., Maskeliunas R. Skin Lesion Segmentation and multiclass classification using deep learning features and improved moth flame optimization. Diagnostics. 2021;11:811. doi: 10.3390/diagnostics11050811.

- 32.Rahul A.R., Mozaffari M.H., Lee W.S., Pari B.E. Skin lesions classification using deep learning based on dilated convolution. bioRxiv. 2019:860700. doi: 10.1101/860700.

- 33.Gessert N., Nielsen M., Shaikh M., Werner R., Schlaefer A. Skin lesion classification using ensembles of multi-resolution EfficientNets with meta data. MethodsX. 2020;7:100864. doi: 10.1016/J.MEX.2020.100864.

- 34.Sun Q., Huang C., Chen M., Xu H., Yang Y. Skin lesion classification using additional patient information. BioMed Res. Int. 2021;2021:6673852. doi: 10.1155/2021/6673852.

- 35.Khan M.A., Javed M.Y., Sharif M., Saba T., Rehman A. Multimodal deep neural network based features extraction and optimal selection approach for skin lesion classification; Proceedings of the International Conference on Computer and Information Science (ICCIS2019); Sakaka, Saudi Arabia. 3–4 April 2019; pp. 1–7.

- 36.Mohamed E.H., Behaidy E.W.H. Enhanced skin lesions classification using deep convolutional networks; Proceedings of the International Conference on Intelligent computing and information systems (ICICIS2019); Cairo, Egypt. 8–10 December 2019; pp. 180–188.

- 37.Huang H.W., Hsu B.W.Y., Lee C.H., Tseng V.S. Development of a lightweight deep learning model for cloud applications and remote diagnosis of skin cancers. J. Dermatol. 2021;48:310–316. doi: 10.1111/1346-8138.15683.

- 38.Liu Q., Yu L., Luo L., Dou Q., Heng P.A. Semi-supervised medical image classification with relation-driven self-ensembling model. IEEE Trans. Med. Imaging. 2020;39:3429–3440. doi: 10.1109/TMI.2020.2995518.

- 39.Gu Y., Ge Z., Bonnington C.P., Zhou J. Progressive transfer learning and adversarial domain adaptation for cross-domain skin disease classification. IEEE J. Biomed. Health Inform. 2019;24:1379–1393. doi: 10.1109/JBHI.2019.2942429.

- 40.Zhou L., Luo Y. Deep features fusion with mutual attention transformer for skin lesion diagnosis; Proceedings of the IEEE International Conference on Image Processing (ICIP2021); Anchorage, AK, USA. 19–22 September 2021; pp. 3797–3801.

- 41.Cai G., Zhu Y., Wu Y., Jiang X., Ye J., Yang D. A multimodal transformer to fuse images and metadata for skin disease classification. Vis. Comput. 2022:1–13. doi: 10.1007/s00371-022-02492-4.

- 42.Ahmed S.A.A., Yanikoglu B., Goksu O., Aptoula E. Skin lesion classification with deep CNN ensembles; Proceedings of the Signal Processing and Communications Applications Conference (SIU); Gaziantep, Turkey. 5–7 October 2020; pp. 1–4.

- 43.Pacheco A.G.C., Ali A.R., Trappenberg T. Skin cancer detection based on deep learning and entropy to detect outlier samples. arXiv. 2019:1909.04525.

- 44.Molina E.O., Solorza S., Alvarez J. Classification of dermoscopy skin lesion color images using fractal deep learning features. Appl. Sci. 2020;10:5954. doi: 10.3390/app10175954.

- 45.Sun Q., Huang C., Chen M., Xu H., Yang Y. Skin Lesion Classification Using Additional Patient Information. BioMed Res. Int. 2021;2021:6673852. doi: 10.1155/2021/6673852.

- 46.Iqbal I., Younus M., Walayat K., Kakar M.U., Ma J. Automated multi-class classification of skin lesions through deep convolutional neural network with dermoscopic images. Comput. Med. Imaging Graph. 2021;88:101843. doi: 10.1016/j.compmedimag.2020.101843.

- 47.Villa-Pulgarin J.P., Ruales-Torres A.A., Arias-Garzon D., Bravo-Ortiz M.A., Arteaga-Arteaga H.B., Mora-Rubio A., Alzate-Grisales J.A., Mercado-Ruiz E., Hassaballah M., Orozco-Arias S., et al. Optimized convolutional neural network models for skin lesion classification. Comput. Mater. Contin. 2022;70:2131–2148. doi: 10.32604/cmc.2022.019529.

- 48.Afza F., Khan M.A., Sharif M., Rehman A. Microscopic Skin Laceration segmentation and classification: A Framework of statistical normal distribution and optimal feature selection. Microsc. Res. Tech. 2019;82:1471–1488. doi: 10.1002/jemt.23301.

- 49.Sadri A.R., Azarianpour S., Zekri M., Celebi M.E. WN-based approach to melanoma diagnosis from dermoscopy images. IET Image Process. 2017;11:475–482. doi: 10.1049/iet-ipr.2016.0681.

- 50.Schaefer G., Krawczyk B., Celebi M.E., Iyatomi H. An ensemble classification approach for melanoma diagnosis. Memetic Comput. 2014;6:233–240. doi: 10.1007/s12293-014-0144-8.

- 51.Waheed Z., Zafar M., Waheed A., Riaz F. An efficient machine learning approach for the detection of melanoma using dermoscopic images; Proceedings of the International Conference on Communication Computing and Digital Systems (C-CODE); Islamabad, Pakistan. 8–9 March 2017; pp. 316–319.

- 52.Sivaraj S., Malmathanraj R., Palanisamy P. Detecting anomalous growth of skin lesion using threshold-based segmentation algorithm and fuzzy k-nearest neighbor classifier. J. Cancer Res. Ther. 2020;16:40–52. doi: 10.4103/jcrt.JCRT_306_17.

- 53.Bi L., Kim J., Ahn E., Feng D., Fulham M. Automatic melanoma detection via multi-scale lesion-biased representation and joint reverse classification; Proceedings of the International Symposium on Biomedical Imaging (ISBI); Prague, Czech Republic. 13–16 April 2016; pp. 1055–1058.

- 54.Abbes W., Sellami D. Deep Neural network for fuzzy automatic melanoma diagnosis; Proceedings of the International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP2019); Prague, Czech Republic. 25–27 February 2019; pp. 47–56.

- 55.Kawahara J., Hamarneh G. Multi-resolution-tract CNN with hybrid pretrained and skin-lesion trained layers. In: Wang L., Adeli E., Wang Q., Shi Y., Suk H.I., editors. Machine Learning in Medical Imaging. MLMI 2016. Vol. 10019. Springer; Cham, Switzerland: 2016. pp. 164–171. Lecture Notes in Computer Science.

- 56.Kawahara J., BenTaieb A., Hamarneh G. Deep features to classify skin lesions; Proceedings of the IEEE International Symposium on Biomedical Imaging (ISBI2016); Prague, Czech Republic. 13–16 April 2016; pp. 1397–1400.

- 57.Nyiri T., Kiss A. Novel ensembling methods for dermatological image classification. In: Fagan D., Martín-Vide C., O’Neill M., Vega-Rodríguez M.A., editors. Theory and Practice of Natural Computing. TPNC 2018. Vol. 11324. Springer; Cham, Switzerland: 2018. pp. 438–448. Lecture Notes in Computer Science.

- 58.Shahin A.H., Kamal A., Elattar M.A. Deep ensemble learning for skin lesion classification from dermoscopic images; Proceedings of the Cairo International Biomedical Engineering Conference (CIBEC2018); Cairo, Egypt. 20–22 December 2018; pp. 150–153.

- 59.Pennisi A., Bloisi D.D., Nardi D., Giampetruzzi A.R., Mondino C., Facchiano A. Skin lesions image segmentation using Delaunay Triangulation for melanoma detection. Comput. Med. Imaging Graph. 2016;52:89–103. doi: 10.1016/j.compmedimag.2016.05.002.

- 60.Warsi F., Khanam R., Kamya S. An efficient 3D color texture feature and neural network technique for melanoma detection. Inform. Med. Unlocked. 2019;1:100176. doi: 10.1016/j.imu.2019.100176.

- 61.Khatib E., Popescu D., Ichim L. Deep learning based methods for automatic diagnosis of skin lesions. Sensors. 2020;20:1753. doi: 10.3390/s20061753.

- 62.Jain S., Singhania U., Tripathy B., Nasr E.A., Aboudaif M.K., Kamrani A.K. Deep Learning-Based Transfer Learning for Classification of Skin Cancer. Sensors. 2021;21:8142. doi: 10.3390/s21238142.

- 63.Wu D., Luo X., Shang M., He Y., Wang G., Wu X. A data characteristic aware latent factor model for web services QoS prediction. IEEE Trans. Knowl. Data Eng. 2022;34:2525–2538. doi: 10.1109/TKDE.2020.3014302.

- 64.Wu D., He Q., Luo X., Shang M., He Y., Wang G. A posterior neighborhood regularized latent factor model for highly accurate web service QoS prediction. IEEE Trans. Serv. Comput. 2022;15:793–805. doi: 10.1109/TSC.2019.2961895.

Data Availability Statement

Not applicable.