Abstract

Approximately one billion individuals suffer from mental health disorders, such as depression, bipolar disorder, schizophrenia, and anxiety. Mental health professionals use various assessment tools to detect and diagnose these disorders. However, these tools are complex, contain an excessive number of questions, and require a significant amount of time to administer, leading to low participation and completion rates. Additionally, the results obtained from these tools must be analyzed and interpreted manually by mental health professionals, which may yield inaccurate diagnoses. To this end, this research utilizes advanced analytics and artificial intelligence to develop a decision support system (DSS) that can efficiently detect and diagnose various mental disorders. As part of the DSS development process, the Network Pattern Recognition (NEPAR) algorithm is first utilized to build the assessment tool and identify the questions that participants need to answer. Then, various machine learning models are trained using participants’ answers to these questions and other historical data as inputs to predict the existence and the type of their mental disorder. The results show that the proposed DSS can automatically diagnose mental disorders using only 28 questions without any human input, with an accuracy of 89%. Furthermore, the proposed mental disorder diagnostic tool has significantly fewer questions than its counterparts; hence, it provides higher participation and completion rates. Therefore, mental health professionals can use this proposed DSS and its accompanying assessment tool for improved clinical decision-making and diagnostic accuracy.

Keywords: Mental Disorder, Artificial Intelligence, Machine learning, Network Science, Feature selection, SCL-90-R, Network Pattern Recognition, Healthcare Analytics, Disease Prediction, Diagnosis

Introduction

The World Health Organization (WHO) has described mental health as a state of wellbeing in which individuals can realize their abilities, cope with the everyday stresses of life, work productively, and contribute to their community (WHO, 2018). According to recent estimates (Ritchie, 2018; WHO, 2020), approximately one billion individuals suffer from mental health disorders (e.g., depression, anxiety, bipolar disorder), costing the global economy trillions of dollars in disability payments and lost productivity. However, a significant proportion of individuals with mental illnesses do not receive treatment or the quality of care they need, often due to resource shortages (Docrat et al., 2019; Petersen et al., 2019; Wainberg et al., 2017; Wang & Cheung, 2011). For example, in many countries, there is fewer than one psychiatrist for every 100,000 people (Hanna et al., 2018; Jenkins et al., 2010). Moreover, certain tools and methods used by mental health professionals to make care-related decisions (e.g., formulating accurate diagnoses) are inadequate (Kilbourne et al., 2018; Wang & Cheung, 2011). Additionally, recent pandemics (e.g., COVID-19) and epidemics (e.g., opioid overdose) have exacerbated the mental health crisis worldwide (Johnson et al., 2021; Khanal et al., 2020; Ransing et al., 2020). Thus, there is a need for innovative tools that can help mental health professionals (e.g., psychiatrists, counselors) make more efficient and accurate diagnostic decisions (Casado-Lumbreras et al., 2012; Perkins et al., 2018; Thieme Anja et al., 2020).

The primary diagnostic guidelines used by mental health professionals to detect and classify mental disorders according to the type, intensity, and duration of symptoms are the Diagnostic and Statistical Manual of Mental Disorders (DSM) and the International Classification of Diseases (Americans et al., 2013; Fabiano & Haslam, 2020; Fellmeth et al., 2021). These tools, despite their frequent use, have certain limitations. For instance, the criteria used to identify diseases are common to many diagnoses; thus, diagnostic groups cannot be clearly separated from one another (Lin et al., 2006; Ogasawara et al., 2017; Park & Kim, 2020; Sleep et al., 2021). Moreover, these tools do not consider additional factors such as demographic and biochemical data, information obtained during a patient interview, the family's history of mental illness, or the individual’s response to medications. They also examine patients according to a binary structure (i.e., patient vs. not-patient), resulting in misdiagnoses and inappropriate treatment plans (Garcia-Zattera et al., 2010; Pechenizkiy et al., 2006; Sleep et al., 2021).

Due to these limitations, mental health professionals often use other diagnostic guidelines, particularly the Symptom Checklist 90-Revised (SCL-90-R), which has become the most common assessment tool used around the world (Akhavan, Abiri, & Shairi, 2020b; Hildenbrand et al., 2015; Li et al., 2018). This tool includes 90 questions that are employed to assess 10 primary mental disorders, including somatization, obsessive-compulsive disorder, interpersonal sensitivity, depression, anxiety, hostility, phobic anxiety, paranoid ideation, psychoticism, and additional items (i.e., sleep, appetite, and feelings of guilt) (Gallardo-Pujol & Pereda, 2013).

Despite the availability of various diagnostic guidelines and tools, many mental health professionals still struggle to diagnose patients accurately and efficiently. Consequently, recent literature has called for more research on the use of cutting-edge technologies (i.e., artificial intelligence) to develop decision support systems (DSSs) that can help mental health professionals make evidence-based treatment decisions and guide policymakers with the digital mental health implementation (Balcombe & Leo, 2021; D’Alfonso, 2020). This study precisely responds to this call and addresses the challenges regarding the guidelines and tools used to diagnose mental disorders. This study mainly aims to build a mental health assessment tool and develop an AI-based DSS for mental health professionals to accurately diagnose mental disorders.

The remainder of the research is organized as follows. First, Sect. 2 summarizes the relevant literature regarding the standard mental health assessment tools and the use of AI to detect mental disorders and ethical guidelines regarding AI to design such tools. Next, Sect. 3 explains the research framework and applies it to a real-world dataset to demonstrate the use of AI to build a mental health assessment tool and develop an efficient DSS that accurately diagnoses mental disorders. Finally, Sect. 4 discusses the implications of the proposed DSS, while Sect. 5 offers concluding remarks.

Literature Review

Assessment tools for mental disorder diagnosis

One of the primary tools used to assess mental health is the SCL-90-R. The instrument features 90 questions scored on a five-point scale ranging from 0 to 4 to denote symptom occurrence (e.g., 0 for symptoms that never occur and 4 for symptoms that occur extremely frequently) (Derogatis, 2017). The SCL-90-R can be used to diagnose various mental disorders, as provided in Table 1 (Derogatis, 2017; Holi, 2003; Prinz et al., 2013).

Table 1.

Mental Disorders Diagnosable using the SCL-90-R (Excluding “Other”)

| Mental Disorder | Definition |
|---|---|
| Anxiety (ANX) | A disorder that causes feelings of apprehension, dread, terror, and panic. |
| Depression (DEP) | A disorder that leads to painful symptoms that negatively affect daily activities such as eating and sleeping. |
| Hostility (HOS) | A disorder wherein patients have thoughts, feelings, and actions that cause a negative state of anger (e.g., aggression, rage). |
| Interpersonal sensitivity (INT) | A disorder wherein patients feel inadequate and inferior when they compare themselves to others (e.g., feelings of self-deprecation, uneasiness). |
| Obsessive-compulsive (OC) | A disorder in which patients have repeated unwanted thoughts or desire to do something continually and urgently. |
| Paranoid ideation (PAR) | A disorder wherein patients have a mode of thinking featuring hostility, suspiciousness, grandiosity, or fear of loss of autonomy. |
| Phobic anxiety (PHOB) | A disorder wherein patients have a persistent feeling of fear of a specific person, place, object, or situation that becomes irrational. |
| Psychoticism (PSY) | A disorder epitomized by aggressiveness and interpersonal hostility (e.g., lack of empathy). |
| Somatization (SOM) | A disorder caused by bodily perceptions and complaints related to cardiovascular, gastrointestinal, respiratory, and other body systems. |
| Additional items (ADI) | Includes items such as sleep and appetite problems and feelings of guilt. |

The SCL-90-R has gained the attention of many researchers, becoming the most widespread mental disorder diagnostic tool used around the world (Barker-Collo, 2003; Bernet et al., 2015; Chen et al., 2020; Kim & Jang, 2016; Olsen et al., 2004; Rytilä-Manninen et al., 2016; Schmitz et al., 2000; Sereda & Dembitskyi, 2016; Urbán et al., 2016). However, the SCL-90-R is lengthy and requires a considerable amount of time to answer all questions. Thus, various studies have proposed using statistical techniques to shorten the number of questions (Akhavan, Abiri, & Shairi, 2020a; Imperatori et al., 2020; Lundqvist & Schröder, 2021). For example, Derogatis (2017) developed the BSI-18, which features 18 questions and is used to diagnose somatization, anxiety, and depression. Prinz et al., (2013) created the SCL-14 to diagnose somatization, phobic anxiety, and depression. However, these tools cannot be used to diagnose all 10 mental health disorders that the original SCL-90-R can detect, because they feature fewer questions.

Despite its effectiveness, the SCL-90-R has some limitations. First, the SCL-90-R includes several sets of questions, each reserved for a specific mental disorder. For instance, 13 questions are reserved for depression, while nine address anxiety. When mental health professionals interpret responses to these questions, they do not account for interactions among different groups of questions, meaning that each set of questions only linearly contributes to the diagnosis of a specific mental disorder. Another issue with the SCL-90-R is its length. Answering all of the questions on the SCL-90-R requires substantial time and can be exhausting, drastically reducing the participation and completion rates (Galesic & Bosnjak, 2009). Despite efforts to shorten it, the best version for diagnosing the same mental disorders with fewer questions remains the BSI-53, which features 53 questions (Derogatis & Spencer, 1993). Reducing the number of questions on a diagnostic tool, however, brings additional challenges. For example, the SCL-14 can only assess somatization, phobic anxiety, and depression, while the SCL-25 can only diagnose depression and anxiety. Some scales (e.g., the SCL-27 and SCL-27-Plus) include altered questions that are drastically different from those on the original SCL-90-R and are used to assess various sets of disorders (Hardt & Gerbershagen, 2001; Jochen & Hardt, 2008).

Due to the limitations in the past literature, various researchers have emphasized the urgent need to reduce the length of the SCL-90-R (Kruyen et al., 2013) without compromising on the number of disorders that it can diagnose (Graham et al., 2019; Hao et al., 2013; Luxton, 2016; Nie et al., 2012). This study responds to this call and attempts to reduce the number of questions used to assess and detect all 10 mental disorders through the use of AI.

DSSs for mental disorder diagnosis

Several studies have designed and deployed DSSs for the diagnosis and treatment of mental disorders. For instance, the Computerized Texas Medication Algorithm Project is used to support diagnosis, treatment, follow-up, and preventive care decisions related to major depressive disorders and can be incorporated into a clinical setting (Trivedi et al., 2004). A clinical DSS called the SADDESQ, constructed by Razzouk et al., (2006), can be used to diagnose schizophrenia spectrum disorders, based on variables such as symptoms of psychosis and the number and duration of seizures. The Sequenced Treatment Alternatives to Relieve Depression is another DSS that assists doctors with determining the optimal dose and timing of medications by considering changes in symptoms and the medications’ side effects (Sinyor et al., 2010).

In addition to the above-mentioned studies, there have also been efforts to design AI-based DSSs to produce more accurate diagnoses of mental disorders. For instance, Mueller et al., (2011) detected ADHD using a DSS that employs an SVM model constructed using the responses of individuals to the BSI tool. Nie et al., (2012) diagnosed mental disorders using an SVM model built via the SCL-90-R tool. Similarly, Hao et al., (2013) determined individuals’ mental health through SVM and ANN models constructed by combining an SCL-90-R response dataset with social media blogs. Chekroud et al., (2017) proposed a DSS that can cluster depression symptoms. A software platform created by Rovini et al., (2018) can be used to help clinicians diagnose Parkinson’s disease early on by evaluating various non-motor symptoms. Stewart et al., (2020) proposed a DSS based on tree-based learning such as decision trees (DTs) and random forest (RF) to identify children at the highest risk of suicide and self-harm. Chen et al., (2020) utilized a DSS built through deep learning to screen and score dementia patients. Zhang et al., (2020) employed biological markers and genetic data to propose a deep learning framework for recognizing and diagnosing mental disorders early on.

Despite these previous efforts to develop an AI-based DSS to detect and diagnose mental disorders, there are still various gaps in the literature. First, most diagnostic tools are provided to individuals on paper instead of digitally. This necessitates the individual visiting the clinic in person. Also, mental health professionals must expend a significant amount of effort to manually analyze the answers and derive an accurate diagnosis. Additionally, mental health professionals do not investigate the relationships among various variables used to assess mental health. Many of these previous studies focus on a particular set of disorders, instead of assessing multiple disorders at the same time. Additionally, these studies did not consider ethical design elements when creating AI-based DSSs. To the best of our knowledge, our study is the first study that aims to detect 10 disorders using the same set of variables.

Ethical issues related to AI and mental disease diagnosis

Several researchers have indicated that many information systems (IS) researchers do not consider the practitioners’ needs when designing DSSs (Dennehy et al., 2021). On the other hand, these practitioners mostly rely on vendors and consultants to solve IS-related problems rather than IS researchers (Dennehy et al., 2021). In addition, many studies have emphasized that IS researchers should integrate ethical guidelines obtained from IS practitioners when creating AI-based DSSs, particularly in the healthcare area where patients’ health and wellbeing are at stake (Fosso Wamba & Queiroz, 2021). Therefore, there is a growing need to forge alliances and improve the collaboration between IS researchers and practitioners when designing ethical and socially responsible AI-based DSSs.

The primary problem with AI-based DSSs is that they can be unfair and biased in their decision-making, specifically if they are developed via Blackbox algorithms and using various variables (Akter et al., 2021; Parra et al., 2021; Tsamados et al., 2021). There has been evidence in the literature that AI-based DSSs can perpetuate and exacerbate gender and racial biases and discriminate against some members of society more than others (Gupta et al., 2021; Mittelstadt et al., 2016; Mittelstadt & Floridi, 2016). These research studies noted that AI solutions are value-laden and have biases that are “specified by developers and configured by users with desired outcomes in mind that privilege some values and interests over others.” Additionally, most AI algorithms are not transparent and are difficult to explain (Buhmann & Fieseler, 2021). AI-based DSSs can also yield unintended negative consequences if certain variables and features (e.g., gender and race) are utilized in the training dataset (Crawford & Calo, 2016; Mittelstadt et al., 2016). For example, HireVue, a recruiting-technology firm, designed an AI-based DSS to hire employees. But according to AI researchers, the HireVue AI application penalized nonnative speakers, visibly nervous interviewees, and anyone else who did not fit the model for look and speech. Another example is the AI application called COMPAS, which was designed to determine recidivism risk. The algorithm assigns a higher probability of recidivism to black and brown men than to all other persons (Kirkpatrick et al., 2017). Thus, there have been proposals to create guidelines for integrating ethics into the process of AI-based DSS design to prevent their misuse and potential biases, specifically in the healthcare field (Borgesius, 2020; Floridi & Cowls, 2021; Johnson et al., 2021).

Gerke et al., (2020) and Chandler et al., (2020) proposed a theoretical framework for the ethical implementation of AI models in the health care industry. Their framework has three major principles: fairness, trustworthiness, and transparency. Fairness (i.e., an AI system without biases) particularly deals with data collection and variable types when training AI models. Biases result from the datasets themselves or from how researchers choose and analyze the data (Price, 2019). For instance, including variables such as race, gender, and insurance payer type as a proxy for socioeconomic status when training an AI-based DSS in the healthcare field introduces biases (Chen et al., 2019). Trustworthiness indicates the users’ confidence in the AI systems and has three core elements (IBM, 2020). First, the purpose of AI is to augment human intelligence and help decision-making, not completely replace it. Second, creators of the AI-based DSSs own the data and the insights; thus, they are liable for the decisions that the AI makes. Third, AI systems must be transparent and explainable. Transparency stands for the knowledge regarding the internal structure of AI-based DSSs. In other words, transparency indicates that the predictions of the AI model used within the DSS can be properly explained (Fosso Wamba et al., 2021; Fosso Wamba & Queiroz, 2021). Transparency allows practitioners to see whether the AI models have been thoroughly tested and make sense, and to understand why particular decisions are made. Issues related to the trustworthiness of AI-based DSSs arise with the use of “Blackbox” algorithms because users cannot provide a logical explanation of how the algorithm arrived at its given output (Schönberger, 2019). Therefore, explainable and Whitebox AI algorithms are recommended over complex Blackbox models. However, there are cases when these Blackbox models need to be used (e.g., with image data). In such cases, a post hoc model analysis (e.g., SHAP, LIME) is needed to further understand the decisions made by these Blackbox models.

As many research studies have stated, there is a need for a methodology for integrating ethics into the design of AI-based DSSs from the start of the project. For instance, Morley et al., (2019) emphasized that the ethical challenges raised by implementing AI in healthcare settings should be tackled proactively rather than reactively. This brings up the concept called “Ethics by Design,” which is concerned with algorithms and tools needed to endow AI-based DSSs with the ability to reason about the ethical aspects of their decisions, to ensure that their behavior remains within given moral bounds (D’Aquin et al., 2018; Dignum et al., 2018; Iphofen & Kritikos, 2019).

In this study, the “Ethics by Design” approach was utilized to create an AI-based DSS that can diagnose mental disorders. Throughout the design process, a close cooperative relationship between IS researchers designing AI-based DSSs and the practitioners using the AI-based DSSs (i.e., mental health professionals) was established for ensuring greater impact and addressing concerns regarding the social and responsible use of AI, as addressed by Dennehy et al., (2021) and Morley et al., (2019). Furthermore, various procedures were embedded in the design of the DSS to ensure the fairness, transparency, and trustworthiness of the proposed DSS, as discussed in the methodology section.

Methodology and application

Phase I: data collection and preprocessing

We developed a web portal called Psikometrist that digitally collects participants’ responses to the SCL-90-R and saves them in a database. Mental health professionals such as counselors, psychologists, and psychiatrists seeking to use this platform must register and obtain preauthorization. After registering and obtaining access to the Psikometrist platform, these mental health professionals can administer the SCL-90-R test on the platform by sending an encrypted link to the participant. The questions appearing via the Psikometrist platform have time thresholds, establishing the minimum amount of time necessary to read and answer them. The Psikometrist platform compares the time that patients spend answering an SCL-90-R question with the minimum threshold and excludes patients who answer faster than that threshold. Using the encrypted link, participants can securely access the SCL-90-R questions. Before answering the questions, participants or their guardians sign an informed consent form indicating that their answers to the SCL-90-R questions can be anonymously used to develop a decision support system. Additionally, patients are informed that they can skip or refuse to answer questions. After data collection, personal identifiers (e.g., name, date of birth, address) are masked and remain confidential in the system and are only visible to the mental health professional who administered the test to satisfy the ethical AI guidelines mentioned in Sect. 2.3. The system generates a unique identifier for each participant and uses it instead of personal identification to ensure anonymity. This ensures that the information collected from patients is kept strictly confidential. Additionally, participants are informed that the data collected will be utilized to improve their mental health diagnosis. Each participant’s responses are tabulated and stored in the system. Respondents also receive a copy of their tabulated responses.
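The screening and anonymization steps described above can be illustrated with a minimal sketch. It is not the Psikometrist implementation: the single time threshold of 2 seconds, the rule that one rushed answer is enough to exclude a respondent, the field names, and the use of random UUIDs are all assumptions made for illustration.

```python
import uuid

# Hypothetical minimum reading time, in seconds, applied to every question.
MIN_SECONDS_PER_QUESTION = 2.0


def answered_too_fast(response_times, min_seconds=MIN_SECONDS_PER_QUESTION):
    """Return True if any question was answered faster than the threshold."""
    return any(t < min_seconds for t in response_times)


def pseudonymize(participants, min_seconds=MIN_SECONDS_PER_QUESTION):
    """Drop rushed respondents and replace names with random identifiers."""
    records = []
    for person in participants:
        if answered_too_fast(person["times"], min_seconds):
            continue  # excluded: at least one answer was below the threshold
        records.append({
            "participant_id": uuid.uuid4().hex,  # random, not derived from identity
            "answers": list(person["answers"]),
        })
    return records


# Hypothetical input: two respondents, three questions each (0-4 scale).
participants = [
    {"name": "A", "answers": [0, 3, 4], "times": [4.1, 3.0, 5.2]},
    {"name": "B", "answers": [1, 1, 1], "times": [0.4, 0.5, 0.3]},
]
clean = pseudonymize(participants)
```

In this example only respondent A is retained, and the stored record carries a random identifier in place of the name.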
Following this procedure, more than 6,000 participants have taken the SCL-90-R test since 2019. We established a close cooperative relationship with three mental health experts (i.e., psychiatrists) who plan to use the proposed DSS to ensure greater impact and address concerns regarding the social and responsible use of AI, as discussed by Dennehy et al., (2021) and Morley et al., (2019). These three mental health experts (i.e., psychiatrists) evaluated the participants’ responses to identify potential mental disorders. To integrate ethics into our AI solution and ensure that the predictions of mental disorders remain within given moral bounds, we removed variables related to the participants’ demographic information (e.g., race, gender, and insurer information). We only considered their responses to the SCL-90-R test. Figure 1 shows the registration GUI for Psikometrist, which was created as a part of this research.

Fig. 1.

GUI of the Psikometrist platform

Phase II: variable selection and creation through NEPAR

We utilized a social network analysis technique called the Networked Pattern Recognition (NEPAR) algorithm to reduce the number of SCL-90-R questions and identify similarities among individuals taking the test (i.e., participants). Through NEPAR, we calculated similarities among the questions and participants, built undirected network graphs, and extracted three centrality measures (i.e., closeness, degree, and betweenness centralities). We then compared these centrality measures to decrease the number of questions from 90 to 28, without reducing the number of disorders the SCL-90-R could detect. Additionally, we applied NEPAR to compute similarities among participants by obtaining three centrality measures, namely closeness, betweenness, and degree centrality. The NEPAR algorithm extracts similarities and relationships among variables. It creates a network diagram with nodes and links, using the responses given by the participants to the SCL-90-R questions. For more information about NEPAR, see Khan & Tutun (2021) and Tutun et al., (2017). It is important to note that the NEPAR algorithm is transparent and explainable as it generates a network diagram providing insights regarding the observations and their relationships in the dataset. Thus, it is possible for the creator of the AI-based DSSs to easily identify issues regarding fairness and bias. We developed two NEPAR models, NEPAR-Q and NEPAR-P. The Q and P stand for questions and participants, respectively. The nodes in the NEPAR-Q model indicate the questions, while the nodes in the NEPAR-P model represent the participants. The links in both models depict similarities among the nodes. NEPAR-Q was used in the present research to reduce the number of questions on the SCL-90-R (i.e., variable selection), while NEPAR-P was utilized for feature engineering (i.e., variable creation) because it generates and incorporates similarities among patients as new variables. 
The NEPAR algorithm employs the similarity measures of closeness, betweenness, and degree centrality to compute the abovementioned links. We used all of these similarity measures and combined them, since choosing one over another might have caused bias in either model.
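To make the network construction more concrete, the following sketch builds an undirected similarity graph from answer vectors and computes degree and closeness centrality. It is only an illustration under stated assumptions, not the NEPAR algorithm itself: the cosine similarity measure, the edge cutoff of 0.9, and the toy data are hypothetical, and betweenness centrality is omitted for brevity.

```python
from collections import deque
from math import sqrt


def cosine(u, v):
    """Cosine similarity between two equal-length answer vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = sqrt(sum(a * a for a in u)) * sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def build_graph(vectors, min_similarity):
    """Undirected graph: link two nodes when their similarity is high enough."""
    names = list(vectors)
    graph = {name: set() for name in names}
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            if cosine(vectors[a], vectors[b]) >= min_similarity:
                graph[a].add(b)
                graph[b].add(a)
    return graph


def degree_centrality(graph):
    """Number of neighbours divided by the maximum possible (n - 1)."""
    n = len(graph)
    if n < 2:
        return {node: 0.0 for node in graph}
    return {node: len(nbrs) / (n - 1) for node, nbrs in graph.items()}


def closeness_centrality(graph):
    """Reachable nodes divided by the sum of shortest-path lengths (BFS)."""
    result = {}
    for source in graph:
        dist = {source: 0}
        queue = deque([source])
        while queue:
            node = queue.popleft()
            for nbr in graph[node]:
                if nbr not in dist:
                    dist[nbr] = dist[node] + 1
                    queue.append(nbr)
        total = sum(dist.values())
        result[source] = (len(dist) - 1) / total if total else 0.0
    return result


# Hypothetical answers of four participants to four questions (0-4 scale).
answers = {
    "Q1": [0, 1, 4, 3],
    "Q2": [0, 1, 4, 3],
    "Q3": [4, 3, 0, 1],
    "Q4": [4, 4, 0, 0],
}
question_graph = build_graph(answers, min_similarity=0.9)
degree = degree_centrality(question_graph)
closeness = closeness_centrality(question_graph)
```

Passing one answer vector per question gives a question network in the spirit of NEPAR-Q; passing one vector per participant instead gives a participant network in the spirit of NEPAR-P.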

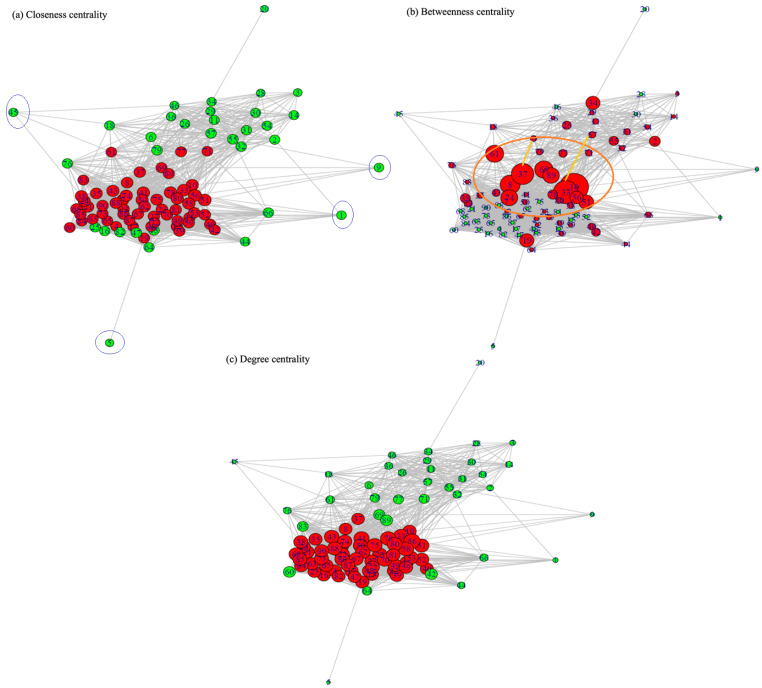

For variable selection through the NEPAR-Q model, three mental health experts determined the threshold values for each similarity measure, based on their experience. These threshold values were 36% for betweenness centrality and 39% for closeness and degree centrality. The threshold values were then used to identify the questions to be removed from the SCL-90-R. Next, the remaining questions for each similarity measure were combined, leading to an assessment tool comprised of 28 questions in total. Figure 2 represents the network diagrams based on three centrality measures obtained from the NEPAR-Q model. The red nodes in the figure represent questions with a similarity value above the threshold, and the green nodes are questions with similarity values below the threshold. The intersection of the red nodes in the three graphs represents the remaining 28 questions.
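The selection rule described above (keep the questions that exceed the threshold on every centrality measure) can be sketched as follows. The centrality scores are invented for illustration, and treating the reported percentages as cutoffs on scores normalized to the range 0 to 1 is an assumption; the text does not specify how the thresholds are applied.

```python
# Hypothetical normalized centrality scores (0 to 1), keyed by SCL-90-R question number.
scores = {
    "betweenness": {7: 0.52, 8: 0.41, 1: 0.10, 5: 0.30, 10: 0.44},
    "closeness":   {7: 0.61, 8: 0.45, 1: 0.22, 5: 0.43, 10: 0.40},
    "degree":      {7: 0.58, 8: 0.47, 1: 0.18, 5: 0.35, 10: 0.50},
}

# Threshold values reported in the text, expressed as fractions.
thresholds = {"betweenness": 0.36, "closeness": 0.39, "degree": 0.39}


def select_questions(scores, thresholds):
    """Keep questions whose score exceeds the threshold on every measure."""
    kept_per_measure = [
        {q for q, value in scores[measure].items() if value > thresholds[measure]}
        for measure in thresholds
    ]
    return set.intersection(*kept_per_measure)


selected = sorted(select_questions(scores, thresholds))
```

With these made-up scores, question 5 passes only the closeness threshold and question 1 passes none, so both are dropped.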

Fig. 2.

Centrality graphs

Figure 2.a shows the questions (nodes) located further away from the densely populated nodes, with fewer interconnections. These nodes (seen in the blue circles) were 1: Somatization, 5: Interpersonal Sensitivity, 9: OCD, 20: Depression, and 45: Obsessive Compulsive. Therefore, these questions were most likely the weakest and could be removed from the SCL-90-R, since they provided information that could be obtained from other questions.

The top 10 questions (seen in the orange circle) conveying the most information on the SCL-90-R are indicated as augmented nodes in Fig. 2.b. These top 10 questions were 56: Somatization, 78: Anxiety, 41: Interpersonal Sensitivity, 58: Somatization, 33: Anxiety, 80: Anxiety, 7: Psychoticism, 51: Obsessive-Compulsive, 10: Obsessive-Compulsive, and 43: Paranoid Thoughts. Additionally, Fig. 2.b shows that some questions were not necessary and could be predicted using other questions, as seen in the yellow lines of Fig. 2.b. For example, we could infer that “Question 6: Feeling critical of others” was highly correlated with “Question 37: Feeling that people are unfriendly or dislike you.” Moreover, the response to “Question 33: Feeling fearful” was highly correlated with the response to “Question 57: Feeling tense or keyed up.” The SCL-90-R was reduced to a tool called the Symptom Checklist 28-Artificial Intelligence (SCL-28-AI), which features 28 questions and threshold values, as well as a correlation comparison of the centrality values in the non-directional graphs. This tool’s questions and corresponding mental disorders are given in Table 2.

Table 2.

New SCL-28-AI Obtained by Applying NEPAR-Q to the SCL-90-R

| Question # in SCL-28-AI | Question # in SCL-90-R | Question | Mental Disorder | Mental Disorders Assigned by Experts |
|---|---|---|---|---|
| 1 | 7 | The idea that someone else controls your thoughts | PSY | PSY |
| 2 | 8 | Feeling that others are to blame for most of your troubles | PAR | DEP, PAR, INT, HOS |
| 3 | 10 | Worry about sloppiness and carelessness | OC | SOM, ANX, OC |
| 4 | 19 | Poor appetite | ADI | DEP, ANX, PSY, SOM |
| 5 | 22 | Feelings of being trapped or caught | DEP | DEP, PAR, PSY, HOS |
| 6 | 23 | Suddenly frightened for no reason | ANX | ANX, PAR, PSY |
| 7 | 24 | Temper outbursts that cannot be controlled | HOS | HOS, ANX, PSY |
| 8 | 27 | Pain in lower back | SOM | SOM, ANX, DEP |
| 9 | 33 | Feeling fearful | ANX | PAR, PSY, ANX, PHOB |
| 10 | 35 | Other people being aware of your private thoughts | PSY | PSY |
| 11 | 37 | Feeling that people are unfriendly or dislike you | INT | INT, HOS, PAR, PSY |
| 12 | 38 | Having to do things very slowly to ensure correctness | OC | OC, ANX |
| 13 | 39 | Heart pounding and racing | ANX | ANX, HOS, INT |
| 14 | 40 | Nausea or upset stomach | SOM | SOM, ANX |
| 15 | 41 | Feeling inferior to others | INT | DEP, INT, ANX |
| 16 | 43 | Feeling that you are being watched or talked about by others | PAR | PAR, PSY, SEN |
| 17 | 49 | Hot or cold spells | SOM | SOM, ANX, DEP |
| 18 | 50 | Having to avoid certain things because they frighten you | PHOB | PHOB, ANX, PAR |
| 19 | 51 | Your mind going blank | OC | DEP, PSY, ANX, SOM |
| 20 | 56 | Feeling weakness in parts of your body | SOM | SOM, ANX, DEP |
| 21 | 58 | Heavy feeling in your arms or legs | SOM | SEP, SOM, ANX |
| 22 | 59 | Thoughts of death or dying | ADI | DEP, ANX |
| 23 | 67 | Having the urge to break or smash things | HOS | HOS, ANX, DEP |
| 24 | 70 | Feeling uneasy in crowds, such as when shopping | PHOB | ANX, PHOB, PAR |
| 25 | 72 | Spells of terror and panic | ANX | ANX, PSY, PHOB |
| 26 | 74 | Getting into frequent arguments | HOS | HOS, ANX, INT, PSY |
| 27 | 78 | Feeling so restless that you cannot sit still | ANX | ANX, HOS, DEP |
| 28 | 80 | Feeling like something bad is going to happen to you | ANX | ANX, PSY, PAR, SOM |

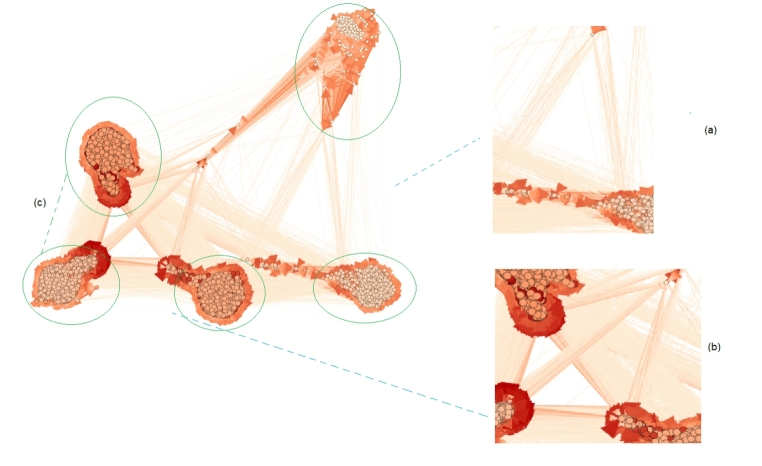

Figure 3 displays the network graph of participants (nodes) obtained using NEPAR-P and degree centrality. It is important to note that the other centrality measures (i.e., closeness and betweenness) provided similar graphs for various sets of communities. As seen in Fig. 3, there were five communities of individuals related to one another via links. This underscores that the similarities among participants provide critical information and should be used in the AI models as new variables to improve diagnostic accuracy. Figures 3.a and 3.b show that the nodes are highly connected and that these connections can provide new information about the participants.

Fig. 3.

Network graph of individuals obtained through NEPAR-P, using the degree centrality measure

Model training

In this phase, we built various AI and ML models using two different training sets: one including only participants’ responses to the 28 questions and one with their responses and three centrality measures. These AI and ML models were designed to determine the probability of participants having a particular type of mental disorder (e.g., depression, anxiety). The prediction models were determined based on our preliminary analysis and included logistic regression-based ridge regression (R-LR), lasso regression (L-LR), RF, and SVM. To ensure that the AI-based DSS is explainable and transparent, we specifically selected three Whitebox explainable AI algorithms (i.e., R-LR, L-LR, and RF) and compared their performance measures to those of a Blackbox algorithm (i.e., SVM).
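The two training sets can be pictured as the same response vectors with and without three extra columns. The sketch below is only an illustration of that layout; the participant identifiers, centrality values, and column order are hypothetical.

```python
# Order in which the three centrality measures are appended (an assumption).
CENTRALITY_KEYS = ("closeness", "betweenness", "degree")


def build_training_sets(responses, centralities):
    """Return (responses only, responses plus three centrality columns)."""
    base, augmented = {}, {}
    for pid, answers in responses.items():
        base[pid] = list(answers)
        extra = [centralities[pid][key] for key in CENTRALITY_KEYS]
        augmented[pid] = list(answers) + extra
    return base, augmented


# Hypothetical data: two participants, 28 answers each on the 0-4 scale.
responses = {"p1": [0] * 28, "p2": [4] * 28}
centralities = {
    "p1": {"closeness": 0.40, "betweenness": 0.05, "degree": 0.30},
    "p2": {"closeness": 0.55, "betweenness": 0.12, "degree": 0.45},
}
base, augmented = build_training_sets(responses, centralities)
```

Each row of the first set has 28 features, while each row of the second has 31.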

Logistic regression (LR) is a statistical approach that explains the relationship between multiple input variables and one output variable. The input variables can be of any type (either numerical or categorical), while the output variable must be categorical. The class probabilities (i.e., the likelihood of having specific mental disorders) are predicted based on the relationships among the input and output variables. In L-LR, a penalty function is added to the traditional multinomial LR such that the coefficients of unimportant variables are set to zero (Hastie et al., 2017; James et al., 2013; Johnson, Albizri, Harfouche, et al., 2021; Tutun et al., 2022). Conversely, R-LR uses a different penalty term that shrinks the coefficients of insignificant variables toward zero instead of setting them exactly to zero.
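For reference, the two penalties can be written in a standard textbook form. The notation below (class coefficients $\beta_{kj}$, penalty weight $\lambda$, $K$ disorder classes, $p$ input variables, $n$ participants) is assumed for illustration and is not taken from the original implementation.

$$
\Pr(Y = k \mid x) = \frac{\exp\left(\beta_{k0} + x^{\top}\beta_{k}\right)}{\sum_{l=1}^{K} \exp\left(\beta_{l0} + x^{\top}\beta_{l}\right)}
$$

$$
\text{L-LR:}\quad \min_{\beta}\; -\frac{1}{n}\sum_{i=1}^{n} \log \Pr(Y = y_i \mid x_i) \;+\; \lambda \sum_{k=1}^{K}\sum_{j=1}^{p} \left|\beta_{kj}\right|
$$

$$
\text{R-LR:}\quad \min_{\beta}\; -\frac{1}{n}\sum_{i=1}^{n} \log \Pr(Y = y_i \mid x_i) \;+\; \lambda \sum_{k=1}^{K}\sum_{j=1}^{p} \beta_{kj}^{2}
$$

The absolute-value penalty can drive individual coefficients exactly to zero, whereas the squared penalty only shrinks them, which matches the contrast between L-LR and R-LR drawn above.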

RF is a tree-based ensemble algorithm comprised of many DTs represented by “if-then” rules. The RF algorithm uses the DT algorithm to build numerous uncorrelated DTs by sampling observations with replacement from the training dataset (Hastie et al., 2017; James et al., 2013). Then, the individual DTs are combined using a function such as simple averages or majority voting (Johnson et al., 2020, 2021; Simsek et al., 2020). Because RF combines multiple uncorrelated DTs built from resampled training data, it provides lower model variance and better accuracy rates and is considered very robust.
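The bagging-and-voting idea described above can be sketched in plain Python. This is a toy illustration under stated assumptions, not the authors' implementation: each "tree" is reduced to a one-rule stump, and the function names, the made-up item scores, and the labels are all hypothetical.

```python
import random
from collections import Counter


def majority(labels, default):
    """Return the most common label, or `default` when the list is empty."""
    return Counter(labels).most_common(1)[0][0] if labels else default


def fit_stump(sample, feature):
    """Fit a one-rule tree: if x[feature] >= threshold then `high` else `low`."""
    threshold = sum(x[feature] for x, _ in sample) / len(sample)
    overall = majority([y for _, y in sample], None)
    high = majority([y for x, y in sample if x[feature] >= threshold], overall)
    low = majority([y for x, y in sample if x[feature] < threshold], overall)
    return feature, threshold, high, low


def predict_stump(stump, x):
    feature, threshold, high, low = stump
    return high if x[feature] >= threshold else low


def fit_forest(data, n_trees=25, seed=0):
    """Build `n_trees` stumps, each on its own bootstrap sample."""
    rng = random.Random(seed)
    n_features = len(data[0][0])
    forest = []
    for _ in range(n_trees):
        sample = rng.choices(data, k=len(data))  # sampling with replacement
        feature = rng.randrange(n_features)      # random feature helps decorrelate trees
        forest.append(fit_stump(sample, feature))
    return forest


def predict_forest(forest, x):
    """Combine the individual predictions by majority voting."""
    return majority([predict_stump(stump, x) for stump in forest], None)


# Hypothetical toy data: two item scores on a 0-4 scale and a made-up label.
data = [
    ((4, 3), "ANX"), ((3, 4), "ANX"), ((4, 4), "ANX"), ((3, 3), "ANX"),
    ((0, 1), "none"), ((1, 0), "none"), ((0, 0), "none"), ((1, 1), "none"),
]
forest = fit_forest(data)
print(predict_forest(forest, (4, 4)), predict_forest(forest, (0, 0)))
```

A real RF grows full decision trees and also subsamples candidate features at every split; the stump here only keeps the example short.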

SVM is a supervised learning algorithm mainly used for classification. SVM uses quadratic programming to find hyperplanes that can optimally separate classes with the largest gap possible (Hastie et al., 2009; Simsek et al., 2020). It is important to note that SVM can utilize various kernel functions to classify datasets that are not linearly separable. This allows SVM to efficiently operate in high-dimensional space at high accuracy rates. For each of these algorithms, we used four different datasets, as provided below:

Without NEPAR-Q and NEPAR-P: This dataset only included participants’ responses to the total 90 questions on the SCL-90-R. This set did not make use of NEPAR-Q or NEPAR-P.

Without NEPAR-Q and with NEPAR-P: This dataset included participants’ responses to the total 90 questions on the SCL-90-R, in addition to three similarity features (i.e., closeness, betweenness, and degree similarity) obtained through NEPAR-P. Each similarity feature represented the average similarity of a particular participant to the remaining participants.

With NEPAR-Q and without NEPAR-P: This dataset included participants’ responses to the 28 questions on the SCL-28-AI, which were selected through NEPAR-Q. This set did not make use of NEPAR-P.

With NEPAR-Q and NEPAR-P: This dataset included participants’ responses to the 28 questions on the SCL-28-AI, in addition to the three similarity features obtained through NEPAR-P.
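As a rough sketch of how the four input variants relate to one another, the snippet below assembles them for a single participant. The function name, the item positions, and the numeric values are placeholders chosen for illustration; the actual 28 items and similarity values come from NEPAR-Q and NEPAR-P.

```python
def build_variants(responses, selected_items, similarity):
    """Return the four feature vectors for one participant.

    responses      : list of 90 item scores in SCL-90-R order
    selected_items : 0-based positions of the 28 retained items (placeholders here)
    similarity     : (closeness, betweenness, degree) similarity features
    """
    short = [responses[i] for i in selected_items]
    extra = list(similarity)
    return {
        "without_Q_without_P": list(responses),
        "without_Q_with_P": list(responses) + extra,
        "with_Q_without_P": short,
        "with_Q_with_P": short + extra,
    }


# Made-up participant: scores, item positions, and similarity values are not real data.
responses = [i % 5 for i in range(90)]
selected_items = list(range(0, 84, 3))  # 28 placeholder positions
similarity = (0.42, 0.10, 0.35)

variants = build_variants(responses, selected_items, similarity)
for name, features in variants.items():
    print(name, len(features))
```

The four vectors have 90, 93, 28, and 31 entries, respectively, which mirrors the dataset descriptions above.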

We divided the datasets into training and test sets. The training set was used for model building, while the test set was used to assess the models’ performance. During model training, we performed cross-validation to tune the model hyperparameters and prevent overfitting. Next, we ran the data preprocessing pipeline for the test set and computed the three additional features. We then predicted the mental disorders of the participants within the test set and calculated the performance measures. Table 3 provides the macro-averages of the performance measures, indicating their overall ability to detect mental disorders.
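To make the macro-averaging step concrete, the following sketch computes overall accuracy together with macro-averaged sensitivity and specificity from predicted and true labels, treating each disorder one-vs-rest. The function name and the toy labels are assumptions, and the exact averaging used for the reported accuracy is not specified in the text, so this is only one plausible reading.

```python
from statistics import mean


def macro_metrics(y_true, y_pred):
    """Return (accuracy, macro sensitivity, macro specificity)."""
    labels = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    n = len(pairs)
    sensitivities, specificities = [], []
    for c in labels:
        tp = sum(t == c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        tn = n - tp - fn - fp
        # One-vs-rest rates for class c; guard against empty denominators.
        sensitivities.append(tp / (tp + fn) if tp + fn else 0.0)
        specificities.append(tn / (tn + fp) if tn + fp else 0.0)
    accuracy = sum(t == p for t, p in pairs) / n
    return accuracy, mean(sensitivities), mean(specificities)


# Toy test-set labels (made up for illustration).
y_true = ["ANX", "ANX", "DEP", "DEP", "HOS", "HOS"]
y_pred = ["ANX", "DEP", "DEP", "DEP", "HOS", "ANX"]
accuracy, sensitivity, specificity = macro_metrics(y_true, y_pred)
print(round(accuracy, 4), round(sensitivity, 4), round(specificity, 4))
```

Macro-averaging gives every disorder the same weight regardless of how many participants have it, so rare classes influence the summary as much as common ones.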

Table 3.

Macro-averages of Performance Measures by Model

| Variable Set | Extra Variables | Model Name | Accuracy | Sensitivity | Specificity | CI Lower | CI Upper |
|---|---|---|---|---|---|---|---|
| Without NEPAR-Q (SCL-90-R) | Without NEPAR-P | L-LR | 0.9682 | 0.9676 | 0.9688 | 0.9568 | 0.9773 |
| Without NEPAR-Q (SCL-90-R) | Without NEPAR-P | R-LR | 0.9666 | 0.9659 | 0.9672 | 0.9549 | 0.9759 |
| Without NEPAR-Q (SCL-90-R) | Without NEPAR-P | RF | 0.9560 | 0.9573 | 0.9548 | 0.9430 | 0.9668 |
| Without NEPAR-Q (SCL-90-R) | Without NEPAR-P | SVM | 0.9625 | 0.9625 | 0.9626 | 0.9503 | 0.9724 |
| Without NEPAR-Q (SCL-90-R) | With NEPAR-P | L-LR | 0.9707 | 0.9778 | 0.9641 | 0.9596 | 0.9794 |
| Without NEPAR-Q (SCL-90-R) | With NEPAR-P | R-LR | 0.9698 | 0.9710 | 0.9688 | 0.9587 | 0.9787 |
| Without NEPAR-Q (SCL-90-R) | With NEPAR-P | RF | 0.9560 | 0.9642 | 0.9485 | 0.9430 | 0.9668 |
| Without NEPAR-Q (SCL-90-R) | With NEPAR-P | SVM | 0.9625 | 0.9625 | 0.9626 | 0.9503 | 0.9724 |
| With NEPAR-Q (SCL-28-AI) | Without NEPAR-P | L-LR | 0.8719 | 0.8190 | 0.8767 | 0.8531 | 0.8889 |
| With NEPAR-Q (SCL-28-AI) | Without NEPAR-P | R-LR | 0.8539 | 0.8082 | 0.8706 | 0.8491 | 0.8056 |
| With NEPAR-Q (SCL-28-AI) | Without NEPAR-P | RF | 0.8508 | 0.7844 | 0.8855 | 0.8461 | 0.8128 |
| With NEPAR-Q (SCL-28-AI) | Without NEPAR-P | SVM | 0.8693 | 0.8017 | 0.8826 | 0.7803 | 0.8865 |
| With NEPAR-Q (SCL-28-AI) | With NEPAR-P | L-LR | 0.8918 | 0.8355 | 0.9040 | 0.8738 | 0.9083 |
| With NEPAR-Q (SCL-28-AI) | With NEPAR-P | R-LR | 0.8852 | 0.8221 | 0.8916 | 0.8604 | 0.8996 |
| With NEPAR-Q (SCL-28-AI) | With NEPAR-P | RF | 0.8811 | 0.8181 | 0.8985 | 0.8647 | 0.7305 |
| With NEPAR-Q (SCL-28-AI) | With NEPAR-P | SVM | 0.8849 | 0.8207 | 0.8991 | 0.8668 | 0.8118 |

Reducing the number of questions from 90 to 28 led to an approximately 9% decrease in performance measures. However, the AI models using the version with 28 questions (i.e., with NEPAR-P) could still diagnose all 10 mental disorders. This substantially contributes to the literature, since previous studies using fewer than 30 questions could only diagnose three to four mental disorders (Derogatis & Fitzpatrick, 2004; Prinz et al., 2013). Thus, mental health professionals can now use the SCL-28-AI instead of the SCL-90-R without compromising the number of mental disorders diagnosed or diagnostic accuracy. Furthermore, the SCL-28-AI has fewer questions; hence, it is much faster to complete and will yield a better response rate. Moreover, the performance measures obtained via NEPAR-P were higher than those obtained without, indicating that including participants’ similarities as additional features improved mental disorder diagnosis. The L-LR model with NEPAR-P yielded the highest performance measures; therefore, it was selected as the final model and deployed as part of the DSS.
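The text does not state how the approximately 9% figure was computed. One possible reading, shown below as a hedged check rather than the authors' calculation, averages the accuracy values reported in Table 3 over all models for the 90-question and 28-question versions and takes the difference in percentage points. The variable names are illustrative.

```python
from statistics import mean

# Accuracy values copied from Table 3 (order: L-LR, R-LR, RF, SVM).
acc_90 = {
    "without_P": [0.9682, 0.9666, 0.9560, 0.9625],
    "with_P": [0.9707, 0.9698, 0.9560, 0.9625],
}
acc_28 = {
    "without_P": [0.8719, 0.8539, 0.8508, 0.8693],
    "with_P": [0.8918, 0.8852, 0.8811, 0.8849],
}

mean_90 = mean(acc_90["without_P"] + acc_90["with_P"])
mean_28 = mean(acc_28["without_P"] + acc_28["with_P"])

# Average drop across all eight model/feature combinations.
drop_points = mean_90 - mean_28

# Drop for the selected final model (L-LR with NEPAR-P).
best_drop = acc_90["with_P"][0] - acc_28["with_P"][0]

print(f"average drop: {drop_points:.4f}, L-LR with NEPAR-P drop: {best_drop:.4f}")
```

Under this reading the average drop is about nine percentage points, while the drop for the selected L-LR model with NEPAR-P is slightly smaller.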

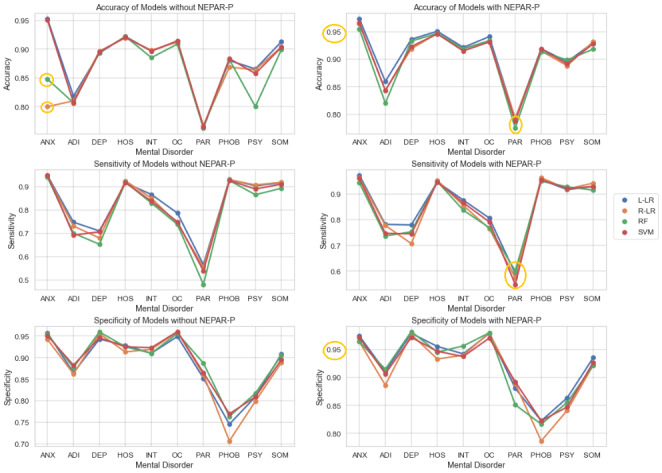

Figure 4 shows each model’s accuracy, sensitivity, and specificity with NEPAR-Q, at the mental disorder level. As seen from the plots in Fig. 4, the models with NEPAR-P (i.e., the three additional features accounting for similarities among participants) outperformed (seen in the yellow circles) the models without NEPAR-P. These models were powerful for diagnosing anxiety, depression, hostility, obsessive-compulsive disorder, and somatization. It appears that the models built both with and without NEPAR-P had some minor issues with diagnosing PAR and ADI. This was mainly because the numbers of individuals with these two disorders are relatively low. We believe that the DSS will perform significantly better once we collect more data and increase the sample size of individuals with these two disorders.

Fig. 4.

Performance of the AI and ML models with NEPAR-Q, by mental disorder

Discussions, implications and Future Research

This study was conducted in response to several calls for research on the use of cutting-edge technologies to develop decision support systems (DSSs) to help mental health professionals make evidence-based ethical treatment decisions and guide policymakers with digital mental health implementation (Balcombe & De Leo, 2021; D’Alfonso, 2020). Because many mental health professionals use cumbersome standardized assessment tools such as the SCL-90-R for mental disorder diagnosis, previous studies emphasized the importance of developing less complex diagnostic tools with fewer questions (Kruyen et al., 2013). To this end, this study mainly focused on techniques to reduce the length of the SCL-90-R for faster completion times and better completion rates. Also, various studies stated the need for DSSs to diagnose mental disorders automatically and accurately (Chekroud et al., 2017; Rovini et al., 2018; Stewart et al., 2020; Zhang et al., 2020). Therefore, this study explored how to utilize AI and ML to develop a DSS for mental health diagnosis. This study has various implications and makes several contributions to the pertinent literature and practice, as follows.

Theoretical implications

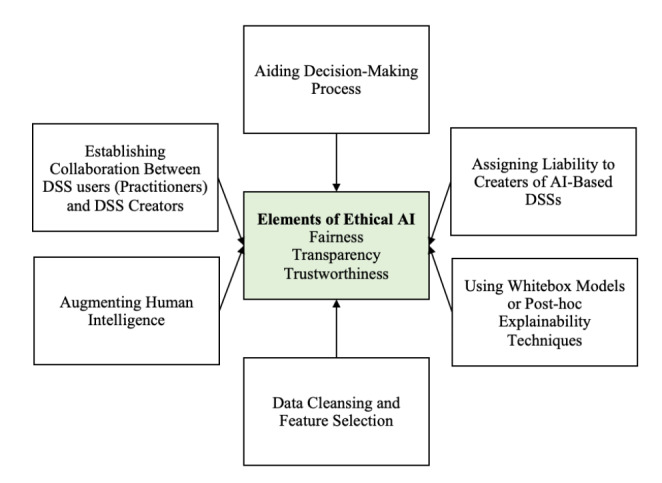

How an ethics framework is implemented in an AI-based healthcare application is not widely reported in the previous literature. Thus, there is a need for examples of AI implementations that satisfy the three principles of ethical AI, particularly in the healthcare field. As mentioned in Sect. 2.3, these principles are fairness, transparency, and trustworthiness. Therefore, this study focused on techniques as developed and described in Fig. 5 in the various phases of AI-based DSS creation and contributed to the theory of developing ethical AI solutions.

Fig. 5.

Core elements of designing ethical AI solutions

First, the study emphasized the importance of establishing close cooperation between the creators of AI-based DSSs and the practitioners. Second, this close collaboration ensured that the practitioners (i.e., mental health professionals) were included in the design process and that the proposed DSS is used to aid their decision making and augment their capabilities, not to replace them. The decisions made by the proposed DSS were examined by the mental health practitioners before model deployment to address issues regarding DSS fairness and liability.

Additionally, this study used transparent algorithms. Most AI algorithms used to develop DSSs, such as ANN, are not transparent and may have internal biases (Buhmann & Fieseler, 2021). For example, recommendations and decisions made by a Blackbox-based DSS can yield unintended adverse consequences when certain variables and features are utilized in the training dataset (e.g., gender and race) (Crawford & Calo, 2016; Mittelstadt et al., 2016). For instance, Nie et al. (2012) and Hao et al. (2013) used SVM and ANN models to diagnose mental disorders. Unfortunately, these models have a Blackbox nature and do not provide information about their internal structure. Therefore, mental health professionals using this kind of Blackbox DSS do not know how certain factors and variables affect the final diagnosis. Thus, this study made a unique contribution by using explainable and transparent AI models when diagnosing mental disorders.

Various steps were undertaken to ensure that the proposed DSS did not generate biased predictions. For instance, several variables (e.g., gender and race) were removed from the analysis to ensure the predictions are not biased towards a certain group of people. Furthermore, the study used the NEPAR algorithm for feature selection because it is an interpretable algorithm showing how features are related to one another, thus reducing model bias and making the model transparent. Moreover, three different functions were applied to calculate similarities among questions and participants because integrating different functions improved the robustness and reliability of the NEPAR models.

Practical implications

Various studies have proposed using statistical techniques to shorten the SCL-90-R test (Akhavan Abiri & Shairi, 2020a; Imperatori et al., 2020; Lundqvist & Schröder, 2021). For example, Derogatis (2017) developed the BSI-18, which features 18 questions and is used to diagnose somatization, anxiety, and depression. Prinz et al. (2013) created the SCL-14 to diagnose somatization, phobic anxiety, and depression. However, these shorter tools cannot be used to diagnose all ten mental health disorders that the original SCL-90-R can detect because they feature fewer questions. This study addresses this major issue by creating a new symptoms checklist tool called the SCL-28-AI. Unlike the tools proposed by several previous studies (Barker-Collo, 2003; Bernet et al., 2015; Chen et al., 2020; Kim & Jang, 2016; Olsen et al., 2004; Rytilä-Manninen et al., 2016; Schmitz et al., 2000; Sereda & Dembitskyi, 2016; Urbán et al., 2016), the SCL-28-AI can diagnose all the mental disorders that the SCL-90-R was intended to diagnose but with fewer questions. Therefore, it provides significantly higher participation and completion rates.

Previous studies have not considered the similarities among patients. In other words, previous studies did not consider the fact that patients who are similar in terms of demographics and other metrics may have similar mental disorders (Akhavan Abiri & Shairi, 2020a; Imperatori et al., 2020; Lundqvist & Schröder, 2021). Using NEPAR, this study utilizes the similarities among participants as an input to determine their mental disorders. Another important practical implication of this study is that it eliminated the manual analysis of participants’ answers to the SCL-90-R test. Because the DSS is used to diagnose disorders, mental health professionals can use their valuable time to develop treatment plans and effective interventions to improve their patients’ wellbeing instead of manually analyzing the test results (Chekroud et al., 2017; Rovini et al., 2018; Stewart et al., 2020; Zhang et al., 2020). This is the first study using the NEPAR algorithm along with major AI algorithms to develop a transparent DSS. Additionally, the proposed DSS can generate the likelihood of a person having a particular mental disorder, instead of a binary decision (i.e., whether or not the person has a disorder), which can help mental health professionals determine the severity of the diagnosis.

This research also has economic and social implications. For example, accurate diagnosis of mental disorders through the proposed DSS can reduce the overall healthcare cost due to misdiagnosis, overdiagnosis, and unnecessary treatment. Additionally, accurate and faster diagnosis of patients through the proposed DSS can help them start their treatment early, improving their overall quality of life. Finally, mental health professionals can increase their panel size (i.e., the number of patients that they see) because it is easier to administer the assessment and diagnose mental disorders through the proposed DSS. This can provide a greater population with access to mental health services.

Limitations and Future Research

This study is not without limitations. The proposed research is implemented using a dataset obtained from a web portal called Psikometrist. This web portal is currently used by specific mental health professionals. Future research should obtain data from different mental health professionals and apply the framework to other datasets collected from a larger population. The dataset contained approximately 6000 observations. Future studies can use this framework for larger datasets with more variables to increase diagnostic accuracy and reduce model bias. The datasets can include historical variables related to patient demographics, genetic data, medications used, etc., and explore the impact of bias in the final diagnosis. In addition to traditional ML algorithms, future studies can consider more complex models (i.e., deep learning) with post-model explainability techniques to explore the possibility of improving the results.

Conclusions

The mental health crisis has worsened in recent years, with severe impacts on the personal wellbeing and financial situation of many individuals. Due to the lack of adequate tools to help mental health professionals, this study was motivated by the urgent need to develop innovative tools that can help professionals make improved clinical diagnostic decisions. Our paper developed a DSS called Psikometrist that can replace traditional paper-based examinations, decreasing the possibility of missing data and significantly reducing the cost and time needed by patients and mental health professionals. The findings show how AI-based tools can be utilized to efficiently detect and diagnose various mental disorders. In addition, the study discussed the ethical challenges faced during AI implementation in healthcare settings and outlined the ethical guidelines that should be integrated.

Biographies

Salih Tutun

is a faculty member at Olin Business School and the Institute of Public Health at Washington University in St. Louis. Moreover, he is the co-founder of Cobsmind for understanding human behaviors and mitigating risks for improving businesses. He is an expert in developing business analytic frameworks using responsible AI, machine learning, and network science. He was awarded the 2021 Reid Teaching Award Master of Science in Business Analytics–Financial Technology from Olin Business School. His NEPAR framework research is ranked by National News Hits at Binghamton University (Circulation: Over 9 million in two months), is mentioned by more than 40 news outlets globally, and published on the front cover of Industrial and Systems Eng. at work magazine. It has also been a finalist at the IISE 2020 and IISE 2021 Cup Competitions.

Marina Johnson

is an Assistant Professor in the Information Management and Business Analytics Department at Montclair State University. Dr. Johnson completed her Ph.D. in Industrial and System Engineering, focusing on Data Mining and Machine Learning, from The State University of New York at Binghamton. Dr. Johnson has worked at various companies in industry, including Hugo Boss and Comcast, and implemented optimization, simulation, and predictive modeling projects. Dr. Johnson’s research interests lie in the area of applications of machine learning and optimization. Dr. Johnson has published articles in the Annals of Operations research, Journal of Enterprise Information Management, Information Systems Frontiers, Decision Sciences Journal of Innovative Education, and others.

Abdulaziz Ahmed

is an Assistant Professor in the Health Informatics Graduate Programs in the Department of Health Services Administration, School of Health Professions (SHP) at the University of Alabama at Birmingham (UAB). Before joining UAB, Dr. Ahmed worked as Assistant Professor at the Business Department at the University of Minnesota Crookston. Dr. Ahmed received his B.Sc. and M.Sc. degrees in Industrial Engineering from Jordan University of Science and Technology (JUST) in 2009 and 2012, respectively. Dr. Ahmed received his Ph.D. in Industrial and Systems Engineering from the State University of New York at Binghamton. Dr. Ahmed teaches in the Graduate Programs in Health Informatics, focusing on machine learning courses in the Data Analytics track. His research focuses on the applications of novel optimization and machine learning techniques to improve complex healthcare systems. Dr. Ahmed has published several conference proceedings and journal articles in prestigious journals such as Expert Systems with Applications, Operations Research for Health Care, and Healthcare Management Science.

Abdullah Albizri

is currently an Assistant Professor of Information Management and Business Analytics at the Feliciano School of Business, Montclair State University. He received his Ph.D. in Management Information Systems from Sheldon B. Lubar School of Business, University of Wisconsin-Milwaukee, in August 2014. Dr. Albizri’s research focuses on using AI solutions and machine learning techniques for financial fraud detection, blockchain applications, and the role of Information Systems in sustainability. Before joining academia, Dr. Albizri worked as an IT business consultant in the banking industry. Dr. Albizri has published articles in the International Journal of Information Management, Journal of Information Systems, Communications of the Association for Information Systems, and others.

Sedat Irgil

is a practicing psychiatrist at his private practice in Turkey as well as the co-founder of and the main psychiatric consultant for DNB Analytics, a company focusing on AI Applications in the fields of psychiatry and behavioral science. Dr. Irgil completed his medical residency at Gulhane Military Medical Academy, focusing on group psychodynamic therapies and psychoanalytic therapies. Dr. Irgil’s research interests include psychometric testing, graph analysis, and AI applications in psychiatry. Dr. Irgil has contributed to conference presentations and book chapters in the field of AI applications in behavioral sciences, including a conference paper that was published in Recent Advances in Intelligent Manufacturing and Service Systems.

Ilker Yesilkaya

is an independent researcher in risk assessment and data analytics. İlker Yeşilkaya has completed his B.Sc. degree in Business Administration from Anatolian University and M.Sc. degree in Occupational Health and Safety Management from Balıkesir University. He worked on various projects in the mining industry, health care, and education as a software and data architect and data analyst. It has also been the finalist at the IISE 2020 and IISE 2021 Cup Competitions.

Esma Nur Ucar

is currently pursuing her master’s degree in Experimental Psychology at Dokuz Eylül University. She completed her undergraduate education in the Department of Psychology at Ege University and previously worked as a psychologist in a psychiatry clinic and in centers focused on psychometric assessment. Her research interests include neuropsychology, the neurological basis of psychiatric diseases, and the measurement and evaluation of neuropsychological disorders.

Tanalp Sengun

is a Business Analyst working at McKinsey&Company. He graduated from Koc University with a double-major in Electrical and Electronics Engineering and Business Administration. He worked at Wecurex AI research lab and Optical Microsystems and Biofluids and Cardiovascular Mechanics Labs at Koc University. His research focuses on artificial intelligence, machine learning, image processing, and their medical applications, such as the classification of cataract disease from retinal images and the classification of prosthetic hand gestures based on neural signals.

Antoine Harfouche

is an Associate Professor of Information Systems (IS) and Artificial Intelligence (AI) at University Paris Nanterre where he teaches undergraduate and graduate courses in various areas, including IS, AI, Big Data, and Quantitative Methods. Dr. Harfouche’s research primarily examines how IS and AI impact individuals, organizations, countries, and societies in general. His publications appeared in peer-reviewed journals (e.g., the Annals of Operation Research, Trends in Biotechnology, Information Technology and People, Lecture Notes in Information Systems and Organization) and renowned conference proceedings (e.g., ICIS, PACIS, MCIS). Throughout his career, Dr. Harfouche has obtained a considerable amount of funding (above 2 million dollars) from the French Research Council (ANR) and the European ERASMUS+.

Data Availability

Data is available only on request, due to privacy/ethical restrictions. Data supporting the findings of this study are available from WeCureX and the DNB Data Analytics Group, LLC. Restrictions apply to the availability of these data, which were used under license for this study.

Declarations

Conflict of Interest

The authors declare that there are no conflicts of interest.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Salih Tutun, Email: salihtutun@wustl.edu.

Marina E. Johnson, Email: johnsonmari@montclair.edu

Abdulaziz Ahmed, Email: aahmed2@uab.edu.

Abdullah Albizri, Email: albizria@montclair.edu.

Sedat Irgil, Email: sedatirgil@dnbanalytics.com.

Ilker Yesilkaya, Email: ilkeryesilkaya@dnbanalytics.com.

Esma Nur Ucar, Email: esmanurucar@dnbanalytics.com.

Tanalp Sengun, Email: tsengun16@ku.edu.tr.

Antoine Harfouche, Email: antoine.h@parisnanterre.fr.

References

- Akhavan Abiri F, Shairi MR. Short Forms of Symptom Checklist (SCL): Investigation of validity & Reliability. Clinical Psychology and Personality. 2020;18(1):137–162. doi: 10.22070/CPAP.2020.2929.

- Akhavan Abiri F, Shairi MR. Validity and Reliability of Symptom Checklist-90-Revised (SCL-90-R) and Brief Symptom Inventory-53 (BSI-53). Clinical Psychology and Personality. 2020;17(2):169–195. doi: 10.22070/CPAP.2020.2916.

- Akter S, McCarthy G, Sajib S, Michael K, Dwivedi YK, D’Ambra J, Shen KN. Algorithmic bias in data-driven innovation in the age of AI. International Journal of Information Management. 2021;60:102387. doi: 10.1016/J.IJINFOMGT.2021.102387.

- Americans, N., Article, S., Haghir, H., Mokhber, N., Azarpazhooh, M. R., Haghighi, M. B. … Plan, Y. (2013). … World Health Organization. The ICD-10 Classification of Mental and Behavioural Disorders. IACAPAP E-Textbook of Child and Adolescent Mental Health, 55(1993), 135–139. 10.4103/0019

- Balcombe L, De Leo D. Digital Mental Health Challenges and the Horizon Ahead for Solutions. JMIR Ment Health. 2021;8(3):1. doi: 10.2196/26811.

- Barker-Collo SL. Culture and validity of the Symptom Checklist-90-Revised and Profile of Mood States in a New Zealand student sample. Cultural Diversity and Ethnic Minority Psychology. 2003;9(2):185–196. doi: 10.1037/1099-9809.9.2.185.

- Bernet W, Baker AJL, Verrocchio MC. Symptom Checklist-90-Revised Scores in Adult Children Exposed to Alienating Behaviors: An Italian Sample. Journal of Forensic Sciences. 2015;60(2):357–362. doi: 10.1111/1556-4029.12681.

- Borgesius, F. J. Z. (2020). Strengthening legal protection against discrimination by algorithms and artificial intelligence. 24(10), 1572–1593. 10.1080/13642987.2020.1743976

- Buhmann A, Fieseler C. Towards a deliberative framework for responsible innovation in artificial intelligence. Technology in Society. 2021;64:101475. doi: 10.1016/J.TECHSOC.2020.101475.

- Casado-Lumbreras C, Rodríguez-González A, Álvarez-Rodríguez JM, Colomo-Palacios R. PsyDis: Towards a diagnosis support system for psychological disorders. Expert Systems with Applications. 2012;39(13):11391–11403. doi: 10.1016/j.eswa.2012.04.033.

- Chandler C, Foltz PW, Elvevåg B. Using Machine Learning in Psychiatry: The Need to Establish a Framework That Nurtures Trustworthiness. Schizophrenia Bulletin. 2020;46(1):11–14. doi: 10.1093/SCHBUL/SBZ105.

- Chekroud AM, Gueorguieva R, Krumholz HM, Trivedi MH, Krystal JH, McCarthy G. Reevaluating the efficacy and predictability of antidepressant treatments: A symptom clustering approach. JAMA Psychiatry. 2017;74(4):370–378. doi: 10.1001/jamapsychiatry.2017.0025.

- Chen IH, Lin CY, Zheng X, Griffiths MD. Assessing Mental Health for China’s Police: Psychometric Features of the Self-Rating Depression Scale and Symptom Checklist 90-Revised. International Journal of Environmental Research and Public Health. 2020;17(8):2737. doi: 10.3390/IJERPH17082737.

- Chen IY, Szolovits P, Ghassemi M. Can AI help reduce disparities in general medical and mental health care? AMA Journal of Ethics. 2019;21(2):167–179. doi: 10.1001/AMAJETHICS.2019.167.

- Chen S, Stromer D, Alabdalrahim HA, Schwab S, Weih M, Maier A. Automatic dementia screening and scoring by applying deep learning on clock-drawing tests. Scientific Reports. 2020;10(1):1–11. doi: 10.1038/s41598-020-74710-9.

- Crawford K, Calo R. There is a blind spot in AI research. Nature. 2016;538(7625):311–313. doi: 10.1038/538311a.

- D’Alfonso S. AI in mental health. Current Opinion in Psychology. 2020;36:112–117. doi: 10.1016/J.COPSYC.2020.04.005.

- D’Aquin, M., Troullinou, P., O, N. E., Cullen, A., Faller, G., & Holden, L. (2018). Towards an “Ethics by Design” Methodology for AI Research Projects. 18. 10.1145/3278721

- Dennehy D, Pappas IO, Wamba SF, Michael K. Socially responsible information systems development: the role of AI and business analytics. Information Technology and People. 2021;34(6):1541–1550. doi: 10.1108/ITP-10-2021-871.

- Derogatis, L. (2017). Symptom Checklist-90-Revised, Brief Symptom Inventory, and BSI-18. Handbook of Psychological Assessment in Primary Care Settings, 599–629. https://psycnet.apa.org/record/2017-23747-023

- Derogatis, L., & Fitzpatrick, M. (2004). The SCL-90-R, the Brief Symptom Inventory (BSI), and the BSI-18. In The use of psychological testing for treatment planning and outcomes assessment: Instruments for adults (pp. 1–41). https://psycnet.apa.org/record/2004-14941-001

- Derogatis, L., & Spencer, P. (1993). Brief Symptom Inventory

- Dignum, V., Baldoni, M., Baroglio, C., Caon, M., Chatila, R., Dennis, L. … De Wildt, T. (2018). Ethics by Design: Necessity or Curse? AIES 2018 - Proceedings of the 2018 AAAI/ACM Conference on AI, Ethics, and Society, 18, 60–66. 10.1145/3278721.3278745

- Docrat S, Besada D, Cleary S, Daviaud E, Lund C. Mental health system costs, resources and constraints in South Africa: a national survey. Health Policy and Planning. 2019;34(9):706–719. doi: 10.1093/HEAPOL/CZZ085.

- Fabiano F, Haslam N. Diagnostic inflation in the DSM: A meta-analysis of changes in the stringency of psychiatric diagnosis from DSM-III to DSM-5. Clinical Psychology Review. 2020;80:101889. doi: 10.1016/J.CPR.2020.101889.

- Fellmeth G, Harrison S, Opondo C, Nair M, Kurinczuk JJ, Alderdice F. Validated screening tools to identify common mental disorders in perinatal and postpartum women in India: a systematic review and meta-analysis. BMC Psychiatry. 2021;21(1):1–10. doi: 10.1186/S12888-021-03190-6.

- Floridi L, Cowls J. A Unified Framework of Five Principles for AI in Society. Philosophical Studies Series. 2021;144:5–17. doi: 10.1007/978-3-030-81907-1_2.

- Fosso Wamba S, Bawack RE, Guthrie C, Queiroz MM, Carillo KDA. Are we preparing for a good AI society? A bibliometric review and research agenda. Technological Forecasting and Social Change. 2021;164:120482. doi: 10.1016/J.TECHFORE.2020.120482.

- Fosso Wamba, S., & Queiroz, M. M. (2021). Responsible Artificial Intelligence as a Secret Ingredient for Digital Health: Bibliometric Analysis, Insights, and Research Directions. Information Systems Frontiers, 1–16. 10.1007/S10796-021-10142-8

- Galesic M, Bosnjak M. Effects of questionnaire length on participation and indicators of response quality in a web survey. Public Opinion Quarterly. 2009;73(2):349–360. doi: 10.1093/poq/nfp031.

- Gallardo-Pujol, D., & Pereda, N. (2013). Person-environment transactions: personality traits moderate and mediate the effects. Personality and Mental Health, 7(April 2012), 102–113. 10.1002/pmh

- Garcia-Zattera MJ, Mutsvari T, Jara A, Declerck D, Lesaffre E. Correcting for misclassification for a monotone disease process with an application in dental research. Statistics in Medicine. 2010;29(30):3103–3117. doi: 10.1002/sim.3906.

- Gerke, S., Minssen, T., & Cohen, G. (2020). Ethical and legal challenges of artificial intelligence-driven healthcare. Artificial Intelligence in Healthcare, 295–336. 10.1016/B978-0-12-818438-7.00012-5

- Graham S, Depp C, Lee EE, Nebeker C, Tu X, Kim HC, Jeste DV. Artificial Intelligence for Mental Health and Mental Illnesses: an Overview. Current Psychiatry Reports. 2019;21(11):1–18. doi: 10.1007/S11920-019-1094-0.

- Gupta, M., Parra, C. M., & Dennehy, D. (2021). Questioning Racial and Gender Bias in AI-based Recommendations: Do Espoused National Cultural Values Matter? Information Systems Frontiers, 1–17. 10.1007/S10796-021-10156-2

- Hanna F, Barbui C, Dua T, Lora A, van Altena M, Saxena S. Global mental health: how are we doing? World Psychiatry. 2018;17(3):368. doi: 10.1002/WPS.20572.

- Hao, B., Li, L., Li, A., & Zhu, T. (2013). Predicting mental health status on social media: a preliminary study on microblog. Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), 8024 LNCS(PART 2), 101–110. 10.1007/978-3-642-39137-8_12

- Hardt J, Gerbershagen HU. Cross-validation of the SCL-27: A short psychometric screening instrument for chronic pain patients. European Journal of Pain. 2001;5(2):187–197. doi: 10.1053/eujp.2001.0231.

- Hardt J. The symptom checklist-27-plus (SCL-27-plus): a modern conceptualization of a traditional screening instrument. Psycho-Social Medicine. 2008;5:Doc08.

- Hastie, T., Tibshirani, R., & Friedman, J. (2009). Support Vector Machines and Flexible Discriminants. In The elements of statistical learning (pp. 1–42). 10.1007/b94608_12

- Hastie, T., Tibshirani, R., & Friedman, J. (2017). The Elements of Statistical Learning: Data Mining, Inference, and Prediction (2nd ed.). Springer Series in Statistics

- Hildenbrand, A. K., Nicholls, E. G., Aggarwal, R., Brody-Bizar, E., & Daly, B. P. (2015). Symptom Checklist-90-Revised (SCL-90-R). The Encyclopedia of Clinical Psychology, 1–5. 10.1002/9781118625392.wbecp495

- Holi, M. (2003). Assessment of psychiatric symptoms using the SCL-90. [Matti Holi]

- IBM (2020). Trustworthy AI. https://www.ibm.com/watson/trustworthy-ai

- Imperatori C, Bianciardi E, Niolu C, Fabbricatore M, Gentileschi P, Lorenzo G, Innamorati M. The Symptom-Checklist-K-9 (SCL-K-9) Discriminates between Overweight/Obese Patients with and without Significant Binge Eating Pathology: Psychometric Properties of an Italian Version. Nutrients. 2020;12(3):674. doi: 10.3390/NU12030674.

- Iphofen R, Kritikos M. Regulating artificial intelligence and robotics: ethics by design in a digital society. Contemporary Social Science. 2019;16(2):170–184. doi: 10.1080/21582041.2018.1563803.

- James, G., Witten, D., Hastie, T., & Tibshirani, R. (2013). An Introduction to Statistical Learning (Vol. 103). Springer New York. 10.1007/978-1-4614-7138-7

- Jenkins R, Kydd R, Mullen P, Thomson K, Sculley J, Kuper S, Wong ML. International migration of doctors, and its impact on availability of psychiatrists in low and middle income countries. PLoS ONE. 2010;5(2):1–9. doi: 10.1371/journal.pone.0009049.

- Johnson, M., Albizri, A., & Harfouche, A. (2021). Responsible Artificial Intelligence in Healthcare: Predicting and Preventing Insurance Claim Denials for Economic and Social Wellbeing. Information Systems Frontiers, 1–17. 10.1007/s10796-021-10137-5

- Johnson M, Albizri A, Harfouche A, Tutun S. Digital transformation to mitigate emergency situations: increasing opioid overdose survival rates through explainable artificial intelligence. Industrial Management and Data Systems. 2021. doi: 10.1108/IMDS-04-2021-0248.

- Johnson, M., Albizri, A., & Simsek, S. (2020). Artificial intelligence in healthcare operations to enhance treatment outcomes: a framework to predict lung cancer prognosis. Annals of Operations Research, 1–31. 10.1007/s10479-020-03872-6

- Khan ME, Tutun S. Understanding and Predicting Organ Donation Outcomes Using Network-based Predictive Analytics. Procedia Computer Science. 2021;185:185–192. doi: 10.1016/J.PROCS.2021.05.020.

- Khanal P, Devkota N, Dahal M, Paudel K, Joshi D. Mental health impacts among health workers during COVID-19 in a low resource setting: a cross-sectional survey from Nepal. Globalization and Health. 2020;16(1):1–12. doi: 10.1186/S12992-020-00621-Z.

- Kilbourne AM, Beck K, Spaeth-Rublee B, Ramanuj P, O’Brien RW, Tomoyasu N, Pincus HA. Measuring and improving the quality of mental health care: a global perspective. World Psychiatry. 2018;17(1):30–38. doi: 10.1002/WPS.20482.

- Kim JH, Jang S. The Relationship Between Job Stress, Job Satisfaction, and the Symptom Checklist-90-Revision (SCL-90-R) in Marine Officers on Board. Journal of Preventive Medicine and Public Health. 2016;49(6):376. doi: 10.3961/JPMPH.16.046.

- Kirkpatrick J, Pascanu R, Rabinowitz N, Veness J, Desjardins G, Rusu AA, Hadsell R. Overcoming catastrophic forgetting in neural networks. Proceedings of the National Academy of Sciences of the United States of America. 2017;114(13):3521–3526. doi: 10.1073/PNAS.1611835114.

- Kruyen PM, Emons WHM, Sijtsma K. On the Shortcomings of Shortened Tests: A Literature Review. International Journal of Testing. 2013;13(3):223–248. doi: 10.1080/15305058.2012.703734.

- Li P, Wang F, Ji GZ, Miao L, You S, Chen X. The psychological results of 438 patients with persisting GERD symptoms by Symptom Checklist 90-Revised (SCL-90-R) questionnaire. Medicine (United States). 2018;97(5):10768. doi: 10.1097/MD.0000000000009783. [Retracted]

- Lin L, Hu PJH, Liu Sheng OR. A decision support system for lower back pain diagnosis: Uncertainty management and clinical evaluations. Decision Support Systems. 2006;42(2):1152–1169. doi: 10.1016/j.dss.2005.10.007.

- Lundqvist, L. O., & Schröder, A. (2021). Evaluation of the SCL-9S, a short version of the symptom checklist-90-R, on psychiatric patients in Sweden by using Rasch analysis. 10.1080/08039488.2021.1901988

- Luxton, D. D. (2016). An Introduction to Artificial Intelligence in Behavioral and Mental Health Care. Artificial Intelligence in Behavioral and Mental Health Care, 1–26. 10.1016/B978-0-12-420248-1.00001-5

- Mittelstadt, B. D., Allo, P., Taddeo, M., Wachter, S., & Floridi, L. (2016). The ethics of algorithms: Mapping the debate. Big Data & Society, 3(2), 10.1177/2053951716679679

- Mittelstadt BD, Floridi L. The Ethics of Biomedical Big Data. 1. Cham: Springer; 2016. The Ethics of Big Data: Current and Foreseeable Issues in Biomedical Contexts; pp. 445–480.

- Morley J, Machado C, Burr C, Cowls J, Taddeo M, Floridi L. The Debate on the Ethics of AI in Health Care: A Reconstruction and Critical Review. SSRN Electronic Journal. 2019. doi: 10.2139/SSRN.3486518.

- Mueller A, Candrian G, Grane VA, Kropotov JD, Ponomarev VA, Baschera GM. Discriminating between ADHD adults and controls using independent ERP components and a support vector machine: A validation study. Nonlinear Biomedical Physics. 2011;5(1):1–18. doi: 10.1186/1753-4631-5-5.

- Nie, D., Ning, Y., & Zhu, T. (2012). Predicting mental health status in the context of web browsing. Proceedings of the 2012 IEEE/WIC/ACM International Conference on Web Intelligence and Intelligent Agent Technology Workshops, WI-IAT 2012, 185–189. 10.1109/WI-IAT.2012.196

- Ogasawara K, Nakamura Y, Kimura H, Aleksic B, Ozaki N. Issues on the diagnosis and etiopathogenesis of mood disorders: reconsidering DSM-5. Journal of Neural Transmission. 2017;125(2):211–222. doi: 10.1007/S00702-017-1828-2.

- Olsen LR, Mortensen EL, Bech P. Prevalence of major depression and stress indicators in the Danish general population. Acta Psychiatrica Scandinavica. 2004;109(2):96–103. doi: 10.1046/j.0001-690X.2003.00231.x.

- Park SC, Kim YK. Anxiety Disorders in the DSM-5: Changes, Controversies, and Future Directions. Advances in Experimental Medicine and Biology. 2020;1191:187–196. doi: 10.1007/978-981-32-9705-0_12.

- Parra CM, Gupta M, Mikalef P. Information and communication technologies (ICT)-enabled severe moral communities and how the (Covid19) pandemic might bring new ones. International Journal of Information Management. 2021;57:102271. doi: 10.1016/J.IJINFOMGT.2020.102271.

- Pechenizkiy, M., Tsymbal, A., Puuronen, S., & Pechenizkiy, O. (2006). Class noise and supervised learning in medical domains: The effect of feature extraction. Proceedings - IEEE Symposium on Computer-Based Medical Systems, 2006, 708–713. 10.1109/CBMS.2006.65

- Perkins A, Ridler J, Browes D, Peryer G, Notley C, Hackmann C. Experiencing mental health diagnosis: a systematic review of service user, clinician, and carer perspectives across clinical settings. The Lancet Psychiatry. 2018;5(9):747–764. doi: 10.1016/S2215-0366(18)30095-6.

- Petersen I, Bhana A, Fairall LR, Selohilwe O, Kathree T, Baron EC, Lund C. Evaluation of a collaborative care model for integrated primary care of common mental disorders comorbid with chronic conditions in South Africa. BMC Psychiatry. 2019;19(1):1–11. doi: 10.1186/S12888-019-2081-Z.

- Price, W. N. I. (2019). Medical AI and Contextual Bias. Harvard Journal of Law & Technology (Harvard JOLT), 33. https://heinonline.org/HOL/Page?handle=hein.journals/hjlt33&id=71&div=&collection=

- Prinz U, Nutzinger DO, Schulz H, Petermann F, Braukhaus C, Andreas S. Comparative psychometric analyses of the SCL-90-R and its short versions in patients with affective disorders. BMC Psychiatry. 2013;13:1–9. doi: 10.1186/1471-244X-13-104.

- Ransing, R., Ramalho, R., Orsolini, L., Adiukwu, F., Gonzalez-Diaz, J. M., Larnaout, A. … Kilic, O. (2020). Can COVID-19 related mental health issues be measured? Brain, Behavior, and Immunity, 88, 32. 10.1016/J.BBI.2020.05.049

- Razzouk D, Mari JJ, Shirakawa I, Wainer J, Sigulem D. Decision support system for the diagnosis of schizophrenia disorders. Brazilian Journal of Medical and Biological Research. 2006;39(1):119–128. doi: 10.1590/S0100-879X2006000100014.

- Ritchie, H. (2018). Global burden of disease studies: Implications for mental and substance use disorders. Health Affairs, 35(6). Project HOPE. 10.1377/HLTHAFF.2016.0082

- Rovini E, Maremmani C, Moschetti A, Esposito D, Cavallo F. Comparative Motor Pre-clinical Assessment in Parkinson’s Disease Using Supervised Machine Learning Approaches. Annals of Biomedical Engineering. 2018;46(12):2057–2068. doi: 10.1007/s10439-018-2104-9.

- Rytilä-Manninen, M., Fröjd, S., Haravuori, H., Lindberg, N., Marttunen, M., Kettunen, K., & Therman, S. (2016). Psychometric properties of the Symptom Checklist-90 in adolescent psychiatric inpatients and age- and gender-matched community youth. Child and Adolescent Psychiatry and Mental Health, 10(1), 1–12. 10.1186/S13034-016-0111-X

- Schmitz N, Hartkamp N, Kruse J, Franke GH, Reister G, Tress W. The Symptom Check-List-90-R (SCL-90-R): A German validation study. Quality of Life Research. 2000;9(2):185–193. doi: 10.1023/A:1008931926181.

- Schönberger D. Artificial intelligence in healthcare: a critical analysis of the legal and ethical implications. International Journal of Law and Information Technology. 2019;27(2):171–203. doi: 10.1093/IJLIT/EAZ004.

- Sereda, Y., & Dembitskyi, S. (2016). Validity assessment of the symptom checklist SCL-90-R and shortened versions for the general population in Ukraine. BMC Psychiatry, 16(1), 1–11. 10.1186/S12888-016-1014-3

- Simsek S, Albizri A, Johnson M, Custis T, Weikert S. Predictive data analytics for contract renewals: a decision support tool for managerial decision-making. Journal of Enterprise Information Management. 2020. doi: 10.1108/JEIM-12-2019-0375.

- Sinyor M, Schaffer A, Levitt A. The Sequenced Treatment Alternatives to Relieve Depression (STAR*D) trial: A review. Canadian Journal of Psychiatry. 2010;55(3):126–135. doi: 10.1177/070674371005500303.

- Sleep C, Lynam DR, Miller JD. Personality impairment in the DSM-5 and ICD-11: Current standing and limitations. Current Opinion in Psychiatry. 2021;34(1):39–43. doi: 10.1097/YCO.0000000000000657.

- Stewart SL, Celebre A, Hirdes JP, Poss JW. Risk of Suicide and Self-harm in Kids: The Development of an Algorithm to Identify High-Risk Individuals Within the Children’s Mental Health System. Child Psychiatry and Human Development. 2020;51(6):913. doi: 10.1007/S10578-020-00968-9.

- Thieme A, Belgrave D, Doherty G. Machine Learning in Mental Health. ACM Transactions on Computer-Human Interaction (TOCHI). 2020;27(5):34. doi: 10.1145/3398069.

- Trivedi MH, Kern JK, Grannemann BD, Altshuler KZ, Sunderajan P. A computerized clinical decision support system as a means of implementing depression guidelines. Psychiatric Services. 2004;55(8):879–885. doi: 10.1176/appi.ps.55.8.879.

- Tsamados A, Aggarwal N, Cowls J, Morley J, Roberts H, Taddeo M, Floridi L. The ethics of algorithms: key problems and solutions. AI & SOCIETY. 2021;1:1–16. doi: 10.1007/S00146-021-01154-8.

- Tutun S, Khasawneh MT, Zhuang J. New framework that uses patterns and relations to understand terrorist behaviors. Expert Systems with Applications. 2017;78:358–375. doi: 10.1016/J.ESWA.2017.02.029.