Abstract

The accurate assessment of left ventricular systolic function is crucial in the diagnosis and treatment of cardiovascular diseases. Left ventricular ejection fraction (LVEF) and global longitudinal strain (GLS) are the most critical indexes of cardiac systolic function. Echocardiography has become the mainstay of cardiac imaging for measuring LVEF and GLS because it is non-invasive, radiation-free, and allows for bedside operation and real-time processing. However, the human assessment of cardiac function depends on the sonographer’s experience, and despite years of training, inter-observer variability exists. In addition, GLS requires post-processing, which is time-consuming and varies across devices. Researchers have turned to artificial intelligence (AI) to address these challenges. The powerful learning capabilities of AI enable automatic feature extraction, which supports accurate identification of cardiac structures and reliable estimation of ventricular volumes and myocardial motion. Hence, systolic function indexes can be output automatically from echocardiographic images. This review summarizes the latest progress of AI in assessing left ventricular systolic function and in the differential diagnosis of heart diseases by echocardiography, and discusses the challenges and promises of this new field.

Keywords: echocardiography, artificial intelligence, left ventricular systolic function, machine learning, deep learning

1. Left Ventricular Systolic Function Assessment in Clinical Practice

Left ventricular systolic dysfunction is a common disorder mainly caused by myocarditis, cardiomyopathy, and ischemic heart diseases [1,2]. It gives rise to fatigue, dyspnea, or even death from heart failure [1,2]. Accurate assessment of left ventricular systolic function is crucial for the diagnosis, treatment, and prognosis of cardiovascular diseases.

Currently, parameters such as LVEF, GLS, and the peak systolic velocity of the mitral annulus (S’) are used to evaluate left ventricular systolic function in clinical practice [1,2,3,4,5]. Echocardiography has become the mainstay of cardiac imaging for measuring LVEF and GLS because it is non-invasive, radiation-free, and easy to obtain. Although echocardiography plays a vital role in the treatment and follow-up of cardiovascular diseases, it has limitations such as dependence on operator experience and intra- and inter-observer variability. Recently, AI has shown increasing promise for overcoming these challenges in medicine.

2. AI’s Application in Left Ventricular Systolic Function Assessment

2.1. Key Concepts in AI

Medical imaging, which produces large amounts of data rich in lesion information, is of great significance in the diagnosis, treatment, and prognosis of diseases. However, humans have a limited ability to interpret high-dimensional image features. AI is an effective tool for assisting experts in analyzing medical imaging data and can improve the accuracy and repeatability of complex, multi-dimensional data analysis [6,7].

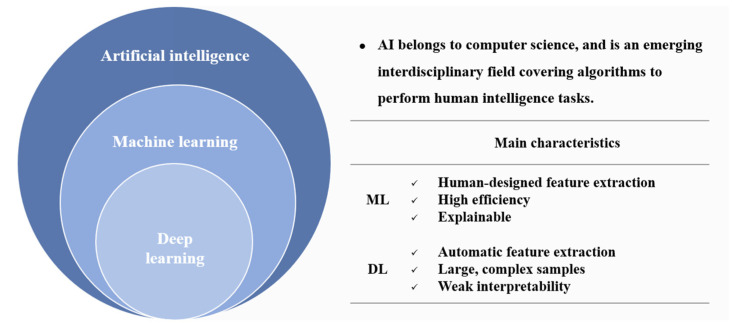

AI is a field of computer science in which algorithms are used to perform tasks that normally require human intelligence; it is an emerging interdisciplinary field spanning computer science, psychology, and philosophy [8]. Machine learning (ML) is a subset of AI, and deep learning (DL) is an essential branch of ML [8] (Figure 1). ML uses statistical algorithms such as random forest (RF) and support vector machine (SVM) to analyze complex clinical data quickly, accurately, and efficiently on the basis of feature engineering. These algorithms can build decision-support models for cardiovascular diseases with high efficiency and good interpretability. However, traditional ML requires human-designed feature extraction, which is time-consuming. DL performs feature extraction automatically and plays a vital role in analyzing large and complex samples, but its interpretability still needs improvement [8,9].

Figure 1.

Logical diagram of AI, ML, and DL and the main characteristics of ML and DL. (AI: artificial intelligence; ML: machine learning; DL: deep learning).

2.2. AI in Echocardiography

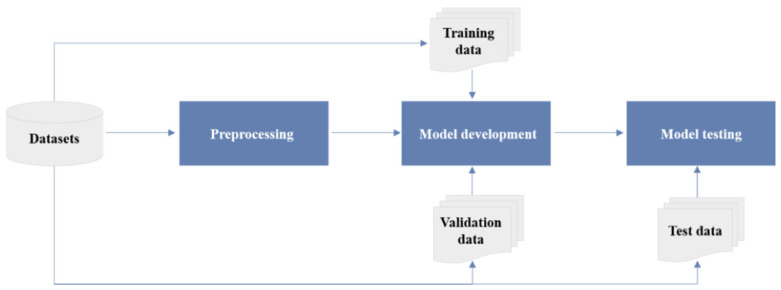

Research on the application of AI in echocardiography is currently proliferating. These studies generally follow a standard paradigm with four stages: data collection, preprocessing, model development, and model testing [10] (Figure 2).

Figure 2.

The workflow of studies on AI in echocardiography, including four main steps: (1) clinical problem-oriented data collection; (2) data preprocessing operations based on task characteristics (classification tasks require explicit sample labels; segmentation tasks require the marking of regions of interest) and data splitting (training, validation, and testing datasets are mutually independent); (3) based on the type of tasks (regression, classification, or clustering), appropriate AI algorithms are selected for model development on the training datasets, and the performance of the model is validated on the validation datasets; (4) the reliability and generalization of the model are tested on the internal and external independent testing datasets.
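The caption above requires the training, validation, and testing datasets to be mutually independent. Because one patient usually contributes several echocardiographic clips, a common way to enforce this is to split at the patient level rather than the clip level, so that no patient's images leak across subsets. The Python sketch below illustrates the idea; the function name `split_by_patient`, the 70/15/15 fractions, and the `(patient_id, item)` record format are illustrative assumptions, not taken from any cited study.

```python
import random
from collections import defaultdict


def split_by_patient(records, train=0.7, val=0.15, seed=0):
    """Split (patient_id, item) records into train/val/test subsets so that
    every clip from a given patient lands in exactly one subset."""
    by_patient = defaultdict(list)
    for patient_id, item in records:
        by_patient[patient_id].append(item)

    # Sort first so the shuffle is reproducible for a fixed seed.
    patients = sorted(by_patient)
    random.Random(seed).shuffle(patients)

    n_train = round(train * len(patients))
    n_val = round(val * len(patients))
    groups = {
        "train": patients[:n_train],
        "val": patients[n_train:n_train + n_val],
        "test": patients[n_train + n_val:],  # all remaining patients
    }
    # Expand patient IDs back into their (patient_id, item) records.
    return {
        name: [(pid, item) for pid in ids for item in by_patient[pid]]
        for name, ids in groups.items()
    }


# Hypothetical example: 20 patients with 3 clips each.
records = [(f"patient{i:02d}", f"clip{j}") for i in range(20) for j in range(3)]
splits = split_by_patient(records)
print({name: len(rows) for name, rows in splits.items()})  # → {'train': 42, 'val': 9, 'test': 9}
```

Splitting on patient IDs rather than on individual clips keeps test performance from being inflated by near-duplicate frames of the same heart appearing in both training and testing data.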

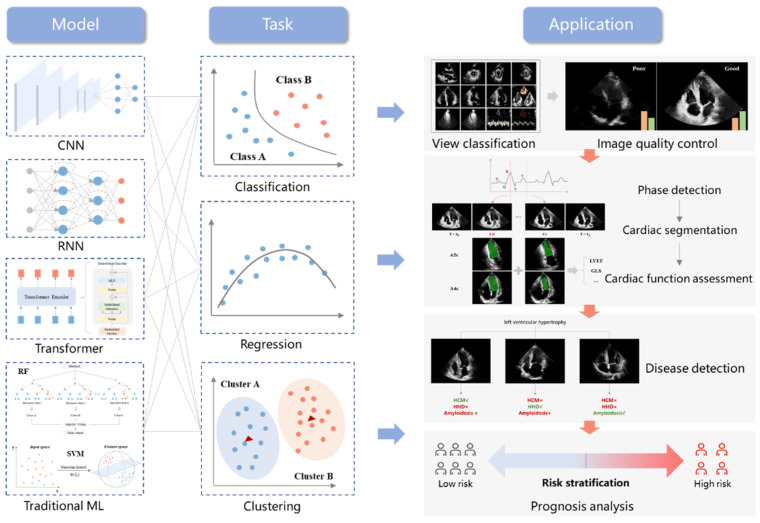

AI extracts features from ultrasound images to recognize standard views, segment cardiac structures, assess cardiac function, identify disease phenotypes, and analyze prognosis in combination with multi-dimensional parameters [7,11] (Figure 3).

Figure 3.

Overview diagram of AI’s application in echocardiography. Current applications focus on view classification and image quality control (classification), cardiac phase detection and cardiac function assessment (regression), and disease diagnosis and prognosis analysis (clustering). Mainstream AI algorithms consist of the convolutional neural network (CNN), recurrent neural network (RNN), transformer, and traditional machine learning algorithms (RF and SVM).

View classification is the first step in both real-time quantification and post-processing analysis of cardiac structure and function. Echocardiography comprises multimodal images, such as M-mode still images, two-dimensional gray-scale videos, and Doppler recordings, and each mode contains multiple standard views [3]. Because of between-subject variability and differing imaging parameters, the same standard view can vary in appearance [3]. In addition, multiple standard views contain similar cardiac motion information (such as valvular and ventricular wall motion), which makes view classification more challenging [3]. Several studies have confirmed the feasibility and accuracy of AI in analyzing echocardiograms and classifying standard views [12,13,14,15]. AI algorithms, typified by the convolutional neural network (CNN), can extract multi-scale features from ultrasound images, improve the accuracy of view classification, and simplify image processing. However, the efficient classification of multimodal ultrasound images requires further study, given the high frame rate of echocardiography, the small pixel changes between frames, and information redundancy.

In medical image processing, cardiac segmentation is key to accurately assessing cardiac structure and function. Because of the complexity of cardiac motion and anatomy, blurred edges, low signal-to-noise ratios, and small cardiac target regions, accurate cardiac segmentation on echocardiography has long been an extremely challenging task [16]. AI algorithms are valuable for mining high-dimensional information that is imperceptible to the naked eye and for maximizing the extraction of image features. By integrating spatial and temporal information, AI can identify critical cardiac anatomical structures, improve the accuracy of cardiac segmentation, and lay a solid foundation for the assessment of cardiac function [16,17,18].

Human assessment of cardiac function depends on the sonographer’s experience and is subject to inter- and intra-observer variability [19]. AI algorithms enable fully automatic, comprehensive analysis of cardiac function. They also improve the accuracy and repeatability of image interpretation, simplify the diagnosis and treatment process, and reduce time and labor costs, which is of significant clinical value [19,20,21,22].

Disease diagnosis, differential diagnosis, and prognosis analysis usually require integrating multi-dimensional imaging parameters and clinical information to build disease decision models. Traditional statistical methods require a priori knowledge from clinicians, and their predictive performance is poor when dealing with large sample data [23]. With its strong feature extraction ability, AI performs well in analyzing high-dimensional and complex data. It has significant advantages over clinicians in analyzing complex clinical information and enabling personalized risk stratification [23,24,25,26,27].

With the continuous development of ultrasound imaging and computer technology, the application of AI in the quantitative assessment of cardiac structure and function is shifting from single-frame images and two-dimensional video to three-dimensional images, from ML to DL, and from single-task to multitask models. The emergence of the latest AI algorithms has injected new vitality into the quantification of cardiac function by echocardiography. These algorithms are expected to help achieve automatic full-stack analysis in echocardiography and improve the accuracy and reproducibility of cardiac function assessments. However, the application of AI in echocardiography is still at an early stage, and current research focuses mainly on automatic assessment of left ventricular systolic function. This paper reviews current studies on AI-enhanced echocardiography for evaluating left ventricular systolic function.

3. AI’s Application in Left Ventricular Systolic Function—LVEF

LVEF is one of the most critical indexes of cardiac systolic function. However, human assessment of cardiac function shows inter-observer variability despite sonographers’ years of training. Therefore, researchers have been working on fully automated methods for assessing LVEF (Table 1).

Table 1.

Studies of AI’s Application in Left Ventricular Systolic Function—LVEF.

| Authors | Year | Task | Model | Dataset | Results |
|---|---|---|---|---|---|
| Leclerc S. et al. [16] | 2019 | LV segmentation | U-Net | 500 subjects | Accuracy in LV volumes (MAE = 9.5 mL, r = 0.95). |
| Smistad E. et al. [17] | 2019 | LV segmentation | U-Net | 606 subjects | Accuracy for LV segmentation (DSC 0.776–0.786). |
| Leclerc S. et al. [18] | 2020 | LV segmentation | LU-Net | 500 subjects | Accuracy in LV volumes (MAE = 7.6 mL, r = 0.96). |
| Wei H. et al. [28] | 2020 | LV segmentation | CLAS | 500 subjects | Accuracy for LVEF assessment (r = 0.926, bias = 0.1%). |
| Reynaud H. et al. [29] | 2021 | LVEF assessment | Transformer | 10,030 subjects | Accuracy for LVEF assessment (MAE = 5.95%, R² = 0.52). |
| Ouyang D. et al. [19] | 2020 | LVEF assessment | EchoNet-Dynamic | 10,030 subjects | Accuracy for LV segmentation (DSC = 0.92), LVEF assessment (MAE = 4.1%), and HFrEF classification (AUC 0.97). |
| Asch F.M. et al. [20] | 2019 | LVEF assessment | CNN | >50,000 studies | Agreement of AutoEF with GT: r = 0.95, bias = 1.0%; sensitivity 0.90 and specificity 0.92 for detecting EF < 35%. |
| Zhang J. et al. [21] | 2018 | LVEF assessment; GLS assessment; disease detection | CNN | 14,035 studies | Agreement with GT: LVEF, MAE = 9.7%; GLS, MAE = 7.5% and 9.0% (in two cohorts). Disease detection: HCM, cardiac amyloidosis, and PAH (AUC 0.93, 0.87, and 0.85). |
| Tromp J. et al. [30] | 2022 | LVEF assessment | CNN | 43,587 studies | Accuracy for LVEF assessment (MAE 6–10%). |
| Narang A. et al. [15] | 2021 | LVEF assessment | Caption Guidance | 240 subjects | Diagnostic-quality images for LV size, LV function, and pericardial effusion in 237 cases (98.8%) and for RV size in 222 cases (92.5%). |
| Asch F.M. et al. [32] | 2021 | LVEF assessment | Caption Health | 166 subjects (Protocol 1); 67 subjects (Protocol 2) | Protocol 1: agreement with GT, ICC 0.86–0.95, bias < 2%. Protocol 2: agreement with GT, ICC = 0.84, bias 2.5 ± 6.4%. |
| Tokodi M. et al. [24] | 2020 | Disease detection (HFpEF) | TDA | 1334 subjects | Region 4 vs. region 1: HR = 2.75, 95% CI 1.27–5.95, p = 0.01. Correlation of NYHA class and ACC/AHA stage with regions: r = 0.56 and 0.67. |

LVEF, left ventricular ejection fraction; LV, left ventricle; RV, right ventricle; MAE, mean absolute error; R², coefficient of determination; DSC, dice similarity coefficient; CLAS, Co-Learning of Segmentation and Tracking on Appearance and Shape Level; AUC, area under the receiver operating characteristic curve; CNN, convolutional neural network; GT, ground truth; GLS, global longitudinal strain; HCM, hypertrophic cardiomyopathy; PAH, pulmonary arterial hypertension; HFrEF, heart failure with reduced ejection fraction; HFpEF, heart failure with preserved ejection fraction; ICC, intraclass correlation coefficient; TDA, topological data analysis; HR, hazard ratio; CI, confidence interval; NYHA, New York Heart Association; ACC, American College of Cardiology; AHA, American Heart Association.

3.1. Cardiac Segmentation

Accurate segmentation of the left ventricle is the basis for estimating LVEF. In 2019, Leclerc S. et al. [16] released a large publicly available dataset called Cardiac Acquisitions for Multi-Structure Ultrasound Segmentation (CAMUS), which contains two- and four-chamber views of 500 patients with expert annotations. Using this dataset, they benchmarked multiple algorithms for segmenting structures such as the left ventricular endocardium, myocardium, and left atrium, and then measured end-diastolic and end-systolic volumes and LVEF using Simpson’s biplane method. The optimized U-Net model outperformed the other algorithms in segmenting the left ventricle, and its automatic measurements of left ventricular volumes and LVEF were consistent with expert assessments. In the same year, Smistad E. et al. [17] used the CAMUS dataset and an additional dataset of 106 patients with apical long-axis views to construct a multi-view segmentation network by transfer learning; the network successfully segmented the left ventricle and left atrium in apical long-axis views. Later, Leclerc S. et al. [18] proposed a multistage attention network (LU-Net). Compared with the U-Net described above, it adds a region proposal network that locates the left ventricle before segmentation, which improved the accuracy and robustness of left ventricular segmentation.
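Simpson’s biplane method (the biplane method of disks) slices the left ventricle into disks along its long axis and treats each disk as an elliptical cylinder whose two diameters come from the orthogonal four- and two-chamber views: V = (π/4) · (L/n) · Σ aᵢbᵢ. The minimal Python sketch below computes EDV, ESV, and LVEF from disk diameters; it assumes the diameters have already been derived from the segmented contours (that step is not shown), and the function names and the idealized cylinder-shaped example are hypothetical.

```python
import math


def biplane_volume(diams_a4c, diams_a2c, long_axis_cm):
    """LV volume (mL) by the biplane method of disks (modified Simpson's rule).

    diams_a4c, diams_a2c: disk diameters (cm) measured at the same levels in
    the apical four- and two-chamber views (20 disks by convention).
    long_axis_cm: LV long-axis length (cm). Since 1 cm^3 = 1 mL, the result is in mL.
    """
    if not diams_a4c or len(diams_a4c) != len(diams_a2c):
        raise ValueError("both views need the same, non-zero number of disks")
    disk_height = long_axis_cm / len(diams_a4c)
    # Each disk is an elliptical cylinder with cross-sectional area pi/4 * a_i * b_i.
    return math.pi / 4 * disk_height * sum(a * b for a, b in zip(diams_a4c, diams_a2c))


def ejection_fraction(edv, esv):
    """LVEF (%) = (EDV - ESV) / EDV * 100."""
    return (edv - esv) / edv * 100.0


# Hypothetical cylinder-shaped ventricle: 4 cm wide at ED, 3 cm wide at ES.
edv = biplane_volume([4.0] * 20, [4.0] * 20, 8.0)  # 32*pi, about 100.5 mL
esv = biplane_volume([3.0] * 20, [3.0] * 20, 7.0)  # 15.75*pi, about 49.5 mL
print(round(ejection_fraction(edv, esv), 1))  # → 50.8
```

The segmentation network therefore affects LVEF only through the contours it produces, which is why the volume errors in Table 1 (MAE in mL) are reported alongside segmentation overlap.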

Previous studies focused mainly on segmenting the cardiac cavity in single video frames. However, echocardiography also provides rich information in the temporal domain. Wei H. et al. [28] therefore designed a network called Co-Learning of Segmentation and Tracking on Appearance and Shape Level (CLAS), which segments whole sequences with high temporal consistency and assesses LVEF accurately.

3.2. Automatic Assessment of LVEF

Based on the image segmentation, researchers have devoted themselves to developing fully automatic models to assess LVEF.

Ouyang et al. [19] presented a video-based AI model called EchoNet-Dynamic to evaluate cardiac function. The study included apical four-chamber videos of 10,030 patients, annotated only at the end-systolic and end-diastolic frames. The authors generalized these sparse labels with a weak supervision approach, which considerably reduces labeling costs, and developed an atrous convolution model to generate frame-level semantic segmentation of the left ventricle over the whole cardiac cycle. By integrating information across the temporal domain, the model tracks left ventricular volume over multiple cardiac cycles to evaluate LVEF. EchoNet-Dynamic segmented the left ventricle accurately, with a Dice similarity coefficient greater than 0.9 at end-systole, at end-diastole, and across the cardiac cycle. The researchers further assessed the accuracy of LVEF estimation by external validation in 2895 patients; the automatic measurements were consistent with expert assessments and showed good repeatability. In addition, the study examined several problems that clinicians may encounter in practice, including measurement accuracy in patients with arrhythmias and across different video qualities, instruments, and imaging conditions. EchoNet-Dynamic proved robust to variations in heart rhythm and video acquisition conditions.
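Two quantities from this paragraph, the Dice overlap between a predicted and a reference mask and an LVEF derived from a multi-beat volume curve, can be sketched in plain Python. This is a simplified illustration under stated assumptions, not the EchoNet-Dynamic implementation: masks are assumed to be nested lists of 0/1 values, end-systolic frames are assumed to be simple local minima of a clean volume curve (a real curve would need smoothing or a prominence threshold), and the per-beat averaging scheme and function names are hypothetical.

```python
def dice(mask_a, mask_b):
    """Dice similarity coefficient 2|A ∩ B| / (|A| + |B|) for two binary
    masks given as equally shaped nested lists of 0/1 values."""
    a = [v for row in mask_a for v in row]
    b = [v for row in mask_b for v in row]
    overlap = sum(1 for x, y in zip(a, b) if x and y)
    total = sum(a) + sum(b)
    return 1.0 if total == 0 else 2.0 * overlap / total


def beat_level_ef(volumes):
    """Per-beat LVEF (%) from a frame-level LV volume curve.

    End-systolic frames are taken as local minima; for each pair of
    consecutive minima, EDV is the peak volume between them and ESV is the
    volume at the second minimum. Assumes a clean, noise-free curve.
    Returns (mean LVEF over beats, list of per-beat LVEFs).
    """
    es = [i for i in range(1, len(volumes) - 1)
          if volumes[i] < volumes[i - 1] and volumes[i] <= volumes[i + 1]]
    efs = []
    for start, end in zip(es, es[1:]):
        edv = max(volumes[start:end + 1])  # end-diastolic peak within the beat
        esv = volumes[end]                 # end-systolic trough closing the beat
        efs.append((edv - esv) / edv * 100.0)
    if not efs:
        raise ValueError("need at least two end-systolic minima")
    return sum(efs) / len(efs), efs


# Hypothetical 2 x 3 masks and a hypothetical volume curve (mL) spanning two beats.
pred = [[1, 1, 0], [0, 1, 0]]
truth = [[1, 0, 0], [0, 1, 1]]
print(round(dice(pred, truth), 3))  # → 0.667
curve = [120, 100, 60, 50, 70, 110, 120, 90, 55, 50, 80, 115, 120, 95, 52, 60]
mean_ef, per_beat = beat_level_ef(curve)
print(round(mean_ef, 1))  # → 57.5
```

Averaging over several beats, rather than reading a single ED/ES pair, dampens beat-to-beat variation, which is one reason multi-cycle volume curves are attractive in patients with arrhythmias.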

Asch F.M. et al. [20] developed a computer vision model, trained on more than 50,000 studies, that estimated LVEF by mimicking expert assessment. The automatic measurements showed good correlation and agreement with human assessments. In addition, Reynaud H. et al. [29] proposed a transformer model based on the self-attention mechanism that can analyze echocardiographic videos of any length, precisely locate the end-diastolic (ED) and end-systolic (ES) frames, and assess LVEF accurately.
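Agreement between automated and expert LVEF in these studies is summarized with statistics such as the correlation coefficient r, the bias (mean difference), and the mean absolute error (MAE). A minimal Python sketch of these metrics, plus Bland–Altman-style 95% limits of agreement, is shown below; the function name and the five paired LVEF values are hypothetical and do not come from any cited study.

```python
import math
from statistics import mean, stdev


def agreement(auto, ref):
    """Agreement between automated and reference LVEF values (%):
    bias (mean difference), 95% limits of agreement (bias ± 1.96 SD),
    mean absolute error, and Pearson correlation coefficient r."""
    if len(auto) != len(ref) or len(auto) < 3:
        raise ValueError("need at least 3 paired measurements")
    diffs = [a - r for a, r in zip(auto, ref)]
    bias, sd = mean(diffs), stdev(diffs)
    mae = mean(abs(d) for d in diffs)
    ma, mr = mean(auto), mean(ref)
    cov = sum((a - ma) * (r - mr) for a, r in zip(auto, ref))
    r = cov / math.sqrt(sum((a - ma) ** 2 for a in auto)
                        * sum((b - mr) ** 2 for b in ref))
    return {"bias": bias, "loa": (bias - 1.96 * sd, bias + 1.96 * sd),
            "mae": mae, "r": r}


auto = [30, 45, 55, 60, 25]  # hypothetical automated LVEF (%)
ref = [28, 47, 52, 61, 24]   # hypothetical expert LVEF (%)
stats = agreement(auto, ref)
print(round(stats["bias"], 2), round(stats["mae"], 2), round(stats["r"], 3))  # → 0.6 1.8 0.992
```

A high r alone does not guarantee interchangeability with expert reads; the bias and limits of agreement show how far individual automated values may fall from the reference.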

Zhang J. et al. [21] presented the first fully automated multitask echocardiogram interpretation system to simplify clinical diagnosis and treatment. The model classified 23 views, segmented cardiac structures, assessed LVEF, and detected three diseases (hypertrophic cardiomyopathy, cardiac amyloidosis, and pulmonary arterial hypertension). In 2022, Tromp J. et al. [30] developed a fully automated AI workflow to classify, segment, and interpret two-dimensional and Doppler modalities using international, multi-ethnic datasets. The algorithms assessed LVEF successfully, with an area under the receiver operating characteristic curve (AUC) of 0.90–0.92.
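The AUC used throughout these studies has a simple probabilistic reading: it is the probability that a randomly chosen positive case receives a higher model score than a randomly chosen negative case, with ties counted as one half (the Mann–Whitney formulation). The Python sketch below computes it directly from that definition; the function name, scores, and labels are hypothetical, and the O(n²) pairwise loop is chosen for clarity rather than speed.

```python
def roc_auc(scores, labels):
    """AUC as the probability that a random positive case scores higher than
    a random negative case (ties count 1/2). labels: 1 = positive, 0 = negative."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need both positive and negative cases")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical model scores for "reduced LVEF" (1) vs. "not reduced" (0).
scores = [0.9, 0.8, 0.4, 0.35, 0.1]
labels = [1, 1, 0, 1, 0]
print(round(roc_auc(scores, labels), 3))  # → 0.833
```

Because AUC depends only on the ranking of scores, it summarizes discrimination across all thresholds; a specific operating point (such as EF < 35% in Table 1) is still needed to report sensitivity and specificity.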

The above studies addressed LVEF quantification in routine medical imaging practice. Point-of-care ultrasonography (POCUS), however, is widely used in emergency and critical care. POCUS is the acquisition, interpretation, and immediate clinical integration of ultrasound imaging performed by a clinician at the patient’s bedside rather than by a radiologist or cardiologist [31]. POCUS enables direct interaction between patients and clinicians, contributing to accurate diagnosis and treatment. Moreover, because the devices are portable and easy to handle, POCUS can provide timely diagnoses for emergency and critically ill patients. However, a prerequisite for using POCUS is that the clinician is competent in operating the device and interpreting the images, which requires standardized training. To overcome this challenge, researchers have turned to AI. In 2020, the FDA approved two products from Caption Health: Caption Guidance and Caption Interpretation. Caption Guidance enabled novices without ultrasonographic experience to obtain diagnostic-quality views after short-term training [15], and Caption Interpretation assisted doctors in measuring LVEF automatically [20]. The two were then combined into an AI workflow for POCUS [32] that supports image acquisition, quality assessment, and fully automatic LVEF measurement, helping doctors collect and analyze images accurately and quickly.

These studies indicate that AI algorithms have essential clinical value: they can improve the accuracy of LVEF assessment, simplify the workflow of diagnosis and treatment, and reduce time and labor costs.

3.3. Disease Diagnosis

According to the 2021 ESC guidelines [1], heart failure is divided into three categories: heart failure with reduced ejection fraction (LVEF ≤ 40%), heart failure with mildly reduced ejection fraction (LVEF 41–49%), and heart failure with preserved ejection fraction (LVEF ≥ 50%). The three types show different degrees of left ventricular systolic and diastolic dysfunction. However, no sensitive prediction model based on echocardiographic indicators exists to stage heart failure in patients with similar features or to predict major adverse cardiac events. To address this, Tokodi M. et al. [24] applied topological data analysis (TDA) to integrate echocardiographic parameters of left ventricular structure and function into a patient similarity network. The TDA network represents the geometric structure of the data according to the similarity between multiple echocardiographic parameters, retaining critical data features and capturing topological information in high-dimensional data. It divided patients into four regions based on nine echocardiographic parameters and performed well in dynamically evaluating the disease course and predicting major adverse cardiac events, with cardiac function deteriorating progressively from region one to region four.
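The LVEF cut-offs quoted above from the 2021 ESC guidelines can be expressed as a small lookup. The Python sketch below is only an illustration of those thresholds: the function name is hypothetical, and it assumes the patient already has a heart failure diagnosis, since the guidelines also require symptoms and/or signs (and, for HFpEF, objective evidence of structural or functional abnormalities) that LVEF alone cannot supply.

```python
def esc_2021_hf_category(lvef_percent):
    """LVEF-based heart failure category per the 2021 ESC thresholds.

    Only meaningful for a patient already diagnosed with heart failure;
    LVEF alone does not establish the diagnosis.
    """
    if lvef_percent <= 40:
        return "HFrEF"   # reduced ejection fraction (LVEF <= 40%)
    if lvef_percent < 50:
        return "HFmrEF"  # mildly reduced ejection fraction (LVEF 41-49%)
    return "HFpEF"       # preserved ejection fraction (LVEF >= 50%)


for ef in (35, 45, 60):  # hypothetical LVEF values (%)
    print(ef, esc_2021_hf_category(ef))
```

Because automated LVEF estimates carry errors of several percentage points (Table 1), values close to the 40% and 50% boundaries are exactly where an AI-derived category is most likely to differ from an expert one.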

These results show that AI algorithms perform well in mining latent features of echocardiographic data and hence facilitate early and accurate diagnosis.

4. AI’s Application in Left Ventricular Systolic Function—GLS

LVEF is one of the main indexes of cardiac systolic function, but it is not sensitive for identifying early ventricular systolic dysfunction. The guidelines published by the Heart Failure Association of the European Society of Cardiology (ESC) [33] point out that GLS is superior to LVEF in evaluating subclinical ventricular systolic dysfunction because it is more stable and reproducible, and therefore recommend GLS for detecting subclinical ventricular systolic dysfunction. The current challenge is that GLS post-processing is time-consuming, so researchers have turned to AI to address it (Table 2).

Table 2.

Studies of AI’s Application in Left Ventricular Systolic Function—GLS.

| Authors | Year | Task | Models | Dataset | Results |
|---|---|---|---|---|---|
| Kawakami H. et al. [34] | 2021 | GLS assessment | AutoStrain | 561 subjects | Automated vs. manual GLS: r = 0.685, bias = 0.99%. Semi-automated vs. manual GLS: r = 0.848, bias = −0.90%. Automated vs. semi-automated GLS: r = 0.775, bias = 1.89%. |
| Salte I.M. et al. [22] | 2021 | GLS assessment | EchoPWC-Net | 200 studies | EchoPWC-Net vs. EchoPAC: r = 0.93, MD 0.3 ± 0.3%. |
| Evain E. et al. [36] | 2022 | GLS assessment | PWC-Net | >60,000 images | Automated vs. manual GLS: r = 0.77, MAE 2.5 ± 2.1%. |
| Narula S. et al. [25] | 2016 | Disease detection (ATH vs. HCM) | Ensemble model (SVM, RF, ANN) | 77 ATH, 62 HCM patients | Sensitivity 0.96; specificity 0.77. |
| Sengupta P.P. et al. [26] | 2016 | Disease detection (CP vs. RCM) | AMC | 50 CP patients, 44 RCM patients, and 47 controls | AUC 0.96. |
| Zhang J. et al. [27] | 2021 | Disease detection (CHD) | Two-step stacking | 217 CHD patients, 207 controls | Sensitivity 0.903; specificity 0.843; AUC 0.904. |
| Loncaric F. et al. [37] | 2021 | Disease detection (HT) | ML | 189 HT patients, 97 controls | HT divided into 4 phenotypes. |
| Yahav A. et al. [38] | 2020 | Disease detection (strain curve classification) | ML | 424 subjects | Strain curves classified as physiological, non-physiological, or uncertain (accuracy 86.4%). |
| Pournazari P. et al. [39] | 2021 | Prognosis analysis (COVID-19) | ML | 724 subjects | BC (AUC 0.79). BC + laboratory data + vital signs (AUC 0.86). BC + laboratory data + vital signs + Echos (AUC 0.92). |
| Przewlocka-Kosmala M. et al. [40] | 2019 | Prognosis analysis (HFpEF) | Clustering | 177 HFpEF patients, 51 asymptomatic controls | HFpEF divided into 3 prognostic phenotypes. |

GLS, global longitudinal strain; MD, mean difference; MAE, mean absolute error; ATH, athletes; HCM, hypertrophic cardiomyopathy; SVM, support vector machine; RF, random forest; ANN, artificial neural networks; CP, constrictive pericarditis; RCM, restrictive cardiomyopathy; AMC, associative memory classifier; AUC, area under the receiver operating characteristic curve; CHD, coronary heart disease; HT, hypertension; ML, machine learning; HFpEF, heart failure with preserved ejection fraction; BC, baseline characteristics; Echos, echocardiographic measurements.

4.1. Automatic Assessment of GLS

AutoStrain is an application integrated into the EPIQ CVx system that measures GLS automatically. One study [34] showed that AutoStrain was feasible in 99.5% of patients. However, automated, semi-automated, and manual measurements were discordant (automated vs. manual GLS: r = 0.685, bias = 0.99%; semi-automated vs. manual GLS: r = 0.848, bias = −0.90%; automated vs. semi-automated GLS: r = 0.775, bias = 1.89%), and approximately 40% of patients needed manual correction after automated assessment. A balance must therefore be struck between post-processing speed and measurement accuracy before automatic software can be widely used in clinical practice to help doctors evaluate myocardial motion quickly and accurately.

At present, automatic assessment is still based on speckle tracking echocardiography, which tracks the displacement of local speckles between consecutive frames and is therefore sensitive to the signal-to-noise ratio of the images. Furthermore, post-processing is time-consuming. Researchers therefore proposed a CNN-based optical flow estimation algorithm called EchoPWC-Net [22,35]. By calculating the displacement vectors of pixels in the region of interest between consecutive frames, EchoPWC-Net estimated myocardial motion quickly and accurately and assessed GLS fully automatically. In 200 two-dimensional echocardiographic studies, the authors compared EchoPWC-Net with commercial semi-automatic software (EchoPAC), and GLS values from the two methods were strongly correlated (r = 0.93). The optical flow approach offers several advantages. First, whereas speckle tracking follows sparse speckles, optical flow estimation computes a dense flow field of myocardial motion and captures more informative pixels. Second, it reduced post-processing time (< 5 s for a single view; 13 s on average for three views), making real-time GLS measurement feasible in the future. Third, fully automated GLS measurement eliminated intra- and inter-observer variability and showed good repeatability. However, the robustness of the model to different image qualities needs further evaluation.
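GLS is commonly defined as the relative change in length of a left ventricular myocardial contour between end-diastole and end-systole, GLS = (L_ES − L_ED) / L_ED × 100%, so longitudinal shortening gives a negative value. The Python sketch below shows how per-frame displacement vectors, of the kind an optical flow network such as EchoPWC-Net estimates, can be turned into GLS. It is not EchoPWC-Net: the displacements are supplied as input rather than estimated from images, and the contour representation, the frame-0-is-end-diastole convention, and all function names are illustrative assumptions.

```python
import math


def contour_length(points):
    """Length of an open polyline through (x, y) points, e.g. a myocardial
    contour traced from one side of the mitral annulus through the apex to the other."""
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))


def track_contour(points_ed, displacements):
    """Propagate contour points through the cine loop.

    displacements[t][k] is the (dx, dy) motion of point k between frames t
    and t + 1, i.e. a dense optical-flow field sampled at that point.
    Returns one list of points per frame, starting with end-diastole.
    """
    frames = [list(points_ed)]
    for step in displacements:
        frames.append([(x + dx, y + dy)
                       for (x, y), (dx, dy) in zip(frames[-1], step)])
    return frames


def global_longitudinal_strain(frames, es_index):
    """GLS (%) = (L_ES - L_ED) / L_ED * 100, with frame 0 as end-diastole.
    Negative values indicate longitudinal shortening."""
    l_ed = contour_length(frames[0])
    l_es = contour_length(frames[es_index])
    return (l_es - l_ed) / l_ed * 100.0


# Hypothetical 3-point contour whose length shrinks from 10 to 9 to 8 units.
contour = [(0.0, 0.0), (0.0, 5.0), (0.0, 10.0)]
flow = [
    [(0.0, 0.0), (0.0, -0.5), (0.0, -1.0)],  # frame 0 -> 1
    [(0.0, 0.0), (0.0, -0.5), (0.0, -1.0)],  # frame 1 -> 2
]
frames = track_contour(contour, flow)
print(round(global_longitudinal_strain(frames, es_index=2), 1))  # → -20.0
```

Because points are advanced frame by frame, small displacement errors accumulate along the loop, which is one reason image quality and signal-to-noise ratio matter for both speckle tracking and optical flow approaches.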

Evain E. et al. [36] developed a new optical flow estimation algorithm based on PWC-Net that accurately assessed GLS in echocardiographic sequences, with a good correlation between automated and manual GLS (r = 0.77). The study also proposed a method for generating simulated datasets, with and without artifacts, to mimic the diversity of real-world data and improve the robustness of the model.

These studies show that AI algorithms help to improve the accuracy and real-time capability of GLS measurement, thus assisting clinicians in evaluating left ventricular systolic function comprehensively and accurately.

4.2. Disease Diagnosis

Strain parameters obtained by post-processing echocardiograms faithfully reflect myocardial systolic and diastolic deformation, which aids the early diagnosis and prognosis of cardiac dysfunction. However, these high-dimensional data usually contain redundant information, which makes data mining and interpretation challenging. Traditional analysis methods lack the feature extraction capability to exploit such data, which hampers their clinical application and prevents them from giving clinicians sufficient information to make decisions quickly and accurately. This is where AI can help: with its strong feature extraction capability, AI performs well in analyzing high-dimensional and complex data and is widely used in the differential diagnosis of diseases.

Narula S. et al. [25] developed an ensemble model (SVM, RF, and ANN) using echocardiographic data from 77 athletes with physiological myocardial hypertrophy and 62 patients with hypertrophic cardiomyopathy. Using strain parameters, the model distinguished physiological from pathological patterns of myocardial hypertrophy and identified hypertrophic cardiomyopathy with a sensitivity of 0.96 and a specificity of 0.77.

Sengupta P.P. et al. [26] conducted a study including 50 patients with constrictive pericarditis, 44 patients with restrictive cardiomyopathy, and 47 controls. Based on strain parameters, they developed an associative memory classification algorithm, using pathological results as the gold standard. The algorithm effectively distinguished constrictive pericarditis from restrictive cardiomyopathy (AUC 0.96).

Zhang J. et al. [27] conducted a study including 217 patients with coronary heart disease and 207 controls. Based on two-dimensional speckle tracking echocardiographic and clinical parameters, they combined several classifiers with a stacking strategy to build a prediction model for coronary heart disease. The stacked model combined the strengths of the individual classifiers: in the test set, it classified coronary heart disease with an accuracy of 87.7%, a sensitivity of 0.903, a specificity of 0.843, and an AUC of 0.904, significantly higher than any single model.
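The accuracy, sensitivity, and specificity reported for these classifiers all come from the same confusion matrix of true and predicted labels. The Python sketch below shows the definitions; it does not reproduce the stacking model itself, and the function name and the eight hypothetical labels are assumptions for illustration only.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, sensitivity, and specificity from binary labels,
    where 1 = disease present and 0 = disease absent."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": tp / (tp + fn),  # true-positive rate
        "specificity": tn / (tn + fp),  # true-negative rate
    }


# Hypothetical reference labels and model predictions for 8 patients.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0]
print(binary_metrics(y_true, y_pred))  # → {'accuracy': 0.875, 'sensitivity': 0.75, 'specificity': 1.0}
```

Sensitivity and specificity depend on the decision threshold applied to the model's scores, whereas AUC summarizes performance across all thresholds; reporting both, as in Table 2, gives a fuller picture.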

Beyond using GLS values directly, researchers have also explored disease phenotypes based on strain curves. Loncaric F. et al. [37] conducted a study including 189 patients with hypertension and 97 controls. Based on strain curves and pulsed-wave Doppler velocity curves of the mitral and aortic valves, they developed an unsupervised ML algorithm that automatically identifies patterns in the strain and velocity curves throughout the cardiac cycle. The algorithm divided hypertension into four functional phenotypes (P1, healthy; P2, transitional; P3, functional remodeling in response to pressure overload; and P4, related to a higher burden of comorbidities and differences in clinical management in female patients). In addition, Yahav A. et al. [38] developed a fully automated ML algorithm that classified strain curves as physiological, non-physiological, or uncertain, with a classification accuracy of 86.4%.
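The idea of grouping patients by the shape of their strain curves without predefined labels can be illustrated with a generic clustering algorithm. The Python sketch below uses plain k-means (Lloyd's algorithm) as a stand-in; it is not the unsupervised pipeline of Loncaric F. et al., which is not described here. It assumes each curve has already been resampled to the same number of time points over one cardiac cycle, and the function name, initial centroids, and example curves are hypothetical.

```python
def kmeans(curves, init_centroids, iterations=20):
    """Plain k-means (Lloyd's algorithm) on equal-length curves using squared
    Euclidean distance. Returns (labels, centroids)."""
    centroids = [list(c) for c in init_centroids]
    labels = []
    for _ in range(iterations):
        # Assignment step: each curve joins its nearest centroid.
        new_labels = [
            min(range(len(centroids)),
                key=lambda k: sum((a - b) ** 2 for a, b in zip(curve, centroids[k])))
            for curve in curves
        ]
        if new_labels == labels:  # converged: assignments unchanged
            break
        labels = new_labels
        # Update step: each centroid becomes the mean curve of its members.
        for k in range(len(centroids)):
            members = [c for c, lab in zip(curves, labels) if lab == k]
            if members:
                centroids[k] = [sum(vals) / len(members) for vals in zip(*members)]
    return labels, centroids


# Hypothetical longitudinal strain curves (%) sampled at 5 time points.
curves = [
    [0, -10, -20, -10, 0],
    [0, -11, -21, -9, 0],
    [0, -5, -10, -5, 0],
    [0, -4, -9, -6, 0],
]
labels, centroids = kmeans(curves, init_centroids=[curves[0], curves[2]])
print(labels)  # → [0, 0, 1, 1]
```

Each resulting centroid is a mean curve that can be inspected directly, which offers some of the interpretability that clinicians ask of phenotyping methods; the number of clusters and the initialization, however, must still be chosen by the analyst.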

AI performs well in mining nonlinear relationships hidden in data, but its lack of interpretability limits its clinical application. Improving the interpretability of AI algorithms and adapting AI-aided diagnosis systems to clinical practice are essential directions for future research.

5. Challenges and Future Directions

In recent years, AI-enhanced echocardiography has attracted extensive attention. However, it is still at an early stage of development and faces many challenges. First, the lack of large, diverse, and standardized datasets hampers the integration of AI into echocardiographic practice. Second, AI-related clinical research is scientifically oriented and requires every sonographer on the research team to identify and refine key scientific questions in daily clinical practice, which can be time-consuming and impractical. In addition, traditional ML and DL algorithms each have merits and drawbacks. Traditional ML algorithms can be applied to small datasets and offer some interpretability, but they do not guarantee exhaustive feature extraction. DL has unique advantages in analyzing large samples, but its results lack interpretability, and insufficient samples may lead to overfitting and limited generalizability. Combining traditional ML and DL algorithms might therefore improve the interpretability and sensitivity of intelligent medical prediction models, which is essential for solving critical clinical problems. Moreover, the exploration of AI in multimodal imaging is bringing new insights into modern medicine. In the future, we expect that multimodal information, such as ultrasound imaging, magnetic resonance imaging, and clinical data, could be integrated into a one-stop AI diagnostic model that simplifies diagnosis and treatment and improves disease detection rates.

Author Contributions

Conceptualization, Z.Z. (Zisang Zhang), Y.Z. (Ye Zhu), X.Y., M.X., and L.Z.; data curation, Z.Z. (Zisang Zhang), Y.Z. (Ye Zhu), M.L., Z.Z. (Ziming Zhang), and Y.Z. (Yang Zhao); formal analysis, Z.Z. (Zisang Zhang), Y.Z. (Ye Zhu), M.L., Z.Z. (Ziming Zhang), and Y.Z. (Yang Zhao); visualization, Z.Z. (Zisang Zhang), Y.Z. (Ye Zhu), and Y.Z. (Yang Zhao); writing—original draft preparation, Z.Z. (Zisang Zhang), and Y.Z. (Ye Zhu); writing—review and editing, M.L., Z.Z. (Ziming Zhang), X.Y., M.X., and L.Z. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

All authors declare no conflict of interest.

Funding Statement

This work was supported by the National Natural Science Foundation of China (Nos. 81922033 and 82171964) and the Key Research and Development Program of Hubei (No. 2021CFA046).

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

1. McDonagh T.A., Metra M., Adamo M., Gardner R.S., Baumbach A., Böhm M., Burri H., Butler J., Čelutkienė J., Chioncel O., et al. 2021 ESC Guidelines for the diagnosis and treatment of acute and chronic heart failure. Eur. Heart J. 2021;42:3599–3726. doi: 10.1093/eurheartj/ehab368.

2. Heidenreich P.A., Bozkurt B., Aguilar D., Allen L.A., Byun J.J., Colvin M.M., Deswal A., Drazner M.H., Dunlay S.M., Evers L.R., et al. 2022 AHA/ACC/HFSA Guideline for the Management of Heart Failure: A Report of the American College of Cardiology/American Heart Association Joint Committee on Clinical Practice Guidelines. Circulation. 2022;145:e895–e1032. doi: 10.1161/CIR.0000000000001063.

3. Lang R.M., Badano L.P., Mor-Avi V., Afilalo J., Armstrong A., Ernande L., Flachskampf F.A., Foster E., Goldstein S.A., Kuznetsova T., et al. Recommendations for cardiac chamber quantification by echocardiography in adults: An update from the American Society of Echocardiography and the European Association of Cardiovascular Imaging. Eur. Heart J. Cardiovasc. Imaging. 2015;16:233–270. doi: 10.1093/ehjci/jev014.

4. Nauta J.F., Jin X., Hummel Y.M., Voors A.A. Markers of left ventricular systolic dysfunction when left ventricular ejection fraction is normal. Eur. J. Heart Fail. 2018;20:1636–1638. doi: 10.1002/ejhf.1326.

5. Potter E., Marwick T.H. Assessment of Left Ventricular Function by Echocardiography: The Case for Routinely Adding Global Longitudinal Strain to Ejection Fraction. JACC Cardiovasc. Imaging. 2018;11:260–274. doi: 10.1016/j.jcmg.2017.11.017.

6. Wallis C. How Artificial Intelligence Will Change Medicine. Nature. 2019;576:S48. doi: 10.1038/d41586-019-03845-1.

7. Litjens G., Ciompi F., Wolterink J.M., de Vos B.D., Leiner T., Teuwen J., Išgum I. State-of-the-Art Deep Learning in Cardiovascular Image Analysis. JACC Cardiovasc. Imaging. 2019;12:1549–1565. doi: 10.1016/j.jcmg.2019.06.009.

8. Davis A., Billick K., Horton K., Jankowski M., Knoll P., Marshall J.E., Paloma A., Palma R., Adams D.B. Artificial Intelligence and Echocardiography: A Primer for Cardiac Sonographers. J. Am. Soc. Echocardiogr. 2020;33:1061–1066. doi: 10.1016/j.echo.2020.04.025.

9. LeCun Y., Bengio Y., Hinton G. Deep learning. Nature. 2015;521:436–444. doi: 10.1038/nature14539.

10. Krittanawong C., Johnson K.W., Rosenson R.S., Wang Z., Aydar M., Baber U., Min J.K., Tang W.H.W., Halperin J.L., Narayan S.M. Deep learning for cardiovascular medicine: A practical primer. Eur. Heart J. 2019;40:2058–2073. doi: 10.1093/eurheartj/ehz056.

11. Nolan M.T., Thavendiranathan P. Automated Quantification in Echocardiography. JACC Cardiovasc. Imaging. 2019;12:1073–1092. doi: 10.1016/j.jcmg.2018.11.038.

12. Khamis H., Zurakhov G., Azar V., Raz A., Friedman Z., Adam D. Automatic apical view classification of echocardiograms using a discriminative learning dictionary. Med. Image Anal. 2017;36:15–21. doi: 10.1016/j.media.2016.10.007.

13. Madani A., Ong J.R., Tibrewal A., Mofrad M.R.K. Deep echocardiography: Data-efficient supervised and semi-supervised deep learning towards automated diagnosis of cardiac disease. NPJ Digit. Med. 2018;1:59. doi: 10.1038/s41746-018-0065-x.

14. Madani A., Arnaout R., Mofrad M., Arnaout R. Fast and accurate view classification of echocardiograms using deep learning. NPJ Digit. Med. 2018;1:6. doi: 10.1038/s41746-017-0013-1.

15. Narang A., Bae R., Hong H., Thomas Y., Surette S., Cadieu C., Chaudhry A., Martin R.P., McCarthy P.M., Rubenson D.S., et al. Utility of a Deep-Learning Algorithm to Guide Novices to Acquire Echocardiograms for Limited Diagnostic Use. JAMA Cardiol. 2021;6:624–632. doi: 10.1001/jamacardio.2021.0185.

16. Leclerc S., Smistad E., Pedrosa J., Ostvik A., Cervenansky F., Espinosa F., Espeland T., Berg E.A.R., Jodoin P.-M., Grenier T., et al. Deep Learning for Segmentation Using an Open Large-Scale Dataset in 2D Echocardiography. IEEE Trans. Med. Imaging. 2019;38:2198–2210. doi: 10.1109/TMI.2019.2900516.

17. Smistad E., Salte I.M., Ostvik A., Leclerc S., Bernard O., Lovstakken L. Segmentation of apical long axis, four- and two-chamber views using deep neural networks; Proceedings of the IEEE International Ultrasonics Symposium; Glasgow, UK. 6–9 October 2019.

18. Leclerc S., Smistad E., Ostvik A., Cervenansky F., Espinosa F., Espeland T., Rye Berg E.A., Belhamissi M., Israilov S., Grenier T., et al. LU-Net: A Multistage Attention Network to Improve the Robustness of Segmentation of Left Ventricular Structures in 2-D Echocardiography. IEEE Trans. Ultrason. Ferroelectr. Freq. Control. 2020;67:2519–2530. doi: 10.1109/TUFFC.2020.3003403.

19. Ouyang D., He B., Ghorbani A., Yuan N., Ebinger J., Langlotz C.P., Heidenreich P.A., Harrington R.A., Liang D.H., Ashley E.A., et al. Video-based AI for beat-to-beat assessment of cardiac function. Nature. 2020;580:252–256. doi: 10.1038/s41586-020-2145-8.

20. Asch F.M., Poilvert N., Abraham T., Jankowski M., Cleve J., Adams M., Romano N., Hong H., Mor-Avi V., Martin R.P., et al. Automated Echocardiographic Quantification of Left Ventricular Ejection Fraction without Volume Measurements Using a Machine Learning Algorithm Mimicking a Human Expert. Circ. Cardiovasc. Imaging. 2019;12:e009303. doi: 10.1161/CIRCIMAGING.119.009303.

21. Zhang J., Gajjala S., Agrawal P., Tison G.H., Hallock L.A., Beussink-Nelson L., Lassen M.H., Fan E., Aras M.A., Jordan C., et al. Fully Automated Echocardiogram Interpretation in Clinical Practice. Circulation. 2018;138:1623–1635. doi: 10.1161/CIRCULATIONAHA.118.034338.

22. Salte I.M., Østvik A., Smistad E., Melichova D., Nguyen T.M., Karlsen S., Brunvand H., Haugaa K.H., Edvardsen T., Lovstakken L., et al. Artificial Intelligence for Automatic Measurement of Left Ventricular Strain in Echocardiography. JACC Cardiovasc. Imaging. 2021;14:1918–1928. doi: 10.1016/j.jcmg.2021.04.018.

23. Quer G., Arnaout R., Henne M., Arnaout R. Machine Learning and the Future of Cardiovascular Care: JACC State-of-the-Art Review. J. Am. Coll. Cardiol. 2021;77:300–313. doi: 10.1016/j.jacc.2020.11.030.

24. Tokodi M., Shrestha S., Bianco C., Kagiyama N., Casaclang-Verzosa G., Narula J., Sengupta P.P. Interpatient Similarities in Cardiac Function: A Platform for Personalized Cardiovascular Medicine. JACC Cardiovasc. Imaging. 2020;13:1119–1132. doi: 10.1016/j.jcmg.2019.12.018.

25. Narula S., Shameer K., Salem Omar A.M., Dudley J.T., Sengupta P.P. Machine-Learning Algorithms to Automate Morphological and Functional Assessments in 2D Echocardiography. J. Am. Coll. Cardiol. 2016;68:2287–2295. doi: 10.1016/j.jacc.2016.08.062.

26. Sengupta P.P., Huang Y.-M., Bansal M., Ashrafi A., Fisher M., Shameer K., Gall W., Dudley J.T. Cognitive Machine-Learning Algorithm for Cardiac Imaging: A Pilot Study for Differentiating Constrictive Pericarditis From Restrictive Cardiomyopathy. Circ. Cardiovasc. Imaging. 2016;9:e004330. doi: 10.1161/CIRCIMAGING.115.004330.

27. Zhang J., Zhu H., Chen Y., Yang C., Cheng H., Li Y., Zhong W., Wang F. Ensemble machine learning approach for screening of coronary heart disease based on echocardiography and risk factors. BMC Med. Inform. Decis. Mak. 2021;21:187. doi: 10.1186/s12911-021-01535-5.

28. Wei H., Cao H., Cao Y., Zhou Y., Xue W., Ni D., Li S. Temporal-consistent Segmentation of Echocardiography with Co-learning from Appearance and Shape; Proceedings of the Medical Image Computing and Computer Assisted Intervention; Lima, Peru. 29 June 2020.

29. Reynaud H., Vlontzos A., Hou B., Beqiri A., Leeson P., Kainz B. Ultrasound Video Transformers for Cardiac Ejection Fraction Estimation. arXiv. 2021. arXiv:2107.00977.

30. Tromp J., Seekings P.J., Hung C.-L., Iversen M.B., Frost M.J., Ouwerkerk W., Jiang Z., Eisenhaber F., Goh R.S.M., Zhao H., et al. Automated interpretation of systolic and diastolic function on the echocardiogram: A multicohort study. Lancet Digit. Health. 2022;4:e46–e54. doi: 10.1016/S2589-7500(21)00235-1.

31. Díaz-Gómez J.L., Mayo P.H., Koenig S.J. Point-of-Care Ultrasonography. N. Engl. J. Med. 2021;385:1593–1602. doi: 10.1056/NEJMra1916062.

32. Asch F.M., Mor-Avi V., Rubenson D., Goldstein S., Saric M., Mikati I., Surette S., Chaudhry A., Poilvert N., Hong H., et al. Deep Learning-Based Automated Echocardiographic Quantification of Left Ventricular Ejection Fraction: A Point-of-Care Solution. Circ. Cardiovasc. Imaging. 2021;14:e012293. doi: 10.1161/CIRCIMAGING.120.012293.

33. Čelutkienė J., Plymen C.M., Flachskampf F.A., de Boer R.A., Grapsa J., Manka R., Anderson L., Garbi M., Barberis V., Filardi P.P., et al. Innovative imaging methods in heart failure: A shifting paradigm in cardiac assessment. Position statement on behalf of the Heart Failure Association of the European Society of Cardiology. Eur. J. Heart Fail. 2018;20:1615–1633. doi: 10.1002/ejhf.1330.

34. Kawakami H., Wright L., Nolan M., Potter E.L., Yang H., Marwick T.H. Feasibility, Reproducibility, and Clinical Implications of the Novel Fully Automated Assessment for Global Longitudinal Strain. J. Am. Soc. Echocardiogr. 2021;34:136–145. doi: 10.1016/j.echo.2020.09.011.

35. Leeson P., Fletcher A.J. Let AI Take the Strain. JACC Cardiovasc. Imaging. 2021;14:1929–1931. doi: 10.1016/j.jcmg.2021.05.012.

36. Evain E., Sun Y., Faraz K., Garcia D., Saloux E., Gerber B.L., De Craene M., Bernard O. Motion estimation by deep learning in 2D echocardiography: Synthetic dataset and validation. IEEE Trans. Med. Imaging. 2022. doi: 10.1109/TMI.2022.3151606.

37. Loncaric F., Marti Castellote P.-M., Sanchez-Martinez S., Fabijanovic D., Nunno L., Mimbrero M., Sanchis L., Doltra A., Montserrat S., Cikes M., et al. Automated Pattern Recognition in Whole-Cardiac Cycle Echocardiographic Data: Capturing Functional Phenotypes with Machine Learning. J. Am. Soc. Echocardiogr. 2021;34:1170–1183. doi: 10.1016/j.echo.2021.06.014.

38. Yahav A., Zurakhov G., Adler O., Adam D. Strain Curve Classification Using Supervised Machine Learning Algorithm with Physiologic Constraints. Ultrasound Med. Biol. 2020;46:2424–2438. doi: 10.1016/j.ultrasmedbio.2020.03.002.

39. Pournazari P., Spangler A.L., Ameer F., Hagan K.K., Tano M.E., Chamsi-Pasha M., Chebrolu L.H., Zoghbi W.A., Nasir K., Nagueh S.F. Cardiac involvement in hospitalized patients with COVID-19 and its incremental value in outcomes prediction. Sci. Rep. 2021;11:19450. doi: 10.1038/s41598-021-98773-4.

40. Przewlocka-Kosmala M., Marwick T.H., Dabrowski A., Kosmala W. Contribution of Cardiovascular Reserve to Prognostic Categories of Heart Failure With Preserved Ejection Fraction: A Classification Based on Machine Learning. J. Am. Soc. Echocardiogr. 2019;32:604–615. doi: 10.1016/j.echo.2018.12.002.
