Abstract

Deep learning has received extensive research interest in developing new medical image processing algorithms, and deep learning based models have been remarkably successful in a variety of medical imaging tasks to support disease detection and diagnosis. Despite the success, the further improvement of deep learning models in medical image analysis is majorly bottlenecked by the lack of large-sized and well-annotated datasets. In the past five years, many studies have focused on addressing this challenge. In this paper, we reviewed and summarized these recent studies to provide a comprehensive overview of applying deep learning methods in various medical image analysis tasks. Especially, we emphasize the latest progress and contributions of state-of-the-art unsupervised and semi-supervised deep learning in medical image analysis, which are summarized based on different application scenarios, including classification, segmentation, detection, and image registration. We also discuss the major technical challenges and suggest the possible solutions in future research efforts.

Keywords: Deep learning, unsupervised learning, self-supervised learning, semi-supervised learning, medical images, classification, segmentation, detection, registration, Transformer, attention

1. INTRODUCTION

In current clinical practice, accuracy of detection and diagnosis of cancers and/or many other diseases depends on the expertise of individual clinicians (e.g., radiologists, pathologists) (Kruger et al., 1972), which results in large inter-reader variability in reading and interpreting medical images. In order to address and overcome this clinical challenge, many computer-aided detection and diagnosis (CAD) schemes have been developed and tested, aiming to help clinicians more efficiently read medical images and make the diagnostic decision in a more accurate and objective manner. The scientific rationale of this approach is that using computer-aided quantitative image feature analysis can help overcome many negative factors in the clinical practice, including the wide variations in expertise of the clinicians, potential fatigue of human experts, and lack of sufficient medical resources.

Although early CAD schemes have been developed in 1970s (Meyers et al., 1964; Kruger et al., 1972; Sezaki and Ukena, 1973), progress of the CAD schemes accelerates since the middle of 1990s (Doi et al., 1999), due to the development and integration of more advanced machine learning methods or models into CAD schemes. For conventional CAD schemes, a common developing procedure consists of three steps: target segmentation, feature computation, and disease classification. For example, Shi et al. (2008) developed a CAD scheme to achieve mass classification on digital mammograms. The ROIs containing the target masses were first segmented from the background using a modified active contour algorithm (Sahiner et al., 2001). Then a large number of image features were applied to quantify the lesion characteristics in size, morphology, margin geometry, texture, and etc. Thus the raw pixel data was converted into a vector of representative features. Finally, a LDA (linear discrimination analysis) based classification model was applied on the feature vector to identify the mass malignancy.

As a comparison, for deep learning based models, hidden patterns inside ROIs are progressively identified and learned by the hierarchical architecture of deep neural networks (LeCun et al., 2015). During this process, important properties of the input image will be gradually identified and amplified for certain tasks (e.g. classification, detection), while irrelevant features will be attenuated and filtered out. For instance, an MRI image depicting suspicious liver lesions comes with a pixel array (Hamm et al., 2019), and each entry is used as one input feature of the deep learning model. The first several layers of the model may initially obtain some basic lesion information, such as tumor shape, location, and orientation. The next batch of layers may identity and keep the features consistently related to lesion malignancy (e.g. shape, edge irregularity), while ignoring irrelevant variations (e.g. location). The relevant features will be further processed and assembled by subsequent higher layers in a more abstract manner. When increasing the number of layers, a higher level of feature representations can be achieved. Through the entire procedure, important features hidden inside the raw image are recognized by a general neural network based model in a self-taught manner, and thus the manual feature development is not needed.

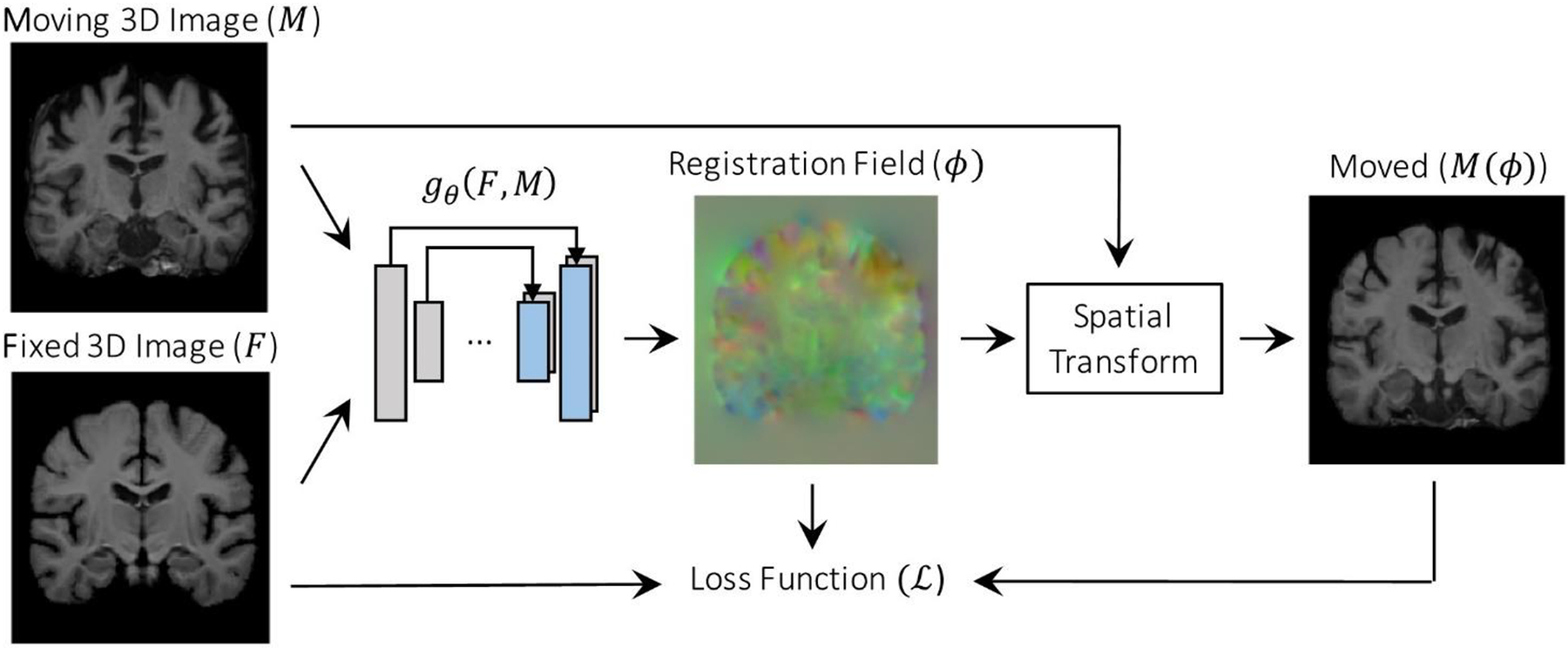

Due to its huge advantage, deep learning related methods have become the mainstream technology in the CAD field and have been widely applied in a variety of tasks, such as disease classification (Li et al., 2020a; Shorfuzzaman and Hossain, 2021; Zhang et al., 2020a; Frid-Adar et al., 2018a; Kumar et al., 2016; Kumar et al., 2017), ROI segmentation (Alom et al., 2018; Yu et al., 2019; Fan et al., 2020), medical object detection (Rijthoven et al., 2018; Mei et al., 2021; Nair et al., 2020; Zheng et al., 2015), and image registration (Simonovsky et al., 2016; Sokooti et al., 2017; Balakrishnan et al., 2018). Among different kinds of deep learning techniques, supervised learning was first adopted in medical image analysis. Although it has been successfully utilized in many applications (Esteva et al., 2017; Long et al., 2017), further deployment of supervised models in many scenarios is majorly hurdled by the limited size of most medical datasets. As compared to regular datasets in computer vision, a medical image dataset usually contains relatively small amounts of images (less than 10,000), and in many cases, only a small percentage of images are annotated by experts. To overcome this limitation, unsupervised and semi-supervised learning methods have received extensive attention in the past three years, which are able to (1) generate more labeled images for model optimization, (2) learn meaningful hidden patterns from unlabeled image data, and (3) generate pseudo labels for the unlabeled data.

There already exist a number of excellent review articles that summarized deep learning applications in medical image analysis. Litjens et al. (2017) and Shen et al. (2017) reviewed relatively early deep learning techniques, which are mainly based on supervised methods. More recently, Yi et al. (2019) and Kazeminia et al. (2020) reviewed the applications of generative adversarial networks across different medical imaging tasks. Cheplygina et al. (2019) surveyed on how to use semi-supervised learning and multiple instance learning in diagnosis or segmentation tasks. Tajbakhsh et al. (2020) investigated a variety of methods to deal with dataset limitations (e.g., scarce or weak annotations) specifically in medical image segmentation. In contrast, a major goal of this paper is to shed light on how the medical image analysis field, which is often bottlenecked by limited, annotated data, can potentially benefit from some latest trends of deep learning. Our survey distinguishes itself from recent review papers with two characteristics – being comprehensive and technically oriented. “Comprehensive” is reflected in three aspects. First, we highlight the applications of a broad range of promising approaches falling in the “not-so-supervised” category, including self-supervised, unsupervised, semi-supervised learning; meanwhile, we do not ignore the continuing importance of supervised methods. Second, rather than covering only a specific task, we introduce the applications of the above learning approaches in four classical medical image analysis tasks (classification, segmentation, detection, and registration). Especially, we discussed the deep learning based object detection in detail, which is rarely mentioned in recent review papers (after 2019). We focused on the applications of chest X-ray, mammogram, CT, and MRI images. All these types of the images have many common characteristics, which are interpreted by physicians at the same department (Radiology). We also mentioned some general methods which were applied on other image domains (e.g. histopathology) but have potential to be used in radiological or MRI images. Third, state-of-the-art architectures/models for these tasks are explained. For example, we summarized how to adapt Transformers from natural language processing for medical image segmentation, which has not been mentioned by existing review papers to the best of our knowledge. In terms of “technically oriented”, we review the recent advances of not-so-supervised approaches in detail. In particular, self-supervised learning is quickly emerging as a promising direction but yet systematically reviewed in the context of medical vision. A wide audience may benefit from this survey, including researchers with deep learning, artificial intelligence and big data expertise, and clinicians/medical researchers.

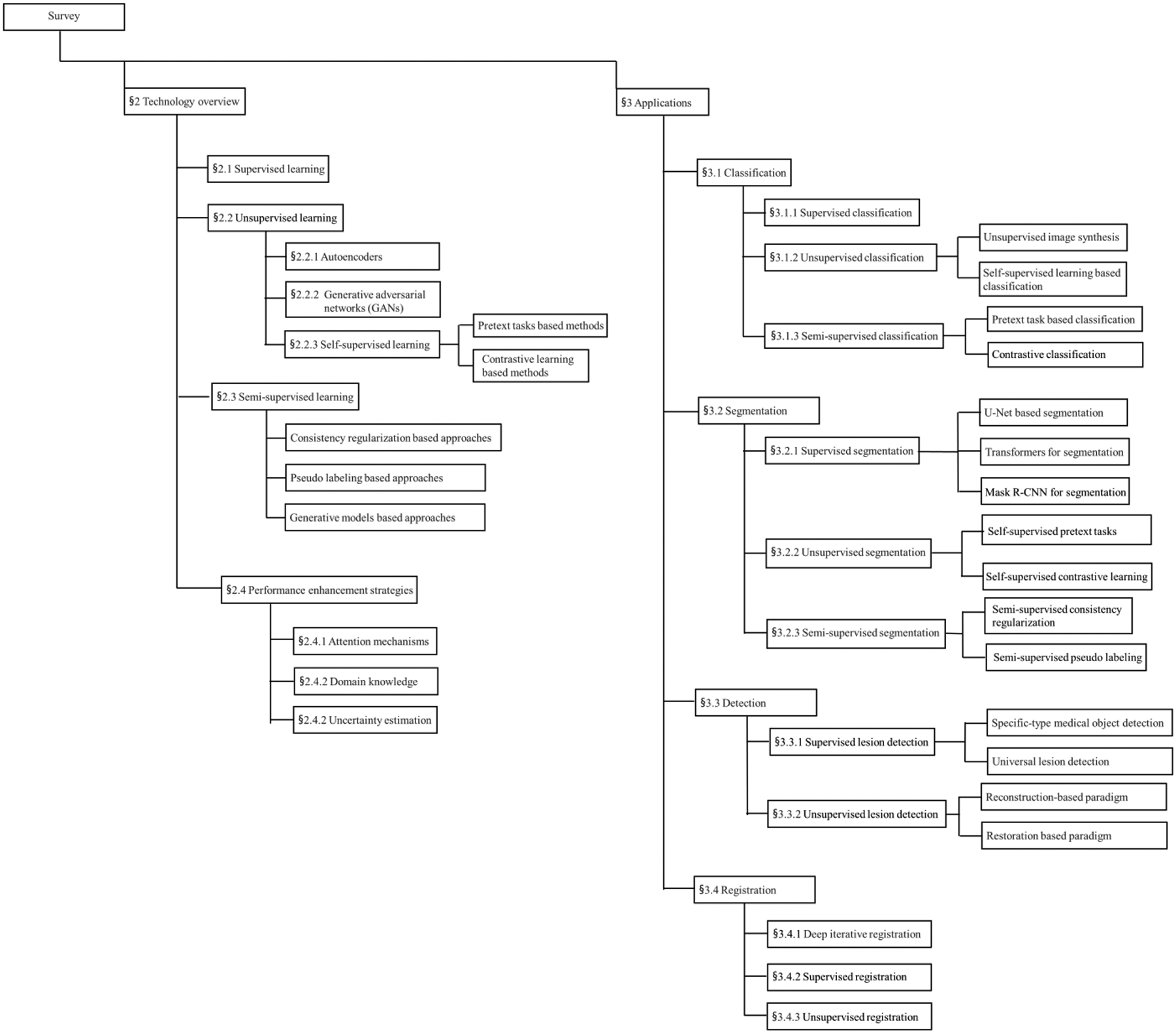

This survey is presented as follows (Figure 1): Section 2 provides an in-depth overview of recent advances in deep learning, with a focus on unsupervised and semi-supervised approaches. In addition, three important strategies for performance enhancement, including attention mechanisms, domain knowledge, and uncertainty estimation, are also discussed. Section 3 summarizes the major contributions of applying deep learning techniques in four main tasks: classification, segmentation, detection, and registration. Section 4 discusses challenges for further improving the model and suggests possible perspectives on future research directions toward large scale applications of deep learning based medical image analysis models.

Figure 1.

The overall structure of this survey.

2. OVERVIEW OF DEEP LEARNING METHODS

Depending on whether labels of the training dataset are present, deep learning can be roughly divided into supervised, unsupervised, and semi-supervised learning. In supervised learning, all training images are labeled, and the model is optimized using the image-label pairs. For each testing image, the optimized model will generate a likelihood score to predict its class label (LeCun et al., 2015). For unsupervised learning, the model will analyze and learn the underlying patterns or hidden data structures without labels. If only a small portion of training data is labeled, the model learns input-output relationship from the labeled data, and the model will be strengthened by learning semantic and fine-grained features from the unlabeled data. This type of learning approach is defined as semi-supervised learning (van Engelen and Hoos, 2020). In this section, we briefly mentioned supervised learning at the beginning, and then majorly reviewed the recent advances of unsupervised learning and semi-supervised learning, which can facilitate performing medical image tasks with limited annotated data. Popular frameworks for these two types of learning paradigms will be introduced accordingly. In the end, we summarize three general strategies that can be combined with different learning paradigms for better performance in medical image analysis, including attention mechanisms, domain knowledge, and uncertainty estimation.

2.1. Supervised learning

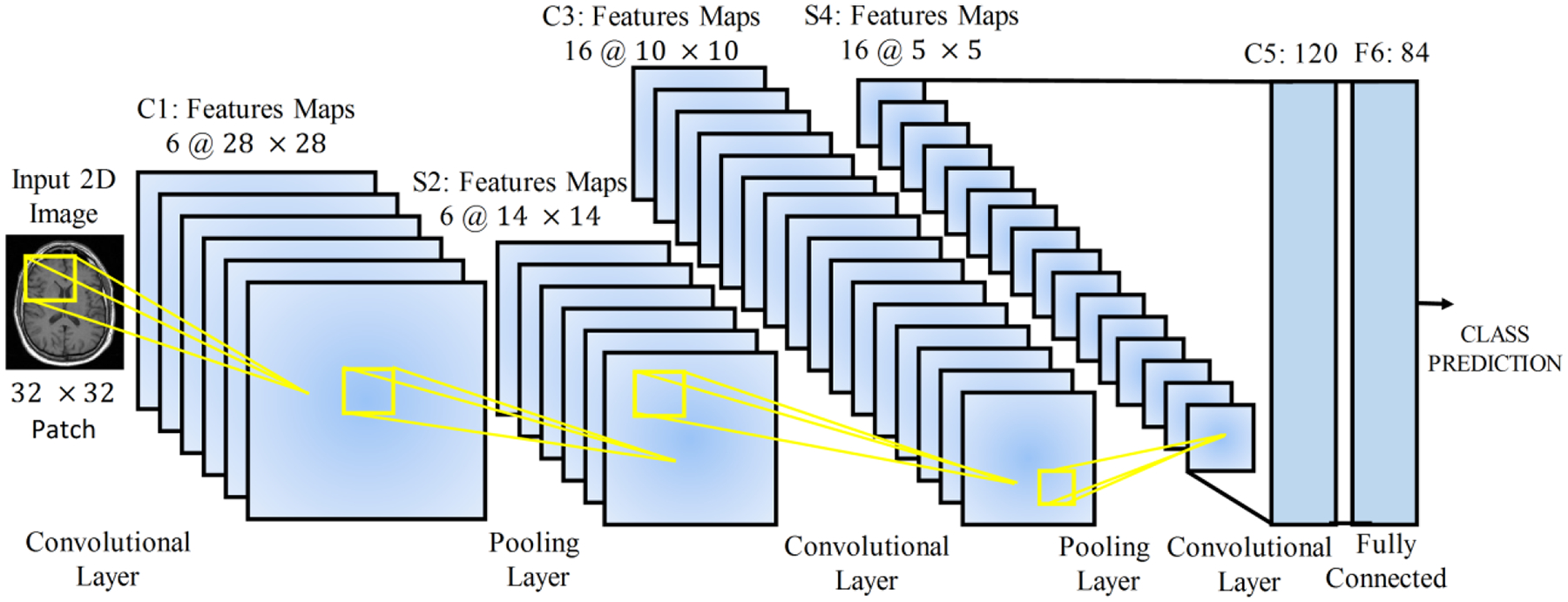

Convolutional neural networks (CNNs) are a widely used deep learning architecture in medical image analysis (Anwar et al., 2018). CNNs are mainly composed of convolutional layers and pooling layers. Figure 2 shows a simple CNN in the context of medical image classification task. The CNN directly takes an image as input, and transforms it via convolutional layers, pooling layers, and fully connected layers, and finally outputs a class-based likelihood of that image.

Figure 2.

A simple CNN for disease classification from MRI images (Anwar et al., 2018).

At each convolutional layer l, a bunch of kernels W = {W1, …, Wk} are used to extract features from the input image, and biases b = {b1, …, bk} are added, generating new feature maps . Then a non-linear transform, an activation function σ(.), is applied resulting in as the input of the next layer. After the convolutional layer, a pooling layer is incorporated to reduce the dimension of feature maps, thus reducing the number of parameters. Average pooling and maximum pooling are two common pooling operations. The above process is repeated for the rest layers. At the end of the network, fully connected layers are usually employed to produce the probability distribution over classes via a sigmoid or softmax function. The predicted probability distribution gives a label for each input instance so that a loss function can be calculated, where y is the real label. Parameters of the network are iteratively optimized by minimizing the loss function.

2.2. Unsupervised learning

2.2.1. Autoencoders

Autoencoders are widely applied in dimensionality reduction and feature learning (Hinton and Salakhutdinov, 2006). The simplest autoencoder, initially known as auto-associator (Bourlard and Kamp, 1988), is a neural network with only one hidden layer that learns a latent feature representation of the input data by minimizing a reconstruction loss between the input and its reconstruction from the latent representation. The shallow structure of simple autoencoders limits their representation power, but deeper autoencoders with more hidden layers can improve the representation. By stacking multiple auto-encoders and optimizing them in a greedy layer-wise manner, deep autoencoders or Stacked Autoencoders (SAEs) can learn more complicated non-linear patterns than shallow ones and thus generalize better outside training data (Bengio et al., 2007). SAEs consist of an encoder network and a decoder network, which are typically symmetrical to each other. To further force models to learn useful latent representations with desirable characteristics, regularization terms such as sparsity constraints in Sparse Autoencoders (Ranzato et al., 2007) can be added to the original reconstruction loss. Other regularized autoencoders include the Denoising Autoencoder (Vincent et al., 2010) and Contractive Autoencoder (Rifai et al., 2011), both designed to be insensitive to input perturbations.

Unlike the above classic autoencoders, variational autoencoder (VAE) (Kingma and Welling, 2013) works in a probablistic manner to learn mappings between the observed data space x ∈ Rm and latent space z ∈ Rn (m ≫ n). As a latent variable model, VAE formulates this problem as maximizing the log-likelihood of the observed samples log p(x) = log ∫ p(x|z)p(z) dz, where p(x|z) can be easily modeled using a neural network, and p(z) is a prior distribution (such as Gaussian) over the latent space. However, the integral is intractable because it is impossible to sample the full latent space. As a result, the posterior distribution p(z|x) also becomes intractable according to Bayes rule. To solve the intractability issue, the authors of VAE propose that in addition to modeling p(x|z) using the decoder, the encoder learns q(z|x) that approximates the unknown posterior distribution. Ultimately a tractable lower bound also termed as evidence lower bound (EBLO), can be derived for log p(x).

where KL stands for the Kullback-Leibler divergence. The first term can be understood as a reconstruction loss measuring the similarity between the input image and its counterpart reconstructed from the latent representation. The second term computes the divergence between the approximated posterior and Gaussian prior.

Later different VAE extensions were proposed to learn more complicated representations. Although the probabilistic working mechanism allows its decoder to generate new data, VAE cannot specifically control the data generation process. Sohn et al. (2015) proposed the so-called conditional VAE (CVAE), where probabilistic distributions learnt by the encoder and decoder are both conditioned using external information (e.g. image classes). This enables VAE to generate structured output representations. Another line of research explores imposing more complex priors on the latent space. For example, Dilokthanakul et al. (2016) presented Gaussian Mixture VAE (GMVAE) that uses a mixture of Gaussians as prior to obtain higher modeling compacity in latent space. We refer readers to a recent paper (Kingma and Welling, 2019) for more details of VAE and its extensions.

2.2.2. Generative adversarial networks (GANs)

Generative adversarial networks (GANs) are a class of deep nets for generative modeling first proposed by Goodfellow et al. (2014). For this architecture, a framework for estimating generative models is designed to directly draw samples from the desired underlying data distribution without the need to explicitly define a probability distribution. It consists of two models: a generator G and a discriminator D. The generative model G takes as input a random noise vector z sampled from a prior distribution Pz(z), often either a Gaussian or a uniform distribution, and then maps z to data space as G(z, θg), where G is a neural network with parameters θg. The fake samples denoted as G(z) or xg are expected to resemble real samples from the training data Pr(x), and these two types of samples are sent into D. The discriminator, a second neural network parameterized by θd, outputs the probability D(x, θd) that a sample comes from the training data rather than G. The training procedure is like playing a minimax two-player game. The discriminative network D is optimized to maximize the log likelihood of assigning correct labels to fake samples and real samples, while the generative model G is trained to maximize the log likelihood of D making a mistake. Through the adversarial process, G is desired to gradually estimate the underlying data distribution and generate realistic samples.

Based on the vanilla GAN, the performance was improved in the following two directions: 1) different loss (objective) functions, and 2) conditional settings. For the first direction, Wasserstein GAN (WGAN) is a typical example. In WGAN, Earth-Mover (EM) distance or Wasserstein-1, commonly known as the Wasserstein distance, was proposed to replace the Jensen–Shannon (JS) divergence in original Vanilla GAN and measure the distance between the real and synthetic data distribution (Arjovsky et al., 2017). The critic of WGAN has the advantage to provide useful gradients information where JS divergence saturates and results in vanishing gradients. WGAN could also improve the stability of learning and alleviate problems like mode collapse.

An unconditional generative model cannot explicitly control the modes of data being synthesized. To guide the data generation process, the conditional GAN (cGAN) is constructed by conditioning its generator and discriminator with additional information (i.e., the class labels) (Mirza and Osindero, 2014). Specifically, the noise vector z and class label c are jointly provided to G; the real/ fake data and class label c are together presented as the inputs of D. The conditional information can also be images or other attributes, not limited to class labels. Further, the auxiliary classifier GAN (ACGAN) presents another strategy to employ label conditioning to improve image synthesis (Odena et al., 2017). Unlike the discriminator of cGAN, D in ACGAN is no longer provided with the class conditional information. Apart from separating real and fake images, D is also tasked with reconstructing class labels. When forcing D to perform the additional classification task, ACGAN can generate high-quality images easily.

2.2.3. Self-supervised learning

In the past few years, unsupervised representation learning has gained huge success in natural language processing (NLP), where massive unlabeled data is available for pre-training models (e.g. BERT, Kenton and Toutanova, 2019) and learning useful feature representations. Then the feature representations are fine-tuned in downstream tasks such as question answering, natural language inference, and text summarization. In computer vision, researchers have explored a similar pipeline – models are first trained to learn rich and meaningful feature representations from the raw unlabeled image data in an unsupervised manner, and then the feature representations are fine-tuned in a wide variety of downstream tasks with labeled data, such as classification, object detection, instance segmentation, etc. However, this practice was not as successful as in NLP for quite a long time, and instead supervised pre-training has been the dominant strategy. Interestingly, we find this situation is changing toward the opposite direction in recent two years, as more and more studies report a higher performance of self-supervised pre-training than supervised pre-training.

In recent literature, the term self-supervised learning is used interchangeably with unsupervised learning; more accurately, self-supervised learning actually refers to a form of deep unsupervised learning, where inputs and labels are created from unlabeled data itself without external supervision. One important motivation behind this technology is to avoid supervised tasks that are often expensive and time-consuming, due to the need to establish new labeled datasets or acquire high-quality annotations in certain fields like medicine. Despite the scarcity and high cost of labeled data, there usually exist large amounts of cheap unlabeled data remaining unexploited in many fields. The unlabeled data is likely to contain valuable information that is either weak or not present in labeled data. Self-supervised learning can leverage the power of unlabeled data to improve both the performance and efficiency in supervised tasks. Since self-supervised learning touches upon vaster data than supervised learning, features learnt in a self-supervised manner can potentially better generalize in the real world. Self supervision can be created in two ways: pretext tasks based methods and contrastive learning based methods. Since the contrastive learning based methods have received broader attention in very recent years, we will highlight more works in this direction.

Pretext task is designed to learn representative features for downstream tasks, but the pretext itself is not of the true interest (He et al., 2020). The pretext tasks learn representations by hiding certain information (e.g., channel, patches, etc.) for each input image, and then predict the missing information from the image’s remaining parts. Examples include image inpainting (Pathak et al., 2016), colorization (Zhang et al., 2016), relative patch prediction (Doersch et al., 2015), jigsaw puzzles (Noroozi and Favaro, 2016), rotation (Gidaris et al., 2018), etc. However, the learnt representations’ generalizability is heavily dependent on the quality of hand-crafted pretext tasks (Chen et al., 2020a).

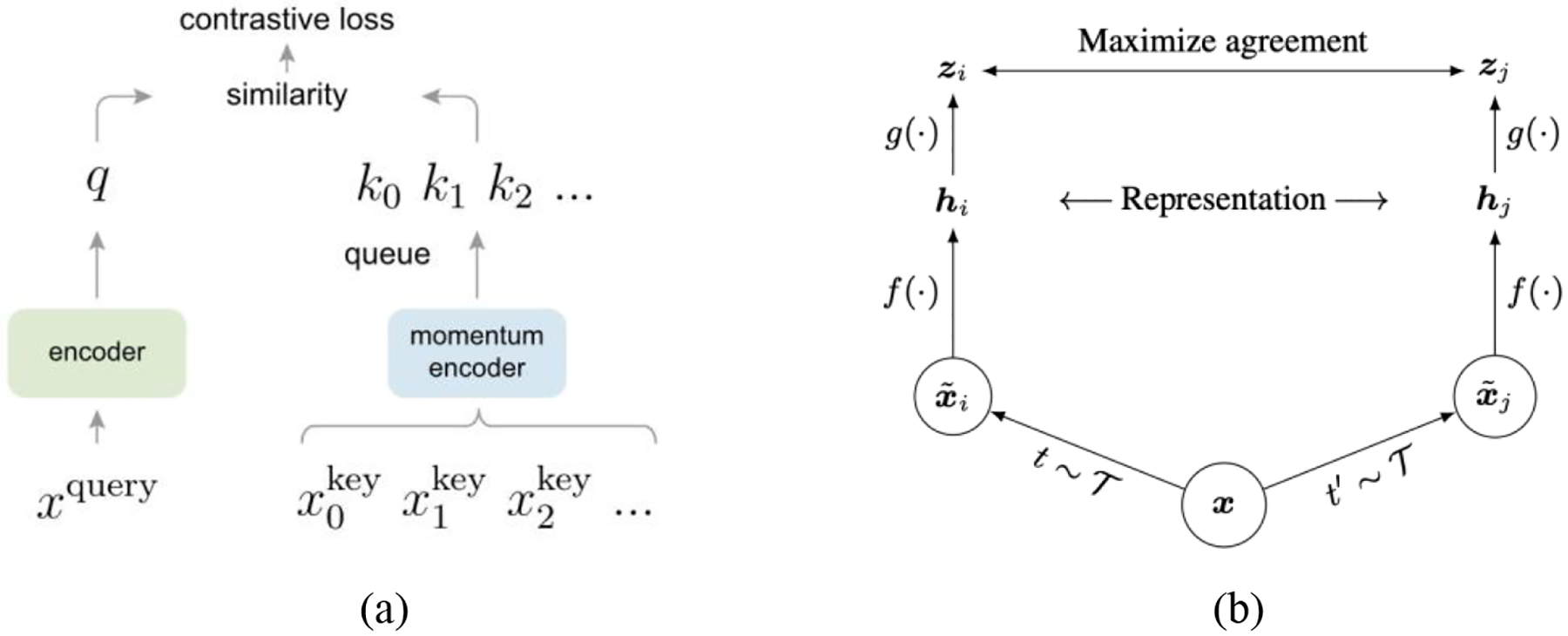

Contrastive learning relies on the so-called contrastive loss, which can date back to at least (Hadsell et al., 2006; Chopra et al., 2005a). Later a number of variants of this contrastive loss were used (Oord et al., 2018; Chen et al., 2020a; Chaitanya et al., 2020). In essence, the original loss and its later versions all enforce a similarity metric to be maximized for positive (similar) pairs and be minimized for negative (dissimilar) pairs, so that the model can learn discriminative features. In the following we will introduce two representative frameworks for contrastive learning, namely Momentum Contrast (MoCo) (He et al., 2020) and SimCLR (Chen et al., 2020a).

MoCo formulates contrastive learning as a dictionary look-up problem, which requires an encoded query to be similar to its matching key. As shown in Figure 3 (a), given an image x, an encoder encodes the image resulting in a feature vector, which is used as a query (q). Likewise, with another encoder the dictionary can be built up by the features, {k0, k1, k2, …} also known as keys, from a large set of image samples {x0, x1, x2, …}. In MoCo, the encoded query q and a key are considered similar if they come from different crops of the same image. Suppose there exists a single dictionary key (k+) that matches with q, then these two items are regarded as a positive pair, whereas the rest keys in the dictionary are considered negative. The authors compute the loss function of a positive pair using InfoNCE (Oord et al., 2018) as follows:

Figure 3.

(a) MoCo (He et al., 2020); (b) SimCLR (Chen et al., 2020a).

Established from a sampled subset of all images, a large dictionary is important for good accuracy. To make the dictionary large, the authors maintain the feature representations from previous image batches as a queue: new keys are enqueued with old keys dequeued. Therefore, the dictionary consists of encoded representations from the current and previous batches. This, however, could lead to a rapidly updated key encoder, rendering the dictionary keys inconsistent, i.e., their comparisons to the encoded query are not consistent. The authors thus propose using momentum update on the key encoder to avoid rapid changes. This key encoder is referred as the momentum encoder.

SimCLR is another popular framework for contrastive learning. In this framework, two augmentated images are considered a postitive pair if they derive from the same example; if not, they are a negative pair. The agreement of feature representations from of postive image pairs are maximized. As shown in Figure 3 (b), SimCLR consists of four components: (1) stochastic image augmentation; (2) encoder networks (f (.)) extracting feature representations from augmented images; (3) a small neural network (multilayer perceptron (MLP) projection head) (g (.)) that maps the feature representations to a lower-dimensional space; and (4) contrastive loss computation. The third component differs SimCLR from its predecessors. Previous frameworks like MoCo compute the feature representations directly rather than first mapping them to a lower-dimensional space. This component is further proven important in achieving satisfactory results, as demonstrated in MoCo v2 (Chen et al., 2020b).

Note that since self-supervised contrastive learning is very new, wide applications of recent advances such as MoCo and SimCLR in the medical image analysis field have yet been established at the time of this writing. Nonetheless, considering the promising results of self-supervised learning reported in the existing literature, we anticipate studies applying this new technology to analyze medical images are likely to explode soon. Also, self-supervised pre-training has great potential to be a strong alternative of supervised pre-training.

2.3. Semi-supervised learning

Different from unsupervised learning that can work just on unlabeled data to learn meaningful representations, semi-supervised learning (SSL) combines labeled and unlabeled data during model training. Especially, SSL applies to the scenario where limited labeled data and large-scale but unlabeled data are available. These two types of data should be relevant, so that the additional information carried by unlabeled data could be useful in compensating the labeled data. It is reasonable to expect that unlabeled data would lead to an average performance boost – probably the more the better for performing tasks with only limited labeled data. In fact, this goal has been explored for several decades, and the 1990s already witnessed a rising interest of applying SSL methods in text classification. The Semi-Supervised Learning book (Chapelle et al., 2009) is a good source for readers to grasp the connection of SSL to classic machine learning algorithms. Interestingly, despite its potential positive value, the authors present empirical findings that unlabeled data sometimes deteriorates the performance. However, this empirical finding seems to be experiencing changes in recent literature of deep learning – an increasing number of works, mostly from the computer vision field, have reported that deep semi-supervised approaches generally perform better than high-quality supervised baselines (Ouali et al., 2020). Even when varying the amount of labeled and unlabeled data, a consistent performance improvement can still be observed. At the same time, deep semi-supervised learning has been successfully applied in the medical image analysis field to reduce annotation cost and achieve better performance. We divide popular SSL methods into three groups: (1) consistency regularization based approaches; (2) pseudo labeling based approaches; (3) generative models based approaches.

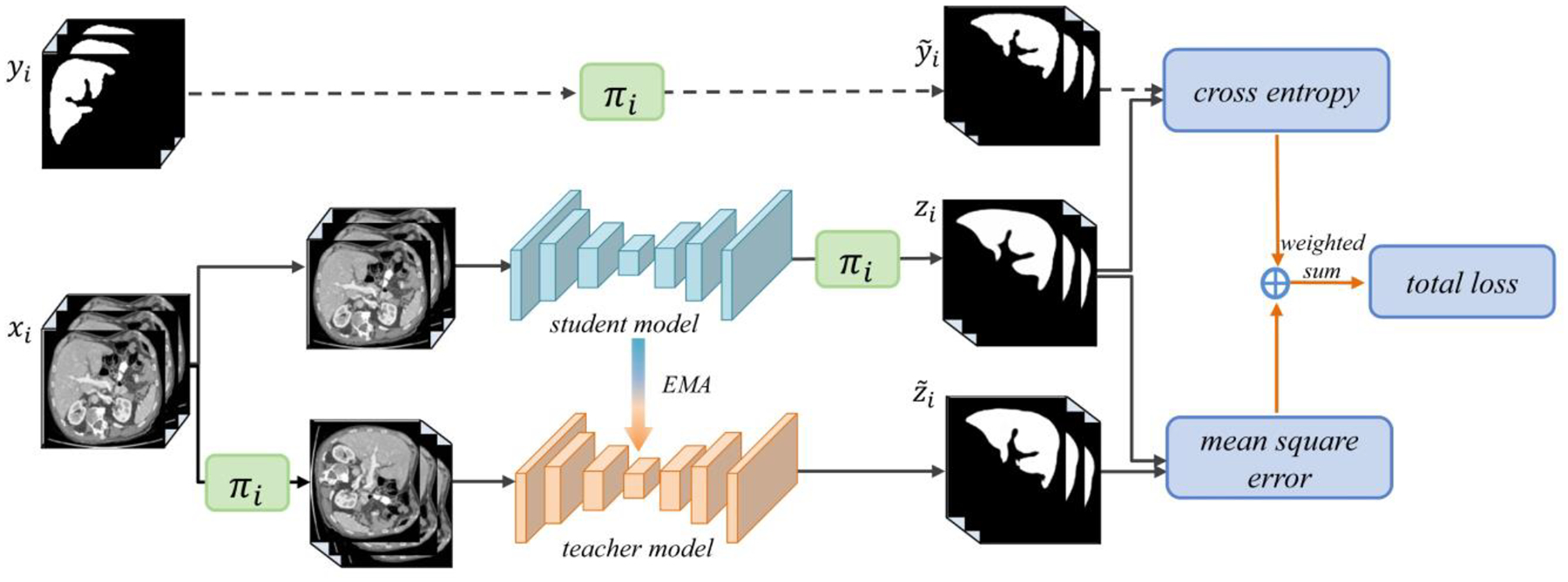

Methods in the first category share one same idea that the prediction for an unlabeled example should not change significantly if some perturbations (e.g., adding noise, data augmentation) are applied. The loss function of an SSL model generally consists of two parts. More concretely, given an unlabeled data example x and its perturbed version , the SSL model outputs logits fθ(x) and . On the unlabeled data, the objective is to give consistent predictions by minimizing the mean squared error , and this leads to the consistency (unsupervised) loss Lu on unlabeled data. On the labeled data, a cross entropy supervised loss Ls is computed. Example SSL models that are regularized by consistency constraints include Ladder Networks (Rasmus et al., 2015), Π-Model (Laine and Aila, 2017), and Temporal Ensembling (Laine and Aila, 2017). A more recent example is the Mean Teacher paradigm (Tarvainen and Valpola, 2017), composed of a teacher model and a student model (Figure 4). The student model is optimized by minimizing Lu on unlabeled data and Ls on labeled data; as an Exponential Moving Average (EMA) of the student model, the teacher model is used to guide the student model for consistency training. Most recently, several works such as unsupervised data augmentation (UDA) (Xie et al., 2020) and MixMatch (Berthelot et al., 2019) have brought the performance of SSL to a new level.

Figure 4.

Mean Teacher model application in medical image analysis (Li et al., 2020b). πi refers to the transformation operations, including rotation, flipping, and scaling. zi and are network outputs.

For pseudo labeling (Lee, 2013), an SSL model itself generates pseudo annotations for unlabeled examples; the pseudo-labeled examples are used jointly with labeled examples to train the SSL model. This process is iterated for several times, during which the quality of pseudo labels and the model’s performance get enhanced. The naïve pseudo-labeling process can be combined with Mixup augmentation (Zhang et al., 2018a) to further improve SSL model’s performance (Arazo et al., 2020). Pseudo labeling also works well with multi-view co-training (Qiao et al., 2018). For each view of the labeled examples, co-training learns a separate classifier, and then the classifier is used to generate pseudo labels for the unlabeled data; co-training maximizes the agreement of assigning pseudo annotations among each view of unlabeled examples.

For methods in the third category, semi-supervised generative models such as GANs and VAEs put more focus on solving target tasks (e.g., classification) than just generating high-fidelity samples. Here we illustrate the mechanism of semi-supervised GAN for brevity. One simple way to adapt GAN to semi-supervised settings is by modifying the discriminator to perform additional tasks. For example, in the task of image classification, Salimans et al. (2016) and Odena (2016) changed the discriminator in DCGAN by forcing it to serve as a classifier. For an unlabeled image, the discriminator functions as in the vanilla GAN, providing a probability of the input image being real; for a labeled image, the discriminator predicts its class besides generating a realness probability. However, Li et al. (2017) demonstrated that the optimal performance of the two tasks may not be achieved at the same time by a single discriminator. Thus, they introduced an additional classifier that is independent from the generator and discriminator. This new architecture composed of three components is called Triple-GAN.

2.4. Strategies for performance enhancement

2.4.1. Attention mechanisms

Attention originates from primates’ visual processing mechanism that selects a subset of pertinent sensory information, rather than using all available information for complex scene analysis (Itti et al., 1998). Inspired by this idea of focusing on specific parts of inputs, deep learning researchers have integrated attention into developing advanced models in different fields. Attention-based models have achieved huge success in fields related to natural language processing (NLP), such as machine translation (Bahdanau et al., 2015; Vaswani et al., 2017) and image captioning (Xu et al., 2015; You et al., 2016; Anderson et al., 2018). One prominent example is the Transformer architecture that solely relies on self-attention to capture global dependencies between input and output, without requiring sequential computation (Vaswani et al., 2017). Attention mechanisms have also become popular in computer vision tasks, such as natural image classification (Wang et al., 2017; Woo et al., 2018; Jetley et al., 2018), segmentation (Chen et al., 2016; Ren and Zemel, 2017), etc. When processing images, attention modules can adaptively learn “what” and “where” to attend so that model predictions are conditioned on the most relevant image regions and features. Based on how attended locations in an image are selected, attention mechanisms can be roughly divided into two categories, namely soft and hard attention. The former deterministically learns a weighted average of features at all locations, whereas the latter stochastically samples one subset of feature locations to attend (Cho et al., 2015). Since hard attention is not differentiable, soft attention, despite being more computationally expensive, has received more research efforts. Following this differentiable mechanism, different types of attention have been further developed, such as (1) spatial attention (Jaderberg et al., 2015), (2) channel attention (Hu et al., 2018a), (3) combination of spatial and channel-wise attention (Wang et al., 2017; Woo et al., 2018), and (4) self attention (Wang et al., 2018). Readers are referred to the excellent review by Chaudhari et al. (2021) for more details of attention mechanisms.

2.4.2. Domain knowledge

Most well-established deep learning models, originally designed to analyze natural images, are likely to produce only suboptimal results when directly applied to medical image tasks (Zhang et al., 2020a). This is because natural and medical images are very different in nature. First, medical images usually exhibit high inter-class similarity, so one major challenge lies in the extraction of fine-grained visual features to understand subtle differences that are important to making correct predictions. Second, typical medical image datasets are much smaller than benchmark natural datasets that contain images ranging from tens of thousands to millions. This hinders models with high complexity in computer vision from being directly applied in the medical domain. Therefore, how to customize models for medical image analysis remains an important issue. One possible solution is to integrate proper domain knowledge or task-specific properties, which has proven beneficial to facilitate learning useful feature representations and reducing model complexity in the medical imaging context. In this review paper, we will mention a variety of domain knowledge, such as anatomical information in MRI and CT images (Zhou et al., 2021; Zhou et al., 2019a), 3D spatial context information from volumetric images (Zhang et al., 2017; Zhuang et al., 2019; Zhu et al., 2020a), multi-instance data from the same patient (Azizi et al., 2021), patient metadata (Vu et al., 2021), radiomic features (Shorfuzzaman and Hossain, 2021), text reports accompanying images (Zhang et al., 2020a), etc. Readers interested in a comprehensive review of how to integrate medical domain knowledge into network designing can refer to the work of Xie et al. (2021a).

2.4.3. Uncertainty estimation

Reliability is of critical concern when it comes to clinical settings with high-safety requirements (e.g. cancer diagnosis). Model predictions are easily subject to factors such as data noise and inference errors, so it is desirable to quantify uncertainty and make the results trustworthy (Abdar et al., 2021). Commonly used techniques for uncertainty estimation include Bayesian approximation (Gal and Ghahramani, 2016) and model ensemble (Lakshminarayanan et al., 2017). Bayesian approaches like Monte Carlo dropout (MC-dropout) (Gal and Ghahramani, 2016) revolve around approximating the posterior distribution over neural networks’ parameters. Ensemble techniques combine multiple models to measure uncertainty. Readers interested in uncertainty estimation are referred to the comprehensive review by Abdar et al. (2021).

3. DEEP LEARNING APPLICATIONS

3.1. Classification

Medical image classification is the goal of computer-aided diagnosis (CADx), which aims at either distinguishing malignant lesions from benign ones or identifying certain diseases from input images (Shen et al., 2017; van Ginneken et al., 2011). Deep learning based CADx schemes have received huge success over the last decade. However, deep neural nets generally depend on sufficient annotated images to ensure good performance, and this requirement may not be easily satisfied by many medical image datasets. To alleviate the lack of large annotated datasets, many techniques have been used, and transfer learning has stood out indisputably as the most dominant paradigm. Beyond transfer learning, several other learning paradigms including unsupervised image synthesis, self-supervised and semi-supervised learning, have demonstrated great potential in performance enhancement given limited annotated data. We will introduce these learning paradigms’ applications in medica image classification in the following subsections.

3.1.1. Supervised classification

Starting from AlexNet (Krizhevsky et al., 2012), a variety of end-to-end models with increasingly deeper networks and larger representation compacity have been developed for image classification, such as VGG (Simonyan and Zisserman, 2014), GoogleLeNet (Szegedy et al., 2015), and ResNet (He et al., 2016), and DenseNet (Huang et al., 2017). These models have yielded superior results, making deep learning mainstream not only in developing high-performing CADx schemes but also in other subfields of medical image processing.

Nonetheless, the performance of deep learning models highly depends on the size of training dataset and the quality of image annotations. In many medical image analysis tasks especially 3D scenarios, it can be challenging to establish a sufficiently large and high-quality training dataset because of difficulties in data acquisition and annotation (Tajbakhsh et al., 2016; Chen et al., 2019a). The supervised transfer learning technique (Tajbakhsh et al., 2016; Donahue et al., 2014) has been routinely used to tackle the insufficient training data problem and improve model’s performance, where standard architectures like ResNet (He et al., 2016) are first pre-trained in the source domain with a large amount of natural images (e.g., ImageNet (Deng et al., 2009)) or medical images, and then the pre-trained models are transferred to the target domain and fine-tuned using much fewer training examples. Tajbakhsh et al. (2016) showed that pre-trained CNNs with adequate fine-tuning performed at least as well as CNNs trained from scratch. Indeed, transfer learning has become a cornerstone for image classification tasks (de Bruijne, 2016) across a variety of modalities, including CT (Shin et al., 2016), MRI (Yuan et al., 2019), mammography (Huynh et al., 2016), X-ray (Minaee et al., 2020), etc.

Within the paradigm of supervised classification, different types of attention modules have been used for performance boost and better model interpretability (Zhou et al., 2019b). Guan et al. (2018) introduced an attention-guided CNN, which is based on ResNet-50 (He et al., 2016). The attention heatmaps from the global X-ray image were used to suppress large irrelevant areas and highlight local regions that contain discriminative cues for the thorax disease. The proposed model effectively fused the global and local information and achieved a good classification performance. In another study, Schlemper et al. (2019) incorporated attention modules to a variant network of VGG (Baumgartner et al., 2017) and U-Net (Ronneberger et al., 2015) for 2D fetal ultrasound image plane classification and 3D CT pancreas segmentation, respectively. Each attention module was trained to focus on a subset of local structures in input images, and these local structures contain salient features useful to the target task.

3.1.2. Unsupervised methods

I. Unsupervised image synthesis

Classic data augmentation (e.g., rotation, scale, flip, translation, etc.) is simple but effective in creating more training instances to achieve better performance (Krizhevsky et al., 2012). However, it cannot bring much new information to the existing training examples. Given the advantage of learning hidden data distribution and generating realistic images, GANs have been used as a more complicated approach for data augmentation in the medical domain.

Frid-Adar et al. (2018b) exploited DCGAN for synthesizing high-quality examples to improve liver lesion classification on a limited dataset. The dataset only has 182 liver lesions including cysts, metastases, and hemangiomas. Since training GAN typically needs a large number of examples, the authors applied classic data augmentation (e.g., rotation, flip, translation, scale) to create nearly 90,000 examples. The GAN-based synthetic data augmentation significantly improved the classification performance, with the sensitivity and specificity increased from 78.6% and 88.4% to 85.7% and 92.4% respectively. In their later work (Frid-Adar et al., 2018a), the authors further extended lesion synthesis from the unconditional setting (DCGAN) to a conditional setting (ACGAN). The generator of ACGAN was conditioned on the side information (lesion classes), and the discriminator predicted lesion classes besides synthesizing new examples. However, it was found that ACGAN-based synthetic augmentation delivered a weaker classification performance than its unconditional counterpart.

To alleviate data scarcity and especially the lack of positive cancer cases, Wu et al. (2018a) adopted a conditional structure (cGAN) to generate realistic lesions for mammogram classification. Traditional data augmentation was also used to create enough examples for training GAN. The generator, conditioned with malignant/non-malignant labels, can control the process of generating a specific type of lesions. For each non-malignant patch image, a malignant lesion was synthesized onto it using a segmentation mask of another malignant lesion; for each malignant image, its lesion was removed, and a non-malignant patch was synthesized. Although the GAN-based augmentation achieved better classification performance than traditional data augmentation, the improvement was relatively small, less than 1%.

II. Self-supervised learning based classification

Recent self-supervised learning approaches have shown great potential in improving performance of medical tasks lacking sufficient annotations (Bai et al., 2019; Tao et al., 2020; Li et al., 2020a; Shorfuzzaman and Hossain, 2021; Zhang et al., 2020a). This method is suitable to the scenario where large amounts of medical images are available, but only a small percentage are labeled. Accordingly, the model optimization is divided into two steps, namely, self-supervised pre-training and supervised fine-tuning. The model is initially optimized using unlabeled images to effectively learn good features that are representative of the image semantics (Azizi et al., 2021). The pre-trained models from self-supervision are followed by supervised fine-tuning to achieve faster and better performance in subsequent classification tasks (Chen et al., 2020c). In practice, self-supervision can be created either through pretext tasks (Misra and Maaten, 2020) or contrastive learning (Jing and Tian, 2020) as follows.

Self-supervised pretext task based classification utilizes common pretext tasks such as rotation prediction (Tajbakhsh et al., 2019) and Rubik’s cube recovery (Zhuang et al., 2019; Zhu et al., 2020a). Chen et al. (2019b) argued that existing pretext tasks such as relative position prediction (Doersch et al., 2015) and local context prediction (Pathak et al., 2016) resulted in only marginal improvements on medical image datasets; the authors designed a new pretext task based on context restoration. This new pretext task has two steps: disordering patches in corrupted images and restoring the original images. The context restoration pre-training strategy improved the performance of medical image classification. Tajbakhsh et al. (2019) exploited three pretext tasks, namely, rotation (Gidaris et al., 2018), colorization (Larsson et al., 2017), and WGAN-based patch reconstruction, to pre-train models for classification tasks. After pre-training, models were trained using labeled examples. It was shown that pretext tasks based pre-training in the medical domain was more effective than random initializations and transfer learning (ImageNet pre-training) for diabetic retinopathy classification.

For self-supervised contrastive classification, Azizi et al. (2021) adopted the self-supervised learning framework SimCLR (Chen et al., 2020a) to train models (wider versions of ResNet-50 and ResNet-152) for dermatology condition classification and chest X-ray classification. They pre-trained the models by first using unlabeled natural images then with unlabeled dermatology images and chest X-rays. Feature representations were learned by maximizing agreement between positive image pairs that are either two augmented examples of the same image or multiple images from the same patient. The pre-trained models were fine-tuned using much fewer labeled dermatology images and chest X-rays. These models outperformed their counterparts pre-trained using ImageNet by 1.1% in mean AUC for chest X-ray classification and 6.7% in top-1 accuracy for dermatology condition classification. MoCo (He et al., 2020; Chen et al., 2020b) is another popular self-supervised learning framework to pre-train models for medical classification tasks, such as COVID-19 diagnosis from CT images (Chen et al., 2021a) and pleural effusion identification in chest X-rays (Sowrirajan et al., 2021). Furthermore, it has been shown that self-supervised contrastive pre-training can greatly benefit from the incorporation of domain knowledge. For example, Vu et al. (2021) harnessed patient metadata (patient number, image laterality, and study number) to construct and select positive pairs from multiple chest X-ray images for MoCo pre-training. With only 1% of the labeled data for pleural effusion classification, the proposed approach improved mean AUC by 3.4% and 14.4% compared to previous contrastive learning method (Sowrirajan et al., 2021) and ImageNet pre-training respectively.

3.1.3. Semi-supervised learning

Unlike self-supervised approaches that can learn useful feature representations just from unlabeled data, semi-supervised learning needs to integrate unlabeled data with labeled data through different ways to train models for a better performance. Madani et al. (2018a) employed GAN that was trained in a semi-supervised manner (Kingma et al., 2014) for cardiac disease classification in chest X-rays where labeled data was limited. Unlike the vanilla GAN (Goodfellow et al., 2014), this semi-supervised GAN was trained using both unlabeled and labeled data. Its discriminator was modified to predict not only the realness of input images but also image classes (normal/abnormal) for real data. When increasing the number of labeled examples, the semi-supervised GAN based classifier consistently performed better than supervised CNN. Semi-supervised GAN was also shown useful in other data-limited classification tasks, such as CT lung nodule classification (Xie et al., 2019a), and left ventricular hypertrophy classification from echocardiograms (Madani et al., 2018b). Besides the semi-supervised adversarial approach, consistency-based semi-supervised methods such as Π -Model (Laine and Aila, 2017) and Mean Teacher (Tarvainen and Valpola, 2017) have also been used to leverage unlabeled medical image data for better classification (Shang et al., 2019; Liu et al., 2020a).

3.2. Segmentation

Medical image segmentation, identifying the set of pixels or voxels of lesions, organs, and other substructures from background regions, is another challenging task in medical image analysis (Litjens et al., 2017). Among all common image analysis tasks such as classification and detection, segmentation needs the strongest supervision (large amounts of high-quality annotations) (Tajbakhsh et al., 2020). Since its introduction in 2015, U-Net (Ronneberger et al., 2015) has become probably the most well-known architecture for segmenting medical images; afterwards, different variants of U-Net have been proposed to further improve the segmentation performance. From the very recent literature, we observe that the combination of U-Net and Transformers from NLP (Chen et al., 2021b) has contributed to state-of-the-art performance. In addition, a number of semi-supervised and self-supervised learning based approaches have also been proposed to alleviate the need for large annotated datasets. Accordingly, in this section we will (1) review the original U-Net and its important variants, and summarize useful performance enhancing strategies; (2) introduce the combination of U-Net and Transformers, and Mask RCNN (He et al., 2017); 3) cover self-supervised and semi-supervised approaches for segmentation. Since recent studies focus on applying Transformers to segment medical images in a supervised manner, we purposely position the introduction of Transformers-based architectures in the supervised segmentation section. However, it should be noted that such categorization does not mean Transformers-based architectures cannot be used in semi-supervised or unsupervised settings.

3.2.1. Supervised learning based segmenting models

I. U-Net and its variants

In a convolutional network, the high-level coarse-grained features learned by higher layers capture semantics beneficial to the whole image classification; in contrast, the low-level fine-grained features learned by lower layers contain useful details for precise localizations (i.e., assigning a class label to each pixel) (Hariharan et al., 2015), which are important to image segmentation. U-Net is built on the fully convolutional network (Long et al., 2015), the key innovation of U-Net is the so-called skip connections between opposing convolutional layers and deconvolutional layers, which successfully concatenate features learned at different levels to improve the segmentation performance. Meanwhile, skip connections is also helpful in recovering the network’s output to be of the same spatial resolution as the input. U-Net takes 2D images as input, and it generates several segmentation maps, each of which corresponds to one respective pixel class.

Based on the basic architectures, Drozdzal et al. (2016) further studied the influence of long and short skip connections in biomedical image segmentation. They concluded that adding short skip connections is important to train very deep segmentation networks. In one study, Zhou et al. (2018) claimed that the plain skip connections between U-Net’s encoder and decoder subnetworks leads to fusion of semantically dissimilar feature maps; they proposed to reduce the semantic gap prior to fusing feature maps. In the proposed model UNet++, the plain skip connections were replaced by nested and dense skip connections. The suggested architecture outperformed U-Net and wide U-Net across four different medical image segmentation tasks.

Aside from redesigning the skip connections, Çiçek et al. (2016) replaced all 2D operations with their 3D counterparts to extend the 2D U-Net to 3D U-Net for volumetric segmentation with sparsely annotated images. Further, Milletari et al. (2016) proposed V-Net for 3D MRI prostate volumes segmentation. A major architecture difference between U-Net and V-Net lies in the change of the forward convolutional units (Figure 5(a)) to residual convolutional units (Figure 5(c)), so V-Net is also referred as residual U-Net. A new loss function based on Dice coefficient was proposed to deal with the imbalanced number of foreground and background voxels. To tackle the scarcity of annotated volumes, the authors augmented their training dataset with random non-linear transformations and histogram matching. Gibson et al. (2018a) proposed the Dense V-network that modified V-Net’s loss function of binary segmentation to support multiorgan segmentation of abdominal CT images. Although the authors followed the V-Net architecture, they replaced its relatively shallow down-sampling network with a sequence of three dense feature stacks. The combination of densely linked layers and the shallow V-Net architecture demonstrates its importance in improving segmentation accuracy, and the proposed model yielded significantly higher Dice scores for all organs compared to multi-atlas label fusion (MALF) methods.

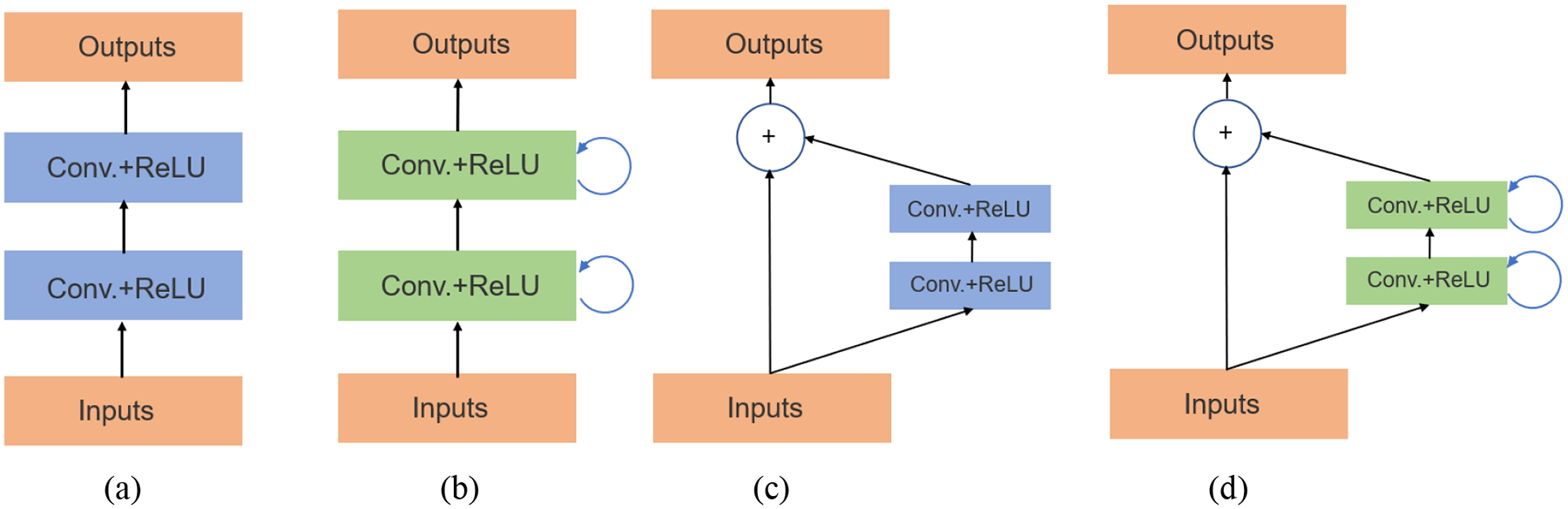

Figure 5.

Units of different segmentation networks (a) forward convolutional unit (U-Net), (b) recurrent convolutional block (RCNN), (c) residual convolutional unit (residual U-Net), and (d) recurrent residual convolutional unit (R2U-Net) (Alom et al., 2018).

Alom et al. (2018) proposed to integrate the architectural advantages of recurrent convolutional neural network (RCNN) (Ming and Xiaolin, 2015) and ResNet (He et al., 2016) when designing U-Net based segmentation networks. In their first network (RU-Net), the authors replaced U-Net’s forward convolutional units using RCNN’s recurrent convolutional layers (RCL) (Figure 5(b)), which can help accumulate useful features to improve segmentation results. In their second network (R2U-Net), the authors further modified RCL using ResNet’s residual units (Figure 5(d)), which learns a residual function by using identity mapping for shortcut connections, thus allowing for training very deep networks. Both models achieved better segmentation performance than U-Net and residual U-Net. Dense convolutional blocks (Huang et al., 2017) also demonstrated its superiority in enhancing segmentation performance on liver and tumor CT volumes (Li et al., 2018).

Besides the redesigned skip connections and modified architectures, U-Net based segmentation approaches also benefit from adversarial training (Xue et al., 2018; Zhang et al., 2020b), attention mechanisms (Jetley et al., 2018; Anderson et al., 2018; Oktay et al., 2018; Nie et al., 2018; Sinha and Dolz, 2021), and uncertainty estimation (Wang et al., 2019a; Yu et al., 2019; Baumgartner et al., 2019; Mehrtash et al., 2020). For example, Xue et al. (2018) developed an adversarial network for brain tumor segmentation, and the network has two parts: a segmentor and a critic. The segmentor is a U-Net-like network that generates segmentation maps given input images; the predicted maps and ground-truth segmentation maps are sent into the critic network. Alternatively training these two components eventually led to good segmentation results. Oktay et al. (2018) proposed incorporating attention gates (AGs) into the U-Net architecture to suppress irrelevant features from background regions and highlight important salient features that are propagated through the skip connections. Attention U-Net consistently delivered a better performance than U-Net in CT pancreas segmentation. Baumgartner et al. (2019) developed a hierarchical probabilistic model to estimate uncertainties in the segmentation of prostate MR and thoracic CT images. The authors employed variational autoencoders to infer the uncertainties or ambiguities in expert annotations, and separate latent variables were used to model segmentation variations at different resolutions.

II. Transformers for segmentation

Transformers are a group of encoder-decoder network architectures used for sequence-to-sequence processing in NLP (Chaudhari et al., 2021). One critical sub-module is known as multi-head self-attention (MSA), where multiple parallel self-attention layers are used to simultaneously generate multiple attention vectors for each input. Different from the convolution based U-Net and its variants, Transformers rely on the self-attention mechanisms, which possess the advantage of learning complex, long-range dependencies from input images. There exist two ways to adapt Transformers in the context of medical image segmentation: hybrid and Transformer-only. The hybrid approach combines CNNs and Transformers, while the latter approach does not involve any convolution operations.

Chen et al. (2021b) present TransUNet, the first Transformers-based framework for medical image segmentation. This architecture combines CNN and Transformer in a cascaded manner, where one’s advantages are used to compensate for the other’s limitations. As introduced previously, U-Net and its variants based on convolution operations have achieved satisfactory results. Because of skip connections, low-level/high-resolution CNN features from the encoder, which contain precise localization information, are utilized by the decoder to enable better performance. However, due to the intrinsic locality of convolutions, these models are generally weak at modeling long-range relations. On the other hand, although Transformers based on self-attention mechanisms can easily capture long-range dependencies, the authors found that using Transformer alone cannot provide satisfactory results. This is because it exclusively concentrates on learning global context but ignores learning low-level details containing important localization information. Therefore, the authors propose to combine low-level spatial information from CNN features with global context from the Transformer. As shown in Figure 6 (b), TransUNet has an encoder-decoder design with skip connections. The encoder is composed of a CNN and several Transformer layers. The input image needs to be first split into patches and tokenized. Then the CNN is used to generate feature maps for input patches. CNN features at different resolution levels are passed to the decoder though skip connections, so that spatial localization information can be retained. Next, patch embeddings and positional embeddings are applied to the sequence of feature maps. The embedded sequence is sent into a series of Transformer layers to learn global relations. Each Transformer layer consists of an MSA block (Vaswani et al., 2017; Dosovitskiy et al., 2020) and a multi-layer perceptron (MLP) block (Figure 6 (a)). The hidden feature representations produced by the last Transformer layer are reshaped and gradually upsampled by the decoder, which outputs a final segmentation mask. TransUNet demonstrates superior performance in the CT multi-organ segmentation task over other competing methods like attention U-Net.

Figure 6.

(a) Transformer layer; (b) the architecture of TransUNet (Chen et al., 2021b)

In another study, Zhang et al. (2021) adopt a different approach to combine CNN and Transformer. Instead of first using CNN to extract low-level features and then passing features through the Transformer layers, the proposed model TransFuse combines CNN and Transformer with two branches in a parallel manner. The Transformer branch consisting of several layers takes as input a sequence of embedded image patches to capture global context information. The output of the last layer is reshaped into 2D feature maps. To recover finer local details, these maps are upsampled to higher resolutions at three different scales. Correspondingly, the CNN branch uses three ResNet-based blocks to extract features from local to global at three different scales. Features with the same resolution scale from both branches are selectively fused using an independent module. The fused features can capture both the low-level spatial and high-level global context. In the end, the multi-level fused features are used to generate a final segmentation mask. TransFuse achieved good performance in prostate MRI segmentation.

In addition to 2D image segmentation, the hybrid approach is also useful to 3D scenarios. Hatamizadeh et al. (2022) propose a UNet-based architecture to perform volumetric segmentation of MRI brain tumor and CT spleen. Similar to 2D cases, 3D images are first split into volumes. Then linear embeddings and positional embeddings are applied to the sequence of input image volumes before fed to the encoder. The encoder, composed of multiple Transformer layers, extracts multi-scale global feature representations from the embedded sequence. The extracted features at different scales are all upsampled to higher resolutions and later merged with multi-scale features from the decoder via skip connections. In another study, Xie et al. (2021b) research on reducing Transformers’ computational and spatial complexities in the 3D multi-organ segmentation task. To achieve this goal, they replace the original MSA module in the vanilla Transformer with the deformable self-attention module (Zhu et al., 2021a). This attention module attends over a small set of key positions instead of treating all positions equally, thus resulting in much lower complexity. Besides, their proposed architecture CoTr, is in the same spirit of TransUNet – a CNN generates feature maps, used as the inputs of Transformers. The difference lies in that instead of extracting only single-scale features, the CNN in CoTr extracts feature maps at multiple scales.

For the Transformer-only paradigm, Cao et al. (2021) present Swin-Unet, the first UNet-like pure Transformer architecture for medical image segmentation. Swin-UNet has a symmetric encoder-decoder structure without using any convolutional operations. The major components of the encoder and decoder are (1) Swin Transformer blocks (Liu et al., 2021) and (2) patching merging or expanding layers. Enabled by a shifted windowing scheme, the Swin Transformer block exhibits better modeling power as well as lower complexity in computing self-attention. Therefore, the authors use it to extract feature representations for the input sequence of image patch embeddings. The subsequent patching layer down-samples the feature representations/ maps into lower resolutions. These down-sampled maps are further passed through several other Transformer blocks and patching merging layers. Likewise, the decoder also uses Transformer blocks for feature extraction, but its patching expanding layers upsample feature maps into higher resolutions. Similar to U-Net, the upsampled feature maps are fused with the down-sampled feature maps from the encoder via skip connections. Finally, the decoder outputs pixel-level segmentation predictions. The proposed framework achieved satisfactory results on multi-organ CT and cardiac MRI segmentation tasks.

Note that, to ensure good performance and reduce training time, most of the Transformers-based segmentation models introduced so far are pre-trained on a large external dataset (e.g., ImageNet). Interestingly, it has been shown that Transformers can also produce good results without pre-training by utilizing computationally efficient self-attention modules (Wang et al., 2020a) and new training strategies to integrate high-level information and finer details (Valanarasu et al., 2021). Also, when applying Transformers-based model for 3D medical image segmentation, Hatamizadeh et al. (2022) and Xie et al. (2021b) find pre-training did not show performance improvement.

III. Mask R-CNN for segmentation

Aside from the above UNet and Transformers-based approaches, another architecture Mask RCNN (He et al., 2017), which was originally developed for pixelwise instance segmentation, has achieved good results in medical tasks. Since it is closely related to Faster RCNN (Ren et al., 2015; Ren et al., 2017), which is a region-based CNN for object detection, details of Mask RCNN and its relations with the detection architectures will be elaborated later. To sum up in brief, Mask RCNN has (1) a region proposal network (RPN) as in Faster RCNN to produce high-quality region proposals (i.e., likely to contain objects), (2) the RoIAlign layer to preserve spatial correspondence between RoIs and their feature maps, and (3) a parallel branch for binary mask prediction in addition to bounding box prediction as in Faster RCNN. Notably, the Feature Pyramid Network (FPN) (Lin et al., 2017a) is used as the backbone of the Mask RCNN to extract multi-scale features. FPN has a bottom-up pathway and a top-down pathway to extract and merge features in a pyramidal hierarchy. The bottom-up pathway extracts feature maps from high resolution (semantically weak features) to low resolution (semantically strong), whereas the top-down pathway operates in the opposite. At each resolution, features generated by the top-down pathway are enhanced by features from the bottom-up pathway via skip connections. This design might make FPN seemingly resemble U-Net, but the major difference is that FPN makes predictions independently at all resolution scales instead of one.

Wang et al. (2019b) proposed a volumetric attention (VA) module within the Mask RCNN framework for 3D medical image segmentation. This attention module can utilize the contextual relations along the z direction of 3D CT volumes. More concretely, feature pyramids are extracted from not only the target image (3 adjacent slices with the target CT slice in the middle), but also a series of neighboring images (also 3 CT slices). Then the target and neighboring feature pyramids are concatenated at each level to form an intermediate pyramid, which carries long-range relations in the z axis. In the end, spatial attention and channel attention are applied on the intermediate and target pyramids to form the final feature pyramid for mask prediction. With this VA module incorporated, Mask RCNN could achieve lower false positives in segmentation. In another study, Zhou et al. (2019c) combined UNet++ and Mask RCNN, leading to Mask RCNN++. As mentioned earlier, UNet++ demonstrates better segmentation results using the redesigned nested and dense skip connections, so the authors use them to replace the plain skip connections of the FPN inside Mask RCNN. A large performance boost was observed using the proposed model.

3.2.2. Unsupervised learning based segmenting models

For medical image segmentation, to alleviate the need for a large amount of annotated training data, reserachers have adopted generative models for image synthesis to increase the number of training examples (Zhang et al., 2018b; Zhao et al., 2019a). Meanwhile, exploiting the power of unlabeled medical images seems like a much more popular choice. In contrast to the difficult and expensive high-quality annotated dataset, unlabeled medical images are often available, usually coming with a large number. Given a small medical image dataset with limited ground truth annotations and a related but unlabeled large dataset, reserachers have explored self-supervised and semi-supervised learning approches to learn useful and transferrable feature representations from the unlabled dataset, which will be discussed in this and the next section respectively.

Self-supervised pretext tasks:

Since self-supervision via pretext tasks and contrastive learning can learn rich semantic representations from unlabeled datasets, self-supervised learning is often used to pre-train the model and enable solving downstream tasks (e.g., medical image segmentation) more accurately and efficiently when limited annotated examples are available (Taleb et al., 2020). The pretext tasks could be either designed based on application scenarios or chosen from traditional ones used in the computer vision field. For the former type, Bai et al. (2019) designed a novel pretext task by predicting anatomical positions for cardiac MR image segmentation. The self-learnt features via the pretext task were transferred to tackle a more challenging task, accurate ventricles segmentation. The proposed method achieved much higher segmentation accuracy than the standard U-Net trained from scratch, especially when only limited annotations were available.

For the latter type, Taleb et al. (2020) extended performing pretext tasks from 2D to 3D scenarios, and they investigated the effectiveness of several pretext tasks (e.g., rotation prediction, jigsaw puzzles, relative patch location) in 3D medical image segmentation. For brain tumor segmentation, they adopted the U-Net architecture, and the pretext tasks were performed on a large unlabeled dataset (about 22,000 MRI scans) to pre-train the models; then the learned feature representations were fine-tuned on a much smaller labeled dataset (285 MRI scans). The 3D pretext tasks performed better than their 2D counterparts; more importantly, the proposed methods sometimes outperformed supervised pre-training, suggesting a good generalization ability of the self-learnt features.

The performance of self-supervised pre-training could also be improved by adding other types of information. Hu et al. (2020) implemented a context encoder (Pathak et al., 2016) performing semantic inpainting as the pretext task, and they incorporated DICOM metadata from ultrasound images as weak labels to boost the quality of pre-trained features toward facilitating two different segmentation tasks.

Self-supervised contrastive learning based approaches: For this method, early studies such as the work by Jamaludin et al. (2017) adopted the original contrastive loss (Chopra et al., 2005b) to learn useful feature representations. In recent three years, with a surge of interest in self-supervised contrastive learning, contrastive loss has evolved from the original version to more powerful ones (Oord et al., 2018) for learning expressive feature representations from unlabeled datasets. Chaitanya et al. (2020) claimed although the contrastive loss in Chen et al. (2020a) is suitable for learning image-level (global) feature representations, it does not guarantee learning distinctive local representations that are important for per-pixel segmentation. They proposed a local contrastive loss to capture local features that can provide complementary information to boost the segmentation performance. Meanwhile, to the best of our knowledge, when computing the global contrastive loss, these authors are the first to utilize the domain knowledge that there is structural similarity in volumetric medical images (e.g., CT and MRI). In MR image segmentation with low annotations, the proposed method substantially outperformed other semi-supervised and self-supervised methods. In addition, it was shown that the proposed method could further benefit from data augmentation techniques like Mixup (Zhang et al., 2018a).

3.2.3. Semi- supervised learning based segmenting models

Semi-supervised consistency regularization:

The mean teacher model is commonly used. Based on the mean teacher framework, Yu et al. (2019) introduced uncertainty estimation (Kendall and Gal, 2017) for better segmentation of 3D left atrium from MR images. They argued that on an unlabeled dataset, the output of the teacher model can be noisy and unreliable; therefore, besides generating target outputs, the teacher model was modified to estimate these outputs’ uncertainty. The uncertainty-aware teacher model can produce more reliable guidance for the student model, and the student model could in turn improve the teacher model. The mean teacher model can also be improved by the transformation-consistent strategy (Li et al., 2020b). In one study, Wang et al. (2020b) proposed a semi-supervised framework to segment COVID-19 pneumonia lesions from CT scans with noisy labels. Their framework is also based on the Mean Teacher model; instead of updating the teacher model with a predefined value, they adaptively updated the the teacher model using a dynamic threshold for the student model’s segmentation loss. Similarly, the student model was also adaptively updated by the teacher model. To simultaneously deal with noisy labels and the foreground-background imbalance, the authors developed a generalized version of the Dice loss. The authors designed the segmentation network in the same spirit of U-Net but made several changes in terms of new skip connections (Pang et al., 2019), multi-scale feature representation (Chen et al., 2018a), etc. In the end, the segmentation network with the Dice loss were combined with the mean teacher framework. The proposed method demonstrated high robustness to label noise and achieved better performance for pneumonia lesion segmentation than other state-of-the-art methods.

Semi-supervised pseudo labeling:

Fan et al. (2020) presented a semi-supervised framework (Semi-InfNet) to tackle the lack of high-quality labeled data in COVID-19 lung infection segmentation from CT images. To generate pseudo labels for the unlabeled images, they first used 50 labeled CT images to train their model, which produced pseudo labels for a small amount of unlabeled images. Then the newly pseudo-labeled examples were included in the original labeled training dataset to re-train the model to generate pseudo labels for another batch of unlabled images. This process was repeated until 1600 unlabeled CT images all got pseudo-labeled. Both the labeled and pseudo-labeled examples were used to train Semi-InfNet, and its performance surpassed other cutting-edge segmentation models such as UNet++ by a large margin. Aside from the semi-supervised learning strategy, there are three critical components in the model responsible for the good performance: parallel partial decoder (PPD) (Wu et al., 2019a), reverse attention (RA) (Chen et al., 2018b), and edge attention (Zhang et al., 2019). PPD can aggregate high-level features of the input image and generate a global map indicating the rough location of lung infection regions; EA module uses low-level features to model boundary details, and RA module further refines the rough estimation into an accurate segmentation map.

Semi-supervised generative models:

As one of the earliest works that extended generative models to semi-supervised segmentation task, Sedai et al. (2017) utilized two VAEs to segment optic cup from retinal fundus images. The first VAE was employed to learn feature embeddings from a large number of unlabeled images by performing image reconstruction; the second VAE, trained on a smaller number of labeled images, mapped input images to segmentation masks. In other words, the authors used the first VAE to perform an auxiliary task (image reconstruction) on unlabeled data, which can help the second VAE to better achieve the target objective (image segmentation) using labled data. To leverage the feature embeddings learned by the first VAE, the second VAE simultaneously reconstructed segmentation masks and latent representations of the first VAE. The utilization of additional information from unlabled images improved segmentation accuracy. In another study, Chen et al. (2019c) also adopted a similar idea of introducing an auxiliary task on unlabeled data to facilitate performing image segmentation with limited labeled data. Specifically, the authors proposed a semi-supervised segmentation framework consisting of a UNet-like network for segmentation (target objective) and an autoencoder for reconstruction (auxiliary task). Unlike the previous study that trained two VAEs separately, the segmentation network and reconstruction network in this framework share the same encoder. Another difference lies in that the foreground and background parts of the input image were reconstructed/generated separately, and the respective segmentation labels were obtained via an attention mechanism. This semi-supervised segmentation framework outperformed its counterparts (e.g., fully supervised CNNs) in different labeled/unlabeled data splits.

In addition to the aforementioned approaches, researchers have also explored incorporating domain-specific prior knowledge to tailor the semi-supervised frameworks for a better segmentation performance. The prior knowledge varies a lot, such as the anatomical prior (He et al., 2019), atlas prior (Zheng et al., 2019), topological prior (Clough et al., 2020), semantic constraint (Ganaye et al., 2018), and shape constraint (Li et al., 2020c) to name a few.

3.3. Detection

A natural image may contain objects belonging to different categories, and each object category may contain several instances. In the computer vision field, object detection algorithms are applied to detect and identify if any instance(s) from certain object categories are present in the image (Sermanet et al., 2014; Girshick et al., 2014; Russakovsky et al., 2015). Previous works (Shen et al., 2017; Litjens et al., 2017) have reviewed the successful applications of the frameworks before 2015, such as OverFeat (Sermanet et al., 2014; Ciompi et al., 2015), RCNN (Girshick et al., 2014), and fully convolutional networks (FCN) based models (Long et al., 2015; Dou et al., 2016; Wolterink et al., 2016). As a comparison, we aim at summarizing applications of more recent object detection frameworks (since 2015), such as Faster RCNN (Ren et al., 2015), YOLO (Redmon et al., 2016), and RetinaNet (Lin et al., 2017b). In this section, we will first briefly review several recent milestone detection frameworks, including one-stage and two-stage detectors. It should be noted that, since these detection frameworks are often used in supervised and semi-supervised settings, we introduce them under these learning paradigms. Then we will cover these frameworks’ applications in specific type of lesion detection and universal lesion detection. In the end, we will introduce unsupervised lesion detection based on GANs and VAEs.

3.3.1. Supervised and semi-supervised lesion detection

I. Overview of the detection frameworks