Abstract

How neuronal variability affects sensory coding is a central question in systems neuroscience, often with complex and model-dependent answers. Many studies explore population models with a parametric structure for response tuning and variability, preventing an analysis of how synaptic circuitry establishes neural codes. We study stimulus coding in networks of spiking neuron models with spatially ordered excitatory and inhibitory connectivity. The wiring structure is capable of producing rich population-wide shared neuronal variability that agrees with many features of recorded cortical activity. While both the spatial scales of feedforward and recurrent projections strongly affect noise correlations, only recurrent projections, and in particular inhibitory projections, can introduce correlations that limit the stimulus information available to a decoder. Using a spatial neural field model, we relate the recurrent circuit conditions for information limiting noise correlations to how recurrent excitation and inhibition can form spatiotemporal patterns of population-wide activity.

Spatiotemporal dynamics in spiking neuron networks limit information transmission.

INTRODUCTION

A prominent feature of cortical response to sensory stimuli is that neuronal activity varies substantially across presentation trials (1), even when efforts are taken to control or account for variable animal behavior (2–4). A component of this variability is coordinated among neurons in a brain area, often leading to shared fluctuations in spiking activity (5–7). How stimulus processing is affected by this large, population-wide neuronal variability is a longstanding question in both experimental and theoretical neuroscience communities.

Recording from neuronal populations while simultaneously monitoring an animal’s behavior during a structured task offers a glimpse into how neuronal activity supports computation. Correlations between spike counts from pairs of neurons in response to repeated stimulus, often referred to as noise correlations, are modulated by a variety of cognitive factors that are known to affect task performance (2). For example, noise correlations decrease with animal arousal (8) or task engagement (9). In the visual pathway, noise correlations between neurons in the superficial layer are decreased when spatial attention is directed into the receptive field of a recorded population (10), although the attention-mediated changes in noise correlation depend on cortical layer (11) and task conditions (12). In addition, perceptual learning (13, 14) and visual experience (15) can also result in an attenuation of noise correlations. The common theme in all of these studies is that a reduction in noise correlations co-occurs with cognitive shifts that improve task performance. This supports the often cited idea that shared variability is deleterious to neuronal coding because it cannot be reduced by ensemble averaging (16, 17), and thus, it is expected that its reduction will enhance neural coding.

While the above narrative is appealing, it oversimplifies a long debate in the computational neuroscience community (16, 18–20). It is popular (and pragmatic) to restrict analysis to a linear decoding of neuronal response, where performance is often measured using the linear Fisher information between the estimated and actual stimulus (19, 21–23). Linear Fisher information depends on two components: the set of neuronal tuning curves and the population covariance matrix (19, 21). Whether noise correlations degrade or improve stimulus coding depends on the relationship between these components. For example, while it is true that correlations between similarly tuned neurons limit Fisher information (17), correlations between dissimilarly tuned neurons can actually increase information compared to an asynchronous population (18, 19). Several more recent studies have built upon this idea and shown how stimulus-dependent correlations can also improve linear information transmission (24–26). Last, Moreno-Bote et al. (27) showed that information is only limited by one specific type of correlations, termed differential correlations, which align with population activity in the direction defined by the derivatives of the tuning curves. In all of these varied modeling studies, both tuning curves and covariance structure were assumed and thus had a prescribed relation to one another. In this study, we adopt an alternative modeling approach and consider biophysically grounded population models, where cellular spiking dynamics and synaptic wiring are assumed, and population response and its variability are emergent properties of the circuit.

A serious obstacle in using circuit models to study neuronal coding is the lack of a complete mechanistic theory underlying population-wide variability (28). An often used framework assumes that neuronal fluctuations are inherited from external sources and that any circuit wiring filters and propagates this variability throughout the population (29, 30). Along these lines, recent modeling work in a feedforward circuit model of primary visual cortex shows that when such inherited variability originates from a noisy stimulus, then population-wide correlations, which limit information transfer, naturally develop throughout the circuit (31). Neuronal variability can also emerge through recurrent interactions within the network, when synaptic weights are large, and excitation is dynamically balanced by recurrent inhibition (1, 32). However, these networks famously support an asynchronous state (33), making them on the surface ill-suited to probe how internally generated population-wide variability affects neuronal processing. Recent extensions of balanced networks, which include structured wiring, have provided an understanding of how shared variability can emerge through internal mechanics (34–39). These networks are a useful modeling framework to probe how shared variability generated through circuit dynamics affects population coding.

In this work, we study information transmission in spatially ordered networks of spiking neuron models, where a balance between excitation and inhibition produces population-wide variability. We embed a columnar organization of stimulus orientation preference in our model and measure the performance of an optimal estimation of a stimulus from a linear decoding of population activity. In particular, we explore how the spatial scales of feedforward and recurrent wiring influence stimulus estimation. First, we show that both feedforward and recurrent wiring shape population-wide noise correlations within the population, indicating that both aspects of circuitry may affect population coding. However, only the structure of the recurrent network determines whether the network generates information limiting noise correlations, which degrade stimulus estimation. These correlations emerge from pattern-forming dynamics in the recurrent circuit, which prevent a linear model from capturing population-wide variability, and thereby degrade any linear estimation of a stimulus. As we show, inhibition plays a critical, yet complex, role in this pattern-forming dynamics and, hence, in information transmission. Broader lateral inhibition creates patterned network activity, which decreases information, while for spatially narrow inhibition, increasing the mean inhibitory drive stabilizes activity, resulting in increased information transfer. In summary, our work begins to connect the emerging theory of how neuronal circuits produce trial-variable activity with ongoing theories of how population-wide variability affects stimulus representation.

RESULTS

Our study measures the propagation of stimulus information in a two-layer network of spatially ordered neurons. As motivation, our model aims to capture layer (L)4 and L2/3 neurons in the primary visual cortex (V1) (Fig. 1A, see Materials and Methods); however, our model is sufficiently general to capture an arbitrary layered network. We model L4 neuronal spiking activity as a Poisson process with Gabor receptive fields defining the stimulus to firing rate transfer. The orientation preference of L4 neurons is determined from a superimposed pinwheel orientation map [Fig. 1A, bottom right; see (40)]. The Gabor visual images are corrupted by spatially independent noise obeying a temporal Ornstein-Uhlenbeck process; this provides a bound on the stimulus information entering our network. L2/3 is modeled as a recurrently connected network of both excitatory and inhibitory spiking neuron models. The connection probability of all recurrent projections within L2/3 and the feedforward projections from L4 to L2/3 decays with distance with spatial widths αrec and αffwd, respectively [see Materials and Methods and (36) for details]. The spatial size of the network is normalized to be 1 in both x and y directions. The columnar radius is set to be 0.1, and the feedforward and recurrent connection widths vary between 0.05 and 0.2. For comparison, in cat primary visual cortex, the radius of recurrent connection was found to be around 250 μm, and the column radius was approximately 500 μm (41, 42). We assume that the image stimulus is in the receptive field of all neurons, meaning that the image activates the whole network.

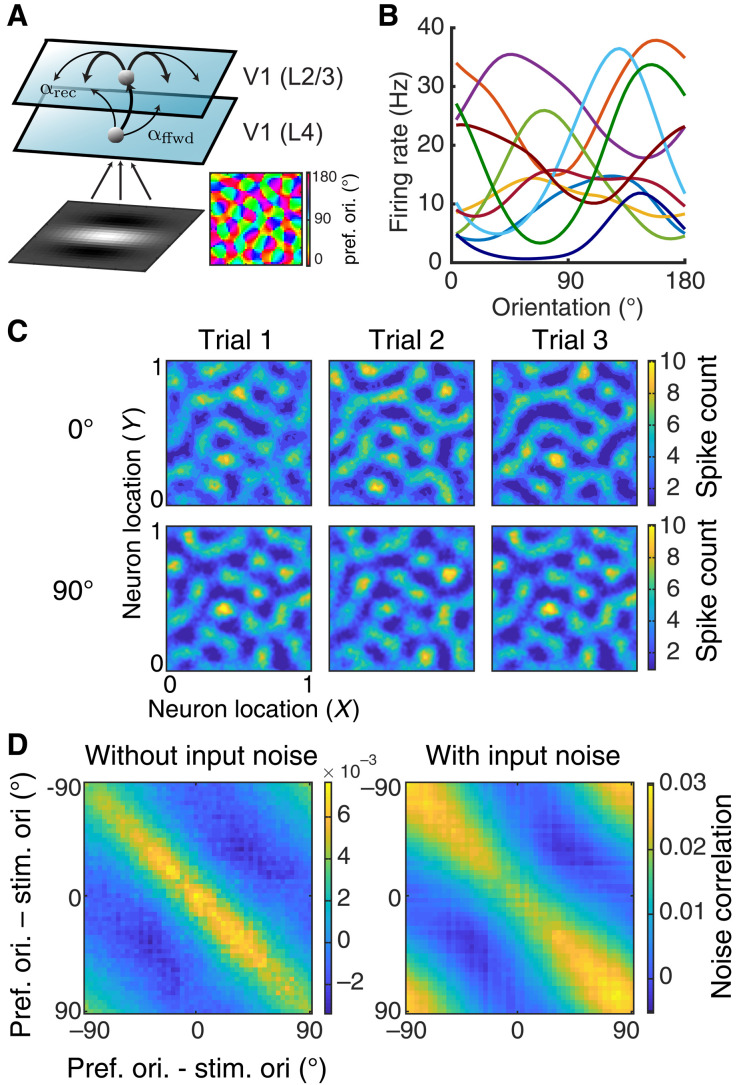

Fig. 1. Spatially ordered balanced networks generate heterogeneous tuning curves and structured trial-to-trial variability.

(A) Model schematic of a two-layer network of spatially ordered spiking neurons modeling L4 neurons and L2/3 neurons, respectively, from visual area V1. The visual input to the model is a Gabor image with orientation θ. The L4 network consists of Poisson units with Gabor receptive fields. The orientation preferences of L4 neurons are assigned according to a pinwheel orientation map (bottom right). The L2/3 network consists of both excitatory and inhibitory neurons modeled with integrate-and-fire dynamics, all arranged on a unit square. The spatial widths of the feedforward projections from L4 to L2/3 and the recurrent projections within L2/3 are denoted as αffwd and αrec, respectively. (B) The L2/3 neurons have heterogeneous orientation tuning curves. Ten examples of tuning curves are shown with different colors representing different neurons (smoothed with a Gaussian kernel of width 9°). (C) The model internally generates trial-to-trial variability. Three trials of network spike counts (200 ms time window) are shown from a network with αffwd = 0.05 and αrec = 0.1 in response to a Gabor image of 0° (top) or 90° (bottom). Images are smoothed with a Gaussian kernel of width 0.01. (D) Noise correlation matrix with neurons ordered by their preferred stimulus orientation. Responses were simulated for a Gabor input with orientation at θ = 0° without (left) and with stimulus noise (right).

Networks with large, balanced excitatory and inhibitory connections are an attractive model framework for cortical activity because they capture several aspects of reported in vivo population response. When large synaptic connections are paired with random network wiring, they naturally produce significant heterogeneity in spiking activities across the network (32, 43). In our spatially ordered network, the L2/3 neurons have very heterogeneous tuning curves with various widths and magnitudes (Fig. 1B). This produces a large spread of orientation selectivity across the population (Figs. 1B and 2B), capturing the broad heterogeneity of orientation tuning reported in primary visual cortex (44).

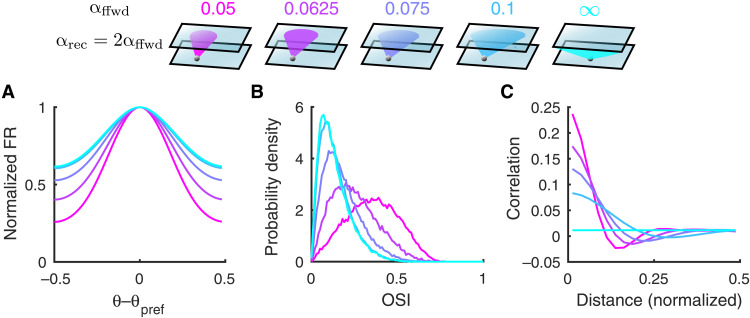

Fig. 2. Both tuning curves and noise correlations change with the feedforward projection width.

(A) The average tuning curve, normalized and centered at the preferred orientation, broadens as the feedforward projection width, αffwd, increases (color scheme shown on top). The recurrent width, αrec, is fixed to be 2αffwd. The columnar radius, αcol, is 0.1. The case of αffwd = ∞ means the connection probability is independent of distance. (B) The probability density distributions of orientation selectivity indexes (OSI, Eq. 7) of the L2/3 excitatory neurons. (C) Pairwise noise correlations as a function of distance between neuron pairs from the L2/3 excitatory population. The magnitude of pairwise correlations decreases as αffwd increases.

Balanced networks produce significant dynamic and trial-to-trial spiking variability through internal mechanisms (32). While balanced networks with disordered connectivity produce asynchronous activity (33), networks with structured wiring can produce correlated variability (34, 35, 37), which, under certain conditions, can be population wide (36). These past models were concerned with the mechanics of neuronal variability and did not model a spatially distributed stimulus to drive network response. In our model, when L4 columnar stimulus structure is enforced, a broad L2/3 columnar activation is recruited, accompanied by significant population-wide trial-to-trial variability (Fig. 1C). Despite the lack of feature-based coupling (i.e., connectivity was determined only by the spatial distance between neurons), there is a clear positive relation between pairwise signal and noise correlations (Fig. 1D), matching diverse in vivo datasets (10, 13, 30, 45). This occurs for two reasons. First, the stimulus has a spatial columnar profile, so any spatial wiring partially overlaps with the signal profile. Second, it is well known that spike count correlations increase with the firing rates of the neuron pair (46), so that when neuron pairs with similar stimulus preference are coactivated, any significant trial-to-trial covariability in their synaptic inputs is better expressed in their output spiking. In our model, the relation between signal and noise correlations occurs in response to Gabor images, which are noiseless (Fig. 1D, left), or those contaminated by sensory noise (Fig. 1D, right). The latter case naturally provides feedforward noise correlations (31) with a columnar spatial scale. These externally imposed correlations are reduced for neuron pairs with a stimulus preference that matches the driving stimulus, in agreement with past models where correlations are inherited from outside the circuit (30). In total, our spiking network captures many of the trial-averaged and trial-variable aspects of real cortical population response.

We focus on the network’s ability to discriminate two Gabor images with similar orientations, a paradigm commonly used in experiments (47). Gabor images with orientation θ are presented to the L4 neurons repetitively with an ON interval of 200 ms and an OFF interval of 300 ms (see Materials and Methods). In what follows, we consider a decoder that has access to N model L2/3 pyramidal neurons (randomly chosen from the 4 × 10⁴ L2/3 neurons). We simulate the spiking activities of L2/3 neurons and collect spike counts from the observed population, n = [n1, …, nN], during each stimulus presentation (ON interval). The linear Fisher information about θ available from n is defined as

$$I_F = \mathbf{f}'^{\,T}\,\Sigma^{-1}\,\mathbf{f}' \qquad (1)$$

where f is the expectation of n conditioned on θ (population tuning curve), ′ denotes differentiation with respect to θ, and Σ is the covariance matrix of n (noise covariance matrix). Fisher information is a useful metric when considering the estimation of orientation θ from n. Let θ̂ be the optimal linear estimator of θ for a given Gabor image; then we have that Var(θ̂) = 1/IF (48). Put more simply, IF measures the accuracy of the optimal linear estimator of orientation. In practice, we measure the linear Fisher information from the spike counts of the L2/3 excitatory neurons using a bias-corrected estimation [Materials and Methods and Eq. 8; (49)].
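As an illustration of how such an estimate can be computed from simulated spike counts, the following is a minimal sketch and not the code used in this study. It evaluates the quadratic form f′ᵀΣ⁻¹f′ directly and then applies a bias correction of the kind commonly used for finite trial numbers. The function names, the two-stimulus finite-difference design, and the exact form of the correction are assumptions and should be checked against Eq. 8 in Materials and Methods.

```python
import numpy as np


def linear_fisher(fprime, cov):
    """Linear Fisher information f'^T Sigma^{-1} f' for a given gain and covariance."""
    fprime = np.asarray(fprime, dtype=float)
    # Solve Sigma x = f' instead of forming the explicit inverse.
    return float(fprime @ np.linalg.solve(cov, fprime))


def fisher_bias_corrected(counts_a, counts_b, dtheta):
    """Bias-corrected estimate from spike counts (trials x neurons) recorded at
    two orientations separated by dtheta.

    ASSUMPTION: the correction below is a commonly used finite-sample form;
    it may differ in detail from Eq. 8 of the paper.
    """
    counts_a = np.asarray(counts_a, dtype=float)
    counts_b = np.asarray(counts_b, dtype=float)
    n_trials, n_neurons = counts_a.shape
    # Finite-difference estimate of the tuning-curve derivative.
    fprime = (counts_b.mean(axis=0) - counts_a.mean(axis=0)) / dtheta
    # Noise covariance averaged over the two stimuli.
    cov = 0.5 * (np.cov(counts_a, rowvar=False) + np.cov(counts_b, rowvar=False))
    naive = linear_fisher(fprime, cov)
    scale = (2 * n_trials - n_neurons - 3) / (2 * n_trials - 2)
    offset = 2 * n_neurons / (n_trials * dtheta**2)
    return naive * scale - offset
```

The correction matters most when the number of decoded neurons approaches the number of trials, where the uncorrected estimate is biased upward.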

The spatial scales of connectivity in our network are key determinants of overall population spiking activity in unstimulated conditions (34–36). In the next sections, we explore how the spatial scales of the feedforward L4 to L2/3 connectivity (αffwd; Fig. 1A) and those of the L2/3 to L2/3 recurrent projections (αrec; Fig. 1A) influence information transfer about stimulus θ within the network.

Narrow feedforward projections increase information saturation rate

Linear Fisher information (Eq. 1) depends on two components: the trial-averaged response gain to stimulus orientation (f′) and the trial-to-trial covariance matrix Σ. As we will show, αffwd influences the gain f′ and the covariance Σ in ways that lead to competing predictions for how αffwd should ultimately affect IF.

On the one hand, the population-averaged tuning curve broadens as αffwd increases, resulting in reduced orientation gain f′ (Fig. 2A). In agreement, the orientation selectivity index (OSI, Eq. 7) of individual neurons decreases with αffwd (Fig. 2B). This reduction in orientation tuning is due to broader spatial filtering of tuned inputs from L4, where the tuning preferences of neurons are spatially clustered in a columnar organization. In particular, when the connection probabilities of all projections are uniform in space (αffwd → ∞), the columnar structure is not preserved in L2/3, and neurons are least tuned. Hence, considering these changes in tuning selectivity alone predicts that the IF about θ decoded from L2/3 neurons would decrease with αffwd.
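For readers who want to reproduce a selectivity measure of this kind, the sketch below computes one widely used orientation selectivity index, the normalized length of the response vector in doubled-angle space. This convention is an assumption made for illustration; the definition in Eq. 7 may differ, and the function name is hypothetical.

```python
import numpy as np


def osi(rates, thetas_deg):
    """Orientation selectivity index as the normalized vector sum of responses.

    ASSUMPTION: this is a common convention (0 = untuned, 1 = responds to a
    single orientation); it is not necessarily the paper's Eq. 7.
    """
    rates = np.asarray(rates, dtype=float)
    angles = np.deg2rad(np.asarray(thetas_deg, dtype=float))
    total = rates.sum()
    if total == 0:
        return 0.0
    # Orientation is periodic over 180 degrees, hence the factor of 2.
    return float(np.abs(np.sum(rates * np.exp(2j * angles))) / total)
```

With this measure, a tuning curve that broadens (as in Fig. 2A) yields a smaller index.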

On the other hand, the noise correlations between nearby neurons decrease with increasing αffwd (Fig. 2C). Rosenbaum et al. (35) showed that for spatially distributed balanced networks, where αffwd < αrec, an asynchronous solution does not exist. Rather, network activity is organized so that nearby cell pairs have positive noise correlations, while more distant cell pairs have negative correlations (Fig. 2C, pink curves). We remark that while very nearby neurons can have large noise correlations (∼0.25), most pairs are distant, so that mean noise correlation across all pairs remains low (0.01). Previous work on population coding has suggested that positive correlation between similarly tuned neurons can limit information, while between oppositely tuned neurons, it can benefit coding (16–18). Since the tuning preferences of neurons are spatially clustered with a pinwheel orientation map, nearby neurons are likely to share similar tuning. Therefore, in contrast to the effect of the tuning selectivity, which would suggest that IF could decrease with αffwd, the overall reduction in the spatial structure of pairwise noise correlations implies that IF could increase with αffwd.
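The distance dependence of noise correlations described here can be measured along the following lines. This is a minimal sketch under stated assumptions: spike counts come from repeated presentations of a single stimulus, neuron positions lie on the unit square, and distances are computed with periodic boundaries (an assumption about the model geometry). The function and argument names are hypothetical.

```python
import numpy as np


def noise_corr_by_distance(counts, positions, bin_edges):
    """Mean pairwise spike-count correlation in each distance bin.

    counts:    trials x neurons, responses to ONE repeated stimulus
    positions: neurons x 2, coordinates in [0, 1) x [0, 1)
    bin_edges: increasing distance bin edges
    """
    counts = np.asarray(counts, dtype=float)
    positions = np.asarray(positions, dtype=float)
    corr = np.corrcoef(counts, rowvar=False)
    # Distance on the torus (ASSUMPTION: periodic boundary conditions).
    delta = np.abs(positions[:, None, :] - positions[None, :, :])
    delta = np.minimum(delta, 1.0 - delta)
    dist = np.sqrt((delta**2).sum(axis=-1))
    # Use each unordered pair once.
    iu = np.triu_indices(counts.shape[1], k=1)
    pair_corr = corr[iu]
    which = np.digitize(dist[iu], bin_edges) - 1
    means = np.full(len(bin_edges) - 1, np.nan)
    for b in range(len(bin_edges) - 1):
        sel = which == b
        if sel.any():
            means[b] = pair_corr[sel].mean()
    return means
```

Because most pairs in a two-dimensional sheet are far apart, the mean over all pairs can stay small even when the nearest bins show large correlations.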

We divide our analysis of how IF depends on αffwd into two cases: first, when the decoder is restricted to a small (N ∼ 10²) population of neurons and, second, for decoders that have access to large (N ∼ 10⁴) populations. For decoders restricted to small populations, the combined effects of reduced tuning selectivity and correlations with larger αffwd result in the linear Fisher information being largely reduced as αffwd increases (Fig. 3A). Consistently, the neural thresholds of single neurons, measured as the ratio of the standard deviation of the spike counts to the derivative of the tuning curve function (σ/|f′|), also increase with αffwd (Fig. 3B). In total, the population code is less informative with larger αffwd, in line with the single neuron reduction in orientation selectivity (Fig. 2B), despite the associated decrease in pairwise noise correlations (Fig. 2C).

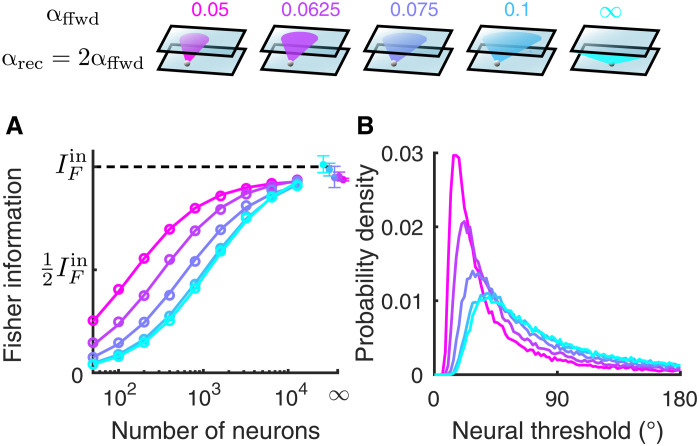

Fig. 3. The linear Fisher information saturates faster with smaller feedforward projection width.

(A) The linear Fisher information (IF) increases with the number of neurons sampled from the excitatory population in L2/3 (color scheme on top). With a large number of neurons, the linear Fisher information saturates close to the input information bound from the L4 network (black dashed line). The asymptotic values of IF at large N limit (dots at N = ∞) are estimated by fitting Eq. 10 (see Materials and Methods). Solid curves are the fits of IF (the linear fits shown in fig. S1A). Open circles are the numerical estimation of the linear Fisher information (Eq. 8). Error bars are the 95% confidence intervals. The input noise on the Gabor images is chosen such that the input information bound corresponds to a discrimination threshold of about 1.8°, which is consistent with psychophysical experiments (79) (see Materials and Methods). (B) The probability density distributions of neural thresholds of the L2/3 excitatory neurons.

The noise in the Gabor image creates shared fluctuations in the L2/3 neurons that cannot be distinguished from signal, limiting the information about θ that can be decoded (the input information bound; Fig. 3A, black dashed line; see Materials and Methods). For decoders that have access to large populations, the linear Fisher information of the L2/3 neurons saturates to a level close to this input bound, regardless of the feedforward projection width (Fig. 3A). This suggests that the network is very efficient at transmitting information. In particular, even networks with spatially disordered connections (αffwd → ∞), where neurons are very weakly tuned, still contain most of the information from the input layer.

Moreno-Bote et al. (27) explicitly make the observation that while networks may show significant noise correlations, it is the shared fluctuations that align with the direction of response gain that limit information. In particular, they consider the decomposition of the covariance Σ = Σ0 + ϵf′f′ᵀ, with ϵ measuring the shared variance along the coding direction (f′). They show that for decoders that have access to a large number of neurons, IF ∼ 1/ϵ. By assuming that the information of the nonlimiting covariance component (Σ0) scales linearly with N, we estimated the asymptotic value of IF at the N → ∞ limit [Fig. 3A, dots, and fig. S1A; (50); see Materials and Methods]. For our network, it is not (to our knowledge) possible to formally decompose Σ into Σ0 + ϵf′f′ᵀ, meaning we do not have a way of estimating how network interactions contribute to ϵ. However, the fact that the information eventually saturates close to the input information bound for all values of αffwd indicates that αffwd does not affect the value of ϵ. This is consistent with our network being in a regime where the origin of information limiting correlations stems from strictly external fluctuations, as has been previously studied (31). Recent experiments have found strong evidence supporting the existence of information-limiting correlations in mouse visual cortex in response to grating images (50–52). Information with respect to the orientation of gratings increased sublinearly with the number of decoded neurons and was estimated to approach a plateau with tens of thousands of neurons (50, 51). Consistently, the information in our model typically approaches the asymptotic value with around 10,000 neurons.
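The saturation argument can be made concrete. Applying the Sherman-Morrison identity to Σ = Σ0 + ϵf′f′ᵀ gives IF = I0/(1 + ϵI0), where I0 = f′ᵀΣ0⁻¹f′ is the information of the nonlimiting component. If I0 grows as cN, then 1/IF = ϵ + 1/(cN), which is linear in 1/N with intercept ϵ. The sketch below checks the identity numerically and recovers the asymptote from a straight-line fit. It assumes this linear form for the extrapolation; the exact expression used in the study is Eq. 10 in Materials and Methods, and the function names are hypothetical.

```python
import numpy as np


def fisher_with_differential_corr(fprime, cov0, eps):
    """Linear Fisher information for Sigma = Sigma0 + eps * f' f'^T."""
    fprime = np.asarray(fprime, dtype=float)
    cov = np.asarray(cov0, dtype=float) + eps * np.outer(fprime, fprime)
    return float(fprime @ np.linalg.solve(cov, fprime))


def asymptotic_information(n_values, info_values):
    """Fit 1/I_F = eps + 1/(c N) by least squares.

    ASSUMPTION: information of the nonlimiting component scales as c * N.
    Returns (I_F at N -> infinity, c).
    """
    x = 1.0 / np.asarray(n_values, dtype=float)
    y = 1.0 / np.asarray(info_values, dtype=float)
    slope, intercept = np.polyfit(x, y, 1)
    return 1.0 / intercept, 1.0 / slope
```

A usage example: given information estimates at several population sizes, `asymptotic_information(n_values, info_values)` returns the extrapolated plateau 1/ϵ together with the per-neuron information rate c of the nonlimiting component.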

Recurrent connections with spatially balanced excitation and inhibition have small effects on information transfer

Next, we study how the spatial scales of recurrent connections (αrec) affect information transmission. We first consider networks with the same spatial scale of recurrent excitatory and inhibitory projections. A previous study has shown that the relative spatial scale between recurrent and feedforward projections has a large impact on the spatial structure of pairwise noise correlations (35). For fixed αffwd and increasing αrec, pairwise noise correlations become spatially structured with large magnitude (Fig. 4D). In addition, the individual neuron orientation selectivity is higher when αrec is larger than αffwd since the recurrent synaptic current is effectively inhibitory and thus sharpens the tuning curves (Fig. 4C) (34).

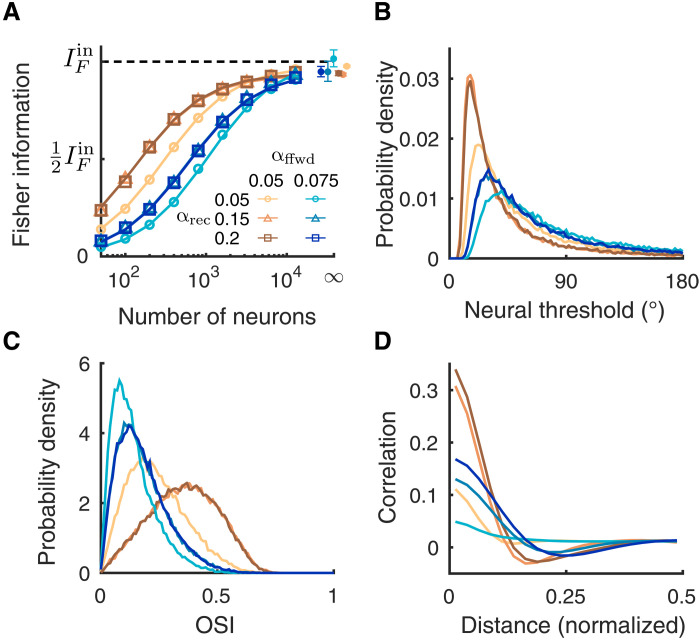

Fig. 4. The recurrent projection width has small effects on the linear Fisher information.

(A) The linear Fisher information is slightly lower when the recurrent projection width (αrec) is narrower than the feedforward width (αffwd) (light shade). When αrec is broader than αffwd, the saturation curves of the Fisher information overlap (darker shades). Examples of two αffwd values are shown (brown, αffwd = 0.05; blue, αffwd = 0.075). The asymptotic values of IF at N = ∞ (dots) are estimated by fitting Eq. 10 (see Materials and Methods). Solid curves are the fits of IF (the linear fits shown in fig. S1B). Open circles are the numerical estimation of the linear Fisher information (Eq. 8). Error bars are the 95% confidence intervals. (B) The probability density distributions of neural thresholds of the L2/3 excitatory neurons. (C) The probability density distributions of OSI of the L2/3 excitatory neurons. (D) Pairwise noise correlation as a function of the distance between a neuron pair. The excitatory and inhibitory projection widths are set to be equal (σe = σi = αrec).

For decoders that consider only small populations (N ∼ 10² to 10³ neurons), we see a clear difference between how αrec and αffwd affect IF. Specifically, IF is relatively insensitive to αrec (the brown curves scanning over αrec for αffwd = 0.05 are clustered in Fig. 4A; similar to the blue curves with αffwd = 0.075). Both the distributions of neural thresholds and OSI show similar ordering as the Fisher information (Fig. 4, B and C). By contrast, the spatial structures of pairwise correlations vary in both magnitude and width and do not show a clear relationship with the ordering of the Fisher information curves for finite N (Fig. 4D). In addition, the fact that all IF saturate at the same value for N → ∞ suggests that the changes in the population correlations do not affect the information-limiting component. Overall, for spatially balanced recurrent excitatory and inhibitory projections, the specific scale of αrec has a much smaller effect than that of the feedforward projections when considering information transfer.

Broad inhibitory projections reduce information

Our cortical network can exist in one of two regimes. The preceding sections consider a regime where circuit parameters are such that the firing rate dynamics across the network are stable despite pairwise correlations being structured. The second regime is where the firing rates are no longer dynamically stable, and large rate fluctuations are produced through internal interactions (as we will formalize below). In past work (36), we showed how model networks in this second regime produced the low-dimensional, population-wide shared variability characteristic of a variety of cortical areas (6, 7, 53, 54). In this section, we consider how networks in this second regime transfer information across layers.

Dynamical networks with spatially ordered coupling can produce rich spatiotemporal patterns of activity (55). To explore how the network circuitry in our cortical model determines its pattern-forming dynamics, we first implement two simplifications. First, we restrict our analysis to an associated firing rate model (56, 57), sharing the same spatial connectivity as our network of model spiking neurons (see Materials and Methods). This greatly simplifies any linear stability analysis, and it provides qualitatively, but not quantitatively, similar macroscopic network firing rates when spiking neuron models are in a fluctuation-driven regime (58). Second, we analyze the dynamics of the network when driven by spatially uniform inputs. This provides a spatial translational symmetry in the network needed for Fourier analysis.

A stable, spatially uniform solution of the firing rate model corresponds to an asynchronous state in the network of spiking neuron models. We linearize the firing rate network dynamics around this uniform solution and obtain a set of eigenvalues and associated eigenmodes. This eigenstructure governs the linearized firing rate dynamics near the uniform solution. Each eigenmode has a wave number indicating its spatial scale across the network; the zero wave number eigenmode describes a spatially uniform solution, while higher wave number eigenmodes contribute to spatially structured solutions (at the spatial period of the wave number). The stability of the solution at each eigenmode is given by the sign of the real component of the associated eigenvalue: A negative (positive) real component implies that dynamics about that eigenmode are stable (unstable). If all eigenmodes are stable, then we say that the solution is stable. The imaginary component of the associated eigenvalue near the instability indicates the temporal frequency of the unstable solution. A zero imaginary component implies a nonrhythmic solution, while a nonzero imaginary component suggests an oscillatory solution with a characteristic frequency proportional to the imaginary component.
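The stability test described above can be sketched for a generic two-population rate model. The example assumes Gaussian connectivity kernels on a periodic unit domain, so that each kernel contributes the Fourier factor exp(−2π²σ²k²) at wave number k, together with a linearized gain and unit time constants. The weights used are hypothetical values chosen for illustration and are not the parameters of Eq. 6.

```python
import numpy as np


def max_growth_rate(k, sigma_e, sigma_i, w, gain=1.0, tau=(1.0, 1.0)):
    """Largest real part of the eigenvalues of the linearized E-I dynamics at wave number k.

    ASSUMPTIONS: Gaussian kernels of widths sigma_e, sigma_i; w = (w_ee, w_ei, w_ie, w_ii)
    are positive weight magnitudes; gain is the slope of the transfer function at the
    uniform solution.
    """
    kernel_e = np.exp(-2.0 * (np.pi * sigma_e * k) ** 2)
    kernel_i = np.exp(-2.0 * (np.pi * sigma_i * k) ** 2)
    w_ee, w_ei, w_ie, w_ii = w
    coupling = gain * np.array([[w_ee * kernel_e, -w_ei * kernel_i],
                                [w_ie * kernel_e, -w_ii * kernel_i]])
    # tau_a * d(dr_a)/dt = -dr_a + sum_b coupling_ab * dr_b
    jac = (coupling - np.eye(2)) / np.asarray(tau, dtype=float)[:, None]
    return float(np.linalg.eigvals(jac).real.max())


def growth_curve(sigma_i, sigma_e=0.1, w=(2.0, 3.0, 2.0, 2.0), k_max=6):
    """Growth rate at each integer wave number 0..k_max (hypothetical weights)."""
    ks = np.arange(k_max + 1)
    rates = np.array([max_growth_rate(k, sigma_e, sigma_i, w) for k in ks])
    return ks, rates
```

With these illustrative weights, `growth_curve(0.1)` is negative at every wave number, whereas `growth_curve(0.5)` stays negative at wave number zero but becomes positive at a nonzero wave number. In this toy setting the unstable eigenvalue is real, so the example shows a loss of stability at nonzero wave number without reproducing the oscillatory component discussed in the text.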

Networks with spatially balanced recurrent excitatory and inhibitory projections (σe = σi) and strong static inputs to the inhibitory neurons (μi) have a stable spatially uniform solution (Fig. 5, A and B, gray region and orange curves, respectively). In this regime, the network dynamics is approximately linear with weak perturbations, meaning that the network fluctuations are linearly related with input fluctuations. Since linear Fisher information is conserved under an invertible linear transformation (see Discussion), a network in a stable regime preserves almost all of its input information. As shown in previous sections, IF converges to the input information bound for large N decoders, and this convergence is independent of the connectivity scales of both the feedforward and the recurrent pathways (Figs. 3A and 4A).

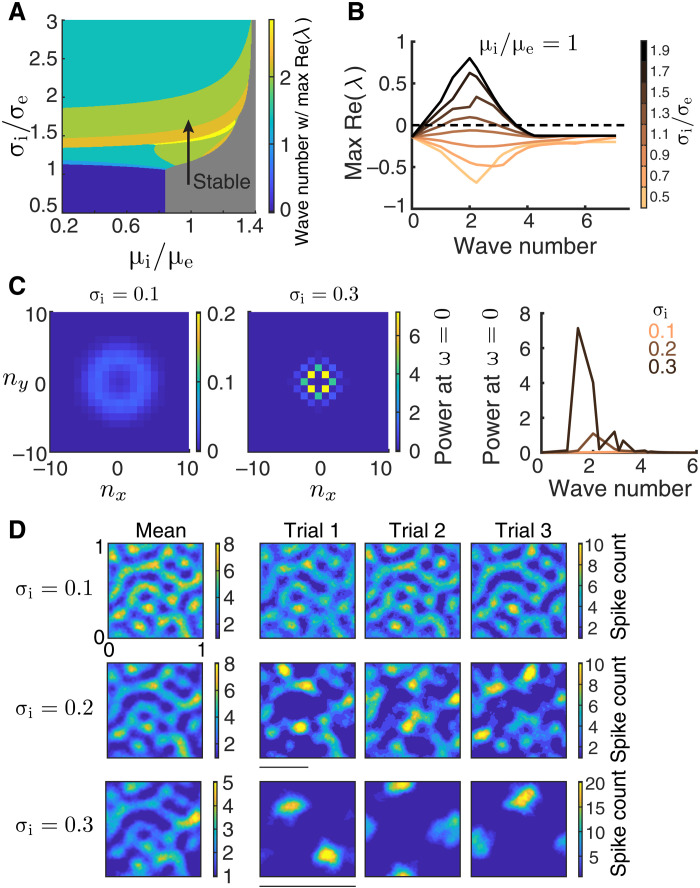

Fig. 5. Networks with broad inhibitory projections exhibit pattern-forming dynamics.

(A) Bifurcation diagram of a firing rate model (Eq. 6) as a function of the inhibitory projection width σi and the depolarization current to the inhibitory neurons (μi). The excitatory projection width and the drive to the excitatory neurons are fixed at σe = 0.1 and μe = 0.5, respectively. Color represents the wave number whose eigenvalue has the largest positive real part, and the gray region is marked stable since all eigenvalues have a negative real part. (B) The maximum real part of eigenvalues as a function of wave number for increasing σi (σe = 0.1), when μi = μe. Nonzero wave numbers lose stability as σi increases, indicating that the network will exhibit firing rate dynamics with spatial scales of the unstable eigenmodes of the uniform solution. (C) The power spectrum at temporal frequency ω = 0 for different spatial Fourier modes (nx, ny) of the spontaneous spiking activity from the spiking neuron networks with σi = 0.1 (left; the nonzero spatial frequency power peaks around wave number 3.6) and σi = 0.3 (middle). Right: The average power at ω = 0 across wave numbers. The power spectra are computed using a 200-ms time window. (D) Activities of spiking neuron networks with different σi when driven by a Gabor image (right, three trials of spike counts within the 200-ms window; left, mean spike counts). The scale bars indicate wavelengths of 0.5 (normalized unit, corresponding to wave number 2; middle) and 1 (normalized unit, corresponding to wave number 1; bottom). The spatial domain of the network is [0,1] × [0,1]. Images are smoothed with a Gaussian kernel of width 0.01. Other parameters for the spiking neuron networks (C and D) are σe = 0.1, αffwd = 0.05, and feedforward strengths JeF = 240 mV and JiF = 400 mV.

When the excitatory and inhibitory projections differ in spatial scale, recurrent connectivity can have a large impact on network dynamics. As the inhibitory projection width (σi) increases, the uniform solution in the spatial firing rate model loses stability at a band of nonzero wave numbers (Fig. 5, A and B). This is via a Turing-Hopf bifurcation (55), a pattern-forming transition common to spatially distributed neuronal network models (56, 57). The unstable eigenmodes that emerge from the bifurcation contribute to population dynamics confined to the spatial scale of their wave numbers. The temporal structure of the population dynamics is due to the eigenvalues having nonzero imaginary components at the bifurcation (fig. S2). Similar pattern-forming transitions are also observed in the spontaneous activity of spiking neuron networks (movies S1 to S3) (34, 36, 39). To quantify the spatial scales of population activity, we compute the spatial power of population spiking (at zero temporal frequency) in the spontaneous state. The power at the zero temporal frequency is measured using the same time window (200 ms) as used for Fisher information calculation; therefore, it measures the fluctuations in population activity relevant for the readout information. When σi equals σe the power spectrum is small (Fig. 5C, right, orange curve) showing a band at a wave number of approximately 3.6 (Fig. 5C, left), reflecting the spatial scale of the feedforward projections [see fig. S3 in (35)]. As σi increases, there is a large increase in power at some nonzero wave numbers (Fig. 5C), reflecting large, internally generated fluctuations at nonzero spatial frequencies. The pattern-forming dynamics vary slowly in time, with temporal power concentrating in a frequency band below 5 Hz (fig. S3A). 
Overall, this suggests that as σi increases, the network of spiking neuron models transitions through either a pure Turing instability, where spiking noise causes slow diffusion, or a Turing-Hopf bifurcation.
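The spatial power measurement described above can be illustrated with a short Python sketch. It is not the analysis code used for Fig. 5C; the array layout (windows by grid), the normalization, and the function names are assumptions made for this example. Because the zero-temporal-frequency component of a spike train within a window is its spike count, the sketch works directly with spike counts per 200-ms window.

```python
import numpy as np

def zero_frequency_spatial_power(counts):
    """Spatial power of window-to-window spike count fluctuations.

    counts: array of shape (n_windows, n_side, n_side) with spike counts per
    200-ms window for neurons on a square grid (assumed layout).
    Returns (power, k): power per spatial Fourier mode and its wave number.
    """
    n_side = counts.shape[-1]
    # Remove the mean pattern so that only fluctuations contribute.
    fluct = counts - counts.mean(axis=0, keepdims=True)
    spec = np.abs(np.fft.fft2(fluct, axes=(-2, -1))) ** 2
    power = spec.mean(axis=0) / n_side**4  # normalization is a free choice
    modes = np.fft.fftfreq(n_side, d=1.0 / n_side)  # integer modes n_x, n_y
    nx, ny = np.meshgrid(modes, modes)
    return power, np.sqrt(nx**2 + ny**2)

def average_by_wave_number(power, k, k_max):
    """Average the power over all modes whose wave number rounds to 0..k_max."""
    rounded = np.rint(k).astype(int)
    return np.array([power[rounded == m].mean() for m in range(k_max + 1)])

# Synthetic check: a stripe pattern at wave number 3 with random amplitude.
rng = np.random.default_rng(0)
n_side, n_windows = 32, 40
x = np.arange(n_side) / n_side
pattern = np.cos(2 * np.pi * 3 * x)[None, None, :] * np.ones((1, n_side, 1))
amplitude = rng.normal(size=(n_windows, 1, 1))
counts = 5.0 + amplitude * pattern
power, k = zero_frequency_spatial_power(counts)
profile = average_by_wave_number(power, k, k_max=8)
```

In the synthetic check, the averaged profile is largest at the wave number of the imposed stripe pattern, which is the kind of peak that Fig. 5C (right) reports for networks with broad inhibition.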

When the spiking network is driven by a Gabor image, the internal dynamics of the network interact with the spatial patterns of the feedforward inputs. In the stable regime, where excitation is spatially balanced with inhibition (σi = σe), the spatial patterns of the network activity are similar across trials and are inherited from the input spatial scale (Fig. 5D, first row). As σi increases, the internal pattern-forming dynamics of the network become dominant over the feedforward input patterns (Fig. 5D, top to bottom rows). The spatially periodic patterns of the internal dynamics become evident in the variations of spike counts from the mean (fig. S4). The broad inhibition suppresses significant activity, resulting in only a few stimulus-evoked areas compared to the spatially balanced case. The locations of the evoked areas vary from trial to trial, hence introducing excessive trial-to-trial variability in the population activity patterns. The mean population activity pattern across trials also degrades as σi increases (Fig. 5D, left). In total, this suggests that there will be information loss in networks with larger σi.

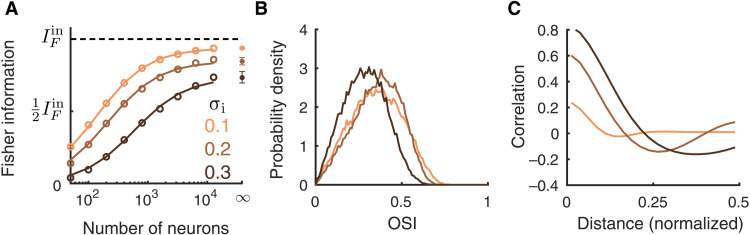

The Fisher information IF about stimulus θ is markedly reduced when the network loses stability through broader inhibitory projections (Fig. 6A). The orientation selectivity of neurons only changes slightly with σi (Fig. 6B), which means that a reduction in tuning selectivity cannot be the primary reason for the decrease in IF. However, the magnitude of pairwise correlations increases drastically as σi increases. Because of the internal pattern-forming dynamics in networks with large σi, nearby neurons are strongly correlated, while neuron pairs that are separated by half the distance between active zones are strongly anticorrelated. Consider the decomposition of network covariance Σ = Σ0 + ϵf′f′ᵀ, recalling that in the limit of large decoded populations (N), we have that the Fisher information IF ∼ 1/ϵ, so that ϵ measures the strength of information-limiting correlations. In the stable regime, IF converges to the input information (Fig. 6A, orange curve), implying that all information-limiting correlations are inherited through the feedforward pathway (as reported earlier in Figs. 3 and 4). However, for broader inhibition, it appears that for large N, the information IF saturates to a level that is below the input information. This argues that a component of the internally generated network covariability actually limits information transfer (i.e., contributes to ϵ). While our simulations support this conclusion, we admit that since it is computationally prohibitive to consider N beyond 10⁴ neurons, we cannot verify how IF truly converges. In total, the pattern-forming dynamics in networks with broad inhibitory projections largely reduce the transmitted information.
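The scaling IF ∼ 1/ϵ can be checked numerically. For a covariance of the form Σ0 + ϵf′f′ᵀ, the Sherman-Morrison identity gives IF = I0/(1 + ϵI0), where I0 = f′ᵀΣ0⁻¹f′ is the information without the information-limiting term; as I0 grows with N, IF approaches 1/ϵ. The Python sketch below verifies this identity on a randomly generated example. The covariance Σ0, the tuning derivative f′, and the value of ϵ are hypothetical and are not taken from the simulations.

```python
import numpy as np

def linear_fisher(f_prime, cov):
    """Linear Fisher information f'^T cov^{-1} f'."""
    return float(f_prime @ np.linalg.solve(cov, f_prime))

rng = np.random.default_rng(0)
n = 50
f_prime = rng.normal(size=n)             # hypothetical tuning curve derivatives
a = rng.normal(size=(n, n))
sigma0 = a @ a.T / n + np.eye(n)         # hypothetical positive definite covariance
eps = 0.05                               # strength of information-limiting term

i0 = linear_fisher(f_prime, sigma0)
i_full = linear_fisher(f_prime, sigma0 + eps * np.outer(f_prime, f_prime))
i_predicted = i0 / (1.0 + eps * i0)      # Sherman-Morrison prediction
```

Here `i_full` matches `i_predicted` and never exceeds 1/ϵ, regardless of how large `i0` becomes.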

Fig. 6. Broad inhibitory projections reduce information flow.

(A) The linear Fisher information from the L2/3 excitatory neurons is largely reduced as the inhibitory projection width (σi) increases. The asymptotic values of IF at N = ∞ (dots) are estimated by fitting Eq. 10 (see Materials and Methods). Solid curves are the fits of IF (the linear fits shown in fig. S1C). Open circles are the numerical estimation of the linear Fisher information (Eq. 8). Error bars are the 95% confidence intervals. (B) The probability density distributions of OSI of the excitatory neurons from L2/3. (C) Pairwise noise correlations as a function of distance between a neuron pair in L2/3.
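The extrapolation to N = ∞ used in these panels can be sketched as follows. Eq. 10 is not reproduced in this section, so the sketch assumes the saturating form 1/IF = 1/I∞ + c/N, which is what a linear fit of 1/IF versus 1/N implies; the function name and the synthetic data are hypothetical.

```python
import numpy as np

def extrapolate_information(n_values, info_values):
    """Fit 1/I_F = 1/I_inf + c/N by least squares and return (I_inf, c)."""
    inv_n = 1.0 / np.asarray(n_values, dtype=float)
    inv_info = 1.0 / np.asarray(info_values, dtype=float)
    slope, intercept = np.polyfit(inv_n, inv_info, 1)
    return 1.0 / intercept, slope

# Synthetic data generated from the assumed saturating form.
n_values = np.array([100, 200, 400, 800, 1600])
info_values = 1.0 / (1.0 / 200.0 + 2.0 / n_values)
i_inf, c = extrapolate_information(n_values, info_values)
```

With real estimates, the fit would be applied to the bias-corrected values of IF at each N, and the confidence interval of the intercept would set the uncertainty of the asymptote.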

Depolarizing inhibitory neurons improves information flow

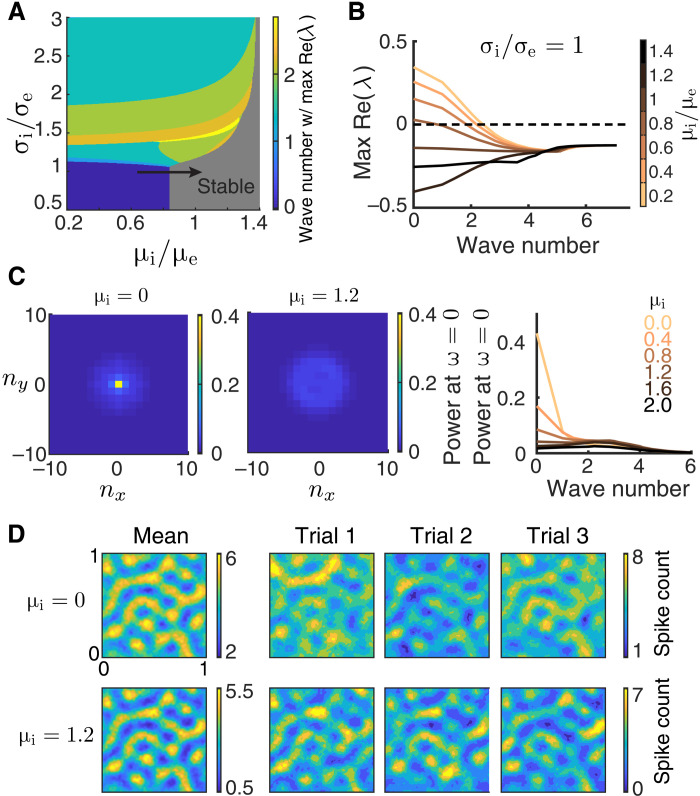

Even when excitatory and inhibitory projections are spatially matched, a network can nevertheless have an unstable uniform solution. This occurs when the static input to the inhibitory neurons (μi) is low (Fig. 7A, blue region), and the firing rate network is unstable through the eigenmode with zero wave number (Fig. 7B). In this case, the stability of the uniform solution is mediated by a Hopf bifurcation, through which bulk population-wide firing dynamics are produced (Fig. 7A, transition from blue to gray regions; movies S4 and S5), as opposed to the spatially confined dynamics produced via a Turing instability (Fig. 5). The spiking network shows a similar instability; when the static current to the inhibitory neurons is small, the spontaneous activities of spiking neuron networks exhibit large power at zero wave number, indicating large-magnitude global fluctuations (Fig. 7C). The global fluctuations exhibit oscillations in the gamma frequency band, with temporal spectra peaking around 25 to 50 Hz (fig. S3B). We note that networks in this regime lack fluctuations on the slow time scale as measured in the visual cortex [around 70 to 150 ms; (59, 60)]. Furthermore, the global fluctuations in the spontaneous activities of the spiking neuron network are largely suppressed with more input to the inhibitory neurons (Fig. 7C). In response to a stimulus θ, the population activity patterns show large fluctuations in the overall spiking activity level when μi is small (Fig. 7D). However, unlike the case for broad inhibition, since the instability is at zero wave number, no spatially patterned internal dynamics occur that would compete with the stimulus-evoked spatial patterns. Consequently, the spatial patterns of the evoked activities are similar across trials (Fig. 7D).

Fig. 7. Lack of inhibitory drive gives rise to network-wide fluctuations.

(A) Bifurcation diagram of a firing rate model (Eq. 6) as a function of the inhibitory projection width σi and the depolarization current to the inhibitory neurons. The same as Fig. 5A. (B) The real part of eigenvalues as a function of wave number for increasing μi (μe = 0.5) when σi = σe. The zero wave number loses stability when μi is small, indicating that the network will exhibit network-wide nonlinear dynamics. (C) The power spectrum at temporal frequency ω = 0 for different spatial Fourier modes (nx, ny) of the spontaneous spiking activity from the spiking neuron networks with μi = 0 (left) and μi = 1.2 (middle). Right: The average power at ω = 0 across wave numbers (√(nx² + ny²)). The power spectra are computed using a 200-ms time window. (D) Activities of spiking neuron networks with different μi when driven by a Gabor image (right, three trials of spike counts within the 200-ms window; left, mean spike counts). Images are smoothed with a Gaussian kernel of width 0.01. Other parameters for the spiking neuron networks (C and D) are σe = σi = 0.1, αffwd = 0.05, JeF = 140 mV, and JiF = 0 mV, and the tonic current to excitatory neurons is μe = 0. This corresponds to an average current of 1.56 to each excitatory neuron.

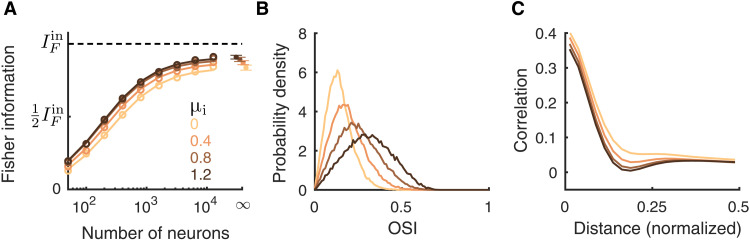

The Fisher information about θ increases with μi as the network becomes more stable (Fig. 8A). Once the network is in the stable regime, a further increase in μi has little effect on the IF (Fig. 8A, μi = 0.8 compared to μi = 1.2). To understand this, we again consider how response gain and population covariability are affected by μi. First, as μi increases, the tuning curves of the excitatory neurons are sharpened (Fig. 8B). Second, since the unstable dynamics correlate the whole network, they give rise to positive correlations between neurons across long distances. As μi increases, these population-wide fluctuations are suppressed, and overall neurons are less correlated (Fig. 8C). This combination could, in principle, conspire to produce the increase in information. However, we note that the impact of global fluctuations upon information transfer is much less than that of the spatially confined fluctuations induced by broad inhibition (compare Figs. 6A and 8A).

Fig. 8. Depolarizing inhibitory neurons improves information flow.

(A) The linear Fisher information increases with depolarizing the inhibitory neurons (μi). The saturation curves of the linear Fisher information overlap for μi ≥ 0.8. The asymptotic values of IF at N = ∞ (dots) are estimated by fitting Eq. 10 (see Materials and Methods). Solid curves are the fits of IF (the linear fits shown in fig. S1D). Open circles are the numerical estimation of the linear Fisher information (Eq. 8). Error bars are the 95% confidence intervals. (B) The probability density distributions of OSI of the L2/3 excitatory neurons. (C) Pairwise noise correlations as a function of the distance between neuron pairs from the L2/3 excitatory population. The feedforward strengths are JeF = 140 mV and JiF = 0 mV, and the tonic current to excitatory neurons is μe = 0.

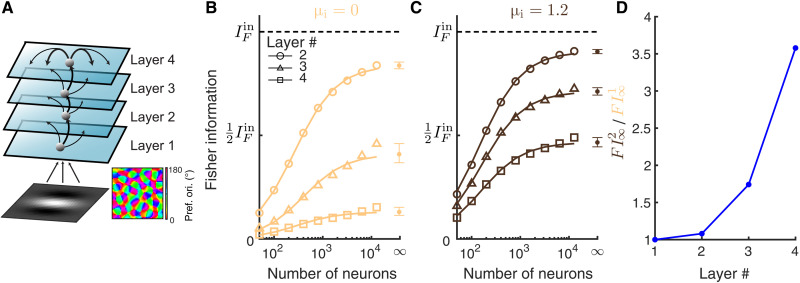

The small improvement in information transfer between two layers can be compounded during multilayer processing. We consider a four-layer network model with the same spatial wiring to model the processing hierarchy in the visual cortex (Fig. 9A). The first and second layers are the same as the L4 and L2/3 networks of the V1 area, respectively, in the previous model. The third and fourth layers can be considered as models of the V2 and V4 areas, respectively. We compare the two networks with different inhibitory biases (μi) applied to the neurons in layers 2, 3, and 4. The feedforward strength was adjusted such that the firing rates were similar across layers 2 to 4 (fig. S5C) and between the two conditions of μi. As expected, the information about θ decreases as it propagates to higher-order layers (Fig. 9, B and C); this is simply an expression of the data-processing inequality (61). This suggests that information becomes more diffuse in higher-order layers (Fig. 9, B and C); indeed, the pairwise correlations are higher, and the thresholds of single neurons are larger in higher-order layers (fig. S5, A and B). However, the information deteriorates much faster across layers in networks with smaller μi (Fig. 9, B and C), not only because of the compounding effect but also because of the increase in spike train synchrony among neurons for small μi (fig. S5D). Since the inhibition is insufficient to cancel correlations at each layer, the synchrony builds up as the signal propagates to higher-order layers (62). With stronger input to the inhibitory neurons, excitation is more balanced by inhibition, leading to stable asynchronous dynamics (fig. S5D). In total, the improvement in the Fisher information by increasing μi markedly increases with the number of processing layers (Fig. 9D).

Fig. 9. Information deteriorates in a multilayer model with internal dynamics.

(A) Schematic of a multilayer model. Layer 2 to 4 are recurrent networks of excitatory and inhibitory neurons, with the same projection widths of both recurrent and feedforward connections (αrec = 0.1 and αffwd = 0.05). Recurrent connections of layer 2 and layer 3 are not shown for better illustration. The layer 1 network is the same as the V1 L4 network in Fig. 1A. (B) The linear Fisher information is much more reduced in the higher-order layers when there is no input to the inhibitory neurons (μi = 0). The black dashed line is the total input Fisher information from layer 1 (FIctin). The asymptotic values of IF at N = ∞ (dots) are estimated by fitting Eq. 10 (see Materials and Methods). Solid curves are the fits of IF (the linear fits shown in fig. S1E). Open circles are the numerical estimation of the linear Fisher information (Eq. 8). Error bars are the 95% confidence intervals. (C) The same as B with larger input to the inhibitory neurons (μi = 1.2). The linear fits of 1/IF versus 1/N are shown in fig. S1F. (D) The ratio between the Fisher information estimated at N = ∞ in networks with μi = 1.2 (FI2) and that with μi = 0 (FI1) across layers. The feedforward strengths are adjusted such that the firing rates are similar between the two conditions of μi and are maintained across layers (fig. S5C).

Tuning-dependent connectivity partially mitigates information loss

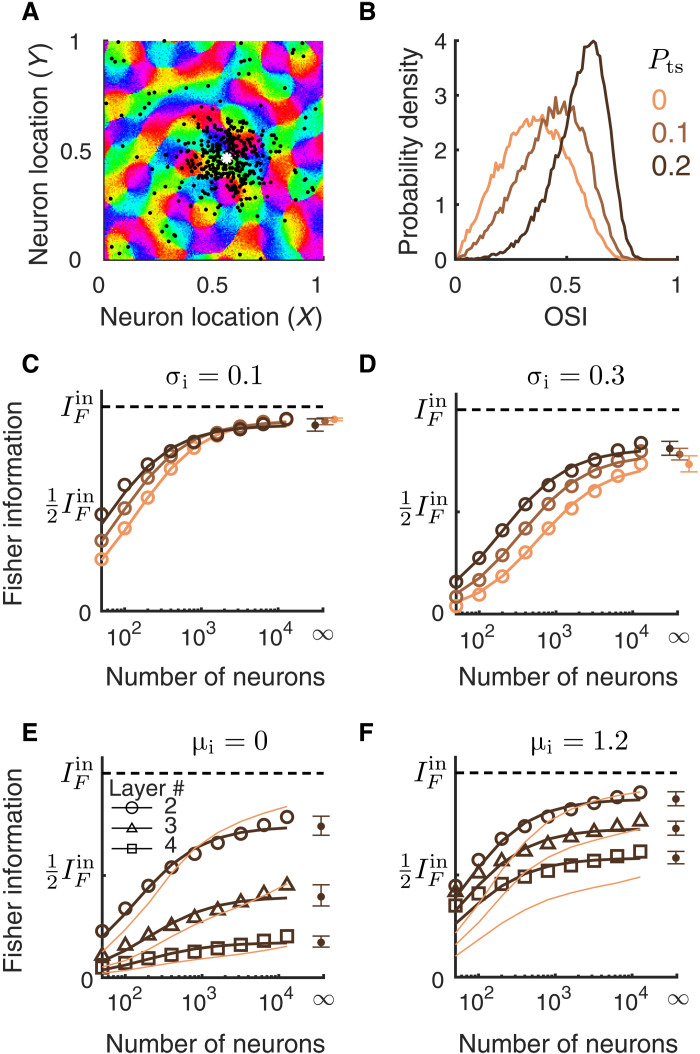

We have, thus far, considered networks with only spatially dependent connections. However, the connection probability between neurons depends not only on the physical distance between them but also on the similarity of their tuning preferences (41, 63, 64). To investigate how tuning-dependent connections shape the information flow, we modified network connectivity by rewiring a percentage of recurrent excitatory connections to be only between similarly tuned neurons (Fig. 10A; see Materials and Methods). This mimics the long-range “patchy” connections found in the visual cortex of cat and tree shrew (41, 63). We denote the percentage of such tuning-dependent connections as Pts. Networks with Pts = 0 are the same as those described previously with only spatial-dependent connections.

Fig. 10. Networks with tuning-dependent connectivity.

(A) Locations of postsynaptic excitatory neurons (black dots) of one example presynaptic excitatory neuron (white asterisk). The connection probability is defined similar to the networks described previously, except that a percentage (Pts) of recurrent excitatory connections were rewired to connect similarly tuned neurons across the whole network (see Materials and Methods). (B) The probability density distributions of OSI of the excitatory neurons from L2/3 for varying the percentage of tuning-dependent connections (Pts). The network parameters are the same as in (C) with σi = σe = 0.1. (C to F) The linear Fisher information as a function of the number of neurons sampled from the excitatory population for networks with different Pts [color scheme the same as in (B)]. The asymptotic values of IF at large N limit (dots at N = ∞) are estimated by fitting Eq. 10 (see Materials and Methods). Solid curves are the fits of IF. Open circles are the numerical estimation of the linear Fisher information (Eq. 8). Error bars are the 95% confidence intervals. (C) Networks with σi = σe = 0.1; (D) networks with σi = 0.3 and σe = 0.1; (E) multilayer networks with Pts = 0.2 (dark color) or Pts = 0 (light color), and μi = 0; (F) the same as (E) with μi = 1.2. The information curves of networks with Pts = 0 (light color) in (E) and (F) are the same as those in Fig. 9 (B and C).

We focus on our two identified mechanisms that transition the network between the stable and the pattern-forming dynamical regimes: varying the spatial scale of inhibitory projections (σi; Fig. 10, C and D) and the bias current to the inhibitory neurons (μi; Fig. 10, E and F). We find that the tuning-dependent connections increase the information encoded in small ensembles of neurons, which is likely due to the large improvement in the orientation selectivity of individual neurons (Fig. 10B). Despite this difference, our main conclusions from previous sections still hold in networks with tuning-dependent connections. First, the addition of tuning-dependent connections does not change the asymptotic information value when networks are in a dynamically stable regime (Fig. 10C). Second, broadening the inhibitory projections largely reduces the total amount of information (Fig. 10D compared with C), although tuning-dependent connections partially mitigate the information loss (Fig. 10D, different colors). Last, depolarizing inhibitory neurons improves the information transmission across the processing layers in multilayer networks (Fig. 10, E and F). Tuning-dependent connections do not have much of an effect on information transmission in networks with smaller μi (Fig. 10E), whereas they partially reduce the information loss in higher layers with larger μi (Fig. 10F).

DISCUSSION

The performance of a population code depends on the relationship between the individual tuning curves and the shared variability among neurons (18, 20, 21). Previous studies have tacitly assumed a prescribed structure between tuning and variability, allowing a dissection of their respective impacts on population coding (19, 24, 25). While this approach has given some critical insights, an understanding of how the mechanics of a neural circuit affect population coding has remained elusive. It is well known that the trial-averaged (65) and trial-to-trial variability (22, 31, 35, 36) of a population response are shaped by both feedforward and recurrent network connectivity. In this study, we estimated the information available (to a linear decoder) about a simple, one-dimensional stimulus that is contained in the activity of a recurrently coupled network of spatially ordered spiking neuron models. We show that simply understanding how pairwise noise correlations are determined through circuitry is insufficient to predict how information will be transferred across layers. Rather, an analysis of how circuitry determines the stability of network firing activity provides a better understanding of information transfer. Our work reveals in particular the critical role of inhibition in information transmission. Increasing the mean inhibitory drive resulted in better information transmission, while increasing the width of lateral inhibition worsened information transmission.

A central result of our paper is that in the limit of a large number of decoded neurons, the linear Fisher information IF can either saturate to the input information for networks with stable firing rate dynamics (Figs. 3 and 4) or fall short of that bound for networks in a pattern-forming regime (Figs. 6, 8, and 9). To appreciate why this is the case, it is useful to recall a simple fact about how linear systems encode inputs. Consider a one-dimensional stimulus θ that drives a vector of noisy inputs x(θ) = s(θ) + η, where the noise process η has zero mean with covariance matrix Σx. Let the output y(θ) be a linear mapping of the input; specifically, we take y(θ) = Lx(θ) for some invertible matrix L. Then, we have that the linear Fisher information available to a decoder from y, termed $I_F^y$, is simply

$$I_F^y = s'(\theta)^T L^T \left(L \Sigma_x L^T\right)^{-1} L\, s'(\theta) = s'(\theta)^T \Sigma_x^{-1} s'(\theta) = I_F^x \qquad (2)$$

where $I_F^x$ is the linear Fisher information available from the input x. In other words, deterministic linear mappings do not distort or degrade information transfer (when the decoder is also linear). We remark that $I_F^y$ does not depend on the linear mapping L.
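This invariance is easy to confirm numerically. The Python sketch below draws a hypothetical signal derivative s′, input covariance Σx, and invertible matrix L, and compares the linear Fisher information before and after the mapping. It also includes a rank-reducing mapping, which is outside the invertible case considered above, to show that information can then only decrease. All quantities are randomly generated for illustration.

```python
import numpy as np

def linear_fisher(mean_derivative, cov):
    """Linear Fisher information m'^T cov^{-1} m'."""
    return float(mean_derivative @ np.linalg.solve(cov, mean_derivative))

rng = np.random.default_rng(1)
n = 30
s_prime = rng.normal(size=n)                    # hypothetical s'(theta)
a = rng.normal(size=(n, n))
sigma_x = a @ a.T / n + 0.5 * np.eye(n)         # hypothetical input covariance

# Invertible mapping: a random matrix shifted away from singularity.
L = rng.normal(size=(n, n)) + n * np.eye(n)
info_x = linear_fisher(s_prime, sigma_x)
info_y = linear_fisher(L @ s_prime, L @ sigma_x @ L.T)

# Rank-reducing mapping (first 10 rows of L): information cannot increase.
L_sub = L[:10]
info_sub = linear_fisher(L_sub @ s_prime, L_sub @ sigma_x @ L_sub.T)
```

The values `info_x` and `info_y` agree to numerical precision, while `info_sub` is bounded above by `info_x`.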

When the cortical network is in the stable regime and any input noise is weakly correlated, then we can linearize network dynamics about an operating point (29, 58). In this regime, the noisy spike counts during a trial, n(θ), in response to stimulus θ obey

$$n(\theta) = G\left[J\, n(\theta) + x(\theta)\right] + \xi$$

Here, Gij = giδij is a diagonal matrix of the neuron response gains (which depend on the operating point) and Jij is the synaptic coupling from neuron j to i. In addition to stimulus noise in x(θ), there is an internal noise process ξ that can reflect any trial fluctuations accrued from the digitization from continuous inputs to spike counts (27) or a “background” noise that is present even when coupling and stimulus are absent (29); in all cases, ξ is independent across neurons. If sufficiently large numbers of neurons are decoded or sufficiently long observation times are used, then the contribution of ξ to population statistics is negligible, and we have that n ≈ (I − GJ)⁻¹Gx, or in other words, the population output is a linear function of the input. Given this linearization and Eq. 2, we would then expect that the output information equals the input information, $I_F^n = I_F^x$, so long as the coupling J and input x provide a stable operating point around which to linearize. In contrast to this stable case, when the network is in a pattern-forming regime, then there is not a stable operating point about which to linearize. This means that Eq. 2 is no longer valid, and we would expect that the output information falls below the input information, $I_F^n < I_F^x$. In total, this argument sketches how an understanding of the network firing rate stability translates quite naturally to an understanding of what circuit conditions will allow the network to faithfully transfer all the information available (to a linear decoder) in an input signal.

Broad inhibitory projections, also referred to as lateral inhibition, are a common circuit structure used to support various neural computations, such as working memory (56, 66), sharpening of tuning curves (22, 65), formation of clustered networks (67), and the periodic spatial receptive fields of grid cells (68). In this work, we show that the pattern-forming dynamics generated by lateral inhibition degrade information transmission, which is consistent with previous results (22). Anatomical measurements of local cortical circuitry in the visual cortex show that excitatory and inhibitory neurons project with similar spatial scales (41, 64). Our results suggest that such spatially balanced excitatory and inhibitory projections are important for maintaining faithful representations of sensory stimuli. Nevertheless, lateral inhibition may be important for transforming sensory information into task-related information in higher-order cortices, such as the prefrontal cortex.

Information transmission in feedforward networks has been studied in several models. Bejjanki et al. (69) show that by improving feedforward template matching of Gabor filters, perceptual learning can increase the information gleaned from a presented image. In our model, the L4 network is similar to such models (31, 69), where information loss depends on the Gabor filters of the L4 neurons. In contrast, the feedforward projections from L4 to L2/3 neurons in our model are random and expansive (meaning there are many more neurons in L2/3 than L4), which is known to minimize information loss (23, 70). Therefore, there is little information loss in the feedforward projections from L4 to L2/3 in our model, regardless of the projection width. Renart and van Rossum (71) and Zylberberg et al. (70) study information transmission with external noise added to the output layer and compute the optimal connectivity and input covariance that maximize the information in the output layer. In our model, there is no external noise imposed on the L2/3 neurons. All the neuronal variability in the L2/3 network is either internally generated or inherited from the feedforward inputs (32, 35, 36).

Our results can potentially explain the improvement in discriminability by selective attention. Spatial attention has been shown to significantly reduce the global fluctuations in cortical recordings and, meanwhile, improve animals’ performance in the orientation change detection task [(10); but see (11, 12)]. However, the magnitude of global fluctuations is found to have little effect on information, and instead, information is only limited by input noise (27, 31). Recently, we developed a circuit model where large-scale wave dynamics give rise to low-dimensional shared variability (36), thus capturing properties of population recordings in the visual cortex (6, 45, 54). By depolarizing the inhibitory neurons, the attentional modulation in our model stabilizes the network and decreases noise correlations. To account for attention-mediated increase in firing rates, the model requires additional mechanisms such as increasing the feedforward projection strengths (72). Our past work suggests that the attention-mediated reduction in noise correlations reflects an increase in the stability of network dynamics. Results from the present study imply that enhanced network stability can increase information flow by quenching the internally generated, turbulent dynamics in the recurrent circuit (Fig. 8A).

In this work, we focused on two types of solutions in spatial networks, each with distinct nonlinear dynamics. In the pattern-forming regime with broad inhibition, networks generate slow fluctuations (below 5 Hz), positive correlations between nearby neuron pairs, and negative correlations for neuron pairs that are half a wavelength apart. In the regime of wave dynamics, network activity shows gamma rhythms (25 to 50 Hz) and, on average, positive correlations across all distances. Recordings from visual cortex have found fluctuations around the 70- to 150-ms time scale (59, 60) and, on average, positive correlations across long distances (36), which is more consistent with the wave dynamics regime.

In addition to the two types of nonlinear dynamics that we focused on in this work, there are other mechanisms for networks to internally generate shared variability. For example, models with bistability can produce stochastic transitions between active and inactive states (11, 67, 73), which have been commonly observed in anesthetized (73) and awake behaving animals (74). Networks with bistability generate slow fluctuations and large correlations among neurons within the same population (11, 67, 73). The time scale of variability depends on the adaptation time constant and input noise strength. A recent spatial network model with local bistability models attention as excitatory inputs and predicts a reduction in the spatial scale of noise correlations by attention (11). In contrast, networks in the wave dynamics regime produce a more spatially uniform shift of correlations (Fig. 8C). A reanalysis of multielectrode recordings from the V4 area shows that noise correlations have a shorter spatial scale in the attended state (fig. S6), which is more consistent with models with local bistability. Despite the differences in mechanisms, models with local bistability also predict that attention improves the stability of the active state of local populations (11). Since the transitions between active and inactive states are internal nonlinear dynamics, results from the present study suggest that they may also reduce information transmission. A more detailed study is needed to compare how different mechanisms of nonlinear network dynamics affect information processing.

Spontaneous dynamics of cortex have been shown to mimic the response patterns evoked by external stimuli (75, 76). It has been hypothesized that the spontaneous dynamics reflect the prior distribution of the stimulus statistics, which is critical for optimal Bayesian inference (76, 77). Our results show that the internal dynamics of recurrent networks do not improve information flow and can reduce it drastically when they are excessive. This is consistent with the correlation between the reduction in global fluctuations in population activity by attention and learning and the improvement in animal’s performance (13, 14). The inclusion of tuning-dependent wiring only partially mitigates the information loss that is due to internal dynamics in some networks. The exact role of spontaneous dynamics in information processing and neural computation remains to be elucidated in future studies.

MATERIALS AND METHODS

Network model description

The network consists of two stages, modeling the L4 and L2/3 neurons in V1, respectively (Fig. 1A). Neurons in the two layers are arranged on a uniform grid covering a unit square Γ = [−0.5,0.5] × [−0.5,0.5]. The L4 population is modeled similarly to the model in (31). There are Nx = 2500 neurons in L4. The firing rate of neuron i from L4 is determined by its Gabor filter Fi acting on the presented image

where is a Gabor filter, is a Gabor image corrupted by independent additive noise, , P is the number of pixels in each image, and · is the inner product of two vectors. The noise on each pixel follows the Ornstein-Uhlenbeck process

with τn = 40 ms, σn = 3.5, and W being a vector of P independent Wiener processes. Spike trains of L4 neurons are generated as inhomogeneous Poisson processes with rate ri(t). The shared stimulus noise generates correlations across L4 neuron pairs and provides an external source of information-limiting correlations, as in (31). These correlations are weak, yet nonzero (0.0052 on average), and match the weak values reported in the literature (78).
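The two stochastic ingredients of the L4 model, Ornstein-Uhlenbeck pixel noise and inhomogeneous Poisson spiking, can be sketched in Python as below. This is an illustration under stated assumptions rather than the simulation code: the sketch assumes that σn is the stationary standard deviation of the noise (the normalization of the noise equation is not reproduced in this section), it uses an exact one-step update for the Ornstein-Uhlenbeck process, and the mapping from the noisy image to the rate ri(t) is left out, so a constant hypothetical rate stands in for it.

```python
import numpy as np

def ou_noise(n_steps, n_pixels, dt, tau=40.0, sigma=3.5, rng=None):
    """Ornstein-Uhlenbeck noise per pixel (times in ms).

    Assumption: sigma is the stationary standard deviation.
    """
    rng = np.random.default_rng() if rng is None else rng
    decay = np.exp(-dt / tau)
    kick = sigma * np.sqrt(1.0 - decay**2)
    eta = np.empty((n_steps, n_pixels))
    eta[0] = sigma * rng.standard_normal(n_pixels)   # start in the stationary state
    for t in range(1, n_steps):
        eta[t] = decay * eta[t - 1] + kick * rng.standard_normal(n_pixels)
    return eta

def poisson_spikes(rates_hz, dt_ms, rng):
    """Bernoulli approximation of an inhomogeneous Poisson process.

    rates_hz: array of rates (time bins x neurons); valid when rate * dt << 1.
    """
    p_spike = rates_hz * dt_ms * 1e-3
    return rng.random(rates_hz.shape) < p_spike

rng = np.random.default_rng(1)
eta = ou_noise(n_steps=2000, n_pixels=625, dt=1.0, rng=rng)   # 25 x 25 pixels
rates = np.full((2000, 625), 20.0)                            # hypothetical 20-Hz rate
spikes = poisson_spikes(rates, dt_ms=1.0, rng=rng)
```

In a full model, `rates` would be replaced by the time-varying output of each neuron's Gabor filter applied to the noisy image, so that the shared pixel noise induces correlated rate fluctuations across L4 neurons.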

The Gabor image is defined on Γ with 25 × 25 pixels (Δx = 0.04),

The size of the spatial Gaussian envelope was σ = 0.2, the spatial wavelength was λ = 0.6, and phase was ϕ = 0. The orientation θ is normalized between 0 and 1. The Gabor filters were

with the same σ, λ, and ϕ as the image. The orientation map was generated using the formula from (40) (Supplementary Materials, eq. S20). The preferred orientation at (x, y) is θpref(x, y) = angle(z(x, y))/(2π) and

where Λ = 0.2 is the average column spacing, lj = ±1 is a random binary vector, and the phase ϕj is uniformly distributed in [0,2π].
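For concreteness, a Gabor image with the stated envelope size, wavelength, and phase can be generated as in the Python sketch below. The exact expression for the image is not reproduced in this section, so the sketch assumes the standard form (a Gaussian envelope multiplied by a cosine grating) and assumes that the normalized orientation θ ∈ [0, 1] maps to an angle πθ; both are assumptions of this example.

```python
import numpy as np

def gabor_image(theta, n_pix=25, dx=0.04, sigma=0.2, lam=0.6, phi=0.0):
    """Gabor image on a centered n_pix x n_pix grid with pixel spacing dx.

    theta: orientation normalized to [0, 1]; assumed to map to angle pi * theta.
    """
    coords = (np.arange(n_pix) - (n_pix - 1) / 2) * dx   # pixel centers on Gamma
    x, y = np.meshgrid(coords, coords)
    angle = np.pi * theta
    u = x * np.cos(angle) + y * np.sin(angle)            # axis across the stripes
    envelope = np.exp(-(x**2 + y**2) / (2.0 * sigma**2))
    return envelope * np.cos(2.0 * np.pi * u / lam - phi)

image = gabor_image(theta=0.25)
```

A filter bank would reuse the same function with each neuron's preferred orientation in place of `theta`.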

The L2/3 network consists of recurrently coupled excitatory (Ne = 40,000) and inhibitory (Ni = 10,000) neurons. Each neuron is modeled as an exponential integrate-and-fire neuron whose membrane potential is described by

$$C_m \frac{dV_j^{\alpha}}{dt} = -g_L\left(V_j^{\alpha} - E_L\right) + g_L \Delta_T \exp\!\left(\frac{V_j^{\alpha} - V_T}{\Delta_T}\right) + I_j^{\alpha}(t) \qquad (3)$$

where superscript α = e, i denotes excitatory and inhibitory neurons, respectively. Each time $V_j^{\alpha}$ exceeds a threshold Vth, the neuron spikes and the membrane potential is held for a refractory period τref and then resets to a fixed value Vre. Neuron parameters for excitatory neurons are τm = Cm/gL = 15 ms, EL = −60 mV, VT = −50 mV, Vth = −10 mV, ΔT = 2 mV, Vre = −65 mV, and τref = 1.5 ms. Inhibitory neurons are the same except τm = 10 ms, ΔT = 0.5 mV, and τref = 0.5 ms. The total current to each neuron is

| (4) |

where N = Ne + Ni is the total number of neurons in the network. Postsynaptic current is

where τer = 1 ms, τed = 5 ms for excitatory synapses and τir = 1 ms, τid = 8 ms for inhibitory synapses. The feedforward synapses from L4 to L2/3 have the same kinetics as the recurrent excitatory synapse, i.e., ηF(t) = ηe(t).

The probability that two neurons, with coordinates x = (x1, x2) and y = (y1, y2), respectively, are connected depends on their distance measured on Γ with periodic boundary condition

| (5) |

Here, is the mean connection probability and

is a wrapped Gaussian distribution. A presynaptic neuron is allowed to make more than one synaptic connection to a single postsynaptic neuron.
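One simple way to realize a wrapped Gaussian connection profile is to draw Gaussian displacements from the presynaptic location and wrap them onto the periodic domain. The Python sketch below does this for a single presynaptic neuron. It is an illustration only: the fixed number of outgoing connections, the use of coordinates in [0, 1) instead of Γ, and the function name are assumptions of this example. Repeated targets are kept, in line with the statement that a presynaptic neuron may make more than one connection to the same postsynaptic neuron.

```python
import numpy as np

def sample_targets(pre_xy, n_side, k_out, sigma, rng):
    """Draw k_out postsynaptic neurons for one presynaptic neuron.

    pre_xy: presynaptic location on the periodic unit square [0, 1) x [0, 1).
    n_side: neurons per side of the postsynaptic grid.
    Returns flat grid indices (duplicates allowed).
    """
    offsets = sigma * rng.standard_normal((k_out, 2))
    post_xy = (np.asarray(pre_xy) + offsets) % 1.0       # wrapping gives a wrapped Gaussian
    ij = np.floor(post_xy * n_side).astype(int) % n_side  # guard against rounding to n_side
    return ij[:, 0] * n_side + ij[:, 1]

rng = np.random.default_rng(2)
targets = sample_targets((0.5, 0.5), n_side=200, k_out=1000, sigma=0.1, rng=rng)
```

With 200 neurons per side, the grid matches the 40,000 excitatory neurons of the L2/3 network; the value of `k_out` here is hypothetical.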

The mean recurrent connection probabilities were , , , and , and the recurrent synaptic weights were Jee = 80 mV, Jei = −240 mV, Jie = 40 mV, and Jii = −300 mV. The feedforward connection probabilities were and . The feedforward connection strengths were JeF = 240 mV and JiF = 400 mV for Figs. 1 to 6, and JeF = 140 mV and JiF = 0 mV for Figs. 7 and 8. The static inputs to inhibitory neurons were μi = 0 mV/ms for Figs. 1 to 6, and μi = 0,0.4,0.8, and 1.2 mV/ms for Figs. 7 and 8. The static inputs to excitatory neurons were μe = 0 mV/ms for all simulations.

The feedforward connection widths, αffwd, were 0.05, 0.625, 0.75, 0.1, and ∞, and the excitatory and inhibitory connection widths were fixed to be σe = σi = 2αffwd for Figs. 2 and 3. The inhibitory connection widths σi were 0.1, 0.2, and 0.3 for Figs. 5 and 6, and the excitatory and the feedforward connection widths were σe = 0.1 and αffwd = 0.05, respectively. The feedforward connection width was αffwd = 0.05, and the excitatory and inhibitory connection widths were σe = σi = 0.1 for Figs. 1 and 7 to 9.

In the multilayer network (Fig. 9), the recurrent and the feedforward connections of all layers are αrec = 0.1 and αffwd = 0.05, respectively. The first and the second layer are the same as the L4 and L2/3 networks, respectively, described above. The other layers are modeled the same as the L2/3 network. In the condition when μi = 0 mV/ms, the feedforward connection strength from L1 to L2 excitatory neurons is JeF = 140 mV, and the feedforward connection strengths between other layers are JeF = 35 mV. In the condition when μi = 1.2 mV/ms, the feedforward connection strength from L1 to L2 excitatory neurons is JeF = 180 mV, and the feedforward connection strengths between other layers are JeF = 54 mV. The feedforward connection probability from L1 to L2 excitatory neurons was , and feedforward connection probabilities to other layers were in both conditions of μi. All the feedforward connections to inhibitory neurons and the static inputs to excitatory neurons were set to be zero, i.e., JiF = 0 mV and μe = 0 mV/ms. The feedforward strengths were chosen such that the mean firing rates of L2 to L4 are similar across layers and between the two conditions of μi.

In networks with tuning-dependent connections (Fig. 10), a fraction Pts of the recurrent excitatory connections is chosen at random from similarly tuned neurons, independent of their spatial positions. The remaining fraction, 1 − Pts, is generated according to a connection probability that depends only on the physical distance between neurons (Eq. 5). Neurons i and j are called similarly tuned if their preferred orientations, θi and θj, satisfy the condition cos(θi − θj) > 0.6. The tuning preference of each excitatory neuron is set to the preferred orientation θ of its estimated feedforward input, h(x, y, θ) = f(x, y, θ) * g(x, y, αffwd), where f is the tuning curve function of neurons in the feedforward layer, g is a wrapped Gaussian spatial kernel of width αffwd, and * is convolution over space. Other parameters are the same as described above.

All simulations were performed on the High Performance Computing Cluster at the Center for the Neural Basis of Cognition in Pittsburgh, PA. All simulations were written in a combination of C and MATLAB (R2015a, MathWorks). The differential equations of the neuron model were solved using the forward Euler method with a time step of 0.05 ms.

Neural field model and stability analysis

We use a two-dimensional neural field model to describe the dynamics of population rate (Figs. 4 and 6). The neural field equations are

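$$\tau_\alpha \frac{\partial r_\alpha(\mathbf{x}, t)}{\partial t} = -r_\alpha(\mathbf{x}, t) + \phi\left(w_{\alpha e} * r_e + w_{\alpha i} * r_i + \mu_\alpha\right) \tag{6}$$

where rα(x, t) is the firing rate of neurons in population α = e, i near spatial coordinates x ∈ [0,1] × [0,1]. The symbol * denotes convolution in space, μα is a constant external input, and $w_{\alpha\beta}(\mathbf{x}) = \bar{w}_{\alpha\beta}\, g(\mathbf{x}; \sigma_\beta)$, where g(x; σβ) is a two-dimensional wrapped Gaussian with width parameter σβ, β = e, i. The transfer function is a threshold-quadratic function, $\phi(x) = [x]_+^2$. The time scale of synaptic and firing rate responses is implicitly combined into τα.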

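For constant inputs, μe and μi, there exists a spatially uniform fixed point, $r_\alpha(\mathbf{x}, t) = \bar{r}_\alpha$. Linearizing around this fixed point in the Fourier domain gives a Jacobian matrix at each spatial Fourier mode (34),

$$J(\mathbf{k}) = \begin{pmatrix} \left(-1 + \phi'_e\, \tilde{w}_{ee}(\mathbf{k})\right)/\tau_e & \phi'_e\, \tilde{w}_{ei}(\mathbf{k})/\tau_e \\ \phi'_i\, \tilde{w}_{ie}(\mathbf{k})/\tau_i & \left(-1 + \phi'_i\, \tilde{w}_{ii}(\mathbf{k})\right)/\tau_i \end{pmatrix}$$

where $\mathbf{k} = (k_1, k_2)$ is the two-dimensional Fourier mode, $\tilde{w}_{\alpha\beta}(\mathbf{k})$ is the Fourier coefficient of wαβ(x), and $\phi'_\alpha$ is the gain (the slope of the transfer function of population α at the fixed point). The fixed point is stable at Fourier mode $\mathbf{k}$ if both eigenvalues of $J(\mathbf{k})$ have negative real part. Note that stability depends only on the wave number, $\|\mathbf{k}\| = \sqrt{k_1^2 + k_2^2}$, so Turing-Hopf instabilities always occur simultaneously at all Fourier modes with the same wave number (spatial frequency).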
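The stability test described here can be sketched numerically. The following is a minimal illustration, assuming the linearized dynamics take the standard two-population form with Jacobian entries (−δαβ + gain_α · ŵαβ)/τα, and that a wrapped Gaussian of width σβ on the unit square has Fourier coefficients exp(−2π²σβ²‖k‖²) at integer modes. The function names, the weights, and the gains are hypothetical placeholders; only τe = 5 ms and τi = 8 ms are taken from the text.

```python
import cmath
import math

def jacobian(k_norm, w_bar, sigma, gain, tau):
    """2x2 Jacobian of the linearized rate dynamics at wave number k_norm.

    Assumes kernels w_ab(x) = w_bar[ab] * g(x; sigma[b]) with g a wrapped
    Gaussian on the unit square, so the Fourier coefficient at an integer
    mode k is w_bar[ab] * exp(-2 * pi^2 * sigma[b]^2 * |k|^2).
    """
    def w_hat(a, b):
        decay = math.exp(-2.0 * math.pi**2 * sigma[b]**2 * k_norm**2)
        return w_bar[a + b] * decay

    return [
        [(-1.0 + gain["e"] * w_hat("e", "e")) / tau["e"],
         gain["e"] * w_hat("e", "i") / tau["e"]],
        [gain["i"] * w_hat("i", "e") / tau["i"],
         (-1.0 + gain["i"] * w_hat("i", "i")) / tau["i"]],
    ]

def eigenvalues(m):
    """Eigenvalues of a 2x2 matrix from its trace and determinant."""
    trace = m[0][0] + m[1][1]
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    root = cmath.sqrt(trace * trace - 4.0 * det)
    return (trace + root) / 2.0, (trace - root) / 2.0

def is_stable(k_norm, w_bar, sigma, gain, tau):
    """True if both eigenvalues at this wave number have negative real part."""
    jac = jacobian(k_norm, w_bar, sigma, gain, tau)
    return all(lam.real < 0 for lam in eigenvalues(jac))

# Hypothetical values for illustration only (not the paper's parameters).
tau = {"e": 5.0, "i": 8.0}      # ms, time constants quoted in the text
sigma = {"e": 0.1, "i": 0.1}    # projection widths
gain = {"e": 1.0, "i": 1.0}     # placeholder gains at the fixed point
w_bar = {"ee": 0.5, "ei": -1.0, "ie": 1.0, "ii": -0.5}  # placeholder weights

# Modes (k1, k2) with the same k1^2 + k2^2 share one stability result,
# so it is enough to scan the distinct wave numbers.
wave_numbers = sorted({math.hypot(k1, k2) for k1 in range(6) for k2 in range(6)})
unstable = [k for k in wave_numbers if not is_stable(k, w_bar, sigma, gain, tau)]
print("unstable wave numbers:", unstable)
```

Scanning a grid of (μi, σi) values with such a test, after solving for the fixed point and its gains at each grid point, would produce a stability diagram of the kind described in this section; that outer loop is omitted here.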

For the stability analysis in Figs. 5 and 7, μi varied from 0.1 to 0.7 with a step size of 0.002, σi varied from 0.05 to 0.2 with a step size of 0.0005, and μe = 0.5 and σe = 0.1. The rest of the parameters were , , , , τe = 5 ms, and τi = 8 ms.

Statistical methods

Each simulation was 20 s long and consisted of alternating OFF (300 ms) and ON (200 ms) intervals. An image was presented during ON intervals, during which the average firing rate of L4 neurons was rX = 10 Hz. During OFF intervals, the spike trains of L4 neurons were independent Poisson processes with rate rX = 5 Hz. Spike counts from the L2/3 excitatory neurons during the ON intervals were used to compute the linear Fisher information and noise correlations. The first spike count in each simulation was excluded. For each parameter condition, the connectivity matrices were fixed across all simulations. The initial membrane potential of each neuron was randomized in each simulation.
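As a quick consistency check on this trial structure, the sketch below counts the usable ON intervals in one simulation. The variable names are illustrative and are not taken from the authors' code.

```python
# Trial bookkeeping for one simulation (values taken from the text).
SIM_DURATION_MS = 20_000   # each simulation lasts 20 s
OFF_MS = 300               # OFF interval
ON_MS = 200                # ON interval (image presented)

cycle_ms = OFF_MS + ON_MS                    # one OFF/ON cycle
on_intervals = SIM_DURATION_MS // cycle_ms   # ON intervals per simulation
usable_counts = on_intervals - 1             # the first spike count is excluded

print(on_intervals, usable_counts)  # prints: 40 39
```

With 39 usable spike counts per simulation, the totals reported in this section (9750 counts for tuning curves and 117,000 counts for the Fisher information analysis) are whole multiples of 39: they would correspond to 250 and 3000 simulations, respectively, if every simulation contributes all of its usable counts.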

To compute tuning curve functions, the orientation for each ON interval was randomly sampled from 50 orientations uniformly spaced between 0 and 1. There were 9750 spike counts in total across all orientations. Tuning curves were smoothed with a Gaussian kernel of width 0.05. The orientation selectivity index (OSI) of neuron i is computed as

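$$\mathrm{OSI}_i = \frac{\left|\sum_k r_i(\theta_k)\, \exp(2\pi i\, \theta_k)\right|}{\sum_k r_i(\theta_k)} \tag{7}$$

where ri(θ) is the tuning curve function of neuron i, θk = k/Nth, and Nth = 50 is the number of sampled orientations. In the exponent, i denotes the imaginary unit.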
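A minimal sketch of an OSI computation follows, assuming the common vector-averaging definition, OSI = |Σk r(θk) exp(2πi θk)| / Σk r(θk), with orientation normalized to one period on [0, 1). The function name and the two toy tuning curves are hypothetical.

```python
import cmath
import math

def osi(tuning_curve):
    """Orientation selectivity index via vector averaging.

    tuning_curve[k] is the mean response at orientation theta_k = k / N_th,
    with orientation normalized to one period on [0, 1).
    """
    n_th = len(tuning_curve)
    total = sum(tuning_curve)
    if total == 0:
        return 0.0  # silent neuron: OSI set to 0 (a choice made for this sketch)
    vector_sum = sum(
        rate * cmath.exp(2j * math.pi * k / n_th)
        for k, rate in enumerate(tuning_curve)
    )
    return abs(vector_sum) / total

flat = [5.0] * 50        # untuned neuron: equal response at every orientation
sharp = [0.0] * 50
sharp[10] = 8.0          # neuron that responds to a single orientation

print(round(osi(flat), 6), round(osi(sharp), 6))  # prints: 0.0 1.0
```

Under this definition the index runs from 0 (no orientation preference) to 1 (response confined to one orientation), and it is insensitive to the overall firing rate.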

To compute the linear Fisher information and noise correlation, the orientations of the Gabor images during ON intervals were randomly chosen from θ1 = θ + δθ/2 and θ2 = θ − δθ/2, where θ = 0.5 and δθ = 0.01. The linear Fisher information of L2/3 neurons is computed using the bias-corrected estimate (49)

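$$\hat{I}_N = \frac{(\mathbf{f}_1 - \mathbf{f}_2)^{T}\, \mathbf{Q}^{-1}\, (\mathbf{f}_1 - \mathbf{f}_2)}{\delta\theta^2}\; \frac{2N_{tr} - N - 3}{2N_{tr} - 2} \;-\; \frac{2N}{N_{tr}\, \delta\theta^2}, \qquad \mathbf{Q} = \frac{\mathbf{Q}_1 + \mathbf{Q}_2}{2} \tag{8}$$

where fi and Qi are the empirical mean and covariance, respectively, for θi, Ntr is the number of trials for each θi, and N is the number of neurons. The population sizes were N = 50, 100, 200, 400, 800, 1600, 3200, 6400, and 12,800, with neurons randomly sampled without replacement from the excitatory population of L2/3.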
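The bias-corrected estimator can be sketched with NumPy as follows. This is an illustration, assuming the estimator has the form (Δf/δθ)ᵀ Q⁻¹ (Δf/δθ) · (2Ntr − N − 3)/(2Ntr − 2) − 2N/(Ntr δθ²) with Q the average of the two empirical covariance matrices. The function name and the synthetic Gaussian "spike counts" are hypothetical and stand in for simulation output.

```python
import numpy as np

def linear_fisher_bc(counts1, counts2, dtheta):
    """Bias-corrected linear Fisher information estimate.

    counts1, counts2: arrays of shape (n_trials, n_neurons) holding spike
    counts for the two stimuli, with the same number of trials for each.
    dtheta: stimulus difference between the two conditions.
    """
    n_tr, n = counts1.shape
    # Finite-difference estimate of the tuning-curve derivative.
    df = (counts1.mean(axis=0) - counts2.mean(axis=0)) / dtheta
    # Average the two empirical covariance matrices.
    q = 0.5 * (np.cov(counts1, rowvar=False) + np.cov(counts2, rowvar=False))
    q = np.atleast_2d(q)
    naive = df @ np.linalg.solve(q, df)
    # Correct the upward bias of the naive estimate.
    return naive * (2 * n_tr - n - 3) / (2 * n_tr - 2) - 2 * n / (n_tr * dtheta**2)

# Toy check with independent, unit-variance Gaussian "neurons".
# For this toy model the true linear Fisher information is sum(slope**2) = 10.
rng = np.random.default_rng(0)
n_tr, n, dtheta = 5000, 10, 1.0
slope = np.ones(n)  # hypothetical tuning-curve derivatives
x1 = rng.normal(+0.5 * dtheta * slope, 1.0, size=(n_tr, n))
x2 = rng.normal(-0.5 * dtheta * slope, 1.0, size=(n_tr, n))
estimate = linear_fisher_bc(x1, x2, dtheta)
print(estimate)
```

Solving the linear system with `np.linalg.solve` avoids forming the inverse covariance explicitly, which matters when N approaches the number of trials and Q becomes poorly conditioned.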

To estimate the asymptotic value of the linear Fisher information, I∞, at the limit of N → ∞, we used the fitting algorithm proposed by Kafashan et al. (50). Briefly, the theory of information-limiting correlations (27) shows that the linear Fisher information, IN, in a population of N neurons can be decomposed into a limiting component, I∞, and a nonlimiting component I0(N),

$$I_N = \frac{1}{\dfrac{1}{I_0(N)} + \dfrac{1}{I_\infty}} \tag{9}$$

where we assume that the nonlimiting component increases linearly with N, i.e., I0 = cN. Hence, Eq. 9 can be rewritten as

$$\frac{1}{I_N} = \frac{1}{cN} + \frac{1}{I_\infty} \tag{10}$$

which shows that 1/IN scales linearly with 1/N with 1/I∞ as the intercept. We therefore fit a line to 1/IN versus 1/N, with N ranging from 200 to 12,800, and took the intercept as the estimate of 1/I∞. A summary of the linear fit is shown in fig. S1.
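The extrapolation step can be sketched as an ordinary least-squares line through the points (1/N, 1/IN). The code below is an illustration, not the fitting routine of Kafashan et al.; the function name and the synthetic values of c and I∞ are hypothetical.

```python
def fit_asymptotic_information(ns, infos):
    """Least-squares line through (1/N, 1/I_N); returns (I_inf, c)."""
    xs = [1.0 / n for n in ns]
    ys = [1.0 / info for info in infos]
    x_mean = sum(xs) / len(xs)
    y_mean = sum(ys) / len(ys)
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    slope = sxy / sxx                     # estimates 1/c
    intercept = y_mean - slope * x_mean   # estimates 1/I_inf
    return 1.0 / intercept, 1.0 / slope

# Synthetic data generated from 1/I_N = 1/(c N) + 1/I_inf.
ns = [200, 400, 800, 1600, 3200, 6400, 12800]
c_true, i_inf_true = 2.0, 500.0   # hypothetical values for the demo
infos = [1.0 / (1.0 / (c_true * n) + 1.0 / i_inf_true) for n in ns]

i_inf, c = fit_asymptotic_information(ns, infos)
print(i_inf, c)
```

Because the fit is done on reciprocals, the smallest populations (largest 1/N) have the most leverage on the slope, while the intercept, and hence I∞, is an extrapolation beyond the largest sampled N.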

The linear Fisher information of L4 neurons can be estimated analytically as (31), with

where , T is the time window for spike counts, and δij is a Kronecker delta, which is 1 if i = j, and 0 otherwise. We can calculate the variance of the integrated noise over time window T as .

The noise correlation was computed with N = 1600 neurons randomly sampled without replacement from the excitatory population of L2/3. The Pearson correlation coefficients were computed from the average covariance matrix (Q1 + Q2)/2.
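The correlation computation can be sketched with NumPy as follows. The function name and the synthetic data (Gaussian counts with one shared noise source) are hypothetical. The exclusion of neurons firing below 1 Hz and the repeated sampling of neurons are not shown.

```python
import numpy as np

def noise_correlations(counts1, counts2):
    """Pairwise Pearson correlations from the condition-averaged covariance.

    counts1, counts2: (n_trials, n_neurons) spike counts for the two stimuli.
    Returns the correlation coefficients for all neuron pairs with i < j.
    """
    q = 0.5 * (np.cov(counts1, rowvar=False) + np.cov(counts2, rowvar=False))
    sd = np.sqrt(np.diag(q))
    corr = q / np.outer(sd, sd)
    rows, cols = np.triu_indices(corr.shape[0], k=1)
    return corr[rows, cols]

rng = np.random.default_rng(1)

def toy_counts(mean, n_trials=20000, n_neurons=5):
    """Counts with unit-variance shared and private noise (correlation 0.5)."""
    shared = rng.normal(size=(n_trials, 1))
    private = rng.normal(size=(n_trials, n_neurons))
    return mean + shared + private

rho = noise_correlations(toy_counts(10.0), toy_counts(12.0))
print(rho.mean())
```

Averaging the two within-condition covariance matrices, rather than pooling the trials, keeps the difference in mean response between the two stimuli from being counted as shared variability.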

For the computation of both noise correlations and linear Fisher information, neurons were randomly sampled 20 times for each N. Neurons with firing rates below 1 Hz were excluded. There were 117,000 spike counts in total for θ1 and θ2.

Acknowledgments

Funding: The work was funded by the Swartz Foundation Fellowship no. 2017-7 (C.H.); grants from the Simons Foundation Collaboration on the Global Brain (C.H., A.P., and B.D.); the Swiss National Science Foundation (www.snf.ch), nos. 31003A_143707 and 31003A_165831 (A.P.); the National Institutes of Health (grant nos. 1U19NS107613-01 and R01 EB026953); and the Vannevar Bush Faculty Fellowship (no. N00014-18-1-2002). This work used the Extreme Science and Engineering Discovery Environment (XSEDE), which is supported by National Science Foundation grant number ACI-1548562. Specifically, it used the Bridges system, which is supported by NSF award number ACI-1445606, at the Pittsburgh Supercomputing Center (PSC).

Author contributions: C.H., A.P., and B.D. conceived the project; C.H. performed the simulations and data analysis; B.D. supervised the project; all authors contributed to writing the manuscript.

Competing interests: The authors declare that they have no competing interests.

Data and materials availability: All data needed to evaluate the conclusions in the paper are present in the paper and/or the Supplementary Materials. Computer code for all simulations and analysis of the resulting data is available at https://doi.org/10.5281/zenodo.5874682 and https://github.com/hcc11/FI_SpatialNet.

Supplementary Materials

This PDF file includes:

Figs. S1 to S6

Other Supplementary Material for this manuscript includes the following:

Movies S1 to S5

REFERENCES AND NOTES

- 1. Shadlen M. N., Newsome W. T., The variable discharge of cortical neurons: Implications for connectivity, computation, and information coding. J. Neurosci. 18, 3870–3896 (1998).

- 2. Ruff D. A., Ni A. M., Cohen M. R., Cognition as a window into neuronal population space. Annu. Rev. Neurosci. 41, 77–97 (2018).

- 3. Stringer C., Pachitariu M., Steinmetz N., Reddy C. B., Carandini M., Harris K. D., Spontaneous behaviors drive multidimensional, brainwide activity. Science 364, eaav7893 (2019).

- 4. Musall S., Kaufman M. T., Juavinett A. L., Gluf S., Churchland A. K., Single-trial neural dynamics are dominated by richly varied movements. Nat. Neurosci. 22, 1677–1686 (2019).

- 5. Cohen M., Kohn A., Measuring and interpreting neuronal correlations. Nat. Neurosci. 14, 811–819 (2011).

- 6. Rabinowitz N. C., Goris R. L., Cohen M., Simoncelli E., Attention stabilizes the shared gain of V4 populations. eLife 4, e08998 (2015).

- 7. Whiteway M. R., Butts D. A., Revealing unobserved factors underlying cortical activity with a rectified latent variable model applied to neural population recordings. J. Neurophysiol. 117, 919–936 (2017).

- 8. Vinck M., Batista-Brito R., Knoblich U., Cardin J. A., Arousal and locomotion make distinct contributions to cortical activity patterns and visual encoding. Neuron 86, 740–754 (2015).

- 9. Downer J. D., Niwa M., Sutter M. L., Task engagement selectively modulates neural correlations in primary auditory cortex. J. Neurosci. 35, 7565–7574 (2015).

- 10. Cohen M. R., Maunsell J. H. R., Attention improves performance primarily by reducing interneuronal correlations. Nat. Neurosci. 12, 1594–1600 (2009).

- 11. Shi Y.-L., Steinmetz N. A., Moore T., Boahen K., Engel T. A., Influence of on-off dynamics and selective attention on the spatial pattern of correlated variability in neocortex. bioRxiv, 2020.09.02.279893 (2020).

- 12. Ruff D. A., Cohen M. R., Attention can either increase or decrease spike count correlations in visual cortex. Nat. Neurosci. 17, 1591–1597 (2014).

- 13. Gu Y., Liu S., Fetsch C. R., Yang Y., Fok S., Sunkara A., DeAngelis G. C., Angelaki D. E., Perceptual learning reduces interneuronal correlations in macaque visual cortex. Neuron 71, 750–761 (2011).

- 14. Ni A., Ruff D., Alberts J., Symmonds J., Cohen M., Learning and attention reveal a general relationship between population activity and behavior. Science 359, 463–465 (2018).

- 15. Smith G. B., Sederberg A., Elyada Y. M., Van Hooser S. D., Kaschube M., Fitzpatrick D., The development of cortical circuits for motion discrimination. Nat. Neurosci. 18, 252–261 (2015).

- 16. Zohary E., Shadlen M. N., Newsome W. T., Correlated neuronal discharge rate and its implications for psychophysical performance. Nature 370, 140–143 (1994).

- 17. Sompolinsky H., Yoon H., Kang K., Shamir M., Population coding in neuronal systems with correlated noise. Phys. Rev. E 64, 051904 (2001).

- 18. Averbeck B. B., Latham P. E., Pouget A., Neural correlations, population coding and computation. Nat. Rev. Neurosci. 7, 358–366 (2006).

- 19. Abbott L. F., Dayan P., The effect of correlated variability on the accuracy of a population code. Neural Comput. 11, 91–101 (1999).

- 20. Kohn A., Coen-Cagli R., Kanitscheider I., Pouget A., Correlations and neuronal population information. Annu. Rev. Neurosci. 39, 237–256 (2016).

- 21. Seung H. S., Sompolinsky H., Simple models for reading neuronal population codes. Proc. Natl. Acad. Sci. U.S.A. 90, 10749–10753 (1993).

- 22. Seriès P., Latham P. E., Pouget A., Tuning curve sharpening for orientation selectivity: Coding efficiency and the impact of correlations. Nat. Neurosci. 7, 1129–1135 (2004).

- 23. Beck J., Bejjanki V. R., Pouget A., Insights from a simple expression for linear Fisher information in a recurrently connected population of spiking neurons. Neural Comput. 23, 1484–1502 (2011).

- 24. Josić K., Shea-Brown E., Doiron B., de la Rocha J., Stimulus-dependent correlations and population codes. Neural Comput. 21, 2774–2804 (2009).

- 25. Zylberberg J., Cafaro J., Turner M. H., Shea-Brown E., Rieke F., Direction-selective circuits shape noise to ensure a precise population code. Neuron 89, 369–383 (2016).

- 26. Franke F., Fiscella M., Sevelev M., Roska B., Hierlemann A., da Silveira R. A., Structures of neural correlation and how they favor coding. Neuron 89, 409–422 (2016).

- 27. Moreno-Bote R., Beck J., Kanitscheider I., Pitkow X., Latham P., Pouget A., Information-limiting correlations. Nat. Neurosci. 17, 1410–1417 (2014).

- 28. Doiron B., Litwin-Kumar A., Rosenbaum R., Ocker G., Josic K., The mechanics of state-dependent neural correlations. Nat. Neurosci. 19, 383–393 (2016).

- 29. Ocker G. K., Hu Y., Buice M. A., Doiron B., Josić K., Rosenbaum R., Shea-Brown E., From the statistics of connectivity to the statistics of spike times in neuronal networks. Curr. Opin. Neurobiol. 46, 109–119 (2017).

- 30. Hennequin G., Ahmadian Y., Rubin D. B., Lengyel M., Miller K. D., The dynamical regime of sensory cortex: Stable dynamics around a single stimulus-tuned attractor account for patterns of noise variability. Neuron 98, 846–860.e5 (2018).

- 31. Kanitscheider I., Coen-Cagli R., Pouget A., Origin of information-limiting noise correlations. Proc. Natl. Acad. Sci. U.S.A. 112, E6973–E6982 (2015).

- 32. van Vreeswijk C., Sompolinsky H., Chaos in neuronal networks with balanced excitatory and inhibitory activity. Science 274, 1724–1726 (1996).