Abstract

Hand hygiene monitoring and compliance systems play a significant role in curbing the spread of healthcare associated infections (HCAI) and the COVID-19 virus. In this paper, a model has been developed using convolutional neural networks (CNN) and computer vision to detect an individual’s germ level, monitor their hand wash technique and create a database containing all records. The proposed model is intended to help every individual entering a public place avoid spreading HCAI. In our model, the individual’s identity is verified using two-factor authentication, followed by a check of the hand germ level. If required, the model then requests hand sanitizing or washing to complete the process. During this time, the hand movements are checked to ensure each hand wash step is completed according to World Health Organization (WHO) guidelines. Upon completion of the process, a database with details of the individual’s germ level is created. The advantage of our model is that it can be implemented in every public place and is easy to integrate. The performance of each segment of the model has been tested on real-time images and validated. The accuracy of the model is 100% for personal identification, 96.87% for hand detection, 93.33% for germ detection and 85.5% for the compliance system.

Keywords: Convolution neural networks (CNN), Template matching, Optical character recognition (OCR), Frame extraction, Image processing, Hand hygiene, Healthcare associated infections (HCAI)

Introduction

Infection prevention and control (IP&C) measures are vital in providing a safe environment for people by reducing the risk of the spread of diseases. These measures are generally used in hospitals to reduce the potential spread of HCAI and to provide patients and health professionals with a safe environment. Most HCAI are known to spread to patients through the hands of healthcare workers [18]. According to WHO, hundreds of millions of people are infected by HCAI. In such a scenario, hand hygiene is widely regarded as the most effective preventive measure for HCAI. Newborn babies in developing countries are at a 20 times higher risk of acquiring HCAI than those in developed nations. In order to reduce the spread of such infections, hand hygiene monitoring and compliance systems need to be installed in hospitals. This can be further expanded to schools and colleges to prevent the spread of diseases in buses and classrooms [20]. Despite the publication of many guidelines, compliance remains low, at approximately 40%, even in properly resourced facilities [4]. Hand hygiene has been a growing concern among people, especially in the health sector, even before the COVID-19 pandemic. Shashank et al. have proposed a collaborative framework for addressing the challenges faced by health care workers during pandemics and stressed the need to develop infrastructure with appropriate technological support to combat infections [10]. Ever since the pandemic started, people around the world have been cautious about their hand hygiene as there is a high possibility of coming into contact with COVID-19 [1]. In the past, various experiments have shown that hand hygiene compliance systems have helped to reduce the spread of infections [3] and diseases in hospitals. Since hand hygiene compliance was poor in Groote Schuur hospital, Cape Town, South Africa, Patel et al. used a multifaceted hospital-wide intervention to increase hand hygiene compliance [15]. It was a quasi-experimental pre-post intervention design conducted for 3 months from June to August in 2015. The sample size was 497 and it was conducted in 11 wards of the hospital. They followed the WHO guidelines and a statistically significant improvement in hand hygiene was witnessed. The study confirmed that a reduction in hospital-acquired infections and a decline in antibiotic resistance can be achieved, which in turn would improve patient care and reduce the future cost of healthcare. These studies on curbing infections have inspired and motivated us to propose a simple and effective hand hygiene monitoring and compliance system.

In this paper, an integrated hand hygiene monitoring and compliance system is proposed and developed using convolutional neural networks (CNN) and computer vision to detect an individual’s germ level, monitor their hand wash technique and create a database containing all records. The integrated model consists of four stages: personal dual-factor authentication in the first stage, detection of the germ level of an individual in the second stage, activation of WHO recommended washing/sanitizing in the third stage and generation of the database in the fourth stage. The following paragraphs briefly present the important works in the literature that are related to the design of each stage of our proposed model.

An important segment of our proposed work is hand detection. Previous papers have introduced computer vision-based methods to extract features from a video frame for the detection of hands [7]. In the literature review conducted by Manish et al., vision techniques for hand detection are compared, and the adaptive boosting algorithm is found to provide better and faster results. The authors used the adaptive boosting algorithm for the detection of the hand, the Haar classifier algorithm for training the classifier, the HSV model for noise removal and background subtraction, and the convex hull to draw contours. In another method, Arpit et al. have used a two-stage method to detect hands [13]. The first stage uses 3 detectors (hand shape detector, context detector, and skin-based detector) to propose hand bounding boxes. Each bounding box was scored independently. In the second stage, a final score was given using these three detectors. The drawbacks of skin-based detectors with respect to illumination and background are overcome by using CNN [21]. Given these advantages of CNN, our model is designed to harness them.

Another important segment of our proposed model is extracting text for the personal authentication model. There are numerous methods to extract text from an image, and text extraction has mainly been done using optical character recognition (OCR) with Tesseract. Zhang et al. have proposed their own approach for text extraction, which performs feature extraction in six phases (pixel research, similarity between pairs of pixels, connected component (CC) features, similarity between pairs of CCs, text features and text layout features) [24]. Vedant et al. extract information from bills using OCR and store it as machine-processable text that is easy to access [11]. In our model, OpenCV is used for image preprocessing and the Tesseract OCR engine is used for text extraction. After examining the functioning and benefits of OCR, our model utilizes it to complete the personal authentication segment.

According to WHO hand hygiene guidelines [19], there are 7 steps for hand rub and for washing hands with soap. Previously, David et al. implemented a computer vision-based system to assess the quality of hand rubbing with sanitizer and hand washing with soap [12]. Hand and arm segmentation is achieved using skin and motion features to determine the region of interest (ROI). For measuring the position of the arms, a multi-modal distribution is used. Histogram of oriented gradients (HOG) and histogram of oriented optical flow (HOF) features are used to create the feature vector, which is then sent to the classifier. Two support vector machines (SVMs) are used for pose recognition with a one-against-one multiclass approach. Yeung et al. have used depth sensors for hand hygiene monitoring systems [22]. In this method, computer vision is used to detect when a person performs a hand hygiene action. In some other cases, biosensors are also used to detect viruses and germs. Saylan et al. have shown the use of biosensors to detect viruses [16]. The disadvantage of using a biosensor is that it depends on external parameters like temperature and pH values.

Weighing the advantages and disadvantages of the aforementioned methods, we propose to integrate computer vision techniques with artificial intelligence (AI). AI has huge potential in clinical medicine, for example in the surveillance of HCAI [5], the diagnosis of infection with IPC implications [8], the diagnosis of COVID-19 using AI and computer vision [2], the study of post-COVID effects in society [14] and hand hygiene [6]. With this in mind, we have proposed and developed an integrated system that helps not only health professionals but also people everywhere to monitor their hand hygiene using a convolutional neural network and computer vision. The systematic implementation of the proposed system can help to significantly reduce the risk of transmission of diseases in hospitals and public areas. It can also help to increase compliance rates, which will in turn reduce the spread of HCAI.

The structure of the paper is as follows. Section 2 discusses the steps involved in developing a system that monitors hand hygiene and its compliance. Section 3 deals with the database and performance metrics used. Section 4 presents the results, and Section 5 discusses them. Section 6 concludes the work with important inferences.

Proposed method

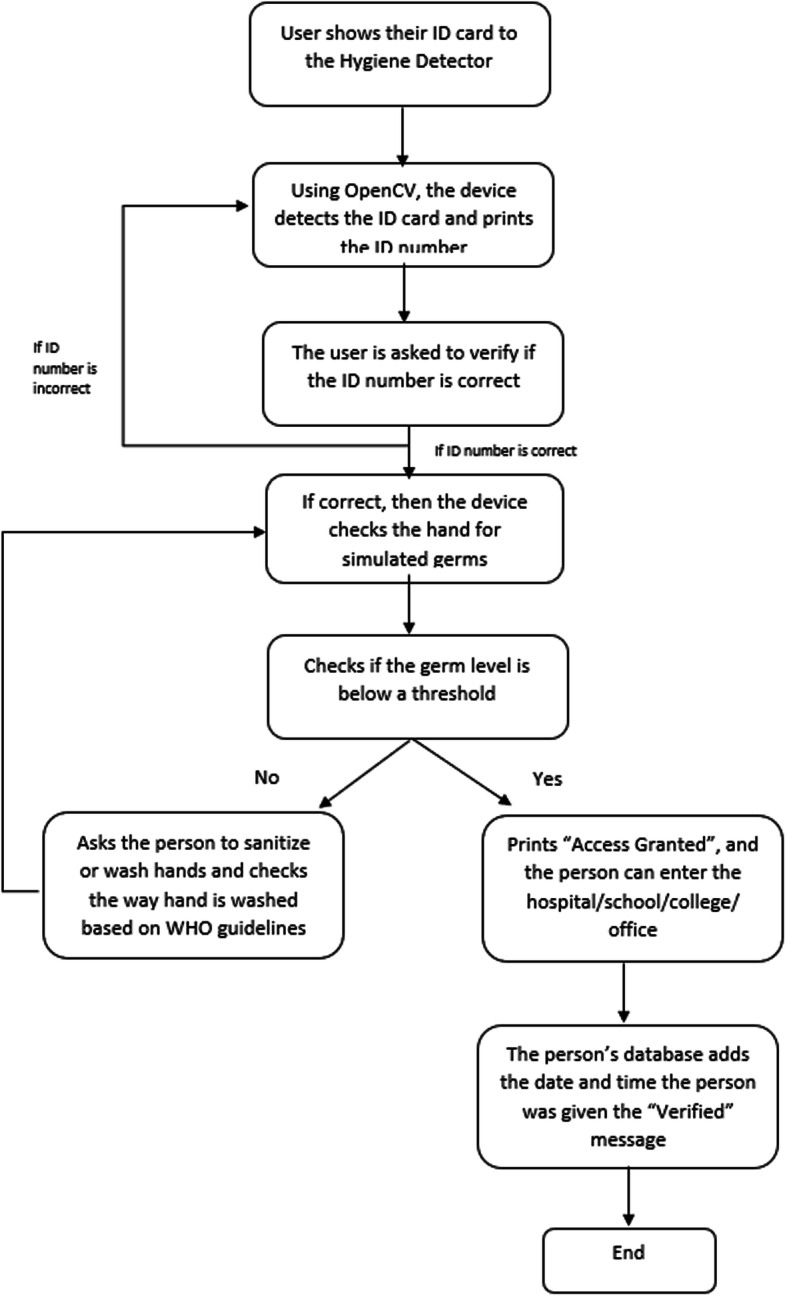

The proposed method depicted in Fig. 1 is a four-stage model for implementing a hand hygiene compliance system. In the first stage, personal dual-factor authentication is completed by scanning the identity (ID) card and verifying a password. In the second stage, the germ level of the individual is detected. The third stage is activated only if the person’s germ level is high. In this stage, the person is required to wash or sanitize their hands according to the WHO guidelines, which is monitored by the CNN model. The fourth stage generates a database.

Fig. 1.

Block diagram of the proposed methodology

First stage of personal authentication

For preprocessing the images, we have used OpenCV, whose predefined functions can be used to transform an image. Tesseract uses a neural network subsystem as its text line recognizer. For the recognition of a sequence of characters, Tesseract uses recurrent neural networks (RNN). Our system uses Pytesseract, which is a Python wrapper for Tesseract [23].

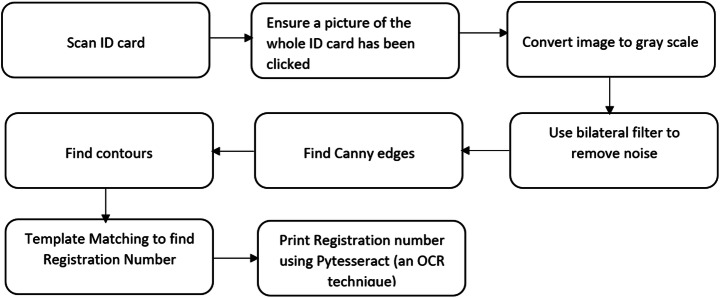

To crop the region that contains the information to be extracted, we use template matching, which searches for a template image within the original image. The template slides over the image until it finds a match; this is done as a 2D convolution. Personal authentication is executed using an eight-step process that ensures a secure dual-factor authentication. The eight steps are highlighted in Fig. 2.

Fig. 2.

Flow chart of the personal authentication process
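
The sliding-window idea behind template matching can be illustrated with a minimal pure-Python sketch. It is not the implementation used in this work: the images are tiny hypothetical grayscale grids, and the sum of squared differences (SSD) is assumed as the match score, which is comparable in spirit to the squared-difference mode of OpenCV's `cv2.matchTemplate`.

```python
def match_template(image, template):
    """Slide `template` over `image` (both 2D lists of grayscale values)
    and return (row, col, score) of the best match.

    The score is the sum of squared differences (SSD): 0 means a perfect
    match, so the best position is the one with the smallest score.
    """
    img_h, img_w = len(image), len(image[0])
    tpl_h, tpl_w = len(template), len(template[0])
    best = None
    for row in range(img_h - tpl_h + 1):
        for col in range(img_w - tpl_w + 1):
            ssd = 0
            for dy in range(tpl_h):
                for dx in range(tpl_w):
                    diff = image[row + dy][col + dx] - template[dy][dx]
                    ssd += diff * diff
            if best is None or ssd < best[2]:
                best = (row, col, ssd)
    return best


# Hypothetical 5x6 "ID card" image with a bright 2x3 patch at row 2, col 3.
image = [
    [10, 10, 10, 10, 10, 10],
    [10, 10, 10, 10, 10, 10],
    [10, 10, 10, 200, 180, 200],
    [10, 10, 10, 180, 200, 180],
    [10, 10, 10, 10, 10, 10],
]
template = [
    [200, 180, 200],
    [180, 200, 180],
]

row, col, score = match_template(image, template)
# Crop the matched region; this is the ROI that would be passed on to OCR.
roi = [line[col:col + len(template[0])] for line in image[row:row + len(template)]]
```

The returned position marks the top-left corner of the best match, so the ROI is simply the template-sized window at that position.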

The first step scans the ID card while ensuring that no noise or other objects are incorrectly included. The second step converts the image to grayscale, which simplifies processing compared with a color image. The third step uses a bilateral filter to remove noise while keeping edges sharp. It replaces each pixel value with a weighted average of neighboring pixels, with weights drawn from a Gaussian distribution. The fourth step applies the Canny edge detector to detect edges in noisy images. This step proceeds as follows: noise reduction, gradient calculation, non-maximum suppression, double thresholding and edge tracking by hysteresis.
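
To show why a bilateral filter smooths noise without blurring edges, the following is a minimal pure-Python sketch of the filter on a small grayscale grid. The radius and the two sigma values are illustrative assumptions, not the settings used in this work, and a real pipeline would normally call a library routine instead.

```python
import math


def bilateral_filter(image, radius=1, sigma_space=1.0, sigma_range=25.0):
    """Return a bilateral-filtered copy of a 2D grayscale image (list of lists).

    Each output pixel is a weighted average of its neighbors. The weight is
    the product of two Gaussians: one on spatial distance and one on
    intensity difference, so pixels across a strong edge contribute little.
    """
    height, width = len(image), len(image[0])
    output = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            center = image[y][x]
            weighted_sum = 0.0
            weight_total = 0.0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    ny, nx = y + dy, x + dx
                    if not (0 <= ny < height and 0 <= nx < width):
                        continue  # ignore neighbors outside the image
                    neighbor = image[ny][nx]
                    spatial = math.exp(-(dx * dx + dy * dy) / (2 * sigma_space ** 2))
                    tonal = math.exp(-((neighbor - center) ** 2) / (2 * sigma_range ** 2))
                    weight = spatial * tonal
                    weighted_sum += weight * neighbor
                    weight_total += weight
            output[y][x] = weighted_sum / weight_total
    return output


# Hypothetical noisy step edge: dark region (~50) next to bright region (~200).
noisy = [
    [52, 48, 50, 198, 202, 200],
    [49, 51, 53, 201, 199, 203],
    [50, 47, 52, 200, 204, 197],
]
smoothed = bilateral_filter(noisy)
```

In the smoothed result the small fluctuations within each region are reduced, while the jump between the dark and bright regions stays in place because neighbors with very different intensity receive almost zero weight.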

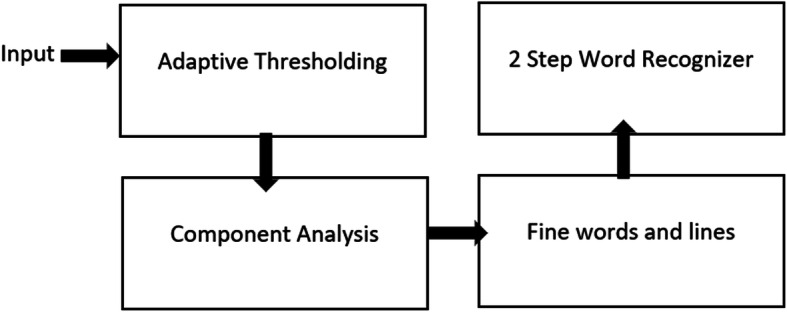

The fifth step finds contours, which are imperative for object recognition. In the sixth step, template matching is used to extract the ID number area of the image. The input image is converted to grayscale and a template is selected. The template is then slid over the image. The position with the highest match score is taken as the region of interest (ROI). This ROI is used in the text extraction process. In the seventh step, Tesseract OCR extracts the ID number in text format. The Tesseract OCR engine performs text localization, character segmentation and recognition. It first applies adaptive thresholding to produce a binary image that represents the segmentation, and performs connected component analysis to find character outlines. Then, it finds lines and words to create outlines around each word, and lastly runs a two-step word recognition stage to identify each letter or digit, as shown in Fig. 3.

Fig. 3.

Flow chart of OCR (Optical Character Recognition)

In the eighth step, dual-factor authentication is completed by verifying the individual against a database of ID numbers and passwords. The model compares the password entered by the individual with the one stored in the database for the extracted ID number. This completes the personal identification process.
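
The final verification step can be sketched as follows. This is a minimal illustration under stated assumptions: the in-memory dictionary stands in for the ID/password database, the ID number and password are made up, and storing salted password hashes is our illustrative choice, since the storage format of the actual database is not specified.

```python
import hashlib
import hmac


def hash_password(password, salt):
    """Derive a salted PBKDF2-SHA256 hash so plain-text passwords are not stored."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 10_000)


SALT = b"demo-salt"  # illustrative only; a random per-user salt is preferable

# Hypothetical stand-in for the database of ID numbers and passwords.
USER_DB = {
    "18BEC0001": hash_password("correct horse", SALT),  # made-up ID number
}


def authenticate(ocr_id_number, entered_password):
    """Return True only if the OCR-extracted ID exists and the password matches."""
    stored_hash = USER_DB.get(ocr_id_number.strip())
    if stored_hash is None:
        return False  # first factor failed: unknown ID card number
    candidate = hash_password(entered_password, SALT)
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(stored_hash, candidate)
```

For example, `authenticate(" 18BEC0001\n", "correct horse")` succeeds even though the OCR output carries stray whitespace, while a wrong password or an unknown ID number is rejected.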

CNN for hand detection system and hand hygiene compliance system

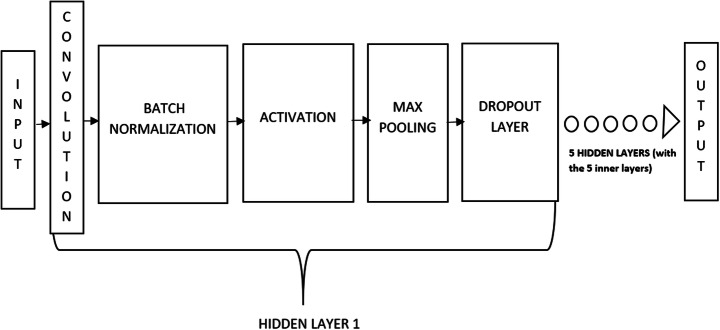

CNN is a class of deep neural networks. After reviewing candidate machine learning models for image classification, we chose CNN because of its high accuracy. Beyond accuracy, CNN has an edge over other machine learning models because it detects and extracts important features by itself, which makes it computationally efficient. It is also very effective in reducing the number of parameters without affecting the quality of the model. Consequently, we have used CNN in our hand detection and hygiene compliance system, the architecture of which is shown in Fig. 4.

Fig. 4.

CNN architecture

To implement CNN, we used TensorFlow and Keras. We use Keras because its application programming interface (API) is simple and clean, and allows deep learning models to be defined, fit and evaluated with just a few lines of code. In 2019, Google integrated the Keras API with the TensorFlow library and released the TensorFlow 2 deep learning library. The integration is commonly referred to as the tf.keras interface or API. We have used this API to develop the CNN models for the hand hygiene monitoring and compliance system.

The second stage of hand detection and germ detection

Germ detection first requires the hand to be detected; the germ level on the detected hand is then classified.

Hand detection

Architecture of the model

The parameters set in our model include an input image size of 128 × 128 × 3, 2 output categories for classification, 5 convolution and pooling layers, 5 epochs and a batch size of 16. The training and testing data paths are given as input to the model along with a CSV file which augments the process. The training dataset is split into 85% training data and 15% validation data. A softmax function is used as the output activation and the Adam optimizer is used to train the model. The architecture of the CNN is shown in Fig. 4.
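
To make the effect of five convolution and pooling layers on a 128 × 128 input concrete, the following minimal sketch traces the spatial size of the feature map through the network. The 3 × 3 kernel, stride of 1 and 2 × 2 pooling window are assumptions for illustration, as the text above does not state them.

```python
def trace_feature_map_sizes(input_size=128, num_blocks=5, kernel=3,
                            padding="same", pool=2):
    """Return the spatial size after each convolution + pooling block.

    Assumes stride-1 convolutions and non-overlapping pooling windows.
    """
    sizes = []
    size = input_size
    for _ in range(num_blocks):
        if padding == "valid":
            size = size - kernel + 1  # unpadded convolution shrinks the map
        size = size // pool           # pooling divides the size by the window
        sizes.append(size)
    return sizes


same_sizes = trace_feature_map_sizes()                  # zero-padded convolutions
valid_sizes = trace_feature_map_sizes(padding="valid")  # unpadded convolutions
```

With zero-padded convolutions the map halves at every block (64, 32, 16, 8, 4), so the final feature map is 4 × 4 before the classification layers; without padding it shrinks faster and ends at 2 × 2.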

Training the model

Our model consists of 5 hidden layers and can classify images into two categories namely, ‘hand’ and ‘no hand’. Our model takes an image as its input and assigns weights and biases to various aspects/objects in the image, through which it is able to classify images. Once all the parameters are set, the model is trained with the training dataset and is also run for the validation dataset. The time taken for the process is 2 min and 21 s. Using this model the categories of the testing dataset are predicted and the values are printed. A data frame is created which contains the name of the image and its predicted label/class. The parameters of the hand detection model are shown in Table 1.

Table 1.

Model accuracy of hand detection

| Model parameters | Model accuracy (%) |
|---|---|
| Batch size = 16, epochs = 5 | 94.17 |
| Batch size = 16, epochs = 10 | 97.5 |
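
The prediction step described above, in which each test image receives a class label that is stored next to its file name, can be sketched as follows. The raw network outputs and file names are hypothetical, and a plain list of dictionaries stands in for the data frame.

```python
import math

CLASS_NAMES = ["hand", "no hand"]


def softmax(logits):
    """Convert raw network outputs into probabilities that sum to 1."""
    largest = max(logits)
    exps = [math.exp(v - largest) for v in logits]  # shift for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def predict_label(logits):
    """Return (class name, probability) for the most likely class."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return CLASS_NAMES[best], probs[best]


# Hypothetical raw network outputs for two test images.
raw_outputs = {"test_1.jpg": [2.0, 0.0], "test_2.jpg": [-1.0, 1.5]}

# One record per image: file name plus predicted class.
predictions = [
    {"image": name, "label": predict_label(logits)[0]}
    for name, logits in raw_outputs.items()
]
```

The same pattern extends to the germ detection and WHO compliance models by changing the list of class names.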

Germ detection

Architecture of the model

The parameters set in the germ detection model are similar to those of the hand detection model. The model has 2 output categories for classification, 5 convolution and pooling layers, 20 epochs and a batch size of 4. It also uses a softmax activation function. The training dataset is split into 85% training data and 15% validation data.
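
The 85%/15% split can be sketched as below. This is a minimal illustration that assumes a random shuffle with a fixed seed and uses 100 made-up file names; how the split was actually drawn is not specified in the text.

```python
import random


def split_train_validation(items, validation_fraction=0.15, seed=42):
    """Shuffle the items reproducibly and return (training, validation) lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_val = round(len(shuffled) * validation_fraction)
    return shuffled[n_val:], shuffled[:n_val]


# Hypothetical dataset of 50 'High' and 50 'Low' germ level images.
filenames = [f"High.{i}" for i in range(1, 51)] + [f"Low.{i}" for i in range(1, 51)]
train_files, val_files = split_train_validation(filenames)
```

Fixing the seed keeps the split identical between runs, which makes accuracy figures from different training configurations comparable.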

Training the model

In this model, the images are classified into two categories, namely “High” germ level and “Low” germ level. The parameters of the germ detection model are shown in Table 2.

Table 2.

Model accuracy of germ detection

| Model parameters | Model accuracy (%) |
|---|---|
| Batch size = 4, epochs = 20 | 93.33 |

The third stage of the WHO compliance system

The WHO compliance system comes into play only if the person’s hand germ level is categorized as high. This model requires the person to perform all hand wash steps using soap or sanitizer in front of the camera. Our system records the entire process and extracts frames from the video. Those frames are used to validate whether the person has performed all required steps.

Architecture of the model

The parameters set in our model include an input image size of 128 × 128 × 3 and 6 output categories for classification (depicting the 6 WHO compliance steps). This multiclass classification model uses CNN to determine whether the user has washed their hands according to the WHO guidelines. The model has 5 convolution and pooling layers, 10 epochs and a batch size of 16. The training and testing data paths are given as input to the model along with a CSV file which augments the process. The training dataset is split as in the above models (85% training data and 15% validation data). It also uses a softmax function and the Adam optimizer.


Training the model

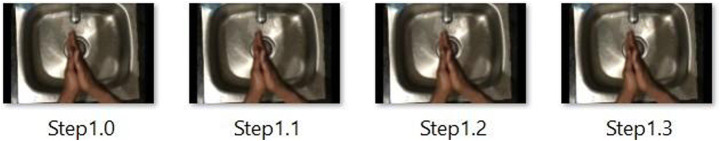

Our model consists of 5 hidden layers and can classify images into six categories, namely ‘step0’, ‘step1’, ‘step2’, ‘step3’, ‘step4’ and ‘step5’. Once all the parameters are set, the model is trained with the training dataset and is also run on the validation dataset. Meanwhile, the person performs the hand washing procedure and our system records it as a video, from which frames are then extracted. These frames are given as input to the model, which categorizes them and prints the predicted values. A data frame is created which contains the name of each image and its predicted label/class. The parameters of the WHO compliance system are shown in Table 3.

Table 3.

Model accuracy of WHO compliance system

| Model parameters | Model accuracy (%) |
|---|---|
| Batch size = 16, epochs = 10 | 85.5 |
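
Once every extracted frame has a predicted step label, deciding whether the hand wash was complete reduces to checking that each of the six steps was observed. The sketch below is one possible way to do this; the minimum number of frames per step is a hypothetical threshold that we introduce to tolerate isolated misclassifications, not a value taken from this work.

```python
from collections import Counter

REQUIRED_STEPS = ["step0", "step1", "step2", "step3", "step4", "step5"]


def check_compliance(frame_labels, min_frames_per_step=3):
    """Return (is_compliant, missing_steps) for a list of per-frame labels.

    A step counts as performed only if at least `min_frames_per_step`
    frames were classified as that step.
    """
    counts = Counter(frame_labels)
    missing = [step for step in REQUIRED_STEPS if counts[step] < min_frames_per_step]
    return (not missing, missing)


# Hypothetical predictions for two recordings.
complete_wash = [step for step in REQUIRED_STEPS for _ in range(4)]
incomplete_wash = [label for label in complete_wash if label != "step3"] + ["step3"]

complete_result = check_compliance(complete_wash)
incomplete_result = check_compliance(incomplete_wash)
```

The list of missing steps can be used to tell the individual which steps to repeat.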

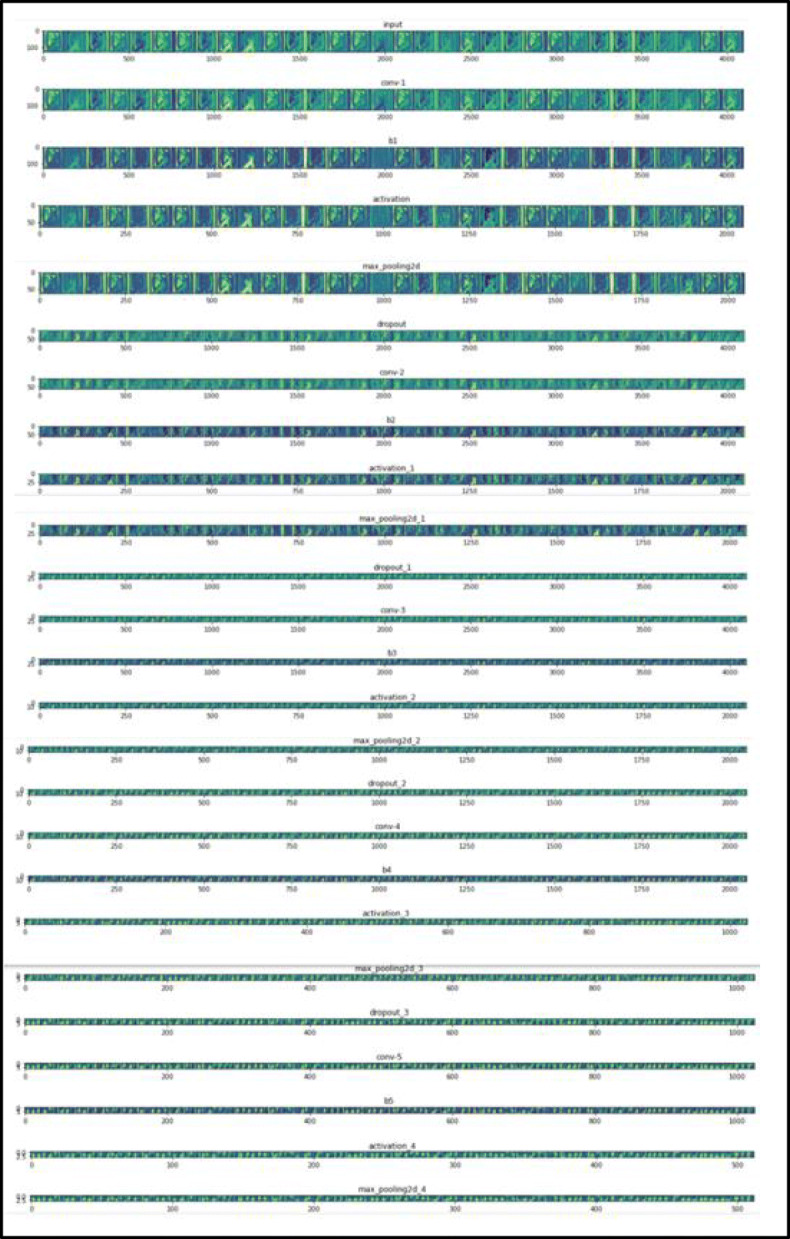

Feature maps for each of the steps are also plotted; the feature map for Step 3 is shown in Fig. 5. As the feature maps show, the filters in the CNN help the model to highlight lines, focus on the background, outline the hands and focus on the edges. The model is able to capture fine details about the image, which it then uses during classification and prediction.

Fig. 5.

Feature map for ‘Step 3’

Fourth stage of database generation

In order to connect the system with a database, we use openpyxl, a library for accessing Excel sheets and opening them as active workbooks so that we can add our final data to this database (Excel format). After the completion of the hand hygiene validation, the data of the individual is written to the database using the xlsxwriter module. The Excel sheet is opened in an editable format, the number of occupied rows is recorded and the new record is written to the next row. This database helps maintain a record of the germ level of each individual entering any public place.
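
The append-to-next-row logic can be illustrated with a short sketch. To keep the example dependency-free, it swaps the Excel workbook for a CSV file written with Python's standard `csv` module; the column names, file name and sample records are hypothetical.

```python
import csv
import os
import tempfile
from datetime import datetime

FIELDS = ["id_number", "germ_level", "hand_wash_completed", "timestamp"]


def append_record(path, id_number, germ_level, hand_wash_completed):
    """Append one record after the existing rows and return the row count.

    The header is written first if the file is new or empty.
    """
    is_new = not os.path.exists(path) or os.path.getsize(path) == 0
    with open(path, "a", newline="") as handle:
        writer = csv.DictWriter(handle, fieldnames=FIELDS)
        if is_new:
            writer.writeheader()
        writer.writerow({
            "id_number": id_number,
            "germ_level": germ_level,
            "hand_wash_completed": hand_wash_completed,
            "timestamp": datetime.now().isoformat(timespec="seconds"),
        })
    # Count the occupied data rows (the header is excluded by DictReader).
    with open(path, newline="") as handle:
        return sum(1 for _ in csv.DictReader(handle))


db_path = os.path.join(tempfile.mkdtemp(), "hand_hygiene_log.csv")
first_count = append_record(db_path, "18BEC0001", "High", True)
second_count = append_record(db_path, "18BEC0002", "Low", False)
```

Opening the file in append mode plays the role of locating the first free row in the spreadsheet, so earlier records are never overwritten.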

Database and performance metrics

The first step in developing a hand hygiene monitoring and compliance system is the collection of data. The dataset for each of the four segments mentioned in Section 2 is created independently by taking real-time images. Moreover, for personal authentication and database generation, real-time analysis is performed on the generated output. For the hand identification data, we used real-time hand images with varied skin tones, backgrounds and hand sizes, together with pictures of various other objects, so that the dataset contains both images with a hand and images without one. The training images were named according to whether a hand is present or not: the images are named ‘Hand.n’ or ‘No.n’, where n runs from 1 up to the total number of hand/no hand images. Some sample images used for hand detection are shown in Fig. 6.

Fig. 6.

Dataset sample for hand detection

For the WHO hand hygiene compliance system, we used the UCF-101 dataset [9], which contains videos of individuals performing the hand wash action according to the WHO guidelines. It also covers varied parameters like illumination, background (like different sinks), camera position and field of view. Along with this, we added videos of ourselves performing the hand wash actions according to WHO guidelines. Frames are extracted from these video files using OpenCV and used as input to the CNN model (training dataset). There are 4815 images in the training dataset. The training images are named according to the action they represent: for example, the images are named ‘Step1.n’, where n runs from 1 up to the total number of images, as shown in Fig. 7.

Fig. 7.

Dataset sample of WHO Compliance system

For germ detection, a dataset is created using real-time images of fluorescent paint on the hand (simulating a virus). The germ levels are of two intensities, “High” and “Low”, based on the luminescence, as shown in Fig. 8. The hands on the left and right are classified as having “High” and “Low” germ levels respectively. The training images are named according to whether the germ level is “High” or “Low”: the images are named ‘High.n’ or ‘Low.n’, where n runs from 1 up to the total number of high/low germ level hand images.

Fig. 8.

This image shows the difference between “high” germ level and “low” germ level. The “high” germ level can be transformed to a “low” germ level by a 30-second hand wash procedure
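
Because the class of each training image is encoded in its file name (‘Hand.n’, ‘No.n’, ‘Step1.n’, ‘High.n’, ‘Low.n’), the labels can be recovered by parsing the names. The sketch below shows one way to do so; the file extensions and sample names are assumptions for illustration.

```python
def label_from_filename(filename):
    """Split a name such as 'High.12' or 'Hand.3.jpg' into (label, index)."""
    parts = filename.split(".")
    if len(parts) < 2 or not parts[1].isdigit():
        raise ValueError(f"unexpected file name: {filename!r}")
    return parts[0], int(parts[1])


# Hypothetical file names following the naming convention above.
files = ["Hand.1.jpg", "No.1.jpg", "High.7.png", "Low.12.png", "Step1.300.jpg"]

# (file name, label, index) rows, e.g. for writing a labels CSV file.
labelled = [(name,) + label_from_filename(name) for name in files]
```

Rows of this form could populate a CSV file of file names and labels such as the one passed to the models.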

To evaluate the effectiveness of the proposed model, the accuracy of each of the four steps mentioned in Section 2 is obtained. As the performance of a CNN model is characterized by its training accuracy, testing accuracy and computation time, we report these three performance metrics for each segment of our model.
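
Accuracy here is taken to mean the percentage of images whose predicted class equals the true class. A minimal sketch with made-up labels:

```python
def accuracy(true_labels, predicted_labels):
    """Return the percentage of predictions that match the true labels."""
    if not true_labels or len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must be non-empty and of equal length")
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return 100.0 * correct / len(true_labels)


# Hypothetical germ level labels for eight test images.
true_labels = ["High", "High", "Low", "Low", "High", "Low", "Low", "High"]
predicted = ["High", "Low", "Low", "Low", "High", "Low", "High", "High"]

score = accuracy(true_labels, predicted)  # 6 of 8 correct
```

Six of the eight predictions match, giving an accuracy of 75%.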

Results

Experimental setup

The four segments are integrated to give the desired results. The methodology explained in Section 2 is executed entirely in Python using Jupyter Notebook. The Python libraries described earlier aided in the execution of our project. For the execution of the project, a laptop with 32 GB RAM, 2 × 64-bit, 2.8 GHz 8.00 GT/s CPUs, a stable internet connection and a working camera are used [17].

Model training and testing

Each segment of our model has been executed successfully, and the performance metrics are shown in Table 4. To evaluate the model, the accuracy of each of the four steps mentioned in Section 2 is obtained, along with the computation time. Personal authentication correctly detects the ID card number and validates the password for two-factor authentication. The hand detection, germ detection and WHO compliance models are tested with subject images the models had not seen before. All processes are tested with different backgrounds to make sure they predict values accurately. Our dataset covers varied parameters such as illumination, background (like different sinks), camera position, field of view and hand complexion. This makes our results more robust when new hands are introduced to the system.

Table 4.

Result analysis

| Model | Training dataset accuracy (%) | Validation accuracy (%) | Computation time |
|---|---|---|---|
| Hand detection model | 96.87 | 90.9 | 2 min 21 s |
| Germ/Virus detection model | 93.3 | 57.0 | 2 min 01 s |
| WHO hand hygiene compliance model | 85.5 | 99.7 | 1 h 9 min 49 s |

Discussions

Our study has a few limitations regarding germ detection and the WHO hand hygiene compliance system. Our study simulates the presence of germs and does not yet detect actual germs. This could be achieved by using fluorescence spectral imaging techniques. The computation time of the WHO hand hygiene compliance system is longer than that of existing models such as the one proposed by Harvey Wang et al. [6]. However, the accuracy obtained by our model is higher, as our dataset consists of various clips of individuals washing their hands with different types of sinks, skin colours and environments.

If the model encounters any discrepancy, it asks the individual to redo the steps required for completion of the process. For hand detection and the WHO compliance system, 100 images and 4815 images are used for training respectively. The accuracy of the model is expected to increase if more images are used (an image dataset of 3000 images or more). Previous studies by Yeung et al. [22] and Saylan et al. [16] rely on dedicated sensors (depth sensors and biosensors respectively) for monitoring. The cost associated with such sensors can be high; hence we have provided a cost-effective yet efficient method that uses a camera for the same purpose.

Conclusions

Hand hygiene is one of the most effective preventive methods for reducing the spread of HCAI and other diseases. In this paper, the practical use of deep learning combined with computer vision in detecting the germ level of all individuals in places like hospitals and schools is demonstrated. The aim of the model is to authenticate an individual’s identity, check the hand for germs, monitor the WHO hand washing steps if the germ level is high and add the individual’s name to a database. CNN and OpenCV are used to achieve this. The most important feature of the proposed work is the WHO compliance part of our model, which comes into play if the germ level is high and makes sure the individual sanitizes or washes their hands according to the WHO guidelines. Our model achieved an accuracy of 100% for personal authentication, 96.87% for hand detection, 93.33% for germ detection and 85.5% for the WHO compliance system. Instantaneous feedback is given after every stage of the model. The model could be improved by increasing the data size. At a macro level, the government could install such systems at required places like hospitals, schools, offices and any public area to monitor each person’s germ level and keep a record. Moreover, at a micro level, a mobile application could be designed to record high germ locations.

Acknowledgements

The authors would like to thank Vellore Institute of Technology for the encouragement, approval and support in pursuing this research study.

Declarations

Conflict of interest

The authors have no conflicts of interest, affiliations or financial interests to report.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Anubha Nagar, Email: anubha.ed@gmail.com.

Mithra Anand Kumar, Email: madhumithraa99@gmail.com.

Naveen Kumar Vaegae, Email: vegenaveen@vit.ac.in.

References

- 1. Alzyood M, Jackson D, Aveyard H, Brooke J. COVID-19 reinforces the importance of handwashing. J Clin Nurs. 2020. doi: 10.1111/jocn.15313.

- 2. Bhargava A, Bansal A. Novel coronavirus (COVID-19) diagnosis using computer vision and artificial intelligence techniques: a review. Multimed Tools Appl. 2021;80:19931–19946. doi: 10.1007/s11042-021-10714-5.

- 3. Boyce JM, Pittet D. Guideline for hand hygiene in health-care settings: recommendations of the Healthcare Infection Control Practices Advisory Committee and the HICPAC/SHEA/APIC/IDSA Hand Hygiene Task Force. Infect Control Hosp Epidemiol. 2002;23(S12):S3–S40. doi: 10.1086/503164.

- 4. Erasmus V, Daha TJ, Brug H, Richardus JH, Behrendt MD, Vos MC, van Beeck EF. Systematic review of studies on compliance with hand hygiene guidelines in hospital care. Infect Control Hosp Epidemiol. 2010;31(3):283–294. doi: 10.1086/650451.

- 5. Fitzpatrick F, Doherty A, Lacey G. Using artificial intelligence in infection prevention. Current Treatment Options in Infectious Diseases. 2020;12(2):135–144. doi: 10.1007/s40506-020-00216-7.

- 6. Galkin M, Rehman K, Schornstein B, Sunada-Wong W, Wang H (2019) A hygiene monitoring system. Rutgers University’s School of Engineering

- 7. Gurav RM, Kadbe PK (2015) Real time finger tracking and contour detection for gesture recognition using OpenCV. In: 2015 International Conference on Industrial Instrumentation and Control (ICIC). IEEE, pp 974-977. doi: 10.1109/IIC.2015.7150886

- 8. Haque A, Guo M, Alahi A, Yeung S, Luo Z, Rege A, Fei-Fei L (2017) Towards vision-based smart hospitals: a system for tracking and monitoring hand hygiene compliance. In: Machine Learning for Healthcare Conference. PMLR, pp 75-87. Available from https://proceedings.mlr.press/v68/haque17a.html. Accessed 21 Mar 2021

- 9. Kaggle S (2020) Hand wash dataset. Available online: https://www.kaggle.com/realtimear/hand-wash-dataset. Accessed 22 Mar 2021

- 10. Kumar S, Raut RD, Narkhede BE. A proposed collaborative framework by using artificial intelligence-internet of things (AI-IoT) in COVID-19 pandemic situation for healthcare workers. Int J Healthc Manag. 2020;13(4):337–345. doi: 10.1080/20479700.2020.1810453.

- 11. Kumar V, Kaware P, Singh P, Sonkusare R, Kumar S (2020) Extraction of information from bill receipts using optical character recognition. In: 2020 International Conference on Smart Electronics and Communication (ICOSEC). IEEE, pp 72-77. doi: 10.1109/ICOSEC49089.2020.9215246

- 12. Llorca DF, Parra I, Sotelo M, Lacey G. A vision-based system for automatic hand washing quality assessment. Mach Vis Appl. 2011;22(2):219–234. doi: 10.1007/s00138-009-0234-7.

- 13. Mittal A, Zisserman A, Torr PH (2011) Hand detection using multiple proposals. In: BMVC, vol 2, no 3, p 5. doi: 10.5244/C.25.75

- 14. Nayal K, Raut R, Priyadarshinee P, Narkhede BE, Kazancoglu Y, Narwane V. Exploring the role of artificial intelligence in managing agricultural supply chain risk to counter the impacts of the COVID-19 pandemic. Int J Logist Manag. 2021. doi: 10.1108/IJLM-12-2020-0493.

- 15. Patel B, Engelbrecht H, McDonald H, Morris V, Smythe W. A multifaceted hospital-wide intervention increases hand hygiene compliance: in practice. S Afr Med J. 2016;106(4):335–341. doi: 10.7196/SAMJ.2016.v106i4.10671.

- 16. Saylan Y, Erdem Ö, Ünal S, Denizli A (2019) An alternative medical diagnosis method: biosensors for virus detection. Biosensors 9(2):65. doi: 10.3390/bios9020065

- 17. System Requirements — Anaconda Documentation. Available online: https://docs.anaconda.com/anaconda-enterprise/system-requirements/. Accessed 24 Mar 2021

- 18. World Health Organization (2006) WHO guidelines on hand hygiene in health care (advanced draft): global safety challenge 2005-2006: clean care is safer care (No. WHO/EIP/SPO/QPS/05.2 Rev. 1). World Health Organization. https://apps.who.int/iris/handle/10665/69323. Accessed 1 Mar 2021

- 19. WHO Hand Hygiene: Why, How and When (2009) https://www.who.int/gpsc/5may/Hand_Hygiene_Why_How_and_When_Brochure.pdf Accessed 16 Mar 2021

- 20. World Health Organization. Health care-associated infections FACT SHEET. http://www.who.int/gpsc/country_work/gpsc_ccisc_fact_sheet_en.pdf. Accessed 12 Mar 2021

- 21. Yang L, Qi Z, Liu Z, Liu H, Ling M, Shi L, Liu X. An embedded implementation of CNN-based hand detection and orientation estimation algorithm. Mach Vis Appl. 2019;30(6):1071–1082. doi: 10.1007/s00138-019-01038-4.

- 22. Yeung S, Alahi A, Haque A, Peng B, Luo Z, Singh A, … Li FF (2016) Vision-based hand hygiene monitoring in hospitals. In: AMIA

- 23. Zelic F “[Tutorial] OCR in Python with Tesseract, OpenCV and Pytesseract.” AI & Machine Learning Blog, 14 May 2021, http://www.nanonets.com/blog/ocr-withtesseract

- 24. Zhang J, Cheng R, Wang K, Zhao H (2013) Research on the text detection and extraction from complex images. In: 2013 Fourth International Conference on Emerging Intelligent Data and Web Technologies. IEEE, pp 708-713. doi: 10.1109/EIDWT.2013.122