Abstract

In this preregistered study (https://osf.io/s4rm9) we investigated the behavioural and neurological [electroencephalography; alpha (attention) and theta (effort)] effects of dynamic non-predictive social and non-social cues on working memory. In a virtual environment realistic human-avatars dynamically looked to the left or right side of a table. A moving stick served as a non-social control cue. Kitchen items were presented in the valid cued or invalid un-cued location for encoding. Behavioural findings showed a similar influence of the cues on working memory performance. Alpha power changes were equivalent for the cues during cueing and encoding, reflecting similar attentional processing. However, theta power changes revealed different patterns for the cues. Theta power increased more strongly for the non-social cue compared to the social cue during initial cueing. Furthermore, while for the non-social cue there was a significantly larger increase in theta power for valid compared to invalid conditions during encoding, this was reversed for the social cue, with a significantly larger increase in theta power for the invalid compared to valid conditions, indicating differences in the cues’ effects on cognitive effort. Therefore, while social and non-social attention cues impact working memory performance in a similar fashion, the underlying neural mechanisms appear to differ.

Keywords: EEG, gaze, social, joint attention, virtual reality

Eye gaze is highly important in human communication (Kleinke, 1986), and people will generally follow other people’s eye gaze, engaging in joint attention (see Frischen et al., 2007). Joint attention is linked to theory of mind (e.g. Charman et al., 2000) which leads to mentalising and perspective taking (Frith and Frith, 2006). This gaze-following behaviour is typically investigated using an adapted Posner cueing task (e.g. Posner, 1980) whereby targets are presented in a valid, cued or in an invalid, un-cued location. Despite the uninformative nature of the cues, validly cued targets are generally responded to faster than those invalidly cued, with this being found both for eye gaze cues (e.g. Friesen and Kingstone, 1998; Driver et al., 1999; Frischen et al., 2007; Gregory, 2021; Gregory and Jackson, 2021) and for other non-social communicative cues such as arrows and directional words (e.g. Hommel et al., 2001; Ristic et al., 2002; Tipples, 2002, 2008; Gregory and Jackson, 2021).

While cueing effects are not unique to eye gaze, joint attention is shown to have a unique effect upon higher-order cognitive processes, with equivalent effects not found for non-social controls. For example, joint attention has been found to improve language comprehension in children (Tomasello and Farrar, 1986; Tomasello, 1988) and adults (Hanna and Brennan, 2007; Knoeferle and Kreysa, 2012), to influence memory processes in infants (Striano et al., 2006; Cleveland et al., 2007; Wu and Kirkham, 2010; Wu et al., 2011) and adults (Shteynberg, 2010; Dodd et al., 2012; Richardson et al., 2012; Gregory and Jackson, 2017), and to influence perceived object value (Bayliss et al., 2007; van der Weiden et al., 2010; Madipakkam et al., 2019). These uniquely social effects are considered to reflect default altercentric (other-centred) processing (Kampis and Southgate, 2020), where humans cannot help but be influenced by the perspective of others. Evidence therefore suggests that objects seen under shared/joint attention undergo enhanced processing due to the uniquely social influence of mutual gaze (Becchio et al., 2008; Shteynberg, 2010).

While evidence shows that joint attention enhances working memory for simple objects (Gregory and Jackson, 2017, 2019), the mechanisms of this effect are currently unknown. For example, it is unclear if joint attention influences memory for multidimensional information (Baddeley, 2010). Information that captures attention is found to be favoured by working memory (Awh et al., 2006), and these attention and memory processes are linked to neural oscillatory activity in the alpha (8–12 Hz) and theta (3–7 Hz) bands (Klimesch, 1999). Alpha desynchronisation (decrease in power) is related to enhanced attentional processing of target stimuli (Hanslmayr et al., 2005; Sauseng et al., 2005). Theta synchronisation (increase in power) is related to effortful cognitive control processes (Demiralp and Başar, 1992; Min and Park, 2010; Cavanagh and Frank, 2014; Noonan et al., 2018). In memory, increased theta power at encoding and retrieval is related to better recall of stimuli (see Hsieh and Ranganath, 2014 for a review) and increases in task demands lead to greater theta synchronisation (e.g. Gevins et al., 1997; Jensen and Tesche, 2002).

Changes in alpha and theta rhythms also reflect social processing. During perspective taking, theta oscillations occur in brain areas linked to mentalising processes (Bögels et al., 2015; Wang et al., 2016; Seymour et al., 2018). Further, gaze processing deficits in schizophrenia have been linked to irregular theta activity (Grove et al., 2021), and theta is also linked to social exclusion (Cristofori et al., 2013, 2014). When using real humans as stimuli (not photographs), alpha power is found to be modulated by eye gaze. For example, alpha power desynchronisation is more pronounced for direct than averted gaze (Chapman et al., 1975), and direct gaze triggers approach-related alpha activity while averted gaze triggers avoidance-related activity (Hietanen et al., 2008; Pönkänen et al., 2011). Furthermore, higher alpha desynchronisation is triggered by a joint attention compared to a no joint attention condition in an otherwise identical task (Lachat et al., 2012). Finally, using a humanoid robot in an innovative gaze cueing task, it was found that eye contact prior to gaze shift results in higher alpha desynchronisation compared to no eye contact prior to shift (Kompatsiari et al., 2021).

When photographs instead of real people are used as gaze cues, the effects of gaze on alpha are absent (Hietanen et al., 2008). Indeed, there is a range of important variations found in people’s responses to real people compared to photographic or video stimuli (see Risko et al., 2016). However, while the use of real people as stimuli is a useful and enlightening method, it can impose limitations on complexity, replicability and the types of behaviours that can be investigated. As an alternative, Wykowska and colleagues have successfully used robots as social stimuli in social cognition research (Wykowska et al., 2016; Kompatsiari et al., 2018, 2021; Willemse et al., 2018). This demonstrates that it is not always necessary to use real people when studying social phenomena. Indeed, research shows that interactions with virtual humans are comparable to real human interaction (see Bombari et al., 2015).

In the current preregistered study (https://osf.io/s4rm9), we investigated the effect of dynamic eye gaze on working memory using virtual human avatars. Critically, we recorded electroencephalography (EEG) to investigate oscillatory power changes in alpha, reflecting attentional effects, and theta, reflecting cognitive effort, during the task. To the best of our knowledge, this is the first investigation of theta and alpha oscillations with respect to the effect of gaze cueing on working memory. Notably, to the best of our knowledge, this is also the first investigation of theta oscillations in gaze cueing more generally.

Presented using a head-mounted display, 3D avatars looked up to engage the participant in eye contact before looking down to the left or right. Reflecting real gaze behaviour, the avatar’s eyes shifted gaze direction rapidly in the direction of head turn (e.g. Hayhoe et al., 2012). Unlike traditional cueing tasks where items are presented to the side of the cue, here target kitchen items (bowl, plate, cup and teapot) appeared on the left or right side of a table below the cue. This allowed investigation of the influence of cues in a more realistic environment where cue and target are not on the same visual plane. Participants were asked to remember multidimensional (see Baddeley, 2010) location and status information about the four presented items. A dynamic 3D stick was presented as a non-social control cue which reflected the movement of the social avatar (Figure 1), allowing investigation of movement vs social cueing effects on working memory. Neural activity was assessed during cue shift, encoding and retrieval.

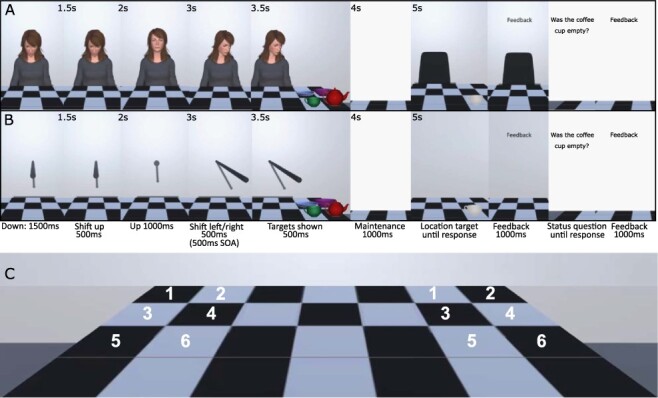

Fig. 1.

Illustration of the trial procedure (chequered pattern inspired by Harkin et al., 2011). Adopting the parameters of the traditional central cueing paradigm, the cue remained on screen for the entire trial (e.g. Friesen and Kingstone, 1998; Driver et al., 1999). Panel A shows the social avatar cue, and Panel B shows the non-social stick cue. Timings, as shown in the figure, were matched across cue types. Note that the inter-trial interval was 1000 ms during which a fixation cross was presented. The experiment was a free viewing experiment; thus, participants could move their eyes as they wished. Panel C shows the six possible left and right locations for the four encoding targets.

Generally, in memory research, theta power changes are prevalent at anterior sites, while alpha changes tend to be posterior (Jensen et al., 2002; Khader et al., 2010; Sauseng et al., 2010). Therefore, if the cues directly impact working memory encoding, effects would be expected in these areas. However, these effects have not been tested in the context of social and non-social cueing in virtual reality (VR); therefore, our analysis does not focus on specific locations.

The following predictions were preregistered: (i) In the social gaze cue condition only, we predicted working memory performance would be better in the valid compared to the invalid condition. (ii) We predicted alpha and theta power would be more strongly affected by the shift of the social compared to the non-social cue (e.g. Lachat et al., 2012; Kompatsiari et al., 2021). (iii) In the social gaze cue condition only, we predicted there would be stronger theta power increases and stronger alpha power decreases for the valid compared to the invalid condition at both the encoding and retrieval intervals.

Note that we also preregistered predictions in the domain of event-related potentials (ERPs), related to replicating basic attentional effects; however, this is not pursued here in favour of more specific predictions made for memory functioning in oscillations (see Supplementary materials).

Method

Participants

We recruited 49 participants [33 females, 16 males, mean age 21 years (s.d. = 3.1, range 18–32), 3 left-handed] from Aston University for payment (£10/h, cash) or course credit. Planned recruitment of 60 participants was cut short by the 2020 coronavirus pandemic; however, the study is sufficiently powered to detect behavioural and neurological effects of the cues if present: a within-subjects design with 49 participants has 80% power (Westfall, 2016) to detect a small-to-medium effect (e.g. d = 0.35, Gregory and Jackson, 2017; d ≈ 0.5, Kompatsiari et al., 2021). All participants reported having normal or corrected-to-normal vision. Consent was obtained in accordance with the Declaration of Helsinki, and ethical approval was obtained from the Aston University Research Ethics Committee.

Apparatus

The study was programmed in Unity using the Unity experimental framework (Brookes et al., 2020). A Lenovo Legion Y540-17IRH laptop computer (Intel Core i7-9750H Processor, 32 GB RAM, NVIDIA GeForce RTX 2060 graphics card) ran the programme and communicated triggers wirelessly to the EEG (LSL4Unity; https://github.com/labstreaminglayer/LSL4Unity). Participants viewed the study through the Oculus Rift S PC-Powered VR Gaming Head-Mounted Display (HMD) and responded using a touch controller. Study and materials can be downloaded here: https://osf.io/s9xmu/files/.

Stimuli

Human avatar cue

Four male and four female identities with neutral facial expressions and plain grey clothing were created (see Supplementary materials). Avatars were independently rated (n = 61, online study; see Supplementary materials) for human personality traits (Oosterhof and Todorov, 2008), anthropomorphism, animacy and likeability (Bartneck et al., 2009). Importantly, the ratings indicated that the avatars were seen as humanlike (Table 1).

Table 1.

Average ratings for the avatars across all identities on the general personality traits from Oosterhof and Todorov (2008), alongside the results from the original study, in which 327 participants rated 66 different neutral faces on personality traits (data shown with permission). The scale ranged from 1 (not at all) to 9 (extremely) for each trait. The results do not indicate that the avatars were perceived as particularly strange (mean difference in rating = 0.6 points), although note the higher variability in the avatar ratings. Table 1 also shows the Godspeed ratings (9-point scale) for the human avatars and the robot avatar; ratings were significantly higher, and thus more humanlike, for the human avatars than for the robot (P < 0.001).

| Rating scale | Current study (avatars), mean (s.d.) | Oosterhof and Todorov (2008) Table S1, mean (s.d.) |
|---|---|---|
| Aggressive | 3.67 (2.26) | 4.68 (0.98) |
| Attractive | 4.54 (2.36) | 2.85 (0.78) |
| Caring | 4.67 (2.04) | 4.54 (0.72) |
| Confident | 4.38 (1.98) | 4.81 (0.68) |
| Dominant | 4.25 (2.36) | 4.81 (0.81) |
| Emotionally stable | 4.16 (2.12) | 4.74 (0.79) |
| Intelligent | 5.31 (1.91) | 4.88 (0.68) |
| Mean | 4.23 (2.28) | 4.94 (0.87) |
| Responsible | 4.82 (1.88) | 4.31 (0.77) |
| Sociable | 4.20 (2.23) | 4.58 (0.74) |
| Trustworthy | 4.57 (2.09) | 4.74 (0.85) |
| Unhappy | 4.98 (2.47) | 4.72 (0.82) |
| Weird | 4.43 (2.55) | 5.01 (1.05) |
| Threatening | 3.61 (2.22) | Not included |

| Godspeed scale | Avatars, mean (s.d.) | Robot, mean (s.d.) |
|---|---|---|
| Anthropomorphism | 5.04 (1.73) | 2.16 (1.34) |
| Animacy | 5.00 (1.64) | 2.55 (1.48) |
| Likeability | 5.22 (1.37) | 3.25 (1.85) |

Non-social stick cue

The stick was created in Unity as a cylindrical game object that extended to a similar distance from the participant and table as the avatars (see Figure 1). Both cues have been shown to trigger typical cueing effects (Gregory, 2021).

Targets

Target items were adapted from the Unity asset store (assetstore.unity.com/packages/3d/white-porcelain-dish-set-demo-82858). The cup and bowl were presented either empty or full (by adding coffee/soup), the plate contained a pastry (assetstore.unity.com/packages/3d/props/food/croissants-pack-112263) that was bitten or whole, and the teapot was presented cracked or not cracked. Items were presented in colour at encoding and greyscale at retrieval to avoid colour matching.

Design

Within-subjects independent variables were cue type (avatar and stick) and cue-target validity (50% valid and 50% invalid) pseudorandomised. Further controlled variables were probe-item location (same/different, 50% each) and correct answer to status question (yes/no, 50% each). Per cue type there were 56 valid and 56 invalid trials. Additionally, we pseudorandomised equally whether items appeared to the left or right and whether the cues looked left or right. The computer programme randomised (simple randomisation) avatar identity, item location (six possible locations), probe item for location and probe question for status. It was possible for location and status questions to probe memory for different items.
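The balancing constraints described above can be illustrated with a short sketch. This is not the Unity implementation used in the study: it is a minimal Python illustration that assumes a trial counts as valid when the cue direction matches the side on which the targets appear, and the function name `make_trial_list` and the field names are hypothetical.

```python
import random
from collections import Counter

def make_trial_list(n_trials=112, seed=None):
    """Return a shuffled trial list balanced for cue direction and target side
    (hypothetical helper, not the study's code)."""
    if n_trials % 4 != 0:
        raise ValueError("n_trials must be divisible by 4")
    rng = random.Random(seed)
    per_cell = n_trials // 4
    trials = []
    for cue_dir in ("left", "right"):
        for target_side in ("left", "right"):
            for _ in range(per_cell):
                trials.append({
                    "cue_dir": cue_dir,
                    "target_side": target_side,
                    # Assumption: valid means the cue points at the target side.
                    "valid": cue_dir == target_side,
                })
    rng.shuffle(trials)
    return trials

trials = make_trial_list(seed=1)
validity_counts = Counter(t["valid"] for t in trials)
cue_counts = Counter(t["cue_dir"] for t in trials)
```

Crossing cue direction with target side in equal cells yields 56 valid and 56 invalid trials per cue type, with left and right equally represented for both the cue and the targets.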

Procedure

A 5-trial familiarization session preceded the main experiment during which the HMD was configured prior to EEG set up. For the main study, cue condition was counterbalanced such that participants saw either the social avatar or the non-social stick condition first and all trials were completed before seeing the other cue condition. For both cue types there were 10 practice trials and 112 experimental trials. Breaks were encouraged every 28 trials and an enforced break was taken between the two cue type sessions. Participants could remove the HMD during breaks.

For both cue types, a trial proceeded as follows (Figure 1): a fixation cross was presented for 1000 ms (inter-trial interval) and then replaced by the cue looking/pointing at the table (1500 ms). The cue then looked/pointed at the participant (transition 500 ms) and after 1000 ms pointed/looked to the left or right (transition 500 ms; for gaze, the eyes also moved rapidly during the first 30 ms of the 500 ms head turn). Targets were then presented [stimulus onset asynchrony (SOA) 500 ms, calculated from the moment the cue began to shift]. Four items were presented for encoding (500 ms) in four of the six possible locations (Figure 1C) on either the valid or invalid side. Participants were instructed that the cue was not informative and should be ignored. After a blank maintenance interval (1000 ms), a probe item was shown in greyscale either in the location in which it was initially presented or in a different location (occupied previously by another object). Participants responded with a button press and received accuracy feedback. Next, the status of an item at encoding was probed using text (e.g. ‘Did the bowl have soup in it?’), and participants responded with a button press and received feedback. The experiment was a free-viewing study and there was no response-window cut-off.
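As a worked check of the timings above, the phase durations can be accumulated into onset times relative to cue onset (time 0). This is an illustrative Python sketch only; the phase labels are hypothetical, and it assumes the 1000 ms facing period begins once the 500 ms upward transition has finished, a reading that reproduces the event times given for the time-frequency plots (cue shift at 3 s, target onset at 3.5 s, target offset at 4 s and probe onset at 5 s).

```python
from itertools import accumulate

# Phase durations in ms, in trial order, taken from the procedure text.
# Time 0 is cue onset; the 1000 ms fixation cross precedes it.
PHASES = [
    ("cue_at_table", 1500),
    ("shift_up_transition", 500),
    ("facing_participant", 1000),
    ("cue_shift_to_target_onset", 500),  # the SOA
    ("encoding", 500),
    ("maintenance", 1000),
]

def phase_onsets(phases):
    """Map each phase label to its onset in ms and add the probe onset."""
    durations = [duration for _, duration in phases]
    starts = [0] + list(accumulate(durations))
    onsets = {name: start for (name, _), start in zip(phases, starts)}
    onsets["probe"] = starts[-1]
    return onsets

onsets = phase_onsets(PHASES)
```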

EEG acquisition and pre-processing

We recorded EEG using a 64-channel eego™ sports mobile EEG system (ANT Neuro, Enschede, the Netherlands; Ag/AgCl electrodes, international 10–10 system), digitised at a sampling rate of 500 Hz. Electrode CPz served as online reference and AFz as the ground electrode. Mastoids and electrooculogram (EOG) electrodes were not used, and impedance was kept below 20 kΩ during task.

EEG data were pre-processed using the FieldTrip toolbox version 20191028 (Oostenveld et al., 2011) in MATLAB R2019b. Data were detrended and then bandpass filtered between 0.5 and 36.0 Hz. The data were epoched from 1 s before cue onset to 1 s after the probe response, such that cue onset = time 0. Trials were inspected for artefacts, and trials with large artefacts were removed (average 221 total trials/participant in final analysis); corrupted electrodes were interpolated using the average method (5 in total; max 2/participant); data were re-referenced using the average reference method (post interpolation). Independent component analysis (fastica) was used to identify noise, eye-blink, saccade, heartbeat and muscle components (average 11 components removed per participant, range 2–23; see Figure S3 in Supplementary materials for example components).
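Two of the steps above, detrending and average re-referencing, are simple enough to sketch directly. The following is a minimal NumPy illustration on synthetic data, not the toolbox pipeline used in the study; the function names are hypothetical, and filtering, epoching, artefact rejection, interpolation and ICA are omitted.

```python
import numpy as np

def detrend_linear(data):
    """Remove a least-squares straight line from each channel.
    data: array of shape (n_channels, n_samples)."""
    t = np.arange(data.shape[1])
    # polyfit fits every channel at once when y has shape (n_samples, n_channels)
    slope, intercept = np.polyfit(t, data.T, deg=1)
    trend = np.outer(slope, t) + intercept[:, None]
    return data - trend

def average_reference(data):
    """Subtract the across-channel mean from every channel at each sample."""
    return data - data.mean(axis=0, keepdims=True)

# Synthetic example: 8 channels of noise sharing a slow linear drift
rng = np.random.default_rng(0)
n_channels, n_samples = 8, 1000
drift = np.linspace(0, 5, n_samples)
raw = rng.normal(size=(n_channels, n_samples)) + drift
clean = average_reference(detrend_linear(raw))
```

After average referencing, the channels sum to zero at every sample, which is why the step is applied only once corrupted electrodes have been interpolated.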

Data analysis and results

Behavioural data

Due to a programming error (see Supplementary materials), the preregistered use of d' as a measure of WM accuracy was not possible; instead, percent accuracy was used. Repeated-measures analyses of variance (ANOVAs) were conducted on percent accuracy separately for the location and status data with cue type (social and non-social) and cue validity (valid and invalid) as within-subjects variables. Conforming to the preregistered analysis plan, results are reported using standard null hypothesis significance testing, with supporting Bayesian analysis conducted in JASP (Version 0.12.2.0; Love et al., 2015) using default priors (Wagenmakers et al., 2018) and ANOVA effects across matched models only (see Van Den Bergh et al., 2020). Bayesian analysis allows us to make inferences about the strength of findings as well as about the nature of any null findings.

Time–frequency analysis

Time–frequency analysis was carried out by applying a Morlet wavelet transform to each trial from 2 to 30 Hz (in 1 Hz steps), with three cycles per time-window in steps of 50 ms. For each participant, trials were then averaged within each condition and a decibel (db) baseline correction was applied using the interval from 500 ms to 100 ms before cue onset. Time–frequency representations were generated for the full time–frequency spectrum, and statistical analysis focussed on the alpha (8–12 Hz) and theta (3–7 Hz) bands separately, averaging across each frequency band. Analysis was data-driven (no pre-selected time intervals or electrodes), and multiple comparisons across time points and electrodes were corrected using non-parametric cluster-based permutation tests implemented in the FieldTrip toolbox (Maris and Oostenveld, 2007), with 5000 permutations (cluster alpha = P < 0.05, critical alpha = P < 0.05). Analysis compared valid and invalid conditions separately for each cue type. In addition, to understand potential interactions between cue validity and cue type (person/stick), for each participant we subtracted the invalid condition from the valid condition for both cue types separately and then compared the magnitude of the difference. This statistical approach is recommended on the FieldTrip website and has been implemented in previous work (Bögels et al., 2015; Wang et al., 2016; Huizeling et al., 2020); analysis scripts and data are available from OpenNeuro (https://openneuro.org/datasets/ds003702).
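To make the wavelet and baseline steps concrete, the sketch below computes Morlet wavelet power for a synthetic signal and expresses it in decibels relative to the −500 to −100 ms window. It is a minimal NumPy illustration rather than the toolbox code used in the study. It assumes that "three cycles" sets the temporal standard deviation of the wavelet to cycles/(2πf), a common convention; the function names and the test signal are hypothetical, and only 3–12 Hz is computed to keep the example short.

```python
import numpy as np

def morlet_power(signal, sfreq, freqs, n_cycles=3):
    """Power over time at each frequency via complex Morlet convolution.
    signal: 1-D array; returns array of shape (n_freqs, n_samples)."""
    power = np.empty((len(freqs), signal.size))
    for i, f in enumerate(freqs):
        sigma_t = n_cycles / (2 * np.pi * f)   # assumed width convention
        t = np.arange(-4 * sigma_t, 4 * sigma_t, 1 / sfreq)
        wavelet = np.exp(2j * np.pi * f * t) * np.exp(-t**2 / (2 * sigma_t**2))
        wavelet /= np.sqrt(np.sum(np.abs(wavelet) ** 2))   # unit energy
        power[i] = np.abs(np.convolve(signal, wavelet, mode="same")) ** 2
    return power

def db_baseline(power, times, tmin=-0.5, tmax=-0.1):
    """Express power as 10*log10 of the ratio to mean baseline power."""
    mask = (times >= tmin) & (times <= tmax)
    baseline = power[:, mask].mean(axis=1, keepdims=True)
    return 10 * np.log10(power / baseline)

# Synthetic 6 Hz oscillation whose amplitude doubles 0.5 s after time 0
sfreq = 500.0
times = np.arange(-1.0, 3.0, 1 / sfreq)
amplitude = np.where(times < 0.5, 1.0, 2.0)
signal = amplitude * np.sin(2 * np.pi * 6 * times)

freqs = np.arange(3, 13)
power_db = db_baseline(morlet_power(signal, sfreq, freqs), times)
window = (times >= 1.0) & (times <= 2.0)
theta_gain_db = power_db[freqs == 6][:, window].mean()
```

Because decibel correction is a log ratio, a doubling of amplitude (a fourfold increase in power) appears as a gain of about 6 dB regardless of the absolute power at that frequency, which is what makes changes comparable across the alpha and theta bands.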

Results

Behavioural

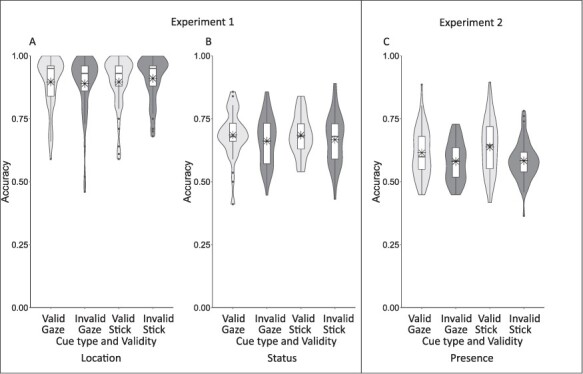

Location accuracy was not statistically different for the social (M = 0.89, s.d. = 0.11) and non-social (M = 0.90, s.d. = 0.09) cueing conditions, F(1,48) = 0.959, P = 0.332, ηp2 = 0.020, BFincl = 0.398. There was also no difference in location accuracy between the valid (M = 0.90, s.d. = 0.10) and invalid (M = 0.90, s.d. = 0.09) conditions, F(1,48) = 0.398, P = 0.531, ηp2 = 0.008, BFincl = 0.175. However, there was a significant interaction between cue type and validity, F(1,48) = 8.958, P = 0.004, ηp2 = 0.157, BFincl = 0.464. For the social cue, there was no significant difference in location accuracy between the valid (M = 0.90, s.d. = 0.10) and invalid (M = 0.89, s.d. = 0.12) conditions, t(48) = 1.015, P = 0.315, Cohen’s d = 0.145, BF10 = 0.68. For the non-social cue, however, there was a significant difference, with location accuracy being worse in the valid condition (M = 0.90, s.d. = 0.10) compared to the invalid condition (M = 0.91, s.d. = 0.09), t(48) = −2.251, P = 0.029, Cohen’s d = −0.322, BF10 = 1.54 (Figure 2, Panel A; see also Supplementary materials Figure S1 for individual differences data).

Fig. 2.

Results from Experiments 1 and 2 showing location accuracy (A) and status accuracy (B) for Experiment 1, and presence accuracy for Experiment 2 (C) plotted as a function of cue validity. Boxplots indicate the median and quartiles (whiskers 1.5 times interquartile range), violin overlay shows the full distribution of the data (kernel probability density), and mean is marked by an asterisk.

Status accuracy was not statistically different for the social (M = 0.67, s.d. = 0.09) and non-social (M = 0.68, s.d. = 0.08) cueing conditions, F(1,48) = 0.058, P = 0.811, ηp2 = 0.001, BFincl = 0.16. There was a significant main effect of validity, F(1,48) = 6.196, P = 0.016, ηp2 = 0.114, BFincl = 1.99; here status accuracy was better in the valid (M = 0.69, s.d. = 0.07) compared to the invalid condition (M = 0.66, s.d. = 0.09). The interaction between cue type and validity was not significant, F(1,48) = 0.142, P = 0.708, ηp2 = 0.003, BFincl = 0.22, indicating that the effect of cue validity on status accuracy was not modulated by cue type (Figure 2, Panel B, and Supplementary materials Figure S1).

Due to the unpredicted and disparate effects of the stick cue (i.e. better memory for invalidly cued items in the location task, and better memory for the validly cued items in the status task), we ran an online follow-up to test the effects of the two cues in a simpler memory task; participants indicated if a probe item had been one of five items presented at encoding (see Supplementary materials). This 60-participant study replicated the status memory effects, showing a significant main effect of validity, F(1,59) = 33.331, P < 0.001, ηp2 = 0.361, BFincl > 100, with validly cued items (M = 0.63, s.d. = 0.09) being recalled more accurately than invalidly cued items (M = 0.58, s.d. = 0.07) for both the social and non-social cues (Figure 2, Panel C).
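The effect sizes reported in this section follow from the test statistics and their degrees of freedom, which offers a quick consistency check. The sketch below is illustrative Python with hypothetical function names; it assumes that Cohen’s d for the paired comparisons is the t value divided by the square root of the sample size (often written d_z), an assumption that reproduces the reported value for the non-social cue.

```python
import math

def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

def cohens_d_paired(t_value, n):
    """Assumed definition: d_z = t / sqrt(n) for a paired t-test."""
    return t_value / math.sqrt(n)

# Location accuracy: cue type by validity interaction, F(1,48) = 8.958
eta_interaction = partial_eta_squared(8.958, 1, 48)
# Follow-up study: main effect of validity, F(1,59) = 33.331
eta_followup = partial_eta_squared(33.331, 1, 59)
# Non-social cue, valid vs invalid location accuracy, t(48) = -2.251, n = 49
d_nonsocial = cohens_d_paired(-2.251, 49)
```

Rounded to three decimals these give 0.157, 0.361 and −0.322, matching the values reported above.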

EEG

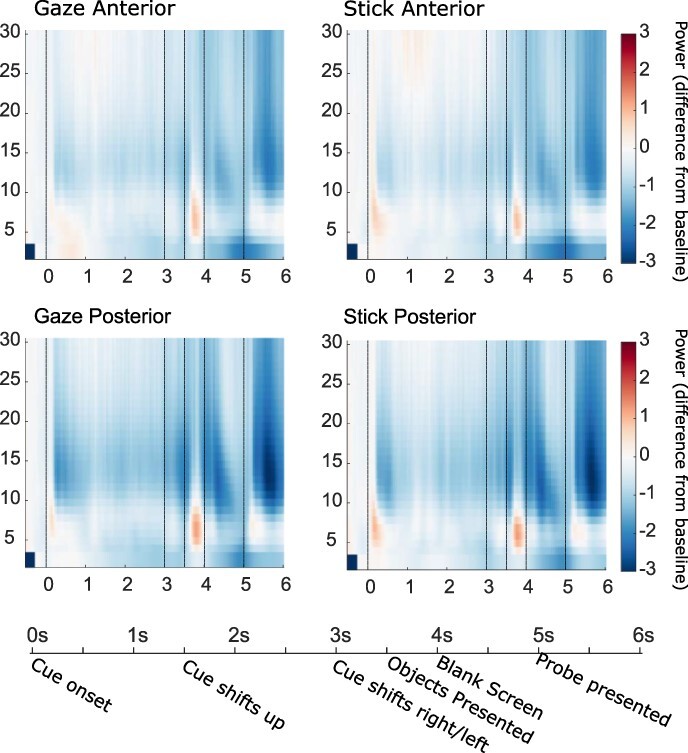

EEG analysis was performed on trials where the location question was answered correctly. For both cues, the time-frequency representations (TFRs) in Figure 3 show the expected increase in theta power at 3.5 s when the targets are presented for encoding as well as expected decreases in alpha power.

Fig. 3.

Figure shows time frequency (TFR) plots for oscillatory power (db baseline corrected −500–−100 ms) during the social avatar and the non-social stick condition, collapsed across validity conditions for anterior (Fp1, Fpz, Fp2, AF7, AF3, AF4, AF8, F7, F5, F3, F1, Fz, F2, F4, F6, F8, FT7, FC5, FC3, FC1, FCz, FC2, FC4, FC6 and FT8) and posterior (TP7, CP5, CP3, CP1, CP2, CP4, CP6, TP8, P7, P5, P3, P1, Pz, P2, P4, P6, P8, PO7, PO5, PO3, POz, PO4, PO6, PO8, O1, Oz and O2) electrodes separately. Timeline shows key experimental events and the crucial time points indicated on the TFRs are the trial start at 0 ms, cue shift at 3 s, the target onset at 3.5 s, target offset at 4 s and the probe onset at 5 s.

Cue shift window

The first crucial comparison focusses on whether alpha and theta oscillatory effects are modulated by cue type during the initial cue shift. The window of interest is a 1000-ms period (see Figure 1) where the cue proceeds from pointing/looking at the participant to looking/pointing at a side of the table (2.5–3.5 s, shift to left/right begins at 3 s), thus containing a 500-ms period of eye contact in the social avatar condition. At this point, cue validity was not yet known to the participant.

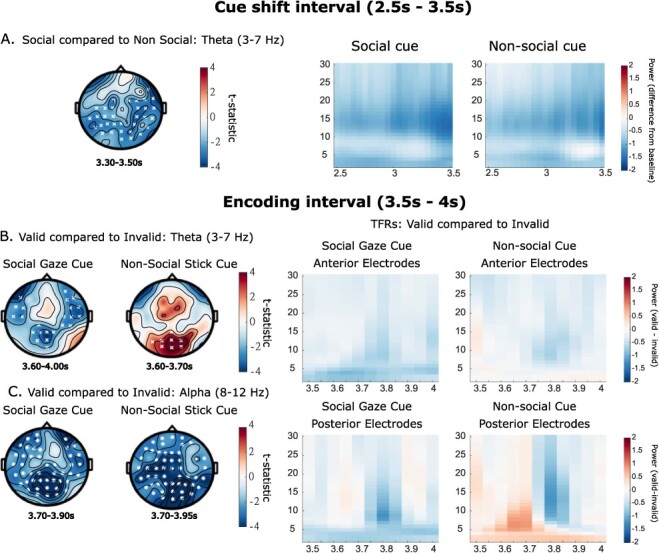

Results show no significant differences in alpha power changes between cue types in this interval, with both cues showing the expected reduction in alpha power (Figure 4, Panel A). However, during the cue shift, there was an apparent decrease in theta power for both cues, with this being larger for the social than the non-social cue (P = 0.023, Figure 4, Panel A).

Fig. 4.

The left side of the figure shows representative cluster plots, full plots of effects across the time window are in Supplementary materials (Figure 1). The right side shows associated TFRs (db baseline corrected −500 to −100 ms). In Plot A the social gaze and non-social stick cue are contrasted during the initial cue shift window (theta effects only); the associated TFR is plotted over all electrodes. Valid and invalid conditions are contrasted for each cue separately in the encoding window; B shows theta band effects and C shows alpha band effects. In associated TFR plots, in the upper plots data show a snapshot of the anterior electrodes (Fp1, Fpz, Fp2, AF7, AF3, AF4, AF8, F7, F5, F3, F1, Fz, F2, F4, F6, F8, FT7, FC5, FC3, FC1, FCz, FC2, FC4, FC6 and FT8), and in the lower plots data are plotted over the electrodes that make up the significant posterior clusters in B (Social: CP1, P3, Pz, POz, P1, P2, PO3 and Oz, Non-social: Pz, POz, O1, O2, PO5, PO3, PO4, PO6 and Oz). Note that these plots are created by subtracting the invalid from the valid power-spectrum data, thus they show the difference between the conditions. Cluster significance levels from a two-tailed test are indicated as x P < 0.05, ✱ P < 0.01.

Because no differences were observed in the eye contact period, we conducted a second exploratory analysis to determine if any eye contact effects are present when the cue initially moved to point/look at the participant, i.e. when eye contact is engaged in the gaze condition. Thus, we compared effects in alpha and theta between the social and non-social cue at the 1.5-s (shift up starts) to 2.5-s interval. Results show no significant differences in alpha or theta power changes between cue types in this interval.

Encoding window

The next comparison investigated whether changes in alpha and theta power were modulated by cue validity during the 500-ms encoding period (3.5–4 s; see Figure 1). Looking first at theta power, for the social cue there was a significantly smaller increase in theta power (in relation to baseline) for the valid compared to the invalid condition (P = 0.012; Figure 4, Panel B). In contrast, for the non-social cue there was a significantly larger increase in theta power for the valid compared to the invalid condition (P = 0.044; Figure 4, Panel B). Comparing these validity differences between the two cue types across all electrodes revealed a non-significant difference (P > 0.10). However, for the non-social cue, effects appear to be located over occipital electrodes, while for the social cue effects are more dispersed both in time and in location. A more focussed post hoc cluster-based permutation analysis on the occipital electrodes that make up the significant clusters (see Figure 4 caption) during the encoding window indicates that the changes in theta power at this location are significantly different between the two cue types (P = 0.046).

Looking now at changes in alpha power, there was a significantly stronger decrease in alpha power in the validly cued compared to the invalidly cued condition for both the social (P = 0.002) and non-social cue (P < 0.001; Figure 4, Panel C). Comparing the validity differences across the two cue types across all electrodes revealed a non-significant difference in the magnitude of this alpha power change (P > 0.20).
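The comparison of validity effects between cue types used in this section amounts to testing a difference of differences with a cluster-based permutation test. The sketch below illustrates the logic on synthetic data in NumPy. It is deliberately simplified and is not the toolbox routine used for the reported analysis: clusters are formed over time points only, whereas the reported analysis clustered over time points and electrodes. The function names, the synthetic effect and the fixed threshold of 2.01 (approximately the two-tailed critical t at 0.05 for 48 degrees of freedom) are assumptions made for illustration.

```python
import numpy as np

def max_cluster_mass(t_vals, thresh):
    """Largest summed t over any run of consecutive same-sign samples
    whose absolute t exceeds thresh."""
    best = 0.0
    for signed in (t_vals, -t_vals):   # positive clusters, then negative
        run = 0.0
        for t in signed:
            run = run + t if t > thresh else 0.0
            best = max(best, run)
    return best

def paired_t(x):
    """One-sample t value per time point for differences x (subjects x times)."""
    n = x.shape[0]
    return x.mean(axis=0) / (x.std(axis=0, ddof=1) / np.sqrt(n))

def cluster_permutation_test(diff, thresh=2.01, n_perm=1000, seed=0):
    """Sign-flip permutation test on the maximum cluster mass."""
    rng = np.random.default_rng(seed)
    observed = max_cluster_mass(paired_t(diff), thresh)
    null = np.empty(n_perm)
    for i in range(n_perm):
        # Under the null, the sign of each participant's difference is arbitrary.
        signs = rng.choice([-1.0, 1.0], size=(diff.shape[0], 1))
        null[i] = max_cluster_mass(paired_t(diff * signs), thresh)
    p_value = (np.sum(null >= observed) + 1) / (n_perm + 1)
    return observed, p_value

# Synthetic valid-minus-invalid power differences, 49 participants x 20 time bins
rng = np.random.default_rng(42)
social = rng.normal(0.0, 1.0, (49, 20))
nonsocial = rng.normal(0.0, 1.0, (49, 20))
nonsocial[:, 5:12] += 1.5              # injected effect, for illustration only
interaction = social - nonsocial       # difference of differences
observed, p_value = cluster_permutation_test(interaction)
```

Only the largest cluster is compared with the permutation distribution of maximum cluster masses, which is how the method controls the family-wise error rate across time points without a per-sample correction.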

Probe window

Finally, we investigated changes in alpha and theta power during the probe interval (retrieval; 5–6 s). Results for both cue types showed that cue validity at encoding did not modulate alpha and theta power at retrieval, all P values > 0.1.

Discussion

Here we examined the behavioural and neural effects of virtual social and non-social cues on working memory for the status and location of presented objects. We predicted that the social gaze cue would influence working memory, while the non-social cue would not. Furthermore, we predicted that the social cue would have a stronger effect on alpha and theta oscillations than the non-social cue.

Contrary to predictions, working memory for status information was modulated by both the social and non-social cues, with objects in the valid location being recalled more accurately than those in the invalid location for both cue types. This finding was replicated in Experiment 2 using a different task. This is contrary to previous work conducted using arrows as the non-social cue, where no effects of the non-social cue were seen on working memory (Gregory and Jackson, 2017), long-term memory (Dodd et al., 2012) or object appraisal (Bayliss et al., 2006). Due to its size and motion, the non-social cue used in the studies presented here is likely to be much more potent than the traditional arrow. It is therefore possible that arrow cues are easier to ignore when required, such as during complex higher-order tasks, than either eye gaze or the potent moving stick used here.

The social cue had no effect on working memory for the location information; however, for the non-social cue, surprisingly memory was better in the invalid than the valid condition. Location accuracy was high across participants, with some achieving accuracy of 100%; therefore, gaze cue effects may have been lost to ceiling effects, and so it is unknown if gaze cues would influence location accuracy in a more difficult task. The non-social cue result may reflect inhibition of return (Klein, 2000); however, this is unlikely because this cue has been found to show facilitative cueing at this 500-ms SOA (Gregory, 2021). Furthermore, the result is reversed for the status condition, where memory is better for items in the valid condition. This is therefore possibly a spurious effect, likely driven by ceiling effects. However, to speculate briefly, evidence suggests that visuo-spatial working memory works as a distributed network, processing visual appearance (i.e. status) information separately from spatial location information (see Zimmer, 2008). It is possible that the presence of the stick cue increased the overall attention to the cued objects enhancing visual appearance processing while adding an extra spatial element to be processed which disrupted spatial processing. For the gaze cue the head of the avatar was attached to a body giving it a more distinct and fixed spatial location compared to the moving stick.

There was no difference between the two cue types in their influence on alpha power during the cue shift, which incorporated a period of eye contact (social cue only) as well as the left/right cue shift movement, nor were there any differences during the earlier period where eye contact was established. This is contrary to the results of Kompatsiari et al. (2021) who found that eye contact modulated alpha power, with a greater decrease in power found in an eye contact condition compared to a no eye contact condition. Here both the social gaze cue and the non-social stick cue engaged the participant prior to cue shift either by looking or by pointing at them. It is therefore possible that prior findings that eye contact and joint attention modulated alpha power (Chapman et al., 1975; Lachat et al., 2012; Kompatsiari et al., 2021) are related to the participant attending more when the stimulus is more engaging, either through looking at them, or looking where they look, rather than the specific social nature of the stimuli. However, it is possible that differences could be found when focussing analysis on specific regions of interest. There was also no difference in the alpha effects between the two cues during the encoding interval. For both cues there was a significantly stronger decrease in alpha power in the validly cued condition compared to the invalidly cued condition, with no difference in the magnitude of the effect. This reflects the memory findings and indicates that the cues had similar influences on attention.

During the cue shift there was no clear theta synchronisation and instead significantly stronger theta desynchronisation for the social cue compared to the non-social cue. This indicates that the cues were generally ignored and that ignoring them did not require effortful processing. The significant decrease in the social condition may be due to the apparent automatic nature of Level-1 perspective taking (Samson et al., 2010).

Theta power during the encoding interval was also modulated by cue type. For the social cue there was a significantly smaller increase in theta power (in relation to baseline) for the valid condition, where working memory for the status information was better than that in the invalid condition. Theta oscillatory power increases reflect effort (Gevins et al., 1997; Klimesch, 1999; Jensen and Tesche, 2002); therefore, this result indicates that less effort was needed for encoding of status information when cued by gaze. In contrast, for the non-social cue there was a significantly larger increase in theta power for the valid condition, where working memory for the status information was again better than that in the invalid condition. This indicates that more effort was made for the validly cued location, leading to better encoding of status information.

The effects in the social condition may be explained by the tendency for humans to automatically track another’s perspective (e.g. Michelon and Zacks, 2006; Kessler and Rutherford, 2010; Samson et al., 2010; Kessler et al., 2014). It is possible that less effort is required in the valid gaze condition due to this ease of (Level-1) perspective taking. Indeed, it has been stated that ‘…objects falling under the gaze of others acquire properties that they would not display if not looked at’ (Becchio et al., 2008, p. 254), and research by Shteynberg (2010) indicates that stimuli experienced as part of a social group are more prominent due to what is termed a ‘social tuning’ effect. Alternatively, it is possible that tracking the perspective of the avatar in the absence of a target makes it more difficult to disengage from the invalid location, so that greater theta power is required in that condition.

In contrast to our expectations, there were no effects of cue validity on alpha and theta power during the retrieval interval, indicating that effects are specific to encoding. Research has shown stronger theta power at parietal-to-central electrodes during successful encoding (Khader et al., 2010), although generally memory-related theta power changes tend to occur in anterior sites (Jensen and Tesche, 2002). For the gaze cue, effects appear across temporal, parietal and occipital electrodes, with some differences in anterior electrodes, whereas for the stick cue the differences occur posteriorly. Parietal theta may promote successful memory encoding, while frontal theta may mediate general attentional processing (Khader et al., 2010). Due to the nature of the study, we do not have clear information about the sources of the power changes; however, the differences in the nature and location of effects suggest that there are differences in how social and non-social cues influence memory processes during encoding.

Here we aimed to understand the influence of social cues on working memory, expanding on previous work in this area (Dodd et al., 2012; Gregory and Jackson, 2017). Using a realistic immersive environment and dynamic social and non-social cues, we found that social and non-social cues had similar effects on working memory performance, but that this was underpinned by differences in neural activations. While alpha oscillations were comparable in their modulation of effects, theta oscillations during encoding told a different story. Results therefore indicate that while attention cueing does impact working memory in a similar fashion for the social and non-social cues presented, the underlying neural mechanisms differ, with objects seen under joint attention appearing to require less processing power to be encoded. This provides further evidence for the idea that eye gaze offers a specialised signal in human cognition (Becchio et al., 2008; Samson et al., 2010; Shteynberg, 2010; Kampis and Southgate, 2020).

Acknowledgements

We thank the two anonymous reviewers for their helpful comments on earlier drafts of the manuscript.

Contributor Information

Samantha E A Gregory, Department of Psychology, University of Salford, Salford M5 4WT, UK; Institute of Health and Neurodevelopment, Aston Laboratory for Immersive Virtual Environments, Aston University, Birmingham B4 7ET, UK.

Hongfang Wang, Institute of Health and Neurodevelopment, Aston Laboratory for Immersive Virtual Environments, Aston University, Birmingham B4 7ET, UK.

Klaus Kessler, Institute of Health and Neurodevelopment, Aston Laboratory for Immersive Virtual Environments, Aston University, Birmingham B4 7ET, UK; School of Psychology, University College Dublin, Dublin D04 V1W8, Ireland.

Funding

This work was supported by a Leverhulme Trust early career fellowship [ECF2018-130] awarded to S.E.A. Gregory.

Conflict of interest

None declared.

Supplementary data

Supplementary data are available at SCAN online.

References

- Awh E., Vogel E.K., Oh S.H. (2006). Interactions between attention and working memory. Neuroscience, 139(1), 201–8.

- Baddeley A.D. (2010). Working memory. Current Biology, 20(4), 136–40.

- Bartneck C., Kulić D., Croft E., Zoghbi S. (2009). Measurement instruments for the anthropomorphism, animacy, likeability, perceived intelligence, and perceived safety of robots. International Journal of Social Robotics, 1(1), 71–81.

- Bayliss A.P., Paul M.A., Cannon P.R., Tipper S.P. (2006). Gaze cuing and affective judgments of objects: I like what you look at. Psychonomic Bulletin & Review, 13(6), 1061–6.

- Bayliss A.P., Frischen A., Fenske M.J., Tipper S.P. (2007). Affective evaluations of objects are influenced by observed gaze direction and emotional expression. Cognition, 104(3), 644–53.

- Becchio C., Bertone C., Castiello U. (2008). How the gaze of others influences object processing. Trends in Cognitive Sciences, 12(7), 254–8.

- Bögels S., Barr D.J., Garrod S., Kessler K. (2015). Conversational interaction in the scanner: mentalizing during language processing as revealed by MEG. Cerebral Cortex, 25(9), 3219–34.

- Bombari D., Schmid Mast M., Canadas E., Bachmann M. (2015). Studying social interactions through immersive virtual environment technology: virtues, pitfalls, and future challenges. Frontiers in Psychology, 6(June), 1–11.

- Brookes J., Warburton M., Alghadier M., Mon-Williams M., Mushtaq F. (2020). Studying human behavior with virtual reality: the unity experiment framework. Behavior Research Methods, 52(2), 455–63.

- Cavanagh J.F., Frank M.J. (2014). Frontal theta as a mechanism for affective and effective control. Trends in Cognitive Sciences, 18(8), 414–21.

- Chapman A.J., Gale A., Smallbone A., Spratt G. (1975). EEG correlates of eye contact and interpersonal distance. Biological Psychology, 3(4), 237–45.

- Charman T., Baron-Cohen S., Swettenham J., Baird G., Cox A., Drew A. (2000). Testing joint attention, imitation, and play as infancy precursors to language and theory of mind. Cognitive Development, 15(4), 481–98.

- Cleveland A., Schug M., Striano T. (2007). Joint attention and object learning in 5- and 7-month-old infants. Infant and Child Development, 16(3), 295–306.

- Cristofori I., Moretti L., Harquel S., et al. (2013). Theta signal as the neural signature of social exclusion. Cerebral Cortex, 23(10), 2437–47.

- Cristofori I., Harquel S., Isnard J., Mauguière F., Sirigu A. (2014). Monetary reward suppresses anterior insula activity during social pain. Social Cognitive and Affective Neuroscience, 10(12), 1668–76.

- Demiralp T., Başar E. (1992). Theta rhythmicities following expected visual and auditory targets. International Journal of Psychophysiology, 13(2), 147–60.

- Dodd M.D., Weiss N., McDonnell G.P., Sarwal A., Kingstone A. (2012). Gaze cues influence memory…but not for long. Acta Psychologica, 141(2), 270–5.

- Driver J., Davis G., Ricciardelli P., Kidd P., Maxwell E., Baron-Cohen S. (1999). Gaze perception triggers reflexive visuospatial orienting. Visual Cognition, 6(5), 509–40.

- Friesen C.K., Kingstone A. (1998). The eyes have it! Reflexive orienting is triggered by nonpredictive gaze. Psychonomic Bulletin & Review, 5(3), 490–5.

- Frischen A., Bayliss A.P., Tipper S.P. (2007). Gaze cueing of attention: visual attention, social cognition, and individual differences. Psychological Bulletin, 133(4), 694–724.

- Frith C.D., Frith U. (2006). The neural basis of mentalizing. Neuron, 50(4), 531–4.

- Gevins A., Smith M.E., McEvoy L., Yu D. (1997). High-resolution EEG mapping of cortical activation related to working memory: effects of task difficulty, type of processing, and practice. Cerebral Cortex, 7(4), 374–85.

- Gregory S.E.A. (2021). Investigating facilitatory versus inhibitory effects of dynamic social and non-social cues on attention in a realistic space. Psychological Research. doi: 10.1007/s00426-021-01574-7.

- Gregory S.E.A., Jackson M.C. (2017). Joint attention enhances visual working memory. Journal of Experimental Psychology: Learning, Memory, and Cognition, 43(2), 237–49.

- Gregory S.E.A., Jackson M.C. (2019). Barriers block the effect of joint attention on working memory: perspective taking matters. Journal of Experimental Psychology: Learning, Memory, and Cognition, 45(5), 795–806.

- Gregory S.E.A., Jackson M.C. (2021). Increased perceptual distraction and task demand enhances gaze and non-biological cuing effects. Quarterly Journal of Experimental Psychology, 74(2), 221–40.

- Grove T.B., Lasagna C.A., Martínez-Cancino R., Pamidighantam P., Deldin P.J., Tso I.F. (2021). Neural oscillatory abnormalities during gaze processing in schizophrenia: evidence of reduced theta phase consistency and inter-areal theta-gamma coupling. Biological Psychiatry: Cognitive Neuroscience and Neuroimaging, 6(3), 370–9.

- Hanna J.E., Brennan S.E. (2007). Speakers’ eye gaze disambiguates referring expressions early during face-to-face conversation. Journal of Memory and Language, 57(4), 596–615.

- Hanslmayr S., Klimesch W., Sauseng P., et al. (2005). Visual discrimination performance is related to decreased alpha amplitude but increased phase locking. Neuroscience Letters, 375(1), 64–8.

- Harkin B., Rutherford H., Kessler K. (2011). Impaired executive functioning in subclinical compulsive checking with ecologically valid stimuli in a working memory task. Frontiers in Psychology, 2, 1–10.

- Hayhoe M.M., McKinney T., Chajka K., Pelz J.B. (2012). Predictive eye movements in natural vision. Experimental Brain Research, 217(1), 125–36.

- Hietanen J.K., Leppänen J.M., Peltola M.J., Linna-aho K., Ruuhiala H.J. (2008). Seeing direct and averted gaze activates the approach-avoidance motivational brain systems. Neuropsychologia, 46(9), 2423–30.

- Hommel B., Pratt J., Colzato L., Godijn R. (2001). Symbolic control of visual attention. Psychological Science, 12(5), 360–5.

- Hsieh L.-T., Ranganath C. (2014). Frontal midline theta oscillations during working memory maintenance and episodic encoding and retrieval. NeuroImage, 85, 721–9.

- Huizeling E., Wang H., Holland C., Kessler K. (2020). Age-related changes in attentional refocusing during simulated driving. Brain Sciences, 10(8), 1–22.

- Jensen O., Gelfand J., Kounios J., Lisman J.E. (2002). Oscillations in the alpha band (9–12 Hz) increase with memory load during retention in a short-term memory task. Cerebral Cortex, 12(8), 877–82.

- Jensen O., Tesche C.D. (2002). Frontal theta activity in humans increases with memory load in a working memory task. European Journal of Neuroscience, 15(8), 1395–9.

- Kampis D., Southgate V. (2020). Altercentric cognition: how others influence our cognitive processing. Trends in Cognitive Sciences, 24(11), 1–15. doi: 10.1016/j.tics.2020.09.003.

- Kessler K., Cao L., O’Shea K.J., Wang H. (2014). A cross-culture, cross-gender comparison of perspective taking mechanisms. Proceedings of the Royal Society B: Biological Sciences, 281(1785). doi: 10.1098/rspb.2014.0388.

- Kessler K., Rutherford H. (2010). The two forms of visuo-spatial perspective taking are differently embodied and subserve different spatial prepositions. Frontiers in Psychology, 1(DEC), 1–12.

- Khader P.H., Jost K., Ranganath C., Rösler F. (2010). Theta and alpha oscillations during working-memory maintenance predict successful long-term memory encoding. Neuroscience Letters, 468(3), 339–43.

- Klein R. (2000). Inhibition of return. Trends in Cognitive Sciences, 4(4), 138–47.

- Kleinke C.L. (1986). Gaze and eye contact. Psychological Bulletin, 100(1), 78–100.

- Klimesch W. (1999). EEG alpha and theta oscillations reflect cognitive and memory performance: a review and analysis. Brain Research Reviews, 29(2–3), 169–95.

- Knoeferle P., Kreysa H. (2012). Can speaker gaze modulate syntactic structuring and thematic role assignment during spoken sentence comprehension? Frontiers in Psychology, 3, 1–15. doi: 10.3389/fpsyg.2012.00538.

- Kompatsiari K., Ciardo F., Tikhanoff V., Metta G., Wykowska A. (2018). On the role of eye contact in gaze cueing. Scientific Reports, 8(1), 17842.

- Kompatsiari K., Bossi F., Wykowska A. (2021). Eye contact during joint attention with a humanoid robot modulates oscillatory brain activity. Social Cognitive and Affective Neuroscience, 16(4), 1–10. doi: 10.1093/scan/nsab001.

- Lachat F., Hugueville L., Lemaréchal J.D., Conty L., George N. (2012). Oscillatory brain correlates of live joint attention: a dual-EEG study. Frontiers in Human Neuroscience, 6(June 2012), 1–12.

- Love J., Selker R., Marsman M., et al. (2015). JASP (Version 0.7) [Computer Software].

- Madipakkam A.R., Bellucci G., Rothkirch M., Park S.Q. (2019). The influence of gaze direction on food preferences. Scientific Reports, 9(1), 1–9.

- Maris E., Oostenveld R. (2007). Nonparametric statistical testing of EEG- and MEG-data. Journal of Neuroscience Methods, 164(1), 177–90.

- Michelon P., Zacks J.M. (2006). Two kinds of visual perspective taking. Perception & Psychophysics, 68(2), 327–37.

- Min B.K., Park H.J. (2010). Task-related modulation of anterior theta and posterior alpha EEG reflects top-down preparation. BMC Neuroscience, 11, 1–8. doi: 10.1186/1471-2202-11-79.

- Noonan M.P., Crittenden B.M., Jensen O., Stokes M.G. (2018). Selective inhibition of distracting input. Behavioural Brain Research, 355(September 2017), 36–47.

- Oostenveld R., Fries P., Maris E., Schoffelen J. (2011). FieldTrip: open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Computational Intelligence and Neuroscience, 2011, 1–9. doi: 10.1155/2011/156869.

- Oosterhof N.N., Todorov A. (2008). The functional basis of face evaluation. Proceedings of the National Academy of Sciences of the United States of America, 105(32), 11087–92.

- Pönkänen L.M., Peltola M.J., Hietanen J.K. (2011). The observer observed: frontal EEG asymmetry and autonomic responses differentiate between another person’s direct and averted gaze when the face is seen live. International Journal of Psychophysiology, 82(2), 180–7.

- Posner M.I. (1980). Orienting of attention. Quarterly Journal of Experimental Psychology, 32(1), 3–25.

- Richardson D.C., Street C.N.H., Tan J.Y.M., Kirkham N.Z., Hoover M.A., Ghane Cavanaugh A. (2012). Joint perception: gaze and social context. Frontiers in Human Neuroscience, 6, 1–8. doi: 10.3389/fnhum.2012.00194.

- Risko E.F., Richardson D.C., Kingstone A. (2016). Breaking the fourth wall of cognitive science: real-world social attention and the dual function of gaze. Current Directions in Psychological Science, 25(1), 70–4.

- Ristic J., Friesen C.K., Kingstone A. (2002). Are eyes special? It depends on how you look at it. Psychonomic Bulletin & Review, 9(3), 507–13.

- Samson D., Apperly I.A., Braithwaite J.J., Andrews B.J., Scott B., Sarah E. (2010). Seeing it their way: evidence for rapid and involuntary computation of what other people see. Journal of Experimental Psychology: Human Perception and Performance, 36(5), 1255–66.

- Sauseng P., Klimesch W., Stadler W., et al. (2005). A shift of visual spatial attention is selectively associated with human EEG alpha activity. European Journal of Neuroscience, 22(11), 2917–26.

- Sauseng P., Griesmayr B., Freunberger R., Klimesch W. (2010). Control mechanisms in working memory: a possible function of EEG theta oscillations. Neuroscience and Biobehavioral Reviews, 34(7), 1015–22.

- Seymour R.A., Wang H., Rippon G., Kessler K. (2018). Oscillatory networks of high-level mental alignment: a perspective-taking MEG study. NeuroImage, 177(May), 98–107.

- Shteynberg G. (2010). A silent emergence of culture: the social tuning effect. Journal of Personality and Social Psychology, 99(4), 683–9.

- Striano T., Chen X., Cleveland A., Bradshaw S. (2006). Joint attention social cues influence infant learning. European Journal of Developmental Psychology, 3(3), 289–99.

- Tipples J. (2002). Eye gaze is not unique: automatic orienting in response to uninformative arrows. Psychonomic Bulletin & Review, 9(2), 314–8.

- Tipples J. (2008). Orienting to counterpredictive gaze and arrow cues. Perception & Psychophysics, 70(1), 77–87.

- Tomasello M. (1988). The role of joint attentional processes in early language development. Language Sciences, 10(1), 69–88.

- Tomasello M., Farrar M.J. (1986). Joint attention and early language. Child Development, 57(6), 1454–63.

- Van Den Bergh D., Van Doorn J., Marsman M., et al. (2020). A tutorial on conducting and interpreting a Bayesian ANOVA in JASP. Annee Psychologique, 120(1), 73–96.

- van der Weiden A., Veling H., Aarts H. (2010). When observing gaze shifts of others enhances object desirability. Emotion, 10(6), 939–43.

- Wagenmakers E.J., Love J., Marsman M., et al. (2018). Bayesian inference for psychology. Part II: example applications with JASP. Psychonomic Bulletin & Review, 25(1), 58–76.

- Wang H., Callaghan E., Gooding-Williams G., McAllister C., Kessler K. (2016). Rhythm makes the world go round: an MEG-TMS study on the role of right TPJ theta oscillations in embodied perspective taking. Cortex, 75, 68–81.

- Westfall J. (2016). PANGEA. https://jakewestfall.shinyapps.io/pangea/. http://jakewestfall.org/publications/pangea.pdf [December 10, 2019].

- Willemse C., Marchesi S., Wykowska A. (2018). Robot faces that follow gaze facilitate attentional engagement and increase their likeability. Frontiers in Psychology, 9(FEB), 1–11.

- Wu R., Gopnik A., Richardson D.C., Kirkham N.Z. (2011). Infants learn about objects from statistics and people. Developmental Psychology, 47(5), 1220–9.

- Wu R., Kirkham N.Z. (2010). No two cues are alike: depth of learning during infancy is dependent on what orients attention. Journal of Experimental Child Psychology, 107(2), 118–36.

- Wykowska A., Chaminade T., Cheng G. (2016). Embodied artificial agents for understanding human social cognition. Philosophical Transactions of the Royal Society B: Biological Sciences, 371, 1693.

- Zimmer H.D. (2008). Visual and spatial working memory: from boxes to networks. Neuroscience and Biobehavioral Reviews, 32(8), 1373–95.
