Abstract

Objective:

Previous research has demonstrated the feasibility of programming cochlear implants (CIs) via telepractice. To effectively use telepractice in a comprehensive manner, all components of a clinical CI visit should be validated using remote technology. Speech-perception testing is important for monitoring outcomes with a CI, but it has yet to be validated for remote service delivery. The objective of this study, therefore, was to evaluate the feasibility of using direct audio input (DAI) as an alternative to traditional sound-booth speech-perception testing for serving people with CIs via telepractice. Specifically, our goal was to determine whether there was a significant difference in speech-perception scores between the remote DAI (telepractice) and the traditional (in-person) sound-booth conditions.

Design:

This study used a prospective, split-half design to test speech perception in the remote DAI and in-person sound-booth conditions. Thirty-two adults and older children with CIs participated; all had a minimum of 6 months of experience with their device. Speech-perception tests included the Consonant-Nucleus-Consonant (CNC) words, Hearing in Noise Test (HINT) sentences, and Arizona Biomedical Institute at Arizona State University (AzBio) sentences. All three tests were administered at levels of 50 and 60 dBA in quiet. Sentence stimuli were also presented in four-talker babble at signal-to-noise ratios (SNRs) of +10 dB and +5 dB for both the 50-dBA and 60-dBA presentation levels. A repeated-measures analysis of variance (RM ANOVA) was used to assess the effects of location (remote, in person), stimulus level (50 dBA, 60 dBA), and SNR (if applicable; quiet, +10 dB, +5 dB) on each outcome measure (CNC, HINT, AzBio).

Results:

The results showed no significant effect of location for any of the tests administered (p > 0.1). There was no significant effect of presentation level for CNC words or phonemes (p > 0.2). There was, however, a significant effect of level (p < 0.001) for both HINT and AzBio sentences, but the direction of the effect was opposite to what was expected: scores were poorer for 60 dBA than for 50 dBA. For both sentence tests, there was a significant effect of SNR, with poorer performance for worsening SNRs, as expected.

Conclusions:

The present study demonstrated that speech-perception testing via telepractice is feasible using DAI. There was no significant difference in scores between the remote and in-person conditions, which suggests that DAI testing can be used as a valid alternative to standard sound-booth testing. The primary limitation is that the calibration tools are presently not commercially available.

Keywords: Cochlear Implant, Telehealth, Telepractice, Speech Perception, Direct Audio Input

INTRODUCTION

Cochlear implant (CI) centers are not widely distributed because they provide specialized and multidisciplinary services. Access to CI services can therefore be difficult, particularly for people who live in rural areas or who have mobility or transportation issues. The increasing implementation of telehealth/telepractice may provide a solution to this accessibility problem. Telepractice is already being used in audiology for hearing-aid fittings, maintenance, and adjustments, especially within the Veterans Health Administration (Pross et al. 2016). In recent years, studies have investigated the feasibility of using telepractice for CI service delivery (Eikelboom et al. 2014; Goehring et al. 2012; Goehring & Hughes 2017; Hughes et al. 2012; Ramos et al. 2009; Wesarg et al. 2010). Most studies with people using CIs have focused on the feasibility of programming CIs for adults via distance technology. Speech-perception testing, however, is an important component of monitoring outcomes with a CI, and it has not been validated for remote service delivery. It therefore remains unknown whether telepractice can be used as a feasible and reliable method for testing speech perception with people using CIs. The goal of this study was to evaluate the validity of using direct audio input (DAI) to test speech perception remotely in people using CIs via telepractice.

Several studies have examined the feasibility of programming CI sound processors for both adults and children via telepractice. Results for both age groups have shown no significant difference in programming (map) levels between traditional and remote methods (Eikelboom et al. 2014; Goehring & Hughes 2017; Hughes et al. 2018; Hughes et al. 2012; Ramos et al. 2009; Wesarg et al. 2010). Adult recipients generally responded positively to remote programming as an alternative to traditional in-person programming and would recommend it to others. In a study conducted by Eikelboom et al. (2014), CI programming was conducted with adults both in the sound booth and remotely using the MED-EL clinical software. Programming levels did not differ significantly between the two conditions. Similarly, Wesarg et al. (2010) compared remote programming to the traditional face-to-face method, with half of the recipients starting in the remote condition and the other half starting in the face-to-face condition; no significant difference was found in programming levels between the two conditions. Ramos et al. (2009) also evaluated programming levels obtained in the in-person and remote conditions in adults, and additionally measured electrically evoked compound action potentials (eCAPs) via Neural Response Imaging. No significant difference was found in the programming levels between the two conditions; eCAP thresholds were reported as “similar” but were not statistically compared. Last, McElveen et al. (2010) examined speech perception in two groups, each consisting of seven adults with CIs. One group was programmed in person and the second group was programmed remotely. Both groups then completed speech-perception testing in person using Consonant-Nucleus-Consonant (CNC) words and Hearing in Noise Test (HINT) sentences. Scores did not differ significantly between the groups. It is important to note that speech perception was not tested remotely; rather, the scores were used to evaluate the effect of the programming levels obtained across the two conditions. Further, the study used a between-groups design instead of a within-subjects design.

In children with CIs, Goehring and Hughes (2017) and Hughes et al. (2018) examined behavioral thresholds for remote versus in-person conditions. Children were tested using either conditioned play audiometry (Goehring & Hughes 2017) or visual reinforcement audiometry (Hughes et al. 2018). Other factors examined were test duration, measurement success rate, and caregiver satisfaction with the remote programming procedure. Results from both studies revealed no significant differences between remote and in-person conditions for threshold levels, test duration, or measurement success rate. Parent/caregiver satisfaction with telepractice was positive overall. Questions used a Likert scale to evaluate caregivers’ willingness to use telepractice for CI appointments, whether they found the technology overwhelming, and whether they felt that the quality of care via telepractice was comparable to that of traditional in-person visits. All respondents reported favorable views of remote testing on all questions. In summary, the majority of studies that have evaluated the use of telepractice for CI service delivery have (1) focused primarily on programming the sound processor, (2) found that map levels are similar for in-person and remote programming, and (3) determined that the remote programming procedure is generally well accepted by recipients and/or caregivers.

Although programming the sound processor is a main focus of audiological appointments for people using CIs, it is not the only procedure performed at clinical visits. For telepractice to serve as an effective alternative to in-person visits, every service performed as part of a comprehensive clinical visit must be deliverable remotely. In particular, speech-perception testing is important for documenting the benefit of the CI relative to pre-implant performance and for monitoring performance over time. In a study with 29 people using CIs, Hughes et al. (2012) compared various physiological and perceptual outcome measures, including speech perception, obtained in person versus remotely. The physiological and basic perceptual measures were all obtained using a direct connection from the computer to the processor, whereas speech perception was measured in the sound field (sound booth for in-person testing and office/conference room for remote testing). There were no significant differences between the two test conditions for any measure except speech perception, for which scores were significantly poorer in the remote condition than in the in-person condition. This difference was attributed to higher background noise levels (~20–50 dB SPL) and longer reverberation times (~0.4–0.8 sec) at the remote sites (office or conference room) compared with a sound-treated booth (~20 dB SPL and ~0.1 sec). Speech-test stimuli delivered via DAI (de Graaff et al. 2016) may offer a way to overcome the negative effects of background noise and reverberation at remote test sites that lack a sound booth. DAI deactivates the processor microphones and delivers the stimuli directly to the sound processor, thereby eliminating reverberation and background-noise effects from the external environment.

The primary aim of this study was to determine whether DAI can be used for remote speech-perception testing as a suitable alternative to in-person sound-booth testing. Speech perception was tested in both the traditional sound-booth setting and remotely with DAI using a within-subjects design. The null hypothesis was that there would be no significant difference in speech-perception scores between the in-person sound-booth and remote DAI conditions.

MATERIALS AND METHODS

Participants

Thirty-five adults and children aged 13 years and older with CIs were enrolled. Three adults could not complete the study or were excluded due to floor effects; therefore, this study presents data from a total of 32 participants (mean age, 57.6 years; range, 13–85 years). Demographic information is detailed in Table 1. Participants included 15 individuals with Advanced Bionics (Advanced Bionics, Valencia, CA, USA) devices (CI, CII, or HiRes 90K) and 17 with Cochlear Ltd. (Cochlear Ltd., Sydney, NSW, Australia) devices (CI24R, CI24RE, CI422, CI512, or CI522). Enrollment criteria were (1) a minimum of six months of device use, (2) proficiency in spoken English, and (3) a history of successfully completing clinical speech-perception testing. All participants had at least nine months of device use prior to participation (mean, 9.7 years; range, 9 months–22 years), exceeding the six-month minimum. If a recipient was bilaterally implanted, only the better-performing ear was tested, both to avoid floor effects and to prevent the test familiarity that could result from testing both ears with the limited number of lists available for the speech-perception tests administered. All participants provided signed informed consent and were compensated for mileage and on an hourly basis for participation. This study was approved by the Boys Town National Research Hospital (BTNRH) Institutional Review Board under protocol 13-09-XP.

Table 1.

Participant demographics. Durations are in years (y) or years and months (y,m). Starting location is the first location tested (S, sound booth; R, remote). All participants with Advanced Bionics devices (subject IDs starting with K or C) used a Harmony processor, and all participants with Cochlear devices (subject IDs starting with F, N, or R) used an N6 processor.

| Subject ID | Age at Implant (y,m) | Age at Test (y) | Internal Device | Length of CI Use (y,m) | Processing Strategy | Per-Channel Rate (pps) | Starting Location |
|---|---|---|---|---|---|---|---|
| C11 | 55,3 | 77 | C1.0 | 22,0 | MPS | 1444 | S |
| KJ23 | 63,1 | 72 | HiRes 90K 1J | 8,6 | HiRes Optima-S | 1954 | R |
| CJ7 | 6,3 | 20 | CII 1J | 13,8 | HiRes-S | 829 | R |
| CJ16 | 1,5 | 15 | CII 1J | 13,5 | HiRes Optima-P | 2750 | R |
| KJ25 | 70,11 | 74 | HiRes 90K 1J | 2,2 | HiRes Optima-S | 3712 | R |
| KJ47 | 8,9 | 22 | HiRes 90K 1J | 13,1 | HiRes Optima-P | 3093 | S |
| KM1 | 55,8 | 70 | HiRes 90K MS | 14,0 | HiRes Optima-P | 3712 | S |
| KM4 | 57,9 | 60 | HiRes 90K MS | 2,0 | HiRes Optima-S | 1856 | S |
| CJ11 | 40,11 | 55 | CII 1J | 14,4 | HiRes-P | 3480 | S |
| CJ14 | 58,6 | 75 | CII 1J | 16,1 | HiRes Optima-P | 2750 | R |
| KJ48 | 24,7 | 33 | HiRes 90K 1J | 8,3 | HiRes Optima-P | 3712 | R |
| KM14 | 74,5 | 76 | HiRes 90K MS | 1,6 | HiRes Optima-S | 1515 | S |
| CJ17 | 2,1 | 13 | CII 1J | 11,1 | HiRes Optima-P | 3712 | S |
| CJ20 | 31,9 | 48 | CII 1J | 17,9 | HiRes Optima-S | 2750 | R |
| KJ17 | 35,1 | 41 | HiRes 90K 1J | 5,4 | HiRes Optima-S | 1856 | R |
| KM28 | 68,10 | 69 | HiRes 90K 1J | 0,9 | HiRes Optima-S | 3093 | R |
| KJ49 | 74,11 | 85 | HiRes 90K 1J | 10,1 | HiRes Optima-S | 1547 | S |
| FS32 | 59,3 | 63 | CI422 | 4,0 | ACE | 500 | S |
| R17 | 48,9 | 63 | CI24R(CS) | 13,9 | ACE | 900 | S |
| F17 | 42,11 | 54 | CI24RE(CA) | 11,7 | ACE | 900 | S |
| NS22 | 71,0 | 72 | CI522 | 1,8 | ACE | 900 | S |
| F42 | 22,1 | 32 | CI24RE(CA) | 10,0 | ACE | 900 | R |
| F35 | 49,8 | 58 | CI24RE(CA) | 8,11 | ACE | 900 | R |
| F43 | 73,10 | 79 | CI24RE(CA) | 4,10 | ACE | 900 | S |
| N5 | 49,4 | 57 | CI512 | 6,8 | ACE | 900 | R |
| R5 | 49,6 | 66 | CI24R(CS) | 17,1 | ACE | 900 | R |
| R18 | 60,3 | 73 | CI24R(CA) | 13,1 | ACE | 1200 | R |
| F47 | 63,1 | 63 | CI24RE(CA) | 0,10 | ACE | 900 | R |
| FS44 | 65,8 | 70 | CI422 | 4,1 | ACE | 900 | S |
| N33 | 41,2 | 47 | CI512 | 6,2 | ACE | 900 | R |
| R6 | 46,7 | 66 | CI24R(CS) | 19,7 | ACE | 1200 | S |
| R7 | 62,3 | 75 | CI24R(CS) | 13,11 | ACE | 900 | S |

Study Design

This study used a prospective, split-half, within-subjects design (N = 16 tested in the remote condition first; N = 16 tested in the in-person condition first) with pseudo-random group assignment. Participants were randomly assigned to begin in either the remote or the in-person condition, without consideration of device type or any other variable. When randomization produced unequal group sizes, the remaining enrollees were purposefully assigned to the smaller group to achieve a balanced design. Both conditions were tested within the same building: the in-person condition was conducted in a sound-treated booth within the Cochlear Implant Research Laboratory at BTNRH, and for the remote condition the audiologist remained in the laboratory while the participant was in a different room of the same building. This arrangement was chosen for logistical and scheduling convenience. Both conditions were typically tested on the same day, with a 10–15-minute break between them. Due to time constraints, four participants were unable to complete both conditions in one day; in those cases, the second condition was completed within one week of the first visit.
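The assignment rule described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the procedure the authors used: the function name `assign_start_location`, the participant IDs, and the exact balancing rule (once one group reaches its target size, all remaining enrollees go to the other group) are hypothetical.

```python
import random


def assign_start_location(participant_ids, n_per_group, seed=None):
    """Hypothetical sketch of pseudo-random split-half assignment.

    Each enrollee is randomly assigned to start in the remote ("R") or
    sound-booth ("S") condition. Once one group reaches n_per_group, the
    remaining enrollees go to the other group so the final design is
    balanced (assumed balancing rule).
    """
    if len(participant_ids) > 2 * n_per_group:
        raise ValueError("more participants than the balanced design allows")
    rng = random.Random(seed)
    counts = {"R": 0, "S": 0}
    assignment = {}
    for pid in participant_ids:
        if counts["R"] >= n_per_group:
            group = "S"
        elif counts["S"] >= n_per_group:
            group = "R"
        else:
            group = rng.choice(("R", "S"))
        counts[group] += 1
        assignment[pid] = group
    return assignment


# Usage with 32 hypothetical IDs: 16 start remote, 16 start in the booth.
ids = [f"P{i:02d}" for i in range(32)]
plan = assign_start_location(ids, n_per_group=16, seed=2024)
print(sum(g == "R" for g in plan.values()), sum(g == "S" for g in plan.values()))  # prints: 16 16
```

Capping each group at its target size guarantees balance whatever the random draws, at the cost of making the last few assignments deterministic.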

Stimuli and Equipment

For both the sound-booth and DAI conditions, speech stimuli were presented using the ListPlayer research software provided by Advanced Bionics (Advanced Bionics, Valencia, CA, USA). This software, which is not commercially available, allows the tester to choose the test material, list number, presentation level, signal-to-noise ratio (SNR), and noise type. Recipients with an Advanced Bionics device used a laboratory Harmony sound processor for both test conditions, and recipients with Cochlear devices (Cochlear Ltd., Sydney, NSW, Australia) used a Nucleus 6 (CP910) processor for both test conditions. Stimuli consisted of prerecorded lists from the CNC word test, HINT sentences, and AzBio sentences. At each test location (in-person sound booth, remote DAI), two lists of each sentence test were presented for each level/SNR combination, and one CNC list was presented at each level. The order of tests (CNC, HINT, AzBio), list numbers, and level/SNR combinations was randomized across recipients. Contralateral devices (CI or hearing aid) were deactivated prior to testing. CNC words were presented at 50 and 60 dBA in quiet only. HINT and AzBio sentences were presented at 50 and 60 dBA in each of the following noise conditions: no noise (quiet), +10 dB SNR, and +5 dB SNR. Four-talker babble was used for the speech-in-noise conditions. In total, each recipient heard 12 AzBio, 12 HINT, and two CNC lists (of a possible 22 AzBio, 26 HINT, and 10 CNC lists) at each of the two test locations.
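As a check on the list counts above, the per-location plan can be enumerated programmatically. This sketch assumes that "randomized" means a simple shuffle of all list presentations at one location; the authors' actual randomization scheme and list-number assignment are not specified, and `build_location_plan` and `babble_level_dba` are hypothetical helpers. The babble level follows from the usual definition SNR = speech level − babble level.

```python
import random
from itertools import product

LEVELS_DBA = (50, 60)
SNRS_DB = (None, 10, 5)  # None = quiet (no babble)


def babble_level_dba(speech_dba, snr_db):
    """Babble level implied by SNR = speech level - noise level (None in quiet)."""
    return None if snr_db is None else speech_dba - snr_db


def build_location_plan(seed=None):
    """Hypothetical per-location plan: 2 lists per level/SNR combination for
    HINT and AzBio, plus 1 CNC list per level in quiet, in shuffled order.
    Selection of specific list numbers is not modeled."""
    plan = []
    for test, level, snr in product(("HINT", "AzBio"), LEVELS_DBA, SNRS_DB):
        plan.extend([(test, level, snr)] * 2)
    plan.extend(("CNC", level, None) for level in LEVELS_DBA)
    random.Random(seed).shuffle(plan)
    return plan


plan = build_location_plan(seed=7)
for test in ("HINT", "AzBio", "CNC"):
    print(test, sum(1 for t, _, _ in plan if t == test))  # HINT 12, AzBio 12, CNC 2
print(babble_level_dba(60, 5))  # 55
```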

Procedures

Participants were tested using their everyday program, including all front-end processing [e.g., Adaptive Dynamic Range Optimization (ADRO), Automatic Sensitivity Control (ASC), Automatic Scene Classifier system (SCAN), ClearVoice, EchoBlock, WindBlock, SoundRelax] that they normally used. For participants with Advanced Bionics devices, a dedicated auxiliary-only program had to be loaded onto the Harmony sound processor for DAI listening. Therefore, the laboratory sound processor was loaded with the recipient’s daily program (Program 1) for testing in the sound booth, as well as a copy of the daily program that was designated for auxiliary only (Program 2) for testing in the remote DAI condition. For participants with Cochlear devices, the accessory-mixing ratio was set to “Accessory Only”, which automatically deactivates the two microphones on the external processor when an accessory is plugged into the DAI port. After the accessory is unplugged, the external microphones on the sound processor are automatically reactivated.

In-Person Testing

In the in-person (sound-booth) condition, a GSI 61 audiometer served as an attenuator for routing stimuli from ListPlayer to the free-field loudspeaker inside the sound booth. Stimulus level was calibrated at the position of the participant’s processor microphone with a Radio Shack digital sound-level meter (slow response, A-weighting), using the 60-dB SPL, 1-kHz calibration tone built into the ListPlayer software. The participant was seated facing the loudspeaker at 0 degrees azimuth in the horizontal plane, at a distance of one meter. The speech stimuli were then presented from ListPlayer via the loudspeaker. Participants were instructed to repeat what they heard to the best of their ability and were encouraged to guess if unsure. The tester entered responses into ListPlayer.

Remote DAI Testing

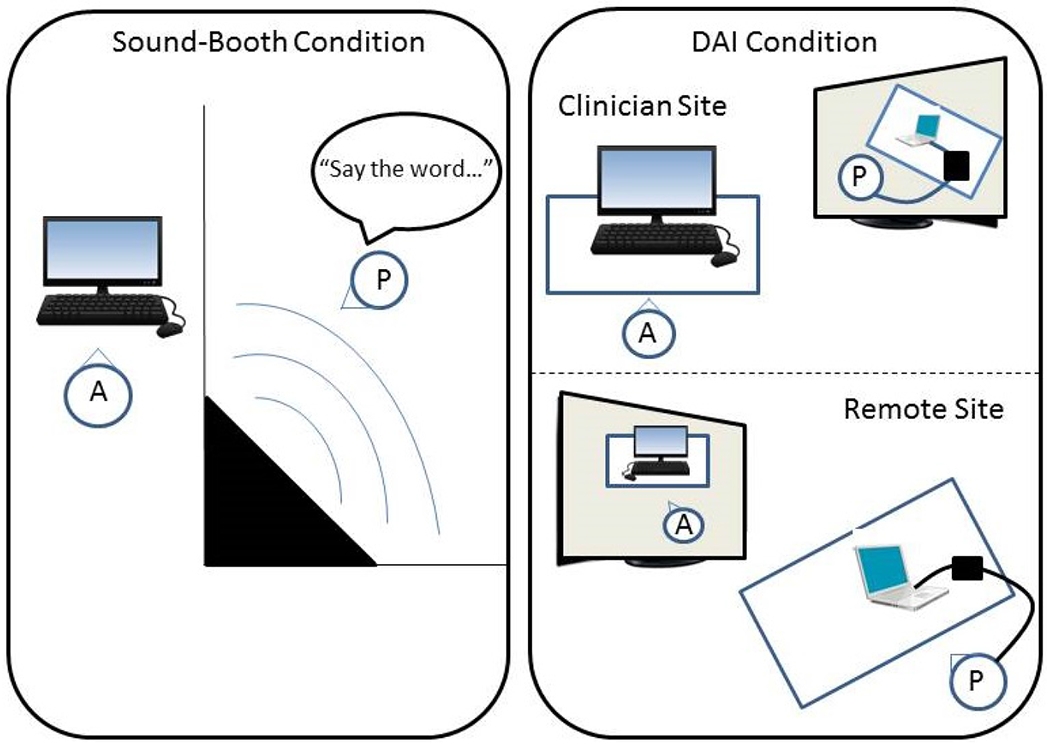

For the remote DAI condition, the participant was seated in the remote-site room while the CI audiologist was seated in the CI laboratory. The audiologist site used a Polycom VSX 7000 (Polycom Inc., San Jose, CA) system for two-way audio/video communication. The Polycom system used an encrypted firewall for a secure connection. The remote site used a Cisco videoconferencing system (Cisco Systems, San Jose, CA) and a Dell laptop (Dell, Round Rock, TX) running Windows 7, which was loaded with the ListPlayer software and placed on the recipient’s table. A commercially available USB sound card, equipped with standard unbalanced (no separate ground wire) RCA ports that were compatible with both CI manufacturers’ personal audio cables, was connected to the laptop. The sound card routed the speech stimuli from ListPlayer to the sound processor. The Cochlear personal audio cable was commercially available, whereas the Advanced Bionics cable was modified by the company for use with ListPlayer. Figure 1 depicts a schematic of the equipment setup for each of the two test conditions.

Figure 1.

Schematic of the test setup for the in-person (sound-booth) and remote direct audio input (DAI) conditions for speech-perception testing (A = audiologist; P = participant). The left panel shows the in-person condition, with the audiologist outside the sound booth controlling the stimuli and the participant inside during speech-perception testing. The right panel shows the remote DAI condition, with the audiologist (top) communicating via the video-communication system with the participant (bottom), whose CI processor is connected to the laptop at the remote site.

Calibration for DAI stimulus delivery differed between the two CI manufacturers. For Advanced Bionics devices, calibration was performed using a built-in feature of the ListPlayer software that was designed specifically for use with the Harmony sound processor. For Cochlear devices, calibration was achieved using proprietary software from Cochlear Ltd. that allowed access to internal sound-level meters coupled to both the external microphones and the DAI port of the CP910 sound processor (de Graaff et al. 2016). This software tool was used in conjunction with ListPlayer, which presented the stimulus¹, to adjust the stimulus levels to achieve the desired SPL.
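Whatever the tool, level calibration reduces to a decibel offset: the difference between the measured and desired levels, applied as a linear gain to the stimulus waveform. The sketch below shows only that arithmetic; it does not reproduce the ListPlayer calibration feature or Cochlear's proprietary tool, whose internals are not described here, and the 57-dB reading and the helper names are hypothetical.

```python
import math


def level_db(samples):
    """RMS level of a sample sequence in dB re: full scale (amplitude 1.0)."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms)


def gain_to_target(measured_db, target_db):
    """Gain (dB) and linear amplitude factor that move measured_db to target_db."""
    gain_db = target_db - measured_db
    return gain_db, 10 ** (gain_db / 20)


# Hypothetical example: the 1-kHz calibration tone reads 57 dB; the target is 60 dB.
gain_db, factor = gain_to_target(57.0, 60.0)
tone = [0.1 * math.sin(2 * math.pi * 1000 * n / 44100) for n in range(44100)]
scaled = [s * factor for s in tone]
print(round(gain_db, 2), round(factor, 4))           # 3.0 1.4125
print(round(level_db(scaled) - level_db(tone), 6))   # 3.0
```

Because decibels are logarithmic, a +3-dB correction multiplies the waveform amplitude by about 1.41, and the RMS level of the scaled signal rises by exactly the requested amount.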

At the beginning of the remote session, the audiologist escorted the participant to the remote-site room, provided instructions regarding the study procedures, and connected the personal audio cable to the recipient’s processor, thereby deactivating the external microphones. The audiologist then returned to the laboratory to begin testing. In clinical practice, a test assistant at the remote site would connect the hardware and provide further instructions to the recipient during the appointment. Once the test session commenced, the audiologist held cue cards up to the Polycom camera in the laboratory to communicate with the recipient, who watched on the video monitor of the Cisco system at the remote site. The cue cards indicated whether the next stimuli would be sentences or words and whether they would include background noise. Unlike clinical programming software, ListPlayer does not have a talk-over feature that allows the clinician to speak to the patient or participant during testing.

The audiologist used an HP ProDesk (Hewlett-Packard, Palo Alto, CA) computer to control the ListPlayer software on the Dell laptop at the recipient site via the Remote Desktop Connection feature in Windows 7. Once this connection was established, the ListPlayer content was no longer visible to the participant on the laptop screen. The audiologist then used ListPlayer to present the speech-perception materials to the sound processor. Participants spoke their responses to the audiologist through a Cisco table-top microphone placed in front of them. As in the in-person condition, responses to the AzBio and HINT sentences were scored in real time within ListPlayer, and the percent correct was displayed and stored electronically at the end of each list. Because ListPlayer scores CNC responses only as whole words correct, phoneme scores were recorded and calculated by hand. All participants were native English speakers, and none had speech difficulties that prevented the investigator from understanding their responses. The total testing time for both the in-person and DAI conditions, including multiple breaks, was approximately four hours.
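Hand-scoring phonemes follows directly from the CNC structure: each word has three phonemes (consonant, nucleus, consonant), so the phoneme score for a list is the total number of phonemes repeated correctly divided by three times the number of words. The sketch below assumes that a word is scored correct only when all three phonemes are correct; `score_cnc` is a hypothetical helper, and the 50-word response pattern is made up for illustration.

```python
def score_cnc(phonemes_correct, phonemes_per_word=3):
    """Word and phoneme percent-correct for one CNC list.

    phonemes_correct: phonemes repeated correctly for each word (0-3).
    A word counts as correct only if all of its phonemes are correct (assumption).
    """
    if not phonemes_correct:
        raise ValueError("empty list")
    if any(not 0 <= c <= phonemes_per_word for c in phonemes_correct):
        raise ValueError("phoneme count out of range")
    n_words = len(phonemes_correct)
    words_correct = sum(c == phonemes_per_word for c in phonemes_correct)
    word_pct = 100 * words_correct / n_words
    phoneme_pct = 100 * sum(phonemes_correct) / (phonemes_per_word * n_words)
    return word_pct, phoneme_pct


# Hypothetical 50-word list: 35 words fully correct, 10 with 2 phonemes, 5 missed.
responses = [3] * 35 + [2] * 10 + [0] * 5
words, phonemes = score_cnc(responses)
print(f"{words:.1f}% words, {phonemes:.1f}% phonemes")  # 70.0% words, 83.3% phonemes
```

Partially correct words still earn phoneme credit, which is why phoneme scores run higher than word scores, as in the CNC results reported below.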

Analysis

A mixed analysis of variance (ANOVA) was used to evaluate within- and between-subjects factors. Although the primary goal of this study was to determine whether speech-perception scores differ between the in-person and remote conditions, multiple levels and SNRs were tested so that this question could be addressed for most conditions used clinically. Level and SNR were therefore included as factors in the analysis to determine whether any location effect (in person or remote) depended on level or SNR. For the sentence tests (HINT and AzBio), the within-subjects (repeated-measures) factors were location (remote, in person), presentation level (50 dBA, 60 dBA), and SNR (quiet, +10 dB, +5 dB). CNC word and phoneme scores were analyzed separately, with location (remote, in person) and presentation level (50 dBA, 60 dBA) as within-subjects factors. In addition to the within-subjects factors, device was included as a between-subjects factor to determine whether any of the outcomes were device-specific. When the assumption of sphericity was violated (Mauchly’s test of sphericity), the degrees of freedom were adjusted using the Greenhouse-Geisser correction.
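To make the Greenhouse-Geisser step concrete, the sketch below computes a one-way repeated-measures ANOVA for a single within-subjects factor, together with the Greenhouse-Geisser epsilon estimated from the double-centered covariance matrix of the conditions. It is a simplification under stated assumptions: it handles one within-subjects factor with complete data and no between-subjects factor, so it does not reproduce the mixed model (location × level × SNR, with device between subjects) used here, and `rm_anova_oneway` and the example scores are hypothetical. The corrected degrees of freedom are epsilon times the uncorrected ones; with only two levels, epsilon is always 1, so the correction can matter only for the three-level SNR factor.

```python
def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA.

    data: one row per subject, one column per condition (complete data).
    Returns F, df_effect, df_error, partial eta squared, and the
    Greenhouse-Geisser epsilon (multiply both dfs by it when correcting).
    """
    n, k = len(data), len(data[0])
    grand = sum(map(sum, data)) / (n * k)
    col_means = [sum(row[j] for row in data) / n for j in range(k)]
    row_means = [sum(row) / k for row in data]

    ss_cond = n * sum((m - grand) ** 2 for m in col_means)
    ss_subj = k * sum((m - grand) ** 2 for m in row_means)
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_error = ss_total - ss_cond - ss_subj  # condition x subject residual

    df_effect, df_error = k - 1, (k - 1) * (n - 1)
    f_stat = (ss_cond / df_effect) / (ss_error / df_error)
    partial_eta_sq = ss_cond / (ss_cond + ss_error)

    # Sample covariance matrix of the k conditions.
    cov = [[sum((row[i] - col_means[i]) * (row[j] - col_means[j]) for row in data) / (n - 1)
            for j in range(k)] for i in range(k)]
    # Double-center it (symmetric, so row means equal column means).
    cov_row_means = [sum(r) / k for r in cov]
    cov_grand = sum(cov_row_means) / k
    dc = [[cov[i][j] - cov_row_means[i] - cov_row_means[j] + cov_grand
           for j in range(k)] for i in range(k)]
    trace = sum(dc[i][i] for i in range(k))
    sum_sq = sum(v * v for r in dc for v in r)
    epsilon = trace ** 2 / ((k - 1) * sum_sq)  # between 1/(k-1) and 1
    return f_stat, df_effect, df_error, partial_eta_sq, epsilon


# Two conditions (e.g., in person vs. remote) for four hypothetical subjects.
scores = [[1, 3], [2, 5], [3, 4], [2, 6]]
f_stat, df1, df2, eta, eps = rm_anova_oneway(scores)
print(round(f_stat, 3), df1, df2, round(eta, 3), round(eps, 3))
```

With two conditions, F equals the square of the paired t statistic (here t ≈ 3.873, so F = 15), which is a convenient sanity check on the sums of squares.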

RESULTS

CNC Words and Phonemes

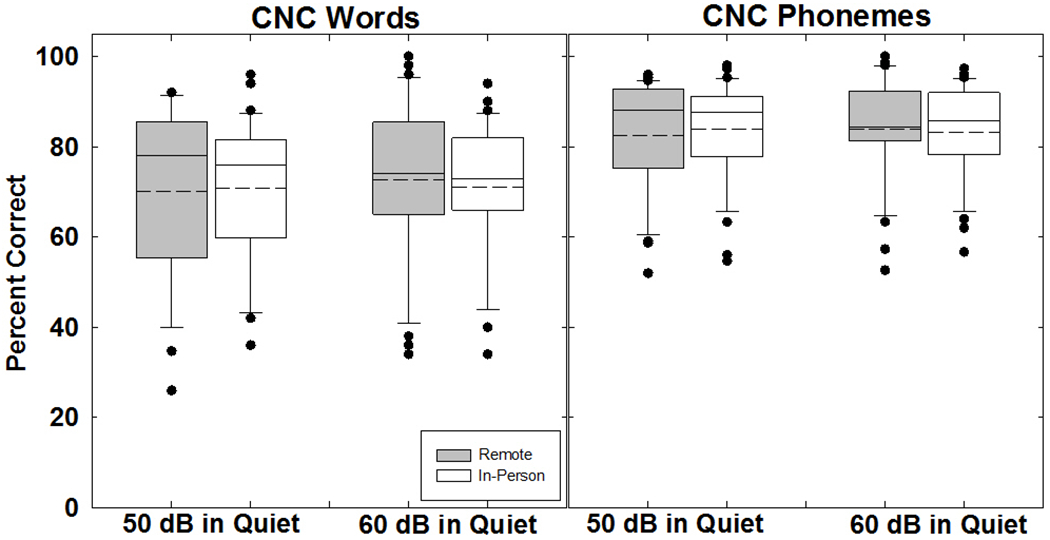

Figure 2 shows box-and-whisker plots for CNC words (left panel) and phonemes (right panel). Box boundaries represent the 25th and 75th percentiles, and whiskers represent the 10th and 90th percentiles. Means and medians are shown with dashed and solid lines, respectively. Solid circles indicate outliers beyond the 10th and 90th percentiles. For CNC words (Fig. 2, left panel), the ANOVA showed no significant effect of location (F(1, 30) = 0.103, p = 0.75, partial η² = 0.003) or level (F(1, 30) = 0.987, p = 0.33, partial η² = 0.032), and no significant interaction between these factors (F(1, 30) = 0.807, p = 0.38, partial η² = 0.026). Mean word scores for the in-person and remote conditions (averaged across level and device) were 71.1% and 71.5%, respectively. Mean word scores for 50 and 60 dBA (averaged across location and device) were 70.7% and 71.9%, respectively.
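Partial η² and F carry the same information once the degrees of freedom are known, because partial η² = SS_effect / (SS_effect + SS_error) = F·df_effect / (F·df_effect + df_error). The short check below applies that identity to the CNC word statistics reported above; it is a consistency check on the reported values, not a reanalysis of the data, and `partial_eta_squared` is a hypothetical helper.

```python
def partial_eta_squared(f_stat, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return f_stat * df_effect / (f_stat * df_effect + df_error)


# (F, df_effect, df_error, reported partial eta squared) for CNC words, as reported above.
reported = {
    "location": (0.103, 1, 30, 0.003),
    "level": (0.987, 1, 30, 0.032),
    "location x level": (0.807, 1, 30, 0.026),
}
for effect, (f_stat, df1, df2, eta_reported) in reported.items():
    eta = partial_eta_squared(f_stat, df1, df2)
    print(f"{effect}: {eta:.3f} (reported {eta_reported:.3f})")
```

The same identity can be applied to the HINT and AzBio statistics below, including the Greenhouse-Geisser-corrected degrees of freedom, since the correction scales both degrees of freedom by the same factor.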

Figure 2.

Box-and-whisker plots showing percent correct for consonant-nucleus-consonant (CNC) words (left panel) and phonemes (right panel) obtained in the remote (gray boxes) and in-person (white boxes) conditions. Box boundaries represent the 25th and 75th percentiles and whiskers represent the 10th and 90th percentiles. Means and medians are shown with dashed and solid lines, respectively. Solid circles indicate outliers.

The between-subjects analysis revealed no significant effect of device (F(1, 30) = 0.576, p = 0.45, partial η² = 0.019). Mean word scores were 69.2% for participants with Advanced Bionics devices and 73.5% for participants with Cochlear devices. There was, however, a significant interaction between device and level. Descriptive statistics for the significant interactions for CNC scores are shown in Supplemental Digital Content (SDC) 1. Mean scores were better for 60 dB than for 50 dB for Advanced Bionics (71.2% vs. 67.3%, respectively), whereas mean scores were poorer for 60 dB than for 50 dB for Cochlear (72.7% vs. 74.2%, respectively; see SDC 1A).

For CNC phonemes (Fig. 2, right panel), the ANOVA showed no significant effect of location (F(1, 30) = 0.205, p = 0.65, partial η² = 0.007) or level (F(1, 30) = 0.058, p = 0.81, partial η² = 0.002), and no significant interaction between location and level (F(1, 30) = 1.218, p = 0.28, partial η² = 0.039). Mean phoneme scores for the in-person and remote conditions (averaged across level and device) were 83.7% and 83.3%, respectively. Mean phoneme scores for 50 and 60 dBA (averaged across location and device) were 83.4% and 83.6%, respectively.

The between-subjects analysis revealed no significant effect of device (F(1, 30) = 0.328, p = 0.57, partial η² = 0.011). Mean phoneme scores were 82.4% for participants with Advanced Bionics devices and 84.6% for participants with Cochlear devices. As with the word scores, there was a significant interaction between device and level for phonemes (see SDC 1B). Mean scores were better for 60 dB than for 50 dB for Advanced Bionics (83.5% vs. 81.3%, respectively), whereas mean scores were poorer for 60 dB than for 50 dB for Cochlear (83.6% vs. 85.5%, respectively). There was also a significant three-way interaction between device, location, and level (see SDC 1C). For both devices, the effect of level was larger in the remote condition than in person.

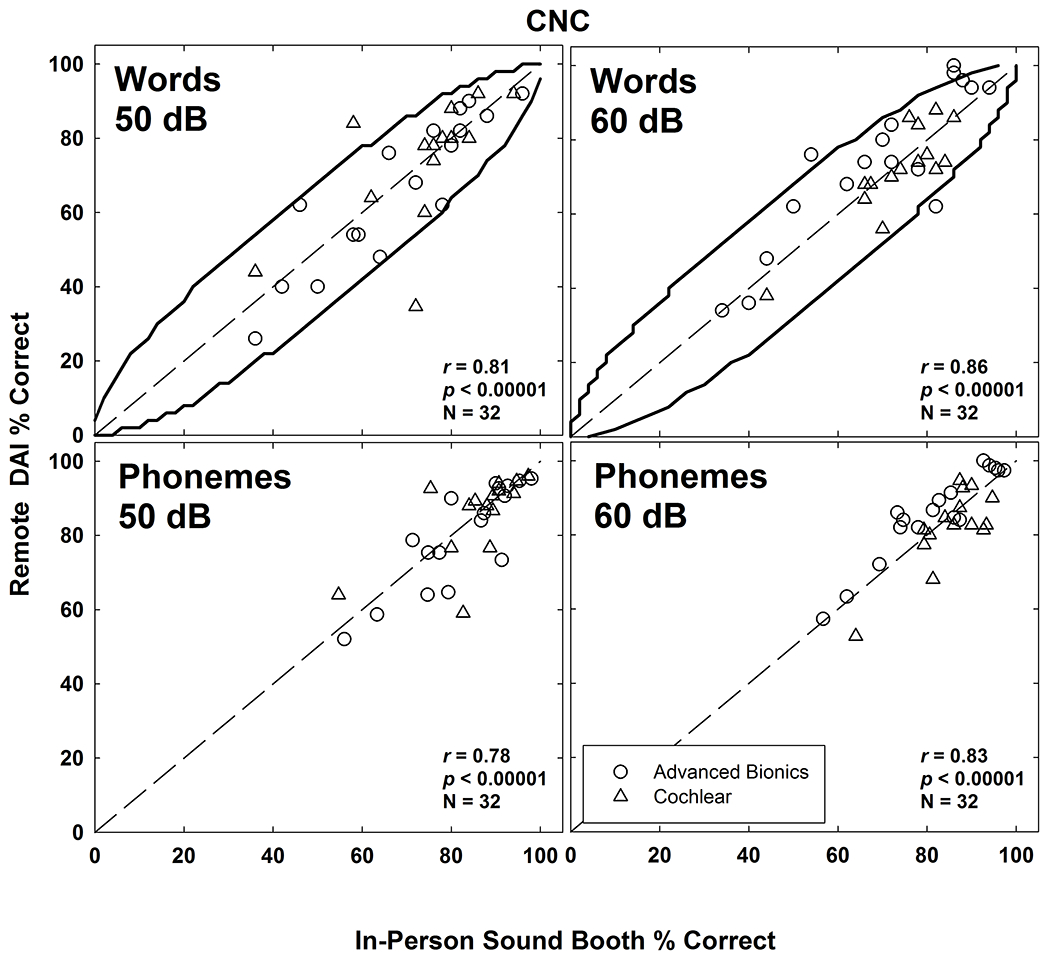

Figure 3 shows scatter plots of individual CNC word (top row) and phoneme (bottom row) scores obtained in the remote and in-person conditions. Curved lines in the top panels indicate 95% confidence intervals based on Thornton and Raffin (1978). With the exception of two outliers at 50 dB (both were participants with Cochlear devices) and four outliers at 60 dB (all had Advanced Bionics devices), the word scores were located within the confidence intervals. Pearson correlations (shown in each panel) were significant (p < 0.00001) for all conditions.
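The confidence intervals in Figure 3 reflect the binomial variability of a percent-correct score on a fixed-length word list. As a rough sketch of that idea, the code below approximates a 95% critical range for a second score given a first score, using the arcsine-transformed binomial proportion. This is a swapped-in normal approximation, not Thornton and Raffin's published procedure, so its limits may differ slightly from their tables; the default list length of 50 words and the helper names are assumptions.

```python
import math


def critical_range(score_pct, n_items=50, z=1.96):
    """Approximate 95% critical range for a retest score on an n-item list.

    On the arcsine scale, arcsin(sqrt(p)) has variance of about 1/(4n), so the
    difference of two independent scores has a standard deviation of about
    1/sqrt(2n). Approximation only; not Thornton and Raffin's exact tables.
    """
    theta = math.asin(math.sqrt(score_pct / 100))
    half_width = z / math.sqrt(2 * n_items)
    lo = max(0.0, theta - half_width)
    hi = min(math.pi / 2, theta + half_width)
    return 100 * math.sin(lo) ** 2, 100 * math.sin(hi) ** 2


def outside_range(in_person_pct, remote_pct, n_items=50):
    """True if the remote score falls outside the critical range of the in-person score."""
    lo, hi = critical_range(in_person_pct, n_items)
    return not lo <= remote_pct <= hi


lo, hi = critical_range(50)
print(f"{lo:.1f}-{hi:.1f}")  # 30.9-69.1 for a 50% score on a 50-item list
print(outside_range(50, 72), outside_range(50, 60))  # True False
```

The range is widest near 50% and narrows toward 0% and 100%, which is why the confidence-interval curves in Figure 3 bow outward in the middle of the score range.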

Figure 3.

Scatter plots of individual CNC word (top row) and phoneme (bottom row) scores obtained in the remote DAI and in-person sound-booth conditions. Data for presentation levels of 50 dBA and 60 dBA are shown in the left and right columns, respectively. Curved lines in the top panels indicate 95% confidence intervals based on Thornton and Raffin (1978). Dashed diagonals represent unity. Advanced Bionics and Cochlear data are displayed with open circles and triangles, respectively. Pearson correlations (r), significance (p), and number of observations (N) are indicated in each panel.

HINT Sentences

Figure 4 shows box-and-whisker plots for HINT sentence scores. The left and right panels show percent-correct data for the 50- and 60-dB signal levels, respectively. Within each panel, data are plotted from left to right for the quiet, +10 dB, and +5 dB SNR conditions. Data were missing for two participants in the 50 dB/+10 SNR condition and for one participant in the 50 dB/+5 SNR condition. Results showed no significant effect of location (F(1, 28) = 0.158, p = 0.69, partial η² = 0.006). Mean scores for the in-person and remote conditions were 59.8% and 60.4%, respectively. There was a significant effect of level (F(1, 28) = 19.452, p < 0.001, partial η² = 0.410); unexpectedly, mean scores were significantly higher for 50 dB (63.6%) than for 60 dB (56.5%). There was also a significant effect of SNR (F(2, 56) = 333.563, p < 0.001, partial η² = 0.923). Mean scores for the quiet, +10, and +5 dB SNR conditions were 93.5%, 59.6%, and 27.1%, respectively, following the expected trend. There was no significant interaction between location and level (F(1, 28) = 1.704, p = 0.20, partial η² = 0.057) or between location and SNR (F(2, 56) = 0.147, p = 0.86, partial η² = 0.005). However, there was a significant interaction between level and SNR (F(2, 56) = 10.717, p < 0.001, partial η² = 0.277; see SDC 2A) and a significant three-way interaction between location, level, and SNR (F(2, 56) = 6.273, p = 0.003, partial η² = 0.183; see SDC 2B).

Figure 4.

Box-and-whisker plots showing percent correct for Hearing in Noise Test (HINT) sentence scores obtained in the remote (gray boxes) and in-person (white boxes) conditions. The left (50 dB) and right (60 dB) panels show data for decreasing SNRs (Quiet, +10, +5) from left to right within each panel. Box boundaries represent the 25th and 75th percentiles, and whiskers represent the 10th and 90th percentiles. Means and medians are shown with dashed and solid lines, respectively. Solid circles indicate outliers.

The between-subjects analysis revealed a significant effect of device (F(1, 28) = 4.449, p = 0.044, partial η² = 0.137), with better overall performance for participants with Advanced Bionics devices (65.6%) than for participants with Cochlear devices (54.6%). There were significant interactions between device and the following factors: location, level, SNR, location*SNR, and level*SNR. Descriptive statistics for these interactions are detailed in SDC 2C–G. For the device*location interaction, the remote DAI condition yielded better performance for participants with Advanced Bionics devices, and poorer performance for participants with Cochlear devices, than the in-person condition did (SDC 2C). For the device*level interaction, performance was better at 50 dB than at 60 dB for both devices, but this difference was larger for Cochlear than for Advanced Bionics (SDC 2D). This trend continued for the interactions that included SNR (see SDC 2E–G): performance degraded to a larger extent with worsening SNR for participants with Cochlear devices than for participants with Advanced Bionics devices.

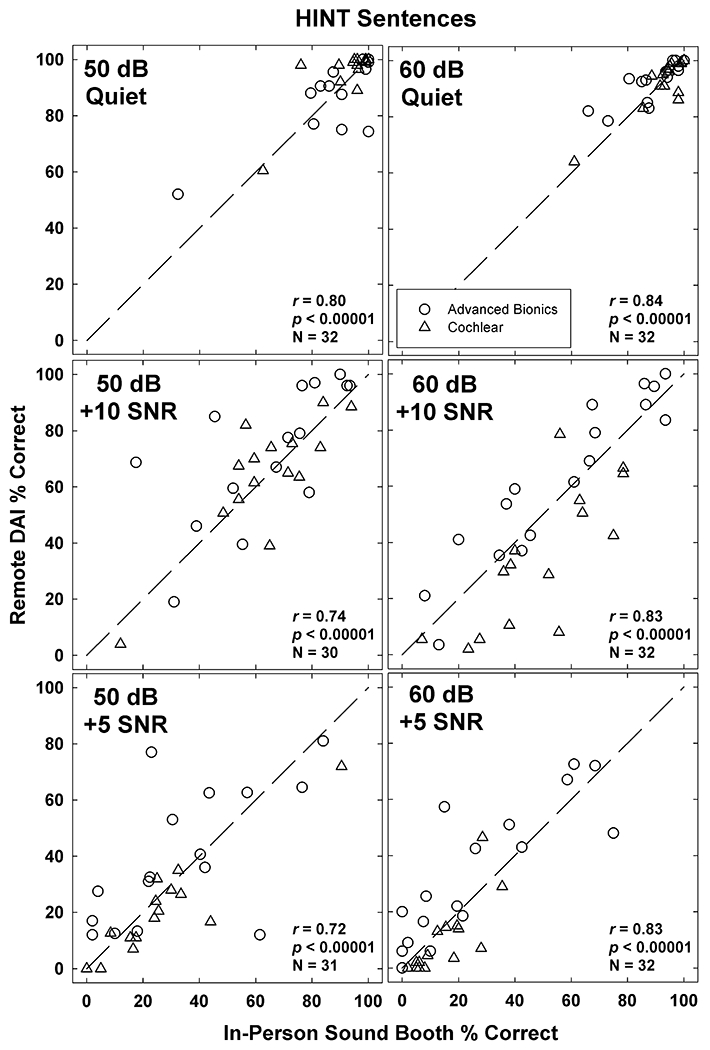

Figure 5 shows scatter plots of individual HINT percent-correct scores obtained in the remote versus in-person conditions. Four participants had relatively large differences between remote and in-person scores. For example, in the 50 dB +10 SNR condition, one participant (KJ25) scored 17.5% in the in-person condition but 68.7% in the remote DAI condition; this participant is not the same as the outlier in the corresponding region of the 50 dB +5 SNR panel. The outliers in the upper left quadrants of the 50 dB +5 SNR and 60 dB +5 SNR panels are the same participant (KJ17), as are the outliers in the lower right quadrants of those two panels (KM28). Pearson correlations (shown in each panel) were significant (p < 0.00001) for all conditions and were slightly stronger for the 60-dB conditions than for the 50-dB conditions.
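The per-panel Pearson correlations, and the flagging of participants whose remote and in-person scores differ markedly, can be sketched in a few lines of stdlib Python. The function names, the 20-point threshold, and the example scores below are hypothetical and are not study data.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length score lists."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two equal-length lists with at least 3 scores")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def large_differences(ids, in_person, remote, threshold_pct):
    """IDs whose remote and in-person scores differ by at least threshold_pct points."""
    return [pid for pid, a, b in zip(ids, in_person, remote)
            if abs(b - a) >= threshold_pct]


# Hypothetical percent-correct scores for one condition (not study data)
ids = ["P01", "P02", "P03", "P04", "P05"]
in_person = [92.0, 71.0, 48.0, 20.0, 85.0]
remote = [90.0, 75.0, 44.0, 62.0, 88.0]

print(f"r = {pearson_r(in_person, remote):.2f}")
print(large_differences(ids, in_person, remote, threshold_pct=20.0))
```

With these made-up scores only P04 is flagged, and that single discrepant pair is enough to pull r well below 1, which is why a few outliers can visibly weaken a panel's correlation.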

Figure 5.

Scatter plots of individual HINT percent-correct scores obtained in the remote DAI and in-person sound-booth conditions. Data for presentation levels of 50 dB and 60 dB are shown in the left and right columns, respectively. SNR conditions are shown from top to bottom: Quiet (top), +10 SNR (middle), and +5 SNR (bottom). Dashed diagonals represent unity. Advanced Bionics and Cochlear data are displayed with open circles and triangles, respectively. Pearson correlations (r), significance (p), and number of observations (N) are indicated in each panel.

AzBio Sentences

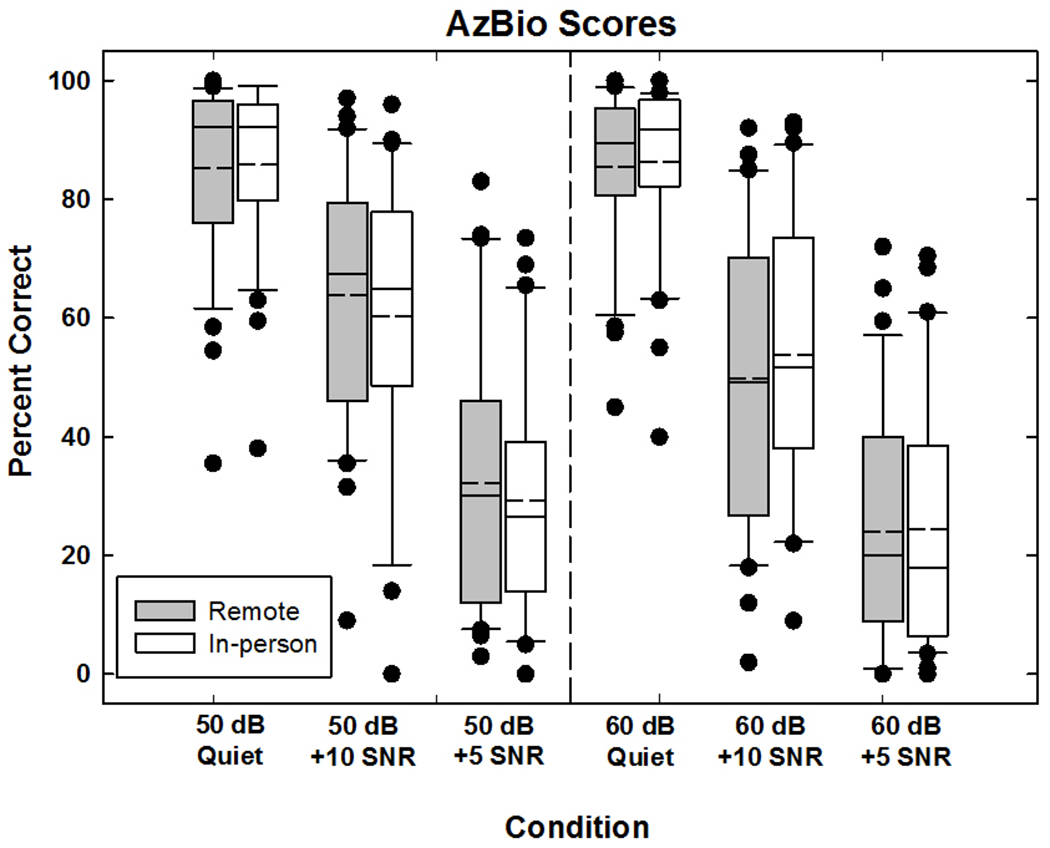

Figure 6 shows box-and-whisker plots for AzBio sentence scores, plotted as in Fig. 4. Results showed no significant effect of location (F(1, 28) = 0.131, p = 0.72, partial η² = 0.005); mean scores for the in-person and remote conditions were 58.4% and 57.9%, respectively. The effect of level was significant (F(1, 28) = 31.326, p < 0.001, partial η² = 0.528). As for HINT sentences, the mean score at 50 dB (61.1%) was unexpectedly higher than at 60 dB (55.1%). There was also a significant effect of SNR (F(1.52, 42.4) = 379.146, p < 0.001, partial η² = 0.931), with performance decreasing as SNRs became more challenging, as expected; mean scores for the quiet, +10, and +5 SNR conditions were 87.6%, 58.5%, and 28.3%, respectively. There was a small but significant interaction between location and level (F(1, 28) = 4.232, p = 0.049, partial η² = 0.131; see SDC 3A), as well as significant interactions between level and SNR (F(2, 56) = 17.690, p < 0.001, partial η² = 0.387; see SDC 3B) and between location, level, and SNR (F(2, 56) = 4.290, p = 0.018, partial η² = 0.133; see SDC 3C).
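The non-integer degrees of freedom for the AzBio SNR effect, F(1.52, 42.4), are consistent with a sphericity correction such as Greenhouse–Geisser, which multiplies both the effect and error degrees of freedom of a within-subjects factor by an estimate ε ≤ 1 (the text does not name the correction, so this is an inference). Because ε scales both degrees of freedom, it leaves the F ratio and partial eta squared unchanged and affects only the p value. The Python sketch below, with hypothetical function names, backs out ε from the reported values.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared implied by an F ratio and its degrees of freedom."""
    return f_value * df_effect / (f_value * df_effect + df_error)


def implied_epsilon(corrected_dfs, uncorrected_dfs):
    """Correction factor implied separately by the effect and error degrees of freedom."""
    return (corrected_dfs[0] / uncorrected_dfs[0],
            corrected_dfs[1] / uncorrected_dfs[1])


F_SNR = 379.146
UNCORRECTED = (2, 56)     # 3 SNR levels; error df as reported for the AzBio level x SNR effect
CORRECTED = (1.52, 42.4)  # as reported for the AzBio SNR main effect

eps_effect, eps_error = implied_epsilon(CORRECTED, UNCORRECTED)
print(f"epsilon from effect df: {eps_effect:.3f}, from error df: {eps_error:.3f}")
print(f"eta_p^2 with corrected dfs:   {partial_eta_squared(F_SNR, *CORRECTED):.3f}")
print(f"eta_p^2 with uncorrected dfs: {partial_eta_squared(F_SNR, *UNCORRECTED):.3f}")
```

Both ε estimates are about 0.76 (they differ only through rounding of the reported degrees of freedom), and both versions of partial eta squared round to the reported 0.931.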

Figure 6.

Box-and-whisker plots showing percent correct for Arizona Biomedical Institute at Arizona State University (AzBio) sentence scores. The left (50 dB) and right (60 dB) panels show data for decreasing SNRs (Quiet, +10, +5) from left to right within each panel. Box boundaries represent the 25th and 75th percentiles and the whiskers represent the 10th and 90th percentiles. Means and medians are shown with dashed and solid lines, respectively. Solid circles indicate outliers.

The between-subjects analysis revealed no significant effect of device (F(1, 28) = 1.317, p = 0.261, partial η² = 0.045), although general trends were similar to those for HINT sentences: participants with Advanced Bionics devices scored numerically higher overall (61.5%) than participants with Cochlear devices (54.7%). There were significant interactions between device and each of the following factors: location, level, SNR, level*SNR, and location*level*SNR; descriptive statistics for these interactions are detailed in SDC 3D–H. As for HINT sentences, for the device*location interaction, compared with the in-person condition, the remote DAI condition yielded better performance for participants with Advanced Bionics devices and poorer performance for participants with Cochlear devices (SDC 3D). For the device*level interaction, performance was better at 50 dB than at 60 dB for both device groups, but the difference was larger for participants with Cochlear devices than for those with Advanced Bionics devices (SDC 3E). This trend, also similar to the HINT results, continued for the interactions that included SNR (SDC 3F–H): as the SNR became more challenging, performance decrements were generally larger for participants with Cochlear devices than for those with Advanced Bionics devices.

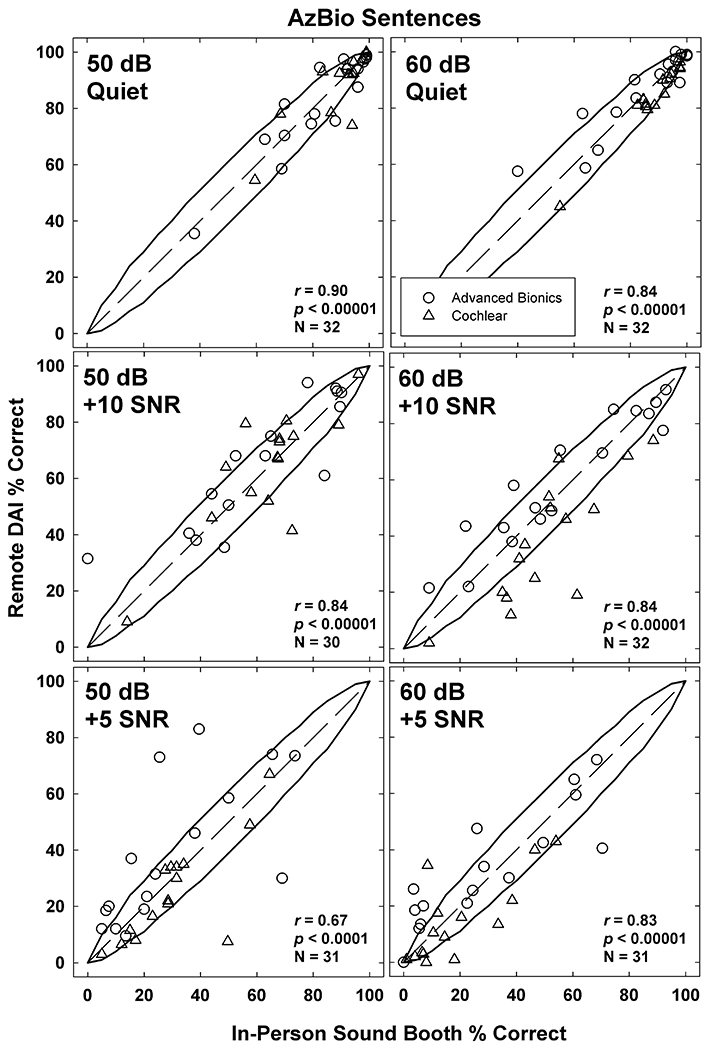

Figure 7 shows scatter plots of AzBio scores obtained in the remote versus in-person conditions, plotted as in Fig. 5. Curved lines indicate 95% confidence intervals derived from testing in a sound-treated booth at 60 dB without background noise (Spahr et al., 2012). Some participants had relatively large differences between remote and in-person scores. For example, in the 50 dB +5 SNR condition, one participant (KM28) scored 69% in the in-person condition but 30% in the remote DAI condition; this participant is also the outlier in the same region of the 60 dB +5 SNR panel. For both levels, correlations decreased as the SNR became more challenging, particularly at 50 dB.
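Figure 7 uses the empirically derived AzBio confidence intervals of Spahr et al. (2012). As a rough, model-based stand-in, the Python sketch below computes a 95% range for a retest score under a simple binomial model with an arcsine transform, in the spirit of Thornton and Raffin (1978). It is not the method used to draw the curves in Figure 7: the number of independently scored items (`n_items`) is an assumption, words within AzBio sentences are not truly independent, and the function names are hypothetical.

```python
import math


def binomial_critical_range(first_score, n_items, z=1.96):
    """Approximate 95% range for a retest score under a simple binomial model.

    Scores are proportions (0-1). The transform theta = 2*asin(sqrt(p)) has a
    variance of roughly 1/n, so the difference between two independent scores
    has a variance of roughly 2/n on that scale.
    """
    if not 0.0 <= first_score <= 1.0 or n_items <= 0:
        raise ValueError("score must be a proportion and n_items positive")
    theta = 2.0 * math.asin(math.sqrt(first_score))
    half_width = z * math.sqrt(2.0 / n_items)
    low = max(0.0, theta - half_width)      # clip to the valid range [0, pi]
    high = min(math.pi, theta + half_width)
    return math.sin(low / 2.0) ** 2, math.sin(high / 2.0) ** 2


def outside_range(first_score, second_score, n_items):
    """True if the second score falls outside the first score's critical range."""
    low, high = binomial_critical_range(first_score, n_items)
    return not (low <= second_score <= high)


low, high = binomial_critical_range(0.69, n_items=50)  # n_items = 50 is hypothetical
print(f"range for a first score of 69%: {low:.0%} to {high:.0%}")
print(outside_range(0.69, 0.30, n_items=50))
```

With a hypothetical 50 independently scored items, a first score of 69% yields a range of roughly 50% to 85%, so a second score of 30%, as reported for KM28, would fall well outside it under this simplified model.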

Figure 7.

AzBio sentence percent-correct scores shown as scatter plots comparing the remote DAI and in-person sound-booth conditions. The left (50 dB) and right (60 dB) columns show data for decreasing SNRs: Quiet (top), +10 (middle), and +5 (bottom). Dashed diagonals represent unity lines between the remote and in-person conditions. Scores for participants with Advanced Bionics and Cochlear devices are shown with open circles and open triangles, respectively.

DISCUSSION

The primary goal of this study was to evaluate whether speech-perception testing for people with CIs using DAI via telepractice could be a suitable alternative to traditional in-person testing. Across the tests administered, the null hypothesis could not be rejected: there was no significant difference in performance between DAI via telepractice and traditional in-person testing. Scores did not differ significantly between 50 dB and 60 dB for words or phonemes; for HINT and AzBio sentences, however, scores were unexpectedly better at 50 dB than at 60 dB. At both presentation levels, scores progressively worsened as the SNR became more challenging, as expected (e.g., Dunn et al., 2010; Fetterman & Domico, 2002; Gifford & Revit, 2010).

The present study was designed to circumvent the deleterious effects of room noise and reverberation at the remote site, which were presumed to account for the poorer performance in the remote setting relative to the in-person (booth) setting in Hughes et al. (2012). In that study, speech perception was tested at the remote site in a non-sound-treated room, with speech stimuli delivered in the sound field through the loudspeaker of the videoconferencing unit. Scores for CNC words and phonemes were 14% and 10% poorer, respectively, than those obtained in person in the sound booth, and scores for HINT sentences were 19% poorer. When the input mixing ratio is set to 100% for the auxiliary (accessory) port, DAI deactivates the sound processor's external microphones (including any directional capabilities), thereby eliminating environmental noise and the effects of room acoustics. Speech-perception testing using DAI can therefore be completed in environments where a sound booth is not available. These results suggest that remote CI service delivery can be expanded beyond programming the processor to include assessing outcomes with the device via speech-perception testing. Because a sound booth is not required with DAI, CI services can be provided remotely at non-audiological sites, such as primary-care physician offices or rural health clinics. At the remote site, service provision may include a test assistant in the room to instruct the recipient and connect hardware, or to teach recipients how to connect their own device to the interface. Recent advances in wireless connectivity for programming certain sound processors may alleviate issues with physically connecting the sound processor to the interface.
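As a simplified illustration of why an accessory-only setting keeps room noise out of the test signal, the Python sketch below models the processor input as a weighted sum of the microphone and accessory-port signals. The linear model, the function name `mix_processor_input`, and the `accessory_fraction` parameter are hypothetical; actual processors express mixing ratios in manufacturer-specific ways and apply further front-end processing.

```python
def mix_processor_input(mic, accessory, accessory_fraction):
    """Hypothetical linear mix of microphone and accessory-port signals.

    accessory_fraction = 1.0 models an accessory-only (100% DAI) setting,
    in which the microphone path, and any room noise it picks up,
    contributes nothing to the processed signal.
    """
    if not 0.0 <= accessory_fraction <= 1.0:
        raise ValueError("accessory_fraction must be between 0 and 1")
    if len(mic) != len(accessory):
        raise ValueError("signals must have the same length")
    mic_weight = 1.0 - accessory_fraction
    return [accessory_fraction * a + mic_weight * m for m, a in zip(mic, accessory)]


room_noise_at_mic = [0.3, -0.2, 0.25, -0.1]  # made-up samples of room noise
speech_via_dai = [0.5, 0.1, -0.4, 0.2]       # made-up samples of a test stimulus

print(mix_processor_input(room_noise_at_mic, speech_via_dai, 1.0))  # accessory only
print(mix_processor_input(room_noise_at_mic, speech_via_dai, 0.5))  # equal mix
```

In the accessory-only case the output equals the DAI stimulus sample for sample, whereas any mixing ratio below 100% lets the room noise through in proportion to the microphone weight.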

The present study evaluated speech perception at two presentation levels that are typically used in the clinic, and the level effects were unexpected. Previous research has shown that speech-perception scores are typically better at louder presentation levels (Firszt et al., 2004), although it is unclear whether those findings apply to current front-end processing technology. In the present study, however, scores did not differ significantly between the 50- and 60-dB presentation levels for CNC words or phonemes, and scores were better at 50 dB than at 60 dB for both HINT and AzBio sentences. For participants with Cochlear devices, Adaptive Dynamic Range Optimization (ADRO), which boosts soft sounds to make them more audible for the recipient (Blamey, 2005), may have made speech stimuli presented at 50 dB as intelligible as those presented at 60 dB. The algorithm functions according to four “fuzzy logic” rules, each of which applies as long as it does not interfere with any other rule: the “comfort rule” decreases gain if the output exceeds the comfort target more than 10% of the time; the “audibility rule” increases gain if the output is below the audibility target more than 30% of the time; the “hearing protection rule” prevents the output from exceeding a maximum allotted level; and the “background noise rule” limits the maximum allotted gain so that low-level background noise is not amplified. All Cochlear recipients had ADRO activated. The interaction of the “comfort” and “background noise” rules may have reduced performance in certain noise conditions (Blamey, 2005). The poorer performance at 60 dB could also reflect the recipient’s Q-value, a parameter that defines the curvature of the function mapping the acoustic input to the electrical dynamic range.
For example, the instantaneous input dynamic range (IIDR) for recipients using Cochlear sound processors is 40 dB in the DAI condition, the same as in the everyday microphone configuration. A speech stimulus presented at 50 dB falls on the steepest portion of the function, which may enhance the ability to listen to the target speech over background noise. At 60 dB, the speech signal falls on the most compressed portion of the growth function, which may limit the ability to listen to the target speech over background noise. Similar effects can be obtained in Advanced Bionics processors by manually adjusting the input dynamic range (IDR) and threshold (T) levels. Manipulating the IDR and T levels allows the processor to detect softer sounds in the surrounding environment, in a non-instantaneous manner similar to the IIDR used by Cochlear, which also aids the audibility of soft sounds for the recipient. The short duration of the CNC stimuli may have prevented automatic gain control (AGC) loops and other pre-processing algorithms from affecting presentation level in the same way as in sentence testing.
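The four ADRO rules summarized above can be expressed as a simplified, crisp-threshold gain update for a single channel. This Python sketch only illustrates the rule logic as described in the text: the target values, the 1-dB step size, giving the comfort rule priority over the audibility rule, and all names are assumptions, and the actual ADRO implementation (Blamey, 2005) uses fuzzy-logic rules with details not captured here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AdroChannelSettings:
    """Hypothetical per-channel targets (dB) for a simplified ADRO-style rule set."""
    comfort_target_db: float      # comfort rule target
    audibility_target_db: float   # audibility rule target
    max_output_db: float          # hearing-protection ceiling on output
    max_gain_db: float            # background-noise rule: ceiling on gain
    step_db: float = 1.0          # assumed gain change per update


def adro_update(gain_db, input_levels_db, settings):
    """Update one channel's gain from a block of input levels (dB).

    Returns the new gain and the output levels after the hearing-protection limit.
    """
    if not input_levels_db:
        raise ValueError("need at least one input level")
    outputs = [level + gain_db for level in input_levels_db]
    n = len(outputs)
    frac_above_comfort = sum(out > settings.comfort_target_db for out in outputs) / n
    frac_below_audibility = sum(out < settings.audibility_target_db for out in outputs) / n

    if frac_above_comfort > 0.10:            # comfort rule: too loud > 10% of the time
        gain_db -= settings.step_db
    elif frac_below_audibility > 0.30:       # audibility rule: too soft > 30% of the time
        gain_db += settings.step_db
    gain_db = min(gain_db, settings.max_gain_db)  # background-noise rule: cap the gain

    # Hearing-protection rule: never exceed the maximum output level.
    limited = [min(level + gain_db, settings.max_output_db) for level in input_levels_db]
    return gain_db, limited


settings = AdroChannelSettings(comfort_target_db=70.0, audibility_target_db=40.0,
                               max_output_db=80.0, max_gain_db=20.0)
gain = 0.0
for _ in range(5):  # repeated soft input: the audibility rule raises the gain step by step
    gain, _out = adro_update(gain, [30.0] * 10, settings)
print(gain)
```

The sketch shows the qualitative behavior the Discussion relies on: sustained soft input drives the gain up (capped by the background-noise rule), while sustained loud input drives it down, so two presentation levels can end up closer in output level than their 10-dB input difference.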

Performance was generally poorer in the remote DAI condition than in person for participants with Cochlear devices, whereas it was generally better in the remote DAI condition than in person for participants with Advanced Bionics devices. For Cochlear recipients, a difference in frequency response between the personal audio cable (PAC) and the processor microphones may have contributed to the reduced scores in the DAI condition. de Graaff et al. (2016) reported a small difference in frequency response between the PAC and the sound processor microphones, and some people with CIs may be more sensitive to this difference than others. The spectrum at the sound processor for a sound delivered from loudspeakers in the sound field to the processor microphones will therefore differ somewhat from the spectrum of the same sound delivered via DAI. In the processor design, the microphone response and the pre-emphasis filter were carefully calibrated for maximum hearing performance, whereas the DAI path uses a basic first-order filter in the cable to approximate this frequency shaping, without additional filtering. An N-of-M processing strategy, such as the Advanced Combination Encoder (ACE), will occasionally select different channels depending on the spectrum of the sound and the magnitude of the difference between setups (Zachary Smith, Cochlear Americas, personal communication, 4/13/2018). It should also be noted that the technology used differed between the two manufacturers tested. At the time of testing, the Nucleus 6 sound processor was the newest technology from Cochlear Americas, whereas the Harmony sound processor from Advanced Bionics was two models older than the Naida Q90, that company's newest technology (note that the Harmony was the only processor compatible with the ListPlayer calibration).
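To illustrate how a small frequency-response difference can change channel selection in an N-of-M strategy, the Python sketch below picks the M largest channel envelopes in a single analysis frame, before and after adding a small spectral tilt. This is a generic maxima-selection sketch, not Cochlear's ACE implementation; the channel count, envelope values, tilt, and function names are hypothetical.

```python
def select_maxima(envelopes_db, m):
    """Indices of the m channels with the largest envelope levels (ties -> lower index)."""
    if not 0 < m <= len(envelopes_db):
        raise ValueError("m must be between 1 and the number of channels")
    ranked = sorted(range(len(envelopes_db)), key=lambda i: (-envelopes_db[i], i))
    return sorted(ranked[:m])


def apply_tilt(envelopes_db, tilt_db_per_channel):
    """Add a linear spectral tilt (dB per channel) as a stand-in for a small
    frequency-response difference between two input paths."""
    return [level + i * tilt_db_per_channel for i, level in enumerate(envelopes_db)]


# One hypothetical analysis frame with 8 channels (envelope levels in dB)
frame = [45.0, 52.0, 51.8, 55.0, 54.0, 53.0, 40.0, 38.0]

print(select_maxima(frame, 4))                   # microphone-like path
print(select_maxima(apply_tilt(frame, 0.3), 4))  # slightly tilted DAI-like path
```

In this toy frame, channels 1 and 2 differ by only 0.2 dB, so a tilt of 0.3 dB per channel (about 2 dB across eight channels) swaps channel 1 for channel 2 in the selected set while the three strongest channels stay selected. Selection changes of this kind occur only when channels are close in level, consistent with the description of "occasional" differences above.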

Limitations

The primary limitation of the present study was that stimulus calibration could be achieved only with tools that are not currently commercially available. Advanced Bionics processors were calibrated using a built-in function in the ListPlayer software, which is currently available only for research purposes. Cochlear Nucleus 6 sound processors were calibrated using research software that accesses internal sound level meters to determine input levels at the external microphones as well as at the accessory port used for DAI. Internal sound level meters are currently available only in the CP 910/920/1000 sound processors, and they cannot be accessed through the clinical programming software.
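The level-matching step behind such a calibration can be sketched as simple dB arithmetic: read the internal input level produced by a calibration signal through the microphones at the target sound-field level, read it again for the same signal through the accessory port, and scale the DAI stimuli by the difference. The Python sketch below shows only that arithmetic, assuming the meters respond linearly in dB. The meter readings are hypothetical inputs supplied by the user, no manufacturer software interface is implied, and the function names are illustrative.

```python
import math


def rms_level_db(samples, reference=1.0):
    """RMS level of a signal in dB relative to `reference` (full scale by default)."""
    if not samples:
        raise ValueError("empty signal")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20.0 * math.log10(rms / reference)


def level_offset_db(target_level_db, measured_level_db):
    """Gain (dB) to add so that the measured input level reaches the target."""
    return target_level_db - measured_level_db


def scale_signal(samples, gain_db):
    """Scale a signal by a gain expressed in dB."""
    factor = 10.0 ** (gain_db / 20.0)
    return [factor * s for s in samples]


# Hypothetical internal-meter readings (dB) for the same calibration signal
mic_reading_at_60_dba = 62.0   # presented at 60 dBA in the sound field
accessory_reading = 55.5       # delivered through the accessory port

offset_60 = level_offset_db(mic_reading_at_60_dba, accessory_reading)
offset_50 = offset_60 - 10.0   # 50-dBA condition, assuming linear meters
print(offset_60, offset_50)
```

With these made-up readings, the DAI stimuli would be raised by 6.5 dB for the 60-dBA condition and lowered by 3.5 dB for the 50-dBA condition; in practice, the commercially available calibration tools called for above would replace this manual step.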

One disadvantage of using DAI for speech-perception testing is that problems with the sound-processor microphone may not be apparent during testing. Because DAI bypasses the external microphone(s), any degradation of speech perception that a listener experiences due to a malfunctioning microphone would not be reflected in scores obtained with DAI. This issue could be alleviated by training support staff at the remote site to troubleshoot sound processors.

A relatively minor limitation was the inability to communicate with participants in the DAI condition. The DAI mode lacks a talk-over feature that would allow for verbal communication with the recipient. Written messages and pre-printed prompts were used to indicate testing conditions. Future developments of technology from CI manufacturers may incorporate a talk-over feature for DAI connections when providing telepractice services.

As with any aspect of telehealth, communication between the host and recipient sites may also be subject to weaknesses in internet security if not properly encrypted. Bandwidth issues may also cause reduced video quality. During the course of this study, there were two instances when the audio and video feeds were terminated unintentionally. Both instances were a result of system upgrades taking place within the hospital network, and only briefly disrupted data collection.

A final limitation concerns the availability of required hardware. A programming interface is needed at the remote site to connect the recipient’s processor to the programming/test computer. In the present study, the audiologist attached the CI hardware and then left the remote site to begin testing. In clinical practice, an assistant would be needed at the remote location to set up the equipment and connect the recipient’s processor to the programming interface. As technology evolves, some of these technical limitations might be overcome. For example, the newly released CP1000 (Nucleus 7) platform by Cochlear Ltd. (Sydney, Australia) includes made-for-iPhone compatibility, which allows the recipient to adjust program levels in a limited capacity and allows direct streaming from a device to the sound processor. As a result, future telepractice services for people with CIs may be conducted via wireless streaming.

CONCLUSIONS

This study demonstrated the feasibility of remotely testing speech perception for people with CIs using DAI. It should be noted that only experienced CI users were included in this study. The next step toward clinical implementation would be the commercial development of calibration tools for stimuli delivered to the DAI port. Overall, results from this study indicate that remote speech-perception testing via DAI can be used as a suitable alternative to traditional in-person testing.

Supplementary Material

ACKNOWLEDGMENTS

This study was supported by the National Institute on Deafness and Other Communication Disorders (NIDCD) Grant R01 DC013281 and the National Institute of General Medical Sciences Grant P20 GM109023. The content of this project is solely the authors’ responsibility and does not necessarily represent the official views of the NIDCD or the National Institutes of Health. The authors thank Leo Litvak and Advanced Bionics for providing the ListPlayer software and related hardware; Tony Spahr and Chen Chen from Advanced Bionics for technical and calibration assistance; Zachary Smith and Amy Popp from Cochlear Americas for technical and calibration assistance; Sig Soli and Daniel Valente for technical assistance in the initial phases of this study; Jacquelyn Baudhuin, Jenny Goehring, and Margaret Miller for assistance with data collection in the pilot phase of this study; and Roger Harpster, Todd Sanford, Dave Jenkins, and Aurelie Villard for videoconferencing assistance.

Conflicts of Interest and Source of Funding:

Michelle Hughes is a member of the Ear and Hearing editorial board. No other conflicts of interest are declared for any of the authors. This research was supported by the National Institutes of Health (NIH), the National Institute on Deafness and Other Communication Disorders (NIDCD), grant R01 DC013281 (M. L. Hughes, PI) and the National Institute of General Medical Sciences (NIGMS) P20 GM109023.

Footnotes

Within the ListPlayer software, the direct-connect setting was used because of internal calibrations for Advanced Bionics devices. While Cochlear devices also used the accessory port for DAI testing, the “free field” setting in the software was used because the calibration was performed externally.

REFERENCES

- Blamey PJ (2005). Adaptive Dynamic Range Optimization (ADRO): A Digital Amplification Strategy for Hearing Aids and Cochlear Implants. Trends in Amplification, 9, 77–98.

- de Graaff F, Huysmans E, Qazi O, Vanpoucke FJ, Merkus, Goverts ST, Smits C (2016). The Development of Remote Speech Recognition Tests for Adult Cochlear Implant Users: The Effect of Presentation Mode of the Noise and a Reliable Method to Deliver Sound in Home Environments. Audiology and Neurotology, 21, 48–54.

- Dunn CC, Noble W, Tyler RS, Kordus M, Gantz BJ, Ji H (2010). Bilateral and Unilateral Cochlear Implant Users Compared on Speech Perception in Noise. Ear & Hearing, 31(2), 296–298.

- Eikelboom RH, Jayakody DM, Swanepoel DW, Chang S, Atlas MD (2014). Validation of remote mapping of cochlear implants. Journal of Telemedicine and Telecare, 0, 1–7.

- Fetterman BL, Domico EH (2002). Speech recognition in background noise of cochlear implant patients. Otolaryngology–Head and Neck Surgery, 126(3), 257–263.

- Firszt JB, Holden LK, Skinner MW, Tobey EA, Peterson A, Gaggl W, Runge-Samuelson CL, Wackym PA (2004). Recognition of Speech Presented at Soft to Loud Levels by Adult Cochlear Implant Recipients of Three Cochlear Implant Systems. Ear & Hearing, 25, 375–387.

- Gifford RH, Revit LJ (2010). Speech Perception for Adult Cochlear Implant Recipients in a Realistic Background Noise: Effectiveness of Preprocessing Strategies and External Option for Improving Speech Recognition in Noise. Journal of the American Academy of Audiology, 21(7), 441–488.

- Goehring JL, Hughes ML (2017). Measuring Sound-Processor Threshold Levels for Pediatric Cochlear Implant Recipients Using Conditioned Play Audiometry via Telepractice. Journal of Speech, Language, and Hearing Research, 60, 732–740.

- Goehring JL, Hughes ML, Baudhuin JL, Valente DL, McCreery RW, Diaz GR, Sanford T, Harpster R (2012). The Effect of Technology and Testing Environment on Speech Perception Using Telehealth With Cochlear Implant Recipients. Journal of Speech, Language, and Hearing Research, 55, 1373–1386.

- Hughes ML, Goehring JL, Baudhuin JL, Diaz GR, Sanford T, Harpster R, Valente DL (2012). Use of Telehealth for Research and Clinical Measures in Cochlear Implant Recipients: A Validation Study. Journal of Speech, Language, and Hearing Research, 55, 1112–1127.

- Hughes ML, Goehring JL, Sevier JD, Choi S (2018). Measuring sound-processor threshold levels for pediatric cochlear implant recipients using visual reinforcement audiometry via telepractice. Journal of Speech, Language, and Hearing Research, in press.

- McElveen J, Blackburn E, Green J, McLear P, Thimsen D, Wilson B (2010). Remote Programming of Cochlear Implants: A Telecommunications Model. Otology and Neurotology, 31, 1035–1040.

- Pross SE, Bourne AL, Cheung SW (2016). TeleAudiology in the Veterans Health Administration. Otology and Neurotology, 37(7), 847–850.

- Ramos A, Rodriguez C, Martinez-Beneyto P, Perez D, Gault A, Falcon JC, Boyle P (2009). Use of telemedicine in the remote programming of cochlear implants. Acta Oto-Laryngologica, 129, 533–540.

- Spahr A, Dorman M, Litvak L, et al. (2012). Development and validation of the AzBio sentence lists. Ear & Hearing, 33(1), 112–117.

- Thornton AR, Raffin MJ (1978). Speech-Discrimination Scores Modeled as a Binomial Variable. Journal of Speech and Hearing Research, 21, 507–518.

- Wesarg T, Wasowski A, Skarzynski H, Ramos A, Gonzalez J, Kyriafinis G, Junge F, Novakovich A, Mauch H, Laszig R (2010). Remote fitting in Nucleus cochlear implant recipients. Acta Oto-Laryngologica, 130, 1379–1388.
