Abstract

Performance-raising practices tend to diffuse slowly in the health care sector. To understand how incentives drive adoption, I study a practice that generates revenue for hospitals: submitting detailed documentation about patients. After a 2008 reform, hospitals could raise their Medicare revenue by over 2% by always specifying a patient’s type of heart failure. Hospitals captured only around half of this revenue, indicating that large frictions impeded takeup. Exploiting the fact that many doctors practice at multiple hospitals, I find that four-fifths of the dispersion in adoption reflects differences in the ability of hospitals to extract documentation from physicians. A hospital’s adoption of coding is robustly correlated with its heart attack survival rate and its use of inexpensive survival-raising care. Hospital-physician integration and electronic medical records are also associated with adoption. These findings highlight the potential for institution-level frictions, including agency conflicts, to explain variations in health care performance across providers.

Keywords: Hospitals, Healthcare, Technology adoption, Firm Performance, Upcoding

1. Introduction

A classic finding of studies of technology is that new, performance-raising forms of production are adopted slowly and incompletely. For example, Griliches (1957) observed this pattern in the takeup of hybrid corn across states; more recent research has studied adoption patterns in agriculture in the developing world, manufacturing in advanced economies, management practices internationally, and a host of other examples (Conley and Udry, 2010; Foster and Rosenzweig, 1995; Collard-Wexler and De Loecker, 2015; Bloom et al., 2012). In the health care sector, quality-improving clinical practices, including checklists, hand-washing, and drugs like β-blockers, provide analogous examples of slow adoption. Disparities in the use of these practices are a leading explanation for variations in health care productivity across providers and regions (Skinner and Staiger, 2015; Baicker and Chandra, 2004; Chandra et al., 2013). Given the enormous potential for new forms of production to improve patient outcomes in the health care sector and to raise output in the economy more generally, the nearly ubiquitous finding of delayed takeup is particularly vexing.

In this paper, I study a health care practice that raises revenue for the hospital: the detailed reporting of heart failure patients to Medicare. A 2008 Medicare policy change created a financial incentive for hospitals to provide more detail about their patients in insurance reimbursement claims.1 Yet hospitals could provide these details only if they were documented by physicians. The incentive for hospitals to report the information was large: this policy put over 2% of hospital Medicare income on the line in 2009 – about $2 billion – though it did not directly affect the pay of physicians. By tracking the spread of the reporting practice across hospitals, this study examines the role of financial incentives and agency conflicts in the adoption of new practices. Improved heart failure billing raises revenue but not survival, so it is less influenced by physicians’ intrinsic motivation to deliver high clinical quality; this makes it a useful test case of how financial incentives drive takeup in the presence of firm-level barriers to adoption.

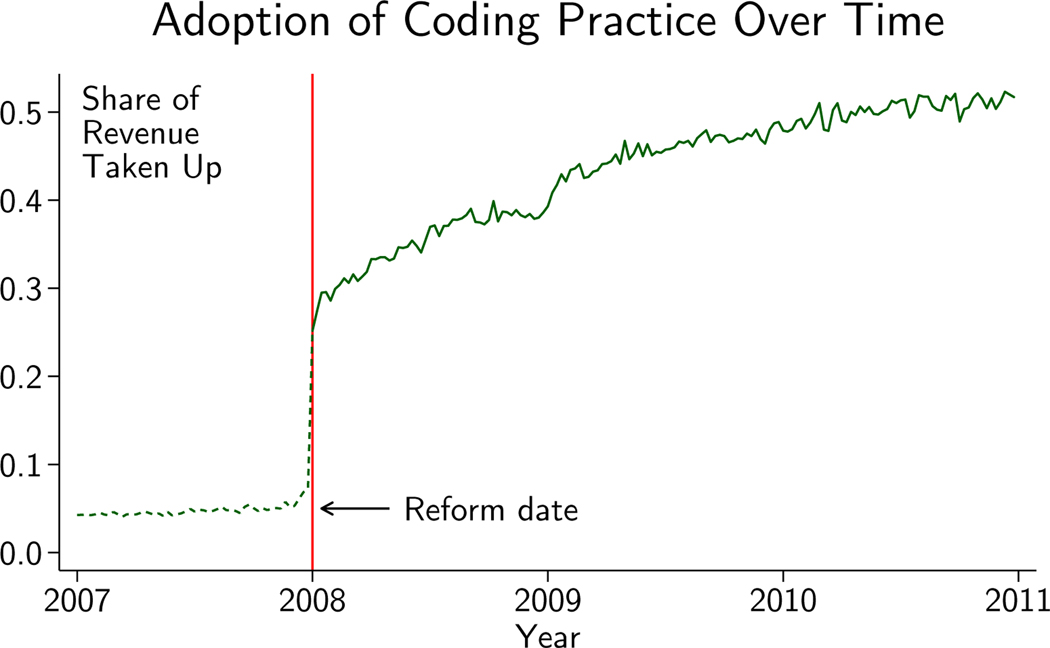

Figure 1 shows that the change in incentives triggered a rapid but incomplete response by hospitals: within weeks of the reform, hospitals were capturing 30% of the revenue made available; by the end of 2010 they were capturing about 52%. This finding is consistent with existing work showing that hospitals respond to incentives by changing how they code their patients (Dafny, 2005; Silverman and Skinner, 2004). Viewed the other way, although the reform had been announced earlier that year, 70% of the extra heart failure revenue went uncaptured shortly after implementation, and nearly half was still not being realized by the end of 2010.

Figure 1.

Figure plots the share of revenue available for detailed coding of HF that was captured by hospitals over time. Dotted line shows revenue that would have been captured in 2007 if hospitals had been paid per 2008 rules. See Appendix Section A.1.2 for more details.

I show that substantial hospital-level heterogeneity underlies the national takeup of detailed heart failure codes. Mirroring the literature that has demonstrated large differences in productivity across seemingly similar firms (Fox and Smeets, 2011; Syverson, 2011; Bartelsman et al., 2013), I find dispersion in the takeup of detailed billing codes across hospitals. This dispersion exists even after accounting for disparities in the types of patients that different hospitals treat. For example, 55% of heart failure patients received a detailed code at the average hospital in 2010, and with the full set of patient controls the standard deviation of that share was 15 percentage points. A hospital two standard deviations below the mean provided detailed heart failure codes for 24% of its heart failure patients, while a hospital two standard deviations above the mean did so for 85% of its patients. While Song et al. (2010) and Finkelstein et al. (2017) find evidence of disparities in regional coding styles, this study is the first to isolate the hospital-specific component of coding adoption and study its distribution (I also find disparities in coding across regions, but regions leave unexplained at least three-quarters of the variation in hospital coding styles).

My findings suggest that hospitals were aware of the financial incentive to use the detailed codes, but that significant frictions limited their response. I note two key potential drivers of the incomplete and uneven adoption of the codes across hospitals. First, an agency problem arises because physicians supply the extra information about the HF, but Medicare does not pay them for the detailed codes. Second, hospitals’ health information management staff and systems may have differed in how effectively they translated the information that physicians provided into the high-value codes.

To study the role of these frictions, I consider adoption rates that isolate the role of hospitals above and beyond their patients and physicians. Because doctors practice at multiple hospitals, it is possible to decompose the practice of detailed documentation into hospital- and physician-specific components. This decomposition is an application of a labor economics technique that has been frequently used in the context of workers and firms (Abowd et al., 1999; Card et al., 2013); to the author’s knowledge this study is among the first, alongside Finkelstein et al. (2016)’s decomposition of health spending across regions, to apply this approach in health care.

Isolating the hospital contribution addresses the concern that some hospitals might work with physicians who would be more willing to supply the documentation wherever they practice. Yet dispersion is, if anything, slightly increased when the hospital component is isolated: the standard deviation of the detailed documentation rate across hospitals rises from 15 percentage points with rich patient controls to 16 percentage points with patient and physician controls. The residual variation means that even if facilities had the same doctors, some would be more capable of extracting specific documentation from their physicians than others (I also study the physician contribution to adoption, where dispersion is of a similar magnitude). These results are consistent with firm-level disparities in resolving frictions.

I next consider the correlation between hospital adoption and hospital characteristics. The most powerful predictors of hospital adoption are the measures of clinical quality: heart attack survival and use of survival-raising processes of care. High-quality facilities are also more likely to be early adopters. Under the view that extracting the revenue-generating codes from physicians makes a hospital revenue-productive, these results show that treatment productivity and revenue productivity are positively correlated. This result also bears on a key policy implication of this study: financial incentives that push providers to raise treatment quality may be relatively ineffective for the low-quality facilities most in need of improvement. Adoption is correlated with hospital-physician integration, suggesting that contractual arrangements aligning the two parties may be a key tool for resolving takeup frictions. Electronic medical records are also associated with adoption, suggesting that health information systems can help to resolve the frictions, though this estimate is imprecise in my preferred specification.

I contribute to the literature on variation in health care provider performance in several ways. First, by focusing on whether hospitals are able to modify their billing techniques to extract revenue, I isolate disparities in a context where one might plausibly expect them to be small or nonexistent. These disparities reflect differences in hospitals’ basic ability to respond to incentives. Second, using decomposition techniques adapted from studies of labor markets, I show that four-fifths of the variation in adoption reflects some hospitals being able to extract more high-revenue codes from their patients and physicians than others. Third, I correlate the adoption of revenue-generating codes with the use of high-quality standards of care in treatment and find that a common factor may drive both outcomes. Fourth, I show that facilities that integrate more closely with their physicians are also more likely to adopt, hinting that principal-agent problems may play a role in productivity dispersion more generally, both inside and outside the health care sector.

A key caveat of these analyses is that they are descriptive, and thus only suggestive of causal relationships. For example, this study shows that clinical performance and coding are correlated; this relationship could be driven by unobserved institution-level factors like the quality of hospital staff (though not the quality of physicians, whom I control for). Likewise, while hospitals with better coding are more likely to be integrated with physicians, this integration could be the result of other factors, like management practices, that exert their own influence on coding.

The paper proceeds as follows. Section 2 describes the heart failure billing reform and the data I use to study it, and lays out a simple analytical framework. Section 3 describes the econometric strategy and identification. Section 4 presents results on dispersion in takeup, then shows how takeup relates to hospital and physician characteristics. Section 5 provides a discussion of the results. Section 6 concludes.

2. Setting and Data

Heart failure (HF) is a syndrome defined as the inability of the heart’s pumping action to meet the body’s metabolic needs. It is uniquely prevalent and expensive among medical conditions. There are about 5 million active cases in the United States; about 500,000 cases are newly diagnosed each year. Medicare, the health insurance program that covers nearly all Americans age 65 and over, spends approximately 43% of its hospital and supplementary insurance dollars treating patients with HF (Linden and Adler-Milstein, 2008).

The classic economic literature on health care eschews HF in favor of less common conditions like acute myocardial infarction (AMI), or heart attack (see e.g. McClellan et al., 1994; Cutler et al., 1998, 2000; Skinner et al., 2006; Chandra and Staiger, 2007). The literature has focused on these conditions because they are thought to be sensitive to treatment quality, are well observed in most administrative data, and almost always result in a hospitalization, which largely removes the issue of selection into treatment. Since this paper concerns how hospitals learn to improve their billing practices, not the effect of treatment on health, the endogenous selection of patients into the inpatient setting is not a central econometric barrier. Rather, the large amount of revenue at stake in the reimbursement of heart failure patients makes HF well suited to this study’s aim of understanding how hospitals respond to documentation and coding incentives.

The hospitals I study are paid through Medicare’s Acute Inpatient Prospective Payment System (IPPS), the $112 billion program that pays for most Medicare beneficiaries who are admitted as inpatients to most hospitals in the United States (MEDPAC, 2015). As part of a 2008 overhaul of the IPPS – the most significant change to the program since its inception – the relative payment for HF of unspecified type (vaguely documented) and HF of specified type (specifically documented) changed. This element of the reform made the documentation valuable and provided the financial incentive for the spread of the practice.

2.1. Payment Reform and Patient Documentation

The 2008 overhaul was a redesign of the IPPS risk-adjustment system, the process that adjusts payments to hospitals depending on the severity, or level of illness, of a patient. Medicare assigns a severity level to every potential condition a patient might have. A patient’s severity is the highest severity of any condition listed on the hospital’s reimbursement claim. The reform created three levels of severity (low, medium, and high) where there had been two (low and high), reshuffling the severity levels of the many heart failure codes in the process.2
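The severity rule described above can be sketched in a few lines of Python. This is a minimal illustration, not Medicare’s grouper logic: the code-to-severity table is a hypothetical fragment whose HF entries follow the post-reform column of Table 1, `OTHER_MED` is a made-up placeholder for a non-HF medium-severity condition, and codes missing from the table are assumed to be low severity.

```python
SEVERITY_RANK = {"low": 0, "medium": 1, "high": 2}

# Post-reform severities for a few codes. HF entries follow Table 1;
# "OTHER_MED" is a hypothetical stand-in for any non-HF medium-severity condition.
SEVERITY = {
    "428.0": "low",      # congestive HF, unspecified (vague)
    "428.22": "medium",  # systolic HF, chronic
    "428.21": "high",    # systolic HF, acute
    "OTHER_MED": "medium",
}


def patient_severity(codes, table=SEVERITY):
    """Severity of a claim = highest severity among its listed conditions.

    Codes missing from the table are treated as low severity (an assumption).
    """
    levels = [table.get(code, "low") for code in codes]
    return max(levels, key=SEVERITY_RANK.__getitem__, default="low")


print(patient_severity(["428.0"]))               # low
print(patient_severity(["428.0", "OTHER_MED"]))  # medium
print(patient_severity(["428.21", "OTHER_MED"])) # high
```

Because the claim takes the maximum, a detailed HF code raises the claim’s severity only when it is more severe than every other condition listed.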

By the eve of the reform, Medicare policymakers had come to believe that the risk-adjustment system had broken down, with nearly 80% of inpatients crowded into the high-severity category (GPO, 2007). The reporting of HF had been a primary cause of the breakdown: there were many codes describing different types of HF, and all of them had been considered high-severity. Patients with HF accounted for about one-fourth of high-severity patients (or one-fifth of patients overall) in the final year before the reform.

Risk adjustment relies on detailed reporting of patients by providers, but according to the Centers for Medicare & Medicaid Services (CMS), which administers Medicare, by far the most common HF code – 428.0, “congestive heart failure, unspecified” – was vague: patients with this code did not have greater treatment costs than average (GPO, 2007). By contrast, a set of heart failure codes that gave more information about the nature of the condition was found to predict treatment cost and, because these codes represented specifically identified illnesses, was medically consistent with the agency’s definitions of medium and high severity. These codes were in the block 428.xx, with two digits after the decimal point to provide the extra information. The vague codes were moved to the low-severity list, while each of the detailed codes was put on either the medium- or the high-severity list (Table 1).

Table 1. Vague and Specific HF Codes

| Code | Description | Severity before reform | Severity after reform |
|---|---|---|---|
| Vague codes | | | |
| 428.0 | Congestive HF, Unspecified | High | Low |
| 428.9 | HF, Other | High | Low |
| Specific codes (exhaustive over types of HF) | | | |
| 428.20 | HF, Systolic, Onset Unspecified | High | Medium |
| 428.21 | HF, Systolic, Acute | High | High |
| 428.22 | HF, Systolic, Chronic | High | Medium |
| 428.23 | HF, Systolic, Acute on Chronic | High | High |
| 428.30 | HF, Diastolic, Onset Unspecified | High | Medium |
| 428.31 | HF, Diastolic, Acute | High | High |
| 428.32 | HF, Diastolic, Chronic | High | Medium |
| 428.33 | HF, Diastolic, Acute on Chronic | High | High |
| 428.40 | HF, Combined, Onset Unspecified | High | Medium |
| 428.41 | HF, Combined, Acute | High | High |
| 428.42 | HF, Combined, Chronic | High | Medium |
| 428.43 | HF, Combined, Acute on Chronic | High | High |

Congestive HF (the description of code 428.0) is often used synonymously with HF.

The detailed codes were exhaustive over the types of heart failure, so with the right documentation, a hospital could continue to raise its HF patients to at least a medium level of severity following the reform. The specific HF codes indicate whether the systolic and/or diastolic part of the cardiac cycle is affected and, optionally, whether the condition is acute and/or chronic. Submitting them is a practice that requires effort from both physicians and hospital staff and coordination between the two. In this way it is similar to technologies that have been the focus of researchers and policymakers, including the use of β-blockers (an inexpensive class of drugs that have been shown to raise survival following AMI; see e.g. Skinner and Staiger, 2015) in health care and the implementation of best managerial practices in firms (e.g. Bloom et al., 2012; McConnell et al., 2013; Bloom et al., 2016).
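The following is a minimal sketch of how the specific codes in Table 1 encode documented details. It assumes the documentation has already been reduced to a type (systolic, diastolic, or combined) and an optional onset. `hf_code` and `post_reform_severity` are hypothetical helpers and are not part of any coding software. The sketch also shows why a statement of the type of dysfunction is the binding piece of documentation: without it, only the vague code is available.

```python
# Digits follow the structure of Table 1: 428.<type digit><onset digit>.
HF_TYPE_DIGIT = {"systolic": "2", "diastolic": "3", "combined": "4"}
HF_ONSET_DIGIT = {None: "0", "acute": "1", "chronic": "2", "acute on chronic": "3"}


def hf_code(hf_type=None, onset=None):
    """Most specific HF code the (simplified) documentation supports.

    Without a statement of systolic, diastolic, or combined dysfunction,
    only the vague code 428.0 can be submitted; onset is optional.
    """
    if hf_type is None:
        return "428.0"
    return "428." + HF_TYPE_DIGIT[hf_type] + HF_ONSET_DIGIT[onset]


def post_reform_severity(code):
    """Post-reform severity of a Table 1 code (only valid for those codes)."""
    if code in ("428.0", "428.9"):
        return "low"
    # Acute (x1) and acute-on-chronic (x3) are high; x0 and x2 are medium.
    return "high" if code[-1] in ("1", "3") else "medium"


code = hf_code("diastolic", "acute on chronic")
print(code, post_reform_severity(code))  # 428.33 high
print(hf_code(onset="acute"))            # 428.0: onset alone is not enough
```

For example, documentation of acute on chronic diastolic HF maps to 428.33, a high-severity code after the reform, while an undocumented type falls back to 428.0 regardless of onset.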

2.2. Analytical Approach

The framework for analyzing adoption treats the decision to use a specific HF code, code ∈ {0,1}, as a function of the propensities of the hospital and the doctor to favor recording the code or the documentation that supports it. I let hospitals be indexed by h, doctors by d, and patients by p. Under additive separability, hospitals can be represented by a hospital type αh and doctors by a doctor type αd. Patient observables are Xp, and the remaining heterogeneity, which accounts for unobserved determinants of coding behavior, is ϵph:

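$$\text{code}_{phd} = \alpha_h + \alpha_d + X_p\beta + \epsilon_{ph} \qquad (1)$$

where β is the vector of coefficients on the patient observables.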

The hospital’s type can be thought of as its underlying propensity to identify and extract the codes independently of the types of physicians who practice at the hospital. The doctor type reflects that some physicians are more or less prone to document the kind of HF that their patients have due to their own practice styles and the incentives of the physician payment system. In this framework, doctors carry their types across hospitals. Finally, the patient component accounts for observed differences that, in a way that is common across facilities, affect the cost or benefit of providing a specific code.
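To make the identification logic of equation (1) concrete, the sketch below simulates a linear probability model with hospital and doctor types. It then recovers the hospital types by least squares with two sets of dummies, in the spirit of Abowd et al. (1999). All parameter values, the sample sizes, and the assumption that each doctor practices at exactly two hospitals are hypothetical choices for illustration; the paper’s actual estimator and controls may differ.

```python
import numpy as np

rng = np.random.default_rng(0)
n_hosp, n_doc, n_pat = 20, 200, 10_000

# Hypothetical "true" types on the probability scale (linear probability model).
alpha_h = rng.uniform(0.2, 0.5, n_hosp)   # hospital types
alpha_d = rng.uniform(-0.1, 0.1, n_doc)   # doctor types
beta = 0.1                                # effect of one binary patient observable

# Each doctor practices at two distinct hospitals; this mobility is what
# lets hospital and doctor types be separated.
doc_hosps = np.array([rng.choice(n_hosp, size=2, replace=False) for _ in range(n_doc)])
doc = rng.integers(0, n_doc, n_pat)
hosp = doc_hosps[doc, rng.integers(0, 2, n_pat)]
x = rng.integers(0, 2, n_pat)

# Equation (1): code = alpha_h + alpha_d + x * beta + noise, drawn as a 0/1 outcome.
prob = alpha_h[hosp] + alpha_d[doc] + beta * x   # stays inside [0.1, 0.7]
code = (rng.random(n_pat) < prob).astype(float)

# Design matrix: all hospital dummies, doctor dummies except doctor 0
# (types are identified only up to a common constant), and x in the last column.
X = np.zeros((n_pat, n_hosp + n_doc))
rows = np.arange(n_pat)
X[rows, hosp] = 1.0
has_dummy = doc > 0
X[rows[has_dummy], n_hosp + doc[has_dummy] - 1] = 1.0
X[:, -1] = x

coef, *_ = np.linalg.lstsq(X, code, rcond=None)
alpha_h_hat = coef[:n_hosp]   # hospital types, shifted by doctor 0's type

print("corr(true, estimated hospital types):", round(np.corrcoef(alpha_h, alpha_h_hat)[0, 1], 3))
print("SD of true vs. estimated hospital types:", round(alpha_h.std(), 3), round(alpha_h_hat.std(), 3))
print("estimated beta:", round(coef[-1], 3))
```

Doctors who move between hospitals link the hospital dummies together; without such mobility, hospital and doctor types could not be separated. The standard deviation of the estimated types also mixes true dispersion with estimation noise, so in small samples it tends to overstate the dispersion of the true types.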

The dispersion of the hospital types is the first focus of the empirical analysis. A hospital’s type can be thought of as its revenue productivity – its residual ability to extract revenue from Medicare after accounting for the observable inputs to the coding production process, like patient and doctor types. A wide literature has documented persistent productivity differentials in the manufacturing sector (see Syverson, 2011 for a review), and ongoing work documents similar facts in the service and health care sectors (Fox and Smeets, 2011; Chandra et al., 2016a,b). Dispersion in hospital types is therefore a form of productivity dispersion. In Section 2.5 I discuss potential drivers of this dispersion, and in Section 4.3 I estimate it.

The second element of the empirical analysis describes the kinds of hospitals that are most effective at responding to the incentives for detailed coding. These analyses look at the relationships between hospital types and characteristics of the hospital. The first set of characteristics, called Ch, comprises the hospital’s size, ownership, location, teaching status, and ex ante per-patient revenue put at stake by the reform. The second set, called Ih, contains factors that might reduce facility-level frictions and thereby improve revenue extraction, like electronic medical records (EMRs) and hospital-physician integration. The final set, Zh, includes measures of the hospital’s clinical performance, defined here as its ability to use evidence-based medical inputs and to generate survival. In the key hospital-level analysis, I regress the hospital type on these three sets of characteristics:

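$$\alpha_h = C_h\rho + I_h\gamma + Z_h\theta + u_h \qquad (2)$$

where $u_h$ is an unobserved hospital-level error term.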

The signs of ρ, γ, and θ are not obvious, both because the causal relationships between hospital characteristics and the takeup of revenue-generating technology are not well known and because other, unobserved factors may be correlated with Ch, Ih, and Zh and drive takeup. I discuss these potential relationships and estimate this equation in Section 4.4.
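Equation (2) is an ordinary least squares regression at the hospital level. The sketch below shows one way to fit it, plus an intercept, and split the coefficients into ρ, γ, and θ. It assumes the estimated hospital types and the three characteristic blocks are already stored as NumPy arrays. `fit_equation_2`, the simulated characteristics, and the coefficient values are hypothetical. Because the dependent variable is itself estimated, inference would at minimum call for heteroskedasticity-robust standard errors, which this sketch does not compute.

```python
import numpy as np


def fit_equation_2(alpha_hat, C, I, Z):
    """OLS of estimated hospital types on C_h, I_h, Z_h plus an intercept.

    C, I, Z are 2-D arrays (hospitals x characteristics).
    Returns (rho, gamma, theta) as coefficient arrays.
    """
    n = len(alpha_hat)
    X = np.column_stack([np.ones(n), C, I, Z])
    coef, *_ = np.linalg.lstsq(X, alpha_hat, rcond=None)
    k_c, k_i = C.shape[1], I.shape[1]
    rho = coef[1:1 + k_c]
    gamma = coef[1 + k_c:1 + k_c + k_i]
    theta = coef[1 + k_c + k_i:]
    return rho, gamma, theta


# Hypothetical hospital data for illustration only.
rng = np.random.default_rng(1)
n = 300
C = np.column_stack([np.log(rng.integers(50, 800, n)),   # log beds
                     rng.integers(0, 2, n)])             # teaching indicator
I = rng.integers(0, 2, (n, 1))                           # EMR indicator
Z = rng.normal(0.85, 0.03, (n, 1))                       # AMI survival rate
alpha_hat = (0.1 + C @ np.array([0.01, 0.02]) + I @ np.array([0.03])
             + Z @ np.array([0.5]) + rng.normal(0, 0.05, n))

rho, gamma, theta = fit_equation_2(alpha_hat, C, I, Z)
print("rho:", rho.round(3), "gamma:", gamma.round(3), "theta:", theta.round(3))
```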

The final component of the empirical analysis applies these methods to the physician types, analyzing their dispersion and correlates. From the perspective of revenue generation, physician types are a form of productivity; in practice, they embody the physician’s willingness to supply the detailed documentation about their patients. A physician type thus may reflect her alignment with hospitals’ aim to generate revenue or her desire to supply information in medical records. Since supplying the documentation may have clinical payoffs as well, types may reflect differences in clinical practice patterns. Section 4.6 studies the dispersion and correlates of these types.

2.3. Data

My data is primarily drawn from the MEDPAR and Inpatient Research Information Files (RIFs), 100% samples of all inpatient stays by Medicare beneficiaries with hospital care coverage through the government-run Original Medicare program. Each row in this file is a reimbursement claim that a hospital sent Medicare. I use data on heart failure hospital stays from the calendar year 2006–2010 files, yielding fiscal year 2007–2010 data (in some secondary analyses I use files back to 2002). These stays are identified as those with a principal or secondary ICD-9 diagnosis code of 428.x, 398.91, 402.x1, 404.x1, or 404.x3.3 I source additional information about patients from the enrollment and chronic conditions files.

I eliminate those who lacked full Medicare coverage at any point during their hospital stay, were covered by a private plan, were under age 65, or had an exceptionally long hospital stay (longer than 180 days). To focus on hospitals that were subject to the reform, I include only inpatient acute care facilities that are paid according to the IPPS. As a result, I drop stays that occur at critical access hospitals (these hospitals number about 1,300 but are very small and have opted to be paid on a different basis) and Maryland hospitals (which are exempt from the IPPS). The result is a grand sample of all 7.9 million HF claims for 2007 through 2010, 7.3 million of which (93%) also have information about the chronic conditions of the patients.
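The sample construction in the two paragraphs above can be sketched as a filter over claim records. The `Claim` fields are a hypothetical, simplified schema rather than the actual MEDPAR layout. Codes are assumed to be stored with a decimal point (raw claims files may store them without one). In the HF patterns, each “x” in the text is read as any single digit.

```python
import re
from dataclasses import dataclass

# HF stays: 428.x, 398.91, 402.x1, 404.x1, or 404.x3, with "x" read as any digit.
HF_PATTERN = re.compile(r"^(428(\.\d{1,2})?|398\.91|402\.\d1|404\.\d[13])$")


@dataclass
class Claim:                 # hypothetical, simplified claim record
    diagnoses: list          # principal and secondary ICD-9 codes
    age: int
    full_coverage: bool      # full Medicare coverage throughout the stay
    private_plan: bool       # covered by a private (Medicare Advantage) plan
    length_of_stay: int      # days
    ipps_hospital: bool      # False for critical access and Maryland hospitals


def in_sample(c: Claim) -> bool:
    """Apply the HF definition and the sample restrictions described in the text."""
    return (
        any(HF_PATTERN.match(dx) for dx in c.diagnoses)
        and c.full_coverage
        and not c.private_plan
        and c.age >= 65
        and c.length_of_stay <= 180
        and c.ipps_hospital
    )


print(in_sample(Claim(["486", "404.93"], 80, True, False, 3, True)))  # True
```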

2.4. Revenue at Stake from Reform

Since HF was so common and the payment for medium- or high-severity patients was so much greater than for low-severity patients, hospitals had an incentive to use detailed codes when possible. Before the reform, the gain from these detailed codes relative to the vague code was zero because they were effectively identical in the Medicare payment calculation. Consistent with these incentives, fewer than 15% of HF patients received a detailed code in the year before the reform.

Following the reform, the gain was always weakly positive and could be as high as tens of thousands of dollars; the exact amount depended on the patient’s main diagnosis and whether the patient had other medium- or high-severity conditions. For patients with other medium-severity conditions, hospitals could gain revenue if they could find documentation of a high-severity form of HF. For patients with other high-severity conditions, finding evidence of high-severity HF would not change Medicare payments, but using the detailed codes was still beneficial to the hospital because it would help to keep payments from being reduced if the claim were audited and the other high-severity conditions were found to be poorly supported.

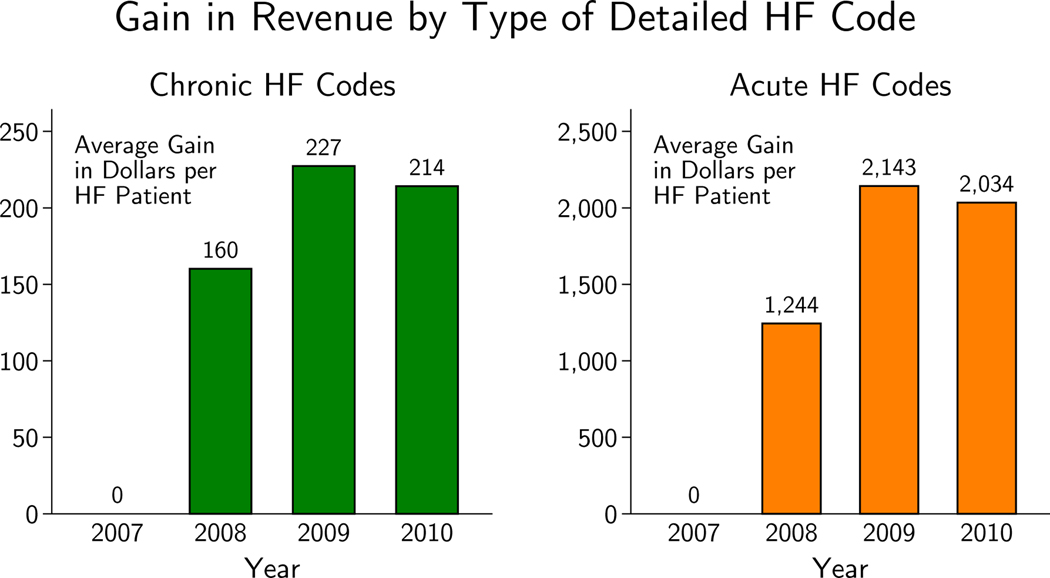

The reform was phased in over two years, and incentives reached full strength in 2009. By then, the average gain per HF patient from using a detailed HF code instead of a vague one was $227 if the code indicated chronic HF (a medium-severity condition) and $2,143 if it indicated acute HF (a high-severity condition).4 As a point of comparison, Medicare paid hospitals about $9,700 for the average patient and $10,400 for the average HF patient in 2009.5 For the grand sample of all HF patients from 2007 through 2010, Figure 2 shows the evolution of the gain to specific coding and Figure 1 shows the corresponding takeup in revenue (Appendix Figure A1 plots the raw takeup of the detailed codes).

Figure 2.

Figure plots the average per-HF patient gain in revenue going from always using vague codes for HF patients to always using chronic codes or acute codes. Prices in 2009 dollars.

For each hospital, the gain to taking up the revenue-raising practice – the revenue at stake from the reform – depended on its patient mix. Hospitals with more HF patients, and more acute (high-severity) HF patients, had more to gain from adopting specific HF coding. To get a sense of how this gain varied across hospitals, I predict each hospital’s ex ante revenue put at stake by the reform. This prediction takes the hospital’s 2007 HF patients and probabilistically fills in the detailed HF codes the patients would have received under full adoption. It then processes each patient under the new payment rules to calculate the expected gain in payment from these codes. Heart failure codes are predicted using the relationship between coding and patient characteristics in hospitals that were relatively specific coders in 2010 (see Appendix Section A.1.2).
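The expected-gain calculation can be sketched as follows, under strong simplifying assumptions. Payments depend only on the claim’s severity within a single DRG family. The dollar amounts in `PAY` are hypothetical rather than actual 2009 rates. Each patient’s predicted detailed code is summarized by the probabilities that it is medium- or high-severity. The gain is the probability-weighted difference between the payment with the predicted detailed code and the payment with the vague (low-severity) code.

```python
# Hypothetical payments by claim severity for one DRG family (not real rates).
PAY = {"low": 6000.0, "medium": 7500.0, "high": 10000.0}
RANK = {"low": 0, "medium": 1, "high": 2}


def payment(severity_levels):
    """Payment is determined by the highest severity on the claim."""
    return PAY[max(severity_levels, key=RANK.__getitem__)]


def expected_gain(other_severity, code_probs):
    """Expected gain from replacing the vague HF code with a predicted detailed one.

    other_severity: highest severity among the patient's non-HF conditions.
    code_probs: {"medium": p, "high": q}, the predicted probabilities that the
    detailed code is chronic/onset-unspecified (medium) or acute (high).
    """
    vague = payment([other_severity, "low"])
    return sum(p * (payment([other_severity, sev]) - vague) for sev, p in code_probs.items())


def revenue_at_stake_per_hf_patient(patients):
    """Average expected gain over a hospital's pre-reform HF patients."""
    gains = [expected_gain(other, probs) for other, probs in patients]
    return sum(gains) / len(gains)


patients = [
    ("low", {"medium": 0.7, "high": 0.3}),
    ("medium", {"medium": 0.6, "high": 0.4}),
    ("high", {"medium": 0.5, "high": 0.5}),
]
print(round(revenue_at_stake_per_hf_patient(patients), 2))
```

Consistent with the incentives described in Section 2.4, the payment gain is zero for a patient whose other conditions are already high-severity, and largest for a patient whose only qualifying condition is HF.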

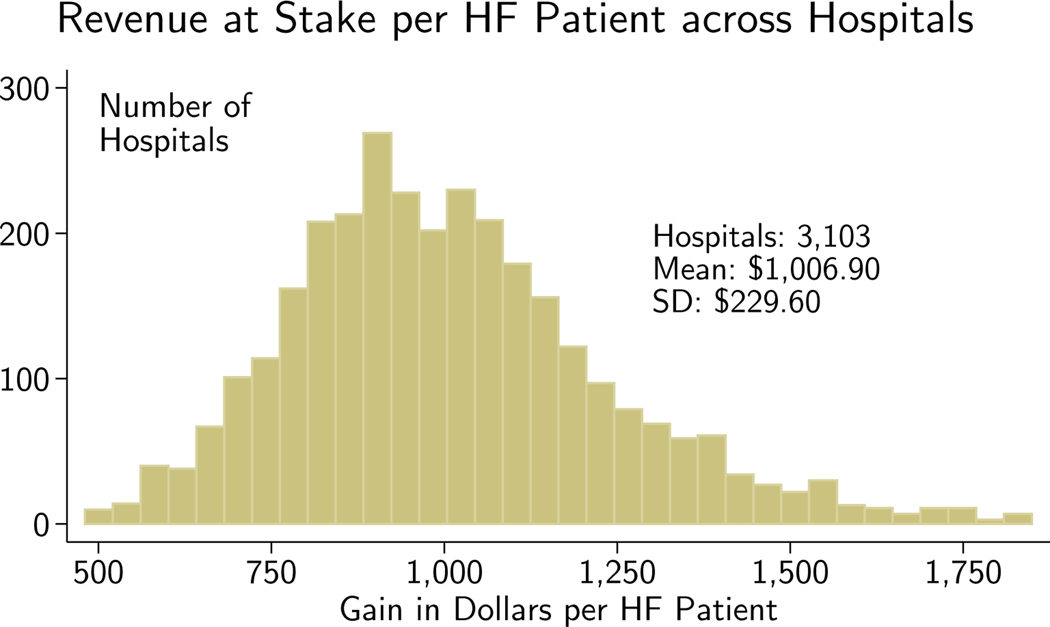

Figure 3 shows the distribution of ex ante revenue put at stake by the reform across hospitals; the average hospital would have expected to gain $1,007 per HF patient in 2009 by giving all of its HF patients specific HF codes rather than vague ones. The standard deviation of the revenue at stake per HF patient was $230. Appendix Figure A2 shows the distribution of the gain when it is spread across all Medicare admissions, which follows a similar but, as expected, attenuated pattern.

Figure 3.

Revenue at stake is calculated using pre-reform (2007) patients processed under post-reform (2009) payment rules. The prediction process is described in the appendix. The 422 hospitals with <50 HF patients are suppressed and the upper and lower 1% in revenue at stake per HF patient are then removed.

To provide a sense of scale, one can compare these amounts with hospital operating margins. The 2010 Medicare inpatient margin, which equals hospitals’ aggregate inpatient Medicare revenues less costs, divided by revenues, was −1.7% (MEDPAC, 2015). The American Hospital Association has cited negative Medicare margins as evidence that Medicare does not pay hospitals adequately (American Hospital Association, 2005). The gains from detailed HF coding were larger in magnitude than this margin: pricing the pre-reform patients under the 2009 rules shows that hospitals could have expected to raise their Medicare revenues by 2.9% by giving all of their HF patients specific HF codes.
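As a back-of-the-envelope illustration, assume that the 2.9% gain applies to the same inpatient Medicare revenue base as the margin and that costs stay fixed. Both are simplifying assumptions, not results from the paper. Under them, the margin would move from about −1.7% to about +1.2%:

```python
def inpatient_margin(revenue, cost):
    """Margin as defined in the text: (revenue - cost) / revenue."""
    return (revenue - cost) / revenue


revenue = 100.0              # normalize inpatient Medicare revenue to 100
cost = revenue * 1.017       # cost level implied by a -1.7% margin
print(round(inpatient_margin(revenue, cost), 4))          # -0.017
print(round(inpatient_margin(revenue * 1.029, cost), 4))  # 0.0117, costs held fixed
```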

2.5. Organizational Processes and Takeup Frictions

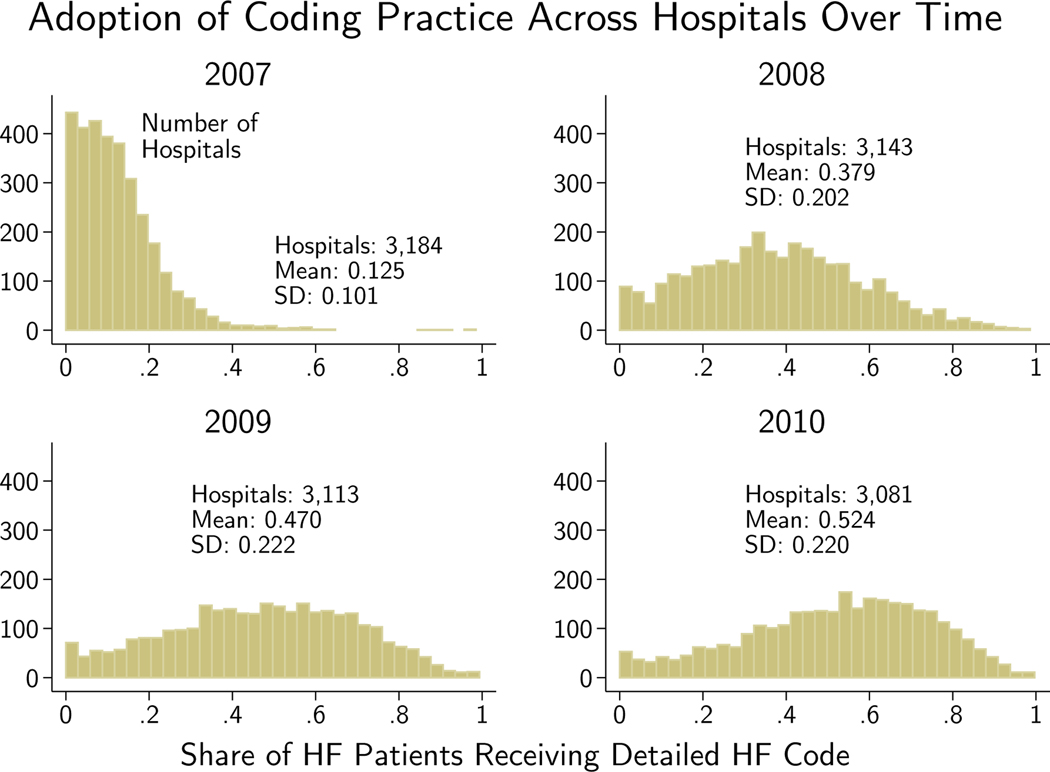

Figure 4 shows that the reform induced an almost instantaneous partial adoption of the detailed coding practice. Takeup continued over the following years, though it remained far from 100% even by the end of 2010. This incomplete takeup raises the question of what costs hospitals had to incur to adopt.

Figure 4.

A hospital’s adoption equals the share of its HF patients who received a detailed HF code in that year. Hospitals with fewer than 50 HF patients in the year excluded.
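The adoption measure defined in the Figure 4 note can be computed as in the sketch below. The input format is hypothetical: one record per HF patient, with a hospital identifier, a year, and a flag for whether a detailed HF code was submitted.

```python
from collections import defaultdict


def adoption_rates(records, min_patients=50):
    """Share of HF patients with a detailed HF code, by (hospital, year).

    records: iterable of (hospital_id, year, got_detailed_code) tuples.
    Hospital-years with fewer than min_patients HF patients are dropped.
    """
    counts = defaultdict(lambda: [0, 0])   # (hospital, year) -> [detailed, total]
    for hosp, year, detailed in records:
        counts[(hosp, year)][0] += int(detailed)
        counts[(hosp, year)][1] += 1
    return {key: d / n for key, (d, n) in counts.items() if n >= min_patients}


records = [("A", 2009, True)] * 30 + [("A", 2009, False)] * 30 + [("B", 2009, True)] * 10
print(adoption_rates(records))  # hospital B is excluded (only 10 HF patients)
```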

For a hospital to legally submit a detailed code, a doctor must state the details about the HF in the patient’s medical chart.6 As the physician treats a patient, she inputs information about diagnoses, tests, and treatments in the patient’s medical chart. When the patient is discharged, the physician summarizes the patient’s encounter, including the key medical diagnoses that were confirmed or ruled out during the stay. This discharge summary provides the primary evidence that the hospital’s health information staff (often called coders) and computer systems use when processing the chart (Youngstrom, 2013). The staff can review the chart and send it back to the doctor with a request for more information – this process is called querying. Then, the staff must work with coding software to convert the descriptions of diagnoses into the proper numeric diagnosis codes, which become a part of the inpatient reimbursement claim. A concise description of the coding process can be found in O’Malley et al. (2005).

Both physicians and staff needed to revise old habits and learn new definitions; they also needed to work together to clarify ambiguous documentation. Coding staff might query a physician to specify which part of the cardiac cycle was affected by the HF, and other staff might review patient charts and instruct physicians on how to provide more detailed descriptions (Rosenbaum et al., 2014). Hospitals could also provide clinicians with scorecards on whether their documentation translated into high-value codes, or update their medical record forms and software to make it quicker to document high-value conditions (Richter et al., 2007; Payne, 2010).

One potential friction is a principal-agent problem that pitted the hospital’s interest in detailed documentation against physicians who had little to gain financially from providing the information. Although this documentation may seem nearly costless to produce, physicians face competing demands on their time when they edit medical charts. HF is often just one of many conditions relevant to the patient’s treatment. A doctor’s first-order concern may be documenting aspects of the patient that are crucial for clinical care, making documentation that matters solely for the hospital’s billing a secondary issue; the American College of Physicians, for example, has expressed this view (Kuhn et al., 2015).

Hospitals also face significant constraints on using incentive pay to resolve potential conflicts between their aims and those of physicians. The Stark Law and the Anti-Kickback Statute (AKS) both make it illegal for hospitals to incentivize their physicians to refer patients to the facility (regulations are less strict for physicians employed by the hospital, who are broadly exempted from the AKS). Both laws implicate hospital payments to physicians that reward documentation, because such payments would encourage physicians to refer certain groups of patients to the hospital. These arrangements would pay physicians according to the “volume or value” of referrals, placing them outside the exceptions and safe harbor provisions of both laws (BNA, 2017).

Verifying that hospitals follow these rules in practice is difficult due to the confidential nature of hospital-physician contracts. One approach to reach into the “black box” of hospital practices is to survey hospital managers directly, as in Bloom et al. (2012) and McConnell et al. (2013). In preliminary work comprising 18 interviews on documentation and coding practices with hospital chief financial officers (CFOs) in a large for-profit hospital chain, all stated that they did not use financial incentives to encourage coding. Generating systematic evidence on the managerial practices underlying coding intensity will be an important avenue for future research.

Taking up the revenue-generating practice required hospitals to pay a variety of fixed and variable costs that could improve both physician documentation and the hospital’s ability to translate documentation into high-value codes. Examples of these costs include training hospital staff to prompt doctors for more information when a patient’s chart lacks details, training coding staff to read documentation more effectively, and hiring more experienced coders. Hospitals could purchase health information technology that automatically suggests high-value codes and prompts staff to look for and query doctors about these codes. Hospitals could also expend resources creating ordeals for physicians who fail to provide detailed documentation. The view that physician habits are expensive for the hospital to change matches accounts of quality improvement efforts that sought to make reluctant physicians prescribe evidence-based medicines, wash their hands, and perform other tasks to improve clinical outcomes (Voss and Widmer, 1997; Stafford and Radley, 2003; Pittet et al., 1999).

2.6. Clinical Costs of Takeup

One possibility is that taking up the reform requires medical testing of HF patients to confirm the details of their conditions. The minimum information needed to use a specific code is a statement of whether there is systolic or diastolic dysfunction. Echocardiograms are non-invasive diagnostic tests that are the gold standard to confirm these dysfunctions. Some observers proposed that the reform put pressure on physicians to perform echocardiograms that they had not considered medically necessary (Leppert, 2012). If these concerns were realized, one could interpret the adoption friction as not one of documentation, but rather the refusal of doctors and hospital staff to provide costly treatment that they perceived to lack clinical benefit.

Official coding guidelines indicate that more detailed HF coding did not have to involve changes in real medical treatment. The coding guidelines state that “if a diagnosis documented at the time of discharge is qualified as ‘probable,’ ‘suspected,’ ‘likely,’ ‘questionable,’ ‘possible,’ or ‘rule out,’ the condition should be coded as if it existed or was established” (Prophet, 2000). Clinically, the information to diagnose and submit a vague HF code typically enables the submission of a specific HF code – a patient’s medical history and symptoms are predictive of the type of HF – and time series evidence is consistent with this view. Appendix Figure A3 shows no perceptible change in heart testing rates (echocardiograms) around the reform.

A more systematic test of the correlation between HF coding and treatment suggests that heart testing can account for only a small fraction of the rise in detailed coding. In Appendix Table A2 I partition patients into 25 groups using major diagnostic categories (MDCs), an output of the DRG classification system that is based on the patient’s principal diagnosis. For each group, I calculate its ex ante HF rate using 2003–2004 patients and analyze how its detailed HF coding and echocardiogram rates grew between the pre-reform (2005–2007) and post-reform (2008–2010) eras. Unsurprisingly, groups with a greater fraction of HF patients ex ante were more likely to grow their detailed HF coding rates: for each additional 10 percentage points of HF ex ante, detailed coding later rose by 4.5 percentage points. These groups were also more likely to grow their echocardiogram rates, but the growth was one-eighth that of detailed HF coding, with an additional 10 percentage points of HF ex ante associated with a 0.6 percentage point higher echocardiogram rate later.
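The group-level comparison amounts to two bivariate regressions across MDC groups, one for detailed coding growth and one for echocardiogram growth. The Python snippet below is a minimal, unweighted sketch: the five groups and their rates are hypothetical values constructed to lie exactly on the reported slopes (they are not the Appendix Table A2 data), and any weighting of groups in the actual analysis is not stated here. It shows how a slope on the ex ante HF share converts into the "per 10 percentage points" effects and their ratio.

```python
def ols_slope(x, y):
    """Slope from a bivariate OLS regression of y on x (with an intercept)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    return sxy / sxx


# Hypothetical MDC groups: ex ante (2003-2004) HF share, and the change in each
# rate from 2005-2007 to 2008-2010. Illustrative values placed exactly on the
# slopes reported in the text; they are not the Appendix Table A2 data.
hf_share_ex_ante = [0.02, 0.05, 0.10, 0.25, 0.40]
coding_growth = [0.01 + 0.45 * s for s in hf_share_ex_ante]
echo_growth = [0.002 + 0.06 * s for s in hf_share_ex_ante]

coding_slope = ols_slope(hf_share_ex_ante, coding_growth)
echo_slope = ols_slope(hf_share_ex_ante, echo_growth)

# Rate change (in percentage points) per 10 extra points of ex ante HF share.
print(f"detailed coding: {10 * coding_slope:.1f} pp")
print(f"echocardiograms: {10 * echo_slope:.1f} pp")
print(f"echo growth / coding growth: {echo_slope / coding_slope:.3f}")
```

A ratio of 0.06 to 0.45 is about 0.13, which is the sense in which echocardiogram growth was roughly one-eighth of detailed coding growth.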

3. Econometric Strategy

In this section, I describe my approach for analyzing the roles that hospitals and physicians played in the adoption of the revenue-generating practice. I decompose coding into the component that is due to the facility and the component that is due to its doctors. The notion of outcomes being due to a hospital and doctor component follows a common econometric model of wages that decomposes them into firm and worker effects (Abowd et al., 1999; Card et al., 2013).

This approach enables two key hospital analyses. First, it uncovers the dispersion in the adoption of detailed HF coding among observably similar hospitals and shows whether it is robust to removing the physician component of coding – that is, it tests whether dispersion would persist even if hospitals had the same doctors. Second, it admits a study of the relationship between adoption and hospital factors like EMRs, financial integration with physicians, and clinical quality. Later, I apply the same approaches to study the dispersion in and correlates of physician coding.

3.1. Specification

The key analyses use a two-step method to describe the distribution of adoption of the coding practice. The first step extracts a measure of adoption at the hospital level: the hospital effect given in equation 1. This fixed effect is the probability that a HF patient in the hospital receives a detailed HF code, after adjusting for patient observables and physician effects. In the second step, I analyze the distribution of the fixed effects by calculating their standard deviation (to look for variations among seemingly similar enterprises) and by regressing them on hospital characteristics and clinical performance (to see which facilities are most likely to adopt).

3.1.1. First Step: Estimating Hospital Fixed Effects

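In the first step, I run the regression given in equation 1. I consider versions of this regression with patient controls of varying degrees of richness, and run these regressions both with and without physician fixed effects. I then extract estimates of the hospital fixed effects. These estimates equal the share of HF patients at the hospital who received a specific code, less the contribution of the hospital's average patient ($\bar{X}_h'\hat{\beta}$) and the patient-weighted average physician effect ($\frac{1}{N_h}\sum_{p \in P_h}\hat{\psi}_{d(p)}$). Here $y_p$ indicates that patient $p$ received a specific HF code, $X_p$ are the patient controls with coefficients $\beta$, $\psi_d$ is the effect of physician $d$, $N_h$ is the number of HF patients at the hospital, $P_h$ is the set of those patients, and $d(p)$ indicates the doctor that attended to patient $p$:

$$\hat{\alpha}_h = \frac{1}{N_h}\sum_{p \in P_h} y_p - \bar{X}_h'\hat{\beta} - \frac{1}{N_h}\sum_{p \in P_h}\hat{\psi}_{d(p)}, \qquad \bar{X}_h = \frac{1}{N_h}\sum_{p \in P_h} X_p$$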

In the simplest specification, which includes neither patient controls nor physician fixed effects, the estimates of the hospital fixed effects $\hat{\alpha}_h$ become the shares of HF patients in hospital $h$ who receive a specific HF code:

$$\hat{\alpha}_h^{\text{simple}} = \frac{1}{N_h}\sum_{p \in P_h} y_p \qquad (3)$$

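There are two caveats to using this measure, both of which can be seen by taking the difference between $\hat{\alpha}_h^{\text{simple}}$ and $\alpha_h$, where $\bar{X}_h$ is the hospital's average patient controls and $\psi_{d(p)}$ is the effect of the physician attending patient $p$:

$$\hat{\alpha}_h^{\text{simple}} - \alpha_h = \bar{X}_h'\beta + \frac{1}{N_h}\sum_{p \in P_h}\psi_{d(p)} + \frac{1}{N_h}\sum_{p \in P_h}\varepsilon_p$$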

One is that heterogeneity in $\hat{\alpha}_h^{\text{simple}}$ may be due to patient-level factors that have been shifted to the error term of the simple measure. For example, dispersion in coding could reflect that some hospitals have patients who are difficult or less profitable to code. The specifications with rich sets of patient observables aim to address this concern. When patient-level factors are included, the use of hospital (and potentially physician) fixed effects means that the coefficients on patient characteristics are estimated from the within-hospital (and potentially within-physician) relationships between these characteristics and coding.

The second caveat is that dispersion could also reflect the role of physicians in coding, captured by the patient-weighted average physician effect $\frac{1}{N_h}\sum_{p \in P_h}\psi_{d(p)}$: some hospitals may have doctors who are particularly willing or unwilling to provide detailed documentation of their patients. Whether the physician component should be removed depends on the aim of the analysis, since the physician's actions inside the hospital are a component of the hospital's overall response to the reform. For example, hospitals with much to gain from the reform may be more likely to teach their physicians how to recognize the signs and symptoms of HF. These physicians would then be more likely to document specific HF in any hospital. Controlling for the physician effects would sweep out this improvement. Still, the extent to which the response to the reform is driven by changes in hospital behavior above and beyond the actions of its physicians is of interest in identifying the performance of the facility itself, which could reflect the performance of its own coding systems as well as how it resolves agency issues.

3.1.2. Second Step: Describing the Distribution of the Hospital Fixed Effects

This section explains the analyses of the $\hat{\alpha}_h$ and how they account for estimation error due to sampling variance.

Dispersion among Similar Hospitals

The first key analysis of this paper studies the dispersion of the hospital fixed effects. However, the $\hat{\alpha}_h$ are noisy, though unbiased, estimates of $\alpha_h$, meaning that their dispersion will be greater than the true dispersion of $\alpha_h$. This noise comes from small samples at the hospital level (some hospitals treat few HF patients) and imprecision in the estimates of the other coefficients in the model. When the specification lacks physician fixed effects, the only other coefficients in the model are at the patient level, and are estimated from millions of observations. These coefficients are estimated precisely, reducing the role for this noise.

When the specification includes physician fixed effects, the imprecision of the hospital effects grows as the variation available to identify the hospital component is reduced. In a simple specification with no patient-level characteristics, the hospital effects are identified only by patients who were treated by mobile doctors, and one component of the measurement error in the hospital effect is an average of the measurement error of those physicians' effects. As these coefficients are estimated more precisely, for example as the number of patients treated by the mobile doctors rises, the estimation error falls (for more discussion of the identification conditions, see Abowd et al., 2002 and Andrews et al., 2008).

Estimates of the variance of $\alpha_h$ must account for measurement error in order to avoid overstating dispersion. To produce these estimates, I adopt the Empirical Bayes procedure described in Appendix C of Chandra et al. (2016a). This procedure uses the diagonals of the variance-covariance matrix from the first-step regression as estimates of the variance of the hospital fixed effect measurement error. I generate a consistent estimate of the variance of $\alpha_h$ by taking the variance of $\hat{\alpha}_h$ and subtracting the average squared standard error of the hospital fixed effects (i.e., the average value of the diagonals of the variance-covariance matrix).7
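The variance correction subtracts average sampling noise from the raw variance of the estimates. The Python sketch below assumes the population variance (dividing by the number of hospitals) and takes the first-step standard errors as given; the function name `signal_variance` is hypothetical, and the inputs are made-up numbers rather than estimates from the paper.

```python
from statistics import pvariance


def signal_variance(estimates, std_errors):
    """Noise-corrected variance of true hospital effects.

    Raw variance of the estimated effects minus the average squared standard
    error (the mean of the diagonal of the first-step variance-covariance matrix).
    """
    mean_noise = sum(se ** 2 for se in std_errors) / len(std_errors)
    return pvariance(estimates) - mean_noise


# Hypothetical first-step estimates and standard errors for four hospitals.
alpha_hat = [0.3, 0.5, 0.7, 0.9]
se = [0.1, 0.1, 0.1, 0.1]

var_signal = signal_variance(alpha_hat, se)
print(f"raw SD: {pvariance(alpha_hat) ** 0.5:.3f}")
# Truncate at zero before taking the square root, in case noise dominates.
print(f"noise-corrected SD: {max(var_signal, 0.0) ** 0.5:.3f}")
```

With a raw variance of 0.05 and standard errors of 0.1, the implied signal variance is 0.05 - 0.01 = 0.04, a standard deviation of 0.2. In small samples the difference can turn negative, which signals that sampling noise dominates the observed spread.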

Describing the Adopters

The other key hospital analysis describes the adopters by placing the hospital fixed effect estimates on the left-hand side of regressions of the form of equation 2. The measurement error in the $\hat{\alpha}_h$ therefore moves into the error term, where its primary effect is to reduce the precision of the estimates of the coefficients ρ, γ, and θ. Since the measurement error is due to sampling variance in the first step, it is not correlated with the characteristics and performance measures that are found on the right-hand side of the key regressions, and it does not bias the estimates of the coefficients.
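The claim that first-step noise on the left-hand side lowers precision without biasing the coefficients is the textbook result for classical measurement error in a dependent variable. The simulation below is a hypothetical illustration, not the paper's data: it draws a hospital characteristic `x`, a true effect that is linear in `x`, and a noisy estimate that adds mean-zero error drawn independently of `x`, then compares the two OLS slopes.

```python
import random


def ols_slope(x, y):
    """Slope from a bivariate OLS regression of y on x (with an intercept)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    return sxy / sxx


def simulate(n=20_000, true_slope=0.5, noise_sd=0.2, seed=1):
    """Return OLS slopes using the true and the noisily estimated outcome."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(n)]
    # True hospital effect: linear in the characteristic plus idiosyncratic variation.
    y_true = [true_slope * xi + rng.gauss(0.0, 0.1) for xi in x]
    # First-step sampling error: mean zero and independent of x.
    y_noisy = [yi + rng.gauss(0.0, noise_sd) for yi in y_true]
    return ols_slope(x, y_true), ols_slope(x, y_noisy)


slope_true, slope_noisy = simulate()
print(f"slope with true outcome:  {slope_true:.3f}")
print(f"slope with noisy outcome: {slope_noisy:.3f}")
```

Both slopes should land close to the true value of 0.5; the added noise only widens the sampling spread of the second estimate.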

3.2. Separate Identification of Hospital and Physician

The HF context allows the separate identification of hospital and physician contributions to takeup. The key insight behind the decomposition is that physicians are frequently observed treating patients at multiple hospitals, since doctors may have admitting privileges at several facilities. When the same physician practices in two hospitals, her propensity to provide detailed documentation at each facility identifies the hospital effects relative to each other. Likewise, when two physicians practice at the same hospital, their outcomes at that hospital identify the physician effects relative to each other.
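One way to see how mobile physicians link hospitals is to compute additive hospital and physician effects by alternating projections: repeatedly set each hospital effect to its patients' mean outcome net of their doctors' effects, then set each doctor effect to the mean net of the hospitals' effects. This Gauss-Seidel scheme is a standard way to fit two-way fixed effects without building the full dummy matrix, but it is a stand-in here; this section does not describe the paper's estimation routine, and the function `two_way_fe` is hypothetical. The sketch omits patient controls and uses noise-free, continuous outcomes so that the recovered differences are exact.

```python
from collections import defaultdict


def two_way_fe(records, n_iter=1000):
    """Additive hospital and physician effects by alternating projections.

    records: list of (hospital, doctor, y) tuples, one per patient.
    Only differences within a connected mobility group are identified;
    the physician effects are normalized to have mean zero.
    """
    hospitals = {h for h, _, _ in records}
    doctors = {d for _, d, _ in records}
    alpha = {h: 0.0 for h in hospitals}
    psi = {d: 0.0 for d in doctors}
    for _ in range(n_iter):
        # Hospital step: mean outcome net of the attending doctors' effects.
        sums, counts = defaultdict(float), defaultdict(int)
        for h, d, y in records:
            sums[h] += y - psi[d]
            counts[h] += 1
        alpha = {h: sums[h] / counts[h] for h in hospitals}
        # Doctor step: mean outcome net of the treating hospitals' effects.
        sums, counts = defaultdict(float), defaultdict(int)
        for h, d, y in records:
            sums[d] += y - alpha[h]
            counts[d] += 1
        psi = {d: sums[d] / counts[d] for d in doctors}
    # The common level is not identified: move it from psi into alpha.
    shift = sum(psi.values()) / len(psi)
    psi = {d: v - shift for d, v in psi.items()}
    alpha = {h: v + shift for h, v in alpha.items()}
    return alpha, psi


# Hypothetical true effects; d1 (A, B) and d2 (B, C) are the mobile doctors.
true_alpha = {"A": 0.5, "B": 0.3, "C": 0.6}
true_psi = {"d1": 0.1, "d2": -0.1, "d3": 0.0, "d4": 0.2}
pairs = [("A", "d1"), ("B", "d1"), ("A", "d3"), ("A", "d3"),
         ("B", "d2"), ("C", "d2"), ("C", "d4"), ("C", "d4")]
records = [(h, d, true_alpha[h] + true_psi[d]) for h, d in pairs]

alpha, psi = two_way_fe(records)
print({h: round(alpha[h] - alpha["A"], 3) for h in sorted(alpha)})
```

Only differences between hospital effects are identified, so the natural check compares $\hat{\alpha}_B - \hat{\alpha}_A$ and $\hat{\alpha}_C - \hat{\alpha}_A$ with their true values of -0.2 and 0.1. Without the mobile doctors d1 and d2, the three hospitals would no longer be connected and their effects could not be compared.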

The hospital and physician effects can be separately identified within a mobility group: the set of doctors and hospitals that are connected to each other, directly or through a chain of shared patients. A mobility group starts with a doctor or hospital and includes all other doctors and hospitals that are connected to her or it. Thus mobility groups are the maximal connected subgraphs (connected components) of the graph in which doctors and hospitals are nodes and shared patients are edges.
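Because mobility groups are the connected components of a bipartite graph, they can be found with a union-find (disjoint-set) structure. The sketch below assumes one `(hospital, doctor)` pair per patient, treats each patient as an edge, and keeps the component with the most patients; measuring "largest" in patients rather than in nodes is an assumption, and `largest_mobility_group` is a hypothetical helper.

```python
from collections import Counter


def largest_mobility_group(pairs):
    """Keep patients in the largest connected set of hospitals and doctors.

    pairs: list of (hospital, doctor) tuples, one per patient.
    Hospitals and doctors are nodes; each patient is an edge between them.
    """
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    def union(a, b):
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[ra] = rb

    # Tag node types so a hospital and a doctor sharing an ID stay distinct.
    for h, d in pairs:
        union(("hosp", h), ("doc", d))

    # Count patients (edges) per component and keep the largest one.
    sizes = Counter(find(("hosp", h)) for h, _ in pairs)
    root = max(sizes, key=sizes.get)
    return [(h, d) for h, d in pairs if find(("hosp", h)) == root]


pairs = [("A", "d1"), ("B", "d1"), ("B", "d2"), ("C", "d3"), ("C", "d3")]
print(largest_mobility_group(pairs))
```

Here doctor d1 links hospitals A and B into one group with three patients, while hospital C and doctor d3 form a separate, smaller group that is dropped.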

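The key assumption of the econometric model here is that the probability that a patient receives a specific code approximately follows a linear probability model with additive effects from the patient, hospital, and doctor, such that:

$$\mathbb{E}\left[y_p \mid X_p, h(p), d(p)\right] = X_p'\beta + \alpha_{h(p)} + \psi_{d(p)}$$

where $h(p)$ and $d(p)$ denote the hospital and doctor that treated patient $p$.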

Though the idea that the three levels are linear and additively separable is clearly an approximation given the binary nature of the outcome, the additivity assumption can be tested by estimating a match effects model (Card et al., 2013). This model replaces the hospital and physician fixed effects with a set of effects at the hospital-physician level (i.e., αh,d), allowing any arbitrary relationship between hospital and physician types. The match effects model improves explanatory power minimally, suggesting that additivity is not a restrictive assumption in this context.8

The additive model does not structure the matching process between hospital types and physician types. Hospitals drawn from one part of the hospital type distribution may systematically match with physicians drawn from any part of their type distribution. Likewise, the model makes no assumption about the relationship between physician type and mobility status: mobile physicians may be drawn from a different part of the physician type distribution than their non-mobile counterparts.

Instead, the principal threats to identification are twofold. First, the conditional expectation equation implies that patients do not select hospitals or doctors on the basis of unobserved costs of coding. If such selection were to occur, the fixed effect of a hospital with unobservably more costly to code patients would, for example, be estimated with negative bias. In practice, I test this assumption by including a rich set of patient characteristics as controls. Adding controls yields qualitatively similar, albeit somewhat attenuated, results.

A second identification requirement is that the assignment of doctors to hospitals must not reflect match-specific synergies in the coding outcome. Though there may be an unobserved component of coding that is due to the quality of the match, the matching of doctors and hospitals must not systematically depend on this component (Card et al., 2013). For example, one hospital might demand more specificity in HF coding from physicians who were directly employed by the facility. These physicians would have positive match effects with that hospital. If they tended to practice at the hospital, the match effects would load onto the hospital effect, biasing it upward. The role of match-specific synergies is bounded by the match effects model described in footnote 8 – the low explanatory improvement of that model indicates that the size of these synergies must be small, limiting the scope for endogeneity from this source.

4. Analysis and Results

4.1. Analysis Sample

I use the grand sample described in Section 2.3 to construct an analysis sample of hospitals' claims to Medicare for their HF patients. I start with the 1.9 million HF patients across 3,414 hospitals from 2010. For 1.6 million (84%) of these stays across 3,381 hospitals and 136,067 physicians, I observe the patient's history of chronic conditions as well as the attending physician, who was primarily in charge of taking care of the patient in the hospital and thus most responsible for the final diagnoses that were coded and submitted on the hospital's claim.9 Hospital and physician types are only separately identified within a mobility group, as described in Section 3.2. I define the first-step analysis sample as the set of 1.5 million patient claims that occur within the largest mobility group of hospitals and physicians, 80% of the grand sample of HF claims in 2010.

This sample is described in Table 2. There are 2,831 hospitals and 130,487 doctors in the sample. The average hospital sees 534 HF patients in 2010 and its HF patients are treated by 57 distinct doctors. At the average hospital, 19 of these doctors are mobile, which means that they are observed treating at least one HF patient at another hospital. In this sample, the average doctor sees 12 HF patients in a given year and works at 1.23 distinct hospitals. About one-fifth of doctors are mobile.

Table 2 –

Statistics about the First-Step Analysis Sample

| | (1) Mean | (2) SD | (3) Min | (4) Max |
|---|---|---|---|---|
| Hospitals (N=2,831) | | | | |
| HF Patients | 533.73 | 504.59 | 1 | 3,980 |
| Distinct Physicians | 56.61 | 52.45 | 1 | 531 |
| Mobile Physicians | 19.02 | 21.02 | 1 | 169 |
| Physicians (N=130,487) | | | | |
| HF Patients | 11.58 | 17.29 | 1 | 563 |
| Distinct Hospitals | 1.23 | 0.54 | 1 | 8 |
| Mobile (>1 hospital) | 0.184 | 0.388 | 0 | 1 |

The first-step analysis sample includes 1,510,988 HF patients. See text for more details.

Table 3 provides additional information about the doctors by mobility status using data from the AMA Masterfile.10 The average mobile physician treats about 60% more HF patients than a non-mobile physician (20.2 versus 12.9).11 Mobile physicians are more likely to be medical specialists like cardiologists and less likely to be surgeons or women; the two groups are equally likely to be primary care physicians like internists. Mobile physicians have about 8 months more training, but about 8 months less experience since completing training, than their non-mobile counterparts, and they are also more likely to have received their medical training outside the U.S. The difference in characteristics between mobile and non-mobile physicians does not invalidate the econometric model, which allows physician types to vary flexibly with mobility status. The relevant identification assumption, described in more detail in Section 3.2, is instead that physicians and hospitals do not match based on unobserved coding synergies.

Table 3 –

Statistics about Physicians by Mobility Status

| All values are means | (1) All | (2) Mobile | (3) Non-Mobile |
|---|---|---|---|
| Patient and Hospital Volume | | | |
| HF Patients | 14.6 | 20.2 | 12.9 |
| Share Given Specific Code | 0.53 | 0.53 | 0.53 |
| Distinct Hospitals | 1.29 | 2.24 | 1 |
| Mobile (>1 hospital) | 0.24 | 1 | 0 |
| Specialization | | | |
| Primary Care Physician | 0.51 | 0.51 | 0.51 |
| Medical Specialist | 0.30 | 0.34 | 0.28 |
| Surgeon | 0.17 | 0.14 | 0.18 |
| Unknown/Other | 0.025 | 0.021 | 0.026 |
| Demographics | | | |
| Female | 0.19 | 0.15 | 0.20 |
| Age | 49.0 | 48.9 | 49.0 |
| Training and Experience | | | |
| Years in Training* | 5.94 | 6.51 | 5.76 |
| Years Since Training* | 15.9 | 15.4 | 16.0 |
| Trained in US | 0.69 | 0.59 | 0.72 |
| Physicians | 101,370 | 24,048 | 77,322 |

Mobile physicians are observed attending to HF patients at multiple hospitals in 2010; non-mobile physicians attend to patients at one hospital in that period. Data on specialization, demographics, training, and experience derived from AMA Masterfile. Excludes 29,117 “singleton” physicians who do not contribute to identification in the full econometric model and are omitted from the later physician analysis.

* Excludes physicians for whom years in/since training is unknown (3.5% in each column).

4.2. Hospital Characteristics

Table 4 shows summary statistics for the 2,341 hospitals in the main analyses for which I observe complete information on all covariates – the second-step analysis sample. Hospital size (beds) and ownership are taken from the Medicare Provider of Services file. Hospital location and teaching status are taken from the 2010 Medicare IPPS Impact file. The location definition is the one used by Medicare: a large urban area is any Metropolitan Statistical Area (MSA) with a population of at least 1 million, an other urban area is any other MSA, and the rest of the country is considered rural. Only 22% of the hospitals in the sample are rural – many rural hospitals are classified as critical-access facilities exempt from this reform, and they are excluded from my analyses. Teaching hospitals are defined as those with any residents; major teaching facilities are the 10% with a resident-to-bed ratio of at least 0.25; minor teaching facilities are the 28% that have a resident-to-bed ratio greater than zero but less than 0.25.
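The location and teaching definitions are threshold rules, sketched below in Python. The inputs (MSA population, with `None` for hospitals outside any MSA, and counts of residents and beds) are assumed stand-ins for the corresponding Impact file fields, whose actual names are not given here; both function names are hypothetical, and the exclusion of critical-access hospitals is not modeled.

```python
def classify_location(msa_population):
    """Medicare location category; msa_population is None outside any MSA."""
    if msa_population is None:
        return "rural"
    if msa_population >= 1_000_000:
        return "large urban"
    return "other urban"


def classify_teaching(residents, beds):
    """Teaching status from the resident-to-bed ratio (assumes beds > 0)."""
    ratio = residents / beds
    if ratio >= 0.25:
        return "major teaching"
    if ratio > 0:
        return "minor teaching"
    return "non-teaching"


print(classify_location(2_500_000), classify_teaching(10, 400))
```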

Table 4 –

Hospital Summary Statistics

| | (1) Mean | (2) SD |
|---|---|---|
| Heart Failure Coding and Physicians | | |
| HF Patients | 601.8 | 514.2 |
| Share Given Specific Code | 0.546 | 0.199 |
| Distinct Physicians | 62.97 | 54.16 |
| Mobile Physicians | 20.24 | 21.83 |
| Hospital Characteristics | | |
| Beds | 287.9 | 235.0 |
| Ownership | | |
| Government | 0.167 | |
| Non-Profit | 0.671 | |
| For-Profit | 0.161 | |
| Location | | |
| Rural Area | 0.224 | |
| Large Urban Area | 0.422 | |
| Other Urban Area | 0.354 | |
| Teaching Status | | |
| Non-Teaching | 0.623 | |
| Major Teaching Hospital | 0.101 | |
| Minor Teaching Hospital | 0.276 | |
| Ex Ante $ at Stake / Patient | 267.5 | 71.77 |
| EMR and Hospital-Physician Integration | | |
| EMR | | |
| None | 0.065 | |
| Basic | 0.502 | |
| Advanced | 0.434 | |
| Hospital-Physician Integration | | |
| None | 0.305 | |
| Contract | 0.167 | |
| Employment | 0.351 | |
| Unknown/Other | 0.177 | |
| Standards of Care (share of times standards used in 2006) | | |
| for AMI Treatment | 0.916 | 0.084 |
| for Heart Failure Treatment | 0.827 | 0.113 |
| for Pneumonia Treatment | 0.864 | 0.061 |
| for High-Risk Surgeries | 0.798 | 0.118 |
| AMI Treatment (patients in 2000–2006) | | |
| Adjusted 30-Day Survival | 0.813 | 0.030 |

N=2,341 hospitals. See text for more details on the source and definitions of the characteristics. The standard deviations of specific coding for HF and AMI survival account for sampling variance.

I define the ex ante revenue at stake as the expected value of giving all of the hospital's pre-reform (2007) HF patients a specific code according to post-reform (2009) reimbursement rules. The revenue at stake is scaled by the total number of patients at the hospital, making it the per-patient expected gain from fully taking up the reform (see Appendix Section A.1.2).

Hospital EMR adoption comes from Healthcare Information and Management Systems Society (HIMSS) data and is classified into basic and advanced according to the approach in Dranove et al. (2014). About 6% of hospitals do not have an EMR, half have EMRs with only a basic feature (clinical decision support, clinical data repository, or order entry), and 43% have EMRs with an advanced feature (computerized practitioner order entry or physician documentation).
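The EMR grouping is a most-advanced-feature rule: any advanced feature makes an EMR advanced; otherwise any basic feature makes it basic. The sketch below encodes that rule with hypothetical feature labels; they are not HIMSS variable names, and Dranove et al. (2014) may apply additional criteria not described here.

```python
# Hypothetical feature labels (not HIMSS variable names).
BASIC_FEATURES = {"clinical decision support", "clinical data repository", "order entry"}
ADVANCED_FEATURES = {"computerized practitioner order entry", "physician documentation"}


def classify_emr(features):
    """Classify a hospital's EMR by the most advanced feature it reports."""
    features = set(features)
    if features & ADVANCED_FEATURES:
        return "advanced"
    if features & BASIC_FEATURES:
        return "basic"
    return "none"


print(classify_emr(["clinical data repository", "physician documentation"]))
```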

To measure hospital-physician integration, I use the American Hospital Association (AHA) hospital survey data and follow Scott et al. (2016) to group hospitals by the tightest form of integration that they report. Hospitals that report no formal contractual or employment agreement with physicians are said to have no relationship (31% of hospitals). Hospitals may sign agreements with outside physician or joint physician-hospital organizations; these hospitals are said to have contract relationships (17% of hospitals). The most integrated arrangements occur when hospitals directly salary physicians or own the physician practice; these models are considered employment relationships (35% of hospitals).12 If the hospital did not respond to the question or described integration using a freeform text field, I classify the hospital as having an unknown or other relationship (18% of hospitals).
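The integration measure likewise keeps the tightest arrangement a hospital reports. In the hypothetical sketch below, the arrangement labels are illustrative rather than AHA survey fields, `None` stands for a non-response, and the label `"freeform"` marks a free-text answer; letting a structured answer take precedence over free text is an assumption about how the two cases interact.

```python
# Hypothetical labels for structured survey answers, grouped by tightness.
EMPLOYMENT = {"salaried physicians", "hospital-owned practice"}
CONTRACT = {"outside physician organization", "physician-hospital organization"}


def classify_integration(reported):
    """Assign the tightest reported form of hospital-physician integration.

    reported: set of arrangement labels, or None if the hospital did not
    answer; the label "freeform" marks a free-text description.
    """
    if reported is None:
        return "unknown/other"
    if reported & EMPLOYMENT:
        return "employment"
    if reported & CONTRACT:
        return "contract"
    if "freeform" in reported:
        return "unknown/other"
    return "none"


print(classify_integration({"physician-hospital organization", "salaried physicians"}))
```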

The standards of care measures were collected by CMS under its Hospital Compare program and are described in greater detail in Appendix Section A.2.1. They indicate the shares of times that standards of care were followed for AMI, HF, pneumonia, and high-risk surgery patients in 2006. These standards of care are inexpensive, evidence-based treatments that were selected because they had been shown to improve patient outcomes and aligned with clinical practice guidelines (Williams et al., 2005; Jencks et al., 2000). When productivity is defined as the amount of survival a hospital can generate for a fixed set of inputs, these scores measure the takeup of productivity-raising technologies. They notably include β-blockers, a class of inexpensive drugs that dramatically improve survival following AMI and have been the subject of several studies of technology diffusion (see e.g. Skinner and Staiger, 2007, 2015).

Adjusted AMI survival is based on the sample and methods of Chandra et al. (2013) and its construction is described in Appendix Section A.2.2. As a measure of treatment performance, a hospital's adjusted survival is the average 30-day survival rate of AMI patients treated at the hospital in 2000–2006, after controlling for the inputs used to treat the patient and a rich set of patient observables. An increase in the rate of 1 percentage point means that, at the same level of inputs and for the same patient characteristics, the hospital is able to produce a 1 percentage point greater probability that the patient survives 30 days. This rate is adjusted to account for measurement error using an Empirical Bayes shrinkage procedure. The survival rate at the average hospital is 81%, and the standard deviation of that rate across facilities, after accounting for differences in patient characteristics, input utilization, and measurement error, is 3 percentage points.
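For a single hospital, textbook normal-normal Empirical Bayes shrinkage pulls a noisy estimate toward the overall mean in proportion to its unreliability. The sketch below uses that standard formula as an illustration only; it may differ in details from the procedure of Chandra et al. (2013), `eb_shrink` is a hypothetical name, and the inputs are hypothetical, with the signal variance set to $(0.03)^2$ to echo the 3 percentage point dispersion reported above.

```python
def eb_shrink(raw, se, prior_mean, signal_var):
    """Normal-normal Empirical Bayes shrinkage of one hospital's estimate.

    The weight on the raw estimate is its reliability: the share of its
    variance that reflects true differences rather than sampling noise.
    """
    reliability = signal_var / (signal_var + se ** 2)
    return prior_mean + reliability * (raw - prior_mean)


# Hypothetical inputs: mean survival 0.81 and signal SD 0.03 across hospitals.
adjusted = eb_shrink(raw=0.85, se=0.03, prior_mean=0.81, signal_var=0.03 ** 2)
print(f"{adjusted:.3f}")
```

A hospital with a raw rate of 0.85 and a standard error equal to the signal standard deviation gets a reliability weight of one-half, so its adjusted rate is 0.83; a perfectly measured hospital (standard error of zero) would keep its raw rate.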

To provide a sense of whether the analysis sample is representative of the broader set of hospitals that treat HF, Appendix Table A3 compares the characteristics of these hospitals to the grand sample. The analysis sample includes 69% of hospitals in the grand sample. As expected given the selection criteria that required sample facilities to have doctors in the mobility group and to have information on all covariates, the sample is similar but not identical to the population: the sample tends to be larger (in beds) and contains a greater proportion of non-profit, urban, and teaching facilities.

4.3. Dispersion across Hospitals

I now assess dispersion in hospital adoption and its sensitivity to patient and physician controls (for dispersion at the hospital system and geographic region levels, see Appendix Section A.3). To provide a sense of the time series of adoption, Figure 4 shows the distribution of $\hat{\alpha}_h^{\text{raw}}$, the share of HF patients at hospital $h$ who received a detailed HF code, in each year from 2007 to 2010. Takeup across hospitals occurred rapidly after the reform. By 2010, the third year after the reform, the median hospital used detailed codes 55% of the time. Variation was substantial: a mass of hospitals used the codes for the vast majority of their HF patients while a nontrivial number of hospitals almost never used them.13

Table 5 shows the standard deviation of adoption overall and among homogeneous categories of hospitals. I divide the space of hospitals on the basis of characteristics that have been the focus of literature on hospital quality. The left three columns estimate dispersion using varying sets of patient controls and no physician controls; in these results, the hospital effects include the component of coding that is due to the physicians. The fourth column adds physician fixed effects to the first step, which removes the physician component.

Table 5 –

Standard Deviation of Coding by Type of Hospital

| Statistic | (1) Std Dev | (2) Std Dev | (3) Std Dev | (4) Std Dev | (5) N |
|---|---|---|---|---|---|
| All Hospitals | 0.199 | 0.151 | 0.151 | 0.160 | 2,341 |
| By Ownership | | | | | |
| Government | 0.222 | 0.163 | 0.162 | 0.141 | 392 |
| Non-Profit | 0.191 | 0.147 | 0.147 | 0.167 | 1,571 |
| For-Profit | 0.192 | 0.143 | 0.143 | 0.143 | 378 |
| By Location | | | | | |
| Rural | 0.229 | 0.171 | 0.170 | 0.190 | 525 |
| Large Urban | 0.192 | 0.146 | 0.145 | 0.146 | 988 |
| Other Urban | 0.182 | 0.142 | 0.141 | 0.151 | 828 |
| By Size | | | | | |
| Upper Tercile | 0.174 | 0.137 | 0.137 | 0.129 | 780 |
| Middle Tercile | 0.184 | 0.141 | 0.141 | 0.143 | 775 |
| Lower Tercile | 0.227 | 0.168 | 0.167 | 0.196 | 786 |
| By Teaching Status | | | | | |
| Non-Teaching | 0.206 | 0.154 | 0.153 | 0.159 | 1,459 |
| Major Teaching | 0.183 | 0.146 | 0.146 | 0.129 | 237 |
| Minor Teaching | 0.182 | 0.141 | 0.141 | 0.168 | 645 |
| By EMR Type | | | | | |
| None | 0.184 | 0.143 | 0.143 | 0.135 | 151 |
| Basic | 0.207 | 0.155 | 0.154 | 0.151 | 1,175 |
| Advanced | 0.186 | 0.143 | 0.143 | 0.171 | 1,015 |
| By Hospital-Physician Integration | | | | | |
| None | 0.201 | 0.151 | 0.150 | 0.180 | 714 |
| Contract | 0.188 | 0.145 | 0.145 | 0.129 | 392 |
| Employment | 0.191 | 0.147 | 0.147 | 0.164 | 821 |
| Patient Controls | None | Admission | Full | Full | |
| Physician Controls | None | None | None | FE | |

Each row shows the standard deviation in coding score for a different partition of hospitals (hospital counts, which apply to columns 1–4, shown in column 5). Column 1 uses no controls to calculate the hospital effects. Column 2 adds controls for patient characteristics observable upon admission, and column 3 adds histories of chronic conditions. Column 4 adds physician fixed effects. All results are adjusted for sampling variation.

The controls are described briefly here and in full detail in Appendix Section A.4. Column 1 uses no patient-level controls. Column 2 controls for observables about the patient’s hospital admission found in the hospital’s billing claim: age, race, and sex interactions; whether the patient was admitted through the emergency department; and finely grained categories for the patient’s primary diagnosis. Richly controlling for the patient’s principal diagnosis also helps to account for the patient-level return to the detailed codes, which will vary depending on the patient’s DRG. Column 3 adds indicators for a broad set of chronic conditions. To improve comparability across analyses, the table only includes hospitals for which all covariates are observed.

Among all hospitals, the standard deviation of the coding scores with no controls is 0.20 (column 1), meaning that a hospital with one standard deviation greater adoption gives 20 percentage points more of its HF patients a specific HF code. This measure does not account for differences in patient or doctor mix across hospitals. With patient observables on admission included, the standard deviation falls to 0.15 (column 2). Additionally controlling for patient illness histories has little further effect (column 3). This dispersion is the standard deviation across hospitals of the probability a HF patient gets a specific code, holding fixed the patient’s observed characteristics. It calculates adoption across hospitals after removing the component that can be explained by within-hospital relationships between patient observables and coding. Further adding physician fixed effects raises the standard deviation slightly to 0.16 (column 4). This result is the dispersion across hospitals in the probability a specific code is used, given a HF patient with a fixed set of characteristics and a fixed physician. With these controls, a hospital with one standard deviation greater adoption is 16 percentage points more likely to give a patient a specific code.

Within key groups of hospitals, dispersion tends to decline with the inclusion of patient characteristics in the first step; the additional inclusion of physician fixed effects yields smaller changes in magnitude of varying sign. Specifically, dispersion declines by 4–6 percentage points with the inclusion of patient characteristics; the additional inclusion of physician effects yields changes ranging from a decline of 2 percentage points to a rise of 3 percentage points.

While it may seem counterintuitive that disparities in adoption sometimes increase with the addition of physician controls, this finding is possible if high-type hospitals tend to match with low-type physicians. When physician controls are omitted, the hospital's adoption includes both the facility component and an average physician component. If dispersion in adoption rises after removing the physician component, it indicates that the average physician component was negatively correlated with the hospital component, which is evidence of negative assortative matching. While the econometric model assumes additivity of hospital and physician effects, it is agnostic about the matching process, permitting assortative or non-assortative matching.
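The argument rests on the identity $\mathrm{Var}(\alpha_h + \bar{\psi}_h) = \mathrm{Var}(\alpha_h) + \mathrm{Var}(\bar{\psi}_h) + 2\,\mathrm{Cov}(\alpha_h, \bar{\psi}_h)$, where $\bar{\psi}_h$ is the hospital's average physician component. The numbers below are hypothetical; they show that when the covariance is sufficiently negative, the combined measure (analogous to column 3 of Table 5) is less dispersed than the hospital component alone (analogous to column 4).

```python
from statistics import pvariance


def pcov(a, b):
    """Population covariance of two equal-length sequences."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    return sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b)) / len(a)


# Hypothetical hospital components: alpha is the facility effect and psi_bar the
# patient-weighted average physician effect. Higher-alpha hospitals have
# lower-psi physicians (negative assortative matching).
alpha = [0.10, 0.20, 0.30, 0.40]
psi_bar = [0.05, 0.02, -0.02, -0.05]
combined = [a + p for a, p in zip(alpha, psi_bar)]

var_identity = pvariance(alpha) + pvariance(psi_bar) + 2 * pcov(alpha, psi_bar)
print(f"var(alpha + psi_bar) = {pvariance(combined):.5f}")
print(f"var(alpha)           = {pvariance(alpha):.5f}")
```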

4.4. Describing the Adopters

Having found evidence of disparities in adoption even after accounting for patients and physicians, in this section I turn to the characteristics that are associated with adoption. That is, I estimate equation 2 by regressing the hospital adoption measures (estimated with varying patient and physician controls) on the hospital characteristics. I first discuss what existing literature on hospital performance suggests for the ex ante relationships one might expect between hospital covariates and HF coding. I then show how these correlations are borne out in my data.

In Appendix Section A.3, I consider two additional explanatory factors: hospital system and geographic region. While neither explains most of the variation in hospital adoption, the explanatory power of each is non-trivial: system and region fixed effects account for as much as one-fourth of the variation when physician effects are not swept out, and one-fifth when physician effects are removed.

4.4.1. Potential Roles of Hospital Characteristics

Size (Number of Beds)

A long line of research has documented a relationship between hospital size and quality, though the causal link is unclear. Epstein (2002) provides a critical review of this association, known as the volume-outcomes hypothesis. Likewise, a scale-coding relationship could arise from several factors. It could derive from features of the code production process. As with clinical quality, it could reflect learning by doing, since large hospitals have more patients to learn from. Larger hospitals would also be more likely to adopt detailed HF coding if adoption involved fixed costs, because the return on these fixed costs is greater when they yield better coding for a larger patient population. In this context, fixed costs could include health information technology software (a possibility I study directly by looking at EMRs). Lastly, a scale-coding gradient could be the incidental result of an omitted third factor, though the correlation between size and coding could still be of interest to policymakers seeking to understand the distributional effects of the reform and which facilities are likely to respond in the future.

Ownership

While there is no consensus on whether non-profit or for-profit hospitals provide superior quality of care (see e.g. McClellan and Staiger, 2000; Sloan et al., 2003; Joynt et al., 2014), the differences have been clearer in studies of billing and coding, which have found that for-profit hospitals exploited revenue-making opportunities more aggressively than their non-profit and government-run counterparts (Dafny, 2005; Silverman and Skinner, 2004). Earlier work has typically focused on upcoding, the exaggeration of patient severity to raise payments. Here, by contrast, a hospital can give a detailed HF code to all of its HF patients, supported by detailed documentation, without any upcoding (upcoding would entail submitting a detailed code that lacked supporting documentation).

Location

Research has considered differences in clinical performance between urban and rural facilities, but whether rural hospitals should be more effective at adopting the revenue-raising technology than urban hospitals holding scale fixed is unclear ex ante. Evidence on outcomes and processes along the dimension of hospital location may be suggestive. Most of the literature has found that health care outcomes and clinical quality are lower in rural hospitals relative to their urban counterparts, a finding that persists even conditional on hospital size (MEDPAC, 2012; Baldwin et al., 2010; Goldman and Dudley, 2008).

Teaching Status

Teaching hospitals have better outcomes and higher-quality processes of care than non-teaching hospitals (Ayanian and Weissman, 2002; Mueller et al., 2013; Burke et al., 2017). Beyond the academic literature, conventional wisdom appears to regard teaching hospitals as providers of frontier, high-quality care (see, for example, U.S. News and World Report rankings of hospitals). Whether this conventional wisdom is true, and whether it translates into greater responsiveness to incentives in the form of takeup of the revenue-generating practice, is an open question. For example, the presence of residents who lack prior experience with hospital documentation and billing needs may act as a drag on a hospital's coding, while the need to document extensively for training purposes could improve coding.

Revenue at Stake

A hospital with more revenue at stake from the reform would, all else equal, have a greater incentive to buy software that improves specific coding and to coax its doctors to provide detailed documentation. The revenue at stake depends on the hospital's patient mix: hospitals with more HF patients and hospitals with more acute HF patients have more to gain. However, even after controlling for a host of hospital observables, unobserved characteristics may still affect adoption along this gradient, since patient mix and acuity may be correlated with other attributes of the hospital that independently affect its coding (for example, conditional on observed characteristics, having more revenue at stake could be correlated with having a safety-net role and thus with other associated but unobserved factors). In the regressions, I use revenue at stake per patient rather than total revenue at stake or the total number of HF patients, because the total measures are closely related to hospital size (in practice, the total measures are not conditionally correlated with adoption).

Electronic Medical Records

Hospital EMRs may facilitate detailed coding by reducing physicians' cost of providing additional information. EMRs can also prompt physicians to provide documentation or copy it over automatically from older records (Abelson et al., 2012). While most of the literature on EMRs centers on their potential to improve the quality of care, some quasi-experimental work considers their effects on documentation and coding. Two studies find that EMRs raise coding intensity, albeit by different magnitudes: Li (2014) shows a significant increase in the fraction of patients in high-severity DRGs as hospitals submit more diagnosis codes in their claims, while Agha (2014) shows that hospital payments rise due to EMR adoption but that increased coding intensity explains only 7% of the change.

Hospital-Physician Integration

Physicians traditionally practiced at hospitals without formal contractual or employment relationships, billing insurers directly for the care they provided. In recent years hospitals and physicians have come to integrate more closely (Scott et al., 2016). The tightest form of integration occurs when physicians are directly employed by hospitals; an intermediate form occurs when physician group practices contract with the hospital to establish a relationship. Multiple studies have shown that integration raises the prices providers receive from private insurers, either by increasing the bargaining power of the integrated unit or because Medicare’s administrative pricing rules favor integrated entities (Baker et al., 2014; Neprash et al., 2015).

Tighter hospital-physician integration has the potential to increase coding rates by aligning the revenue objectives of physicians with those of the hospital. In addition, the federal Anti-Kickback Statute, which restricts how hospitals pay physicians, does not apply to employment relationships (BNA, 2017). While there is little evidence on how hospital-physician integration affects documentation and coding, integration in other areas of health care can improve coding: Geruso and Layton (2015) show that private insurance plans in Medicare that integrate with health care providers raise the coded severity of their patients more than unintegrated plans, leading to increased federal capitation payments.

Clinical Performance and Quality

Whether hospitals with high treatment performance are more likely to adopt the coding practice is not obvious. High-quality hospitals may have good managers who work effectively with physicians to incorporate consensus standards of care, a correlation that has been observed in U.S. hospital cardiac care units (McConnell et al., 2013). These managers may use the same techniques to extract more detailed descriptions from their physicians. They could also use their performance-raising techniques to ensure that coding staff do not miss revenue-making opportunities.

On the other hand, a negative correlation between treatment quality and revenue productivity is also plausible. To the extent that both depend on managerial quality, the relationship between revenue productivity and treatment quality could reflect whether the two are substitutes or complements in the hospital management production process. In the substitutes view, managers specialize either in coaxing physicians and staff to extract revenue from payers or in encouraging them to treat patients well.

4.4.2. Results

Table 6 displays estimates of the correlation between hospital characteristics and takeup of detailed HF coding. The columns of this table show the results when different sets of first-step controls are included; these specifications match those used in the dispersion analysis. Because the hospital effects are estimated with noise, the regressions contain measurement error in the dependent variable. This measurement error comes from sampling variance, so it is mean zero and does not bias the coefficients, although it reduces their precision.
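The claim that first-step sampling error does not bias the second-step coefficients rests on that error being mean zero and unrelated to the hospital characteristics. The Monte Carlo sketch below illustrates this under hypothetical parameter values: adding such noise to the dependent variable leaves the average OLS slope essentially unchanged while making each estimate less precise. Only the sample size of 2,341 hospitals is taken from Table 6; everything else, including the helper `ols_slope`, is an assumption.

```python
import numpy as np


def ols_slope(x, y):
    """Slope from a bivariate OLS regression of y on x (with intercept)."""
    x_dm = x - x.mean()
    return (x_dm @ (y - y.mean())) / (x_dm @ x_dm)


rng = np.random.default_rng(2)
n_hosp, n_reps, true_beta = 2341, 500, 0.025
clean, noisy = [], []
for _ in range(n_reps):
    x = rng.normal(size=n_hosp)  # a standardized hospital characteristic
    adoption = true_beta * x + rng.normal(0, 0.15, n_hosp)  # true hospital effect
    # Hypothetical first-step sampling error: mean zero, independent of x.
    estimated = adoption + rng.normal(0, 0.10, n_hosp)
    clean.append(ols_slope(x, adoption))
    noisy.append(ols_slope(x, estimated))

clean, noisy = np.array(clean), np.array(noisy)
print(f"mean slope, true effects:      {clean.mean():.4f} (sd {clean.std():.4f})")
print(f"mean slope, estimated effects: {noisy.mean():.4f} (sd {noisy.std():.4f})")
```

Heteroskedastic noise, for instance larger sampling error at smaller hospitals, would not change the conclusion about bias as long as the error remains mean zero given the characteristics, though it does matter for the standard errors.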

Table 6: Association Between Hospital Characteristics and Coding

| | (1) | (2) | (3) | (4) |
|---|---|---|---|---|
| Outcome | Score | Score | Score | Score |
| Hospital Characteristics (C_h) | | | | |
| ln(Beds) | 0.018** | 0.011* | 0.011* | −0.009 |
| | (0.008) | (0.006) | (0.006) | (0.011) |
| Government Ownership | ref. | ref. | ref. | ref. |
| Non-Profit Ownership | 0.037** | 0.028** | 0.028** | 0.020 |
| | (0.015) | (0.011) | (0.011) | (0.013) |
| For-Profit Ownership | 0.018 | 0.016 | 0.015 | 0.031* |
| | (0.017) | (0.012) | (0.012) | (0.017) |
| Located in Rural Area | ref. | ref. | ref. | ref. |
| Located in Large Urban Area | 0.002 | −0.001 | −0.002 | 0.030 |
| | (0.016) | (0.012) | (0.012) | (0.020) |
| Located in Other Urban Area | 0.016 | 0.008 | 0.008 | 0.031* |
| | (0.014) | (0.011) | (0.011) | (0.016) |
| Non-Teaching Hospital | ref. | ref. | ref. | ref. |
| Major Teaching Hospital | 0.024 | 0.028** | 0.028** | 0.045** |
| | (0.018) | (0.014) | (0.014) | (0.020) |
| Minor Teaching Hospital | −0.003 | 0.002 | 0.002 | 0.003 |
| | (0.010) | (0.008) | (0.008) | (0.014) |
| Ex Ante $ at Stake per Patient | 0.000 | 0.000 | 0.000 | 0.000 |
| | (0.000) | (0.000) | (0.000) | (0.000) |
| EMR and Hospital-Physician Integration (I_h) | | | | |
| No EMR | ref. | ref. | ref. | ref. |
| Basic EMR | −0.038* | −0.034** | −0.034** | −0.012 |
| | (0.020) | (0.016) | (0.016) | (0.022) |
| Advanced EMR | −0.010 | −0.010 | −0.009 | 0.003 |
| | (0.020) | (0.016) | (0.016) | (0.023) |
| No Affiliation | ref. | ref. | ref. | ref. |
| Contract Affiliation | 0.001 | 0.004 | 0.004 | 0.021 |
| | (0.013) | (0.010) | (0.010) | (0.014) |
| Employment Affiliation | 0.024** | 0.018** | 0.018** | 0.024* |
| | (0.012) | (0.009) | (0.009) | (0.013) |
| Unknown/Other Affiliation | −0.018 | −0.011 | −0.011 | 0.015 |
| | (0.014) | (0.011) | (0.011) | (0.015) |
| Standards of Care and Clinical Performance (Z_h) | | | | |
| Standards of Care Z-Score | 0.026*** | 0.019*** | 0.019*** | 0.017*** |
| | (0.006) | (0.004) | (0.004) | (0.006) |
| AMI Survival Z-Score | 0.030*** | 0.025*** | 0.025*** | 0.030*** |
| | (0.007) | (0.005) | (0.005) | (0.008) |
| Observations | 2,341 | 2,341 | 2,341 | 2,341 |
| R² | 0.107 | 0.109 | 0.107 | 0.032 |
| p-value, Basic EMR = Advanced EMR | 0.002 | 0.000 | 0.000 | 0.178 |
| Patient Controls | None | Admission | Full | Full |
| Physician Controls | None | None | None | FE |

This table presents the results of regressing hospital coding scores on hospital characteristics. Column 1 uses no controls to calculate the hospital scores, column 2 adds controls for patient characteristics observable upon admission, and column 3 adds histories of chronic conditions. Column 4 adds physician fixed effects. Standard errors clustered at the market level in parentheses. Significance levels: *** 1%, ** 5%, * 10%.

Without Physician Controls

Columns 1 to 3 show the correlations with increasingly rich first-step patient controls but no physician controls. They establish several relationships of interest. There is a coding-scale relationship: hospitals that are 10% larger give 0.18 percentage points more of their HF patients a specific code. Adding patient controls reduces this effect to 0.11 percentage points (significant at the 10% but not the 5% level), so some of the raw relationship between size and coding can be accounted for by larger hospitals tending to have patients who are more likely to receive a detailed code at any hospital. Hospital ownership matters: controlling for patients, non-profit hospitals give 2.8 percentage points more of their patients a specific code than government-run facilities. There is no statistically significant difference between the takeup rates of for-profit and government-run hospitals. Major (but not minor) teaching hospitals are significantly more likely to provide detailed codes than non-teaching facilities, a difference of 2.8 percentage points with patient controls.
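The size effects quoted above convert the coefficient on ln(Beds) into percentage points using the usual approximation that a 10% increase in beds raises ln(Beds) by about 0.1. The short Python helper below (the name `pp_effect_of_pct_increase` is hypothetical) compares that approximation with the exact log change, using the coefficients from columns 1 and 2 of Table 6.

```python
import math


def pp_effect_of_pct_increase(beta, pct):
    """Percentage-point change in the share of HF patients given a specific
    code when beds rise by `pct` percent, for coefficient `beta` on ln(Beds)
    in a regression whose outcome is a share between 0 and 1."""
    exact = 100 * beta * math.log(1 + pct / 100)  # exact change in ln(Beds)
    approx = 100 * beta * (pct / 100)             # uses ln(1 + g) ≈ g
    return exact, approx


for label, beta in [("column 1", 0.018), ("column 2", 0.011)]:
    exact, approx = pp_effect_of_pct_increase(beta, 10)
    print(f"{label}: exact {exact:.3f} pp, approximate {approx:.3f} pp")
```

For a 10% increase the exact and approximate figures differ only in the second decimal place (roughly 0.17 versus 0.18 percentage points for column 1), so the approximation does not affect the interpretation.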