Abstract

Background:

Health IT, such as clinical decision support (CDS), has the potential to improve patient safety. However, poor usability of health IT continues to be a major concern. Human factors engineering (HFE) approaches are recommended to improve the usability of health IT. Limited evidence exists on the actual impact of HFE methods and principles on the usability of health IT.

Objective:

To identify and describe the usability barriers and facilitators of an HFE-based CDS prior to implementation in the emergency department (ED).

Methods:

We conducted debrief interviews with 32 emergency medicine physicians as part of a scenario-based simulation study evaluating the usability of an HFE-based CDS for pulmonary embolism (PE) diagnosis, the PE Dx. We performed a deductive content analysis of the interviews using the usability criteria of Scapin and Bastien as a framework.

Results:

We identified 271 occurrences of usability barriers (94) and facilitators (177) of the HFE-based CDS. For instance, we found a facilitator related to the usability criterion prompting: the PE Dx helps the physician order diagnostic tests following the risk assessment. The sub-criterion with the most facilitators was minimal actions; for example, the PE Dx automatically populates vital signs (e.g., heart rate) from the chart into the CDS. Most usability barriers related to the usability criterion compatibility (i.e., workflow integration), which was not explicitly considered in the HFE design of the CDS. For example, the CDS did not support resident and attending physician teamwork in the PE diagnostic process.

Conclusion:

The systematic use of HFE principles in the design of CDS improves the usability of these technologies. In order to further reduce usability barriers, workflow integration should be explicitly considered in the design of health IT.

Keywords: Clinical Decision Support, Human Factors Engineering, Usability Evaluation, Workflow Integration, Emergency Medicine

1. Introduction

The widespread implementation of health information technology (IT) provides new opportunities to leverage these technologies to improve care quality and patient safety. For instance, one type of health IT, clinical decision support (CDS), integrates patient-specific information with a computerized knowledge base to support clinicians’ decisions [1, 2]. Because CDS provides evidence-based guidelines at the point of care (i.e., at the time of decision-making), it can support a systematic approach to diagnosis, ordering of tests, and evidence-based prescribing. However, the usability of health IT, including CDS technologies, remains a major challenge [3]. Acknowledging the impact of poor usability on patient (e.g., medical errors) and clinician (e.g., burnout) outcomes [4], the Office of the National Coordinator for Health Information Technology recommends incorporating human factors engineering (HFE) methods and principles in the design of CDS [5]. Yet, only a few studies have applied HFE in the design of CDS or demonstrated the value of the HFE approach [6, 7].

1.1. Impact of HFE on CDS usability

HFE is “the scientific discipline concerned with the understanding of interactions among humans and other elements of a system, and the profession that applies theory, principles, data, and methods to design in order to optimize human well-being and overall system performance” [8]. HFE applies holistic and participatory approaches to evaluate and design systems, taking into account the physical, cognitive, sociotechnical, environmental, and organizational work system factors and their interactions. We need to learn more about the impact of HFE-based design on CDS usability to understand whether these methods actually achieve usability improvements. A group of researchers at Lille University in France explored the link between HFE and the usability of CDS. In a systematic review, they evaluated 26 papers discussing usability flaws in medication-related CDS [9]. They identified 168 usability flaws that led to negative consequences for workflow, technology effectiveness, care processes, and patient safety [10]. Yet, we do not know whether the application of an HFE approach could have prevented these negative outcomes. In a follow-up study [11], the French researchers demonstrated the value of HFE in the design of a patient prioritization tool in the emergency department (ED). They conducted a work system analysis to identify design specifications for the tool. After developing initial mock-ups, they conducted 4 phases of usability testing, identifying important modifications (e.g., to icons) that improved the usability of the tool, which was subsequently implemented. Building on this work, additional research is needed to elucidate the impact of HFE design on CDS usability. In this study, we investigate the usability barriers and facilitators of an HFE-based CDS.

1.2. Usability

The International Organization for Standardization (ISO) defines usability as “the extent to which a system, product, or service can be used by specific users to achieve specified goals” and describes three aspects of usability: efficiency, effectiveness, and satisfaction [12]. Several usability frameworks exist, such as the Nielsen-Shneiderman heuristics by Zhang et al. [13] and the usability criteria of Scapin and Bastien [14], both of which have been applied in the design of health IT. While there is significant overlap between the two frameworks, the usability criteria of Scapin and Bastien [14] (Table 1; see Appendix 1 for full definitions of the criteria) provide a broader, macro-view of usability compared to the more micro-focus of Zhang and colleagues [13]; this macro-view is emphasized in one of their criteria, compatibility, which specifically focuses on the context of use and the workflow of users [9]. The framework also explicitly considers ‘workload’, a major concern with health IT (e.g., technology burden). For these reasons (i.e., the macro-view and the specific compatibility and workload criteria), we use the Scapin and Bastien [14] criteria as a framework in our study.

Table 1:

Scapin and Bastien [14] usability criteria

| Usability criteria | Sub-criteria |
|---|---|
| 1. Guidance | Prompting |
| | Grouping by location |
| | Grouping by format |
| | Immediate feedback |
| | Legibility |
| 2. Workload | Conciseness |
| | Minimal actions |
| | Information density |
| 3. Explicit control | Explicit user actions |
| | User control |
| 4. Adaptability | Flexibility |
| | Users’ experience |
| 5. Error management | Error protection |
| | Quality of error messages |
| | Error correction |
| 6. Consistency | Consistency |
| 7. Significance of codes | Significance of codes |
| 8. Compatibility | Compatibility |

1.3. Context of the study

Using HFE methods and principles [7, 15], we designed a CDS to support pulmonary embolism (PE) diagnosis in the ED. PE, a blood clot in the lung, contributes to approximately 100,000 deaths in the US each year [16]. Diagnosis of PE is frequently delayed or missed and is especially challenging in the ED due to limited patient information and high time pressure. Despite the availability of numerous risk scores to support PE diagnosis, CT scans continue to be overused to diagnose PE, which is harmful to patients [17].

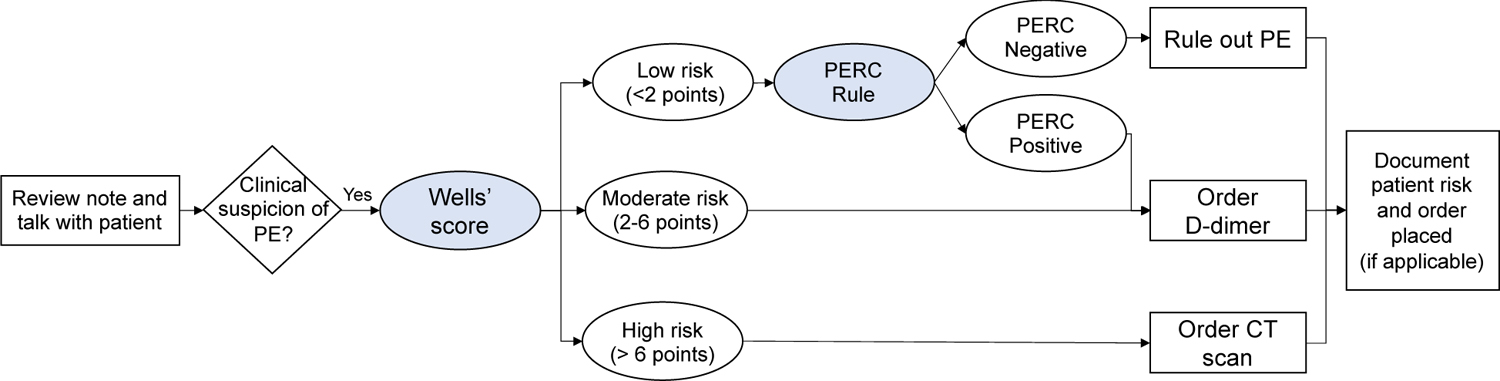

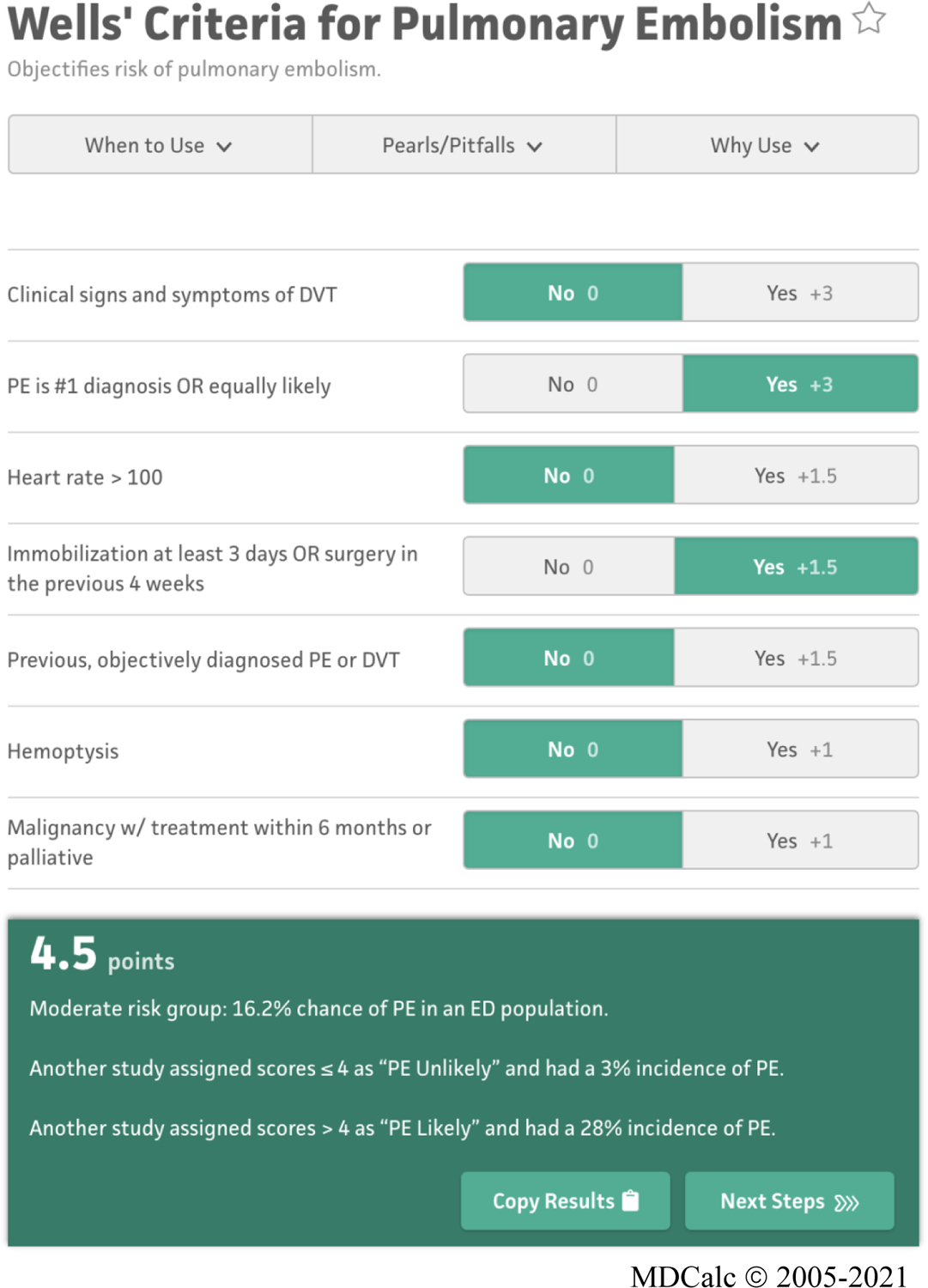

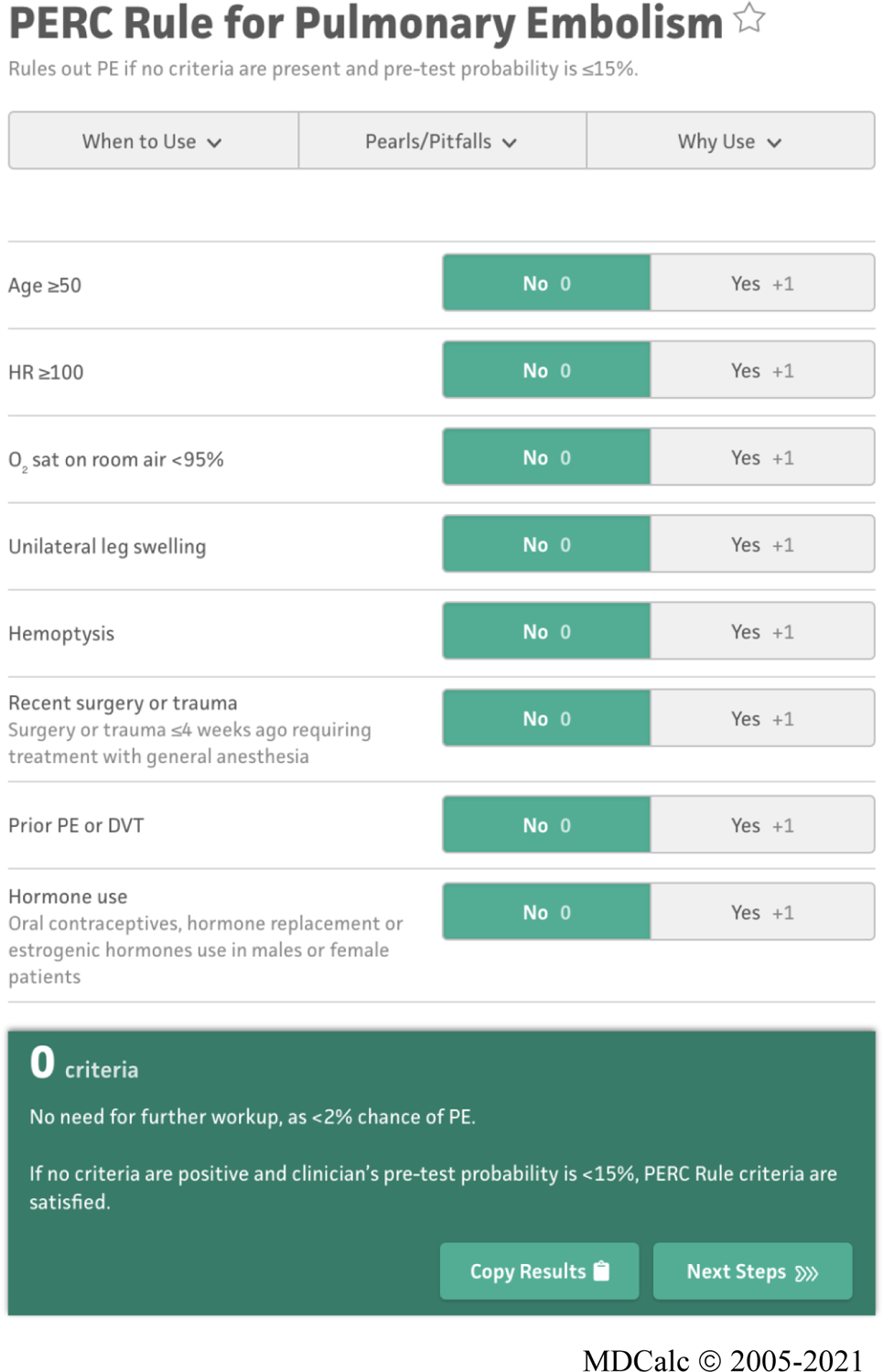

The HFE-based CDS, i.e., “PE Dx”, combines two risk scores, the Wells’ score [18] and the Pulmonary Embolism Rule-out Criteria (PERC) rule [19, 20], which are recommended by the American College of Physicians [19] to assess the risk of PE in patients aged 18 years or older with acute onset of new or worsening shortness of breath or chest pain. Figure 1 depicts the recommended workflow for PE workup. An interdisciplinary team designed the PE Dx through a thorough work system analysis, 9 participatory design sessions, and 2 focus groups [7]. We built the PE Dx in the EHR “playground” environment, a simulated environment that mirrors the actual EHR used at the hospital. We then conducted a group heuristic evaluation to identify additional usability flaws in the technology. The design of PE Dx integrated multiple HFE principles, such as minimizing workload and appropriate use of automation (see Figure 3 for the list of HFE design principles used for PE Dx) [7].

Figure 1:

American College of Physicians recommended workflow for PE workup [15, 19]

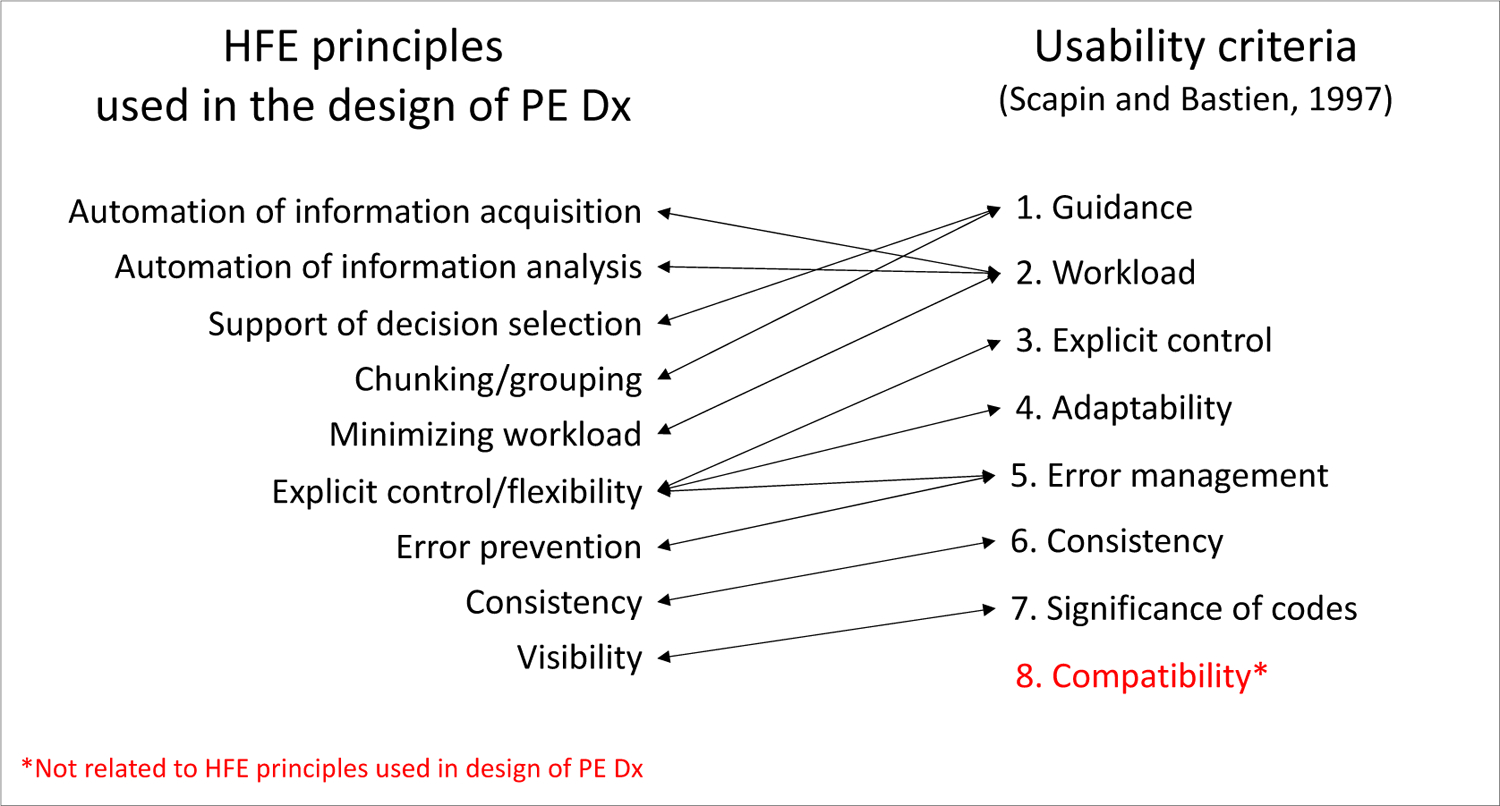

Figure 3:

HFE principles considered in the design of PE Dx and corresponding Scapin and Bastien [14] usability criteria

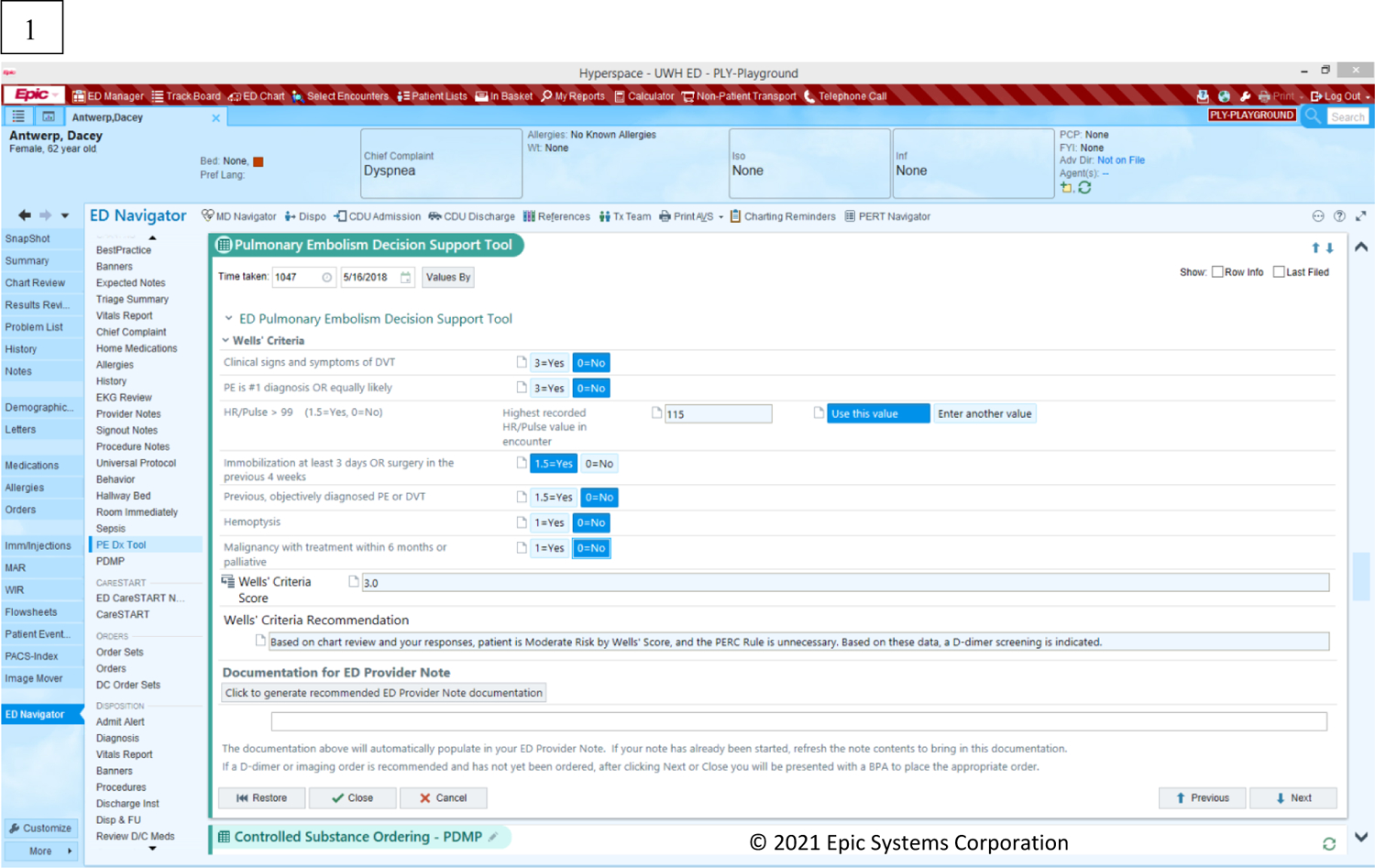

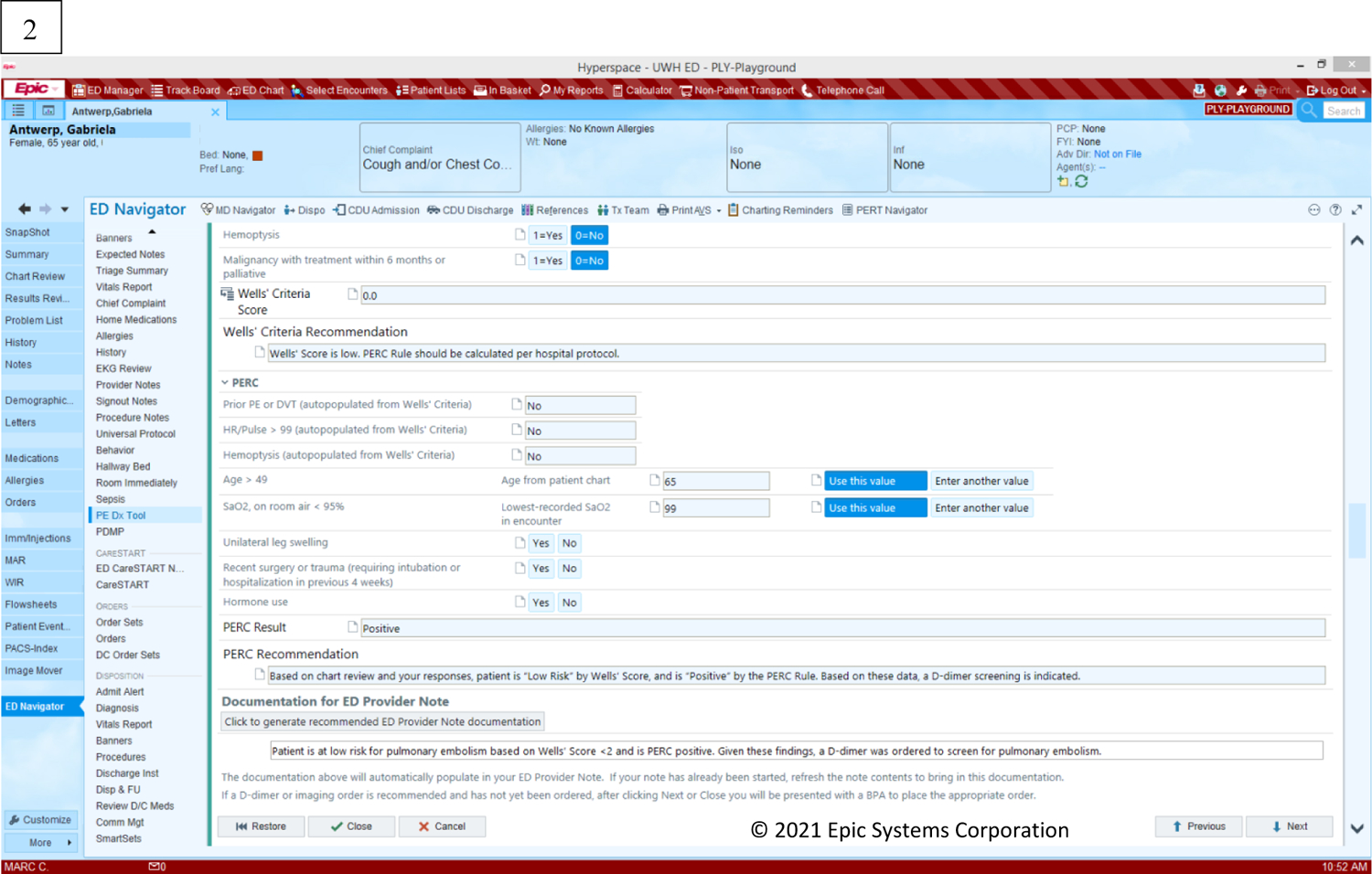

Figure 2 depicts the PE Dx screens in the EHR. Physicians access the PE Dx by clicking the “PE CDS” button in the ED Navigator section of the EHR. The PE Dx then opens and presents the Wells’ criteria, which the physician completes by selecting the yes/no toggle for each criterion. The PE Dx automatically populates patient data from the EHR (e.g., heart rate, age) and automatically selects the yes/no toggle corresponding to each value. For example, if a patient’s heart rate is 105, the “yes” button is automatically selected for the criterion “Heart rate > 100”. Once all the Wells’ criteria are complete, the PE Dx generates a patient-specific risk score. If the Wells’ score is medium or high (see Figure 1), the PE Dx supports ordering the recommended diagnostic test (e.g., D-dimer or CT scan). If the Wells’ score is low, the PERC criteria appear on the screen for the physician to complete. Finally, the PE Dx documents the diagnostic workup decision in the physician’s note.
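The screen logic just described can be read as a small decision procedure. The Python sketch below is illustrative only and is not the PE Dx build, which was configured inside the EHR. It assumes the commonly published Wells’ point values, a three-tier cut-off (low < 2, medium 2 to 6, high > 6), the eight standard PERC items, and a pathway in which a high score leads to CT pulmonary angiography, a medium score to a D-dimer, and a low score to the PERC rule (PERC positive leading to a D-dimer, PERC negative to no further testing). Figure 1 remains the authoritative description of the workflow, and its thresholds may differ. All identifiers (`ChartData`, `prefill_wells`, `recommend`, and the criterion keys) are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Assumed Wells' criteria for PE with commonly published point values.
WELLS_POINTS = {
    "clinical_signs_of_dvt": 3.0,
    "pe_most_likely_diagnosis": 3.0,
    "heart_rate_over_100": 1.5,
    "immobilization_or_recent_surgery": 1.5,
    "previous_pe_or_dvt": 1.5,
    "hemoptysis": 1.0,
    "malignancy": 1.0,
}

# Assumed PERC items; the rule is negative only if every item is absent.
PERC_CRITERIA = (
    "age_50_or_older",
    "heart_rate_100_or_more",
    "spo2_below_95",
    "unilateral_leg_swelling",
    "hemoptysis",
    "recent_surgery_or_trauma",
    "previous_pe_or_dvt",
    "hormone_use",
)


@dataclass
class ChartData:
    """Hypothetical subset of chart values the CDS could pull from the EHR."""
    age: int
    heart_rate: int
    spo2: float  # oxygen saturation, percent


def prefill_wells(chart: ChartData) -> dict:
    """Pre-select yes/no toggles for Wells' items derivable from the chart."""
    return {"heart_rate_over_100": chart.heart_rate > 100}


def prefill_perc(chart: ChartData) -> dict:
    """Pre-select yes/no toggles for PERC items derivable from the chart."""
    return {
        "age_50_or_older": chart.age >= 50,
        "heart_rate_100_or_more": chart.heart_rate >= 100,
        "spo2_below_95": chart.spo2 < 95,
    }


def _require_all(answers: dict, criteria) -> None:
    # A result is produced only once every toggle has been set.
    missing = [c for c in criteria if c not in answers]
    if missing:
        raise ValueError(f"unanswered criteria: {missing}")


def wells_score(answers: dict) -> float:
    _require_all(answers, WELLS_POINTS)
    return sum(points for c, points in WELLS_POINTS.items() if answers[c])


def wells_tier(score: float) -> str:
    if score > 6:
        return "high"
    if score >= 2:
        return "medium"
    return "low"


def recommend(wells_answers: dict, perc_answers: Optional[dict] = None) -> tuple:
    """Return (Wells' tier, suggested next step) under the assumed pathway."""
    tier = wells_tier(wells_score(wells_answers))
    if tier == "high":
        return tier, "CT pulmonary angiography"
    if tier == "medium":
        return tier, "D-dimer"
    if perc_answers is None:
        return tier, "complete PERC rule"  # low risk: PERC screen shown next
    _require_all(perc_answers, PERC_CRITERIA)
    if any(perc_answers[c] for c in PERC_CRITERIA):
        return tier, "D-dimer"  # PERC positive
    return tier, "no further PE testing"  # PERC negative
```

With the heart-rate example from the text (105 beats per minute) and every other Wells’ item answered “no”, `prefill_wells` selects “yes” for the heart-rate item, the score is 1.5 (low), and `recommend` returns the PERC step; completing PERC with the same chart values makes the rule positive (heart rate ≥ 100), so the suggested next step becomes a D-dimer. Pre-filled toggles are kept as ordinary entries in the answer dictionary, so a physician could overwrite them before scoring.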

Figure 2:

PE Dx CDS screen displays: (1) Wells’ criteria, (2) PERC rule

We performed a scenario-based simulation study to evaluate the usability of the PE Dx compared to the currently used risk-scoring website, ‘MDCalc’. MDCalc is a free medical reference website with point-of-care CDS for over 200 conditions, including the Wells’ score and PERC rule for PE. In the existing workflow, physicians review a patient’s chart and meet with a patient to discuss their symptoms. The physician then orders the appropriate diagnostic test (e.g., D-dimer, CT scan) either based on clinical gestalt of the patient’s risk or by using one or both of the Wells’ criteria and PERC rule on MDCalc via their phone or computer. When compared to MDCalc, PE Dx demonstrated higher usability in an experimental simulation-based evaluation [7]. In this study, we conduct an in-depth analysis of the usability of PE Dx based on qualitative interview data collected in the experimental evaluation. Our aim is to develop a deep understanding of the linkage between the HFE design principles used for PE Dx and the usability criteria proposed by Scapin and Bastien [14]; this analysis focuses on the identification of barriers and facilitators in the use of PE Dx and its integration in the work and workflow of emergency physicians.

2. Materials and methods

2.1. Setting and sample

The study took place from April to June 2018 in the ED of a large academic hospital in the US. Data were collected as part of a scenario-based simulation study evaluating the usability of PE Dx [7]. Thirty-two emergency medicine physicians participated in the study: 8 year 1 residents, 8 year 2 residents, 8 year 3 residents, and 8 attending physicians (see Table 2). A power calculation for the scenario-based simulation determined the sample size for the study (full sample justification in Carayon et al. [7]). We recruited physicians by advertising the study in email communications. The study was approved by the associated institutional review board.

Table 2:

Sample demographics

| Characteristic | n |
|---|---|
| Role | |
| Year 1 residents | 8 |
| Year 2 residents | 8 |
| Year 3 residents | 8 |
| Attending physicians | 8 |
| Age (years) | |
| 24–29 | 15 |
| 30–34 | 13 |
| 35–39 | 3 |
| 40–44 | 0 |
| 45–49 | 0 |
| 50–54 | 1 |
| Male (%) | 75% |

2.2. Data collection

At the end of the scenario-based simulation, we interviewed each physician to gather qualitative feedback on the usability barriers and facilitators of PE Dx. The lead HFE researcher performing the experiments conducted each interview. We asked physicians 3 questions: (1) What about using PE Dx together with the EHR interferes with your workflow? (2) What about using PE Dx together with the EHR fits your workflow? and (3) How does PE Dx compare to MDCalc? We audio-recorded and transcribed each interview. The 32 semi-structured interviews lasted on average 5 minutes (SD: 3 minutes; range: 2–15 minutes) for a total of 154 minutes. The audio-recordings produced a total of 91 pages of text.

2.3. Data analysis

To analyze the interview data, two HFE researchers performed a deductive content analysis [21] guided by the usability criteria of Scapin and Bastien [14]. First, one researcher coded 5 transcripts for barriers and facilitators of PE Dx and for the Scapin and Bastien [14] usability criteria. The two researchers discussed the coding and refined the codebook. Next, both researchers independently coded 2 transcripts and met to review the coding in a consensus-based process, updating the codebook to clarify any discrepancies found. The two researchers continued this process until all the transcripts were coded. They then re-coded the first 5 transcripts according to the finalized codebook. Finally, one researcher randomly selected two transcripts to re-code to verify that there was no researcher drift during the coding process. The final coded excerpts were exported from Microsoft Word into Microsoft Excel, where we analyzed the occurrence of barriers and facilitators for each usability criterion. We created a tab in Excel for each usability criterion containing the excerpts of barriers and facilitators coded for that criterion. One researcher reviewed the excerpts within each criterion to develop a comprehensive list of all the barriers and facilitators coded for each criterion (see Table 3).
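Counting barriers and facilitators per criterion, done here in Excel, is a simple group-and-count over the coded excerpts. A minimal Python sketch follows, assuming each coded excerpt is stored as a record carrying its criterion label and code type; the record layout (`CodedExcerpt`) and the function names are hypothetical and do not describe the study’s actual spreadsheet.

```python
from collections import Counter, defaultdict
from typing import NamedTuple

CODE_TYPES = ("barrier", "facilitator")


class CodedExcerpt(NamedTuple):
    """Hypothetical record for one coded interview excerpt."""
    transcript_id: int
    criterion: str  # one of the eight Scapin and Bastien criteria
    code_type: str  # "barrier" or "facilitator"
    text: str


def group_by_criterion(excerpts):
    """Analogue of one spreadsheet tab per criterion: criterion -> excerpts."""
    tabs = defaultdict(list)
    for e in excerpts:
        tabs[e.criterion].append(e)
    return dict(tabs)


def tally(excerpts):
    """Count barriers and facilitators for each usability criterion."""
    counts = Counter()
    for e in excerpts:
        if e.code_type not in CODE_TYPES:
            raise ValueError(f"unknown code type: {e.code_type!r}")
        counts[(e.criterion, e.code_type)] += 1
    criteria = sorted({criterion for criterion, _ in counts})
    # Counter returns 0 for absent (criterion, type) pairs.
    return {c: {t: counts[(c, t)] for t in CODE_TYPES} for c in criteria}
```

`group_by_criterion` plays the role of the per-criterion tabs, and `tally` yields the kind of per-criterion barrier and facilitator counts summarized in Figure 4. Rejecting unknown code types guards against typos in hand-entered labels.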

Table 3:

Description of usability barriers and facilitators in PE Dx

| Usability criteria | Sub-criteria | Facilitators | Barriers |
|---|---|---|---|
| 1. Guidance | Prompting | | |
| | Grouping by location | | |
| | Grouping by format | No facilitators reported | |
| | Immediate feedback | No facilitators reported | No barriers reported |
| | Legibility | No facilitators reported | |
| 2. Workload | Conciseness | | No barriers reported |
| | Minimal actions | | |
| | Information density | | No barriers reported |
| 3. Explicit control | Explicit user actions | | |
| | User control | | |
| 4. Adaptability | Flexibility | No facilitators reported | |
| | Users’ experience | No facilitators reported | No barriers reported |
| 5. Error management | Error protection | | |
| | Quality of error messages | No facilitators reported | No barriers reported |
| | Error correction | | No barriers reported |
| 6. Consistency | Consistency | | No barriers reported |
| 7. Significance of codes | Significance of codes | | |
| 8. Compatibility | Compatibility | | |

We compared the Scapin and Bastien [14] usability criteria to the HFE design principles used for PE Dx. Figure 3 depicts each HFE principle used in the design of PE Dx [7] and the corresponding Scapin and Bastien [14] usability criteria. The usability criterion shown in red (compatibility) did not align with any of the PE Dx design principles.

3. Results

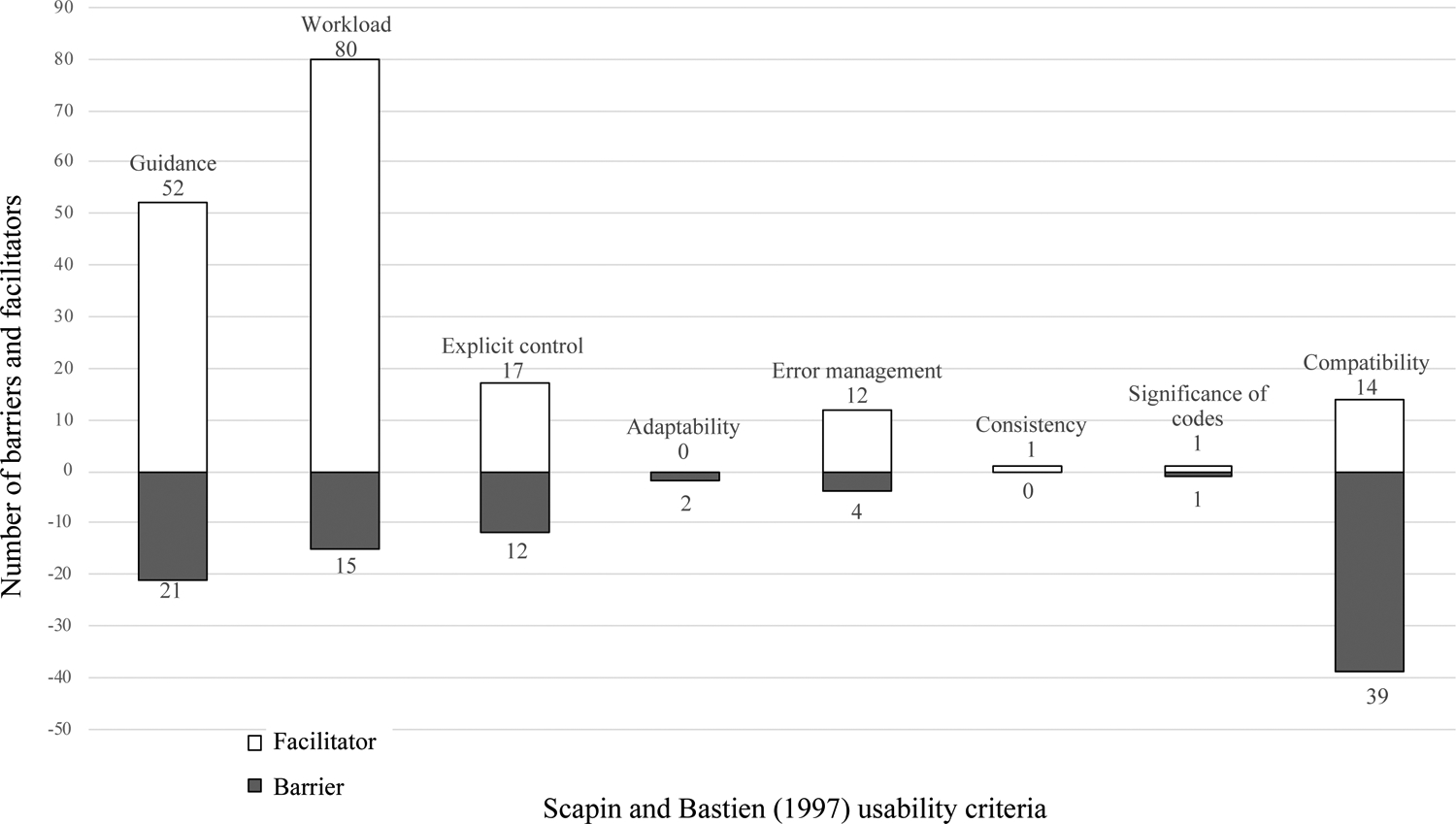

The 32 interview transcripts resulted in a total of 271 occurrences of the usability criteria with 94 (35%) and 177 (65%) occurrences of barriers and facilitators, respectively. A description of the barriers and facilitators for the eight usability criteria can be found in Table 3. The distribution of barriers and facilitators for each usability criterion can be found in Figure 4.

Figure 4:

Number of barriers and facilitators for each usability criterion

3.1. Usability criteria considered in PE Dx design by HFE principles

Seven of the eight Scapin and Bastien [14] usability criteria aligned with the HFE principles used in the design of PE Dx. We identified 218 occurrences of these usability criteria, with the majority (75%) coded as facilitators.

We identified 73 occurrences of the usability criterion guidance, with 21 barriers and 52 facilitators. Physicians liked that the CDS automatically recommended the next steps based on the patient’s risk score and provided documentation text that could be sent directly into the note. A resident explained, “I think that thing that pops up at the end is super helpful too, to be able to just like click ‘order’ at the decision point.” Conversely, physicians did not like that PERC-positive and PERC-negative results looked the same; this made it hard to distinguish whether a patient was PERC positive (and needed diagnostic testing) or PERC negative.

We identified 95 occurrences of the usability criterion workload, with 84% coded as facilitators. Physicians liked that PE Dx automatically populated some of the patient’s vital signs (e.g., heart rate) into the CDS, reducing the need for physicians to search for information. A year 2 resident explained: “Having it draw in the patient information really saved a lot of time too, to not have to go back and look it up or have to remember exactly what the numbers were”. Physicians thought that the PE Dx reduced the time required for ordering and documentation. A year 1 resident stated: “it’s really nifty. Especially the documentation thing is so awesome. It’s always the thing that takes the most time in our jobs”.

Explicit control resulted in 12 occurrences of barriers and 17 occurrences of facilitators. Physicians liked that they could edit the automatically populated vital signs and that they had the choice not to order the recommended diagnostic test. Additionally, physicians liked that the PE Dx did not pop up as an alert; rather, the physician had to actively choose to use PE Dx.

We identified 12 occurrences of facilitators relating to error management. Physicians believed that the order support functionality in the CDS would reduce the chance that they would forget to order a PE diagnostic test. Physicians also thought the CDS would prevent errors because it auto-populates vital signs, which can reduce the chance of missing significant vital signs in the chart. A resident explained: “as somebody who perhaps does not check vital signs as closely as I ought to, in the one case where the single pulse ox [oxygen saturation] of 94% that was slightly low, to have blown in automatically, that was helpful to me”. However, some physicians mentioned that they might not double-check whether the correct data had been automatically populated, which was a barrier to error management.

The usability criteria adaptability, consistency, and significance of codes resulted in only 2, 1, and 2 occurrences (barriers and facilitators combined), respectively (see the description in Table 3).

3.2. Usability criterion of compatibility not considered in PE Dx design

One of the usability criteria, compatibility, was not considered in the design of PE Dx (see Figure 3). We identified 53 occurrences of the usability criterion compatibility, with 74% of these coded as barriers. Physicians did not like that the CDS forced them to use Wells’ followed by PERC (see Figure 1 for the guideline-recommended workflow) and instead preferred to use one risk score or the other (i.e., Wells’ or PERC). A year 3 resident described “being forced to use the Wells’ criteria, which in my personal practice I don’t use as much. I use the PERC almost all the time, almost every shift, the Wells’ criteria I don’t”. Additionally, some physicians determined a patient’s risk of PE before leaving the patient’s room, where the CDS was not available; for these physicians, the CDS was incompatible with their workflow.

Some physicians placed all their orders for a patient together at one time and then used risk scores to verify their decision. The PE Dx order support functionality did not fit this workflow because it supported ordering only the PE diagnostic test; a resident explained: “I tend to order, as we say it, ‘a la carte’…Normally, I would type in… all the things I’m trying to rule out, particularly the blood work, all at one time. So, it’s just a little bit of a change in my workflow”.

Another barrier was that PE Dx did not fit the workflow of resident and attending teams, in which the resident assesses the patient’s PE risk, discusses it with the attending, and then places the order and documents the decision based on the resident-attending discussion. A resident explained: “when it pops up, the option to, you know, ‘do you want to order a CT’, or ‘do you want to order an MRI’? Right then, I was like, well, I have to cancel out of this and check with an attending and see where we’re at with that. So that kind of wiped out what I’d done”. Finally, physicians said that they used MDCalc to check many potential diagnoses for a patient, not just PE; therefore, using PE Dx in the EHR did not fit their overall workflow, which included concurrent consideration of multiple diagnoses for the patient. Physicians also described several facilitators related to compatibility. For instance, the fact that the CDS is integrated within the EHR made it easy to fit the CDS into their current workflow.

3.3. Residents versus attending physicians

We compared the barriers and facilitators identified by residents and attending physicians. We found that residents described more facilitators relating to error management than attending physicians did. For example, residents liked that the CDS ordering prompt helped them remember to place an order (e.g., CT scan) for the patient. Residents also said that the CDS helped confirm their clinical gestalt and made sure they took appropriate actions. They also liked that the auto-population of vital signs ensured they did not make a mistake in entering the values. These factors were less important to attending physicians, who have more clinical expertise and experience. We also found a difference between residents and attendings relating to the usability criterion compatibility. Residents described a barrier to compatibility in that the CDS did not support their collaborative teamwork with attending physicians; attending physicians did not describe this barrier. We did not identify any other major differences between residents and attending physicians.

4. Discussion

Through a qualitative analysis of debrief interview data collected from 32 emergency medicine physicians as part of a scenario-based simulation, we identified 271 occurrences of usability barriers (94) and facilitators (177) of an HFE-based CDS. We categorized the barriers and facilitators according to the Scapin and Bastien [14] usability criteria, which we compared to the HFE principles used in the PE Dx design process. Seven of the eight usability criteria aligned with HFE principles used in the design process.

4.1. Benefits of HFE design principles

We provide evidence that HFE principles affect the usability of CDS. In our data, the usability criteria addressed by the HFE design principles were mostly coded as facilitators (75%); in contrast, the one usability criterion not addressed by these principles was mostly coded as barriers (74%). This contrast demonstrates the importance of explicitly using HFE principles in the design of health IT: when they are explicitly considered during design, the usability of the technology is enhanced. This study expands on previous work [9–11] by demonstrating how the use of HFE approaches in the design of CDS mitigates usability flaws.
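The 75% versus 74% contrast can be traced back to the counts reported in the Results. The short Python check below assumes that the reported percentages are rounded to whole numbers; under that assumption, the compatibility split (39 barriers, 14 facilitators) is inferred from the rounded 74% rather than reported directly, and the remaining counts follow by subtraction.

```python
def pct(part: int, whole: int) -> int:
    """Percentage rounded to the nearest whole number."""
    return round(100 * part / whole)


# Counts reported in the Results section.
TOTAL, BARRIERS, FACILITATORS = 271, 94, 177
COMPAT_TOTAL = 53  # compatibility occurrences, reported as 74% barriers

# Integer barrier counts for compatibility consistent with a rounded 74%.
compat_candidates = [b for b in range(COMPAT_TOTAL + 1)
                     if pct(b, COMPAT_TOTAL) == 74]
compat_barriers = compat_candidates[0]
compat_facilitators = COMPAT_TOTAL - compat_barriers

# The seven criteria that aligned with the HFE design principles.
aligned_total = TOTAL - COMPAT_TOTAL
aligned_barriers = BARRIERS - compat_barriers
aligned_facilitators = FACILITATORS - compat_facilitators

print(compat_candidates, compat_facilitators)
print(aligned_total, aligned_barriers, aligned_facilitators,
      pct(aligned_facilitators, aligned_total))
```

Running it prints `[39] 14` and then `218 55 163 75`: only 39 of 53 rounds to 74%, and the aligned total of 218 matches the figure given in Section 3.1. The overall 35% and 65% split follows the same way from 94/271 and 177/271.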

Building on the work of Carayon et al. [7], we provide a deeper understanding of how PE Dx does, and does not, support the workflow of physicians. The identified barriers and facilitators to usability can inform the design of future CDS. For instance, automatically populating data into CDS can reduce workload and errors in data entry; however, designers should allow clinicians to edit automatically populated data to ensure users have explicit control. Similarly, designers should consider how the CDS technology supports the workflow of clinicians; for instance, users should be prompted to complete next steps (e.g., placing orders, documenting the decision-making process) based on the calculated risk score.

We demonstrate the importance of minimizing workload in the design of CDS. Of all the usability criteria, workload was the one physicians discussed most frequently. We explicitly considered workload in the design of PE Dx, resulting in 80 facilitators compared to 15 barriers related to workload. This study demonstrates the importance of efficiency and minimizing workload in CDS design, especially in the fast-paced ED. Systematic consideration of the usability criterion workload during the design of CDS may mitigate physician workload and stress relating to technology.

4.2. Workflow integration or compatibility

We found inadequate consideration of workflow integration in the design of the HFE-based CDS. We did not explicitly consider the usability criterion compatibility in our design process, as we focused on the PE diagnostic pathway; this usability criterion resulted in the highest number of barriers. Compatibility represents a broader, macro-view of the technology’s interaction with the work system and workflow of users. In essence, the compatibility usability criterion represents integration of the technology in clinical workflow. Our findings demonstrate the challenges of workflow integration when designing health IT. For instance, we identified a barrier to using the PE Dx due to a misfit of the technology with attending-resident teamwork. Designers of CDS should focus not only on supporting the tasks of an individual but also on the broader process and teamwork involved in providing patient care [22]. We identified another barrier to using the PE Dx, as physicians reported that they place multiple orders for a patient at one time rather than only the PE diagnostic test (e.g., CT scan). Because our CDS only supported ordering one test at a time, it did not fit physician workflow. To design usable health IT, it is important that the technology fits within the broader work processes, including the work of teams. Our findings emphasize the importance of workflow integration in health IT design.

Previous studies have frequently discussed challenges integrating health IT in clinical workflow [23, 24], and workflow integration is commonly cited as a reason for poor adoption and use of CDS [3, 25, 26]. Yet, workflow integration is poorly defined and conceptualized and is therefore challenging to consider systematically during the design of CDS. Goodhue [27] proposed the task-technology fit (TTF) model, which specifies that task characteristics, technology characteristics, and individual characteristics interact to produce a task-technology fit, which in turn influences both utilization of the technology by users and task performance. This model has been adapted [28] and applied to identify barriers to CDS adoption [23]. More recently, Salwei et al. [29] proposed that, in addition to task, technology, and individual characteristics, workflow integration relies on the technology’s fit with the physical environment and organizational context (i.e., the 5 elements of the Work System model [30]). For instance, we identified a barrier to using the PE Dx because the CDS is not available while the physician is in the room talking with the patient; this is an example of how the technology does not fit with the task and physical environment. Salwei et al. [29] developed a conceptual model of workflow integration that includes 4 dimensions: TIME, FLOW, SCOPE of patient journey, and LEVEL. Each of these dimensions includes sub-dimensions that specify the multiple elements that influence workflow integration of CDS. Future research is needed to apply these concepts in the design of CDS and determine how they influence workflow integration; this work could leverage the workflow integration checklist developed by Salwei et al. [29].

4.3. Implications to the design of health IT

This study presents implications for the design of health IT. First, future research should apply the Scapin and Bastien [14] usability criteria throughout iterative cycles of health IT design to continually improve the usability and integration of the technology in clinical workflows. Because these usability criteria integrate both micro- and macro-HFE design considerations, they are more likely to yield benefits when the technology is actually implemented. Next, in addition to usability criteria focused on the interface, the usability criterion compatibility should be explicitly considered during the development of health IT. Consideration of this design principle may reduce usability barriers and improve integration of health IT in clinical workflows. Finally, future studies should conduct debrief interviews both before and after implementation of the technology. These qualitative interview data can be systematically analyzed to identify CDS design improvements before implementation as well as after implementation, once the technology is in use.

One limitation is that these data come from one ED of a US academic health system; the results may not be applicable to other settings. Another limitation of the study is that the interviews were short (~5 minutes). Although the interviews were short, the data represent the view of 32 emergency physicians with different roles and experience, which enabled us to gather diverse feedback on the CDS usability. Another limitation is that the data come from physicians interacting with the CDS in a simulated setting. The barriers and facilitators of PE Dx may be different when the technology is used over a longer period of time and in a real clinical setting. Future research should also evaluate the implementation of the HFE-based CDS to identify the barriers and facilitators of the CDS in the real clinical environment [29].

5. Conclusion

CDS has the potential to improve patient care; however, previous implementations have faced challenges due to poor usability and lack of integration in clinician workflow. This study provides evidence that consideration of HFE principles during the design of CDS can improve the usability of the technology, and highlights the importance of applying explicit HFE criteria, such as Scapin and Bastien’s, to ensure all relevant factors are considered. While the results demonstrate that systematic consideration of HFE design principles produces more facilitators than barriers, we still found multiple barriers related to compatibility, i.e., a macro-HFE design principle that was not systematically integrated in the design process. Incorporating the usability criterion of compatibility in CDS design can further support consideration of workflow integration and therefore improve the technology’s usability and integration in clinical workflow. Designing CDS technologies that are usable and integrated in the clinical workflow is a difficult endeavor, which can benefit from systematic consideration of multiple HFE design principles. Further research should continue to address this challenge as well as explore how HFE design principles can be integrated in a continuous technology design process.

Manuscript highlights.

Systematic consideration of HFE design principles can improve the usability of CDS

Consideration of workflow integration (or compatibility) should be included during design to improve CDS usability and reduce barriers to use; we outline specific factors that influence CDS workflow integration

The Scapin and Bastien [14] usability criteria can support consideration of workflow integration during a technology’s design


Acknowledgements

This research was made possible by funding from the Agency for Healthcare Research and Quality (AHRQ) and the Patient-Centered Outcomes Research Institute (PCORI), grants K12HS026395, R01HS022086, and K08HS024558; by the Clinical and Translational Science Award (CTSA) program through the NIH National Center for Advancing Translational Sciences (NCATS), grant UL1TR000427; and by the National Library of Medicine Institutional Training Program in Biomedical Informatics and Data Science through the NIH, grant T15LM007450–19. The content is solely the responsibility of the authors and does not necessarily represent the official views of AHRQ, PCORI, or the NIH.

Appendix 1: Scapin and Bastien [14] usability criteria, sub-criteria, and definitions

| Usability criteria | Sub-criteria | Definition |
|---|---|---|
| 1. Guidance: “means available to advise, orient, inform, instruct, and guide the users throughout their interactions with a computer” | Prompting | Means available to guide the users towards making specific actions |
| | Grouping and distinguishing items by location | Relative positioning of items in order to indicate whether or not they belong to a given class or to indicate differences between classes |
| | Grouping and distinguishing items by format | Graphical features that indicate whether or not items belong to a given class or to indicate differences between classes |
| | Immediate feedback | System responses to users’ actions |
| | Legibility | Lexical characteristics of the information presented on the screen that may hamper or facilitate the reading of the information |
| 2. Workload: “all interface elements that play a role in reducing the users’ perceptual or cognitive load, and in increasing the dialogue efficiency” | Conciseness | Perceptual and cognitive workload for individual inputs or outputs |
| | Minimal actions | Workload with respect to the number of actions necessary to accomplish a goal or task |
| | Information density | Workload from a perceptual and cognitive point of view with regard to the whole set of information presented to the users rather than each individual item |
| 3. Explicit control: “concerns both the system processing of explicit user actions, and the control users have on the processing of their actions by the system” | Explicit user actions | Relationship between the computer processing and the actions of the users |
| | User control | Users should always be in control of the system processing |
| 4. Adaptability: “its capacity to behave contextually and according to the users’ needs and preferences” | Flexibility | Means available to the users to customize the interface in order to take into account their working strategies and/or their habits and task requirements |
| | Users’ experience | Means available to take into account the level of user experience |
| 5. Error management: “means available to prevent or reduce errors and to recover from them when they occur” | Error protection | Means available to detect and prevent data entry errors, command errors, or actions with destructive consequences |
| | Quality of error messages | Phrasing and content of error messages |
| | Error correction | Means available to the users to correct their errors |
| 6. Consistency: “the way interface design choices (codes, naming, formats, procedures, etc.) are maintained in similar contexts, and are different when applied to different contexts” | Consistency | Interface design choices (codes, naming, formats, procedures) are maintained in similar contexts and are different when applied to different contexts |
| 7. Significance of codes: “qualifies the relationship between a term and/or a sign and its reference” | Significance of codes | Relationship between a term and/or a sign and its reference |
| 8. Compatibility: “refers to the match between users’ characteristics (memory, perceptions, customs, skills, age, expectations, etc.) and task characteristics on the one hand, and the organization of the output, input, and dialogue for a given application, on the other hand” | Compatibility | Match between users’ characteristics (memory, perceptions, customs, skills, age, expectations) and task characteristics on the one hand, and the organization of the output, input, and dialogue for a given application on the other hand |

Appendix 2: MDCalc interface (www.mdcalc.com)

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Conflicts of interest

The author(s) declare no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Authorship statement

Conception and design of study: P. Carayon, P.L.T. Hoonakker, D. Wiegmann, M.S. Pulia, B.W. Patterson

Acquisition of data: M.E. Salwei, P.L.T. Hoonakker

Analysis and/or interpretation of data: M.E. Salwei, P. Carayon

Drafting the manuscript: M.E. Salwei

Revising the manuscript: M.E. Salwei, P. Carayon, P.L.T. Hoonakker, D. Wiegmann, M.S. Pulia, B.W. Patterson

Approval of final version of the manuscript: M.E. Salwei, P. Carayon, P.L.T. Hoonakker, D. Wiegmann, M.S. Pulia, B.W. Patterson

Declaration of interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

1. Hunt DL, et al., Effects of computer-based clinical decision support systems on physician performance and patient outcomes: a systematic review. JAMA, 1998. 280(15): p. 1339–1346.

2. Patterson BW, et al., Scope and Influence of Electronic Health Record–Integrated Clinical Decision Support in the Emergency Department: A Systematic Review. Annals of Emergency Medicine, 2019.

3. Tcheng JE, et al., Optimizing Strategies for Clinical Decision Support: Summary of a Meeting Series. Washington, DC: National Academy of Medicine, 2017.

4. National Academies of Sciences, Engineering, and Medicine, Taking Action Against Clinician Burnout: A Systems Approach to Professional Well-being. 2019: Washington, DC.

5. The Office of the National Coordinator for Health Information Technology, Strategy on Reducing Regulatory and Administrative Burden Relating to the Use of Health IT and EHRs. 2020.

6. Russ A, et al., Applying human factors principles to alert design increases efficiency and reduces prescribing errors in a scenario-based simulation. Journal of the American Medical Informatics Association, 2014. 21(e2): p. e287–e296.

7. Carayon P, et al., Application of human factors to improve usability of clinical decision support for diagnostic decision-making: a scenario-based simulation study. BMJ Quality & Safety, 2020. 29(4): p. 329–340.

8. International Ergonomics Association (IEA), What Is Ergonomics? 2021. Available from: https://iea.cc/what-is-ergonomics/.

9. Marcilly R, et al., Usability flaws of medication-related alerting functions: A systematic qualitative review. Journal of Biomedical Informatics, 2015. 55: p. 260–271.

10. Marcilly R, et al., Usability Flaws in Medication Alerting Systems: Impact on Usage and Work System. Yearbook of Medical Informatics, 2015. 10(1): p. 55–67.

11. Schiro J, et al., Applying a Human-Centered Design to Develop a Patient Prioritization Tool for a Pediatric Emergency Department: Detailed Case Study of First Iterations. JMIR Human Factors, 2020. 7(3): p. e18427.

12. International Organization for Standardization, ISO 9241-11 Ergonomics of Human-System Interaction, Part 11: Usability: Definitions and Concepts. 2018, ISO: Geneva, Switzerland.

13. Zhang J, et al., Using usability heuristics to evaluate patient safety of medical devices. Journal of Biomedical Informatics, 2003. 36(1–2): p. 23–30.

14. Scapin DL and Bastien JC, Ergonomic criteria for evaluating the ergonomic quality of interactive systems. Behaviour & Information Technology, 1997. 16(4–5): p. 220–231.

15. Hoonakker PL, et al., The Design of PE Dx, a CDS to Support Pulmonary Embolism Diagnosis in the ED. Context Sensitive Health Informatics: Sustainability in Dynamic Ecosystems, 2019. 265: p. 134.

16. Khan F, et al., Venous thromboembolism. The Lancet, 2021. 398: p. 64–77.

17. Kline JA, et al., Outcomes and radiation exposure of emergency department patients with chest pain and shortness of breath and ultralow pretest probability: a multicenter study. Annals of Emergency Medicine, 2014. 63(3): p. 281–288.

18. Wells PS, et al., Excluding pulmonary embolism at the bedside without diagnostic imaging: management of patients with suspected pulmonary embolism presenting to the emergency department by using a simple clinical model and d-dimer. Annals of Internal Medicine, 2001. 135(2): p. 98–107.

19. Raja AS, et al., Evaluation of patients with suspected acute pulmonary embolism: Best practice advice from the Clinical Guidelines Committee of the American College of Physicians. Annals of Internal Medicine, 2015. 163(9): p. 701–711.

20. Kline JA, Peterson CE, and Steuerwald MT, Prospective Evaluation of Real-time Use of the Pulmonary Embolism Rule-out Criteria in an Academic Emergency Department. Academic Emergency Medicine, 2010. 17(9): p. 1016–1019.

21. Elo S and Kyngäs H, The qualitative content analysis process. Journal of Advanced Nursing, 2008. 62(1): p. 107–115.

22. Carayon P and Hoonakker P, Human Factors and Usability for Health Information Technology: Old and New Challenges. Yearbook of Medical Informatics, 2019. 28(1): p. 71–77.

23. Lesselroth BJ, et al., Addressing the sociotechnical drivers of quality improvement: a case study of post-operative DVT prophylaxis computerised decision support. Quality and Safety in Health Care, 2011: p. qshc.2010.042689.

24. Press A, et al., Usability testing of a complex clinical decision support tool in the emergency department: lessons learned. JMIR Human Factors, 2015. 2(2): p. e14.

25. Bennett P and Hardiker NR, The use of computerized clinical decision support systems in emergency care: a substantive review of the literature. Journal of the American Medical Informatics Association, 2016. 24(3): p. 655–668.

26. Jun S, et al., Point-of-care Cognitive Support Technology in Emergency Departments: A Scoping Review of Technology Acceptance by Clinicians. Academic Emergency Medicine, 2018. 25(5): p. 494–507.

27. Goodhue DL and Thompson RL, Task-technology fit and individual performance. MIS Quarterly, 1995: p. 213–236.

28. Ammenwerth E, Iller C, and Mahler C, IT-adoption and the interaction of task, technology and individuals: a fit framework and a case study. BMC Medical Informatics and Decision Making, 2006. 6(1): p. 1–13.

29. Salwei ME, et al., Workflow integration analysis of a human factors-based clinical decision support in the emergency department. Applied Ergonomics, 2021. 97: p. 1–16.

30. Smith MJ and Carayon-Sainfort P, A balance theory of job design for stress reduction. International Journal of Industrial Ergonomics, 1989. 4(1): p. 67–79.