Abstract

Background

Recent research recommends that epi-transcriptome regulation through post-transcriptional RNA modifications is essential for all sorts of RNA. Exact identification of RNA modification is vital for understanding their purposes and regulatory mechanisms. However, traditional experimental methods of identifying RNA modification sites are relatively complicated, time-consuming, and laborious.

Machine learning approaches have been applied in the procedures of RNA sequence features extraction and classification in a computational way, which may supplement experimental approaches more efficiently. Recently, convolutional neural network (CNN) and long short-term memory (LSTM) have been demonstrated achievements in modification site prediction on account of their powerful functions in representation learning. However, CNN can learn the local response from the spatial data but cannot learn sequential correlations. And LSTM is specialized for sequential modeling and can access both the contextual representation but lacks spatial data extraction compared with CNN. There is strong motivation to construct a prediction framework using natural language processing (NLP), deep learning (DL) for these reasons.

Results

This study presents an ensemble multiscale deep learning predictor (EMDLP) to identify RNA methylation sites in an NLP and DL way. It organically combines the dilated convolution and Bidirectional LSTM (BiLSTM), which helps to take better advantage of the local and global information for site prediction.

The first step of EMDLP is to represent the RNA sequences in an NLP way. Thus, three encodings, e.g., RNA word embedding, One-hot encoding, and RGloVe, which is an improved learning method of word vector representation based on GloVe, are adopted to decipher sites from the viewpoints of the local and global information. Then, a dilated convolutional Bidirectional LSTM network (DCB) model is constructed with the dilated convolutional neural network (DCNN) followed by BiLSTM to extract potential contributing features for methylation site prediction. Finally, these three encoding methods are integrated by a soft vote to obtain better predictive performance. Experiment results on m1A and m6A reveal that the area under the receiver operating characteristic(AUROC) of EMDLP obtains respectively 95.56%, 85.24%, and outperforms the state-of-the-art models. To maximize user convenience, a user-friendly webserver for EMDLP was publicly available at http://www.labiip.net/EMDLP/index.php (http://47.104.130.81/EMDLP/index.php).

Conclusions

We developed a predictor for m1A and m6A methylation sites.

Supplementary Information

The online version contains supplementary material available at 10.1186/s12859-022-04756-1.

Keywords: RNA modification site, Deep learning, Natural language processing, Predictor

Background

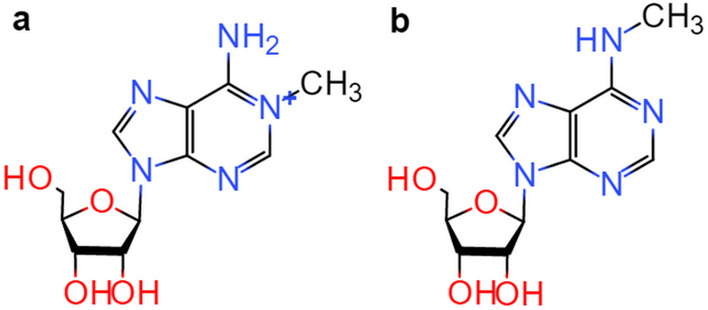

RNA molecules’ functional diversity is enriched by post-transcriptional RNA modifications, which regulate all stages of RNA life [1]. Up to now, there are around 160 different forms of RNA modifications that have been discovered [2], including N1-methyladenosine(m1A), N6-methyladenosine(m6A), 5-methylcytosine(m5C), N2-methylguanosine(m2G), 7-methylguanosine(m7G) [3, 4], etc. Among them, m1A modification is a prevalent RNA modification, which occurs on the nitrogen-1 position of the adenine base attached with a methyl group [5], as shown in Fig. 1a. It’s linked to problems with the respiratory chain, neurodevelopmental regression, and mediate antibiotic resistance bacteria, etc. [6–8]. Another modification affecting adenine is m6A modification, the most abundant modification in mammals, which occurs on the nitrogen-6 position of the adenosine base [9], as shown in Fig. 1b. It has a profound impact on human growth and disease [10]. The adenosine usually undergoes m1A and m6A [11]. Interestingly, m1A is also known to undergo Dimroth rearrangement to m6A under alkaline conditions [11]. Therefore, it is important to accurately identify m1A and m6A modification sites to uncover the mechanisms and functions of those modifications [12].

Fig. 1.

Chemical structures of modifications. a m1A modification. b m6A modification

Many experimental methods for identifying m1A and m6A modification sites have been constructed with the significant advances in high-throughput sequencing technology, such as m6A-CLIP [13], m6A-miCLIP [14], m1A-seq [15], m1A-ID-seq [11], etc. However, the experimental methods are expensive and time-consuming, which limit their extensive use [16]. Fortunately, various computational methods have become powerful supplements in this area.

Most machine learning methods designed for site prediction from sequences usually first extracted features based on human-understood feature methods, followed by a classifier to predict whether the site is a methylation site or not. For example, RAMPred extracted features based on nucleotide chemical properties (NCP), nucleotide composition (NC), and adopted the support vector machine (SVM) to predict the m1A methylation site for the first time [17]. iRNA-3typeA extracted features based on NCP, accumulated nucleotide frequency(ANF), and adopted SVM to predict m1A, m6A, and A-to-I modification sites [18]. iMRM extracted features based on NCP, NC, One-hot encoding, Dinucleotide Binary Encoding (DBE), Nucleotide Density (ND), Dinucleotide physicochemical properties (DPCP) and adopted eXtreme Gradient Boosting(XGboost) to predict m1A, m6A, m5C, and A-to-I modification sites, whose performance was superior to existing methods [19]. M6AMRFS extracted features based on DBE, ANF, used the F-score algorithm combined with Sequential Forward Search(SFS) to raise feature representation, and employed XGBoost to predict m6A site [20]. RNAMethPre extracted the features of the flanking sequences, the local secondary structure data, and the relative position data first, then adopted SVM to predict m6A methylation site with satisfactory performance [21]. SRAMP combines three random forest classifiers by exploiting One-hot encoding, K-nearest neighbor encoding, and Nucleotide pair spectrum encoding to predict m6A sites [22]. RFAthM6A extracted features based on four encoding methods, including Knucleotide frequencies (KNF), position-specific nucleotide sequence profile (PSNSP), Kspaced nucleotide pair frequencies (KSNPF), and position-specific dinucleotide sequence profile (PSDSP), respectively, then built four random forest models, which were competitive compared with AthMethPre, M6ATH, and RAM-NPPS [23]. WHISTLE adds 35 genomic features in addition to integrating conventional sequence features and predicts m6A methylation by SVM [24], which significantly improved compared to other computational approaches. However, genomic features are not always available when only a few RNA sequences are provided to predict m6A methylation. These conclusions show that extracted features is extremely critical to the final prediction.

It is well known that RNA-seq contains rich biometric information. Thus, the Rational representation of RNA sequences becomes even more critical. To address this problem, representation learning of sequences by natural language processing (NLP) has attracted a lot of attention [25], where an RNA sequence is regarded as a sentence, and a k-monomeric unit (k-mer) is regarded as a word, has gained great traction [26, 27]. Compared with conventional machine learning methods, most of the deep learning(DL) models can be divided into three parts: first, learning input data representations by NLP models [28]; second, composing over the word vectors that have been learned [29]; third, classing by a classifier to predict whether or not the site is a methylation site.

By far, some prediction methods using NLP and DL networks have been developed to predict m6A or m1A sites. Among them, Gene2Vec [30], DeepPromise [12], and EDLm6Apred [16] were the most representative and advanced methods for methylation site prediction. Specifically, Gene2Vec was developed to predict m6A site based on Word2vec [31] and convolutional neural network (CNN). DeepPromise adopted CNN and integrated enhanced nucleic acid content (ENAC) [32], RNA word embedding [33], and One-hot encoding [20, 34] features to identify m1A and m6A sites. EDLm6Apred adopted Word2vec, One-hot encoding, RNA word embedding, and BiLSTM to predict m6A sites. However, the existing methods have the following shortcomings. As is known, from the perspective of NLP, ENAC, One-hot, and RNA word embedding focused on the local semantic information [16] but ignored the context and global information. Word2vec encoding considered the context window information, ignoring the global information [35]. From the perspective of DL, CNN can learn the local response from the spatial data [25]. The different scale of the convolution kernel impacts the network's learning ability. Gene2Vec [30] and DeepPromise [12] directly used CNN composed of a single-scale convolution kernel, which might lead to incomplete representation learning of sequences [36]. The missing information in both methods may be important to the final site prediction. In addition, CNN has no memory function and lacks the ability to learn sequential correlations [25]. On the contrary, EDLm6Apred [16] presented a deep BiLSTM network to address the above issue, which simultaneously accessed context information. However, BiLSTM lacks spatial data extraction compared with CNN and needs a high training time [37, 38].

Consider the above questions. This paper proposes EMDLP to identify RNA methylation sites in an NLP and DL way. Specifically, One-hot encoding, RNA word embedding, and RGloVe were initially used to encode the sequences. Secondly, the DCB model was constructed with DCNN followed by BiLSTM to extract potential contributing features for methylation site prediction. Third, Three predictors were constructed based on the DCB model by the three feature encoding methods above. Finally, EMDLP was formulated by a soft vote with average predicted probabilities to use the three predictors to obtain better predictive performance. The results showed that the performance of the EMDLP model outperformed the state-of-the-art methods such as DeepPromise [12] and EDLm6Apred [16] in independent tests.

Results

Evaluation metrics

To estimate the prediction of the models, we adopted widely used binary classifier evaluation metrics, including Sensitivity(Sn, Recall), Specificity(Sp), Accuracy(Acc), Precision(Pre), F1 score (F1), Matthews correlation coefficient(MCC), Area under the receiver operating characteristic(AUROC), and Area under the precision-recall curve (AUPRC). Sn, Sp, Acc, Pre, F1, MCC are defined as follows:

| 1 |

| 2 |

| 3 |

| 4 |

| 5 |

| 6 |

where TP refers to true positive, TN refers to true negative, FP refers to false positive, and FN refers to false negative. In addition, the AUROC and AUPRC values are calculated based on the receiver operation curve (ROC) and the precision-recall curve (PRC), respectively. All the metric values range from 0 to 1 except for the MCC value, which ranges lies in [− 1, + 1], with a higher value indicating better performance.

Results analysis

This paper first examined the performance of RGloVe and GloVe on different sliding window sizes. Second, the self-built DCB model was compared and analyzed with the CNN, DCNN, and BiLSTM models. Third, this study compared the RGloVe feature encoding with the three others on predicting methylation modification sites. Last, this paper compared the EMDLP model with state-of-the-art methods based on the independent datasets. Our computing device has two NVIDIA RTX2080Ti GPU and 11 GB of GPU device memory. In addition to the GPU, the machine has two 2.3 GHz 16-core Intel(R) Xeon(R) Gold 5218 CPU and 128 GB of RAM. The device is installed with 64-bit Windows10 Professional Edition 20H2, python 3.7.6, Keras 2.2.4, and TensorFlow-gpu 1.14.0.

The size of the sliding window is an important parameter that affects the performance of the encoding scheme. Based on benchmark datasets, this experiment compares the performance of RGloVe and GloVe in predicting m1A and m6A methylation sites under four different sliding window sizes(i.e., 8, 15, 30, and 60). RGloVe is based on the GloVe model framework and adopts RMSProp instead of Adagrad to minimize the loss function of the global vector model. As a result, RGloVe shows the best prediction performance when the sliding window length = 30, as shown in Table 1. The experiment results show that using RMSProp can train the model more effectively.

Table 1.

AUROC scores of RGloVe and GloVe under different sliding windows sizes based on benchmark datasets

| Modification type | Encoding | Window sizes = 8 | Window sizes = 15 | Window sizes = 30 | Window sizes = 60 |

|---|---|---|---|---|---|

| m1A | RGloVe | 0.9283 | 0.9317 | 0.9377 | 0.9315 |

| GloVe | 0.9282 | 0.9193 | 0.9305 | 0.9185 | |

| m6A | RGloVe | 0.8414 | 0.8415 | 0.8432 | 0.8407 |

| GloVe | 0.8399 | 0.8420 | 0.8414 | 0.8372 |

The bolded values represent the best results

Comparison with other different learning models

Next, DCB was compared and analyzed with CNN, DCNN, and BiLSTM using the same benchmark datasets. The experiments used RGloVe encoding to describe the RNA sequence, constructed CNNRGloVe, DCNNRGloVe, BiLSTMRGloVe, and DCBRGloVe, respectively. Among them, CNNRGloVe employed the CNN model in Deeppromise [12]. DCBRGloVe represented a self-built DCB model, including the DCNN and BiLSTM stage. The DCNNRGloVed denoted the DCBRGloVe removing the BiLSTM stage, which was substituted by the flatten layer. Similarly, the BiLSTMRGloVe represented the DCBRGloVe without the DCNN stage.

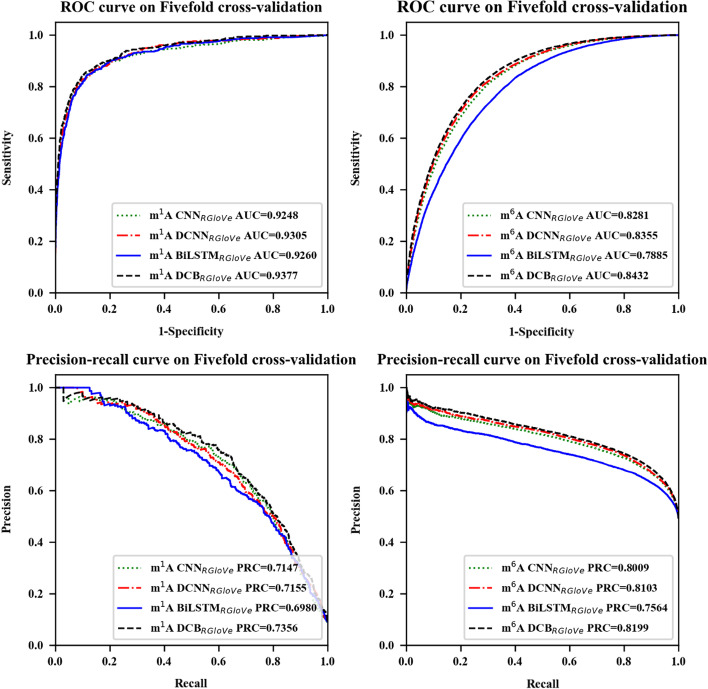

The fivefold cross-validation evaluation results, the AUROC and AUPRC curves on the m1A and m6A are shown in Fig. 2 and Table 2. The result shows the AUROC of DCNNRGloVe is 0.57% and 0.74% higher than CNNRGloVe’s on m1A and m6A, and the AUPRC of DCNNRGloVe is 0.08% and 0.94% higher than CNNRGloVe’s. This result.

Fig. 2.

Performance of the different models through fivefold cross-validation. The models are CNNRGloVe, DCNNRGloVe, BiLSTMRGloVe, and DCBRGloVe, respectively. "CNNRGloVe" employs the CNN model in Deeppromise; "DCBRGloVe" represents a self-built DCB model, including the DCNN and the BiLSTM stage; "DCNNRGloVe" denotes the DCBRGloVe removing the BiLSTM stage; "BiLSTMRGloVe" represents the DCBRGloVe without the DCNN stage

Table 2.

Evaluation results of the different models trained on the fivefold cross-validation

| Modification type | Classifiers | AUROC | Acc (%) | Sn (%) | Sp (%) | MCC (%) | Pre (%) | F1 (%) | AUPRC | Time (s) |

|---|---|---|---|---|---|---|---|---|---|---|

| m1A | CNNRGloVe | 0.9248 | 94.06 | 66.95 | 96.78 | 63.97 | 67.52 | 67.23 | 0.7147 | 127 |

| DCNNRGloVe | 0.9305 | 94.22 | 58.01 | 97.84 | 61.97 | 72.88 | 64.60 | 0.7155 | 96 | |

| BiLSTMRGloVe | 0.9260 | 93.02 | 66.44 | 95.68 | 59.63 | 60.62 | 63.40 | 0.6980 | 2104 | |

| DCBRGloVe | 0.9377 | 94.62 | 61.72 | 97.91 | 65.04 | 74.69 | 67.59 | 0.7356 | 1809 | |

| m6A | CNNRGloVe | 0.8281 | 74.93 | 81.84 | 68.22 | 50.47 | 71.44 | 76.29 | 0.8009 | 5264 |

| DCNNRGloVe | 0.8355 | 75.79 | 82.48 | 69.29 | 52.18 | 72.29 | 77.05 | 0.8103 | 18,732 | |

| BiLSTMRGloVe | 0.7885 | 71.42 | 83.87 | 59.33 | 44.48 | 66.70 | 74.31 | 0.7564 | 131,340 | |

| DCBRGloVe | 0.8432 | 76.46 | 79.30 | 73.65 | 53.03 | 74.96 | 77.07 | 0.8199 | 21,638 |

The bolded values represent the best results

Verifies that the single-scale convolution kernel in CNN is challenging to learn deep semantics from RNA sequences. On the contrary, the multiscale convolution kernels can extract additional features to provide deep semantics.

In addition, the study compared the performance of DCBRGloVe and DCNNRGloVe. The AUROC of DCBRGloVe is 0.72% and 0.77% higher than DCNNRGloVe’s on m1A and m6A, respectively, and the AUPRC of DCBRGloVe is 2.01% and 0.96% higher than DCNNRGloVe’s on m1A and m6A, respectively. The reason may be that DCNN has no memory function and cannot learn sequential correlations. On the contrary, DCB can capture the local correlation of different spatial structures according to DCNN and effectively learn the context of each k-mer in the text according to BiLSTM. In summary, DCB can understand sequence semantics more accurately than other methods.

Finally, the study compared the running time of DCBRGloVe and BiLSTMRGloVe. Although many factors affect the model's training time, the experiment results show that the training time of BiLSTMRGloVe is very long, for it is several times that of DCBRGloVe. The reason is that the max-pooling layer of the DCNN stage reduces the parameters of the network, which plays an active role in lowering dimensionality and computational complexity.

In conclusion, the DCBRGloVe classifier could effectively and quickly capture the sequence details on m1A and m6A modification sites.

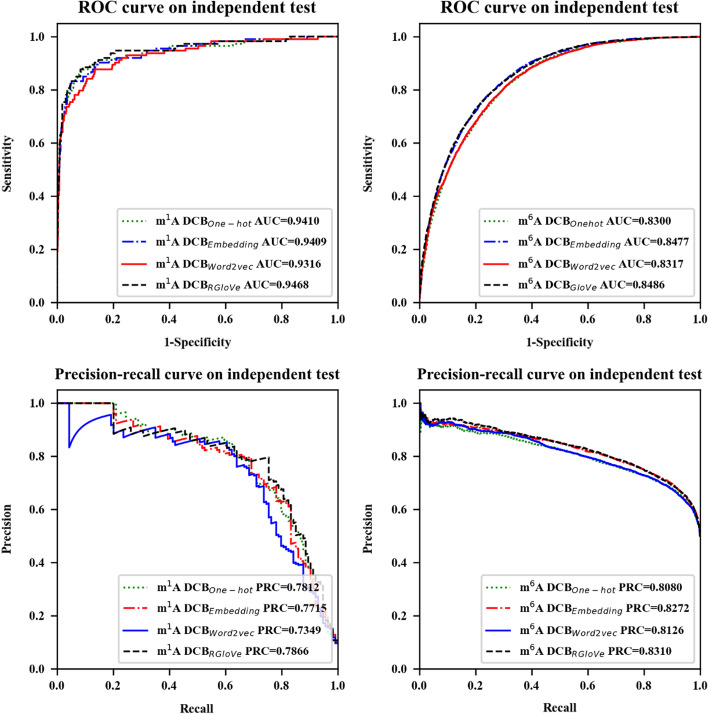

Comparison with other different feature encoding methods

Besides, the following content compared the prediction performance of the four feature encoding methods. The experiment encoded the sequences by our RGloVe and the three commonly used schemes, RNA word embedding, One-hot encoding, and word2vec, respectively, then applied the same DCB model to predict the modification site on the same independent dataset. The comparison results demonstrate that RGloVe outperforms the other three encoding techniques in predicting AUROC, as shown in Fig. 3 and Table 3. In the sense of exactly, for m1A and m6A sites, DCBRGloVe achieved AUROC 0.9468 and 0.8486 and more accurately than other methods. The reason is that the One-hot encoding and RNA word embedding emphasize local semantic information, and Word2vec encoding highlights the context windows information, but the above three encodings ignore the global information. RGloVe inherits the advantages of GloVe, which combines the benefits of global matrix factorization and local context approaches [37]. Therefore, RGloVe can improve the model prediction accuracy according to this advantage.

Fig. 3.

Performance of the DCB model based on One-hot encoding, RNA word embedding, Word2vec, and RGloVe

Table 3.

Evaluation results of the DCB model based on One-hot encoding, RNA word embedding, Word2vec, and RGloVe

| Modification type | Classifiers | AUROC | Acc (%) | Sn (%) | Sp (%) | MCC (%) | Pre (%) | F1 (%) | AUPRC |

|---|---|---|---|---|---|---|---|---|---|

| m1A | DCBOne-hot | 0.9410 | 95.37 | 64.04 | 98.51 | 69.66 | 81.11 | 71.57 | 0.7812 |

| DCBEmbedding | 0.9409 | 95.37 | 65.79 | 98.33 | 70.0 | 79.79 | 72.12 | 0.7715 | |

| DCBword2vec | 0.9316 | 95.29 | 61.4 | 98.68 | 68.72 | 82.35 | 70.35 | 0.7349 | |

| DCBRGloVe | 0.9468 | 95.45 | 64.04 | 98.6 | 70.12 | 82.02 | 71.92 | 0.7866 | |

| m6A | DCBOne-hot | 0.8300 | 74.51 | 72.25 | 76.76 | 49.06 | 75.57 | 73.87 | 0.8080 |

| DCBEmbedding | 0.8477 | 76.52 | 83.30 | 69.79 | 53.56 | 73.28 | 77.97 | 0.8272 | |

| DCBword2vec | 0.8317 | 75.10 | 79.60 | 70.62 | 50.43 | 72.95 | 76.13 | 0.8126 | |

| DCBRGloVe | 0.8486 | 76.36 | 84.2 | 68.57 | 53.41 | 72.72 | 78.04 | 0.8310 |

The bolded values represent the best results

In summary, RGloVe shows higher semantic accuracy than the other three commonly used schemes.

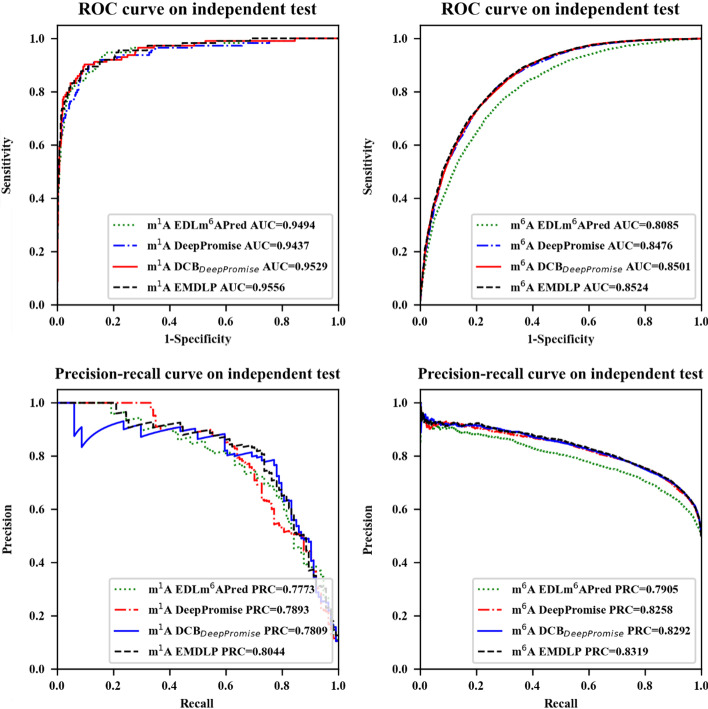

Comparison with state-of-the-art approaches

Finally, EMDLP was compared with other state-of-the-art approaches on the same independent datasets, such as DeepPromise [12] and EDLm6Apred [16]. To make the comparison more illustrative, we built DCBDeepPromise by replacing the CNN model in DeepPromise with DCB, and our EMDLP replaced the ENAC encoding in DCBDeepPromise with RGloVe.

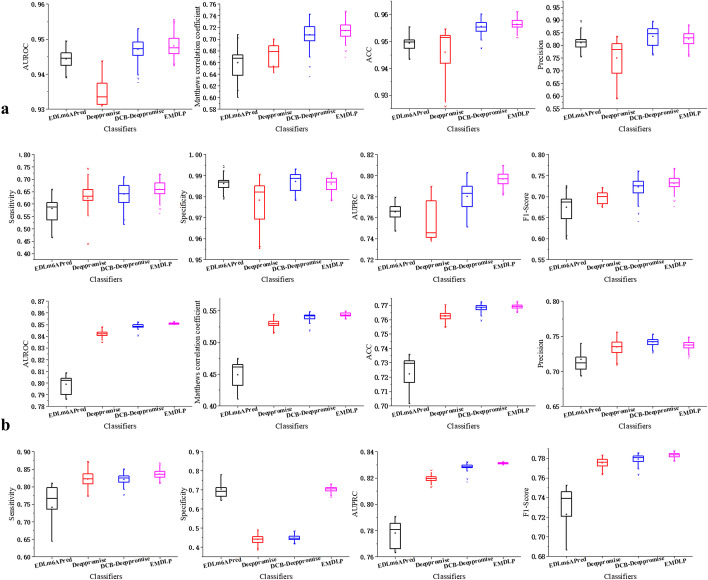

In order to evaluate the reliability of the model, the EDLm6Apred, DeepPromise, DCBDeepPromise, and EMDLP models were performed 100 replicate experiments on the same independent test sets of m1A and m6A, respectively. In each replicate, new evaluation results were produced. As shown in Fig. 4, Table 4, and Fig. 5, the AUROC and AUPRC of EMDLP are better than other approaches. The reason may be that ENAC, One-hot, and RNA word embeddings focus on local semantic information, and Word2vec encoding considers context window information, but none of them pay attention to global statistical information. At the same time, RGloVe can represent semantic information sequences more comprehensively than the other four encodings. And DCB is more suitable for extracting the RNA sequence's features than the other methods. Furthermore, We test the statistical significance of AUROC values between different tools by the student’s t-test [39], as shown in Table 5.

Fig. 4.

Performance of EMDLP and other methods on the independent test

Table 4.

Compare EMDLP model

| Modification type | Classifiers | AUROC | Acc (%) | Sn (%) | Sp (%) | MCC (%) | Pre (%) | F1 (%) | AUPRC |

|---|---|---|---|---|---|---|---|---|---|

| m1A | EDLm6Apred | 0.9494 | 95.06 | 64.91 | 98.07 | 68.10 | 77.08 | 70.47 | 0.7773 |

| DeepPromise | 0.9437 | 95.30 | 65.79 | 98.25 | 69.57 | 78.95 | 71.77 | 0.7893 | |

| DCBDeepPromise | 0.9529 | 95.61 | 67.54 | 98.42 | 71.67 | 81.05 | 73.68 | 0.7809 | |

| EMDLP | 0.9556 | 95.62 | 61.40 | 99.04 | 70.69 | 86.42 | 71.79 | 0.8044 | |

| m6A | EDLm6APred | 0.8085 | 73.38 | 80.14 | 66.66 | 47.23 | 70.52 | 75.02 | 0.7905 |

| DeepPromise | 0.8476 | 77.07 | 82.15 | 45.00 | 54.43 | 74.79 | 78.30 | 0.8258 | |

| DCBDeepPromise | 0.8501 | 76.76 | 81.89 | 44.95 | 53.81 | 74.19 | 77.85 | 0.8292 | |

| EMDLP | 0.8524 | 76.98 | 84.36 | 69.64 | 54.58 | 73.44 | 78.52 | 0.8319 |

The bolded values represent the best results

Fig. 5.

Boxplot of eight metrics for comparative performance assessment of the four methods based on the pAerformance of 100 replications of four methods. a for the m1A independent dataset. b for the m6A independent dataset

Table 5.

Statistically significant correlation matrix for the difference in the performance of the four classifiers

| Modification type | Classifiers | Classifiers | |||

|---|---|---|---|---|---|

| EDLm6APred | DeepPromise | DCBDeepPromise | EMDLP | ||

| m1A | EDLm6APred | ||||

| DeepPromise | 6.80137E-27 | ||||

| DCBDeepPromise | 2.14723E-11 | 5.22548E-34 | |||

| EMDLP | 8.734E-20 | 4.51535E-37 | 0.01606677 | ||

| m6A | EDLm6APred | ||||

| DeepPromise | 1.7731E-122 | ||||

| DCBDeepPromise | 3.3248E-133 | 2.05181E-42 | |||

| EMDLP | 8.6672E-142 | 6.72773E-87 | 3.06352E-20 | ||

Webserver

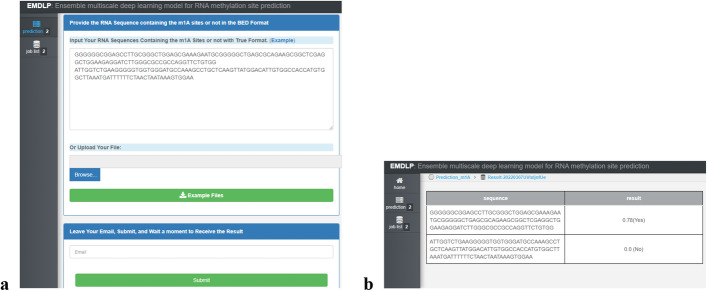

We established an online webserver to simultaneously identify m1A and m.6A modifications in H. sapiens to facilitate scientific research. The user-friendly webserver for EMDLP was publicly available at http://www.labiip.net/EMDLP/index.php (http://47.104.130.81/EMDLP/index.php). The usage guide of the webserver for EMDLP is as follows. Open the home page at http://www.labiip.net/EMDLP/index.php (http://47.104.130.81/EMDLP/index.php). First, clicking the "Prediction" button and selecting the "m1A" or"m6A" successively, the page will appear, as shown in Fig. 6a. Second, Type or paste an RNA sequence in the input box. Third, leave your email in the input box, clicking the "submit" button, and the predictive results will appear on a new page, as shown in Fig. 6b.

Fig. 6.

Screenshot of EMDLP webserver. a Site input interface of EMDLP. b The prediction result returned by EMDLP

Discussion

This paper proposes EMDLP to identify RNA methylation sites in an NLP and DL way. The specific discussion is as follows:

Firstly, this study compared the performance of predicting m1A and m6A methylation sites under four different sliding window sizes (i.e., 8, 15, 30, and 60) based on the RGloVe and GloVe encoding methods. The evaluation results show that using RMSProp instead of Adagrad to minimize the loss function of the global vector model can indeed train the model more effectively. This result is consistent with that of Ruder, S. (2017), who pointed out that RMSProp can overcome the weakness of Adagrad. RGloVe shows the best prediction performance when the sliding window length = 30.

Secondly, based on the feature representation of the sequence by the above RGloVe, this study compared the DCB model with the CNN, DCNN, and BiLSTM models for predicting methylation modification sites. The experiment result shows the AUROC of DCNNRGloVe is 0.57% and 0.74% higher than CNNRGloVe's on m1A and m6A. This study confirms that the multiscale convolution kernels can extract different features to provide deep semantics. The experiment results show that the training time of BiLSTMRGloVe is very long, and it is several times that of DCBRGloVe. That also accords with Min, X.’s conclusion, which showed that the max-pooling layer of the DCNN stage reduces the parameters of the network, which plays an active role in lowering dimensionality and computational complexity. The experimental results show that the DCBRGloVe model is superior to other models in predicting m1A and m6A sites. This study confirms that the combination of DCNN and BiLSTM makes the understanding of sequence semantics more accurate.

Third, based on the above self-built DCB model, this paper compared the prediction performance of RGloVe, RNA word embedding, One-hot encoding, and word2vec. The results reveal that Our RGloVe outperforms the other three encoding schemes in prediction performance. This finding is consistent with Pennington, J (2014), who proposed that GloVe shows higher semantic accuracy than word2vec.

Finally, EMDLP was constructed by a soft vote to use the three predictors to obtain better predictive performance. This paper compared the prediction performance of EMDLP, DeepPromise, DCBDeepPromise, and EDLm6Apred based on the independent datasets. The results show that the AUROC of EMDLP is significantly better than the three methods. This study further indicates that RGloVe can better represent the semantic information of sequences than the other four encodings, and DCB is more suitable for extracting the RNA sequence's features than the other methods.

Conclusions

The contribution of this paper proposes a predictor EMDLP to identify RNA methylation sites by NLP and DL way. It organically combines the dilated convolution and BiLSTM, which helps take better advantage of the local and global information for site prediction.

Although EMDLP outperforms state-of-the-art predictors, which is currently limited to humans and has not been extended to other model organisms due to the lack of a sufficient number of single-nucleotide datasets for other species. It is worth looking forward to testing the performance of EMDLP when sufficient other species RNA modification datasets become available in the future.

Materials and methods

Datasets

We have extracted two common types of human RNA modification site datasets published at single-nucleotide resolution, including m1A and m6A. For the m1A and m6A sites, the datasets in this paper were derived from the previous studies of Chen et al. [12] and Zou et al. [30], respectively. The only difference is that the Zou validation set was used as the independent test set of this paper on the m6A site.

The study divided the dataset into two parts: a benchmark dataset for cross-validation testing and an independent dataset for independent testing. It took the modified/non-modified site as the center for each sample and brought the (2n + 1)-nt partial sequence window. It was worth noting that the "n" for these two modifications was different. Referring to the experimental results in Chen’s paper, the size of the optimal window was 101 and 1001 for m1A and m6A sites[12], respectively. If the length of the original sequences were shorter than 2n + 1, the empty positions would be filled with the character "-" to ensure the sequence length is consistent. The ratio of positive and negative samples of m1A sites and m6A sites was 1:10 and 1:1, respectively. The statistic of these two RNA modification datasets is shown in Table 6.

Table 6.

A statistical of these two RNA modification datasets

| Modification type | Dataset | Window size | Number of positive samples | Number of negative samples |

|---|---|---|---|---|

| m1A | m1A_BM | 101 | 593 | 5930 |

| m1A | m1A_IND | 101 | 114 | 1140 |

| m6A | m6A_ BM | 1001 | 26,586 | 27,371 |

| m6A | m6A_IND | 1001 | 6879 | 6914 |

BM benchmark; IND independent

Feature encoding representation on different perspectives

As we all know, feature encoding is the key to evaluating the excellent performance of site prediction models. This paper encodes the sequences by RNA word embedding, One-hot encoding, and RGloVe.

One-hot encoding is a sparse binary, high-dimensional word vector, while RNA word embedding is a continuous, low-dimensional dense word vector that captures the local semantic information. RGloVe inherits the principle of GloVe, which captures the global semantic information.

One-hot encoding is a very simple encoding method to describe the nucleotides sequence. The four nucleotides and the the gap symbol "-" are encoded as , where A = (1,0,0,0,0), C = (0,1,0,0,0), G = (0,0,1,0,0), T = (0,0,0,1,0), and "-" = (0,0,0,0,1). Take m1A as an example, a sequence of 101nts is transformed to 505-bit vectors.

RNA word embedding is a standard method for encoding RNA sequences. A sliding window of size k slides on the RNA sequence by overlapping an equal length to form a k-mer sub-sequence, and these sub-sequences are created as a vocabulary. Take m1A as an example. A sequence of 101nts is converted to 99 sub-sequence through a sliding window of size 3. The study obtained 105 different sub-sequences, which are indexed by a unique integer index. Each pre-processed sequence is changed with an integer index and fed into the Keras embedding layer to generate 300-dimension word vectors. Thus, the 101nts sequences are transformed into a matrix of 99 × 300.

RNA word embedding only considers the frequency information but neglects the context and global information. Word2vec only trains independently by information from each local context window, while it does not use the statistical data in the global co-occurrence matrix [35]. Pennington et al. [40] proposed global vectors(GloVe) that can consider the statistical data in the global co-occurrence matrix and used Adagrad to train GloVe word embeddings [41]. But, Adagrad has a primary weakness, which can cause the learning rate of Adagrad to decrease and get extremely small, at which point the algorithm can not learn new information [41]. Therefore, the study uses RMSProp instead of Adagrad to minimize the loss function of the global vector model. The word vector trained by this method is called RGloVe. The specific analysis process is as follows.

The statistics of k-mer incidence is the most important data source for learning embedding representations. Y denotes the matrix of co-occurrence counts, and Yij records the frequency of the word k-mer appearing in the context sliding windows of the word k-mer i. are two k-mer indexes, the vocabulary size W = 105. According to the GloVe model, we get the embedding vector by training the cost function under,

| 7 |

where are expected embedding vectors, are separate context k-mer vectors that help obtain , are the biases for respectively. is a non-decreasing weighting function below

| 8 |

where is a maximum cutoff value and denotes the fractional power scaling, which is commonly 0.75.

The original GloVe uses Adagrad [42] to minimize Eq. (7). At every time step , the specific iterative rules are as follows:

| 9 |

where indicates the gradient of the objective function, is the parameter at a time step . The Adagrad update for every parameter at each time step are as follows:

| 10 |

where indicates the learning rate, is a diagonal matrix where each diagonal element i, i is the sum of the gradients' squares. up to time step t, δ is commonly 1 − 8.

The primary deficiency of Adagrad is its accumulation of the squared gradients in the denominator, at which point the algorithm stops learning new information [41]. The RMSprop algorithm solves this flaw by reducing its monotonically decreasing learning rate. RMSprop does not accumulate all past square gradients but limits the window of accumulated past gradients to a fixed size . The total of gradients is recursively defined as a decaying average of all past square gradients rather than merely keeping previous square gradients [41]. At time step , the running average depends on the previous average and the current gradient :

| 11 |

at each time step , the RMSprop update for every parameter below:

| 12 |

The momentum term is usually set to 0.9 or a similar value, while the learning rate of RMSprop is 0.001. We use RMSprop to minimize Eq. (7) and obtained the D-dimensional embedding vector representations . According to the vectors, the study has completed the embedding encoding of representation learning by embedding each k-mer into the vector space :

| 13 |

where . We carried out the convolution stage based on the output matrix.

Take m1A as an example. If the dimension is 300, the 101nts sequences are transformed into a matrix of 99 × 300. Three feature encoding input and output formats are in Table 7.

Table 7.

Input and output formats with three kinds of feature encoding

| Modification type | Encoding method | Input | Output |

|---|---|---|---|

| m1A | One-hot | 101 × 1 | 101 × 5 |

| RNA word embedding | 99 × 3 | 99 × 300 | |

| RGloVe | 99 × 3 | 99 × 300 | |

| m6A | One-hot | 1001 × 1 | 1001 × 5 |

| RNA word embedding | 999 × 3 | 999 × 300 | |

| RGloVe | 999 × 3 | 999 × 300 |

Dilated convolutional neural network

Holschneider et al. [43] were the first to develop dilated convolution, which kept the feature map's resolution by introducing holes into the regular convolution [44]. Compared to ordinary convolution, dilated convolution adds a hyperparameter named dilation rate(DR), which corresponds to the number of kernel intervals, such as DR = 1 in ordinary convolution.

When applied to a one-dimensional situation, dilated convolution can be calculated as Eq. (14). Different dilution rates can be regarded as inserting varying sizes of blank rows between each kernel of convolution, as shown in Additional file 1: Fig. S1.

| 14 |

where xj is the jth element of input, yj denotes the output of the jth element in the DCNN, is the weight of the filter, N is the length of the filter, r is known as the DR.

In addition to the dilated convolution, the DCNN comprises the pooling and dropout layer. The pooling layer is applied to each feature map and outputs the average or maximum value of the input in a pooling window so that the pooling layer can reduce the number of parameters.

The dropout layer is used to avoid overfitting during model training and is the most commonly used regularization technique. In each training activity during forwarding propagation, some neurons are randomly set to zero, which intuitively leads to the integration of different networks. The dropout rate is the probability of a neuron withdrawing.

In this study, dilated convolutional layers of three dilation rates(DR = 1, 2, and 3, respectively) are concatenated to send to the BiLSTM stage.

Bidirectional LSTM

BiLSTM is a specific sort of recurrent neural network(RNN) that combines forward LSTM and backward LSTM. Among them, forward LSTM calculates the hidden features in the forward direction and saves the output at each moment . With the same reasoning, backward LSTM calculates the hidden features in the reverse direction and saves the output at each moment , as shown in Additional file 1: Fig. S2. Ultimately, the final result is derived from merging the output values of the forward and backward LSTM layers at each instant.

The LSTM [45] framework addresses the exploding or disappearing gradients in RNNs. Commonly, the LSTM unit is defined as a current input , a memory unit , an input modulation vector , a hidden state , a forget gate , an input gate , and an output gate at the moment , as shown in Additional file 1: Fig. S3.

Among them, a memory unit is controlled by three "gates": a forget gate , an input gate , and an output gate , where their entries are in [0, 1]. The following are the LSTM transition equations:

| 15 |

| 16 |

| 17 |

| 18 |

| 19 |

| 20 |

where and are the weight metrics, represents bias, is the logistic Sigmoid function, represents element-wise multiplication.

LSTM has been demonstrated significant benefits in modeling time series data attributable to features of its engineer. BiLSTM combines forward and backward LSTM, which overcomes the vanishing or exploding gradients and evaluates the context's meaning [25].

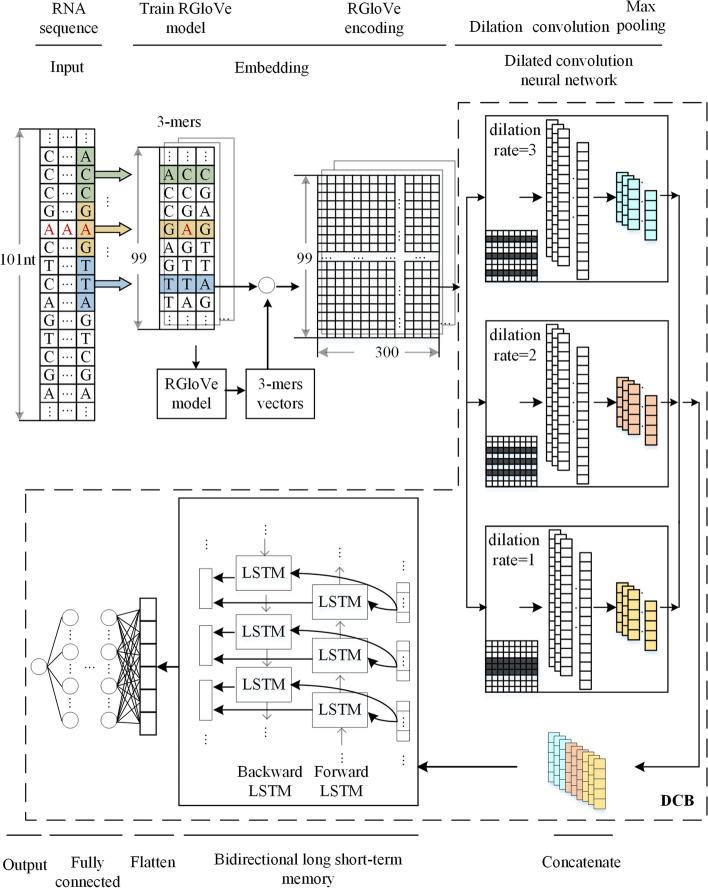

Site prediction based on dilated convolutional Bidirectional LSTM

The study combined the DCB model with three encoding methods: RNA word embedding, one-hot encoding, and RGloVe to create three modification site predictors. Consider the RGloVe predictor, as shown in Fig. 7.

Fig. 7.

structure of our computational framework based on RGloVe, DCNN, and BiLSTM neural network to predict m1A methylation site

Suppose that we have N RNA sequences of L0-length. Each has a binary label indicating whether it is a methylation modification site, meaning N-labeled samples . For each sequence with A, C, T, G nucleotides, and "-", we split it into sub-sequences by using a split window. Each sub-sequence containing k nucleotides is called the k-mer motif. We extract the sub-sequence of length k with stride s, resulting in a k-mer motif of length . Take m1A as an example. A sequence of L0 = 101nts is converted to 99 sub-sequence through a split window of size k = 3 and stride s = 1, where all these 3-mers have a positive integer index in the set = [1, 2, 3, 4…, 105], and sequence data .

The following content will specifically introduce learning a feature map that maps into feature vectors useful for DL tasks.

We used DCB with k-mer embedding to train the model, as shown in Fig. 7. The representation learning function can be separated into four stages:

| 21 |

The embedding stage calculates the co-occurrence statistics of k-mers and maps them to the D-dimensional space .

The DCNN stage has three blocks of DCNNs, and the dilution rate of three DCNNs is 1, 2, and 3, respectively. A dilated convolutional layer with the rectified linear unit (ReLU) as its active function, a max-pooling layer, and a dropout unit are all included in each DCNN block. We used the grid-search strategy for the optimization of hyperparameters. There are 64 convolution kernels with a size of 3 each. For the max-pool layer, the size of the max-pool windows is 2. The drop rate is set at 0.2 to avoid overfitting. The concatenate stage concatenates the three blocks of DCNNs to build a multiscale feature extractor. The BiLSTM stage applies a Bi-direction LSTM network to the input in order to collect long-term data dependency information between the data. The number of neurons is set at 64, and the drop rate is 0.2. After the BiLSTM stage, the data were flattened into one dimension by the flatten layer, followed by a fully connected layer. The fully connected layer consists of three full connections, which contain the number of neurons is 256,128,64, activated by ReLU function, and dropout with a probability of 0.5. Finally, the output layer calculates the probability score to indicate the likelihood of the site being modified with the Sigmoid function as follows:

| 22 |

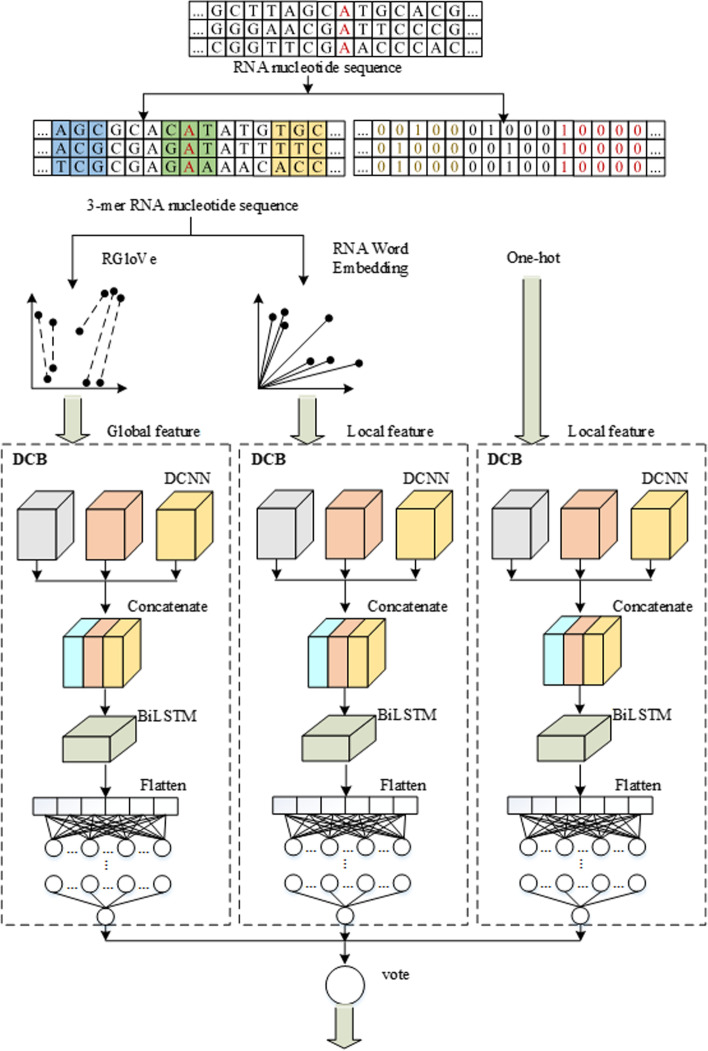

Ensemble-based site prediction

Various encoding techniques will observe the sequences from various perspectives. RNA word embedding and One-hot encoding emphasize the local information, while RGlove employs global statistics to learn the global semantics. As a result, different predictors may have complementary impacts on prediction. Based on the DCB model, three predictors are constructed by RNA word embedding, One-hot encoding, and RGloVe. Finally, EMDLP was formulated with the three predictors above by a soft vote, as shown in Fig. 8.

Fig. 8.

Structure of EMDLP predictor. The diagrams depicted our method's architecture. Three different DL classifiers predicted the methylation sequences and decided the final finding by a soft vote

Supplementary Information

Additional file 1. Supplementary Figures.

Acknowledgements

Not applicable.

Abbreviations

- RNA

Ribonucleic acid

- m6A

N6-methyladenosine

- m1A

N1-methyladenosine

- CNN

Convolutional neural network

- BiLSTM

Bidirectional long short-term memory

- LSTM

Long short-term memory

- RNN

Recurrent neural network

- NLP

Natural language processing

- DL

Deep learning

- EMDLP

Ensemble multiscale deep learning model for RNA methylation site prediction

- DCB

Dilated convolutional bidirectional long short-term memory network

- DCNN

Dilated convolutional neural network

- GloVe

Global vectors

- Sn

Sensitivity

- Sp

Specificity

- ACC

Accuracy

- Pre

Precision

- MCC

Matthews correlation coefficient

- AUROC

Area under the receiver operating characteristic

- AUPRC

Area under the precision-recall curve

- ENAC

Enhanced nucleic acid composition

Author contributions

HW built the architecture for EMDLP, designed and implemented the experiments, analyzed the result, and wrote the paper. GL and TH conducted the experiments and revised the paper. LZ conducted the experiments, analyzed the result, and revised the paper. HL and YS supervised the project, analyzed the result, and revised the paper. All authors read, critically revised, and approved the final manuscript.

Funding

This work has been supported by the Fundamental Research Funds for the Central Universities (2014QNA84 to HL), the National Natural Science Foundation of China (31871337 to HL), and the "333 Project" of Jiangsu(BRA2020328 to WHL). The funding body did not play any roles in the design of the study and collection, analysis, and interpretation of data and in writing the manuscript.

Availability of data and materials

The data supporting the findings of the article is available at the webserver http://www.labiip.net/EMDLP/index.php (http://47.104.130.81/EMDLP/index.php). The code implemented to perform the analysis is deposited at https://github.com/whl-cumt/EMDLP.

Declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Hui Liu, Email: hui.liu@cumt.edu.cn.

Yanjing Sun, Email: yjsun@cumt.edu.cn.

References

- 1.Song ZT, Huang DY, Song BW, Chen KQ, Song YY, Liu G, Su JL, de Magalhaes JP, Rigden DJ, Meng J. Attention-based multi-label neural networks for integrated prediction and interpretation of twelve widely occurring RNA modifications. Nat Commun. 2021;12(1):1–11. doi: 10.1038/s41467-020-20314-w. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Boccaletto P, Machnicka MA, Purta E, Piatkowski P, Baginski B, Wirecki TK, de Crecy-Lagard V, Ross R, Limbach PA, Kotter A, et al. MODOMICS: a database of RNA modification pathways 2017 update. Nucleic Acids Res. 2018;46(D1):303–307. doi: 10.1093/nar/gkx1030. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Sun WJ, Li JH, Liu S, Wu J, Zhou H, Qu LH, Yang JH. RMBase: a resource for decoding the landscape of RNA modifications from high-throughput sequencing data. Nucleic Acids Res. 2016;44(D1):259–265. doi: 10.1093/nar/gkv1036. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Xuan JJ, Sun WJ, Lin PH, Zhou KR, Liu S, Zheng LL, Qu LH, Yang JH. RMBase v2.0: deciphering the map of RNA modifications from epitranscriptome sequencing data. Nucleic Acids Res. 2018;46(D1):327–334. doi: 10.1093/nar/gkx934. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Dunn DB. The occurence of 1-methyladenine in ribonucleic acid. Biochem Biophys Acta. 1961;46(1):198–200. doi: 10.1016/0006-3002(61)90668-0. [DOI] [PubMed] [Google Scholar]

- 6.Hauenschild R, Tserovski L, Schmid K, Thuring K, Winz ML, Sharma S, Entian KD, Wacheul L, Lafontaine DL, Anderson J, et al. The reverse transcription signature of N-1-methyladenosine in RNA-Seq is sequence dependent. Nucleic Acids Res. 2015;43(20):9950–9964. doi: 10.1093/nar/gkv895. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.El Allali A, Elhamraoui Z, Daoud R. Machine learning applications in RNA modification sites prediction. Comput Struct Biotechnol J. 2021;19:5510–5524. doi: 10.1016/j.csbj.2021.09.025. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Ballesta JP, Cundliffe E. Site-specific methylation of 16S rRNA caused by pct, a pactamycin resistance determinant from the producing organism, Streptomyces pactum. J Bacteriol. 1991;173(22):7213–7218. doi: 10.1128/jb.173.22.7213-7218.1991. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Deng X, Chen K, Luo GZ, Weng X, Ji Q, Zhou T, He C. Widespread occurrence of N6-methyladenosine in bacterial mRNA. Nucleic Acids Res. 2015;43(13):6557–6567. doi: 10.1093/nar/gkv596. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Xiao S, Cao S, Huang Q, Xia L, Deng M, Yang M, Jia G, Liu X, Shi J, Wang W, et al. The RNA N(6)-methyladenosine modification landscape of human fetal tissues. Nat Cell Biol. 2019;21(5):651–661. doi: 10.1038/s41556-019-0315-4. [DOI] [PubMed] [Google Scholar]

- 11.Li X, Xiong X, Wang K, Wang L, Shu X, Ma S, Yi C. Transcriptome-wide mapping reveals reversible and dynamic N(1)-methyladenosine methylome. Nat Chem Biol. 2016;12(5):311–316. doi: 10.1038/nchembio.2040. [DOI] [PubMed] [Google Scholar]

- 12.Chen Z, Zhao P, Li F, Wang Y, Smith AI, Webb GI, Akutsu T, Baggag A, Bensmail H, Song J. Comprehensive review and assessment of computational methods for predicting RNA post-transcriptional modification sites from RNA sequences. Brief Bioinform. 2019;21(5):1676–1696. doi: 10.1093/bib/bbz112. [DOI] [PubMed] [Google Scholar]

- 13.Ke S, Alemu EA, Mertens C, Gantman E, Darnell RB. A majority of m6A residues are in the last exons, allowing the potential for 3′ UTR regulation. Genes Dev. 2015;29(19):2037–2053. doi: 10.1101/gad.269415.115. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Linder B, Grozhik AV, Olarerin-George AO, Meydan C, Mason CE, Jaffrey SR. Single-nucleotide-resolution mapping of m6A and m6Am throughout the transcriptome. Nat Methods. 2015;12(8):767–772. doi: 10.1038/nmeth.3453. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Dominissini D, et al. The dynamic N(1)-methyladenosine methylome in eukaryotic messenger RNA. Nature. 2016;530(7591):1–39. doi: 10.1038/nature16998. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Zhang L, Li GS, Li XY, Wang HL, Chen ST, Liu H. EDLm(6)APred: ensemble deep learning approach for mRNA m(6)A site prediction. BMC Bioinformatics. 2021;22(1):1–15. doi: 10.1186/s12859-020-03881-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Chen W, Feng P, Tang H, Ding H, Lin H. RAMPred: identifying the N(1)-methyladenosine sites in eukaryotic transcriptomes. Sci Rep. 2016;6:1–8. doi: 10.1038/s41598-016-0001-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Chen W, Feng P, Yang H, Ding H, Lin H, Chou KC. iRNA-3typeA: identifying three types of modification at RNA's adenosine sites. Mol Ther Nucleic Acids. 2018;11:468–474. doi: 10.1016/j.omtn.2018.03.012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Liu K, Chen W. iMRM: a platform for simultaneously identifying multiple kinds of RNA modifications. Bioinformatics. 2020;36(11):3336–3342. doi: 10.1093/bioinformatics/btaa155. [DOI] [PubMed] [Google Scholar]

- 20.Qiang XL, Chen HR, Ye XC, Su R, Wei LY. M6AMRFS: robust prediction of N6-methyladenosine sites with sequence-based features in multiple species. Front Genet. 2018;9:1–9. doi: 10.3389/fgene.2018.00495. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Xiang S, Liu K, Yan Z, Zhang Y, Sun Z. RNAMethPre: a web server for the prediction and query of mRNA m6A sites. PLoS ONE. 2016;11(10):1–13. doi: 10.1371/journal.pone.0162707. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Zhou Y, Zeng P, Li YH, Zhang ZD, Cui QH. SRAMP: prediction of mammalian N-6-methyladenosine (m(6)A) sites based on sequence-derived features. Nucleic Acids Res. 2016;44(10):e91. doi: 10.1093/nar/gkw104. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Wang XF, Yan RX. RFAthM6A: a new tool for predicting m(6)A sites in Arabidopsis thaliana. Plant Mol Biol. 2018;96(3):327–337. doi: 10.1007/s11103-018-0698-9. [DOI] [PubMed] [Google Scholar]

- 24.Chen KQ, Wei Z, Zhang Q, Wu XY, Rong R, Lu ZL, Su JL, de Magalhaes JP, Rigden DJ, Meng J. WHISTLE: a high-accuracy map of the human N-6-methyladenosine (m(6)A) epitranscriptome predicted using a machine learning approach. Nucleic Acids Res. 2019;47(7):1–8. doi: 10.1093/nar/gkz077. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Liu G, Guo JB. Bidirectional LSTM with attention mechanism and convolutional layer for text classification. Neurocomputing. 2019;337:325–338. doi: 10.1016/j.neucom.2019.01.078. [DOI] [Google Scholar]

- 26.Angermueller C, Rnamaa PT, Parts L, Stegle O. Deep learning for computational biology. Mol Syst Biol. 2016;12(7):1–16. doi: 10.15252/msb.20156651. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Zou J, Huss M, Abid A, Mohammadi P, Torkamani A, Telenti A. A primer on deep learning in genomics. Nat Genet. 2019;51(1):12–18. doi: 10.1038/s41588-018-0295-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Pang B, Lee L. Seeing stars: exploiting class relationships for sentiment categorization with respect to rating scales. arXiv 2005:115–124.

- 29.Collobert R, Weston J, Bottou L, Karlen M, Kavukcuoglu K, Kuksa P. Natural language processing (almost) from scratch. J Mach Learn Res. 2011;12:2493–2537. [Google Scholar]

- 30.Zou Q, Xing PW, Wei LY, Liu B. Gene2vec: gene subsequence embedding for prediction of mammalian N-6-methyladenosine sites from mRNA. RNA. 2019;25(2):205–218. doi: 10.1261/rna.069112.118. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Church K. Ward: emerging trends word2vec. Nat Lang Eng. 2017;23(1):155–162. doi: 10.1017/S1351324916000334. [DOI] [Google Scholar]

- 32.Chen Z, Zhao P, Li F, Leier A, Marquez-Lago TT, Wang Y, Webb GI, Smith AI, Daly RJ, Chou KC, et al. iFeature: a Python package and web server for features extraction and selection from protein and peptide sequences. Bioinformatics. 2018;34(14):2499–2502. doi: 10.1093/bioinformatics/bty140. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Dai HJ, Umarov R, Kuwahara H, Li Y, Song L, Gao X. Sequence2Vec: a novel embedding approach for modeling transcription factor binding affinity landscape. Bioinformatics. 2017;33(22):3575–3583. doi: 10.1093/bioinformatics/btx480. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Wei LY, Luan S, Nagai LAE, Su R, Zou Q. Exploring sequence-based features for the improved prediction of DNA N4-methylcytosine sites in multiple species. Bioinformatics. 2019;35(8):1326–1333. doi: 10.1093/bioinformatics/bty824. [DOI] [PubMed] [Google Scholar]

- 35.Liu XQ, Li BX, Zeng GR, Liu QY, Ai DM. Prediction of long non-coding RNAs based on deep learning. Genes (Basel) 2019;10(4):1–16. doi: 10.3390/genes10040273. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Wang R, Shi RY, Hu X, Shen CQ. Remaining useful life prediction of rolling bearings based on multiscale convolutional neural network with integrated dilated convolution blocks. Shock Vib. 2021;2021:1–11. [Google Scholar]

- 37.Min X, Zeng W, Chen N, Chen T, Jiang R. Chromatin accessibility prediction via convolutional long short-term memory networks with k-mer embedding. Bioinformatics. 2017;14:92–101. doi: 10.1093/bioinformatics/btx234. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Zhao CY, Huang XZ, Li YX, Iqbal MY. A double-channel hybrid deep neural network based on CNN and BiLSTM for remaining useful life prediction. Sensors-Basel. 2020;20(24):1–15. doi: 10.3390/s20247109. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Chen Z, Zhao P, Li C, Li FY, Xiang DX, Chen YZ, Akutsu T, Daly RJ, Webb GI, Zhao QZ, et al. iLearnPlus: a comprehensive and automated machine-learning platform for nucleic acid and protein sequence analysis, prediction and visualization. Nucleic Acids Res. 2021;49(10):e60. doi: 10.1093/nar/gkab122. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Pennington J, Socher R, Manning C. Glove. Global vectors for word representation. In: conference on empirical methods in natural language processing. 2014. pp. 1532–1543.

- 41.Ruder S. An overview of gradient descent optimization algorithms. 2017:1–14. arXiv:160904747.

- 42.Duchi J, Hazan E, Singer Y. Adaptive subgradient methods for online learning and stochastic optimization. J Mach Learn Res. 2011;12:2121–2159. [Google Scholar]

- 43.Holschneider M, Kronland-Martinet R, Morlet J. A real-time algorithm for signal analysis with help of the wavelet transform. In: Combes JM, Grossmann A, Tchamitchian P, editors. Wavelets. Heidelberg: Springer; 1989. pp. 286–297. [Google Scholar]

- 44.Ku T, Yang QR, Zhang H. Multilevel feature fusion dilated convolutional network for semantic segmentation. Int J Adv Rob Syst. 2021;18(2):1–11. [Google Scholar]

- 45.Hochreiter S, Schmidhuber J. Long short-term memory. Neural Comput. 1997;9(8):1735–1780. doi: 10.1162/neco.1997.9.8.1735. [DOI] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Additional file 1. Supplementary Figures.

Data Availability Statement

The data supporting the findings of the article is available at the webserver http://www.labiip.net/EMDLP/index.php (http://47.104.130.81/EMDLP/index.php). The code implemented to perform the analysis is deposited at https://github.com/whl-cumt/EMDLP.