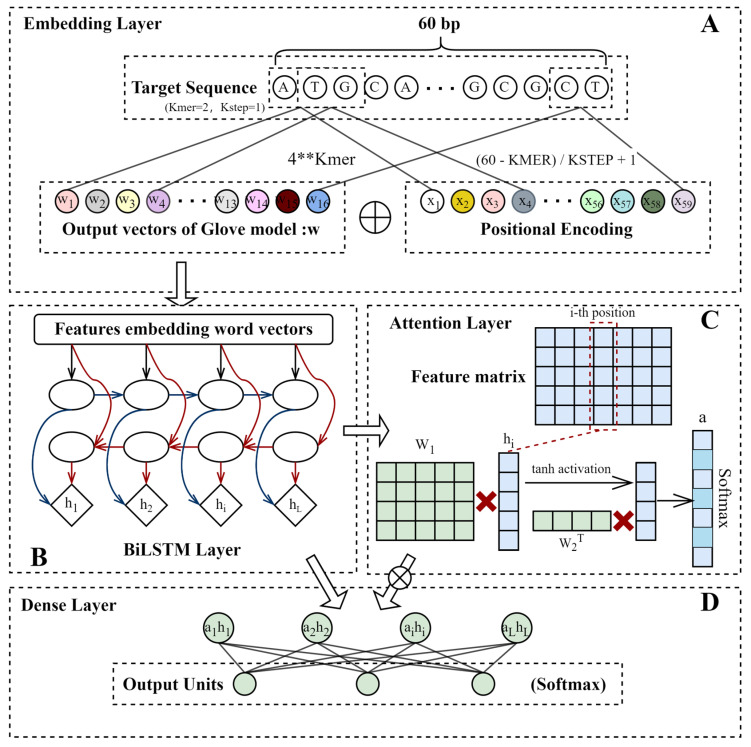

Figure 2.

Apindel architecture. (A) Embedding layer, consisting mainly of a GloVe model and positional encoding. (B) BiLSTM layer, which captures the contextual information in the input sequence. (C) Attention layer. The attention vector is calculated from the feature matrix output by the previous layer and stores the importance scores of the different sequence loci for the final prediction. (D) Dense layer. The attention vector is combined with the BiLSTM layer output, and a final fully connected layer produces the prediction.
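
As an illustration of panels (C) and (D), the following minimal sketch shows one common way to turn a feature matrix into per-locus importance scores and combine them with that matrix. It is not the Apindel implementation: the scoring rule (a dot product with a vector `w` followed by a softmax), the weighted-sum combination, and the names `attention_weights` and `combine` are assumptions made for this example, and `features` stands in for the BiLSTM output.

```python
import math


def attention_weights(features, w):
    """Return one importance score per sequence position.

    features: list of seq_len rows, each a list of d floats
              (a stand-in for the BiLSTM output).
    w:        list of d floats, a hypothetical learned scoring vector.
    """
    # Raw score for each position: dot product of its feature row with w.
    scores = [sum(f * wi for f, wi in zip(row, w)) for row in features]
    # Numerically stable softmax so the scores sum to 1 across positions.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def combine(features, weights):
    """Weighted sum of the feature rows (assumed combination step)."""
    d = len(features[0])
    return [
        sum(weights[t] * features[t][j] for t in range(len(features)))
        for j in range(d)
    ]


# Toy input: 3 sequence positions, 2 features per position.
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
w = [0.0, 0.0]  # an all-zero scoring vector gives uniform attention

weights = attention_weights(features, w)
context = combine(features, weights)
```

In this sketch `weights` plays the role of the attention vector in (C), and `context` is the summary that a fully connected layer, as in (D), would take as input.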