Abstract

Within the context of the Direct and Indirect Effects model of Writing, we examined a dynamic relations hypothesis, which contends that the relations of component skills, including reading comprehension, to written composition vary as a function of dimensions of written composition. Specifically, we investigated (a) whether higher order cognitive skills (i.e., inference, perspective taking, and monitoring) are differentially related to three dimensions of written composition: writing quality, writing productivity, and correctness in writing; (b) whether reading comprehension is differentially related to the three dimensions of written composition after accounting for oral language, cognition, and transcription skills, and whether reading comprehension mediates the relations of discourse oral language and lexical literacy to the three dimensions of written composition; and (c) whether the total effects of oral language, cognition, transcription, and reading comprehension vary across the three dimensions of written composition. Structural equation model results from 350 English-speaking second graders showed that higher order cognitive skills were differentially related to the three dimensions of written composition. Reading comprehension was related only to writing quality, not to writing productivity or correctness in writing, and reading comprehension differentially mediated the relations of discourse oral language and lexical literacy to writing quality. The total effects of language, cognition, transcription, and reading comprehension varied considerably across the three dimensions of written composition. These results support the dynamic relations hypothesis, the role of reading in writing, and the importance of accounting for dimensions of written composition in a theoretical model of writing.

Keywords: direct and indirect effects model of writing (DIEW), writing, reading, higher order cognitions, mediation

In order to produce coherent written compositions, one needs to carefully coordinate and regulate complex, recursive processes of generating ideas, translating them into oral language, transcribing them into print, and revising and editing written texts. These complex writing processes draw on multiple language and cognitive skills and knowledge in the context of physical and social environments (see Berninger & Winn, 2006; Graham, 2018; Hayes, 1996; Kim & Park, 2019). The Direct and Indirect Effects model of Writing (DIEW; Kim, 2020a; Kim & Park, 2019; Kim & Schatschneider, 2017) was recently proposed to describe the component or contributing skills and knowledge (henceforth, component skills) that are involved in these writing processes and in writing development. In the present study, we expand DIEW in two ways: by adding a dynamic or differential relations hypothesis as a function of measurement and dimensions of written composition, and by adding reading as a component skill that contributes to writing. We then empirically examine these additional hypotheses of DIEW using data from English-speaking children in Grade 2. Specifically, we examine (a) the relations of higher order cognitive skills (inference, perspective taking, and monitoring) to three dimensions of written composition: writing quality, writing productivity, and correctness in writing; (b) the relation of reading comprehension to the three dimensions of written composition after accounting for oral language, cognition, and transcription skills, and the mediating role of reading comprehension in the relations of discourse oral language and lexical-level literacy skill to the three dimensions of written composition; and (c) the total effects of oral language, cognition, transcription, and reading comprehension on the three dimensions of written composition.

DIEW

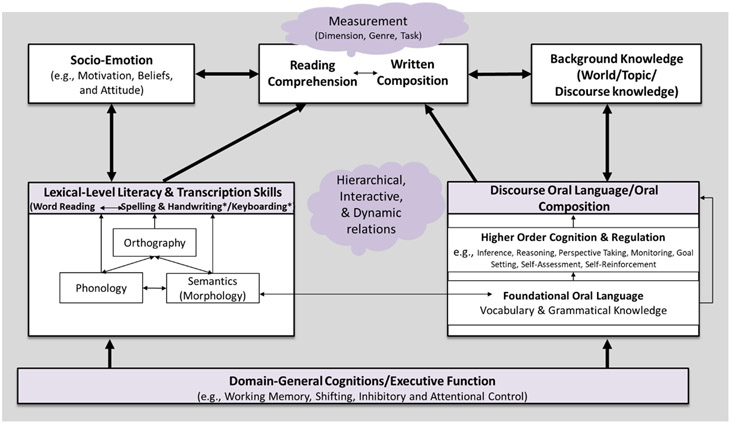

DIEW hypothesizes that the following skills contribute to writing processes and their product, written composition, as well as to writing development (see Figure 1 and Appendix A): background knowledge; socio-emotions; transcription skills such as spelling and handwriting/keyboarding; knowledge or awareness of phonology, orthography, and semantics; oral composition or discourse oral language; higher order cognitive skills and regulation skills such as reasoning, perspective taking, inferencing, goal setting, and monitoring; vocabulary and grammatical knowledge; and domain-general cognitions or executive function such as working memory and attentional control. These component skills are expected to develop in interaction with environmental factors, including the home language and literacy environment, instruction at school, and larger communities and structures (e.g., Kim, Boyle, Zuilkowski, & Nakamura, 2016; also see Graham, 2018).

Figure 1.

Expanded Direct and Indirect Effects model of Writing. The component skills are expected to contribute to writing processes and the consequent written composition. *Handwriting and keyboarding skills are relevant only to written composition, not to reading comprehension. Discourse knowledge includes genre knowledge (e.g., text structures and features associated with different genres) and knowledge about how to carry out specific writing tasks. Grammatical knowledge includes morphosyntactic and syntactic knowledge. Interactive relations beyond those indicated graphically here by double-headed arrows are expected as well.

DIEW extends previous theoretical models in several ways. First, DIEW explicitly articulates a comprehensive set of specific component skills of writing. It is a component skills model that specifies the information processing systems involved in complex and recursive writing processes. Writing processes such as planning, revising and reflection, and text generation were well articulated in previous influential work such as the Hayes and Flower model (Hayes & Flower, 1980) and the knowledge-telling and knowledge-transforming model (Bereiter & Scardamalia, 1987). Although these theoretical models included component skills, they did not focus on them fully. For example, the Hayes and Flower (1980) model specified that the planning process requires input from long-term memory. Hayes and Flower did identify various types of knowledge stored in long-term memory, but specific component skills and their structural relations were not the focus of the model.

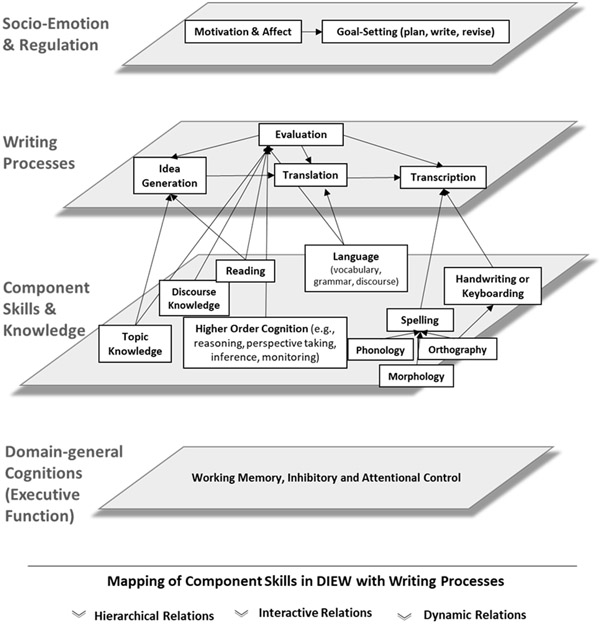

DIEW maps component skills onto writing processes more explicitly than prior models such as the one created and modified over time by Hayes (2012). This mapping is illustrated in Figure 2. The idea generation process primarily draws on topic/content knowledge and on reading (when the task involves reading source materials); the translation process primarily draws on oral language; the transcription process draws on spelling and handwriting or keyboarding skills; and the evaluation process primarily draws on language skills, reading skills, higher order cognitions, topic knowledge, and discourse knowledge. Domain-general cognitions, socio-emotions, and self-regulation are involved throughout the entire writing process.

Figure 2.

Expanded Direct and Indirect Effects model of Writing (DIEW), in which writing processes are mapped onto component skills and knowledge. Domain-general cognitions are involved throughout the writing process via component skills, while social-emotional control supports the writing process. The arrows in the writing process plane should not be construed as strictly sequential; simultaneous activations (e.g., of generation, translation, and evaluation) and recursion are allowed. The component skills and knowledge also have hierarchical, interactive, and dynamic relations with one another, but these are not represented here.

Explicit and precise articulation of component skills is an important step toward linking theory directly to assessment and instruction. For example, it is not apparent how planning, ideation, or text generation should be operationalized for assessment and instruction (e.g., how to assess and teach ideation). In contrast, specifying the component skills that the planning process requires, such as oral composition or discourse oral language, vocabulary, higher order cognitions, transcription, and text structure knowledge, offers a clearer picture of how to operationalize assessment and instruction. This is a distinct advantage of DIEW. Also central to the model is the principle that component skills are involved in the writing process only as needed; not all component skills are involved during the entire writing process.

Another crucial way that DIEW extends previous theoretical models (e.g., the simple view of writing, the not-so-simple view of writing, and the Hayes and Flower model) is its articulation of three testable hypotheses regarding the structural relations of component skills: the hierarchical, interactive, and dynamic relations hypotheses. DIEW hypothesizes and specifies hierarchical relations among the component skills (see Kim & Park, 2019, for a review of evidence). The hierarchical relations hypothesis lays out a chain of multi-channeled pathways by which component skills are related to written composition. An important corollary of hierarchical relations is that component skills have direct and indirect relations: not all component skills are directly related to written composition, and lower order skills are related to written composition via higher order skills (see Appendix A for an example).

DIEW also posits an interactive relations hypothesis, which states that the relations between component skills and writing, and those among component skills, are interactive and bidirectional (see the double-headed arrows in Figure 1; see Kim & Park, 2019, for details). For example, social-emotional aspects of writing are expected to develop interactively with writing, as is content/topic knowledge, particularly in an advanced phase such as Kellogg's (2008) knowledge-transforming stage. Other component skills are also expected to have interactive relations, such as vocabulary and grammatical knowledge with inferencing skills (Currie & Cain, 2015; Kim, 2017; Lepola, Lynch, Laakkonen, Silven, & Niemi, 2012), and morphological awareness with vocabulary and grammatical knowledge (Kieffer & Lesaux, 2012; McBride-Chang et al., 2008).

The final hypothesis of DIEW is the dynamic relations hypothesis: the relations of component skills to written composition change as a function of development, such that transcription skills are expected to exert a large influence on the writing process in the beginning phase of writing development, whereas discourse oral language and its component skills, such as higher order cognitions, are expected to play greater roles in a more advanced developmental phase (see Kim & Park, 2019).

Expanded DIEW

Dynamic Relations as a Function of Writing Measurement

We expand the dynamic relations hypothesis to posit that the relations of component skills to written composition also vary as a function of the measurement and dimensions of written composition. Measurement refers to how writing skills are assessed, whereas dimensions refer to the aspects evaluated in written composition. Measurement is the broader concept and includes multiple facets of the assessment of written composition, including assessment format¹, assessment genre², and evaluation of written composition. The dynamic relations hypothesis in the expanded DIEW includes these various facets of writing measurement, and each deserves careful attention. However, elaborating on each measurement facet is beyond the scope of this paper; in the present study, we focus on the evaluation of written composition (i.e., dimensions of written composition).

Written composition has been evaluated in multiple ways (e.g., Kim, Schatschneider, Wanzek, Gatlin, & Al Otaiba, 2017; Swartz et al., 1999), including writing quality, writing productivity, correctness in writing, spelling and conventions, vocabulary, and syntactic complexity. Studies have found that these dimensions of written composition are related but dissociable (Coker, Ritchey, Uribe-Zarain, & Jennings, 2018; Kim, Al Otaiba, Folsom, Greulich, & Puranik, 2014; Kim, Al Otaiba, Wanzek, & Gatlin, 2015; Puranik, Lombardino, & Altmann, 2008; Wagner et al., 2011). Writing quality is the most widely evaluated and arguably the most important dimension of written composition; it typically includes the coherence and quality of ideas and the use of language (vocabulary and sentence structure; e.g., Coker et al., 2018; Graham, Harris, & Chorzempa, 2002; Hooper, Swartz, Wakely, de Kruif, & Montgomery, 2002; Kim et al., 2015; Olinghouse, 2008). Another widely examined dimension is writing productivity, the amount of writing, such as the number of words and sentences (e.g., Abbott & Berninger, 1993; Berman & Verhoeven, 2002; Kim et al., 2011, 2014; Mackie & Dockrell, 2004; Olinghouse & Graham, 2009; Scott & Windsor, 2000). Also widely used, particularly for screening and progress monitoring of developing writers, are Curriculum-Based Measurement (CBM) writing scores, which include indicators of correctness in writing such as accuracy in spelling and grammaticality. Note that although writing productivity and correctness in writing are not, in and of themselves, the ultimate outcomes or dimensions of interest, they have been widely used as indicators of writing proficiency, particularly with developing writers, and studies have shown moderate to strong relations between them and writing quality (Abbott & Berninger, 1993; Kim et al., 2011, 2014; Mackie & Dockrell, 2004; Wagner et al., 2011).

If there are multiple dimensions of written composition, or multiple ways of evaluating written composition, are the relations of component skills to these dimensions similar or different? Previous theoretical models were silent about dimensionality and its implications. Dimensionality is an important question for a theoretical model because the demands on component skills are likely to differ depending on the focal dimension of written composition. In other words, a theoretical model should recognize both sides of the equation, the outcome (dimensions of written composition) and the predictors (component skills), particularly when the outcome is multidimensional, as is the case with written composition. For instance, if quality of ideas is the focal dimension, then the ability to express ideas using precise vocabulary and sentence structures, and the ability to use higher order cognitive skills to arrange ideas coherently while considering the audience's needs, should be vital contributors, in addition to transcription skills. In contrast, oral language skills and higher order cognitive skills are less likely to be particularly important to writing productivity, or the length of a composition; transcription skills should be important instead. Furthermore, grammatical knowledge and spelling skill should be important to correctness in writing, as this dimension evaluates grammatical and spelling accuracy in written composition.

A few previous studies have suggested differential relations of component skills to various dimensions of written composition. Oral language skill, composed of vocabulary and grammatical knowledge, made an independent contribution to writing quality over and above transcription skills, but not to writing productivity (Kim et al., 2014, 2015). In contrast, transcription skills were more strongly related to writing productivity as well as to the spelling and conventions dimension of written composition (Kim et al., 2014). Studies have also shown that higher order cognitions, such as inference, perspective taking, and monitoring, are related to writing quality. In particular, inference was independently related to writing quality for first graders even after accounting for language, transcription, and monitoring (Kim & Schatschneider, 2017), and inference in Grade 1 predicted writing quality in Grade 3 even after controlling for writing quality in Grade 1 (Kim & Park, 2019). Perspective taking, as measured by theory of mind, was related to writing quality for students in Grade 4 (Kim, 2020a).

It should be noted that written composition in DIEW, as in other theoretical models of writing such as the Hayes and Flower model or the not-so-simple view of writing, refers to a theoretical construct of general writing skill. Although one's writing skill is manifested in varying contexts and tasks, and may vary across genres and tasks, this does not deny the existence of a measurable general writing skill. In practice, general writing skill can be measured across multiple genres and tasks and modeled as a latent variable that captures the variance common to those genres and tasks. The point is that DIEW is not a model of a particular genre or task. Instead, DIEW is a theoretical model that describes the component skills contributing to the writing process and writing development across genres and tasks, while positing that the extent of their contributions varies as a function of individual factors such as development, and of assessment factors such as measurement and dimensions of written composition.

Reading as a Component Skill of Writing

The second way we expand DIEW is by including reading as an additional component skill of writing. Reading skills, such as word reading and reading comprehension, are essential during the revision and editing processes (Breetvelt, van den Bergh, & Rijlaarsdam, 1996; Hayes, 1996; also see Deane et al., 2008). Another rationale for including reading as a component skill of writing is functional (e.g., Shanahan, 2016): reading and writing co-occur and are both needed to complete a task when writers analyze and interpret meaning from written sources and respond to them in writing. Word reading is necessary because the writer has to decode the words she wrote during the revision process, or those in source materials. Reading comprehension is also expected to contribute to written composition, especially to the writing quality dimension. When the writer reads her own text for revision, she has to construct an accurate mental representation of the text (see Hayes, 1996) and evaluate it against her intended goals, which then guides subsequent revision: if there are discrepancies between the intended goals and the draft text, the author makes the necessary changes. Therefore, by contributing to an accurate mental representation of one's own text during revision, reading comprehension is expected to contribute to the quality of one's written composition. The same is true when reading source materials is part of a writing task: an accurate and deep understanding of source texts is important to idea generation and formulation and to subsequent writing processes, and therefore influences the quality of written composition. In other words, constructing a rich and accurate mental model, or deep understanding, of one's own written texts or source materials should lead to rich and coherent written composition (i.e., writing quality), whereas an incomplete or shallow understanding of them should lead to incomplete or less coherent ideas in written composition.

One key point to underscore regarding the hypothesis that reading is a component skill of writing is that reading is itself a complex skill. As shown in Figure 1, reading comprehension and written composition are both constructs built on a highly similar, complex set of component skills, such as background knowledge, higher order cognitions, vocabulary, grammatical knowledge, working memory, attentional control, and lexical-level literacy skills (see Kim, 2020b, for a theoretical model of reading). One exception is handwriting or keyboarding fluency, which is not relevant to reading comprehension. Furthermore, the relative contributions of component skills to reading comprehension versus written composition are expected to differ (see Kim, 2020c, for details regarding reading-writing relations). Similarly, word reading and spelling are built on the same component skills, such as phonological, orthographic, and semantic knowledge and awareness (see Adams, 1990), although spelling requires a more precise orthographic representation (see Ehri, 1997; Perfetti, 1997).

The reading-writing relation has long been recognized (Kim, 2020c; Fitzgerald & Shanahan, 2000; Hayes, 1996; Langer & Flihan, 2000; Shanahan, 2016; Shanahan & Lomax, 1986). One hypothesis for this relation is that reading and writing draw on shared skills and knowledge (Kim, 2020c; Fitzgerald & Shanahan, 2000; Langer & Flihan, 2000; Shanahan, 2016; Shanahan & Lomax, 1986). Fitzgerald and Shanahan (2000) elegantly summarized the shared sources of reading and writing as follows: metaknowledge (pragmatics, such as knowledge about the functions and purposes of reading and writing, and one's own meaning making), domain knowledge about content, knowledge about universal text attributes (e.g., graphophonics, syntax, and text organization), and procedural knowledge (e.g., accessing and using knowledge). The inclusion of reading in the expanded DIEW is very much in line with Fitzgerald and Shanahan's (2000) conceptualization, with one critical difference: the expanded DIEW articulates the specific component skills shared by reading and writing, based on the theoretical models of writing noted above and on those of reading (see the triangle model [Adams, 1990], the simple view of reading [Gough & Tunmer, 1986], the direct and mediated model [Cromley & Azevedo, 2007], the reading systems framework [Perfetti & Stafura, 2014], and the direct and indirect effects model of reading [Kim, 2017, 2020b]). DIEW is also in line with a recent literacy model that integrates reading and writing (Kim, 2020c).

As shown in Figure 1, reading comprehension and written composition are hypothesized to have interactive relations, particularly beyond the beginning phase of development characterized as the knowledge-telling phase (see Kim, 2020c, for details). In the present study, we examined the relation from reading comprehension to the dimensions of written composition, based on extant evidence from developing writers (Ahmed et al., 2014; Berninger & Abbott, 2010; Kim, Petscher, Wanzek, & Al Otaiba, 2018). Specifically, two longitudinal studies expressly examined directionality. Ahmed, Wagner, and Lopez (2014) followed children from Grade 1 to Grade 4 and found that reading predicted writing, not the other way around, at both the lexical level (word reading predicted spelling) and the discourse level (reading comprehension predicted written composition). Highly similar findings were reported in a longitudinal study from Grade 3 to Grade 6 (Kim et al., 2018).

One corollary of reading comprehension being a component skill of written composition is that reading comprehension mediates³ the relations between component skills and different dimensions of written composition. Reading comprehension and written composition share largely similar component skills (see Figure 1), and reading comprehension is a component skill of written composition (see Figure 2). It is therefore reasonable to posit that reading comprehension mediates the relations of shared component skills to written composition, writing quality in particular (see above). In other words, reading comprehension largely captures the component skills that contribute to written composition and therefore mediates their relations to written composition. It should be noted that ‘mediation’ here does not mean that reading comprehension is part of the writing process for all writers in all writing tasks. Writers, whether beginning or advanced, differ in the extent to which they reread their own texts for revision purposes in different writing tasks, and writing tasks vary in the extent to which they include source materials. What we examine is the theoretical proposition that reading comprehension, which draws on a highly similar set of component skills as written composition, mediates the relations of component skills to dimensions of written composition. When writers engage in reading while writing, reading comprehension would clearly play a mediating role; when writers do not engage in reading, it would not. The latter, however, does not preclude testing the theoretical idea that reading comprehension plays a mediating role, even though writers do not always read during the writing process.

In line with the dynamic relations hypothesis, the relation of reading comprehension to written composition is expected to vary as a function of development and dimensions of written composition. In the initial phase of development, when students are just learning to write, reading comprehension is constrained by lexical-level literacy skill (i.e., word reading), and therefore reading comprehension captures lexical-level literacy skill to a greater extent than discourse oral language (Adlof, Catts, & Little, 2006; Kim & Wagner, 2015; Reed, Petscher, & Foorman, 2016). This does not, however, mean that reading comprehension is identical to word reading in the initial phase. Studies have shown that discourse oral language contributes uniquely to reading comprehension over and above word reading even for beginning readers such as English-speaking students in Grade 1 (Hoover & Gough, 1990; Kim, Wagner, & Foster, 2011; Ouellette & Beers, 2010). Thus, even during the initial phase of development, reading comprehension captures and is a function of both word reading and discourse oral language and their associated component skills. Given the greater dominance of lexical-level literacy skill in reading comprehension during the initial phase, reading comprehension is likely to partially, not completely, mediate the relations of discourse oral language, or its component skills, to dimensions of written composition in the beginning phase of development. In contrast, as students develop reading skills, reading comprehension is less constrained by lexical-level literacy skills and thus captures language and higher order cognitions to a greater extent (Adlof et al., 2006; Foorman, Koon, Petscher, Mitchell, & Truckenmiller, 2015; Kim & Wagner, 2015), so reading comprehension might completely mediate the relation of discourse oral language to written composition in an advanced phase of development.
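The distinction between partial and complete mediation can be made concrete with the standard decomposition for a single-mediator linear path model. If a is the path from a predictor (e.g., discourse oral language) to the mediator (reading comprehension) and b is the path from the mediator to an outcome (e.g., writing quality), the indirect effect is a × b, and the total effect is the direct effect c′ plus a × b. The Python sketch below is illustrative only: the function names, the rule of treating an effect as non-zero when its confidence interval excludes zero, and all numbers are our assumptions, not the estimation procedure or results of this study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Effect:
    """A point estimate with a confidence interval (e.g., a bootstrap CI)."""
    estimate: float
    ci_low: float
    ci_high: float

    def excludes_zero(self) -> bool:
        # Assumed decision rule: an effect counts as non-zero when its
        # confidence interval lies entirely above or entirely below 0.
        return self.ci_low > 0 or self.ci_high < 0


def indirect_effect(a: float, b: float) -> float:
    # Single-mediator model: X -> M has path a, M -> Y has path b,
    # so the effect of X on Y transmitted through M is a * b.
    return a * b


def total_effect(direct: float, indirect: float) -> float:
    # In a linear single-mediator model, total effect c = c' + a * b.
    return direct + indirect


def classify_mediation(indirect: Effect, direct: Effect) -> str:
    # No mediation: the indirect path is indistinguishable from zero.
    if not indirect.excludes_zero():
        return "no mediation"
    # Partial mediation: the predictor still has its own direct path.
    if direct.excludes_zero():
        return "partial mediation"
    # Complete mediation: the relation runs entirely through the mediator.
    return "complete mediation"


if __name__ == "__main__":
    # Hypothetical values for illustration only, not estimates from this study.
    ind = Effect(indirect_effect(0.40, 0.30), 0.05, 0.19)
    direct_est = Effect(0.20, 0.08, 0.32)
    print(total_effect(direct_est.estimate, ind.estimate))
    print(classify_mediation(ind, direct_est))  # partial mediation
```

Under this decision rule, the pattern hypothesized above for Grade 2 would appear as "partial mediation" for discourse oral language and "complete mediation" for lexical literacy.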

Present Study

Like any theoretical model, DIEW should undergo rigorous testing using data from writers in different developmental phases, writers learning to read and write in different writing systems, and writers learning in their first (L1) and second (L2) languages. The roles of the component skills in DIEW, their hierarchical relations, and their dynamic relations as a function of development have been examined in prior work with students in elementary grades (Kim, 2020a; Kim & Park, 2019; Kim & Schatschneider, 2017). In the present study, we focused on the two newly proposed hypotheses of DIEW, the dynamic relations hypothesis as a function of dimensions of written composition and reading comprehension as a component skill of writing, using data from readers and writers in Grade 2. The specific research questions and associated hypotheses were as follows.

Is the dynamic relations hypothesis supported for the relations of higher order cognitive skills to different dimensions of written composition? Specifically, do higher order cognitive skills such as inference, perspective taking, and comprehension monitoring differentially relate to writing quality, writing productivity, and correctness in writing after accounting for transcription skills (spelling and handwriting fluency) and domain-general cognitions (working memory and attentional control)? We anticipated that higher order cognitive skills would be related to writing quality, but not to writing productivity or correctness in writing because higher order cognitions are expected to contribute to establishing global coherence (see Appendix A), which is primarily captured in writing quality, but not in writing productivity or correctness in writing.

Is reading comprehension differentially related to writing quality, writing productivity, and correctness in writing after controlling for language, cognition, lexical literacy (word reading and spelling), and handwriting fluency? Does reading comprehension partially or completely mediate the relations of discourse oral language and lexical literacy to written composition, and do the mediating relations vary for writing quality, writing productivity, and correctness in writing? We posited that reading comprehension would predict writing quality (see above; e.g., Berninger & Abbott, 2010; Kim et al., 2018), but not writing productivity or correctness in writing, after accounting for language, cognition, lexical literacy, and handwriting fluency skills. We also anticipated that reading comprehension would differentially mediate the relations of discourse oral language and lexical literacy to written composition—partial mediation for discourse oral language and full mediation for lexical literacy—given that English-speaking children in Grade 2 are, on average, in the beginning phase of literacy development (see above).

Do the total effects of language (discourse oral language, vocabulary, grammatical knowledge), cognition (inference, perspective taking, monitoring, working memory, attentional control), lexical literacy (word reading and spelling), handwriting fluency, and reading comprehension vary for writing quality, writing productivity, and correctness in writing? We expected variation in the total effects of component skills on the different dimensions of written composition. Specifically, we anticipated that all the component skills would be important to writing quality, although the magnitudes of the total effects would vary (Kim et al., 2014, 2015). For writing productivity, we anticipated that lexical literacy and handwriting fluency would make large contributions. For correctness in writing, we expected that spelling and grammatical knowledge would be particularly important.
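A total effect combines a component skill's direct path to a writing dimension with all of its indirect paths through other component skills. In a recursive (acyclic) linear path model, the total effect can be computed by path tracing: multiply the coefficients along each directed path from the skill to the outcome, then sum over all such paths. The Python sketch below assumes a small hypothetical model; the variable names and coefficients are invented for illustration and are not estimates from this study. The acyclicity assumption also excludes the bidirectional relations that DIEW otherwise allows.

```python
from typing import Dict

# Hypothetical standardized path coefficients for a recursive (acyclic) model.
# Illustrative only; these are NOT estimates from the present study.
PATHS: Dict[str, Dict[str, float]] = {
    "vocabulary": {"discourse_oral_language": 0.50},
    "discourse_oral_language": {"reading_comprehension": 0.40, "writing_quality": 0.20},
    "word_reading": {"reading_comprehension": 0.60},
    "reading_comprehension": {"writing_quality": 0.30},
}


def total_effect_by_path_tracing(source: str, target: str,
                                 paths: Dict[str, Dict[str, float]] = PATHS) -> float:
    """Sum, over every directed path from source to target, of the product
    of the coefficients along that path. Assumes the graph has no cycles."""
    if source == target:
        return 1.0
    # Recurse through each outgoing arrow; unreachable targets contribute 0.0.
    return sum((coef * total_effect_by_path_tracing(child, target, paths)
                for child, coef in paths.get(source, {}).items()), 0.0)


def direct_effect(source: str, target: str,
                  paths: Dict[str, Dict[str, float]] = PATHS) -> float:
    # The direct effect is the single arrow from source to target (0.0 if absent).
    return paths.get(source, {}).get(target, 0.0)


if __name__ == "__main__":
    for skill in ["vocabulary", "discourse_oral_language", "word_reading"]:
        total = total_effect_by_path_tracing(skill, "writing_quality")
        direct = direct_effect(skill, "writing_quality")
        print(f"{skill}: total={total:.2f}, direct={direct:.2f}, "
              f"indirect={total - direct:.2f}")
```

With these made-up coefficients, discourse oral language has a total effect of about 0.32 on writing quality (0.20 direct plus 0.40 × 0.30 = 0.12 through reading comprehension), whereas word reading's total effect of about 0.18 is entirely indirect. This illustrates how two skills with no large direct paths can still differ in their total contributions to a writing dimension.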

Method

Participants

Data for the present study came from 350 children in Grade 2 from 30 classrooms in seven public schools in a semirural area of the southeastern United States (53% boys; mean age = 7.54 years, SD = .64). The data were collected as part of a larger longitudinal study of children's literacy development, and results on reading comprehension were reported earlier (Kim, 2017, which examined predictors of reading comprehension). In the present study, we used cross-sectional data from children in Grade 2. These children comprised two cohorts from two consecutive years in the same schools (n = 165 for Cohort 1; n = 185 for Cohort 2). The protocol, order, and timing of the assessments within the academic year were identical across the two cohorts. Because the distributions, performance levels (e.g., raw scores and standard scores), and correlation patterns (directions and magnitudes) were similar for the two cohorts, the combined sample was used in the present study for statistical power (see further details in Kim, 2017). The racial/ethnic composition of the sample was as follows: 53% Caucasian, 34% African American, and 6% Hispanic. Approximately three fourths of the children were eligible for free or reduced-price lunch, and approximately 1.8% of the sample were considered English learners according to district records. All children in participating classes were invited to participate in the study, but children with identified intellectual disabilities were excluded. School personnel indicated that there was no formal district-wide writing curriculum, but many teachers reported using a writer's workshop approach.

Measures

Children’s responses for the majority of tasks were scored dichotomously (1 = correct, 0 = incorrect) for each item. Exceptions include written composition, discourse oral language production (oral retell), handwriting fluency, working memory, attention, and a few items in the Narrative Comprehension of the Test of Narrative Language (see below). Unless otherwise noted, all the items were administered to children.

Written composition: Dimensions of written composition (writing quality, writing productivity, and correctness in writing).

Children were administered two expository prompts, one normed and one experimental: the Essay Composition task of the Wechsler Individual Achievement Test – 3rd edition (WIAT-III; Wechsler, 2009) and a Beaver prompt. In the WIAT-III task, the child was asked to write about his or her favorite game and provide at least three reasons. In the Beaver task, the child was provided with a passage about beavers (297 words) with three accompanying illustrations. The passage was adapted from the Qualitative Reading Inventory – 5th Edition (QRI; Leslie & Caldwell, 2011; Level 3). The original beaver text did not have accompanying illustrations and is designated as a third-grade text in the QRI. We used the adapted text with illustrations in a pilot study and found it to be adequate for second graders (i.e., the majority of children were able to write about beavers at least to some extent). After reading the text, the child was asked to write “details about what beavers do and how they do it.” Children were asked to use information from the text and illustrations to support their writing and were told not to copy sentences verbatim from the passage. Based on our extensive experience with primary grade children, we gave children 15 minutes for each prompt, excluding reading time for the Beaver prompt. The format of the Beaver task, writing based on source materials, was chosen to reflect the emphasis on writing in response to source materials in the Common Core State Standards and other similar state standards in the United States.

Children’s handwritten compositions were typed verbatim. Then, a second typed version was created in which words that were misspelled but decodable by people familiar with children’s writing (two former classroom teachers) were converted to real words for evaluation purposes. However, strings of letters that were not reasonably decodable were retained verbatim in both typed versions. Children’s written compositions were scored on three dimensions: quality, productivity, and correctness. Writing quality and productivity were evaluated using the typed versions with corrected spelling; therefore, children’s spelling was not taken into consideration in these evaluations. This decision was based on evidence that handwriting legibility and spelling errors influence evaluators’ judgments of writing quality (see Graham, Harris, & Hebert, 2011b, for a review). Children’s original handwritten versions were used to evaluate correctness in writing, following the protocols of the CBM-Writing literature (McMaster & Espin, 2007).

Writing quality was operationalized as the extent and clarity of idea development and organization. We modified the “ideas” and “organization” traits of the 6 + 1 Trait Rubric so that a single score on a scale of 1 to 7, considering both ideas and organization, was assigned to each composition. A score of zero was assigned to compositions that were clearly unscorable (e.g., because of illegibility), which was rare. Compositions that clearly and explicitly presented on-topic ideas with relevant supporting details were rated high. In addition, overall structural organization and logical sequencing of ideas were taken into consideration, so that compositions with a clear beginning, middle, and end and tightly coherent sequencing of ideas were rated high, in line with previous studies (e.g., Hooper et al., 2002; Kim et al., 2015; Olinghouse, 2008). For the Beaver prompt, compositions in which the vast majority of the text was copied verbatim from the source passage were scored as zero (six students’ compositions). When only a few sentences were taken directly from the provided text, those sentences were excluded from evaluation. Two raters were extensively trained, and inter-rater reliabilities (Cohen’s kappa) were .87 for the WIAT-III prompt and .95 for the Beaver prompt, based on a total of 80 written samples.
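For readers who want to reproduce this kind of inter-rater check, the sketch below computes Cohen’s kappa for two raters. It assumes unweighted kappa (the text does not state whether a weighted variant was used for the ordinal quality scale), and the ratings are hypothetical; it is an illustration, not the study’s scoring code.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters scoring the same compositions.

    Assumption: unweighted kappa; a weighted kappa would give partial credit
    for near-misses on the ordinal quality scale, which this sketch does not.
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set of samples")
    n = len(rater_a)
    # Observed agreement: share of compositions given the identical score.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal distribution of scores.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_expected = sum(freq_a[score] * freq_b[score] for score in freq_a) / n ** 2
    if p_expected == 1:
        # Both raters used one identical category throughout; kappa is
        # undefined, so this sketch reports perfect agreement by convention.
        return 1.0
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical quality ratings from two raters on six compositions.
print(round(cohens_kappa([3, 4, 2, 5, 3, 1], [3, 4, 2, 4, 3, 1]), 3))  # prints 0.786
```

Kappa is preferred over raw agreement for ratings like these because it discounts the agreement expected by chance given how often each rater uses each score.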

Writing productivity was measured by the number of words written, following previous studies (e.g., Abbott & Berninger, 1993; Kim et al., 2011, 2014; Puranik et al., 2008; Wagner et al., 2011). Words that were recognizable as real words (those listed in the dictionary, including slang expressions) in the context of the child’s composition were given credit despite spelling errors. Reliability (exact percent agreement) was .95, based on 42 written samples. Fewer samples were used to establish reliability for the number of words written than for writing quality because a high agreement rate was observed during training.
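Exact percent agreement is the proportion of double-scored samples on which both coders recorded the same value. A minimal sketch, with a hypothetical function name and hypothetical word counts:

```python
def exact_percent_agreement(coder_a, coder_b):
    """Proportion of double-scored samples given identical scores by both coders."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must score the same non-empty set of samples")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return matches / len(coder_a)


# Hypothetical words-written counts for four compositions; the coders differ on one.
print(exact_percent_agreement([65, 42, 110, 18], [65, 43, 110, 18]))  # prints 0.75
```

Because the index requires an exact match, a one-word discrepancy counts as a full disagreement, which makes it a conservative check for count data.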

Correctness in writing was measured using one of the CBM writing scores, correct minus incorrect word sequences (CIWS), because of its strong validity evidence (Graham, Harris, & Hebert, 2011a; McMaster & Espin, 2007). CIWS is derived by subtracting the number of incorrect word sequences from the number of correct word sequences, where a correct word sequence is a pair of adjacent words that are both spelled correctly and grammatically correct. Following CBM conventions, students’ original handwritten versions were used for CBM writing scores. Reliabilities, using a similarity coefficient, were .95 and .94 for correct and incorrect word sequences, respectively, based on 60 written samples. A similarity coefficient, which indicates the proximity of coders’ scores (Shrout & Fleiss, 1979), was used because these data are interval rather than categorical.
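The CIWS arithmetic can be sketched as follows. The sketch assumes that a trained scorer has already judged each adjacent-word sequence as correct or incorrect, because that judgment is made by a human. The function name and the judgments are hypothetical. Because CIWS is a difference score, its mean across children equals mean CWS minus mean IWS when all three are computed on the same children, which agrees approximately with Table 1 (44.21 − 31.87 ≈ 12.35 for the WIAT task).

```python
def score_word_sequences(judgments):
    """Return (CWS, IWS, CIWS) from per-sequence judgments.

    judgments: one boolean per scored adjacent-word sequence, True when both
    words are spelled correctly and the pair is grammatically correct.
    """
    cws = sum(1 for is_correct in judgments if is_correct)
    iws = len(judgments) - cws
    return cws, iws, cws - iws


# Hypothetical judgments for a short composition with five scored sequences.
print(score_word_sequences([True, True, False, True, False]))  # prints (3, 2, 1)
```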

Component skills.

Reading comprehension, discourse oral language, word reading, spelling, handwriting fluency, inference, perspective taking, monitoring, vocabulary, grammatical knowledge, working memory, and attention were measured.

Reading comprehension.

Two widely used normed tasks were used: the Passage Comprehension of Woodcock Johnson-III (WJ-III; Woodcock, McGrew, & Mather, 2001) and the Reading Comprehension of WIAT-III (Wechsler, 2009). The former is a cloze task where the child was asked to read sentences and passages and to fill in blanks. In the latter task, the child was asked to read passages and to answer multiple choice questions. Cronbach’s alpha estimates of the scores were .83 and .82 for WJ-III and WIAT-III, respectively.
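Cronbach’s alpha is reported for this and several later measures. It compares the sum of the item variances with the variance of the total score: α = k/(k − 1) × (1 − Σσ²ᵢ/σ²ₓ), where k is the number of items. The sketch below assumes complete item-level data, with one row per child and one column per item. It shows the formula only; it is not the software the authors used, and the scores are hypothetical.

```python
from statistics import pvariance


def cronbach_alpha(item_scores):
    """Cronbach's alpha from a list of per-child item-score lists (no missing data)."""
    k = len(item_scores[0])
    if k < 2:
        raise ValueError("alpha requires at least two items")
    columns = list(zip(*item_scores))  # transpose: one tuple of scores per item
    sum_item_variances = sum(pvariance(col) for col in columns)
    total_variance = pvariance([sum(row) for row in item_scores])
    # Population variances are used for both terms; the n vs. n - 1 choice
    # cancels in the ratio as long as it is applied consistently.
    return k / (k - 1) * (1 - sum_item_variances / total_variance)


# Hypothetical dichotomous scores (1 = correct, 0 = incorrect): 4 children x 3 items.
scores = [[1, 1, 1], [1, 1, 0], [0, 0, 0], [1, 0, 0]]
print(round(cronbach_alpha(scores), 2))  # prints 0.75
```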

Discourse oral language.

Oral language proficiency at the discourse level was measured by three listening comprehension tasks and two oral retell and production tasks. It may be argued, based on theoretical models such as the simple view of reading (Hoover & Gough, 1990) and the simple view of writing (Juel et al., 1986), that listening comprehension tasks and oral retell/production tasks should be used for reading comprehension and written composition, respectively. However, listening comprehension and oral retell/production tasks were modeled as a single latent variable for two reasons. First, in the statistical model of the present study, discourse oral language skill was used to predict both reading comprehension and written composition; therefore, including either listening comprehension or oral retell, but not both, would not appropriately capture the relation of discourse language skill to reading comprehension and written composition. Second, a previous study showed that listening comprehension and oral retell/production were best described by a bifactor structure, composed of a common factor and residual comprehension-specific and production-specific factors. Importantly, it was the common factor (the variance shared by listening comprehension and oral retell and production tasks) that was related to reading comprehension and written composition, not the comprehension- and production-specific factors (Kim, Park, & Park, 2015). Note that analyses for Research Questions 2 and 3 were replicated after excluding listening comprehension tasks from the discourse oral language construct. The patterns of results were essentially identical to those reported in the main text.

Listening comprehension was measured by three tasks: the Listening Comprehension Scale of the Oral and Written Language Scales–II (OWLS-II; Carrow-Woolfolk, 2011), the Narrative Comprehension subtest of the Test of Narrative Language (TNL; Gillam & Pearson, 2004), and an experimental informational task. In the OWLS-II task, the child heard sentences and was asked to point to the picture that best represented the answer to a question (α = .94). Testing was discontinued after four consecutive incorrect items. In the TNL task, the child heard three narrative stories and was asked a total of 30 open-ended comprehension questions across the three stories (25 literal questions and 5 inferential questions; α = .74). The majority of the items were scored dichotomously, but six items were scored 0 to 2 and two items were scored 0 to 3 according to the TNL manual (maximum possible score = 40). In the experimental informational task, the child heard three informational texts (Changing Matter, Whales and Fish, and Where Do People Live?) from the Qualitative Reading Inventory-5 (Leslie & Caldwell, 2011) and was asked eight comprehension questions about each passage, for a total possible score of 24 (14 literal questions and 10 inferential questions; α = .72).
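The stated maximum scores follow from the item scoring rules. The sketch below shows the arithmetic under two assumptions. First, the 30 TNL comprehension items are the total across the three stories, which is the only reading under which the stated rules give the manual’s maximum of 40. Second, every item not listed as polytomous is scored 0 or 1. The helper name is hypothetical.

```python
def max_possible(points_per_item):
    """Sum of item maxima, given a mapping {max points per item: number of items}."""
    return sum(points * n_items for points, n_items in points_per_item.items())


TNL_ITEMS = 30
tnl_scheme = {1: TNL_ITEMS - 6 - 2, 2: 6, 3: 2}  # 22 dichotomous, 6 scored 0-2, 2 scored 0-3
informational_scheme = {1: 3 * 8}                 # 3 passages x 8 dichotomous questions

print(max_possible(tnl_scheme))            # prints 40
print(max_possible(informational_scheme))  # prints 24
```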

Oral retell and production were measured by children’s retells after hearing the three TNL stories and the three experimental informational passages. Children were asked to tell everything they remembered. Children’s oral retells were digitally recorded, transcribed using the Systematic Analysis of Language Transcription guidelines (SALT; Miller & Iglesias, 2006), and then coded for overall quality. In the TNL task, retells were coded for the quality of story structure elements such as main characters, setting, main events, problem, and resolution. Most story structure elements were rated on a scale of 0 (absence of relevant information) to 3 (precise information), except for the resolution element, which was scored 0 to 2. For example, for character description in TNL task 1, a score of 0 was given when the child’s retell did not include any names of the characters, a score of 1 when only one of the characters was correctly named, a score of 2 when two of the three characters were correctly named, and a score of 3 when all three characters were named correctly. In addition, retells were coded for the inclusion of important details (1 for each important detail included), the inclusion of an introduction (1 = introduction was present [e.g., This story is about…]; 0 = introduction was absent) and a closing (1 = closing was present [e.g., That is all.]; 0 = closing was absent), and logical sequencing of the story (1 = order of mainline events was logical; 0 = order of mainline events was not logical; see Kim & Schatschneider, 2017, for a similar approach). Maximum possible scores varied slightly depending on the nature of the story: 23 for TNL task 1, 21 for TNL task 2, and 24 for TNL task 3.

In oral retells of informational texts, we evaluated the extent to which main ideas were stated and key details were included. Main ideas were scored on a scale of 0 to 2 depending on accuracy. Key details were counted (1 point for each key detail; see Wagner et al., 2011, for a similar approach). Maximum possible scores were 18 for the Matter text, 21 for the Whales and Fish text, and 34 for the Where Do People Live? text. The maximum possible score for the last passage was relatively high because the passage compared and contrasted different places where people live, yielding many possible points for explicitly noting similarities and differences. Percent agreement ranged from .90 to .99, based on 50 sample retells. All retells were double scored, and final scores were determined after discussion of discrepant scores.

Spelling.

In order to capture the ability to spell words appropriate for children in Grade 2 (e.g., CVCe words, vowel digraphs, multi-syllabic words), an experimental dictation task was used. Target words were first presented in isolation, then in a sentence, and, last, in isolation again. There were 22 items. Cronbach’s alpha was estimated to be .88.

Word reading.

Three widely used normed word reading tasks were used. In the Letter Word Identification of the WJ-III, the child was asked to read aloud a list of words of increasing difficulty (α = .91). Test administration discontinued after six consecutive incorrect items. The other tasks were two forms (A & B) of the Sight Word Efficiency task of the Test of Word Reading Efficiency–II (Wagner, Torgesen, & Rashotte, 2012), where the child was asked to read words of increasing difficulty with accuracy and speed in 45 seconds (test-retest reliability = .93, Wagner et al., 2012).

Handwriting fluency.

Handwriting fluency was measured by three sentence copying tasks. In each task, the child was shown a sentence and was asked to copy it as many times as possible in 1 minute. The sentences were “The quick brown fox jumps over the lazy dog,” “My dog jumps and runs when I tell him to jump and run,” and “My mom put the lid on the pan to cook the food.” The first sentence is a pangram and has been used in previous studies (e.g., Wagner et al., 2011). The second and third sentences were experimental. Children’s responses were scored by counting the number of letters copied correctly. Alternate form reliability (correlations among the three sentences) ranged from .63 to .72.
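Alternate form reliability here is the set of pairwise correlations among the three sentence-copying forms. The sketch below computes a Pearson correlation for every pair of forms and reports the range. The function names and the letter counts are hypothetical.

```python
from itertools import combinations
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length score lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def alternate_form_range(scores_by_form):
    """(min r, max r) over all pairs of forms; lists hold the same children in the same order."""
    rs = [pearson_r(scores_by_form[a], scores_by_form[b])
          for a, b in combinations(scores_by_form, 2)]
    return min(rs), max(rs)


# Hypothetical letters-copied-correctly counts for five children on three sentences.
forms = {
    "sentence_1": [8, 12, 10, 15, 6],
    "sentence_2": [11, 16, 13, 19, 9],
    "sentence_3": [14, 18, 17, 24, 12],
}
low, high = alternate_form_range(forms)
print(f"{low:.2f} to {high:.2f}")
```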

Inference.

Inference was measured by the Inference task of the Comprehensive Assessment of Spoken Language (CASL; Carrow-Woolfolk, 1999). The child heard a one- to three-sentence story and was asked a question that required inference drawing on background knowledge. For instance, the child heard “Mom, Dad, and Sam were at the dinner table when Mom looked at Sam and said, ‘I forgot to get the water.’ What do you think Mom wanted Sam to do?” The correct responses include “get the water” or something similar. Test administration discontinued after five consecutive incorrect items. Cronbach’s alpha was .91.

Perspective taking.

Perspective taking was measured by a theory of mind task (Caillies & Sourn-Bissaoui, 2008; Kim, 2015, 2016; Kim & Phillips, 2014). Three second-order false belief scenarios, appropriate to the developmental stage of the participating children (7-year-olds), were used (see Kim, 2015, for details). These second-order scenarios required the child to infer a story character’s mistaken belief about another character’s knowledge. The child heard scenarios set at a bake sale, an outing for a birthday celebration, and a visit to a farm, each presented with a series of illustrations, and was then asked questions about the characters’ mental states (e.g., What does Sam think they are selling at the bake sale? Why does he think that?). There were 18 questions (six per scenario). Cronbach’s alpha was .71.

Monitoring.

Children’s ability to monitor comprehension was assessed using an inconsistency detection task (Cain, Oakhill, & Bryant, 2004; Kim, 2015). In this task, the child heard a short story and was asked whether the story made sense. If the child stated that the story did not make sense, then he or she was asked to provide a brief explanation and to fix the story so that it made sense. There were two practice items (one consistent and one inconsistent) and nine test items (three consistent and six inconsistent). For all nine items, accuracy of the child’s answer about whether a story was consistent or inconsistent was dichotomously scored. For the six inconsistent stories, the accuracy of children’s explanation and repair of the story were also dichotomously scored; thus, the total possible score for this task was 21. Cronbach’s alpha was estimated to be .69.
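The scoring rule for the inconsistency detection task can be written compactly. In this sketch, with hypothetical class and field names, every story earns 1 point for a correct judgment of whether it made sense. Each inconsistent story can earn 1 additional point for an acceptable explanation and 1 for an acceptable repair. We assume the explanation and repair are credited only when the child flagged the story as not making sense, because only then were they elicited. With three consistent and six inconsistent stories, the maximum is 9 + 6 × 2 = 21.

```python
from dataclasses import dataclass


@dataclass
class MonitoringItem:
    story_is_consistent: bool      # true status of the story
    child_says_consistent: bool    # child's answer to "Did the story make sense?"
    explanation_correct: bool = False
    repair_correct: bool = False


def monitoring_score(items):
    total = 0
    for item in items:
        # 1 point for correctly judging whether the story made sense.
        total += int(item.child_says_consistent == item.story_is_consistent)
        # Explanation and repair are credited only for inconsistent stories the
        # child flagged (assumption: they are not elicited otherwise).
        if not item.story_is_consistent and not item.child_says_consistent:
            total += int(item.explanation_correct) + int(item.repair_correct)
    return total


perfect = ([MonitoringItem(True, True)] * 3
           + [MonitoringItem(False, False, True, True)] * 6)
print(monitoring_score(perfect))  # prints 21
```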

Vocabulary.

A normed task, the Picture Vocabulary of the Woodcock Johnson-III, was used. In this task, the child was asked to name pictured objects or provide synonyms. Test administration discontinued after six consecutive incorrect items. Cronbach’s alpha was estimated to be .69.

Grammatical knowledge.

A normed task, the Grammaticality Judgement task of the Comprehensive Assessment of Spoken Language (CASL; Carrow-Woolfolk, 1999), was used. In this task, the child heard a sentence (e.g., The boy are happy) and was asked whether the sentence was grammatically correct. If it was grammatically incorrect, the child was asked to correct the sentence. Testing was discontinued after five consecutive incorrect responses. Cronbach’s alpha was estimated to be .94.

Working memory.

Working memory was measured by a listening span task (Cain et al., 2004; Kim, 2015, 2016). The child heard sentences (e.g., Apples are red) and was asked to judge whether each sentence was true. After hearing a set of sentences, the child was asked to recall the last word of each sentence. There were four practice items and 14 test items. Testing was discontinued after three consecutive incorrect responses. Children’s true/false (yes/no) judgments were not scored; only word recall was scored. Recall of the correct last words in the correct order received a score of 2, recall of the correct last words in an incorrect order received a score of 1, and recall of incorrect last words received a score of 0. Therefore, the maximum possible total score was 28 (14 items × 2). Cronbach’s alpha was estimated to be .71.
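The listening span scoring and discontinuation rules can be sketched as below. Two assumptions are ours, not the text’s. A response counts as incorrect for discontinuation when it earns 0 points. “Correct last words in incorrect order” means the same words recalled in a different order. The item content and the function name are hypothetical, and only test items (not practice items) are passed in.

```python
def score_listening_span(responses, stop_after=3):
    """Total recall score over test items, applying the discontinuation rule.

    responses: (target_last_words, recalled_words) pairs in administration order.
    """
    total = 0
    consecutive_incorrect = 0
    for target, recalled in responses:
        if list(recalled) == list(target):
            points = 2  # correct words in correct order
        elif sorted(recalled) == sorted(target):
            points = 1  # correct words, wrong order
        else:
            points = 0  # any wrong, missing, or extra word
        total += points
        consecutive_incorrect = consecutive_incorrect + 1 if points == 0 else 0
        if consecutive_incorrect >= stop_after:
            break  # testing discontinued; later items are not administered
    return total


items = [
    (["red", "fast"], ["red", "fast"]),    # 2 points
    (["cold", "tall"], ["tall", "cold"]),  # 1 point
    (["wet", "loud", "round"], ["wet"]),   # 0 points
]
print(score_listening_span(items))  # prints 3
```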

Attentional control.

The first nine items of the Strengths and Weaknesses of ADHD Symptoms and Normal Behavior (SWAN; Swanson et al., 2006), which are related to sustained attention on tasks or activities (Sáez, Folsom, Al Otaiba, & Schatschneider, 2012), were used. The SWAN includes 30 items on children’s behaviors related to attention and hyperactivity, each rated on a 7-point scale (1 = far below average; 7 = far above average). The maximum possible score was therefore 63 (9 items × 7). Teachers of the participating children completed the SWAN checklist. Cronbach’s alpha was estimated to be .99.

Procedures

Rigorously trained research assistants (assessors had to pass a 99% fidelity check) worked with children in a quiet space in the school. To reduce fatigue effects, the assessment batteries were administered in several sessions, each lasting approximately 30 to 40 minutes. The vast majority of tasks were administered to children individually, except for the written composition, spelling, and handwriting fluency tasks, which were administered in groups (typically four children). Language and cognitive skills were measured in the fall, reading skills in the winter, and writing skills (transcription and written composition) in the spring. Assessment time for the included tasks varied by child, but individual assessments took approximately 160 minutes, on average, and group assessments took approximately 70 minutes per group, on average. Written compositions were evaluated and oral retells were coded by project staff who had extensive experience evaluating children’s written compositions and oral retells in previous work led by the first author and who were rigorously trained in several sessions for each dimension of written composition (i.e., writing quality, productivity, and correctness in writing).

Results

Descriptive Statistics and Preliminary Data Analysis

Prior to estimating descriptive statistics and correlations, we evaluated missing data for all measures. Missing data rates were minimal, ranging from 0% on the TNL retell to 4% on the CIWS for the WIAT writing task. Data from all children were retained in the analysis through full information maximum likelihood estimation in confirmatory factor analysis and SEM (see, e.g., Enders & Bandalos, 2001, on full information maximum likelihood for missing data).

Descriptive statistics are displayed in Table 1. Mean writing quality scores were 2.99 and 2.74 for the WIAT Essay Composition and Beaver tasks, respectively, with sufficient variation around the means (SDs = 1.06 and .99, respectively). Children wrote, on average, 65 (SD = 32.52) to 68 (SD = 42.73) words in the two written composition tasks. Children’s mean performances on the normed and standardized tasks, such as reading comprehension, word reading, listening comprehension, inference, vocabulary, and grammatical knowledge, were in the average range (e.g., mean standard score for the WJ Passage Comprehension = 96.88). Skewness (absolute values < 2) and kurtosis (< 7; West, Finch, & Curran, 1995) values were within acceptable ranges. Subsequent analyses were conducted using raw scores.
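A screening check of this kind can be sketched as follows. The sketch assumes simple moment-based estimates of skewness and excess kurtosis, so a normal distribution has kurtosis near 0, and it applies the cutoffs cited above. Statistical packages often report bias-adjusted versions, so the values may differ slightly from those in Table 1. The function name and the scores are hypothetical.

```python
def skew_kurtosis_check(values, skew_cutoff=2.0, kurtosis_cutoff=7.0):
    """Return (skewness, excess kurtosis, within_cutoffs) using moment estimators."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((x - mean) ** 2 for x in values) / n
    m3 = sum((x - mean) ** 3 for x in values) / n
    m4 = sum((x - mean) ** 4 for x in values) / n
    skewness = m3 / m2 ** 1.5
    excess_kurtosis = m4 / m2 ** 2 - 3  # normal distribution -> about 0
    ok = abs(skewness) < skew_cutoff and abs(excess_kurtosis) < kurtosis_cutoff
    return skewness, excess_kurtosis, ok


# Hypothetical scores for five children.
print(skew_kurtosis_check([1, 2, 3, 4, 5]))
```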

Table 1.

Descriptive Statistics

| Measure | M | SD | Min–Max | Skewness | Kurtosis |
|---|---|---|---|---|---|
| Writing Quality | | | | | |
| WIAT Writing: quality | 2.99 | 1.06 | 0 to 6 | −0.22 | −0.20 |
| Beaver Writing: quality | 2.74 | 0.99 | 0 to 6 | −0.10 | 0.77 |
| Writing Productivity | | | | | |
| WIAT Words written | 68.10 | 42.73 | 0 to 222 | 0.90 | 0.26 |
| Beaver Words written | 64.72 | 32.52 | 7 to 167 | 0.80 | 0.27 |
| Correctness in Writing | | | | | |
| WIAT Correct word sequences | 44.21 | 31.14 | 0 to 139 | 1.02 | 0.37 |
| WIAT Incorrect word sequences | 31.87 | 22.93 | 1 to 149 | 1.57 | 3.24 |
| WIAT CIWS | 12.35 | 28.65 | −89 to 106 | 0.68 | 1.79 |
| Beaver Correct word sequences | 42.31 | 28.94 | 1 to 154 | 1.05 | 0.86 |
| Beaver Incorrect word sequences | 30.60 | 21.17 | 0 to 115 | 1.16 | 1.50 |
| Beaver CIWS | 11.71 | 35.40 | −82 to 142 | 0.43 | 0.59 |
| Word Reading | | | | | |
| WJ Letter Word Identification | 42.01 | 6.47 | 18 to 63 | 0.38 | 0.62 |
| WJ Letter Word Identification – SS | 104.18 | 12.90 | 47 to 135 | −0.49 | 0.91 |
| TOWRE SWE 1 | 51.64 | 12.08 | 7 to 75 | −0.56 | 0.08 |
| TOWRE SWE 1 – SS | 98.95 | 16.10 | 55 to 131 | −0.54 | 0.01 |
| TOWRE SWE 2 | 52.01 | 12.18 | 9 to 78 | −0.44 | 0.21 |
| TOWRE SWE 2 – SS | 99.24 | 16.45 | 55 to 135 | −0.45 | 0.00 |
| Spelling | | | | | |
| Spelling | 12.52 | 4.98 | 0 to 22 | −0.18 | −0.74 |
| Handwriting Fluency | | | | | |
| Sentence Copying 1 | 10.43 | 3.61 | 0 to 23 | 0.24 | 0.29 |
| Sentence Copying 2 | 14.93 | 4.55 | 1 to 29 | 0.12 | 0.45 |
| Sentence Copying 3 | 18.85 | 5.36 | 0 to 34 | −0.09 | 0.33 |
| Reading Comprehension | | | | | |
| WJ Passage Comprehension | 22.98 | 4.22 | 9 to 33 | 0.08 | −0.43 |
| WJ Passage Comprehension – SS | 96.88 | 11.70 | 44 to 122 | −0.58 | 1.05 |
| WIAT Reading Comprehension | 50.94 | 11.30 | 3 to 83 | −0.08 | 0.81 |
| WIAT Reading Comprehension – SS | 96.71 | 13.18 | 40 to 138 | 0.03 | 1.23 |
| Discourse Oral Language | | | | | |
| OWLS Listening Comprehension | 76.46 | 13.03 | 37 to 103 | −0.17 | −0.49 |
| OWLS Listening Comprehension – SS | 97.05 | 15.15 | 44 to 124 | −0.45 | 0.08 |
| TNL Comprehension | 25.87 | 4.98 | 5 to 36 | −0.79 | 0.94 |
| TNL Comprehension – SS | 8.30 | 2.87 | 1 to 15 | −0.09 | −0.03 |
| Informational Text Comprehension | 9.70 | 3.48 | 1 to 20 | 0.47 | 0.07 |
| TNL Retell | 30.29 | 12.30 | 0 to 53 | −0.61 | −0.08 |
| Informational Text Retell | 10.39 | 7.16 | 0 to 42 | 1.07 | 1.52 |
| Knowledge-Based Inference | | | | | |
| CASL Inference | 10.89 | 6.98 | 0 to 31 | 0.59 | −0.43 |
| CASL Inference – SS | 92.51 | 13.29 | 56 to 127 | 0.25 | −0.32 |
| Perspective Taking | | | | | |
| Theory of Mind | 7.79 | 3.92 | 0 to 17 | 0.08 | −0.76 |
| Monitoring | | | | | |
| Comprehension Monitoring | 6.77 | 2.96 | 1 to 16 | 0.36 | −0.50 |
| Vocabulary | | | | | |
| WJ Picture Vocabulary | 20.48 | 2.90 | 7 to 29 | −0.10 | 1.14 |
| WJ Picture Vocabulary – SS | 96.91 | 10.52 | 43 to 126 | −0.43 | 1.81 |
| Grammatical Knowledge | | | | | |
| CASL Grammaticality | 32.43 | 12.71 | 2 to 66 | 0.02 | −0.15 |
| CASL Grammaticality – SS | 95.84 | 13.54 | 40 to 134 | −0.43 | 0.75 |
| Working Memory | | | | | |
| Working Memory | 8.21 | 3.91 | 0 to 20 | 0.02 | 0.20 |
| Attentional Control | | | | | |
| SWAN Attention | 35.69 | 12.03 | 9 to 63 | 0.36 | −0.23 |

Note. Unless otherwise noted, all the scores are raw scores. Theory of mind is a measure of perspective taking. WIAT = Wechsler Individual Achievement Test; CIWS = correct minus incorrect word sequences; WJ = Woodcock Johnson; SS = standard score; TOWRE SWE = The Sight Word Efficiency task of Test of Word Reading Efficiency; OWLS = Oral and Written Language Scales; TNL = Test of Narrative Language; CASL = Comprehensive Assessment of Spoken Language; SWAN = Strengths and Weaknesses of ADHD Symptoms and Normal Behavior.

Bivariate correlations between measures were generally in the expected directions and magnitudes (see Table 2). Higher order cognitive skills (inference, theory of mind, and comprehension monitoring) were consistently, although weakly (.17 ≤ rs ≤ .27), related to writing quality, but not to writing productivity (−.09 ≤ rs ≤ .06). For correctness in writing, comprehension monitoring was weakly but significantly related (.17 ≤ rs ≤ .19); theory of mind was significantly related only to the Beaver CIWS (r = .15), and inference was not related (rs = .03 and .05). Reading comprehension was weakly to moderately related to writing quality (.26 ≤ rs ≤ .42), weakly related to writing productivity (.03 ≤ rs ≤ .20), and moderately related to correctness in writing (.33 ≤ rs ≤ .43). Furthermore, discourse oral language, vocabulary, grammatical knowledge, and working memory were weakly to moderately related to writing quality and correctness in writing, but were unrelated or only very weakly related to writing productivity.

Table 2.

Bivariate Correlations Between Measures

| Measure | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 | 21 | 22 | 23 | 24 | 25 | 26 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1. WIAT W. Quality | -- |
| 2. Beaver W. Quality | .32 | -- |
| 3. WIAT W. Words | .12 | .03 | -- |
| 4. Beaver W. Words | .20 | .24 | .50 | -- |
| 5. WIAT CIWS | .38 | .16 | .34 | .29 | -- |
| 6. Beaver CIWS | .38 | .14 | .22 | .33 | .60 | -- |
| 7. WJ Letter Word Iden. | .35 | .23 | .20 | .27 | .49 | .49 | -- |
| 8. TOWRE SWE 1 | .31 | .19 | .30 | .33 | .40 | .39 | .76 | -- |
| 9. TOWRE SWE 2 | .29 | .19 | .29 | .32 | .39 | .39 | .74 | .92 | -- |
| 10. Spelling | .36 | .23 | .28 | .31 | .54 | .56 | .76 | .65 | .65 | -- |
| 11. Sentence Copying 1 | .24 | .17 | .35 | .45 | .30 | .35 | .28 | .39 | .41 | .31 | -- |
| 12. Sentence Copying 2 | .20 | .20 | .31 | .48 | .30 | .31 | .29 | .36 | .38 | .31 | .67 | -- |
| 13. Sentence Copying 3 | .28 | .13 | .38 | .50 | .26 | .35 | .26 | .36 | .38 | .33 | .69 | .74 | -- |
| 14. WJ Passage Comp | .38 | .29 | .17 | .20 | .39 | .43 | .75 | .66 | .63 | .64 | .24 | .18 | .18 | -- |
| 15. WIAT Reading Comp | .42 | .26 | .03 | .12 | .33 | .37 | .54 | .51 | .47 | .48 | .24 | .18 | .20 | .58 | -- |
| 16. OWLS Listening Comp | .32 | .20 | −.06 | −.06 | .07 | .14 | .28 | .14 | .08 | .18 | .06 | .05 | .06 | .36 | .35 | -- |
| 17. TNL Comp | .34 | .28 | −.02 | .05 | .13 | .19 | .30 | .18 | .11 | .22 | .09 | .06 | .06 | .48 | .43 | .44 | -- |
| 18. Informational Comp | .33 | .30 | −.03 | .05 | .19 | .18 | .27 | .12 | .11 | .22 | .05 | .05 | .05 | .42 | .41 | .43 | .60 | -- |
| 19. TNL Retell | .20 | .17 | .05 | .12 | .13 | .09 | .22 | .16 | .15 | .19 | .16 | .08 | .06 | .31 | .35 | .23 | .52 | .44 | -- |
| 20. Informational Retell | .26 | .13 | .03 | .10 | .15 | .18 | .23 | .15 | .15 | .19 | .11 | .06 | .07 | .34 | .35 | .30 | .48 | .67 | .54 | -- |
| 21. Inference | .20 | .17 | −.09 | −.04 | .03 | .05 | .23 | .13 | .11 | .15 | −.01 | −.02 | .00 | .38 | .43 | .40 | .56 | .48 | .38 | .40 | -- |
| 22. Perspective (ToM) | .23 | .22 | −.08 | −.01 | .04 | .15 | .16 | .08 | .05 | .13 | .03 | .01 | −.01 | .37 | .34 | .37 | .51 | .47 | .33 | .41 | .44 | -- |
| 23. Comp Monitoring | .27 | .19 | .00 | .06 | .17 | .19 | .23 | .10 | .07 | .19 | .04 | .04 | .06 | .34 | .29 | .30 | .46 | .42 | .30 | .41 | .48 | .33 | -- |
| 24. WJ Vocabulary | .26 | .19 | −.07 | .00 | .14 | .15 | .35 | .25 | .20 | .27 | .06 | −.01 | .00 | .49 | .38 | .47 | .46 | .41 | .24 | .30 | .45 | .35 | .29 | -- |
| 25. Grammaticality | .33 | .31 | .01 | .10 | .22 | .25 | .44 | .29 | .27 | .40 | .13 | .10 | .10 | .47 | .45 | .41 | .53 | .44 | .29 | .33 | .58 | .32 | .41 | .44 | -- |
| 26. Working Memory | .28 | .14 | .02 | .04 | .14 | .15 | .31 | .18 | .18 | .24 | .13 | .05 | .08 | .39 | .27 | .33 | .34 | .28 | .21 | .27 | .25 | .24 | .21 | .36 | .33 | -- |
| 27. SWAN Attention | .38 | .18 | .17 | .21 | .38 | .44 | .50 | .45 | .44 | .52 | .26 | .23 | .26 | .55 | .46 | .32 | .29 | .34 | .19 | .29 | .27 | .29 | .25 | .26 | .33 | .32 |

Note. Values equal to or smaller than .10 are not statistically significant at the p < .05 level. WIAT = Wechsler Individual Achievement Test; W = Writing; CIWS = correct minus incorrect word sequences; WJ = Woodcock Johnson; Iden = Identification; TOWRE SWE = The Sight Word Efficiency task of Test of Word Reading Efficiency; Comp = Comprehension; OWLS = Oral and Written Language Scales; TNL = Test of Narrative Language; ToM = Theory of Mind; SWAN = Strengths and Weaknesses of ADHD Symptoms and Normal Behavior.

The following latent variables were created using confirmatory factor analysis in Mplus 8.4 (Muthén & Muthén, 2020): writing quality, writing productivity, correctness in writing, reading comprehension, lexical literacy, handwriting fluency, and discourse oral language. A single lexical literacy latent variable was created in place of a word reading latent variable and an observed spelling variable because the two were strongly correlated (r = .81), which would have caused multicollinearity had both been entered in the same model. As presented in Table 3, loadings of indicators on latent variables were moderate to strong, ranging from .47 to .91 (ps < .001). Correlations between latent variables are presented in Table 4. The dimensions of written composition were moderately to fairly strongly related to one another (.39 ≤ rs ≤ .65). Discourse oral language was fairly strongly related to writing quality (.62) and reading comprehension (.68), whereas it was not related to writing productivity (.09, p = .21). Lexical literacy was moderately to strongly related to the dimensions of written composition (.43 ≤ rs ≤ .71) and very strongly related to reading comprehension (.93).

Table 3.

Loadings for Latent Variables

| Latent Variable | Observed Variable | Loading | p value |
|---|---|---|---|
| Writing Quality | WIAT Essay Composition Quality | .69 | < .001 |
| | Beaver Quality | .47 | < .001 |
| Writing Productivity | WIAT Essay Composition Number of words | .61 | < .001 |
| | Beaver Composition Number of words | .82 | < .001 |
| Correctness in Writing | WIAT Essay Composition CIWS | .77 | < .001 |
| | Beaver Composition CIWS | .79 | < .001 |
| Reading Comprehension | WJ Passage Comprehension | .85 | < .001 |
| | WIAT Reading Comprehension | .68 | < .001 |
| Discourse Oral Language | OWLS Listening Comprehension | .52 | < .001 |
| | TNL Comprehension | .76 | < .001 |
| | Informational text comprehension | .82 | < .001 |
| | TNL retell | .62 | < .001 |
| | Informational text retell | .73 | < .001 |
| Lexical Literacy | WJ Letter Word Identification | .91 | < .001 |
| | TOWRE SWE 1 | .82 | < .001 |
| | TOWRE SWE 2 | .81 | < .001 |
| | Spelling | .83 | < .001 |
| Handwriting Fluency | Sentence copying task 1 | .77 | < .001 |
| | Sentence copying task 2 | .83 | < .001 |
| | Sentence copying task 3 | .86 | < .001 |

Note. WIAT = Wechsler Individual Achievement Test; CIWS = Correct minus incorrect word sequences; WJ = Woodcock Johnson; OWLS = Oral and Written Language Scales; TNL = Test of Narrative Language; TOWRE SWE = The Sight Word Efficiency task of Test of Word Reading Efficiency.

Table 4.

Correlations between latent variables

| Variable | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| 1. Writing Quality | -- | | | | | |
| 2. Writing Productivity | .39 | -- | | | | |
| 3. Correctness in Writing | .65 | .52 | -- | | | |
| 4. Reading Comprehension | .73 | .29 | .66 | -- | | |
| 5. Discourse Oral Language | .62 | .09+ | .30 | .68 | -- | |
| 6. Lexical Literacy | .56 | .43 | .71 | .93 | .35 | -- |
| 7. Handwriting Fluency | .49 | .70 | .59 | .33 | .13 | .45 |

Note. All values are statistically significant at the .05 level except the value marked +.

Research Question 1: Dynamic Relations of Higher Order Cognitive Skills to Writing Quality, Writing Productivity, and Correctness in Writing

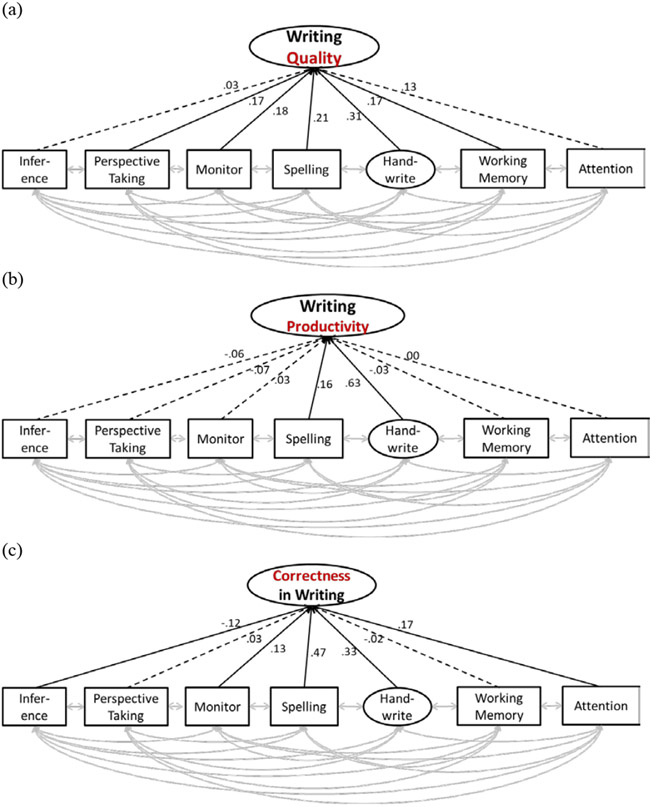

The unique contributions of higher order cognitive skills to the three dimensions of written composition (writing quality, writing productivity, and correctness in writing) were examined by fitting the three structural equation models in Figures 3a to 3c. In these models, higher order cognitive skills predicted each dimension of written composition after accounting for spelling, handwriting fluency, working memory, and attention, which are the essential skills for writing identified in the not-so-simple view of writing (Berninger & Winn, 2006) and DIEW (Kim & Park, 2019). Model fit was evaluated using multiple indices: the chi-square statistic, comparative fit index (CFI), Tucker-Lewis index (TLI), root mean square error of approximation (RMSEA), and standardized root mean square residual (SRMR). RMSEA values below .08, CFI and TLI values equal to or greater than .95, and SRMR values equal to or less than .05 indicate excellent model fit (Hu & Bentler, 1999). CFI and TLI values greater than .90 and SRMR values equal to or less than .10 are considered acceptable (Kline, 2005).
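The cutoffs above amount to a simple decision rule. The sketch below encodes only the thresholds stated in this paragraph. The `FitIndices` class and `classify_fit` function are hypothetical names introduced for illustration. The index values themselves would come from the SEM output (Mplus in this study) and are not computed here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FitIndices:
    """Fit indices as reported by SEM software (inputs only; nothing is estimated here)."""
    cfi: float
    tli: float
    rmsea: float
    srmr: float


def classify_fit(fit: FitIndices) -> str:
    """Label model fit using only the cutoffs stated in the text."""
    # Excellent: RMSEA < .08, CFI >= .95, TLI >= .95, SRMR <= .05 (Hu & Bentler, 1999).
    if fit.rmsea < 0.08 and fit.cfi >= 0.95 and fit.tli >= 0.95 and fit.srmr <= 0.05:
        return "excellent"
    # Acceptable: CFI > .90, TLI > .90, SRMR <= .10 (Kline, 2005); RMSEA is not part of this rule.
    if fit.cfi > 0.90 and fit.tli > 0.90 and fit.srmr <= 0.10:
        return "acceptable"
    return "below acceptable"


# Writing quality model (Figure 3a).
print(classify_fit(FitIndices(cfi=1.00, tli=0.99, rmsea=0.02, srmr=0.02)))  # excellent
```

Applied to the values reported for Figures 3a to 3c and Table 5, this rule labels every model as excellent. As stated, the acceptable tier does not involve RMSEA.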

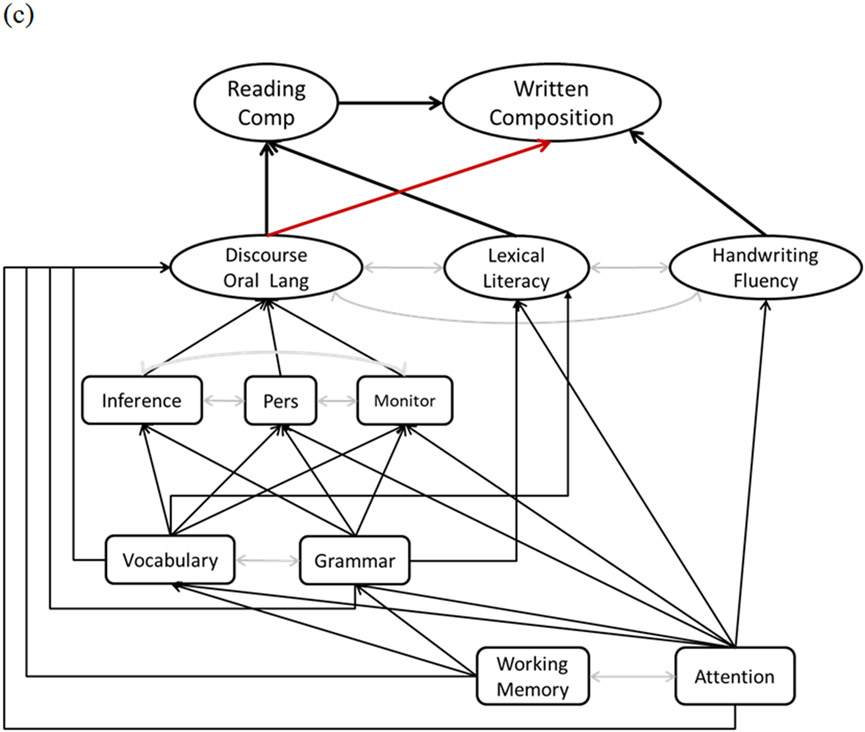

Figure 3.

Standardized path coefficients of relations of higher order cognitive skills (inference, perspective taking as measured by theory of mind, and comprehension monitoring) to the three dimensions of written composition: (a) writing quality, (b) writing productivity, and (c) correctness in writing. Black solid lines represent statistically significant relations, whereas black dashed lines represent nonsignificant relations. Gray lines represent covariances, which were included in the analysis, but results are not shown. Monitor = comprehension monitoring; Hand write = handwriting fluency; Attention = Attentional control.

The models in Figures 3a to 3c fit the data very well: χ2 (22) = 24.50, p = .32, CFI = 1.00, TLI = .99, RMSEA = .02, SRMR = .02 for writing quality; χ2 (22) = 13.23, p = .92, CFI = 1.00, TLI = 1.00, RMSEA = .00, SRMR = .02 for writing productivity; and χ2 (22) = 18.23, p = .92, CFI = 1.00, TLI = 1.00, RMSEA = .00, SRMR = .02 for correctness in writing. Figure 3 shows standardized path coefficients for the three dimensions of written composition. For writing quality (Figure 3a), perspective taking (.17, p = .02) and comprehension monitoring (.18, p = .01) were uniquely related, whereas inference (.03, p = .74) was not, after accounting for all other predictors in the model. For writing productivity (Figure 3b), none of the higher order cognitive skills was uniquely related (ps ≥ .28). For correctness in writing (Figure 3c), comprehension monitoring (.13, p = .01) had a unique, positive relation, inference showed a suppression effect (−.12, p = .03), and perspective taking (.03, p = .47) was not related. Approximately 53%, 52%, and 64% of the variance in writing quality, writing productivity, and correctness in writing, respectively, was explained.

Research Question 2: Dynamic Relations of Reading Comprehension to Writing Quality, Writing Productivity, and Correctness in Writing, and the Mediating Role of Reading Comprehension

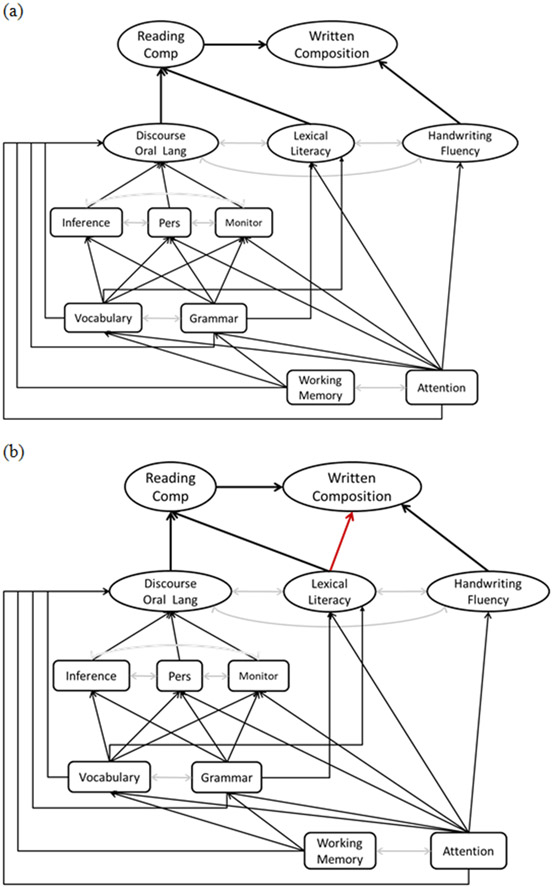

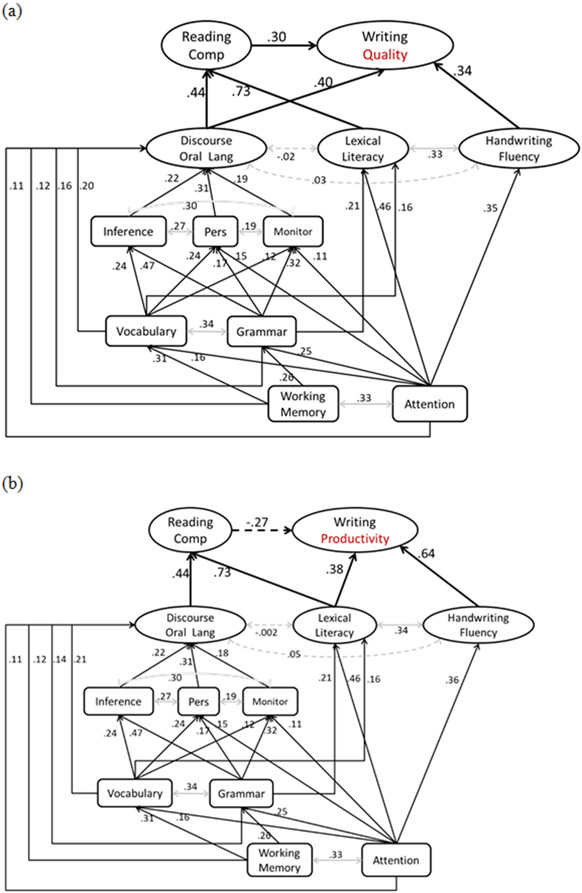

Prior to examining the mediating role of reading comprehension with the Figure 4 models, we fitted structural equation models without reading comprehension. These models established the relations of discourse oral language, lexical literacy, handwriting fluency, and the other skills to the three dimensions of written composition. The results supported the existence of relations of these component skills to written composition, as well as their differential relations (see Table S1 and Figure S1 in the online supplemental materials). We then fitted the three alternative models in Figure 4, which include reading comprehension, to each dimension of written composition (writing quality, writing productivity, and correctness in writing). This yielded a total of nine structural equation models (3 models × 3 dimensions of written composition). In all these models, reading comprehension was hypothesized to be directly predicted by discourse oral language and lexical literacy. It was also hypothesized to be indirectly predicted by their associated component skills, in line with theoretical models of reading comprehension and empirical evidence (Florit & Cain, 2011; Hoover & Gough, 1990; Kim, 2017, 2020b). The three alternative models differed, however, in the nature of the mediating role of reading comprehension. In Figure 4a, reading comprehension was hypothesized to completely mediate the relations of both discourse oral language and lexical literacy to written composition. In Figure 4b, reading comprehension was hypothesized to partially mediate the relation of lexical literacy to written composition (i.e., a direct path from lexical literacy to written composition was added) while completely mediating the relation of discourse oral language. In Figure 4c, reading comprehension was hypothesized to partially mediate the relation of discourse oral language to written composition while completely mediating the relation of lexical literacy.
The Figure 4a model was nested within the Figure 4b and 4c models; therefore, its fit was compared with theirs using chi-square difference tests. The Figure 4b and 4c models were equivalent (they have identical fit) and thus could not be statistically compared. Instead, they were examined for estimation problems (e.g., a Heywood case) to guide model choice.
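The nested-model comparison and the Heywood screen can be sketched in a few lines of Python. This sketch makes three assumptions:

- It uses unscaled maximum likelihood chi-square values. If a robust estimator such as MLR was used, a scaled difference test is required, and its result can differ from simple subtraction.
- It handles only a 1-df difference, which keeps the p value computable with the standard library.
- It treats any standardized estimate beyond ±1, or any negative variance, as a Heywood case. This is one common screening rule.

Both function names are hypothetical.

```python
import math


def chi_square_difference(chi2_restricted, df_restricted, chi2_free, df_free):
    """Unscaled chi-square difference test between two nested models (1-df case only)."""
    delta_chi2 = chi2_restricted - chi2_free
    delta_df = df_restricted - df_free
    if delta_df != 1:
        raise ValueError("this sketch handles only a 1-df difference")
    if delta_chi2 < 0:
        raise ValueError("the restricted model should not fit better than the free model")
    # Upper-tail probability of a chi-square with 1 df: P(X > x) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(delta_chi2 / 2))
    return delta_chi2, delta_df, p_value


def has_heywood_case(standardized_estimates, residual_variances):
    """Flag an improper solution: |standardized estimate| > 1 or a negative variance."""
    return (any(abs(b) > 1 for b in standardized_estimates)
            or any(v < 0 for v in residual_variances))


# Writing productivity: Figure 4a (restricted) vs. Figure 4b/4c (free), values from Table 5.
d, ddf, p = chi_square_difference(354.45, 200, 347.98, 199)
print(f"delta chi2 = {d:.2f}, df = {ddf}, p = {p:.3f}")
```

With the writing productivity values in Table 5, the test returns Δχ2 = 6.47 with 1 df and p ≈ .011, which matches the tabled comparison.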

Figure 4.

Alternative models of the relation of reading comprehension to written composition, which were fitted to each of the three dimensions of written composition, writing quality, writing productivity, and correctness in writing. Black lines represent predictive paths (the red line indicates how the alternative models differ), and gray lines represent covariances. Comp = comprehension; Lang = language; Lexical literacy = Word reading and spelling; Pers = perspective taking (theory of mind); Monitor = comprehension monitoring; Attention = Attentional control.

Given the complexity of these models, a preliminary analysis was conducted to examine relations among component skills. In all the models, handwriting fluency was not hypothesized to be related to reading comprehension, based on DIEW (Figure 1) and on our preliminary analysis confirming no such relation. Covariances between higher order cognitive skills and transcription skills were not allowed, based on theory (see Figure 1), prior findings (Kim & Schatschneider, 2017), and the preliminary analysis. The preliminary analysis also showed that attentional control had direct relations to perspective taking and monitoring, but not to inference, after accounting for vocabulary, grammatical knowledge, and working memory. Furthermore, attentional control was directly related to spelling and handwriting fluency, whereas working memory was related to neither of these skills after accounting for the other variables in the model. These preliminary findings were applied to all models in Figures 4a, 4b, and 4c.

Table 5 presents model fits of the three alternative models (Figures 4a, 4b, and 4c) for each dimension of written composition: writing quality, writing productivity, and correctness in writing. For writing quality, Figure 4c was chosen as the final model. Figure 4a fit statistically significantly worse than Figures 4b and 4c, and Figure 4b suffered from a Heywood case in which the standardized coefficient for the relation of reading comprehension to writing quality was greater than 1. Results of the Figure 4c model are shown in Figure 5a. Reading comprehension (.30, p < .001) and handwriting fluency (.32, p < .001) were independently related to writing quality after accounting for the other variables in the model, including lexical literacy. Lexical literacy was independently related to reading comprehension (.73, p < .001) but not to writing quality. Discourse oral language was independently related to both reading comprehension (.44, p < .001) and writing quality (.40, p < .001) after accounting for the other variables in the model. These results indicate the following:

- Reading comprehension is directly predicted by discourse oral language and lexical literacy.
- Writing quality is directly predicted by discourse oral language.
- Both are indirectly predicted by the component skills of discourse oral language and lexical literacy, such as inference, perspective taking, monitoring, vocabulary, grammatical knowledge, working memory, and attentional control.

The results also indicate that the relations of lexical literacy and its component skills to writing quality are completely mediated by reading comprehension. In contrast, the relations of discourse oral language and its component skills to writing quality are partially mediated by reading comprehension. Approximately 67% of the total variance in writing quality was explained by the included predictors.
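The mediation pattern can be illustrated with the product-of-coefficients rule. An indirect effect is the path into the mediator multiplied by the path out of it, and the total effect adds any direct path. The sketch below plugs in the standardized Figure 5a coefficients reported in this paragraph.

This is a back-of-the-envelope decomposition, not the estimation procedure used in this study:

- It ignores all other paths in the model.
- It provides no significance test. Indirect effects are commonly tested with bootstrap confidence intervals.

`mediation_effects` is a hypothetical helper.

```python
def mediation_effects(a, b, c_prime=0.0):
    """Product-of-coefficients decomposition for X -> M -> Y with an optional direct path X -> Y.

    a: standardized path X -> M; b: standardized path M -> Y; c_prime: direct path X -> Y.
    """
    indirect = a * b
    total = indirect + c_prime
    proportion = indirect / total if total != 0 else float("nan")
    return {"indirect": indirect, "direct": c_prime, "total": total,
            "proportion_mediated": proportion}


rc_to_wq = 0.30  # reading comprehension -> writing quality (Figure 5a)

# Lexical literacy: completely mediated (no direct path to writing quality in Figure 4c).
lexical = mediation_effects(a=0.73, b=rc_to_wq)

# Discourse oral language: partially mediated (direct path .40 retained).
discourse = mediation_effects(a=0.44, b=rc_to_wq, c_prime=0.40)

for name, eff in (("lexical literacy", lexical), ("discourse oral language", discourse)):
    print(name, {k: round(v, 3) for k, v in eff.items()})
```

Under these assumptions, lexical literacy has an indirect effect of about .22 on writing quality, all of it through reading comprehension. Discourse oral language has an indirect effect of about .13 and a total effect of about .53, so roughly a quarter of its effect runs through reading comprehension.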

Table 5.

Model Fit Comparisons

| Dimension of Written Composition | Figure | χ2 (df), p value | CFI (TLI) | RMSEA (SRMR) | nBIC | Model comparison: Δχ2 (Δdf, p value) |
|---|---|---|---|---|---|---|
| Writing Quality | Figure 4a | 382.41 (200), < .001 | .96 (.95) | .05 (.05) | 45207.28 | |
| | Figure 4b * | 367.99 (199), < .001 | .96 (.95) | .05 (.05) | 45195.55 | |
| | **Figure 4c** | 367.99 (199), < .001 | .96 (.95) | .05 (.05) | 45195.55 | 4a vs. 4c: 12.42 (1, < .001) |
| Writing Productivity | Figure 4a | 354.45 (200), < .001 | .96 (.96) | .05 (.05) | 50008.26 | |
| | **Figure 4b** | 347.98 (199), < .001 | .97 (.96) | .05 (.05) | 50004.47 | |
| | Figure 4c + | 347.98 (199), < .001 | .97 (.96) | .05 (.05) | 50004.47 | 4a vs. 4b & 4c: 6.47 (1, .01) |
| Correctness in Writing | Figure 4a | 392.70 (200), < .001 | .96 (.95) | .05 (.05) | 49673.95 | |
| | **Figure 4b** | 380.95 (199), < .001 | .96 (.95) | .05 (.05) | 49664.88 | |
| | Figure 4c + | 380.95 (199), < .001 | .96 (.95) | .05 (.05) | 49664.88 | 4a vs. 4b & 4c: 9.75 (1, .002) |

Note. * = Heywood case; + = statistically significant suppression effect. Final models are shown in bold.

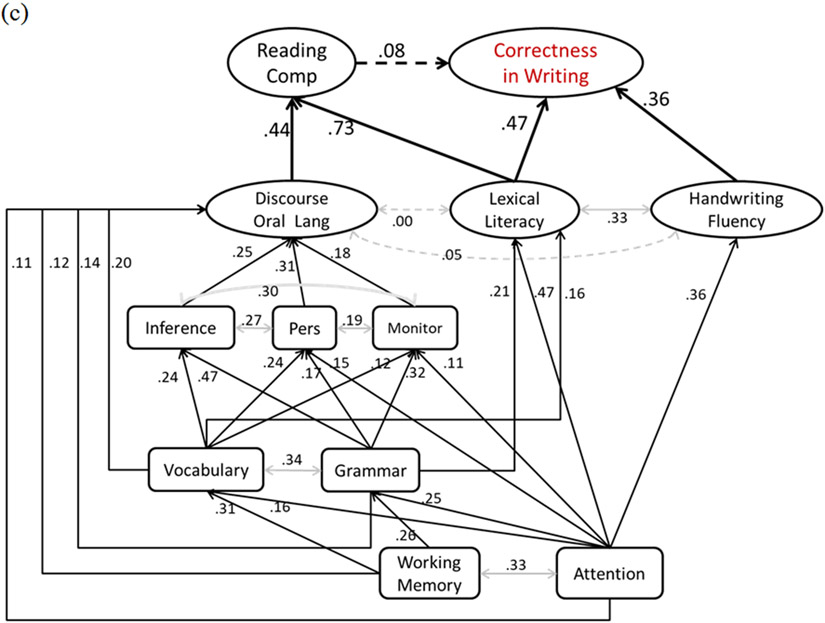

Figure 5.

Standardized path coefficients of expanded DIEW, where reading comprehension is a mediator for (a) writing quality, (b) writing productivity, and (c) correctness in writing. Solid lines represent statistically significant relations, whereas dashed lines represent nonsignificant relations. Gray lines represent covariances. Comp = comprehension; Lang = language; Lexical literacy = Word reading and spelling; Pers = perspective taking (theory of mind); Monitor = comprehension monitoring; Attention = Attentional control.

Because reading comprehension was related to writing quality over and above all the other skills in the model, a post hoc analysis was conducted to explore the directionality of the reading comprehension-writing quality relation. As stated above, DIEW posits bidirectional relations between reading comprehension and written composition. However, bidirectionality is anticipated at an advanced phase of writing development. In the beginning phase examined in the present study, we expected the relation to run from reading comprehension to writing (see Ahmed et al., 2014; Kim et al., 2018, for empirical evidence). Although the directionality question is better addressed with longitudinal data, we explored whether a writing quality-to-reading comprehension model fit the data better than the reading comprehension-to-writing quality model shown in Figure 5a. The results indicated that the reading comprehension-to-writing quality model (Figure 5a) fit the data better (see Appendix B).

For writing productivity, Figure 4b was chosen as the final model. Both Figures 4b and 4c fit better than Figure 4a. However, the Figure 4c model had a statistically significant suppression effect of discourse oral language on writing productivity: no relation in the bivariate correlation (.09, p = .21) but a negative relation in Figure 4c (−.23, p = .01). The same pattern was found for correctness in writing, so Figure 4b was also chosen as the final model for that dimension. Standardized path coefficients are presented in Figures 5b and 5c for writing productivity and correctness in writing, respectively. In both models, reading comprehension was not related to the outcome (−.27, p = .07 for writing productivity; .08, p = .52 for correctness in writing). In contrast, lexical literacy and handwriting fluency made independent contributions (≥ .36, ps < .001) after accounting for the other variables in the model. Therefore, reading comprehension did not act as a mediator for writing productivity or correctness in writing. Given the absence of a unique relation, no post hoc analysis of directionality was conducted for these two dimensions. Approximately 51% and 61% of the variance in writing productivity and correctness in writing, respectively, was explained.
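The suppression criterion used to choose between the equivalent Figure 4b and 4c models can be made explicit. The function below is a hypothetical screening heuristic that mirrors how suppression is described in this section. It flags a statistically significant path when the corresponding bivariate correlation is either nonsignificant or opposite in sign. It is not a formal definition of suppression, and the significance flags are inputs taken from the fitted models.

```python
def flag_suppression(r, r_significant, beta, beta_significant):
    """Heuristic screen for a suppression effect.

    r: bivariate correlation between predictor and outcome.
    beta: standardized path coefficient for the same predictor in the full model.
    """
    if not beta_significant:
        return False  # a nonsignificant path is not flagged
    if not r_significant:
        return True  # no zero-order relation, yet a significant path in the model
    return (r > 0) != (beta > 0)  # sign reversal relative to the correlation


# Discourse oral language -> writing productivity in Figure 4c.
print(flag_suppression(r=0.09, r_significant=False, beta=-0.23, beta_significant=True))  # True
```

For discourse oral language and writing productivity (r = .09, p = .21; path = −.23, p = .01), the function flags suppression, which is the pattern that led to setting aside the Figure 4c model.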

Research Question 3: Dynamic Relations in Terms of Total Effects of Component Skills