Abstract

The cortical grasp network encodes planning and execution of grasps and processes spoken and written aspects of language. High-level cortical areas within this network are attractive implant sites for brain-machine interfaces (BMIs). While a tetraplegic patient performed grasp motor imagery and vocalized speech, neural activity was recorded from the supramarginal gyrus (SMG), ventral premotor cortex (PMv), and somatosensory cortex (S1). In SMG and PMv, five imagined grasps were well represented by firing rates of neuronal populations during visual cue presentation. During motor imagery, these grasps were significantly decodable from all brain areas. During speech production, SMG encoded both spoken grasp types and the names of five colors. While PMv neurons significantly modulated their activity during grasping, SMG’s neural population broadly encoded features of both motor imagery and speech. Together, these results indicate brain signals from high-level areas of the human cortex could be used for grasping and speech BMI applications.

Keywords: brain-machine interfaces, single-unit recording, grasp decoding, speech decoding, supramarginal gyrus, ventral premotor cortex, somatosensory cortex

eTOC Blurb:

Wandelt et al. study how signals from the human supramarginal gyrus, ventral premotor cortex, and somatosensory cortex can be used for brain-machine interfaces. The supramarginal gyrus and ventral premotor cortex encode grasp motor imagery and the supramarginal gyrus encodes vocalized speech, indicating new target regions for grasp and speech BMI applications.

Introduction

The ability to grasp and manipulate everyday objects is a fundamental skill, required for most daily tasks of independent living. Functional loss of this ability, due to partial or complete paralysis from a spinal cord injury (SCI), can irrevocably degrade an individual’s autonomy. Recovery of hand and arm function (Anderson, 2004), as well as recovery of speech communication (Hecht et al., 2002), is very important to tetraplegic patients and those suffering from certain neurological conditions such as amyotrophic lateral sclerosis (ALS).

Brain-machine interfaces (BMIs) could give tetraplegic individuals greater independence by directly recording neural activity from the brain and decoding these signals to control external devices, such as a robotic arm or hand (Aflalo et al., 2015), (Collinger et al., 2013). Recently, BMIs have utilized neural signals to reconstruct speech (Moses et al., 2021), (Wilson et al., 2020), (Angrick et al., 2021). Intracortical BMIs use microelectrode arrays to record the action potentials of individual neurons with high signal-to-noise ratio (SNR) and high spatial resolution (Nicolas-Alonso and Gomez-Gil, 2012). These characteristics make them valuable for BMI applications.

This study targeted three regions of the human cortex: the supramarginal gyrus (SMG), which is a sub-region of the posterior parietal cortex (PPC), the ventral premotor cortex (PMv) and the primary somatosensory cortex (S1). These brain areas are key components of the cortical grasp circuit. PPC and PMv each encode complex cognitive processes, like goal signals (Aflalo et al., 2015), but, similar to M1, also encode low-level trajectory and joint-angle motor commands (Andersen et al., 2014), (Schaffelhofer and Scherberger, 2016). Instead of decoding individual finger movements from M1, decoding movement intentions from upstream brain areas such as PPC and PMv may allow for more rapid and intuitive control of a grasp BMI (Andersen et al., 2019). S1 processes incoming sensory feedback signals from the peripheral nervous system, including proprioceptive signals during movement (Goodman et al., 2019) and imagined somatosensations (Bashford et al., 2021).

The supramarginal gyrus (SMG) is involved in processing motor activity during complex tool use (Orban and Caruana, 2014). This finding is supported by functional magnetic resonance imaging (fMRI) studies of humans and non-human primates (NHPs), demonstrating tool use activation of SMG is unique to humans (Peeters et al., 2009). Other studies have confirmed modulation of SMG activity during grasping and manipulation of objects (Sakata, 1995), reaching (Filimon et al., 2009), planning (Johnson-Frey, 2004) and execution of tool use (Gallivan et al., 2013), (Orban and Caruana, 2014), (McDowell et al., 2018), (Buchwald et al., 2018), (Reynaud et al., 2019), (Garcea and Buxbaum, 2019). These characteristics highlight SMG’s rich potential as a source of grasp-related neural signals in the human cortex.

In the somatosensory cortex, human and non-human primate (NHP) studies have demonstrated decoding of hand kinematics during executed hand gestures (Branco et al., 2017) and before contact during object grasping (Okorokova et al., 2020). Modulation of S1 neurons during motor imagery of reaching (Jafari et al., 2020) has been demonstrated for the same participant whose data underlies this work. Therefore, S1 might encode grasp motor imagery.

While the human grasping circuit represents an ideal target for grasp BMI applications, aspects of language are also decodable from this same network. Stavisky et al. (2019) and Wilson et al. (2020) demonstrated decoding of speech from the ‘hand knob’ area in M1, the final cortical output of the grasp circuit. Aflalo et al. (2020) found PPC activation during reading of action words, and Zhang et al. (2017) found it during vocalized speech. Transcranial magnetic stimulation (TMS) and fMRI experiments have both documented SMG’s involvement in language processing (Stoeckel et al., 2009), (Sliwinska et al., 2012), (Oberhuber et al., 2016) and verbal working memory (Deschamps et al., 2014), suggesting potential involvement in speech production. However, to our knowledge, speech decoding has not previously been demonstrated in SMG.

Results

Grasp representation in SMG, PMv and S1 was characterized by decoding five imagined grasps, cued with visual images taken from the “Human Grasping Database” (Feix et al., 2016). We quantified grasp tuning in the neuronal population and decodability of each individual grasp across all brain regions. SMG, PMv and S1 neural populations showed significant grasp selectivity, making them candidates for grasp BMI implantation sites. We evaluated each region’s role during language processing by cueing the participant to vocalize grasp names and colors. PMv was selectively active during imagined grasps, while SMG was selective during both imagined grasps and speech production.

Motor imagery task design

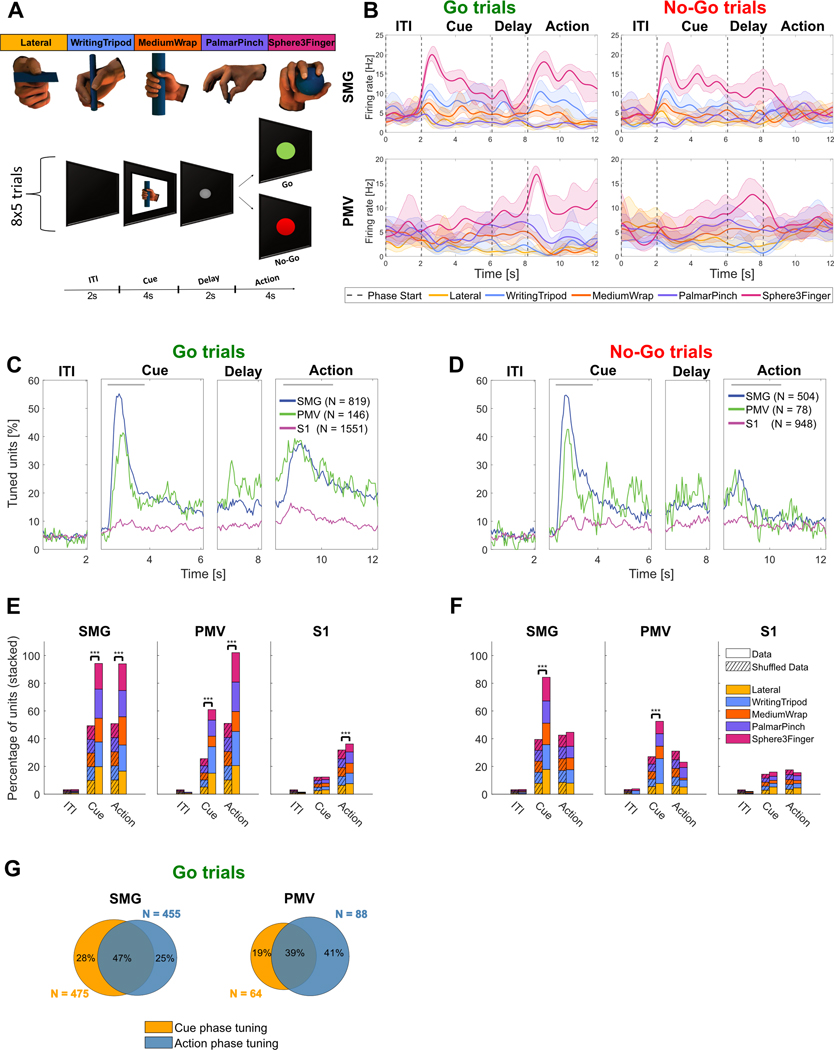

The motor imagery task contained four phases: an inter-trial interval (ITI), a cue phase, a delay phase, and an action phase (Figure 1A). The Go variation of the task consisted of only Go-trials, with performed motor imagery during the action phase. A Go/No-Go variation of the task contained an action phase with randomly intermixed Go trials and No-Go trials. This control condition verified the participant could control motor imagery-related activity at will.

Figure 1|. Neurons in the cortical grasp circuit encode grasp types.

A) Grasp images were used to cue motor imagery in a tetraplegic human. The task was composed of an inter-trial interval (ITI), a cue phase displaying one of the grasp images, a delay phase and an action phase. For the Go/No-Go task the action phase contained intermixed Go trials (green – performed motor imagery) and No-Go trials (red – rest). B) Example smoothed firing rates of neurons in SMG and PMv during Go and No-Go trials. Smoothed average firing rates of two example units (solid line: mean, shaded area: 95% bootstrapped confidence interval) for 8 trials of each grasp. Vertical dashed lines represent the beginning of each phase. C) Percentage of tuned units to grasps for Go trials in 50ms time bins in SMG, PMv and S1 over the trial duration. The gray lines represent cue and action analysis windows for figures E,F. D) Same as C) for No-Go trials. E) Stacked percentage of units tuned for each grasp in the ITI, cue and action phase windows during Go trials. Significance was calculated by comparing data to a shuffle distribution (striped lines, *** = p < 0.001). F) Same as E) for No-Go trials. G) Overlap of tuned units between the cue and action analysis windows during Go trials for SMG and PMv.

Go trial results were quantitatively similar in the Go and Go/No-Go variations of the task, as assessed through a t-test between classification accuracies (p > 0.05 for all). Therefore, neurons involved during Go-trials in both tasks were pooled over all session days (see Table 1), resulting in 819 SMG Go task units, 504 SMG No-Go task units, 146 PMv Go task units, 78 PMv No-Go task units, 1551 S1 Go task units, and 948 S1 No-Go task units.

Table 1. Number of recording sessions for each task variation.

| Area / Task | Go task | Go/No-Go task | Spoken Grasps | Spoken Colors |
|---|---|---|---|---|
| SMG | 6 | 9 | 5 | 5 |
| PMv | 6 | 6 | 5 | 5 |
| S1 | 6 | 7 | 5 | 5 |

SMG, PMv and S1 show significant tuning to grasps during motor imagery

Smoothed firing rates of example neurons for SMG and PMv most active for grasp “Sphere3Finger” are shown in Figure 1B. Motor imagery evoked a much stronger response during the action phase of Go trials compared to the action phase of No-Go trials.

After establishing individual neural firing rate modulation during motor imagery for different grasps, we quantified the entire neuronal population’s selectivity for each grasp. To compare selective neural activity within task epochs (image cue, Go-task action phase, No-Go task action phase), we determined the duration of selective (or tuned) activity of the neural population during each phase. Tuning of a neuron to a grasp was determined by fitting a linear regression model to the firing rate in 50ms time bins (see methods).
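As a rough illustration of this kind of tuning analysis, the Python sketch below regresses one unit's firing rate in a single time bin on grasp indicator variables, using ITI samples as the baseline. With that coding, each ordinary least-squares coefficient equals the grasp's mean rate minus the ITI mean. This is not the analysis code used in the study (which was written in MATLAB); the function name `grasp_tuning_tstats`, the toy firing rates, and the use of a t-statistic as the tuning measure are all assumptions made for illustration.

```python
from math import sqrt
from statistics import mean


def grasp_tuning_tstats(iti_rates, grasp_rates):
    """Indicator-variable regression with the ITI as baseline (hypothetical helper).

    iti_rates:   list of baseline firing rates (Hz).
    grasp_rates: dict mapping grasp name -> list of firing rates (Hz) in one bin.
    Returns a dict mapping grasp name -> (beta, t_statistic).
    """
    groups = {"ITI": iti_rates, **grasp_rates}
    n_total = sum(len(v) for v in groups.values())
    n_params = len(groups)  # intercept plus one coefficient per grasp
    # For this design the fitted value of every sample is its group mean,
    # so residuals are deviations from the group means.
    sse = sum((x - mean(v)) ** 2 for v in groups.values() for x in v)
    sigma2 = sse / (n_total - n_params)
    baseline = mean(iti_rates)
    result = {}
    for name, rates in grasp_rates.items():
        beta = mean(rates) - baseline
        se = sqrt(sigma2 * (1 / len(rates) + 1 / len(iti_rates)))
        result[name] = (beta, beta / se)
    return result


# Toy data (illustrative only): one unit, one 50 ms bin.
iti = [2.0, 3.0, 2.5, 2.5]
rates = {"Lateral": [2.0, 3.0, 3.0, 2.0], "Sphere3Finger": [9.0, 10.0, 11.0, 10.0]}
tuning = grasp_tuning_tstats(iti, rates)
```

In this toy example the unit shows a large positive coefficient for “Sphere3Finger” and none for “Lateral”. A function like this would be called once per unit and per 50 ms bin, with significance then assessed from the t-statistic or from a shuffle distribution.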

Population analysis (Figure 1C) of Go trials revealed two main peaks of activation in SMG and PMv, one at cue presentation (54.8% SMG, 41.1% PMv) and another during the action phase (37.4% SMG, 39.0% PMv). For S1, only a minor increase in neural tuning was observed during the action phase. During No-Go trials, neuronal activity decreased around 1s after start of the action phase (Figure 1D, action phase).

The peaks of activity were selected to compute individual grasp tuning. Time windows incorporating the peaks began 250ms after the start of either the cue or action phase (to account for processing latencies), and were respectively 1.5s and 2s long (gray lines, top of Figure 1C,D). A longer time window was chosen for the action phase, as the exact onset of motor imagery is not possible to measure.
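The window definition above can be expressed in 50 ms bins relative to phase onset. The following Python sketch is only an illustration; the constant and function names are hypothetical.

```python
BIN_MS = 50        # bin width used for the tuning analysis
LATENCY_MS = 250   # offset after phase onset, to account for processing latencies
WINDOW_MS = {"cue": 1500, "action": 2000}  # window lengths stated in the text


def window_bins(phase, bin_ms=BIN_MS):
    """Return the bin indices (relative to phase onset) covered by the window."""
    start = LATENCY_MS // bin_ms
    stop = (LATENCY_MS + WINDOW_MS[phase]) // bin_ms
    return list(range(start, stop))


cue_bins = window_bins("cue")        # 30 bins, starting at bin 5
action_bins = window_bins("action")  # 40 bins, starting at bin 5
```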

To assess if grasp tuning was significant, results were compared to a shuffled condition, where grasp labels were randomly reassigned (see methods). As linear regression uses the ITI phase as a baseline condition, shuffled results were proportional to the general increase of activity in the neuronal population. Tuning was significant during the Go-trial peak activity for all brain areas (Figure 1E). As expected, tuning was not significant in the ITI condition. During the cue phase, results were significant in SMG and PMv, but not significant in S1. During the action phase of No-Go trials, tuning was not significant in any brain area (Figure 1F). These results highlight grasp-dependent neuronal activity during cue presentation in SMG and PMv, and during instructed motor imagery in all brain areas. Additionally, units tuned to multiple grasps existed in all brain areas, demonstrating mixed grasp encoding within the population (Figure S1).
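A label-shuffle comparison of this kind can be sketched as a generic permutation test. The Python example below is a minimal illustration and not the procedure from the methods: the selectivity statistic (`spread_of_group_means`), the number of shuffles, and the toy firing rates are assumptions.

```python
import random
from statistics import mean


def spread_of_group_means(rates, labels):
    """Toy selectivity statistic: range of the per-grasp mean firing rates."""
    groups = {}
    for rate, label in zip(rates, labels):
        groups.setdefault(label, []).append(rate)
    means = [mean(v) for v in groups.values()]
    return max(means) - min(means)


def shuffle_test(rates, labels, statistic, n_shuffles=1000, seed=0):
    """Compare the actual statistic with a null built by reassigning labels."""
    rng = random.Random(seed)
    actual = statistic(rates, labels)
    shuffled = list(labels)
    null = []
    for _ in range(n_shuffles):
        rng.shuffle(shuffled)  # random reassignment of grasp labels
        null.append(statistic(rates, shuffled))
    # One-sided p-value with the usual +1 correction.
    p_value = (1 + sum(s >= actual for s in null)) / (1 + n_shuffles)
    return actual, null, p_value


# Toy data (illustrative only): 8 trials for each of two grasps.
labels = ["Lateral"] * 8 + ["MediumWrap"] * 8
rates = [2.0, 3.0] * 4 + [9.0, 10.0] * 4
actual, null, p_value = shuffle_test(rates, labels, spread_of_group_means)
```

The same scaffold applies to other statistics, such as the percentage of tuned units or a classification accuracy, by swapping the `statistic` argument.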

Similar to previous analysis methodologies (Murata et al., 1997), (Sakata, 1995), (Taira, 1998), (Klaes et al., 2015), we separated tuned units into three categories: those tuned during the cue phase (“visual units”), those tuned during Go-trial action phase (“motor-imagery units”) and those tuned during both (“visuo-motor units”). All three neuron types were found in SMG and PMv (Figure 1B,G).
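The three categories amount to simple set operations on the units tuned in each window. A minimal Python sketch, with hypothetical unit identifiers:

```python
def categorize_units(cue_tuned, action_tuned):
    """Split tuned units into the three categories described in the text."""
    cue_tuned, action_tuned = set(cue_tuned), set(action_tuned)
    return {
        "visual": cue_tuned - action_tuned,         # tuned during the cue phase only
        "motor-imagery": action_tuned - cue_tuned,  # tuned during the Go action phase only
        "visuo-motor": cue_tuned & action_tuned,    # tuned during both phases
    }


# Hypothetical unit IDs, for illustration only.
categories = categorize_units(cue_tuned=[1, 2, 3, 4], action_tuned=[3, 4, 5])
```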

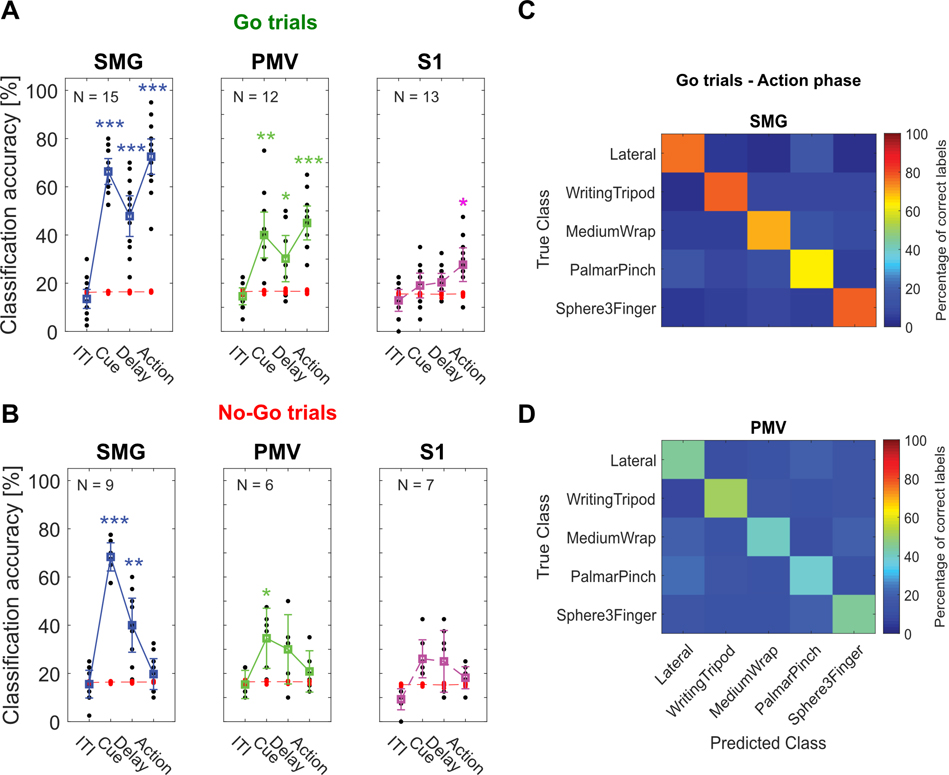

SMG, PMv and S1 show significant classification accuracy during grasp motor imagery

To assess each brain region’s potential use for BMI applications, we evaluated decodability of individual imagined grasps using linear discriminant analysis (LDA - see methods). Significant motor imagery decoding was observed during the cue, delay and Go-action phases in SMG and PMv and during the Go-action phase in S1 (Figure 2A). For No-Go trials, no significant classification accuracies were obtained in the action phases (Figure 2B). Importantly, these results mirror our findings in Figure 1E,F, indicating significant grasp tuning can predict significant classification accuracies. A confusion matrix averaged over all sessions of Go-trials in SMG and PMv during the action phase indicates all grasps can be decoded (Figure 2C,D).
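The cross-validation scheme can be illustrated with a short Python sketch. To keep it self-contained, a nearest-class-mean classifier is used as a stand-in for LDA, so this is not the classifier used in the study; the function names and toy data are hypothetical. With five grasps, chance level is 20%.

```python
from math import dist
from statistics import mean


def fit_centroids(X, y):
    """Class centroid = mean feature vector of that class's training trials."""
    by_class = {}
    for row, label in zip(X, y):
        by_class.setdefault(label, []).append(row)
    return {c: [mean(col) for col in zip(*rows)] for c, rows in by_class.items()}


def predict(centroids, row):
    """Assign a trial to the class with the closest centroid."""
    return min(centroids, key=lambda c: dist(centroids[c], row))


def leave_one_out_accuracy(X, y):
    """Hold out each trial once, train on the remaining trials, and score it."""
    hits = 0
    for i in range(len(X)):
        train_X = X[:i] + X[i + 1:]
        train_y = y[:i] + y[i + 1:]
        hits += predict(fit_centroids(train_X, train_y), X[i]) == y[i]
    return hits / len(X)


# Toy data (illustrative only): 3 trials x 2 features for each of three grasps.
X = [[1.0, 0.0], [1.2, 0.1], [0.9, -0.1],
     [5.0, 5.0], [5.1, 4.9], [4.8, 5.2],
     [0.0, 9.0], [0.2, 9.1], [-0.1, 8.8]]
y = ["Lateral"] * 3 + ["MediumWrap"] * 3 + ["PalmarPinch"] * 3
loo_accuracy = leave_one_out_accuracy(X, y)
```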

Figure 2|. Significantly decodable grasps from all brain areas during motor imagery.

A) Classification was performed for each session day individually using leave-one-out cross-validation (black dots). PCA was performed. 95% c.i.s for the session means were computed. Significance was evaluated by comparing actual data results to a shuffle distribution (averaged shuffle results = red dots, * = p < 0.05, ** = p < 0.01, *** = p < 0.001). B) Same as A) for No-Go trials. C) Error matrix during Go-trial action phase for SMG, averaged over all session days. D) Same as C) for PMv.

SMG and PMv show high generalizability of grasp encoding in the neural population

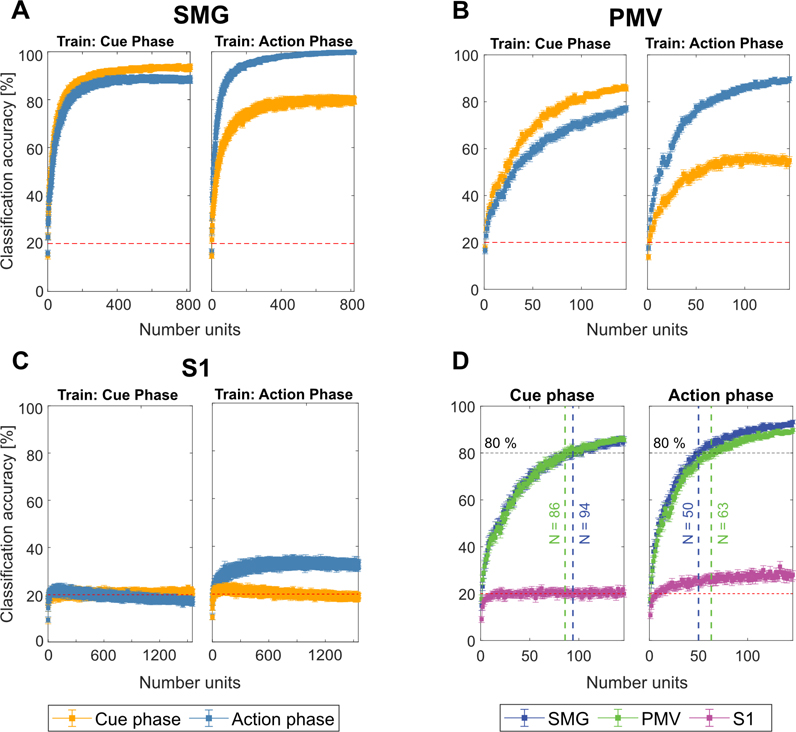

We addressed generalizability of grasp encoding in the neural population via two analyses, cross-phase classification and stability across different population sizes.

Cross-phase classification examined the similarities of neural processes across the cue and action phases (see methods). We trained a classification model on a subset of the data of one phase (e.g. cue phase), and tested it on two different subsets taken from the cue and action phases. If a model trained on the cue phase did not generalize to the action phase, distinct neural processes might be present in each phase. However, if the model generalized well, common cognitive processes might be occurring in both phases. A neuron dropping analysis tracked the evolving classification accuracy as units were removed or added to the pool of predictors (see methods). The analysis was performed separately for each of the implanted brain regions, with 100 repetitions of eight-fold cross-validation.
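The two analyses can be combined in one loop, as in the hedged Python sketch below: for each population size, units are drawn at random, a model is trained on held-in trials of one phase, and it is scored on held-out trials of every phase. A nearest-class-mean classifier stands in for the classifier used in the study, no PCA step is included, and all names and the noise-free toy data are assumptions.

```python
import random
from math import dist


def centroids(X, y):
    """Mean feature vector per class (nearest-class-mean stand-in classifier)."""
    groups = {}
    for row, label in zip(X, y):
        groups.setdefault(label, []).append(row)
    return {c: [sum(col) / len(col) for col in zip(*rows)] for c, rows in groups.items()}


def accuracy(model, X, y):
    preds = [min(model, key=lambda c: dist(model[c], row)) for row in X]
    return sum(p == t for p, t in zip(preds, y)) / len(y)


def take(matrix, trials, units):
    """Sub-matrix restricted to the given trials (rows) and units (columns)."""
    return [[matrix[i][u] for u in units] for i in trials]


def neuron_dropping(train_phase, test_phases, labels, sizes, n_reps=100, n_folds=8, seed=0):
    """train_phase: trials x units; test_phases: dict name -> trials x units.

    Returns {phase name: {population size: mean held-out accuracy}}.
    """
    rng = random.Random(seed)
    n_trials, n_units = len(labels), len(train_phase[0])
    scores = {name: {n: [] for n in sizes} for name in test_phases}
    for n in sizes:
        for _ in range(n_reps):
            units = rng.sample(range(n_units), n)  # random subset of units
            order = list(range(n_trials))
            rng.shuffle(order)
            for k in range(n_folds):
                test_idx = order[k::n_folds]
                train_idx = [i for i in order if i not in test_idx]
                model = centroids(take(train_phase, train_idx, units),
                                  [labels[i] for i in train_idx])
                y_test = [labels[i] for i in test_idx]
                for name, matrix in test_phases.items():
                    scores[name][n].append(accuracy(model, take(matrix, test_idx, units), y_test))
    return {name: {n: sum(v) / len(v) for n, v in by_n.items()}
            for name, by_n in scores.items()}


# Toy data (illustrative only): unit u fires at 10 Hz for grasp u, a sixth unit is uninformative.
grasps = ["Lateral", "WritingTripod", "MediumWrap", "PalmarPinch", "Sphere3Finger"]
labels = [g for g in grasps for _ in range(8)]  # 8 trials per grasp
cue = [[10.0 if u == grasps.index(g) else 0.0 for u in range(5)] + [1.0] for g in labels]
action = [row[:] for row in cue]  # identical code in both phases, so the model generalizes
curves = neuron_dropping(cue, {"cue": cue, "action": action}, labels, sizes=[1, 6], n_reps=5)
```

Because the toy cue and action data are identical, a model trained on the cue phase transfers perfectly once all units are included; with recorded data, a gap between the two curves would indicate phase-specific activity.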

Results were averaged over the eight folds, and bootstrapped confidence intervals (c.i.s) of the mean were computed over 100 repetitions (Figure 3). Stable results led to small c.i.s, ranging from ±2.88% to ±0.05% for SMG, ±2.83% to ±0.54% for PMv and ±2.36% to ±0.8% for S1, decreasing with the number of available units. SMG and PMv showed strong shared activity between the cue and action phases. When training on the cue phase, and testing on the cue and action phases, we observed good generalization of the model in SMG, with overlapping c.i.s, diverging only at high unit counts. In PMv, the generalization was lower, but showed similar trends, while decoding remained at chance level for S1 (Figure 3A,B,C Train: Cue Phase). However, when training on the action phase, and evaluating on the cue phase, lower generalization of the model was observed in SMG and PMv (Figure 3A,B,C Train: Action Phase).
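A bootstrapped confidence interval of the mean can be computed as in the Python sketch below. The percentile method, the number of resamples, and the example accuracies are assumptions for illustration; the text does not specify which bootstrap variant was used.

```python
import random
from statistics import mean


def bootstrap_ci_of_mean(values, n_boot=2000, level=0.95, seed=0):
    """Percentile bootstrap c.i. of the mean (resampling with replacement)."""
    rng = random.Random(seed)
    boot_means = sorted(
        mean(rng.choices(values, k=len(values))) for _ in range(n_boot)
    )
    lower = boot_means[round((1 - level) / 2 * n_boot)]
    upper = boot_means[round((1 + level) / 2 * n_boot) - 1]
    return lower, upper


# Hypothetical per-repetition accuracies, for illustration only.
accuracies = [0.78, 0.80, 0.82, 0.79, 0.81, 0.80, 0.83, 0.77]
ci_low, ci_high = bootstrap_ci_of_mean(accuracies)
```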

Figure 3|. SMG and PMv show high generalizability of grasp encoding in neuronal populations.

A-C) Neuron dropping curves were computed in SMG, PMv and S1 over 100 repetitions of eight-fold cross-validation. The first 20 PCs were used as features. The model was trained once on the cue phase and applied on both cue and action phases (Train: Cue phase), and vice-versa (Train: Action phase). The mean classification accuracy with bootstrapped 95% c.i.s are plotted. D) The first 140 units of each brain area were plotted together to compare the number of units required for 80% classification accuracy. SMG and PMv results were similar, with fewer units needed for classification during the action phase compared to the cue phase.

During the action phase, SMG peaked at 99% decoding accuracy when all recorded units were included in the analysis (Figure 3A). In S1, decoding accuracy during the action phase peaked around 32%, even when the pool of available neurons increased (Figure 3C). As PMv did not reach its peak decoding accuracy because fewer units were recorded (Figure 3B), performance of SMG and PMv at the same population levels was compared directly. Figure 3D depicts the number of units needed to obtain 80% classification accuracy during the cue (left) and action (right) phases. During the cue phase, 94 units in SMG and 86 units in PMv were needed. During the action phase, 80% classification accuracy was obtained with 50 units in SMG, and 63 units in PMv. These results demonstrate SMG’s and PMv’s potential for comparable grasp decoding. If higher neuronal population counts were available, excellent grasp classification results could be expected in both brain areas.
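Reading the required population size off a neuron dropping curve amounts to finding the first point that reaches the target accuracy. A minimal Python sketch follows; the curve values are made up for illustration and are not the recorded results.

```python
def units_needed(curve, target=0.80):
    """curve: (n_units, accuracy) pairs sorted by n_units.

    Returns the smallest population size reaching the target, or None.
    """
    for n_units, acc in curve:
        if acc >= target:
            return n_units
    return None


# Hypothetical curve, for illustration only.
toy_curve = [(10, 0.42), (20, 0.58), (30, 0.71), (40, 0.79), (50, 0.82), (60, 0.88)]
needed = units_needed(toy_curve)
```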

PMv had a limited number of neurons available for each daily session. It is possible that some units were included multiple times across multiple days, potentially reducing the amount of independent available information. However, since the highest classification accuracy during the action phase was higher for the neuron dropping curve (90%) than for individual session days (65%, Figure 2A), new grasp information was available by combining units across several days.

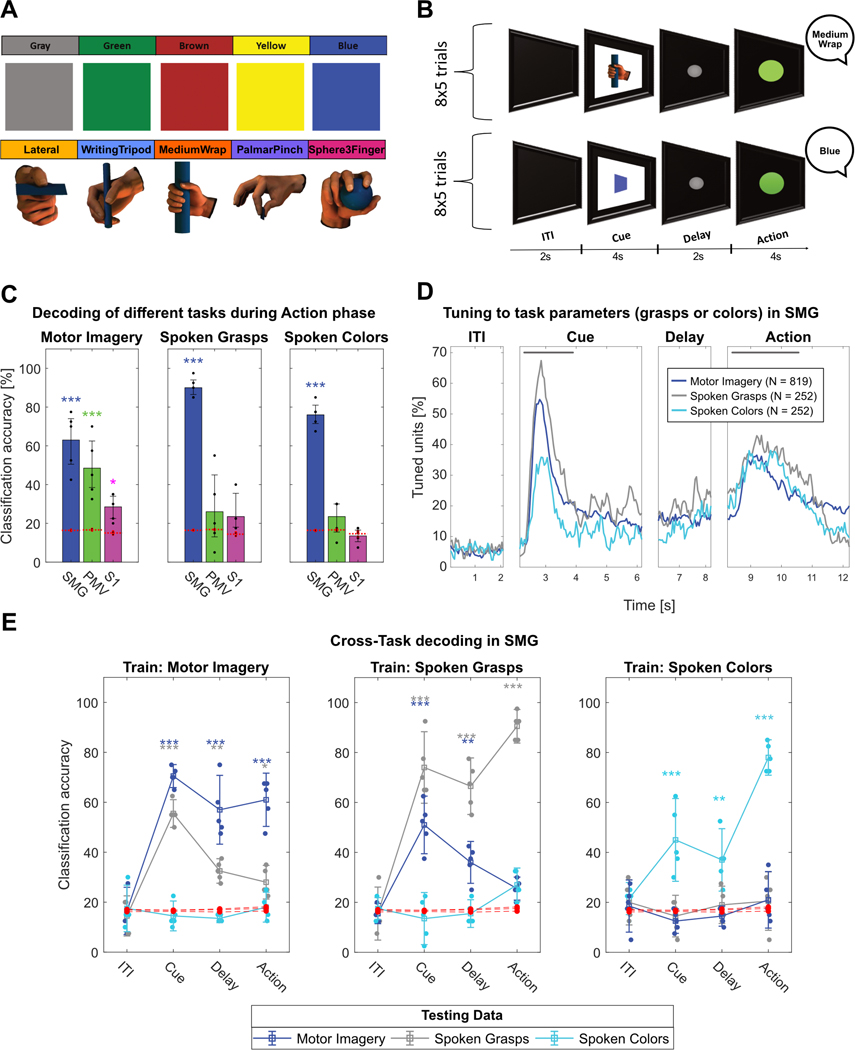

SMG significantly decodes spoken grasps and colors

To explore SMG, PMv and S1’s potential for speech BMIs, the participant was instructed to vocalize speech instead of performing motor imagery during the action phase. By comparing each region’s evoked activity between these two cognitive processes, we aimed to uncover evidence for language processing activity at the single unit level. During each daily session, “Motor Imagery”, “Spoken Grasps” and “Spoken Colors” versions of the task were run (Figure 4A,B, see methods). Importantly, both the “Motor Imagery” and the “Spoken Grasps” tasks were cued with the same images. This allowed us to investigate if the cue representation of the grasps remained similar, even if different motor outputs (grasping vs speaking) were planned.

Figure 4|. SMG encodes speech.

A) Grasp images cued the “Motor Imagery” and “Spoken Grasps” tasks. Colored squares cued the “Spoken Colors” task. B) The task contained an ITI, a cue phase displaying the image of one of the grasps or colored squares, a delay phase and an action phase. During the action phase, the participant vocalized the name of the cued grasp or color once. C) Classification was performed for each individual session day using leave-one-out cross-validation (black dots) for all tasks. PCA was performed for feature selection. 95% c.i.s for the session means were computed. Significance was computed by comparing actual data results to a shuffle distribution (averaged shuffle results = red dots, * = p < 0.05, ** = p < 0.01, *** = p < 0.001). SMG, PMv, and S1 showed significant classification results for motor imagery. Only SMG data significantly classified spoken grasps and spoken colors. D) Percentage of tuned units to grasps or colors in 50ms time bins in SMG for each task. The gray lines represent cue and action analysis windows for Figure S2A,B. E) Cross-task classification was performed by training a classification model on one task (e.g. Motor Imagery) and evaluating it on all three tasks, for each phase separately. Confidence intervals and significance were computed as described in Figure 4C. During the cue phase, generalization between tasks using the same image cue (“Motor Imagery” and “Spoken Grasps”) was observed. During the action phase, weak (*) or no generalization was observed.

Classification results during the action phase corroborate SMG’s involvement during language processing (Figure 4C) (Oberhuber et al., 2016), (Deschamps et al., 2014), (Stoeckel et al., 2009). In contrast to our motor imagery task, only SMG showed significant results during vocalization of grasp names and colors.

To assess selectivity of SMG neurons to the different task parameters, tuning in 50ms bins was computed identically to Figure 1C,D for each task (Figure 4D). The population analysis revealed similar temporal dynamics during the cue phase for the “Motor Imagery” and “Spoken Grasps” tasks. This result was expected; both conditions employed the same grasp cue. However, responses for the “Spoken Colors” cues were shorter in time and of lower amplitude, even though they were presented for the same duration as the grasp cues on the screen. During the action phase, temporal dynamics between motor imagery and spoken words were comparable, possibly indicating similar underlying cognitive processes.

We evaluated this hypothesized similarity between motor imagery and speech production by performing cross-task classification of the cue and action phases. Cross-task classification involved training a model on the neuronal firing rate observed in one task, and evaluating the model on all three tasks, performed separately for each phase (see methods). During the cue phase, decoding of grasps nicely generalized between the “Motor Imagery” and the “Spoken Grasps” task (Figure 4E, Train: Motor Imagery; Train: Spoken Grasps). This effect weakened during the delay phase, potentially indicating the formation of separate motor plans for speech and motor imagery. During the action phase, generalization between grasp motor imagery and grasp speech was weak or absent, even if the semantic content was identical. No generalization between the “Spoken Colors” and “Spoken Grasps” tasks occurred.

These results were strengthened by two additional analyses. Venn diagrams displayed the overlap of tuned units between the tasks in different phases. During the cue presentation (Figure S2A), highly overlapping neural populations were engaged in the “Motor Imagery” and “Spoken Grasps” tasks. However, during the action phase (Figure S2B), the output modality (speech vs. motor imagery) was represented more similarly than semantic content (grasps vs. colors). The projection of z-scored action phase data onto the first two principal components indicated grasp motor imagery, grasp speech and spoken colors occupied different feature spaces (Figure S2C).

We found a similar relationship between the cue and action phase neural representations during speech production as was found previously (Figure 3) in the “Motor Imagery” task (Figure S3A,B). Results from the cross phase classification analysis and neuron dropping curves yielded evidence of generalizability from the cue to action phase in both types of tasks (Figure S3).

Discussion

To demonstrate the participant’s volitional control of motor imagery during the action phase, interleaved No-Go trials served as a control. As expected, during No-Go trials in the action phase, unit tuning was not significantly different from a shuffled distribution (Figure 1F, Figure S1B), and classification was not significantly different from chance (Figure 2B). A non-significant peak in tuning was observed in Figure 1D (No-Go action phase trials), potentially indicating the formation of a motor plan before the No-Go cue that then dissipated in the action phase. Similar cancelled plans have been previously observed in PPC of NHPs for reach and saccade plans (Cui and Andersen, 2007).

S1 encodes imagined grasps significantly, but does not improve with population size

While S1 grasp motor imagery classification was significant (Figure 2A), performance did not improve with increased population sizes as was seen with SMG and PMv (Figure 3D). This could be an indication of limited grasp information within the S1 population, or highly correlated firing units. Firstly, no actual movement was performed, likely decreasing the occurrence of proprioceptive signals. Secondly, the task design might have only weakly engaged the neural populations we recorded from, as the electrode implant mostly covered the contralateral arm area (Armenta Salas et al., 2018). Thirdly, units in S1 showed mostly grasp independent increases in activity compared to baseline (Figure 1C,E), possibly indicating that the grasp-related responses were not different enough to support stronger decoding in S1.

SMG and PMv show significant grasp activity during the visual cue and motor imagery

SMG’s cue phase activity rose faster, and peaked higher compared to activity during motor imagery (Figure 1C). A study showed grasp planning in SMG was disrupted by TMS as early as 17ms after cue presentation, implicating a causal role in grasp planning and execution (Potok et al., 2019).

While human participants can self-report strategies employed while performing internal cognitive tasks, cue processing and motor imagery do not have independently observable behavioral outputs to correlate with the measured neural data. Our analysis showed generalizable representation (Figure 3A,B) and overlapping tuning (Figure 1G) in both SMG and PMv during both the cue and action phases. Multiple explanations for generalized neural activity observed during these tasks are plausible. During cue presentation, an increase in neural activity could represent visual feature extraction of the presented cue (visual processes). Alternatively, activity could be independent of visual input and represent planning activity of the cued grasp (motor processes). Additionally, activity could be related to memory or semantic meaning, as the participant remembers the instructed grasp (cognitive processes). Finally, a combination of all these processes might be at play. While providing a definitive answer to these questions is beyond the scope of this paper, performing cross-phase classification between the cue and action phases can help identify similar or distinct cognitive processes within the observed data.

These similarities could be explained by the participant performing “visual imagery” rather than motor imagery during the action phase, as they recall a mental image of the grasp (Figure 3A,B Train: Cue Phase). Cue phase activity can partly be explained during the action phase (classification performance 80% SMG, 55% PMv) (Figure 3A,B Train: Action Phase), but neuronal activity unique to the action phase exists (classification performance 99% SMG, 89% PMv, Figure 3A,B Train: Action Phase). This generalization from cue to action is not bidirectional (from action to cue). Therefore, we argue this additional information during the action phase is likely motor-related and thus fundamentally different from neural activity during the cue phase.

Good generalization of the model to both cue and action phases when training on the cue phase could indicate motor components as well as visual components. PMv has been shown to represent planning activity of the grasp in NHP experiments (Schaffelhofer and Scherberger, 2016). Therefore, planned hand shape as well as visual object features can modulate neuronal firing rates within the cortical grasp circuit during a grasp task. In SMG, an fMRI study demonstrated planning activity for grasping tools that were previously manipulated without vision, hinting that SMG’s cue phase activity is unlikely to be purely visual (Styrkowiec et al., 2019).

During tool use, SMG is hypothesized to integrate the appropriate grasp type with the knowledge of how to use the tool (Osiurak and Badets, 2016; Vingerhoets, 2014), which requires access to semantic information. As our current task design does not allow the determination of this cognitive process, further experimentation is necessary.

SMG encodes speech

During speech, SMG and PMv showed vastly different results. In SMG, spoken words (both grasp names and colors) were decodable as well as or better than motor imagery of grasps. In contrast, PMv and S1 showed neither significant classification of spoken grasp names nor of spoken colors (Figure 4C).

The motor imagery and speech tasks showed similar proportions of tuned SMG neurons during the action phase (Figure 4D). Does this result suggest SMG processes semantics, regardless of the performed task? To answer this question, we performed cross-task classification (Figure 4E).

During the cue phase, the model generalized nicely between the grasp motor imagery and spoken grasps tasks, confirming the neural code of the cued grasp image remained similar. This effect decreased during the delay phase and became weak or absent during the action phase. SMG may engage different motor plans when motor imagery or speech was performed, even if the semantic content was identical.

During the action phase, none of the models trained during one task generalized well to a different task. Furthermore, accurate classification of color words confirmed SMG’s role is not confined to only action verbs, even if classification accuracy of spoken colors was lower than that for spoken grasps. Possibly, the novelty of the words affected the amplitude of the neural representation, as color words are more common than the grasp names we employed. However, our participant was well versed in the names of the grasps, having used them repeatedly prior to data collection.

Study of the underlying feature space in SMG’s neuronal population suggested that hand posture (proximity of “Lateral” and “Palmar Pinch” in PCA space, Figure S2C) rather than object size and shape modulates SMG activity. These results support fMRI findings, where object size was not shown to modulate SMG activity (Perini et al., 2020).

Conclusion

In this study, we demonstrate grasps are well represented by single unit firing rates of neuronal populations in human SMG and PMv during cue presentation. During motor imagery, individual grasps could be significantly decoded in all brain areas. SMG and PMv achieved similar highly-significant decoding performances, demonstrating their viability for a grasp BMI. During speech, SMG achieved significant classification performance, in contrast to PMv and S1, which were not able to significantly decode individual spoken words. While temporal dynamics between motor imagery and speech were similar, we observed different motor plans for each output modality. These results are evidence for a larger role of SMG in language processing. Given the flexibility of neural representations within SMG, this brain area may be a candidate implant site for BMI speech and grasping applications.

STAR Methods

Resource Availability

Lead Contact

Further information and requests for resources should be directed to the Lead Contact, Sarah K. Wandelt (skwandelt@caltech.edu).

Materials Availability

This study did not generate new unique materials.

Data and Code Availability

All analyses were conducted in MATLAB using previously published methods and packages. MATLAB analysis scripts and preprocessed data are available on GitHub (https://doi.org/10.5281/zenodo.6330179).

Experimental model and subject details

A tetraplegic participant was recruited for an IRB- and FDA-approved clinical trial of a brain-machine interface and he gave informed consent to participate. The participant suffered a spinal cord injury at cervical level C5 two years prior to participating in the study.

Method details

Implants

The targeted areas for implant were the left ventral premotor cortex (PMv), supramarginal gyrus (SMG), and primary somatosensory cortex (S1). Exact implant sites within PPC and PMv were identified using fMRI while the participant performed imagined reaching and grasping tasks. The subject performed precision grip, power grip or reaches without hand shaping of objects in different orientations (Aflalo, Kellis et al., 2015). For localization of the S1 implant, the subject was touched on areas with residual sensation on the biceps, forearm and thenar eminence during fMRI, and reported the number of touches (Armenta Salas et al., 2018). In November 2016, the participant underwent surgery to implant one 96-channel multi-electrode array (Neuroport Array, Blackrock Microsystems, Salt Lake City, UT) in SMG and PMv each, and two 7 × 7 sputtered iridium oxide film - tipped microelectrode arrays with 48 channels each in S1.

Data collection

Recording began two weeks after surgery and continued one to three times per week. Data for this work were collected between 2017 and 2019. Broadband electrical activity was recorded from the NeuroPort arrays using Neural Signal Processors (Blackrock Microsystems, Salt Lake City, UT). Analog signals were amplified, bandpass filtered (0.3–7500 Hz), and digitized at 30,000 samples/sec. To identify putative action potentials, these broadband data were bandpass filtered (250–5000 Hz) and thresholded at −4.5 times the estimated root-mean-square voltage of the noise. Waveforms captured at these threshold crossings were then spike sorted by manually assigning each observation to a putative single neuron, and the rate of occurrence of each “unit”, in spikes/sec, constitutes the data underlying this work. Units with a firing rate <1.5 Hz were excluded from all analyses. To allow for meaningful analysis of individual datasets, recording sessions where high levels of noise prevented us from isolating more than three units on an array were excluded. This resulted in the removal of three PMv datasets. The rounded average number of recorded units per session was 55 +/− 17 for SMG, 12 +/− 9 for PMv, and 119 +/− 48 for S1.
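As an illustration of the thresholding and unit-exclusion rules described above, the following Python sketch detects downward crossings of −4.5 times an RMS estimate and applies the 1.5 Hz criterion. It is not the recording system's implementation: the function names are hypothetical, the input is assumed to be already bandpass filtered, and the noise RMS is approximated here by the RMS of the whole trace.

```python
import math


def rms(samples):
    """Root-mean-square of a sequence of voltages."""
    return math.sqrt(sum(v * v for v in samples) / len(samples))


def threshold_crossings(filtered, multiplier=-4.5):
    """Return sample indices where the signal first drops below the threshold.

    `filtered` is assumed to be bandpass filtered already (250-5000 Hz in the
    text). Assumption: the noise RMS is approximated by the RMS of the trace.
    Only the first sample of each downward excursion is reported.
    """
    threshold = multiplier * rms(filtered)
    crossings = []
    for i in range(1, len(filtered)):
        if filtered[i] < threshold <= filtered[i - 1]:
            crossings.append(i)
    return crossings


def keep_unit(spike_count, duration_s, min_rate_hz=1.5):
    """Exclusion rule from the text: units firing below 1.5 Hz are dropped."""
    return spike_count / duration_s >= min_rate_hz


# Toy trace: unit-amplitude alternating "noise" with two large negative spikes.
trace = [1.0 if i % 2 == 0 else -1.0 for i in range(1000)]
trace[100] = -20.0
trace[500] = -20.0
spike_indices = threshold_crossings(trace)
```

In practice the noise estimate would be computed on spike-free data, so the threshold in this toy example is slightly more conservative than a true noise-based threshold.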

Experimental Task

We implemented a task that cued five different grasps with visual images taken from the “Human Grasping Database” (Feix et al., 2016) to examine the neural activity related to imagined grasps in SMG, PMv and S1. The grasps were selected to cover a range of different hand configurations and were labeled “Lateral”, “WritingTripod”, “MediumWrap”, “PalmarPinch”, and “Sphere3Finger” (Figure 1A).

Go task:

Each trial consisted of four phases, referred to in this paper as ITI, cue, delay and action (Figure 1B). The trial began with a brief inter-trial interval (2 sec), followed by a visual cue of one of the five specific grasps (4 sec). Then, after a delay period (gray circle onscreen; 2 sec), the participant was instructed to imagine performing the cued grasp with his right (contralateral) hand (Go trials; green circle on screen; 4 sec). Three datasets had a longer action phase. For these, only data from the first four seconds of the action phase were included in the analysis.

Go/No-Go task:

In a Go/No-Go version of this task, the participant was presented with either a green circle (Go condition) or a red circle (No-Go condition) after the delay, with instructions to imagine performing the cued grasp as normal during the Go condition (Go trials), and to do nothing for the No-Go condition (No-Go trials). In both variations of the task, conditions and grasp types were pseudorandomly interleaved and balanced with eight trials collected per combination (Figure 1B).
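The balanced, pseudorandomly interleaved trial structure can be sketched as follows. This is a minimal Python illustration rather than the Psychophysics Toolbox task code; `make_trials` and `PHASES_S` are hypothetical names, and the phase durations are those stated for the Go task.

```python
import random
from collections import Counter

GRASPS = ["Lateral", "WritingTripod", "MediumWrap", "PalmarPinch", "Sphere3Finger"]

# Phase durations in seconds, as described for the Go task.
PHASES_S = {"ITI": 2, "cue": 4, "delay": 2, "action": 4}


def make_trials(conditions=("Go",), reps=8, seed=None):
    """Build a shuffled trial list with `reps` trials per grasp/condition pair."""
    trials = [(grasp, cond)
              for grasp in GRASPS
              for cond in conditions
              for _ in range(reps)]
    random.Random(seed).shuffle(trials)
    return trials


go_trials = make_trials(seed=1)                        # 5 grasps x 8 = 40 trials
go_nogo_trials = make_trials(("Go", "No-Go"), seed=1)  # 5 x 2 x 8 = 80 trials
counts = Counter(go_nogo_trials)
```

Shuffling a fully enumerated list guarantees that every grasp and condition combination appears exactly eight times, whatever the order.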

Spoken Grasps task:

A speaking variation of the task was constructed with the same task design outlined above, but instead of performing motor imagery during the action phase, the participant was instructed to vocalize the name of the grasp once.

Spoken Colors task:

Another variation of this speaking task used five squares of different colors instead of five grasps, and the participant was instructed to vocalize the color once during the action phase (Figure 4A,B). On each session day, a “Motor Imagery task” (identical to the Go task), a “Spoken Grasps task” and a “Spoken Colors task” were performed, to allow comparisons between tasks.

Table 1 illustrates the number of recording sessions for each task variation.

The participant was situated 1 m in front of an LED screen (1190 mm screen diagonal), where the task was visualized. The task was implemented using the Psychophysics Toolbox (Brainard, 1997; Pelli, 1997; Kleiner et al., 2007) extension for MATLAB (MATLAB. (2018). 9.7.0.1190202 (R2019b). Natick, Massachusetts: The MathWorks Inc.).

Neural Firing Rates

Firing rates of sorted units were computed as the number of spikes that occurred in 50ms bins, divided by the bin width, and smoothed using a Gaussian filter with kernel width of 50ms to form an estimate of the instantaneous firing rates (spikes/sec). For the Go condition, 40 trials (8 repetitions of 5 grasps) were recorded per block. For the No-Go condition, two consecutive blocks of 40 trials (4 repetitions of 5 Go and 5 No-Go grasps) were recorded and combined, to accommodate the participant with shorter tasks.
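The binning and smoothing steps can be sketched in a few lines of Python. This is an illustrative sketch, not the authors' MATLAB code: the function names are hypothetical, and the 50ms "kernel width" is assumed here to mean a Gaussian standard deviation of one bin, which the text does not specify.

```python
import math


def binned_rates(spike_times_s, t_start, t_stop, bin_s=0.05):
    """Count spikes in consecutive bins and divide by bin width (spikes/sec)."""
    n_bins = int(round((t_stop - t_start) / bin_s))
    counts = [0] * n_bins
    for t in spike_times_s:
        idx = math.floor((t - t_start) / bin_s)
        if 0 <= idx < n_bins:
            counts[idx] += 1
    return [c / bin_s for c in counts]


def gaussian_smooth(rates, sigma_bins=1.0, half_width=3):
    """Smooth with a Gaussian kernel, renormalised at the edges.

    Assumption: sigma equals one 50 ms bin; the kernel is truncated at
    +/- `half_width` bins.
    """
    offsets = range(-half_width, half_width + 1)
    kernel = [math.exp(-0.5 * (k / sigma_bins) ** 2) for k in offsets]
    smoothed = []
    for i in range(len(rates)):
        num, den = 0.0, 0.0
        for k, w in zip(offsets, kernel):
            j = i + k
            if 0 <= j < len(rates):
                num += w * rates[j]
                den += w
        smoothed.append(num / den)
    return smoothed


raw = binned_rates([0.01, 0.02, 0.12], t_start=0.0, t_stop=0.2)
smooth = gaussian_smooth(raw)
```

Renormalising the kernel at the trial edges keeps a constant firing rate constant after smoothing, at the cost of slightly less smoothing in the first and last bins.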

Quantification and Statistical Analysis

All analyses were performed using MATLAB R2020b.

Linear regression analysis

To identify units that exhibited selective firing rate patterns (or tuning) for the different grasps, linear regression analysis was performed in two different ways: 1) step by step in 50ms time bins, to assess changes in neuronal tuning over the entire trial duration; 2) on the firing rate averaged over specified time windows during the cue (1.5s) and action (2s) phases, to compare tuning between both phases. The model returns a fit that estimates the firing rate of a unit based on the following variables:

$$FR = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \beta_3 X_3 + \beta_4 X_4 + \beta_5 X_5$$

where FR corresponds to the firing rate of that unit, and β corresponds to the estimated regression coefficients. A 48 × 5 indicator variable, X, indicated which data corresponded to which grasp. The first 8 rows corresponded to the average firing rate of the ITI phase and defined the offset term β0, or baseline condition; these rows contained only zeros. The next 40 rows indicated the trial data: for example, if the first trial was “Lateral” (grasp 1), its row would have a 1 in column 1 and zeros in all other columns.

In this model, β symbolizes the change of firing rate from baseline for each grasp. A Student’s t-test was performed to test the hypothesis of β = 0. A unit was defined as tuned if the hypothesis could be rejected (p < 0.05, t-statistic). This definition allows for tuning of a unit to zero, one, or multiple grasps during different time points of the trial.
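To make the regression concrete, the following Python sketch builds the 48 × 5 indicator matrix and computes the coefficients and t-statistics for one unit. It is a sketch under stated assumptions, not the authors' MATLAB code: the function names are hypothetical, and it relies on the fact that, for an indicator design with an all-zero baseline block, ordinary least squares reduces to group means (β0 is the baseline mean and each β is a grasp mean minus the baseline mean).

```python
import math

GRASPS = ["Lateral", "WritingTripod", "MediumWrap", "PalmarPinch", "Sphere3Finger"]


def design_matrix(trial_labels, n_baseline=8):
    """Indicator matrix: all-zero baseline rows, then one-hot trial rows."""
    rows = [[0] * len(GRASPS) for _ in range(n_baseline)]
    for label in trial_labels:
        row = [0] * len(GRASPS)
        row[GRASPS.index(label)] = 1
        rows.append(row)
    return rows


def fit_tuning(baseline_rates, trial_rates, trial_labels):
    """Return (beta0, betas, t_stats) for one unit via the closed-form OLS fit."""
    groups = {g: [] for g in GRASPS}
    for rate, label in zip(trial_rates, trial_labels):
        groups[label].append(rate)
    beta0 = sum(baseline_rates) / len(baseline_rates)
    sse = sum((r - beta0) ** 2 for r in baseline_rates)
    means = {}
    for g, vals in groups.items():
        means[g] = sum(vals) / len(vals)
        sse += sum((v - means[g]) ** 2 for v in vals)
    # Residual degrees of freedom: observations minus (5 slopes + 1 offset).
    dof = len(baseline_rates) + len(trial_rates) - (len(GRASPS) + 1)
    sigma = math.sqrt(sse / dof)
    betas, t_stats = {}, {}
    for g in GRASPS:
        betas[g] = means[g] - beta0
        se = sigma * math.sqrt(1 / len(baseline_rates) + 1 / len(groups[g]))
        t_stats[g] = betas[g] / se
    return beta0, betas, t_stats


labels = [g for g in GRASPS for _ in range(8)]
X = design_matrix(labels)
```

Converting each t-statistic into a p-value requires the t-distribution with 42 degrees of freedom, for example via `scipy.stats.t.sf`; that step is omitted here to keep the sketch dependency-free.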

Linear regression significance testing

To assess significance of unit tuning, a null dataset was created by repeating linear regression analysis 1000 times with shuffled labels. Then, different percentile levels of the resulting null distribution were computed and compared to the actual data. Data higher than the 95th percentile of the null distribution were denoted with a * symbol, higher than the 99th percentile with **, and higher than the 99.9th percentile with ***.
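The shuffle-based significance test can be sketched generically in Python. This is an illustration under assumptions, not the authors' code: `shuffled_null`, `percentile` and `significance_stars` are hypothetical names, the statistic is passed in as a function, and percentiles use a simple nearest-rank rule.

```python
import math
import random


def shuffled_null(statistic, data, labels, n_shuffles=1000, seed=0):
    """Recompute `statistic(data, labels)` with the labels shuffled each time."""
    rng = random.Random(seed)
    null = []
    for _ in range(n_shuffles):
        shuffled = list(labels)
        rng.shuffle(shuffled)
        null.append(statistic(data, shuffled))
    return null


def percentile(sorted_values, q):
    """Nearest-rank percentile of an ascending list (q in percent)."""
    rank = max(1, math.ceil(q * len(sorted_values) / 100))
    return sorted_values[min(rank, len(sorted_values)) - 1]


def significance_stars(observed, null_values):
    """Return '', '*', '**' or '***' by comparing to null percentiles."""
    ordered = sorted(null_values)
    stars = ""
    for q, mark in ((95, "*"), (99, "**"), (99.9, "***")):
        if observed > percentile(ordered, q):
            stars = mark
    return stars


# Toy statistic: difference between the mean of class 1 and class 0.
def mean_difference(values, labels):
    ones = [v for v, lab in zip(values, labels) if lab == 1]
    zeros = [v for v, lab in zip(values, labels) if lab == 0]
    return sum(ones) / len(ones) - sum(zeros) / len(zeros)


values = [1.0, 1.2, 0.9, 1.1, 3.0, 3.2, 2.9, 3.1]
classes = [0, 0, 0, 0, 1, 1, 1, 1]
null = shuffled_null(mean_difference, values, classes, n_shuffles=200, seed=0)
```

The same two helper functions apply whether the statistic is a count of tuned units or a classification accuracy, since only the function passed as `statistic` changes.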

Classification

Using the neuronal firing rates recorded in this task, a classifier was used to evaluate how well the set of grasps could be differentiated during each phase. For each session and each array individually, linear discriminant analysis (LDA) was performed, assuming an identical diagonal covariance matrix for data of each grasp. These assumptions, compared to a full diagonal covariance matrix, resulted in the best classification accuracies. Classifiers were trained using averaged data from each phase, which were either 2s (ITI, delay) or 4s (cue, action). We applied principal component analysis (PCA) and selected the 10 highest principal components (PCs), or the PCs explaining more than 90% of the variance (whichever was higher), for feature selection on the training set. When fewer than 10 PCs were available, all features were used. This feature selection method allowed us to assess whether the number of tuned units and classification accuracy were correlated, without selecting tuned units as features. The unit yield in PMv was generally lower than in SMG and S1; however, significant classification accuracies were still obtained with a limited number of features. Between 12 and 21 PCs were used in SMG, 6 and 16 in PMv, and 18 and 27 in S1. Leave-one-out cross-validation was performed to estimate decoding performance. A 95% confidence interval was computed using the Student’s t inverse cumulative distribution function.
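A minimal Python sketch of the classification scheme is given below. It is illustrative only: the analyses in this work were run in MATLAB, all function names are hypothetical, equal class priors are assumed (trials were balanced), and the PCA projection itself is omitted, with only the rule for choosing the number of PCs shown.

```python
def fit_diag_lda(X, y):
    """LDA with one diagonal covariance shared by all classes.

    Returns class means and pooled per-feature within-class variances.
    """
    classes = sorted(set(y))
    n_feat = len(X[0])
    means = {}
    for c in classes:
        rows = [x for x, lab in zip(X, y) if lab == c]
        means[c] = [sum(r[j] for r in rows) / len(rows) for j in range(n_feat)]
    var = [0.0] * n_feat
    for x, lab in zip(X, y):
        for j in range(n_feat):
            var[j] += (x[j] - means[lab][j]) ** 2
    dof = len(X) - len(classes)
    var = [max(v / dof, 1e-12) for v in var]  # guard against zero variance
    return means, var


def predict(model, x):
    """Assign x to the class with the smallest variance-scaled distance."""
    means, var = model

    def scaled_distance(c):
        return sum((x[j] - means[c][j]) ** 2 / var[j] for j in range(len(x)))

    return min(means, key=scaled_distance)


def loo_accuracy(X, y):
    """Leave-one-out cross-validation accuracy."""
    correct = 0
    for i in range(len(X)):
        model = fit_diag_lda(X[:i] + X[i + 1:], y[:i] + y[i + 1:])
        correct += predict(model, X[i]) == y[i]
    return correct / len(X)


def n_components(explained_ratio, minimum=10, target=0.90):
    """Number of PCs: the larger of `minimum` and the count needed to exceed
    `target` explained variance, capped at the number of available PCs."""
    cumulative, needed = 0.0, len(explained_ratio)
    for k, ratio in enumerate(explained_ratio, start=1):
        cumulative += ratio
        if cumulative > target:
            needed = k
            break
    return min(len(explained_ratio), max(minimum, needed))


X_demo = [[0.0, 0.0], [0.1, 0.2], [0.2, 0.1], [-0.1, 0.1],
          [5.0, 5.0], [5.1, 4.9], [4.9, 5.2], [5.2, 5.1]]
y_demo = ["a", "a", "a", "a", "b", "b", "b", "b"]
accuracy = loo_accuracy(X_demo, y_demo)
```

With equal priors and a shared diagonal covariance, the LDA decision rule reduces to picking the class whose mean is closest after scaling each feature by its pooled variance, which is what `predict` implements.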

Classification performance significance testing

To assess the significance of classification performance, a null dataset was created by repeating classification 1000 times with shuffled labels. Then, different percentile levels of this null distribution were computed and compared to the mean of the actual data. Mean classification performances higher than the 95th percentile were denoted with a * symbol, higher than 99th percentile were denoted with **, and higher than 99.9th percentile were denoted with ***.

Neuron dropping curve and cross-phase classification

The neuron dropping curve represents the evolution of the classification accuracy based on the number of neurons used to train and test the model. All available neurons were used for all brain areas. Cross-phase classification was performed to investigate how well a model trained on data of the cue phase can predict data of the action phase, and vice-versa. Classification with eightfold cross validation was performed for each subset of neurons selected for classification. First, one of the neurons was randomly selected, and the classification accuracy on the cue and action phase was computed with a model trained on either the action phase or the cue phase. Then, a new subset of two random neurons was selected, and classification accuracy was again computed. This was performed until all available neurons were randomly added. PCA was performed on the dataset. To avoid overfitting by using more features than observations (40), the maximum number of principal components used was 20, and the process was repeated 100 times. The prediction accuracy was averaged over the cross-validation folds, and the mean with 95% confidence interval (bootstrapped) was plotted against the number of neurons.
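The structure of a neuron dropping curve with cross-phase evaluation can be sketched as follows. This Python sketch simplifies the procedure described above: a nearest-centroid classifier stands in for the PCA and LDA pipeline, the model is trained on all trials of one phase and tested on all trials of the other (no eightfold cross-validation), and all names are hypothetical.

```python
import random


def nearest_centroid_accuracy(train_X, train_y, test_X, test_y):
    """Train class centroids on one dataset and score them on another."""
    classes = sorted(set(train_y))
    centroids = {}
    for c in classes:
        rows = [x for x, lab in zip(train_X, train_y) if lab == c]
        centroids[c] = [sum(col) / len(rows) for col in zip(*rows)]
    hits = 0
    for x, lab in zip(test_X, test_y):
        pred = min(
            classes,
            key=lambda c: sum((a - b) ** 2 for a, b in zip(x, centroids[c])),
        )
        hits += pred == lab
    return hits / len(test_X)


def neuron_dropping_curve(train_phase, test_phase, labels, n_repeats=100, seed=0):
    """Mean cross-phase accuracy for random neuron subsets of growing size.

    `train_phase` and `test_phase` are trials x neurons lists of firing rates
    (e.g. cue and action phase), sharing the same trial labels.
    """
    rng = random.Random(seed)
    n_neurons = len(train_phase[0])
    curve = []
    for size in range(1, n_neurons + 1):
        accuracies = []
        for _ in range(n_repeats):
            subset = rng.sample(range(n_neurons), size)
            train = [[row[j] for j in subset] for row in train_phase]
            test = [[row[j] for j in subset] for row in test_phase]
            accuracies.append(
                nearest_centroid_accuracy(train, labels, test, labels))
        curve.append(sum(accuracies) / len(accuracies))
    return curve


# Toy data: only neuron 0 carries grasp information in both phases.
cue = [[0.0, 1.0, 1.0], [0.1, 1.0, 1.0], [1.0, 1.0, 1.0], [0.9, 1.0, 1.0]]
action = [[0.2, 1.0, 1.0], [0.0, 1.0, 1.0], [1.1, 1.0, 1.0], [1.0, 1.0, 1.0]]
trial_labels = [0, 0, 1, 1]
curve = neuron_dropping_curve(cue, action, trial_labels, n_repeats=20)
```

Swapping the `train_phase` and `test_phase` arguments gives the reverse direction (trained on action, tested on cue).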

Cross-task classification

To evaluate the similarity of neuronal firing in the “Motor Imagery”, the “Spoken Grasps” and the “Spoken Colors” tasks, cross-task classification was performed. This method consisted of training a classifier on the averaged neuronal firing rates recorded during one of the tasks (e.g. “Motor Imagery”), and evaluating it on the neuronal firing rates of all three tasks. For “Spoken Colors”, data were only averaged over the first 2s of the cue phase, as neuronal activity for this condition was shorter than for the other tasks (Figure 4D). LDA with PCA and leave-one-out cross-validation was performed for each individual phase (see method section “Classification”).

Supplementary Material

Key resources table.

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Software and algorithms | | |
| MATLAB R2020b | MathWorks | http://www.mathworks.com |
| Psychophysics Toolbox extension for MATLAB (2018) | http://psychtoolbox.org/ | |
| Other | | |
| Neuroport System | Blackrock Microsystems | http://blackrockmicro.com/ |

Highlights:

Single neurons in the human cortical grasp circuit encode motor imagery and language

Neural representations of unique grasps are found in cortical areas SMG, PMv and S1

Additionally, SMG encodes the vocalization of grasp names and colors

Our results identify new target areas for grasp and speech brain-machine interfaces

Acknowledgements

We wish to thank L. Bashford, H. Jo and I. Rosenthal for helpful discussions and data collection. We wish to thank our study participant FG for his dedication to the study which made this work possible. This research was supported by the NIH National Institute of Neurological Disorders and Stroke Grant U01: U01NS098975 (S.K.W., S.K., D.B., K.P., C.L. and R.A.A.) and by the T&C Chen Brain-machine Interface center (S.K.W., D.B., R.A.A.).

Footnotes

Declaration of interests

The authors declare no competing interests.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

REFERENCES

- Aflalo T, Kellis S, Klaes C, Lee B, Shi Y, Pejsa K, Shanfield K, Hayes-Jackson S, Aisen M, Heck C, Liu C, Andersen RA, 2015. Decoding motor imagery from the posterior parietal cortex of a tetraplegic human. Science 348, 906–910. doi:10.1126/science.aaa5417

- Aflalo T, Zhang CY, Rosario ER, Pouratian N, Orban GA, Andersen RA, 2020. A shared neural substrate for action verbs and observed actions in human posterior parietal cortex. Science Advances 6, eabb3984. doi:10.1126/sciadv.abb3984

- Andersen RA, Aflalo T, Kellis S, 2019. From thought to action: The brain–machine interface in posterior parietal cortex. PNAS 116, 26274–26279. doi:10.1073/pnas.1902276116

- Andersen RA, Kellis S, Klaes C, Aflalo T, 2014. Toward more versatile and intuitive cortical brain machine interfaces. Curr Biol 24, R885–R897. doi:10.1016/j.cub.2014.07.068

- Anderson KD, 2004. Targeting recovery: priorities of the spinal cord-injured population. J. Neurotrauma 21, 1371–1383. doi:10.1089/neu.2004.21.1371

- Angrick M, Ottenhoff MC, Diener L, Ivucic D, Ivucic G, Goulis S, Saal J, Colon AJ, Wagner L, Krusienski DJ, Kubben PL, Schultz T, Herff C, 2021. Real-time synthesis of imagined speech processes from minimally invasive recordings of neural activity. Commun Biol 4, 1–10. doi:10.1038/s42003-021-02578-0

- Armenta Salas M, Bashford L, Kellis S, Jafari M, Jo H, Kramer D, Shanfield K, Pejsa K, Lee B, Liu CY, Andersen RA, 2018. Proprioceptive and cutaneous sensations in humans elicited by intracortical microstimulation. eLife 7, e32904. doi:10.7554/eLife.32904

- Bashford L, Rosenthal I, Kellis S, Pejsa K, Kramer D, Lee B, Liu C, Andersen RA, 2021. The Neurophysiological Representation of Imagined Somatosensory Percepts in Human Cortex. J. Neurosci. 41, 2177–2185. doi:10.1523/JNEUROSCI.2460-20.2021

- Branco MP, Freudenburg ZV, Aarnoutse EJ, Bleichner MG, Vansteensel MJ, Ramsey NF, 2017. Decoding hand gestures from primary somatosensory cortex using high-density ECoG. Neuroimage 147, 130–142. doi:10.1016/j.neuroimage.2016.12.004

- Buchwald M, Przybylski Ł, Króliczak G, 2018. Decoding Brain States for Planning Functional Grasps of Tools: A Functional Magnetic Resonance Imaging Multivoxel Pattern Analysis Study. Journal of the International Neuropsychological Society 24, 1013–1025. doi:10.1017/S1355617718000590

- Collinger JL, Wodlinger B, Downey JE, Wang W, Tyler-Kabara EC, Weber DJ, McMorland AJ, Velliste M, Boninger ML, Schwartz AB, 2013. 7 degree-of-freedom neuroprosthetic control by an individual with tetraplegia. Lancet 381, 557–564. doi:10.1016/S0140-6736(12)61816-9

- Cui H, Andersen RA, 2007. Posterior Parietal Cortex Encodes Autonomously Selected Motor Plans. Neuron 56, 552–559. doi:10.1016/j.neuron.2007.09.031

- Deschamps I, Baum SR, Gracco VL, 2014. On the role of the supramarginal gyrus in phonological processing and verbal working memory: Evidence from rTMS studies. Neuropsychologia 53, 39–46. doi:10.1016/j.neuropsychologia.2013.10.015

- Feix T, Romero J, Schmiedmayer HB, Dollar AM, Kragic D, 2016. The GRASP Taxonomy of Human Grasp Types. IEEE Transactions on Human-Machine Systems 46, 66–77. doi:10.1109/THMS.2015.2470657

- Filimon F, Nelson JD, Huang R-S, Sereno MI, 2009. Multiple parietal reach regions in humans: cortical representations for visual and proprioceptive feedback during on-line reaching. J. Neurosci. 29, 2961–2971. doi:10.1523/JNEUROSCI.3211-08.2009

- Gallivan JP, McLean DA, Valyear KF, Culham JC, 2013. Decoding the neural mechanisms of human tool use. eLife 2, e00425. doi:10.7554/eLife.00425

- Garcea FE, Buxbaum LJ, 2019. Gesturing tool use and tool transport actions modulates inferior parietal functional connectivity with the dorsal and ventral object processing pathways. Human Brain Mapping 40, 2867–2883. doi:10.1002/hbm.24565

- Goodman JM, Tabot GA, Lee AS, Suresh AK, Rajan AT, Hatsopoulos NG, Bensmaia S, 2019. Postural Representations of the Hand in the Primate Sensorimotor Cortex. Neuron 104, 1000–1009.e7. doi:10.1016/j.neuron.2019.09.004

- Hecht M, Hillemacher T, Gräsel E, Tigges S, Winterholler M, Heuss D, Hilz M-J, Neundörfer B, 2002. Subjective experience and coping in ALS. Amyotroph Lateral Scler Other Motor Neuron Disord 3, 225–231. doi:10.1080/146608202760839009

- Jafari M, Aflalo T, Chivukula S, Kellis SS, Salas MA, Norman SL, Pejsa K, Liu CY, Andersen RA, 2020. The human primary somatosensory cortex encodes imagined movement in the absence of sensory information. Commun Biol 3, 1–7. doi:10.1038/s42003-020-01484-1

- Johnson-Frey SH, 2004. The neural bases of complex tool use in humans. Trends in Cognitive Sciences 8, 71–78. doi:10.1016/j.tics.2003.12.002

- Klaes C, Kellis S, Aflalo T, Lee B, Pejsa K, Shanfield K, Hayes-Jackson S, Aisen M, Heck C, Liu C, Andersen RA, 2015. Hand Shape Representations in the Human Posterior Parietal Cortex. J Neurosci 35, 15466–15476. doi:10.1523/JNEUROSCI.2747-15.2015

- McDowell T, Holmes NP, Sunderland A, Schürmann M, 2018. TMS over the supramarginal gyrus delays selection of appropriate grasp orientation during reaching and grasping tools for use. Cortex 103, 117–129. doi:10.1016/j.cortex.2018.03.002

- Moses DA, Metzger SL, Liu JR, Anumanchipalli GK, Makin JG, Sun PF, Chartier J, Dougherty ME, Liu PM, Abrams GM, Tu-Chan A, Ganguly K, Chang EF, 2021. Neuroprosthesis for Decoding Speech in a Paralyzed Person with Anarthria. New England Journal of Medicine 385, 217–227. doi:10.1056/NEJMoa2027540

- Murata A, Fadiga L, Fogassi L, Gallese V, Raos V, Rizzolatti G, 1997. Object Representation in the Ventral Premotor Cortex (Area F5) of the Monkey. Journal of Neurophysiology 78, 2226–2230. doi:10.1152/jn.1997.78.4.2226

- Nicolas-Alonso LF, Gomez-Gil J, 2012. Brain Computer Interfaces, a Review. Sensors (Basel) 12, 1211–1279. doi:10.3390/s120201211

- Oberhuber M, Hope TMH, Seghier ML, Parker Jones O, Prejawa S, Green DW, Price CJ, 2016. Four Functionally Distinct Regions in the Left Supramarginal Gyrus Support Word Processing. Cereb Cortex 26, 4212–4226. doi:10.1093/cercor/bhw251

- Okorokova EV, Goodman JM, Hatsopoulos NG, Bensmaia SJ, 2020. Decoding hand kinematics from population responses in sensorimotor cortex during grasping. J. Neural Eng. 17, 046035. doi:10.1088/1741-2552/ab95ea

- Orban GA, Caruana F, 2014. The neural basis of human tool use. Front Psychol 5. doi:10.3389/fpsyg.2014.00310

- Osiurak F, Badets A, 2016. Tool use and affordance: Manipulation-based versus reasoning-based approaches. Psychol Rev 123, 534–568. doi:10.1037/rev0000027

- Peeters R, Simone L, Nelissen K, Fabbri-Destro M, Vanduffel W, Rizzolatti G, Orban GA, 2009. The representation of tool use in humans and monkeys: common and uniquely human features. J. Neurosci. 29, 11523–11539. doi:10.1523/JNEUROSCI.2040-09.2009

- Perini F, Powell T, Watt SJ, Downing PE, 2020. Neural representations of haptic object size in the human brain revealed by multivoxel fMRI patterns. Journal of Neurophysiology 124, 218–231. doi:10.1152/jn.00160.2020

- Potok W, Maskiewicz A, Króliczak G, Marangon M, 2019. The temporal involvement of the left supramarginal gyrus in planning functional grasps: A neuronavigated TMS study. Cortex 111, 16–34. doi:10.1016/j.cortex.2018.10.010

- Reynaud E, Navarro J, Lesourd M, Osiurak F, 2019. To Watch is to Work: a Review of NeuroImaging Data on Tool Use Observation Network. Neuropsychology Review 29, 484–497. doi:10.1007/s11065-019-09418-3

- Sakata H, 1995. Neural Mechanisms of Visual Guidance of Hand Action in the Parietal Cortex of the Monkey. Cerebral Cortex. URL https://academic.oup.com/cercor/article-abstract/5/5/429/375668?redirectedFrom=fulltext (accessed 2.28.18).

- Schaffelhofer S, Scherberger H, 2016. Object vision to hand action in macaque parietal, premotor, and motor cortices. eLife 5. doi:10.7554/eLife.15278

- Sliwinska MWW, Khadilkar M, Campbell-Ratcliffe J, Quevenco F, Devlin JT, 2012. Early and Sustained Supramarginal Gyrus Contributions to Phonological Processing. Front. Psychol. 3. doi:10.3389/fpsyg.2012.00161

- Stavisky SD, Willett FR, Wilson GH, Murphy BA, Rezaii P, Avansino DT, Memberg WD, Miller JP, Kirsch RF, Hochberg LR, Ajiboye AB, Druckmann S, Shenoy KV, Henderson JM, 2019. Neural ensemble dynamics in dorsal motor cortex during speech in people with paralysis. eLife 8, e46015. doi:10.7554/eLife.46015

- Stoeckel C, Gough PM, Watkins KE, Devlin JT, 2009. Supramarginal gyrus involvement in visual word recognition. Cortex, Special Issue on “The Contribution of TMS to Structure-Function Mapping in the Human Brain. Action, Perception and Higher Functions” 45, 1091–1096. doi:10.1016/j.cortex.2008.12.004

- Styrkowiec PP, Nowik AM, Króliczak G, 2019. The neural underpinnings of haptically guided functional grasping of tools: An fMRI study. NeuroImage 194, 149–162. doi:10.1016/j.neuroimage.2019.03.043

- Taira, 1998. Parietal cortex neurons of the monkey related to the visual guidance of hand movement. doi:10.1007/BF00232190 (accessed 2.28.18).

- Vingerhoets G, 2014. Contribution of the posterior parietal cortex in reaching, grasping, and using objects and tools. Front Psychol 5. doi:10.3389/fpsyg.2014.00151

- Wilson GH, Stavisky SD, Willett FR, Avansino DT, Kelemen JN, Hochberg LR, Henderson JM, Druckmann S, Shenoy KV, 2020. Decoding spoken English from intracortical electrode arrays in dorsal precentral gyrus. J. Neural Eng. 17, 066007. doi:10.1088/1741-2552/abbfef

- Zhang CY, Aflalo T, Revechkis B, Rosario ER, Ouellette D, Pouratian N, Andersen RA, 2017. Partially Mixed Selectivity in Human Posterior Parietal Association Cortex. Neuron 95, 697–708.e4. doi:10.1016/j.neuron.2017.06.040
