Abstract

Background:

There is no commonly accepted comprehensive framework for describing the practical specifics of external support for practice change. Our goal was to develop such a taxonomy that could be used by both external groups or researchers and health care leaders.

Methods:

The leaders of 8 grants from the Agency for Healthcare Research and Quality for the EvidenceNOW study of improving cardiovascular preventive services in over 1500 primary care practices nationwide worked collaboratively over 18 months to develop descriptions of key domains that might comprehensively characterize any external support intervention. Combining literature reviews with our practical experiences in this initiative and past work, we aimed to define these domains and recommend measures for them.

Results:

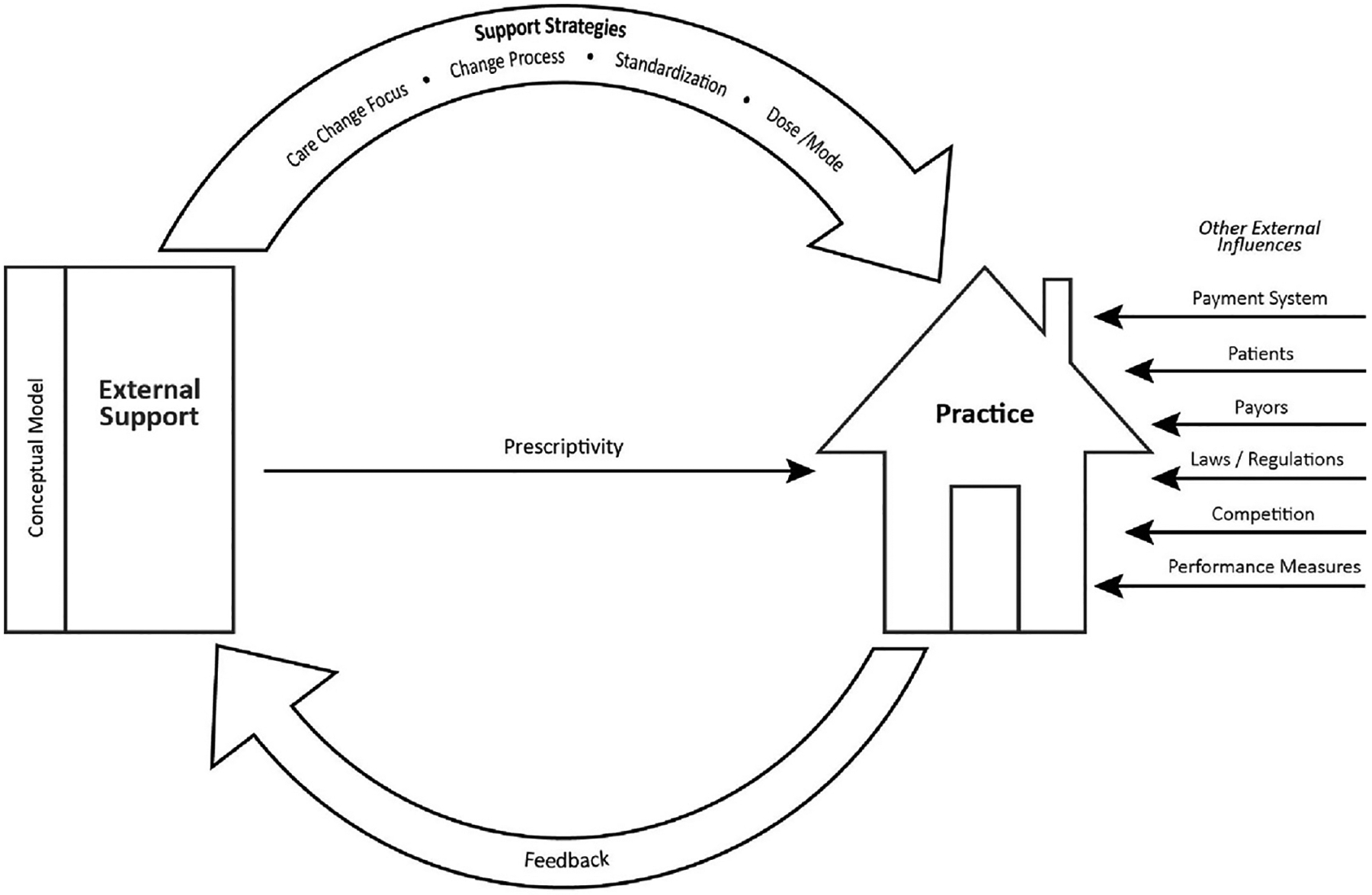

The taxonomy includes 1 domain to specify the conceptual model(s) on which an intervention is built and another to specify the types of support strategies used. Another 5 domains provide specifics about the dose/mode of that support, the types of change process and care process changes that are encouraged, and the degree to which the strategies are prescriptive and standardized. A model was created to illustrate how the domains fit together and how they would respond to practice needs and reactions.

Conclusions:

This taxonomy and its use in more consistently documenting and characterizing external support interventions should facilitate communication and synergies between 3 areas (quality improvement, practice change research, and implementation science) that have historically tended to work independently. The taxonomy was designed to be as useful for practices or health systems managing change as it is for research.

Keywords: Change Management, Delivery of Health Care, Diffusion of Innovation, Implementation Science, Organizational Innovation, Primary Health Care, Quality Improvement

Introduction

There is widespread agreement that the quality and cost of US health care need to improve. From 1990 to early 2000, the main approach to improvement was to use guideline-based efforts to improve specific conditions and care processes. More recently, the focus has broadened to seeking wholesale transformation in culture and care processes to achieve quadruple aim goals on a large scale.1–6 This has led to many initiatives and clinical trials of various sizes and approaches aimed at changing medical practice, especially in primary care.7–9 It has also provided a focus for the growing new field of dissemination and implementation research.10,11

During this time, quality improvement researchers, dissemination and implementation scientists, and health care leaders have each been working—in different ways—to support and learn from the many change efforts in the primary care setting. Yet, most of their efforts have had mixed results, and there are few studies of whether and why changes are sustained after the external intervention goes away.12–14 More important, we know little about the details of most quality initiatives and interventions. When the focus is on quality improvement, the details of external support may not be documented or evaluated, and therefore are not easily sharable. Even among researchers, the published literature is scarce in important details about the interventions being tested, in part because journals have limited space for details, although there has also been little emphasis by the research community on the need for such details.

We use the term, external support, here to refer to the strategies (eg, facilitation, audit, and feedback) that an external organization or agent might provide to a practice to assist it to change. However, the terms, “intervention” and “implementation,” are often used interchangeably without clarifying whether they refer to what is done by an external agent, what actual changes practices make, or what change process practices use to make changes. These ambiguous terms highlight a problem for the fields of practice change and improvement as well as dissemination and implementation science: there is a lack of common language and standardized descriptions of the specific features of the strategies, change focus, or change process, either as planned or as actually delivered. This has limited the field’s ability to compare and learn within and across initiatives and research studies.

We are the lead investigators for EvidenceNOW, one of the largest trials ever to study the impact of external support for primary care practice change.15,16 In EvidenceNOW, the Agency for Healthcare Research and Quality funded 7 regionally dispersed grantees to each engage approximately 250 primary care practices (more than 1500 practices in total), and to provide external support to help these practices improve their processes for delivering cardiovascular preventive care. The principal outcomes for the trial were practice-level rates of delivery of the “ABCS” (aspirin for patients who need it, blood pressure control, cholesterol management, and smoking cessation support), plus measures of practice capacity for change. Each grantee had 3 years to recruit practices, implement its intervention, and evaluate the results. In addition, the Agency for Healthcare Research and Quality funded an eighth grantee to conduct an independent national evaluation of EvidenceNOW. This evaluation was conducted prospectively and used both pooled practice-level quantitative data and complementary qualitative data. In doing this work, we all recognized the importance of describing and documenting, in as similar a way as possible, the external support that grantees provided to practices. We created the resulting taxonomy and hoped it would facilitate 3 things:

Harmonization of detailed descriptions of our external support interventions that varied in content and approach across the 7 grantees to facilitate both comparisons and aggregate analyses;

Identification of practical specifics for use by others, especially the practice and care delivery leaders responsible for bringing their care processes into alignment with ever-changing payment methods, patient desires, and policy concerns;

Development of more conceptual clarity about external support—both what it is and a framework for more consistent descriptions of what was planned and then actually done.

To do that, we needed a more pragmatic and comprehensive way to document and characterize external support than is currently available in the many articles describing conceptual frameworks and intervention strategies at a more theoretical level.17–21 We wanted to build a common terminology like the one that was begun by Colquhoun and other implementation science thought leaders in 2014.22 They convened an international working group that explored various approaches to that task and concluded there was need “to draft a new, simplified consensus framework of interventions to promote and integrate evidence into health practices, systems, and policies.” Their resulting framework identified 4 elements for categorizing interventions: active ingredients, causal mechanisms, mode of delivery, and intended target. They invited others to debate, build on, and revise this nascent theoretical framework. A parallel proposal by Proctor et al as extended by Leeman et al provides another perspective, but each of these needs the kind of specificity and focus on external agents that we hoped to add.23,24

This article is our effort to complement the Colquhoun framework from an empirical perspective by expanding and revising their elements/domains and by suggesting some specifics and measures that should be tracked and summarized so that others can know both what external support was planned and how it was actually delivered. Thus, members from all 8 EvidenceNOW grants worked together to create an empirical taxonomy for external support for practice change that would facilitate how to describe and measure the kinds of activities that an external agent might use to provide support for practices to change. However, we believe that the taxonomy should be equally useful for a health system that is providing internal improvement support to its practices as well as for internal leadership of individual practices, and is certainly not limited to research studies.

Methods

Setting

The setting for this work was the EvidenceNOW initiative, described in more detail elsewhere.15,16 The leaders of all 8 grants in that initiative had extensive experience with both studying and supporting practice change and we collaboratively developed this taxonomy so that dissemination products, including publications, would include consistent terminology. This project developed over an 18-month time period with monthly conference calls, including an in-person retreat at the 12-month mark. It began after all support was completed, most data had been collected, and final analyses and dissemination efforts were beginning.

Process

We began by generating a list of 10 potentially important aspects of external support interventions (hereafter referred to as domains) that might collectively describe what each grantee had done in its effort to help primary care practices improve both their cardiovascular preventive services and overall infrastructure. We then conducted literature reviews and had multiple conversations over 15 months to clarify the terminology and definitions. Through this process, we reduced the domains to 7.

Then, each grantee identified the domains that were of most interest to them. This allowed subgroups to be responsible for conducting additional literature searches, fine tuning the domain definition, summarizing what was known about it, proposing ways to measure that domain’s use in an intervention, and providing examples to increase understanding of what the domain includes.

Once these documents were drafted and discussed by the group as a whole, an in-person meeting was arranged where each domain was presented and discussed to identify questions and suggestions for further revision by the team responsible for it. As we did that, we also developed a better understanding of the inter-relationships among the domains in a way that also enhanced our descriptions of each domain and the overall dynamics of delivering an external support intervention to practices.

Results

Table 1 lists and defines the 7 final domains of external support interventions designed to help primary care practices improve and transform. The Conceptual Model domain is qualitatively different from the other domains because it is primarily used by the external agent to inform and format its intervention. Although listed separately, 5 of the domains could also be seen as sub-domain descriptors that further characterize the Support Strategies domain. Those 5 also had very few relevant articles in the literature reviews that were performed, so it is rare to find them described in quality improvement initiatives or research studies. Further information about the definitions, literature review, and examples for each domain is provided in the Appendix.

Table 1.

Intervention Taxonomy Domains

| Domain | Definition | Example |
|---|---|---|
| Conceptual model | Models used to conceptualize the care process, change process, outcomes, and changes at the practice, team, and individual levels that are hypothesized to influence outcomes | Choosing to base support targets on the Chronic Care Model25 would require consideration of changes in its domains (decision support, clinical information system, etc), but would provide no guidance for how to facilitate those changes |
| Support strategies | Methods or techniques used by practice change support agents to motivate, guide, and support practices in adopting, implementing, and sustaining evidence-based changes and quality improvements | Although practice facilitation was the main strategy used by all of the EvidenceNOW grantees, all also included data support and audit with feedback and some used education and training |
| Care change focus | The establishment of a priority regarding what care processes will be targets for improvement | Some grantees encouraged trying to improve care processes for all 4 EN targets (aspirin, blood pressure, cholesterol, and smoking) while others left the focus up to individual practices |
| Change process | The method of guiding change through ongoing support activities to help implement innovations, create efficiency, or improve outcomes | The Model for Improvement26 provides a framework for change by asking a change team to answer 3 questions and then conduct rapid-cycle tests of change |
| Prescriptivity | The extent to which practices are expected to make pre-specified changes and then are assessed on the degree to which they make those changes | Generally, only government entities can mandate that practices make specific changes and have the ability to require them |
| Standardization | The degree to which an external agent provides support in a standardized way for all practices participating in an initiative | The DIAMOND initiative27 to implement collaborative care for depression provided the same training and support options for all practices |
| Dose/mode | A measure of practice support that accounts for exposure (number of contacts), intensity (total contact time), reach (practice members involved and their level of influence), engagement (commitment and effort), duration (total time over which support occurred) and mode (proportion of contacts that occur in person vs other forms of communication) | EN grantees used various methods for facilitators to track the number, duration, and type of interactions with the practices they were assisting |

DIAMOND, name of a statewide initiative to improve depression care in MN; EHR, electronic health record; EN, EvidenceNOW; IT, information technology; LtOT, long-term opioid therapy; MED, morphine-equivalent dose.

Figure 1 illustrates the relationships among these domains and between the external agent and a practice. First, notice the direct arrow between the external support agent and the practices. This arrow is labeled Prescriptivity and it references the degree to which any changes targeted by the initiative or study are required. When the changes are highly prescriptive, the practice is told exactly what changes need to be made and even how to implement them by the external agent (eg, when a regulator or payer requires a particular approach to patient care); thus, there is little value in feedback from the practice or attempts to make modifications. In that sense, prescriptivity can also be thought of as a way to maintain fidelity to an evidence-based change. While Prescriptivity describes the degree to which practice changes are required, Standardization describes the degree to which the external agent intends to provide the same type and amount of support for every practice, regardless of differences among them (eg, most randomized controlled trials). If the intent is to provide a very standardized intervention, change agents are not permitted to adapt it to apparent needs or desires of the clinics they are helping. Within EvidenceNOW, most grantee support had little prescriptivity but moderate standardization across practices.

Figure 1.

Domain and Agent Relationships.

In the absence of prescriptivity, there is usually a cyclic relationship between the external organization’s support strategies and a practice’s feedback about what it desires or is willing to do. This reciprocal relationship then usually determines the dose and mode of support actually provided as well as what change process was used and what care changes were focused on. For example, while the grantee’s goal was to improve all 4 cardiovascular preventive services, a practice may have only wanted to work on 1 to 2 of them or may have wanted to focus instead on some more generic aspect of its care infrastructure like appointments or test result reporting. Similarly, even though the grantee had decided on facilitating multidisciplinary teams to manage a practice’s changes, that may have been unacceptable in a 1-clinician practice or in one whose practice manager is accustomed to managing any changes in a different way. We have found that such feedback may manifest as behaviors rather than specific communications. For example, the practice may be reluctant to schedule contacts with a facilitator or include only a few practice participants in those contacts. Then, the external agent would be forced to modify its planned intervention, at least for that practice. In that case, rather than providing the target Dose/Mode of facilitation (eg, 12 monthly in-person visits), the facilitator might provide fewer visits and try to stay connected to 1 person by e-mail or phone (Mode). Other factors can also affect the way an intervention is operationalized for particular practices. If a practice is located far from those delivering the support, the Dose and Mode of interactions are likely to be quite different from those for a practice located nearby, and a practice that consists of separate physician practices linked only for billing and call purposes may not be very receptive to the suggestion of developing standardized care processes.
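To make the contrast between planned and delivered Dose/Mode concrete, the following is a minimal sketch of how the countable components defined in Table 1 (exposure, intensity, duration, and mode, plus a crude headcount proxy for reach) might be tallied from a facilitator's contact log. The `Contact` record, its field names, and the example dates are hypothetical and are not drawn from any EvidenceNOW tracking system; engagement and participants' level of influence would need separate, likely qualitative, measures.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Contact:
    """One facilitator contact with a practice (hypothetical log format)."""
    day: date
    minutes: int
    in_person: bool
    participants: int  # number of practice members present


def summarize_dose_mode(contacts: list[Contact]) -> dict:
    """Tally the countable Dose/Mode components for one practice."""
    if not contacts:
        return {"exposure": 0, "intensity_minutes": 0, "mean_reach": 0.0,
                "duration_days": 0, "in_person_share": 0.0}
    days = [c.day for c in contacts]
    n = len(contacts)
    return {
        "exposure": n,                                           # number of contacts
        "intensity_minutes": sum(c.minutes for c in contacts),   # total contact time
        "mean_reach": sum(c.participants for c in contacts) / n, # headcount proxy only
        "duration_days": (max(days) - min(days)).days,           # first to last contact
        "in_person_share": sum(c.in_person for c in contacts) / n,  # mode
    }


# Illustrative log: one in-person visit followed by two remote contacts.
log = [
    Contact(date(2017, 1, 10), 60, True, 4),
    Contact(date(2017, 2, 14), 30, False, 1),
    Contact(date(2017, 4, 11), 45, False, 1),
]
summary = summarize_dose_mode(log)
```

Comparing such a summary against the planned target (eg, 12 monthly in-person visits) is one possible way to document how far delivered support departed from the plan for a given practice.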

Finally, no matter how large or important an initiative’s external support may be, there are many other external and internal forces that can affect the way that support is received and conducted. For example, many EvidenceNOW practices experienced disruptive changes during the support period (change in electronic health record (EHR), loss of clinicians). These forces or factors are now called determinants of practice or implementation mechanisms.28–30 An increasingly common example for practices that are owned by a larger care system is that there will be organizational rules, procedures, and processes that might be important for the targeted change but that are not under the control of either the practice or an external agent. For that reason, we made no attempt to identify such mediators and moderators, but contextual information that described the practice environment would be 1 logical extension of this taxonomy.

Discussion

The taxonomy domains defined briefly here and elaborated in the Appendix aim to provide a framework for a more comprehensive, consistent, and specific description of the components of external support for practice transformation and care improvements as well as how they are actually implemented. Although the other domains highlight the shortcomings of relying on strategies alone to characterize external support, we do not suggest that these are the only domains or measures to quantify external support. Instead, this taxonomy is intended as a starting point for further development of measures within these domains to allow for better comparison across support initiatives and a standardized platform on which to build new ones. We also hope it will help foster a common language and will complement the more theoretical approach taken by Colquhoun et al.22

Many other taxonomies and frameworks have been proposed for organizing information about efforts to implement what are often called evidence-based practices. Back in the earliest days of guideline development, Lomas and Haynes31 proposed a taxonomy for tested strategies for applying clinical practice recommendations. Since then, the scoping review of classification schemes by Lokker et al32 for interventions to integrate evidence into practice in health care identified 23 taxonomies, 15 frameworks, 8 intervention lists, 3 models, and 2 others. Most of the taxonomies focused on public health or behavior change, often for a particular type of health problem, and all were attempts to organize individual strategies into some type of hierarchical structure. None identified different characteristics of strategies or distinguished those that were used by an external agent from those for use by various other actors and none focused in particular on medical practices.

Although a substantial body of research has been done in dissemination and implementation science to identify implementation strategies, much of that work has mixed strategies used by external agents with strategies used by practices and care systems and has remained at a theoretical level. Thus, we have found relatively little in the literature to help us to characterize the specifics of our external support interventions. The development of the consolidated framework for implementation research has at least summarized the disparate literature and a compilation of 73 implementation strategies has provided a starting point for this work.17–19 However, an intervention involves much more than strategies, and the compilation of strategies has not distinguished those that are used by external change facilitator groups from those that are necessarily used by practices or that require actions by external stakeholders.

In 2013, Proctor, Powell, and McMillen24 proposed recommendations for specifying and reporting on the use of implementation strategies. They pointed out that strategies “are often inconsistently labeled and poorly described, are rarely justified theoretically, lack operational definitions or manuals to guide their use, and are part of ‘packaged’ approaches whose specific elements are poorly understood.” Therefore, they recommended that strategies be operationalized along 7 dimensions: actor, action, action targets, temporality, dose, implementation outcomes addressed, and theoretical justification. Our taxonomy builds on these dimensions by adding pragmatic concepts such as prescriptivity and standardization, while also adding the concept of a cyclic relationship between the external support agent and the practices.

Nobody had addressed who was doing what to whom until Proctor et al24 suggested that would be valuable. Leeman et al23 extended that recommendation by proposing a system for classifying implementation strategies based on who the actors (similar to our use of “agents”) and action targets are. This followed an observation that using a single term for strategies does not distinguish what practice facilitators do in support of primary care staff efforts to implement changes in their care processes.23 To counteract this, they suggested structuring strategies into groups based on the relevant actors and targets. They identified 3 types of actors: those in care delivery systems, those in support systems, and those who identify, translate, and disseminate evidence. That led them to 5 classes of implementation strategies:

Dissemination strategies that target decision-maker and clinician awareness of evidence

Implementation process strategies that are enacted by those in delivery systems

Integration strategies that are also used by delivery system actors, but in this case to target factors that facilitate or impede implementation

Capacity-building strategies that are enacted by support system actors to facilitate implementation

Scale-up strategies that are also enacted by support system actors and are aimed at getting multiple settings to implement specific evidence

Our taxonomy was designed to address at least 3 gaps that we saw in the implementation and quality improvement literature. First, we found it necessary to resolve the confusion caused by describing implementation strategies without regard to which agents they were relevant to, as described above. Second, we thought that an intervention description that was limited to strategies was insufficient, and that other characteristics of an intervention were equally important for other change agents to be able to understand its generalizability and potential for replication. Finally, we also believed that change initiatives needed to measure the important components of their interventions as they were actually delivered.

Although EvidenceNOW was funded as a research initiative aimed at testing various approaches to providing support for cardiovascular preventive services in small primary care practices with limited resources, we intended to create a taxonomy that will be helpful for a wide variety of improvement initiatives, practice settings, and care changes, not just for research or implementation scientists. There are many changes being requested of health care systems, especially of primary care practices, and few of them have the time, resources, and expertise to make them without guidance about what changes are most likely to impact outcomes and support for operationalizing those changes. Even practices in large health systems need external support, though they are more likely to get it from their own organization. If a taxonomy like this were used in describing all change efforts, those who wished to replicate such approaches would benefit by knowing more specifically how that support was provided.

We encourage others to react to this early effort to characterize and quantify external support interventions, whether from health plans, quality improvement organizations or a multi-site care system for its component practices. Although we have had the advantage of input from many leaders with considerable experience in facilitating and evaluating practice change, we are certain that this taxonomy can be improved. Help us to do that.

Funding:

This project is supported by research grants from the Agency for Healthcare Research & Quality (Grant No. R01HS023940-01) as part of the EvidenceNOW Initiative R18 HS023908, R18 HS023922, R18 HS023921, R18 HS023912, R18 HS023904, R18 HS023913, R18 HS023919, R01 HS023940.

We are grateful to the Agency for Healthcare Research and Quality, which not only funded each of our grants to test external support for practice improvement and transformation, but also has facilitated our collaborations and harmonizations. This taxonomy is but 1 example of those efforts.

APPENDIX

This appendix extends the article by providing a detailed description of the 7 taxonomy domains (conceptual model, external support strategies, care change focus, change process, prescriptivity, standardization, and dose/mode). For each domain, we provide definitions, findings from brief literature reviews, and discussions. We close with examples derived from the EvidenceNOW cooperatives that illustrate choices and rationales for all 7 domains.

Conceptual Model

Definition

Models used to conceptualize the care process, change process, outcomes, and changes at the practice, team, and individual levels that are hypothesized to influence outcomes.

Literature Review

We conducted a comprehensive literature review to identify conceptual models that address the complexity of external support like practice facilitation (PF) interventions and related multi-level evaluations. Per concordant work by Tabak et al,2 the term, “models,” is used to refer to both theories and frameworks that enhance dissemination and implementation of evidence-based interventions.

First, we reviewed the literature on models that each Cooperative reported applying to their study. Second, we conducted a review to identify PF frameworks. Third, we conducted a broader review of 85 implementation science frameworks indexed by The Center for Research in Implementation Science and Prevention (CRISP).3 CRISP expanded on Tabak’s2 work of implementation science model categorization, which included classifying models by their focus on dissemination versus implementation. Given that the Cooperatives’ work was focused on implementing evidence-based guidelines, we identified 45 from the CRISP index that were relevant to this study; 3 were used by Cooperatives. Fourth, we conducted a review of team- and individual-level change frameworks. We found 1 leading model for team-level change that has been used in practice transformation research and 2 systematic reviews depicting the key determinants of individual behavior change.

Discussion

Studies of external practice support are strengthened by the application of frameworks that inform design and evaluation,4 including implementation science taxonomy work by Nilsen,5 who proposed that such models serve 3 main purposes: to describe and/or guide the process of translating research into practice; to understand and/or explain what influences implementation outcomes; and to evaluate implementation.

In other words, models have different purposes—some will drive the intervention components (eg, the Chronic Care Model was used by HealthyHearts NYC to identify “high-risk patient identification” as a change strategy), and others will help guide the intervention implementation (eg, CFIR guided the formative work to fit electronic reports that supported high-risk patient identification into the practice setting). Multiple models may be necessary to guide different components of a study.

External Practice Support Strategies

Definition

The methods or techniques used by practice change support organizations to motivate, guide, and support practices in adopting, implementing, and sustaining evidence-based changes and quality improvements.

Strategies for External Practice Support

The range of key strategies used across EvidenceNOW cooperatives was initially captured from various cross-cooperative documents and then modified based on strategies that arose from the literature review.

Literature Review

Much of the literature review pointed to the results of the project, Expert Recommendations for Implementing Change (ERIC). Concept mapping and Delphi processes identified and defined 73 discrete implementation strategies.6 Subsequently, Waltz and colleagues7 grouped them into 9 domains. Other publications provide related formulations of the practice redesign and quality improvement process.8–10 Regarding practice facilitation, a systematic review by Baskerville et al3 demonstrated the impact but did not define specific elements of the intervention components. Berta et al11 describe facilitation as an intervention applied in the health care sector to increase the learning capacity of the health care organization to adopt and use evidence-based knowledge to improve health outcomes and organizational performance.

Discussion

Perry and colleagues12 identified 33 ERIC practice support strategies across the 7 EvidenceNOW cooperatives. However, they recommended revising the definition of 14 strategies and identified 3 strategies not previously described: engage community resources, create online learning communities, and assess and redesign workflow. This suggests that a reconceptualization of external supports provided to primary care practices is needed.

Care Change Focus and Change Process

Definitions

Care change focus is the establishment of a priority regarding what care processes might need improvement. Change process is the method of guiding change in the practice through ongoing support activities to help implement innovations, create efficiency, or improve outcomes.

Literature Review

The concepts and processes of health care change were adapted from previous work in industry and business.13,14 Many of these components and models were incorporated into the Institute for Healthcare Improvement’s fundamental Model for Improvement.15 The Lean Six Sigma change process describes methods of motivation, measurement, focus on patients, and eliminating waste to improve efficiency, satisfaction, and care outcomes. These theories and methods have been consolidated in models of diffusion of innovation such as the Promoting Action on Research Implementation in Health Services (PARiHS), which describes 3 key domains: evidence, context, and facilitation.16 Primary care transformation projects report how change processes have been adopted for QI.9,17,18

Discussion

In the change process, we first utilized techniques from corporate literature to build the case for organizational change and then added elements of the Institute for Healthcare Improvement and Lean Six Sigma to iteratively structure actual practice systems. These actions aim to modify attitudes, knowledge and skills of the people, redesign the structure and function of the process workflow, and integrate technology, particularly information technology.19,20

Facilitating a practice’s implementation of a change process is a complex intervention using performance feedback and evidence-based improvement approaches.21 It takes practices through change processes using systematic steps from awareness-raising to outcomes.22 Change facilitation has been shown to require significant tailoring for its success.23,24 The measures of success include clinical outcomes, satisfaction, leadership support, productivity, and work efficiency.

Prescriptivity

Definition

Prescriptivity refers to the extent to which, in the context of a practice improvement study or program, practices are expected to make prespecified changes and then assessed on whether or not they make those changes. It can be applied to 2 aspects of interventions—the targeted care process changes (eg, reminders) and the change process used to implement them (eg, a multi-disciplinary quality improvement team).

Literature Review

We identified 174 articles that addressed the process used by clinics to implement care changes. However, very few described external facilitation strategies and none focused on whether a particular change was required. Some examples are:

In the DIAMOND initiative to implement the collaborative care model for depression in primary care clinics, each clinic needed to be certified by the training organization that they had implemented each of the 6 components of that care process model.25 If they hadn’t, they were ineligible for extra monthly reimbursements from insurance plans.

In the ULTRA trial, practices were provided with facilitation for a specific type of practice improvement team, but they could work on anything they wished. None chose to work on adherence to any clinical guidelines, so there were no measurable improvements in care.26

Discussion

An intervention can be described on a continuum from not prescriptive to highly prescriptive. It is also useful to apply that continuum to each focus of prescriptivity: the care process and the change process used to implement those care changes. Each component can be rated on a scale that runs from 0 (none) to 10 (highly prescriptive):

- Care process prescriptivity
  - 0 = Any change a practice wants to make in the way it provides care
  - 10 = Practices implement the required care changes

- Change process prescriptivity
  - 0 = Any approach a practice wants to take to managing their changes
  - 10 = Only practices that actually use the required change process
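As an illustration of how the two prescriptivity ratings might be recorded for an intervention, the following is a minimal sketch. The class and field names are hypothetical, and the example values are invented for illustration rather than taken from any study.

```python
from dataclasses import dataclass


def check_rating(name: str, value: int) -> int:
    """Reject values outside the 0-10 range used by the scales above."""
    if not 0 <= value <= 10:
        raise ValueError(f"{name} must be between 0 and 10, got {value}")
    return value


@dataclass(frozen=True)
class PrescriptivityRating:
    """Hypothetical record of the two prescriptivity ratings for one intervention."""
    care_process: int    # 0 = any care change the practice wants, 10 = required changes
    change_process: int  # 0 = any approach the practice wants, 10 = required change process

    def __post_init__(self) -> None:
        check_rating("care_process", self.care_process)
        check_rating("change_process", self.change_process)


# Illustrative values only, not ratings reported by any study.
example = PrescriptivityRating(care_process=8, change_process=2)
print(example)
```

Keeping the two ratings as separate fields reflects the point that an intervention can be prescriptive about care changes while leaving the change process open, or the reverse.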

Standardization

Definition

Standardization is the degree to which an external agent provides support in a consistent way for all practices participating in an initiative. A highly standardized intervention would follow the same schedule of visits, calls, or meetings and provide the same information to practices regardless of their desires or needs, while an unstandardized one would vary across practices, allowing for adaptations that may be responsive to local context.

Literature Review

Our literature review did not identify any articles or studies that explicitly addressed standardization as a characteristic of an intervention. However, some reports had implicit attention to standardization, or lack thereof. Some examples are:

The DIAMOND initiative to implement collaborative care for depression involved a very detailed series of training sessions for representatives of all participating practices.25,27 In both cases, the training was the same for all participants. However, the practice facilitation that followed the training was highly tailored to practices.

In the STEP-UP trial, practices were given a choice of options from a toolkit of preventive service strategies to implement, along with assistance from a facilitator to coach the practice through these changes.28 What each practice received was highly individualized for facilitator and practice preferences.

Discussion

The domain of standardization has 2 aspects:

- The types and amounts of support provided

- The content of that support for managing change and providing care

For both aspects, it is helpful to document both what was originally planned and what was actually delivered (fidelity vs adaptation). A rating from 0 to 10 for both intent and actual implementation could be anchored by:

- Standardization of support approach:
  - 0 = None - both amount and type of support are tailored for each practice
  - 10 = Completely - both are the same for every practice (like a controlled trial)

- Standardization of support content:
  - 0 = None - tailored to practice desires and needs
  - 10 = Completely - every practice receives the same information
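The planned-versus-delivered comparison suggested above could be captured as in the sketch below. The `StandardizationRating` class and its `drift` method are hypothetical names, and the numbers are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StandardizationRating:
    """Hypothetical planned-versus-delivered rating (0-10) for one aspect."""
    planned: int
    delivered: int

    def __post_init__(self) -> None:
        for label, value in (("planned", self.planned), ("delivered", self.delivered)):
            if not 0 <= value <= 10:
                raise ValueError(f"{label} must be between 0 and 10, got {value}")

    def drift(self) -> int:
        """Delivered minus planned; negative means more tailoring than intended."""
        return self.delivered - self.planned


# Illustrative values only.
support_approach = StandardizationRating(planned=9, delivered=6)
support_content = StandardizationRating(planned=5, delivered=5)
print(support_approach.drift())  # -3
print(support_content.drift())   # 0
```

A nonzero drift is one simple way to flag adaptation for later description; it does not by itself say whether the adaptation was appropriate.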

Dose and Mode

Definitions

Dose is a measure of practice support that accounts for exposure (number of contacts), intensity (total contact time), reach (practice members engaged in the intervention and their level of influence), engagement (commitment and effort), and duration (total time over which support occurred). Mode is the means of communication by which external practice support is provided, and the individuals involved.

Literature Review

The dose of practice support has typically been defined as the duration and intensity of support organization encounters with each practice. Using this definition, an analysis of multiple studies of practice support found no relationship between the duration of support and effect size, but did see a significant trend for intensity (total contact time with the practice).3 Multiple additional dimensions of intervention dose are proposed by McHugh and colleagues.29

Discussion

Five of the dimensions of dose seem particularly important and led us to propose the revised definition. Exposure and duration would both be easy to record and measure, whereas intensity, reach, and engagement would require a more qualitative assessment. Nonlinear modeling of the relationship of dose to other practice features might reveal tipping points. For example, practice support that creates greater adaptive reserve in the practice in turn opens the door for practice support that helps achieve more ambitious and important goals.17
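The countable parts of dose could be tallied from a contact log, as in the minimal sketch below. The `Contact` record and `summarize_dose` function are hypothetical names, and the log entries are invented. Total contact time is included because the definition above equates it with intensity; reach and engagement are left out because they call for qualitative judgment.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Contact:
    """One hypothetical support contact with a practice."""
    day: date
    minutes: int


def summarize_dose(contacts: list[Contact]) -> dict[str, int]:
    """Compute the dose dimensions that can be counted directly from a log."""
    if not contacts:
        return {"exposure": 0, "intensity_minutes": 0, "duration_days": 0}
    days = [c.day for c in contacts]
    return {
        "exposure": len(contacts),                              # number of contacts
        "intensity_minutes": sum(c.minutes for c in contacts),  # total contact time
        "duration_days": (max(days) - min(days)).days,          # first to last contact
    }


# Illustrative log only.
log = [
    Contact(date(2020, 1, 6), 60),
    Contact(date(2020, 2, 3), 45),
    Contact(date(2020, 3, 2), 30),
]
print(summarize_dose(log))
```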

Reporting practice support dose is not sufficient unless the mode of practice support is also reported and linked to dose. We propose 3 key axes of the mode of external practice support: 1) Synchronicity, whether the contact between participants occurs at the same time (such as a meeting) or can be experienced in different time frames (such as e-mail); 2) Virtuality, whether the contact occurs face-to-face in the same location or in different locations through technological support (such as a videoconference); and 3) Participant Group Size, whether support is delivered 1 on 1 (at-the-elbow consultation) or 1 to a group (training session). Because each axis has 2 values, one can imagine 8 (2 × 2 × 2) distinct combinations of synchronicity, virtuality, and participant group size.
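The 8 combinations can be listed mechanically, as in this short sketch. The value labels are shorthand chosen here for the 3 axes, not terms defined by the taxonomy, and some combinations may be uncommon in practice.

```python
from itertools import product

# Shorthand labels for the two values on each axis (assumed wording).
SYNCHRONICITY = ("synchronous", "asynchronous")
VIRTUALITY = ("in person", "virtual")
GROUP_SIZE = ("one-on-one", "group")

# Cartesian product of the three axes: 2 x 2 x 2 combinations.
modes = list(product(SYNCHRONICITY, VIRTUALITY, GROUP_SIZE))
for number, (sync, place, size) in enumerate(modes, start=1):
    print(f"{number}. {sync}, {place}, {size}")

print(len(modes))  # 8
```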

Example

We offer a composite example of the external practice support initiatives designed and implemented by cooperatives in EvidenceNOW to illustrate the choices inherent in paying attention to all 7 domains of practice support. We show both how these domains are distinct and how they should be integrated in planning, implementing, evaluating, and reporting on an external practice support initiative. The EvidenceNOW cooperatives had the overarching goal of helping primary care practices develop capacity and incorporate patient-centered outcomes research findings into the care of the populations they serve, with an initial focus on cardiovascular preventive care: Aspirin for secondary prevention, Blood pressure control, Cholesterol effect mitigation, and Smoking cessation (ABCS).

Conceptual Models

Cooperatives used conceptual models to guide their work, particularly Bodenheimer’s 10-block model30 and the Chronic Care model.31 Conceptual models helped inform the overall design and approach to delivering external practice support. For instance, Bodenheimer’s model highlighted the need to engage leadership in the change process and then help practices address their data needs, to engage in data-driven improvement and population management, and help practices think about how teams might be engaged in improving CVD preventive care. Elements of the conceptual models used addressed both the key drivers and the change process in practices. Most Cooperatives used conceptual models that guided the change process in practices, some with a particular emphasis on using health information technology to support change. Cooperatives did not have conceptual models that informed patient behavior change, as the target of EvidenceNOW was practices.

External Support Strategies

Cooperatives developed explicit plans for most of the strategies, and many developed extensive toolkits to guide facilitators’ work with practices; one example is the set of materials developed by the Southwest Cooperative (https://www.practiceinnovationco.org/ensw/ensw-resources/). Some Cooperatives had difficulties obtaining the ABCS data in real time, which made it difficult to use these data to inform the quality improvement function (the accountability agent function of practice facilitation). Cooperatives that did not have the ability to provide ongoing audit and feedback either did not provide it or found ways to use manual chart audit data to inform practice quality improvement efforts. Other education and training strategies such as online resources, online member discussion forums, webinars, and academic detailing were available. Some of these educational materials were directed to practice members.32

Care Change Focus and Change Process

Care Change Focus

Cooperatives varied in the extent to which practices could decide which priorities they worked on. Some used a baseline assessment to guide collaborative prioritization; others let a practice leader decide; others provided a menu of options to inform choices; others were quite directive and had practices work on all 4 ABCS in a specific sequence.

Change Process

Some cooperatives focused on educating practice members about the ABCS and then left it to the practice or the clinician to choose how to implement these changes in practice. Other Cooperatives worked one-on-one with a practice manager and others in the practice, as needed, to assist with planning the implementation of quality-improving changes. Others convened a quality improvement team in the practice to identify care gaps and develop a plan for testing and implementing operational practice changes. Cooperatives focused on making both general practice changes to improve workflows and changes specific to ABCS outcome improvement.

Prescriptivity

While Cooperatives varied in the extent to which their practices could choose which ABC or S to work on (see Care Change Focus), Cooperatives were flexible about the choices practices made regarding how they would modify care processes to make these improvements. Cooperatives’ facilitators did provide examples of what had worked in other similar practices, and had tools and examples to guide these changes, but these were not prescriptive, as they might be in a health system. They were suggestions or a starting point for practices.

Standardization

Most Cooperatives developed a comprehensive set of external support strategies. For a number of cooperatives, facilitation was the most standardized element of the external support strategies. Facilitators in some cooperatives were expected to make a certain number of visits to their practices, and they had, as described above, a tailorable set of tools that could inform this work. In addition to facilitation, practice members could choose from the other external support strategies cooperatives offered, often with the guidance of a facilitator. For example, some practices might prefer to learn on their own and make use of a library of materials and online educational materials available to them. Cooperatives that relied on health IT tools as a key external support strategy had planned on a highly standardized approach but had to modify this to be more flexible when implementation of these tools became challenging.

Dose and Mode

While all Cooperatives kept track of the dose of facilitation practices received (eg, number of visits, visit length, and mode of visit [in person, virtual]), not all cooperatives monitored this information to ensure practices received a target dose of facilitation. In terms of other external support activities (eg, participation in webinars, learning collaboratives), cooperatives varied in the extent to which they tracked attendance at these events, and did not take steps to require or enforce attendance. For facilitation and for attendance at other types of external support, there was variation in tracking the number of individuals at practices who were involved (including degree of involvement). Cooperatives also had various ways of indicating level of engagement in real time.

References

- 1.Berwick DM. Continuous improvement as an ideal in health care. N Engl J Med 1989;320:53–6. [DOI] [PubMed] [Google Scholar]

- 2.Tabak RG, Khoong EC, Chambers DA, Brownson RC. Bridging research and practice: models for dissemination and implementation research. Am J Prev Med 2012;43:337–50. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Baskerville NB, Liddy C, Hogg W. Systematic review and meta-analysis of practice facilitation within primary care settings. Ann Fam Med 2012;10:63–74. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Coly A, Parry G. Evaluating complex health interventions: a guide to rigorous research designs. 2017. Available from: https://www.academyhealth.org/evaluationguide. Accessed November 15, 2018.

- 5.Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci 2015;10:53. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Powell BJ, Waltz TJ, Chinman MJ, et al. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci 2015;10:21. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Waltz TJ, Powell BJ, Matthieu MM, et al. Use of concept mapping to characterize relationships among implementation strategies and assess their feasibility and importance: results from the Expert Recommendations for Implementing Change (ERIC) study. Implement Sci 2015;10:109. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Lewis CC, Klasnja P, Powell BJ, et al. From classification to causality: advancing understanding of mechanisms of change in implementation science. Front Public Health 2018;6:136. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Solberg LI. Improving medical practice: a conceptual framework. Ann Fam Med 2007;5:251–6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Solberg LI, Asche SE, Margolis KL, Whitebird RR. Measuring an organization’s ability to manage change: the change process capability questionnaire and its use for improving depression care. Am J Med Qual 2008;23:193–200. [DOI] [PubMed] [Google Scholar]

- 11.Berta W, Cranley L, Dearing JW, Dogherty EJ, Squires JE, Estabrooks CA. Why (we think) facilitation works: insights from organizational learning theory. 2015. Available from: http://www.implementationscience.com/content/10/1/141. [DOI] [PMC free article] [PubMed]

- 12.Perry CK, Damschroder LJ, Hemler JR, Woodson TT, Ono SS, Cohen DJ. Specifying and comparing implementation strategies across seven large implementation interventions: a practical application of theory. Implement Sci 2019;14:32. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Rogers EM. Diffusion of innovations. 5th ed. New York, NY: Free Press; 2003. [Google Scholar]

- 14.Langley GJ. The improvement guide: a practical approach to enhancing organizational performance. 1st ed. San Francisco, CA: Jossey-Bass Publishers; 1996. [Google Scholar]

- 15.Langley GJ. The improvement guide: a practical approach to enhancing organizational performance. 2nd ed. San Francisco, CA: Jossey-Bass Publishers; 2009. [Google Scholar]

- 16.Kitson AL, Rycroft-Malone J, Harvey G, McCormack B, Seers K, Titchen A. Evaluating the successful implementation of evidence into practice using the PARiHS framework: theoretical and practical challenges. Implement Sci 2008;3:1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Nutting PA, Crabtree BF, Stewart EE, et al. Effect of facilitation on practice outcomes in the National Demonstration Project model of the patient-centered medical home. Ann Fam Med 2010;8(Suppl1):S33–44; S92. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Dickinson WP, Dickinson LM, Nutting PA, et al. Practice facilitation to improve diabetes care in primary care: a report from the EPIC randomized clinical trial. Ann Fam Med 2014;12:8–16. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Nagykaldi Z, Mold JW. The role of health information technology in the translation of research into practice: an Oklahoma Physicians Resource/Research Network (OKPRN) study. J Am Board Fam Med 2007;20:188–95. [DOI] [PubMed] [Google Scholar]

- 20.Kanouse DE, Jacoby I. When does information change practitioners’ behavior? Int J Technol Assess Health Care 1988;4:27–33. [DOI] [PubMed] [Google Scholar]

- 21.Nagykaldi Z, Mold JW, Aspy CB. Practice facilitators: a review of the literature. Fam Med 2005;37:581–8. [PubMed] [Google Scholar]

- 22.Sastri P, Narayana JL. Outcome based engineering education: a case study on implementing DMAIC method to derive learning outcomes. Int J Adv Res Engin Technol 2019;10:216–22. [Google Scholar]

- 23.Cykert S, Lefebvre A, Bacon T, Newton W. Meaningful use in chronic care: improved diabetes outcomes using a primary care extension center model. N C Med J 2016;77:378–83. [DOI] [PubMed] [Google Scholar]

- 24.Mold JW, Fox C, Wisniewski A, et al. Implementing asthma guidelines using practice facilitation and local learning collaboratives: a randomized controlled trial. Ann Fam Med 2014;12:233–40. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Solberg LI, Glasgow RE, Unützer J, et al. Partnership research: a practical trial design for evaluation of a natural experiment to improve depression care. Med Care 2010;48:576–82. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Balasubramanian BA, Chase SM, Nutting PA, ULTRA Study Team, et al. Using Learning Teams for Reflective Adaptation (ULTRA): insights from a team-based change management strategy in primary care. Ann Fam Med 2010;8:425–32. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Solberg LI, Kottke TE, Brekke ML, et al. Failure of a continuous quality improvement intervention to increase the delivery of preventive services. A randomized trial. Eff Clin Pract 2000;3:105–15. [PubMed] [Google Scholar]

- 28.Goodwin MA, Zyzanski SJ, Zronek S, et al. A clinical trial of tailored office systems for preventive service delivery. The Study to Enhance Prevention by Understanding Practice (STEP-UP). Am J Prev Med 2001;21:20–8. [DOI] [PubMed] [Google Scholar]

- 29.McHugh M, Harvey JB, Kang R, Shi Y, Scanlon DP. Measuring the dose of quality improvement initiatives. Med Care Res Rev 2016;73:227–46. [DOI] [PubMed] [Google Scholar]

- 30.Bodenheimer T, Ghorob A, Willard-Grace R, Grumbach K. The 10 building blocks of high-performing primary care. Ann Fam Med 2014;12:166–71. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Wagner EH, Austin BT, Von Korff M. Organizing care for patients with chronic illness. Milbank Q 1996;74:511–44. [PubMed] [Google Scholar]

- 32.EvidenceNOW tools for change for practice facilitators. https://www.ahrq.gov/evidencenow/tools/facilitation/index.html. Accessed March 16, 2020.

Footnotes

Conflict of interest: None.

References

- 1.Berwick DM. Continuous improvement as an ideal in health care. N Engl J Med 1989;320:53–6. [DOI] [PubMed] [Google Scholar]

- 2.Berwick DM, Nolan TW, Whittington J. The Triple Aim: care, health, and cost. Health Aff (Millwood) 2008;27:759–69. [DOI] [PubMed] [Google Scholar]

- 3.Best A, Greenhalgh T, Lewis S, Saul JE, Carroll S, Bitz J. Large-system transformation in health care: a realist review. Milbank Q 2012;90:421–56. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Conway PH, Clancy C. Transformation of health care at the front line. JAMA 2009;301:763–5. [DOI] [PubMed] [Google Scholar]

- 5.Lukas CV, Holmes SK, Cohen AB, et al. Transformational change in health care systems: an organizational model. Health Care Manage Rev 2007;32:309–20. [DOI] [PubMed] [Google Scholar]

- 6.Silvey AB. A transformational approach to healthcare quality improvement. J Healthc Qual 2004; 26:57. 3. [DOI] [PubMed] [Google Scholar]

- 7.Dale SB, Ghosh A, Peikes DN, et al. Two-year costs and quality in the comprehensive primary care initiative. N Engl J Med 2016;374:2345–56. [DOI] [PubMed] [Google Scholar]

- 8.Edwards ST, Bitton A, Hong J, Landon BE. Patient-centered medical home initiatives expanded in 2009–13: providers, patients, and payment incentives increased. Health Aff (Millwood) 2014;33:1823–31. [DOI] [PubMed] [Google Scholar]

- 9.Hughes LS, Peltz A, Conway PH. State innovation model initiative: a state-led approach to accelerating health care system transformation. JAMA 2015;313:1317–8. [DOI] [PubMed] [Google Scholar]

- 10.Brownson RC, Colditz GA, Proctor EK, eds. Dissemination and implementation research in health: translating science to practice. New York, NY: Oxford University Press, Inc; 2012. [Google Scholar]

- 11.Holtrop JS, Rabin BA, Glasgow RE. Dissemination and implementation science in primary care research and practice: contributions and opportunities. J Am Board Fam Med 2018;31:466–78. [DOI] [PubMed] [Google Scholar]

- 12.Wiltsey Stirman SKJ, Cook N, Calloway A, Castro F, Charns M. The sustainability of new programs and innovations: a review of the empirical literature and recommendations for future research. Implement Sci 2012;7:17. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Tricco AC, Ashoor HM, Cardoso R, et al. Sustainability of knowledge translation interventions in healthcare decision-making: a scoping review. Implement Sci 2016;11:55. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Lennox L, Maher L, Reed J. Navigating the sustainability landscape: a systematic review of sustainability approaches in healthcare. Implement Sci 2018;13:27. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Cohen DJ, Balasubramanian BA, Gordon L, et al. A national evaluation of a dissemination and implementation initiative to enhance primary care practice capacity and improve cardiovascular disease care: the ESCALATES study protocol. Implement Sci 2016;11:86. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Meyers D, Miller T, Genevro J, et al. EvidenceNOW: balancing primary care implementation and implementation research. Ann Fam Med 2018;16:S5–S11. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci 2009;4:50. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Powell BJ, McMillen JC, Proctor EK, et al. A compilation of strategies for implementing clinical innovations in health and mental health. Med Care Res Rev 2012;69:123–57. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Powell BJ, Waltz TJ, Chinman MJ, et al. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci 2015;10:21. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Powell BJ, Stanick CF, Halko HM, et al. Toward criteria for pragmatic measurement in implementation research and practice: a stakeholder-driven approach using concept mapping. Implement Sci 2017;12:118. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Moullin JC, Dickson KS, Stadnick NA, Rabin B, Aarons GA. Systematic review of the Exploration, Preparation, Implementation, Sustainment (EPIS) framework. Implement Sci 2019;14:1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Colquhoun H, Leeman J, Michie S, et al. Towards a common terminology: a simplified framework of interventions to promote and integrate evidence into health practices, systems, and policies. Implement Sci 2014;9:51. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Leeman J, Birken SA, Powell BJ, Rohweder C, Shea CM. Beyond “implementation strategies”: classifying the full range of strategies used in implementation science and practice. Implement Sci 2017;12:125. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Proctor EK, Powell BJ, McMillen JC. Implementation strategies: recommendations for specifying and reporting. Implement Sci 2013;8:139. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Wagner EH, Austin BT, Davis C, Hindmarsh M, Schaefer J, Bonomi A. Improving chronic illness care: translating evidence into action. Health Aff (Millwood) 2001;20:64–78. [DOI] [PubMed] [Google Scholar]

- 26.Courtlandt CD, Noonan L, Feld LG. Model for improvement—Part 1: a framework for health care quality. Pediatr Clin North Am 2009;56:757–78. [DOI] [PubMed] [Google Scholar]

- 27.Solberg LI, Glasgow RE, Unützer J, et al. Partnership research: a practical trial design for evaluation of a natural experiment to improve depression care. Med Care 2010;48:576–82. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Baker R, Camosso-Stefinovic J, Gillies C, et al. Tailored interventions to address determinants of practice. Cochrane Database Syst Rev 2015;CD005470. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Krause J, Van Lieshout J, Klomp R, et al. Identifying determinants of care for tailoring implementation in chronic diseases: an evaluation of different methods. Implement Sci 2014;9:102. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Lewis CC, Klasnja P, Powell BJ, et al. From classification to causality: advancing understanding of mechanisms of change in implementation science. Front Public Health 2018;6:136. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Lomas J, Haynes RB. A taxonomy and critical review of tested strategies for the application of clinical practice recommendations: from “official” to “individual” clinical policy. Am J Prev Med 1988;4:77–94; discussion 95–77. [PubMed] [Google Scholar]

- 32.Lokker C, McKibbon KA, Colquhoun H, Hempel S. A scoping review of classification schemes of interventions to promote and integrate evidence into practice in healthcare. Implement Sci 2015;10:27. [DOI] [PMC free article] [PubMed] [Google Scholar]