Keywords: amplitude modulation, audiovisual, ECoG, multisensory, phase reset

Abstract

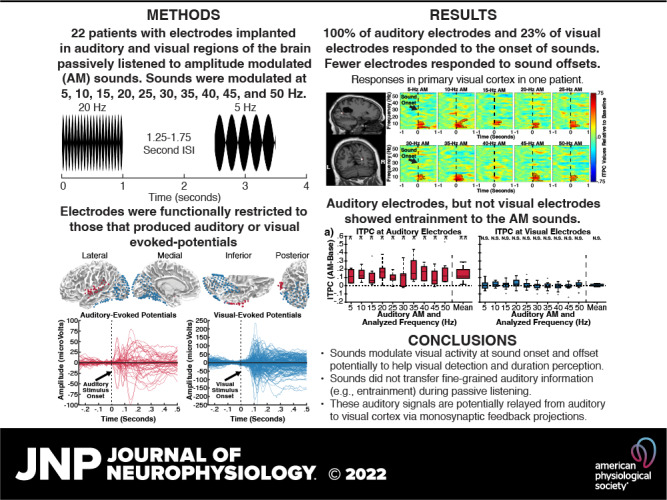

Sounds enhance our ability to detect, localize, and respond to co-occurring visual targets. Research suggests that sounds improve visual processing by resetting the phase of ongoing oscillations in visual cortex. However, it remains unclear what information is relayed from the auditory system to visual areas and whether sounds modulate visual activity even in the absence of visual stimuli (e.g., during passive listening). Using intracranial electroencephalography (iEEG) in humans, we examined the sensitivity of visual cortex to three forms of auditory information during a passive listening task: auditory onset responses, auditory offset responses, and rhythmic entrainment to sounds. Because some auditory neurons respond to both sound onsets and offsets, visual timing and duration processing may benefit from each. In addition, if auditory entrainment information is relayed to visual cortex, it could support the processing of complex stimulus dynamics that are aligned between auditory and visual stimuli. Results demonstrate that in visual cortex, amplitude-modulated sounds elicited transient onset and offset responses in multiple areas, but no entrainment to sound modulation frequencies. These findings suggest that activity in visual cortex (as measured with iEEG in response to auditory stimuli) may not be affected by temporally fine-grained auditory stimulus dynamics during passive listening, although such a signal might still be observable with simultaneous auditory-visual stimuli. Moreover, auditory responses were maximal in low-level visual cortex, potentially implicating a direct pathway for rapid interactions between auditory and visual cortices. This mechanism may facilitate perception by time-locking visual computations to environmental events marked by auditory discontinuities.

NEW & NOTEWORTHY Using intracranial electroencephalography (iEEG) in humans during a passive listening task, we demonstrate that sounds modulate activity in visual cortex at both the onset and offset of sounds, which likely supports visual timing and duration processing. However, more complex auditory rate information did not affect visual activity. These findings are based on one of the largest multisensory iEEG studies to date and reveal the type of information transmitted between auditory and visual regions.

INTRODUCTION

Co-occurring sounds can facilitate the detection and identification of visual stimuli (1–3), but the specific forms of auditory information that are transmitted to visual areas remain poorly understood. Although early investigations focused on multisensory convergence in the superior colliculus and higher-order association cortices (4, 5), recent research has demonstrated the importance of crossmodal modulations of oscillatory activity in nominally “unisensory” cortical areas (6–9), including sound-evoked early responses in primary visual cortex (10–12). By resetting the phase of oscillations in visual cortex, simple transient sounds can enhance cortical and perceptual sensitivity to temporally coupled visual input (13–20). However, because the signals driving these crossmodal modulations may originate from many levels of the auditory processing hierarchy (21–24), the content and representational complexity of the information conveyed by this mechanism are unknown.

The auditory system encodes many perceptually relevant dimensions of auditory signals, including pitch, spatial location, sound onset and offset, and rhythmic structure, among others (for a review see Ref. 25). Although it is well documented that sounds can bias visual perception and modulate visual activity, the information carried by this auditory-visual transmission has received less attention. Previous reports indicate that early visual cortex represents high-level auditory information from complex natural sounds (26, 27), and there is strong evidence that sounds can bias visual cortex onset timing and visual spatial processing (15, 28–33). Moreover, information about canonical auditory features such as pitch has been reported within visual areas of early blind and sighted individuals (34, 35). However, it is unclear what other forms of auditory information are transmitted to visual areas and whether this information is transmitted reflexively or only during concurrent visual processing.

One relevant question is whether rhythmic sounds can entrain visual cortex. Auditory neurons generally exhibit frequency-following behavior in response to amplitude-modulated (AM) sounds (Fig. 1), with oscillatory activity entrained at the rhythmic rate of the auditory signal (36). This auditory response is paralleled in the visual system by the entrainment of visual neurons at the rhythmic rate of flashing strobe lights (37, 38). The susceptibility of both the auditory and visual systems to neural entrainment has led to predictions that one modality should be able to entrain the other. Indeed, recent studies have reported that repetitive sounds can modulate visual perception (39) and alter neural activity in putative visual areas measured using noninvasive electroencephalography (EEG) (40), but these effects have not been dissociated from transient auditory responses, and these studies lacked the spatial resolution to establish that visual neurons specifically were entrained. Studies using functional magnetic resonance imaging (fMRI) have similarly reported results consistent with auditory entrainment of visual activity (41, 42), but not all fMRI studies have observed this pattern (43). Moreover, fMRI blood-oxygen-level-dependent (BOLD) signals are indirect measures of neural activity that cannot capture the oscillatory phase information that denotes neural entrainment, so this question must be examined with more direct measures of neural activity. To better understand the content of auditory-visual transmissions, we used intracranially implanted electrodes in humans (intracranial electroencephalography; iEEG) to measure the sensitivity of visual cortex to three different forms of auditory information (rhythmic entrainment to sounds, auditory onset responses, and auditory offset responses) during a passive listening task.

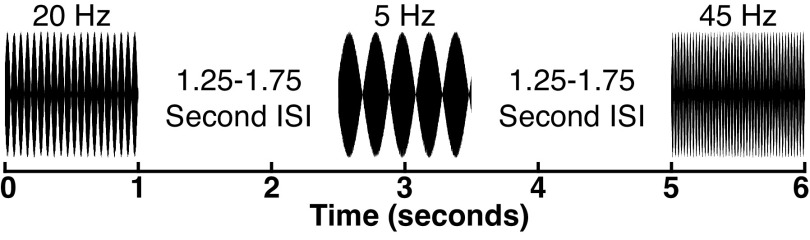

Figure 1.

Schematic of experiment 1 passive listening task, showing three example amplitude-modulated (AM) sounds at different AM frequencies. ISI, intersound interval.

MATERIAL AND METHODS

Participants

Data were acquired from patients with epilepsy during invasive work-up for medically intractable seizures using intracranial electroencephalography (iEEG) monitoring from chronically implanted depth electrodes (5 mm center-to-center spacing, 2 mm diameter) and/or subdural electrodes (10 mm center-to-center spacing, 3 mm diameter). Sixteen patients participated in experiment 1. Patients ranged in age from 17 to 50 yr (mean = 37.5, mean absolute deviation = 7.9) and included eight females. Thirteen patients with epilepsy (7 of whom were included in experiment 1) participated in experiment 2. Patients ranged in age from 21 to 49 yr (mean = 36.8, mean absolute deviation = 9.1) and included five females. Age did not significantly differ between the groups [t(27) = 0.18, P = 0.860]. For both experiments, electrodes were placed according to the clinical needs of the patients. Written informed consent was obtained from each patient according to separate protocols that were reviewed and approved by institutional review boards (IRBs) at the University of Michigan, University of Chicago, and Henry Ford Hospital.

Method Details

Amplitude-modulated sound paradigm.

Patients were seated in a hospital bed. Auditory stimuli (∼65 dB) were delivered via a laptop using PsychToolbox (44, 45) through a pair of free-field speakers placed ∼15° to the right and left of participants’ midline. A central fixation cross against a blank background was presented on the laptop display throughout the duration of the experiment. Patients were instructed to maintain central fixation and passively listen to presented sounds.

In experiment 1, participants were presented with 1- or 2-s auditory stimuli consisting of sinusoidally amplitude-modulated white noise with different rates of modulation (100% modulation depth). For the first three participants, trials included nine different AM rates, ranging from 10 to 50 Hz in 5-Hz intervals (2 s in duration), with 10 trials of each condition, resulting in 90 total trials (AM sound variant A). For the remaining 13 participants, trials included 10 different AM rates, ranging from 5 to 50 Hz in 5-Hz intervals (1 s in duration), with 20 trials of each condition, resulting in 200 total trials (AM sound variant B). The paradigm was updated across participants to widen the range of frequencies tested and to increase the number of trials, improving the signal-to-noise ratio for detecting transient onset responses while maintaining sensitivity to entrainment (if present). The sounds were presented in a randomized order for each participant, and the intersound interval (ISI) was randomly varied between 1.25 and 1.75 s (uniform distribution) for AM sound variant A and between 2.25 and 2.75 s (uniform distribution) for AM sound variant B. We used a variable ISI, common in event-related EEG designs, to reduce the influence of intrinsic oscillations that were unperturbed by the AM sounds.
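A minimal sketch of how sinusoidally amplitude-modulated white noise and a jittered ISI schedule like those described above could be generated with NumPy. The 44.1-kHz sampling rate, the peak normalization, the modulator starting phase, and the helper names `sam_noise` and `jittered_isis` are illustrative assumptions, not the PsychToolbox code used in the study.

```python
import numpy as np


def sam_noise(am_rate_hz, duration_s, fs=44100, depth=1.0, rng=None):
    """Sinusoidally amplitude-modulated (SAM) white noise, peak-normalized.

    depth=1.0 is 100% modulation depth: the envelope swings between 0 and 1.
    The sampling rate and modulator phase are illustrative assumptions.
    """
    rng = np.random.default_rng(rng)
    t = np.arange(int(round(duration_s * fs))) / fs
    carrier = rng.standard_normal(t.size)                      # white noise carrier
    envelope = (1 + depth * np.sin(2 * np.pi * am_rate_hz * t)) / (1 + depth)
    sound = carrier * envelope
    return sound / np.max(np.abs(sound))                       # presentation level set elsewhere


def jittered_isis(n_trials, low_s, high_s, rng=None):
    """Intersound intervals drawn from a uniform distribution on [low_s, high_s)."""
    rng = np.random.default_rng(rng)
    return rng.uniform(low_s, high_s, size=n_trials)


# Variant B: 10 AM rates (5-50 Hz), 20 trials each, 1-s sounds, ISI 2.25-2.75 s
rates = np.repeat(np.arange(5, 55, 5), 20)
order = np.random.default_rng(0).permutation(rates)   # randomized trial order
isis = jittered_isis(order.size, 2.25, 2.75, rng=1)
first_sound = sam_noise(order[0], 1.0, rng=2)
```

Dividing the envelope by (1 + depth) keeps it within [0, 1], so at 100% depth the envelope reaches zero once per modulation cycle.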

In experiment 2, participants were presented with 10-s AM sounds. This longer duration was used to boost sensitivity for detecting any frequency-following response (if present). Patients 1–4 were presented with 36 trials of 10-s sounds consisting of amplitude-modulated white noise differing in the rate and depth of modulation. The sounds were presented in a randomized order for each participant, and the intersound interval was randomly varied between 4 and 6 s (uniform distribution). Patients 5–13 were presented with 8 trials of 10-s sounds consisting of white noise amplitude-modulated at 40 Hz at 100% depth, with an intersound interval randomly varying between 12 and 14 s (uniform distribution). The present study reports neural activity evoked by sounds amplitude-modulated at 40 Hz at 100% depth (a subset of the amplitude-modulated sounds presented to participants 1–4, totaling 40 s per participant, and all the amplitude-modulated sounds presented to participants 5–13, totaling 80 s). A 40-Hz AM rate was selected for experiment 2 to maximize the chance that a robust auditory entrainment signal, if it exists, would be transmitted to and isolated in visual cortex, given the sensitivity of auditory regions to this rate and because 40 Hz is above the range of activity modulated by transient onset/offset responses.

MRI and CT acquisition and processing.

A preoperative T1-weighted MRI and a postoperative CT scan were acquired for each participant to aid in localization of electrodes. Cortical reconstruction and volumetric segmentation of each participant’s MRI were performed with the Freesurfer image analysis suite (http://surfer.nmr.mgh.harvard.edu/) (46, 47). Postoperative CT scans were registered to the T1-weighted MRI through Statistical Parametric Mapping (SPM), and electrodes were localized along the Freesurfer cortical surface using customized open-source software developed in our laboratories (48) (available for download at https://github.com/towle-lab/electrode-registration-app/). This software segments electrodes from the CT by intensity values and projects the normal tangent of each electrode to the dura surface, avoiding sulcal placements and correcting for postimplantation brain deformation present in CT images.

Intracranial electroencephalography data acquisition and preprocessing.

For experiment 1, iEEG recordings were made at either 1,024 Hz (10 participants), 4,096 Hz (4 participants), or 1,000 Hz (2 participants) due to differences in the clinical amplifiers used. For experiment 2, recordings were made at either 1,024 Hz (7 participants), 4,096 Hz (3 participants), or 1,000 Hz (3 participants). Data recorded at 4,096 Hz were downsampled to 1,024 Hz for the initial stages of processing, and all participants’ data were resampled to 1,024 Hz for group-level time-frequency analyses. The onset of each trial was denoted online by a voltage-isolated transistor–transistor logic (TTL) pulse. To ensure that electrodes reflected maximally local and independent activity, we used bipolar referencing. Noisy channels were identified by first calculating the standard deviation across time for each channel (resulting in one value per channel) and then comparing this value across channels: channels with a value more than 5 standard deviations above or below the mean were excluded; this conservative threshold was applied to allow maximum inclusion of visual electrodes sensitive to auditory content. Noisy trials were identified by first calculating the standard deviation across time for each trial (resulting in one value per trial) and then comparing this value across trials: trials with a value more than 3 standard deviations above or below the mean were excluded. Following the rejection of artifactual channels and trials, data were high-pass filtered at 0.01 Hz to remove slow drift artifacts and notch-filtered at 60 Hz and its harmonics to remove line noise.
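The bipolar referencing and the channel and trial rejection rules above can be sketched as follows. The sketch assumes that each depth shaft or strip is passed in contact order for bipolar referencing and that trial rejection is applied per channel; both assumptions, the simulated data, and all function names are hypothetical.

```python
import numpy as np


def bipolar_reference(contacts):
    """Difference between neighbouring contacts on ONE shaft or strip.

    contacts: (n_contacts, n_samples), ordered along the electrode (assumed).
    Returns (n_contacts - 1, n_samples).
    """
    return contacts[:-1] - contacts[1:]


def outlier_mask(values, n_sd):
    """True where a value lies more than n_sd SDs above or below the mean."""
    z = (values - values.mean()) / values.std()
    return np.abs(z) > n_sd


def reject_channels(continuous, n_sd=5.0):
    """continuous: (n_channels, n_samples); one SD over time per channel."""
    return outlier_mask(continuous.std(axis=1), n_sd)


def reject_trials(epochs, n_sd=3.0):
    """epochs: (n_trials, n_samples) for one channel; one SD over time per trial."""
    return outlier_mask(epochs.std(axis=1), n_sd)


rng = np.random.default_rng(0)
raw = rng.standard_normal((100, 10_000))
raw[7] *= 50                           # simulated artifactual channel
bad_channels = reject_channels(raw)    # flags channel 7

epochs = rng.standard_normal((200, 1_000))
epochs[42] *= 20                       # simulated artifactual trial
bad_trials = reject_trials(epochs)     # flags trial 42
```

Because an outlier inflates the very SD it is compared against, a single bad channel among N channels can reach at most z = √(N − 1) with the population SD used here, so a 5-SD rule only becomes able to flag anything once more than about 26 channels are compared; this is one concrete sense in which the threshold is conservative.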

Electrode selection.

Auditory and visual electrodes included for analyses were selected based on their functional responses and anatomical locations. Spatial selection of the auditory electrodes was limited to those located proximal to the superior temporal gyrus or neighboring white matter based on the Desikan–Killiany–Tourville atlas calculated on individual-participant Freesurfer cortical parcellations. Functional selection of the auditory electrodes was limited to those that showed significant early event-related potential (ERP) responses to sounds (AM sounds for experiment 1; tones and pink noise-bursts obtained from separate passive listening tasks for experiment 2). Spatial selection of the visual electrodes was limited to those located in occipital, parietal, or inferior temporal areas or neighboring white matter. Functional selection of the visual electrodes was limited to those that showed significant early ERP responses to salient visual stimuli during a separate task. Specifically, participants completed one of three tasks with visual stimuli. Visual task variant A presented participants with images of faces, houses, chairs, and scrambled noise one at a time. Visual task variant B presented participants with images of complex visual scenes with humans and objects. Visual task variant C presented participants with movies of a speaking face that started with a static image of the face shown for 500 ms. A significant functional response in both auditory and visual areas was defined as any significant deviation from baseline between 0 and 120 ms: statistics were calculated using one-sample t tests separately at each timepoint, with trial as the random effect, and corrected for multiple comparisons across timepoints using the false discovery rate (FDR). ERPs were used for functional localization because our analyses focus on low-frequency oscillations, which are reflected in the ERP waveform.
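A sketch of the early-ERP criterion: one-sample t tests at each timepoint in the 0–120-ms window, with trials as the random effect, followed by Benjamini–Hochberg FDR correction across timepoints. The baseline window (−200 to 0 ms), the restriction of the FDR family to the 0–120-ms window, the 1-kHz sampling rate, and the function names are assumptions for illustration.

```python
import numpy as np
from scipy import stats


def fdr_bh(pvals, q=0.05):
    """Benjamini-Hochberg step-up procedure; returns a boolean rejection mask."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    order = np.argsort(p)
    passed = p[order] <= q * np.arange(1, m + 1) / m
    reject = np.zeros(m, dtype=bool)
    if passed.any():
        k = np.flatnonzero(passed).max()          # largest rank meeting its threshold
        reject[order[:k + 1]] = True
    return reject


def early_erp_response(epochs, times, baseline=(-0.2, 0.0), window=(0.0, 0.12), q=0.05):
    """True if any timepoint in `window` deviates from baseline (FDR-corrected).

    epochs: (n_trials, n_times) for one electrode; trials are the random effect.
    The baseline window is an assumption; the text does not specify it.
    """
    in_base = (times >= baseline[0]) & (times < baseline[1])
    in_win = (times >= window[0]) & (times <= window[1])
    corrected = epochs - epochs[:, in_base].mean(axis=1, keepdims=True)
    _, p = stats.ttest_1samp(corrected[:, in_win], 0.0, axis=0)   # two-sided
    return bool(fdr_bh(p, q).any())


times = np.arange(-500, 500) / 1000.0                  # 1-kHz sampling (assumed)
rng = np.random.default_rng(0)
epochs = rng.standard_normal((100, times.size))
epochs[:, (times >= 0.05) & (times < 0.10)] += 3.0     # simulated early response
```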
Locations for each participant’s electrodes are given in Supplemental Table S1 (see https://doi.org/10.6084/m9.figshare.14410247) and participant specific maps are shown in Supplemental Figs. S1 (see https://doi.org/10.6084/m9.figshare.14410244) and S3 (see https://doi.org/10.6084/m9.figshare.19698544).

Quantification and Statistical Analyses

After preprocessing, iEEG data were segmented into 6-s epochs (−3 to 3 s around sound onset) for experiment 1 and 24-s epochs (−12 to 12 s around sound onset) for experiment 2. For all analyses, the resultant epochs were convolved with complex Morlet wavelets, also referred to as complex Gabor wavelets (49). Wavelets were constructed by multiplying a complex sine wave by a Gaussian, given by the equation:

$$w(t) = A\, e^{-t^{2}/(2s^{2})}\, e^{i 2\pi f t}$$

where A refers to the amplitude of the Gaussian, t is time, f is frequency, and s refers to the standard deviation of the Gaussian, given by $s = n/(2\pi f)$, where n refers to the number of wavelet cycles (50). Wavelet center frequencies (variable f above) were set between 1 and 20 Hz (yielding 20 wavelets) or 1 and 50 Hz (yielding 50 wavelets), depending on the analysis, as described in the sections Analysis of transient phase coherence and Analysis of entrainment. The number of wavelet cycles (variable n) for each wavelet was equal to the center frequency, with the exception of the 1- and 2-Hz wavelets, which used n = 3 cycles due to the Nyquist limit (50). After the iEEG data were convolved with wavelets, instantaneous phase angles were extracted for each timepoint, frequency bin, and trial.
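The wavelet definition above can be sketched in NumPy/SciPy as follows. The ±5-SD span of the Gaussian, the normalization chosen for A (which does not affect phase), the use of FFT-based convolution, and the function names are assumptions; only phase angles are returned, since those are what the subsequent ITPC analyses use.

```python
import numpy as np
from scipy.signal import fftconvolve


def morlet_wavelet(f, n_cycles, fs):
    """Complex Morlet wavelet: complex sine wave multiplied by a Gaussian taper."""
    s = n_cycles / (2 * np.pi * f)              # Gaussian SD in seconds, s = n / (2*pi*f)
    half = int(np.ceil(5 * s * fs))             # span +/- 5 SD (assumed); odd length, centred on t = 0
    t = np.arange(-half, half + 1) / fs
    A = 1.0 / np.sqrt(s * np.sqrt(np.pi))       # one common choice; phase does not depend on A
    return A * np.exp(-t**2 / (2 * s**2)) * np.exp(2j * np.pi * f * t)


def n_cycles_for(f):
    """n equals the center frequency, except 3 cycles at 1 and 2 Hz."""
    return max(f, 3)


def wavelet_phase(epochs, freqs, fs):
    """epochs: (n_trials, n_times) -> phase angles of shape (n_freqs, n_trials, n_times)."""
    phases = np.empty((len(freqs),) + epochs.shape)
    for i, f in enumerate(freqs):
        w = morlet_wavelet(f, n_cycles_for(f), fs)
        analytic = fftconvolve(epochs, w[np.newaxis, :], mode="same", axes=1)
        phases[i] = np.angle(analytic)
    return phases


fs = 256
times = np.arange(-3 * fs, 3 * fs) / fs          # a 6-s epoch, as in experiment 1
x = np.cos(2 * np.pi * 10 * times)[np.newaxis, :]
ph = wavelet_phase(x, freqs=[1, 10, 20], fs=fs)  # 10-Hz phase is ~0 at t = 0 for a cosine
```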

At each timepoint for each convolved frequency, intertrial phase coherence (ITPC) was calculated as the magnitude of the complex average of the phase-angle vectors across trials, given by the equation:

$$\mathrm{ITPC} = \left| \frac{1}{n} \sum_{r=1}^{n} e^{i k_{r}} \right|$$

where n is the number of trials and k represents the polar angle on trial r. ITPC quantifies the consistency of phase angles across trials (51) and is widely used in EEG analyses, most often through its implementation in EEGLAB (52). The same measure has also been reported under other names, including “phase locking factor” and “intertrial coherence.” For all analyses except the time-frequency analyses, ITPC values were averaged over each analysis period of interest, yielding a single value for each condition and electrode that indexes the average phase alignment across that period. ITPC values are bounded between 0 (uniform phase-angle distribution) and 1 (identical phase angles across epochs). Throughout all analyses, only increases in ITPC values were considered significant because both phase reset and entrainment are expected to produce increases, not decreases, in phase alignment. Results for electrode-specific analyses of transient onset, transient offset, and entrainment responses are tabulated according to participant and electrode location in Supplemental Table S1.
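Given an array of phase angles with trials along the first axis, the ITPC equation reduces to a one-line NumPy expression; the toy phases below are hypothetical and illustrate the two bounds.

```python
import numpy as np


def itpc(phase, axis=0):
    """Magnitude of the mean unit phase vector across trials (trials on `axis`)."""
    return np.abs(np.mean(np.exp(1j * phase), axis=axis))


rng = np.random.default_rng(0)
identical = np.full(500, 0.7)                  # same phase on every trial
uniform = rng.uniform(-np.pi, np.pi, 500)      # uniformly distributed phases
```

With n trials of uniformly random phase, ITPC does not reach exactly 0 but fluctuates around roughly √(π/(4n)) (about 0.04 for n = 500), which is one reason the analyses compare poststimulus ITPC against a baseline or a shuffled null rather than against 0.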

Analysis of transient phase coherence.

For group-level analyses of transient ITPC, each epoch was convolved with 20 wavelets with center frequencies ranging from 1 to 20 Hz in 1-Hz intervals, with the number of cycles varying across this frequency range (3 cycles for 1–3 Hz, and 4–20 cycles varying linearly for 4–20 Hz). For group-level comparisons against baseline, ITPC was calculated separately for each frequency band and each electrode during the prestimulus baseline period (−1,000 to −500 ms) and the 200-ms stimulus onset or offset period (0–200 ms); we used a 500-ms window in the baseline period (relative to the shorter 200-ms windows in the poststimulus periods) to ensure stable “null” ITPC values. For each frequency, the baseline ITPC value was subtracted from the poststimulus value, and the difference was Fisher Z-transformed before averaging across frequencies to produce electrode-specific difference scores. These difference scores were then averaged across each participant’s auditory or visual electrodes, and these participant-level difference scores were compared with 0 using a one-tailed, one-sample t test. Because 3 of 7 participants with auditory electrodes were not tested on the 5-Hz AM sound condition, this condition was omitted from group-level analyses of auditory electrodes.
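A sketch of the electrode- and participant-level steps, assuming per-frequency ITPC has already been computed for the 0–200-ms and baseline windows. The order (subtract, Fisher Z-transform via arctanh, average across frequencies) follows the wording above; the electrode scores in the usage lines are made-up numbers and the function names are hypothetical.

```python
import numpy as np
from scipy import stats


def electrode_score(itpc_post, itpc_base):
    """Per-frequency ITPC arrays (n_freqs,) for one electrode -> one difference score.

    Order follows the text: subtract baseline, Fisher Z-transform (arctanh),
    then average across frequencies.
    """
    return np.mean(np.arctanh(np.asarray(itpc_post) - np.asarray(itpc_base)))


def group_test(participant_scores):
    """One-tailed one-sample t test of participant-level scores against 0."""
    res = stats.ttest_1samp(participant_scores, 0.0, alternative="greater")
    return res.statistic, res.pvalue


# Hypothetical electrode scores, grouped by participant
scores = {"P1": [0.08, 0.12], "P2": [0.05], "P3": [0.10, 0.02, 0.07], "P4": [0.04]}
participant_means = np.array([np.mean(v) for v in scores.values()])  # average within participant
t, p = group_test(participant_means)
```

Averaging within participants before testing makes the participant, not the electrode, the unit of analysis, which is why the degrees of freedom reported in the Results track the number of participants.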

For group-level time-frequency analyses, ITPC values for each timepoint (−1 to 1 s) and frequency band were first averaged across electrodes within each participant and then averaged across participants. Significant timepoints were identified in the 0 to 1 s range relative to the prestimulus baseline period (−1 to −0.5 s) using one-tailed one-sample t tests at each timepoint and frequency. Multiple comparison corrections were applied using cluster statistics, in which the time-frequency map of t value statistics is permuted 10,000 times to identify clusters of significant contiguous time-frequency points (at P < 0.05) that are greater than those in 95% of the permuted data (50).
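One way to implement the cluster correction is sketched below. The text does not specify how the t-value maps were permuted; this sketch assumes a sign-flipping permutation of each participant's baseline-subtracted ITPC map (a common choice for one-sample designs), uses cluster size (number of contiguous points, 4-connectivity) as the cluster statistic, and runs 1,000 rather than 10,000 permutations to keep the example fast. The simulated maps and function names are hypothetical.

```python
import numpy as np
from scipy import ndimage, stats


def _tmap(x):
    """One-sample t value at every (freq, time) point; x: (n_subj, n_freqs, n_times)."""
    n = x.shape[0]
    return x.mean(axis=0) / (x.std(axis=0, ddof=1) / np.sqrt(n))


def _clusters(tmap, t_thresh):
    """Label contiguous supra-threshold points; return labels and cluster sizes."""
    labels, _ = ndimage.label(tmap > t_thresh)
    return labels, np.bincount(labels.ravel())[1:]


def cluster_test(diff_maps, n_perm=1000, alpha=0.05, rng=None):
    """Boolean mask of points belonging to clusters larger than 95% of the null maxima."""
    rng = np.random.default_rng(rng)
    n = diff_maps.shape[0]
    t_thresh = stats.t.ppf(1 - alpha, df=n - 1)          # one-tailed, P < 0.05 per point
    labels, sizes = _clusters(_tmap(diff_maps), t_thresh)
    null_max = np.empty(n_perm)
    for i in range(n_perm):
        signs = rng.choice([-1.0, 1.0], size=(n, 1, 1))  # assumed permutation scheme
        _, perm_sizes = _clusters(_tmap(diff_maps * signs), t_thresh)
        null_max[i] = perm_sizes.max() if perm_sizes.size else 0
    crit = np.quantile(null_max, 1 - alpha)              # 95th percentile of max cluster size
    keep = np.flatnonzero(sizes > crit) + 1              # label ids of surviving clusters
    return np.isin(labels, keep)


rng = np.random.default_rng(1)
maps = rng.standard_normal((16, 20, 100))   # 16 participants x 20 freqs x 100 timepoints
maps[:, 2:8, 10:40] += 2.0                  # simulated low-frequency onset effect
sig = cluster_test(maps, rng=0)
```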

For single-electrode analyses of transient ITPC, phase-shuffling was used to generate a null distribution of ITPC values for the 200-ms onset period in each electrode. For 10,000 iterations, a random value ranging from −π to +π (uniformly sampled) was added to each trial before calculation of ITPC. The distribution of ITPC values produced by this procedure is designed to reflect the expected distribution of values observed under purely spurious phase coherence. P values were determined by identifying the proportion of values from this distribution that equaled or exceeded observed ITPC values, and then were corrected for multiple comparisons using FDR correction (q = 0.05).
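The phase-shuffling null can be sketched as below, assuming one random offset per trial (shared across the frequencies and timepoints of that trial) and an observed statistic equal to ITPC averaged over frequency and time in the analysis window. Array shapes, the simulated phases, and function names are illustrative; the resulting P values would then be FDR-corrected across electrodes.

```python
import numpy as np


def mean_itpc(phase):
    """phase: (n_trials, n_freqs, n_times) -> ITPC averaged over frequency and time."""
    return np.abs(np.mean(np.exp(1j * phase), axis=0)).mean()


def phase_shuffle_p(phase, n_iter=10_000, rng=None):
    """P value of the observed mean ITPC against a phase-shuffled null distribution."""
    rng = np.random.default_rng(rng)
    observed = mean_itpc(phase)
    n_trials = phase.shape[0]
    null = np.empty(n_iter)
    for i in range(n_iter):
        # one uniform random offset per trial (assumed to apply to all freqs/times)
        offsets = rng.uniform(-np.pi, np.pi, size=(n_trials, 1, 1))
        null[i] = mean_itpc(phase + offsets)
    return observed, np.mean(null >= observed)   # proportion equal to or exceeding


rng = np.random.default_rng(0)
aligned = 0.5 + 0.4 * rng.standard_normal((60, 5, 20))    # phase-reset-like trials
obs, p = phase_shuffle_p(aligned, n_iter=1_000, rng=1)
```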

As prior literature has indicated that sounds relay meaningful information to visual motion area V5/hMT+ (12, 35), we compared the proportions of electrodes exhibiting transient onset responses near area V5/hMT+ versus other visual areas using a permutation test with restricted exchangeability (53). For each of 10,000 permutations, labels indicating electrode location were permuted so that the relationship between electrode location and ITPC significance was obliterated, and the number of significant electrodes with a V5/hMT+ label was recorded for each permutation to generate a null distribution. Permutations were restricted so that labels were only exchanged between electrodes belonging to the same participant, producing an exchangeability restriction reflecting the dependency structure of the data. All participants with at least one electrode within the V5/hMT+ label also had at least one electrode outside of the label. The P value was determined by identifying the proportion of values from the resultant null distribution that equaled or exceeded the observed number of significant electrodes with a V5/hMT+ label (i.e., those within 1 cm of the Freesurfer V5/hMT+ probabilistic label). Statistical results were comparable when using unrestricted permutations or a parametric χ2 test for equality of proportions.
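A sketch of the restricted-exchangeability permutation: location labels are shuffled only among electrodes from the same participant, so each participant keeps its own number of V5/hMT+ electrodes. The input arrays, the toy data in the usage lines, and the function name are hypothetical.

```python
import numpy as np


def restricted_permutation_p(participant, in_v5, significant, n_perm=10_000, rng=None):
    """Permutation P value for the number of significant electrodes labelled V5/hMT+.

    Location labels are shuffled only within each participant (restricted
    exchangeability), preserving each participant's count of V5/hMT+ electrodes.
    """
    rng = np.random.default_rng(rng)
    participant = np.asarray(participant)
    in_v5 = np.asarray(in_v5, dtype=bool)
    significant = np.asarray(significant, dtype=bool)
    observed = int(np.sum(in_v5 & significant))
    groups = [np.flatnonzero(participant == p) for p in np.unique(participant)]
    null = np.empty(n_perm)
    for i in range(n_perm):
        shuffled = in_v5.copy()
        for idx in groups:
            shuffled[idx] = in_v5[rng.permutation(idx)]
        null[i] = np.sum(shuffled & significant)
    return observed, np.mean(null >= observed)


# Hypothetical data: 3 participants x 20 electrodes, 5 labelled V5/hMT+ in each
participant = np.repeat([1, 2, 3], 20)
in_v5 = np.tile([True] * 5 + [False] * 15, 3)
significant = in_v5.copy()
significant[[10, 30, 50]] = True          # a few significant electrodes elsewhere
obs, p = restricted_permutation_p(participant, in_v5, significant, n_perm=2_000, rng=0)
```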

Analysis of entrainment.

For group-level time-spectral analyses of entrainment, the epoched data were convolved with 10 wavelets (center frequencies ranging from 5 to 50 Hz in 5-Hz intervals), with the number of cycles varying linearly across this frequency range (5–50 cycles in steps of 5). ITPC values for each timepoint and frequency were calculated separately for each participant, Fisher Z-transformed, and then averaged across participants, with group-level t tests conducted at each timepoint and frequency. Multiple comparison corrections were applied using cluster statistics, in which the time-frequency map of t value statistics was permuted 10,000 times to identify clusters of significant contiguous time-frequency points (at P < 0.05) that were greater than those in 95% of the permuted data (50).

For frequency-specific analyses, data from each condition were convolved with a single wavelet whose center frequency and number of cycles both equaled the condition AM frequency (e.g., data from the 40-Hz condition were convolved with a 40-cycle wavelet with a center frequency of 40 Hz). ITPC values were then calculated separately at each timepoint, Fisher Z-transformed, and averaged across time to yield two ITPC values for each electrode and condition: one for the prestimulus baseline period (experiment 1: −1.5 to −0.5 s; experiment 2: −11 to −1 s) and one for the full stimulus period. Difference scores were then taken from these values to yield a single ITPC value (relative to baseline) per condition, electrode, and participant. For single-electrode analyses of entrainment, frequency-averaged ITPC values for the full stimulus period were compared with null distributions generated through phase shuffling. P values were determined as the proportion of values in the null distribution that exceeded the observed ITPC value and were then corrected for multiple comparisons using FDR correction (q = 0.05). For group-level analyses of entrainment, data were averaged across electrodes before statistics (one-sample t tests) were conducted separately for each condition, with correction for multiple comparisons using FDR.
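A simulated example of the frequency-specific measure with the experiment 1 windows: a single 40-Hz wavelet with 40 cycles, ITPC per timepoint, Fisher Z-transform, and the stimulus-minus-baseline difference. The simulated 40-Hz response, noise level, trial count, sampling rate, and function names are assumptions chosen only to show that a response phase-locked at the AM rate yields a positive score while noise alone does not.

```python
import numpy as np
from scipy.signal import fftconvolve


def morlet(f, n_cycles, fs):
    """Complex Morlet wavelet (unit-amplitude Gaussian; only phase is used)."""
    s = n_cycles / (2 * np.pi * f)
    half = int(np.ceil(5 * s * fs))
    t = np.arange(-half, half + 1) / fs
    return np.exp(-t**2 / (2 * s**2)) * np.exp(2j * np.pi * f * t)


def entrainment_score(epochs, times, am_hz, fs, base=(-1.5, -0.5), stim=(0.0, 1.0)):
    """Stimulus-minus-baseline Fisher-Z ITPC at the AM rate, using one wavelet with n = f.

    epochs: (n_trials, n_times); default windows follow experiment 1.
    """
    w = morlet(am_hz, am_hz, fs)[np.newaxis, :]
    phase = np.angle(fftconvolve(epochs, w, mode="same", axes=1))
    z = np.arctanh(np.abs(np.mean(np.exp(1j * phase), axis=0)))   # Fisher Z per timepoint
    in_base = (times >= base[0]) & (times < base[1])
    in_stim = (times >= stim[0]) & (times < stim[1])
    return z[in_stim].mean() - z[in_base].mean()


fs = 1024
times = np.arange(-3 * fs, 3 * fs) / fs                 # 6-s epochs, as in experiment 1
rng = np.random.default_rng(0)
noise = rng.standard_normal((100, times.size))           # 100 simulated trials
tone = np.where((times >= 0) & (times < 1), np.sin(2 * np.pi * 40 * times), 0.0)
entrained = noise + tone                                 # 40-Hz response phase-locked to the sound
score_entrained = entrainment_score(entrained, times, 40, fs)
score_noise = entrainment_score(noise, times, 40, fs)
```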

The aforementioned analyses examined whether entrainment is present at the entrained frequencies. To examine more generally whether different AM sounds elicited selective power or phase changes at any frequency, we used multiclass support vector machine (SVM) decoding to classify AM frequencies using either spectral phase or spectral power information, separately at auditory and visual electrodes. ITPC values were calculated as described earlier from 5 to 50 Hz in 5-Hz intervals. Event-related spectral power (ERSP) was calculated from the squared magnitude of the same signals used in ITPC analyses from 5 to 50 Hz in 5-Hz intervals and averaged across trials. ITPC and ERSP data were averaged from 0 to 1 s to yield a pair of values per condition, electrode, and participant. Classification was performed using the MATLAB function “fitcecoc” through a leave-one-participant-out group-level approach to classify AM sound conditions. Specifically, each fold included a training set consisting of all but one participant’s data and a test set consisting of the left-out participant’s data; thus, the number of folds equaled the number of participants with auditory (or visual) electrodes. Significance was calculated using one-sample t tests comparing participants’ test classification accuracy with chance level (10% for 10 AM sound conditions); one-tailed statistics were used on the expectation that classification should be above chance and not below.
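The leave-one-participant-out scheme is sketched below. To stay dependency-light, this sketch swaps the multiclass SVM (`fitcecoc` in MATLAB) for a simple nearest-centroid classifier; the fold structure and the one-tailed test against 10% chance follow the description above. The synthetic features (a peak at the condition's own frequency plus noise) and all function names are hypothetical.

```python
import numpy as np
from scipy import stats


def fit_centroids(X, y):
    """Stand-in classifier (NOT an SVM): one mean feature vector per class."""
    classes = np.unique(y)
    return classes, np.stack([X[y == c].mean(axis=0) for c in classes])


def predict(model, X):
    """Assign each row of X to the class with the nearest centroid (Euclidean)."""
    classes, centroids = model
    dist = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return classes[np.argmin(dist, axis=1)]


def leave_one_participant_out(X, y, groups):
    """Train on all but one participant, test on the left-out one; one accuracy per fold."""
    accs = []
    for g in np.unique(groups):
        test = groups == g
        model = fit_centroids(X[~test], y[~test])
        accs.append(np.mean(predict(model, X[test]) == y[test]))
    return np.array(accs)


# Hypothetical features: 10-point spectrum (5-50 Hz) per electrode and AM condition
rng = np.random.default_rng(0)
X, y, groups = [], [], []
for subj in range(8):
    for elec in range(3):
        for cond in range(10):
            X.append(np.eye(10)[cond] + 0.3 * rng.standard_normal(10))  # peak at own rate
            y.append(cond)
            groups.append(subj)
X, y, groups = np.array(X), np.array(y), np.array(groups)

accs = leave_one_participant_out(X, y, groups)
res = stats.ttest_1samp(accs, 0.10, alternative="greater")   # chance = 1/10
```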

RESULTS

Sound Onsets Produce Transient Phase Reset in Visual Cortex

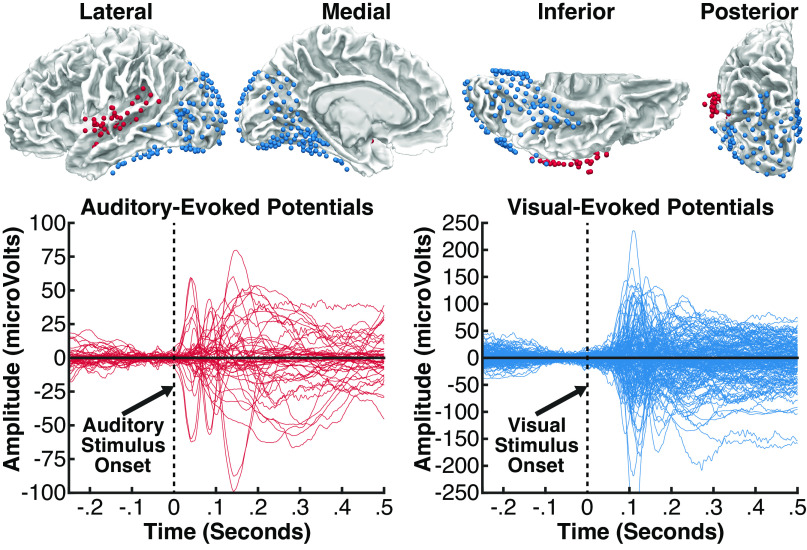

To test whether AM sounds produce transient modulations of oscillatory activity in visual cortex, we first examined whether auditory onsets produced transient intertrial phase coherence (ITPC) in visually responsive electrodes (see Fig. 2, Supplemental Fig. S1, and material and methods for electrode selection criteria). ITPC is quantified across trials and is therefore sensitive to the consistency of oscillatory phase angles produced by both stimulus-evoked phase resetting and oscillatory entrainment. To isolate phase coherence evoked by auditory onsets (regardless of AM frequency), we combined data across stimulus conditions so that the frequencies of potential entrainment responses were inconsistent across trials and therefore would not produce reliable frequency-specific ITPC. We restricted our analysis of transient responses to the 1–20 Hz range based on previous reports indicating that brief auditory stimuli reset visual oscillations in the δ, θ, and α frequency bands (13, 15, 54). At the group level [data were averaged across electrodes and frequency bands (1–20 Hz) for each participant before group comparison], we found significantly more phase coherence in both auditory [t(6) = 6.21, P = 0.0004, d = 2.35; Fig. 3A] and visual electrodes [t(12) = 3.57, P = 0.002, d = 0.991; Fig. 3B] during the 200-ms period following stimulus onset than during the prestimulus baseline period, suggesting that auditory onsets produced transient phase coherence in both auditory and visual cortex. Note that the degrees of freedom vary according to the number of participants contributing to each group-level analysis (auditory: n = 7; visual: n = 13).
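The reported effect sizes are consistent, to rounding, with Cohen's d computed from a one-sample t statistic as d = t/√n, where n is the number of participants; this is an inference from the reported values rather than a formula stated by the authors.

```python
import math


def cohens_d_one_sample(t, n):
    """t = mean / (sd / sqrt(n)) for a one-sample test, hence d = mean / sd = t / sqrt(n)."""
    return t / math.sqrt(n)


d_auditory = cohens_d_one_sample(6.21, 7)    # about 2.35
d_visual = cohens_d_one_sample(3.57, 13)     # about 0.990 (reported: 0.991; t is rounded)
```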

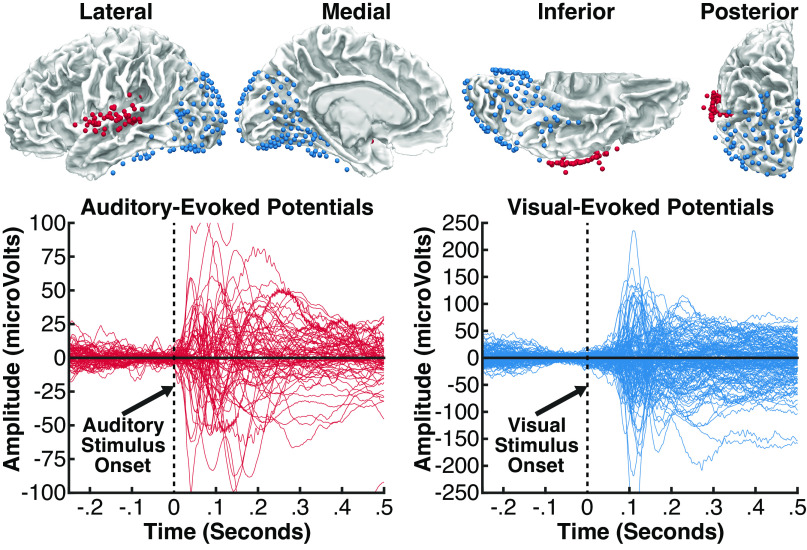

Figure 2.

Top: intracranial electrodes from 16 patients, displayed on an average brain (all electrodes projected into the left hemisphere). Each colored sphere reflects a single electrode contact included in analyses, localized in auditory areas (red, 55 electrodes) or visual areas (blue, 178 electrodes). Auditory electrodes were limited to those located proximal to the superior temporal gyrus or neighboring white matter and showing a significant event-related potential (ERP) to sounds beginning at less than 120 ms. Visual electrodes were limited to those located in occipital, parietal, or inferior temporal areas and showing a significant ERP to visual stimuli beginning at less than 120 ms. Bottom: ERP responses at all auditory electrodes (red) evoked during an auditory task and at all visual electrodes (blue) evoked during a visual task; time zero indicates stimulus onset in each task.

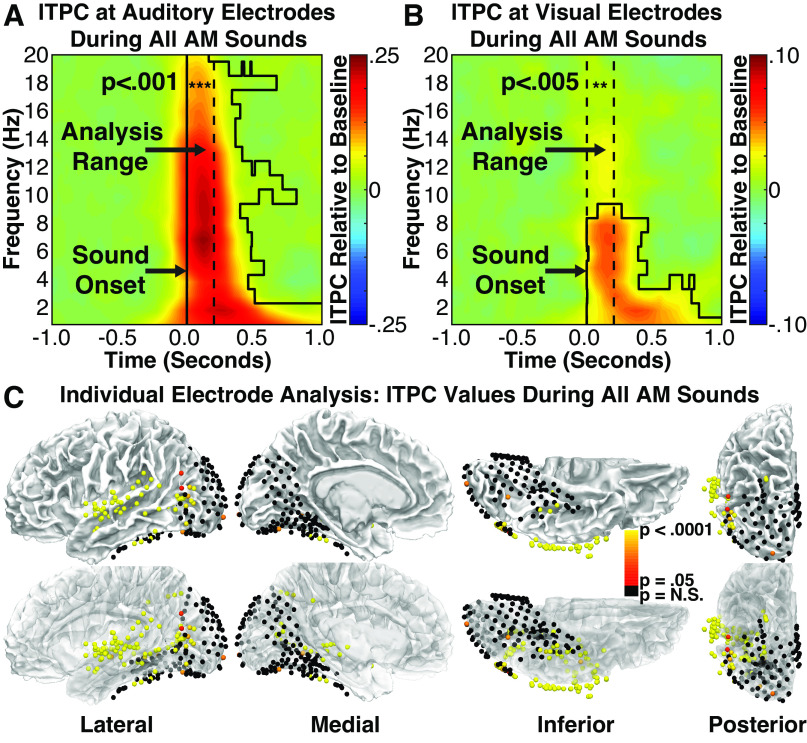

Figure 3.

Group intertrial phase coherence (ITPC) time-spectral plots for auditory (A) and visual (B) electrodes during the average of all amplitude-modulated (AM) sound conditions. Dotted lines denote the analyzed time-frequency range. Relative to the prestimulus baseline period, significantly greater ITPC values were present at low frequencies for both auditory and visual electrodes, highlighting an auditory-driven transient phase reset to the onset of AM sounds in auditory and visual areas. Black polygons highlight significant times and frequencies using cluster-corrected statistics (P < 0.05). C: auditory and visual electrodes from all patients showing either significant (red to yellow) or nonsignificant (black) ITPC values during the average of all AM sound conditions. Significant electrodes cluster around the central superior temporal gyrus, proximal to auditory cortex, and are additionally broadly distributed throughout visual areas, most strongly at pericalcarine and occipitotemporal areas (potentially V5/hMT+). The bottom row of images shows the same data on partially transparent brains to reveal statistics from electrodes not visible at the surface.

To characterize the spectro-temporal profile of these effects, we identified timepoints and frequency bands in which significant ITPC was observed at the group level (auditory and visual electrodes were separately averaged within each participant before being averaged across participants). After correcting for multiple comparisons using cluster-based statistics (see material and methods), significant clusters were observed in the 1–20 Hz range for auditory electrodes (Fig. 3A; black polygons surround significant clusters) and the 1–9 Hz range for visual electrodes (Fig. 3B). Although stimuli were 1 s or longer in duration, significant phase coherence persisted for only ∼500 ms in frequency bands above 3 Hz, indicating the transient nature of these responses. More prolonged phase coherence is apparent in the 1–3 Hz range; this likely reflects the temporal smoothing inherent to frequency-resolved analysis of slow oscillations (owing to the temporal width of the wavelets), although the poor temporal precision at these frequencies prevents us from excluding a sustained response. These group-level results suggest that the onsets of AM sounds produce transient phase coherence in both auditory and visual cortex.
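To put the temporal-smoothing argument in numbers, the sketch below computes the full width at half maximum (FWHM) of the Gaussian taper, 2√(2 ln 2)·s with s = n/(2πf), under the cycle rule given in the Methods (n = f, with 3 cycles at 1 and 2 Hz). The function name is hypothetical.

```python
import math


def taper_fwhm_s(f_hz, n_cycles):
    """FWHM (seconds) of the Gaussian taper exp(-t^2 / (2 s^2)), with s = n / (2*pi*f)."""
    s = n_cycles / (2 * math.pi * f_hz)
    return 2 * math.sqrt(2 * math.log(2)) * s


widths = {f: round(taper_fwhm_s(f, max(f, 3)), 3) for f in (1, 2, 3, 10, 20)}
# -> {1: 1.124, 2: 0.562, 3: 0.375, 10: 0.375, 20: 0.375}
```

Under this rule the taper width is constant (about 0.37 s) from 3 Hz upward but roughly triples at 1 Hz, consistent with more prolonged apparent coherence at the lowest frequencies.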

To examine the regional specificity of these effects, we identified individual electrodes showing significant phase coherence using a similar, but electrode-specific, analysis (Fig. 3C). For each electrode, we computed the average ITPC across the 1–20 Hz frequency range (ITPC calculated independently at each 1 Hz band) during the initial 200 ms following stimulus onset. To assess statistical significance, ITPC values averaged for each electrode were compared with the distribution of values produced by phase-shuffled data, and the resulting P values were FDR-corrected (q = 0.05) for multiple comparisons across electrodes. Based on this analysis, 55 of 55 (100%) auditory electrodes and 41 of 178 (23.0%) visual electrodes showed significant phase coherence (Fig. 3C).

Significant visual electrodes were broadly distributed across sampled regions, with multiple electrodes clustered in pericalcarine, posterior parietal, and inferior temporal cortex, and many significant electrodes in lateral occipito-temporal cortex. The location of this region, inferior to the angular gyrus at the junction of inferior/middle temporal cortex and lateral occipital cortex, corresponds closely to the expected anatomical location of area V5/hMT+ (55). To examine whether significant electrodes preferentially clustered near area V5/hMT+, we compared the proportion of visual electrodes within 1 cm of the estimated anatomical location of V5/hMT+ (FreeSurfer probabilistic atlas) (56) that showed significant transient ITPC with the corresponding proportion among visual electrodes in other areas. Sixteen of 30 (53.3%) electrodes in the V5/hMT+ region showed significant ITPC, whereas only 25 of 148 (16.9%) of the remaining electrodes did, a significant difference (P = 0.0001; restricted permutation test). These results suggest that electrodes exhibiting transient onset responses cluster preferentially near putative area V5/hMT+, while also occurring in multiple regions of visual cortex. Moreover, electrodes exhibiting significant onset responses in the V5/hMT+ region came from 4 of the 6 participants with electrodes in this region, suggesting that the effect generalizes across participants.
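A permutation test for this difference in proportions can be sketched as follows. The text does not specify how the permutation was restricted; this sketch assumes region labels are permuted only among electrodes from the same participant, so each participant keeps the same number of in-region electrodes. The record layout and function names are hypothetical. The usage example pools the reported counts (16 of 30 vs. 25 of 148) into a single hypothetical participant, which reduces the test to an ordinary, unrestricted permutation test.

```python
import random
from collections import defaultdict


def prop_diff(records):
    """Proportion significant inside the region minus proportion significant outside it."""
    inside = [sig for _, in_roi, sig in records if in_roi]
    outside = [sig for _, in_roi, sig in records if not in_roi]
    return sum(inside) / len(inside) - sum(outside) / len(outside)


def restricted_permutation_test(records, n_perm=2000, seed=0):
    """records: (participant_id, in_region, significant) tuples.
    Region labels are shuffled only within each participant (assumed restriction).
    Returns (observed difference, one-sided p-value)."""
    rng = random.Random(seed)
    observed = prop_diff(records)
    by_participant = defaultdict(list)
    for rec in records:
        by_participant[rec[0]].append(rec)
    exceed = 0
    for _ in range(n_perm):
        permuted = []
        for recs in by_participant.values():
            labels = [in_roi for _, in_roi, _ in recs]
            rng.shuffle(labels)
            permuted.extend((pid, lab, sig) for (pid, _, sig), lab in zip(recs, labels))
        if prop_diff(permuted) >= observed:
            exceed += 1
    return observed, (exceed + 1) / (n_perm + 1)


# Usage with the reported pooled counts, placed in one hypothetical participant "p0".
records = ([("p0", True, True)] * 16 + [("p0", True, False)] * 14
           + [("p0", False, True)] * 25 + [("p0", False, False)] * 123)
observed, p = restricted_permutation_test(records)
```

With real data, each electrode would carry its own participant ID, so the shuffle respects between-participant differences in coverage and responsiveness.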

For completeness, we additionally analyzed responses from the remaining electrodes that did not meet inclusion criteria for the aforementioned analyses. Supplemental Fig. S2 (see https://doi.org/10.6084/m9.figshare.19388477) shows the results of this analysis (FDR-corrected) for n = 603 electrodes. Although none of these electrodes met functional inclusion criteria, this subset included coverage of three main regions: n = 194 electrodes in auditory areas (n = 25 middle temporal, n = 69 superior temporal, and n = 6 supramarginal), n = 128 electrodes in visual areas (n = 3 lingual, n = 7 lateral occipital, n = 48 fusiform, and n = 70 inferior temporal), and n = 183 electrodes in medial temporal areas (n = 38 entorhinal, n = 41 parahippocampal, n = 72 hippocampus, n = 9 amygdala, and n = 23 temporal pole). Note that the auditory and visual electrodes in this set were excluded from the main analyses because they failed to show a significant auditory or visual response, respectively, in the functional localizer. Nevertheless, these auditory and visual electrodes showed a pattern similar to that of electrodes meeting inclusion criteria: 100 of 194 (51.5%) auditory electrodes and 28 of 128 (21.9%) visual electrodes showed significant phase coherence. In contrast, few electrodes in the medial temporal lobe showed significant phase coherence (9 of 183; 4.92%), indicating that auditory-driven responses are not equivalent across all areas.

Altogether, these results suggest that the onsets of AM sounds produce transient phase coherence in both auditory and visual cortices. Importantly, because both evoked responses that are independent of ongoing oscillatory activity and phase resetting of oscillatory activity can produce the appearance of transient phase coherence, event-related ITPC must be interpreted with care (57). In auditory cortex, sounds are known to evoke both changes in spectral power and oscillatory phase reset, so phase coherence in auditory cortex likely reflects both mechanisms (58). In contrast, converging evidence from transcranial magnetic stimulation (TMS), EEG, psychophysics, and intracranial electrophysiology in human and nonhuman primates (13, 15, 54) suggests that sounds influence visual cortex by modulating subthreshold oscillatory activity rather than by driving event-related evoked responses; transient phase coherence in visual cortex therefore most likely reflects crossmodal phase-resetting to auditory onsets. Because transient responses to the onsets of AM sounds emerge only at the cortical level of the auditory processing hierarchy, these results provide initial evidence that crossmodal phase-resetting in visual cortex may be driven by auditory cortical activity.

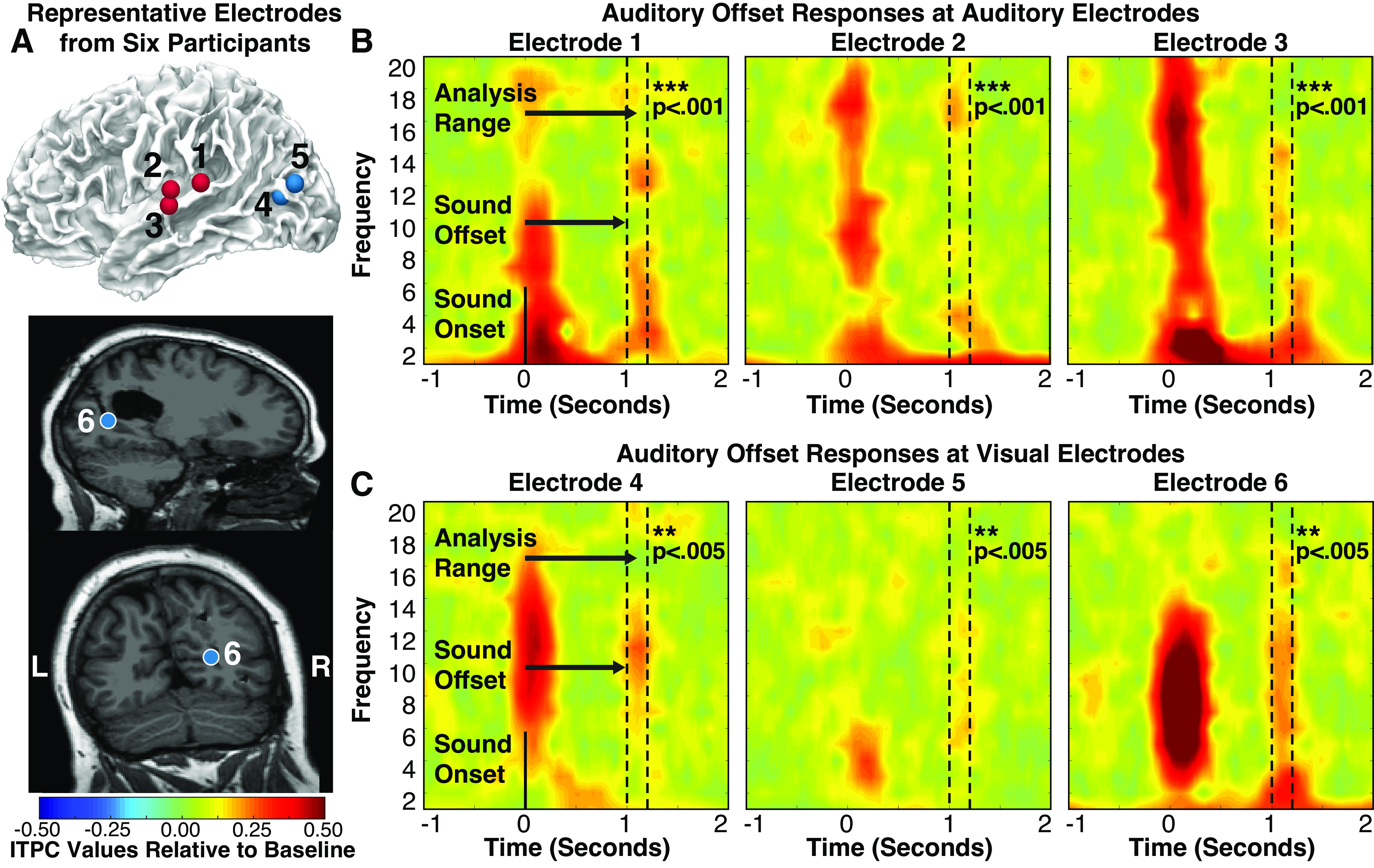

Sound Offsets Produce Transient Phase Reset in Visual Cortex

In addition to responding to auditory onsets, cortical auditory neurons also display transient responses to the offsets of AM sounds (59–64). To rule out the possibility that the transient onset responses observed in visual cortex simply reflect rapidly adapting or highly damped entrainment responses, we tested whether cortical offset responses were also reflected in visual oscillations. To do so, we applied the same analyses to the 200-ms window following auditory offsets as we had applied to the 200-ms window following auditory onsets (Fig. 4). In group-level analyses averaging ITPC values for auditory or visual electrodes within each participant, both auditory [t(6) = 2.75, P = 0.017, d = 1.04] and visual [t(12) = 2.56, P = 0.013, d = 0.710] regions showed significant phase coherence in response to auditory offsets (compared with the prestimulus baseline), suggesting that auditory offsets do indeed produce transient phase coherence in visual cortex. Analyzing electrodes individually, 39 of 55 auditory electrodes (70.9%; representative electrodes/responses: Fig. 4, A and B) and 7 of 178 visual electrodes (3.9%; Fig. 4, A and C) showed significant phase coherence following auditory offsets, suggesting that both auditory and visual cortex respond reliably, but more weakly, to auditory offsets than to onsets. This weaker offset response is consistent with previous human iEEG research showing weaker offset than onset responses in auditory cortex (59).
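The reported effect sizes are consistent with Cohen's d_z for a paired (stimulus minus baseline) comparison, d = t/√n with n = df + 1: for example, 2.75/√7 ≈ 1.04 and 2.56/√13 ≈ 0.710. The sketch below assumes one stimulus value and one baseline value per participant and computes both quantities; the function names are hypothetical.

```python
import math
import statistics


def paired_t_and_d(stimulus, baseline):
    """Paired t statistic, Cohen's d_z, and degrees of freedom for per-participant values."""
    diffs = [s - b for s, b in zip(stimulus, baseline)]
    n = len(diffs)
    mean = statistics.mean(diffs)
    sd = statistics.stdev(diffs)  # sample SD (n - 1 denominator)
    t = mean / (sd / math.sqrt(n))
    d = mean / sd  # identical to t / sqrt(n)
    return t, d, n - 1


def d_from_t(t, df):
    """Recover d_z from a reported paired/one-sample t statistic and its degrees of freedom."""
    return t / math.sqrt(df + 1)


# Reported offset-response statistics: auditory t(6) = 2.75, visual t(12) = 2.56.
d_auditory = d_from_t(2.75, 6)
d_visual = d_from_t(2.56, 12)
```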

Figure 4.

A: representative electrodes (auditory electrodes are red, visual electrodes are blue) from six participants that showed significant intertrial phase coherence (ITPC) responses (after correcting for multiple comparisons) at the offset of sounds. ITPC at auditory (left) and visual (right) electrodes is averaged across all amplitude-modulated (AM) sound conditions. Dotted lines denote the analyzed time-frequency range. Individual participant ITPC plots at selected auditory (B) and visual (C) electrodes. AM sound onset occurred at 0 s and offset at 1 s. Each electrode shows both onset and offset responses.

In visual cortex, although only a small number of electrodes showed significant offset responses, these responses were statistically robust (all P < 0.005 after FDR-based multiple comparison correction; Fig. 4C), suggesting that they are unlikely to be spurious. Of the seven visual electrodes that showed significant offset responses, six also showed significant onset responses, suggesting that onset and offset responses occurred at similar sites in visual cortex. When we restricted the offset analysis to the 41 visual electrodes that showed significant responses to auditory onsets, 10 of 41 (24.4%) visually selective electrodes showed significant offset responses. The greater number of significant electrodes in this analysis likely reflects the smaller number of electrodes tested, which reduces the severity of the multiple comparison correction. These results suggest that crossmodal responses to auditory offsets tend to occur in the same neural populations that display crossmodal responses to auditory onsets. Electrodes showing both responses tended to cluster around pericalcarine and occipito-temporal (putative V5/hMT+) cortex (see Fig. 4A), suggesting that these regions may be particularly responsive to modulatory input originating from auditory cortex.
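Why restricting the test family can increase the number of significant electrodes is easy to see with the Benjamini–Hochberg procedure, a standard method for FDR control. The text reports FDR correction at q = 0.05 but does not name the procedure, so BH is an assumption here, and the p-values below are made up for illustration: the same small p-values partly survive within a family of 10 tests but none survive within a family of 100.

```python
def benjamini_hochberg(pvals, q=0.05):
    """Flag p-values that survive Benjamini-Hochberg FDR control at level q."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    # Largest 1-based rank k such that p_(k) <= (k / m) * q.
    k_max = 0
    for rank, idx in enumerate(order, start=1):
        if pvals[idx] <= rank / m * q:
            k_max = rank
    reject = [False] * m
    for idx in order[:k_max]:
        reject[idx] = True
    return reject


# Hypothetical p-values: five electrodes with small p-values plus unremarkable ones.
small = [0.001, 0.004, 0.009, 0.018, 0.04]
subset = small + [0.5] * 5   # family of 10 (e.g., pre-selected electrodes)
full = subset + [0.6] * 90   # family of 100 (all electrodes)

n_subset = sum(benjamini_hochberg(subset))  # 4 survive
n_full = sum(benjamini_hochberg(full))      # 0 survive
```

Because each BH threshold scales with rank/m, adding many null tests to the family raises the bar for every electrode, which is consistent with the larger yield when only onset-responsive electrodes are tested.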

Visual Areas Do Not Entrain to Auditory Amplitude Modulations: 1–2 s Stimuli

Previous research indicates that although ∼20% of neurons in auditory cortex show transient onset and/or offset responses to AM sounds, the remaining neurons show primarily bandpass or lowpass frequency-following responses, maximally tuned to AM frequencies below 16 Hz (60). If visual neurons are sensitive to neural dynamics in auditory cortex in general, then they should also exhibit entrainment to these AM frequencies. However, if visual cortex primarily receives input from auditory neurons that display transient responses, then no crossmodal entrainment should be apparent. Finally, if some visual neurons receive input from subcortical auditory areas, then they should exhibit entrainment to higher frequencies.

To determine whether visual cortex exhibits entrainment to AM sounds during passive listening, we tested whether visual electrodes displayed ITPC in the frequency bands of the auditory amplitude modulations. Individual modulation frequencies were analyzed separately for frequency-specific analyses and averaged together to gauge overall entrainment. Group-level and individual-electrode analyses were analogous to the analyses of transient responses, except that ITPC was computed over the full stimulus duration and only at the AM frequency of each stimulus.
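Entrainment ITPC at a single AM frequency can be illustrated with toy data. This sketch assumes a single-bin discrete Fourier transform over a 1-s epoch in place of the wavelet-based decomposition used in the study, and the simulated trials (a 40-Hz sinusoid with either stimulus-locked or random phase, plus Gaussian noise) and the 500-Hz sampling rate are hypothetical. Stimulus-locked phase yields ITPC near 1, whereas random phase yields values on the order of 1/√N for N trials.

```python
import cmath
import math
import random


def phase_at_freq(signal, fs, freq):
    """Phase of a single DFT bin at `freq` Hz, computed over the whole epoch."""
    acc = sum(x * cmath.exp(-2j * math.pi * freq * n / fs) for n, x in enumerate(signal))
    return cmath.phase(acc)


def am_itpc(trials, fs, freq):
    """ITPC at one frequency across trials (1 = identical phase on every trial)."""
    vecs = [cmath.exp(1j * phase_at_freq(trial, fs, freq)) for trial in trials]
    return abs(sum(vecs)) / len(vecs)


def simulate(n_trials, fs, freq, locked, seed=0):
    """Toy 1-s trials: a sinusoid at `freq` with fixed (stimulus-locked) or random phase, plus noise."""
    rng = random.Random(seed)
    trials = []
    for _ in range(n_trials):
        phi = 0.0 if locked else rng.uniform(-math.pi, math.pi)
        trials.append([math.cos(2 * math.pi * freq * n / fs + phi) + rng.gauss(0, 1.0)
                       for n in range(fs)])
    return trials


fs = 500  # hypothetical sampling rate (Hz)
locked_itpc = am_itpc(simulate(40, fs, 40, locked=True), fs, 40)
random_itpc = am_itpc(simulate(40, fs, 40, locked=False), fs, 40)
```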

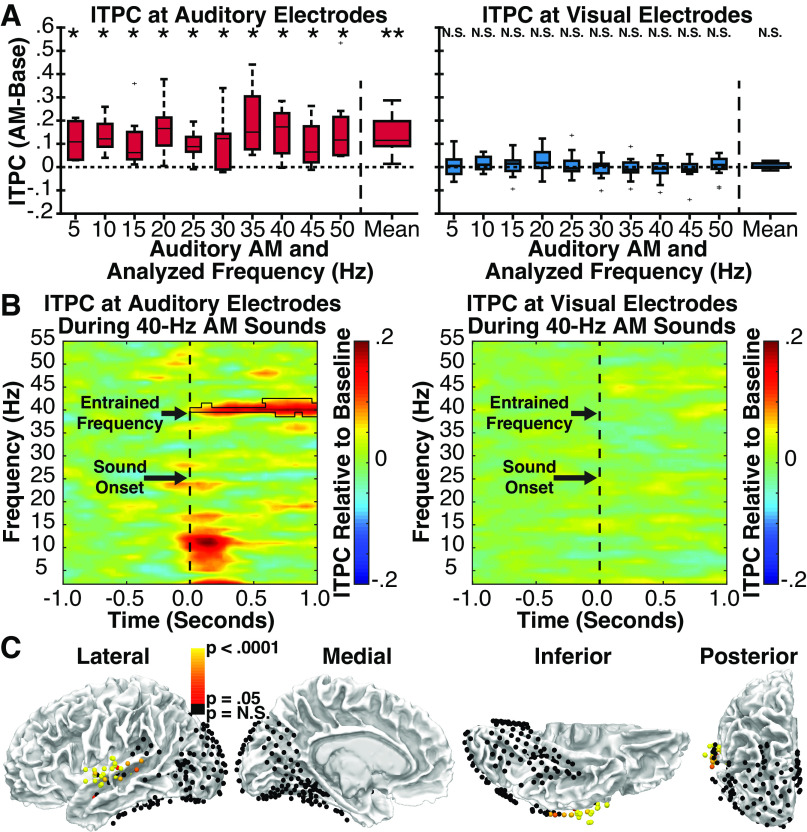

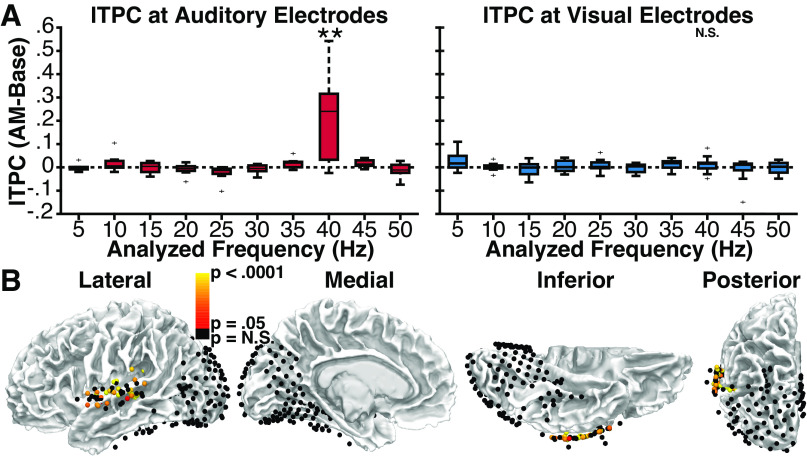

In group-level comparisons with the prestimulus baseline, auditory electrodes (Fig. 5A, left) showed significant entrainment in the frequency-averaged analysis [t(6) = 3.93, P = 0.004, d = 1.49] and for 10 of 10 modulation frequencies in frequency-specific analyses (all P < 0.05, corrected for multiple comparisons), suggesting that auditory neurons exhibited the expected reliable entrainment to amplitude modulations. No clear frequency tuning was evident, potentially indicating an influence of higher-frequency thalamocortical signals on auditory electrodes. In contrast, visual electrodes (Fig. 5A, right) showed no significant entrainment in either the frequency-averaged [t(12) = 1.31, P = 0.108, d = 0.362] or frequency-specific analyses (all P > 0.295), suggesting that neurons in visual cortex do not exhibit entrainment to auditory amplitude modulations. As an illustrative example, group-level entrainment responses to 40-Hz AM sounds are shown for auditory and visual electrodes in Fig. 5B. Although entrainment is clearly visible in the 40-Hz frequency band in auditory electrodes, it is notably absent in visual electrodes.

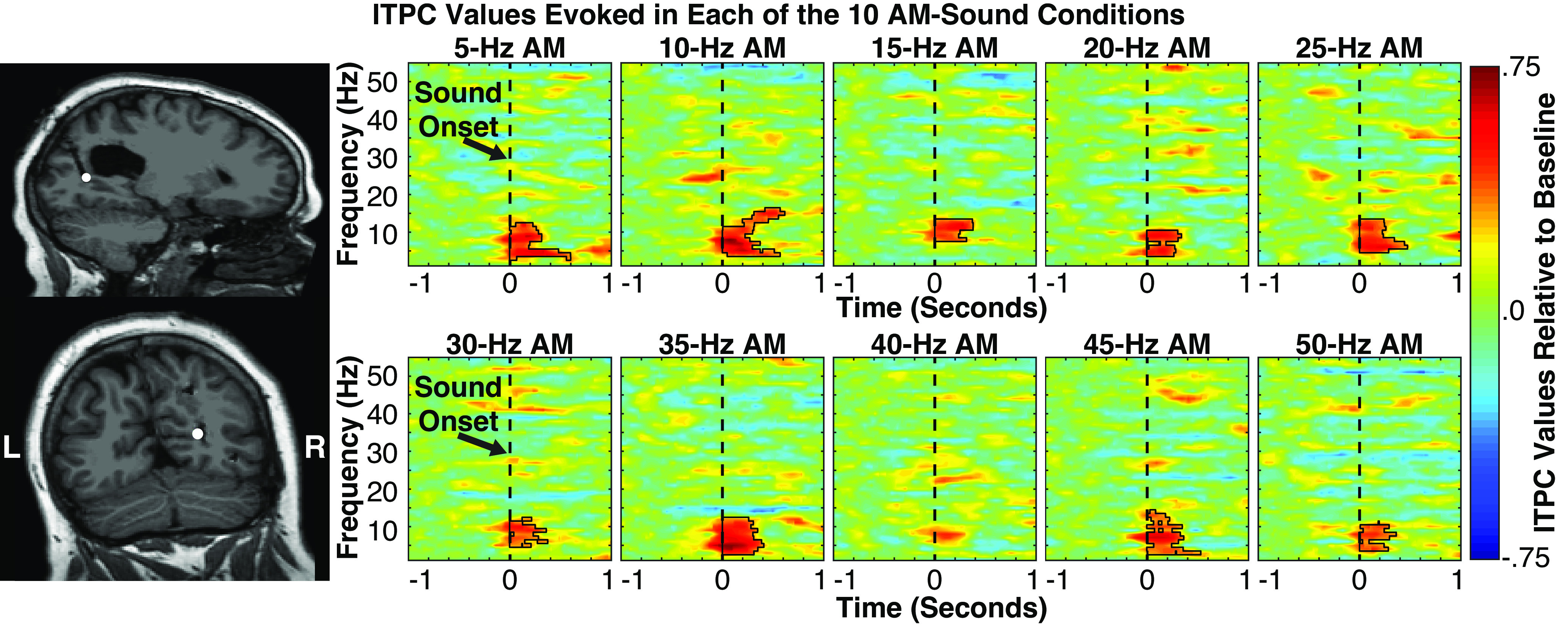

Figure 5.

Experiment 1. A: group-level analysis of intertrial phase coherence (ITPC) at auditory (left) and visual (right) electrodes in response to amplitude-modulated (AM) sounds relative to ITPC values in the prestimulus baseline period. Individual bars reflect auditory AM rate and matched analysis frequency. Center line indicates median; box limits, upper and lower quartiles; whiskers, 1.5 times interquartile range; points, outliers. *P < 0.05, **P < 0.005 (corrected for multiple comparisons). Error bars reflect SEM. B: group ITPC time-spectral plots for auditory (left) and visual (right) electrodes during a representative AM sound condition (40 Hz). Black polygon denotes P < 0.05 significance (corrected for multiple comparisons). Significant entrainment is present at 40 Hz only for auditory electrodes. C: auditory and visual electrodes from all patients showing either significant (red to white) or nonsignificant (black) ITPC values during the presentation of AM sounds relative to phase-shuffled data, reflecting neural entrainment to the auditory stimulus. Significant electrodes cluster around the central superior temporal gyrus, proximal to auditory cortex, and are noticeably absent from visual areas.

Electrode-specific analyses using frequency-averaged ITPC values yielded similar results. Relative to phase-shuffled data, 41 of 55 (74.5%) auditory electrodes showed significant (P < 0.05, FDR-corrected) entrainment (Fig. 5C). By contrast, only 1 of 178 visual electrodes (0.56%) showed significant entrainment relative to phase-shuffled data. Moreover, even after limiting this analysis to the 41 of 178 visual electrodes with transient onset responses and the 7 of 178 visual electrodes with transient offset responses (to reduce the number of multiple comparison corrections required), we failed to observe entrainment at any additional visual electrode (beyond the single contact shown in Fig. 6).

Figure 6.

Left: the sole visually selective intracranial electrode (white disk on the patient’s postoperative MRI) showing significant intertrial phase coherence (ITPC) values (following correction for multiple comparisons) in neural entrainment analyses. The electrode was localized to the anterior portion of the calcarine sulcus in the right hemisphere, probabilistically mapped to either V1 or V2. Right: spectral-temporal plots showing ITPC values evoked at this electrode in each of the 10 amplitude-modulated (AM) sound conditions. Black polygons denote P < 0.005 significance (corrected for multiple comparisons using cluster statistics). Significant ITPC values were evoked transiently in the α frequency range regardless of AM frequency, indicating a transient phase reset rather than entrainment of visual areas to the AM sound.

Because only a single visual electrode was significant in this analysis, we inspected its response profile in detail to determine whether it genuinely exhibited entrainment or was merely a false positive. This electrode was of particular interest because it was located in the anterior portion of the calcarine sulcus (Fig. 6, left; also Electrode 6 in Fig. 4), where direct crossmodal connections from auditory cortex have been shown to terminate in nonhuman primates (65, 66). Closer examination of frequency-resolved ITPC at this electrode indicated that it did not exhibit sustained entrainment to AM frequencies but instead showed pronounced transient onset responses in the 2–12 Hz range regardless of AM frequency. This large transient response may have produced the appearance of entrainment by contributing exceptionally large ITPC values from the low-frequency conditions to the frequency-averaged analysis. Consistent with this interpretation, transient ITPC at stimulus onset was larger at this electrode than at any other visual electrode and, indeed, than at 87% of auditory electrodes.

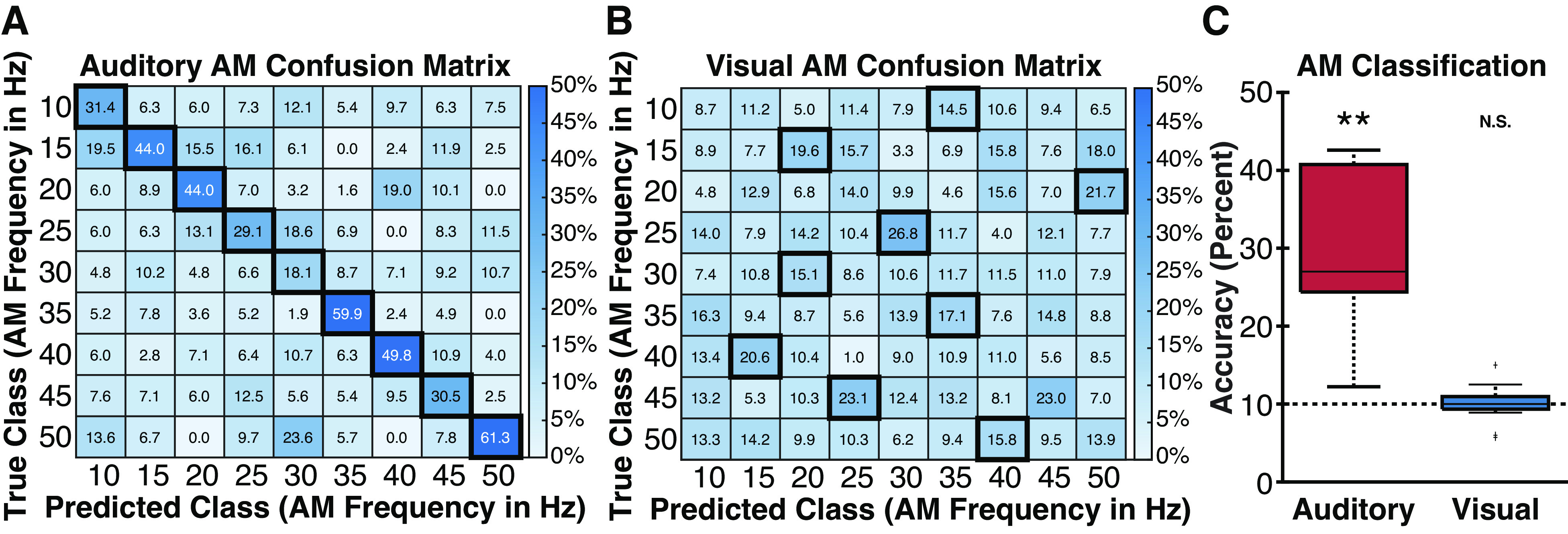

Finally, to examine whether different AM sounds elicited selective power or phase changes not captured by the entrainment analyses above, we used SVM classification of both ERSP and ITPC data for auditory and visual electrodes. Specifically, ITPC and ERSP values were computed for each electrode and participant at each frequency of interest (from 5 to 50 Hz in 5-Hz intervals). Because three participants were not tested in the 5-Hz AM sound condition, we calculated classification performance both including and excluding this condition (the pattern of results did not differ). Figure 7 shows classification performance and confusion matrices for auditory and visual electrodes. ITPC classification results mirrored the entrainment results. Classification of 10 AM sound frequencies was significantly above chance (10%) at the group level for auditory electrodes [accuracy = 33.3%, t(6) = 3.52, P = 0.006, d = 1.33] but not for visual electrodes [accuracy = 10.0%, t(12) = −0.053, P = 0.521, d = −0.015]. Similarly, classification of 9 AM sound frequencies was significantly above chance (11.1%) at the group level for auditory electrodes [accuracy = 34.9%, t(6) = 3.90, P = 0.004, d = 1.47] but not for visual electrodes [accuracy = 11.3%, t(12) = 0.190, P = 0.426, d = 0.053]. We observed a similar, albeit much weaker, pattern of decoding using ERSP data, consistent with the expectation that entrainment is better characterized by the phase information captured by ITPC. AM sounds were classified at slightly above chance accuracy from ERSP values at auditory electrodes [10 conditions: accuracy = 13.7%, t(6) = 2.14, P = 0.038, d = 0.807; 9 conditions: accuracy = 14.8%, t(6) = 2.15, P = 0.037, d = 0.813]. In contrast, AM sounds could not be classified using ERSP data from visual electrodes [10 conditions: accuracy = 9.60%, t(12) = −0.431, P = 0.663, d = −0.120; 9 conditions: accuracy = 9.89%, t(12) = −0.798, P = 0.780, d = −0.221].
Taken together, these results suggest that visual cortex does not exhibit reliable entrainment to AM sounds during passive listening but is modulated by event-segmented auditory signals, potentially originating from auditory cortex.
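The logic of decoding AM frequency against chance can be sketched with a leave-one-out classifier. This is explicitly a substitute for the SVM used in the study: a nearest-centroid classifier keeps the example dependency-free. The feature vectors (one value per analysis frequency) and the toy data are hypothetical. For k balanced classes, chance accuracy is 1/k, i.e., 10% for 10 conditions and 11.1% for 9.

```python
import random


def nearest_centroid_loo(samples, labels):
    """Leave-one-out accuracy and confusion counts for a nearest-centroid classifier
    (a dependency-free stand-in for an SVM)."""
    classes = sorted(set(labels))
    confusion = {true: {pred: 0 for pred in classes} for true in classes}
    correct = 0
    for i, (x, y) in enumerate(zip(samples, labels)):
        # Class centroids computed without the held-out sample.
        centroids = {}
        for c in classes:
            members = [s for j, (s, lab) in enumerate(zip(samples, labels)) if lab == c and j != i]
            centroids[c] = [sum(col) / len(members) for col in zip(*members)]
        pred = min(classes, key=lambda c: sum((a - b) ** 2 for a, b in zip(x, centroids[c])))
        confusion[y][pred] += 1
        correct += int(pred == y)
    return correct / len(samples), confusion


def toy_data(signal, n_classes=10, n_per_class=5, seed=0):
    """Hypothetical features: one value per analysis frequency; class c raises feature c."""
    rng = random.Random(seed)
    samples, labels = [], []
    for c in range(n_classes):
        for _ in range(n_per_class):
            samples.append([(signal if f == c else 0.0) + rng.gauss(0, 0.2)
                            for f in range(n_classes)])
            labels.append(c)
    return samples, labels


chance = 1 / 10
acc_signal, confusion = nearest_centroid_loo(*toy_data(signal=1.0))
acc_null, _ = nearest_centroid_loo(*toy_data(signal=0.0))
```

In the study, group-level accuracies were then compared with chance across participants (e.g., a one-sample t test), which is the step that yields the t, P, and d values reported above.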

Figure 7.

Group-level intertrial phase coherence (ITPC) classification data. A and B: confusion matrices at auditory and visual electrodes for amplitude-modulated (AM) sounds (10–50 Hz). Colors denote the percentage of predicted class labels. Dark boxes reflect the most frequently predicted AM frequency for each true AM frequency. Auditory electrodes show reliable classification of the true AM sound (diagonal of the confusion matrix), whereas visual electrodes do not. C: boxplots showing group-level AM classification rates for auditory and visual electrodes for AM sounds (5–50 Hz). The horizontal dotted line reflects chance-level accuracy. **P < 0.01.

Visual Areas Do Not Entrain to Auditory Amplitude Modulations: 10-s Stimuli

To further verify this interpretation, we considered the possibility that the stimuli used in our first experiment were too short for reliable crossmodal entrainment to emerge. To test whether entrainment would become apparent with longer stimulus durations, we conducted a second experiment in which we presented 40-Hz AM sounds lasting 10 s to 13 participants with similar electrode coverage (7 of whom also participated in experiment 1; see Fig. 8 and Supplemental Fig. S3 for electrode selection). This modulation frequency provided a rigorous test for subcortically driven crossmodal entrainment because it is near the preferred modulation frequencies of neurons in both the inferior colliculus and auditory thalamus (67, 68). Moreover, studies in nonhuman primates suggest that brief sounds can produce transient phase-resetting near the 40-Hz frequency band in low-level visual cortex (13). Therefore, if any subcortically driven crossmodal entrainment were present in visual cortex, it would likely be evident in this frequency band.

Figure 8.

Experiment 2. Top: intracranial electrodes from 13 patients, displayed on an average brain (all electrodes projected onto the left hemisphere). Each colored sphere reflects a single electrode contact included in analyses, localized in auditory areas (red, 73 electrodes) or visual areas (blue, 151 electrodes). Auditory electrodes were limited to those located proximal to the superior temporal gyrus or neighboring white matter and showing a significant event-related potential (ERP) to sounds beginning at less than 120 ms. Visual electrodes were limited to those located in occipital, parietal, or inferior temporal areas and showing a significant ERP to visual stimuli beginning at less than 120 ms. Bottom: ERPs at all auditory electrodes (red) evoked during a passive listening task, and at all visual electrodes (blue) evoked during a visual task; time zero indicates stimulus onset in each task.

To test for entrainment, we divided each stimulus period (from 0 to 10 s) and each prestimulus baseline period (from −11 to −1 s) into 1-s epochs, producing a total of 10 stimulus and 10 baseline epochs per trial. Segmenting trials into these epochs was intended to enhance the signal-to-noise ratio (SNR) of the entrained signal by increasing the number of epochs contributing to each ITPC estimate. We then used all stimulus or all baseline epochs to estimate ITPC in each frequency band from 5 to 50 Hz (in 5-Hz intervals) and averaged these values across each participant’s electrodes for our group-level analysis.
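The epoching step can be sketched as below. One property worth noting: with 1-s epochs, a 40-Hz modulation completes exactly 40 cycles per epoch, so the stimulus-locked phase at 40 Hz is identical at the start of every epoch and consecutive epochs can be treated as repeated observations. A modulation that does not complete an integer number of cycles per epoch (a hypothetical 40.5-Hz rate, for example) would shift phase by π from one epoch to the next, and ITPC across epochs would collapse toward zero. The single-bin DFT, the 500-Hz sampling rate, and the noise-free test signals are simplifying assumptions.

```python
import cmath
import math


def split_epochs(signal, fs, epoch_s=1.0):
    """Cut a continuous recording into consecutive, non-overlapping epochs."""
    n = int(fs * epoch_s)
    return [signal[i:i + n] for i in range(0, len(signal) - n + 1, n)]


def bin_phase(epoch, fs, freq):
    """Phase of the DFT bin at `freq` Hz within one epoch."""
    acc = sum(x * cmath.exp(-2j * math.pi * freq * k / fs) for k, x in enumerate(epoch))
    return cmath.phase(acc)


def epoch_itpc(signal, fs, freq, epoch_s=1.0):
    """ITPC at `freq` across the epochs cut from one long trial."""
    vecs = [cmath.exp(1j * bin_phase(e, fs, freq)) for e in split_epochs(signal, fs, epoch_s)]
    return abs(sum(vecs)) / len(vecs)


fs = 500  # hypothetical sampling rate (Hz)
samples = range(10 * fs)  # one 10-s stimulus period
am_40 = [math.cos(2 * math.pi * 40 * k / fs) for k in samples]      # 40 cycles per epoch
am_40_5 = [math.cos(2 * math.pi * 40.5 * k / fs) for k in samples]  # 40.5 cycles per epoch
```

All analysis frequencies used here (5 to 50 Hz in 5-Hz steps) are integers, so each completes a whole number of cycles in a 1-s epoch.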

Comparing ITPC values between stimulus and baseline periods at the group level, we found significant entrainment in only the 40-Hz frequency band in auditory electrodes [t(7) = 3.00, P = 0.010, d = 1.06], indicating selective entrainment to the modulation frequency of the auditory stimulus (Fig. 9A, left). In contrast, no significant entrainment was observed at the group level in the 40-Hz frequency band for visual electrodes [t(10) = 1.10, P = 0.149, d = 0.331; Fig. 9A, right], providing additional evidence that AM sounds do not produce entrainment in visual cortex. In electrode-specific analyses comparing ITPC values in the 40-Hz frequency band to those produced using phase-shuffled data, 43 of 73 (58.9%) auditory electrodes showed significant (P < 0.05 FDR-corrected) entrainment (Fig. 9B). In contrast, 0 of 151 (0%) visual electrodes showed significant entrainment, providing further evidence that even prolonged AM sounds do not produce entrainment in visual cortex during passive listening.

Figure 9.

Experiment 2. A: group-level analysis of intertrial phase coherence (ITPC) at auditory (left) and visual (right) electrodes in response to 40-Hz amplitude-modulated (AM) sounds relative to ITPC values in the prestimulus baseline period. Individual bars reflect ITPC values at various frequencies, highlighting the specificity of auditory entrainment at 40 Hz. Center line indicates median; box limits, upper and lower quartiles; whiskers, 1.5 times interquartile range; points, outliers. **P < 0.01 (non-40-Hz frequencies corrected for multiple comparisons). Error bars reflect SEM. B: auditory and visual electrodes from all patients showing either significant (red to yellow) or nonsignificant (black) ITPC values during the presentation of AM sounds relative to phase-shuffled data, reflecting neural entrainment to the auditory stimulus. Significant electrodes cluster around the central superior temporal gyrus, proximal to auditory cortex, and are noticeably absent from visual areas.

DISCUSSION

In this study, we used iEEG to identify what information in an auditory signal is transmitted to visual cortex during passive listening. Although we found clear evidence of transient phase-resetting to the onsets and offsets of AM sounds, we found no reliable evidence of crossmodal entrainment to AM frequencies, consistent with past iEEG studies indicating that AM entrainment is largely restricted to auditory regions (69, 70). These transient cortical responses are thought to reflect temporal parsing of fine-grained stimulus dynamics into a segmented representation of auditory events (62), suggesting that crossmodal phase-resetting primarily conveys the timing of individuated auditory events, rather than relatively “raw” auditory stimulus dynamics, to visual cortex. Furthermore, these results place an upper bound on the types of auditory information made available to the visual system during passive listening: neurons in visual cortex failed to demonstrate entrainment to AM sounds but still generated onset and offset responses.

These results validate and extend prior studies characterizing the forms of information relayed from auditory to visual areas, including auditory timing and spatial location (29, 30). Here, we demonstrate the novel result in humans that auditory offsets (in addition to onsets) elicit transient responses in visual cortex with temporal dynamics similar to those of transient responses in the auditory system. This is consistent with prior research in mice demonstrating both onset and offset responses to sounds in primary visual cortex (71). These onset/offset potentials may have perceptual consequences for the visual system, potentially improving visual motion tracking and temporal duration estimation (64). These potentials may also contribute to the sound-induced flash illusion, in which a single visual flash paired with two auditory beeps is perceived as two distinct visual events (72), or to the audiovisual stream-bounce illusion, in which transient auditory onsets/offsets promote the perception of visual objects bouncing off, rather than passing by, each other (73).

The topography and dynamics of these crossmodal responses may also provide information about the likely sources of the crossmodal modulatory signals that influence visual cortex. At subcortical levels, signals from the inferior colliculus or auditory thalamus could be transmitted to visual cortex via the tecto-pulvinar system (74) or other thalamocortical connections conveying modulatory signals (24). Cortically, auditory signals could instead be relayed to visual cortex via direct cortico-cortical pathways connecting low-level auditory and visual cortex (65, 66, 75, 76) or via feedback connections from multisensory association cortex (65, 77–79). Although we found significant transient responses throughout visual cortex, onset responses were most pronounced at pericalcarine (putative V1/V2) and lateral occipito-temporal (putative V5/hMT+) sites, where significant offset responses were also observed. One possibility is that modulatory signals from auditory cortex are transmitted to V1/V2 and V5/hMT+ through direct “lateral” cortico-cortical pathways. Anatomical tracer studies in nonhuman primates have revealed direct connections from auditory cortex to V1/V2 (65, 66, 75), and recent diffusion MRI findings suggest a similar connection to area V5/hMT+ in humans (80). Consistent with this interpretation, we observed the strongest crossmodal response in the anterior portion of the calcarine sulcus, where direct connections from auditory cortex have been shown to terminate in nonhuman primates (65). This area is also densely connected with V5/hMT+ by projections from magnocellular-pathway neurons in V1 (81), which are characterized by rapid transient responses to visual dynamics (82). One plausible hypothesis is that this set of connections could facilitate rapid detection of and behavioral responses to multisensory stimuli by linking auditory and visual cortical neurons that are tuned to sudden changes in the sensory environment.
Indeed, psychophysical studies suggest that temporally correspondent sounds primarily enhance the perception of transient low-spatial frequency or peripheral visual stimuli that are preferentially encoded by magnocellular neurons (2). Direct connections between low-level auditory and visual cortices may also contribute to the facilitative effects of spatialized sounds on visual perception and spatial orienting by modulating visual activity in a hemi-spatially biased manner (10, 12).

Because neurons at different levels of the auditory processing hierarchy exhibit unique responses to AM sounds, additional evidence for the likely sources of crossmodal modulatory signals can also be inferred from characteristic response patterns reflected in visual oscillations. Importantly, although subcortical auditory neurons reliably exhibit entrainment to AM sounds, ∼20% of neurons in auditory cortex do not exhibit entrainment and respond only to the onsets and/or offsets of auditory stimuli (60). Similarly, in human iEEG, neural populations in posterior superior temporal cortex have been found to respond preferentially to sentence onsets, rather than the extended amplitude dynamics of speech (83). Mirroring the expected response of these auditory cortical neurons, we observed reliable phase coherence to the onsets of AM sounds, weaker coherence to sound offsets, and no evidence of extended entrainment in visual cortex. These results are consistent with a cortical auditory source for the crossmodal modulations observed in visual cortex, and match results from recent animal studies (e.g., see Ref. 71). Indeed, onset-sensitive auditory neurons in the posterior temporal cortex are a plausible candidate source given their proximity to white-matter pathways connecting auditory cortex to V1/V2 and V5/hMT+ (65, 75, 78, 80). Thus, both anatomical and functional considerations point to direct “lateral” connections from auditory cortex as a plausible source for the observed crossmodal responses. Alternatively, cortical auditory signals could be transmitted to visual cortex via feedback connections from multisensory association cortex, including the posterior superior temporal sulcus and intraparietal sulcus (84, 85). In nonhuman primates, feedback connections from the superior temporal polysensory (STP) region also target low-level visual cortex (65, 75), leaving open the possibility that cortical auditory signals could be conveyed to visual cortex via this pathway. 
However, the speed with which sounds modulate activity in low-level visual cortex leads us to disfavor this explanation. Specifically, reliable auditory effects on V1/V2 activity have been observed as early as 28 ms after stimulus onset, only 2 ms after the earliest responses observed in (nonprimary) auditory cortex in the same participant (10) and 15 ms after the earliest responses to clicks recorded from primary auditory cortex in earlier reports (86), leaving little time for polysynaptic delays along the crossmodal pathway from polysensory cortex. Indeed, in the present data set, a significant auditory-evoked potential was observed in the calcarine sulcus (the electrode highlighted in Fig. 6) beginning at 39 ms, replicating prior reports of fast auditory-driven modulation of visual activity.

Finally, the pulvinar nucleus of the thalamus is another potential source of crossmodal modulations in visual cortex, given its connections with V1/V2 and V5/hMT+ (87, 88) and recent research suggesting that the pulvinar may exhibit temporal parsing of auditory patterns (89). However, the functional properties of pulvinar neurons and their cortical connections are largely inconsistent with the iEEG responses observed in our experiments using lateralized sounds (12). For example, pulvinar neural populations tend to have receptive fields of roughly 1° to 10° (90) and project to cortical neurons with spatially matched receptive fields (88). Pulvinar outputs to visual cortex would therefore be expected to exhibit at least hemifield-specific spatial tuning. In contrast, in our iEEG experiments, sounds lateralized ∼45° from the midline produced bilateral responses throughout visual cortex, with only a modest contralateral bias (12). The topography of crossmodal responses to lateralized sounds is thus inconsistent with the strictly hemispheric response pattern predicted by a pulvinar source. Rather, the pattern of responses is more consistent with a source that exhibits only a partial hemispheric response bias, such as auditory cortex (86, 91). Taken together with the previous literature, the current results therefore lead us to favor a cortico-cortical explanation for the observed crossmodal modulations.

Importantly, these inferences rest on the premise that responses observed in visual cortex primarily reflect the response properties of upstream neurons in the auditory system, and not transformations generated within visual cortex itself. We believe this assumption is likely justified for two reasons. First, the types of transformations or response nonlinearities required to produce the observed responses are unlikely to occur in V1, given the properties of the relevant neural populations. Second, even if the observed onset/offset responses were the result of a transformation in visual cortex, input from auditory subcortical regions would be expected to produce detectable presynaptic activity entrained to stimulus modulation frequencies in visual cortex. For example, one alternative explanation of our results is that the observed pattern of visual responses reflects lowpass filtering or nonlinear transformations of subcortical auditory input within visual cortex (i.e., visual neurons locally filter out irrelevant auditory information). However, this interpretation is largely inconsistent with the known properties of visual cortex and the biophysical basis of the iEEG signals studied here. In nonhuman primates, V1 neurons exhibit entrainment to visual dynamics up to 100 Hz (92, 93), with typical high-frequency cut-offs ranging from 12 to 48 Hz (94), providing sufficient temporal resolution for robust entrainment to high-frequency inputs from auditory subcortical sites.
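The filtering argument can be made concrete with a deliberately simple model. Treating the temporal response of V1 as a first-order lowpass filter (an idealization assumed here, not taken from the cited studies, whose cut-off definitions may differ from the −3 dB point used below), the gain at frequency f for cut-off f_c is 1/√(1 + (f/f_c)²). For a 40-Hz input this gives about 0.77 with a 48-Hz cut-off and about 0.29 with a 12-Hz cut-off: attenuated, but not abolished.

```python
import math


def first_order_gain(f, fc):
    """Magnitude response |H(f)| of a first-order lowpass filter with -3 dB cut-off fc (Hz)."""
    return 1.0 / math.sqrt(1.0 + (f / fc) ** 2)


# Cut-off range reported for V1 neurons (12 to 48 Hz), evaluated at a 40-Hz input.
gain_fc48 = first_order_gain(40, 48)  # about 0.77
gain_fc12 = first_order_gain(40, 12)  # about 0.29
```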

Moreover, the probable generator of the crossmodal responses observed in pericalcarine cortex is unlikely to produce the types of transformations or nonlinearities required to generate the observed response profiles. Previous research in nonhuman primates suggests that crossmodal influences on oscillatory activity, including auditory effects on primary visual cortex, occur through modulatory phase resetting of subthreshold oscillations in the supragranular layers (layers 2/3) of nominally unisensory cortex (13, 95, 96). Population responses in these layers of V1, as assessed using voltage-sensitive dye imaging, do not exhibit transient onset and offset responses to visual stimuli, and produce only negligible response nonlinearities associated with gain control (97). Furthermore, because subthreshold modulations generally do not produce spiking activity, response nonlinearities associated with spiking are unlikely to have contributed to the crossmodal responses observed in the current study. In addition, although some V1 neurons exhibit transient offset responses to static visual stimuli (98), to our knowledge, no previous reports have demonstrated transient offset responses to the termination of temporally modulated stimuli (e.g., flicker) in visual cortex, despite the substantial literature exploring sustained entrainment responses to such stimuli. Rather, flickering stimuli tend to produce repeated offset responses at the frequency of stimulation, without especially pronounced offset responses or after-discharges at stimulus offset (38). Therefore, the response profiles observed in pericalcarine visual cortex are unlikely to have originated from transformations of subcortical inputs within visual cortex.

Finally, because the extracellular potentials measured by iEEG partially reflect synaptic potentials associated with subthreshold synaptic inputs (99), temporally organized synaptic potentials produced by AM-entrained inputs from auditory subcortex would likely have been observable even if substantial transformations of subcortical inputs occurred in visual cortex, particularly in our decoding analyses. For example, such synaptic potentials may have contributed to the apparently flat AM frequency tuning of entrainment responses in auditory cortex, despite the well-documented lowpass tuning of auditory cortex neurons (60). However, no comparable entrainment responses were observed in visual cortex, suggesting a lack of auditory subcortical input.
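The detectability argument can be illustrated with inter-trial phase coherence (ITPC), a standard measure of phase locking that is substituted here for illustration. It is not the decoding analysis used in the study. The Python sketch below uses simulated data under assumed parameters (1-s trials sampled at 1 kHz, a 40 Hz component, unit-variance Gaussian noise), and `itpc_at_frequency` is a hypothetical helper. A component whose phase is locked to the stimulus on every trial drives ITPC toward 1, whereas activity with no consistent phase yields values on the order of 1/sqrt(n_trials).

```python
import numpy as np


def itpc_at_frequency(trials: np.ndarray, fs: float, freq: float) -> float:
    """Inter-trial phase coherence at a single frequency.

    trials: array of shape (n_trials, n_samples); fs: sampling rate in Hz.
    Returns a value in [0, 1]; 1 means the phase at `freq` is identical on every trial.
    """
    n_samples = trials.shape[1]
    t = np.arange(n_samples) / fs
    # Project each trial onto a complex exponential at `freq`
    # (one Fourier coefficient per trial).
    coeffs = trials @ np.exp(-2j * np.pi * freq * t)
    # Discard amplitude: keep only each trial's phase as a unit vector.
    phases = coeffs / np.abs(coeffs)
    # Length of the mean phase vector across trials.
    return float(np.abs(phases.mean()))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    fs, n_trials = 1000.0, 100
    t = np.arange(int(fs)) / fs  # assumed 1-s trials
    noise = rng.standard_normal((n_trials, t.size))
    # Simulated 40 Hz input with the same phase on every trial (i.e., entrained).
    entrained = np.sin(2 * np.pi * 40.0 * t) + noise
    print("entrained:", round(itpc_at_frequency(entrained, fs, 40.0), 3))
    print("noise only:", round(itpc_at_frequency(noise, fs, 40.0), 3))
```

In this toy setting, the entrained condition should give an ITPC close to 1 and the noise-only condition a value near 0.1. A clearly elevated value at the AM frequency is the kind of signature that, according to the text, was absent in visual cortex.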

Nevertheless, as this study did not measure activity along a specific white matter pathway or functional connectivity between cortical auditory and visual sites, it remains possible that some of the observed effects are subcortical in origin, with the pulvinar as one reasonable candidate. Additional data will be required to resolve this question conclusively, including functional connectivity analyses between auditory and visual sites in humans, supplemented by recordings of the pulvinar. One possibility is that, as hypothesized in studies on unimodal attention, the pulvinar may play a role in coordinating functional connectivity across cortico-cortical anatomical connections (100).

The present study represents one of the largest iEEG studies of multisensory processing to date. Invasive recordings were necessary to address this question because scalp-recorded EEG lacks the required spatial resolution and fMRI is insensitive to phase-encoded oscillatory information (101). One potential concern in interpreting these data is that they were acquired from patients with epilepsy, who may exhibit altered network topology or neural activity. However, this concern is tempered by the facts that electrodes exhibiting epileptic activity were excluded from all analyses, data were collected from a large number of patients with different seizure etiologies, and electrodes were selected on the basis of their functional responses to visual stimuli and their anatomical locations. Additional limitations include 1) the definition of V5/hMT+ based on anatomy rather than on functional localization with a motion localizer task; 2) the absence of an auditory-visual condition in which visual stimuli flash at the same rate as the AM sounds, which would test whether the entrainment of visual neurons to visual stimuli is modulated by auditory entrainment; and 3) the fact that we only tested 40 Hz with 10-s long stimuli due to clinical constraints, whereas it is possible that low-frequency AM sounds (e.g., 5 Hz) lead to entrainment after prolonged presentation. We suggest these as necessary avenues of future research to establish the generalizability of the observed results. Finally, the absence of concurrent eye tracking prevents us from ruling out that some of the observed visual effects were related to eye movements generated in response to the sounds.
However, we believe this is unlikely for three reasons: 1) sounds were presented centrally and would not have required a saccade; 2) human saccades typically occur 100–150 ms after a stimulus is presented, which is later than the onset of auditory responses in visual cortex (10, 102); and, most importantly, 3) studies in humans and animals that used eye tracking concluded that auditory-driven responses in visual cortex could not be explained by eye movements (30, 71, 103, 104).

In summary, the present data demonstrate that, during passive listening, sounds elicit robust phase-resetting responses in visual cortex without eliciting entrained responses. This response pattern contrasts with the more extended modulations that dynamic visual signals (e.g., visual speech) produce in auditory cortex (105, 106). Because the present study used AM sounds rather than auditory speech stimuli, we cannot exclude the possibility that visual cortex encodes complex auditory dynamics in some contexts. However, we believe it more likely that these differences in modulatory dynamics reflect the distinct functional roles that low-frequency oscillations play in perceptual computations in each modality. Whereas nested oscillations in auditory cortex track the extended dynamics of vocalizations at multiple (e.g., phonemic, syllabic, and phrasal) time scales (107, 108), indicating a role in the integrative encoding of temporally extended auditory information, slow oscillations in visual cortex are thought to reflect temporally discretized sampling of visual signals (109–112). Crossmodal modulations may facilitate perception in a modality-dependent manner by modulating oscillatory dynamics in ways that support their primary functional role in the recipient cortical region. In visual cortex, this would entail temporally aligning periodic sampling to important visual events to maximize the information content of encoded visual signals (15). Crossmodal phase resetting to the onsets and offsets of sounds could facilitate this process by time-locking visual computations to multisensory events marked by auditory discontinuities, thereby optimizing visual sampling in the temporal domain.

SUPPLEMENTAL DATA

Supplemental Fig. S1: https://doi.org/10.6084/m9.figshare.14410244;

Supplemental Fig. S2: https://doi.org/10.6084/m9.figshare.19388477;

Supplemental Fig. S3: https://doi.org/10.6084/m9.figshare.19698544;

Supplemental Table S1: https://doi.org/10.6084/m9.figshare.14410247.

GRANTS

This study was supported by National Institutes of Health (NIH) Grants R00 DC013828 and R01 EY021184, and National Institute of Neurological Disorders and Stroke (NINDS) Grant 2T32NS047987.

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the authors.

AUTHOR CONTRIBUTIONS

D.B., A.S., M.G., V.L.T., and S.S. conceived and designed research; D.B., W.C.S., V.S.W., E.A., V.L.T., J.X.T., S.W., and N.P.I. performed experiments; D.B., J.P., and A.S. analyzed data; D.B., J.P., A.S., W.C.S., V.S.W., M.G., V.L.T., J.X.T., S.W., N.P.I., and S.S. interpreted results of experiments; D.B. and J.P. prepared figures; D.B. and J.P. drafted manuscript; D.B., J.P., A.S., W.C.S., V.S.W., M.G., E.A., V.L.T., J.X.T., S.W., N.P.I., and S.S. edited and revised manuscript; D.B., J.P., A.S., W.C.S., V.S.W., M.G., E.A., V.L.T., J.X.T., S.W., N.P.I., and S.S. approved final version of manuscript.

REFERENCES

1. Lippert M, Logothetis NK, Kayser C. Improvement of visual contrast detection by a simultaneous sound. Brain Res 1173: 102–109, 2007. doi: 10.1016/j.brainres.2007.07.050.

2. Jaekl PM, Soto-Faraco S. Audiovisual contrast enhancement is articulated primarily via the M-pathway. Brain Res 1366: 85–92, 2010. doi: 10.1016/j.brainres.2010.10.012.

3. Chen Y-C, Huang P-C, Yeh S-L, Spence C. Synchronous sounds enhance visual sensitivity without reducing target uncertainty. Seeing Perceiving 24: 623–638, 2011. doi: 10.1163/187847611X603765.

4. Stein BE, Meredith MA. The Merging of the Senses (1st ed.). Cambridge, MA: The MIT Press, 1993.

5. Ghazanfar AA, Schroeder CE. Is neocortex essentially multisensory? Trends Cogn Sci 10: 278–285, 2006. doi: 10.1016/j.tics.2006.04.008.

6. Kayser C, Logothetis NK. Do early sensory cortices integrate cross-modal information? Brain Struct Funct 212: 121–132, 2007. doi: 10.1007/s00429-007-0154-0.

7. Thorne JD, Debener S. Look now and hear what’s coming: on the functional role of cross-modal phase reset. Hear Res 307: 144–152, 2014. doi: 10.1016/j.heares.2013.07.002.

8. van Atteveldt NM, Peterson BS, Schroeder CE. Contextual control of audiovisual integration in low-level sensory cortices. Hum Brain Mapp 35: 2394–2411, 2014. doi: 10.1002/hbm.22336.

9. Murray MM, Thelen A, Thut G, Romei V, Martuzzi R, Matusz PJ. The multisensory function of the human primary visual cortex. Neuropsychologia 83: 161–169, 2016. doi: 10.1016/j.neuropsychologia.2015.08.011.

10. Brang D, Towle VL, Suzuki S, Hillyard SA, Tusa SD, Dai Z, Tao J, Wu S, Grabowecky M. Peripheral sounds rapidly activate visual cortex: evidence from electrocorticography. J Neurophysiol 114: 3023–3028, 2015. doi: 10.1152/jn.00728.2015.

11. McClure JP, Polack P-O. Pure tones modulate the representation of orientation and direction in the primary visual cortex. J Neurophysiol 121: 2202–2214, 2019. doi: 10.1152/jn.00069.2019.

12. Plass J, Ahn E, Towle VL, Stacey WC, Wasade VS, Tao J, Wu S, Issa NP, Brang D. Joint encoding of auditory timing and location in visual cortex. J Cogn Neurosci 31: 1002–1017, 2019. doi: 10.1162/jocn_a_01399.

13. Lakatos P, O'Connell MN, Barczak A, Mills A, Javitt DC, Schroeder CE. The leading sense: supramodal control of neurophysiological context by attention. Neuron 64: 419–430, 2009. doi: 10.1016/j.neuron.2009.10.014.

14. Naue N, Rach S, Strüber D, Huster RJ, Zaehle T, Körner U, Herrmann CS. Auditory event-related response in visual cortex modulates subsequent visual responses in humans. J Neurosci 31: 7729–7736, 2011. doi: 10.1523/JNEUROSCI.1076-11.2011.

15. Romei V, Gross J, Thut G. Sounds reset rhythms of visual cortex and corresponding human visual perception. Curr Biol 22: 807–813, 2012. doi: 10.1016/j.cub.2012.03.025.

16. Mercier MR, Foxe JJ, Fiebelkorn IC, Butler JS, Schwartz TH, Molholm S. Auditory-driven phase reset in visual cortex: human electrocorticography reveals mechanisms of early multisensory integration. NeuroImage 79: 19–29, 2013. doi: 10.1016/j.neuroimage.2013.04.060.

17. Mercier MR, Molholm S, Fiebelkorn IC, Butler JS, Schwartz TH, Foxe JJ. Neuro-oscillatory phase alignment drives speeded multisensory response times: an electro-corticographic investigation. J Neurosci 35: 8546–8557, 2015. doi: 10.1523/JNEUROSCI.4527-14.2015.

18. Cecere R, Romei V, Bertini C, Làdavas E. Crossmodal enhancement of visual orientation discrimination by looming sounds requires functional activation of primary visual areas: a case study. Neuropsychologia 56: 350–358, 2014. doi: 10.1016/j.neuropsychologia.2014.02.008.

19. Sutherland CAM, Thut G, Romei V. Hearing brighter: changing in-depth visual perception through looming sounds. Cognition 132: 312–323, 2014. doi: 10.1016/j.cognition.2014.04.011.

20. Leo F, Romei V, Freeman E, Ladavas E, Driver J. Looming sounds enhance orientation sensitivity for visual stimuli on the same side as such sounds. Exp Brain Res 213: 193–201, 2011. doi: 10.1007/s00221-011-2742-8.

21. Schroeder CE, Foxe J. Multisensory contributions to low-level, ‘unisensory’ processing. Curr Opin Neurobiol 15: 454–458, 2005. doi: 10.1016/j.conb.2005.06.008.

22. Driver J, Noesselt T. Multisensory interplay reveals crossmodal influences on ‘sensory-specific’ brain regions, neural responses, and judgments. Neuron 57: 11–23, 2008. doi: 10.1016/j.neuron.2007.12.013.

23. Cappe C, Rouiller EM, Barone P. Multisensory anatomical pathways. Hear Res 258: 28–36, 2009. doi: 10.1016/j.heares.2009.04.017.

24. Cappe C, Rouiller EM, Barone P. Cortical and thalamic pathways for multisensory and sensorimotor interplay. In: The Neural Bases of Multisensory Processes, edited by Murray MM, Wallace MT. Boca Raton, FL: CRC Press/Taylor & Francis, 2012, p. 15–30.

25. Wang X. Cortical coding of auditory features. Annu Rev Neurosci 41: 527–552, 2018. doi: 10.1146/annurev-neuro-072116-031302.

26. Vetter P, Smith FW, Muckli L. Decoding sound and imagery content in early visual cortex. Curr Biol 24: 1256–1262, 2014. doi: 10.1016/j.cub.2014.04.020.

27. Paton A, Petro L, Muckli L. An investigation of sound content in early visual areas. J Vis 16: 153, 2016. doi: 10.1167/16.12.153.

28. Romei V, Murray MM, Merabet LB, Thut G. Occipital transcranial magnetic stimulation has opposing effects on visual and auditory stimulus detection: implications for multisensory interactions. J Neurosci 27: 11465–11472, 2007. doi: 10.1523/JNEUROSCI.2827-07.2007.

29. Romei V, Murray MM, Cappe C, Thut G. Preperceptual and stimulus-selective enhancement of low-level human visual cortex excitability by sounds. Curr Biol 19: 1799–1805, 2009. doi: 10.1016/j.cub.2009.09.027.