Abstract

Rationale

Mobile technology has been widely utilized as an effective healthcare tool during the COVID-19 pandemic. Notably, over 50 countries have released contact-tracing apps to trace and contain infection chains. While earlier studies have examined obstacles to app uptake and usage, whether and how this uptake affects users’ behavioral patterns is not well understood. This is crucial because uptake can theoretically increase or decrease behavior that carries infection risks.

Objective

The goal of this study is to evaluate the impact of app uptake on the time spent out of home in Japan. It tests four potential mechanisms that may drive the uptake effect: compliance with stay-at-home requirements, learning about infection risk, reminders, and use of the app as a commitment device.

Method

We use unique nationwide survey data collected from 4,379 individuals aged between 20 and 69 in December 2020 and February 2021 in Japan. Japan has features suitable for this exercise. The Japanese government released a contact tracing app in June 2020, which sends a warning message to users who have been in close contact with an infected person. We employ a difference-in-differences estimation strategy combined with the entropy balancing method.

Results

App uptake reduces the time spent out of home. Sensitivity analysis shows that it cannot be explained by unobserved confounders. Importantly, the impact is large even among users who have not received a warning message from the app, and even larger for those with poor self-control ability. Furthermore, individuals’ self-control ability is negatively associated with the uptake decision, supporting our hypothesis that the apps serve as a commitment device.

Conclusions

It may be beneficial to encourage citizens to take up contact tracing apps and other forms of commitment devices. This study also contributes to the literature on mobile health (mHealth) by demonstrating its efficacy as a commitment device.

Keywords: mHealth, Self-control, Commitment device, Unintended impact, COVID-19, Contact tracing apps

1. Introduction

Mobile technology is a widely utilized and effective healthcare tool (Mosa et al., 2012). Since the onset of the COVID-19 pandemic, various mobile apps have been developed for the self-management of symptoms, home monitoring, and risk assessment (Kondylakis et al., 2020). In particular, contact tracing apps have been released officially or semi-officially in more than 50 nations, and at least 300 million people have downloaded them as of January 2021 (MIT Technology Review, 2021). These apps aim to trace and contain infection chains by sending users a warning message when other users with whom they have been in close contact are confirmed to be infected. An experimental study in Spain shows that the app traces close-contact persons twice as well as manual tracing by healthcare centers (Rodríguez et al., 2021). Since the apps' effectiveness increases with their adoption rates, how to facilitate their uptake is of key interest to policymakers. To address this question, prior studies have examined the effects of socio-psychological obstacles to app uptake (or installation and usage), such as citizens’ lack of prosocial attitudes, concerns about security and privacy, and low estimations of infection risk and thus low perceived benefits from usage (Altmann et al., 2020; Jonker et al., 2020; Munzert et al., 2021; Shoji et al., 2021; von Wyl et al., 2021; Walrave et al., 2020).

While it is undeniably important to implement contact tracing more effectively, researchers and policymakers have not paid much attention to the potential side-effects of app uptake, that is, behavioral changes among users. Importantly, the uptake of apps may influence users' health behavior in both positive and negative ways. First, the apps may alter users’ subjective expectations about infection risk. Those who receive warning messages may update their expectations upward and reduce risky behavior, such as going to a crowded place, while those who do not receive such messages may react in the opposite way. Second, app uptake may serve as a commitment or self-disciplining device (Gul and Pesendorfer, 2001; Strulik, 2019). If people are aware that they will experience disutility from receiving a warning message after app uptake (such as the time opportunity costs of consulting with a medical facility and following stay-at-home requirements, fear of the possibility of infection, and stigma from peers; CNET Japan, 2020), they may change their behavior to reduce the possibility of receiving the message, for example by increasing time at home. Anticipating this change, people may use the app as a device to self-control risky behavior (Section 3 explores these and other potential effects of app uptake in greater detail).

It is crucial to understand the effects of contact tracing apps on users' behavior for two reasons. First, without such understanding, it is questionable whether the government should encourage the diffusion of these apps, because they may lower users’ risk perception and aggravate risky behaviors. According to the first theoretical framework, the extent to which users update their subjective infection risk and alter risky behavior depends on their perception of (1) how many other people use the app, and (2) the probability that infected persons will disclose their status through the app. If users overestimate these probabilities, those who do not receive warning messages may become overly complacent and expose themselves to higher infection risk, suggesting unintentionally negative consequences. Given that the apps are widely used, officially endorsed by governments, and can threaten human lives, it is critical for app developers, users, and policymakers to understand the potential effects of app uptake on health behavior, not just its usefulness for contact tracing.

Second, it is important to understand individuals’ uptake decisions in order to design effective interventions to raise adoption rates. The commitment device hypothesis, which we focus on in this paper, predicts a lower adoption rate among those with high self-control ability, who can maintain social distancing and avoid risky behavior even without relying on a commitment device (see Section 3.4 for details). To facilitate their uptake, it may be effective for policymakers to emphasize alternative benefits, such as the apps' positive effects on contact tracing in the community.

Given these arguments, the goal of this study is to examine the impact of uptake on changes in health behavior, and the mechanisms that underlie them, using the Japanese case. Japan has suitable features to address these questions, particularly when compared to Western countries where many studies on the uptake decision of contact tracing apps have previously been conducted. First, even during official states of emergency, national and local governments lack the legal authority to require business closures, shelter-in-place orders, or citywide lockdowns. Governors are restricted to urging (and if necessary, shaming) businesses and citizens to follow their directives. This enables us to find large variations in individual behavior before and after the release of the contact tracing app. Second, in terms of the app's diffusion, Japan has many similarities with other countries, making our findings generalizable. Specifically, the app's diffusion relies entirely on citizens' voluntary uptake. It was developed only to trace infection chains and does not keep users' behavior under surveillance. Third, internet literacy and smartphone usage rates are high, indicating that citizens are familiar with mobile apps.

2. Study site

2.1. Passive interventions for the COVID-19 pandemic in Japan

In Japan, the first case of COVID-19 was confirmed on January 15th, 2020. The spread of infection accelerated soon after, and the government declared the first state of emergency on April 7th (Fig. 1). To reduce interpersonal contacts, various industries, such as restaurants and movie theatres, were requested to close until May 25th. The number of confirmed cases temporarily decreased during the emergency period, but it started to climb once more in June and reached around 3,000 cases per day in December. Consequently, the government declared a second state of emergency on January 7th, 2021, requesting restaurants to close by 8 p.m. and office workers to work from home until March 21st.

Fig. 1.

Daily Newly Confirmed Cases in Japan. Source: Ministry of Health, Labor and Welfare, Japan. Note: Gray shaded areas indicate state of emergency periods between January 2020 and March 2021. Dashed line indicates the release of COCOA. Solid black lines indicate the survey periods.

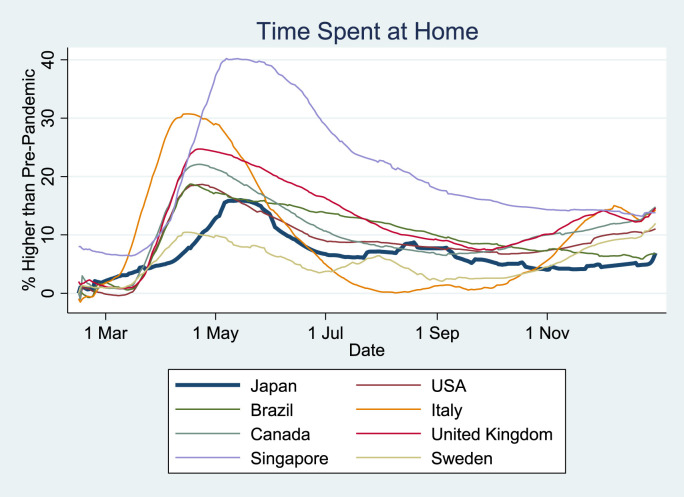

However, because Japanese law only allows governments to request, not legally enforce, these measures, citizens were not prohibited from engaging in regular activities during states of emergency. Furthermore, to support local economies, the government introduced the “Go To Travel” program, which subsidized domestic travel by citizens. These measures stand in stark contrast to many other countries, which went so far as to close public transportation and workplaces. Fig. 2 compares the changes in time spent at home in 2020 relative to the pre-pandemic period across countries. While there is some temporal variation, reflecting cross-national differences in infection spread and government measures, it appears that Japanese citizens did not stay at home as much as their overseas peers.

Fig. 2.

Increase in Time Spent at Home: Cross-Country Comparison. Source: COVID-19 Community Mobility Reports by Google between February and December 2020. Note: The 28-day moving average is reported.

2.2. The COVID-19 Contact Confirming Application (COCOA)

The Japanese government released its official mobile application, the COVID-19 Contact Confirming Application (COCOA), on June 19th, 2020. It adopted a decentralized data privacy approach, one of the three major types of contact tracing apps worldwide: centralized, decentralized, and hybrid (Ahmed et al., 2020; Nakamoto et al., 2020). Once COCOA is installed on a mobile phone, it uses a Bluetooth sensor to detect and record the app ID of other users who stay within 1 m for more than 15 min, even when the app is turned off (Ministry of Health, Labor, and Welfare, Japan, 2020; CNET Japan, 2020). If a user is confirmed to be infected with COVID-19 and reports it via the app, other users with whom they were in close contact in the past 14 days receive a warning message. For those who were not in contact with any infected persons, COCOA does not send any message or notification to inform them of their safety.

The effectiveness of the app relies on three voluntary behaviors of citizens. The first is uptake (download and installation) of COCOA. Like apps in other countries, COCOA requires population adoption rates as high as 60% to contain the virus effectively. However, it is difficult to achieve this level, and the adoption rate was just 17.6% as of December 28th, 2020 (22.5 million downloads) (Ministry of Health, Labor and Welfare, Japan, 2021) (Figure A1). Shoji et al. (2021) show that major obstacles to the uptake of COCOA include lack of trust in the government, perceptions of health risks, and prosocial attitudes towards one's community. Second, users who are confirmed to be infected are requested to report it via the app, but this is not mandatory. Some people may hesitate due to fears that receivers of the message will identify them as the infected person, causing them to suffer reputationally. By the end of December 2020, only 5,566 users had reported their infection on the app. Third, the receivers of the message are requested to consult with medical facilities, take RT-PCR tests, and stay home if necessary. However, these steps also rely on citizens' voluntary, individual compliance.

One advantage of taking up the app is that users can acquire information about concrete infection risks through the receipt of warning messages. However, message receivers may also incur various disutilities. First, the government requests receivers to stay home and consult with a medical facility, particularly should they have any symptoms of infection. Second, some companies and schools prohibit their workers and students from commuting if they or their family members have been in close contact with an infected person. Although none of these requests is legally enforceable, message receivers may still comply due to the psychological costs of engaging in illicit behavior, such as guilt and stigma (Katafuchi et al., 2021). Third, related to the second cost, message receivers may be stigmatized by their work colleagues or school friends. Finally, the receivers may experience fear and mental distress from worrying about their infection risk and health condition.

Although COCOA was initially expected to be an effective tool to implement contact tracing, on February 3, 2021, the government disclosed that a system error had been detected in the Android version of the app (version 1.1.4) and that warning messages had not been sent to its users since September 28, 2020. The error was fixed in the version released on February 18 (version 1.2.2). No errors were found in the iOS version.

3. Hypothesis

In this section, we illustrate four conceptual frameworks which predict behavioral changes among app users: compliance with the stay-home requirement, learning about infection risks, reminders, and commitment devices. The first framework is an intended impact of the app, while the others are not necessarily so. Although the goal of this study is to evaluate the average impact for all users, this section discusses predicted behavior in response to the receipt of a close-contact warning message, which is critical to testing the mechanism of behavioral change empirically. The predictions of each framework are summarized in Table 1.

Table 1.

The impact of app usage on risky behavior.

| Frameworks | Impact on risky behavior for receivers of warning message | Impact on risky behavior for non-receivers |
|---|---|---|
| Compliance with the stay-home requirement | decrease | none |
| Learning about infection risks | decrease | increase |
| Reminders | decrease | none |
| Commitment device | decrease | decrease |

Note: In these predictions, the reference group (the comparison group) is app non-users.

3.1. Compliance with the stay-home requirement

As mentioned in Section 2.2, the government, as well as some companies and schools, has requested those who were in close contact with an infected person to stay home, particularly if they have any symptoms of COVID-19 infection. Hence, receivers of the warning message may be more likely to comply and stay at home than non-users. This framework predicts behavioral change only among message receivers, and only for a short period until the requirement is lifted (typically fourteen days, by which time infected persons should have become symptomatic).

3.2. Learning about infection risks

The apps affect users’ subjective expectation of their infection risk. Those who receive the warning message update their risk expectation upward and reduce risky behavior, such as going to a crowded place, while those who do not receive the message may feel safer and increase such behavior. Therefore, the total impact among app users depends on the proportion of users who receive messages, and the extent to which risk expectations are updated.

The extent of updating depends on each user's subjective expectations about (1) the app's adoption rate around him/her, and (2) the probability that infected users report their infection on the app. Users who overestimate both probabilities may unduly increase risky behaviors when they do not receive warning messages, because they underestimate their risk exposure. They may also be less surprised and react less to the receipt of a warning message. By contrast, those who underestimate both probabilities may overreact to the receipt of a warning message, which they consider a priori to be an unlikely event, while they may underreact to the non-receipt of a message.
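The updating logic above can be illustrated with a stylized Bayes-rule sketch. It is not part of the framework as stated in this paper: the function name `posterior_risk_without_message` and the parameters `prior`, `adoption`, and `report` are hypothetical, and the sketch assumes that a true contact triggers a message only if the infected person both uses the app and reports the infection.

```python
def posterior_risk_without_message(prior, adoption, report):
    """Perceived probability of an undetected close contact after receiving no message.

    Illustrative assumptions (not taken from the paper):
      prior    -- the user's prior belief of having had a close contact
      adoption -- perceived share of contacts who use the app
      report   -- perceived probability that an infected user reports via the app
    """
    detect = adoption * report  # perceived chance that a true contact triggers a message
    # Bayes' rule: P(contact | no message)
    return prior * (1.0 - detect) / (1.0 - prior * detect)


# Same prior, different beliefs about adoption and reporting.
modest_belief = posterior_risk_without_message(0.10, 0.2, 0.3)
inflated_belief = posterior_risk_without_message(0.10, 0.9, 0.9)
print(inflated_belief < modest_belief)  # True: overestimation makes silence more reassuring
```

Under these assumptions, a user who overestimates adoption and reporting reads the absence of a message as stronger evidence of safety, which is the complacency channel described above.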

3.3. Reminders

It is time-consuming and exhausting for people to pay attention to their health risks on a daily basis. In fact, one of the most common healthcare interventions through mobile phones is the sending of reminder messages with healthcare information to those with limited attention (Marcolino et al., 2018). Warning messages from COVID-19 contact tracing apps may play the same role. This mechanism predicts that receivers of the warning message should reduce risky behavior for a short period until they lose attention again, but non-receivers should not change their behavior. Therefore, the predicted patterns are similar to the first framework.

3.4. Commitment device

The apps may serve as a commitment (or self-disciplining) device for those who are aware of their low self-control ability. The American Psychological Association's APA Dictionary of Psychology (VandenBos, 2015) defines self-control as “the ability to be in command of one's behavior (overt, covert, emotional, or physical) and to restrain or inhibit one's impulses.” It is plausible that for any individual, there are occasional utility gains from risky behavior (Fudenberg and Levine, 2006; Gul and Pesendorfer, 2001). For example, the utility gain from drinking with colleagues or friends may increase when the weather is nice or when their work goes smoothly. By definition, those with poorer self-control ability experience a larger volatility in utility gain than those with good self-control. Therefore, they may be more easily tempted to violate social-distancing requirements, even though they understand that such behavior exposes them to high infection risk.

However, if individuals are sophisticated, in that they are aware of their poor self-control ability, they can mitigate the temptation problem by voluntarily adopting a commitment device that increases the costs of risky behavior (Bryan et al., 2010). A commitment device is defined as “an arrangement entered into by an agent who restricts his or her future choice set by making certain choices more expensive, perhaps infinitely expensive, while also satisfying two conditions: (a) The agent would, on the margin, pay something in the present to make those choices more expensive, even if he or she received no other benefit for the payment, and (b) the arrangement does not have a strategic purpose with respect to others” (Bryan et al., 2010). Examples include retirement savings devices and reducing the borrowing limits of credit cards (Thaler and Benartzi, 2004; Cho and Rust, 2017). Furthermore, the behavioral impact of commitment devices becomes larger for those with poorer self-control ability (Himmler et al., 2019). Earlier studies demonstrate the effectiveness of commitment devices for various health behaviors, such as exercise, drinking, and smoking (Giné et al., 2010; Royer et al., 2015; Schilbach, 2019).

It is plausible that contact tracing apps may play the role of a commitment device. As mentioned in Section 2.2, a warning message causes various disutilities to receivers, such as the opportunity costs of stay-at-home requirements and negative psychological strain. The literature on information avoidance argues that individuals behave so as not to receive even useful information, if receiving it causes them to experience disutility (Golman et al., 2017; Sweeny et al., 2010). These arguments predict that app users stay home more than non-users regardless of the receipt of warning messages.

4. Data

4.1. Survey design and sample

This study uses data from an original, nationwide online panel survey conducted in Japan. Our survey was designed to collect responses from around 5,000 people aged between 20 and 69. In the sampling process, 68,480 people were selected from registrants with Cross Marketing Inc., one of the largest survey companies in Japan (4.65 million registrations). They were randomly selected by stratified sampling with regard to gender (two categories), age group (10 five-year categories), and location of residence (10 categories), so that the expected distribution of these characteristics would be comparable to that of the Japanese population. The survey website was designed using Qualtrics. We obtained research ethics approval for this project from the IRB of the Institute of Social Science, the University of Tokyo.

The invitation for the first survey was sent to 68,480 members by email on December 18th, 2020, six months after the release of COCOA. They were informed that participants would receive tokens for shopping as a financial incentive, and that the survey would be closed once the required sample size was obtained. The survey was closed on December 21st, by which time 9,369 people had browsed the survey website, 7,997 had agreed to participate, and 7,080 had completed the survey. The median respondent spent 13.5 min on the survey. Between March 19th and 22nd, 2021, we conducted a short resurvey with these participants to collect further supplementary information. A total of 5,304 out of 7,080 individuals participated in the resurvey (median time = 8.5 min).

Among these respondents, we dropped those who finished the survey within 5 min in the first survey or within 3 min in the resurvey to control for survey quality, leaving 5,029 respondents. After conducting list-wise deletion to deal with missing values, the final sample size was 4,379, of which 637 (15%) had ever used COCOA. In the Online Appendices, we carefully discuss the statistical power of the data (A1), the issue of missing values and robustness to alternative approaches using the multiple imputation method (Sidi and Harel, 2018) (A2), and the representativeness of the sample (A3).

4.2. Measures

4.2.1. Risky behavior

In our survey, we asked respondents how much time they spent outside of their home on a typical non-workday in four periods: December 2019, February 2020, December 2020, and February 2021. The first and third periods were elicited in the first survey wave, while the second and fourth periods were elicited in the second follow-up wave. In other words, the data on the first and second periods are based on retrospective recall, while the third and fourth periods are contemporaneous with survey implementation. To mitigate potential concerns about recall bias, we asked respondents to choose an answer from a list of time intervals: (1) less than 2 h, (2) 2–4 h, (3) 4–8 h, (4) more than 8 h, and (5) do not want to answer. For the empirical analysis, we construct a binary indicator of spending more than 2 h outside the home on a non-workday (roughly the sample median).
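As a minimal sketch of how the binary outcome could be coded from the answer categories listed above, the snippet below maps each answer code to the indicator. The constant names and the function `out_of_home_indicator` are hypothetical, and treating "do not want to answer" as a missing value is an assumption made for illustration.

```python
# Answer codes in the order listed in the survey question.
LESS_THAN_2H, TWO_TO_4H, FOUR_TO_8H, MORE_THAN_8H, NO_ANSWER = 1, 2, 3, 4, 5


def out_of_home_indicator(answer_code):
    """Return 1 for 2 h or more out of home, 0 for less than 2 h, None if refused."""
    if answer_code == NO_ANSWER:
        return None  # assumption: refusals are treated as missing
    if answer_code not in (LESS_THAN_2H, TWO_TO_4H, FOUR_TO_8H, MORE_THAN_8H):
        raise ValueError(f"unexpected answer code: {answer_code}")
    return 1 if answer_code >= TWO_TO_4H else 0


answers = [1, 2, 4, 5, 3]
print([out_of_home_indicator(a) for a in answers])  # [0, 1, 1, None, 1]
```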

4.2.2. Usage of COCOA

Our independent variable of interest is a binary indicator that takes unity if the respondent had downloaded COCOA between its release (June 2020) and the first survey wave (December 2020), and zero otherwise.

4.2.3. Socio-psychological characteristics

In the survey in December 2020, the following socio-psychological information was elicited. First, our survey contains questions about self-control, based on a modified version of the Brief Self-Control Measure (Tangney et al., 2004). The original version consists of 13 items, and Ozaki et al. (2016) translated them into Japanese and conducted a confirmatory factor analysis with a sample of over 500 Japanese adults. Our survey contains the 7 of the 13 items that recorded the highest factor loadings in their analysis: (1) “I have a hard time breaking bad habits”, (2) “I am lazy”, (3) “I do certain things that are bad for me, if they are fun”, (4) “I wish I had more self-discipline”, (5) “I have trouble concentrating”, (6) “Sometimes I can't stop myself from doing something, even if I know it is wrong”, and (7) “Pleasure and fun sometimes keep me from getting work done”. These were asked on a five-point Likert scale, with the additional answer option of “Do not want to answer”. Cronbach's alpha of the seven items is 0.92, indicating high internal consistency. Subsequently, we took the negative of the summation of the answers to obtain a scale such that a higher score indicates greater self-control. Finally, we standardized it to construct our self-control measure (mean = 0; SD = 1). An important feature of this variable is that, since it is based on self-evaluation, only those who are aware of their self-control problem should exhibit a lower score. Therefore, this measure captures sophisticated time-inconsistent preferences but not naïve ones (i.e., those of respondents who do not know they have time-inconsistent preferences).
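The construction of the self-control measure described above can be sketched as follows. This is an illustration on made-up toy data, not the survey data. The function names are hypothetical, complete answers are assumed (no "Do not want to answer" responses), and the use of the population standard deviation for standardization is an assumption.

```python
from statistics import mean, pstdev, pvariance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(rows[0])
    item_vars = [pvariance([r[j] for r in rows]) for j in range(k)]
    total_var = pvariance([sum(r) for r in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def self_control_scores(rows):
    """Negate the item sum (higher = more self-control), then standardize."""
    raw = [-sum(r) for r in rows]
    mu, sd = mean(raw), pstdev(raw)
    return [(x - mu) / sd for x in raw]


# Toy data: 4 hypothetical respondents x 7 items on a 1-5 agreement scale.
toy = [
    [5, 4, 5, 5, 4, 5, 4],  # agrees strongly with the items: low self-control
    [2, 2, 1, 2, 2, 1, 2],
    [3, 3, 4, 3, 3, 3, 4],
    [1, 2, 1, 1, 2, 1, 1],  # disagrees with the items: high self-control
]
alpha = cronbach_alpha(toy)
scores = self_control_scores(toy)
print(scores[0] < scores[3])  # True
```

Because all seven items are worded so that agreement signals weaker self-control, a single sign flip of the sum is enough and no item-level reverse coding is needed.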

Second, the Big 5 personality traits were elicited to quantify individuals’ basic personality traits of neuroticism, extraversion, openness, agreeableness, and conscientiousness. We employ the Ten Item Personality Inventory, which was originally developed by Gosling et al. (2003) and modified into a Japanese version by Oshio et al. (2012), to measure these traits.

Third, our measure of generalized trust is based on an item in the World Values Survey: “Would you say that most people can be trusted?” It was asked on a five-point Likert scale, with the option of “Do not want to answer”. We also asked the extent to which respondents trust the Japanese government with the following question on a four-point Likert scale: “Do you trust the government?” These items are frequently used in the literature on the determinants of COVID-19 contact tracing app uptake (Guillon and Kergall, 2020; Munzert et al., 2021).

Fourth, to capture individuals’ attachment to their neighborhoods, we also asked whether the respondents agree with the following statement on a four-point Likert scale with a “Do not want to answer” option: “I am very attached to my neighborhood”. This item is frequently used in the literature on place identity in psychology (Pitas et al., 2021; Raymond et al., 2010; Williams et al., 1992).

Fifth, willingness to take risks is measured with the following question: “Which of the following two sayings characterizes you better, A: nothing ventured, nothing gained, or B: a wise man never courts danger?” The answer options include (1) B, (2) Lean B, (3) Neutral, (4) Lean A, and (5) A. A lower score indicates greater risk aversion. This question is frequently used in the social survey literature (Iida, 2016; Ikeda et al., 2016, p. 142) and draws from earlier work in the United States.

Finally, the respondents were asked about their risk perception using a question on the perceived risk of suffering from severe symptoms in case of infection. While the respondents' odds of infection are influenced by their risky behavior, the actual risks of severe illness conditional on infection are primarily determined by their age and chronic diseases. As such, we believe that this variable serves as a reasonable proxy of respondents’ risk perceptions in the context of COVID-19.

4.2.4. Demographics and socio-economic characteristics

Demographic characteristics of respondents include continuous indicators of age and household size, and binary indicators of gender (female), married, living with a parent, and living with a child. Socio-economic characteristics include indicators of being a university graduate, having a stable job (i.e., full-time employee, self-employment, or corporate executive), experiences of declines in working hours and income since April 2020, and familiarity with mobile apps. The last variable is measured by the number of apps the respondents use at least once a week out of the following options: Twitter, Facebook, Instagram, LINE, Pinterest, WhatsApp, WeChat, LinkedIn, and TikTok. These questions were asked only once in the December 2020 survey.

4.3. Summary statistics

The first to fourth columns of Table 2 show that COCOA users are more likely than non-users to be male, to be university graduates, to have a stable job, to live with a child, to have experienced declines in income and working time, and to be familiar with mobile apps. They demonstrate higher openness and agreeableness. They are also more likely to trust people and the government, feel attached to their community, and perceive higher risks of severe symptoms.

Table 2.

Respondent characteristics.

| | Before weighting, users: Mean (1) | Before weighting, users: S.D. (2) | Before weighting, non-users: Mean (3) | Before weighting, non-users: S.D. (4) | After weighting, users: Mean (5) | After weighting, users: S.D. (6) | After weighting, non-users: Mean (7) | After weighting, non-users: S.D. (8) |
|---|---|---|---|---|---|---|---|---|
| Panel A: Demographics | | | | | | | | |
| Age | 48.50 | 12.85 | 47.62 | 12.89 | 48.50 | 12.85 | 48.47 | 12.85 |
| Female | 0.42 | 0.49 | 0.48 | 0.50 | 0.42 | 0.49 | 0.42 | 0.49 |
| Married | 0.61 | 0.49 | 0.57 | 0.49 | 0.61 | 0.49 | 0.61 | 0.49 |
| Living with a parent | 0.25 | 0.43 | 0.28 | 0.45 | 0.25 | 0.43 | 0.25 | 0.43 |
| Living with a child | 0.38 | 0.48 | 0.33 | 0.47 | 0.38 | 0.48 | 0.38 | 0.48 |
| Household size | 2.66 | 1.23 | 2.65 | 1.22 | 2.66 | 1.23 | 2.66 | 1.23 |
| Panel B: Socio-Economic Characteristics | | | | | | | | |
| University graduate | 0.60 | 0.49 | 0.50 | 0.50 | 0.60 | 0.49 | 0.60 | 0.49 |
| Stable job | 0.58 | 0.49 | 0.48 | 0.50 | 0.58 | 0.49 | 0.58 | 0.49 |
| Experienced decline in working time since April 2020 | 0.23 | 0.42 | 0.21 | 0.41 | 0.23 | 0.42 | 0.23 | 0.42 |
| Experienced decline in income since April 2020 | 0.32 | 0.47 | 0.26 | 0.44 | 0.32 | 0.47 | 0.32 | 0.46 |
| Familiarity with mobile apps | 1.93 | 1.39 | 1.48 | 1.56 | 1.93 | 1.39 | 1.93 | 1.39 |
| Panel C: Psychological Characteristics | | | | | | | | |
| Self-control | −0.06 | 1.05 | 0.00 | 1.00 | −0.06 | 1.05 | −0.06 | 1.05 |
| Conscientiousness | 4.00 | 1.24 | 3.99 | 1.17 | 4.00 | 1.24 | 4.00 | 1.24 |
| Extraversion | 3.65 | 1.41 | 3.58 | 1.27 | 3.65 | 1.41 | 3.65 | 1.41 |
| Agreeableness | 4.88 | 1.06 | 4.75 | 1.05 | 4.88 | 1.06 | 4.87 | 1.06 |
| Neuroticism | 4.09 | 1.25 | 4.11 | 1.17 | 4.09 | 1.25 | 4.08 | 1.25 |
| Openness | 3.86 | 1.18 | 3.72 | 1.11 | 3.86 | 1.18 | 3.86 | 1.18 |
| Generalized trust | 3.27 | 1.09 | 3.11 | 1.03 | 3.27 | 1.09 | 3.27 | 1.09 |
| Trust in government | 2.06 | 0.83 | 1.98 | 0.79 | 2.06 | 0.83 | 2.06 | 0.83 |
| Attachment to the neighborhood | 2.95 | 0.84 | 2.82 | 0.83 | 2.95 | 0.84 | 2.95 | 0.84 |
| Willingness to take risk | 2.36 | 1.22 | 2.34 | 1.22 | 2.36 | 1.22 | 2.36 | 1.22 |
| Risk perception (of severe illness) | 3.47 | 1.13 | 3.33 | 1.10 | 3.47 | 1.13 | 3.46 | 1.13 |
| Panel D: Risky Behavior | | | | | | | | |
| Spend more than 2 h out of home on non-work days | | | | | | | | |
| December 2019 | 0.82 | 0.38 | 0.71 | 0.45 | 0.82 | 0.38 | 0.76 | 0.43 |
| February 2020 | 0.75 | 0.43 | 0.65 | 0.48 | 0.75 | 0.43 | 0.68 | 0.47 |
| December 2020 | 0.59 | 0.49 | 0.55 | 0.50 | 0.59 | 0.49 | 0.59 | 0.49 |
| February 2021 | 0.57 | 0.50 | 0.52 | 0.50 | 0.57 | 0.50 | 0.55 | 0.50 |
| Observations | 637 | | 3,742 | | 637 | | 3,742 | |

Note: The sample of those who participated in both waves of the survey is used. The controls also include prefecture-period fixed effects but the results are not reported in the table.

Panel D of Table 2 shows that, before weighting, the proportion of respondents who spent more than 2 h out of their homes was around 10 percentage points higher for users than for non-users in December 2019 and February 2020. However, the gap narrows to about half of that size after the release of COCOA.
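To make the comparison in Panel D concrete, the following back-of-envelope calculation computes a raw two-period difference-in-differences from the Table 2 means, using February 2020 as the pre-release period and December 2020 as the post-release period. It is only an arithmetic illustration: it ignores covariates and fixed effects, so it should not be read as the regression estimate. The helper name `raw_did` is hypothetical.

```python
def raw_did(pre_users, post_users, pre_non_users, post_non_users):
    """Change among users minus change among non-users, rounded to 2 decimals."""
    return round((post_users - pre_users) - (post_non_users - pre_non_users), 2)


# Shares spending more than 2 h out of home (Table 2, Panel D).
# Before weighting: columns (1) and (3); after weighting: columns (5) and (7).
raw_did_unweighted = raw_did(0.75, 0.59, 0.65, 0.55)
raw_did_weighted = raw_did(0.75, 0.59, 0.68, 0.59)

print(raw_did_unweighted)  # -0.06
print(raw_did_weighted)    # -0.07
```

Both versions point in the same direction: the share of users spending more than 2 h out of home falls by roughly 6 to 7 percentage points more than the share of non-users.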

5. Econometric strategy

Our estimation strategy combines the difference-in-differences (DID) design and Hainmueller's (2012) entropy balancing method. The former controls for any time-invariant effects of respondents' observed and unobserved characteristics on behavior. The latter, which we explain in more detail below, controls for the time-variant and invariant effects of observed characteristics.

Specifically, we estimate the following weighted least squares model:

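$$Y_{ipt} - Y_{ip1} = \beta_1 (A_i \times Post_t) + \beta_2 A_i + (X_{ip} \times Post_t)\gamma_1 + X_{ip}\gamma_2 + \delta_{pt} + \varepsilon_{ipt}, \quad t = 2, 3, 4 \tag{1}$$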

where $Y_{ip1}$ takes unity if respondent $i$ in prefecture $p$ spent more than 2 h out of home on non-work days in the pre-pandemic period (as of December 2019). Prefectures are the main unit of subnational government in Japan. $Y_{ipt}$ is defined analogously for the subsequent periods ($t = 2$: February 2020; $t = 3$: December 2020; and $t = 4$: February 2021). $A_i$ takes unity if individual $i$ installed COCOA, and zero otherwise. $Post_t$ is an indicator of $t = 3$ or $4$, that is, time periods after the release of COCOA. $X_{ip}$ includes covariates for demographic, socio-economic, and psychological characteristics, listed in Table 2. The interaction term between $X_{ip}$ and $Post_t$ controls for intertemporal differences in the effects of observed covariates. Finally, $\delta_{pt}$ denotes prefecture-period fixed effects. They capture the time-varying effects of the infection risk and socio-economic environment across time and regions (the time-invariant effects are differenced out by the DID design).

In this design, the observations are weighted by 1 for COCOA users ($A_i = 1$). The weight for non-users is computed by the entropy balancing method, so that the first and second moments of observed characteristics ($X_{ip}$ and $\delta_{pt}$) are balanced between users and non-users. Coefficient $\beta_2$ captures the difference in the behavioral change from December 2019 to February 2020 between users and non-users, serving as a falsification test. Coefficient $\beta_1$ represents the additional behavioral response among users after COCOA becomes available, and therefore captures the average treatment effect on the treated (ATT), i.e., COCOA users.
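To make the roles of the two coefficients concrete, the following is a minimal sketch rather than the Stata code used in this study. It assumes a toy data set with one pre-release and one post-release period and no covariates or fixed effects, in which case the weighted least squares coefficients reduce to differences in weighted means of the first-differenced outcome. The function names and all numbers are hypothetical.

```python
def weighted_mean(values, weights):
    """Weighted average of values."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


def did_coefficients(rows):
    """rows: dicts with keys dy (Y_t - Y_1), user (0/1), post (0/1), weight.

    Assumption: no covariates, one pre- and one post-release period, so the
    weighted regression is saturated and equals differences in cell means.
    """
    def gap(post):
        cell = [r for r in rows if r["post"] == post]
        users = [r for r in cell if r["user"] == 1]
        others = [r for r in cell if r["user"] == 0]
        return (weighted_mean([r["dy"] for r in users],
                              [r["weight"] for r in users])
                - weighted_mean([r["dy"] for r in others],
                                [r["weight"] for r in others]))

    beta2 = gap(post=0)          # pre-release gap: falsification test
    beta1 = gap(post=1) - beta2  # additional post-release gap: ATT
    return beta1, beta2


rows = []
# Four hypothetical users, each with weight 1: (pre-release dy, post-release dy)
for dy_pre, dy_post in [(0, -1), (-1, -1), (-1, -1), (-1, -1)]:
    rows.append({"dy": dy_pre, "user": 1, "post": 0, "weight": 1.0})
    rows.append({"dy": dy_post, "user": 1, "post": 1, "weight": 1.0})
# Two hypothetical non-users with made-up balancing weights 0.5 and 1.5
for (dy_pre, dy_post), w in zip([(0, 0), (-1, -1)], [0.5, 1.5]):
    rows.append({"dy": dy_pre, "user": 0, "post": 0, "weight": w})
    rows.append({"dy": dy_post, "user": 0, "post": 1, "weight": w})

beta1, beta2 = did_coefficients(rows)
print(beta1, beta2)
```

In this toy example the pre-release gap is zero by construction, so the whole post-release gap is attributed to the ATT. In the full model, the same logic applies conditional on the covariates and prefecture-period fixed effects.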

An underlying assumption of the model is conditional parallel trends: conditional on the observed covariates, COCOA users and non-users would have had the same trends in outcomes from t = 2 to t = 3 and 4 had the users not used COCOA. Therefore, the choice of control variables is critical. Earlier studies uncover various determinants of uptake, such as trust in government (institutional trust), exposure to infection risks, willingness to take risks, access to information about the pandemic, familiarity with digital technology (e.g., mobile apps), and prosocial attitudes to one's community (Altmann et al., 2020; Jonker et al., 2020; Munzert et al., 2021; Shoji et al., 2021; von Wyl et al., 2021; Walrave et al., 2020). In addition, this study predicts that COCOA usage will be associated with self-control ability.

Bias would remain if these factors were not fully controlled for in the model, but we believe that it is unlikely to be severe. First, because our dependent variables are first differenced, the time-invariant impact of any unobserved characteristics is ruled out. Second, since our independent variables include the major determinants of uptake mentioned in the preceding paragraph, the time-variant effects of these characteristics are also controlled for. Third, we test the severity of the omitted variable bias by conducting a falsification test and sensitivity analysis in the next section.

The data and Stata code are available in the Online Appendix.

6. Results

6.1. Main results

Before presenting the main results, we assess the performance of the entropy balancing method. The fifth to eighth columns of Table 2 present the summary statistics of respondent characteristics after weighting by the entropy balancing weight. They confirm that the first and second moments of all the covariates are balanced between COCOA users and non-users. In addition, Figure A2 depicts the histogram of the entropy balancing weights for the usage of COCOA. They mostly range from zero to one, suggesting that covariate balance is achieved without placing excessively large weights on a few observations.
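The balancing step can be illustrated with a minimal sketch. This is not the implementation used in this study: it balances only the first moment of a single hypothetical covariate, normalizes the non-user weights to sum to one (an assumption made for simplicity), and solves the dual problem of Hainmueller (2012) with a plain Newton iteration. The function name and the data are hypothetical.

```python
import math


def entropy_balance(x_control, target_mean, max_iter=100, tol=1e-12):
    """Weights on control units, proportional to exp(-lam * x), whose
    weighted mean of x equals target_mean (first moment only)."""
    def weights(lam):
        z = [-lam * x for x in x_control]
        z_max = max(z)                       # shift exponents for stability
        e = [math.exp(v - z_max) for v in z]
        total = sum(e)
        return [v / total for v in e]

    lam = 0.0                                # lam = 0 gives uniform weights
    for _ in range(max_iter):
        w = weights(lam)
        mean = sum(wi * xi for wi, xi in zip(w, x_control))
        var = sum(wi * (xi - mean) ** 2 for wi, xi in zip(w, x_control))
        gap = mean - target_mean             # remaining imbalance
        if abs(gap) < tol:
            break
        lam += gap / var                     # Newton step on the dual problem
    return weights(lam)


x_users = [2.0, 3.0, 4.0]                    # hypothetical covariate, users
x_nonusers = [0.0, 1.0, 2.0, 3.0, 4.0]       # hypothetical covariate, non-users
target = sum(x_users) / len(x_users)

w = entropy_balance(x_nonusers, target)
balanced_mean = sum(wi * xi for wi, xi in zip(w, x_nonusers))
print(target, balanced_mean, max(w))
```

Inspecting the largest weight, as in the last line, mirrors the check based on the histogram of weights: balance is more credible when it does not rely on a handful of heavily weighted non-users. Balancing second moments as well would add the squared covariate as a further constraint.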

Table 3 shows our main result: the impact of using the contact tracing app on risky behavior. For comparison, we report the results of both OLS (Column (1)) and the entropy balancing method (Column (2)). First, in both columns, the coefficients of app usage without interaction terms are small and statistically insignificant, which is consistent with the conditional parallel trends assumption holding in the pre-treatment period. Second, Column (2) shows that using the app decreases the probability of staying out of home for more than 2 h (a binary measure) on non-work days by 5.9 percentage points. This negative association cannot be explained by reverse causality, that is, by individuals' demand for risky behavior driving the uptake of the app. The OLS results in Column (1) are qualitatively the same, but the point estimate is smaller in magnitude.

Table 3.

Behavioral impact of installing contact tracing apps (dep var: Change in time spent out of home).

| | OLS | EBM | OLS | EBM | EBM | EBM |
|---|---|---|---|---|---|---|
| | (1) | (2) | (3) | (4) | (5) | (6) |
| App user | −0.001 (0.020) | 0.011 (0.024) | −0.001 (0.020) | 0.011 (0.024) | | |
| App user × After app release | −0.045*** (0.015) | −0.059*** (0.017) | | | | |
| App user × 6 months after release | | | −0.050** (0.021) | −0.067** (0.025) | | |
| App user × 8 months after release | | | −0.041** (0.016) | −0.051*** (0.019) | | |
| App user × infection/close-contact | | | | | 0.044 (0.045) | |
| App user × infection/close-contact × 6 months after release | | | | | −0.138** (0.067) | |
| App user × infection/close-contact × 8 months after release | | | | | −0.063 (0.052) | |
| App user × No infection/close-contact | | | | | 0.006 (0.026) | |
| App user × No infection/close-contact × 6 months after release | | | | | −0.057** (0.026) | |
| App user × No infection/close-contact × 8 months after release | | | | | −0.050** (0.023) | |
| App user × Self-control<0 | | | | | | 0.019 (0.032) |
| App user × Self-control<0 × 6 months after release | | | | | | −0.106*** (0.038) |
| App user × Self-control<0 × 8 months after release | | | | | | −0.090*** (0.028) |
| App user × Self-control>0 | | | | | | −0.011 (0.036) |
| App user × Self-control>0 × 6 months after release | | | | | | −0.008 (0.030) |
| App user × Self-control>0 × 8 months after release | | | | | | −0.007 (0.035) |
| Controls interacted with post-release dummy | Yes | Yes | Yes | Yes | Yes | Yes |
| Prefecture-period fixed effects | Yes | Yes | Yes | Yes | Yes | Yes |
| Observations | 13,137 | 13,137 | 13,137 | 13,137 | 13,137 | 12,357 |
| Mean Dep. Var. among users after the app release | −0.184 | −0.184 | −0.184 | −0.184 | −0.184 | −0.184 |

Note: EBM denotes the entropy balancing method. Columns (1) through (5) use the full sample. Column (6) uses the sample of respondents who were not confirmed to be infected or in close contact with a confirmed case. Standard errors clustered at the prefecture level are in parentheses. ***p < 0.01, **p < 0.05, *p < 0.1.

This 5.9 percentage point reduction is substantively large. Given that 18.4% of COCOA users avoided risky behavior after December 2019 (reported at the bottom of Column (2)), our analysis implies that if those users had not installed the app, only 12.5% would have done the same. Such a large impact is likely observed because we evaluate behavioral changes on non-work days, when people can adjust their behavioral pattern more easily.
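The counterfactual share quoted above follows from simple arithmetic on the Column (2) figures. As a worked check (the ratio in the second line is an illustrative calculation, not a figure reported in Table 3):

$$
\begin{aligned}
\text{counterfactual change among users} &= -0.184 - (-0.059) = -0.125, \\
\frac{0.059}{0.125} &\approx 0.47 .
\end{aligned}
$$

That is, taking the point estimate at face value, the observed reduction among users is roughly 47% larger than the reduction they would have shown without the app.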

In Columns (3) and (4), we allow the impact of app usage to vary across time periods by including the interaction terms between app usage ($A_i$) and period dummies. The results do not change qualitatively. It is intriguing to find a significant impact even in February 2021, when Japan was under a state of emergency (see Fig. 1), although the point estimate becomes slightly smaller. Another potential reason for the smaller impact is weaker behavioral change among users who heard news of the app's system error (see Section 2.2).

The Online Appendix reports the following robustness tests: usage of multiple imputation methods (A4), inclusion of those who completed the survey too quickly in the sample (A5), alternative specification addressing the issue of ceiling effects (A6), test for potential spillover effects (A7), controls for individual slope (A8), the coefficient stability test of Cinelli and Hazlett (2020) (A9), and the usage of frequency of leisure travel as an alternative outcome variable (A10).

6.2. Disentangling four mechanisms

6.2.1. Heterogeneous impact with the receipt of warning message

The results in Section 6.1 suggest that usage of contact tracing apps encourages users to stay home. Section 3 suggests that these effects may be driven by four potential mechanisms, which we can disentangle by analyzing the heterogeneous impact of receiving warning messages about having been in close contact with an infected person. The first and third mechanisms predict an insignificant impact of COCOA uptake on risky behavior among non-receivers, the second predicts increases in risky behavior, and the fourth mechanism (commitment device) predicts reductions in risky behavior (see Table 1).

Given data unavailability on the receipt of a warning message, in Column (5) of Table 3, we alternatively estimate the heterogeneous impact of actually experiencing infection and/or being in close contact with an infected person. Although this approximation may be subject to measurement error, it is certain that those who were not infected or in close contact with an infected person should not have received a warning message. Hence, this issue is unlikely to be severe for the results of those who were not infected or in close contact. The results show that the effect of app uptake is significantly negative even among those who were confirmed neither to be infected nor to be in close contact with an infected person. These patterns are in line with the fourth mechanism, that COCOA serves as a commitment device, and counter to the other three mechanisms.

6.2.2. Further tests for the commitment device hypothesis

To further explore the relevance of the commitment device hypothesis, we conduct two tests. First, this hypothesis predicts a larger behavioral change from uptake for those with lower self-control ability than for those with higher ability (see Section 3.4). Therefore, using the sample of those who were neither infected nor in close contact, we examine the heterogeneous impact of uptake by their scores on the self-control scale. Column (6) of Table 3 demonstrates a pattern in line with this conjecture. The coefficients for those with higher self-control ability (−0.008 and −0.007) are less than 10% of those for respondents with lower ability (−0.106 and −0.090) and are statistically insignificant. Even among those with higher self-control ability who were neither infected nor in close contact, app uptake does not increase their time out of home, again ruling out the second mechanism of learning about infection risks.
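The "less than 10%" comparison can be verified directly from the Column (6) point estimates. The short sketch below only reproduces that arithmetic; the variable names are hypothetical, and the calculation says nothing about the statistical significance of the difference between the two groups.

```python
# Column (6) point estimates as reported in Table 3
low_self_control = {"6 months": -0.106, "8 months": -0.090}
high_self_control = {"6 months": -0.008, "8 months": -0.007}

# Ratio of the high-self-control coefficient to the low-self-control one
ratios = {
    period: high_self_control[period] / low_self_control[period]
    for period in low_self_control
}

for period, ratio in ratios.items():
    print(f"{period} after release: {ratio:.1%}")
```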

Second, if those who are aware of their lower self-control ability see the contact tracing app as a device to self-discipline their behavior, they should be more likely to install it than those with higher ability. We test this hypothesis by regressing the indicator of uptake on the self-control scale. The usage of our self-control scale is suitable for this test, since it captures only sophisticated time-inconsistency, as discussed in Section 4.2.3.

Table 4 presents the OLS results on the determinants of uptake. Column (1) includes no covariates other than prefecture fixed effects, while Column (2) controls for demographic and socioeconomic factors. The coefficient of the self-control measure is significantly negative in all columns. Column (3), which includes a battery of socio-psychological factors as controls, suggests that a one-standard-deviation increase in self-control ability is associated with a decrease in the probability of uptake of 1.1 percentage points. This association is large, given that the adoption rate among this study's respondents is 14.6%. A potential issue with this result is that, if the uptake decision is attributed to unobserved personality traits related to self-control, the association may be partially explained by this common factor. However, since self-control is classified as a facet of conscientiousness, and the coefficient of conscientiousness is insignificant in Column (3), the effect of the common factor is unlikely to be severe. Furthermore, we assess the severity of this issue in Online Appendix A11 through a sensitivity analysis.

Table 4.

Uptake decision of contact tracing apps.

| | (1) | (2) | (3) |
|---|---|---|---|
| Self-control | −0.008** (0.004) | −0.010** (0.004) | −0.011** (0.005) |
| Conscientiousness | | | −0.001 (0.005) |
| Extraversion | | | −0.002 (0.006) |
| Agreeableness | | | 0.013** (0.005) |
| Neuroticism | | | 0.006 (0.004) |
| Openness | | | 0.011** (0.005) |
| Generalized trust | | | 0.009* (0.006) |
| Trust in government | | | 0.008 (0.006) |
| Attachment to the neighborhood | | | 0.015* (0.008) |
| Willingness to take risk | | | −0.007 (0.005) |
| Risk perception | | | 0.011** (0.005) |
| Prefecture fixed effects | Yes | Yes | Yes |
| Demographics | No | Yes | Yes |
| Socio-Economic Characteristics | No | Yes | Yes |
| Observations | 4,379 | 4,379 | 4,379 |

Note: The OLS coefficients are reported. Standard errors clustered at the prefecture level are in parentheses. ***p < 0.01, **p < 0.05, *p < 0.1.
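The interpretation of the self-control coefficient can be illustrated with a minimal sketch of a linear probability model. This is not the specification in Table 4: it uses a single standardized regressor, no controls or fixed effects, and four made-up observations. The last lines put the reported 1.1 percentage point estimate in relation to the 14.6% adoption rate. All names and toy data are hypothetical.

```python
def ols_slope(x, y):
    """Slope of a simple OLS regression of y on x (with an intercept)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var = sum((a - mean_x) ** 2 for a in x)
    return cov / var


# Hypothetical standardized self-control scores and uptake indicators
self_control = [-1.0, -1.0, 1.0, 1.0]
uptake = [1, 0, 0, 0]

# Change in uptake probability per one-standard-deviation increase
slope = ols_slope(self_control, uptake)

# Reported estimate relative to the adoption rate in the sample
reported_effect = 0.011
adoption_rate = 0.146
relative_effect = reported_effect / adoption_rate

print(slope, round(relative_effect, 3))
```

With the reported figures, a one-standard-deviation increase in self-control corresponds to a decrease in uptake of roughly 7.5% of the sample adoption rate.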

7. Discussion

This study shows that installing the COVID-19 contact tracing app in Japan altered the behavior of users by encouraging them to spend more time at home, regardless of the receipt of a warning message about close contact with an infected person. However, the impact is observed only for those who are aware of their poorer self-control ability, and such individuals are more likely to adopt the app. These findings suggest that for some people with self-control problems, using the contact tracing app serves as a commitment device to self-discipline against risky behavior. This may not be the only motive for app uptake, however, given that (1) those with higher self-control ability also adopt the app, and (2) the uptake does not have any behavioral impact on them.

We should nonetheless be cautious about the interpretation of our results, because they hinge on the validity of our data and identification strategy. First, the use of observational data cannot fully rule out unobserved confounders, although we have conducted various robustness tests. Second, it is still unclear why those with higher self-control ability adopt the app. Further experimental studies that evaluate the impact of contact tracing apps and their mechanisms may be required.

Despite these limitations, our findings make three contributions to our understanding of behavioral responses to the COVID-19 pandemic. First and most important, to the best of our knowledge, this is the first study to evaluate the behavioral impact of COVID-19 contact tracing apps and identify the underlying mechanism. This is critical given that the apps can reduce risks to human lives, are widely used, and are officially endorsed by governments. Second, studies show that compliance with social-distancing requirements varies across individuals, based on their perception of infection risk, social capital, usage of social media, and political ideology (Barrios and Hochberg, 2020; Brodeur et al., 2021; Bursztyn et al., 2020; Cato et al., 2020, 2021a, 2021b; Dasgupta et al., 2020; Shoji et al., 2022). However, the role of self-control problems, which is a central feature of our paper, has been largely unexplored. Third, our findings suggest the importance of psychological mechanisms in explaining individuals' compliance with protective behavior. This line of argument may be applicable to understanding variations in vaccination uptake. Existing studies have uncovered socio-political obstacles to achieving universal vaccination coverage, such as poor national governance and citizens' low trust in the government (Aida and Shoji, 2022; Miyachi et al., 2020). This study suggests that there may also be psychological reasons for low vaccination rates. In line with this, the literature demonstrates the role of altruism and social norms in attitudes towards vaccination (Cato et al., 2022).

This study also makes two contributions to the literature on mobile health technology, or mHealth. First, while mobile health technology has been growing in popularity, evidence of its efficacy is still limited (Marcolino et al., 2018). This ambiguity is problematic, because new technologies and policies sometimes cause unintended outcomes. For example, computerized physician order entry (CPOE) systems in hospitals have been associated with medication error risks, greater workload, and overdependence on technology (Ash et al., 2007; Campbell et al., 2006; Harrison et al., 2007; Koppel et al., 2005). This study shows that COVID-19 contact tracing apps are unlikely to have a negative impact on health. If anything, they are likely to have a positive impact.

Second, a common mHealth intervention is sending text messages for the purposes of reminder, education, motivation, and prevention (Marcolino et al., 2018). However, providing accurate information alone may not facilitate users' protective measures given their cognitive biases, such as the normalcy bias or the optimistic underestimation of the infection risk (Kahneman and Tversky, 1972). This study provides evidence that mobile health technology can also serve as a commitment device and ameliorate users' cognitive biases driven by self-control problems.

8. Conclusion

The following policy implications can be derived from our findings. First, contact tracing apps have beneficial side effects beyond tracing infection chains. Combined with earlier evidence about the impact of the Japanese app on mitigating psychological distress (Kawakami et al., 2021), these contact tracing apps may facilitate social distancing without harmful effects on mental health. This contrasts with other major social-distancing measures, such as quarantines and school closures, which can be deleterious to mental health (Serafini et al., 2020; Yamamura and Tsutsui, 2021). Therefore, policymakers should encourage citizens to adopt the apps even further. That said, it should be noted that there is a tradeoff between the roles of the apps as contact tracing devices and as commitment devices. To strengthen their role as a commitment device, the apps need to impose even stronger disutility on those who engage in risky behavior. However, this may make citizens hesitate to install and use the app.

Second, our findings indicate that people may desire commitment devices to encourage protective behavior against the COVID-19 pandemic. Hence, the provision of other forms of commitment devices may be effective in containing the virus.

Author statement

Masahiro Shoji: Formal analysis, writing; Susumu Cato: survey design, writing; Asei Ito: writing; Takashi Iida: writing; Kenji Ishida: data collection, writing; Hiroto Katsumata: Formal analysis, writing; Kenneth Mori McElwain: survey design, writing.

Declaration of competing interest

None.

Footnotes

Supplementary data to this article can be found online at https://doi.org/10.1016/j.socscimed.2022.115142.


References

- Ahmed N., Michelin R.A., Xue W., Ruj S., Malaney R., Kanhere S.S., Jha S.K. A survey of covid-19 contact tracing apps. IEEE Access. 2020;8:134577–134601.

- Aida T., Shoji M. Cross-country evidence on the role of national governance in boosting COVID-19 vaccination. BMC Publ. Health. 2022;22:576. doi: 10.1186/s12889-022-12985-5.

- Altmann S., Milsom L., Zillessen H., Blasone R., Gerdon F., Bach R., Abeler J. Acceptability of app-based contact tracing for COVID-19: cross-country survey study. JMIR mHealth and uHealth. 2020;8(8) doi: 10.2196/19857.

- Ash J.S., Sittig D.F., Poon E.G., Guappone K., Campbell E., Dykstra R.H. The extent and importance of unintended consequences related to computerized provider order entry. J. Am. Med. Inf. Assoc. 2007;14(4):415–423. doi: 10.1197/jamia.M2373.

- Barrios J.M., Hochberg Y. National Bureau of Economic Research; 2020. Risk Perception through the Lens of Politics in the Time of the COVID-19 Pandemic. No. w27008.

- Brodeur A., Grigoryeva I., Kattan L. Stay-at-home orders, social distancing, and trust. J. Popul. Econ. 2021 doi: 10.1007/s00148-021-00848-z. forthcoming.

- Bryan G., Karlan D., Nelson S. Commitment devices. Annual Review of Economics. 2010;2(1):671–698.

- Bursztyn L., Rao A., Roth C.P., Yanagizawa-Drott D.H. National Bureau of Economic Research; 2020. Misinformation during a Pandemic. https://www.nber.org/system/files/working_papers/w27417/w27417.pdf No. w27417.

- Campbell E.M., Sittig D.F., Ash J.S., Guappone K.P., Dykstra R.H. Types of unintended consequences related to computerized provider order entry. J. Am. Med. Inf. Assoc. 2006;13(5):547–556. doi: 10.1197/jamia.M2042.

- Cato S., Iida T., Ishida K., Ito A., Katsumata H., McElwain K.M., Shoji M. Social media infodemics and social distancing under the COVID-19 pandemic: public good provisions under uncertainty. Glob. Health Action. 2021;14(1) doi: 10.1080/16549716.2021.1995958.

- Cato S., Iida T., Ishida K., Ito A., Katsumata H., McElwain K.M., Shoji M. The bright and dark sides of social media usage during the COVID-19 pandemic: survey evidence from Japan. Int. J. Disaster Risk Reduc. 2021;54 doi: 10.1016/j.ijdrr.2020.102034.

- Cato S., Iida T., Ishida K., Ito A., Katsumata H., McElwain K.M., Shoji M. Vaccination and altruism under the COVID-19 pandemic. Public Health in Practice. 2022 doi: 10.1016/j.puhip.2022.100225.

- Cato S., Iida T., Ishida K., Ito A., McElwain K.M., Shoji M. Social distancing as a public good under the COVID-19 pandemic. Publ. Health. 2020;188:51–53. doi: 10.1016/j.puhe.2020.08.005.

- Cho S., Rust J. Precommitments for financial self-control? Micro evidence from the 2003 Korean credit crisis. J. Polit. Econ. 2017;125(5):1413–1464.

- Cinelli C., Hazlett C. Making sense of sensitivity: Extending omitted variable bias. J. Roy. Stat. Soc.: Ser. B (Stat. Methodol.) 2020;82(1):39–67. doi: 10.1111/rssb.12348.

- CNET Japan Sesshoku kakunin apuri “COCOA” de tsuuchi wo uketara dousuru? 2020. https://japan.cnet.com/article/35160125/

- Dasgupta N., Jonsson Funk M., Lazard A., White B.E., Marshall S.W. 2020. Quantifying the Social Distancing Privilege Gap: a Longitudinal Study of Smartphone Movement. Available at SSRN 3588585.

- Fudenberg D., Levine D.K. A dual-self model of impulse control. Am. Econ. Rev. 2006;96(5):1449–1476. doi: 10.1257/aer.96.5.1449.

- Giné X., Karlan D., Zinman J. Put your money where your butt is: a commitment contract for smoking cessation. Am. Econ. J. Appl. Econ. 2010;2(4):213–235.

- Golman R., Hagmann D., Loewenstein G. Information avoidance. J. Econ. Lit. 2017;55(1):96–135.

- Gosling S.D., Rentfrow P.J., Swann W.B., Jr. A very brief measure of the Big-Five personality domains. J. Res. Pers. 2003;37(6):504–528.

- Guillon M., Kergall P. Attitudes and opinions on quarantine and support for a contact-tracing application in France during the COVID-19 outbreak. Publ. Health. 2020;188:21–31. doi: 10.1016/j.puhe.2020.08.026.

- Gul F., Pesendorfer W. Temptation and self‐control. Econometrica. 2001;69(6):1403–1435.

- Hainmueller J. Entropy balancing for causal effects: a multivariate reweighting method to produce balanced samples in observational studies. Polit. Anal. 2012;20(1):25–46.

- Harrison M.I., Koppel R., Bar-Lev S. Unintended consequences of information technologies in health care--an interactive sociotechnical analysis. J. Am. Med. Inf. Assoc. 2007;14(5):542–549. doi: 10.1197/jamia.M2384.

- Himmler O., Jäckle R., Weinschenk P. Soft commitments, reminders, and academic performance. Am. Econ. J. Appl. Econ. 2019;11(2):114–142.

- Iida T. Bokutakusha; 2016. Yūkensha No Risuku Taido to Tōhyōkōdō.

- Ikeda S., Kato H.K., Ohtake F., Tsutsui Y., editors. Behavioral Economics of Preferences, Choices, and Happiness. Springer; 2016.

- Jonker M., de Bekker-Grob E., Veldwijk J., Goossens L., Bour S., Rutten-Van Mölken M. COVID-19 contact tracing apps: predicted uptake in The Netherlands based on a discrete choice experiment. JMIR mHealth and uHealth. 2020;8(10) doi: 10.2196/20741.

- Kahneman D., Tversky A. Subjective probability: a judgment of representativeness. Cognit. Psychol. 1972;3(3):430–454.

- Katafuchi Y., Kurita K., Managi S. COVID-19 with stigma: theory and evidence from mobility data. Economics of Disasters and Climate Change. 2021;5:71–95. doi: 10.1007/s41885-020-00077-w.

- Kawakami N., Sasaki N., Kuroda R., Tsuno K., Imamura K. The effects of downloading a government-issued COVID-19 contact tracing app on psychological distress during the pandemic among employed adults: prospective study. JMIR mental health. 2021;8(1) doi: 10.2196/23699.

- Kondylakis H., Katehakis D.G., Kouroubali A., Logothetidis F., Triantafyllidis A., Kalamaras I., Tzovaras D. COVID-19 mobile apps: a systematic review of the literature. J. Med. Internet Res. 2020;22(12) doi: 10.2196/23170.

- Koppel R., Metlay J.P., Cohen A., Abaluck B., Localio A.R., Kimmel S.E., Strom B.L. Role of computerized physician order entry systems in facilitating medication errors. JAMA. 2005;293(10):1197–1203. doi: 10.1001/jama.293.10.1197.

- Marcolino M.S., Oliveira J.A.Q., D'Agostino M., Ribeiro A.L., Alkmim M.B.M., Novillo-Ortiz D. The impact of mHealth interventions: systematic review of systematic reviews. JMIR mHealth and uHealth. 2018;6(1):e23. doi: 10.2196/mhealth.8873.

- Ministry of Health, Labor and Welfare, Japan Contact confirmation application privacy policy. December. 2020;15:2020.

- Ministry of Health, Labor and Welfare, Japan COCOA official home page. 2021. https://www.mhlw.go.jp/stf/seisakunitsuite/bunya/cocoa_00138.html

- MIT Technology Review. 2021. COVID Tracing Tracker, Compiled by Pandemic Technology Project (Version of 25 January 2021). https://www.technologyreview.com/2020/12/16/1014878/covid-tracing-tracker/

- Miyachi T., Takita M., Senoo Y., Yamamoto K. Lower trust in national government links to no history of vaccination. Lancet. 2020;395(10217):31–32. doi: 10.1016/S0140-6736(19)32686-8.

- Mosa A.S.M., Yoo I., Sheets L. A systematic review of healthcare applications for smartphones. BMC Med. Inf. Decis. Making. 2012;12(1):1–31. doi: 10.1186/1472-6947-12-67.

- Munzert S., Selb P., Gohdes A., Stoetzer L.F., Lowe W. Tracking and promoting the usage of a COVID-19 contact tracing app. Nat. Human Behav. 2021;5(2):247–255. doi: 10.1038/s41562-020-01044-x.

- Nakamoto I., Jiang M., Zhang J., Zhuang W., Guo Y., Jin M., Tang K. A peer-to-peer COVID-19 contact tracing mobile app in Japan: design and implementation evaluation. JMIR mHealth and uHealth. 2020;8(12) doi: 10.2196/22098.

- Oshio A., Shingo A., Pino C. Development, reliability, and validity of the Japanese version of the ten item personality inventory (TIPI-J) Jpn. J. Pers. 2012;21:40–52.

- Ozaki Y., Goto T., Kobayashi M., Kutsuzawa G. Reliability and validity of the Japanese translation of brief self-control scale (BSCS-J) Shinrigaku Kenkyu: Jpn. J. Psychol. 2016;87(2):144–154. doi: 10.4992/jjpsy.87.14222.

- Pitas N.A., Mowen A.J., Powers S.L. Person-place relationships, social capital, and health outcomes at a nonprofit community wellness center. J. Leisure Res. 2021;52(2):247–264.

- Raymond C.M., Brown G., Weber D. The measurement of place attachment: personal, community, and environmental connections. J. Environ. Psychol. 2010;30(4):422–434.

- Rodríguez P., Graña S., Alvarez-León E.E., Battaglini M., Darias F.J., Hernán M.A., Lacasa L. A population-based controlled experiment assessing the epidemiological impact of digital contact tracing. Nat. Commun. 2021;12(1):1–6. doi: 10.1038/s41467-020-20817-6.

- Royer H., Stehr M., Sydnor J. Incentives, commitments, and habit formation in exercise: evidence from a field experiment with workers at a fortune-500 company. Am. Econ. J. Appl. Econ. 2015;7(3):51–84.

- Schilbach F. Alcohol and self-control: a field experiment in India. Am. Econ. Rev. 2019;109(4):1290–1322.

- Serafini G., Parmigiani B., Amerio A., Aguglia A., Sher L., Amore M. The psychological impact of COVID-19 on the mental health in the general population. QJM: Int. J. Med. 2020;113(8):531–537. doi: 10.1093/qjmed/hcaa201.

- Shoji M., Ito A., Cato S., Iida T., Ishida K., Katsumata H., McElwain K.M. Prosociality and the uptake of COVID-19 contact tracing apps: survey Analysis of intergenerational differences in Japan. JMIR mHealth uHealth. 2021;9(8) doi: 10.2196/29923.

- Shoji M., Cato S., Iida T., Ishida K., Ito A., McElwain K.M. 2022. Variations in Early-Stage Responses to Pandemics: Survey Evidence from the COVID-19 Pandemic in Japan. Economics of Disasters and Climate Change. forthcoming.

- Sidi Y., Harel O. The treatment of incomplete data: reporting, analysis, reproducibility, and replicability. Soc. Sci. Med. 2018;209:169–173. doi: 10.1016/j.socscimed.2018.05.037.

- Strulik H. Limited self‐control and longevity. Health Econ. 2019;28(1):57–64. doi: 10.1002/hec.3827.

- Sweeny K., Melnyk D., Miller W., Shepperd J.A. Information avoidance: who, what, when, and why. Rev. Gen. Psychol. 2010;14(4):340–353.

- Tangney J.P., Baumeister R.F., Boone A.L. High self‐control predicts good adjustment, less pathology, better grades, and interpersonal success. J. Pers. 2004;72(2):271–324. doi: 10.1111/j.0022-3506.2004.00263.x.

- Thaler R.H., Benartzi S. Save more tomorrow™: using behavioral economics to increase employee saving. J. Polit. Econ. 2004;112(S1):S164–S187.

- VandenBos G.R. second ed. American Psychological Association; Washington, DC: 2015. APA Dictionary of Psychology.

- von Wyl V., Höglinger M., Sieber C., Kaufmann M., Moser A., Serra-Burriel M., Puhan M.A. Drivers of acceptance of COVID-19 proximity tracing apps in Switzerland: panel survey analysis. JMIR Public Health and Surveillance. 2021;7(1) doi: 10.2196/25701.

- Walrave M., Waeterloos C., Ponnet K. Adoption of a contact tracing app for containing COVID-19: a health belief model approach. JMIR Public Health and Surveillance. 2020;6(3) doi: 10.2196/20572.

- Williams D.R., Patterson M.E., Roggenbuck J.W., Watson A.E. Beyond the commodity metaphor: examining emotional and symbolic attachment to place. Leisure Sci. 1992;14(1):29–46.

- Yamamura E., Tsutsui Y. School closures and mental health during the COVID-19 pandemic in Japan. J. Popul. Econ. 2021:1–38. doi: 10.1007/s00148-021-00844-3.
