Abstract

Background

Systematic reviews of outcome measurement instruments are important tools in the evidence-based selection of these instruments. COSMIN (COnsensus-based Standards for the selection of health Measurement INstruments) has developed a comprehensive and widely used guideline for conducting systematic reviews of outcome measurement instruments, but key information is often missing in published reviews. This hinders the appraisal of the quality of outcome measurement instruments, impacts the decisions of knowledge users regarding their appropriateness, and compromises reproducibility and interpretability of the reviews’ findings. To facilitate sufficient, transparent, and consistent reporting of systematic reviews of outcome measurement instruments, an extension of the PRISMA (Preferred Reporting Items for Systematic reviews and Meta-Analyses) 2020 guideline will be developed: the PRISMA-COSMIN guideline.

Methods

The PRISMA-COSMIN guideline will be developed in accordance with recommendations for reporting guideline development from the EQUATOR (Enhancing the QUAlity and Transparency Of health Research) Network. First, a candidate reporting item list will be created through an environmental literature scan and expert consultations. Second, an international Delphi study will be conducted with systematic review authors, biostatisticians, epidemiologists, psychometricians/clinimetricians, reporting guideline developers, journal editors as well as patients, caregivers, and members of the public. Delphi panelists will rate candidate items for inclusion on a 5-point scale, suggest additional candidate items, and give feedback on item wording and comprehensibility. Third, the draft PRISMA-COSMIN guideline and user manual will be iteratively piloted by applying it to systematic reviews in several disease areas to assess its relevance, comprehensiveness, and comprehensibility, along with usability and user satisfaction. Fourth, a consensus meeting will be held to finalize the PRISMA-COSMIN guideline through roundtable discussions and voting. Last, a user manual will be developed and the final PRISMA-COSMIN guideline will be disseminated through publications, conferences, newsletters, and relevant websites. Additionally, relevant journals and organizations will be invited to endorse and implement PRISMA-COSMIN. Throughout the project, evaluations will take place to identify barriers and facilitators of involving patient/public partners and employing a virtual process.

Discussion

The PRISMA-COSMIN guideline will ensure that the reports of systematic reviews of outcome measurement instruments are complete and informative, enhancing their reproducibility, ease of use, and uptake.

Supplementary Information

The online version contains supplementary material available at 10.1186/s13643-022-01994-5.

Keywords: COSMIN, PRISMA, Reporting guideline, Delphi study, Systematic review, Consensus, Outcome measurement instruments

Background

Outcome measurement instruments (OMIs) are important tools in clinical practice and research for monitoring patients’ health status and evaluating treatment efficacy, effectiveness, and safety [1, 2]. OMIs include patient questionnaires, assessments by health professionals, biomarkers, clinical rating scales, imaging or laboratory tests, and performance-based tests. Choosing the appropriate OMI for a specific construct, target population, and setting can be difficult and time-consuming, as there are often numerous OMIs of uncertain quality that aim to measure the same construct and were developed for the same patient population [3–6]. Apart from the OMI’s quality (i.e., its measurement properties), its feasibility and interpretability need to be considered. The measurement properties concern nine different aspects of reliability, validity, and responsiveness (Table 1), which are all important for OMIs with an evaluative application [7].

Table 1.

COSMIN definitions of domains, measurement properties and aspects of measurement properties [7]

| Domain | Measurement property | Measurement property aspect | Definition |
|---|---|---|---|
| Reliability | | | The degree to which the measurement is free from measurement error |
| Reliability (extended definition) | | | The extent to which scores for patients who have not changed are the same for repeated measurement under several conditions: e.g., using different sets of items from the same OMI (internal consistency); over time (test-retest); by different persons on the same occasion (inter-rater); or by the same persons on different occasions (intra-rater) |
| | Internal consistency | | The degree of interrelatedness among the items |
| | Reliability | | The proportion of the total variance in the measurements which is due to ‘true’ differences between patients |
| | Measurement error | | The systematic and random error of a patient’s score that is not attributed to true changes in the construct to be measured |
| Validity | | | The degree to which an OMI measures the construct(s) it purports to measure |
| | Content validity | | The degree to which the content of an OMI is an adequate reflection of the construct to be measured |
| | | Face validity | The degree to which (the items of) an OMI indeed seems to be an adequate reflection of the construct to be measured |
| | Construct validity | | The degree to which the scores of an OMI are consistent with hypotheses (e.g., with regard to internal relationships, relationships to scores of other OMIs, or differences between relevant groups) based on the assumption that the OMI validly measures the construct to be measured |
| | | Structural validity | The degree to which the scores of an OMI are an adequate reflection of the dimensionality of the construct to be measured |
| | | Hypotheses testing | Idem construct validity |
| | | Cross-cultural validity | The degree to which the performance of the items on a translated or culturally adapted OMI are an adequate reflection of the performance of the items of the original version of the OMI |
| | Criterion validity | | The degree to which the scores of an OMI are an adequate reflection of a gold standard |
| Responsiveness | | | The ability of an OMI to detect change over time in the construct to be measured |
| | Responsiveness | | Idem responsiveness |
| Interpretabilityᵃ | | | The degree to which one can assign qualitative meaning (i.e., clinical or commonly understood connotations) to an OMI’s quantitative scores or change in scores |

COSMIN: COnsensus-based Standards for the selection of health Measurement INstruments

ᵃ Not considered a measurement property, but an important characteristic of a measurement instrument

Because many different OMIs are often available for a given construct and population, systematic reviews that evaluate and compare the measurement properties of these OMIs are increasingly being published (~ 140 new additions per year in the COSMIN database of Systematic Reviews since 2017) in order to facilitate their appraisal and selection [8]. By evaluating and summarizing the measurement properties reported for individual instruments, these systematic reviews are important tools in the evidence-based selection of OMIs. The COSMIN (COnsensus-based Standards for the selection of health Measurement INstruments) initiative has developed comprehensive and widely used guidance documents on how to conduct systematic reviews of OMIs [9–11]. Conducting a systematic review of OMIs for a specific context (e.g., construct, population) using the COSMIN methodology involves (1) systematically searching for the primary empirical studies that evaluate measurement properties and/or aspects of feasibility and interpretability; (2) appraising the methodological quality (i.e., risk of bias) of the included studies [11, 12]; (3) applying criteria for good measurement properties [13, 14]; and (4) summarizing the body of evidence and grading the quality of the evidence. With this combined information, recommendations can be formulated on whether or not to use an OMI.

Although the COSMIN guideline provides detailed guidance on how to conduct a systematic review of OMIs, key information is often missing in published systematic reviews [15–17]. For example, a previous study evaluating the quality and reporting of 246 systematic reviews of OMIs found that the syntax for the search strategy was lacking in more than half of the reports, and there was large variability in reporting of the appraisal process used for measurement properties [16]. In another study evaluating the quality of 102 reviews, it was unclear for 62% of them whether two reviewers evaluated the quality of the instruments. Moreover, in most reports the results from multiple studies on the same OMI were not synthesized at all (58%), or the methods to do so were not clearly described (22%) [17]. Lacking key information in published reports hinders the appraisal of the quality of OMIs, and might impact the decisions of knowledge users (e.g., researchers, healthcare providers, patients and policy-makers, who all rely on the findings of such systematic reviews) regarding the appropriateness of an OMI for a specific context [18].

Reporting guidelines and standards have been developed to help improve the completeness and transparency of reporting for different types of studies, data, and outcomes (e.g., [19–24]). With respect to OMIs, several guidelines exist, mostly focusing on patient-reported outcomes, such as guidelines for reporting results of quality of life assessments or patient-reported outcomes in clinical trials [25–27]. Large organizations have also published guidelines related to the use of patient-reported outcome measures (PROMs) in research, such as the Food and Drug Administration (FDA) [28], International Society of Quality of Life (ISOQOL) [29, 30], Outcome Measures in Rheumatology (OMERACT) [31], and others [32, 33]. Their guidelines often detail how to select or evaluate PROMs, but they do not give extensive guidance on reporting of systematic reviews of PROMs, nor do they apply to other types of OMIs. With respect to systematic reviews, the PRISMA (Preferred Reporting Items for Systematic reviews and Meta-Analyses) guideline [23] and its extensions (e.g., [34–37]) are best known for providing reporting guidance. The Institute of Medicine also published standards for reporting systematic reviews [38], which are a synthesis of standards from various organizations [23, 39–42]. Moreover, the Joanna Briggs Institute has published a manual for systematic reviews of measurement properties [43, 44], which endorses the PRISMA guideline [23] and COSMIN [12]. However, none of these guidelines specifically describes best practices for the reporting of systematic reviews in which measurement properties of OMIs are assessed.

In 2021, the COSMIN reporting guideline for studies on measurement properties of PROMs was developed [45]. This reporting guideline can improve and direct the reporting of primary studies investigating measurement properties of a PROM, but it is not intended to direct the reporting of systematic reviews of PROMs or other types of OMIs. Thus far, authors of systematic reviews of OMIs have been encouraged to complete and adhere to the widely used generic version of the PRISMA guideline when reporting the results of their systematic review [23]. Yet, even though PRISMA captures some key aspects that are also part of the review process for OMIs (e.g., describing the search strategy), it does not include essential information specific to systematic reviews of OMIs, such as detailed information on the construct, population, type of OMI and measurement properties of interest, and the methods used to appraise the methodological quality of the included studies and to evaluate the measurement properties of instruments. This information is required to make systematic reviews of OMIs reproducible and interpretable. Additionally, some (components of) items of the PRISMA 2020 checklist seem to be of limited relevance to systematic reviews of OMIs. As a result, peer reviewers and journal editors often cannot properly appraise how a submitted review was done because key information is lacking, which contributes to the ongoing introduction of poor-quality reviews into the literature. Thus, there is a need for guidance regarding the reporting of systematic reviews of OMIs [18]. Extending and tailoring the PRISMA 2020 guideline with key methodological details relevant to systematic reviews of OMIs is intended to ensure that reports of these reviews are comprehensive and informative, and to facilitate their ease of use and uptake.

This protocol outlines the development process for an internationally harmonized reporting guideline for systematic reviews of OMIs, called the PRISMA-COSMIN guideline. Through an evidence-based and consensus-based process, PRISMA-COSMIN will evaluate what constitutes sufficient reporting of systematic reviews of OMIs employing COSMIN systematic review methodology.

Methods

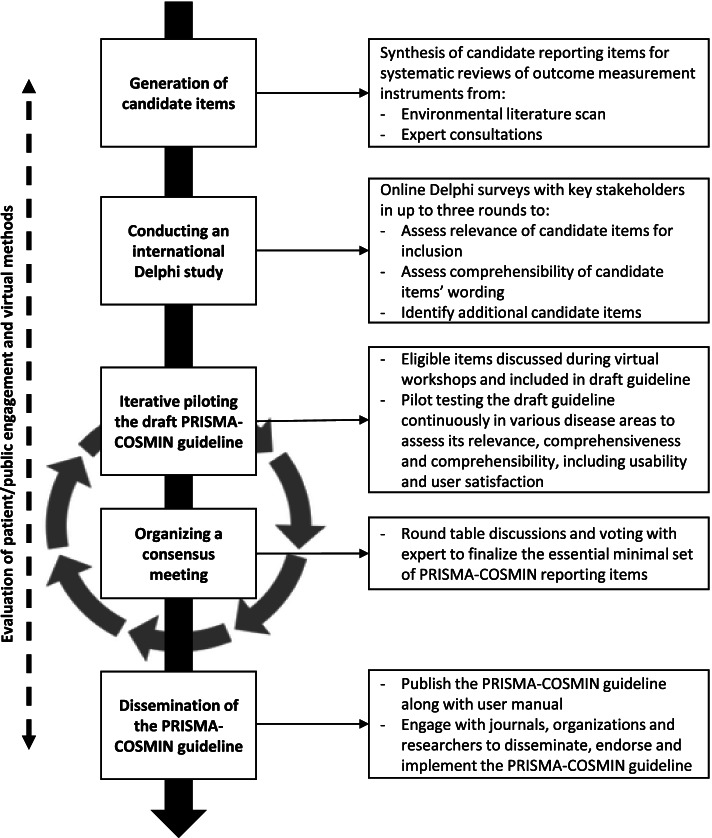

The PRISMA-COSMIN guideline will be developed in accordance with recommendations for reporting guideline development from the EQUATOR (Enhancing the QUAlity and Transparency Of health Research) Network [46]. The development process for the PRISMA-COSMIN guideline consists of the following phases, depicted in Fig. 1: (1) generation of candidate reporting items through an environmental scan of the literature and expert consultations; (2) conducting an international Delphi study to assess candidate items’ relevance for inclusion, comprehensibility and wording, and to identify any key missing reporting items; (3) iterative piloting of the draft PRISMA-COSMIN guideline to assess its relevance, comprehensiveness and comprehensibility, along with usability and user satisfaction; and (4) organizing a consensus meeting to finalize the essential minimal set of PRISMA-COSMIN reporting items. An innovative methodology for reporting guideline development will be explored, by employing a completely virtual process and including patients, caregivers, and members of the public as research partners (hereafter referred to as patient/public partners) throughout the project, which is not yet a formal part of the EQUATOR guideline. Patient/public engagement and acceptability of virtual methods will be evaluated during and after the project, resulting in an overview of barriers and facilitators of this innovative methodology for reporting guideline development.

Fig. 1.

Outline of the PRISMA-COSMIN development process

Project launch, steering committee, and technical advisory group

PRISMA-COSMIN was registered on the EQUATOR Network library on 22 September 2020, and officially launched in October 2021 after funding for development of the PRISMA-COSMIN guideline was secured from the Canadian Institutes of Health Research (CIHR).

The steering committee for the PRISMA-COSMIN guideline consists of eight experienced researchers with collective international expertise in reporting guideline development, knowledge synthesis and translation, and measurement science, including representation from COSMIN and PRISMA. A patient/public partner (MS) with expertise in patient/public engagement is also part of the steering committee. The steering committee will lead the study and provide project oversight. They will prepare and provide all necessary materials for each of the phases. They will generate candidate items, design the international Delphi study, and organize the consensus meeting. They will not act as panelists in the Delphi study but have the authority to make final decisions in each project phase, including a vote in the consensus meeting.

The steering committee will be supported by a 10-member technical advisory group, composed of key outcome methodologists from international leading groups in measurement science and reporting standards (see Additional file 1 for members of the steering committee and technical advisory group). The technical advisory group will be asked to give feedback on each stage of the project, including the opportunity to give feedback on the study protocol (this manuscript), to review the initial draft version of the checklist, to contribute to the Delphi study as panelists, to contribute to piloting the PRISMA-COSMIN checklist as pilot testers, to participate in the consensus meeting, and to give feedback on the (draft) guideline and user manual. Because of their large, international network, members of the steering committee and the technical advisory group will also be able to identify other panelists as well as other pilot testers, and other consensus meeting experts.

Phase 1: Generation of candidate items

To generate candidate items, the steering committee used the PRISMA 2020 checklist [23] as a starting point and evaluated its reporting items for applicability to systematic reviews of OMIs. If deemed applicable, a reporting item was refined where necessary to include aspects specific to systematic reviews of OMIs. The COSMIN guideline for systematic reviews [9, 10] and the COSMIN reporting guideline for studies on measurement properties of PROMs [45] served as guidance documents in this process. New items were added as well. Next, an environmental scan of the literature was conducted to search for scientific articles and existing guidelines that describe reporting items of OMIs or systematic reviews. Literature search results from previous reporting guideline development projects were used [45, 47], and in addition, a search was conducted for reporting recommendations of systematic reviews of OMIs. Reporting recommendations extracted from identified guidance documents [9, 10, 12–14, 19–45] were compared to the candidate reporting items to support, refute and refine them, and to identify additional reporting items, in order to arrive at a comprehensive item list.

The comprehensive item list, containing all possible reporting items, was applied by a member of the steering committee (EE) to three existing high-quality systematic review reports: (1) a systematic review of all PROMs measuring physical functioning in type 2 diabetes, co-authored by some members of the steering committee [48]; (2) a systematic review of one specific PROM (i.e., the Dutch-Flemish PROMIS physical function item bank) [49]; and (3) a systematic review of digital monitoring devices measuring oxygen saturation and respiratory rate in COPD [50]. By applying the comprehensive item list to three different types of systematic reviews, a distinction could be made between items applicable for all review types, and items applicable to certain review types. Based on the results, the comprehensive item list, containing all possible items, was synthesized in an optimal item list, containing essential items. At this time point in the project, the current protocol paper was submitted for publication.

A table detailing all results thus far, including all possible reporting items, the results from the three systematic reviews, and the essential reporting items, will be presented to the entire steering committee and technical advisory group. Following these expert consultations, the preliminary PRISMA-COSMIN checklist will be iteratively presented and modified (i.e., new items will be added and existing items will be modified) based on feedback from the experts obtained in videoconference meetings and/or email communications.

Phase 2: International Delphi study

An online international Delphi study with a web-based questionnaire will be conducted. A Delphi study is a procedure that can be used to generate debate and to structure and organize group communication processes. It is an iterative, multistage process for making the best use of all available information, through structured rounds of surveys interspersed by controlled feedback, to arrive at consensus of opinion among a panel of stakeholders [51]. Based on the results of the generation of candidate items, described above, a list of reporting items, together with operational definitions and examples, will be developed for use in the Delphi. The complete questionnaire for the first Delphi round, including invitation texts, will be designed and pilot tested with five individuals from the steering committee and/or technical advisory group. Feedback on the design will be collected and the questionnaire will be revised accordingly.

Recruitment of panelists

International key stakeholders involved in the design, conduct, publication, and/or application of systematic reviews of OMIs will be invited to be panelists in the Delphi study. Panelists will be selected to represent various scientific backgrounds (e.g., clinical medicine, biostatistics, psychometrics/clinimetrics, epidemiology) as well as leading relevant organizations (e.g., ISOQOL, OMERACT, Cochrane, ISPOR) and journals (e.g., Quality of Life Research, Journal of Patient-Reported Outcomes, Journal of Clinical Epidemiology). In addition, patient/public partners (n = 5 in total) will be invited to contribute to the Delphi study.

Relevant groups, organizations, and individuals will be identified by the steering committee and technical advisory group through their professional contacts, networks, and affiliations. Known panelists from other relevant Delphi studies (e.g., [11, 45, 52]), as well as authors of relevant guidance documents (e.g., [12, 29, 38]) and authors who have conducted multiple systematic reviews of OMIs (as identified through the COSMIN database for systematic reviews [8]) will be invited. Patient/public partners will be recruited through newsletters, social media channels, and contact persons of relevant organizations that often involve them (e.g., OMERACT’s Patient Research Partner Network, COMET’s PoPPIE working group, Cochrane Consumers Network). The invitation to the Delphi study will include text asking candidate panelists to forward the invitation to other qualified colleagues or relevant groups or organizations that might be interested in contributing. There will be no geographical restrictions on eligibility. The Delphi study will be conducted in English. Panelists can enter the study in any round, but retention between rounds will be encouraged through communications conveying the importance of completing the entire Delphi study [53]. Panelists who complete the entire Delphi study will receive an acknowledgement in publications for their contributions, with their permission.

A minimum of 30 panelists will be considered appropriate [54]. Based on previous experiences [11, 45], it is anticipated that at most 50% of the invited persons will complete at least one round. Therefore, initially at least 150 individuals will be invited. Those willing to be panelists will be asked to provide informed consent and complete a brief registration form, including questions regarding basic demographic information, such as job title, country of work, work setting, scientific background, and relevant work experience. Panelists from the patient/public community will be asked to identify their relevant patient/public engagement or lived experience. If fewer than 60 people are willing to be panelists, more persons will be invited until 60 have agreed to complete round 1. Maximum variation with respect to panelists’ scientific background and type of work experience will be sought; recruitment strategies will be adjusted if certain groups are under- or overrepresented.
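The recruitment figures above can be checked with a little arithmetic. The sketch below is illustrative only: it treats the anticipated response rate as the share of invitees who agree to take part, which is an assumption, and the function names are hypothetical rather than part of the protocol.

```python
def expected_panelists(invited: int, response_pct: int) -> int:
    """Panelists expected if response_pct percent of invitees take part.

    Assumption: the response rate is a whole-number percentage of invitees.
    """
    return invited * response_pct // 100


def invitations_needed(target: int, response_pct: int) -> int:
    """Smallest number of invitations expected to yield `target` panelists."""
    # Integer ceiling division avoids floating-point rounding surprises.
    return -((-target * 100) // response_pct)


if __name__ == "__main__":
    # 150 invitations at the anticipated best-case rate of 50%
    print(expected_panelists(150, 50))   # 75
    # Invitations needed for 60 panelists at 50% and at 40% uptake
    print(invitations_needed(60, 50))    # 120
    print(invitations_needed(60, 40))    # 150
```

Under these assumptions, 150 initial invitations reach the target of 60 panelists only if at least 40% of invitees agree to take part, which is consistent with the plan to invite more people if uptake falls short.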

Delphi procedure

Virtual, interactive onboarding sessions with patient/public partners will be held approximately 3 weeks before the Delphi survey launch. These onboarding sessions will facilitate shared clarity and agreement on methodological and practical challenges in conducting systematic reviews of OMIs. In these onboarding sessions, the project will be explained and patient/public partners will be prepared for involvement in the Delphi study. It will also be determined on which topics they may need additional background reading and support. The onboarding sessions will ensure that patient/public partners have understandable information, so that they can meaningfully contribute to the Delphi study. The number and exact content of the onboarding sessions will be determined after meeting with patient/public partners, once their knowledge level and needs have been identified.

Prior to the Delphi study, all panelists will be given information about the objectives and process of the Delphi study. A web-based survey will be designed using Research Electronic Data Capture (REDCap) software [55]. Each Delphi round will be open for approximately 3 weeks. Depending on the results, we expect to need up to three rounds to ensure that all items are evaluated twice. Panelists will receive weekly reminders, starting approximately 1 week after the launch of each Delphi round. The responses of the panelists will remain anonymous throughout the Delphi study and will be analyzed anonymously as well; only designated members of the steering committee will have access to the identifiable responses. Completion of each Delphi round will be voluntary.

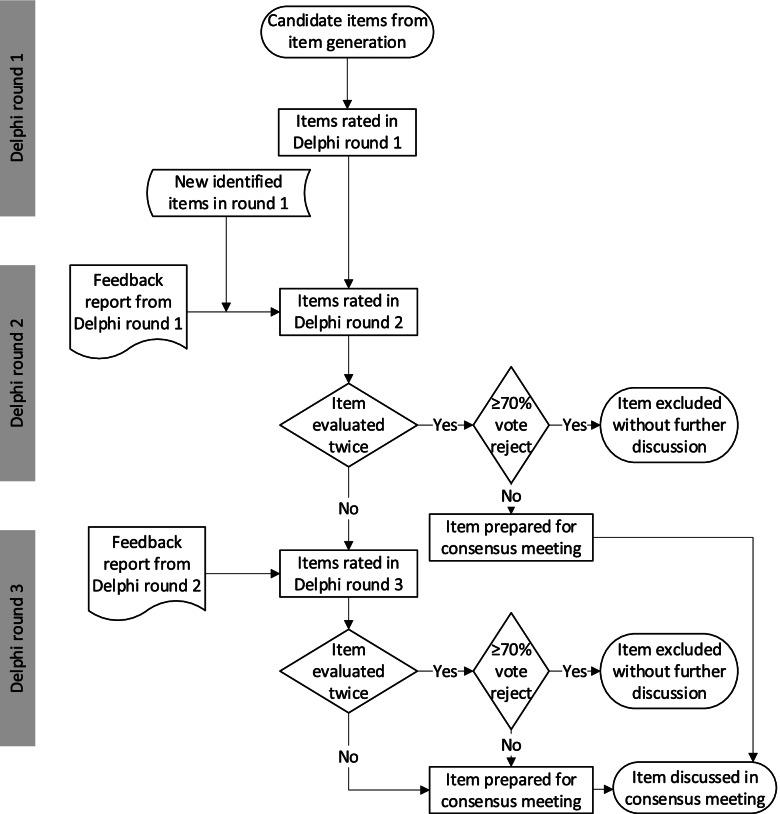

Figure 2 outlines the Delphi study process. Panelists will be asked to rate each reporting item identified in the generation of candidate items for inclusion in the PRISMA-COSMIN guideline. Items will be presented so that it is clear whether an item is new (i.e., not present in the PRISMA 2020 checklist), modified (i.e., covered in part in the PRISMA 2020 checklist), or existing (i.e., already present in the PRISMA 2020 checklist). Panelists will be asked to rate the relevance for inclusion of each item on a 5-point scale (1 = definitely reject, 2 = probably reject, 3 = neutral, 4 = probably keep, 5 = definitely keep). Panelists can also opt for “not my expertise” to accommodate those who do not have the level of expertise required to rate the item. This option may be especially relevant to reassure patient/public partners that it is not necessary for them to rate all items on the list. Panelists will be encouraged to provide a rationale for their ratings, to suggest modifications of definitions or wording of items, to indicate any possible overlap between items or possibilities to aggregate items, and to suggest potential new items not included in the list. Suggestions for item modification, aggregation or new items will be discussed by the steering committee and integrated into round 2. All findings will be organized into a feedback report that will be sent to the panelists.

Fig. 2.

Outline of the Delphi study process

In Delphi round 2, panelists will receive the feedback report containing the results of round 1 presented both quantitatively (i.e., the distribution of ratings/degree of consensus as well as their own rating) and qualitatively (i.e., the suggestions and comments of the panelists regarding each item). Panelists will again be asked to rate the relevance of each of the reporting items, as well as newly identified items, for inclusion in the PRISMA-COSMIN guideline on the same scale. They will again be encouraged to provide a rationale for their ratings, to suggest modifications of definitions or wording of items, and to indicate any possible overlap between items or possibilities to aggregate items. They will no longer be actively asked to suggest potential new items not included in the list. If an item is evaluated for the second time, consensus for exclusion of an item will be reached if at least 70% of the panelists opt for reject (i.e., score 1 or 2). These items will be considered confirmed for exclusion from the PRISMA-COSMIN guideline and will not be further discussed. All other items originating from the generation of candidate items (i.e., items that are evaluated twice) will move forward to the consensus meeting. Any new items proposed in round 1 will move forward to round 3. All findings will again be organized into a feedback report, containing similar information as the first feedback report.

In Delphi round 3, panelists will receive the feedback report containing the results of round 2. The list of items already considered confirmed for exclusion from the PRISMA-COSMIN guideline or prepared for the consensus meeting will also be provided. Panelists will again be asked to rate the relevance of each of the remaining reporting items for inclusion in the PRISMA-COSMIN guideline, on the same scale. Panelists will also be encouraged to provide a rationale for their ratings, to suggest modifications of definitions or wording of items, and to indicate any possible overlap between items or possibilities to aggregate items. They will not be actively asked to suggest potential new items not included in the list. As in round 2, consensus for exclusion of an item will be reached if at least 70% of the panelists opt for reject (i.e., score 1 or 2), and these items will be considered confirmed for exclusion from the PRISMA-COSMIN guideline and will not be discussed further. All other items will move forward to the consensus meeting.
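The exclusion rule used in rounds 2 and 3 can be sketched in a few lines of code. This is a minimal illustration, not the project's analysis script: the function names are hypothetical, and leaving “not my expertise” responses (represented here as `None`) out of the denominator is an assumption, since the protocol does not state how those responses are counted.

```python
from typing import Optional, Sequence

REJECT_SCORES = {1, 2}   # 1 = definitely reject, 2 = probably reject
THRESHOLD_PCT = 70       # at least 70% of panelists opting for reject


def reject_consensus(ratings: Sequence[Optional[int]]) -> bool:
    """True if at least 70% of the panelists who rated the item chose 1 or 2."""
    # Assumption: None ("not my expertise") is left out of the denominator.
    scored = [r for r in ratings if r is not None]
    if not scored:
        return False
    rejects = sum(1 for r in scored if r in REJECT_SCORES)
    # Integer comparison avoids floating-point issues at exactly 70%.
    return rejects * 100 >= THRESHOLD_PCT * len(scored)


def next_step(ratings: Sequence[Optional[int]], times_rated: int) -> str:
    """Route an item after a round; exclusion needs a second evaluation."""
    if times_rated < 2:
        return "rate again in next round"
    if reject_consensus(ratings):
        return "excluded"
    return "consensus meeting"


if __name__ == "__main__":
    second_round = [1, 2, 1, 2, 1, 2, 1, 3, 4, None]  # 7 of 9 rated 1 or 2
    print(next_step(second_round, times_rated=2))     # excluded
    print(next_step([1, 2, 3, 4, 5], times_rated=2))  # consensus meeting
```

Note that an item is never confirmed for inclusion at this stage: anything that does not meet the exclusion threshold after its second evaluation is carried forward to the consensus meeting.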

Phase 3: Piloting of the PRISMA-COSMIN guideline

Items that move forward to the consensus meeting will be discussed during a series of virtual workshops with members of the steering committee and technical advisory group. Phrasing of items will be clarified as necessary, considering the feedback of panelists from the Delphi study. Relevant content for the user manual, containing the background, rationale and justification for each reporting item, together with examples of good reporting, will also be discussed. The steering committee will develop a draft PRISMA-COSMIN guideline along with a draft user manual.

Piloting the PRISMA-COSMIN guideline and user manual will be an iterative process, starting after the Delphi study and continuing until after the consensus meeting, so that each subsequent version of the PRISMA-COSMIN guideline and user manual is pilot tested. Researchers and clinicians in various disease areas (e.g., mental health, rheumatology, surgery, child health) with expertise in systematic reviews of OMIs will be asked to pilot test the draft PRISMA-COSMIN guideline along with the user manual, to ensure the PRISMA-COSMIN guideline is applicable to all fields and outcome types. For each disease area, the PRISMA-COSMIN guideline will be applied to at least four different systematic reviews of OMIs. Pilot testers (n = 4 per disease area) will be selected by the steering committee and technical advisory group, and an open invitation for pilot testing and feedback will be distributed through social media channels and newsletters of relevant organizations.

The relevance and comprehensibility of each reporting item, and the comprehensiveness of the PRISMA-COSMIN guideline will be evaluated on a 7-point scale (1 = not at all to 7 = to a great extent). Pilot testers can also leave comments and suggestions. In addition, usability and user satisfaction with the PRISMA-COSMIN guideline will be determined by three questions:

Is the PRISMA-COSMIN guideline user friendly? Why (not)?

How much time does it take to complete the guideline?

Will it help or hinder the report writing process? Why?

The steering committee will incorporate the qualitative and quantitative feedback from these pilot tests to improve the PRISMA-COSMIN guideline and the user manual.

Phase 4: Consensus meeting

A consensus meeting will be organized in Toronto, Canada, to obtain expert consensus on which items will be included, with their finalized wording, in the final PRISMA-COSMIN guideline. Besides the steering committee and technical advisory group, editors of selected journals, members of important organizations, patient/public partners, and Delphi panelists with appropriate expertise will be invited, in order to obtain 20–25 diverse experts. Experts not able to attend in person can join through videoconference.

Each candidate PRISMA-COSMIN item will be presented, along with the results from the Delphi study, the recommendations from the virtual workshops, and the results from the pilot tests. Moderated round table discussions for each item will follow, after which anonymous voting on each item will be conducted. Voting options are “include in final guideline”, “exclude from final guideline”, “merge with other item”, or “unsure”. Consensus for inclusion or exclusion of an item in/from the final guideline will be reached if at least 70% of the experts vote for inclusion or exclusion, respectively. Items that do not reach consensus will be subject to another round table discussion and voting procedure. This process will continue until all items have reached consensus. Round table discussions will be audio recorded. If consensus is not reached for some items before the end of the meeting, the final decision will be made by the steering committee, taking into account the statements from the round table discussions.
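To illustrate how the 70% consensus rule could be applied to the votes on a single item, a minimal Python sketch is given here. It is not part of the planned study software. The function name and the example votes are hypothetical, and the sketch assumes that the denominator is the number of all votes cast for the item, including “merge with other item” and “unsure”; the protocol does not specify how these votes are counted.

```python
from collections import Counter

# Voting options as listed in the protocol.
INCLUDE = "include in final guideline"
EXCLUDE = "exclude from final guideline"
MERGE = "merge with other item"
UNSURE = "unsure"

THRESHOLD_PERCENT = 70  # consensus threshold stated in the protocol


def consensus_outcome(votes):
    """Classify one item as 'include', 'exclude', or 'no consensus'.

    Assumption: the denominator is every vote cast for the item.
    """
    if not votes:
        raise ValueError("at least one vote is required")
    counts = Counter(votes)
    total = len(votes)
    # Integer comparison avoids floating-point rounding at exactly 70%.
    if counts[INCLUDE] * 100 >= THRESHOLD_PERCENT * total:
        return "include"
    if counts[EXCLUDE] * 100 >= THRESHOLD_PERCENT * total:
        return "exclude"
    return "no consensus"


# Hypothetical vote of 22 experts on one candidate item.
example_votes = [INCLUDE] * 16 + [EXCLUDE] * 3 + [MERGE] * 2 + [UNSURE]
print(consensus_outcome(example_votes))
```

Under this sketch, an item returning "no consensus" would go back for another round table discussion and vote, as described above.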

Publication and dissemination

Throughout the project, active presence on social media (e.g., Twitter, LinkedIn) and relevant websites (e.g., the COSMIN website [56]) will be maintained. The methods and preliminary results of the project will be presented at international, national, and local conferences. To disseminate the results of the project, a final PRISMA-COSMIN guideline with user manual will be developed by the steering committee and the project will be presented at relevant conferences. In addition, a scientific manuscript detailing the process of the PRISMA-COSMIN guideline development will be published in an open access journal. The PRISMA-COSMIN guideline and user manual will be made freely available through relevant websites, such as the EQUATOR website [57], the PRISMA website [58], and the COSMIN website [56]. Important journals and organizations currently endorsing the PRISMA guideline will be approached to also endorse the PRISMA-COSMIN guideline. Other dissemination strategies established throughout the project will also be considered.

Evaluation of innovative methods

To evaluate whether the methods for reporting guideline development (i.e., involving patient/public partners and employing a virtual process) have contributed to enhanced engagement and increased fidelity, contributors’ satisfaction will be monitored at all project phases (Table 2).

Table 2.

Overview of proposed evaluation instruments administered to stakeholders at different phases of the project

| Time point | Public and Patient Engagement Evaluation Tool (PPEET) [59, 60], patient-partner questionnaire: one-time engagements | PPEET [59, 60], project coordinator questionnaire: planning engagement | PPEET [59, 60], project coordinator questionnaire: assessing engagement | PPEET [59, 60], project coordinator questionnaire: assessing impact of engagement | Patient Engagement In Research Scale [61] | Modified Acceptability E-scale [62] | PANELVIEW instrument [63] |
|---|---|---|---|---|---|---|---|
| Prior to onboarding sessions | | Steering committee | | | | | |
| After onboarding sessions | Patient/public partners | | Steering committee | | | Patient/public partners | |
| After Delphi study | | | | | Patient/public partners | Panelists | Panelists |
| After virtual workshops | | | | | | Steering committee and technical advisory group | |
| After consensus meeting | | | Steering committee | | Patient/public partners | | Consensus meeting experts |
| Three months after consensus meeting | | | | Steering committee | | | |

The Patient Engagement In Research Scale (PEIRS) [61] and the Public and Patient Engagement Evaluation Tool (PPEET) [59, 60] will be used to evaluate patient/public engagement. The PEIRS is a 37-item questionnaire that can be used to quantify meaningful patient engagement in research [61]. Patient/public partners will be asked to complete the PEIRS after the Delphi study and after the consensus meeting. The PPEET consists of a series of questionnaires for patient/public partners, project coordinators, and managers of organizations [59, 60], of which the first two (i.e., the patient-partner and project coordinator questionnaires) will be used. Patient/public partners will be asked to complete the ‘one-time engagements’ module of the patient-partner questionnaire after the onboarding sessions. Each member of the steering committee will be asked to complete three modules of the project coordinator questionnaire: the ‘planning the engagement’ module prior to the onboarding sessions, the ‘assessing the engagement’ module after the onboarding sessions and after the consensus meeting, and the ‘assessing the impact of engagement’ module once the project is finalized, three months after the consensus meeting. In addition to these instruments, a focus group discussion (or individual interview, if preferred) will be held with patient/public partners and members of the steering committee after the project to qualitatively assess patient engagement (e.g., what went well, what could be improved, and what lessons were learned for future methodological projects).

The virtual methods employed (i.e., the onboarding sessions, the Delphi survey, and the virtual workshops) will be evaluated among all activity participants after the respective activity with a modified version of the Acceptability E-scale [62]. The Acceptability E-scale is a 6-item scale measuring the acceptability and usability of computer-based assessments or programs. A summary score of 24 or higher (i.e., ≥ 80% of the highest possible score) will be used as a threshold, indicating that the computer-based assessment is acceptable to users [62].
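As a worked example of the threshold described above, the following Python sketch scores one respondent on the Acceptability E-scale. It is a sketch under stated assumptions rather than the scoring procedure of the study. The function name and example responses are hypothetical. The item range of 1 to 5 is an assumption that is consistent with the stated figures (a threshold of 24 being 80% of the highest possible score implies a maximum of 30 across 6 items), and the modified version used in this project may differ.

```python
N_ITEMS = 6          # number of items stated in the protocol
MIN_ITEM_SCORE = 1   # assumption: lowest response option
MAX_ITEM_SCORE = 5   # assumption: consistent with a maximum total of 30
THRESHOLD = 24       # stated threshold (80% of the highest possible score)


def acceptability_summary(item_scores):
    """Return (summary score, acceptable?) for one respondent."""
    if len(item_scores) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} item scores")
    for score in item_scores:
        if not MIN_ITEM_SCORE <= score <= MAX_ITEM_SCORE:
            raise ValueError(f"item score out of range: {score}")
    total = sum(item_scores)
    return total, total >= THRESHOLD


# Hypothetical responses from one participant after an onboarding session.
total, acceptable = acceptability_summary([5, 4, 4, 5, 3, 4])
print(total, acceptable)
```

The sketch validates the number of items and the response range before summing, so that an incomplete questionnaire is not silently scored as unacceptable.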

The overall guideline development process will be evaluated with the PANELVIEW instrument, which assesses contributors’ satisfaction with and perceived appropriateness of the development of the PRISMA-COSMIN guideline [63]. Panelists of the Delphi study will be asked to complete the PANELVIEW instrument after the Delphi study, whereas experts in the consensus meeting will be asked to complete the PANELVIEW instrument after the consensus meeting.

A scientific research methods manuscript will be published regarding the evaluation of the patient/public engagement and the virtual process, barriers and facilitators encountered, and lessons learned, to direct future reporting guideline developers.

Discussion

The PRISMA-COSMIN guideline will provide guidance on what should be minimally reported in systematic reviews of OMIs. The development and implementation of the PRISMA-COSMIN guideline aim to harmonize and standardize the reporting of systematic reviews of OMIs and to ensure reports are comprehensive. This will make studies reproducible, contribute to the transparency of the conclusions drawn, and reduce research waste. Most importantly, it will allow end-users of systematic reviews to formulate their own conclusions.

The evidence-based and consensus-based processes that will be used in the development of the PRISMA-COSMIN guideline will contribute to its acceptance and uptake by journals, organizations, and individual researchers. Guidelines developed by individual experts or small research groups often do not have sufficient credibility to be accepted and implemented. Through involvement of a large group of experts with different scientific backgrounds, representing key international organizations, the PRISMA-COSMIN guideline has a good chance of becoming widely used.

Including patient/public partners and employing a virtual process are relatively novel methods in the field of reporting guideline development. Patients and members of the public can be considered direct end-users of the results of systematic reviews of OMIs, as the conclusions (i.e., the recommendation to use a specific OMI) can impact them directly. For this reason, and because PROMs are used increasingly, it is important to include the perspective of patient/public partners on what is relevant to report and how it should be reported. We recognize the challenge of engaging with patient/public partners on this complex methodological topic and have built in supports to enable them to participate meaningfully. We also note that using virtual methods for reporting guideline development may be less challenging now than it would have been before the COVID-19 pandemic. Owing to the pandemic, new patterns of international collaboration, exchange of ideas, and electronic participation have emerged [64], which we anticipate will benefit the development of the PRISMA-COSMIN guideline. Extensive evaluation of both these relatively novel methods will identify barriers, facilitators, and lessons learned. Evaluation results will be shared through a scientific research methods manuscript, to direct future reporting guideline developers who want to employ similar methods.

Conclusions

Systematic reviews of OMIs’ measurement properties are important tools in the evidence-based selection of these instruments, and are increasingly being published [8]. However, key information is often missing from published reports [15–17], compromising reproducibility and interpretability. Development of the PRISMA-COSMIN guideline will ensure that the reports of systematic reviews of OMIs are complete and informative, and include patient/public partners’ perspectives, thus enhancing their ease of use and uptake. We expect the final version of the PRISMA-COSMIN guideline and user manual to be ready in the last quarter of 2023.

Supplementary Information

Additional file 1. Group membership for the PRISMA-COSMIN guideline

Acknowledgements

Not applicable

Abbreviations

- CIHR: Canadian Institutes of Health Research

- COSMIN: COnsensus-based Standards for the selection of health Measurement INstruments

- EQUATOR: Enhancing the QUAlity and Transparency Of health Research

- FDA: Food and Drug Administration

- ISOQOL: International Society of Quality of Life

- OMERACT: Outcome Measures in Rheumatology

- OMI: Outcome measurement instrument

- PEIRS: Patient Engagement In Research Scale

- PPEET: Public and Patient Engagement Evaluation Tool

- PRISMA: Preferred Reporting of Items for Systematic reviews and Meta-Analyses

- PROM: Patient-reported Outcome Measure

Authors’ contributions

NB, LM, CT, AT, JG, MS, DM, and MO were involved in funding acquisition, and conceptualized and designed the study. EE drafted the manuscript. NB, LM, CT, AT, JG, OA, CB, MS, DM, and MO critically revised the manuscript. All authors read and approved the final manuscript.

Funding

The research is funded by the Canadian Institutes of Health Research (number 452145). The funder had no role in the design or conduct of this research.

Availability of data and materials

Not applicable

Declarations

Ethics approval and consent to participate

Not applicable

Consent for publication

Not applicable

Competing interests

NB receives research funding from Canadian Institutes of Health Research, CHILD-BRIGHT, and the Cundill Centre for Child and Youth Depression. She also declares consulting fees from Nobias Therapeutics, Inc. LM and CT are among the founders of the COSMIN initiative. OLA receives funding from the NIHR Birmingham Biomedical Research Centre (BRC), NIHR Applied Research Collaboration (ARC) West Midlands, Innovate UK (part of UK Research and Innovation), Janssen, Gilead, and GSK. OLA also declares personal fees from Gilead Sciences Ltd., GlaxoSmithKline (GSK), and Merck. CB has received funding from US Department of Defense, MGNet, Muscular Dystrophy Canada, Octapharma, and Grifols. She has been a consultant for Alexion, CSL, Sanofi, and Argenx. She is the primary developer of the MGII and may receive royalties. The other authors declare that they have no competing interests.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Porter ME. What is value in health care? N Engl J Med. 2010;363(26):2477–2481. doi: 10.1056/NEJMp1011024.

- 2. Nelson EC, Eftimovska E, Lind C, Hager A, Wasson JH, Lindblad S. Patient reported outcome measures in practice. BMJ. 2015;350:g7818. doi: 10.1136/bmj.g7818.

- 3. Mew EJ, Monsour A, Saeed L, Santos L, Patel S, Courtney DB, et al. Systematic scoping review identifies heterogeneity in outcomes measured in adolescent depression clinical trials. J Clin Epidemiol. 2020;126:71–79. doi: 10.1016/j.jclinepi.2020.06.013.

- 4. Ardestani SK, Karkhaneh M, Yu HC, Hydrie MZI, Vohra S. Primary outcomes reporting in trials of paediatric type 1 diabetes mellitus: a systematic review. BMJ Open. 2017;7(12):e014610. doi: 10.1136/bmjopen-2016-014610.

- 5. Webbe JWH, Ali S, Sakonidou S, Webbe T, Duffy JM, Brunton G, et al. Inconsistent outcome reporting in large neonatal trials: a systematic review. Arch Dis Child Fetal Neonatal Ed. 2020;105(1):69–75. doi: 10.1136/archdischild-2019-316823.

- 6. Page MJ, McKenzie JE, Green SE, Beaton DE, Jain NB, Lenza M, et al. Core domain and outcome measurement sets for shoulder pain trials are needed: systematic review of physical therapy trials. J Clin Epidemiol. 2015;68(11):1270–1281. doi: 10.1016/j.jclinepi.2015.06.006.

- 7. Mokkink LB, Terwee CB, Patrick DL, Alonso J, Stratford PW, Knol DL, et al. The COSMIN study reached international consensus on taxonomy, terminology, and definitions of measurement properties for health-related patient-reported outcomes. J Clin Epidemiol. 2010;63(7):737–745. doi: 10.1016/j.jclinepi.2010.02.006.

- 8. COSMIN. COSMIN database of systematic reviews. www.cosmin.nl/tools/database-systematic-reviews/. Accessed 30 Jan 2022.

- 9. Prinsen CA, Mokkink LB, Bouter LM, Alonso J, Patrick DL, De Vet HC, et al. COSMIN guideline for systematic reviews of patient-reported outcome measures. Qual Life Res. 2018;27(5):1147–1157. doi: 10.1007/s11136-018-1798-3.

- 10. Terwee CB, Prinsen CA, Chiarotto A, Westerman MJ, Patrick DL, Alonso J, et al. COSMIN methodology for evaluating the content validity of patient-reported outcome measures: a Delphi study. Qual Life Res. 2018;27(5):1159–1170. doi: 10.1007/s11136-018-1829-0.

- 11. Mokkink LB, Boers M, van der Vleuten C, Bouter LM, Alonso J, Patrick DL, et al. COSMIN Risk of Bias tool to assess the quality of studies on reliability or measurement error of outcome measurement instruments: a Delphi study. BMC Med Res Methodol. 2020;20(1):1–13. doi: 10.1186/s12874-020-01179-5.

- 12. Mokkink LB, De Vet HC, Prinsen CA, Patrick DL, Alonso J, Bouter LM, et al. COSMIN risk of bias checklist for systematic reviews of patient-reported outcome measures. Qual Life Res. 2018;27(5):1171–1179. doi: 10.1007/s11136-017-1765-4.

- 13. Terwee CB, Bot SD, de Boer MR, van der Windt DA, Knol DL, Dekker J, et al. Quality criteria were proposed for measurement properties of health status questionnaires. J Clin Epidemiol. 2007;60(1):34–42. doi: 10.1016/j.jclinepi.2006.03.012.

- 14. Prinsen CA, Vohra S, Rose MR, Boers M, Tugwell P, Clarke M, et al. How to select outcome measurement instruments for outcomes included in a “Core Outcome Set”–a practical guideline. Trials. 2016;17(1):1–10. doi: 10.1186/s13063-016-1555-2.

- 15. Mokkink LB, Terwee CB, Stratford PW, Alonso J, Patrick DL, Riphagen I, et al. Evaluation of the methodological quality of systematic reviews of health status measurement instruments. Qual Life Res. 2009;18(3):313–333. doi: 10.1007/s11136-009-9451-9.

- 16. Lorente S, Viladrich C, Vives J, Losilla J-M. Tools to assess the measurement properties of quality of life instruments: a meta-review. BMJ Open. 2020;10(8):e036038. doi: 10.1136/bmjopen-2019-036038.

- 17. Terwee CB, Prinsen C, Garotti MR, Suman A, De Vet H, Mokkink LB. The quality of systematic reviews of health-related outcome measurement instruments. Qual Life Res. 2016;25(4):767–779. doi: 10.1007/s11136-015-1122-4.

- 18. Butcher NJ, Monsour A, Mokkink LB, Terwee CB, Tricco AC, Gagnier J, et al. Needed: guidance for reporting knowledge synthesis studies on measurement properties of outcome measurement instruments in health research. BMJ Open. 2020;10(9).

- 19. Schulz KF, Altman DG, Moher D. CONSORT 2010 statement: updated guidelines for reporting parallel group randomised trials. Trials. 2010;11(1):1–8. doi: 10.1186/1745-6215-11-32.

- 20. Von Elm E, Altman DG, Egger M, Pocock SJ, Gøtzsche PC, Vandenbroucke JP. The Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) statement: guidelines for reporting observational studies. Bull World Health Organ. 2007;85:867–872. doi: 10.2471/BLT.07.045120.

- 21. Bossuyt PM, Reitsma JB, Bruns DE, Gatsonis CA, Glasziou PP, Irwig L, et al. STARD 2015: an updated list of essential items for reporting diagnostic accuracy studies. Clin Chem. 2015;61(12):1446–1452. doi: 10.1373/clinchem.2015.246280.

- 22. Kottner J, Audigé L, Brorson S, Donner A, Gajewski BJ, Hróbjartsson A, et al. Guidelines for reporting reliability and agreement studies (GRRAS) were proposed. Int J Nurs Stud. 2011;48(6):661–671. doi: 10.1016/j.ijnurstu.2011.01.016.

- 23. Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ. 2021;372:n71. doi: 10.1136/bmj.n71.

- 24. Chan A-W, Tetzlaff JM, Altman DG, Laupacis A, Gøtzsche PC, Krleža-Jerić K, et al. SPIRIT 2013 statement: defining standard protocol items for clinical trials. Ann Intern Med. 2013;158(3):200–207. doi: 10.7326/0003-4819-158-3-201302050-00583.

- 25. Staquet M, Berzon R, Osoba D, Machin D. Guidelines for reporting results of quality of life assessments in clinical trials. Qual Life Res. 1996;5(5):496–502. doi: 10.1007/BF00540022.

- 26. Revicki DA, Erickson PA, Sloan JA, Dueck A, Guess H, Santanello NC, et al. Interpreting and reporting results based on patient-reported outcomes. Value Health. 2007;10:S116–S124. doi: 10.1111/j.1524-4733.2007.00274.x.

- 27. Calvert M, Blazeby J, Altman DG, Revicki DA, Moher D, Brundage MD, et al. Reporting of patient-reported outcomes in randomized trials: the CONSORT PRO extension. JAMA. 2013;309(8):814–822. doi: 10.1001/jama.2013.879.

- 28. FDA. Guidance for Industry - patient-reported outcome measures: use in medical product development to support labeling claims. U.S. Department of Health and Human Services Food and Drug Administration; 2009.

- 29. Reeve BB, Wyrwich KW, Wu AW, Velikova G, Terwee CB, Snyder CF, et al. ISOQOL recommends minimum standards for patient-reported outcome measures used in patient-centered outcomes and comparative effectiveness research. Qual Life Res. 2013;22(8):1889–1905. doi: 10.1007/s11136-012-0344-y.

- 30. Brundage M, Blazeby J, Revicki D, Bass B, De Vet H, Duffy H, et al. Patient-reported outcomes in randomized clinical trials: development of ISOQOL reporting standards. Qual Life Res. 2013;22(6):1161–1175. doi: 10.1007/s11136-012-0252-1.

- 31. OMERACT. The OMERACT handbook for establishing and implementing core outcomes in clinical trials across the spectrum of rheumatologic conditions. Ottawa: OMERACT; 2021.

- 32. NQF. Guidance for Measure Testing and Evaluating Scientific Acceptability of Measure Properties. Washington DC: National Quality Forum; 2011.

- 33. Lohr KN. Assessing health status and quality-of-life instruments: attributes and review criteria. Qual Life Res. 2002;11(3):193–205. doi: 10.1023/A:1015291021312.

- 34. McInnes MD, Moher D, Thombs BD, McGrath TA, Bossuyt PM, Clifford T, et al. Preferred reporting items for a systematic review and meta-analysis of diagnostic test accuracy studies: the PRISMA-DTA statement. JAMA. 2018;319(4):388–396. doi: 10.1001/jama.2017.19163.

- 35. Stewart LA, Clarke M, Rovers M, Riley RD, Simmonds M, Stewart G, et al. Preferred reporting items for a systematic review and meta-analysis of individual participant data: the PRISMA-IPD statement. JAMA. 2015;313(16):1657–1665. doi: 10.1001/jama.2015.3656.

- 36. Shamseer L, Moher D, Clarke M, Ghersi D, Liberati A, Petticrew M, et al. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015: elaboration and explanation. BMJ. 2015;349. doi: 10.1136/bmj.g7647.

- 37. Tricco AC, Lillie E, Zarin W, O'Brien KK, Colquhoun H, Levac D, et al. PRISMA extension for scoping reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med. 2018;169(7):467–473. doi: 10.7326/M18-0850.

- 38. Morton S, Berg A, Levit L, Eden J. Standards for Reporting Systematic Reviews. Finding what works in health care: standards for systematic reviews. Washington DC: Institute of Medicine; 2011.

- 39. PCORI. Draft final research report: instructions for awardee. Washington DC: Patient-centered Outcomes Research Institute; 2021.

- 40. AHRQ. Methods guide for effectiveness and comparative effectiveness reviews. Rockville: Agency for Healthcare Research and Quality; 2019.

- 41. CRD. CRD’s guidance for undertaking reviews in health care. York: Centre for Reviews and Dissemination; 2009.

- 42. Higgins J, Lasserson T, Chandler J, Tovey D, Flemyng E, Churchill R. Methodological expectations of Cochrane intervention reviews. London: Cochrane; 2021.

- 43. Aromataris E, Munn Z. JBI Manual for evidence synthesis. Adelaide: JBI; 2020.

- 44. Stephenson M, Riitano D, Wilson S, Leonardi-Bee J, Mabire C, Cooper K, et al. Chapter 12: Systematic reviews of measurement properties. In: Aromataris E, Munn Z, editors. JBI Manual for Evidence Synthesis. Adelaide: JBI; 2020.

- 45. Gagnier JJ, Lai J, Mokkink LB, Terwee CB. COSMIN reporting guideline for studies on measurement properties of patient-reported outcome measures. Qual Life Res. 2021;30(8):2197–2219. doi: 10.1007/s11136-021-02822-4.

- 46. Moher D, Schulz KF, Simera I, Altman DG. Guidance for developers of health research reporting guidelines. PLoS Med. 2010;7(2):e1000217. doi: 10.1371/journal.pmed.1000217.

- 47. Butcher NJ, Mew EJ, Monsour A, Chan A-W, Moher D, Offringa M. Outcome reporting recommendations for clinical trial protocols and reports: a scoping review. Trials. 2020;21(1):1–17. doi: 10.1186/s13063-020-04440-w.

- 48. Elsman EB, Mokkink LB, Langendoen-Gort M, Rutters F, Beulens JW, Elders P, et al. Systematic review on the measurement properties of diabetes-specific patient-reported outcome measures (PROMs) for measuring physical functioning in people with type 2 diabetes. 2021.

- 49. Abma IL, Butje BJ, Peter M, van der Wees PJ. Measurement properties of the Dutch–Flemish patient-reported outcomes measurement information system (PROMIS) physical function item bank and instruments: a systematic review. Health Qual Life Outcomes. 2021;19(1):1–22. doi: 10.1186/s12955-020-01647-y.

- 50. Mehdipour A, Wiley E, Richardson J, Beauchamp M, Kuspinar A. The performance of digital monitoring devices for oxygen saturation and respiratory rate in COPD: a systematic review. COPD. 2021;18(4):469–475. doi: 10.1080/15412555.2021.1945021.

- 51. Powell C. The Delphi technique: myths and realities. J Adv Nurs. 2003;41(4):376–382. doi: 10.1046/j.1365-2648.2003.02537.x.

- 52. Mokkink LB, Terwee CB, Patrick DL, Alonso J, Stratford PW, Knol DL, et al. The COSMIN checklist for assessing the methodological quality of studies on measurement properties of health status measurement instruments: an international Delphi study. Qual Life Res. 2010;19(4):539–549. doi: 10.1007/s11136-010-9606-8.

- 53. Sinha IP, Smyth RL, Williamson PR. Using the Delphi technique to determine which outcomes to measure in clinical trials: recommendations for the future based on a systematic review of existing studies. PLoS Med. 2011;8(1):e1000393. doi: 10.1371/journal.pmed.1000393.

- 54. Moore CM. Group techniques for idea building. Newbury Park: Sage Publications, Inc; 1987.

- 55. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap)—a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(2):377–381. doi: 10.1016/j.jbi.2008.08.010.

- 56. COSMIN. The COSMIN initiative. www.cosmin.nl. Accessed 3 Feb 2022.

- 57. EQUATOR. The EQUATOR Network. https://www.equator-network.org/. Accessed 15 Nov 2021.

- 58. PRISMA. http://www.prisma-statement.org/. Accessed 10 Oct 2021.

- 59. Bavelaar L, van Tol LS, Caljouw MA, van der Steen JT. Nederlandse vertaling en eerste stappen in validatie van de PPEET om burger-en patiëntenparticipatie te evalueren. Tijdschrift voor Gezondheidswetenschappen. 2021;99:146–153. doi: 10.1007/s12508-021-00316-9.

- 60. Abelson J, Li K, Wilson G, Shields K, Schneider C, Boesveld S. Supporting quality public and patient engagement in health system organizations: development and usability testing of the Public and Patient Engagement Evaluation Tool. Health Expect. 2016;19(4):817–827. doi: 10.1111/hex.12378.

- 61. Hamilton CB, Hoens AM, McQuitty S, McKinnon AM, English K, Backman CL, et al. Development and pre-testing of the Patient Engagement In Research Scale (PEIRS) to assess the quality of engagement from a patient perspective. PLoS One. 2018;13(11):e0206588. doi: 10.1371/journal.pone.0206588.

- 62. Tariman JD, Berry DL, Halpenny B, Wolpin S, Schepp K. Validation and testing of the Acceptability E-scale for web-based patient-reported outcomes in cancer care. Appl Nurs Res. 2011;24(1):53–58. doi: 10.1016/j.apnr.2009.04.003.

- 63. Wiercioch W, Akl EA, Santesso N, Zhang Y, Morgan RL, Yepes-Nuñez JJ, et al. Assessing the process and outcome of the development of practice guidelines and recommendations: PANELVIEW instrument development. CMAJ. 2020;192(40):E1138–E1145. doi: 10.1503/cmaj.200193.

- 64. Korbel JO, Stegle O. Effects of the COVID-19 pandemic on life scientists. Genome Biol. 2020;21:113. doi: 10.1186/s13059-020-02031-1.
