Abstract

We present a statistical learning framework for robust identification of differential equations from noisy spatio-temporal data. We address two issues that have so far limited the application of such methods, namely their robustness against noise and the need for manual parameter tuning, by proposing stability-based model selection to determine the level of regularization required for reproducible inference. This avoids manual parameter tuning and improves robustness against noise in the data. Our stability selection approach, termed PDE-STRIDE, can be combined with any sparsity-promoting regression method and provides an interpretable criterion for model component importance. We show that the particular combination of stability selection with the iterative hard-thresholding algorithm from compressed sensing provides a fast and robust framework for equation inference that outperforms previous approaches with respect to accuracy, amount of data required, and robustness. We illustrate the performance of PDE-STRIDE on a range of simulated benchmark problems, and we demonstrate the applicability of PDE-STRIDE on real-world data by considering purely data-driven inference of the protein interaction network for embryonic polarization in Caenorhabditis elegans. Using fluorescence microscopy images of C. elegans zygotes as input data, PDE-STRIDE is able to learn the molecular interactions of the proteins.

Keywords: statistical learning theory, sparse regression, differential equations, stability selection, PAR proteins, machine learning

1. Introduction

Predictive mathematical models, validated in experiments, are of key importance for the scientific understanding of natural phenomena. While this approach has been particularly successful in describing spatio-temporal dynamical systems in physics and engineering, it has not seen the same degree of success in other scientific fields, such as neuroscience, biology, finance, and ecology. This is because the underlying first principles in these areas remain largely elusive. Nevertheless, modelling in those areas has seen increasing use and relevance to help formulate simplified mathematical equations where sufficient observational data are available for validation [1–5]. In biology, modern high-throughput technologies have enabled collection of large-scale datasets, ranging from genomics, proteomics and metabolomics data, to microscopy images and videos of cells and tissues. These datasets are routinely used to infer parameters in hypothesized models, or to perform model selection among a finite number of alternative hypotheses [6–8]. The amount and quality of biological data, as well as the performance of computing hardware and computational methods, have now reached a level that promises direct inference of mathematical models of biological processes from the available experimental data. Such data-driven approaches seem particularly valuable in cell and developmental biology, where first principles are hard to come by, but large-scale imaging data are available, along with an accepted consensus of which phenomena a model could possibly entail. In such scenarios, data-driven modelling approaches have the potential to uncover the unknown first principles underlying the observed biological dynamics.

Biological dynamics can be formalized at different scales, from discrete molecular processes to the continuum mechanics of tissues. Here, we consider the macroscopic, continuum scale where spatio-temporal dynamics are modelled by partial differential equations (PDEs) over coarse-grained state variables [3,9]. PDE models have been used to successfully address a range of biological problems from embryo patterning [10] to modelling gene-expression networks [11,12] to predictive models of cell and tissue mechanics during growth and development [13,14]. They have shown their potential to recapitulate experimental observables in cases where the underlying physical phenomena are known or have been postulated [15–17]. In many biological systems, however, the governing PDEs are not (yet) known, which slows progress in discovering the underlying physical principles. Thus, it is desirable to verify existing models and discover new ones by extracting governing laws directly from measured spatio-temporal data.

For given observable spatio-temporal dynamics, with no governing PDE known, several proposals have been put forward to learn mathematically and physically interpretable PDE models. The earliest work in this direction [18] frames the problem of ‘PDE learning’ as a multivariate nonlinear regression problem where each component in the design matrix consists of space and time differential operators and low-order nonlinearities computed directly from data. Then, the alternating conditional expectation (ACE) algorithm [19] is used to compute both optimal element-wise nonlinear transformations of each component and their associated coefficients. In [20], the problem is formulated as a linear regression problem with a fixed predefined set of space and time differential operators and polynomial transformations that are computed directly from data. Then, backward elimination is used to identify a compact set of PDE components by minimizing the least-square error of the full model and pruning terms that worsen the fit the least. In the statistics literature [21,22], the PDE learning problem has been formulated as a Bayesian estimation problem where the observed dynamics are learned via non-parametric approximation, and a PDE representation serves as the prior to compute the posterior estimates of the PDE coefficients. Recent influential work revived the idea of jointly learning the structure and the coefficients of PDE models from data in discrete space and time using sparse regression [23–25]. Approaches such as SINDy [23] and PDE-FIND [24] compute a large pre-assembled dictionary of possible PDE terms from data and identify the most promising components by penalized linear regression. PDE-FIND was able to learn different types of PDEs from simulated spatio-temporal data, including Burgers, Kuramoto-Sivashinsky, reaction-diffusion, and Navier–Stokes equations. 
PDE-FIND’s performance was evaluated on noise-free simulated data as well as data with up to 1% additive noise and showed a critical dependence on proper tuning of the regularization parameters, which are typically unknown in practice. Recent works attempted to alleviate this dependence by using Bayesian sparse regression for model uncertainty quantification [26] or information criteria for parameter tuning [27]. There is also a growing body of literature that considers deep neural networks for PDE learning [28–30]. For instance, the deep feed-forward network PDE-NET [28] has been shown to directly learn computable, discretized forms of the underlying governing PDEs for forecasting [28,31]. PDE-NET exploits the connection between differential operators and the order of sum rules of convolution filters [32] to constrain network layers to learning valid discretized differential operators. The forecasting capability of this approach was numerically demonstrated for predefined linear differential operator templates. A compact and interpretable symbolic identification of the PDE structure, however, is not available with this approach.

Here, we ask the question whether and how it is possible to extend the class of sparse regression inference methods to work on limited amounts of noisy experimental data. We present a statistical learning framework, PDE-STRIDE (STability-based Robust IDEntification of PDEs), to robustly infer PDE models from noisy spatio-temporal data without requiring manual tuning of learning parameters, such as regularization constants. PDE-STRIDE is based on the statistical principle of stability selection [33,34], which provides an interpretable criterion for any term’s inclusion in the learned PDE in a data-driven manner. Stability selection can be used with any sparsity-promoting regression method, including LASSO [33,35], iterative hard thresholding (IHT) [36], hard thresholding pursuit (HTP) [37] or sequential thresholding ridge regression (STRidge) [24]. PDE-STRIDE therefore provides a drop-in solution to rendering existing inference tools more robust, while reducing the need for parameter tuning. In our benchmarks, the combination of stability selection with de-biased iterative hard thresholding (IHT-d) empirically shows the best performance and highest consistency w.r.t. perturbations of the dictionary matrix and the sampling of the data.

This paper is organized as follows: §2 provides the mathematical formulation of the sparse regression problem and discusses how the design matrix is assembled. We also review the concepts of regularization paths and stability selection and discuss how they are combined in the proposed method. The numerical results in §3 highlight the performance and robustness of PDE-STRIDE for recovering different PDEs from noise-corrupted data. We also perform achievability analysis of PDE-STRIDE + IHT-d for consistency and convergence of the recovery probabilities with increasing sample size. Section 4 demonstrates that the robustness of the proposed method is sufficient for real-world applications. We consider learning a protein interaction model from noisy biological microscopy images of membrane protein dynamics in a Caenorhabditis elegans zygote. Section 5 provides a summary of our results and highlights future challenges for data-driven PDE learning.

2. Problem formulation and optimization

We outline the problem formulation underlying the data-driven PDE inference considered here. We review important sparse regression techniques and introduce the concept of stability selection used in PDE-STRIDE.

(a) Problem formulation for partial differential equations learning

We propose a framework for stable estimation of the structure and parameters of the governing equations of continuous dynamical systems from discrete spatio-temporal measurements or observations. Specifically, we consider PDEs for the multidimensional state variable $\mathbf{u}(\mathbf{x}, t) \in \mathbb{R}^{d}$ of the form shown in equation (2.1), composed of polynomial nonlinearities (e.g. $u^2$, $u^3$), spatial derivatives (e.g. $\partial u/\partial x$, $\partial^2 u/\partial x^2$) and the parametric dependence modelled through the coefficient vector $\boldsymbol{\xi}$.

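$$\frac{\partial \mathbf{u}}{\partial t} = \mathcal{F}\left(\mathbf{u}, \mathbf{u}^2, \ldots, \frac{\partial \mathbf{u}}{\partial x}, \frac{\partial^2 \mathbf{u}}{\partial x^2}, \ldots; \boldsymbol{\xi}\right) \qquad (2.1)$$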

Here, $\mathcal{F}$ is the function map that models the spatio-temporal nonlinear dynamics of the system. We limit ourselves to forms of the function map that can be written as linear combinations of polynomial nonlinearities, spatial derivatives and combinations of both. For instance, for a one-dimensional ($d = 1$) state variable $u(x, t)$, the function map can take the form

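$$\frac{\partial u}{\partial t} = \xi_1 + \xi_2 u + \xi_3 u^2 + \xi_4 \frac{\partial u}{\partial x} + \xi_5 u \frac{\partial u}{\partial x} + \xi_6 \frac{\partial^2 u}{\partial x^2} + \cdots \qquad (2.2)$$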

where $\xi_k$, $k = 1, \ldots, p$, are the coefficients of the PDE components. The continuous PDE of the form described in equation (2.2), with appropriate coefficients $\xi_k$, holds true for all continuous space and time points $(x, t)$ in the domain of the model. Numerical solutions of the PDE try to satisfy the equality relation in equation (2.2) at discrete space and time points $(x_m, t_n)$ in order to reconstitute the nonlinear dynamics of the system. We assume that we have access to noisy observational data $\tilde{u}(x_m, t_n)$ of the state variable at such discrete space and time points. The measurement errors are assumed to be independent and identically distributed following a normal distribution with mean zero and variance $\sigma^2$.

We follow earlier works [20,24,25] and construct a large dictionary of potential PDE components using discrete approximations of the terms from the data $\tilde{u}(x_m, t_n)$. For instance, for the one-dimensional example in equation (2.2), the discrete approximation of PDE terms can be written in vectorized form as a linear regression problem

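$$\mathbf{U}_t = \begin{bmatrix} \mathbf{1} & \mathbf{u} & \mathbf{u}^2 & \mathbf{u}_x & \mathbf{u}\mathbf{u}_x & \mathbf{u}_{xx} & \cdots \end{bmatrix} \boldsymbol{\xi} = \Theta\, \boldsymbol{\xi} \qquad (2.3)$$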

Here, the left-hand side vector $\mathbf{U}_t \in \mathbb{R}^{N}$ contains the discrete approximations of the time derivatives of $u$ at the $N$ data points and represents the response or outcome vector of the linear regression. Each column of the dictionary or design matrix $\Theta \in \mathbb{R}^{N \times p}$ represents the discrete approximation of one PDE component, i.e. one of the terms in equation (2.2), at the discretization points in space and time. Each column is interpreted as a potential predictor of the response vector $\mathbf{U}_t$. The vector $\boldsymbol{\xi} \in \mathbb{R}^{p}$ is the vector of unknown PDE coefficients, i.e. the pre-factors of the terms in equation (2.2).

Both $\mathbf{U}_t$ and $\Theta$ need to be constructed from numerical approximations of the temporal and spatial derivatives of the observed state variables. There is a rich literature in numerical analysis on this topic (e.g. [38,39]). Here, we approximate the time derivatives by first-order forward finite differences (i.e. the explicit Euler scheme) after initial denoising of the data. Similarly, the spatial derivatives are computed by second-order central finite differences. For denoising, we use truncated singular value decomposition (SVD) with a cut-off at the elbow of the singular values curve, as shown in the electronic supplementary material, figures S1 and S2.
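The derivative and denoising choices above can be illustrated with a short NumPy sketch. It is not the authors' implementation: it assumes a one-dimensional field stored as an array `u[x, t]` on a uniform periodic grid, takes the SVD truncation rank as an input rather than detecting the elbow automatically, and uses a small hypothetical six-term dictionary. The function names `svd_denoise` and `build_dictionary` are our own.

```python
import numpy as np


def svd_denoise(U, rank):
    """Truncated-SVD denoising of a space-time data matrix U (space x time).

    `rank` is the number of singular values kept; in the text it is read off
    the elbow of the singular-value curve, here it is simply passed in.
    """
    W, s, Vt = np.linalg.svd(U, full_matrices=False)
    return (W[:, :rank] * s[:rank]) @ Vt[:rank, :]


def build_dictionary(u, dx, dt):
    """Assemble U_t and a small illustrative dictionary Theta from u[x, t].

    Time derivative: first-order forward difference (explicit Euler).
    Space derivatives: second-order central differences, assuming periodic
    boundaries (np.roll). The choice of columns is a hypothetical example.
    """
    u_t = (u[:, 1:] - u[:, :-1]) / dt              # forward difference in time
    v = u[:, :-1]                                   # state at the same time points
    u_x = (np.roll(v, -1, axis=0) - np.roll(v, 1, axis=0)) / (2 * dx)
    u_xx = (np.roll(v, -1, axis=0) - 2 * v + np.roll(v, 1, axis=0)) / dx**2
    names = ["1", "u", "u^2", "u_x", "u*u_x", "u_xx"]
    columns = [np.ones_like(v), v, v**2, u_x, v * u_x, u_xx]
    Theta = np.column_stack([c.ravel() for c in columns])
    return u_t.ravel(), Theta, names
```

With $n_x$ grid points and $n_t$ snapshots, the forward difference in time leaves $N = n_x(n_t - 1)$ rows; the dictionary columns are evaluated at the same time points as the time derivatives, so each row of $\Theta$ pairs with the corresponding entry of $\mathbf{U}_t$.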

Given the general linear regression ansatz in equation (2.3), we formulate the data-driven PDE inference problem as a regularized optimization problem of the form

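$$\hat{\boldsymbol{\xi}} = \underset{\boldsymbol{\xi}}{\arg\min}\; h(\boldsymbol{\xi}) + \lambda\, g(\boldsymbol{\xi}) \qquad (2.4)$$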

where $\hat{\boldsymbol{\xi}}$ is the minimizer of the objective function, $h(\cdot)$ is a smooth convex data-fitting function, $g(\cdot)$ a regularization or penalty function and $\lambda \geq 0$ is a scalar regularization parameter that balances data fitting and regularization. The function $g(\cdot)$ is not necessarily convex or differentiable. We follow previous works [20,24,25] and consider the standard least-squares data-fitting term

$$h(\boldsymbol{\xi}) = \left\| \mathbf{U}_t - \Theta\boldsymbol{\xi} \right\|_2^2 \qquad (2.5)$$

The choice of the penalty function $g(\cdot)$ influences the properties of the coefficient estimates $\hat{\boldsymbol{\xi}}$. We seek to identify a small subset of PDE components among the $p$ possible ones that accurately predict the time evolution of the state variables [23–25]. This implies that we want to identify a sparse coefficient vector $\hat{\boldsymbol{\xi}}$, thus resulting in a simple and interpretable PDE model. This can be achieved through sparsity-promoting penalty functions $g(\cdot)$. We next consider different choices for $g(\cdot)$ that enforce sparsity in the coefficient vector and review algorithms that solve the associated optimization problems.

(b) Sparse optimization for partial differential equations learning

The least-squares loss in equation (2.5) can be combined with different sparsity-promoting penalty functions $g(\cdot)$. The prototypical example is the $\ell_1$-norm, $g(\boldsymbol{\xi}) = \|\boldsymbol{\xi}\|_1$, leading to the LASSO formulation of sparse linear regression [35]:

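$$\hat{\boldsymbol{\xi}} = \underset{\boldsymbol{\xi}}{\arg\min}\; \left\| \mathbf{U}_t - \Theta\boldsymbol{\xi} \right\|_2^2 + \lambda \|\boldsymbol{\xi}\|_1 \qquad (2.6)$$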

The LASSO objective comprises a convex smooth loss and a convex non-smooth regularizer. For this class of problems, efficient optimization algorithms exist that can exploit the properties of the functions and come with convergence guarantees. Important examples include coordinate-descent algorithms [40,41] and proximal algorithms, including the Douglas–Rachford algorithm [42] and the projected (or proximal) gradient method, also known as the forward-backward algorithm [43]. In signal processing, the latter schemes are also known as iterative shrinkage-thresholding algorithms (ISTA, see [44] and references therein), which can be extended to non-convex penalties. Although LASSO has been previously used for PDE learning [25], the statistical performance of LASSO estimates is known to deteriorate if certain conditions on the design matrix are not met. For example, the studies in [45,46] provide sufficient and necessary conditions, called the irrepresentable conditions, for consistent variable selection using LASSO, essentially excluding strong correlations of the predictors in the design matrix. These conditions are, however, difficult to check in practice, as they require knowledge of the true components of the model. One way to relax these conditions is via randomization. The randomized LASSO [33] therefore considers the objective

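$$\hat{\boldsymbol{\xi}} = \underset{\boldsymbol{\xi}}{\arg\min}\; \left\| \mathbf{U}_t - \Theta\boldsymbol{\xi} \right\|_2^2 + \lambda \sum_{k=1}^{p} \frac{|\xi_k|}{W_k} \qquad (2.7)$$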

where each $W_k$ is an i.i.d. random variable uniformly distributed over $[\alpha, 1]$ with $\alpha \in (0, 1]$. For $\alpha = 1$, randomized LASSO reduces to standard LASSO. Randomized LASSO has been shown to successfully overcome the limitations of LASSO in handling correlated components in the dictionary [33], while simultaneously preserving the overall convexity of the objective function. As part of our PDE-STRIDE framework, we evaluate the performance of randomized LASSO in the context of PDE learning using cyclical coordinate descent [41].
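As a minimal sketch of the randomized LASSO objective (2.7), the following NumPy code draws the weights $W_k$ once and minimizes the objective with the proximal-gradient (ISTA) iteration mentioned above. Note that this swaps in ISTA for the cyclical coordinate descent used in the text; the step size $1/L$ with $L = 2\|\Theta\|_2^2$ and the fixed iteration count are illustrative assumptions, and `randomized_lasso` is a hypothetical name.

```python
import numpy as np


def soft_threshold(z, t):
    """Proximal operator of t*|.| applied element-wise (t may be a vector)."""
    return np.sign(z) * np.maximum(np.abs(z) - t, 0.0)


def randomized_lasso(Theta, Ut, lam, alpha, rng, n_iter=2000):
    """Proximal-gradient (ISTA) sketch for the randomized LASSO of eq. (2.7).

    Minimizes ||Ut - Theta xi||_2^2 + lam * sum_k |xi_k| / W_k with
    W_k ~ Uniform[alpha, 1]; alpha = 1 recovers the standard LASSO (2.6).
    """
    p = Theta.shape[1]
    W = rng.uniform(alpha, 1.0, size=p)             # random penalty weights W_k
    L = 2.0 * np.linalg.norm(Theta, 2) ** 2         # Lipschitz constant of the gradient
    xi = np.zeros(p)
    for _ in range(n_iter):
        grad = -2.0 * Theta.T @ (Ut - Theta @ xi)   # gradient of the least-squares term
        xi = soft_threshold(xi - grad / L, lam / (W * L))
    return xi
```

Setting `alpha=1.0` makes all weights equal to one, so the same routine solves the standard LASSO (2.6); in stability selection, a fresh set of weights would be drawn for every sub-sample.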

The sparsity-promoting property of the (weighted) $\ell_1$-norm comes at the expense of considerable bias in the estimation of the non-zero coefficients [46], thus leading to reduced variable selection performance in practice. This drawback can be alleviated by using non-convex penalty functions [47,48], allowing near-optimal variable selection performance at the cost of needing to solve a non-convex optimization problem. For instance, using the $\ell_0$-‘norm’ (which counts the number of non-zero elements of a vector) as regularizer leads to the NP-hard problem

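$$\hat{\boldsymbol{\xi}} = \underset{\boldsymbol{\xi}}{\arg\min}\; \left\| \mathbf{U}_t - \Theta\boldsymbol{\xi} \right\|_2^2 + \lambda \|\boldsymbol{\xi}\|_0 \qquad (2.8)$$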

This formulation has found widespread applications in compressed sensing and signal processing. Algorithms that deliver approximate solutions to equation (2.8) include greedy optimization strategies like orthogonal matching pursuit [49,50], compressed sampling matching pursuit (CoSaMP) [51] and subspace pursuit [52]. We here consider the IHT algorithm [36,53], which belongs to the class of ISTA algorithms. Given the design matrix $\Theta$ and the measurement vector $\mathbf{U}_t$, IHT computes sparse solutions by applying a nonlinear shrinkage (thresholding) operator to gradient descent steps in an iterative manner. One step in the iterative scheme reads

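$$\boldsymbol{\xi}^{k+1} = H_{\sqrt{\lambda}}\left(\boldsymbol{\xi}^{k} + \Theta^{\top}\left(\mathbf{U}_t - \Theta\boldsymbol{\xi}^{k}\right)\right) \qquad (2.9)$$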

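The operator $H_{\sqrt{\lambda}}$ is the nonlinear hard-thresholding operator, which sets every entry with magnitude at most $\sqrt{\lambda}$ to zero and leaves all other entries unchanged. Convergence of the above iteration is guaranteed if and only if $\|\Theta(\boldsymbol{\xi}^{k+1} - \boldsymbol{\xi}^{k})\|_2^2 \leq (1 - c)\,\|\boldsymbol{\xi}^{k+1} - \boldsymbol{\xi}^{k}\|_2^2$ holds in each iteration for some constant $0 < c < 1$. Under the condition that $\|\Theta\|_2 < 1$, the IHT algorithm is guaranteed to not increase the cost function in equation (2.8) (Lemma 1 in [36]). The IHT algorithm can be viewed as a thresholded version of the classic Landweber iteration [54]. The fixed points $\boldsymbol{\xi}^{*}$ for which $\boldsymbol{\xi}^{*} = H_{\sqrt{\lambda}}\big(\boldsymbol{\xi}^{*} + \Theta^{\top}(\mathbf{U}_t - \Theta\boldsymbol{\xi}^{*})\big)$ for the nonlinear operator in equation (2.9) are local minima of the cost function in equation (2.8) (Lemma 3 in [36]). Under the same condition on the design matrix, i.e. $\|\Theta\|_2 < 1$, the optimal solution of the cost function in equation (2.8) thus belongs to the set of fixed points of the IHT algorithm (theorem 2 in [36] and theorem 12 in [55]). Although the IHT algorithm comes with theoretical convergence guarantees, the resulting fixed points are not necessarily sparse [36].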
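A minimal NumPy sketch of the IHT iteration (2.9) follows. It assumes that the design matrix has already been rescaled so that $\|\Theta\|_2 < 1$ (the condition quoted above) and that $H_{\sqrt{\lambda}}$ keeps entries with magnitude strictly above $\sqrt{\lambda}$. The fixed iteration count stands in for a proper convergence test and is an assumption of this sketch, as are the function names.

```python
import numpy as np


def hard_threshold(z, lam):
    """H_sqrt(lam): zero every entry with |z_k| <= sqrt(lam), keep the rest."""
    out = z.copy()
    out[np.abs(z) <= np.sqrt(lam)] = 0.0
    return out


def iht(Theta, Ut, lam, n_iter=500):
    """Iterative hard thresholding, eq. (2.9), for the l0 problem (2.8)."""
    if np.linalg.norm(Theta, 2) >= 1.0:
        raise ValueError("rescale Theta so that its spectral norm is below 1")
    xi = np.zeros(Theta.shape[1])
    for _ in range(n_iter):
        # gradient (Landweber) step followed by hard thresholding
        xi = hard_threshold(xi + Theta.T @ (Ut - Theta @ xi), lam)
    return xi
```

For example, with `Theta = 0.5 * np.eye(4)` and `Ut = [1.0, 0.05, -0.8, 0.0]`, each coordinate evolves as $\xi \leftarrow 0.75\,\xi + 0.5\,U_t$, so the two large entries converge to $2.0$ and $-1.6$ while the small entry never exceeds the threshold $\sqrt{0.01} = 0.1$ and stays at zero.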

Here, we propose a modification of the IHT algorithm that will prove to be particularly well suited for solving PDE learning problems. Following a proposal in [37] for the HTP algorithm, we equip the IHT algorithm with an additional debiasing step. This involves solving, at each iteration, a least-squares problem restricted to the support obtained from the $k$-th IHT iteration. We refer to this form of IHT as iterative hard thresholding with debiasing (IHT-d). In this two-step algorithm, the standard IHT step serves to extract the explanatory variables, while the debiasing step approximately debiases (or re-fits) the coefficients restricted to the currently active support [56]. Rather than solving the least-squares problem to optimality, we use gradient descent steps until a loose upper bound on the least-squares re-fit error is satisfied. This prevents over-fitting by attributing low confidence to large supports and reduces computational overhead, rendering the algorithm practical. The complete IHT-d procedure is detailed in Algorithm 1 in the electronic supplementary material. In PDE-STRIDE, we compare IHT-d with a heuristic iterative algorithm, Sequential Thresholding of Ridge regression (STRidge), that also uses $\ell_0$ penalization and is available in PDE-FIND [24].
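The two-step structure of IHT-d can be sketched by adding a re-fit after each thresholding step. For simplicity, this hypothetical `iht_debiased` function solves the restricted least-squares problem exactly with `numpy.linalg.lstsq` instead of the truncated gradient-descent re-fit described above, and it stops when the iterate no longer changes. It should be read as an illustration of the idea, not as Algorithm 1 of the supplementary material.

```python
import numpy as np


def iht_debiased(Theta, Ut, lam, max_iter=100):
    """IHT with a debiasing (least-squares re-fit) step after each thresholding.

    Simplification: the re-fit is solved exactly with lstsq, rather than with
    the truncated gradient descent described in the text.
    """
    p = Theta.shape[1]
    xi = np.zeros(p)
    for _ in range(max_iter):
        z = xi + Theta.T @ (Ut - Theta @ xi)       # IHT gradient step
        support = np.abs(z) > np.sqrt(lam)         # support kept by H_sqrt(lam)
        xi_new = np.zeros(p)
        if support.any():
            # debiasing: least squares restricted to the active support
            xi_new[support] = np.linalg.lstsq(Theta[:, support], Ut, rcond=None)[0]
        if np.allclose(xi_new, xi):
            return xi_new                          # iterate has stopped changing
        xi = xi_new
    return xi
```

Because the re-fit removes the shrinkage that the gradient step introduces on the active coefficients, noise-free data lying in the span of the true columns are recovered exactly once the thresholding step has found a support containing the true one.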

(c) Stability selection

The practical performance of sparse optimization techniques in PDE learning hinges on proper selection of the regularization parameter $\lambda$ that balances model fit and model complexity. In model discovery tasks on real experimental data, a wrong choice of the regularization parameter could result in incorrect PDE model selection even if true model discovery would have been, in principle, achievable. In statistics, a large number of tuning parameter selection criteria are available, ranging from cross-validation approaches [57] to information criteria [58], or formulations that allow joint learning of model coefficients and tuning parameters [59,60]. Here, we advocate stability-based model selection [33] for robust PDE learning. The statistical principle of stability [61] has been put forward as one of the pillars of modern data science and statistics. It provides an intuitive approach to model selection [33,34,62]. Stability selection has found widespread application from the analysis of gene regulatory networks [63] to graphical models [64] and ecological studies [65].

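In the context of sparse regression, stability selection [33] proceeds as follows (see also figure 1 for an illustration): given a design matrix $\Theta \in \mathbb{R}^{N \times p}$ and measurement vector $\mathbf{U}_t \in \mathbb{R}^{N}$, generate $B$ random sub-sample index sets $I_b \subset \{1, \ldots, N\}$, $b = 1, \ldots, B$, of equal size $|I_b| = N/2$ and produce reduced sub-designs $\Theta[I_b] \in \mathbb{R}^{N/2 \times p}$ and $\mathbf{U}_t[I_b] \in \mathbb{R}^{N/2}$ by choosing rows according to the index set $I_b$. For each of the resulting $B$ subproblems, apply a sparse regression technique and systematically record the recovered supports $S_\lambda(I_b)$, $b = 1, \ldots, B$, as a function of $\lambda$ over a regularization path $\Lambda = [\lambda_{\max}, \lambda_{\min}]$. The values of $\lambda_{\max}$ and $\lambda_{\min}$ are data-dependent and are easily computable for generalized linear models with convex penalties [41]. In our case, the parameter $\lambda_{\max}$ for the non-convex problem in equation (2.8) can be determined from optimality conditions (Theorem 12 in [55] and Theorem 1 in [36]). The lower bound is set to $\lambda_{\min} = \varepsilon\lambda_{\max}$ with a fixed default $\varepsilon < 1$. The $\lambda$-dependent importance measure $\Pi_k^{\lambda}$ for each coefficient $\xi_k$ is then computed as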

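$$\Pi_k^{\lambda} = \mathbb{P}\left[k \in S_\lambda\right] \approx \frac{1}{B} \sum_{b=1}^{B} \mathbb{1}\left[k \in S_\lambda(I_b)\right] \qquad (2.10)$$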

where $I_b$, $b = 1, \ldots, B$, are the independent random sub-samples. The importance measure $\Pi_k^{\lambda}$ of each model coefficient can be plotted across the regularization path, resulting in a component stability profile (see figure 1f for an illustration). This visualization provides an intuitive overview of the importance of the different model components. Different from the original stability selection work [33], we define the stable components of the model as

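$$\hat{S}_{\text{stable}} = \left\{ k : \Pi_k^{\lambda_{\min}} \geq \pi_{\text{th}} \right\} \qquad (2.11)$$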

Here, $\pi_{\text{th}}$ denotes the stability threshold parameter, which can be set to $\pi_{\text{th}} \in (0.6, 0.9)$ [33]. We use a single default value of $\pi_{\text{th}}$ throughout. During exploratory data analysis, the threshold can also be set through visual inspection of the stability plots, allowing principled exploration of alternative PDE models. The importance measures also provide an interpretable criterion for a model component’s stability against random sub-sampling of the data and changes to the dictionary design, guiding the user to build the right model with high probability. As we show in the numerical experiments, stability selection thus ensures robustness against varying dictionary size, different types of data sampling, noise in the data and variability of the sub-optimal solutions when non-convex penalties are used. All of these properties are critical for consistent and reproducible model learning in real-world applications. Under certain conditions, stability selection also provides an upper bound on the expected number of false positives [33]. Such guarantees are not generally assured by any sparsity-promoting regression method in isolation [34]. For instance, stability selection combined with randomized LASSO (equation (2.7) with $\alpha < 1$) is consistent for variable selection even when the irrepresentable condition is violated [33].
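Equations (2.10) and (2.11) translate directly into a counting loop. The sketch below assumes a user-supplied `solver(Theta_sub, Ut_sub, lam)` that returns a coefficient vector (for example an IHT-d routine), a geometric $\lambda$-grid from $\lambda_{\max}$ down to $\lambda_{\min} = \varepsilon\lambda_{\max}$, and sub-samples of size $N/2$. The values of $B$, $\varepsilon$, $M$ and $\pi_{\text{th}}$ must be supplied by the caller, and all function names are hypothetical.

```python
import numpy as np


def lambda_path(lam_max, eps, M):
    """Geometric grid of M values from lam_max down to lam_min = eps * lam_max."""
    return np.geomspace(lam_max, eps * lam_max, M)


def importance_measures(Theta, Ut, solver, lambdas, B, rng):
    """Estimate Pi_k^lambda (eq. 2.10) over B random half-size sub-samples.

    Returns an array of shape (len(lambdas), p); row j holds the selection
    frequency of every dictionary column at lambdas[j].
    """
    N, p = Theta.shape
    counts = np.zeros((len(lambdas), p))
    for _ in range(B):
        idx = rng.choice(N, size=N // 2, replace=False)       # index set I_b
        Theta_b, Ut_b = Theta[idx], Ut[idx]
        for j, lam in enumerate(lambdas):
            support = np.abs(solver(Theta_b, Ut_b, lam)) > 0  # S_lambda(I_b)
            counts[j] += support.astype(float)
    return counts / B


def stable_set(Pi, pi_th):
    """Eq. (2.11): components whose importance at lambda_min reaches pi_th."""
    return np.flatnonzero(Pi[-1] >= pi_th)
```

Because the path is ordered from $\lambda_{\max}$ to $\lambda_{\min}$, the last row of the importance array corresponds to $\lambda_{\min}$, which is where equation (2.11) evaluates the threshold; plotting each column of the array against the path gives the stability profile.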

Figure 1.

Enabling data-driven mathematical model discovery through stability selection. We outline the necessary steps in our method for learning PDE models from spatio-temporal data. (a) Extract spatio-temporal profiles of the chosen state variables from microscopy videos. Data courtesy of Grill Laboratory, MPI-CBG/TU Dresden [66]. (b) Compile the design matrix $\Theta$ and the measurement vector $\mathbf{U}_t$ from the data. (c) Construct multiple linear systems of reduced size through random sub-sampling of the rows of the design matrix $\Theta$ and $\mathbf{U}_t$. (d) Solve and record the sparse/penalized regression solutions independently for each sub-sample along the $\lambda$-paths. (e) Compute the importance measure $\Pi_k^{\lambda}$ for each component. The histogram shows the importance measure for all components at a particular value of $\lambda$. (f) Construct the stability plot by aggregating the importance measures along the $\lambda$-path, leading to separation of the noise variables (dashed black) from the stable components (coloured). Identify the most stable components by thresholding $\Pi_k^{\lambda}$ at $\pi_{\text{th}}$. (g) Build the PDE model from the identified components. (Online version in colour.)

3. Numerical experiments on simulated data

We present numerical experiments in order to benchmark the performance and robustness of PDE-STRIDE combined with different sparsity-promoting regression methods to infer PDEs from spatio-temporal data. To provide comparisons and benchmarks, we first use simulated data obtained by numerically solving known ground-truth PDEs, before applying our method to a real-world dataset from biology. The benchmark experiments on simulation data are presented in four subsections that demonstrate different aspects of the inference framework: §a demonstrates the use of different sparsity-promoting regression methods in our framework in a simple one-dimensional Burgers problem. Section b then compares their performance in order to choose the best regression method, IHT-d. In §c, stability selection is combined with IHT-d to recover two-dimensional vorticity-transport and three-dimensional reaction–diffusion PDEs from limited, noisy simulation data. Section d reports achievability results to quantify the robustness of stability selection to variations in dictionary size, sample size and noise levels. In all cases, we follow the PDE-STRIDE procedure as explained in the box below.

STability-based Robust IDEntification of PDEs (PDE-STRIDE). Given the noise-corrupted data $\tilde{\mathbf{u}}$ and a choice of regression method, e.g. (randomized) LASSO, IHT, HTP, IHT-d, STRidge:

(i) Apply any required denoising method to the noisy data and compute the spatial derivatives and nonlinearities to construct the design matrix $\Theta \in \mathbb{R}^{N \times p}$ and the time-derivative vector $\mathbf{U}_t \in \mathbb{R}^{N}$ for a suitable sample size $N$ and dictionary size $p$.

(ii) Build the sub-samples $\Theta[I_b]$ and $\mathbf{U}_t[I_b]$, for $b = 1, \ldots, B$, by uniformly randomly sub-sampling $N/2$ rows from the design matrix $\Theta$ and the corresponding rows from $\mathbf{U}_t$. For every sub-sample $b$, standardize the sub-design matrix such that $\frac{2}{N}\sum_{i=1}^{N/2} \theta_{ik} = 0$ and $\frac{2}{N}\sum_{i=1}^{N/2} \theta_{ik}^2 = 1$, for $k = 1, \ldots, p$. Here, $\theta_{ik}$ is the element in row $i$ and column $k$ of the matrix $\Theta[I_b]$. The corresponding measurement vector $\mathbf{U}_t[I_b]$ is centred to zero mean.

(iii) Apply the sparsity-promoting regression method independently to each sub-sample to construct the $\lambda$-paths for $M$ values of $\lambda$, as discussed in §2c.

(iv) Compute the importance measures $\Pi_k^{\lambda}$ of all dictionary components along the discretized $\lambda$-paths, as discussed in §2c. Select the stable support set $\hat{S}_{\text{stable}}$ by applying the threshold $\pi_{\text{th}}$ to all $\Pi_k^{\lambda_{\min}}$, as in equation (2.11). Solve a linear least-squares problem restricted to the support $\hat{S}_{\text{stable}}$ to identify the coefficients of the learned model.
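Steps (ii) and (iv) of the procedure can be sketched as follows, assuming NumPy. The guard for constant columns (such as the column of ones), which cannot be scaled to unit variance, is our own assumption, as are the function names. In this sketch the re-fit in step (iv) uses the unstandardized design, so that the coefficients are on the scale of the original PDE terms.

```python
import numpy as np


def standardized_subsample(Theta, Ut, rng):
    """Step (ii): draw N/2 rows at random, standardize columns, centre Ut."""
    N = Theta.shape[0]
    idx = rng.choice(N, size=N // 2, replace=False)
    Theta_b, Ut_b = Theta[idx], Ut[idx]
    mean = Theta_b.mean(axis=0)
    scale = Theta_b.std(axis=0)
    scale[scale == 0] = 1.0  # assumption: leave constant columns unscaled
    return (Theta_b - mean) / scale, Ut_b - Ut_b.mean()


def refit_on_support(Theta, Ut, support):
    """Step (iv): least squares restricted to the stable support."""
    xi = np.zeros(Theta.shape[1])
    support = np.asarray(support, dtype=int)
    if support.size > 0:
        xi[support] = np.linalg.lstsq(Theta[:, support], Ut, rcond=None)[0]
    return xi
```

Standardizing each sub-sample puts all dictionary columns on a common scale, so that a single regularization path applies to terms whose raw magnitudes (for example $u$ versus $u_{xx}$) can differ by orders of magnitude.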

(i) Adding noise to the simulation data

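Let $\mathbf{u} \in \mathbb{R}^{N}$ be the vector of clean simulation data sampled in both space and time. This vector is corrupted with additive Gaussian noise to

$$\tilde{\mathbf{u}} = \mathbf{u} + \boldsymbol{\eta}, \qquad \boldsymbol{\eta} \sim \mathcal{N}\left(0, \sigma^2 \operatorname{Var}(\mathbf{u})\right),$$

such that $\boldsymbol{\eta}$ is the additive Gaussian noise, $\operatorname{Var}(\mathbf{u})$ is the empirical variance of the entries in the vector $\mathbf{u}$, and $\sigma$ is the level of Gaussian noise added.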
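The noise model above amounts to one line of NumPy. This sketch assumes that $\sigma$ is given as a fraction (e.g. `0.04` for 4%) and uses the population standard deviation of the clean data as $\sqrt{\operatorname{Var}(\mathbf{u})}$; `add_noise` is a hypothetical helper name.

```python
import numpy as np


def add_noise(u, sigma, rng):
    """Return u + eta with eta ~ N(0, sigma^2 Var(u)), Var(u) the empirical variance."""
    return u + sigma * np.std(u) * rng.standard_normal(u.shape)
```

Scaling the noise by the spread of the clean data makes a given level $\sigma$ comparable across benchmark problems whose state variables have very different magnitudes.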

(ii) Fixing the parameters for stability selection

We propose that PDE-STRIDE combined with IHT-d provides a PDE learning method that only rarely requires parameter tuning. To demonstrate this, all stability selection parameters described in §2c are fixed to their standard values throughout our numerical experiments. The choice of these standard parameter values is well-discussed in the literature [33,41,64]. We thus fix the repetition number $B$, the regularization path parameter $\varepsilon$, the $\lambda$-path size $M$ and the importance threshold $\pi_{\text{th}}$. Using these standard values, the method works robustly across all tests presented here with no case-specific parameter tuning required. In both stability and regularization plots, we show normalized values $\lambda/\lambda_{\max}$ of the regularization parameter on a decimal logarithmic scale. Although the stable component set is always evaluated at $\lambda_{\min}$, as in equation (2.11), the stability plots sometimes have their axes scaled to larger or smaller ranges of $\lambda$ for better visualization.

(a) One-dimensional Burgers equation with different sparsity promoters

We show that stability selection can be combined with any sparsity-promoting penalized regression framework to learn PDE components from noisy and limited spatio-temporal data. We use simulated data of the one-dimensional Burgers equation

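$$\frac{\partial u}{\partial t} + u \frac{\partial u}{\partial x} = \nu \frac{\partial^2 u}{\partial x^2} \qquad (3.1)$$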

with identical boundary and initial conditions as used in [24] to provide fair comparison between methods: periodic boundaries in space and the following Gaussian initial condition:

The simulation domain is divided uniformly into 256 Cartesian grid points in space and 1000 time points. The numerical solution is visualized in space-time in figure 4. The numerical solution was obtained using a parabolic method based on finite differences and time stepping using explicit Euler with step size $\Delta t$ and the diffusion coefficient $\nu$ of equation (3.1).

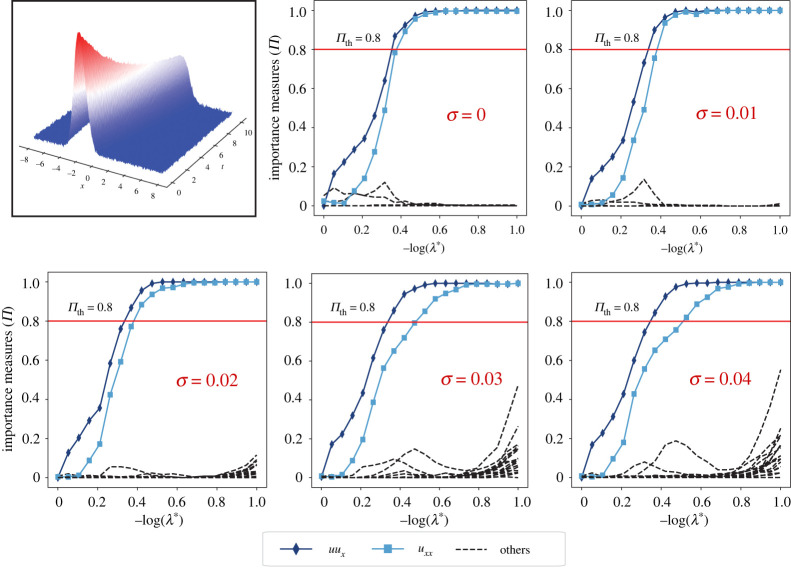

Figure 4.

Model selection with PDE-STRIDE+IHT-d for the one-dimensional Burgers equation. The top left image shows the numerical solution of the one-dimensional Burgers equation on 256 space and 1000 time points. The stability plots for the design $(N, p)$ show clear separation of the true PDE components (in solid colour with line symbols as identified in the inset legend) from the noise components (dashed black). The inference power of the method is tested for additive Gaussian noise levels $\sigma$ up to 4%. In all cases, perfect recovery is possible without parameter tuning. (Online version in colour.)

We test the combinations of stability selection with the three sparsity-promoting regression techniques described in §2b: randomized LASSO, STRidge and IHT-d. The top row of figure 2 shows the regularization paths and the bottom row the corresponding stability plots for each component in the dictionary. The coloured solid lines correspond to the advection and diffusion terms of equation (3.1) as given in the inset legend.

Figure 2.

Model selection with PDE-STRIDE for the one-dimensional Burgers equation. The top row shows regularization paths (see §2c) for three sparsity-promoting regression techniques: randomized LASSO, STRidge and IHT-d, all for the same design $(N, p)$. The bottom row shows the corresponding stability plots. The inset legend at the bottom shows the colour and line symbol correspondence with the dictionary. The ridge parameter for STRidge is fixed as in [24]. The randomization parameter $\alpha$ of the randomized LASSO is set to a value $\alpha < 1$. In all three cases, the standard threshold $\pi_{\text{th}}$ (red solid horizontal line) correctly identifies the true components. A separate regularization path parameter is used for randomized LASSO in order to demonstrate stability selection. (Online version in colour.)

Thresholding the importance measure at $\pi_{\text{th}}$, all sparsity-promoting regression methods are able to identify the correct components of the model and separate them from the noise variables (dashed black lines).

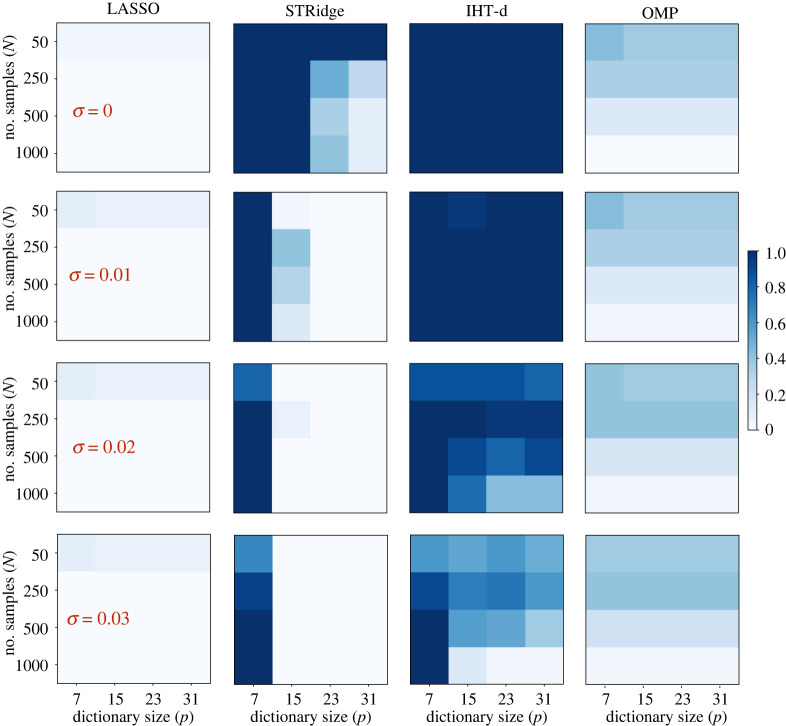

(b) Comparison between sparsity-promoting techniques

Although stability selection can be used in conjunction with any $\ell_1$ or $\ell_0$ sparsity-promoting regression method, the question arises whether a particular choice of regression algorithm is particularly well suited for PDE learning. We therefore perform a systematic comparison between randomized LASSO, STRidge, orthogonal matching pursuit (OMP) [67,68] as implemented in scikit-learn, and IHT-d for recovering the one-dimensional Burgers equation under perturbations to the sample size $N$, the dictionary size $p$ and the noise level $\sigma$. An experiment for a particular triple $(N, p, \sigma)$ is considered a success if there exists a $\lambda \in \Lambda$ (see §2c) for which the true PDE components are recovered. In figure 3, the success frequencies over 30 independent repetitions with uniformly random data sub-samples are shown for the four regression methods.

Figure 3.

Comparison between different sparse regression methods for the one-dimensional Burgers equation. Each coloured square corresponds to a design with a certain sample size $N$, dictionary size $p$ and noise level $\sigma$. Colour indicates the success frequency over 30 independent repetitions with uniformly random data samples, as given in the colour bar to the right. ‘Success’ is defined as the existence of a value $\lambda \in \Lambda$ for which the correct PDE is recovered from the data. The columns compare four popular sparsity-promoting regression methods: randomized LASSO, STRidge, IHT-d and OMP (left to right), as labelled at the top. (Online version in colour.)

A first observation from figure 3 is that $\ell_0$ solutions (here with STRidge, IHT-d and OMP) outperform relaxed $\ell_1$ solutions (here with randomized LASSO). We also observe that IHT-d performs better than the other three methods for large dictionary sizes, high noise levels and small sample sizes. Large dictionaries with higher-order derivatives computed from discrete data cause grouping (correlations between variables), for which randomized LASSO tends to select one variable from each group and ignore the others [69]. Thus, randomized LASSO fails to identify the true support consistently. STRidge shows good recovery for large dictionary sizes with clean data, but it breaks down in the presence of noise. OMP also uses $\ell_0$ regularization and therefore outperforms randomized LASSO, but it cannot compete with STRidge and IHT-d. Of all four methods, IHT-d shows the best robustness to both noise and changes in the design. We note a decrease in inference power with increasing sample size, especially for large dictionaries and high noise levels. This can again be attributed to correlations and groupings in the dictionary, which become more significant with increasing sample size.

Based on these results, we use IHT-d in the following sections as the sparsity-promoting regression method in PDE-STRIDE.
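For readers unfamiliar with IHT-d, here is a minimal NumPy sketch of iterative hard thresholding with a de-biasing least-squares step. It illustrates the technique under stated assumptions and is not the PDE-STRIDE code: the step size is fixed to the inverse of the squared spectral norm of the dictionary, `lam` acts directly as a hard threshold on coefficient magnitudes, and the function name `iht_d` is hypothetical.

```python
import numpy as np


def iht_d(theta, y, lam, n_iter=500, tol=1e-10):
    """Iterative hard thresholding with de-biasing (illustrative sketch).

    lam is used here as a hard threshold on coefficient magnitudes; this
    parameterization is an assumption made for the example.
    """
    n, p = theta.shape
    # 1 / largest eigenvalue of theta^T theta keeps the gradient step stable.
    step = 1.0 / np.linalg.norm(theta, 2) ** 2
    beta = np.zeros(p)
    for _ in range(n_iter):
        # Gradient step on 0.5 * ||y - theta @ beta||^2.
        z = beta + step * theta.T @ (y - theta @ beta)
        support = np.abs(z) >= lam  # hard thresholding
        new = np.zeros(p)
        if support.any():
            # De-biasing: least-squares refit restricted to the current support.
            coef, *_ = np.linalg.lstsq(theta[:, support], y, rcond=None)
            new[support] = coef
        converged = np.linalg.norm(new - beta) <= tol
        beta = new
        if converged:
            break
    return beta


rng = np.random.default_rng(0)
theta = rng.standard_normal((100, 8))
y = 3.0 * theta[:, 0] - 2.0 * theta[:, 5]  # noise-free toy data
beta = iht_d(theta, y, lam=0.5)
```

The de-biasing refit removes the shrinkage a plain thresholded gradient iterate would carry, so spurious components picked up in an early iteration receive near-zero coefficients and are thresholded away in the next one.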

(c) Stability-based model inference

We present benchmark results of PDE-STRIDE for PDE learning with IHT-d as the sparse regression method. This combination of methods is used to recover PDEs from limited noisy data obtained by numerical solution of the one-dimensional Burgers, two-dimensional vorticity-transport and three-dimensional Gray–Scott equations. Once the stable support of the PDE model has been learned by PDE-STRIDE with IHT-d, the actual coefficient values of the non-zero components are determined by solving a linear least-squares problem restricted to the recovered stable support. More sophisticated methods could instead be used for PDE parameter estimation with known model structure [21,22]. Because the sample size exceeds the cardinality of the recovered support, however, we find that simple least-squares fits provide good estimates for the PDE coefficients.
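The re-fitting step amounts to an ordinary least-squares problem on those dictionary columns that belong to the recovered support. A minimal NumPy sketch, with a hypothetical function name and a guard reflecting the requirement that the sample size exceed the size of the support:

```python
import numpy as np


def refit_on_support(theta, y, support):
    """Least-squares coefficients restricted to the recovered stable support."""
    cols = np.asarray(sorted(support), dtype=int)
    if len(cols) >= theta.shape[0]:
        raise ValueError("need more samples than support components")
    coef = np.zeros(theta.shape[1])  # components outside the support stay zero
    sol, *_ = np.linalg.lstsq(theta[:, cols], y, rcond=None)
    coef[cols] = sol
    return coef


rng = np.random.default_rng(2)
theta = rng.standard_normal((50, 4))
y = 0.1 * theta[:, 1] - 1.0 * theta[:, 2]
coef = refit_on_support(theta, y, {1, 2})
```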

(i) One-dimensional Burgers equation

We again consider the one-dimensional Burgers equation from equation (3.1), using the same simulated data as in §a, to quantify the performance and robustness to noise of the PDE-STRIDE+IHT-d method. The results for a small design are shown in figure 4. Even on this small dataset, with a sample size comparable to the dictionary size, our method recovers the correct model with up to 4% noise on the data, although the least-squares fits of the coefficient values gradually deviate from their exact values (table 1).
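The noise levels in this section are quoted as percentages. One common convention, assumed here rather than taken from this section, is to add zero-mean Gaussian noise whose standard deviation is the stated fraction of the standard deviation of the clean data. A minimal sketch with a hypothetical helper name:

```python
import numpy as np


def add_relative_noise(u, level, seed=0):
    """Add zero-mean Gaussian noise with std = level * std(u) (assumed noise model)."""
    rng = np.random.default_rng(seed)
    return u + level * np.std(u) * rng.standard_normal(np.shape(u))


x = np.linspace(0.0, 2.0 * np.pi, 10_000)
u = np.sin(x)
u_noisy = add_relative_noise(u, 0.04)  # "4% noise" under this convention
```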

Table 1.

Coefficient values of the recovered one-dimensional Burgers equation for different noise levels. The stable components of the PDE inferred from the plots in figure 4 are $\{uu_x, u_{xx}\}$. The ground-truth coefficient values are given in parentheses in the column headings.

| Noise level | advection term (1.0) | diffusion term $u_{xx}$ (0.1) |
|---|---|---|
| clean | | 0.1000 |
| 1% | | 0.1016 |
| 2% | | 0.0997 |
| 3% | | 0.0976 |
| 4% | | 0.0984 |
| 5% | | 0.0967 |

For comparison, the corresponding stability plots for PDE-STRIDE + STRidge are shown in the electronic supplementary material, figure S3. STRidge creates many false positives even at mild noise levels.

(ii) Two-dimensional vorticity transport equation

Next, we consider a two-dimensional domain and the vorticity transport equation given in equation (3.2). The vorticity transport equation is obtained by taking the curl of the Navier–Stokes equations and imposing the incompressibility constraint $\nabla\cdot\mathbf{u} = 0$ on the flow velocity field $\mathbf{u}$. This results in a governing equation for the vorticity $\omega = \nabla\times\mathbf{u}$, which is a scalar in two dimensions:

$$\frac{\partial \omega}{\partial t} + \mathbf{u}\cdot\nabla\omega = \nu\,\nabla^{2}\omega. \tag{3.2}$$

This equation has numerous applications in oceanography and climate modelling [70]. The velocity vector $\mathbf{u} = (u, v)$ has two components, and $\nu$ is the fluid’s viscosity. For the numerical solution of the transport equation (3.2) in the unit square, we impose a no-slip boundary condition on the left ($x = 0$), right ($x = 1$) and bottom ($y = 0$) sides and a shear-flow boundary condition with prescribed boundary velocity components on the top side ($y = 1$). This is the classic ‘lid-driven cavity’ problem from fluid mechanics. The simulation code was written using OpenFPM [71] with explicit time stepping on a grid in space. A Poisson equation was solved at every time step to correct the velocities so that the velocity field remains divergence-free. The viscosity of the simulated fluid was set to $\nu = 0.025$. In figure 5, we show a single time snapshot of the two velocity components and the vorticity in the numerical simulation, along with the locations of the 500 sample points used for PDE inference from these data.
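Dictionary columns such as $\omega_{xx}$ or $u\omega_x$ have to be computed from the sampled fields. As an illustration, the sketch below computes the vorticity $\omega = \partial_x v - \partial_y u$ from velocity components $u$, $v$ with NumPy's second-order finite differences and evaluates the right-hand side $\nu\nabla^2\omega - \mathbf{u}\cdot\nabla\omega$ for a viscosity $\nu$. The function name, the `[ix, iy]` array layout and the use of `np.gradient` are assumptions for illustration; the OpenFPM simulation itself is not reproduced. Solid-body rotation, whose vorticity is uniform, serves as a check.

```python
import numpy as np


def vorticity_rhs(u, v, dx, dy, nu):
    """Vorticity and the right-hand side nu*lap(w) - (u*w_x + v*w_y).

    Arrays are indexed as [ix, iy]; np.gradient with edge_order=2 uses
    second-order central differences in the interior and second-order
    one-sided differences at the edges.
    """
    omega = (np.gradient(v, dx, axis=0, edge_order=2)
             - np.gradient(u, dy, axis=1, edge_order=2))
    w_x = np.gradient(omega, dx, axis=0, edge_order=2)
    w_y = np.gradient(omega, dy, axis=1, edge_order=2)
    w_xx = np.gradient(w_x, dx, axis=0, edge_order=2)
    w_yy = np.gradient(w_y, dy, axis=1, edge_order=2)
    rhs = nu * (w_xx + w_yy) - (u * w_x + v * w_y)
    return omega, rhs


# Solid-body rotation u = -y, v = x has uniform vorticity 2, so omega_t = 0.
x = np.linspace(0.0, 1.0, 41)
y = np.linspace(0.0, 1.0, 41)
X, Y = np.meshgrid(x, y, indexing="ij")
omega, rhs = vorticity_rhs(-Y, X, x[1] - x[0], y[1] - y[0], nu=0.025)
```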

Figure 5.

Numerical solution of the two-dimensional vorticity transport equation. We show the two components of the flow velocity and the vorticity in the two-dimensional domain. The black dots in the right panel show the sample points used for PDE inference. They are distributed uniformly at random within a rectangular box. (Online version in colour.)

Figure 6 shows the results of applying PDE-STRIDE + IHT-d to recover the two-dimensional vorticity transport equation from these data samples. The results demonstrate consistent recovery of the true support of the PDE for different noise levels. The stable components correspond to the true terms of equation (3.2). In table 2, we show the re-fitted coefficients for the recovered PDE components. Both the accuracy of the parameter fits and the separation between the true components (coloured solid lines) and the noise components (black dashed lines) deteriorate with increasing noise level. In the electronic supplementary material, figure S4, we also report the stability plots obtained when using STRidge in conjunction with stability selection for the same design and stability selection parameters. STRidge fails to recover the true support even at small noise levels.

Figure 6.

Model selection with PDE-STRIDE + IHT-d for the two-dimensional vorticity transport equation. The stability plots for the chosen design show the separation of the true PDE components (in solid colour with line symbols as identified in the inset legend) from the noise components (dashed black). The inference power of the method is tested for additive Gaussian noise levels up to 5%. In all cases, perfect recovery is possible without parameter tuning. (Online version in colour.)

Table 2.

Coefficient values of the recovered two-dimensional vorticity transport equation for different noise levels. The stable components of the PDE inferred from the plots in figure 6 are $\{\omega_{xx}, \omega_{yy}, u\omega_x, v\omega_y\}$. The ground-truth coefficient values are given in parentheses in the column headings.

| Noise level | diffusion $\omega_{xx}$ (0.025) | diffusion $\omega_{yy}$ (0.025) | advection in $x$ (1.0) | advection in $y$ (1.0) |
|---|---|---|---|---|
| clean | 0.02504 | 0.02502 | | |
| 1% | 0.02501 | 0.02504 | | |
| 2% | 0.02492 | 0.0250 | | |
| 3% | 0.0247 | 0.0250 | | |
| 4% | 0.0245 | 0.0251 | | |
| 5% | 0.0242 | 0.0251 | | |

(iii) Three-dimensional Gray–Scott equation

Finally, we consider a problem in three dimensions, namely, the three-dimensional Gray–Scott reaction–diffusion model

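$$\frac{\partial u}{\partial t} = D_u\,\nabla^{2} u - u v^{2} + F\,(1 - u) \tag{3.3a}$$

and

$$\frac{\partial v}{\partial t} = D_v\,\nabla^{2} v + u v^{2} - (F + k)\,v, \tag{3.3b}$$

where $D_u$ and $D_v$ are the diffusion coefficients of the two species, $F$ is the feed rate and $k$ is the kill rate.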

Reaction and diffusion of two chemical species with scalar concentrations $u$ and $v$ can produce a variety of patterns, reminiscent of those often observed in nature [72]. Such models have been used, for example, to describe skin patterning and pigmentation [73]. This example also involves coupled variables and is similar in structure to the real-world example discussed in §4.

We simulate equation (3.3) using second-order central finite differences implemented in OpenFPM [71]. A snapshot of the simulated concentration field in the three-dimensional cube is shown in figure 7. The simulation used a regular Cartesian mesh in space and explicit Euler time stepping up to a final simulated time of 5 seconds. The ground-truth model parameters used are: , , and .
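The time stepping described above can be sketched as follows. This NumPy sketch assumes the standard Gray–Scott form with diffusion coefficients $D_u$, $D_v$, feed rate $F$ and kill rate $k$, a uniform grid spacing `h`, and periodic boundaries (the boundary conditions are not stated in this section). The function names and all parameter values in the usage lines are hypothetical and chosen only so that the explicit scheme stays stable ($D\,\Delta t/h^2 \le 1/6$ in three dimensions).

```python
import numpy as np


def laplacian_periodic(f, h):
    """Second-order central 7-point Laplacian on a cubic grid, periodic boundaries."""
    lap = -6.0 * f
    for axis in range(3):
        lap = lap + np.roll(f, 1, axis=axis) + np.roll(f, -1, axis=axis)
    return lap / h**2


def gray_scott_euler_step(u, v, Du, Dv, F, k, h, dt):
    """One explicit Euler step of the (assumed standard) Gray-Scott model."""
    uvv = u * v * v
    u_next = u + dt * (Du * laplacian_periodic(u, h) - uvv + F * (1.0 - u))
    v_next = v + dt * (Dv * laplacian_periodic(v, h) + uvv - (F + k) * v)
    return u_next, v_next


# Illustrative run: homogeneous state u = 1, v = 0 with a small seeded perturbation.
n = 24
u = np.ones((n, n, n))
v = np.zeros((n, n, n))
u[10:14, 10:14, 10:14] = 0.5
v[10:14, 10:14, 10:14] = 0.25
for _ in range(10):
    u, v = gray_scott_euler_step(u, v, Du=2e-5, Dv=1e-5, F=0.014, k=0.054,
                                 h=0.02, dt=1.0)
```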

Figure 7.

Model selection with PDE-STRIDE+IHT-d for the $u$-component of the three-dimensional Gray–Scott equation. The top left figure shows a visualization of the scalar concentration field in the three-dimensional simulation domain, with red corresponding to high concentration and blue to low concentration. The stability plots for the chosen design show good separation of the true PDE components (in solid colour with line symbols as identified in the inset legend) from the noise components (dashed black). The inference power of the method is tested for additive Gaussian noise levels up to 6%. In all cases, perfect recovery was possible without parameter tuning. (Online version in colour.)

We test recovery of the ground-truth PDE from data sampled only from a small cube in the centre of the domain. Figure 7 shows the PDE-STRIDE+IHT-d results for the species $u$. All PDE components of the true equation (3.3a) are correctly identified for noise levels up to 6% from a limited number of samples. The plots for the species $v$ are shown in the electronic supplementary material, figure S5. Although perfect recovery of the $v$ equation was not possible owing to the small diffusivity $D_v$ of this species, the reaction terms are recovered consistently and stably. The re-fitted coefficients in the recovered PDEs for the $u$ and $v$ species are reported in table 3 and the electronic supplementary material, table S1, respectively.

Table 3.

Coefficients of the recovered $u$-component of the Gray–Scott reaction–diffusion equation for different noise levels. The stable components of the PDE inferred from the plots in figure 7 are . The ground-truth coefficient values are given in parentheses in the column headings.

| Noise level | 1 (0.014) | () | () | () | (1.0) | |
|---|---|---|---|---|---|---|
| clean | 0.0140 | | | | | |
| 2% | 0.0142 | | | | | |
| 4% | 0.0144 | | | | | |
| 6% | 0.0150 | | | | | |

For comparison, the results when using STRidge in conjunction with stability selection are shown in the electronic supplementary material, figures S6 and S7. STRidge is able to recover the complete form of equation (3.3), albeit only in the noise-free case. It fails to recover either of the two components when noise is added to the data.

(d) Achievability results

We discuss the consistency and robustness of PDE-STRIDE + IHT-d with respect to the design parameters: sample size, dictionary size and noise level. Achievability analysis provides a compact way of checking robustness and consistency of a model selection method for varying design parameters. It also provides an approximate means of revealing the sample complexity of any $\ell_0$ or $\ell_1$ sparsity-promoting technique, i.e. the number of data points required to recover the model with probability one. Specifically, given a sparsity-promoting regularizer, a dictionary size, a sparsity level and a noise level, we are interested in how the sample size must scale with these quantities for the recovery probability to converge to one. The study in [74] reported sharp phase transitions from failure to success for Gaussian random designs with increasing sample size for LASSO-based sparse solutions. The same study also provided sufficient lower bounds on the sample size, as a function of the dictionary size, sparsity and noise level, for full recovery probability. We ask whether sparse model selection with PDE-STRIDE + IHT-d exhibits similar sharp phase-transition behaviour. Because the dictionary components in PDE learning are compiled from derivatives and nonlinearities computed from noisy data, it is also interesting to observe whether full recovery is achieved at all, and maintained, with increasing sample size. In the particular context of PDE learning, increasing the dictionary size by including higher-order nonlinearities and higher-order derivatives tends to introduce strongly correlated components, which can negatively impact the inference power.

In figures 8 and 9, the achievability plots for the one-dimensional Burgers system and the $u$-component of the three-dimensional Gray–Scott system are shown, respectively. Each symbol in the figures shows the mean over 20 repetitions of an experiment with a given design under random data sub-sampling. The Bernoulli variances are shown as coloured bands. An experiment with a given design is considered a success if and only if there exists a value of the regularization parameter for which the true PDE support is recovered by PDE-STRIDE+IHT-d with the default importance threshold.
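Each point in these plots summarizes a set of Bernoulli trials. A minimal plain-Python sketch (hypothetical function name) of the per-design summary, assuming that the band reflects the plug-in Bernoulli variance $\hat p(1-\hat p)$ of the estimated success probability $\hat p$:

```python
def bernoulli_summary(outcomes):
    """Mean success rate and Bernoulli variance p(1 - p) for one design."""
    n = len(outcomes)
    p_hat = sum(bool(o) for o in outcomes) / n
    return p_hat, p_hat * (1.0 - p_hat)


# 20 repetitions of one design, 15 of which recovered the true support.
p_hat, var = bernoulli_summary([True] * 15 + [False] * 5)
```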

Figure 8.

Achievability plots for model selection with PDE-STRIDE+IHT-d for the one-dimensional Burgers equation. Each symbol is the mean over 20 repetitions of the inference for some design under random data sub-sampling. Different symbols and colours correspond to different dictionary sizes as given in the inset legend. The coloured bands show the variance of the Bernoulli trials. Noise levels are given by the red percentage in each panel. (Online version in colour.)

Figure 9.

Achievability plots for model selection with PDE-STRIDE+IHT-d for the $u$-component of the three-dimensional Gray–Scott equation. Each symbol is the mean over 20 repetitions of the inference for some design under random data sub-sampling. Different symbols and colours correspond to different dictionary sizes as given in the inset legend. The coloured bands show the variance of the Bernoulli trials. Noise levels are given by the red percentage in each panel. (Online version in colour.)

In all cases, PDE-STRIDE + IHT-d is strongly consistent and highly robust. In addition, we also observe a sharp phase transition from failure to success with recovery probabilities quickly approaching one for increasing sample size beyond a certain threshold. This suggests that PDE-STRIDE not only enhances the inference power of IHT-d but also ensures consistency. The sharp phase transition also suggests the existence of a strict lower bound on the sample complexity, below which full recovery is not achievable [74]. From the achievability plots, we estimate the sample complexity of the learned dynamical systems: for the one-dimensional Burgers equation, 90% success probability is achieved with as few as data points in the noise-free and data points in the noisy cases across different designs (). For the three-dimensional Gray–Scott system, 90% success probability is achieved with as few as data points in the noise-free and in the noisy cases across different designs (). This demonstrates that PDE-STRIDE + IHT-d is able to consistently and robustly learn PDE models from limited noisy data.
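A sample-complexity estimate of the kind quoted above can be read off an achievability curve mechanically. The plain-Python sketch below (hypothetical function name) returns the smallest tested sample size from which the estimated success probability stays at or above a target such as 90%; requiring the level to persist for all larger tested sample sizes is an assumption made here to reflect the consistency criterion.

```python
def sample_complexity(sample_sizes, success_probs, target=0.9):
    """Smallest sample size from which the estimated success probability
    stays at or above `target` for all larger sample sizes tested."""
    pairs = sorted(zip(sample_sizes, success_probs))
    for i, (n, _) in enumerate(pairs):
        if all(p >= target for _, p in pairs[i:]):
            return n
    return None  # target never reached persistently


n_90 = sample_complexity([50, 100, 150, 200, 250], [0.0, 0.3, 0.95, 0.85, 1.0])
```

In this toy curve the estimate is 250 rather than 150, because the success probability dips below the target again at 200.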

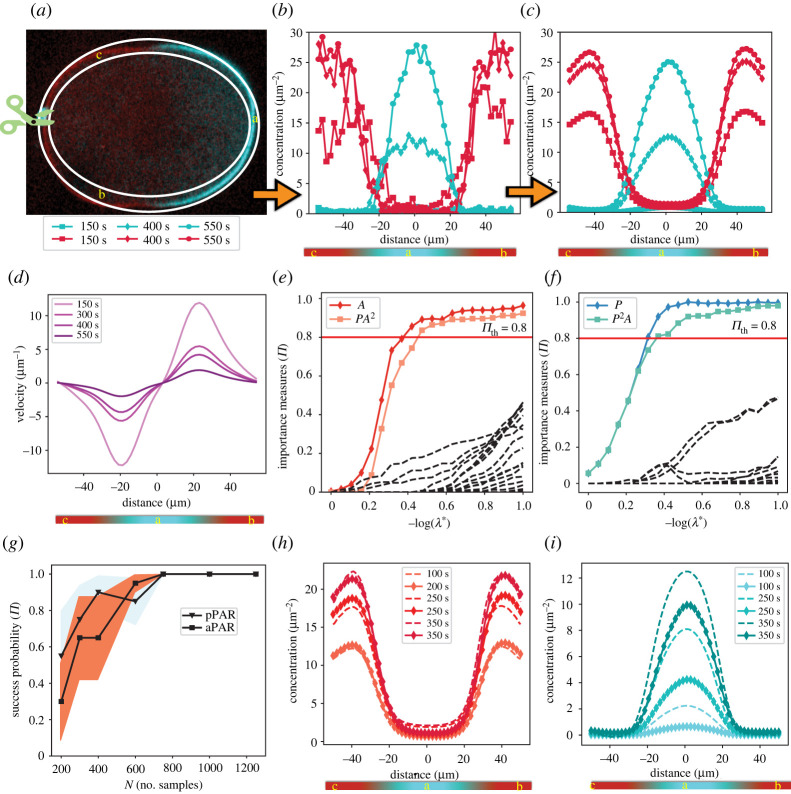

4. Data-driven inference on experimental data to explain C. elegans zygote polarization

We showcase the applicability of PDE-STRIDE with IHT-d to real experimental data. We use microscopy images to infer a model that explains early C. elegans embryo polarity establishment, and we use it to confirm a previous hypothesis about the underlying protein interaction network. Earlier studies of this process proposed a mechano-chemical mechanism for PAR protein polarization on the cell membrane [15,16,66]. They systematically showed that active cortical flows in the zygote provide sufficient bias to trigger symmetry breaking [16]. The experiments conducted in [16] measured the concentration of the anterior PAR complex (aPAR), the concentration of the posterior PAR complex (pPAR) and the cortical flow velocity field as functions of time (figure 10a–d). The concentration and velocity fields were acquired on a grid with a resolution of in space and time, respectively. Space was modelled as one-dimensional along a circumferential line around the embryo in the mid-plane cross section, as shown in figure 10a.

Figure 10.

Data-driven inference of the regulatory network of PAR proteins from spatio-temporal data of a C. elegans zygote. (a) Final frame from a fluorescence microscopy video recorded by the Grill laboratory [16] at MPI-CBG showing a mid-plane cross section through the ellipsoidal zygote. Over time, the pPAR protein complex (blue fluorescence signal) localizes to the posterior pole of the embryo (region ‘a’), whereas aPAR (red fluorescence signal) localizes to the anterior (regions ‘b’ and ‘c’). (b) Protein concentrations extracted from the fluorescence intensity along the one-dimensional midline between the two white ellipses in (a) for aPAR (red) and pPAR (blue) for different time points (symbols, see inset legend under (a)). (c) De-noised concentration profiles obtained by extracting the principal mode of the singular value decomposition (SVD) of the noisy data in (b). (d) De-noised cortical flow velocity profiles (first SVD mode) in the tangential direction along the circumferential line around the zygote for different times (colour, inset legend). (e,f) Stability plots obtained by using PDE-STRIDE + IHT-d to separate the stable model components (coloured solid lines with symbols, inset legend) from the noise components (black dashed lines) for aPAR (e) and pPAR (f). (g) Achievability plot to test the robustness and consistency of the inferred model for both aPAR () and pPAR () with increasing sample size . (h,i) Simulation results (dashed lines) of the learned models for aPAR (h) and pPAR (i) compared with the denoised experimental data (solid lines with symbols) at different times (inset legend). (Online version in colour.)

We set out to learn a differential equation model for the regulatory network of the interacting PAR proteins in a purely data-driven fashion from the single microscopy video available as electronic supplementary material for [16]. Given the high level of noise in the video, we limit our analysis to the first SVD mode of the data, as shown in figure 10c. We only consider the time after the initial advection trigger, when early domains of PAR proteins have already formed. PDE-STRIDE is then tasked with learning an interpretable model that evolves the nascent protein domains into the fully developed polarity patterns shown in figure 10a.
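Keeping only the first SVD mode is a rank-1 truncation of the space-by-time data matrix. A minimal NumPy sketch, assuming rows index space and columns index time; the function name and the synthetic profile are hypothetical:

```python
import numpy as np


def first_svd_mode(data):
    """Rank-1 approximation of a space x time data matrix (leading SVD mode)."""
    U, s, Vt = np.linalg.svd(data, full_matrices=False)
    return s[0] * np.outer(U[:, 0], Vt[0])


# Toy example: a rank-1 space-time profile plus small noise.
rng = np.random.default_rng(0)
space = np.exp(-np.linspace(-3.0, 3.0, 60) ** 2)
time = np.linspace(0.5, 1.5, 40)
clean = np.outer(space, time)
noisy = clean + 0.05 * rng.standard_normal(clean.shape)
denoised = first_svd_mode(noisy)
```

A rank-1 truncation removes most of the noise that lies outside the dominant mode, but it also discards any genuine dynamics carried by higher modes.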

The only mechanism included in this model is the chemical interaction between aPAR and pPAR of the general form

| 4.1 |

with unknown reactant and product stoichiometries and for aPAR and pPAR, respectively. The scalar fields and are the concentrations of aPAR and pPAR, and is the unknown reaction rate.

In designing the dictionary , the maximum allowed stoichiometry for reactants and products is restricted to 2, i.e. . The PDE-STRIDE + IHT-d results for the learned reaction network from data are shown in figure 10e,f. The stable components of the model for pPAR are , and for aPAR they are for , . The achievability plot in figure 10g confirms the consistency and robustness of the learned model across different sample sizes . The inference method achieves full recovery probability for .
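Under mass-action kinetics, a reaction with reactant stoichiometries $m$ for aPAR and $n$ for pPAR proceeds at a rate proportional to $A^m P^n$, where $A$ and $P$ denote the aPAR and pPAR concentrations. Restricting stoichiometries to at most 2 therefore suggests a dictionary of monomials $A^i P^j$ with $0 \le i, j \le 2$. The NumPy sketch below builds such a dictionary; the symbols, the column naming and the exact column set are assumptions for illustration and may differ from the dictionary actually used here.

```python
import itertools

import numpy as np


def reaction_dictionary(A, P, max_order=2):
    """Mass-action monomials A**i * P**j with 0 <= i, j <= max_order."""
    A = np.asarray(A, dtype=float)
    P = np.asarray(P, dtype=float)
    names, columns = [], []
    for i, j in itertools.product(range(max_order + 1), repeat=2):
        names.append(f"A^{i}P^{j}")
        columns.append(A**i * P**j)  # 0**0 evaluates to 1 in NumPy
    return names, np.column_stack(columns)


names, theta = reaction_dictionary([0.0, 0.5, 1.0], [1.0, 0.2, 0.0])
```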

The final chemical reaction model learned by PDE-STRIDE + IHT-d is

| 4.2a |

and

| 4.2b |

where and are the kinetic rate constants for membrane association and dissociation of aPAR and pPAR, respectively. The coefficients and represent the rates of membrane dissociation due to mutually antagonistic interactions between the protein complexes. The constants and can be interpreted as surface-to-volume conversion factors for the concentrations [16]. This purely data-driven model recapitulates the mutually inhibitory nature of the PAR proteins [16].

Moreover, our method allows us to estimate the values of the unknown reaction-rate parameters by least-squares re-fitting on the recovered stable support. The results are shown in table 4.

Table 4.

Coefficient values of the inferred model in equation (4.2).

In figure 10h,i, we overlay the numerical solution of equation (4.2) (dashed lines) with the denoised experimental data (solid lines with symbols) at different time points (see inset legend) for both aPAR and pPAR. The de-noised spatio-temporal measurement data from the early domains are taken as the initial conditions for the simulation. The results show that the simple data-driven chemical reaction model is able to qualitatively match the temporal evolution of the PAR protein domains in the C. elegans zygote. Although the spatial patterns (e.g. the PAR domain sizes) match well for the two proteins, there are non-negligible discrepancies between simulation and experiment in the time scales of the pPAR domain evolution. This is likely because our ODE model only includes the chemical reactions of the protein interactions, but neither diffusion nor advective flow of the proteins. In the electronic supplementary material, figure S8 (left), we show for , that the advection and diffusion components of the aPAR species carry enough importance to be included in the stable set , but that this is not the case for the pPAR components. The preferential advective displacement of aPAR to the anterior side modelled by the advective term is also in line with experimental observations [16]. However, such models with advection and diffusion components exhibit inconsistent recovery with increasing sample size, in contrast to our simple ODE model, as illustrated in figure 10g. We therefore believe that it may be necessary to use structured sparsity for enforcing conservation laws through grouping [75] in order to further develop this data-driven model to also include the mechanical aspects of the PAR system.

5. Conclusion and discussion

We have addressed two key issues that have so far limited the application of sparse regression methods for automated PDE inference from noisy and limited data: the need for manual parameter tuning and the high sensitivity to noise in the data. We have shown that stability selection combined with any sparsity-promoting regression technique provides an appropriate level of regularization for consistent and robust recovery of the correct PDE model. Our numerical benchmarks suggested that iterative hard thresholding with de-biasing (IHT-d) is particularly well suited for combination with stability selection, yielding a robust framework for PDE learning with minimal parameter tuning. This combination of methods outperformed all other tested algorithmic approaches with respect to identification performance, amount of data required and robustness to noise. The resulting stability-based PDE-STRIDE method was tested for robust recovery of the one-dimensional Burgers equation, two-dimensional vorticity transport equation and three-dimensional Gray–Scott reaction–diffusion equations from simulation data corrupted with up to 6% additive Gaussian noise. The achievability studies demonstrated the consistency and robustness of the PDE-STRIDE method for full recovery probability of the model with increasing sample size and for varying dictionary size and noise level. In addition, we confirmed the sharp phase transition in recovery performance and noted that achievability plots provide a natural estimate for the sample complexity of the underlying nonlinear dynamical system. However, this empirical estimate of sample complexity depends on the choice of model selection scheme and on how the data are sampled.

We demonstrated the capabilities of PDE-STRIDE by learning a protein-interaction model of embryo polarization directly from fluorescence microscopy images of a C. elegans zygote. The model recovered the regulatory reaction network of the involved proteins, complete with its parameter values, in a purely data-driven manner, without using any knowledge about the underlying physics or symmetries. The learned, data-derived model was able to qualitatively predict the spatio-temporal dynamics of the embryonic polarity system from the early spatial domains to the fully developed patterns as observed in the polarized C. elegans zygote. The model we inferred from image data using our method confirms both the structure and the mechanisms of physics-derived cell polarity models [16]. Importantly, the mutually inhibitory interactions between the involved protein species, which had previously been discovered by extensive biochemical experimentation, were automatically and unambiguously extracted from the data [16].

Besides rendering sparse inference methods more robust to noise and to parameter settings, stability selection has the important conceptual benefit of also providing interpretable probabilistic importance measures for all model components. This enables modellers to construct their models with high fidelity, and to gain an intuition about correlations and sensitivities. Graphical inspection of stability plots provides additional freedom for intervention in semi-automated model discovery from data.

We expect that statistical learning methods have the potential to enable robust, consistent and reproducible discovery of predictive and interpretable models directly from observational data. Our PDE-STRIDE framework provides a first step towards this goal, but several open issues remain. First, numerically approximating time and space derivatives in the noisy data is a challenge for noise levels higher than a few percent. This currently limits the noise robustness of the overall method, regardless of how robust the subsequent statistical inference is. The impact of noise becomes even more severe when exploring models with higher-order derivatives or stronger nonlinearities. Future work should focus on formulations that are robust to the choice of different derivative-discretization methods, while providing the necessary freedom to impose structure on the coefficients. Here, signal/noise decomposition techniques based on deep neural networks with time-stepping constraints [76,77] could provide better denoising when the noise has finite correlation length in space and time.

Second, it would be desirable to have a principled way to constrain the learning process by physical priors, such as conservation laws and symmetries. Exploiting structural knowledge about the dynamical system is expected to greatly improve learning performance. Structured sparsity or grouping constraints from statistics may help express such prior knowledge in a sparse inference problem [75,78]. In the specific example of the PAR-polarity model, decades of experimentation and theory have revealed physical principles of mass conservation, detailed force balance in the cell cortex and antagonistic interactions between protein complexes. Using structured constraints to encode such physical principles leads to biologically plausible and physically consistent models, rather than models that simply fit the data [79].

In summary, we believe that data-driven model discovery has tremendous potential to provide novel insights into complex systems, in particular in biology. It provides an effective and complementary alternative to theory-driven approaches. We hope that the stability-based model selection method PDE-STRIDE presented here will contribute to the further development and adoption of these approaches in the sciences.

Supplementary Material

Acknowledgements

We are grateful to the Grill laboratory at MPI-CBG, who provided us with high-quality spatio-temporal PAR concentration and flow field data. We also thank Nathan Kutz (University of Washington) and his group for making their code and data public.

Data accessibility

The git repository for the codes and data can be found at https://git.mpi-cbg.de/mosaic/software/machine-learning/pde-stride.

The data are provided in the electronic supplementary material [80].

Authors' contributions

S.M.: conceptualization, data curation, formal analysis, investigation, software, validation, visualization, writing—original draft; B.L.C.: data curation, formal analysis, resources, writing—review and editing; I.F.S.: conceptualization, funding acquisition, investigation, methodology, project administration, resources, supervision, writing—review and editing; C.L.M.: conceptualization, formal analysis, investigation, methodology, project administration, resources, supervision, validation, writing—review and editing.

All authors gave final approval for publication and agreed to be held accountable for the work performed therein.

Conflict of interest declaration

We declare we have no competing interests.

Funding

This work was supported in part by the German Research Foundation (DFG, Deutsche Forschungsgemeinschaft) under funding code EXC-2068, Cluster of Excellence ‘Physics of Life’, and by the Center for Scalable Data Analytics and Artificial Intelligence (ScaDS.AI) Dresden/Leipzig funded by the Federal Ministry of Education and Research (BMBF, Bundesministerium für Bildung und Forschung).

References

- 1. Mogilner A, Wollman R, Marshall WF. 2006. Quantitative modeling in cell biology: what is it good for? Dev. Cell 11, 279-287. (doi:10.1016/j.devcel.2006.08.004)

- 2. Sbalzarini IF. 2013. Modeling and simulation of biological systems from image data. Bioessays 35, 482-490. (doi:10.1002/bies.201200051)

- 3. Tomlin CJ, Axelrod JD. 2007. Biology by numbers: mathematical modelling in developmental biology. Nat. Rev. Genet. 8, 331. (doi:10.1038/nrg2098)

- 4. Duffy DJ. 2013. Finite difference methods in financial engineering: a partial differential equation approach. Chichester, UK: John Wiley & Sons.

- 5. Adomian G. 1995. Solving the mathematical models of neurosciences and medicine. Math. Comput. Simul. 40, 107-114. (doi:10.1016/0378-4754(95)00021-8)

- 6. Donoho D. 2017. 50 years of data science. J. Comput. Graph. Stat. 26, 745-766. (doi:10.1080/10618600.2017.1384734)

- 7. Barnes CP, Silk D, Sheng X, Stumpf MPH. 2011. Bayesian design of synthetic biological systems. Proc. Natl Acad. Sci. USA 108, 15 190-15 195. (doi:10.1073/pnas.1017972108)

- 8. Asmus J, Müller CL, Sbalzarini IF. 2017. Lp-Adaptation: simultaneous design centering and robustness estimation of electronic and biological systems. Sci. Rep. 7, 6660. (doi:10.1038/s41598-017-03556-5)

- 9. Kitano H. 2002. Computational systems biology. Nature 420, 206. (doi:10.1038/nature01254)

- 10. Gregor T, Bialek W, van Steveninck RRR, Tank DW, Wieschaus EF. 2005. Diffusion and scaling during early embryonic pattern formation. Proc. Natl Acad. Sci. USA 102, 18 403-18 407. (doi:10.1073/pnas.0509483102)

- 11. Chen T, He HL, Church GM. 1999. Modeling gene expression with differential equations. In Biocomputing’99, pp. 29–40. World Scientific.

- 12. Ay A, Arnosti DN. 2011. Mathematical modeling of gene expression: a guide for the perplexed biologist. Crit. Rev. Biochem. Mol. Biol. 46, 137-151. (doi:10.3109/10409238.2011.556597)

- 13. Prost J, Jülicher F, Joanny JF. 2015. Active gel physics. Nat. Phys. 11, 111. (doi:10.1038/nphys3224)

- 14. Mietke A, Jemseena V, Kumar KV, Sbalzarini IF, Jülicher F. 2019. Minimal model of cellular symmetry breaking. Phys. Rev. Lett. 123, 188101. (doi:10.1103/PhysRevLett.123.188101)

- 15. Etemad-Moghadam B, Guo S, Kemphues KJ. 1995. Asymmetrically distributed PAR-3 protein contributes to cell polarity and spindle alignment in early C. elegans embryos. Cell 83, 743-752. (doi:10.1016/0092-8674(95)90187-6)

- 16. Goehring NW, Trong PK, Bois JS, Chowdhury D, Nicola EM, Hyman AA, Grill SW. 2011. Polarization of PAR proteins by advective triggering of a pattern-forming system. Science 334, 1137-1141. (doi:10.1126/science.1208619)

- 17. Münster S, Jain A, Mietke A, Pavlopoulos A, Grill SW, Tomancak P. 2019. Attachment of the blastoderm to the vitelline envelope affects gastrulation of insects. Nature 568, 395-399. (doi:10.1038/s41586-019-1044-3)

- 18. Voss H, Bünner M, Abel M. 1998. Identification of continuous, spatiotemporal systems. Phys. Rev. E 57, 2820. (doi:10.1103/PhysRevE.57.2820)

- 19. Breiman L, Friedman JH. 1985. Estimating optimal transformations for multiple regression and correlation. J. Am. Stat. Assoc. 80, 580-598. (doi:10.1080/01621459.1985.10478157)

- 20. Bär M, Hegger R, Kantz H. 1999. Fitting partial differential equations to space-time dynamics. Phys. Rev. E 59, 337. (doi:10.1103/PhysRevE.59.337)

- 21. Xun X, Cao J, Mallick B, Maity A, Carroll RJ. 2013. Parameter estimation of partial differential equation models. J. Am. Stat. Assoc. 108, 1009-1020. (doi:10.1080/01621459.2013.794730)

- 22. Raissi M, Perdikaris P, Karniadakis GE. 2017. Machine learning of linear differential equations using Gaussian processes. J. Comput. Phys. 348, 683-693. (doi:10.1016/j.jcp.2017.07.050)

- 23. Brunton SL, Proctor JL, Kutz JN. 2016. Discovering governing equations from data by sparse identification of nonlinear dynamical systems. Proc. Natl Acad. Sci. USA 113, 3932-3937. (doi:10.1073/pnas.1517384113)

- 24. Rudy SH, Brunton SL, Proctor JL, Kutz JN. 2017. Data-driven discovery of partial differential equations. Sci. Adv. 3, e1602614. (doi:10.1126/sciadv.1602614)

- 25. Schaeffer H. 2017. Learning partial differential equations via data discovery and sparse optimization. Proc. R. Soc. A 473, 20160446. (doi:10.1098/rspa.2016.0446)

- 26. Zhang S, Lin G. 2018. Robust data-driven discovery of governing physical laws with error bars. Proc. R. Soc. A 474, 20180305. (doi:10.1098/rspa.2018.0305)

- 27. Mangan NM, Askham T, Brunton SL, Kutz JN, Proctor JL. 2019. Model selection for hybrid dynamical systems via sparse regression. Proc. R. Soc. A 475, 1-22. (doi:10.1098/rspa.2018.0534)

- 28. Long Z, Lu Y, Ma X, Dong B. 2018. PDE-net: learning PDEs from data. In Int. Conf. on Machine Learning, 10–15 July, pp. 3208–3216. PMLR.

- 29. Raissi M, Karniadakis GE. 2018. Hidden physics models: machine learning of nonlinear partial differential equations. J. Comput. Phys. 357, 125-141. (doi:10.1016/j.jcp.2017.11.039)

- 30.Raissi M, Perdikaris P, Karniadakis GE. 2019. Physics-informed neural networks: a deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J. Comput. Phys. 378, 686-707. ( 10.1016/j.jcp.2018.10.045) [DOI] [Google Scholar]

- 31.Long Z, Lu Y, Dong B. 2019. PDE-Net 2.0: learning PDEs from data with a numeric-symbolic hybrid deep network. J. Comput. Phys. 399, 108925. ( 10.1016/j.jcp.2019.108925) [DOI] [Google Scholar]

- 32.Dong B, Jiang Q, Shen Z. 2017. Image restoration: wavelet frame shrinkage, nonlinear evolution PDEs, and beyond. Multiscale Model. Simul. 15, 606-660. ( 10.1137/15M1037457) [DOI] [Google Scholar]

- 33.Meinshausen N, Bühlmann P. 2010. Stability selection. J. R. Stat. Soc.: Ser. B (Stat. Methodol.) 72, 417-473. ( 10.1111/j.1467-9868.2010.00740.x) [DOI] [Google Scholar]

- 34.Shah RD, Samworth RJ. 2013. Variable selection with error control: another look at stability selection. J. R. Stat. Soc.: Ser. B (Stat. Methodol.) 75, 55-80. ( 10.1111/j.1467-9868.2011.01034.x) [DOI] [Google Scholar]

- 35.Tibshirani R. 1996. Regression shrinkage and selection via the LASSO. J. R. Stat. Soc. Ser. B (Methodological) 58, 267-288. ( 10.1111/j.2517-6161.1996.tb02080.x) [DOI] [Google Scholar]

- 36.Blumensath T, Davies ME. 2008. Iterative thresholding for sparse approximations. J. Fourier Anal. Appl. 14, 629-654. ( 10.1007/s00041-008-9035-z) [DOI] [Google Scholar]

- 37.Foucart S. 2011. Hard thresholding pursuit: an algorithm for compressive sensing. SIAM J. Numer. Anal. 49, 2543-2563. ( 10.1137/100806278) [DOI] [Google Scholar]

- 38.Chartrand R. 2011. Numerical differentiation of noisy, nonsmooth data. ISRN Appl. Math. 2011, 1-11. ( 10.5402/2011/164564) [DOI] [Google Scholar]

- 39.Stickel JJ. 2010. Data smoothing and numerical differentiation by a regularization method. Comput. Chem. Eng. 34, 467-475. ( 10.1016/j.compchemeng.2009.10.007) [DOI] [Google Scholar]

- 40.Wu TT, Lange K. 2008. Coordinate descent algorithms for LASSO penalized regression. Ann. Appl. Stat. 2, 224-244. ( 10.1214/07-aoas147) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Friedman J, Hastie T, Tibshirani R. 2010. Regularization paths for generalized linear models via coordinate descent. J. Stat. Softw. 33, 1. ( 10.18637/jss.v033.i01) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Eckstein J, Bertsekas DP. 1992. On the Douglas-Rachford splitting method and the proximal point algorithm for maximal monotone operators. Math. Program. 55, 293-318. ( 10.1007/BF01581204) [DOI] [Google Scholar]

- 43.Combettes PL, Pesquet JC. 2011. Proximal splitting methods in signal processing. In Fixed-point algorithms for inverse problems in science and engineering, pp. 185–212. New York, NY: Springer.

- 44.Beck A, Teboulle M. 2009. A fast iterative Shrinkage-Thresholding algorithm. Soc. Ind. Appl. Math. J. Imag. Sci. 2, 183-202. ( 10.1137/080716542) [DOI] [Google Scholar]

- 45.Meinshausen N, Bühlmann P. 2006. High-dimensional graphs and variable selection with the LASSO. Ann. Stat. 34, 1436-1462. ( 10.1214/009053606000000281) [DOI] [Google Scholar]

- 46.Zhao P, Yu B. 2006. On model selection consistency of LASSO. J. Mach. Learn. Res. 7, 2541-2563. [Google Scholar]

- 47.Fan J, Li R. 2001. Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 96, 1348-1360. ( 10.1198/016214501753382273) [DOI] [Google Scholar]

- 48.Zhang CH. 2010. Nearly unbiased variable selection under minimax concave penalty. Ann. Stat. 38, 894-942. ( 10.1214/09-AOS729) [DOI] [Google Scholar]

- 49.Tropp JA. 2004. Greed is good: algorithmic results for sparse approximation. IEEE Trans. Inf. Theory 50, 2231-2242. ( 10.1109/TIT.2004.834793) [DOI] [Google Scholar]

- 50.Mallat SG, Zhang Z. 1993. Matching pursuits with time-frequency dictionaries. IEEE Trans. Signal Process. 41, 3397-3415. ( 10.1109/78.258082) [DOI] [Google Scholar]

- 51.Needell D, Tropp JA. 2009. CoSaMP: iterative signal recovery from incomplete and inaccurate samples. Appl. Comput. Harmon. Anal. 26, 301-321. ( 10.1016/j.acha.2008.07.002) [DOI] [Google Scholar]

- 52.Dai W, Milenkovic O. 2009. Subspace pursuit for compressive sensing signal reconstruction. IEEE Trans. Inf. Theory 55, 2230-2249. ( 10.1109/TIT.2009.2016006) [DOI] [Google Scholar]

- 53.Blumensath T, Davies ME. 2009. Iterative hard thresholding for compressed sensing. Appl. Comput. Harmon. Anal. 27, 265-274. ( 10.1016/j.acha.2009.04.002) [DOI] [Google Scholar]

- 54.Herrity KK, Gilbert AC, Tropp JA. 2006. Sparse approximation via iterative thresholding. In 2006 IEEE Int. Conf. on Acoustics Speech and Signal Processing Proc., 14–19 May, vol. 3, pp. III–III. IEEE.

- 55.Tropp JA. 2006. Just relax: convex programming methods for identifying sparse signals in noise. IEEE Trans. Inf. Theory 52, 1030-1051. ( 10.1109/TIT.2005.864420) [DOI] [Google Scholar]

- 56.Figueiredo MA, Nowak RD, Wright SJ. 2007. Gradient projection for sparse reconstruction: application to compressed sensing and other inverse problems. IEEE J. Sel. Top. Signal Process. 1, 586-597. ( 10.1109/JSTSP.2007.910281) [DOI] [Google Scholar]

- 57.Kohavi R. 1995. A study of cross-validation and bootstrap for accuracy estimation and model selection. In Int. Joint Conf. of Artificial Intelligence, vol. 14, pp. 1137–1145.

- 58.Schwarz G. 1978. Estimating the dimension of a model. Ann. Stat. 6, 461-464. ( 10.1214/aos/1176344136) [DOI] [Google Scholar]

- 59.Lederer J, Müller CL. 2015. Don’t fall for tuning parameters: Tuning-free variable selection in high dimensions with the TREX. In Proc. of the 29th AAAI Conf. on Artificial Intelligence (AAAI 2015), Austin, TX, 25–30 January, pp. 2729–2735. AAAI Press.

- 60.Bien J, Gaynanova I, Lederer J, Müller CL. 2018. Non-convex global minimization and false discovery rate control for the TREX. J. Comput. Graph. Stat. 27, 23-33. ( 10.1080/10618600.2017.1341414) [DOI] [Google Scholar]

- 61.Yu B. 2013. Stability. Bernoulli 19, 1484-1500. ( 10.3150/13-BEJSP14) [DOI] [Google Scholar]

- 62.Liu H, Roeder K, Wasserman L. 2010. Stability approach to regularization selection (StARS) for high dimensional graphical models. Adv. Neural Inf. Process. Syst. 24, 1432-1440. [PMC free article] [PubMed] [Google Scholar]

- 63.Haury AC, Mordelet F, Vera-Licona P, Vert JP. 2012. TIGRESS: trustful inference of gene regulation using stability selection. BMC Syst. Biol. 6, 145. ( 10.1186/1752-0509-6-145) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 64.Bühlmann P, Kalisch M, Meier L. 2014. High-dimensional statistics with a view toward applications in biology. Annu. Rev. Stat. Appl. 1, 255-278. ( 10.1146/annurev-statistics-022513-115545) [DOI] [Google Scholar]

- 65.Hothorn T, Müller J, Schröder B, Kneib T, Brandl R. 2011. Decomposing environmental, spatial, and spatiotemporal components of species distributions. Ecol. Monogr. 81, 329-347. ( 10.1890/10-0602.1) [DOI] [Google Scholar]

- 66.Gross P, Kumar KV, Goehring NW, Bois JS, Hoege C, Jülicher F, Grill SW. 2019. Guiding self-organized pattern formation in cell polarity establishment. Nat. Phys. 15, 293. ( 10.1038/s41567-018-0358-7) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 67.Pati YC, Rezaiifar R, Krishnaprasad PS. 1993. Orthogonal matching pursuit: Recursive function approximation with applications to wavelet decomposition. In Proc. of 27th Asilomar Conf. on Signals, Systems and Computers, 1–3 November, pp. 40–44. IEEE.

- 68.Tropp JA, Gilbert AC. 2007. Signal recovery from random measurements via orthogonal matching pursuit. IEEE Trans. Inf. Theory 53, 4655-4666. ( 10.1109/TIT.2007.909108) [DOI] [Google Scholar]

- 69.Wang S, Nan B, Rosset S, Zhu J. 2011. Random LASSO. Ann. Appl. Stat. 5, 468. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 70.Temam R. 2001. Navier-Stokes equations: theory and numerical analysis, vol. 343. Providence, RI: American Mathematical Society. [Google Scholar]

- 71.Incardona P, Leo A, Zaluzhnyi Y, Ramaswamy R, Sbalzarini IF. 2019. OpenFPM: a scalable open framework for particle and particle-mesh codes on parallel computers. Comput. Phys. Commun. 241, 155-177. ( 10.1016/j.cpc.2019.03.007) [DOI] [Google Scholar]

- 72.Meinhardt H. 1982. Models of biological pattern formation. New York, NY: Academic Press.

- 73.Manukyan L, Montandon SA, Fofonjka A, Smirnov S, Milinkovitch MC. 2017. A living mesoscopic cellular automaton made of skin scales. Nature 544, 173. ( 10.1038/nature22031) [DOI] [PubMed] [Google Scholar]

- 74. Wainwright MJ. 2009. Sharp thresholds for high-dimensional and noisy sparsity recovery using ℓ1-constrained quadratic programming (LASSO). IEEE Trans. Inf. Theory 55, 2183-2202. (10.1109/TIT.2009.2016018)

- 75. Ward JH Jr. 1963. Hierarchical grouping to optimize an objective function. J. Am. Stat. Assoc. 58, 236-244. (10.1080/01621459.1963.10500845)

- 76. Rudy SH, Kutz JN, Brunton SL. 2019. Deep learning of dynamics and signal-noise decomposition with time-stepping constraints. J. Comput. Phys. 396, 483-506. (10.1016/j.jcp.2019.06.056)

- 77. Maddu S, Sturm D, Cheeseman BL, Müller CL, Sbalzarini IF. 2021. STENCIL-NET: data-driven solution-adaptive discretization of partial differential equations. (http://arxiv.org/abs/2101.06182)

- 78. Kutz JN, Rudy SH, Alla A, Brunton SL. 2017. Data-Driven discovery of governing physical laws and their parametric dependencies in engineering, physics and biology. In 2017 IEEE 7th Int. Workshop on Computational Advances in Multi-Sensor Adaptive Processing (CAMSAP), 10–13 December, pp. 1–5. IEEE.

- 79. Maddu S, Cheeseman BL, Müller CL, Sbalzarini IF. 2021. Learning physically consistent differential equation models from data using group sparsity. Phys. Rev. E 103, 042310. (10.1103/PhysRevE.103.042310)

- 80. Maddu S, Cheeseman BL, Sbalzarini IF, Müller CL. 2022. Stability selection enables robust learning of differential equations from limited noisy data. Figshare. (10.6084/m9.figshare.c.6016866)


Data Availability Statement

The code and data are available in the git repository at https://git.mpi-cbg.de/mosaic/software/machine-learning/pde-stride.

The data are provided in the electronic supplementary material [80].