Abstract

We present an optics-free CMOS image sensor that incorporates a novel time-gated dual-photodiode pixel design to allow filter- and lens-less image acquisition of near-infrared-excited (NIR-excited) upconverting nanoparticles. Recent biomedical advances have highlighted the benefits of NIR excitation, but NIR interaction with silicon has remained a challenge, even with high-performance optical blocking filters. Using a secondary diode in a dual-photodiode design, this sensor is able to reduce the hundreds of mV of NIR background on its pixels to the single-digit mV level, nearing its noise floor of 2.2 mV rms, which is not achievable with any optical filter. Non-linear effects of background cancellation using the diode pair have been mitigated using an initial one-time pixel-level curve fitting and calibration in post-processing. This imager comprises a highly linear 11 fF metal-oxide-metal (MOM) capacitor and includes integrated angle-selective gratings to reject oblique light and enhance sharpness. Each pixel also includes two distinct correlated double sampling schemes to remove low-frequency flicker noise and systematic offset in the datapath. We demonstrate the performance of this imager using pulsed NIR-excited upconverting nanoparticles on standard United States Air Force (USAF) resolution targets and achieve an SNR of 15 dB, while keeping NIR background below 6 mV. This 36-by-80-pixel array measures only 2.3 mm by 4.8 mm and can be thinned down to 25 μm, allowing it to become surgically compatible with intraoperative instruments and equipment, while remaining optics-free.

Keywords: cancer detection, capacitive transimpedance amplifier (CTIA), dual-photodiode, image sensor, near-infrared, time-gated imaging

I. Introduction

The outcome of cancer therapies depends on the ability of clinicians to visualize, monitor and be guided by the real-time response of the treatment being administered. In the surgical arena, tumor resections rely primarily on tools for intraoperative optical navigation to ensure that no microscopic residual cancer cells are left behind in the tumor bed during the operation. It is therefore critical that these intraoperative imaging tools be sensitive, compatible with minimally invasive surgeries, and readily integrated with the clinical workflow, while maintaining an acceptable resolution and reliability. For example, clinical margins are often only detected on post-operative analysis in the pathology lab, and visualizing cell clusters of fewer than 200 cells, which represents an area of 15 μm by 15 μm, is required for cancer staging purposes, which highlights the fundamental need for a highly sensitive imager[1]. These high levels of sensitivity often necessitate powerful optics which utilize complex and bulky elements, and these criteria impose restrictive requirements on the size and form factor of these intraoperative imaging platforms, particularly when a high degree of maneuverability in a minimally invasive surgical environment is needed.

To image tumor cells, cancer cells can be labeled with optically tagged molecular probes. These probes can be either specific, using a targeted molecule binding to a cancer-specific receptor, or non-specific, relying on effects such as enhanced permeability and retention (EPR). For fluorescent probes in the NIR optical window of tissue, upon excitation with a fixed wavelength, emission light at a slightly longer, Stokes-shifted wavelength (often only 30 to 50 nm longer) is emitted by the targeted cells and captured by the imaging sensor, revealing any existing cancer cells. Recent instrumentation breakthroughs have significantly reduced the form factor of these imagers, down to centimeter-scale levels[2], [3], and brought the field closer to achieving fully guided resection surgeries. Advances in fiber optics have enabled carrying the excitation light directly to the tumor bed and bringing the emission light back to the sensor. As a result, bulky optics and sensors no longer need to be in direct contact with the specimen, and miniaturization is limited only by the size of the fiber optics. These fiber optics are, however, constrained by their bending radius, and because of their limited flexibility, they do not scale well.

The large form factor of conventional imagers is constrained by the optical sensitivity requirements that need to be met for imaging NIR fluorophores. High-performance and rigid optical filters are required to reject the much stronger excitation light and extract the significantly (more than 10⁵ times) weaker emission light, which is only a few dozen nanometers away. Moreover, conventional molecular probes often suffer from photobleaching, in which their optical characteristics degrade over time, preventing longer imaging times. Enhanced optical filters for widefield fluorescence microscopes, a diverse selection of more efficient optical probes and less bulky lenses have all contributed to the miniaturization of previously cumbersome intraoperative imagers. Nonetheless, hard-to-access and complex tumor cavities, common in current surgical procedures, remain a great obstacle to making those imagers practical while remaining minimally invasive, and current optics are not easily planarized, precluding a universal integration of devices into surgical procedures. A chip-scale imager can alleviate these challenges by integrating these optics directly onto planar sensors and using alternative imaging schemes to obviate filters altogether.

Chip-based imagers are an emerging alternative to conventional fluorescence microscopy and are able to achieve ultra-small form factors and microscopic levels of imaging resolution[4]–[6]. In addition to their small size, chip-scale sensors allow direct contact imaging, allowing placement of the sensor only micrometers away from the specimen, obviating large lenses and focusing optics in favor of capturing light before it diverges. The ability to perform direct contact imaging eliminates the need for restrictive fiber optics. Moreover, chip-scale imagers are inherently scalable in CMOS, enabling broad areas to be covered without sacrificing resolution or imaging speed, as pixels can largely operate in parallel. While these imagers achieve a significantly smaller form factor, the high-performance optical (color) filters needed to reject the background generated by the interfering excitation light (often many orders of magnitude stronger than the emission, and particularly critical when using fluorophores with small Stokes shifts) hinder further miniaturization of the platform, as these filters are often angle-dependent and therefore cannot do without precise focusing optics to adjust the angle of incidence of the emission light. A time-gated imaging scheme, on the other hand, where the excitation light is pulsed and emission light is acquired only after the excitation light is no longer present, would require no optical filters and would enable a fully optics-free, chip-scale solution.

Achieving the smallest form factor requires eliminating optical filters, and their requisite lenses, in favor of an alternative imaging scheme that does not require them. It also requires mitigating autofluorescence (the tissue’s inherent short-wavelength fluorescence) by using a longer, lower-energy excitation wavelength, and using molecular probes that are immune to photobleaching. A time-gated acquisition obviates the need for a blocking filter, as the excitation light would be turned off during integration, as seen in Fig. 1(a). To date, time-gated imaging has been used only in limited cases with conventional biomarkers, due to their very short (nanosecond) radiative decay and the fact that this decay is indistinguishable from tissue autofluorescence, resulting in an increased background. Optical probes with NIR-I (700–1000 nm) and NIR-II (1000–1700 nm) excitation wavelengths have deep penetration depths (several millimeters) and are therefore very appealing for surgical procedures requiring deep margin assessments such as lymph node imaging. These optical probes would mitigate the autofluorescence problem, and a longer emission lifetime would allow time-gated acquisition to become viable.

Fig. 1.

Overview of time-gated imaging using upconverting nanoparticles. (a) time-gated imaging scheme waveforms. (b) upconverting nanoparticle absorption and emission. (c) proposed intraoperative imaging platform and surgical integration of the micro-imager.

Lanthanide-doped upconverting nanoparticles (UCNPs) are phosphors that have the ability to absorb multiple photons in the NIR range and combine their energy into a single photon with a higher energy, “upconverting” the excitation (Fig. 1(b)), and thus virtually preventing autofluorescence from occurring thanks to their long-wavelength excitation (980 nm). In addition to their upconversion power efficiency being orders of magnitude higher than those of the best two-photon fluorophores[7], [8], they also have much longer decay times (>100 μs)[9] relative to organic fluorophores (~ns time scales), which enables time-resolved imaging at CMOS speeds. Furthermore, their uniquely long excitation wavelength (980 nm) is ideal for deep tissue penetration, and their visible emission (predominantly 660 nm) provides a synergistic compatibility with CMOS photodiodes and speeds readily achieved in modern processes.

Although no background illumination light or autofluorescence remains, when pulsed, NIR excitation light still results in large amounts of interference in CMOS-based photodiodes. This occurs because the NIR illumination light, absent a filter, will penetrate into the silicon bulk, beneath the photodiodes, generating substrate carriers with diffusion times on the order of hundreds of microseconds[10]. This introduces a background current in the photodiode that needs to be measured and subtracted from the desired signal. Here we present a time-gated, lens-less and filter-less chip-scale solution that mitigates the interference challenge by incorporating a dual-photodiode pixel architecture and a localized background-level and interference adjustment method. Our optics-free platform utilizes UCNPs as optical probes and includes integrated micro-fabricated angle-selective gratings (ASGs) to further enhance image sharpness. As a fully planarized system, this sensor can be thinned down to a thickness nearing 25 μm and can therefore be seamlessly integrated on virtually any surgical surface or probe, as seen in Fig. 1(c), providing real-time intraoperative visualization of targeted cells with pulsed NIR excitation and direct contact imaging, while remaining free of background and autofluorescence.

This fully integrated imaging system includes two correlated double sampling schemes to acquire data, as well as a built-in calibration module to correct gain mismatch between the pixels, to enhance sensitivity. The dual-photodiode design and the localized background measurement and cancellation have also been compensated for non-linear dependencies and optimized for minimum area overhead.

This paper is organized into 6 sections. Section II describes the challenges of NIR excitation interference in CMOS and presents the dual-photodiode pixel architecture used in this work to mitigate it. Section III details the pixel circuit design and Section IV details the optics-free time-gated imaging system. In Section V, we present experimental results to validate the sensor’s performance and its ability to carry out time-gated imaging with UCNP markers using a standard 1951 USAF resolution test chart plate, which emulates cellular feature resolutions. The conclusion is provided in Section VI.

II. NIR Excitation Interference and Dual-Photodiode Pixel Design

A. Effects of NIR Excitation on CMOS Photodiodes

Time-gated illumination schemes obviate, in principle, the need for optical emission filters, since the excitation light is turned off during image acquisition. However, the main challenge arises when light with a wavelength that travels deep into silicon (i.e., NIR light such as 980 nm) penetrates into the bulk silicon, generating a significant number of electron-hole pairs which enter the photodiode and function as interfering carriers. As seen in Fig. 2(a), these carriers are generated throughout the substrate, but the low doping of the bulk leads to slow recombination, allowing them time to travel towards the depletion regions at the surface where they recombine, thus creating a background interference on the photodiodes. Given the angular and spatial uniformity of the carriers’ paths inside the substrate, Fig. 2(a) also illustrates how these interfering carriers will even reach photodiodes covered by metal layers, albeit to a different extent. As a result of the low number of dopants present in the substrate, this undesirable background will have a recombination lifetime of several hundred microseconds, posing a great challenge for time-gated acquisition.

Fig. 2.

Effect of pulsed NIR excitation on covered and uncovered photodiodes: (a) positive (uncovered) and negative (covered) photodiode and the locations and paths of the two different kinds of charges generated within the pixel. (b) time domain characteristics of UCNP emission decay and background generated by the pulsed excitation light on pixels (5-ms pulsed 980 nm laser at 180 W/cm²). (c) measured background level on the PPD as a function of the power of the pulsed (time-gated) 980 nm excitation light (pulse duration = 5 ms).

While NIR light will stimulate a photodiode whether covered or not, shorter wavelengths such as UV or the visible emission of UCNPs that do not penetrate deep into the silicon, are more easily blocked by metal layers, and mainly create optically induced charges only in uncovered diodes, as seen in Fig. 2(a). Thus, we can use this distinction to measure the local background generated and subsequently remove it from the uncovered photodiode. The measured intensities and decay times of both UCNP emission (with a total particle size of 26 nm, comprising a 16 nm NaEr0.8Yb0.2F4 core and a 5 nm NaY0.8Gd0.2F4 shell[11] and concentration of 0.68 μM) and interfering background on a pixel are shown in Fig. 2(b). The UCNP emission has an effective decay lifetime of 950 μs, very similar to the 900 μs average recombination lifetime of interfering carriers, and their similarities in intensity and decay make it challenging to distinguish the signal from the background. Importantly, the intensity of the background does not scale down linearly with the excitation power, as shown in Fig. 2(c) demonstrating that even reducing illumination power dramatically (such as with a thin-film interference filter) does not eliminate the background notably. The converse is also true, that by increasing the optical power, we do not substantially increase our background, but do increase our signal proportionally.

B. Dual-Photodiode Pixel Design

Our proposed imaging system includes a novel background correction method that leverages a secondary and fully covered photodiode labeled “negative photodiode” (NPD), shown in Fig. 2(a), to extract a local and per-pixel measurement of the background and adjust the primary and uncovered “positive photodiode” (PPD) background accordingly and reduce the residual background to near noise level.

The idea of using a secondary covered photodiode to measure and adjust the baseline of the main sensing diode has been demonstrated in works such as [12]–[15], where the signal difference between the two diodes is differentially amplified by a chain of limiting amplifiers to extract the input optical signal, i.e., a binary signal of “0” or “1” where “1” represents light being detected and “0” represents absence of any optical signal at the input. While this allows achieving very high bandwidths of several GHz, its performance is limited to only digital optical signals and fails to extract analog information from signals such as the emission of fluorescently labelled tissues, the main source of information in medical imaging. We have developed a novel method using non-linear per-pixel characterization that allows using the secondary photodiode to adjust the local background and extract the underlying emission from it, while minimizing fill factor loss.

This addition of a second photodiode will result in overall signal loss due to a reduced fill factor. In the case of equal pixel area allocation for the two photodiodes, the system would see its dynamic range lowered by 6 dB. As a result, the secondary diode should only be as large as needed, and additional ratio adjustment is preferred instead to translate background levels between the two photodiodes. Fig. 3(a) illustrates the implementation of the two photodiodes, demonstrating their dissimilarity in both size and shape. The PPD is implemented using 4 identical 19 μm-by-19 μm photodiodes in parallel, covered by integrated angle-selective gratings that block oblique light from reaching the pixel to enhance spatial resolution, as described in [16]. The NPD is formed from a cross-shaped active region spanning the space between the 4 PPDs, overall providing a centroid formation for a more uniform background measurement.
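The 6 dB figure is consistent with halving the light-collecting area. A minimal sketch of that arithmetic follows, assuming the collected signal scales linearly with the PPD share of the photodiode area and the noise floor is unchanged (both are simplifying assumptions, and the function name is illustrative only):

```python
import math


def dynamic_range_change_db(ppd_area_fraction: float) -> float:
    """Change in signal amplitude, in dB, when only a fraction of the
    photodiode area collects emission light.

    Assumes signal is proportional to PPD area and noise is unchanged.
    """
    if not 0.0 < ppd_area_fraction <= 1.0:
        raise ValueError("area fraction must be in (0, 1]")
    return 20.0 * math.log10(ppd_area_fraction)


# Equal split between PPD and NPD: half of the area collects signal.
equal_split_db = dynamic_range_change_db(0.5)
print(f"{equal_split_db:.2f} dB")
```

Under the same assumptions, giving the NPD a smaller share of the area reduces this penalty, which is the motivation for sizing the NPD only as large as needed.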

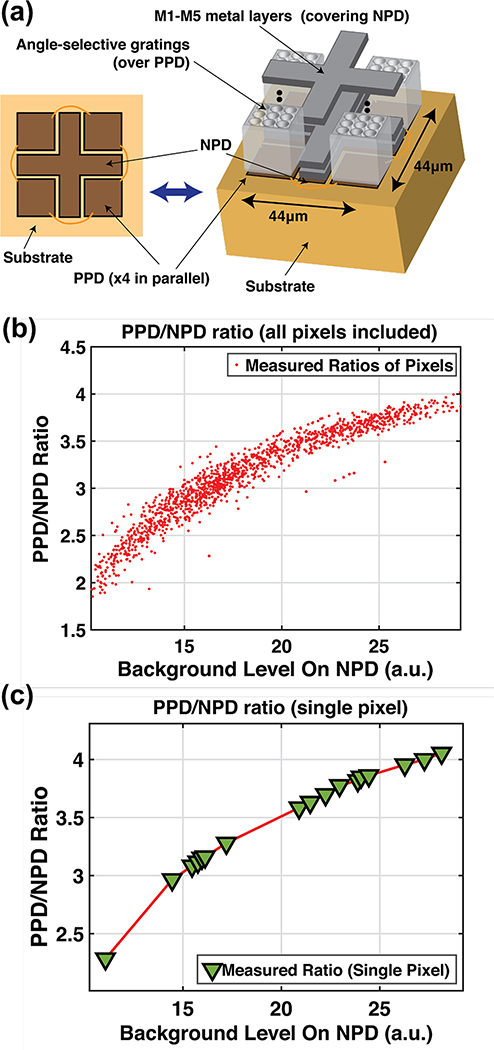

Fig. 3.

Non-linear dependency of positive-to-negative photodiode ratio: (a) 3D diagram of PPD (covered by angle-selective gratings) and NPD (covered with M1-M5 metal layers) in the pixel. (b) aggregate scatter plot of the ratios for all the pixels at various samples of NPD background levels. (c) fitted curve of the photodiode ratio as a function of measured NPD background for one sample pixel.

As seen in Fig. 3(a), the two diodes are not identically sized, and therefore ratio adjustment is needed, where ratio is defined as

$$ f(B_N) = \frac{B_P}{B_N} \tag{1} $$

with BP and BN being the background levels measured on the PPD and NPD respectively. The characteristics of f(BN) were measured by extracting the ratio at various background levels for each individual pixel, and the aggregate of all ratios extracted for the entire array is shown in Fig. 3(b). It can be seen from Fig. 3(b) that the ratio has a non-linear dependency on the background level, which therefore needs to be accounted for when using the NPD to adjust the background of the PPD. To this end, the data for each individual pixel PD-pair is used to perform a one-time ratio curve fitting to extract the profile of this ratio. A curve fitting example for a sample pixel is shown in Fig. 3(c).
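The one-time per-pixel ratio fit can be sketched as below. The text does not state the functional form of the fitted curve, so a quadratic in BN is assumed here purely for illustration; the calibration values are synthetic, and the function names are hypothetical.

```python
def fit_ratio_curve(b_n_samples, b_p_samples):
    """Least-squares fit of ratio = B_P / B_N to c0 + c1*B_N + c2*B_N**2.

    The quadratic model is an assumption; returns [c0, c1, c2].
    """
    x = list(b_n_samples)
    y = [bp / bn for bp, bn in zip(b_p_samples, b_n_samples)]
    # Build the 3x3 normal equations A c = b for the quadratic model.
    s = [sum(xi ** p for xi in x) for p in range(5)]
    a = [[s[i + j] for j in range(3)] for i in range(3)]
    b = [sum(yi * xi ** i for xi, yi in zip(x, y)) for i in range(3)]
    # Gaussian elimination with partial pivoting.
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, 3):
            factor = a[r][col] / a[col][col]
            for c in range(col, 3):
                a[r][c] -= factor * a[col][c]
            b[r] -= factor * b[col]
    # Back substitution.
    coeffs = [0.0, 0.0, 0.0]
    for i in (2, 1, 0):
        acc = b[i] - sum(a[i][j] * coeffs[j] for j in range(i + 1, 3))
        coeffs[i] = acc / a[i][i]
    return coeffs


def predict_ratio(coeffs, b_n):
    """Evaluate the fitted ratio f(B_N) at a given NPD background level."""
    c0, c1, c2 = coeffs
    return c0 + c1 * b_n + c2 * b_n ** 2


# Synthetic calibration sweep for one pixel (values in mV, not measured data).
b_n_sweep = [10.0, 20.0, 40.0, 60.0, 80.0, 100.0]
b_p_sweep = [(3.0 - 0.01 * bn + 0.00005 * bn ** 2) * bn for bn in b_n_sweep]
pixel_coeffs = fit_ratio_curve(b_n_sweep, b_p_sweep)
```

In a full array, one coefficient set would be stored per pixel and reused for every subsequent frame, since the fit is described as a one-time step.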

C. Emission Signal Leakage Correction

The 5 metal layers used to cover the NPD from the emission light almost completely shield the NPD from short wavelengths such as UV, and thus the NPD level measured will only include the background generated by the excitation light, easily removed with ratio adjustment. However, the performance of the blocking metal layers starts to degrade with longer wavelengths, and at 660 nm (the UCNP peak emission wavelength) 6.5% of the emission signal captured by the PPD is also present in the measured signal on the NPD, as seen in Fig. 4(a). Failing to mitigate this “leakage” will not only result in an emission signal loss after adjusting the PPD background, but, due to the non-linear dependency of the ratio of the two photodiodes, will also corrupt the background adjustment.

Fig. 4.

Overview of the wavelength-dependent “k” factor used in the background correction scheme. (a) background generated on NPD at different wavelengths (namely 660 nm, which is of interest) as a percentage of the background on PPD. (b) histogram of original NPD values and true NPD background levels extracted using pixel-level non-linear curve fitting (the data for these two histograms were captured from an image of a 0.68 μM UCNP dispersion chamber, placed directly on the chip, and illuminated with 5 ms pulses of 980 nm excitation light at 45 W/cm²).

To account for the emission component measured on the NPD, we parametrize the measured signal on both photodiodes using the following equations:

$$ \mathrm{PPD} = E_P + B_P, \qquad \mathrm{NPD} = E_N + B_N \tag{2} $$

where EP and EN are the emission components of the measured values on the PPD and NPD respectively, and BP and BN are the excitation background generated on the PPD and NPD. Knowing the proportional (linear) relationship between EP and EN (from Fig. 4(a)) and the ratio f(BN), as defined in (1), we can further simplify (2) into

$$ \mathrm{PPD} = E_P + f(B_N)\,B_N, \qquad \mathrm{NPD} = k\,E_P + B_N \tag{3} $$

where k is the proportional coefficient extracted from Fig. 4(a). While k is wavelength dependent (see Fig. 4(a) for dependency), we may consider it constant because the majority of the emission power of the aUCNPs is concentrated around 660 nm. As a result, using the measured values of PPD and NPD, we can then solve (3) for BN and extract the true and corrected level of background. Removing BP from the PPD signal then becomes trivial using (2) and f(BN). Fig. 4(b) shows the distribution of a sample set of NPD measurements and the corrected and extracted background levels, and highlights the importance of this correction.
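One way to carry out this extraction numerically is sketched below. It assumes the leakage model EN = k·EP and the ratio BP = f(BN)·BN, which together give the scalar equation NPD = k·(PPD − f(BN)·BN) + BN. A fixed-point iteration is used here as an illustrative solver (the text does not specify one), and the ratio function and readings are hypothetical.

```python
def solve_background(ppd, npd, k, ratio_fn, tol=1e-9, max_iter=200):
    """Solve NPD = k*(PPD - f(B_N)*B_N) + B_N for B_N by fixed-point iteration.

    Returns (B_N, B_P, E_P). Convergence relies on k * d(f(B_N)*B_N)/dB_N
    being well below 1, which holds for the small k assumed here.
    """
    b_n = npd  # initial guess: treat the whole NPD reading as background
    for _ in range(max_iter):
        b_p = ratio_fn(b_n) * b_n
        b_n_next = npd - k * (ppd - b_p)
        converged = abs(b_n_next - b_n) < tol
        b_n = b_n_next
        if converged:
            break
    b_p = ratio_fn(b_n) * b_n
    e_p = ppd - b_p  # emission component left on the PPD
    return b_n, b_p, e_p


def example_ratio(b_n):
    """Hypothetical fitted ratio curve for one pixel (not measured data)."""
    return 3.0 - 0.002 * b_n


# Synthetic readings in mV, built from B_N = 60, E_P = 50 and k = 0.065.
b_n_est, b_p_est, e_p_est = solve_background(
    ppd=222.8, npd=63.25, k=0.065, ratio_fn=example_ratio
)
```

For the synthetic readings, the solver recovers the background and emission values used to construct them. Re-running it with a perturbed k gives a quick feel for how sensitive the extracted background is to that coefficient.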

The precision of this technique relies on the accuracy of the proportional coefficient k that is used. While the values shown in Fig. 4(a) are measured at normally incident light, it is evident that the emission light coming from an arbitrary specimen on the imager will not be confined to normal incidence. To quantify the limits of this correction, the PPD background levels were extracted using a 20% larger and a 20% smaller k, to account for its angle dependency with a reasonable range of change in its value. Fig. 5(a) shows a histogram of the extracted background values in the 3 cases of a 20% higher, baseline (normal incidence) and 20% lower k value. The individual deviations of the extracted NPD backgrounds from the baseline (normal incidence) case are shown in Fig. 5(b), demonstrating a mean NPD background deviation of −1.33 mV for a 20% higher k and 1.89 mV for a 20% lower one. The PPD deviations from their baseline values are shown in Fig. 5(c), demonstrating that the overall correction precision of this method is near 3.5 mV.

Fig. 5.

Effect of angle dependency, and k variation on NPD and PPD correction reliability. (a) histogram of sample set of extracted and adjusted NPD background values at baseline k, 20% higher k and 20% lower k value. (b) histogram of the deviation of adjusted NPD background with 20% higher and lower k value from their baseline values. (c) absolute value of effective deviation of PPD levels from their baseline values with a 20% higher and lower k value.

III. Pixel Design

There are several challenges to achieve a robust and linear pixel design that need to be carefully addressed, such as a sufficiently large dynamic range, a linear optical response, signal-dependent leakage of the switches, flicker noise and fill factor loss. Shown in Fig. 6 is the schematic of the pixel circuitry. The pixel architecture is a capacitive transimpedance amplifier (CTIA) which provides an acceptable dynamic range and a linear response, provided its loop gain is sufficiently high.

Fig. 6.

Schematic of the pixel circuit and control signal waveforms.

The integration capacitor Cint placed in feedback is a custom metal-oxide-metal (MOM) capacitor with a capacitance of 11 fF. This highly linear capacitor and a high-gain front-end amplifier ensure a linear response to optical stimulation over the entire dynamic range. The two photodiode paths also include switches (M7-M8) to connect the photodiodes to the analog supply whenever not in use, to ensure leakage across the switches M5 and M6 remains signal-independent. To keep the leakage across the integrating capacitor’s reset switch (M9-M10) signal-independent as well, a replica biasing circuit is connected when the switch is opened (when ϕRST is low), to keep a constant voltage applied to the switch[16]. This allows the leakage of the switches to appear as a constant offset at the output, which can be easily measured and cancelled out during acquisition.

Each photodiode’s signal is measured individually in a time-domain duplexed manner, and the data is acquired using two rounds of correlated double sampling (CDS). Fig. 6 shows the timing of the control waveforms, where during the non-inverted acquisition for either photodiode, the laser light is initially pulsed (for 5 ms) and, upon turning off, the acquisition begins with ϕRST going high (for about 100 μs) and resetting the charge on the integration capacitor. The first CDS scheme, implemented to mitigate the effects of flicker noise, is carried out by capturing two samples, “RESET” as the baseline and “SIGNAL” as the secondary CDS sample, each of which is first stored on a register capacitor and transferred to the output through dedicated column buffers. The integration time is determined by the spacing of the “RESET” and “SIGNAL” acquisition timepoints, as shown in Fig. 6.

The second CDS scheme is included to remove systematic offsets in the “RESET” and “SIGNAL” buffering paths and is implemented by acquiring a second sample for each photodiode, with swapped “RESET” and “SIGNAL” register capacitors, resulting in an inverted sample (shown in Fig. 6). This offset will appear in the two samples as a common-mode component which can be removed, and the underlying signal retrieved, via simple subtraction. Thanks to its fast readout time of 1.2 ms and repeatability, a single acquisition can be repeated as many times as needed and, when averaged, increases the SNR by a factor proportional to √N, where N is the number of repetitions.
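The offset cancellation and averaging described above can be illustrated with a small numerical sketch. The path offsets are modeled as fixed additive terms, and the noise is assumed uncorrelated between repetitions so that averaging N acquisitions lowers the rms noise by √N; the function names and sample values are illustrative only.

```python
import math


def double_cds(v_reset, v_signal, offset_reset_path, offset_signal_path):
    """Combine a non-inverted and an inverted CDS sample.

    Each buffering path adds its own fixed offset. Swapping the register
    capacitors flips the sign of the signal difference but not of the
    path-offset difference, so subtracting the two results removes it.
    """
    # First pass: SIGNAL and RESET travel through their nominal paths.
    non_inverted = (v_signal + offset_signal_path) - (v_reset + offset_reset_path)
    # Second pass: register capacitors swapped, so the paths are exchanged.
    inverted = (v_reset + offset_signal_path) - (v_signal + offset_reset_path)
    return 0.5 * (non_inverted - inverted)


def averaged_noise_rms(noise_rms, repetitions):
    """RMS noise after averaging, assuming uncorrelated noise per repetition."""
    return noise_rms / math.sqrt(repetitions)


# Illustrative values in mV: a 50 mV integrated signal with mismatched offsets.
recovered = double_cds(v_reset=100.0, v_signal=150.0,
                       offset_reset_path=3.0, offset_signal_path=-2.0)
noise_after_16 = averaged_noise_rms(2.2, 16)
```

In this model the recovered value equals the true difference between the SIGNAL and RESET levels regardless of the two path offsets.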

The noise performance of the pixel was simulated at various integration times, with all noise sources accounted for, including but not limited to shot noise, thermal noise, and flicker noise, which are the major contributors of noise to the system. The shot noise in particular is a signal-dependent noise which increases whenever emission or background is present and a larger photocurrent is generated, and is therefore the dominant source of noise at longer integration times (above a few ms). For simplicity and without loss of generality, all noise measurements and simulations have been performed in the absence of emission and excitation signal. The noise of the pixel was measured, and Fig. 7 shows the results of the simulated and measured noise values. For a typical integration window duration of 1 ms, the measured noise voltage was 2.2 mV rms, dominated primarily by the thermal noise of the front-end amplifier.

Fig. 7.

Pixel noise measurement and simulation results.

The chip microphotograph of the imager array, fabricated in a 0.18 μm process, is shown in Fig. 8(a). The sensor measures 2.3 mm by 4.8 mm and includes internal digital control blocks to monitor and carry out the acquisition and readout of the array via an external FPGA. Fig. 8(b) shows a microphotograph of the pixels. As seen in Fig. 8(b), the negative PD is covered by 5 metal layers and the 4 identical PPDs are connected in parallel and covered by angle-selective gratings, surrounded by the control and readout circuitry of the pixel. The 55 μm by 55 μm pixel has been designed to allow efficient use of photodiode areas and shapes to minimize fill factor loss, while accommodating the photodiode pair in it.

Fig. 8.

Chip microphotograph: (a) pixel array and digital and control blocks. (b) pixel microphotograph close-up.

IV. Optics-Free Intraoperative Imaging Platform

As an intraoperative imaging platform, shown in Fig. 9, our proposed imaging system has at its core a 36-by-80-pixel array, where each pixel includes a photodiode pair (NPD and PPD) and column buffers for time-domain duplexed readout. The sensor also includes a digital control block for readout and acquisition control as well as a row decoder paired with column-wise multiplexers to enable parallel readout of 80 columns using only 8 channels. The acquisition and readout timings are controlled by an FPGA, using digital control signals, and readout data is sent to the FPGA through 8 external 12-bit analog-to-digital converters (ADCs) that communicate with it serially. The readout process is carried out by the pair of data lines labeled “SIGNAL”, representing the main CDS sample, and “RESET”, representing the baseline sample for the CDS scheme.

Fig. 9.

System level overview of the imaging platform, including the specimen, external controlling FPGA, and image sensor and its internal architecture.

The imaging sensor also includes a current calibration scheme, implemented to correct integration capacitor variation throughout the array, which translates to a pixel gain error and can be a limiting factor for resolving images with low emission light levels. This calibration is an initial one-time step and is performed by directly connecting a single constant current source to each pixel successively, extracting the current-to-voltage gain of each pixel relative to the others, and using those stored values to adjust the gain during sample acquisition accordingly. Fig. 9 shows how the single calibration current source is multiplexed and connected to each pixel.
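A minimal sketch of how such a relative gain correction could be applied in post-processing is given below. Normalizing each pixel's calibration reading to the array mean is an assumption made for illustration, as are the function names and the sample values.

```python
def relative_gains(calibration_readings):
    """Per-pixel gain relative to the array mean.

    Every pixel is driven by the same calibration current, so differences
    in the readings are attributed to integration capacitor mismatch.
    """
    mean_reading = sum(calibration_readings) / len(calibration_readings)
    return [reading / mean_reading for reading in calibration_readings]


def apply_gain_correction(raw_values, gains):
    """Divide each raw pixel value by its stored relative gain."""
    return [raw / gain for raw, gain in zip(raw_values, gains)]


# Illustrative 3-pixel example (mV): same current, mismatched capacitors.
stored_gains = relative_gains([100.0, 110.0, 90.0])
# A uniform scene then reads differently on each pixel until corrected.
corrected = apply_gain_correction([50.0, 55.0, 45.0], stored_gains)
```

Because the gains are extracted once and stored, the per-frame cost of the correction is a single division per pixel.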

Using direct contact imaging, the specimen will be placed directly on the chip, as shown in Fig. 9 while the pulsed excitation light is provided by the external light source. The decaying emission light will subsequently be captured and acquired by our proposed sensor using a time-resolved acquisition scheme.

V. Experimental Results

A. Experimental Setup

To validate the ability of our sensor to perform time-resolved imaging using upconverting nanoparticles, we tested its performance in a specimen experiment. Fig. 10(a) shows the imaging platform (without the specimen mounted). Due to our ability to perform direct contact imaging, the platform does not include any filters or lenses, and as a result, the imager chip can be used bare, as shown in Fig. 10(a).

Fig. 10.

Overview of the setup used for imaging: (a) before mounting the specimen on the imager. (b) after mounting the specimen on the imager.

The specimen used for the experiment is a negative USAF resolution target test plate, coated with a chrome background. We have selected a single feature on the plate (the digit “1” in the “group 1” section of the plate) and placed the plate directly on the sensor. This specimen is illuminated by a quartz chamber containing an upconverting nanoparticle dispersion, which is in turn illuminated by an external excitation light source. 500 μL of a 0.68 μM upconverting nanoparticle dispersion (in hexane) is transferred into the quartz chamber, which has a 1 mm light path (thickness) and is placed directly on the USAF target plate, as shown in Fig. 10(b). Once the specimen is mounted, the laser is collimated and pulsed, and the time-gated acquisition of the specimen emission is carried out. The laser pulse duration and integration time were 5 ms and 1 ms respectively, resulting in an overall frame rate of 34 Hz. The laser power density used for this experiment was 200 W/cm². This experiment has been designed to emulate a tumor specimen that has been injected with targeted upconverting nanoparticles, where the imaging platform will serve as a surgical tool that guides clinicians in locating and visualizing tumor bed layers and microscopic residual cancer cells left behind during resection surgeries.
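The reported frame rate can be compared against a simple timing budget. The breakdown below is an assumption rather than something stated in the text: it supposes four sub-acquisitions per frame (a non-inverted and an inverted sample for each of the two photodiodes), each consisting of a 5 ms laser pulse, a roughly 100 μs reset, a 1 ms integration window and a 1.2 ms readout.

```python
def frame_rate_hz(pulse_ms, reset_ms, integration_ms, readout_ms,
                  sub_acquisitions):
    """Frame rate for a frame built from identical sequential sub-acquisitions.

    The sequential, non-overlapping timing model is an assumption.
    """
    sub_acquisition_ms = pulse_ms + reset_ms + integration_ms + readout_ms
    frame_ms = sub_acquisitions * sub_acquisition_ms
    return 1000.0 / frame_ms


# Assumed budget: 2 photodiodes x 2 CDS polarities = 4 sub-acquisitions.
estimated_rate = frame_rate_hz(pulse_ms=5.0, reset_ms=0.1,
                               integration_ms=1.0, readout_ms=1.2,
                               sub_acquisitions=4)
print(f"{estimated_rate:.1f} Hz")
```

Under these assumptions the estimate lands close to the reported 34 Hz, which suggests the laser pulse duration is the dominant term in the frame time.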

B. Experimental Results

The ground truth image of the specimen (digit “1”) is shown in Fig. 11(a). The background correction method relies on the values extracted from the positive PD and the negative PD, both of which are shown in Fig. 11(b) and 11(c) respectively. The PPD measured an average of 225 mV of signal where emission was present, while the average NPD’s measured signal in that same region was 62 mV, both of which include an emission component as well as the background from the NIR excitation. Using the background adjustment method proposed, we can extract the underlying emission component of the image and resolve the image shown in Fig. 11(d). The average emission signal in the final extracted image is 50 mV, for an integration time of 1 ms. The background in Fig. 11(d) (surrounding the specimen) is less than 10 mV and has a mean of 6.5 mV, demonstrating that the proposed method is able to reduce the interfering NIR excitation to single-digit mV level and near the noise floor of 2.2 mV, thus allowing filter-less and lens-less time-resolved imaging to be possible. The angle-sensitivity of the background adjustment method remains the main limiting factor to achieve higher sensitivity, surpassing integration capacitor and photodiode responsivity mismatch between the pixels, each only resulting in at most 1.5 mV of measurement error.

Fig. 11.

Specimen experiment results: (a) image of the feature on the USAF resolution target plate being acquired. (b) measured time-resolved image of the positive photodiode (Tint = 1 ms). (c) measured time-resolved image of the negative photodiode (Tint = 1 ms). (d) extracted and background-corrected image of the specimen.
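To make the idea of a one-time, pixel-level calibration followed by background subtraction more concrete, the following is a minimal sketch rather than the authors' implementation. It assumes a simplified model in which, for each pixel, the excitation background seen by the PPD is a quadratic function of the NPD reading, fitted once from excitation-only calibration frames. It also assumes, unlike the measurement described above, that the NPD carries no emission component. All function names, the polynomial degree, and the synthetic values are hypothetical.

```python
import numpy as np


def fit_background_model(npd_cal, ppd_cal, degree=2):
    """Fit, per pixel, a polynomial mapping NPD reading -> PPD background.

    npd_cal, ppd_cal: arrays of shape (n_levels, rows, cols), in mV, recorded
    with excitation only (no emitter) at several excitation power levels.
    Returns coefficients of shape (degree + 1, rows, cols), highest power first.
    """
    _, rows, cols = npd_cal.shape
    coeffs = np.empty((degree + 1, rows, cols))
    for r in range(rows):
        for c in range(cols):
            coeffs[:, r, c] = np.polyfit(npd_cal[:, r, c], ppd_cal[:, r, c], degree)
    return coeffs


def correct_background(ppd, npd, coeffs):
    """Subtract the predicted excitation background from the PPD image."""
    predicted = np.zeros_like(ppd, dtype=float)
    for k in coeffs:  # Horner evaluation of the per-pixel polynomial
        predicted = predicted * npd + k
    return ppd - predicted


# Synthetic demonstration (all numbers are made up for illustration).
rng = np.random.default_rng(0)
rows, cols = 4, 5
gain = 2.5 + 0.2 * rng.random((rows, cols))  # hypothetical per-pixel PPD/NPD ratio
curve = 0.004                                # hypothetical mild non-linearity (1/mV)


def synthetic_ppd_background(npd):
    return gain * npd + curve * npd**2


# Calibration frames: excitation only, eight power levels.
npd_cal = np.linspace(10.0, 80.0, 8)[:, None, None] * np.ones((rows, cols))
ppd_cal = synthetic_ppd_background(npd_cal)
coeffs = fit_background_model(npd_cal, ppd_cal)

# Imaging frame: a 50 mV synthetic emission feature on top of the background.
emission = np.zeros((rows, cols))
emission[1:3, 2] = 50.0
npd = np.full((rows, cols), 60.0)
ppd = synthetic_ppd_background(npd) + emission
recovered = correct_background(ppd, npd, coeffs)
print(np.round(recovered, 3))
```

In this idealized case the synthetic feature is recovered essentially exactly. In the real sensor the NPD also collects some emission and the background depends on the angle of incidence, so the residual background would not vanish.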

While the experimental results in Fig. 11(d) demonstrate enough resolution for the imager to be considered a reliable tool for intraoperative imaging, its performance could be improved further if clinical and surgical settings allowed a larger form factor. The sharpness and resolution of the imager can be enhanced by increasing the aspect ratio of the angle-selective micro-gratings. Alternative methods of angular selectivity have been reported previously [17]–[19], in which the Talbot effect is used to recover angular information from the pixels and computationally deblur the image. However, these methods rely on optical wave interference and are therefore wavelength-dependent; unlike [16], [20]–[22], they cannot be used for a broad range of emission wavelengths.

As an intraoperative imager, our platform must fully comply with clinical optical exposure limits. According to the American National Standards Institute (ANSI), the maximum allowed power density for a 980 nm laser pulsed for 5 ms is 200 W/cm² [23]. Fig. 12 shows how the SNR changes with excitation power density; as seen in the graph, the imager still achieves a maximum SNR of 15 dB while staying within the ANSI limit of 200 W/cm².

Fig. 12.

SNR of pixel (in emission region) as a function of excitation light power.
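For readers who prefer linear ratios to decibels, the small helper below converts between the two. It is a sketch that assumes the amplitude convention, dB = 20·log10(ratio), which is the usual choice for voltage-like quantities; the exact SNR definition behind Fig. 12 is not restated in this section, so the convention is an assumption. The second calculation uses the 50 mV emission and 6.5 mV mean background reported above and yields a signal-to-background figure, which is a different quantity from the SNR plotted in Fig. 12.

```python
import math


def db_to_amplitude_ratio(db):
    """Invert dB = 20*log10(ratio), the convention for voltage-like quantities."""
    return 10.0 ** (db / 20.0)


def amplitude_ratio_to_db(ratio):
    """Express a voltage-like ratio in decibels."""
    return 20.0 * math.log10(ratio)


# Reported maximum SNR, expressed as a linear ratio (assumed convention).
snr_ratio = db_to_amplitude_ratio(15.0)
print(round(snr_ratio, 2))

# Signal-to-background from the reported 50 mV emission and 6.5 mV background.
emission_mv, background_mv = 50.0, 6.5
sbr_db = amplitude_ratio_to_db(emission_mv / background_mv)
print(round(sbr_db, 1))
```

With this convention, 15 dB corresponds to a ratio of about 5.6, and the reported emission and background levels give a signal-to-background figure of about 17.7 dB.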

VI. Conclusion

Table I compares the imager proposed in this paper with several other chip-scale image sensors for microscopic disease detection and biomedical applications. Existing sensors can be distinguished by their acquisition method and categorized into two types: time-resolved and wavelength-resolved. Wavelength-resolved acquisition requires excitation-blocking and color filters ([16], [24], [25]), often resulting in a large form factor, while the time-resolved method requires the excitation to be turned off during integration. In the time-resolved case, the signal is either extracted with a time-to-digital converter that measures the time of arrival of photons at single-photon avalanche diodes ([26]–[28]), or measured with a CMOS photodiode and accumulated over the integration time. Freed from optics (filters and lenses), such imagers can be integrated seamlessly with surgical surfaces.

TABLE I.

Comparison Table of Biomedical Imagers

| | TBIOCAS’19 [16] | TBIOCAS’11 [24] | JSSC’17 [25] | ISSCC’15 [26] | JSSC’12 [27] | JSSC’19 [28] | TBIOCAS’20 [6] | TBIOCAS’21 [29] | This work |
|---|---|---|---|---|---|---|---|---|---|
| Technology | 180 nm CMOS | 0.5 μm CMOS | 65 nm CMOS | 0.35 μm CMOS | 65 nm CMOS | 40 nm CMOS | 130 nm BCD* | 0.35 μm CMOS | 180 nm CMOS |
| Method of detection | Frequency-resolved (CMOS PD) | Frequency-resolved (CMOS PD) | Frequency-resolved (CMOS PD) | Time-resolved (TDC*) | Time-resolved (TDC*) | Time-resolved (TDC*) | Time-resolved (CMOS PD) | Time-resolved (CMOS PD) | Time-resolved (CMOS PD) |
| Imaging system size [mm × mm] | 2.3 × 4.8 | 3 × 3 | 0.4 × 0.8 | 3.4 × 3.5 | 4 × 4 | 3.2 × 2.4 | 4 × 0.42 | 3.8 × 3.8 | 2.3 × 4.8 |
| Requires optics? (filters/lenses) | Yes | Yes | No | Yes | Yes | No | No | No | No |
| Photodiode area fill factor | 64 % | 42 % | - | 21 % | 37 % | 42 % | - | 6.4 % | 48 % |
| Imaging rate | 19 fps | 28 fps | - | 486 fps | 20 fps | 18.6 kfps | 50 kfps | 2.4 fps | 34 fps |
| Integration time | 50 ms | 36 ms | 1–10 s | - | - | 100 ms | 2 ns | - | 1 ms |
| Pixel pitch [μm] | 55 | 20.1 | [91, 123] | 15 | [60, 72] | [18.4, 9.2] | [25.3, 51.2] | 400 | 55 |
| Excitation | 405 nm (0.1 W/cm²) | 550 nm | 405 nm | 470 nm | 610 nm | 485 nm | 488 nm | 617 nm | 980 nm (200 W/cm²) |
| Array size | 36 × 80 | 132 × 124 | Single PD | 160 × 120 | 32 × 32 | 192 × 128 | 8 × 64 | 6 × 7 | 36 × 80 |

*TDC: Time-to-digital conversion

*BCD: Bipolar-CMOS-DMOS

While emission filters and lenses have been obviated in [25] and [29] using integrated nano-structures and enzyme-based coatings, respectively, our work instead mitigates the excitation background with a time-resolved method, and the focusing optics have been miniaturized into angle-selective gratings to create a fully integrated system-on-chip. Although the optics-free imagers presented in Table I ([6], [25], [28]) can resolve nanosecond-scale lifetimes, their performance will be compromised when imaging tumor tissue, because tissue autofluorescence is most significant precisely at their excitation wavelengths (350 nm to 500 nm) [30]. Additionally, since autofluorescence exhibits a lifetime almost identical to that of conventional fluorophores (in the nanosecond range [31]), the emission signal will be masked by tissue autofluorescence. In contrast, autofluorescence is virtually eliminated with NIR excitation, creating a regime in which time-gated acquisition directly on tumor tissue is possible without any autofluorescence background. In this regime, we have validated the performance of our imager with a UCNP specimen, demonstrating its ability to image tumor sites targeted by UCNPs in a medical setting.

While our proposed imaging platform addresses some of the critical challenges of optics-free time-resolved imaging, further enhancements such as lower-noise front-end circuitry and a more angle-selective structure (whether integrated or external) would increase the signal-to-background ratio and improve overall detection sensitivity.

Acknowledgment

The authors would like to acknowledge Efthymios Papageorgiou for his contribution to this work.

Research reported in this publication was supported by the National Institute of Biomedical Imaging and Bioengineering of the National Institutes of Health under award number R21EB027238. HN is supported by R21EB027238. MA is supported by R21EB027238 and the Office of the Director and the National Institute of Dental & Craniofacial Research of the National Institutes of Health under award number DP2DE030713. Work at the Molecular Foundry was supported by the Director, Office of Science, Office of Basic Energy Sciences, Division of Materials Sciences and Engineering, of the U.S. Department of Energy under Contract No. DE-AC02-05CH11231.

Biographies

Hossein Najafiaghdam (M’17) received the B.Sc. degree from Sharif University of Technology, Tehran, Iran, in 2016, where he worked on analog and mixed-signal circuit design, and is currently pursuing the Ph.D. degree in the Department of Electrical Engineering and Computer Sciences at the University of California, Berkeley, CA, USA. His research includes developing biomedical imagers, specifically intraoperative surgical imagers for NIR-illuminated molecular probes targeting cancer cells. He worked at Apple Inc. during the summers of 2019 and 2020 on design test automation using machine learning and on DAC calibration algorithm development for system-on-chip sigma-delta modulators. He is also currently with Apple Inc., Cupertino, CA, USA.

Cassio C.S. Pedroso, Ph.D., is a postdoctoral fellow at the Molecular Foundry at Lawrence Berkeley National Laboratory in Berkeley, CA, USA, where his research focuses on the design, synthesis, and application of lanthanide-doped upconverting nanoparticles for bioimaging. Previously, he worked as a FAPESP postdoctoral fellow in the laboratory of Paolo Di Mascio at the University of São Paulo (USP, Brazil) developing luminescent nanoparticles for addressing problems in generation of radical oxygen species and redox processes in cells. He graduated with honors in Chemistry from Federal University of São Paulo (UNIFESP, Brazil) and earned his Ph.D. in Science with emphasis in Chemistry from USP (Brazil). He has a broad background covering nanoscience, spectroscopy, photo-physics, and chemical biology.

Bruce E. Cohen, Ph.D., is a staff scientist at the Molecular Foundry and the Division of Molecular Biophysics & Integrated Bioimaging at Lawrence Berkeley National Laboratory in Berkeley, CA, USA, where his research focuses on the design and synthesis of novel luminescent nanomaterials for bioimaging. He graduated with honors in Chemistry from Princeton and earned his Ph.D. in Chemistry and Biophysics from UC Berkeley working with Daniel E. Koshland, Jr. He earned a certificate in Neurobiology from Woods Hole Institute before working as a Howard Hughes Medical Association postdoctoral fellow in the laboratory of Lily Y. Jan (University of California San Francisco) developing organic and protein-based fluorescent probes for addressing problems in protein biophysics and bioimaging. He has a broad background covering nanoscience, biophysics, neuroscience, photo-physics, and chemical biology. His current research interests include development of novel organic fluorophores for imaging and sensing, nanoparticle-based biosensors, and lanthanide-doped upconverting nanoparticles as single molecule and deep tissue imaging probes.

Mekhail Anwar (M’17) received the B.A. degree in Physics from the University of California, Berkeley, CA, USA, where he graduated as the University Medalist, and the Ph.D. degree in Electrical Engineering and Computer Sciences from the Massachusetts Institute of Technology, Cambridge, MA, USA, in 2007, followed by an M.D. from the University of California, San Francisco, CA, USA, in 2009. After completing a Radiation Oncology residency at UCSF in 2014, he joined the faculty of the UCSF Department of Radiation Oncology, where he is now an Associate Professor. He is board certified in Radiation Oncology and a Core Member of the UC Berkeley and UCSF Bioengineering group. He was the recipient of the Department of Defense Prostate Cancer Research Program Physician Research Award, the NIH Trailblazer Award, and the NIH New Innovator Award. His research interests include developing sensors to guide cancer care using integrated-circuit based platforms.

Contributor Information

Hossein Najafiaghdam, Department of Electrical Engineering and Computer Sciences, University of California, Berkeley, CA 94720 USA.

Cassio C.S. Pedroso, Molecular Foundry, Lawrence Berkeley National Laboratory, Berkeley, CA 94720 USA.

Bruce E. Cohen, Molecular Foundry and the Division of Molecular Biophysics & Integrated Bioimaging, Lawrence Berkeley National Laboratory, Berkeley, CA 94720 USA.

Mekhail Anwar, Department of Radiation Oncology, University of California, San Francisco, CA 94158 USA.

REFERENCES

- [1] AE G et al., “Breast Cancer-Major changes in the American Joint Committee on Cancer eighth edition cancer staging manual,” CA: A Cancer Journal for Clinicians, vol. 67, no. 4, pp. 290–303, Jul. 2017, DOI: 10.3322/CAAC.21393.

- [2] Senarathna J et al., “A miniature multi-contrast microscope for functional imaging in freely behaving animals,” Nature Communications, vol. 10, no. 1, pp. 1–13, Jan. 2019, DOI: 10.1038/s41467-018-07926-z.

- [3] Senarathna J et al., “A miniature multi-contrast microscope for functional imaging in freely behaving animals,” Nature Communications, vol. 10, no. 1, pp. 1–13, Jan. 2019, DOI: 10.1038/s41467-018-07926-z.

- [4] Rustami E et al., “Needle-Type Imager Sensor with Band-Pass Composite Emission Filter and Parallel Fiber-Coupled Laser Excitation,” IEEE Transactions on Circuits and Systems I: Regular Papers, vol. 67, no. 4, pp. 1082–1091, Apr. 2020, DOI: 10.1109/TCSI.2019.2959592.

- [5] Blair S, Cui N, Garcia M, and Gruev V, “A 120 dB Dynamic range logarithmic multispectral imager for near-infrared fluorescence image-guided surgery,” Proceedings - IEEE International Symposium on Circuits and Systems, vol. 2020-October, 2020, DOI: 10.1109/ISCAS45731.2020.9180736.

- [6] Choi J et al., “Fully Integrated Time-Gated 3D Fluorescence Imager for Deep Neural Imaging,” IEEE Transactions on Biomedical Circuits and Systems, vol. 14, no. 4, pp. 636–645, Aug. 2020, DOI: 10.1109/TBCAS.2020.3008513.

- [7] Dong QJ et al., “Novel yellow- to red-emitting fluorophores: Facile synthesis, aggregation-induced emission, two-photon absorption properties, and application in living cell imaging,” Dyes and Pigments, vol. 185, p. 108849, Feb. 2021, DOI: 10.1016/J.DYEPIG.2020.108849.

- [8] Rodrigues ACB et al., “Bioinspired water-soluble two-photon fluorophores,” Dyes and Pigments, vol. 150, pp. 105–111, Mar. 2018, DOI: 10.1016/J.DYEPIG.2017.11.020.

- [9] Teitelboim A et al., “Energy Transfer Networks within Upconverting Nanoparticles Are Complex Systems with Collective, Robust, and History-Dependent Dynamics,” The Journal of Physical Chemistry C, vol. 123, no. 4, pp. 2678–2689, Jan. 2019, DOI: 10.1021/ACS.JPCC.9B00161.

- [10] Watters RL and Ludwig GW, “Measurement of Minority Carrier Lifetime in Silicon,” Journal of Applied Physics, vol. 27, no. 5, p. 489, May 2004, DOI: 10.1063/1.1722409.

- [11] Tian B et al., “Low irradiance multiphoton imaging with alloyed lanthanide nanocrystals,” Nature Communications, vol. 9, no. 1, pp. 1–8, Aug. 2018, DOI: 10.1038/s41467-018-05577-8.

- [12] Lee D, Han J, Han G, and Park SM, “An 8.5-Gb/s fully integrated CMOS optoelectronic receiver using slope-detection adaptive equalizer,” IEEE Journal of Solid-State Circuits, vol. 45, no. 12, pp. 2861–2873, Dec. 2010, DOI: 10.1109/JSSC.2010.2077050.

- [13] Huang SH and Chen WZ, “A 10-Gbps CMOS single chip optical receiver with 2-D meshed spatially-modulated light detector,” Proceedings of the Custom Integrated Circuits Conference, pp. 129–132, 2009, DOI: 10.1109/CICC.2009.5280901.

- [14] Jutzi M, Grözing M, Gaugler E, Mazioschek W, and Berroth M, “2-Gb/s CMOS optical integrated receiver with a spatially modulated photodetector,” IEEE Photonics Technology Letters, vol. 17, no. 6, pp. 1268–1270, Jun. 2005, DOI: 10.1109/LPT.2005.846563.

- [15] Kuijk M, Coppée D, and Vounckx R, “Spatially modulated light detector in CMOS with sense-amplifier receiver operating at 180 Mb/s for optical data link applications and parallel optical interconnects between chips,” IEEE Journal on Selected Topics in Quantum Electronics, vol. 4, no. 6, pp. 1040–1045, Nov. 1998, DOI: 10.1109/2944.736111.

- [16] Papageorgiou EP, Boser BE, and Anwar M, “Chip-Scale Angle-Selective Imager for In Vivo Microscopic Cancer Detection,” IEEE Trans Biomed Circuits Syst, vol. 14, no. 1, pp. 91–103, Feb. 2020, DOI: 10.1109/TBCAS.2019.2959278.

- [17] Wang A, Gill PR, and Molnar A, “An angle-sensitive CMOS imager for single-sensor 3D photography,” Digest of Technical Papers - IEEE International Solid-State Circuits Conference, pp. 412–413, 2011, DOI: 10.1109/ISSCC.2011.5746375.

- [18] Choi J et al., “A 512-Pixel, 51-kHz-Frame-Rate, Dual-Shank, Lens-Less, Filter-Less Single-Photon Avalanche Diode CMOS Neural Imaging Probe,” IEEE Journal of Solid-State Circuits, vol. 54, no. 11, pp. 2957–2968, Nov. 2019, DOI: 10.1109/JSSC.2019.2941529.

- [19] Wang A and Molnar A, “A light-field image sensor in 180 nm CMOS,” IEEE Journal of Solid-State Circuits, vol. 47, no. 1, pp. 257–271, Jan. 2012, DOI: 10.1109/JSSC.2011.2164669.

- [20] Papageorgiou EP, Boser BE, and Anwar M, “Chip-scale fluorescence imager for in vivo microscopic cancer detection,” IEEE Symposium on VLSI Circuits, Digest of Technical Papers, pp. C106–C107, Aug. 2017, DOI: 10.23919/VLSIC.2017.8008565.

- [21] Najafiaghdam H, Papageorgiou E, Torquato NA, Tian B, Cohen BE, and Anwar M, “A 25 micron-thin microscope for imaging upconverting nanoparticles with NIR-I and NIR-II illumination,” Theranostics, vol. 9, no. 26, p. 8239, 2019, DOI: 10.7150/THNO.37672.

- [22] Papageorgiou EP, Boser BE, and Anwar M, “An angle-selective CMOS imager with on-chip micro-collimators for blur reduction in near-field cell imaging,” Proceedings of the IEEE International Conference on Micro Electro Mechanical Systems (MEMS), vol. 2016-February, pp. 337–340, Feb. 2016, DOI: 10.1109/MEMSYS.2016.7421629.

- [23] American National Standards Institute, American National Standard For Safe Use Of Lasers, Z136.1. New York, NY USA: Laser Institute of America, 2014.

- [24] Murari K, Etienne-Cummings R, Thakor NV, and Cauwenberghs G, “A CMOS in-pixel CTIA high-sensitivity fluorescence imager,” IEEE Transactions on Biomedical Circuits and Systems, vol. 5, no. 5, pp. 449–458, Oct. 2011, DOI: 10.1109/TBCAS.2011.2114660.

- [25] Hong L, Li H, Yang H, and Sengupta K, “Fully Integrated Fluorescence Biosensors On-Chip Employing Multi-Functional Nanoplasmonic Optical Structures in CMOS,” IEEE Journal of Solid-State Circuits, vol. 52, no. 9, pp. 2388–2406, Sep. 2017, DOI: 10.1109/JSSC.2017.2712612.

- [26] Perenzoni M, Massari N, Perenzoni D, Gasparini L, and Stoppa D, “A 160×120-pixel analog-counting single-photon imager with Sub-ns time-gating and self-referenced column-parallel A/D conversion for fluorescence lifetime imaging,” Digest of Technical Papers - IEEE International Solid-State Circuits Conference, vol. 58, pp. 200–201, Mar. 2015, DOI: 10.1109/ISSCC.2015.7062995.

- [27] Guo J and Sonkusale S, “A 65 nm CMOS digital phase imager for time-resolved fluorescence imaging,” IEEE Journal of Solid-State Circuits, vol. 47, no. 7, pp. 1731–1742, 2012, DOI: 10.1109/JSSC.2012.2191335.

- [28] Henderson RK et al., “A 192 × 128 Time Correlated SPAD Image Sensor in 40-nm CMOS Technology,” IEEE Journal of Solid-State Circuits, vol. 54, no. 7, pp. 1907–1916, Jul. 2019, DOI: 10.1109/JSSC.2019.2905163.

- [29] Hofmann A, Meister M, Rolapp A, Reich P, Scholz F, and Schafer E, “Light Absorption Measurement with a CMOS Biochip for Quantitative Immunoassay Based Point-of-Care Applications,” IEEE Transactions on Biomedical Circuits and Systems, vol. 15, no. 3, pp. 369–379, Jun. 2021, DOI: 10.1109/TBCAS.2021.3083359.

- [30] Monici M, “Cell and tissue autofluorescence research and diagnostic applications,” Biotechnology Annual Review, vol. 11, no. SUPPL., pp. 227–256, Jan. 2005, DOI: 10.1016/S1387-2656(05)11007-2.

- [31] Berezin MY and Achilefu S, “Fluorescence Lifetime Measurements and Biological Imaging,” Chemical Reviews, vol. 110, no. 5, pp. 2641–2684, May 2010, DOI: 10.1021/CR900343Z.