Abstract

Disease prediction from diagnostic reports and pathological images using artificial intelligence (AI) and machine learning (ML) is one of the fastest-growing application areas in recent years. Researchers strive to achieve near-perfect results by combining advanced hardware with AI and ML based approaches. As a result, a large number of AI and ML based methods can be found in the literature. A systematic survey of state-of-the-art disease prediction methods, specifically chronic disease prediction algorithms, provides a clear picture of the recent models developed in this field and helps researchers identify the existing research gaps. To this end, this paper reviews the approaches in the literature designed for predicting chronic diseases such as Breast Cancer, Lung Cancer, Leukemia, Heart Disease, Diabetes, Chronic Kidney Disease and Liver Disease. The advantages and disadvantages of the various techniques are explained in detail, and a detailed performance comparison of different methods is presented. Finally, the survey concludes by highlighting future research directions in this field that can be addressed by forthcoming research.

Introduction

In this digital era, many organizations have been established across the globe that provide continuous health monitoring facilities. In the traditional approach, patients visit a clinic and health professionals advise them based on their diagnostic expertise. However, with this age-old mode of medical diagnosis, patients face various difficulties owing to the growing population and the increasing number of health-related problems, especially in developing countries. This situation sometimes leads to improper patient care, which can even prove fatal.

Technology offers an alternative to this traditional system and plays a significant role in healthcare through a large number of computer aided support systems and tools. This integration has not only improved the quality of patient care but also reduced the cost of treatment by enabling efficient allocation of medical resources. The main components of technology-enabled healthcare systems are medical experts, hardware and software. However, designing an automatic system that can predict a disease from electronically available medical data is very challenging. The large social impact of this research field has attracted researchers from various domains such as computer science, biology, medicine, statistics, and drug design, who continue to work towards a near-perfect system for better patient care.

In this context, with the growing availability of digital records and data, the last two decades have witnessed an extensive adoption of data mining and machine learning (ML) techniques [89] in healthcare and patient care systems. Healthcare, one of the most crucial sectors of our society, benefits from the digitization of medical records and the emergence of Electronic Health Records (EHRs). The large-scale availability of EHRs has led to a surge in Computer aided Diagnosis and Detection (CADD) systems, which generally employ ML algorithms to predict the presence of a particular disease in a subject. These CADD systems help remove subjectivity from EHR and/or histopathology image analysis, thereby minimizing prediction error. According to the third global survey on electronic health (eHealth), conducted by the World Health Organization (WHO) in 2016, there has been a steady growth in the adoption of EHRs over the past 15 years, with a 46.00% global increase in the past five years.

A disease is an abnormal condition, usually affecting part of an organ, that is not caused by an external injury. In medical science, diseases fall into many categories, such as acute, infectious, hereditary, and chronic. Chronic diseases generally persist for a long period (3 months or more). In general, such diseases can neither be prevented by vaccines nor cured by medication. However, early detection of these diseases can save many lives. Chronic diseases such as cancer, heart disease, and diabetes are leading causes of death. With 784,821 deaths in India and an estimated 9.6 million deaths globally in 2018, cancer is considered one of the most fatal diseases. Cancer generally involves abnormal cell growth with the potential to spread to other organs of the body. The most common forms of cancer include Breast Cancer, Lung Cancer, Bronchial Cancer, Leukemia, Prostate Cancer, etc. It is therefore no surprise that the earliest efforts in CADD [58] started with mammography for the detection of Breast Cancer, and these techniques were later applied to other forms of cancer as well.

Heart Disease, also called Cardiovascular Disease (CVD), is another group of diseases that causes a large number of deaths every year. Diseases that fall under the umbrella of CVDs include coronary artery disease, heart rhythm problems (also known as arrhythmias), and congenital heart diseases, among others. According to Abdul-Aziz et al. [3], one in four deaths in India is due to CVD. According to a WHO report, more people die from CVDs worldwide (over 17.9 million each year) than from any other cause. Diabetes is one of the prime risk factors for CVDs. Diabetes mellitus is of two main types: Type-I Diabetes, in which the pancreas produces little to no insulin, and Type-II Diabetes, which affects the way the body uses insulin. Type-II Diabetes accounts for more than 90% of all Diabetes cases [155] and mainly arises from unhealthy lifestyle choices. Over 30 million people have been diagnosed with Diabetes in India, and worldwide, Diabetes was the direct cause of an estimated 1.6 million deaths in 2016.

Diabetes and hypertension are the leading causes of Chronic Kidney Disease (CKD). In CKD, the malfunctioning kidneys fail to filter waste from the blood, leading to waste accumulation that may eventually cause renal failure. A major impediment to CKD diagnosis is that its early stages show no symptoms. Around 100,000 patients are diagnosed with end-stage kidney disease every year in India. Unhealthy lifestyle choices also cause Liver Diseases. The liver is the largest internal organ of the body, and Liver Diseases mainly arise from excessive consumption of substances such as alcohol, harmful gases, contaminated food, pickles and drugs [148]. With 259,749 deaths in India in 2017 and around 1.3 million deaths worldwide from cirrhosis alone, Liver Disease is one of the major health issues worldwide.

Motivation and Contributions

All the facts mentioned above underline the need for early detection of such chronic diseases, which could save a large number of lives. As a result, researchers around the globe have devoted considerable effort to detecting chronic diseases at their early stages. Moreover, the abundant data gathered from EHRs, medical diagnosis and medical imaging, together with technical advances in ML and artificial intelligence (AI), have led to significant research in Bioinformatics, Biomedical imaging and CADD systems. Disease prediction has been researched extensively: such methods have been published in refereed journals and conferences since 1997 [163], and the literature now contains a large volume of research articles. Accordingly, several review articles on chronic disease prediction [5, 57, 59, 78, 91, 92, 93, 103, 134, 177, 201] are also available. We summarize the types of methods these review articles considered in Table 1. From Table 1, we can observe that these articles address the problem domain narrowly: in most cases they focus on a specific chronic disease [90, 93, 177], and in a few cases on a specific category of ML or AI-aided techniques [5, 91, 92, 103, 201]. Sometimes, the authors discussed specific ML or AI-aided techniques for a particular disease [78, 90, 177]. Hence, although surveys have been published on research efforts for specific diseases or specific ML techniques, they fail to shed light on the prevailing research trends across multiple diseases or ML approaches.

Table 1.

Coverage of the present survey in comparison with the past surveys

| Review article | Remarks |
|---|---|
| Fatima et al. [57] | 1. Research works related to the prediction of heart disease, Diabetes, liver disease, dengue and hepatitis were included for discussion. 2. On average, 4 to 5 works for each disease were included for discussion. 3. No critical analysis was provided to highlight future research directions for these diseases. |
| Rigla et al. [177] | 1. Research findings on Diabetes detection from diagnosis reports were considered. 2. Classical ML approaches such as Neural Network (NN), Decision Tree (DT) and Support Vector Machine (SVM) based methods were included. 3. Important aspects such as notable research findings, future research directions and critical analysis of the surveyed works were missing. |
| Jain and Singh [103] | 1. Importance was given to describing feature selection (FS) techniques rather than disease detection methods. 2. Most of the works (more than 90%) used for critical analysis were on Diabetes prediction. 3. Image based disease prediction was absent, as all the cited works used diagnosis report based repositories. 4. Disease specific discussion was missing. |
| Ahmadi et al. [5] | 1. The review is fuzzy technique centric rather than disease centric, i.e., fuzzy techniques were prioritized over disease detection. 2. Papers published up to 2017 were considered for discussion. 3. Only 52 works covering more than 10 diseases (i.e., 3 to 4 works per disease on average) were considered, and only diagnosis report based disease prediction works were included. |
| Hosni et al. [91] | 1. Seven research questions, mostly oriented towards classifier ensembles, were set, and the published works addressing each of them were investigated; the review is thus entirely focused on ensemble based methods. 2. Only works on Lung Cancer detection from diagnosis reports were considered. 3. The ensemble methods considered were built on classical ML algorithms. |
| Hosni et al. [90] | 1. Some notable research outcomes related to Breast Cancer detection from diagnosis reports were considered for analysis. 2. No description or critical analysis of individual methods was provided; instead, the works were analysed categorically. 3. The datasets used were not described and no performance comparison was given, so beginners may find it difficult to get a complete picture of the problem domain. |
| Fernández et al. [59] | 1. Research initiatives for Diabetes detection were considered. 2. Only disease detection techniques that employed classifier ensembles were included. 3. Better performing single classifier works were excluded owing to the paper selection strategy. 4. No critical analysis of the works was provided. |
| Hosni et al. [92] | 1. Extended version of the work [91]. 2. The extension concerns the paper selection strategy and the addition of one research question. 3. Statistics related to the research questions were discussed, with no focus on disease detection methods. |
| Gupta and Gupta [78] | 1. Investigated the performance of Artificial NNs (ANNs), Restricted Boltzmann Machines, Deep Autoencoders, and Convolutional Neural Networks (CNNs) for screening Breast Cancer. 2. Described only 17 previously published research works. 3. Only results on the Surveillance, Epidemiology and End Results (SEER) dataset were investigated to suggest a suitable solution. |
| Manhas et al. [134] | 1. Reviewed image based cancer detection techniques. 2. Five types of cancer (Cervical, Oral, Breast, Brain and Skin) were included in the review. 3. Diagnosis report based cancer detection techniques were overlooked. |
| Sharma and Rani [201] | 1. A set of 12 research questions was identified for cancer research. 2. Gene expression data based research was highlighted the most. 3. Research related to diagnosis reports was also included. |
| Houssein et al. [93] | 1. Breast Cancer screening techniques were discussed. 2. Only research works that dealt with histopathological and mammogram images were included. 3. Diagnosis report based methods were also included. |
| Reshmi et al. [175] | 1. Image based Breast Cancer detection techniques were included. 2. Only research works that dealt with histopathological images were included, which narrowed the research spectrum. 3. Other aspects of Breast Cancer detection were not discussed. |
| Proposed, 2022 | 1. Several diseases, including Breast Cancer, Lung Cancer, Leukemia, Heart Disease, Diabetes, Liver Disease and CKD, have been covered. 2. Research endeavors over the last two decades involving both image based and diagnostic report based disease screening methods have been studied. 3. Important ML strategies such as missing value imputation, feature reduction, FS, classifier combination and fuzzy logic, along with DL based strategies, have been considered. 4. A comprehensive description of each work, along with its shortcomings and possible scope for future improvement, is provided, together with a comparative performance analysis of the methods for each disease. 5. Disease specific research trends are analyzed, and future research directions for each disease are suggested accordingly. |

To this end, the present survey is a significant endeavor, as it not only encapsulates the concerted research efforts on specific diseases but also reflects on the current research trends across chronic diseases such as Breast Cancer, Leukemia, Lung Cancer, Heart Disease, Diabetes, CKD and Liver Disease, and across AI based methods including missing value imputation, feature reduction, feature selection (FS), classifier combination, fuzzy logic, and ML and Deep Learning (DL) based approaches. This survey also chronicles both diagnostic report based and image based approaches for these diseases. A comparison of our survey with some recent review articles is reported in Table 1, which shows that the present survey not only covers the methods described in state-of-the-art review articles but also includes a wider range of AI based applications in disease prediction systems. Note that in this article we concentrate only on automatic disease prediction systems (an integral part of technology-enabled healthcare systems) that use ML and DL schemes for prediction.

Organization of the Article

The overall organization of this survey is shown in Table 2. Section 2 describes the working procedure of a generic disease prediction system, along with generic diagnostic report based and image based systems. Section 3 discusses the evaluation metrics commonly used to assess the performance of a disease prediction system. Sections 4, 5, 6, 7 and 8 report the research endeavors in Cancer, Heart Disease, Diabetes, CKD and Liver Disease, respectively. Section 4 is subdivided into three subsections describing Breast Cancer, Lung Cancer and Leukemia, respectively, each of which further contains subsections for pathological image based and non-image based (diagnosis report based or, for Leukemia, gene expression based) prediction techniques. Section 5 has separate subsections for single classifier based and ensemble based approaches to Heart Disease detection. For easy reference, the organization of the disease specific discussions in this article is shown in Fig. 1. Some important future research directions are discussed in Sect. 9. Finally, Sect. 10 concludes the survey.

Table 2.

The overall organization of the article

| Topic | Work Refs. |
|---|---|
| 1: Introduction | |
| 1.1: Motivation and Contributions | |
| 1.2: Organization of the Article | |
| 2: Generic Chronic Disease Prediction Method | |
| 2.1: Diagnosis Report based Method | |
| 2.2: Pathological Image based Method | |
| 3: Evaluation Metrics | |
| 4: Cancer Prediction Methods | |
| 4.1: Breast Cancer Prediction Methods | |
| 4.1.1: Diagnosis Report based Methods | [6, 36, 43, 49, 66, 67, 72, 73, 98, 109, 121, 122, 129, 149, 165, 166, 202–204] |
| 4.1.2: Pathological Image based Methods | [28, 30, 37, 38, 40, 44, 52, 77, 79, 105, 172, 178, 185, 189, 195, 199, 212, 228, 230] |
| 4.2: Lung Cancer Prediction Methods | |
| 4.2.1: Diagnosis Report based Methods | [47, 49, 53, 87, 132, 151, 153, 161, 169, 180, 183] |
| 4.2.2: Pathological Image based Methods | [9, 13, 16, 104, 117, 152, 198, 209, 216, 218, 222, 231] |
| 4.3: Leukemia Prediction Methods | |
| 4.3.1: Gene Expression based Methods | [27, 55, 63, 70, 94, 114, 187, 188] |
| 4.3.2: Pathological Image based Methods | [2, 125, 142–145, 170, 176, 181, 196, 197] |
| 5: Heart Disease Prediction Methods | |
| 5.1: Single Classifier based Methods | [8, 43, 56, 60, 64, 69, 81, 97, 100, 101, 106, 110, 113, 136, 158, 186, 194, 200, 208] |
| 5.2: Ensemble based Methods | [14, 48, 130, 138, 146] |
| 6: Diabetes Prediction Methods | [19, 39, 43, 46, 54, 81, 82, 84, 99, 102, 111, 154, 160, 168, 173, 182, 202–204, 206, 221, 227] |
| 7: CKD Prediction Methods | [11, 12, 15, 18, 21, 35, 41, 74, 81, 108, 164, 167, 186, 190, 207, 219, 223, 225] |
| 8: Liver Disease Prediction Methods | [1, 4, 22, 43, 45, 80, 83, 112, 118–120, 174, 210, 220] |
| 9: Future Research Directions | |
| 9.1: Exploring more DL Models | |
| 9.2: Designing Sophisticated Data Processing Strategies | |
| 9.2.1: Handling Missing Values | |
| 9.2.2: Exploring more Dimensionality Reduction Strategies | |
| 9.2.3: Designing new FS Strategies | |
| 9.3: Fusing Handcrafted Features with Deep Features | |
| 9.4: Handling Class Imbalance Problem | |
| 9.5: Generating Large-scale Datasets | |
| 9.5.1: New Data Collection | |
| 9.5.2: Generating Synthetic Data | |
| 9.5.3: Preparing Multi-modal Data | |
| 9.6: Medical Image Segmentation | |
| 9.7: Maintaining Ethical and Legal Aspects | |
| 10: Conclusion | |

Fig. 1.

Organization of disease prediction methods discussed in this article. Numbers indicate sections and subsections where the particular topics are discussed

Generic Chronic Disease Prediction Method

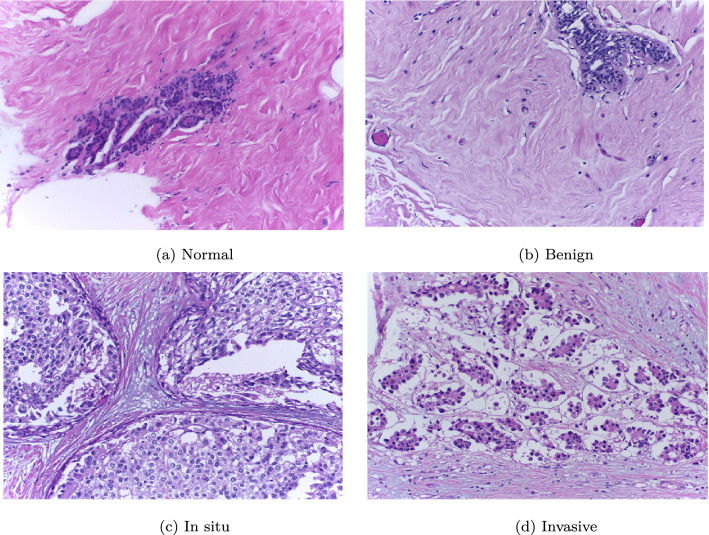

A chronic disease prediction system accepts data from a new subject as input and generates a report on the status of the disease in that subject. In general, the subject is labelled either positive or negative: positive if the subject is affected by the disease being tested for, and negative otherwise. In some recent systems, positive cases are further classified into sub-categories based on the stage or type of the disease. For example, in the Breast Cancer Histology (BACH) image dataset, Aresta et al. [17] labelled positive Breast Cancer cases into three categories: benign, in situ carcinoma and invasive carcinoma, while in the Breast Cancer Histopathological Database (BreakHis), positive Breast Cancer cases are labelled into eight sub-categories.

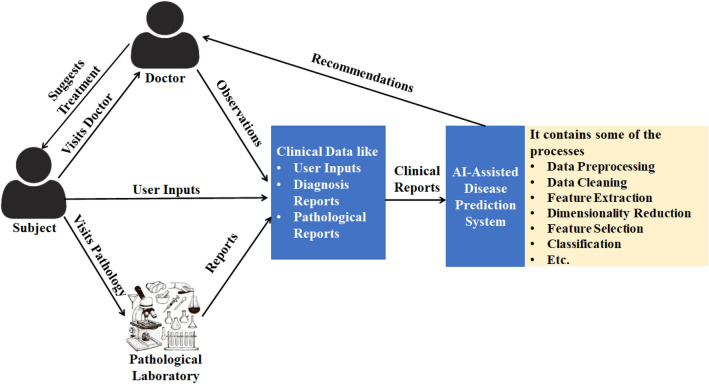

Fig. 2 shows a schematic diagram of how a generic (here, chronic) disease prediction system works. The figure highlights the communication among subjects, doctors, pathological laboratories and AI-assisted decision support systems. The clinical data (a set of diagnostic reports) for a subject may come from three sources: (i) the subject (e.g., height, weight, age and sex), (ii) doctors or experts (e.g., heart rate, body mass index and disease specific observations), and (iii) a pathological laboratory (e.g., blood cell counts, pathological images and X-ray images). Note that the diagnostic parameters vary from one disease to another. The collected clinical data are analysed by an AI-assisted disease prediction system, which generates recommendations for the doctor; here, these are the presence or absence of the disease. The doctor analyses these recommendations and suggests treatment for the subject. In this survey, based on the nature of the clinical data, we broadly divide disease prediction systems into diagnosis report based systems and pathological image based systems.

Fig. 2.

Steps involved in a general decision support system

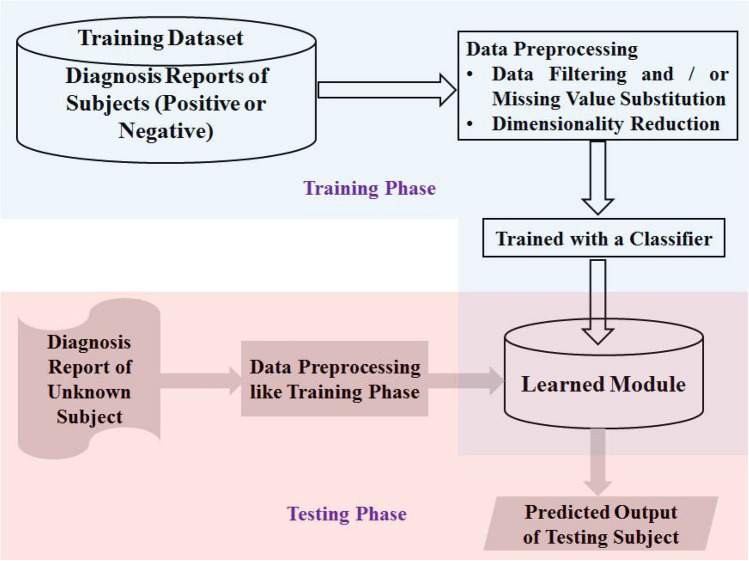

Diagnosis Report based Method

In the early years of research on computerized disease prediction systems, patients' data (diagnostic reports) were mostly stored electronically in text form, and a set of systems was designed over time to work with such data. In these systems, a classifier generally learns from the stored textual data. Most popular diagnosis report based datasets contain missing values, insignificant attributes and redundant information about the subjects, which do not help classifiers learn from the data and often reduce their efficiency. Therefore, researchers apply preprocessing techniques, including missing value imputation or data filtering (used as a substitute for missing value imputation) and data reduction, before feeding the data to a classification model. The preprocessed training samples are then used to train the selected classifier, yielding a learned model with optimally tuned hyperparameters. In the testing (or prediction) phase, a diagnosis report is collected from an unknown subject and preprocessed using the information obtained in the training stage. Finally, the preprocessed data are fed to the saved learned model to decide whether the subject has the disease. A generic model of a diagnosis report based disease prediction system is shown in Fig. 3.
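The training and prediction phases described above can be illustrated with a minimal Python sketch. It assumes mean imputation as the preprocessing step and a nearest-centroid classifier as the learner; both are illustrative choices rather than methods from any surveyed work, and the function names and toy data are hypothetical. The key point is that the imputation statistics are learned only from the training reports and then reused, unchanged, on the unknown subject's report.

```python
from statistics import mean

def fit_imputer(rows):
    """Learn per-attribute means from training rows (None marks a missing value)."""
    return [mean(r[j] for r in rows if r[j] is not None) for j in range(len(rows[0]))]

def impute(rows, means):
    """Replace missing values with the means learned at training time."""
    return [[m if v is None else v for v, m in zip(r, means)] for r in rows]

def fit_centroids(rows, labels):
    """Train a nearest-centroid classifier: one mean vector per class."""
    centroids = {}
    for c in set(labels):
        members = [r for r, y in zip(rows, labels) if y == c]
        centroids[c] = [mean(col) for col in zip(*members)]
    return centroids

def predict(row, centroids):
    """Assign the class whose centroid is closest (squared Euclidean distance)."""
    return min(centroids,
               key=lambda c: sum((a - b) ** 2 for a, b in zip(row, centroids[c])))

# Hypothetical training reports: 3 attributes per subject; 1 = disease, 0 = healthy.
train_X = [[1, 2, None], [2, 1, 1], [8, 9, 7], [9, None, 8]]
train_y = [0, 0, 1, 1]

# Training phase: learn preprocessing statistics, then fit the classifier.
means = fit_imputer(train_X)
centroids = fit_centroids(impute(train_X, means), train_y)

# Prediction phase: the unknown subject's report is preprocessed with the
# training-time statistics before being passed to the saved model.
test_row = [7, None, 9]
print(predict(impute([test_row], means)[0], centroids))
```

In a real system the classifier would typically be an SVM, k-NN or similar model, and data reduction would sit between imputation and training.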

Fig. 3.

A block diagram representing steps used, in general, for predicting a disease from a given set of diagnosis reports

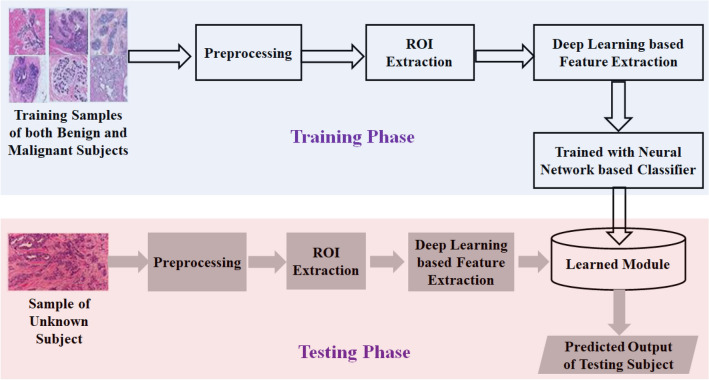

Pathological Image based Method

A diagnosis system that predicts disease from pathological images collected from subjects generally follows one of two approaches: a feature engineering based approach (see Fig. 4) or a DL based approach (see Fig. 5). Methods following either approach commonly apply preprocessing techniques to obtain better features from the images. As shown in Figs. 4 and 5, after preprocessing, the general trend is to locate the Region of Interest (ROI), i.e., the areas from which features are extracted. In DL based methods, high-level features are extracted from ROI images through a series of convolution and pooling operations, whereas for handcrafted features, domain knowledge contributes significantly to feature extraction. In feature engineering based methods, standard classifiers are used to predict the disease for unknown samples, whereas DL based methods generally use multi-layered NNs that extract features from the input data automatically and classify them.
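The convolution and pooling operations mentioned for DL based methods can be sketched in plain Python as follows. This is an illustrative toy, not a model from any surveyed work: the 5 × 5 "ROI", the hand-set 2 × 2 edge kernel and the helper names are assumptions, whereas a real CNN learns many kernels and stacks several such layers.

```python
def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation (the operation DL libraries call convolution)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def relu(fmap):
    """Element-wise ReLU non-linearity."""
    return [[max(0, v) for v in row] for row in fmap]

def max_pool(fmap, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    return [[max(fmap[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(fmap[0]) - size + 1, size)]
            for i in range(0, len(fmap) - size + 1, size)]

# Toy grey-level ROI: a dark region on the left, a bright region on the right.
roi = [[0, 0, 0, 9, 9] for _ in range(5)]
# Hand-set vertical-edge kernel; in a CNN such weights are learned from data.
kernel = [[-1, 1], [-1, 1]]

features = max_pool(relu(conv2d(roi, kernel)))
print(features)
```

Here the kernel responds only at the boundary between the dark and bright regions, and pooling keeps the strongest response in each 2 × 2 window, giving a smaller feature map that a classifier layer could consume.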

Fig. 4.

A block diagram representing the steps generally used for predicting a disease from a pathological image using handcrafted feature extraction and shallow learner based classifiers

Fig. 5.

A block diagram representing steps, in general, used for predicting a disease from pathological images using some DL based models

Evaluation Metrics

The disease prediction systems described in this article use classification models for disease diagnosis. To report the performance of their models, researchers use standard metrics such as accuracy (ACC), precision (P), recall (R), F1-score (F1), specificity (S) and the Area Under the Receiver Operating Characteristic (ROC) Curve (ROC-AUC score or simply AUC score). These metrics can easily be computed from the confusion matrix (or error matrix), which describes the complete prediction performance of a model. It is an N × N matrix, where N is the number of classes, and it helps to summarize and visualize the performance of a predictive model. For binary classification, it consists of four entries: True Positive (TP), True Negative (TN), False Positive (FP), and False Negative (FN), which are defined below.

TP indicates the number of positive samples that are correctly predicted as positive by the classification model.

TN indicates the number of negative samples that are correctly predicted as negative by the classification model.

FP indicates the number of negative samples that are incorrectly predicted as positive by the classification model.

FN indicates the number of positive samples that are incorrectly predicted as negative by the classification model.
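As an illustration of the four definitions above, the following Python sketch counts TP, TN, FP and FN for a binary task in which label 1 denotes the disease (positive) class. The function name and the toy labels are hypothetical.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (TP, TN, FP, FN) for a binary disease-prediction task."""
    tp = tn = fp = fn = 0
    for t, p in zip(y_true, y_pred):
        if t == positive and p == positive:
            tp += 1
        elif t != positive and p != positive:
            tn += 1
        elif p == positive:      # actual negative predicted as positive
            fp += 1
        else:                    # actual positive predicted as negative
            fn += 1
    return tp, tn, fp, fn

y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 0, 0, 1, 0]
print(confusion_counts(y_true, y_pred))  # (2, 4, 1, 1)
```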

Accuracy

One of the most commonly used metrics for reporting the performance of a disease prediction method is accuracy. It indicates how often a disease prediction model predicts a sample correctly. It is calculated as the ratio of the number of correctly classified samples (i.e., TP + TN) to the total number of test samples (i.e., TP + TN + FP + FN), as defined in Eq. 1.

$$\mathrm{ACC} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

If the test set suffers from the class imbalance problem [32, 131], which is very common in medical datasets because normal cases far outnumber disease cases, this metric may give a misleading picture of the overall model performance. Hence, other metrics such as P, R, F1, S and AUC become necessary and have been widely used in past works.
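The following made-up example illustrates why accuracy alone can mislead on imbalanced data. With 95 healthy subjects and 5 patients, a degenerate model that labels everyone as healthy reaches 95% accuracy while detecting none of the patients.

```python
# Hypothetical test set: 95 healthy subjects (label 0) and 5 patients (label 1).
y_true = [0] * 95 + [1] * 5
# A degenerate "classifier" that always predicts "healthy".
y_pred = [0] * 100

correct = sum(t == p for t, p in zip(y_true, y_pred))
accuracy = correct / len(y_true)
tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
recall = tp / sum(y_true)  # fraction of actual patients that were detected

print(f"accuracy = {accuracy:.2f}, recall = {recall:.2f}")
# accuracy = 0.95, recall = 0.00
```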

Precision

Precision, also known as Positive Predictive Value (PPV), is the fraction of samples predicted as positive that are actually positive, i.e., it reflects how reliable the classifier's positive predictions are. It is calculated using Eq. 2.

$$P = \frac{TP}{TP + FP} \tag{2}$$

Recall

Recall, also known as sensitivity, is the fraction of the actual positive samples in the test dataset that the model classifies correctly. It is calculated using Eq. 3.

$$R = \frac{TP}{TP + FN} \tag{3}$$

F1-Score (F1)

The F1-score captures the trade-off between recall and precision: it is the harmonic mean of the two. It is particularly useful for assessing a model's predictive capability when FP and FN are equally important. It is calculated using Eq. 4.

$$F1 = \frac{2 \times P \times R}{P + R} \tag{4}$$

Specificity

Specificity, also known as the TN rate, is the proportion of negative samples that are correctly classified, i.e., the ratio of TN to TN + FP. It is calculated using Eq. 5.

$$S = \frac{TN}{TN + FP} \tag{5}$$
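The metrics defined in Eqs. 1 to 5 can be computed together from confusion-matrix counts. The sketch below is a minimal Python illustration; the function name is hypothetical, and returning 0.0 when a denominator is zero is an assumed convention (libraries differ in how they handle this case).

```python
def metrics(tp, tn, fp, fn):
    """Compute ACC, P, R, F1 and S (Eqs. 1-5) from confusion-matrix counts.
    A zero denominator yields 0.0 by convention."""
    def safe_div(a, b):
        return a / b if b else 0.0

    acc = safe_div(tp + tn, tp + tn + fp + fn)  # Eq. 1
    p = safe_div(tp, tp + fp)                   # Eq. 2
    r = safe_div(tp, tp + fn)                   # Eq. 3
    f1 = safe_div(2 * p * r, p + r)             # Eq. 4
    s = safe_div(tn, tn + fp)                   # Eq. 5
    return {"ACC": acc, "P": p, "R": r, "F1": f1, "S": s}

print(metrics(tp=2, tn=4, fp=1, fn=1))
```

For the counts TP = 2, TN = 4, FP = 1 and FN = 1, this gives ACC = 0.75, P = R = F1 = 2/3 and S = 0.8.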

ROC-AUC Score (AUC)

The ROC curve is a widely used evaluation tool for classification problems and disease diagnostic tasks. It plots the TP rate (recall) against the FP rate (1 - specificity) at various decision threshold values, thereby showing the trade-off between sensitivity and specificity graphically. In other words, it describes how adjusting the threshold changes the two types of error: false positives and false negatives. The AUC score, i.e., the area under the ROC curve, summarizes the curve as a single number that quantifies how well a model distinguishes between classes; a higher AUC means the model is better at separating them. Readers may refer to the article by Carrington et al. [32] for a more insightful explanation.
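The AUC score can also be computed without drawing the curve, using its well-known probabilistic interpretation: it equals the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative sample, with ties counted as one half. The quadratic-time Python sketch below (the function name and toy scores are illustrative) follows this definition directly; practical implementations typically sort the scores instead.

```python
def roc_auc(y_true, scores):
    """AUC as the probability that a random positive outranks a random negative
    (ties count 1/2); this pairwise form equals the area under the empirical ROC curve."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(pos) * len(neg))

y_true = [0, 0, 1, 1, 0, 1]
scores = [0.1, 0.4, 0.35, 0.8, 0.2, 0.9]  # model's predicted disease probabilities
print(roc_auc(y_true, scores))
```

For the toy scores, 8 of the 9 positive-negative pairs are ranked correctly (only the positive scored 0.35 falls below the negative scored 0.4), so the AUC is 8/9, approximately 0.89.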

Cancer Prediction Methods

In this section, we mainly discuss recent DL and ML aided cancer detection methods, covering three types of cancer: Breast Cancer, Lung Cancer and Leukemia.

Breast Cancer Prediction Methods

This section describes some of the existing Breast Cancer detection techniques in the literature. We came across several quality research works on both diagnosis report based and image based Breast Cancer detection, which are discussed hereafter.

Diagnosis Report based Methods

A significant number of research articles in the literature use the Wisconsin Breast Cancer (WBC) dataset for Breast Cancer detection research. It contains diagnosis reports of 699 subjects (241 positive and 458 negative), each described by 9 attributes. The values of all these attributes are scaled to the range 1 to 10 according to their proportion. A class attribute (2 for a benign tumor and 4 for a malignant tumor) is also present in the dataset. A thorough analysis of the state-of-the-art methods shows that many works have used this dataset with different ML based approaches and that, in most cases, methods involving SVM have outperformed the rest. Table 3 lists the performance of various research efforts on the WBC dataset, and some of the important methods are discussed here.
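The following Python sketch shows how records in the WBC layout might be parsed and the class attribute mapped to a binary label (benign = 0, malignant = 1). It assumes the comma-separated layout of the UCI distribution file, in which each row starts with a sample ID followed by the 9 attributes and the class, and missing attribute values are written as "?". The function name is hypothetical, and the two records are made up for illustration rather than taken from the dataset.

```python
def parse_wbc_line(line):
    """Parse one comma-separated WBC-style record: sample ID, 9 attributes (1-10),
    class (2 = benign, 4 = malignant). Missing values written as '?' become None."""
    fields = line.strip().split(",")
    if len(fields) != 11:
        raise ValueError(f"expected 11 fields, got {len(fields)}")
    attrs = [None if f == "?" else int(f) for f in fields[1:10]]
    label = {"2": 0, "4": 1}[fields[10]]  # 0 = benign, 1 = malignant (positive)
    return attrs, label

# Made-up records in the WBC layout (not taken from the real file).
sample = """\
1001,5,1,1,1,2,1,3,1,1,2
1002,8,10,10,8,7,?,9,7,1,4"""

for line in sample.splitlines():
    attrs, label = parse_wbc_line(line)
    print(attrs, label)
```

Records parsed this way still contain None entries, so they would pass through an imputation or filtering step before FS and classification.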

Table 3.

Performance comparison of recent prediction models on the WBC dataset

| Work Ref. | Technique Used | ACC | P | R | F1 | S |
|---|---|---|---|---|---|---|
| Zheng et al. [234] | K-means aided feature transform + SVM | 0.94 | – | 0.96 | – | 0.92 |
| Kamel et al. [109] | GWO FS + SVM | 1.00 | – | 1.00 | – | 1.00 |
| Mafarja et al. [129] | Hybridized WOA and SA | 0.97 | – | – | – | – |
| Ghosh et al. [66] | SMO-X FS + k-NN | 1.00 | – | – | – | – |
| Huang et al. [98] | GA-RBF SVM (small scale data) | 0.98 | – | – | 0.99 | – |
| Huang et al. [98] | RBF-SVM (large scale data) | 0.99 | – | – | 0.99 | – |
| Kumari and Singh [122] | k-NN | 0.99 | – | – | – | – |
| Kumar et al. [121] | Lazy k-star / Lazy IBK | 0.99 | 0.99 | 0.99 | 0.99 | – |
| Prakash and Rajkumar [165] | HLFDA-T2FNN | 0.98 | – | – | – | – |
| Preetha and Jinny [166] | PCA-LDA + ANNFIA | 0.97 | – | – | – | – |
| Sandhiya and Palani [186] | ICRF-LCFS | 0.94 | – | – | – | – |
| Chatterjee et al. [36] | SSD-LAHC + k-NN | 0.99 | – | – | – | – |
| Ahmed et al. [6] | RTHS + k-NN | 0.99 | – | – | – | – |
| Guha et al. [72] | CPBGSA + MLP | 0.99 | – | – | – | – |
| Guha et al. [73] | ECWSA + k-NN | 0.95 | – | – | – | – |
| Ghosh et al. [67] | MRFO + k-NN | 1.00 | – | – | – | – |

Over the last 10 years, FS based techniques have been the most commonly employed approach for Breast Cancer detection on the WBC dataset. In 2014, Zheng et al. [234] designed a feature transform method based on the k-means algorithm: they first generated n (fewer than the number of attributes) cluster centres by running k-means on the training dataset, and then used the distances of each sample from these n cluster centres as the transformed features. The transformed feature vectors were then classified using an SVM classifier. The authors showed experimentally that this method outperformed Genetic Algorithm (GA) and Particle Swarm Optimization (PSO) based FS techniques when SVM was used as the classifier. Kamel et al. [109] showed the importance of a good FS technique for this research problem. In this work, outliers were eliminated using the outer line method, and Grey Wolf Optimization (GWO) [141] was used for FS. This FS technique yielded perfect scores with the SVM classifier (see Table 3). In another work, Mafarja et al. [129] proposed a hybrid FS approach combining the Whale Optimization Algorithm (WOA) [140] and Simulated Annealing (SA) [116]. The authors obtained very promising FS results, selecting only 4 of the 9 attributes. Shen et al. [204] used the Fruit Fly Optimization Algorithm (FOA) [156], which iteratively searches for an optimal feature subset, to select diagnostic attributes. Moorthy and Gandhi [149] proposed another hybrid FS technique combining Analysis of Variance (ANOVA) and WOA based FS. With this model, the accuracy of the SVM classifier on the dataset increased by 2.10% compared with SVM based classification without FS.
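A simplified reading of the k-means feature transform of Zheng et al. [234] is sketched below in Python: k-means is run on the training data, and each sample is then represented by its distances to the n cluster centres (here n = 2 for 3 attributes). The deterministic farthest-first initialization, the fixed iteration count, the helper names and the toy data are assumptions made for reproducibility, and the subsequent SVM step is omitted; this is not the authors' implementation.

```python
import math

def kmeans(points, n_clusters, n_iter=20):
    """Lloyd's k-means with a deterministic farthest-first initialization."""
    centres = [list(points[0])]
    while len(centres) < n_clusters:
        # Next initial centre: the point farthest from all centres chosen so far.
        far = max(points, key=lambda p: min(math.dist(p, c) for c in centres))
        centres.append(list(far))
    for _ in range(n_iter):
        groups = [[] for _ in centres]
        for p in points:
            nearest = min(range(len(centres)), key=lambda k: math.dist(p, centres[k]))
            groups[nearest].append(p)
        # Recompute each centre; keep the old one if its cluster is empty.
        centres = [[sum(col) / len(g) for col in zip(*g)] if g else c
                   for g, c in zip(groups, centres)]
    return centres

def distance_features(samples, centres):
    """Transform each sample into its vector of distances to the k-means centres."""
    return [[math.dist(s, c) for c in centres] for s in samples]

# Toy training data with 3 attributes; the transform uses n = 2 < 3 centres.
train = [[1, 1, 2], [2, 1, 1], [1, 2, 1], [9, 8, 9], [8, 9, 9], [9, 9, 8]]
centres = kmeans(train, n_clusters=2)
feats = distance_features([[1, 1, 1], [9, 9, 9]], centres)
print(feats)
```

Each 3-attribute sample becomes a 2-dimensional vector of distances, which would then be passed to the classifier.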

Ghosh et al. [66] introduced a swarm based FS technique named Social Mimic Optimizer with an X-shaped transfer function (SMO-X). With SMO-X, the authors achieved their highest accuracy using the k-nearest neighbors (k-NN) classifier while retaining only 33.33% of the original features. Prakash and Rajkumar [165] used a hybrid FS technique termed Hybrid Local Fisher Discrimination Analysis (HLFDA) [61]. After FS, the reduced dataset containing a near-optimal feature subset was fed into a Type 2 Fuzzy NN (T2FNN) for classification. Preetha and Jinny [166] combined two powerful feature transformation strategies: Principal Component Analysis (PCA) and Linear Discriminant Analysis (LDA). They applied PCA and LDA to the dataset separately and then combined the resulting transformed features to obtain the final feature set used for disease detection. The performances of three classifiers, viz., Adaptive Neuro-Fuzzy Inference System (ANFIS), SVM and Multilayer Perceptron (MLP), were investigated, of which ANFIS performed best. More recently, Dey et al. [49] proposed a hybrid FS technique called Learning Automata based Grasshopper Optimization Algorithm (LAGOA) that addresses the premature convergence of the Grasshopper Optimization Algorithm (GOA). Learning Automata (LA) were used to adjust the GOA parameters adaptively, while a two-phase mutation technique was used to increase the exploitation capability of GOA.
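Wrapper FS methods such as SMO-X score each candidate feature subset by evaluating a classifier on it. A weighted objective that trades classification error against the fraction of retained features is common in this literature; the sketch below assumes that form, with a weight `alpha = 0.99` and a leave-one-out 1-NN error. Both choices are illustrative rather than the exact settings of the cited works, and the function names are hypothetical.

```python
import math

def knn_loo_error(X, y, mask, k=1):
    """Leave-one-out error of k-NN using only the features whose mask bit is 1."""
    cols = [j for j, keep in enumerate(mask) if keep]
    errors = 0
    for i, row in enumerate(X):
        xi = [row[j] for j in cols]
        neighbours = sorted(
            (math.dist(xi, [X[t][j] for j in cols]), y[t])
            for t in range(len(X)) if t != i
        )
        votes = [label for _, label in neighbours[:k]]
        prediction = max(set(votes), key=votes.count)
        errors += prediction != y[i]
    return errors / len(X)

def fs_fitness(X, y, mask, alpha=0.99):
    """Lower is better: alpha * error + (1 - alpha) * fraction of features kept."""
    if not any(mask):
        return float("inf")  # an empty subset cannot be evaluated
    return alpha * knn_loo_error(X, y, mask) + (1 - alpha) * sum(mask) / len(mask)
```

A swarm optimizer would call `fs_fitness` for every binary position it visits and keep the mask with the lowest value; the large `alpha` makes accuracy dominate, with subset size acting as a tie-breaker.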

Several other hybrid FS methods [6, 36, 67, 72, 73] have also been evaluated on the WBC dataset. For example, Chatterjee et al. [36] designed a hybrid FS method that improves the local search capability of the Social Ski Driver (SSD) algorithm with the Late Acceptance Hill Climbing (LAHC) method; the resulting method is named SSD-LAHC. Ahmed et al. [6] proposed the Ring Theory based Harmony Search (RTHS) algorithm, which couples the well-known Harmony Search (HS) algorithm with the Ring Theory based Evolutionary Algorithm (RTEA). Guha et al. [72] designed the Clustering based Population in Binary Gravitational Search Algorithm (CPBGSA), in which the initial population is determined by a clustering method to overcome the premature convergence of the Gravitational Search Algorithm. In another work, Guha et al. [73] proposed the Embedded Chaotic Whale Survival Algorithm (ECWSA), based on WOA, aiming at better classification accuracy; a filter method was used to refine the features selected by WSA. Ghosh et al. [67] compared the performance of Manta Ray Foraging Optimization (MRFO) with different transfer functions; in this work, the performances of four variants of S-shaped and V-shaped transfer functions were studied.
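Binary swarm optimizers such as the MRFO variants above map each continuous position component to a bit that says whether a feature is kept. The sketch below shows one commonly used member of each family, the sigmoid (S-shaped) and |tanh| (V-shaped), with the usual update rules: the S-shaped value is the probability that the bit is 1, while the V-shaped value is the probability that the current bit is flipped. These are representative examples only, not necessarily the variants studied in [67]; function names are hypothetical.

```python
import math
import random

def s_shaped(v):
    """Sigmoid transfer function: probability that the feature bit becomes 1."""
    v = max(-50.0, min(50.0, v))  # clip to avoid overflow in math.exp
    return 1.0 / (1.0 + math.exp(-v))

def v_shaped(v):
    """|tanh| transfer function: probability that the current feature bit is flipped."""
    return abs(math.tanh(v))

def binarize_s(position, rng):
    """S-shaped rule: set each bit independently with probability s_shaped(v)."""
    return [1 if rng.random() < s_shaped(v) else 0 for v in position]

def binarize_v(position, bits, rng):
    """V-shaped rule: flip each current bit with probability v_shaped(v)."""
    return [1 - b if rng.random() < v_shaped(v) else b for v, b in zip(position, bits)]

rng = random.Random(42)
mask = binarize_s([2.0, -2.0, 0.0, 0.5], rng)      # 1 = feature selected
mask = binarize_v([0.1, 3.0, -0.2, 0.0], mask, rng)
```

Note the design difference: a zero velocity gives a 50% chance of selection under the S-shaped rule but leaves the bit unchanged under the V-shaped rule.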

Apart from FS based techniques, other classical ML approaches have also been used. For example, Huang et al. [98] performed a detailed comparative study between SVMs with different kernels (i.e., Linear, Polynomial and Radial Basis Function (RBF)) and ensemble methods (bagging and boosting) with SVM as the base classifier. They also experimented with GA to establish the effect of FS on classification in the WBC dataset. Through extensive experiments, they found that for small datasets, boosted SVM with a polynomial kernel and GA performed best, while for large datasets, SVM with the RBF kernel and SVM with the polynomial kernel performed best. Missing values in data can have a detrimental effect on the classifier. To ameliorate this, Choudhury and Pal [43] introduced a novel missing value imputation method using an autoencoder NN. In this method, the autoencoder was first trained on datasets such as WDBC that have no missing values, and the learned autoencoder was then used to predict the missing values of other datasets that contain them. The nearest neighbor rule was used to make an initial guess of each missing value, which was then refined by minimizing the reconstruction error. This was based on the hypothesis that a good choice for a missing value is one that the autoencoder can reconstruct well. Recently, Shaw et al. [202, 203] addressed the class imbalance problem and thereby improved cancer prediction performance. In [203], the authors proposed an ensemble approach to handle class imbalance, while in [202] they solved the problem using an evolutionary algorithm: a ring theory based algorithm was hybridized with the PSO algorithm to select near-optimal majority class samples from the training set. This improved the final Breast Cancer detection performance of the classifiers.
Apart from these major works, several authors, such as Kumari and Singh [122] and Kumar et al. [121], have focused on comparative studies. Kumari and Singh [122] applied correlation based FS and established the superiority of k-NN over the SVM and LR classifiers, while Kumar et al. [121] compared 12 classifiers, namely the Adaptive Boosting (AdaBoost) algorithm, decision table, J-Rip, a DT classifier (J48), k-NN, Lazy K-star, LR, Multiclass Classifier, MLP, NB, RF and Random Tree (RT). The authors recommended four classifiers, namely RF, RT, Lazy K-star and k-NN, which yielded the best accuracy in their experiments.
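The three SVM kernels compared by Huang et al. [98] have standard closed forms. The sketch below writes them out and builds the Gram (kernel) matrix that an SVM solver consumes; the parameter defaults (`degree`, `gamma`, `coef0`) are illustrative assumptions, not the values used in [98].

```python
import math

def linear_kernel(x, z):
    """K(x, z) = <x, z>."""
    return sum(a * b for a, b in zip(x, z))

def polynomial_kernel(x, z, degree=3, gamma=1.0, coef0=1.0):
    """K(x, z) = (gamma * <x, z> + coef0) ** degree."""
    return (gamma * linear_kernel(x, z) + coef0) ** degree

def rbf_kernel(x, z, gamma=0.5):
    """K(x, z) = exp(-gamma * ||x - z||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, z)))

def gram_matrix(X, kernel):
    """Pairwise kernel values for all samples in X."""
    return [[kernel(a, b) for b in X] for a in X]
```

The RBF kernel depends only on the distance between samples and always lies in (0, 1], whereas the polynomial kernel grows with the magnitude of the inputs, which is one reason feature scaling matters when comparing them.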

The performances of some state-of-the-art works on the WBC dataset are recorded in Table 3. These results indicate that the better performing methods applied FS before classifier training. Moreover, methods such as Shaw et al. [202], Ghosh et al. [67] and Dey et al. [49] provide state-of-the-art results on the UCI Breast Cancer Wisconsin (Diagnostic) Dataset9. Hence, applying an FS method before the classifier may well be regarded as standard practice for diagnosis report based disease prediction systems.

Pathological Image Based Methods

As mentioned earlier, Breast Cancer is a deadly disease and its early detection is crucial. Various diagnostic methods, including physical examination, ultrasound, magnetic resonance imaging, mammography and biopsy, have been developed and employed in the past. Among these, histopathological image based diagnosis from needle biopsy samples has become the gold standard for diagnosing Breast Cancer. Meanwhile, DL and ML based CADDs are steadily finding their way into diagnostic studies. Deep NNs (DNNs) are approaching, and in some cases surpassing, the accuracy and precision of pathologists in identifying important features in diagnostic imaging studies.

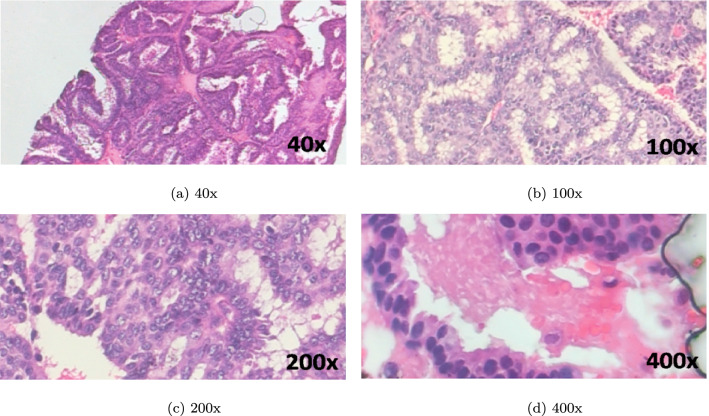

Most of the DL and ML aided works on Breast Cancer detection considered here perform histopathological image based classification, using the BreakHis and BACH datasets for their experiments. The BreakHis dataset is made up of 7,909 histopathological images (2,480 benign and 5,429 malignant) of breast tumor tissue, collected from 82 patients at four different magnification factors (40X, 100X, 200X and 400X). Each image is stored in PNG format with a resolution of 700 × 460 pixels. Some samples from this dataset are shown in Figs. 6 and 7. The BACH dataset contains microscopy images that were made available through the grand challenge held with the International Conference on Image Analysis and Recognition (ICIAR 2018). It consists of 400 Hematoxylin and Eosin (H&E) stained breast images of 2048 × 1536 pixels, each labelled with one of four classes, namely normal, benign, in situ carcinoma and invasive carcinoma. The images have been annotated by medical experts. Some samples from the BACH dataset are shown in Fig. 8.

Fig. 6.

Sample images from the BreakHis dataset: eight classes of Breast Cancer histopathological images

Fig. 7.

Sample images from the BreakHis dataset: Papillary Carcinoma (malignant) tumor seen at different magnification levels

Fig. 8.

Examples of different forms of microscopic biopsy images taken from the BACH dataset

In the last few years, CNNs have undergone substantial refinement and evolution, resulting in a plethora of CNN architectures such as VGG-16 [205], Inception-v1 [214], Inception-v3 [215], ResNet-50 [85], Xception [42] and DenseNet [96], all of which have been used extensively in image based Breast Cancer prediction. Shallu and Mehra [199] presented a comparative study employing three pre-trained networks, VGG-16, VGG-19 and ResNet-50, with fine-tuning and full training. During the preprocessing of BreakHis images, data augmentation was carried out by rotating the images about their centre. The effects of image scaling and of training data size on overall system performance were studied using fully trained CNNs (with randomly initialized weights) and fine-tuned CNNs. However, overfitting caused by the large capacity of the networks was not addressed by freezing layers, owing to space constraints. Features extracted with the fine-tuned pre-trained VGG-16 model, combined with the LR classifier, outperformed the other pre-trained networks. In another work, Yan et al. [228] proposed a hybrid model combining CNN and recurrent NN (RNN) models. They released a larger and more diverse dataset10 consisting of 3771 high resolution, annotated H&E stained Breast Cancer histopathological images, each labelled as normal, benign, in situ carcinoma or invasive carcinoma. However, instead of the entire dataset, they used a subset of the same size as the BACH dataset. The authors compared different combinations of patch-wise and image-wise DL based methods. In the preprocessing stage, patches were extracted from each image, followed by data augmentation. A fine-tuned Inception-V3 was employed to extract features from each patch, which were then fed into a bidirectional long short-term memory (BLSTM) network to fuse the patch features.
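Rotation about the image centre, the augmentation used by Shallu and Mehra [199], can be sketched for right-angle rotations, which need no interpolation. The exact angles used in [199] are not given here, so restricting the sketch to multiples of 90 degrees is an assumption; images are plain lists of pixel rows and the function names are hypothetical.

```python
def rotate90(image):
    """Rotate a 2-D image (list of rows) 90 degrees clockwise about its centre."""
    return [list(row) for row in zip(*image[::-1])]

def rotation_augment(image):
    """Return the original image followed by its 90, 180 and 270 degree rotations."""
    views = [image]
    for _ in range(3):
        views.append(rotate90(views[-1]))
    return views
```

Each training image thus yields four views with the same label, quadrupling the training set without altering pixel values.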

Among ResNet based approaches, Jannesari et al. [105] investigated the performances of ResNet extensions and all versions of the Inception model (i.e., v1-v4). Data augmentation methods like flipping, rotating, cropping and random resizing were applied to the BreakHis images, and saturation, brightness and contrast adjustments were applied to introduce color distortions. The fine-tuned ResNet-152 classified malignant and benign cancer types with the highest accuracy. Unlike many studies that use a single magnification level, this work used all the magnification levels of BreakHis, i.e., 40X, 100X, 200X and 400X. Rakhlin et al. [172] extracted deep features from stain-normalized images using three pre-trained CNNs, namely ResNet-50, Inception-v3 and VGG-16. To counter overfitting, the authors used an unsupervised dimensionality reduction mechanism along with data augmentation such as image scaling and cropping. Recently, Gupta and Chawla [77] used the pre-trained CNN models VGG-16, VGG-19, Xception and ResNet-50 to extract features from histopathological images of the BreakHis dataset. The CNNs were trained separately on the different magnification factors (40x, 100x, 200x and 400x). The top layers, i.e., the FC layers of each CNN model, were removed and replaced by traditional ML methods, i.e., SVM and LR. Features from the pre-trained ResNet-50 with the LR classifier outperformed the other models at the 40x and 100x magnification factors. In another work, Dey et al. [52] used a pre-trained DenseNet-121 model for feature extraction to detect Breast Cancer from thermal images. However, instead of passing the original image to the CNN model, they preprocessed it using edge detection: the edge images generated by the Prewitt and Roberts edge detectors were stacked with the original gray-level image to form a three-channel image. On the other hand, Sánchez-Cauce et al. [185] proposed a multi-input CNN model for detecting Breast Cancer from multi-modal data consisting of patients' information along with thermal images.
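The three-channel input built by Dey et al. [52] can be sketched as follows: compute Prewitt and Roberts gradient magnitudes of the gray-level image and stack them with the image itself, pixel by pixel. The channel order, the zero-valued borders and the use of gradient magnitude are assumptions of this sketch, the names are hypothetical, and the DenseNet-121 feature extractor is not shown.

```python
import math

PREWITT_X = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]
PREWITT_Y = [[-1, -1, -1], [0, 0, 0], [1, 1, 1]]
ROBERTS_X = [[1, 0], [0, -1]]
ROBERTS_Y = [[0, 1], [-1, 0]]

def correlate(image, kernel):
    """Cross-correlate a 2-D image with a small kernel; uncovered border pixels stay 0."""
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    oi, oj = (kh - 1) // 2, (kw - 1) // 2  # centre for 3x3, top-left for 2x2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h - kh + 1):
        for j in range(w - kw + 1):
            out[i + oi][j + oj] = sum(kernel[a][b] * image[i + a][j + b]
                                      for a in range(kh) for b in range(kw))
    return out

def edge_magnitude(image, kx, ky):
    """Gradient magnitude sqrt(gx^2 + gy^2) for a pair of directional kernels."""
    gx, gy = correlate(image, kx), correlate(image, ky)
    return [[math.hypot(a, b) for a, b in zip(rx, ry)] for rx, ry in zip(gx, gy)]

def stack_three_channels(gray):
    """Per-pixel [gray, Prewitt magnitude, Roberts magnitude] triples (H x W x 3)."""
    prewitt = edge_magnitude(gray, PREWITT_X, PREWITT_Y)
    roberts = edge_magnitude(gray, ROBERTS_X, ROBERTS_Y)
    return [[[g, p, r] for g, p, r in zip(rg, rp, rr)]
            for rg, rp, rr in zip(gray, prewitt, roberts)]
```

One practical reason for producing exactly three channels is that ImageNet pre-trained CNNs such as DenseNet-121 expect RGB-shaped input.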

The above mentioned methods used existing CNN architectures to obtain better results. However, some researchers designed their own CNN architectures instead of using the popular ones mentioned earlier. For example, Han et al. [79] designed a Class Structure-based Deep CNN (CSDCNN) for Breast Cancer classification. Training instances were augmented to mitigate the class imbalance problem. They used two different training strategies: in the first, CSDCNN was trained from scratch on the BreakHis dataset, while the second was based on transfer learning, where CSDCNN was first pre-trained on ImageNet-37 and then fine-tuned on the BreakHis dataset. Sudharshan et al. [212] studied Multiple Instance Learning (MIL) and Single Instance Classification (SIC) for Breast Cancer detection. This work showed that MIL can achieve comparable or better results than SIC without labelling all the images. MIL methods such as Axis-Parallel Hyper Rectangle (APR), diverse density, citation-k-NN, SVMs with linear, polynomial and RBF kernels, non-parametric MIL and Multiple Instance Learning based CNN (MILCNN) were applied to the dataset, and the hyper-parameters of each method were tuned by grid search. The evaluations showed that non-parametric MIL and MILCNN-APR performed best, while APR and citation-k-NN performed comparatively poorly.
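Under the standard MIL assumption, a bag (for instance, all images of one patient, or all patches of one image) is labelled malignant if at least one of its instances is malignant, so instance scores are commonly pooled with a maximum. The sketch below illustrates only this bag-level aggregation step; it is not one of the specific MIL methods (APR, citation-k-NN, MILCNN, etc.) evaluated by Sudharshan et al. [212], and the threshold and function names are assumptions.

```python
def bag_score(instance_probs, pooling="max"):
    """Pool instance-level malignancy probabilities into one bag-level score."""
    if pooling == "max":  # standard MIL assumption: one positive instance suffices
        return max(instance_probs)
    if pooling == "mean":  # softer alternative, shown for comparison
        return sum(instance_probs) / len(instance_probs)
    raise ValueError(f"unknown pooling: {pooling}")

def classify_bag(instance_probs, threshold=0.5, pooling="max"):
    """Return 1 (malignant) if the pooled bag score reaches the threshold, else 0."""
    return int(bag_score(instance_probs, pooling) >= threshold)
```

Only bag labels are needed for training under this assumption, which is why MIL avoids labelling every individual image.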

It is well known that the performance of weak classifiers can be improved by classifier ensemble techniques. Thus, many researchers have designed classifier combination techniques for image based Breast Cancer detection. For example, Yang et al. [230] proposed the Ensemble of Multi-Scale CNN (EMS-Net) model, which uses pre-trained DenseNet-161, ResNet-152 and ResNet-101 models to form the ensemble. The method follows three stages: multiscale image patch extraction, training of multiple DCNNs, and model selection and combination. Overfitting was attenuated by various data augmentation techniques that increased the size and color diversity of the training data. Similarly, Chennamsetty et al. [40] used two pre-trained CNN models, DenseNet-161 and ResNet-101, which were applied to differently preprocessed histology images. Brancati et al. [30] built their ensemble from different versions of the ResNet architecture. The notable contribution of this work is that the authors reduced the problem complexity by down-sampling the images by a factor of k. The model also took only the central patch of the image as input, which further reduced the training complexity. Recently, Bhowal et al. [28] proposed a classifier combination method that uses the Choquet fuzzy integral as the aggregation function to combine the confidence scores returned by CNN based classifiers. The most notable aspect of the work is that the authors used coalition game and information theory to estimate the fuzzy measures used in the Choquet integral with the help of validation accuracy. In another work, Chouhan et al. [44] proposed an emotional learning inspired feature fusion strategy to classify mammogram images into normal and abnormal classes.
The authors used three static features, namely taxonomic indices, statistical measures and Local Binary Patterns (LBP), along with CNN based features. Chattopadhyay et al. [38] designed a DL based method called Dense Residual Dual-shuffle Attention Network (DRDA-Net) for Breast Cancer detection from histopathological images.
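The Choquet integral aggregates classifier confidences with a fuzzy measure that assigns a weight to every coalition of classifiers, so interactions between classifiers can be rewarded or penalized. The sketch below implements the standard discrete Choquet integral and applies it class by class; the fuzzy measure values are supplied by hand, whereas Bhowal et al. [28] estimate them with coalition game and information theory from validation accuracy, which is not reproduced here. Function names are hypothetical.

```python
def choquet(scores, measure):
    """Discrete Choquet integral of {source: score} w.r.t. a fuzzy measure.

    `measure` maps every frozenset of sources to a value in [0, 1]; it should be
    monotone, with the measure of the full set equal to 1.
    """
    ordered = sorted(scores, key=scores.get, reverse=True)
    total, coalition = 0.0, frozenset()
    for i, src in enumerate(ordered):
        coalition = coalition | {src}  # sources with the i+1 largest scores
        nxt = scores[ordered[i + 1]] if i + 1 < len(ordered) else 0.0
        total += (scores[src] - nxt) * measure[coalition]
    return total

def choquet_fuse(confidences, measure):
    """Fuse per-class confidences of several classifiers; return (winning class, fused scores)."""
    n_classes = len(next(iter(confidences.values())))
    fused = [choquet({name: probs[k] for name, probs in confidences.items()}, measure)
             for k in range(n_classes)]
    return max(range(n_classes), key=fused.__getitem__), fused
```

With an additive measure the integral reduces to a weighted average of the scores, and with a measure equal to 1 on every non-empty set it reduces to their maximum; non-additive measures interpolate between such behaviours.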

All the methods described above pass the entire pathological image to the detection system. However, multi-view analysis of the pathological images might help improve the final result. With this objective, some authors followed patch-based approaches. For example, Roy et al. [178] designed a CNN aided patch-based classification model for histology breast images. Patches carrying discriminative information were extracted from the original images for classification, which was evaluated under two strategies, namely One Patch in One Decision (OPOD) and All Patches in One Decision (APOD). Sanyal et al. [189] proposed a detection approach similar to that of Roy et al. [178], but used a novel hybrid ensemble for classification, whereas Roy et al. [178] used a single CNN architecture. This ensemble combined the confidence scores returned by the base CNN models with those returned by Extreme Gradient Boosting trees (XGB) classifiers trained on different features to make the final decision at the patch level. The patch level results were then combined again to make the image level decision.
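The patch-based pipeline can be sketched as a sliding-window patch extractor plus a decision rule over patch labels. Under OPOD each patch keeps its own predicted label, whereas under APOD the patch predictions of an image are combined into one image-level label; majority voting is assumed here as the combination rule, and the patch size and stride are illustrative. The patch classifier itself (a CNN in [178], a hybrid ensemble in [189]) is not shown, and the function names are hypothetical.

```python
from collections import Counter

def extract_patches(image, size, stride):
    """Slide a size x size window with the given stride over a 2-D image (list of rows)."""
    h, w = len(image), len(image[0])
    return [[row[j:j + size] for row in image[i:i + size]]
            for i in range(0, h - size + 1, stride)
            for j in range(0, w - size + 1, stride)]

def apod_decision(patch_labels):
    """APOD: majority vote over all patch-level labels gives the image-level label."""
    return Counter(patch_labels).most_common(1)[0][0]
```

A stride smaller than the patch size produces overlapping patches, giving more (but correlated) votes per image.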

All the works discussed above mostly used deep features, which might contain redundant or irrelevant features. Therefore, applying an FS method might help improve the final output. Based on this, Chatterjee et al. [37] proposed a deep feature selection technique in which the Dragonfly Algorithm (DA) was improved with the Grünwald-Letnikov method to perform FS, improving the model's performance with fewer features. In computer vision, it is also observed that fusing handcrafted features with deep features often improves the overall performance. Relying on this observation, Sethy and Behera [195] concatenated LBP features with deep features extracted by the VGG19 CNN model and classified them using the k-NN classifier. The performances of some important state-of-the-art Breast Cancer detection methods on different datasets are summarized in Table 4. Although these methods perform satisfactorily, Breast Cancer detection remains an open research problem given the lethality of the disease. Analysing the performance trends of the methods studied here, we observe that DL assisted patch-based methods performed better on the BACH dataset. Hence, this approach could also be studied on other datasets in the future to design better Breast Cancer detection techniques from pathological images.
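Fusing handcrafted and deep features, as Sethy and Behera [195] did, can amount to simple vector concatenation. The sketch below computes a basic 8-neighbour LBP histogram (256 bins; a neighbour greater than or equal to the centre contributes a 1 bit) on a gray-level image given as a list of rows, and appends it to a deep feature vector. The LBP variant and the histogram normalisation are assumptions, the VGG19 features are represented by a placeholder list, and the function names are hypothetical.

```python
# 8 neighbours, clockwise from top-left; each contributes one bit of the code
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]

def lbp_code(image, i, j):
    """Basic LBP code of pixel (i, j): bit k is 1 if neighbour k >= the centre pixel."""
    centre = image[i][j]
    code = 0
    for bit, (di, dj) in enumerate(OFFSETS):
        if image[i + di][j + dj] >= centre:
            code |= 1 << bit
    return code

def lbp_histogram(image):
    """Normalised 256-bin histogram of LBP codes over the interior pixels."""
    hist = [0] * 256
    for i in range(1, len(image) - 1):
        for j in range(1, len(image[0]) - 1):
            hist[lbp_code(image, i, j)] += 1
    total = sum(hist)
    return [h / total for h in hist] if total else hist

def fuse_features(deep_features, image):
    """Concatenate a deep feature vector with the LBP histogram of the same image."""
    return list(deep_features) + lbp_histogram(image)
```

Because the histogram is normalised to sum to 1, its scale stays comparable across image sizes; in practice the deep features may also need scaling before a distance based classifier such as k-NN is applied.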

Table 4.

Performance comparison of recent prediction models on various Breast Cancer datasets. Classifiers with ’*’ represent the classifiers with the highest ACC scores in the respective paper

| Work Ref. | Classifier | ACC | P | R | F1 | S | AUC |
|---|---|---|---|---|---|---|---|
| BreakHis Dataset | | | | | | | |
| Han et al. [79] | CSDCNN | 0.93 | – | – | – | – | – |
| Sudharshan et al. [212] | MILCNN* | 0.92 | – | – | – | – | – |
| Shallu and Mehra [199] | VGG-16 + LR | 0.92 | 0.93 | 0.93 | 0.93 | – | 0.95 |
| Jannesari et al. [105] | ResNet V1 152 | 0.98 | 0.99 | – | – | – | 0.98 |
| Gupta and Chawla [77] | ResNet-50 + LR | 0.93 | – | – | – | – | – |
| Chattopadhyay et al. [38] | DRDA-Net | 0.98 | – | – | – | – | – |
| BACH Dataset | | | | | | | |
| Rakhlin et al. [172] | LightGBM + CNN | 0.87 | – | – | – | – | – |
| Yang et al. [230] | EMS-Net | 0.91 | – | – | – | – | – |
| Roy et al. [178] | Self-designed (OPOD) | 0.77 | 0.77 | – | 0.77 | 0.77 | – |
| Roy et al. [178] | Self-designed (APOD) | 0.90 | 0.92 | – | 0.90 | 0.90 | – |
| Sanyal et al. [189] | Hybrid Ensemble (OPOD) | 0.87 | 0.86 | 0.87 | 0.86 | 0.99 | – |
| Sanyal et al. [189] | Hybrid Ensemble (APOD) | 0.95 | 0.95 | 0.95 | 0.95 | 0.98 | – |
| Bhowal et al. [28] | Choquet fuzzy integral and coalition game based classifier ensemble | 0.95 | – | – | – | – | – |
| Misc. Datasets | | | | | | | |
| Yan et al. [228] | Inception-V3* | 0.91 | – | 0.87 | – | – | 0.89 |
| Dey et al. [52] | DenseNet-121 | 0.99 | 0.99 | 0.98 | 0.98 | – | – |

Lung Cancer Prediction Methods

As with Breast Cancer, Lung Cancer detection methods are divided into two categories in this work: diagnosis report based and pathological image based methods. Some research attempts produced satisfactory results with ML algorithms like SVM and AdaBoost. However, most works show a clear dominance of DL based techniques for both image based and diagnosis report based methods.

Diagnosis Report based Methods

The dataset most commonly used in diagnosis report based Lung Cancer detection comes from the UCI-Irvine repository11. It contains 32 instances with 57 attributes (1 class attribute and 56 predictive attributes). It is an old dataset, and its very limited number of samples relative to its large number of diagnosis attributes makes it challenging. Some works instead used Lung Cancer Data12 (LCD), survey Lung Cancer Data13 (SLCD) from the data.world repository, the Thoracic Surgery Dataset (TSD) of the UCI repository [23], the Michigan Lung Cancer Dataset14 (MLCD) and the SEER15 dataset. The LCD dataset contains 1000 instances with 24 attributes and three class labels: ’0’ for a healthy person, ’1’ for a person with a benign tumor and ’2’ for a person with a malignant tumor. SLCD contains 309 instances with 16 attributes. Of these, 14 attributes take the values 1 and 2 to represent NO and YES respectively, gender is encoded as M (male) or F (female), and the values of the age attribute are normalized by min-max normalization. The TSD contains 470 instances and 17 attributes (14 nominal and 3 continuous). Its class label is the binary attribute Risk1Yr, which is true if the patient died and false if the patient was alive. MLCD contains 96 instances (86 cancerous and 10 healthy) and 7130 attributes. The SEER dataset, released in April 2016, contains 149 attributes and 643,924 instances. Table 5 lists the performance of various research efforts on the datasets discussed here. The long-standing lack of diverse datasets for Lung Cancer detection might have lowered the quality of research in this area compared with other diseases.

Most works in the literature are experimental comparative studies of different shallow classifiers. For example, Danjuma [47] compared the performance of three classifiers, MLP, J48 and Naive Bayes (NB), on the TSD from the UCI repository, and found that MLP produced the best result. Murty and Babu [151] studied the performances of four popular classifiers, viz., NB, NN with RBF kernel (RBF-NN), MLP and DT, on two datasets: the UCI Lung Cancer dataset and MLCD. RBF-NN outperformed all other classifiers. Radhika et al. [169] used the Lung Cancer dataset from the UCI repository and SLCD, and observed that LR with 7-fold cross validation and SVM with 10-fold cross validation outperformed the other classifiers on the UCI dataset and SLCD, respectively. Patra [161] compared four classical ML algorithms, RBF-NN, k-NN, NB and J48, on the UCI Lung Cancer dataset; the experimental outcomes confirmed that RBF-NN produced the best accuracy under 10-fold cross validation. Doppalapudi et al. [53] compared 6 classifiers (3 DL models, namely ANN, CNN and RNN, and 3 shallow learners, namely NB, SVM and RF) for Lung Cancer survivability prediction on the SEER dataset. They showed experimentally that ANN performed best, followed by the RNN and CNN models. In another work, Gultepe [75] studied six classifiers, namely k-NN, RF, NB, Logistic Regression (LR), DT and SVM, on the UCI-Irvine dataset and found that k-NN performed best. The author applied the classifiers to data preprocessed by imputing the missing values.
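Several of the comparisons above depend on k-fold cross validation (e.g., 7-fold and 10-fold). The sketch below generates k-fold train/test index splits after a seeded shuffle; it is not stratified, which is a simplification, and the 32-sample example in the usage only mirrors the size of the UCI Lung Cancer dataset. The function name is hypothetical.

```python
import random

def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_idx, test_idx) pairs for k-fold cross validation."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    folds = [idx[f::k] for f in range(k)]  # round-robin: fold sizes differ by at most 1
    for f in range(k):
        test = sorted(folds[f])
        train = sorted(i for g, fold in enumerate(folds) if g != f for i in fold)
        yield train, test
```

For example, `k_fold_indices(32, 7)` yields seven splits whose test folds hold 4 or 5 samples each; every sample is tested exactly once, and the reported score is the mean over the folds. With only 32 samples and a class label, a stratified split would usually be preferable.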

In addition to these comparative studies, a few works have concentrated on method building. For example, Ali and Reza [180] applied several preprocessing steps to filter out redundant or noisy patient records and attributes from the SEER dataset. In the first phase, only the records of patients who died due to cancer were kept, and irrelevant attributes like the cause of death, ID_no, age and sex were removed. A correlation based FS algorithm was then employed to obtain a near-optimal set of attributes. After preprocessing, 46 of the 149 attributes and 17,484 of the 643,924 instances were retained. Various ensemble methods were applied to this reduced dataset: J48 with the base learner, RF with Dagging, and Repeated Incremental Pruning to Produce Error Reduction (RIPPER) with AdaBoost, of which RIPPER with AdaBoost performed best.
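Correlation based FS of the kind used by Ali and Reza [180] typically follows Hall's CFS heuristic: a subset of k features is scored by merit = k * r_cf / sqrt(k + k(k - 1) * r_ff), where r_cf is the mean feature-class correlation and r_ff the mean feature-feature correlation, so relevant but mutually redundant features are penalized. The sketch below assumes absolute Pearson correlation and a greedy forward search; the exact correlation measure and search strategy of the cited work may differ, and the function names are hypothetical.

```python
import math

def pearson(a, b):
    """Pearson correlation coefficient (0.0 if either input is constant)."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb) if va and vb else 0.0

def cfs_merit(columns, target, subset):
    """Hall's CFS merit of the feature indices in `subset`."""
    k = len(subset)
    r_cf = sum(abs(pearson(columns[f], target)) for f in subset) / k
    pairs = [(f, g) for i, f in enumerate(subset) for g in subset[i + 1:]]
    r_ff = (sum(abs(pearson(columns[f], columns[g])) for f, g in pairs) / len(pairs)
            if pairs else 0.0)
    return k * r_cf / math.sqrt(k + k * (k - 1) * r_ff)

def greedy_cfs(columns, target):
    """Forward selection: add the feature that most increases merit; stop when none does."""
    selected, best = [], 0.0
    remaining = list(range(len(columns)))
    while remaining:
        merit, f = max((cfs_merit(columns, target, selected + [f]), f) for f in remaining)
        if merit <= best:
            break
        selected.append(f)
        remaining.remove(f)
        best = merit
    return selected
```

Here `columns` holds one list per attribute; an exact duplicate of an already selected feature adds nothing to the merit, so the search does not add it.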

Nasser and Abu-Naser [153] used the SLCD dataset. They used 15 of its 16 attributes, excluding the chest pain attribute, and an ANN with 3, 1 and 2 nodes in its hidden layers. Salaken et al. [183] introduced a deep autoencoder based classification method in which deep features were first learned and then used to train an ANN. Hinton and Salakhutdinov [87] used a DNN model with 80 and 10 features in the first and second layers, respectively, and 5 hidden neurons, with a sigmoid encoder function, and obtained promising results. Recently, Dey et al. [49] proposed the LAGOA FS method for several disease diagnosis tasks; the features selected by LAGOA were classified using an RF classifier, and the model was evaluated on the UCI-Irvine Lung Cancer dataset. In another recent work, Maleki et al. [132] used a k-NN classifier along with a GA based FS approach for Lung Cancer prediction on the LCD dataset. Hsu et al. [95] proposed an FS scheme combining GA with a correlation based FS technique to select an optimal set of features from the UCI-Irvine dataset.

Some of the important findings are listed in Table 5. As with diagnosis report based Breast Cancer detection, FS based methods dominate here as well. However, to the best of our knowledge, methods that combine FS with deep learners for Lung Cancer diagnosis are still missing in the literature, even though such methods could significantly improve the diagnosis performance of ML/DL based approaches.

Table 5.

Performance comparison of recent prediction models on various Lung Cancer datasets

| Work Ref. | Classifier | ACC | P | R | F1 | Error | AUC |
|---|---|---|---|---|---|---|---|
| UCI-TSD Dataset | | | | | | | |
| Danjuma [47] | MLP | 0.82 | 0.82 | 0.82 | 0.82 | – | 0.84 |
| MLCD Dataset | | | | | | | |
| Murty and Babu [151] | RBF-NN | – | – | – | – | 0.19 | – |
| UCI-Irvine Dataset | | | | | | | |
| Patra [161] | RBF-NN | 0.81 | 0.81 | 0.81 | 0.81 | – | 0.75 |
| Dey et al. [49] | LAGOA + RF | 0.86 | – | – | – | – | – |
| LCD Dataset | | | | | | | |
| Radhika et al. [169] | SVM | 0.99 | – | – | – | – | – |
| Salaken et al. [183] | Deep-ANN | 0.80 | – | – | – | – | – |
| Maleki et al. [132] | GA + k-NN | 1.00 | – | – | – | – | – |
| SEER Dataset | | | | | | | |
| Ali and Reza [180] | RIPPER with AdaBoost | 0.89 | – | – | – | – | 0.95 |
| Doppalapudi et al. [53] | ANN | 0.71 | 0.71 | 0.71 | 0.71 | – | 0.87 |
| SLCD Dataset | | | | | | | |
| Nasser and Abu-Naser [153] | ANN | 0.97 | – | – | – | – | – |

Pathological Image Based Methods

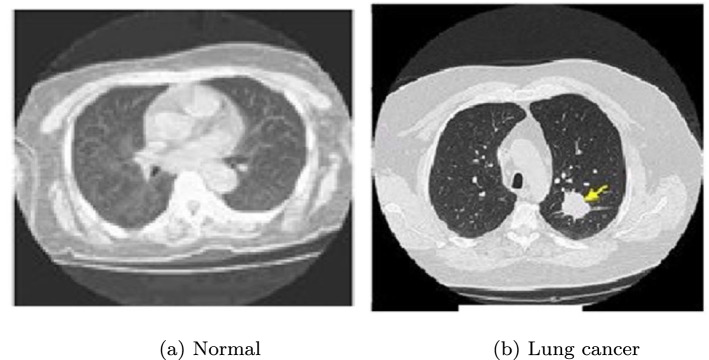

The practical benefits of ML and DL based models have already been discussed in the previous sections. With rapid advances in computational intelligence and GPUs, it has become easier for researchers to develop robust image classifiers. As a result, many recent methods treat Lung Cancer detection from Computed Tomography (CT) images as an image classification problem. Before transfer learning became widely accepted for learnable texture feature extraction, researchers [16, 117, 152] mostly used preprocessing to improve the visual quality of the CT images, followed by handcrafted feature extraction and classification with shallow learners; the advent of transfer learning later widened the research spectrum. Among these earlier works, Kulkarni and Panditrao [117] used CT images from the National Institutes of Health (NIH)/National Cancer Institute (NCI) Lung Image Database Consortium (LIDC) dataset to detect the stages of Lung Cancer. Before feature extraction, the quality of the CT images was enhanced using a median filter followed by a Gabor filter. Next, marker based watershed segmentation [9] was performed to extract the tumor regions. Finally, geometric features like area, perimeter and eccentricity were fed to an SVM classifier to determine the stage of cancer. Nadkarni and Borkar [152] classified CT images as cancerous (abnormal) or non-cancerous (normal) using an SVM classifier on top of several extracted geometric features, including area, perimeter and eccentricity. Prior to feature extraction, a median filter was applied to remove noise from the CT images, after which image enhancement was carried out by contrast adjustment, followed by image segmentation. Annotated CT images of Lung Cancer were collected from the Cancer Imaging Archive (CIA) repository.
Two sample images taken from the CIA database are shown in Fig. 9.
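Median filtering, used by Kulkarni and Panditrao [117] and Nadkarni and Borkar [152] to suppress noise in CT images, replaces each pixel with the median of its neighbourhood, which removes isolated outlier pixels while preserving edges better than averaging. The sketch assumes a 3 × 3 window and clamps coordinates at the image border; neither choice is taken from the cited works, and the function name is hypothetical.

```python
import statistics

def median_filter(image, size=3):
    """Replace each pixel by the median of its size x size neighbourhood (borders clamped)."""
    h, w, r = len(image), len(image[0]), size // 2
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            window = [image[min(max(i + di, 0), h - 1)][min(max(j + dj, 0), w - 1)]
                      for di in range(-r, r + 1) for dj in range(-r, r + 1)]
            row.append(statistics.median(window))
        out.append(row)
    return out
```

With an odd window size the median is always one of the original pixel values, so no new intensities are introduced.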

Fig. 9.

Examples of Lung CT images from CIA

Anifah et al. [16] used an ANN with back-propagation and Gray Level Co-occurrence Matrix (GLCM) based features to classify 50 CT images obtained from the CIA. During preprocessing, the images were binarized and enhanced as in [152]. Finally, GLCM features were extracted from the ROIs to classify the images using the designed ANN. Vas and Dessai [222] also proposed a Haralick feature assisted Lung Cancer detection technique in which image segmentation was performed using morphological operations. Images were collected from Manipal Hospital and V.M. Salgaocar Hospital, both situated in Goa, India. The authors applied a median filter of size to improve the input CT image quality. For ROI segmentation, a four-step method was employed: 1) complementing the image and opening it with periodic-line structuring elements, 2) filtering out the lungs using the maximum area, 3) performing a closing operation with a disk structuring element to obtain the lung mask, and 4) superimposing the lung mask on the original image. Seven Haralick features (second-order statistical features) were extracted by applying Haar wavelets and computing the GLCM from the wavelet-transformed images. Recently, Shakeel et al. [198] designed a Lung Cancer detection model for CT images that applies a deep learning instantaneously trained neural network (DITNN) classifier on top of several statistical and spectral features designed by Sridhar et al. [209]. The main contribution of the authors is an improved profuse clustering technique (IPCT) that efficiently segments the affected regions from the CT image and thus improves the overall detection result. Alzubaidi et al. [13] performed an experimental comparison between global and local hand-crafted features for Lung Cancer detection from CT images. For this study, 100 CT images were collected from different sources, and 10 different feature extraction methods, including intensity histogram, HOG, Gabor and Haar wavelet features, were compared. The study showed that local Gabor features with an SVM classifier performed best. Recently, Akter et al. [7] designed a fuzzy rule based image segmentation technique to extract suspicious nodules from a CT image. Next, they computed shape (2D and 3D) and texture (2D) based features from the extracted nodules and classified them using a neuro-fuzzy classifier. In another work, Zhou et al. [236] first employed a region growing mechanism to segment suspicious regions from a CT image and then used a CNN model to classify the segmented regions and decide whether Lung Cancer is present.
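The GLCM texture features used by Anifah et al. [16] and Vas and Dessai [222] can be illustrated with a minimal pure-Python sketch. It assumes the image has already been quantized to a few grey levels, uses a single horizontal pixel offset, and computes only three Haralick-style descriptors (contrast, angular second moment, homogeneity). The offset, the number of levels, and this descriptor subset are illustrative assumptions, not the settings used in those works.

```python
from collections import Counter

def glcm(image, dx=1, dy=0, levels=8):
    """Return a normalized, symmetric GLCM for the pixel offset (dx, dy).

    image: 2D list of ints already quantized to the range [0, levels).
    """
    rows, cols = len(image), len(image[0])
    counts = Counter()
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                a, b = image[r][c], image[r2][c2]
                counts[(a, b)] += 1
                counts[(b, a)] += 1  # symmetric GLCM: count each pair both ways
    total = sum(counts.values())
    if total == 0:
        raise ValueError("offset leaves no valid pixel pairs")
    return [[counts[(i, j)] / total for j in range(levels)] for i in range(levels)]

def texture_features(p):
    """Three Haralick-style descriptors computed from a normalized GLCM p."""
    n = len(p)
    cells = [(i, j) for i in range(n) for j in range(n)]
    return {
        "contrast": sum(p[i][j] * (i - j) ** 2 for i, j in cells),
        "asm": sum(p[i][j] ** 2 for i, j in cells),  # angular second moment
        "homogeneity": sum(p[i][j] / (1 + abs(i - j)) for i, j in cells),
    }

# Usage on a toy 2-level checkerboard where every horizontal neighbour differs.
checker = [[0, 1], [1, 0]]
print(texture_features(glcm(checker, levels=2)))
```

In practice, features are often averaged over several offsets (for example 0°, 45°, 90° and 135°) to reduce directional bias, and optimized routines such as scikit-image's `graycomatrix` and `graycoprops` would normally replace these loops.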

In the era of DL and transfer learning, some works have adopted these techniques. For example, Jakimovski and Davcev [104] gathered CT images of 70 subjects from a local hospital and had them labelled by oncology specialists. The subjects were divided into two groups: 58 diagnosed with cancer and 12 cancer-free. The data were split into training (90% of the images) and testing sets. After binarization, the images were fed into a DNN for classification. In another work, Taher et al. [216] proposed a rule based method that analyzed sputum samples obtained from the Tokyo Center of Lung Cancer in Japan. The features extracted from the nucleus region included the nucleus-cytoplasm ratio, perimeter, density, curvature, circularity and eigen ratio, and a diagnosis rule was derived for each feature. The rule based classifier was evaluated on 100 sputum color images. While Kulkarni and Panditrao [117] used classical ML in their work, Tekade and Rajeswari [218] proposed a 3D multipath VGG-like CNN architecture. In the preprocessing stage, operations such as image binarization and morphological operations were applied. After preprocessing, a U-Net architecture was used to segment lung nodules, which were then fed to the CNN model for detection. Finally, the features were fed to an ANN for classification. Since identified lung nodules greatly help in assessing the risk of Lung Cancer, Zhang and Kong [231] designed a Multi-Scene Deep Learning Framework (MSDLF) to detect lung nodules from lung CT images. The detected nodules were classified using a 4-channel CNN architecture. A summary of the performance of all the methods studied here is provided in Table 6.

Table 6.

Performance comparison of recent prediction models on Lung Cancer Datasets

| Work Ref. | Classifier | ACC | P | R | F1 | S |
|---|---|---|---|---|---|---|
| CIA | | | | | | |
| Tekade and Rajeswari [218] | VGG | 0.95 | – | – | – | – |
| Anifah et al. [16] | DNN | 0.80 | – | – | – | – |
| Alam et al. [10] | SVM | 0.97 | – | – | – | – |
| Shakeel et al. [198] | DITNN | 0.95 | 0.94 | 0.97 | 0.95 | 0.97 |
| Zhang and Kong [231] | MSDLF | 0.99 | – | – | – | – |
| Misc. Datasets | | | | | | |
| Jakimovski and Davcev [104] | DNN | 0.75 | – | – | – | – |
| Taher et al. [216] | Rule-based classifier | 0.93 | 0.95 | 0.94 | – | 0.91 |
| Vas and Dessai [222] | DNN | 0.92 | – | 0.88 | – | 0.97 |
| Alzubaidi et al. [13] | SVM | 0.97 | – | 0.96 | – | 0.97 |
| Akter et al. [7] | Neuro-Fuzzy | 0.90 | 0.86 | – | – | 0.81 |

Leukemia Prediction Methods

Considering the mortality rate of Leukemia, researchers have made significant inroads into its early detection using both gene expression data and pathological images. Not all genes present in gene expression data are responsible for Leukemia; only a few, known as biomarkers, are. Thus, selecting the responsible genes has been widely explored in the literature. Earlier methods used filter methods, while the current trend is to use hybrid FS mechanisms for this purpose. For image based methods, a shift from traditional ML approaches to DL approaches is observed. In the following subsections, we describe the methods that follow either gene expression or cell image based diagnosis.

Gene Expression Based Methods

In this study, the classification of Leukemia into Acute Lymphoblastic Leukemia (ALL) or Acute Myeloid Leukemia (AML) is considered. The most commonly used dataset is the gene expression dataset provided by Golub et al. [70]. It consists of samples from 72 Leukemia patients (47 ALL and 25 AML), each with 7129 gene expression measurements. Researchers aimed to find a near-optimal set of genes responsible for Leukemia before designing a classifier based detection technique. For example, Hsieh et al. [94] used Information Gain (IG) to select the genes that play a significant role in identifying the presence of Leukemia. The authors selected 150 genes out of 7128 using IG, and these 150 genes were then used to classify the samples using an SVM with an RBF kernel. In another work, Begum et al. [27] performed Consistency based FS (CBFS) to select optimal biomarkers (i.e., genes) that helped an SVM classifier with a polynomial kernel distinguish ALL from AML. In contrast to the above methods, Kavitha et al. [114] used the Fast Correlation-Based Filter Solution (FCBF) as the feature selector and SVM with Recursive Feature Elimination (SVM-RFE) as the classifier. In FCBF, Symmetrical Uncertainty (SU) is first computed for every feature, and correlated features are then deleted based on a preset threshold value.
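The FCBF procedure mentioned above can be sketched in pure Python. This is a minimal illustration of the standard FCBF idea (a relevance filter on SU with the class, followed by removal of redundant features), not the implementation of Kavitha et al. [114]. It assumes the gene expression values have already been discretized, since entropy is computed over discrete values; the threshold default and the gene names in the usage are hypothetical.

```python
import math
from collections import Counter

def entropy(values):
    """Shannon entropy (bits) of a sequence of discrete values."""
    n = len(values)
    return -sum((c / n) * math.log2(c / n) for c in Counter(values).values())

def symmetrical_uncertainty(x, y):
    """SU(X, Y) = 2 * I(X; Y) / (H(X) + H(Y)), in [0, 1]."""
    hx, hy = entropy(x), entropy(y)
    if hx + hy == 0:
        return 0.0  # both variables constant: treat as carrying no information
    mutual_info = hx + hy - entropy(list(zip(x, y)))  # I(X;Y) = H(X) + H(Y) - H(X,Y)
    return 2.0 * mutual_info / (hx + hy)

def fcbf(features, labels, threshold=0.0):
    """Fast Correlation-Based Filter over discretized features.

    features: dict mapping feature name -> list of discrete values.
    Returns the selected feature names, most relevant first.
    """
    # Relevance: keep features whose SU with the class label exceeds the threshold.
    su_class = {name: symmetrical_uncertainty(vals, labels) for name, vals in features.items()}
    candidates = sorted((f for f in features if su_class[f] > threshold),
                        key=lambda f: su_class[f], reverse=True)
    selected = []
    # Redundancy: drop any feature that the current best feature predicts at least
    # as well as the class does, i.e. SU(best, f) >= SU(f, class).
    while candidates:
        best = candidates.pop(0)
        selected.append(best)
        candidates = [f for f in candidates
                      if symmetrical_uncertainty(features[best], features[f]) < su_class[f]]
    return selected

# Usage with hypothetical genes: gene_b duplicates gene_a, gene_c is constant.
labels = [0, 0, 1, 1, 0, 1]
genes = {"gene_a": [0, 0, 1, 1, 0, 1], "gene_b": [0, 0, 1, 1, 0, 1], "gene_c": [5] * 6}
print(fcbf(genes, labels))
```

Because SU is normalized to [0, 1], a single threshold can be applied across genes with very different value ranges, which is one reason FCBF scales to datasets with thousands of genes.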

In another work, Dwivedi [55] compared several classifiers, namely ANN, SVM, LR, k-NN and a classification tree, using leave-one-out cross-validation; ANN gave the best result. Recently, Santhakumar and Logeswari [187] used the Ant Lion Optimizer (ALO), designed by Mirjalili [139], for FS and then applied an SVM classifier to the selected features. In another work [188], the same authors proposed a hybrid of ALO and Ant Colony Optimization (ACO) to improve on their previous performance. Although these two approaches are new, their performance was lower than that of other works on the same dataset. In another work, Gao and Liu [63] achieved the highest accuracy with Least Squares SVM (LSSVM), an extension of the SVM classifier. The authors first normalized the data and then used the F-statistic as a filter method to reduce the complexity of the subsequent SVM-RFE step. Since both PSO and FOA tend to get stuck in local optima, FOA was applied first, and PSO was then used to reach the global optimum whenever FOA got stuck in a local optimum. Finally, LSSVM was applied to the selected features, achieving 100% accuracy with only 4 features.
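The F-statistic filter used by Gao and Liu [63] before SVM-RFE ranks each gene by how well its values separate the classes. A minimal sketch of the one-way ANOVA F-statistic and a top-k filter is shown below; the function names, the value of k, and the toy data are illustrative assumptions, and this is not the authors' implementation.

```python
def f_statistic(values, labels):
    """One-way ANOVA F-statistic of a single feature across class labels."""
    groups = {}
    for v, y in zip(values, labels):
        groups.setdefault(y, []).append(v)
    k, n = len(groups), len(values)
    if k < 2 or n <= k:
        raise ValueError("need at least two classes and more samples than classes")
    grand_mean = sum(values) / n
    means = {y: sum(g) / len(g) for y, g in groups.items()}
    # Between-class and within-class sums of squares.
    ss_between = sum(len(g) * (means[y] - grand_mean) ** 2 for y, g in groups.items())
    ss_within = sum((v - means[y]) ** 2 for y, g in groups.items() for v in g)
    if ss_within == 0:
        return float("inf") if ss_between > 0 else 0.0
    return (ss_between / (k - 1)) / (ss_within / (n - k))

def top_k_by_f(features, labels, k):
    """Rank features (dict name -> values) by F-statistic and keep the top k names."""
    scores = {name: f_statistic(vals, labels) for name, vals in features.items()}
    return sorted(scores, key=scores.get, reverse=True)[:k]

# Usage: g1 separates the two classes, g2 has identical class means.
labels = [0, 0, 0, 1, 1, 1]
feats = {"g1": [1, 2, 3, 7, 8, 9], "g2": [1, 9, 5, 1, 9, 5]}
print(top_k_by_f(feats, labels, 1))
```

Such a filter is cheap (one pass per gene), so applying it first shrinks the candidate set that the far more expensive SVM-RFE has to process.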

Recently, Baldomero-Naranjo et al. [24] designed a modified SVM classifier that handles outlier detection and FS simultaneously. They incorporated a ramp loss margin error [31] into the SVM model to mitigate the influence of outliers on the classifier, and a budget constraint similar to [123] to restrict the number of selected features. In another work, Baliarsing et al. [25] designed a hybrid FS method, termed SARA, that combines SA with the Rao Algorithm (RA). The SA based local search helps improve the exploration capability of the RA method. They also used a log-sigmoid transfer function to convert the continuous-domain SARA into the discrete domain. Mandal et al. [133] proposed a tri-stage wrapper-filter FS method and evaluated its performance on the dataset provided by Golub et al. [70]. In the first stage, four filter methods, namely Mutual Information, ReliefF, Chi-Square and Xvariance, were combined into an ensemble FS that removes irrelevant features from the high-dimensional feature set. In the next stage, highly correlated features were filtered out using a Pearson correlation based method. Finally, the authors employed WOA to select the final set of biomarkers. Table 7 lists the performance of the various research efforts discussed here.
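The Pearson correlation based redundancy filter in the second stage of Mandal et al. [133] can be illustrated with a greedy sketch: walk through features in a given ranking (for example, the order produced by the ensemble filter stage) and keep a feature only if it is not strongly correlated with any feature already kept. The greedy strategy, the ranking input, and the 0.9 threshold are assumptions for illustration; the exact procedure and threshold of [133] are not stated here.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return 0.0  # undefined for a constant feature; treat as uncorrelated
    return cov / (sx * sy)

def drop_correlated(features, ranking, max_abs_r=0.9):
    """Keep features in ranking order, skipping any whose |r| with an
    already-kept feature exceeds max_abs_r."""
    kept = []
    for name in ranking:
        if all(abs(pearson(features[name], features[k])) <= max_abs_r for k in kept):
            kept.append(name)
    return kept

# Usage with hypothetical genes: g2 is an exact multiple of g1, so it is redundant.
feats = {"g1": [1, 2, 3, 4], "g2": [2, 4, 6, 8], "g3": [4, 1, 3, 2]}
print(drop_correlated(feats, ["g1", "g2", "g3"]))
```

Processing features in ranked order means that, of two correlated genes, the one judged more relevant by the earlier stage is the one retained.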

Table 7.

Performance comparison of recent prediction models on Leukemia Datasets. All the methods were evaluated on the dataset provided by Golub et al. [70]

| Work Ref. | Classifier | ACC | R | F1 | S |
|---|---|---|---|---|---|
| Hsieh et al. [94] | IG-SVM | 0.98 | – | – | – |
| Begum et al. [27] | CBFS-Poly SVM | 0.97 | – | – | – |
| Kavitha et al. [114] | FCBF-SVM RFE | 0.93 | 0.95 | – | 0.98 |
| Dwivedi [55] | ANN | 0.98 | 1.00 | – | 0.93 |
| Gao and Liu [63] | PSOFOA-ANNFIS | 1.00 | – | – | – |
| Santhakumar and Logeswari [187] | ALO-SVM | 0.91 | 0.95 | 0.93 | 0.95 |
| Santhakumar and Logeswari [188] | ALO-ACO-SVM | 0.95 | 0.95 | 0.96 | 0.98 |
| Baldomero-Naranjo et al. [24] | Modified SVM | 0.95 | – | – | – |
| Mandal et al. [133] | Tri-stage FS | 1.00 | – | – | – |

Pathological Image Based Methods

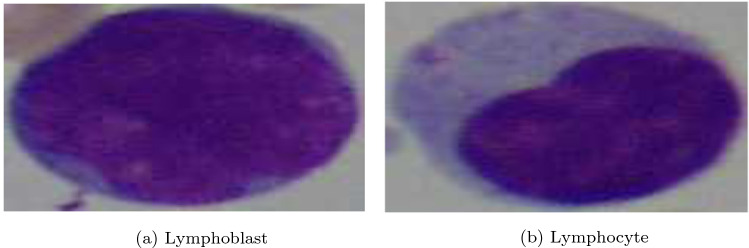

Since Leukemia usually affects white blood cells, the nature of these cells helps in screening for it. As a result, pathological image based Leukemia detection algorithms rely on understanding the nature and proportion of blood cells. The Leukemia classification methods in this category considered here used the Acute Lymphoblastic Leukemia Image Database (ALL_IDB). All images are in JPG format with 24-bit color depth and dimension . Some sample images of the ALL_IDB dataset are shown in Figs. 10 and 11. The dataset has two parts: ALL_IDB1 and ALL_IDB2. The ALL_IDB1 dataset, with 108 samples, is used primarily for differentiating patients from non-patients. It contains about 39000 blood cells, in which the lymphocytes have been labelled by expert oncologists. The ALL_IDB2 dataset is primarily used to classify different types of Leukemia. It contains a collection of cropped areas of interest of normal and blast cells (see Fig. 11) taken from the ALL_IDB1 dataset (see Fig. 10). ALL_IDB2 images have grey level properties similar to those of ALL_IDB1 images, except for the image dimensions. Below, we discuss some significant works on Leukemia detection.

Fig. 10.

Samples taken from ALL_IDB images

Fig. 11.

Example of a peripheral blood smear having (a) lymphoblast and (b) lymphocyte (ALL_IDB Dataset)

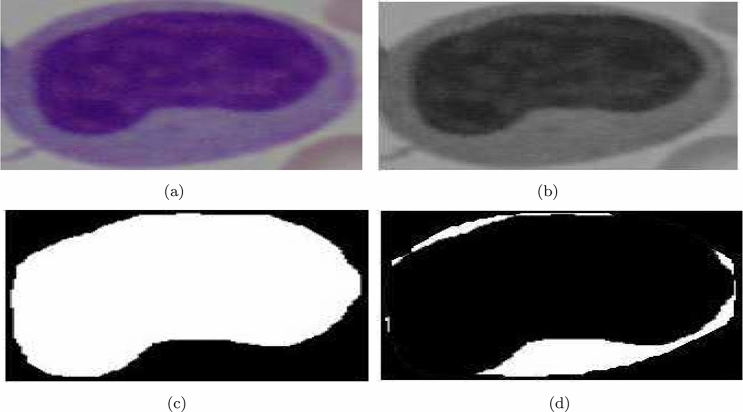

Abdeldaim et al. [2] utilized the ALL_IDB2 dataset to detect different types of Leukemia. In this work, the images were first converted to the CMYK color model, and a histogram based threshold calculation method was then used to separate lymphocytes from non-lymphocyte cells, taking the roundness and solidity of the cells into account. After this, 30 shape based, 15 color based and 84 texture based features were extracted from the detected lymphocytes and stacked to classify the cells using k-NN, SVM (with RBF, polynomial and linear kernels), DT and NB classifiers; k-NN outperformed the other classifiers. Mishra et al. [142–144] proposed a series of solutions in this field with varying features and classifiers. In all these works, they applied a Wiener filter followed by histogram equalization based contrast enhancement to the images from the ALL_IDB2 dataset. Next, grouped leukocytes were segmented using the marker based watershed segmentation algorithm [125]. Some of the segmented outputs are shown in Fig. 12.
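The roundness and solidity cues used by Abdeldaim et al. [2] can be illustrated on a cell outline. The sketch below assumes each cell is given as a polygonal contour of (x, y) vertices and uses the common definitions roundness = 4πA/P² (1.0 for a circle) and solidity = A / (convex hull area) (1.0 for a convex shape). The contour representation and these exact definitions are assumptions; the original work may compute them on pixel masks.

```python
import math

def polygon_area(pts):
    """Shoelace formula; pts is a list of (x, y) vertices in order."""
    s = 0.0
    for (x1, y1), (x2, y2) in zip(pts, pts[1:] + pts[:1]):
        s += x1 * y2 - x2 * y1
    return abs(s) / 2.0

def polygon_perimeter(pts):
    """Sum of edge lengths of the closed polygon."""
    return sum(math.dist(p, q) for p, q in zip(pts, pts[1:] + pts[:1]))

def convex_hull(pts):
    """Andrew's monotone chain; returns hull vertices counter-clockwise."""
    pts = sorted(set(pts))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]

def shape_features(contour):
    """Return (roundness, solidity) of a closed polygonal contour."""
    area = polygon_area(contour)
    roundness = 4 * math.pi * area / polygon_perimeter(contour) ** 2
    solidity = area / polygon_area(convex_hull(contour))
    return roundness, solidity

# Usage: a square is convex (solidity 1), an L-shape is not.
print(shape_features([(0, 0), (2, 0), (2, 2), (0, 2)]))
print(shape_features([(0, 0), (2, 0), (2, 1), (1, 1), (1, 2), (0, 2)]))
```

Lymphocytes tend to be compact and close to convex, so low roundness or low solidity can flag overlapping cells or debris that a simple threshold would otherwise accept.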

Fig. 12.

Separation of nucleus and cytoplasm performed by Mishra et al. [143] in their work

In the work by Mishra et al. [142], Discrete Cosine Transform (DCT) features were extracted from isolated leukocyte cells and classified using SVM, NB, k-NN and an NN trained with back-propagation (BPNN). The first 50 DCT coefficients with the SVM classifier performed best. In the following year, in [143], they first extracted GLCM features from the segmented cells and then employed probabilistic PCA for dimensionality reduction. Finally, they applied RF, k-NN, SVM and BPNN classifiers to the reduced features of both nuclei and cytoplasm, from which it was inferred that nucleus features were more suitable for accurate detection of Leukemia. In another work [144], the authors performed texture based cell classification for predicting Leukemia. Texture features were extracted from each segmented region using the Discrete Orthonormal S-Transform (DOST), followed by dimensionality reduction using LDA. For classification, the authors tried AdaBoost, SVM, BPNN, RF and k-NN, of which AdaBoost yielded the best result.
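Keeping "the first 50 DCT coefficients", as in Mishra et al. [142], is typically done by transforming the cell image and keeping the low-frequency coefficients. The sketch below assumes a square grey-level block, an orthonormal 2D DCT-II, and JPEG-style zigzag ordering (low frequencies first); the survey does not state how the coefficients were ordered, so the zigzag choice is an assumption. The direct O(N⁴) loop is written for clarity, not speed.

```python
import math

def dct2(block):
    """Orthonormal 2D DCT-II of an N x N block (list of lists of numbers)."""
    n = len(block)

    def alpha(k):
        return math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)

    out = [[0.0] * n for _ in range(n)]
    for u in range(n):
        for v in range(n):
            s = 0.0
            for x in range(n):
                for y in range(n):
                    s += (block[x][y]
                          * math.cos((2 * x + 1) * u * math.pi / (2 * n))
                          * math.cos((2 * y + 1) * v * math.pi / (2 * n)))
            out[u][v] = alpha(u) * alpha(v) * s
    return out

def zigzag_first_k(coeffs, k):
    """Return the first k coefficients in zigzag (low-frequency-first) order."""
    n = len(coeffs)
    # Walk anti-diagonals i + j = s; alternate direction on odd and even diagonals.
    order = sorted(((i, j) for i in range(n) for j in range(n)),
                   key=lambda ij: (ij[0] + ij[1], ij[0] if (ij[0] + ij[1]) % 2 else -ij[0]))
    return [coeffs[i][j] for i, j in order[:k]]

# Usage: a flat 4x4 block has all its energy in the DC coefficient.
flat = [[1.0] * 4 for _ in range(4)]
print(zigzag_first_k(dct2(flat), 3))
```

In practice the transform would be computed with a library routine such as `scipy.fft.dctn(block, norm="ortho")`, and only the coefficient selection step would remain custom.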