Abstract

In a previous study, we identified biocular asymmetries in fundus photographs, and the macula was the discriminative area for distinguishing left and right fundus images with > 99.9% accuracy. The purposes of this study were to investigate whether optical coherence tomography (OCT) images of the left and right eyes could be discriminated by convolutional neural networks (CNNs) and to support the previous result. We used a total of 129,546 OCT images. CNNs identified right and left horizontal images with high accuracy (99.50%). Even after flipping the left images, all of the CNNs were capable of discriminating them (DenseNet121: 90.33%, ResNet50: 88.20%, VGG19: 92.68%). The classification accuracy results were similar for the right and left flipped images (90.24% vs. 90.33%, respectively; p = 0.756). The CNNs also differentiated right and left vertical images (86.57%). In all of these cases, the discriminatory ability of the CNNs yielded a significant p value (< 0.001). However, the CNNs could not discriminate between two randomly divided subsets of right horizontal images (50.82%, p = 0.548). There was a significant difference in identification accuracy between right and left horizontal and vertical OCT images and between flipped and non-flipped images. As this could result in bias in machine learning, care should be taken when flipping images.

Subject terms: Eye diseases, Computer science

Introduction

Optical coherence tomography (OCT) is an imaging modality providing high-resolution cross-sectional and three-dimensional images of living tissue1. OCT can be used to quickly and safely examine eyes at the cellular level. OCT has been widely used for diagnosing retinal and optic disc diseases, is readily accessible for ophthalmologists, and is being used increasingly in dermatology2 and cardiology2.

A convolutional neural network (CNN) is an image analysis method that has developed rapidly in recent years. The multi-layered structure of the visual cortex inspired the development of CNNs3. CNNs show high ability to analyze and classify images. In recent studies, the classification ability of some CNNs was similar to that of physicians4,5.

The accuracy of CNNs for diagnosing ophthalmic diseases has been evaluated in numerous studies6, including ones on retinal disease7–11 and glaucoma12, in which CNNs were able to determine patients' age, sex, and even smoking status from retinal images. A fundus image of the left eye appears as a mirror image of the fundus image of the right eye. In a previous study of CNNs, we identified asymmetries in fundus photographs13. However, fundus images can also be affected by several factors such as the camera lens, flash, and room lighting conditions. As OCT is free from these factors, we evaluated the asymmetry of right and left eyes using high-resolution OCT with CNN models.

Results

Baseline characteristics of image sets

Medical charts of patients who visited Gyeongsang National University Changwon Hospital from February 2016 to December 2020 were reviewed retrospectively. A total of 3,238,650 macular images were taken from 9274 patients between 2016 and May 2021. We selected 129,546 median images from among the total of 3,238,650 images. There were 33,366 right horizontal, 31,211 right vertical, 33,429 left horizontal, and 31,540 left vertical OCT images.

We split the images in each set into training, validation, and testing sets according to an 8:1:1 ratio. Sets 1–5 consisted of horizontal images (33,366 right horizontal and 33,429 left horizontal images). Of these 66,795 images, 6680 were used for model testing. During CNN learning, 53,435 images were used to train the models and 6680 for model validation. Sets 6 and 7 consisted of vertical images (31,211 right vertical and 31,540 left vertical images). Of the 62,751 images, 6276 were used for testing. During CNN learning, 50,199 images were used for training and 6276 for validation. Set 8 consisted of only 33,366 right horizontal images, split into 3337 images for testing, 26,692 for training, and 3337 for validation.
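The split sizes above can be reproduced with a few lines of Python. This is only a sketch: the rounding rule is an assumption (each 10% share is rounded up), since the text reports the resulting counts but not how they were derived, and `split_sizes` is a hypothetical helper name.

```python
from typing import Tuple


def split_sizes(n_images: int) -> Tuple[int, int, int]:
    """Return (train, validation, test) counts for an 8:1:1 split."""
    # Assumption: each 10% share is rounded up; the remainder goes to training.
    tenth = -(-n_images // 10)  # integer ceiling of n_images / 10
    return n_images - 2 * tenth, tenth, tenth


# Image counts taken from the text above.
for name, n in [
    ("Sets 1-5 (horizontal)", 33_366 + 33_429),
    ("Sets 6-7 (vertical)", 31_211 + 31_540),
    ("Set 8 (right horizontal only)", 33_366),
]:
    print(name, split_sizes(n))
```

Under this rounding assumption the helper returns the same training, validation, and testing counts as those reported for all three groups of sets.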

Comparison of right and left horizontal OCT images (Set 1; RhLhD121)

We classified the right and left horizontal OCT images using the CNN model. After the 50th epoch, the validation accuracy was 99.50% (Fig. 1). Of the 6680 test set images, 6675 were correctly labeled by the CNNs, for a test accuracy of 99.93% (AUC = 0.999, p < 0.001, Fig. 2).

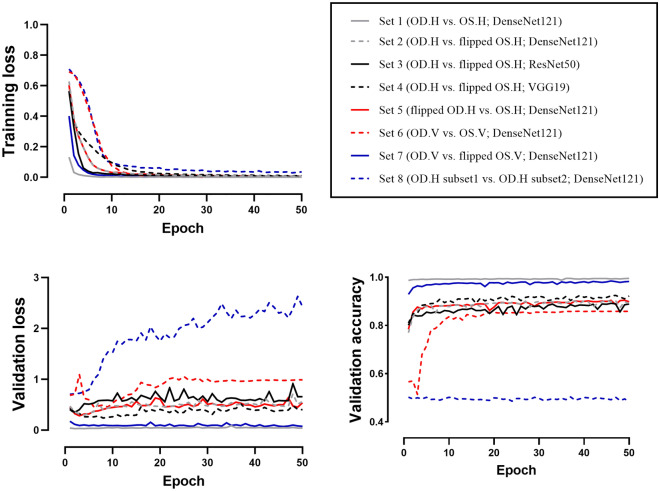

Figure 1.

Training and validation results. The training loss of Sets 1–7 approached zero. In Set 1, images of right and left eyes were easily distinguished, with the validation loss approaching zero and the validation accuracy thus approaching 1.0. In Sets 2–5, similar validation loss (~ 0.4) and validation accuracy (~ 90%) were obtained. For Set 6, which consisted of vertical images, the accuracy was slightly inferior compared to Sets 2–5. Set 7 showed the second-highest validation accuracy. In contrast to Sets 1–7, overfitting was observed in Set 8; in this set, training loss converged whereas validation loss diverged. The validation accuracy of Set 8 was around 0.5 and failed to improve over the learning period. OD oculus dexter, OS oculus sinister, H horizontal, V vertical.

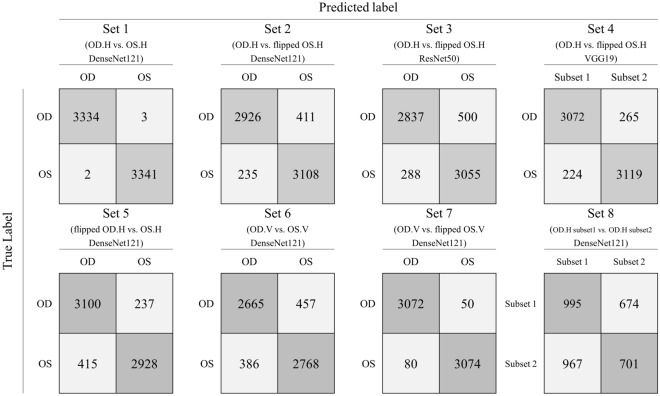

Figure 2.

Confusion matrix for each test set. The classification accuracy for Set 1 was 99.93%, which was the highest among all sets (AUC = 0.999, p < 0.001). Sets 2–5 showed test accuracies of ~ 90% (90.33%, 88.20%, 92.68%, and 90.24%, respectively; AUC: 0.902, 0.882, 0.927, and 0.902, respectively; all p values < 0.001). Set 6 showed a test accuracy of 86.57% (AUC = 0.866, p < 0.001). The accuracy of Set 7 was 97.93%, which was the second-highest (AUC = 0.979, p < 0.001). The results for Sets 1–7 were statistically significant. Set 8 showed 50.82% classification accuracy (AUC = 0.505, p = 0.548). OD oculus dexter, OS oculus sinister, H horizontal, V vertical.

Comparison of right and flipped left horizontal OCT images (Sets 2–4; RhfLh)

We classified the non-flipped right horizontal OCT images and flipped left horizontal images using DenseNet121, ResNet50, and VGG19. The numbers of images in Sets 2–4(RhfLh) were the same as in Set 1(RhLhD121). After the 50th epoch, the validation accuracy was 92.97%, 88.92%, and 92.23% in Set 2(RhfLhD121), Set 3(RhfLhR50), and Set 4(RhfLhV19), respectively (Fig. 1). The test accuracies were around 90% (90.33%, 88.20%, and 92.68%, respectively; AUC: 0.902, 0.882, and 0.927, respectively; all p values < 0.001, Fig. 2). The AUCs differed significantly in the ROC curve comparisons (all p values < 0.001).

Comparison of flipped right and non-flipped left horizontal OCT images (Set 5; fRhLhD121)

Set 5(fRhLhD121) comprised horizontally inverted versions of the images in Set 2(RhfLhD121). Because only the left horizontal images were flipped in the other sets, this could have introduced bias. We therefore verified the results by flipping the right-eye images instead. The DenseNet121 model classified the flipped right horizontal images and non-flipped left horizontal images. After the 50th epoch, the validation accuracy was 89.83% (Fig. 1). The test accuracy was 90.24% (AUC: 0.902, p < 0.001, Fig. 2). In comparison to the ROC curve analysis for Set 2(RhfLhD121), the AUCs were not significantly different (fRhLhD121 vs. RhfLhD121; p = 0.756).

Comparison of right and left vertical OCT images (Set 6; RvLvD121)

We classified the right and left vertical untransformed images using the DenseNet121 model. After the 50th epoch, the validation accuracy was 85.86% (Fig. 1). Of the 6276 test set images, 5433 were correctly labeled by the CNNs, for a test accuracy of 86.57% (AUC = 0.866, p < 0.001, Fig. 2). The AUC was significantly lower than that of Set 1(RhLhD121; AUC = 0.999) and Set 2(RhfLhD121; AUC = 0.902) (all p values < 0.001).

Comparison of right and flipped left vertical OCT images (Set 7; RvfLvD121)

We classified the non-flipped right and flipped left vertical images using the DenseNet121 model. After the 50th epoch, the validation accuracy was 98.26% (Fig. 1). Of the 6276 test set images, 6146 were correctly labeled by the CNNs, for a test accuracy of 97.93% (AUC = 0.979, p < 0.001, Fig. 2). The AUC was significantly higher than that of Set 6 (RvLvD121 vs. RvfLvD121; p < 0.001).

Comparison between randomly distributed right horizontal OCT images (Set 8; RhRhD121)

Set 8(RhRhD121) was designed to test overfitting, with DenseNet121 applied to classify randomly selected right horizontal images. Although the training accuracy increased and training loss decreased, the validation accuracy after the 50th epoch was only 48.55%. Of the 3337 test set images, 1696 (50.82%) were classified correctly (AUC = 0.505, p = 0.548, Fig. 2). Unlike Sets 1–7, Set 8 displayed an irregular CAM pattern that emphasized the outside regions of the vitreous and sclera.
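The test accuracies quoted in this section follow directly from the reported counts of correctly labeled images. The short sketch below recomputes them; the dictionary only restates figures given in the text, and `accuracy_percent` is a hypothetical helper name.

```python
# (correctly labeled, total test images) as reported in the Results.
reported_counts = {
    "Set 1": (6675, 6680),
    "Set 6": (5433, 6276),
    "Set 7": (6146, 6276),
    "Set 8": (1696, 3337),
}


def accuracy_percent(correct: int, total: int) -> float:
    """Classification accuracy as a percentage, rounded to two decimals."""
    return round(100.0 * correct / total, 2)


for name, (correct, total) in reported_counts.items():
    print(name, accuracy_percent(correct, total))
```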

Discussion

OCT is a novel imaging modality that provides high-resolution cross-sectional images of the internal microstructure of living tissue1. The low-coherence light of OCT penetrates the human retina and is then reflected back to the interferometer to yield a cross-sectional retinal image14. Retinal OCT images consist of repeated hyporeflective and hyperreflective layers. Hyperreflective layers in OCT include the retinal nerve fiber layer, inner plexiform layer, outer plexiform layer, external limiting membrane, ellipsoid zone, and retinal pigmented epithelium. Hyporeflective layers include the ganglion cell, inner nuclear, and outer nuclear layers. The choroid and parts of the sclera also appear in OCT images15.

Our previous study13 showed that left fundus images are not mirror-symmetric with respect to right fundus images. CNNs are capable of distinguishing the left from right fundus with an accuracy greater than 99.9%. However, it is important to consider the various factors that can affect fundus photography outcomes. In fundus photography, light from the flashlight reflects off the retina and enters the sensor of the fundus camera; a sensor then examines the wavelength and strength of the light. According to the working principle of a fundus camera, fundus images may be affected by the type and location of the light source and sensor, as well as by reflection and various environmental factors16. However, OCT is a completely different modality free from these confounding factors; high-quality cross-sectional OCT images allow visualization of the anatomy.

Our CNNs showed 99.93% classification accuracy for bilateral horizontal OCT images (Set 1; RhLhD121). This result is not surprising because the thick retinal nerve fiber layer (RNFL), which consists of the papillomacular bundle, is on the nasal side of the fovea but not the temporal side; in addition, large blood vessels are concentrated on the nasal side. Notably, CAM highlighted not only the RNFL but also the entire thickness of the parafoveal retina. These results indicate that CNNs are capable of recognizing anatomical asymmetry based on the anatomical information of every layer of the retina, choroid, and sclera, as well as the RNFL.

The human eye cannot distinguish a right horizontal OCT from a flipped left horizontal OCT, as the images largely coincide with each other. To examine this problem, we used image sets (Sets 2–4; RhfLh) to train DenseNet121, ResNet50, and VGG19. Although the classification accuracy differed among the models, all of the CNNs distinguished right horizontal OCT images from flipped left horizontal OCT images with around 90% accuracy. Thus, CNNs can discriminate horizontal OCT images that are not mirror-symmetric.

However, we could not fully explain the CAM results of Sets 2–4(RhfLh). CAM was used to analyze the last layers of the CNN, and the results may have been affected by the model structure. The classification results for DenseNet121 were similar between Set 1(RhLhD121) and Set 2(RhfLhD121). The ResNet50 CAM for Set 3(RhfLhR50) displayed a vertically linear pattern that included the vitreous region and the region outside the sclera. Given that the region surrounding the sclera is irrelevant for OCT images, this indicates an error. The AUC for Set 3(RhfLhR50) was significantly lower than those of Set 2(RhfLhD121) and Set 4(RhfLhV19); this was attributable to uninterpretable CAM results. The VGG19 CAM for Set 4(RhfLhV19) highlighted the outer retina parafoveal and foveolar regions, different from Set 2(RhfLhD121) and Set 3(RhfLhR50).

Because only the left horizontal images were flipped in the other sets, this could have induced bias. The purpose of Set 5(fRhLhD121) was to verify whether the flip function in the NumPy package has errors. Set 5(fRhLhD121) consisted of horizontally inverted versions of the images in Set 2(RhfLhD121). If image flipping did not distort the images, we would expect to obtain similar results between Set 2(RhfLhD121) and Set 5(fRhLhD121). Classification accuracy was similar between the two sets (RhfLhD121, 90.33%; fRhLhD121, 90.24%), and the AUCs were not significantly different (p = 0.756). Thus, the results did not differ according to whether the left-eye or right-eye images were flipped.
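Independently of the classification experiment, the claim that flipping does not distort an image can also be checked at the array level. The following is a minimal sketch, not the authors' procedure: it uses a random array as a hypothetical stand-in for a cropped OCT image and checks that `numpy.fliplr` only reorders pixels within each row and is undone by a second flip.

```python
import numpy as np


def flip_is_lossless(image: np.ndarray) -> bool:
    """Check that a horizontal flip only reorders pixels and is reversible."""
    flipped = np.fliplr(image)
    same_shape = flipped.shape == image.shape
    # Every row must contain exactly the same pixel values before and after.
    same_row_values = np.array_equal(np.sort(flipped, axis=1), np.sort(image, axis=1))
    # Flipping a second time must restore the original image exactly.
    restored = np.array_equal(np.fliplr(flipped), image)
    return same_shape and same_row_values and restored


# Hypothetical stand-in for a cropped 496 x 496 grayscale B-scan.
rng = np.random.default_rng(0)
scan = rng.integers(0, 256, size=(496, 496), dtype=np.uint8)
print(flip_is_lossless(scan))
```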

Set 6(RvLvD121) consisted of vertical images of the right and left eyes. It is believed that vertical images of the two eyes are symmetrical; thus, we did not expect the CNNs to distinguish them. Set 6(RvLvD121) images were unmodified, similar to Set 1(RhLhD121). The CNNs distinguished the right and left vertical OCT images with relatively high accuracy (86.57%, AUC = 0.866, p < 0.001). However, the accuracy for Set 6 images was significantly lower than for Sets 1 and 2. The CAM results for Set 6 were also different from those for Set 1(RhLhD121) and Set 2(RhfLhD121). For Set 6(RvLvD121) images, CAM highlighted not only the parafovea, but also the fovea. In a previous study using fundus photography13, CAM brightly highlighted the temporal parafovea and moderately highlighted the fovea. It is possible that the degree of asymmetry differs according to retinal location, and that the temporal parafovea is more asymmetric than the superior and inferior parafovea; additional research is required to test this hypothesis.

Set 7(RvfLvD121) comprised OCT images of the upper and lower halves of the eye. Several studies17–19 have demonstrated macular and choroidal asymmetry between the upper and lower halves of the eyes. In this study, the classification accuracy was second-highest for Set 7(RvfLvD121), supporting previous studies. CAM highlighted the thickness of the parafoveal retina and choroid. The AUC of Set 7(RvfLvD121) was significantly higher compared to that of Set 6(RvLvD121), which also supports previous studies showing that the upper and lower halves of the retina and choroid are not identical.

Set 8(RhRhD121) was designed to test overfitting, which is a common problem with CNNs. The results for Sets 1–7 may have resulted from overfitting, in which a CNN would show similar results for any random OCT image. The reliability of our results would be demonstrated by an inability of the CNNs to distinguish among uniform images. The CNNs could not accurately discriminate Set 8(RhRhD121) images (p = 0.548), although training loss decreased. This result indicates that the classification results for Sets 1–7 were not affected by overfitting.

We observed asymmetry between the left- and right-eye OCT images. Cameron et al.20 also observed asymmetry; however, they were unable to identify specific asymmetric components. Wagner et al.21 reported that the “angles between the maxima of peripheral RNFL thickness” were higher in right than left eyes, and that RNFL asymmetry could be influenced by the locations of the superotemporal retinal artery and vein22. The retinal vascular system also exhibits interocular asymmetry. Leung et al.23 reported that the mean central retinal arteriolar equivalent of right eyes was 3.14 µm larger than that of left eyes. In this study, the CNNs were capable of recognizing this asymmetry.

Based on our results and previous studies using fundus imagery24–26, it seems clear that CNNs can distinguish several features through analyzing retinal images that cannot be resolved by humans; that is, CNNs can determine patient age, sex, and smoking status. Our CNNs identified several features distinguishing left- and right-eye images that cannot be detected by humans. The results were similar after resetting the CNNs many times. Therefore, we assume that there are hidden patterns in gray-scale OCT images detectable only by CNNs. One possible hypothesis is that the human brain has limited multi-tasking capacity compared to the computer. Human cognition has limitations in processing multiple inputs at the same time27. For example, in the “Where’s Wally?” visual search task28, the human brain has difficulty processing the seven salient features (a red-striped long-sleeved T-shirt, jeans, round glasses, a hat, a chin, and curly hair) simultaneously, whereas a computer can do this easily29. The numbers of filters in the last layer of DenseNet121, ResNet50, and VGG19 are 1024, 2048, and 512, respectively. In theory, each filter can find a different feature. Thus, DenseNet121 can process 1024 features, which is beyond human capabilities.

The main strength of this study was that we included images of both normal and pathological eyes. It seems that there are significant biocular asymmetries in both healthy and pathological eyes. It would be interesting to analyze asymmetry according to disease type and progression, which could aid the development of a scale for measuring normal structure degradation/destruction. In addition, we used “lossless” BMP and unmodified images (except for the cropping and flipping processes). However, only one OCT device (Spectralis SD-OCT; Heidelberg Engineering) was used, and all analyses were conducted at a single institution. Thus, future studies should compare multiple OCT systems.

In conclusion, we hypothesized that OCT images of the right and left eyes are mirror-symmetric. However, we found asymmetry in both vertical and horizontal OCT images of the right and left eyes. To our knowledge, this is the first machine learning study to assess differences in OCT images of the left and right eyes. Our CNNs could accurately distinguish left- and right-eye OCT images. However, asymmetry may introduce bias into CNN results; thus, care should be taken when flipping images during preprocessing, given the possible impact of bias on evaluations of diseases that involve the macula, such as age-related macular degeneration and diabetic macular edema.

Methods

Study design

This retrospective study was approved by the Institutional Review Board of Gyeongsang National University Changwon Hospital (GNUCH 2020-07-009). The procedures used in this study followed the principles of the Declaration of Helsinki. The requirement for informed patient consent was waived by the Institutional Review Board of Gyeongsang National University Changwon Hospital due to the retrospective nature of the study.

Image acquisition protocol

An expert examiner evaluated the retinas with a Spectralis SD-OCT device (Heidelberg Engineering, Heidelberg, Germany). The system acquired 40 k A-scans per second, with an axial resolution of 3.9 μm/pixel and transverse resolution of 5.7 μm/pixel. Twenty-five cross-sectional images were taken with an interval of 240 μm. Each cross-sectional image consisted of 768 A-scans and subtended an angle of 30° (Fig. 3). The examiner took a horizontal cross-sectional image of the macula, followed by vertical cross-sectional images. We accessed these images using automated programs written in AutoIt and saved them in the bitmap (BMP) format. Only the 13th image (i.e., the median image of 25 consecutive OCT images) was analyzed. We included all cases in the analysis to reduce selection bias. The cases included various retinal diseases such as epiretinal membrane, macular hole, and rhegmatogenous retinal detachment; some eyes were filled with gas, air, or silicone oil, etc.

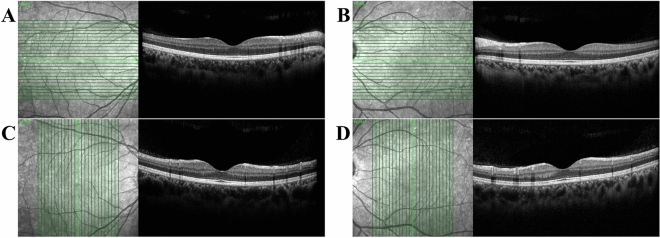

Figure 3.

Optical coherence tomography (OCT) images used in the study. Twenty-five OCT images were obtained during one examination; only the median (13th) images were analyzed. A Right horizontal OCT image, B Left horizontal OCT image, C Right vertical OCT image, D Left vertical OCT image. In horizontal images (A,B), the optic disc is positioned on the right side of the right eye (A) and left side of the left eye (B). Since the retinal nerve fiber layer (RNFL) and major retinal vessels gather from the optic disc, the RNFL is thicker on the optic disc than on the opposite side. Also, shadows of the retinal vessels are easily identifiable on the optic disc side. As the optic discs of both eyes are located on the opposite sides, the horizontal images of the right and left eyes look like mirror images. In vertical images (C,D), the left side is the inferior part of the fovea (bottom of vertical green line), and the right side is the superior part of the fovea (top of vertical green line). Because the inferior and superior parts are located at similar distances from the optic disc, the RNFL thickness and the vascular shade density are similar. The vertical images of the right eye (C) and left eye (D) are difficult to discriminate.

Data pre-processing

We did not perform data augmentation. We trimmed and flipped the images using the Python packages OpenCV and NumPy30. The size of the original OCT images was 768 × 496 pixels. We trimmed 136 pixels at both ends of each image to remove boundary artifacts, leaving only the macula (496 × 496 pixel-size image, Fig. 4). The trimmed 496 × 496 pixel image corresponds to about 19.4° of the macula.

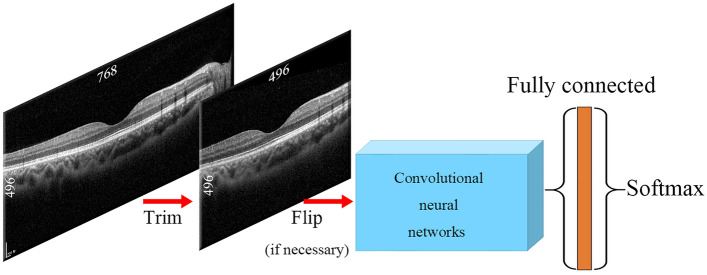

Figure 4.

Diagram of the image processing procedure. The images were trimmed from 768 × 496 to 496 × 496 pixels. If needed, the images were flipped horizontally. The processed images were loaded into convolutional neural networks (CNNs) embedded in the Keras package and attached to the fully connected and Softmax layers.
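The trimming and flipping steps can be sketched with NumPy slicing as follows. This is an illustration under stated assumptions rather than the authors' code: image loading is omitted, the array is assumed to be stored as height × width, and `preprocess` and the constant names are hypothetical.

```python
import numpy as np

RAW_WIDTH, RAW_HEIGHT = 768, 496  # original image size in pixels (from the text)
TRIM = 136                        # pixels removed at each horizontal end
SCAN_ANGLE_DEG = 30.0             # angle subtended by the full 768-pixel scan


def preprocess(scan: np.ndarray, flip: bool = False) -> np.ndarray:
    """Trim a 496 x 768 (height x width) scan to 496 x 496 and optionally mirror it."""
    if scan.shape[:2] != (RAW_HEIGHT, RAW_WIDTH):
        raise ValueError("expected a 496 x 768 (height x width) image")
    cropped = scan[:, TRIM:RAW_WIDTH - TRIM]
    return np.fliplr(cropped) if flip else cropped


# Angle covered by the trimmed image: 30 degrees * 496 / 768, about 19.4 degrees.
field_of_view_deg = SCAN_ANGLE_DEG * (RAW_WIDTH - 2 * TRIM) / RAW_WIDTH

# Hypothetical stand-in for a raw B-scan.
raw = np.arange(RAW_HEIGHT * RAW_WIDTH, dtype=np.uint32).reshape(RAW_HEIGHT, RAW_WIDTH)
print(preprocess(raw).shape, preprocess(raw, flip=True).shape, field_of_view_deg)
```

The field-of-view line makes explicit where the "about 19.4°" figure comes from: the trimmed image keeps 496 of the 768 A-scans that span 30°.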

CNN model

Transfer learning is a method for generating a new model using a previously trained model and weights. We used a pre-trained model to improve accuracy31–34, as previous studies have demonstrated that the features of pre-trained models are transferable to ophthalmology data33. DenseNet12135, ResNet5036, and VGG1937, embedded in the Keras package, showed excellent ability to distinguish left and right fundus photographs13. The outputs of DenseNet121, ResNet50, and VGG19 were connected to the fully connected layer and Softmax layer. We used categorical cross-entropy loss functions and Adam as the gradient descent optimization algorithm.
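A model of this kind might be assembled in Keras roughly as shown below. This is a hedged sketch, not the authors' implementation: the global average pooling, the single softmax `Dense` layer standing in for the fully connected and Softmax layers, the three-channel 496 × 496 input, and the default Adam settings are all assumptions, and `build_classifier` is a hypothetical name.

```python
from tensorflow.keras import layers, models
from tensorflow.keras.applications import DenseNet121


def build_classifier(weights="imagenet", input_shape=(496, 496, 3), n_classes=2):
    """DenseNet121 backbone followed by a softmax classification layer."""
    # Assumption: grayscale OCT images are replicated to three channels so that
    # ImageNet-pretrained weights can be reused.
    base = DenseNet121(
        include_top=False,   # drop the original 1000-class ImageNet head
        weights=weights,     # "imagenet" for transfer learning, None for random init
        input_shape=input_shape,
        pooling="avg",       # assumption: global average pooling before the classifier
    )
    outputs = layers.Dense(n_classes, activation="softmax")(base.output)
    model = models.Model(inputs=base.input, outputs=outputs)
    model.compile(
        optimizer="adam",
        loss="categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

Calling `build_classifier()` downloads the ImageNet weights on first use; swapping `DenseNet121` for `ResNet50` or `VGG19` from the same `tensorflow.keras.applications` module would give the other two backbones under the same assumptions.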

Image sets

Eight image sets were prepared (Fig. 5). Set 1(RhLhD121) consisted of horizontal OCT images of right and left eyes without any transformation. Sets 2–4(RhfLh) comprised non-flipped right horizontal images and horizontally flipped left horizontal images. We tested different CNNs on the same image dataset to check for asymmetry. Set 5(fRhLhD121), which consisted of flipped right horizontal images and non-flipped left horizontal images, was a horizontally inverted version of the dataset used in Sets 2–4. Set 6(RvLvD121) consisted of vertical images of right and left eyes without any transformation. Set 7(RvfLvD121) consisted of non-flipped right vertical images and horizontally flipped left vertical images. The images in Sets 1–7 were of right and left eyes. The purpose of Set 8(RhRhD121) was to verify the overfitting problem that often occurs in CNNs. Set 8(RhRhD121) consisted of only non-flipped right horizontal images. The images were randomly divided into subsets 1 and 2.

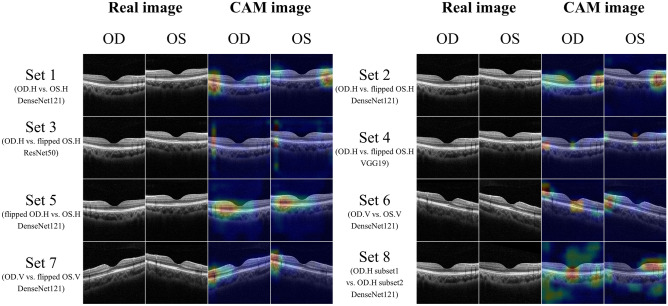

Figure 5.

Image sets and their corresponding class activation mapping (CAM) results. Set 1 consisted of horizontal images of right and left eyes. Papillomacular retinal nerve fiber layers with high reflectivity were shown on the nasal side of the image. CAM highlights both ends of parafoveal regions of images. Sets 2–4 consisted of right horizontal images and flipped left horizontal images. Papillomacular retinal nerve fiber layers with high reflectivity were shown in the right-side of OCT images. CAM patterns in Sets 2–4 differed: DenseNet121 highlighted the parafovea, whereas ResNet50 highlighted only the parafovea and VGG19 both the parafovea and macula. Set 5 consisted of flipped right and non-flipped left horizontal images; CAM highlighted the parafovea, similar to Set 2. Set 6 consisted of vertical OCT images of right and left eyes. From Set 6, heatmaps highlighting the parafovea and macular region were produced. Set 7 comprised non-flipped right vertical images and flipped left vertical OCT images; the ends of the parafoveal regions were highlighted. Set 8 consisted only of right horizontal images. Unlike Sets 1–7, Set 8 images showed an irregular pattern, emphasizing the vitreous region and outside of the sclera. OD oculus dexter, OS oculus sinister, H horizontal, V vertical.
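For readers who want the design of the eight sets at a glance, it can be written down as a small lookup table. The field names and the `ImageSet` type are hypothetical; the contents restate the set descriptions given in this section.

```python
from typing import NamedTuple, Tuple


class ImageSet(NamedTuple):
    label: str                # set label used in the text
    model: str                # CNN architecture
    orientation: str          # scan direction
    classes: Tuple[str, str]  # the two classes to be discriminated
    flip_first: bool          # horizontally flip images of the first class
    flip_second: bool         # horizontally flip images of the second class


EYES = ("right", "left")

IMAGE_SETS = {
    1: ImageSet("RhLhD121", "DenseNet121", "horizontal", EYES, False, False),
    2: ImageSet("RhfLhD121", "DenseNet121", "horizontal", EYES, False, True),
    3: ImageSet("RhfLhR50", "ResNet50", "horizontal", EYES, False, True),
    4: ImageSet("RhfLhV19", "VGG19", "horizontal", EYES, False, True),
    5: ImageSet("fRhLhD121", "DenseNet121", "horizontal", EYES, True, False),
    6: ImageSet("RvLvD121", "DenseNet121", "vertical", EYES, False, False),
    7: ImageSet("RvfLvD121", "DenseNet121", "vertical", EYES, False, True),
    # Set 8 uses right-eye images only, randomly divided into two subsets.
    8: ImageSet("RhRhD121", "DenseNet121", "horizontal",
                ("right subset 1", "right subset 2"), False, False),
}

for number, spec in IMAGE_SETS.items():
    print(number, spec.label, spec.model, spec.orientation)
```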

Class activation mapping

We used class activation mapping (CAM)38 to better understand how the CNNs worked. CAM uses heatmaps to identify the areas used by CNNs to make decisions. Hotter areas carry more weight in CAM heatmaps and are more important in the CNN class discrimination process. Using CAM, we identified locations that carried more weight in the final convolutional and classification layers.
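The core of CAM is a weighted sum of the final convolutional feature maps, using the classification-layer weights of the class of interest. The NumPy sketch below illustrates that computation on toy arrays; the function name, the array layout (height × width × channels), and the min-max scaling for display are assumptions, and extracting the feature maps and weights from a trained network is not shown.

```python
import numpy as np


def class_activation_map(feature_maps: np.ndarray, class_weights: np.ndarray) -> np.ndarray:
    """Weighted sum of feature maps, scaled to [0, 1] for display as a heatmap.

    feature_maps: array of shape (H, W, K) from the last convolutional layer.
    class_weights: array of shape (K,), the classifier weights for one class.
    """
    maps = np.asarray(feature_maps, dtype=float)
    weights = np.asarray(class_weights, dtype=float)
    cam = np.tensordot(maps, weights, axes=([2], [0]))  # shape (H, W)
    cam = cam - cam.min()
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Toy example: two 2 x 2 feature maps, the first weighted twice as strongly.
toy_maps = np.zeros((2, 2, 2))
toy_maps[0, 0, 0] = 1.0
toy_maps[1, 1, 1] = 1.0
print(class_activation_map(toy_maps, np.array([2.0, 1.0])))
```

In practice the low-resolution map is resized to the input image size and overlaid as a heatmap, so that "hotter" regions mark locations with larger weighted activation.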

Software

Python (version 3.7.9) was used for this study. The CNN models were implemented with TensorFlow 2.4.1, Keras 2.4.3, OpenCV 4.5.1.48, and NumPy 1.19.5. The performance of each CNN model was evaluated by calculating the accuracy of the test set. The CNN models were trained on a system with an Intel® Core™ i9-10980XE central processing unit (Intel Corp., Santa Clara, CA, USA) and a GeForce RTX 3090 graphics card (Nvidia Corp., Santa Clara, CA, USA). We analyzed the results of the test set using SPSS for Windows statistical software (version 24.0; SPSS Inc., Chicago, IL, USA). We drew receiver operating characteristic (ROC) curves with test set results and computed the area under the ROC curve (AUC). The p values for the ROC curves were calculated with SPSS software. We compared the AUCs of two ROC curves using MedCalc Statistical Software for Windows (version 19.2.6; MedCalc, Ostend, Belgium). Statistical significance was set at a p value of < 0.05.
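For readers without access to SPSS, the AUC itself can be illustrated with a short pure-Python function based on its rank interpretation: the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counted as one half. This is a sketch for illustration only; it is not the SPSS or MedCalc procedure, it does not compute p values or compare two curves, and `roc_auc` is a hypothetical name.

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Area under the ROC curve via pairwise comparison of positive and negative scores."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("both classes must be present")
    # Count positive-negative pairs ranked correctly; a tie counts as half.
    wins = sum((p > n) + 0.5 * (p == n) for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))


# Toy example with four cases (labels: 1 = positive class, 0 = negative class).
print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))
```

An AUC near 0.5, as reported for Set 8, corresponds to scores that rank positive and negative cases no better than chance.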

Author contributions

Design and conduct of the study (T.S.K. and Y.S.H.); collection of data (T.S.K. and S.H.P.); analyses and interpretation of data (T.S.K., S.H.P., and Y.S.H.); writing the manuscript (T.S.K. and Y.S.H.); critical revision of the manuscript (T.S.K., W.L., S.H.P., and Y.S.H.); and final approval of the manuscript (T.S.K., W.L., S.H.P., and Y.S.H.).

Data availability

Data supporting the findings of the current study are available from the corresponding author upon reasonable request.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Schmitt JM. Optical coherence tomography (OCT): A review. IEEE J. Sel. Top. Quantum Electron. 1999;5:1205–1215. doi: 10.1109/2944.796348.

- 2. LeCun Y, Bottou L, Bengio Y, Haffner P. Gradient-based learning applied to document recognition. Proc. IEEE. 1998;86:2278–2324. doi: 10.1109/5.726791.

- 3. Lindsay GW. Convolutional neural networks as a model of the visual system: Past, present, and future. J. Cogn. Neurosci. 2020;86:1–15. doi: 10.1162/jocn_a_01544.

- 4. Yamashita R, Nishio M, Do RKG, Togashi K. Convolutional neural networks: An overview and application in radiology. Insights Imaging. 2018;9:611–629. doi: 10.1007/s13244-018-0639-9.

- 5. Esteva A, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542:115–118. doi: 10.1038/nature21056.

- 6. Schmidt-Erfurth U, Sadeghipour A, Gerendas BS, Waldstein SM, Bogunović H. Artificial intelligence in retina. Prog. Retin. Eye Res. 2018;67:1–29. doi: 10.1016/j.preteyeres.2018.07.004.

- 7. Liu Y-P, Li Z, Xu C, Li J, Liang R. Referable diabetic retinopathy identification from eye fundus images with weighted path for convolutional neural network. Artif. Intell. Med. 2019;99:101694. doi: 10.1016/j.artmed.2019.07.002.

- 8. Li Y-H, Yeh N-N, Chen S-J, Chung Y-C. Computer-assisted diagnosis for diabetic retinopathy based on fundus images using deep convolutional neural network. Mob. Inf. Syst. 2019;2019:1–14.

- 9. Hu K, et al. Retinal vessel segmentation of color fundus images using multiscale convolutional neural network with an improved cross-entropy loss function. Neurocomputing. 2018;309:179–191. doi: 10.1016/j.neucom.2018.05.011.

- 10. García, G., Gallardo, J., Mauricio, A., López, J. & Del Carpio, C. In International Conference on Artificial Neural Networks 635–642 (Springer).

- 11. Eftekhari N, Pourreza H-R, Masoudi M, Ghiasi-Shirazi K, Saeedi E. Microaneurysm detection in fundus images using a two-step convolutional neural network. Biomed. Eng. Online. 2019;18:67. doi: 10.1186/s12938-019-0675-9.

- 12. Elangovan P, Nath MK. Glaucoma assessment from color fundus images using convolutional neural network. Int. J. Imaging Syst. Technol. 2020;31:955–971. doi: 10.1002/ima.22494.

- 13. Kang TS, et al. Asymmetry between right and left fundus images identified using convolutional neural networks. Sci. Rep. 2022;12:1–8. doi: 10.1038/s41598-021-99269-x.

- 14. Hassan, T., Akram, M. U., Hassan, B., Nasim, A. & Bazaz, S. A. Review of OCT and fundus images for detection of Macular Edema. In 2015 IEEE International Conference on Imaging Systems and Techniques (IST) 1–4 (2015).

- 15. Tao LW, Wu Z, Guymer RH, Luu CD. Ellipsoid zone on optical coherence tomography: A review. Clin. Exp. Ophthalmol. 2016;44:422–430. doi: 10.1111/ceo.12685.

- 16. Agrawal A, Raskar R, Nayar SK, Li Y. Removing photography artifacts using gradient projection and flash-exposure sampling. ACM Trans. Graph. 2005;24:828–835. doi: 10.1145/1073204.1073269.

- 17. Yamashita T, et al. Posterior pole asymmetry analyses of retinal thickness of upper and lower sectors and their association with peak retinal nerve fiber layer thickness in healthy young eyes. Invest. Ophthalmol. Vis. Sci. 2014;55:5673–5678. doi: 10.1167/iovs.13-13828.

- 18. Yamada H, et al. Asymmetry analysis of macular inner retinal layers for glaucoma diagnosis. Am. J. Ophthalmol. 2014;158:1318–1329.e1313. doi: 10.1016/j.ajo.2014.08.040.

- 19. Mori K, Gehlbach PL, Yoneya S, Shimizu K. Asymmetry of choroidal venous vascular patterns in the human eye. Ophthalmology. 2004;111:507–512. doi: 10.1016/j.ophtha.2003.06.009.

- 20. Cameron JR, et al. Lateral thinking–interocular symmetry and asymmetry in neurovascular patterning, in health and disease. Prog. Retin. Eye Res. 2017;59:131–157. doi: 10.1016/j.preteyeres.2017.04.003.

- 21. Wagner FM, et al. Peripapillary retinal nerve fiber layer profile in relation to refractive error and axial length: Results from the gutenberg health study. Transl. Vis. Sci. Technol. 2020;9:35–35. doi: 10.1167/tvst.9.9.35.

- 22. Jee D, Hong SW, Jung YH, Ahn MD. Interocular retinal nerve fiber layer thickness symmetry value in normal young adults. J. Glaucoma. 2014;23:e125–e131. doi: 10.1097/IJG.0000000000000032.

- 23. Leung H, et al. Computer-assisted retinal vessel measurement in an older population: Correlation between right and left eyes. Clin. Exp. Ophthalmol. 2003;31:326–330. doi: 10.1046/j.1442-9071.2003.00661.x.

- 24. Wen, Y., Chen, L., Qiao, L., Deng, Y. & Zhou, C. On the deep learning-based age prediction of color fundus images and correlation with ophthalmic diseases. In Proceedings (IEEE International Conference on Bioinformatics and Biomedicine) 1171–1175 (2020).

- 25. Munk MR, et al. Assessment of patient specific information in the wild on fundus photography and optical coherence tomography. Sci. Rep. 2021;11:1–10. doi: 10.1038/s41598-021-86577-5.

- 26. Kim YD, et al. Effects of hypertension, diabetes, and smoking on age and sex prediction from retinal fundus images. Sci. Rep. 2020;10:1–14. doi: 10.1038/s41598-019-56847-4.

- 27. Marois R, Ivanoff J. Capacity limits of information processing in the brain. Trends Cogn. Sci. 2005;9:296–305. doi: 10.1016/j.tics.2005.04.010.

- 28. Handford M. Where's Wally? Walker Books; 1997.

- 29. Harron, W., Pettinger, C. & Dony, R. A neural network approach to a classic image recognition problem. In 2008 Canadian Conference on Electrical and Computer Engineering 001503–001506 (2008).

- 30. Harris CR, et al. Array programming with NumPy. Nature. 2020;585:357–362. doi: 10.1038/s41586-020-2649-2.

- 31. Sharif Razavian, A., Azizpour, H., Sullivan, J. & Carlsson, S. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops 806–813.

- 32. Yosinski, J., Clune, J., Bengio, Y. & Lipson, H. How transferable are features in deep neural networks? arXiv preprint arXiv:1411.1792 (2014).

- 33. Kermany DS, et al. Identifying medical diagnoses and treatable diseases by image-based deep learning. Cell. 2018;172:1122–1131.e1129. doi: 10.1016/j.cell.2018.02.010.

- 34. Donahue, J. et al. Decaf: A deep convolutional activation feature for generic visual recognition. In International Conference on Machine Learning 647–655 (2014).

- 35. Huang, G., Liu, Z., Van Der Maaten, L. & Weinberger, K. Q. Densely connected convolutional networks. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition 4700–4708 (2017).

- 36. He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition 770–778 (2016).

- 37. Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv:1409.1556 (2014).

- 38. Zhou, B., Khosla, A., Lapedriza, A., Oliva, A. & Torralba, A. Learning deep features for discriminative localization. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition 2921–2929 (2016).
