Abstract

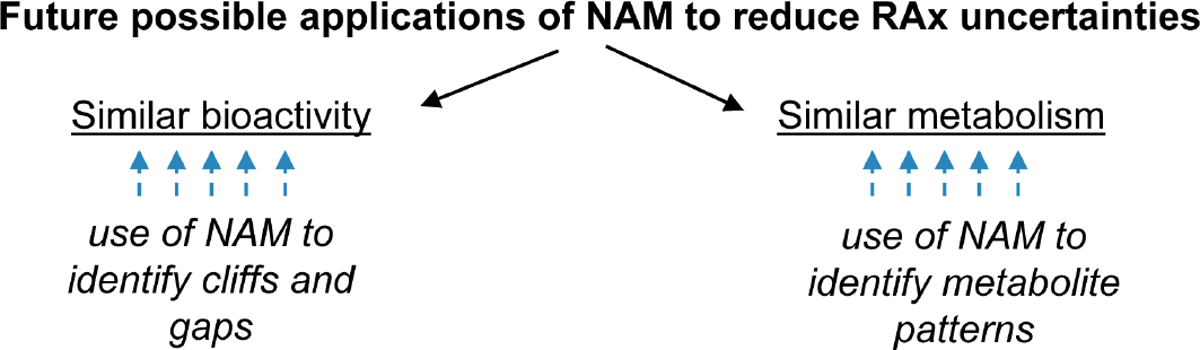

Read-across (RAx) translates available information from well-characterized chemicals to a substance for which there is a toxicological data gap. The OECD is working on case studies to probe general applicability of RAx, and several regulations (e.g., EU-REACH) already allow this procedure to be used to waive new in vivo tests. The decision to prepare a review on the state of the art of RAx as a tool for risk assessment for regulatory purposes was taken during a workshop with international experts in Ranco, Italy in July 2018. Three major issues were identified that need optimization to allow a higher regulatory acceptance rate of the RAx procedure: (i) the definition of similarity of source and target, (ii) the translation of biological/toxicological activity of source to target in the RAx procedure, and (iii) how to deal with issues of ADME that may differ between source and target. The use of new approach methodologies (NAM) was discussed as one of the most important innovations to improve the acceptability of RAx. At present, NAM data may be used to confirm chemical and toxicological similarity. In the future, the use of NAM may be broadened to fully characterize the hazard and toxicokinetic properties of RAx compounds. Concerning available guidance, documents on Good Read-Across Practice (GRAP) and on best practices to perform and evaluate the RAx process were identified. Here, in particular, the RAx guidance, being worked out by the European Commission’s H2020 project EU-ToxRisk together with many external partners with regulatory experience, is given.

1. Introduction

1.1. Background

In 2006, the REACH Regulation (Registration, Evaluation, Authorisation and Restriction of Chemicals, Regulation EC 1907/2006) was adopted in the EU, starting a new era of chemical assessment and asking for an unprecedented level of effort in collecting toxicological data on chemicals that were already on the market (Hartung, 2010a). Under REACH, importers and manufacturers of chemical substances are obliged to submit a registration dossier to the European Chemicals Agency (ECHA) containing an extensive list of data on the intrinsic properties of a substance, ranging from chemical characterization and physicochemical properties to toxicological and ecotoxicological data, with increasing demands depending on the tonnage band of the quantity of the substance placed on the market. In addition, registrants should collect information on use and exposure to perform a chemical safety assessment (CSA) based on the toxicity profile of the substance. The minimum data requirements are described in Annexes VI-X of the regulation. For toxicological testing, the standard requirements ask for in vivo or in vitro tests according to Regulation EC 440/2008, though tests performed according to OECD (Organisation for Economic Co-operation and Development) guidelines are generally accepted. Rovida and Hartung (2009) predicted that full compliance with the REACH Regulation would have required an enormous number of animals. This did not happen because fewer chemicals than expected were registered and because REACH permits waiving of the standard studies by applying a suitable adaptation to the standard required endpoint information. 
This opportunity is detailed in Annex XI of the REACH regulation, which also defines grouping of substances and the read-across (RAx) approach as: “Substances whose physicochemical, toxicological and ecotoxicological properties are likely to be similar or follow a regular pattern as a result of structural similarity may be considered as a group or ‘category’ of substances. Application of the group concept requires that physicochemical properties, human health effects, and environmental effects or environmental fate may be predicted from data for reference substance(s) within the group by interpolation to other substances in the group (read-across approach). This avoids the need to test every substance for every endpoint.”

Generally speaking, RAx is a technique used to predict endpoint information for one chemical by using data from the same endpoint from (an)other chemical(s) that is considered to be similar in some way. If data are read across from one or only a few source substances, this is often called an “analogue approach”. In contrast, grouping refers to the definition of a set of substances combined in a category, where their known toxicological properties follow a specific trend that can be used to infer the properties of the chemicals belonging to it. The precise border of distinction is not defined, because one-to-one comparison is often supported by evidence gained with other, similar chemicals. For this reason, RAx, as used in this report, represents any approach where a data gap is filled using existing information obtained for other substances (Patlewicz et al., 2014).
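The distinction between the analogue and the category approach can be made concrete with a minimal sketch. It is illustrative only and not a method prescribed by REACH, ECHA or the OECD: the `Substance` record, the use of a single numeric descriptor (e.g., carbon chain length) to order the category, and the choice of linear interpolation are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Substance:
    name: str
    descriptor: float          # hypothetical ordering property, e.g. carbon chain length
    endpoint: Optional[float]  # measured endpoint value; None marks a data gap


def analogue_read_across(source: Substance) -> float:
    """Analogue approach: the target takes over the value of a single source."""
    if source.endpoint is None:
        raise ValueError("source substance has no data for this endpoint")
    return source.endpoint


def category_read_across(category: Sequence[Substance], target: Substance) -> float:
    """Category approach: interpolate linearly between the two nearest
    tested members that bracket the target along the descriptor."""
    tested = sorted(
        (s for s in category if s.endpoint is not None),
        key=lambda s: s.descriptor,
    )
    below = [s for s in tested if s.descriptor <= target.descriptor]
    above = [s for s in tested if s.descriptor >= target.descriptor]
    if not below or not above:
        # Interpolation only: a target outside the tested range is refused.
        raise ValueError("target lies outside the tested range of the category")
    lo, hi = below[-1], above[0]
    if hi.descriptor == lo.descriptor:
        return lo.endpoint
    weight = (target.descriptor - lo.descriptor) / (hi.descriptor - lo.descriptor)
    return lo.endpoint + weight * (hi.endpoint - lo.endpoint)


if __name__ == "__main__":
    # Invented values for illustration; these are not experimental data.
    category = [
        Substance("C6 member", 6, 100.0),
        Substance("C8 member", 8, 80.0),
        Substance("C12 member", 12, 40.0),
    ]
    target = Substance("C10 member", 10, None)
    print(analogue_read_across(category[1]))       # 80.0
    print(category_read_across(category, target))  # 60.0
```

The sketch deliberately refuses to extrapolate beyond the tested members, mirroring the wording of Annex XI, which speaks of prediction "by interpolation". A real justification would also have to explain why the chosen descriptor governs the trend.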

REACH Annex XI also describes other approaches to waive tests, including quantitative structure-activity relationships (QSARs), weight-of-evidence (WoE), and in vitro testing. Among the non-testing approaches, no sharp boundary between RAx, WoE and QSAR can be defined, and thus these three techniques may be combined.

According to the latest ECHA report (ECHA, 2017a) on the general use of alternative methods for REACH purposes, RAx has been extensively used in REACH registration dossiers, in particular for human health data endpoints. Indeed, alternative strategies have been used in about 34% of the dossiers for lower tier toxicological endpoints, e.g., local and acute endpoints for substances up to 100 t/y, whereas alternative strategies were employed in nearly 84% of the dossiers for substances above 100 t/y in the assessment of higher tier endpoints, e.g., repeated dose toxicity, and reproductive and developmental toxicity. The evaluation of these dossiers by ECHA found overall poor quality of the information provided, leading to low acceptance rates, as outlined by Ball et al. (2016) and ECHA. This was corroborated by a report and workshop organized by the German Federal Institute for Risk Assessment (BfR, 2018a,b). In many cases, RAx justifications by the registrants lacked important information, specifically with regard to explaining the influence of structural differences between source(s) and target(s), and justification of the inclusion/exclusion of group members. However, when performed correctly, RAx is probably the most direct approach for adapting standard information requirements.

Under Title VI, Chapter 1 (Dossier Evaluation) of the REACH Regulation, ECHA must evaluate the submitted dossiers for compliance with regulatory requirements. To that end, ECHA has implemented a system to manually check all dossiers that waive standard information requirements, as is the case when RAx is included in a REACH registration dossier. In this context, rejecting RAx due to inadequate justification has been shown to be one of the main reasons for requesting further (i.e., always in vivo) data from the registrants. Notably, a RAx justification will be rejected not only when ECHA considers the registrants’ RAx hypothesis implausible but also when the documentation provided is inadequate to allow a reliable assessment of its soundness. Given ECHA’s announcement to substantially increase their dossier evaluation activities, starting already in 2019¹, it is clear that adequately documenting valid RAx hypotheses in a scientifically sound way is a key issue when trying to avoid unnecessary animal testing under REACH.

Regarding RAx, ECHA issued a dedicated document describing the Read-Across Assessment Framework (RAAF) in 2015, which was updated in 2017 to include multi-constituent substances and substances of unknown or variable composition, complex reaction products and biological materials (UVCB) (ECHA, 2017b,c). Originally, these publications were intended only to define the rules applied by ECHA when assessing the RAx strategies. Like any framework, the RAAF document provides high-level information on the scope and the key elements a valid RAx justification should contain but provides no detailed guidance on how a RAx justification should be prepared. It also lacks practical examples of accepted or rejected RAx justifications. However, the RAAF document is very useful, as it describes the basic principles of RAx, which should be supported by both strong chemical and biological similarity, and a scoring system for rating the acceptability of the RAx strategy. Notably, the RAAF was published late in the REACH process, i.e., when most of the REACH registration dossiers were already submitted or at an advanced stage of preparation. Being published in 2015, the RAAF was available only to REACH phase-in registrants submitting dossiers for the final deadline, May 30, 2018, which included all substances marketed in the tonnage band above 1 t/y.

As far back as 2012, the European Centre for Ecotoxicology and Toxicology of Chemicals (ECETOC) published a technical report on the category approach, RAx and QSAR (ECETOC, 2012) that represents an introduction to the topic but provides only minor guidance on how to prepare and submit a RAx justification document to the regulatory authorities. Many of the recommendations proposed in the ECETOC document were later incorporated in an OECD guidance document for grouping that was published in 2014 (OECD, 2014) and updated in 2017 (OECD, 2017a). The latter describes the difference(s) between the analogue approach, where the comparison between source and target is mostly one-to-one and there is no difference in activity, and the category approach, where the toxicological assessment is performed on a group of chemicals for which the specific endpoint has a regular pattern or trend within the category. The innovative aspect was to provide some more guidance on how to go about crafting the RAx justification and start the discussion about where in vitro testing and adverse outcome pathways (AOPs) could play a role.

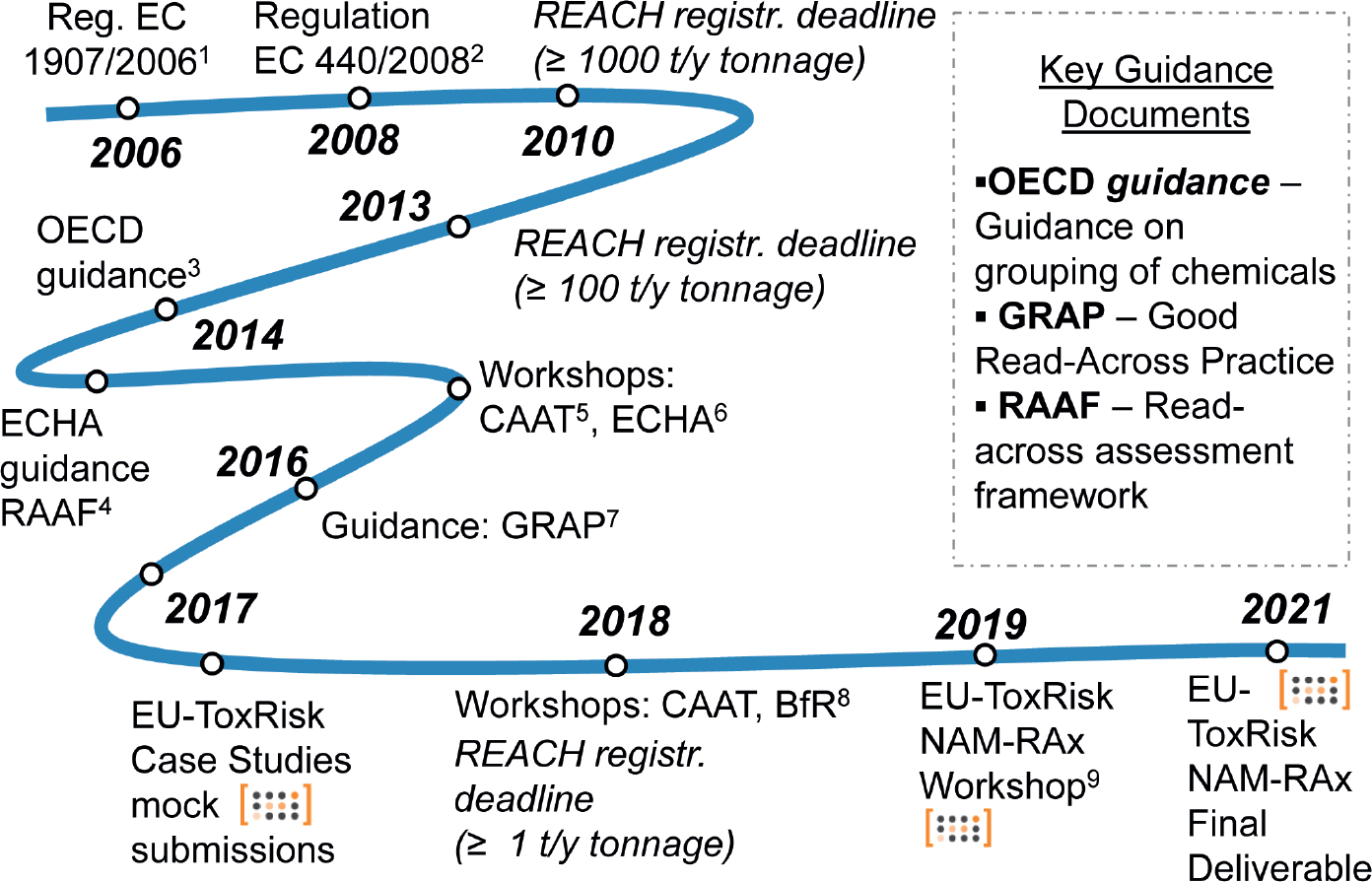

In recognition of the need for further guidance and agreement on how to build and apply a good RAx strategy, several initiatives were undertaken in the EU and in the US (Fig. 1). In 2016, CAAT-Europe, Cefic-LRI and EU-ToxRisk hosted a workshop (Maertens et al., 2016) to initiate a discussion on Good Read-Across Practice (GRAP). This was followed by a similar initiative in the US² with the collaboration of the US Food and Drug Administration’s Center for Food Safety and Applied Nutrition (FDA-CFSAN), accompanied by a satellite meeting at the Annual Meeting of the Society of Toxicology (SOT) in 2018 on the specific topic of regulatory acceptance of RAx (Chesnut et al., 2018). The intent was to summarize the state of the art and provide examples and strategies for preparing a RAx justification based on the experience of industry registrants at the time.

Fig. 1: Timeline of the development of RAx application for risk assessment.

Schematic representation illustrating the key dates and documents for the development of the RAx application for regulatory purposes in Europe and within the EU-ToxRisk Project. Reference documents as mentioned in the text: 1 http://data.europa.eu/eli/reg/2006/1907/oj; 2 http://data.europa.eu/eli/reg/2008/440/oj; 3 https://bit.ly/2IqOBJB; 4 https://echa.europa.eu/documents/10162/13628/raaf_en.pdf; 5 Maertens et al., 2016; 6 ECHA, 2017b; 7 Ball et al., 2016; 8 https://bit.ly/3jVbYJ9; 9 https://bit.ly/3dl4Ing. The EU-ToxRisk final deliverable will represent an advisory document, complementary to the already published ECHA reporting template for grouping and read-across (ECHA RAAF), addressing the issue of regulatory acceptance from the point of view of the registrants of NAM-supported read-across dossiers.

A complementary document (Zhu et al., 2016) described the possible contribution of biological information to structure-based RAx. These concepts were also discussed at a workshop organized by ECHA in 2016 (ECHA, 2016, 2017d), arriving at the conclusion that new in vitro tests can be applied to justify RAx approaches. For the first time, the term “new approach methodologies” (NAMs) was introduced and taken “in a broad context to include in silico approaches, in chemico and in vitro assays, as well as the inclusion of information from the exposure of chemicals in the context of hazard assessment”. NAMs also embrace other information on toxicodynamics (e.g., high-throughput screening and high content-methods) and toxicokinetics with the aim of improving the understanding of toxic effects (van Vliet et al., 2014).

Currently, the EU-ToxRisk project, which is an Integrated European “Flagship” Programme driving mechanism-based toxicity testing and risk assessment for the 21st century (Daneshian et al., 2016), aims to move away from observational toxicology based on animal models and progress towards a toxicological assessment based on in vitro test responses using human cells together with a better mechanistic understanding of chemical adverse effects. The research is focused on two in vivo endpoints: repeated dose systemic toxicity (e.g., Hiemstra et al., 2019; Albrecht et al., 2019; Delp et al., 2019) and developmental and reproductive toxicity (e.g., Rempel et al., 2015; Nyffeler et al., 2017; Delp et al., 2018). One of the first applications of the EU-ToxRisk in vitro and in silico test batteries is to support RAx hypotheses (Graepel et al., 2019; Escher et al., 2019). With this goal, the project has developed ad hoc case studies to be reviewed by regulators from ECHA and EU Member States with the aim of further improving the understanding of what constitutes an acceptable RAx justification and how this justification can be supported and improved using NAM data. The integrated results were submitted to regulators from ECHA and EU Member State competent authorities as mock dossiers and discussed in a workshop with stakeholders from academia, industry and authorities in May 2019 (manuscript in preparation).

The ongoing general discussion on RAx has confirmed the complexity of the approach and the need to establish suitable rules for its objective application, leading to a broader regulatory acceptance of RAx for the risk assessment of chemicals. With this goal, a group of stakeholders from many different areas of expertise and organizations, including regulatory, validation and government bodies, was convened in Ranco (Varese, Italy) on July 16–18, 2018. During that workshop, sponsored and co-organized by CAAT-Europe, the EU-ToxRisk project and the Doerenkamp-Zbinden Foundation (DZF), it was decided to prepare an extensive document presenting the state of the art of RAx for regulatory purposes, with further comments to foster its applicability. The present report, which also benefits from the contribution of experts who did not participate in that meeting, has the ambitious goal of describing the basis for immediate improvement of RAx acceptance and of defining the strategy for future development. It may serve as an educational text both for those who are approaching the topic for the first time and for those who want to explore it in greater depth. The concepts and ideas presented here come from the individual participants and do not necessarily reflect the views and opinions of the organizations they represent. The debates were based on scientific discussions among the participants, without a necessarily unanimous final agreement on all issues.

1.2. The international dimension of RAx

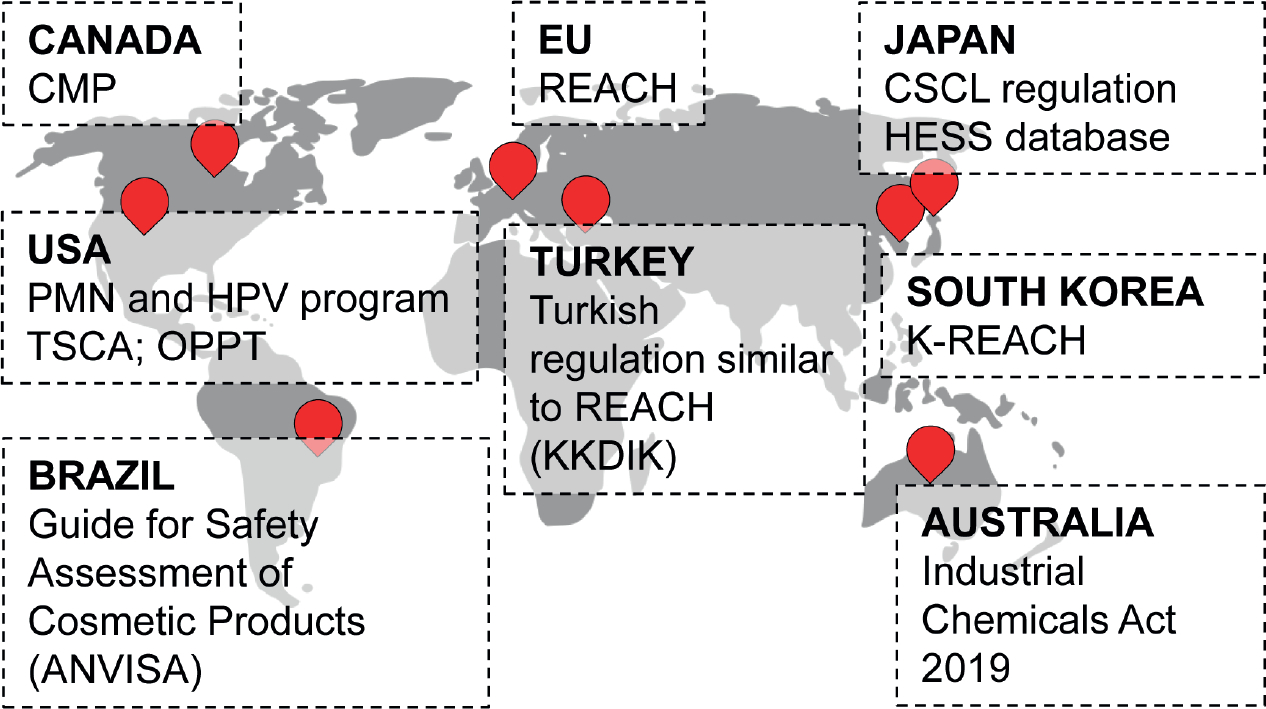

The EU was the first group of countries to accept RAx as a tool to address information requirements in lieu of standard testing, but for proper implementation of the new approach, greater international acceptance is necessary. RAx, and in more general terms in silico methods, are now included and promoted in many regulations across countries and sectors. As an example, the situation in some countries is considered here (Fig. 2), without the claim of providing an extensive analysis.

Fig. 2: Overview of the current legislations covering RAx regulatory applications worldwide.

ANVISA, Agência Nacional de Vigilância Sanitária (Brazilian National Health Surveillance Agency); CMP, Chemicals Management Plan; CSCL, Chemical Substances Control Law; HESS, Hazard Evaluation Support System; HPV, High Production Volume; OPPT, Office of Pollution Prevention and Toxics; PMN, Pre-Manufacture Notice; REACH, Registration, Evaluation, Authorisation and Restriction of Chemicals; TSCA, Toxic Substances Control Act. This list is not intended to be complete.

In the US, there is minimal application of RAx for official regulatory dossiers, and there are no formal protocols, procedures or guidance documents on its use. To date, the most widely known use cases in the US are those within the US Environmental Protection Agency (EPA) that have already been captured in the current OECD guidance (OECD, 2017), such as experiences under the Pre-Manufacture Notice (PMN) process or High Production Volume (HPV) program (van Leeuwen et al., 2009) as well as the chemical categories used to review new chemicals under the Toxic Substances Control Act (TSCA)³. The US EPA’s Office of Pollution Prevention and Toxics (OPPT), whose experience with RAx approaches is relatively mature, has developed the Analogue Identification Methodology⁴ (AIM) and the Chemical Assessment Clustering Engine (ChemACE)⁵, specifically designed to assist, review and prioritize large inventories of chemicals and to facilitate RAx and data gap-filling for untested substances. The activity of OPPT started in 2010, with the publication of the New Chemical Categories document (OPPT, 2010) that was intended to provide an effective tool for EPA reviewers to benefit from the accumulated data and past decisions as precedents. This document collects the different substances grouped by main chemical functional groups that are supposed to result in a specific physicochemical or toxicological property of the molecule. The Interagency Coordinating Committee on the Validation of Alternative Methods (ICCVAM) has shown continued interest in the potential of RAx to waive new tests on vertebrate animals. In 2016, ICCVAM broadcast a dedicated webinar⁶ and established a working group focused on RAx, which was assigned the task of summarizing best practices for the application and implementation of RAx in the different regulatory settings of interest.
A recent publication by the ICCVAM RAx working group summarized current needs and opportunities across US agencies, including decision contexts, desired activities, and any available guidance, and provided a list of freely available RAx tools (Patlewicz et al., 2019). This paper also detailed two specific case studies to illustrate how RAx is applied in practice: the EPA evaluation of n-heptanal under the Superfund program and a toxicological risk assessment for a medical device at the US FDA.

In Canada, Health Canada and Environment and Climate Change Canada’s risk assessment programs have continued to increase the use of RAx in risk assessments throughout the evolution of the three phases of the Chemicals Management Plan (CMP). RAx was introduced as a key approach to support risk assessment during Phase 2 of the CMP (Substance Groupings Initiative 2011–2015)⁷, where chemicals began to be assessed as groups based on structural, functional or mechanistic similarities, and is now routinely used in the risk assessment of new and existing substances in Phase 3 (2016–2020). The Government of Canada gained experience in using RAx by contributing to the development of the OECD guidance on the grouping of chemicals and subsequent updates (OECD, 2014, 2017a) and through expert consultation from the CMP Science Committee on best practices for deriving a sufficient rationale to support risk assessment decisions within the context of the CMP⁸. As the CMP in Canada continues to advance and modernize, RAx remains a critical tool contributing to the WoE evaluation⁹ and reducing uncertainty in an assessment, as well as permitting the assessment of substances that would otherwise have insufficient information. It can also provide verification or validation of other data to fill endpoint-specific data gaps and support the identification of needs for further testing. The RAx tools and approaches that have been developed and implemented under the CMP are evolving to facilitate the integration of emerging data sources and NAM data for the formation of chemical categories to support the identification of groups of chemicals as risk assessment priorities moving forward. Furthermore, there is ongoing development of computational approaches for analogue selection for RAx to advance risk assessment through integrated approaches to testing and assessment (IATA) such as that illustrated by the case study for a group of substituted phenols (Webster et al., 2019).

Other countries have adopted legislation very similar to REACH, but not equally advanced in the promotion of alternative methods. For example, in South Korea the structure of K-REACH resembles that of the EU approach but lacks any reference to the possibility of adapting standard information requirements (Ha et al., 2016). This means that the RAx approach is acceptable but not applied. The first deadline for registering substances in the tonnage band above 1000 t/y will be in 2021, and it is now too early for any analysis of this topic.

In Australia, the Industrial Chemicals (Notification and Assessment) Act 1989 will be replaced on 1 July 2020 by the Industrial Chemicals Act 2019¹⁰. This act contains no details on the toxicological tests, which will be specified in dedicated guidelines that were still under development at the time of writing. However, the new law includes a ban on animal testing of cosmetic ingredients.

As a candidate country for accession to the EU, Turkey is harmonizing its legislation with that of the EU. KKDIK, the Turkish version of REACH, is very similar to the EU’s REACH regulation. However, since KKDIK came into effect only in 2017, with pre-registration and registration deadlines in 2020 and 2023, respectively, it is not yet known to what extent adaptation to standard information requirements will be applied.

The Japanese Hazard Evaluation Support System (HESS) database and platform¹¹, which was started in 2012 and has been updated regularly, is promising with respect to RAx application. HESS supports the evaluation of repeated dose toxicity by category approach and is linked to two databases. The first is a toxicity knowledge database, which contains information on repeated-dose toxicity and toxicity mechanisms. The second is a metabolism knowledge database containing rat metabolism maps and information on absorption, distribution, metabolism and excretion (ADME) in rats and humans. HESS allows chemicals to be categorized on the basis of structural, physicochemical and mechanistic similarities and supports the prediction of repeated-dose toxicity for untested chemicals by means of the category approach. Regarding the legislative framework, chemical substances are regulated by the Chemical Substances Control Law (CSCL), which may accept a RAx approach for hazard assessment on a case-by-case basis upon the formal approval of a scientific committee. However, so far, the application of RAx for regulatory purposes has been limited.

Regarding Brazil, the Guide for Safety Assessment of Cosmetic Products published by the Brazilian National Health Surveillance Agency (ANVISA – Agência Nacional de Vigilância Sanitária) suggests the use of in silico models for selection of raw materials in order to avoid the use of unsafe chemicals. It also states that such results must be used with care and do not fully replace validated in vitro or in vivo models. For drugs and biological products, the mutagenicity of impurities must be evaluated by two in silico methods (one expert rule-based and one statistics-based). Read-across is not fully acceptable for general toxicity. The Brazilian Center for Validation of Alternative Methods (BraCVAM) encourages the use of the RAx concept, at least at the beginning of a study, as part of the integrated testing strategy (ITS).

1.3. Applications

Given its inherent potential for huge economic savings and scientific innovation, industry is, in general, open to the opportunity of RAx, provided that there are clear rules for its applicability and guarantees for regulatory acceptance. Some key examples are provided here.

The petrochemical industry represented by CONCAWE, a division of the European Petroleum Refiners Association, is studying the environmental health and safety of petroleum derivatives. The difficulty with petroleum products is that they are complex UVCBs with highly variable compositions. Grouping is generally based on broad similarities in analytical characterization and physicochemical characteristics, which are not sufficient in many cases to justify RAx hypotheses. For this reason, CONCAWE has sponsored a new project, called Cat-App¹², with the final goal of addressing the specific challenge posed by UVCBs such as petroleum chemicals and defining practical, cost-effective strategies for grouping and RAx. The approach is to integrate innovations in (i) in vitro testing, (ii) high-throughput genomics, and (iii) integrative data analyses and visualization into a transparent workflow for RAx assessment. This approach offers interesting perspectives; however, it remains to be seen whether the results produced will ultimately lead to more reliable categories that are acceptable from a regulatory point of view.

Another industrial association that is very active in this field is the International Fragrance Association (IFRA). Similar to petroleum products, many fragrances are also UVCBs, often with unclear structures and embracing many different chemical classes. Fragrances are common ingredients of cosmetic products, and their precise toxicological characterization is of the utmost importance. In the case of fragrances, and for the whole cosmetic sector in general, the opportunity of performing risk assessment using the RAx approach is highly appealing (Api et al., 2015). In fact, more than other industry branches, the cosmetics industry needs very accurate animal-free prediction methods for possible adverse health effects in humans due to the prohibition of testing in vertebrates by the EU Cosmetics Regulation (EC) 1223/2009. To demonstrate how this topic is of outstanding importance, the European Union’s Scientific Committee on Consumer Safety (SCCS) has published a call to hire RAx experts, acknowledging that assessment of cosmetic ingredients is increasingly based on this approach.

RAx is also applied in the evaluation of chemical constituents within dietary supplements (Cohen et al., 2018). For example, the US FDA has used computational modelling of the 25 most prevalent substances in Kratom, a material of plant origin, to compare structural similarities with opioid analgesics¹³. They then used this information, together with previously available experimental data, to determine the substances’ biological targets. Also in this area, botanical safety can be evaluated using RAx in combination with the threshold of toxicological concern (TTC), which is the estimated exposure level that is considered of negligible harm (Little et al., 2017).

An emerging field of RAx application is the grouping of nanomaterials. Many different nanomaterials are used in various sectors, ranging from cosmetics to paints to drugs. Specific biological activities of nanomaterials, which may be determined by their shape, size and/or surface properties, might not be fully captured by traditional toxicological methods, and testing all of these materials with in vivo methods seems impossible (Hartung, 2010b; Hartung and Sabbioni, 2011). A major challenge of applying grouping and read-across to nanomaterials is the identification of those material properties that are critical for adverse outcomes of nanomaterial exposure (Oomen et al., 2015; Burden et al., 2017). Current concepts for the grouping of nanomaterials for human health risk assessment (e.g., Landsiedel et al., 2017) consider composition, solubility, morphology and/or surface reactivity. These concepts have been developed further within the EU GRACIOUS project¹⁴. Mech et al. (2018) reviewed the regulatory acceptance of RAx applied to nanomaterials and pointed out that specific guidance for implementing grouping and RAx of nanomaterials still needs to be developed. There is, however, consensus (e.g., OECD, 2016a) that for nanomaterials, particularly nanoforms of the same chemical composition, grouping and RAx can help to reduce testing while still obtaining sufficient information to assess their risks. Under REACH, ECHA has issued a guidance appendix relevant for nanomaterials (ECHA, 2017e). Similarly, this has been highlighted as an option in the EFSA guidance on the application of nanoscience and nanotechnologies in the food and feed chain (EFSA, 2018a). Several case studies on nanomaterial RAx are available for specific endpoints or exposure routes (Arts et al., 2016; Aschberger et al., 2019).

Another sector where the potential of the RAx approach can be important is the assessment of polymers, whose toxicological assessment is seldom performed. In addition to that, the question about the impact of microplastics in the environment is only starting to be considered from a toxicological point of view. The only exceptions are in the application of polymers as food contact materials. In general, risk assessment of food contact materials is performed only on migrating substances.

The field of medical devices is definitely more complex. In the EU, medical devices are in the scope of the new EU Regulation 2017/745, which is still in the transition period until May 26, 2020. When fully in force, this regulation will require far more demanding tests to approve new products, in particular those with chronic exposure. Since it is new, there is time to improve the use of RAx, which is completely absent from the authorization dossiers of existing products. Toxicological assessment of medical devices in the US is very similar to that proposed in the EU, as both legislations ask for ISO test methods. In theory, in vitro tests and RAx are not prohibited, but the probability that regulators will request confirmation with in vivo tests usually leads applicants to immediately perform in vivo methods to avoid the risk of duplicate testing (Rovida, 2010), which increases costs and causes delays to marketing (Kerecman Myers et al., 2017).

Drug safety assessment shares a very similar situation. The European Medicines Agency (EMA), FDA and other governmental institutions with responsibilities in the approval of new drugs declare a willingness to consider any new approach if its scientific validity is demonstrated, but in the end, all substances must undergo extensive in vivo tests for acceptance. At present, RAx is nevertheless applied and accepted for the evaluation of drug impurities that cannot be isolated and characterized¹⁵. In this sense, RAx is formally accepted in the risk assessment of non-tested contaminants (EMA, 2014). Pharmaceutical companies extensively apply in silico methods during drug discovery and development to detect drug candidates with potential safety concerns, which are then removed from the development pipeline to save costs and minimize risks.

The EU Plant Protection Products Regulation (Regulation (EU) 1107/2009), while acknowledging the general principle of avoiding unnecessary animal testing, does not make specific reference to RAx. Article 5 of Regulation (EU) 283/2013 (detailing the data requirements for pesticide active substances) states that “tests on vertebrate animals shall be undertaken only where no other validated methods are available. Alternative methods to be considered shall include in vitro methods and in silico methods,” and arguably RAx could be considered an in silico method, in particular when supported by in silico tools such as the OECD QSAR Toolbox¹⁶. However, for the assessment of pesticide active ingredients, RAx is practically never used, though it is increasingly applied for pesticide impurities, metabolites and degradation products. RAx is also usually considered in the preliminary assessment during the gathering and organization of existing information (SAPEA, 2018). Only in the case of dietary assessment of pesticide metabolites is RAx formally used and accepted, in particular for the evaluation of genotoxicity (EFSA, 2016).

In contrast, Annex IV of the EU Biocidal Products Regulation (Regulation (EU) 528/2012) allows for the adaptation of standard information requirements in the same way as Annex XI of the REACH Regulation, and therefore analogue/grouping approaches are frequently employed. As an example, RAx was used to assess the similarity of 5-chloro-2-(4-chlorophenoxy)phenol (DCCP), a biocide approved for human hygiene, disinfection, food and animal feeds, to Triclosan, based on similar toxicokinetics measured in hamsters17.

Recently, ECHA and EFSA jointly prepared new guidance for the identification of endocrine disruptor properties of pesticides and biocides, in which a RAx opportunity is explicitly mentioned (ECHA/EFSA, 2018).

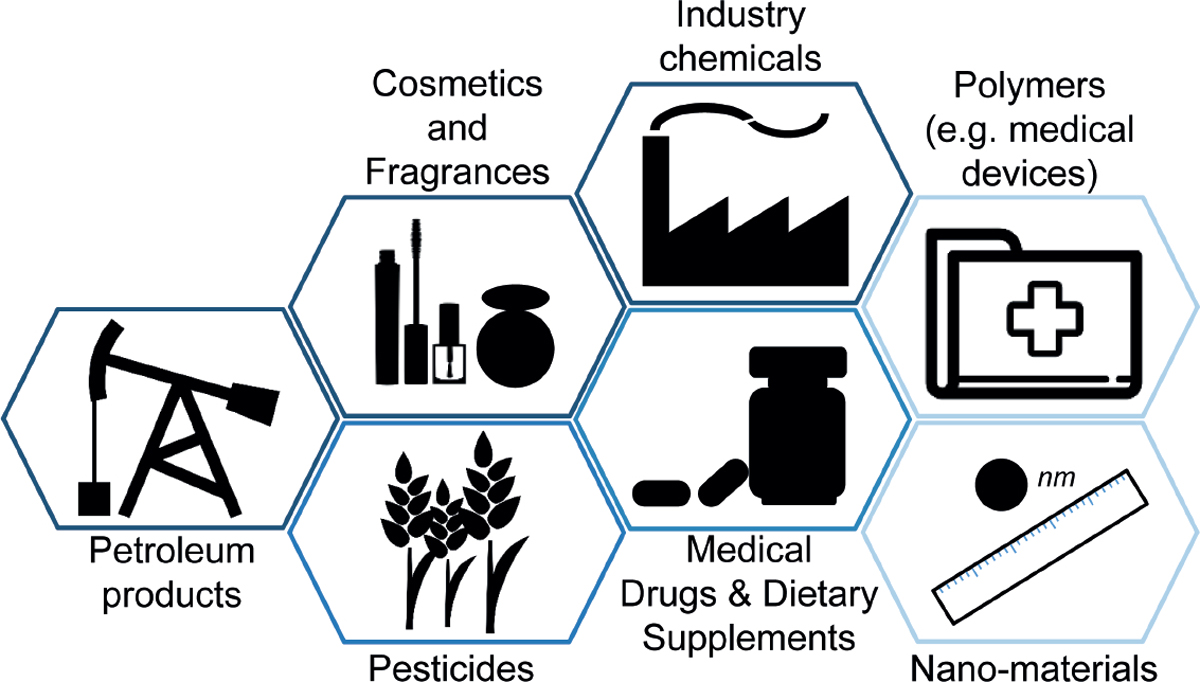

In conclusion, there are plenty of opportunities for the use of RAx in the different industrial sectors, but they remain substantially underexploited, even though the hope is for a more extensive application in the future (Fig. 3).

Fig. 3: Industry sectors affected by the application of the RAx approach for risk assessment.

Affected products range from industrial chemicals, cosmetics, medical drugs and pesticides, to petroleum products, nanomaterials, and polymers. Tiles are outlined in shades representing maturity of RAx applications, from darkest (most) to lightest (least).

2. Confidence building

2.1. Chemical and biological starting points for similarity assessment

Though this paper is not intended to review all scientific possibilities for RAx justification, it is important to provide the basic principles for the applicability of this approach to facilitate the discussion on validation and acceptance.

A RAx hypothesis should start from a proper definition of the target substance(s) by providing the correct molecular structure and purity. In the case of multi-constituent substances, each component should be defined with respect to both its molecular structure and its relative content in the target substance or, more generally, the composition should be described as fully as possible. Ideally, full spectroscopic characterization, including mass spectrometry (MS) spectra, nuclear magnetic resonance (NMR) spectra of both hydrogen and carbon, an infrared (IR) spectrum if necessary, and results from any other technique useful for the proper definition of the compound, should be provided for each substance. This process should aim at characterizing the whole sample rather than merely defining the main component. For this purpose, the ECHA guidance for substance identification can be very helpful (ECHA, 2017f).

Once the characterization of the target substance is clear, the next step is the identification of one or more possible source substance(s). In many cases, the first approach is based on expert judgment derived from observation of the chemical structure. In comparatively easy scenarios, the source compound is a stereoisomer of the target compound or a salt with a different counterion or a substance sharing the same scaffold with minor differences in molecular weight. In other cases, the selection of source compounds is not trivial and requires the support of reliable computational tools that may assist in the identification of the proper chemical or group of chemicals. For this, the structure of the target substance should be transferred into a notation for molecular descriptions, for instance Simplified Molecular-Input Line-Entry System (SMILES) strings or the IUPAC International Chemical Identifier (InChI) keys, which are typically used as input into software for the calculation of molecular descriptors and physicochemical properties as well as molecular modelling (Heller et al., 2015). Some in silico methodology, e.g., docking simulations or molecular dynamic simulations, may require three-dimensional structural information (Luechtefeld and Hartung, 2017). The computational tool most frequently applied in the area of RAx is the OECD QSAR Toolbox16, which represents a good starting point for the identification of possible source substances. The QSAR Toolbox16 profiles molecules according to their chemical structure and corresponding possible reactivity in order to define criteria for category formation and provides the basis to explain the presence/absence of hazards or other properties.

After collecting candidates for source substances, the next step is the collection of all available information to fill the data gap and demonstrate similarity. A possible source compound is useless if there are no data or if the available data are not accessible, for example because the study report is proprietary or simply not available. Furthermore, existing information needs to be categorized. In particular, all data should be analyzed for the mechanistic knowledge they provide, in order to identify the biological pathways responsible for an adverse effect and to substantiate the mechanism of action of target and source substances. If the mechanism behind an endpoint is unknown, chemical similarity and computational tools may still support the hypothesis, but the prediction will be associated with a higher degree of uncertainty.

After the identification of possible source substances, and collection and categorization of the existing information, the next step is the formulation of a possible RAx hypothesis. Its confirmation may need the identification of a subset of assays and experimental tests to confirm the overlap of biological behavior through the demonstration that relevant key events are fully shared or that a regular trend exists (Zhu et al., 2016). In fact, evaluation of chemical similarity with Tanimoto metrics or other systems is useful but often not sufficient to demonstrate that the target and the source share similar properties for the endpoint under consideration (Mellor et al., 2019). For both regulatory acceptance and scientific reasons, it is preferable to broaden the perspective to include biological similarities and ensure that all possible source substances are taken into proper consideration.
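As an illustration of the Tanimoto metric mentioned above, the following minimal sketch computes the coefficient for fingerprints represented as sets of "on" bit indices and ranks candidate source substances against a target. The fingerprints and the names `analogue_A` and `analogue_B` are hypothetical and not taken from any real substance; in practice, fingerprints would be generated by a cheminformatics tool.

```python
from typing import Dict, List, Set, Tuple


def tanimoto(fp_a: Set[int], fp_b: Set[int]) -> float:
    """Tanimoto coefficient: shared on-bits divided by the union of on-bits."""
    if not fp_a and not fp_b:
        return 0.0  # convention chosen for this sketch when both fingerprints are empty
    shared = len(fp_a & fp_b)
    return shared / (len(fp_a) + len(fp_b) - shared)


def rank_sources(target: Set[int], sources: Dict[str, Set[int]]) -> List[Tuple[str, float]]:
    """Return candidate source substances sorted by decreasing similarity to the target."""
    scored = [(name, tanimoto(target, fp)) for name, fp in sources.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


# Hypothetical fingerprints (sets of on-bit indices), for illustration only.
target_fp = {1, 4, 7, 9, 12}
candidate_fps = {
    "analogue_A": {1, 4, 7, 9, 13},
    "analogue_B": {2, 4, 20},
}
ranking = rank_sources(target_fp, candidate_fps)
```

A high coefficient only indicates structural resemblance; as noted above, it does not by itself show that target and source behave alike for the endpoint under consideration.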

The starting point of a strong similarity justification is the definition and characterization of all relevant physicochemical properties. This goal is achieved with the aid of available experimental information or reliable in silico predictions for both the source and the target substance(s). The full set depends on the specific substance and the in vivo endpoint under investigation, but should include as a minimum:

Water solubility;

Melting and boiling point;

Octanol/water partitioning (Ko/w);

Volatility, e.g., as vapor pressure or Henry’s law constant;

Particle size for powders;

Stability (e.g., in air, aqueous solution, pH, light).

Other properties should be considered based on the molecular structure and the endpoint in question. For endpoints such as (DNA-reactive) genotoxicity or skin sensitization parameters, estimating the electrophilicity of certain functional groups or, more generally, the chemical reactivity might be helpful. Other useful further parameters could be, for example, chelating power, surface tension, oxidizing properties, and so on. The scope may include data from related areas, such as environmental fate data, in particular ready biodegradability, because they are indicative of a possible biological similarity, even though not directly relevant to the endpoint of interest. If not possible or too costly to obtain, there are programs that can provide relatively accurate estimations based on quantitative structure-property relationship (QSPR) models. Most of them are collected in the ECHA guidance for the preparation of REACH registration dossiers in the sections on adaptations of the standard testing regime (ECHA, 2017g). Others have been recently published, e.g., the OPEn structure-activity/property relationship app (OPERA; Mansouri et al., 2018) developed jointly by EPA and NICEATM and applied to more than 800,000 chemicals to produce freely available predicted properties on the EPA CompTox Chemicals Dashboard18. However, as these parameters are fundamental for predicting the biological activity of any substance, it is recommended, in general, to measure physicochemical properties with standard experimental protocols rather than to use prediction models, if possible.
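The side-by-side comparison of physicochemical properties described above can be sketched as a small data structure. This is only an illustration: the property names, the example values, and in particular the tolerances are assumptions made for this sketch and are not regulatory thresholds.

```python
from typing import Dict, Optional, Tuple

# Illustrative tolerances (assumptions for this sketch, not regulatory criteria).
TOLERANCE: Dict[str, float] = {
    "log_kow": 0.5,                # log units
    "log_water_sol_mg_l": 1.0,     # log10 of solubility in mg/L
    "melting_point_c": 20.0,       # degrees Celsius
    "boiling_point_c": 20.0,       # degrees Celsius
    "log_vapor_pressure_pa": 1.0,  # log10 of vapor pressure in Pa
}

Profile = Dict[str, float]
Report = Dict[str, Tuple[Optional[float], Optional[float], Optional[bool]]]


def compare_profiles(target: Profile, source: Profile,
                     tolerance: Dict[str, float] = TOLERANCE) -> Report:
    """For each property return (target value, source value, within tolerance).

    The third element is None when either value is missing, i.e. a data gap
    that prevents any judgment on similarity for that property.
    """
    report: Report = {}
    for prop, tol in tolerance.items():
        t, s = target.get(prop), source.get(prop)
        if t is None or s is None:
            report[prop] = (t, s, None)
        else:
            report[prop] = (t, s, abs(t - s) <= tol)
    return report


# Hypothetical values for one target and one candidate source substance.
target_profile = {"log_kow": 2.1, "log_water_sol_mg_l": 2.5,
                  "melting_point_c": 45.0, "boiling_point_c": 210.0}
source_profile = {"log_kow": 2.4, "log_water_sol_mg_l": 1.0,
                  "melting_point_c": 50.0, "boiling_point_c": 215.0,
                  "log_vapor_pressure_pa": 0.3}
report = compare_profiles(target_profile, source_profile)
```

Reporting missing values explicitly, rather than skipping them, keeps data gaps visible so that they can be filled by measurement or, failing that, by a QSPR estimate.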

When all available data are collected, they must be evaluated to decide whether they provide a clear picture and enough information to conclude whether the comparison between the source substances and the target substance is accurate enough to permit a conclusion and fill the gaps as required. If the approach seems reasonable but not sufficient to demonstrate the similarity, further tests on the category are necessary. The necessity to perform new in vivo tests should be considered at the very end of the process and only when all other opportunities have been considered. Gaps or uncertainties should be filled with existing studies or new in vitro tests.

Computational tools assist the identification of source materials and the demonstration of similarity, which would ideally include both toxicokinetic and toxicodynamic (TK/TD) considerations. Computational systems can be applied to analyze the issue globally, for example by defining the bioactivity profile through fingerprint description to feed machine learning models (Sturm et al., 2018). A useful database that can facilitate read-across groupings based on chemical similarity is the Danish QSAR database. It contains information on more than 600,000 chemicals from over 200 QSAR models19. Where models are used, they should be run also for category members for which experimental data exist. In this way, the applicability of the QSAR/QSPR model(s) to the specific set of chemicals under examination can be demonstrated. Where available, study results should be compared with the output of the computational approach as a reference to assess the accuracy of the prediction and claim better reliability for the output when applied to fill a data gap. Another computational tool developed specifically for RAx purposes is EPA’s Generalized Read Across (GenRA) add-in within the CompTox Chemicals Dashboard18, which uses a similarity-weighted activity algorithm to compute a RAx prediction using both chemical (structural fingerprint) and biological (in vitro HTS data from the Tox21 program) descriptors.
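The idea of a similarity-weighted activity prediction can be illustrated with a few lines of code. The sketch below is a generic weighted average over the most similar source substances, written in the spirit of the approach described above; it is not GenRA's actual implementation, and the similarity and activity values are hypothetical.

```python
from typing import List, Tuple


def similarity_weighted_activity(neighbours: List[Tuple[float, float]], k: int = 3) -> float:
    """Predict the target's activity from its k most similar source substances.

    neighbours: (similarity to target in [0, 1], observed activity) pairs, where
    the activity may be binary (0 or 1) or continuous.
    """
    top = sorted(neighbours, key=lambda n: n[0], reverse=True)[:k]
    total_similarity = sum(sim for sim, _ in top)
    if total_similarity == 0:
        raise ValueError("no neighbour with non-zero similarity to the target")
    return sum(sim * act for sim, act in top) / total_similarity


# Hypothetical neighbours: two active and two inactive source substances.
neighbours = [(0.8, 1.0), (0.6, 1.0), (0.4, 0.0), (0.1, 0.0)]
prediction = similarity_weighted_activity(neighbours, k=3)
```

Applying the same function to category members with known experimental results, each time leaving the tested member out of its own neighbour list, gives a simple check of how well the approach performs for the chemicals under examination.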

Given that potential selection bias is one of the major issues with respect to the reliability of the RAx approach, it is essential to document that the selection and deselection of source chemicals, as well as their chemical and biological characterization, were performed according to a clear rationale and in an unbiased way. Whatever the amount and quality of existing information, there will always be some uncertainties that need to be characterized, quantified and accepted. Computational methods may be very useful in providing variance and correlation among features. Uncertainty should be defined for each piece of information, and in case of discordant results for the same substance, a conclusion should be drawn carefully by weighing all available information and relevant differences in the experimental protocols of the studies. Missing data and the lack of mechanistic coverage should be overcome with new ad hoc experimental studies.

2.2. New approach methodologies (NAMs) and adverse outcome pathways (AOPs)

As already mentioned, NAMs represent a good opportunity to support the RAx hypothesis by providing data to confirm whether a group of substances shares the same biological mechanism or if they show a specific trend within the category (Fig. 4). In the latter case, NAMs can help in the identification of the most representative compounds for further testing and may also contribute to the definition of the boundaries for the group. In this sense, NAMs should constitute new experimental tests/predictions that are performed with the specific goal of demonstrating the RAx hypothesis. The strength of NAMs in RAx is that all members of the category are tested simultaneously with the same test method, and the results are assessed as a category, demonstrating similarities and dissimilarities or providing clues to link the chemical structure to the biological activity.

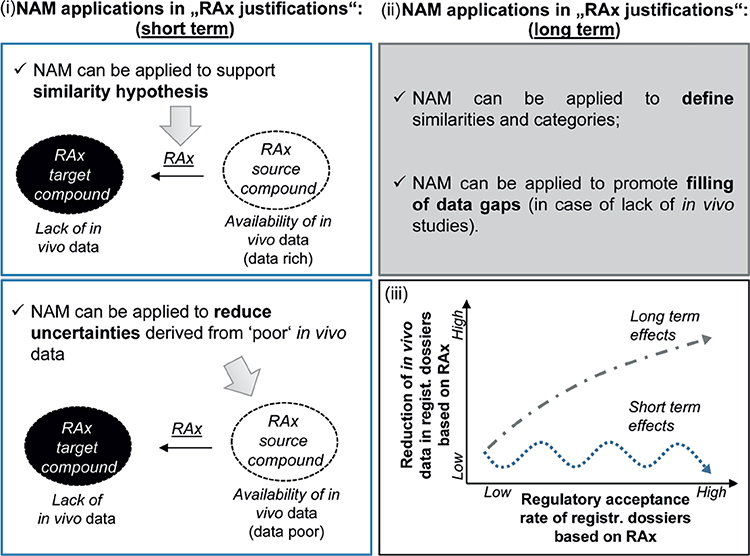

Fig. 4: Inclusion of NAM-derived data in RAx justification dossiers to build confidence in its regulatory use.

(i) A registration dossier based on RAx always contains a justification including data that prove the validity of the similarity hypothesis and/or the reliability of the in vivo studies performed on the source compound. Recently, NAM-derived data have started to be used as complementary information to support the RAx hypothesis. (ii) Increasing confidence in the use of NAM data may lead to their exclusive use for similarity definition, category formation, and data gap filling (where in vivo data are lacking). (iii) As a short-term effect, the use of NAM-derived data in RAx dossiers can increase the regulatory acceptance rate of RAx justifications in risk assessment. As a long-term effect, the increasing confidence in such data could lead to a RAx application based only on in vitro/in silico data.

A plethora of in vitro and in silico techniques are now available and can assist in the purpose of demonstrating similarities within a category. Listing all the opportunities is out of the scope of this paper, and more details are available elsewhere (e.g., Berggren et al., 2015). NAMs are often organized to model a key event (KE) of an AOP, the principle of which is to describe a sequential chain of causally linked events at different levels of biological organization that lead to an adverse health or ecotoxicological effect (Ankley et al., 2010; Stiegler et al., 2011; Becker et al., 2015; Dreser et al., 2015). The AOP concept is very similar to the pathway of toxicity (PoT) concept (Kleensang et al., 2014). AOPs provide a useful framework for comparing two or more chemicals and also a starting point for building the experiments for the biological demonstration of similarity (Nelms et al., 2018). In the definition of an endpoint, NAMs can be organized in an IATA that represents how the different approaches are combined within a regulatory context to reach a final conclusion on the hazard characterization of a substance20 (Leist et al., 2014).

The idea to apply the AOP principle to organize information was first proposed during a workshop at the OECD organized in 2010 (OECD, 2011). Members of a category should share the same molecular initiating event (MIE) and the same metabolic pathways (Cronin and Richarz, 2017). According to these authors, the role of AOPs to support RAx can be summarized as:

A plausible and transparent means of linking MIEs to the in vivo outcomes of regulatory interest and making uncertainties explicit.

A qualitative means of establishing causal linkages.

A conceptual framework for organizing information at different levels of biological organization, characterizing the WoE.

Evidence supporting the robustness of chemical categories.

A means of forming categories based on both intrinsic chemical and biological activity.

A basis for testable hypotheses, which in turn leads to the development and use of in vitro databases for developing new profilers and to establish response-to-response relationships.

A means of developing and justifying targeted and efficient testing and assessment scenarios that save time and resources, e.g., by identifying data gaps.

A means of supporting assessments of combined exposure to multiple chemicals within and across AOPs.

Greater biological context to what is currently a statistically based approach.

Even though AOPs, as presented in the AOPwiki21, offer a useful framework for comparing two or more chemicals and a starting point for designing the experiments for the biological demonstration of similarity (Nelms et al., 2018), sharing the same AOP only demonstrates possible biological activity. It says nothing about whether this effect really occurs and gives no indication of the doses that may trigger it, which is fundamental in chemical risk assessment.

The EU-ToxRisk Project is developing conceptual frameworks to integrate NAMs for RAx assessment (Escher et al., 2019) and in particular on how NAM testing can prove the RAx hypothesis (Fig. 5). This concept has been developed through learnings from several case studies in which in vitro and in silico models are used to support the analysis of TD/TK properties, with a strong link between grouping and AOPs.

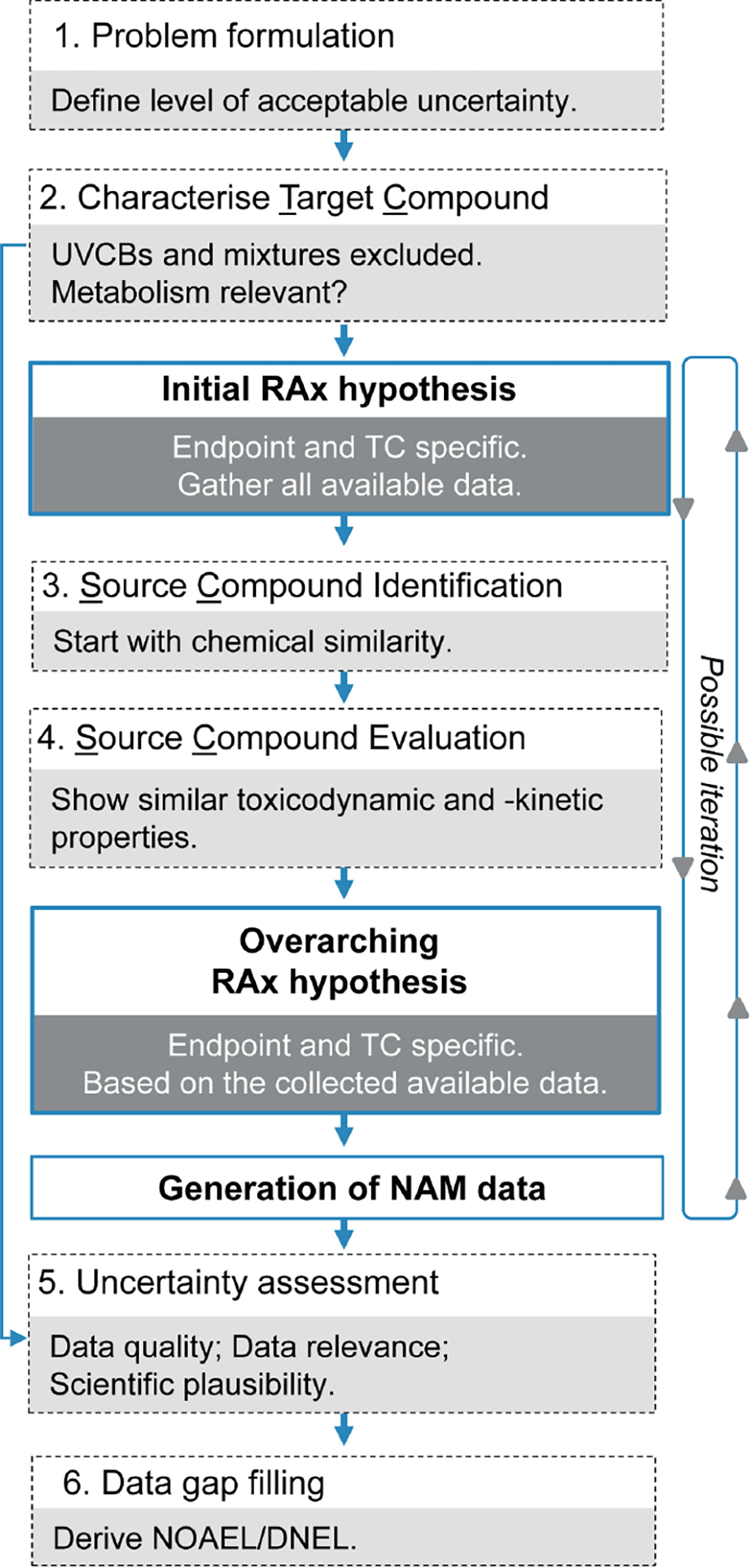

Fig. 5: Workflow describing the RAx framework developed and applied within the EU-ToxRisk project.

In the future, NAMs in combination with RAx represent a strong possibility to dramatically reduce or even eliminate the need for new in vivo testing. This will happen when the development of in vitro testing is mature enough to fill the data gaps in the categories (Fig. 4).

2.3. Absorption, distribution, metabolism and excretion (ADME)

ADME profiling represents an important step of RAx justification and, in particular, for moving from hazard to risk assessment. ADME also can be useful to select source substances.

To complement this information, both the source and target substance need a proper characterization of ADME data (Tsaioun et al., 2016). Unfortunately, outside of the pharmaceutical and agrochemical sector, this information is at best partially available from dedicated experimental studies.

Differences in absorption rate and distribution can be considered in RAx for risk assessment purposes, and big differences may be considered a pointer towards more important dissimilarities between chemicals, while the demonstration of similar absorption and distribution may support the RAx justification. Information on the distribution of a substance in the organism can also indicate a possible target organ. Here NAMs can be supported by quantitative in vitro to in vivo extrapolation (QIVIVE), even though there are still no valid and universally applicable systems (Hartung, 2018; Kisitu et al., 2019). Excretion mode and kinetics represent other parameters affecting systemic uptake and exposure, and they should always be considered to conclude on the similarity among the components of the category.

Metabolism is one of the most important steps of the ADME process. RAx studies where the source and target compounds share the same active metabolites are an infrequent but fortunate situation, allowing an easy justification of the RAx approach (van Ravenzwaay et al., 2016). In general, dissimilarities in metabolism will considerably complicate the RAx, since this would trigger the need to demonstrate the absence of (relevant) toxicity for all metabolites not common to both source and target chemicals. Metabolism assessment can be performed through a new experimental in vitro study, for example with primary hepatocytes, which can provide an idea of clearance and transformation. Unfortunately, an exhaustive analysis of metabolism requires long and expensive studies, and it is hardly ever performed outside of pharmaceutical and agrochemical applications. To cope with the lack of experimental data, chemoinformatics may help to provide a prediction of metabolism products (Madden and Cronin, 2006; Kirchmair et al., 2015; Djoumbou-Feunang et al., 2019; Miller et al., 2019). Computational metabolite predictions represent a valuable tool that may identify outliers in a group of similar substances or explain differences when in vivo animal studies are applied to the situation in humans.
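The comparison of metabolite profiles described above amounts to a simple set operation, sketched below. The metabolite identifiers are hypothetical placeholders; in practice they could be structure identifiers obtained from experimental studies or from a metabolite prediction tool.

```python
from typing import Dict, Iterable, Set


def metabolite_overlap(source_metabolites: Iterable[str],
                       target_metabolites: Iterable[str]) -> Dict[str, Set[str]]:
    """Split metabolites into those shared and those unique to source or target.

    Metabolites that are not common to both substances are the ones for which
    the absence of (relevant) toxicity would have to be argued separately.
    """
    source, target = set(source_metabolites), set(target_metabolites)
    return {
        "shared": source & target,
        "source_only": source - target,
        "target_only": target - source,
    }


# Hypothetical metabolite identifiers, for illustration only.
overlap = metabolite_overlap(
    source_metabolites=["M1", "M2", "M3"],
    target_metabolites=["M2", "M3", "M4"],
)
```

An empty `source_only` and `target_only` corresponds to the favorable case in which source and target share all metabolites.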

The EU-ToxRisk project is taking up the challenge of ADME, being aware that, in many cases, lack of demonstration that target and source substances are similar was the cause of rejection of the RAx justification by regulators (Ball et al., 2016). The RAx is acceptable only if sufficient similarity in ADME properties between source and target chemicals is demonstrated and the possible impact of any dissimilarities is sufficiently explained. The absence of reliable ADME information often represents one of the weakest points in RAx justifications, and in this regard it seems fair to note that by not making ADME data a mandatory standard requirement, the REACH Regulation has put a major hurdle in the way of achieving its own goals in terms of facilitating the move away from in vivo animal testing.

2.4. Applicability domain of RAx

The ECHA RAAF document is very explicit in limiting RAx to a single endpoint (ECHA, 2017b,c). On the other hand, the success of RAx depends on a more holistic approach facilitated by the analysis of large quantities of data. Even though the final goal is the prediction of the specific endpoint, this always should be connected to a more general analysis of all possible analogues and groups of chemicals.

Enoch et al. (2008) demonstrated that reliability of QSARs is improved when modelling the individual mechanisms of toxic action present in the data set. The link of the prediction to the full elucidation of the mechanism provides specific limits to the prediction, with a precise definition of the boundaries for the group of chemicals in RAx, including the applicability domain. The one-to-one comparison, i.e., one target and one source chemical, should be applied only in case of very strong evidence of similarity and identical biological activity. Rather, any demonstration of similarity should benefit from a global analysis that includes not only a larger set of chemicals but also the assessment of general toxicity of the substances, with a possible explanation of the differences that may derive also from physicochemical properties.

The concept of local validity (Patlewicz et al., 2014) can be applied in reverse mode. The explanation of a possible mechanism can set the boundaries for the applicability domain of RAx by imposing the use of a specific method to substances that show a specific mechanism or that are metabolized to a specific chemical.

The mechanistic applicability domain is one important aspect, but the structural and physicochemical descriptors, as well as property and effect spaces also need proper attention when building a category. Overall, the applicability domain of a RAx category can be considered a multidimensional space composed of all these elements, in which members of a valid category are located relatively “closely” to each other. In case of even distribution of the category members, trust in RAx categories is increased if the category encompasses a densely populated multidimensional space and the target compound is located in such an area.
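One simple way to make the notion of a target lying in a "densely populated" region concrete is a nearest-neighbour distance check in a standardized descriptor space. The sketch below is only one of many possible definitions of an applicability domain; the choice of descriptors, the value of `k`, the threshold rule, and all numerical values are assumptions made for illustration.

```python
import math
from statistics import mean, pstdev
from typing import List, Sequence, Tuple


def knn_distance(point: Sequence[float], others: List[List[float]], k: int) -> float:
    """Mean Euclidean distance from `point` to its k nearest points in `others`."""
    distances = sorted(math.dist(point, other) for other in others)
    return mean(distances[:k])


def in_domain(target: Sequence[float], category: List[List[float]],
              k: int = 2) -> Tuple[bool, float, float]:
    """Check whether the target lies as close to the category as its members do to each other.

    Returns (inside, target distance, threshold). The threshold is the largest
    k-nearest-neighbour distance observed among the category members themselves.
    """
    columns = list(zip(*category))
    mus = [mean(col) for col in columns]
    sds = [pstdev(col) or 1.0 for col in columns]  # guard against zero variance
    scale = lambda row: [(v - m) / s for v, m, s in zip(row, mus, sds)]
    scaled = [scale(row) for row in category]
    member_distances = [
        knn_distance(p, scaled[:i] + scaled[i + 1:], k) for i, p in enumerate(scaled)
    ]
    threshold = max(member_distances)
    distance = knn_distance(scale(target), scaled, k)
    return distance <= threshold, distance, threshold


# Hypothetical category described by two descriptors: (log Kow, molecular weight).
category = [[1.0, 100.0], [1.5, 110.0], [2.0, 120.0], [2.5, 130.0], [3.0, 140.0]]
inside, _, _ = in_domain([2.2, 125.0], category)
outside, _, _ = in_domain([6.0, 300.0], category)
```

In a real assessment, the descriptor space would also need to reflect the mechanistic, property and effect dimensions discussed above, not only structural or physicochemical descriptors.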

2.5. RAx for non-classified substances

Declaring the existence of a certain risk of toxicity for a substance is comparatively easy, even though there is always the possibility of a hidden risk of higher concern. The situation is different when the RAx exercise aims to demonstrate the absence of concern, because this conclusion should really include the demonstration that any possibility of hazard has been taken into account. For this reason, RAx for substances with no or low activity requires more comprehensive justification, supported by a high level of confidence and strong evidence. At the same time, most chemicals do not seem to require hazard classification (Hoffmann and Hartung, 2005; Luechtefeld et al., 2016a), and therefore it may be expected that the number of RAx resulting in a “negative”, i.e., absence of relevant effects, outcome will be clearly higher than that of RAx predictions with a “positive” effect.

The big question is how to achieve sufficient confidence in negative conclusions on toxicity. It is a truism that scientific knowledge develops over time, and the regulatory landscape will continue to change based on the discovery of new or a re-evaluation of known mechanisms and effects of chemicals in biological systems. Therefore, the natural answer to the above dilemma could be that predictions of a general absence of toxicity should cover all areas of potentially adverse effects known and considered relevant in the respective legislative framework at the time the prediction is performed. Under REACH, for example, the range of endpoints to consider in general is characterized by the output of the methodology accepted for the generation of the standard information specified in Annexes VII-X, even though it should be enlarged in situations where there is a reason to assume that the standard information is not sufficient. As a consequence, confidence, whether in traditional assessment or RAx, is best increased by considering a wide range of endpoints with in-depth TK/TD analysis. In the scope of RAx, a claim that a specific substance activates no toxicological alert should be supported either by a larger number of source substances, all demonstrating biological inactivity, or, if there is only one source substance, by a strong demonstration of similarity. As far as possible, the latter case should always be supported by a further demonstration that “biological surprises” appear unlikely, for example by providing evidence for similar substances. One way to tackle uncertainty is to use several independent lines of evidence that lead to the same conclusion in a WoE approach (Linkov et al., 2015). Schultz et al. (2017a) have provided a good example with the analysis of a set of n-alkanols, which were analyzed by grouping substances sharing common absorption profiles and metabolic pathways, confirmed by the existence of good 90-day oral repeated dose toxicity studies in rats.

In conclusion, general rules for RAx validity are applied also for demonstration of the absence of biological concern, with a further detailed description of ADME and all possible MoAs/AOPs that may occur. In the future, omics profiling or high-throughput testing schemes such as the Tox21/ToxCast program might aid in demonstrating a lack of biological activity over a broad range of effect markers.

As reported in other sections, the amount of information considered sufficient depends on the risk that is related to the assessment together with the regulatory application scope and should be considered on a case-by-case basis. The principles described to reduce the uncertainty are generally valid, but they should be applied even more rigorously for the definition of the absence of concern. More specific guidance would be helpful here.

2.6. Hazard characterization and potency

A comparatively easy application of RAx is hazard identification by means of a demonstration that two or more chemicals may qualitatively exhibit the same toxicological effect. In contrast, the prediction of potency, e.g., in the form of a classification sub-category, places considerably higher demands. Hazard characterization is communicated to all users with specific pictograms and hazard phrases, as internationally agreed by the United Nations in the Globally Harmonized System of Classification and Labelling of Chemicals (GHS), which was implemented in the EU with the Classification Labelling and Packaging (CLP) Regulation (EC) 1272/2008, which explicitly accepts RAx when describing the scope of WoE determination in its Annex I.

The difficulty in applying RAx for classification purposes lies in the analysis of potency for the determination of hazard category. Categories of local effects are generally well characterized with defined thresholds, while more concerning endpoints, such as CMRs (carcinogenic, mutagenic and reprotoxic substances), have no defined threshold in CLP, and the category is demarcated only by the qualitative probability that a substance may exert that effect. In fact, Category 2 covers substances suspected of causing the effect, Category 1B those presumed to cause it, largely on the basis of animal evidence, and Category 1A those known to cause it, largely on the basis of human evidence. In all cases, risk assessment requires assigning a dose to the hazard: a defined threshold for local and acute toxicity endpoints and the no-observed-adverse-effect level (NOAEL) for systemic endpoints.

The OECD guidance on grouping of chemicals provides some consideration on how a quantitative prediction for target substances can be made by extra- and interpolation within a category for which trend(s) in toxicity or factors influencing toxicity have been identified. When the AOP and quantitative information on the relationship between KE and AO are known, NAMs can be used for the target and source substances to further strengthen the (quantitative) prediction, with the application of a suitable QIVIVE correction. While larger categories might provide the basis for trend analysis and thus a relative potency assessment of their members regarding a specific effect, the situation is much more difficult in one-to-one assessment, and additional reasoning will generally be required to assign the same, or a higher or lower, potency to the target. Depending on the legislative framework, the assignment of additional assessment factors (AF) might be required in such circumstances. A more scientific approach could consider a detailed toxicokinetic assessment with correlation of the exposure and the concentration at the target site.
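A quantitative prediction by trend analysis within a category can be illustrated with a simple least-squares fit. The sketch below assumes, purely for illustration, that log10(NOAEL) varies linearly with a single descriptor such as carbon chain length; the data are hypothetical, and the optional assessment factor is applied as a plain divisor.

```python
import math
from statistics import mean
from typing import Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares slope and intercept for y = slope * x + intercept."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("descriptor values must not all be identical")
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return slope, my - slope * mx


def predict_noael(x_target: float, xs: Sequence[float], noaels: Sequence[float],
                  assessment_factor: float = 1.0) -> Tuple[float, bool]:
    """Predict the target NOAEL from the category trend on a log10 scale.

    Returns (predicted NOAEL divided by the assessment factor, interpolated),
    where `interpolated` is False when the target lies outside the range of the
    source substances, i.e. when the prediction is an extrapolation.
    """
    slope, intercept = fit_line(xs, [math.log10(n) for n in noaels])
    interpolated = min(xs) <= x_target <= max(xs)
    noael = 10 ** (slope * x_target + intercept)
    return noael / assessment_factor, interpolated


# Hypothetical category: chain lengths and NOAELs (mg/kg bw/day) of three source substances.
chain_lengths = [4.0, 6.0, 10.0]
source_noaels = [1000.0, 10 ** 2.5, 10 ** 1.5]
predicted, is_interpolation = predict_noael(8.0, chain_lengths, source_noaels)
```

Flagging extrapolation explicitly reflects the point made above that predictions outside the range covered by the source substances, like one-to-one assessments, generally call for additional reasoning or assessment factors.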

This particular topic clearly needs more discussion and agreement between regulators.

2.7. Practical case studies

The published literature gives an indication of the considerable breadth of RAx studies that have been undertaken and reported. Whilst this is not necessarily representative of all REACH submissions, it does indicate that RAx has been applied in a number of scenarios and with a variety of techniques. Turning some of these into case studies is useful for a number of reasons that go beyond simply providing an illustration for further use (Tab. 1).

Tab. 1: Some examples of available public case studies on the applicability of RAx for regulatory purposes, as described in the text

| Projects | Description | Main documents |
|---|---|---|
| ECHA - COLLA project (2017–2018) | The project aimed to improve the information used to decide on the needs for further regulatory risk management with the involvement of Member State competent authorities and concerned registrants. Two different elements were tested: addressing substances by groups and early interaction with registrants. The project demonstrated an increase in effectiveness, providing a better picture of the gaps and the points of concern. | ECHA, 2018 |
| SEURAT-1 (2009–2014) | The primary goal of the read-across case study within the project was to increase confidence in the read-across assessment by using data from alternative methods. The case studies were run under 2 conditions: read-across without additional new approach data; addition of NAM data (primarily from ToxCast and from application of the alternative methods developed within the initiative). | Berggren et al., 2015; Schultz et al., 2015, 2017a,b; Mellor et al., 2017 |
| EU-ToxRisk project (2016–2021) | In the EU-ToxRisk project, the Read-Across Case Study Strategy includes assessment of toxicokinetics, both in the in vitro experimental set up and the extrapolation to safe human doses. These are integrated into the design of EU-ToxRisk case studies through the use of ADME models and generation of ADME in vitro data. The results of the NAM-supported read-across case studies are compiled and sent for evaluation to regulators as a “mock submission”. The experience and learnings gained during the project will be compiled in a NAM-based read-across guidance. | Escher et al., 2019; Rovida et al., in preparation |
| OECD Cooperative Chemicals Assessment Programme (CoCAP); IATA Case Studies Project (2015-current) | The objective of the IATA Case Studies Project is to increase experience with the use of IATA by developing case studies, which constitute examples of predictions that are fit for regulatory use. The aim is to create a common understanding of using novel methodologies and the generation of considerations/guidance stemming from these case studies. Case studies submitted by OECD member countries are reviewed regularly. Learnings and lessons derived from such reviews are regularly published as OECD reports. | OECD, 2018 |

For the illustration of RAx in various forms, the OECD has published case studies on the applicability of IATA including RAx (OECD 2016b, 2017b, 2018). These examples perfectly demonstrate the new idea of risk assessment as a combination of QSAR, RAx, NAMs and WoE. The way they are presented is not intended to provide exhaustive documentation to justify the RAx approach, but rather to analyze the state-of-the-art of this methodology, with particular attention to strengths and pitfalls. The focus is on one or more specific endpoints such as mutagenicity, repeated dose toxicity, etc. and not so much on the categories themselves.

As part of the SEURAT-1 initiative, RAx case studies were instigated (Berggren et al., 2015). This resulted in the development of a template for reporting RAx (Schultz et al., 2015) as well as the publication of some of the findings in the peer-reviewed literature (Schultz et al., 2017a,b; Mellor et al., 2017). Analysis of the case studies overall allowed Schultz and Cronin (2017) to provide practical examples on how to reduce the uncertainty related to the RAx assessment, concluding on the importance of data quality, similarity argumentation and justification, and the overwhelming need for TK/TD data (Pamies et al., 2018). These analyses later influenced the description of the overarching uncertainties associated with RAx, allowing for a series of questions to probe the quality, or otherwise, of the underpinning science and data (Schultz et al., 2019).

The EU-ToxRisk Project has started an initiative in collaboration with regulators from different scientific areas, including experts in the areas of pesticides, food safety, industrial chemicals, etc. Partners have prepared mock submissions to regulators from agencies associated with European regulations in order to learn whether their approaches would be acceptable in a legal context or not. In this way, EU-ToxRisk case studies are treated as if they were real cases with the purpose of challenging the traditional regulatory assessment approach. A dedicated workshop with the active collaboration of ECHA and EFSA has already taken place<sup>22</sup>, and the final report should be published in 2020.

In recent years, ECHA and the EU Member States have also increasingly included grouping approaches in their screening and assessment schemes. In particular, ECHA launched the collaborative approach (COLLA) pilot projects focused on five groups of substances (ECHA, 2018). The aim was to improve the information used to decide on the needs for further regulatory risk management with the involvement of Member State competent authorities and concerned registrants. The projects explored how the overall grouping approach can be used to clarify and address the identified concerns and what type of supporting information is required. The projects tested two different elements: addressing substances by groups and early interaction with registrants. It was reported that the resources spent by ECHA and Member State authorities were significant, and almost equally divided between the screening and the interaction phases. The COLLA projects demonstrated an increase in effectiveness, providing a better picture of the gaps and the points of concern, but it was difficult to draw conclusions on efficiency gains because of the resources required for their management.

In the scope of REACH, other simple approaches have been used in the area of metal salts, e.g., for nickel, cobalt, iron chlorides, sulfates, nitrates, etc. More complex RAx categories have been built for petroleum/coal products, fatty acid derivatives, etc. With the exception of a few publications (Clark et al., 2013) or indications on consortia websites such as CONCAWE<sup>23</sup>, these data mainly derive from the personal experience of some co-authors and are generally not public. Further analysis is therefore not possible and, moreover, the general feedback from the authorities is not known unless one searches for data on the submissions of the individual substances. Although such a search would be interesting, it is outside the scope of this publication.

2.8. Integrated use of RAx

The main potential of RAx lies in its application in an integrated way on several source substances, so it is more than a simple tool to waive a single in vivo test. Rather, the assessment relies on a more global approach that considers a variety of data. Even if the comparison is between one source and one target, its use in support of many other chemicals in a group, or the comparison with other similar substances, can be of benefit. Let us consider a very simple example of the RAx of a sodium salt (source) with its acid. If the dissociation constant confirms that the acid is fully dissociated at physiological pH, such information might already seem enough to justify the read-across. However, the hypothesis would be strengthened further if other experimental endpoints could be compared for both substances, highlighting differences that may derive only from the capacity of the acid to alter the pH. It would not be surprising if the acid was classified as an irritant and the salt was not, but they may share identical biological behavior with regard to mutagenicity, developmental toxicity, etc. A RAx hypothesis supported by other similar substances, with data on both the acid and the sodium salt, and even other salts, would then appear much stronger.
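The dissociation argument in the salt/acid example can be made concrete with the Henderson-Hasselbalch relation. The sketch below is only illustrative: it assumes a monoprotic acid in dilute aqueous solution, takes pH 7.4 as the physiological pH, and uses hypothetical pKa values that do not refer to any substance discussed here.

```python
def ionized_fraction(pka: float, ph: float = 7.4) -> float:
    """Fraction of a monoprotic acid present as its anion at the given pH.

    Derived from the Henderson-Hasselbalch relation:
    [A-]/[HA] = 10 ** (pH - pKa).
    """
    return 1.0 / (1.0 + 10.0 ** (pka - ph))


# Hypothetical pKa values, chosen only to show the trend.
for pka in (3.0, 4.8, 7.4):
    fraction = ionized_fraction(pka)
    print(f"pKa {pka}: {fraction:.4f} ionized at pH 7.4")
```

An acid whose pKa lies several units below the physiological pH is almost entirely present as the same anion that the sodium salt delivers, which is the basis of the hypothesis. The calculation says nothing about local pH effects such as irritation, which is why the additional endpoint comparisons remain important.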

In a more complex situation, the application of RAx requires a holistic approach that considers a multitude of data gained from both the target and the source substances. In Figure 6, the process for the demonstration of similarity is described. This process is based on:

- Similar chemical structure and behavior;

- QSAR prediction;

- Collection and evaluation of existing in vivo studies;

- Assessment of new in vitro tests to confirm the mechanism.

If the RAx hypothesis is based on the assumption of the same TK/TD profile, a strong RAx justification should include, if possible (Fig. 7):

- Comparison between two substances sharing the same metabolic products;

- Comparison with the substance representing the metabolic product itself;

- Comparison with a group of similar substances showing a comparable metabolic trend.

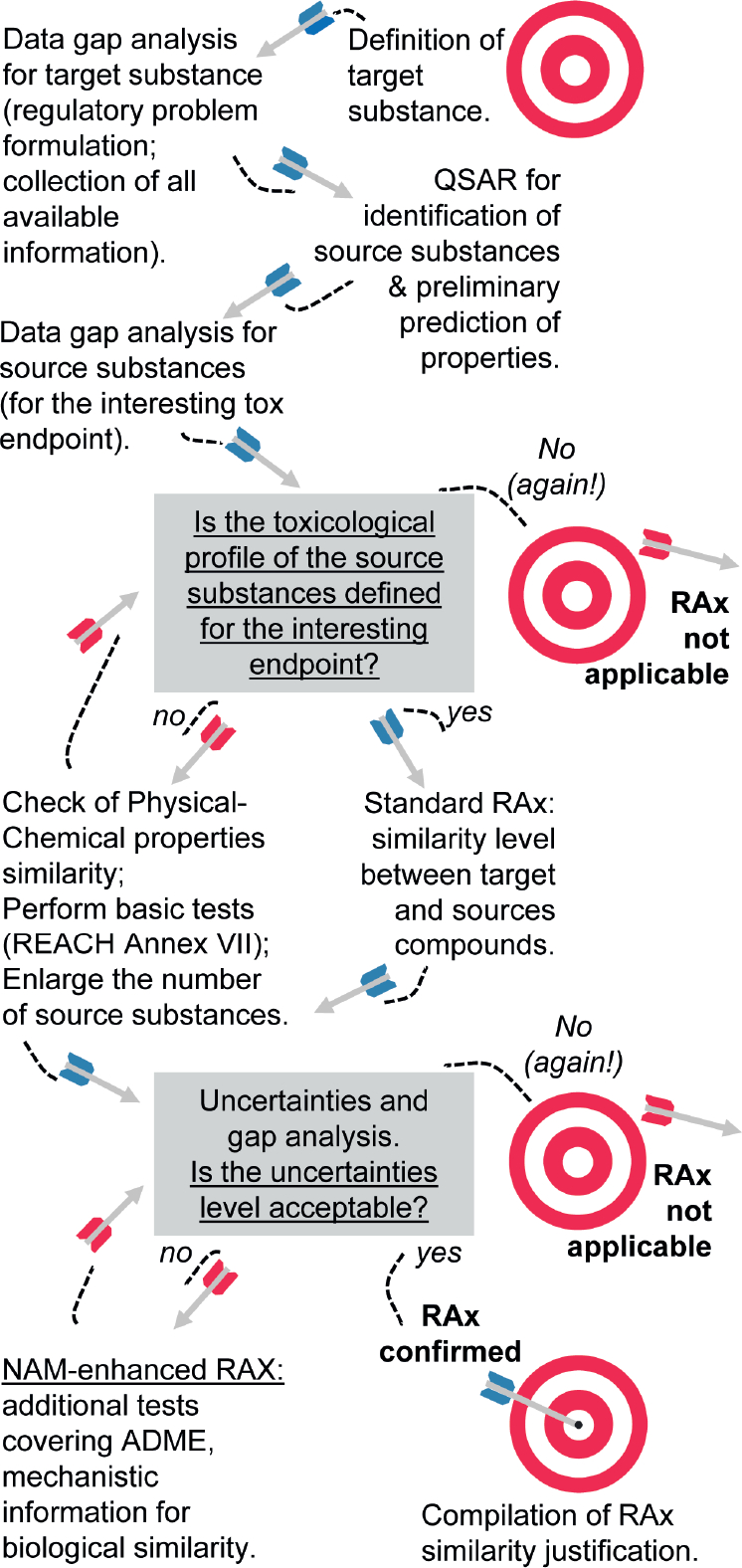

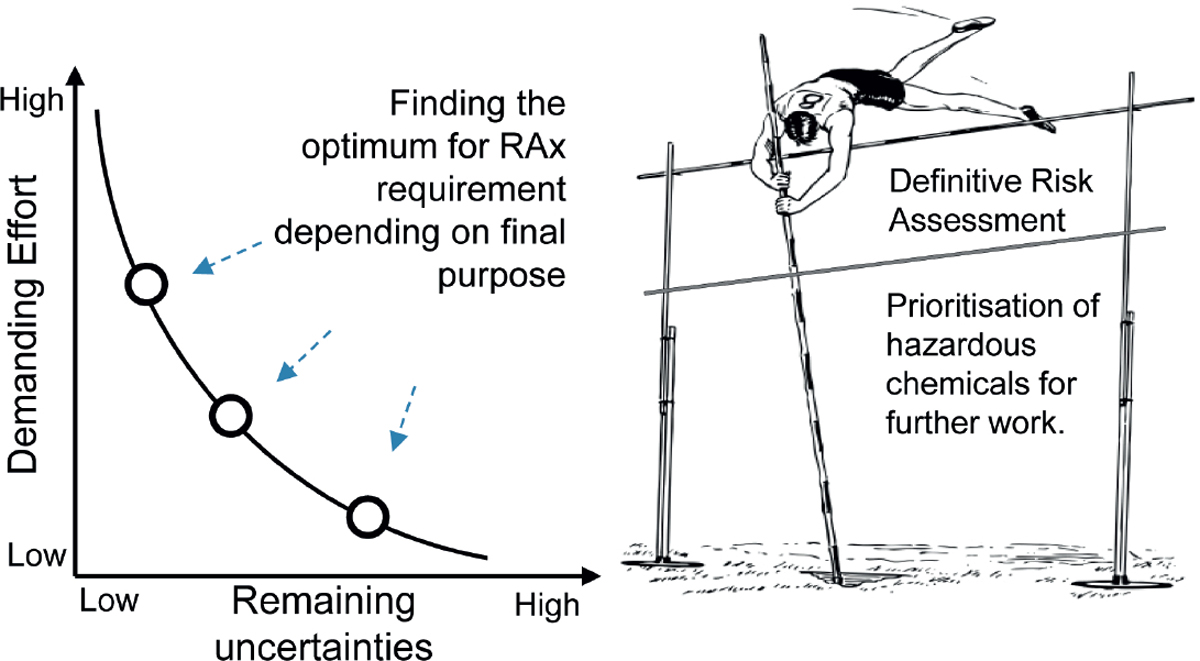

Fig. 6: Decision strategy for the application of a RAx framework for risk assessment.

After the definition of the target substance and the identification of data gaps, the first step is the identification of all possible source substances with the support of QSAR tools. The process proceeds only if it is demonstrated that target and source substances share a similar toxicological profile. A full characterization of the physicochemical properties and the experimental determination of basic biological endpoints, such as those required in Annex VII of REACH, can support this step. The NAM-enhanced RAx benefits from the performance of suitable in vitro tests that can provide mechanistic information for biological similarity or elucidation of ADME. The whole procedure moves forward in an iterative way. Once the RAx is confirmed, the last step is the compilation of the RAx report, which should contain all details of the steps and the decisions taken along the process.

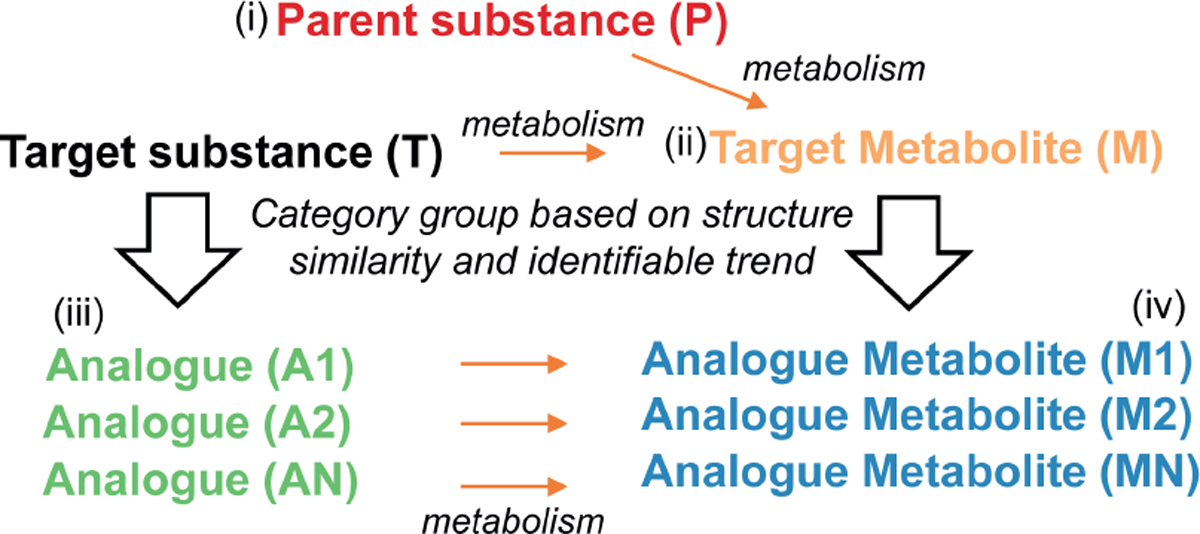

Fig. 7: Scheme for the identification of possible source substances in a RAx approach.

Given the target substance T, possible source substances are (i) substances producing the same metabolite M (parent substance P) but also (ii) the metabolite itself (target metabolite M). This pattern can be confirmed if other substances present the same trend in a category, where substances A1, A2, etc. (iii) metabolize to give metabolite M1, M2, etc., which can also be grouped together (iv). Availability of existing in vivo studies may be random in such a scheme. Nevertheless, they may predict the possible effect of target substance T with a high level of confidence.
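The selection logic of Fig. 7 can be sketched as a lookup over a metabolism map. The example is a minimal illustration under stated assumptions: the `METABOLISM` dictionary, the function name `candidate_sources`, and the simplification of one main metabolite per substance are all hypothetical, and the substance labels simply reuse those of the figure.

```python
# Hypothetical metabolism map: substance -> main metabolite (labels as in Fig. 7).
METABOLISM = {"T": "M", "P": "M", "A1": "M1", "A2": "M2"}


def candidate_sources(target, metabolism):
    """List possible source substances for a target, following Fig. 7.

    Assumes every substance in the map already belongs to the same
    category and has exactly one main metabolite.
    """
    metabolite = metabolism[target]
    # (i) other parent substances that produce the same metabolite
    same_metabolite = sorted(
        s for s, m in metabolism.items() if m == metabolite and s != target
    )
    # (iii) category members that form their own, analogous metabolites
    analogues = sorted(s for s, m in metabolism.items() if m != metabolite)
    return {
        "same_metabolite": same_metabolite,
        "metabolite": metabolite,  # (ii) the metabolite itself
        "category_analogues": analogues,
        # (iv) the analogous metabolites, which can be grouped together
        "analogue_metabolites": sorted(metabolism[s] for s in analogues),
    }


print(candidate_sources("T", METABOLISM))
```

In practice, the metabolism map would come from experimental TK data or in silico metabolism prediction, and each candidate would still need to be checked for available in vivo studies.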

From this perspective, RAx is much more than the comparison of two chemicals to waive an in vivo test; rather, it is a complex system that studies toxicity in a highly integrated manner (Fig. 6). The final goal is an increase of knowledge on the toxicological profile of substances. This principle is well described in the EFSA document on WoE (EFSA, 2017). The EFSA guidance defines three steps for the WoE approach, i.e., assembling the evidence into lines of evidence of similar type, weighing the evidence, and integrating the evidence. The three key parameters are (i) reliability, as the extent to which the information comprising a piece or line of evidence is correct, (ii) relevance, as the contribution to answering a specified question, and (iii) consistency, as the compatibility of the different pieces of information with regard to the final toxicity prediction.

New in vitro tests should be tailored to the specific need, whether it is the demonstration of a shared KE within an AOP or a TK/TD route. Other tests should be considered, such as the fish embryo toxicity (FET) test, if there is an issue related to developmental toxicity (Kroese et al., 2015). The selection of the right method should consider the applicability domain, the regulatory scope, and also other practical constraints, such as experimental availability and costs, with the latter probably being the main obstacle to wider use of these new tools.

At the moment, this procedure is based mainly on expert judgment, but the inclusion of defined approaches, i.e., testing strategies with clearly specified information sources and objective data interpretation procedures, is highly recommended for the future in order to increase the objectivity of the final outcome and to guarantee that all available data and information are taken into consideration. The analysis of dossiers containing RAx justifications that have been rejected by the authorities can be very useful to understand the regulatory requirements of an application (Ball et al., 2016).

3. Regulatory use of RAx

3.1. Validation

Validation of the RAx approach represents the best opportunity for regulatory acceptance and the necessary step for reducing uncertainties and building scientific confidence. More specifically, method validation is a process designed to show that a given method is adequate and reliable for the purpose it was designed for. The requirement of validation is a direct consequence of the scientific method and addresses many of its fundamental aspects, e.g., hypothesis formulation, hypothesis testing, predictions based on hypothesis, replicability, bias, uncertainty, etc. If a method has not been validated, this means, in effect, that it is unknown whether it successfully serves the purpose it was designed for. The usefulness of such a method is then subordinate to subjective beliefs. This is a criticism brought forward also against the currently applied in vivo methods, most of which were never formally validated but which are still used in risk assessment following conventional scientific acceptance.

Regarding RAx, its validation should demonstrate that the approach leads to the expected result, e.g., that toxicological effects (or their absence) as observed in traditional in vivo or in vitro tests are correctly predicted within reasonable limits. RAx is not per se a defined method, and therefore it cannot be validated following the procedure that is traditionally applied for in vitro tests<sup>24</sup>. Also, each RAx is a case-specific assessment combining purely computational tools with a mix of chemistry, mechanistic knowledge, QSAR, in vivo and in vitro techniques, brought together under the auspices of expert judgement. Source and target substances can be unique or multiple, and the endpoint comparison can be based on the same value or following a trend. The quality and amount of source data are also variable. Nevertheless, the adequacy, reliability and relevance of individual test or prediction methods combined in a RAx argument can be assessed, as can the overall plausibility of the RAx justification along with the extent to which uncertainties remain.

In many cases, the RAx approach is performed manually by an individual toxicologist or a group of toxicologists (Benfenati et al., 2016). Although standardized RAx workflows (similar to a “defined approach” (DA) as used by the OECD, i.e., a defined set of information sources assessed with a defined data interpretation procedure) or even automated RAx would appear a good option for providing an impartial output that is always repeatable and independent of the operator, fully automated assessment should not be run in an unsupervised mode. The best option should be somewhere in the middle, i.e., a standardized/automatic evaluation of particular criteria that supports expert judgement and provides performance indicators for expert review, but not necessarily an overall opinion. Even then, the best diagnostic tools will have little value unless they are used by operators who are familiar with the RAx technique as well as the underlying toxicology and chemistry of the specific case and the context-specific criteria for scientific validity. For example, the most recent version of the OECD QSAR Toolbox<sup>16</sup> supports the user by providing information on alert performance based on the category candidates that are selected (Yordanova et al., 2019). Recently, Luechtefeld and co-workers (2018a) attempted this task using an automated RAx-based QSAR approach on a huge set of acute and topical toxicity as well as ecotoxicological endpoints. They showed that, on average, a given self-classification in REACH registration dossiers could be reproduced with a RAx-based QSAR model based on the nearest neighbors of that chemical in descriptor space with higher reliability than was found for traditional test results in the REACH database. Notably, this work focused on a simple GHS/CLP classification endpoint, whereas such work currently cannot be realized for complex, multiple-endpoint systemic toxicities, even though some authors see such methodology as promising in the future, and this possibility should be kept open (Berggren et al., 2015).
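To illustrate what a nearest-neighbor prediction in descriptor space means in its simplest form, the following sketch assigns a classification to a target by majority vote among its closest source substances. It is not the method of Luechtefeld and co-workers: the two-dimensional descriptors, the Euclidean distance, the value of `k`, and all data points are assumptions made purely for illustration.

```python
import math
from collections import Counter

# Hypothetical source substances: (descriptor vector, classification).
SOURCES = [
    ((0.10, 0.20), "irritant"),
    ((0.20, 0.10), "irritant"),
    ((0.15, 0.15), "irritant"),
    ((0.90, 0.80), "not classified"),
    ((0.80, 0.90), "not classified"),
]


def knn_read_across(target, sources, k=3):
    """Predict a class for the target from its k nearest source substances.

    Returns the majority label and the share of neighbors that agree,
    which an expert can use as a crude indicator of consistency.
    """
    # Rank sources by Euclidean distance to the target in descriptor space.
    ranked = sorted(sources, key=lambda s: math.dist(target, s[0]))
    neighbours = ranked[:k]
    votes = Counter(label for _, label in neighbours)
    label, count = votes.most_common(1)[0]
    return label, count / len(neighbours)


print(knn_read_across((0.12, 0.18), SOURCES, k=3))
```

In line with the recommendation above, the agreement share would serve as a performance indicator for expert review rather than as a final opinion.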