Abstract

Reducing meat consumption may improve human health, curb environmental damage, and limit the large-scale suffering of animals raised in factory farms. Most attention to reducing consumption has focused on restructuring environments where foods are chosen or on making health or environmental appeals. However, psychological theory suggests that interventions appealing to animal welfare concerns might operate on distinct, potent pathways. We conducted a systematic review and meta-analysis evaluating the effectiveness of these interventions. We searched eight academic databases and extensively searched grey literature. We meta-analyzed 100 studies assessing interventions designed to reduce meat consumption or purchase by mentioning or portraying farm animals, that measured behavioral or self-reported outcomes related to meat consumption, purchase, or related intentions, and that had a control condition. The interventions consistently reduced meat consumption, purchase, or related intentions, at least in the short term, with meaningfully large effects (meta-analytic mean risk ratio [RR] = 1.22; 95% CI: [1.13, 1.33]). We estimated that a large majority of population effect sizes (71%; 95% CI: [59%, 80%]) were stronger than RR = 1.1 and that few were in the unintended direction. Via meta-regression, we identified some specific characteristics of studies and interventions that were associated with effect size. Risk-of-bias assessments identified both methodological strengths and limitations of this literature; however, results did not differ meaningfully in sensitivity analyses retaining only studies at the lowest risk of bias. Evidence of publication bias was not apparent. In conclusion, animal welfare interventions preliminarily appear effective in these typically short-term studies of primarily self-reported outcomes. Future research should use direct behavioral outcomes that minimize the potential for social desirability bias and are measured over long-term follow-up.

Keywords: Meta-analysis, Nutrition, Behavior interventions, Meat consumption, Planetary health

1. Introduction

Excessive consumption of meat and animal products may be deleterious to human health (with meta-analytic evidence regarding cancer (Crippa et al., 2018; Farvid et al., 2018; Gnagnarella et al., 2018; Larsson & Wolk, 2006), cardiovascular disease (Cui et al., 2019; Guasch-Ferré et al., 2019; Zhang and Zhang, 2018), metabolic disease (Fretts et al., 2015; Kim & Je, 2018; Pan et al., 2011), obesity (Rouhani et al., 2014), stroke (Kim et al., 2017), and all-cause mortality (Larsson & Orsini, 2013; Wang et al., 2016)); promotes the emergence and spread of pandemics and antibiotic-resistant pathogens (Bartlett et al., 2013; Di Marco et al., 2020; Marshall & Levy, 2011); is a major source of greenhouse gas emissions, environmental degradation, and biodiversity loss (Machovina et al., 2015; Sakadevan & Nguyen, 2017); and contributes to the preventable suffering and slaughter of approximately 500 to 12,000 animals over the lifetime of each human consuming a diet typical of his or her country (Bonnet et al., 2020; Scherer et al., 2019).1 Therefore, developing simple, effective interventions to reduce meat consumption could carry widespread societal benefits.

“Nudge” interventions that restructure the physical environment, for example by repositioning meat dishes in cafeterias or making vegetarian options the default, may be effective (Bianchi, Garnett, et al., 2018; Garnett et al., 2019; Hansen et al., 2019), as may direct appeals regarding individual health or the environment (Bianchi, Dorsel, et al., 2018; Jalil et al., 2019). Despite sustained academic interest in developing those types of interventions, there has been much less attention to the potential effectiveness of appeals related to animal welfare (Bianchi, Dorsel, et al., 2018). However, the emerging literature on the psychology of meat consumption suggests that appeals to animal welfare might operate on distinct and powerful psychological pathways (Rothgerber, 2020), suggesting that these appeals merit assessment as a potentially effective component of interventions to reduce meat consumption. We first provide a theoretical review of this psychological literature.

1.1. Psychological theory underlying animal welfare interventions

A number of interventions have used psychologically sophisticated approaches to reducing meat consumption by appealing to or portraying the welfare of animals raised for meat (henceforth “animal welfare interventions”). In general, portraying a desired behavior as aligning with injunctive social norms (what others believe one should do) or descriptive social norms (what others actually do) can effectively shift behaviors, including food choices (Higgs, 2015; Schultz et al., 2007). Many animal welfare interventions have invoked social norms (Amiot et al., 2018; Hennessy, 2016; Norris, 2014; Norris and Hannan, 2019; Norris and Roberts, 2016; Reese, 2015), for example by stating: “You can’t help feeling that eliminating meat is becoming unavoidably mainstream, with more and more people choosing to become vegetarians by cutting out red meat, poultry, and seafood from their diets” (Macdonald et al., 2016). Animal welfare interventions have also leveraged the “identifiable victim effect”, in which people often experience stronger affective reactions when considering a single, named victim rather than multiple victims or a generic group (Jenni & Loewenstein, 1997). In a classic demonstration of this general effect, subjects made larger real donations to the organization Save the Children after reading about a single named child than after reading about multiple children (Västfjäll et al., 2014). Analogously, many animal welfare interventions describe farm animals with reference to specific, named individuals, such as “Leon” the pig (Bertolaso, 2015) or “Lucy” the chicken (Reese, 2015), an approach that may be more effective at shifting behavior than statistical descriptions of the number of animals affected. Last, many interventions provide concrete implementation suggestions for reducing meat consumption, for example by listing plant-based dishes or recipes for breakfasts, lunches, and dinners (Norris and Hannan, 2019). These suggestions may help individuals form concrete intentions for how to respond when later faced with food options, which can be an effective means of shifting food choices (Adriaanse et al., 2011).

In addition to leveraging these three well-known components of effective behavioral interventions, animal welfare interventions have the potential to harness the unique social, moral, and affective psychology underlying meat consumption (Loughnan et al., 2014; Rozin, 1996). For example, ethical concern about factory farming conditions is now a majority stance in several developed countries (Cornish et al., 2016), yet meat consumption remains nearly universal (the “meat paradox”; Bastian and Loughnan, 2017). How does meat-eating behavior survive the resulting cognitive dissonance between people’s ethical views and their actual behavior (Rothgerber, 2020)? There are several explanations. First, most individuals in developed countries do not acquire meat by personally raising animals in intensive factory farm conditions and then slaughtering and preparing them, but rather obtain already processed meat that bears little visual resemblance to the animals from which it came. It is therefore rather easy to implicitly view meat as distinct from animals (Benningstad & Kunst, 2020). This situation is captured well in an episode of The Simpsons that has been used as an intervention to reduce meat consumption (Byrd-Bredbenner et al., 2010), in which Homer Simpson chastises his newly vegetarian daughter: “Lisa, get a hold of yourself. This is lamb, not a lamb!” Some interventions operate simply by reminding the subject of the connection between meat and animals by, for example, displaying photographs of meat dishes alongside photographs of the animals from which they came; these meat-animal reminders seem to consistently reduce meat consumption (Kunst & Hohle, 2016; Kunst & Haugestad, 2018; Earle et al., 2019; Tian et al., 2016; da Silva, 2016; Lackner, 2019).

Second, the public is poorly informed about animal welfare conditions on factory farms, and individuals often deliberately avoid information about farm animal welfare, even admitting to doing so when asked explicitly (Onwezen and van der Weele, 2016). Presumably, people avoid such information because they anticipate that it may be upsetting (Knight & Barnett, 2008). Thus, interventions that circumvent individuals’ cultivated ignorance by graphically describing or depicting conditions on factory farms may provide a “moral shock” that could, for some individuals, lead to dietary change, potentially by triggering cognitive dissonance (Jasper & Poulsen, 1995; Rothgerber, 2020; Wrenn, 2013). In principle, animal welfare interventions might be more effective at prompting such dissonance than interventions appealing instead to individual health or the environment, though this point remains speculative (Rothgerber, 2020). However, the use of graphic depictions is controversial, as they might be ineffective or even detrimental in some contexts (Wrenn, 2013).

Third, even when individuals do consider the animal origins of meat and are informed about conditions on factory farms, they may ascribe little or no sentience to animals raised to produce meat or edible animal products (henceforth “farm animals”), limiting the moral relevance of eating meat (Rothgerber, 2020). Indeed, the Cartesian view that animals are automata that do not experience pain, suffering, or emotions, and hence are outside the sphere of moral concern, was once influential in ethical philosophy, though a modern scientific understanding of animal cognition has essentially eliminated this view from scholarly philosophy (Singer, 1995). In fact, individuals may reduce their attributions of mind and sentience post hoc when faced with the dissonance that could otherwise arise from eating meat: subjects randomly assigned to eat beef subsequently reported that cows are less capable of suffering, and they showed less moral concern, than subjects randomly assigned to eat nuts (Loughnan et al., 2010). Interventions that encourage mind attribution to farm animals, for example by asking subjects to imagine the cognitive and affective experiences of a cow, may disarm this dissonance-reduction strategy, thus reducing willingness to eat meat (Amiot et al., 2018). Similarly, other interventions leverage the fact that most people already recognize the sentience of companion animals, such as dogs and cats, and therefore incorporate these animals into their spheres of moral concern, even while excluding farm animals with comparable cognitive abilities and capacity for suffering (Rothgerber, 2020). Interventions targeting this form of dissonance highlight the moral equivalence of farm animals and companion animals, for example by stating: “If the anti-cruelty laws that protect pets were applied to farmed animals, many of the nation’s most routine farming practices would be illegal in all 50 states.” (Norris and Hannan, 2019).

Additionally, physical disgust and moral disgust are closely intertwined. Experiencing physical disgust can amplify negative moral judgments, even when the two sources of disgust are conceptually unrelated (e.g., viewing physically disgusting video clips versus judging cheating behavior); conversely, experiencing moral disgust can induce physical disgust (Chapman & Anderson, 2013). Given the powerful impact of physical disgust on food choices (Rozin and Fallon, 1980), evoking moral disgust regarding animal welfare may be a particularly potent means of shaping food choices (Feinberg et al., 2019). Indeed, many animal welfare interventions contain graphic verbal or visual depictions of conditions in factory farms that may themselves be physically disgusting. For example, one intervention (Cordts et al., 2014) describes “crowded conditions [and] pens covered in excrement and germs”; a leaflet that has been studied repeatedly describes sows with “deep, infected sores and scrapes from constantly rubbing against the [gestation crate] bars” and “decomposing corpses [found] in cages with live birds” (Vegan Outreach, 2018). We speculate that such interventions might increase moral disgust by triggering physical disgust. Although we are not aware of studies that directly assess this hypothesis using physically disgusting interventions, it is interesting that even interventions that are not obviously physically disgusting, such as the meat-animal reminders described above, seem to operate in part by increasing physical disgust (Earle et al., 2019; Kunst & Hohle, 2016).

Finally, the connection of animal welfare interventions to an existing social movement encouraging greater consideration of the welfare of farm animals (Singer, 1995) may further trigger “process motivations” for participation (Robinson, 2010, 2017, chap. 99), such that the process of participating in the social movement (e.g., reducing meat consumption due to ethical concerns) may itself be motivating. Participating in social movements can be intrinsically motivating because they provide opportunities for identity development, social interaction and support, perceived belonging, and activities that boost participants’ perceptions of collective efficacy (Bandura, 2002, chap. 6). That animal welfare interventions are related to a broader social movement may additionally trigger group- or societal-level changes (e.g., increasing public attention, shifting norms regarding meat consumption, or decreasing availability of meat) that may alter the social and physical environments to make it easier to sustain the new behaviors (Robinson, 2010, 2017, chap. 99). Whereas behavior-change appeals emphasizing individual health sometimes suffer from high recidivism (Grattan & Connolly-Schoonen, 2012; Robinson, 2010), interventions that instead link behavior to ethical values, self-identity, and existing social movements may be especially potent and long-lasting (Robinson, 2010, 2017, chap. 99; Walton, 2014). Such interventions have, for example, successfully reduced childhood obesity-related behaviors and risk factors for cardiovascular disease and diabetes by appealing to cultural and ethical values in order to increase physical activity, rather than by appealing directly to obesity reduction or other health motivations (Robinson et al., 2003, 2010; Weintraub et al., 2008). Animal welfare interventions might operate similarly.

In theory, then, animal welfare interventions have the potential to be particularly effective by harnessing:

(1) multiple general mechanisms of effective behavior interventions (by leveraging social norms and the identifiable victim effect, and by giving implementation suggestions);

(2) the unique psychology of meat-eating (by invoking meat-animal reminders, moral shocks triggered by graphic depictions of factory farms, mind attribution, the moral equivalence of farm animals and companion animals, and the physical-moral disgust connection); and

(3) the psychological and practical advantages of connection to a social movement.

But do these interventions work in practice? We now turn to the present empirical assessment of this question.

1.2. Objectives of this meta-analysis

We conducted a systematic review and meta-analysis to address the primary research question: “How effective are animal welfare appeals at reducing consumption of meat?”, in which we define “meat” as any edible animal flesh (Section 3.3). We additionally investigated whether interventions’ effectiveness differed systematically based on their content, such as types of dietary recommendations made, use of verbal, visual, and/or graphic content,2 or on study characteristics such as length of follow-up and percentage of male subjects (Rozin et al., 2012) (Section 3.5). We evaluated risks of bias in each study (Section 3.2.4) and conducted numerous sensitivity analyses (Section 3.4). Finally, considering the evidence holistically in light of its methodological strengths and limitations, we discuss what the evidence suggests about the effectiveness of animal welfare interventions (Section 4.1) and give specific recommendations for future research to advance the field (Section 4.3).

2. Methods

See the Reproducibility section for information on the publicly available dataset, code, and materials. All methods and statistical analysis plans were preregistered in detail (https://osf.io/d3y56/registrations) and subsequently published as a protocol paper (Mathur et al., 2020). In the Supplement, we describe and justify some deviations from this protocol.

2.1. Systematic search

Our inclusion criteria were as follows. Studies could recruit from any human population, including from online crowdsourcing websites. Studies needed to assess at least one intervention that was intended to reduce meat consumption or purchase, and interventions needed to include any explicit or implicit mention or portrayal of farm animals, their suffering, their slaughter, or their welfare. Composite interventions, defined as those including both an animal welfare appeal and some other form of appeal (e.g., environmental), were included, though we conducted sensitivity analyses excluding such interventions. Studies needed to include a control group, condition, or time period not subjected to any form of intervention intended to change meat consumption. Because a control time period sufficed, studies making within-subject comparisons were also eligible. Last, studies needed to report an outcome regarding the consumption or purchase of meat or all edible animal products, as assessed by a direct behavioral measure (e.g., the amount of meat that subjects self-served at a buffet), self-reported behavior (e.g., reported meat consumption over the week following exposure to the intervention), or a self-report of intended future behavior (e.g., intended meat consumption over the upcoming week). Although our focus was primarily on meat consumption, we included studies with outcomes related to consumption of all animal products because we anticipated that many interventions designed to reduce meat consumption would in fact make broader recommendations to reduce all animal product consumption and therefore would be assessed using correspondingly broad outcome measures. Further details on inclusion criteria were published previously (Mathur et al., 2020).

In addition to the nascent academic literature, evidence-based nonprofits have conducted numerous studies that have been reported in a separate body of grey literature. We therefore developed sensitive search strategies targeting both the academic literature and the grey literature to identify eligible articles, which could be published or released at any time and written in any language. For non-English articles, we used automated translation to determine eligibility. To search the academic literature, including unpublished dissertations and theses, we collaborated with an academic reference librarian (PAB) to design detailed search strings for each of eight databases (Medline, Embase, Web of Science, PsycInfo, CAB Abstracts, Sociological Abstracts, ProQuest Dissertations & Theses, and PolicyFile). To search the grey literature and help capture potentially missed academic articles, an author (JP) who is the director of an evidence-based animal welfare research organization designed a three-stage search strategy: (1) we screened The Humane League Labs’ existing internal compilation of relevant literature, including both academic studies and grey literature; (2) we screened the websites of 24 relevant nonprofits; and (3) we posted a bibliography of literature identified in the first two stages on relevant forums in the animal advocacy research community to solicit additional leads to studies.3 All methods are detailed in the Supplement. We conducted the final searches on January 17, 2020 for the academic literature and November 20, 2019 for the grey literature.

We reviewed articles and managed data using the software applications Covidence (Covidence Development Team, 2019) and Microsoft Excel. Each article retrieved from an academic database first underwent title/abstract screening, conducted independently by at least two reviewers from among DBR, JN, and MBM. In this stage, reviewers excluded only articles that clearly failed the inclusion criteria. Articles receiving an “include” vote from either or both reviewers proceeded to a full-text screening, during which at least two reviewers independently assessed inclusion criteria in detail. We resolved 9 conflicts between reviewers through discussion or adjudication by other authors. Interrater reliability for inclusion decisions was greater than⁴ Cohen’s κ = 0.71. Grey literature articles that JP identified as potentially eligible underwent the same full-text dual review as academic articles. We refer to articles ultimately judged to meet all inclusion criteria as “eligible”.

2.2. Data extraction

Basic descriptive characteristics of studies.

For each study, we extracted 33 basic descriptive characteristics regarding, for example, the subject population, location, intervention, and outcome. All extracted characteristics are enumerated in the Supplement. One of the extracted variables indicated whether the study was borderline with respect to the inclusion criteria, a classification made through discussion amongst the review team. For example, one “meat-animal reminder” intervention consisting of a photograph of a pork roast with the head still attached (versus a headless roast in the control condition) was classified as borderline because it was difficult to judge whether the intervention was about animal welfare. As described in Section 2.3, we conducted sensitivity analyses excluding borderline studies.

Hypothesized effect modifiers.

Also among the extracted variables were 13 hypothesized effect modifiers.5 We categorize the effect modifiers into 8 “coarse” intervention characteristics, which we could code for nearly all studies, versus 5 “fine-grained” intervention characteristics, which we could code for only the k = 80 studies for which we had access to all intervention materials or to detailed descriptions of their content. The coarse intervention characteristics were: (1) whether the intervention contained text; (2) whether the intervention contained visuals; (3) whether the intervention contained graphic visual or verbal depictions of factory farm conditions; (4) the nature of the recommendation made in the intervention (“go vegan”, “go vegetarian”, “reduce consumption”, a mixed recommendation [e.g., recommendations to either reduce or eliminate meat consumption], or no recommendation); (5) whether the intervention lasted longer than 5 min; (6) whether the outcome was measured 7 or more days after the intervention (to capture possible decays in intervention effects over time); (7) the percentage of male subjects in the study (because meat-eating may be closely intertwined with masculine identity in Western cultures; Rothgerber, 2020; Rozin et al., 2012; Ruby, 2012); and (8) subjects’ mean or median age.6

The fine-grained intervention characteristics described whether the intervention: (1) used mind attribution by describing a farm animal’s inner states; (2) described social norms in favor of reduced meat consumption; (3) used the identifiable victim effect by giving a proper-noun name to a farm animal; (4) described or depicted pets (i.e., companion animals that typically live in people’s houses), with or without explicitly comparing them to farm animals; and (5) gave implementation suggestions in the form of describing or depicting a specific plant-based meal, restaurant dish, or recipe.

Risk-of-bias characteristics.

We also extracted 9 characteristics related to the study’s risks of bias, including design characteristics (e.g., randomization), missing data, analytic reproducibility and preregistration practices, exchangeability of the intervention and control conditions (i.e., avoidance of statistical confounding through randomization and minimization of differential dropout), avoidance of social desirability bias (e.g., by keeping subjects naïve to the purpose of the intervention), and external generalizability (i.e., the extent to which results are likely to apply to subjects in the general population, who may not already be particularly motivated to reduce meat consumption or purchase). Details on the development, contents, and use of our risk-of-bias tool are provided in the Supplement.

Quantitative data extraction.

We extracted quantitative data for the meta-analysis as follows. For each eligible article that reported sufficient statistical information, the statistician (MBM) used either custom-written R code or the R package metafor (Viechtbauer, 2010) to extract the point estimate(s) and variance estimate(s) most closely approximating the treatment effect of the animal welfare appeal on the risk ratio (RR) scale (see Supplement for details). We synchronized the directions of all studies’ estimates such that risk ratios greater than 1 represented reductions in meat consumption or purchase. When relevant statistics were not reported, we hand-calculated them from available statistics, plots, or publicly available datasets as feasible, or made repeated attempts to contact study authors. Articles reporting multiple point estimates on separate subject samples (even those sharing a control group) could contribute all of these point estimates to the analyses; further details on how we handled articles reporting on multiple eligible interventions were published previously (Mathur et al., 2020). For studies that reported multiple outcomes (e.g., consumption or purchase of all meat, of all edible animal products, and of specific subsets of meats), we used the outcome most closely matching the intended scope of the intervention. For example, we used the outcome “pork consumption” for an intervention specifically targeting pork consumption (Anderson, 2017) and used the outcome “all animal product consumption” for an intervention that recommended going vegan (Bertolaso, 2015).
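As an illustration of the direction synchronization described above, the sketch below computes a log risk ratio and its standard large-sample variance for a hypothetical binary outcome (whether each subject chose a meat dish) and flips its sign so that RR > 1 indicates reduced meat consumption. The helper name `log_rr_reduction` and the binary-outcome setup are illustrative assumptions; the authors worked in R, and conversions for other outcome types are described in the Supplement.

```python
import math

def log_rr_reduction(events_tx, n_tx, events_ctl, n_ctl, outcome_is_meat=True):
    """Hypothetical helper: log risk ratio and its variance for a binary outcome,
    oriented so that log RR > 0 (RR > 1) means less meat in the intervention group."""
    p_tx = events_tx / n_tx
    p_ctl = events_ctl / n_ctl
    log_rr = math.log(p_tx / p_ctl)
    # Large-sample variance of a log risk ratio from a 2x2 table
    var = 1 / events_tx - 1 / n_tx + 1 / events_ctl - 1 / n_ctl
    if outcome_is_meat:
        # Counting meat choosers, a beneficial effect gives RR < 1, so invert the RR.
        # Flipping the sign of log RR leaves its variance unchanged.
        log_rr = -log_rr
    return log_rr, var

# Illustrative numbers only: 30/100 chose meat after the intervention vs 40/100 in control
est, var = log_rr_reduction(30, 100, 40, 100)
```

With these made-up counts the synchronized risk ratio is 0.40/0.30, about 1.33, so the estimate lands on the "reduction" side of 1 as intended.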

Although meta-analysts sometimes extract all eligible outcomes from each paper and average them within studies (Sutton et al., 2000), we correctly anticipated that most studies with multiple eligible food outcomes would be those whose primary outcome was a composite measure of total meat or total animal product consumption and whose other eligible food outcomes were subscales of this composite, representing consumption of specific meats and animal products. Averaging these estimates within a given study would therefore yield a point estimate that would be essentially equivalent to the composite itself, but with the additional limitation that its variance would not be estimable without information on the full correlation structure of the various subscales.
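To see why the variance of a within-study average is not estimable without the subscales' correlations: for m estimates with standard errors σ_1, …, σ_m and pairwise correlations ρ_ij, the variance of their unweighted mean is (1/m²) Σ_i Σ_j ρ_ij σ_i σ_j. The Python sketch below assumes, purely for illustration, a single common correlation ρ between every pair of subscales; `var_of_average` is a hypothetical helper.

```python
import itertools

def var_of_average(ses, rho):
    """Variance of the unweighted mean of len(ses) estimates, assuming one
    common correlation rho between every pair (an illustrative simplification)."""
    m = len(ses)
    total = sum(se ** 2 for se in ses)  # diagonal terms (i == j)
    # Each unordered pair appears twice in the double sum over i != j
    total += 2 * sum(rho * a * b for a, b in itertools.combinations(ses, 2))
    return total / m ** 2

low = var_of_average([0.1, 0.1, 0.1], 0.0)   # independent subscales
high = var_of_average([0.1, 0.1, 0.1], 1.0)  # perfectly correlated subscales
```

With three subscales that each have a standard error of 0.1, the variance of their average ranges from about 0.0033 (ρ = 0) to 0.01 (ρ = 1), a threefold difference that cannot be resolved from the reported subscale statistics alone.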

When possible, to reduce the possibility of our choosing which estimates to include in a biased manner, we made all decisions about how we would calculate each study’s point estimate, and for which outcome, according to these and other detailed rules (see Supplement) before we actually calculated the estimate. However, doing so was not always feasible (e.g., for studies that directly reported the point estimates we needed).

2.3. Statistical analysis methods

Main analyses.

The statistician (MBM) conducted all statistical analyses in R (R Core Team, 2020b).7 To accommodate potential correlation between point estimates contributed by the same article and to obviate distributional assumptions, we fit a robust semiparametric meta-analysis model using the R package robumeta (Fisher & Tipton, 2015; Hedges et al., 2010). For the primary analysis, we included all eligible and borderline-eligible studies and estimated the average effect size with a 95% confidence interval and p-value, the standard deviation of the true population effects, and metrics that characterize evidence strength when effects are heterogeneous. Regarding the latter, we estimated the percentage of true population effects8 that were stronger than various effect-size thresholds representing all effects in the beneficial direction (i.e., RR > 1) and more stringently representing only effects that might be considered to be meaningfully large by two different criteria (i.e., RR > 1.1 or RR > 1.2) (Mathur and VanderWeele, 2019, 2020b).9
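Under the simplifying assumption that true log risk ratios are normally distributed with mean μ and standard deviation τ, the proportion of true effects stronger than a risk-ratio threshold q is 1 − Φ((log q − μ)/τ), and the proportion below q is Φ((log q − μ)/τ). The sketch below implements this parametric version only; the cited work also describes nonparametric estimators, so this is not necessarily the estimator used here, and `prop_stronger` and its inputs are hypothetical rather than this meta-analysis's estimates.

```python
import math
from statistics import NormalDist

def prop_stronger(mu, tau, q, above=True):
    """Estimated proportion of true effects beyond risk-ratio threshold q,
    assuming true log RRs ~ Normal(mu, tau**2). Set above=False for the
    proportion below q (e.g., q = 1 or 0.9 for unintended effects)."""
    z = (math.log(q) - mu) / tau
    below = NormalDist().cdf(z)
    return 1 - below if above else below

# Hypothetical inputs on the log-RR scale, for illustration only
for threshold in (1.0, 1.1, 1.2):
    print(threshold, round(prop_stronger(0.2, 0.15, threshold), 3))
```

Raising the threshold from 1 to 1.1 to 1.2 can only shrink the estimated proportion, which is why the more stringent thresholds give a more conservative picture of how many effects are meaningfully large.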

We also estimated the percentage of true population effects with risk ratios less than 1 and alternatively less than 0.90, representing unintended detrimental effects of the interventions (Mathur and VanderWeele, 2019, 2020b). Taken together, these percentage metrics can help identify if: (1) there are few meaningfully large effects despite a “statistically significant” meta-analytic mean; (2) there are some large effects despite an apparently null meta-analytic mean; or (3) strong effects in the direction opposite of the meta-analytic mean also regularly occur (Mathur and VanderWeele, 2019, 2020b). As a hypothesis-generating method to help identify the individual interventions that appear most effective, we estimated the true population effect size in each study using nonparametric calibrated estimates (Wang and Lee, 2019). Intuitively, the calibrated estimates account for differences in precision between studies by shrinking each point estimate toward the meta-analytic mean, such that the least precise studies receive the strongest shrinkage toward the meta-analytic mean.
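The calibrated estimates can be written as θ̃_i = μ̂ + sqrt(τ̂² / (τ̂² + v_i)) · (y_i − μ̂), where y_i is study i's log risk ratio and v_i its estimated variance, so studies with larger v_i are pulled more strongly toward μ̂. One nonparametric way to obtain the percentage metrics above is then to count the share of calibrated estimates beyond a threshold. The sketch below assumes μ̂ and τ̂² are already available rather than estimating them, and the helper names are hypothetical.

```python
import math

def calibrated_estimates(yi, vi, mu, tau2):
    """Shrink each log RR toward the meta-analytic mean mu; the shrinkage factor
    sqrt(tau2 / (tau2 + v)) is smaller (more shrinkage) for less precise studies."""
    return [mu + math.sqrt(tau2 / (tau2 + v)) * (y - mu) for y, v in zip(yi, vi)]

def prop_calibrated_beyond(yi, vi, mu, tau2, q, above=True):
    """Share of calibrated estimates above (or below) risk-ratio threshold q."""
    cut = math.log(q)
    cal = calibrated_estimates(yi, vi, mu, tau2)
    hits = [c > cut if above else c < cut for c in cal]
    return sum(hits) / len(cal)

# Two hypothetical studies with equal estimates but very different precision
cal = calibrated_estimates([0.5, 0.5], [0.01, 0.25], mu=0.2, tau2=0.04)
```

In this toy case both studies report the same log RR of 0.5, but the imprecise second study is shrunk much closer to μ̂ = 0.2 than the precise first study.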

Publication bias.

We assessed publication bias using selection model methods (Vevea & Hedges, 1995), sensitivity analysis methods (Mathur and VanderWeele, 2020c), and the significance funnel plot (Mathur and VanderWeele, 2020c). These methods assume that the publication process favors “statistically significant” (i.e., p < 0.05) and positive results over “nonsignificant” or negative results, a typically reasonable assumption that also conforms well to empirical evidence on how publication bias operates in practice (Jin et al., 2015; Mathur and VanderWeele, 2020c). We used the sensitivity analysis methods to estimate the meta-analytic mean under hypothetical worst-case publication bias (i.e., if “statistically significant” positive results were infinitely more likely to be published than “nonsignificant” or negative results). These methods, unlike the selection model, also accommodated the point estimates’ non-independence within articles and did not make any distributional assumptions (Mathur and VanderWeele, 2020c).
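Because the worst-case analysis rests on the distinction between affirmative studies (a positive point estimate with two-sided p < 0.05) and nonaffirmative ones, a simplified version can be sketched in a few lines: classify each study by its z-statistic, then pool only the nonaffirmative studies. This sketch uses common-effect inverse-variance weights and ignores clustering within articles, unlike the robust methods described above; `is_affirmative` and `worst_case_estimate` are hypothetical helpers.

```python
from statistics import NormalDist

def is_affirmative(y, se, alpha=0.05):
    """True if the estimate is positive and two-sided p < alpha, i.e., y/se > z_crit."""
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    return y / se > z_crit

def worst_case_estimate(yi, sei):
    """Inverse-variance weighted mean of the nonaffirmative studies only
    (a simplified, common-effect version of the worst-case estimate)."""
    kept = [(y, se) for y, se in zip(yi, sei) if not is_affirmative(y, se)]
    if not kept:
        raise ValueError("no nonaffirmative studies to pool")
    weights = [1 / se ** 2 for _, se in kept]
    return sum(w * y for w, (y, _) in zip(weights, kept)) / sum(weights)

# Hypothetical log RRs and standard errors
est = worst_case_estimate([0.5, 0.1, -0.2], [0.1, 0.1, 0.2])
```

Here the first study (z = 5) is affirmative and dropped; the remaining two are pooled with weights 100 and 25, giving a worst-case log RR of 0.04. The diagonal line in a significance funnel plot marks the same boundary, y = z_crit · se.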

Other sensitivity analyses.

As further sensitivity analyses, we conducted meta-analyses within 9 separate subsets of studies, namely: (1) excluding studies with borderline eligibility (leaving k = 91 analyzed studies); (2) excluding studies with composite interventions (k = 52 analyzed); (3) excluding studies that measured intended behavior rather than directly measured or self-reported behavior (k = 43 analyzed); (4) including only studies judged to be at the lowest risk of bias as described above (k = 12); (5) including only randomized studies (k = 75); (6) including only studies that were preregistered and had open data (k = 21); (7) including only published studies (k = 17); (8) including only unpublished studies (k = 83); and (9) excluding one study (FIAPO, 2017) with a very large point estimate and also a very large standard error, visible in Fig. 1 (k = 99 analyzed). The first 2 subset analyses were preregistered; the subsequent ones were introduced post hoc. We also conducted a simple post hoc analysis that assessed the sensitivity of our results to potential social desirability bias, conceptualized as bias in which subjects in the intervention group under-report meat and animal product consumption more than subjects in the control group (VanderWeele & Li, 2019). We ultimately did not conduct a preregistered sensitivity analysis in which we would have excluded interventions targeting consumption of only a specific type of meat; this proved infeasible because of the small number of interventions that unambiguously did so.

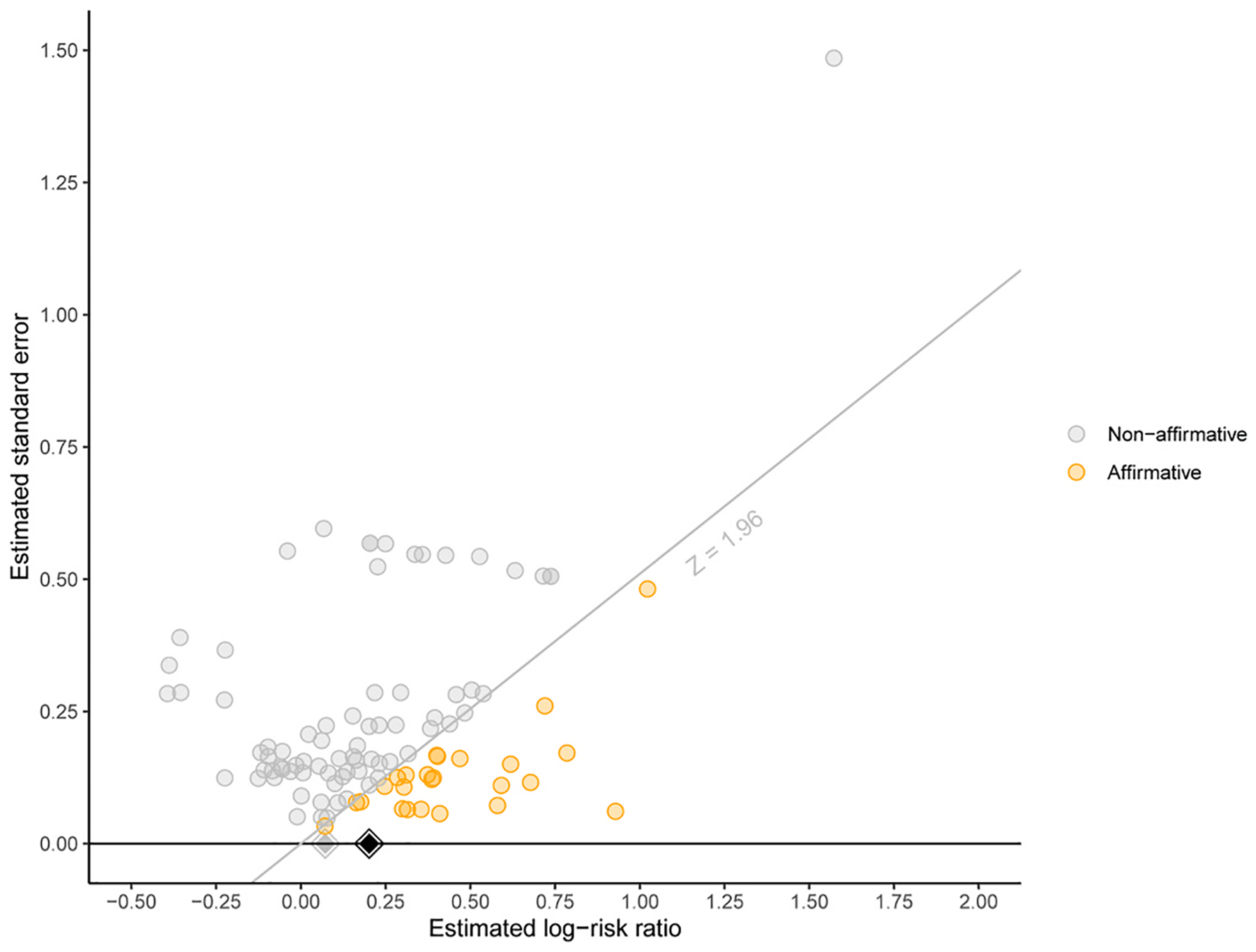

Fig. 1.

Significance funnel plot displaying studies’ point estimates versus their estimated standard errors. Orange points: affirmative studies (p < 0.05 and a positive point estimate). Grey points: nonaffirmative studies (p ≥ 0.05 or a negative point estimate). Diagonal grey line: the standard threshold of “statistical significance” for positive point estimates; studies lying on the line have exactly p = 0.05. Black diamond: main-analysis point estimate within all studies; grey diamond: worst-case point estimate within only the nonaffirmative studies.

Meta-regression on hypothesized effect modifiers.

As a secondary analysis, we used robust meta-regression (Hedges et al., 2010) to investigate intervention and study characteristics associated with increased or decreased effectiveness. The meta-regressive covariates were the hypothesized effect modifiers listed in Section 2.2. As described there, we could code the 5 fine-grained intervention characteristics (namely, use of mind attribution, social norms, the identifiable victim effect, depictions of pets, and implementation suggestions) for 80 studies. Additionally, because many of these components were used only in interventions that contained text, these 5 covariates were highly collinear with the covariate indicating that the intervention contained text. We therefore fit two meta-regression models: (1) a “coarse” model that included only the coarse intervention characteristics listed in Section 2.2 (k = 86 studies with complete data on the effect modifiers); and (2) a “fine-grained” model containing all fine-grained and the coarse intervention characteristics except the presence of text (k = 80 studies). For each model, we included the covariates in the meta-regression simultaneously rather than in separate univariable models because they were fairly highly correlated (Supplementary Table S5).
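The Python (NumPy) sketch below shows the general form of a meta-regression with cluster-robust ("sandwich") standard errors, in the spirit of robust variance estimation. It assumes simple inverse-variance weights and omits the small-sample corrections typically used in practice, so it is not the exact estimator we used. `robust_meta_regression` is a hypothetical helper. Exponentiating the coefficients gives effect modification risk ratios on the multiplicative scale.

```python
import numpy as np


def robust_meta_regression(y, v, X, cluster):
    """Weighted least-squares meta-regression with a cluster-robust (CR0) sandwich variance.

    y: log-risk ratios; v: their sampling variances; X: covariates (n x p, no intercept);
    cluster: article identifier for each estimate.
    Assumption: simple inverse-variance weights; no small-sample correction.
    Returns coefficients (intercept first) and their robust standard errors.
    """
    y = np.asarray(y, dtype=float)
    X = np.column_stack([np.ones(len(y)), np.asarray(X, dtype=float)])
    w = 1.0 / np.asarray(v, dtype=float)
    cluster = np.asarray(cluster)

    xtwx = X.T @ (X * w[:, None])
    bread = np.linalg.inv(xtwx)
    beta = bread @ (X.T @ (w * y))
    resid = y - X @ beta

    # "Meat": sum over articles of outer products of weighted score vectors,
    # so estimates from the same article may be arbitrarily correlated.
    meat = np.zeros_like(xtwx)
    for c in np.unique(cluster):
        idx = cluster == c
        score = X[idx].T @ (w[idx] * resid[idx])
        meat += np.outer(score, score)

    vcov = bread @ meat @ bread
    se = np.sqrt(np.clip(np.diag(vcov), 0.0, None))  # guard against tiny negative rounding
    return beta, se
```

For example, `np.exp(beta[1:])` converts the covariate coefficients into effect modification risk ratios. Entering all covariates in one call mirrors the simultaneous, rather than univariable, specification described above.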

Metrics of societal impact.

We had preregistered an additional type of secondary analysis in which we intended to express intervention effectiveness using metrics that more directly characterize societal impact, such as the estimated reduction in human all-cause mortality events, in the number of animals raised for consumption, and in greenhouse gas emissions. However, limitations in outcome measurement and follow-up duration in the meta-analyzed studies ultimately precluded these analyses; we return to this issue in Section 4.3.

3. Results

3.1. Results of search process

Supplementary Fig. S1 is a PRISMA flowchart depicting the search results. After removing duplicate articles, we screened 4139 articles from the academic database searches and an additional 81 articles identified from manual searches of the grey literature and the academic literature. We assessed 144 full-text articles for eligibility. In full-text screening, we removed 96 articles that failed inclusion criteria, that reported insufficient information to determine eligibility and for which we could not obtain full texts, or that analyzed the same subject sample as an existing article (e.g., because they were re-analyses of an existing dataset). We thus found that 48 articles met the inclusion criteria. Of these, 7 could not be meta-analyzed because we could not obtain the relevant point estimates from publicly available data, from the paper, or from repeated attempts to contact the authors. (Characteristics of these eligible, but not analyzed, studies are described qualitatively in Supplementary Table S3.)

Of the 41 articles with available statistics, 7 met our preregistered inclusion criteria but were excluded from the main analysis because they reported on a specific, unanticipated study design that is at very high risk of bias. These studies assessed the effectiveness of “challenges” or “pledges” in which, for example, subjects try to follow a vegan diet for one month. These studies shared two key design features: (1) subjects were not specifically recruited, but rather were individuals who had chosen to sign up for the challenge and who therefore may have been already highly motivated to reduce their consumption; and (2) there was no separate control group, but rather the challenge’s effectiveness was assessed by comparing within-subject changes in consumption after versus before the challenge. The combination of these two features is highly problematic: subjects with a strong pre-existing motivation to reduce consumption may have done so regardless of whether they took the challenge, and the lack of a separate control group precludes estimation of a valid treatment effect of the challenge even among this subset of highly motivated subjects. These studies also typically provided very limited statistical summaries; in many cases, we would not have had enough information to calculate an effect size that was statistically comparable to the risk ratios we estimated for other studies. For these reasons, we made a post hoc decision during data extraction to exclude studies with both of these features (subject self-selection and lack of a separate control group) from the main analysis. However, in keeping with our preregistered inclusion criteria, we report results that include these “self-selected within-subjects” (SSWS) studies in Section 3.6. Therefore, after excluding the 7 articles reporting on SSWS studies, our main analyses included 34 articles, totaling 100 point estimates.

3.2. Characteristics of included articles and studies

Table 1 summarizes studies’ basic characteristics. We meta-analyzed data from 34 articles; 24% were published in peer-reviewed journals, and all others were dissertations, theses, conference proceedings, or reports by nonprofits. The earliest study (Byrd-Bredbenner et al., 2010) was published in 2010. The articles contributed a total of 100 point estimates, which were estimated using a total of 24,817 subjects. We will refer to these 100 estimates as “studies” to distinguish them from “articles”. We obtained 53% of statistical estimates from publicly available datasets; 28% by manually calculating statistics from information reported in the articles’ text, tables, or figures; and the remaining 19% from datasets provided by the study’s authors.

Table 1.

Basic characteristics of meta-analyzed studies and their interventions. Binary and categorical variables are reported as “count (percentage)”. Continuous variables are reported as “median (first quartile, third quartile)”.

| Characteristic | Number of studies (%) or median (Q1, Q3) |
|---|---|
| Country | |
| Canada | 1 (1%) |
| China | 2 (2%) |
| Czech Republic | 2 (2%) |
| Ecuador | 1 (1%) |
| France | 3 (3%) |
| Germany | 1 (1%) |
| India | 3 (3%) |
| Netherlands | 1 (1%) |
| Portugal | 2 (2%) |
| Scotland | 2 (2%) |
| USA | 37 (37%) |
| USA, Canada | 2 (2%) |
| Not reportedᵃ | 43 (43%) |
| Percent male subjects | 43 (36.1, 51.7) |
| Not reported | 4 (4%) |
| Average age (years) | 33.7 (21.9, 35.4) |
| Not reported | 9 (9%) |
| Student recruitment | |
| Not undergraduates | 77 (77%) |
| General undergraduates | 10 (10%) |
| Social sciences undergraduates | 3 (3%) |
| Mixed | 10 (10%) |
| Intervention had text | |
| Yes | 83 (83%) |
| No | 14 (14%) |
| Not reported | 3 (3%) |
| Intervention had visuals | |
| Yes | 62 (62%) |
| No | 36 (36%) |
| Not reported | 2 (2%) |
| Intervention had graphic content | |
| Yes | 61 (61%) |
| No | 37 (37%) |
| Not reported | 2 (2%) |
| Intervention used mind attribution | |
| Yes | 65 (65%) |
| No | 28 (28%) |
| Not reported | 7 (7%) |
| Intervention used social norms | |
| Yes | 29 (29%) |
| No | 62 (62%) |
| Not reported | 9 (9%) |
| Intervention identified a named victim | |
| Yes | 21 (21%) |
| No | 70 (70%) |
| Not reported | 9 (9%) |
| Intervention depicted pets | |
| Yes | 32 (32%) |
| No | 60 (60%) |
| Not reported | 8 (8%) |
| Intervention gave implementation suggestions | |
| Yes | 29 (29%) |
| No | 61 (61%) |
| Not reported | 10 (10%) |
| Intervention described animal welfare only | |
| Yes | 52 (52%) |
| No | 44 (44%) |
| Not reported | 4 (4%) |
| Intervention was personally tailored | |
| Yes | 2 (2%) |
| No | 98 (98%) |
| Not reported | 0 (0%) |
| Intervention’s recommendation | |
| No recommendation | 43 (43%) |
| “Reduce consumption” | 13 (13%) |
| “Go vegetarian” | 9 (9%) |
| “Go vegan” | 7 (7%) |
| Mixed recommendation | 24 (24%) |
| Not reported | 4 (4%) |
| Intervention’s duration (minutes) | 1.5 (1, 5.88) |
| Not reported | 2 (2%) |
| Outcome category | |
| Consumption | 96 (96%) |
| Purchase | 4 (4%) |
| Length of follow-up (days) | 0 (0, 32.5) |
| Not reported | 1 (1%) |
| Source of statistics | |
| Data from author | 19 (19%) |
| Paper | 28 (28%) |
| Public data | 53 (53%) |

ᵃ There was a high proportion of missing data regarding studies’ countries because we coded country as missing for online studies (e.g., conducted on Amazon Mechanical Turk) that did not specifically state whether they used geographical restrictions when recruiting subjects.

Many studies (53%) reported multiple food outcomes that were potentially eligible for inclusion; as described in Section 2.2, we extracted statistics for only the outcome most closely matching the scope of the intervention. For all but one article, the outcome we extracted (e.g., consumption of all meats) was a composite of some or all of the additional food outcomes (e.g., consumption of specific categories of meats), as we had anticipated. For the remaining article (Caldwell, 2016), the primary and additional outcomes were pork consumption and egg consumption, respectively; egg consumption was measured because the study also contained an ineligible intervention regarding egg production.

Given the large number of studies, we detail their individual characteristics, along with risks of bias ratings, point estimates, variance estimates, and additional food outcomes, in a publicly available spreadsheet (https://osf.io/8zsw7/; see Supplement for codebook).

3.2.1. Subjects

The median analyzed sample size per article was 522 subjects (25th percentile: 235; 75th percentile: 802). (We report the sample sizes by article rather than by study to avoid double-counting control subjects whose data contributed to multiple point estimates from the same article.) Studies were conducted in at least 11 countries (Canada, China, the Czech Republic, Ecuador, France, Germany, India, the Netherlands, Portugal, Scotland, and the United States) that represented a diverse range of geographical regions as well as cultural characteristics that may be relevant to meat consumption, including affluence, traditional cuisines, and religious and ethical traditions. The median percentage of male subjects was 43%, and the median of studies’ average ages was 33.7 years. Thirteen percent of studies recruited only undergraduates, including 3% (3 studies) that specifically recruited social sciences undergraduates; 77% did not specifically recruit undergraduates, and the remaining 10% recruited both undergraduate and non-undergraduate samples.

3.2.2. Interventions

Typical interventions included, among others, providing informational leaflets about factory farming conditions (Norris and Hannan, 2019), showing photographs of meat dishes accompanied by photographs of the animals they came from (Kunst & Hohle, 2016), and providing mock newspaper articles (Macdonald et al., 2016). Details on the many other interventions represented in our meta-analysis are provided in the aforementioned publicly available spreadsheet of study characteristics. Below, percentages are computed over all studies with missing data treated as a separate category, so the reported percentages for a characteristic (e.g., “yes” and “no”) may sum to less than 100%. Most studies’ interventions contained text (83%), contained visuals (e.g., photographs, infographics, or videos) (62%), contained graphic verbal or visual depictions of welfare conditions in factory farms (61%), and used mind attribution (65%). Fewer interventions invoked social norms (29%), identified a named victim (21%), depicted pets (32%), or gave implementation suggestions (29%), though these lower percentages partly reflect missing data on these variables when we did not have access to all intervention materials.

Just over half of interventions (52%) made appeals only regarding animal welfare; the remaining interventions also appealed to, for example, individual health or environmental concerns. The interventions were typically quite brief: we estimated that 66% lasted less than 5 min, though there was a wide range, with some interventions lasting only about 30 s and others lasting over 90 min. Interventions also varied considerably in their use of explicit recommendations to the viewer: 13% recommended reducing meat consumption, 9% recommended going vegetarian, 7% recommended going vegan, and 24% made some combination of these recommendations. Another 43% of interventions made no explicit recommendation, instead simply describing or depicting animal welfare in a manner that was intended to reduce meat consumption. The overwhelming majority (98%) of interventions were not personally tailored (i.e., their content was the same for all subjects regardless of personal characteristics); the few tailored interventions were from a single article (FIAPO, 2017) in which the interventions’ contents differed for currently vegetarian versus currently non-vegetarian subjects.

3.2.3. Outcomes

Throughout this section, we describe the outcomes used in our own analysis based on the considerations described in Section 2, which sometimes differed from the main outcome reported in the article, especially when raw data were available. Nearly all studies (96 studies; 96%) assessed consumption of meat or animal products, with the remaining studies instead assessing purchasing. Of all studies, 57% assessed subjects’ intended future behavior, 41% assessed subjects’ self-reported behavior, and the remaining 2% (2 studies) used direct objective measures of subjects’ behavior (i.e., the amount eaten of an offered sample of ham (Anderson & Barrett, 2016) or the percentage of actually purchased meals that contained meat (Schwitzgebel et al., 2019)). About half of studies (53%) measured outcomes immediately after exposure to the intervention. Among the studies that measured outcomes after some delay, the median and maximum follow-up lengths were 39 and 120 days, respectively. For 71% of studies, our extracted point estimates were risk ratios of low versus high meat or animal product consumption¹⁰ defined in absolute terms (e.g., being below versus above the baseline median consumption); the remaining estimates were risk ratios of reducing consumption relative to the subject’s own previous consumption.
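To illustrate the absolute-threshold definition, the Python sketch below dichotomizes a continuous consumption measure at a cutoff (e.g., a baseline median) and computes the risk ratio of low consumption. It also returns the usual large-sample standard error of the log risk ratio. The data, the strict "below the cutoff" rule for ties, and the function name are assumptions for illustration only.

```python
import math
import statistics


def risk_ratio_low_consumption(treated, control, cutoff):
    """Risk ratio of 'low' consumption (strictly below cutoff), intervention vs. control.

    Returns (RR, standard error of log RR).
    """
    a = sum(1 for x in treated if x < cutoff)  # low consumers, intervention group
    c = sum(1 for x in control if x < cutoff)  # low consumers, control group
    n1, n0 = len(treated), len(control)
    if a == 0 or c == 0:
        raise ValueError("zero cell count; a continuity correction would be needed")
    rr = (a / n1) / (c / n0)
    se_log_rr = math.sqrt(1 / a - 1 / n1 + 1 / c - 1 / n0)
    return rr, se_log_rr


# Hypothetical usage: weekly meat servings, cutoff at the control-group median.
treated = [1, 2, 3, 4, 2, 1]
control = [2, 3, 4, 5, 3, 4]
rr, se = risk_ratio_low_consumption(treated, control, statistics.median(control))
```

An RR above 1 means the intervention group was more likely than the control group to fall in the low-consumption category. This is the direction used for all risk ratios in this meta-analysis.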

3.2.4. Design quality, reproducibility, and risks of bias

Table 2 summarizes study characteristics related to the methodological strength of study design, analytic reproducibility, potential for selective reporting, and risks of bias. The table presents these characteristics for all studies, for published studies, and for unpublished studies. Overall, 75% of point estimates were from randomized studies¹¹ (with randomization occurring between subjects, within subjects, or by clusters of subjects); the remaining estimates were from nonrandomized designs with a separate control group or in which subjects’ meat consumption was assessed before and after the intervention. Regarding analytic reproducibility and the potential for selective reporting, 53% of studies had publicly available data, 22% had publicly available analysis code, and 25% had been preregistered. The median percentage of missing data at the longest follow-up time point was 7.5%, but 23% of studies did not report on missing data.¹² As described above, nearly all studies (98%) measured outcomes based on subjects’ self-reports of behavior or of future intentions rather than on direct behavioral measures. We gave “low” or “medium” risk-of-bias ratings (versus “high” or “unclear”) to 61% of studies regarding exchangeability of the intervention and control groups, to 27% regarding social desirability bias, and to 64% regarding external generalizability.¹³ Published studies appeared to be at lower risk of bias than unpublished studies on most criteria, except that unpublished studies more often had publicly available data and were more often preregistered (Table 2).
Post hoc, we defined studies with the lowest risk of bias overall as those that were randomized, had less than 15% missing data, and had “low” or “medium” risks of bias for exchangeability and social desirability bias.¹⁴ Twelve studies, contributed by six unique articles (Anderson & Barrett, 2016; Earle et al., 2019; Kunst & Haugestad, 2018; Kunst & Hohle, 2016; Lackner, 2019; Tian et al., 2016), were identified as being at the lowest risk of bias.

Table 2.

Study characteristics regarding design, analytic reproducibility, and risks of bias. Binary and categorical variables are reported as “count (percentage)”. Continuous variables are reported as “median (first quartile, third quartile)”.

| Characteristic | All studies (k = 100) | Published studies (k = 17) | Unpublished studies (k = 83) |
|---|---|---|---|
| Design | | | |
| Between-subjects RCT | 72 (72%) | 16 (94%) | 56 (67%) |
| Within-subject RCT | 1 (1%) | 1 (6%) | 0 (0%) |
| Cluster RCT | 2 (2%) | 0 (0%) | 2 (2%) |
| Between-subjects NRCT | 21 (21%) | 0 (0%) | 21 (25%) |
| Within-subject UCT | 4 (4%) | 0 (0%) | 4 (5%) |
| Outcome measurement | | | |
| Direct behavioral measure | 2 (2%) | 1 (6%) | 1 (1%) |
| Self-reported past behavior | 41 (41%) | 1 (6%) | 40 (48%) |
| Intended future behavior | 57 (57%) | 15 (88%) | 42 (51%) |
| Percent missing data | 7.5 (7.3, 42) | 0 (0, 7.95) | 13.2 (7.5, 59.75) |
| Not reported | 23 (23%) | 2 (12%) | 21 (25%) |
| Exchangeability | | | |
| Low risk of bias | 52 (52%) | 16 (94%) | 36 (43%) |
| Medium risk of bias | 9 (9%) | 1 (6%) | 8 (10%) |
| High risk of bias | 29 (29%) | 0 (0%) | 29 (35%) |
| Unclear | 10 (10%) | 0 (0%) | 10 (12%) |
| Avoidance of social desirability bias | | | |
| Low risk of bias | 13 (13%) | 6 (35%) | 7 (8%) |
| Medium risk of bias | 14 (14%) | 5 (29%) | 9 (11%) |
| High risk of bias | 68 (68%) | 6 (35%) | 62 (75%) |
| Unclear | 5 (5%) | 0 (0%) | 5 (6%) |
| External generalizability | | | |
| Low risk of bias | 36 (36%) | 10 (59%) | 26 (31%) |
| Medium risk of bias | 28 (28%) | 7 (41%) | 21 (25%) |
| High risk of bias | 15 (15%) | 0 (0%) | 15 (18%) |
| Unclear | 21 (21%) | 0 (0%) | 21 (25%) |
| Preregistered | | | |
| Yes | 25 (25%) | 2 (12%) | 23 (28%) |
| No | 75 (75%) | 15 (88%) | 60 (72%) |
| Public data | | | |
| Yes | 53 (53%) | 5 (29%) | 48 (58%) |
| No | 47 (47%) | 12 (71%) | 35 (42%) |
| Public code | | | |
| Yes | 22 (22%) | 4 (24%) | 18 (22%) |
| No | 78 (78%) | 13 (76%) | 65 (78%) |
| Overall lowest risk of bias | | | |
| Yes | 12 (12%) | 9 (53%) | 3 (4%) |
| No | 88 (88%) | 8 (47%) | 80 (96%) |

k: Number of studies in subset. RCT: randomized controlled trial. NRCT: non-randomized controlled trial. UCT: uncontrolled trial (i.e., no separate control group) but with subjects serving as own controls.

For studies in which missing data were unreported but the outcome was measured in the same session as the intervention, we coded missing data as 0. Details on the risk-of-bias categories are provided in the Supplement.

3.3. Main analyses

The overall pooled risk ratio was 1.22 (95% CI: [1.13, 1.33]; p < 0.0001), indicating that, on average, interventions increased a subject’s probability of intending, self-reporting, or behaviorally demonstrating low (versus high) meat consumption by 22% compared to the control condition. Most articles (71%) contributed more than one point estimate to the meta-analysis, and point estimates were moderately correlated within articles (intraclass correlation coefficient = 0.44).
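The Python sketch below illustrates pooling on the log scale. It computes a random-effects meta-analytic mean using the DerSimonian-Laird estimator of heterogeneity. This is a deliberately simplified stand-in: unlike the robust methods we used, it treats all point estimates as independent and so ignores the within-article correlation just noted. The function name is hypothetical.

```python
import math


def dersimonian_laird(yi, vi):
    """Random-effects pooled mean of log-risk ratios (DerSimonian-Laird tau^2).

    Treats every estimate as independent (unlike a robust, clustered analysis).
    Returns (pooled log-RR, standard error, tau^2).
    """
    k = len(yi)
    if k < 2:
        raise ValueError("need at least two estimates")
    w = [1.0 / v for v in vi]
    sw = sum(w)
    y_fixed = sum(wi * y for wi, y in zip(w, yi)) / sw
    q = sum(wi * (y - y_fixed) ** 2 for wi, y in zip(w, yi))  # Cochran's Q
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - (k - 1)) / c)  # truncated at zero
    w_re = [1.0 / (v + tau2) for v in vi]
    mu = sum(wi * y for wi, y in zip(w_re, yi)) / sum(w_re)
    return mu, math.sqrt(1.0 / sum(w_re)), tau2
```

A pooled log-risk ratio `mu` maps back to RR = `math.exp(mu)`, with an approximate 95% CI of `math.exp(mu ± 1.96 * se)`. An RR of 1.22 corresponds to the 22% relative increase described above.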

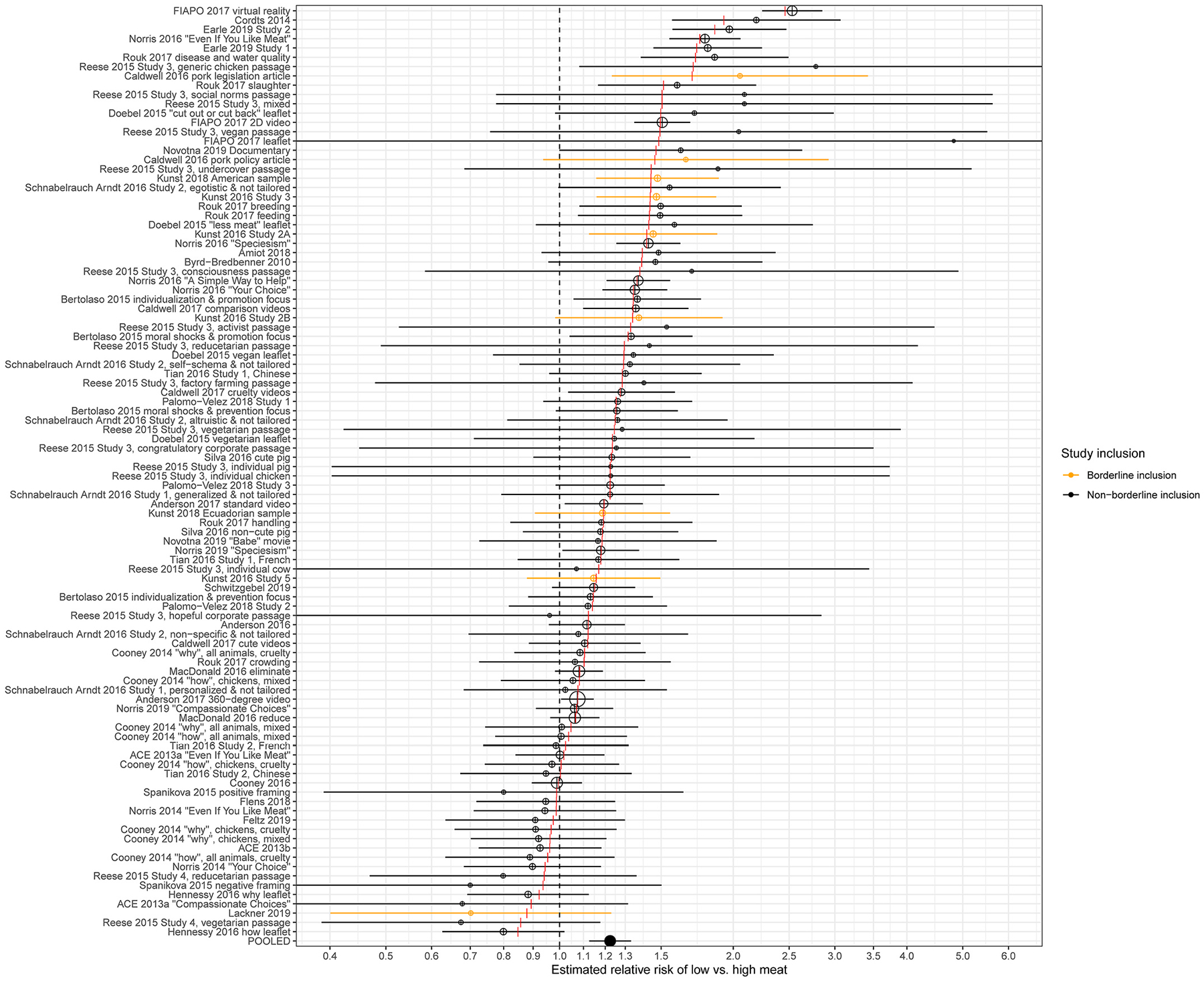

Fig. 2 shows point estimates, 95% confidence intervals (CIs), and calibrated estimates for each study. Interventions’ effect sizes appeared fairly heterogeneous across studies, with an estimated standard deviation of true population effects on the log-risk ratio scale of τ̂ = 0.12 (Table 3). Supplementary Fig. S2 shows the estimated distribution of true population effects across all studies. Despite this heterogeneity, the interventions’ effects were overwhelmingly in the beneficial direction (i.e., reducing rather than increasing meat consumption, purchase, or relevant intentions), with an estimated 83% (95% CI: [72%, 91%]) of true risk ratios above 1. Upon more stringently considering only risk ratios of at least 1.1, or alternatively 1.2, as being meaningfully large, we estimated that 71% (95% CI: [59%, 80%]) and 53% (95% CI: [38%, 64%]) of effects were stronger than these two thresholds, respectively. Supplementary Fig. S3 displays the estimated percentage of risk ratios above various other thresholds. Considering effects in the detrimental direction (i.e., interventions that “backfired” by increasing rather than decreasing meat consumption relative to the control condition), we estimated that 17% (95% CI: [6%, 27%]) of interventions had true population risk ratios below 1. Very few interventions produced risk ratios smaller than 0.90 in the detrimental direction (4%; 95% CI: [0%, 12%]).
The 10 studies with the largest calibrated estimates (i.e., the top 10%) used interventions consisting of: brief factual passages that graphically described and/or visually depicted conditions on factory farms (Cordts et al., 2014; Reese, 2015) or fish farms (Rouk, 2017), sometimes combined with health or environmental appeals (Reese, 2015); a 16-page leaflet containing detailed information and graphic portrayals of conditions on factory farms along with health appeals and implementation suggestions (“Even If You Like Meat” leaflet; Norris and Roberts, 2016); a factual mock newspaper article with graphic photographs and descriptions of gestation crates along with discussion of legislation to ban their use (Caldwell, 2016); a 3-min virtual reality video graphically depicting conditions on factory farms (FIAPO, 2017); and meat-animal reminders consisting of photographs of meat dishes portrayed alongside photographs of the animals they came from (Earle et al., 2019). Nine of these 10 studies assessed outcomes related to intended behavior, and the remaining study assessed self-reported behavior (Norris and Roberts, 2016).
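Calibrated estimates shrink each study's point estimate toward the pooled mean, with noisier estimates shrunk more. The percentages of effects stronger than a threshold can be read as shares of calibrated estimates beyond that threshold. The Python sketch below shows that calculation, assuming log-risk-ratio inputs with known variances and plug-in values of μ̂ and τ̂². It omits the bootstrapped confidence intervals, and the function names are hypothetical.

```python
import math


def calibrated_estimates(yi, vi, mu, tau2):
    """Shrink each log-RR toward the pooled mean mu by sqrt(tau2 / (tau2 + v_i))."""
    return [mu + math.sqrt(tau2 / (tau2 + v)) * (y - mu) for y, v in zip(yi, vi)]


def percent_stronger(calibrated, rr_threshold, tail="above"):
    """Percentage of calibrated log-RRs above (or below) log(rr_threshold)."""
    cut = math.log(rr_threshold)
    if tail == "above":
        hits = sum(1 for t in calibrated if t > cut)
    elif tail == "below":
        hits = sum(1 for t in calibrated if t < cut)
    else:
        raise ValueError("tail must be 'above' or 'below'")
    return 100.0 * hits / len(calibrated)
```

For instance, `percent_stronger(cal, 1.1)` corresponds to the "% above 1.1" quantity, and `percent_stronger(cal, 0.9, tail="below")` to the share of effects below 0.90 in the detrimental direction. When τ̂² = 0, every calibrated estimate collapses to the pooled mean.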

Fig. 2.

Point estimates in each study (open circles), ordered by the study’s calibrated estimate (vertical red tick marks), and the overall meta-analytic mean (solid circle). Areas of open circles are proportional to the estimate’s relative weight in the meta-analysis. Orange estimates were borderline with respect to inclusion criteria and were excluded in sensitivity analysis. The x-axis is presented on the log scale. Error bars represent 95% confidence intervals. The vertical, black dashed line represents the null (no intervention effect).

3.4. Sensitivity analyses

3.4.1. Publication bias

The meta-analytic mean corrected for publication bias (Hedges, 1992) was 1.33 (95% CI: [1.22, 1.45]; p < 0.0001). Thus, this estimate was in fact somewhat larger than the uncorrected meta-analytic mean of 1.22, though the estimates had substantially overlapping confidence intervals. This result suggests that publication bias was likely mild and did not meaningfully affect results. As a post hoc analysis, we fit the selection model to unpublished and to published studies separately, yielding similar conclusions. The sensitivity analyses for publication bias indicated that even under hypothetical worst-case publication bias (i.e., if “statistically significant” positive results were infinitely more likely to be published than “nonsignificant” or negative results), the meta-analytic mean would decrease to 1.07 (95% CI: [1.02, 1.13]) but would remain positive and with a confidence interval bounded above the null. This worst-case estimate arises from meta-analyzing only the 75 observed “nonsignificant” or negative studies and excluding the 25 observed “significant” and positive studies (Mathur and VanderWeele, 2020c). As a graphical heuristic, the significance funnel plot in Fig. 1 relates studies’ point estimates to their standard errors and compares the pooled estimate within all studies (black diamond) to the worst-case estimate (grey diamond). When the diamonds are close to one another or the grey diamond represents a positive, nonnegligible effect size, as seen here, the meta-analysis may be considered fairly robust to publication bias (Mathur and VanderWeele, 2020c). Taken together, the results from the selection model and from the sensitivity analysis suggest that publication bias appeared negligible and not likely to substantially attenuate the results.

3.4.2. Social desirability bias

We conducted a simple sensitivity analysis (VanderWeele & Li, 2019; Mathur and VanderWeele, 2020b; see Supplement for methodological details) that characterizes how severe social desirability bias would have had to be in the meta-analyzed studies in order for: (1) the pooled point estimate of RR = 1.22 to be entirely attributable to social desirability bias (i.e., such that the interventions in fact had no effect on actual consumption on average); or (2) the percentage of true population effects stronger than RR = 1.1 to be reduced from our estimated 71% to only 10%. Specifically, the sensitivity analyses characterize the hypothetical severity of social desirability bias as how strongly the interventions affected subjects’ reported consumption independently of their effects on subjects’ actual consumption (VanderWeele & Li, 2019). For example, this form of social desirability bias could arise in a study in which the intervention did not affect subjects’ actual consumption at all, but did induce them to under-report meat consumption more than they otherwise would, thus yielding a spurious intervention effect estimate. If there were no social desirability bias of this form, the interventions would affect reported consumption exclusively via their effects on actual consumption.

Another plausible form of social desirability bias could arise if subjects were to systematically under-report meat consumption, but by a similar degree in the intervention and control groups (e.g., Hebert et al., 1995; Neff et al., 2018; Rothgerber, 2020). Critically, this form of under-reporting would in general either leave estimates of intervention effects unbiased or bias them toward, rather than away from, the null (Rothman et al., 2008). Hence, this form of under-reporting is of less concern than the type of differential social desirability bias that we considered in the sensitivity analyses, which could bias the estimates away from the null (Rothman et al., 2008).

The sensitivity analyses indicated that, for the observed RR = 1.22 to be entirely attributable to social desirability bias, the interventions would have needed to increase subjects’ probability of reporting low meat consumption, entirely independently of their potential effects on actual consumption, by at least 22% (95% CI: [17%, 29%]) on average. If the severity of social desirability bias were approximately the same for all studies, then reducing the percentage of true population effects stronger than RR = 1.1 from our estimated 71% to only 10% would require that each intervention had increased subjects’ probability of reporting low meat consumption, independently of its effects on actual consumption, by at least 37% (95% CI: [31%, 55%]).
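The logic of these two bias thresholds can be sketched in Python. Under the bound of VanderWeele and Li (2019), an observed RR above 1 is fully explained only if the bias factor is at least that RR. For the proportion-based question, a common bias factor B shifts every calibrated log-risk ratio down by log B, so one can solve for the smallest B that leaves at most 10% of estimates above log(1.1). The sketch assumes homogeneous bias, as in the text above, and omits confidence intervals. The function names are hypothetical, and the sketch illustrates the reasoning rather than reproducing our exact numbers.

```python
import math


def bias_to_explain_away(rr_obs):
    """Minimum bias factor that could fully explain an observed RR (VanderWeele & Li, 2019)."""
    return rr_obs if rr_obs >= 1 else 1.0 / rr_obs


def bias_to_reduce_proportion(calibrated, rr_threshold=1.1, target=0.10):
    """Smallest common bias factor B such that at most `target` of the bias-corrected
    calibrated log-RRs (theta_i - log B) exceed log(rr_threshold).

    Assumes the same bias factor applies to every study.
    """
    k = len(calibrated)
    allowed = math.floor(target * k)  # number of estimates allowed above the threshold
    if allowed >= k:
        return 1.0
    ordered = sorted(calibrated, reverse=True)
    # The (allowed + 1)-th largest estimate must be shifted down to the threshold.
    log_b = ordered[allowed] - math.log(rr_threshold)
    return max(1.0, math.exp(log_b))  # a bias factor below 1 is not needed
```

A returned value of, say, 1.37 would correspond to the "at least 37%" phrasing used above, since the bias factor is expressed as a relative increase in the probability of reporting low consumption.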

3.4.3. Subset analyses

The 9 subset analyses described in Section 2.3 yielded point estimates that were typically close to the main estimate of 1.22 and that were never smaller than 1.09 (ranging from 1.09 for preregistered studies with open data to 1.35 when restricting the analysis to published studies; see Table 3). Some estimates had wide confidence intervals due to the inclusion of only a small number of studies. All subset analyses corroborated the conclusion that a large majority of true population effects (at least 70%) were in the beneficial direction, and 7 of 9 analyses corroborated the conclusion that a majority of true population effects were greater than RR = 1.1.

Table 3.

Sensitivity analyses conducted on different groups of studies, with the overall estimate from the main analysis reported for comparison. Mean risk ratio: meta-analytic mean with 95% confidence interval.

| Studies analyzed | k | Mean risk ratio | p-value | τ̂ | % above 1 | % above 1.1 | % above 1.2 |
|---|---|---|---|---|---|---|---|
| Main analysis | 100 | 1.22 [1.13, 1.33] | <0.0001 | 0.12 | 83 [72, 91] | 71 [59, 80] | 53 [38, 64] |
| Excluding borderline-eligible studies | 91 | 1.21 [1.11, 1.33] | 0.0003 | 0.12 | 82 [69, 91] | 68 [55, 77] | 52 [35, 63] |
| Excluding composite interventions | 52 | 1.29 [1.18, 1.40] | <0.0001 | 0.11 | 98 [NA, NA] | 87 [71, 94] | 71 [38, 83] |
| Studies at lowest risk of bias | 12 | 1.3 [0.98, 1.72] | 0.06 | 0.21 | 92 [NA, NA] | 75 [0, 92] | 58 [8, 83] |
| Excluding studies measuring intended behavior | 43 | 1.11 [0.98, 1.26] | 0.08 | 0.14 | 70 [47, 88] | 47 [26, 60] | 30 [9, 49] |
| Randomized studies | 75 | 1.24 [1.14, 1.34] | <0.0001 | 0.15 | 93 [79, 99] | 80 [66, 88] | 61 [36, 77] |
| Preregistered studies with open data | 21 | 1.09 [0.99, 1.19] | 0.06 | 0 | 100 [NA, NA] | 0 [NA, NA] | 0 [NA, NA] |
| Published studies | 17 | 1.35 [1.09, 1.67] | 0.02 | 0.16 | 100 [NA, NA] | 88 [NA, NA] | 71 [0, 94] |
| Unpublished studies | 83 | 1.19 [1.08, 1.32] | 0.001 | 0.13 | 80 [65, 89] | 66 [52, 77] | 51 [34, 64] |
| Excluding one extreme estimate | 99 | 1.22 [1.13, 1.33] | <0.0001 | 0.1 | 83 [71, 91] | 71 [59, 80] | 53 [36, 64] |
| Including SSWS studies | 108 | 1.31 [1.19, 1.44] | <0.0001 | 0 | 81 [71, 87] | 69 [56, 76] | 56 [44, 65] |

p-value: for mean risk ratio versus null of 1. k: Number of studies in subset. τ̂: estimated standard deviation of true population effects on log-risk ratio scale. Final three columns: estimated percentage of true population effects stronger than various thresholds on risk ratio scale. Bracketed values are 95% confidence intervals for the percentage of effects stronger than a threshold, which were sometimes not estimable (“NA”) when τ̂ = 0 exactly or when the estimated proportion was very high.

3.5. Secondary analyses

As described in Section 2.3, we fit two meta-regression models. In the coarse model (k = 86 studies), the inclusion of the covariates in the model reduced the heterogeneity estimate from , suggesting that these covariates together predicted a moderate amount of the heterogeneity seen in the main analysis. Table 4 shows risk ratio estimates for each effect modifier, which represent the ratio by which the average intervention effect increased in studies in which the effect modifier was present versus absent. In general, these candidate effect modifiers were not strongly associated with effect sizes, although the confidence intervals sometimes indicated considerable uncertainty. The type of recommendation was a possible exception: studies of interventions making a “go vegan” recommendation appeared to have larger effects than studies of interventions making no recommendation (effect modification RR = 1.31; 95% CI: [1.06, 1.62]; p = 0.03). In addition, the point estimates for studies whose interventions recommended “going vegetarian” (1.03) or “reducing consumption” (1.00) heuristically suggested some degree of dose-response, such that studies with broader-scoped recommendations (e.g., “go vegan” versus “reduce meat consumption”) typically had larger effects. Regarding other study characteristics, studies measuring outcomes after a relatively long delay (≥ 7 days) typically had smaller effect sizes (effect modification RR = 0.81; 95% CI: [0.68, 0.97]; p = 0.03), which could reflect decays in intervention effectiveness over time. See the Discussion for important guidance on the interpretation of these meta-regression results. Supplementary Figs. S4–S6 plot individual studies’ calibrated estimates versus the interventions’ durations, studies’ lengths of follow-up, and average ages.

Table 4.

Meta-regressive estimates of effect modification by various study design and intervention characteristics (coarse model). Intercept: estimated mean risk ratio when all listed covariates are set to 0. For binary covariates, estimates represent risk ratios for the increase in intervention effectiveness associated with a study’s having, versus not having, the covariate. For the percentage of male subjects, the estimate represents the risk ratio for the increase in intervention effectiveness associated with a 10-percentage-point increase in the percentage of male subjects. For the average age, the estimate represents the risk ratio for the increase in intervention effectiveness associated with a 5-year increase in average subject age.

| Coefficient | Effect modification RR [95% CI] | p-value |
|---|---|---|
| Intercept | 1.11 [0.66, 1.86] | 0.66 |
| Intervention had text | 0.85 [0.68, 1.06] | 0.12 |
| Intervention had visuals | 0.96 [0.81, 1.13] | 0.57 |
| Intervention had graphic content | 1.10 [0.97, 1.24] | 0.13 |
| Intervention’s recommendation | | |
| No recommendation | Ref. | Ref. |
| “Reduce consumption” | 1.00 [0.77, 1.31] | 0.98 |
| “Go vegetarian” | 1.03 [0.78, 1.36] | 0.81 |
| “Go vegan” | 1.31 [1.06, 1.62] | 0.03 |
| Mixed recommendation | 0.99 [0.83, 1.19] | 0.94 |
| Intervention duration >5 min | 1.03 [0.86, 1.24] | 0.70 |
| Follow-up length at least 7 days | 0.81 [0.68, 0.97] | 0.03 |
| Percentage male subjects (10-pt increase) | 1.00 [0.95, 1.06] | 0.88 |
| Average subject age (5-year increase) | 1.04 [0.98, 1.10] | 0.15 |

CI: confidence interval. p-values are for the effect modification coefficients themselves, not for the subset of studies having the listed characteristic.
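Because the coefficients are reported as risk ratios, the meta-regression is presumably linear on the log-RR scale. Under that reading, effect-modification RRs for several covariates combine by multiplication, and a continuous covariate scales by powers of its reported unit (e.g., a 10-year age difference is two 5-year units). The sketch below transcribes RRs from Table 4 and shows this arithmetic, along with the percent-change reading RR - 1 (1.31 as 31% larger, 0.81 as 19% smaller). The helper names are hypothetical, it assumes a main-effects model without interaction terms, and the combined values remain associations, not causal effects.

```python
from math import exp, log

# Effect-modification RRs transcribed from Table 4 (coarse model)
COARSE_RR = {
    "go_vegan": 1.31,       # binary: "go vegan" vs. no recommendation
    "followup_7d": 0.81,    # binary: follow-up of at least 7 days
    "age_per_5y": 1.04,     # continuous: per 5-year increase in average age
    "male_per_10pt": 1.00,  # continuous: per 10-point increase in % male
}


def combined_modification(units: dict[str, float]) -> float:
    """Multiplicative effect modification for several covariates at once.

    `units` maps a coefficient name to how many of its units apply:
    1 for a present binary covariate, or e.g. 2 for a 10-year age difference.
    Summing on the log scale assumes a main-effects (no-interaction) model.
    """
    return exp(sum(log(COARSE_RR[name]) * n for name, n in units.items()))


def percent_change(rr: float) -> float:
    """Read a ratio of effects as a percent change (RR 1.31 -> +31%)."""
    return (rr - 1.0) * 100.0


# "Go vegan" studies with >= 7-day follow-up vs. studies with neither feature
print(round(combined_modification({"go_vegan": 1, "followup_7d": 1}), 3))
# A 10-year higher average age corresponds to two 5-year units
print(round(combined_modification({"age_per_5y": 2}), 3))
print(round(percent_change(0.81)))
```

Adding on the log scale and exponentiating once avoids rounding drift when many covariates are combined, and it makes the no-interaction assumption explicit.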

In the fine-grained meta-regression model (k = 80 studies; Table 5), the point estimates for the fine-grained intervention components were again usually close to the null, with the possible exception of implementation suggestions (effect modification RR = 1.24; 95% CI: [0.85, 1.80]; p = 0.22). Most confidence intervals were fairly wide. Estimates for the remaining covariates shared with the coarse model were usually similar to their coarse-model counterparts, though often with wider confidence intervals.

Table 5.

Meta-regressive estimates of effect modification by various study design and intervention characteristics (fine-grained model), presented as in Table 4.

| Coefficient | Effect modification RR [95% CI] | p-value |
|---|---|---|
| Intercept | 0.87 [0.51, 1.46] | 0.56 |
| Intervention used mind attribution | 1.00 [0.74, 1.33] | 0.97 |
| Intervention used social norms | 1.03 [0.64, 1.65] | 0.88 |
| Intervention identified victim | 0.94 [0.65, 1.35] | 0.68 |
| Intervention depicted pets | 1.11 [0.70, 1.78] | 0.47 |
| Intervention used implementation suggestions | 1.24 [0.85, 1.80] | 0.22 |
| Intervention had visuals | 1.04 [0.87, 1.25] | 0.61 |
| Intervention had graphic content | 1.07 [0.88, 1.29] | 0.45 |
| Intervention’s recommendation | | |
| No recommendation | Ref. | Ref. |
| “Reduce consumption” | 0.89 [0.68, 1.17] | 0.33 |
| “Go vegetarian” | 0.91 [0.63, 1.31] | 0.55 |
| “Go vegan” | 1.25 [0.78, 1.99] | 0.29 |
| Mixed recommendation | 0.78 [0.54, 1.12] | 0.14 |
| Intervention duration >5 min | 0.96 [0.66, 1.40] | 0.81 |
| Follow-up length at least 7 days | 0.76 [0.50, 1.14] | 0.15 |
| Percentage male subjects (10-pt increase) | 0.98 [0.91, 1.06] | 0.57 |
| Average subject age (5-year increase) | 1.08 [1.00, 1.17] | 0.06 |

3.6. Self-selected within-subjects studies

As described in Section 3.1, self-selected within-subjects (SSWS) studies were excluded post hoc from the main analyses, although they met our preregistered inclusion criteria. When we instead included the 8 SSWS studies for which we could obtain point estimates, the meta-analytic point estimate was somewhat larger than in the main analyses (Table 3), but overall conclusions were unchanged.

4. Discussion

4.1. Summary of findings

Despite authoritative calls for academic research and public policy on reducing meat consumption (Gardner et al., 2019; Godfray et al., 2018; Scherer et al., 2019; Tilman and Clark, 2014), the study of interventions that reduce meat consumption by appealing to animal welfare has largely remained outside the purview of academic research, and few, if any, attempts have been made to synthesize the evidence. In our systematic review and meta-analysis of this literature, we found that the body of evidence is well-developed, both in its size (100 studies reported in 34 articles) and in the psychological sophistication of existing interventions (Section 1.1). The interventions appeared consistently beneficial, at least in the short term and for outcomes primarily based on self-reported or intended behavior: on average, they increased an individual’s probability of intending, self-reporting, or behaviorally demonstrating low versus high meat consumption by 22% (RR = 1.22).
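The 22% figure is a relative increase, not a change in percentage points: under a risk ratio of 1.22, the intervention-group probability of the favorable outcome (low meat consumption) is 1.22 times the control-group probability. The worked example below makes this concrete; the control-group probability of 0.30 is a hypothetical value and the helper name is ours.

```python
def treated_probability(p_control: float, rr: float) -> float:
    """Probability of the favorable outcome under intervention, given RR = p_treated / p_control."""
    if not 0.0 <= p_control <= 1.0:
        raise ValueError("p_control must be a probability")
    p_treated = rr * p_control
    if p_treated > 1.0:
        # A constant RR cannot hold when the baseline probability is already high
        raise ValueError("implied probability exceeds 1 for this baseline")
    return p_treated


p0 = 0.30                           # hypothetical control-group probability
p1 = treated_probability(p0, 1.22)  # meta-analytic mean RR
print(f"{p0:.1%} -> {p1:.1%} ({(p1 - p0) * 100:.1f} percentage points)")
```

In this hypothetical case the absolute difference is 6.6 percentage points; the same RR implies a smaller absolute gain at a lower baseline and a larger one at a higher baseline.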

Although it seemed plausible a priori that animal welfare appeals could backfire in some settings (e.g., by being perceived as intrusively self-righteous and moralizing), our results suggested that this rarely occurred: we estimated that the large majority of interventions (83%) had true population effects in the beneficial rather than the detrimental direction. This finding also suggests that the interventions were consistently effective across the culturally diverse samples we meta-analyzed, spanning at least 11 countries and four continents. However, it remains theoretically possible that even interventions with beneficial average effects could backfire for specific individuals (Rothgerber, 2020), a possibility that large studies could address by measuring individual-level effect modifiers or by assessing individually tailored interventions (Section 4.3).

These generally favorable results regarding animal welfare interventions do not imply, however, that appealing to animal welfare is necessarily more effective than, for example, appealing to concerns about health or the environment. To our knowledge, no quantitative meta-analysis of the latter types of interventions has been performed, precluding direct comparisons of evidence strength. Additionally, the literature on individuals’ reported motivations for reducing meat consumption is somewhat mixed: a nationally representative survey in the United States (McCarthy and DeKoster, 2020) suggested that individuals who reported having reduced their meat consumption most often cited health as a major or minor motivation (90% of subjects), though animal welfare was another important motivation (65% of subjects). Another survey suggested that primary motivations were cost (51% of subjects) and health (50%), with concerns about animal welfare (12%) and the environment (12%) cited considerably less often (Neff et al., 2018).

We speculate that three explanations could account for these findings regarding the motivating role of animal welfare concerns relative to other concerns. First, these differences in reported motivations may simply reflect how often people receive information and interventions with different appeals, rather than differences in effectiveness between appeals. As described in the Introduction, the public is largely uninformed and even misinformed about farm animal welfare, reflecting successful efforts by the animal agriculture industry as well as individuals’ deliberate avoidance of distressing information (Cornish et al., 2016; Rothgerber, 2020), whereas the public may be better informed about the potential health consequences of excessive meat consumption. Second, if animal welfare interventions are indeed effective, this may be partly because they provide information that conflicts with many individuals’ stated ethical values (Cornish et al., 2016; Rothgerber, 2020), perhaps leading these individuals to reduce meat consumption even if they would not do so out of concern for their own health or the environment. Third, food choices in general are shaped more by unconscious adherence to habit and situational cues than by conscious motivations (van’t Riet et al., 2011); we would therefore speculate that non-educational components of animal welfare interventions (such as leveraging the physical-moral disgust connection, invoking social norms, and giving implementation suggestions) might largely bypass conscious motivations in their influence on behavior.

We investigated the associations of specific study and intervention characteristics with effectiveness. Critically, these meta-regression estimates of effect modification, which are based on covariates that vary across studies, should not be interpreted as causal interactions because of potential confounding, as we discuss further below (Thompson and Higgins, 2002; VanderWeele and Knol, 2011). That is, these estimates represent associations between effect size and various characteristics of studies and interventions, not causation. We found that, on average, studies of interventions that recommended going vegan had effects 31% larger than studies of interventions making no recommendation, and the estimates for interventions making intermediate recommendations to “go vegetarian” or “reduce consumption” heuristically suggested a dose-response pattern in which interventions making more forceful recommendations (e.g., “go vegan” versus “reduce meat consumption”) may have had larger effects on average. These findings preliminarily suggest that interventions making more forceful recommendations do not seem to backfire and may in fact be more effective than subtler interventions. Studies measuring outcomes after a relatively long delay (≥ 7 days) typically had effect sizes smaller by an estimated 19%, though with considerable statistical uncertainty due to the small number of studies with long follow-up. Interventions’ durations, their inclusion of text or visuals, and studies’ percentages of male subjects did not appear meaningfully associated with effect size. Again, these estimates may not represent causal effects of different intervention designs; they could be confounded if, for example, more forceful interventions were typically used in studies of individuals who were already particularly motivated to reduce their meat consumption.
Future research should conduct more head-to-head comparisons of interventions varying on these apparent effect modifiers, using randomization for those that can be directly manipulated (as in Cooney, 2014; Macdonald et al., 2016).

4.2. Strengths and limitations

The studies we meta-analyzed had some notable methodological strengths, including predominantly randomized designs, large sample sizes, and, especially among unpublished studies, a relatively high rate of data-sharing and preregistration. The studies also had limitations, including the potential for social desirability bias, short lengths of follow-up (with typically high attrition when longer follow-up was used), and self-reported rather than direct behavioral outcome measures; these issues were most apparent in the unpublished studies. Study designs typically appeared stronger with respect to exchangeability and external generalizability. However, a number of studies did appear susceptible to confounding due to a lack of randomization or to differential attrition, and others may have had limited generalizability due to recruitment strategies that targeted highly motivated or demographically restricted subjects (samples tended to over-represent young North Americans, especially college undergraduates). Nevertheless, sensitivity analyses, including those retaining only studies at the lowest risk of bias by various criteria, changed the results little. Additionally, given the typically short lengths of follow-up, it is not clear whether interventions’ effects were sustained long enough to meaningfully improve individual health, a point we discuss further in Section 4.3. On the other hand, inexpensive interventions such as leaflets or online videos could be deployed to large numbers of individuals, potentially producing meaningfully large environmental and animal welfare impacts in aggregate even if effects were short-lived for any given individual.