Abstract

Background

The COVID-19 pandemic has given rise to more virtual simulation training. This study aimed to review the effectiveness of virtual simulations and their design features in developing clinical reasoning skills among nurses and nursing students.

Method

A systematic search in CINAHL, PubMed, Cochrane Library, Embase, ProQuest, PsycINFO, and Scopus was conducted. The PRISMA guidelines, Cochrane's risk of bias tool, and GRADE were used to assess the articles. Meta-analyses and random-effects meta-regression were performed.

Results

The search retrieved 11,105 articles, and 12 randomized controlled trials (RCTs) were included. Meta-analysis demonstrated a significant improvement in clinical reasoning based on applied knowledge and clinical performance among learners in the virtual simulation group compared with the control group. Meta-regression did not identify any significant covariates. Subgroup analyses revealed that virtual simulations were more effective when they covered patient management content, used multiple scenarios with nonimmersive experiences, lasted more than 30 minutes, and provided postscenario feedback.

Conclusions

Virtual simulations can improve clinical reasoning skills. This study may inform nurse educators on how virtual simulation should be designed to optimize the development of clinical reasoning.

Keywords: virtual simulation, clinical reasoning, nursing education, systematic review, meta-analysis

Introduction

The coronavirus disease 2019 (COVID-19) pandemic has led to increased opportunities for the development of virtual technologies in nursing education. Given the unpredictable nature of the pandemic, continued public health mitigations such as safe distancing measures and avoidance of large group classes have necessitated the transition to more integrated, blended learning approaches in nursing education (Haslam, 2021). Some educators have swiftly incorporated virtual simulation into nursing curricula to complement face-to-face teaching, while others have turned to virtual simulation to supplement limited access to clinical placements, particularly in specialist areas such as mental health, pediatrics, and maternal health (Verkuyl et al., 2021).

Despite the increased adoption, the definition of virtual simulation has remained unclear among educators and researchers (Foronda et al., 2020). This paper adopts the definition of virtual simulation from the Healthcare Simulation Dictionary published by the Agency for Healthcare Research and Quality (AHRQ), which refers to the recreation of reality portrayed on computer screens, involving real people operating simulated systems and playing key roles in performing skills, engaging in decision-making or communicating (Lioce, 2020). Virtual simulation can take the form of serious games, virtual reality, or partial or complete immersive screen-based experiences, with or without the use of headsets. It can be an effective pedagogy in nursing education to improve acquisition of knowledge, skills, critical thinking, self-confidence, and learner satisfaction (Foronda et al., 2020).

A unique function of virtual simulation is the development of clinical reasoning. Levett-Jones et al. (2010) conceptualized clinical reasoning as a process by which one gathers cues, processes the information, identifies the problem, plans and performs actions, evaluates outcomes, and reflects on and learns from the process. This cognitive and meta-cognitive process of synthesizing knowledge and patient data in relation to specific clinical situations is vital for nurses to respond to clinical changes and make decisions on care management (Clemett & Raleigh, 2021; Victor-Chmil, 2013). It is also important to enhance nursing students’ and nurses’ clinical reasoning skills to enable them to provide quality and safe patient care by making accurate inferences and evidence-based decisions (Mohammadi-Shahboulaghi et al., 2021).

Virtual simulation uses clinical scenarios for deliberate practice, a highly customizable, repetitive and structured activity with explicit learning objectives, to enhance learners’ performance in clinical decision-making skills in identifying patient problems and in care management that emphasizes decision-making with consequences (Ericsson et al., 1993; LaManna et al., 2019; Levett-Jones et al., 2019). The scenarios can be adjusted in complexity to allow for repetition and deliberate practice in a safe and controlled environment (Borg Sapiano et al., 2018). These scenarios provide feedback with associated expert practice and rationales that can support the development of clinical reasoning (Posel et al., 2015). The features of virtual simulation correspond with best practice guidelines for healthcare simulation (Motola et al., 2013) and the theoretical framework of experiential learning (Shin et al., 2019). As in other simulation methods, virtual simulation allows learners to be actively engaged in an experience and reflect on those experiences through feedback and assessment methods. These experiences are conceptualized and stored in learners’ existing cognitive frameworks to be utilized in real-world clinical practice (Kolb, 1984).

Prior reviews have supported the use of virtual simulation in the context of nursing education but are limited mainly to narrative synthesis and a focus on general learning outcomes (Coyne et al., 2021; Foronda et al., 2020; Shin et al., 2019). Only one review examined the effect of virtual simulation for teaching diagnostic reasoning to healthcare providers (Duff et al., 2016). However, that review used a scoping review methodology and acknowledged the limitation of including only 12 studies published between 2008 and 2015 (Duff et al., 2016). Although the use of virtual simulation has increased in nursing education, its impact on the development of clinical reasoning skills has not yet been evaluated. This could be due to the variety of approaches, including multiple-choice questions, script concordance, and clinical performance assessment, that have been used as proxies for the outcome measure of clinical reasoning (Clemett & Raleigh, 2021). Thampy et al. (2019) recommended targeting the higher levels of Miller's pyramid of clinical competence to assess clinical reasoning. This pyramid has four hierarchical levels of competency: knowledge (tested by written assessment), applied knowledge (tested by problem-solving exercises such as case scenarios and written assignments), skills demonstration (through simulation and clinical exams), and practice (through observations in real clinical settings) (Witheridge et al., 2019). Currently, there is limited understanding of how virtual simulations can be designed to optimize the development of clinical reasoning. In view of these research gaps, this review aimed to evaluate the effectiveness of virtual simulations and their associated design features for developing clinical reasoning among nurses and nursing students. The review was guided by the following research questions:

1. What is the effectiveness of virtual simulation on clinical reasoning in nursing education?
2. What are the essential features in designing virtual simulation that improves clinical reasoning in nursing?

Methods

This systematic review and meta-analysis was reported according to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) checklist (see Appendix 1) (Liberati et al., 2009) and the Cochrane Handbook for Systematic Reviews (Higgins, Thomas, et al., 2020).

Eligibility Criteria

Inclusion criteria were as follows: (a) pre- or postregistration nursing education, (b) randomized controlled trial (RCT) with a comparison group, (c) study intervention using virtual simulation that incorporated experiential learning approaches, and (d) at least one outcome assessing clinical reasoning at Miller's pyramid level two and above. Outcomes assessing knowledge at level one of Miller's pyramid (“knows” the facts) were excluded. A detailed description of the inclusion and exclusion criteria is presented in Appendix 2.

Search Strategy

Two systematic review databases, PubMed Clinical Queries and the Cochrane Database of Systematic Reviews, were searched first to avoid duplicating an existing review. This was followed by a three-step search strategy that was developed with a librarian and based on the Cochrane Handbook for Systematic Reviews (Lefebvre et al., 2020). First, a search was conducted from inception to 10 January 2021 using keywords and index terms on seven bibliographic databases: PubMed, Scopus, Embase, Cochrane Central Register of Controlled Trials (CENTRAL), Cumulative Index to Nursing and Allied Health Literature (CINAHL), PsycINFO and ProQuest (see Appendix 3). These databases were selected as they are major scientific databases for healthcare-related papers. Second, clinical trial registries including ClinicalTrials.gov and CenterWatch were searched for ongoing and unpublished trials. Lastly, grey literature, targeted journals, and reference lists were searched to maximize the retrieval of relevant articles.

Study Selection

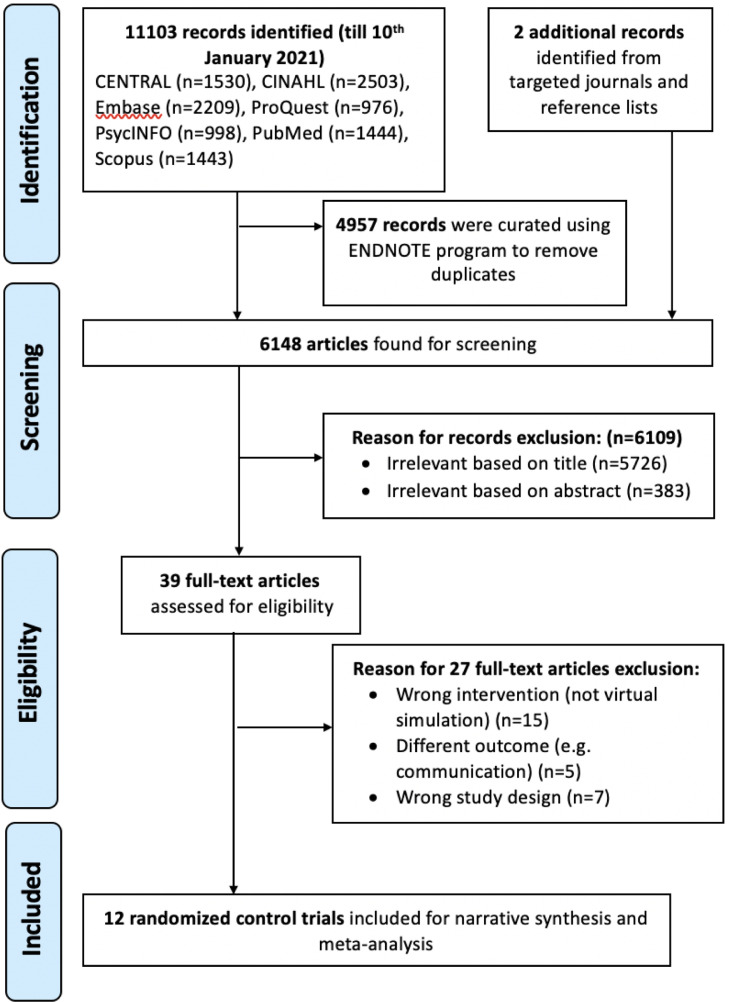

The PRISMA framework involving four stages (identification, screening, eligibility, and inclusion) was adopted for study selection. The reference management software EndNote X9 (Clarivate Analytics, 2020) was used to import the records and remove duplicates. Next, two independent reviewers (JJMS and KDBR) screened titles and abstracts to select full-text articles for eligibility based on the inclusion and exclusion criteria. Subsequently, both reviewers compared findings, and any disagreements were resolved through discussion or consultation with a third reviewer (SYL). Reasons for trial exclusion are indicated in the PRISMA flow diagram (see Figure 1).

Figure 1.

Preferred reporting items for systematic review and meta-analyses (PRISMA) flow diagram.

Data Extraction

A modified Cochrane data extraction form (Li, Higgins, & Deeks, 2020) was used by the two independent reviewers (JJMS and KDBR) for data extraction. Items extracted from eligible studies included author(s), year, country, design, participants, sample size, intervention, comparator, outcomes, intention to treat (ITT), attrition rates, protocol, and trial registration. The specific components of virtual simulation included intervention regime (number/length of sessions, duration), learning content, feedback (postscenario/scenario-embedded), and immersion (nonimmersive/immersive). Authors were contacted for missing relevant data. A third reviewer (SYL) reviewed and confirmed the extracted data.

Quality Assessment

The Cochrane Collaboration's tool (version 1) was utilized by two independent reviewers (JJMS and KDBR) to assess risk of bias of individual studies (Minozzi et al., 2020). The presence of five biases, including selection, performance, detection, attrition, and reporting biases, was examined through (a) random sequence generation, (b) allocation concealment, (c) blinding of participants and personnel, (d) blinding of outcome assessment, (e) incomplete outcome data and (f) selective reporting. These six domains were each appraised as low, unclear, or high risk depending on the information provided. In addition, attrition rate, missing data management, ITT, trial and protocol registration, and funding were examined to ensure robustness of trials.

Grading of Recommendations, Assessment, Development, and Evaluation (GRADE) was used to assess the overall strength of evidence (GRADEpro, 2020). An overall grade of “high,” “moderate,” “low,” or “very low” was given depending on the five domains (methodological limitations, inconsistency, indirectness, imprecision, and publication bias) (Schünemann, Brożek, Guyatt, & Oxman, 2013).

Data Synthesis and Statistical Analyses

Review Manager (RevMan) (version 5.4.1) (The Cochrane Collaboration, 2020) was utilized to analyze outcomes of meta-analyses. A random-effects model was used because it accounts for the statistical assumption of variation in the estimation of mean scores across all selected trials (Deeks, Higgins, & Altman, 2020). The Z-statistic at a significance level of p < .05 was used to evaluate the overall effect. Standardized mean difference (SMD) or Cohen's d was used to express the effect size and its magnitude for continuous outcomes, where d(0.01) = very small, d(0.2) = small, d(0.5) = medium, d(0.8) = large, d(1.2) = very large, and d(2.0) = huge (Sawilowsky, 2009). Cochran's Q (χ² test) was utilized to evaluate statistical heterogeneity, with statistical significance of χ² set at p < .10. The I² statistic was used to quantify the degree of heterogeneity, where I² was categorized as unimportant (0%-40%), moderate (30%-60%), substantial (50%-90%), and considerable (75%-100%) (Chaimani et al., 2020). Sensitivity analyses were utilized to remove heterogeneous trials to ensure homogeneity (Deeks, Higgins, & Altman, 2020). Subgroup analyses were conducted to determine sources of heterogeneity and compare the intervention effects among intervention features (Higgins, Savović, Page, Elbers, & Sterne, 2020). Predefined subgroups included learning content, duration, feedback, immersion, and number of scenarios (Deeks, Higgins, & Altman, 2020).
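The pooling steps described above (inverse-variance weights, Cochran's Q, I², and the Z-test of the overall effect) can be illustrated with a minimal sketch. It assumes the DerSimonian-Laird estimator of between-trial variance, which is one common random-effects approach and is not confirmed here as the exact procedure used in RevMan. The function name `random_effects_pool` and the three trial values are hypothetical and are not data from this review.

```python
import math


def random_effects_pool(effects, variances):
    """Pool per-trial SMDs with a DerSimonian-Laird random-effects model (assumed estimator)."""
    k = len(effects)
    w = [1.0 / v for v in variances]  # inverse-variance (fixed-effect) weights
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w

    # Cochran's Q: weighted squared deviations from the fixed-effect estimate
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = k - 1

    # Between-trial variance (tau^2), truncated at zero
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)

    # I^2: share of total variability attributed to heterogeneity, in percent
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0

    # Random-effects weights add tau^2 to each trial's own variance
    w_re = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))
    z = pooled / se
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided p-value for Z
    return {"pooled": pooled, "se": se, "z": z, "p": p, "q": q, "tau2": tau2, "i2": i2}


# Hypothetical SMDs and variances for three trials (not data from this review)
result = random_effects_pool([0.20, 0.90, 1.50], [0.04, 0.05, 0.06])
print({name: round(value, 3) for name, value in result.items()})
```

When all trials report the same effect, Q falls to zero, so both tau² and I² are zero and the random-effects estimate coincides with the fixed-effect estimate.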

Meta-regression was performed using Jamovi (version 1.6) (The Jamovi Project, 2021) to examine whether heterogeneity among trials was attributed to covariates (Deeks, Higgins, & Altman, 2020). The random-effects meta-regression model was utilized to determine if the year of publication, age of participants, sample size, learning content, number of scenarios, type of feedback, and immersive experience influenced the effect size of applied knowledge in virtual simulation. Random-effects meta-regression analysis at the significance level of p < .05 was adopted.
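As a rough illustration of a single-covariate random-effects meta-regression, the sketch below fits a weighted least-squares line with weights 1/(v + tau²). It assumes tau² is supplied rather than estimated, so it does not reproduce the estimator used in Jamovi. The function name `meta_regression` and all trial values are hypothetical.

```python
import math


def meta_regression(effects, variances, covariate, tau2=0.0):
    """Weighted least-squares slope of effect size on one covariate (tau^2 assumed known)."""
    w = [1.0 / (v + tau2) for v in variances]  # random-effects weights
    sum_w = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, covariate)) / sum_w
    y_bar = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, covariate))
    sxy = sum(wi * (xi - x_bar) * (yi - y_bar)
              for wi, xi, yi in zip(w, covariate, effects))

    beta = sxy / sxx                        # regression coefficient
    se = math.sqrt(1.0 / sxx)               # standard error of the coefficient
    z = beta / se                           # Z statistic
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided p-value
    return {"beta": beta, "se": se, "lower": beta - 1.96 * se,
            "upper": beta + 1.96 * se, "z": z, "p": p}


# Hypothetical trials: publication year as the covariate (not data from this review)
years = [2012, 2014, 2016, 2017, 2019]
smds = [0.30, 0.55, 0.60, 0.95, 1.10]
trial_variances = [0.05, 0.04, 0.06, 0.05, 0.07]
fit = meta_regression(smds, trial_variances, years, tau2=0.10)
print({name: round(value, 3) for name, value in fit.items()})
```

In this formulation, Z is the coefficient divided by its standard error, and the 95% limits are the coefficient plus or minus 1.96 standard errors.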

Results

Study Selection

As shown in Figure 1, the study selection process followed the PRISMA guidelines (Liberati et al., 2009). A total of 11,105 records were retrieved from seven databases and additional sources, and 4,957 duplicate records were removed using EndNote X9. After 6,109 records were excluded based on titles and abstracts, the remaining 39 full-text articles were assessed for eligibility. Twelve RCTs met the criteria for this review.
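The record counts reported above can be checked with simple arithmetic. The short sketch below uses only the figures stated in this section; the variable names are illustrative, and the number excluded at full-text review is derived by subtraction rather than quoted from the flow diagram.

```python
# Counts reported in the study selection paragraph
identified = 11105
duplicates_removed = 4957
excluded_on_title_abstract = 6109
full_text_assessed = 39
included = 12

# Records left for title and abstract screening after de-duplication
screened = identified - duplicates_removed

# The screening stage should leave exactly the full-text articles assessed
assert screened - excluded_on_title_abstract == full_text_assessed

# Derived value: full-text articles excluded before inclusion
excluded_at_full_text = full_text_assessed - included
print(screened, excluded_at_full_text)
```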

Study Characteristics

Table 1 summarizes the characteristics of the 12 RCTs. In total, there were 856 participants in the included studies, with mean ages ranging from 18.97 (Bayram & Caliskan, 2019) to 27.61 years (Liaw et al., 2017). They represented seven countries, namely Canada (n = 1) (Cobbett & Clarke, 2016), China (n = 1) (Gu et al., 2017), France (n = 1) (Blanié et al., 2020), Portugal (n = 1) (Padilha et al., 2019), Singapore (n = 5) (Liaw et al., 2014; Liaw, Wong, Ang, et al., 2015; Liaw, Wong, Chan, et al., 2015; Liaw et al., 2017; Tan et al., 2017), Turkey (n = 1) (Bayram & Caliskan, 2019) and the United States (n = 2) (LeFlore et al., 2012; Li, 2016). Most RCTs targeted nursing students (n = 9), of which six involved final-year students; the remaining three (Liaw, Wong, Ang, et al., 2015a; Liaw, Wong, Chan, et al., 2015b; Liaw et al., 2017) included nurses.

Table 1.

Characteristics of 12 Included Randomized Controlled Trials (RCTs)

| Author (Year) | Study Design/Country | Participants/Age (Mean ± SD/Range) | Sample Size | Intervention (I) | Comparator (C) | Outcomes (Measures) | p-value | Attrition Rate (%) | ITT/MDM | Protocol/Registration/Funding |
|---|---|---|---|---|---|---|---|---|---|---|
| Bayram & Caliskan (2019) | 2-arm RCT/Turkey | Undergraduate Nursing Students (1st Year) / I: NM; C: NM; Overall^a: 18.97 ± 1.00 | I: 43; C: 43; T: 86 | Virtual Game† (Single scenario in immersive virtual environment related to tracheostomy clinical procedural skills, followed by postscenario feedback) LD: 10 minutes | NonDigital Education (Classroom-Based Teaching) | Applied Knowledge (Self-Developed MCQ); Skills Demonstration (Self-Developed Performance Tool) | .568; .017 | I: 13.5%; C: 13.5% | No/Yes | No/No/Yes |
| Blanié et al. (2020) | 2-arm RCT/France | Undergraduate Nursing Students (Final Year) / I: 24.0 ± 6.40; C: 25.0 ± 6.50; Overall^a: 24.5 ± NM | I: 73; C: 73; T: 146 | Virtual Game† (Multiple scenarios in nonimmersive virtual environment related to postoperative complications management, followed by postscenario feedback) LD: 120 minutes | NonDigital Education (Classroom-Based Teaching) | Applied Knowledge (SCTs) | .43 | I: 0%; C: 0% | NA/NA | No/Yes/No |
| Cobbett & Clarke (2016) | 2-arm RCT/Canada | Undergraduate Nursing Students (Final Year) / I: NM; C: NM; Overall^a: 25.0 ± NM | I: 27; C: 28; T: 55 | vSim™ (Single scenario in nonimmersive virtual environment related to maternal-newborn complications management, followed by postscenario feedback) LD: 45 minutes | NonDigital Education (PS) | Applied Knowledge (Self-Developed MCQ) | .31 | I: 1.8%; C: 0% | No/Yes | No/No/Yes |
| Gu et al. (2017) | 2-arm RCT/China | Undergraduate Nursing Students (2nd Year) / I: 19.0 ± 0.58; C: 19.29 ± 0.73; Overall^a: 19.15 ± NM | I: 13; C: 14; T: 27 | vSim™ (Multiple scenarios in nonimmersive virtual environment related to fundamentals of nursing clinical procedural skills, followed by postscenario feedback) LD: 29 minutes | NonDigital Education (Lecture) | Applied Knowledge (Self-Developed MCQ) | .032* | I: 3.6%; C: 0% | No/Yes | No/No/No |
| Li (2016) | 2-arm RCT/United States | Undergraduate Nursing Students (Final Year) / I: 25.68 ± 6.80; C: 25.63 ± 4.13; Overall^a: 26.65 ± NM | I: 22; C: 27; T: 49 | vSim™ (Multiple scenarios in nonimmersive virtual environment related to management of acute and chronic diseases, followed by postscenario feedback) LD: 150 minutes | NonDigital Education (PS) | Applied Knowledge (HSRT) | .418 | I: 5.8%; C: 0% | No/Yes | No/No/No |
| LeFlore et al. (2012) | 2-arm RCT/United States | Undergraduate Nursing Students (Final Year) / I: NM; C: NM; Overall^a: 25.7 ± NM | I: 46; C: 47; T: 93 | Virtual Patient Trainer (Unreal Engine 3)† (Multiple scenarios in immersive virtual environment related to paediatric respiratory issues management, followed by scenario-embedded feedback) LD: 180 minutes | NonDigital Education (Lecture) | Applied Knowledge (Self-Developed MCQ) | .004* | I: 0%; C: 0% | NA/NA | No/No/No |
| Liaw et al. (2014) | 2-arm RCT/Singapore | Undergraduate Nursing Students (Final Year) / I: NM; C: NM; Overall^a: 21.86 ± 1.13 | I: 31; C: 26; T: 57 | e-RAPIDS (Multiple scenarios in nonimmersive virtual environment related to clinical deterioration management, followed by postscenario feedback) LD: 120 minutes | NonDigital Education (PS) | Skills Demonstration (RAPIDS-Tool) | .94 | I: 0%; C: 6.6% | No/Yes | No/No/Yes |
| Liaw et al. (2015a) | 2-arm RCT/Singapore | Registered Nurses / I: NM; C: NM; Overall^a: 25.58 ± 3.19 | I: 35; C: 32; T: 67 | e-RAPIDS (Multiple scenarios in nonimmersive virtual environment related to clinical deterioration management, followed by postscenario feedback) LD: 180 minutes | No Intervention Control Group | Skills Demonstration (RAPIDS-Tool) | <.001* | I: 0%; C: 4.3% | No/Yes | No/No/Yes |
| Liaw et al. (2015b) | 2-arm RCT/Singapore | Registered Nurses / I: 26.17 ± 3.17; C: 24.94 ± 3.14; Overall^a: 25.6 ± NM | I: 35; C: 32; T: 67 | e-RAPIDS (Multiple scenarios in nonimmersive virtual environment related to clinical deterioration management, followed by postscenario feedback) LD: 180 minutes | No Intervention Control Group | Applied Knowledge (Self-Developed MCQ); Skills Demonstration (Modified RAPIDS-Tool) | <.001*; <.001* | I: 0%; C: 4.3% | No/Yes | No/No/Yes |
| Liaw et al. (2017) | 2-arm RCT/Singapore | Enrolled Nurses / I: 28.16 ± 4.00; C: 27.06 ± 4.30; Overall^a: 27.61 ± 4.16 | I: 32; C: 32; T: 64 | e-RAPIDS (Multiple scenarios in nonimmersive virtual environment related to clinical deterioration management, followed by postscenario feedback) LD: 150 to 180 minutes | No Intervention Control Group | Applied Knowledge (Self-Developed MCQ); Skills Demonstration (Modified RAPIDS-Tool) | .01*; .001* | I: 3.0%; C: 1.5% | No/Yes | No/No/Yes |
| Padilha et al. (2019) | 2-arm RCT/Porto, Portugal | Postgraduate Nursing Students (Final Year) / I: 19.29 ± 0.46; C: 20.29 ± 2.19; Overall^a: 19.99 ± 1.99 | I: 21; C: 21; T: 42 | Clinical Virtual Simulator (Body Interact) (Single scenario in nonimmersive virtual environment related to management of respiratory issues, followed by postscenario feedback) LD: 45 minutes | NonDigital Education (PS) | Applied Knowledge (Self-Developed True/False Questions and MCQ) | .001* | I: 12.5%; C: 12.5% | No/Yes | No/No/Yes |
| Tan et al. (2017) | 2-arm RCT/Singapore | Undergraduate Nursing Students (Second Year) / I: 21.14 ± 2.08; C: 20.72 ± 0.96; Overall^a: 20.59 ± NM | I: 57; C: 46; T: 103 | Virtual Game (3DHive)† (Single scenario in immersive virtual environment related to blood transfusion and post-transfusion reaction management, followed by scenario-embedded feedback) LD: 30 minutes | Wait-List Control Group | Applied Knowledge (Self-Developed MCQ); Skills Demonstration (Self-Developed Performance Tool) | <.001*; .11 | I: 4.5%; C: 2.7% | No/No | No/No/No |

a = total mean age of participants; C = comparator; CCTDI = California critical thinking disposition inventory; HSRT = health science reasoning test; I = intervention group; ITT = intention to treat; L = length of each intervention session; LD = learning duration; LEP = learning environment preferences; MCQ = multiple-choice questions; MDM = missing data management; NA = not applicable; NM = not mentioned; NOS = number of intervention sessions; OD = overall duration of intervention; PS = physical simulation; RAPIDS = Rescuing a patient in deteriorating situations; SCTs = script concordance tests; T = total number of participants included in meta-analysis.

*p < .05; † name of virtual simulation system not specified.

Details of Virtual Simulation

The virtual simulations used in the trials were vSim™ (n = 3) (Cobbett & Clarke, 2016; Gu et al., 2017; Li, 2016), e-RAPIDS (n = 4) (Liaw et al., 2014; Liaw, Wong, Ang, et al., 2015; Liaw, Wong, Chan, et al., 2015; Liaw et al., 2017) and Clinical Virtual Simulator (Body Interact) (n = 1) (Padilha et al., 2019). Four of the RCTs did not specify the name of the simulation system. All of the trials involved learning topics related to patient care management focused on acute care, except for Bayram and Caliskan (2019) and Gu et al. (2017), which focused on clinical procedural skills including tracheostomy care, medication administration and urinary catheterisation. The virtual environments were either immersive using three-dimensional (3D; n = 3) or nonimmersive using two-dimensional (2D; n = 9) modalities. All involved one scenario, except for Cobbett and Clarke (2016), which involved two; Li (2016), which involved five; and Bayram and Caliskan (2019) and Gu et al. (2017), which were user-determined. Only two studies used scenario-embedded feedback (LeFlore et al., 2012; Tan et al., 2017), with the others (n = 10) utilising postscenario feedback. Learning duration ranged from 10 (Bayram & Caliskan, 2019) to 180 (Liaw, Wong, Ang, et al., 2015) minutes.

Risk of Bias Assessment

All studies were appraised as high risk, except Tan et al. (2017) and Liaw et al. (2017), where the risk of bias was rated as unclear (see Appendix 4). Predominant risk of bias was observed in the following domains: unclear risk of reporting bias (100%) due to lack of trial registration and ITT analysis for transparency, high risk of performance bias (58.3%) due to the nature of virtual simulation, and high or unclear risk of selection bias for allocation concealment due to lack of apparent evidence (58.3%). Attrition bias was low, as only two studies had an attrition rate exceeding 20%, a level that raises threats to validity (Bayram & Caliskan, 2019; Padilha et al., 2019).

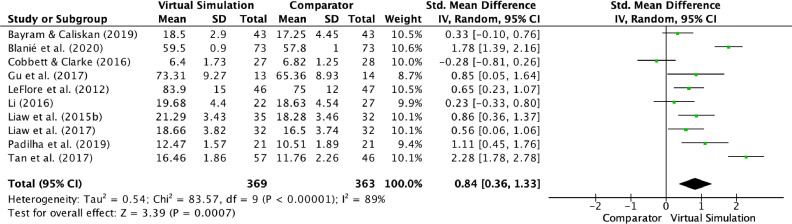

Applied Knowledge Outcomes

Figure 2 presents the pooled meta-analysis results from ten RCTs that compared knowledge scores between virtual simulation and comparator groups. A total of 732 participants were included in the analysis, which yielded a significant increase in knowledge scores in the virtual simulation group (Z = 3.39, p < .001), with a large effect size (d = 0.84). Given that substantial heterogeneity (I² = 89%, p < .001) was detected, sensitivity and subgroup analyses were performed. The sensitivity analysis did not reduce heterogeneity.

Figure 2.

Forest plot of standardized mean difference (95% CI) on applied knowledge scores (post intervention) in VS.

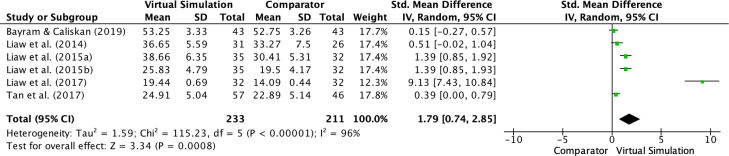

Skills Demonstration Outcomes

Figure 3 illustrates the pooled meta-analysis results from six RCTs with a total of 444 participants where skills demonstration scores were used as an outcome. The analysis indicated a significant improvement of skills in the virtual simulation group (Z = 3.34, p < .001), with a very large to huge effect size (d = 1.79). Because of considerable heterogeneity (I² = 96%, p < .001), sensitivity and subgroup analyses were conducted. The sensitivity analysis did not reduce heterogeneity.

Figure 3.

Forest plot of standardized mean difference (95% CI) on skills demonstration scores (post intervention) in VS.
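The effect-size labels attached to the pooled results follow the Sawilowsky (2009) benchmarks listed in the Methods. The sketch below maps a Cohen's d value to a label under the assumption that each benchmark acts as a lower bound for its category. The function name `describe_effect` and the fallback label "negligible" are illustrative choices, not terms from the source.

```python
# Sawilowsky (2009) benchmarks as listed in the Methods, largest first
BENCHMARKS = [
    (2.0, "huge"),
    (1.2, "very large"),
    (0.8, "large"),
    (0.5, "medium"),
    (0.2, "small"),
    (0.01, "very small"),
]


def describe_effect(d):
    """Return the label of the largest benchmark that |d| reaches (assumed rule)."""
    size = abs(d)
    for cutoff, label in BENCHMARKS:
        if size >= cutoff:
            return label
    return "negligible"  # illustrative label for values below the smallest benchmark


print(describe_effect(0.84))  # pooled applied knowledge effect
print(describe_effect(1.79))  # pooled skills demonstration effect
```

Under this rule, d = 0.84 is labelled large, while d = 1.79 reaches the very large benchmark (1.2) without reaching the huge benchmark (2.0), which is consistent with describing it as very large to huge.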

Subgroup Analyses

Subgroup analyses were conducted to examine key features of virtual simulation that result in acquisition of clinical reasoning skills through applied knowledge and skills demonstration (see Table 2). Virtual simulation had a greater effect in increasing knowledge scores when the learning content included patient care management (d = 0.91), when multiple scenarios were used (d = 0.84), and when postscenario feedback was provided (d = 0.73). As shown in Table 2, there were no significant subgroup differences for knowledge scores when comparing learning duration (I² = 0%, p = .41) and immersive experience (I² = 0%, p = .59).

Table 2.

Subgroup Analyses of Virtual Simulation for Applied Knowledge and Skills Demonstration Scores

| Category | Subgroups | No. of Studies (Ref) | Sample Size (n) | d (95% CI) | Overall effect (Z, p-value for Z) | Subgroup Difference (p-value for Q, I^2) |
|---|---|---|---|---|---|---|
| Knowledge Scores | | | | | | |
| Learning Content | Patient Care Management | 8 (b, c, e, f, i, j, k, l) | 619 | 0.91 (0.33, 1.48) | Z = 3.08, p = .002* | p = .25, I^2 = 23.8% |
| | Clinical Procedural Skills | 2 (a, d) | 113 | 0.48 (0.02, 0.93) | Z = 2.04, p = .04* | |
| Number of Scenarios | Multiple | 6 (b, d, e, f, i, j) | 446 | 0.84 (0.35, 1.33) | Z = 3.36, p < .0008* | p = .97, I^2 = 0% |
| | Single | 4 (a, c, k, l) | 286 | 0.86 (-0.25, 1.96) | Z = 1.52, p = .13 | |
| Feedback | Post Scenario | 8 (b, c, d, e, f, i, j, k) | 543 | 0.73 (0.25, 1.20) | Z = 3.00, p < .003* | p = .36, I^2 = 0% |
| | NonPost Scenario | 2 (a, l) | 189 | 1.33 (-0.61, 3.21) | Z = 1.33, p = .18 | |
| Learning Duration | ≤ 30 minutes | 3 (a, d, l) | 216 | 1.16 (-0.16, 2.47) | Z = 1.73, p = .08 | p = .41, I^2 = 0% |
| | > 30 minutes | 5 (c, f, i, j, k) | 321 | 0.57 (0.15, 1.00) | Z = 2.63, p = .009* | |
| Immersive Experience | Immersive Environment | 3 (a, f, l) | 282 | 1.08 (-0.03, 2.19) | Z = 1.90, p = .06 | p = .59, I^2 = 0% |
| | NonImmersive Environment | 7 (b, c, d, e, i, j, k) | 450 | 0.74 (0.17, 1.30) | Z = 2.56, p = .01* | |
| Skills Demonstration Scores | | | | | | |
| Learning Duration | ≤ 30 minutes | 2 (a, l) | 189 | 0.28 (-0.01, 0.57) | Z = 1.92, p = .06 | p = .005*, I^2 = 87.0% |
| | > 30 minutes | 4 (g, h, i, j) | 255 | 2.82 (1.05, 4.59) | Z = 3.13, p = .002* | |
| Immersive Experience | Immersive Environment | 2 (a, l) | 189 | 0.28 (-0.01, 0.57) | Z = 1.92, p = .06 | p = .005*, I^2 = 87.0% |
| | NonImmersive Environment | 4 (g, h, i, j) | 255 | 2.82 (1.05, 4.59) | Z = 3.13, p = .002* | |

Note: CI = Confidence Interval; d = Cohen's d (Effect Size); I^2 = Heterogeneity; Ref = Reference; Z = z-Statistics;

Reference: a(Bayram & Caliskan, 2019); b(Blanié et al., 2020); c(Cobbett & Clarke, 2016); d(Gu et al., 2017); e(Li, 2016); f(LeFlore et al., 2012); g(Liaw et al., 2014); h(Liaw et al., 2015a); i(Liaw et al., 2015b); j(Liaw et al., 2017); k(Padilha et al., 2019); l(Tan et al., 2017)

*p < .05

As shown in Table 2, virtual simulation had a greater effect in increasing skills performance scores, with significant subgroup differences of considerable heterogeneity (I² = 87.0%, p = .005), when the duration was more than 30 minutes (d = 2.82) and when using nonimmersive virtual simulation (d = 2.82).
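The test for subgroup differences can be illustrated with the skills demonstration estimates from Table 2. The sketch below assumes that standard errors can be recovered from the 95% confidence limits using a normal approximation, and it handles only the two-subgroup case (one degree of freedom). The function names are illustrative.

```python
import math


def se_from_ci(lower, upper, z_crit=1.96):
    """Approximate a standard error from 95% confidence limits (normal assumption)."""
    return (upper - lower) / (2.0 * z_crit)


def two_subgroup_difference(d1, ci1, d2, ci2):
    """Q test for the difference between two subgroup estimates (df = 1)."""
    var1 = se_from_ci(*ci1) ** 2
    var2 = se_from_ci(*ci2) ** 2
    q = (d1 - d2) ** 2 / (var1 + var2)         # Cochran's Q across two subgroups
    df = 1
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0
    p = math.erfc(math.sqrt(q / 2.0))          # chi-square upper tail for df = 1
    return {"q": q, "i2": i2, "p": p}


# Skills demonstration scores by learning duration, taken from Table 2
short_duration = (0.28, (-0.01, 0.57))   # 30 minutes or less
long_duration = (2.82, (1.05, 4.59))     # more than 30 minutes
outcome = two_subgroup_difference(*short_duration, *long_duration)
print({name: round(value, 4) for name, value in outcome.items()})
```

With these inputs the sketch gives an I² of about 87% and a p-value close to .005, in line with the subgroup difference reported in Table 2.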

Meta-regression

The random-effects meta-regression was performed to assess the effects of the following covariates on the effect size of applied knowledge scores: year of publication, age of participants, sample size, learning content, number of scenarios, type of feedback and immersive experience (see Table 3). Covariates that had no effect on applied knowledge scores included year of publication (β = 0.10, p = .36), age of participants (β = -0.17, p = .37), sample size (β = 0.01, p = .76), patient care management (β = 0.34, p = .60), multiple scenarios (β = -0.03, p = .96), postscenario feedback (β = 0.30, p = .45) and nonimmersive environment (β = -0.34, p = .55).

Table 3.

Random Effects Meta-regression Models of Virtual Simulation by Various Covariates

| Covariates | β | Standard Error | 95% Lower | 95% Upper | Z | p |
|---|---|---|---|---|---|---|
| Year of Publication | 0.10 | 0.11 | -0.12 | 0.33 | -0.91 | .36 |
| Age of Participants | -0.17 | 0.19 | -0.55 | 0.21 | -0.88 | .37 |
| Sample Size | 0.01 | 0.02 | -0.38 | 0.53 | 0.31 | .76 |
| Patient Care Management | 0.34 | 0.65 | -0.93 | 1.61 | 0.52 | .60 |
| Multiple Scenarios | -0.03 | 0.54 | -1.08 | 1.03 | -0.05 | .96 |
| PostScenario Feedback | -0.57 | 0.65 | -1.83 | 0.70 | -0.88 | .38 |
| Nonimmersive Environment | -0.34 | 0.57 | -1.47 | 0.78 | -0.59 | .55 |

Note: β = Regression coefficient; Z = Z statistics

Overall Quality Appraisal (GRADE)

Using the GRADE certainty assessment, the overall quality of evidence for knowledge and clinical performance outcomes was graded as very low (see Appendix 5). The domains of certainty assessment, including biases, inconsistency, indirectness and imprecision, were downgraded as a result of methodological limitations; variabilities in population, intervention and comparator group; and small sample size. Publication bias was not detected for trials that reported applied knowledge scores, as a symmetrical distribution of the included trials was observed on the funnel plot (Egger's test, p = .73) (see Appendix 6).

Discussion

The meta-analysis demonstrated a significant improvement in clinical reasoning skills based on applied knowledge (know how) and clinical performance (show how) among nursing students and nurses in the virtual simulation groups compared with control groups. Subgroup analyses revealed that virtual simulation was more effective for the acquisition of clinical reasoning skills when learning content focused on patient management and when conducted for more than 30 minutes’ duration, using multiple scenarios with nonimmersive experiences and provision of postscenario feedback. Meta-regression did not identify any significant covariates.

By employing a quantitative synthesis of outcomes with selectively included studies, the findings from this review add further evidence to support earlier narrative reviews that identified the effectiveness of virtual simulation in improving knowledge and clinical performance of healthcare learners (Coyne et al., 2021; Foronda et al., 2020). Similar to our review findings, a meta-analysis on virtual patient simulations in health professional education found improved skills performance outcomes compared with traditional education (Kononowicz et al., 2019).

The effectiveness of virtual simulation in improving clinical reasoning can be explained by the application of Kolb's (1984) experiential learning, which requires learners to engage in clinical decision-making processes through problem-solving of clinical scenarios and gives them access to feedback on performance to facilitate reflection. According to Fowler (2008), the effectiveness of experiential learning depends on the quality of the experience and reflection on the experience. As reported by Edelbring (2013), key design strategies for virtual simulation are essential to optimize experiential learning approaches. Although the features identified for effective learning in high-fidelity simulation include various clinical scenarios and training levels, deliberate practice and feedback were found to be commonly used in the design of virtual simulation (Liaw et al., 2014). However, the application of these features for optimal design of virtual simulation may vary according to educational context (Cook & Triola, 2009).

Our subgroup analysis provided evidence on the specific features of virtual simulation that optimize the facilitation of clinical reasoning. The findings revealed a greater effect for virtual simulation programs that focused on developing critical thinking skills related to patient care management, such as management of clinical deterioration, than for those that focused on developing knowledge application related to clinical procedures (e.g., tracheostomy care). Virtual simulation offers real-life clinical scenarios that enable learners to conduct nursing assessment based on the given scenario and apply the assessment findings to make clinical decisions in the development of a patient management plan (LaManna et al., 2019). Thus, it is considered best suited for promoting clinical reasoning skills related to patient management, to prepare students for a range of clinical situations (Borg Sapiano et al., 2018). Conversely, the use of virtual simulation for the development of knowledge related to procedural skills has been criticized because other, more cost-effective methods are available (Cook & Triola, 2009).

In this subgroup analysis, virtual simulation was found to have a greater effect when multiple clinical scenarios with longer duration (>30 minutes) were used. Expertise in clinical reasoning is believed to be developed through exposure to a range of clinical cases that can facilitate appropriate pattern recognition, that is, the process of recognizing similarity on the basis of prior experience (Norman et al., 2007). Apart from promoting pattern recognition, multiple and varied clinical scenarios can facilitate deliberate practice of the reasoning process by reinforcing knowledge structures (Cook & Triola, 2009). The ease of access and flexibility in terms of time and place were shown to promote deliberate practice in virtual simulation, which made it as effective as one-off manikin-based simulations (Liaw et al., 2014). Besides varied clinical scenarios, the deliberate practice of a mental model (e.g., ABCDE) in these scenarios was considered critical to arriving at the appropriate clinical decision for the specific patient (Liaw et al., 2015a). However, logistical challenges, such as scheduling of sessions with students and the development of appropriate case scenarios, should be taken into consideration during the virtual simulation development phase (Liaw et al., 2020).

In the studies included in this review, feedback using quizzes or checklists was incorporated throughout the clinical cases. According to Norman and Eva (2010), feedback that provides individual responses with rationales and evidence can support the development of clinical reasoning. Our study provides evidence that the incorporation of feedback at the end of each scenario is effective in supporting the development of clinical reasoning. As reported by Posel et al. (2015), postscenario feedback that enables learners to review the case for errors made, their associated rationales and experts’ responses can provide an opportunity for students to undertake postcase reflection—a critical element in the development of clinical reasoning skills. More research is needed to inform how to effectively deliver postscenario feedback to optimize the development of clinical reasoning in virtual simulation.

Interestingly, our findings revealed that nonimmersive 2D environments (e.g., screen-based simulation) were more effective than immersive 3D virtual environments (e.g., virtual reality simulation). The application of emotional engagement theory and cognitive load theory may help to clarify this finding (La Rochelle et al., 2011; Van der Land et al., 2013). While the 3D virtual environment can enhance students’ motivation and engagement to learn through realistic, immersive, and interactive learning environments, it can also increase learners’ cognitive load because they have to attend to irrelevant immersive stimuli that distract them from the learning tasks (Van der Land et al., 2013). Thus, the 3D virtual environment should be used with caution, as this approach, which aims to increase the authenticity of learning, does not appear to improve clinical reasoning skills (La Rochelle et al., 2011).

Strengths and Limitations

This is one of the first systematic reviews and meta-analyses to present contemporary and robust evidence of the effectiveness and essential features of virtual simulation for developing clinical reasoning in nursing education. Although a robust search was undertaken to decrease publication bias, the inclusion of English-only articles might have limited the study selection and may affect generalization of the findings. Only RCT designs were included in this review to ensure scientific credibility. However, the small sample sizes in the selected trials might have resulted in small study effects. Larger trials are needed to strengthen the existing evidence. Because of the high risk of selection, performance, and reporting biases, the overall quality of the evidence was low; thus, the results should be interpreted with caution. Miller's pyramid of clinical competence was applied to assess clinical reasoning at the higher levels to ensure clinical reasoning was appropriately evaluated. The reviewed studies only included “know how” (applied knowledge) and “show how” (skills demonstration) levels. However, proficiency at these levels may not automatically transfer to real-life clinical settings (Thampy et al., 2019). Future studies should target the top of Miller's pyramid (“does” level) by examining whether the clinical reasoning skills gained in virtual simulations influence learners’ actual performance in clinical settings.

Conclusion

The development of clinical reasoning as a core competency is critical for nursing education to ensure the provision of safe and quality patient care. Our review demonstrated that the experiential learning approach in virtual simulation can improve this nursing competency. Future designs of virtual simulation should consider the use of nonimmersive virtual environments and multiple scenarios with postscenario feedback in delivering learning content related to patient care management. Future studies using robust RCTs and examining the impact on actual clinical performance are needed to strengthen the existing evidence.

Acknowledgement

We would like to thank Elite Editing for providing editing services for this manuscript.

Conflict of interest

No conflicts of interest are declared.

Footnotes

Supplementary material associated with this article can be found, in the online version, at doi:10.1016/j.ecns.2022.05.006.

References

- Bayram S., Caliskan N. Effect of a game-based virtual reality phone application on tracheostomy care education for nursing students: a randomized controlled trial. Nurse Education Today. 2019;79:25–31. doi: 10.1016/j.nedt.2019.05.010.

- Blanié A., Amorim M.A., Benhamou D. Comparative value of a simulation by gaming and a traditional teaching method to improve clinical reasoning skills necessary to detect patient deterioration: a randomized study in nursing students. BMC Medical Education. 2020;20:53. doi: 10.1186/s12909-020-1939-6.

- Borg Sapiano A., Sammut R., Trapani J. The effectiveness of virtual simulation in improving student nurses' knowledge and performance during patient deterioration: a pre and post test design. Nurse Education Today. 2018;62:128–133. doi: 10.1016/j.nedt.2017.12.025.

- Chaimani, A., Caldwell, D., Li, T., Higgins, J., & Salanti, G. (2020). Chapter 11: Undertaking network meta-analyses. In Cochrane handbook for systematic reviews of interventions. Accessed from: https://training.cochrane.org/handbook/current/chapter-11

- Clarivate Analytics. (2020). EndNote X9 [Computer software]. Philadelphia, PA: The EndNote Team. Accessed from: https://endnote.com

- Clemett V.J., Raleigh M. The validity and reliability of clinical judgement and decision-making skills assessment in nursing: a systematic literature review. Nurse Education Today. 2021;102. doi: 10.1016/j.nedt.2021.104885.

- Cobbett S., Clarke E. Virtual versus face-to-face clinical simulation in relation to student knowledge, anxiety, and self-confidence in maternal-newborn nursing: a randomized controlled trial. Nurse Education Today. 2016;45:179–184. doi: 10.1016/j.nedt.2016.08.004.

- Cook D.A., Triola M.M. Virtual patients: a critical literature review and proposed next steps. Medical Education. 2009;43(4):303–311. doi: 10.1111/j.1365-2923.2008.03286.x.

- Coyne E., Calleja P., Forster E., Lin F. A review of virtual-simulation for assessing healthcare students' clinical competency. Nurse Education Today. 2021;96. doi: 10.1016/j.nedt.2020.104623.

- Deeks, J., Higgins, J., & Altman, D. (2020). Chapter 10: Analysing data and undertaking meta-analyses. In Cochrane handbook for systematic reviews of interventions. Accessed at: January 7, 2021. Accessed from: https://training.cochrane.org/handbook/current/chapter-10

- Duff E., Miller L., Bruce J. Online virtual simulation and diagnostic reasoning: a scoping review. Clinical Simulation in Nursing. 2016;12(9):377–384. doi: 10.1016/j.ecns.2016.04.001.

- Edelbring S. Research into the use of virtual patients is moving forward by zooming out. Medical Education. 2013;47(6):544–546. doi: 10.1111/medu.12206.

- Ericsson K., Krampe R., Tesch-Römer C. The role of deliberate practice in the acquisition of expert performance. Psychological Review. 1993;100(3):363–406. doi: 10.1037/0033-295x.100.3.363.

- Foronda C.L., Fernandez-Burgos M., Nadeau C., Kelley C.N., Henry M.N. Virtual simulation in nursing education: a systematic review spanning 1996 to 2018. Simulation in Healthcare. 2020;15(1):46–54. doi: 10.1097/SIH.0000000000000411.

- Fowler J. Experiential learning and its facilitation. Nurse Education Today. 2008;28(4):427–433. doi: 10.1016/j.nedt.2007.07.007.

- GRADEpro. (2020). GRADEpro GDT: GRADEpro guideline development tool [software]. Accessed at: February 18, 2021. Accessed from: https://gradepro.org

- Gu Y., Zou Z., Chen X. The effects of vSIM for nursing™ as a teaching strategy on fundamentals of nursing education in undergraduates. Clinical Simulation in Nursing. 2017;13(4):194–197. doi: 10.1016/j.ecns.2017.01.005.

- Haslam M.B. What might COVID-19 have taught us about the delivery of Nurse Education, in a post-COVID-19 world? Nurse Education Today. 2021;97. doi: 10.1016/j.nedt.2020.104707.

- Higgins, J. P. T., Thomas, J., Chandler, J., Cumpston, M., Li, T., Page, M.J., & Welch, V. A. (2020). Cochrane handbook for systematic reviews of interventions. Accessed at: January 7, 2021. Accessed from: www.training.cochrane.org/handbook

- Higgins, J., Savović, J., Page, M., Elbers, R., & Sterne, J. (2020). Chapter 8: assessing risk of bias in a randomized trial. In Cochrane handbook for systematic reviews of interventions. Accessed at: January 7, 2021. Accessed from: www.training.cochrane.org/handbook

- Kolb D.A. Prentice-Hall; Englewood Cliffs, N.J: 1984. Experiential learning: experience as the source of learning and development.

- Kononowicz A., Woodham L., Edelbring S., Stathakarou N., Davies D., Saxena N., Zary N. Virtual patient simulations in health professions education: systematic review and meta-analysis by the digital health education collaboration. Journal of Medical Internet Research. 2019;21(7):e14676. doi: 10.2196/14676.

- LaManna J., Guido-Sanz F., Anderson M., Chase S., Weiss J., Blackwell C. Teaching diagnostic reasoning to advanced practice nurses: positives and negatives. Clinical Simulation in Nursing. 2019;26:24–31. doi: 10.1016/j.ecns.2018.10.006.

- Lefebvre, C., Glanville, J., Briscoe, S., Littlewood, A., Marshall, C., Metzendorf, M, … Wieland, S. (2020). Chapter 4: searching for and selecting studies. Cochrane Handbook for Systematic Reviews of Interventions. Accessed at: January 7, 2021. Accessed from: https://training.cochrane.org/handbook/archive/v6.1/chapter-04

- LeFlore J.L., Anderson M., Zielke M.A., Nelson K.A., Thomas P.E., Hardee G., John L. Can a virtual patient trainer teach student nurses how to save lives—Teaching nursing students about pediatric respiratory diseases. Simulation in Healthcare: The Journal of the Society for Simulation in Healthcare. 2012;7(1):10–17. doi: 10.1097/sih.0b013e31823652de.

- Levett-Jones T., Hoffman K., Dempsey J., Jeong S.Y., Noble D., Norton C.A., Hickey N. The ‘five rights’ of clinical reasoning: an educational model to enhance nursing students' ability to identify and manage clinically 'at risk' patients. Nurse Education Today. 2010;30(6):515–520. doi: 10.1016/j.nedt.2009.10.020.

- Levett-Jones T., Pich J., Blakey N. In: Clinical reasoning in the health professions. 4th Ed. Higgs J, editor. Elsevier; Marrickville: 2019. Teaching clinical reasoning in nursing education.

- Li, C. (2016). A comparison of traditional face-to-face simulation versus virtual simulation in the development of critical thinking skills, satisfaction, and self-confidence in undergraduate nursing students. ProQuest Dissertations & Theses Global. 10800814.

- Li, T., Higgins, J., & Deeks, J. (2020). Chapter 5: Collecting Data. In Cochrane Handbook for Systematic Reviews of Interventions. Accessed at: January 7, 2021. Accessed from: https://training.cochrane.org/handbook/current/chapter-05

- Liaw S., Chan S., Chen F., Hooi S., Siau C. Comparison of virtual patient simulation with mannequin-based simulation for improving clinical performances in assessing and managing clinical deterioration: randomized controlled trial. Journal of Medical Internet Research. 2014;16(9):e214. doi: 10.2196/jmir.3322.

- Liaw S., Wong L., Ang S., Ho J., Siau C., Ang E. Strengthening the afferent limb of rapid response systems: an educational intervention using web-based learning for early recognition and responding to deteriorating patients. BMJ Quality & Safety. 2015;25(6):448–456. doi: 10.1136/bmjqs-2015-004073.

- Liaw S., Wong L., Chan S., Ho J., Mordiffi S., Ang S., Ang E. Designing and evaluating an interactive multimedia web-based simulation for developing nurses’ competencies in acute nursing care: randomized controlled trial. Journal of Medical Internet Research. 2015;17(1):e5. doi: 10.2196/jmir.3853.

- Liaw S., Chng D., Wong L., Ho J., Mordiffi S., Cooper S., Chua W., Ang E. The impact of a Web-based educational program on the recognition and management of deteriorating patients. Journal of Clinical Nursing. 2017;26(23-24):4848–4856. doi: 10.1111/jocn.13955.

- Liaw S.Y., Ooi S.W., Rusli K.D.B., Lau T.C., Tam W.W.S., Chua W.L. Nurse-physician communication team training in virtual reality versus live simulation: randomized controlled trial on team communication and teamwork attitudes. Journal of Medical Internet Research. 2020;22(4):e17279. doi: 10.2196/17279.

- Liberati A., Altman D., Tetzlaff J., Mulrow C., Gotzsche P., Ioannidis J., Moher D. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate healthcare interventions: explanation and elaboration. BMJ. 2009;339:b2700. doi: 10.1136/bmj.b2700.

- Lioce L. Agency for Healthcare Research and Quality; Rockville, MD: 2020. Healthcare Simulation Dictionary – Second Edition. September 2020. AHRQ Publication No. 20-0019.

- Minozzi S., Cinquini M., Gianola S., Gonzalez-Lorenzo M., Banzi R. The revised Cochrane risk of bias tool for randomized trials (RoB 2) showed low interrater reliability and challenges in its application. Journal of Clinical Epidemiology. 2020;126:37–44. doi: 10.1016/j.jclinepi.2020.06.015.

- Mohammadi-Shahboulaghi F., Khankeh H., HosseinZadeh T. Clinical reasoning in nursing students: a concept analysis. Nursing Forum. 2021;56(4):1008–1014. doi: 10.1111/nuf.12628.

- Motola I., Devine L., Chung H., Sullivan J., Issenberg S. Simulation in healthcare education: a best evidence practical guide. AMEE guide no. 82. Medical Teacher. 2013;35(10):e1511–e1530. doi: 10.3109/0142159x.2013.818632.

- Norman G., Eva K. Diagnostic error and clinical reasoning. Medical Education. 2010;44(1):94–100. doi: 10.1111/j.1365-2923.2009.03507.x.

- Norman G., Yong M., Brooks L. Non-analytical models of clinical reasoning: the role of experience. Medical Education. 2007;41(12):1140–1145. doi: 10.1111/j.1365-2923.2007.02914.x.

- Padilha J., Machado P., Ribeiro A., Ramos J., Costa P. Clinical virtual simulation in nursing education: randomized controlled trial. Journal of Medical Internet Research. 2019;21(3):e11529. doi: 10.2196/11529.

- Posel N., Mcgee J., Fleiszer D.M. Twelve tips to support the development of clinical reasoning skills using virtual patient cases. Medical Teacher. 2015;37(9):813–818. doi: 10.3109/0142159x.2014.993951.

- La Rochelle J., Durning S., Pangaro L., Artino A., van der Vleuten C., Schuwirth L. Authenticity of instruction and student performance: a prospective randomised trial. Medical Education. 2011;45(8):807–817. doi: 10.1111/j.1365-2923.2011.03994.x.

- Sawilowsky S. New effect size rules of thumb. Journal of Modern Applied Statistical Methods. 2009;8(2):597–599. doi: 10.22237/jmasm/1257035100.

- Schünemann, H., Brożek, J., Guyatt, G., & Oxman, A. (2013). Introduction to grading of recommendations, assessment, development and evaluation (GRADE) handbook. Accessed at: February 18, 2021. Accessed from: https://gdt.gradepro.org/app/handbook/handbook.html

- Shin H., Rim D., Kim H., Park S., Shon S. Educational characteristics of virtual simulation in nursing: an integrative review. Clinical Simulation in Nursing. 2019;37:18–28. doi: 10.1016/j.ecns.2019.08.002.

- Tan A.J.Q., Lee C.C.S., Lin P.Y., Cooper S., Lau S.T.L., Chua W.L., Liaw S.Y. Designing and evaluating the effectiveness of a serious game for safe administration of blood transfusion: a randomized controlled trial. Nurse Education Today. 2017;55:38–44. doi: 10.1016/j.nedt.2017.04.027.

- Thampy H., Willert E., Ramani S. Correction to: assessing clinical reasoning: targeting the higher levels of the pyramid. Journal of General Internal Medicine. 2019. doi: 10.1007/s11606-019-05593-4.

- The Cochrane Collaboration (2020). Review Manager (RevMan) [Computer Software]. (Version 5.4.1). Copenhagen: The Nordic Cochrane Centre. Accessed at: January 7, 2021. Accessed from: https://training.cochrane.org/online-learning/core-software-cochrane-reviews/revman

- The Jamovi Project (2021). Jamovi (Version 1.6) [Computer Software]. Sydney, Australia. Accessed from: https://www.jamovi.org

- Van Der Land S., Schouten A., Feldberg F., van den Hooff B., Huysman M. Lost in space? Cognitive fit and cognitive load in 3D virtual environments. Computers in Human Behavior. 2013;29(3):1054–1064. doi: 10.1016/j.chb.2012.09.006.

- Verkuyl M., Lapum J.L., St-Amant O., Hughes M., Romaniuk D. Curricular uptake of virtual gaming simulation in nursing education. Nurse Education in Practice. 2021;50. doi: 10.1016/j.nepr.2021.102967.

- Victor-Chmil J. Critical thinking versus clinical reasoning versus clinical judgment: differential diagnosis. Nurse Educator. 2013;38(1):34–36. doi: 10.1097/NNE.0b013e318276dfbe.

- Witheridge A., Ferns G., Scott-Smith W. Revisiting Miller's pyramid in medical education: the gap between traditional assessment and diagnostic reasoning. International Journal of Medical Education. 2019;10:191–192. doi: 10.5116/ijme.5d9b.0c37.
