Abstract

Following previous works about integro-differential equations of parabolic type modelling the Darwinian evolution of a population, we study a two-population system in the cooperative case. First, we provide a theoretical study of the limit of rare mutations and we prove that the limit is described by a constrained Hamilton-Jacobi equation. This equation is given by an eigenvalue of a matrix which accounts for the diffusion parameters and the coefficients of the system. Then, we focus on a particular application: the understanding of a phenomenon called Adaptation to DNA damage. In this framework, we provide several numerical simulations to illustrate our theoretical results and investigate mathematical and biological questions.

Keywords: Adaptive evolution, Cooperative system, Lotka-Volterra equation, Hamilton-Jacobi equation, Viscosity solutions

Introduction

A common way to investigate evolutionary dynamics [11, 22] is to model populations structured by a phenotypical trait with non-local partial differential equations [5, 6, 8]. This methodology has the advantage of studying not only the final situation but also the fitness landscape and the possible evolutionary paths in a given setting [42]. In those kind of models, the organisms are described by a trait and their density expands or decays in function of both and the competition with other individuals. A simple possibility to represent mutations along the trait is to use a Laplacian:

| 1.1 |

This type of model can be derived from individual based stochastic models in the large population limit [15, 16].

In many works (e.g. [5, 6, 8, 10]), the authors introduce a parameter which provides a way to study the asymptotic limit of the model in the regime of small mutations and long time [12]. This procedure relies upon a Hamilton-Jacobi approach and was extensively investigated for system (1.2). It consists in making the change of variable

which leads to the equation

| 1.2 |

This change of variables allows to catch the effective behaviour of the solutions in large timescales. It can be understood heuristically as follows. The parameter in front of the time derivative accelerates the time meanwhile the parameter in front of the mutation operator (the Laplacian here) avoids that individuals adapt to their environment too fast. Therefore, only the individuals with a trait “well-adapted” to the environment survive. This is why, we expect the solution to concentrate to a sum of Dirac mass centred at these well adapted traits. In a suitable mathematical setting, when , the solutions of (1.2) concentrate into a sum of Dirac masses moving in time, and in the limit the location of emergent traits is driven by an Hamilton-Jacobi equation of the form

| 1.3 |

This type of non-local model was intensively studied and applied to many different biological contexts, for example adaptation of cancer to treatment [17, 33, 34, 43], epigenetics changes [32], non-inherited antibiotic resistance [9] or more generally long-time evolutionary dynamics [24, 26, 37]. Finally, we underline that a more realistic approach is to use an integral term and a mutation kernel (see for instance [5, 39] and the references therein) since, in our case, it is tantamount to saying that the mutations are independent of birth.

Adaptation to DNA Damage as a Modelling Drive for This Study

The theoretical study in this article is mainly motivated by a specific modelling challenge which is still poorly understood from an evolutionary dynamics point of view: the so-called “adaptation to DNA damage” phenomenon.1

When eukaryotic cells face damage to their DNA, specialised mechanisms come into play. The DNA damage checkpoint signalling pathway leads to stopping the cell cycle at the G2/M phase. Then, appropriate repair pathways are activated. These mechanisms are called the DNA damage response. However, the attempts of the cell at restoring its DNA integrity can fail: for example, the damage may be impossible to repair, the appropriate repair pathway may not be available at this stage of the cell cycle or the source of the damage might still be present, causing damage faster than the cell can repair.

In case repair fails for too long, cells will override the DNA damage checkpoint and resume cell division even though the damage is still present [30, 50]. This prevents the cells from being blocked in the cell cycle until they die. This phenomenon is called adaptation to DNA damage. Note that this is a metabolic adaptation occurring in each individual cell which is to be distinguished from the genetic adaptation of the whole cell population on longer timescales (see footnote 1).

Due to improper chromosome segregation [27], adapted cells have chromosomal instability and a high mortality rate, making adaptation a last resort mechanism after all repair options have already failed. The descendants of adapted cells can carry the damage and share the mortality rate of their parents, but adaptation also allows cells to have access to other repair pathways at later stages of the cell cycle; it also happens that one of the daughter cells of an adapted cell doesn’t suffer from the damage due to asymmetric segregation of the chromosomes [48, 51]. Both the success of repair and, if repair is unsuccessful, the survival after an adaptation event, depend on the type and location of the damage. Double-strand breaks are especially dangerous to the cell survival and have been used by experimental biologists to investigate repair mechanisms.

This leads to a hierarchy of cell fate decisions: repair is attempted first and then the cells adapt. In the budding yeast model organism [45], the cells will adapt at a variable timing, ranging from 5 to 15 hours, which is consistent with the fact that one of the slowest repair pathways, break-induced replication,2 takes around 5 hours to be fully attempted [35]. Common experiments to investigate adaptation rely upon mutant populations which cannot repair heat-induced or irradiation-induced damage, making adaptation and its specific timescales easier to characterise [25, 28]. These experiments suggest that adaptation is detrimental to the population and mutants who cannot adapt have better survival when the experimental conditions are made favorable again.

Given how dangerous adaptation to DNA damage can be for cells and their progeny, an important question is to understand what shapes the characteristics of the phenomenon. The two main components of the adaptation process are when it happens on average after the damage which we refer to as the timing of adaptation and how variable it is in time, which we call heterogeneity of adaptation. At the moment, biologists have very few ways of investigating experimentally how these features are selected by natural selection or constrained by chemical limitations. Most of the experiments are performed in controlled environment and little is known about what happens in the wild or during long timescales. Some reviews by experts in the field proposed that adaptation timing and heterogeneity could have been selected by evolution because they are optimal for long term survival of the populations in adverse and unpredictable conditions (e.g. [20]).

A first evolutionary model was proposed recently for adaptation to DNA damage in [44]. The authors derive formally from a stochastic compartment model an ODE model representing how a population of damaged cells recovers and fills the medium up to carrying capacity.

If we call the adaptation timing and the heterogeneity of adaptation, the authors of [44] assume that cells damaged at time 0 repair with a given rate and adapt with a rate . The unit of is in cell-cycle, which is about 1.5–2 hours depending on the environmental conditions. The parameter ranges from 0 (totally random) and (totally deterministic). Based on experimental insights, they choose a logistic model for the adaptation rate after a time since the damage:

| 1.4 |

with the maximal value of the adaptation rate.

Using first the stochastic compartment model and then the deterministic ODE model, the authors prove that there is an optimal value for that allows for the fastest recovery of a fully damaged population. However, their optimisation procedure indicates that the optimal value for is , which is in contrast with what the experiments indicate: adaptation ranges between 5 and 15 hours. The authors then improve their model, taking into account the fact that if the source of damage is still present for some hours (heat, X-rays, chemicals in the medium,…) then the cells cannot repair at all because their repair capacity is overloaded by the continuous source of damage. For each run of their model, they draw a random variable determining when repair becomes possible after the damage. With this component and optimising for expectancy of survival, they find an optimal value for the heterogeneity parameter .

As explained in [44], the selection of an optimal value for the heterogeneity parameter when the environment becomes unpredictable can be related to the more general concept of bet-hedging. Bet-hedging occurs when, on the long term, an isogenic population of organisms selects a phenotype that is suboptimal in any given environment but is optimal for maximising survival in average in an unpredictable environment [47, 49]. A classical example is reservoirs of ungerminated seeds in the soil: in favourable conditions it is optimal for all seeds to germinate as soon as possible, but seed banks allows the population to escape extinction in case of severe drought because ungerminated seeds are less affected [19].

A major drawback in the work of [44] is that their model considers only an isogenic population with fixed parameters and , and then compares, depending on the parameter, the fitness of the population. Their work doesn’t model how the cells change their genetic traits nor how cells with different genetic profiles compete with each other in a same environment. Their model is also very rigid in terms of evolutionary timescales and assumes an initial damage to the whole population instead of continuous damage in time. The later is more realistic since DNA damage can also come from endogenous stochastic causes.

In this work, we propose a more general approach using non-local partial differential equations of the type of (1.1) and a rescaling procedure akin to the one used in (1.2). In Sect. 5, we explain in more details the model of [44] and we build upon it a more complex model consisting in three coupled non-local PDEs representing healthy, damaged and adapted cells: system (5.5)–(5.6)–(5.7)–(5.8) in Sect. 5.

This system being difficult to tackle both theoretically and numerically, we make the simplifying assumption of a quasi-stationary equation for damaged cells, which allows us to remove them from the system by assuming they are treated instantly and redirected to the healthy cells population, the adapted cells population or killed. We thereby obtain a simpler two-populations model describing the dynamics and genetic diffusion of healthy and adapted cells: system (5.10)–(5.11) in Sect. 5. In the theoretical part of this article, we provide a mathematical study of a more general class of two-populations non-local models that includes system (5.10)–(5.11). The next subsection describes this mathematical framework.

A Model for Two Cooperative Populations Structured by a Phenotypical Trait

We propose to study through a Hamilton-Jacobi procedure a system of non-local PDEs modelling two cooperative populations structured by a same phenotypical trait and described by their densities and . This model generalises the system (5.10)–(5.11). For this reason, we first perform the abstract mathematical study in the general case in order to have more tool for the subsequent modelling and numerical parts.

To the best of our knowledge, there is little research about the asymptotic behaviour of several specie non-local PDEs in evolutionary dynamics. Existing works in this direction focus, for instance, on the influence of a spatial domain [10], on organisms which specialise in order to consume particular resources [23], on a model for juvenile-adult population undergoing small mutations [14], on elliptic systems [29, 37, 41] for two species or on influence of a spatial domain [10].

The model we focus on writes

| 1.5 |

where and represent the intrinsic fitness of organisms with trait in the two populations. The terms and are cooperative terms (or, in our application in Sect. 5, conversion terms from one cell type to the other) between the two populations. The total number of cells represents the competition for resources. Since the system is cooperative (i.e. an increase in one of the two populations imply an increase in the other population), we expect that both population converge to a Dirac mass with a same trait as , even in the case where one population has a smaller mutation rate (notice that we allow in the forthcoming assumption (H1) but ). Heuristically, the dynamics of the main phenotype of this population will be driven by the mutation of the other population and the exchange term.

The system can be summarised in the following compact form

| 1.6 |

with Neuman boundary conditions: . Here stands for the vector and for the following operators:

| 1.7 |

First, we assume that

| H1 |

Note that (H1) allows one of the two coefficients being equal to 0, but not both at the same time. We will also assume that there exists such that

| H2 |

An other hypothesis is

| H3 |

Finally, we assume that both initial conditions satisfy:

| H4 |

Theorem 1.1

Under the assumptions (H1), (H2), (H3) and (H4), there exists a solution to (1.5). Moreover, we have

The proof is an adaptation of the one presented in Appendix A of [8]. We provide it in the Appendix for the sake of completeness.

The Main Mathematical Result

We adopt the classical approach for Hamilton-Jacobi equations: we perform the so-called Hopf-Cole transformation by defining

| 1.8 |

This approach is well adapted since we expect to converge to a Dirac mass in the sens of the measure as vanishes. In this order, it is sufficient to prove that converges in some set of continuous functions with for some whereas for . Performing the inverse transformation of the Hopf-Cole transform provides the desired result. Therefore, we rewrite (1.5) in the following form

| 1.9 |

Finally, following [7], we introduce the effective Hamiltonian as known as one of the eigenvalue of (associated to a constant sign eigen-vector):

| 1.10 |

We introduce the Hamiltonian fitness

| 1.11 |

such that

We will denote the corresponding principal eigen-vector:

| 1.12 |

All the components of can be chosen strictly positive. The other eigenvector, associated to the eigenvalue , has a positive and a negative component.

Theorem 1.2

Under the hypotheses (H1), (H2), (H3) and (H4), there hold

- The sequence converges to a non-decreasing function as with

- The sequence converges locally uniformly to a same continuous function , with a viscosity solution of

1.13 - The sequence converges in the sense of measures to . Moreover, we have

Outline of the Paper

In Sect. 2, we detail the general approach and state the main technical results that lead to the proof of Theorem 1.2. Section 3 is devoted to the proofs of these technical results. In Sect. 4, we prove Theorem 1.2. Next, in Sect. 5, we detail the biological context that motivates our theoretical study. Finally, in Sect. 6, we illustrate our theoretical study by some numerical simulations in the framework given by our biological motivations. We also investigate numerically some open questions.

Notations: All along the paper, we adopt the following conventions:

the letters refer, when there is no confusion possible, to an index in ,

if and are used in a same equation then ,

the bold mathematical characters are strictly reserved for vectors of or matrix of ,

the constants are taken positive and may change from line to line when there is no confusion possible (the capital letter is preferentially used for large constants and the small letter for small constants).

The Hamilton Jacobi Approach

We develop in this part of the work a general approach for non-local cooperative systems. For technical reasons, we focus on a model with only two species and a uni-dimensional space. This part is largely inspired by [7] and [8], but since we study a coupled system, we cannot use the same arguments straight away. Unlike in the articles [5] and [8] for the single species problem, it is not possible to obtain directly a uniform BV estimate for the total mass . There are additional mixing terms and, a priori, nothing prevents them to blow-up when goes to 0. Moreover, one can not apply directly the method of [7] because the non-local total mass does not prevent the logarithm of the solution to be positive. We will circumvent these issues by employing a combination of the two former approaches.

The Approach

Before dealing with the mathematical details, we propose an overview of the classical methods to treat this kind of problem as well as a presentation of heuristic arguments.

A local version of (1.6) was studied in [7] (i.e. with replaced by ). Moreover, the authors focus on general systems with more that two equations. We do not obtain the same level of generality than [7]. As we will see later, the hypothesis of having only two equations (rather than several) is a key hypothesis in our work. From a technical point of view, Barles, Evans and Souganidis do not prove any regularity results on but they study the system through the semi-relaxed limit method by defining

We did not succeed in adapting this idea without proving any regularity results on . Indeed, with the semi-relaxed limit approach, one key point is to prove that . In [7], this claim is true; otherwise, it would be in contradiction with some natural bounds on (obtained with the maximum principle). However, in our setting without any regularity result in space on , even if we have natural bounds on the total mass , nothing prevents the solution to be positive at a singular point. Indeed, contrary to the problem studied in [7], may be positive on a sequence of intervals with (where stands for the Lebesgue measure).

Therefore, we state regularity results in space on . Our result generalizes the case of the single population equation (1.2) (i.e. and ). In the first works treating this equation [5, 6, 8], the main result on the convergence of was obtained by proving some BV-estimates on and some bounds on by using the Bernstein method. Then obtaining the Lipschitz regularity of with respect to time leads to the convergence by using the Arzela-Ascoli Theorem. Before, dealing with the Hamilton-Jacobi equation (1.13), we prove the convergence of toward subsequence. We adapt the proof of [6] (Theorem 3.1) and [8] (Theorem 2.4). The proof of the Theorem 3.1 of [6] involves the positiveness of (equation (3.5) of [6]). In our work, it is not clear in general that

the right-hand side being what we would obtain in place of .

To tackle this issue, we propose a precise estimate of . Indeed, this estimate ensures that the exponential term is bounded and then one can apply the classical Bernstein method to obtain regularity in space. From this space regularity, we will deduce that is Lipschitz with respect to time. Finally from this last result, we deduce that the family converges. It will allow us to conclude.

We underline that the estimate of plays a similar role than the Harnack estimates obtained in [29, 36] in elliptic settings.

We formally write a Taylor expansion of :

We first expect that since we do not expect a blow up of the exponential term. Next, by subtracting the two equations of (1.9) and using the fact that , we obtain

Taking formally, the limit , we expect

The above expression involves which is not clearly defined yet. Notice here that in the special case the formula is simpler since the right-hand part is only defined thanks to the functions and we expect

Definition 2.1

Let be the unique positive root of

| 2.1 |

The fact that is important because it allows us to make a reasoning on the sign of . Next, with this definition, we state the main technical statements that are necessary to prove Theorem 1.2.

Theorem 2.2

Under the hypotheses (H1), (H2), (H3) and (H4), the following assertions hold true.

- Bounds. There exists such that

2.2 - Space regularity. For any times and there exists a constant such that

2.3 - Ratio . For any positive time, we have

2.4 Time regularity. The family is locally uniformly continuous with respect to time.

Remark that the third item (the ratio estimates) comes after the space regularity result since when , if is not locally bounded with respect to , one can not conclude the proof of (2.4). However, to prove the space regularity result, one needs an estimate similar to (2.4). We prove a weaker version of (2.4) as an intermediate result but we state only the stronger result in the theorem above. We also highlight that the terms has an exponent 4 that will be used in the proof of point 1. of Theorem 1.2.

The Special Case

In this special setting, note that does not involve anymore. Therefore, the point 3. of Theorem 2.2 can be obtained directly by observing that

(for some large constant ). We refer to the forthcoming proof of Lemma 3.3 for more details. It follows that the point 2. of Theorem 2.2 can be obtained from the point 3.

Last, we can also derive formally a simpler equivalent equation for system (5.10) in the long time limit. We can assume when and thus the quantity

should satisfy the equation

| 2.5 |

with and where the global fitness function of the system writes

Equation (2.5) is well understood. It is proved in [1, 31] that for each there exists a unique solution which is the ground state of the Schroedinger operator

First, we remark that

Recalling the definition (1.11), we notice that

We conclude

| 2.6 |

Hence, the Hamiltonian fitness referred to above describes the behaviour of the system in both the limits and . This function is formally the equivalent fitness of the overall system formed by the two cooperating populations. They adjust their fitness parameter in function of the maximum points of .

The Intermediate Technical Results

Here, we prove all the statements of Theorem 2.2 and some intermediate results that are not stated above.

Bounds on

First, we focus on the bounds for . The method is quite standard (see e.g. [4] for a general introduction of this kind of method or [8] for a closer use of this method) but some new difficulties arise from the interplay between the two populations.

Proof of 1. of 2.2

We split the proof into two parts: the upper bound and then the lower one.

The upper bound. First, we define with and that will be fixed later on. We also introduce and the corresponding integer. From assumption (H4), it is clear that . Next, we consider

We prove by contradiction that . Assume . We distinguish two cases:

- Case 1: There exists such that . It follows by definition of

The definition of yields that the exponential part is bounded by 1. From this bound and (H2), it follows

which is impossible for . (We remark that according to the Neumann boundary conditions imposed on . Moreover, the first inequality above is a strict inequality whenever .) -

Case 2: There holds with . In this case, we introduce with and

Since and , we have . Remark also that for . Moreover, since , we conclude as in case 1 that . We claim that . Indeed, since there exists, by definition of , a sequence such that . If , it would imply for large enough that and a contradiction follows from

We deduce the existence of and such that

Finally, we introduce

We underline that since .Moreover, for all such that , one has that since . We deduce that there exists such that

As above, we deduce that

Next, using the bounds on and , we conclude that

Passing to the inferior limits and then , it follows

which is absurd for .

It concludes the proof of the upper bound.

The lower bound. First, we define with two free parameters satisfying . We prove the lower bound for with . As above, we introduce

Remarking that , we deduce that . As for the upper bound, we distinguish the proof into two cases:

- Case 1: There exists such that . In this case, we have

We deduce that

It is impossible for large enough (the first above inequality is strict if and only if ). - Case 2: There holds for all . As for the upper bound, we introduce for

It is clear that . Next, there exists and such that

We introduce

Moreover, we have . Since for , we have (since ), it follows the existence of such that

A direct computation implies

Taking the inferior limits and and large enough, leads to the desired contradiction.

It concludes the proof. □

A First Weak Asymptotic Result for

As mentioned in the comment that follows the statement of Theorem 2.2, we only prove a first imprecise (but necessary) result on . For this purpose, we introduce

Definition 3.1

Let be defined by

| 3.1 |

We emphasize that

| 3.2 |

This new quantity is introduced in order to prove the following result

Lemma 3.2

Under the hypothesis (H2), we have

Proof

By definition of , in any case and for all , we have

Thanks to (H2), we have

□

Notice that when , the conclusion of Lemma 3.2 may be false for if is not locally bounded. With this result, one can state the following lemma:

Lemma 3.3

Under the hypothesis (H1), (H2), (H3) and (H4), we have

Proof

Set , and . The aim is to prove that

First, we assume . We introduce

Thanks to 1. of Theorem 2.2, we have . Remark also that for all , we have

It follows that for all . Next, we distinguish two cases:

,

.

We will only consider the second case, since it is clear that in the first case the conclusion holds true.

We prove by contradiction that this case can not hold. Let be such that converges to . For sake of readability, we replace by . Notice that this limit belongs to .

According to the point 1. of Theorem 2.2 and Lemma 3.2, we have

It follows the existence of such that

Moreover, we observe that as , one has . One also has

| 3.3 |

Since converges as and all the involved functions are continuous, we deduce the existence of (independent of but that may depend on ) such that for all small enough,

| 3.4 |

We subtract the equations for and and we obtain

| 3.5 |

Next, we evaluate the above equation at . First, since , we deduce that

It follows

We deduce thanks to (3.3), (3.4) and (3.5) that there holds for small enough

We have reached the desired contradiction.

If , we provide the same arguments with

The proof for is identical by studying . Therefore, we let it to the reader. □

It follows the following corollary

Corollary 3.4

Under the hypothesis (H1), (H2), (H4) and (H3) we have

| 3.6 |

Proof

We focus on the case , the other case works exactly the same. According to Lemma 3.3, it is sufficient to prove that

First, we remark that thanks to (H2)

Next, we treat the numerator of . When , we have

Combining the two above equations the conclusion follows.

For the case, , we simply have thanks to the above computations

□

Space Regularity of

Proof of 2. of Theorem 2.2

First, we fix an initial time and a maximal time and a bound . We focus our study in the set .

Next, we define where will be chosen later on. A direct computation yields:

Replacing in the th equation (1.9), it follows

| 3.7 |

Next, we differentiate (3.7) with respect to and we multiply by to obtain:

Next, we assume that . It follows

Next, by defining where is large enough such that (thanks to point 1. of Theorem 2.2) and dividing by , we obtain thanks to Corollary 3.4

Next, thanks to Corollary 3.4, for any , it follows the existence of a large constant (independent of ), such that for

we have

Following, the Appendix B of [8], the conclusion follows by comparing to . We conclude that

□

Corollary 3.5

Under the hypotheses (H1), (H2), (H3) and (H4) we have

(where is a new arbitrary large constant that depends only on and ).

Asymptotic of

We only prove the following Lemma

Lemma 3.6

Under the hypotheses (H1), (H2), (H3) and (H4), for any time interval , there exists such that

Indeed, it is sufficient to prove this lemma because the proof of the upper bound point 3. of Theorem 2.2 is the same than the proof of Lemma 3.3 by replacing by and by using the lower bound provided by Lemma 3.6 instead of the estimate provided by Lemma 3.2. Notice that the proof of the lower bounds follows exactly the same argument than the upper bound except that . Therefore, we let the details of the proof for the reader.

Proof of Lemma 3.6

We prove this lemma for , the proof works the same with . We underline that the constant can increase from line to line but does not depend on or .

• The upper bound. We start from the definition of :

| 3.8 |

According to Corollary 3.5, we have that for all

It follows

| 3.9 |

Thanks to (H2), we have

| 3.10 |

Inserting (3.9) and (3.10) into (3.8), the conclusion follows for the upper bound.

• The lower bound. If , then the result is exactly the one obtained in Lemma 3.2. Therefore, we only consider the case , in this case we have

Next, following similar computations than (3.9) and (3.10) the conclusion follows for the lower bound. □

To finish, we state a Proposition that provides some identity related to . The proposition follows from straightforward computations that we omit here. However, the following identities will be very useful in the proof of point 1. of Theorem 1.2.

Proposition 3.7

The following identities hold true:

where is introduced in (1.11),

,

.

Notice that in the special case , we recover .

Time Regularity of

The local Lipschitz time regularity of is an exact transposition of the proof of the time regularity of in [8] in Sect. 3.5. It is performed with the so-called method of doubling variable and relies mainly on the above bounds about . We do not provide this proof and just refer to [8] Sect. 3.5.

The Hamilton Jacobi Convergence Result

Proof of Theorem 1.2

We split the proof in several parts:

The convergence of ,

The convergence of to and the control condition,

The function is solution of (1.13),

The convergence of .

• Convergence of . We follow the proofs of Theorem 3.1 of [6] and Theorem 2.4 of [8]. First, we sum the two equations and we integrate with respect to , it follows

Notice that for all , we have

| 4.1 |

Next, we differentiate over time and it follows thanks to (H3)

As mentioned in the introduction, a new technical difficulty arises since we deal with a system: it is not clear that the quantity

Indeed, using mainly Proposition 3.7 on , we prove

which is enough to conclude to the convergence of (as we will detail later on). To prove such an inequality, we start from

| 4.2 |

Thanks to the point 3. of Theorem 2.2 and Proposition 3.7, we have for

| 4.3 |

With similar computations, we also have

| 4.4 |

Inserting (4.3) and (4.4) into (4.2), it follows

Using again the point 3. of Theorem 2.2 and Proposition 3.7, it follows

We deduce that

We conclude that for all , we have

By integrating the above inequality between and , we deduce thanks to (4.1)

Finally, following the Annex B of [8], we fix and it follows for

We conclude thanks to the compact embedding of into . Up to a subsequence, converges to a function on every interval of the form for every . By a diagonal process, we conclude to the convergence of on . Moreover, it is clear that is non-decreasing.

• Convergence of . From the points 1, 2 and 4 of Theorem 2.2, we deduce thanks to the Arzela-Ascoli Theorem that converges uniformly on any set of the form with arbitrary large constants and an arbitrary small constant. We deduce that converges uniformly locally on . Moreover, thanks to the point 3 of Theorem 2.2, we deduce that

Next, we claim that . We prove it by contradiction: assume that there exists a time and such that . We deduce the existence of a sequence such that . Next, according to the point 2 of Theorem 2.2, there exists a radius such that for all , there holds

It follows that for small enough, which is in contradiction with the conclusion of Theorem 1.1.

We finally claim that for all , we have . Assume that the conclusion does not hold true. It follows the existence of a time such that . We deduce that for small enough, we have

We conclude that for small enough, which is in contradiction with the conclusion of Theorem 1.1.

• The function is solution of (1.13). We first prove that is a super-solution in a viscosity sense of . We proceed as it was introduced in the article [8]. Let and be a regular test function such that

Then, we notice that

where

The function , introduced in (1.12), is a positive eigenvector of associated to the eigenvalue . We deduce

| 4.5 |

As we have denoted , we will denote . Notice that . Since it is a minimum point, it follows

Using the equation (1.9), we deduce that

Moreover, according (4.5), we have

It follows

Moreover, recalling that is an eigenvalue of , it follows

We deduce that

Taking the limit , we conclude that is a super-solution of in a viscosity sense.

It remains to prove the limit conditions: we verify that satisfies in a viscosity sense . Let be such that takes its minimum at and for some positive time . We deduce the existence of such that

We distinguish two cases:

- Case 1: . In this case, we conclude exactly as above that

- Case 2: . In this case, using the fact that is a minimum point, we deduce that

Next, according to the Neumann boundary conditions imposed to , we deduce that

Passing to the superior limit

which corresponds to the boundary conditions in a viscosity sense.

The proof that is a sub-solution of (1.13) follows from the same arguments.

• Convergence of in the sense of measures. The proof that converges to a measure follows from the convergence of towards . Indeed, fix times ; then, according to point 1. of Theorem 2.2, there exists such that for any , and small enough, we have

Hence, we deduce that on . It follows

Since the same type of inequalities is valid for , we deduce that up to an extraction we have , where and are two non-trivial measures. Next, we prove that

Let a time and be a positive regular compactly supported test function, such that

We deduce that there exists such that

Hence, for small enough, we have . The conclusion follows the following computation:

□

Optimal Timing and Heterogeneity in the Adaptation to DNA Damage

A General Non-local System Modelling Adaptation to DNA Damage

We now describe with more mathematical details the model used in [44] and we build a more complex model involving coupled non-local partial differential equations.

The initial population is composed of a quantity of damaged cells. These cells are assumed to be the only survivors of an event that damaged their DNA and killed part of them. They first try to repair their DNA and succeed at a time-dependent rate that we assume to be Gaussian:

| 5.1 |

where is the time elapsed since the damage, is the maximal value of the rate, the variance and the mean. We assume that the cells adapt with a time-dependent rate of logistic type:

| 5.2 |

This rate involves the parameters of the adaptation timing that represent the center of the curve and which represents the heterogeneity. We also fix the hyper-parameter for the maximal value of this rate.

Adapted cells and their progeny have access to other repair mechanisms at later stages of the cell cycle and we assume that they manage to repair their DNA damage at a constant low rate . The values and are the death rates of damaged and adapted cells respectively; the death rate of healthy cells is assumed to be 0 for the sake of clearness.

Currently, there are not enough data to justify a precise choice of the fixed parameters and , but insights from experimental biologists suggest orders of magnitudes and approximate values. In this work, we stick to the choices of [44], which come from discussions with experimental biologists:

The choice of 0 for the death rate of healthy cells is not important since what matters in the model is the relative values of the different death rates. The real values of these death rates in the wild is very hard to determine and depends on many varying factors like predators and environmental conditions.

With these choices of parameters and functions, the model of [44] is defined by the following system of ordinary differential equations:

where, at time , is the quantity of damaged cells, the quantity of adapted cells and the quantity of healthy cells whose DNA is repaired. We denote

the total population at time . This system converges to a situation where the healthy cells fill entirely the carrying capacity : when .

Depending on the value of the timing of adaptation (or the value when we study heterogeneity), the population will take a certain time (respectively ) to reach some arbitrary level near the carrying capacity of the system. The authors of [44] observe that there exists for most values of an optimal value which minimises , thus allowing the population to grow back to a healthy size as fast as possible after an external event has damaged the DNA of all cells. The authors also investigate the dependency of with respect to the heterogeneity parameter when is fixed. As we explained in the introduction, for realistic values of , , which is in contrast with experimental data that indicate that adaptation is quite heterogeneous in time. The authors of [44] then improve their model, assuming that the source of the initial damage (heat, X-rays, chemicals in the medium,…) is still present for some time and prevents repair. This is modelled by a random variable determining when repair becomes possible after the damage. With this component and minimising the expectancy with respect to the law of the environmental random variable, they find an optimal value

for the heterogeneity parameter .

Here we go further into investigating the selection of optimal adaptation timing and heterogeneity . Instead of studying for each value of the genetic trait the evolution after a damaging event in the isogenic population, we consider a population of cells with varying genetic trait competing for the same resources in an environment.

Consider for example the trait representing the timing of adaptation. Let represent at time the density of healthy cells with genetic trait ; let represent at time the density of cells with genetic trait whose DNA is damaged since a time ; let represent the density of adapted cells at time with genetic trait . We now assume that some cells are damaged at rate . We model genetic diffusion in the variable of interest ( or ) for both healthy and adapted cells with a Laplacian with diffusion coefficients (healthy cells) and (adapted cells). The adapted cells having higher genomic instability, it can be interesting to consider the case . Last, we directly introduce the scaling parameter in the spirit of the model (1.2). As mentioned in the introduction, the parameter accelerates time and makes the mutations rare. In this regime, we expect that only the traits with a positive or null growth rate will be selected. Therefore, we expect that the set of solutions converges to moving Dirac masses.

The repair rate can now take into account both an absolute time part and a “time elapsed since the damage occurred” part:

| 5.3 |

where the function allows us to take into account environmental events that prevent cells from repairing their DNA damage, like X-rays, anomalous heat or toxic chemicals. The adaptation rate can depend on or depending on what we investigate, which we will denote for clarity

| 5.4 |

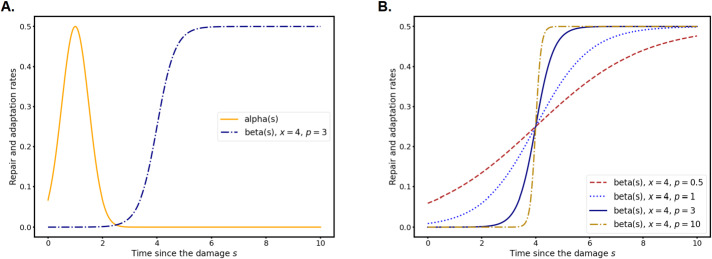

to indicate if cells vary along genetic trait or in the model. In Fig. 1 A, we present the rates and in terms of the time elapsed since the damage of a cell. In Fig. 1 B, we plot on the same graph the adaptation rate for different values of the heterogeneity parameter to help visualise what kind of effect varying it has.

Fig. 1.

(A) Plot of the rates with and with and in terms of the time since the damage. (B) Plot of the adaptation rate in terms of the time since the damage for and

Our non-local PDE model writes:

| 5.5 |

| 5.6 |

| 5.7 |

| 5.8 |

with the following initial and boundary conditions

| 5.9 |

and where the constants are the same as in the previous model.

Let us comment further on this system in order to justify the different choices we made in the crafting of equations (5.5)–(5.6)–(5.7)–(5.8).

-

Diffusion part: We assume that mutations can occur when cells reproduce and we choose to model these mutations with a Laplacian in the variable under investigation ( or ). Let us recall that other approaches have been studied in the literature, like integral operator with a mutation kernel [8, 40]. Our main assumption in this work, which comes from insights from the experimental community ([20]), is that the value of the parameters and can be affected by random mutations, leading to colonies where cells present different behaviours when faced with damage on their DNA.

In equation (5.5), mutations occur in the healthy population with a rate driven by the diffusion coefficient and the scaling parameter ; when the later is small, we consider a regime of rare mutations over a long timescale. Although they have chromosomic instability, damaged cells do not have genetic diffusion because, since they are blocked in the cell cycle, they don’t reproduce at all until the damage it either sorted out or ignored. When they adapt, cell division resumes and we model mutations in the adapted population with a Laplacian and a diffusion coefficient . It is expected that because of the genetic instability ([20]), but there are currently no experimental data to rigorously support this claim. Estimating mutation rates for organisms is still a widely open question in experimental biology and most systematic review on the topic concern bacteria (e.g. [46]).

Let us also clarify a point about diffusion when we consider as a variable. The times at which individual cells adapt is then random for two different reasons: first the value of (the smaller , the more random are adaptation events), then the diversity of the values of in the population. The effect we want to investigate is the first one, in order to determine which value of leads to the more fitness in the population, which is why we assume that mutations are rare (), leading to less heterogeneity in adaptation events due to the diversity of values of and more heterogeneity due to the value of which is selected by evolution over long timescales.

- Reaction part: Cells are damaged at a rate and thus removed from the healthy population . These damaged cells enter the damaged population at time since damage through the boundary condition . The damaged cells population evolves according to the age-structured hyperbolic equation (5.6). Time since the damage increases at speed , damaged cells die at rate . The cells damaged at time since a time repair their DNA at a rate . At any time , all cells repaired are put back into the healthy population through the term

In the same fashion, damaged cells adapt at a rate and at any time all cells that adapt are put in the adapted population through the term

Last, all cells compete for resources and are subject to the control of the total population defined by (5.8). These integrals and the equation (5.6) for damaged cells could be posed on the interval in the direction since the hyperbolic nature of this equation implies a finite propagation speed. However, it would prevent the use of an arbitrary initial condition in the system and other age-structured equations in the literature are posed with ; for these reasons, we pose the equation on an infinite domain for .

Simplification into a Two Populations System

This system of non-local partial differential equations is hard to tackle numerically for multiple reasons. First, the hyperbolic equation with coupled boundary condition describing damaged cells must be solved on a two dimensional domain which may be large in the direction because when is not small adaptation events can happen later on. This implies that the more the domain is large in , the more it must be large in . A potential way to circumvent this issue would be a non-square domain, but then it raises the problem of preserving the hyperbolic structure of the equation. More generally, the system possesses conservation properties between , and that a robust numerical scheme would have to preserve. Then, every iteration of the system involves the computation of a lot of integrals in the direction: in the equations for and and in the computation of the total mass . Last, when the scaling parameter becomes small, there are no guarantees of numerical stability for this intricate non-local parabolic-hyperbolic system; without proper tuning of the numerical scheme, the time step would have to be small. Numerical study of this model is still possible with a carefully crafted numerical scheme and enough computational power, but this is outside the scope of our paper.

Hence, we simplify the dynamics of the damaged cells by making for small enough the quasi-static approximation

Then, we can compute the quantity of damaged cells explicitly:

We also make the simplifying assumption that the damage rate is constant, i.e. , and the new total mass is given by

The damaged cells are absent of this total mass because the quasi-static approximation is tantamount to say that on longer timescales damaged cells are instantaneously distributed between the three categories they go to: healthy, adapted and dead. Since they are instantly redistributed, they don’t need to participate in the carrying capacity in this simpler model.

Hence, we come to the simplified model

| 5.10 |

with the following initial and boundary conditions

| 5.11 |

If we choose , i.e. , and if we denote

the system (5.10) is of the form (1.5).

If we assume that and , then the functions defined above satisfy assumptions (H2) and (H3). Most of the conditions can be readily checked and we postpone the remaining technicalities to the Appendix B. For (H2) the only difficult part is to check that , which is granted thanks to Lemma B.1. For (H3), thanks to Lemma B.2 and using the fact that

we can choose

Therefore, we can apply Theorem 1.2 and Theorem 2.2. In particular, if , then for all ,

Moreover, recall that in the case the Hamiltonian defined in (1.10) can be decomposed into

with

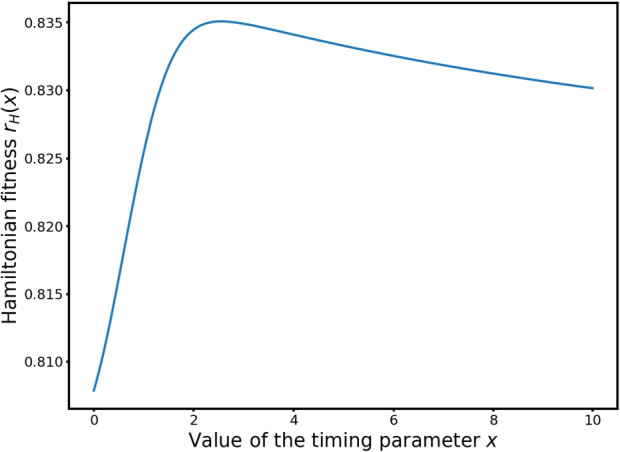

the Hamiltonian fitness. This function also describes the stationary states as explained in Sect. 2.2. We can compute numerically this function to gain insights about the behaviour of the system in the limits or .

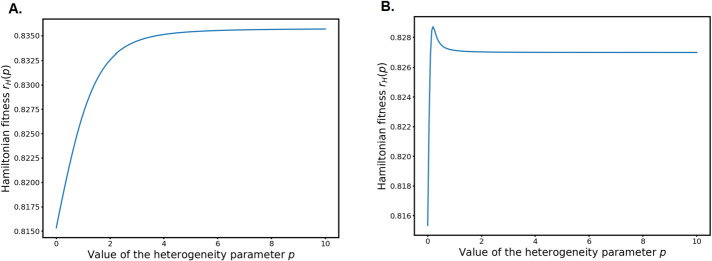

When the variable of interest is the mean time of adaptation , with fixed , has a unique global maximum as can be seen on Fig. 2. The numerical results in the next section indicate that when goes to 0, the solutions concentrate on a Dirac mass moving towards the maximum point. Hence, this model strengthens the hypothesis of [44] that an optimal timing for adaptation tend to be favored by natural selection over long timescales. Here, the optimal time is expressed as

Let us mention that should also drive the profile of the stationary state for fixed , since, as mentioned above, the formal equation for is

Fig. 2.

Hamiltonian fitness of the system for

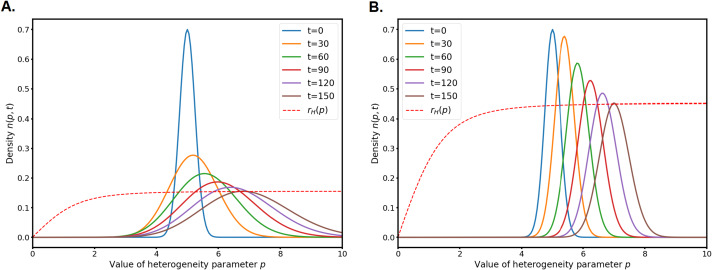

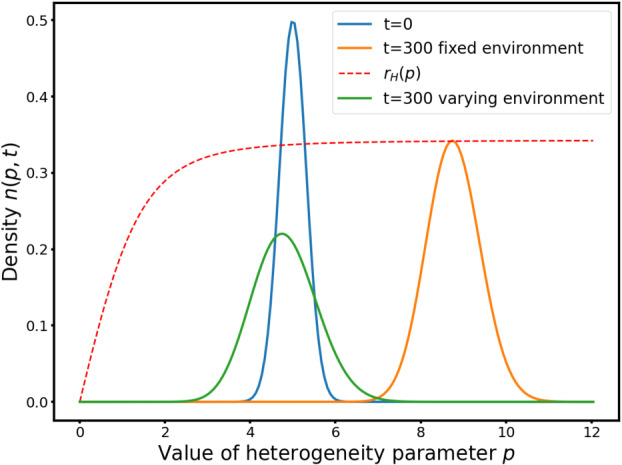

If we fix an adaptation timing and we take as a variable the adaptation heterogeneity parameter , we can compute another equivalent fitness which is displayed in Fig. 3.

Fig. 3.

Hamiltonian fitness of the system for (A.) and (B.)

As can be seen in Fig. 3A, for “reasonable” values of the function is increasing on . As we can observe in the numerical simulations in the following section, when the solutions concentrate on a Dirac mass that moves towards . This is in accordance with the findings of [44] in the simpler ODE model: when the environment is predictable, the optimal strategy for the cells is to minimise the variance around any “good enough” adaptation timing, which amount to taking the largest possible value for .

If the mean adaptation time is large enough, for example , it can be seen in Fig. 3B that has a unique global maximum. Since adaptation is really late, a smaller value (i.e. a larger variance for the adaptation) is selected to compensate.

However, as we said in the beginning of this section, in real life experiments the cells adapt with a variable timing. In [44], this fact was explained as a bet-hedging mechanisms in an unpredictable environment. When the optimisation procedure in the variable has to take into account a random variable in the repair function , a particular value is selected. Here we use the absolute-time part in the repair function to model the changing environment. In the next section, we also make numerical experiments to explore what happens to the solution with a time-periodic function.

Numerical Simulations

In this section, we investigate numerically the behaviour of the system (5.10) with the parameters and functions described in Sect. 5.2.

We use a standard Cranck-Nicolson scheme for the Laplacians and the reaction terms are treated explicitly. This rather simple scheme appears to be very robust even for small values, as long as the time step is of the same order of magnitude than . The numerical domain is chosen such that increasing it further has no effect whatsoever on the simulation, which is most of the time . The smaller the scaling parameter is, the smaller the numerical domain can be. At both ends of the numerical domain, we use a homogeneous Neumann boundary condition. This method is valid also because we use fast decreasing initial conditions.

Note that the recent preprint [13] proposes a new asymptotic-preserving numerical scheme for this type of equations, along with theoretical guarantees. It could lead to further numerical research in this two-population framework.

The Python code we used to produce the numerical simulations is available at https://github.com/pierreabelroux/Leculier_Roux_2021. The figures can be obtained by running the code.

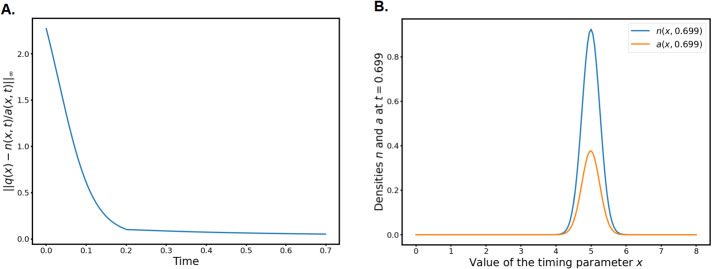

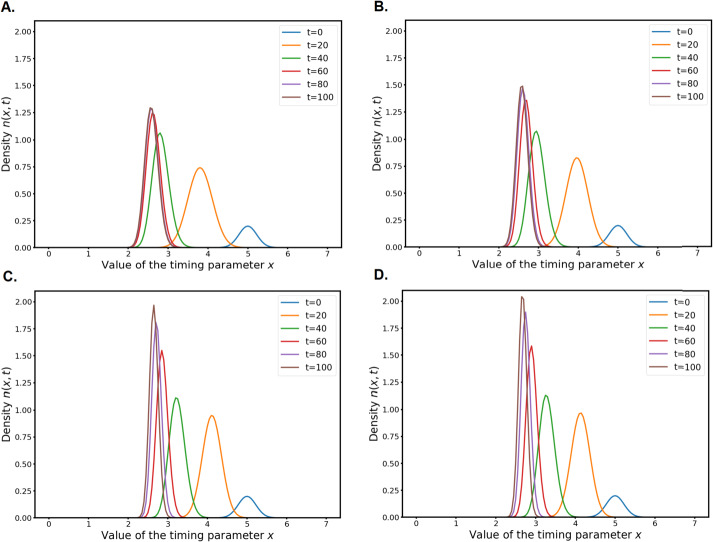

As can be seen on Fig. 4, the convergence of the quantity is very fast and the shapes of and are similar right after a short transitory period. In this figure and the following ones we take

to avoid visual scaling problems with (this quotient can be very large in the first milliseconds for Gaussians with distant means) but it does not affect the speed of convergence towards which is consistent across all types of initial data.

Fig. 4.

(A.) Distance between and in norm. The norm is computed over the finite numerical domain (B.) Plot of and for . The diffusion parameters are

Consequently, we will only plot in the following numerical experiments for the sake of clarity.

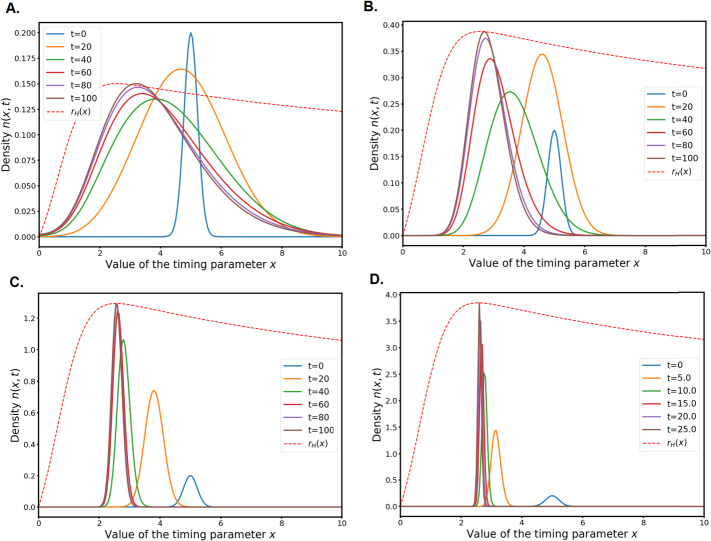

Evolution Along the Parameter for Fixed

For the fixed values and , we simulate the system (5.10) for different values of (see Fig. 5). As predicted by our theoretical results, when tends to 0 the solution behaves like a Dirac mass moving towards the maximum point of the Hamiltonian fitness . This corresponds to the selection of an optimal mean value for the timing of the adaptation process. In laboratory experiments on budding yeasts ([20, 25, 28]), researchers use mutant populations of cells that are incapable of repairing heat-induced or radiation induced damage. They observe that despite repair being impossible, adaptation occur at a specific timing. The theoretical and numerical results of our model strengthen the hypothesis that this specific average timing is driven by natural selection. Note that this natural selection mechanism is not something that experiments can test currently, which makes model aided hypotheses quite useful.

Fig. 5.

Evolution in time of from the same initial data for and different values of . The dashed line is the Hamiltonian fitness which is re-scaled for the sake of readability. (A.) (B.) (C.) (D.)

Yet, taking is not realistic from a biological point of view in this context. It is observed in experiments that adapted cells have a more unstable genome and thus the genetic diffusion might be very asymmetric [20, 21]. Our theoretical setting gives us less clear results in the case because we can’t define and simulate in a simple way a Hamiltonian fitness to see were are the optimal traits: the Hamiltonian rather decomposes into

and the function then involves the gradient of the solution, which is evolving in space and time.

Therefore, we run numerical experiments for and different values of and (see Fig. 6) to see how it impacts the evolution of the solutions in time. It appears that the overall behaviour of the system is not changed much by the different values but asymmetric diffusion make the concentration to a Dirac mass faster. The higher diffusion drives the evolution and even in the extreme case the qualitative behaviour similar to the case . This last case, when the genetic diffusion is assumed to be negligible in healthy cells, is of particular interest for biologists for it allows to investigate adaptation to DNA damage as a mechanism promoting genetic diversity of organisms. Note that this question was opened in [20]. The stability of the model with respect to this particular case strengthens the hypothesis of a role played by adaptation in genetic diversity of cell populations.

Fig. 6.

Evolution in time of from the same initial data for , and different pairs with same sum (A.) , (B.) , (C.) , (D.) ,

Evolution Along the Parameter for Fixed

In [44], the authors also explore the influence of the parameter which describes the slope of the adaptation curve (see Fig. 1B). When is very large, the population barely adapt before time since the damage and brutally starts adapting with constant rate after a time . When is mild, there is a smoother transition and thus cells adapt at more random times. Experimental evidence strongly suggest that the individual adaptation time is heterogeneous and the curves available in the literature suggest that is neither too small nor too big. In [44], the authors set a biologically realistic value of , and then run the model for different values of to see which values lead to the fastest recovery of the population after a damage of all the cells. In a stable environment (, they find that the optimal value is , which is in contrast to experiments. The authors then add randomness into the repair process to account for the fact that repairing can be impossible for some time in hostile environment or if the source of damage is still present. Across different choices of laws for the random environment (Gaussian, exponential, uniform) an optimal value for emerge. The authors relate this phenomenon to the wider concept of bet-hedging (see [47] for a good introduction to this notion).

In our non-local PDE setting, we add more complexity as now is not a fixed parameter but a variable and the population is genetically heterogeneous. We simulate the evolution in genetic trait of the population to see if we can validate some of the findings of [44].

Stable Environment

If we fix a mild value for the timing parameter and take the heterogeneity parameter as the variable, then the equivalent fitness function is increasing. We can observe (see Fig. 7) that the solution stabilizes into a Gaussian moving in time towards . When is smaller, the variance decreases. According to our theoretical results and [5, Theorem 7.1], the solutions concentrate in the limit on a Dirac measure moving towards infinity. This is what the authors of [5] call the monomorphic case. An increasing fitness function in the Hamilton-Jacobi limit ensures that the movement of the Dirac delta mass is monotone and continuous almost everywhere in time.

Fig. 7.

Evolution in time of from the same initial data for and different values of . The dashed line is the Hamiltonian fitness which is re-scaled for the sake of readability. (A.) (B.)

This implies that in a stable environment, the cells select an optimal adaptation timing for the adaptation to DNA damage and then minimise the variance around it, which amounts to maximising towards . It confirms the conclusions of [44] obtained with a simpler model: in a predictable environment, the optimal strategy is to minimise randomness and optimise timing.

Time-Varying Environment

Yet, the experiments on budding yeast cells show that there is a huge variance around the mean adaptation timing. There are to our knowledge no study quantifying it from a statistical perspective, but the fact that adaptation occur at a variable timing is widely accepted in the community studying it. Following [44], we try to explain this discrepancy between the model and reality by adding a varying environment. In the wild, when sources of damage like heat, solar radiation or chemicals damage the DNA of cells, repair can be impossible for some time because the damage is still present and overloads the repair capacity of the cells. In such case, having some individuals that will adapt earlier can be beneficial for the survival of the population. Since there are no information available about the time distribution of such events in the wild, we choose here to use a time-periodic function for the varying environment, thereby modelling an alternation between favorable and hostile environments. Time periodic environment have been used recently in other studies (see e.g. [3, 24]) to model colonisation of an unpredictable environment by organisms.

To make the problem numerically tractable we use

rather than

because the later requires the program to compute a full vector of integrals at each time step, which makes long time simulations intractable. What this modification change is that the exponential term under the integral in the adaptation and repair inflows are time-independent but the repair inflow is suppressed altogether in hostile environment. It tends to slightly underestimate adaptation when repair is not possible because in the exponential more cells are suppressed “like they have repaired” than the quantity of cells actually repairing. This modification thus leads to a slightly increased death term for damaged cells in hostile environment. It is hard to be sure of which effect it has, but our guess is that the outcome would be the same where we able to simulate the costly time-dependent repair and adaptation rates.

We choose the time-varying environmental function

and we run the model in both a fixed and a time-varying environment from the same initial datum (see Fig. 8). We can observe that at the results are very different. In the case of the stable environment, as in Fig. 7, the mass moves towards . However, with the time-varying environment, the solution moves slowly towards the left. This numerical result strengthens the hypothesis of [44] that the heterogeneity in time of the adaptation to DNA process could be due to a bet-hedging mechanism when cells face an unpredictable environment.

Fig. 8.

Evolution in time of for , and in a stable or in a periodically varying environment from the same initial datum

Conclusion and Perspectives

In this article, we have investigated a cooperative two-population system of non-local parabolic PDEs motivated by a particular application in genetics: the understanding of the so-called adaptation to DNA damage phenomenon. We used a Hamilton-Jacobi approach which is well understood for one population non-local models [5, 6, 8]. This approach is well adapted to characterize a guess of the main trait in the limit population as it is underlined in [38]. Such a guess can be useful for instance in the context of cancer treatment as it is developed in [2, 17, 18] (and the references therein). It also appears that studying the Hopf-Cole transform (1.8) instead of the solution allows to compute the ratio . It would be helpful to calibrate the system.

First, in order to prove a similar result in our setting, we combined the approach for the one-population model with tools developed in [7] for non-local systems. We wrote the Hamiltonian associated with the system in terms of an eigenvalue of the matrix . After performing a Hopf-Cole transform, we first prove uniform regularity results on the solutions , which allows us to pass to the limit and obtain the constrained Hamilton-Jacobi equation for the limit . When the diffusion coefficients of the two populations are identical, we obtain the additional result that converges in time towards a corrective term dependent only on .

Then, we have derived from the ODE model of [44] a PDE system modelling the evolutionary dynamics of adaptation to DNA damage in a population of eukaryotic cells. Our theoretical results and numerical simulations allow us to support the findings of [44] that:

natural selection could be responsible for the apparition of a precise mean timing for the adaptation phenomenon.

the experimentally observed heterogeneity of individual adaptation timings in a population of cells could be explained by a bet-hedging mechanism while facing an unpredictable environment.

Our modelling approach is also more general and realistic than the one proposed in [44], because we take into account both mutations and competitions between cells presenting different genotypes in a same environment. It allows us to understand better why a particular trait is selected for the average timing in adaptation to DNA damage: using the optimal average timing allows cells to reproduce and thus out-compete populations with other phenotypes

Yet, this study leaves open several questions on both the mathematical and biological sides that this multiple population approach could help answer in the future.

First, our method relies heavily upon the cross terms and being positive. In the case of a competitive system or a prey-predator setting, we cannot apply the same techniques. In particular, it is unlikely that the convergence towards a fixed corrective term will hold true.

We did not address rigorously the question of the long time behaviour of the system. Our heuristic reasoning and the numerical simulations indicate strongly that there is convergence in time of towards a stationary state of the one-population model endowed with the Hamiltonian fitness of the system. A careful analysis is needed to validate this result.

Moreover, the use of a system of non-local PDEs open the possibility to test more hypotheses on adaptation to DNA damage or other natural selection mechanisms. For example by coupling with other equations representing predators, we could sharpen our understanding of the selection dynamics. The presence of dynamically evolving predators densities could introduce an Allee effect, giving ill-adapted cells lesser chances of survival.

Regarding the bet-hedging explanation for the heterogeneity of adaptation to DNA damage, our theoretical framework has to be adapted to prove solid results. It would be very useful to have a theory able to encompass the same kind of system but with time-varying coefficients as in [24] for a single species. The question of periodically changing environment is important in many biological applications [3]. It would be especially important to study theoretically and numerically the influence of the time period of those coefficients with a more systematic and realistic approach. For now, we can conclude with our numerical experiments that the varying environment changes radically the dynamics, but the convergence is very slow. We did experiments for longer time and it was still unclear if a stable equilibrium was reached or not. An inappropriate time-step can also produce strange resonances in the numerical solution, further hindering the numerical exploration. Implementing the asymptotic-preserving scheme recently proposed in [13] could be a route for a better understand of the evolution for small in a variable environment.

It would also be interesting to study this kind of two populations system in higher dimension. In particular, in our biological setting, it would be interesting to have a bi-dimensional space for the genetic trait with Neumann boundary conditions on the boundaries and in order to validate the idea that in a stable environment the solution concentrates on a Dirac mass moving at the same time towards the line and the direction in the limit. In this bi-dimensional space for the genetic trait, it would also be possible to study the effect of a time-periodic environment in a more realistic framework.

Last, it could be useful to study the more complex model (5.5)–(5.8) theoretically and numerically in order to verify that the quasi-static approximation does not hide key features of the biological phenomenon. This might require cumbersome computations and significant computing power, but it remains feasible in principle with a carefully crafted numerical scheme.

Acknowledgements

Alexis Léculier and Pierre Roux were supported by the ERC ADORA. Pierre Roux was supported by the Advanced Grant Non local-CPD (Nonlocal PDEs for Complex Particle Dynamics: Phase Transitions, Patterns and Synchronization) of the European Research Council Executive Agency (ERC) under the European Union’s Horizon 2020 research and innovation programme (grant agreement No. 883363). Both authors want to warmly thank Benoît Perthame for his help and precious advice. Both authors want also to thank Zhou Xu for the time dedicated to fruitful discussions about the biological motivation of this project. A.L. wants also to thank Sepideh Mirrahimi for all the enlightening discussions.

Appendix A: Existence and Bounds of

We prove in this section the existence of a solution of (1.5). We use the classical Picard Banach fixed point Theorem. The details follow the Appendix A of [8]. We recall that we have made the assumptions (H1)-(H4).

Let be a given time and be the following closed subset:

where and for some positive . In the following, we will denote . Next, we define the following application

where is the solution of

| A.1 |

where

and

The aim is to verify that satisfies two claims:

maps into itself,

is a contractive application for small enough.

Proof of claim 1

Let and . By the maximum principle, we have that . It remains to prove the bound. According to (A.1), we have

with . Next, we conclude thanks to the Gronwall Lemma that

It finishes the proof of claim 1. □

Proof of claim 2

Let , and . We have

We recall that and . Next, by multiplying at left by and integrating over space, we deduce that

Since , we conclude thanks to the Gronwall Lemma that

Remarking that as , we conclude that for small enough, is contractive. It concludes the proof of existence. □

Next, we focus on the bounds of . According to (1.5), we have

Adding the two first equations of the system (1.5) and integrating over leads to

Therefore, since each components of the min (resp. max) in (H3) is decreasing with respect to , we deduce that if then (resp. if then ). The conclusion follows.

Appendix B: Checking the Technical Hypotheses in the Main Application

Lemma B.1

Denote

| B.1 |

Then, there exists such that

Proof

Note first that the form of implies that

| B.2 |

Then, we have for all ,

By integration by parts we have

Moreover,

and with (B.2),

Thus, we have

and the result follows. □

Lemma B.2

Assume , then

and there exist such that

Proof

Note that there exists two constants such that

We have

By positivity of and ,

because .

We compute:

Moreover,

and

Hence,

□

Footnotes

The naming “adaptation to DNA damage” can be a bit misleading because it describes a metabolic response of the cells and not a genetic adaptation, so speaking about both adaptation to DNA damage and genetic adaptation from the perspective of evolutionary dynamics can sometimes be confusing. Nonetheless, this expression was used for so long among specialists and has gained so much momentum that it is now impossible to change.

Break-induced replication (BIR) consists in healing a one-ended break by copying to the extremity a template chromosome

P. Roux previously: Laboratoire Jacques-Louis Lions (LJLL), Sorbonne Université, 75205 Paris Cedex 06, France.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Alexis Léculier, Email: leculier@ljll.math.upmc.fr.

Pierre Roux, Email: pierre.roux@maths.ox.ac.uk.

References

- 1.Alfaro M., Veruete M. Evolutionary branching via replicator-mutator equations. J. Dyn. Differ. Equ. 2019;31(4):2029–2052. [Google Scholar]

- 2.Almeida L., Bagnerini P., Fabrini G., Hughes B.D., Lorenzi T. Evolution of cancer cell populations under cytotoxic therapy and treatment optimisation: insight from a phenotype-structured model. ESAIM: Math. Model. Numer. Anal. 2019;53(4):1157–1190. [Google Scholar]

- 3.Ambrosio B., Ducrot A., Ruan S. Generalized traveling waves for time-dependent reaction–diffusion systems. Math. Ann. 2021;381(1):1–27. [Google Scholar]

- 4.Barles G. Hamilton-Jacobi Equations: Approximations, Numerical Analysis and Applications. Heidelberg: Springer; 2013. An introduction to the theory of viscosity solutions for first-order Hamilton-Jacobi equations and applications; pp. 49–109. [Google Scholar]

- 5.Barles G., Perthame B. Concentrations and constrained Hamilton-Jacobi equations arising in adaptive dynamics. Contemp. Math. 2007;439:57–68. [Google Scholar]

- 6.Barles G., Perthame B. Dirac concentrations in Lotka-Volterra parabolic PDEs. Indiana Univ. Math. J. 2008;57:3275–3301. [Google Scholar]

- 7.Barles G., Evans L.C., Souganidis P.E. Wavefront propagation for reaction-diffusion systems of PDE. Duke Math. J. 1990;61(3):835–858. [Google Scholar]

- 8.Barles G., Mirrahimi S., Perthame B. Concentration in Lotka-Volterra parabolic or integral equations: a general convergence result. Methods Appl. Anal. 2009;16(3):321–340. [Google Scholar]

- 9.Bootsma M., van der Horst M., Guryeva T., Ter Kuile B., Diekmann O. Modeling non-inherited antibiotic resistance. Bull. Math. Biol. 2012;74(8):1691–1705. doi: 10.1007/s11538-012-9731-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10. Bouin, E., Mirrahimi, S.: A Hamilton-Jacobi approach for a model of population structured by space and trait (2013). 1307.8332

- 11.Brännström Å., Johansson J., Von Festenberg N. The hitchhiker’s guide to adaptive dynamics. Games. 2013;4(3):304–328. [Google Scholar]

- 12.Calsina À., Cuadrado S. Small mutation rate and evolutionarily stable strategies in infinite dimensional adaptive dynamics. J. Math. Biol. 2004;48(2):135–159. doi: 10.1007/s00285-003-0226-6. [DOI] [PubMed] [Google Scholar]

- 13. Calvez, V., Hivert, H., Yoldaş, H.: Concentration in Lotka-Volterra parabolic equations: an asymptotic-preserving scheme (2022). 2204.04146

- 14.Carrillo J.A., Cuadrado S., Perthame B. Adaptive dynamics via Hamilton–Jacobi approach and entropy methods for a juvenile-adult model. Math. Biosci. 2007;205(1):137–161. doi: 10.1016/j.mbs.2006.09.012. [DOI] [PubMed] [Google Scholar]

- 15.Champagnat N., Ferrière R., Méléard S. Unifying evolutionary dynamics: from individual stochastic processes to macroscopic models. Theor. Popul. Biol. 2006;69(3):297–321. doi: 10.1016/j.tpb.2005.10.004. [DOI] [PubMed] [Google Scholar]

- 16.Champagnat N., Ferrière R., Méléard S. Seminar on Stochastic Analysis, Random Fields and Applications V. Berlin: Springer; 2007. Individual-based probabilistic models of adaptive evolution and various scaling approximations; pp. 75–113. [Google Scholar]

- 17.Chisholm R.H., Lorenzi T., Lorz A., Larsen A.K., de Almeida L.N., Escargueil A., Clairambault J. Emergence of drug tolerance in cancer cell populations: an evolutionary outcome of selection, nongenetic instability, and stress-induced adaptation. Cancer Res. 2015;75(6):930–939. doi: 10.1158/0008-5472.CAN-14-2103. [DOI] [PubMed] [Google Scholar]

- 18.Clairambault J., Pouchol C. A survey of adaptive cell population dynamics models of emergence of drug resistance in cancer, and open questions about evolution and cancer. Biomathematics. 2019;8(1):1905147. [Google Scholar]

- 19.Cohen D. Optimizing reproduction in a randomly varying environment. J. Theor. Biol. 1966;12(1):119–129. doi: 10.1016/0022-5193(66)90188-3. [DOI] [PubMed] [Google Scholar]

- 20.Coutelier H., Xu Z. Adaptation in replicative senescence: a risky business. Curr. Genet. 2019;65(3):711–716. doi: 10.1007/s00294-019-00933-7. [DOI] [PubMed] [Google Scholar]

- 21.Coutelier H., Xu Z., Morisse M.C., Lhuillier-Akakpo M., Pelet S., Charvin G., Dubrana K., Teixeira M.T. Adaptation to DNA damage checkpoint in senescent telomerase-negative cells promotes genome instability. Genes Dev. 2018;32(23–24):1499–1513. doi: 10.1101/gad.318485.118. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Diekmann O. Summer School on Mathematical Biology. 2002. A beginners guide to adaptive dynamics; pp. 63–100. [Google Scholar]

- 23.Diekmann O., Jabin P.-E., Mischler S., Perthame B. The dynamics of adaptation: an illuminating example and a Hamilton-Jacobi approach. Theor. Popul. Biol. 2005;67(4):257–271. doi: 10.1016/j.tpb.2004.12.003. [DOI] [PubMed] [Google Scholar]

- 24.Figueroa Iglesias S., Mirrahimi S. Long time evolutionary dynamics of phenotypically structured populations in time-periodic environments. SIAM J. Math. Anal. 2018;50(5):5537–5568. [Google Scholar]

- 25.Galgoczy D.J., Toczyski D.P. Checkpoint adaptation precedes spontaneous and damage-induced genomic instability in yeast. Mol. Cell. Biol. 2001;21(5):1710–1718. doi: 10.1128/MCB.21.5.1710-1718.2001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Jabin P.-E., Raoul G. On selection dynamics for competitive interactions. J. Math. Biol. 2011;63(3):493–517. doi: 10.1007/s00285-010-0370-8. [DOI] [PubMed] [Google Scholar]

- 27.Kaye J.A., Melo J.A., Cheung S.K., Vaze M.B., Haber J.E., Toczyski D.P. DNA breaks promote genomic instability by impeding proper chromosome segregation. Curr. Biol. 2004;14(23):2096–2106. doi: 10.1016/j.cub.2004.10.051. [DOI] [PubMed] [Google Scholar]

- 28.Klermund J., Bender K., Luke B. High nutrient levels and torc1 activity reduce cell viability following prolonged telomere dysfunction and cell cycle arrest. Cell Rep. 2014;9(1):324–335. doi: 10.1016/j.celrep.2014.08.053. [DOI] [PubMed] [Google Scholar]

- 29. Léculier, A., Mirrahimi, S.: Adaptation to a heterogeneous patchy environment with nonlocal dispersion (2021). Preprint

- 30.Lee S.E., Moore J.K., Holmes A., Umezu K., Kolodner R.D., Haber Saccharomyces J.E. Ku70, Mre11/Rad50, and RPA proteins regulate adaptation to G2/M arrest after DNA damage. Cell. 1998;94(3):399–409. doi: 10.1016/s0092-8674(00)81482-8. [DOI] [PubMed] [Google Scholar]

- 31.Lorenzi T., Pouchol C. Asymptotic analysis of selection-mutation models in the presence of multiple fitness peaks. Nonlinearity. 2020;33(11):5791. [Google Scholar]

- 32.Lorenzi T., Chisholm R.H., Desvillettes L., Hughes B.D. Dissecting the dynamics of epigenetic changes in phenotype-structured populations exposed to fluctuating environments. J. Theor. Biol. 2015;386:166–176. doi: 10.1016/j.jtbi.2015.08.031. [DOI] [PubMed] [Google Scholar]

- 33.Lorenzi T., Chisholm R.H., Clairambault J. Tracking the evolution of cancer cell populations through the mathematical lens of phenotype-structured equations. Biol. Direct. 2016;11(1):1–17. doi: 10.1186/s13062-016-0143-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Lorz A., Lorenzi T., Hochberg M.E., Clairambault J., Perthame B. Populational adaptive evolution, chemotherapeutic resistance and multiple anti-cancer therapies. ESAIM: Math. Model. Numer. Anal. 2013;47(2):377–399. [Google Scholar]

- 35.Malkova A., Naylor M.L., Yamaguchi M., Ira G., Haber J.E. Rad51-dependent break-induced replication differs in kinetics and checkpoint responses from rad51-mediated gene conversion. Mol. Cell. Biol. 2005;25(3):933–944. doi: 10.1128/MCB.25.3.933-944.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Mirrahimi S. Adaptation and migration of a population between patches. Discrete Contin. Dyn. Syst., Ser. B. 2013;18(3):753–768. [Google Scholar]

- 37.Mirrahimi S. A Hamilton-Jacobi approach to characterize the evolutionary equilibria in heterogeneous environments. Math. Models Methods Appl. Sci. 2017;27(13):2425–2460. [Google Scholar]

- 38.Mirrahimi S., Gandon S. Evolution of specialization in heterogeneous environments: equilibrium between selection, mutation and migration. Genetics. 2020;214(2):479–491. doi: 10.1534/genetics.119.302868. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Perthame B. Transport Equations in Biology. Berlin: Springer; 2006. [Google Scholar]

- 40.Perthame B. Transport Equations in Biology. Berlin: Springer; 2006. [Google Scholar]

- 41.Perthame B., Souganidis P. Rare mutations limit of a steady state dispersal evolution model. Math. Model. Nat. Phenom. 2016;11(4):154–166. [Google Scholar]