Abstract.

Purpose

The purpose of our study was to analyze dental panoramic radiographs and contribute to dentists’ diagnosis by automatically extracting the information necessary for reading them. As the initial step, we detected teeth and classified their tooth types in this study.

Approach

We propose single-shot multibox detector (SSD) networks with a side branch for 1-class detection without distinguishing the tooth type and for 16-class detection (i.e., the central incisor, lateral incisor, canine, first premolar, second premolar, first molar, second molar, and third molar, distinguished by the upper and lower jaws). In addition, post-processing was conducted to integrate the results of the two networks and categorize them into 32 classes, differentiating between the left and right teeth. The proposed method was applied to 950 dental panoramic radiographs obtained at multiple facilities, including a university hospital and dental clinics.

Results

The recognition performance of the SSD with a side branch was better than that of the original SSD. In addition, the detection rate was improved by the integration process. As a result, the detection rate was 99.03%, the number of false detections was 0.29 per image, and the classification rate was 96.79% for 32 tooth types.

Conclusions

We propose a method for tooth recognition using object detection and post-processing. The results show the effectiveness of network branching on the recognition performance and the usefulness of post-processing for neural network output.

Keywords: dental panoramic radiograph, deep learning, tooth recognition, computer-assisted diagnosis

1. Introduction

Medical images used in the field of dentistry include dental panoramic radiographs and dental periapical films, as well as dental computed tomography (CT) images. Dental panoramic radiographs are the most common images in the dental field, and they are routinely taken and interpreted. However, because panoramic radiographs contain various types of information, including the treatment status, caries, and apex lesions, it is difficult for dentists to make an accurate diagnosis of every tooth. In addition to teeth, many different types of lesions, such as periodontal disease and jawbone lesions, can be visualized in the images. Therefore, computer-aided diagnosis is expected to assist dentists in reading the images.1 The purpose of this study was to extract information necessary for reading dental panoramic radiographs and contribute to a diagnosis. We detected teeth and classified their tooth types as the initial step in this study.

In this study, we considered a neural network and post-processing for the detection of teeth and the classification of tooth types on dental panoramic radiographs. An attention branch network2 has been proposed to improve classification performance by creating an attention map through a network branch and using it to boost the feature maps. We believe that global information acquired from a branch will be useful in medical imaging, where a variety of findings occur. In this study, we propose a network based on a single-shot multibox detector (SSD),3 which predicts the probability of the existence of the 32 tooth types through the branch as an alternative to an attention map.

Studies on tooth recognition in dental images include methods for classifying tooth types using cropped images of each tooth4–6 and for segmenting individual teeth7–10 in panoramic images and dental cone beam CT. Regarding the recognition of the 32 tooth types in panoramic radiographs, as addressed in this study, Kim et al.11 proposed a method using object detection, classification, and heuristics. They reported a tooth recognition recall of 58.4%, which is calculated by multiplying the tooth detection recall by the accuracy of the 32 tooth-type classification. Lee12 proposed a method using multiclass segmentation for each tooth type. The recall for tooth segmentation was 89.3%, whereas the classification performance was not reported. Tuzoff et al.13 used 1574 cases taken at a single institution for tooth detection using Faster region-based convolutional neural networks (R-CNN) and then classified the detected teeth within a possible anatomical order. They obtained a recognition recall of 97.4%. Moreover, Chung et al.14 used 818 cases taken by four different imaging machines to recognize 32 tooth types by predicting their positions regardless of missing teeth. Although a tooth identification recall of 97.2% was reported, the boxes with teeth present and absent were not distinguished. In the aforementioned studies, two different CNN models were used for detection and classification. In patch-based classification, the local and global information that dentists generally use is not fully utilized. We first used multi-class detection to detect and classify teeth simultaneously. Furthermore, we expected that the proposed model, by using both local and global information, would improve tooth-type recognition compared with the SSD.

In our preliminary study, the proposed network with post-processing was evaluated using 100 panoramic images obtained at a single university hospital.15 However, owing to a lack of cases, the robustness was a concern. In this study, we evaluated the proposed method using 950 cases taken with various imaging systems at ten different facilities, the majority of which are community dental clinics. In addition, we compared the proposed model with the original SSD and the attention SSD. The attention map was created as suggested in the attention branch network.2

2. Methods

2.1. Databases

In this study, 950 dental panoramic radiographs obtained at ten facilities, including a university hospital and dental clinics, were used. They were collected under the approval of the Institutional Review Board of Asahi University. Informed consent was waived with opportunities for an opt-out. The images were captured using various imaging machines, and the matrix size ranged from pixel resolutions of to . Approximate outlines of the crown and root of each tooth were annotated by six dentists with 4, 6, 10, 11, 13, and 24 years of experience in dentistry. Each case was annotated by only one dentist. In addition, all cases were checked by another dentist with 35 years of experience. Rectangular bounding boxes enclosing the outlines were used as labels in this study. The detection targets were teeth with crowns and roots and teeth with crowns only. The crown-only teeth mainly include impacted teeth whose roots are not visible in the image because of the angle of the tooth, and teeth in young patients in which only the crown has formed. Implants, pontics, and remaining roots were excluded.

2.2. Input Images

First, dentition areas were extracted to exclude unnecessary background, which saves processing time, removes sources of false positives, and unifies the approximate tooth size across images from different machines. For this extraction, the original SSD was trained using the training samples described in Sec. 2.5. Once the dentition area was detected, zero padding was applied to the top and bottom to form a square, which was then scaled down to a pixel resolution of for input to the tooth recognition models (Fig. 1).

Fig. 1.

Region of interest as shown in the green rectangle was extracted from the original image and zero padded on the top and bottom to create the input image.
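The padding step can be illustrated with a minimal sketch. It assumes that the missing rows are split as evenly as possible between the top and bottom, which the text does not specify, and the function name `pad_to_square` is hypothetical. The subsequent rescaling is omitted.

```python
def pad_to_square(image, fill=0):
    """Pad a 2D image (a list of rows) with `fill` rows on the top and bottom
    until its height equals its width.

    Assumption: the extra rows are split as evenly as possible between top
    and bottom; the source only states that both sides are zero padded.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    if height > width:
        raise ValueError("expected a landscape region (width >= height)")
    missing = width - height
    top = missing // 2
    bottom = missing - top
    padded = (
        [[fill] * width for _ in range(top)]
        + [list(row) for row in image]
        + [[fill] * width for _ in range(bottom)]
    )
    return padded, top, bottom


# Toy dentition region: 2 rows x 5 columns.
roi = [[5, 6, 7, 8, 9],
       [1, 2, 3, 4, 5]]
square, top, bottom = pad_to_square(roi)
print(len(square), len(square[0]), top, bottom)  # 5 5 1 2
```

Under this assumption, the y-coordinates of the annotated boxes would be shifted by `top` rows before scaling so that the labels remain aligned with the padded image.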

2.3. Object Detectors

In the classification of tooth types, the teeth surrounding the target tooth contain important information. Local information, similar to what dentists use, is useful for the multi-class detection of teeth. However, teeth generally have similar characteristics in panoramic radiographs. Therefore, to increase the tooth detection sensitivity and type classification accuracy, two object detection models were trained: one for a single class (“tooth” class) and one for 16 classes (central incisors, lateral incisors, canines, first premolars, second premolars, first molars, second molars, and third molars, as distinguished by the upper and lower jaws). We labeled 16 classes instead of 32 because there was no significant difference in shape between the right and left teeth of the same type, and we judged that it would be difficult to distinguish them using deep learning. In addition, merging the left and right teeth approximately doubles the number of samples per class.
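The reduction from 32 to 16 classes can be sketched as follows. The sketch assumes that the 32 tooth types are written as two-digit codes in which the first digit is the quadrant (1 and 2 upper, 3 and 4 lower) and the second digit is the tooth position from the midline, consistent with class numbers such as 23 and 46 used in the figure captions. The function name `to_16_class` is hypothetical.

```python
TOOTH_TYPES = [
    "central incisor", "lateral incisor", "canine",
    "first premolar", "second premolar",
    "first molar", "second molar", "third molar",
]


def to_16_class(code):
    """Map a two-digit tooth code (assumed: quadrant digit, position digit)
    to a (jaw, tooth type) label that ignores left and right."""
    quadrant, position = divmod(code, 10)
    if quadrant not in (1, 2, 3, 4) or not 1 <= position <= 8:
        raise ValueError(f"unexpected tooth code: {code}")
    jaw = "upper" if quadrant in (1, 2) else "lower"
    return jaw, TOOTH_TYPES[position - 1]


labels_32 = [q * 10 + p for q in (1, 2, 3, 4) for p in range(1, 9)]
labels_16 = {to_16_class(code) for code in labels_32}
print(len(labels_32), len(labels_16))   # 32 16
print(to_16_class(16) == to_16_class(26))  # True: both are upper first molars
```

Because each of the 16 labels receives samples from two of the 32 tooth types, every class sees roughly twice as many training boxes.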

In the proposed model, the network is branched, and the probability of the presence of each of the 32 tooth types is learned and predicted at the end of the branch. We combined this global information with the local features extracted using the convolutional layers. Figure 2 shows the proposed model. The base model is the SSD, and the newly added branch is indicated by the shaded area. In this paper, we call the array of the probabilities of the existence of the 32 tooth types, each expressed from 0 to 1, a dental chart. The original meaning of a dental chart is a record of the dental findings made by a dentist. The array here is a simplified concept compared to the original.

Fig. 2.

Proposed model, based on SSD, with the newly added branching network specified by the shaded area.

Global average pooling is applied to each of the seven scales of feature maps obtained through the feature extraction section and combined with a concatenation layer. It is then input to a fully connected layer (Dense) to predict the dental chart. Binary cross entropy is then used as the loss function for this side branch. During inference, the predicted dental chart is adjusted to the size of the feature map for each scale through dense and reshape layers, and multiplication and addition are then conducted. Finally, object detection is conducted on the feature map of each scale according to the SSD process. The overall learning loss is the sum of the dental chart loss and the SSD-loss.
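The side branch can be illustrated with a minimal pure-Python sketch. Two toy feature maps stand in for the seven SSD scales, the dense-layer weights are set to zero for simplicity, the binary cross entropy is averaged over the 32 entries (the reduction is not stated in the text), and the SSD loss is a placeholder value. All function names are hypothetical, and the feedback of the dental chart to the feature maps is not shown.

```python
import math


def global_average_pool(feature_map):
    """feature_map: list of channels, each a 2D list. Returns one mean per channel."""
    pooled = []
    for channel in feature_map:
        values = [v for row in channel for v in row]
        pooled.append(sum(values) / len(values))
    return pooled


def dense_sigmoid(inputs, weights, biases):
    """Fully connected layer followed by a sigmoid, giving values in (0, 1)."""
    outputs = []
    for w_row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(w_row, inputs)) + b
        outputs.append(1.0 / (1.0 + math.exp(-z)))
    return outputs


def binary_cross_entropy(target, predicted, eps=1e-7):
    """Mean binary cross entropy (assumed reduction) between two equal-length lists."""
    total = 0.0
    for t, p in zip(target, predicted):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        total -= t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
    return total / len(target)


# Two toy scales standing in for the seven scales of SSD feature maps.
scales = [
    [[[1.0, 3.0], [5.0, 7.0]], [[0.0, 0.0], [2.0, 2.0]]],  # 2 channels, 2 x 2
    [[[4.0]]],                                             # 1 channel, 1 x 1
]
pooled = [v for fm in scales for v in global_average_pool(fm)]  # concatenation
weights = [[0.0] * len(pooled) for _ in range(32)]  # untrained toy weights
biases = [0.0] * 32
chart = dense_sigmoid(pooled, weights, biases)      # predicted dental chart

target = [1.0] * 28 + [0.0] * 4  # toy ground truth: 28 teeth present, 4 absent
chart_loss = binary_cross_entropy(target, chart)
ssd_loss = 1.25                  # placeholder for the usual SSD loss
total_loss = chart_loss + ssd_loss

print(pooled)                # [4.0, 1.0, 4.0]
print(round(chart_loss, 4))  # 0.6931
```

With zero weights every entry of the predicted chart is 0.5, so the loss equals ln 2; training would push each entry toward 1 for present teeth and toward 0 for absent ones.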

2.4. Post-Processing

For the detected 2D bounding boxes, we applied (i) duplicate deletion, (ii) left-right classification, (iii) integration, and (iv) classification correction. Figure 3 shows an overview of the post-processing.

Fig. 3.

Flow of post-processing.

In (i), if the intersection over union (IoU) of two detected boxes exceeds 0.5, the one with the lower output confidence is deleted. In (ii), the remaining boxes are classified into left and right teeth based on the central incisors detected by the 16-class detection. Using this process, the 16 classes are converted into 32 classes (32 tooth types). In (iii), the results of the 1-class detection after (i) and the output of (ii) are integrated. Duplicate deletion is applied again to the integrated results; in this case, if the two overlapping boxes are output by different networks, the box from the 16-class detection is kept and the one from the 1-class detection is deleted. Because there was a trade-off between the detection rate and multi-class detection, we improved the detection rate by combining the results of the two networks. In (iv), each detected box carrying the "tooth" class from the 1-class detection is given a new class corresponding to one of the 32 tooth types based on the adjacent boxes. In addition, if multiple boxes share the same class, the classes of the boxes with lower confidence are modified, or those boxes are deleted. The correction is based on the box with the higher confidence among the detected boxes adjacent to the target box. When the left and right adjacent boxes are already numbered sequentially, leaving no number available for the target box, the target box is deleted.
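Steps (i) and (ii) can be sketched as follows. This is an illustration under stated assumptions rather than the exact procedure: duplicate deletion is implemented as a greedy pass over the boxes in order of decreasing confidence; the midline is taken as the mean horizontal center of the detected central incisors; the patient's right side is assumed to appear on the left of the image; and the 32 classes are written as two-digit codes (quadrant, position). The function names and dictionary keys are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def delete_duplicates(detections, threshold=0.5):
    """Step (i): keep the higher-confidence box of any pair with IoU > threshold."""
    kept = []
    for det in sorted(detections, key=lambda d: d["score"], reverse=True):
        if all(iou(det["box"], k["box"]) <= threshold for k in kept):
            kept.append(det)
    return kept


def assign_sides(detections):
    """Step (ii): convert (jaw, position) labels into two-digit 32-class codes.

    Assumptions: the midline is the mean x-center of the detected central
    incisors (position 1), and the patient's right is on the image left.
    """
    centers = [(d["box"][0] + d["box"][2]) / 2
               for d in detections if d["position"] == 1]
    if not centers:
        raise ValueError("no central incisor detected; midline is undefined")
    midline = sum(centers) / len(centers)
    codes = []
    for d in detections:
        patient_right = (d["box"][0] + d["box"][2]) / 2 < midline
        if d["jaw"] == "upper":
            quadrant = 1 if patient_right else 2
        else:
            quadrant = 4 if patient_right else 3
        codes.append(quadrant * 10 + d["position"])
    return codes


detections = [
    {"box": (40, 10, 50, 40), "score": 0.9, "jaw": "upper", "position": 1},
    {"box": (41, 10, 51, 40), "score": 0.6, "jaw": "upper", "position": 2},  # duplicate
    {"box": (50, 10, 60, 40), "score": 0.8, "jaw": "upper", "position": 1},
    {"box": (60, 10, 70, 40), "score": 0.7, "jaw": "upper", "position": 2},
]
kept = delete_duplicates(detections)
print(len(kept))           # 3
print(assign_sides(kept))  # [11, 21, 22]
```

The second box overlaps the first with an IoU of about 0.82 and has the lower confidence, so it is removed before the sides are assigned.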

2.5. Evaluation Methods

Of the 950 cases, 680 cases were used as training data, 80 as validation data, and 190 as test data in each round of a five-fold cross-validation. For each round of the cross-validation, no test data were used during the training. The test data were used only once to evaluate the performance of the final fixed model, and no parameter adjustments were made. For a detected box, if the IoU with a labeled box exceeded 0.5, it was considered a successful detection; otherwise, it was considered a false detection. If multiple detected boxes had an IoU exceeding 0.5 with the same labeled box, the one with the highest IoU was counted as a true detection, and the other boxes were considered false positives. The following indices were used to evaluate the tooth detection and classification performance.

1. Detection rate: total number of successful boxes / total number of labeled boxes.

2. Number of false positives per image: total number of false detection boxes / number of cases.

3. Recognition rate: total number of successful boxes with the correct class / total number of labeled teeth.

4. Perfect detection (PD): 100% detection rate with no false positives in a case.

5. Perfect recognition (PR): 100% recognition rate with no false positives in a case (PD and classification).
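The five indices can be computed as in the following sketch, which assumes axis-aligned boxes given as (x_min, y_min, x_max, y_max) and assigns each detected box to the labeled box with which it has the highest IoU. The function names and the toy cases are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def evaluate_case(labels, detections, threshold=0.5):
    """labels and detections are lists of (box, tooth_class).

    Returns (detected, correct, false_positives) for one image.
    """
    best = {}  # label index -> (IoU, detection index) of its best detection
    false_positives = 0
    for d_idx, (d_box, _) in enumerate(detections):
        scores = [(iou(d_box, l_box), l_idx)
                  for l_idx, (l_box, _) in enumerate(labels)]
        top_iou, l_idx = max(scores, default=(0.0, -1))
        if top_iou <= threshold:
            false_positives += 1          # no labeled box is matched
        elif l_idx not in best:
            best[l_idx] = (top_iou, d_idx)
        else:
            false_positives += 1          # one of the two competing boxes loses
            if top_iou > best[l_idx][0]:
                best[l_idx] = (top_iou, d_idx)
    correct = sum(1 for l_idx, (_, d_idx) in best.items()
                  if detections[d_idx][1] == labels[l_idx][1])
    return len(best), correct, false_positives


def summarize(cases):
    """cases: list of (labels, detections) pairs, one per image."""
    n_labels = n_detected = n_correct = n_fp = pd_cases = pr_cases = 0
    for labels, detections in cases:
        detected, correct, fp = evaluate_case(labels, detections)
        n_labels += len(labels)
        n_detected += detected
        n_correct += correct
        n_fp += fp
        if detected == len(labels) and fp == 0:
            pd_cases += 1                 # perfect detection
            if correct == len(labels):
                pr_cases += 1             # perfect recognition
    return {
        "detection_rate": n_detected / n_labels,
        "false_positives_per_image": n_fp / len(cases),
        "recognition_rate": n_correct / n_labels,
        "pd_cases": pd_cases,
        "pr_cases": pr_cases,
    }


cases = [
    # All teeth detected, one misclassified: PD but not PR.
    ([((0, 0, 10, 10), 11), ((20, 0, 30, 10), 12)],
     [((0, 0, 10, 10), 11), ((21, 0, 31, 10), 13)]),
    # Tooth detected correctly, plus one false positive: neither PD nor PR.
    ([((0, 0, 10, 10), 21)],
     [((0, 0, 10, 10), 21), ((50, 0, 60, 10), 22)]),
]
summary = summarize(cases)
print(summary["detection_rate"], summary["false_positives_per_image"])  # 1.0 0.5
print(summary["pd_cases"], summary["pr_cases"])                         # 1 0
```

In this toy example, all three labeled teeth are detected, two of them with the correct class, so the recognition rate is 2/3.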

3. Results

3.1. Comparison of Detectors

Table 1 gives an evaluation of the outputs of the detectors before post-processing for the original SSD, the SSD with an attention map, and the proposed model. All 950 cases were evaluated by a five-fold cross-validation, and the numbers shown in Table 1 are the averages over the five folds. An example of an attention map is shown in Fig. 4.

Table 1.

Comparison of detectors.

| Detection type | Model | Detection rate (%) | Number of false positives per image | Recognition rate (%) |
|---|---|---|---|---|
| 1-class | SSD | 98.27 | 0.31 | — |
| 1-class | Attention map | 98.01 | 0.29 | — |
| 1-class | Proposed model | 98.45 | 0.28 | — |
| 16-class | SSD | 97.75 | 2.03 | 95.20 |
| 16-class | Attention map | 97.67 | 1.98 | 95.08 |
| 16-class | Proposed model | 98.04 | 1.80 | 95.75 |

Fig. 4.

An input image (above) and the attention map (below). The attention map shows the areas of focus for predicting the probability of the presence of teeth.

3.2. Post-Processing Effects

Table 2 gives the post-processing results of the proposed model for the 1-class and 16-class detections. Figure 5 shows examples of PD, in which all teeth were detected but one was assigned an incorrect class, and of PR, in which all teeth were detected and correctly classified. Figure 6 shows cases of misdetection and/or misclassification.

Table 2.

Post-processing effects. The recognition rate is for 16 tooth types before post-processing and after (i), and for 32 tooth types from (ii) onward.

| Processing step | Detection rate (%) | Number of false positives per image | Recognition rate (%) | Number of cases of PD | Number of cases of PR |
|---|---|---|---|---|---|
| Before post-processing | 98.04 | 1.80 | 95.75 | — | — |
| (i) Duplicate deletion | 97.73 | 0.25 | 95.48 | — | — |
| (ii) Left-right classification | 97.73 | 0.25 | 95.32 | 472 | 333 |
| (iii) Integration | 99.12 | 0.37 | 95.31 | 581 | 308 |
| (iv) Classification correction | 99.03 | 0.29 | 96.79 | 606 | 456 |
| Tuzoff et al.13 | 99.41 | 0.13 | 97.42 | — | — |

Fig. 5.

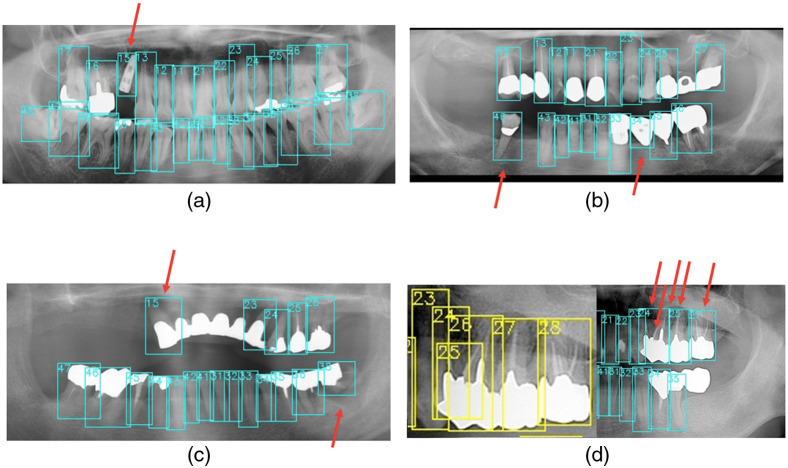

(a) A case of perfect detection. The arrow shows the detected box predicted as class 23, when it is actually class 24. (b) A case of perfect detection. The arrow shows the box predicted as class 18, where the true class is actually class 17. (c) and (d) Cases of perfect recognition.

Fig. 6.

Cases with misdetection and/or misclassification (arrows). (a) Implant predicted as 15 was a false positive. (b) The 45 was predicted as 46. The box predicted as 34 is a false positive (a pontic is excluded from detection in this study). (c) The predicted classes were 15 and 38, whereas the true classes are 13 and 37, respectively. (d) Numbers 24–28 were incorrectly detected/misclassified. The correct boxes are shown on the left in yellow.

4. Discussion

4.1. Proposed Model

The proposed model achieved better recognition performance than the original SSD. This is probably because the global information, in terms of the existence of the 32 tooth types, is incorporated into the feature maps. Therefore, further improvement in the recognition performance is expected by exploring methods that provide feedback to the feature maps from the end of the branch. In this study, the performance did not improve when the attention map was fed back. We believe that one of the advantages of the proposed model over the attention map is that the proposed model can learn through training how the information is added to the feature map.

On the other hand, the performance of the proposed method is slightly lower than that of the method developed by Tuzoff et al.,13 which has the best performance among the related studies. However, this study used images taken at multiple facilities, whereas Tuzoff et al. used images obtained by a single imaging unit at a single institution. Therefore, we believe that the proposed method is highly versatile. In addition, the proposed method requires only one CNN model for detection and classification, whereas the method developed by Tuzoff et al.13 requires two CNN models, one for detection and one for classification.

4.2. Post-Processing

Because most of the false positives were caused by the prediction of multiple detection boxes for a single tooth, post-processing (i) was able to remove many of the false positives. Although (ii) was successful in many cases, classification failures occurred in some cases, reducing the recognition rate. Because this process depends on the prediction accuracy of the central incisors, it is expected to be improved by improving the recognition performance of the detector. In addition, (iii) contributed to an improvement in the detection rate. Moreover, the recognition rate and number of cases of PR showed that a class correction was successfully conducted in (iv).

4.3. Failed Cases

Implants and pontics were often sources of false positives, as shown in Figs. 6(a) and 6(b). Some of them were given tooth numbers that were inconsistent with the surrounding teeth but were corrected through post-processing (iii). Therefore, it is possible to recognize them. However, whether they should be included in the detection targets and treated as teeth or distinguished from teeth during the training process remains to be considered in future work. For the failures in Figs. 5(b) and 6(c), we believe that the shape of the tooth had an effect. As shown in Figs. 5 and 6, there were frequent misclassifications between classes 6, 7, and 8, which are similar in shape. For the class 13 tooth in Fig. 6(c), the misclassification was probably caused by a change in the shape of the crown owing to treatment. Another difficult case was that of overlapping teeth, as shown in Fig. 6(d).

4.4. 32-Class Detection

To investigate the usefulness of 16-class detection, we conducted 32-class detection using SSD. The results (before post-processing) showed that the detection rate was 99.46%, the number of false positives was 31.79 per image, and the recognition rate was 60.53%, resulting in a significant decrease in the recognition rate. The 32-class detection mainly failed to distinguish between the left and right teeth, indicating the effectiveness of the proposed method of 16-class detection followed by left-right classification.

4.5. Imaging Conditions

The database we used consisted of images obtained at ten different facilities, which is, to the best of our knowledge, the largest number among the relevant studies. Figure 7 shows images taken using the four different types of imaging machines. Images from different machines have different image qualities, such as the mean pixel value, contrast, and edge strength. However, the proposed method can successfully recognize the teeth in all images shown in Fig. 7, as indicated in Fig. 8. We achieved a high detection and recognition performance, as shown in Table 2, indicating the robustness of our proposed method.

Fig. 7.

Four images (a)–(d) obtained with four different machines. They present various contrasts and average pixel values.

Fig. 8.

Results of images in Figs. 7(a)–7(d) by the proposed method. All cases have perfect recognition.

5. Conclusion

Using 950 dental panoramic radiographs, we recognized 32 tooth types using the proposed model, which adds a branch to the SSD, together with post-processing. The detection rate was 99.03%, the number of false positives per image was 0.29, and the recognition rate was 96.79%. Because the proposed model improved the recognition performance compared with the original SSD, we will explore ways to effectively feed back information from the branch to further improve the recognition performance. We believe that the proposed model can assist dentists in image-based diagnosis.

Acknowledgments

This study was partially supported by JSPS Grants-in-Aid for Scientific Research JP19K10347 and by a joint study grant from Eye Tech Co. The authors would like to express their gratitude to Y. Ariji, DDS, PhD, and E. Ariji, DDS, PhD, of Aichi-Gakuin University, and all who provided useful advice in conducting this study. We would like to thank Editage16 for English language editing.

Biographies

Takumi Morishita received a master’s degree in engineering from the Graduate School of Natural Science and Technology, Gifu University, Japan, in 2022.

Chisako Muramatsu is an associate professor at Shiga University’s Faculty of Data Science, Japan. She received her PhD in medical physics from the University of Chicago in 2008. Her research interests include image processing and medical image analysis. She is a member of SPIE.

Yuta Seino received his DDS (doctor of dental surgery) and PhD degrees in dentistry from Niigata University, Japan, in 2016 and 2020, respectively. From 2020 to 2022, he held a research fellowship for young scientists of the Japan Society for the Promotion of Science. He is a postdoctoral research fellow of the same organization and works at Gifu University (Faculty of Engineering), Japan. His research interests include clinical applications of AI using medical images, especially in dentistry.

Wataru Nishiyama graduated from Aichi-Gakuin University School of Dentistry in 2009. He has been an assistant professor in the Department of Dental Radiology at Aichi-Gakuin University since 2014. He has been an assistant professor in the Department of Dental Radiology at Asahi University since 2016. He received his PhD from Asahi University in 2021. His research interests include AI-based computer-aided diagnosis systems for panoramic radiography.

Xiangrong Zhou received his MS and PhD degrees in information engineering from Nagoya University, Japan, in 1997 and 2000, respectively. From 2000 to 2002, he continued his research in medical image processing as a postdoctoral researcher at Gifu University. Currently, he is an associate professor in the Department of Electrical, Electronic & Computer Engineering, Gifu University, Japan. His research interests include medical image analysis, pattern recognition, and machine learning.

Takeshi Hara received his PhD in information engineering from Gifu University, Japan, in 2000. He became an associate professor in 2001 in the Faculty of Engineering. From 2002 to 2017, he was an associate professor in the Department of Intelligent Image Information, Graduate School of Medicine, Gifu University. He is currently a professor in the Faculty of Engineering. His research interest includes image analysis and evaluation of computer-aided diagnosis methods using human-AI interaction.

Akitoshi Katsumata received his DDS (doctor of dental surgery) degree from Asahi University School of Dentistry in April 1987. He received his doctor of dental science degree (PhD equivalent) from Asahi University in March 1993. He became a professor and chairman in the Department of Oral Radiology, School of Dentistry, Asahi University, in April 2011. His specialty is oral and maxillofacial radiology.

Hiroshi Fujita received his PhD in information engineering from Nagoya University, Japan, in 1983. From 1983 to 1986, he was a research associate at the University of Chicago, United States. He became a professor in the Faculty of Engineering, Gifu University, in 1995. From 2002 to 2017, he was a chairman and professor in the Department of Intelligent Image Information, Graduate School of Medicine. He is currently a research professor/emeritus professor. His research interest includes AI-based computer-aided diagnosis systems.

Disclosures

The authors have no relevant conflicts of interest to disclose.

Contributor Information

Takumi Morishita, Email: tmori@fjt.info.gifu-u.ac.jp.

Chisako Muramatsu, Email: chisako-muramatsu@biwako.shiga-u.ac.jp.

Yuta Seino, Email: historoids@gmail.com.

Ryo Takahashi, Email: r-takahashi@eyetech.jp.

Tatsuro Hayashi, Email: t-hayashi@eyetech.jp.

Wataru Nishiyama, Email: wataru@dent.asahi-u.ac.jp.

Xiangrong Zhou, Email: zxrgoo@gmail.com.

Takeshi Hara, Email: hara@fjt.info.gifu-u.ac.jp.

Akitoshi Katsumata, Email: kawamata@dent.asahi-u.ac.jp.

Hiroshi Fujita, Email: fujita@fjt.info.gifu-u.ac.jp.

References

- 1. Fujita H., “AI-based computer-aided diagnosis (AI-CAD): the latest review to read first,” Radiol. Phys. Technol. 13(1), 6–19 (2020). doi:10.1007/s12194-019-00552-4

- 2. Fukui H., et al., “Attention branch network: learning of attention mechanism for visual explanation,” in Proc. IEEE Conf. Comput. Vision and Pattern Recognit., pp. 10705–10714 (2019). doi:10.1109/CVPR.2019.01096

- 3. Liu W., et al., “SSD: single shot multibox detector,” in Eur. Conf. Comput. Vision, pp. 21–37 (2016).

- 4. Muramatsu C., et al., “Tooth detection and classification on panoramic radiographs for automatic dental chart filing: improved classification by multi-sized input data,” Oral Radiol. 37(1), 13–19 (2021). doi:10.1007/s11282-019-00418-w

- 5. Miki Y., et al., “Tooth labeling in cone-beam CT using deep convolutional neural network for forensic identification,” Proc. SPIE 10134, 101343E (2017). doi:10.1117/12.2254332

- 6. Miki Y., et al., “Classification of teeth in cone-beam CT using deep convolutional neural network,” Comput. Biol. Med. 80, 24–29 (2017). doi:10.1016/j.compbiomed.2016.11.003

- 7. Cui Z., Li C., Wang W., “ToothNet: automatic tooth instance segmentation and identification from cone beam CT images,” in IEEE/CVF Conf. Comput. Vision and Pattern Recognit., pp. 6368–6377 (2019). doi:10.1109/CVPR.2019.00653

- 8. Silva G., Oliveira L., Pithon M., “Automatic segmenting teeth in X-ray images: trends, a novel data set, benchmarking and future perspectives,” Expert Syst. Appl. 107, 15–31 (2018). doi:10.1016/j.eswa.2018.04.001

- 9. Wirtz A., Mirashi S. G., Wesarg S., “Automatic teeth segmentation in panoramic X-ray images using a coupled shape model in combination with a neural network,” Lect. Notes Comput. Sci. 11073, 712–719 (2018). doi:10.1007/978-3-030-00937-3_81

- 10. Tian S., et al., “Automatic classification and segmentation of teeth on 3D dental model using hierarchical deep learning networks,” IEEE Access 7, 84817–84828 (2019). doi:10.1109/ACCESS.2019.2924262

- 11. Kim C., et al., “Automatic tooth detection and numbering using a combination of a CNN and heuristic algorithm,” Appl. Sci. 10(16), 5624 (2020). doi:10.3390/app10165624

- 12. Lee J. H., “Application of a fully deep convolutional neural network to the automation of tooth segmentation on panoramic radiographs,” Oral Surg. Oral Med. Oral Pathol. Oral Radiol. 129(6), 635–642 (2020). doi:10.1016/j.oooo.2019.11.007

- 13. Tuzoff D. V., et al., “Tooth detection and numbering in panoramic radiographs using convolutional neural networks,” Dentomaxillofac. Radiol. 48(4), 20180051 (2019). doi:10.1259/dmfr.20180051

- 14. Chung M., et al., “Individual tooth detection and identification from dental panoramic x-ray images via point-wise localization and distance regularization,” Artif. Intell. Med. 111, 101996 (2021). doi:10.1016/j.artmed.2020.101996

- 15. Morishita T., et al., “Tooth recognition and classification using multi-task learning and post-processing in dental panoramic radiographs,” Proc. SPIE 11597, 115971X (2021). doi:10.1117/12.2582046

- 16. Editage, “Meeting all your researcher needs to help you publish & flourish,” www.editage.com (accessed 27 July 2021).