Abstract

In this article, we consider the development of unbiased estimators of the Hessian, of the log-likelihood function with respect to parameters, for partially observed diffusion processes. These processes arise in numerous applications, where such diffusions require derivative information, either through the Jacobian or Hessian matrix. As time-discretizations of diffusions induce a bias, we provide an unbiased estimator of the Hessian. This is based on using Girsanov’s Theorem and randomization schemes developed through Mcleish (2011 Monte Carlo Methods Appl. 17, 301–315 (doi:10.1515/mcma.2011.013)) and Rhee & Glynn (2016 Op. Res. 63, 1026–1043). We demonstrate our developed estimator of the Hessian is unbiased, and one of finite variance. We numerically test and verify this by comparing the methodology here to that of a newly proposed particle filtering methodology. We test this on a range of diffusion models, which include different Ornstein–Uhlenbeck processes and the Fitzhugh–Nagumo model, arising in neuroscience.

Keywords: partially observed diffusions, randomization methods, Hessian estimation, coupled conditional particle filter

1. Introduction

In many scientific disciplines, diffusion processes [1] are used to model and describe important phenomena. Particular applications where such processes arise include biological sciences, finance, signal processing and atmospheric sciences [2–5]. Mathematically, diffusion processes take the general form

| 1.1 |

where , is a parameter, is the initial condition with given, denotes the drift term, denotes the diffusion coefficient and is a standard -dimensional Brownian motion. In practice, it is often difficult to have direct access to such continuous processes, where instead one has discrete-time partial observations of the process , denoted as , where , such that . Such processes are referred to as partially observed diffusion processes (PODPs), where one is interested in doing inference on the hidden process (1.1) given the observations. In order to do such inference, one must time-discretize such a process which induces a discretization bias. For (1.1), this can arise through common discretization forms such as an Euler or Milstein scheme [6]. Therefore, an important question, related to inference, is how one can reduce, or remove the discretization bias. Such a discussion motivates the development and implementation of unbiased estimators.

(a) . Motivating example

To help motivate unbiased estimation for PODPs, we provide an interesting application, for which we will test in this work. Our model example is the Fitzhugh–Nagumo (FHN) model for a neuron, which is a second-order ODE model arising in neuroscience, describing the actional potential generation within a neuronal axon. We consider a stochastic version of it, which is represented as the following:

| 1.2 |

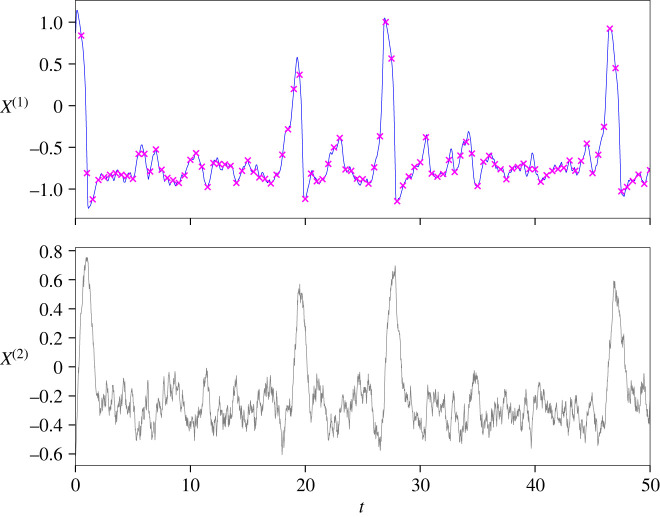

where is the membrane potential. There has been some interest in parameter estimation [7–9], related to various variants of the FHN model. This particular model is well known within the community, and commonly acts as a toy problem in the field of mathematical neurosciences. For this reason, we will use this example within our numerical experiments. In figure 1, we provide a simulation of (1.2), for arbitrary choices of , which demonstrates the interesting behaviour and dynamics. In the plot, we have also plotted non-noisy observations for .

Figure 1.

Simulation of FHN model for . Purple crosses in the top subplot represent discrete-time observations. (Online version in colour.)

(b) . Methodology

The unbiased estimation of PODPs has been an important, yet challenging topic. Some original seminal work on this has been the idea of exact simulation, proposed in various works [10–12]. The underlying idea behind exact simulation is that, through a particular transformation, one can acquire an unbiased estimator, subject to certain restrictions on the form of the diffusion and its dimension. Since then there have been a number of extensions aimed at going beyond this, w.r.t. to more general multidimensional diffusions and continuous-time dynamics [13,14]. However, attention has recently been paid to unbiased estimation, for Bayesian computation, through the work of Rhee and Glynn [15,16], in which they provide unbiased and finite variance estimators through introducing randomization. In particular, these methods allow us to unbiasedly estimate an expectation of a functional, by randomizing on the level of the time-discretization in a type of multilevel Monte Carlo (MLMC) approach [17], where there is a coupling between different levels. As a result, this methodology has been considered in the context of both filtering and Bayesian computation [18–21] and gradient estimation [22]. One advantage of this approach is that, with couplings, it is relatively simple to use & implement computationally, while exploiting such methodologies on a range of different model problems or set-ups.

In this work, we are interested in developing an unbiased estimator of the Hessian for PODPs. Typically, the Hessian is not required for PODPS but currently this is of interest as current state-of-the-art stochastic gradient methodologies exploit Hessian information for an improved speed of convergence. Methods using this information include Newton type methods [23,24], which have improved rates of convergence over first-order stochastic gradient methods. It is also well known that these methods are typically biased as one does not fully require the whole Hessian, due to the computational burden. Therefore, this provides our motivation for firstly developing an unbiased estimator, and secondly for the Hessian. In order to develop an unbiased estimator, our methodology will largely follow that described in [22], with the extension of this from the score function to the Hessian. Other works that consider unbiased estimation of the gradient include [25,26]. In particular, we will exploit the use of the conditional particle filter (CPF), first considered by Andrieu et al. [27,28]. We provide an expression for the Hessian of the likelihood, while introducing an Euler time-discretization of the diffusion process in order to implement our unbiased estimator. We then describe how one can attain unbiased estimators, which is based on various couplings of the CPF. From this, we test this methodology to that of using the methods of [18,29] for the Hessian computation, as for a comparison, where we demonstrate the unbiased estimator through both the variance and bias. This will be conducted on both a single and multidimensional Ornstein–Uhlenbeck (OU) process, as well as a more complicated form of the FHN model. We remark that our estimator of the hessian is unbiased, but if the inverse hessian is required, it is possible to adapt the forthcoming methodology to that context as well.

(c) . Outline

In §2, we present our setting for our diffusion process. We also present a derived expression for the Hessian, with an appropriate time-discretization. Then, in §3, we describe our algorithm in detail for the unbiased estimator of the Hessian. This will be primarily based on a coupling of a coupled CPF. This will lead to §4 where we present our numerical experiments, which provide variance and bias plots. We compare the methodology of this work with that of the Delta particle filter. This comparison will be tested on a range of diffusion processes, which include an OU process and the FHN model. We summarize our findings in §5.

2. Model

In this section, we introduce our setting and notation regarding our partially observed diffusions. This will include a number of assumptions. We will then provide an expression for the Hessian of the likelihood function, with a time-discretization based on the Euler scheme. This will include a discussion on the stochastic model where we define the marginal likelihood. Finally, we present a result indicating the approximation of the Hessian computation as we take the limit of the discretization level.

(a) . Notation

Let be a measurable space. For we write as the collection of bounded measurable functions, are the collection of -times, continuously differentiable functions and we omit the subscript if the functions are simply continuous; if we write and . Let , denote the collection of real-valued functions that are Lipschitz w.r.t. ( denotes the norm of a vector ). That is, if there exists a such that for any

We write as the Lipschitz constant of a function . For , we write the supremum norm . denotes the collection of probability measures on . For a measure on and a , the notation is used. denote the Borel sets on . is used to denote the Lebesgue measure. Let be a non-negative operator and be a measure, then we use the notations and for , For , the indicator is written . denotes the uniform distribution on the set . (resp. ) denotes an -dimensional Gaussian distribution (density evaluated at ) of mean and covariance . If we omit the subscript . For a vector/matrix , is used to denote the transpose of . For , denotes the Dirac measure of , and if with , we write . For a vector-valued function in -dimensions (resp. -dimensional vector), (resp. ) say, we write the component () as (resp. ). For a matrix we write the entry as . For and a random variable on with distribution associated with we use the notation .

(b) . Diffusion process

Let be fixed and we consider a diffusion process on the probability space , such that

| 2.1 |

where , with given, is the drift term, is the diffusion coefficient and is a standard -dimensional Brownian motion. We assume that for any fixed , and for . For fixed , we have for .

Furthermore, we make the following additional assumption, termed (D1).

-

(i)

Uniform ellipticity: is uniformly positive definite over .

-

(ii) Globally Lipschitz: for any , there exists a positive constant such that

for all , .

Let be a given collection of time points. Following [22], by the use of Girsanov Theorem, for any -integrable ,

| 2.2 |

where denotes the expectation w.r.t. , set , and the change of measure is given by

with is a -dimensional vector. Below, we consider a change of measure to the law , which is induced by using that , where solves such a process. Since and are equibilant, therefore by Girsanov’s Theorem

where the corresponding Radon–Nikodym derivative is

Now if we assume that is differentiable w.r.t. , then one has for

| 2.3 |

where and is the law of the diffusion process (1.1). From herein, we will use the short-hand notation and also set, for ,

(c) . Hessian expression

Given the expression (2.3) our objective is now to write the matrix of second derivatives, for

in terms of expectations w.r.t. .

We have the following simple calculation:

Under relatively weak conditions, one can express and as

and

Therefore, we have the following expression:

| 2.4 |

Defining, for

one can write more succinctly

(d) . Stochastic model

Consider a sequence of random variables where , where , which are assumed to have the following joint conditional Lebesgue density

where for any such that is the Lebesgue measure. Now if one considers instead realizations of the random variables , in the conditioning of the joint density we have a state-space model with marginal likelihood

Note that the framework to be investigated in this article is not restricted to this special case, but we shall focus on it for the rest of the paper. So to clarify from herein.

In reference to (2.3) and (2.4), we have that

and

(e) . Time-discretization

From herein, we take the simplification that . Let be a given level of discretization, and consider the Euler discretization of step size with :

| 2.5 |

Set . We then consider the vector-valued function and the matrix-valued function defined as, for

and

Then, noting (2.4), we have an Euler approximation of the Hessian

In the context of the model in §d, if one sets

where is the transition density induced by discretized diffusion process (2.5) (over unit time), and we use the abuse of notation that is the Lebesgue measure on the coordinates , then one has that

| 2.6 |

where we are using the short-hand and etc.

We have the following result whose proof and assumption (D2) is in appendix A.

Proposition 2.1. —

Assume (D1-D2). Then for any , we have

The main strategy of the proof is by strong convergence, which means that one can characterize an upper-bound on of but that rate is most likely not sharp, as one expects .

3. Algorithm

The objective of this section, using only approximations of (2.6), is to obtain an unbiased estimate of for any fixed and . Our approach is essentially an application of the methodology in [22] and so we provide a review of that approach in the sequel.

(a) . Strategy

To focus our description, we shall suppose that we are interested in computing an unbiased estimate of for some fixed ; we remark that this specialization is not needed and is only used for notational convenience. An Euler approximation of is . To further simplify the notation, we will simply write instead of .

Suppose that one can construct a sequence of random variables on a potentially extended probability space with expectation operator , such that for each , . Moreover, consider the independent sequence of random variables, which are constructed so that for

| 3.1 |

with . Now let be a positive probability mass function on and set . Now if,

| 3.2 |

then if one samples from independently of the sequence then by e.g. ([17], Theorem 5) the estimate

| 3.3 |

is an unbiased and finite variance estimator of . Before describing in fuller detail our approach, which requires numerous techniques and methodologies, we first present our main result which is an unbiased theorem related to our estimator of the Hessian. This is given through the following proposition.

Proposition 3.1. —

Assume (D1-D2). Then there exists choices of so that (3.9) is an unbiased and finite variance estimator of for each .

Proof. —

This is the same as ([22], theorem 2), except one must repeat the arguments of that paper given lemma A.3 in the appendix and given the rate in the proof of proposition 2.1. Since the arguments and calculations are almost identical, they are omitted in their entirety.

The main point is that the choice of is as in [22], which is: in the case that is constant and in the non-constant case ; both choices achieve finite variance and costs to achieve an error of with high probability as in ([16], propositions 4 and 5).

The main issue is to construct the sequence of independent random variables such that (3.1) and (3.2) hold and that the expected computational cost for doing so is not unreasonable as a functional of : a method for doing this is in [22] as we will now describe.

(b) . Computing

The computation of is performed by using exactly the coupled conditional particle filter (CCPF) that has been introduced in [30]. This is an algorithm which allows one to construct a random variable such that and we will set .

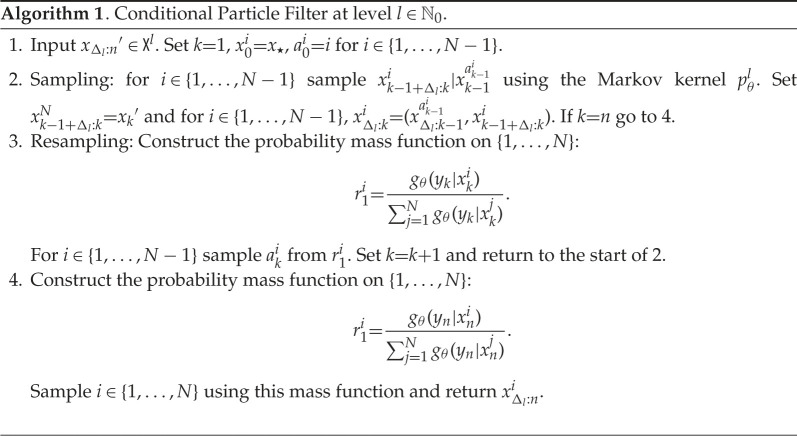

We begin by introducing the Markov kernel in algorithm 1. To that end, we will use the notation , where is the level of discretization, is a particle (sample) indicator, is a time parameter and . The kernel described in algorithm 1 is called the called the CPF, as developed in [27], and allows one to generate, under minor conditions, an ergodic Markov chain of invariant measure . By itself, it does not provide unbiased estimates of expectations w.r.t. , unless is the initial distribution of the Markov chain. However, the kernel will be of use in our subsequent discussion.

Our approach generates a Markov chain on the space , . In order to describe how one can simulate this Markov chain, we introduce several objects which will be needed. The first of these is the kernel , which we need in the case and its simulation is described in algorithm 2. The Markov kernel is used to simulate intermediate points from to the next observations at time , with time step . We will also need to simulate the maximal coupling of two probability mass functions on , for some , and this is described in algorithm 3.

Remark 3.2. —

Step 4 of algorithm 3 can be modified to the case where one generates the pair from any coupling of the two probability mass functions . In our simulations in §4, we will do this by sampling by inversion from , using the same uniform random variable. However, to simplify the mathematical analysis that we will give in the appendix, we consider exactly algorithm 3 in our calculations.

To describe the CCPF kernel, we must first introduce a driving CCPF, which is presented in algorithm 4. The driving CCPF is nothing more than an ordinary coupled particle filter, except the final pair of trajectories is ‘frozen’ as is given in the algorithm (that is as in step 1 of algorithm 4) and allowed to interact with the rest of the particle system. Given the ingredients in algorithms 2–4, we are now in a position to describe the CCPF kernel, which is a Markov kernel , whose simulation is presented in algorithm 5. We will consider the Markov chain , , generated by the CCPF kernel in algorithm 5 and with initial distribution

| 3.4 |

where .

We remark that in algorithm 5, marginally, (resp. ) has been generated according to (resp. ). A rather important point is that if the two input trajectories in step 1 of algorithm 5 are equal, i.e. , then the output trajectories will also be equal. To that end, define the stopping time associated with the given Markov chain

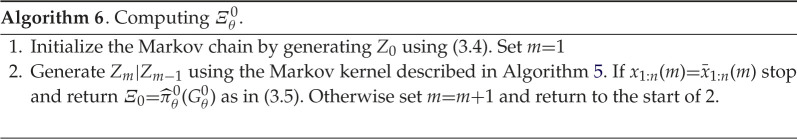

Then, setting one has the following estimator:

| 3.5 |

and one sets . The procedure for computing is summarized in algorithm 6.

(c) . Computing

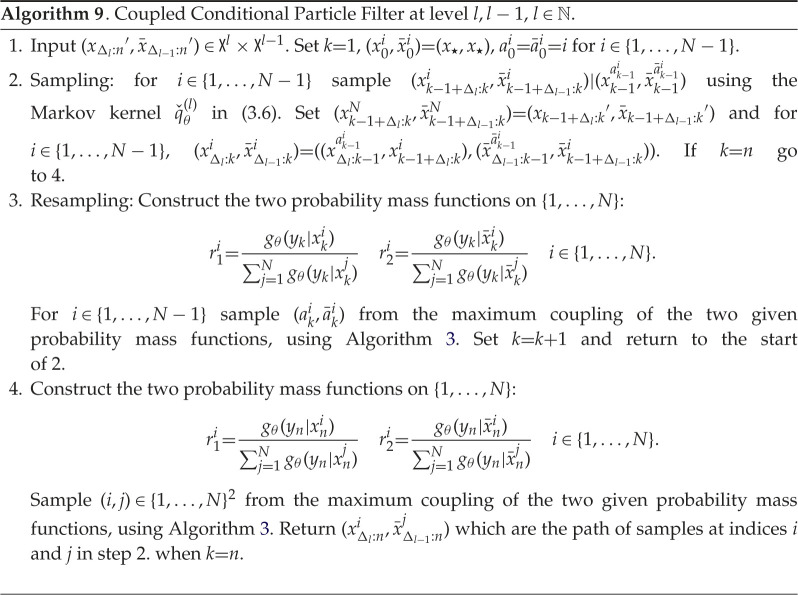

We are now concerned with the task of computing such that (3.1)–(3.2) are satisfied. Throughout the section is fixed. We will generate a Markov chain on the space , where and . In order to construct our Markov chain kernel, as in the previous section, we will need to provide some algorithms. We begin with the Markov kernel which will be needed and whose simulation is described in algorithm 7. We will also need to sample a coupling for four probability mass functions on and this is presented in algorithm 8.

To continue onwards, we will consider a generalization of that in algorithm 4. The driving CCPF at level is described in algorithm 10. Now given algorithms 7–10, we are in a position to give our Markov kernel, , which we shall call the coupled-CCPF (C-CCPF) and it is given in algorithm 11. To assist the subsequent discussion, we will introduce the marginal Markov kernel

| 3.6 |

Given this kernel, one can describe the CCPF at two different levels in algorithm 9. Algorithm 9 details a Markov kernel which we will use in the initialization of our Markov chain to be described below.

We will consider the Markov chain , with

generated by the C-CCPF kernel in algorithm 11 and with initial distribution

| 3.7 |

where . An important point, as in the case of algorithm 5, is that if the two input trajectories in step 1 of algorithm 11 are equal, i.e. , or , then the associated output trajectories will also be equal. As before, we define the stopping times associated with the given Markov chain ,

Then, setting one has the following estimator:

| 3.8 |

and one sets . The procedure for computing is summarized in algorithm 12.

(d) . Estimate and remarks

Given the commentary above we are ready to present the procedure for our unbiased estimate of for each ; is a symmetric matrix. The two main algorithms we will use (algorithms 6 and 12) are stated in terms of providing for one specified function (recall that was suppressed from the notation). However, the algorithms can be run once and provide an unbiased estimate of for every , of and for every . To that end, we will write , and to denote the appropriate estimators.

Our approach consists of the following steps, repeated for :

-

(i)

Generate according to .

-

(ii)

Compute for every and , for every using algorithm 6. Independently, compute for every using algorithm 6.

-

(iii)

If then independently for each and independently of step 2, calculate for every and , for every using algorithm 12.

-

(iv)

If then independently for each and independently of steps 2 and 3, calculate for every using algorithm 12.

-

(v) Compute for every

Then our estimator is for each

| 3.9 |

The algorithm and the various settings are described and investigated in detail in [22] as well as enhanced estimators. We do not discuss the methodology further in this work.

Remark 3.3. —

As we will see in the succeeding section, we will compare our methodology which is based on the C-CCPF to that of another methodology, which is the PF, within particle Markov chain Monte Carlo. Specifically, it will be a particle marginal Metropolis Hastings algorithm. We omit such a description of the latter, as we only use it as a comparison, but we refer the reader to [18] for a more concrete description. However, we emphasize that it is only asymptotically unbiased, in relation to the Hessian identity (2.4).

Remark 3.4. —

It is important to emphasize that with inverse Hessian, which is required for Newton methodologies, we can debias both the C-CCPF and the PF. This can be achieved by using the same techniques which are presented in the work of Jasra et al. [21].

4. Numerical experiments

In this section, we demonstrate that our estimate of the Hessian is unbiased through various different experiments. We consider testing this through the study of the variance and bias of the mean square error, while also providing plots related to the Newton-type learning. Our results will be demonstrated on three underlying diffusion processes: a univariate OU process, a multivariate OU process and the FHN model. We compare our methodology to that using the PF instead of the coupled-CCPF within our unbiased estimator.

(a) . Ornstein–Uhlenbeck process

Our first set of numerical experiments will be conducted on a univariate OU process, which takes the form

and

where is our initial condition, is a parameter of interest and is the diffusion coefficient. For our discrete observations, we assume that we have Gaussian measurement errors, for and for some . Our observations will be generated with parameter choices defined as , and . Throughout the simulation, one observation sequence is used. The true distribution of observations can be computed analytically, therefore the Hessian is known. In figure 2, we present the surface plots comparing the true Hessian with the estimated Hessian, obtained by the Rhee & Glynn estimator (3.9) truncated at discretization level , this is done by letting . We use to obtain the estimate Hessian surface plot. Both surface plots are evaluated at . In figure 3, we test out the convergence of bias of the Hessian estimate (3.9) with respect to its truncated discretization level. This essentially tests the result in lemma A.2. We use and plot the bias against .

Figure 2.

Experiments for the OU model. (a) True values of the Hessian. (b) Estimated values of the Hessian. (Online version in colour.)

Figure 3.

Experiments for the OU model: bias values of Hessian estimate. (Online version in colour.)

The bias is obtained by using i.i.d. samples, and taking its entry-wise difference with the true Hessian entry-wise value. Note that the Hessian estimate here is evaluated with true parameter choice. As the parameter is two-dimensional, we present four - plots where the rate represents the fitted slope of -scaled bias against -scaled . We observe that the Hessian estimate bias is of order where respectively for the four entries, which verifies our result in lemma A.2. We also compare the wall-clock time cost of obtaining one realization of Hessian estimate (3.9) with the cost of obtaining one realization of score estimate (see [22]), both truncated at the same discretization levels , here . The comparison result is provided in figure 4a.

Figure 4.

Experiments for the OU model. (a) Cost of Hessian & score estimate. (b) Incremental Hessian estimate variance summed over all entries. (Online version in colour.)

We observe that the cost of obtaining the Hessian estimate is on average three times more expensive than obtaining a score estimate. The reason for this is that we need to simulate three CCPF paths in order to obtain one summand in the Hessian estimate, while to estimate the score function, we need only one path. We also record the fitted slope of -scaled cost against -scaled for both estimates, the cost for Hessian estimates is roughly proportional to . To verify the rate obtained in lemma A.3, we compare the variance of the Hessian incremental estimate with respect to discretization level . The incremental variance is approximated with the sample variance over repetitions, and we sum over all entries and present the - plot of the summed variance against on the right of figure 4. We observe that the incremental variance is of order for the OU process model. This verifies the result obtained in lemma A.3. It is known that when truncated, the Rhee & Glynn method essentially serves as a variance reduction method. As a result, compared to the discrete Hessian estimate (2.6), the truncated Hessian estimate (3.9) will require less cost to achieve the same MSE target.

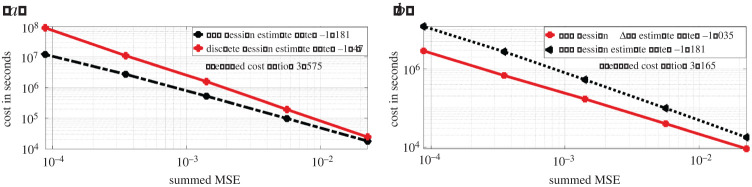

We present on figure 5a, the - plot of cost against MSE for discrete Hessian estimate (2.6) and the Rhee & Glynn (R&G) Hessian estimate (3.9). We observe that (2.6) requires much lower cost for an MSE target compared to (3.9). For (2.6), the cost is proportional to for an MSE target of order . While for (3.9), the cost is proportional to . The average cost ratio between (3.9) and (2.6) under the same MSE target is 5.605. In figure 5b, we present the - plot of cost against MSE for (3.9) and the Hessian estimate obtained by the . We observe that under similar MSE target, the latter method on average has cost 5.054 times less than (3.9). In figure 6, we present the convergence plots for the stochastic gradient descent (SGD) method with score estimate and Newton method with score Hessian estimate. For both methods, the parameter is initialized at , and the learning rate for the SGD method is set to 0.002. Our conclusion from figure 5a is that firstly the methodology using R&G has a better rate, relating the MSE to computational cost, which favours our methodology. For figure 5b, we note that the methodology exploiting the and our methodology, i.e. ‘R&G Hessian’, result in the same rate. As we know the former is unbiased, this comparison indicates our methodology is also unbiased, despite being more expensive by 5.054 times. For figure 6, we observe as expected that the Newton method requires fewer iterations (five compared to 132 which uses SGD) to reach the true parameters of interest, i.e. and .

Figure 5.

Hessian estimate cost against summed MSE for the OU model. (Online version in colour.)

Figure 6.

Parameter estimate for the OU model. (a) SGD with score estimate. (b) Newton method with score & Hessian estimate. (Online version in colour.)

(b) . Multivariate Ornstein–Uhlenbeck process model

Our second model of interest is a two-dimensional OU process defined as

where is the initial condition and are the diffusion coefficients. We assume Gaussian measurement errors, where is a two-dimensional identity matrix. We generate one sequence of observations up to time with parameter choice , , , . As before, we study various properties of (3.9) with the true parameter choice. In figure 7, we present the - plot of bias against for (3.9), where the five points are evaluated with . The bias is approximated by the difference between (3.9) and the true Hessian with , we sum over all entry-wise bias and present it on the plot. We observe that the summed bias is of order . This verifies the result in lemma A.2. In figure 8a, we present a - plot of cost against for (3.9) and the RG score estimate both with . We observe that the cost of (3.9) is proportional to . This rate is similar to that of the score estimate, on average the cost ratio between (3.9) and the score estimate is 3.495.

Figure 7.

Hessian estimate bias summed over all entries for the multivariate OU diffusion model. (Online version in colour.)

Figure 8.

Experiments for the multivariate OU diffusion model. (a) Cost of Hessian and score estimate. (b) Incremental Hessian estimate variance summed over all entries. (Online version in colour.)

In figure 8, we present on the left the - plot of summed incremental variance of Hessian estimate against . We compute the entry-wise sample variance of the incremental Hessian estimate for times, and plot the summed variance against . We observe that the Hessian incremental variance is proportional to . This verifies the result in lemma A.3. In figure 9a, we present the - plot of the cost against MSE for (3.9) and (2.6), where the MSE is approximated through averaging over i.i.d. repetitions of both estimators. We observe that under a summed MSE target of , the cost for (3.9) is of order , while the cost for (2.6) is of order . On average, the cost ratio between (2.6) and (3.9) is 3.575. This verifies the variance reduction effect of truncated R&G scheme. In figure 9b, we present the - plot of the cost against MSE for (3.9) and Hessian estimate using PF. We observe that under a similar MSE target, the latter method on average costs times less than that of (3.9). In figure 10, we present the convergence plots for the SGD and Newton methods. Both the score estimate and the Hessian estimate (3.9) are obtained with , truncated at level . The learning rate for the SGD is set to 0.005. The training reaches convergence when the relative Euclidean distance between trained and true is no bigger than 0.02. We initialize the training parameter at , and we observe that the SGD method reaches convergence with iterations, compared to four iterations of the modified Newton method. The actual training time until convergence for the Newton method is roughly 7.6 times faster than the SGD method.

Figure 9.

Hessian estimate cost against summed MSE for the multivariate OU diffusion model. (Online version in colour.)

Figure 10.

Parameter estimate for the multivariate OU model. (a) SGD with the score estimate. (b) Newton method with score and Hessian estimate. (Online version in colour.)

(c) . FitzHugh–Nagumo model

Our next model will be a two-dimensional ordinary differential equation, which arises in neuroscience, known as the FHN model [7,31]. It is concerned with the membrane potential of a neuron and a (latent) recovery variable modelling the ion channel kinetics. We consider a stochastic perturbed extended version, given as

For the discrete observations, we assume Gaussian measurement errors, , where , ( are the diffusion coefficients and, as before, is a Brownian motion. We generate one observation sequence with parameter choices , . As the true distribution of the observation is not available analytically, we use to simulate out where .

In figure 11, we compared the bias of (3.9), truncated at discretization level and plot it against (- plot). The summed bias is obtained by taking element-wise difference between an average of i.i.d. realizations of the Hessian estimate and the true Hessian, then summed over all the element-wise difference. The true Hessian is approximated by (3.9) with and . We observe that the summed bias is of order . This verifies the result in lemma A.2. In figure 12a, we present the - plot of cost against for (3.9) and the RG score estimate both with . We observe that the cost of (3.9) is of order , while the cost for score estimate is of order . The average cost ratio between (3.9) and the score estimate is . In figure 12b, we present the - plot of the summed incremental variance with . We observe that the summed incremental variance is of order . This verifies the result in lemma A.3.

Figure 11.

Hessian estimate bias summed over all entries for the FHN model.

Figure 12.

Experiments for the FHN model. (a) Cost of Hessian & score estimate. (b) Incremental Hessian estimate variance summed over all entries. (Online version in colour.)

In figure 13a, we again present a - plot of the cost against the summed MSE of (3.9) over all entries for both (3.9) and (2.6). We observe that under an MSE target of , (3.9) requires cost of order , while (2.6) requires cost of order . The average cost ratio between (3.9) and (2.6) under the same MSE target is 3.987. This verifies the variance reduction effect of truncated R&G scheme. In figure 13b is the - plot of cost against summed MSE for (3.9) and Hessian estimate using the PF. We observe that under similar MSE target, the latter method on average costs times less than that of (3.9). In figure 14, we present the convergence plots of SGD and the modified Newton method. For the modified Newton method, we set all the off-diagonal entries to zero for the Hessian estimate, and add 0.0001 to the diagonal entries to avoid singularity. When the norm of the score is smaller than 0.1, we scale the searching step by a learning rate of 0.002. Both the score estimate and the Hessian estimate (3.9) are obtained with , truncated at level . The learning rate for the SGD is set to 0.001. The training reaches convergence when the relative Euclidean distance between trained and true is no bigger than 0.02. We initialize the training parameter at , and observe that the SGD method reaches convergence with fewer iterations than the stochastic Newton method.

Figure 13.

Hessian estimate cost against summed MSE for FHN model. (Online version in colour.)

Figure 14.

Parameter estimate for the FHN model. (a) SGD with score estimate. (b) Modified Newton method with score & Hessian estimate. (Online version in colour.)

5. Summary

In this work, we were interested in developing an unbiased estimator of the Hessian, related to PODPs. This task is of interest, as computing the Hessian is primarily biased, due to its computational cost, but also it has improved convergence over the score function. We presented a general expression for the Hessian and proved, in the limit of discretization level, that it is consistent with the continuous form. We demonstrated that we were able to reduce the bias, arising from the discretization. This was shown through various numerical experiments that were tested on a range of diffusion processes. This not only highlighted the reduction in bias, but also that convergence is better compared to computing and using the score function. In terms of research directions beyond what we have done, it would be nice firstly to extend this to more complicated diffusion models, such as ones arising in mathematical finance [32,33]. Such diffusion models would be rough volatility models. Another potential direction would be to consider diffusion bridges, and analyse how one can could use the tools here and adapt them. This has been of interest, with recent works such as [34,35]. One could also aim to derive similar results for unbiased estimation using alternative discretization schemes, such as the Milstein scheme. This should result in different rates of convergence, however the analysis would be different. To do so, one would also require such a newly developed analysis for the score function [22]. Finally, one could aim to apply this to other applications, which are Monte Carlo methods being exploited such as phylogenetics. In particular, as we have tested our methodology on various OU processes, one could test this further on an SDE arising in phylogenetics, presented ad discussed in [36,37].

Supplementary Material

Appendix A. Proofs for proposition 2.1

In this section, we will consider a diffusion process which follows (2.1) and has an initial condition and we will also consider Euler discretizations (2.5), at some given level , which are driven by the same Brownian motion as and the same initial condition, written . We also consider another diffusion process which also follows (2.1), initial condition with the same Brownian motion as and associated Euler discretizations, at level , which are driven by the same Brownian motion as and the same initial condition, written . The purpose of signifying the initial condition will be made apparent later on in the appendix. The expectation operator for the described process is written .

We require the following additional assumption called (D2) and all derivatives are assumed to be well defined.

-

—

.

-

—

For any , , , .

-

—

For any , there exists such that for any , . In addition for any , .

-

—

For any , , .

-

— For any ,

.

The following result is proved in [22] and is lemma 1 of that article.

Lemma A.1. —

Assume (D1-2). Then for any , there exists a such that for any

We have the following result which can be proved using very similar arguments to lemma A.1.

Lemma A.2. —

Assume (D1-2). Then for any , there exists a such that for any :

Proof of proposition 2.1. —

In the following proof, we will suppress the initial condition from the notation. We have

where

We remark that

A 1 by using (D2) and convergence of Euler approximations of diffusions. For , we have

So one can easily deduce by ([22], theorem 1) and (D2) that for some that does not depend upon

Now for any real numbers, with non-zero, we have the simple identity

So for combining this identity with lemma A.2, (A 1) and (D2) one can easily conclude that for some that does not depend upon

From here, the proof is easily concluded.

We end the section with a couple of results which are more-or-less direct corollaries of ([22], remarks 1 & 2). We do not prove them.

Lemma A.3. —

Assume (D1–D2). Then for any , there exists a such that for any

Appendix B. Algorithms

Data accessibility

Data and code for the paper can be found at https://github.com/fangyuan-ksgk/Hessian_Estimate.

Authors' contributions

N.K.C.: conceptualization, investigation, methodology, resources, supervision, writing—original draft, writing—review and editing; A.J.: formal analysis, methodology, project administration, resources, supervision, writing—original draft, writing—review and editing; F.Y.: resources, software, visualization.

All authors gave final approval for publication and agreed to be held accountable for the work performed therein.

Conflict of interest declaration

We declare we have no competing interest.

Funding

This work was supported by KAUST baseline funding.

References

- 1.Cappé O, Moulines E, Ryden T. 2005. Inference in Hidden Markov Models, vol. 580. New York, NY: Springer. [Google Scholar]

- 2.Bain A, Crisan D. 2009. Fundamentals of stochastic filtering. New York, NY: Springer. [Google Scholar]

- 3.Majda A, Wang X. 2006. Non-linear dynamics and statistical theories for basic geophysical flows. Cambridge, UK: Cambridge University Press. [Google Scholar]

- 4.Ricciardi LM. 1977. Diffusion processes and related topics in biology. Lecture Notes in Biomathematics. Berlin, Heidelberg: Springer. [Google Scholar]

- 5.Shreve SE. 2004. Stochastic calculus for finance II: continuous-time model. New York, NY: Springer Science & Business Media. [Google Scholar]

- 6.Kloeden PE, Platen E. 2013. Numerical solution of stochastic differential equations, vol. 23. New York, NY: Springer Science & Business Media. [Google Scholar]

- 7.Bierkens J, van der Meulen F, Schauer M. 2020. Simulation of elliptic and hypo-elliptic conditional diffusions. Adv. Appl. Probab. 52, 173-212. ( 10.1017/apr.2019.54) [DOI] [Google Scholar]

- 8.Che Y, Geng L-H, Han C, Cui S, Wang J. 2012. Parameter estimation of the FitzHugh-Nagumo model using noisy measurements for membrane potential. Chaos 22, 023139. ( 10.1063/1.4729458) [DOI] [PubMed] [Google Scholar]

- 9.Doruk R, Abosharb L. 2019. Estimating the parameters of Fitzhugh-Nagumo neurons from neural spiking data. Brain Sci. 9, 364. ( 10.3390/brainsci9120364) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Beskos A, Roberts GO. 2005. Exact simulation of diffusions. Ann. Appl. Probab. 15, 2422-2444. ( 10.1214/105051605000000485) [DOI] [Google Scholar]

- 11.Beskos A, Papaspiliopoulos O, Roberts GO. 2006. Retrospective exact simulation of diffusion sample paths with applications. Bernoulli 12, 1077-1098. ( 10.3150/bj/1165269151) [DOI] [Google Scholar]

- 12.Fearnhead P, Papaspiliopoulos O, Roberts GO. 2008. Particle filters for partially observed diffusions. J. R. Stat. Soc. B 70, 755-777. ( 10.1111/j.1467-9868.2008.00661.x) [DOI] [Google Scholar]

- 13.Blanchet J, Zhang F. 2017. Exact simulation for multivariate Ito diffusions. (http://arxiv.org/abs/1706.05124)

- 14.Fearnhead P, Papaspiliopoulos O, Roberts GO, Stuart AM. 2010. Random-weight particle filtering of continuous time processes. J. R. Stat. Soc. Ser. B Stat. Methodol. 72, 497-512. ( 10.1111/j.1467-9868.2010.00744.x) [DOI] [Google Scholar]

- 15.Glynn PW. 2014. Exact estimation for Markov chain equilibrium expectations. J. Appl. Probab. 51, 377-389. ( 10.1239/jap/1417528487) [DOI] [Google Scholar]

- 16.Rhee CH, Glynn P. 2016. Unbiased estimation with square root convergence for SDE models. Op. Res. 63, 1026-1043. ( 10.1287/opre.2015.1404) [DOI] [Google Scholar]

- 17.Vihola M. 2018. Unbiased estimators and multilevel Monte Carlo. Op. Res. 66, 448-462. ( 10.1287/opre.2017.1670) [DOI] [Google Scholar]

- 18.Chada NK, Franks J, Jasra A, Law KJH, Vihola M. 2021. Unbiased inference for discretely observed hidden Markov model diffusions. SIAM/ASA J. Unc. Quant. 9, 763-787. ( 10.1137/20m131549x) [DOI] [Google Scholar]

- 19.Heng J, Jasra A, Law KJH, Tarakanov A. 2021. On unbiased estimation for discretized models. (http://arxiv.org/abs/2102.12230)

- 20.Jacob PE, O’Leary J, Atchadè YF. 2020. Unbiased Markov chain Monte Carlo methods with couplings. J. R. Stat. Soc. B 82, 543-600. ( 10.1111/rssb.12336) [DOI] [Google Scholar]

- 21.Jasra A, Law KJH. 2021. Unbiased filtering for a class of partially observed diffusion processes. Adv. Appl. Probab. (to appear). [Google Scholar]

- 22.Heng J, Houssineau J, Jasra A. 2021. On unbiased score estimation for partially observed diffusions. (http://arxiv.org/abs/2105:04912)

- 23.Agarwal N, Bullins B, Hazan E. 2017. Second-order stochastic optimization for machine learning in linear time. J. Mach. Learn. Res. 18, 1-40. [Google Scholar]

- 24.Byrd RH, Chin GM, Neveitt W, Nocedal J. 2011. On the Use of stochastic Hessian information in optimization methods for machine learning. SIAM J. Optim. 21, 977-995. ( 10.1137/10079923X) [DOI] [Google Scholar]

- 25.Jasra A, Law KJH, Lu D. 2021. Unbiased estimation of the gradient of the log-likelihood in inverse problems. Stat. Comp. 31, 1-18. ( 10.1007/s11222-021-09994-6) [DOI] [Google Scholar]

- 26.Shi Y, Cornish R. 2021. On multilevel Monte Carlo unbiased gradient estimation for deep latent variable models. In Proc. of The 24th Int. Conf. on Artificial Intelligence and Statistics, vol. 130, pp. 3925–3933, PMLR.

- 27.Andrieu C, Doucet A, Holenstein R. 2010. Particle Markov chain Monte Carlo methods. J. R. Stat. Soc. B 72, 269-342. ( 10.1111/j.1467-9868.2009.00736.x) [DOI] [Google Scholar]

- 28.Andrieu C, Lee A, Vihola M. 2018. Uniform ergodicity of the iterated conditional SMC and geometric ergodicity of particle Gibbs samplers. Bernoulli 24, 842-872. ( 10.3150/15-BEJ785) [DOI] [Google Scholar]

- 29.Jasra A, Law KJH, Zhou Y. 2018. Bayesian static parameter estimation for partially observed diffusions via multilevel Monte Carlo. SIAM J. Sci. Comp. 40, A887-A902. ( 10.1137/17M1112595) [DOI] [Google Scholar]

- 30.Jacob P, Lindstein F, Schön T. 2021. Smoothing with couplings of conditional particle filters. J. Am. Stat. Assoc. 115, 721-729. ( 10.1080/01621459.2018.1548856) [DOI] [Google Scholar]

- 31.Ditlevsen S, Samson A. 2019. Hypoelliptic diffusions: filtering and inference from complete and partial observations. J. R. Stat. Soc. B 81, 361-384. ( 10.1111/rssb.12307) [DOI] [Google Scholar]

- 32.Gatheral J. 2006. The volatility surface: a practitioner’s guide. New York, NY: John Wiley & Sons. [Google Scholar]

- 33.Gatheral J, Jaisson T, Rosenbaum M. 2018. Volatility is Rough. Quant. Financ. 18, 933-949. ( 10.1080/14697688.2017.1393551) [DOI] [Google Scholar]

- 34.van der Meulen F, Schauer M. 2018. Bayesian estimation of incompletely observed diffusions. Stochastic 90, 641-662. ( 10.1080/17442508.2017.1381097) [DOI] [Google Scholar]

- 35.Schauer M, Van Der Meulen F, Van Zanten H. 2017. Guided proposals for simulating multi-dimensional diffusion bridges. Bernoulli 23, 2917-2950. ( 10.3150/16-BEJ833) [DOI] [Google Scholar]

- 36.Hansen T, Martins E. 1996. Translating between microevolutionary process and macroevolutionary patterns: the correlation structure of interspecific data. Evolution 50, 1404-1417. ( 10.1111/j.1558-5646.1996.tb03914.x) [DOI] [PubMed] [Google Scholar]

- 37.Jhwueng T, Vasileios M. 2014. Phylogenetic Ornstein–Uhlenbeck regression curves. Stat. Probab. Lett. 89, 110-117. ( 10.1016/j.spl.2014.02.023) [DOI] [Google Scholar]

- 38.Thorisson H. 2002. Coupling, stationarity, and regeneration. New York, NY: Springer. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Data Availability Statement

Data and code for the paper can be found at https://github.com/fangyuan-ksgk/Hessian_Estimate.