Abstract

Various non-classical approaches to distributed information processing, such as neural networks, reservoir computing, vector symbolic architectures, and others, employ the principle of collective-state computing. In this type of computing, the variables relevant to a computation are superimposed into a single high-dimensional state vector, the collective state. The variable encoding uses a fixed set of random patterns, which has to be stored and kept available during the computation. Here we show that an elementary cellular automaton with rule 90 (CA90) enables a space-time tradeoff for collective-state computing models that use random dense binary representations, i.e., memory requirements can be traded off against computation by running CA90. We investigate the randomization behavior of CA90, in particular, the relation between the length of the randomization period and the size of the grid, and how CA90 preserves similarity in the presence of initialization noise. Based on these analyses, we discuss how to optimize a collective-state computing model in which CA90 expands representations on the fly from short seed patterns, rather than storing the full set of random patterns. The CA90 expansion is applied and tested in concrete scenarios using reservoir computing and vector symbolic architectures. Our experimental results show that collective-state computing with CA90 expansion performs similarly to traditional collective-state models, in which random patterns are generated initially by a pseudo-random number generator and then stored in a large memory.

Keywords: cellular automata, rule 90, collective-state computing, reservoir computing, hyperdimensional computing, vector symbolic architectures, distributed representations, random number generation

I. Introduction

Collective-state computing is an emerging paradigm of computing, which leverages interactions of nodes or neurons in a highly interconnected network [1]. This paradigm was first proposed in the context of neural networks and neuroscience for exploiting the parallelism of complex network dynamics to perform challenging computations. The classical examples include reservoir computing (RC) [2]–[5] for buffering spatio-temporal inputs, and attractor networks for associative memory [6] and optimization [7]. In addition, many other models can be regarded as collective-state computing, such as random projection [8], compressed sensing [9], [10], randomly connected feedforward neural networks [11], [12], and vector symbolic architectures (VSAs) [13]–[15]. Interestingly, these diverse computational models share a fundamental commonality – they all include an initialization phase in which high-dimensional independent and identically distributed (i.i.d.) random vectors or matrices are generated that have to be memorized.

In different models, these memorized random vectors or matrices serve a similar purpose: to represent inputs and variables that need to be manipulated as distributed patterns across all neurons. For example, in RC random matrices are used as weights for projecting input to the reservoir (commonly denoted as Win) as well as weights of recurrent connections between the neurons in the reservoir (commonly denoted as W). The collective state is the (linear) superposition of these distributed representations. Decoding a particular variable from the collective state can be achieved by a linear projection onto the corresponding representation vector. Since high-dimensional random vectors are pseudo-orthogonal, the decoding interference is rather small, even if the collective state contains many variables1. In contrast, if representations of different variables are not random, but contain correlations or statistical dependencies, then the interference becomes large when decoding and collective-state computing ceases to work. In order to achieve near orthogonality and low decoding interference, a large dimension of the random vectors is essential.

When implementing a collective-state computing model in hardware (e.g., in Field Programmable Gate Arrays, FPGA), the memory requirements are typically a major bottleneck for scaling the system [16]. It seems counterintuitive to spend a large amount of memory just to store random vectors. Thus, our key question is whether collective-state computing can be achieved without memorizing the full array of random vectors. Instead of memorization, can memory requirements be traded off against computation?

Cellular automata (CA) are simple discrete computations capable of producing complex random behavior [17]. Here we study the randomization behavior of an elementary cellular automaton with rule 90 (CA90) for generating distributed representations for collective-state computing. CA90 is chosen because of its highly parallelizable implementation and its randomization properties, which, in our opinion, make it amenable to collective-state computing (see Section II-C). We demonstrate in the context of RC and VSAs that collective-state computing at full performance is possible by storing only short random seed patterns, which are then expanded “on the fly” to the full required dimension by running CA90. Thus, CA90 provides a space-time tradeoff for collective-state computing models: instead of memorizing random vectors/matrices, CA90 allows recomputing them in real time while storing only a fraction of the fully memorized solution, at the cost of running CA90 computations every time access is required. This work is partially inspired by [18], which proposed that RC, VSAs, and CA can benefit from each other by expanding low-dimensional representations via CA computations into high-dimensional representations that are then used in RC and VSA models. The specific contributions of this article are:

Characterization of the relation between the length of the randomization period of CA90 and the size of its grid;

Analysis of the similarity between CA90 expanded representations in the case when the seed pattern contains errors;

Experimental evidence that for RC and VSAs the CA90 expanded representations are functionally equivalent to the representations obtained from a standard pseudo-random number generator.2

The article is structured as follows. The main concepts used in this study are presented in Section II. The effect of randomization of states by CA90 is described in Section III. The use of RC and VSAs with the CA90 expanded representations is reported in Section IV. The findings and their relation to the related work are discussed in Section V.

II. Concepts

A. Collective-state computing

As explained in the introduction, collective-state computing subsumes numerous types of network computations that employ distributed representation schemes based on i.i.d. random vectors. One type is VSA or hyperdimensional computing [13], [19], [20], which has been proposed in cognitive neuroscience as a formalism for symbolic reasoning with distributed representations. Recently, the VSA formalism has been used to formulate other types of collective-state computing models, such as RC [5], compressed sensing [21], and randomly connected feed-forward neural networks [12] such as random vector functional link networks [11] and extreme learning machines [22]. Following this lead, we formulate below the types of collective-state computing that are used in Section IV to study the CA90 expansion.3

VSAs are defined for different spaces (e.g., real or complex), but here we focus on VSAs with dense binary [23] or bipolar [24] vectors, where the similarity between vectors is measured by the normalized Hamming distance (denoted as d_h) for binary vectors or by the dot product (denoted as d_d) for bipolar ones. The VSA formalism will be introduced as we go. Please also refer to Section S.2 in the Supplementary materials for a concise introduction to VSAs.

1). Item memory with nearest neighbor search:

A common feature in collective-state computing is that a set of basic concepts/symbols4 is defined and each is assigned an i.i.d. random high-dimensional atomic vector. In VSAs, these atomic vectors are stored in the so-called item memory (denoted as H), which in its simplest form is a matrix whose size is determined by the dimensionality of the vectors (denoted as K) and the number of symbols (denoted as D). The item memory H enables associative or content-based search: for a given, possibly noisy, query vector q, the memory returns the best match using the nearest neighbor search:

$$\hat{i} = \arg\min_{i \in \{1,\dots,D\}} d_h(\mathbf{H}_i, \mathbf{q}) \tag{1}$$

The search returns the index of the vector that is closest to the query in terms of the similarity metric (e.g., the Hamming distance as in (1)). In VSAs and, implicitly, in most types of collective-state computing, this content-based search is required for selecting and error-correcting results of computations that, in noisy form, have been produced by dynamical transformations of the collective state.
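The search in (1) can be illustrated with a minimal NumPy sketch. It assumes that the item memory stores one atomic vector per row (a layout choice of ours) and uses a hypothetical helper `nearest_neighbor`; the values of D, K, and the 30% noise level are arbitrary.

```python
import numpy as np

def nearest_neighbor(H, q):
    """Index of the row of H closest to q in normalized Hamming distance, as in (1)."""
    d_h = np.mean(H != q, axis=1)   # normalized Hamming distance to every stored vector
    return int(np.argmin(d_h))

rng = np.random.default_rng(0)
D, K = 100, 1000                    # number of symbols and dimensionality (illustrative values)
H = rng.integers(0, 2, size=(D, K), dtype=np.uint8)   # item memory: i.i.d. random atomic vectors

# Noisy query: item 42 with roughly 30% of its bits flipped.
noise = (rng.random(K) < 0.3).astype(np.uint8)
q = H[42] ^ noise
print(nearest_neighbor(H, q))
```

With K = 1000, the distance to the correct item concentrates around 0.3 while all other distances concentrate around 0.5, so the search recovers index 42 with overwhelming probability.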

2). Memory buffer:

RC is a prominent example of collective-state computing as in echo state networks [3], liquid state machines [2], and state-dependent computation [35]. In these models the dynamics of a recurrent network is used to memorize or buffer the structure of a spatio-temporal input pattern. In essence, the memory buffer accumulates the input history over time into a compound vector. For example, the recurrent network dynamics of echo state networks can be viewed as attaching time stamps to the inputs arriving at different times.5 The time stamps can be used to isolate past inputs at a particular time from the compound vector describing the present network state. For a detailed explanation of this view of echo state networks, see [5].

It has been recently shown how the memory buffer task can be analyzed using the VSA formalism [5], [36], which builds on earlier VSA proposals of the memory buffer task under the name trajectory association task [37], [38].

Here we use a simple variant of the echo state network [39], called the integer echo state network [40], which uses a random binary matrix for projecting the input to the reservoir. The memory buffer involves the item memory and two other VSA operations: permutation and bundling. The item memory contains a random binary/bipolar vector assigned to each symbol from a dictionary of symbols of size D; it corresponds to the Win weight matrix for projecting the input to the reservoir. A fixed permutation (rotation) of the components of the vector (denoted as ρ)6 is used to represent the position of a symbol in the input sequence. In other words, the permutation operation is used as a systematic transformation of a symbol as a function of its serial position. For example, a symbol a, represented by the vector a, is associated with its position i in the sequence through permutation, which yields the vector r:

$$\mathbf{r} = \rho^{i}(\mathbf{a}) \tag{2}$$

where ρ^i(∗) denotes that some fixed permutation ρ() has been applied i times.

The bundling operation forms a linear superposition of several vectors, which in some form is present in all collective-state computing models. Its simplest realization is component-wise addition. However, with component-wise addition the range of component values is unbounded; therefore, it is practical to limit the values of the result. In general, the normalization function applied to the result of superposition is denoted as fn(∗).7 The vector x resulting from the bundling of several vectors, e.g.,

$$\mathbf{x} = f_n(\mathbf{a} + \mathbf{b} + \mathbf{c}) \tag{3}$$

is similar to each of the bundled vectors, which allows storing information as a superposition of multiple vectors [5], [41]. Therefore, in the context of the memory buffer, the bundling operation is used to update the buffer with new symbols.
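Both operations can be checked numerically on bipolar vectors. The sketch below assumes NumPy, a cyclic shift as the fixed permutation ρ, and clipping to [−κ, κ] as one possible choice of fn(∗), with κ = 3 chosen arbitrarily; `rho`, `f_n`, and `sim` are illustrative names, not part of the original models.

```python
import numpy as np

rng = np.random.default_rng(1)
K = 10_000
a, b, c = (rng.choice([-1, 1], size=K) for _ in range(3))   # random bipolar atomic vectors

def rho(v, i=1):
    """Permutation applied i times; here a cyclic shift by i positions."""
    return np.roll(v, i)

def f_n(v, kappa=3):
    """Normalization of a superposition: clip every component to [-kappa, kappa]."""
    return np.clip(v, -kappa, kappa)

def sim(u, v):
    """Dot-product similarity divided by the dimensionality."""
    return float(u @ v) / K

r = rho(a, 5)             # position tagging as in (2): a at position 5
x = f_n(a + b + c)        # bundling as in (3)

print(sim(a, r))                        # near 0: the permuted vector is dissimilar to a
print(sim(x, a), sim(x, b), sim(x, c))  # near 1: the bundle stays similar to every input
```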

The memory buffer task involves two stages, memorization and recall, which are done in discrete time steps. At the memorization stage, at every time step t we add a vector H_{s(t)} representing the symbol s(t) from the sequence s to the current memory buffer x(t), which is formed as:

$$\mathbf{x}(t) = f_n\big(\rho(\mathbf{x}(t-1)) + \mathbf{H}_{s(t)}\big) \tag{4}$$

where x(t − 1) is the previous state of the buffer. Note that the symbol added d steps ago is represented in the buffer as ρ^d(H_{s(t−d)}).

At the recall stage, at every time step we use x(t) to predict the delayed symbol stored d steps ago, using the readout matrix W_d for that particular delay d and the nearest neighbor search:

$$\hat{s}(t-d) = \arg\max_{i \in \{1,\dots,D\}} \big(\mathbf{W}_d\, \mathbf{x}(t)\big)_i \tag{5}$$

Due to the use of the normalization function fn(∗), the memory buffer exhibits a recency effect; therefore, the average recall accuracy is higher for smaller values of the delay. There are several approaches to forming the readout matrix W_d and the normalization function fn(∗). Please see Section S.1 in the Supplementary materials for additional details on integer echo state networks.
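The memorization and recall stages in (4) and (5) can be sketched as follows. This is only an illustration under our own assumptions: bipolar item memory rows, a cyclic shift as ρ, clipping as fn(∗), and the simple readout W_d obtained by applying ρ^d to every row of the item memory; the values of D, K, κ, and T are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(2)
D, K, kappa, T = 27, 1000, 7, 300      # alphabet size, reservoir size, clipping bound, sequence length
H = rng.choice([-1, 1], size=(D, K))   # item memory, one bipolar row per symbol (plays the role of W_in)

def rho(v, i=1):
    """Permutation rho^i implemented as a cyclic shift along the last axis."""
    return np.roll(v, i, axis=-1)

def f_n(v):
    """Clipping normalization that keeps the buffer integer-valued and bounded."""
    return np.clip(v, -kappa, kappa)

# Memorization stage, (4): shift the previous state and add the current symbol.
s = rng.integers(0, D, size=T)
x = np.zeros(K, dtype=int)
states = []
for t in range(T):
    x = f_n(rho(x) + H[s[t]])
    states.append(x)

def recall(x_t, d):
    """Recall stage, (5): readout W_d = rho^d(H), then pick the most similar symbol."""
    W_d = rho(H, d)
    return int(np.argmax(W_d @ x_t))

def accuracy(d):
    """Fraction of time steps at which the symbol from d steps ago is recalled correctly."""
    return float(np.mean([recall(states[t], d) == s[t - d] for t in range(d, T)]))

print([accuracy(d) for d in (1, 5, 10, 60)])
```

Because clipping gradually overwrites old contributions, the accuracy is expected to decrease as the delay d grows, which is the recency effect mentioned above.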

3). Factorization with resonator network:

General symbolic manipulations with VSAs require one more operation in addition to bundling, permutation, and the item memory. The representation of an association of two or more symbols, such as a role-filler pair, is achieved by a binding operation, which associates several vectors (e.g., a and b) and produces another vector (denoted as z) of the same dimensionality:

$$\mathbf{z} = \mathbf{a} \oplus \mathbf{b} \tag{6}$$

where ⊕ denotes the component-wise XOR used for binding in dense binary VSAs. While bundling leads to a vector that is correlated with each of its components, the vector z resulting from binding is pseudo-orthogonal to the component vectors. Another important property of binding is that it is conditionally invertible: given all but one of the components, the unknown factor can be computed directly from the binding representation, e.g., z ⊕ a = a ⊕ a ⊕ b = b.
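The binding and unbinding in (6) can be verified on dense binary vectors with a small NumPy sketch (K and the random seed are arbitrary):

```python
import numpy as np

rng = np.random.default_rng(3)
K = 10_000
a = rng.integers(0, 2, K, dtype=np.uint8)
b = rng.integers(0, 2, K, dtype=np.uint8)

def d_h(u, v):
    """Normalized Hamming distance."""
    return float(np.mean(u != v))

z = a ^ b                     # binding (6): component-wise XOR
print(d_h(z, a), d_h(z, b))   # both close to 0.5: z is pseudo-orthogonal to its factors
recovered = z ^ a             # unbinding with the known factor: a XOR a XOR b = b
print(np.array_equal(recovered, b))   # True
```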

If none of the factors is given, but they are all contained in item memories, unbinding is still feasible but becomes a combinatorial search problem whose complexity grows exponentially with the number of factors. This problem often occurs in symbolic manipulation, for example, in finding the position of a given item in a tree structure [42]. Let us assume that each component (factor; denoted as f_i) comes from a separate item memory (^1H, ^2H, ...), called a factor item memory. For example, a vector with four factors is:

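$$\mathbf{s} = \mathbf{f}_1 \oplus \mathbf{f}_2 \oplus \mathbf{f}_3 \oplus \mathbf{f}_4, \qquad \mathbf{f}_i \in {}^{i}\mathbf{H} \tag{7}$$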

Recent work [43] proposes an elegant mechanism called the resonator network to address the challenge of factoring. In a nutshell, the resonator network is a novel recurrent neural network design that uses VSA principles to solve combinatorial optimization problems.

To factor the input vector s = f_1 ⊕ f_2 ⊕ f_3 ⊕ f_4, which represents the binding of several vectors, the resonator network uses several populations of units, f̂_1(t), f̂_2(t), f̂_3(t), and f̂_4(t), each of which tries to infer a particular factor from the input vector. Each population, called a resonator, communicates with the input vector and with all other populations to invert the input vector using the following dynamics:

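$$\begin{aligned}
\hat{\mathbf{f}}_1(t+1) &= f_n\big({}^{1}\mathbf{H}\,{}^{1}\mathbf{H}^{\top}(\mathbf{s} \oplus \hat{\mathbf{f}}_2(t) \oplus \hat{\mathbf{f}}_3(t) \oplus \hat{\mathbf{f}}_4(t))\big)\\
\hat{\mathbf{f}}_2(t+1) &= f_n\big({}^{2}\mathbf{H}\,{}^{2}\mathbf{H}^{\top}(\mathbf{s} \oplus \hat{\mathbf{f}}_1(t) \oplus \hat{\mathbf{f}}_3(t) \oplus \hat{\mathbf{f}}_4(t))\big)\\
\hat{\mathbf{f}}_3(t+1) &= f_n\big({}^{3}\mathbf{H}\,{}^{3}\mathbf{H}^{\top}(\mathbf{s} \oplus \hat{\mathbf{f}}_1(t) \oplus \hat{\mathbf{f}}_2(t) \oplus \hat{\mathbf{f}}_4(t))\big)\\
\hat{\mathbf{f}}_4(t+1) &= f_n\big({}^{4}\mathbf{H}\,{}^{4}\mathbf{H}^{\top}(\mathbf{s} \oplus \hat{\mathbf{f}}_1(t) \oplus \hat{\mathbf{f}}_2(t) \oplus \hat{\mathbf{f}}_3(t))\big)
\end{aligned} \tag{8}$$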

Note that the process is iterative and progresses in discrete time steps t. In essence, at time t each resonator can hold multiple weighted guesses for a vector from its factor item memory through the VSA principle of superposition, which is used for the bundling operation. Each resonator also uses the current guesses for the factors from the other resonators; these guesses are used to invert the input vector and infer the factor of interest in the given resonator. Superposition allows a population to hold multiple estimates of factor identity and test them simultaneously. The cost of superposition is crosstalk noise, so the inference step is noisy when many guesses are tested at once. However, the next step uses the factor item memory ^iH to remove the extraneous guesses that do not fit: the guess for each factor is cleaned up by constraining the resonator activity to the allowed atomic vectors stored in ^iH. Finally, a regularization step (denoted as fn(∗)) is applied. Successive iterations of this inference and clean-up procedure (8) eliminate the noise as the factors become identified and find their place in the input vector. When the factors are fully identified, the resonator network reaches a stable equilibrium, and the factors can be read out from the stable activity pattern. Please refer to Section S.3 in the Supplementary materials for additional motivation and explanation of the resonator network.
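The dynamics in (8) can be sketched in a few lines. This is our own illustration, not the implementation of [43], and rests on several assumptions: it works in the bipolar representation, where XOR binding corresponds to component-wise multiplication (0 → +1, 1 → −1), so that graded estimates held in superposition can also be bound; it uses the sign function (ties mapped to +1) as fn(∗); it updates the resonators one after another using the newest estimates; and the sizes K, D, and F are illustrative.

```python
import numpy as np

rng = np.random.default_rng(4)
K, D, F = 1000, 10, 3   # dimensionality, items per factor item memory, number of factors

# Factor item memories as (D, K) bipolar matrices, one atomic vector per row.
codebooks = [rng.choice([-1, 1], size=(D, K)) for _ in range(F)]
true_idx = [int(rng.integers(D)) for _ in range(F)]
s = np.prod([codebooks[f][true_idx[f]] for f in range(F)], axis=0)   # composite input vector

def sgn(v):
    """Bipolar regularization: sign with ties mapped to +1."""
    return np.where(v >= 0, 1, -1)

# Start every estimate as the superposition of all atomic vectors in its factor item memory.
est = [sgn(cb.sum(axis=0)) for cb in codebooks]

for _ in range(100):
    prev = [e.copy() for e in est]
    for f in range(F):
        # Inference: unbind the other resonators' current guesses from the input.
        others = np.prod([est[g] for g in range(F) if g != f], axis=0)
        guess = s * others
        # Clean-up: project onto the span of the factor item memory, then regularize.
        est[f] = sgn(codebooks[f].T @ (codebooks[f] @ guess))
    if all(np.array_equal(p, e) for p, e in zip(prev, est)):
        break   # stable equilibrium reached

decoded = [int(np.argmax(cb @ e)) for cb, e in zip(codebooks, est)]
print(decoded == true_idx)
```

With a row-per-item layout, `codebooks[f].T @ codebooks[f]` plays the role of ^iH ^iHᵀ in (8).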

B. Cellular automata-based expansion

A CA is a discrete computational model consisting of a regular grid of cells [17] of size N. Each cell can be in one of a finite number of states (the elementary CA is binary). The states of the cells evolve in discrete time steps according to some fixed rule. In the elementary CA, the new state of a cell at the next step depends on its current state and the states of its immediate neighbors. Despite the apparent simplicity of the system, amongst the elementary CAs there are rules (e.g., rule 110) that make the CA dynamics operate at the edge of chaos [44] and that have been proven to be Turing complete [45]. In the scope of this article, we consider another rule, rule 90 (CA90), as it possesses several properties highly relevant for collective-state computing.

In the elementary CA, the state of a cell is updated using the current states of the cell itself and its left and right neighboring cells. A computation step in a CA refers to the simultaneous update of the states of all the cells in the grid. For a CA with binary states, there are 2^3 = 8 possible input combinations, and for each of them there are two possible assignments for the output cell, which gives in total 2^8 = 256 combinations, where each particular assignment defines a rule. Fig. 1 shows all input combinations and the corresponding assignment of output states for CA90. CA90 assigns the next state of a central cell based only on the previous states of its two neighbors: the new state is the result of the XOR operation on the states of the neighboring cells. This gives CA90 a computational advantage, since its implementation can be easily vectorized and implemented in hardware (especially when working with cyclic boundary conditions8). For example, if at time step t vector x(t) describes the states of the CA grid, then the grid state at t + 1 is computed as:

$$\mathbf{x}(t+1) = \rho^{+1}(\mathbf{x}(t)) \oplus \rho^{-1}(\mathbf{x}(t)) \tag{9}$$

where ρ^{+1} and ρ^{−1} denote the cyclic shift to the right and to the left by one position, respectively. Note that (9) is identical to one step of evolution of CA90 with cyclic boundary conditions. This observation is important as it allows for highly parallelized implementations in, e.g., FPGA or application-specific integrated circuits (ASIC).
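The equivalence between (9) and the rule-90 lookup table of Fig. 1 can be checked with a short NumPy sketch. Here `np.roll(x, 1)` is taken as ρ^{+1} (our convention; since XOR is commutative, the shift direction does not matter), and `ca_step_by_rule` is a hypothetical reference function that decodes any elementary CA rule number in Wolfram's numbering.

```python
import numpy as np

def ca90_step(x):
    """One CA90 step with cyclic boundaries, (9): XOR of the two cyclic shifts of x."""
    return np.roll(x, 1) ^ np.roll(x, -1)

def ca_step_by_rule(x, rule=90):
    """Reference elementary-CA update that reads the output bit from the rule number."""
    left, center, right = np.roll(x, 1), x, np.roll(x, -1)
    idx = (left << 2) | (center << 1) | right   # neighborhood encoded as a 3-bit number 0..7
    return (rule >> idx) & 1

rng = np.random.default_rng(5)
x = rng.integers(0, 2, size=37, dtype=np.uint8)
print(np.array_equal(ca90_step(x), ca_step_by_rule(x)))   # True
```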

Fig. 1.

The assignment of new states for a center cell when the CA uses rule 90. A hollow cell corresponds to state zero, while a shaded cell marks state one.

Since in this study we use CA90 for the purposes of randomization, we call the state of the grid x(0) at the beginning of the computations the initial short seed. It is worth pointing out that CA90 formulated as in (9) is a sequence of VSA operations [46]: given the vector x as an argument, performing two rotations (ρ^{+1}(x) and ρ^{−1}(x)) and then binding the results of the rotations together (ρ^{+1}(x) ⊕ ρ^{−1}(x)) implements CA90.

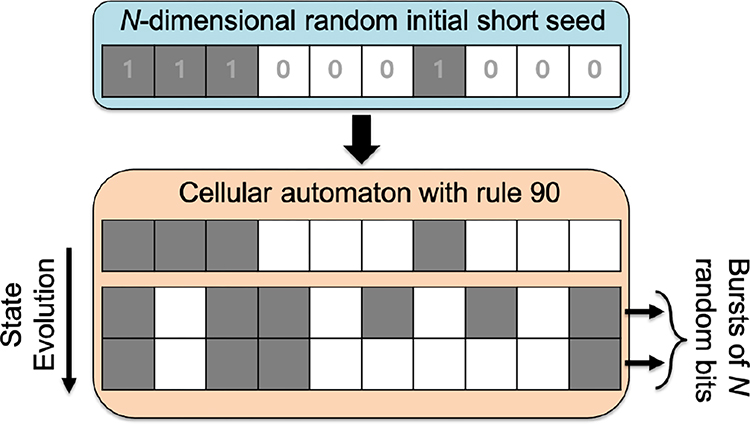

The core idea of this paper is to use CA90 to generate a distributed representation of expanded dimensionality that can be used within the context of collective-state computing. This expansion must have certain randomization properties and be robust to perturbations. Fig. 2 presents the basic idea of obtaining an expanded dense binary distributed representation from a short initial seed. In essence, the seed is used to initialize the CA grid. Once initialized, CA90 computations are applied for several steps, and the resulting grid states are concatenated; the number of concatenated grid states (including the seed) is denoted as L.

Fig. 2.

The basic scheme for expanding distributed representations with CA90 from an initial short seed. The dimensionality of the seed N equals the size of the CA grid.

To illustrate the idea, let us consider a concrete example. In the context of RC, the CA90 expansion might be used to significantly reduce the memory footprint required to store a random matrix Win of an echo state network containing the weights between input and reservoir layers. Normally, Win ∈ [K × u] where K corresponds to the size of the reservoir and u denotes the size of the input. Instead of storing the whole Win, the use of CA90 expansion allows storing a smaller matrix S(0) ∈ [N × u], with N = K/L. Thus, the usage of CA90 in RC reduces the memory requirements by a factor of L. In order to explicitly re-materialize Win obtained from the expansion, S(0) is used to initialize the grid and CA90 (9) is run for L − 1 steps:

$$\mathbf{W}_{\mathrm{in}} = [\mathbf{S}(0), \mathbf{S}(1), \dots, \mathbf{S}(L-1)] \tag{10}$$

where [·,·] denotes concatenation along the vertical dimension and S(i) = ρ^{+1}(S(i − 1)) ⊕ ρ^{−1}(S(i − 1)), 1 ≤ i ≤ L − 1, with the shifts applied to each column (i.e., along the grid dimension). Thus, the expansion comes at the computational cost of executing L − 1 steps of CA90. At every step i of CA90 evolution, the grid states in S(i) provide a new burst of N × u bits, which can either be used on the fly (without memorization) for the necessary manipulations and then erased, or concatenated to the previous states (with memorization, as in (10)) if the distributed representation should be re-materialized explicitly. In either case, the dimensionality of the expanded representation is K = NL.
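The expansion in (10) and its on-the-fly counterpart can be sketched with NumPy as below. The function names and the values of N, u, and L are our own illustrative choices; the grid runs along axis 0 so that every column of S(0) is an independent seed.

```python
import numpy as np

def ca90_step(S):
    """One CA90 step applied to every column of S; the grid runs along axis 0 (cyclic boundaries)."""
    return np.roll(S, 1, axis=0) ^ np.roll(S, -1, axis=0)

def expand(S0, L):
    """Re-materialize W_in as in (10) by stacking S(0), S(1), ..., S(L-1) vertically."""
    blocks = [S0]
    for _ in range(L - 1):
        blocks.append(ca90_step(blocks[-1]))
    return np.vstack(blocks)

def blocks_on_the_fly(S0, L):
    """Yield S(0), ..., S(L-1) one at a time, without storing the expanded matrix."""
    S = S0
    for _ in range(L):
        yield S
        S = ca90_step(S)

rng = np.random.default_rng(6)
N, u, L = 37, 10, 27                   # seed length, input size, number of grid states (illustrative)
S0 = rng.integers(0, 2, size=(N, u), dtype=np.uint8)   # the only stored matrix: N * u bits
W_in = expand(S0, L)                   # K = N * L = 999 rows, L times more bits than stored

# Projecting an input v needs only one N x u block at a time.
v = rng.integers(0, 2, size=u)
y_stored = W_in @ v
y_fly = np.concatenate([S @ v for S in blocks_on_the_fly(S0, L)])
print(W_in.shape, np.array_equal(y_stored, y_fly))   # (999, 10) True
```

The generator variant trades memory for recomputation: each block is produced by one CA90 step and can be discarded after use.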

C. CA90 and VSAs

Section V discusses related work on the joint use of RC, VSAs, and CA90 expansion. Amongst the related works discussed there [47]–[52] (see Section V-B1), [50] is the most relevant, as it uses the randomization property of CA90. In particular, that work identified the following properties of CA90 as useful for VSAs:

Random projection;

Preservation of the binding operation;

Preservation of the cyclic shift.

By random projection we mean that when CA90 is initialized with a random state x(0) in which ones and zeros are equally likely (p1 ≈ p0 ≈ 0.5), which should be seen as an initial short seed, its evolved state at step t is a vector x(t) of the same size and density. Moreover, during the randomization period (see Section III-A), x(t) is dissimilar both to the initial short seed x(0), i.e., d_h(x(0), x(t)) ≈ 0.5, and to the other states in the evolution of the seed.

The preservation of the binding operation refers to the fact that if a seed c(0) is the result of the binding of two other seeds: c(0) = a(0) ⊕ b(0) then after t computational steps of CA90, the evolved state c(t) can be obtained by binding the evolved states of the initial seeds a(0) and b(0) used to form c(0), i.e., c(t) = a(t) ⊕ b(t).

Finally, CA90 computations preserve a special case of the permutation operation: the cyclic shift by i cells. Suppose d(0) = ρ^i(a(0)) is an initial seed. After t computational steps of CA90, the cyclic shift of the evolved seed a(t) by i cells equals the evolved shifted seed d(t), so that d_h(d(t), ρ^i(a(t))) = 0.
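The three properties can be checked numerically with a short NumPy sketch. The grid size, number of steps, and shift are illustrative, with t = 20 assumed to lie well within the randomization period for N = 37; the random-projection property is probed only crudely, through the mean distance between seeds and their evolved states over many random seeds.

```python
import numpy as np

def ca90(x, t):
    """Evolve grid state x for t CA90 steps (cyclic boundaries)."""
    for _ in range(t):
        x = np.roll(x, 1) ^ np.roll(x, -1)
    return x

rng = np.random.default_rng(7)
N, t, i = 37, 20, 5                          # grid size, number of steps, shift (illustrative)
a0 = rng.integers(0, 2, N, dtype=np.uint8)
b0 = rng.integers(0, 2, N, dtype=np.uint8)

# Random projection: averaged over many random seeds, x(t) is about 0.5 away from x(0).
seeds = rng.integers(0, 2, size=(500, N), dtype=np.uint8)
mean_dist = float(np.mean([np.mean(x0 != ca90(x0, t)) for x0 in seeds]))

# Preservation of binding: evolving a(0) XOR b(0) equals the XOR of the evolved seeds.
binding_ok = np.array_equal(ca90(a0 ^ b0, t), ca90(a0, t) ^ ca90(b0, t))

# Preservation of cyclic shift: evolving rho^i(a(0)) equals rho^i applied to a(t).
shift_ok = np.array_equal(ca90(np.roll(a0, i), t), np.roll(ca90(a0, t), i))

print(round(mean_dist, 3), binding_ok, shift_ok)
```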

III. Randomization of states by cellular automaton

A. Errorless randomization

Usually, distributed representations in collective-state computing use i.i.d. random vectors. Similarly, we start with i.i.d. random vectors for the short seeds. However, in contrast to the conventional approach, we expand the dimensionality of the seed vectors via CA90 with cyclic boundary conditions. An important question for such an expansion is: what are the limits of CA90 in terms of producing randomness?

One very useful empirical tool for answering this question is the calculation of the degrees of freedom from the statistics of normalized Hamming distances between binary vectors (see, e.g., [53]). Given that p_h denotes the average normalized Hamming distance and σ_h denotes its standard deviation, the degrees of freedom are calculated as:

$$F = \frac{p_h (1 - p_h)}{\sigma_h^2} \tag{11}$$

Due to the randomization properties of CA90, we expect that after a certain number of steps it will produce new degrees of freedom. To be able to compare different grid sizes, we will report the degrees of freedom normalized by the grid size, i.e., F/N. In other words, if a single step of CA90 increased F by N (best case), the normalized value would increase by 1.
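As a sketch of how (11) can be applied, the code below compares i.i.d. random vectors with vectors obtained by concatenating L CA90 grid states expanded from random seeds. The values N = 23, L = 30, and the number of seeds M are our own illustrative choices; L is assumed to lie inside the randomization period, in which case F/N is expected to be close to L for both kinds of vectors.

```python
import numpy as np

def degrees_of_freedom(dists):
    """(11): F = p_h (1 - p_h) / sigma_h^2, from a sample of normalized Hamming distances."""
    p_h, sigma_h = np.mean(dists), np.std(dists)
    return p_h * (1 - p_h) / sigma_h**2

def pairwise_hamming(V):
    """Normalized Hamming distances between all distinct pairs of rows of V."""
    dist = (V[:, None, :] != V[None, :, :]).mean(axis=2)
    return dist[np.triu_indices(len(V), k=1)]

def ca90_expand(seeds, L):
    """Concatenate each seed with its next L - 1 CA90 states (grid along axis 1)."""
    blocks = [seeds]
    for _ in range(L - 1):
        blocks.append(np.roll(blocks[-1], 1, axis=1) ^ np.roll(blocks[-1], -1, axis=1))
    return np.concatenate(blocks, axis=1)

rng = np.random.default_rng(8)
N, L, M = 23, 30, 150                      # grid size, number of states, number of seeds (illustrative)

iid = rng.integers(0, 2, size=(M, N * L), dtype=np.uint8)
expanded = ca90_expand(rng.integers(0, 2, size=(M, N), dtype=np.uint8), L)

F_iid = degrees_of_freedom(pairwise_hamming(iid)) / N        # reference: about L for random vectors
F_ca = degrees_of_freedom(pairwise_hamming(expanded)) / N    # normalized F/N of the CA90 expansion
print(round(F_iid, 1), round(F_ca, 1))
```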

Fig. 3 presents the normalized degrees of freedom measured over 5,000 steps of CA90 for different grid sizes, using the same values as in [17] (p. 259). Several interesting observations can be drawn from the figure. First, for all of the considered grid sizes, the degrees of freedom grow linearly at the beginning (following the reference dashed black line, which indicates the degrees of freedom of random binary vectors of the corresponding size). At some point, however, the degrees of freedom reach a maximum value and saturate, as we would expect. We are interested in the period of linear growth, which we call the randomization period. Second, larger grid sizes typically have longer randomization periods. For example, the longest randomization period of 2,047 steps was observed for N = 23 (which is not the largest grid size).9 Third, the randomization periods of odd grid sizes are always longer than those of the even ones. For example, the randomization periods for N = 22 and N = 24 were only 31 and 7, respectively (cf. 2,047 for N = 23). Thus, there is a dependency between N and the randomization period, but, despite the above observations, there is no clear general pattern connecting the grid size to the length of the randomization period.

Fig. 3.

The normalized degrees of freedom for different values of the grid size of CA90. The evolution of CA90 is reported for 5,000 steps. The number of short seeds in the item memory was fixed to 100. The reported values were averaged over 100 simulations randomizing initial short seeds. Note the logarithmic scales of the axes.

The good news, however, is that the length of the randomization period is closely related to the length of the periodic cycles (denoted as Π_N) in CA90 discovered in [54]. In short, the irregular behaviour of both randomization periods and periodic cycles is a consequence of their dependence on number-theoretical properties of N; [54] provides the following characterization of the periodic cycles Π_N in CA90:

For CA90 with N of the form 2^j, Π_N = 1;

For CA90 with N even but not of the form 2^j, Π_N = 2Π_{N/2};

For CA90 with N odd, Π_N divides 2^{sord_N(2)} − 1, where sord_N(2) is the multiplicative “sub-order” function of 2 modulo N, defined as the least integer j such that 2^j = ±1 mod N.
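The characterization above can be probed empirically. The sketch below (our own illustration; `sord` and `cycle_length` are hypothetical helper names) computes sord_N(2) and measures the length of the cycle that CA90 eventually enters from a single active cell, detecting the first repeated grid state. For odd N the measured length is expected to divide 2^{sord_N(2)} − 1, and for N of the form 2^j the grid dies out into the all-zero fixed point.

```python
import numpy as np

def sord(N):
    """Multiplicative sub-order of 2 modulo odd N > 1: least j >= 1 with 2^j = +-1 (mod N)."""
    j = 1
    while pow(2, j, N) not in (1, N - 1):
        j += 1
    return j

def cycle_length(N):
    """Length of the cycle that CA90 (cyclic grid of size N) enters from a single active cell."""
    x = np.zeros(N, dtype=np.uint8)
    x[0] = 1
    seen, t = {}, 0
    key = x.tobytes()
    while key not in seen:
        seen[key] = t
        x = np.roll(x, 1) ^ np.roll(x, -1)
        t += 1
        key = x.tobytes()
    return t - seen[key]

for N in (9, 11, 13, 15, 23):
    print(N, cycle_length(N), 2 ** sord(N) - 1)
print(16, cycle_length(16))   # N = 2^4: the grid reaches the all-zero state, a cycle of length 1
```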

Fig. 4 presents the empirically measured randomization periods as well as the analytically calculated periodic cycles [54] for grid sizes in the range [9, 46]. First, we see that when N is odd, the randomization period equals the periodic cycle. The only exception is N = 37, but this is just the first case where Π_N ≠ 2^{sord_N(2)} − 1. Second, when N is of the form 2^j, the randomization period does not equal one, because CA90 produces activity for 2^{j−1} steps, which increases the degrees of freedom. In fact, the randomization period in this case is 2^{j−2} − 1. Third, when N is even, CA90 produces Π_N unique grid states, but the patterns of Hamming distances between the states evolved from two random initial short seeds start to repeat after Π_N/2 steps and thus do not contribute new degrees of freedom. Therefore, the randomization period is half the periodic cycle. Aggregating these points, we have the following for the randomization period of CA90:

Fig. 4.

The empirically measured randomization period (blue) and the analytical periodic cycles [54] (red) for grid sizes in the range [9, 46]. Note the logarithmic scale of the y-axis.

For CA90 with N of the form 2^j, the randomization period is 2^{j−2} − 1;

For CA90 with N even but not of the form 2^j, the randomization period is Π_N/2;

For CA90 with N odd, the randomization period is a divisor of 2^{sord_N(2)} − 1.

B. The effect of noise in the short seed

In the previous section, we have seen how CA90 can be used to expand initial short seeds into longer randomized representations. A collective-state system could utilize this property for efficient communication by exchanging only short seeds and expanding them with CA90. Since real communication channels are noisy, one must be able to handle some amount of error in the communicated short seeds. Therefore, it is important to understand how errors in the initial short seed affect the evolution of an expanded distributed representation.

We address this issue by observing the empirical behavior for N = 37 during the first 256 steps of CA90 evolution. Fig. 5 reports the averaged normalized Hamming distance between the states evolved from an errorless short seed and from a noisy version of it, in which 2 (dash-dot line), 4 (solid line), or 8 (dashed line) randomly chosen bits were flipped. The reported results are for the corresponding grid states at a given step, not for the concatenated states. They show that even a single step of CA90 increases the normalized Hamming distance between the evolved states. For example, when 4 bits were flipped, the normalized Hamming distance between the seeds was 4/37 ≈ 0.11, while after a single step of CA90 it increased to almost 0.2, nearly doubling. Further, we observe that the normalized Hamming distance never drops below its value after the first step.

Fig. 5.

The normalized Hamming distance between the original and noisy vectors obtained from short seeds for N = 37 during the first 256 steps of CA90 evolution. The reported values were averaged over 500 simulations where both initial short seeds and errors were chosen at random. Note the logarithmic scales of axes.

Interestingly, the distances induced by errors change in a predictable manner: they reset to the lowest possible value at regular intervals, namely at every CA90 step of the form 2^j. This behavior of CA90 suggests that the impact of errors can be mitigated when expanding short seeds. To minimize the distance between the errorless evolutions and their noisy versions, one should use only the grid states at CA90 steps of the form 2^j, which, however, further limits the achievable dimensionality expansion.

To understand the observed cyclic behavior of CA90, we examine the case when the initial state of the grid includes only one active cell. Because CA90 is additive (the evolution of a ⊕ b equals the XOR of the evolutions of a and b), the active cell can be interpreted as a single bit flip introduced into some random initial short seed. Fig. 6 shows the evolution of this configuration for the first 65 steps. Red rectangles in Fig. 6 highlight the steps of the form 2^j; at these steps there are only 2 active cells. The behavior of the configuration with a single active cell explains both why, for a small number of bit flips, the normalized Hamming distance in Fig. 5 approximately doubled after the first step and why the distance resets at every step of the form 2^j.
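The single-active-cell argument can be reproduced with a short NumPy sketch (the random seed is arbitrary): it flips one bit of a random seed, evolves the clean seed, the noisy seed, and the error pattern side by side, checks that the difference between the two evolutions equals the evolved error pattern (additivity), and counts the differing cells at each step.

```python
import numpy as np

def step(x):
    """One CA90 step with cyclic boundaries."""
    return np.roll(x, 1) ^ np.roll(x, -1)

rng = np.random.default_rng(11)
N, T = 37, 65
seed = rng.integers(0, 2, N, dtype=np.uint8)
noisy = seed.copy()
noisy[N // 2] ^= 1                     # a single bit flip in the seed
e = seed ^ noisy                       # error pattern: one active cell

active, additive = [], True
for t in range(1, T + 1):
    seed, noisy, e = step(seed), step(noisy), step(e)
    additive = additive and np.array_equal(seed ^ noisy, e)   # the error evolves on its own
    active.append(int(e.sum()))                               # differing cells after t steps

print({t: active[t - 1] for t in (1, 2, 4, 8, 16, 32, 64)})
# {1: 2, 2: 2, 4: 2, 8: 2, 16: 2, 32: 2, 64: 2}
```

Between the highlighted steps the error pattern spreads to more cells (e.g., 4 cells at step 3), which matches the peaks between the resets in Fig. 5.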

Fig. 6.

The evolution of CA90 for 65 steps, N = 37; the initial state includes one active cell, which can be thought of as introducing one bit flip into some random initial short seed. All steps of the form 2^j are highlighted by red rectangles.

Given the bit error rate (BER, i.e., the fraction of flipped bits) in the short seed (p_bf), we can calculate the BER after CA90 expansion (denoted as p_CA) for steps of the form 2^j as follows:

$$p_{CA} = 2p_{bf}^2(1-p_{bf}) + 2p_{bf}(1-p_{bf})^2 = 2p_{bf}(1-p_{bf}) \tag{12}$$

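The intuition here is that, owing to the local interactions of the CA, it is enough to consider only the cases shown in Fig. 1: at a step of the form 2^j, each cell equals the XOR of the two seed cells located 2^j positions to its left and right (for odd N with cyclic boundary conditions), so the error pattern behaves as if a single step of rule 90 had been applied to it. In particular, we are only interested in the cases that result in an active (erroneous) cell at the next step. There are only 4 such cases, two with two active cells and two with one active cell, which are enumerated in (12).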

Fig. 7 plots the analytical p_CA according to (12) against the value obtained empirically in numerical simulations; the two curves match.
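A Monte Carlo check of (12) can be sketched as follows. It assumes that bits of the seed are flipped independently with probability p_bf (our modeling choice) and compares the seed evolutions at step 16, a step of the form 2^j; N = 37 follows the text, while the number of trials and the tested BER values are arbitrary.

```python
import numpy as np

def p_ca(p_bf):
    """(12): BER after CA90 steps of the form 2^j, given the BER p_bf of the seed."""
    return 2 * p_bf**2 * (1 - p_bf) + 2 * p_bf * (1 - p_bf)**2   # equals 2 p_bf (1 - p_bf)

def ca90(X, t):
    """Evolve every row of X for t CA90 steps (grid along axis 1, cyclic boundaries)."""
    for _ in range(t):
        X = np.roll(X, 1, axis=1) ^ np.roll(X, -1, axis=1)
    return X

rng = np.random.default_rng(9)
N, trials, step = 37, 2000, 16          # grid size, Monte Carlo trials, a step of the form 2^j
results = {}
for p_bf in (0.05, 0.1, 0.2):
    seeds = rng.integers(0, 2, size=(trials, N), dtype=np.uint8)
    errors = (rng.random((trials, N)) < p_bf).astype(np.uint8)   # independent bit flips
    results[p_bf] = float(np.mean(ca90(seeds, step) != ca90(seeds ^ errors, step)))
    print(p_bf, round(p_ca(p_bf), 4), round(results[p_bf], 4))
```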

Fig. 7.

The expected BER for CA90 steps of the form 2j against the BER in the short seeds. The solid line is analytical calculation while the dashed line was measured empirically. The empirical results were averaged over 10 simulations.

IV. Experimental demonstration of CA90 expansion for collective-state computing

This section focuses on using CA90 expansion for RC and VSAs. In several scenarios, we provide empirical evidence that expanded vectors obtained via CA90 computations are functionally equivalent to i.i.d. random vectors.¹⁰ The code for reproducing the results of the experiments is available as supplementary material to this article.

A. Nearest neighbor search in item memories

One potential application of CA90 expansion is the “on the fly” generation of item memories as used in RC and VSAs. An item memory is used to decode the output of a collective-state computation, which often requires finding the nearest neighbor of a query vector within the item memory. As mentioned before, when there is noise in the query vector, the outcome of the search may not always be correct. Therefore, we explored the accuracy of the nearest neighbor search when the query vector was significantly distorted by noise. We compared two item memories: one with i.i.d. random vectors, and the other with CA90 expanded vectors where only the initial short seeds (N = 23) were i.i.d. random. Fig. 8 reports the accuracy results of the simulation experiments. The item memory with vectors based on CA90 expansion demonstrated the same accuracy as the item memory with fully i.i.d. random vectors.
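A hedged sketch of this comparison follows. The seed length (N = 23), the item memory size (100) and the bit error rates come from the text. The following choices are our own assumptions: building each item by concatenating the seed with the next CA90 states, using 20 such states (so K = 460), and the number of queries. The nearest neighbor is found by Hamming distance.

```python
import numpy as np


def ca90_expand(seed, n_states):
    """Concatenate the seed and the next n_states - 1 CA90 states (dimension N * n_states)."""
    states = [seed]
    for _ in range(n_states - 1):
        states.append(np.roll(states[-1], 1) ^ np.roll(states[-1], -1))
    return np.concatenate(states)


def nn_accuracy(item_memory, ber, n_queries, rng):
    """Accuracy of Hamming-distance nearest neighbor search for queries with bit flips."""
    n_items, dim = item_memory.shape
    correct = 0
    for _ in range(n_queries):
        target = rng.integers(n_items)
        noise = (rng.random(dim) < ber).astype(np.uint8)
        query = item_memory[target] ^ noise
        distances = np.count_nonzero(item_memory != query, axis=1)
        correct += int(np.argmin(distances) == target)
    return correct / n_queries


rng = np.random.default_rng(1)
n_seed, n_states, n_items = 23, 20, 100
ca_memory = np.stack(
    [ca90_expand(rng.integers(0, 2, n_seed, dtype=np.uint8), n_states) for _ in range(n_items)]
)
iid_memory = rng.integers(0, 2, (n_items, n_seed * n_states), dtype=np.uint8)
for ber in (0.30, 0.35, 0.40):
    print(ber, nn_accuracy(iid_memory, ber, 400, rng), nn_accuracy(ca_memory, ber, 400, rng))
```

Only the 100 short seeds (2,300 bits) would need to be stored for `ca_memory`, versus 46,000 bits for `iid_memory`.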

Fig. 8.

The usage of i.i.d. random vectors against the CA90 expanded representations in the item memory. The figure reports the accuracy of the nearest neighbor search where a query was a noisy version of one of the vectors stored in the item memory. The noise was introduced in the form of bit flips. Three different values of Bit Error Rates were simulated ({0.30, 0.35, 0.40}). The dimensionality of the initial short seeds was set to N = 23. The size of the item memory was set to 100. The reported values were averaged over 1,000 simulations randomizing initial short seeds, random item memories, and noise added to queries.

B. Memory buffer

Next, we demonstrate the use of CA90 expanded representations in the memory buffer task described in Section II-A2. These experiments were done with integer echo state networks [40]. We measured the accuracy of recall from the memory buffer when the item memory was created from initial short seeds (N = 37) by concatenating the results of CA90 computations for several steps so that K = NL. As a benchmark, we used an item memory with i.i.d. random vectors of matching dimensionality. Three different values of delay were considered: {5, 10, 15}. The results are reported in Fig. 9. As expected, increasing the dimensionality of the memory buffer increased the accuracy of the recall. The main point, however, is that the memory buffer made from CA90 expanded representations achieved the same accuracy as the memory buffer made from i.i.d. random vectors.
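The sketch below shows a simplified version of this experiment and should not be read as the authors' integer echo state network setup. Our assumptions are:

- the recurrence is a cyclic shift followed by adding the bipolar item vector and clipping to [−κ, κ], with κ = 7 chosen arbitrarily;
- recall at a given delay shifts the state back and picks the item with the largest dot product, instead of using a trained readout;
- D = 27 in Fig. 9 is taken to be the number of distinct symbols.

```python
import numpy as np


def ca90_expand(seed, n_states):
    """Concatenate the seed and the next n_states - 1 CA90 states."""
    states = [seed]
    for _ in range(n_states - 1):
        states.append(np.roll(states[-1], 1) ^ np.roll(states[-1], -1))
    return np.concatenate(states)


def buffer_accuracy(item_memory, delay, kappa=7, seq_len=300, seed=0):
    """Recall accuracy of a simplified intESN-style buffer (assumed update and decoding)."""
    rng = np.random.default_rng(seed)
    n_symbols, dim = item_memory.shape
    symbols = rng.integers(n_symbols, size=seq_len)
    state = np.zeros(dim, dtype=np.int64)
    correct = total = 0
    for t, s in enumerate(symbols):
        # Recurrence: cyclic shift, add the bipolar item vector, clip to [-kappa, kappa]
        state = np.clip(np.roll(state, 1) + item_memory[s], -kappa, kappa)
        if t >= delay:
            probe = np.roll(state, -delay)  # undo the shifts applied since symbols[t - delay]
            correct += int(np.argmax(item_memory @ probe) == symbols[t - delay])
            total += 1
    return correct / total


def bipolar(binary):
    """Map {0, 1} entries to {-1, +1}."""
    return binary.astype(np.int64) * 2 - 1


rng = np.random.default_rng(42)
n_seed, n_symbols = 37, 27
for n_states in (4, 8, 16):
    ca_mem = bipolar(np.stack([ca90_expand(rng.integers(0, 2, n_seed, dtype=np.uint8), n_states)
                               for _ in range(n_symbols)]))
    iid_mem = bipolar(rng.integers(0, 2, (n_symbols, n_seed * n_states)))
    print(n_seed * n_states, buffer_accuracy(iid_mem, delay=10), buffer_accuracy(ca_mem, delay=10))
```

Both memories are decoded from the same symbol sequence (`seed=0`), so any difference in the printed accuracies comes from the item vectors alone.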

Fig. 9.

The usage of i.i.d. random vectors against the CA90 expanded representations in the memory buffer task; D = 27 in the experiments. The figure reports the accuracy of the correct recall of symbols for three different values of delay ({5, 10, 15}). The dimensionality of the initial short seeds was set to N = 37. The reported values were averaged over 10 simulations randomizing initial short seeds, random item memories, and traces of symbols to be memorized.

C. Resonator network factoring in the errorless case

To further assess the quality of vectors obtained via the results of CA90 computations, we also examined their use in the resonator network [42]. Please see Section II-A3 and Section S.3 in the Supplementary materials for details of the resonator network.

It is important to emphasize that, because CA90 preserves the binding operation, multiple aspects of the resonator network can benefit from CA90 expansion. Neither the composite input vector nor the factor item memories have to be memorized explicitly; both can be expanded from seeds. The factorization process is computed at each level of CA90 expansion, with the vector dimensions increasing by N for each CA step. The outputs of the resonator network are collected, compared to the ground truth, and averaged over many randomized simulation experiments. Fig. 10 presents the average accuracies (left column) and the average number of iterations until convergence (right column) for three dimensionalities of initial short seeds {100, 200, 300} and three sizes of factor item memories {8, 16, 32}. The number of factors was set to four. The simulations considered the first 100 steps of CA90, which is fewer than the randomization period (1,023) of the shortest seed (N = 100).
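The property this relies on can be checked in a few lines. The sketch does not implement the resonator network itself (see Section S.3 of the Supplementary materials for that). Under our own choice of N = 100, item memories of size 8, four factors and 10 concatenated CA90 states, it only verifies two things. First, expanding the bound (XOR) seed gives the same vector as binding the individually expanded factors. Second, unbinding three known factors therefore recovers the expanded fourth factor exactly.

```python
import numpy as np


def ca90_expand(seed, n_states):
    """Concatenate the seed and the next n_states - 1 CA90 states."""
    states = [seed]
    for _ in range(n_states - 1):
        states.append(np.roll(states[-1], 1) ^ np.roll(states[-1], -1))
    return np.concatenate(states)


rng = np.random.default_rng(0)
n_seed, n_states, n_factors, memory_size = 100, 10, 4, 8
seed_memories = [rng.integers(0, 2, (memory_size, n_seed), dtype=np.uint8) for _ in range(n_factors)]
choice = rng.integers(memory_size, size=n_factors)  # ground-truth index for each factor

# Composite input at the seed level: binding (XOR) of the chosen seed vectors
composite_seed = np.bitwise_xor.reduce([m[c] for m, c in zip(seed_memories, choice)])
expanded_composite = ca90_expand(composite_seed, n_states)

# Binding the individually expanded factors gives the same long vector
bound_expansions = np.bitwise_xor.reduce(
    [ca90_expand(m[c], n_states) for m, c in zip(seed_memories, choice)]
)
print(np.array_equal(expanded_composite, bound_expansions))  # True

# Unbinding three known factors leaves the expanded fourth factor
partial = expanded_composite.copy()
for m, c in zip(seed_memories[:-1], choice[:-1]):
    partial ^= ca90_expand(m[c], n_states)
last_memory = np.stack([ca90_expand(v, n_states) for v in seed_memories[-1]])
recovered = int(np.argmin(np.count_nonzero(last_memory != partial, axis=1)))
print(recovered == choice[-1])  # True
```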

Fig. 10.

The usage of random vectors against CA90 expanded representations in the resonator network. Left column reports the average accuracies while right column reports the average number of iterations until convergence. The maximal number of iterations was set to 500. The dimensionality of the initial short seeds varied between {100, 200, 300}. The evolution of CA90 is reported for the first 100 steps. The size of an individual item memory varied between {8, 16, 32}. The number of factors was fixed to four. The reported values were averaged over 100 simulations randomizing initial short seeds.

The performance of the resonator network was as expected. For a given dimensionality of the short seed and item memory size, the average accuracy increased with the number of CA90 steps, since in practice this means using vectors of larger dimensionality. In contrast, the number of iterations decreased for larger vectors. Importantly, there was no notable difference in the performance of the resonator network, either in terms of accuracy or number of iterations, between i.i.d. random vectors and CA90 expanded representations. This further confirms that it is reasonable to use CA90 expanded representations to trade off memory for computation.

D. Resonator network factoring in the case of errors

In order to examine the capabilities of CA90 expanded representations when the initial short seeds were subject to errors, we performed simulations for two dimensionalities of initial short seeds (N = 37 and N = 39). Similar to the experiments in Fig. 10, we used the resonator network to reconstruct a randomly chosen combination of factors. The difference was that some bit flips were added to the initial short seed (i.e., the vector to be factored), where the number of bit flips was in the range [0, 5] with step 1. The results are reported in Fig. 11. To minimize the noise introduced by CA90 computations, we only used steps (x-axis in Fig. 11) of the form 2^j (cf. Fig. 6) to expand the dimensionality. Columns in Fig. 11 correspond to different amounts of information carried by the vector to be factored.

Fig. 11.

The usage of the CA90 expanded representations in the resonator network in the case when the initial short seed might have errors. The upper panels report the case for N = 37, while the lower panels correspond to N = 39. The noise was introduced in the form of bit flips. The number of bit flips was in the range [0, 5] with step 1. The legends show the corresponding Bit Error Rates relative to N. The solid lines depict the resonator network with 4 factors, while the dashed lines depict the resonator network with 3 factors. Columns correspond to different amounts of information carried by a vector, which was determined by the size of the item memory for one factor. The sizes of item memories for resonator networks with three and four factors were set to approximately match each other in terms of amount of information. The reported values were averaged over 100 simulations randomizing initial short seeds as well as introduced bit flips.

The experiments were done for two configurations of the resonator network: with 3 factors (dashed lines) and with 4 factors (solid lines). Clearly, under the same conditions, the resonator network with 3 factors outperforms the one with 4 factors. This observation is in line with the expected behavior of the resonator network. It should be noted, however, that the resonator network with 3 factors requires larger item memories in order to store the same amount of information. For example, for 16.00 bits, the size of an individual item memory was 16 in the case of 4 factors and 40 in the case of 3 factors, i.e., each item memory of the resonator network with 3 factors was 2.5 times larger (120 versus 64 stored vectors in total). Thus, a resonator network with fewer factors performs better, but it requires more memory to be allocated.
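The numbers in this example can be reproduced with a little arithmetic. As the 16.00-bit figure suggests, we assume that a vector built from F factors, each drawn from an item memory of size M, carries F·log2(M) bits. The function name is ours.

```python
import math


def info_bits(memory_size, n_factors):
    """Bits carried by a composite vector: log2 of the number of factor combinations."""
    return n_factors * math.log2(memory_size)


print(round(info_bits(16, 4), 2), round(info_bits(40, 3), 2))  # 16.0 15.97
print(40 / 16, (3 * 40) / (4 * 16))  # 2.5 1.875
```

The first ratio compares individual item memories. The second compares the total number of stored vectors across all factors.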

We also see that even in the absence of errors (BER = 0.00) the accuracy is not perfect when a vector carries a lot of information, because we are limited by the capacity of the resonator network, which fails at factorization when the size of the factorization problem is too large. For example, for 16.00 bits none of the expanded dimensionalities reached perfect accuracy, as opposed to the other two cases. Naturally, the inclusion of errors hurts the accuracy, but the performance degradation is gradual.

When comparing the performance of the resonator networks for the expanded vectors using all 21 CA90 steps of the form 2^j, we made the counterintuitive observation that the performance for N = 37 is better despite shorter vectors and higher BER. Recall from Fig. 4 that the chosen grid sizes have different randomization periods: 87,381 for N = 37 and 4,095 for N = 39. The longer randomization period for N = 37 means that N = 37 provides more randomness for a large number of CA90 steps. This is the main reason why the shorter seed at higher BER resulted in better performance. When only steps of the form 2^j are taken into account, these randomization periods correspond to about log2(87,381) ≈ 16.41 and log2(4,095) ≈ 12.00 steps. These are exactly the values at which the performance of the resonator networks starts to saturate, since concatenating additional dimensions after the randomization period stops adding extra randomness.
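The periods and their base-2 logarithms can be computed directly. This sketch rests on one assumption: for the odd grid sizes used here, the randomization period equals the CA90 cycle length reached from a single active cell. That configuration attains the maximal cycle length, because every configuration is an XOR of shifted single-cell configurations. The state is packed into a Python integer. For odd grid sizes, any state reached after one step already lies on a cycle.

```python
import math


def ca90_cycle_length(n_cells):
    """Cycle length of CA90 on a cyclic grid of odd size, started from a single active cell."""
    mask = (1 << n_cells) - 1

    def step(x):
        # Bit i holds cell i; the two rotations bring in the left and right neighbors.
        from_left = ((x << 1) | (x >> (n_cells - 1))) & mask
        from_right = ((x >> 1) | (x << (n_cells - 1))) & mask
        return from_left ^ from_right

    start = step(1)  # for odd grid sizes, this state already lies on the cycle
    state, period = step(start), 1
    while state != start:
        state, period = step(state), period + 1
    return period


for n in (37, 39):
    p = ca90_cycle_length(n)
    print(n, p, f"{math.log2(p):.3f}")
```

For small grids the function returns values that can be checked by hand, e.g., 3 for N = 5 and 7 for N = 7.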

V. Discussion

A. Summary of our results

The use of CA computations for the generation of random numbers is not new; for instance, the seminal work [55] proposed to generate random sequences with CA rule 30.¹¹ Numerous studies on building CA-based pseudo-random number generators followed, e.g., [56], some of them specifically investigating CA90; see [57] for a recent overview. Here, we focused on how collective-state computing can benefit from the randomness produced by CA90. Our results are based on the key observation that collective-state computing typically relies on high-dimensional random representations, which have to be generated initially and stored so that they are accessible, e.g., for nearest neighbor searches during compute time. In many models, the representations are dense binary random patterns. Rather than storing the full random representations in memory (e.g., W_in in RC), we proposed to store just short seed patterns and to use CA90 for re-materializing the full random representations when required. The usage of CA90 expanded high-dimensional representations was demonstrated in the context of RC and VSAs. Our results provided empirical evidence that the expansion of representations on demand (re-materialization solution) is functionally equivalent to storing the full i.i.d. random representations in the item memory (memorization solution).

Specifically, we have shown that the randomization period of CA90 is closely connected to its cycle length and depends on the size of the grid. We provided the exact relation between the grid size and the length of the randomization period. The general trend is that larger grid sizes yield longer randomization periods. However, the period length depends on number-theoretic properties of the grid size. In general, odd grid sizes have longer randomization periods than even ones. In particular, one should avoid grid sizes that are powers of two (2^j), as they have the shortest randomization periods. The longest periods are obtained when the size of the grid is a prime number. Thus, given a memory constraint, it is best to choose the largest prime within the constraint.

We have also demonstrated that it is possible to use the expansion even in the presence of errors in the short seed patterns. Unfortunately, CA90 introduces additional errors on top of those present in the seed pattern, so the error rate after CA90 increases. The distribution of the introduced errors is, however, not uniform: some steps introduce more errors than others. We have shown that the lowest amount of errors (cf. Fig. 5) is introduced for CA90 steps that are powers of two (2^j). Thus, to minimize the errors in the expanded representation, one should use only steps of the form 2^j. This, of course, limits the possibilities for expansion, since in practice only a limited number of steps of the form 2^j can be computed (e.g., j ≤ 20 in our experiments).

B. Related work

1). Combining reservoir computing, vector symbolic architectures and cellular automata:

It has been demonstrated recently [5], [40] that echo state networks [39], an instance of RC, can be formulated in the VSA formalism. CA were first introduced to RC and VSA models for expanding low-dimensional feature representations into high-dimensional representations to improve classification [18], [47]. Due to the local interactions in CA, the evolution of the initial state (i.e., the low-dimensional representation) over several steps produces a richer and higher-dimensional representation of features while preserving similarity. This method was applied to activation patterns from neural networks [47] and to manually extracted features [58]. The expanded representations improved classification results for natural [47] and medical [59] images.

Works [18], [47] suggested that the expanded representations could be seen as a reservoir, but they did not study its memorization capabilities in detail. The characteristics of the formed memory were studied in [48]. Later, work [49] proposed to form a reservoir using a pair of CA by first evolving the initial state for several steps with one CA rule and then continuing the computations with another CA rule. Similarly, works [51], [52] investigated nonuniform binary CA [51] and CA rules with larger neighborhoods (five cells) and more states (three, four, and five) [52].

Similar to these works, our approach also employs CA to expand the dimension of representations. However, we apply the expansion not to feature vectors but only to i.i.d. random seed patterns. All we need is the property of CA90 that the resulting high-dimensional vectors remain pseudo-orthogonal. In our study, similarity preservation is only required if the random seed patterns contain errors.
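The pseudo-orthogonality that this approach relies on can be checked empirically. This sketch uses our own choices: ten independent seeds of length N = 37, each expanded into 50 concatenated CA90 states (K = 1,850), which is well below the randomization period of N = 37. It prints the smallest and largest pairwise normalized Hamming distances.

```python
import numpy as np


def ca90_expand(seed, n_states):
    """Concatenate the seed and the next n_states - 1 CA90 states."""
    states = [seed]
    for _ in range(n_states - 1):
        states.append(np.roll(states[-1], 1) ^ np.roll(states[-1], -1))
    return np.concatenate(states)


def normalized_hamming(a, b):
    """Fraction of positions in which two binary vectors differ."""
    return np.count_nonzero(a != b) / a.size


rng = np.random.default_rng(3)
n_seed, n_states, n_vectors = 37, 50, 10
expanded = [ca90_expand(rng.integers(0, 2, n_seed, dtype=np.uint8), n_states) for _ in range(n_vectors)]
distances = [normalized_hamming(expanded[i], expanded[j])
             for i in range(n_vectors) for j in range(i + 1, n_vectors)]
print(min(distances), max(distances))  # both close to 0.5
```

Because CA90 is linear, the XOR of two expansions is the expansion of the XOR of their seeds, which is itself a random seed. This is why the distances concentrate around 0.5, just as they do for i.i.d. random vectors.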

Our work is most directly inspired by [16] and [50], which both used CA to expand item memories from short [16] or long [50] i.i.d. random seed patterns. In [16] the expansion was done with the CA30 rule, which is known to exhibit chaotic behavior. Here, as in [50], we used the CA90 rule.¹²

For collective-state computing, CA90 has the great advantage that it distributes over the binding and the cyclic shift operations. We have seen this advantage in action when studying the resonator network in Section IV-C: since CA90 distributes over the binding operation, it was possible to expand the collective state (i.e., the input vector (7) with factors) on demand during the factorization. Going beyond [50], [60], we also systematically explored the randomization properties of CA90, such as the length of the randomization period. Moreover, none of the previous works studied the randomization behavior of CA90 in the presence of errors in the initial seed.
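Both properties follow from CA90 being a linear, translation-invariant rule on a cyclic grid. The sketch below checks them numerically for two random 37-cell states and 13 steps (our arbitrary choices). A cyclic shift applied before the evolution gives the same result as the shift applied after it. Evolving a bound (XOR) pair gives the same result as binding the two evolved states.

```python
import numpy as np


def ca90_run(state, n_steps):
    """Apply n_steps CA90 updates on a cyclic grid."""
    for _ in range(n_steps):
        state = np.roll(state, 1) ^ np.roll(state, -1)
    return state


rng = np.random.default_rng(7)
n_cells, n_steps = 37, 13
x = rng.integers(0, 2, n_cells, dtype=np.uint8)
y = rng.integers(0, 2, n_cells, dtype=np.uint8)

# Cyclic shift: shifting before or after the evolution gives the same state
shift_ok = all(
    np.array_equal(ca90_run(np.roll(x, k), n_steps), np.roll(ca90_run(x, n_steps), k))
    for k in range(n_cells)
)
# Binding (XOR): evolving a bound pair equals binding the evolved states
bind_ok = np.array_equal(ca90_run(x ^ y, n_steps), ca90_run(x, n_steps) ^ ca90_run(y, n_steps))
print(shift_ok, bind_ok)  # True True
```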

2). Other computation methods that use randomness:

A complementary approach of computing with randomness is sampling-based computation [61]. This approach differs fundamentally from collective-state computing, which exploits the concentration-of-measure phenomenon that makes random patterns pseudo-orthogonal. Once generated, a fixed set of random patterns can serve as unique and well distinguishable identifiers for handling variables and values during compute time. In contrast, in sampling-based computation each compute step produces independent randomness to provide good mixing properties. Good mixing ensures that even a small set of samples is representative of the entire probability distribution and, therefore, constitutes a compact, faithful representation (ch. 29 in [62]). We should add that the “frozen” randomness in VSAs can be used to form different types of compact representations of probability distributions. For example, a combination of binding and bundling can constitute compact representations of large histograms describing the n-gram statistics of languages [30]. The advantage of such a representation is that it is a vector of the same fixed dimension as the atomic random patterns, somewhat independent of the number of non-zero entries in the histogram.

C. Future work

1). Potential for hardware implementation:

The space-time or memory-computation tradeoff introduced by the inclusion of CA can be used to optimize the implementation of collective-state computations in hardware. Of course, this optimization depends on the context of a computational problem and a particular hardware platform, which is outside the scope of this article.

Further, although the use of CA90 incurs additional computational costs, it enables highly energy-efficient collective-state computing models. For example, an ASIC implementation of an RC system with the proposed CA90 expansion has recently been reported [63]. This work reports that the CA90 expansion improved energy efficiency by 4.8× over state-of-the-art implementations. The reason is that CA90 allows one to flexibly choose an operating point between two sub-optimal extremes: large leakage power when storing all randomly generated parts of the model explicitly (no CA90 expansion) versus large dynamic power due to many CA90 computational steps (CA90 expansion with N very small relative to K). All told, we expect CA90 expansion to become a standard primitive for designing efficient hardware implementations of collective-state computing models.
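A deliberately simplified count helps to see where the operating point moves. The sketch ignores leakage and dynamic power models entirely. It only counts, for a hypothetical item memory of 100 vectors built from seeds of N = 37 cells and L concatenated states (K = N·L), the bits stored under memorization versus re-materialization and the CA90 cell updates (one XOR each) needed for one full re-materialization. All parameter values are illustrative.

```python
def tradeoff(n_items, seed_len, n_states):
    """Storage and compute for an item memory of n_items vectors of dimension K = N * L."""
    dim = seed_len * n_states
    bits_full = n_items * dim                           # memorization: store every expanded vector
    bits_seeds = n_items * seed_len                     # re-materialization: store only the seeds
    cell_updates = n_items * seed_len * (n_states - 1)  # one XOR per cell per CA90 step
    return {"K": dim, "bits_full": bits_full, "bits_seeds": bits_seeds, "cell_updates": cell_updates}


for n_states in (1, 8, 64):
    print(tradeoff(n_items=100, seed_len=37, n_states=n_states))
```

Storage under re-materialization stays fixed at 3,700 bits, while the number of cell updates grows linearly with L. This is the memory-for-computation exchange described above.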

The optimized hardware implementation of the models we described raises another interesting topic for future research, namely, how to parallelize the computation of CA90. While the implementation of CA90 in an FPGA is quite straightforward (see equation (9)), the implementation with neural networks and on neuromorphic hardware [64] is still an open problem.
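The word-level parallelism behind the straightforward FPGA mapping can be illustrated in software. The sketch below is a hedged Python illustration, not a hardware design, and its function names are ours. It decodes rule 90 from Wolfram's rule number and confirms that the neighborhoods producing an active cell are exactly those whose two outer cells differ. It then checks that a packed update built from two rotations and one XOR matches the cell-by-cell rule on a cyclic grid.

```python
import random


def rule_table(rule_number):
    """Next state for each (left, center, right) neighborhood under Wolfram's rule numbering."""
    return {(l, c, r): (rule_number >> (4 * l + 2 * c + r)) & 1
            for l in (0, 1) for c in (0, 1) for r in (0, 1)}


def table_step(cells, table):
    """Reference update: look up every cell's neighborhood on a cyclic grid."""
    n = len(cells)
    return [table[(cells[i - 1], cells[i], cells[(i + 1) % n])] for i in range(n)]


def packed_step(word, n_cells):
    """Word-parallel update: two cyclic rotations and one XOR update all cells at once."""
    mask = (1 << n_cells) - 1
    from_left = ((word << 1) | (word >> (n_cells - 1))) & mask
    from_right = ((word >> 1) | (word << (n_cells - 1))) & mask
    return from_left ^ from_right


def pack(cells):
    """Bit i of the returned integer holds cell i."""
    return sum(bit << i for i, bit in enumerate(cells))


table90 = rule_table(90)
print(sorted(k for k, v in table90.items() if v))  # [(0, 0, 1), (0, 1, 1), (1, 0, 0), (1, 1, 0)]

random.seed(0)
cells = [random.randint(0, 1) for _ in range(37)]
print(pack(table_step(cells, table90)) == packed_step(pack(cells), 37))  # True
```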

2). Integration of CA computations in neural associative memories:

Another interesting future direction is to investigate how associative memories [65] can trade off synaptic memory for neural computation implementing the CA. Such CA-based approaches could be compared to other proposals in the literature on how to replace memory with computation, e.g., [66].

Supplementary Material

Acknowledgments

The authors thank members of the Redwood Center for Theoretical Neuroscience and Berkeley Wireless Research Center for stimulating discussions. The authors also thank Evgeny Osipov and Abbas Rahimi for fruitful discussions on the potential role of cellular automata in vector symbolic architectures, which inspired the current work. The work of DK was supported by the European Union’s Horizon 2020 Research and Innovation Programme under the Marie Skłodowska-Curie Individual Fellowship Grant Agreement 839179 and in part by the DARPA’s Virtual Intelligence Processing (VIP, Super-HD Project) and Artificial Intelligence Exploration (AIE, HyDDENN Project) programs. FTS is supported by NIH R01-EB026955.

Biographies

Denis Kleyko (M’20) received the B.S. degree (Hons.) in telecommunication systems and the M.S. degree (Hons.) in information systems from the Siberian State University of Telecommunications and Information Sciences, Novosibirsk, Russia, in 2011 and 2013, respectively, and the Ph.D. degree in computer science from the Luleå University of Technology, Luleå, Sweden, in 2018.

He is currently a Post-Doctoral Researcher on a joint appointment between the Redwood Center for Theoretical Neuroscience at the University of California, Berkeley, CA, USA and the Intelligent Systems Lab, Research Institutes of Sweden, Kista, Sweden. His current research interests include machine learning, reservoir computing, and vector symbolic architectures/hyperdimensional computing.

Edward Paxon Frady received the B.S. degree in computation and neural systems from California Institute of Technology, USA in 2008 and the Ph.D. degree in neuroscience from University of California San Diego, USA in 2014.

He is currently a Researcher in Residence with the Neuromorphic Computing Lab, Intel Labs, Santa Clara, CA, USA, and a Visiting Scholar with the Redwood Center for Theoretical Neuroscience at the University of California, Berkeley, CA, USA. His research interests include neuromorphic engineering, vector symbolic architectures/hyperdimensional computing, and machine learning.

Friedrich T. Sommer received the diploma in physics from the University of Tuebingen, Tuebingen, Germany, in 1987 and the Ph.D. degree from the University of Duesseldorf, Duesseldorf, Germany, in 1993. He received his habilitation in computer science from University of Ulm, Ulm, Germany, in 2002.

He is currently a Researcher in Residence with the Neuromorphic Computing Lab, Intel Labs, Santa Clara, CA, USA, and an Adjunct Professor with the Redwood Center for Theoretical Neuroscience at the University of California, Berkeley, CA, USA. His research interests include neuromorphic engineering, vector symbolic architectures/hyperdimensional computing, and machine learning.

Footnotes

1. Although decoding by projection would work even better for exactly orthogonal distributed patterns, such a coding scheme is less desirable: i.i.d. random generation of distributed patterns is computationally much cheaper and does not impose a hard limit requiring the number of encoded variables to be smaller than or equal to the number of nodes or neurons.

2. Please note that while a (pseudo-)random number generator is not part of collective-state computing models (RC, VSA, etc.) during run time, it is required for model initialization.

3. There are several different terms describing computations in CA, e.g., “expansion”, “evolution”, and “development”. In the context of this article, we use the terms expansion and evolution interchangeably. The term “expansion” is used to emphasize the fact that CA90 is used to produce distributed representations, while the term “evolution” is used when referring to running CA computations.

4. Examples of the basic concepts are distinct features in machine learning problems [25]–[28] or distinct symbols in data structures [29]–[34].

5. This is done implicitly since the recurrent connection matrix is applied a different number of times to inputs presented at different time stamps.

6. The cyclic shift is used frequently due to its simplicity.

7. In the case of dense binary VSAs, the arithmetic sum-vector of two or more vectors is thresholded back to a binary vector by using the majority rule/sum (denoted as f_m(∗)), where ties are broken at random.

8. Cyclic boundary condition means that the first and the last cells in the grid are considered to be neighbors.

9. As will be explained later in this section, this is because the length of the randomization period depends on N and primes tend to have long randomization periods. Since N = 23 is the largest prime used in Fig. 3, this grid size exhibits the longest randomization period among all considered grid sizes.

10. In the scope of this article, by random vectors we mean vectors generated with a standard pseudo-random number generator. Strictly speaking, they should be called pseudo-random vectors, but the term random is used to distinguish them from the vectors obtained with CA90 computations.

11. CA with rule 30 is also used for random number generation in Wolfram Mathematica [17].

Contributor Information

Denis Kleyko, Redwood Center for Theoretical Neuroscience at the University of California, Berkeley, CA 94720 USA; Intelligent Systems Lab at Research Institutes of Sweden, 164 40 Kista, Sweden.

E. Paxon Frady, Neuromorphic Computing Lab, Intel Labs, Santa Clara, CA 95054 USA; Redwood Center for Theoretical Neuroscience at the University of California, Berkeley, CA 94720 USA.

Friedrich T. Sommer, Neuromorphic Computing Lab, Intel Labs, Santa Clara, CA 95054 USA; Redwood Center for Theoretical Neuroscience at the University of California, Berkeley, CA 94720 USA.

References

- [1] Csaba G and Porod W, “Coupled Oscillators for Computing: A Review and Perspective,” Applied Physics Reviews, vol. 7, no. 1, pp. 1–19, 2020.

- [2] Maass W, Natschläger T, and Markram H, “Real-Time Computing Without Stable States: A New Framework for Neural Computation Based on Perturbations,” Neural Computation, vol. 14, no. 11, pp. 2531–2560, 2002.

- [3] Jaeger H, “Tutorial on Training Recurrent Neural Networks, Covering BPTT, RTRL, EKF and the Echo State Network Approach,” GMD Report 159, German National Research Center for Information Technology, 2002.

- [4] Rodan A and Tiňo P, “Minimum Complexity Echo State Network,” IEEE Transactions on Neural Networks, vol. 22, no. 1, pp. 131–144, 2011.

- [5] Frady EP, Kleyko D, and Sommer FT, “A Theory of Sequence Indexing and Working Memory in Recurrent Neural Networks,” Neural Computation, vol. 30, pp. 1449–1513, 2018.

- [6] Hopfield JJ, “Neural Networks and Physical Systems with Emergent Collective Computational Abilities,” Proceedings of the National Academy of Sciences, vol. 79, no. 8, pp. 2554–2558, 1982.

- [7] Hopfield JJ and Tank DW, ““Neural” Computation of Decisions in Optimization Problems,” Biological Cybernetics, vol. 52, no. 3, pp. 141–152, 1985.

- [8] Achlioptas D, “Database-friendly Random Projections: Johnson-Lindenstrauss with Binary Coins,” Journal of Computer and System Sciences, vol. 66, no. 4, pp. 671–687, 2003.

- [9] Donoho DL, “Compressed Sensing,” IEEE Transactions on Information Theory, vol. 52, no. 4, pp. 1289–1306, 2006.

- [10] Amini A and Marvasti F, “Deterministic Construction of Binary, Bipolar, and Ternary Compressed Sensing Matrices,” IEEE Transactions on Information Theory, vol. 57, no. 4, pp. 2360–2370, 2011.

- [11] Igelnik B and Pao YH, “Stochastic Choice of Basis Functions in Adaptive Function Approximation and the Functional-Link Net,” IEEE Transactions on Neural Networks, vol. 6, pp. 1320–1329, 1995.

- [12] Kleyko D, Kheffache M, Frady EP, Wiklund U, and Osipov E, “Density Encoding Enables Resource-Efficient Randomly Connected Neural Networks,” IEEE Transactions on Neural Networks and Learning Systems, vol. 32, no. 8, pp. 3777–3783, 2021.

- [13] Plate TA, “Holographic Reduced Representations,” IEEE Transactions on Neural Networks, vol. 6, no. 3, pp. 623–641, 1995.

- [14] Rachkovskij DA, “Representation and Processing of Structures with Binary Sparse Distributed Codes,” IEEE Transactions on Knowledge and Data Engineering, vol. 13, no. 2, pp. 261–276, 2001.

- [15].Kanerva P, “Hyperdimensional Computing: An Introduction to Computing in Distributed Representation with High-Dimensional Random Vectors,” Cognitive Computation, vol. 1, no. 2, pp. 139–159, 2009. [Google Scholar]

- [16].Schmuck M, Benini L, and Rahimi A, “Hardware Optimizations of Dense Binary Hyperdimensional Computing: Rematerialization of Hypervectors, Binarized Bundling, and Combinational Associative Memory,” ACM Journal on Emerging Technologies in Computing Systems, vol. 15, no. 4, pp. 1–25, 2019. [Google Scholar]

- [17].Wolfram S, A New Kind of Science. Champaign, IL. Wolfram Media, Inc., 2002. [Google Scholar]

- [18].Yilmaz O, “Symbolic Computation Using Cellular Automata-Based Hyperdimensional Computing,” Neural Computation, vol. 27, no. 12, pp. 2661–2692, 2015. [DOI] [PubMed] [Google Scholar]

- [19].Gallant SI and Okaywe TW, “Representing Objects, Relations, and Sequences,” Neural Computation, vol. 25, no. 8, pp. 2038–2078, 2013. [DOI] [PubMed] [Google Scholar]

- [20].Rahimi A, Datta S, Kleyko D, Frady EP, Olshausen B, Kanerva P, and Rabaey JM, “High-dimensional Computing as a Nanoscalable Paradigm,” IEEE Transactions on Circuits and Systems I: Regular Papers, vol. 64, no. 9, pp. 2508–2521, 2017. [Google Scholar]

- [21].Frady EP, Kleyko D, and Sommer FT, “Variable Binding for Sparse Distributed Representations: Theory and Applications,” IEEE Transactions on Neural Networks and Learning Systems, vol. PP, no. 99, pp. 1–14, 2021. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [22].Huang G and Zhu Q and Siew C, “Extreme Learning Machine: Theory and Applications,” Neurocomputing, vol. 70, no. 1–3, pp. 489–501, 2006. [Google Scholar]

- [23].Kanerva P, “Fully Distributed Representation,” in Real World Computing Symposium (RWC), 1997, pp. 358–365. [Google Scholar]

- [24].Gayler RW, “Multiplicative Binding, Representation Operators & Analogy,” in Gentner D, Holyoak KJ, Kokinov BN (Eds.), Advances in analogy research: Integration of theory and data from the cognitive, computational, and neural sciences, New Bulgarian University, Sofia, Bulgaria, 1998, pp. 1–4. [Google Scholar]

- [25].Kleyko D, Osipov E, Senior A, Khan AI, and Sekercioglu YA, “Holographic Graph Neuron: A Bioinspired Architecture for Pattern Processing,” IEEE Transactions on Neural Networks and Learning Systems, vol. 28, no. 6, pp. 1250–1262, 2017. [DOI] [PubMed] [Google Scholar]

- [26].Rasanen O and Saarinen J, “Sequence Prediction with Sparse Distributed Hyperdimensional Coding Applied to the Analysis of Mobile Phone Use Patterns,” IEEE Transactions on Neural Networks and Learning Systems, vol. 27, no. 9, pp. 1878–1889, 2016. [DOI] [PubMed] [Google Scholar]

- [27].Rosato A, Panella M, and Kleyko D, “Hyperdimensional Computing for Efficient Distributed Classification with Randomized Neural Networks,” in International Joint Conference on Neural Networks (IJCNN), 2021, pp. 1–10. [Google Scholar]

- [28].Diao C, Kleyko D, Rabaey JM, and Olshausen BA, “Generalized Learning Vector Quantization for Classification in Randomized Neural Networks and Hyperdimensional Computing,” in International Joint Conference on Neural Networks (IJCNN), 2021, pp. 1–9. [Google Scholar]

- [29].Kleyko D, Davies M, Frady EP, Kanerva P, Kent SJ, Olshausen BA, Osipov E, Rabaey JM, Rachkovskij DA, Rahimi A, and Sommer FT, “Vector Symbolic Architectures as a Computing Framework for Nanoscale Hardware,” arXiv:2106.05268, pp. 1–28, 2021. [PMC free article] [PubMed] [Google Scholar]

- [30].Joshi A, Halseth JT, and Kanerva P, “Language Geometry Using Random Indexing,” in Quantum Interaction (QI), 2016, pp. 265–274. [Google Scholar]

- [31].Osipov E, Kleyko D, and Legalov A, “Associative Synthesis of Finite State Automata Model of a Controlled Object with Hyperdimensional Computing,” in Annual Conference of the IEEE Industrial Electronics Society (IECON), 2017, pp. 3276–3281. [Google Scholar]

- [32].Yerxa T, Anderson A, and Weiss E, “The Hyperdimensional Stack Machine,” in Cognitive Computing, 2018, pp. 1–2. [Google Scholar]

- [33].Pashchenko DV, Trokoz DA, Martyshkin AI, Sinev MP, and Svistunov BL, “Search for a Substring of Characters using the Theory of Non-deterministic Finite Automata and Vector-Character Architecture,” Bulletin of Electrical Engineering and Informatics, vol. 9, no. 3, pp. 1238–1250, 2020. [Google Scholar]

- [34].Kleyko D, Osipov E, and Gayler RW, “Recognizing Permuted Words with Vector Symbolic Architectures: A Cambridge Test for Machines,” Procedia Computer Science, vol. 88, pp. 169–175, 2016.

- [35].Buonomano DV and Maass W, “State-dependent Computations: Spatiotemporal Processing in Cortical Networks,” Nature Reviews Neuroscience, vol. 10, no. 2, pp. 113–125, 2009.

- [36].Kleyko D, Rosato A, Frady EP, Panella M, and Sommer FT, “Perceptron Theory for Predicting the Accuracy of Neural Networks,” arXiv:2012.07881, pp. 1–12, 2020.

- [37].Plate TA, “Holographic Recurrent Networks,” in Advances in Neural Information Processing Systems (NIPS), 1992, pp. 34–41.

- [38].Plate TA, Holographic Reduced Representations: Distributed Representation for Cognitive Structures. Stanford: CSLI, 2003.

- [39].Lukosevicius M, “A Practical Guide to Applying Echo State Networks,” in Neural Networks: Tricks of the Trade, ser. Lecture Notes in Computer Science, vol. 7700, 2012, pp. 659–686.

- [40].Kleyko D, Frady EP, Kheffache M, and Osipov E, “Integer Echo State Networks: Efficient Reservoir Computing for Digital Hardware,” IEEE Transactions on Neural Networks and Learning Systems, vol. PP, no. 99, pp. 1–14, 2020.

- [41].Thomas A, Dasgupta S, and Rosing T, “A Theoretical Perspective on Hyperdimensional Computing,” Journal of Artificial Intelligence Research, vol. 72, pp. 215–249, 2021.

- [42].Frady EP, Kent SJ, Olshausen BA, and Sommer FT, “Resonator Networks, 1: An Efficient Solution for Factoring High-Dimensional, Distributed Representations of Data Structures,” Neural Computation, vol. 32, no. 12, pp. 2311–2331, 2020.

- [43].Kent SJ, Frady EP, Sommer FT, and Olshausen BA, “Resonator Networks, 2: Factorization Performance and Capacity Compared to Optimization-Based Methods,” Neural Computation, vol. 32, no. 12, pp. 2332–2388, 2020.

- [44].Langton CG, “Computation at the Edge of Chaos: Phase Transitions and Emergent Computation,” Physica D: Nonlinear Phenomena, vol. 42, no. 1–3, pp. 12–37, 1990.

- [45].Cook M, “Universality in Elementary Cellular Automata,” Complex Systems, vol. 15, no. 1, pp. 1–40, 2004.

- [46].Gayler RW, “Vector Symbolic Architectures Answer Jackendoff’s Challenges for Cognitive Neuroscience,” in Joint International Conference on Cognitive Science (ICCS/ASCS), 2003, pp. 133–138.

- [47].Yilmaz O, “Machine Learning Using Cellular Automata Based Feature Expansion and Reservoir Computing,” Journal of Cellular Automata, vol. 10, no. 5–6, pp. 435–472, 2015.

- [48].Nichele S and Molund A, “Deep Learning with Cellular Automaton-Based Reservoir Computing,” Complex Systems, vol. 26, no. 4, pp. 319–339, 2017.

- [49].McDonald N, “Reservoir Computing and Extreme Learning Machines using Pairs of Cellular Automata Rules,” in International Joint Conference on Neural Networks (IJCNN), 2017, pp. 2429–2436.

- [50].Kleyko D and Osipov E, “No Two Brains Are Alike: Cloning a Hyperdimensional Associative Memory Using Cellular Automata Computations,” in Biologically Inspired Cognitive Architectures (BICA), ser. Advances in Intelligent Systems and Computing, vol. 636, 2017, pp. 91–100.

- [51].Nichele S and Gundersen M, “Reservoir Computing Using Nonuniform Binary Cellular Automata,” Complex Systems, vol. 26, no. 3, pp. 225–245, 2017.

- [52].Babson N and Teuscher C, “Reservoir Computing with Complex Cellular Automata,” Complex Systems, vol. 28, no. 4, pp. 433–455, 2019.

- [53].Daugman J, “The Importance of being Random: Statistical Principles of Iris Recognition,” Pattern Recognition, vol. 36, pp. 279–291, 2003.

- [54].Martin O, Odlyzko AM, and Wolfram S, “Algebraic Properties of Cellular Automata,” Communications in Mathematical Physics, vol. 93, pp. 219–258, 1984.

- [55].Wolfram S, “Random Sequence Generation by Cellular Automata,” Advances in Applied Mathematics, vol. 7, no. 2, pp. 123–169, 1986.

- [56].Santoro R, Roy S, and Sentieys O, “Search for Optimal Five-neighbor FPGA-based Cellular Automata Random Number Generators,” in International Symposium on Signals, Systems and Electronics (ISSSE), 2007, pp. 343–346.

- [57].Dascalu M, “Cellular Automata and Randomization: A Structural Overview,” in From Natural to Artificial Intelligence - Algorithms and Applications, Lopez-Ruiz R, Ed. IntechOpen, 2018, ch. 9, pp. 165–183.

- [58].Karvonen N, Nilsson J, Kleyko D, and Jimenez LL, “Low-Power Classification using FPGA—An Approach based on Cellular Automata, Neural Networks, and Hyperdimensional Computing,” in IEEE International Conference On Machine Learning And Applications (ICMLA), 2019, pp. 370–375.

- [59].Kleyko D, Khan S, Osipov E, and Yong SP, “Modality Classification of Medical Images with Distributed Representations based on Cellular Automata Reservoir Computing,” in IEEE International Symposium on Biomedical Imaging (ISBI), 2017, pp. 1053–1056.

- [60].McDonald N and Davis R, “Complete & Orthogonal Replication of Hyperdimensional Memory via Elementary Cellular Automata,” in Unconventional Computation and Natural Computation (UCNC), 2019, pp. 1–1.

- [61].Orbán G, Berkes P, Fiser J, and Lengyel M, “Neural Variability and Sampling-based Probabilistic Representations in the Visual Cortex,” Neuron, vol. 92, no. 2, pp. 530–543, 2016.

- [62].MacKay DJC, Information Theory, Inference and Learning Algorithms. Cambridge University Press, 2003.

- [63].Menon A, Sun D, Aristio M, Liew H, Lee K, and Rabaey JM, “A Highly Energy-Efficient Hyperdimensional Computing Processor for Wearable Multi-modal Classification,” in IEEE Biomedical Circuits and Systems Conference (BioCAS), 2021, pp. 1–4.

- [64].Davies M, Srinivasa N, Lin T-H, Chinya G, Cao Y, Choday SH, Dimou G, Joshi P, Imam N, Jain S, Liao Y, Lin C-K, Lines A, Liu R, Mathaikutty D, McCoy S, Paul A, Tse J, Venkataramanan G, Weng Y-H, Wild A, Yang Y, and Wang H, “Loihi: A Neuromorphic Manycore Processor with On-Chip Learning,” IEEE Micro, vol. 38, no. 1, pp. 82–99, 2018.

- [65].Gritsenko VI, Rachkovskij DA, Frolov AA, Gayler RW, Kleyko D, and Osipov E, “Neural Distributed Autoassociative Memories: A Survey,” Cybernetics and Computer Engineering, vol. 2, no. 188, pp. 5–35, 2017.

- [66].Knoblauch A, “Zip Nets: Efficient Associative Computation with Binary Synapses,” in International Joint Conference on Neural Networks (IJCNN), 2010, pp. 1–8.
