Abstract

We consider estimation of mean and covariance functions of functional snippets, which are short segments of functions possibly observed irregularly on an individual specific subinterval that is much shorter than the entire study interval. Estimation of the covariance function for functional snippets is challenging since information for the far off-diagonal regions of the covariance structure is completely missing. We address this difficulty by decomposing the covariance function into a variance function component and a correlation function component. The variance function can be effectively estimated nonparametrically, while the correlation part is modeled parametrically, possibly with an increasing number of parameters, to handle the missing information in the far off-diagonal regions. Both theoretical analysis and numerical simulations suggest that this hybrid strategy is effective. In addition, we propose a new estimator for the variance of measurement errors and analyze its asymptotic properties. This estimator is required for the estimation of the variance function from noisy measurements.

Keywords: Functional data analysis, functional principal component analysis, sparse functional data, variance function, correlation function

1. Introduction

Functional data are random functions on a common domain, e.g., an interval $\mathcal{T}=[a,b]$. In practice, they can only be observed on a discrete schedule, possibly intermittently, which leads to an incomplete data problem. Fortunately, this problem has by now largely been resolved (Rice and Wu, 2001; Yao et al., 2005; Li and Hsing, 2010; Zhang and Wang, 2016), and there is a large literature on the analysis of functional data. For a more comprehensive treatment, readers are referred to the monographs by Ramsay and Silverman (2005), Ferraty and Vieu (2006), Hsing and Eubank (2015) and Kokoszka and Reimherr (2017), and to the review paper by Wang et al. (2016).

In this paper, we address a different type of incomplete data. It occurs frequently in longitudinal studies when subjects enter the study at random times and are followed for a short period within the domain $\mathcal{T}$. Specifically, we focus on functional data with the following property: each function $X_i$ is only observed on a subject-specific interval $[A_i,B_i]\subset\mathcal{T}$, and

(S) there exists an absolute constant δ such that 0 < δ < 1 and $B_i-A_i\le\delta(b-a)$ for all $i$.

As a result, the design of support points (Yao et al., 2005), where one has information about the covariance function, is incomplete. There are no design points in the off-diagonal region $\mathcal{O}_\delta=\{(s,t)\in\mathcal{T}^2: |s-t|>\delta(b-a)\}$. This is mathematically characterized by

$$\Big(\bigcup_{i=1}^{n}[A_i,B_i]^2\Big)\cap\mathcal{O}_\delta=\emptyset.\qquad (1)$$

Consequently, local smoothing methods such as PACE (Yao et al., 2005), which are interpolation methods, fail to produce a consistent estimate of the covariance function in the off-diagonal region, because the problem requires extrapolation.

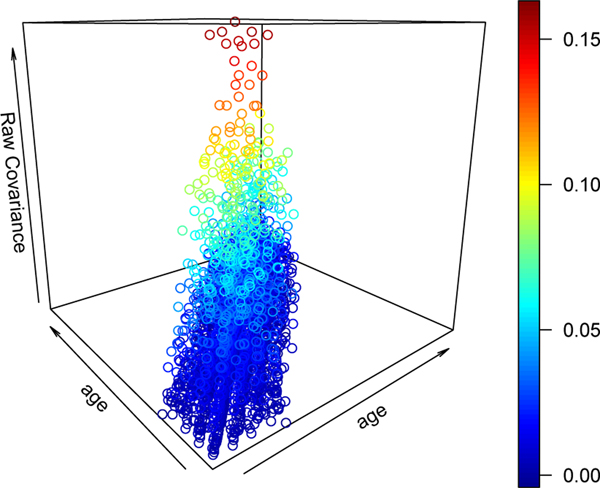

An example is the spinal bone mineral density data collected from 423 subjects ranging in age from 8.8 to 26.2 years (Bachrach et al., 1999). The design plot for the covariance function is shown in Figure 1. All of the design points fall within a narrow band around the diagonal, whereas the domain of interest, [8.8, 26.2], is much larger than this band. This happens because each individual trajectory is only recorded on an individual-specific subinterval that is much shorter than the span of the study. For the spinal bone mineral density data, the span of each individual is at most 4.3 years, where the span is the length of the interval between the first and the last measurement. The span of the study, by contrast, is about 17 years.

Data with this characteristic, mathematically described by (S) or (1), are called functional snippets in this paper, by analogy with the longitudinal snippets studied in Dawson and Müller (2018). Functional snippets turn out to be quite common in longitudinal studies (Raudenbush and Chan, 1992; Galbraith et al., 2017), and they require extrapolation methods. This is usually not an issue for parametric approaches, such as linear mixed-effects models, but it calls for careful treatment in non- and semi-parametric approaches.

Figure 1: The design of the covariance function for the spinal bone mineral density data.

Functional fragments (Liebl, 2013; Kraus, 2015; Kraus and Stefanucci, 2019; Kneip and Liebl, 2019+; Liebl and Rameseder, 2019), like functional snippets, are partially observed functional data, and they have been studied extensively in the literature. However, for the data investigated as functional fragments in these works, the span of a single individual domain $[A_i,B_i]$ can be nearly as large as the span $[a,b]$ of the study. This makes them distinctly different from functional snippets. Such data, collectively referred to as “non-snippet functional data” in this paper, often satisfy the following condition:

(F) for any for a strictly increasing sequence .

For instance, Kneip and Liebl (2019+) assumed that , which implies that design points and local information are still available in the off-diagonal region. In other words, for non-snippet functional data and for each $(s,t)\in[a,b]^2$, one has $(s,t)\in\bigcup_{i=1}^{n}[A_i,B_i]^2$ for sufficiently large n, in contrast to (1) for functional snippets. Other works on partially observed functional data, by Gellar et al. (2014), Goldberg et al. (2014), Gromenko et al. (2017) and Stefanucci et al. (2018), do not explicitly discuss the design. Nevertheless, they require condition (F) for their proposed methodologies and theory. All of these settings can be handled with a proper interpolation method, which is fundamentally different from the extrapolation methods needed for functional snippets.

The analysis of functional snippets is more challenging than non-snippet functional data, since information in the far off-diagonal regions of the covariance structure is completely missing for functional snippets according to (1). Delaigle and Hall (2016) addressed this challenge by assuming that the underlying functional data are Markov processes, which is only valid at the discrete level, as pointed out by Descary and Panaretos (2019). Zhang and Chen (2018) and Descary and Panaretos (2019) used matrix completion methods to handle functional snippets, but their approaches require modifications to handle longitudinally recorded snippets that are sampled at random design points, and their theory does not cover random designs. Delaigle et al. (2019) proposed to numerically extrapolate an estimate, such as PACE (Yao et al., 2005), from the diagonal region to the entire domain via basis expansion. In this paper, we propose a divide-and-conquer strategy to analyze (longitudinal) functional snippets with a focus on the mean and covariance estimation. Once the covariance function has been estimated, functional principal component analysis can be performed through the spectral decomposition of the covariance operator.

Specifically, we divide the covariance function into two components, the variance function and the correlation function. The former can be estimated via classic kernel smoothing. The latter is modeled parametrically, with a potentially diverging number of parameters. The principle behind this idea is to estimate nonparametrically the components for which sufficient information is available, and to parameterize the component with missing pieces. The correlation structure is usually much more regular than the covariance surface, and it can be estimated nonparametrically within the diagonal band. Therefore, a parametric correlation model can be selected from candidate models in the existing literature, and such a model usually fits the unknown correlation structure quite well.

Compared to the aforementioned works, our proposal enjoys at least two advantages. First, it can be applied to all types of designs, whether the snippets are observed sparsely or densely, regularly or irregularly. Second, our approach is simple, thanks to the parametric form of the correlation function. It is also powerful, owing to the nonparametric variance component and the possibility of a growing number of parameters. We stress that our semiparametric, divide-and-conquer strategy is fundamentally different from the penalized basis expansion approach adopted in the recent paper by Lin et al. (2019). In that approach, the covariance function is represented by an analytic basis and the basis coefficients are estimated via penalized least squares. A numerical comparison of the two methods is provided in Section 5.

This divide-and-conquer approach has been explored in Fan et al. (2007) and Fan and Wu (2008) to model the covariance structure of time-varying random noise in a varying-coefficient partially linear model. We demonstrate here that a similar strategy can overcome the missing data challenge posed by functional snippets. We further allow the number of parameters in the correlation function to grow to infinity.

In addition, we take into account measurement errors in the observed data. These are an important component of functional data analysis, but they are of less interest in a partially linear model and were therefore not considered in Fan et al. (2007) and Fan and Wu (2008). The presence of measurement errors complicates the estimation of the variance function, because the error variance and the variance function are entangled along the diagonal of the covariance surface. Consequently, the estimation procedure for the variance function in Fan et al. (2007) and Fan and Wu (2008) does not apply. It is possible to estimate the error variance using the approach in Yao et al. (2005) or Liu and Müller (2009). However, these methods require a pilot estimate of the covariance function in the diagonal area, which involves two-dimensional smoothing and is thus inefficient.

A key contribution of this paper is a new estimator for the error variance, given in Section 3, that is simple and easy to compute. It improves upon the estimators in Yao et al. (2005) and Liu and Müller (2009), as demonstrated through theoretical analysis and numerical studies; see Sections 4 and 5 for details.

2. Mean and Covariance Function Estimation

Let X be a second-order random process defined on an interval $\mathcal{T}$, with mean function $\mu(t)=E\{X(t)\}$ and covariance function $C(s,t)=\mathrm{Cov}\{X(s),X(t)\}$. Without loss of generality, we assume $\mathcal{T}=[0,1]$ in the sequel.

Suppose $X_1,\dots,X_n$ is an independent random sample of X, where n is the sample size. In practice, functional data are rarely fully observed. Instead, they are often recorded with noise at discrete points in $\mathcal{T}$. To accommodate this, we assume that each $X_i$ is only measured at $m_i$ points $T_{i1},\dots,T_{im_i}$. The observed data are $Y_{ij}=X_i(T_{ij})+\varepsilon_{ij}$ for $j=1,\dots,m_i$, where $\varepsilon_{ij}$ represents homoscedastic random noise such that $E\varepsilon_{ij}=0$ and $E\varepsilon_{ij}^2=\sigma_0^2$. The homoscedasticity assumption can be relaxed to accommodate heteroscedastic noise; see Section 3 for details.

To further describe the functional snippets characterized by (S), we assume that the ith subject can only be studied between times $O_i-\delta/2$ and $O_i+\delta/2$. The variable $O_i\in[\delta/2,1-\delta/2]$, called the reference time in this paper, is specific to each subject. The $O_i$ are modeled as independent and identically distributed (i.i.d.) random variables. We further assume that $T_{i1},\dots,T_{im_i}$ are i.i.d. conditional on $O_i$. These assumptions reflect the reality of many data collection processes, in which subjects enter a study at a random time $O_i-\delta/2$ and are followed for a fixed period of time. Such a sampling plan is termed an accelerated longitudinal design. Compared to a single-cohort longitudinal design, it has the advantage of covering a wide time range of interest within a short study period.
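The accelerated longitudinal design can be illustrated with a short simulation of the design points only. This is a minimal sketch that rests on several hypothetical distributional choices, none of which come from the text:

- The reference times $O_i$ are uniform on $[\delta/2,1-\delta/2]$.
- The measurement times are uniform on each subject's window.
- The number of points per subject is two plus a Poisson count.

The helper `max_within_subject_gap` checks the snippet property: no subject contributes a pair of design points more than δ apart.

```python
import numpy as np

def simulate_snippet_design(n, delta, mean_pts=4, rng=None):
    """Draw reference times O_i and measurement times T_ij on the domain [0, 1].

    Hypothetical choices for illustration only:
      * O_i ~ Uniform[delta/2, 1 - delta/2];
      * given O_i, the T_ij are i.i.d. Uniform[O_i - delta/2, O_i + delta/2];
      * m_i = 2 + Poisson(mean_pts - 2), so every subject has at least two points.
    """
    rng = np.random.default_rng(rng)
    O = rng.uniform(delta / 2, 1 - delta / 2, size=n)
    m = 2 + rng.poisson(mean_pts - 2, size=n)
    T = [np.sort(rng.uniform(o - delta / 2, o + delta / 2, size=k)) for o, k in zip(O, m)]
    return O, T

def max_within_subject_gap(T):
    """Largest |T_ij - T_il| over all subjects; for snippets it cannot exceed delta."""
    return max(t.max() - t.min() for t in T)

O, T = simulate_snippet_design(n=200, delta=0.25, rng=1)
print(max_within_subject_gap(T) <= 0.25)  # no design pair falls in the region |s - t| > delta
```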

2.1. Mean Function

Even though only functional snippets are observed rather than full curves, smoothing approaches such as that of Yao et al. (2005) can be applied to estimate the mean function μ. This works because, for each t, there is a positive probability that some design points fall into a small neighborhood of t. Here, we adopt a ridged version of the local linear smoothing method of Zhang and Wang (2016), as follows.

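Let K be a kernel function and $h_\mu$ a bandwidth, and define $K_h(u)=K(u/h)/h$. The non-ridged local linear estimate of μ is given by $\tilde\mu(t)=\hat b_0$ with

$$(\hat b_0,\hat b_1)=\mathop{\arg\min}_{b_0,b_1}\sum_{i=1}^{n}w_i\sum_{j=1}^{m_i}K_{h_\mu}(T_{ij}-t)\{Y_{ij}-b_0-b_1(T_{ij}-t)\}^2,$$

where $w_i\ge0$ are weights such that $\sum_{i=1}^{n}m_iw_i=1$. For the optimal choice of weights, readers are referred to Zhang and Wang (2018). It can be shown that $\tilde\mu(t)=(R_0S_2-R_1S_1)/(S_0S_2-S_1^2)$, where

$$S_r=\sum_{i=1}^{n}w_i\sum_{j=1}^{m_i}K_{h_\mu}(T_{ij}-t)\Big(\frac{T_{ij}-t}{h_\mu}\Big)^{r},\qquad R_r=\sum_{i=1}^{n}w_i\sum_{j=1}^{m_i}K_{h_\mu}(T_{ij}-t)\Big(\frac{T_{ij}-t}{h_\mu}\Big)^{r}Y_{ij}.$$

Although $\tilde\mu$ behaves well most of the time, in a finite sample there is a positive probability that $S_0S_2-S_1^2=0$, in which case $\tilde\mu(t)$ is undefined. This minor issue can be addressed by ridging, a regularization technique used by Fan (1993), with details in Seifert and Gasser (1996) and Hall and Marron (1997). The basic idea is to add a small positive constant to the denominator of $\tilde\mu(t)$ when the denominator falls below a threshold. More specifically, the ridged version of $\tilde\mu$ is given by

$$\hat\mu(t)=\frac{R_0S_2-R_1S_1}{S_0S_2-S_1^2+\Delta\,\mathbb{1}\{|S_0S_2-S_1^2|<\Delta\}},\qquad (2)$$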

where Δ is a sufficiently small constant depending on n and . A convenient choice here is , where .
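The ridged estimator (2) at a single evaluation point can be sketched in Python. The sketch computes the closed form $(R_0S_2-R_1S_1)/(S_0S_2-S_1^2)$ from kernel-weighted moments of $(T_{ij}-t)/h_\mu$. It assumes an Epanechnikov kernel and takes the ridge constant Δ as a user-supplied argument `ridge`, since the text does not fix these choices. The function names are hypothetical.

```python
import numpy as np

def epanechnikov(u):
    """Epanechnikov kernel (an assumed choice), supported on [-1, 1]."""
    return np.where(np.abs(u) <= 1, 0.75 * (1 - u ** 2), 0.0)

def ridged_local_linear(t, T, Y, w, h, ridge):
    """Ridged local linear estimate of the mean function at a single point t.

    T, Y, w are flat arrays over all observations (i, j); w repeats the subject
    weight w_i for each of subject i's observations. The ridge constant is added
    to the denominator S0*S2 - S1^2 only when that denominator is below it.
    """
    u = (T - t) / h
    k = w * epanechnikov(u) / h                      # w_i * K_h(T_ij - t)
    S0, S1, S2 = k.sum(), (k * u).sum(), (k * u ** 2).sum()
    R0, R1 = (k * Y).sum(), (k * u * Y).sum()
    denom = S0 * S2 - S1 ** 2
    if abs(denom) < ridge:
        denom += ridge
    return (R0 * S2 - R1 * S1) / denom

# Usage with equal observation-level weights 1 / (total number of observations).
T = np.linspace(0, 1, 41)
Y = 2 + 3 * T
w = np.full(T.size, 1 / T.size)
print(ridged_local_linear(0.5, T, Y, w, h=0.2, ridge=1e-12))  # close to 3.5: lines are reproduced
```

For nonnegative weights, $S_0S_2-S_1^2\ge0$, so the ridge only acts when the local design is nearly degenerate. For example, if no $T_{ij}$ lies within $h_\mu$ of t, the sketch returns 0 instead of failing.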

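The tuning parameter $h_\mu$ can be selected via the following κ-fold cross-validation procedure. Let κ be a positive integer, e.g., κ = 5, and let $\mathcal{P}_1,\dots,\mathcal{P}_\kappa$ be a roughly even random partition of the set $\{1,\dots,n\}$. From a set $\mathcal{H}$ of candidate values for $h_\mu$, we choose the value that minimizes the cross-validation error

$$\mathrm{CV}(h)=\sum_{k=1}^{\kappa}\sum_{i\in\mathcal{P}_k}\sum_{j=1}^{m_i}\{Y_{ij}-\hat\mu_h^{(-k)}(T_{ij})\}^2,\qquad (3)$$

where $\hat\mu_h^{(-k)}$ is the estimator in (2) with $h_\mu=h$ and with the subjects in $\mathcal{P}_k$ excluded.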
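The cross-validation criterion (3) can be sketched as follows. The sketch makes three assumptions:

- Folds are formed by subjects, so all observations of a held-out subject are removed together.
- The smoother is a vectorized ridged local linear estimator with an Epanechnikov kernel and equal weights.
- `cv_bandwidth` and its arguments are hypothetical names.

```python
import numpy as np

def local_linear(t, T, Y, h, ridge=1e-10):
    """Ridged local linear smoother (Epanechnikov kernel, equal weights) at each point of t."""
    t = np.atleast_1d(t)
    u = (T[None, :] - t[:, None]) / h
    k = np.where(np.abs(u) <= 1, 0.75 * (1 - u ** 2), 0.0)
    S0, S1, S2 = k.sum(1), (k * u).sum(1), (k * u ** 2).sum(1)
    R0, R1 = (k * Y).sum(1), (k * u * Y).sum(1)
    denom = S0 * S2 - S1 ** 2
    denom = denom + ridge * (np.abs(denom) < ridge)   # ridge only where the denominator is tiny
    return (R0 * S2 - R1 * S1) / denom

def cv_bandwidth(T_list, Y_list, candidates, kappa=5, rng=None):
    """Return the candidate bandwidth minimizing the kappa-fold CV error, plus all errors."""
    rng = np.random.default_rng(rng)
    n = len(T_list)
    folds = np.array_split(rng.permutation(n), kappa)  # same subject partition for every h
    errors = []
    for h in candidates:
        err = 0.0
        for fold in folds:
            held_out = set(fold.tolist())
            train = [i for i in range(n) if i not in held_out]
            T_tr = np.concatenate([T_list[i] for i in train])
            Y_tr = np.concatenate([Y_list[i] for i in train])
            for i in fold:
                resid = Y_list[i] - local_linear(T_list[i], T_tr, Y_tr, h)
                err += float(np.sum(resid ** 2))
        errors.append(err)
    return candidates[int(np.argmin(errors))], errors
```

Using one fixed partition for all candidates makes the CV errors directly comparable across bandwidths.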

2.2. Covariance Function

Estimation of the covariance function for functional snippets is considerably more challenging. As pointed out in Section 1, local information in the far off-diagonal region, |s − t| > δ, is completely missing. To tackle this challenge, we first observe that the covariance function can be decomposed into two parts, a variance function and a correlation structure: $C(s,t)=\sigma(s)\rho(s,t)\sigma(t)$. Here $\sigma^2$ is the variance function of X, more precisely $\sigma^2(t)=\mathrm{Var}\{X(t)\}=C(t,t)$, and ρ is the correlation function. Like the mean function μ, the variance function can be well estimated via local linear smoothing, even for functional snippets. The real difficulty stems from the estimation of the correlation structure, which we propose to model parametrically.

At first glance, a parametric model might seem restrictive. However, with a nonparametric variance component and a large number of parameters, the model is often still flexible enough to capture the covariance structure of the data. Indeed, the simulation studies in Section 5 show that, even with a single parameter, the proposed model often performs well when the sample size is limited. As an additional flexibility, our parametric model does not require a low-rank assumption, so it can model truly infinite-dimensional functional data. Trading the low-rank assumption for the proposed parametric assumption seems worthwhile, especially because we allow the dimension of the parameters to increase with the sample size. The increasing dimension of the parameter essentially places the methodology in the nonparametric paradigm.

To estimate $\sigma^2$, we first note that the PACE method of Yao et al. (2005) can still be used to estimate C on the band $\{(s,t)\in\mathcal{T}^2:|s-t|\le\delta\}$ that includes the diagonal, although not on the full domain $\mathcal{T}^2$. Since $\sigma^2(t)=C(t,t)$, the PACE estimate of C on the diagonal gives rise to an estimate of $\sigma^2$. However, this method requires two-dimensional smoothing, which is cumbersome and computationally less efficient. It also has the convergence rate of a two-dimensional smoother, which is suboptimal for a target that is a one-dimensional function.

Here we propose a simpler approach that only requires one-dimensional smoothing. It is based on the observation that the quantity $\nu(t)=\sigma^2(t)+\sigma_0^2=E[\{Y(t)-\mu(t)\}^2]$ can be estimated by local linear smoothing of the squared residuals $\{Y_{ij}-\hat\mu(T_{ij})\}^2$ against $T_{ij}$. More specifically, the non-ridged local linear estimate of ν, denoted by $\tilde\nu$, is $\tilde\nu(t)=\hat b_0$ with

$$(\hat b_0,\hat b_1)=\mathop{\arg\min}_{b_0,b_1}\sum_{i=1}^{n}w_i\sum_{j=1}^{m_i}K_{h_\sigma}(T_{ij}-t)\big[\{Y_{ij}-\hat\mu(T_{ij})\}^2-b_0-b_1(T_{ij}-t)\big]^2,$$

where $h_\sigma$ is the bandwidth, selected by a cross-validation procedure similar to (3). As with the ridged mean estimate in (2), we avoid the positive probability that $\tilde\nu(t)$ is undefined by adopting the ridged version of $\tilde\nu$ as the estimate of ν, denoted by $\hat\nu$. Our estimate of $\sigma^2$ is then $\hat\sigma^2(t)=\hat\nu(t)-\hat\sigma_0^2$. Here $\hat\sigma_0^2$ is a new estimate of $\sigma_0^2$, defined in the next section, that attains the convergence rate of a one-dimensional smoother. Because $\hat\nu$ also has a one-dimensional convergence rate, the resulting estimate $\hat\sigma^2$ has a one-dimensional convergence rate.
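The variance-function step $\hat\sigma^2(t)=\hat\nu(t)-\hat\sigma_0^2$ can be sketched in a few lines. Squared residuals from a mean estimate are smoothed by a ridged local linear smoother, and a noise-variance estimate is then subtracted. The kernel, the equal weights and the function names are assumptions of this sketch. `mu_hat` can be any callable mean estimate. The sketch does not truncate negative values, because the text does not prescribe a truncation.

```python
import numpy as np

def local_linear(t, T, Z, h, ridge=1e-10):
    """Ridged local linear smoother of Z on T (Epanechnikov kernel, equal weights)."""
    t = np.atleast_1d(t)
    u = (T[None, :] - t[:, None]) / h
    k = np.where(np.abs(u) <= 1, 0.75 * (1 - u ** 2), 0.0)
    S0, S1, S2 = k.sum(1), (k * u).sum(1), (k * u ** 2).sum(1)
    R0, R1 = (k * Z).sum(1), (k * u * Z).sum(1)
    denom = S0 * S2 - S1 ** 2
    denom = denom + ridge * (np.abs(denom) < ridge)
    return (R0 * S2 - R1 * S1) / denom

def variance_function(t, T, Y, mu_hat, h_sigma, sigma0_sq_hat):
    """sigma^2_hat(t) = nu_hat(t) - sigma0_sq_hat, where nu_hat smooths squared residuals."""
    Z = (Y - mu_hat(T)) ** 2          # squared residuals target sigma^2(T) + sigma_0^2
    return local_linear(t, T, Z, h_sigma) - sigma0_sq_hat
```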

For the correlation function ρ, we assume that ρ is indexed by a $d_n$-dimensional vector of parameters $\theta\in\Theta\subset\mathbb{R}^{d_n}$, and we write $\rho=\rho_\theta$. The dimension of the parameters is allowed to grow with the sample size at a certain rate; see Section 4 for details. Some popular parametric families of correlation functions are listed below.

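- Power exponential: $\rho_\theta(s,t)=\exp\{-(|s-t|/\theta_2)^{\theta_1}\}$, with $0<\theta_1\le2$ and $\theta_2>0$.

- Rational quadratic (Cauchy): $\rho_\theta(s,t)=\{1+(|s-t|/\theta)^2\}^{-1}$, with $\theta>0$.

- Matérn:

$$\rho_\theta(s,t)=\frac{2^{1-\theta_1}}{\Gamma(\theta_1)}\Big(\frac{\sqrt{2\theta_1}\,|s-t|}{\theta_2}\Big)^{\theta_1}B_{\theta_1}\Big(\frac{\sqrt{2\theta_1}\,|s-t|}{\theta_2}\Big),\qquad \theta_1,\theta_2>0,\qquad (4)$$

with $B_{\theta_1}$ being the modified Bessel function of the second kind of order $\theta_1$.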
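These families can be coded directly. This sketch assumes the following standard parameterizations:

- Power exponential: $\exp\{-(|s-t|/\theta_2)^{\theta_1}\}$.
- Cauchy: $\{1+(|s-t|/\theta)^2\}^{-1}$.
- Matérn: the argument is scaled by $\sqrt{2\theta_1}$.

To stay within NumPy, the Matérn function is implemented only for the half-integer orders 1/2, 3/2 and 5/2, where it has a closed form. General orders need the modified Bessel function of the second kind, for example `scipy.special.kv`. With order 1/2 and length scale 1, the Matérn function reduces to $\exp(-|s-t|)$.

```python
import numpy as np

def power_exponential(s, t, theta1, theta2):
    """exp{-(|s - t| / theta2)^theta1}, valid for 0 < theta1 <= 2 and theta2 > 0."""
    return np.exp(-(np.abs(s - t) / theta2) ** theta1)

def rational_quadratic(s, t, theta):
    """Cauchy-type correlation {1 + (|s - t| / theta)^2}^(-1), theta > 0."""
    return 1.0 / (1.0 + (np.abs(s - t) / theta) ** 2)

def matern_half_integer(s, t, nu, ell):
    """Matern correlation (argument scaled by sqrt(2 nu)) in closed form for nu in {0.5, 1.5, 2.5}."""
    r = np.abs(s - t) / ell
    if nu == 0.5:
        return np.exp(-r)
    if nu == 1.5:
        a = np.sqrt(3.0) * r
        return (1.0 + a) * np.exp(-a)
    if nu == 2.5:
        a = np.sqrt(5.0) * r
        return (1.0 + a + a ** 2 / 3.0) * np.exp(-a)
    raise ValueError("closed form only for nu in {0.5, 1.5, 2.5}; use a Bessel routine otherwise")
```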

Note that if $\rho_1,\dots,\rho_K$ are correlation functions, then $\sum_{k=1}^{K}v_k\rho_k$ is also a correlation function, provided $\sum_{k=1}^{K}v_k=1$ and $v_k\ge0$ for all k. Therefore, a fairly flexible class of correlation functions can be constructed from several relatively simple classes by such convex combinations. Moreover, even when one adopts a stationary correlation function, the resulting covariance function can be non-stationary. This is because the variance component is nonparametric and hence often non-stationary.
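Both the convex-combination property and the non-stationarity remark can be checked numerically on a grid. In this sketch, the two component correlations, their weights and the standard deviation function $\sqrt{1+t^2}$ are arbitrary illustrative choices, not taken from the text.

```python
import numpy as np

def convex_combination(correlations, v):
    """Return sum_k v_k * R_k; this is a valid correlation matrix when v_k >= 0 and sum v_k = 1."""
    v = np.asarray(v, dtype=float)
    if np.any(v < 0) or not np.isclose(v.sum(), 1.0):
        raise ValueError("weights must be nonnegative and sum to one")
    return sum(vk * R for vk, R in zip(v, correlations))

def covariance_from_parts(sigma, R):
    """C(s, t) = sigma(s) * rho(s, t) * sigma(t), evaluated on a grid."""
    return np.outer(sigma, sigma) * R

grid = np.linspace(0, 1, 50)
D = np.abs(grid[:, None] - grid[None, :])
R = convex_combination([np.exp(-D / 0.2), 1.0 / (1.0 + (D / 0.3) ** 2)], [0.7, 0.3])
sigma = np.sqrt(1.0 + grid ** 2)      # hypothetical non-constant standard deviation
C = covariance_from_parts(sigma, R)   # stationary correlation, non-stationary covariance
```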

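Given the estimate $\hat\sigma^2$, the parameter θ can be effectively estimated by least squares, i.e., $\hat\theta=\arg\min_{\theta}\hat Q_n(\theta)$ with

$$\hat Q_n(\theta)=\sum_{i=1}^{n}\sum_{1\le j\ne l\le m_i}\{\hat\sigma(T_{ij})\rho_\theta(T_{ij},T_{il})\hat\sigma(T_{il})-C_{ijl}\}^2,$$

where $C_{ijl}=\{Y_{ij}-\hat\mu(T_{ij})\}\{Y_{il}-\hat\mu(T_{il})\}$ is the raw covariance of subject i at two different measurement times, $T_{ij}$ and $T_{il}$.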
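The least-squares step for a scalar θ can be sketched as follows. Raw covariances are formed from all within-subject pairs j ≠ l, and the criterion is minimized by exhaustive search over a grid. Three simplifying assumptions are made for illustration:

- the sum over pairs is unweighted;
- a grid search replaces a numerical optimizer;
- the one-parameter family is a hypothetical exponential correlation, `rho_exp`.

```python
import numpy as np

def raw_covariances(T_list, Y_list, mu_hat):
    """Collect (T_ij, T_il, C_ijl) over all ordered pairs j != l within each subject."""
    s, t, c = [], [], []
    for T, Y in zip(T_list, Y_list):
        r = Y - mu_hat(T)                                # centered observations
        j, l = np.nonzero(~np.eye(len(T), dtype=bool))   # all ordered pairs with j != l
        s.append(T[j])
        t.append(T[l])
        c.append(r[j] * r[l])
    return np.concatenate(s), np.concatenate(t), np.concatenate(c)

def fit_theta_grid(s, t, c, sigma_hat, rho, theta_grid):
    """Least-squares fit of a scalar theta by exhaustive search over theta_grid."""
    losses = [np.sum((sigma_hat(s) * rho(s, t, th) * sigma_hat(t) - c) ** 2) for th in theta_grid]
    return theta_grid[int(np.argmin(losses))]

def rho_exp(s, t, theta):
    """Hypothetical one-parameter family: exp(-|s - t| / theta)."""
    return np.exp(-np.abs(s - t) / theta)
```

With several parameters, a grid search becomes expensive. A gradient-based optimizer is then the natural replacement.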

3. Estimation of Noise Variance

The estimation of $\sigma_0^2$ has received relatively little attention in the literature. For sparse functional data, the PACE estimator proposed in Yao et al. (2005) is a popular option. However, the PACE estimator can be negative in some cases. Liu and Müller (2009) refined it by first fitting the observed data with PACE and then estimating $\sigma_0^2$ by a cross-validated residual sum of squares; see Appendix A.1 of Liu and Müller (2009) for details. Both methods require an estimate of the covariance function, which we do not have before we obtain an estimate of $\sigma_0^2$. Moreover, the covariance estimate in both methods is obtained by two-dimensional local linear smoothing, as detailed in Yao et al. (2005). This is computationally costly and leads to a slower, two-dimensional convergence rate for these estimators. To resolve this conundrum, we propose the following new estimator. It requires neither an estimate of the covariance function nor of any other quantity, such as the mean function.

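For a bandwidth $h_0>0$, define the quantities

$$A_0=E\big[Y(T_1)^2\,\mathbb{1}\{|T_1-T_2|<h_0\}\big],\qquad A_1=E\big[X(T_1)^2\,\mathbb{1}\{|T_1-T_2|<h_0\}\big]$$

and

$$B=E\big[\mathbb{1}\{|T_1-T_2|<h_0\}\big],$$

where $T_1$ and $T_2$ denote two design points from the same generic subject. From these definitions, we immediately see that $\sigma_0^2=(A_0-A_1)/B$. These quantities also appear easy to estimate. For example, $A_0$ and B can be straightforwardly estimated, respectively, by

$$\hat A_0=\frac{1}{n}\sum_{i=1}^{n}\frac{1}{m_i(m_i-1)}\sum_{1\le j\ne l\le m_i}Y_{ij}^2\,\mathbb{1}\{|T_{ij}-T_{il}|<h_0\}\qquad (5)$$

and

$$\hat B=\frac{1}{n}\sum_{i=1}^{n}\frac{1}{m_i(m_i-1)}\sum_{1\le j\ne l\le m_i}\mathbb{1}\{|T_{ij}-T_{il}|<h_0\}.\qquad (6)$$

This motivates us to estimate $\sigma_0^2$ through estimates of $A_0$, $A_1$ and B.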

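It remains to estimate $A_1$. Because of the random noise, $A_1$ cannot be estimated using information along the diagonal only. Instead, we exploit the smoothness of the covariance function. If $T_1$ is close to $T_2$, say $|T_1-T_2|<h_0$, then $X(T_1)^2\approx X(T_1)X(T_2)$ and

$$A_1\approx A_2:=E\big[X(T_1)X(T_2)\,\mathbb{1}\{|T_1-T_2|<h_0\}\big]=E\big[Y(T_1)Y(T_2)\,\mathbb{1}\{|T_1-T_2|<h_0\}\big].$$

Indeed, Lemma 5 in the appendix bounds the difference $A_1-A_2$. Therefore, it is sensible to use $A_2$ as a surrogate for $A_1$. $A_2$ can be effectively estimated by

$$\hat A_2=\frac{1}{n}\sum_{i=1}^{n}\frac{1}{m_i(m_i-1)}\sum_{1\le j\ne l\le m_i}Y_{ij}Y_{il}\,\mathbb{1}\{|T_{ij}-T_{il}|<h_0\},\qquad (7)$$

and we set $\hat A_1=\hat A_2$. Finally, the estimate of $\sigma_0^2$ is given by

$$\hat\sigma_0^2=\frac{\hat A_0-\hat A_1}{\hat B}.\qquad (8)$$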
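The estimator (8) uses only within-subject pairs of observations and needs no mean or covariance estimate. This minimal sketch assumes the per-subject normalization $1/\{n\,m_i(m_i-1)\}$ for the pair sums in (5)–(7), and it plugs in $\hat A_2$ for $\hat A_1$. The difference $\hat A_0-\hat A_2$ equals a weighted sum of squared within-pair differences $(Y_{ij}-Y_{il})^2$. The returned value is therefore nonnegative up to rounding.

```python
import numpy as np

def noise_variance(T_list, Y_list, h0):
    """Estimate sigma_0^2 as (A0_hat - A2_hat) / B_hat from within-subject pairs with |T_ij - T_il| < h0.

    Each subject's pair sums are scaled by 1 / (n * m_i * (m_i - 1)), an assumed normalization.
    Subjects with fewer than two observations contribute nothing.
    """
    n = len(T_list)
    A0 = A2 = B = 0.0
    for T, Y in zip(T_list, Y_list):
        m = len(T)
        if m < 2:
            continue
        close = np.abs(T[:, None] - T[None, :]) < h0
        np.fill_diagonal(close, False)                 # keep only pairs with j != l
        scale = 1.0 / (n * m * (m - 1))
        A0 += scale * np.sum(close * Y[:, None] ** 2)  # sum of Y_ij^2 over close pairs
        A2 += scale * np.sum(close * np.outer(Y, Y))   # sum of Y_ij * Y_il over close pairs
        B += scale * np.sum(close)
    if B == 0:
        raise ValueError("no within-subject pairs closer than h0; increase h0")
    return (A0 - A2) / B

# Usage: subject-level constant curves plus noise with standard deviation 0.5.
rng = np.random.default_rng(0)
T_list = [rng.uniform(0, 1, 5) for _ in range(500)]
Y_list = [rng.standard_normal() + 0.5 * rng.standard_normal(5) for _ in range(500)]
print(noise_variance(T_list, Y_list, h0=0.2))  # should be near sigma_0^2 = 0.25
```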

To choose h0, motivated by the convergence rate stated in Theorem 1 of the next section, we suggest the following empirical rule, , for sparse functional snippets, where acts as an estimate for represents the average number of measurements per curve, is the estimate of defined in Section 2, and represents the overall variability of the data. The coefficient 0.29 is determined by a method described in the appendix. If this rule yields a value of h0 that makes the neighborhood empty or contain too few points, then we recommend to choose the minimal value of h0 such that contains at least points. In this way, we ensure that a substantial fraction of the observed data are used for estimation of the variance . This rule is found to be very effective in practice; see Section 5 for its numerical performance.
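The fallback rule for $h_0$ can be implemented by sorting the within-subject gaps $|T_{ij}-T_{il}|$. In this sketch, the target count `k` is a free, hypothetical argument; the threshold used in the text is not reproduced here. Each unordered pair is counted once.

```python
import numpy as np

def min_h0_with_pairs(T_list, k):
    """Smallest h0 such that at least k within-subject pairs satisfy |T_ij - T_il| < h0."""
    gaps = np.concatenate([
        np.abs(T[:, None] - T[None, :])[np.triu_indices(len(T), 1)]  # pairs j < l
        for T in T_list if len(T) >= 2
    ])
    if k > gaps.size:
        raise ValueError("fewer than k within-subject pairs are available")
    kth = np.sort(gaps)[k - 1]
    return np.nextafter(kth, np.inf)  # strict inequality: step just above the k-th smallest gap
```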

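Compared to the estimators of Yao et al. (2005) and Liu and Müller (2009), the proposed estimate (8) is simple and easy to compute. It can be computed much faster, since it does not require the costly computation of a pilot covariance estimate. More importantly, the ingredients $\hat A_0$, $\hat A_1$ and $\hat B$ of our estimator are obtained by one-dimensional smoothing. The indicator $\mathbb{1}\{|T_{ij}-T_{il}|<h_0\}$ in (5)–(7) acts as a local constant smoother. Consequently, as we show in Section 4, our estimator enjoys an asymptotic convergence rate that is faster than that of a two-dimensional local linear smoother. In addition, unlike the estimate of Yao et al. (2005), the proposed estimate is always nonnegative. This follows from the derivation

$$\hat A_0-\hat A_1=\frac{1}{n}\sum_{i=1}^{n}\frac{1}{m_i(m_i-1)}\sum_{1\le j\ne l\le m_i}(Y_{ij}^2-Y_{ij}Y_{il})\,\mathbb{1}\{|T_{ij}-T_{il}|<h_0\}=\frac{1}{n}\sum_{i=1}^{n}\frac{1}{m_i(m_i-1)}\sum_{1\le j<l\le m_i}(Y_{ij}-Y_{il})^2\,\mathbb{1}\{|T_{ij}-T_{il}|<h_0\}\ge0.\qquad (9)$$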

Remark:

The above discussion assumes that the noise is homoscedastic, i.e., its variance is identical for all t. As an extension, it is possible to modify the above procedure to account for heteroscedastic noise, as follows. With intuition and rationale similar to the homoscedastic case, we define

and let

be the estimate of $\sigma_0^2(t)$, the variance of the noise at time t. As in the derivation of (9), one can show that this estimator is also nonnegative.

4. Theoretical Properties

For clarity of exposition, we assume throughout this section that all $m_i$ equal a common sampling rate m, i.e., $m_i=m$, where m may tend to infinity. We emphasize that parallel asymptotic results can be derived without this assumption by replacing m with . The theory presented below covers both the case in which m is bounded, i.e., $m\le m_0$ for some $m_0<\infty$, and the case in which m diverges to ∞ as n → ∞.

We assume that the reference times $O_i$ are i.i.d. with density $f_O$, and that $T_{i1},\dots,T_{im}$ are i.i.d. conditional on $O_i$. The i.i.d. assumptions can be relaxed to accommodate heterogeneous distributions and weak dependence, at the cost of a much more complicated analysis and heavy technicalities. Since such a relaxation does not provide further insight into our problem, we do not pursue it in this paper. The following conditions on $O_i$ and other quantities are needed for our theoretical development.

(A1) The density of each Oi satisfies for all , and the conditional density of Tij given Oi satisfies for a fixed function f0 and for all and . Also, the derivative is Lipschitz continuous on [0, δ].

(A2) The second derivatives of μ and C are continuous, and hence bounded, on $\mathcal{T}$ and $\mathcal{T}^2$, respectively.

(A3) and .

Condition (A1) characterizes the design points of functional snippets and can be relaxed. The regularity conditions (A2) and (A3) are common in the literature, e.g., in Zhang and Wang (2016). According to Scheuerer (2010), (A2) also implies that the sample paths of X are almost surely continuously differentiable, and hence Lipschitz continuous. Let $L_X$ be the best Lipschitz constant of X, i.e., $L_X=\sup_{s\ne t}|X(s)-X(t)|/|s-t|$. We will see shortly that a moment condition on $L_X$ allows us to derive a rather sharp bound on the convergence rate of $\hat\sigma_0^2$. For the bandwidth $h_0$, we require the following condition:

(H1) and .

The following result gives the asymptotic rate of the estimator $\hat\sigma_0^2$. Its proof is straightforward given Lemma 5, which appears in the appendix.

Theorem 1.

Assume the conditions (A1)–(A3) and (H1) hold.

. With the optimal choice , .

If in addition , then . With the optimal choice .

If we define with , the ridged version of (8), then in the above theorem, can be replaced with and can be replaced with , respectively. For comparison, under conditions stronger than (A1)–(A3), the rate derived in Yao et al. (2005) for the PACE estimator is at best . Paul and Peng (2011) improved this rate to . Our estimator thus enjoys a faster convergence rate, in addition to its computational efficiency. The rate in part (b) of Theorem 1 leaves little room for improvement: when n is finite but m → ∞, the rate is optimal, i.e., . When m is finite but n → ∞ in the sparse design, we obtain , in contrast to the rate $O_P(n^{-2/3})$ for the PACE estimator according to Paul and Peng (2011).

To study the properties of $\hat\mu$ and $\hat\sigma^2$, we shall assume

(B1) the kernel K is a symmetric and Lipschitz continuous density function supported on [−1,1].

Also, the bandwidths $h_\mu$ and $h_\sigma$ are assumed to satisfy the following conditions.

(H2) $h_\mu\to0$ and $nmh_\mu\to\infty$.

(H3) $h_\sigma\to0$ and $nmh_\sigma\to\infty$.

The choice of these bandwidths depends on the interplay of the sampling rate m and sample size n. The optimal choice is given in the following condition.

(H4) If , then , where the notation means . Otherwise, . Also, .

The asymptotic convergence rates of $\hat\mu$ and $\hat\sigma^2$ are given in the following theorem. Its proof can be obtained by adapting the proof of Proposition 1 in Lin and Yao (2020+) and is omitted. The theorem shows that $\hat\mu$ and $\hat\sigma^2$ have the same rate, which is hardly surprising, since both are obtained by one-dimensional local linear smoothing. Our results generalize those in Fan et al. (2007) and Fan and Wu (2008) by taking the measurement errors and the order of the sampling rate m into account in the theoretical analysis. Our convergence rates also complement the asymptotic normality results in Fan et al. (2007) and Fan and Wu (2008).

Theorem 2.

Suppose the conditions (A1)–(A3) hold.

With additional conditions (B1) and (H2) . With the choice of bandwidth in (H4), .

With additional conditions (B1) and (H1)–(H2). . With the choice of bandwidth in (H4), .

To derive the asymptotic properties of , we need the convergence rate of . Define

and assume the following conditions.

(B2) $\rho_\theta(s,t)$ is twice continuously differentiable with respect to s and t. Furthermore, the first three derivatives of $\rho_\theta(s,t)$ with respect to θ are uniformly bounded for all $\theta\in\Theta$.

(B3) for some c0 > 0 and τ ≥ 0, where θ0 denotes the true value of θ, and λmin(·) denotes the smallest eigenvalue of a matrix.

(B4) for some and .

Note that in the condition (B3), we allow the smallest eigenvalue of the Hessian to decay with dn. This, departing from the assumption in Fan and Wu (2008) of fixed dimension on the parameter θ, enables us to incorporate the case that ρθ is constructed from the aforementioned convex combination of a diverging number of correlation functions, e.g., , where τ = 1 if all components satisfy (B2) uniformly. The condition (B4), although it is slightly stronger than (A3), is often required in functional data analysis, e.g., in Li and Hsing (2010) and Zhang and Wang (2016) for the derivation of uniform convergence rates for . Such uniform rates are required to bound sharply in our development, which is critical to establish the following rate for .

Proposition 3.

Suppose the conditions (A1)–(A2) and (B1)–(B4) hold. If , then with the choice of bandwidth in (H4), .

This result suggests that the estimation quality of $\hat\theta$ depends on three factors: the dimension of the parameters, the sample size, and the singularity of the Hessian matrix at θ = θ0, as measured by the constant τ in condition (B3). In practice, a few parameters are often sufficient for an adequate fit. In such cases, the dimension $d_n$ need not grow with the sample size, i.e., $d_n=O(1)$, and we obtain a parametric rate for $\hat\theta$. We are now ready to state our main theorem. It establishes the convergence rate of $\hat C(s,t)=\hat\sigma(s)\rho_{\hat\theta}(s,t)\hat\sigma(t)$ in the Hilbert-Schmidt norm $\|\cdot\|_{HS}$ and follows immediately from the above results.

Theorem 4.

Under the same conditions of Proposition 3, we have . With the choice of bandwidth in (H4), .

In practice, a fully nonparametric approach to estimating the correlation structure, such as local regression, is inefficient, particularly when the data are snippets. On the other hand, a parametric method with a fixed number of parameters might be restrictive when the sample size is large. One way to resolve this dilemma is to allow the family of parametric models to grow with the sample size. As a working assumption, one might suppose that the correlation function ρ falls into $\mathcal{R}_{d_n}$, a $d_n$-dimensional family of correlation functions, when the sample size is n. The dimension $d_n$ typically grows with the sample size. For example, one might consider a $d_n$-dimensional Fourier basis family:

| (10) |

where and are fixed orthonormal Fourier basis functions defined on . The theoretical result in Theorem 4 applies to this setting by explicitly accounting for the impact of the dimension dn on the convergence rate.

5. Simulation Studies

To evaluate the numerical performance of the proposed estimators, we generated X(·) from a Gaussian process. Three different covariance functions were considered, namely,

with the variance function and the Matérn correlation function $\rho_\theta$ with θ = (0.5, 1),

with λ = 2 and Fourier basis functions , and

with .

Two sample sizes, n = 50 and n = 200, were considered to illustrate the behavior of the estimators under a small and a relatively large sample size. We set the domain $\mathcal{T}=[0,1]$ and δ = 0.25.

To evaluate the impact of the mean function, we also considered two different mean functions, $\mu_1$ and $\mu_2$. The results are not sensitive to the mean function, so this section focuses on $\mu_1$; the results for $\mu_2$ are provided in the Supplementary Material. To evaluate the impact of the design, we considered two design schemes. In the first scheme, referred to as the sparse design, each curve was sampled at 4 points on average, to mimic the data application in Section 6. In the second scheme, referred to as the dense design, each snippet was recorded on a dense regular grid within an individual-specific subinterval of length δ. As the focus of the paper is on sparse snippets, we report the results for the sparse design below. The results for dense snippets are reported in the Supplementary Material.

To assess the performance of the estimators of the noise variance $\sigma_0^2$, we considered several noise levels (listed in Table 1), ranging from no noise to large noise. For example, when , the signal-to-noise ratio is about 2. The performance of $\hat\sigma_0^2$ is assessed by the root mean squared error (RMSE), defined by

$$\mathrm{RMSE}=\Big\{\frac{1}{N}\sum_{k=1}^{N}(\hat\sigma_{0,k}^2-\sigma_0^2)^2\Big\}^{1/2},$$

where $\hat\sigma_{0,k}^2$ is the estimate in the kth replicate and N = 100 is the number of independent simulation replicates. For comparison, we also computed the PACE estimate of Yao et al. (2005) and the estimate of Liu and Müller (2009), denoted by LM. Both were obtained with the fdapace R package (Chen et al., 2020), available on the Comprehensive R Archive Network (CRAN). The bandwidths $h_\mu$ and $h_\sigma$, as well as those in Yao et al. (2005) and Liu and Müller (2009), were selected by five-fold cross-validation. The tuning parameter $h_0$ was selected by the empirical rule described in Section 3.

The simulation results are summarized in Table 1 for the sparse design with mean function μ1. Tables S.1–S.3 in the Supplementary Material cover the dense design and mean function μ2. In these tables, SNPT denotes our method proposed in Section 3. In almost all cases, SNPT performs substantially better than the other two methods. The results also demonstrate the effectiveness of the proposed empirical selection rule for the tuning parameter $h_0$.

Table 1: RMSEs (standard errors in parentheses) of $\hat\sigma_0^2$ under the sparse design with mean function μ1.

| Cov | n | Noise level | SNPT | PACE | LM |
|---|---|---|---|---|---|
| I | 50 | 0 | 0.012 (0.009) | 0.144 (0.166) | 0.129 (0.203) |
|  |  | 0.1 | 0.029 (0.038) | 0.129 (0.146) | 0.186 (0.197) |
|  |  | 0.25 | 0.050 (0.056) | 0.147 (0.185) | 0.117 (0.125) |
|  |  | 0.5 | 0.100 (0.135) | 0.181 (0.195) | 0.157 (0.131) |
|  | 200 | 0 | 0.009 (0.005) | 0.080 (0.103) | 0.073 (0.077) |
|  |  | 0.1 | 0.017 (0.019) | 0.091 (0.098) | 0.144 (0.150) |
|  |  | 0.25 | 0.032 (0.038) | 0.086 (0.097) | 0.093 (0.127) |
|  |  | 0.5 | 0.049 (0.064) | 0.098 (0.118) | 0.165 (0.106) |
| II | 50 | 0 | 0.036 (0.030) | 0.252 (0.245) | 0.219 (0.255) |
|  |  | 0.1 | 0.047 (0.052) | 0.254 (0.285) | 0.237 (0.255) |
|  |  | 0.25 | 0.087 (0.133) | 0.241 (0.244) | 0.159 (0.151) |
|  |  | 0.5 | 0.128 (0.202) | 0.238 (0.260) | 0.126 (0.134) |
|  | 200 | 0 | 0.024 (0.015) | 0.177 (0.172) | 0.192 (0.200) |
|  |  | 0.1 | 0.027 (0.027) | 0.185 (0.179) | 0.176 (0.174) |
|  |  | 0.25 | 0.042 (0.050) | 0.177 (0.177) | 0.097 (0.097) |
|  |  | 0.5 | 0.071 (0.084) | 0.174 (0.182) | 0.124 (0.089) |
| III | 50 | 0 | 0.004 (0.004) | 0.099 (0.103) | 0.028 (0.064) |
|  |  | 0.1 | 0.024 (0.029) | 0.102 (0.106) | 0.099 (0.127) |
|  |  | 0.25 | 0.049 (0.063) | 0.093 (0.109) | 0.077 (0.080) |
|  |  | 0.5 | 0.094 (0.130) | 0.113 (0.146) | 0.172 (0.128) |
|  | 200 | 0 | 0.002 (0.002) | 0.065 (0.077) | 0.009 (0.023) |
|  |  | 0.1 | 0.010 (0.012) | 0.066 (0.067) | 0.049 (0.075) |
|  |  | 0.25 | 0.027 (0.033) | 0.068 (0.071) | 0.069 (0.067) |
|  |  | 0.5 | 0.059 (0.071) | 0.067 (0.073) | 0.163 (0.091) |

To evaluate the performance of the estimators for the covariance structure, we considered two levels of signal-to-noise ratio (SNR), namely, SNR = 2 and SNR = 4. The performance of estimators for the variance function and the covariance function is evaluated by the root mean integrated squared error (RMISE), defined by

for the variance function and

for the covariance function. We compared four methods. The first two, denoted by SNPTM and SNPTF, are our semi-parametric approach with the correlation models (4) and (10), respectively. For SNPTF, the dimension dn in (10) is selected by five-fold cross-validation. Note that SNPTM and SNPTF yield the same estimate of the variance function but different estimates of the correlation structure. The third method, denoted by PFBE (penalized Fourier basis expansion), is the method proposed by Lin et al. (2019), and the last one, denoted by PACE, is the approach of Yao et al. (2005).

For the estimation of the variance function, the results are summarized in Table 2 for the sparse design and mean function μ1, and in Tables S.4–S.6 of the Supplementary Material for the dense design and mean function μ2. The results of SNPTF are omitted from these tables because they coincide with those of SNPTM. In all cases, SNPTM and PFBE substantially outperform PACE. For the dense design, SNPTM and PFBE yield comparable results. SNPTM performs better than PFBE when n = 200 in most cases, except in setting III, which favors PFBE. This suggests that SNPTM, which combines local linear smoothing with our estimator of the noise variance, tends to converge faster as the sample size grows.

Table 2:

RMISE and their standard errors for the estimated variance function under the sparse design and μ1

| Cov | SNR | n | SNPTM | PFBE | PACE |
|---|---|---|---|---|---|
| I | 2 | 50 | 0.535 (0.218) | 0.518 (0.211) | 2.133 (1.536) |
| | | 200 | 0.339 (0.130) | 0.330 (0.118) | 1.344 (1.126) |
| | 4 | 50 | 0.531 (0.199) | 0.517 (0.229) | 1.845 (1.461) |
| | | 200 | 0.313 (0.136) | 0.334 (0.127) | 1.151 (0.952) |
| II | 2 | 50 | 0.775 (0.396) | 0.743 (0.214) | 2.602 (1.747) |
| | | 200 | 0.509 (0.163) | 0.530 (0.141) | 1.699 (1.045) |
| | 4 | 50 | 0.768 (0.303) | 0.734 (0.351) | 2.510 (1.578) |
| | | 200 | 0.471 (0.162) | 0.507 (0.149) | 1.515 (1.056) |
| III | 2 | 50 | 0.633 (0.201) | 0.592 (0.136) | 1.478 (1.052) |
| | | 200 | 0.376 (0.133) | 0.392 (0.107) | 1.178 (0.700) |
| | 4 | 50 | 0.592 (0.208) | 0.586 (0.158) | 1.428 (1.166) |
| | | 200 | 0.350 (0.139) | 0.385 (0.114) | 0.923 (0.451) |

For the estimation of the covariance function, the results are summarized in Table 3 for the sparse design and mean function μ1, and in Tables S.7–S.9 of the Supplementary Material for the dense design and mean function μ2. As expected, SNPTM, SNPTF and PFBE substantially outperform PACE in all cases, since PACE is not designed for functional snippets. Among SNPTM, SNPTF and PFBE, SNPTM performs best in setting I, where its correlation model is correctly specified; SNPTF performs best in setting II, where its correlation model is correctly specified; and PFBE performs best in setting III. Although no estimator is universally best, the three have comparable overall performance. To select a method in practice, one can first produce a scatter plot of the raw covariance function. If the raw covariance appears to decay monotonically as the point (s, t) moves away from the diagonal, then SNPT with a monotonically decaying correlation, such as SNPTM, is recommended. Otherwise, SNPT with a general correlation structure, such as SNPTF, or the PFBE approach might be adopted.
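The recommended diagnostic, checking whether the raw covariance decays away from the diagonal, can be made a little more quantitative by averaging the off-diagonal raw covariances (Y_ij − μ̂(T_ij))(Y_il − μ̂(T_il)), j ≠ l, within bins of the lag |T_ij − T_il|. The sketch below assumes the mean has already been estimated (the true zero mean of the simulated data is passed in), and the simulation design, the exponential covariance and the helper names are hypothetical choices for illustration only. Pairs with j = l are excluded because they also carry the noise variance.

```python
import numpy as np

def simulate_snippets(n, m, delta, length_scale, noise_sd, seed=0):
    """Zero-mean Gaussian snippets with exponential covariance (illustrative only)."""
    rng = np.random.default_rng(seed)
    data = []
    for _ in range(n):
        a = rng.uniform(0.0, 1.0 - delta)
        t = np.sort(rng.uniform(a, a + delta, size=m))
        cov = np.exp(-np.abs(t[:, None] - t[None, :]) / length_scale)
        x = rng.multivariate_normal(np.zeros(m), cov)
        data.append((t, x + rng.normal(0.0, noise_sd, size=m)))
    return data

def raw_cov_by_lag(data, mean_fn, bin_edges):
    """Average off-diagonal raw covariances within bins of the lag |T_ij - T_il|."""
    lags, prods = [], []
    for t, y in data:
        r = y - mean_fn(t)
        j, l = np.triu_indices(len(t), k=1)  # j < l only: the diagonal j = l carries noise
        lags.append(np.abs(t[j] - t[l]))
        prods.append(r[j] * r[l])
    lags, prods = np.concatenate(lags), np.concatenate(prods)
    b = np.digitize(lags, bin_edges) - 1      # bin index of each pair
    return np.array([prods[b == k].mean() if np.any(b == k) else np.nan
                     for k in range(len(bin_edges) - 1)])

data = simulate_snippets(n=300, m=5, delta=0.3, length_scale=0.2, noise_sd=0.3, seed=2)
binned = raw_cov_by_lag(data, lambda t: np.zeros_like(t), np.linspace(0.0, 0.3, 5))
```

A clear downward trend in `binned` points toward a monotonically decaying correlation such as the one used by SNPTM. Pooling by lag ignores where (s, t) lies on the domain, so this summary complements rather than replaces the scatter plot.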

Table 3:

RMISE and their standard errors for the estimated covariance function under the sparse design and μ1

| Cov | SNR | n | SNPTM | SNPTF | PFBE | PACE |
|---|---|---|---|---|---|---|
| I | 2 | 50 | 0.339 (0.101) | 0.441 (0.158) | 0.399 (0.156) | 1.470 (0.808) |
| | | 200 | 0.235 (0.092) | 0.359 (0.089) | 0.295 (0.101) | 1.044 (0.625) |
| | 4 | 50 | 0.315 (0.093) | 0.424 (0.135) | 0.371 (0.143) | 1.348 (0.809) |
| | | 200 | 0.225 (0.084) | 0.341 (0.090) | 0.254 (0.097) | 0.902 (0.513) |
| II | 2 | 50 | 0.556 (0.119) | 0.521 (0.183) | 0.541 (0.160) | 2.061 (1.061) |
| | | 200 | 0.474 (0.068) | 0.436 (0.132) | 0.465 (0.101) | 1.625 (0.632) |
| | 4 | 50 | 0.536 (0.126) | 0.472 (0.148) | 0.517 (0.139) | 2.014 (0.868) |
| | | 200 | 0.457 (0.063) | 0.419 (0.133) | 0.431 (0.112) | 1.543 (0.604) |
| III | 2 | 50 | 0.503 (0.090) | 0.511 (0.154) | 0.491 (0.130) | 1.248 (0.650) |
| | | 200 | 0.473 (0.041) | 0.439 (0.092) | 0.366 (0.052) | 1.136 (0.439) |
| | 4 | 50 | 0.493 (0.075) | 0.499 (0.120) | 0.487 (0.122) | 1.217 (0.727) |
| | | 200 | 0.469 (0.055) | 0.423 (0.087) | 0.358 (0.063) | 0.997 (0.316) |

6. Application

We applied the proposed method to the longitudinal data collected and described in Bachrach et al. (1999), which consist of measurements of spinal bone mineral density for 423 healthy subjects. Each individual was measured annually for up to 4 years. Among the 423 subjects, we focused on the n = 280 subjects, aged from 8.8 to 26.2 years, who completed at least 2 measurements. The design of the covariance function is plotted in Figure 1, and a scatter plot of the raw covariance surface is given in Figure 2. The raw covariance surface appears to decay rapidly to zero as the design points move away from the diagonal, which motivated us to model the correlation with a Matérn correlation function; this method is referred to as SNPTM. We also used the more flexible dn-dimensional Fourier basis family, with dn = 2 selected by the Akaike information criterion (AIC), to see whether a better fit could be achieved; this approach is denoted by SNPTF.
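The exact parameterization of the Matérn family in (4) is not reproduced in this section. As an illustration only, the sketch below uses a common parameterization, ρ(s, t) = 2^(1−ν)/Γ(ν) · (√(2ν)|s − t|/ℓ)^ν · K_ν(√(2ν)|s − t|/ℓ) with smoothness ν and length scale ℓ, and restricts to ν ∈ {1/2, 3/2, 5/2}, for which the Bessel term reduces to closed forms; a general ν would need a modified Bessel function routine. The function name `matern_corr` and the parameter values are hypothetical.

```python
import numpy as np

def matern_corr(s, t, nu, length_scale):
    """Matérn correlation for half-integer nu (closed forms of the Bessel term)."""
    d = np.abs(np.asarray(s, dtype=float) - np.asarray(t, dtype=float))
    r = np.sqrt(2.0 * nu) * d / length_scale
    if nu == 0.5:
        return np.exp(-r)                      # exponential correlation
    if nu == 1.5:
        return (1.0 + r) * np.exp(-r)
    if nu == 2.5:
        return (1.0 + r + r ** 2 / 3.0) * np.exp(-r)
    raise ValueError("this sketch only covers nu in {0.5, 1.5, 2.5}")

grid = np.linspace(0.0, 1.0, 41)
R = matern_corr(grid[:, None], grid[None, :], nu=1.5, length_scale=0.2)
min_eig = np.linalg.eigvalsh(R).min()   # should be >= 0 up to rounding error
```

Each of these forms decays monotonically in |s − t|, which matches the shape seen in the raw covariance scatter plot; larger ν gives smoother sample paths.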

Figure 2:

Scatter plot of the raw covariance function of the spinal bone mineral density data.

The estimated variance of the measurement error is 1.5 × 10⁻³ by the method proposed in Section 3, 10⁻⁶ by PACE and 7.8 × 10⁻⁷ by LM. The estimates of the covariance surface are depicted in Figure 3. The estimates produced by SNPTM and SNPTF are similar near the diagonal but differ visibly in the off-diagonal region. For this dataset, the upward off-diagonal parts of the covariance surface estimated by SNPTF seem artificial, so we recommend the SNPTM estimate. The PACE estimate suffers from a substantial boundary effect, due to the missing data in the off-diagonal region and the scarcity of observations at the two ends of the diagonal region.

Figure 3:

The covariance functions estimated by SNPTM (left), SNPTF (middle) and PACE (right). The z-axis is scaled by 10⁻² for visualization.

The mean function estimated by SNPTM¹, shown in the left panel of Figure 4, is similar to its counterpart in Lin et al. (2019) and suggests that spinal bone mineral density increases rapidly from age 9 to age 16, after which the increase slows down. The variance function estimated by SNPTM², shown in the middle panel of Figure 4, indicates that the mineral density varies most around age 14. By comparison, the PACE estimate of the variance function, shown in the right panel of Figure 4, inherits the boundary effect of the PACE covariance estimate, because PACE estimates the variance function by the diagonal of the estimated covariance function.

Figure 4:

The estimated mean function (left), the estimated variance function by SNPTM and SNPTF (middle), and the estimated variance function by PACE (right).

7. Concluding Remarks

In this paper, we consider mean and covariance estimation for functional snippets. Estimation of the mean function is still an interpolation problem, so previous approaches based on local smoothing still work, although the theory needs some adjustment to reflect the design of functional snippets. Estimation of the covariance function, however, is quite different: it is now an extrapolation problem rather than an interpolation problem, so previous approaches based on local smoothing no longer work. We propose a hybrid approach that leverages the information available in the diagonal band, together with the structure of the correlation, to estimate the correlation function parametrically and the variance function nonparametrically. Because the number of parameters can grow with the sample size, the approach is very flexible and can make the final covariance estimate nearly nonparametric.
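The hybrid strategy rests on the decomposition C(s, t) = σ(s)σ(t)ρ(s, t), writing σ²(t) for the variance function: σ² is estimated nonparametrically, while the parametric correlation ρ fills in the far off-diagonal region where no raw covariances exist. A minimal sketch of this final assembly step on a grid, assuming a variance estimate and a fitted correlation function are already available (both are stand-ins below, and `assemble_covariance` is a hypothetical name), is:

```python
import numpy as np

def assemble_covariance(grid, sigma2, corr_fn):
    """C(s, t) = sigma(s) * sigma(t) * rho(s, t) evaluated on a grid."""
    grid = np.asarray(grid, dtype=float)
    # Clip guards against small negative values from a smoothed variance estimate.
    sd = np.sqrt(np.clip(np.asarray(sigma2, dtype=float), 0.0, None))
    rho = corr_fn(grid[:, None], grid[None, :])
    return sd[:, None] * rho * sd[None, :]

grid = np.linspace(0.0, 1.0, 51)
sigma2_hat = 1.0 + 0.5 * np.sin(np.pi * grid) ** 2     # stand-in variance estimate
exp_corr = lambda s, t: np.exp(-np.abs(s - t) / 0.3)   # stand-in fitted correlation
C_hat = assemble_covariance(grid, sigma2_hat, exp_corr)
```

By the Schur product theorem, the assembled matrix is positive semi-definite whenever the correlation matrix is, since it is the elementwise product of that matrix with the rank-one matrix σσᵀ; its diagonal reproduces the variance estimate exactly.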

An interesting feature of the algorithm is that, compared to existing approaches for non-snippet functional data, it reverses the order in which the variance components are estimated: it first estimates the noise variance σ0², then the variance function, and then fits the correlation function. The covariance function is estimated at the very end, once all other components have been estimated. This differs substantially from traditional approaches such as PACE (Yao et al., 2005), which estimate the covariance function first, obtain the variance function as a byproduct from the diagonal of the covariance estimate, and estimate the noise variance at the very end. The new procedure for estimating the noise variance is both simpler than and superior to that of PACE. Thus, even for non-snippet data, one can use the method proposed in Section 3 to estimate the noise variance.
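The estimator of the noise variance proposed in Section 3 is not reproduced in this section. To illustrate why σ0² can be estimated before anything else, the sketch below uses a different and simpler technique, a difference-based estimator: for within-subject pairs with |T_ij − T_il| < h0, E{(Y_ij − Y_il)²}/2 = σ0² + E{(X_i(T_ij) − X_i(T_il))²}/2, and the second term is small when sample paths are smooth and h0 is small. This is not the authors' estimator; the function name, the simulated process and the choice h0 = 0.02 are assumptions made for this illustration.

```python
import numpy as np

def diff_noise_variance(snippets, h0):
    """Average of (Y_ij - Y_il)^2 / 2 over within-subject pairs with |T_ij - T_il| < h0."""
    vals = []
    for t, y in snippets:
        j, l = np.triu_indices(len(t), k=1)
        close = np.abs(t[j] - t[l]) < h0
        vals.append(0.5 * (y[j[close]] - y[l[close]]) ** 2)
    vals = np.concatenate(vals)
    if vals.size == 0:
        raise ValueError("no within-subject pairs closer than h0")
    return float(vals.mean())

# Hypothetical smooth snippets X(t) = xi1 cos(2 pi t) + xi2 sin(2 pi t) plus N(0, sigma0^2) noise.
rng = np.random.default_rng(3)
sigma0, delta = 0.3, 0.2
snippets = []
for _ in range(600):
    a = rng.uniform(0.0, 1.0 - delta)
    t = np.sort(rng.uniform(a, a + delta, 8))
    xi = rng.normal(size=2)
    x = xi[0] * np.cos(2 * np.pi * t) + xi[1] * np.sin(2 * np.pi * t)
    snippets.append((t, x + rng.normal(0.0, sigma0, t.size)))
sigma0_sq_hat = diff_noise_variance(snippets, h0=0.02)
```

Like the ordering described above, this estimate uses no covariance estimate at all; its bias grows with h0 at a rate governed by the smoothness of the sample paths.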

We emphasize that, although the proposed method targets functional snippets, it is also applicable to functional fragments and to functional data in which each curve consists of multiple disjoint snippets. The theory in Section 4 can also be slightly modified to accommodate such data. In contrast, methods designed for non-snippet functional data are generally not applicable to functional snippets, for the reasons discussed in Section 1. In practice, one can distinguish functional snippets from non-snippet data by inspecting a design plot such as Figure 1: if the support points cover the entire region, the data are of the non-snippet type; otherwise they are functional snippets. However, when data are sparsely observed, it may be unclear whether the entire region is covered by support points. In such situations, snippet-based methods, such as the proposed one, are a safer option.
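The design-plot check described above can be automated in a rough way: bin [0, 1]² (after rescaling the time domain to [0, 1]) into a grid of cells, mark every cell hit by a within-subject pair (T_ij, T_il), and report the fraction of far off-diagonal cells that are hit. The function name, the number of bins and the band defining far off-diagonal cells are hypothetical choices; with sparse data, a low fraction is only suggestive, which is exactly the ambiguity noted above.

```python
import numpy as np

def far_offdiag_coverage(obs_times, n_bins=20, band=5):
    """Fraction of design-plot cells with |i - j| >= band (in bin units) hit by some pair."""
    hit = np.zeros((n_bins, n_bins), dtype=bool)
    for t in obs_times:
        k = np.minimum((np.asarray(t, dtype=float) * n_bins).astype(int), n_bins - 1)
        hit[np.ix_(k, k)] = True  # every (T_ij, T_il) pair of this subject
    idx = np.arange(n_bins)
    far = np.abs(idx[:, None] - idx[None, :]) >= band
    return float(hit[far].mean())

rng = np.random.default_rng(4)
delta = 0.2
snippet_times = [a + delta * rng.uniform(size=6) for a in rng.uniform(0.0, 1.0 - delta, 200)]
full_times = [rng.uniform(size=10) for _ in range(100)]
cov_snippet = far_offdiag_coverage(snippet_times)
cov_full = far_offdiag_coverage(full_times)
```

For snippets of length 0.2 and 20 bins of width 0.05, no pair can land five or more bins away from the diagonal, so the snippet coverage is exactly zero, whereas the full-domain design covers essentially all far cells.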

Reliable estimates of the mean and covariance functions are fundamental to the analysis of functional data. They are also building blocks of functional regression methods and functional hypothesis testing procedures. Together, the proposed mean and covariance estimators for functional snippets provide a stepping stone for future work on regression and inference specific to functional snippets.


Acknowledgments

Research supported by NIH ECHO grant (5UG3OD023313-03) and NSF (15-12975 and 19-14917).

Appendix

Selection of h0

The constant 0.29 in the empirical rule presented in Section 3 was determined by optimizing over , where the summation is taken over combinations of various parameters. Specifically, for each tuple , we generated a batch of G = 100 independent datasets of n centered Gaussian snippets with the covariance function . Each snippet was recorded at m random points in a random subinterval of length δ in [0,1]. For each batch of datasets, we found to minimize , where is the estimate of based on the rth dataset in the batch, obtained by the proposed method with the bandwidth . We also obtained the quantities and , where and are the estimates of δ and ς based on the rth dataset in the batch, respectively. In this way, we obtained a collection of vectors . Finally, we found that c = 0.29 minimizes , where the summation is taken over the collection .

In the above process, the covariance function was taken from a collection composed of 1) covariance functions whose correlation part is the correlation function listed in Section 2, with various values of the parameters, and whose variance functions are exponential functions, squared sine/cosine functions or positive polynomials, 2) covariance functions with various values of a > 0, 3) covariance functions with various values of a > 0, λ > 0 and K ≥ 1, where the functions are the Fourier basis functions described in Section 5, and 4) covariance functions with various choices of a > 0, b > 0 and K ≥ 1.

Technical Lemmas

Lemma 5.

(a) Under conditions (A1)–(A2), one has .

(b) With condition (A1), .

(c) Under conditions (A1)–(A3), . If is also assumed, then .

Proof.

To show in part (a), we define and . Let g_s be the partial derivative of g with respect to s. Then g_s is Lipschitz continuous given conditions (A1) and (A2). With t∗ denoting a real number satisfying , one has

where the last equality is obtained by observing that

For part (b), it is seen that and

| (11) |

Now we first observe that , since

and

Therefore, if j, l, p, q are all distinct, then

It is relatively straightforward to show that if j = p but l ≠ q or j = q but l ≠ p, then , and if j = p and l = q or j = q and l = p, then . Assembling the above results, one has

which together with (11) implies the conclusion of part (b).

For part (c), with the aid of part (a), it is straightforward to see that

| (12) |

Now we shall calculate the variance of . With definition , one derives

| (13) |

Below we derive bounds for the term V(j, l, p, q).

Case 1: j, l, p and q are all distinct. In this case, via straightforward computation, one can show that .

Case 2: j = p but l ≠ q, or j = q but l ≠ p. Similar to Case 1, one has V(j, l, p, q) = .

Case 3: j = p and l = q or j = q and l = p. In this case,

Based on the above bounds, we have . Together with the bias given in (12), this implies the first statement of part (c).

For the second statement of part (c), we observe that with condition , the bound in Case 1 can be sharpened in the following way. First, we see that

where . Then, we decompose into , where

For I2, one can show that

where the first equality is due to the assumption that are i.i.d. conditional on Oi, and the second one is based on the following observation

where we recall that . Similarly, I3 = 0 and I4 = 0. For I1, one can show that

where the first inequality is due to the Lipschitz continuity of sample paths. Again, by this continuity property, one has . Therefore, we conclude that . Together with I2 = I3 = I4 = 0, this implies that . It further indicates that . Combined with the bias term in (12), this implies the second statement of part (c). □

Proofs of Main Results

Proof of Proposition 3.

For the moment, we assume . Denote

Now we show that

| (14) |

where $a_n = (\log n)\{(nm)^{-4/5} + n^{-1}\}$. First, we observe that

with

To derive the rate for I1, we define

It can be verified that , and also given conditions (A3) and (B2). We view each Gi as a random linear functional from the space , i.e.,

where . Then, following the argument for Lemma 2 of Severini and Wong (1992), we establish that converges to a Gaussian element on the Banach space C(Λ0) of continuous functions on Λ0 with the sup norm. On the other hand, using the technique of Zhang and Wang (2016) for the uniform convergence of the local linear estimator of the mean function, we can show that , and hence . By condition (B2), which states that is uniformly bounded for all j, we can deduce that, for sufficiently large n, with probability tending to one, the function with falls into for all j. Therefore,

where OP is uniform for all j. Noting that , one can deduce from the above that

When , an argument similar to the above can be applied to handle the extra terms induced by the discrepancy between and μ, so that we still obtain the same rate. A similar argument applies to I2, and we have . It is easy to see that I3 is dominated by the other terms. Together, these establish (14). It is seen that . Thus, we have

A straightforward but somewhat tedious calculation shows that

and

Now let . By Taylor expansion,

for some constant c0 > 0 and if for a sufficiently large absolute constant c > 0. Thus, .

Footnotes

¹ SNPTM, SNPTF and PACE use the same method to estimate the mean function.

² SNPTM and SNPTF use the same method to estimate the variance function.

Supplementary Material

The online supplementary material contains additional simulation results, as well as information on implementing the proposed method with the R package mcfda.

Contributor Information

Zhenhua Lin, Department of Statistics and Applied Probability, National University of Singapore.

Jane-Ling Wang, Department of Statistics, University of California at Davis.

References

- Bachrach LK, Hastie T, Wang M-C, Narasimhan B, and Marcus R (1999), “Bone mineral acquisition in healthy Asian, Hispanic, black, and Caucasian youth: A longitudinal study,” The Journal of Clinical Endocrinology & Metabolism, 84, 4702–4712.

- Chen Y, Carroll C, Dai X, Fan J, Hadjipantelis PZ, Han K, Ji H, Müller H-G, and Wang J-L (2020), fdapace: Functional Data Analysis and Empirical Dynamics, R package version 0.5.2, available at https://CRAN.R-project.org/package=fdapace.

- Dawson M and Müller H-G (2018), “Dynamic modeling of conditional quantile trajectories, with application to longitudinal snippet data,” Journal of the American Statistical Association, 113, 1612–1624.

- Delaigle A and Hall P (2016), “Approximating fragmented functional data by segments of Markov chains,” Biometrika, 103, 779–799.

- Delaigle A, Hall P, Huang W, and Kneip A (2019), “Estimating the covariance of fragmented and other related types of functional data,” Journal of the American Statistical Association, to appear.

- Descary M-H and Panaretos VM (2019), “Functional data analysis by matrix completion,” The Annals of Statistics, 47, 1–38.

- Fan J (1993), “Local linear regression smoothers and their minimax efficiencies,” The Annals of Statistics, 21, 196–216.

- Fan J, Huang T, and Li R (2007), “Analysis of longitudinal data with semiparametric estimation of covariance function,” Journal of the American Statistical Association, 102, 632–641.

- Fan J and Wu Y (2008), “Semiparametric estimation of covariance matrices for longitudinal data,” Journal of the American Statistical Association, 103, 1520–1533.

- Ferraty F and Vieu P (2006), Nonparametric Functional Data Analysis: Theory and Practice, New York: Springer-Verlag.

- Galbraith S, Bowden J, and Mander A (2017), “Accelerated longitudinal designs: an overview of modelling, power, costs and handling missing data,” Statistical Methods in Medical Research, 26, 374–398.

- Gellar JE, Colantuoni E, Needham DM, and Crainiceanu CM (2014), “Variable-domain functional regression for modeling ICU data,” Journal of the American Statistical Association, 109, 1425–1439.

- Goldberg Y, Ritov Y, and Mandelbaum A (2014), “Predicting the continuation of a function with applications to call center data,” Journal of Statistical Planning and Inference, 147, 53–65.

- Gromenko O, Kokoszka P, and Sojka J (2017), “Evaluation of the cooling trend in the ionosphere using functional regression with incomplete curves,” The Annals of Applied Statistics, 11, 898–918.

- Hall P and Marron JS (1997), “On the shrinkage of local linear curve estimators,” Statistics and Computing, 516, 11–17.

- Hsing T and Eubank R (2015), Theoretical Foundations of Functional Data Analysis, with an Introduction to Linear Operators, Wiley.

- Kneip A and Liebl D (2019+), “On the optimal reconstruction of partially observed functional data,” The Annals of Statistics, to appear.

- Kokoszka P and Reimherr M (2017), Introduction to Functional Data Analysis, Chapman and Hall/CRC.

- Kraus D (2015), “Components and completion of partially observed functional data,” Journal of the Royal Statistical Society: Series B (Statistical Methodology), 77, 777–801.

- Kraus D and Stefanucci M (2019), “Classification of functional fragments by regularized linear classifiers with domain selection,” Biometrika, 106, 161–180.

- Li Y and Hsing T (2010), “Uniform convergence rates for nonparametric regression and principal component analysis in functional/longitudinal data,” The Annals of Statistics, 38, 3321–3351.

- Liebl D (2013), “Modeling and forecasting electricity spot prices: A functional data perspective,” The Annals of Applied Statistics, 7, 1562–1592.

- Liebl D and Rameseder S (2019), “Partially observed functional data: The case of systematically missing parts,” Computational Statistics & Data Analysis, 131, 104–115.

- Lin Z, Wang J-L, and Zhong Q (2019), “Basis expansions for functional snippets,” arXiv.

- Lin Z and Yao F (2020+), “Functional regression on manifold with contamination,” Biometrika, to appear.

- Liu B and Müller H-G (2009), “Estimating derivatives for samples of sparsely observed functions, with application to online auction dynamics,” Journal of the American Statistical Association, 104, 704–717.

- Paul D and Peng J (2011), “Principal components analysis for sparsely observed correlated functional data using a kernel smoothing approach,” Electronic Journal of Statistics, 5, 1960–2003.

- Ramsay JO and Silverman BW (2005), Functional Data Analysis, Springer Series in Statistics, New York: Springer, 2nd ed.

- Raudenbush SW and Chan W-S (1992), “Growth Curve Analysis in Accelerated Longitudinal Designs,” Journal of Research in Crime and Delinquency, 29, 387–411.

- Rice JA and Wu CO (2001), “Nonparametric Mixed Effects Models for Unequally Sampled Noisy Curves,” Biometrics, 57, 253–259.

- Scheuerer M (2010), “Regularity of the sample paths of a general second order random field,” Stochastic Processes and their Applications, 120, 1879–1897.

- Seifert B and Gasser T (1996), “Finite-Sample Variance of Local Polynomials: Analysis and Solutions,” Journal of the American Statistical Association, 91, 267–275.

- Severini TA and Wong WH (1992), “Profile Likelihood and Conditionally Parametric Models,” The Annals of Statistics, 20, 1768–1802.

- Stefanucci M, Sangalli LM, and Brutti P (2018), “PCA-based discrimination of partially observed functional data, with an application to AneuRisk65 data set,” Statistica Neerlandica, 72, 246–264.

- Wang J-L, Chiou J-M, and Müller H-G (2016), “Review of functional data analysis,” Annual Review of Statistics and Its Application, 3, 257–295.

- Yao F, Müller H-G, and Wang J-L (2005), “Functional Data Analysis for Sparse Longitudinal Data,” Journal of the American Statistical Association, 100, 577–590.

- Zhang A and Chen K (2018), “Nonparametric covariance estimation for mixed longitudinal studies, with applications in midlife women’s health,” arXiv.

- Zhang X and Wang J-L (2016), “From sparse to dense functional data and beyond,” The Annals of Statistics, 44, 2281–2321.

- Zhang X (2018), “Optimal weighting schemes for longitudinal and functional data,” Statistics & Probability Letters, 138, 165–170.
