Abstract

Background: Previous COVID-19 lung diagnosis systems lack both scientific validation and explainable artificial intelligence (AI) for understanding lesion localization. This study presents a cloud-based explainable AI system, “COVLIAS 2.0-cXAI”, using four kinds of class activation map (CAM) models. Methodology: Our cohort consisted of ~6000 CT slices from two sources (Croatia, 80 COVID-19 patients and Italy, 15 control patients). The COVLIAS 2.0-cXAI design consisted of three stages: (i) automated lung segmentation using a hybrid deep learning ResNet-UNet model with automatic adjustment of Hounsfield units, hyperparameter optimization, and parallel and distributed training, (ii) classification using three kinds of DenseNet (DN) models (DN-121, DN-169, DN-201), and (iii) validation using four kinds of CAM visualization techniques: gradient-weighted class activation mapping (Grad-CAM), Grad-CAM++, score-weighted CAM (Score-CAM), and FasterScore-CAM. The COVLIAS 2.0-cXAI was validated by three trained senior radiologists for its stability and reliability. The Friedman test was also performed on the scores of the three radiologists. Results: The ResNet-UNet segmentation model achieved a Dice similarity of 0.96, a Jaccard index of 0.93, and a correlation coefficient of 0.99, with a figure-of-merit of 95.99%, while the classifier accuracies for the three DN nets (DN-121, DN-169, and DN-201) were 98%, 98%, and 99%, with losses of ~0.003, ~0.0025, and ~0.002, respectively, using 50 epochs. The mean AUC for all three DN models was 0.99 (p < 0.0001). For 80% of the scans, the mean alignment index (MAI) between the heatmaps and the gold standard reached a score of four out of five, establishing the system for clinical settings. Conclusions: The COVLIAS 2.0-cXAI successfully demonstrated a cloud-based explainable AI system for lesion localization in lung CT scans.

Keywords: COVID-19 lesion, lung CT, Hounsfield units, ground-glass opacities, hybrid deep learning, explainable AI, segmentation, classification, Grad-CAM, Grad-CAM++, Score-CAM, FasterScore-CAM

1. Introduction

COVID-19, the disease caused by the novel severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2), spread rapidly and was declared a global pandemic on 11 March 2020 by the World Health Organization (WHO) [1]. As of 20 May 2022, COVID-19 had infected over 521 million people worldwide and killed nearly 6.2 million [2].

Molecular pathways [3] and imaging [4] of COVID-19 have proven to be worse in individuals with comorbidities such as coronary artery disease [5,6], diabetes [7], atherosclerosis [8], fetal programming [9], pulmonary embolism [10], and stroke [11]. Further, evidence shows damage to the aorta’s vasa vasorum, leading to thrombosis and plaque vulnerability [12]. COVID-19 can cause severe lung damage, with abnormalities primarily in the lower region of the lung lobes [13,14,15,16,17,18,19,20]. It is challenging to distinguish COVID-19 pneumonia from interstitial pneumonia or other lung illnesses; consequently, manual classification can be skewed by the opinion of the radiological expert. An automated computer-aided diagnostics (CAD) system is therefore sorely needed to categorize and characterize the condition [21], as it delivers excellent performance with minimal inter- and intra-observer variability.

With the advancements of artificial intelligence (AI) technology [22,23,24], machine learning (ML) and deep learning (DL) approaches have become increasingly popular for detection of pneumonia and its categorization. There have been several innovations in ML and DL frameworks, some of which are applied to lung parenchyma segmentation [25,26,27], pneumonia classification [21,25,28], symptomatic vs. asymptomatic carotid plaque classification [29,30,31,32,33], coronary disease risk stratification [34], cardiovascular/stroke risk stratification [35], classification of Wilson disease vs. controls [36], classification of eye diseases [37], and cancer classification in thyroid [38], liver [39], ovaries [40], prostate [41], and skin [42,43,44].

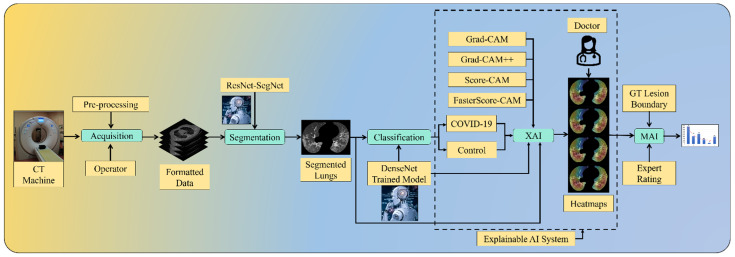

AI can further help in the detection of pneumonia type and can overcome the shortage of specialist personnel by assisting in investigating CT scans [45,46]. One of the key benefits of AI is its ability to emulate manually developed processes, which speeds up the identification and diagnosis of diseases. However, the black-box nature of AI creates resistance to its use in clinical settings. Thus, there is a clear need for human readability and interpretability of deep networks, which requires identified lesions to be interpreted and quantified. We, therefore, developed an explainable AI system in a cloud framework, labeled the “COVLIAS 2.0-cXAI” system, which is our primary novelty [47,48,49,50,51,52]. The COVLIAS 2.0-cXAI design consists of three stages (Figure 1): (i) automated lung segmentation using the hybrid deep learning ResNet-UNet model with automatic adjustment of Hounsfield units [53], hyperparameter optimization [54], and a parallel and distributed design during training; (ii) classification using three kinds of DenseNet (DN) models (DN-121, DN-169, DN-201) [55,56,57,58]; and (iii) scientific validation using four kinds of class activation mapping (CAM) visualization techniques: gradient-weighted class activation mapping (Grad-CAM) [59,60,61,62,63], Grad-CAM++ [64,65,66,67], score-weighted CAM (Score-CAM) [68,69,70], and FasterScore-CAM [71,72]. The COVLIAS 2.0-cXAI was validated by three trained senior radiologists for its stability and reliability. The proposed study also considers different variations in COVID-19 lesions, such as ground-glass opacity (GGO), consolidation, and crazy paving [73,74,75,76,77,78,79,80,81,82]. The COVLIAS 2.0-cXAI design reduced the model size by roughly 30% and made the online version of the AI system about twice as fast.

Figure 1.

COVLIAS 2.0-cXAI system.

To summarize, our prime contributions in the proposed study consist of six main stages: (i) automated lung segmentation using the HDL-ResNet-UNet model; (ii) classification of COVID-19 vs. controls using three kinds of DenseNets, namely DenseNet-121 [55,56,57,83], DenseNet-169, and DenseNet-201, with the combination of segmentation and classification depicting the overall performance of the system; (iii) the use of explainable AI, for the first time, to visualize and validate the prediction of the DenseNet models using four kinds of CAM, namely Grad-CAM, Grad-CAM++, Score-CAM, and FasterScore-CAM, which helps us understand the AI model’s learning in the input CT image [35,84,85,86]; (iv) a mean alignment index (MAI) between the heatmaps and the gold standard, scored by three trained senior radiologists at four out of five, establishing the system for clinical applicability, together with a Friedman statistical test to present the statistical significance of the scores from the three experts; (v) application of quantization to the trained AI model to make the system light and further ensure faster online prediction; and (vi) an end-to-end cloud-based CT image analysis system, including the CT lung segmentation and COVID-19 intensity map using the four CAM techniques (Figure 1).

Our study is divided into five sections. The methodology, patient demographics, image acquisition, description of the DenseNet models, and the explainable AI system used in this work are described in Section 2. In Section 3, the models’ findings and their performance evaluation are presented. The discussion and benchmarking are in Section 4, and Section 5 presents the conclusions.

2. Methodology

2.1. Patient Demographics

Two distinct cohorts representing two different countries (Croatia and Italy) were used in the proposed study. The experimental data set included 20 Croatian COVID-19-positive individuals, 17 of whom were male, and the remainder of whom were three females. The GGO, consolidation, and crazy paving had an average value of 4. The second data set included 15 Italian control subjects, ten of whom were male, and the remainder of whom were five females. To confirm the presence of COVID-19 in the selected cohort, an RT-PCR test [87,88,89] was performed for both data sets.

2.2. Image Acquisition and Data Preparation

2.2.1. Croatian Data Set

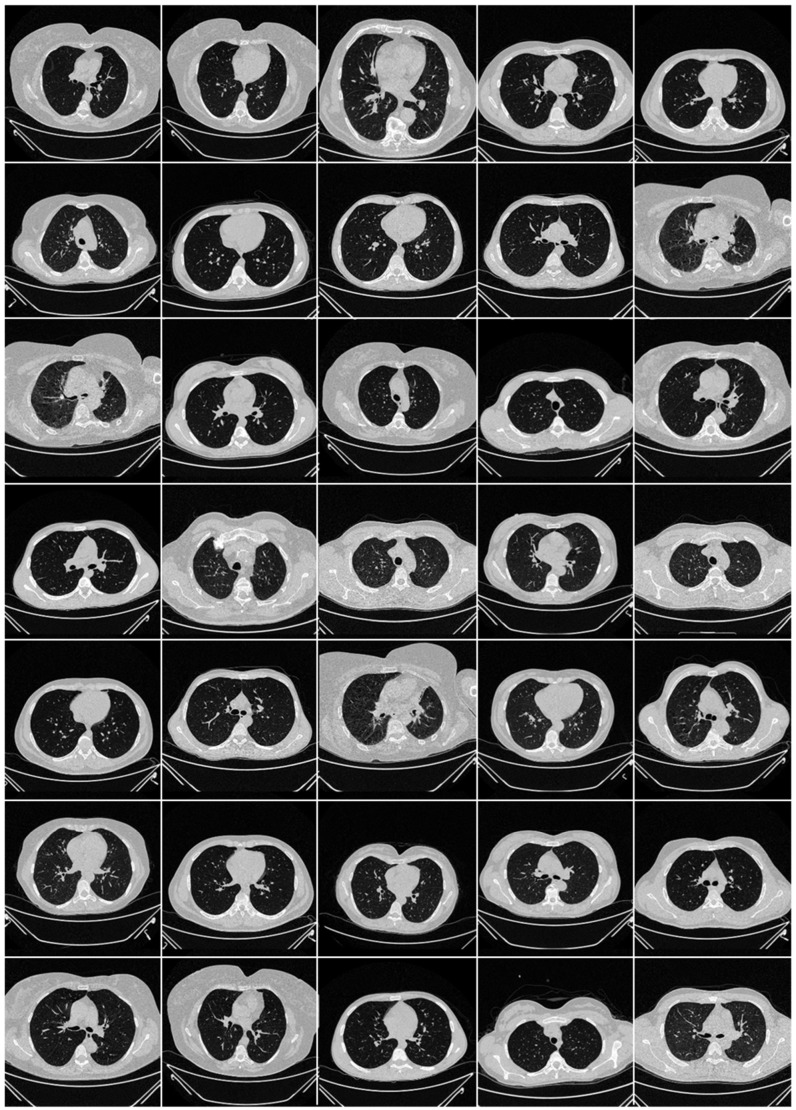

A Croatian data set of 20 COVID-19-positive patients was employed in our investigation (Figure 2). This cohort was acquired between 1 March and 31 December 2020, at the University Hospital for Infectious Diseases (UHID) in Zagreb, Croatia. The patients who underwent thoracic MDCT during their hospital stay showed a positive RT-PCR test for COVID-19 and were also above the age of 18 years. These patients also had hypoxia (oxygen saturation 92%), tachypnea (respiratory rate 22 per minute), tachycardia (pulse rate > 100), and hypotension (systolic blood pressure 100 mmHg). The proposal was approved by the UHID Ethics Committee. The acquisition of the CT data was conducted using a 64-detector FCT Speedia HD scanner (Fujifilm Corporation, Tokyo, Japan, 2017).

Figure 2.

Raw CT slice of COVID-19 patients taken from Croatian data set.

2.2.2. Italian Data Set

The CT scans for the Italian cohort of 15 patients (Figure 3) were acquired using a 128-slice multidetector-row CT scanner (Philips Ingenuity Core, by Philips Healthcare). The breath-hold procedure was used during acquisition and no contrast agent was administered. To acquire a 1 mm thick slice, a lung kernel of a 768 × 768 matrix together with a soft-tissue kernel was utilized. The CT scans were carried out at 120 kV and 226 mAs/slice (using Philips’ automated tube current modulation, Z-DOM), with a spiral pitch factor of 1.08, a 0.5 s gantry rotation time, and a 64 × 0.625 detector configuration.

Figure 3.

Raw control CT slice taken from Italian data set.

2.3. Artificial Intelligence Architecture

Recent deep learning developments, such as hybrid deep learning (HDL), have yielded encouraging results [26,27,90,91,92,93,94,95]. We hypothesize that HDL models are superior to solo deep learning (SDL) models (e.g., UNet [96] and SegNet [97]) due to the joint effect of the two DL models. We therefore use an HDL model, ResNet-UNet, that has been trained and tested on the COVID-19 lung segmentation database in our current study. Because the proposed study is aimed mainly at explainable AI (XAI) using the classification models, we have used only one HDL model.

2.3.1. ResNet-UNet Architecture

VGGNet [98,99,100] was highly efficient and speedy, but it suffered from vanishing gradients. During backpropagation, the gradient is multiplied repeatedly as it propagates back through the layers, so the weight updates in the initial layers become very small or vanish altogether. The residual network, or ResNet [101], was created to solve this problem. Skip connections, a new kind of link, were built into this architecture, allowing gradients to skip a specific set of layers and thus overcoming the vanishing gradient problem. Furthermore, during the backpropagation step, the identity mapping preserves the local gradient value. In a ResNet-UNet-based segmentation network, the encoding part of the base UNet network is replaced with the ResNet architecture, thus providing a hybrid approach.

2.3.2. Dense Convolutional Network Architecture

A dense convolutional network (DenseNet) is a convolutional neural network (CNN) architecture that uses shorter connections across layers, making it highly efficient to train [102]. In DenseNet, every layer is connected to all the layers that follow it: the first layer feeds the 2nd, 3rd, 4th, and so on, whereas the second layer feeds the 3rd, 4th, 5th, and so on. The key idea is to increase the flow of information between the network layers.

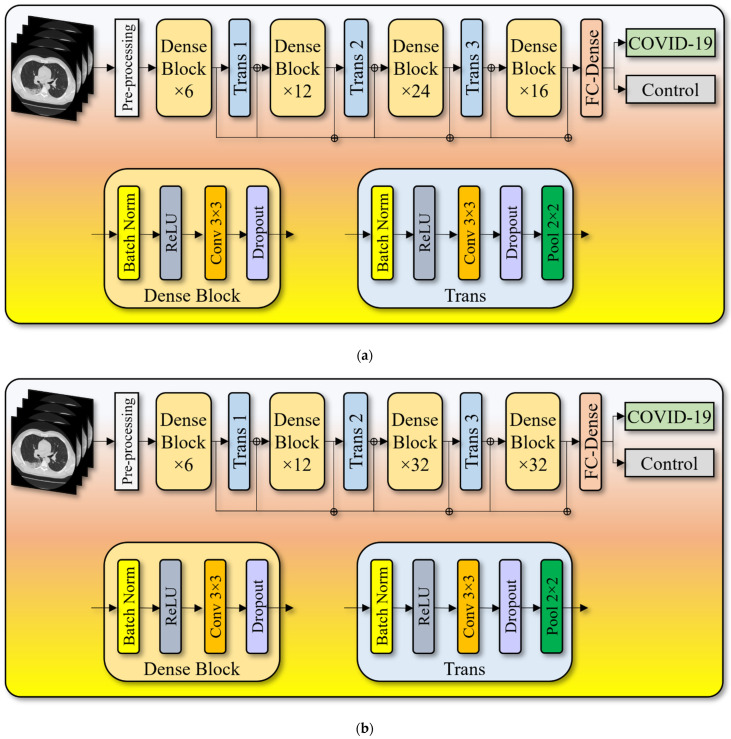

To maintain the flow of information, the feature maps received by each layer are forwarded to all subsequent layers. Unlike ResNet, DenseNet does not combine features by summing them; instead, it concatenates them. As a result, the “jth” layer has j inputs, comprising the feature maps of all preceding convolutional blocks, and passes its own feature maps on to all “J − j” subsequent layers. Instead of only J connections, as in standard deep learning designs, the network now has “(J(J + 1))/2” links. This requires fewer parameters than a traditional CNN, because redundant feature maps need not be relearned. This paper presents three kinds of DenseNet architectures, namely, (i) DenseNet-121 (Figure 4a), (ii) DenseNet-169 (Figure 4b), and (iii) DenseNet-201 (Figure 4c). Table 1 presents the output feature map sizes of the input layer, convolution layer, dense blocks, transition layers, and fully connected layer followed by the SoftMax classification layer.
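The connection count quoted above can be checked with a few lines of Python. This is only an illustrative sketch: the function names `dense_connections` and `plain_connections` are hypothetical and are not part of the COVLIAS code base.

```python
def dense_connections(num_layers: int) -> int:
    """Direct links when every layer feeds all later layers.

    Layer j receives j inputs (the network input plus the outputs of the
    j - 1 layers before it), so the total is 1 + 2 + ... + J = J(J + 1)/2.
    """
    return sum(range(1, num_layers + 1))


def plain_connections(num_layers: int) -> int:
    """A plain feed-forward chain has one incoming link per layer."""
    return num_layers


if __name__ == "__main__":
    for J in (4, 6, 12):
        # Columns: number of layers, plain links, dense links.
        print(J, plain_connections(J), dense_connections(J))
```

The quadratic growth in links is offset by the fact that each layer only adds a small number of new feature maps, which is why the dense design can still use fewer parameters.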

Figure 4.

(a) DenseNet-121 model. (b) DenseNet-169 model. (c) DenseNet-201 model.

Table 1.

Output feature map sizes of the three DenseNet architectures.

| Layers | Output Feature Size |
|---|---|
| Input | 512 × 512 |
| Conv. | 256 × 256 |
| Max Pool | 128 × 128 |
| Dense Block 1 | 128 × 128 |
| Transition Layer 1 | 128 × 128, then 64 × 64 |
| Dense Block 2 | 64 × 64 |
| Transition Layer 2 | 64 × 64, then 32 × 32 |
| Dense Block 3 | 32 × 32 |
| Transition Layer 3 | 32 × 32, then 16 × 16 |
| Dense Block 4 | 16 × 16 |
| Classification Layer (SoftMax) | 1024, then 2 |

2.4. Explainable Artificial Intelligence System for COVID-19 Lesion

As machine learning (ML) technology advances and models become more accurate, ML is being used to address increasingly complicated problems, and the models themselves become increasingly sophisticated. This is one of the reasons to use cloud-based explainable AI (cXAI), which provides a set of tools to help understand how the ML model arrives at its predictions.

Instead of presenting individual pixels, cXAI displays attributions that highlight which prominent characteristics of an image had the most significant impact on the model. The resulting heatmap (red-yellow-blue) shows which regions contributed to the model’s classification of the image. Based on the color palette, cXAI highlights the most influential areas in red, the moderately influential areas in yellow, and the least influential areas in blue. Understanding why a model produced a given prediction is helpful when debugging an incorrect classification or deciding whether to trust the prediction. Explainability can help (i) debug the AI model, (ii) validate the results, and (iii) provide a visual explanation as to what drove the AI model to classify the image in a certain way. As part of cXAI, we present four cloud-based CAM techniques to visualize the prediction of the AI model and validate it using the color palette described above.

Four CAM Techniques in Cloud-Based Explainable Artificial Intelligence System

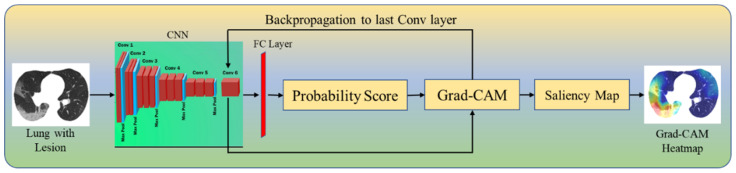

Grad-CAM (Figure 5) generates a localization map that highlights the critical regions of the image representing the lesions, using the gradients of the target label/class flowing into the final convolutional layer. The input image is fed to the model, and the Grad-CAM heatmap (Equation (1)) is then computed to show the explainable lesions in the COVID-19 CT scans. The image first follows the typical prediction cycle, generating class probability scores before the model loss is calculated. Next, we compute the gradient of the model loss with respect to the output of the chosen model layer. Finally, the gradient regions that contribute to the prediction are processed (Equation (3)), and the resulting heatmap is overlaid on the original grayscale scan.

Figure 5.

Grad-CAM.

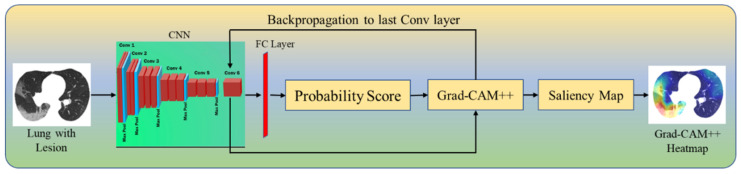

Grad-CAM++ (Figure 6) is an improved version of Grad-CAM that provides a better understanding by creating a more accurate localization map of the identified object and by explaining multiple occurrences of objects of the same class. Grad-CAM++ generates a pictorial depiction for the class label using weights derived from the feature map of the CNN layer by considering its positive partial derivatives (Equation (2)). Then, a process similar to that of Grad-CAM is followed to produce the saliency map (Equation (3)) that contributes to the prediction. This map is then overlaid on the original image.

| (1) |

| (2) |

| (3) |

where represents the final score of class c and represents the global average pool of the last convolutional layer by considering its linear combination. Estimated weights for the last convolutional layer can be given by for class c. represents a class-specific saliency map for each spatial location (i, j).
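As a rough illustration of the Grad-CAM computation described above, the sketch below follows the standard formulation: each channel weight is the global average of the class-score gradients, the weighted activation maps are summed, and a ReLU keeps only positive evidence. It is not the COVLIAS implementation; the function name `grad_cam` is hypothetical, plain Python lists are used to stay dependency-free, and in practice the activations and gradients would come from a deep learning framework.

```python
from typing import List

Map2D = List[List[float]]


def grad_cam(activations: List[Map2D], gradients: List[Map2D]) -> Map2D:
    """Combine K activation maps into one heatmap scaled to [0, 1].

    activations[k][i][j]: feature map k of the last convolutional layer.
    gradients[k][i][j]:   d(class score) / d(activations[k][i][j]).
    """
    height, width = len(activations[0]), len(activations[0][0])
    # Channel weight = global average of that channel's gradients.
    weights = [sum(map(sum, g)) / (height * width) for g in gradients]
    heatmap = [[0.0] * width for _ in range(height)]
    for w, fmap in zip(weights, activations):
        for i in range(height):
            for j in range(width):
                heatmap[i][j] += w * fmap[i][j]
    # ReLU keeps only the locations that push the class score up.
    heatmap = [[max(v, 0.0) for v in row] for row in heatmap]
    peak = max(max(row) for row in heatmap)
    # Scale so the map can be color-coded and overlaid on the CT slice.
    return [[v / peak if peak > 0 else 0.0 for v in row] for row in heatmap]
```

Grad-CAM++ differs only in how the channel weights are obtained (a weighted combination of the positive partial derivatives); the weighted sum and ReLU steps stay the same.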

Figure 6.

Grad-CAM++.

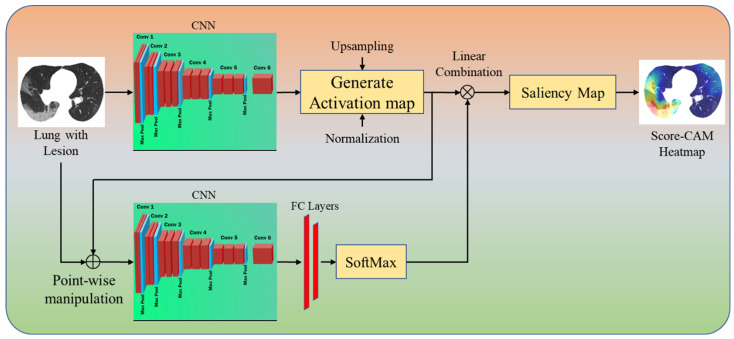

Our third CAM technique is Score-CAM (Figure 7). In this technique, each activation map is used as a mask for the input image, hiding sections of the image so that the model predicts on the partially masked image. The target class’s score is then used to represent the importance of that activation map. The main difference between Grad-CAM and Score-CAM is that Score-CAM does not use gradients, as the propagated gradients introduce noise and are unstable. The class-discriminative saliency map is obtained with Score-CAM in the following steps. (i) Images are processed through the CNN model in a forward pass, and the activations are taken from the network’s last convolutional layer. (ii) Each activation map, of shape 1 × m × n, is upsampled to the size of the input image using bilinear interpolation. (iii) The upsampled activation maps are normalized so that each pixel lies within [0, 1], which maintains the relative intensities between the pixels. The formula given in Equation (4) is used for this normalization. (iv) After the activation maps have been normalized, the highlighted areas are projected onto the input space by multiplying each normalized activation map (1 × X × Y) with the original input image (3 × X × Y) to obtain a masked image M with the shape 3 × X × Y (Equation (5)). The resulting masked images M are then fed into a CNN with SoftMax output (Equation (6)). (v) Finally, pixel-wise ReLU (Equation (7)) is applied to the final activation map, which is the linear combination of the activation maps weighted by their target class scores.

| (4) |

| (5) |

| (6) |

| (7) |
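Steps (iii) to (v) of Score-CAM can be sketched in a few lines. This is a minimal sketch under stated assumptions, not the authors' code: the activation maps are assumed to be already upsampled to the image size (step (ii) is skipped), the image is treated as single-channel for brevity, and `class_score` is a hypothetical callable standing in for the CNN with SoftMax output that returns the target-class score of a masked image.

```python
from typing import Callable, List

Image = List[List[float]]


def normalize(fmap: Image) -> Image:
    """Min-max scale an activation map into [0, 1] (step iii)."""
    lo = min(min(row) for row in fmap)
    hi = max(max(row) for row in fmap)
    span = hi - lo
    return [[(v - lo) / span if span > 0 else 0.0 for v in row] for row in fmap]


def score_cam(image: Image, activations: List[Image],
              class_score: Callable[[Image], float]) -> Image:
    """Gradient-free saliency map: weight each map by the score it yields."""
    height, width = len(image), len(image[0])
    saliency = [[0.0] * width for _ in range(height)]
    for fmap in activations:
        mask = normalize(fmap)
        # Step iv: keep only the image regions highlighted by this map.
        masked = [[image[i][j] * mask[i][j] for j in range(width)]
                  for i in range(height)]
        weight = class_score(masked)  # target-class score of the masked input
        for i in range(height):
            for j in range(width):
                saliency[i][j] += weight * mask[i][j]
    # Step v: pixel-wise ReLU on the weighted sum of activation maps.
    return [[max(v, 0.0) for v in row] for row in saliency]
```

Because every activation map needs its own forward pass through the classifier, the cost grows with the number of channels, which is the motivation for the faster variant described next.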

Figure 7.

Score-CAM.

Finally, the fourth technique is labeled FasterScore-CAM. The main innovation of using FasterScore-CAM over the traditional Score-CAM technique is that it eliminates the channels with small variance and only utilizes the activation maps with large variance for heatmap computation and visualization. This selection of activation maps with large variance helps improve the overall speed by nearly ten-fold compared to Score-CAM.
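The channel-selection idea behind FasterScore-CAM can be illustrated as follows. This is a hedged sketch only: the function name `top_variance_channels` and the `keep` parameter are hypothetical, and the number of channels actually retained by the system is not stated in the text.

```python
from statistics import pvariance
from typing import List

Image = List[List[float]]


def top_variance_channels(activations: List[Image], keep: int) -> List[int]:
    """Indices of the `keep` activation maps with the largest variance."""
    variances = [pvariance([v for row in fmap for v in row])
                 for fmap in activations]
    ranked = sorted(range(len(activations)),
                    key=lambda k: variances[k], reverse=True)
    # Return the surviving channel indices in their original order.
    return sorted(ranked[:keep])
```

Only the selected channels would then be passed to the Score-CAM masking and scoring loop, so the number of forward passes drops from the full channel count to `keep`.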

2.5. Loss Function for Artificial-Intelligence-Based Models

During model generation, our system uses the cross-entropy (CE)-loss [103,104,105] function. If CE-loss can be represented by the notation , probability of the AI model by , gold standard label 1 and 0 by i and (1 − i), respectively, then the loss function equation can be mathematically expressed as shown in Equation (8).

| (8) |
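For a two-class problem such as COVID-19 vs. control, the cross-entropy loss takes the standard binary form sketched below. The symbols are assumptions made for illustration: `labels` holds the gold standard (1 or 0), `probs` holds the model probability of class 1, `eps` is a hypothetical clamp to avoid taking the logarithm of zero, and averaging over samples is assumed.

```python
import math
from typing import Sequence


def binary_cross_entropy(labels: Sequence[int], probs: Sequence[float],
                         eps: float = 1e-12) -> float:
    """Mean cross-entropy between 0/1 labels and predicted probabilities."""
    total = 0.0
    for g, p in zip(labels, probs):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(g * math.log(p) + (1 - g) * math.log(1.0 - p))
    return total / len(labels)
```

A confident correct prediction contributes a loss near zero, while a confident wrong prediction is penalized heavily, which is what drives the classifier toward well-separated class probabilities.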

2.6. Experimental Protocol

Our team has demonstrated several cross-validation (CV) protocols using the AI framework; this study uses a standardized five-fold CV technique to train the AI models [106,107]. Each fold consisted of 80% training data and 20% testing data. The K5 CV protocol was adopted, in which the data were partitioned into five parts, and each part was used in turn as the independent testing set while the remaining parts formed the training set. Note that we also used 10% of the data for validation.
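The K5 protocol can be sketched as an index-splitting routine. This is an illustrative sketch, not the study's code: the function name and the `seed` parameter are hypothetical, and it assumes that the 10% validation subset is carved out of the training portion of each fold, which the text does not specify.

```python
import random
from typing import Iterator, List, Tuple

Split = Tuple[List[int], List[int], List[int]]


def five_fold_splits(n_samples: int, n_folds: int = 5,
                     val_fraction: float = 0.10,
                     seed: int = 0) -> Iterator[Split]:
    """Yield (train, validation, test) index lists, one triple per fold."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Interleaved slicing gives n_folds disjoint parts of near-equal size.
    folds = [indices[k::n_folds] for k in range(n_folds)]
    n_val = int(round(val_fraction * n_samples))
    for k in range(n_folds):
        test = folds[k]
        rest = [i for j, fold in enumerate(folds) if j != k for i in fold]
        # Assumption: validation samples are taken from the training part.
        yield rest[n_val:], rest[:n_val], test
```

With 100 samples this yields five rotations of 70 training, 10 validation, and 20 testing indices, and every sample appears in exactly one testing set.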

The accuracy of the AI system is computed by comparing the predicted output with the ground-truth label. Because the output lung mask was either black or white, these measurements were interpreted as binary values (1 for white or 0 for black). If the symbols TP, TN, FN, and FP represent true positive, true negative, false negative, and false positive, respectively, Equation (9) may be used to evaluate the accuracy of the AI system.

| $\mathrm{Accuracy} = \dfrac{TP + TN}{TP + TN + FN + FP}$ | (9) |

Precision (Equation (10)) of an AI model is the ratio of the correctly labeled COVID-19 cases to all cases that the model labeled as COVID-19, including the false-positive cases. Recall (Equation (11)) is the ratio of the correctly labeled COVID-19-positive cases to the total number of COVID-19 cases in the data set. The F1-score (Equation (12)) is the harmonic mean of the precision and recall for the given AI model [108,109,110].

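| $\mathrm{Precision} = \dfrac{TP}{TP + FP}$ | (10) |

| $\mathrm{Recall} = \dfrac{TP}{TP + FN}$ | (11) |

| $\mathrm{F1\text{-}score} = \dfrac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$ | (12) |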
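The four metrics follow directly from the confusion-matrix counts, as the sketch below shows. The function name `classification_metrics` and the counts in the usage example are hypothetical and chosen only for illustration; they are not results from this study.

```python
from typing import Dict


def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> Dict[str, float]:
    """Accuracy, precision, recall, and F1-score from confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        # Harmonic mean of precision and recall.
        "f1": 2 * precision * recall / (precision + recall),
    }


if __name__ == "__main__":
    # Illustrative counts only.
    print(classification_metrics(tp=90, tn=80, fp=10, fn=20))
```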

3. Results and Performance Evaluation

The proposed study uses the ResNet-UNet model for lung CT segmentation (see Appendix A, Figure A1) and three DenseNet models, namely, DenseNet-121, DenseNet-169, and DenseNet-201 to classify COVID-19 vs. control. The AI classification model was trained on 1400 COVID-19 and 1050 control images, giving an accuracy of 98.21% with an AUC of 0.99 (p < 0.0001).

A confusion matrix (CM) is a table that shows how well a classification model performs on a set of test data for which the true labels are known. Table 2 presents the CM for the three kinds of DenseNet (DN) models (DN-121, DN-169, and DN-201). For DN-121, 1382 COVID-19 and 1020 control images were correctly classified, while 18 COVID-19 and 30 control images were misclassified. For DN-169, 1386 COVID-19 and 1028 control images were correctly classified, while 14 COVID-19 and 22 control images were misclassified. For DN-201, 1388 COVID-19 and 1038 control images were correctly classified, while 12 COVID-19 and 12 control images were misclassified.

Table 2.

Confusion matrix.

| DN-121 | COVID | Control |
|---|---|---|
| COVID | 99% (1382) | 3% (30) |
| Control | 1% (18) | 97% (1020) |

| DN-169 | COVID | Control |
|---|---|---|
| COVID | 99% (1386) | 2% (22) |
| Control | 1% (14) | 98% (1028) |

| DN-201 | COVID | Control |
|---|---|---|
| COVID | 99% (1388) | 1% (12) |
| Control | 1% (12) | 99% (1038) |

3.1. Results Using Explainable Artificial Intelligence

Visual Results Representing Lesion Using the Four CAM Techniques

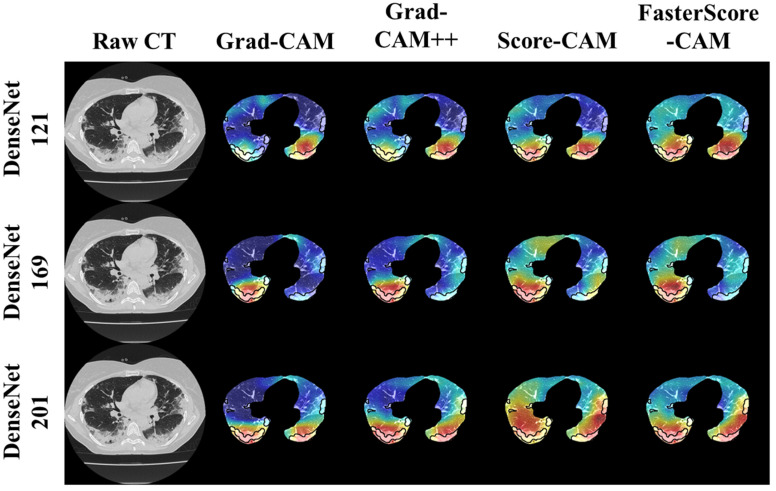

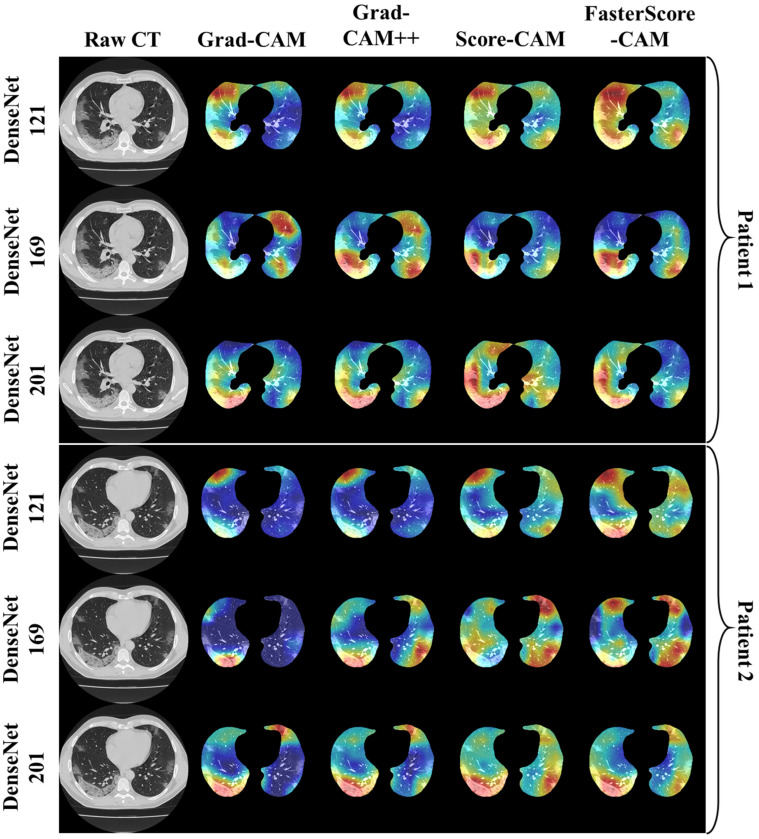

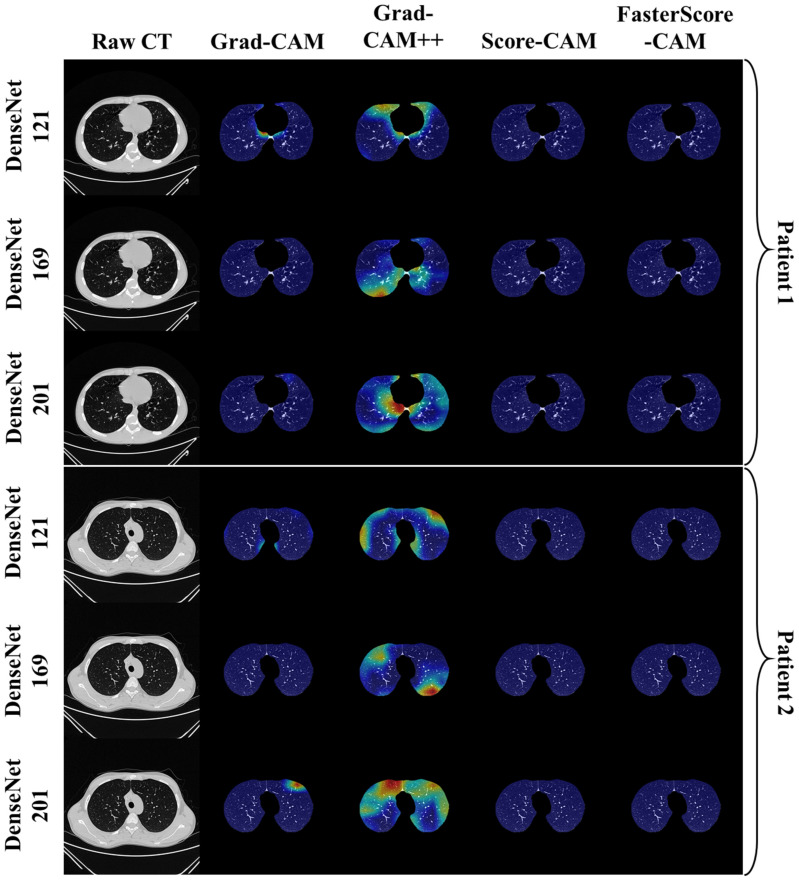

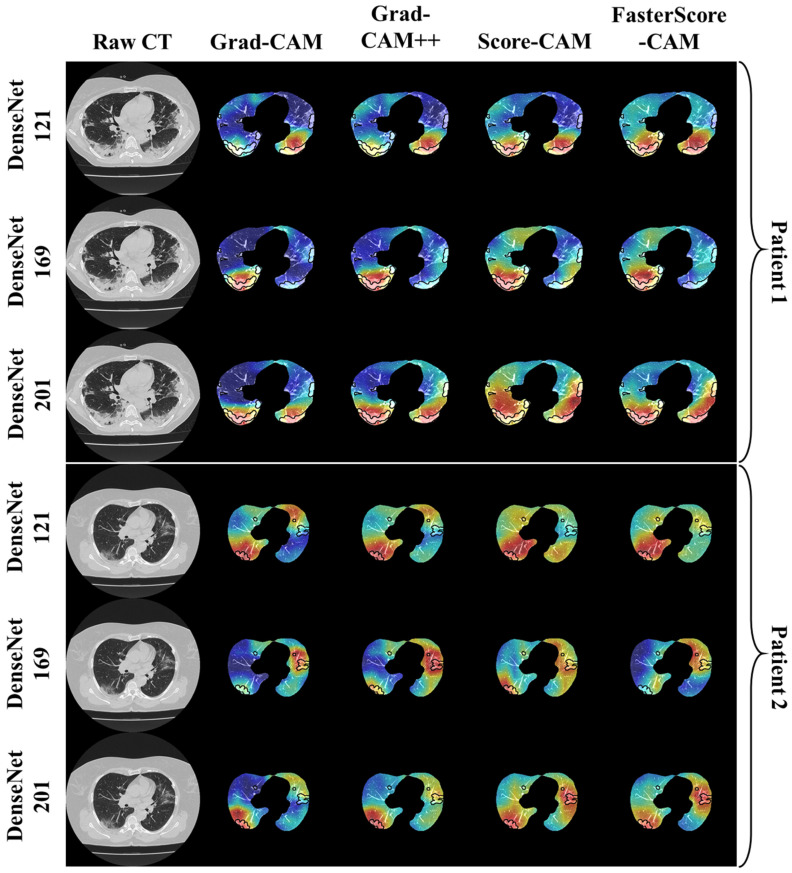

The trained DenseNet-121, DenseNet-169, and DenseNet-201 classification models were taken, and cXAI was applied to them to generate the heatmaps representing the lesions, thereby validating the prediction of the DenseNet models. The images used to train the classification models followed the pipeline described in Figure 1, where we first preprocess the CT volume by adjusting the HU intensities and then segment the lungs using the ResNet-UNet model. These segmented lung images are then fed to the classification network for training and for the application of cXAI. As part of cXAI, we used four CAM techniques, namely, (i) Grad-CAM, (ii) Grad-CAM++, (iii) Score-CAM, and (iv) FasterScore-CAM to visualize the results of the classification model. Figure 8 shows the output from the cXAI, which includes the expert’s lesion localization with black borders, indicating the lesions that the AI model missed and those it captured correctly.

Figure 8.

Heatmap using four CAM techniques using three kinds of DenseNet classifiers on COVID-19 lesion images.

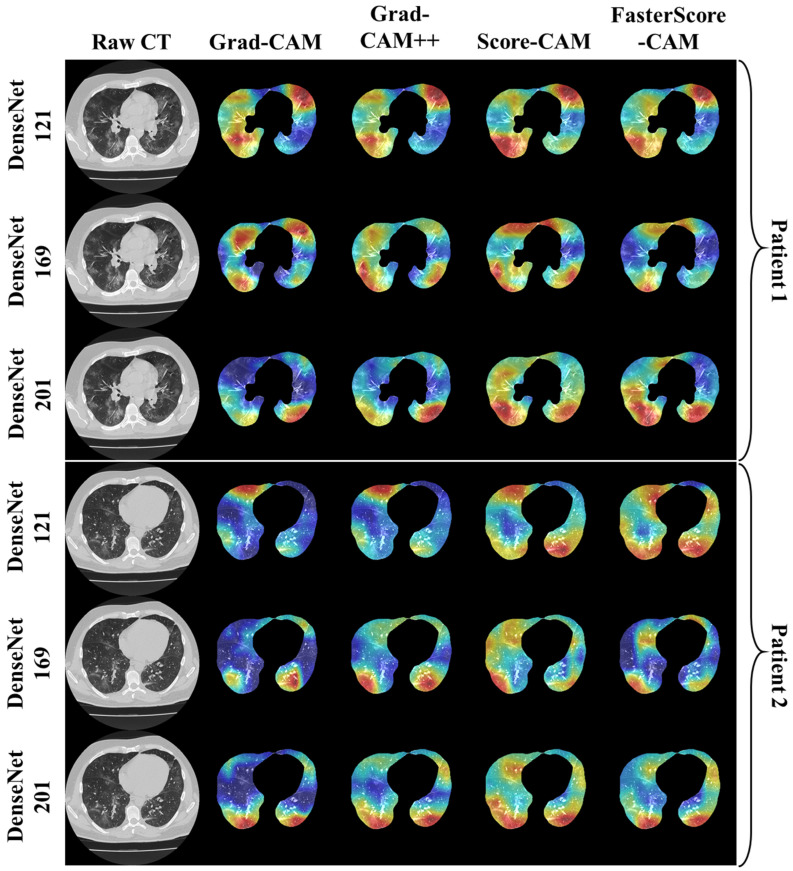

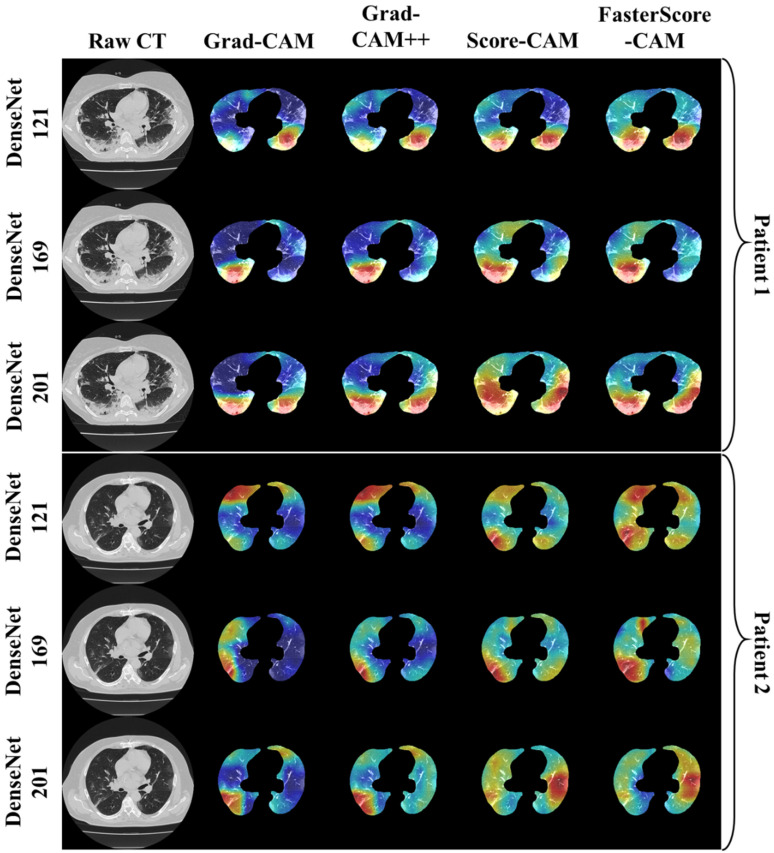

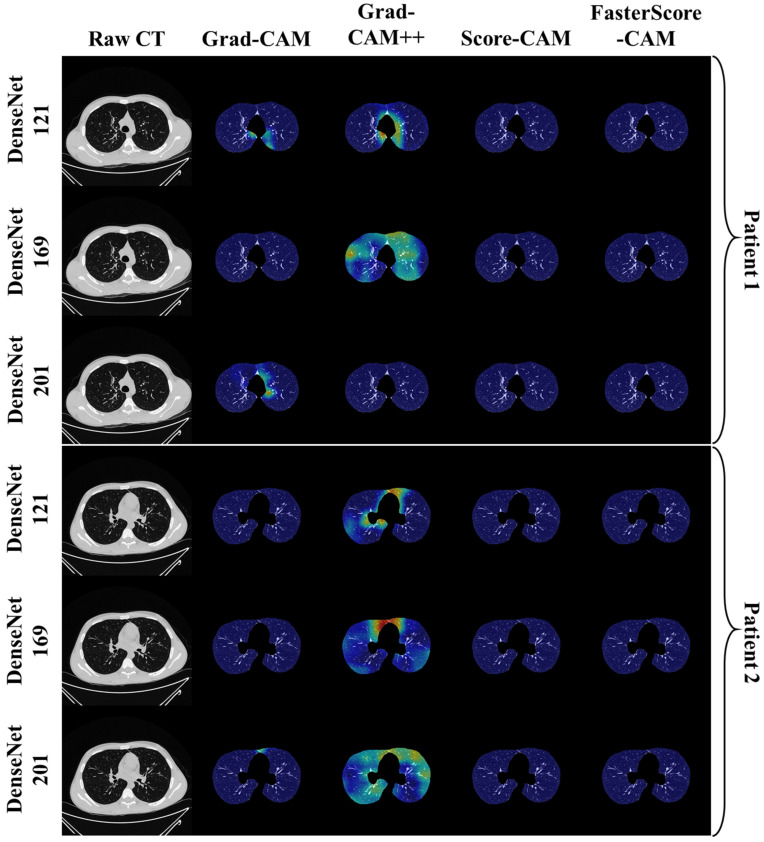

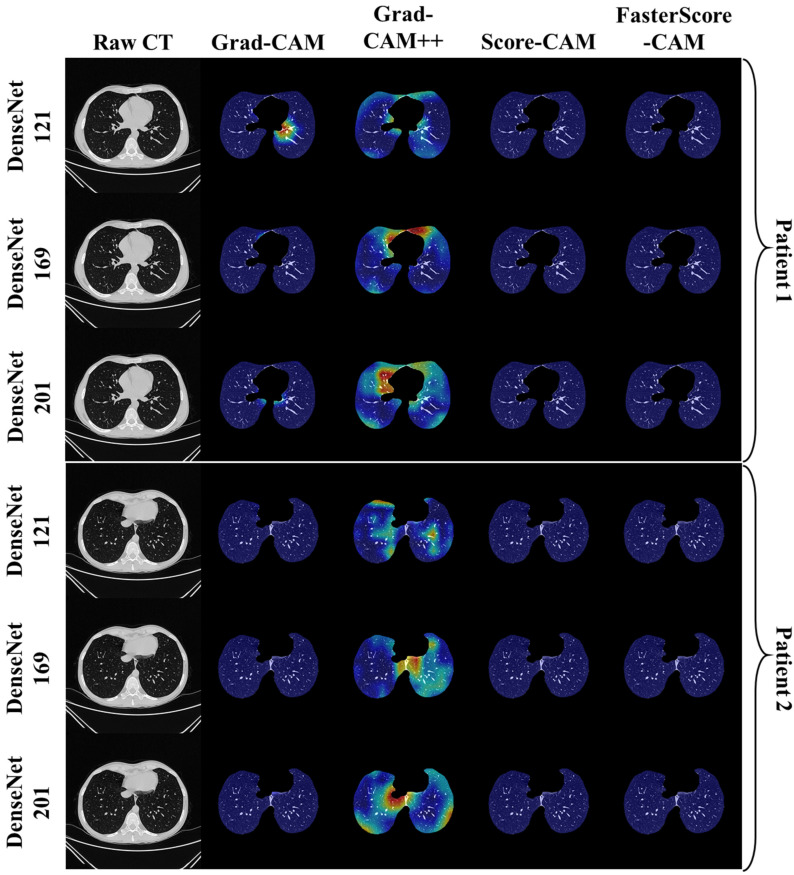

Figure 9, Figure 10, Figure 11, Figure 12, Figure 13 and Figure 14 show the visual results for the three kinds of DenseNet-based classifiers combined with four types of CAM models, namely Grad-CAM (column 2), Grad-CAM++ (column 3), Score-CAM (column 4), and FasterScore-CAM (column 5) on COVID-19 vs. control segmented lung images, where the red regions of the color map show the lesion localization using cXAI, thereby validating the prediction of the DenseNet models. Table 3 presents a comparative analysis of the three DenseNet models used in this study. The performance of the models has been compared using accuracy, loss, specificity, F1-score, recall, precision, and AUC scores. DenseNet-201 is the best-performing model in terms of accuracy, loss, specificity, F1-score, recall, and precision. However, because of its larger model size of 233 MB and its total of 203 million parameters, its training batch size was kept at 4. In contrast, the batch sizes for training DenseNet-121 and DenseNet-169 were kept at 16 and 8, respectively, because of their smaller model sizes of 93 MB and 165 MB and their lower parameter counts of 81 million and 143 million.

Figure 9.

Heatmap using four CAM techniques and three kinds of DenseNet classifiers on COVID-19 lesion images. The top row is the CT slice for patient 1, and the bottom row is the CT slice for patient 2.

Figure 10.

Heatmap using four CAM techniques using three kinds of DenseNet classifiers on COVID-19 lesion images. The top row is the CT slice for patient 1, and the bottom row is the CT slice for patient 2.

Figure 11.

Heatmap using four CAM techniques using three kinds of DenseNet classifiers on COVID-19 lesion images. The top row is the CT slice for patient 1, and the bottom row is the CT slice for patient 2.

Figure 12.

Heatmap using four CAM techniques using three kinds of DenseNet classifiers on control images. The top row is the CT slice for patient 1, and the bottom row is the CT slice for patient 2.

Figure 13.

Heatmap using four CAM techniques using three kinds of DenseNet classifiers on control images. The top row is the CT slice for patient 1, and the bottom row is the CT slice for patient 2.

Figure 14.

Heatmap using four CAM techniques using three kinds of DenseNet classifiers on control images. The top row is the CT slice for patient 1, and the bottom row is the CT slice for patient 2.

Table 3.

Comparative table for three kinds of DenseNet classifier models.

| SN | Attributes | DN-121 | DN-169 | DN-201 |
|---|---|---|---|---|
| 1 | # Layers | 430 | 598 | 710 |
| 2 | Learning Rate | 0.0001 | 0.0001 | 0.0001 |
| 3 | # Epochs | 20 | 20 | 20 |
| 4 | Loss | 0.003 | 0.0025 | 0.002 |
| 5 | ACC | 98 | 98.5 | 99 |
| 6 | SPE | 0.975 | 0.98 | 0.985 |
| 7 | F1-Score | 0.96 | 0.97 | 0.98 |
| 8 | Recall | 0.96 | 0.97 | 0.98 |
| 9 | Precision | 0.96 | 0.97 | 0.98 |
| 10 | AUC | 0.99 | 0.99 | 0.99 |
| 11 | Size (MB) | 93 | 165 | 233 |
| 12 | Batch size | 16 | 8 | 4 |
| 13 | Trainable Parameters | 80 M | 141 M | 200 M |
| 14 | Total Parameters | 81 M | 143 M | 203 M |

DN-121: DenseNet-121; DN-169: DenseNet-169; DN-201: DenseNet-201; # = number of. Bold highlights the superior performance of the DenseNet-201 (DN-201) model.

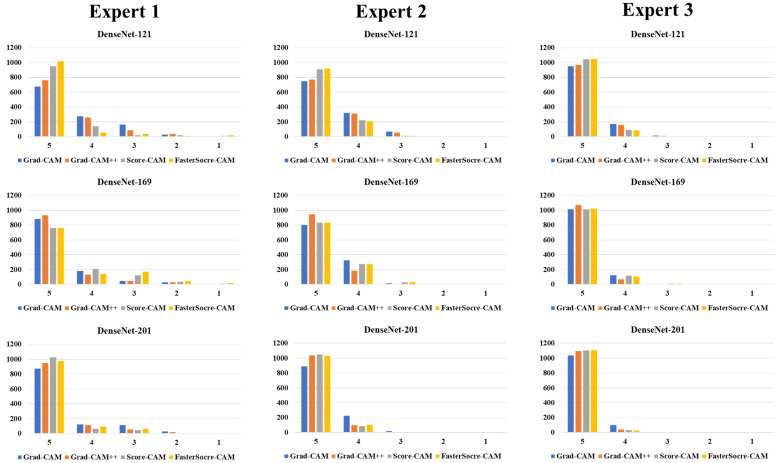

3.2. Performance Evaluation

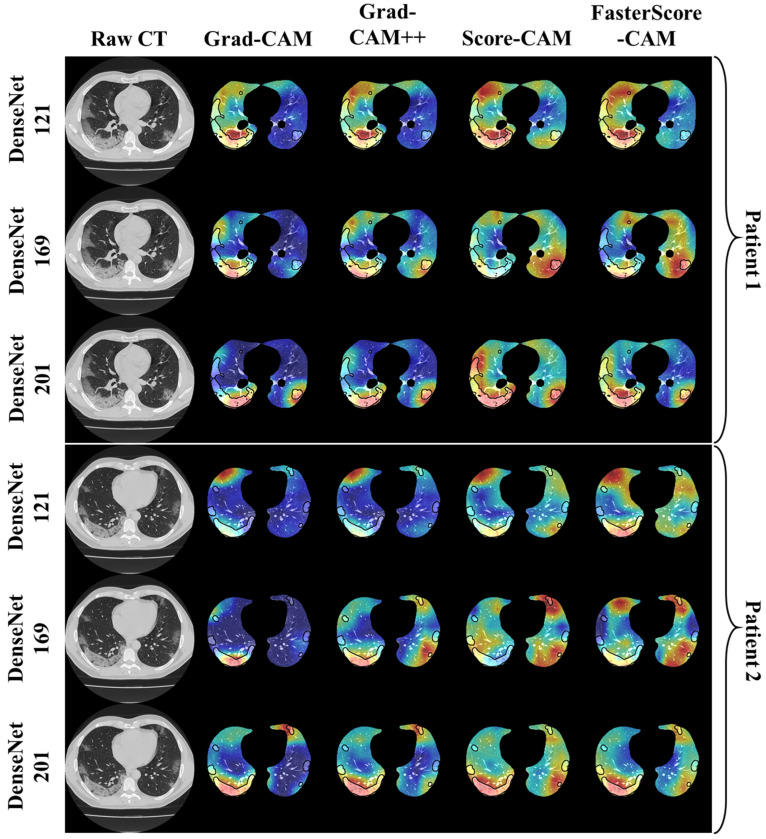

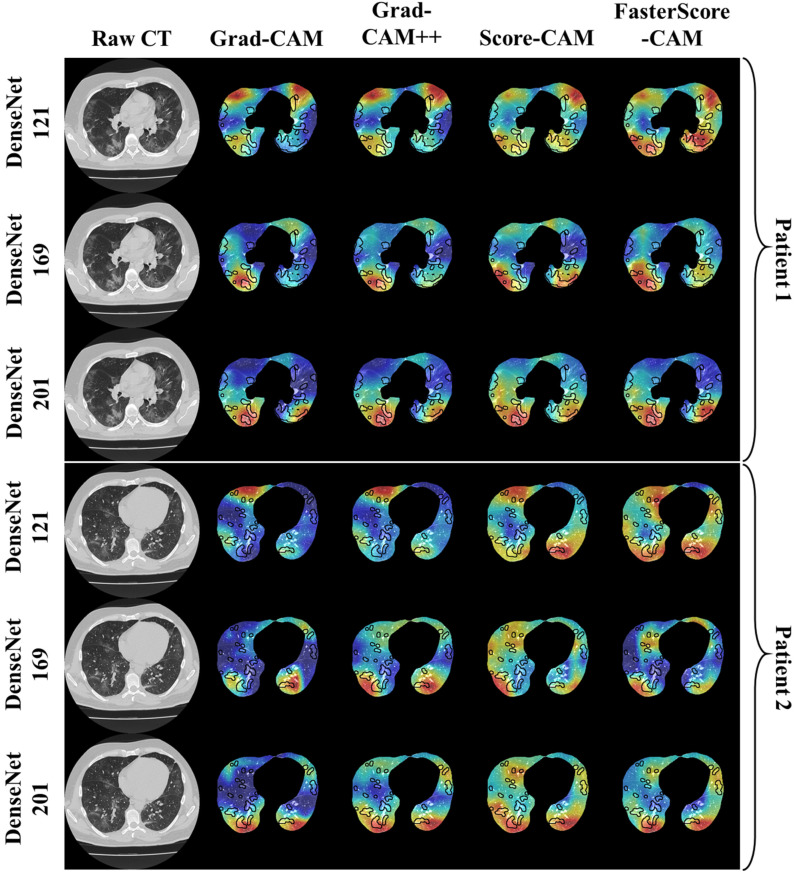

The proposed study uses two techniques: (i) segmentation of the CT lung; and (ii) classification of the CT lung between COVID-19 vs. controls. For the segmentation part, we present five kinds of performance evaluation metrics: (i) area error, (ii) Bland–Altman [111,112], (iii) correlation coefficient [113,114], (iv) dice similarity [115], and (v) Jaccard index. Figure 15, Figure 16 and Figure 17 show the overlay of the ground truth lesions on heatmaps as part of the performance evaluation. The four columns represent Grad-CAM (column 2), Grad-CAM++ (column 3), Score-CAM (column 4), and FasterScore-CAM (column 5) on the segmented lung CT image. For the three DenseNet-based classification models, we introduce a new metric to evaluate the heatmap, the mean alignment index (MAI). The MAI requires grading by a trained radiologist, who rates each heatmap image between 1 and 5, with 5 being the best score. This study incorporates inter-observer analysis using three senior trained radiologists from different countries for MAI scoring of the cXAI-generated heatmaps showing lesion localization on the images. The scores are then presented in the form of a bar chart (Figure 18) with grading from expert 1 (Figure 18, column 1), expert 2 (Figure 18, column 2), and expert 3 (Figure 18, column 3).

Figure 15.

Overlay of ground truth annotation on heatmap using four CAM techniques on three kinds of DenseNet classifiers for COVID-19 lesion images as part of the performance evaluation.

Figure 16.

Overlay of ground truth annotation on heatmap using four CAM techniques on three kinds of DenseNet classifiers for COVID-19 lesion images as part of the performance evaluation.

Figure 17.

Overlay of ground truth annotation on heatmap using four CAM techniques on three kinds of DenseNet classifiers for COVID-19 lesion images as part of the performance evaluation.

Figure 18.

Bar chart representing the MAI.

3.3. Statistical Validation

This study uses the Friedman test to check for statistically significant differences among three or more related groups, all of which comprise the same subjects [116,117,118]. The Friedman test’s null hypothesis states that there are no differences between the sample medians. The null hypothesis is rejected if the calculated p-value is less than the set significance threshold (0.05), in which case it can be concluded that at least two of the sample medians are significantly different from each other. Further analysis of the Friedman test is presented in “Appendix A (Table A1, Table A2 and Table A3)”. It was noted that the MAI scores of the three experts, for all three classification models (DenseNet-121, DenseNet-169, and DenseNet-201) and all four CAM techniques used in cXAI, showed significance of p < 0.00001. This supports the reliability of the overall COVLIAS 2.0-cXAI system.
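The mechanics of the Friedman test can be sketched for the three-rater case as follows. This is an illustrative sketch, not the analysis code of the study: it assumes that the three experts are the related groups and the scored images are the subjects, the function names are hypothetical, and it relies on the fact that a chi-square distribution with two degrees of freedom has the closed-form survival function exp(−q/2). The chi-square reference distribution is itself a large-sample approximation.

```python
import math
from typing import List, Sequence, Tuple


def average_ranks(row: Sequence[float]) -> List[float]:
    """Rank values within one subject, giving tied values their mean rank."""
    order = sorted(range(len(row)), key=lambda j: row[j])
    ranks = [0.0] * len(row)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and row[order[end + 1]] == row[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = mean_rank
        start = end + 1
    return ranks


def friedman_three_raters(scores: Sequence[Sequence[float]]) -> Tuple[float, float]:
    """Friedman statistic and p-value; rows are images, 3 columns are raters."""
    n, k = len(scores), 3
    rank_sums = [0.0] * k
    ties = 0.0
    for row in scores:
        ranks = average_ranks(row)
        for j in range(k):
            rank_sums[j] += ranks[j]
        for value in set(row):
            t = list(row).count(value)
            ties += t * (t * t - 1)
    q = 12.0 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3.0 * n * (k + 1)
    # Tie correction; undefined if every row is completely tied.
    q /= 1.0 - ties / (n * k * (k * k - 1))
    # k = 3 gives 2 degrees of freedom, so the p-value is exp(-q / 2).
    return q, math.exp(-q / 2.0)
```

A p-value below 0.05 would reject the null hypothesis that the three sets of scores share the same distribution.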

4. Discussion

4.1. Study Findings

To summarize, our prime contributions in the proposed study are six types of innovation in the design of COVLIAS 2.0-cXAI: (i) automated HDL lung segmentation using the ResNet-UNet model; (ii) classification of COVID-19 vs. controls using three kinds of DenseNets, namely, DenseNet-121 [55,56,57,83], DenseNet-169, and DenseNet-201, where the combination of segmentation and classification improved the overall performance of the system; (iii) the use of explainable AI, for the first time, to visualize and validate the prediction of the DenseNet models using four kinds of CAM, namely Grad-CAM, Grad-CAM++, Score-CAM, and FasterScore-CAM, which helps us understand the AI model’s learning in the input CT image [35,84,85,86]; (iv) a mean alignment index (MAI) between the heatmaps and the gold standard, scored by three trained senior radiologists at four out of five, establishing the system for clinical applicability, together with a Friedman test to present the statistical significance of the scores from the three experts; (v) application of quantization to the trained AI model, which speeds up online prediction and also reduces the final trained model size, making the complete system light; and (vi) an end-to-end cloud-based CT image analysis system, including the CT lung segmentation and COVID-19 intensity map using the four CAM techniques (Figure 1).

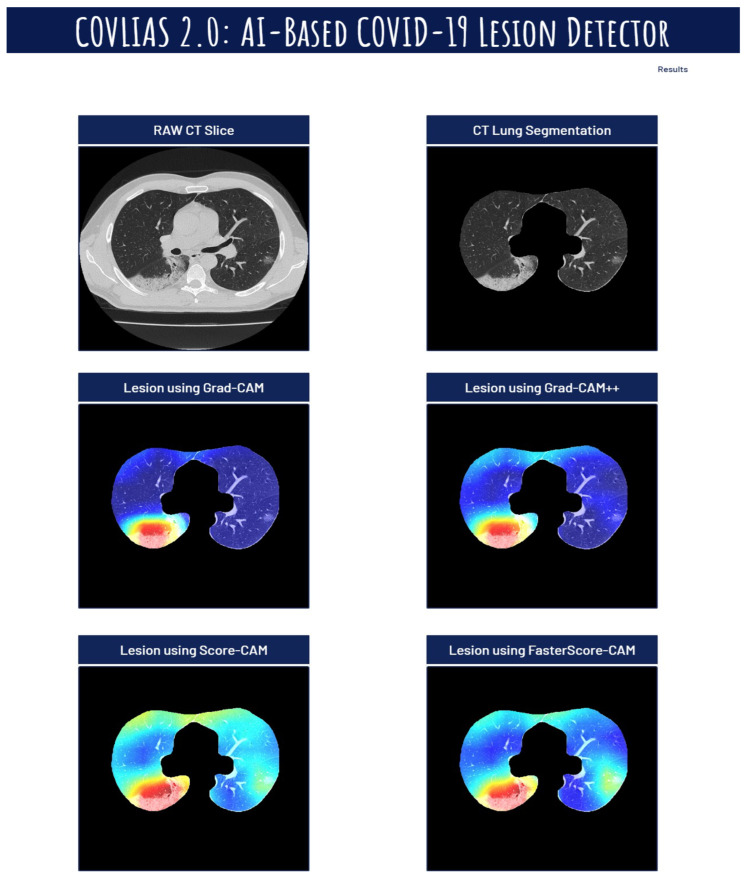

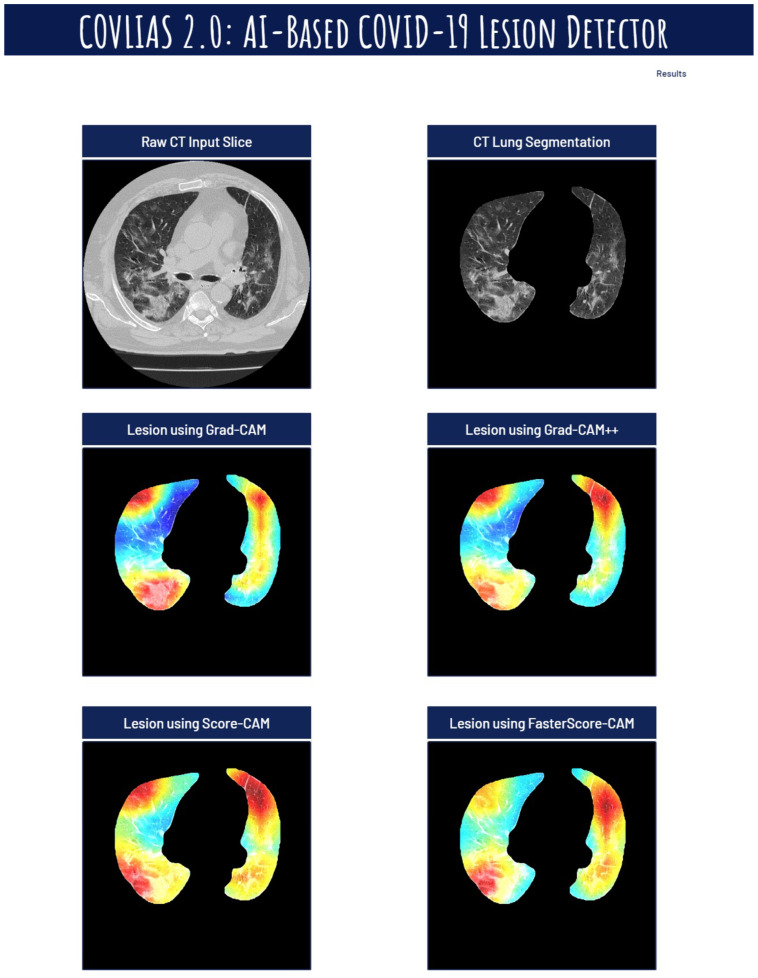

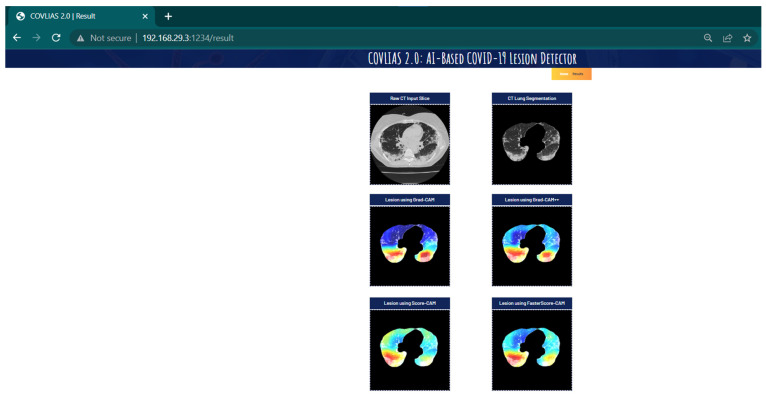

The proposed study presents heatmaps using four CAM techniques, namely, (i) Grad-CAM, (ii) Grad-CAM++, (iii) Score-CAM, and (iv) FasterScore-CAM. The CT lung segmentation using ResNet-UNet was adapted from our previous publication [93]. The segmented lung is then given as the input to the DenseNet classification models, which are trained to distinguish between COVID-19-positive and control individuals. The preprocessing involved in training the classification model consists of a Hounsfield unit (HU) adjustment to highlight the lung region (1600, −400), which lets the model train efficiently by improving the visibility of COVID-19 lesions [53]. Further, we have also designed a cloud-based AI system that takes the raw CT slice as the input and processes this image first for lung segmentation, followed by heatmap visualization using the four techniques [119,120,121,122,123]. Figure 19, Figure 20 and Figure 21 represent the output from the cloud-based COVLIAS 2.0-cXAI system (Figure 22, a web-view screenshot). The COVLIAS 2.0-cXAI uses multithreading to process the four CAM techniques in parallel and thus produces results faster than sequential processing.
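The HU adjustment mentioned above can be illustrated with a simple windowing routine. This is a sketch under an explicit assumption: the pair (1600, −400) is interpreted as a window width of 1600 HU centered at a level of −400 HU, which the text does not state outright. The function name `apply_hu_window` is hypothetical.

```python
from typing import List

Slice = List[List[float]]


def apply_hu_window(hu_slice: Slice, width: float = 1600.0,
                    level: float = -400.0) -> Slice:
    """Clip HU values to [level - width/2, level + width/2], scale to [0, 1]."""
    low = level - width / 2.0
    high = level + width / 2.0
    return [[(min(max(v, low), high) - low) / (high - low) for v in row]
            for row in hu_slice]
```

Under this assumption the window spans −1200 HU to 400 HU, so air-filled lung tissue and denser lesions both fall inside the displayed intensity range.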

Figure 19.

COVLIAS 2.0 cloud-based display of the lesion images using four CAM models.

Figure 20.

COVLIAS 2.0 cloud-based display of the lesion images using four CAM models.

Figure 21.

COVLIAS 2.0 cloud-based display of the lesion images using four CAM models.

Figure 22.

A web-view screenshot.
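The parallel generation of the four heatmaps in the cloud system can be sketched as follows. This is only an outline of the threading pattern: the CAM functions are hypothetical placeholders (`make_placeholder_cam` is not part of COVLIAS), and the source does not describe how the threads are actually organized.

```python
from concurrent.futures import ThreadPoolExecutor


def make_placeholder_cam(name):
    """Return a stand-in CAM function; a real one would run the classifier."""
    def cam_fn(ct_slice):
        # Hypothetical output: the method name and the slice size it received.
        return {"method": name, "rows": len(ct_slice), "cols": len(ct_slice[0])}
    return cam_fn


CAM_METHODS = {
    name: make_placeholder_cam(name)
    for name in ("Grad-CAM", "Grad-CAM++", "Score-CAM", "FasterScore-CAM")
}


def run_cams_in_parallel(ct_slice, methods=CAM_METHODS):
    """Submit one task per CAM method and collect the results by name."""
    with ThreadPoolExecutor(max_workers=len(methods)) as pool:
        futures = {name: pool.submit(fn, ct_slice) for name, fn in methods.items()}
        return {name: future.result() for name, future in futures.items()}


segmented_slice = [[0.0] * 4 for _ in range(4)]  # toy 4x4 stand-in for a CT slice
heatmaps = run_cams_in_parallel(segmented_slice)
print(sorted(heatmaps))
```

In Python, threads only shorten wall-clock time when the underlying work releases the interpreter lock (as numerical libraries typically do), so the actual speed-up depends on the framework used for inference.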

While it is intuitive to examine the relationship between demographics and COVID-19 severity [22,124,125,126], it is not always the case that (i) such a relationship exists, (ii) the data are collected with all demographic parameters and COVID-19 severity, (iii) the data are collected with comorbidity in mind, and/or (iv) the cohort sizes are large enough to establish the relationship. These limitations apply to our setup, and therefore no such relationship could be established; however, future research could establish such a relationship along with survival analysis. Collecting demographics and relating them to COVID-19 severity was not an objective of this study, although we have attempted this in previous studies [127].

Multiclass classification is not new [21,124,128,129]. In multiclass classification, the models are trained with multiple classes; if there are two or more classes, then the gold standard must also consist of the same two or more classes [124,129]. Note that in our study, the only two classes used were COVID-19 and controls; however, different kinds of lesions can be classified using a multiclass classification framework (for example, GGO vs. consolidations vs. crazy paving). This was out of the scope of the current work but can be part of a future study. Moreover, the inclusion of unsupervised techniques can also be attempted [130].

The total data size for ResNet-UNet-based segmentation was 5000 CT images. The trained models were used for segmentation followed by classification on 2450 test CT scans consisting of 1400 COVID-19 and 1050 control CT scans. Three kinds of DenseNet classifiers were used for classification of COVID-19 vs. controls. Further, COVLIAS 2.0-cXAI used explainable AI with four kinds of CAM for heatmap generation. Thus, overall, the system used 7450 CT images, which is relatively large. Owing to constraints on the radiologists’ time and cost, the test data set was nearly 33% of the total data set of the system, which is considered reasonable.
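The totals quoted above follow directly from the stated counts, as this short check shows (the variable names are illustrative):

```python
segmentation_images = 5000      # images used for ResNet-UNet segmentation
test_images = 1400 + 1050       # COVID-19 + control test scans
total_images = segmentation_images + test_images
test_fraction = test_images / total_images

print(total_images, round(100 * test_fraction, 1))
```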

4.2. Memorization vs. Generalization for Longitudinal Studies

Generalization is the process where the AI model does not purely depend upon the data sample size for best performance [34,131]. Since the models were trained using K5 cross-validation (CV) protocol (80:20), and the accuracy was predicted on the test data set, which was not part of the training data sets, the process of memorization was thus less likely to happen. Note that for every CV protocol, the “memorization vs. generalization” needs to be evaluated independently, especially keeping the treatment paradigm for longitudinal data sets, which was out of scope for the current settings. From our past experiences, the effect of generalization can be retained in the deep learning framework to a certain degree. In our recent experiments, where we had applied “unseen test data” on our trained AI models, it resulted in encouraging accuracy [27,132], which justifies “superior generalization” in deep learning frameworks, unlike in machine learning frameworks. Since COVLIAS 2.0-cXAI is a deep learning framework, we thus conclude that the cloud-based “COVLIAS 2.0-cXAI” can be adopted for the longitudinal data sets during the monitoring phase.
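The K5 (80:20) protocol can be sketched as below. This is a generic five-fold split over sample indices, written only to show that each fold's test portion is disjoint from its training portion; the helper name `k_fold_indices` and the seed are assumptions, and the source does not state whether folds were formed per slice or per patient.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Deal the shuffled indices into k folds of near-equal size.
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test_idx = folds[i]
        train_idx = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train_idx, test_idx


splits = list(k_fold_indices(n_samples=100, k=5))
for train_idx, test_idx in splits:
    # No sample appears on both sides of a split.
    assert not set(train_idx) & set(test_idx)
print([(len(train), len(test)) for train, test in splits])
```

With 100 samples and k = 5, every split holds 80 training and 20 test indices, and each sample is tested exactly once across the five folds.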

4.3. A Special Note on Training Data Set

We trained the ResNet-UNet segmentation model on 5000 COVID-19 images. An unseen data set of 2450 images (1400 COVID-19 and 1050 control) was used for testing. Since the training data set was quite large, we did not use augmentation during the training protocol. Note that the unseen data (2450) were also not augmented. While several published studies used an augmentation protocol [36,90,94,133,134,135] during classification, the inputs to our DenseNet classification models were never altered by rotation, tilt, or change in orientation. Further, note that we used the DICOM images directly, which contain orientation information. This information in the DICOM tag was used to correct rotation, tilt, or any abnormal orientation so that the lung is always vertically straight in the image.

4.4. A Special Note on Four CAM Models

While DL has demonstrated accuracy in image classification, object recognition, and image segmentation, model interpretability, a key component of model explainability, comprehension, and debugging, remains one of its most significant issues. This poses an intriguing question: how can one trust a model’s decisions without being able to fully justify how it reached them? There has been a recent surge of interest in XAI for a better understanding of AI black boxes [49,136,137,138,139]. Grad-CAM and Grad-CAM++ produce a coarse localization map that highlights the key regions in the image for predicting a target concept (say, “COVID-19” in a classification network) by using the gradients of that target concept with respect to the final convolutional layer. In contrast, Score-CAM is built on the idea of perturbation-based approaches that mask portions of the original input and measure the change in the target score. Each activation map is used as a mask on the input image, and the model then makes a prediction on the partially masked image. The resulting target class score is used as the weight of that activation map in the final class activation map. While Score-CAM is an excellent method, it takes more time to process than the other CAM methods. FasterScore-CAM makes Score-CAM more efficient by using only the dominant channels with large variances as mask images. The result is a CAM version that is ten times faster than Score-CAM.
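The difference between gradient-based and score-based weighting can be made concrete with a toy sketch. It operates on plain nested lists rather than a real network: `activations` and `gradients` stand in for the final convolutional layer, `toy_score` is a hypothetical stand-in for the classifier's target score, and the activation maps are assumed to already match the input size (real implementations upsample them). The `top_n` option follows the spirit of FasterScore-CAM by keeping only the highest-variance channels. None of this is the authors' code.

```python
import math


def flatten(channel):
    return [value for row in channel for value in row]


def variance(channel):
    values = flatten(channel)
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def normalise(channel):
    """Rescale one activation map to [0, 1] so it can act as a soft mask."""
    values = flatten(channel)
    low, span = min(values), max(values) - min(values)
    if span == 0:
        return [[0.0] * len(row) for row in channel]
    return [[(v - low) / span for v in row] for row in channel]


def weighted_relu_sum(maps, weights):
    """ReLU of the weighted sum of activation maps."""
    height, width = len(maps[0]), len(maps[0][0])
    return [[max(0.0, sum(w * m[r][c] for w, m in zip(weights, maps)))
             for c in range(width)] for r in range(height)]


def grad_cam(activations, gradients):
    """Grad-CAM: weight each channel by its spatially averaged gradient."""
    size = len(activations[0]) * len(activations[0][0])
    weights = [sum(map(sum, grad)) / size for grad in gradients]
    return weighted_relu_sum(activations, weights)


def score_cam(activations, image, score_fn, top_n=None):
    """Score-CAM: weight each channel by the score of the masked input."""
    channels = list(range(len(activations)))
    if top_n is not None:  # FasterScore-CAM-style channel selection
        channels.sort(key=lambda k: variance(activations[k]), reverse=True)
        channels = channels[:top_n]
    scores = []
    for k in channels:
        mask = normalise(activations[k])
        masked = [[p * m for p, m in zip(p_row, m_row)]
                  for p_row, m_row in zip(image, mask)]
        scores.append(score_fn(masked))
    # Softmax over the scores gives the channel weights.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    return weighted_relu_sum([activations[k] for k in channels], weights)


activations = [[[2.0, 0.0], [0.0, 0.0]],   # channel 0 fires top-left
               [[0.0, 0.0], [0.0, 1.0]]]   # channel 1 fires bottom-right
gradients = [[[1.0, 1.0], [1.0, 1.0]],     # channel 0 supports the class
             [[-1.0, -1.0], [-1.0, -1.0]]] # channel 1 opposes it
image = [[1.0, 1.0], [1.0, 1.0]]


def toy_score(masked_image):
    return masked_image[0][0]  # toy "model": responds only to the top-left pixel


print(grad_cam(activations, gradients))
print(score_cam(activations, image, toy_score, top_n=1))
```

Grad-CAM needs one backward pass, whereas Score-CAM needs one forward pass per channel, which is why restricting the channel set reduces its cost.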

4.5. Benchmarking the Proposed Model against Previous Strategies

We present the benchmarking strategy in Table 4, and this includes studies that utilized the CAM technique for COVID-19-based lesion localization. Lu et al. [140] presented CGENet, a deep graph model for COVID-19 detection on CT images. First, they established the appropriate backbone network for the CGENet adaptively. The authors then devised a novel graph-embedding mechanism to merge the spatial relationship into the feature vectors. Finally, to improve classification performance, they picked the extreme learning machine (ELM) [24] as the classifier for the proposed CGENet. Based on five-fold cross-validation, the suggested CGENet obtained an average accuracy of 97.78% on a large publicly available COVID-19 data set with ~2400 CT slices. They also compared the performance of CGENet against five existing methods. In addition, based on COVID-19 samples, the Grad-CAM maps were used to offer a visual explanation of CGENet. The authors did not report the AUC values and did not compare the other CAM methods such as Grad-CAM++, Score-CAM, and FasterScore-CAM.

Table 4.

Benchmarking table.

| SN | Author | Year | TP | TS | IS² | TM | DL Model | Modality | XAI | Heatmap Models | AUC | SEN | SPE | PRE | F1 | ACC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Lu et al. [140] | 2021 | | 2482 | 100 to 500 | 5 | CGENet | CT | ✗ | Grad-CAM | ✗ | 97.9 | 97.7 | 97.7 | 97.8 | 97.8 |
| 2 | Lahsaini et al. [141] | 2021 | 177 | 4968 | ✗ | 6 | Transferred DenseNet201 | CT | ✗ | Grad-CAM | 0.988 | 99.5 | 98.2 | 97.8 | 98 | 98.2 |
| 3 | Zhang et al. [143] | 2021 | 86 | 5504 | 1024 (CT), 2048 (X-ray) | 8 | MIDCAN | CT, X-ray | ✗ | Grad-CAM | 0.98 | 98.1 | 98 | 97.9 | 98 | 98 |
| 4 | Monta et al. [144] | 2021 | | 9208 | 299 | 7 | Fused-DenseNet-Tiny | X-ray | ✗ | Grad-CAM | ✗ | ✗ | ✗ | 98.4 | 98.3 | 98 |
| 5 | Proposed Suri et al. | 2022 | 80 | 5000 | 512 | 3 | DenseNet-121, DenseNet-169, DenseNet-201 | CT | ✓ | Grad-CAM, Grad-CAM++, Score-CAM, FasterScore-CAM | 0.99, 0.99, 0.99 | 0.96, 0.97, 0.98 | 0.975, 0.98, 0.985 | 0.96, 0.97, 0.98 | 0.96, 0.97, 0.98 | 98, 98.5, 99 |

TP: total patients; TS: total slice; IS: image size; TM: total models; AUC: area under the curve; SEN (%): sensitivity (or recall); SPE (%): specificity; PRE (%): precision; ACC (%): accuracy.

At Tlemcen Hospital in Algeria, Lahsaini et al. [141] first gathered a data set of 4986 COVID and non-COVID images validated by RT-PCR assays. Then, to conduct a comparative analysis, they performed transfer learning on DL models that received the highest results on the ImageNet data set, such as DenseNet-121, DenseNet-201, VGG16, VGG19, Inception Resnet-V2, and Xception [142]. Finally, they proposed an explainable model for detecting COVID-19 in chest CT images and explaining the output decision based on the DenseNet-201 architecture. According to the results of the tests, the proposed design has a 98.8% accuracy rate. It also uses Grad-CAM to provide a visual explanation. The authors did not compare them with other CAM methods such as Grad-CAM++, Score-CAM, and FasterScore-CAM.

Zhang et al. [143] investigated whether combining chest CT and chest X-ray data can assist AI to diagnose more accurately. Approximately 5500 CT slices were collected from 86 participants for this study. The convolutional block attention module (CBAM) was used to create an end-to-end multiple-input deep convolutional attention network (MIDCAN). One of the model’s inputs received a CT image, while the other received an X-ray image. Grad-CAM was also used to create an explainable heatmap. The suggested MIDCAN had an accuracy of 98.02%, a sensitivity of 98.1%, and a specificity of 97.95%. The authors did not compare the other CAM methods such as Grad-CAM++, Score-CAM, and FasterScore-CAM.

Monta et al. [144] presented the Fused-DenseNet-Tiny, a lightweight DCNN model based on a truncated and concatenated DenseNet. Transfer learning, partial layer freezing, and feature fusion were used to train the model to learn chest X-ray (CXR) features from 9208 CXR images. The proposed model was shown to be 97.99% accurate during testing. Despite its lightweight construction, the Fused-DenseNet-Tiny cannot outperform its larger counterpart due to its limited feature extraction capabilities. The authors also used Grad-CAM to explain the trained AI model visually. The authors did not report the AUC values and did not compare the other CAM methods such as Grad-CAM++, Score-CAM, and FasterScore-CAM.

4.6. Strengths, Weakness, and Extensions

The study presented COVLIAS 2.0-cXAI, a cloud-based XAI system for COVID-19 lesion detection and visualization. The cXAI system presented a comparison of four heatmap techniques, (i) Grad-CAM, (ii) Grad-CAM++, (iii) Score-CAM, and (iv) FasterScore-CAM for the first time using three DenseNet models, namely, DenseNet-121, DenseNet-169, and DenseNet-201 for COVID-19 lung CT images. To improve the prediction of the three DenseNet models, we first segment the CT lung using a hybrid DL model ResNet-UNet and then pass it to the classification network. Applying quantization to the three trained AI models, namely, DenseNet-121, DenseNet-169, and DenseNet-201, while making the prediction, helps in faster online prediction. Further, it also reduces the final trained AI model size, making the complete system light. The overall cXAI system incorporates validation of the lesion localization using expert grading, thereby generating an MAI score. Lastly, the study presents an end-to-end cloud-based CT image analysis system (COVLIAS 2.0-cXAI), including the CT lung segmentation (ResNet-UNet) and COVID-19 lesion intensity map using cXAI techniques. This study uses inter-observer variability similar to other variability measurements [145] to score the MAI for lesion localization, which was further validated using the Friedman test.
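The size and speed benefit of quantization can be illustrated with a generic 8-bit affine quantization of a weight list. This is a textbook sketch, not the scheme used in COVLIAS 2.0-cXAI (the source does not specify one); `quantize_int8` and `dequantize_int8` are hypothetical helper names.

```python
def quantize_int8(weights):
    """Affine-quantize floats to int8 codes; return (codes, scale, zero_point)."""
    w_min = min(min(weights), 0.0)  # include 0.0 so it is exactly representable
    w_max = max(max(weights), 0.0)
    scale = (w_max - w_min) / 255.0 or 1.0  # fall back to 1.0 for all-zero input
    zero_point = round(-128 - w_min / scale)
    codes = [max(-128, min(127, round(w / scale) + zero_point)) for w in weights]
    return codes, scale, zero_point


def dequantize_int8(codes, scale, zero_point):
    """Map int8 codes back to approximate float values."""
    return [(q - zero_point) * scale for q in codes]


weights = [-0.51, -0.02, 0.0, 0.13, 0.76]  # illustrative values only
codes, scale, zero_point = quantize_int8(weights)
restored = dequantize_int8(codes, scale, zero_point)
print(codes)
print([round(w, 3) for w in restored])
```

Each weight is stored in one byte instead of the four bytes of a 32-bit float, at the cost of a rounding error on the order of the quantization step `scale`.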

Even though the three AI models, DenseNet-121, DenseNet-169, and DenseNet-201, produced promising results on a data set from a single location, the study was limited to one observer due to cost, time, and radiologists’ availability. Several DenseNet variants have been developed that could be tried in place of the current DenseNet models [146,147,148]; as an extension to this study, more AI models can be explored, including the use of the HDL model within a binary or multiclass classification framework [128]. Explainable AI is an emerging field, and many new strategies can be incorporated [47,50,149,150,151,152,153,154,155,156,157]. New techniques such as SHAP [52,158] and UMAP [159] have evolved. Heatmaps produced by Grad-CAM have been used for XAI in several applications [64], where the generated heatmaps are thresholded to compute the lesions, which are then compared against the gold standard [49]. Choi et al. [48] used SHAP to demonstrate the high-risk factors responsible for higher phosphate. Further, to improve the speed of the AI model, model optimization techniques such as weight clustering and AI model pruning [160,161,162,163,164] can be applied [115,165,166,167,168,169]. Techniques such as storage reduction are necessary when dealing with AI solutions [51,54,170,171,172]. Conventional image processing can be fused with AI to improve the performance of the system [173,174]. These AI technologies are likely to benefit long-COVID [175].

5. Conclusions

The proposed study is the first pilot study that integrates a cloud-based explainable artificial intelligence system using four techniques, namely, (i) Grad-CAM, (ii) Grad-CAM++, (iii) Score-CAM, and (iv) FasterScore-CAM-based lesion localization using three DenseNet models, namely, DenseNet-121, DenseNet-169, and DenseNet-201. Thus, it compares the methods and explainability of the four different CAM strategies for COVID-19-based CT lung lesion localization. DenseNet-121, DenseNet-169, and DenseNet-201 demonstrated an accuracy performance of 98%, 98.5%, and 99%, respectively. The study incorporated a hybrid DL (ResNet-UNet) for COVID-19-based CT lung segmentation using independent cross-validation and performance evaluation schemes. To validate the lesion, three trained senior radiologists scored the lesion localization on the CT lung data set and then compared it against the heatmap generated by cXAI, resulting in the MAI score. Overall, ~80% of CT scans were above an MAI score of four out of five, demonstrating matching lesion locations using cXAI vs. gold standard, thus proving the clinical applicability. Further, the Friedman test was also performed on the MAI scores by comparing the three radiologists. The online cloud-based COVLIAS 2.0-cXAI achieves (i) CT lung image segmentation and (ii) generation of four CAM techniques in less than 10 s for one CT slice. The COVLIAS 2.0-cXAI demonstrated reliability, high accuracy, and clinical stability.

Appendix A

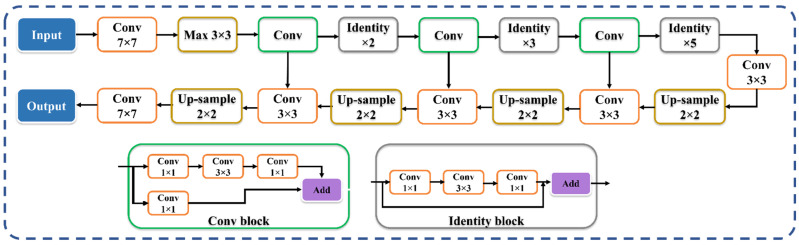

Figure A1.

ResNet-UNet architecture.

Table A1.

Friedman test using DenseNet-121 model on the MAI score from three experts.

| Model | XAI | Experts | Min. | 25th Percentile | Med | 75th Percentile | Max | DF-1 | DF-2 | p Value | F |
|---|---|---|---|---|---|---|---|---|---|---|---|
| DenseNet-121 | Grad-CAM | Expert 1 | 2 | 4 | 5 | 5 | 5 | 2 | 2278 | <0.00001 | 171.81 |
| | | Expert 2 | 3 | 4 | 5 | 5 | 5 | | | | |
| | | Expert 3 | 2.7 | 4.2 | 4.6 | 4.8 | 5 | | | | |
| | Grad-CAM++ | Expert 1 | 2 | 4 | 5 | 5 | 5 | 2 | 2278 | <0.00001 | 244.9 |
| | | Expert 2 | 3 | 4 | 5 | 5 | 5 | | | | |
| | | Expert 3 | 2.8 | 4.3 | 4.6 | 4.8 | 5 | | | | |
| | Score-CAM | Expert 1 | 1 | 5 | 5 | 5 | 5 | 2 | 2278 | <0.00001 | 740.1 |
| | | Expert 2 | 3 | 5 | 5 | 5 | 5 | | | | |
| | | Expert 3 | 2 | 4.5 | 4.7 | 4.9 | 5 | | | | |
| | FasterScore-CAM | Expert 1 | 1 | 5 | 5 | 5 | 5 | 2 | 2278 | <0.00001 | 1072.54 |
| | | Expert 2 | 3 | 5 | 5 | 5 | 5 | | | | |
| | | Expert 3 | 2.8 | 4.5 | 4.7 | 4.8 | 5 | | | | |

Min: minimum; Med: median; Max: maximum; F: Friedman statistics.

Table A2.

Friedman test using DenseNet-169 model on the MAI score from three experts.

| Model | XAI | Experts | Min. | 25th Percentile | Med | 75th Percentile | Max | DF-1 | DF-2 | p Value | F |
|---|---|---|---|---|---|---|---|---|---|---|---|
| DenseNet-169 | Grad-CAM | Expert 1 | 2 | 5 | 5 | 5 | 5 | 2 | 2278 | <0.00001 | 432.84 |
| | | Expert 2 | 3 | 4 | 5 | 5 | 5 | | | | |
| | | Expert 3 | 2.7 | 4.4 | 4.6 | 4.8 | 5 | | | | |
| | Grad-CAM++ | Expert 1 | 2 | 5 | 5 | 5 | 5 | 2 | 2278 | <0.00001 | 689.05 |
| | | Expert 2 | 3 | 5 | 5 | 5 | 5 | | | | |
| | | Expert 3 | 3.2 | 4.5 | 4.7 | 4.8 | 5 | | | | |
| | Score-CAM | Expert 1 | 1 | 4 | 5 | 5 | 5 | 2 | 2278 | <0.00001 | 282.56 |
| | | Expert 2 | 3 | 4 | 5 | 5 | 5 | | | | |
| | | Expert 3 | 2.8 | 4.5 | 4.7 | 4.8 | 5 | | | | |
| | FasterScore-CAM | Expert 1 | 1 | 4 | 5 | 5 | 5 | 2 | 2278 | <0.00001 | 253.15 |
| | | Expert 2 | 3 | 4 | 5 | 5 | 5 | | | | |
| | | Expert 3 | 2.7 | 4.4 | 4.4 | 4.8 | 5 | | | | |

Min: minimum; Med: median; Max: maximum; F: Friedman statistics.

Table A3.

Friedman test using DenseNet-201 model on the MAI score from three experts.

| Model | XAI | Experts | Min. | 25th Percentile | Med | 75th Percentile | Max | DF-1 | DF-2 | p Value | F |
|---|---|---|---|---|---|---|---|---|---|---|---|
| DenseNet-201 | Grad-CAM | Expert 1 | 2 | 5 | 5 | 5 | 5 | 2 | 2278 | <0.00001 | 499.3 |
| | | Expert 2 | 3 | 5 | 5 | 5 | 5 | | | | |
| | | Expert 3 | 2.8 | 4.5 | 4.7 | 4.9 | 5 | | | | |
| | Grad-CAM++ | Expert 1 | 2 | 5 | 5 | 5 | 5 | 2 | 2278 | <0.00001 | 1151.78 |
| | | Expert 2 | 3 | 5 | 5 | 5 | 5 | | | | |
| | | Expert 3 | 2.7 | 4.6 | 4.7 | 4.9 | 5 | | | | |
| | Score-CAM | Expert 1 | 3 | 5 | 5 | 5 | 5 | 2 | 2278 | <0.00001 | 1719.93 |
| | | Expert 2 | 3 | 5 | 5 | 5 | 5 | | | | |
| | | Expert 3 | 3 | 4.6 | 4.7 | 4.9 | 5 | | | | |
| | FasterScore-CAM | Expert 1 | 3 | 5 | 5 | 5 | 5 | 2 | 2278 | <0.00001 | 1239.82 |
| | | Expert 2 | 3 | 5 | 5 | 5 | 5 | | | | |
| | | Expert 3 | 2.9 | 4.6 | 4.7 | 4.9 | 5 | | | | |

Min: minimum; Med: median; Max: maximum; F: Friedman statistics.
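For readers who wish to reproduce this kind of analysis, the Friedman test on scores from several raters can be sketched as below. The sketch computes the tie-corrected Friedman chi-square statistic and its F-form (the Iman-Davenport conversion) with degrees of freedom (k − 1) and (k − 1)(n − 1) for k raters and n items. Whether Tables A1, A2 and A3 were produced with this exact variant is an assumption, and the scores in the example are made up for illustration.

```python
import math


def average_ranks(values):
    """Rank values from 1 to k, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        mean_rank = (pos + end) / 2.0 + 1.0
        for i in order[pos:end + 1]:
            ranks[i] = mean_rank
        pos = end + 1
    return ranks


def friedman_test(scores):
    """scores[i][j] is the score given to item i by rater j.

    Returns (chi_square, f_statistic, (df1, df2)).
    """
    n, k = len(scores), len(scores[0])
    ranked = [average_ranks(row) for row in scores]
    rank_sums = [sum(row[j] for row in ranked) for j in range(k)]
    expected = n * (k + 1) / 2.0
    between = sum((r - expected) ** 2 for r in rank_sums)
    sum_sq = sum(r * r for row in ranked for r in row)
    denom = sum_sq - n * k * (k + 1) ** 2 / 4.0
    chi_sq = 0.0 if denom == 0 else (k - 1) * between / denom
    f_denom = n * (k - 1) - chi_sq
    f_stat = math.inf if f_denom <= 0 else (n - 1) * chi_sq / f_denom
    return chi_sq, f_stat, (k - 1, (k - 1) * (n - 1))


# Made-up scores for four items from three raters (illustration only).
example_scores = [[1, 2, 3], [1, 2, 3], [1, 2, 3], [1, 3, 2]]
chi_sq, f_stat, dof = friedman_test(example_scores)
print(chi_sq, f_stat, dof)
```

With three raters, DF-1 = 2 as in the tables above; a DF-2 of 2278 would correspond to n = 1140 rated items under this form, although the source does not state n.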

Author Contributions

Conceptualization, J.S.S., S.A. and N.N.K.; Data curation, G.L.C., A.C., A.P., P.S.C.D., L.S., A.M., G.F., M.T., P.R.K., F.N., Z.R. and K.V.; Formal analysis, J.S.S.; Funding acquisition, M.M.F.; Investigation, J.S.S., I.M.S., P.S.C., A.M.J., N.N.K., S.M., J.R.L., G.P., D.W.S., P.P.S., G.T., A.D.P., D.P.M., V.A., J.S.T., M.A.-M., S.K.D., A.N., A.S., M.F., A.A., F.N., Z.R., M.M.F. and K.V.; Methodology, J.S.S., S.A., G.L.C. and A.B.; Project administration, J.S.S. and M.K.K.; Software, S.A. and L.S.; Supervision, J.S.S., L.S., A.M., M.F., M.M.F., S.N. and M.K.K.; Validation, S.A., G.L.C., K.V. and M.K.K.; Visualization, S.A. and V.R.; Writing—original draft, S.A.; Writing—review & editing, J.S.S., G.L.C., A.C., A.P., P.S.C.D., L.S., A.M., I.M.S., M.T., P.S.C., A.M.J., N.N.K., S.M., J.R.L., G.P., M.M., D.W.S., A.B., P.P.S., G.T., A.D.P., D.P.M., V.A., G.D.K., J.S.T., M.A.-M., S.K.D., A.N., A.S., V.R., M.F., A.A., M.M.F., S.N., K.V. and M.K.K. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved as follows. For the Italian data set: IRB approval for the retrospective analysis of CT lung in patients affected by COVID-19 was granted by the Hospital of Novara to Alessandro Carriero, co-author of this research on the application of artificial intelligence to the detection and risk stratification of COVID patients. Ethics Committee Name: Assessment of diagnostic performance of Computed Tomography in patients affected by SARS COVID-19 Infection. Approval Code: 131/20. Approval: authorized by the Azienda Ospedaliero Universitaria Maggiore della Carità di Novara on 25 June 2020. For the Croatian data set: Ethics Committee Name: The use of artificial intelligence for multislice computer tomography (MSCT) images in patients with adult respiratory diseases syndrome and COVID-19 pneumonia. Approval Code: 01-2239-1-2020. Approval: authorized by the University Hospital for Infectious Diseases “Dr. Fran Mihaljevic”, Zagreb, Mirogojska 8, on 9 November 2020. Approved to Klaudija Viskovic.

Informed Consent Statement

Informed Consent was waived because the research involves anonymized records and data sets.

Data Availability Statement

Not available.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This research received no external funding.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Cucinotta D., Vanelli M. WHO Declares COVID-19 a Pandemic. Acta Biomed. 2020;91:157–160. doi: 10.23750/abm.v91i1.9397. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.WHO Coronavirus (COVID-19) Dashboard. [(accessed on 24 January 2022)]. Available online: https://covid19.who.int/

- 3.Suri J.S., Puvvula A., Biswas M., Majhail M., Saba L., Faa G., Singh I.M., Oberleitner R., Turk M., Chadha P.S., et al. COVID-19 pathways for brain and heart injury in comorbidity patients: A role of medical imaging and artificial intelligence-based COVID severity classification: A review. Comput. Biol. Med. 2020;124:103960. doi: 10.1016/j.compbiomed.2020.103960. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Cau R., Mantini C., Monti L., Mannelli L., Di Dedda E., Mahammedi A., Nicola R., Roubil J., Suri J.S., Cerrone G., et al. Role of imaging in rare COVID-19 vaccine multiorgan complications. Insights Imaging. 2022;13:44. doi: 10.1186/s13244-022-01176-w. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Saba L., Gerosa C., Fanni D., Marongiu F., La Nasa G., Caocci G., Barcellona D., Balestrieri A., Coghe F., Orru G., et al. Molecular pathways triggered by COVID-19 in different organs: ACE2 receptor-expressing cells under attack? A review. Eur. Rev. Med. Pharm. Sci. 2020;24:12609–12622. doi: 10.26355/eurrev_202012_24058. [DOI] [PubMed] [Google Scholar]

- 6.Onnis C., Muscogiuri G., Paolo Bassareo P., Cau R., Mannelli L., Cadeddu C., Suri J.S., Cerrone G., Gerosa C., Sironi S., et al. Non-invasive coronary imaging in patients with COVID-19: A narrative review. Eur. J. Radiol. 2022;149:110188. doi: 10.1016/j.ejrad.2022.110188. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Viswanathan V., Puvvula A., Jamthikar A.D., Saba L., Johri A.M., Kotsis V., Khanna N.N., Dhanjil S.K., Majhail M., Misra D.P. Bidirectional link between diabetes mellitus and coronavirus disease 2019 leading to cardiovascular disease: A narrative review. World J. Diabetes. 2021;12:215. doi: 10.4239/wjd.v12.i3.215. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Fanni D., Saba L., Demontis R., Gerosa C., Chighine A., Nioi M., Suri J.S., Ravarino A., Cau F., Barcellona D., et al. Vaccine-induced severe thrombotic thrombocytopenia following COVID-19 vaccination: A report of an autoptic case and review of the literature. Eur. Rev. Med. Pharm. Sci. 2021;25:5063–5069. doi: 10.26355/eurrev_202108_26464. [DOI] [PubMed] [Google Scholar]

- 9.Gerosa C., Faa G., Fanni D., Manchia M., Suri J., Ravarino A., Barcellona D., Pichiri G., Coni P., Congiu T. Fetal programming of COVID-19: May the barker hypothesis explain the susceptibility of a subset of young adults to develop severe disease? Eur. Rev. Med. Pharmacol. Sci. 2021;25:5876–5884. doi: 10.26355/eurrev_202109_26810. [DOI] [PubMed] [Google Scholar]

- 10.Cau R., Pacielli A., Fatemeh H., Vaudano P., Arru C., Crivelli P., Stranieri G., Suri J.S., Mannelli L., Conti M., et al. Complications in COVID-19 patients: Characteristics of pulmonary embolism. Clin. Imaging. 2021;77:244–249. doi: 10.1016/j.clinimag.2021.05.016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Kampfer N.A., Naldi A., Bragazzi N.L., Fassbender K., Lesmeister M., Lochner P. Reorganizing stroke and neurological intensive care during the COVID-19 pandemic in Germany. Acta Biomed. 2021;92:e2021266. doi: 10.23750/abm.v92i5.10418. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Congiu T., Demontis R., Cau F., Piras M., Fanni D., Gerosa C., Botta C., Scano A., Chighine A., Faedda E. Scanning electron microscopy of lung disease due to COVID-19-a case report and a review of the literature. Eur. Rev. Med. Pharmacol. Sci. 2021;25:7997–8003. doi: 10.26355/eurrev_202112_27650. [DOI] [PubMed] [Google Scholar]

- 13.Pan F., Ye T., Sun P., Gui S., Liang B., Li L., Zheng D., Wang J., Hesketh R.L., Yang L. Time course of lung changes on chest CT during recovery from 2019 novel coronavirus (COVID-19) pneumonia. Radiology. 2020;13:200370. doi: 10.1148/radiol.2020200370. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Wong H.Y.F., Lam H.Y.S., Fong A.H., Leung S.T., Chin T.W., Lo C.S.Y., Lui M.M., Lee J.C.Y., Chiu K.W., Chung T.W., et al. Frequency and Distribution of Chest Radiographic Findings in Patients Positive for COVID-19. Radiology. 2020;296:E72–E78. doi: 10.1148/radiol.2020201160. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Smith M.J., Hayward S.A., Innes S.M., Miller A.S.C. Point-of-care lung ultrasound in patients with COVID-19—A narrative review. Anaesthesia. 2020;75:1096–1104. doi: 10.1111/anae.15082. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Tian S., Hu W., Niu L., Liu H., Xu H., Xiao S.Y. Pulmonary Pathology of Early-Phase 2019 Novel Coronavirus (COVID-19) Pneumonia in Two Patients With Lung Cancer. J. Thorac. Oncol. 2020;15:700–704. doi: 10.1016/j.jtho.2020.02.010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Ackermann M., Verleden S.E., Kuehnel M., Haverich A., Welte T., Laenger F., Vanstapel A., Werlein C., Stark H., Tzankov A., et al. Pulmonary Vascular Endothelialitis, Thrombosis, and Angiogenesis in COVID-19. N. Engl. J. Med. 2020;383:120–128. doi: 10.1056/NEJMoa2015432. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Aigner C., Dittmer U., Kamler M., Collaud S., Taube C. COVID-19 in a lung transplant recipient. J. Heart Lung Transpl. 2020;39:610–611. doi: 10.1016/j.healun.2020.04.004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Suri J.S., Rangayyan R.M. Recent Advances in Breast Imaging, Mammography, and Computer-Aided Diagnosis of Breast Cancer. SPIE; Bellingham, WA, USA: 2006. [Google Scholar]

- 20.Peng Q.Y., Wang X.T., Zhang L.N., Chinese Critical Care Ultrasound Study G. Findings of lung ultrasonography of novel corona virus pneumonia during the 2019–2020 epidemic. Intensive Care Med. 2020;46:849–850. doi: 10.1007/s00134-020-05996-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Nillmani, Jain P.K., Sharma N., Kalra M.K., Viskovic K., Saba L., Suri J.S. Four Types of Multiclass Frameworks for Pneumonia Classification and Its Validation in X-ray Scans Using Seven Types of Deep Learning Artificial Intelligence Models. Diagnostics. 2022;12:652. doi: 10.3390/diagnostics12030652. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Suri J.S., Agarwal S., Gupta S.K., Puvvula A., Biswas M., Saba L., Bit A., Tandel G.S., Agarwal M., Patrick A. A narrative review on characterization of acute respiratory distress syndrome in COVID-19-infected lungs using artificial intelligence. Comput. Biol. Med. 2021;130:104210. doi: 10.1016/j.compbiomed.2021.104210. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Saba L., Biswas M., Kuppili V., Cuadrado Godia E., Suri H.S., Edla D.R., Omerzu T., Laird J.R., Khanna N.N., Mavrogeni S., et al. The present and future of deep learning in radiology. Eur. J. Radiol. 2019;114:14–24. doi: 10.1016/j.ejrad.2019.02.038. [DOI] [PubMed] [Google Scholar]

- 24.Kuppili V., Biswas M., Sreekumar A., Suri H.S., Saba L., Edla D.R., Marinhoe R.T., Sanches J.M., Suri J.S. Extreme learning machine framework for risk stratification of fatty liver disease using ultrasound tissue characterization. J. Med. Syst. 2017;41:152. doi: 10.1007/s10916-017-0797-1. [DOI] [PubMed] [Google Scholar]

- 25.Noor N.M., Than J.C., Rijal O.M., Kassim R.M., Yunus A., Zeki A.A., Anzidei M., Saba L., Suri J.S. Automatic lung segmentation using control feedback system: Morphology and texture paradigm. J. Med. Syst. 2015;39:22. doi: 10.1007/s10916-015-0214-6. [DOI] [PubMed] [Google Scholar]

- 26.Suri J.S., Agarwal S., Pathak R., Ketireddy V., Columbu M., Saba L., Gupta S.K., Faa G., Singh I.M., Turk M. COVLIAS 1.0: Lung Segmentation in COVID-19 Computed Tomography Scans Using Hybrid Deep Learning Artificial Intelligence Models. Diagnostics. 2021;11:1405. doi: 10.3390/diagnostics11081405. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Suri J.S., Agarwal S., Carriero A., Pasche A., Danna P.S.C., Columbu M., Saba L., Viskovic K., Mehmedovic A., Agarwal S., et al. COVLIAS 1.0 vs. MedSeg: Artificial Intelligence-Based Comparative Study for Automated COVID-19 Computed Tomography Lung Segmentation in Italian and Croatian Cohorts. Diagnostics. 2021;11:2367. doi: 10.3390/diagnostics11122367. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Saba L., Agarwal M., Patrick A., Puvvula A., Gupta S.K., Carriero A., Laird J.R., Kitas G.D., Johri A.M., Balestrieri A., et al. Six artificial intelligence paradigms for tissue characterisation and classification of non-COVID-19 pneumonia against COVID-19 pneumonia in computed tomography lungs. Int. J. Comput. Assist. Radiol. Surg. 2021;16:423–434. doi: 10.1007/s11548-021-02317-0. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Acharya R.U., Faust O., Alvin A.P., Sree S.V., Molinari F., Saba L., Nicolaides A., Suri J.S. Symptomatic vs. asymptomatic plaque classification in carotid ultrasound. J. Med. Syst. 2012;36:1861–1871. doi: 10.1007/s10916-010-9645-2. [DOI] [PubMed] [Google Scholar]

- 30.Acharya U.R., Faust O., Alvin A.P.C., Krishnamurthi G., Seabra J.C., Sanches J., Suri J.S. Understanding symptomatology of atherosclerotic plaque by image-based tissue characterization. Comput. Methods Programs Biomed. 2013;110:66–75. doi: 10.1016/j.cmpb.2012.09.008. [DOI] [PubMed] [Google Scholar]

- 31.Acharya U.R., Faust O., Sree S.V., Alvin A.P.C., Krishnamurthi G., Sanches J., Suri J.S. Atheromatic™: Symptomatic vs. asymptomatic classification of carotid ultrasound plaque using a combination of HOS, DWT & texture; Proceedings of the 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society; Boston, MA, USA. 30 August–3 September 2011; pp. 4489–4492. [DOI] [PubMed] [Google Scholar]

- 32.Acharya U.R., Mookiah M.R., Vinitha Sree S., Afonso D., Sanches J., Shafique S., Nicolaides A., Pedro L.M., Fernandes E.F.J., Suri J.S. Atherosclerotic plaque tissue characterization in 2D ultrasound longitudinal carotid scans for automated classification: A paradigm for stroke risk assessment. Med. Biol. Eng. Comput. 2013;51:513–523. doi: 10.1007/s11517-012-1019-0. [DOI] [PubMed] [Google Scholar]

- 33.Molinari F., Liboni W., Pavanelli E., Giustetto P., Badalamenti S., Suri J.S. Accurate and automatic carotid plaque characterization in contrast enhanced 2-D ultrasound images; Proceedings of the 2007 29th Annual International Conference of the IEEE Engineering in Medicine and Biology Society; Lyon, France. 22–26 August 2007; pp. 335–338. [DOI] [PubMed] [Google Scholar]

- 34.Banchhor S.K., Londhe N.D., Araki T., Saba L., Radeva P., Laird J.R., Suri J.S. Wall-based measurement features provides an improved IVUS coronary artery risk assessment when fused with plaque texture-based features during machine learning paradigm. Comput. Biol. Med. 2017;91:198–212. doi: 10.1016/j.compbiomed.2017.10.019. [DOI] [PubMed] [Google Scholar]

- 35.Saba L., Sanagala S.S., Gupta S.K., Koppula V.K., Johri A.M., Khanna N.N., Mavrogeni S., Laird J.R., Pareek G., Miner M., et al. Multimodality carotid plaque tissue characterization and classification in the artificial intelligence paradigm: A narrative review for stroke application. Ann. Transl. Med. 2021;9:1206. doi: 10.21037/atm-20-7676. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36. Agarwal M., Saba L., Gupta S.K., Johri A.M., Khanna N.N., Mavrogeni S., Laird J.R., Pareek G., Miner M., Sfikakis P.P. Wilson disease tissue classification and characterization using seven artificial intelligence models embedded with 3D optimization paradigm on a weak training brain magnetic resonance imaging datasets: A supercomputer application. Med. Biol. Eng. Comput. 2021;59:511–533. doi: 10.1007/s11517-021-02322-0.

- 37. Acharya U.R., Kannathal N., Ng E., Min L.C., Suri J.S. Computer-based classification of eye diseases; Proceedings of the 2006 International Conference of the IEEE Engineering in Medicine and Biology Society; New York, NY, USA. 30 August–3 September 2006; pp. 6121–6124.

- 38. Acharya U., Vinitha Sree S., Mookiah M., Yantri R., Molinari F., Zieleźnik W., Małyszek-Tumidajewicz J., Stępień B., Bardales R., Witkowska A. Diagnosis of Hashimoto’s thyroiditis in ultrasound using tissue characterization and pixel classification. Proc. Inst. Mech. Eng. Part H J. Eng. Med. 2013;227:788–798. doi: 10.1177/0954411913483637.

- 39. Biswas M., Kuppili V., Edla D.R., Suri H.S., Saba L., Marinhoe R.T., Sanches J.M., Suri J.S. Symtosis: A liver ultrasound tissue characterization and risk stratification in optimized deep learning paradigm. Comput. Methods Programs Biomed. 2018;155:165–177. doi: 10.1016/j.cmpb.2017.12.016.

- 40. Acharya U.R., Saba L., Molinari F., Guerriero S., Suri J.S. Ovarian tumor characterization and classification: A class of GyneScan™ systems; Proceedings of the 2012 Annual International Conference of the IEEE Engineering in Medicine and Biology Society; San Diego, CA, USA. 28 August–1 September 2012; pp. 4446–4449.

- 41. Pareek G., Acharya U.R., Sree S.V., Swapna G., Yantri R., Martis R.J., Saba L., Krishnamurthi G., Mallarini G., El-Baz A. Prostate tissue characterization/classification in 144 patient population using wavelet and higher order spectra features from transrectal ultrasound images. Technol. Cancer Res. Treat. 2013;12:545–557. doi: 10.7785/tcrt.2012.500346.

- 42. Shrivastava V.K., Londhe N.D., Sonawane R.S., Suri J.S. Exploring the color feature power for psoriasis risk stratification and classification: A data mining paradigm. Comput. Biol. Med. 2015;65:54–68. doi: 10.1016/j.compbiomed.2015.07.021.

- 43. Shrivastava V.K., Londhe N.D., Sonawane R.S., Suri J.S. A novel and robust Bayesian approach for segmentation of psoriasis lesions and its risk stratification. Comput. Methods Programs Biomed. 2017;150:9–22. doi: 10.1016/j.cmpb.2017.07.011.

- 44. Shrivastava V.K., Londhe N.D., Sonawane R.S., Suri J.S. Reliable and accurate psoriasis disease classification in dermatology images using comprehensive feature space in machine learning paradigm. Expert Syst. Appl. 2015;42:6184–6195. doi: 10.1016/j.eswa.2015.03.014.

- 45. Bayraktaroglu S., Cinkooglu A., Ceylan N., Savas R. The novel coronavirus pneumonia (COVID-19): A pictorial review of chest CT features. Diagn. Interv. Radiol. 2021;27:188–194. doi: 10.5152/dir.2020.20304.

- 46. Verschakelen J.A., De Wever W. Computed Tomography of the Lung. Springer; Berlin/Heidelberg, Germany: 2007.

- 47. Arrieta A.B., Díaz-Rodríguez N., Del Ser J., Bennetot A., Tabik S., Barbado A., García S., Gil-López S., Molina D., Benjamins R. Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion. 2020;58:82–115. doi: 10.1016/j.inffus.2019.12.012.

- 48. Choi T.Y., Chang M.Y., Heo S., Jang J.Y. Explainable machine learning model to predict refeeding hypophosphatemia. Clin. Nutr. ESPEN. 2021;45:213–219. doi: 10.1016/j.clnesp.2021.08.022.

- 49. Gunashekar D.D., Bielak L., Hagele L., Oerther B., Benndorf M., Grosu A.L., Brox T., Zamboglou C., Bock M. Explainable AI for CNN-based prostate tumor segmentation in multi-parametric MRI correlated to whole mount histopathology. Radiat. Oncol. 2022;17:65. doi: 10.1186/s13014-022-02035-0.

- 50. Gunning D., Aha D. DARPA’s explainable artificial intelligence (XAI) program. AI Mag. 2019;40:44–58.

- 51. Sabih M., Hannig F., Teich J. Utilizing explainable AI for quantization and pruning of deep neural networks. arXiv. 2020. arXiv:2008.09072.

- 52. Apley D.W., Zhu J. Visualizing the effects of predictor variables in black box supervised learning models. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 2020;82:1059–1086. doi: 10.1111/rssb.12377.

- 53. DenOtter T.D., Schubert J. Hounsfield Unit. In: StatPearls. StatPearls Publishing LLC.; Treasure Island, FL, USA: 2022.

- 54. Adedigba A.P., Adeshina S.A., Aina O.E., Aibinu A.M. Optimal hyperparameter selection of deep learning models for COVID-19 chest X-ray classification. Intell.-Based Med. 2021;5:100034. doi: 10.1016/j.ibmed.2021.100034.

- 55. Chhabra M., Kumar R. A Smart Healthcare System Based on Classifier DenseNet 121 Model to Detect Multiple Diseases. In: Mobile Radio Communications and 5G Networks. Springer; Berlin/Heidelberg, Germany: 2022; pp. 297–312.

- 56. Hasan N., Bao Y., Shawon A., Huang Y. DenseNet convolutional neural networks application for predicting COVID-19 using CT image. SN Comput. Sci. 2021;2:389. doi: 10.1007/s42979-021-00782-7.

- 57. Nandhini S., Ashokkumar K. An automatic plant leaf disease identification using DenseNet-121 architecture with a mutation-based henry gas solubility optimization algorithm. Neural Comput. Appl. 2022;34:5513–5534. doi: 10.1007/s00521-021-06714-z.

- 58. Ruiz J., Mahmud M., Modasshir M., Shamim Kaiser M., for the Alzheimer’s Disease Neuroimaging Initiative. 3D DenseNet ensemble in 4-way classification of Alzheimer’s disease. In: International Conference on Brain Informatics. Springer; Berlin/Heidelberg, Germany: 2020; pp. 85–96.

- 59. Jiang H., Xu J., Shi R., Yang K., Zhang D., Gao M., Ma H., Qian W. A multi-label deep learning model with interpretable Grad-CAM for diabetic retinopathy classification; Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC); Montreal, QC, Canada. 20–24 July 2020; pp. 1560–1563.

- 60. Joo H.-T., Kim K.-J. Visualization of deep reinforcement learning using grad-CAM: How AI plays atari games?; Proceedings of the 2019 IEEE Conference on Games (CoG); London, UK. 20–23 August 2019; pp. 1–2.

- 61. Panwar H., Gupta P., Siddiqui M.K., Morales-Menendez R., Bhardwaj P., Singh V. A deep learning and grad-CAM based color visualization approach for fast detection of COVID-19 cases using chest X-ray and CT-Scan images. Chaos Solitons Fractals. 2020;140:110190. doi: 10.1016/j.chaos.2020.110190.

- 62. Zhang Y., Hong D., McClement D., Oladosu O., Pridham G., Slaney G. Grad-CAM helps interpret the deep learning models trained to classify multiple sclerosis types using clinical brain magnetic resonance imaging. J. Neurosci. Methods. 2021;353:109098. doi: 10.1016/j.jneumeth.2021.109098.

- 63. Selvaraju R.R., Cogswell M., Das A., Vedantam R., Parikh D., Batra D. Grad-cam: Visual explanations from deep networks via gradient-based localization; Proceedings of the IEEE International Conference on Computer Vision; Venice, Italy. 22–29 October 2017; pp. 618–626.

- 64. Chattopadhay A., Sarkar A., Howlader P., Balasubramanian V.N. Grad-cam++: Generalized gradient-based visual explanations for deep convolutional networks; Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV); Lake Tahoe, NV, USA. 12–15 March 2018; pp. 839–847.

- 65. Inbaraj X.A., Villavicencio C., Macrohon J.J., Jeng J.-H., Hsieh J.-G. Object Identification and Localization Using Grad-CAM++ with Mask Regional Convolution Neural Network. Electronics. 2021;10:1541. doi: 10.3390/electronics10131541.

- 66. Joshua E.S.N., Chakkravarthy M., Bhattacharyya D. Lung cancer detection using improvised grad-cam++ with 3d cnn class activation. In: Smart Technologies in Data Science and Communication. Springer; Berlin/Heidelberg, Germany: 2021; pp. 55–69.

- 67. Joshua N., Stephen E., Bhattacharyya D., Chakkravarthy M., Kim H.-J. Lung Cancer Classification Using Squeeze and Excitation Convolutional Neural Networks with Grad Cam++ Class Activation Function. Traitement Signal. 2021;38:1103–1112. doi: 10.18280/ts.380421.

- 68. Wang H., Wang Z., Du M., Yang F., Zhang Z., Ding S., Mardziel P., Hu X. Score-CAM: Score-weighted visual explanations for convolutional neural networks; Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops; Seattle, WA, USA. 14–19 June 2020; pp. 24–25.

- 69. Wang H., Du M., Yang F., Zhang Z. Score-Cam: Improved Visual Explanations via Score-Weighted Class Activation Mapping. arXiv. 2019. arXiv:1910.01279.

- 70. Naidu R., Ghosh A., Maurya Y., Kundu S.S. IS-CAM: Integrated Score-CAM for axiomatic-based explanations. arXiv. 2020. arXiv:2010.03023.

- 71. Oh Y., Jung H., Park J., Kim M.S. Evet: Enhancing visual explanations of deep neural networks using image transformations; Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision; Virtual. 5–9 January 2021; pp. 3579–3587.

- 72. Sugeno A., Ishikawa Y., Ohshima T., Muramatsu R. Simple methods for the lesion detection and severity grading of diabetic retinopathy by image processing and transfer learning. Comput. Biol. Med. 2021;137:104795. doi: 10.1016/j.compbiomed.2021.104795.

- 73. Cozzi D., Cavigli E., Moroni C., Smorchkova O., Zantonelli G., Pradella S., Miele V. Ground-glass opacity (GGO): A review of the differential diagnosis in the era of COVID-19. Jpn. J. Radiol. 2021;39:721–732. doi: 10.1007/s11604-021-01120-w.

- 74. Wu J., Pan J., Teng D., Xu X., Feng J., Chen Y.C. Interpretation of CT signs of 2019 novel coronavirus (COVID-19) pneumonia. Eur. Radiol. 2020;30:5455–5462. doi: 10.1007/s00330-020-06915-5.

- 75. De Wever W., Meersschaert J., Coolen J., Verbeken E., Verschakelen J.A. The crazy-paving pattern: A radiological-pathological correlation. Insights Imaging. 2011;2:117–132. doi: 10.1007/s13244-010-0060-5.

- 76. Niu R., Ye S., Li Y., Ma H., Xie X., Hu S., Huang X., Ou Y., Chen J. Chest CT features associated with the clinical characteristics of patients with COVID-19 pneumonia. Ann. Med. 2021;53:169–180. doi: 10.1080/07853890.2020.1851044.

- 77. Salehi S., Abedi A., Balakrishnan S., Gholamrezanezhad A. Coronavirus Disease 2019 (COVID-19): A Systematic Review of Imaging Findings in 919 Patients. AJR Am. J. Roentgenol. 2020;215:87–93. doi: 10.2214/AJR.20.23034.

- 78. Xie X., Zhong Z., Zhao W., Zheng C., Wang F., Liu J. Chest CT for Typical Coronavirus Disease 2019 (COVID-19) Pneumonia: Relationship to Negative RT-PCR Testing. Radiology. 2020;296:E41–E45. doi: 10.1148/radiol.2020200343.

- 79. Cau R., Falaschi Z., Pasche A., Danna P., Arioli R., Arru C.D., Zagaria D., Tricca S., Suri J.S., Karla M.K., et al. Computed tomography findings of COVID-19 pneumonia in Intensive Care Unit-patients. J. Public Health Res. 2021;10:2270. doi: 10.4081/jphr.2021.2270.

- 80. Yang X., He X., Zhao J., Zhang Y., Zhang S., Xie P. COVID-CT-dataset: A CT scan dataset about COVID-19. arXiv. 2020. arXiv:2003.13865.

- 81. Shalbaf A., Vafaeezadeh M. Automated detection of COVID-19 using ensemble of transfer learning with deep convolutional neural network based on CT scans. Int. J. Comput. Assist. Radiol. Surg. 2021;16:115–123. doi: 10.1007/s11548-020-02286-w.

- 82. Gozes O., Frid-Adar M., Greenspan H., Browning P.D., Zhang H., Ji W., Bernheim A., Siegel E. Rapid ai development cycle for the coronavirus (COVID-19) pandemic: Initial results for automated detection & patient monitoring using deep learning ct image analysis. arXiv. 2020. arXiv:2003.05037.

- 83. Solano-Rojas B., Villalón-Fonseca R., Marín-Raventós G. Alzheimer’s disease early detection using a low cost three-dimensional densenet-121 architecture; Proceedings of the International Conference on Smart Homes and Health Telematics; Hammamet, Tunisia. 24–26 June 2020; pp. 3–15.

- 84. Saba L., Suri J.S. Multi-Detector CT Imaging: Principles, Head, Neck, and Vascular Systems. Volume 1. CRC Press; Boca Raton, FL, USA: 2013.

- 85. Murgia A., Erta M., Suri J.S., Gupta A., Wintermark M., Saba L. CT imaging features of carotid artery plaque vulnerability. Ann. Transl. Med. 2020;8:1261. doi: 10.21037/atm-2020-cass-13.