Abstract

Behavioral science researchers are often interested in whether there is negligible interaction among continuous predictors of an outcome variable. For example, a researcher might be interested in demonstrating that the effect of perfectionism on depression is very consistent across age. In this case, the researcher is interested in assessing whether the interaction between the predictors is too small to be meaningful. Unfortunately, most researchers address the above research question using a traditional association-based null hypothesis test (e.g. regression) where their goal is to fail to reject the null hypothesis of no interaction. Common problems with traditional tests are their sensitivity to sample size and their opposite (and hence inappropriate) hypothesis setup for finding a negligible interaction effect. In this study, we investigated a method for testing for negligible interaction between continuous predictors using unstandardized and standardized regression-based models and equivalence testing. A Monte Carlo study provides evidence for the effectiveness of the equivalence-based test relative to traditional approaches.

KEYWORDS: Moderation, negligible interaction, linear models, interaction, multiple regression

Negligible interaction test for continuous predictors

An interaction effect between two predictors occurs when the relationship between one predictor and an outcome depends on the level of a second predictor. A negligible interaction is defined as an interaction effect so small that it has no practical significance (importance) within the context of the research; zero interaction (i.e. a population interaction coefficient of exactly 0) would be the smallest magnitude example of a negligible interaction. In this case, the relationship between one predictor and an outcome would not depend on the level of the second predictor, or minimally depend on the level of the second predictor. Detecting a negligible interaction among two or more predictor variables on an outcome variable is of interest to many researchers in the behavioral sciences. For example, negligible interaction might be the primary hypothesis of a study or it might be a necessary requirement in order to drop a higher order interaction term from a model. Applying traditional null hypothesis tests (i.e. tests where the goal is to detect the presence of an association, such as an interaction, among variables) in order to assess whether there is negligible interaction has been a common approach among researchers, even though these tests are not appropriate for assessing a lack of relationship (e.g. [23,29]). More specifically, it is not suitable to use non-significance of the interaction term from a traditional (association-based) null hypothesis significance test as evidence of a lack of interaction.

In this paper, we propose a method, based on an equivalence testing framework, for testing negligible interaction among continuous predictors using standardized regression-based models. Previously, Cribbie, Ragoonanan, and Counsell [10] addressed a lack of interaction when predictors are categorical; in this paper, we address the issue when the predictors are continuous. Cribbie et al. [10] proposed an unstandardized bootstrap-based intersection-union test [5] for 2 × 2 and, in general, a × b designs to assess a negligible interaction among categorical predictors, and explained the problems of using a factorial analysis of variance (ANOVA) test for this purpose. Compared with categorical predictors, selecting an appropriate equivalence interval with continuous predictors is more involved. Moreover, important issues arise with continuous predictors because they can be measured on different scales.

The order of the paper is as follows. First, the paper introduces the benefits of equivalence tests and the methodological difficulties of traditional (association-based) null hypothesis significance tests for detecting negligible interaction. Following that, the paradigm of the proposed test for negligible interaction is outlined. Lastly, a simulation study is used to compare the proposed equivalence-based test of negligible interaction with the inappropriate, but popular, strategy of using a non-significant interaction effect from a traditional regression model (which focuses on detecting the presence of an interaction) to demonstrate negligible interaction.

Introduction to equivalence testing

Traditional null hypothesis significance tests, for example the two independent-samples t-test with null hypothesis H0: µ1 = µ2, can be used to assess whether there is an association among the predictor variable(s) and outcome variable(s) (in this case whether the two populations have different means). It should be evident that increasing the sample size with a traditional test causes an increase in power for detecting an association among the predictor and outcome. In this case, even a small association among the variables may be statistically significant. Moreover, since not rejecting H0 does not prove that H0 is true, traditional null hypothesis testing is not useful for research questions regarding a lack of association [11,23].

When the research hypothesis relates to a lack of association among variables, equivalence testing is the recommended approach. In the example from the paragraph above, equivalence testing would be valuable if a researcher was interested in demonstrating that the difference in means across two independent groups was too small to be of any importance. The goal of equivalence tests is not to demonstrate that variables are completely unrelated (e.g. that population means are identical), but instead that the association (in this case difference in means) is too small to be considered meaningful. For example, imagine that a researcher wants to demonstrate that treating an illness with a generic drug is similar to treating the illness with a brand name drug. In this example, showing that the two types of treatments are exactly equivalent (H0: µ1 = µ2) would not be the interest of the researcher, but showing that the differences in the treatment results are small enough to be considered negligible would be of interest to the researcher. The null hypothesis in this case could be expressed as: H0: µ1 – µ2 ≥ ε | µ1 – µ2 ≤ −ε, where ε represents the smallest difference in means that is practically significant (| is the ‘or’ operator). Note that the null hypothesis in this case is specifying the presence of a non-negligible mean difference, where traditional null hypotheses specify a lack of association. Rejecting H0 would provide evidence that the difference in means is negligible (i.e. −ε < µ1 – µ2 < ε).

Equivalence tests, which have been popular in biopharmaceutical and bio-statistical studies for decades, are now being used in behavioral science research for determining whether two means are equivalent (e.g. [11,23]), whether there is a lack of association between two variables (e.g. [13,17,22,32]), for evaluating measurement invariance [31,9], etc. Numerous different types of equivalence-based tests have been proposed (e.g. [2,24,26,28,29,30]). However, the two one-sided tests (TOST) approach of Schuirmann [26] is one of the most popular methods, and important articles by Rogers et al. [23] and Seaman and Serlin [27], which both focused on the TOST approach, introduced equivalence testing to the behavioral sciences. The two one-sided tests approach specifies that a researcher conduct two separate tests, one for each potential direction of an effect. For example, we could rewrite the null hypothesis above as: H01: µ1 − µ2 ≥ ε; H02: µ1 − µ2 ≤ −ε. The first null hypothesis specifies that the difference in population means is greater than or equal to ε, while the second specifies that the difference in population means is less than or equal to −ε. Both null hypotheses would need to be rejected in order to conclude that the population mean difference falls between −ε and ε.
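As an illustration of the two one-sided tests logic for two independent means, the following is a minimal sketch rather than a reference implementation. It assumes equal population variances (a pooled-variance t statistic); the function name `tost_two_means` and the simulated scores are hypothetical and are not taken from any of the cited articles.

```python
import numpy as np
from scipy import stats


def tost_two_means(x, y, eps, alpha=0.05):
    """Two one-sided tests of H01: mu1 - mu2 >= eps and H02: mu1 - mu2 <= -eps.

    Assumption of this sketch: pooled-variance (Student) t statistic.
    """
    x, y = np.asarray(x, float), np.asarray(y, float)
    n1, n2 = len(x), len(y)
    diff = x.mean() - y.mean()
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * x.var(ddof=1) + (n2 - 1) * y.var(ddof=1)) / df
    se = np.sqrt(pooled_var * (1 / n1 + 1 / n2))
    p_upper = stats.t.cdf((diff - eps) / se, df)  # small when diff is well below eps
    p_lower = stats.t.sf((diff + eps) / se, df)   # small when diff is well above -eps
    # Equivalence is declared only when BOTH one-sided nulls are rejected.
    return {"diff": diff, "p_upper": p_upper, "p_lower": p_lower,
            "equivalent": bool(max(p_upper, p_lower) < alpha)}


# Hypothetical scores for a generic and a brand name drug
rng = np.random.default_rng(1)
generic = rng.normal(50, 10, 200)
brand = rng.normal(50, 10, 200)
print(tost_two_means(generic, brand, eps=5))
```

Taking the larger of the two p-values mirrors the requirement that both one-sided null hypotheses be rejected before the mean difference is declared to lie inside (−ε, ε).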

In this study, we explore an equivalence-based test for evaluating whether an interaction among two continuous variables is too small to be considered meaningful. As the TOST is the dominant and most straightforward method for conducting equivalence testing, the proposed negligible interaction test is developed using the logic of the TOST procedure.

Traditional linear model test for interaction

We are interested in linear models where the primary focus is on whether there is an interaction among continuous predictors. The traditional general linear model (regression) approach can be written as

$$y_i = b_0 + b_1 x_{1i} + b_2 x_{2i} + b_3 x_{1i} x_{2i} + \delta_i$$

where yi is the score on the response variable for subject i, x1i and x2i are respectively the scores for the ith subject on the first and second predictors, and x1ix2i represents the interaction among the predictors and is discussed in more detail below. b0 represents the intercept, b1 and b2 are the regression coefficients (slopes) for the predictors, and b3 is the coefficient for the interaction. δi represents the error in predicting the outcome score for subject i, and the δ values across all i = 1, … , n subjects are assumed to be independent and normally distributed. With traditional (association-based) null hypothesis testing, if the research hypothesis relates to the interaction then the goal is to reject H0: b3* = 0, where b3* represents the population regression coefficient for the interaction, and thus provide support for the alternative hypothesis that there is an interaction (H1: b3* ≠ 0). The (two-tail) null hypothesis is rejected if:

$$\left| \frac{b_3}{SE(b_3)} \right| \ge t_{1-\alpha/2,\; n-k-1}$$

where SE(b3) represents the standard error associated with b3, α is the nominal Type I error rate, k is the number of predictors (in this case k = 3), and $t_{1-\alpha/2,\,n-k-1}$ is the 1 − α/2 quantile of a t-distribution with n − k − 1 degrees of freedom. When a research hypothesis concerns the detection of an interaction, the main goal is to reject the null hypothesis of no interaction. When researchers attempt to establish negligible interaction, however, they often do so (inappropriately) by attempting to fail to reject the null hypothesis of no interaction. This approach is problematic for (at least) two reasons. First, failing to reject the null hypothesis of zero interaction (H0: b3* = 0) does not imply that b3* = 0; as discussed by Altman and Bland [1], absence of evidence is not evidence of absence. Therefore, any conclusion regarding the null hypothesis, when the null hypothesis of zero interaction is not rejected, cannot be supported because of a lack of statistical evidence [7]. Second, when using a test of the traditional null hypothesis to test for a lack of interaction, a decrease in sample size actually increases the probability of concluding that there is no interaction. This runs counter to the more desirable (and scientifically-based) notion that a decrease in sample size should be accompanied by a decrease in one’s ability to detect the actual state of affairs (in this case, interaction). Thus, smaller sample sizes lack power for rejecting H0: b3* = 0 and have inflated power for finding a lack of interaction. When the sample size increases, in contrast, H0: b3* = 0 will almost always be rejected, and thus it will be almost impossible to ever conclude that there is a negligible interaction effect even if the effect is in fact trivial. This undesirable property of traditional tests, i.e. ample power for finding a negligible interaction with small sample sizes but minimal power for finding a negligible interaction with large sample sizes, is a very important issue that should be addressed under the equivalence-testing framework.

Assessing negligible interaction via equivalence testing

Since a complete lack of association among variables (i.e. a population coefficient, such as b3*, of exactly 0) is unrealistic, the goal of equivalence tests is not to demonstrate that there is no relationship among variables, but to show that any relationship between the variables is too small to be meaningful (i.e. negligible). Defining how small a relationship must be to be considered negligible is both important and difficult [25]. Let the absolute value of the smallest interaction effect that is still meaningful be ε, which is specified a priori and defines the equivalence interval [i.e. EI = {−ε, ε}]. Assessing whether the interaction effect (b3*) falls within the pre-specified interval (i.e. −ε < b3* < ε) is the purpose of the proposed equivalence test.

As introduced above, a subjective yet challenging feature of equivalence testing is determining the threshold values based on the experimenters’ goals. There are very few rules to aid behavioral science researchers in choosing these threshold values based on their field of study and expertise [23,25]. This subjective choice requires an understanding of what constitutes a minimum meaningful interaction in the research area of interest; for instance, one researcher may find 10% of the standard deviation an appropriate choice, but another researcher may not find it suitable for their particular study.

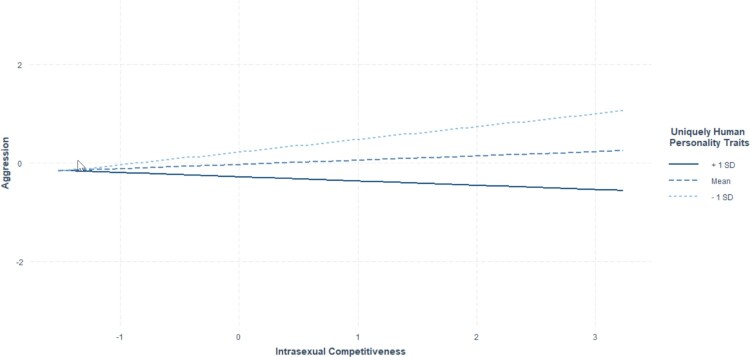

As an example, we discuss a study by Arnocky et al. [3]. The data from the study are accessible at https://osf.io/jqug4/. One of the primary hypotheses of Arnocky et al. [3] was the interaction of uniquely human personality traits (comprised of openness and conscientiousness personality items) and individual differences in intrasexual competitiveness on aggression. If a researcher is interested in testing for negligible interaction in this setting, then the researcher needs to determine the smallest meaningful interaction effect (i.e. the smallest difference in slopes that would be meaningful for a one unit change in the predictor, recalling that the variables are standardized so a one unit change is a one standard deviation change). Although the decision is highly subjective and dependent on the context of the research, we can use general guidelines as a starting point. Cohen [8] suggested that standardized coefficients of .1 might represent a small relationship and .3 might represent a moderate relationship. Thus, in this study, we adopted values for ε of .1 and .3 to represent conservative and liberal equivalence thresholds (for models including standardized variables). In other words, the associated equivalence intervals would be EIconservative = {−.1, .1} and EIliberal = {−.3, .3}. Note that what is being specified, at the conservative level, is that for the effect of uniquely human personality traits a .1 difference in the slopes for a one standard deviation difference in intrasexual competitiveness would be minimally meaningful. Returning to the example, a multiple regression on the standardized variables returns an interaction effect of bint = −.17, and the interaction is plotted in Figure 1. According to the criteria above, the interaction effect would be considered negligible according to our liberal bounds, but non-negligible according to our conservative bounds. However, no actual hypothesis testing has been conducted, so we now outline non-standardized and standardized approaches for testing for negligible interaction.

Figure 1.

Plot of the interaction between intrasexual competitiveness and uniquely human personality traits on aggression.

Non-standardized equivalence-based approach for testing negligible interaction

Under the described model with normally distributed errors, the TOST approach can be used for hypothesis testing. Although the following approach could easily be extended to higher dimensional cases, we focus our attention on the case where there are two predictors (plus the interaction, i.e. k = 3) in our model (repeated from earlier):

$$y_i = b_0 + b_1 x_{1i} + b_2 x_{2i} + b_3 x_{1i} x_{2i} + \delta_i$$

In this case, the null hypothesis can be written as H0: b3* ≥ ε | b3* ≤ −ε and the alternate hypothesis can be written as H1: −ε < b3* < ε. Unlike traditional association-based hypothesis testing, where the null hypothesis states that b3* exactly equals zero, the proposed method allows a range of values under both the null and alternate hypotheses. The null hypothesis is rejected if:

$$\frac{b_3 - \varepsilon}{SE(b_3)} \le -t_{1-\alpha,\; n-k-1} \quad \text{and} \quad \frac{b_3 + \varepsilon}{SE(b_3)} \ge t_{1-\alpha,\; n-k-1}$$

where $t_{1-\alpha,\,n-k-1}$ is the 1 − α quantile of a t-distribution with n − k − 1 degrees of freedom (the residual degrees of freedom for a model with an intercept and k predictor terms). Equivalently, H0 is rejected if the 100(1 − 2α)% confidence interval for b3* is contained within the equivalence interval [27].
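The unstandardized test can be sketched in a few lines. This is a hedged illustration, not the authors' code: the function name `interaction_tost`, the simulated data, and the choice ε = 0.2 are hypothetical, and the residual degrees of freedom are taken as n minus the number of fitted coefficients (intercept, two predictors, and their product).

```python
import numpy as np
from scipy import stats


def interaction_tost(x1, x2, y, eps, alpha=0.05):
    """TOST of H0: b3* >= eps or b3* <= -eps for the x1*x2 coefficient."""
    x1, x2, y = (np.asarray(v, float) for v in (x1, x2, y))
    X = np.column_stack([np.ones_like(x1), x1, x2, x1 * x2])
    n, p = X.shape                                # p = intercept + 3 terms
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ coef
    df = n - p                                    # residual degrees of freedom
    sigma2 = resid @ resid / df
    se_b3 = np.sqrt(sigma2 * np.linalg.inv(X.T @ X)[3, 3])
    b3 = coef[3]
    t_crit = stats.t.ppf(1 - alpha, df)
    # 100(1 - 2*alpha)% confidence interval for the interaction coefficient
    lower, upper = b3 - t_crit * se_b3, b3 + t_crit * se_b3
    # Rejecting both one-sided nulls is the same as the CI lying inside (-eps, eps).
    return {"b3": b3, "se": se_b3, "ci": (lower, upper),
            "negligible": bool(-eps < lower and upper < eps)}


# Hypothetical data with no interaction in the population
rng = np.random.default_rng(0)
x1, x2 = rng.normal(size=2000), rng.normal(size=2000)
y = 0.5 * x1 + 0.3 * x2 + rng.normal(size=2000)
print(interaction_tost(x1, x2, y, eps=0.2))
```

The confidence-interval form of the decision is used because it makes the role of ε visible: the narrower the equivalence interval, the more precise the estimate must be before negligible interaction can be declared.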

Standardized equivalence-based approach for testing negligible interaction

In some instances, it might be difficult to select an appropriate equivalence interval because the predictor and/or outcome variables are measured on an arbitrary scale. To make the threshold interval unit-invariant, one possibility is to standardize the response variable and predictors before applying the regression analysis; hence the coefficients refer to the number of standard deviation changes in the response or outcome variable per standard deviation increase in the predictor variable [19].

Even though standardizing the variables is convenient, and is the recommended approach, it should be noted that the conventional standard errors will not be correct for the standardized coefficients. The standard errors of standardized parameters are not a simple rescaling of the standard errors of the original parameter estimates [14], and will be both biased and inconsistent [15]. In the current study, we adjusted the standard error of the interaction coefficient with the delta method due to Jones and Waller [15]. The delta method, unlike standard methods for computing confidence intervals with standardized variables, accounts for the sampling variability of the criterion standard deviation.

Regardless of the unit invariant property inherited from standardization, there is still much subjectivity in determining an appropriate equivalence interval. In this study, as discussed earlier, we use suggested small and medium thresholds for standardized regression coefficients [8], specifically .1 and .3, respectively. However, researchers are encouraged to consider what the smallest meaningful standardized coefficient might be for their particular research study by considering the context of the work.

Given a sample of n observations, we test the null hypothesis H0: β3* ≥ ε | β3* ≤ −ε by applying the following steps (note that β represents the standardized coefficient, and β* represents the population standardized coefficient). First, standardize the response variable and each of the predictors. Second, create the interaction term as the product of the standardized predictor variables (instead of standardizing the product of the raw predictor variables; see [20]). Third, regress the standardized outcome variable on the standardized predictor and interaction variables. Finally, reject the null hypothesis H0: β3* ≥ ε | β3* ≤ −ε if:

$$\frac{\beta_3 - \varepsilon}{SE(\beta_3)} \le -t_{1-\alpha,\; n-k-1} \quad \text{and} \quad \frac{\beta_3 + \varepsilon}{SE(\beta_3)} \ge t_{1-\alpha,\; n-k-1}$$

and retain it otherwise, where $t_{1-\alpha,\,n-k-1}$ is the 1 − α quantile of a t-distribution with n − k − 1 degrees of freedom. An equivalent alternative is to reject the null hypothesis if the 1 − 2α confidence interval for β3* falls completely within the equivalence interval {−ε, ε}. Recall that SE(β3) is computed via the delta method [15].
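The first three steps (standardize, form the product of the standardized predictors, regress) can be sketched as follows. Note the substitution: this sketch obtains the 1 − 2α interval from a percentile bootstrap that re-standardizes within every resample, whereas the procedure described in the text uses a delta-method standard error. The bootstrap is an assumption of this illustration, chosen because it also lets the sample standard deviations vary from sample to sample; the function names and simulated data are hypothetical.

```python
import numpy as np


def standardized_interaction(x1, x2, y):
    """Steps 1-3: z-score all variables, multiply the z-scored predictors, regress."""
    z1, z2, zy = (
        (v - v.mean()) / v.std(ddof=1)
        for v in (np.asarray(a, float) for a in (x1, x2, y))
    )
    X = np.column_stack([np.ones_like(z1), z1, z2, z1 * z2])
    coef, *_ = np.linalg.lstsq(X, zy, rcond=None)
    return coef[3]


def bootstrap_negligible(x1, x2, y, eps, alpha=0.05, n_boot=2000, seed=0):
    """Percentile-bootstrap 100(1 - 2*alpha)% interval for the standardized interaction.

    NOTE: the bootstrap is a stand-in used in this sketch, not the delta method.
    """
    x1, x2, y = (np.asarray(v, float) for v in (x1, x2, y))
    rng = np.random.default_rng(seed)
    n = len(y)
    boots = np.empty(n_boot)
    for b in range(n_boot):
        idx = rng.integers(0, n, n)          # resample cases with replacement
        boots[b] = standardized_interaction(x1[idx], x2[idx], y[idx])
    lower, upper = np.quantile(boots, [alpha, 1 - alpha])
    return {"beta3": standardized_interaction(x1, x2, y),
            "ci": (lower, upper),
            "negligible": bool(-eps < lower and upper < eps)}


# Hypothetical data on arbitrary raw scales
rng = np.random.default_rng(2)
x1, x2 = rng.normal(20, 4, 300), rng.normal(5, 1, 300)
y = 0.3 * x1 + rng.normal(0, 2, 300)
print(bootstrap_negligible(x1, x2, y, eps=0.3, n_boot=500))
```

Because the variables are standardized inside `standardized_interaction`, the same ε can be used regardless of the raw measurement units.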

To follow up on the Arnocky et al. [3] example, we now apply the standardized equivalence-based approach for testing for negligible interaction. Recall that the outcome variable is aggression, and the predictors are uniquely human personality traits, individual differences in intrasexual competitiveness, and the interaction between these two predictors. The null hypothesis being evaluated is H0: β3* ≥ ε | β3* ≤ −ε, where in this case β3* represents the population standardized interaction effect between uniquely human personality traits and individual differences in intrasexual competitiveness. We will use, arbitrarily, α = .05 and the conservative standardized bounds ({−ε, ε} = {−.1, .1}). First, we convert all of our variables (outcome and predictors) to standardized variables. Next, we compute the interaction term as the product of the standardized predictors. We then use the seBeta function from the fungible package in R to compute the 1 − 2α confidence intervals. The estimated standardized interaction effect is −.191, with a 1 − 2α = 1 − 2(.05) = .90 (90%) confidence interval of {−0.378, −0.003}. Since the confidence interval does not fall completely within the equivalence interval, {−.1, .1}, we do not reject the null hypothesis. In other words, we cannot conclude that the interaction between uniquely human personality traits and individual differences in intrasexual competitiveness is negligible. To assist readers, we have made the full R code for this example available at: osf.io/?
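The decision rule in this example reduces to checking whether the reported confidence interval lies inside the equivalence interval. The short sketch below applies that check to the interval reported in the text; the helper name `ci_within_interval` is hypothetical.

```python
def ci_within_interval(ci_lower, ci_upper, eps):
    """Return True when the confidence interval lies entirely inside (-eps, eps)."""
    return -eps < ci_lower and ci_upper < eps


# 90% confidence interval reported in the text for the standardized interaction
ci_90 = (-0.378, -0.003)

for eps in (0.1, 0.3):
    inside = ci_within_interval(*ci_90, eps)
    decision = "reject H0 (negligible)" if inside else "do not reject H0"
    print(f"eps = {eps}: {decision}")
```

With the reported interval, the null hypothesis is retained under both sets of bounds, because the lower limit of −0.378 lies below −.1 and also below −.3.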

Method

A simulation study was conducted using R [21] to investigate the efficiency of the proposed negligible interaction test and the traditional association-based test for detecting negligible interaction (i.e. in this latter case, inappropriately concluding negligible interaction from a non-significant test of the interaction). The application of the traditional association-based test is inappropriate for testing for a lack of interaction regardless of the results of the simulation study. However, by providing empirical results, we aim to help the reader better understand the inaccurate use of non-rejection of the null hypothesis of no interaction in a traditional association-based test for detecting a lack of interaction and provide evidence to support the use of the proposed equivalence-based test of negligible interaction.

Ten thousand simulations were conducted for each condition using a nominal significance level of α = .05. Manipulated variables included sample size (N = 25, 50, 100, 1000, 5000), standardized equivalence interval ({−.1, .1}, {−.3, .3}) and interaction coefficients (b3*/β3*). Note that different coefficients are used for evaluating Type I error (β3* falls outside of the equivalence interval), maximum Type I error (β3* falls on the border of the equivalence interval) and power (β3* falls within the equivalence interval). With regard to the maximum Type I error rate, recall that with equivalence testing there is not a point null (e.g. H0: b3* = 0), and therefore many values of the interaction coefficient qualify as Type I error conditions. For example, if ε = .1, then rejecting H0 when β3* = .2 is a Type I error, rejecting H0 when β3* = .5 is a Type I error, etc. However, the maximum Type I error rate is achieved when β3* = .1 (the minimum effect that qualifies as a Type I error). Also note that when referring to the equivalence interval (e.g. {−.1, .1}) below we often just use the short-form ε = .1 (since all of the simulations focus on positive values for b3*/β3*). The specific conditions used in the study can be found in Table 1.

Table 1.

Simulation study conditions.

| ε | Type I Error | Max Type I Error | Power (1) | Power (2) |
|---|---|---|---|---|
| .1 | b3* = 0.08 | b3* = 0.02021 | b3* = 0 | b3* = 0.01 |
| .3 | b3* = 0.2 | b3* = 0.06290 | b3* = 0 | b3* = 0.03 |

Note: ε represents the equivalence bounds [recall that the equivalence interval is (−ε, ε)], b3* represents the population unstandardized interaction regression coefficient (which has an associated standardized coefficient, β3*), and Max Type I error represents the case where the standardized interaction coefficient is on the edge of the equivalence interval (i.e. β3* = ε). Power (1) and Power (2) represent two different conditions in which β3* falls within the equivalence interval. Each combination of ε and b3* represents a distinct condition, so there are eight different conditions. Note that each condition was repeated for each of the sample size conditions.

For each simulation, a sample of size n for x1 and x2 was drawn independently from their respective distributions. A full factorial model was utilized to generate the outcome variable:

$$y_i = b_0 + b_1 x_{1i} + b_2 x_{2i} + b_3^{*} x_{1i} x_{2i} + \delta_i$$

where b1 and b2 were fixed at 0, δi was drawn from the normal distribution N(0, σ2), and b3* was set at the values in Table 1. The next step was to standardize the outcome variable and each of the predictors and generate the interaction term as the product of the standardized predictor variables.

The proposed equivalence-based test was evaluated by exploring Type I error rates and power. In addition, the traditional (association-based) hypothesis testing approach for interactions was utilized where the inappropriate use of a non-significant interaction test was used as evidence of a lack of interaction (and the definitions of Type I error and power are in many cases unique from those of equivalence testing). In other words, since the null and alternate hypotheses are different under these two tests, Type I error and power have different meanings. To relate this back to the Arnocky et al. [3] example, where the null hypothesis was that the interaction was non-negligible, a Type I error would be rejecting the null hypothesis and concluding that the interaction was negligible when in fact in the population it was non-negligible.

For the proposed equivalence-based procedure, when |β3*| ≥ ε, Type I error rates were investigated. For example, when b3* = 0.02021 and ε = .1, β3* = ε and Type I error rates are expected to be at the maximum rate. Recall that β3* represents the population standardized interaction coefficient, and b3* represents the population unstandardized interaction coefficient. Larger values of β3* also fall under the label of Type I error conditions, but the Type I error rate is expected to be lower since it is less likely that the null hypothesis is falsely rejected with a large value for b3*/β3*. When |β3*| < ε, power rates were investigated. For example, when b3* = 0 or .01 and ε = .1, |β3*| < ε and thus this is a power condition. Type I error rates for the proposed test were considered acceptable if they fell within Bradley’s [6] liberal limits, α ± .5α, which for our study equates to .025–.075.
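The labelling of conditions and the acceptance criterion for empirical Type I error rates can be written compactly. This is only an illustrative sketch; the helper names `classify_condition` and `within_bradley_limits` are hypothetical, and the classification is expressed in terms of the population standardized coefficient.

```python
def classify_condition(beta3_pop, eps, tol=1e-12):
    """Label a simulation condition for the equivalence-based test."""
    gap = abs(beta3_pop) - eps
    if abs(gap) <= tol:
        return "max Type I error"      # coefficient on the edge of the interval
    return "Type I error" if gap > 0 else "power"


def within_bradley_limits(rate, alpha=0.05):
    """Bradley's liberal criterion: 0.5 * alpha <= rate <= 1.5 * alpha."""
    return 0.5 * alpha <= rate <= 1.5 * alpha


for beta3 in (0.0, 0.1, 0.2, 0.5):
    print(beta3, classify_condition(beta3, eps=0.1))

print(within_bradley_limits(0.046))
```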

For the traditional null hypothesis testing procedure, |b3*| > 0 would ordinarily be a power condition, whereas b3* = 0 would be a Type I error condition. In this study, however, we are recording when a lack of interaction is concluded (i.e. nonrejection of the traditional null hypothesis); therefore, whenever |b3*| > 0 the recorded rates (i.e. conclusions of lack of interaction) are actually Type II errors, whereas whenever b3* = 0 the recorded rates are correct decisions. The traditional test is included as a comparison in order to demonstrate to researchers that it is not acceptable to use a non-significant test of interaction as proof of a negligible interaction effect.
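To make the comparison concrete, the following is a scaled-down Monte Carlo sketch, not a reproduction of the study. Several details are assumptions of this illustration and are labeled as such in the code: the predictors and errors are drawn from N(0, 1), only 500 replications are used instead of ten thousand, and the conventional OLS standard error is used in place of the delta-method standard error. The function names are hypothetical.

```python
import numpy as np
from scipy import stats


def one_replication(rng, n, b3, eps, alpha=0.05):
    """Return (traditional test retains H0: b3* = 0, TOST declares negligible)."""
    x1, x2 = rng.normal(size=n), rng.normal(size=n)   # ASSUMED N(0, 1) predictors
    y = b3 * x1 * x2 + rng.normal(size=n)             # b1 = b2 = 0; ASSUMED N(0, 1) errors
    z1, z2, zy = ((v - v.mean()) / v.std(ddof=1) for v in (x1, x2, y))
    X = np.column_stack([np.ones(n), z1, z2, z1 * z2])
    coef, *_ = np.linalg.lstsq(X, zy, rcond=None)
    resid = zy - X @ coef
    df = n - X.shape[1]
    # Conventional OLS standard error (a simplification; not the delta method)
    se = np.sqrt(resid @ resid / df * np.linalg.inv(X.T @ X)[3, 3])
    trad_retains = abs(coef[3] / se) < stats.t.ppf(1 - alpha / 2, df)
    t_crit = stats.t.ppf(1 - alpha, df)
    tost_rejects = (coef[3] + t_crit * se < eps) and (coef[3] - t_crit * se > -eps)
    return bool(trad_retains), bool(tost_rejects)


def simulate(n, b3, eps, reps=500, seed=1):
    """Proportion of 'negligible interaction' conclusions: (traditional, equivalence)."""
    rng = np.random.default_rng(seed)
    results = np.array([one_replication(rng, n, b3, eps) for _ in range(reps)])
    return results.mean(axis=0)


for n in (25, 100, 1000):
    trad, tni = simulate(n, b3=0.0, eps=0.1)
    print(f"n = {n}: traditional = {trad:.3f}, equivalence-based = {tni:.3f}")
```

Recording non-rejection for the traditional test and rejection for the equivalence-based test puts both on the same footing: each proportion is the rate at which a researcher would conclude that the interaction is negligible.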

Results

Figures 2 and 3 display the proportion of lack of interaction conclusions for the proposed and traditional tests. Figure 2 displays the results for the conservative equivalence interval (ε = .1) and Figure 3 displays the results for the liberal equivalence interval (ε = .3).

Figure 2.

Rate of detecting negligible interaction between continuous predictors in the traditional test and the proposed test with the equivalence interval of {−.1, .1}. TNI = proposed test of negligible interaction; Trad = traditional interaction test. b3* = 0.02021 is an example of an interaction coefficient on the edge of the interval (β3* = ε), b3* = 0.08 is an example of a non-negligible interaction (β3* > ε), and b3* = 0.01 is an example of a non-zero negligible interaction (−ε < β3* < ε).

Figure 3.

Rate of detecting negligible interaction between continuous predictors in the traditional test and the proposed test with the equivalence interval of {−.3, .3}. TNI = proposed test of negligible interaction; Trad = traditional interaction test. b3* = 0.0629 is an example of an interaction coefficient on the edge of the interval (β3* = ε), b3* = 0.2 is an example of a non-negligible interaction (β3* > ε), and b3* = 0.03 is an example of a non-zero negligible interaction (−ε < β3* < ε).

Type I error conditions for the proposed equivalence-based test

The further the interaction coefficient is from the equivalence interval, the more conservative the Type I error rate. Thus, in order to understand the behavior of the upper bound of the Type I error (maximum Type I error rate) as a function of sample size, we consider the interaction coefficient that falls on the edge of the equivalence interval after standardization. In other words, when β3* is equal to ε this is a Type I error condition for the proposed test (but a power condition for the traditional test, i.e. as discussed, the reported rates are the proportion of Type II errors). Note that b3* = .02021 for ε = .1 and b3* = .06290 for ε = .3 are the required coefficients for simulating a maximum Type I error condition, because the partial standardized coefficient for b3* = .02021 is β3* = .1 and the partial standardized coefficient for b3* = .06290 is β3* = .3.
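The link between an unstandardized interaction coefficient and its standardized counterpart can be checked numerically. For a least squares fit that includes an intercept and both main effects, the coefficient on the product of z-scored predictors equals b3 multiplied by the two predictor standard deviations and divided by the outcome standard deviation. This identity is a general algebraic property stated here as background, not a formula quoted from the study, and the predictor scales and error variance below are hypothetical, so the sketch does not reproduce the specific values .02021 or .06290.

```python
import numpy as np


def ols(X, y):
    """Least squares coefficients for design matrix X and outcome y."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


rng = np.random.default_rng(42)
n = 400
x1 = rng.normal(10, 2, n)          # hypothetical predictor scales
x2 = rng.normal(50, 5, n)
y = 1.0 + 0.02 * x1 * x2 + rng.normal(0, 3, n)

# Raw-metric model: intercept, x1, x2, x1 * x2
b3 = ols(np.column_stack([np.ones(n), x1, x2, x1 * x2]), y)[3]

# Standardized model: product of z-scores, not the z-score of the product
z1, z2, zy = ((v - v.mean()) / v.std(ddof=1) for v in (x1, x2, y))
beta3 = ols(np.column_stack([np.ones(n), z1, z2, z1 * z2]), zy)[3]

# Rescaling identity: beta3 = b3 * s_x1 * s_x2 / s_y
implied = b3 * x1.std(ddof=1) * x2.std(ddof=1) / y.std(ddof=1)
print(beta3, implied, np.isclose(beta3, implied, rtol=1e-6))
```

The identity also shows why the standardized coefficient depends on the predictor and error variances used in a simulation: the same b3* maps to different values of β3* when those variances change.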

When ε = .3, the Type I error results were approximately equal to α at all sample sizes (i.e. within Bradley’s limit). However, when ε = .1, the rates of Type I error were approximately equal to α only for n > 100 and conservative otherwise.

Recalling that the null hypothesis of the proposed test holds whenever the interaction coefficient (after standardization) falls outside of the equivalence interval, in order to simulate Type I error conditions we can choose infinitely many values for the interaction term (i.e. any β3* ≥ ε | β3* ≤ −ε). For the cases where we have a non-negligible interaction effect (i.e. b3* = .08 for ε = .1, b3* = .20 for ε = .3), the rates of detecting no interaction for both the traditional test and the proposed test, as expected, approach zero as the sample size increases. The most important difference is that the proposed test never results in a conclusion of negligible interaction, whereas the traditional null hypothesis test incorrectly concludes a lack of interaction more than 50% of the time at n = 25 and more than 25% of the time at n = 50.

Power conditions for the proposed equivalence-based test

When the partial standardized population interaction coefficient (β3*) falls within the equivalence interval, this is a power condition for the equivalence-based test, and our interest is in the power of the proposed test to reject the null (i.e. detect a negligible interaction effect). In addition to b3* = 0, b3* = 0.01 and b3* = 0.03 are examples of non-zero negligible interaction effects (−ε < β3* < ε) used in the simulation study for ε = .1 and ε = .3, respectively. For the traditional test, however, when b3* = 0 what is being recorded is the complement of the Type I error rate (i.e. we expect the rates to be equal to 1 − α), whereas when b3* > 0 we are again recording the Type II error rates.

For both ε = .1 and ε = .3, the power of the proposed negligible interaction test increased from 0 to 1 as n increased. The power of the proposed test is close to zero for n < 100 for ε = .1 and n = 25 for ε = .3. This was expected, as we do not have enough evidence to reject the null hypothesis of a non-negligible interaction with a small number of cases. As sample size grows, we have more statistical power for rejecting H0: β3* ≥ ε | β3* ≤ −ε. For the non-zero interaction coefficient and narrower equivalence interval, as expected, the power of the proposed test is lower.

The traditional null hypothesis test results for b3* = 0 are, as expected, approximately equal to 1 − α. For the cases where we have a non-zero negligible interaction, the traditional test will often conclude no interaction with a small sample size because of a lack of statistical power, but rarely or never find a lack of interaction with a large sample size.

Discussion

Two methods for detecting a negligible interaction effect were compared in this study: The traditional association-based null hypothesis testing method (which is actually testing for the presence of a non-zero interaction effect and relies on a non-significant coefficient for the interaction) and a proposed equivalence-testing based method, which was introduced in this study. Detecting a negligible interaction is the interest of many researchers and consequently the availability of an appropriate test for assessing a negligible interaction effect is crucial. The traditional association-based approach for assessing a lack of interaction has some known issues and is not recommended for the purpose of detecting negligible interaction.

The proposed test introduced in this study, unlike the traditional approach mentioned above, has statistical properties that are in line with the research hypothesis of the study. More specifically, the null hypothesis of the proposed equivalence-based test specifies a non-negligible interaction effect and the goal is to be able to reject this hypothesis and conclude that there is evidence for a negligible interaction effect. A procedure with a similar objective was previously proposed by Cribbie et al. [10], who discussed the inappropriateness of using a factorial analysis of variance (ANOVA) test to assess a lack of interaction with categorical predictors. However, the approach proposed in that work involved an unstandardized bootstrap-based intersection-union test for categorical variables in a × b designs. Such an approach is not necessary in the continuous predictor setting because there is a single coefficient representing the interaction term (rather than, for example, multiple interaction contrast dummy variables). In addition to the nature of the test, the details of that procedure, including scaling, equivalence interval construction, and interpretation, are very different from those of the procedure proposed in this study for assessing negligible interaction among continuous predictors using standardized regression-based models. Below we discuss the results of the simulation study, and it is important to remember that the definitions of Type I error and power differ for the negligible interaction and traditional hypothesis testing approaches.

Type I error rates for the proposed test of negligible interaction, when the population interaction coefficient equaled ε = .1, were acceptable only when the sample size reached N = 1000. However, when the coefficient equaled ε = .3, Type I error rates of the proposed test were acceptable at all sample sizes. As was discussed above, there is much subjectivity in setting an appropriate equivalence interval, and our results highlight that when the equivalence interval is very narrow, results can be overly conservative. To clarify, we are not suggesting that researchers increase the width of the equivalence interval in order to achieve appropriate Type I error rates; what we are proposing is that researchers take very seriously the selection of an appropriate equivalence interval for each negligible interaction problem (see [4]). What degree of interaction is deemed negligible in one setting may not be deemed negligible in another. As expected, rates of Type I error were zero in all conditions when the coefficient exceeded ε. This is a desirable property of the test in that it does not permit any Type I errors when the coefficient falls beyond the border of the equivalence interval.

In the proposed test, when the population interaction coefficient was smaller than ε and ε = .3, power rates reached 100% for coefficients of both 0 and .03 when sample sizes were equal to 1000. With the conservative interval, ε = .1, and a coefficient smaller than ε, power rates reached 100% for sample sizes equal to 5000. Power rates for ε = .1 were nil until N = 500, whereas power for ε = .3 increased fairly steadily from the smallest to the largest sample size. This is a key point; as introduced at the start of the paper, detecting negligible interaction is an important goal for many researchers. It is possible to declare negligible interaction with a theoretically appropriate method as long as the equivalence interval is not overly narrow or the sample size is large.

To summarize, since the alternative hypothesis of the proposed test matches the research hypothesis, as the sample size increases the proposed test’s rate of detecting negligible interaction will approach 100% when the interaction term (after standardization) falls within the equivalence interval, approach zero when it falls outside the equivalence interval, and approximate α when it falls on the edge of the equivalence interval.
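The large-sample behavior summarized above can be illustrated with a rough analytic sketch. This is not the simulation code used in the study. It assumes a two one-sided tests decision rule with a normal approximation, and it assumes that the standard error of the interaction estimate is roughly 1/√N; both are simplifications adopted here for illustration. The function names and the sample sizes in the loop are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # standard normal distribution


def tost_declares_negligible(estimate, se, eps, alpha=0.05):
    """Two one-sided tests (normal approximation): declare negligible
    interaction only if the (1 - 2*alpha) interval lies inside (-eps, eps)."""
    z = _STD_NORMAL.inv_cdf(1 - alpha)
    return (estimate - z * se > -eps) and (estimate + z * se < eps)


def tost_rejection_rate(beta, eps, n, alpha=0.05):
    """Approximate probability of declaring negligible interaction.

    Assumption (illustrative only): the estimate is Normal(beta, se^2)
    with se = 1 / sqrt(n).
    """
    se = 1 / sqrt(n)
    z = _STD_NORMAL.inv_cdf(1 - alpha)
    # Estimates strictly between `lower` and `upper` lead to rejection of H0.
    lower, upper = -eps + z * se, eps - z * se
    if upper <= lower:
        return 0.0  # interval too narrow for this n: the test can never reject
    return (_STD_NORMAL.cdf((upper - beta) / se)
            - _STD_NORMAL.cdf((lower - beta) / se))


if __name__ == "__main__":
    for eps in (0.1, 0.3):
        for n in (50, 100, 500, 1000, 5000):  # illustrative sample sizes
            inside = tost_rejection_rate(0.0, eps, n)          # power
            edge = tost_rejection_rate(eps, eps, n)            # Type I error at the bound
            outside = tost_rejection_rate(eps + 0.2, eps, n)   # Type I error beyond the bound
            print(f"eps={eps} N={n}: inside={inside:.3f} "
                  f"edge={edge:.3f} outside={outside:.3f}")
```

Under these assumptions the rejection rate for a coefficient inside the interval rises toward 1, the rate at the edge rises toward α from below (the conservativeness seen with narrow intervals), and the rate beyond the interval stays near zero.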

The results for the traditional test when the population interaction coefficient is greater than 0 are Type II errors and thus approach zero as the sample size increases. However, when sample sizes were small the traditional test often concluded a lack of interaction even when the interaction effect was non-zero. This result is important: with the proposed test, whether sample sizes were small or large, interaction coefficients beyond the bounds of the equivalence interval were never declared negligible. With the traditional test, by contrast, even interaction coefficients beyond the bounds of the equivalence interval can lead to a conclusion of no interaction unless the sample size is large. These results highlight why it is not appropriate to use non-rejection of a traditional test as evidence of negligible interaction; with null hypothesis significance testing, it is important that power for investigating the research hypothesis increases, not decreases, with sample size.
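The pattern described for the traditional test can be sketched in the same rough way. The function below approximates how often a two-sided test fails to reject the null of zero interaction, which is the event that would be read as "no interaction". As before, the normal approximation and the standard error of 1/√N are assumptions made for illustration, and the function name is hypothetical; this is not the study's simulation.

```python
from math import sqrt
from statistics import NormalDist


def traditional_nonrejection_rate(beta, n, alpha=0.05):
    """Approximate probability that a two-sided test of H0: beta = 0
    is NOT rejected (i.e. 'no interaction' is concluded).

    Assumption (illustrative only): estimate ~ Normal(beta, se^2),
    with se = 1 / sqrt(n).
    """
    nd = NormalDist()
    se = 1 / sqrt(n)
    z = nd.inv_cdf(1 - alpha / 2)
    # Non-rejection occurs when |estimate / se| < z.
    return nd.cdf(z - beta / se) - nd.cdf(-z - beta / se)


if __name__ == "__main__":
    for n in (50, 100, 500, 1000, 5000):  # illustrative sample sizes
        rate = traditional_nonrejection_rate(0.3, n)
        print(f"N={n}: P(conclude 'no interaction' | beta=0.3) = {rate:.3f}")
```

With a non-zero coefficient, the non-rejection rate is substantial at small N and shrinks as N grows, so the chance of concluding "no interaction" is highest exactly where the data are least informative.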

For the cases with a small sample size and a negligible interaction effect, the traditional test appears to perform better than the proposed test. However, as described above, this apparent superior performance is a result of how the null hypotheses are defined for the traditional test and the proposed test. For small sample sizes, with a traditional test and a small to moderate effect, there is a lack of evidence for rejection of the null. In other words, there is not sufficient power to detect the interaction effect. However, it is still inappropriate to prefer the traditional test in this situation, since a lack of power for detecting an interaction does not constitute an appropriate test for a lack of interaction. Further, when appropriate, larger values of ε provide good power and keep the testing within the recommended equivalence testing framework.

What do all of these results mean for applied researchers? If a researcher is attempting to demonstrate that a negligible interaction effect exists between two predictors on an outcome, using a traditional (association-based) null hypothesis significance test might provide more power for concluding that negligible interaction exists with small sample sizes; however, that comes at the expense of also often declaring that negligible interaction occurs when, in fact, a non-negligible interaction is present. Further, with a large sample size, even very small (i.e. negligible) interactions may be declared statistically significant even if they have little practical significance. These results occur because the hypothesis testing procedure is backwards; the research hypothesis is aligned with the null, not the alternate, hypothesis. The equivalence-testing based approach proposed in this study aligns the research hypothesis (negligible interaction) with the alternate hypothesis, and thus the statistical results are as desired (i.e. power for detecting negligible interaction increases as sample size increases, and Type I error rates are minimal for non-negligible interactions).

As with all simulation studies, the results and conclusions are limited to the conditions we investigated in this study. Our focus was on linear models with two continuous independent variables in which all assumptions were met. Future research may expand this methodology to higher order designs, such as three-way interactions, or assess the effect of assumption violations on lack of interaction tests.

An alternative to the proposed approach is the use of Bayesian methods (e.g. [33]). For example, Bayesian highest density intervals on the interaction effect can be applied in a manner similar to the methods proposed in this paper (and will yield similar results unless the sample size is small and a precise prior is adopted, see [16]). Bayes factors can also be applied, wherein the researcher compares the relative likelihood of the null and alternative hypotheses. It is important in these settings that an interval-based null hypothesis is adopted in order to be able to incorporate the equivalence interval [18,33]. Another alternative is to simply explore the effect size for the interaction; however, hypothesis testing adds important information regarding the magnitude of the effect relative to the error [12].

To summarize, although much care is required when setting an appropriate equivalence interval, researchers investigating negligible interaction among continuous predictors are encouraged to utilize an equivalence-testing based approach.

Funding Statement

This work was supported by the Social Sciences and Humanities Research Council of Canada [Grant Number 435-2016-1057].

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- 1. Altman D.G., and Bland J.M., Statistics notes: absence of evidence is not evidence of absence. Br. Med. J. 311 (1995), pp. 485–485. doi: 10.1136/bmj.311.7003.485

- 2. Anderson S., and Hauck W.W., A new procedure for testing equivalence in comparative bioavailability and other clinical trials. Commun. Stat. Theory Methods 12 (1983), pp. 2663–2692. doi: 10.1080/03610928308828634

- 3. Arnocky S., Proietti V., Ruddick E.L., Côté T.-R., Ortiz T.L., Hodson G., and Carré J.M., Aggression toward sexualized women is mediated by decreased perceptions of humanness. Psychol. Sci. 30 (2019), pp. 748–756. doi: 10.1177/0956797619836106

- 4. Beribisky N., Davidson H., and Cribbie R.A., Exploring perceptions of meaningfulness in visual representations of bivariate relationships. PeerJ. 7 (2019), pp. e6853. doi: 10.7717/peerj.6853

- 5. Berger R.L., Multiparameter hypothesis testing and acceptance sampling. Technometrics. 24 (1982), pp. 295–300. doi: 10.2307/1267823

- 6. Bradley J.V., Robustness? Br. J. Math. Stat. Psychol. 31 (1978), pp. 144–152. doi: 10.1111/j.2044-8317.1978.tb00581.x

- 7. Cheng B., and Shao J., Exact tests for negligible interaction in two-way analysis of variance/covariance. Stat. Sin. 17 (2007), pp. 1441–1455.

- 8. Cohen J., Statistical Power Analysis for the Behavioral Sciences, 2nd ed., Lawrence Erlbaum, New Jersey, 1988.

- 9. Counsell A., Cribbie R.A., and Flora D., Evaluating equivalence testing methods for measurement invariance. Multivariate. Behav. Res. 52 (2020), pp. 312–328. doi: 10.1080/00273171.2019.1633617

- 10. Cribbie R.A., Ragoonanan C., and Counsell A., Testing for negligible interaction: A coherent and robust approach. Br. J. Math. Stat. Psychol. 69 (2016), pp. 159–174. doi: 10.1111/bmsp.12066

- 11. Cribbie R.A., Gruman J., and Arpin-Cribbie C., Recommendations for applying tests of equivalence. J. Clin. Psychol. 60 (2004), pp. 1–10. doi: 10.1002/jclp.10217

- 12. Funder D.C., and Ozer D.J., Evaluating effect size in psychological research: Sense and nonsense. Adv. Methods Pract. Psychol. Sci. 2 (2019), pp. 156–168. doi: 10.1177/2515245919847202

- 13. Goertzen J.R., and Cribbie R.A., Detecting a lack of association. Br. J. Math. Stat. Psychol. 63 (2010), pp. 527–537. doi: 10.1348/000711009X475853

- 14. Jamshidian M., and Bentler P.M., Improved standard errors of standardized parameters in covariance structure models: Implications for construct explication, in Problems and Solutions in Human Assessment: Honoring Douglas N. Jackson at Seventy, Goffin R.D., Helmes E., eds., Kluwer Academic/Plenum Publishers, New York, 2000. pp. 73–94.

- 15. Jones J.A., and Waller N.G., The normal-theory and asymptotic distribution-free (ADF) covariance matrix of standardized regression coefficients: Theoretical extensions and finite sample behavior. Psychometrika 80 (2015), pp. 365–378. doi: 10.1007/s11336-013-9380-y

- 16. Kruschke J.K., and Liddell T.M., The Bayesian new statistics: hypothesis testing, estimation, meta-analysis, and power analysis from a Bayesian perspective. Psychonomic Bull. Rev. 25 (2018), pp. 178–206. doi: 10.3758/s13423-016-1221-4

- 17. Mascha E.J., and Sessler D.I., Equivalence and noninferiority testing in regression models and repeated-measures designs. Anesth. Analg. 112 (2011), pp. 678–687. doi: 10.1213/ANE.0b013e318206f872

- 18. Morey R.D., and Rouder J.N., Bayes factor approaches for testing interval null hypotheses. Psychol. Methods 16 (2011), pp. 406–419. doi: 10.1037/a0024377

- 19. Nieminen P., Lehtiniemi H., Vähäkangas K., Huusko A., and Rautio A., Standardized regression coefficient as an effect size index in summarizing findings in epidemiological studies. Epidemiol. Biostat. Public Health 10 (2013). doi: 10.2427/8854

- 20. Preacher K.J. (2003). A primer on interaction effects in multiple linear regression. Retrieved from http://www.quantpsy.org/interact/interactions.htm

- 21. R Core Team, R: A Language and Environment for Statistical Computing, R Foundation for Statistical Computing, Vienna, Austria, 2016. https://www.R-project.org/

- 22. Robinson A.P., Duursma R.A., and Marshall J.D., A regression-based equivalence test for model validation: shifting the burden of proof. Tree Physiol. 25 (2005), pp. 903–913. doi: 10.1093/treephys/25.7.903

- 23. Rogers J.L., Howard K.I., and Vessey J.T., Using significance tests to evaluate equivalence between two experimental groups. Psychol. Bull. 113 (1993), pp. 553–565. doi: 10.1037/0033-2909.113.3.553

- 24. Rouanet H., Bayesian methods for assessing importance of effects. Psychol. Bull. 119 (1996), pp. 149–158. doi: 10.1037/0033-2909.119.1.149

- 25. Rusticus S.A., and Eva K.W., Defining equivalence in medical education evaluation and research: does a distribution-based approach work? Adv. Health Sci. Educ. 21 (2015), pp. 359–373. doi: 10.1007/s10459-015-9633-x

- 26. Schuirmann D.J., A comparison of the two one-sided tests procedure and the power approach for assessing equivalence of average bioavailability. J. Pharmacokinet. Biopharm. 15 (1987), pp. 657–680. doi: 10.1007/BF01068419

- 27. Seaman M.A., and Serlin R.C., Equivalence confidence intervals for two-group comparisons of means. Psychol. Methods 3 (1998), pp. 403–411. doi: 10.1037/1082-989X.3.4.403

- 28. Selwyn M.R., and Hall N.R., On Bayesian methods for bioequivalence. Biometrics 40 (1984), pp. 1103–1108. doi: 10.2307/2531161

- 29. Wellek S., Testing Statistical Hypotheses of Equivalence and Noninferiority, 2nd ed., Chapman and Hall/CRC, 2010. doi: 10.1201/EBK1439808184

- 30. Westlake W.J., Symmetrical confidence intervals for bioequivalence trials. Biometrics 32 (1976), pp. 741–744. doi: 10.2307/2529259

- 31. Yuan K.H., and Chan W., Measurement invariance via multigroup SEM: issues and solutions with chi-square-difference tests. Psychol. Methods 21 (2016), pp. 405–426. doi: 10.1037/met0000080

- 32. Shiskina T., Farmus L., and Cribbie R.A., Testing for a lack of relationship among categorical variables. Quant. Methods Psychol. 14 (2018), pp. 167–179. doi: 10.20982/tqmp.14.3.p167

- 33. Hoyda J., Counsell A., and Cribbie R.A., Traditional and Bayesian approaches for testing mean equivalence and a lack of association. Quant. Methods Psychol. 15 (2019), pp. 12–24. doi: 10.20982/tqmp.15.1.p012