PURPOSE

Older hospitalized cancer patients face high risks of hospital mortality. Improved risk stratification could help identify high-risk patients who may benefit from future interventions, although we lack validated tools to predict in-hospital mortality for patients with cancer. We evaluated the ability of a high-dimensional machine learning prediction model to predict inpatient mortality and compared the performance of this model to existing prediction indices.

METHODS

We identified patients with cancer older than 75 years from the Nationwide Emergency Department Sample between 2016 and 2018. We constructed a high-dimensional predictive model called the Cancer Frailty Assessment Tool (cFAST), which used an extreme gradient boosting algorithm to predict in-hospital mortality. cFAST model inputs included patient demographics, hospital variables, and diagnosis codes. Model performance was assessed with the area under the curve (AUC) from receiver operating characteristic curves, with an AUC of 1.0 indicating perfect prediction. We compared model performance to existing indices including the Modified 5-Item Frailty Index, Charlson comorbidity index, and Hospital Frailty Risk Score.

RESULTS

We identified 2,723,330 weighted emergency department visits among older patients with cancer, of whom 144,653 (5.3%) died in the hospital. Our cFAST model included 240 features and demonstrated an AUC of 0.92. Comparator models including the Modified 5-Item Frailty Index, Charlson comorbidity index, and Hospital Frailty Risk Score achieved AUCs of 0.58, 0.62, and 0.71, respectively. Predictive features of the cFAST model included acute conditions (respiratory failure and shock), chronic conditions (lipidemia and hypertension), patient demographics (age and sex), and cancer and treatment characteristics (metastasis and palliative care).

CONCLUSION

High-dimensional machine learning models enabled accurate prediction of in-hospital mortality among older patients with cancer, outperforming existing prediction indices. These models show promise in identifying patients at risk of severe adverse outcomes, although additional validation and research on clinical implementation of these tools are needed.

INTRODUCTION

Each year, > 40% of cancer-related deaths occur among patients older than 75 years.1 A large proportion of these patients will use hospital resources throughout their disease course.2 Management of older patients with cancer is complicated by accumulated age-related comorbidities.3 Furthermore, older patients with cancer are at risk of frailty, defined as an aging-related syndrome of physiologic decline associated with decreased tolerance to acute stressors and a high risk of mortality.4 Different prediction algorithms have been constructed to help identify frail older adults who might benefit from early interventions to mitigate the risks of adverse outcomes.5-8

CONTEXT

Key Objective

Can machine learning (ML) methods improve hospital mortality risk-stratification for older patients with cancer presenting for acute care?

Knowledge Generated

We demonstrate that three commonly used risk indices are poor at predicting in-hospital mortality for older patients with cancer. Our ML model incorporated 240 features—acute and chronic diagnoses, demographics, and cancer characteristics—and vastly outperformed existing risk indices with high accuracy (area under the curve, 0.92).

Relevance

Our high-dimensional ML model demonstrated high capacity for in-hospital mortality prediction, highlighting the potential use of these models for supporting clinical decision making regarding adverse outcomes for patients with cancer.

Despite the benefits of using risk prediction to identify frail older adults with cancer, we lack a validated frailty algorithm for this population. Assessments such as the Hospital Frailty Risk Score (HFRS) were developed from a general population of older adults. Other indices, such as the Modified 5-Item Frailty Index (mFI-5), have been tested in patients with cancer, although this testing was limited largely to oncologic surgeries.9,10 Many additional cancer-specific tools have been evaluated for risk prediction. However, these studies have limitations when applied to older patients with cancer, including single-institution designs, small sample sizes, restriction to a single tumor subtype, and inclusion of younger patients with cancer.11-14 The purpose of this study was to create and test a novel high-dimensional predictive model to help identify older patients with cancer at risk of hospital mortality. Furthermore, we compared our predictive model to existing predictive indices to assess the overall utility of this approach in a population of older adults with cancer.

METHODS

Study Design and Data Source

We intended to evaluate the applicability of frailty risk assessment tools at the earliest initiation of hospital care, which for most patients is the emergency department (ED). Using ED-level visits also allowed us to select for the acute care setting while avoiding inpatient admissions for elective procedures. We identified patients from the Nationwide Emergency Department Sample (NEDS), developed for the Healthcare Cost and Utilization Project and sponsored by the Agency for Healthcare Research and Quality. NEDS is a large, nationally representative ED database covering 980 hospitals and 79.2% of all ED visits in 37 states.15 The database covers both academic and community medical centers, captures demographic, clinical, and nonclinical variables, and can be requested from the Healthcare Cost and Utilization Project. This study was deemed exempt from institutional review board review and was unfunded.

Our cohort consisted of patients with cancer who visited the ED between 2016 and 2018. Similar to existing research on frailty among older adults,5 our study cohort included only individuals age 75 years or older. NEDS records up to 35 International Classification of Diseases, version 10 (ICD-10), diagnosis codes per encounter. For each weighted patient visit, the ICD-10 codes accumulated during the hospital course are included, covering both acute conditions and any recorded chronic conditions. Per the structure of NEDS coding, no patient should carry ICD-10 codes recorded before the ED encounter, although we cannot exclude the possibility that some data from previous encounters remain, which is a possible limitation. Using existing methodology to identify patients with cancer,16,17 we defined a cancer diagnosis as any cancer ICD-10 code (Data Supplement) in any position on the list of diagnosis codes. When more than one cancer code was present, we classified the specific type of cancer on the basis of the first listed cancer diagnosis.
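The cohort rule described above can be sketched in a few lines of Python. This is an illustration only, not the study code: the actual cancer code list is in the Data Supplement, so the three prefixes below are hypothetical placeholders, and the function names are our own.

```python
from typing import Iterable, Optional, Sequence

# Hypothetical stand-in for the cancer code list given in the Data Supplement.
ILLUSTRATIVE_CANCER_PREFIXES = frozenset({"C34", "C50", "C91"})


def first_cancer_code(
    diagnoses: Sequence[str],
    cancer_prefixes: Iterable[str] = ILLUSTRATIVE_CANCER_PREFIXES,
) -> Optional[str]:
    """Return the first listed diagnosis whose three-character category is a cancer code."""
    prefixes = set(cancer_prefixes)
    for code in diagnoses:
        if code[:3].upper() in prefixes:
            return code
    return None


def in_cohort(age: int, diagnoses: Sequence[str]) -> bool:
    """Age 75 years or older with a cancer code in any diagnosis position."""
    return age >= 75 and first_cancer_code(diagnoses) is not None


# Toy visit: two cancer codes, neither in the first position.
visit = {"age": 81, "diagnoses": ["J96", "C50", "C34", "I10"]}
print(in_cohort(visit["age"], visit["diagnoses"]))  # True
print(first_cancer_code(visit["diagnoses"]))        # C50
```

In this toy visit the cancer type would be taken from C50 because it is listed before C34, mirroring the first-listed rule.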

Model Selection and Risk-Tool Evaluation

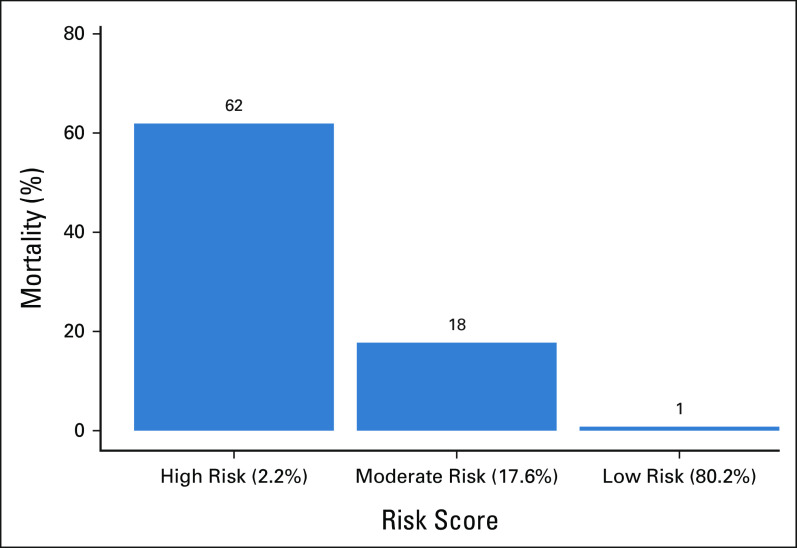

We constructed a high-dimensional predictive model named the Cancer Frailty Assessment Tool (cFAST) to predict the primary end point of hospital mortality, defined as death in the ED or during subsequent inpatient admission. For this model, we used an extreme gradient boosting algorithm (XGBoost).18 The model incorporated individual ICD-10 diagnosis codes as binary variables. We condensed ICD-10 codes into their first three characters, with the exception of the codes for radiation therapy, chemotherapy/immunotherapy, and palliative care, which we kept as independent variables. We only included ICD-10 codes with > 1% prevalence in the cohort. Our model also incorporated patient variables and hospital characteristics, including age, sex, primary payer, rural/metro status, income quartile, discharge quarter, hospital ownership, and hospital teaching status, although the majority of features were ICD-10 diagnosis codes. Missing data were binned into categorical unknown columns. We divided the entire data set into training (75%) and test (25%) data. We used the training data to construct models and the test data to formally assess model performance. We estimated XGBoost feature importance with total gain, which evaluates the relative contribution of each variable to model accuracy. Model performance may be influenced by the underlying predictive algorithm; therefore, we conducted a sensitivity analysis in which we constructed two additional machine learning (ML) models: L1 regularized logistic regression (lasso) and a simple neural network. The specifics of model training for all ML models, including hyperparameter tuning and number of rounds, are summarized in the Data Supplement. We used the predicted risk scores generated from our cFAST model to categorize patients into low-, moderate-, and high-risk groups. We defined these risk groups on the basis of cutoffs determined a priori to be clinically relevant (Fig 1).
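The diagnosis-code encoding described above (condense to three characters, keep selected treatment codes whole, drop rare codes, one binary column per code) can be sketched as follows. This is a minimal illustration under stated assumptions, not the study pipeline, which was written in R. The specific full-length codes kept here (Z51.0, Z51.11, Z51.12, and Z51.5) are our assumption about which codes represent radiation therapy, chemotherapy/immunotherapy, and palliative care, and the function names are hypothetical.

```python
from collections import Counter
from typing import List, Sequence, Tuple

# Assumed full-length codes kept as separate features (dots removed).
KEEP_FULL_CODES = frozenset({"Z510", "Z5111", "Z5112", "Z515"})


def condense(code: str) -> str:
    """Truncate a code to three characters unless it is one of the kept codes."""
    code = code.replace(".", "").upper()
    return code if code in KEEP_FULL_CODES else code[:3]


def build_features(
    visits: Sequence[Sequence[str]], min_prevalence: float = 0.01
) -> Tuple[List[str], List[List[int]]]:
    """Return (feature names, binary matrix) for codes above the prevalence cutoff."""
    condensed = [{condense(c) for c in visit} for visit in visits]
    counts = Counter(code for codes in condensed for code in codes)
    n = len(visits)
    # Strictly greater than the cutoff, matching the "> 1%" rule in the text.
    kept = sorted(code for code, k in counts.items() if k / n > min_prevalence)
    matrix = [[1 if code in codes else 0 for code in kept] for codes in condensed]
    return kept, matrix


# Toy data; the 30% cutoff is used only so that a four-visit example filters something.
toy_visits = [
    ["J96.01", "I10", "Z51.5"],
    ["I10", "E78.5"],
    ["C34.90", "I10"],
    ["J96.21"],
]
names, X = build_features(toy_visits, min_prevalence=0.3)
print(names)  # ['I10', 'J96']
print(X)      # [[1, 1], [1, 0], [1, 0], [0, 1]]
```

Counting each code at most once per visit keeps prevalence defined per encounter rather than per recorded code.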

FIG 1.

Risk groups for the cFAST. Percentage of hospital mortality among risk-stratified patients converted using cFAST. Risk scores displayed as risk: (% of total validation cohort within this risk). cFAST, Cancer Frailty Assessment Tool.
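As a sketch of how predicted probabilities could be converted into the three risk groups and summarized as in Fig 1, consider the following. The cutoffs of 0.10 and 0.50 are hypothetical placeholders chosen for illustration; the cutoffs actually used are shown in Fig 1 and are not restated in the text. The data are toy values.

```python
from typing import Dict, Sequence, Tuple


def risk_group(p: float, low_cut: float = 0.10, high_cut: float = 0.50) -> str:
    """Map a predicted mortality probability to a risk group (placeholder cutoffs)."""
    if p < low_cut:
        return "low"
    if p < high_cut:
        return "moderate"
    return "high"


def summarize(
    probs: Sequence[float],
    died: Sequence[int],
    low_cut: float = 0.10,
    high_cut: float = 0.50,
) -> Dict[str, Tuple[float, float]]:
    """Return {group: (share of cohort, observed mortality within the group)}."""
    counts = {"low": [0, 0], "moderate": [0, 0], "high": [0, 0]}
    for p, d in zip(probs, died):
        bucket = counts[risk_group(p, low_cut, high_cut)]
        bucket[0] += 1  # patients in the group
        bucket[1] += d  # deaths in the group
    n = len(probs)
    return {
        g: (k / n, deaths / k if k else float("nan"))
        for g, (k, deaths) in counts.items()
    }


# Toy predictions and outcomes (1 = died in hospital).
probs = [0.02, 0.05, 0.08, 0.20, 0.30, 0.70, 0.90, 0.03]
died = [0, 0, 0, 0, 1, 1, 1, 0]
print(summarize(probs, died))
# {'low': (0.5, 0.0), 'moderate': (0.25, 0.5), 'high': (0.25, 1.0)}
```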

Comparator Models

We selected comparator risk indices on the basis of the extent of their validation, frequency of use, and feasibility of construction from variables within the NEDS data set. The mFI-5, Charlson comorbidity index, and HFRS represent well-established indices that have been tested in a variety of cohorts, and all three can be calculated from ICD-10 diagnosis codes. The mFI-5 was developed as a more concise alternative to the 11-item modified frailty index and was intended to risk-stratify surgical patients.6 The mFI-5 includes five common comorbidities. The Charlson comorbidity index was originally developed to predict risk of death within 1 year of hospitalization, using 19 categories of comorbidity.19 Its use has expanded to diverse risk-stratification end points, and it is widely used within health outcomes research. The HFRS was developed using more than 100 ICD-10 codes associated with older, frail patients.5 For consistency between our cFAST and the comparator models, we incorporated patient demographic and hospital variables into each of the final comparator risk scores. We accomplished this by entering each calculated comparator risk score as a predictor in an XGBoost algorithm along with the demographic and hospital variables. Therefore, each of the three presented comparator risk scores includes both the baseline risk score (mFI-5, Charlson, or HFRS) and the demographic and hospital variables. For each predictive model, including our cFAST model, we calculated the area under the curve (AUC) from a receiver operating characteristic curve to assess prediction accuracy using the test data sets. An AUC of 1.0 indicates perfect prediction. All analyses were conducted using R 4.0.3, with ML models from the tidymodels, xgboost, glmnet, and nnet libraries.
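For readers less familiar with the AUC, the sketch below computes it from labels and predicted scores using the rank-sum (Mann-Whitney) identity: the AUC equals the probability that a randomly chosen patient who died receives a higher score than a randomly chosen survivor, with ties counted as one half. This is an illustrative standard-library Python implementation, not the R code used in the study, and the function name is our own.

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Area under the ROC curve via average ranks (ties receive the mean rank)."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("AUC needs at least one positive and one negative label")
    pairs = sorted(zip(scores, labels))
    rank_sum_pos = 0.0
    i = 0
    while i < len(pairs):
        j = i
        # Advance j past every observation tied with pairs[i] on score.
        while j < len(pairs) and pairs[j][0] == pairs[i][0]:
            j += 1
        avg_rank = (i + 1 + j) / 2  # mean of the 1-based ranks i+1 .. j
        rank_sum_pos += avg_rank * sum(label for _, label in pairs[i:j])
        i = j
    return (rank_sum_pos - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)


print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
print(roc_auc([0, 1], [0.2, 0.9]))                    # 1.0 (perfect ranking)
print(roc_auc([0, 1], [0.5, 0.5]))                    # 0.5 (no discrimination)
```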

RESULTS

Between 2016 and 2018, we identified 2,723,330 weighted ED visits among older patients with cancer across 990 EDs. Among these visits, 1,918,504 (70.4%) led to an inpatient admission. Out of all visits, 144,653 (5.3%) resulted in death either in the ED (n = 9,290) or during the subsequent inpatient admission (n = 135,363). Demographics of the study cohort by ED visit are included in Table 1, stratified by whether the encounter resulted in death or not. The most prevalent cancer subtypes were lung (11.8%), myelodysplastic and other hematopoietic disorders (10.3%), and leukemias (9.3%).
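The counts reported above are internally consistent, which can be checked with a few lines of arithmetic. The snippet uses only the figures stated in this paragraph; the variable names are our own.

```python
total_visits = 2_723_330
admitted = 1_918_504
deaths_ed = 9_290
deaths_inpatient = 135_363

deaths = deaths_ed + deaths_inpatient
print(deaths)                                    # 144653
print(round(100 * deaths / total_visits, 1))     # 5.3
print(round(100 * admitted / total_visits, 1))   # 70.4
```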

TABLE 1.

Patient and Hospital Demographics for Older Adult Patients With Cancer Visiting the Emergency Department

A total of 1,806 possible unique three-character ICD-10 codes were present in our cancer cohort. The final cFAST model was trained on 215 ICD-10 codes with prevalence of 1% or more and 25 patient demographic variables, for a total of 240 model features. A total of 36,292 patients had at least one demographic variable binned into an unknown categorical feature. Evaluation of the predictive ability of the cFAST model revealed an AUC of 0.92 (95% CI, 0.915 to 0.921; Fig 2). The risk cohorts derived from cFAST categorized 2.2% of patients as high risk, with 62% of these patients experiencing hospital mortality (Fig 1). Conversely, 80.2% of patients were classified as low risk, and only 1% of these patients died within the hospital. Our sensitivity analysis with different ML techniques found similar predictive ability with a lasso regression (AUC of 0.90) and a simple neural network (AUC of 0.91). The AUCs of the comparator prediction algorithms were lower than that of the cFAST algorithm. The AUC of HFRS was 0.71, followed by the Charlson comorbidity index with an AUC of 0.62 and mFI-5 with an AUC of 0.58 (Fig 2).

FIG 2.

Performance of the cFAST prediction and comparator prediction models. This figure shows the receiver operating characteristic curves for the cFAST model, mFI-5, Charlson comorbidity index, and the HFRS in predicting in-hospital mortality for older adults with cancer. The AUC assesses the model accuracy. AUC, area under the curve; cFAST, Cancer Frailty Assessment Tool; HFRS, Hospital Frailty Risk Score; mFI-5, Modified 5-Item Frailty Index.

Figure 3 shows the 30 most important features influencing the cFAST model prediction. The important features included cancer and treatment-related factors such as palliative care, metastatic disease, and fluid/electrolyte disturbances. Other important features included emergent conditions (respiratory failure, systemic inflammatory response syndrome, and shock), chronic conditions (lipidemia and hypertension), and diagnoses reflecting patient mentation (somnolence, stupor, and coma). Among demographic factors, age and sex were the most important, although income and hospital characteristics were also weighted highly.

FIG 3.

The 30 most important features for the cFAST model to predict in-hospital mortality for older adults with cancer. The feature importance reflects the total gain for individual variables with the extreme gradient boosting algorithm. cFAST, Cancer Frailty Assessment Tool; SIRS, systemic inflammatory response syndrome.
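Total gain can be understood as the sum, over every tree split that uses a feature, of the improvement in the training objective attributed to that split. The sketch below aggregates such per-split gains for a handful of splits. The feature names and gain values are invented for illustration and are not taken from the fitted cFAST model; in practice the boosting library reports these totals directly.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def total_gain(splits: Iterable[Tuple[str, float]]) -> Dict[str, float]:
    """Sum per-split gains by feature and return them in descending order."""
    totals: Dict[str, float] = defaultdict(float)
    for feature, gain in splits:
        totals[feature] += gain
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))


# Hypothetical (feature, gain) pairs, one per split across all trees.
toy_splits = [
    ("palliative_care", 120.0),
    ("respiratory_failure", 80.0),
    ("palliative_care", 30.0),
    ("age", 10.0),
    ("respiratory_failure", 5.0),
]
print(total_gain(toy_splits))
# {'palliative_care': 150.0, 'respiratory_failure': 85.0, 'age': 10.0}
```

Because total gain accumulates over splits, a feature used in many modest splits can rank above one used in a single large split.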

All predictive models (both cFAST and comparator models) incorporated ICD-10 codes as well as patient demographic and hospital variables, although with a secondary analysis, we constructed models dropping the patient demographic and hospital variables. This secondary analysis without patient demographic and hospital variables found AUCs only slightly lower than the initial estimates (decreased by 0.00-0.02). The results of this secondary analysis are provided in the Data Supplement.

Finally, we evaluated the performance of our cFAST model on different subsets of patients, stratified by patient age, sex, income quartile, and cancer type (Table 2). Overall, the cFAST model maintained its ability to predict the risk of hospital mortality among these different cohorts of patients, with AUCs ranging from 0.89 to 0.94.

TABLE 2.

Performance of Cancer Frailty Assessment Tool Model in Subsets of Older Patients With Cancer
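A subgroup evaluation of this kind can be sketched by computing discrimination separately within each stratum of the test data. The example below is a minimal illustration with toy data and hypothetical function names. It uses the direct pairwise definition of the AUC, which is simple but quadratic in the number of patients and therefore suited only to small examples.

```python
from collections import defaultdict
from itertools import product
from typing import Dict, Hashable, List, Optional, Sequence, Tuple


def pairwise_auc(labels: Sequence[int], scores: Sequence[float]) -> Optional[float]:
    """Share of (death, survivor) pairs ranked correctly; ties count one half."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        return None  # AUC is undefined without both outcomes
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p, n in product(pos, neg))
    return wins / (len(pos) * len(neg))


def auc_by_subgroup(
    groups: Sequence[Hashable], labels: Sequence[int], scores: Sequence[float]
) -> Dict[Hashable, Optional[float]]:
    """Compute the AUC separately within each subgroup."""
    buckets: Dict[Hashable, Tuple[List[int], List[float]]] = defaultdict(lambda: ([], []))
    for g, y, s in zip(groups, labels, scores):
        buckets[g][0].append(y)
        buckets[g][1].append(s)
    return {g: pairwise_auc(ys, ss) for g, (ys, ss) in buckets.items()}


# Toy test set stratified by sex.
groups = ["F", "F", "F", "M", "M", "M", "M"]
labels = [0, 1, 0, 0, 1, 1, 0]
scores = [0.1, 0.9, 0.3, 0.2, 0.6, 0.4, 0.5]
print(auc_by_subgroup(groups, labels, scores))  # {'F': 1.0, 'M': 0.75}
```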

DISCUSSION

In this retrospective study, we used a high-dimensional ML model to predict the risk of death among a large cohort of older adults with cancer. We found that our ML model, cFAST, substantially outperformed existing risk prediction models including the HFRS, mFI-5, and Charlson comorbidity index when applied to our cohort of older patients with cancer. Our cFAST model achieved a relatively robust AUC of 0.92, whereas the AUC of existing indices demonstrated a relatively poor ability to predict mortality, with an AUC ranging from 0.58 to 0.71. One must consider that an AUC of 0.5 represents random chance.

To understand these differences in model performance, it helps to consider differences in model composition. One main difference between models is that the existing indices we evaluated were not created among cohorts of patients with cancer and therefore, do not explicitly capture unique prognostic aspects related to patients with cancer. For instance, renal failure within a cancer population may confer a distinct risk because of specific exposures—nephrotoxic chemotherapy—that are not present within the general population. The use of comorbid conditions when risk-stratifying patients with cancer would benefit from models that consider the unique treatment characteristics and systemic tumor burden of patients with cancer. The analysis of important features in our cFAST model emphasizes the importance of considering cancer-specific features in a prognostic model among patients with cancer. We found several important model features of acute illness (respiratory failure, sepsis, and hypotension), although we also found important features more specific to patients with cancer, including metastatic disease and complications commonly associated with cancer and cancer treatment. Notably, palliative care and do not resuscitate status were within the top three most important features identified by cFAST. Transitioning from cancer-focused therapy to palliative or comfort care within the acute care or inpatient setting is a challenging and important clinical decision, which is not accounted for in the pre-existing risk indices. By better accounting for these transitions in care, our cFAST model adds the potential utility of aiding physicians in end-of-life care discussions. Additionally, the high-, moderate-, and low-risk categories calculated by cFAST may serve as a quick, efficient reference when evaluating these patients.

Furthermore, the addition of baseline patient demographics and hospital characteristics did not substantially influence model discrimination. One potential explanation is that, in a cohort of older patients with cancer, predictors such as age or sex may offer less predictive gain than differences in comorbid conditions. These findings underscore the necessity of cancer-specific cohort validation when constructing these risk indices to more accurately weight patient comorbidities. Additionally, our analysis of different subsets of patients with cancer found comparable performance for individual tumor subtypes, with AUCs ranging from 0.89 to 0.94. Altogether, this emphasizes the point that prediction models among patients with cancer require model training on a cancer-specific cohort of patients.

Another important observation worth discussing relates to the influence of the high-dimensional model on the ability to predict our outcome of interest. Our cFAST model includes a large number of variables, many more variables than the existing comparator indices in this study. Increasing the number of variables in a model tends to increase the predictive capacity, although one must consider that a high-dimensional model would require a different implementation strategy before becoming integrated in practice. A provider can use an online calculator or mobile app to estimate a risk score with a simple predictive model such as the mFI-5 or Charlson comorbidity index. An online calculator would not be feasible with our cFAST prediction algorithm, which includes 240 variables. However, a high-dimensional model such as cFAST is well positioned for integration within an electronic health record (EHR) system, given that ICD-10 codes are ubiquitous within EHR systems. Additionally, automatic calculation from ICD-10 codes within an EHR would permit a dynamic prediction that could evolve over a patient's admission. Our cFAST model stands in contrast to some existing cancer-specific prognostication tools, such as those developed by Chow et al and Krishnan et al.20,21 These tools relied less on diagnoses coding and included features such as performance status and previous cancer treatments. Both performed well in the outpatient oncology setting, and our cFAST model would certainly benefit from inclusion of similar variables in future iterations of our algorithm.

The application of ML algorithms to clinical oncology is a growing area of study. One group developed an ML tool for predicting emergency visits and hospitalizations during cancer therapy, which was then prospectively validated, achieving an AUC of 0.85.22,23 When considering existing research on survival, our model's discrimination compares favorably to published research. Research on hospital mortality among patients with cancer within the NEDS cohort is relatively uncommon, although other predictive models using a variety of methods in different study environments often have lower predictive ability.24 One notable study from a large academic health system used an ML approach to predict 6-month mortality among patients with cancer, using a different set of variables than this study, and found a promising AUC that ranged from 0.86 to 0.88.25 Overall, the increased precision with ML will help this methodologic approach continue to spread into different applications across health care.26

This study has limitations worth noting. The NEDS database lacks information regarding tumor stage, tumor burden, specifics of treatment, and patient-specific factors known to influence survival such as performance status, vital signs, and laboratory values. Incorporating these factors into a predictive model would likely improve the accuracy of our model, although despite this lack of information, our model still had a strong ability to predict in-hospital mortality. Tumor stage and burden information may also provide more nuanced discrimination between predictive features and better inform future studies. Variability in coding with ICD-10 diagnosis codes represents another limitation. We suspect some degree of misclassification with coding, although in general, we expect this misclassification would tend to bias our analysis toward the null (ie, pull the AUC closer to 0.5). More accurate coding would likely increase our model's ability to predict outcomes. Availability of features in real-world EHRs may represent another limitation. Our cFAST model incorporates basic patient demographic information (age, sex, and insurance status), hospital information (ownership and rural designation), and ICD-10 diagnosis codes. Theoretically, diagnosis information should be available shortly after admission to the hospital, although misdiagnoses could harm performance of cFAST. Some variables, such as patient income, may be less readily available upon admission, although they could be obtained. Finally, the ICD-10 codes included in this study represent the final diagnosis codes associated with an ED or subsequent inpatient visit, and therefore the ICD-10 codes we incorporate into our model reflect events across the entire patient encounter. Additional research is needed to evaluate how the cFAST model performs with diagnosis codes at the time of presentation.

Despite these limitations, this analysis demonstrates the utility of a high-dimensional predictive model to accurately identify patients with cancer at an increased risk of in-hospital mortality. Furthermore, this study emphasizes the importance of validating existing noncancer prediction indices among patients with cancer. High-dimensional prediction models on the basis of ICD diagnosis codes hold promise, although these algorithms require additional validation and research into optimal implementation strategies before translation into clinical practice.

AUTHOR CONTRIBUTIONS

Conception and design: Edmund M. Qiao, Lucas K. Vitzthum, James D. Murphy

Financial support: Edmund M. Qiao, James D. Murphy

Administrative support: Edmund M. Qiao, James D. Murphy

Provision of study materials or patients: Edmund M. Qiao

Collection and assembly of data: Edmund M. Qiao, Vinit Nalawade, James D. Murphy

Data analysis and interpretation: Edmund M. Qiao, Alexander S. Qian, Vinit Nalawade, Rohith S. Voora, Nikhil V. Kotha, James D. Murphy

Manuscript writing: All authors

Final approval of manuscript: All authors

Accountable for all aspects of the work: All authors

AUTHORS' DISCLOSURES OF POTENTIAL CONFLICTS OF INTEREST

The following represents disclosure information provided by authors of this manuscript. All relationships are considered compensated unless otherwise noted. Relationships are self-held unless noted. I = Immediate Family Member, Inst = My Institution. Relationships may not relate to the subject matter of this manuscript. For more information about ASCO's conflict of interest policy, please refer to www.asco.org/rwc or ascopubs.org/cci/author-center.

Open Payments is a public database containing information reported by companies about payments made to US-licensed physicians (Open Payments).

Lucas K. Vitzthum

Research Funding: RefleXion Medical (Inst)

James D. Murphy

Consulting or Advisory Role: Boston Consulting Group

Research Funding: eContour

No other potential conflicts of interest were reported.

REFERENCES

- 1. USCS Data Visualizations. CDC. https://gis.cdc.gov/Cancer/USCS/#/Demographics/

- 2. Stafford RS, Cyr PL: The impact of cancer on the physical function of the elderly and their utilization of health care. Cancer 80:1973-1980, 1997

- 3. Ethun CG, Bilen MA, Jani AB, et al: Frailty and cancer: Implications for oncology surgery, medical oncology, and radiation oncology. CA Cancer J Clin 67:362-377, 2017

- 4. Handforth C, Clegg A, Young C, et al: The prevalence and outcomes of frailty in older cancer patients: A systematic review. Ann Oncol 26:1091-1101, 2015

- 5. Gilbert T, Neuburger J, Kraindler J, et al: Development and validation of a Hospital Frailty Risk Score focusing on older people in acute care settings using electronic hospital records: An observational study. Lancet 391:1775-1782, 2018

- 6. Chimukangara M, Helm MC, Frelich MJ, et al: A 5-item frailty index based on NSQIP data correlates with outcomes following paraesophageal hernia repair. Surg Endosc 31:2509-2519, 2017

- 7. Hall DE, Arya S, Schmid KK, et al: Development and initial validation of the Risk Analysis Index for measuring frailty in surgical populations. JAMA Surg 152:175-182, 2017

- 8. Kim SW, Han HS, Jung HW, et al: Multidimensional frailty score for the prediction of postoperative mortality risk. JAMA Surg 149:633-640, 2014

- 9. Huq S, Khalafallah AM, Jimenez AE, et al: Predicting postoperative outcomes in brain tumor patients with a 5-factor modified frailty index. Neurosurgery 88:147-154, 2021

- 10. Dammeyer K, Alfonso AR, Diep GK, et al: Predicting postoperative complications following mastectomy in the elderly: Evidence for the 5-factor frailty index. Breast J 27:509-513, 2021

- 11. Adelson K, Lee DKK, Velji S, et al: Development of imminent mortality predictor for advanced cancer (IMPAC), a tool to predict short-term mortality in hospitalized patients with advanced cancer. J Oncol Pract 14:e168-e175, 2018

- 12. Loh KP, Kansagra A, Shieh MS, et al: Predictors of in-hospital mortality in patients with metastatic cancer receiving specific critical care therapies. J Natl Compr Canc Netw 14:979-987, 2016

- 13. Hui D, Kilgore K, Fellman B, et al: Development and cross-validation of the in-hospital mortality prediction in advanced cancer patients score: A preliminary study. J Palliat Med 15:902-909, 2012

- 14. Hill JS, Zhou Z, Simons JP, et al: A simple risk score to predict in-hospital mortality after pancreatic resection for cancer. Ann Surg Oncol 17:1802-1807, 2010

- 15. NEDS Overview. https://www.hcup-us.ahrq.gov/nedsoverview.jsp

- 16. Rivera DR, Gallicchio L, Brown J, et al: Trends in adult cancer–related emergency department utilization: An analysis of data from the nationwide emergency department sample. JAMA Oncol 3:e172450, 2017

- 17. Qian AS, Qiao EM, Nalawade V, et al: Impact of underlying malignancy on emergency department utilization and outcomes. Cancer Med 10:9129-9138, 2021

- 18. Chen T, Guestrin C: XGBoost: A scalable tree boosting system, in Proceedings of the ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. New York, NY, Association for Computing Machinery, 2016, pp 785-794

- 19. Charlson ME, Pompei P, Ales KL, et al: A new method of classifying prognostic comorbidity in longitudinal studies: Development and validation. J Chronic Dis 40:373-383, 1987

- 20. Chow E, Abdolell M, Panzarella T, et al: Predictive model for survival in patients with advanced cancer. J Clin Oncol 26:5863-5869, 2008

- 21. Krishnan MS, Epstein-Peterson Z, Chen Y-H, et al: Predicting life expectancy in patients with metastatic cancer receiving palliative radiotherapy: The TEACHH model. Cancer 120:134-141, 2014

- 22. Hong JC, Niedzwiecki D, Palta M, et al: Predicting emergency visits and hospital admissions during radiation and chemoradiation: An internally validated pretreatment machine learning algorithm. JCO Clin Cancer Inform 2:1-11, 2018

- 23. Hong JC, Eclov NCW, Dalal NH, et al: System for high-intensity evaluation during radiation therapy (SHIELD-RT): A prospective randomized study of machine learning–directed clinical evaluations during radiation and chemoradiation. J Clin Oncol 38:3652-3661, 2020

- 24. Nates JL, Cárdenas-Turanzas M, Ensor J, et al: Cross-validation of a modified score to predict mortality in cancer patients admitted to the intensive care unit. J Crit Care 26:388-394, 2011

- 25. Parikh RB, Manz C, Chivers C, et al: Machine learning approaches to predict 6-month mortality among patients with cancer. JAMA Netw Open 2:e1915997, 2019

- 26. Davenport T, Kalakota R: The potential for artificial intelligence in healthcare. Futur Healthc J 6:94-98, 2019