Abstract

Background

Video-based coaching (VBC) is used to supplement current teaching methods in surgical education and may be useful in competency-based frameworks. Whether VBC can effectively improve surgical skill in surgical residents has yet to be fully elucidated. The objective of this study is to compare surgical residents receiving and not receiving VBC in terms of technical surgical skill.

Methods

The following databases were searched from database inception to October 2021: Medline, EMBASE, Cochrane Central Register of Controlled Trials (CENTRAL), and PubMed. Articles were included if they were randomized controlled trials (RCTs) comparing surgical residents receiving and not receiving VBC. The primary outcome, as defined prior to data collection, was change in objective measures of technical surgical skill following implementation of either VBC or control. A pairwise meta-analysis using an inverse variance random-effects model was performed. Standardized mean differences (SMDs) were used as the primary effect measure to account for differences among objective surgical skill evaluation tools.

Results

From 2734 citations, 11 RCTs with 157 residents receiving VBC and 141 residents receiving standard surgical teaching without VBC were included. There was no significant difference in post-coaching scores on objective surgical skill evaluation tools between groups (SMD 0.53, 95% CI 0.00 to 1.01, p = 0.05, I² = 74%). The improvement in scores from pre- to post-intervention was significantly greater in residents receiving VBC than in those not receiving VBC (SMD 1.62, 95% CI 0.62 to 2.63, p = 0.002, I² = 85%). These results were unchanged with leave-one-out sensitivity analysis and subgroup analysis according to operative setting.

Conclusion

VBC can improve objective surgical skills in surgical residents of various levels. The benefit may be most substantial for trainees with lower baseline levels of objective skill. Further studies are required to determine the impact of VBC on competency-based frameworks.

Keywords: Surgical education, Medical education, Video-based coaching, Surgical simulation, Surgical residency, Practice-based learning and improvement, Patient care and procedural skills

Surgical residency requires mastery of both theoretical knowledge and technical skill [1, 2]. This task is becoming progressively more challenging with increasingly detailed knowledge of underlying disease processes, advancing technologies, and the advent of subspecialized fields [3, 4]. Recent restrictions on working hours may pose an additional barrier to attaining the exposure necessary to develop adequate intraoperative skills [5, 6]. Moreover, the COVID-19 pandemic has further reduced operative exposure for surgical residents [7]. Together, these factors are forcing surgical residency programs to adapt to ensure that they graduate technically competent surgeons prepared for independent practice.

Currently, the most common approach to surgical technical training is the master-apprentice model (MAM) [8]. This model is heavily didactic and rarely extends beyond the walls of the operating room (OR) [8]. In an attempt to increase the efficiency and effectiveness of surgical technical training, video-based coaching (VBC) has recently been applied [9–11]. A concept first applied in sport, VBC refers to the use of modeling and provision of feedback by a coach on the basis of audiovisual recordings of the player practicing or playing in a game situation [12]. In surgery, VBC is applied similarly, with the coach often being a staff surgeon, the player being a resident, and the audiovisual footage pertaining to intraoperative technical or interpersonal skills. Numerous publications, including randomized controlled trials (RCTs), have demonstrated its effectiveness in improving objective technical skill [13–16]. Coaching frameworks, such as the Wisconsin Surgical Coaching Framework, have been designed to further enhance its effectiveness [17]. In addition to improving the efficiency and effectiveness of surgical skill training, VBC can be completed in the perioperative period, away from the physical space of the OR, and thus may help offset the reduced OR exposure described above [18].

Yet, the implementation of VBC in surgical residency remains sporadic. A recently published meta-analysis pooled peer-reviewed data pertaining to VBC, but it included data derived from medical student surgical training programs as well as staff surgeon peer-to-peer feedback programs [18]. While these are important areas of surgical education research, we believe that these data introduced significant heterogeneity and limited the ability to apply these findings in a practical setting. Moreover, surgical residency is under significant strain from both work-hour restrictions and decreasing OR exposure and thus may benefit most from VBC research [5–7]. As such, the aim of the present systematic review and meta-analysis was to pool previously published data evaluating the impact of VBC and compare surgical residents receiving and not receiving VBC in terms of technical surgical skill.

Materials and methods

Search strategy

The following databases were searched from database inception to October 2021: Medline, EMBASE, Cochrane Central Register of Controlled Trials (CENTRAL), and PubMed. The search was designed and conducted by a medical research librarian with input from study investigators. Search terms included “video-based,” “coaching,” “internship and residency,” and “surgical education,” among others (the complete search strategy is available in the Appendix). The reference lists of published studies and the gray literature were searched manually to ensure that all relevant articles were included. This systematic review and meta-analysis is reported in accordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines [19]. The study protocol was registered a priori on PROSPERO, the International Prospective Register of Systematic Reviews.

Study selection

Articles were eligible for inclusion if they were RCTs that compared technical performance in live or simulated surgical tasks with and without a preoperative or postoperative VBC intervention for surgical residents. For this study, VBC was defined as review of, and feedback pertaining to, audiovisual footage of a specific surgical operation, task, or simulation before and/or after the completion of that same task [9, 18]. Observational studies were not eligible for inclusion. Single-arm studies, studies that did not include surgical residents, studies including medical students, studies evaluating peer-to-peer coaching, and studies that did not employ preoperative or postoperative VBC were excluded. No language restrictions were applied. Case reports, systematic reviews, meta-analyses, letters to editors, and editorials were excluded.

Outcomes assessed

The primary outcome was change in objective measures of technical surgical skill following implementation of either VBC or control. Many objective measures of technical surgical skill exist. Contemporary VBC surgical literature most commonly employs the following objective measures/scales: (1) Objective Structured Assessment of Technical Skills (OSATS) [20]; (2) Mini/Modified Objective Structured Assessment of Technical Skills (MOSATS) [20]; (3) Bariatric Objective Structured Assessment of Technical Skills (BOSATS) [21]; (4) Global Operative Assessment of Laparoscopic Skills (GOALS) [22]; (5) Generic Error Rating Tool (GERT) [23]; (6) time to completion; and (7) other institution-specific global technical skills assessment scales.

Secondary outcomes included post-coaching scores according to the aforementioned objective measures of technical surgical skill. Additional secondary outcomes included resident satisfaction with VBC and procedure/simulation-specific outcomes.

Data extraction

Three reviewers independently screened the titles and abstracts retrieved by the systematic search using a standardized, pilot-tested form. Discrepancies at the title and abstract screening phase were resolved by inclusion of the study. At the full-text screening stage, discrepancies were resolved by consensus among the three reviewers. If disagreement persisted, the study was excluded. The same reviewers independently extracted data into a data collection form designed a priori. The extracted data included study characteristics (e.g., author, year of publication, study design), resident demographics (e.g., age, year of study, operative experience), intervention characteristics (e.g., simulated and/or clinical environment, timing of intervention), and resident operative performance measures (e.g., OSATS, GOALS, time, number of errors).

Risk of bias assessment

Risk of bias for each included study was assessed using the Cochrane Risk of Bias Tool for Randomized Controlled Trials 2.0 [24]. This tool evaluates RCTs according to the randomization process, assignment to intervention, adherence to intervention, missing outcome data, outcome measurement, and outcome reporting. Each study was rated as low risk of bias, some concerns, or high risk of bias in each domain and overall. Three reviewers assessed the quality of the studies independently. Discrepancies were discussed among the reviewers until consensus was reached.

Statistical analysis

All statistical analyses and meta-analyses were performed using STATA version 14 (StataCorp, College Station, TX) and Cochrane Review Manager 5.3 (London, United Kingdom). The threshold for statistical significance was set a priori at p < 0.05. A pairwise meta-analysis was performed using an inverse variance random-effects model for all meta-analyzed outcomes. Pooled effect estimates were obtained by calculating the standardized mean difference (SMD) for continuous variables along with the respective 95% confidence intervals (CIs). SMDs were used to account for differences in scaling among objective technical performance tools (e.g., OSATS, GOALS). Mean and standard deviation (SD) were estimated for studies that reported only the median and interquartile range or range, using the method described by Wan et al. [25]. For studies that did not report a measure of central tendency, authors were contacted for missing data. Data were presumed to be unreported if no response was received from study authors within two weeks of the initial contact. Missing SD data were then imputed according to the prognostic method [26]. Heterogeneity was assessed using the inconsistency (I²) statistic; an I² greater than 50% was considered to represent considerable heterogeneity [27]. Bias in meta-analyzed outcomes was assessed with funnel plots when data from more than 10 studies were included in the analysis [28]. A leave-one-out sensitivity analysis was performed by iteratively removing one study at a time from the inverse variance random-effects models to ensure that pooled effect estimates were not driven by a single study. Risk of bias sensitivity analyses were performed for all meta-analyzed outcomes. Subgroup analyses were completed by setting (i.e., operating room, simulation) and level of training (i.e., post-graduate year) where applicable.
For outcomes reported in fewer than three studies, or outcomes for which heterogeneous reporting precluded meta-analysis, a systematic narrative summary was provided [29].
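The pooling and leave-one-out steps described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' STATA/RevMan analysis: it assumes Hedges' g as the SMD (with a standard large-sample variance approximation), assumes the DerSimonian-Laird estimator for the between-study variance (a common default for inverse variance random-effects models, although the text does not name the estimator), and uses a normal approximation for the 95% CI and p value. As example input it uses only the seven studies in Table 3 that report post-intervention mean (SD) for both arms, so its output is not expected to reproduce the published pooled estimate. The names `Arm`, `hedges_g`, `random_effects`, and `leave_one_out` are hypothetical.

```python
import math
from dataclasses import dataclass
from statistics import NormalDist


@dataclass
class Arm:
    """Summary statistics for one study arm (hypothetical helper type)."""
    mean: float
    sd: float
    n: int


def hedges_g(vbc: Arm, ctrl: Arm) -> tuple[float, float]:
    """Return Hedges' g (VBC minus control) and its approximate variance."""
    df = vbc.n + ctrl.n - 2
    pooled_sd = math.sqrt(((vbc.n - 1) * vbc.sd**2 + (ctrl.n - 1) * ctrl.sd**2) / df)
    d = (vbc.mean - ctrl.mean) / pooled_sd           # Cohen's d
    j = 1 - 3 / (4 * df - 1)                         # small-sample correction factor
    var_d = (vbc.n + ctrl.n) / (vbc.n * ctrl.n) + d**2 / (2 * (vbc.n + ctrl.n))
    return j * d, j**2 * var_d


def random_effects(effects: list[float], variances: list[float]) -> dict:
    """Inverse variance random-effects pooling with a DerSimonian-Laird tau^2 (assumed)."""
    k = len(effects)
    w = [1 / v for v in variances]                   # fixed-effect weights
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sw
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))  # Cochran's Q
    c = sw - sum(wi**2 for wi in w) / sw
    tau2 = max(0.0, (q - (k - 1)) / c) if k > 1 else 0.0          # between-study variance
    w_re = [1 / (v + tau2) for v in variances]       # random-effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    se = math.sqrt(1 / sum(w_re))
    p = 2 * (1 - NormalDist().cdf(abs(pooled / se)))
    i2 = 100 * max(0.0, (q - (k - 1)) / q) if q > 0 else 0.0       # I^2 in percent
    return {"smd": pooled, "ci": (pooled - 1.96 * se, pooled + 1.96 * se),
            "p": p, "i2": i2, "tau2": tau2}


def leave_one_out(effects: list[float], variances: list[float]) -> list[dict]:
    """Re-fit the random-effects model k times, omitting one study each time."""
    return [random_effects(effects[:i] + effects[i + 1:], variances[:i] + variances[i + 1:])
            for i in range(len(effects))]


# Post-intervention mean (SD) and N from Table 3 (studies reporting mean (SD) in both arms)
studies = {
    "Backstein": (Arm(31.46, 4.87, 14), Arm(29.75, 2.23, 12)),
    "Jensen": (Arm(19.5, 4.4, 23), Arm(19.6, 5.3, 22)),
    "Crawshaw": (Arm(46.8, 6.0, 27), Arm(42.3, 4.4, 27)),
    "Karam": (Arm(73.0, 8.0, 7), Arm(55.0, 7.0, 8)),
    "Rindos": (Arm(31.9, 5.0, 11), Arm(29.3, 5.5, 9)),
    "Soucisse": (Arm(22.6, 4.8, 14), Arm(20.9, 5.7, 14)),
    "Norris": (Arm(10.6, 2.1, 11), Arm(11.6, 1.9, 10)),
}
names = list(studies)
gs, vs = (list(t) for t in zip(*(hedges_g(*studies[s]) for s in names)))

overall = random_effects(gs, vs)
print(f"Pooled SMD {overall['smd']:.2f} (95% CI {overall['ci'][0]:.2f} to "
      f"{overall['ci'][1]:.2f}), p = {overall['p']:.3f}, I2 = {overall['i2']:.0f}%")
for name, res in zip(names, leave_one_out(gs, vs)):
    print(f"without {name}: SMD {res['smd']:.2f}, p = {res['p']:.3f}")
```

Each `leave_one_out` entry is a complete re-fit without one study; a pooled estimate whose significance changes when a single study is dropped indicates that the result depends on that study.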

Results

Study characteristics

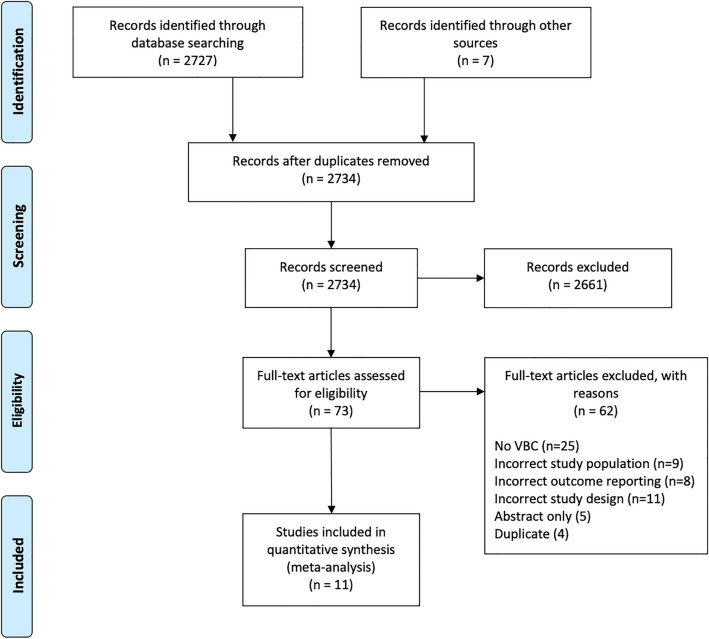

From 2734 citations, 11 RCTs with 157 residents receiving VBC and 141 residents receiving standard surgical teaching without VBC were included [13–16, 30–36]. A PRISMA flow diagram of the study selection is illustrated in Fig. 1. Eight studies reported post-graduate year (PGY) of training for included residents; 49.3% and 45.4% were PGY-1, 27.5% and 29.2% were PGY-2, 5.8% and 6.2% were PGY-3, 10.1% and 13.8% were PGY-4, and 7.2% and 5.4% were PGY-5 in the VBC group and standard surgical teaching without VBC group, respectively. Nine studies reported trainee surgical subspecialties; 58.3% and 59.3% were general surgery residents, 23.3% and 24.6% were orthopedic surgery residents, and 18.3% and 16.1% were obstetrics and gynecology residents in the VBC group and standard surgical teaching without VBC group, respectively. Detailed study characteristics of the included studies are reported in Table 1.

Fig. 1.

PRISMA diagram—transparent reporting of systematic reviews and meta-analysis flow diagram outlining the search strategy results from initial search to included studies

Table 1.

Study characteristics of included studies

| Study | Arm | N | % Female | N level of training (%) | N discipline (%) |
|---|---|---|---|---|---|
| Backstein et al. [36] | VBC | 14 | – | PGY-1: 14 (100) | Both arms combined: General: 11 (42.3); Ortho: 7 (26.9); Cardiac: 1 (3.8); NeuroSx: 1 (3.8); ENT: 3 (11.6); Plastics: 3 (11.6) |
| | No VBC | 12 | – | PGY-1: 12 (100) | |
| Jensen et al. [32] | VBC | 23 | – | PGY-1: 17 (73.9); PGY-2: 6 (26.1) | – |
| | No VBC | 22 | – | PGY-1: 13 (59.1); PGY-2: 9 (40.9) | – |
| Bonrath et al. [14] | VBC | 9 | 88.9 | Median: PGY-4 (range 3.5–5) | General: 9 (100) |
| | No VBC | 9 | 54.4 | Median: PGY-4 (range 3–4.5) | General: 9 (100) |
| Crawshaw et al. [31] | VBC | 27 | 44.4 | PGY-2: 5 (18.5); PGY-3: 3 (11.1); PGY-4: 9 (33.3); PGY-5: 10 (37.0) | General: 27 (100) |
| | No VBC | 27 | 48.1 | PGY-2: 5 (18.5); PGY-3: 3 (11.1); PGY-4: 12 (44.4); PGY-5: 7 (25.9) | General: 27 (100) |
| Karam et al. [33] | VBC | 7 | – | PGY-1: 3 (42.9); PGY-2: 4 (57.1) | Ortho: 7 (100) |
| | No VBC | 8 | – | PGY-1: 4 (50.0); PGY-2: 4 (50.0) | Ortho: 8 (100) |
| Yule et al. [34] | VBC | 8 | – | – | General: 8 (100) |
| | No VBC | 8 | – | – | General: 8 (100) |
| Rindos et al. [30] | VBC | 11 | 91.0 | PGY-1: 4 (36.4); PGY-2: 4 (36.4); PGY-3/4: 3 (27.3) | OBGYN: 11 (100) |
| | No VBC | 9 | 88.9 | PGY-1: 3 (33.3); PGY-2: 3 (33.3); PGY-3/4: 3 (33.3) | OBGYN: 11 (100) |
| Vaughn et al. [35] | VBC | 12 | – | PGY-1: 12 (100) | General: 12 (100) |
| | No VBC | 12 | – | PGY-1: 12 (100) | General: 12 (100) |
| Soucisse et al. [13] | VBC | 14 | – | PGY-1: 4 (28.6); PGY-2: 3 (21.4); PGY-3: 2 (14.3); PGY-4: 5 (35.7) | General: 14 (100) |
| | No VBC | 14 | – | PGY-1: 4 (28.6); PGY-2: 2 (14.3); PGY-3: 2 (14.3); PGY-4: 6 (42.9) | General: 14 (100) |
| Dickerson et al. [16] | VBC | 20 | 30.0 | Mean: 2.7 | Ortho: 20 (100) |
| | No VBC | 22 | 18.0 | Mean: 2.3 | Ortho: 22 (100) |
| Norris et al. [15] | VBC | 11 | 83.3 | PGY-1: 4 (33.3); PGY-2: 8 (66.7) | OBGYN: 11 (100) |
| | No VBC | 10 | 91.7 | PGY-1: 4 (33.3); PGY-2: 8 (66.7) | OBGYN: 10 (100) |

N number of participants; VBC video-based coaching; PGY post-graduate year; Ortho orthopedics; NeuroSx neurosurgery; ENT ear, nose, and throat surgery; OBGYN obstetrics and gynecology

Coaching environment

Two of the included studies evaluated the use of VBC in the preoperative setting [31, 32]. The remaining studies evaluated the use of postoperative VBC. Three of the included studies evaluated the use of VBC with intraoperative tasks/surgeries (e.g., laparoscopic right hemicolectomy, laparoscopic salpingo-oophorectomy) [14, 15, 31]. The most common objective skills assessment scoring system was the OSATS (8 studies). Detailed surgical and coaching parameters of the included studies are reported in Table 2.

Table 2.

Video-based coaching environments

| Study | Arm | N | Timing of intervention | Assessment tool(s) | Operative setting | Description of procedure |
|---|---|---|---|---|---|---|
| Backstein et al. [36] | VBC | 14 | Postoperative | MOSATS | Simulation | Hand-sewn vascular anastomosis with human, animal, and inanimate models |
| | No VBC | 12 | | | | |
| Jensen et al. [32] | VBC | 23 | Preoperative | OSATS, time to completion, assessment of final product quality | Simulation | Excision of a simulated skin lesion and interrupted vertical mattress closure of the wound with porcine tissue; hand-sewn bowel anastomosis with porcine tissue |
| | No VBC | 22 | | | | |
| Bonrath et al. [14] | VBC | 9 | Postoperative | OSATS, BOSATS, GERT | OR | Laparoscopic jejunojejunostomy in a live OR |
| | No VBC | 9 | | | | |
| Crawshaw et al. [31] | VBC | 27 | Preoperative | Global assessment scale | OR | Laparoscopic right hemicolectomy in a live OR |
| | No VBC | 27 | | | | |
| Karam et al. [33] | VBC | 7 | Postoperative | OSATS, time to completion, total number of fluoroscopic images required | Simulation | Validated tibial plateau fracture simulator with synthetic material |
| | No VBC | 8 | | | | |
| Yule et al. [34] | VBC | 8 | Postoperative | NOTSS, time to completion, time to stop bleeding, time to call for help | Simulation | Laparoscopic cholecystectomy in a simulated OR with five different synthetic models |
| | No VBC | 8 | | | | |
| Rindos et al. [30] | VBC | 11 | Postoperative | GOALS | Simulation | Suturing on a validated, synthetic vaginal cuff model |
| | No VBC | 9 | | | | |
| Vaughn et al. [35] | VBC | 12 | Postoperative | Global assessment scale; procedure- and institution-specific checklist | Simulation | A simulated surgical skills curriculum that included two-hand square knot tying, one-hand slip knot tying, running simple interrupted suturing, and running subcuticular suturing |
| | No VBC | 12 | | | | |
| Soucisse et al. [13] | VBC | 14 | Postoperative | OSATS | Simulation | Hand-sewn side-to-side intestinal anastomosis using cadaveric dog bowel |
| | No VBC | 14 | | | | |
| Dickerson et al. [16] | VBC | 20 | Postoperative | OSATS, GRS | Simulation | A fracture reduction simulation with a three-segment distal tibial fracture model with soft tissue coverage used to represent a comminuted and displaced tibial pilon fracture |
| | No VBC | 22 | | | | |
| Norris et al. [15] | VBC | 11 | Preoperative | OSATS | OR | Laparoscopic salpingo-oophorectomy in a live OR |
| | No VBC | 10 | | | | |

N number of participants; VBC video-based coaching; OSATS Objective Structured Assessment of Technical Skills; MOSATS Mini/Modified Objective Structured Assessment of Technical Skills; BOSATS Bariatric Objective Structured Assessment of Technical Skills; GERT Generic Error Rating Tool; GOALS Global Operative Assessment of Laparoscopic Skills; GRS Global Rating Scale; OR operating room; NOTSS Non-Technical Skills for Surgeons

*Median (IQR)

†Mean (range)

Objective skills assessments

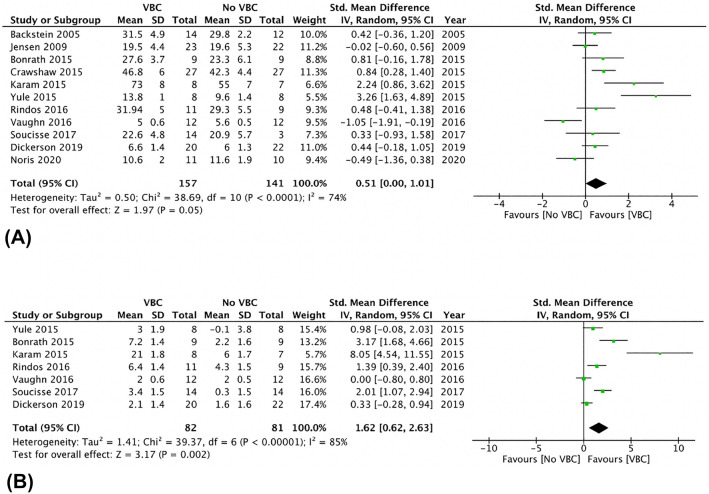

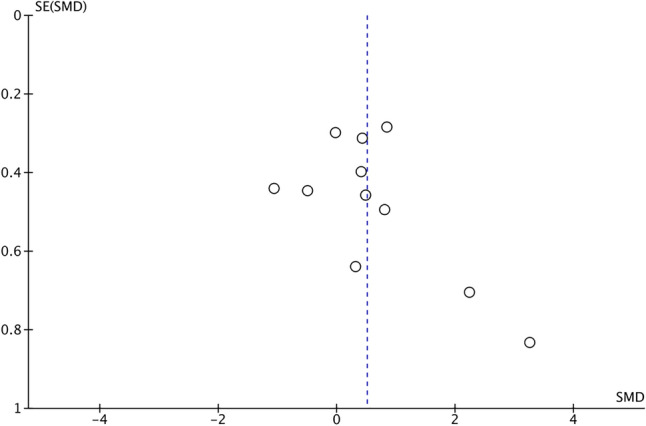

There was no significant difference in post-coaching scores on objective surgical skill evaluation tools between groups (SMD 0.53, 95% CI 0.00 to 1.01, p = 0.05, I² = 74%) (Fig. 2A). The corresponding funnel plot is presented in Fig. 3. In leave-one-out and risk of bias sensitivity analyses, the association between VBC and post-coaching scores became statistically significant upon removal of the studies by Norris et al., Jensen et al., and Vaughn et al. [15, 32, 35]. Post-coaching scores also differed significantly between the two groups in subgroup analyses including only low risk of bias studies (SMD 1.46, 95% CI 0.56–2.36, p = 0.002, I² = 77%) and only simulation-based coaching (SMD 1.34, 95% CI 0.34–2.34, p = 0.009, I² = 84%).

Fig. 2.

A Post-coaching objective assessment tool scores: random-effects meta-analysis comparing the presence and absence of video-based coaching. B Change in objective assessment tool scores: random-effects meta-analysis comparing the presence and absence of video-based coaching

Fig. 3.

Funnel plot for the random-effects meta-analysis of post-coaching objective assessment tool scores

The improvement in objective surgical skill scores from pre- to post-intervention was significantly greater in residents receiving VBC than in those receiving standard surgical teaching without VBC (SMD 1.62, 95% CI 0.62 to 2.63, p = 0.002, I² = 85%) (Fig. 2B). These results were unchanged with leave-one-out sensitivity analysis. Subgroup analyses including only low risk of bias studies (SMD 0.54, 95% CI 0.03–1.04, p = 0.04, I² = 66%) and only simulation-based coaching (SMD 0.51, 95% CI 0.07–0.95, p = 0.02, I² = 62%) also demonstrated statistically significant improvements in the VBC group. Table 3 reports all objective skills assessment data from the included studies.
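Pooling change from baseline requires an SD for each arm's change score, which studies rarely report directly. One common approach, described in the Cochrane Handbook, derives it from the baseline and post-intervention SDs and an assumed within-person correlation r: SD_change = sqrt(SD_pre² + SD_post² − 2·r·SD_pre·SD_post). The text does not state how change-score SDs were obtained in this review, so the correlations below are purely hypothetical. The sketch applies the formula to Karam et al. [33] from Table 3 and shows how sensitive the resulting SMD is to r; the function names are illustrative.

```python
import math


def change_sd(sd_pre: float, sd_post: float, r: float) -> float:
    """SD of the within-person change (post - pre), given an ASSUMED correlation r."""
    return math.sqrt(sd_pre**2 + sd_post**2 - 2 * r * sd_pre * sd_post)


def hedges_g(m1: float, sd1: float, n1: int, m2: float, sd2: float, n2: int) -> float:
    """Hedges' g for two independent groups (group 1 minus group 2)."""
    df = n1 + n2 - 2
    sp = math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df)
    return (1 - 3 / (4 * df - 1)) * (m1 - m2) / sp


# Karam et al. [33], Table 3: OSATS mean (SD) at baseline and post-intervention
vbc = {"pre": (52.0, 12.0), "post": (73.0, 8.0), "n": 7}
ctrl = {"pre": (49.0, 8.0), "post": (55.0, 7.0), "n": 8}


def change_smd(r: float) -> float:
    """SMD of change scores (VBC minus control) under a hypothetical correlation r."""
    arms = []
    for arm in (vbc, ctrl):
        mean_change = arm["post"][0] - arm["pre"][0]
        sd = change_sd(arm["pre"][1], arm["post"][1], r)
        arms.append((mean_change, sd, arm["n"]))
    return hedges_g(*arms[0], *arms[1])


for r in (0.0, 0.5, 0.8):  # hypothetical pre/post correlations
    print(f"r = {r}: change-score SMD {change_smd(r):.2f}")
```

Because a higher correlation shrinks the change-score SD, the magnitude of the change-score SMD grows with r; reporting the assumed correlation (or a range of values) alongside pooled change-from-baseline estimates helps readers judge their robustness.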

Table 3.

Comparison between baseline and post-intervention technical performance in the included studies

| Study | Arm | N | Assessment tool | Mean baseline performance (SD) | Mean post-intervention performance (SD) |
|---|---|---|---|---|---|
| Backstein et al. [36] | VBC | 14 | MOSATS | – | 31.46 (4.87) |
| | No VBC | 12 | | – | 29.75 (2.23) |
| Jensen et al. [32] | VBC | 23 | OSATS | – | 19.5 (4.4) |
| | No VBC | 22 | | – | 19.6 (5.3) |
| Bonrath et al. [14] | VBC | 9 | OSATS | 21.5 (17–22)* | 27.0 (25.75–30.75)* |
| | No VBC | 9 | | 20.0 (17.5–25.5)* | 24.0 (19.5–26.5)* |
| Crawshaw et al. [31] | VBC | 27 | Global assessment scale | – | 46.8 (6.0) |
| | No VBC | 27 | | – | 42.3 (4.4) |
| Karam et al. [33] | VBC | 7 | OSATS | 52.0 (12.0) | 73.0 (8.0) |
| | No VBC | 8 | | 49.0 (8.0) | 55.0 (7.0) |
| Yule et al. [34] | VBC | 8 | NOTSS | 10.8 (8.5–12.5)† | 13.8 (12–16)† |
| | No VBC | 8 | | 9.7 (4.5–14)† | 9.6 (6.5–12.5)† |
| Rindos et al. [30] | VBC | 11 | GOALS | 25.5 (5.0) | 31.9 (5.0) |
| | No VBC | 9 | | 25.1 (5.5) | 29.3 (5.5) |
| Vaughn et al. [35] | VBC | 12 | Global assessment scale | 3.0 (2.0–4.0)* | 5.0 (4.0–6.0)* |
| | No VBC | 12 | | 3.5 (3.0–4.5)* | 5.5 (5.0–6.5)* |
| Soucisse et al. [13] | VBC | 14 | OSATS | 19.2 (4.6) | 22.6 (4.8) |
| | No VBC | 14 | | 20.6 (5.8) | 20.9 (5.7) |
| Dickerson et al. [16] | VBC | 20 | OSATS | 4.5 (–) | 6.6 (–) |
| | No VBC | 22 | | 4.4 (–) | 6.0 (–) |
| Norris et al. [15] | VBC | 11 | OSATS | – | 10.6 (2.1) |
| | No VBC | 10 | | – | 11.6 (1.9) |

N number of participants; VBC video-based coaching; OSATS Objective Structured Assessment of Technical Skills; MOSATS Mini/Modified Objective Structured Assessment of Technical Skills; GOALS Global Operative Assessment of Laparoscopic Skills; NOTSS Non-Technical Skills for Surgeons; SD standard deviation
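Several entries in Table 3 are reported as median (IQR) or mean (range) rather than mean (SD), and the Methods state that such values were converted using the method of Wan et al. [25]. The sketch below implements the Wan et al. estimators as we understand them: for median m and quartiles q1, q3, mean ≈ (q1 + m + q3)/3 and SD ≈ (q3 − q1)/η(n) with η(n) = 2Φ⁻¹((0.75n − 0.125)/(n + 0.25)); for a range [a, b], SD ≈ (b − a)/ξ(n) with ξ(n) = 2Φ⁻¹((n − 0.375)/(n + 0.25)). Φ⁻¹ is taken from Python's `statistics.NormalDist`. The function names are hypothetical, and the outputs are illustrative estimates, not the values extracted in this review.

```python
from statistics import NormalDist

_inv_phi = NormalDist().inv_cdf  # standard normal quantile function


def mean_sd_from_median_iqr(q1: float, median: float, q3: float, n: int) -> tuple[float, float]:
    """Wan et al. estimates of mean and SD from median and interquartile range."""
    mean = (q1 + median + q3) / 3
    eta = 2 * _inv_phi((0.75 * n - 0.125) / (n + 0.25))
    return mean, (q3 - q1) / eta


def sd_from_range(low: float, high: float, n: int) -> float:
    """Wan et al. estimate of SD from the minimum-maximum range."""
    xi = 2 * _inv_phi((n - 0.375) / (n + 0.25))
    return (high - low) / xi


# Bonrath et al. [14], VBC arm, post-intervention OSATS: median 27.0 (IQR 25.75-30.75), n = 9
bonrath_mean, bonrath_sd = mean_sd_from_median_iqr(25.75, 27.0, 30.75, 9)

# Yule et al. [34], VBC arm, post-intervention: mean 13.8 (range 12-16), n = 8
yule_sd = sd_from_range(12.0, 16.0, 8)

print(f"Bonrath VBC: mean {bonrath_mean:.2f}, SD {bonrath_sd:.2f}")
print(f"Yule VBC: mean 13.8, SD {yule_sd:.2f}")
```

Both estimators assume approximately normally distributed data; with arms of 8 to 12 residents, as in these studies, the converted SDs carry considerable uncertainty.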

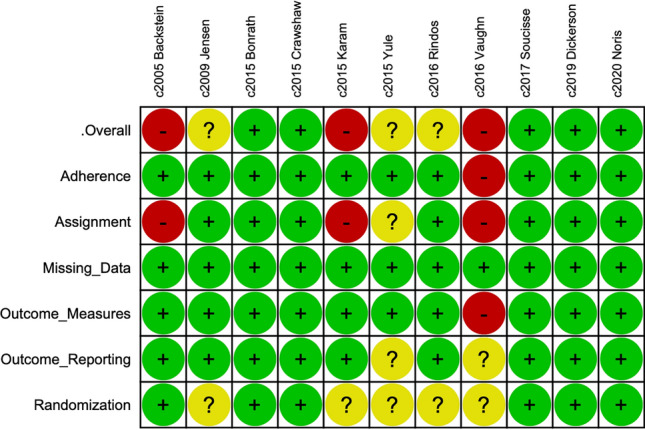

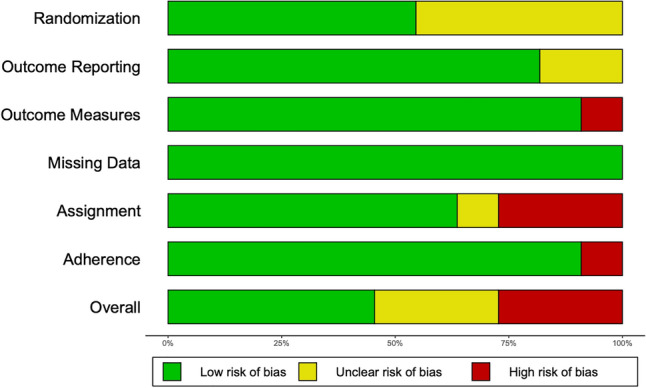

Risk of bias

Figures 4 and 5 present the risk of bias assessment for each included study and for the overall cohort of included studies, respectively, according to the Cochrane Risk of Bias Tool for Randomized Controlled Trials 2.0 [24]. Five studies were at low risk of bias, three raised some concerns, and three were at high risk of bias. Included studies were uniformly at low risk of bias from outcome reporting, randomization, and missing data. In all studies at high risk of bias, the bias arose from failure to use appropriate analyses to estimate the effect of assignment to the intervention. For example, Karam et al. evaluated orthopedic surgery resident performance in repairing a simulated tibial plafond fracture but neither reported nor statistically controlled for previous experience with the procedure [33]. There were no missing outcome data across all studies.

Fig. 4.

Cochrane risk of bias tool for randomized controlled trials 2.0—individual study analyses

Fig. 5.

Cochrane risk of bias tool for randomized controlled trials 2.0—grouped outcomes for included trials

Discussion

VBC remains a relatively novel concept in surgical education [9]. The majority of published data are RCTs from the last 5–6 years [13–15, 30, 33–35]. Pooling these data, this review demonstrated that VBC can be an effective intervention for improving surgical resident technical skill. Specifically, residents receiving VBC showed greater improvement in scores on objective surgical skill assessments than residents undergoing standard surgical teaching without VBC. No such difference was observed when post-intervention scores were analyzed in isolation, suggesting that the benefit may be most substantial for trainees with lower baseline levels of objective skill and for trainees earlier in their surgical residency. Risk of bias was relatively low across included studies.

The findings of this review are in keeping with previously published literature examining VBC in surgery. The previous meta-analysis by Augestad et al. demonstrated significant improvements in objective surgical scoring scales in trainees receiving VBC [37]. RCTs evaluating VBC for surgical residents have reported either improvement or no change in technical skill, alongside increased confidence and knowledge of intraoperative tasks and anatomy [13, 15, 18, 30]. For example, Soucisse et al. demonstrated a significant improvement in OSATS scores in residents undergoing VBC for a simulated bowel anastomosis task compared to residents without VBC [13]. Rindos et al. found VBC to be the most influential variable in improving junior resident surgical skill in a simulated laparoscopic vaginal cuff closure model [30]. The most recent RCT, in which Norris et al. evaluated VBC for surgical residents performing laparoscopic salpingo-oophorectomy, did not demonstrate a measurable improvement in objective surgical skill; however, VBC did improve knowledge of and confidence in operative anatomy [15]. Beyond resident training, VBC has also been used in the surgical setting for peer-to-peer coaching [38, 39]. A statewide VBC program in Wisconsin matches practicing surgeons with surgeon coaches; participants valued sharing ideas and learning from other surgeons and overall had a positive perception of the program [39]. These and other variations on surgical VBC can all be valuable when applied in the appropriate setting and warrant further implementation into surgical curricula across different levels of training.

In surgical residency, VBC can address decreasing OR exposure as well as an increasingly demanding curriculum. It provides dedicated teaching on the technical steps of operations in the perioperative setting [40]. As residency programs transition to competency-by-design (CBD) frameworks, VBC may become even more important [41]. In addition to improving the efficiency and effectiveness of surgical teaching, VBC can offer regular checkpoints at which surgeons assess both the technical competency and intraoperative knowledge of surgical trainees. We believe that regular (e.g., monthly) VBC sessions for residents could be a valuable addition to CBD curricula and warrant investigation.

A barrier to the implementation of VBC in CBD curricula may be scheduling, given the clinical duties of both staff surgeons and surgical residents. Moreover, while VBC sessions are an efficient means of teaching surgical skills, they still require an additional 20–30 min [13, 15, 18]. Artificial intelligence (AI) may have a role in lessening that burden and fully realizing the potential of VBC. Recent work suggests that AI can segment operations into their important parts, which may help identify high-yield intraoperative techniques for VBC [42]. Mirchi et al. employed a virtual assistant that was able to provide feedback to novice and expert learners on a simulated surgical task [43]. Importantly, however, AI systems do not necessarily convey the nuanced technical detail that an experienced surgeon may provide [44]. A hybrid version of VBC that combines staff surgeons and AI-driven tools may be the most efficient and effective solution for the future of surgical post-graduate education [2].

The strengths of the present systematic review and meta-analysis include the rigorous methodology, exclusion of non-RCT data, comprehensive risk of bias assessment, thorough sensitivity analyses, and inclusion of a homogeneous group of studies pertaining only to surgical resident education. Limitations of the present study include the small number of residents in the included studies (n = 298) and heterogeneity in objective measures of surgical skill. Moreover, there was no uniform definition of VBC among the included studies, with some studies employing VBC preoperatively and others examining its use in the postoperative setting. Most studies applied VBC to simulated surgical tasks, with only three evaluating live operations [14, 15, 31]; thus, the impact of VBC on performance in the OR remains largely unexplored. Further studies examining VBC with live intraoperative audiovisual footage are warranted. Lastly, none of the included studies evaluated open operations in a live OR. This is understandable given the inherent difficulty in obtaining high-quality audiovisual footage in a sterile field without a laparoscope. Future studies may evaluate the use of wearable technology, such as GoPro cameras, for capturing intraoperative footage that can subsequently be used for VBC.

The findings of this systematic review and meta-analysis demonstrate a significantly greater improvement from baseline in objective surgical skill among surgical residents undergoing VBC than among those receiving standard surgical teaching without VBC. The lack of difference in post-intervention scores suggests that the benefit may be most substantial for trainees with lower baseline levels of objective skill and those earlier in their surgical residencies (i.e., PGY-1 and -2). Further work evaluating the effectiveness of VBC as part of CBD curricula is warranted and could yield important benefits for contemporary surgical education.

Acknowledgements

None.

Abbreviations

- BOSATS

Bariatric Objective Structured Assessment of Technical Skills

- CBD

Competency-by-Design

- GERT

Generic Error Rating Tool

- GOALS

Global Operative Assessment of Laparoscopic Skills

- MOSATS

Mini/Modified Objective Structured Assessment of Technical Skills

- OR

Operating room

- OSATS

Objective Structured Assessment of Technical Skills

- PGY

Post-graduate year

- RCT

Randomized controlled trial

- SMD

Standardized mean difference

- VBC

Video-based coaching

Appendix: Complete search strategy (Medline database example)

OVID Medline Epub Ahead of Print, In-Process & Other Non-Indexed Citations, Ovid MEDLINE(R) Daily, and Ovid MEDLINE(R) 1946 to Oct 2021

1. Video Recording/
2. Video-Audio Media/
3. Videotape Recording/
4. Video-Based.mp
5. Video Based.mp
6. Video.mp
7. Or/1–6
8. Clinical Competence/
9. Competency-Based Education/
10. Mentoring/
11. Coaching.mp
12. Feedback.mp
13. OSATS.mp
14. GOALS.mp
15. “Internship and Residency”/
16. Surgical Education.mp
17. Surgical Training
18. Residen**.mp
19. Trainee*.mp
20. Learner.mp
21. Novice.mp
22. Apprentice.mp
23. Or/8–22
24. General Surgery/
25. Neurosurgery/
26. Orthopedic Procedures/
27. Surgery, Plastic/
28. Cardiac Surgery.mp
29. Otolaryngology/
30. Obstetrics/
31. Urology/
32. Vascular Surgical Procedures/
33. Thoracic Surgery/
34. Or/24–33
35. 7 and 23 and 34
36. Animals/
37. Humans/
38. 36 not (36 and 37)
39. 35 not 38
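Lines 35 to 39 combine the earlier result sets with Boolean operators, which behave like set intersection (`and`) and set difference (`not`). Line 38 is a common idiom for removing animal-only records: it subtracts from the Animals/ set only those records not also indexed with Humans/, so records indexed with both are retained. The Python sketch below illustrates this logic with hypothetical record IDs; it does not query Medline, and the set contents are invented solely to show the operators.

```python
# Hypothetical record IDs; only the Boolean logic mirrors the search lines above.
line_7 = {"a", "b", "c", "d", "e"}   # video-related terms (lines 1-6 OR-ed together)
line_23 = {"a", "b", "c", "e"}       # education/trainee terms (lines 8-22 OR-ed together)
line_34 = {"a", "b", "c", "d"}       # surgical specialty terms (lines 24-33 OR-ed together)
line_36 = {"b", "c", "x"}            # records indexed with Animals/
line_37 = {"c", "y"}                 # records indexed with Humans/

line_35 = line_7 & line_23 & line_34       # "7 and 23 and 34": records matching all three concepts
line_38 = line_36 - (line_36 & line_37)    # "36 not (36 and 37)": animal-only records
line_39 = line_35 - line_38                # "35 not 38": final result set

print(sorted(line_39))
```

Record `c`, indexed with both Animals/ and Humans/, survives to line 39, whereas the animal-only record `b` is removed.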

Author contributions

Conception and design of the study—all authors. Acquisition of data—RD, CCK, and TM. Analysis and interpretation of data—all authors. Drafting and revision of the manuscript—all authors. Approval of the final version of the manuscript—All authors. Agreement to be accountable for all aspects of the work—All authors.

Funding

This research was conducted without external or internal sources of funding.

Declarations

Disclosures

Ryan Daniel, Tyler McKechnie, Colin C. Kruse, Marc Levin, Yung Lee, Aristithes G. Doumouras, Dennis Hong, and Cagla Eskicioglu have no conflicts of interest or financial ties to disclose.

Footnotes

Presentation

Quick shot oral presentation at the SAGES 2022 Annual Meeting (S011).

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Ryan Daniel and Tyler McKechnie are joint first authors and contributed equally.

References

- 1.Liu JH, Etzioni DA, O’Connell JB, Maggard MA, Ko CY. The increasing workload of general surgery. Arch Surg. 2004;139(4):423–428. doi: 10.1001/archsurg.139.4.423. [DOI] [PubMed] [Google Scholar]

- 2. Levin M, McKechnie T, Khalid S, Grantcharov TP, Goldenberg M. Automated methods of technical skill assessment in surgery: a systematic review. J Surg Educ. 2019;76(6):1629–1639. doi: 10.1016/j.jsurg.2019.06.011.

- 3. Malangoni MA, Biester TW, Jones AT, Klingensmith ME, Lewis FR. Operative experience of surgery residents: trends and challenges. J Surg Educ. 2013;70(6):783–788. doi: 10.1016/j.jsurg.2013.09.015.

- 4. Borman KR, Vick LR, Biester TW, Mitchell ME. Changing demographics of residents choosing fellowships: long-term data from the American Board of Surgery. J Am Coll Surg. 2008;206(5):782–788. doi: 10.1016/j.jamcollsurg.2007.12.012.

- 5. Jamal MH, Wong S, Whalen TV. Effects of the reduction of surgical residents’ work hours and implications for surgical residency programs: a narrative review. BMC Med Educ. 2014;14(Suppl 1):S14. doi: 10.1186/1472-6920-14-S1-S14.

- 6. Webber EM, Ronson AR, Gorman LJ, Taber SA, Harris KA. The future of general surgery: evolving to meet a changing practice. J Surg Educ. 2016;73(3):496–503. doi: 10.1016/j.jsurg.2015.12.002.

- 7. McKechnie T, Levin M, Zhou K, Freedman B, Palter V, Grantcharov TP. Virtual surgical training during COVID-19: operating room simulation platforms accessible from home. Ann Surg. 2020. doi: 10.1097/SLA.0000000000003999.

- 8. Schwellnus H, Carnahan H. Peer-coaching with health care professionals: what is the current status of the literature and what are the key components necessary in peer-coaching? A scoping review. Med Teach. 2013;36(1):38–46. doi: 10.3109/0142159X.2013.836269.

- 9. Greenberg CC, Dombrowski J, Dimick JB. Video-based surgical coaching: an emerging approach to performance improvement. JAMA Surg. 2016;151(3):282–283. doi: 10.1001/jamasurg.2015.4442.

- 10. Hu YY, Mazer LM, Yule SJ, et al. Complementing operating room teaching with video-based coaching. JAMA Surg. 2017;152(4):318–325. doi: 10.1001/jamasurg.2016.4619.

- 11. Levin M, McKechnie T, Kruse CC, Aldrich K, Grantcharov TP, Langerman A. Surgical data recording in the operating room: a systematic review of modalities and metrics. Br J Surg. 2021;108(6):613–621. doi: 10.1093/bjs/znab016.

- 12. O’Donoghue P. The use of feedback videos in sport. Int J Perform Anal Sport. 2006;6(2):1–14. doi: 10.1080/24748668.2006.11868368.

- 13. Soucisse ML, Boulva K, Sideris L, Drolet P, Morin M, Dubé P. Video coaching as an efficient teaching method for surgical residents: a randomized controlled trial. J Surg Educ. 2017;74(2):365–371. doi: 10.1016/j.jsurg.2016.09.002.

- 14. Bonrath EM, Dedy NJ, Gordon LE, Grantcharov TP. Comprehensive surgical coaching enhances surgical skill in the operating room: a randomized controlled trial. Ann Surg. 2015;262(2):205–212. doi: 10.1097/SLA.0000000000001214.

- 15. Norris S, Papillon-Smith J, Gagnon LH, Jacobson M, Sobel M, Shore EM. Effect of a surgical teaching video on resident performance of a laparoscopic salpingo-oophorectomy: a randomized controlled trial. J Minim Invasive Gynecol. 2020;27(7):1545–1551. doi: 10.1016/j.jmig.2020.01.010.

- 16. Dickerson P, Grande S, Evans D, Levine B, Coe M. Utilizing intraprocedural interactive video capture with Google Glass for immediate postprocedural resident coaching. J Surg Educ. 2019;76(3):607–619. doi: 10.1016/j.jsurg.2018.10.002.

- 17. Greenberg CC, Ghousseini HN, Quamme SRP, Beasley HL, Wiegmann DA. Surgical coaching for individual performance improvement. Ann Surg. 2015;261(1):32–34. doi: 10.1097/SLA.0000000000000776.

- 18. Augestad KM, Butt K, Ignjatovic D, Keller DS, Kiran R. Video-based coaching in surgical education: a systematic review and meta-analysis. Surg Endosc. 2020;34(2):521–535. doi: 10.1007/s00464-019-07265-0.

- 19. Moher D, Liberati A, Tetzlaff J, Altman DG; PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Ann Intern Med. 2009;151(4):264–269. doi: 10.7326/0003-4819-151-4-200908180-00135.

- 20. Martin JA, Regehr G, Reznick R, et al. Objective structured assessment of technical skill (OSATS) for surgical residents. Br J Surg. 1997;84(2):273–278. doi: 10.1046/j.1365-2168.1997.02502.x.

- 21. Zevin B, Bonrath E, Aggarwal R, Ahmed N, Grantcharov T. Development, feasibility, validity, and reliability of a scale for objective assessment of operative performance in laparoscopic gastric bypass surgery. J Am Coll Surg. 2013;216(5):955–965. doi: 10.1016/j.jamcollsurg.2013.01.003.

- 22. Vassiliou M, Feldman L, Andrew C, et al. A global assessment tool for evaluation of intraoperative laparoscopic skills. Am J Surg. 2005;190:107–113. doi: 10.1016/j.amjsurg.2005.04.004.

- 23. Husslein H, Shirreff L, Shore E, Lefebvre G, Grantcharov T. The generic error rating tool: a novel approach to assessment of performance and surgical education in gynecologic laparoscopy. J Surg Educ. 2015;72(6):1259–1265. doi: 10.1016/j.jsurg.2015.04.029.

- 24. Higgins JP, Savovic J, Page MJ, Sterne J. Revised Cochrane risk-of-bias tool for randomized trials (RoB 2). Cochrane Handb Syst Rev Interv. 2019. doi: 10.1002/9781119536604.ch8.

- 25. Wan X, Wang W, Liu J, Tong T. Estimating the sample mean and standard deviation from the sample size, median, range and/or interquartile range. BMC Med Res Methodol. 2014;14(1):135. doi: 10.1186/1471-2288-14-135.

- 26. Weir CJ, Butcher I, Assi V, et al. Dealing with missing standard deviation and mean values in meta-analysis of continuous outcomes: a systematic review. BMC Med Res Methodol. 2018. doi: 10.1186/s12874-018-0483-0.

- 27. Higgins J, Green S. Identifying and measuring heterogeneity. In: Higgins JP, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA, editors. Cochrane Handbook for Systematic Reviews of Interventions. Hoboken: Wiley; 2011.

- 28. Lau J, Ioannidis JPA, Terrin N, et al. The case of the misleading funnel plot. BMJ. 2006;333:597–600. doi: 10.1136/bmj.333.7568.597.

- 29. Higgins J, Green S. Cochrane Handbook for Systematic Reviews of Interventions. Hoboken: Wiley; 2008.

- 30. Rindos NB, Wroble-Biglan M, Ecker A, Lee TT, Donnellan NM. Impact of video coaching on gynecologic resident laparoscopic suturing: a randomized controlled trial. J Minim Invasive Gynecol. 2017;24(3):426–431. doi: 10.1016/j.jmig.2016.12.020.

- 31. Crawshaw BP, Steele SR, Lee EC, et al. Failing to prepare is preparing to fail: a single-blinded, randomized controlled trial to determine the impact of a preoperative instructional video on the ability of residents to perform laparoscopic right colectomy. Dis Colon Rectum. 2016;59(1):28–34. doi: 10.1097/DCR.0000000000000503.

- 32. Jensen AR, Wright AS, Levy AE, et al. Acquiring basic surgical skills: Is a faculty mentor really needed? Am J Surg. 2009;197(1):82–88. doi: 10.1016/j.amjsurg.2008.06.039.

- 33. Karam MD, Thomas GW, Koehler DM, et al. Surgical coaching from head-mounted video in the training of fluoroscopically guided articular fracture surgery. J Bone Joint Surg Am. 2015;97(12):1031–1039. doi: 10.2106/JBJS.N.00748.

- 34. Yule S, Parker SH, Wilkinson J, et al. Coaching non-technical skills improves surgical residents’ performance in a simulated operating room. J Surg Educ. 2015;72(6):1124–1130. doi: 10.1016/j.jsurg.2015.06.012.

- 35. Vaughn CJ, Kim E, O’Sullivan P, et al. Peer video review and feedback improve performance in basic surgical skills. Am J Surg. 2016;211(2):355–360. doi: 10.1016/j.amjsurg.2015.08.034.

- 36. Backstein D, Agnidis Z, Sadhu R, Macrae H. Effectiveness of repeated video feedback in the acquisition of a surgical technical skill. Can J Surg. 2005;48(3):195.

- 37. Augestad KM, Delaney CP. Postoperative ileus: impact of pharmacological treatment, laparoscopic surgery and enhanced recovery pathways. World J Gastroenterol. 2010;16(17):2067–2074. doi: 10.3748/wjg.v16.i17.

- 38. Palter VN, Beyfuss KA, Jokhio AR, Ryzynski A, Ashamalla S. Peer coaching to teach faculty surgeons an advanced laparoscopic skill: a randomized controlled trial. Surgery. 2016;160(5):1392–1399. doi: 10.1016/j.surg.2016.04.032.

- 39. Greenberg CC, Ghousseini HN, Pavuluri Quamme SR, et al. A statewide surgical coaching program provides opportunity for continuous professional development. Ann Surg. 2018. doi: 10.1097/SLA.0000000000002341.

- 40. Orr CJ, Sonnadara RR. Coaching by design: exploring a new approach to faculty development in a competency-based medical education curriculum. Adv Med Educ Pract. 2019;10:229–244. doi: 10.2147/AMEP.S191470.

- 41. Hu YY, Mazer LM, Yule SJ, et al. Complementing operating room teaching with video-based coaching. JAMA Surg. 2017;152(4):318–325. doi: 10.1001/jamasurg.2016.4619.

- 42. Kadkhodamohammadi A, Sivanesan Uthraraj N, Giataganas P, et al. Towards video-based surgical workflow understanding in open orthopaedic surgery. Comput Methods Biomech Biomed Eng Imaging Vis. 2021;9(3):286–293. doi: 10.1080/21681163.2020.1835552.

- 43. Mirchi N, Bissonnette V, Yilmaz R, Ledwos N, Winkler-Schwartz A, del Maestro RF. The virtual operative assistant: an explainable artificial intelligence tool for simulation-based training in surgery and medicine. PLoS ONE. 2020. doi: 10.1371/journal.pone.0229596.

- 44. Skjold-Ødegaard B, Søreide K. Competency-based surgical training and entrusted professional activities: perfect match or a procrustean bed? Ann Surg. 2021;273(5):e173–e175. doi: 10.1097/SLA.0000000000004521.