Abstract

Background

Artificial intelligence (AI) in medicine has shown significant promise, particularly in neuroimaging. AI increases efficiency and reduces errors, making it a valuable resource for physicians. With the increasing amount of data processing and image interpretation required, the ability to use AI to augment and aid the radiologist could improve the quality of patient care.

Observations

AI can predict patient wait times, which may allow more efficient patient scheduling. Additionally, AI can save time otherwise lost to repeat magnetic resonance neuroimaging and reduce the time spent during imaging. AI can interpret computed tomography, magnetic resonance imaging, and positron emission tomography acquired with reduced or no contrast without significant loss in sensitivity for detecting lesions. However, the use of AI in neuroimaging raises important ethical considerations and is subject to bias. It is vital that users understand the practical and ethical considerations of the technology.

Conclusions

The demonstrated applications of AI in neuroimaging are numerous and varied, and it is reasonable to assume that its implementation will increase as the technology matures. AI’s use for detecting neurologic conditions holds promise in combatting ever-increasing imaging volumes and providing timely diagnoses.

Artificial intelligence (AI) in medicine has shown significant promise, particularly in neuroimaging. AI refers to computer systems designed to perform tasks that normally require human intelligence.1 Machine learning (ML), a field in which computers learn from data without being specifically programmed, is the AI subset responsible for its success in matching or even surpassing humans in certain tasks.2

Supervised learning, a subset of ML, uses an algorithm with annotated data from which to learn.3 The program will use the characteristics of a training data set to predict a specific outcome or target when exposed to a sample data set of the same type. Unsupervised learning finds naturally occurring patterns or groupings within the data.4 With deep learning (DL) algorithms, computers learn the features that optimally represent the data for the problem at hand.5 Both ML and DL are meant to emulate neural networks in the brain, giving rise to artificial neural networks composed of nodes structured within input, hidden, and output layers.

The DL neural network differs from a conventional one by having many hidden layers instead of just 1 layer that extracts patterns within the data.6 Convolutional neural networks (CNNs) are the most prevalent DL architecture used in medical imaging. A CNN’s hidden layers apply convolution and pooling operations to break down an image into features containing the most valuable information. The connecting layer applies high-level reasoning before the output layer provides predictions for the image. This framework has applications within radiology, such as predicting a lesion category or condition from an image, determining whether a specific pixel belongs to background or a target class, and predicting the location of lesions.1
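The convolution and pooling operations described above can be illustrated with a minimal sketch in plain Python. The 5 × 5 toy image and the hand-picked edge kernel are assumptions made for illustration; a trained CNN learns its kernels from data and stacks many such layers.

```python
def convolve2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the operation CNN layers call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows or columns that do not fill a window are dropped."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            max(feature_map[r * size + i][c * size + j] for i in range(size) for j in range(size))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Hypothetical toy image: dark left columns (0), bright right columns (1).
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-picked kernel that responds to a dark-to-bright vertical edge (illustrative only).
kernel = [[-1, 1], [-1, 1]]

features = convolve2d(image, kernel)  # 4 x 4 map, large only where the edge sits
pooled = max_pool2d(features)         # 2 x 2 summary keeping the strongest responses
```

The feature map is nonzero only in the column where intensity jumps, and pooling keeps that response while shrinking the map, which is the sense in which these layers reduce an image to its most informative features.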

AI promises to increase efficiency and reduce errors. With increased data processing and image interpretation, AI technology may help radiologists improve the quality of patient care.6 This article discusses the current applications and future integration of AI in neuroradiology.

NEUROIMAGING APPLICATIONS

AI can improve the quality of neuroimaging and reduce the clinical and systemic loads of other imaging modalities. AI can predict patient wait times for computed tomography (CT), magnetic resonance imaging (MRI), ultrasound, and X-ray imaging.7 An ML-based AI has detected the variables that most affected patient wait times, including proximity to federal holidays and severity of the patient’s condition, and calculated how long patients would be delayed after their scheduled appointment time. This AI modality could allow more efficient patient scheduling and reveal areas of patient processing that could be changed, potentially improving patient satisfaction and outcomes for time-sensitive neurologic conditions.

AI can save patient and health care practitioner time for repeat MRIs. An estimated 20% of MRI scans require a repeat series—a massive loss of time and funds for both patients and the health care system.8 A DL approach can determine whether an MRI is usable clinically or unclear enough to require repetition.9 This initial screening measure can prevent patients from making return visits and neuroradiologists from reading inconclusive images. AI offers the opportunity to reduce time and costs incurred by optimizing the health care process before imaging is obtained.

Speeding Up Neuroimaging

AI can reduce the time spent performing imaging. Because MRIs consume time and resources, compressed sensing (CS) is commonly used. CS preferentially maintains in-plane resolution at the expense of through-plane resolution to produce a scan with a single, usable viewpoint that preserves signal-to-noise ratio (SNR). CS, however, limits interpretation to single directions and can create aliasing artifacts. An AI algorithm known as synthetic multi-orientation resolution enhancement works in real time to reduce aliasing and improve resolution in these compressed scans.10 This AI improved resolution of white matter lesions in patients with multiple sclerosis (MS) on FLAIR (fluid-attenuated inversion recovery) images, and permitted multiview reconstruction from these limited scans.

Tasks of reconstructing and anti-aliasing come with high computational costs that vary inversely with the extent of scanning compression, potentially negating the time and resource savings of CS. DL AI modalities have been developed to reduce operational loads and further improve image resolution in several directions from CS. One such deep residual learning AI was trained with compressed MRIs and used the framelet method to create a CNN that could rapidly remove global and deeply coherent aliasing artifacts.11 This system, compared with synthetic multi-orientation resolution enhancement, uses a pretrained, pretested AI that does not require additional time during scanning for computational analysis, thereby multiplying the time benefit of CS while retaining the benefits of multidirectional reconstruction and increased resolution. This methodology suffers from inherent degradation of perceptual image quality in its reconstructions because of the L2 loss function the CNN uses to reduce mean squared error, which causes blurring by averaging all possible outcomes of signal distribution during reconstruction. To combat this, researchers have developed another AI to reduce reconstruction times that uses a different loss function in a generative adversarial network to retain image quality, while offering reconstruction times several hundred times faster than current CS-MRI structures.12 So-called sparse-coding methods promise further reduction in reconstruction times, with the possibility of processing completed online with a lightweight architecture rather than on a local system.13
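The blurring attributed to the L2 loss can be shown with a small worked example. The sketch below assumes two equally plausible sharp intensity profiles for the same undersampled input (both hypothetical) and compares the expected mean squared error of a sharp prediction with that of the pixelwise average.

```python
def mean_squared_error(prediction, target):
    """Mean of squared pixelwise differences (the L2 loss)."""
    return sum((p - t) ** 2 for p, t in zip(prediction, target)) / len(target)


def expected_l2_loss(prediction, candidates):
    """Average L2 loss over equally likely candidate ground truths."""
    return sum(mean_squared_error(prediction, c) for c in candidates) / len(candidates)


# Hypothetical 1D profiles: the tissue edge sits at index 3 or at index 5.
sharp_a = [0, 0, 0, 1, 1, 1, 1, 1]
sharp_b = [0, 0, 0, 0, 0, 1, 1, 1]
candidates = [sharp_a, sharp_b]

# Pixelwise average of the candidates: the edge becomes a 0.5 ramp, that is, a blur.
blurred = [(a + b) / 2 for a, b in zip(sharp_a, sharp_b)]

loss_sharp = expected_l2_loss(sharp_a, candidates)    # commits to one sharp answer
loss_blurred = expected_l2_loss(blurred, candidates)  # hedges between both answers
```

In this toy case the blurred profile scores a lower expected L2 loss than either sharp profile, so a network trained only to minimize mean squared error is rewarded for producing it. Adversarial and perceptual losses are one way to penalize that averaged output.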

Neuroimaging of acute cases benefits most directly from these technologies because MRIs and their high resolution and SNR begin to approach CT imaging time scales. This could have important implications in clinical care, particularly for stroke imaging and evaluating spinal cord compression. CS-MRI optimization represents one of the greatest areas of neuroimaging cost savings and neurologic care improvement in the modern radiology era.

Reducing Contrast and Radiation Doses

AI can interpret CT, MRI, and positron emission tomography (PET) acquired with reduced or no contrast without significant loss in sensitivity for detecting lesions. With MRI, gadolinium-based contrast can cause injection site reactions, allergic reactions, metal deposition throughout the body, and nephrogenic systemic fibrosis in the most severe instances.14 DL has been applied to brain MRIs performed with 10% of a full dose of contrast without significant degradation of image quality. Neuroradiologists did not rate the AI-synthesized images for several MRI indications lower than their full-dose counterparts.15 Low-dose contrast imaging, regardless of modality, generates greater noise with a significantly reduced signal. However, with AI applied, researchers found that the software suppressed motion and aliasing artifacts and improved image quality, perhaps evidence that this low-dose modality is less vulnerable to the most common pitfalls of MRI.

Recently, low-dose MRI moved into the spotlight when Subtle Medical’s SubtleGAD software received a National Institutes of Health grant and an expedited pathway to phase 2 clinical trials.16 SubtleGAD, a DL AI that enables low-dose MRI interpretation, might allow contrast MRI for patients with advanced kidney disease or contrast allergies. At some point, contrast with MRI might not be necessary because DL AI applied to noncontrast MRIs for detecting MS lesions was found to be preliminarily effective with 78% lesion detection sensitivity.17
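For readers less familiar with the metric, sensitivity is the fraction of true lesions that a system detects. The sketch below uses hypothetical counts chosen only to reproduce a 78% figure; they are not taken from the cited study.

```python
def sensitivity(true_positives, false_negatives):
    """Fraction of actual lesions that were detected: TP / (TP + FN)."""
    total_lesions = true_positives + false_negatives
    if total_lesions == 0:
        raise ValueError("sensitivity is undefined when there are no true lesions")
    return true_positives / total_lesions


# Hypothetical counts: 78 enhancing lesions flagged, 22 missed.
detected, missed = 78, 22
lesion_sensitivity = sensitivity(detected, missed)
```

Sensitivity says nothing about false alarms, so it is usually reported alongside specificity or a false-positive rate.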

PET-MRI combines simultaneous PET and MRI and has been used to evaluate neurologic disorders. PET-MRI can detect amyloid plaques in Alzheimer disease 10 to 20 years before clinical signs of dementia emerge.18 PET-MRI has sparked DL AI development to decrease the dose of the IV radioactive tracer 18F-florbetaben used in imaging to reduce radiation exposure and imaging costs. This reduction is critical if PET-MRI is to become used widely.19–21

An initial CNN could reconstruct low-dose amyloid scans to full-dose resolution, albeit with a greater susceptibility to some artifacts and motion blurring.22 Similar to the synthetic multi-orientation resolution enhancement CNN, this program showed signal blurring from the L2 loss function, which was corrected in a later AI that used a generative adversarial network to minimize perceptual loss.23 This new AI demonstrated greater image resolution, feature preservation, and radiologist rating over the previous AI and was capable of reconstructing low-dose PET scans to full-dose resolution without an accompanying MRI. Applications of this algorithm are far-reaching, potentially allowing neuroimaging of brain tumors at more frequent intervals with higher resolution and lower total radiation exposure.

AI also has been applied to neurologic CT to reduce radiation exposure.24 Because it is critical to abide by the principles of ALARA (as low as reasonably achievable), the ability of AI to reduce radiation exposure holds significant promise. A CNN has been used to transform low-dose CTs of anthropomorphic models with calcium inserts and cardiac patients to normal-dose CTs, with the goal of improving the SNR.25 By training a noise-discriminating CNN and a noise-generating CNN together in a generative adversarial network, the AI improved image feature preservation during transformation. This algorithm has a direct application in imaging cerebral vasculature, including calcification that can explain lacunar infarcts and tracking systemic atherosclerosis.26

Another CNN has been applied to remove more complex noise patterns from the phenomena of beam hardening and photon starvation common in low-dose CT. This algorithm extracts the directional components of artifacts and compares them to known artifact patterns, allowing for highly specific suppression of unwanted signals.27 In June 2019, the US Food and Drug Administration (FDA) approved ClariPi, a deep CNN program for advanced denoising and resolution improvement of low- and ultra low-dose CTs.28 Aside from only low-dose settings, this AI could reduce artifacts in all CT imaging modalities and improve therapeutic value of procedures, including cerebral angiograms and emergency cranial scans. As the average CT radiation dose decreased from 12 mSv in 2009 to 1.5 mSv in 2014 and continues to fall, these algorithms will become increasingly necessary to retain the high resolution and diagnostic power expected of neurologic CTs.29,30

DOWNSTREAM APPLICATIONS

Downstream applications refer to AI use after a radiologic study is acquired, mostly image interpretation. More than 70% of FDA-approved AI medical devices are in radiology, and many of these relate to image analysis.6,31 Although AI is not limited to black-and-white image interpretation, it is hypothesized that one reason radiology is inviting to AI is that gray-scale images lend themselves to standardization.3 Moreover, most radiology departments already use AI-friendly picture archiving and communication systems.31,32

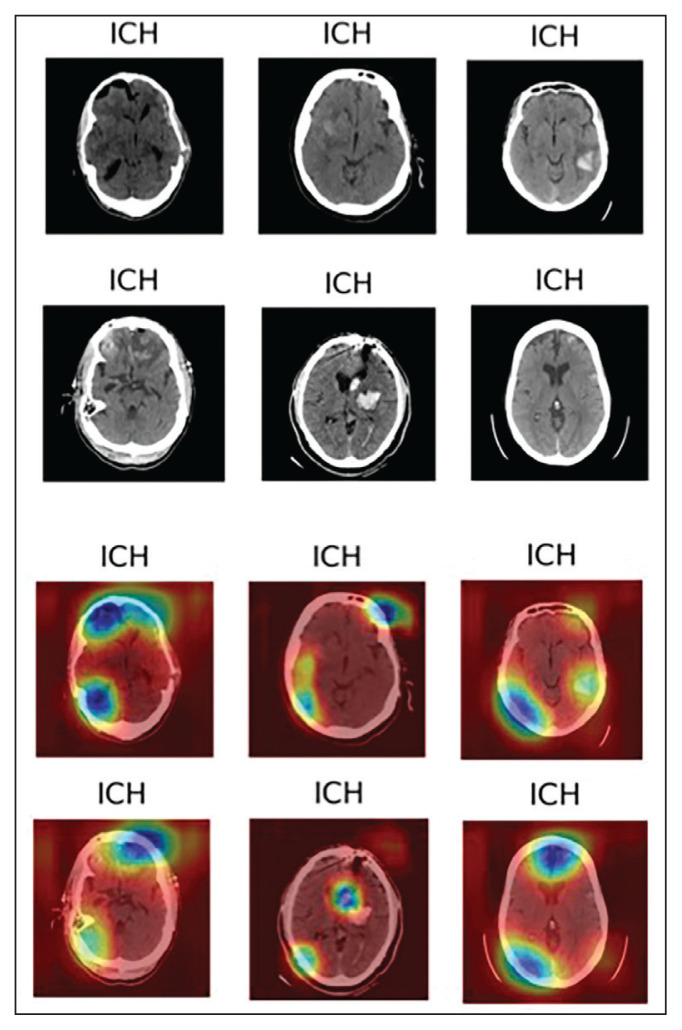

AI has been applied to a range of radiologic modalities, including MRI, CT, ultrasonography, PET, and mammography.32–38 AI also has been specifically applied to radiography, including the interpretation of tuberculosis, pneumonia, lung lesions, and COVID-19.33,39–45 AI also can assist with triage and patient screening, rapidly provide a “second opinion,” shorten the time needed to attain a diagnosis, monitor disease progression, and predict prognosis.37–39,43,45–47 Downstream applications of AI in neuroradiology and neurology include using CT to aid in detecting hemorrhage or ischemic stroke; using MRI to automatically segment lesions, such as tumors or MS lesions; assisting in early diagnosis and predicting prognosis in MS; assisting in treating paralysis, including from spinal cord injury; determining seizure type and localizing area of seizure onset; and using cameras, wearable devices, and smartphone applications to diagnose and assess treatment response in neurodegenerative disorders, such as Parkinson or Alzheimer diseases (Figure).37,48–56

FIGURE. Grad-CAM of ICH.

Abbreviations: Grad-CAM, gradient-weighted class activation mapping; ICH, intracranial hemorrhage. Applied to a convolutional neural network, Grad-CAM highlights the image regions that drive the detection and classification of serious neurologic conditions, such as ICH.

Several AI tools have been deployed in the clinical setting, particularly for triaging intracranial hemorrhage and moving these studies to the top of the radiologist’s worklist. In 2020, the Centers for Medicare and Medicaid Services (CMS) began reimbursing Viz.ai software’s AI-based Viz ContaCT (Viz LVO) with a new International Statistical Classification of Diseases, Tenth Revision procedure code.57

Viz LVO automatically detects large vessel occlusions, flags the occlusion on CT angiogram, alerts the stroke team (interventional radiologist, neuroradiologist, and neurologist), and transmits images through a secure application to the stroke team members’ mobile devices, all in less than 6 minutes from study acquisition to alarm notification.48 Additional software can quantify and measure perfusion in affected brain areas.48 This could have implications for quantifying and targeting areas of ischemic penumbra that could be salvaged after a stroke and then using that information to plan targeted treatment and/or intervention. Because trials such as DAWN and DEFUSE 3 have shown benefits in stroke outcome by extending the therapeutic window for endovascular thrombectomy, the ability to identify appropriate candidates is essential.58,59 AI tools that assess ischemic penumbra with quantitative parameters (mean transit time, cerebral blood volume, cerebral blood flow, mismatch ratio) have benefited image interpretation. Medtronic RAPID software can provide quantitative assessment of CT perfusion. AI tools also could provide an automatic ASPECT score, a quantitative measure for assessing potential ischemic zones that aids in identifying appropriate candidates for thrombectomy.
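As an illustration of one of the quantitative parameters named above, the sketch below computes a mismatch volume and mismatch ratio from two lesion volumes. It assumes the commonly used definitions (mismatch volume as hypoperfused volume minus core volume, and mismatch ratio as hypoperfused volume divided by core volume). The function name and the input volumes are hypothetical, and the sketch does not represent any vendor's algorithm or a treatment threshold.

```python
def perfusion_mismatch(core_ml, hypoperfused_ml):
    """Return (mismatch_volume_ml, mismatch_ratio) from two volumes in milliliters.

    Assumed definitions: volume = hypoperfused - core, ratio = hypoperfused / core.
    The ratio is returned as None when the core volume is zero (undefined).
    """
    if core_ml < 0 or hypoperfused_ml < 0:
        raise ValueError("volumes must be non-negative")
    mismatch_volume = max(hypoperfused_ml - core_ml, 0.0)
    mismatch_ratio = hypoperfused_ml / core_ml if core_ml > 0 else None
    return mismatch_volume, mismatch_ratio


# Hypothetical patient: 20 mL ischemic core inside 80 mL of hypoperfused tissue.
volume_ml, ratio = perfusion_mismatch(20.0, 80.0)
```

A larger mismatch suggests more potentially salvageable tissue relative to the established core. Eligibility cutoffs vary by trial and are deliberately left out of the sketch.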

Several FDA-approved AI tools help quantify brain structures in neuroradiology, including quantitative analysis through MRI for analysis of anatomy and PET for analysis of functional uptake, assisting in more accurate and more objective detection and monitoring of conditions such as atrophy, dementia, trauma, seizure disorders, and MS.48 The growing number of FDA-approved AI technologies and the recent CMS-approved reimbursement for an AI tool indicate a changing landscape that is more accepting of downstream applications of AI in neuroradiology. As AI continues to integrate into medical regulation and finance, we predict AI will continue to play a prominent role in neuroradiology.

PRACTICAL AND ETHICAL CONSIDERATIONS

In any discussion of the benefits of AI, it is prudent to address its shortcomings. Chief among these is overfitting, which occurs when an AI is too closely aligned with its training dataset and prone to error when applied to novel cases. Often this is a byproduct of a small training set.60 Neuroradiology, particularly with uncommon, advanced imaging methods, has a smaller number of available studies.61 Even with more prevalent imaging modalities, such as head CT, the work of collecting training scans from patients with the prerequisite disease processes, particularly if these processes are rare, can limit the number of datapoints collected. Neuroradiologists should understand how an AI tool was generated, including the size and variety of the training dataset used, to best gauge the clinical applicability and fitness of the system.
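Overfitting on a small training set can be demonstrated with a toy regression. The sketch below assumes a true relationship of y = x, eight training points with a fixed ±0.3 labeling error, and noise-free test points between them; all values are hypothetical. It compares a straight-line fit with a polynomial that passes through every training point.

```python
def fit_line(xs, ys):
    """Ordinary least-squares line: a low-capacity model."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept


def fit_interpolant(xs, ys):
    """Polynomial through every training point (Lagrange form): memorizes the data."""
    def predict(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            weight = 1.0
            for j, xj in enumerate(xs):
                if j != i:
                    weight *= (x - xj) / (xi - xj)
            total += yi * weight
        return total
    return predict


def mse(model, xs, ys):
    """Mean squared error of a model over a data set."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hypothetical data: true relation y = x, training labels off by a fixed +/-0.3.
train_x = [i / 7 for i in range(8)]
train_y = [x + (0.3 if i % 2 == 0 else -0.3) for i, x in enumerate(train_x)]
test_x = [(i + 0.5) / 7 for i in range(7)]  # unseen points between training points
test_y = list(test_x)                       # noise-free ground truth

line = fit_line(train_x, train_y)
interpolant = fit_interpolant(train_x, train_y)

line_train, line_test = mse(line, train_x, train_y), mse(line, test_x, test_y)
interp_train, interp_test = mse(interpolant, train_x, train_y), mse(interpolant, test_x, test_y)
```

The interpolating polynomial reproduces the training labels exactly yet errs badly on the unseen points, whereas the line tolerates training error and generalizes far better. Strong performance on a small training set can overstate how a tool will behave on new studies.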

Another point of concern for the implementation of AI clinical decision support tools is automation bias: the tendency for clinicians to favor machine-generated decisions and ignore contrary data or conflicting human decisions.62 This situation often arises when radiologists experience overwhelming patient loads or are in underresourced settings, where there is little ability to review every AI-based diagnosis. Although AI might be of benefit in such conditions by reducing physician workload and streamlining the diagnostic process, there is the propensity to improperly rely on a tool meant to augment, not replace, a radiologist’s judgment. Such cases have led to adverse outcomes for patients, and legal precedent shows that this constitutes negligence.63 Maintaining awareness of each tool’s limitations and proper application is the only remedy for such situations.

Ethically, we must consider the opaqueness of ML-developed neuroimaging AIs. For many systems, the specific process by which an AI arrives at its conclusions is unknown. This AI “black box” can conceal potential errors and biases that are masked by overall positive performance metrics. The lack of understanding about how a tool functions in the zero-failure clinical setting understandably gives radiologists pause. The question must be asked: Is it ethical to use a system that is a relatively unknown quantity? Entities, including state governments, Canada, and the European Union, have produced an answer. Each of these governments has implemented policies requiring that health care AIs use some method to display to end users the process by which they arrive at conclusions.64–68

The 21st Century Cures Act declares that to attain approval, clinical AIs must demonstrate this explainability to clinicians and patients.69 The response has been an explosion in the development of explainable AI. Systems that visualize the areas where AI attention most often rests with heatmaps, generate labels for the most heavily weighted features of radiographic images, and create full diagnostic reports to justify AI conclusions aim to meet the goal of transparency and inspiring confidence in clinical end users.70 The ability to understand the “thought process” of a system proves useful for error correction and retooling. A trend toward under- or overdetecting conditions, flagging seemingly irrelevant image regions, or low reproducibility can be better addressed when it is clear how the AI is drawing its false conclusions. With an iterative process of testing and redesigning, false positive and negative rates can be reduced, the need for human intervention can be lowered to an appropriate minimum, and patient outcomes can be improved.71
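One simple way to produce an attention heatmap of the kind described above is occlusion sensitivity: mask one image region at a time and record how much the model's score drops. This is a different and simpler technique than the Grad-CAM shown in the Figure, and the scoring function below is a hypothetical stand-in for a trained model.

```python
def occlusion_heatmap(image, score_fn, patch=2, baseline=0.0):
    """Score drop when each non-overlapping patch is replaced by a baseline value."""
    reference = score_fn(image)
    rows, cols = len(image), len(image[0])
    heatmap = []
    for top in range(0, rows, patch):
        heat_row = []
        for left in range(0, cols, patch):
            occluded = [row[:] for row in image]  # copy so the original is untouched
            for r in range(top, min(top + patch, rows)):
                for c in range(left, min(left + patch, cols)):
                    occluded[r][c] = baseline
            heat_row.append(reference - score_fn(occluded))
        heatmap.append(heat_row)
    return heatmap


def toy_score(image):
    """Hypothetical model: responds only to brightness in the top-right 2 x 2 corner."""
    return sum(image[r][c] for r in range(2) for c in range(2, 4)) / 4


# Hypothetical 4 x 4 image with a bright "lesion" in the top-right corner.
image = [
    [0.1, 0.1, 0.9, 0.8],
    [0.1, 0.1, 0.9, 1.0],
    [0.1, 0.1, 0.1, 0.1],
    [0.1, 0.1, 0.1, 0.1],
]
heatmap = occlusion_heatmap(image, toy_score)
```

Only the top-right cell of the heatmap is nonzero, which matches the region this toy model relies on. A heatmap that lit up an anatomically irrelevant region would be a prompt to investigate the tool before trusting its output.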

Data collection raises another ethical concern. To train functional clinical decision support tools, massive amounts of patient demographic, laboratory, and imaging data are required. With incentives to develop the most powerful AI systems, record collection can venture down a path where patient autonomy and privacy are threatened. Radiologists have a duty to ensure data mining serves patients and improves the practice of radiology while protecting patients’ personal information.62 Policies have placed similar limits on the access to and use of patient records.64–69 Patients have the right to request explanation of the AI systems their data have been used to train. Approval for data acquisition requires the use of explainable AI, standardized data security protocol implementation, and adequate proof of communal benefit from the clinical decision support tool. Establishment of state-mandated protections bodes well for a future when developers can access enormous caches of data while patients and health care professionals are assured that no identifying information has escaped a well-regulated space. On the level of the individual radiologist, the knowledge that each datum represents a human life, a person who has made themselves vulnerable by seeking relief for what ails them, should serve as a lasting reminder to operate with utmost care when handling sensitive information.

CONCLUSIONS

The demonstrated applications of AI in neuroimaging are numerous and varied, and it is reasonable to assume that its implementation will increase as the technology matures. AI use for detecting important neurologic conditions holds promise in combatting ever greater imaging volumes and providing timely diagnoses. As medicine witnesses the continuing adoption of AI, it is important that practitioners possess an understanding of its current and emerging uses.

Footnotes

Author disclosures

The authors report no actual or potential conflicts of interest or outside sources of funding with regard to this article.

Disclaimer

The opinions expressed herein are those of the authors and do not necessarily reflect those of Federal Practitioner, Frontline Medical Communications Inc., the US Government, or any of its agencies. This article may discuss unlabeled or investigational use of certain drugs. Please review the complete prescribing information for specific drugs or drug combinations—including indications, contraindications, warnings, and adverse effects—before administering pharmacologic therapy to patients.

References

- 1. Chartrand G, Cheng PM, Vorontsov E, et al. Deep learning: a primer for radiologists. Radiographics. 2017;37(7):2113–2131. doi: 10.1148/rg.2017170077.

- 2. King BF Jr. Guest editorial: discovery and artificial intelligence. AJR Am J Roentgenol. 2017;209(6):1189–1190. doi: 10.2214/AJR.17.19178.

- 3. Syed AB, Zoga AC. Artificial intelligence in radiology: current technology and future directions. Semin Musculoskelet Radiol. 2018;22(5):540–545. doi: 10.1055/s-0038-1673383.

- 4. Deo RC. Machine learning in medicine. Circulation. 2015;132(20):1920–1930. doi: 10.1161/CIRCULATIONAHA.115.001593.

- 5. Litjens G, Kooi T, Bejnordi BE, et al. A survey on deep learning in medical image analysis. Med Image Anal. 2017;42:60–88. doi: 10.1016/j.media.2017.07.005.

- 6. Pesapane F, Codari M, Sardanelli F. Artificial intelligence in medical imaging: threat or opportunity? Radiologists again at the forefront of innovation in medicine. Eur Radiol Exp. 2018;2(1):35. doi: 10.1186/s41747-018-0061-6.

- 7. Curtis C, Liu C, Bollerman TJ, Pianykh OS. Machine learning for predicting patient wait times and appointment delays. J Am Coll Radiol. 2018;15(9):1310–1316. doi: 10.1016/j.jacr.2017.08.021.

- 8. Andre JB, Bresnahan BW, Mossa-Basha M, et al. Toward quantifying the prevalence, severity, and cost associated with patient motion during clinical MR examinations. J Am Coll Radiol. 2015;12(7):689–695. doi: 10.1016/j.jacr.2015.03.007.

- 9. Sreekumari A, Shanbhag D, Yeo D, et al. A deep learning-based approach to reduce rescan and recall rates in clinical MRI examinations. AJNR Am J Neuroradiol. 2019;40(2):217–223. doi: 10.3174/ajnr.A5926.

- 10. Zhao C, Shao M, Carass A, et al. Applications of a deep learning method for anti-aliasing and super-resolution in MRI. Magn Reson Imaging. 2019;64:132–141. doi: 10.1016/j.mri.2019.05.038.

- 11. Lee D, Yoo J, Tak S, Ye JC. Deep residual learning for accelerated MRI using magnitude and phase networks. IEEE Trans Biomed Eng. 2018;65(9):1985–1995. doi: 10.1109/TBME.2018.2821699.

- 12. Mardani M, Gong E, Cheng JY, et al. Deep generative adversarial neural networks for compressive sensing MRI. IEEE Trans Med Imaging. 2019;38(1):167–179. doi: 10.1109/TMI.2018.2858752.

- 13. Dong C, Loy CC, He K, Tang X. Image super-resolution using deep convolutional networks. IEEE Trans Pattern Anal Mach Intell. 2016;38(2):295–307. doi: 10.1109/TPAMI.2015.2439281.

- 14. Sammet S. Magnetic resonance safety. Abdom Radiol (NY). 2016;41(3):444–451. doi: 10.1007/s00261-016-0680-4.

- 15. Gong E, Pauly JM, Wintermark M, Zaharchuk G. Deep learning enables reduced gadolinium dose for contrast-enhanced brain MRI. J Magn Reson Imaging. 2018;48(2):330–340. doi: 10.1002/jmri.25970.

- 16. Subtle Medical. NIH awards Subtle Medical, Inc. $1.6 million grant to improve safety of MRI exams by reducing gadolinium dose using AI. Press release. Published September 18, 2019. Accessed March 14, 2022. https://www.biospace.com/article/releases/nih-awards-subtle-medical-inc-1-6-million-grant-to-improve-safety-of-mri-exams-by-reducing-gadolinium-dose-using-ai

- 17. Narayana PA, Coronado I, Sujit SJ, Wolinsky JS, Lublin FD, Gabr RE. Deep learning for predicting enhancing lesions in multiple sclerosis from noncontrast MRI. Radiology. 2020;294(2):398–404. doi: 10.1148/radiol.2019191061.

- 18. Jack CR Jr, Knopman DS, Jagust WJ, et al. Hypothetical model of dynamic biomarkers of the Alzheimer’s pathological cascade. Lancet Neurol. 2010;9(1):119–128. doi: 10.1016/S1474-4422(09)70299-6.

- 19. Gatidis S, Würslin C, Seith F, et al. Towards tracer dose reduction in PET studies: simulation of dose reduction by retrospective randomized undersampling of list-mode data. Hell J Nucl Med. 2016;19(1):15–18. doi: 10.1967/s002449910333.

- 20. Kaplan S, Zhu YM. Full-dose PET image estimation from low-dose PET image using deep learning: a pilot study. J Digit Imaging. 2019;32(5):773–778. doi: 10.1007/s10278-018-0150-3.

- 21. Xu J, Gong E, Pauly J, Zaharchuk G. 200x low-dose PET reconstruction using deep learning. arXiv:1712.04119. Accessed February 16, 2022. https://arxiv.org/pdf/1712.04119.pdf

- 22. Chen KT, Gong E, de Carvalho Macruz FB, et al. Ultra-low-dose 18F-florbetaben amyloid PET imaging using deep learning with multi-contrast MRI inputs. Radiology. 2019;290(3):649–656. doi: 10.1148/radiol.2018180940.

- 23. Ouyang J, Chen KT, Gong E, Pauly J, Zaharchuk G. Ultra-low-dose PET reconstruction using generative adversarial network with feature matching and task-specific perceptual loss. Med Phys. 2019;46(8):3555–3564. doi: 10.1002/mp.13626.

- 24. Brenner DJ, Hall EJ. Computed tomography—an increasing source of radiation exposure. N Engl J Med. 2007;357(22):2277–2284. doi: 10.1056/NEJMra072149.

- 25. Wolterink JM, Leiner T, Viergever MA, Isgum I. Generative adversarial networks for noise reduction in low-dose CT. IEEE Trans Med Imaging. 2017;36(12):2536–2545. doi: 10.1109/TMI.2017.2708987.

- 26. Sohn YH, Cheon HY, Jeon P, Kang SY. Clinical implication of cerebral artery calcification on brain CT. Cerebrovasc Dis. 2004;18(4):332–337. doi: 10.1159/000080772.

- 27. Kang E, Min J, Ye JC. A deep convolutional neural network using directional wavelets for low-dose X-ray CT reconstruction. Med Phys. 2017;44(10):e360–e375. doi: 10.1002/mp.12344.

- 28. ClariPi gets FDA clearance for AI-powered CT image denoising solution. Published June 24, 2019. Accessed February 16, 2022. https://www.itnonline.com/content/claripi-gets-fda-clearance-ai-powered-ct-image-denoising-solution

- 29. Hausleiter J, Meyer T, Hermann F, et al. Estimated radiation dose associated with cardiac CT angiography. JAMA. 2009;301(5):500–507. doi: 10.1001/jama.2009.54.

- 30. Al-Mallah M, Aljizeeri A, Alharthi M, Alsaileek A. Routine low-radiation-dose coronary computed tomography angiography. Eur Heart J Suppl. 2014;16(suppl B):B12–B16. doi: 10.1093/eurheartj/suu024.

- 31. Benjamens S, Dhunnoo P, Meskó B. The state of artificial intelligence-based FDA-approved medical devices and algorithms: an online database. NPJ Digit Med. 2020;3:118. doi: 10.1038/s41746-020-00324-0.

- 32. Talebi-Liasi F, Markowitz O. Is artificial intelligence going to replace dermatologists? Cutis. 2020;105(1):28–31.

- 33. Khan O, Bebb G, Alimohamed NA. Artificial intelligence in medicine: what oncologists need to know about its potential—and its limitations. Oncology Exchange. 2017;16(4):8–13. http://www.oncologyex.com/pdf/vol16_no4/feature_khan-ai.pdf

- 34. Liu X, Faes L, Kale AU, et al. A comparison of deep learning performance against health-care professionals in detecting diseases from medical imaging: a systematic review and meta-analysis. Lancet Digit Health. 2019;1(6):e271–e297. doi: 10.1016/S2589-7500(19)30123-2.

- 35. Topol EJ. High-performance medicine: the convergence of human and artificial intelligence. Nat Med. 2019;25(1):44–56. doi: 10.1038/s41591-018-0300-7.

- 36. Salim M, Wåhlin E, Dembrower K, et al. External evaluation of 3 commercial artificial intelligence algorithms for independent assessment of screening mammograms. JAMA Oncol. 2020;6(10):1581–1588. doi: 10.1001/jamaoncol.2020.3321.

- 37. Arbabshirani MR, Fornwalt BK, Mongelluzzo GJ, et al. Advanced machine learning in action: identification of intracranial hemorrhage on computed tomography scans of the head with clinical workflow integration. NPJ Digit Med. 2018;1(1):1–7. doi: 10.1038/s41746-017-0015-z.

- 38. Sheth D, Giger ML. Artificial intelligence in the interpretation of breast cancer on MRI. J Magn Reson Imaging. 2020;51(5):1310–1324. doi: 10.1002/jmri.26878.

- 39. Borkowski AA, Viswanadhan NA, Thomas LB, Guzman RD, Deland LA, Mastorides SM. Using artificial intelligence for COVID-19 chest X-ray diagnosis. Fed Pract. 2020;37(9):398–404. doi: 10.12788/fp.0045.

- 40. Kermany DS, Goldbaum M, Cai W, et al. Identifying medical diagnoses and treatable diseases by image-based deep learning. Cell. 2018;172(5):1122–1131.e9. doi: 10.1016/j.cell.2018.02.010.

- 41.Nam JG, Park S, Hwang EJ, et al. Development and validation of deep learning-based automatic detection algorithm for malignant pulmonary nodules on chest radiographs. Radiology. 2019;290(1):218–228. doi: 10.1148/radiol.2018180237. [DOI] [PubMed] [Google Scholar]

- 42.Zech JR, Badgeley MA, Liu M, Costa AB, Titano JJ, Oermann EK. Variable generalization performance of a deep learning model to detect pneumonia in chest radiographs: a cross-sectional study. PLoS Med. 2018;15(11):e1002683. doi: 10.1371/journal.pmed.1002683.

- 43.Lakhani P, Sundaram B. Deep learning at chest radiography: automated classification of pulmonary tuberculosis by using convolutional neural networks. Radiology. 2017;284(2):574–582. doi: 10.1148/radiol.2017162326.

- 44.Rajpurkar P, Joshi A, Pareek A, et al. CheXpedition: investigating generalization challenges for translation of chest X-Ray algorithms to the clinical setting. arXiv preprint arXiv:2002.11379. [Accessed February 16, 2022]. https://arxiv.org/pdf/2002.11379.pdf

- 45.He J, Baxter SL, Xu J, Xu J, Zhou X, Zhang K. The practical implementation of artificial intelligence technologies in medicine. Nat Med. 2019;25(1):30–36. doi: 10.1038/s41591-018-0307-0.

- 46.Meyer-Bäse A, Morra L, Meyer-Bäse U, Pinker K. Current status and future perspectives of artificial intelligence in magnetic resonance breast imaging. Contrast Media Mol Imaging. 2020;2020:6805710. doi: 10.1155/2020/6805710.

- 47.Booth AL, Abels E, McCaffrey P. Development of a prognostic model for mortality in COVID-19 infection using machine learning. Mod Pathol. 2020;4(3):522–531. doi: 10.1038/s41379-020-00700-x.

- 48.Bash S. Enhancing neuroimaging with artificial intelligence. Applied Radiology. 2020;49(1):20–21.

- 49.Jiang F, Jiang Y, Zhi H, et al. Artificial intelligence in healthcare: past, present and future. Stroke Vasc Neurol. 2017;2(4):230–243. doi: 10.1136/svn-2017-000101.

- 50.Valliani AA, Ranti D, Oermann EK. Deep learning and neurology: a systematic review. Neurol Ther. 2019;8(2):351–365. doi: 10.1007/s40120-019-00153-8.

- 51.Gupta R, Krishnam SP, Schaefer PW, Lev MH, Gonzalez RG. An east coast perspective on artificial intelligence and machine learning: part 2: ischemic stroke imaging and triage. Neuroimaging Clin N Am. 2020;30(4):467–478. doi: 10.1016/j.nic.2020.08.002.

- 52.Belić M, Bobić V, Badža M, Šolaja N, Đurić-Jovičić M, Kostić VS. Artificial intelligence for assisting diagnostics and assessment of Parkinson’s disease-A review. Clin Neurol Neurosurg. 2019;184:105442. doi: 10.1016/j.clineuro.2019.105442.

- 53.An S, Kang C, Lee HW. Artificial intelligence and computational approaches for epilepsy. J Epilepsy Res. 2020;10(1):8–17. doi: 10.14581/jer.20003.

- 54.Pavel AM, Rennie JM, de Vries LS, et al. A machine-learning algorithm for neonatal seizure recognition: a multicentre, randomised, controlled trial. Lancet Child Adolesc Health. 2020;4(10):740–749. doi: 10.1016/S2352-4642(20)30239-X.

- 55.Afzal HMR, Luo S, Ramadan S, Lechner-Scott J. The emerging role of artificial intelligence in multiple sclerosis imaging. Mult Scler. 2020:1352458520966298. doi: 10.1177/1352458520966298.

- 56.Bouton CE. Restoring movement in paralysis with a bioelectronic neural bypass approach: current state and future directions. Cold Spring Harb Perspect Med. 2019;9(11):a034306. doi: 10.1101/cshperspect.a034306.

- 57.Hassan AE. New technology add-on payment (NTAP) for Viz LVO: a win for stroke care. J Neurointerv Surg. 2020. doi: 10.1136/neurintsurg-2020-016897.

- 58.Nogueira RG, Jadhav AP, Haussen DC, et al. DAWN Trial Investigators. Thrombectomy 6 to 24 hours after stroke with a mismatch between deficit and infarct. N Engl J Med. 2018;378:11–21. doi: 10.1056/NEJMoa1706442.

- 59.Albers GW, Marks MP, Kemp S, et al. DEFUSE 3 Investigators. Thrombectomy for stroke at 6 to 16 hours with selection by perfusion imaging. N Engl J Med. 2018;378:708–718. doi: 10.1056/NEJMoa1713973.

- 60.Bi WL, Hosny A, Schabath MB, et al. Artificial intelligence in cancer imaging: clinical challenges and applications. CA Cancer J Clin. 2019;69(2):127–157. doi: 10.3322/caac.21552.

- 61.Wagner MW, Namdar K, Biswas A, Monah S, Khalvati F, Ertl-Wagner BB. Radiomics, machine learning, and artificial intelligence-what the neuroradiologist needs to know. Neuroradiology. 2021;63(12):1957–1967. doi: 10.1007/s00234-021-02813-9.

- 62.Geis JR, Brady AP, Wu CC, et al. Ethics of artificial intelligence in radiology: summary of the Joint European and North American Multisociety Statement. J Am Coll Radiol. 2019;16(11):1516–1521. doi: 10.1016/j.jacr.2019.07.028.

- 63.Kingston J. Artificial intelligence and legal liability. arXiv preprint arXiv:1802.07782. https://arxiv.org/ftp/arxiv/papers/1802/1802.07782.pdf

- 64.Council of the European Union. General Data Protection Regulation. Official Journal of the European Union. [Accessed February 16, 2022]. https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=CELEX:32016R0679

- 65.Consumer Privacy Protection Act of 2017, HR 4081, 115th Cong (2017). [Accessed February 10, 2022]. https://www.congress.gov/bill/115th-congress/house-bill/4081

- 66.Cal. Civ. Code § 1798.198(a) (2018). California Consumer Privacy Act of 2018.

- 67.Va. Code Ann § 59.1. Consumer Data Protection Act. 2021. [Accessed February 10, 2022]. https://lis.virginia.gov/cgi-bin/legp604.exe?212+ful+SB1392ER+pdf

- 68.Colo. Rev. Stat. § 6-1-1301 (2021). Colorado Privacy Act. [Accessed February 10, 2022]. https://leg.colorado.gov/sites/default/files/2021a_190_signed.pdf

- 69.21st Century Cures Act, Pub L No. 114-255. 2016. [Accessed February 10, 2022]. https://www.govinfo.gov/content/pkg/PLAW-114publ255/html/PLAW-114publ255.htm

- 70.Huff DT, Weisman AJ, Jeraj R. Interpretation and visualization techniques for deep learning models in medical imaging. Phys Med Biol. 2021;66(4):04TR01. doi: 10.1088/1361-6560/abcd17.

- 71.Thrall JH, Li X, Li Q, et al. Artificial intelligence and machine learning in radiology: opportunities, challenges, pitfalls, and criteria for success. J Am Coll Radiol. 2018;15(3 pt B):504–508. doi: 10.1016/j.jacr.2017.12.026.