Abstract

Cardiovascular diseases (CVDs) are the leading cause of death worldwide. People affected by CVD may go undiagnosed until the occurrence of a serious cardiovascular event such as stroke, heart attack, or myocardial infarction. In Qatar, there is a lack of studies focusing on CVD diagnosis based on non-invasive methods such as retinal imaging or dual-energy X-ray absorptiometry (DXA). In this study, we aimed to diagnose CVD using a novel approach that integrates information from retinal images and DXA data. We considered an adult Qatari cohort of 500 participants from Qatar Biobank (QBB), equally divided between the CVD and control groups. We designed a case-control study and proposed a novel multi-modal deep learning (DL)-based technique, combining DXA data and retinal images, to distinguish the CVD group from the control group. Uni-modal models based on retinal images and DXA data achieved 75.6% and 77.4% accuracy, respectively. The multi-modal model showed an improved accuracy of 78.3% in classifying the CVD and control groups. We used gradient-weighted class activation mapping (GradCAM) to highlight the areas of the retinal images that most influenced the decisions of the proposed DL model. The model focused mostly on the centre of the retinal images, where signs of CVD such as hemorrhages were present. This indicates that our model can identify and make use of certain prognostic markers for hypertension and ischemic heart disease. From the DXA data, we found higher values of bone mineral density, fat content, muscle mass, and bone area across the majority of body parts in the CVD group compared to the control group, indicating better bone health in the Qatari CVD cohort. This approach based on DXA scans and retinal images demonstrates major potential for the early detection of CVD in a fast and relatively non-invasive manner.

Keywords: cardiovascular diseases, DXA, retina, deep learning, machine learning, Qatar Biobank (QBB)

1. Introduction

Globally, cardiovascular diseases (CVDs) remain the leading cause of mortality and a major contributor to rising healthcare costs in many countries [1]. According to the World Health Organization (WHO), CVDs account for 32% of all global deaths, two-thirds of which occur in low- and middle-income countries [2]. Furthermore, CVDs cause 38% of premature deaths (i.e., deaths of people under 70 years of age) due to non-communicable diseases (NCDs) [3]. In the Eastern Mediterranean region, 54% of all NCD deaths are caused by CVD, according to the WHO Regional Office for the Eastern Mediterranean [4]. In the State of Qatar, according to the Planning and Statistics Authority, 33.1% of all deaths in 2018 were caused by diseases of the circulatory system, including high blood pressure, making CVD the top concern for Qatar in combating NCDs [5]. Although methods and tools for CVD diagnosis and treatment have advanced over the years, many people still go undiagnosed until the occurrence of a serious CVD event such as stroke, heart attack, or myocardial infarction [3].

Risk factors such as age, gender, smoking, obesity, diabetes, hypertension, low-density lipoprotein (LDL) cholesterol, and a sedentary lifestyle [6] are known to be linked to CVD, and some of these factors have been studied and confirmed for the Qatari population [7,8]. In fact, CVD risk score calculators such as the Framingham Risk Score [9] and the atherosclerotic cardiovascular disease (ASCVD) risk score [10] use some of these factors to predict the 10-year risk of developing CVD. Multiple clinical biomarkers, such as cardiac troponins I and T, C-reactive protein, D-dimer, and B-type natriuretic peptides, have also been shown to be linked to CVD [6]. Furthermore, with the advancement of medical imaging techniques, several imaging modalities have been used to detect CVD, including delayed enhancement cardiac magnetic resonance (DE-CMR) [11], echocardiography (ECHO) [12,13], ultrasound imaging [14], magnetic resonance imaging (MRI) [15,16,17], computed tomography (CT) [18,19,20], single-photon emission computed tomography (SPECT) [21,22], and coronary CT angiography (CCTA) [23]. Recently, retinal fundus imaging has emerged as a non-invasive modality for examining heart condition. Retinal microvascular abnormalities have been found to be linked to CVD [24,25]. For example, [25] showed that generalized arteriolar narrowing, focal arteriolar narrowing, arteriovenous nicking, and retinopathy, which are all abnormalities of the retinal vessels, can serve as indicators of cardiovascular disease, and that fundus images can capture these abnormalities. In [26], the authors predicted a variety of CVD risk factors such as hypertension, hyperglycemia, and dyslipidemia from retinal fundus images. Moreover, retinal fundus images have been used to predict diabetes [27] and CVD risk factors [28,29].

Another imaging technique that could be used to diagnose CVD is dual-energy X-ray absorptiometry (DXA), through lean and fat mass [30] and/or bone mineral density (BMD) [31]. DXA measurements of fat mass have been shown to correlate strongly with CVD events and CVD risk [32]. Furthermore, a recent study showed an independent association between lower BMD and a higher risk of ASCVD events and death among women in South Korea [33]. DXA offers several advantages, including the ability to accurately and precisely measure and differentiate fat, lean, and bone components. It can assess the entire body in a single scan, which makes acquisition fast, and its low radiation dose makes it relatively safe. In addition, it is a non-invasive procedure [34].

In this paper, we focused on two imaging techniques, namely DXA scans and retinal fundus images, to demonstrate their contribution to diagnosing CVD in the Qatari population. We selected these techniques for their non-invasive nature and the ease of access to the data, as both are already part of the QBB protocol for collecting participants' health status. Moreover, the capability of retinal images and DXA scans for CVD diagnosis has not been thoroughly investigated for the Qatari population. The contributions of this work are as follows:

We proposed a novel technique that uses retinal images to distinguish the CVD group from the control group with over 75% accuracy. To the best of our knowledge, this is the first work on CVD diagnosis from retinal images in the Qatari population.

We conducted extensive experiments on DXA scan data and showed that it has a reasonable ability to discriminate the CVD group from the control group, yielding over 77% accuracy in the Qatari population.

We proposed a multi-modal approach for CVD diagnosis that fuses tabular (DXA) and image (retinal) data and outperforms both uni-modal models, distinguishing the CVD group from the control group with an accuracy of 78.3%. Hence, our study proposes a fast and relatively non-invasive approach to diagnosing patients with CVD.

2. Related Work

2.1. Retinal Fundus Images

Retinal fundus images provide an easy-to-use, non-invasive, and accessible way to screen eye health [35]. Retinal images allow medical professionals to examine the eye and diagnose diseases such as diabetic retinopathy, hypertension, or arteriosclerosis. They also enable measurement of the diameter and tortuosity of the retinal blood vessels, which supports the diagnosis of CVD [36]. Machine learning (ML)-based techniques have been used to process retinal images for CVD risk factor prediction. Guo et al. [37] investigated the association between CVD and a range of retinal features, and whether this association is independent of CVD risk factors in patients with type 2 diabetes. This age- and gender-matched case-control study recruited 79 eligible patients with CVD and 150 without CVD (controls) and used three stepwise logistic regression models to evaluate CVD risk factors. The three models achieved area under the curve (AUC) values of 0.692, 0.661, and 0.775, respectively. The first model showed that hypertension, longer diabetes duration, and decreased high-density lipoprotein (HDL) cholesterol were associated with CVD. The second model showed that patients with diabetic retinopathy and a smaller arteriolar-to-venular diameter ratio (AVR) and arteriolar junctional exponent (JE) were more likely to develop CVD. Finally, the third model showed that CVD is associated with hypertension, longer diabetes duration, higher HbA1c level, smaller AVR, arteriolar branching coefficient (BC) and JE, and a larger venular length-to-diameter ratio. These results indicate that retinal information is associated with CVD independently of CVD risk factors in patients with type 2 diabetes, although this requires further investigation. Cheung et al. [29] developed a DL-based model that measures retinal vascular caliber from retinal images.
The authors compared the measurements of the DL system with those of human graders using the Singapore I Vessel Assessment (SIVA) software and found a strong correlation between them. The dataset used in that study was a composite of images from 15 different retinal imaging datasets, totaling over 70,000 images. The correlation between the system and the human graders using SIVA ranged from 0.82 to 0.95 across datasets. Furthermore, multivariable linear regression analysis was carried out between CVD risk factors and the vessel caliber measurements of both the DL system and the human graders. For both the central retinal artery equivalent (CRAE) and the central retinal vein equivalent (CRVE), the coefficient of determination (R²) for all CVD risk factors was greater with the DL system than with human measurement. Zhang et al. [26] used retinal images to predict hypertension, hyperglycemia, dyslipidemia, and other CVD risk factors. A total of 1222 retinal images were collected from 625 Chinese participants, and transfer learning was applied to build the prediction model. The model achieved an accuracy of 78.7% and an AUC of 0.880 for hyperglycemia detection, an accuracy of 68.8% and an AUC of 0.766 for hypertension detection, and an accuracy of 66.7% and an AUC of 0.703 for dyslipidemia detection. The model was also trained on age, gender, drinking status, smoking status, salty taste, BMI, waist-hip ratio (WHR), and hematocrit, and it predicted these variables with an AUC over 0.7. Gerrits et al. [38] used retinal images to develop a deep neural network approach for predicting cardiometabolic risk factors. The study used data from the Qatar Biobank (QBB), comprising 3000 people and 12,000 retinal images.
Age, sex, smoking, total lipid, blood pressure, glycaemic state, sex steroid hormones, and bioimpedance measures were all investigated as risk factors. In addition, the study examined the role of age and sex as mediating factors in cardiometabolic risk prediction. When four images per participant were used for person-level prediction, good results were achieved for age and sex; acceptable results were obtained for diastolic blood pressure (DBP), HbA1c, relative fat mass (RFM), testosterone, and smoking habit; however, total lipid prediction was poor. Craenendonck et al. [39] used retinal images to investigate the relationship between mono- and multifractal properties of retinal vessels and cardiometabolic risk factors. The study considered data from 2333 QBB participants, from which mono- and multifractal metrics were derived to estimate the topological complexity of the retinal blood vessels. Multiple linear regression analysis revealed a significant relationship between one or more fractal metrics and age, sex, systolic blood pressure (SBP), DBP, BMI, insulin, HbA1c, glucose, albumin, and LDL cholesterol. As summarized in Table 1, multiple studies have focused on estimating CVD risk factors from retinal fundus images. However, to the best of our knowledge, no study has focused on diagnosing CVD by classifying CVD versus non-CVD (control) subjects based on retinal images in the Qatari population.

Table 1.

Summary of DXA- and retinal image-based works on CVD-associated risk factors (AUC: area under the curve; MAE: mean absolute error; N/A: not available).

| Reference | Year | Dataset | Cohort | ML/DL Results | Findings |
|---|---|---|---|---|---|
| Retinal Image Data | | | | | |
| [37] | 2016 | Retinal images | Images of 79 CVD and 150 non-CVD patients in Hong Kong | AUC: Model 1 (0.692), Model 2 (0.661), Model 3 (0.775) | The paper evaluated three stepwise logistic regression models to investigate the association of retinal information with CVD and whether this association is independent of CVD risk factors in patients with type 2 diabetes. The findings reveal that retinal information is independently linked to CVD in patients with type 2 diabetes. |
| [29] | 2020 | Retinal images | >70,000 images (collected from 15 datasets across multiple countries and ethnicities) | N/A | The paper developed a DL system to assess retinal vessel caliber, which reflects microvascular structural changes correlated with CVD risk factors. The outcome of the DL system was compared with that of human graders, followed by an analysis of associations with CVD risk factors. The DL system predicted CVD risk factors comparably to or better than human graders. |
| [26] | 2020 | Retinal images | Retinal images of 625 patients from China | Accuracy: 78.7% (hyperglycemia), 68.8% (hypertension), 66.7% (dyslipidemia) | The study aimed at predicting hypertension, hyperglycemia, dyslipidemia, and other CVD risk factors from retinal images. Transfer learning was used for model development and achieved good results. |
| [38] | 2020 | Retinal images | 12,000 images from QBB | AUC (sex): 0.97; MAE (age): 2.78 years; MAE (SBP): 8.96 mmHg; MAE (DBP): 6.84 mmHg; MAE (HbA1c): 0.61%; MAE (relative fat mass): 5.68 units; MAE (testosterone): 3.76 nmol/L | The paper investigated the potential of fundus images for predicting cardiometabolic risk factors such as age, sex, smoking habit, blood pressure, lipid profile, and bioimpedance measures using DL. |
| [39] | 2020 | Retinal images | Images of 2333 participants from QBB | N/A | The study investigated the association between cardiometabolic risk factors and mono- and multifractal properties of retinal vessels. Fractal metrics were calculated, followed by linear regression analysis. According to the findings, one or more fractal metrics are linked to sex, age, BMI, SBP, DBP, glucose, insulin, HbA1c, albumin, and LDL. |
| DXA Data | | | | | |
| [40] | 2012 | DXA data | 409 participants | N/A | The study compared BMI with direct measures of fat and lean mass for predicting CVD and diabetes among police officers in Buffalo, New York, USA. The findings showed a strong correlation between multiple DXA indices of obesity and CVD. |
| [41] | 2014 | DXA data | 616 ambulatory patients, excluding pregnant women and patients with self-reported cardiac failure, a cardiac pacemaker, or limb amputation | N/A | The researchers examined how body composition factors affect BMI and whether they could serve as markers of metabolic and cardiovascular health in Switzerland. They found that fat mass and muscle mass were important markers of nutritional status and extended their investigation to the impact on health outcomes across all BMI categories. The authors also underlined the need to evaluate body composition during medical examinations to predict metabolic and cardiovascular diseases. |
| [42] | 2016 | DXA data | 117 patients with heart failure with preserved ejection fraction | N/A | The study, conducted on patients from Germany, England, and Slovenia, investigated how sarcopenia in individuals with heart failure with preserved ejection fraction relates to exercise capacity, muscle strength, and quality of life. Heart failure was found to have a detrimental effect on appendicular skeletal muscle mass. |
| [43] | 2020 | DXA data | 570 patients with and without heart failure | N/A | The study examined how aging and heart failure treatments affect bone mineral density. Heart failure was linked to greater BMD loss and a higher prevalence of osteoporosis, and it exacerbates the loss of bone mineral density that comes with aging. |
| [44] | 2020 | Anthropometric and DXA data | 558 participants not diagnosed with diabetes, hypertension, dyslipidemia, or CVD | N/A | The study, based on the Qatari population from QBB, compared anthropometric and DXA data for predicting cardiometabolic risk factors in randomly selected healthy participants. It found that DXA-based assessment of adiposity provided more insight into cardiometabolic risk in Qatar than anthropometric markers. |
| [45] | 2020 | Demographic, cardiometabolic, and DXA data | 2802 participants from QBB | N/A | The study aimed to determine body fat cut-off values for predicting metabolic risk in the Qatari population. It developed age- and gender-specific cut-off values for body fat measurements that may serve as a reference for assessing obesity-related metabolic risk in Qatari adults. According to the findings, body fatness is substantially linked to the likelihood of developing metabolic disorders. |

2.2. Dual-Energy X-ray Absorptiometry (DXA)

Bone densitometry, also known as dual-energy X-ray absorptiometry (DXA), is an X-ray technology used to analyze bone health and bone loss. DXA is currently a widely accepted procedure for measuring BMD [46]. Reduced BMD has been reported to be associated with adverse cardiovascular effects and osteoporosis, which pose a risk to patients with a history of heart failure [47]. Furthermore, a study of 570 patients found that heart failure was linked to a faster loss of BMD, regardless of other osteoporosis risk factors [43].

In another study involving 117 people with a history of heart failure, DXA revealed that heart failure has a negative impact on appendicular skeletal muscle mass [42]. In a study of 409 police officers in Buffalo, New York, multiple DXA indices of obesity showed a strong correlation with CVD [40]. Lang et al. [41] found that DXA readouts of fat mass and muscle mass were key indicators of nutritional status, and they extended their research to examine the influence on health outcomes across all BMI categories. The authors also emphasized the need to assess body composition during medical examinations in order to anticipate metabolic and cardiovascular disorders. Taken together, these studies report that changes in BMD, muscle mass, and fat content associated with CVD have a detrimental impact on patients with advanced CVD. Reid et al. [48] investigated the use of convolutional neural networks (CNNs) to predict abdominal aortic calcification (AAC) from DXA. High AAC values could be used as a predictor of coronary artery calcium, cardiovascular outcomes, or even death. They trained and evaluated an ensemble of CNNs on vertebral fracture assessment (VFA) lateral spine images acquired with DXA, and the predictions correlated highly with human annotations. In Qatar, the use of DXA to predict CVD, its risk factors, and associated medical conditions has only recently gained traction. Bawadi et al. [49] examined QBB data in 2019 to investigate whether a body shape index could be used as a predictor of diabetes mellitus (DM). A year later, in 2020, Kerkadi et al. [44] studied DXA-based measures for cardiometabolic risk prediction and found that DXA-based assessment of adiposity provided more insight into cardiometabolic risk in Qatar than anthropometric markers. In the same year, Bawadi et al. [45] studied age- and gender-specific cut-off points for body fat among adults in Qatar. As summarized in Table 1, DXA data are linked to multiple metabolic risk factors related to CVD. However, to the best of our knowledge, no study has applied machine learning or deep learning techniques to diagnose CVD using DXA measurements.

2.3. Multi-Modal Approaches

Although both DXA and retinal image acquisition are non-invasive and require little preparation, to the best of our knowledge there is no published work that combines DXA and retinal image data for CVD detection. We therefore summarize existing multi-modal methods (Table 2), albeit with modalities other than DXA and retinal images. These approaches mainly rely on modalities such as magnetic resonance imaging (MRI), X-ray, myocardial perfusion imaging (MPI), electrocardiogram (ECG), echocardiography (ECHO), and phonocardiogram (PCG). None of these multi-modal methods is directly comparable to ours because they use different modalities.

Table 2.

CVD detection based on multi-modal datasets.

| Ref. | Year | Country | Fused Data | Results | Summary |
|---|---|---|---|---|---|
| [50] | 2019 | USA | Electronic health records (EHR) and genetic data | AUROC: 0.790; AUPRC: 0.285 | The study used 10 years of EHR data together with genetic data to predict CVD events using random forest, gradient boosting trees, logistic regression, CNN, and long short-term memory (LSTM) networks. A chi-squared test was used for feature selection on the EHR data. Combining both sources improved CVD prediction (AUROC of 0.790) compared with EHR alone (AUC of 0.71) or genetic data alone (AUC of 0.698). |
| [51] | 2020 | China | Electrocardiogram (ECG), phonocardiogram (PCG), Holter monitoring, echocardiography (ECHO), and biomarker levels (BIO) | Accuracy: 96.67%; Sensitivity: 96.67%; Specificity: 96.67%; F1-score: 96.64% | The study aimed at detecting coronary artery disease (CAD) using ECG and PCG data from 62 patients, together with data from Holter monitoring, ECHO, and BIO. Feature selection was applied to obtain optimal features, and a support vector machine was used for classification. The best performance was achieved when features from all sources were fused. |
| [52] | 2020 | USA | Sensors (blood pressure, oxygen, respiration rate, etc.) and medical records (history, lab tests, etc.) | After feature weighting: Accuracy: 98.5%; Recall: 96.4%; Precision: 98.2%; F1-score: 97.2%; RMSE: 0.21; MAE: 0.12 | The study aimed at predicting heart disease (such as heart attack or stroke) using data gathered from sensors and medical records. Features such as age, height, BMI, respiration rate, and blood pressure were extracted, and the data from both sources were fused. Conditional probability was used for feature weighting to improve accuracy, and an ensemble deep learning model was then used to predict heart disease. |
| [53] | 2021 | Greece | Myocardial perfusion imaging (MPI) and clinical data | Accuracy: 78.44%; Sensitivity: 77.36%; Specificity: 79.25%; F1-score: 75.50%; AUC: 79.26% | The study aimed at CVD diagnosis using MPI and clinical data. Polar maps were derived from the MPI data and fused with the clinical data of 566 patients. Random forest, neural network, and deep learning with Inception V3 were used for classification. A hybrid model of Inception V3 with random forest achieved an accuracy of 78.44%, compared with an accuracy of 79.15% achieved by medical experts. |
| [54] | 2021 | USA | Electronic medical records (EMR) and abdominopelvic CT imaging | AUROC: 0.86; AUPRC: 0.70 | The study developed a risk assessment model for ischemic heart disease (IHD) using combined information from patients' EMR and features extracted from abdominopelvic CT images. A CNN was used to extract image features, and XGBoost was used as the learning algorithm. The combined model showed improved prediction performance, with an AUROC of 0.86 and an AUPRC of 0.70. |
| [55] | 2021 | USA | Genetic, clinical, demographic, imaging, and lifestyle data | N/A | The study evaluated the ability of machine learning to detect CAD subgroups using multimodal genetic, clinical, demographic, imaging, and lifestyle data. K-means clustering and generalized low-rank modeling were used, and four distinct subgroups were identified. |

3. Materials and Methods

3.1. Ethical Approval

This research was carried out under the regulations of Qatar's Ministry of Public Health. It was approved by the Institutional Review Board of Qatar Biobank (QBB) and used a de-identified dataset from QBB.

3.2. Data Collection from QBB

The data used in this study were collected from QBB. The details of the data collection protocol adopted by QBB are described in [56,57]. In brief, participants were invited to QBB, where staff nurses interviewed them to collect their background history. Multiple lab tests and imaging data, such as DXA scans and retinal images, were then collected. The dataset considered for this study comprises 500 participants equally divided between the CVD group and the control group. The cohort included 262 male participants (CVD:control = 137:125) and 238 female participants (CVD:control = 113:125). All participants were adult Qatari nationals aged between 18 and 84 years. Mean BMI was higher in the CVD group than in the control group (26.10 ± 2.8 vs. 23.20 ± 2.8 kg/m²).

3.3. DXA Scan Data Preprocessing

The DXA data consisted of bone mineral density, lean mass, fat content, and bone area measurements from different body parts of the participants. The dataset was cleaned by removing columns with more than 50% missing values within each class (i.e., CVD and control). For the remaining columns, missing values were imputed with the column median. After data cleaning, 122 features were retained for this study. Supplementary File S1 provides the summary statistics of these features. The data of the CVD and control groups were then normalized using min-max normalization [58]:

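$$x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}} \tag{1}$$

where $x$ denotes the value of a specific DXA feature, $x'$ its normalized value, and $x_{\max}$ and $x_{\min}$ denote the largest and smallest values of that feature, respectively.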
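The cleaning and normalization steps above can be sketched with pandas as follows. This is a minimal illustration, not the authors' code: it assumes one row per participant, numeric DXA feature columns, and a hypothetical binary label column named `cvd`. We read "more than 50% missing values within each class" as dropping a column if either class exceeds the threshold; that reading is our assumption.

```python
import numpy as np
import pandas as pd


def clean_dxa(df: pd.DataFrame, label_col: str = "cvd", max_missing: float = 0.5) -> pd.DataFrame:
    """Drop sparse columns, median-impute the rest, then min-max scale (Equation (1))."""
    features = df.drop(columns=[label_col])
    # Fraction of missing values per column, computed separately for each class.
    missing_by_class = features.isna().groupby(df[label_col]).mean()
    # Assumption: keep a column only if no class exceeds the missing-value threshold.
    keep_mask = (missing_by_class <= max_missing).all(axis=0).to_numpy()
    kept = features[missing_by_class.columns[keep_mask]]
    # Impute remaining gaps with each column's median.
    kept = kept.fillna(kept.median())
    # Equation (1): x' = (x - x_min) / (x_max - x_min); constant columns map to 0.
    col_range = (kept.max() - kept.min()).replace(0, 1)
    scaled = (kept - kept.min()) / col_range
    scaled[label_col] = df[label_col].to_numpy()
    return scaled


if __name__ == "__main__":
    demo = pd.DataFrame({
        "bmd": [1.0, 2.0, np.nan, 4.0],   # 50% missing in class 0 -> kept
        "fat": [np.nan, np.nan, 3.0, 5.0],  # 100% missing in class 1 -> dropped
        "cvd": [1, 1, 0, 0],
    })
    print(clean_dxa(demo))
```

Whether the normalization statistics are computed on the full dataset or only on each training fold is not specified here; fitting them on training folds only is a common way to avoid information leakage during cross-validation.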

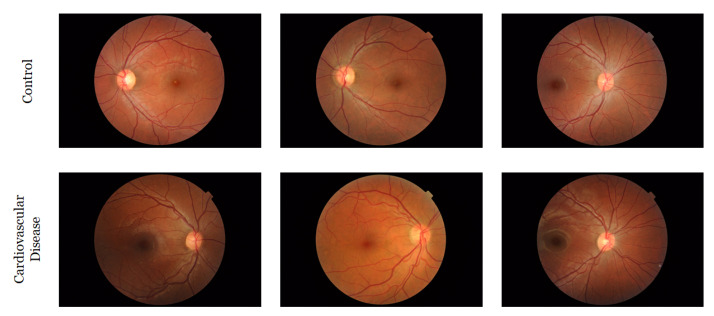

3.4. Retinal Image Collection and Preprocessing

The retinal fundus images were acquired at QBB using a Topcon TRC-NW6S retinal camera to capture the “microscopic” characteristics of the optic nerve and macula of the participants. At least two images (one per eye) were collected from each participant, and in some cases three or four images were collected across both eyes. Both macula-centered and disc-centered images were captured for both eyes. A few participants had no retinal images and were excluded from our analysis. Figure 1 shows a few randomly selected images from each group.

Figure 1.

Some randomly selected images from the QBB retinal image dataset.

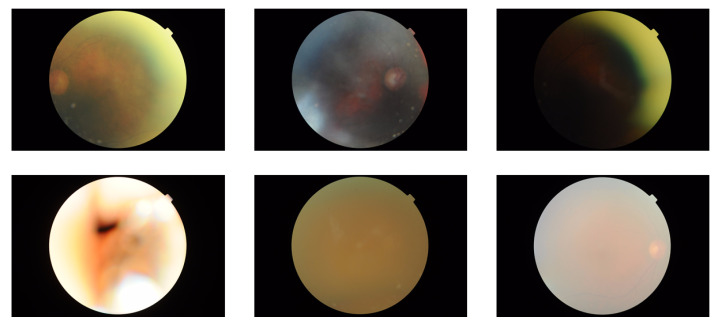

The dataset contained 1839 retinal images from all participants. We removed low-quality images by visual inspection, since they may negatively affect the downstream classification task. All removed low-quality images were from the CVD group, and their removal reduced the number of images to 1805 (874 from the CVD group and 931 from the control group). For some participants, all images were removed due to low quality; therefore, the number of participants in our study was reduced to 483 (250 control and 233 CVD). Examples of low-quality images are shown in Figure 2.

Figure 2.

Examples of low-quality images.

4. Experiment Setup

To investigate the effect of different types of input data on the outcome of our study, we conducted both uni-modal and multi-modal experiments. Specifically, we used the tabular and image datasets in isolation in the uni-modal experiments and in combination in the multi-modal experiment. This resulted in three experiment configurations: (i) DXA model: traditional machine learning techniques applied to the DXA data; (ii) Retinal image model: deep learning applied to the retinal images; and (iii) Hybrid model: deep learning applied to both the tabular DXA data and the retinal image data. All experiments were performed on a machine with an Intel(R) Core(TM) i9 CPU @ 3.60 GHz, 64 GB RAM, and an NVIDIA GeForce RTX 2080 Ti GPU. We used Scikit-learn for the DXA model and fastai for the other two models. Details of each experiment are given in the following subsections.

4.1. DXA Model

In the first experiment, we applied six different machine learning algorithms: Decision Tree (DT) [59], (Shallow) Artificial Neural Network (ANN) [60], Random Forest (RF) [61], Extreme Gradient Boosting (XGBoost) [62], CatBoost [63], and Logistic Regression (LR) [64]. We utilized the GridSearchCV utility from Python’s Scikit-learn package for hyperparameter tuning with nested cross validation [65]. Supplementary File S2 includes all the tuned parameters for the ML models.
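Nested cross-validation with `GridSearchCV` can be sketched as follows: an inner `GridSearchCV` selects hyperparameters on each outer training fold, and `cross_val_score` reports performance on the held-out outer folds, so the reported score is not biased by the tuning. The synthetic data from `make_classification`, the logistic-regression grid, and the inner fold count are illustrative assumptions, not the paper's settings (the tuned parameters are listed in Supplementary File S2). The outer loop uses five folds to mirror the 5-fold CV described in Section 4.4.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, StratifiedKFold, cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import MinMaxScaler

# Synthetic stand-in for the DXA table: 500 participants x 122 features, balanced classes.
X, y = make_classification(n_samples=500, n_features=122, n_informative=10,
                           weights=[0.5, 0.5], random_state=0)

# Scaling inside the pipeline is re-fit on every training split (avoids leakage).
pipe = Pipeline([
    ("scale", MinMaxScaler()),
    ("clf", LogisticRegression(max_iter=1000)),
])
param_grid = {"clf__C": [0.01, 0.1, 1.0, 10.0]}  # hypothetical grid

inner_cv = StratifiedKFold(n_splits=3, shuffle=True, random_state=1)
outer_cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=2)

# Inner loop: hyperparameter search; outer loop: unbiased performance estimate.
search = GridSearchCV(pipe, param_grid, cv=inner_cv, scoring="accuracy")
outer_scores = cross_val_score(search, X, y, cv=outer_cv, scoring="accuracy")
print(f"nested CV accuracy: {outer_scores.mean():.3f} +/- {outer_scores.std():.3f}")
```

The same pattern applies to the other five classifiers by swapping the `clf` step and its grid.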

4.2. Retinal Image Model

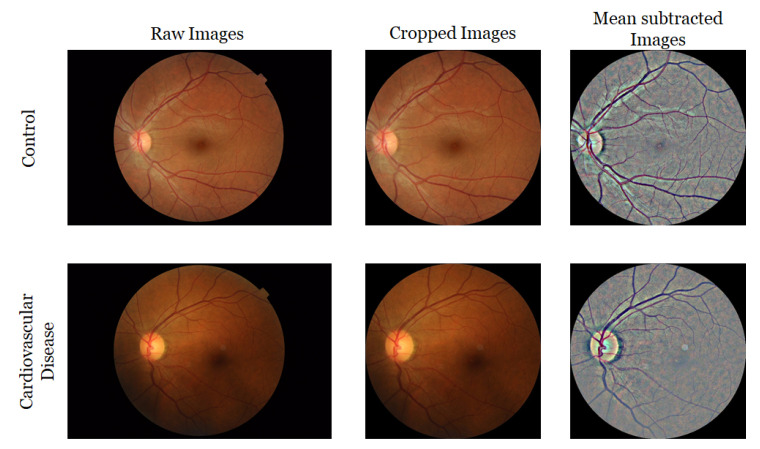

In the second experiment, we applied DL-based techniques to distinguish the CVD group from the control group based on the retinal images only. For this experiment, two different image pre-processing pipelines were applied to generate two sets of images. For the first set, the circular region of each image was extracted, and border noise was then removed by cropping the outer 10% of each image. For the second set, the local mean over a 4 × 4-pixel neighborhood was subtracted from each cropped image, and the result was placed on a dark background within a tightly bounded square image. All final images were 540 × 540 pixels with a black background. Figure 3 presents samples of original and pre-processed images. We also applied data augmentation techniques such as random horizontal flipping and random brightness and contrast perturbation to enhance the robustness of the model. We experimented with eight popular image classification models, namely AlexNet [66], VGGNet-11 [67], VGGNet-16 [67], ResNet-18 [68], ResNet-34 [68], DenseNet-121 [69], SqueezeNet-0, and SqueezeNet-1 [70]. We used super-convergence [71], which allows the network to converge faster. The model was fine-tuned for 10 epochs with all layers except the last frozen, using the one-cycle policy [72] to schedule the learning rate, with a maximum learning rate of 0.01 and a batch size of 8. The model took around 30 min to train.
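The two pre-processing steps described above can be illustrated with a short numpy sketch for a single RGB image stored as an H × W × 3 array. Several details are our assumptions rather than the authors' documented choices: the 10% crop is split evenly between opposite sides, a circular mask stands in for the circular-region extraction, a +0.5 offset keeps the mean-subtracted image in a displayable [0, 1] range, and nearest-neighbour indexing replaces whatever resizing routine was actually used.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def crop_border(img: np.ndarray, frac: float = 0.10) -> np.ndarray:
    """Remove `frac` of each dimension in total (assumed: half from each side)."""
    h, w = img.shape[:2]
    dh, dw = int(h * frac / 2), int(w * frac / 2)
    return img[dh:h - dh, dw:w - dw]


def local_mean(img: np.ndarray, k: int = 4) -> np.ndarray:
    """Mean over a k x k neighbourhood of every pixel (edge-padded), per channel."""
    before, after = (k - 1) // 2, k - 1 - (k - 1) // 2
    padded = np.pad(img, ((before, after), (before, after), (0, 0)), mode="edge")
    windows = sliding_window_view(padded, (k, k), axis=(0, 1))  # H x W x C x k x k
    return windows.mean(axis=(-2, -1))


def resize_nearest(img: np.ndarray, size: int) -> np.ndarray:
    """Nearest-neighbour resize to size x size (stand-in for a proper resampler)."""
    h, w = img.shape[:2]
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return img[rows][:, cols]


def preprocess(img: np.ndarray, k: int = 4, border_frac: float = 0.10, size: int = 540) -> np.ndarray:
    img = img.astype(np.float32)
    if img.max() > 1.0:  # accept 8-bit input
        img = img / 255.0
    img = crop_border(img, border_frac)
    # Subtract the local mean to emphasise vessels and lesions; +0.5 is our offset choice.
    out = np.clip(img - local_mean(img, k) + 0.5, 0.0, 1.0)
    # Black out everything outside the inscribed circle (dark background).
    h, w = out.shape[:2]
    yy, xx = np.ogrid[:h, :w]
    radius = min(h, w) / 2
    inside = (yy - (h - 1) / 2) ** 2 + (xx - (w - 1) / 2) ** 2 <= radius ** 2
    out[~inside] = 0.0
    return resize_nearest(out, size)
```

For example, `preprocess(rgb_array)` returns a 540 × 540 × 3 float array in [0, 1]. A Gaussian blur is another common choice for the local-mean step in fundus pre-processing; the box filter here simply follows the 4 × 4 neighborhood stated in the text.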

Figure 3.

Example of images from QBB dataset before and after pre-processing.

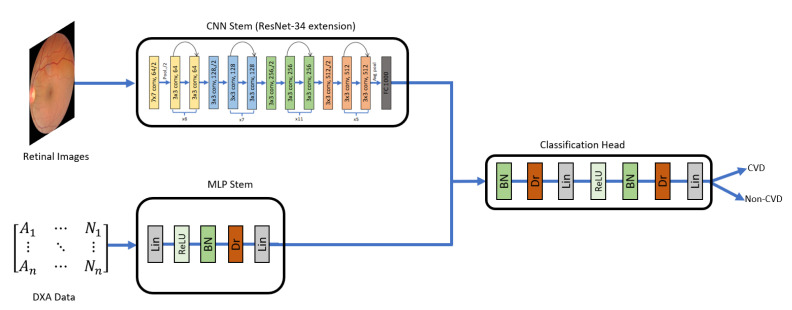

4.3. Hybrid Model

In the third experiment, we aimed to combine the DXA and retinal image data in a deep learning-based approach. To this end, we designed a deep neural network that accepted a multi-modal input. The network consisted of three components: the CNN stem, the MLP stem, and the Classification Head (Figure 4). The CNN stem processed the retinal images, while the MLP stem processed the DXA data. Hence, in our work, the two data modalities are not fused at the image level. Instead, the tabular data (DXA) and the images (retinal fundus images) are passed through their respective stem networks (the MLP stem and the CNN stem), and the resulting feature vectors are fused by concatenation into a single feature vector, which is finally passed through the Classification Head to produce the output classification probability. For the CNN stem, we experimented with extensions of AlexNet, VGGNet-11, VGGNet-16, ResNet-18, ResNet-34, DenseNet-121, SqueezeNet-0, and SqueezeNet-1. We also tried multiple configurations of the MLP stem and the Classification Head with different numbers of layers and neurons. The best configuration we found for the MLP stem and the Classification Head is shown in Table 3.

Figure 4.

Hybrid model to distinguish CVD from non-CVD using retinal images and DXA tabular data. The CNN stem for the retinal images was based on the ResNet-34 architecture [68]. The MLP stem includes Linear (Lin), Batch Normalization (BN), and Dropout (Dr) layers, as shown in the diagram. The outputs of both stems were concatenated and fed into the Classification Head, which consists of BN, Dr, Lin, ReLU, BN, and Dr layers followed by a single linear output layer (CVD or non-CVD).

Table 3.

Details of the layers in the MLP stem and the Classification Head of Hybrid model.

| Component | Layer Name | Output Size |
|---|---|---|
| MLP Stem | Linear | 8 |
| | ReLU | 8 |
| | BatchNorm1d | 8 |
| | Dropout | 8 |
| | Linear | 8 |
| Classification Head | BatchNorm1d | 264 |
| | Dropout | 264 |
| | Linear | 32 |
| | ReLU | 32 |
| | BatchNorm1d | 32 |
| | Dropout | 32 |
| | Linear | 2 |
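The hybrid architecture in Figure 4 and Table 3 can be sketched in PyTorch as below. This is an illustrative sketch, not the authors' implementation (which used fastai and image_tabular). Assumptions: the CNN stem emits a 256-dimensional feature vector, inferred from the 264-wide input of the Classification Head (264 = 256 + 8); the MLP stem takes all 122 DXA features; the dropout probability of 0.5 is a placeholder; and a tiny convolutional network stands in for ResNet-34 so the example stays self-contained. The class name `HybridNet` is hypothetical.

```python
import torch
import torch.nn as nn


class HybridNet(nn.Module):
    """Two-stem late-fusion network following the layer order in Table 3 (sizes partly assumed)."""

    def __init__(self, n_tabular: int = 122, img_feat_dim: int = 256, p_drop: float = 0.5):
        super().__init__()
        # Placeholder CNN stem (assumption): stands in for ResNet-34 and projects to img_feat_dim.
        self.cnn_stem = nn.Sequential(
            nn.Conv2d(3, 16, kernel_size=3, stride=2, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(1),
            nn.Flatten(),
            nn.Linear(16, img_feat_dim),
        )
        # MLP stem for the DXA features: Linear, ReLU, BatchNorm1d, Dropout, Linear (Table 3).
        self.mlp_stem = nn.Sequential(
            nn.Linear(n_tabular, 8),
            nn.ReLU(),
            nn.BatchNorm1d(8),
            nn.Dropout(p_drop),
            nn.Linear(8, 8),
        )
        fused = img_feat_dim + 8  # 256 + 8 = 264, the head's input width in Table 3
        self.head = nn.Sequential(
            nn.BatchNorm1d(fused),
            nn.Dropout(p_drop),
            nn.Linear(fused, 32),
            nn.ReLU(),
            nn.BatchNorm1d(32),
            nn.Dropout(p_drop),
            nn.Linear(32, 2),
        )

    def forward(self, image: torch.Tensor, tabular: torch.Tensor) -> torch.Tensor:
        img_feat = self.cnn_stem(image)          # (N, 256)
        tab_feat = self.mlp_stem(tabular)        # (N, 8)
        fused = torch.cat([img_feat, tab_feat], dim=1)  # late fusion by concatenation
        return self.head(fused)                  # logits for (non-CVD, CVD)
```

Calling the model on a batch of images of shape (N, 3, H, W) and DXA features of shape (N, 122) returns logits of shape (N, 2). To use a ResNet-34 stem instead, one option is `torchvision.models.resnet34()` with its final `fc` layer replaced by `nn.Linear(512, 256)`.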

For the retinal images, we used the cropped images and the mean-subtracted images shown in Figure 3 and combined them with the tabular data. To achieve this, we used fastai and the image_tabular library (https://github.com/naity/image_tabular, accessed on 15 August 2021) to integrate the image data and the DXA (tabular) data. Figure 4 shows a high-level diagram of the proposed network architecture for the hybrid model. For the CNN stem, multiple data augmentation techniques, such as brightness and contrast changes, flipping, rotation, and scaling, were applied to the images.

The hybrid model was first fine-tuned for 10 epochs with all layers except the last frozen, using a one-cycle scheduler, a learning rate of 1 × 10 and a batch size of 64. Then, after unfreezing the whole network, it was trained for another 10 epochs using discriminative learning rates in the range of (1 × 10 and 1 × 10). Over the 20 epochs, training took around 30 min.
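The learning-rate policies mentioned above can be illustrated in plain Python. The sketch assumes cosine-shaped warm-up and annealing phases and the defaults we believe fastai's `fit_one_cycle` uses (`div=25`, `div_final=1e5`, `pct_start=0.25`); fastai also cycles momentum, which is omitted here. `discriminative_lrs` spaces learning rates geometrically between a lower and an upper bound, one per layer group, similar to how fastai interprets `slice(lo, hi)`. Bounds such as 1e-5 and 1e-3 in the usage below are illustrative, not the values used in the paper, and all function names are hypothetical.

```python
import math


def cos_anneal(start: float, end: float, pos: float) -> float:
    """Cosine interpolation from `start` (pos = 0) to `end` (pos = 1)."""
    return start + (1 + math.cos(math.pi * (1 - pos))) * (end - start) / 2


def one_cycle_lr(step, total_steps, lr_max, div=25.0, div_final=1e5, pct_start=0.25):
    """Learning rate at `step`: warm up to lr_max, then anneal far below it.

    The default div, div_final and pct_start values are assumptions mirroring fastai.
    """
    pos = step / max(total_steps - 1, 1)  # training progress in [0, 1]
    if pos < pct_start:
        return cos_anneal(lr_max / div, lr_max, pos / pct_start)
    return cos_anneal(lr_max, lr_max / div_final, (pos - pct_start) / (1 - pct_start))


def discriminative_lrs(lo, hi, n_groups):
    """Geometrically spaced learning rates: `lo` for the earliest layers, `hi` for the head."""
    if n_groups == 1:
        return [hi]
    ratio = (hi / lo) ** (1 / (n_groups - 1))
    return [lo * ratio**i for i in range(n_groups)]
```

For example, `discriminative_lrs(1e-5, 1e-3, 3)` gives approximately `[1e-5, 1e-4, 1e-3]`, so early pretrained layers change slowly while the newly added head adapts fastest.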

4.4. Performance Evaluation Metrics

We evaluated all models with 5-fold cross-validation (CV) [65] in terms of accuracy, sensitivity, precision, F1-score, and Matthews Correlation Coefficient (MCC), defined in the following equations:

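$$\text{Accuracy} = \frac{tp + tn}{tp + tn + fp + fn} \tag{2}$$

$$\text{Sensitivity} = \frac{tp}{tp + fn} \tag{3}$$

$$\text{Precision} = \frac{tp}{tp + fp} \tag{4}$$

$$\text{F1-score} = \frac{2 \times \text{Precision} \times \text{Sensitivity}}{\text{Precision} + \text{Sensitivity}} \tag{5}$$

$$\text{MCC} = \frac{tp \times tn - fp \times fn}{\sqrt{(tp + fp)(tp + fn)(tn + fp)(tn + fn)}} \tag{6}$$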

where tp, fn, fp, and tn denote the numbers of true positives, false negatives, false positives, and true negatives, respectively. Specificity, also reported in Tables 4–6, is computed as tn/(tn + fp). Moreover, we computed an empirical p-value with permutations to evaluate the significance of the cross-validated performance scores.
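Equations (2)–(6), specificity, and the empirical permutation p-value can be computed from confusion-matrix counts as follows. This is a minimal sketch: the zero-division guards are our own choice, and the (C + 1)/(n + 1) form of the p-value is an assumption (it is the form scikit-learn's `permutation_test_score` uses). The permuted scores would come from re-running cross-validation with shuffled labels, which is not shown; function names are hypothetical.

```python
import math


def classification_metrics(tp, fn, fp, tn):
    """Accuracy, sensitivity, specificity, precision, F1-score and MCC from counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = (2 * precision * sensitivity / (precision + sensitivity)
          if precision + sensitivity else 0.0)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {"accuracy": accuracy, "sensitivity": sensitivity, "specificity": specificity,
            "precision": precision, "f1": f1, "mcc": mcc}


def permutation_p_value(observed_score, permuted_scores):
    """Share of label-permuted CV runs scoring at least as well as the real labels (+1 smoothing)."""
    c = sum(1 for s in permuted_scores if s >= observed_score)
    return (c + 1) / (len(permuted_scores) + 1)
```

For instance, with tp = 40, fn = 10, fp = 10, and tn = 40, accuracy, sensitivity, precision, and F1-score are all 0.8 and MCC = (1600 − 100)/2500 = 0.6. With 99 permutations, the smallest attainable p-value is 1/100.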

5. Results

5.1. Experimental Results from the Machine Learning Models

The results obtained from the three experiments are presented in this section. For the DXA Model, we conducted an ablation study with 5-fold CV on each DXA feature type separately and on all features together. The ablation study shows that the area measurement features contributed least, with a best accuracy of 66.4%, whereas body fat composition, BMD, and lean mass achieved best accuracies of 77.0%, 73.2%, and 70.2%, respectively. Using all 122 features, the XGBoost model performed best among the six classifiers, with an accuracy of 77.4%, an F1-score of 76.8%, and an MCC of 55.5% (see Table 4). For all DXA-based experiments, a p-value < 0.05 was obtained, indicating their statistical significance.
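The ablation design, training on each DXA feature group separately and then on all features, can be sketched with scikit-learn. In this illustration, logistic regression (one of the six classifiers in Table 4) stands in for all of them; the contiguous column layout of the four groups, the standardization step, the shuffled stratified folds, and the helper names are assumptions for illustration only. Only the group sizes (55, 15, 7, and 45 features) come from Table 4.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Hypothetical column layout: the four DXA feature groups of Table 4 stored side by side.
GROUP_SIZES = {"Bone Mineral Density": 55, "Body Fat Composition": 15,
               "Lean Mass": 7, "Area Measurements": 45}


def contiguous_groups(sizes):
    """Map each group name to its (assumed contiguous) column indices."""
    groups, start = {}, 0
    for name, n in sizes.items():
        groups[name] = list(range(start, start + n))
        start += n
    return groups


def ablation_accuracy(X, y, groups, n_splits=5, seed=0):
    """Mean k-fold CV accuracy for each feature group alone and for all features together."""
    cv = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=seed)
    # Logistic regression stands in for the six classifiers compared in Table 4.
    model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
    subsets = dict(groups, All=list(range(X.shape[1])))
    return {name: float(cross_val_score(model, X[:, cols], y, cv=cv, scoring="accuracy").mean())
            for name, cols in subsets.items()}
```

Given a participant-by-feature matrix `X` with 122 columns and binary labels `y`, `ablation_accuracy(X, y, contiguous_groups(GROUP_SIZES))` returns one mean accuracy per group plus one for "All", which is the layout of the Accuracy rows in Table 4.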

Table 4.

Performance of ML techniques for ablation study on DXA Model.

| Property (No. of Features) | Evaluation Metric | DT | MLP | RF | LR | CatBoost | XGBoost |
|---|---|---|---|---|---|---|---|
| Bone Mineral Density (55) | Accuracy | 0.620 | 0.682 | 0.686 | 0.732 | 0.710 | 0.726 |
| | Sensitivity | 0.617 | 0.574 | 0.647 | 0.678 | 0.635 | 0.672 |
| | Specificity | 0.628 | 0.795 | 0.724 | 0.785 | 0.784 | 0.780 |
| | Precision | 0.624 | 0.740 | 0.701 | 0.758 | 0.746 | 0.758 |
| | F1-score | 0.609 | 0.639 | 0.672 | 0.716 | 0.685 | 0.710 |
| | MCC | 0.250 | 0.382 | 0.373 | 0.466 | 0.424 | 0.456 |
| | p-value | 1.996 × 10 | 1.089 × 10 | 1.664 × 10 | 1.536 × 10 | 6.623 × 10 | 1.332 × 10 |
| Body Fat Composition (15) | Accuracy | 0.720 | 0.770 | 0.746 | 0.742 | 0.740 | 0.754 |
| | Sensitivity | 0.594 | 0.640 | 0.697 | 0.741 | 0.673 | 0.723 |
| | Specificity | 0.832 | 0.902 | 0.789 | 0.749 | 0.806 | 0.787 |
| | Precision | 0.790 | 0.867 | 0.770 | 0.747 | 0.777 | 0.767 |
| | F1-score | 0.669 | 0.734 | 0.731 | 0.741 | 0.720 | 0.743 |
| | MCC | 0.445 | 0.560 | 0.489 | 0.488 | 0.482 | 0.508 |
| | p-value | 1.248 × 10 | 1.175 × 10 | 1.110 × 10 | 1.052 × 10 | 4.975 × 10 | 9.515 × 10 |
| Lean Mass (7) | Accuracy | 0.576 | 0.652 | 0.690 | 0.634 | 0.668 | 0.702 |
| | Sensitivity | 0.556 | 0.652 | 0.674 | 0.613 | 0.702 | 0.669 |
| | Specificity | 0.596 | 0.664 | 0.710 | 0.657 | 0.638 | 0.736 |
| | Precision | 0.582 | 0.717 | 0.699 | 0.641 | 0.661 | 0.716 |
| | F1-score | 0.560 | 0.650 | 0.683 | 0.625 | 0.678 | 0.691 |
| | MCC | 0.156 | 0.329 | 0.386 | 0.270 | 0.342 | 0.406 |
| | p-value | 9.083 × 10 | 6.950 × 10 | 8.326 × 10 | 7.994 × 10 | 3.984 × 10 | 7.402 × 10 |
| Area Measurements (45) | Accuracy | 0.580 | 0.614 | 0.600 | 0.664 | 0.644 | 0.598 |
| | Sensitivity | 0.546 | 0.528 | 0.624 | 0.665 | 0.660 | 0.605 |
| | Specificity | 0.623 | 0.698 | 0.584 | 0.667 | 0.633 | 0.597 |
| | Precision | 0.597 | 0.636 | 0.602 | 0.675 | 0.644 | 0.598 |
| | F1-score | 0.556 | 0.575 | 0.607 | 0.664 | 0.647 | 0.598 |
| | MCC | 0.175 | 0.230 | 0.210 | 0.335 | 0.295 | 0.203 |
| | p-value | 7.138 × 10 | 4.135 × 10 | 6.662 × 10 | 6.447 × 10 | 3.332 × 10 | 6.057 × 10 |
| All (122) | Accuracy | 0.672 | 0.750 | 0.748 | 0.768 | 0.750 | 0.774 |
| | Sensitivity | 0.658 | 0.656 | 0.704 | 0.761 | 0.694 | 0.754 |
| | Specificity | 0.691 | 0.855 | 0.797 | 0.780 | 0.812 | 0.800 |
| | Precision | 0.694 | 0.827 | 0.777 | 0.777 | 0.789 | 0.790 |
| | F1-score | 0.663 | 0.722 | 0.736 | 0.766 | 0.734 | 0.768 |
| | MCC | 0.363 | 0.526 | 0.504 | 0.542 | 0.511 | 0.555 |
| | p-value | 5.879 × 10 | 5.711 × 10 | 5.552 × 10 | 5.402 × 10 | 2.849 × 10 | 5.126 × 10 |

For the Retinal Image Model, where only retinal images were used to distinguish the CVD group from the control group, DenseNet-121 achieved the highest accuracy of 75.6% on the cropped image set with 5-fold CV, and 73.0% accuracy on the mean-subtracted image set. Table 5 compares the performance of all DL models used in this study. For all retinal image-based experiments, a p-value < 0.05 was obtained, indicating their statistical significance.

Table 5.

Comparison of the performance of deep learning models built on retinal images.

| Type of Images | DL Model | Accuracy | Sensitivity | Specificity | Precision | F1-score | MCC | p-value |
|---|---|---|---|---|---|---|---|---|
| Cropped images | DenseNet-121 | 0.756 | 0.753 | 0.758 | 0.74 | 0.746 | 0.511 | 2.023 × 10 |
| | ResNet-18 | 0.694 | 0.735 | 0.656 | 0.661 | 0.696 | 0.392 | 1.732 × 10 |
| | ResNet-34 | 0.753 | 0.682 | 0.817 | 0.773 | 0.725 | 0.505 | 8.824 × 10 |
| | VGGNet-11 | 0.744 | 0.712 | 0.774 | 0.742 | 0.727 | 0.487 | 5.529 × 10 |
| | VGGNet-16 | 0.739 | 0.7 | 0.774 | 0.739 | 0.719 | 0.476 | 4.612 × 10 |
| | AlexNet | 0.699 | 0.659 | 0.737 | 0.696 | 0.677 | 0.397 | 3.519 × 10 |
| | SqueezeNet1_0 | 0.719 | 0.665 | 0.769 | 0.724 | 0.693 | 0.436 | 3.130 × 10 |
| | SqueezeNet1_1 | 0.685 | 0.729 | 0.645 | 0.653 | 0.689 | 0.375 | 6.687 × 10 |
| Mean subtracted images | DenseNet-121 | 0.73 | 0.712 | 0.747 | 0.72 | 0.716 | 0.459 | 3.545 × 10 |
| | ResNet-18 | 0.713 | 0.682 | 0.742 | 0.707 | 0.695 | 0.425 | 1.953 × 10 |
| | ResNet-34 | 0.713 | 0.635 | 0.785 | 0.73 | 0.679 | 0.426 | 6.846 × 10 |
| | VGGNet-11 | 0.685 | 0.735 | 0.64 | 0.651 | 0.691 | 0.376 | 5.984 × 10 |
| | VGGNet-16 | 0.725 | 0.724 | 0.726 | 0.707 | 0.715 | 0.449 | 3.542 × 10 |
| | AlexNet | 0.683 | 0.688 | 0.677 | 0.661 | 0.674 | 0.365 | 2.806 × 10 |
| | SqueezeNet1_0 | 0.677 | 0.635 | 0.715 | 0.671 | 0.653 | 0.352 | 2.390 × 10 |
| | SqueezeNet1_1 | 0.669 | 0.612 | 0.72 | 0.667 | 0.638 | 0.334 | 1.390 × 10 |

Finally, for the Hybrid Model, which combined DXA tabular data and retinal images, ResNet-34 achieved the highest accuracy of 78.3% with 5-fold CV. Table 6 compares the eight DL models tested in this experiment. For all hybrid model-based experiments, a p-value < 0.05 was obtained, indicating their statistical significance. We also calculated the area under the receiver operating characteristic (ROC) curve (AUC) for all DL models in this experiment on the cropped images (Supplementary File S3).
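The AUC can be read as the probability that a randomly chosen CVD case receives a higher predicted score than a randomly chosen control. A minimal pure-Python sketch of this rank-based definition (ties counted as one half) follows; the function name is hypothetical, it is quadratic in the number of samples, and it is meant only to illustrate the quantity, whereas a library routine such as scikit-learn's `roc_auc_score` would be used in practice.

```python
def roc_auc(labels, scores):
    """ROC AUC: P(score of a random positive > score of a random negative), ties count 1/2.

    labels: iterable of 0/1 (1 = CVD); scores: predicted CVD probabilities.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # positive correctly ranked above negative
            elif p == n:
                wins += 0.5   # tie
    return wins / (len(pos) * len(neg))
```

For labels `[0, 0, 1, 1]` and scores `[0.1, 0.4, 0.35, 0.8]`, three of the four positive/negative pairs are ranked correctly, giving an AUC of 0.75.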

Table 6.

Comparison of the performance of hybrid models built on both retinal images and DXA data.

| Type of Images | DL Model | Accuracy | Sensitivity | Specificity | Precision | F1-score | MCC | p-value |
|---|---|---|---|---|---|---|---|---|
| Cropped images | DenseNet-121 + DXA | 0.74 | 0.688 | 0.793 | 0.771 | 0.719 | 0.492 | 1.371 × 10 |
| | ResNet-18 + DXA | 0.756 | 0.666 | 0.842 | 0.802 | 0.725 | 0.519 | 1.255 × 10 |
| | ResNet-34 + DXA | 0.783 | 0.747 | 0.816 | 0.793 | 0.767 | 0.566 | 1.290 × 10 |
| | VGGNet-11 + DXA | 0.752 | 0.691 | 0.812 | 0.784 | 0.729 | 0.512 | 1.297 × 10 |
| | VGGNet-16 + DXA | 0.739 | 0.675 | 0.8 | 0.773 | 0.71 | 0.49 | 1.608 × 10 |
| | AlexNet + DXA | 0.778 | 0.698 | 0.854 | 0.815 | 0.751 | 0.559 | 1.166 × 10 |
| | SqueezeNet1_0 + DXA | 0.748 | 0.653 | 0.836 | 0.786 | 0.713 | 0.498 | 3.795 × 10 |
| | SqueezeNet1_1 + DXA | 0.767 | 0.736 | 0.795 | 0.773 | 0.753 | 0.534 | 1.243 × 10 |
| Mean subtracted images | DenseNet-121 + DXA | 0.736 | 0.669 | 0.8 | 0.76 | 0.71 | 0.475 | 1.409 × 10 |
| | ResNet-18 + DXA | 0.734 | 0.639 | 0.825 | 0.775 | 0.699 | 0.474 | 1.355 × 10 |
| | ResNet-34 + DXA | 0.757 | 0.755 | 0.761 | 0.754 | 0.75 | 0.52 | 1.173 × 10 |
| | VGGNet-11 + DXA | 0.734 | 0.707 | 0.760 | 0.737 | 0.715 | 0.474 | 1.323 × 10 |
| | VGGNet-16 + DXA | 0.723 | 0.702 | 0.746 | 0.727 | 0.705 | 0.456 | 1.608 × 10 |
| | AlexNet + DXA | 0.753 | 0.658 | 0.841 | 0.796 | 0.718 | 0.511 | 1.466 × 10 |
| | SqueezeNet1_0 + DXA | 0.754 | 0.673 | 0.829 | 0.789 | 0.725 | 0.511 | 1.241 × 10 |
| | SqueezeNet1_1 + DXA | 0.770 | 0.732 | 0.804 | 0.780 | 0.754 | 0.540 | 1.464 × 10 |

5.2. Performance of the Hybrid Model Based on Gender and Age Stratified Dataset

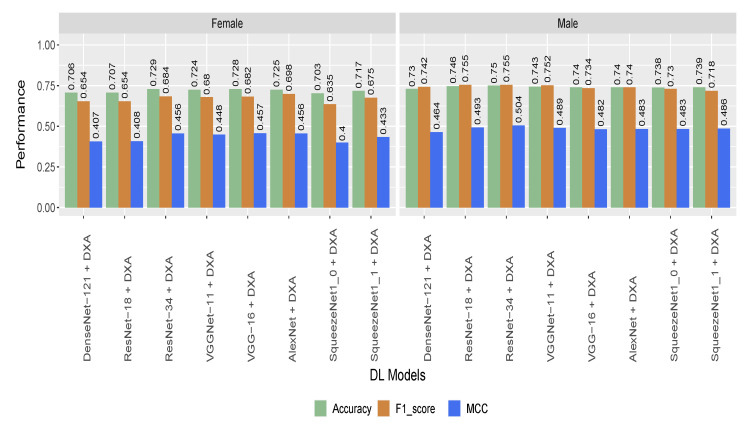

To check how well the proposed model performs on different population subgroups, we tested the Hybrid Model (combining DXA and retinal images) on age- and gender-stratified samples. Although performance dropped slightly on the gender-stratified datasets, the ResNet-34-based model achieved the highest performance, with 75% and 72.9% accuracy for males and females, respectively (Figure 5). All eight models performed better for males than for females (Figure 5).

Figure 5.

Performance of the hybrid models on gender-stratified participants (based on cropped image).

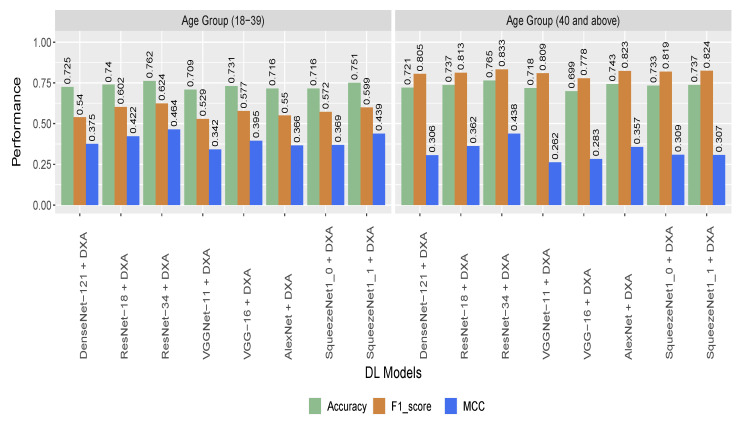

Table 7 lists the numbers of participants and images in each age group. For the age-stratified samples, the model's performance was close to its performance on the whole dataset. Across all DL models, the highest performance was obtained by the ResNet-34 model, with 76.5% accuracy for the 40-and-above age group. For the younger age group (below 40), ResNet-34 also achieved the best performance, with 76.2% accuracy. Figure 6 shows the detailed results and a comparison of model performance for the age-stratified participants.

Table 7.

Details of number of participants and number of images used for each age group.

| Class | Age Group 18–39: Participants | Age Group 18–39: Images | Age Group 40 and Above: Participants | Age Group 40 and Above: Images |
|---|---|---|---|---|
| CVD | 115 | 440 | 118 | 434 |
| Control | 210 | 782 | 40 | 140 |

Figure 6.

Performance of the hybrid models on age-stratified participants (based on cropped image).
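The gender- and age-stratified evaluations amount to computing the same metric separately within each subgroup. The sketch below (hypothetical helper names; accuracy only) also reports each subgroup's majority-class rate, that is, the accuracy of always predicting the subgroup's most frequent class, which is a useful reference point when class sizes differ, as they do between the age groups in Table 7.

```python
from collections import Counter, defaultdict


def accuracy_by_subgroup(y_true, y_pred, subgroup):
    """Accuracy computed separately within each subgroup (e.g. sex or age band)."""
    hits, counts = defaultdict(int), defaultdict(int)
    for t, p, g in zip(y_true, y_pred, subgroup):
        counts[g] += 1
        hits[g] += int(t == p)
    return {g: hits[g] / counts[g] for g in counts}


def majority_rate_by_subgroup(y_true, subgroup):
    """Accuracy of always predicting each subgroup's most frequent class."""
    label_counts = defaultdict(Counter)
    for t, g in zip(y_true, subgroup):
        label_counts[g][t] += 1
    return {g: max(c.values()) / sum(c.values()) for g, c in label_counts.items()}


def age_band(age):
    """Age bands used in Table 7."""
    return "18-39" if age < 40 else "40+"
```

Subgroup labels would be built with, for example, `[age_band(a) for a in ages]` and passed alongside the cross-validated predictions.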

5.3. Statistical Analysis on DXA Data

We conducted a statistical analysis of the DXA data comparing the CVD group against the control group. Of the 122 features analyzed, 95 were statistically significant (p-value < 0.05) (Supplementary File S1).

Compared with the control group, the CVD group had accumulated more fat in different body parts, including the arms, legs, trunk, android, gynoid, and android visceral regions.

Lean mass measurements in the android region, legs, arms, trunk, and other body parts were also higher in the CVD group. For instance, trunk lean mass was greater in the CVD group than in the control group (CVD vs. control: 20,329.32 ± 3809 vs. 18,779.82 ± 3965). Overall, both total fat mass (25,908.58 ± 6342 vs. 20,962.12 ± 4946) and total lean mass (43,643.57 ± 8617 vs. 40,614.88 ± 9059) were higher in the CVD group, consistent with the higher BMI of this group.

We also observed higher BMD and anthropometric measurements for different body parts in the CVD group compared with the control group (Supplementary File S1). For BMD measurements in body parts such as the head, arms, spine, troch, and trunk, the CVD group had greater BMD than the control group. Overall, total BMD was higher in the CVD group than in the control group (CVD vs. control: 1.20 ± 0.11 vs. 1.17 ± 0.12). Individuals with higher weight and BMI tend to have higher BMD, which might help reduce the risk of bone fracture [73,74,75]; a similar trend was seen in our cohort.

Furthermore, for anthropometric measurements, we observed larger bone areas in the troch, lumbar spine (L1, L2, L3, L4), and pelvis for the CVD group compared with the control group. In summary, most BMD, fat content, muscle mass, and bone area measurements were higher in the CVD group than in the control group. Details of the analysis can be found in Supplementary File S1.

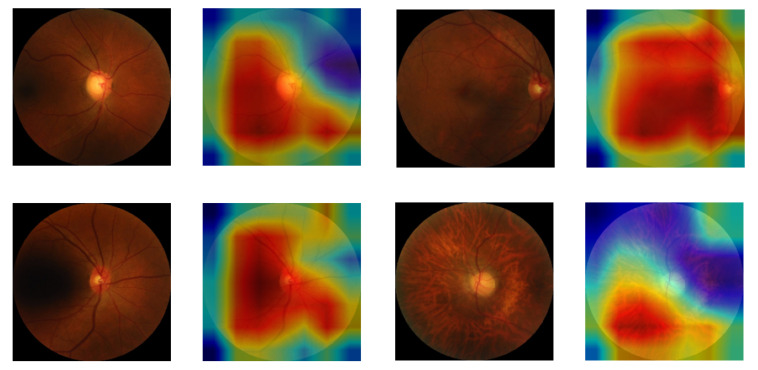

5.4. Class Activation Map for Highlighting the Region of Interest in CVD Patients

We used Gradient-weighted Class Activation Mapping (GradCAM) [76] to highlight the regions of interest that most influenced the DL model's predictions. Figure 7 shows GradCAM results on images from the CVD class. The regions of interest, shown in the color-coded heat map to the right of each image, lie mostly in the central region of the retina. Microhemorrhages, which are associated with hypertension [77,78], can be observed in all images, especially in the bottom-left images.

Figure 7.

A few retinal images from the CVD group with overlaid GradCAM maps. Reddish colors indicate a higher influence on the prediction model's decision, and bluish colors indicate less influence.
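The GradCAM maps follow the standard Grad-CAM recipe [76]: each feature channel of a convolutional layer is weighted by the spatial average of the class-score gradient, the weighted channels are summed, and a ReLU keeps only positive evidence for the class. The NumPy sketch below assumes the activations and gradients have already been captured (for example, with framework hooks on the last convolutional block of the CNN stem) and omits upsampling the map to the 540 × 540 input and overlaying it on the image; the function name is hypothetical.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM map from one conv layer.

    activations, gradients: arrays of shape (K, H, W), where `gradients` holds the
    derivative of the CVD class score with respect to each activation.
    Returns an (H, W) map scaled to [0, 1].
    """
    weights = gradients.mean(axis=(1, 2))            # alpha_k: global-average-pooled gradients
    cam = np.tensordot(weights, activations, axes=1)  # sum over k of alpha_k * A^k -> (H, W)
    cam = np.maximum(cam, 0.0)                        # ReLU keeps positive evidence only
    peak = cam.max()
    return cam / peak if peak > 0 else cam
```

The resulting low-resolution map would then be resized to the image size and rendered with a colormap, giving the red-to-blue overlays described in the caption above.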

6. Discussion

6.1. Principal Findings

Integrating retinal images with DXA improves the performance of CVD detection. In our experiments, the classification accuracy was 75.6% with retinal images only and 77.4% with DXA data only. When both data sources were integrated in a joint deep learning model, performance improved, reaching 78.3% accuracy with the ResNet-34-based model. This suggests that integrating the multi-modal data improved the performance of the proposed model. For the gender-stratified participants, the proposed model achieved similar performance for both genders (Figure 5), although all models performed somewhat better for males. The performance of the model on the age-stratified participants was close to its performance on all participants (Figure 6), suggesting that the proposed model's performance is not strongly affected by age group in this adult population.

The majority of DXA readings were higher in the CVD group than in the control group. Among the statistically significant variables, most BMD, fat content, muscle mass, and bone area measurements had greater average values in the CVD group (Supplementary File S1). Obesity has been shown to be a protective factor against osteoporosis through mechanical and biochemical mechanisms [79]. In the current study, although obesity (higher BMI) has a deleterious effect on cardiovascular risk factors, we also observe its protective effect on bone health. In summary, our results indicate better bone health in the CVD group, which had a higher BMI than the control group. The ablation study revealed better discriminatory power for fat content and BMD than for muscle mass and bone area (Table 4). This highlights the discriminatory power of DXA measurements, which could open new avenues in CVD diagnosis.

6.2. Comparison against Other Tools

We could not find any work that used retinal images and DXA for CVD detection, either in the Qatari population or elsewhere. The published work we reviewed focused on predicting risk factors associated with CVD (see Table 1) rather than predicting CVD directly; therefore, we could not compare the performance of the proposed model against any existing model.

We do, however, present a summary of existing methods (Table 2) that use a multi-modal approach, albeit with modalities other than DXA and retinal images. We reiterate that a direct comparison of our multi-modal approach with any of these would not be fair, for two reasons. First, none of the existing studies uses this particular combination of modalities (DXA and retinal images); to the best of our knowledge, ours is the first study to consider these two modalities using a machine learning approach. Second, the data used in the approaches in Table 2 include biomarkers directly related to heart health, which gives them an advantage in CVD prediction over our approach at the cost of being more invasive. Our method may therefore be better suited to health centers in remote regions with access to only limited, non-invasive resources.

6.3. Motivation for Using a Multi-Modal Approach

We were motivated to use a multi-modal approach to predict CVD from retinal images and DXA data for several reasons. First, we have shown that the multi-modal approach (Table 6) outperforms its uni-modal counterparts (Table 4 and Table 5) in predicting CVD. Second, to our knowledge, this particular combination of modalities (DXA and retinal images) has not been explored before, providing a novel insight into possible ways of predicting CVD non-invasively. Last but not least, since neither DXA nor retinal image acquisition is invasive, the proposed multi-modal approach is non-invasive overall and hence applicable to a wide variety of health centers, including those in remote regions with limited resources.

7. Limitations

In this work, we used a dataset from QBB consisting of participants from Qatar only. The outcome of this study could therefore be specific to the Qatari population and to people of Gulf countries with similar lifestyles and ethnicity, and the results might not generalize to other populations. Moreover, human retinal images contain a vascular tree with a complex branching pattern. Identifying different aspects of this tree requires retinal images with high contrast, good color balance, and high quality; hence, more and better quality-controlled images might further improve the accuracy of the proposed model. Despite these limitations, this study serves as a proof of concept that incorporating information from retinal images and DXA provides a novel avenue for diagnosing CVD with reasonable accuracy in a non-invasive manner.

8. Conclusions

In this work, we presented a novel deep learning-based model to distinguish the CVD group from the control group by integrating information from retinal images and DXA scans. The proposed multi-modal approach achieved an accuracy of 78.3%, outperforming the individual uni-modal (DXA and retinal image) models. To the best of our knowledge, this is the first study to diagnose CVD from DXA data and retinal images. The achieved performance demonstrates that signals from fast and relatively non-invasive techniques such as DXA and retinal imaging can be used to identify patients with CVD. These findings need to be validated in a clinical setting to properly understand their links to CVD diagnosis.

Acknowledgments

We thank Qatar Biobank (QBB) for providing access to the de-identified dataset. The open-access publication of this article is funded by the College of Science and Engineering, Hamad Bin Khalifa University (HBKU), Doha 34110, Qatar.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| ASCVD | Atherosclerotic Cardiovascular Disease |
| AUC | Area Under the Curve |
| BMI | Body Mass Index |
| BMD | Bone Mineral Density |
| CCTA | Coronary CT Angiography |
| CT | Computed Tomography |
| CVD | Cardiovascular Disease |
| DL | Deep Learning |
| DXA | Dual-energy X-ray Absorptiometry |
| ECG | Electrocardiogram |
| ECHO | Echocardiography |
| ML | Machine Learning |
| MPI | Myocardial Perfusion Imaging |
| MRI | Magnetic Resonance Imaging |
| PCG | Phonocardiogram |
| ROC | Receiver Operating Characteristic |
| WHO | World Health Organization |
| WHR | Waist-to-hip Ratio |

Supplementary Materials

The following are available online at https://www.mdpi.com/article/10.3390/s22124310/s1. Supplementary File S1: Summary Statistics of the DXA features. Supplementary File S2: Details for parameter optimization for the models. Supplementary File S3: ROC Curve Analysis.

Author Contributions

H.R.H.A.-A. and T.A. conceived and planned the experiments. H.R.H.A.-A. and M.T.I. performed the experiments. H.R.H.A.-A. analyzed the data. H.R.H.A.-A., T.A. and M.T.I. prepared the manuscript with assistance from the other authors. Data curation, M.A.R. Validation, M.E.H.C. All authors have read and agreed to the final version of the manuscript.

Institutional Review Board Statement

This research was carried out under the regulation of Qatar’s Ministry of Public Health. This work was approved by the Institutional Review Board of Qatar Biobank (QBB) in Qatar, and used a de-identified dataset from QBB.

Informed Consent Statement

QBB obtained informed consent from all participants.

Data Availability Statement

Restrictions apply to the availability of these data. Data were obtained from QBB under a non-disclosure agreement (NDA).

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Funding Statement

This research received no external funding.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Roth G.A., Mensah G.A., Johnson C.O., Addolorato G., Ammirati E., Baddour L.M., Barengo N.C., Beaton A.Z., Benjamin E.J., Benziger C.P., et al. Global burden of cardiovascular diseases and risk factors, 1990–2019: Update from the GBD 2019 study. J. Am. Coll. Cardiol. 2020;76:2982–3021. doi: 10.1016/j.jacc.2020.11.010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.World Health Organization . Cardiovascular Diseases (CVDs) World Health Organization; Geneva, Switzerland: 2021. [Google Scholar]

- 3.Long C.P., Chan A.X., Bakhoum C.Y., Toomey C.B., Madala S., Garg A.K., Freeman W.R., Goldbaum M.H., DeMaria A.N., Bakhoum M.F. Prevalence of subclinical retinal ischemia in patients with cardiovascular disease—A hypothesis driven study. EClinicalMedicine. 2021;33:100775. doi: 10.1016/j.eclinm.2021.100775. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.WHO-EMRO . Cardiovascular Diseases. World Health Organization, Regional Office for the Eastern Mediterranean; Cairo, Egypt: 2021. [Google Scholar]

- 5.Planning and Statistics Authority (Qatar) Births & Deaths in the State of Qatar (Review & Analysis). Report 2018. [(accessed on 18 September 2021)]; Available online: https://www.psa.gov.qa/en/statistics/Statistical%20Releases/General/StatisticalAbstract/2018/Birth_death_2018_EN.pdf.

- 6.Ghantous C.M., Kamareddine L., Farhat R., Zouein F.A., Mondello S., Kobeissy F., Zeidan A. Advances in cardiovascular biomarker discovery. Biomedicines. 2020;8:552. doi: 10.3390/biomedicines8120552. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Al-Absi H.R., Refaee M.A., Rehman A.U., Islam M.T., Belhaouari S.B., Alam T. Risk Factors and Comorbidities Associated to Cardiovascular Disease in Qatar: A Machine Learning Based Case-Control Study. IEEE Access. 2021;9:29929–29941. doi: 10.1109/ACCESS.2021.3059469. [DOI] [Google Scholar]

- 8.Rehman A.U., Alam T., Belhaouari S.B. Investigating Potential Risk Factors for Cardiovascular Diseases in Adult Qatari Population; Proceedings of the 2020 IEEE International Conference on Informatics, IoT, and Enabling Technologies (ICIoT); Doha, Qatar. 2–5 February 2020; pp. 267–270. [Google Scholar]

- 9.Wilson P.W., D’Agostino R.B., Levy D., Belanger A.M., Silbershatz H., Kannel W.B. Prediction of coronary heart disease using risk factor categories. Circulation. 1998;97:1837–1847. doi: 10.1161/01.CIR.97.18.1837. [DOI] [PubMed] [Google Scholar]

- 10.American College of Cardiology . ASCVD Risk Estimator. American College of Cardiology; Washington, DC, USA: 2022. [(accessed on 18 September 2021)]. Available online: https://tools.acc.org/ascvd-risk-estimator-plus/ [Google Scholar]

- 11.Xu C., Xu L., Gao Z., Zhao S., Zhang H., Zhang Y., Du X., Zhao S., Ghista D., Liu H., et al. Direct delineation of myocardial infarction without contrast agents using a joint motion feature learning architecture. Med. Image Anal. 2018;50:82–94. doi: 10.1016/j.media.2018.09.001. [DOI] [PubMed] [Google Scholar]

- 12.Sudarshan V.K., Acharya U.R., Ng E., San Tan R., Chou S.M., Ghista D.N. An integrated index for automated detection of infarcted myocardium from cross-sectional echocardiograms using texton-based features (Part 1) Comput. Biol. Med. 2016;71:231–240. doi: 10.1016/j.compbiomed.2016.01.028. [DOI] [PubMed] [Google Scholar]

- 13.Madani A., Ong J.R., Tibrewal A., Mofrad M.R. Deep echocardiography: Data-efficient supervised and semi-supervised deep learning towards automated diagnosis of cardiac disease. NPJ Digit. Med. 2018;1:1–11. doi: 10.1038/s41746-018-0065-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Vidya K.S., Ng E., Acharya U.R., Chou S.M., San Tan R., Ghista D.N. Computer-aided diagnosis of myocardial infarction using ultrasound images with DWT, GLCM and HOS methods: A comparative study. Comput. Biol. Med. 2015;62:86–93. doi: 10.1016/j.compbiomed.2015.03.033. [DOI] [PubMed] [Google Scholar]

- 15.Larroza A., López-Lereu M.P., Monmeneu J.V., Gavara J., Chorro F.J., Bodí V., Moratal D. Texture analysis of cardiac cine magnetic resonance imaging to detect nonviable segments in patients with chronic myocardial infarction. Med. Phys. 2018;45:1471–1480. doi: 10.1002/mp.12783. [DOI] [PubMed] [Google Scholar]

- 16.Snaauw G., Gong D., Maicas G., Van Den Hengel A., Niessen W.J., Verjans J., Carneiro G. End-to-end diagnosis and segmentation learning from cardiac magnetic resonance imaging; Proceedings of the 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019); Venice, Italy. 8–11 April 2019; pp. 802–805. [Google Scholar]

- 17.Baessler B., Luecke C., Lurz J., Klingel K., Das A., von Roeder M., de Waha-Thiele S., Besler C., Rommel K.P., Maintz D., et al. Cardiac MRI and texture analysis of myocardial T1 and T2 maps in myocarditis with acute versus chronic symptoms of heart failure. Radiology. 2019;292:608–617. doi: 10.1148/radiol.2019190101. [DOI] [PubMed] [Google Scholar]

- 18.Mannil M., von Spiczak J., Manka R., Alkadhi H. Texture analysis and machine learning for detecting myocardial infarction in noncontrast low-dose computed tomography: Unveiling the invisible. Investig. Radiol. 2018;53:338–343. doi: 10.1097/RLI.0000000000000448. [DOI] [PubMed] [Google Scholar]

- 19.Coenen A., Kim Y.H., Kruk M., Tesche C., De Geer J., Kurata A., Lubbers M.L., Daemen J., Itu L., Rapaka S., et al. Diagnostic accuracy of a machine-learning approach to coronary computed tomographic angiography–based fractional flow reserve: Result from the MACHINE consortium. Circ. Cardiovasc. Imaging. 2018;11:e007217. doi: 10.1161/CIRCIMAGING.117.007217. [DOI] [PubMed] [Google Scholar]

- 20.Zreik M., Van Hamersvelt R.W., Wolterink J.M., Leiner T., Viergever M.A., Išgum I. A recurrent CNN for automatic detection and classification of coronary artery plaque and stenosis in coronary CT angiography. IEEE Trans. Med Imaging. 2018;38:1588–1598. doi: 10.1109/TMI.2018.2883807. [DOI] [PubMed] [Google Scholar]

- 21.Sacha J.P., Goodenday L.S., Cios K.J. Bayesian learning for cardiac SPECT image interpretation. Artif. Intell. Med. 2002;26:109–143. doi: 10.1016/S0933-3657(02)00055-6. [DOI] [PubMed] [Google Scholar]

- 22.Shibutani T., Nakajima K., Wakabayashi H., Mori H., Matsuo S., Yoneyama H., Konishi T., Okuda K., Onoguchi M., Kinuya S. Accuracy of an artificial neural network for detecting a regional abnormality in myocardial perfusion SPECT. Ann. Nucl. Med. 2019;33:86–92. doi: 10.1007/s12149-018-1306-4. [DOI] [PubMed] [Google Scholar]

- 23.Liu C.Y., Tang C.X., Zhang X.L., Chen S., Xie Y., Zhang X.Y., Qiao H.Y., Zhou C.S., Xu P.P., Lu M.J., et al. Deep learning powered coronary CT angiography for detecting obstructive coronary artery disease: The effect of reader experience, calcification and image quality. Eur. J. Radiol. 2021;142:109835. doi: 10.1016/j.ejrad.2021.109835. [DOI] [PubMed] [Google Scholar]

- 24.Farrah T.E., Dhillon B., Keane P.A., Webb D.J., Dhaun N. The eye, the kidney, and cardiovascular disease: Old concepts, better tools, and new horizons. Kidney Int. 2020;98:323–342. doi: 10.1016/j.kint.2020.01.039. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Wong T.Y., Klein R., Klein B.E., Tielsch J.M., Hubbard L., Nieto F.J. Retinal microvascular abnormalities and their relationship with hypertension, cardiovascular disease, and mortality. Surv. Ophthalmol. 2001;46:59–80. doi: 10.1016/S0039-6257(01)00234-X.

- 26.Zhang L., Yuan M., An Z., Zhao X., Wu H., Li H., Wang Y., Sun B., Li H., Ding S., et al. Prediction of hypertension, hyperglycemia and dyslipidemia from retinal fundus photographs via deep learning: A cross-sectional study of chronic diseases in central China. PLoS ONE. 2020;15:e0233166. doi: 10.1371/journal.pone.0233166.

- 27.Islam M.T., Al-Absi H.R., Ruagh E.A., Alam T. DiaNet: A deep learning based architecture to diagnose diabetes using retinal images only. IEEE Access. 2021;9:15686–15695. doi: 10.1109/ACCESS.2021.3052477.

- 28.Poplin R., Varadarajan A.V., Blumer K., Liu Y., McConnell M.V., Corrado G.S., Peng L., Webster D.R. Prediction of cardiovascular risk factors from retinal fundus photographs via deep learning. Nat. Biomed. Eng. 2018;2:158–164. doi: 10.1038/s41551-018-0195-0.

- 29.Cheung C.Y., Xu D., Cheng C.Y., Sabanayagam C., Tham Y.C., Yu M., Rim T.H., Chai C.Y., Gopinath B., Mitchell P., et al. A deep-learning system for the assessment of cardiovascular disease risk via the measurement of retinal-vessel calibre. Nat. Biomed. Eng. 2021;5:498–508. doi: 10.1038/s41551-020-00626-4.

- 30.Spahillari A., Mukamal K., DeFilippi C., Kizer J.R., Gottdiener J.S., Djoussé L., Lyles M.F., Bartz T.M., Murthy V.L., Shah R.V. The association of lean and fat mass with all-cause mortality in older adults: The Cardiovascular Health Study. Nutr. Metab. Cardiovasc. Dis. 2016;26:1039–1047. doi: 10.1016/j.numecd.2016.06.011.

- 31.Chuang T.L., Lin J.W., Wang Y.F. Bone Mineral Density as a Predictor of Atherogenic Indexes of Cardiovascular Disease, Especially in Nonobese Adults. Dis. Markers. 2019;2019:1045098. doi: 10.1155/2019/1045098.

- 32.Messina C., Albano D., Gitto S., Tofanelli L., Bazzocchi A., Ulivieri F.M., Guglielmi G., Sconfienza L.M. Body composition with dual energy X-ray absorptiometry: From basics to new tools. Quant. Imaging Med. Surg. 2020;10:1687. doi: 10.21037/qims.2020.03.02.

- 33.Park J., Yoon Y.E., Kim K.M., Hwang I.C., Lee W., Cho G.Y. Prognostic value of lower bone mineral density in predicting adverse cardiovascular disease in Asian women. Heart. 2021;107:1040–1046. doi: 10.1136/heartjnl-2020-318764.

- 34.Ceniccola G.D., Castro M.G., Piovacari S.M.F., Horie L.M., Corrêa F.G., Barrere A.P.N., Toledo D.O. Current technologies in body composition assessment: Advantages and disadvantages. Nutrition. 2019;62:25–31. doi: 10.1016/j.nut.2018.11.028.

- 35.Hansen A.B., Sander B., Larsen M., Kleener J., Borch-Johnsen K., Klein R., Lund-Andersen H. Screening for diabetic retinopathy using a digital non-mydriatic camera compared with standard 35-mm stereo colour transparencies. Acta Ophthalmol. Scand. 2004;82:656–665. doi: 10.1111/j.1600-0420.2004.00347.x.

- 36.Oloumi F., Rangayyan R.M., Ells A.L. Digital Image Processing for Ophthalmology: Detection and Modeling of Retinal Vascular Architecture. Synth. Lect. Biomed. Eng. 2014;9:1–185. doi: 10.2200/S00569ED1V01Y201402BME049.

- 37.Guo V.Y., Chan J.C.N., Chung H., Ozaki R., So W., Luk A., Lam A., Lee J., Zee B.C.Y. Retinal information is independently associated with cardiovascular disease in patients with type 2 diabetes. Sci. Rep. 2016;6:19053. doi: 10.1038/srep19053.

- 38.Gerrits N., Elen B., Van Craenendonck T., Triantafyllidou D., Petropoulos I.N., Malik R.A., De Boever P. Age and sex affect deep learning prediction of cardiometabolic risk factors from retinal images. Sci. Rep. 2020;10:9432. doi: 10.1038/s41598-020-65794-4.

- 39.Van Craenendonck T., Gerrits N., Buelens B., Petropoulos I.N., Shuaib A., Standaert A., Malik R.A., De Boever P. Retinal microvascular complexity comparing mono- and multifractal dimensions in relation to cardiometabolic risk factors in a Middle Eastern population. Acta Ophthalmol. 2021;99:e368–e377. doi: 10.1111/aos.14598.

- 40.Sharp D.S., Andrew M.E., Burchfiel C.M., Violanti J.M., Wactawski-Wende J. Body mass index versus dual energy X-ray absorptiometry-derived indexes: Predictors of cardiovascular and diabetic disease risk factors. Am. J. Hum. Biol. 2012;24:400–405. doi: 10.1002/ajhb.22221.

- 41.Lang P.O., Trivalle C., Vogel T., Proust J., Papazian J.P. Markers of metabolic and cardiovascular health in adults: Comparative analysis of DEXA-based body composition components and BMI categories. J. Cardiol. 2015;65:42–49. doi: 10.1016/j.jjcc.2014.03.010.

- 42.Bekfani T., Pellicori P., Morris D.A., Ebner N., Valentova M., Steinbeck L., Wachter R., Elsner S., Sliziuk V., Schefold J.C., et al. Sarcopenia in patients with heart failure with preserved ejection fraction: Impact on muscle strength, exercise capacity and quality of life. Int. J. Cardiol. 2016;222:41–46. doi: 10.1016/j.ijcard.2016.07.135.

- 43.Martens P., Ter Maaten J.M., Vanhaen D., Heeren E., Caers T., Bovens B., Dauw J., Dupont M., Mullens W. Heart failure is associated with accelerated age related metabolic bone disease. Acta Cardiol. 2021;76:718–726. doi: 10.1080/00015385.2020.1771885.

- 44.Kerkadi A., Suleman D., Salah L.A., Lotfy C., Attieh G., Bawadi H., Shi Z. Adiposity indicators as cardio-metabolic risk predictors in adults from country with high burden of obesity. Diabetes Metab. Syndr. Obesity Targets Ther. 2020;13:175. doi: 10.2147/DMSO.S238748.

- 45.Bawadi H., Hassan S., Zadeh A.S., Sarv H., Kerkadi A., Tur J.A., Shi Z. Age and gender specific cut-off points for body fat parameters among adults in Qatar. Nutr. J. 2020;19:75. doi: 10.1186/s12937-020-00569-1.

- 46.Bartl R., Bartl C. The Osteoporosis Manual: Prevention, Diagnosis and Management. Springer International Publishing; Cham, Switzerland: 2019. Bone densitometry; pp. 67–75.

- 47.Loncar G., Cvetinovic N., Lainscak M., Isaković A., von Haehling S. Bone in heart failure. J. Cachexia Sarcopenia Muscle. 2020;11:381–393. doi: 10.1002/jcsm.12516.

- 48.Reid S., Schousboe J.T., Kimelman D., Monchka B.A., Jozani M.J., Leslie W.D. Machine learning for automated abdominal aortic calcification scoring of DXA vertebral fracture assessment images: A pilot study. Bone. 2021;148:115943. doi: 10.1016/j.bone.2021.115943.

- 49.Bawadi H., Abouwatfa M., Alsaeed S., Kerkadi A., Shi Z. Body shape index is a stronger predictor of diabetes. Nutrients. 2019;11:1018. doi: 10.3390/nu11051018.

- 50.Zhao J., Feng Q., Wu P., Lupu R.A., Wilke R.A., Wells Q.S., Denny J.C., Wei W.Q. Learning from longitudinal data in electronic health record and genetic data to improve cardiovascular event prediction. Sci. Rep. 2019;9:717. doi: 10.1038/s41598-018-36745-x.

- 51.Zhang H., Wang X., Liu C., Liu Y., Li P., Yao L., Li H., Wang J., Jiao Y. Detection of coronary artery disease using multi-modal feature fusion and hybrid feature selection. Physiol. Meas. 2020;41:115007. doi: 10.1088/1361-6579/abc323.

- 52.Ali F., El-Sappagh S., Islam S.R., Kwak D., Ali A., Imran M., Kwak K.S. A smart healthcare monitoring system for heart disease prediction based on ensemble deep learning and feature fusion. Inf. Fusion. 2020;63:208–222. doi: 10.1016/j.inffus.2020.06.008.

- 53.Apostolopoulos I.D., Apostolopoulos D.I., Spyridonidis T.I., Papathanasiou N.D., Panayiotakis G.S. Multi-input deep learning approach for cardiovascular disease diagnosis using myocardial perfusion imaging and clinical data. Phys. Medica. 2021;84:168–177. doi: 10.1016/j.ejmp.2021.04.011.

- 54.Chaves J.M.Z., Chaudhari A.S., Wentland A.L., Desai A.D., Banerjee I., Boutin R.D., Maron D.J., Rodriguez F., Sandhu A.T., Jeffrey R.B., et al. Opportunistic assessment of ischemic heart disease risk using abdominopelvic computed tomography and medical record data: A multimodal explainable artificial intelligence approach. medRxiv. 2021. doi: 10.1101/2021.01.23.21250197.

- 55.Flores A.M., Schuler A., Eberhard A.V., Olin J.W., Cooke J.P., Leeper N.J., Shah N.H., Ross E.G. Unsupervised Learning for Automated Detection of Coronary Artery Disease Subgroups. J. Am. Heart Assoc. 2021;10:e021976. doi: 10.1161/JAHA.121.021976.

- 56.Al Thani A., Fthenou E., Paparrodopoulos S., Al Marri A., Shi Z., Qafoud F., Afifi N. Qatar biobank cohort study: Study design and first results. Am. J. Epidemiol. 2019;188:1420–1433. doi: 10.1093/aje/kwz084.

- 57.Al Kuwari H., Al Thani A., Al Marri A., Al Kaabi A., Abderrahim H., Afifi N., Qafoud F., Chan Q., Tzoulaki I., Downey P., et al. The Qatar Biobank: Background and methods. BMC Public Health. 2015;15:1–9. doi: 10.1186/s12889-015-2522-7.

- 58.Jain Y.K., Bhandare S.K. Min max normalization based data perturbation method for privacy protection. Int. J. Comput. Commun. Technol. 2011;2:45–50. doi: 10.47893/IJCCT.2013.1201.

- 59.Breiman L., Friedman J.H., Olshen R.A., Stone C.J. Classification and Regression Trees. Routledge; London, UK: 2017.

- 60.Hagan M., Demuth H., Beale M. Neural Net and Traditional Classifiers. Lincoln Laboratory; Lexington, MA, USA: 1987.

- 61.Breiman L. Random forests. Mach. Learn. 2001;45:5–32. doi: 10.1023/A:1010933404324.

- 62.Brownlee J. XGBoost with Python: Gradient Boosted Trees with XGBoost and Scikit-Learn. Machine Learning Mastery; 2016. Available online: https://machinelearningmastery.com/xgboost-with-python/ (accessed on 27 October 2021).

- 63.Dorogush A.V., Ershov V., Gulin A. CatBoost: Gradient boosting with categorical features support. arXiv. 2018. arXiv:1810.11363.

- 64.Hoffman J.I. Biostatistics for Medical and Biomedical Practitioners. Academic Press; Cambridge, MA, USA: 2015.

- 65.Cawley G.C., Talbot N.L. On over-fitting in model selection and subsequent selection bias in performance evaluation. J. Mach. Learn. Res. 2010;11:2079–2107.

- 66.Krizhevsky A., Sutskever I., Hinton G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012;25:1097–1105. doi: 10.1145/3065386.

- 67.Simonyan K., Zisserman A. Very deep convolutional networks for large-scale image recognition. arXiv. 2014. arXiv:1409.1556.

- 68.He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition; Proceedings of the IEEE conference on computer vision and pattern recognition; Las Vegas, NV, USA. 27–30 June 2016; pp. 770–778.

- 69.Huang G., Liu Z., Van Der Maaten L., Weinberger K.Q. Densely connected convolutional networks; Proceedings of the IEEE conference on computer vision and pattern recognition; Honolulu, HI, USA. 21–26 July 2017; pp. 4700–4708.

- 70.Iandola F.N., Han S., Moskewicz M.W., Ashraf K., Dally W.J., Keutzer K. SqueezeNet: AlexNet-level accuracy with 50× fewer parameters and <0.5 MB model size. arXiv. 2016. arXiv:1602.07360.

- 71.Smith L.N., Topin N. Super-convergence: Very fast training of neural networks using large learning rates; Proceedings of the Artificial Intelligence and Machine Learning for Multi-Domain Operations Applications; Baltimore, MD, USA. 10 May 2019; p. 1100612.

- 72.Smith L.N. A disciplined approach to neural network hyper-parameters: Part 1–learning rate, batch size, momentum, and weight decay. arXiv. 2018. arXiv:1803.09820.

- 73.Nguyen T., Kelly P., Sambrook P., Gilbert C., Pocock N., Eisman J. Lifestyle factors and bone density in the elderly: Implications for osteoporosis prevention. J. Bone Miner. Res. 1994;9:1339–1346. doi: 10.1002/jbmr.5650090904.

- 74.Nguyen T.V., Eisman J.A., Kelly P.J., Sambrook P.N. Risk factors for osteoporotic fractures in elderly men. Am. J. Epidemiol. 1996;144:255–263. doi: 10.1093/oxfordjournals.aje.a008920.

- 75.Khondaker M., Islam T., Khan J.Y., Refaee M.A., Hajj N.E., Rahman M.S., Alam T. Obesity in Qatar: A Case-Control Study on the Identification of Associated Risk Factors. Diagnostics. 2020;10:883. doi: 10.3390/diagnostics10110883.

- 76.Gildenblat J. PyTorch Library for CAM Methods. Available online: https://github.com/jacobgil/pytorch-grad-cam (accessed on 2 January 2022).

- 77.Wong T.Y., Mitchell P. Hypertensive retinopathy. N. Engl. J. Med. 2004;351:2310–2317. doi: 10.1056/NEJMra032865.

- 78.Kanukollu V.M., Ahmad S.S. Retinal Hemorrhage. StatPearls; 2021. Available online: https://www.ncbi.nlm.nih.gov/books/NBK560777/ (accessed on 16 January 2022).

- 79.Gkastaris K., Goulis D.G., Potoupnis M., Anastasilakis A.D., Kapetanos G. Obesity, osteoporosis and bone metabolism. J. Musculoskelet. Neuronal Interact. 2020;20:372.

Supplementary Materials

Data Availability Statement

Restrictions apply to the availability of these data. Data were obtained from QBB under a non-disclosure agreement (NDA).