Significance

Scientific retraction has been on the rise recently. Retracted papers are frequently discussed online, enabling the broad dissemination of potentially flawed findings. Our analysis spans a nearly 10-y period and reveals that most papers exhaust their attention by the time they get retracted, meaning that retractions cannot curb the online spread of problematic papers. This is striking as we also find that retracted papers are pervasive across mediums, receiving more attention after publication than nonretracted papers even on curated platforms, such as news outlets and knowledge repositories. Interestingly, discussions on social media express more criticism toward subsequently retracted results and may thus contain early signals related to unreliable work.

Keywords: retraction, science of science, collective attention, scientific misinformation

Abstract

Retracted papers often circulate widely on social media, digital news, and other websites before their official retraction. The spread of potentially inaccurate or misleading results from retracted papers can harm the scientific community and the public. Here, we quantify the amount and type of attention 3,851 retracted papers received over time in different online platforms. Comparing with a set of nonretracted control papers from the same journals with similar publication year, number of coauthors, and author impact, we show that retracted papers receive more attention after publication not only on social media but also on heavily curated platforms, such as news outlets and knowledge repositories, amplifying the negative impact on the public. At the same time, we find that posts on Twitter tend to express more criticism about retracted than about control papers, suggesting that criticism-expressing tweets could contain factual information about problematic papers. Most importantly, around the time they are retracted, papers generate discussions that are primarily about the retraction incident rather than about research findings, showing that by this point, papers have exhausted attention to their results and highlighting the limited effect of retractions. Our findings reveal the extent to which retracted papers are discussed on different online platforms and identify at scale audience criticism toward them. In this context, we show that retraction is not an effective tool to reduce online attention to problematic papers.

Retraction in academic publishing is an important and necessary mechanism for science to self-correct (1). Prior studies have shown that the number of retractions has increased in recent years (2–5). This rise can be explained by many different factors (3–7). One reason is that the number of publications is increasing exponentially (8). Meanwhile, as scientific research has become more complex and interdisciplinary than ever before, reviewers are facing a higher cognitive burden (9, 10). This undermines the scientific community’s ability to filter out problematic papers. In fact, research shows that prominent journals with rigorous screening and high publishing standards are as likely to publish erroneous papers as less prominent journals (11). Finally, not all retractions are due to research fraud—some papers are retracted due to unintentional errors or mistakes, which become more likely as research data grow in size and complexity (6).

Regardless of the reasons behind this increase, a high incidence of retractions in academic literature has the potential to undermine the credibility of scientific communities and reduce public trust in science (6, 12). What is more, the circulation of misleading findings can be harmful to the lay public (6, 13–15), especially given how broadly papers can be disseminated via social media (16). For instance, there are 2 retracted papers among the 10 most highly shared papers in 2020 according to Altmetric, a service that tracks the online dissemination of scientific articles (17). One of them, published in a top biology journal, reported that treatment with chloroquine had no benefit in COVID-19 patients based on data that were likely fabricated (18). Another paper, published in a well-regarded general interest journal, falsely claimed that having more female mentors was negatively correlated with postmentorship impact of junior scholars (19). Both papers attracted considerable attention before they were retracted, raising questions about their possible negative impact on online audiences’ trust in science.

As these examples suggest, retracted papers can attain substantial online attention, and potentially flawed knowledge can reach the public, which often is impacted by the research results (20). This large-scale spreading of papers occurs as the web has become the primary channel through which the lay public interacts with scientific information (21–23). Past research on the online diffusion of science has mainly studied the spread of papers without regard to their retraction status (24–29). Other work has examined the dissemination of retracted papers in scientific communities, focusing mainly on the associated citation penalty (5, 14, 30–36).

However, the impact of retraction on the online dissemination of retracted papers is unclear. Here, we address this essential open question. Past research found that authors tend to keep citing retracted papers long after they have been red flagged, although at a lower rate (5, 6, 12, 14). This raises the question of whether retraction is effective in reducing public attention beyond the academic literature. Studying the impact of retraction relative to the temporal “trajectory” of mentions a paper receives could be helpful for journals to devise policies and practices that maximize the effect of retractions (12, 37).

To understand whether retraction is appropriate for reducing dissemination online, we first assess the extent of online circulation of erroneous findings by investigating variations in how often retracted papers are mentioned on different types of platforms before and after retraction. Recent research indicates that, overall, retracted papers tend to receive more attention than nonretracted ones (38). Prior work also showed that retractions occur most frequently among highly cited articles published in high-impact journals (11, 39), suggesting a counterintuitive link between rigorous screening and retraction. Is there a similar tendency online where retracted papers receive more attention on carefully curated platforms, such as news outlets, than on platforms with limited entry barriers, like social media sites? Such a trend would highlight difficulties with identifying unreliable research given their broad visibility in established venues and could inform attempts to manage the harm caused by retractions. Second, we distinguish between critical and uncritical attention to papers to uncover how retracted research is mentioned. More than half of retracted papers are flagged because of scientific misconduct, such as fabrication, falsification, and plagiarism (4, 5). These papers may receive lots of attention due to criticism raised by online audiences. Is the attention received by retracted papers due to sharing without knowing about the mistakes of a paper, or is it rather expressing concerns (so-called “critical” mentions)? As suggested in a recent case study (40), knowing how retracted papers are mentioned may uncover users who are improving science-related discussions on Twitter by identifying papers that require a closer examination.

In this paper, we compiled a dataset to quantify the volume of attention that 3,851 retracted papers received on 14 online platforms (e.g., public social media posts on Twitter, Facebook, and Reddit; coverage in online news; citations in Wikipedia; and research blogs). We compared their attention with nonretracted papers selected through a matching process based on publication venue and year, number of authors, and authors’ citation count (Fig. 1A and Identifying Control Papers). We obtained retracted papers from Retraction Watch (41), the largest database to date that records retracted papers, and pulled their complete trajectory of mentions over time on various platforms from a service called Altmetric (42) that has been tracking posts about research papers for the past decade. The granularity and scale of the data enabled us to differentiate mentions on four different types of platforms, including social media, news media, blogs, and knowledge repositories (Fig. 1 B and C). We thus provide a systematic investigation of the online mentions of papers disentangled by platform during the time periods between publication and retraction (Fig. 1D) and after retraction (Fig. 1E).

Fig. 1.

Illustration of the research process that compares the online attention received by retracted and control papers. (A) We match five control papers to each retracted paper using the Altmetric database. (B and C) We track the change in mentions over time on four different types of platforms and in top news outlets. As an example, we show here the retracted paper “Effect of a program combining transitional care and long-term self-management support on outcomes of hospitalized patients with chronic obstructive pulmonary disease: A randomized clinical trial” published in JAMA (DOI: 10.1001/jama.2018.17933) and one of its matched control papers: “Vitamin D, calcium, or combined supplementation for the primary prevention of fractures in community-dwelling adults: US Preventive Services Task Force Recommendation Statement” (DOI: 10.1001/jama.2018.3185). (D) We compute the average cumulative number of mentions across all platforms within 6 mo after publication (and before retraction) for all retracted and control papers in the dataset. (E) Similarly, we compute the average cumulative number of mentions within 6 mo after retraction. Error bars indicate 95% CIs.

Our findings offer insights into how extensively retracted papers are mentioned in different online platforms over time and how frequent their critical vs. uncritical discussion is on Twitter. Most importantly, we show that retractions are not reducing harmful dissemination of problematic research on any of the platforms studied here because by the time the retraction is issued, most papers have exhausted their online attention. We also contribute a large dataset of identifiers of tweets that mention the papers used in this study and human annotations of whether the tweets express criticism with respect to the findings of the papers (Labeling Critical Tweets). This dataset (43) can be useful to the broader research community for the study of criticism toward scientific articles and can aid the development of automated methods to detect criticism computationally (44).

Results

To understand whether retraction can contain the spread of problematic papers online in comparison with control papers, we first evaluate the amount of attention that retracted papers receive over time on four types of platforms. Then, we identify large-scale discussions on Twitter that criticize papers before they are retracted.

Are Retracted Papers More Popular Even in the News?

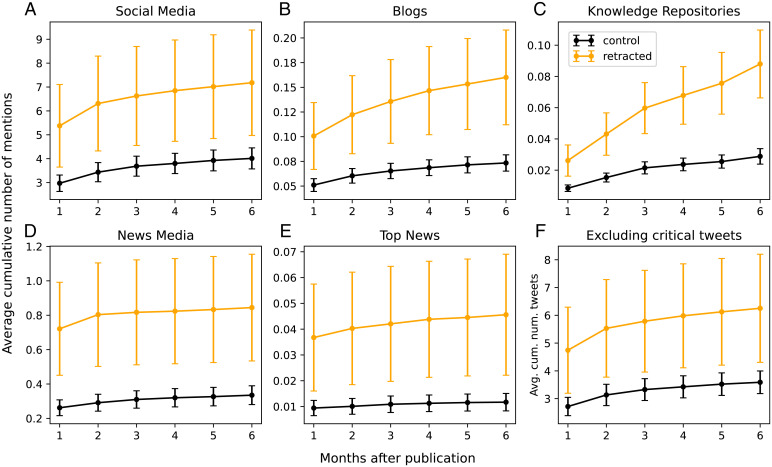

Retracted papers attract more overall attention than control papers (Fig. 1D). However, does this trend apply to all types of platforms? As shown in Fig. 2 A–E, across 2,830 retracted and 13,599 control papers with a tracking window of at least 6 mo (Defining Tracking Windows), we find that retracted papers receive more attention after publication on all four types of platforms and are also mentioned more in news outlets with high-quality science reporting (SI Appendix, SI Methods has outlet selection). On average, papers obtain mentions most frequently on social media followed by news media, and they receive roughly similar amounts of attention on blogs and knowledge repositories. Changing the length of the tracking window produces qualitatively similar results (SI Appendix, Fig. S1).
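The quantity plotted in Fig. 2 A–E, the average cumulative number of mentions within a window after publication with a 95% CI, can be sketched as follows. This is a minimal illustration and not the authors' code: the 180-d approximation of 6 mo, the normal-approximation interval, the function names, and the toy data are all assumptions made here.

```python
from datetime import date, timedelta
from math import sqrt
from statistics import mean, stdev


def mentions_within(published, mention_dates, days=180):
    """Count mentions dated in [published, published + days).

    Assumption: 6 mo is approximated as 180 d.
    """
    end = published + timedelta(days=days)
    return sum(published <= d < end for d in mention_dates)


def mean_with_ci(counts, z=1.96):
    """Mean and half-width of a normal-approximation 95% CI (an assumption)."""
    m = mean(counts)
    half = z * stdev(counts) / sqrt(len(counts)) if len(counts) > 1 else 0.0
    return m, half


# Toy data, invented for illustration only: (publication date, mention dates).
papers = [
    (date(2018, 1, 1), [date(2018, 1, 2), date(2018, 3, 1), date(2019, 1, 1)]),
    (date(2018, 6, 1), [date(2018, 6, 1)]),
    (date(2018, 9, 1), []),
]
counts = [mentions_within(pub, ms) for pub, ms in papers]
avg, half_width = mean_with_ci(counts)
```

The sketch does not enforce the tracking-window requirement described in Defining Tracking Windows; a faithful version would first drop papers whose window is shorter than the chosen length.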

Fig. 2.

After publication and before retraction mentions. (A–E) Average cumulative number of mentions received within 6 mo after publication on four types of platforms and in top news outlets for both retracted and control papers. (F) Average cumulative number of mentions on Twitter after excluding critical tweets. Comparisons across different types of platforms show that retracted papers receive more attention after publication than nonretracted papers. Error bars indicate 95% CIs.

The distribution of online attention to retracted papers is right skewed, meaning that most papers do not receive much attention, while a few become very popular (SI Appendix, Fig. S2). To statistically compare the attention between retracted and control papers while controlling for fundamental factors that could affect the amount of attention a paper receives, we performed negative binomial regression to examine the association between retraction and attention. The association between a higher chance of retraction and more mentions of a paper is significant across all types of platforms and across different time windows (SI Appendix, Table S1). Furthermore, a Mann–Whitney U test also shows that the central tendency of the distribution of mentions for retracted papers is larger than that of control papers on all types of platforms but news media (SI Appendix, Table S2). Since matching based on journals in identifying control papers is imperfect in accounting for research topics, particularly for papers published in multidisciplinary journals, we repeated the analysis by excluding papers in general science journals and found our results to be robust to this change (SI Appendix, Fig. S13).
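For right-skewed counts like these, a rank-based comparison is a natural complement to the regression. The sketch below computes only the Mann–Whitney U statistic in plain Python; the negative binomial regression is not shown, and the function names and toy counts are hypothetical. A P value would normally come from a statistics library (e.g., `scipy.stats.mannwhitneyu`).

```python
def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """U for sample x: pairs (xi, yj) with xi > yj, counting ties as 0.5."""
    ranks = average_ranks(list(x) + list(y))
    rank_sum_x = sum(ranks[: len(x)])
    return rank_sum_x - len(x) * (len(x) + 1) / 2


# Toy mention counts, invented for illustration only.
retracted_mentions = [0, 3, 5, 12]
control_mentions = [0, 1, 1, 2]
u_retracted = mann_whitney_u(retracted_mentions, control_mentions)
```

A U value close to the maximum, `len(x) * len(y)`, indicates that the first sample tends to have larger values than the second.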

Moreover, investigating the ratio of the average number of mentions of retracted papers relative to their control counterparts, we find more attention to retracted papers in news outlets, including top news, and knowledge repositories than in social media and blogs (SI Appendix, Fig. S3). This suggests that curated content tends to contain more mentions of retracted findings for each mention of nonretracted research than unfiltered contributions by individuals in social media.

Are Retracted Papers Shared Critically?

Since it is possible that the additional mentions of retracted papers are not meant to propagate their findings but to express criticism, we repeated the analysis after excluding posts that express concerns about the claims of the paper. Here, we focused on Twitter, the single largest platform in our dataset, which accounts for about 80% of all posts in the Altmetric database. Twitter features a diverse representation of different types of users who share scientific content, including academics, practitioners, news organizations, and the lay public (16, 45) (SI Appendix, Fig. S16).

We define a critical tweet as a tweet expressing uncertainty, skepticism, doubt, criticism, concern, confusion, or disbelief with respect to a paper’s findings, data, credibility, novelty, contribution, or other scientific elements. We adopt the term “uncritical attention” to refer to mentions of the paper that do not express criticism. We used a combination of automated and manual methods to label critical tweets with high recall. We identified a large number of critical tweets after collecting three to four independent expert annotations for thousands of tweets (Labeling Critical Tweets).

Fig. 2F shows that even after excluding critical tweets for both groups of papers (full regression results are in SI Appendix, Table S3), retracted papers still receive more mentions on Twitter than control papers, suggesting that potentially flawed findings are indeed uncritically mentioned and shared more. The number of tweets does not necessarily reflect a paper’s true influence since different posts may have different audience sizes. Based on the number of followers of users who mention retracted vs. control papers, the former indeed reaches comparable or even more people after publication than the latter (SI Appendix, Fig. S4). Similar findings may also apply to other platforms, such as news outlets, given that journalists are discouraged from publishing news articles that question a scientific paper (46, 47).

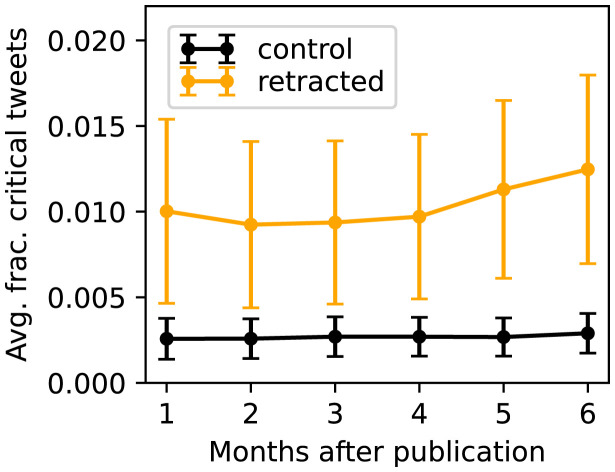

Having examined uncritical attention to papers on Twitter, we now analyze their critical attention. A recent case study suggested that Twitter attention received after publication could be indicative of factual information that should be investigated (40). To assess this possibility at a large scale, we investigated how frequently retracted papers receive critical tweets between publication and retraction. For control papers, we identified critical tweets between publication and the retraction date of their matched paper (an illustration of the reference date is in Fig. 1C). Fig. 3 shows that, compared with control papers, retracted papers receive a higher fraction of critical tweets, especially when looking at longer time periods, between 5 and 6 mo. This finding is also supported by regression analyses, with a paper’s fraction of critical tweets as the dependent variable and the retraction status as the independent variable while controlling for the publication year, the number of authors, and the authors’ log citations. The coefficients of the retraction status are positive and statistically significant (P < 0.001 for 5 to 6 mo) (SI Appendix, Table S4). Note that we did not test whether this finding holds on platforms other than Twitter. Establishing a similar presence of critical discussions on other types of platforms requires further examination.

Fig. 3.

Average cumulative fraction of critical tweets within 6 mo after publication. Control papers are selected from a matching process that considers the publication year, publication venue, number of authors, and authors’ citation count. We focused on papers that have at least one tweet mention in each time window. Retracted papers receive a higher fraction of critical tweets.
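The per-paper fraction of critical tweets and its group average, restricted to papers with at least one tweet, can be sketched as below. The boolean labels stand in for the human annotations described in Labeling Critical Tweets; the function names and toy labels are hypothetical.

```python
def critical_fraction(labels):
    """Fraction of a paper's tweets labeled critical; None if it has no tweets."""
    if not labels:
        return None
    return sum(labels) / len(labels)


def group_average(papers):
    """Average the per-paper fractions over papers with at least one tweet."""
    fractions = [critical_fraction(labels) for labels in papers]
    kept = [f for f in fractions if f is not None]
    return sum(kept) / len(kept) if kept else None


# Toy labels, invented for illustration only (True = critical tweet).
retracted_papers = [[True, False, False, False], [False, False], []]
control_papers = [[False] * 5, [True] + [False] * 9]

retracted_avg = group_average(retracted_papers)
control_avg = group_average(control_papers)
```

Averaging per-paper fractions, rather than pooling all tweets, keeps a few heavily tweeted papers from dominating the comparison.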

If papers that receive a high fraction of critical tweets are also largely ignored by news media and other platforms, then the critical signal from Twitter would not be as relevant. We measured the correlation between the fraction of critical tweets and the number of mentions on other types of platforms (SI Appendix, Table S5), finding that there is no significant association. Retracted papers with a higher fraction of critical tweets are thus not necessarily those with more or less coverage in other types of platforms, such as news media.

Overall, this analysis suggests that Twitter readily hosts critical discussion of problematic papers well before they get retracted. These discussions credit voices that are actively helping to improve science-related discussions in digital media.

Is Retraction Effective in Reducing Attention?

The fact that retracted papers are mentioned more often after their publication prompts us to ask if they continue to receive outsized attention up until and even beyond their retraction. This leads to our main question focused on understanding the effectiveness of the retraction in reducing online attention. We found that retracted papers are discussed much more frequently than control papers even over the 6 mo after their retraction (SI Appendix, Fig. S5). This is not unexpected, as it is very likely that people are reacting to the news of retraction in ways that involve mentioning the paper, such as questioning and criticizing the authors’ practices, the peer review system, or the publishers.

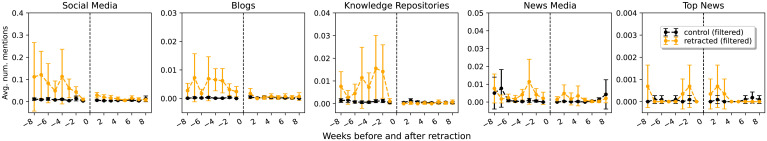

To more accurately measure the extent to which potentially flawed results from retracted papers are shared without regard to their problematic nature, we excluded posts that discussed the retraction itself. To identify such content, we used a filtering strategy (Filtering Retraction-Related Posts). As SI Appendix, Fig. S5 shows, after this filtering, retracted papers are mentioned as much as or less than control papers. This result indicates that the additional attention to retracted papers after their retraction is primarily related to the retraction incident rather than to the paper’s findings, and the previously observed surplus of mentions of retracted papers has disappeared. Yet, this finding does not show the effectiveness of the retraction itself. Since online attention tends to decay naturally over time (48), it is possible that retracted papers already received attention at a similar level as control papers by the time they were retracted. We thus compared the online attention to retracted and control papers before and after the retraction/reference date. Fig. 4 shows that, across different types of platforms, retracted papers are not discussed significantly more often than control papers immediately before their retraction. In fact, even without excluding any posts, 80.2% of retracted papers receive no mentions over the 2 mo preceding their retraction, while 93.6% of them receive no mentions in the last month before retraction. This suggests that by the time the retraction is issued, most papers have already exhausted their attention, meaning that the retraction does not serve the purpose of further reducing the attention. Note that we did not exclude critical tweets in this analysis but only posts that discussed the retraction itself. If we had excluded critical posts before retraction for all platforms, this result would only become more stark since the preretraction mentions would decrease further, indicating even less attention that the retraction could intervene on.
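The share of papers that receive no mentions in a window preceding retraction can be computed along the following lines. This is a sketch under stated assumptions: 1 mo and 2 mo are approximated as 30 and 60 d, and the function name and toy data are hypothetical.

```python
from datetime import date, timedelta


def share_without_mentions(papers, days):
    """Share of papers with no mention in the `days` days before retraction."""
    silent = 0
    for retracted_on, mention_dates in papers:
        start = retracted_on - timedelta(days=days)
        if not any(start <= d < retracted_on for d in mention_dates):
            silent += 1
    return silent / len(papers)


# Toy data, invented for illustration only: (retraction date, mention dates).
retraction = date(2019, 6, 1)
papers = [
    (retraction, [date(2019, 5, 20)]),  # mentioned in the final 30 d
    (retraction, [date(2019, 4, 15)]),  # mentioned 30 to 60 d before
    (retraction, [date(2018, 1, 1)]),   # mentioned only long before
    (retraction, []),                   # never mentioned
]
share_60d = share_without_mentions(papers, days=60)
share_30d = share_without_mentions(papers, days=30)
```

Because the shorter window is contained in the longer one, the share of silent papers can only stay the same or grow as the window shrinks.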

Fig. 4.

Average number of weekly mentions within 2 mo before and after the retraction. Trends are shown on four types of platforms and in top news outlets for data that exclude posts containing the term “retract” during the whole time period for both control and retracted papers. In the case of retracted papers, we also manually excluded all after-retraction posts that discussed the retraction in some form. Comparisons across different types of platforms show that retracted papers do not receive statistically more mentions than control papers immediately before or after retraction. Error bars indicate 95% CIs.

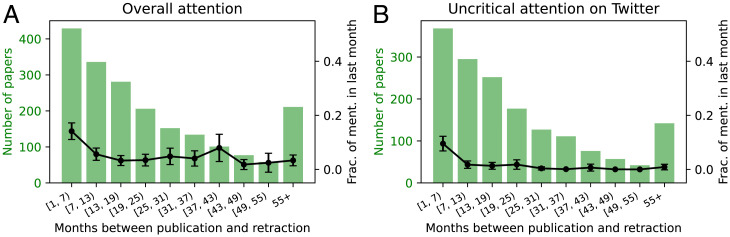

We provide six robustness tests to further probe the finding that retraction has limited efficacy in containing the spread of problematic papers. 1) Changing the length of the tracking window produced qualitatively similar results (SI Appendix, Fig. S6). 2) The finding was universally observed in each of four broad disciplines, including social sciences, life sciences, health sciences, and physical sciences (SI Appendix, Figs. S7–S10 and SI Methods). 3) We obtained consistent results when excluding papers published in nine multidisciplinary journals (SI Appendix, Figs. S13 and S14). 4) Considering only uncritical tweets before retraction on Twitter shows once again the ineffectiveness of retraction in reducing attention (SI Appendix, Fig. S11). 5) We performed an interrupted time series analysis (49) using the average weekly mention trajectory on all types of platforms and uncritical Twitter mentions to assess the effect of retraction on attention. This analysis confirms statistically that since online attention to retracted papers is exhausted when the retraction occurs, the retraction itself does not lead to a faster rate of decrease in mentions compared with the preretraction trend (SI Appendix, Table S6). 6) We investigated the fraction of mentions that occurred within the last month before retraction. We found that this fraction was very small for papers retracted more than 7 mo after their publication, which holds for both the overall attention (Fig. 5A) and the uncritical mentions on Twitter (Fig. 5B). These tests further support the main result that retractions do very little to limit the spread of problematic papers online, as attention has already been exhausted by the time retractions occur. This finding implies that retractions cannot be expected to remedy the problem that retracted papers get outsized attention.
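An interrupted time series analysis is commonly set up as a segmented regression on the weekly series, y_t = b0 + b1·t + b2·post_t + b3·(t − t0)·post_t, where post_t is 1 from the interruption week t0 onward. The source does not state the exact specification used, so the sketch below is only one standard form, fitted by ordinary least squares in plain Python; all names and the toy series are hypothetical. A b3 near zero means that the slope after the interruption does not differ from the slope before it.

```python
def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def fit_segmented(y, t0):
    """Least-squares fit of [b0, b1, b2, b3] for an interruption at week t0."""
    rows = [
        [1.0, float(t), float(t >= t0), float(max(t - t0, 0))]
        for t in range(len(y))
    ]
    # Normal equations: (X^T X) b = X^T y.
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(4)] for i in range(4)]
    xty = [sum(r[i] * v for r, v in zip(rows, y)) for i in range(4)]
    return solve(xtx, xty)


# Toy weekly mentions, invented for illustration only: a steady decline that
# continues unchanged through the interruption at week 8.
weekly_mentions = [20.0 - t for t in range(16)]
b0, b1, b2, b3 = fit_segmented(weekly_mentions, t0=8)
```

In practice one would also want standard errors for b2 and b3, which a regression library provides; this sketch returns point estimates only.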

Fig. 5.

Average fraction of mentions in the last month before retraction. The x axis represents different time windows between publication and retraction. The green bar plot (left y axis) shows the number of papers in each time window, excluding those with zero total mentions between publication and retraction. The black line plot (right y axis) shows the average fraction of mentions in the last month before retraction. Error bars indicate 95% CIs. (A) Data based on mentions in all platforms. (B) Similar results for uncritical mentions on Twitter.
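The per-paper quantity averaged in this figure can be sketched as follows, assuming that the last month is approximated as the final 30 d before retraction. Papers with zero mentions between publication and retraction return `None` and are skipped, mirroring the exclusion stated in the caption. The function name and toy dates are hypothetical.

```python
from datetime import date, timedelta


def last_month_fraction(published, retracted, mention_dates, days=30):
    """Fraction of preretraction mentions that fall in the final `days` days."""
    before = [d for d in mention_dates if published <= d < retracted]
    if not before:
        return None  # no mentions between publication and retraction
    cutoff = retracted - timedelta(days=days)
    return sum(d >= cutoff for d in before) / len(before)


# Toy data, invented for illustration only.
mentions = [
    date(2018, 1, 2),
    date(2018, 1, 5),
    date(2018, 2, 1),
    date(2018, 12, 20),  # within the final 30 d
    date(2019, 1, 10),   # after retraction, ignored
]
fraction = last_month_fraction(date(2018, 1, 1), date(2019, 1, 1), mentions)
```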

Discussion

Our study shows that retracted papers attract more attention after publication than comparable nonretracted papers across a variety of online platforms, including social and news media, blogs, and knowledge repositories. Moreover, their popularity surplus relative to nonretracted papers tends to be higher on curated than noncurated platforms. On the platform accountable for most mentions of research papers, retracted papers remain mentioned more often, even after excluding critical tweets. These findings suggest that retracted papers are disseminated widely and through multiple channels before they are eventually retracted, possibly spreading flawed findings throughout the scientific community and the lay public.

Retracted papers might receive more attention due to a number of mechanisms. First, problematic papers may naturally attract more attention. For example, if the paper is retracted due to overclaiming, the results are likely to be presented as more significant, exciting, and attention grabbing than they should. Similarly, if the authors unintentionally make mistakes, they are more likely to publish the paper and receive attention if the results are positive rather than negative (50, 51). Hence, retracted papers are prone to present (false) positive findings and are, therefore, more eye-catching. Second, attention leads to scrutiny, which could increase the likelihood of retraction. In contrast, a paper that attracts limited attention may present little opportunity for retraction.

Our analysis of papers’ critical mentions indicates that subsequently retracted papers are questioned relatively more often on Twitter after their publication than control papers. This is nontrivial since retracted papers passed the peer review system that science relies on to legitimize new findings through expert evaluation. Our finding validates at population-wide scale a recent case study of three retracted COVID-19 papers (40) in suggesting that collective attention on Twitter includes meaningful discussions about science and may contain useful early signals related to problematic papers that could eventually contribute to their investigation.

We find that retracted papers continue to be discussed more than their control counterparts after being retracted. However, those discussions are primarily related to the retraction incident. Surprisingly, retracted papers do not receive more mentions than control papers right before the retraction date (for control papers, the corresponding reference date). This result is essential because it addresses an important open question about whether simply retracting a paper has an impact on the organic online dissemination of its findings, which can be extensive especially in the context of varied and broadly adopted online platforms as well as the increased engagement with science on most platforms (23, 52). We showed at scale that retraction has limited impact in reducing the spread of problematic papers online, as it comes after papers have already exhausted attention that is unaware of the retraction. This finding thus adds to knowledge about the consequences of retraction beyond the narrow scientific literature and academic sphere. One practical implication is that journals, the scientific community, and the lay public should not think of retraction as an effective tool in decreasing online attention to problematic papers.

Although we studied the largest set of retracted papers to date, our work is not without limitations. First, not all problematic papers that should be retracted are retracted (53, 54), and not all retracted papers are mentioned and traceable on major online platforms. Our findings are based solely on the subset recorded in the Altmetric database, which may not be representative of all problematic papers. Second, the Altmetric database may not cover all mentions of each paper. For instance, when a news outlet, such as the New Scientist, posts a tweet with a link to its piece that covers a research paper, this tweet and its retweets would not necessarily be tracked by Altmetric unless they explicitly contain the unique identifier of the paper. Third, our matching scheme controlling for journal topic, publication year, coauthor team size, and author citation impact leaves other potential factors, such as prestige of affiliation, uncharted. Future work should thus expand on the matching used here and explore controlling for more factors that could impact attention volume. Fourth, our detection of critical mentions is focused on a single platform. While Twitter accounts for a large fraction of all posts in the Altmetric database, future work needs to test our content-related findings on other online platforms. Fifth, our study analyzes public attention to retracted papers. Distinguishing different types of online audiences, including academics and professionals, who engage with problematic papers is an interesting avenue for future research.

Our results inform efforts concerned with the trustworthiness of science (55–57) and have crucial implications for the handling of retracted papers in academic publishing (58). First, it is important to retract problematic papers as they are widely shared online by scholars, practitioners, journalists, and the public, some of whom might have no knowledge of their errors. Second, the fact that people tend to question these papers before retraction could be incorporated into efforts to identify questionable results earlier. Third, our results show that by the time papers are retracted, they no longer receive much attention that is unaware of the retraction. This suggests that the scientific community and the lay public should not expect journal retractions to be an effective tool to decrease harmful attention to problematic papers. Beyond revealing the limited efficacy of retractions in reducing attention to problematic papers, our work introduces a framework and contributes a public dataset of critical and uncritical mentions of research papers, which open up avenues to study the dissemination of retracted papers beyond strictly academic circles.

Materials and Methods

Altmetric Database.

The dataset that records online attention to research papers is provided by Altmetric (October 8, 2019 version). It monitors a range of online sources, searching their posts for links and references to published research. The database we used contains posts for more than 26 million academic records, which are primarily research papers. Each post mentioning a paper contains a uniform resource locator (URL) to its unique identifiers, such as digital object identifier (DOI), PubMed identification, and arXiv identification. Altmetric combines different identifiers for each unique paper and also collates a record of attention for different versions of each paper. The database contains a variety of paper metadata, such as the title, authors, journal, publication date, and article type (research paper, book, book chapter, dataset, editorial piece, etc.). Our analysis was focused on research papers and their posts written in English. For our critical mention analysis, we collected all tweets referenced in the Altmetric database using the Twitter application programming interface (API). Due to users’ privacy settings and account deletion, we successfully retrieved 90.7% of the 85,411,606 tweets. Detailed methods on defining four types of platforms, selecting top news outlets, and the four broad disciplines are shown in SI Appendix, SI Methods.

Retraction Watch Database.

We obtained the set of retracted articles from Retraction Watch (41). This database consists of 21,850 papers retracted by 2020. Each paper has metadata, including the title, journal, authors, publication date, and retraction date. Of the 9,201 retracted papers published after June 10, 2011, the launch date of Altmetric.com, 8,434 also have DOI information. The majority of papers with missing DOI are conference abstracts. We located 4,210 retracted papers in the Altmetric database based on DOI. Due to inconsistencies in recording and tracking retractions, we had to correct some publication and retraction dates. Details are in SI Appendix, SI Methods.
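The filtering and DOI-based linking described above can be sketched as follows. This is a minimal illustration, not the code used in the study: the record layout (dictionaries with `doi` and `published` keys), the function names, and the DOI normalization step are all assumptions introduced here.

```python
from datetime import date

# Launch date of Altmetric.com, as stated in the text.
ALTMETRIC_LAUNCH = date(2011, 6, 10)

# Hypothetical list of prefixes to strip; the source does not describe
# how DOIs were normalized before matching.
_DOI_PREFIXES = (
    "https://doi.org/",
    "http://doi.org/",
    "https://dx.doi.org/",
    "http://dx.doi.org/",
    "doi:",
)


def normalize_doi(doi: str) -> str:
    """Lowercase a DOI and strip a leading resolver prefix (assumed step)."""
    doi = doi.strip().lower()
    for prefix in _DOI_PREFIXES:
        if doi.startswith(prefix):
            return doi[len(prefix):]
    return doi


def link_retracted(records, altmetric_dois):
    """Keep retracted records published after the Altmetric launch date
    that have a DOI and whose DOI appears in the Altmetric database."""
    index = {normalize_doi(d) for d in altmetric_dois}
    linked = []
    for rec in records:
        if rec["published"] <= ALTMETRIC_LAUNCH:
            continue  # published on or before the launch date
        if not rec.get("doi"):
            continue  # missing DOI (mostly conference abstracts, per the text)
        if normalize_doi(rec["doi"]) in index:
            linked.append(rec)
    return linked
```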

Identifying Control Papers.

To compare the amount of attention received by retracted and nonretracted papers, we used the Altmetric database to construct, for each retracted paper, a set of five control papers with similar characteristics in terms of the publication year, publication venue, number of authors, and authors’ total citation count 1 y before publication. The publication year controls for potential temporal variations in the amount of public attention to scientific papers online. Matching based on the journal ensures that we are comparing papers from similar research areas, at least in the case of disciplinary venues, which might also affect the volume of attention. The number of authors and their citation status control for confounding factors related to prestige that can influence a paper’s attention. We collected information on author citations from the Microsoft Academic Graph (59) based on papers’ unique identifiers (DOIs). To ensure that we identified matching control papers for as many retracted papers as possible, we used coarsened exact matching (60). For each retracted paper, we identified controls 1) published in the same journal, 2) within 2 y of the publication year of the retracted paper (2 y before or 2 y after), 3) whose number of authors differs from that of the retracted paper by no more than two, and 4) whose most highly cited author’s citation rank percentile is within 20% of that of the retracted paper. Rank percentile was calculated based on all papers in the Altmetric database. This matching strategy and its thresholds were determined based on a number of iterations that aimed to include as many potential confounding factors as possible while finding matches for as many retracted papers as possible. Retracted papers for which we found fewer than five matches were excluded. In total, we matched 19,255 control papers to 3,851 retracted papers.
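The four matching criteria can be expressed as a simple predicate. The sketch below is illustrative only: the `Paper` fields and function names are hypothetical, "within 20%" is interpreted here as 20 percentile points, and taking the first five matches is an assumption, since the text does not state how five controls were chosen when more were available.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Paper:
    doi: str
    journal: str
    year: int
    n_authors: int
    # Citation rank percentile (0-100) of the most highly cited author.
    top_author_percentile: float


def is_match(retracted: Paper, candidate: Paper) -> bool:
    """Apply the four coarsened matching criteria from the text."""
    return (
        candidate.journal == retracted.journal
        and abs(candidate.year - retracted.year) <= 2
        and abs(candidate.n_authors - retracted.n_authors) <= 2
        # Assumption: "within 20%" means 20 percentile points.
        and abs(candidate.top_author_percentile
                - retracted.top_author_percentile) <= 20
    )


def select_controls(retracted: Paper, pool, k: int = 5):
    """Return k controls, or None when fewer than k candidates match
    (such retracted papers were excluded in the study)."""
    matches = [p for p in pool
               if p.doi != retracted.doi and is_match(retracted, p)]
    if len(matches) < k:
        return None
    # Assumption: keep the first k matches in pool order.
    return matches[:k]
```

With five controls per retained retracted paper, the totals reported above are consistent: 3,851 × 5 = 19,255.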

Defining Tracking Windows.

To ensure that we are using comparable timescales throughout our analyses, we defined tracking windows for the periods after publication as well as before and after retraction. For the after-publication time, we define the tracking window of retracted papers to be the time from their publication to their retraction date or the Altmetric database access date of October 9, 2019 (whichever comes first). We consider the tracking window of control papers to be the window from their publication date to the Altmetric database access date. For the after-retraction time period, we define a reference date for each paper. Retracted papers have their retraction date as their reference date. Control papers have the retraction date of their matched paper as their reference date. The tracking window for both retracted and control papers is from the reference date to the Altmetric database access date.
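As an illustration of these definitions, the tracking windows could be computed as below. The function names and the choice to represent a window as a `(start, end)` pair of dates are assumptions made for this sketch; treating both window endpoints as inclusive when counting posts is likewise an assumption.

```python
from datetime import date

# Altmetric database access date given in the text.
ACCESS_DATE = date(2019, 10, 9)


def after_publication_window(published, retracted_on=None):
    """Window from publication to retraction or access date, whichever
    comes first. Control papers pass retracted_on=None, so their window
    runs from publication to the access date."""
    if retracted_on is None:
        return published, ACCESS_DATE
    return published, min(retracted_on, ACCESS_DATE)


def after_retraction_window(reference_date):
    """Window from the reference date to the access date. For a control
    paper, the reference date is its matched paper's retraction date."""
    return reference_date, ACCESS_DATE


def count_in_window(post_dates, window):
    """Count posts dated within the window (both ends inclusive, assumed)."""
    start, end = window
    return sum(start <= d <= end for d in post_dates)
```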

Labeling Critical Tweets.

We collected all preretraction tweets that mention retracted or control papers (control papers used the retraction date of their matched papers as the reference date). When attempting to identify critical tweets in this sample, we found that existing automated methods of detecting critical posts did not work well. We devised an approach that started with constructing a set of key words to retrieve candidate tweets that may contain criticism. Our goal was for this heuristic to have high recall even if the precision was low. That is, we wanted the list to return a very large fraction of the critical tweets, even if it included many false positives. To construct the key word list, we began with a set of seed words, such as “wonder,” “concern,” and “suspect.” We then collected a random sample of 500 tweets and manually labeled them. Using these labels, we measured the recall of a simple criticism attribution based on whether the tweet contained any of the words from the current key word list. As long as the recall was not high enough, we expanded our key word list based on the content of tweets that were labeled as being critical but did not include any of the key words. After two iterations, we achieved a recall of 0.9 with a list of 76 key words (SI Appendix, SI Methods). We applied this heuristic to 42,766 preretraction tweets and found 7,036 tweets that contained at least one of the key words.
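The recall-driven expansion of the key word list can be sketched as follows. This is a hedged illustration: the function names are hypothetical, matching by case-insensitive substring is an assumption (the text says only that a tweet "contained" a key word), and the example tweets in the usage note are invented for demonstration rather than taken from the dataset.

```python
def contains_keyword(text, keywords):
    """True if any key word occurs in the tweet text.
    Assumption: case-insensitive substring matching."""
    lowered = text.lower()
    return any(k in lowered for k in keywords)


def recall(labeled, keywords):
    """Fraction of manually labeled critical tweets that the key word
    heuristic retrieves. `labeled` holds (text, is_critical) pairs."""
    critical = [text for text, is_critical in labeled if is_critical]
    if not critical:
        raise ValueError("no critical tweets in the labeled sample")
    hits = sum(contains_keyword(text, keywords) for text in critical)
    return hits / len(critical)


def missed_critical(labeled, keywords):
    """Critical tweets the current list fails to retrieve; reading these
    suggests new key words for the next iteration."""
    return [text for text, is_critical in labeled
            if is_critical and not contains_keyword(text, keywords)]
```

For example, with the seed words "wonder," "concern," and "suspect" and a toy sample in which one of three critical tweets reads "This looks flawed to me," `recall` returns 2/3 and `missed_critical` returns that tweet; adding "flawed" to the list raises the recall on this sample to 1.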

Then, we manually labeled each of these candidate tweets as critical or not. We used three to four annotators for this task. Each tweet was independently labeled by three different annotators. One of the authors trained the annotators by providing them a definition of critical tweets, examples, and labeling guidelines. The annotators were also familiarized with various aspects of scientific papers and publishing that are commonly criticized and questioned, such as the review process, the validity of the data, the generalizability of the findings, etc. The annotators then labeled a batch of 500 tweets and discussed disagreements with one of the authors. They then labeled a second batch of 500 tweets, which resulted in an average Cohen’s kappa score of 0.77, indicating substantial interrater agreement (61). The annotators then labeled all remaining tweets, resulting in three labels per tweet and achieving a weighted average Cohen’s kappa score of 0.72. Of the 7,036 tweets, 6,201 had unanimous agreement among the three annotators, with 720 being labeled as critical. The other authors of the paper then provided a fourth label for the 835 tweets that had disagreement among the three annotators. After this step, 476 tweets had a majority label (three vs. one) and were labeled accordingly. The other 359 tweets remained ambiguous (two vs. two) (SI Appendix, Table S7 shows some examples). We treated these ambiguous tweets as being uncritical. The results are qualitatively similar when treating them as critical (SI Appendix, Fig. S12).
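The agreement statistic and the label aggregation rule can be illustrated with a short sketch. The function names are hypothetical, and `average_pairwise_kappa` computes an unweighted mean over annotator pairs, which is a simplification: the text reports a weighted average and does not specify the weights.

```python
from collections import Counter
from itertools import combinations


def cohens_kappa(a, b):
    """Cohen's kappa for two annotators' labels over the same items."""
    if len(a) != len(b) or not a:
        raise ValueError("label lists must be non-empty and equal in length")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    counts_a, counts_b = Counter(a), Counter(b)
    expected = sum(counts_a[c] * counts_b[c]
                   for c in set(a) | set(b)) / (n * n)
    if expected == 1:
        return 1.0  # both annotators used a single identical label
    return (observed - expected) / (1 - expected)


def average_pairwise_kappa(ratings):
    """Unweighted mean kappa over all annotator pairs (a simplification)."""
    pairs = list(combinations(ratings, 2))
    return sum(cohens_kappa(a, b) for a, b in pairs) / len(pairs)


def final_label(three_labels, fourth_label=None):
    """Aggregate labels (True = critical) following the rule in the text:
    unanimous labels stand; otherwise a fourth label is added, a 3-vs-1
    majority wins, and 2-vs-2 ties are treated as uncritical."""
    votes = sum(three_labels)
    if votes in (0, 3):
        return votes == 3
    if fourth_label is None:
        raise ValueError("disagreement requires a fourth label")
    return votes + int(fourth_label) >= 3
```

The counts reported above are consistent with this procedure: 6,201 unanimous tweets plus 835 with disagreement give 7,036, and the 835 split into 476 majority cases and 359 ties.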

Filtering Retraction-Related Posts.

To label posts that discuss the retraction of a paper, we first identified all paper mentions that contained the term “retract” as it is often used in phrases, such as “it has been retracted,” “journal retracts paper,” “retraction of that paper,” etc. All such posts were considered to be discussing the retraction incident (a manual inspection of 50 random such posts on each type of platform shows no false-positive cases). We then manually labeled each after-retraction post that did not contain this key word as either discussing the retraction or not. Some examples are as follows: “We have to dig deeper! Science is never settled” and “Be careful when you are expected to believe something because ‘its science.’” Using this method, we labeled all posts in news media and blogs. For social media, we labeled only posts from Twitter as this is the largest platform in that group. For knowledge repositories, we labeled Wikipedia posts, the second largest platform in this category (the largest one is patents, which we speculate do not usually discuss retraction). Note that since we did not label posts from all platforms, the mention volume of retracted papers after excluding retraction-related content is an overestimation.
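The first, automated step of this filter amounts to a substring test, sketched below. The function names are hypothetical, and case-insensitive matching is an assumption; posts that do not contain the term are only set aside here for the manual labeling described above, not classified.

```python
def mentions_retraction(text):
    """True if the post contains the term "retract", which also covers
    "retracted", "retracts", and "retraction".
    Assumption: matching is case-insensitive."""
    return "retract" in text.lower()


def split_posts(posts):
    """Separate posts flagged automatically as retraction-related from
    those that still require manual labeling."""
    flagged, needs_manual_label = [], []
    for post in posts:
        if mentions_retraction(post):
            flagged.append(post)
        else:
            needs_manual_label.append(post)
    return flagged, needs_manual_label
```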

Acknowledgments

We thank Altmetric.com, Retraction Watch, and Microsoft Academic Graph for providing the data used in this study; Annika Weinberg, Berit Ginsberg, Henry Dambanemuya, and Rod Abhari for labeling critical tweets; Danaja Maldeniya for collecting the tweets used in this study; Jordan Braun for copyediting; and Aparna Ananthasubramaniam, Danaja Maldeniya, and Ed Platt for helpful suggestions and discussion. This work was partially funded by NSF Career Grant IIS-1943506 and by Air Force Office of Scientific Research Award FA9550-19-1-0029.

Footnotes

The authors declare no competing interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at https://www.pnas.org/lookup/suppl/doi:10.1073/pnas.2119086119/-/DCSupplemental.

Data Availability

The Altmetric data can be accessed free of charge by researchers from https://www.altmetric.com/research-access/. The Retraction Watch database is available freely from The Center For Scientific Integrity subject to a standard data use agreement (details are at https://retractionwatch.com/retraction-watch-database-user-guide/). The Microsoft Academic Graph is publicly available at https://www.microsoft.com/en-us/research/project/microsoft-academic-graph/ or https://www.microsoft.com/en-us/research/project/open-academic-graph/. All custom code and data created by us have been deposited on GitHub (https://github.com/haoopeng/retraction_attention/), including tweets about research papers labeled as expressing uncertainty with respect to their data, approach, or findings. The data we provide in our repository include unique identifiers for all retracted and control papers, which were used to link these data sources. For user privacy reasons, Twitter does not allow sharing of the text of tweets. To comply with this requirement, we provide identifications for the tweets that we labeled as critical/uncritical. Researchers can use these identifications to collect the text and metadata using the Twitter API (https://developer.twitter.com/en/products/twitter-api/academic-research).

References

- 1. Ajiferuke I., Adekannbi J. O., Correction and retraction practices in library and information science journals. J. Librarian. Inform. Sci. 52, 169–183 (2020).

- 2. Van Noorden R., Science publishing: The trouble with retractions. Nature 478, 26–28 (2011).

- 3. Steen R. G., Retractions in the scientific literature: Is the incidence of research fraud increasing? J. Med. Ethics 37, 249–253 (2011).

- 4. Fang F. C., Steen R. G., Casadevall A., Misconduct accounts for the majority of retracted scientific publications. Proc. Natl. Acad. Sci. U.S.A. 109, 17028–17033 (2012).

- 5. Shuai X., et al., A multidimensional investigation of the effects of publication retraction on scholarly impact. J. Assoc. Inf. Sci. Technol. 68, 2225–2236 (2017).

- 6. Budd J. M., Sievert M., Schultz T. R., Phenomena of retraction: Reasons for retraction and citations to the publications. JAMA 280, 296–297 (1998).

- 7. Steen R. G., Casadevall A., Fang F. C., Why has the number of scientific retractions increased? PLoS One 8, e68397 (2013).

- 8. Dong Y., Ma H., Shen Z., Wang K., “A century of science: Globalization of scientific collaborations, citations, and innovations” in Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (Association for Computing Machinery, New York, NY, 2017), pp. 1437–1446.

- 9. Lee C. J., Sugimoto C. R., Zhang G., Cronin B., Bias in peer review. J. Am. Soc. Inf. Sci. Technol. 64, 2–17 (2013).

- 10. Jones B. F., Wuchty S., Uzzi B., Multi-university research teams: Shifting impact, geography, and stratification in science. Science 322, 1259–1262 (2008).

- 11. Cokol M., Iossifov I., Rodriguez-Esteban R., Rzhetsky A., How many scientific papers should be retracted? EMBO Rep. 8, 422–423 (2007).

- 12. Van Noorden R., The trouble with retractions: A surge in withdrawn papers is highlighting weaknesses in the system for handling them. Nature 478, 26–29 (2011).

- 13. Marcus A., A scientist’s fraudulent studies put patients at risk. Science 362, 394 (2018).

- 14. Sharma K., Patterns of retractions from 1981-2020: Does a fraud lead to another fraud? arXiv [Preprint] (2020). https://arxiv.org/abs/2011.13091 (Accessed 5 March 2021).

- 15. O’Grady C., Honesty study was based on fabricated data. Science 373, 950–951 (2021).

- 16. Ke Q., Ahn Y. Y., Sugimoto C. R., A systematic identification and analysis of scientists on Twitter. PLoS One 12, e0175368 (2017).

- 17. Altmetric.com, The 2020 Altmetric top 100 (2020). https://www.altmetric.com/top100/2020/. Accessed 1 February 2021.

- 18. Mehra M. R., Ruschitzka F., Patel A. N., Retraction: Hydroxychloroquine or chloroquine with or without a macrolide for treatment of COVID-19: A multinational registry analysis. Lancet 395, 1820 (2020).

- 19. AlShebli B., Makovi K., Rahwan T., Retracted note: The association between early career informal mentorship in academic collaborations and junior author performance. Nat. Commun. 11, 6446 (2020).

- 20. Clauset A., Larremore D. B., Sinatra R., Data-driven predictions in the science of science. Science 355, 477–480 (2017).

- 21. Sundar S. S., Effect of source attribution on perception of online news stories. J. Mass Commun. Q. 75, 55–68 (1998).

- 22. Flanagin A. J., Metzger M. J., Perceptions of internet information credibility. J. Mass Commun. Q. 77, 515–540 (2000).

- 23. Scheufele D. A., Krause N. M., Science audiences, misinformation, and fake news. Proc. Natl. Acad. Sci. U.S.A. 116, 7662–7669 (2019).

- 24. Morris M. R., Counts S., Roseway A., Hoff A., Schwarz J., “Tweeting is believing?: Understanding microblog credibility perceptions” in Proceedings of the ACM 2012 Conference on Computer Supported Cooperative Work (ACM, New York, NY, 2012), pp. 441–450.

- 25. Brossard D., New media landscapes and the science information consumer. Proc. Natl. Acad. Sci. U.S.A. 110 (suppl. 3), 14096–14101 (2013).

- 26. Scheufele D. A., Communicating science in social settings. Proc. Natl. Acad. Sci. U.S.A. 110 (suppl. 3), 14040–14047 (2013).

- 27. Milkman K. L., Berger J., The science of sharing and the sharing of science. Proc. Natl. Acad. Sci. U.S.A. 111 (suppl. 4), 13642–13649 (2014).

- 28. Su L. Y. F., Akin H., Brossard D., Scheufele D. A., Xenos M. A., Science news consumption patterns and their implications for public understanding of science. J. Mass Commun. Q. 92, 597–616 (2015).

- 29. Zakhlebin I., Horvát E.-Á., “Diffusion of scientific articles across online platforms” in Proceedings of the International AAAI Conference on Web and Social Media (Association for the Advancement of Artificial Intelligence, Palo Alto, CA, 2020), pp. 762–773.

- 30. Lu S. F., Jin G. Z., Uzzi B., Jones B., The retraction penalty: Evidence from the Web of Science. Sci. Rep. 3, 3146 (2013).

- 31. Madlock-Brown C. R., Eichmann D., The (lack of) impact of retraction on citation networks. Sci. Eng. Ethics 21, 127–137 (2015).

- 32. Avenell A., Stewart F., Grey A., Gamble G., Bolland M., An investigation into the impact and implications of published papers from retracted research: Systematic search of affected literature. BMJ Open 9, e031909 (2019).

- 33. Budd J. M., Coble Z., Abritis A., An investigation of retracted articles in the biomedical literature. Proc. Assoc. Inf. Sci. Technol. 53, 1–9 (2016).

- 34. Wadhwa R. R., Rasendran C., Popovic Z. B., Nissen S. E., Desai M. Y., Temporal trends, characteristics, and citations of retracted articles in cardiovascular medicine. JAMA Netw. Open 4, e2118263 (2021).

- 35. Jin G. Z., Jones B., Lu S. F., Uzzi B., The reverse Matthew effect: Consequences of retraction in scientific teams. Rev. Econ. Stat. 101, 492–506 (2019).

- 36. Azoulay P., Furman J. L., Murray F., Retractions. Rev. Econ. Stat. 97, 1118–1136 (2015).

- 37. Brainard J., You J., What a massive database of retracted papers reveals about science publishing’s ‘death penalty.’ Science 25, 1–5 (2018).

- 38. Serghiou S., Marton R. M., Ioannidis J. P. A., Media and social media attention to retracted articles according to Altmetric. PLoS One 16, e0248625 (2021).

- 39. Furman J. L., Jensen K., Murray F., Governing knowledge in the scientific community: Exploring the role of retractions in biomedicine. Res. Policy 41, 276–290 (2012).

- 40. Haunschild R., Bornmann L., Can tweets be used to detect problems early with scientific papers? A case study of three retracted COVID-19/SARS-CoV-2 papers. Scientometrics 126, 5181–5199 (2021).

- 41. Retraction Watch, Retraction Watch (2020). https://retractionwatch.com/. Accessed 10 January 2021.

- 42. Altmetric.com, Who’s talking about your research? (2019). https://www.altmetric.com/. Accessed 9 October 2019.

- 43. Peng H., Romero D. M., Horvát E.-Á., Critical tweets about retracted papers. GitHub. https://github.com/haoopeng/retraction_attention/blob/main/data/all_tweets_uncertain_label_revision_share.csv. Deposited 29 March 2022.

- 44. Pei J., Jurgens D., “Measuring sentence-level and aspect-level (un)certainty in science communications” in Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing (Association for Computational Linguistics, Stroudsburg, PA, 2021), pp. 9959–10011.

- 45. Kwak H., Lee C., Park H., Moon S., “What is Twitter, a social network or a news media?” in Proceedings of the 19th International Conference on World Wide Web (ACM, New York, NY, 2010), pp. 591–600.

- 46. Rödder S., Franzen M., Weingart P., The Sciences’ Media Connection—Public Communication and Its Repercussions (Springer Science & Business Media, 2011), vol. 28.

- 47. Blum D., Knudson M., Henig R. M., Eds., A Field Guide for Science Writers (Oxford University Press, New York, NY, 2006).

- 48. Wu F., Huberman B. A., Novelty and collective attention. Proc. Natl. Acad. Sci. U.S.A. 104, 17599–17601 (2007).

- 49. McDowall D., McCleary R., Bartos B. J., Interrupted Time Series Analysis (Oxford University Press, 2019).

- 50. Olson C. M., et al., Publication bias in editorial decision making. JAMA 287, 2825–2828 (2002).

- 51. Easterbrook P. J., Berlin J. A., Gopalan R., Matthews D. R., Publication bias in clinical research. Lancet 337, 867–872 (1991).

- 52. Hargittai E., Füchslin T., Schäfer M. S., How do young adults engage with science and research on social media? Some preliminary findings and an agenda for future research. Soc. Media Soc., 10.1177/2056305118797720 (2018).

- 53. Ioannidis J. P., Why most published research findings are false. PLoS Med. 2, e124 (2005).

- 54. Fanelli D., Is science really facing a reproducibility crisis, and do we need it to? Proc. Natl. Acad. Sci. U.S.A. 115, 2628–2631 (2018).

- 55. Jamieson K. H., McNutt M., Kiermer V., Sever R., Signaling the trustworthiness of science. Proc. Natl. Acad. Sci. U.S.A. 116, 19231–19236 (2019).

- 56. Grimes D. R., Bauch C. T., Ioannidis J. P. A., Modelling science trustworthiness under publish or perish pressure. R. Soc. Open Sci. 5, 171511 (2018).

- 57. Fanelli D., Ioannidis J. P., US studies may overestimate effect sizes in softer research. Proc. Natl. Acad. Sci. U.S.A. 110, 15031–15036 (2013).

- 58. Resnik D. B., Wager E., Kissling G. E., Retraction policies of top scientific journals ranked by impact factor. J. Med. Libr. Assoc. 103, 136–139 (2015).

- 59. Sinha A., et al., “An overview of Microsoft academic service (MAS) and applications” in Proceedings of the 24th International Conference on World Wide Web Companion (Association for Computing Machinery, New York, NY, 2015), pp. 243–246.

- 60. Iacus S. M., King G., Porro G., Causal inference without balance checking: Coarsened exact matching. Polit. Anal. 20, 1–24 (2012).

- 61. McHugh M. L., Interrater reliability: The kappa statistic. Biochem. Med. (Zagreb) 22, 276–282 (2012).
