Significance

Why does the public remain misinformed about many issues, even though studies have repeatedly shown that exposure to factual information increases belief accuracy? To answer this question, we administer a four-wave panel experiment to estimate the effects of exposure to news and opinion about climate change. We find that accurate scientific information about climate change increases factual accuracy and support for government action to address it immediately after exposure. However, these effects largely disappear in later waves. Moreover, opinion content that voices skepticism of the scientific consensus reverses the accuracy gains generated by science coverage (unlike exposure to issue coverage featuring partisan conflict, which has no measurable effects).

Keywords: factual beliefs, climate change, attitude change

Abstract

Although experiments show that exposure to factual information can increase factual accuracy, the public remains stubbornly misinformed about many issues. Why do misperceptions persist even when factual interventions generally succeed at increasing the accuracy of people’s beliefs? We seek to answer this question by testing the role of information exposure and decay effects in a four-wave panel experiment (n = 2,898 at wave 4) in which we randomize the media content that people in the United States see about climate change. Our results indicate that science coverage of climate change increases belief accuracy and support for government action immediately after exposure, including among Republicans and people who reject anthropogenic climate change. However, both effects decay over time and can be attenuated by exposure to skeptical opinion content (but not issue coverage featuring partisan conflict). These findings demonstrate that the increases in belief accuracy generated by science coverage are short lived and can be neutralized by skeptical opinion content.

While recent studies have shown that the provision of factually accurate information leads to more factually accurate political beliefs (1–3), the public remains stubbornly misinformed about many issues. Why do misperceptions remain widespread on issues like climate change even as factual interventions typically succeed at increasing the accuracy of people’s beliefs in experimental studies?

Some scholars attribute the persistence of misperceptions to the prevalence of directionally motivated reasoning on divisive political and social issues (4, 5). Even matters of fact can become entangled with partisan and other group identities, seemingly preventing people from reasoning about them dispassionately (6). These divisions are frequently driven by elite cues on issues where the parties have diverged (7, 8). As a result, misperceptions about issues like climate change frequently persist for years despite being contradicted by overwhelming scientific evidence (9).

Other researchers find, however, that exposure to factual evidence generally increases the accuracy of people’s beliefs even on issues with widespread and highly politicized misperceptions (1–3, 10, 11). These effects are also observed when factual information is bundled with competing partisan cues, although the presence of such cues tends to attenuate accuracy gains (12). Some scholars have extended this claim further, citing macro time series data to argue that public beliefs tend to become more accurate over time as people slowly update in response to evidence (13, 14).

In this paper, we describe results from an experiment designed to test three possible ways of reconciling these competing findings. First, the persistence of inaccurate beliefs may reflect insufficient levels of exposure to accurate information (15, 16). Under this explanation, the public sees too little factual information about issues on which misperceptions are common to produce durable accuracy increases. For instance, only 15% of climate coverage in the United States from 2011 to 2015 mentioned the scientific consensus on the issue. Among this, 10% featured false balance with fringe views, and 26% featured a polarizing opponent (16). If people were exposed to more accurate information about science, they might come to hold more accurate scientific beliefs.

An alternate possibility proposes that subsequent exposure to contrary partisan or ideological cues offsets the effects of factual information exposure and encourages people to revert to misperceptions they previously held (16). Under this explanation, partisan or ideological cues typically neutralize the accuracy gains resulting from exposure to science coverage. Finally, the effects of accurate information may decay away quickly after exposure (17–19). For instance, belief in the “birther” myth decreased substantially after the highly publicized release of Barack Obama’s birth certificate but rebounded to previous levels afterward (20, 21). According to this explanation, this pattern of surge and decline is likely to be common; exposure to science coverage increases the accuracy of people’s beliefs, but over time, they tend to revert back toward their prior views.

We test these explanations in the context of the climate change debate, perhaps the most high-profile issue in the United States on which factually accurate information has failed to reduce aggregate misperceptions as much as experimental research might seem to suggest. Although factual corrections of related misinformation reduce misperceptions about climate change (22, 23), a substantial minority of the public continues to reject the scientific consensus in high-quality surveys (24).

Our experimental design allows us to test each of the above explanations in the context of the climate debate. First, to investigate the effects of factually accurate information, we randomize exposure to news articles about the science of climate change. Second, to examine the possibility that partisan cues and/or ideological opinion may neutralize or even reverse the effects of factual information, we randomize exposure to news content featuring partisan conflict over climate or opinion articles that question the consensus on anthropogenic climate change. Finally, to measure how quickly effects dissipate, we measure effects over four waves of an experiment (with randomized treatments being administered in the second and third waves).

To approximate real-world news diets, the treatment stimuli consisted of articles published during the 2018 debate over climate change that followed the release of an Intergovernmental Panel on Climate Change (IPCC) report (Materials and Methods has details, and SI Appendix, section A has the full text of each article). Unlike prior studies, which typically document that belief accuracy increases following exposure to factual corrections, our study tests if general science news articles that do not explicitly seek to debunk misperceptions can also increase factual accuracy. Our findings on the effects of opinion articles and articles that emphasize partisan conflict test the effects of other types of similarly realistic stimuli that are more frequently encountered than fact checks or other types of corrective information.

After providing pretreatment measures of beliefs and attitudes in the first wave, respondents were randomized to read news articles describing scientific findings on climate change, journalistic coverage of climate change with partisan cues, opinion articles that voiced skepticism of climate change, or placebo content in waves 2 and 3. Wave 3 was launched 1 wk after wave 2, and wave 4 was launched 1 wk after wave 3. Overall, the median time elapsed from randomization in wave 2 to the conclusion of the study in wave 4 was 14 d.

Participants were US adults recruited from Mechanical Turk (Materials and Methods has more details). While nonrepresentative, other research on misperceptions has found that effects obtained on Mechanical Turk mirror those obtained via more representative samples (2). Critically for our purposes, the platform facilitates cost-effective multiwave experiments with comparable levels of attrition to high-quality longitudinal studies (25).

Table 1 describes the conditions to which respondents were randomly assigned with equal probability and provides examples of content shown to respondents by condition and wave. These conditions were selected to test the effects of exposure to scientific coverage and how it can be offset or undermined by exposure to coverage focusing on partisan conflict or skeptical opinion content. For example, participants assigned to science wave 2 → opinion wave 3 were shown three science articles in the second wave (e.g., “Climate change warning is dire”) and three skeptical opinion articles in the third wave (e.g., “Climate change industry grows rich from hysteria”). Further discussion about our selection of conditions (including the combinations of treatments that we omitted to maximize statistical power) can be found in Materials and Methods.

Table 1.

Summary of treatment content by wave with example headline

| Condition | W2 | W3 |
|---|---|---|
| Science W2 → Science W3 | Science predicts catastrophe from climate change (e.g., “Climate change warning is dire”) | Science predicts catastrophe from climate change (e.g., “The clock is ticking to stop catastrophic global warming, top climate scientists say”) |
| Science W2 → Opinion W3 | Science predicts catastrophe from climate change (e.g., “Climate change warning is dire”) | Climate science can’t be trusted (e.g., “Climate change industry grows rich from hysteria”) |
| Science W2 → Placebo W3 | Science predicts catastrophe from climate change (e.g., “Climate change warning is dire”) | Unrelated articles (e.g., “Got a meeting? Take a walk”) |
| Science W2 → Partisan W3 | Science predicts catastrophe from climate change (e.g., “Climate change warning is dire”) | Democrats, Trump disagree over climate (e.g., “Trump steadily undoing US efforts on climate change”) |
| Placebo W2 → Science W3 | Unrelated articles (e.g., “Five sauces for the modern cook”) | Science predicts catastrophe from climate change (e.g., “The clock is ticking to stop catastrophic global warming, top climate scientists say”) |
| Partisan W2 → Partisan W3 | Democrats, Trump disagree over climate (e.g., “Poles apart on climate change in election season”) | Democrats, Trump disagree over climate (e.g., “Trump steadily undoing US efforts on climate change”) |
| Placebo W2 → Placebo W3 | Unrelated articles (e.g., “Five sauces for the modern cook”) | Unrelated articles (e.g., “Got a meeting? Take a walk”) |

W2 and W3 indicate waves 2 and 3, respectively.

The primary outcome measures of the study are factual beliefs and attitudes toward climate change, which we measure using questions adapted from the American National Election Study and Pew Research Center on whether climate change has been happening, whether climate change is caused by human activity, whether the government should do more to address climate change, and whether the respondent prefers to support renewable energy or fossil fuels. We also measure the perceived importance of climate change and attitudes toward scientists. (Full question wording is provided in SI Appendix, section A.)

We test two preregistered hypotheses. First, consistent with the explanation that misperceptions endure because of insufficient exposure to accurate information, we test whether exposure to science coverage of climate change increases the accuracy of people’s factual beliefs about the issue immediately after exposure (hypothesis 1). Second, we consider the explanation that partisan and ideological cues help negate science coverage effects by testing if the effects on belief accuracy are reduced by subsequent exposure to issue coverage featuring partisan conflict (hypothesis 2a) or skeptical opinion content (hypothesis 2b). Either type of content could undermine the effects of science coverage by exposing people to claims or arguments that conflict with the scientific evidence (often accompanied by partisan or ideological cues). Finally, we examine the decay explanation for persistent misperceptions by testing whether the effects of exposure to factual evidence on climate change beliefs persist over time (a preregistered research question).

We also consider several other preregistered research questions where existing literature and theory offer weaker expectations. First, we examine how our interventions affect not only factual beliefs but also attitudes toward climate change and energy issues. Understanding the relationship between belief accuracy and related attitudes is especially important given the association between holding accurate beliefs about climate change and supporting policies to curtail it (26). However, prior experimental findings about the effect of factual information on policy attitudes are mixed (27–29).

Second, as described above, people may often encounter media coverage of controversial issues that includes partisan cues and conflict rather than news coverage featuring accurate information on matters of scientific consensus (16). Exposure to partisan-focused news coverage may promote directionally motivated reasoning or increase belief consistency pressures (8, 30). We therefore ask how exposure to climate coverage focused on partisan conflict affects climate beliefs in the wave of exposure, whether these effects persist among respondents who are repeatedly exposed to partisan-focused coverage, and how these effects compare with the effects of repeated exposure to science coverage of climate.*

Finally, given the overwhelming evidence of differences by party in climate beliefs and variation in information processing by prior views on the issue (e.g., ref. 33), we test whether the effects of our treatments differ by respondent partisanship and pretreatment beliefs about the human role in climate change.

Our between-subjects experiment was administered from 25 August to 22 September 2020. All deviations from our preregistration are noted below (preregistration link: https://osf.io/xc3v8). To simulate a realistic media environment during the month-long experiment, respondents were shown stimulus articles selected from articles published in the 2 mo after the 2018 IPCC report on the potential impacts of a 1.5 °C increase in global temperatures. (Materials and Methods has details on the article selection process; the complete text of all survey items and stimulus materials is provided in SI Appendix, section A.)

Following our preanalysis plan, we report two sets of results. First, we estimate the immediate effect of exposure to each type of content—science news, coverage of partisan conflict, or skeptical opinion content—within wave 2 or wave 3. Second, we estimate the effects of assignment to one of the seven possible sequences of treatments on climate change beliefs and policy attitudes across waves 2, 3, and 4.

Our results indicate that exposure to science content improves factual accuracy but that the improvements are short lived and no longer detectable by the end of our study. We also find that exposure to opinion content skeptical of science can neutralize or reverse accuracy gains. Contrary to expectations, we do not find that exposure to news coverage focused on partisan conflict decreases factual accuracy. Immediately after exposure, science coverage about climate change increases support for government action to address climate change, but this effect fades over time. Reported effects are generally consistent across party affiliations and pretreatment belief (or not) in anthropogenic climate change. However, the effects of skeptical opinion content are larger for both Republicans and climate change deniers.

Results

Primary hypotheses and research questions are tested using ordinary least squares regression with robust SEs. Following prior work (34), we use a lasso variable selection procedure to determine the set of prognostic covariates to include in each model (Materials and Methods has details). Consistent with our preanalysis plan, these models are estimated only on participants who completed all four waves. Respondents were balanced by condition at randomization (SI Appendix, Table S1). Consult ref. 35 for data.
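As a rough illustration of the estimation approach described above, the following Python sketch computes a treatment effect as the OLS coefficient on a binary treatment indicator, with a heteroskedasticity-robust SE. It is a simplification under stated assumptions: it omits the lasso-selected covariates, it uses the HC2 variant of robust SEs (the text does not say which variant was used), and the function name and outcome values are hypothetical rather than taken from the study.

```python
import math
from statistics import mean, variance


def ols_binary_treatment(treated, control):
    """OLS slope on a 0/1 treatment indicator with an HC2 robust SE.

    With an intercept and a single binary regressor, the OLS slope equals
    the difference in group means, and the HC2 variance equals the sum of
    the per-group sample variances divided by the group sizes.
    """
    estimate = mean(treated) - mean(control)
    se = math.sqrt(variance(treated) / len(treated)
                   + variance(control) / len(control))
    # Normal-approximation 95% confidence interval (an assumption of this sketch).
    ci = (estimate - 1.96 * se, estimate + 1.96 * se)
    return estimate, se, ci


# Hypothetical outcome scores, NOT data from the study.
science = [0.9, 0.8, 1.0, 0.7, 0.9, 0.8]
placebo = [0.7, 0.6, 0.9, 0.5, 0.8, 0.7]

estimate, se, ci = ols_binary_treatment(science, placebo)
print(f"estimate={estimate:.3f}, SE={se:.3f}, 95% CI=({ci[0]:.3f}, {ci[1]:.3f})")
```

Adding prognostic covariates, as the models reported here do, would typically tighten the CIs without changing what the coefficient on the treatment indicator is meant to estimate.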

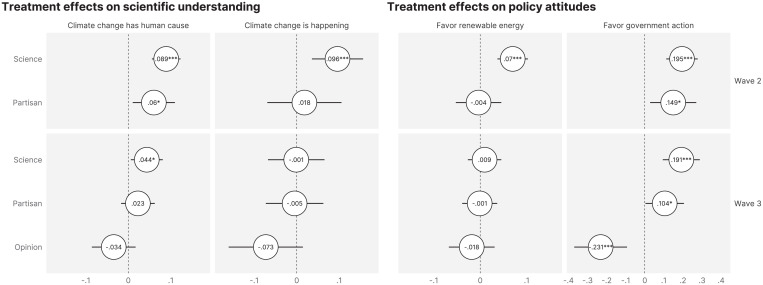

We first consider the direct effects of exposure to different types of content within waves 2 and 3 on the accuracy of people’s beliefs immediately after exposure. Our results, which are reported in Fig. 1, provide strong evidence supporting our first hypothesis, which predicts that exposure to science content about climate change would increase factual accuracy in the wave in which people were exposed. Consistent with our expectations, belief accuracy increased after exposure to science coverage of climate change both for climate change having a human cause in waves 2 and 3 (β̂ = 0.089 and β̂ = 0.044, P < 0.05, respectively) and for whether climate change is happening in wave 2 (β̂ = 0.096, P < 0.005; no significant result was observed in wave 3). (Full model results are provided in SI Appendix, Table S2.)

Fig. 1.

Treatment effects on climate change beliefs and policy attitudes within the wave by content type. Point estimates represent estimates from OLS regressions with robust SEs and include 95% CIs. Each point represents the estimated effect of assignment to exposure to the content type in question rather than placebo within the wave. SI Appendix, Tables S2 and S3 have details. *P < 0.05; ***P < 0.005.

However, exposure to science coverage not only improved factual accuracy but also affected some policy attitudes immediately after exposure (a preregistered research question). Fig. 1 shows that participants exposed to science content reported greater agreement immediately afterward that government should do more to address climate in both waves 2 and 3 (β̂ = 0.195, P < 0.005 and β̂ = 0.191, P < 0.005, respectively) and should support renewable energy in wave 2 (β̂ = 0.070, P < 0.005; no significant result was observed in wave 3). (SI Appendix, Table S3 shows results in tabular form.)

By contrast, as Fig. 1 indicates, the effects of exposure to coverage of partisan conflict over climate are less consistent (a preregistered research question). Across both measures of factual beliefs and both waves, exposure to partisan conflict affected factual accuracy immediately after exposure in only one case: a belief that climate change has a human cause in wave 2 (β̂ = 0.06, P < 0.05). Similarly, coverage of partisan conflict increased support for government action immediately after exposure in both waves (β̂ = 0.149, P < 0.05 in wave 2 and β̂ = 0.104, P < 0.05 in wave 3) but did not have a measurable effect on support for renewable energy in either wave.†

The results above estimate the effects of a particular type of content immediately after exposure using models that compare respondents randomized to see that content in a given wave with those randomized to a placebo condition instead. For instance, we compare belief in climate change between respondents who were randomly assigned to see science coverage in wave 2 vs. those who saw placebo content in wave 2. However, respondents were randomized to one of seven possible sequences of conditions across waves 2 and 3 (Table 1). We therefore next estimate the effects of the sequence of stimuli to which respondents were exposed in waves 2, 3, and 4. For instance, to estimate the persistence of the effects of science coverage across waves, we compare respondents who were randomly assigned to science coverage in wave 2 and to placebo in wave 3 with respondents assigned to placebo in both waves.
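The two kinds of comparison described above can be made concrete with a short Python sketch. It encodes the seven sequences from Table 1 and returns the treated and comparison groups for a within-wave contrast and for a sequence contrast. The function names are hypothetical, and pooling across the other wave's content in the within-wave contrast is an assumption of this sketch rather than a detail confirmed by the text.

```python
# The seven assigned sequences from Table 1: (wave 2 content, wave 3 content).
CONDITIONS = [
    ("science", "science"),
    ("science", "opinion"),
    ("science", "placebo"),
    ("science", "partisan"),
    ("placebo", "science"),
    ("partisan", "partisan"),
    ("placebo", "placebo"),
]

BASELINE = ("placebo", "placebo")


def within_wave_groups(content, wave):
    """Conditions showing `content` vs. placebo in the given wave (2 or 3)."""
    idx = {2: 0, 3: 1}[wave]
    treated = [c for c in CONDITIONS if c[idx] == content]
    control = [c for c in CONDITIONS if c[idx] == "placebo"]
    return treated, control


def sequence_groups(sequence):
    """One full sequence compared with the placebo -> placebo baseline."""
    if sequence not in CONDITIONS or sequence == BASELINE:
        raise ValueError("sequence must be one of the six non-baseline conditions")
    return [sequence], [BASELINE]


treated, control = within_wave_groups("science", wave=2)
print("Within-wave (science, wave 2):", treated, "vs.", control)

treated_seq, control_seq = sequence_groups(("science", "placebo"))
print("Sequence contrast:", treated_seq, "vs.", control_seq)
```

The sequence contrast for science wave 2 → placebo wave 3 is the one used to assess persistence: any remaining difference from the baseline in wave 3 or wave 4 reflects the earlier science exposure alone.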

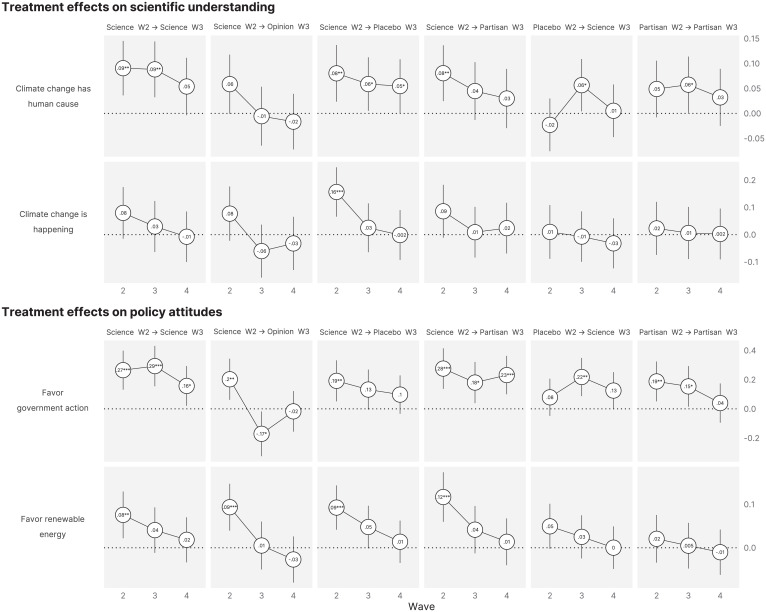

Fig. 2 displays the estimated effect of each treatment condition (i.e., each of the seven possible combinations of content that respondents could encounter) in waves 2, 3, and 4 vs. the placebo wave 2 → placebo wave 3 baseline. (SI Appendix, Tables S4 and S5 show full results.) The top two rows of Fig. 2 illustrate the effects on our factual belief measures, while the bottom two depict effects on our policy attitude measures.

Fig. 2.

Treatment effects on climate change beliefs and policy attitudes across waves by condition. Point estimates represent estimates from OLS regressions with robust SEs and include 95% CIs. Each point represents the estimated effect of exposure to the condition in question rather than placebo wave 2 → placebo wave 3 in waves 2, 3, and 4. SI Appendix, Tables S4 and S5 have details. W, wave. *P < 0.05; **P < 0.01; ***P < 0.005.

The immediate effects of science coverage exposure on factual beliefs described above did not consistently endure in subsequent waves (a preregistered research question). Consider the third column in Fig. 2. In the top two cells, we illustrate the change in factual beliefs among those assigned to science coverage in wave 2 and then placebo content in wave 3 relative to the baseline condition (people who saw placebo content in both waves). While effects on belief that climate change had a human cause remained detectable in wave 3 (β̂ = 0.06, P < 0.05), effects on belief that climate change is happening were no longer detectable. In addition, as the top two cells in the first column show, the effect of science coverage on factual beliefs was no longer detectable in wave 4 even when it was repeated in wave 2 and wave 3.

Similarly, while exposure to science coverage had immediate effects on attitudes, with subjects becoming more supportive of government action and renewable energy as a result, the effects did not consistently endure to the end of the study. As SI Appendix, Tables S5 and S23 show, the durability of effects on attitudes varies with model selection when exposure to science coverage is repeated. (As SI Appendix, Table S23 shows, the durability of effects on attitudes is no longer detectable when we use inverse probability weights to account for attrition.) Likewise, we observe no evidence that repeated exposure to coverage of partisan conflict leads to durable effects on climate change beliefs (a preregistered research question) (SI Appendix, Table S4).

We next test whether the effects of exposure to science coverage are undermined by subsequent exposure to two other forms of climate-related content: coverage of partisan conflict and skeptical opinion content.

Contrary to expectations (hypothesis 2a), our results provide no evidence that exposure to partisan conflict undermines the effects of science coverage. We found no measurable difference in factual beliefs between participants who viewed science coverage followed by partisan conflict and those for whom science coverage was followed by placebo content. This comparison also showed no measurable effect on support for renewable energy or government action to address climate change. (The relevant quantities are reported in SI Appendix, Tables S4 and S5, respectively.‡)

In contrast, exposure to skeptical opinion content rather than placebo diminished the effects of prior exposure to science coverage (hypothesis 2b). Although it had no effect on beliefs that climate change is happening, skeptical opinion content exposure reduced belief that climate change has a human cause in wave 3 (β̂ = –0.064, P < 0.05). This effect remained detectable in wave 4 (β̂ = –0.071, P < 0.01) (SI Appendix, Table S4 shows both). Exposure to skeptical opinion rather than placebo after science coverage also had a strongly negative effect on support for government action (β̂ = –0.297, P < 0.005) (SI Appendix, Table S5), although this effect was no longer measurable in wave 4 and no effect was observed on support for renewable energy.

In total, we observe relatively little evidence of persistence in messaging effects. In the final wave, we find significant effects for 3 of 24 possible combinations of treatment conditions and outcome measures.

Next, we consider whether the treatment effects reported above vary by party or prior beliefs about anthropogenic climate change (two preregistered research questions). We formally test treatment effect heterogeneity by partisanship across waves and conditions in SI Appendix, Tables S8 and S9 and by pretreatment beliefs in anthropogenic climate change in SI Appendix, Tables S10 and S11 (within-wave estimates are presented in SI Appendix, Tables S2 and S3). Marginal effect estimates by party and pretreatment belief are plotted in SI Appendix, Figs. S1 and S2.

The most consistent evidence of treatment effect heterogeneity by political predispositions is observed for the skeptical opinion condition. Specifically, responses to skeptical opinion content varied significantly by both pretreatment partisanship and belief in anthropogenic climate change. Exposure to the opinion condition in the wave after exposure to science coverage of climate change differentially decreased support for government action among Republicans compared with Democrats (P < 0.05) and for people who reject anthropogenic climate change compared with those who accept it (P < 0.01). As a result, Republicans and climate change deniers exposed to skeptical opinion after science coverage favored government action less in wave 3 than their counterparts who saw placebo content in both treatment waves (P < 0.05 and P < 0.01, respectively). By contrast, like all respondents, Republicans and climate change deniers who saw science coverage of climate change in both treatment waves were more likely to support government action than their counterparts who saw placebo content instead (P < 0.01 in both cases). In sum, the effects of skeptical opinion were unique; exposure to this type of content depressed support for government action among Republicans and climate deniers immediately afterward—the opposite of the observed effect of science coverage on these subgroups.

We otherwise find little evidence of consistent treatment effect heterogeneity by pretreatment partisanship and belief in anthropogenic climate change across the other treatments. Similarly, we find minimal evidence of heterogeneous treatment effects by political knowledge and trust in science (also a preregistered research question) (SI Appendix, Tables S14–S17).

Finally, we find no evidence that any intervention affected trust and confidence in scientists (a preregistered research question) (SI Appendix, Table S6).

Discussion

Results from a multiwave panel experiment in the United States offer insights into the persistence of misperceptions about controversial issues in science and politics. We first demonstrate that exposure to real-world science news about climate change reduces misperceptions immediately after exposure. However, these effects proved fleeting; they were no longer detectable by the end of our study. Sustained exposure to accurate information may be required to create lasting change in mass factual beliefs (36). In addition, unlike news coverage of partisan conflict, skeptical opinion content was especially effective at cultivating resistance to factual information and government action, suggesting that its importance may have been neglected in past research.

These findings show that none of the three explanations considered in this study for the persistence of misperceptions are individually sufficient. First, even when we exogenously expose people to more science coverage, the resulting accuracy gains are fragile. This finding suggests that insufficient exposure to accurate information (the first explanation) offers an incomplete account of the persistence of misperceptions. Instead, the effects of science coverage exposure are reduced or eliminated by subsequent exposure to skeptical opinion content and the passage of time (consistent with the second and third explanations). In sum, the persistence of misperceptions reflects both the limits of human memory and the constraints imposed by the political information environment. Even high doses of accurate information can be neutralized by contrary opinion or naturally decay with time.§

Of course, the present research has limitations that should be noted. First, future studies should strive for greater realism. While we exposed participants to genuine articles published after the release of the most recent IPCC report, the articles still concerned events that had taken place nearly 2 y before. Second, treatment effects might have been larger had the science coverage of climate change that was shown to participants contained newer information or if participants had been exposed to science coverage more frequently. In addition, the skeptical opinion content that we study comes from op-eds, a form of content whose audience is likely rather small (38). Our expectation is that the content of the skeptical opinion articles we test is similar to the climate skepticism Americans encounter in formats such as talk radio and cable news, but future research should test this expectation and assess the effects of exposure to climate skepticism in other forms. Finally, it would be desirable to replicate this study on a more representative sample (presuming comparable levels of panel retention could be achieved); to expand the combinations of stimuli tested in this study beyond the seven we evaluate; to test whether our findings are mirrored on other issues and/or in different countries; and to administer a similar experiment over a longer period to more closely approximate the temporal scale of related work on beliefs in the mass public (13, 14).

Ultimately, however, these results help explain why misperceptions persist even though factual information improves belief accuracy. Simply put, the accuracy gains that factual information creates do not last; their effects wane over time and can be eliminated by exposure to skeptical opinion content. Durably improving factual accuracy would likely require long-term shifts in public information flows that create sustained exposure to factual information and reduced exposure to skeptical opinion content.

Materials and Methods

Institutional Review Board Approval.

The study was approved by institutional review boards at Dartmouth College (STUDY00032100), George Washington University (NCR202686), and Ohio State University (2020E0656). All participants provided informed consent prior to participating in each wave of the study.

Participant Recruitment and Sample Composition.

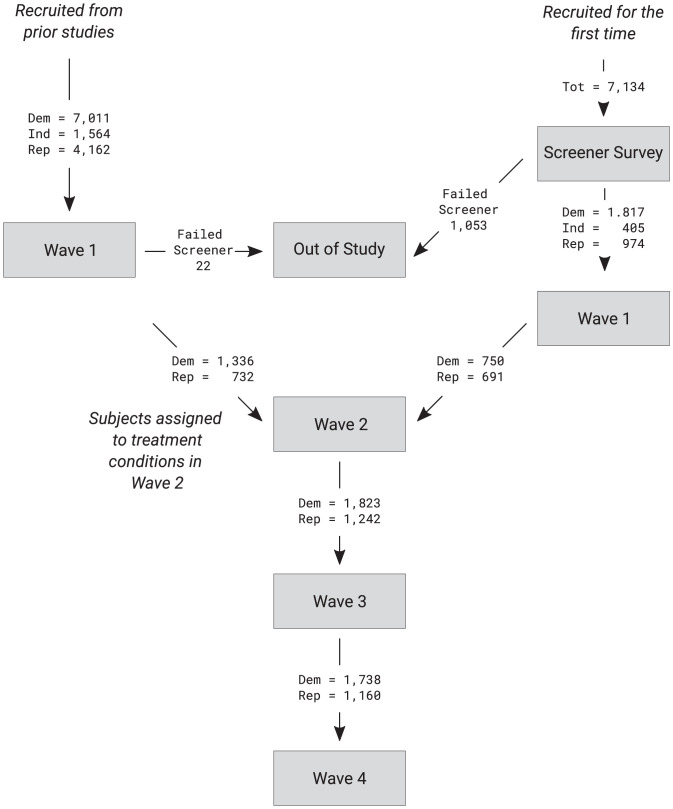

This preregistration was filed after collection of wave 1 of our planned four-wave study but prior to the experimental randomization, which took place at the onset of wave 2. Our final wave 1 sample, which was collected and finalized prior to preregistration, consists of 4,321 self-identified Democrats and Republicans who 1) were recruited on Mechanical Turk [an online platform whose validity for experimental research and survey panels has previously been established (25, 39)], 2) provided a valid response to an open-ended text question that previous work (40) has shown can identify low-quality respondents (including but not limited to bots and ineligible respondents outside the United States), 3) took at least 120 s to complete the wave 1 survey, and 4) did not say they provided humorous or insincere responses to survey questions “always” or “most of the time” on the wave 1 survey (those who skipped this question were also excluded).

Fig. 3 provides a study flow diagram that visually represents the recruitment process. The wave 1 sample was recruited via two mechanisms. Some respondents who were invited to take part in the survey had previously taken part in studies conducted by one author and were contacted by email through the Mechanical Turk platform. Others were identified via a screener survey that was open to participants on Mechanical Turk who have US IP addresses, are age 18 or over, and have a 95% or higher approval rate. Those respondents who completed wave 1 without being screened (that is, they were recruited from the set of prior study participants described above) were not invited to take part in wave 2 if they provided blank, incomplete, or nonsensical responses to an open-ended text question about what they thought were the most important national priorities in the first survey they took as part of the study (either the screener or the wave 1 survey) or if they did not meet conditions 3 and 4 described in the prior paragraph (40). Respondents who similarly failed to provide a valid response to this open-ended text question in the screener were not invited to take part in wave 1. Finally, Independents who do not lean toward either party were not included in the final wave 1 sample (and thus, not invited to take part in wave 2) to maximize our statistical power to distinguish between Democrats and Republicans in analyses of heterogeneous treatment effects.

Fig. 3.

Study flow diagram. Dem, Democrat; Ind, Independent; Rep, Republican; Tot, total.

We invited all respondents in the final wave 1 sample to take part in wave 2. Respondents who accepted the invitation were the participants in the experimental component of our study. All participants in wave 2 were then invited to take part in waves 3 and 4.

The preregistered study began with wave 2 of the panel. Waves 2 and 3 were each open for 7 d, and wave 4 was open for 2 wk: wave 2 from 25 August to 1 September 2020, wave 3 from 1 September to 8 September 2020, and wave 4 from 8 September to 22 September 2020. We sent reminder emails to respondents who had not yet completed the relevant survey 3 d after launch during waves 2 and 3 and 3, 7, and 10 d after launch for wave 4. Respondents were required to have taken part in wave 2 to participate in waves 3 and 4 (although participation in wave 3 was not required to be eligible for wave 4).

Participants who were invited to take part in wave 2 were randomized into one of the experimental conditions described above with equal probability. Each was defined with an indicator variable. Assignment to those conditions persisted in the data from waves 3 and 4.
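The assignment step can be illustrated with a minimal sketch. It assumes simple (independent) randomization over the seven wave 2 → wave 3 pairings named under Condition Selection; the condition labels, the function name `assign_conditions`, and the seed are hypothetical and are not taken from the replication code.

```python
import random

# Wave 2 -> wave 3 stimulus pairings (labels are illustrative assumptions).
CONDITIONS = [
    "science_science",
    "science_opinion",
    "science_placebo",
    "science_partisan",
    "placebo_placebo",
    "placebo_science",
    "partisan_partisan",
]

def assign_conditions(respondent_ids, seed=0):
    """Assign each respondent to one condition with equal probability."""
    rng = random.Random(seed)  # seed value is illustrative only
    rows = []
    for rid in respondent_ids:
        chosen = rng.choice(CONDITIONS)
        row = {"respondent_id": rid, "condition": chosen}
        # One 0/1 indicator per condition; exactly one equals 1.
        for name in CONDITIONS:
            row[name] = int(name == chosen)
        rows.append(row)
    return rows
```

Because the assignment is stored once per respondent, the same indicator columns can be merged onto the wave 3 and wave 4 data by respondent identifier.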

We implemented the following exclusion restrictions, which were not preregistered. First, when responses from the same respondent were observed twice in a given survey wave, we retained the one with the longest duration (time spent on the survey). Second, when a respondent reported a Mechanical Turk identification that did not correspond to the one that they used to take part in the survey and that matched an identification reported by another respondent, we removed all responses by that respondent across waves.
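A minimal sketch of these two rules follows. The record layout (the keys `respondent_id`, `reported_id`, `wave`, and `duration_s`) and both function names are assumptions made for illustration, not the structure of the replication data.

```python
from collections import defaultdict

def keep_longest(responses):
    """For each (respondent, wave) pair, keep the response with the longest duration."""
    best = {}
    for r in responses:
        key = (r["respondent_id"], r["wave"])
        if key not in best or r["duration_s"] > best[key]["duration_s"]:
            best[key] = r
    return list(best.values())

def drop_conflicting_ids(responses):
    """Drop all responses, across waves, from respondents whose self-reported ID
    differs from their platform ID and matches an ID reported by someone else."""
    reporters = defaultdict(set)  # reported ID -> respondents who reported it
    for r in responses:
        reporters[r["reported_id"]].add(r["respondent_id"])
    flagged = {
        r["respondent_id"]
        for r in responses
        if r["reported_id"] != r["respondent_id"]
        and len(reporters[r["reported_id"]]) > 1
    }
    return [r for r in responses if r["respondent_id"] not in flagged]
```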

We observe no evidence of differential attrition by condition (SI Appendix, Table S18), although we do observe some evidence of demographic differences in attrition rates (SI Appendix, Table S19). We therefore demonstrate in SI Appendix that the results are largely unchanged when we estimate exploratory treatment effect models using inverse probability of treatment weights (as recommended by ref. 41). Overall, 76% of all reported significant effects remain significant after this procedure is performed (SI Appendix, Tables S20–S24). The effects discussed in this paper all remained significant after inverse probability weighting.

Stimulus Materials.

The news and opinion articles that respondents saw were drawn from the real-world debate over climate change after the 7 October 2018 release of the fall/winter 2018 publication of an IPCC report on the potential impacts of a 1.5 °C increase in global temperatures. They were selected via the following procedure.

First, research assistants not aware of our hypotheses or research questions selected candidate news and opinion articles in LexisNexis Academic, Newsbank, and Google that were published in prominent news sources between 7 October and 8 December 2018 (inclusive) and mentioned global warming or climate change. The research assistants were specifically asked to identify “news articles indicating the existence of a consensus or widespread agreement among experts on climate change that reference the report and do not discuss or reference partisan disagreement over the issue,” “news articles featuring partisan disagreement over climate change (facts and/or policies) that reference the report,” and “opinion articles that express a skeptical view of the scientific consensus on climate change that reference the report.” From these sets, we selected 26 candidate articles in the corresponding categories of media content, which we call “scientific coverage,” “partisan conflict,” and “skeptical opinion,” respectively.

The articles were then pretested to eliminate outliers and increase within-category uniformity. We conducted a pretest from 1 June to 6 June 2020 among workers on Mechanical Turk (n = 2,013) in which participants were asked to read a random subset of five candidate articles of any type and assess whether the articles were reporting or opinion, the credibility of the articles, and how well written the articles were. We used these results to eliminate articles that were viewed very differently across partisan groups as well as clear outliers (e.g., articles not viewed as well written). Ultimately, we selected six scientific coverage articles, six partisan conflict articles, and three skeptical opinion articles (which are only shown in wave 3). These are presented in SI Appendix, section A along with the full instrument from each wave of the survey.

Finally, we used a brute force randomization technique to partition the group of six scientific coverage and partisan conflict articles into all possible groups of equal size. We then compared group differences in mean perceived article credibility and chose the partitioning rules that minimized these differences. Those partitions were then used to assign scientific and partisan articles between wave 2 and wave 3.
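The exhaustive search described above can be sketched as follows. The sketch assumes that each set of six articles is split into two groups of three (one per wave) and that the criterion is the absolute difference in mean perceived credibility; the function name `best_equal_split` and the scores in the usage example are hypothetical, not pretest values.

```python
from itertools import combinations
from statistics import mean

def best_equal_split(credibility):
    """Enumerate every split of the articles into two equal-size groups and
    return (gap, group_a, group_b) for the smallest gap in mean credibility."""
    ids = sorted(credibility)
    half = len(ids) // 2
    anchor = ids[0]  # fix one article in group A so each split is counted once
    best = None
    for rest in combinations(ids[1:], half - 1):
        group_a = (anchor,) + rest
        group_b = tuple(i for i in ids if i not in group_a)
        gap = abs(
            mean(credibility[i] for i in group_a)
            - mean(credibility[i] for i in group_b)
        )
        if best is None or gap < best[0]:
            best = (gap, group_a, group_b)
    return best

# Usage with made-up credibility scores for six articles.
scores = {"s1": 3.1, "s2": 3.4, "s3": 3.3, "s4": 3.6, "s5": 3.0, "s6": 3.5}
gap, wave2_articles, wave3_articles = best_equal_split(scores)
```

With six articles there are only 10 distinct splits into two groups of three, so checking every one is cheap and guarantees the minimum under the chosen criterion.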

The six placebo articles, which concern unrelated topics such as cooking and bird-watching, were selected from placebo stimuli used in prior studies.

Condition Selection.

We selected the treatments tested in this study to maximize our ability to evaluate the hypotheses and research questions of interest given available resources and expected effect sizes. Given our research focus on understanding why the effects of factually accurate information do not persist, we included all possible combinations of the science treatment in wave 2 and the four stimulus types in wave 3 (i.e., science wave 2 → science wave 3, science wave 2 → opinion wave 3, science wave 2 → placebo wave 3, and science wave 2 → partisan wave 3). The placebo wave 2 → placebo wave 3 condition was included as a baseline. The placebo wave 2 → science wave 3 condition was included to provide a baseline for separating the dosage effects of science wave 2 → science wave 3 from science wave 2 → placebo wave 3. Finally, we tested one other condition, in which respondents were assigned to partisan coverage in both waves (i.e., partisan wave 2 → partisan wave 3), that was required to test a hypothesis and multiple preregistered research questions. As noted in Discussion, it would be desirable to test other possible combinations of stimuli, but we limited our design to these conditions to maximize statistical power.

Control Variable Selection Procedure.

The models we report in this article were estimated using OLS regression with robust SEs. We use a lasso variable selection procedure to determine the set of prognostic covariates to include in each model (i.e., separately for each dependent variable). Specifically, we use the R package glmnet with default parameters and seed set to 1234. If one or more levels (e.g., “Northeast”) are selected from a factor variable, we include only the selected level(s) in the model. Below is the set of candidate variables that we select from (as measured in wave 1); if not otherwise stated, these variables are treated as continuous: pretreatment climate change belief (one to four), pretreatment belief in human causes of climate change (one to three), pretreatment preferences for how much the federal government should do about climate change (one to seven), pretreatment energy policy attitudes (one to three), pretreatment importance of climate change (one to five), education (college graduate indicator; factor variable), age group (18 to 34, 35 to 44, 45 to 54, 55 to 64, 65+; factor variable), male (one/zero; factor variable), region (Northeast, South, Midwest, West; factor variable), party identification (three points; factor variable), ideological self-placement (seven points), knowledge (total correct answers: zero to five), non-White (one/zero; factor variable), interest in politics (one to five), and science trust and confidence (factor score).
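For reference, the two-step procedure can be written out as follows. The first expression is the standard glmnet objective for a Gaussian outcome with the default lasso penalty; the notation, the way the penalty weight λ is chosen, and whether the treatment indicators enter the selection step are assumptions of this sketch and are not specified in the text.

```latex
% Step 1 (lasso selection), for each dependent variable y with N respondents
% and candidate covariate vector x_i measured in wave 1:
\[
  (\hat{\beta}_0, \hat{\beta}) =
  \arg\min_{\beta_0,\,\beta}\;
  \frac{1}{2N} \sum_{i=1}^{N} \bigl( y_i - \beta_0 - x_i^{\top}\beta \bigr)^2
  + \lambda \lVert \beta \rVert_1 ,
  \qquad
  S = \{\, j : \hat{\beta}_j \neq 0 \,\}.
\]

% Step 2 (OLS with robust SEs), using condition indicators T_{ik} and only
% the selected covariates (or selected factor levels) in S:
\[
  y_i = \alpha + \sum_{k} \tau_k T_{ik} + \sum_{j \in S} \gamma_j x_{ij} + \varepsilon_i .
\]
```

Here the τ_k are the treatment effect estimates of interest, and the covariates in S serve only to improve precision.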

Acknowledgments

We thank seminar participants at George Washington University, including Brandon Bartels, David Dagan, Matthew H. Graham, Danny Hayes, Jared Heern, Eric Lawrence, Shannon McQueen, Andrew Thompson, and Chris Warshaw. This research is supported by the John S. and James L. Knight Foundation through a grant to the Institute for Data, Democracy & Politics at George Washington University. All mistakes are our own.

Footnotes

The authors declare no competing interest.

This article is a PNAS Direct Submission. L.B. is a guest editor invited by the Editorial Board.

*Prior research suggests that repeated exposure may be more likely to lead to lasting change in beliefs or attitudes (31, 32).

†This pattern was mirrored when respondents were assigned to see science coverage or news about partisan conflict twice instead (a preregistered research question) (SI Appendix, Table S5).

‡In addition, we found that support for government action to address climate change increased for respondents who saw only coverage of partisan conflict in both wave 2 (β̂ = 0.188, P < 0.01) and wave 3 (β̂ = 0.155, P < 0.05), an unexpected finding that deserves further investigation.

§We also note that the effect sizes in this study are small by traditional standards, underscoring the limited over-time impact of information on beliefs and attitudes about salient issues [although our findings are consistent with reported effect sizes in preregistered experiments (37)].

This article contains supporting information online at https://www.pnas.org/lookup/suppl/doi:10.1073/pnas.2122069119/-/DCSupplemental.

Data Availability

Data and code necessary to replicate the findings in this article as well as materials from the pretests and screener surveys have been deposited in GitHub (https://github.com/thomasjwood/nyhanporterwood_tso) (35).

References

- 1. Chan M. S., Jones C. R., Hall Jamieson K., Albarracín D., Debunking: A meta-analysis of the psychological efficacy of messages countering misinformation. Psychol. Sci. 28, 1531–1546 (2017).

- 2. Nyhan B., Porter E., Reifler J., Wood T., Taking fact-checks literally but not seriously? The effects of journalistic fact-checking on factual beliefs and candidate favorability. Polit. Behav. 42, 939–960 (2019).

- 3. Walter N., Cohen J., Holbert R. L., Morag Y., Fact-checking: A meta-analysis of what works and for whom. Polit. Commun. 37, 350–375 (2020).

- 4. Kunda Z., The case for motivated reasoning. Psychol. Bull. 108, 480–498 (1990).

- 5. Taber C. S., Lodge M., Motivated skepticism in the evaluation of political beliefs. Am. J. Pol. Sci. 50, 755–769 (2006).

- 6. Kahan D. M., Peters E., Dawson E. C., Slovic P., Motivated numeracy and enlightened self-government. Behav. Public Policy 1, 54–86 (2017).

- 7. Tesler M., Elite domination of public doubts about climate change (not evolution). Polit. Commun. 35, 306–326 (2018).

- 8. Merkley E., Stecula D. A., Party cues in the news: Democratic elites, Republican backlash, and the dynamics of climate skepticism. Br. J. Polit. Sci. (2020).

- 9. McCright A. M., Dunlap R. E., The politicization of climate change and polarization in the American public’s views of global warming, 2001–2010. Sociol. Q. 52, 155–194 (2011).

- 10. Wood T., Porter E., The elusive backfire effect: Mass attitudes’ steadfast factual adherence. Polit. Behav. 41, 135–163 (2019).

- 11. Porter E., Wood T. J., The global effectiveness of fact-checking: Evidence from simultaneous experiments in Argentina, Nigeria, South Africa, and the United Kingdom. Proc. Natl. Acad. Sci. U.S.A. 118, e2104235118 (2021).

- 12. Porter E., Wood T., False Alarm: The Truth about Political Mistruths in the Trump Era (Cambridge University Press, 2019).

- 13. Page B. I., Shapiro R. Y., The Rational Public: Fifty Years of Trends in Americans’ Policy Preferences (University of Chicago Press, 1992).

- 14. Stimson J. A., Wager E. M., Converging on Truth: A Dynamic Perspective on Factual Debates in American Public Opinion (Cambridge Elements in American Politics, Cambridge University Press, 2020).

- 15. Guess A. M., Nyhan B., Reifler J., Exposure to untrustworthy websites in the 2016 US election. Nat. Hum. Behav. 4, 472–480 (2020).

- 16. Merkley E., Are experts (news)worthy? Balance, conflict, and mass media coverage of expert consensus. Polit. Commun. 37, 530–549 (2020).

- 17. Gerber A. S., Gimpel J. G., Green D. P., Shaw D. R., How large and long-lasting are the persuasive effects of televised campaign ads? Results from a randomized field experiment. Am. Polit. Sci. Rev. 105, 135–150 (2011).

- 18. Hill S. J., Lo J., Vavreck L., Zaller J., How quickly we forget: The duration of persuasion effects from mass communication. Polit. Commun. 30, 521–547 (2013).

- 19. Vinaes Larsen M., Olsen A. Leth, Reducing bias in citizens’ perception of crime rates: Evidence from a field experiment on burglary prevalence. J. Polit. 82, 747–752 (2020).

- 20. Berinsky A., The birthers are back. YouGovAmerica, 3 February 2012. https://today.yougov.com/topics/politics/articles-reports/2012/02/03/birthers-are-back. Accessed 28 February 2022.

- 21. Berinsky A., The birthers are (still) back. YouGovAmerica, 11 July 2012. https://today.yougov.com/topics/politics/articles-reports/2012/07/11/birthers-are-still-back. Accessed 28 February 2022.

- 22. Porter E., Wood T., Bahador B., Can presidential misinformation on climate change be corrected? Evidence from internet and phone experiments. Res. Politics, 10.1177/2053168019864784 (2019).

- 23. Benegal S. D., Scruggs L. A., Correcting misinformation about climate change: The impact of partisanship in an experimental setting. Clim. Change 148, 61–80 (2018).

- 24. Saad L., Global warming attitudes frozen since 2016. Gallup, 5 April 2021. https://news.gallup.com/poll/343025/global-warming-attitudes-frozen-2016.aspx. Accessed 28 April 2021.

- 25. Gross K., Porter E., Wood T. J., Identifying media effects through low-cost, multiwave field experiments. Polit. Commun. 36, 272–287 (2019).

- 26. van der Linden S. L., Leiserowitz A. A., Feinberg G. D., Maibach E. W., The scientific consensus on climate change as a gateway belief: Experimental evidence. PLoS One 10, e0118489 (2015).

- 27. Sides J., Stories or science? Facts, frames, and policy attitudes. Am. Polit. Res. 44, 387–414 (2016).

- 28. Hopkins D. J., Sides J., Citrin J., The muted consequences of correct information about immigration. J. Polit. 81, 315–320 (2019).

- 29. Barnes L., Feller A., Haselswerdt J., Porter E., Information, knowledge, and attitudes: An evaluation of the taxpayer receipt. J. Polit. 80, 701–706 (2018).

- 30. Bolsen T., Druckman J. N., Cook F. L., The influence of partisan motivated reasoning on public opinion. Polit. Behav. 36, 235–262 (2014).

- 31. Carnahan D., Bergan D. E., Lee S., Do corrective effects last? Results from a longitudinal experiment on beliefs toward immigration in the US. Polit. Behav. 43, 1227–1246 (2020).

- 32. Lecheler S., Keer M., Schuck A. R., Hänggli R., The effects of repetitive news framing on political opinions over time. Commun. Monogr. 82, 339–358 (2015).

- 33. Egan P. J., Mullin M., Climate change: US public opinion. Annu. Rev. Polit. Sci. 20, 209–227 (2017).

- 34. Bloniarz A., Liu H., Zhang C. H., Sekhon J. S., Yu B., Lasso adjustments of treatment effect estimates in randomized experiments. Proc. Natl. Acad. Sci. U.S.A. 113, 7383–7390 (2016).

- 35. Nyhan B., Porter E., Wood T. J., Replication data for “Time and skeptical opinion content erode the effects of science coverage on climate beliefs and attitudes.” GitHub. https://github.com/thomasjwood/nyhanporterwood_tso. Accessed 25 May 2022.

- 36. Nyhan B., Why the backfire effect does not explain the durability of political misperceptions. Proc. Natl. Acad. Sci. U.S.A. 118, e1912440117 (2021).

- 37. Schäfer T., Schwarz M. A., The meaningfulness of effect sizes in psychological research: Differences between sub-disciplines and the impact of potential biases. Front. Psychol. 10, 813 (2019).

- 38. Prior M., Media and political polarization. Annu. Rev. Polit. Sci. 16, 101–127 (2013).

- 39. Coppock A., Generalizing from survey experiments conducted on Mechanical Turk: A replication approach. Political Sci. Res. Methods 7, 613–628 (2019).

- 40. Kennedy C., et al., Assessing the risks to online polls from bogus respondents. Pew Research Center, 18 February 2020. https://www.pewresearch.org/methods/2020/02/18/assessing-the-risks-to-online-polls-from-bogus-respondents/. Accessed 3 February 2021.

- 41. Gerber A., Green D. P., Field Experiments: Design, Analysis, and Interpretation (Cambridge University Press, 2012).
