Abstract

In this paper, a new approach to breast cancer classification based on Fully Convolutional Networks (FCNs) and a Beta Wavelet Autoencoder (BWAE) is presented. FCN, a powerful image segmentation model, is used to extract the relevant information from mammography images: it identifies the relevant zones, while the BWAE models the information extracted from these zones. The wavelet autoencoder has proven superior to the majority of feature extraction approaches. The fusion of these two techniques improves the feature extraction phase by keeping and modeling only the features that are relevant and useful for the identification and description of breast masses. The experimental results show the effectiveness of the proposed method, which gives very encouraging results in comparison with state-of-the-art approaches on the same mammographic image base. A precision of 94% for benign and 93% for malignant cases was achieved, with a recall of 92% for benign and 95% for malignant cases. For normal cases, we reached a rate of 100%.

1. Introduction

In the early stages of breast cancer, a tumor is too small to be felt and may not cause any symptoms, but it can be seen using different imaging techniques [1]. Various researchers have investigated the early diagnosis of breast cancer. Machine learning (ML) algorithms are used in computer-aided diagnosis (CAD) systems to help in breast cancer detection and classification [2–5]. These ML pipelines share common steps: mammogram preprocessing, followed by segmentation, then feature extraction and selection, and finally the application of the ML algorithm to classify the mass in the breast as either benign or malignant [6]. Deep learning moves from handcrafted to automatic image segmentation, feature extraction, and feature selection to achieve more accurate detection and classification results [7]. Fully Convolutional Networks (FCNs) [8] are among the most successful state-of-the-art deep learning methods; they replace all the fully connected layers with convolutional layers. The fundamental idea behind this method is to employ a CNN as a feature extractor to generate high-level feature maps. These maps are then up-sampled to provide pixel-by-pixel output. The method allows end-to-end training of a CNN for semantic segmentation with input images of any size. Skip connections [9] also allow data to flow directly from low-level feature maps to high-level feature maps without distortion, which improves localization accuracy and speeds up convergence. Many studies have used FCNs to segment breast cancer masses. In our proposed model, we combine an FCN and a Beta wavelet autoencoder (BWAE) to extract the most relevant features and reduce the dimension of the mammogram image through a set of layers (encoding), then reconstruct the image with the most significant features (decoding) so that it can be classified correctly.

The idea is to model only the masses that are likely to be abnormal. The FCN is used to extract suspicious masses in the breast; instead of modeling the whole image, the BWAE models only these masses. In this way, only the useful information is modeled.

Our paper is structured as follows: Section 2 presents the literature review. Section 3 describes our proposed method for breast cancer detection and classification. Section 4 reports the experimental results, and Section 5 concludes the paper.

2. Literature Review

Over the past several years, deep learning techniques have achieved higher accuracy than classical machine learning methods on a wide variety of applications, especially in the medical field [10–12]. Convolutional Neural Networks (CNNs) [13] are the most common deep architecture used for breast cancer detection and classification. Recent studies of breast cancer detection and classification report different performance and accuracy depending on the image preprocessing techniques [14, 15], CNN architectures [16], activation functions [17], and optimization algorithms [18, 19] used, and on whether the model is applied to patches or to whole images [20, 21]. Many studies show that CNNs overcome the limitations of classical machine learning methods and achieve better detection and classification accuracy for breast cancer [22, 23]. Moreover, the depth and width of the deep network can help to improve the network's quality [24]. A comprehensive survey of breast cancer segmentation methods was conducted by Michael et al. [25]. They review the deep learning segmentation methods used to extract masses from mammogram images and highlight the most frequently used ones. Segmentation techniques play an essential role in the diagnosis, feature extraction, and classification accuracy of breast masses as benign or malignant. Different deep learning segmentation methods are used for breast cancer images, such as FCN [8], U-Net [9, 26, 27], Segmentation Network (SegNet) [28], Full Resolution Convolutional Network (FrCN) [29], Mask Region-Based Convolutional Neural Networks (Mask R-CNNs) [11, 30], Attention-guided dense up-sampling network (AUNet) [31], Residual attention U-Net model (RUNet) [32], conditional Generative Adversarial Networks (cGANs) [33], densely connected U-Net with attention gates (AGs) [34], and the Conditional Random Field model (CRF) [35].

U-Net [9] is one of the deep learning segmentation techniques most frequently used with mammograms to detect breast cancer. It is an encoder-decoder architecture that modifies and extends FCN by using skip connections to copy the feature maps of the corresponding down-sampling stage (i.e., pooling) and concatenate them (in a concat layer), so that pixel context information is included in the up-sampling convolution process. It is a fast and efficient segmentation method that demonstrates excellent results in breast cancer mass detection and classification. U-Net has several advantages for segmentation tasks. First, it allows for the simultaneous use of global location and context. Second, it works well with a small number of training samples. Third, it produces the segmentation map using an end-to-end pipeline that preserves the whole context of the input images, which is a significant advantage in mammogram classification.

A full resolution convolutional network (FrCN) model was proposed by Al-antari et al. [29] to segment breast cancer masses detected by the You-Only-Look-Once (YOLO) model [36]. Their segmentation method preserves the details of tiny objects by removing the max-pooling and subsampling layers in the encoder network. The decoder of FrCN replaces all three FC layers with three fully convolutional layers. The accuracy of their segmentation model is 92.97%, and the overall accuracy of their CAD system using the CNN classifier is 95.64%.

Many researchers have used the U-Net architecture [32, 37], with or without filters, to detect and classify mammogram images, and some have modified the U-Net architecture to enhance the classification accuracy of breast cancer. Abdelhafiz D. et al. [32] proposed a residual attention U-Net model (RUNet) that uses the U-Net architecture and replaces its regular convolution layers with residual blocks whose identity connections link the encoder and decoder paths at the same level. The resulting architecture is deeper, which preserves information and enhances the segmentation performance on mammogram images. The last layer uses a ResNet classifier that achieved 98% classification accuracy.

Conditional Generative Adversarial Networks (cGANs) [33] are a deep segmentation method whose generator network is composed of an encoder and a decoder. The encoder layers extract features such as texture, edge, and intensity from the input images, while the decoder layers construct a binary mask based on the extracted features. The generator network thus produces a mask of the mammogram masses that is fed to the classifier. Li S. et al. [34] proposed a breast mass segmentation method composed of a densely connected U-Net with attention gates (AGs). The encoder of the U-Net architecture is a densely connected CNN that works as a feature extractor for breast masses of different sizes and shapes, and the decoder is integrated with AGs to enhance the segmentation of the U-Net model.

Deep neural networks can also integrate the probabilistic Conditional Random Field (CRF) model [38] for the segmentation of breast cancer mammogram images. CRF was initially used in deep neural networks as a postprocessing step after the FCN: it models the correlations among pixels for semantic image segmentation in order to achieve sharper boundaries.

Dhungel N. et al. [35] proposed a deep learning model that integrates CNN, CRF, and a structured support vector machine (SSVM) to segment breast cancer masses, and their model shows significant results.

On the other hand, deep learning architectures are complex and require a large amount of data for training, so researchers use techniques such as data augmentation and transfer learning to overcome these issues. Another option is to modify the architecture of the deep learning model to reduce the computational complexity and learning time, for example by combining deep learning with wavelet networks.

Deep neural networks combined with Beta wavelet networks and sparse coding have been used for automatic breast cancer detection and classification. Ben Ali R. et al. [39] proposed the Deep Stacked Patched Auto-Encoders (DSPAEs) framework to detect and classify medical images. Their method is applied to mammogram images, which are encoded and decoded by an autoencoder that reduces the dimension of the input data through a set of layers (encoding) and then reconstructs a new representation with more relevant features (decoding). The last layer of their model uses a linear classifier to classify breast images, with 97.54% and 98.13% classification accuracy for the MIAS and DDSM datasets, respectively.

Our work in this paper builds on our previous work, Hassairi S. et al. [40], where we proposed a Deep Stacked Beta Wavelet Auto-Encoder (DSBWAE) to classify images and speech signals using the global score of each wavelet. The model constructs deep wavelet AE layers with a linear classifier at the last layer. The classification results it achieved outperform those of other classifiers on the same datasets.

Table 1 summarizes the results of different breast cancer segmentation and classification methods based on deep neural networks.

Table 1.

Studies that used different deep segmentation methods on breast cancer mammogram images.

| Ref# | Year | Segmentation method | Segmentation accuracy (dice coefficient index) | Classifier | Dataset | Classification accuracy |
|---|---|---|---|---|---|---|
| [37] | 2020 | Vanilla U-net | 95.1% | VGG-16 | CBIS-DDSM, INbreast, UCHCDM, BCDR-01 | 92.6% |
| [32] | 2019 | RU-Net | 98.3% | ResNet | INbreast | 98.7% |
| [34] | 2019 | U-Net integrated AGs | 82.24% | — | DDSM | 78.38% |
| [29] | 2018 | FrCN | 92.69% | CNN | INbreast | 95.64% |
| [35] | 2015 | CRF | 90% | — | DDSM-BCRP and INbreast | — |
| [33] | 2020 | cGAN | 98% | CNN based on BI-RADS | Abreast | 97.85% |
| [39] | 2020 | DSPAE | — | Linear classifier | MIAS, DDSM | 97.54% (MIAS), 98.13% (DDSM) |

3. Proposed Approach

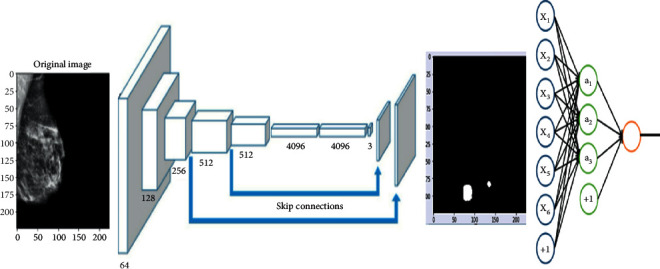

Figure 1 provides an overview of our proposed method for the detection and segmentation of a suspicious mass in the breast and the identification of the type of this mass.

Figure 1.

Illustration of the approach.

To ensure proper segmentation and classification of masses in the breast, the proposed method makes use of two sequential modules followed by a classification phase. The segmentation module is based on an FCN architecture. The feature extraction module is based on a Beta wavelet autoencoder. The classification phase is based on a linear classifier.

Before proceeding with image segmentation, a preprocessing phase is required. This phase is described in the next section.

3.1. Preprocessing

The goal of image preprocessing is to prepare the images as input for the model. The idea is to increase the quality of the images so that features can be retrieved more easily. Such an approach provides the model with more relevant features that it can learn efficiently. The preprocessing pipeline consists of the following steps:

Cropping borders

Normalization

Removing the artifacts

CLAHE enhancement

Raw mammograms have several drawbacks, which explain the procedures involved in the preprocessing of the chosen image.

The purpose of this pipeline is to fix these issues so that we can obtain high-quality mammography images for use by the model.

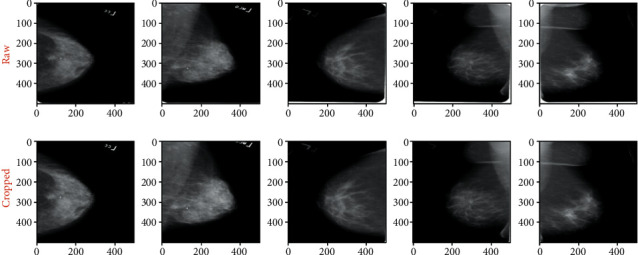

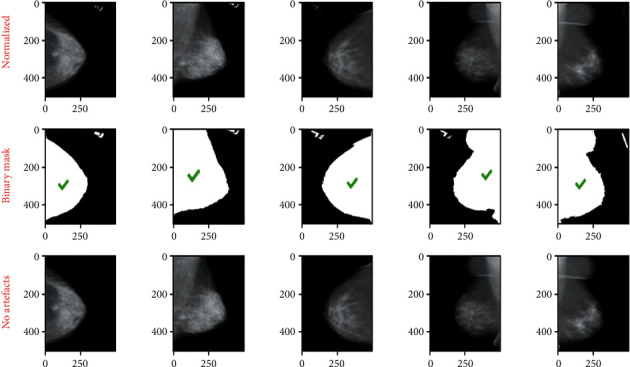

Step 1. Crop Borders: this step solves the problem of the bright white borders and/or corners of raw mammograms. These white borders vary in thickness from image to image, which may cause serious issues in the model training phase, as the model may consider these random edges as part of the features of mammograms. After a long process of trial and error, we crop 1% of the image's width and 4% of its height. Figure 2 shows the results of cropped images.
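The cropping in Step 1 can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' implementation: the function name `crop_borders` is hypothetical, and the sketch assumes the stated percentages are removed from each side of the image, which the text does not specify.

```python
import numpy as np

def crop_borders(image: np.ndarray, width_frac: float = 0.01,
                 height_frac: float = 0.04) -> np.ndarray:
    """Trim a fraction of the width and height from every side of an image.

    Assumption: the fractions apply to each side (left/right, top/bottom).
    """
    rows, cols = image.shape[:2]
    dy = int(rows * height_frac)  # rows removed at the top and at the bottom
    dx = int(cols * width_frac)   # columns removed at the left and at the right
    return image[dy:rows - dy, dx:cols - dx]

# Placeholder 16-bit image standing in for a raw mammogram.
mammogram = np.zeros((1000, 500), dtype=np.uint16)
cropped = crop_borders(mammogram)
print(cropped.shape)  # (920, 490)
```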

Step 2. Normalization: this step rescales the pixel values from the range [0, 65535] to [0, 1]. The mammogram images are saved as 16-bit arrays, which means that the pixel values range over [0, $2^{16} - 1$]; these large values may slow down the learning process of the neural network. Min-Max scaling changes the range of pixel intensity values to a range that is more familiar or normal to the senses. Mathematically, the Min-Max normalization equation is as follows:

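$$M'_{i,j} = \frac{M_{i,j} - \min(M)}{\max(M) - \min(M)} \tag{1}$$

where $M$ is an image, $M_{i,j}$ is a pixel, and $M'_{i,j}$ is the normalized pixel value.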
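As an illustration of the Min-Max normalization of Step 2, the following sketch rescales a 16-bit array to [0, 1]. The function name and the handling of constant images (returning zeros) are assumptions made for this example.

```python
import numpy as np

def min_max_normalize(image: np.ndarray) -> np.ndarray:
    """Rescale pixel values to [0, 1] using the image minimum and maximum."""
    image = image.astype(np.float32)
    lo, hi = image.min(), image.max()
    if hi == lo:
        # Assumption: a constant image maps to all zeros to avoid dividing by 0.
        return np.zeros_like(image)
    return (image - lo) / (hi - lo)

# Tiny 16-bit example covering the full [0, 65535] range.
raw = np.array([[0, 16384], [32768, 65535]], dtype=np.uint16)
scaled = min_max_normalize(raw)
print(scaled.min(), scaled.max())  # 0.0 1.0
```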

Step 3. Artifacts Removal: this step solves the problem of floating artifacts that appear in the background. These artifacts serve as markers that provide information such as the orientation of the scan. Figure 3 illustrates images resulting from the normalization and artifact removal phases.

To remove the artifacts, we proceed as follows. Visual inspection shows that most of the background pixels are very close to black (pixel values close to 0). Therefore, we can binarize the mammogram using a threshold value to create a binary mask, where 0 indicates a background pixel and 1 indicates a pixel belonging to the breast or to an artifact region. After generating the binary mask, we expand the boundaries of the white regions in the mask to ensure that the entire region of any artifact is captured. Further visual inspection shows that the breast contour is almost always the largest contour in the mask and is always binarized as a single region. We can therefore keep the largest contour in the mask as the only region to retain from the original mammogram.
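The artifact removal procedure (threshold, expand, keep the largest region) can be sketched with SciPy's `ndimage` module. This is a hedged illustration: it uses connected-component labeling in place of contour extraction, and the threshold value and the number of dilation iterations are assumed values that the text does not give.

```python
import numpy as np
from scipy import ndimage

def remove_artifacts(image: np.ndarray, threshold: float = 0.1,
                     dilation_iters: int = 2) -> np.ndarray:
    """Keep only the largest bright region of a normalized mammogram."""
    mask = image > threshold                       # 1 = breast or artifact
    mask = ndimage.binary_dilation(mask, iterations=dilation_iters)
    labels, count = ndimage.label(mask)            # connected regions
    if count == 0:
        return np.zeros_like(image)
    sizes = np.bincount(labels.ravel())[1:]        # skip background label 0
    largest = 1 + int(np.argmax(sizes))            # label of the biggest region
    return np.where(labels == largest, image, 0)

# Synthetic example: a large "breast" block and a small floating marker.
image = np.zeros((20, 20))
image[2:15, 0:10] = 0.8     # breast region
image[17:19, 16:18] = 0.9   # artifact
cleaned = remove_artifacts(image)
print(cleaned[17:19, 16:18].sum())  # 0.0
```

Only the largest region survives; the small marker in the corner is set to the background value.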

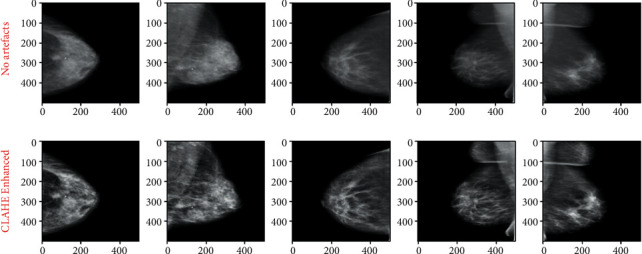

Step 4. CLAHE enhancement: this step solves the problem of the poor contrast of breast tissues. Poor contrast causes the breast region to appear as an almost uniform gray, with almost no texture and no meaningful difference between breast tissues and mass abnormalities, which may slow the model's learning. To solve this problem, we apply contrast-limited adaptive histogram equalization (CLAHE), which adjusts the contrast locally by equalizing the pixel intensity histogram of small image tiles while limiting the amplification; this helps enhance the small details, textures, and features of the mammogram. Figure 4 shows the CLAHE enhancement of images.
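To make the contrast-limiting idea concrete, the sketch below equalizes a single tile with a clipped histogram. It is a simplified building block, not full CLAHE: a complete implementation processes a grid of tiles and interpolates between their mappings. The function name, the number of bins, and the clip limit are assumptions for this example.

```python
import numpy as np

def clipped_equalize_tile(tile: np.ndarray, n_bins: int = 256,
                          clip_limit: float = 0.01) -> np.ndarray:
    """Equalize one tile (values in [0, 1]) using a clipped histogram."""
    hist, _ = np.histogram(tile, bins=n_bins, range=(0.0, 1.0))
    limit = max(1, int(clip_limit * tile.size))    # max count allowed per bin
    excess = np.sum(np.maximum(hist - limit, 0))   # counts above the limit
    # Clip the histogram and spread the excess evenly over all bins.
    hist = np.minimum(hist, limit) + excess / n_bins
    cdf = np.cumsum(hist)
    cdf = cdf / cdf[-1]                            # normalized mapping in (0, 1]
    bin_idx = np.minimum((tile * n_bins).astype(int), n_bins - 1)
    return cdf[bin_idx]

# Low-contrast tile: all values squeezed into [0.4, 0.6).
rng = np.random.default_rng(0)
tile = 0.4 + 0.2 * rng.random((64, 64))
enhanced = clipped_equalize_tile(tile)
print(tile.max() - tile.min(), enhanced.max() - enhanced.min())
```

The output range is wider than the input range, while the clip limit keeps the stretch from amplifying noise as strongly as plain histogram equalization would.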

Figure 2.

Original and cropped image.

Figure 3.

Images resulting from normalization and removing artifacts phases.

Figure 4.

CLAHE enhancement

3.2. FCN

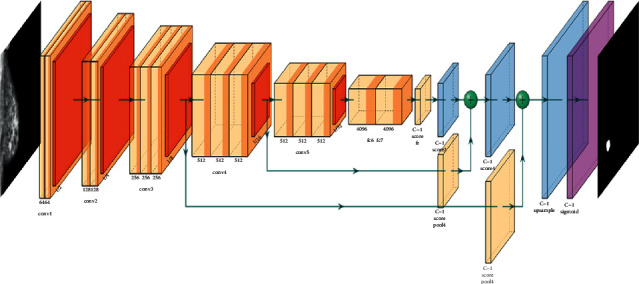

A Fully Convolutional Network, or FCN, is an encoder-decoder architecture primarily used for semantic segmentation of an image. The encoder extracts pixelwise feature maps from the input image, while the decoder restores the original resolution. FCNs can take advantage of well-known ConvNet models such as VGG and ResNet that have been previously trained for the classification task. In keeping with this idea, we took a VGG-16 model (16 layers) pretrained on the ImageNet dataset and transformed it to serve as an encoder. To accomplish this transformation, we removed the fully connected layers and replaced them with 1 × 1 convolutional layers. Reusing the knowledge stored in a pretrained VGG-16, a technique known as transfer learning, speeds up the learning process and improves the efficiency of our model. For the decoder that follows the encoder, we used transposed convolutional layers to upsample the pixelwise feature maps produced by the encoder. After preparing the encoder and decoder, skip connections are added; a skip connection connects the output of one layer to a nonadjacent layer and thereby allows our model to use information from multiple resolutions. As shown in Figure 5, which summarizes our model, the outputs of the third and fourth max-pooling layers are connected by skip connections to the first and second transposed convolutional layers of the decoder, respectively.

Figure 5.

FCN8s model architecture.

In a classic convolutional network with fully connected final layers, the size of the fully connected layers fixes the size of the input. Passing images of different sizes produces feature maps of different sizes, so the matrix multiplication in the final layers can no longer be performed. Convolutional operations, on the other hand, do not depend on the size of the input, so a fully convolutional network works on images of any size.
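The following NumPy sketch illustrates why replacing a fully connected layer with a 1 × 1 convolution removes the input-size constraint: the same weight matrix is applied independently at every pixel, so any spatial size is accepted. The channel counts (8 in, 3 out) and the random weights are arbitrary values chosen for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
weights = rng.normal(size=(8, 3))  # 8 input channels -> 3 output channels
bias = rng.normal(size=3)

def dense(features: np.ndarray) -> np.ndarray:
    """Fully connected layer: requires a vector with exactly 8 values."""
    return features @ weights + bias

def conv1x1(feature_map: np.ndarray) -> np.ndarray:
    """1x1 convolution: the dense weights applied at each pixel of (H, W, 8)."""
    return np.einsum("hwc,co->hwo", feature_map, weights) + bias

small = rng.normal(size=(4, 4, 8))
large = rng.normal(size=(7, 5, 8))
print(conv1x1(small).shape)  # (4, 4, 3)
print(conv1x1(large).shape)  # (7, 5, 3)
```

At any single pixel, `conv1x1` returns the same values as `dense` applied to that pixel's channel vector.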

Fully convolutional networks have been able to solve the size problem in computer vision tasks. These architectures take advantage of the following three special techniques:

Replace fully connected layers with one by one convolutional layers

Up-sampling through the use of transposed convolutional layers

Skip connections

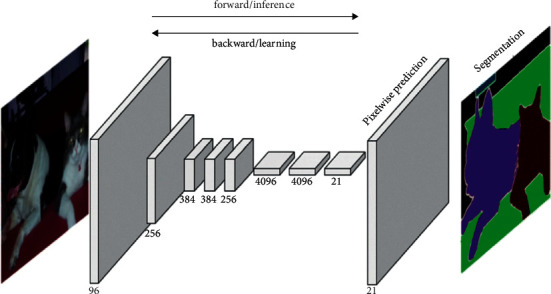

This architecture (Figure 6) uses information from multiple resolution scales through the skip connections. As a result, the network is able to make more precise segmentation decisions.

Figure 6.

General architecture of an FCN [33].

An FCN is composed of an encoder and a decoder. The encoder extracts the relevant features that are used by the decoder. In an FCN, 1 × 1 convolutional layers take the place of the fully connected layers of a CNN. The encoder is followed by a decoder that uses transposed convolutional layers to up-sample the image. Skip connections, each linking a layer to a nonadjacent layer, are added between layers; this technique allows the network to use information from multiple resolutions. Figure 5 illustrates an example of FCN architecture.

Figure 5 presents the FCN8 model. This architecture is configured with the following parameters:
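The decoder mechanics can be illustrated on a toy example: a single-channel transposed convolution that doubles the resolution, followed by a skip connection. The sketch assumes the skip connection is fused by element-wise addition, as in the original FCN design; the kernel and feature values are placeholders.

```python
import numpy as np

def transposed_conv2d(x: np.ndarray, kernel: np.ndarray, stride: int = 2) -> np.ndarray:
    """Single-channel transposed convolution: each input pixel stamps a scaled kernel."""
    h, w = x.shape
    k = kernel.shape[0]
    out = np.zeros(((h - 1) * stride + k, (w - 1) * stride + k))
    for i in range(h):
        for j in range(w):
            out[i * stride:i * stride + k, j * stride:j * stride + k] += x[i, j] * kernel
    return out

coarse = np.array([[1.0, 2.0], [3.0, 4.0]])  # low-resolution decoder input
kernel = np.ones((2, 2))                     # with stride 2: nearest-neighbor upsampling
upsampled = transposed_conv2d(coarse, kernel)

# Stand-in for an encoder feature map at the same resolution.
skip = np.full((4, 4), 0.5)
fused = upsampled + skip                     # skip connection (assumed: addition)
print(upsampled.shape)  # (4, 4)
```

In a trained network the kernel is learned, so the upsampling is not limited to pixel replication.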

Base model = VGG16-D

Input shape = (224 × 224 × 3)

Output shape = (224 × 224 × 1)

Number of classes = 1

Optimizer = Adam

Learning rate = 1e-05

Metrics = Accuracy and Intersection over Union (IoU)

Loss function = BinaryCrossentropy

Batch Size = 32

Epochs = 50

Steps_per_epoch = 506
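The parameters above can be gathered in a small configuration object, together with the IoU metric they mention. This is a sketch: the class and field names are hypothetical, and the IoU function assumes binary masks and returns 1.0 when both masks are empty.

```python
from dataclasses import dataclass
import numpy as np

@dataclass(frozen=True)
class SegmentationConfig:
    """Training settings listed for the FCN8 model (field names are illustrative)."""
    base_model: str = "VGG16-D"
    input_shape: tuple = (224, 224, 3)
    output_shape: tuple = (224, 224, 1)
    num_classes: int = 1
    optimizer: str = "Adam"
    learning_rate: float = 1e-5
    loss: str = "BinaryCrossentropy"
    batch_size: int = 32
    epochs: int = 50
    steps_per_epoch: int = 506

def iou(pred_mask: np.ndarray, true_mask: np.ndarray) -> float:
    """Intersection over Union of two binary masks."""
    pred, true = pred_mask.astype(bool), true_mask.astype(bool)
    union = np.logical_or(pred, true).sum()
    if union == 0:
        return 1.0  # assumption: two empty masks count as a perfect match
    return float(np.logical_and(pred, true).sum() / union)

cfg = SegmentationConfig()
# Number of images seen per epoch: 32 * 506 = 16192.
samples_per_epoch = cfg.batch_size * cfg.steps_per_epoch
example_iou = iou(np.array([[1, 1], [0, 0]]), np.array([[1, 0], [0, 0]]))
print(samples_per_epoch, example_iou)  # 16192 0.5
```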

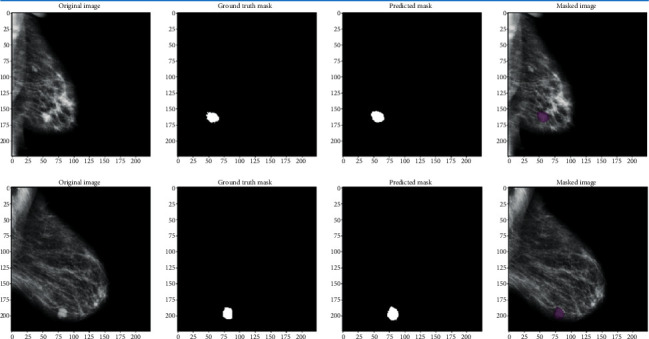

The segmentation phase is added to improve the modeling phase. Indeed, the images resulting from the segmentation phase have more useful information than the original images. Segmented images only present information describing the state of a cancerous nodule while a mammographic image may contain additional information unnecessary for the modeling phase and the classification phase. Figure 7 illustrates the results of the segmentation phase based on FCN8.

Figure 7.

FCN8s segmentation results.

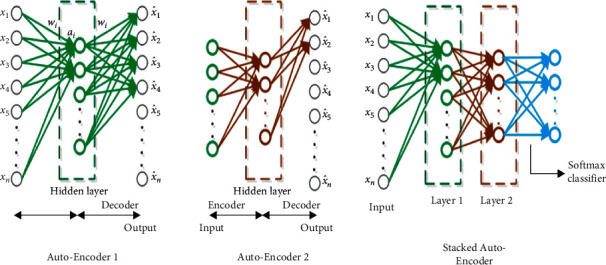

3.3. Autoencoder-Based Beta Wavelets Network

Building on the capacity of wavelet analysis and of AE approaches for feature extraction and learning, Hassairi et al. [40] implemented a BWAE. This architecture is based on a wavelet network (WN) and an autoencoder. Together, these two models led to the DSBWAE, built from a new WN model called the Global Wavelet Network (GWN).

To construct the GWN, we created WNs using the Best Contribution Algorithm (BCA) [40], which builds each WN using the 2D FWT and multiresolution analysis. Each WN models one signal, and all the WNs of a class are merged to construct the GWN.

The first step is to create a GWN that approximates a single class of the dataset. To obtain this GWN, we choose the best wavelets among all the wavelets used in the decomposition of the signals of the dataset. It is therefore essential to create a WN for each signal and then compute the wavelets' scores to obtain the GWN that approximates only one class of the dataset [40].

To prepare a DSWAE, a set of WAEs is constructed and stacked: the hidden layer of the first WAE constitutes the input layer of the second WAE. Figure 8 illustrates the construction of a DSWAE.
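The stacking principle (the hidden layer of one autoencoder feeds the next) can be sketched as follows. This illustration uses plain dense sigmoid layers with random, untrained weights as stand-ins for the wavelet-based layers, and the layer sizes (64, 32, 16) are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))

class TinyAutoencoder:
    """Dense autoencoder with one hidden layer (stand-in for a WAE)."""

    def __init__(self, n_in: int, n_hidden: int):
        self.w_enc = rng.normal(scale=0.1, size=(n_in, n_hidden))
        self.w_dec = rng.normal(scale=0.1, size=(n_hidden, n_in))

    def encode(self, x: np.ndarray) -> np.ndarray:
        return sigmoid(x @ self.w_enc)

    def decode(self, h: np.ndarray) -> np.ndarray:
        return sigmoid(h @ self.w_dec)

x = rng.random((5, 64))        # 5 samples with 64 features each
ae1 = TinyAutoencoder(64, 32)
ae2 = TinyAutoencoder(32, 16)

h1 = ae1.encode(x)             # hidden layer of the first autoencoder
h2 = ae2.encode(h1)            # ...is the input of the second autoencoder
print(h1.shape, h2.shape)      # (5, 32) (5, 16)
```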

Figure 8.

DSWAE with two layers.

The linear function inside the neurons is replaced by a sigmoid function to allow fine-tuning. The sigmoid favors the important features and, being differentiable, supplies the derivative of the activation function needed in the back-propagation step.

Fine-tuning [41] is commonly used in DL and is used here to greatly improve the performance of a stacked AE.

Intelligent pooling [42, 43] is used to optimize the quality of the features in the hidden layers: when the wavelets in adjacent neurons have the same scale and the same type, pooling is applied to these neurons.

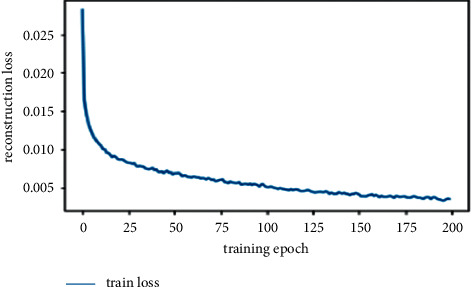

First, we trained the autoencoder in an unsupervised manner on normal and abnormal mammogram images, then we transferred the learning space from the autoencoder to the hybrid classification autoencoder, and then we trained the fully connected layer of the hybrid model using the fine-tuning technique. Figure 9 illustrates the results of the training phase of the autoencoder.

Figure 9.

Learning curves of training the autoencoder model.

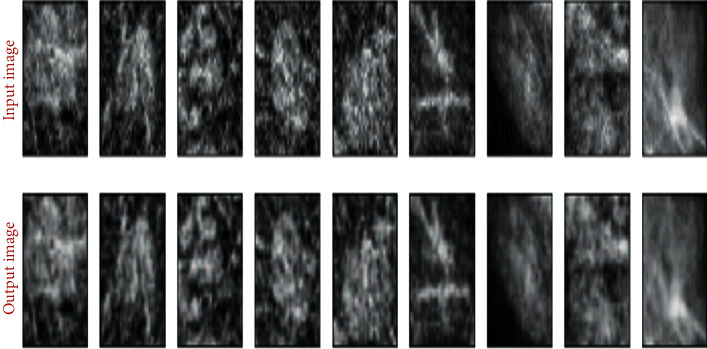

Figure 10 illustrates the output of the autoencoder for training images. The output images show the good quality of the modeling, which is due to the segmentation phase. This phase, based on FCN8, made it possible to keep only the useful part of the mammographic image.

Figure 10.

Autoencoder images reconstruction results.

4. Experimental Results

4.1. Dataset

To train and test our architecture, we used CBIS-DDSM, presented in [44], a database of mammographic images of breasts containing a mass. DDSM is composed of 2,620 scanned film mammography studies. It contains normal, benign, and malignant cases with verified pathology information. In our case, we were interested in the images that contain a mass, in order to check whether the mass is benign or malignant.

4.2. Dataset Splitting

For the segmentation model, we divided the dataset into two folders: one contains the full mammogram images, and the other contains the ground truth masks. To train the FCN, we used 80% of the dataset for the training phase and 20% for the testing phase. The segmentation yields cropped images of the mass abnormalities, which form the dataset for the classification phase. We used 80% of this new dataset for the training phase and 20% for the testing phase of the classification approach.
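An 80/20 split of image and mask pairs can be sketched with the standard library. The file names are hypothetical, and shuffling with a fixed seed is an assumption: the text does not say whether the split was random.

```python
import random

def split_dataset(items, train_frac: float = 0.8, seed: int = 42):
    """Shuffle the items reproducibly and split them into train and test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_frac)
    return shuffled[:cut], shuffled[cut:]

# Hypothetical (image, mask) file pairs.
pairs = [(f"image_{i}.png", f"mask_{i}.png") for i in range(100)]
train, test = split_dataset(pairs)
print(len(train), len(test))  # 80 20
```

Keeping each image with its mask in a single tuple guarantees that a pair never ends up split across the two sets.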

4.3. Classification

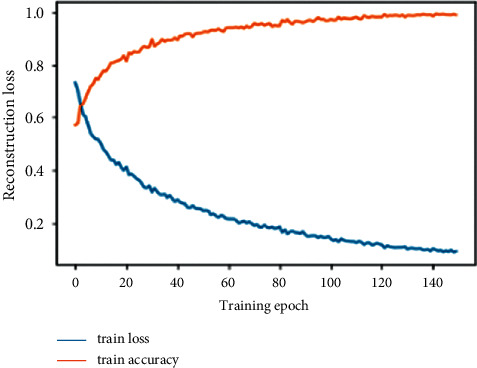

The classification process is based on a linear function. The SoftMax function is used at the end of the modeling process to identify the class of each image. Figure 11 illustrates the learning curves obtained after the segmentation and modeling phases.
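A linear layer followed by SoftMax can be sketched as below. The feature dimension (16) and the random weights are placeholders, not trained parameters; the three class names follow the classes reported in the results.

```python
import numpy as np

CLASSES = ("benign", "malignant", "normal")

def softmax(logits: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

def classify(features: np.ndarray, weights: np.ndarray, bias: np.ndarray):
    """Linear scores -> class probabilities -> most likely class per sample."""
    probs = softmax(features @ weights + bias)
    return probs, [CLASSES[i] for i in probs.argmax(axis=-1)]

rng = np.random.default_rng(0)
features = rng.normal(size=(4, 16))  # placeholder encoded features
weights = rng.normal(size=(16, 3))   # placeholder (untrained) weights
bias = np.zeros(3)
probs, labels = classify(features, weights, bias)
print(probs.sum(axis=1))  # each row sums to 1
```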

Figure 11.

Learning curves of training hybrid classification autoencoder model.

Table 2 shows the confusion matrix presenting the classification rates resulting from our approach.

4.4. Evaluation Metrics

A true positive is an outcome where the model correctly predicts the positive class, and a true negative is an outcome where the model correctly predicts the negative class. A false positive is an outcome where the model incorrectly predicts the positive class, and a false negative is an outcome where the model incorrectly predicts the negative class.
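These four outcomes can be counted directly from lists of true and predicted labels. The labels below are made-up examples, with "malignant" taken as the positive class for illustration.

```python
def confusion_counts(y_true, y_pred, positive):
    """Count true/false positives and negatives for one positive class."""
    tp = tn = fp = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive:
            if truth == positive:
                tp += 1   # predicted positive, actually positive
            else:
                fp += 1   # predicted positive, actually negative
        else:
            if truth == positive:
                fn += 1   # predicted negative, actually positive
            else:
                tn += 1   # predicted negative, actually negative
    return {"TP": tp, "TN": tn, "FP": fp, "FN": fn}

y_true = ["malignant", "benign", "malignant", "benign", "benign"]
y_pred = ["malignant", "malignant", "benign", "benign", "benign"]
counts = confusion_counts(y_true, y_pred, positive="malignant")
print(counts)  # {'TP': 1, 'TN': 2, 'FP': 1, 'FN': 1}
```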

We compared our approach to several state-of-the-art approaches. Table 3 presents an evaluation in terms of classification rates of the different approaches.

Table 3.

Classification rate evaluation.

The evaluation details of our approach are shown in Table 4.

Table 4.

Classification metrics.

| | Precision | Recall |
|---|---|---|
| Benign | 0.94 | 0.92 |
| Malignant | 0.93 | 0.95 |
| Normal | 1.00 | 1.00 |

According to Tables 3 and 4, we can notice the effectiveness of our approach. This quality is due to the following two criteria:

The feature extraction phase is based on the FCN. We no longer process the raw image. Based on the FCN, the part containing the useful information is segmented, which greatly reduces the modeling of the unnecessary information.

The use of autoencoders based on wavelet networks. Wavelet analysis is known for its capacity, which wavelet networks exploit in the data modeling phase. The autoencoder based on wavelet networks is the association of a set of wavelet networks, so it has both the wavelet analysis capability and the modeling capability of wavelet networks.

5. Conclusion

The intelligent analysis and classification of medical images has been a growing field in recent years. In this context, we have proposed a new approach for the classification of mammography images of breasts containing nodules. The proposed approach is a hybridization of two techniques. The FCN is used for nodule segmentation and localization of the affected part of the breast, which removes unnecessary information from the mammogram image. In the end, only the image of the nodule remains, from which we decide on its nature. The DSWAE is an autoencoder model based on wavelet networks. This WAE brings together the qualities of wavelet analysis and the modeling qualities of wavelet networks. We have shown the performance of our approach compared to other state-of-the-art methods on the same test base. These results justify our choice of techniques for the identification and classification of breast mammograms. The proposed method may also be useful for the detection of other types of cancer, such as skin cancer [48], lung cancer [49], and brain tumors [50].

Table 2.

Confusion matrix.

| | | Benign | Malignant | Normal |
|---|---|---|---|---|
| Predicted | Benign | 473 | 41 | 0 |
| | Malignant | 30 | 555 | 0 |
| | Normal | 0 | 0 | 500 |
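The per-class precision and recall of Table 4 can be recomputed from the counts in Table 2. The sketch below treats the rows of the matrix as actual classes and the columns as predicted classes. This orientation is an assumption: it is the reading under which the computed values agree with Table 4, and with the transposed reading the benign and malignant precision and recall values swap.

```python
import numpy as np

classes = ["Benign", "Malignant", "Normal"]
# Counts from Table 2 (assumed: rows = actual class, columns = predicted class).
cm = np.array([[473, 41, 0],
               [30, 555, 0],
               [0, 0, 500]])

true_positives = np.diag(cm)
recall = true_positives / cm.sum(axis=1)     # correct / all samples in the row
precision = true_positives / cm.sum(axis=0)  # correct / all samples in the column
accuracy = true_positives.sum() / cm.sum()   # 1528 correct out of 1599

for name, p, r in zip(classes, precision, recall):
    print(f"{name}: precision={p:.2f}, recall={r:.2f}")
```

Rounded to two decimals, this gives 0.94/0.92 for benign, 0.93/0.95 for malignant, and 1.00/1.00 for normal, with an overall accuracy of 1528/1599, about 95.6%, derived from these counts.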

Acknowledgments

This study was supported by Princess Nourah Bint Abdulrahman University Researchers Supporting Project number (Grant No. PNURSP2022R308), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia. The authors would like to acknowledge the financial support of this work by grants from General Direction of Scientific Research (DGRST), Tunisia, under the ARUB program.

Data Availability

To train and test our architecture, we used CBIS-DDSM, presented in [36], a database of mammographic images of breasts containing a mass. DDSM is composed of 2,620 scanned film mammography studies. It contains normal, benign, and malignant cases with verified pathology information. In our case, we were interested in the images that contain a mass, in order to check whether the mass is benign or malignant.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

References

- 1.Jafari S. H., Saadatpour Z., Salmaninejad A., et al. Breast cancer diagnosis: imaging techniques and biochemical markers. Journal of Cellular Physiology . 2018;233(7):5200–5213. doi: 10.1002/jcp.26379. [DOI] [PubMed] [Google Scholar]

- 2.Cancer Society A. Breast Cancer Facts & Figures 2019-2020 . Atlanta: American Cancer Society, Inc; 2019. [Google Scholar]

- 3.Abbas S., Jalil Z., Javed A. R., et al. BCD-WERT: a novel approach for breast cancer detection using whale optimization based efficient features and extremely randomized tree algorithm. PeerJ Computer Science . 2021;7:p. e390. doi: 10.7717/peerj-cs.390. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Mohiyuddin A., Basharat A., Ghani U., et al. Breast tumor detection and classification in mammogram images using modified YOLOv5 network. Computational and Mathematical Methods in Medicine Hindawi . 2021:1748–670X. doi: 10.1155/2022/1359019. [DOI] [PMC free article] [PubMed] [Google Scholar] [Retracted]

- 5.Mehmood M., Rizwan M., Gregus ml M., Abbas S. Machine learning assisted cervical cancer detection. Frontiers in Public Health . 2021;9 doi: 10.3389/fpubh.2021.788376.788376 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Kaushal C., Bhat S., Koundal D., Singla A. Recent trends in computer assisted diagnosis (CAD) system for breast cancer diagnosis using histopathological images. IRBM . 2019;40(4):211–227. doi: 10.1016/j.irbm.2019.06.001. [DOI] [Google Scholar]

- 7.Hussain L., Aziz W., Saeed S., Rathore S., Rafique M. Automated breast cancer detection using machine learning techniques by extracting different feature extracting strategies. Proceedings of the 2018 17th IEEE International Conference on Trust, Security and Privacy in Computing and Communications/12th IEEE International Conference on Big Data Science and Engineering; August 2018; New York, NY, USA. TrustCom/BigDataSE); pp. 327–331. [DOI] [Google Scholar]

- 8.Long J., Shelhamer E., Trevor Darrell Fully convolutional networks for semantic segmentation. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); May 2015; Boston, MA, USA. pp. 3431–3440. [DOI] [PubMed] [Google Scholar]

- 9.Ronneberger O., Fischer P., Brox T. U-net: convolutional networks for biomedical image segmentation. Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention; 2015 October; Cham. Springer; pp. 234–241. [DOI] [Google Scholar]

- 10.Schmidhuber J. Deep learning in neural networks: an overview. Neural Networks . 2015;61:85–117. doi: 10.1016/j.neunet.2014.09.003. [DOI] [PubMed] [Google Scholar]

- 11.Brahimi S., Ben Aoun N., Ben Amar C. Very deep recurrent convolutional neural network for object recognition. Proceedings of the Ninth International Conference on Machine Vision (ICMV 2016); October2016; Miyazaki, Japan. IEE; [DOI] [Google Scholar]

- 12.Abdul R., Labiba G., Ahmad Farhan A., et al. Automated cognitive health assessment in smart homes using machine learning. Sustainable Cities and Society . 2021;65 doi: 10.1016/j.scs.2020.102572. [DOI] [Google Scholar]

- 13.Khan A., Sohail A., Zahoora U., Qureshi A. S. A survey of the recent architectures of deep convolutional neural networks. Artificial Intelligence Review . 2020;53(8):5455–5516. doi: 10.1007/s10462-020-09825-6. [DOI] [Google Scholar]

- 14.Beeravolu A. R., Azam S., Jonkman M., Shanmugam B., Kannoorpatti K., Anwar A. Preprocessing of breast cancer images to create datasets for deep-CNN. IEEE Access . 2021;9:33438–33463. doi: 10.1109/access.2021.3058773. [DOI] [Google Scholar]

- 15.Mejdoub M., Aoun N. B., Amar C. B. Bag of frequent subgraphs approach for image classification. Intelligent Data Analysis . 2015;19(1):75–88. doi: 10.3233/ida-140697. [DOI] [Google Scholar]

- 16.Saikia A. R., Bora K., Mahanta L. B., Das A. K. Comparative assessment of CNN architectures for classification of breast FNAC images. Tissue and Cell . 2019;57:8–14. doi: 10.1016/j.tice.2019.02.001. [DOI] [PubMed] [Google Scholar]

- 17. Acharya S., Alsadoon A., Prasad P. W. C., Abdullah S., Deva A. Deep convolutional network for breast cancer classification: enhanced loss function (ELF). The Journal of Supercomputing. 2020;76(11):8548–8565. doi: 10.1007/s11227-020-03157-6.

- 18. Belhaj Soulami K., Kaabouch N., Saidi M. N., Tamtaoui A. An evaluation and ranking of evolutionary algorithms in segmenting abnormal masses in digital mammograms. Multimedia Tools & Applications. 2020;79. doi: 10.1007/s11042-019-08449-5.

- 19. Memon M. H., Li J. P., Amin Ul Haq, Memon M. H., Wang Z. Breast cancer detection in the IOT health environment using modified recursive feature selection. Wireless Communications and Mobile Computing. 2019;2019:1–19.

- 20. Alzubaidi L., Al-Shamma O., Fadhel M. A., Farhan L., Zhang J., Duan Y. Optimizing the performance of breast cancer classification by employing the same domain transfer learning from hybrid deep convolutional neural network model. Electronics. 2020;9(3):p. 445. doi: 10.3390/electronics9030445.

- 21. Li Y., Wu J., Wu Q. Classification of breast cancer histology images using multi-size and discriminative patches based on deep learning. IEEE Access. 2019;7:21400–21408. doi: 10.1109/access.2019.2898044.

- 22. Wadhwa G., Mathur M. A convolutional neural network approach for the diagnosis of breast cancer. Proceedings of the 2020 Sixth International Conference on Parallel, Distributed and Grid Computing (PDGC); June 2021; Waknaghat, India. pp. 357–361.

- 23. Mohapatra P., Panda B., Swain S. Enhancing histopathological breast cancer image classification using deep learning. International Journal of Innovative Technology and Exploring Engineering. 2019;8(7):2024–2032.

- 24. Szegedy C., Vanhoucke V., Ioffe S., Shlens J., Wojna Z. Rethinking the inception architecture for computer vision. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; June 2016; Las Vegas, NV, USA. pp. 2818–2826.

- 25. Michael E., Ma H., Li H., Kulwa F., Li J. Breast cancer segmentation methods: current status and future potentials. BioMed Research International. Vol. 20. 2021. Article 9962109.

- 26. Brahimi S., Ben Aoun N., Benoit A., Lambert P., Ben Amar C. Semantic segmentation using reinforced fully convolutional densenet with multiscale kernel. Multimedia Tools and Applications. 2019;78(15):22077–22098. doi: 10.1007/s11042-019-7430-x.

- 27. Brahimi S., Ben Aoun N., Ben Amar C., Benoit A., Lambert P. Multiscale fully convolutional denseNet for semantic segmentation. Journal of WSCG. 2018;26(2):104–111. doi: 10.24132/jwscg.2018.26.2.5.

- 28. Badrinarayanan V., Kendall A., Cipolla R. SegNet: a deep convolutional encoder-decoder architecture for image segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2017;39(12):2481–2495. doi: 10.1109/tpami.2016.2644615.

- 29. Al-antari M. A., Al-masni M. A., Choi M.-T., Han S.-M., Kim T.-S. A fully integrated computer-aided diagnosis system for digital x-ray mammograms via deep learning detection, segmentation, and classification. International Journal of Medical Informatics. 2018;117:44–54. doi: 10.1016/j.ijmedinf.2018.06.003.

- 30. Min H., Wilson D., Huang Y., et al. Fully automatic computer-aided mass detection and segmentation via pseudo-color mammograms and Mask R-CNN. Proceedings of the 2020 IEEE 17th International Symposium on Biomedical Imaging (ISBI); May 2020; Iowa City, IA, USA. pp. 1111–1115.

- 31. Sun H., Li C., Liu B., et al. Aunet: attention-guided dense-upsampling networks for breast mass segmentation in whole mammograms. Physics in Medicine and Biology. 2020;65(5):055005. doi: 10.1088/1361-6560/ab5745.

- 32. Abdelhafiz D., Nabavi S., Ammar R., Yang C., Bi J. Residual deep learning system for mass segmentation and classification in mammography. Proceedings of the 10th ACM International Conference on Bioinformatics, Computational Biology and Health Informatics; 2019; Niagara Falls, NY, USA. pp. 475–484.

- 33. Saffari N., Rashwan H. A., Abdel-Nasser M., et al. Fully automated breast density segmentation and classification using deep learning. Diagnostics. 2020;10(11):p. 988. doi: 10.3390/diagnostics10110988.

- 34. Li S., Dong M., Du G., Mu X. Attention dense-U-net for automatic breast mass segmentation in digital mammogram. IEEE Access. 2019;7:59037–59047. doi: 10.1109/access.2019.2914873.

- 35. Dhungel N., Carneiro G., Bradley A. P. Deep learning and structured prediction for the segmentation of mass in mammograms. Lecture Notes in Computer Science. 2015:605–612. doi: 10.1007/978-3-319-24553-9_74.

- 36. Redmon J., Divvala S., Girshick R., Farhadi A. You only look once: unified, real-time object detection. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; June 2016; Las Vegas, NV, USA. pp. 779–788.

- 37. Abdelhafiz D., Bi J., Ammar R., Yang C., Nabavi S. Convolutional neural network for automated mass segmentation in mammography. BMC Bioinformatics. 2020;21(1):p. 192. doi: 10.1186/s12859-020-3521-y.

- 38. Chen L.-C., Papandreou G., Kokkinos I., Murphy K., Yuille A. L. Semantic image segmentation with deep convolutional nets and fully connected CRFs. Proceedings of the ICLR; May 2015; San Diego, CA, USA.

- 39. Ben Ali R., Ejbali R., Zaied M. Classification of medical images based on deep stacked patched auto-encoders. Multimedia Tools and Applications. 2020;79:25237–25257. doi: 10.1007/s11042-020-09056-5.

- 40. Hassairi S., Ejbali R., Zaied M. A deep stacked wavelet auto-encoders to supervised feature extraction to pattern classification. Multimedia Tools and Applications. 2018;77(5):5443–5459. doi: 10.1007/s11042-017-4461-z.

- 41. Vincent P. Stacked denoising autoencoders: learning useful representations in a deep network with a local denoising criterion. Journal of Machine Learning Research. 2010;11:3371–3408.

- 42. LeCun Y. Learning invariant feature hierarchies. Computer Vision - ECCV 2012. Workshops and Demonstrations. Lecture Notes in Computer Science, vol. 7583; 2012. pp. 496–505.

- 43. Sermanet P., Chintala S., LeCun Y. Convolutional neural networks applied to house numbers digit classification. Proc. Int. Conf. Pattern Recognit. ICPR12. 2012:3288–3291.

- 44. Lee R. S., Gimenez F., Hoogi A., Miyake K. K., Gorovoy M., Rubin D. L. A curated mammography data set for use in computer-aided detection and diagnosis research. Scientific Data. 2017;4(1):170177. doi: 10.1038/sdata.2017.177.

- 45. Tsochatzidis L., Zagoris K., Arikidis N., Karahaliou A., Costaridou L., Pratikakis I. Computer-aided diagnosis of mammographic masses based on a supervised content-based image retrieval approach. Pattern Recognition. 2017;71:106–117. doi: 10.1016/j.patcog.2017.05.023.

- 46. Rouhi R., Jafari M., Kasaei S., Keshavarzian P. Benign and malignant breast tumors classification based on region growing and CNN segmentation. Expert Systems with Applications. 2015;42(3):990–1002. doi: 10.1016/j.eswa.2014.09.020.

- 47. Xie W., Li Y., Ma Y. Breast mass classification in digital mammography based on extreme learning machine. Neurocomputing. 2016;173:930–941. doi: 10.1016/j.neucom.2015.08.048.

- 48. Javaid A., Sadiq M., Akram F. Skin cancer classification using image processing and machine learning. Proceedings of the 2021 International Bhurban Conference on Applied Sciences and Technologies (IBCAST); January 2021; Islamabad, Pakistan. IEEE; pp. 439–444.

- 49. Maurer A. An early prediction of lung cancer using CT scan images. Journal of Computing and Natural Science. 2021;1:39–44. doi: 10.53759/181x/jcns202101008.

- 50. Ramkumar G., Prabu R. T., Singh N. P., Maheswaran U. Experimental analysis of brain tumor detection system using Machine learning approach. Materials Today Proceedings. 2021. doi: 10.1016/j.matpr.2021.01.246.

- 51. Javed A. R., Sarwar M. U., Beg M. O., Asim M., Baker T., Tawfik H. A collaborative healthcare framework for shared healthcare plan with ambient intelligence. Human-centric Computing and Information Sciences. 2020;10(1):p. 40. doi: 10.1186/s13673-020-00245-7.

Data Availability Statement

To train and test our architecture, we used CBIS-DDSM, presented in [44]. It is a database of mammographic images of breasts containing a mass. DDSM, from which it is derived, is composed of 2,620 scanned film mammography studies and contains normal, benign, and malignant cases with verified pathology information. In our case, we were interested in the images that contain a mass, in order to determine whether the mass is benign or malignant.