Abstract

Purpose

Liver cancer is one of the most common malignant tumors in the world, ranking fifth among malignant tumors. The degree of differentiation reflects the degree of malignancy, and liver cancer is graded as poorly differentiated, moderately differentiated, or well differentiated. Diagnosis and treatment tailored to the level of differentiation are crucial to the survival rate and survival time of patients. As the gold standard for liver cancer diagnosis, histopathological images can accurately distinguish liver cancers of different levels of differentiation. At present, however, classifying histopathological images of liver cancer by degree of differentiation is time-consuming, labor-intensive, and demands substantial manual effort. The intelligent classification of histopathological images is therefore of great significance to patients with liver cancer.

Methods

Based on a complete data acquisition scheme, this paper applies the SENet deep learning model to the intelligent classification of liver cancer histopathological images of all differentiation types for the first time, and compares it with four other deep learning models: VGG16, ResNet50, ResNet50_CBAM, and SKNet. The evaluation indexes adopted in this paper include the confusion matrix, precision, recall, and F1 Score, which together evaluate the models comprehensively.

Results

Five deep learning classification models were applied to the collected data set and evaluated. The experimental results show that the SENet model achieved the best classification effect, with an accuracy of 95.27%. The model also has good reliability and generalization ability. The experiment shows that the SENet deep learning model has good application prospects in the intelligent classification of histopathological images.

Conclusions

This study shows that deep learning has great application value in relieving the time-consuming and laborious manual reading of slides, and it has practical significance for research on the intelligent classification of histopathological images of other cancers.

Keywords: Histopathological images of liver cancer, SENet, Degree of differentiation of the whole type, Intelligent classification

Background

Liver cancer is a malignant tumor with an extremely high fatality rate that directly threatens human life. Hepatocellular carcinoma (HCC) is a primary liver cancer derived from hepatocytes, with a high degree of malignancy. There are approximately 750,000 patients worldwide each year. The mortality rate of HCC is as high as 93%, ranking third among all malignant tumors. In 2012, more than 782,500 new liver cancer cases were diagnosed, and more than 745,000 liver cancer-related deaths were recorded globally; half of these cases and deaths occurred in China [1–8]. In recent years, the global morbidity and mortality of liver cancer have continued to increase, and liver cancer has seriously threatened human life and health. The main clinical features of liver cancer are low predictability, rapid deterioration, and high lethality once the disease has developed. The key to the treatment of liver cancer is therefore timely diagnosis [9–13]. At present, the main diagnostic methods for liver cancer include serum tests, biopsy, and imaging diagnosis. Among them, histopathological images of liver cancer clearly show the location, size, number, cell and histological type, degree of differentiation, vascular and capsular invasion, satellite foci and metastases, and the lesions of the liver tissue adjacent to the cancer. Of these, the degree of differentiation reflects the degree of malignancy: the higher the degree of differentiation, the closer the cells are to normal tissue cells and the lower the degree of malignancy; the lower the degree of differentiation, the higher the degree of malignancy. Diagnosis and treatment tailored to the degree of differentiation are crucial to the survival rate and survival time of patients [14, 15].
Therefore, the accurate classification of histopathological images of liver cancer plays a decisive and irreplaceable role in the diagnosis of liver cancer with different degrees of differentiation. However, classifying histopathological images by degree of differentiation is time-consuming, labor-intensive, and demands substantial manual effort. In addition, a doctor's lack of experience, fatigue from long working hours, and subjective judgment can all lead to misjudgment, which seriously affects the formulation of the patient's treatment plan and the prognosis [16, 17]. Further study of histopathological image classification of liver cancer therefore has important research value [18–20].

In recent years, with the rapid development of artificial intelligence, artificial intelligence algorithms have been widely used in the medical field. Deep learning, one family of artificial intelligence algorithms, is a machine learning method based on deep neural networks [21, 22]. Deep learning is widely used in computer vision and natural language processing tasks [23], especially in medical image processing and early disease diagnosis [24–34]. Krishan et al. used six different classifiers to classify different stages of tumors from CT images. The accuracy of tumor classification ranged from 76.38 to 87.01%. This will allow radiologists to save valuable time before liver diagnosis and treatment [35]. However, the classification accuracy of this study still needs improvement. Yu-Shiang Lin used a GoogLeNet (Inception-V1)-based binary classifier to classify HCC histopathology images. The classifier achieved 91.37% (± 2.49) accuracy, 92.16% (± 4.93) sensitivity, and 90.57% (± 2.54) specificity in HCC classification [36]. In the work of Yasmeen Al-Saeed, a computer-aided diagnosis system was introduced to extract liver tumors from computed tomography scans and classify them as malignant or benign. The proposed computer-aided diagnosis system achieved an average accuracy of 96.75%, sensitivity of 96.38%, specificity of 95.20% and Dice similarity coefficient of 95.13% [37]. However, this study is limited to a single sample type. Xu et al. developed a radiomic diagnosis model based on CT images that can quickly distinguish hepatocellular carcinoma (HCC) from intrahepatic cholangiocarcinoma (ICCA). In the training set, the AUC of the radiomics evaluation was 0.855, higher than the 0.689 achieved by radiologists. In the validation cohorts, the AUCs were 0.847 and 0.659, respectively, which may facilitate the differential diagnosis of HCC and ICCA in the future [38].
However, the classification accuracy of this study still needs improvement. Wan et al. proposed a deep learning-based multi-scale and multi-level fusion approach of CNNs for liver lesion diagnosis on magnetic resonance images, termed MMF-CNN. They applied the proposed approach to various state-of-the-art deep learning architectures, and the experimental results demonstrate its effectiveness [39]. Zhou et al. introduced basic technical knowledge about AI, including traditional machine learning and deep learning algorithms, especially convolutional neural networks, and their clinical applications in medical imaging of liver disease, such as detecting and evaluating focal liver lesions, facilitating treatment, and predicting liver response to treatment. They concluded that machine-assisted medical services will be a promising solution for liver medical services in the future [40]. Jing Li designed a fully automated computer-aided diagnosis (CAD) system using a convolutional neural network (CNN) structure to diagnose hepatocellular carcinoma (HCC). The study used a total of 165 venous phase CT images (including 46 diffuse tumors, 43 nodular tumors and 76 large tumors) to evaluate the performance of the proposed CAD system. The CNN classifier achieved classification accuracies of 98.4%, 99.7% and 98.7% for diffuse, nodular and massive tumors, respectively [41]. However, the study does not use gold-standard samples, so it is difficult for doctors to design and arrange accurate treatment procedures based on the diagnosis results. Samy A. Azer studied the role of CNNs in analyzing images and as a tool for early detection of HCC or liver masses. The results show that CNNs achieve state-of-the-art accuracy in liver cancer image segmentation and classification [42].
However, this study covers too few classification types, which limits it. Liangqun Lu and Bernie J. Daigle, Jr. used the pre-trained CNN models VGG 16, Inception V3 and ResNet 50 to accurately distinguish normal samples from cancer samples using image features extracted from HCC histopathological images. However, the study is limited to a single category of classification samples [43]. Sureshkumar et al. outlined various liver tumor detection algorithms and methods for liver tumor analysis. They proposed deep learning methods such as probabilistic neural networks to detect and diagnose liver tumors, and compared them experimentally with different methods. The study proposes a framework that can sort CT images of the liver into expected and abnormal cases and identify unusual tumors with a reduced false positive rate and improved accuracy [44]. However, the sample types in that study are limited and are not the diagnostic gold standard. Lin et al. used 217 combined two-photon excitation fluorescence and second harmonic generation images to train a convolutional neural network based on the VGG-16 framework; the classification accuracy for liver cancer differentiation levels exceeded 90%. This shows that combining multiphoton microscopy with a deep learning algorithm can classify the differentiation of HCC, thereby providing an innovative computer-aided diagnosis method [45]. The research is relatively new, but there is still room for improvement in accuracy. In this study, we used a very complete data set of histopathological images of HCC at multiple differentiation levels, added a visual attention mechanism to the deep learning model to extract the feature information of medical images more effectively, and obtained an accuracy of 95.27% with SENet.
This study proves that the SENet deep learning model has good application value in the intelligent classification of histopathological images.

In order to ensure the authenticity and integrity of the data, we developed a complete data acquisition scheme to obtain a higher-quality data set for the classification experiment. Since histopathological images carry more distinctive feature information than conventional natural images, their characteristics must be fully considered when establishing a deep learning model for histopathological image classification. Therefore, this paper adds a visual attention mechanism to the deep learning model. The attention mechanism improves the interpretability of the model by making the attention visible, and it extracts the feature information of medical images more effectively. On this basis, for the first time, we applied the SENet deep learning model to the intelligent classification of liver cancer histopathological images of all differentiation types, and compared it with four other deep learning models: VGG16, ResNet50, ResNet50_CBAM, and SKNet. SENet achieved a classification accuracy of 95.27%. The experiment shows that the SENet deep learning model has good application prospects in the intelligent classification of histopathological images. Effective classification of the different levels of differentiation helps doctors formulate treatment plans more effectively, so that patients can be treated in time. At the same time, it can reduce the workload of medical workers and the time needed to read histopathological images of liver cancer. This also provides a reference for applying deep learning to the intelligent classification of medical images.

Materials and methods

Obtaining slice samples

The Institutional Review Committee of the Cancer Hospital Affiliated to Xinjiang Medical University approved this study with the ethical approval number K-2021050. Male and female patients who were treated in the Cancer Hospital of Xinjiang Medical University from August 2020 to August 2021 participated in this study with informed consent. Fresh liver cancer tissue samples were collected by professional surgeons. None of the patients had received hormone therapy, radiotherapy or chemotherapy before surgery. In the end, without collecting any personal information, we obtained 74 liver cancer tissue samples from these patients (24 poorly differentiated, 28 moderately differentiated, and 22 well differentiated pathological slice samples). The slice samples were prepared as follows:

The first step is fixation: fresh liver cancer specimens are quickly fixed with 10% neutral buffered formalin at room temperature for 18–24 h. The second step is dehydration: the formalin-fixed sample is immersed in a series of alcohol solutions of increasing concentration to remove water. The third step is embedding: after the dehydrated sample is cleared with an organic solvent, it is embedded in molten paraffin. After cooling, each paraffin-embedded block (1.5 × 1.5 × 0.3 cm³) was cut into consecutive 4 µm slices, and each slice was placed on an albumin-coated glass slide. The fourth step, staining, starts after the paraffin is dissolved: the histological sections of liver cancer tissue are stained with H&E, the most commonly used staining method in medical diagnosis. Finally, 3 pathologists, each with more than 10 years of experience, examined the histological sections under a light microscope and selected representative H&E sections; their diagnoses were consistent. Table 1 shows the basic information of all patients.

Table 1.

Basic patient information

| Differentiation | Age | Gender (M) | Gender (W) | Stage I | Stage II | Stage III | Stage IV | Alcoholism (Y) | Alcoholism (N) | Smoking (Y) | Smoking (N) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Poorly differentiated | 35–50 | 7 | 3 | 4 | 1 | 3 | 2 | 2 | 8 | 5 | 5 |
| Poorly differentiated | 51–66 | 6 | 1 | 4 | 1 | 1 | 1 | 2 | 5 | 3 | 4 |
| Poorly differentiated | 67–82 | 4 | 3 | 2 | 4 | 1 | 0 | 0 | 7 | 0 | 7 |
| Moderately differentiated | 35–50 | 8 | 0 | 2 | 3 | 3 | 0 | 2 | 6 | 3 | 5 |
| Moderately differentiated | 51–66 | 12 | 2 | 8 | 4 | 2 | 0 | 6 | 8 | 8 | 6 |
| Moderately differentiated | 67–82 | 5 | 1 | 1 | 0 | 5 | 0 | 2 | 4 | 3 | 3 |
| Well differentiated | 35–50 | 2 | 0 | 1 | 0 | 0 | 1 | 2 | 0 | 2 | 0 |
| Well differentiated | 51–66 | 12 | 1 | 11 | 2 | 0 | 0 | 1 | 12 | 5 | 8 |
| Well differentiated | 67–82 | 4 | 1 | 1 | 1 | 3 | 0 | 0 | 5 | 2 | 3 |

Data set preparation

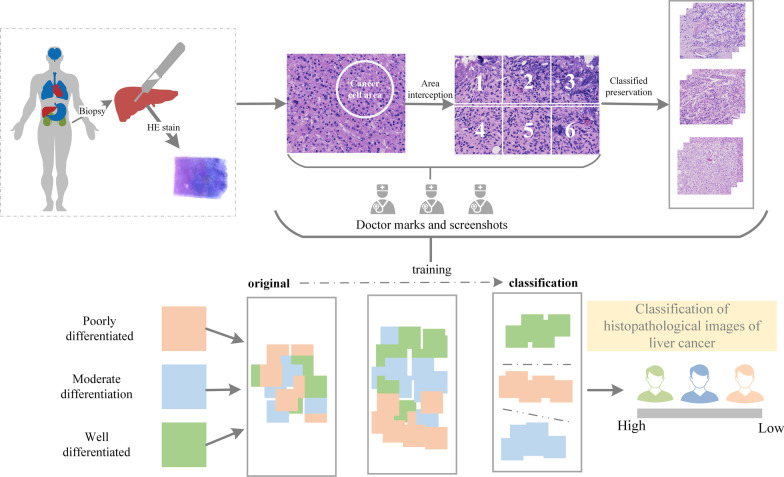

In this paper, 444 liver cancer histopathological images (144 poorly differentiated, 168 moderately differentiated, and 132 well differentiated) were obtained to form the research data set. Every image was acquired with a digital pathology scanner (Model: PRECICE 500B, Shanghai, China) at a size of 1665 × 1393 pixels. The acquisition flow chart is shown in Fig. 1.

Fig. 1.

Flowchart of data acquisition

The data set preparation process is as follows:

(1) Put 5 liver cancer pathological slices with the same degree of differentiation into the card slot of the digital pathology scanner simultaneously and complete the high-definition scanning of the slices.

(2) After scanning, a professional pathologist marks the area densely populated with cancer cells in the high-definition image on the basis of pathological expertise, cuts 6 images evenly from it in a 2 × 3 matrix, and saves the images.

(3) Repeat step (2) for each of the 5 pathological slices in the card slot in turn, and repeat the above process until all images are acquired.

(4) The pathology staff verify once more that all acquired images match their assigned degree of differentiation, exclude all images without normal cells, and proceed to the classification experiment only after confirming that the images are correct.
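The 2 × 3 cropping step can be illustrated with a short sketch. It is only an illustration under stated assumptions: the function name `tile_boxes`, the idea that the marked region starts at a given upper-left pixel, and the use of adjacent, non-overlapping tiles are hypothetical; the tile size of 1665 × 1393 pixels and the count of 74 slices are taken from the text.

```python
def tile_boxes(left, top, tile_w=1665, tile_h=1393, rows=2, cols=3):
    """Return (left, top, right, bottom) pixel boxes for a rows x cols grid of tiles.

    Assumes adjacent, non-overlapping tiles starting at the marked region's
    upper-left corner (a hypothetical layout, not confirmed by the paper).
    """
    boxes = []
    for r in range(rows):
        for c in range(cols):
            x0 = left + c * tile_w
            y0 = top + r * tile_h
            boxes.append((x0, y0, x0 + tile_w, y0 + tile_h))
    return boxes


boxes = tile_boxes(left=0, top=0)
images_per_slice = len(boxes)
total_images = 74 * images_per_slice  # 74 slices, 6 crops each
print(images_per_slice, total_images)
```

With six crops per slice, the 74 slices give the 444 raw images reported for the data set.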

Experimental environment

All program code in this study was developed in Python. The software and hardware of the experimental environment are shown in Table 2.

Table 2.

Experimental environment

| Category | Name | Version |
|---|---|---|
| CPU | Intel Core i5-9600KF | – |
| RAM | 32 GB, 2666 MHz | – |
| GPU | NVIDIA GTX 1070 | – |
| Development language | Python | V3.7.0 |
| Image processing library | scikit-image | V0.16.2 |
| Data processing library | NumPy | V1.17.2 |
| Machine learning library | scikit-learn | V0.22 |
| Deep learning framework 1 | TensorFlow | V2.1.0 |
| Deep learning framework 2 | Keras | V2.3.1 |

Experimental methods

Image enhancement

The 444 original liver cancer histopathological images used in this paper fall into three categories: poorly differentiated, moderately differentiated, and well differentiated. Deep learning models need a large number of training samples to generalize well, and the raw data set is too small to meet this requirement, so it has to be augmented. However, each histopathological image of liver cancer contains rich pathological diagnosis information, and many image enhancement methods cannot preserve it well. In order to also reproduce, as far as possible, the way medical workers actually read slides, this research enhances the original images by image rotation. The principle is as follows:

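Image rotation: Suppose that $(x, y)$ is the coordinate after rotation, $(x_0, y_0)$ is the coordinate before rotation, $(m, n)$ is the center of rotation, $\theta$ is the angle of rotation, and $(left, top)$ is the coordinate of the upper left corner of the rotated image. The realization formula is shown in (1):

$$
\begin{bmatrix} x & y & 1 \end{bmatrix} =
\begin{bmatrix} x_0 & y_0 & 1 \end{bmatrix}
\begin{bmatrix} 1 & 0 & 0 \\ 0 & -1 & 0 \\ -m & n & 1 \end{bmatrix}
\begin{bmatrix} \cos\theta & -\sin\theta & 0 \\ \sin\theta & \cos\theta & 0 \\ 0 & 0 & 1 \end{bmatrix}
\begin{bmatrix} 1 & 0 & 0 \\ 0 & -1 & 0 \\ left & top & 1 \end{bmatrix}
\tag{1}
$$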

The rotation step in this paper is set to 10°: each original image is rotated in 10° increments 36 times, yielding 36 images per original image. The enhanced data set therefore contains a total of 15,984 histopathological images (444 × 36).
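The coordinate mapping behind rotation about a center can be sketched in plain Python. This is a minimal sketch, not the authors' code: the symbol names `x0`, `y0`, `m`, `n`, `left` and `top` are assumptions, as is the three-step reading of the transform (shift the origin to the center and flip the y axis, rotate, flip back and shift by the output offset). The angle list `0°, 10°, ..., 350°` is also an assumption; the text only states a 10° step and 36 images per original.

```python
import math


def rotate_point(x0, y0, m, n, theta_deg, left, top):
    """Map a source pixel (x0, y0) to its position after rotating about (m, n)."""
    theta = math.radians(theta_deg)
    # Step 1: move the origin to the rotation center and flip the y axis
    u = x0 - m
    v = n - y0
    # Step 2: rotate in the centered, y-up frame
    ur = u * math.cos(theta) + v * math.sin(theta)
    vr = -u * math.sin(theta) + v * math.cos(theta)
    # Step 3: flip y back and shift by the offset of the output image
    return ur + left, -vr + top


# Hypothetical example: a 1665 x 1393 image rotated about its center,
# with the output kept in the same frame (offset equal to the center).
m, n = 832.5, 696.5
angles = [10 * k for k in range(36)]
corner_positions = [rotate_point(0, 0, m, n, a, m, n) for a in angles]
augmented_total = 444 * len(angles)
print(len(angles), augmented_total)
```

In practice a library routine such as an image-rotation function would apply this mapping to every pixel and interpolate; the sketch only shows where a single coordinate lands.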

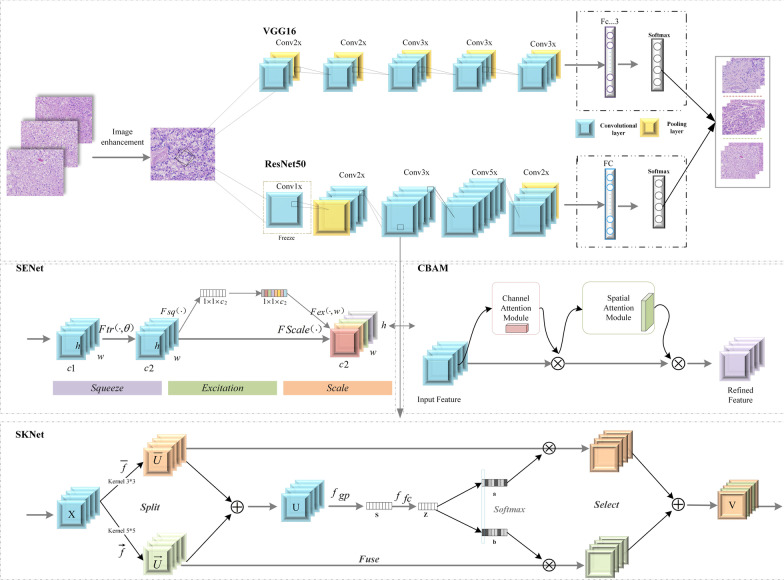

Model training

In this paper, five deep learning models, VGG16, ResNet50, ResNet50_CBAM, SENet, and SKNet, are selected for classification experiments [46, 47]. The models and the training process are described in detail below, and the experimental framework is shown in Fig. 2.

Fig. 2.

Experimental frame diagram

The VGG16 network is relatively simple and has few hyperparameters; building it mainly means stacking convolutional layers. Each convolutional layer uses 3 × 3 filters with a stride of 1 and "same" padding, and each max pooling layer uses a 2 × 2 window with a stride of 2. A major advantage of the VGG network is therefore that it simplifies the neural network structure. However, its number of parameters is very large, which consumes a lot of computing resources, so it is not suitable for learning images with complex features [48, 49].
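The effect of these two layer types on the feature-map size can be traced with a few lines of arithmetic. The sketch below assumes a hypothetical 224 × 224 input (the paper does not state the network input size) and the standard VGG16 layout of 13 convolutional layers in five blocks.

```python
def conv_same(size, stride=1):
    """Output size of a convolution with 'same' padding: ceil(size / stride)."""
    return -(-size // stride)


def max_pool(size, window=2, stride=2):
    """Output size of an unpadded pooling layer."""
    return (size - window) // stride + 1


size = 224                          # hypothetical input side length
convs_per_block = [2, 2, 3, 3, 3]   # 13 convolutional layers in VGG16
sizes = []
for n_convs in convs_per_block:
    for _ in range(n_convs):
        size = conv_same(size)      # 3 x 3, stride 1, same padding keeps the size
    size = max_pool(size)           # 2 x 2, stride 2 halves the size
    sizes.append(size)
print(sizes)  # [112, 56, 28, 14, 7]
```

Only the pooling layers change the spatial resolution, which is why the architecture can be described almost entirely by the number of convolutions per block.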

Traditional convolutional networks or fully connected networks more or less lose information as it is passed through the layers, and they can also suffer from vanishing or exploding gradients, which makes deep networks impossible to train. ResNet solves this problem to a certain extent. Its main idea is to borrow the idea of the Highway Network, which allows a certain proportion of the output of the previous network layer to be retained. The input information is routed directly to the output through a shortcut, protecting the integrity of the information. The network then only needs to learn the difference (residual) between input and output, which simplifies the learning objective and reduces its difficulty [50, 51]. Based on these advantages, this article chooses ResNet50 as one of the training models.
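The shortcut idea can be shown in a few lines. This is a toy sketch on plain Python lists, not ResNet50 itself: `residual_block` and `small_correction` are hypothetical names, and the learned layers are replaced by an arbitrary function.

```python
def residual_block(x, transform):
    """Return transform(x) + x element by element (identity shortcut)."""
    fx = transform(x)
    return [f + xi for f, xi in zip(fx, x)]


def small_correction(x):
    """Hypothetical stand-in for the learned layers F(x)."""
    return [0.1 * xi for xi in x]


x = [1.0, -2.0, 3.0]
y = residual_block(x, small_correction)
# If the learned part outputs zeros, the block passes its input through unchanged.
identity = residual_block(x, lambda v: [0.0] * len(v))
print(y, identity)
```

Because the input is always added back, the layers only have to model the difference between input and desired output, and a block that has nothing to add can fall back to the identity.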

CBAM is a simple and effective attention module for convolutional neural networks. Given any intermediate feature map in a convolutional neural network, CBAM infers attention maps along two independent dimensions of the feature map, channel and space, and then multiplies the attention maps by the input feature map to perform adaptive feature refinement. CBAM is an end-to-end general-purpose module [52]. Based on the advantages of combining CBAM with the ResNet50 model, this paper selects the ResNet50_CBAM model for classification experiments.

The idea of SENet is very simple, and it is easy to extend to existing network structures. The SENet model proposes a new block structure, called the SE block, which uses squeeze to compress each feature layer in the model structure and uses excitation to capture the dependence between feature channels [53, 54]. The SE block can therefore be combined with many existing models. The SE module mainly includes two operations, Squeeze and Excitation, which can be applied to any mapping $F_{tr}: X \to U$, $X \in \mathbb{R}^{H' \times W' \times C'}$, $U \in \mathbb{R}^{H \times W \times C}$. Taking convolution as an example, the kernel of convolution is $V = [v_1, v_2, \ldots, v_C]$, where $v_m$ represents the $m$th convolution kernel. Then the output is $U = [u_1, u_2, \ldots, u_C]$:

$$
u_m = v_m * X = \sum_{n=1}^{C'} v_m^n * x^n \tag{2}
$$

Here $*$ represents the convolution operation, and $v_m^n$ represents the 2-D convolution kernel of the $n$th channel, which takes the spatial characteristics of one channel as input and learns the spatial feature relationship. Because the convolution results of all channels are added together, the feature relationship between channels is mixed with the spatial relationship learned by the convolution kernel. The SE block removes this confounding, so that the model learns the feature relationship between channels directly.
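The Squeeze and Excitation operations can be sketched on nested Python lists. This follows the general SE design (global average pooling, two fully connected layers with ReLU and sigmoid, then channel-wise rescaling); the weights `w1` and `w2`, the reduction to a single hidden unit, and the omission of biases are hypothetical choices for illustration, not values from the paper.

```python
import math


def se_block(feature_maps, w1, w2):
    """Apply squeeze-and-excitation to C channels, each an H x W nested list.

    w1 has shape (C/r) x C and w2 has shape C x (C/r); biases are omitted.
    """
    # Squeeze: global average pooling gives one descriptor per channel
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_maps]
    # Excitation: FC -> ReLU -> FC -> sigmoid gives one weight in (0, 1) per channel
    hidden = [max(0.0, sum(w * zi for w, zi in zip(row, z))) for row in w1]
    scale = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden)))) for row in w2]
    # Scale: reweight every channel by its weight
    out = [[[s * v for v in row] for row in ch] for ch, s in zip(feature_maps, scale)]
    return out, scale


u = [[[1.0, 2.0], [3.0, 4.0]],   # channel 0
     [[0.0, 0.0], [0.0, 0.0]]]   # channel 1
w1 = [[0.5, 0.5]]                # hypothetical weights, one hidden unit
w2 = [[1.0], [-1.0]]
out, scale = se_block(u, w1, w2)
print(scale)
```

Each channel is multiplied by a single number between 0 and 1, so informative channels can be emphasized and less useful ones suppressed without changing the spatial layout.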

SKNet and SENet are lightweight modules that can be embedded directly in a network. In SKNet, feature maps produced by convolution kernels of different sizes are fused through an attention mechanism, and the attention weights are based on the information extracted by the kernels of different sizes [55]. Intuitively, SKNet builds a soft attention mechanism into the network so that the network can obtain information from different receptive fields, which may give a network structure with better generalization ability [56].

After selecting the experimental models, this article randomly divides the data into a training set and a test set at a ratio of 8:2; the specific division is shown in Table 3.

Table 3.

Data partition

| Differentiation type | Raw data | Enhanced data | Training set | Test set |
|---|---|---|---|---|
| Poorly differentiated | 144 | 5184 | 4176 | 1008 |
| Moderately differentiated | 168 | 6048 | 4860 | 1188 |
| Well differentiated | 132 | 4752 | 3816 | 936 |
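All training and test counts in Table 3 are multiples of 36, which is consistent with splitting the original images first and letting the 36 rotated copies follow their original. Whether the authors proceeded this way is an assumption; the sketch below only shows that such a split, with the test share rounded down, reproduces the table. The function name `split_originals` and the fixed seed are hypothetical.

```python
import random


def split_originals(n_originals, test_fraction=0.2, copies=36, seed=0):
    """Split original image ids, then expand each id into its rotated copies."""
    ids = list(range(n_originals))
    random.Random(seed).shuffle(ids)
    n_test = int(n_originals * test_fraction)  # rounded down
    test_ids, train_ids = ids[:n_test], ids[n_test:]
    # Every rotated copy stays on the same side of the split as its original
    train = [(i, k) for i in train_ids for k in range(copies)]
    test = [(i, k) for i in test_ids for k in range(copies)]
    return train, test


raw_counts = {"poorly": 144, "moderately": 168, "well": 132}
sizes = {name: tuple(len(part) for part in split_originals(n))
         for name, n in raw_counts.items()}
print(sizes)
# {'poorly': (4176, 1008), 'moderately': (4860, 1188), 'well': (3816, 936)}
```

Splitting before augmentation also keeps rotated versions of one image from appearing in both sets, which would otherwise inflate the test accuracy.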

In this paper, every deep model is trained for 300 epochs. The configuration of the optimizer and the setting of the learning rate are also crucial in model training. Stochastic gradient descent (SGD) trains quickly on large data sets and converges well as long as the noise is not very large, so this paper chooses SGD as the optimizer. The algorithm principle is as follows:

Suppose there are $n$ samples in a batch, and one sample $i_s$ is selected at random. The model parameter is $W$, the cost function is $J(W)$, the gradient is $\nabla J(W)$, and the learning rate is $\varepsilon_t$; the parameter update is then:

$$
W_{t+1} = W_t - \varepsilon_t g_t \tag{3}
$$

Here $g_t = \nabla J_{i_s}(W_t; X^{(i_s)})$, $i_s \in \{1, 2, \ldots, n\}$, represents a randomly selected gradient direction, and $W_t$ represents the model parameter at time $t$. Since $E(g_t) = \nabla J(W_t)$, the expected update is the correct gradient descent direction.

In formula (3), the learning rate $\varepsilon_t$ is one of the most important parameters in training; it is set to 0.001 in the training of this article.
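The update rule can be tried on a toy problem. The sketch below is illustrative only: it fits a single weight to hypothetical data generated as y = 2x with a squared-error cost, picks one sample at random per step, and uses the learning rate of 0.001 quoted in the text. The function name `sgd_fit`, the data, and the step count are assumptions.

```python
import random


def sgd_fit(samples, lr=0.001, steps=2000, seed=0):
    """Fit y = w * x by stochastic gradient descent on 0.5 * (w*x - y)**2."""
    rng = random.Random(seed)
    w = 0.0
    for _ in range(steps):
        x, y = rng.choice(samples)   # pick one sample at random
        g = (w * x - y) * x          # gradient of the per-sample cost w.r.t. w
        w = w - lr * g               # parameter update with learning rate lr
    return w


samples = [(float(x), 2.0 * x) for x in range(1, 11)]  # hypothetical data
w = sgd_fit(samples)
print(w)
```

Each step uses only one sample, so the individual updates are noisy, but on average they point along the full gradient and the weight settles at the value that generated the data.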

Evaluation index

There are many commonly used evaluation criteria for medical image classification, such as accuracy, error rate, false positive rate, true positive rate, false negative rate, and true negative rate. The evaluation indexes adopted in this paper include the confusion matrix, precision, recall, and F1 Score, which together evaluate the models comprehensively. They are described in detail below.

1. Confusion matrix: All evaluation indexes are directly or indirectly related to the confusion matrix. All evaluation indexes can be directly calculated from the confusion matrix, and their specific forms are shown in Table 4:

Table 4.

Confusion matrix

| | Actual positive class | Actual negative class |
|---|---|---|
| Predicted positive class | True positive (TP) | False positive (FP) |
| Predicted negative class | False negative (FN) | True negative (TN) |

In the confusion matrix shown in the table above:

TP represents that the actual class is positive, and the predicted class of the model is also positive.

FP represents that the predicted class is positive, but the actual class is negative. The actual class is inconsistent with the predicted class.

FN represents that the predicted class is negative, but the actual class is positive. The actual class is inconsistent with the predicted class.

TN represents that the actual class is negative, and the predicted class of the model is also Negative.

By using the confusion matrix, we can intuitively see the classification of a model in positive and negative categories.

2. Precision and recall: Formula (4) and formula (5) are used to calculate the precision and recall of the positive class:

$$
\text{Precision} = \frac{TP}{TP + FP} \tag{4}
$$

$$
\text{Recall} = \frac{TP}{TP + FN} \tag{5}
$$

Precision represents the proportion of samples whose actual class is positive in the samples whose predicted class is positive; the recall represents the percentage of samples that are successfully predicted in a truly positive sample by the model.

3. F1 Score: F1 Score is calculated by Formula (6):

$$
F1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{6}
$$

F1 Score takes both the precision and the recall of the classification model into account. It can be regarded as the harmonic mean of the model's precision and recall. It has a maximum value of 1 and a minimum value of 0.
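For a three-class problem these quantities are computed per class by treating one class as positive and the other two as negative. The sketch below shows this one-vs-rest calculation on a made-up confusion matrix; the counts are hypothetical and are not the paper's results. Rows are the actual class and columns the predicted class, which is the transpose of the layout in Table 4.

```python
def per_class_metrics(cm):
    """Return (precision, recall, f1) per class; cm[i][j] counts actual i predicted j."""
    n = len(cm)
    metrics = []
    for k in range(n):
        tp = cm[k][k]
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, actually another class
        fn = sum(cm[k]) - tp                       # actually k, predicted another class
        precision = tp / (tp + fp)
        recall = tp / (tp + fn)
        f1 = 2 * precision * recall / (precision + recall)
        metrics.append((precision, recall, f1))
    return metrics


# Hypothetical counts for classes 0 (poorly), 1 (moderately), 2 (well differentiated)
cm = [[50, 2, 1],
      [5, 40, 3],
      [0, 4, 45]]
metrics = per_class_metrics(cm)
for label, (p, r, f1) in enumerate(metrics):
    print(label, round(p, 4), round(r, 4), round(f1, 4))
```

With this convention the recall of a class is the share of its test images that were classified correctly, which is the same quantity as a per-class accuracy.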

Results analysis and discussion

The classification results

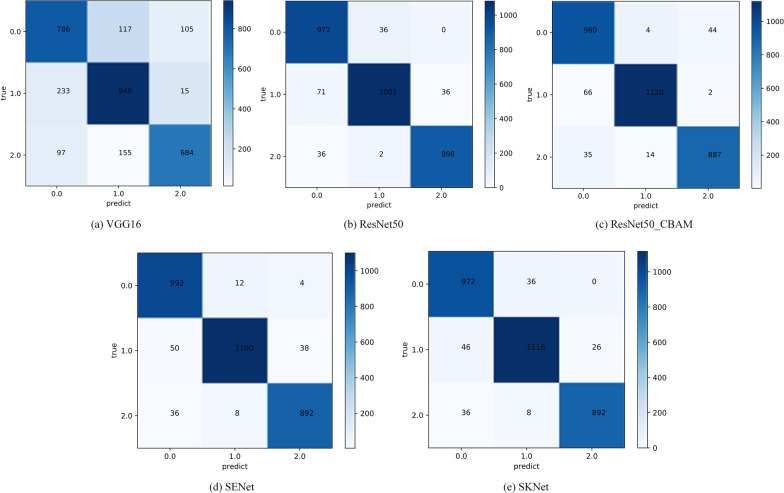

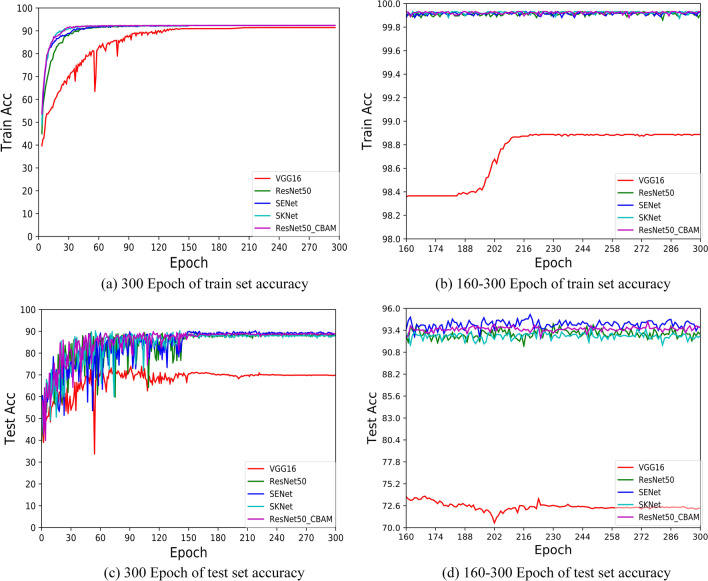

In this section, the classification results of different models are analyzed in detail. The accuracy curves of all classification models under the same training conditions, including optimizer algorithm, loss function, learning rate, and number of epochs, are shown in Fig. 3. Table 5 shows the classification accuracy of different differentiation types.

Fig. 4.

Confusion matrix of different models

Table 5.

Classification accuracy of histopathological images of liver cancer

| Classifier | Poorly differentiated | Moderately differentiated | Well differentiated | Accuracy |
|---|---|---|---|---|
| VGG16 | 77.98 | 79.12 | 73.08 | 76.95 |
| ResNet50 | 96.43 | 90.99 | **95.94** | 94.22 |
| ResNet50_CBAM | 95.24 | **94.28** | 94.76 | 94.73 |
| SENet | **98.41** | 92.59 | 95.30 | **95.27** |
| SKNet | 96.43 | 93.94 | 95.30 | 95.15 |

Bold indicates the highest accuracy

Unit:%

Fig. 3a and c show the accuracy curves of the training set and test set, respectively, for the five classification models. To show the convergence process more clearly, Fig. 3b and d zoom in on the accuracy curves of the training set and test set over epochs 160–300. On the training set, the models other than VGG16 began to converge at about epoch 50 and had fully converged by epoch 120, while the VGG16 model did not fully converge until about epoch 215. On the test set, the other models began to converge at epoch 150, and the VGG16 model began to converge at epoch 220. This suggests that a simple network that focuses on stacking convolutional layers is not well suited to classifying histopathological images of liver cancer.

Fig. 3.

Classification accuracy curves

Through horizontal comparison of the accuracy of different models in Table 5, it was found that the SENet model achieved the best classification effect, with a classification accuracy of 95.27%. The ResNet50 model achieved the highest accuracy (95.94%) on well differentiated histopathological images, and the ResNet50_CBAM model achieved the highest accuracy (94.28%) on moderately differentiated histopathological images. This also confirms the strength of the ResNet50 model in classifying images with relatively simple features. The SENet model, in turn, achieved 98.41% accuracy on poorly differentiated histopathological images.
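The overall accuracy in Table 5 can be cross-checked against the per-class accuracies: it should equal their average weighted by the test-set sizes in Table 3. The sketch below performs this check with the published figures; the function name `overall_accuracy` is hypothetical, and small differences are expected because the table values are rounded to two decimals.

```python
test_sizes = [1008, 1188, 936]  # poorly, moderately, well differentiated (Table 3)

per_class = {                   # per-class accuracy in % (Table 5)
    "VGG16": [77.98, 79.12, 73.08],
    "ResNet50": [96.43, 90.99, 95.94],
    "ResNet50_CBAM": [95.24, 94.28, 94.76],
    "SENet": [98.41, 92.59, 95.30],
    "SKNet": [96.43, 93.94, 95.30],
}
reported = {"VGG16": 76.95, "ResNet50": 94.22, "ResNet50_CBAM": 94.73,
            "SENet": 95.27, "SKNet": 95.15}


def overall_accuracy(class_acc, sizes):
    """Weight each class accuracy by the number of test images in that class."""
    correct = sum(a / 100 * n for a, n in zip(class_acc, sizes))
    return 100 * correct / sum(sizes)


recomputed = {name: overall_accuracy(acc, test_sizes) for name, acc in per_class.items()}
for name, value in recomputed.items():
    print(name, round(value, 2), reported[name])
```

The recomputed values agree with the reported overall accuracies to within rounding for all five models.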

Model evaluation

In this paper, the confusion matrix of each trained model is used as an evaluation index. From the confusion matrices (0 represents poorly differentiated, 1 represents moderately differentiated, 2 represents well differentiated), we can clearly see the classification accuracy of each model for each degree of differentiation. The confusion matrices show that the VGG16 model performs only moderately on all three degrees of differentiation. It is worth noting that, among the moderately differentiated images, far more were misjudged as poorly differentiated than as well differentiated. The main cause is that histopathology images have relatively complex features, which the structurally simple VGG16 model cannot learn adequately, so its classification effect is poor. Since the ResNet50 and ResNet50_CBAM models have deeper structures, they classify images of all degrees of differentiation better than the VGG16 model. The ResNet50_CBAM model is slightly better than the ResNet50 model on moderately differentiated images, and the two models classify similar numbers of poorly differentiated and well differentiated images correctly. The SENet and SKNet models achieve good results on all three types of images, and their results on well differentiated images are identical. The SENet model makes more correct judgments than the SKNet model on poorly differentiated images, while the SKNet model has a slight advantage on moderately differentiated images. The main reason is that the SENet model focuses on strengthening useful information and suppressing useless information, so it is better suited to histopathological images with complex features (Fig. 4).

Through further analysis and calculation of the confusion matrix of different models, we can use more representative Precision, Recall and F1 Score to prove the reliability and generalization ability of different models. These indexes can comprehensively describe the performance of the model from different perspectives. Table 6 shows the evaluation indexes of different models in detail.

Table 6.

Evaluation indexes of different models

| Differentiation type | Metric | VGG16 | ResNet50 | ResNet50_CBAM | SENet | SKNet |
|---|---|---|---|---|---|---|
| Poorly differentiated | Precision | 70.43 | 90.08 | 90.48 | 92.02 | **92.22** |
| | Recall | 77.98 | 96.43 | 95.24 | **99.41** | 96.43 |
| | F1 Score | 74.01 | 93.15 | 92.80 | **95.11** | 94.28 |
| Moderately differentiated | Precision | 77.56 | 96.60 | **98.42** | 98.21 | 96.21 |
| | Recall | 79.12 | 90.99 | **94.28** | 92.59 | 93.94 |
| | F1 Score | 78.33 | 93.71 | **96.30** | 95.32 | 95.06 |
| Well differentiated | Precision | 85.07 | 96.15 | 95.07 | 95.50 | **97.17** |
| | Recall | 73.08 | **95.94** | 94.76 | 95.30 | 95.30 |
| | F1 Score | 78.62 | 96.04 | 94.92 | 95.40 | **96.22** |

Bold indicates the highest accuracy

Unit: %
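As an illustration of how per-class Precision, Recall, and F1 Score follow from a confusion matrix, consider the short sketch below. The matrix `toy_cm` contains hypothetical counts, not the confusion matrices of Fig. 4, and the function names are assumptions. The last line checks that F1 Score is the harmonic mean of Precision and Recall, using the VGG16 values for poorly differentiated images from Table 6.

```python
def per_class_metrics(cm):
    """Return {class: (precision, recall, f1)} in percent.

    cm[i][j] is the number of images of true class i predicted as class j.
    """
    n = len(cm)
    metrics = {}
    for k in range(n):
        tp = cm[k][k]
        predicted_k = sum(cm[i][k] for i in range(n))  # column sum: TP + FP
        actual_k = sum(cm[k])                          # row sum: TP + FN
        precision = tp / predicted_k if predicted_k else 0.0
        recall = tp / actual_k if actual_k else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        metrics[k] = tuple(round(100 * v, 2) for v in (precision, recall, f1))
    return metrics


def accuracy(cm):
    """Overall accuracy in percent: correct predictions over all images."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[k][k] for k in range(len(cm)))
    return round(100 * correct / total, 2)


def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same unit as the inputs)."""
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts (0 = poorly, 1 = moderately, 2 = well differentiated).
toy_cm = [
    [90, 8, 2],
    [10, 80, 10],
    [0, 5, 95],
]

for label, values in per_class_metrics(toy_cm).items():
    print(label, values)
print("accuracy:", accuracy(toy_cm))

# Consistency check against Table 6 (VGG16, poorly differentiated).
print(round(f1_score(70.43, 77.98), 2))  # 74.01
```

Precision is computed over a column of the matrix (everything predicted as a class) and Recall over a row (everything that truly belongs to it), which is why a class can score high on one index and low on the other.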

A side-by-side comparison of the five classification models across the three evaluation indexes shows that the VGG16 model did not achieve satisfactory results for any degree of differentiation. For poorly differentiated histopathological images, the SENet model achieved the highest Recall and F1 Score, and its Precision was second only to that of the SKNet model. This indicates that SENet is better suited to distinguishing poorly differentiated histopathological images, whose image features are more complex. We believe the main reason is that the differences among the features of histopathological images are reflected mainly in the channel dimension, and SENet attends to the most informative channel features while suppressing unimportant ones. The ResNet50_CBAM model achieved the best score on all three indexes for moderately differentiated histopathological images. This is due to the two independent sub-modules of CBAM, the Channel Attention Module (CAM) and the Spatial Attention Module (SAM), which make the model more suitable for moderately differentiated images, in which the cells are clearly abnormal compared with normal cells but retain some traces of their tissue of origin. The SKNet model achieved the best Precision and F1 Score for well differentiated histopathological images. These images contain more normal cells, and their features are relatively simple and easy to learn. SKNet applies an attention mechanism to the convolution kernels, that is, the network itself selects the appropriate convolution kernel, without major changes to the overall structure of the model. Therefore, SKNet is more stable and generalizes better in the classification of well differentiated histopathological images.
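The kernel selection attributed to SKNet above can be sketched as a softmax over parallel branches, computed separately for each channel. This is a simplified illustration under stated assumptions, not the network trained in this study: the branch outputs are given directly instead of being produced by convolutions with different kernel sizes, and `sk_select`, `w_reduce`, and `w_branch` are hypothetical names with hand-picked values.

```python
import math


def sk_select(branches, w_reduce, w_branch):
    """Fuse parallel branches with per-channel soft attention.

    branches: list of M branch outputs, each a list of C channels (H x W).
    w_reduce: d x C weights producing the compact descriptor (hypothetical).
    w_branch: list of M matrices, each C x d, producing branch logits.
    Returns (fused_feature_maps, attention) with attention[c][b] in (0, 1).
    """
    m, c = len(branches), len(branches[0])
    h, w = len(branches[0][0]), len(branches[0][0][0])
    # Fuse: sum the branches element-wise, then average over each channel.
    s = [
        sum(branches[b][ch][i][j]
            for b in range(m) for i in range(h) for j in range(w)) / (h * w)
        for ch in range(c)
    ]
    z = [max(0.0, sum(wt * v for wt, v in zip(row, s))) for row in w_reduce]
    # Select: softmax across branches, separately for every channel.
    attention = []
    for ch in range(c):
        logits = [sum(wt * v for wt, v in zip(w_branch[b][ch], z))
                  for b in range(m)]
        top = max(logits)
        exps = [math.exp(v - top) for v in logits]
        attention.append([e / sum(exps) for e in exps])
    # Weighted sum of the branches using the attention weights.
    fused = [
        [[sum(attention[ch][b] * branches[b][ch][i][j] for b in range(m))
          for j in range(w)] for i in range(h)]
        for ch in range(c)
    ]
    return fused, attention


# Toy input: two branches, one channel, 1 x 2 feature maps.
branches = [
    [[[1.0, 1.0]]],  # stands in for the output of a small kernel
    [[[3.0, 3.0]]],  # stands in for the output of a large kernel
]
w_reduce = [[0.5]]
w_branch = [[[0.0]], [[0.5]]]

fused, attention = sk_select(branches, w_reduce, w_branch)
print(attention)  # weights over the two branches sum to 1
print(fused)
```

Because the weights over the branches sum to one for each channel, the fused output always lies between the branch outputs, and the rest of the architecture is left unchanged.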

Conclusion

In this paper, a complete data acquisition scheme was developed for the intelligent classification of histopathological images with different degrees of differentiation, and histopathological images of liver cancer covering all degrees of differentiation were obtained. Because histopathological images carry more distinctive feature information than conventional natural images, their characteristics must be fully considered when building a deep learning model for histopathological image classification. Therefore, this article adds a visual attention mechanism to some of the deep learning models, which can make the models more interpretable through attention visualization and extract the feature information of medical images more effectively. Five different deep learning classification models were applied to the collected data set and evaluated. The experimental results show that the SENet model achieved the best classification performance, with an accuracy of 95.27%. The model also has good reliability and generalization ability.

However, our work has the following limitations: (1) the dataset used in the experiments was small, so the methods need to be compared on a larger dataset; (2) the data come from a single source, so there is still some distance to clinical application. In the future, we will continue to collect more samples from more hospitals to improve the experiments and address these shortcomings.

This study also shows that deep learning has great value in addressing the time-consuming and laborious nature of traditional manual slide reading, and it has practical significance for research on the intelligent classification of histopathological images of other cancers.

Acknowledgements

This work was supported by the special scientific research project for young medical science (2019Q003) and Karamay City Key R&D Project—Molecular Mechanism and Application of DNA Methylation Liquid Biopsy in the "Prevention, Diagnosis and Treatment" of Malignant Tumors.

Author contributions

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by Chen Chen, Cheng Chen and MM. The first draft of the manuscript was written by Chen Chen and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Funding

This research was funded by the special scientific research project for young medical science (2019Q003), Xinjiang Uygur Autonomous Region Innovation Environment Construction special Project - Key Laboratory opening project (2022D04021), Natural Science Foundation of Xinjiang Uygur Autonomous Region (2022D01C290) and Karamay City Key R&D Project—Molecular Mechanism and Application of DNA Methylation Liquid Biopsy in the “Prevention, Diagnosis and Treatment” of Malignant Tumors.

Availability of data and materials

The data that support the findings of this study are available from the Cancer Hospital of Xinjiang Medical University, but restrictions apply to the availability of these data, which were used under license for the current study. We do not have the right to disclose the data, so they are not publicly available. The data are, however, available from the authors upon reasonable request and with the permission of the Cancer Hospital of Xinjiang Medical University.

Declarations

Ethics approval and consent to participate

The Institutional Review Committee of the Cancer Hospital Affiliated to Xinjiang Medical University approved this study with the ethical approval number K-2021050. All subjects in this study gave informed consent. The data and materials used in this study were collected, processed, and analyzed in accordance with appropriate guidelines and regulations.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no relevant financial and/or non-financial conflict of interests in this article and no potential conflicts of interest to disclose.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Chen Chen and Cheng Chen have contributed equally to this work and should be considered co-first authors.

Contributor Information

Xiaoyi Lv, Email: xiaoz813@163.com.

Xiaogang Dong, Email: 11418267@zju.edu.cn.

