Abstract

As deep-learning-based denoising and reconstruction methods gain popularity in clinical CT, it is vital that these new algorithms undergo rigorous and objective image quality assessment beyond traditional metrics to ensure that diagnostic information is not sacrificed. The channelized Hotelling observer (CHO), which has been shown to correlate well with human observer performance in many clinical CT tasks, has great potential to become the method of choice for objective image quality assessment of these nonlinear methods. However, practical use of CHO beyond research labs has been quite limited, mostly because of the requirement for a large number of repeated scans to ensure sufficient accuracy and precision in CHO computation and the lack of an efficient and widely accepted phantom-based method. In our previous work, we developed an efficient CHO model observer for accurate and precise measurement of low-contrast detectability with only 1–3 repeated scans of the most widely used ACR accreditation phantom. In this work, we applied this optimized CHO model observer to evaluate the low-contrast detectability of a deep learning-based image reconstruction (DLIR) available on a GE Revolution scanner. The commercially available DLIR method showed a consistent increase in low-contrast detectability over filtered back-projection (FBP) and iterative reconstruction (IR) at routine dose levels, suggesting a potential dose reduction of up to 27.5% relative to FBP.

Keywords: Image quality assessment, channelized Hotelling observer (CHO), protocol optimization, radiation dose reduction

1. INTRODUCTION

Accurate and quantitative methods for efficient and objective assessment of CT image quality are essential to ensure that radiation dose reduction does not inadvertently sacrifice important diagnostic information. Recently, deep convolutional neural network (CNN)-based denoising and reconstruction methods have become popular choices for improving CT image quality and reducing radiation dose [1]. However, it is well known that nonlinear methods can suffer a loss of low-contrast detectability when dose reduction is overly aggressive. To assess the image quality of CNN-based techniques, several studies relied on human observers for subjective evaluation, aided by conventional metrics such as the contrast-to-noise ratio (CNR) [2], while others performed objective evaluation using model observers, including CHO [3, 4].

Tremendous progress towards task-based image quality assessment using CHO has been made during the past decade, and its correlation with human observer performance in low-contrast detection, classification, and localization tasks in clinical CT has been demonstrated [5]. Studies have also been performed to improve its computational efficiency and to characterize its inter-laboratory variability [6, 7]. CHO-based image quality assessment has been shown to help avoid clinically significant diagnostic errors [8]. However, practical use of CHO beyond research labs has been quite limited, mostly because of the requirement for a large number of repeated scans to ensure sufficient accuracy and precision in computation [6] and the lack of an efficient and widely accepted phantom-based method. In our previous work, we developed an efficient CHO model observer for accurate and precise measurement of low-contrast detectability (d’) with only 1–3 repeated scans of the most widely used ACR accreditation phantom [9]. In this work, we employed this optimized CHO model observer to efficiently evaluate the low-contrast detectability of a commercially available deep learning-based image reconstruction (DLIR) on a GE Revolution scanner [10]. This work is expected to facilitate wider adoption of CHO-based quantitative evaluation of low-contrast detectability in routine clinical CT practice.

2. METHODS

2.1. Optimized CHO model observer for the ACR phantom

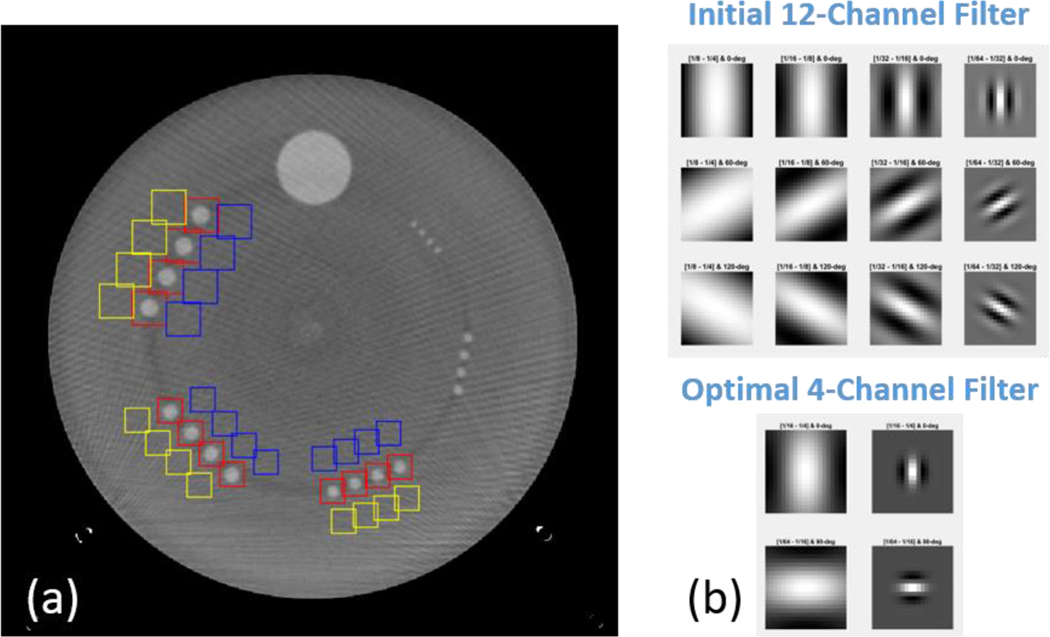

We took advantage of several features of the ACR low-contrast module that can substantially reduce the bias and uncertainty of d’ estimates: 1) it contains 4 identical rods of each size, 2) the rods are 4 cm long, so multiple slices can be extracted, 3) multiple background regions of interest (ROIs) can be extracted, and 4) the low-contrast objects have known sizes and shapes, which makes it possible to reduce the number of channel filters and thereby increase CHO computational efficiency. The CHO was previously optimized for the ACR phantom, achieving highly accurate and precise measurement of low-contrast detectability with 1–3 repeated scans [9]. The ACR ensemble image and the channel filters are shown in Figure 1. In this work, 2 sets of background ROIs in the vicinity of the low-contrast objects were selected, and the optimized channel filter set consisted of 4 Gabor channels with 2 low-frequency passbands ([1/64, 1/32] and [1/32, 1/16]) and 2 orientations (0 and π/2). To avoid possible artifacts from the adjacent ACR modules, only 20 image slices from the central 27 mm of the low-contrast module were extracted for CHO calculation.

Figure 1.

ACR phantom image and Gabor filters used in the CHO calculation: a) ensemble image with signal (red) and background (blue and yellow) ROIs; b) initial 12-channel reference filter set and optimized 4-channel filter set.
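The channelized computation described in Section 2.1 can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the passband edges are taken to be in cycles per pixel, each Gabor channel is centered at the midpoint of its passband with a Gaussian envelope whose spatial full width at half maximum is set from the band width, a single cosine phase is used, and d’ is computed as sqrt(Δvᵀ S⁻¹ Δv), where Δv is the difference of mean channel outputs and S is the average intra-class channel covariance. The same samples are used to build the template and to estimate d’, which biases d’ upward for small sample sizes. The function names `gabor_channels` and `cho_dprime` and the synthetic white-noise images are hypothetical.

```python
import numpy as np


def gabor_channels(size, passbands, orientations, phase=0.0):
    """Return a (size*size, n_channels) matrix whose columns are Gabor channels.

    Assumed parameterization (illustrative): passband edges in cycles/pixel,
    center frequency at the band midpoint, and a Gaussian envelope whose
    spatial FWHM is ws = 4*ln(2) / (pi * bandwidth).
    """
    c = (size - 1) / 2.0
    y, x = np.mgrid[0:size, 0:size] - c  # pixel coordinates relative to ROI center
    channels = []
    for f_lo, f_hi in passbands:
        fc = 0.5 * (f_lo + f_hi)
        ws = 4.0 * np.log(2.0) / (np.pi * (f_hi - f_lo))
        envelope = np.exp(-4.0 * np.log(2.0) * (x**2 + y**2) / ws**2)
        for theta in orientations:
            carrier = np.cos(2.0 * np.pi * fc * (x * np.cos(theta) + y * np.sin(theta)) + phase)
            channels.append((envelope * carrier).ravel())
    return np.stack(channels, axis=1)


def cho_dprime(signal_rois, background_rois, U):
    """Hotelling d' computed on channel outputs.

    signal_rois, background_rois: arrays of shape (n_samples, size, size).
    The same samples estimate the template and d' (resubstitution).
    """
    vs = signal_rois.reshape(len(signal_rois), -1) @ U          # signal-present channel outputs
    vn = background_rois.reshape(len(background_rois), -1) @ U  # signal-absent channel outputs
    dv = vs.mean(axis=0) - vn.mean(axis=0)                      # mean channel-output difference
    S = 0.5 * (np.cov(vs, rowvar=False) + np.cov(vn, rowvar=False))  # average intra-class covariance
    w = np.linalg.solve(S, dv)                                  # channelized Hotelling template
    return float(np.sqrt(dv @ w))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    size, n = 64, 200
    U = gabor_channels(size, passbands=[(1 / 64, 1 / 32), (1 / 32, 1 / 16)],
                       orientations=[0.0, np.pi / 2])
    yy, xx = np.mgrid[0:size, 0:size] - (size - 1) / 2.0
    disk = (xx**2 + yy**2 <= 6**2).astype(float)  # hypothetical unit-contrast disk, radius 6 px
    signal = rng.normal(0.0, 1.0, (n, size, size)) + disk  # white noise, not real CT noise texture
    background = rng.normal(0.0, 1.0, (n, size, size))
    print(f"d' = {cho_dprime(signal, background, U):.2f}")
```

With phantom data, the synthetic arrays would presumably be replaced by signal-present ROIs centered on the same-size rods across the extracted slices and repeated scans, and by signal-absent ROIs from the background ROI sets.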

2.2. Application to commercially available deep learning reconstructions

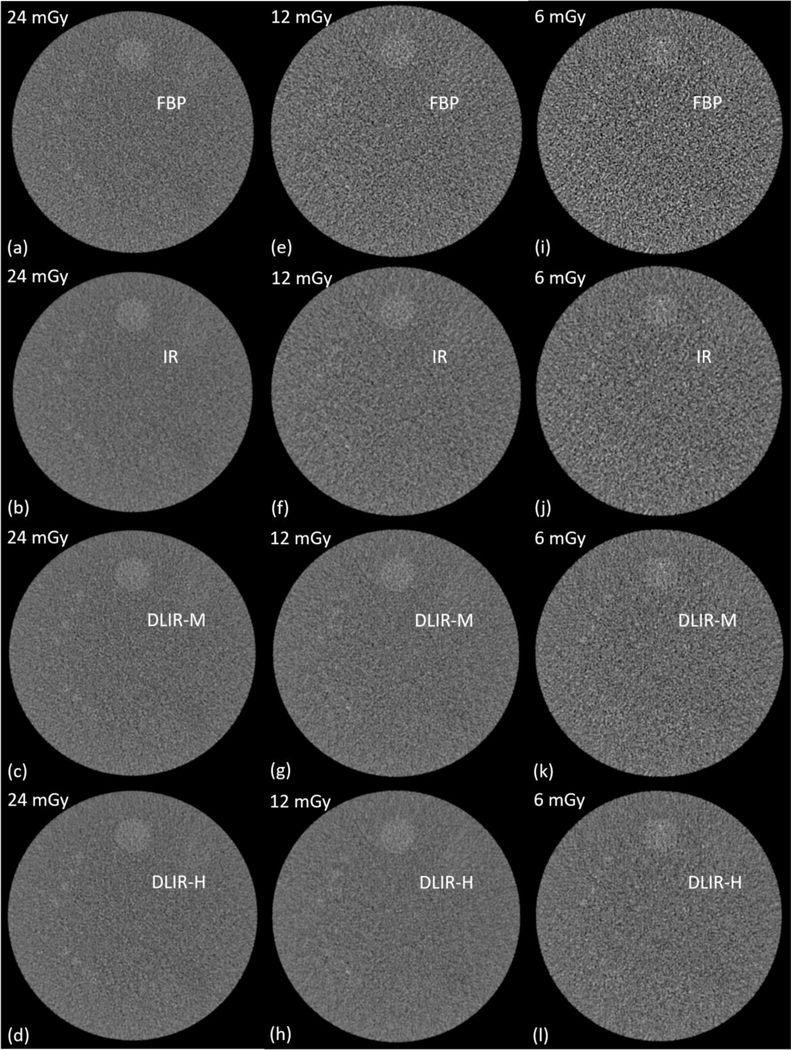

We applied the optimized CHO model observer to evaluate the low-contrast detectability of a deep learning-based reconstruction available on a GE Revolution scanner. An ACR phantom was scanned at three dose levels (6, 12, and 24 mGy CTDIvol) using a routine abdomen-pelvis protocol with a rotation time of 0.5 s and a spiral pitch factor of 0.5078, and three scans were acquired at each dose level. Images were reconstructed with a 210 mm FOV, 2.5 mm slice thickness, and 1.25 mm slice increment using five reconstruction methods: 1) standard filtered back-projection (FBP), 2) iterative reconstruction (IR: ASiR-V 50%), and 3–5) deep learning reconstruction at low (DLIR-L), medium (DLIR-M), and high (DLIR-H) strength settings.

3. RESULTS

Both DLIR-M and DLIR-H reconstructions produced a consistent increase in the detectability index (d’) compared with the FBP and IR methods at all investigated dose levels and object sizes, even though they did not yield the lowest noise levels. Quantitatively, the IR method improved d’ by an average of 5.5% over FBP, while DLIR-L, DLIR-M, and DLIR-H improved it by 5.8%, 8.8%, and 13.3%, respectively.
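The average improvements above can be expressed as a mean of per-condition relative changes in d’. The sketch below assumes the average is taken over ratios at matched conditions (each dose level and object size combination); this averaging scheme is an assumption, and a ratio of mean d’ values would give slightly different numbers. The function name `mean_percent_improvement` is hypothetical.

```python
import numpy as np


def mean_percent_improvement(d_method, d_ref):
    """Mean percent change in d' of a method relative to a reference method.

    Both inputs hold d' values for the same matched conditions (e.g., every
    dose level x object size combination). Averaging per-condition ratios is
    an assumed scheme, not necessarily the one used in the study.
    """
    d_method = np.asarray(d_method, dtype=float)
    d_ref = np.asarray(d_ref, dtype=float)
    if d_method.shape != d_ref.shape:
        raise ValueError("d_method and d_ref must cover the same conditions")
    return float(100.0 * np.mean(d_method / d_ref - 1.0))
```

For example, hypothetical d’ values of [1.1, 1.2] for a method against [1.0, 1.0] for FBP give about 15.0%.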

We also estimated the potential dose reduction relative to FBP by approximating, for each reconstruction method, the dose level required to produce the same d’ value as 1) the 12 mGy scan with DLIR-H reconstruction and 2) the 12 mGy scan with FBP reconstruction. Compared with FBP, the average dose reduction was 12.1% for IR and 13.1%, 18.4%, and 27.5% for DLIR-L, DLIR-M, and DLIR-H, respectively.
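One way to carry out the equal-d’ dose estimate is to fit each method's d’ as a function of dose and invert the fit. The sketch below assumes a power law d’ = a·D^b fitted by least squares in log-log space to the three dose levels; this model is chosen for illustration only (for a quantum-noise-limited linear reconstruction, b is roughly 0.5, but nonlinear methods may deviate). The dose reduction of a method relative to FBP at a target d’ is then 1 - D_method/D_FBP. The function names and the d’ values in the demo are hypothetical, not measured data.

```python
import numpy as np


def fit_power_law(doses, dprimes):
    """Least-squares fit of d' = a * D**b in log-log space; returns (a, b)."""
    b, log_a = np.polyfit(np.log(doses), np.log(dprimes), 1)  # slope, intercept
    return float(np.exp(log_a)), float(b)


def dose_for_target(a, b, d_target):
    """Invert the fitted power law to get the dose that yields d_target."""
    return (d_target / a) ** (1.0 / b)


def dose_reduction(doses, d_fbp, d_method, d_target):
    """Fractional dose reduction of a method vs. FBP at equal d' (= d_target)."""
    dose_fbp = dose_for_target(*fit_power_law(doses, d_fbp), d_target)
    dose_method = dose_for_target(*fit_power_law(doses, d_method), d_target)
    return 1.0 - dose_method / dose_fbp


if __name__ == "__main__":
    doses = np.array([6.0, 12.0, 24.0])  # CTDIvol levels from Section 2.2 (mGy)
    d_fbp = 2.0 * np.sqrt(doses)         # hypothetical FBP d' values
    d_method = 1.2 * d_fbp               # hypothetical method with 20% higher d'
    target = 2.0 * np.sqrt(12.0)         # d' of the hypothetical FBP curve at 12 mGy
    print(dose_reduction(doses, d_fbp, d_method, target))  # 1 - 1/1.2**2, about 0.306
```

Following the procedure described above, the function would be evaluated once for each of the two target d’ values (and presumably for each object size), with the resulting reductions averaged.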

4. CONCLUSION

An efficient CHO-based model observer developed specifically for the most widely used ACR CT accreditation phantom was applied to evaluate the low-contrast detectability of a commercially available deep learning-based reconstruction method. The results showed a consistent improvement in low-contrast detectability over the FBP and IR methods, suggesting a potential dose reduction of up to 27.5% compared with FBP.

Figure 2.

Sample images of the low-contrast module reconstructed with four methods: FBP, IR, and the deep learning method at medium (DLIR-M) and high (DLIR-H) strength levels.

Figure 3.

Detectability index (d’) of the 6-, 5-, and 4-mm objects for five reconstruction methods: FBP, IR, and the deep learning method at low (DLIR-L), medium (DLIR-M), and high (DLIR-H) strength levels.

5. ACKNOWLEDGEMENT

Research reported in this work was supported by the National Institutes of Health under award number U24EB028936. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

REFERENCES

- 1. Mileto A, et al., State of the Art in Abdominal CT: The Limits of Iterative Reconstruction Algorithms. Radiology, 2019. 293(3): p. 491–503.

- 2. Jensen CT, et al., Image quality assessment of abdominal CT by use of new deep learning image reconstruction: initial experience. American Journal of Roentgenology, 2020. 215(1): p. 50–57.

- 3. Greffier J, et al., Image quality and dose reduction opportunity of deep learning image reconstruction algorithm for CT: a phantom study. European Radiology, 2020. 30(7): p. 3951–3959.

- 4. Racine D, et al., Image texture, low contrast liver lesion detectability and impact on dose: deep learning algorithm compared to partial model-based iterative reconstruction. European Journal of Radiology, 2021: p. 109808.

- 5. Yu LF, et al., Correlation between a 2D channelized Hotelling observer and human observers in a low-contrast detection task with multislice reading in CT. Medical Physics, 2017. 44(8): p. 3990–3999.

- 6. Ma C, et al., Impact of number of repeated scans on model observer performance for a low-contrast detection task in computed tomography. Journal of Medical Imaging, 2016. 3(2).

- 7. Ba A, et al., Inter-laboratory comparison of channelized Hotelling observer computation. Medical Physics, 2018. 45(7): p. 3019–3030.

- 8. Favazza CP, et al., Use of a channelized Hotelling observer to assess CT image quality and optimize dose reduction for iteratively reconstructed images. Journal of Medical Imaging, 2017. 4(3).

- 9. Fan M, et al., Accurate and Efficient Measurement of Channelized Hotelling Observer-based Low-contrast Detectability on the ACR Accreditation Phantom. 2021 AAPM Annual Meeting, 2021.

- 10. Hsieh J, et al., A new era of image reconstruction: TrueFidelity™. White Paper (JB68676XX), GE Healthcare, 2019.