Abstract

Objective

To evaluate the effectiveness of feedback reports and feedback reports + external facilitation on completion of the life‐sustaining treatment (LST) note template and durable medical orders. This quality improvement program supported the national roll‐out of the Veterans Health Administration (VA) LST Decisions Initiative (LSTDI), which aims to ensure that seriously‐ill veterans have care goals and LST decisions elicited and documented.

Data Sources

Primary data from national databases for VA nursing homes (called Community Living Centers [CLCs]) from 2018 to 2020.

Study Design

In one project, we distributed monthly feedback reports summarizing LST template completion rates to 12 sites as the sole implementation strategy. In the second involving five sites, we distributed similar feedback reports and provided robust external facilitation, which included coaching, education, and learning collaboratives. For each project, principal component analyses matched intervention to comparison sites, and interrupted time series/segmented regression analyses evaluated the differences in LSTDI template completion rates between intervention and comparison sites.

Data Collection Methods

Data were extracted from national databases in addition to interviews and surveys in a mixed‐methods process evaluation.

Principal Findings

LSTDI template completion rose from 0% to about 80% throughout the study period in both projects' intervention and comparison CLCs. There were small but statistically significant differences for feedback reports alone (comparison sites performed better, coefficient estimate 3.48, standard error 0.99 for the difference between groups in change in trend) and feedback reports + external facilitation (intervention sites performed better, coefficient estimate −2.38, standard error 0.72).

Conclusions

Feedback reports + external facilitation was associated with a small but statistically significant improvement in outcomes compared with comparison sites. The large increases in completion rates are likely due to the well‐planned national roll‐out of the LSTDI. This finding suggests that when dissemination and support for widespread implementation are present and system‐mandated, significant enhancements in the adoption of evidence‐based practices may require more intensive support.

Keywords: advance care planning, implementation science, interrupted time series analysis, nursing homes, United States Department of Veterans Affairs, Veteran

What is known on this topic

Audit with feedback involves the collection and summary of clinical performance data over a specified period to monitor, evaluate, and modify clinician behavior.

Without cointerventions such as facilitation, feedback interventions have been shown to have only a modest positive impact on behavior change needed to implement evidence‐based practices.

Facilitation strategies may incorporate supporting clinical champions, learning collaboratives, and coaching that allow for tailored approaches to implementation.

What this study adds

The intervention we deployed that combined feedback with robust facilitation showed a statistically significant positive difference between intervention and comparison sites, whereas a similar project that included feedback only did not.

Our findings suggest that it is important to couple feedback with facilitation strategies to achieve impact.

In‐depth assessment of implementation interventions, especially feedback reports, is important to better understand their perceived usefulness to the end user.

1. INTRODUCTION

People receiving care in nursing homes are often seriously ill and frail and frequently face critical decisions about care. 1 , 2 , 3 Therefore, determining care preferences, particularly about life‐sustaining treatments (LSTs) such as cardiopulmonary resuscitation (CPR), mechanical ventilation, antibiotics, and medically administered nutrition and hydration, is critical to delivering person‐centered care in this setting. In 2017, the Veterans Health Administration (VA) National Center for Ethics in Health Care launched the Life‐Sustaining Treatment Decisions Initiative (LSTDI), an integrated program to promote goals of care conversations and identify preferences for LST for veterans with serious illness. 4 These conversations and preferences are documented in a durable note and order set that is readily accessible across the VA health care system through a standardized template (the LSTDI template) in the VA electronic health record. The LSTDI template consists of eight fields, four of which are mandatory: decision‐making capacity; the patient's goals of care (e.g., “to be cured” or “to be comfortable”); oral informed consent for the LST plan; and “CPR” status. The LSTDI, described in detail elsewhere, 4 is a multipronged evidence‐based program that included a carefully planned dissemination program with national support for implementation across all VA care settings. VA is a national vertically and horizontally integrated system of care, the largest publicly financed health system in the United States, delivering comprehensive services to over 9 million veterans annually. 5 The LSTDI template is intended for use across all VA settings and is required to document limitations on resuscitation orders for inpatient care.

Complementary to the LSTDI, Implementing Goals of Care Conversations with Veterans in VA Long‐Term Care Settings (LTC QUERI) was a 5‐year quality improvement (QI) program funded through the VA Health Services Research and Development Service Quality Enhancement Research Initiative (QUERI) from 2015 to 2020. The VA's QUERI program, established in 1998, provides funding and infrastructure to ensure the adoption of research evidence, tools, and methods into routine care in the VA health care system. 6

Our goal in the LTC QUERI program was to support the implementation of the LSTDI by designing and testing tools and strategies to improve its implementation in two long‐term care settings: VA home‐based primary care and VA‐owned and operated nursing homes (called Community Living Centers [CLCs]). As a novel system‐wide intervention, the LSTDI was expected to be implemented nationally, but as with most nationally mandated programs, there was no guarantee at initiation that it would be completely or even widely implemented. We focused on long‐term settings, where resources such as nurse staffing and provider time are typically fewer than in acute or primary care. Our intent was to provide support in these settings, where the proportion of seriously ill veterans who could benefit from having goals of care discussions was likely to be high. We had two major projects within the LTC QUERI program; one focused on all veterans newly admitted to CLCs and home‐based primary care, and the other focused on all veterans in CLCs with an additional focus on veterans with dementia. In this report, we focus only on CLCs, as conditions in home‐based primary care are very different.

Audit with feedback (referred to as feedback reports in this paper) involves the collection and summary of clinical performance data over a specified period, which is then shared with clinicians and administrators who ideally use the feedback to monitor, evaluate, and modify clinician behavior. 7 , 8 Feedback reports have been shown to have a generally positive, although modest, increase in the likelihood of achieving desired behavior change. 7 , 9 Several publications call for augmenting feedback with additional strategies to increase action planning following receipt of feedback. 7 , 10 , 11 , 12

Facilitation is a global approach that supports action planning. Kirchner et al. describe facilitation as “a multifaceted strategy that applies a variety of discrete strategies … depending on what is needed given the context and characteristics of those that are providing and receiving the innovation.” 13 Facilitation strategies incorporate a broad array of implementation approaches, including supporting clinical champions, learning collaboratives, and coaching. Previous research shows evidence of facilitation effectiveness, as it allows for iterative, tailored approaches to implementation. 8 , 13 , 14 , 15

Despite the evidence supporting these two strategies, feedback reports and facilitation, few studies have explored the relative impact of combining these strategies compared to feedback reports alone. The LTC QUERI program allows this comparison. The purpose of this analysis was to evaluate the outcomes of the CLC‐focused components of the LTC QUERI program. Specifically, compared to matched comparison sites with no feedback, we examined the relative impact of a feedback report only intervention (Project 1) and feedback reports plus facilitation (Project 2) on the rate of LSTDI template completion for veterans in CLCs.

2. METHODS

The LSTDI was released to the full VA system in January 2017, with a mandate for all VA health care facilities to implement the program by July 2018. The LTC QUERI program initiated two related but separate projects in CLCs. In Project 1, we used monthly feedback reports to provide information on progress toward completing and documenting goals of care conversations for all veterans through the LSTDI template around the time of admission to the CLC. In Project 2, we sought to integrate the LSTDI into regular care planning meetings using monthly feedback reports, coupled with intensive facilitation strategies that are described below. 16 Project 2 included all veterans, with a focus on veterans with dementia. Both projects have been described in detail in earlier publications. 17 , 18

We distributed feedback reports quarterly to Project 1 intervention sites starting April 1, 2018, and increased frequency to monthly feedback reports to both Project 1 and 2 intervention sites starting October 1, 2018. We had intended to continue until July 2020, but the COVID‐19 pandemic fundamentally altered CLC admissions, and we were forced to end the intervention and data collection in March 2020, giving us a total of 17 feedback reports (2 quarterly then 15 monthly). We note that this number of feedback reports is substantially greater than in most published feedback intervention studies, which typically describe a single feedback report. 7

2.1. Sources of participants and data

The two projects were conducted in different states and VA regional networks. The 12 CLCs included in Project 1 were in three Midwestern states and four Western states, located in two VA regional networks. All CLCs in these networks participated in Project 1. Five CLCs in two Eastern states, located in one regional network, participated in Project 2, which involved fewer sites so that the increased engagement it required could be matched to the limited LTC QUERI staff. CLCs were selected because project leads were located in specific regional networks. Regional distribution and rural/urban location are shown in Table 1.

TABLE 1.

Preintervention CLC and veteran characteristics used for matching (FY‐2018)

| Characteristics | Project 1: Intervention (n = 12) | Project 1: Matched (n = 24) | Project 2: Intervention (n = 5) | Project 2: Matched (n = 10) |
|---|---|---|---|---|
| Continuous variables, mean (SD) | | | | |
| Average daily census | 45.9 (25.0) | 58.7 (27.8) | 72.9 (28.6) | 85.1 (36.8) |
| All nursing hours/bed days | 7.6 (1.8) | 7.8 (1.3) | 6.3 (0.4) | 6.9 (1.2) |
| BIMS score | 12.4 (1.4) | 12.4 (1.3) | 11.8 (0.4) | 11.4 (1.2) |
| Cognition for daily decision making modified/moderately/severely impaired | 66.1% (17.6) | 68.0% (21.1) | 66.3% (9.7) | 71.5% (9.3) |
| JEN Frailty Index | 6.5 (0.7) | 6.6 (0.6) | 6.6 (0.4) | 6.7 (0.4) |
| Nosos score using VA HCC score<sup>a</sup> | 8.0 (1.5) | 8.2 (1.3) | 7.3 (0.7) | 7.8 (0.8) |
| Physical function: ADL score | 11.9 (3.0) | 12.7 (3.1) | 12.8 (2.6) | 12.5 (1.8) |
| Race/ethnicity: white | 82.0% (15.2) | 78.1% (20.4) | 73.5% (22.4) | 77.3% (19.2) |
| Treating specialty: NH long‐stay indicator<sup>b</sup> | 51.5% (24.6) | 51.0% (19.2) | 54.3% (16.2) | 54.8% (16.1) |
| Categorical variables, percent | | | | |
| POLST Program: mature/endorsed | 33.3% | 54.2% | 80.0% | 30.0% |
| Geographic division | | | | |
| 1: New England | 0.0% | 4.2%** | 0.0% | 0.0% |
| 2: Middle Atlantic | 0.0% | 16.7% | 80.0% | 30.0% |
| 3: East North Central | 50.0% | 0.0% | 0.0% | 10.0% |
| 4: West North Central | 0.0% | 16.7% | 0.0% | 0.0% |
| 5: South Atlantic | 0.0% | 20.8% | 20.0% | 30.0% |
| 6: East South Central | 0.0% | 0.0% | 0.0% | 10.0% |
| 7: West South Central | 8.3% | 8.3% | 0.0% | 0.0% |
| 8: Mountain | 41.7% | 8.3% | 0.0% | 10.0% |
| 9: Pacific | 0.0% | 25.0% | 0.0% | 10.0% |
| Rural–Urban Continuum Code: description | | | | |
| 1: Metro areas of 1 million or more | 16.7% | 45.8%** | 80.0% | 30.0% |
| 2: Metro areas of 250,000–1 million | 8.3% | 29.2% | 0.0% | 30.0% |
| 3: Metro areas <250,000 | 50.0% | 8.3% | 20.0% | 20.0% |
| 4: Urban area of 20,000+, adjacent to a metro area | 8.3% | 8.3% | 0.0% | 0.0% |
| 5: Urban area of 20,000+, not adjacent to a metro area | 0.0% | 4.2% | 0.0% | 10.0% |
| 6: Urban area of 2500–19,999, adjacent to a metro area | 0.0% | 4.2% | 0.0% | 0.0% |
| 7: Urban area of 2500–19,999, not adjacent to a metro area | 16.7% | 0.0% | 0.0% | 10.0% |

Note: All continuous variables were included in the principal component analysis except where indicated; RUCC classifications from USDA Economic Research Service; Geographic Divisions from US Census Bureau; mature/endorsed POLST Program indicates a state has met national standards and POLST has become the standard of care.

Abbreviations: ADL, activities of daily living; Average Daily Census, average number of patients per day in FY‐2018; BIMS, brief interview on mental status; CLC, Community Living Center; HCC, hierarchical condition category; NH, nursing home; POLST, Physician Orders for Life‐Sustaining Treatment.

<sup>a</sup> Concurrent model.

<sup>b</sup> Not included in principal component analysis.

\*p < 0.1; \*\*p < 0.05.

2.2. The interventions

2.2.1. Feedback reports

Both projects used feedback reports that were designed iteratively using user‐centered design methods prior to the national LSTDI rollout. 19 Participants in the user‐design phase came from four pilot site facilities for the LSTDI and were not included as intervention sites in either project. Project 1 feedback reports showed, on a monthly basis, the number (count) of newly admitted veterans in CLCs with a completed LSTDI template, including veterans whose LSTDI template was completed any time prior to admission until the 14th day of their stay. We focused on admission as a critical time point, as it is a distinct event in the trajectory of a veteran's care (e.g., recent change in status, or another event that requires a different level of care), 20 and other assessments (including the Minimum Data Set 3.0) are required in a specified time window (14 days). The feedback report also provided information about veterans admitted for a short stay (anticipated to be 90 days or less) as well as a long stay (no time limit, usually for the remainder of the resident's life).

Project 2 feedback reports were developed similarly to those for Project 1 but differed in two ways. First, the reports provided data on completed LSTDI templates for all residents in each CLC, divided by long stay and short stay, not just those newly admitted. Second, the reports provided rates of LSTDI template completion for all veterans in the CLC but also identified veterans with dementia. Examples of feedback reports for both projects are included in Appendices S1 and S2.

Feedback reports were sent to intervention sites by email quarterly for Project 1 (in April and August 2018), and then monthly for both projects, beginning in October 2018. The primary recipients were site champions, who were locally identified as leaders for the LSTDI within their care settings and agreed to be the liaison for our work. They held diverse clinical (social work, nursing, and geropsychology) and administrative (QI) roles. In Project 1, the site champions decided whether the reports were then distributed to staff and/or leadership or were not shared beyond the champion. In Project 2, reports were sent to site champions as well as other identified CLC leaders in nursing, social work, and medicine, including the facility LSTDI coordinator.

2.2.2. Facilitation

To support the interpretation of feedback reports and improve LSTDI template completion, facilitation was provided to all sites in Project 2. In addition to receiving and sharing the feedback reports, Project 2 site champions educated, advocated, and built relationships among staff implementing the LSTDI with support from the QUERI project members. We provided champions with written educational materials, tip sheets, and a detailed protocol for conducting and documenting goals of care conversations in the CLC setting to share with staff. The LTC QUERI also presented live educational webinars on topics identified by CLC staff as important when discussing LSTs and facilitated monthly learning collaborative calls to review the feedback reports, engage in action planning to meet future LSTDI template completion targets, share ideas and best practices, and address barriers across sites. To help prioritize veterans for goals of care conversations and LSTDI template completion, each site champion received an encrypted email message with a spreadsheet listing the names of all residents, their admission date, dementia diagnosis (yes/no), completed LSTDI template (yes/no), and if applicable, date of the most recent LSTDI template. A detailed description of the development of these implementation strategies has been previously published. 18

2.3. Matching

For both projects, intervention CLCs were matched with comparison CLCs (i.e., CLCs that did not participate in either project). We matched on the Euclidean distance between scores for each CLC derived from principal component analysis (PCA), a factor analytic approach that uses a large number of variables to generate a predicted value or score, following the approach of Byrne et al. 21 , 22 We used retrospective data from 2018 to estimate scores. The continuous variables used in the matching process are shown in the top part of Table 1; these were used to estimate factors through PCA. Two measures were specific to the CLC and capture important CLC characteristics: average daily census and licensed nurse staffing measured in hours/bed day of care. Additional variables included proportions of veterans with impaired cognitive function (two variables), the proportion of frail veterans (two variables), the proportion with diminished physical function measured by Activities of Daily Living (one variable), and the proportion of veterans with non‐Hispanic White race and ethnicity. We chose these variables because previous studies have shown that they are associated with quality of care. 23 , 24 , 25 With the exception of the proportion of long‐stay residents, these continuous variables were used in the PCA. In the final regression to create the score used for matching, we included the three highest‐loading factors from the PCA, together with the proportion of long‐stay residents as a separate independent variable. This had the effect of highly weighting the proportion of long‐stay residents in each CLC, which we felt to be essential to understanding completion of LSTDI documentation, as long‐stay residents are generally more seriously ill and frail than short‐stay residents, who are often admitted for post‐acute rehabilitative care or other short procedures.

We used Euclidean distance, a measure of closeness of the scores generated through the final regression, to determine the best matches, and matched 1:2 case:comparison. Euclidean distance was our primary assessment of the match, but we also assessed the fit by examining key variables, shown at the bottom of Table 1.
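The matching logic described above can be illustrated with a minimal sketch. It is not the SAS procedure used in the program: it skips the final regression step and instead treats the three leading principal component scores plus a standardized long-stay proportion as the feature vector, and it uses a greedy 1:2 nearest-neighbour rule without replacement. The site data, variable counts, and function names (`pca_scores`, `match_sites`) are hypothetical.

```python
import numpy as np

def pca_scores(X, n_components=3):
    """Standardize columns, then project onto the leading principal components."""
    Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    # SVD of the standardized matrix; rows of Vt are the principal axes.
    _, _, Vt = np.linalg.svd(Z, full_matrices=False)
    return Z @ Vt[:n_components].T

def match_sites(features, is_intervention, k=2):
    """Greedy 1:k matching on Euclidean distance, without replacement (an assumption)."""
    treated = np.flatnonzero(is_intervention)
    pool = list(np.flatnonzero(~is_intervention))
    matches = {}
    for t in treated:
        d = np.linalg.norm(features[pool] - features[t], axis=1)
        chosen = [pool[i] for i in np.argsort(d)[:k]]
        matches[int(t)] = [int(c) for c in chosen]
        pool = [p for p in pool if p not in chosen]
    return matches

# Hypothetical data: 30 sites, 8 continuous characteristics, 4 intervention sites.
rng = np.random.default_rng(0)
n_sites = 30
X = rng.normal(size=(n_sites, 8))
long_stay = rng.uniform(0.2, 0.8, n_sites)
is_intervention = np.zeros(n_sites, dtype=bool)
is_intervention[:4] = True

scores = pca_scores(X, n_components=3)
ls_z = (long_stay - long_stay.mean()) / long_stay.std(ddof=1)
features = np.column_stack([scores, ls_z])  # long-stay share kept as its own dimension
matches = match_sites(features, is_intervention, k=2)
print(matches)
```

Keeping the long-stay proportion as a separate dimension, rather than folding it into the PCA, is one simple way to give it explicit weight in the distance, in the spirit of the weighting described above.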

2.4. Outcome measure

Our primary outcome was the average proportion of veterans with completed LSTDI templates aggregated to the level of the CLC, using monthly rates of newly admitted veterans for Project 1 and monthly rates for all resident veterans for Project 2. Completed LSTDI templates are automatically entered into the VA electronic health record, both as orders and as data in specific fields; specific data elements called LST health factors are stored in each hospital's clinical database and extracted daily into the VA Corporate Data Warehouse (CDW). We retrieved data for completed templates from the CDW and merged it with data on veterans in CLCs. 4 , 17 , 24

For Project 1, the main feedback report metric was the overall percent of long‐ and short‐stay residents admitted with completed LSTDI templates any time prior to admission until the 14th day since admission, but prior to discharge. This metric was aggregated bi‐weekly over the period from January 2017 through February 2020 for all intervention and all matched sites and used as the dependent variable in the interrupted time series (ITS) model. We used the 14th day, or 2 weeks, after admission because Minimum Data Set 3.0 assessments are required to be completed then, by VA policy. For Project 2, the outcome variable was the overall percent of residents in a given month who had a completed LSTDI template by the end of the month, including templates completed in prior months, over the period from January 2017 through February 2020. We aggregated to bi‐weekly for both projects to increase the number of time points for the ITS analysis.
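As an illustration of the Project 1 metric, the sketch below flags an admission as complete when a template exists on or before the 14th day after admission (or on or before discharge, if earlier) and then computes the percent complete in 14-day bins of admission date. The record layout, the choice of bin origin, and the treatment of a template dated on the discharge day as complete are assumptions made for the example, not details taken from the VA data.

```python
from collections import defaultdict
from datetime import date, timedelta

def completed_by_day_14(admit, template, discharge=None):
    """True if a template exists on or before day 14 of the stay (and not after discharge)."""
    if template is None:
        return False
    cutoff = admit + timedelta(days=14)
    if discharge is not None:
        cutoff = min(cutoff, discharge)  # assumption: the discharge day itself counts
    return template <= cutoff

def biweekly_rates(admissions, origin):
    """Percent of admissions with a completed template, per 14-day bin of admission date."""
    counts = defaultdict(lambda: [0, 0])  # bin index -> [completed, admitted]
    for admit, template, discharge in admissions:
        b = (admit - origin).days // 14
        counts[b][1] += 1
        counts[b][0] += completed_by_day_14(admit, template, discharge)
    return {b: 100.0 * c / n for b, (c, n) in sorted(counts.items())}

# Hypothetical admissions: (admission date, template date or None, discharge date or None)
origin = date(2017, 1, 1)
admissions = [
    (date(2017, 1, 2), date(2016, 12, 1), None),               # completed before admission
    (date(2017, 1, 5), date(2017, 1, 25), None),               # completed after day 14
    (date(2017, 1, 16), date(2017, 1, 20), date(2017, 2, 1)),  # completed during the stay
    (date(2017, 1, 20), None, None),                           # never completed
]
rates = biweekly_rates(admissions, origin)
print(rates)
```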

2.5. Statistical analysis

We calculated summary statistics (i.e., means, standard deviations, percentages) to describe the characteristics of veterans within the intervention and comparison CLCs as well as organizational characteristics of each CLC.

After matching, we used ITS analysis, also known as segmented regression analysis, 26 to estimate the effect of the interventions on the outcome variables. We conducted separate analyses for Project 1 and Project 2, given the differences in the interventions. Because ITS analysis requires data aggregated to a time point within and across sites, we aggregated outcome data for each project, separately for all intervention sites and all comparison sites, at bi‐weekly time points between January 2017 and February 2020. We established the interruption point for both analyses on July 5, 2018, because all sites were expected to complete LSTDI implementation by July 1, 2018. This gave us 9 time points before the implementation time point, and 42 time points after. We ended the postintervention period in February 2020. We estimated coefficients for the variables included in the regression analyses and produced time series graphs.
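For readers less familiar with comparative segmented regression, the sketch below shows one common way to set up the eight-term model whose rows appear in the results tables: baseline level, prior trend, change in level, change in trend, a group dummy, and the three group differences. It is a simplified illustration rather than the R analysis used here. It assumes the group dummy is coded 1 for intervention sites, that time since interruption starts at 0 at the first post-interruption point, and that ordinary least squares is adequate (autocorrelation is ignored). The coefficient values are made up and the synthetic outcome is noise-free, so the fit simply recovers them.

```python
import numpy as np

TERMS = [
    "baseline level",
    "trend before intervention",
    "change in level",
    "change in trend",
    "group dummy",
    "group difference in prior trend",
    "group difference in change in level",
    "group difference in change in trend",
]

def its_design(n_pre, n_post):
    """Stack comparison (z=0) and intervention (z=1) series into one design matrix."""
    t = np.arange(n_pre + n_post, dtype=float)      # time point index
    post = (t >= n_pre).astype(float)               # 1 after the interruption
    t_after = np.where(post == 1, t - n_pre, 0.0)   # time since the interruption
    one_group = np.column_stack([np.ones_like(t), t, post, t_after])
    blocks = [np.hstack([one_group, z * one_group]) for z in (0.0, 1.0)]
    return np.vstack(blocks)

# 9 pre and 42 post time points, as in the analysis described above.
design = its_design(n_pre=9, n_post=42)

# Hypothetical coefficients, used only to generate a noise-free outcome.
true_beta = np.array([5.0, 4.0, 2.0, -3.0, 1.0, -1.0, 3.0, 1.5])
y = design @ true_beta

beta_hat, *_ = np.linalg.lstsq(design, y, rcond=None)
for name, b in zip(TERMS, beta_hat):
    print(f"{name:40s} {b:6.2f}")
```

Under this coding, the last three coefficients are the quantities of interest: they describe how the intervention series departs from the comparison series before, at, and after the interruption.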

We used SAS Enterprise Guide, version 7.1, to match the sites using PCA. RStudio, running R version 4.0.2, was used for the ITS analysis. We set statistical significance at p < 0.05.

2.6. Process evaluation

In Project 1, we conducted episodic interviews throughout the intervention period between April 2018 and February 2020. In addition, we conducted post‐feedback surveys toward the end of the intervention period, using a web‐based survey platform (REDCap). The survey consisted of five questions, and we distributed it quarterly, in the week after feedback reports were distributed, to email lists of clinicians in the intervention CLCs provided by the site champions. The five questions all related to the feedback report and asked whether the respondent received the report, read it, understood it, found it useful, and shared it with facility staff. We focused on this information to understand whether the feedback reports were being distributed to clinicians in intervention CLCs. We used descriptive statistics (means and standard deviations) to describe the data. In addition, for both projects, we assessed the proportion of LSTDI templates completed prior to admission (Project 1) or prior to the interval measured (Project 2) early in the project period, as well as later. These were secondary metrics provided in both project feedback reports. The rates of template completion prior to the measured event (admission in Project 1) or interval (month in Project 2) are important to understand because they reflect work done prior to the period, which may not be done within the CLC (e.g., during an acute hospital admission).

Our study was deemed QI by the VA Ann Arbor Healthcare System Research and Development Committee and exempt from human subjects oversight.

3. RESULTS

3.1. Matching

The means for the three factor scores included in the regression analysis were −0.17 (SD 0.87), −0.65 (SD 0.95), and 0.02 (SD 0.57). The mean Euclidean distance between intervention and matched comparisons was 0.76, with a standard deviation of 0.34. In Table 1, we present data describing veteran and CLC characteristics in intervention and comparison sites. The two columns present data from 2018 used in the matching process.

3.2. ITS analysis

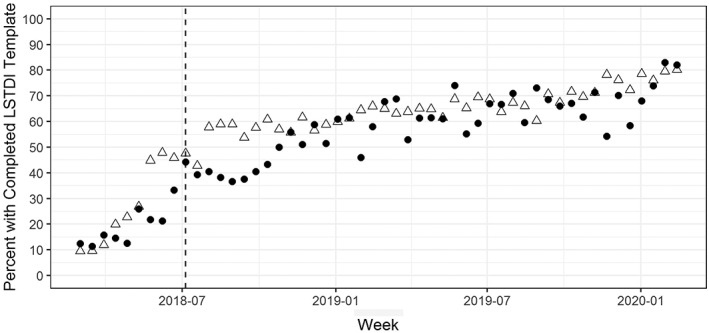

Prior to the intervention, both the comparison and intervention sites had relatively low completion rates (9.5% and 12.5%, respectively). At the time of the intervention, completion rates had notably increased (47.6% and 44.3%, respectively). At the final time point, completion rates exceeded 80% for both groups (80.2% and 82%, respectively). We display Project 1 ITS results in Table 2 and in Figure 1, which depicts the changes in LSTDI completion rates over time for intervention and comparison sites. There were statistically significant differences between the 12 intervention CLCs and their matched comparison sites. The coefficient estimates for the difference between groups in the preintervention trend, in the change in level at the intervention point, and in the change in the regression slope during the intervention period were all significant. The difference in the preintervention trend was negative, and the other two coefficients were positive, indicating that the comparison sites appeared to be doing better than the intervention sites. This finding was unexpected.

TABLE 2.

Project 1 interrupted time series results

| Parameter | Coefficient estimate | SE |
|---|---|---|
| Baseline level of completed LSTDI template | 4.40 | 3.31 |
| Trend before feedback intervention | 5.54* | 0.70 |
| Change in level at the beginning of feedback intervention | −1.83 | 4.24 |
| Change in trend after feedback intervention began | −4.96* | 0.70 |
| Dummy variable for group: intervention versus matched comparison | 5.31 | 4.69 |
| Difference between groups in prior trend | −3.27* | 0.98 |
| Difference between groups in change in level | 13.98** | 5.99 |
| Difference between groups in change in trend | 3.48* | 0.99 |

Abbreviation: LSTDI, Life‐Sustaining Treatment Decisions Initiative.

\*p < 0.01; \*\*p < 0.05.

FIGURE 1.

Project 1 LSTDI template completion rate: intervention CLCs versus comparison CLCs. Δ, comparison CLCs; •, intervention CLCs. Dashed line indicates the start of intervention. LSTDI, Life‐Sustaining Treatment Decisions Initiative

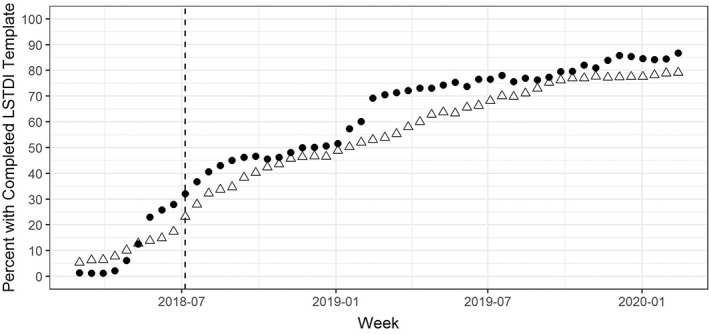

Similar to Project 1, Project 2 LSTDI preintervention mean completion rates for comparison and intervention sites were relatively low (5.3% and 1.4%, respectively). At the time of the intervention, rates modestly increased (23.1% and 32.1%, respectively). At the final time point, completion rates greatly improved (79.7% and 86.7%, respectively). We display Project 2 results in Table 3 and Figure 2. We found statistically significant differences between intervention and comparison sites in the prior trend and change in trend after intervention but not in the change in level at the intervention point. The coefficient estimate for the difference in the prior trend is positive, indicating that the intervention sites had a higher trend prior to the intervention, while the difference between groups in the trend after the intervention is negative, indicating that the rate of completed LSTDI templates increased more for the intervention group than the comparison group. The intervention sites appeared to perform better over the full period.
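As a rough check on the reported significance levels, the ratio of each between‐group change‐in‐trend estimate to its standard error can be computed from Tables 2 and 3. This assumes the usual estimate‐to‐standard‐error test statistic and an approximately normal reference distribution; the exact degrees of freedom used in the original models are not given here.

$$
\frac{3.48}{0.99} \approx 3.52 \quad \text{(Project 1)}, \qquad \frac{-2.38}{0.72} \approx -3.31 \quad \text{(Project 2)}
$$

Both ratios exceed 2.58 in absolute value, the two‐sided critical value for p < 0.01 under a normal approximation, which is consistent with the significance markers shown in the tables.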

TABLE 3.

Project 2 interrupted time series results

| Parameter | Coefficient estimate | SE |
|---|---|---|
| Baseline level of completed LSTDI template | 4.28 | 2.40 |
| Trend before feedback + facilitation intervention | 1.56* | 0.50 |
| Change in level at beginning of feedback + facilitation intervention | 13.66* | 3.06 |
| Change in trend after feedback + facilitation intervention began | −0.27 | 0.51 |
| Dummy variable for group: intervention versus matched comparison | −8.60** | 3.39 |
| Difference between groups in prior trend | 2.34* | 0.71 |
| Difference between groups in change in level | −4.04 | 4.33 |
| Difference between groups in change in trend | −2.38* | 0.72 |

Abbreviation: LSTDI, Life‐Sustaining Treatment Decisions Initiative.

\*p < 0.01; \*\*p < 0.05.

FIGURE 2.

Project 2 LSTDI template completion rate: intervention CLCs versus comparison CLCs. Δ, comparison CLCs; •, intervention CLCs. Dashed line indicates the start of intervention. LSTDI, Life‐Sustaining Treatment Decisions Initiative

Through the process evaluation, we learned that in Project 1, only a small proportion of site champions distributed the feedback reports, either to facility management or to clinicians and other team members in CLCs. The most commonly reported reason was a reluctance to share what often seemed like bad news. In our analysis of the timing of completed LSTDI templates in both projects, we found that in Project 1, veterans in intervention sites on average had templates completed prior to admission 8% of the time prior to July 2018, while 12% of templates were completed prior to admission in comparison sites. After July 2018, the rate of completion prior to admission was 33% for intervention sites and 41% for comparison sites. In both time periods, a greater proportion of templates were completed prior to CLC admission in comparison sites than in intervention sites. In Project 2, the pre‐July 2018 rates for completing templates before each monthly interval were 7% for intervention sites and 8% for comparison sites. After July 2018, the average rate of preinterval completion was 61% for intervention sites and 54% for comparison sites. Preinterval completion was similar early on, but in the latter part of the intervention period, the rates of preinterval completion were higher for intervention than comparison sites. Figures 1 and 2 illustrate these results.

4. DISCUSSION

In this study, we evaluated whether the use of periodic feedback reports with and without facilitation was associated with improvements in LSTDI template completion rates for CLC residents. We found that feedback reports alone in Project 1 did not result in significant positive differences between intervention and comparison sites. In contrast to feedback alone, feedback plus facilitation in Project 2 showed a small but statistically significant difference in LSTDI template completion.

Changes in rates were significantly different between intervention and comparison groups, but the absolute differences in rates between the two groups were modest. The differences between the groups may have been minimized because overall LSTDI completion rates in CLCs across the system rose dramatically over the analytic period; completion rates averaged 82% across all intervention and comparison sites at the final ITS time point. This dramatic increase likely reflects the success of the entire LSTDI, which involved a broad range of training, implementation, and QI activities. 4 The LSTDI was a VA‐wide mandate with strong leadership support. For example, veterans admitted to a CLC were almost 15 times more likely to have a completed LSTDI template than those not admitted to a CLC. 24 Communication and transitions across VA care settings are also managed within an integrated network, which may result in higher LSTDI completion rates; our research deepens understanding of LSTDI implementation in VA CLCs. However, even in community nursing homes, there is evidence suggesting that the adoption of resident‐centered care practices depends on continual buy‐in from leadership and staff. 27

Our findings suggest that feedback plus facilitation was more effective than feedback alone; this is consistent with findings from earlier studies 28 and supports the recommendation from implementation scientists that feedback interventions be coupled with additional approaches, specifically to support action planning. 7 , 13 Facilitation may be particularly important in environments such as CLCs, which have lower staffing ratios and less allocated provider time than acute care and other settings. Considering the intense 24‐h care provided in nursing homes, we note that rates of preinterval completion were higher in the intervention than in the comparison sites in Project 2, in contrast to Project 1. This may have been a minor factor in the different outcomes in the two projects. However, the time and costs of facilitation must be weighed when strong leadership support already exists, which suggests that the components of implementation strategies warrant further study in each unique nursing home setting.

We expected that feedback reports would enhance LSTDI completion rates; for this reason, the findings from Project 1 showing larger increases in rates for comparison facilities than for intervention sites are perplexing. A first‐line explanation suggests that the comparison sites, for unknown reasons, were able to implement LSTDI activities and begin using the template more quickly than the intervention sites, at least for veterans in CLCs. It is important to note that because we averaged the rates for both intervention and comparison sites, larger sites had a disproportionate effect on the average. With a rare exception, the Project 1 sites had a very difficult time getting started with the LSTDI, and by the mandated implementation date, July 2018, most CLCs included in this project were just beginning to document completed templates. It is notable that the intervention sites in Project 1 had lower proportions of templates completed prior to CLC admission than the comparison sites, which suggests that adoption in inpatient acute settings may have been faster in the comparison sites.
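The point about larger sites dominating a group average can be illustrated with a small sketch. The text does not spell out the exact computation, so this example assumes a pooled rate (total completed templates divided by total residents across sites) and contrasts it with an unweighted mean of site‐level rates. All site names and counts below are hypothetical and are not study data.

```python
# Hypothetical site-level counts; none of these numbers come from the study.
sites = [
    {"name": "large_site", "completed": 30, "residents": 100},
    {"name": "small_site_a", "completed": 9, "residents": 10},
    {"name": "small_site_b", "completed": 8, "residents": 10},
]


def pooled_rate(sites):
    """Total completed templates divided by total residents (size-weighted)."""
    total_completed = sum(s["completed"] for s in sites)
    total_residents = sum(s["residents"] for s in sites)
    return total_completed / total_residents


def mean_of_site_rates(sites):
    """Unweighted mean of each site's own completion rate."""
    rates = [s["completed"] / s["residents"] for s in sites]
    return sum(rates) / len(rates)


pooled = pooled_rate(sites)
unweighted = mean_of_site_rates(sites)
print(f"pooled rate: {pooled:.3f}")
print(f"mean of site rates: {unweighted:.3f}")
```

In this made‐up example, the pooled rate sits close to the large site's 30% even though two of the three sites are at 80% or above, which is the kind of disproportionate influence described in the paragraph above.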

The lack of sufficient resources such as nurse staffing and provider time is a persistent problem in all long‐term care settings and is an important factor to consider when implementing complex interventions to improve care. For example, in a recent pragmatic cluster randomized clinical trial studying an advance care planning intervention in a non‐VA community nursing home setting, there were no significant changes in short‐ and long‐stay nursing home resident outcomes. 29 , 30 The team noted that staff fidelity to the intervention was low, demonstrating that it is hard to change practice without greater attention to implementation strategies. Thus, trials of complex interventions in this setting may require more intense implementation than is typically used in pragmatic trials and QI projects.

There were several limitations to this study. First, our study compared the findings of two separate projects using different samples of intervention and comparison CLCs. However, we were able to match intervention sites in each project to comparison sites with similar characteristics for the analysis. Second, our Project 1 process evaluation showed that, in general, the reports were not distributed. Given this limited reach of the intervention, there may have been little practical difference between intervention and comparison sites in this project. It seems clear that the feedback reports could only have had a limited effect in changing behavior. Third, the delay in the release of the LSTDI (January 2017) substantially delayed the project as it was originally planned. We had expected a much longer period for the ITS analysis, which we compensated for by using biweekly time points instead of monthly. These were subject to more fluctuation than monthly time points, given the smaller numbers being aggregated at each time point. Judging from the overall postintervention trends in both projects, a longer postintervention period might have yielded a more positive finding for Project 1. In addition, we were forced to stop the time series 4 months earlier than planned because of the COVID‐19 pandemic. Over 25% of our intervention CLCs became units managing patients with COVID‐19 and, in general, served a very different population than planned. Finally, we used matching methods developed through VA research in our analysis. However, there are only approximately 130 CLCs in the system, thereby limiting the number of possible comparison sites and our ability to derive adequate matches, which introduces the potential for bias of the intervention effects.
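The greater fluctuation of biweekly time points can be made concrete with the standard error of a proportion. This is a simplified sketch, assuming independent binomial observations and a biweekly denominator of roughly half the monthly one; the symbols $p$ (underlying completion probability) and $n$ (residents aggregated at a monthly time point) are introduced here for illustration only.

```latex
% Standard error of an estimated completion rate at a time point with n residents
\mathrm{SE}(\hat{p}) = \sqrt{\frac{p(1-p)}{n}}
% Ratio of biweekly to monthly standard errors, assuming n_{\text{biweekly}} \approx n/2
\frac{\mathrm{SE}_{\text{biweekly}}}{\mathrm{SE}_{\text{monthly}}}
  = \sqrt{\frac{p(1-p)/(n/2)}{p(1-p)/n}} = \sqrt{2} \approx 1.41
```

Under these assumptions, each biweekly estimate would be expected to vary about 41% more than a monthly estimate of the same underlying rate. In practice, the same residents may be counted in adjacent intervals, so the independence assumption holds only approximately.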

Nonetheless, our findings are striking in the overall trend across all sites in both projects: sites went from zero to 80% on both metrics in a very short time period. This may be explained by the excellent dissemination and national support offered across the entire system by the VA National Center for Ethics in Health Care and the CLC staff. 4 , 24 Our efforts in supporting implementation were ancillary to the overall dissemination and implementation support given to this project.

In conclusion, evaluating approaches to user feedback with and without facilitation is useful. Striking a balance between the parsimonious and scalable approach of sending feedback reports to a single key individual and the more intensive approach of interacting with a much larger group of staff throughout a facility is likely essential to supporting and extending the implementation of new evidence‐based practices. A promising direction for future research may be to test the effectiveness of various approaches to facilitation coupled with feedback, addressing both time and costs, to arrive at recommendations for the optimal use of selected implementation strategies. In addition, an in‐depth assessment of the unexpected results from Project 1, which used feedback reports only, is important to better understand the usefulness of audit and feedback to the end user.

CONFLICT OF INTEREST

The authors declare no conflicts of interest.

Supporting information

Appendix S1. Project 1 feedback report example.

Appendix S2. Project 2 feedback report example.

ACKNOWLEDGMENTS

This project was supported through a grant from the Veterans Health Administration (VA), Health Services Research and Development Service Quality Enhancement Research Initiative (QUE 15‐288). The following authors maintain employment with the Department of Veterans Affairs or are affiliated without compensation: JC, WS, JK, MBF, LH, RH, CL, VJP, CP, LP, AS, and ME. The views expressed in this paper are those of the authors and do not reflect the official positions of the Department of Veterans Affairs. The authors would like to acknowledge the resources provided by the VA's Geriatrics & Extended Care Data Analysis Center (GECDAC). The authors would also like to acknowledge the contributions of the VA Community Living Center staff, the VA National Center for Ethics in Health Care, and Michele Karel, PhD to this project.

Carpenter JG, Scott WJ, Kononowech J, et al. Evaluating implementation strategies to support documentation of veterans' care preferences. Health Serv Res. 2022;57(4):734‐743. doi: 10.1111/1475-6773.13958

Funding information Quality Enhancement Research Initiative, Grant/Award Number: QUE 15‐288

REFERENCES

- 1. Teno JM, Mitchell SL, Kuo SK, et al. Decision‐making and outcomes of feeding tube insertion: a five‐state study. J Am Geriatr Soc. 2011;59(5):881‐886.

- 2. Givens JL, Kiely DK, Carey K, Mitchell SL. Healthcare proxies of nursing home residents with advanced dementia: decisions they confront and their satisfaction with decision‐making. J Am Geriatr Soc. 2009;57(7):1149‐1155.

- 3. Kiely DK, Givens JL, Shaffer ML, Teno JM, Mitchell SL. Hospice use and outcomes in nursing home residents with advanced dementia. J Am Geriatr Soc. 2010;58(12):2284‐2291.

- 4. Foglia MB, Lowery J, Sharpe VA, Tompkins P, Fox E. A comprehensive approach to eliciting, documenting, and honoring patient wishes for care near the end of life: the Veterans Health Administration's Life‐Sustaining Treatment Decisions Initiative. Jt Comm J Qual Patient Saf. 2019;45(1):47‐56.

- 5. U.S. Department of Veterans Affairs. About VHA. 2021. Accessed January 22, 2021. https://www.va.gov/health/aboutvha.asp

- 6. U.S. Department of Veterans Affairs. QUERI ‐ Quality Enhancement Research Initiative. 2020. Accessed November 10, 2021. https://www.queri.research.va.gov/about/default.cfm

- 7. Ivers N, Jamtvedt G, Flottorp S, et al. Audit and feedback: effects on professional practice and healthcare outcomes. Cochrane Database Syst Rev. 2012;(6):CD000259.

- 8. Powell BJ, Waltz TJ, Chinman MJ, et al. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci. 2015;10:21.

- 9. Ivers NM, Grimshaw JM, Jamtvedt G, et al. Growing literature, stagnant science? Systematic review, meta‐regression and cumulative analysis of audit and feedback interventions in health care. J Gen Intern Med. 2014;29(11):1534‐1541.

- 10. Ivers NM, Sales A, Colquhoun H, et al. No more 'business as usual' with audit and feedback interventions: towards an agenda for a reinvigorated intervention. Implement Sci. 2014;9:14.

- 11. Gardner B, Whittington C, McAteer J, Eccles MP, Michie S. Using theory to synthesise evidence from behaviour change interventions: the example of audit and feedback. Soc Sci Med. 2010;70(10):1618‐1625.

- 12. Colquhoun HL, Carroll K, Eva KW, et al. Advancing the literature on designing audit and feedback interventions: identifying theory‐informed hypotheses. Implement Sci. 2017;12(1):117.

- 13. Kirchner JE, Smith JL, Powell BJ, Waltz TJ, Proctor EK. Getting a clinical innovation into practice: an introduction to implementation strategies. Psychiatry Res. 2020;283:112467.

- 14. Baskerville NB, Liddy C, Hogg W. Systematic review and meta‐analysis of practice facilitation within primary care settings. Ann Fam Med. 2012;10(1):63‐74.

- 15. Perry CK, Damschroder LJ, Hemler JR, Woodson TT, Ono SS, Cohen DJ. Specifying and comparing implementation strategies across seven large implementation interventions: a practical application of theory. Implement Sci. 2019;14(1):32.

- 16. Harvey G, Kitson A. PARIHS revisited: from heuristic to integrated framework for the successful implementation of knowledge into practice. Implement Sci. 2016;11:33.

- 17. Sales AE, Ersek M, Intrator OK, et al. Implementing goals of care conversations with veterans in VA long‐term care setting: a mixed methods protocol. Implement Sci. 2016;11(1):132.

- 18. Carpenter J, Miller SC, Kolanowski AM, et al. Partnership to enhance resident outcomes for Community Living Center residents with dementia: description of the protocol and preliminary findings. J Gerontol Nurs. 2019;45(3):21‐30.

- 19. Landis‐Lewis Z, Kononowech J, Scott WJ, et al. Designing clinical practice feedback reports: three steps illustrated in Veterans Health Affairs long‐term care facilities and programs. Implement Sci. 2020;15(1):7.

- 20. Gaugler JE, Duval S, Anderson KA, Kane RL. Predicting nursing home admission in the U.S: a meta‐analysis. BMC Geriatr. 2007;7:13.

- 21. Byrne MM, Daw C, Pietz K, Reis B, Petersen LA. Creating peer groups for assessing and comparing nursing home performance. Am J Manag Care. 2013;19(11):933‐939.

- 22. Byrne MM, Daw CN, Nelson HA, Urech TH, Pietz K, Petersen LA. Method to develop health care peer groups for quality and financial comparisons across hospitals. Health Serv Res. 2009;44(2 Pt 1):577‐592.

- 23. Stelmokas J, Rochette AD, Hogikyan R, et al. Influence of cognition on length of stay and rehospitalization in older veterans admitted for post‐acute care. J Appl Gerontol. 2020;39(6):609‐617.

- 24. Levy C, Ersek M, Scott W, et al. Life‐Sustaining Treatment Decisions Initiative: early implementation results of a National Veterans Affairs Program to honor veterans' care preferences. J Gen Intern Med. 2020;35(6):1803‐1812.

- 25. Kutney‐Lee A, Smith D, Thorpe J, Del Rosario C, Ibrahim S, Ersek M. Race/ethnicity and end‐of‐life care among veterans. Med Care. 2017;55(4):342‐351.

- 26. Wagner AK, Soumerai SB, Zhang F, Ross‐Degnan D. Segmented regression analysis of interrupted time series studies in medication use research. J Clin Pharm Ther. 2002;27(4):299‐309.

- 27. Lima JC, Schwartz ML, Clark MA, Miller SC. The changing adoption of culture change practices in U.S. nursing homes. Innov Aging. 2020;4(3):igaa012.

- 28. Boogaard JA, de Vet HCW, van Soest‐Poortvliet MC, Anema JR, Achterberg WP, van der Steen JT. Effects of two feedback interventions on end‐of‐life outcomes in nursing home residents with dementia: a cluster‐randomized controlled three‐armed trial. Palliat Med. 2018;32(3):693‐702.

- 29. Loomer L, Ogarek JA, Mitchell SL, et al. Impact of an advance care planning video intervention on care of short‐stay nursing home patients. J Am Geriatr Soc. 2021;69(3):735‐743.

- 30. Mitchell SL, Volandes AE, Gutman R, et al. Advance care planning video intervention among long‐stay nursing home residents: a pragmatic cluster randomized clinical trial. JAMA Intern Med. 2020;180(8):1070‐1078.
