Abstract

The brain regulates the body by anticipating its needs and attempting to meet them before they arise – a process called allostasis. Allostasis requires a model of the changing sensory conditions within the body, a process called interoception. In this paper, we examine how interoception may provide performance feedback for allostasis. We suggest studying allostasis in terms of control theory, reviewing control theory’s applications to related issues in physiology, motor control, and decision making. We synthesize these by relating them to the important properties of allostatic regulation as a control problem. We then sketch a novel formalism for how the brain might perform allostatic control of the viscera by analogy to skeletomotor control, including a mathematical view on how interoception acts as performance feedback for allostasis. Finally, we suggest ways to test implications of our hypotheses.

Keywords: Interoception, Allostasis, Predictive processing

1. Introduction: the functions of the brain in the body

Imagine that you are learning to play dodgeball as a beginner. You stand with the other players, divided into two teams, and when the game begins you need to pick up a large inflated ball from a pile in the middle and hit a member of the other team with it. As you run, throw, dodge, catch, and reach, your muscle cells require metabolic fuel in the form of molecules such as oxygen and glucose, which must be conveyed to those muscle cells via the blood. Your vascular system must deliver and distribute blood with speed, bringing nutrients and removing metabolites. Despite rapid muscle movements generating waste heat, your body temperature must remain within a narrow, viable range. As blood circulates more quickly throughout your body, your lungs must also increase the rate with which they breathe oxygen in and carbon dioxide out.

Playing a simple game of dodgeball, then, requires your brain to continually coordinate the systems of your body. At the same time, your body sends sensory information about internal events up the spinal cord and vagus nerve to the brain. It is standard practice in neuroscience to distinguish the brain’s “physiological sense of the condition of the body” (interoception (Craig, 2002, 2015; Quigley, Kanoski, Grill, Barrett, & Tsakiris, 2021)) from the collection of sensory modalities that inform the brain about the world outside the body (exteroception).

Interoception includes, but is not limited to, the brain’s modeling of the sensory signals from innervated visceral organs. Nociception, temperature, and C-tactile afferent-mediated (affective) touch on the skin are also considered interoceptive modalities, by virtue of their conveyance of sensory inputs to the brain via unmyelinated or lightly myelinated ascending fibers in the lamina 1 spinothalamic tract (Craig, 2002, 2009, 2015). A broad view of interoception also includes modeling chemosensation from within the body’s interior, such as changes in the endocrine system (Chen et al., 2021), changes in the immune system (Dantzer, 2018), and changes in the digestive system and gut (de Araujo, Schatzker, & Small, 2020; Muller et al., 2020). For simplicity’s sake, however, this paper will treat all these systems as “visceral”.

Viscerosensory signaling (i.e., the ascending signals from the sensory surfaces inside the body and the skin) informs the brain of the state of the body in an ever-changing and only partly predictable world. Since sensory signals themselves are ambiguous and noisy, this poses an inverse problem for the brain, one of inferring causes (the state of the body) from effects (the ascending viscerosensory signals). The brain solves this problem by means of an internal model (McNamee & Wolpert, 2019). Psychologists refer to the internal model, including interoception, by many terms, including memory (Buzsaki & Tingley, 2018), belief (Schwartenbeck, FitzGerald, & Dolan, 2016), perceptual inference (Aggelopoulos, 2015), unconscious inference (Von Helmholtz, 1867), embodied simulation (Barsalou, 2009), concepts and categories (Barrett, 2017), controlled hallucination (Grush, 2004), and prediction (Bar, 2009; Friston & Kiebel, 2009). Regardless of what it is called, the brain is hypothesized to construct a dynamic model of its body in the world (Barrett & Simmons, 2015; Hutchinson & Barrett, 2019). In this paper we will use the terms prediction, simulation, and concept.

The process of building and refining an internal model based on viscerosensory signals does not, in and of itself, accomplish the brain’s most basic task. This task is to maximize the energy efficiency of bodily functions, to “anticipate changing needs, evaluate priorities, and prepare the organism to satisfy them before they lead to errors” (page 4, Sterling, 2012), a process called allostasis (for further discussion on allostasis, see Sterling & Laughlin (2015); Schulkin & Sterling (2019)). Concurrent evolutionary (Cisek, 2019) and neuroanatomical (Barrett & Simmons, 2015; Chanes & Barrett, 2016; Barrett, 2017) evidence suggests that exteroceptive sensory signals, and the internal models anticipating them, contextualize and support motor control (McNamee & Wolpert, 2019). In a similar way, viscerosensory signals provide online feedback for allostasis, and interoceptive internal modeling subserves allostatic visceromotor control (Barrett & Simmons, 2015; Chanes & Barrett, 2016; Kleckner et al., 2017; Barrett, 2017). Many lines of evidence suggest the same conclusion: the brain is predictively regulating the body, which is a problem of motor control rather than of perceiving the world. It is a problem of regulating the body along a desired trajectory to achieve efficiency.

Existing formal models of interoception and body regulation (such as those reviewed by Hulme, Morville, & Gutkin (2019) and Petzschner, Garfinkel, Paulus, Koch, & Khalsa (2021), as well as recent works such as Unal et al. (2021)) have formulated allostasis either as a prospective decision-making problem (without considering how those decisions are enacted) or as a motor control problem (without considering where motor commands come from). Additionally, rather than treat metabolic efficiency as the objective, they discuss homeostasis, the regulation of bodily variables to fixed set points with fixed tolerances for error. While many interpretations allow for regulation to take place preemptively (see Carpenter (2004)), homeostasis is still assumed to correct deviations from a fixed set point (Sterling, 2014). In addition, homeostasis is not well suited to deal with variation in demand on bodily systems across contexts and time, variation that has now been well documented (e.g., Mrosovsky, 1990; Cabanac, 2006; Woods & Ramsay, 2007; Kotas & Medzhitov, 2015). This paper aims to fill this gap by proposing an initial formal model of allostatic regulation. In the process, it will connect existing accounts of motor control based on internal models (Kording & Wolpert, 2006; Gillespie, Ghasemi, & Freudenberg, 2016; McNamee & Wolpert, 2019) and accounts of brain function based on feedback control (Pezzulo & Cisek, 2016; Pezzulo, Donnarumma, Iodice, Maisto, & Stoianov, 2017; Maeda, Cluff, Gribble, & Pruszynski, 2018) to the brain’s regulation of the body’s internal environment.

This paper’s formal model of allostasis draws from control theory, a discipline widely employed in both systems biology and engineering. Control theory deals with driving dynamical systems to move (approximately) along a certain desired trajectory, despite physical disturbances to those systems that might drive them off that trajectory. Control theory also makes explicit the question of what the desired trajectory is, how the trajectory might be physically realized, and how one system can drive another to follow a more desired trajectory rather than a less desired one. This paper describes an approach to formally modeling regulation of the body that retains compatibility with previous empirical (e.g., Kleckner et al. (2017); Young, Gaylor, de Kerckhove, Watkins, & Benton (2019)) and theoretical (e.g., Pezzulo, Rigoli, & Friston (2015); Corcoran & Hohwy (2017); Petzschner et al. (2021)) investigations, while building upon control theory from first principles.

Four sections in this paper connect interoception to allostasis. Section 2 establishes how interoception enables the brain to estimate the physiological efficiency of the body in the present moment, which is precisely what it needs to know to evaluate and refine actions. Section 3 then introduces control theory and explains its applications in physiology, motor control, and decision making; these provide the conceptual tools for modeling how interoception informs allostasis. Section 4 applies the principles of control theory to derive a novel formal model of how the brain might estimate the desirability of physiological trajectories and make prospective regulatory decisions. Finally, Section 5 synthesizes the previous three sections to explore the direct implications of the proposed formalism. Appendix A provides a glossary of terms; Appendix B.1 provides mathematical details related to Section 3; and Appendix C.1 provides mathematical details related to Section 4.

2. Interoception: modeling the body, estimating its efficiency

This section takes up the question of how interoception offers performance metrics for visceromotor regulation. Many interoceptive modalities consist of viscerosensory signals whose values must remain within specific ranges conducive to efficient bodily function and survival (making these signals different from exteroceptive sensory signals in this regard). A core assumption is that the brain, as part of allostasis, estimates how efficiently physiological processes can enable or support needed changes in resource levels (Schulkin & Sterling, 2019). Towards that end, Section 2.1 differentiates two types of viscerosensory variables: those that represent quantities of resources (called regulated resources) and those that represent rates at which processes act (called controlled processes). Section 2.2 applies these concepts to the well-studied controlled process of the carotid baroreflex, which the brain must modulate by central command to meet oncoming demand for the oxygen, glucose, etc. in the blood. This subsection suggests that the brain predicts ongoing fluctuations in physiological efficiency. Section 2.3 considers a more complex regulatory setting, in which several physiological processes act on a common metabolic resource in different ways, and generalizes the proposed notion of physiological efficiency estimation to this more common case. Finally, Section 2.4 discusses how efficiency estimation in interoception could enable the brain to constructively evaluate a rich variety of predicted bodily conditions without requiring a modular, purpose-specific “reward” system.

The discussion of control theory in Section 3 then will use the concepts described here. In Section 4 these concepts will undergird a mathematical formalism for allostatic decision making.

2.1. Regulated resources and controlled processes in physiology

Regulated resources are kept relatively stable over time. Examples include blood glucose and core body temperature. By contrast, a non-regulated resource such as blood alcohol (ethanol) does not have its level stabilized by the body in most contexts. Insofar as a regulated resource like blood glucose represents a physical quantity or a substance (like glucose), its quantity cannot change instantaneously. Regulation of resources does not have to move levels towards a specific set point, and in fact, many can freely vary over a range of possible values without regulatory response. Such a range is called a settling range, since the level of a resource might settle anywhere in the range without provoking a regulatory response.

A regulated resource remains (relatively) stable over time thanks to the adaptive change of one or more controlled processes. Controlled process rates are the rates at which physiological processes operate. These regulatory processes contribute to the relative stability or change in regulated resources over time. Examples of controlled processes include sweating and shivering, while an example of a physiological change that is not a controlled process is when body temperature increases as a result of the body being in direct sunlight. The rates of controlled processes can speed up or slow down within a broad range by altering energy expenditure. Where a controlled process falls within its operating range will determine its effect upon the regulated resource to which it is coupled. Controlled processes do not have to affect the underlying regulated resource directly by a single causal mechanism; they can have their effect via other controlled processes (Box 1).

Box 1. Illustration by example.

Returning to the dodgeball example, the full range of physiological processes maintaining a person’s ability to play would include the metabolic necessities and byproducts carried in the blood itself: oxygen, glucose, and carbon dioxide chief among them. These can be viewed in terms of the functional categories delineated above. The levels of oxygen, glucose, and carbon dioxide in the blood, at any given moment, are called regulated resources. The demand for metabolic inputs by the muscles then can be considered a controlled process. In the specific case of the muscles, their metabolic uptake changes the circulating levels of oxygen, glucose, and carbon dioxide. In addition, blood pressure is a controlled process which subserves the maintenance or replenishment of the regulated resources. The heart rate and levels of autonomic activation (in both branches of the autonomic nervous system) then also function as controlled processes, modulated to indirectly keep the regulated resources in the desired range.

In evolutionary terms, controlled processes contribute to the fitness of the organism by responding to changes in the relevant underlying regulated resource to keep that variable within a viable range. In mathematical terms, changes in controlled process rates can be modeled as functions of regulated resource levels. However, those controlled processes also themselves have limited ranges of possible action. The limited ranges of both regulated resources and controlled processes can be predicted and modeled in terms of capacity curves, which are the topic of the next section.

2.2. Predicting and modeling the ranges of regulated and controlled processes

Both the heart rate and blood pressure must increase during aerobic exercise, as noted in the dodgeball example. If you were to try to play dodgeball at a resting level of blood flow, your muscles would quickly become fatigued and you would be unable to move (for a more detailed discussion, see Sterling & Laughlin (2015)). Your brain must therefore direct the sympathetic branch of your autonomic nervous system to increase its outflow, including increasing blood pressure via vasoconstriction. Under resting conditions, the baroreceptor-heart rate reflex would normally counter any rise in blood pressure by slowing the heartbeat. However, with exertion, your blood pressure and heart rate both must increase to support the needed increase in blood flow required by your exercising muscles. To accomplish this specific change, your brain modulates the response of your baroreceptor-heart rate reflex (Potts, Shi, & Raven, 1993, 1995), shifting the entire function relating a change in your blood pressure to a change in your heart rate (Ogoh et al., 2002). The alterations enable redistribution of blood to meet the new demand so that you can run to avoid the ball or throw the ball at someone else.

However, blood pressure is a controlled process, not a regulated resource. It must shift in order to stabilize the regulated resources of oxygen, glucose, and carbon dioxide concentrations in the blood. It therefore lacks a set point to which the brain will regulate the baroreceptor-heart rate reflex, the heartbeat, or other variables affecting the blood pressure. Although the controlled process regulating the blood pressure can and does shift its rate with time, that rate can only rise or fall so far before reaching physical limits, after which further modulation of the baroreceptor or the heartbeat will have no additional significant effect. The baroreflex’s responsive range can be defined as the range between where the controlled process (the blood pressure) effectively cannot decrease further (the threshold value point) and where it cannot increase further (the saturation value point).

Threshold and saturation points partly define curves that are derived from functions which physiologists commonly use to model the connection between perturbations and regulatory responses, usually naming them response curves (e.g., Ogoh et al. (2005)) or transfer functions. The term capacity curves will be used to emphasize the fact that while such curves can shift over time, in any one instant they represent the current range of limited regulatory resources available to an organism. The terms threshold and saturation will also be used for the levels of the regulatory responses (plotted on the vertical axis) of a capacity curve, rather than the levels of the perturbing stimulus (plotted on the horizontal axis).

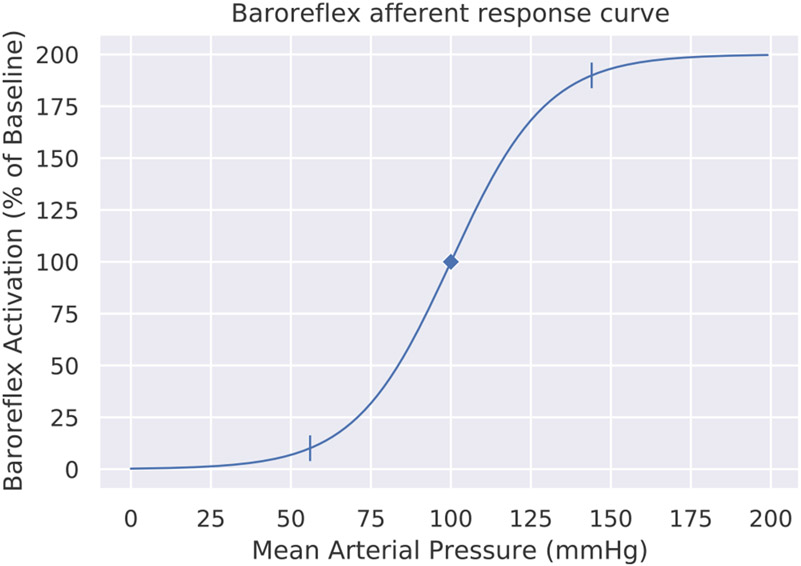

Fig. 1 shows an example capacity curve for afferent activity in human baroreceptors. The left tick marker shows the threshold value, and the right tick marker shows the saturation value. A parameter called the gain specifies the relative slope of the curve throughout its range, determining where the threshold and saturation values will fall. The mean arterial blood pressure (horizontal axis) is a controlled process, and so the baroreflex activation (vertical axis) is also a controlled process, one which only affects the underlying regulated resources (e.g., blood glucose, blood oxygen, etc.) indirectly.

Fig. 1.

Capacity curve for baroreceptor afferent firing, taken as a pedagogical example from Heesch (1999). As the curve flattens in either direction, the baroreflex can no longer respond proportionally to changes in blood pressure. The tick markers show the threshold value (the fifth percentile of response) and the saturation value (the 95th percentile of response) on the horizontal axis.

Mathematically, an ideal small change in the blood pressure will lead to a certain ideal small change in baroreflex activation. The operating point is where this potential response is greatest. For a symmetrical capacity curve such as that of the baroreceptor-heart rate reflex above, the operating point will lie in the center of the curve. Fig. 2 depicts the operating point with a diamond marker and the potential response around that point as a yellow dotted line. Physiologists often employ the sigmoidal form displayed here for a capacity curve because it provides a good empirical fit to data (see McDowall & Dampney (2006), Dampney (2016)).

Fig. 2.

Capacity curve from Fig. 1 above (blue), with the linearized response (orange) around the operating point (blue diamond marker). The diamond marker denotes the point of optimal responsiveness, or operating point. Responsiveness is optimal when the tangent line has maximal slope around the current blood pressure. Regulating to optimal responsiveness requires either keeping current blood pressure near the operating point, or relaxing the baroreflex’s gain to widen the curve. The latter sacrifices performance (slope) at the operating point but provides greater resilience against uncertainty and perturbations. Note that the operating point refers to the point on the horizontal axis.
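The trade-off between slope and width described in this caption can be illustrated numerically. The following is a minimal sketch, assuming that the capacity curve is a four-parameter logistic; the function names and all parameter values are hypothetical and are not taken from Heesch (1999).

```python
import math

def capacity_curve(x, mu, k, R, B):
    """Assumed logistic capacity curve: response y at stimulus x."""
    return B + R / (1.0 + math.exp(-k * (x - mu)))

def slope(x, mu, k, R):
    """Derivative dy/dx of the assumed logistic curve at x."""
    s = 1.0 / (1.0 + math.exp(-k * (x - mu)))
    return R * k * s * (1.0 - s)

def responsive_width(k, lo=0.05, hi=0.95):
    """Stimulus distance between the threshold (5%) and saturation (95%) points."""
    return (math.log(hi / (1.0 - hi)) - math.log(lo / (1.0 - lo))) / k

def linearized(x, mu, k, R, B):
    """Tangent-line approximation of the curve around the operating point mu."""
    return capacity_curve(mu, mu, k, R, B) + slope(mu, mu, k, R) * (x - mu)

# Hypothetical parameters: operating point (mmHg), response range, lower boundary.
MU, R, B = 100.0, 100.0, 0.0

for k in (0.10, 0.05):  # a higher gain, then a relaxed gain
    print(f"gain={k}: slope at operating point={slope(MU, MU, k, R):.2f}, "
          f"responsive width={responsive_width(k):.1f}")
```

Under these assumptions, halving the gain halves the slope at the operating point and doubles the distance between the threshold and saturation values, which is the sense in which a relaxed gain trades performance for resilience.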

The capacity curve in Fig. 1 has mathematical form

| (1) |

and its parameters will take values according to the figure. These values include the response range R (from lower to upper asymptote), the lower boundary B on the response, the operating point x = μ, and the gain k. The variable x on the figure’s horizontal axis represents the mean arterial blood pressure, while the variable y on the figure’s vertical axis represents the baroreflex activation as a percentage of the resting mean. For the figure, the parameters have the values

Eq. (1) defined y, the baroreflex activation, as a function of x, the mean arterial blood pressure. Elementary algebra allows the equation to be solved for x or y as a function of an intermediate quantity u ∈ (0, 1) called the quantile,

| (2) |

| (3) |

| (4) |
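The parameters named above (response range R, lower boundary B, operating point μ, and gain k) match those of a four-parameter logistic function. One form consistent with the text can therefore be sketched as follows; it is an assumption made for illustration and is not necessarily the exact expression behind Eqs. (1)–(4).

```latex
\begin{align*}
y &= B + \frac{R}{1 + e^{-k(x - \mu)}}, \\
u &= \frac{1}{1 + e^{-k(x - \mu)}}, \qquad u \in (0, 1), \\
x &= \mu + \frac{1}{k}\,\ln\frac{u}{1 - u}, \\
y &= B + R\,u .
\end{align*}
```

Under this assumed form, u(μ) = 1/2, so the operating point always sits at the median quantile, and the threshold and saturation values correspond to u = 0.05 and u = 0.95.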

These equations outline the form of a generative model: a procedure for probabilistically predicting observed variables in terms of unobserved variables. μ, k, R, B, u serve as unobserved variables, which are sampled from a prior probability distribution not dependent on data. These variables are plugged into the equations to generate predictions for the observed variables: mean blood pressure x and baroreflex afferent activation y. Together, the prior and the likelihood can form a posterior probability distribution, which defines the probabilities of different values for the unobserved variables given the observed ones. In the brain, any internal generative model with similar structure to the above would likely obtain its probability densities for the unobserved variables from its general knowledge of the body in the world, rather than starting with an uninformed prior.
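The forward (prior-to-prediction) direction of such a generative model can be sketched in a few lines. The sketch below assumes a logistic capacity curve and uses hypothetical prior distributions chosen only for illustration; it generates noise-free predictions, whereas a full likelihood would add observation noise.

```python
import math
import random

def sample_latents(rng):
    """Draw unobserved variables from illustrative (hypothetical) priors."""
    return {
        "mu": rng.gauss(100.0, 10.0),    # operating point
        "k": rng.uniform(0.05, 0.15),    # gain
        "R": rng.uniform(80.0, 120.0),   # response range
        "B": rng.uniform(0.0, 20.0),     # lower boundary
        "u": rng.uniform(0.01, 0.99),    # quantile
    }

def predict_observed(z):
    """Map latents to predicted observations (x, y) under the assumed logistic form."""
    x = z["mu"] + math.log(z["u"] / (1.0 - z["u"])) / z["k"]
    y = z["B"] + z["R"] * z["u"]
    return x, y

rng = random.Random(0)  # fixed seed so the sketch is reproducible
samples = [sample_latents(rng) for _ in range(5)]
predictions = [predict_observed(z) for z in samples]

for z, (x, y) in zip(samples, predictions):
    print(f"u={z['u']:.2f} -> predicted pressure x={x:.1f}, predicted activation y={y:.1f}")
```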

The proposal here has an unusual feature: the equations for x (the mean arterial blood pressure) and y (the baroreflex activation as a percent of baseline) are in terms of the quantile variable u. The quantile variable uniformly represents the relationship between the blood pressure and the baroreflex activation, irrespective of changes in the capacity curve’s operating point μ and gain k. The quantile depends only on the functional form of the capacity curve, not on the parameters. The distance u(x) − u(μ) (i.e., the relative distance between the current value of x and the operating point) therefore provides a time-independent performance metric for the regulatory task of the baroreceptor-heart reflex. Capacity curves change all the time due to variation in their underlying physiological systems (see plot of arterial pressure over time in Bevan, Honour, & Stott (1969), reprinted in Sterling (2012)), but quantiles will retain the same meaning no matter the current parameter values. This supports high regulatory flexibility, a concept often proposed by physiologists as an adjustable set point (Cabanac, 2006).
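To make the parameter-independence of the quantile concrete, the sketch below evaluates the metric u(x) − u(μ) for the same blood pressure on two capacity curves. It assumes a logistic curve, and the two parameter sets (labelled rest and exercise) are hypothetical.

```python
import math

def quantile(x, mu, k):
    """Quantile u(x) under an assumed logistic capacity curve."""
    return 1.0 / (1.0 + math.exp(-k * (x - mu)))

def performance_metric(x, mu, k):
    """u(x) - u(mu): signed distance from the operating point in quantile units."""
    return quantile(x, mu, k) - quantile(mu, mu, k)

# Hypothetical curves: rest, and exercise with the operating point reset upward.
rest = {"mu": 95.0, "k": 0.10}
exercise = {"mu": 120.0, "k": 0.10}

pressure = 120.0  # the same mean arterial pressure (mmHg) in both contexts
for name, params in (("rest", rest), ("exercise", exercise)):
    print(name, round(performance_metric(pressure, **params), 3))
```

The same pressure lies well above the operating point of the resting curve but exactly at the operating point of the reset curve, so the metric is positive in the first case and zero in the second, even though the raw pressure is identical.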

Any point on any capacity curve can be written in terms of quantiles, because capacity curves represent physical responses with finite ranges. Insofar as controlled process responses have the bounded form described above, they can potentially be described in terms of capacity curves, with mathematical description similar to that given above (although usually more complex in the details). Insofar as this remains empirically true, interoceptive internal modeling (Barrett & Simmons, 2015) could be described, mathematically, as estimating capacity curves over time. The brain could potentially model those capacity curves in terms of quantiles without loss of generality, and those quantiles would have a clear regulatory interpretation.

Overall, if the brain’s internal model were to infer capacity curves as a part of interoception, then a variety of sites in the brain would have to generate predictions, and integrate prediction errors, regarding both regulated resources and controlled processes. These sites would have to receive afferent viscerosensory signals to which to compare efferent predictions. The brain would have to generate efferent predictions for each capacity curve’s key parameters (e.g., operating point, gain, boundary, and range), and combine those parameters with an efferent prediction of the present state’s quantile representation. These would generate interoceptive predictions of viscerosensory stimuli: the regulated resources and controlled processes related by capacity curves. Afferent viscerosensory signals would confirm or correct these predictions, and thus correct or confirm the estimated performance metric u(x) − u(μ).
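The predict-compare-correct loop described here can be illustrated with a deliberately simple stand-in. The sketch below assumes a logistic capacity curve and uses a gradient (delta-rule) update on squared prediction error to correct an estimated operating point; the update rule, learning rate, and parameter values are hypothetical and make no claim about how the brain performs this inference.

```python
import math

def predict_response(x, mu, k, R, B):
    """Predicted viscerosensory response under an assumed logistic capacity curve."""
    return B + R / (1.0 + math.exp(-k * (x - mu)))

def update_operating_point(mu_est, x, y_obs, k, R, B, lr=0.05):
    """One gradient step on squared prediction error with respect to mu."""
    s = 1.0 / (1.0 + math.exp(-k * (x - mu_est)))
    error = y_obs - (B + R * s)        # prediction error: observed minus predicted
    dy_dmu = -R * k * s * (1.0 - s)    # sensitivity of the prediction to mu
    return mu_est + lr * error * dy_dmu

# Hypothetical values: the true operating point has shifted away from the estimate.
K, R, B = 0.1, 100.0, 0.0
TRUE_MU = 110.0
mu_est = 100.0

for _ in range(100):
    for x in (90.0, 100.0, 110.0, 120.0, 130.0):
        y_obs = predict_response(x, TRUE_MU, K, R, B)  # noise-free "afferent" signal
        mu_est = update_operating_point(mu_est, x, y_obs, K, R, B)

print(f"estimated operating point: {mu_est:.3f}")
```

As the estimated operating point is corrected, the estimated metric u(x) − u(μ) is corrected with it, since the metric is computed from the same parameters.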

The process of correcting and/or confirming predictions will usually entail spending a sizable amount of energy just on neural firing to update the various predictions (Theriault, Young, & Barrett, 2021). On top of that, the brain also will have to spend energy to reconsider and re-plan current behavior. Imagine an internal chest pain during a game of dodgeball, when you know you haven’t been hit: it could be heartburn, or it could be a heart attack. Whatever the cause, the brain will have a metric of physiological efficiency with which to determine how to spend resources on updating predictions and behavior, so as to optimally keep regulated resources within the responsive ranges of their corresponding controlled processes.

2.3. Modeling the viable ranges of multiple controlled processes to support multi-system regulation and coordinated action

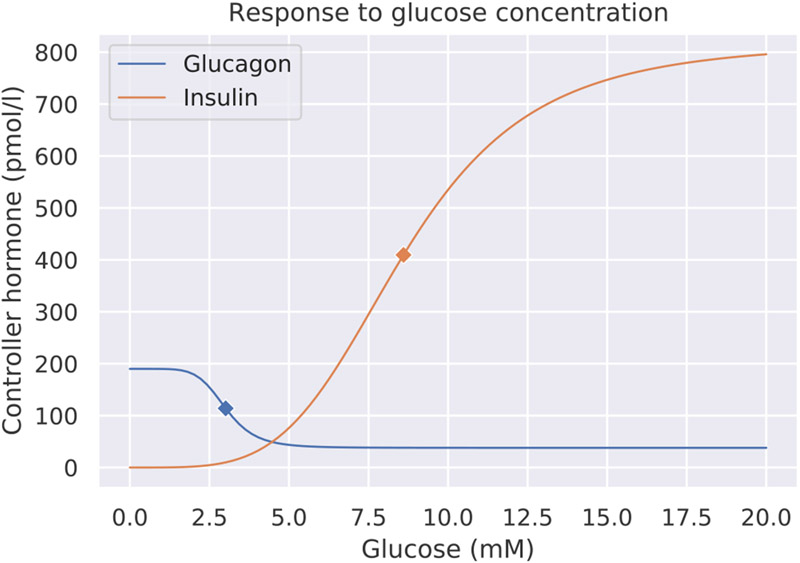

Unlike the simple regulatory relationship between blood pressure and heart rate, many regulated resources in the body cannot be tightly controlled by a small number of effectors. To return to the dodgeball example, the full range of physiological processes maintaining a person’s ability to play would include the metabolic necessities and byproducts carried in the blood itself: oxygen, glucose, and carbon dioxide are chief among them. In the specific case of the muscles, their metabolic uptake changes circulating levels of oxygen, glucose, and carbon dioxide, which are regulated resources that must remain within viable ranges. Heart rate, blood pressure, and the level of activation in both branches of the autonomic nervous system are controlled processes that the brain modulates in service to the maintenance or replenishment of the regulated resources. Next, we focus on blood glucose as a regulated resource, with glucagon as the controlled process enabling secretion of glucose into the blood and insulin as the controlled process enabling removal of glucose from the blood.

Emerging theoretical (Saunders, Koeslag, & Wessels, 1998, 2000) and experimental (Sohn & Ho, 2020) evidence suggests that blood glucose levels are not actively defended at a biologically hard-coded set point any more than heart rate is. Instead, glucagon and insulin activity balance each other’s effects to bring the blood glucose to a point within its settling range (a settling point) with glucose entering the blood after the person ingests food and glucose then crossing from the blood into other bodily tissues to support their function. Recent evidence suggests that when glucagon stimulates insulin production in β-cells in the pancreas, it acts to suppress overshoot of the blood glucose level (Garzilli & Itzkovitz, 2018). This suggests that uptake of glucose into the blood from ingested food could plausibly act as the passive variable of a settling-point regulation model (Speakman et al., 2011). The rate of glucose uptake into tissues from the blood (which leads to insulin secretion) is plausibly a function of glucose availability in the blood.

Settling-point dynamics require either that a controlled process be regulated to decline proportionally to the current level of the regulated resource, or that outputs be regulated to increase proportionally to the current level of the regulated resource. Speakman et al. (2011) give the example of a water reservoir, with water as the resource and outflow from the reservoir as the controlled process. If the depth of the reservoir grows higher due to rain, so will the volume of outflow. The depth of the reservoir stabilizes when the incoming rain and the outgoing outflow over a period of time equal each other.
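The reservoir example can be sketched as a one-line differential equation, d(depth)/dt = inflow − c · depth, whose settling point is inflow / c. The simulation below is a minimal illustration with hypothetical values in arbitrary units; the function name and the proportionality constant are assumptions, not quantities from Speakman et al. (2011).

```python
def simulate_reservoir(depth0, inflow, outflow_coeff, dt=0.1, steps=2000):
    """Euler-integrate d(depth)/dt = inflow - outflow_coeff * depth."""
    depth = depth0
    for _ in range(steps):
        outflow = outflow_coeff * depth  # outflow grows with the current level
        depth += dt * (inflow - outflow)
    return depth

# Hypothetical values in arbitrary units.
INFLOW, COEFF = 2.0, 0.5

settled_from_low = simulate_reservoir(0.0, INFLOW, COEFF)
settled_from_high = simulate_reservoir(10.0, INFLOW, COEFF)
settled_heavy_rain = simulate_reservoir(0.0, 2.0 * INFLOW, COEFF)

print(settled_from_low, settled_from_high, settled_heavy_rain)
```

Whatever the starting depth, the level converges to the same settling point, and a change in inflow moves that point rather than being corrected back to a fixed target; no set point appears anywhere in the model.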

In the body, both of these forms of regulation can and do happen: they are the job of the brain (Filippi, Abraham, Yue, & Lam, 2013). The brain may operate as an additional hierarchical level of control, actively balancing and minimizing the necessary metabolic control effort by preemptively regulating intake and uptake of glucose through behavior. Thus, uptake of glucose by the muscles during a game of dodgeball results in a fall in blood glucose and a corresponding increase in secretion of glucagon (Hall & Hall, 2020). Glucagon acts to cause the release of glucose into the blood (from liver cells). In the event of glucose overshoot (i.e., excess levels in blood), insulin will be secreted to restore blood glucose into its settling range. The brain also registers a “cost” of the glucagon release because it required energy expenditure (both in synthesis and secretion), an expenditure that could instead have been spent on the dodgeball game, had the glucose level been more actively maintained. The reverse can occur when the blood contains a surfeit of glucose stock, which then must be taken up into other tissues for storage or usage.

Mathematical modeling studies suggest that the inflection (operating) points on the generalized sigmoidal curves (generalized capacity curves) for glucagon and insulin can be found at 3.01 millimolar (mM) and 8.6 millimolar (mM) respectively, quite close to the lower and upper limits of normal human blood glucose (König, Bulik, & Holzhutter, 2012). Fig. 3 shows plots of the resulting functions, which can be interpreted as capacity curves. The inflection points are shown by the diamond markers, and by definition, each inflection point is a local optimum of regulatory responsiveness. If glucagon and insulin have their greatest responsiveness at the limits for hypoglycemia and hyperglycemia respectively, then regulation of glucose by behavior will aim to avoid straining either of the two hormones’ capacity curves, effectively keeping blood glucose in the normoglycemic range.

Fig. 3.

Capacity curves for glucagon (blue) and insulin (orange) responses to glucose levels in the blood, measured in millimolar (mM). The diamond markers show the respective operating points (3.01 mM for glucagon, 8.6 mM for insulin) of the curves, and the space between those two markers denotes the potential settling range for blood glucose content.

Derived from König et al. (2012).

Evidence from existing studies (Filippi et al., 2013; Morville, Friston, Burdakov, Siebner, & Hulme, 2018; Zimmerman et al., 2016) suggests that chemo-sensory cells in the circumventricular organs and certain nuclei of the hypothalamus, which detect glucose, may be well-described as predictively modeling such generalized capacity curves. This kind of functionality may extend not only to insulin and glucagon, but to other paired (tandem) controlled processes in the endocrine system, such as leptin and ghrelin (Morville et al., 2018). Afferent hypothalamic firing can be interpreted as sending prediction errors to other parts of the forebrain (Chen & Knight, 2016; Morville et al., 2018) thereby signaling an unanticipated metabolic change, and potentially updating the brain’s internal model as described by these capacity curves. Each such capacity curve and the current point upon it could then motivate some mode of behavior: a predictable mixture of glucagon, ghrelin, and other similar signals could be well-described as motivating consumption behaviors (e.g., a shift in autonomic activity towards greater relative sympathetic nervous system activation, exploration of the environment, etc.), while a predictable mixture of insulin, leptin, etc. could be well-described as motivating satiety behaviors (e.g., a shift towards relatively greater parasympathetic activity, reduced motor activity, etc.).

Similar paired controlled processes seem to appear in a variety of regulatory “modalities” throughout the body, ranging from autonomic activity on the heart (Berntson, Cacioppo, & Quigley, 1991) to blood glucose (Filippi et al., 2013) (as above) to adiposity (Speakman et al., 2011) (in the form of leptin and ghrelin). Evidence also suggests that the brain can combine these signals when they operate in tandem to regulate common behaviors (Zimmerman & Knight, 2020). Allostasis may employ a generalized control motif of having paired peripheral controlled processes, which sometimes work together to drive regulatory behavior in one direction (e.g., a reciprocal mode of sympathetic increase and parasympathetic decrease which both drive the heart rate to increase), but can also “antagonize” each other’s effects (such as when both the sympathetic and parasympathetic branches coactivate, producing a heart rate that is the sum of these two countervailing forces, each driving the heart rate in a different direction). Interoceptive modeling in the brain also may employ these motifs. Such general motifs in interoceptive processing could provide a domain-general mechanism for quantifying regulatory imperatives in interoceptive internal models. This may provide greater flexibility in both physiological regulation and behavior than a centrally enforced set point can provide, as well as suffering less error against challenges in each direction (see Saunders et al., 1998, 2000).

2.4. Viable ranges and capacities could obviate a modular “reward system”

Standard accounts of allostatic regulation describe it chiefly on the physiological level of analysis, attributing allostatic control in the central nervous system to reinforcement learning. As Sterling (2012) writes,

The central representation of “reward” is a brief burst of spikes in neurons of the ventral midbrain that release a pulse of dopamine to the nucleus accumbens and prefrontal cortex. The precise correspondence between a “feeling” and a specific neuro-transmitter is difficult to establish and is probably oversimplified, since many chemicals change in concert. Yet, one imagines that the dopamine pulse evokes momentary relief from flagellating anxiety and a brief sense of satisfaction/pleasure – at last, the carrot.

The picture of “rewards” painted here suggests a modular “reward center” or “reward system” in the ventral midbrain, one whose specialized role is to perform apples-to-oranges comparisons in service to allostasis. However, insofar as the ventral midbrain would function as a “reward center”, the “reward” signals sent to the rest of the brain would not carry contextual information about the bodily needs to which they refer. More recent evidence shows that there is no unique, localized “reward center” or “reward system” in the brain: broad cortical and subcortical brain networks play various roles in reward as a construct (Berridge & Kringelbach, 2015) or an abstract concept in experiments.

Since there is no single brain site that specifically encodes appetitive or aversive reinforcement value, it is useful to reframe discrete “reward” and “decision” systems as a domain-general allostatic control system. Abundant empirical evidence supports such a reframing (Barrett & Satpute, 2013; Hackel et al., 2016), particularly analyses of the default-mode network and the salience network and their subcortical connections (Barrett & Simmons, 2015; Kleckner et al., 2017). Computationally, these networks could implement a formal model similar to what we introduce later in this paper, or they could translate interoceptive information into a teaching signal for a reinforcement learning system, as in Keramati & Gutkin (2014). The domain-generality of interoception provides further theoretical support for the idea that we do not need “mental modules” or “faculty psychology concepts” to understand how a brain works (Barrett, 2009; Lindquist & Barrett, 2012).

If interoceptive processes operate to estimate parameters analogous to operating points and tolerances, then those processes should be able to convey sufficient information to the brain for purposes of regulating the body. The ideas of Section 2.2 and Section 2.3 can be usefully combined here when considering controlled processes that regulate the same underlying resource. From this perspective, interoceptive prediction errors, in the context of decision-making experiments, can be interpreted as learning about “rewards” via “reward prediction errors”. Movement towards an operating point then can be considered a “reward”, and movement away from an operating point a “cost”. Each such movement can be weighted according to the same capacity curve’s gain or inverse-tolerance; this would convey the (momentary, estimated) relative “worth” of adapting to a load on one innervated organ system versus another. Conceived of in a high-dimensional space, such movements can be viewed as “towards” and “away from” trajectories of changing operating points.

Within a view of brain function not based on a modular reward system, the neurotransmitters produced by unmyelinated and lightly myelinated interoceptive nerve fibers (see Carvalho & Damasio (2021)) could play a role in signaling capacity curves. These neurotransmitters, which include dopamine and serotonin, are commonly thought to act as teaching signals for action (Boureau & Dayan, 2011). In support of this suggestion, dopaminergic neurons in the ventral tegmental area in mice (Dabney, Rowland, Bellemare, & Munos, 2018; Dabney et al., 2020; Lowet, Zheng, Matias, Drugowitsch, & Uchida, 2020) have been modeled using a class of mathematical functions that include our capacity curves.

A mathematically sound and biologically plausible account of allostatic control does not require a modular or separate “reward system” in the central nervous system. Rather, it simply requires a brain and a viscerosensory peripheral nervous system to behave as if parameters for capacity curves (describing how adaptable any given state would be to unexpected disturbances) were signaled alongside the location of current physiological states on the corresponding capacity curves. Different physiological needs (say, core body temperature versus blood glucose levels) could then be added, subtracted, compared, etc. by taking the distance of the current state from the operating point in any given dimension, scaled by the capacity curve’s gain. Our formal model, introduced later, will make this idea more precise, providing a way to put numbers to such “distances” and “movements”.
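As a rough illustration only, and not the formal model introduced later in this paper, the gain-scaled comparison described above can be sketched in a few lines of Python. The class and function names, the use of a simple absolute distance, and all numerical values are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class CapacityCurve:
    """Hypothetical summary of one capacity curve (illustrative only)."""
    operating_point: float  # state at which adaptability is assumed to peak
    tolerance: float        # width of the responsive range, in the state's units

    @property
    def gain(self) -> float:
        # The text treats gain as inverse-tolerance.
        return 1.0 / self.tolerance

    def scaled_distance(self, state: float) -> float:
        """Unitless distance of `state` from the operating point."""
        return abs(state - self.operating_point) / self.tolerance


def movement_value(curve: CapacityCurve, before: float, after: float) -> float:
    """Positive ("reward") for movement toward the operating point,
    negative ("cost") for movement away from it."""
    return curve.scaled_distance(before) - curve.scaled_distance(after)


# Made-up numbers: core temperature (deg C) and blood glucose (mg/dL).
temperature = CapacityCurve(operating_point=37.0, tolerance=0.5)
glucose = CapacityCurve(operating_point=90.0, tolerance=20.0)

# Scaling by tolerance puts both needs on a common, unitless footing.
needs = {
    "temperature": temperature.scaled_distance(38.0),
    "glucose": glucose.scaled_distance(70.0),
}
most_urgent = max(needs, key=needs.get)
```

Under these assumed values, a one-degree deviation in temperature counts as more urgent than a 20 mg/dL deviation in glucose, because the temperature curve has the narrower tolerance.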

2.5. Summary

This section outlined a proposal for how the nervous system could potentially function to coordinate and control organ systems across timescales to provide allostatic regulation of the body. Section 2.2 considered the movement of physiological systems’ response curves (such as the example shown in Fig. 1) as signifying their capacity to adapt to challenge; the idea of matching actual system loads to operating points (along the lines of Fig. 2) provides a foundation for allostatic regulation. Section 2.3 extended the idea of capacity curves to systems based on more behavioral, settling-point regulation; in such systems the brain functions as the top level of a hierarchical control scheme, regulating the lower-level controllers. In Section 2.4 we then reasoned that movement toward or away from the responsive range of a capacity curve can be treated as “reward” or “cost”, respectively, and suggested that this could potentially obviate the need for a dedicated neural circuit or module that specifically calculates the behavioral constructs of “reward” and “cost”. Evidence from cognitive neuroscience supports the view that the brain lacks such modules, suggesting that we may gain empirical and theoretical traction by investigating decision-making constructs from an allostatic point of view. Section 3 will build on these ideas by introducing control theory, and consider the use of control theory in physiological regulation, motor control, and decision-making, as well as discuss the potential for control theory to unify previously disparate views on bodily regulation. Section 4 will build upon these foundations to propose a formalism for allostatic decision making. Embodied decision making includes all three of the forms of uncertainty to which allostatic regulation is subject: uncertainty about what is physiologically efficient, uncertainty about the consequences of movements, and uncertainty about the external world.

3. Control theory: A unifying lens for physiology, motor control, and decision making

Section 2 hypothesized that allostatic regulation can be understood in terms of controlled processes’ responsiveness to perturbation. It introduced capacity curves, responsive ranges, regulated resources, and controlled processes as ways to describe aspects of physiological regulation; it also sketched the functional form of a generative model that could infer capacity curves as latent properties of related interoceptive variables. It then suggested that moving actual physiological states towards the operating points of maximum adaptability, with each movement weighted by the relative gain (inverse-tolerance) of the response capacity, can formalize the functional dynamic of allostatic regulation. However, interoception is perception of the innervated body: it can include sensing allostatic responsiveness of present states of the body, as achieved by past actions, but it cannot produce present and future actions in and of itself. The latter is the role of visceromotor control processes. To investigate how the brain accomplishes visceromotor control, some additional theoretical tools are required.

This section introduces concepts from engineering control theory, and then reviews its applications in the life sciences. These include physiology (Section 3.1), skeletomotor movement (Section 3.2), and decision making (Section 3.3). Section 3.4 will connect future interoceptive states to present movements, illuminating what makes allostatic regulation more energy efficient than homeostatic regulation. The next section will build off the account of control used in physiology to suggest how interoception supports allostasis.

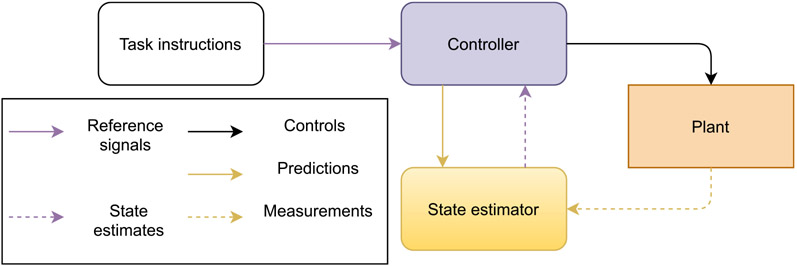

3.1. Control theory for physiology: A reliable body built from unreliable parts

Broadly, control theory deals with driving a physical system towards a desired trajectory, even when the system is built from unreliable or unpredictable parts. Control theorists call the driven system the plant, and its desired trajectory the reference trajectory.8 Generally a plant must be made to conform to its reference trajectory by a driving system called the controller. In controls engineering, these systems are typically thought to be separate physical entities with connections between them, and along these connections the systems transmit signals to each other. The “reference signal” that specifies the reference trajectory goes into the controller, and signals that leave the controller and enter the plant are called controls. Controls affect the state of the plant over time. The reference signal is thought to derive from a source that is external to the system, such as an engineer or a machine operator. Fig. 4 shows an example “block diagram” for an engineered control system.

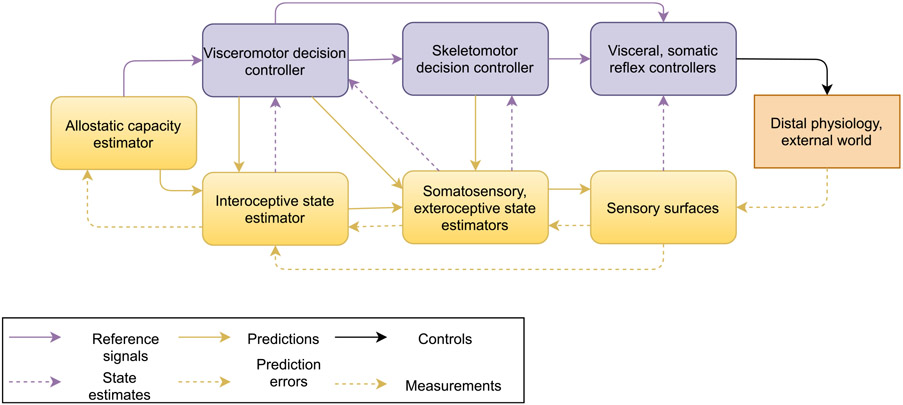

Fig. 4.

Functional block diagram of a model-based control system. The “plant” (orange) is the object or system whose motion or other behavior is controlled. The “controller” (purple) sends signals (“controls”, solid black arrows) that change how the plant moves, and signals the expected outcome (“predictions”, solid yellow arrow) to the “state estimator” (yellow). The task behavior of the plant is prescribed to the controller by an engineer or machine operator (transparent box), in the form of the reference signal (solid purple arrow). Measurements of the plant output (dashed yellow arrow) feed back to the state estimator to yield updated estimates (dashed purple arrow), which the controller compares to the reference signal to adjust the controls.

A controller functions to steer the plant along its reference trajectory, adapting to external disturbances that would push the plant away from the reference trajectory. From the standpoint of a brain controlling a body, “disturbances” might be thought of as uncontrolled changes in the workings of the body’s internal systems. There is an important distinction between an unpredictable event and a disturbance: unpredictable events can either push a system away from its reference trajectory or towards it, but a disturbance, which may or may not be surprising, is always an event that pushes the system away from its reference trajectory. Thus, a disturbance is always relative to the reference trajectory (Box 2).

Box 2. Illustration by example.

The dodgeball example illustrates the distinction between an unpredicted event and a disturbance. If a player looks across the field and sees a ball heading straight for her, her brain knows and predicts (by means of past experience) when and where the ball is likely to arrive. If she sees another player throw the ball, it will not be surprising (i.e., unpredicted) when the ball heads her way and hits her body. It will, nonetheless, be a disturbance; insofar as her brain estimated the movement of the ball and prepared her body to dodge, if she was unable to move fast enough, she deviated from her reference trajectory. By the same token, if a ball hits her from behind as she is standing still, her brain has made no estimate of its trajectory, nor has it prepared her body to dodge, but the hit remains a disturbance. For the same reason, if she positions herself in a way that enables her to catch, by luck or accident, a ball thrown at her, she has followed her reference trajectory, even though her brain will only register this after processing the ensuing prediction errors.

The whole point of a control system is to adapt to disturbances, and a system can attain much greater robustness and adaptability by using sensors to measure the plant’s actual behavior over time. Control theorists call these measurements the feedback for the controller, and the use of feedback to adjust control outputs is called feedback control. Physiologists recognize feedback control as a ubiquitous feature of bodily function (Carpenter, 2004; Cosentino & Bates, 2011), with endocrine control of blood glucose being a well-studied example (Saunders et al., 1998, 2000). Feedback control is essential because no controller is ever perfect: neither all forms of noise nor all external disturbances can ever be fully accounted for. If the feedback loop (seen in Fig. 4 as the arrows flowing from controller to plant, plant to state estimator, and state estimator to controller) is cut, the controller can no longer receive any information from the plant. The control signals calculated under such circumstances are called open-loop or feedforward controls, or even (somewhat idealistically) plans.

The concepts of control theory can illuminate the anatomy of the baroreflex, the example physiological system described above. For simplicity’s sake, the baroreflex and its components are considered the system in question. Its plant comprises the organs of the cardiovascular system as innervated by the autonomic nervous system (ANS). Its controller is a comparator circuit in the brainstem, specifically in the nucleus tractus solitarius (NTS) (Zanutto, Valentinuzzi, & Segura, 2010). Its reference signal comes from two sources: over the short term, top-down signaling from the cerebral cortex (which is outside the controller itself), and over the long term, midbrain structures rostral to the NTS, based upon (as yet not fully understood) endocrine signals (Osborn & Foss, 2017). These are compared to the actual blood pressure measured by the carotid and aortic baroreceptors (the state estimator, which supplies feedback). The baroreflex (the controller) adjusts cardiovascular variables to align the measured blood pressure (as sensed by the baroreceptors) with the reference trajectory of operating points (as specified by short-term signaling from the forebrain or longer-term endocrine signaling), by means of the sympathetic and parasympathetic branches of the autonomic nervous system (as control signals).

For a controller to perform well, it must contain some sort of copy or mirror of the plant’s expected behavior, which is referred to as an internal model (Conant & Ashby, 1970; Francis & Wonham, 1976). However, inaccuracies in the internal model limit controller performance (as does an absence of feedback in open-loop control). Internal models serve a dual purpose: to infer past trajectories 9 (including their control signals) on the basis of present or even counterfactual measurements (such as the reference signal) and to estimate future states and measurements10 in the plant on the basis of control signals. Fig. 4 divides these two functions into the components of a control system that they inform: the controller (purple) infers controls from state estimates to track the reference signal,11 and the state estimator (yellow) predicts future measurements on the basis of present control signals, refining those state estimates using measurements.12 Together, internal models predict the future and infer the past in the plant even when plant behavior is subject to process noise and measurements are subject to measurement noise.

Internal models play an important and specific role in control theory (Wolpert & Kawato, 1998; Kawato, 1999). State estimation of future measurements based on present control signals allows the difference between predicted and actual measurements, which is called the prediction error, to be used to measure the accuracy and precision of online control. The state estimator (yellow) can use prediction errors to send updated state estimates (dashed purple arrow) back to the controller. Comparing the updated state estimates to the reference signal then yields a quantity called the control error, on the basis of which the controller can refine the control signals. The process of calculating prediction errors and control errors online, and using them to improve control signals, is called feedback control. Model-based feedback control is widely used and well-known for driving control error to zero over time (a property called stability), particularly when prediction errors are also driven to zero by refining the internal model. This is a property that open-loop planning cannot enjoy, since an open-loop control system does not measure the control error, which amounts to (falsely) assuming it to be zero. An internal model alone only suffices for open-loop, feedforward planning, while control requires feedback.
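The contrast between open-loop planning and model-based feedback control can be made concrete with a toy simulation. The sketch below is a minimal illustration under stated assumptions: a scalar linear plant, a constant disturbance that the internal model does not know about, noiseless measurements, and hypothetical parameter values. It is not a model of any particular physiological system.

```python
def simulate(use_feedback: bool, steps: int = 50) -> float:
    """Return the final absolute control error |reference - plant state|."""
    a, b = 0.9, 0.5        # dynamics assumed by the internal model
    disturbance = 0.2      # constant push on the plant, unknown to the model
    reference = 1.0        # constant reference signal
    estimator_gain = 0.5   # weight given to prediction errors

    x = 0.0       # true plant state
    x_est = 0.0   # state estimate held by the state estimator

    for _ in range(steps):
        # Controller: invert the internal model so that the predicted
        # next state equals the reference.
        control = (reference - a * x_est) / b

        # Plant: the true dynamics include the unmodelled disturbance.
        x = a * x + b * control + disturbance

        # State estimator: predict the next state with the internal model.
        x_pred = a * x_est + b * control
        if use_feedback:
            # Closed loop: correct the prediction using the measurement.
            prediction_error = x - x_pred
            x_est = x_pred + estimator_gain * prediction_error
        else:
            # Open loop: the prediction is taken at face value.
            x_est = x_pred

    return abs(reference - x)


open_loop_error = simulate(use_feedback=False)
closed_loop_error = simulate(use_feedback=True)
```

With these assumed values the open-loop run settles far from the reference, while the feedback run stays much closer. A residual error remains even with feedback, because in this sketch the internal model is never refined to account for the disturbance.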

Controllers also can couple to each other hierarchically: a higher-level controller can send a control signal to a lower-level controller, which functions as the reference signal for that lower-level controller. In turn, the lower-level controller may send a control error signal up to the higher-level controller. The higher-level and lower-level controller also each may have their own state estimators based on their own internal models. The next subsection will address hierarchical control in human motor control.

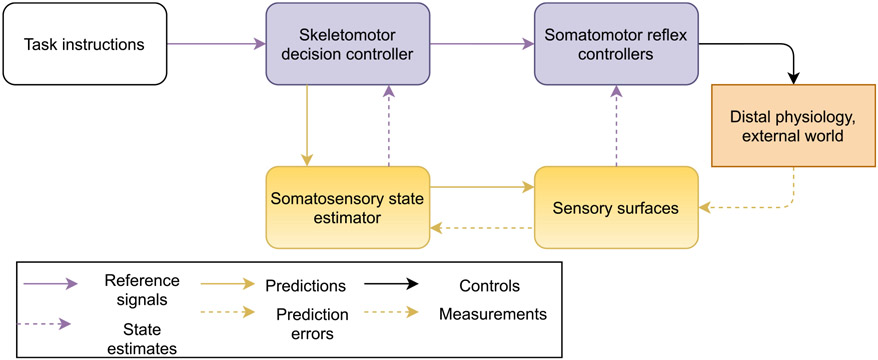

3.2. Moving the body: The referent control hypothesis

The referent control hypothesis (Feldman, 2015; Latash, 2021) describes the skeletomotor system in terms of a hierarchy of controllers, with higher-level controllers in the brain prescribing reference trajectories to the lower-level reflexes in the spinal cord. These reflexes then compare the actual length of the muscle, as signaled by afferent proprioceptor neurons, to the reference length sent down by the brain, and contract the muscle to bring the two into agreement (Latash, 2010; Feldman, 2016). Effectively, higher-level controllers tell lower ones what trajectory to follow, and the lower ones figure out how to track it successfully, a phenomenon beginning to be considered in engineered (i.e., non-biological) control systems (Merel, Botvinick, & Wayne, 2019).

Cortical regions involved in skeletomotor control (e.g., primary motor cortex, premotor cortices, etc.) also send a copy of the downward-flowing reference signals (called an efferent copy) to somatosensory cortices, thereby providing prior predictions to somatosensory regions. These “prior” signals literally change the firing of neurons in somatosensory cortices, preparing them to receive incoming signals from the world based on upcoming skeletomotor movements. This dynamic takes place across all levels of the neural hierarchy, allowing the nervous system to use somatosensory prediction errors as feedback to confirm or correct movement performance; it also allows the nervous system to distinguish reafferent (self-caused) from exafferent (externally caused) sensory signals.13 Through this lens, the brain is considered to act as both a controller (in its visceromotor and skeletomotor functions) and a corresponding state estimator (in its perceptual and simulation functions). Since, under the referent control hypothesis, skeletomotor “commands” take the form of descending signals specifying desired lengths and tensions for proprioceptive measurements, the brain’s state estimation machinery in sensory areas can therefore simulate the somatosensory consequences of those descending control signals.

The brain is hypothesized to exploit its internal model of both the body and the local external environment to predictively construct populations of reference trajectories as embodied simulations (Barsalou, 2009). These simulated populations of referent trajectories can also be thought of as action concepts (Barrett & Finlay, 2018; Leshinskaya, Wurm, & Caramazza, 2020) (for similar ideas see also Wolpert, Pearson, & Ghez (2013) and Hickok (2014)). In the brain, motor areas are hypothesized to implement feedforward control with action concepts by decompressing low-dimensional referent trajectories from higher in the neural hierarchy into higher-dimensional referent trajectories lower in the neural hierarchy.14 Decompressive prediction by the brain eventually produces referent coordinates in the highest-dimensional, most redundant system of coordinates: references for individual peripheral stretch reflexes (Feldman, 2015). Each such stretch reflex circuit compares the ascending stimulus from its proprioceptor neuron to its centrally commanded reference, and activates its motoneuron to suppress the difference between the two. The motor system thus can be imagined as a hierarchy of controllers, with higher-level controllers specifying the reference signals for lower-level controllers as their output control signals. In addition to converting signals from the conceptual reference frame of the behavioral task to the concrete reference frames of individual limbs and muscles, this hierarchical structure finesses neural signaling delays to provide fast, accurate control (Nakahira, Liu, Bernat, Sejnowski, & Doyle, 2019). Fig. 5 shows an elegant “outside-in” view15 of the motor control problem, imagined along these lines. Here, an experimenter directs a participant to perform a task, and the participant constructs an action concept from the instructions. This action concept seeds the construction of sensorimotor prediction populations in the skeletomotor decision controller. The skeletomotor controller further unpacks the action concept into an actual body posture and its attendant reference coordinates for muscles, as well as predictions for the somatosensory state estimator. The movements created by the reference coordinates are themselves stabilized by fast proprioceptive feedback at the level of the individual stretch reflexes in the spinal cord. The hypothesis expressed by the figure assumes that all systems in the body, both somatomotor and visceromotor pathways, serve an externally driven behavioral task.

Fig. 5.

Functional block diagram for an experimental psychologist’s task-oriented view of motor control. The diagram shows a formal logical structure here, at a conceptual level; the boxes and arrows do not map onto the anatomy of the brain or nervous system. In contrast to Fig. 4, this diagram differentiates between skeletomotor (brain) and peripheral (stretch reflex) controllers and between sensory state estimators (brain) and peripheral sense organs (sensory surfaces). The diagram shows an engineering perspective on a psychology experiment, in which the experimenter prescribes a task or behavior to participants, and a participant’s brain then acts as control system to achieve the prescribed behavior. Systems that maintain the body therefore serve systems that move the body, which in turn serve a prescribed behavior.

However, the structure of a motor-control experiment has only limited overlap with the actual structure of motor control as it unfolds in the natural world. An experiment on reaching behaviors involves an experimenter prescribing the reaching task to their participants. A participant’s brain does not function specifically to follow instructions from an experimenter, but rather to regulate their own body. The actual organization of the central nervous system accords better with an “inside-out” view16 of motor function: movement of the body (the somatomotor pathways) serves regulation of the body. For example, in a game of dodgeball, if you unexpectedly step hard on a sharp rock, you (usually) do not purposely impale yourself to stabilize your posture. Rather, you recoil in pain, and the unplanned disturbance of any tissue damage requires you to make a decision about what to do next: excuse yourself to nurse your foot, or play through the pain.

This referent control hypothesis relies upon an important assumption: that once a higher-level controller specifies a reference for a lower-level controller, it can rely on that lower-level controller to successfully enact the desired movement. That lower-level controller stabilizes itself with measurements at a smaller spatial scale, and functions reactively rather than predictively. Neither do the lower-level control systems implemented by proprioceptors and motoneurons integrate information other than proprioception. The stretch reflex receives a signal specifying the referent length for the muscle, compares it to the proprioceptor’s signal of the muscle’s actual length, and fires the motoneurons to contract the muscle if the actual length exceeds the referent length (Box 3).
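The division of labor described above, in which a higher-level controller only specifies a referent and a reactive comparator does the tracking, can be sketched as follows. This is a deliberately simplified toy, not a biomechanical model: muscle length is a single number in arbitrary units, the reflex gain is a made-up value, and the muscle can only shorten (lengthening would require a load or an antagonist, both omitted here).

```python
def stretch_reflex(referent_length: float, actual_length: float,
                   gain: float = 0.5) -> float:
    """Motoneuron drive: nonzero only when the muscle is longer than
    its referent length (purely reactive, proprioception only)."""
    return gain * max(0.0, actual_length - referent_length)


def simulate_muscle(referent_trajectory, initial_length: float):
    """Track a descending referent trajectory with the reflex alone."""
    length = initial_length
    lengths = []
    for referent in referent_trajectory:
        drive = stretch_reflex(referent, length)
        length -= drive  # contraction shortens the muscle
        lengths.append(length)
    return lengths


# Higher-level controller (hypothetical): hold a referent of 10 units,
# then command a shorter referent of 8 units.
trajectory = [10.0] * 5 + [8.0] * 20
lengths = simulate_muscle(trajectory, initial_length=10.0)
```

The higher level never computes how strongly to drive the motoneurons. It changes the referent, and the reflex closes the gap between actual and referent length on its own.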

Box 3. Terminology.

The terms for directions of neural signaling, depending on the implied origin of the signal, are efferent, afferent, and reafferent. Motor neuroscientists refer to efferent signals (flowing from somatomotor cortex down to somatosensory areas, the midbrain, and the peripheral nervous system) as feedforward signals. They then refer to afferent signals, particularly reafferent somatosensory signals, as feedback signals. Since the usage from motor neuroscience agrees with that from control theory, this paper follows that usage.

3.3. Making decisions: constructing future reference trajectories

So far control theory has been presented as it applies to physiology and motor neuroscience. These control mechanisms have largely been local, in the sense that they only drive neural outputs to impact a small, narrow domain: blood vessels and baroreceptors modulating autonomic outflow to reduce the heart rate; proprioceptors in individual muscle spindles driving the stretch reflex. In the stretch reflex, the reference is set by top-down signaling from the brain as part of voluntary movement, and therefore varies widely. The baroreflex displays a similar structure, receiving parameters for its capacity curve via central command.

Reactive control requires reference signals before physiological needs are fully known. In contrast, the brain controls the body predictively, both viscerally and through overt somatomotor behavior. Predictive neural control of the viscera must take into account biological processes that change the body (such as those occurring with development) and cyclical routines such as the wake-sleep cycle. The brain also must coordinate across a variety of physiological demands in the moment, each with its own capacity curve that may change over time. At the same time, the brain is subject to uncertainty from both sensory/measurement noise and process noise in the innervated tissues. Decision making in the brain must therefore operate according to control principles that take into account competing demands over time.

A control theory formulation meeting these criteria is stochastic optimal control (SOC). Stochastic optimal control uses probability distributions over states x to model the effects of both process noise and measurement noise. The goal then is to optimize the probabilistic expectation of an objective function summed over the indefinite future. This expectation of a sum, accounting for both uncertainty and time, is called the value function, and is defined recursively by the Bellman equation. A control strategy is optimal when it maximizes the value function. Since objective functions include terms that quantify the relative tolerance for regulatory error, SOC supports a wide variety of approximate solution methods to find “good enough” controls, which drive the plant close to its reference trajectory even when noise and disturbances prevent exact reference tracking.
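For concreteness, one standard textbook form of the Bellman equation is shown below. The notation is an assumption made here rather than this paper's own: $u$ denotes a control, $p(x' \mid x, u)$ the plant dynamics under process noise, $r(x, u)$ the objective function, and $\gamma \in [0, 1)$ a discount factor, which is one common way to keep a sum over the indefinite future finite.

$$
V^{*}(x) \;=\; \max_{u}\; \mathbb{E}_{x' \sim p(\cdot \mid x, u)}\!\left[\, r(x, u) + \gamma\, V^{*}(x') \,\right]
$$

Read recursively, the value of a state is the best achievable expectation of the immediate objective plus the discounted value of whatever state comes next. When measurements are noisy, $x$ is not observed directly, and the same recursion is usually written over the controller's probability distribution (belief) about $x$ instead.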

Objective functions generalize the simple comparators often used in classical control; they compare a reference state with an actual state to generate a real number as output. Generally the output value should be monotonically related to the degree of agreement or disagreement between the reference and the actual state. Objective functions with a fixed notional reference state and a fixed tolerance for deviation from that state can model fixed physiological set-points and tolerances, like those found in homeostatic theories of regulation (Box 4).

Box 4. Terminology.

Predictive homeostasis is the hypothesized mode of regulation in which anticipatory decision-making mechanisms maintain regulated resources at fixed set-points with fixed tolerances. Neurally, a homeostatic regulator would consist of a comparator circuit without an incoming signal modulating its reference. Since most existing computational models of visceromotor control and interoception (e.g., Petzschner et al., 2021; Gu & FitzGerald, 2014) fall into this camp, we consider them to model allostasis as predictive homeostasis, designed for settings in which long-run set-points and tolerances define the chief control mechanism. This family of models includes certain active inference approaches (Pezzulo et al., 2015, 2018; Corcoran, Pezzulo, & Hohwy, 2020) and homeostatic reinforcement learning (Keramati & Gutkin, 2014).

Sometimes in stochastic optimal control, the objective function is not known a priori, and must be learned from samples. This special case is called reinforcement learning (abbreviated as RL). Neuroscientists have studied reinforcement learning (Sutton & Barto, 2018) as a model of how the brain might make decisions over time. Modeling midbrain phasic dopamine signaling with RL has led to a popular approach in computational neuroscience (the reward prediction error hypothesis (Niv, 2009; Colombo, 2014)), and continues to yield novel findings today (Lowet, Zheng, Matias, Drugowitsch, & Uchida, 2020). Notably, these successes rely on a specific way to approximate the value function for behavior over time by comparing predictions generated in the brain to the actual sensory effects of behavior.
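One textbook instance of approximating a value function by comparing predictions with outcomes is temporal-difference learning (Sutton & Barto, 2018), in which the mismatch is a reward prediction error. The sketch below is a minimal illustration of that idea, not a model from this paper: the deterministic five-state chain, the single terminal reward, and the learning-rate and discount values are all assumptions.

```python
def td0_values(n_states: int = 5, episodes: int = 500,
               alpha: float = 0.1, gamma: float = 0.9):
    """Learn state values on a deterministic chain 0 -> 1 -> ... -> end.

    A reward of 1.0 is delivered on leaving the last state; every other
    transition yields 0.0. Returns the learned value of each state.
    """
    values = [0.0] * (n_states + 1)  # final entry is the terminal state
    for _ in range(episodes):
        for state in range(n_states):
            reward = 1.0 if state == n_states - 1 else 0.0
            # Reward prediction error: outcome plus discounted prediction
            # for the next state, minus the current prediction.
            prediction_error = (reward + gamma * values[state + 1]
                                - values[state])
            values[state] += alpha * prediction_error
    return values[:-1]


values = td0_values()
```

After learning, states closer to the reward carry higher values, and the prediction errors shrink toward zero as the predictions come to match what actually follows each state.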

Stochastic optimal control, at first glance, might seem unnecessarily complex. Taking an expectation of a sum over time seems almost repetitive: why should the brain not just wait until it arrives at a future bodily state, and then compare it to the corresponding reference using the objective function? This is, after all, precisely how reactive control mechanisms work. What problem is allostatic decision-making in the brain solving that a homeostatic reflex in the body cannot? Reflexive, reactive control under uncertainty carries a hidden assumption: that uncertainty in the moment is equivalent to uncertainty over time, and thus if control mechanisms can compensate for errors in the moment, they can compensate for all errors in the future. When that assumption holds, predictive and reactive control will be equivalent. When that assumption does not hold, stochastic optimal control can yield vastly better regulation than reactive control.

That assumption is called ergodicity, and it amounts (very roughly) to modeling time as having no effect on probability distributions. B.1.1 discusses ergodicity in detail, including its implications for experimental design. B.1.2 also discusses a paradigm for studying the brain as a whole that does assume ergodicity, allowing it to bridge perceptual processing with decision making and motor control. The following material places more emphasis on situations that are not ergodic, which include those with periodic structure, or with irreversible changes over time. Real life is filled with non-ergodic situations in which one must make decisions: the cycles of day and night (and circadian rhythms tracking them) are non-ergodic; processes of development (from childhood to adulthood) are non-ergodic; events like injury and death are non-ergodic. The brain accounts for these non-ergodic realities of life in decision-making processes (Mangalam & Kelty-Stephen, 2021).
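A standard toy example, not drawn from this paper, shows how averaging across possibilities in the moment can disagree with averaging along a single trajectory over time. Suppose some quantity is multiplied at each step by one of two equally likely factors; the factor values below are made up for illustration.

```python
import math

up, down = 1.5, 0.6  # equally likely multiplicative changes (assumed values)

# Ensemble view: expected one-step growth factor, averaged across many
# hypothetical copies of the process at a single moment.
ensemble_growth = 0.5 * up + 0.5 * down

# Time view: long-run per-step growth factor along one trajectory,
# which is the geometric mean of the two factors.
time_growth = math.exp(0.5 * math.log(up) + 0.5 * math.log(down))
```

Here the ensemble average grows at every step while the per-step growth along a single trajectory is below one, so a typical individual trajectory shrinks over time. A process like this is non-ergodic in the sense that the two kinds of average cannot be substituted for one another.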

One final alternative way to think of SOC is in terms of what a controller needs to model, and which signals into a controller may be subject to noise or disturbance. In a typical control problem, the control engineer “trusts” that she can provide an exact reference signal, while building the controller to be robust to noise and disturbances in the plant. In SOC and RL, the control engineer may not be able to specify the reference signal exactly, but she can provide the controller with encouragement (rewards) or discouragement (costs) for following observed trajectories. “Rewards” thus count as evidence in favor of the plant’s recent behavior following the reference trajectory, while “costs” count as evidence for behavior deviating from the reference trajectory (Friston, Adams, & Montague, 2012). This implies that an optimal controller, viewed from the perspective of Fig. 4 or Fig. 5, has two sources of uncertainty that require two separate state-estimation processes: one for the state of the plant, and another for the reference signal. The next subsection will describe how interoception works alongside somatosensory perception to reduce both of these uncertainties.

3.4. Allostatic control: motivating movements with an interoceptive model

The brain allostatically regulates the body. Accordingly, there ought to be a description of the brain in terms analogous to inferring capacity curves; these can be transformed into objective functions and projected into the future to construct a value function. This value function would allow the brain to take account of the future when deciding what to do now. When the value function successfully predicted instantaneous allostatic capacity curves, it would seem as if the future body prescribed a reference trajectory to the present brain. Since moving the body would predictably change allostatic capacities (i.e., operating points and tolerances) in the future, a “circular causation” of self-organization and autonomy would emerge. Fig. 6 diagrams allostatic regulation using the language of control theory. This figure employs the same visual vocabulary of plants (orange), controllers (purple), internal models (yellow), feedforward signals (solid arrows), and feedback signals (dashed arrows) as the earlier Figs. 4 and 5. Systems that move the body are hypothesized to be in the service of systems that maintain the body (i.e., movement is in service of allostasis).

Fig. 6.

Functional block diagram for a control-theoretic view of allostasis. In contrast to Fig. 5, this diagram shows a closed-loop control system design for autonomous regulation of the body. An experimenter’s desired “task behavior” is replaced by the allostatic capacity estimator, which sends predictions of capacity curves to the interoceptive state estimator and receives prediction errors with which to update its estimates. The updated estimates are issued as a reference signal to the visceromotor controller. This diagram shows a formal logical structure, at a conceptual level; the hypothesis depicted is constrained by the inferred anatomical structures in Barrett (2017) but the boxes and arrows do not map one-to-one onto the anatomy of the brain or nervous system (Lee, Ferreira-Santos, & Satpute, 2021).

Allostatic regulation contains homeostatic regulation as a special case. Allostasis consists of regulating a system’s state to track a reference trajectory, one which fully allows for system states to change over time. Homeostasis consists of regulating a system’s state towards reference points, independent of time. Thus, an allostatic controller can implement homeostatic control by prescribing a reference trajectory as a single, unchanging point, while a homeostatic controller cannot implement allostatic control. This is because a fixed reference point cannot vary with context. The allostatic trajectory approach, by contrast, allows for the possibility that past behaviors affect the present state and reference. It allows the effect of past perturbations or interoceptive conditions to influence what happens next (Box 5).
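The special-case relationship can be sketched with a single trajectory-tracking loop whose reference is a function of time. The first-order plant, the proportional gain, and the numerical values below are hypothetical, and the loop is purely reactive: it illustrates only the difference between a fixed point and a trajectory, not the predictive aspect of allostasis.

```python
import math


def track(reference, steps=200, gain=0.5, state=0.0):
    """Drive a scalar state toward reference(t) with proportional feedback.

    `reference` is a function of the time step, so it may vary over time.
    """
    history = []
    for t in range(steps):
        error = reference(t) - state
        state += gain * error
        history.append(state)
    return history


# Homeostatic special case: the reference trajectory is a single fixed point.
homeostatic = track(lambda t: 37.0)

# Allostatic case: the reference itself changes over time (here, a slow cycle).
allostatic = track(lambda t: 37.0 + 0.5 * math.sin(2 * math.pi * t / 100))
```

The same `track` function serves both cases, whereas a controller written around a hard-coded constant could not follow the cyclic reference.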

Box 5. Illustration by example.

A return to the dodgeball example will ground these ideas. During a game of dodgeball, muscles will demand greater amounts of oxygen and glucose than they had needed during rest. Successfully throwing the ball at an opposing player will require mobilizing both the skeletomotor musculature (“soma”, plant) as well as the internal bodily systems such as the cardiovascular system (“viscera”, plant) across a timescale of tens of seconds to minutes via the visceromotor and somatomotor controllers (purple). We hypothesize that a functional equivalent to an allostatic capacity estimator (yellow) anticipates the need, altering the reference trajectory conveyed as a prediction to the visceromotor controller (purple). The visceromotor controller must then mobilize the cardiovascular system to supply those metabolic necessities via the blood. In this instance, among other adjustments, the visceromotor controller shifts and flattens the baroreflex’s capacity curve (Dicarlo & Bishop, 1992), allowing both vasoconstriction and an increased heart rate to work in tandem to supply more blood flow to the muscles.

Translating the example into the language of Fig. 6, the allostatic capacity estimator (yellow) signals the anticipated demand as a reference trajectory (solid purple arrow) to the visceromotor decision controller. The visceromotor controller decompresses this low-dimensional reference trajectory into higher-dimensional reference trajectories (solid purple arrows) for the skeletomotor decision controller (purple) and peripheral reflex controllers (purple). Simultaneously, the visceromotor decision controller sends efference copies (downward solid yellow arrows) to the viscerosensory (“interoceptive”) and somatosensory exteroceptive state estimators (yellow) to generate sensory predictions (e.g., predict that the heart rate will be close to the reference). The skeletomotor controller decompresses its own reference trajectory into even higher-dimensional reference trajectories (solid purple arrow) for peripheral reflex controllers (purple); these convey motor signals about where your hands and feet should be, how bent your knees should be, etc. The skeletomotor controller also emits efference copies to the somatosensory model, which generates sensory predictions regarding the sense data coming from sensory surfaces: the strain of bending the knees, the thump of the heart against the chest, and so on. Finally, the local reflex controllers enact motor controls (solid black arrow) that move the body. Basing sensory predictions on the efference copies enables the resultant measurements at the sensory surfaces (dotted yellow arrow) to generate sensory prediction errors (dotted yellow arrows), which serve as feedback on the timescale of tens or hundreds of milliseconds. This feedback flows through the state estimators to update their state estimates, and these estimates are then signalled to controllers as control feedback. At the far end, updates to interoceptive state estimates generate prediction errors that update the estimated allostatic capacities, thus closing the loop.

3.5. Summary

This section discussed three applications of control theory to studying the body and brain. Section 3.1 described control theory as a whole and discussed its applications in physiology, providing an example of a control system in Fig. 4. Section 3.2 then discussed the study of voluntary motor control in the nervous system. The construct of reference trajectories in control theory finds a close analogue in the referent control hypothesis of somatomotor control, and its application in Fig. 5 shows an engineer’s view of motor control. Section 3.3 discussed the necessity for allostatic decision-making in the brain to take account of changing bodily conditions over time, constructing references based on physiological capacity curves. The next section will apply the concepts from this section to describe a formal model of allostatic regulation.

Before diving into formal modeling details below, it might be helpful to compare and contrast the approach here with a prominent modeling framework: active inference (Friston, Daunizeau, Kilner, & Kiebel, 2010; Friston, Samothrakis, & Montague, 2012). Like many active inference models, the formal model below takes the form of information-theoretic model-predictive control (similar to work such as Williams, Drews, Goldfain, Rehg, & Theodorou (2018) and Nasiriany et al. (2021) in engineering) with a hierarchically-defined objective (similar to Smith, Thayer, Khalsa, & Lane (2017), Pezzulo et al. (2018)). Insofar as such efforts can be considered “active inference”, the formal model outlined in the next section is also an active inference model. Unlike most active inference models in the literature, however (with the exception of Millidge (2020)), the material below considers an indefinite-time or “infinite horizon” control setting. Insofar as the research community prefers for the term “active inference” to refer specifically to formal models derived from the free energy principle (see B.1.2 and Kirchhoff, Parr, Palacios, Friston, & Kiverstein (2018)), with its ergodic assumptions and its unique expected free energy objective, the formal model below is distinct from these traditional active inference models.

4. Allostasis as trajectory-tracking stochastic optimal control

The previous section overviewed and applied control theory to study physiological reflexes, voluntary motor movements, and decision making in the brain. It also described stochastic optimal control (SOC) theory as a mathematical formalism capable of flexibly modeling decision making under uncertainty. This section will describe our formal model of allostatic decision making: the Allostatic Path-Integral Control (APIC) model. APIC has a simple idea at its core: just as perceptual concepts serve as internal models of the body’s sensory surfaces (Barrett, 2017; Barrett & Finlay, 2018; Barsalou, 2009), action concepts also serve as internal models of potential behaviors and their predicted outcomes. The brain refines and selects sensory predictions derived from a concept based on their fit to past and present sensory evidence; we suggest that it likewise refines and selects the motoric reference configurations derived from an action concept based on their present and future allostatic value. Section 4.1 derives an SOC objective function from the mathematical form by which Section 2.2 represented capacity curves. Section 4.2 marshals behavioral and ecological evidence of how animals balance and meet their needs over time into a long-run mathematical form. Section 4.3 then sketches a formal model of how action concepts fit into SOC. Section 4.4 describes how to exploit action concepts optimally in feedback control. These last two subsections include the motivations for particular modeling choices.

4.1. Transforming capacity curves into objective functions

Section 3 pointed out that allostatic control poses a decision-making problem: the brain must predict the body’s future needs in the form of a reference trajectory or value function, and move to satisfy those needs before they become acute. This subsection presents a mathematical treatment of capacity curves as objective functions. The resulting objective functions have maxima at the operating points, and have slopes away from the maxima corresponding to tolerances for error. Since this derivation of objective functions applies to arbitrary capacity curves, it can be applied to model a variety of interoceptive modalities.

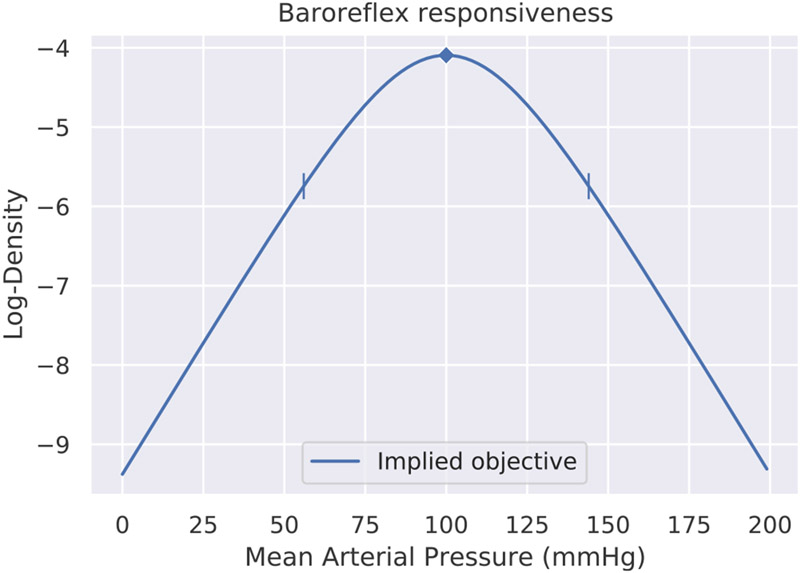

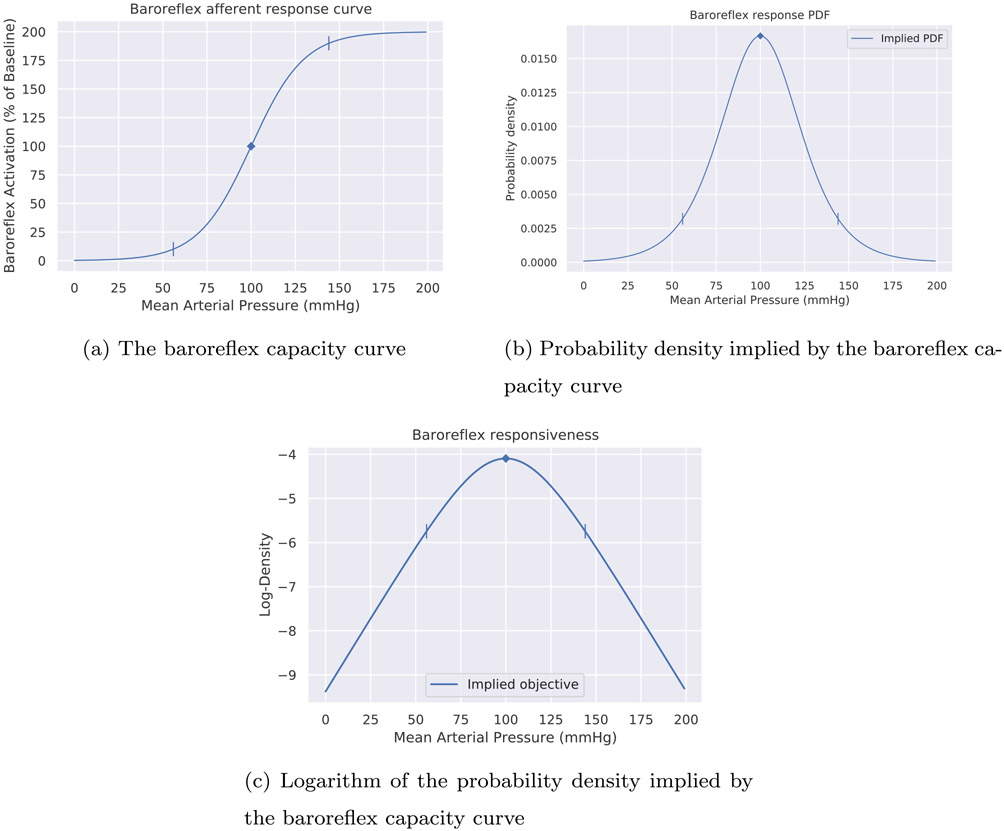

Since any given capacity curve (such as the one in Fig. 1 above) reaches a maximum value on the vertical axis, it can be divided by its maximum Y-value to “normalize” it to range between zero and one. Once normalized in this way, it can be interpreted as a cumulative distribution function (CDF) from probability theory. This is in fact precisely what Srinivasan, Laughlin, & Dubs (1982) did to interpret the firing of retinal neurons in flies as a form of predictive coding (for an excellent example, see their Fig. 1). The derivative (instantaneous slope) of a CDF yields a probability density function (PDF). This is the more familiar way of representing a probability distribution, where height on the vertical axis corresponds to likelihood, but for a PDF derived from a capacity curve, it represents relative responsiveness to perturbation. We will call such a distribution a reference distribution. Fig. 7 therefore shows the result of normalizing and differentiating the capacity curve in Fig. 2 to obtain its corresponding PDF. The density function’s graph clearly shows that the baroreflex’s capacity to adapt to changes in mean arterial pressure (MAP) degrades the further MAP moves from the peak at 100 mmHg. The rate at which it degrades, and the response elicited, is governed by the baroreflex gain. The gain of a capacity curve thereby defines the relative importance of deviations from the operating point, and thus corresponds neatly to precision in predictive coding. The greater the baroreflex gain, the more sharply curved the PDF around its operating point, and the greater the response mobilized by any deviation from the operating point. Section 2.3 described how the capacity curves in settling-point physiological controllers will often have inflection points that lie somewhere other than the center, thus being horizontally asymmetrical. This property translates neatly to probability densities: PDFs need not be horizontally symmetrical either. Bodily responses such as inflammation or nociception could have highly asymmetrical capacity curves, with the operating point even potentially being located near a zero value of the PDF.
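The normalize-then-differentiate procedure described above can be sketched numerically. The logistic shape of `capacity_curve`, its `maximum` and `gain` values, and the MAP grid are assumptions made for illustration; only the operating point of 100 mmHg comes from the text, and the actual curve behind Fig. 7 may differ.

```python
import math


def capacity_curve(x, maximum=120.0, operating_point=100.0, gain=0.1):
    """Hypothetical sigmoidal capacity curve (the logistic form is an assumption)."""
    return maximum / (1.0 + math.exp(-gain * (x - operating_point)))


def reference_density(xs, curve):
    """Normalize a capacity curve to a CDF, then differentiate it numerically."""
    ys = [curve(x) for x in xs]
    top = max(ys)
    cdf = [y / top for y in ys]  # now ranges between zero and one
    # Central finite differences approximate the slope (the PDF) at interior points.
    pdf = [(cdf[i + 1] - cdf[i - 1]) / (xs[i + 1] - xs[i - 1])
           for i in range(1, len(xs) - 1)]
    return xs[1:-1], pdf


xs = [float(x) for x in range(0, 201)]  # hypothetical MAP grid in mmHg
grid, pdf = reference_density(xs, capacity_curve)
peak = grid[pdf.index(max(pdf))]  # MAP value of greatest responsiveness
```

Passing a curve with a larger `gain` to `reference_density` yields a taller, narrower density around the same operating point, which is the sense in which gain corresponds to precision. An asymmetrical capacity curve could be substituted for `capacity_curve` without changing `reference_density`.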

Fig. 7.