Abstract

Sequence learning, prediction and replay have been proposed to constitute the universal computations performed by the neocortex. The Hierarchical Temporal Memory (HTM) algorithm realizes these forms of computation. It learns sequences in an unsupervised and continuous manner using local learning rules, permits a context specific prediction of future sequence elements, and generates mismatch signals in case the predictions are not met. While the HTM algorithm accounts for a number of biological features such as topographic receptive fields, nonlinear dendritic processing, and sparse connectivity, it is based on abstract discrete-time neuron and synapse dynamics, as well as on plasticity mechanisms that can only partly be related to known biological mechanisms. Here, we devise a continuous-time implementation of the temporal-memory (TM) component of the HTM algorithm, which is based on a recurrent network of spiking neurons with biophysically interpretable variables and parameters. The model learns high-order sequences by means of a structural Hebbian synaptic plasticity mechanism supplemented with a rate-based homeostatic control. In combination with nonlinear dendritic input integration and local inhibitory feedback, this type of plasticity leads to the dynamic self-organization of narrow sequence-specific subnetworks. These subnetworks provide the substrate for a faithful propagation of sparse, synchronous activity, and, thereby, for a robust, context specific prediction of future sequence elements as well as for the autonomous replay of previously learned sequences. By strengthening the link to biology, our implementation facilitates the evaluation of the TM hypothesis based on experimentally accessible quantities. The continuous-time implementation of the TM algorithm permits, in particular, an investigation of the role of sequence timing for sequence learning, prediction and replay. 
We demonstrate this aspect by studying the effect of the sequence speed on the sequence learning performance and on the speed of autonomous sequence replay.

Author summary

Essentially all data processed by mammals and many other living organisms is sequential. This holds true for all types of sensory input data as well as motor output activity. Being able to form memories of such sequential data, to predict future sequence elements, and to replay learned sequences is a necessary prerequisite for survival. It has been hypothesized that sequence learning, prediction and replay constitute the fundamental computations performed by the neocortex. The Hierarchical Temporal Memory (HTM) is an abstract, powerful algorithm implementing this form of computation and has been proposed to serve as a model of neocortical processing. In this study, we reformulate this algorithm in terms of known biological ingredients and mechanisms to foster the verifiability of the HTM hypothesis based on electrophysiological and behavioral data. The proposed model learns continuously in an unsupervised manner by biologically plausible, local plasticity mechanisms, and successfully predicts and replays complex sequences. Apart from establishing contact to biology, the study sheds light on the mechanisms determining at what speed we can process sequences and provides an explanation of fast sequence replay observed in the hippocampus and in the neocortex.

Introduction

Learning and processing sequences of events, objects, or percepts are fundamental computational building blocks of cognition [1–4]. Prediction of upcoming sequence elements, mismatch detection and sequence replay in response to a cue signal constitute central components of this form of processing. We are constantly making predictions about what we are going to hear, see, and feel next. We effortlessly detect surprising, non-anticipated events and adjust our behavior accordingly. Further, we manage to replay learned sequences, for example, when generating motor behavior, or recalling sequential memories. These forms of processing have been studied extensively in a number of experimental works on sensory processing [5, 6], motor production [7], and decision making [8].

The majority of existing biologically motivated models of sequence learning addresses sequence replay [9–12]. Sequence prediction and mismatch detection are rarely discussed. The Hierarchical Temporal Memory (HTM) [13] combines all three aspects: sequence prediction, mismatch detection and replay. Its Temporal Memory (TM) model [14] learns complex context dependent sequences in a continuous and unsupervised manner using local learning rules [15], and is robust against noise and failure in system components. Furthermore, it explains the functional role of dendritic action potentials (dAPs) and proposes a mechanism of how mismatch signals can be generated in cortical circuits [14]. Its capacity benefits from sparsity in the activity, and therefore provides a highly energy efficient sequence learning and prediction mechanism [16].

The original formulation of the TM model is based on abstract models of neurons and synapses with discrete-time dynamics. Moreover, the way the network forms synapses during learning is difficult to reconcile with biology. Here, we propose a continuous-time implementation of the TM model derived from known biological principles such as spiking neurons, dAPs, lateral inhibition, spike-timing-dependent structural plasticity, and homeostatic control of synapse growth. This model successfully learns, predicts and replays high-order sequences, where the prediction of the upcoming element is not only dependent on the current element, but also on the history. Bringing the model closer to biology allows for testing its hypotheses based on experimentally accessible quantities such as synaptic connectivity, synaptic currents, transmembrane potentials, or spike trains. Reformulating the model in terms of continuous-time dynamics moreover enables us to address timing-related questions, such as the role of the sequence speed for the prediction performance and the replay speed.

The study is organized as follows: the Methods describe the task, the network model, and the performance measures. The Results illustrate how the interaction of the model’s components gives rise to context dependent predictions and sequence replay, and evaluate the sequence processing speed and prediction performance. The Discussion finally compares the spiking TM model to other biologically motivated sequence learning models, summarizes limitations, and provides suggestions for future model extensions.

Methods

In the following, we provide an overview of the task and the training protocol, the network model, and the task performance analysis. A detailed description of the model and parameter values can be found in Tables 1 and 2.

Table 1. Description of the network model.

Parameter values are given in Table 2.

| Summary | |
|---|---|
| Populations | excitatory neurons (E), inhibitory neurons (I), external spike sources (X); E and I each composed of M disjoint subpopulations (k = 1, …, M) |
| Connectivity | |
| Neuron model | |
| Synapse model | exponential or alpha-shaped postsynaptic currents (PSCs) |
| Plasticity | homeostatic spike-timing dependent structural plasticity in excitatory-to-excitatory connections |

Table 2. Model and simulation parameters.

Parameters derived from other parameters are marked in gray. Bold numbers depict default values.

| Name | Value | Description |
|---|---|---|
| Network | | |
| NE | 2100 | total number of excitatory neurons |
| NI | 14 | total number of inhibitory neurons |
| M | | number of excitatory subpopulations (= number of external spike sources) |
| | | number of excitatory neurons per subpopulation |
| | | number of inhibitory neurons per subpopulation |
| ρ | 20 | (target) number of active neurons per subpopulation after learning = minimal number of coincident excitatory inputs required to trigger a spike in postsynaptic inhibitory neurons |
| (Potential) Connectivity | | |
| KEE | 420 | number of excitatory inputs per excitatory neuron (EE in-degree) |
| | | probability of potential (excitatory) connections |
| | | number of inhibitory inputs per excitatory neuron (EI in-degree) |
| | | number of excitatory inputs per inhibitory neuron (IE in-degree) |
| KII | 0 | number of inhibitory inputs per inhibitory neuron (II in-degree) |
| Excitatory neurons | | |
| τm,E | 10 ms | membrane time constant |
| τref,E | 10 ms | absolute refractory period |
| Cm | 250 pF | membrane capacitance |
| Vr | 0.0 mV | reset potential |
| θE | 20 mV (predictive mode), 5 mV (replay mode) | somatic spike threshold |
| IdAP | 200 pA | dAP current plateau amplitude |
| τdAP | 60 ms | dAP duration |
| θdAP | 59 pA (predictive mode), 41.3 pA (replay mode) | dAP threshold |
| Inhibitory neurons | | |
| τm,I | 5 ms | membrane time constant |
| τref,I | 2 ms | absolute refractory period |
| Cm | 250 pF | membrane capacitance |
| Vr | 0.0 mV | reset potential |
| θI | 15 mV | spike threshold |
| Synapse | | |
| γ | 5 | number of co-active presynaptic neurons required to trigger a dAP in the postsynaptic neuron |
| W | 12.98 pA | weight of mature EE connections (EPSC amplitude) |
| | 0.9 mV (predictive mode), 0.12 mV (replay mode) | weight of IE connections (EPSP amplitude) |
| JIE | 581.19 pA (predictive mode), 77.49 pA (replay mode) | weight of IE connections (EPSC amplitude) |
| | −40 mV | weight of EI connections (IPSP amplitude) |
| JEI | | weight of EI connections (IPSC amplitude) |
| | 22 mV | weight of EX connections (EPSP amplitude) |
| JEX | | weight of EX connections (EPSC amplitude) |
| τEE | 5 ms | synaptic time constant of EE connections |
| τIE | 0.5 ms | synaptic time constant of IE connections |
| τEI | 1 ms | synaptic time constant of EI connections |
| τEX | 2 ms | synaptic time constant of EX connections |
| dEE | 2 ms | delay of EE connections (dendritic) |
| dIE | 0.1 ms | delay of IE connections |
| dEI | {0.1, 0.2} ms | delay of EI connections (non-default value used in Figs 10 and 11) |
| dEX | 0.1 ms | delay of EX connections |
| Plasticity | | |
| λ+ | 0.08 (sequence set I), 0.28 (sequence set II) | potentiation rate |
| λ− | 0.0015 (sequence set I), 0.0061 (sequence set II) | depression rate |
| θP | 20 | synapse maturity threshold |
| Pmin,ij | | minimum permanence |
| Pmax | 20 | maximum permanence |
| P0,min | 0 | minimal initial permanence |
| P0,max | 8 | maximal initial permanence |
| τ+ | 20 ms | potentiation time constant |
| z* | 1 | target dAP activity |
| λh | 0.014 (sequence set I), 0.024 (sequence set II) | homeostasis rate |
| τh | 440 ms (sequence set I), 1560 ms (sequence set II) | homeostasis time constant |
| yi | 1 | depression decrement |
| Δtmin | 4 ms | minimum time lag between pairs of pre- and postsynaptic spikes at which synapses are potentiated |
| Δtmax | 2ΔT | maximum time lag between pairs of pre- and postsynaptic spikes at which synapses are potentiated |
| Input | | |
| L | 1 | number of subpopulations per sequence element = number of target subpopulations per spike source |
| S | 2 (sequence set I), 6 (sequence set II) | number of sequences per set |
| C | 4 (sequence set I), 5 (sequence set II) | number of elements per sequence |
| A | 14 | alphabet length (total number of distinct sequence elements) |
| ΔT | {2,…,40,…,90} ms | inter-stimulus interval |
| ΔTseq | max(2.5ΔT, τdAP) | inter-sequence interval |
| ΔTcue | 80 ms | inter-cue interval |
| Simulation | | |
| Δt | 0.1 ms | time resolution |
| K | {80, 100} | number of training episodes |

Task and training protocol

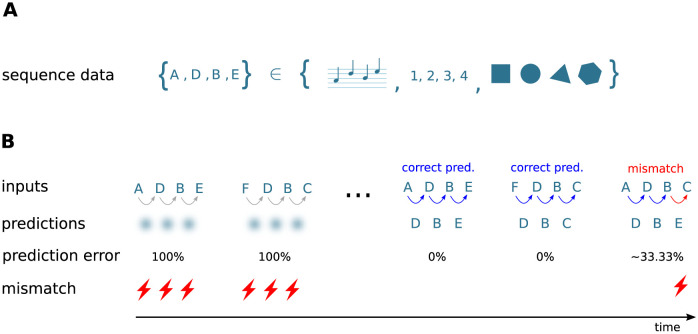

In this study, we develop a neuronal architecture that can learn and process an ensemble of S sequences si = {ζi,1, …, ζi,Ci} of ordered discrete items ζi,j, with i ∈ [1, …, S] and j ∈ [1, …, Ci]. The length of sequence si is denoted by Ci. Throughout this study, the sequence elements ζi,j ∈ {A, B, C, …} are represented by Latin characters, serving as placeholders for arbitrary discrete objects or percepts, such as images, numbers, words, musical notes, or movement primitives (Fig 1A). The order of the sequence elements within a given sequence represents the temporal order of item occurrence.

Fig 1. Sketch of the task and the learning protocol.

A) The neuronal network model developed in this study learns and processes sequences of ordered discrete elements, here represented by characters “A”, “B”, “C”, …. Sequence elements may constitute arbitrary discrete items, such as musical notes, numbers, or images. The order of sequence elements represents the temporal order of item occurrence. B) After repeated, consistent presentation of sets of high-order sequences, i.e., sequences with overlapping characters (here, {A, D, B, E} and {F, D, B, C}), the model learns to predict subsequent elements in response to the presentation of other elements (blue arrows) and to detect unanticipated elements by generating a mismatch signal if the prediction is not met (red arrows and flash symbols). The learning process is continuous and unsupervised. At the beginning of the learning process, all presented elements are unanticipated and hence trigger the generation of a mismatch signal. The learning progress is monitored and quantified by the prediction error (see Task performance measures).

The tasks to be solved by the network consist of

- i) predicting subsequent sequence elements in response to the presentation of other elements,
- ii) detecting unanticipated stimuli and generating a mismatch signal if the prediction is not met, and
- iii) autonomously replaying sequences in response to a cue signal after learning.

The architecture learns sequences in a continuous manner: the network is exposed to repeated presentations of a given ensemble of sequences (e.g., {A, D, B, E} and {F, D, B, C} in Fig 1B). In the prediction mode (tasks i) and ii)), there is no distinction between a “training” and a “testing” phase. At the beginning of the learning process, all presented sequence elements are unanticipated and do not lead to a prediction (diffuse shades in Fig 1B, left). As a consequence, the network generates mismatch signals (flash symbols in Fig 1B, left). After successful learning, the presentation of a sequence element leads to a prediction of the subsequent stimulus (colored arrows in Fig 1B). If this subsequent stimulus does not match the prediction, the network generates a mismatch signal (red arrow and flash symbol in Fig 1B, right). The learning process is entirely unsupervised, i.e., the prediction performance does not affect the learning. As described in Sequence replay, the network can be configured into a replay mode where it autonomously replays learned sequences in response to a cue signal (task iii)).

In general, the sequences in this study are “high-order” sequences, similar to those generated by a high-order Markov chain; the prediction of an upcoming sequence element requires accounting for not just the previous element, but for (parts of) the entire sequence history, i.e., the context. Sequences within a given set of training data can be partially overlapping; they may share certain elements or subsequences (such as in {A, D, B, E} and {F, D, B, C}). Similarly, the same sequence element (but not the first one, see Limitations and outlook) may occur multiple times within the same sequence (such as in {A, D, B, D}). Throughout this work, we use two sequence sets:

Sequence set I

For an illustration of the learning process and the network dynamics in the prediction (section Sequence learning and prediction) and in the replay mode (section Sequence replay), as well as for the investigation of the sequence processing speed (section Dependence of prediction performance on the sequence speed), we start with a simple set of two partially overlapping sequences s1 = {A, D, B, E} and s2 = {F, D, B, C} (see Fig 1B).

Sequence set II

For a more rigorous evaluation of the sequence prediction performance (section Prediction performance), we consider a set of S = 6 high-order sequences: s1 = {E, N, D, I, J}, s2 = {L, N, D, I, K}, s3 = {G, J, M, C, N}, s4 = {F, J, M, C, I}, s5 = {B, C, K, H, I}, s6 = {A, C, K, H, F}, each consisting of C = 5 elements. The complexity of this sequence ensemble is comparable to the one used in [14], but is more demanding in terms of the high-order context dependence.

Results for two additional sequence sets are summarized in the Supporting information. The set used in S2 Fig is composed of sequences with recurring first elements. In S3 Fig, we show results for longer sequences with a larger number of overlapping elements.
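The notion of a “high-order” sequence set can be made concrete with a small sketch. The Python snippet below is an illustration only and not part of the model; the helper names `successor_table` and `ambiguous_contexts` are hypothetical. For a given history length, it lists the contexts that are followed by more than one element in sequence sets I and II.

```python
from collections import defaultdict

# Sequence sets I and II as given in the text (one character per element).
SET_I = ["ADBE", "FDBC"]
SET_II = ["ENDIJ", "LNDIK", "GJMCN", "FJMCI", "BCKHI", "ACKHF"]

def successor_table(sequences, order):
    """Map each context (up to `order` preceding elements) to its successors.

    Near the start of a sequence the context is shorter than `order`,
    because the history does not extend beyond the first element.
    """
    table = defaultdict(set)
    for seq in sequences:
        for j in range(1, len(seq)):
            context = seq[max(0, j - order):j]
            table[context].add(seq[j])
    return table

def ambiguous_contexts(sequences, order):
    """Return the contexts that do not determine the next element uniquely."""
    table = successor_table(sequences, order)
    return {context for context, nxt in table.items() if len(nxt) > 1}
```

With a history of one element, `ambiguous_contexts(SET_I, 1)` contains the context `"B"`, which is followed by either “E” or “C”. For set I the ambiguity disappears only when three preceding elements are taken into account; for set II, four are needed.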

Network model

Algorithmic requirements

To solve the tasks outlined in Task and training protocol, the network model needs to implement a number of algorithmic components. Here, we provide an overview of these components and their corresponding implementations:

Learning and storage of sequences: in both the original and our model, sequences are represented by specific subnetworks embedded into the recurrent network. During the learning process, these subnetworks are carved out in an unsupervised manner by a form of structural Hebbian plasticity.

Context specificity: in our model, learning of high-order sequences is enabled by a sparse, random potential connectivity, and by a homeostatic regulation of synaptic growth.

Generation of predictions: neurons are equipped with a predictive state, implemented by a nonlinear synaptic integration mimicking the generation of dendritic action potentials (dAPs).

Mismatch detection: only few neurons become active if a prediction matches the stimulus. In our model, this sparsity is realized by a winner-take-all (WTA) dynamics implemented in the form of inhibitory feedback. In case of non-anticipated stimuli, the WTA dynamics cannot step in, thereby leading to a non-sparse activation of larger neuron populations.

Sequence replay: autonomous replay of learned sequences in response to a cue signal is enabled by increasing neuronal excitability.

In the following paragraphs, the implementations of these components and the differences between the original and our model are explained in more detail.
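The interplay of prediction and mismatch detection listed above can be summarized in a strongly simplified, time-free sketch. It is an abstraction for illustration, not the spiking implementation: the function name `subpopulation_response` and the subpopulation size of 100 neurons in the usage note are hypothetical, and the sketch assumes that a mismatch can be read out as non-sparse activity of the stimulated subpopulation. The default ρ = 20 is taken from Table 2.

```python
def subpopulation_response(predictive, rho=20):
    """Abstract response of one stimulated subpopulation.

    predictive: iterable of booleans, one per excitatory neuron, marking
                neurons that are in the predictive state (dAP present).
    rho:        number of coincidently firing excitatory neurons needed
                to trigger the subpopulation's inhibitory neuron.

    Returns (active, mismatch): the list of neurons that fire, and a flag
    that is True if the activation is non-sparse.
    """
    predictive = list(predictive)
    if sum(predictive) >= rho:
        # Predictive neurons fire first and recruit inhibition, which
        # silences all neurons that have not fired yet (winner-take-all).
        return predictive, False
    # Too few predictive neurons: inhibition is not recruited in time,
    # so the external input activates the whole subpopulation.
    return [True] * len(predictive), True
```

For a hypothetical subpopulation of 100 neurons, 20 predictive neurons yield a sparse response of exactly these 20 neurons, whereas 5 predictive neurons yield a response of all 100 neurons and a mismatch flag.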

Network structure

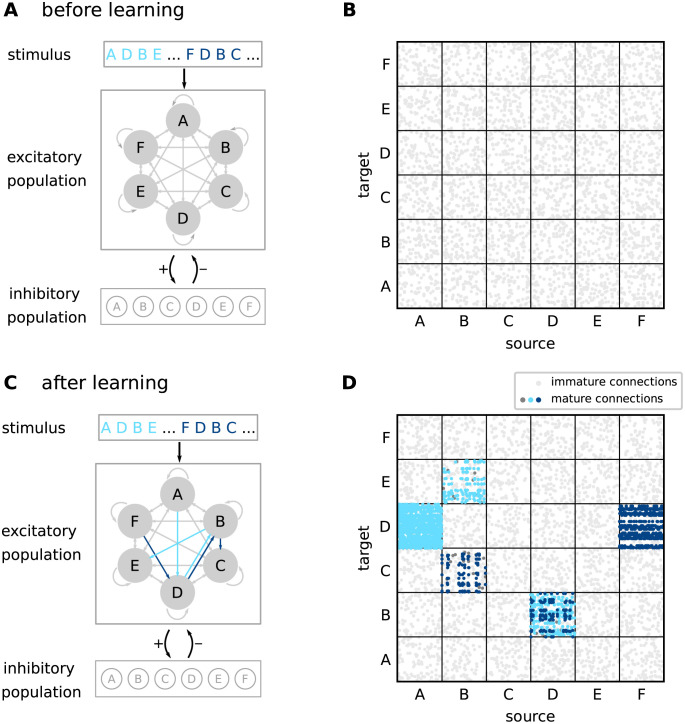

The network consists of a population of NE excitatory (“E”) and a population of NI inhibitory (“I”) neurons. The neurons in E are randomly and recurrently connected, such that each neuron in E receives KEE excitatory inputs from other randomly chosen neurons in E. Note that these “EE” connections are potential connections in the sense that they can be either “mature” (“effective”) or “immature”. Immature connections have no effect on target neurons (see below). In the neocortex, the degree of potential connectivity depends on the distance between the neurons [17]. It can reach probabilities as high as 90% for neighboring neurons, and decays to 0% for neurons that are farther apart. In this work, the connection probability is chosen such that the connectivity is sufficiently dense, allowing for the formation of specific subnetworks, and sufficiently sparse for increasing the network capacity (see paragraph “Constraints on potential connectivity” below). The excitatory population is subdivided into M non-overlapping subpopulations, each of them containing neurons with identical stimulus preference (“receptive field”; see below). Each subpopulation thereby represents a specific element within a sequence (Fig 2A and 2B). In the original TM model [14], a single sequence element is represented by multiple (L) subpopulations (“minicolumns”). For simplicity, we identify the number M of subpopulations with the number of elements required for a specific set of sequences, such that each sequence element is encoded by just one subpopulation (L = 1). All neurons within a subpopulation are recurrently connected to a subpopulation-specific inhibitory neuron. The inhibitory neurons in I are mutually unconnected. The subdivision of excitatory neurons into stimulus-specific subpopulations defines how external inputs are fed to the network (see next paragraph), but does not affect the potential excitatory connectivity, which is homogeneous and not subpopulation specific.
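The potential EE connectivity described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the simulation code of the study: the function name `potential_connectivity` is hypothetical, and the sketch assumes that the KEE sources of a neuron are distinct (no multiple connections between the same pair). The exclusion of self-connections follows from the requirement that inputs come from other neurons, and the uniformly distributed minimal permanences follow the description in Plasticity dynamics.

```python
import random

def potential_connectivity(n_exc, k_ee, p0_min=0.0, p0_max=8.0, seed=1):
    """Draw potential EE connections with a fixed in-degree.

    Returns
    -------
    sources : dict mapping each target neuron i to a list of k_ee distinct
              source neurons j != i.
    p_min   : dict mapping each connection (i, j) to its minimal permanence,
              drawn uniformly from [p0_min, p0_max].
    """
    rng = random.Random(seed)
    sources, p_min = {}, {}
    for i in range(n_exc):
        candidates = [j for j in range(n_exc) if j != i]  # no self-connections
        sources[i] = rng.sample(candidates, k_ee)         # distinct sources (assumption)
        for j in sources[i]:
            p_min[(i, j)] = rng.uniform(p0_min, p0_max)
    return sources, p_min
```

With the values of Table 2, the call would be `potential_connectivity(2100, 420)`; a smaller network such as `potential_connectivity(50, 10)` is sufficient to inspect the structure.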

Fig 2. Sketch of the network structure.

A) The architecture constitutes a recurrent network of excitatory and inhibitory neurons. Excitatory neurons are stimulated by external sources providing sequence-element specific inputs “A”,“D”, etc. The excitatory neuron population is composed of subpopulations containing neurons with identical stimulus preference (gray circles). Connections between and within the excitatory subpopulations are random and sparse. Inhibitory neurons are mutually unconnected. Each neuron in the inhibitory population is recurrently connected to a specific subpopulation of excitatory neurons. B) Initial connectivity matrix for excitatory connections to excitatory neurons (EE connections). Target and source neurons are grouped into stimulus-specific subpopulations (“A”,…,“F”). Before learning, the excitatory neurons are sparsely and randomly connected via immature synapses (light gray dots). C) During learning, sequence specific, sparsely connected subnetworks with mature synapses are formed (light blue arrows: {A, D, B, E}, dark blue arrows: {F, D, B, C}). D) EE connectivity matrix after learning. During the learning process, subsets of connections between subpopulations corresponding to subsequent sequence elements become mature and effective (light and dark blue dots). Mature connections are context specific (see distinct connectivity between subpopulations “D” and “B” corresponding to different sequences), thereby providing the backbone for a reliable propagation of sequence-specific activity. In panels B and D, only 5% of sequence non-specific EE connections are shown for clarity. Dark gray dots in panel D correspond to mature connections between neurons that remain silent after learning. For details on the network structure, see Tables 1 and 2.

External inputs

During the prediction mode, the network is driven by an ensemble of M external inputs, representing inputs from other brain areas, such as thalamic sources or other cortical areas. Each of these external inputs xk represents a specific sequence element (“A”, “B”, …), and feeds all neurons in the subpopulation with the corresponding stimulus preference. The occurrence of a specific sequence element ζi,j at time ti,j is modeled by a single spike xk(t) = δ(t − ti,j) generated by the corresponding external source xk. Subsequent sequence elements ζi,j and ζi,j+1 within a sequence si are presented with an inter-stimulus interval ΔT = ti,j+1 − ti,j. Subsequent sequences si and si+1 are separated in time by an inter-sequence time interval ΔTseq. During the replay mode, we present only a cue signal encoding the first sequence elements ζi,1 at times ti,1. Subsequent cues are separated in time by an inter-cue time interval ΔTcue = ti+1,1 − ti,1. In the absence of any other (inhibitory) inputs, each external input spike is strong enough to evoke an immediate response spike in all target neurons. Sparse activation of the subpopulations in response to the external inputs is achieved by a winner-take-all mechanism implemented in the form of inhibitory feedback (see Sequence learning and prediction).
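The stimulus timing in the prediction mode can be written down in a few lines. The following sketch is illustrative; the function name `stimulus_times` is hypothetical. It assumes that the inter-sequence interval ΔTseq = max(2.5ΔT, τdAP) from Table 2 is measured from the last element of one sequence to the first element of the next, which is consistent with the total ensemble duration ((C − 1)ΔT + ΔTseq)S used in Plasticity dynamics.

```python
def stimulus_times(sequences, delta_t=40.0, tau_dap=60.0, t_start=0.0):
    """Return the external spike times (ms) for one pass through an ensemble.

    sequences: iterable of sequences, each an ordered collection of elements.
    delta_t:   inter-stimulus interval (ms).
    tau_dap:   dAP duration (ms), entering the inter-sequence interval.

    Returns (events, duration), where events is a list of (time, element)
    pairs and duration is the time taken by one pass through the ensemble.
    """
    delta_t_seq = max(2.5 * delta_t, tau_dap)  # inter-sequence interval
    events, t = [], t_start
    for seq in sequences:
        for j, element in enumerate(seq):
            events.append((t + j * delta_t, element))
        # Advance to the first element of the next sequence (assumption:
        # the interval starts at the last element of the current sequence).
        t += (len(seq) - 1) * delta_t + delta_t_seq
    return events, t - t_start
```

For sequence set I and the default ΔT = 40 ms, the second sequence starts at 220 ms and one pass lasts 440 ms; for sequence set II, one pass lasts 1560 ms. These durations coincide with the homeostasis time constants τh listed in Table 2.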

Neuron and synapse model

In the original TM model [14], excitatory (pyramidal) neurons are described as abstract three-state systems that can assume an active, a predictive, or a non-active state. State updates are performed in discrete time. The current state is fully determined by the external input in the current time step and the network state in the previous step. Each TM neuron is equipped with a number of dendrites (segments), modeled as coincidence detectors. The dendrites are grouped into distal and proximal dendrites. Distal dendrites receive inputs from other neurons in the local network, whereas proximal dendrites are activated by external sources. Inputs to proximal dendrites have a large effect on the soma and trigger the generation of action potentials. Individual synaptic inputs to a distal dendrite, in contrast, have no direct effect on the soma. If the total synaptic input to a distal dendritic branch at a given time step is sufficiently large, the neuron becomes predictive. This dynamic mimics the generation of dendritic action potentials (dAPs; NMDA spikes [18–20]), which result in a long-lasting depolarization (∼50–500 ms) of the somata of neocortical pyramidal neurons.

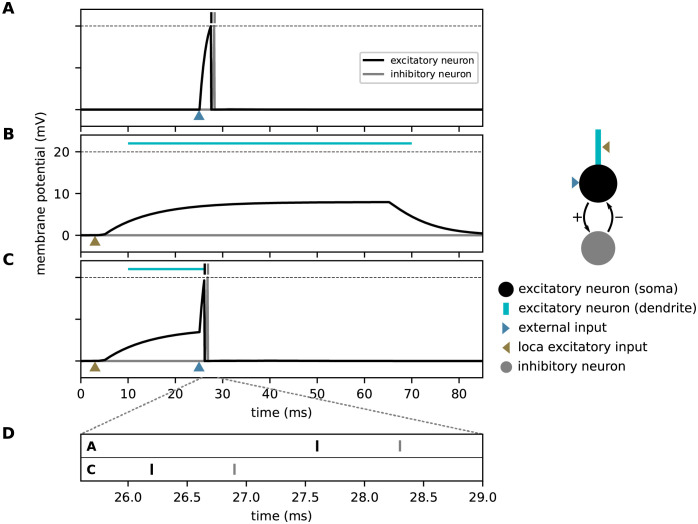

In contrast to the original study, the model proposed here employs neurons with continuous-time dynamics. For all types of neurons, the temporal evolution of the membrane potential is given by the leaky integrate-and-fire model Eq (10). The total synaptic input current of excitatory neurons is composed of currents in distal dendritic branches, inhibitory currents, and currents from external sources. Inhibitory neurons receive only inputs from excitatory neurons in the same subpopulation. Individual spikes arriving at dendritic branches evoke alpha-shaped postsynaptic currents, see Eq (12). The dendritic current includes an additional nonlinearity describing the generation of dAPs: if the dendritic current IED exceeds a threshold θdAP, it is instantly set to the dAP plateau current IdAP, and clamped to this value for a period of duration τdAP, see Eq (16). This plateau current leads to a long-lasting depolarization of the soma (see Fig 3B). The dAP threshold θdAP is chosen such that the co-activation of γ neurons with mature connections to the target neuron reliably triggers a dAP. In this work, we use a single dendritic branch per neuron. However, the model could easily be extended to include multiple dendritic branches. External and inhibitory inputs to excitatory neurons as well as excitatory inputs to inhibitory neurons trigger exponential postsynaptic currents, see Eqs (13)–(15). Similar to the original implementation, an external input strongly depolarizes the neurons and causes them to fire. To this end, the external weights JEX are chosen to be supra-threshold (see Fig 3A). Inhibitory interactions implement the WTA described in Sequence learning and prediction. The weights JIE of excitatory synapses on inhibitory neurons are chosen such that the collective firing of a subset of ρ excitatory neurons in the corresponding subpopulation causes the inhibitory neuron to fire. The weights JEI of inhibitory synapses on excitatory neurons are strong enough that each inhibitory spike prevents all excitatory neurons in the same subpopulation that have not yet generated a spike from firing. All synaptic time constants, delays and weights are connection-type specific (see Table 1).
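The relation between the dAP threshold θdAP and the number γ of co-active presynaptic neurons can be checked with a short numerical sketch. It is an illustration under simplifying assumptions rather than the model definition of Eqs (12) and (16): the function names are hypothetical, the weight W of Table 2 is interpreted as the peak amplitude of the alpha-shaped current, and all presynaptic spikes are assumed to arrive at exactly the same time.

```python
import math

def alpha_psc(t, peak, tau):
    """Alpha-shaped postsynaptic current with peak amplitude `peak` at t = tau."""
    if t < 0.0:
        return 0.0
    return peak * (t / tau) * math.exp(1.0 - t / tau)

def dap_onset(n_inputs, theta_dap, w=12.98, tau_ee=5.0, dt=0.1, t_stop=50.0):
    """Time (ms) at which the summed dendritic current of `n_inputs`
    coincident mature inputs first exceeds `theta_dap` (pA), or None if
    the threshold is never reached within `t_stop`."""
    for step in range(int(round(t_stop / dt)) + 1):
        t = step * dt
        if n_inputs * alpha_psc(t, w, tau_ee) > theta_dap:
            return t
    return None
```

Under these assumptions, five coincident inputs cross the predictive-mode threshold of 59 pA (5 × 12.98 pA = 64.9 pA), whereas four do not (51.92 pA), in line with γ = 5. With the lower replay-mode threshold of 41.3 pA, four coincident inputs are already sufficient.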

Fig 3. Effect of dendritic action potentials (dAP) on the firing response to an external stimulus.

Membrane-potential responses to an external input (blue arrow, A), a strong dendritic input (brown arrow, B) triggering a dAP, and a combination of both (C). Black and gray vertical bars mark times of excitatory and inhibitory spikes, respectively. The horizontal dashed line marks the spike threshold θE. The horizontal light blue lines depict the dAP plateau. D) Magnified view of spike times from panels A and C. A dAP preceding the external input (as in panel C) can speed up somatic, and hence, inhibitory firing, provided the time interval between the dAP and the external input is in the right range. The excitatory neuron is connected bidirectionally to an inhibitory neuron (see sketch on the right).

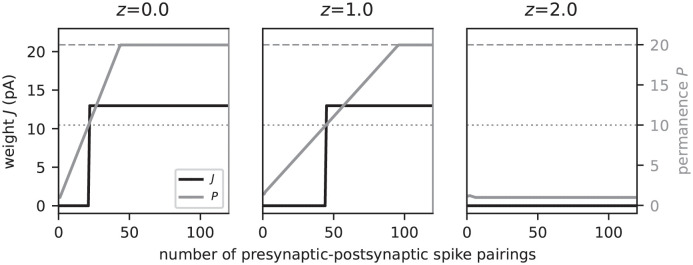

Plasticity dynamics

Both in the original [14] and in our model, the lateral excitatory connectivity between excitatory neurons (EE connectivity) is dynamic and shaped by a Hebbian structural plasticity mechanism mimicking principles known from the neuroscience literature [21–25]. All other connections are static. The dynamics of the EE connectivity is determined by the time evolution of the permanences Pij, representing the synapse maturity, and the synaptic weights Jij. Unless the permanence Pij exceeds a threshold θP, the synapse {j → i} is immature, with zero synaptic weight Jij = 0. Upon threshold crossing, Pij ≥ θP, the synapse becomes mature, and its weight is assigned a fixed value Jij = W (∀i, j). Overall, the permanences evolve according to a Hebbian plasticity rule: the synapse {j → i} is potentiated, i.e., Pij is increased, if the activation of the postsynaptic cell i is immediately preceded by an activation of the presynaptic cell j. Otherwise, the synapse is depressed, i.e., Pij is decreased. At the beginning of the learning process or during relearning, the activity in the individual subpopulations is non-sparse. Hebbian learning alone would therefore lead to the strengthening of all existing synapses between two subsequently activated subpopulations, irrespective of the context these two subpopulations participate in. After learning, the subsets of neurons that are activated by a sequence element recurring in different sequences would therefore largely overlap. As a consequence, it becomes harder to distinguish between different contexts (histories) based on the activation patterns of these subsets. 
The original TM model [14] avoids this loss of context sensitivity by restricting synaptic potentiation to a small subset of synapses between a given pair of source and target subpopulations: if there are no predictive target neurons, the original algorithm selects a “matched” neuron from the set of active postsynaptic cells as the one being closest to becoming predictive, i.e., the neuron receiving the largest number of synaptic inputs on a given dendritic branch from the set of active presynaptic cells (provided this number is sufficiently large). Synapse potentiation is then restricted to this set of matched neurons. In case there are no immature synapses, the “least used” neuron or a randomly chosen neuron is selected as the “matched” cell, and connected to the winner cell of the previously active subpopulation. Restricting synaptic potentiation to synapses targeting such a subset of “matched” neurons is difficult to reconcile with biology. It is known that inhibitory inputs targeting the dendrites of pyramidal cells can locally suppress backpropagating action potentials and, hence, synaptic potentiation [26]. A selection mechanism based on such local inhibitory circuits would however involve extremely fast synapses and require fine-tuning of parameters. The model presented in this work circumvents the selection of “matched” neurons and replaces this with a homeostatic mechanism controlled by the postsynaptic dAP rate. In the following, the specifics of the plasticity dynamics used in this study are described in detail.

Within the interval [Pmin,ij, Pmax], the dimensionless permanences Pij(t) evolve according to a combination of an additive spike-timing-dependent plasticity (STDP) rule [27] and a homeostatic component [28, 29]:

$$
P_\text{max}^{-1}\,\frac{dP_{ij}}{dt} = \lambda_+ \sum_{\{t_i^*\}'} x_j(t)\,\delta\big(t - [t_i^* + d_\text{EE}]\big)\, I(t_i^*, \Delta t_\text{min}, \Delta t_\text{max}) \;-\; \lambda_- \sum_{\{t_j^*\}} y_i\,\delta(t - t_j^*) \;+\; \lambda_h \sum_{\{t_i^*\}'} \big(z^* - z_i(t)\big)\,\delta(t - t_i^*)\, I(t_i^*, \Delta t_\text{min}, \Delta t_\text{max}) \tag{1}
$$

At the boundaries Pmin,ij and Pmax, Pij(t) is clipped. While the maximum permanences Pmax are identical for all EE connections, the minimal permanences Pmin,ij are uniformly distributed in the interval [P0,min, P0,max] to introduce a form of persistent heterogeneity. The first term on the right-hand side of Eq (1) corresponds to the spike-timing dependent synaptic potentiation triggered by the postsynaptic spikes at times $t_i^* \in \{t_i^*\}'$. Here, $\{t_i^*\}'$ denotes the set of all postsynaptic spike times $t_i^*$ for which the time lag to every presynaptic spike $t_j^*$ exceeds Δtmin. The indicator function $I(t_i^*, \Delta t_\text{min}, \Delta t_\text{max})$ ensures that the potentiation (and the homeostasis; see below) is restricted to time lags in the interval (Δtmin, Δtmax) to avoid a growth of synapses between synchronously active neurons belonging to the same subpopulation, and between neurons encoding for the first elements in different sequences; see Eq 17. Note that the potentiation update times lag the somatic postsynaptic spike times by the delay dEE, which is here interpreted as a purely dendritic delay [27, 30]. The potentiation increment is determined by the dimensionless potentiation rate λ+, and the spike trace xj(t) of the presynaptic neuron j, which is updated according to

$$
\frac{dx_j}{dt} = -\frac{x_j(t)}{\tau_+} + \sum_{t_j^*} \delta(t - t_j^*) \tag{2}
$$

The trace xj(t) is incremented by unity at each spike time $t_j^*$, followed by an exponential decay with time constant τ+. The potentiation increment ΔPij at time $t_i^* + d_\text{EE}$ therefore depends on the temporal distance between the postsynaptic spike time $t_i^*$ and all presynaptic spike times $t_j^*$ (STDP with all-to-all spike pairing; [27]). The second term in Eq (1) represents synaptic depression, and is triggered by each presynaptic spike at times $t_j^*$. The depression decrement yi = 1 is treated as a constant, independent of the postsynaptic spike history. The depression magnitude is parameterized by the dimensionless depression rate λ−. The third term in Eq (1) corresponds to a homeostatic control triggered by postsynaptic spikes at times $t_i^* \in \{t_i^*\}'$. Its overall impact is parameterized by the dimensionless homeostasis rate λh. The homeostatic control enhances or reduces the synapse growth depending on the dAP trace zi(t) of neuron i, the low-pass filtered dAP activity updated according to

$$
\frac{dz_i}{dt} = -\frac{z_i(t)}{\tau_h} + \sum_{k} \delta\big(t - t^k_{\text{dAP},i}\big) \tag{3}
$$

Here, τh represents the homeostasis time constant, and $t^k_{\text{dAP},i}$ the onset time of the kth dAP in neuron i. According to Eq (1), synapse growth is boosted if the dAP activity zi(t) is below a target dAP activity z*. Conversely, high dAP activity exceeding z* reduces the synapse growth (Fig 4). This homeostatic regulation of the synaptic maturity controlled by the postsynaptic dAP activity constitutes a variation of previous models [28, 29] describing ‘synaptic scaling’ [31–33]. It counteracts excessive synapse formation during learning driven by Hebbian structural plasticity. In addition, the combination of Hebbian plasticity and synaptic scaling can introduce a competition between synapses [28, 29]. Here, we exploit this effect to ensure that synapses are generated in a context specific manner, and thereby reduce the overlap between neuronal subpopulations activated by the same sequence element occurring in different sequences. To this end, the homeostasis parameters z* = 1 and τh are chosen such that each neuron tends to become predictive, i.e., generate a dAP, at most once during the presentation of a single sequence ensemble of total duration ((C − 1)ΔT + ΔTseq)S (see Network model). The time constant τh is hence adapted to the parameters of the task. For sequence sets I and II and the default inter-stimulus interval ΔT = 40 ms, it is set to τh = 440 ms and τh = 1560 ms, respectively. In section Dependence of prediction performance on the sequence speed, we study the effect of the sequence speed (inter-stimulus interval ΔT) on the prediction performance for a given network parameterization. For these experiments, τh = 440 ms is therefore fixed even though the inter-stimulus interval ΔT is varied.

Fig 4. Homeostatic regulation of the spike-timing-dependent structural plasticity by the dAP activity.

Evolution of the synaptic permanence (gray) and weight (black) during repetitive presynaptic-postsynaptic spike pairing for different levels of the dAP activity. In the depicted example, presynaptic spikes precede the postsynaptic spikes by 40 ms for each spike pairing. Consecutive spike pairs are separated by a 200 ms interval. In each panel, the postsynaptic dAP trace is clamped at a different value: z = 0 (left), z = 1 (middle), z = 2 (right). The dAP target activity is fixed at z* = 1. The horizontal dashed and dotted lines mark the maximum permanence Pmax and the maturity threshold θP, respectively.

The prefactor in Eq (1) ensures that all learning rates λ+, λ− and λh are measured in units of the maximum permanence Pmax.
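The pairing protocol of Fig 4 can be sketched as an event-driven update of a single permanence. The following is a minimal illustration, not the NESTML implementation used in this study: it assumes that each presynaptic spike triggers a constant depression step, that each postsynaptic spike triggers a trace-dependent potentiation step plus a homeostatic step proportional to (z* − z), and that both postsynaptic contributions are gated by the lag window (Δtmin, Δtmax). The function name `simulate_pairing` and all numerical defaults (learning rates, time constants, bounds, delay) are hypothetical placeholders and are not the values of Table 2.

```python
import math


def simulate_pairing(z_clamped, n_pairs=20, lag=40.0, pair_interval=200.0,
                     lam_plus=0.08, lam_minus=0.0015, lam_h=0.03,
                     tau_plus=20.0, z_target=1.0, d_ee=2.0,
                     dt_min=4.0, dt_max=80.0, p_min=0.0, p_max=20.0):
    """Permanence after each pre-before-post spike pair (times in ms).

    The dAP trace z is clamped to `z_clamped`, as in the Fig 4 protocol.
    All default parameter values are hypothetical.
    """
    p = p_min          # permanence P_ij, starts at its lower bound
    x = 0.0            # presynaptic spike trace x_j
    t_last = 0.0       # time at which x was last updated
    history = []
    for n in range(n_pairs):
        t_pre = n * pair_interval
        t_post = t_pre + lag

        # Presynaptic spike: decay the trace, increment it by one,
        # and apply the constant depression step (y_i = 1).
        x = x * math.exp(-(t_pre - t_last) / tau_plus) + 1.0
        t_last = t_pre
        p = min(max(p - p_max * lam_minus, p_min), p_max)

        # Postsynaptic spike: potentiation and homeostasis are applied only
        # if the pre-post lag lies inside the window (dt_min, dt_max).
        if dt_min < lag < dt_max:
            t_update = t_post + d_ee  # update lags the spike by the dendritic delay
            x_now = x * math.exp(-(t_update - t_last) / tau_plus)
            dp = p_max * (lam_plus * x_now + lam_h * (z_target - z_clamped))
            p = min(max(p + dp, p_min), p_max)

        history.append(p)
    return history


if __name__ == "__main__":
    for z in (0.0, 1.0, 2.0):
        print(f"z = {z}: final permanence = {simulate_pairing(z)[-1]:.3f}")
```

With these placeholder values, a dAP trace below the target speeds up permanence growth, a trace at the target leaves only the Hebbian drift, and a trace above the target keeps the permanence at its lower bound, which is the qualitative behavior described for the three panels of Fig 4.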

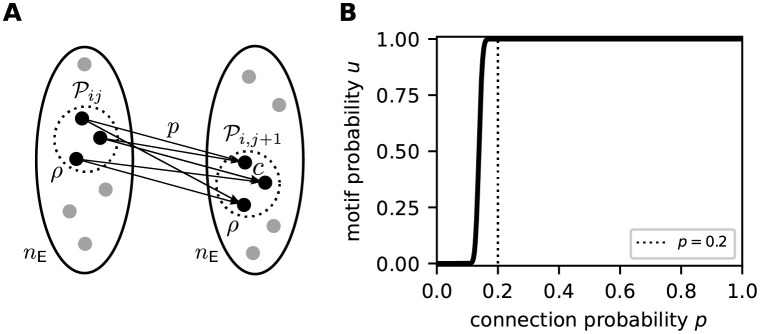

Constraints on potential connectivity

The sequence processing capabilities of the proposed network model rely on its ability to form sequence specific subnetworks based on the skeleton provided by the random potential connectivity. On the one hand, the potential connectivity must not be too diluted to ensure that a subset of neurons representing a given sequence element can establish sufficiently many mature connections to a second subset of neurons representing the subsequent element. On the other hand, a dense potential connectivity would promote overlap between subnetworks representing different sequences, and thereby slow down the formation of context specific subnetworks during learning (see Sequence learning and prediction). Here, we therefore identify the minimal potential connection probability p guaranteeing the existence of network motifs with a sufficient degree of divergent-convergent connectivity.

Consider the subset of ρ excitatory neurons representing the jth sequence element ζij in sequence si (see Task and training protocol and Network model). During the learning process, the plasticity dynamics needs to establish mature connections from this presynaptic subset to a second subset of neurons in another subpopulation representing the subsequent element ζi,j+1. Each neuron in the target subset must receive at least c = ⌈θdAP/W⌉ inputs from the presynaptic subset to ensure that synchronous firing of the presynaptic neurons can evoke a dAP after synapse maturing. For a random, homogeneous potential connectivity with connection probability p, the probability of finding these c potential connections for some arbitrary target neuron is given by

$$
q = 1 - \sum_{s=0}^{c-1} \binom{\rho}{s}\, p^{s}\,(1-p)^{\rho-s} \tag{4}
$$

For a successful formation of sequence specific subnetworks during learning, the sparse subset of presynaptic neurons needs to recruit at least ρ targets in the set of nE neurons representing the subsequent sequence element (Fig 5A). The probability of observing such a divergent-convergent connectivity motif is given by

$$
u = 1 - \sum_{s=0}^{\rho-1} \binom{n_\text{E}}{s}\, q^{s}\,(1-q)^{n_\text{E}-s} \tag{5}
$$

Note that the above described motif does not require the size of the postsynaptic subset to be exactly ρ. Eq (5) constrains the parameters p, c, nE and ρ to ensure such motifs exist in a random network. Fig 5B illustrates the dependence of the motif probability u on the connection probability p for our choice of parameters nE, c, and ρ. For p ≥ 0.2, the existence of the divergent-convergent connectivity motif is almost certain (u ≈ 1). For smaller connection probabilities p < 0.2, the motif probability quickly vanishes. Hence, p = 0.2 constitutes a reasonable choice for the potential connection probability.

Fig 5. Existence of divergent-convergent connectivity motifs in a random network.

A) Sketch of the divergent-convergent potential connectivity motif required for the formation of sequence specific subnetworks during learning. See main text for details. B) Dependence of the motif probability u on the connection probability p for nE = 150, c = 5, and ρ = 20 (see Table 2). The dotted vertical line marks the potential connection probability p = 0.2 used in this study.
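The dependence of the motif probability on p can be checked numerically. The sketch below assumes that both probabilities are binomial tail probabilities: first the probability that one target neuron receives at least c potential inputs from the ρ presynaptic neurons, then the probability that at least ρ of the nE candidate neurons satisfy this condition. The function names and the intermediate variable `q` are introduced here for illustration only; the parameter values are those quoted in the caption of Fig 5.

```python
from math import comb


def binom_tail(n, k_min, prob):
    """P(X >= k_min) for X ~ Binomial(n, prob), clamped to [0, 1]."""
    lower = sum(comb(n, s) * prob**s * (1.0 - prob)**(n - s)
                for s in range(k_min))
    return min(1.0, max(0.0, 1.0 - lower))


def motif_probability(p, n_e=150, c=5, rho=20):
    """Probability u of a divergent-convergent motif for connection probability p."""
    # Probability that a single target neuron has at least c potential
    # inputs from the rho presynaptic neurons.
    q = binom_tail(rho, c, p)
    # Probability that at least rho of the n_e candidate targets qualify.
    return binom_tail(n_e, rho, q)


if __name__ == "__main__":
    for p in (0.05, 0.10, 0.15, 0.20, 0.30):
        print(f"p = {p:.2f}: u = {motif_probability(p):.4f}")
```

Under these assumptions, u is close to 1 at p = 0.2 and drops sharply for smaller p, in line with Fig 5B.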

Network realizations and initial conditions

For every network realization, the potential connectivity and the initial permanences are drawn randomly and independently. All other parameters are identical for different network realizations. The initial values of all state variables are given in Tables 1 and 2.

Simulation details

The network simulations are performed using the neural simulator NEST [34], version 3.0 [35]. The differential equations and state transitions defining the excitatory neuron dynamics are expressed in the domain-specific language NESTML [36, 37], which generates the required C++ code for dynamic loading into NEST. Network states are synchronously updated using exact integration of the system dynamics on a discrete-time grid with step size Δt [38]. The full source code for the implementation with a list of other software requirements is available at Zenodo: https://doi.org/10.5281/zenodo.5578212.

Task performance measures

To assess the network performance, we monitor the dendritic currents reporting predictions (dAPs) as well as the somatic spike times of excitatory neurons. To quantify the prediction error, we identify for each last element $\zeta_{i,C}$ in a sequence si all excitatory neurons that have generated a dAP in the time interval $(t_{i,C} - \Delta T,\, t_{i,C})$, where $t_{i,C}$ and ΔT denote the time of the external input corresponding to the last sequence element and the inter-stimulus interval, respectively (see Task and training protocol and Network model). All subpopulations with at least ρ/2 neurons generating a dAP are considered “predictive”. The prediction state of the network is encoded in an M-dimensional binary vector o, where ok = 1 if the kth subpopulation is predictive, and ok = 0 otherwise. The prediction error

$$
\epsilon = \frac{1}{L}\sqrt{\sum_{k=1}^{M} (o_k - v_k)^2} \tag{6}
$$

is defined as the Euclidean distance between o and the binary target vector v representing the pattern of external inputs for each last element $\zeta_{i,C}$, normalized by the number L of subpopulations per sequence element. Furthermore, we assess the false-positive rate

$$
\text{fp} = \frac{1}{L}\sum_{k=1}^{M} \Theta(o_k - v_k) \tag{7}
$$

and the false-negative rate

$$
\text{fn} = \frac{1}{L}\sum_{k=1}^{M} \Theta(v_k - o_k) \tag{8}
$$

where Θ(⋅) denotes the Heaviside function. In addition to these performance measures, we monitor for each last sequence element the level of sparsity by measuring the ratio between the number of active neurons and the total number LnE of neurons representing this element. During learning, we expose the network repetitively to the same set {s1, …, sS} of sequences for a number of training episodes K. To obtain the total prediction performance in each episode, we average the prediction error, the false-negative and false-positive rates, as well as the level of sparsity across the set of sequences.
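The performance measures can be sketched in a few lines. This illustration assumes that the prediction error is the Euclidean distance between o and v divided by L, as stated in the text, and additionally assumes that the false-positive and false-negative rates count the subpopulations that are wrongly predictive or wrongly silent, normalized by L, with the Heaviside function taken as zero at zero. The function names are hypothetical.

```python
import math


def prediction_state(dap_counts, rho):
    """Binary vector o: subpopulation k is predictive if at least rho/2
    of its neurons generated a dAP in the evaluation window."""
    return [1 if n >= rho / 2 else 0 for n in dap_counts]


def heaviside(x):
    """Heaviside step function with the convention Theta(0) = 0 (assumed)."""
    return 1 if x > 0 else 0


def performance(o, v, n_sub_per_element=1):
    """Return (prediction error, false-positive rate, false-negative rate)."""
    L = n_sub_per_element
    error = math.sqrt(sum((ok - vk) ** 2 for ok, vk in zip(o, v))) / L
    fp = sum(heaviside(ok - vk) for ok, vk in zip(o, v)) / L
    fn = sum(heaviside(vk - ok) for ok, vk in zip(o, v)) / L
    return error, fp, fn


if __name__ == "__main__":
    # Four subpopulations; number of neurons with a dAP in each (made-up data).
    o = prediction_state([0, 12, 3, 10], rho=20)
    v = [0, 1, 0, 0]  # target: only the second subpopulation should be predicted
    print(o, performance(o, v))
```

In this made-up example, the fourth subpopulation is predictive although it is not part of the target, so it contributes one false positive and no false negative.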

Results

Sequence learning and prediction

According to the Temporal Memory (TM) model, sequences are stored in the form of specific paths through the network. Prediction and replay of sequences correspond to a sequential sparse activation of small groups of neurons along these paths. Non-anticipated stimuli are signaled in the form of non-sparse firing of these groups. This subsection describes how the model components introduced in Network model interact and give rise to the network structure and behavior postulated by TM. For illustration, we here consider a simple set of two partly overlapping sequences {A, D, B, E} and {F, D, B, C} corresponding to the sequence set I (see Fig 1B).

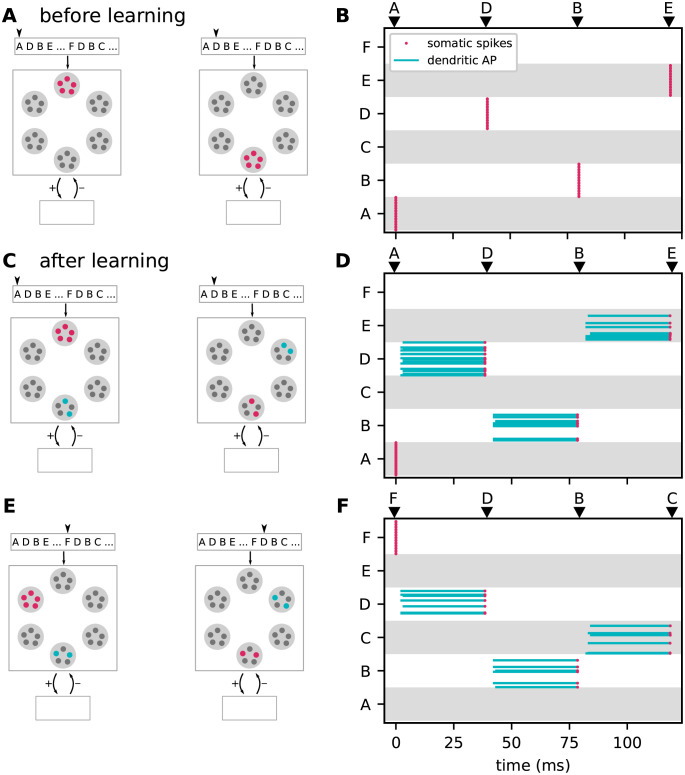

The initial sparse, random and immature network connectivity (Fig 2A and 2B) constitutes the skeleton on which the sequence-specific paths will be carved out during the learning process. To guarantee successful learning, this initial skeleton must be neither too sparse nor too dense (see Methods). Before learning, the presentation of a particular sequence element causes all neurons with the corresponding stimulus preference to reliably and synchronously fire a somatic action potential due to the strong, suprathreshold external stimulus (Fig 3A). All other subpopulations remain silent (see Fig 6A and 6B). The lateral connectivity between excitatory neurons belonging to the different subpopulations is subject to a form of Hebbian structural plasticity. Repetitive and consistent sequential presentation of sequence elements turns immature connections between successively activated subpopulations into mature connections, and hence leads to the formation of sequence-specific subnetworks (see Fig 2C and 2D). Synaptic depression prunes connections not supporting the learned pattern, thereby reducing the chance of predicting wrong sequence items (false positives).

Fig 6. Context specific predictions.

Sketches (left column) and raster plots of network activity (right column) before (top row) and after learning of the two sequences {A, D, B, E} and {F, D, B, C} (middle and bottom rows). In the left column, large light gray circles depict the excitatory subpopulations (same arrangement as in Fig 2). Red, blue and gray circles mark active, predictive and silent neurons, respectively. In the right column, red dots and blue lines mark somatic spikes and dAP plateaus, respectively. Type and timing of presented stimuli are depicted by black arrows. A,B) Snapshots of network activity upon subsequent presentation of the sequence elements “A” and “D” (panel A), and network activity in response to presentation of the entire sequence {A, D, B, E} (panel B) before learning. All neurons in the stimulated subpopulations become active. C,D) Same as panels A and B, but after learning. Presenting the first element “A” causes all neurons in the corresponding subpopulations to fire. Activation of these neurons triggers dAPs (predictions) in a subset of neurons representing the subsequent element “D”. When the next element “D” is presented, only these predictive neurons become active, leading to predictions in the subpopulation representing the subsequent element (“B”), etc. E,F) Same as panels C and D, but for sequence {F, D, B, C}. The subsets of neurons representing “D” and “B” activated during sequences {A, D, B, E} and {F, D, B, C} are distinct, i.e., context specific. For clarity, panels B, D, and F show only a fraction of excitatory neurons (30%).

During the learning process, the number of mature connections grows to a point where the activation of a certain subpopulation by an external input generates dendritic action potentials (dAPs), a “prediction”, in a subset of neurons in the subsequent subpopulation (blue neurons in Fig 6C). The dAPs generate a long-lasting depolarization of the soma (Fig 3B). When receiving an external input, these depolarized neurons fire slightly earlier as compared to non-depolarized (non-predictive) neurons (Fig 3A, 3B and 3D). If the number of predictive neurons within a subpopulation is sufficiently large, their advanced spikes (Fig 3C) initiate a fast and strong inhibitory feedback to the entire subpopulation, and thereby suppress subsequent firing of non-predictive neurons in this population (Fig 6C and 6D). Owing to this winner-take-all dynamics, the network generates sparse spiking in response to predicted stimuli, i.e., if the external input coincides with a dAP-triggered somatic depolarization. In the presence of a non-anticipated, non-predicted stimulus, the neurons in the corresponding subpopulation fire collectively in a non-sparse manner, thereby signaling a “mismatch”.

In the model presented in this study, the initial synapse maturity levels, the permanences, are randomly chosen within certain bounds. During learning, connections with a higher initial permanence mature first. This heterogeneity in the initial permanences enables the generation of sequence specific sparse connectivity patterns between subsequently activated neuronal subpopulations (Fig 2D). For each pair of sequence elements in a given sequence ensemble, there is a unique set of postsynaptic neurons generating dAPs (Fig 6D). These different activation patterns capture the context specificity of predictions. When exposing a network that has learned the two sequences {A, D, B, E} and {F, D, B, C} to the elements “A” and “F”, different subsets of neurons are activated in “D” and “B”. By virtue of these sequence specific activation patterns, stimulation by {A, D, B} or {F, D, B} leads to correct predictions “E” or “C”, respectively (Fig 6C–6F).

Heterogeneity in the permanences alone, however, is not sufficient to guarantee context specificity. The subsets of neurons activated in different contexts may still exhibit a considerable overlap. This overlap is promoted by Hebbian plasticity in the face of the initial non-sparse activity, which leads to a strengthening of connections to neurons in the postsynaptic population in an unspecific manner (Fig 7A and 7B). Moreover, the reoccurrence of the same sequence elements in different co-learned sequences initially causes higher firing rates of the neurons in the respective populations (“D” and “B” in Fig 7). As a result, the formation of unspecific connections would even be accelerated if synapse formation was driven by Hebbian plasticity alone. The model in this study counteracts this loss of context specificity by supplementing the plasticity dynamics with a homeostatic component, which regulates synapse growth based on the rate of postsynaptic dAPs. This form of homeostasis prevents the same neuron from becoming predictive multiple times within the same set of sequences, and thereby reduces the overlap between subsets of neurons activated within different contexts (Fig 7C and S4 Fig). To further aid the formation of context specific paths, the density of the initial potential connectivity skeleton is set close to the minimum value ensuring the existence of the connectivity motifs required for a faithful prediction (see Methods).

Fig 7. dAP-rate homeostasis enhances context specificity.

A) Sketch of subpopulations of excitatory neurons representing the elements of the two sequences {F, D, B} and {A, D, B}, depicted by light and dark blue colors, respectively. Before learning, the connections between the subpopulations are immature (gray lines). Hence, for each element presentation, all neurons in the respective subpopulations fire (filled circles). B) Hebbian plasticity drives the formation of mature connections between subpopulations representing successive sequence elements (colored lines), and leads to sparse firing. The sets of neurons contributing to the two sequences partly overlap. C) Incorporating dAP-rate homeostasis reduces this overlap in the activation patterns.

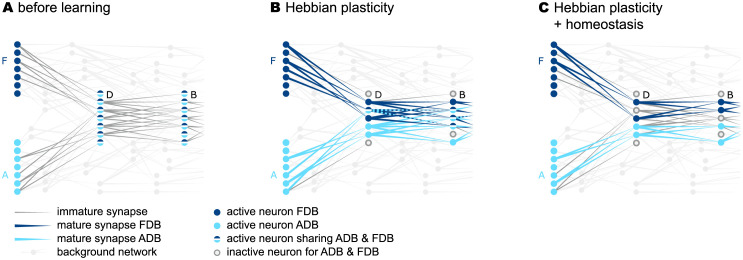

Prediction performance

To quantify the sequence prediction performance, we repetitively stimulate the network with the sequences in sequence set I (see Task and training protocol), and continuously monitor the prediction error, the false-positive and false-negative rates, as well as the fraction of active stimulated neurons as a measure of encoding sparsity (Fig 8; Task performance measures). To ensure the performance results are not specific to a single network, the evaluation is repeated for a number of randomly instantiated network realizations with different initial potential connectivities. At the beginning of the learning process, all neurons of a stimulated subpopulation collectively fire in response to the external input. Non-stimulated neurons remain silent. As the connectivity is still immature at this point, no dAPs are triggered in postsynaptic neurons, and, hence, no predictions are generated. As a consequence, the prediction error, the false-negative rate and the number of active neurons (in stimulated populations) are at their maximum, and the false-positive rate is zero (Fig 8). During the first training episodes, the consistent collective firing of subsequently activated populations leads to the formation of mature connections as a result of the Hebbian structural plasticity. Upon reaching a critical number of mature synapses, the first dAPs (predictions) are generated in postsynaptic cells (in Fig 8, this happens after about 10 learning episodes). As a consequence, the false-negative rate decreases, and the stimulus responses become more sparse. At this early phase of the learning, the predictions of upcoming sequence elements are not yet context specific (for sequence set I, non-sparse activity in “B” triggers a prediction in both “E” and “C”, irrespective of the context). Hence, the false-positive rate transiently increases. As the context specific connectivity is not consolidated at this point, more and more presynaptic subpopulations fail at triggering dAPs in their postsynaptic targets when they switch to sparse firing. Therefore, the false-positive rate decreases again, and the false-negative rate increases. In other words, there exists a negative feedback loop in the interim learning dynamics where the generation of predictions leads to an increase in sparsity which, in turn, causes prediction failures (and, hence, non-sparse firing). With an increasing number of training episodes, synaptic depression and homeostatic regulation increase context selectivity and thereby break this loop. Eventually, sparse firing of presynaptic populations is sufficient to reliably trigger predictions in their postsynaptic targets. For sequence set I, the total prediction error becomes zero and the stimulus responses maximally sparse after about 30 training episodes (Fig 8). For a time resolved visualization of the learning dynamics, see S1 Video.

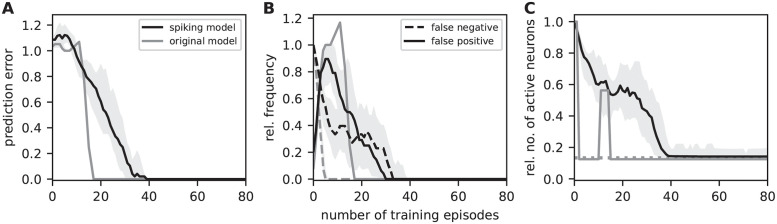

Fig 8. Sequence prediction performance for sequence set I.

Dependence of the sequence prediction error (A), the false-positive and false-negative rates (B), and the number of active neurons relative to the subpopulation size (C) on the number of training episodes during repetitive stimulation with sequence set I (see Task and training protocol). Curves and error bands indicate the median as well as the 5% and 95% percentiles across an ensemble of 5 different network realizations, respectively. All prediction performance measures are calculated as a moving average over the last 4 training episodes. The dashed gray horizontal line in panel C depicts the target sparsity level ρ/(LnE). Inter-stimulus interval ΔT = 40 ms. See Table 2 for remaining parameters.

Up to this point, we illustrated the model’s sequence learning dynamics and performance for a simple set of two sequences (sequence set I). In the following, we assess the network’s sequence prediction performance for a more complex sequence set (II) composed of five high-order sequences (see Task and training protocol), each consisting of five elements. This sequence set is comparable to the one used in [14], but contains a larger amount of overlap between sequences. The overall pattern of the learning dynamics resembles the one reported for sequence set I (Fig 9). The prediction error, the false-positive and false-negative rates as well as the sparsity measure vary more smoothly, and eventually converge at minimal levels after about 40 training episodes. To compare the spiking TM model with the original, non-spiking TM model, we repeat the experiment based on the simulation code provided in [14], see S1 Table. With our parameterization, the learning rates λ+ and λ− of the spiking model are smaller than those of the original model by a factor of about 10. As a consequence, learning sequence set II with the original model converges faster than with the spiking model (compare black and gray curves in Fig 9). The ratio in learning speeds, however, is not larger than about 2. Increasing the learning rates, i.e., the permanence increments, would speed up the learning process in the spiking model, but bears the risk that a large fraction of connections mature simultaneously. This would effectively overwrite the permanence heterogeneity which is essential to form context specific connectivity patterns (see Sequence learning and prediction). As a result, the network performance would decrease. The original model avoids this problem by limiting the number of potentiated synapses in each update step (see “Plasticity dynamics” in Network model).

Fig 9. Sequence prediction performance for sequence set II and comparison with original model.

Same figure arrangement, training and measurement protocol as in Fig 8. Data obtained during repetitive stimulation of the network with sequence set II (see Task and training protocol). Gray curves depict results obtained using the original (non-spiking) TM model from [14] with adapted parameters (see S1 Table). The dashed gray horizontal line in panel C depicts the target sparsity level ρ/(LnE).

In sequence sets I and II, the maximum sequence order is 2 and 3, respectively. For the two sequences {E, N, D, I, J} and {L, N, D, I, K} in sequence set II, for example, predicting element “J” after activation of “I” requires remembering the element “E”, which occurred three steps back in the past. The TM model can cope with sequences of much higher order. Each sequence element in a particular context activates a specific pattern, i.e., a specific subset of neurons. The number of such patterns that can be learned is determined by the size of each subpopulation and the sparsity [39]. In a sequence with repeating elements, such as {ABBBBBC}, the maximum order is limited by this number. Without repeating elements, the order could be arbitrarily high provided the number of subpopulations matches or exceeds the number of distinct characters. In S3 Fig, we demonstrate successful learning of two sequences {A, D, B, G, H, I, J, K, L, M, N, E}, {F, D, B, G, H, I, J, K, L, M, N, C} of order 10.

Dependence of prediction performance on the sequence speed

The reformulation of the original TM model in terms of continuous-time dynamics allows us to ask questions related to timing aspects. Here, we investigate the sequence processing speed by identifying the range of inter-stimulus intervals ΔT that permit a successful prediction performance (Fig 10). The timing of the external inputs affects the dynamics of the network in two respects. First, reliable predictions of sequence elements can only be made if the time interval ΔT between two consecutive stimulus presentations is such that the second input coincides with the somatic depolarization caused by the dAP triggered by the first stimulus. Second, the formation of sequence specific connections by means of the spike-timing-dependent structural plasticity dynamics depends on ΔT.

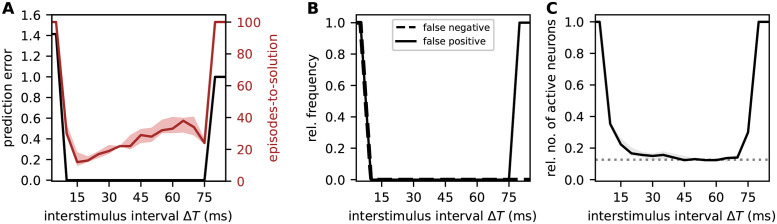

Fig 10. Effect of sequence speed on network performance.

Dependence of the sequence prediction error, the learning speed (episodes-to-solution; A), the false-positive and false-negative rates (B), and the number of active neurons relative to the subpopulation size (C) on the inter-stimulus interval ΔT after 100 training episodes. Curves and error bands indicate the median as well as the 5% and 95% percentiles across an ensemble of 5 different network realizations, respectively. Same task and network as in Fig 8.

If the external input does not coincide with the somatic dAP depolarization, i.e., if ΔT is too small or too large, the respective target population responds in a non-sparse, non-selective manner (mismatch signal; Fig 10C), and in turn, generates false positives (Fig 10B). For small ΔT, the external stimulus arrives before the dAP onset, i.e., before it is predicted. As a consequence, the false-negative rate is high. For large ΔT, the false-negative rate remains low as the network is still generating predictions (Fig 10B). The inter-stimulus interval ΔT in addition affects the formation of sequence specific connections due to the dependence of the plasticity dynamics on the timing of pre- and postsynaptic spikes, see Eqs (1) and (2). Larger ΔT results in smaller permanence increments, and thereby a slow-down of the learning process (red curve in Fig 10A).
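As a rough worked illustration of this slow-down, consider a single presynaptic spike followed by a postsynaptic spike after a lag of approximately ΔT. Assuming the exponentially decaying presynaptic trace described for Eq (2), and neglecting the dendritic delay dEE as well as residual trace contributions from earlier spikes (both simplifications are assumptions made here), the Hebbian contribution to a single permanence increment, denoted here by the shorthand $\Delta P_{ij}^{+}$, scales as

$$
\frac{\Delta P_{ij}^{+}}{P_\text{max}} \approx \lambda_+ \, e^{-\Delta T/\tau_+}
$$

Under these assumptions, lengthening the inter-stimulus interval by one potentiation time constant τ+ reduces the Hebbian increment per spike pair by a factor of e.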

Taken together, the model predicts a range of optimal inter-stimulus intervals ΔT (Fig 10A). For our choice of network parameters, this range spans intervals between 10 ms and 75 ms. The lower bound depends primarily on the synaptic time constant τEE, the spike transmission delay dEE, and the membrane time constant τm. The upper bound is mainly determined by the dAP plateau duration τdAP.

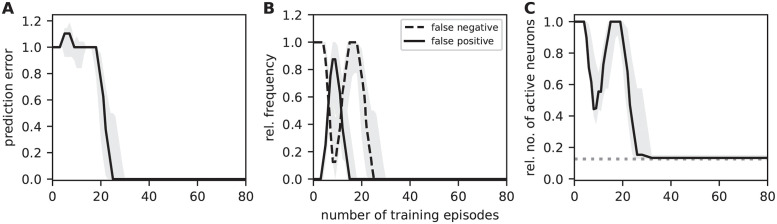

Sequence replay

So far, we studied the network in the predictive mode, where the network is driven by external inputs and generates predictions of upcoming sequence elements. Another essential component of sequence processing is sequence replay, i.e., the autonomous generation of sequences in response to a cue signal (see Task and training protocol). After successful learning, the network model presented in this study is easily configured into the replay mode by increasing the neuronal excitability, such that the somatic depolarization caused by a dAP alone makes the neuron fire a somatic spike. Here, this is implemented by lowering the somatic spike threshold θE of the excitatory neurons. In the biological system, this increase in excitability could, for example, be caused by the effect of neuromodulators [40, 41], additional excitatory inputs from other brain regions implementing a top-down control, e.g., attention [42, 43], or propagating waves during sleep [44, 45].

The presentation of the first sequence element activates dAPs in the subpopulation corresponding to the expected next element in a previously learned sequence. Due to the reduced firing threshold in the replay mode, the somatic depolarization caused by these dAPs is sufficient to trigger somatic spikes during the rising phase of this depolarization. These spikes, in turn, activate the subsequent element. This process repeats, such that the network autonomously reactivates all sequence elements in the correct order, with the same context specificity and sparsity level as in the predictive mode (see Fig 11A and 11B). The latency between the activation of subsequent sequence elements is determined by the spike transmission delay dEE, the synaptic time constant τEE, the membrane time constant τm,E, the synaptic weights JEE,ij, the dAP current plateau amplitude IdAP, and the somatic firing threshold θE. For sequences that can be successfully learned (see previous section), the time required for replaying the entire sequence is independent of the inter-stimulus interval ΔT employed during learning (Fig 11C).

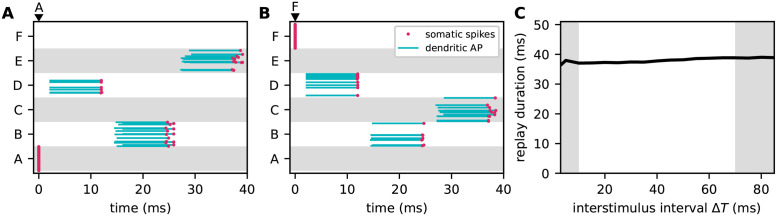

Fig 11. Sequence replay dynamics and speed.

Autonomous replay of the sequences {A, D, B, E} (A) and {F, D, B, C} (B), initiated by stimulating the subpopulations “A” and “F”, respectively. Red dots and blue lines mark somatic spikes and dAP plateaus, respectively, for a fraction of neurons (30%) within each subpopulation. During learning, the inter-stimulus interval ΔT is set to 40 ms. C) Dependence of the sequence replay duration on the inter-stimulus interval ΔT during learning. Replay duration is measured as the difference between the mean firing times of the populations representing the first and last elements in a given sequence. Gray areas mark regions with low prediction performance (see Dependence of prediction performance on the sequence speed). Error bands represent the mean ± standard deviation of the prediction error across 5 different network realizations. Same network and training set as in Fig 8.

As shown in the previous section, sequences cannot be learned if the inter-stimulus interval ΔT is too small or too large. For small ΔT, connections between subpopulations corresponding to subsequent elements are strongly potentiated by the Hebbian plasticity due to the consistent firing of pre- and postsynaptic populations during the learning process. The network responses are, however, non-sparse, as the winner-take-all mechanism cannot be invoked during the learning (Fig 10C). In the replay mode, sequences are therefore replayed in a non-sparse and non-context specific manner (left gray region in Fig 11C). Similarly, connections between subsequent populations are slowly potentiated for very large ΔT. With sufficiently long learning, sequences can still be replayed in the right order, but the activity is non-sparse and therefore not context specific (right gray region in Fig 11C).

Discussion

Summary

In this work, we reformulate the Temporal Memory (TM) model [14] in terms of biophysical principles and parameters. We replace the original discrete-time neuronal and synaptic dynamics with continuous-time models with biologically interpretable parameters such as membrane and synaptic time constants and synaptic weights. We further substitute the original plasticity algorithm with a more biologically plausible mechanism, relying on a form of Hebbian structural plasticity, homeostatic control, and sparse random connectivity. Moreover, our model implements a winner-take-all dynamics based on lateral inhibition that is compatible with the continuous-time neuron and synapse models. We show that the revised TM model supports successful learning and processing of high-order sequences with a performance similar to the one of the original model [14].

A new aspect that we investigated in the context of our work is sequence replay. After learning, the model is able to replay sequences in response to a cue signal. The duration of sequence replay is independent of the sequence speed during training, and determined by the intrinsic parameters of the network. In general, sequence replay is faster than the sequence presentation during learning, consistent with sequence compression and fast replay observed in hippocampus [46–48] and neocortex [6, 49].

Finally, we identified the range of sequence speeds that permit successful learning and prediction. Our model predicts an optimal range of processing speeds (inter-stimulus intervals) with lower and upper bounds constrained by neuronal and synaptic parameters (e.g., firing threshold, neuronal and synaptic time constants, coupling strengths, potentiation time constants). Within this range, the number of required training episodes is proportional to the inter-stimulus interval ΔT.

Relationship to other models

The model presented in this work constitutes a recurrent, randomly connected network of neurons with predefined stimulus preferences. The model learns sequences in an unsupervised manner using local learning rules. This is in essence similar to several other spiking neuronal network models for sequence learning [9–12, 50]. The new components employed in this work are dendritic action potentials (dAPs) and Hebbian structural plasticity. We use structural plasticity to stay as close as possible to the original model; Hebbian forms of structural plasticity are also known from the literature [21, 22, 25]. However, preliminary results show that classical (non-structural) spike-timing-dependent plasticity (STDP) can yield similar performance (see S1 Fig). Dendritic action potentials are instrumental for our model for two reasons. First, they effectively lower the threshold for coincidence detection and thereby permit a reliable and robust propagation of sparse activity [51, 52]. In essence, our model bears similarities to the classical synfire chain [53], one difference being that our mature network is not a simple feedforward network but has an abundance of recurrent connections. As shown in [54], a stable propagation of synchronous activity requires a minimal number of neurons in each synfire group. Without active dendrites, this minimal number is in the range of ∼100 for plausible single-cell and synaptic parameters. In our (and in the original TM) model, coincidence detection happens in the dendrites. The number of presynaptic spikes needed to trigger a dAP is small, of the order of 10 [55–57]. This helps to reduce redundancy (only a small number of neurons needs to become active) and to increase the capacity of the network (the number of different patterns that can be learned increases with pattern sparsity; [39]).
Second, dAPs equip neurons with a third type of state (next to the quiescent and the firing state): the predictive state, i.e., a long lasting (∼ 50–200 ms) strong depolarization of the soma. Due to the prolonged depolarization of the soma, the inter-stimulus interval can be much larger than the synaptic time constants and delays. An additional benefit of dAPs, which is not exploited in the current version of our model, is that they equip individual neurons with more possible states if they comprise more than one dendritic branch. Each branch constitutes an independent pattern detector. The response of the soma may depend on the collective predictions in different dendritic branches. A single neuron could hence perform the types of computations that are usually assigned to multilayer perceptrons, i.e., small networks [58, 59].
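The two roles of dAPs described above can be illustrated with a minimal sketch. It is not the neuron model used in this work: the function names, the 5 ms coincidence window, and the 60 ms plateau duration are assumptions chosen for illustration (the plateau duration lies within the ∼50–200 ms range quoted above, and the threshold of 10 spikes follows the order of magnitude given in the text).

```python
def dap_onset(spike_times_ms, n_threshold=10, window_ms=5.0):
    """Return the time at which a dAP would be triggered, or None.

    A dAP is assumed to start as soon as n_threshold presynaptic spikes
    fall within a coincidence window of window_ms (hypothetical values).
    """
    times = sorted(spike_times_ms)
    for i in range(len(times) - n_threshold + 1):
        last = times[i + n_threshold - 1]
        if last - times[i] <= window_ms:
            return last
    return None


def is_predictive(onset_ms, stimulus_ms, tau_dap_ms=60.0):
    """True if the stimulus arrives while the dAP plateau is still active."""
    if onset_ms is None:
        return False
    return onset_ms <= stimulus_ms <= onset_ms + tau_dap_ms


# A synchronous volley of 10 spikes triggers a dAP; 5 spikes do not.
onset = dap_onset([1.0] * 10)
no_onset = dap_onset([1.0] * 5)
```

In this sketch, a stimulus arriving 40 ms after the volley finds the neuron in the predictive state, whereas a stimulus arriving 200 ms later does not. This mirrors the statement that the inter-stimulus interval can exceed the synaptic time constants but is bounded by the plateau duration.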

Similar to a large class of other models in the literature, the TM network constitutes a recurrent network in the sense that the connectivity before and after learning forms loops at the subpopulation level. Recurrence in the immature connectivity permits the learning of arbitrary sequences without prior knowledge of the input data. In particular, recurrent connections enable the learning of sequences with repeating elements (such as in {A, B, B, C} or {A, B, C, B}). Further, bidirectional connections between subpopulations are needed to learn sequences where pairs of elements occur in different orders (such as in {A, B, C}, {D, C, B}). Apart from providing the capability to learn sequences with all possible combinations of sequence elements, recurrent connections play no further functional role in the current version of the TM model. They may, however, become more important for future versions of the model enabling the learning of sequence timing and duration (see below).
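The connectivity requirements stated above can be made explicit with a small sketch that lists the subpopulation-level connections a given set of sequences requires. The helper names and the representation of sequences as lists of element labels are assumptions introduced for illustration only.

```python
def required_connections(sequences):
    """Collect directed (pre, post) pairs of consecutive sequence elements."""
    edges = set()
    for seq in sequences:
        edges.update(zip(seq, seq[1:]))
    return edges


def self_loops(edges):
    """Connections from a subpopulation onto itself (repeated elements)."""
    return {(pre, post) for pre, post in edges if pre == post}


def bidirectional_pairs(edges):
    """Unordered pairs of subpopulations connected in both directions."""
    return {
        frozenset((pre, post))
        for pre, post in edges
        if pre != post and (post, pre) in edges
    }


repeat_edges = required_connections([["A", "B", "B", "C"]])
swap_edges = required_connections([["A", "B", "C"], ["D", "C", "B"]])
```

For {A, B, B, C}, the required connections include a loop from B onto itself; for the pair {A, B, C}, {D, C, B}, they include both B→C and C→B. A strictly feedforward immature connectivity could provide neither.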

Most of the existing models have been developed to replay learned sequences in response to a cue signal. The TM model can perform this type of pattern completion, too. In addition, it can act as a quiet, sparsely active observer of the world that becomes highly active only in the case of unforeseen, non-anticipated events. In this work, we did not directly analyze the network’s mismatch detection performance. However, this could be easily achieved by equipping each population with a “mismatch” neuron that fires if a certain fraction of neurons in the population fires (threshold detectors). In our model, predicted stimuli result in sparse firing due to inhibitory feedback (WTA). For unpredicted stimuli, this feedback is not effective, resulting in non-sparse firing indicating a mismatch. In [60], a similar mechanism is employed to generate mismatch signals for novel stimuli. In that study, the strength of the inhibitory feedback needs to be learned by means of inhibitory synaptic plasticity. In our model, in contrast, the WTA mechanism is controlled by the predictions (dAPs) and implemented by static inhibitory connections. Furthermore, the model in [60] can learn a set of elements, but not the order of these elements in the sequence.
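The threshold-detector idea mentioned above could be sketched as follows. This is not part of the model presented here: the function name, the population size of 150 neurons, and the threshold fraction of 0.5 are hypothetical choices for illustration.

```python
def mismatch_signal(spiked, threshold_fraction=0.5):
    """Return True if the active fraction of a population reaches the threshold.

    `spiked` holds one boolean per neuron of a subpopulation, indicating
    whether that neuron fired in response to the current stimulus.
    The threshold fraction is a hypothetical value.
    """
    if not spiked:
        raise ValueError("population must contain at least one neuron")
    active_fraction = sum(spiked) / len(spiked)
    return active_fraction >= threshold_fraction


# Hypothetical population of 150 neurons.
predicted_response = [True] * 20 + [False] * 130  # sparse firing (WTA effective)
unpredicted_response = [True] * 150               # non-sparse firing
```

With these assumed numbers, the sparse response to a predicted stimulus stays below the threshold, while the non-sparse response to an unpredicted stimulus exceeds it and would be flagged as a mismatch.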

In contrast to other sequence learning models [9, 11], our model is not able to learn an element specific timing and duration of sequence elements. The model in [9] relies on a clock network, which activates sequence elements in the correct order and with the correct timing. With this architecture, different sequences with different timings would require separate clock networks. Our model learns both sequence contents and order for a number of sequences without any auxiliary network. In an extension of our model, the timing of sequence elements could be learned by additional plastic recurrent connections within each subpopulation. The model in [11] can learn and recall higher-order sequences with limited history by means of an additional reservoir network with sparse readout. The TM model presents a more efficient way of learning and encoding the context in high-order sequences, without prior assignment of context specificity to individual neuron populations [9], and without additional network components (such as reservoir networks in [11]).

An important sequence processing component that is not addressed in our work is the capability of identifying recurring sequences within a long stream of inputs. In the literature, this process is referred to as chunking, and constitutes a form of feature segmentation [3]. Sequence chunking has been illustrated, for example, in [61, 62]. Similar to our model, the network model in [61, 62] is composed of neurons with dendritic and somatic compartments, with the dendritic activity signaling a prediction of somatic spiking. Recurrent connections in their model improve the context specificity of neuronal responses, and thereby permit a context dependent feature segmentation. The model can learn high order sequences, but the history is limited. Although not explicitly tested here, our model is likely to be able to perform chunking if sequences are presented randomly across trials and without breaks. If the order of sequences is not systematic across trials, connections between neurons representing different sequences are not strengthened by spike-timing-dependent potentiation. Consecutive sequences are therefore not merged and remain distinct.

An earlier spiking neural network version of the HTM model has already been devised in [63]. It constitutes a proof-of-concept study demonstrating that the HTM model can be ported to an analog-digital neuromorphic hardware system. It is restricted to small simplistic sequences and does not address the biological plausibility of the TM model. In particular, it does not offer a solution to the question of how the model can perform online learning by known biological ingredients. Our study delivers a solution for this based on local plasticity rules and permits a direct implementation on a neuromorphic hardware system.

Limitations and outlook

The model developed in this study serves as a proof of concept demonstrating that the TM algorithm proposed in [14] can be implemented using biological ingredients. While it is still fairly simplistic, it may provide the basis for a number of future extensions.

Our results on the sequence processing speed revealed that the model presented here can process fast sequences with inter-stimulus intervals ΔT up to ∼75 ms. This range of processing speeds is relevant in many behavioral contexts such as motor generation, vision (saccades), music perception and generation, language, and many others [64]. However, slow sequences with inter-stimulus intervals beyond several hundreds of milliseconds cannot be learned by this model with biologically plausible parameters. This is problematic as behavioral time scales are often larger [64, 65]. By increasing the duration τdAP of the dAP plateau, the upper bound for ΔT could be extended to 500 ms, and maybe beyond [66]. However, for such long intervals, the synaptic potentiation would be very slow, unless the time constant τ+ of the structural STDP is increased and the depression rate λ− is adapted accordingly. Furthermore, while our model explains the fast replay observed in the hippocampus and cortex, it is not able to learn an element specific timing and duration of sequence elements [5, 67, 68]. This could be overcome by equipping the model with a working memory mechanism, which maintains the activity of the subpopulations for behaviorally relevant time scales [9, 69].

In the current version of the model, the number of subpopulations, the number of neurons within each subpopulation, the number of dendritic branches per neuron, as well as the number of synapses per neuron are far from realistic [14]. The number of sequences that can be successfully learned in this network is hence rather small. In addition, the current work is focusing on sequence processing at a single abstraction level, not accounting for a hierarchical network and task structure with both bottom-up and top-down projections. A further simplification in this work is that the lateral inhibition within a subpopulation is mediated by a single interneuron with unrealistically strong and fast connections to and from the pool of excitatory neurons. In future versions of this model, this interneuron could be replaced by a recurrently connected network of inhibitory neurons, thereby permitting more realistic weights, and simultaneously speeding up the interaction between inhibitory and excitatory cells by virtue of the fast-tracking property of such networks [70]. Similarly, the external inputs in our model are represented by single spikes, which are passed to the corresponding target population by a strong connection, and thereby lead to an immediate synchronous spike response. Replacing each external input by a population of synchronously firing neurons would be a more realistic scenario without affecting the model dynamics. The external neurons could even fire in a non-synchronous, rate modulated fashion, provided the spike responses of the target populations remain nearly synchronous and can coincide with the dAP-triggered somatic depolarization (see S6 Fig). 
The current version of the model relies on a nearly synchronous immediate response to ensure that a small set of (∼ 20) active neurons can reliably trigger postsynaptic dAPs, and that the predictive neurons (those depolarized by the dAPs) consistently fire earlier than the non-predictive neurons, such that they can be selected by the WTA dynamics. Non-synchronous responses could possibly lead to a reliable generation of dAPs in postsynaptic neurons, but would require large active neuron populations (loss of sparsity) or unrealistically strong synaptic weights. The temporal separation between predictive and non-predictive neurons becomes harder to achieve for non-synchronous spiking. In future versions of the model, it could potentially be achieved by increasing the dAP plateau potential, and simultaneously equipping the excitatory neurons with a larger membrane time constant, such that non-depolarized neurons need substantially longer to reach the spike threshold. Increasing the dAP plateau potential, however, makes the model more sensitive to background noise (see below). Note that, in our model, only the immediate initial spike response needs to be synchronous. After successfully triggering the WTA circuit, the winning neurons could, in principle, continue firing in an asynchronous manner (for example, due to the working-memory dynamics mentioned above). Similarly, long lasting or tonic external inputs could lead to repetitive firing of the neurons in the TM network. As long as these repetitive responses remain nearly synchronous, the network performance is likely to be preserved.