Abstract

There are few data on the quality of cancer treatment information available on social media. Here, we quantify the accuracy of cancer treatment information on social media and its potential for harm. Two cancer experts reviewed 50 of the most popular social media articles on each of the 4 most common cancers. The proportion of misinformation and potential for harm were reported for all 200 articles, and their association with the number of social media engagements was assessed using a 2-sample Wilcoxon rank-sum test. All statistical tests were 2-sided. Of 200 total articles, 32.5% (n = 65) contained misinformation and 30.5% (n = 61) contained harmful information. Among articles containing misinformation, 76.9% (50 of 65) contained harmful information. The median number of engagements for articles with misinformation was greater than that for factual articles (median [interquartile range] = 2300 [1200-4700] vs 1600 [819-4700], P = .05). The median number of engagements for articles with harmful information was statistically significantly greater than that for safe articles (median [interquartile range] = 2300 [1400-4700] vs 1500 [810-4700], P = .007).

The internet is a leading source of health misinformation (1). This is particularly true for social media, where false information spreads faster and more broadly than fact-checked information (2). Health misinformation threatens public health and must be addressed urgently (3-6) because it hinders delivery of evidence-based medicine, negatively affects patient–physician relationships, and can result in increased risk of death (7-10). Because patients use social media for health information, addressing misinformation has become a critical public health goal (11). This is especially true in cancer care, where use of unproven therapies is associated with decreased survival (10). Here, we quantify the accuracy of cancer treatment information on social media, its potential for harm, and how engagement differs by factualness and harm.

Using web-scraping software (Buzzsumo.com) (12), we searched for the most popular English language articles containing relevant keywords for the 4 most common cancers (breast, prostate, colorectal, and lung). These articles include any news article or blog posted on Facebook, Reddit, Twitter, or Pinterest between January 2018 and December 2019. Each article had a unique link (URL) that allowed for tabulation of engagements, defined as upvotes (Twitter and Pinterest), comments (Reddit and Facebook), and reactions and shares (Facebook). Thus “total engagement” represents aggregate engagement from multiple platforms. The top 50 articles from each cancer type were collected, representing 200 unique articles. The vast majority of engagements were Facebook engagements, which were therefore also analyzed separately.
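As a rough illustration of how "total engagement" aggregates counts across platforms for each unique URL, the following minimal sketch sums hypothetical per-platform counts and ranks articles by the total. The record layout, URLs, and function names are assumptions made for this example; they are not the BuzzSumo export format or the authors' code.

```python
from collections import defaultdict

# Hypothetical per-platform engagement records: (url, platform, count).
# These values are invented for illustration and are not study data.
records = [
    ("https://example.org/a", "facebook", 1200),
    ("https://example.org/a", "twitter", 40),
    ("https://example.org/b", "facebook", 300),
    ("https://example.org/b", "reddit", 15),
    ("https://example.org/c", "pinterest", 5),
]


def total_engagements(rows):
    """Sum engagement counts across all platforms for each unique URL."""
    totals = defaultdict(int)
    for url, _platform, count in rows:
        totals[url] += count
    return dict(totals)


def top_articles(rows, n=50):
    """Return the n URLs with the highest total engagement, highest first."""
    totals = total_engagements(rows)
    return sorted(totals, key=totals.get, reverse=True)[:n]


totals = total_engagements(records)
ranked = top_articles(records, n=2)
```

Keying the totals on the URL mirrors the description above, in which each article's unique link is what allows engagements from different platforms to be tabulated together.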

Two National Comprehensive Cancer Network panel members were selected as content experts from each site (breast: M.M. and J.W.; prostate: T.D. and D.S.; colorectal: S.C. and J.H.; and lung: J.B. and W.A.). Content experts reviewed articles’ primary medical claims and completed 4-question assessments adapted from assessments of factuality and social media credibility (13,14) through an iterative process with L.S., J.T., and A.F. to account for cancer-specific claims (Supplementary Figure 1, available online). Expert reviewers were not compensated.

The experts used two 5-point Likert scales per article (Supplementary Figure 1, available online), and the 2 reviewers’ ratings were summed per article within each domain. Misinformation was defined as summary scores greater than or equal to 6, representing “mixture both true and false,” “mostly false,” and “false” information, and harmful information was defined as any rating by at least 1 reviewer of “probably harmful” or “certainly harmful” information. Inter-rater agreement was evaluated using the Cohen kappa (ĸ) coefficient. The proportion of articles classified as misinformation and harmful information was reported, followed by descriptions of why articles were so rated, with multiple selections allowed.
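The two classification rules described above can be sketched as follows. The numeric coding of the Likert scales is an assumption made for this example (the scales themselves are in Supplementary Figure 1 and are not reproduced in the text): factuality is assumed to run from 1 = "true" to 5 = "false", and harm is assumed to use 4 = "probably harmful" and 5 = "certainly harmful". The function names are hypothetical.

```python
# Cutoff stated in the text: summed factuality score of both reviewers >= 6.
MISINFORMATION_CUTOFF = 6

# ASSUMPTION: numeric codes for "probably harmful" and "certainly harmful".
HARMFUL_RATINGS = {4, 5}


def is_misinformation(rating_a, rating_b):
    """Apply the summed-score rule to the two reviewers' factuality ratings."""
    return rating_a + rating_b >= MISINFORMATION_CUTOFF


def is_harmful(rating_a, rating_b):
    """Flag an article if at least one reviewer chose a harmful rating."""
    return rating_a in HARMFUL_RATINGS or rating_b in HARMFUL_RATINGS


# Two "mixture" ratings (3 + 3) reach the cutoff; a "mostly true" plus a
# "mixture" rating (2 + 3) does not, under the assumed coding.
example_flags = (is_misinformation(3, 3), is_misinformation(2, 3))
```

Note the asymmetry between the rules: the misinformation rule pools both reviewers' scores, whereas the harm rule is triggered by a single reviewer.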

The association of total and Facebook engagements with misinformation and harm was assessed using a 2-sample Wilcoxon rank-sum (Mann-Whitney) test. Statistical analyses were performed using Stata, version 16.1 (StataCorp). All statistical tests were 2-sided, and P less than .05 was considered statistically significant.
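For readers unfamiliar with the test, the sketch below implements a 2-sided Wilcoxon rank-sum test using the normal approximation with a tie-corrected variance and no continuity correction. It is a standard-library illustration only: it is not the Stata routine used in the study and may differ slightly from Stata's output, and the engagement counts are invented.

```python
import math


def midranks(values):
    """Assign 1-based ranks, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    return ranks


def rank_sum_test(x, y):
    """Return (W, z, two-sided P) for samples x and y (normal approximation)."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    ranks = midranks(list(x) + list(y))
    w = sum(ranks[:n1])                  # rank sum of the first sample
    mean_w = n1 * (n + 1) / 2            # expected rank sum under H0
    mean_rank = (n + 1) / 2
    # Tie-corrected variance of W under the null hypothesis.
    var_w = n1 * n2 / (n * (n - 1)) * sum((r - mean_rank) ** 2 for r in ranks)
    if var_w == 0:
        raise ValueError("all observations are identical; test is undefined")
    z = (w - mean_w) / math.sqrt(var_w)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return w, z, p


# Hypothetical engagement counts, for illustration only (not study data).
factual = [819, 1600, 950, 4700, 1200]
misinfo = [2300, 1250, 4600, 5100, 3000]
w, z, p = rank_sum_test(misinfo, factual)
```

Because the test compares ranks rather than raw values, it suits engagement counts, which are heavily right-skewed.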

Of the 200 articles, 37.5% (n = 75), 41.5% (n = 83), 1.0% (n = 2), 3.0% (n = 6), and 17.0% (n = 34) were from traditional news (online versions of print and/or broadcast media), nontraditional news (digital only), personal blog, crowd-funding site, and medical journals, respectively. Following expert review, 32.5% (n = 65; ĸ = 0.63, 95% confidence interval [CI] = 0.50 to 0.77) contained misinformation, most commonly described as misleading (title not supported by text, statistics/data that did not support the conclusion [28.8%, 111 of 386]), strength of the evidence mischaracterized (weak evidence portrayed as strong or vice versa [27.7%, 107 of 386]), and unproven therapies (not studied, insufficient evidence [26.7%, 103 of 386]).
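The ĸ statistic reported for reviewer agreement can be illustrated with a minimal Cohen kappa calculation. The text does not state whether agreement was computed on the raw 5-point ratings or on dichotomized ratings, so this sketch simply assumes two reviewers' categorical labels for the same articles; the labels and the function name are hypothetical, and the confidence interval is not computed here.

```python
def cohen_kappa(labels_a, labels_b):
    """Cohen kappa for two raters labelling the same items (unweighted)."""
    labels_a, labels_b = list(labels_a), list(labels_b)
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both raters must label the same non-empty item set")
    n = len(labels_a)
    categories = set(labels_a) | set(labels_b)
    # Observed agreement: fraction of items given the same label by both.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of the raters' marginal proportions, summed.
    expected = sum(
        (labels_a.count(c) / n) * (labels_b.count(c) / n) for c in categories
    )
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (observed - expected) / (1 - expected)


# Hypothetical binary labels (1 = misinformation, 0 = factual), not study data.
reviewer_1 = [1, 1, 0, 0]
reviewer_2 = [1, 0, 0, 0]
kappa = cohen_kappa(reviewer_1, reviewer_2)
```

Kappa discounts the agreement expected by chance alone, which is why it is lower than the raw proportion of matching ratings (0.75 in this toy example).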

In total, 30.5% (61 of 200; ĸ = 0.66, 95% CI = 0.52 to 0.80) of articles contained harmful information, described as harmful inaction (could lead to delay or not seeking medical attention for treatable or curable condition [31.0%, 111 of 358]), economic harm (out-of-pocket financial costs associated with treatment or travel [27.7%, 99 of 358]), harmful action (potentially toxic effects of the suggested test or treatment [17.0%, 61 of 358]), or harmful interactions (known or unknown medical interactions with curative therapies [16.2%, 58 of 358]). Select qualitative examples of primary medical claims made for each assessment question are reported in Supplementary Table 1 (available online). Among articles containing misinformation, 76.9% (50 of 65) contained harmful information.
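As a quick arithmetic check, the percentages reported in the two preceding paragraphs can be recomputed from the stated counts. The dictionary keys and the helper name are labels chosen for this example; the counts are taken directly from the text.

```python
# (numerator, denominator) pairs as reported in the text.
reported_counts = {
    "misinformation": (65, 200),
    "harmful": (61, 200),
    "harmful among misinformation": (50, 65),
    "misleading": (111, 386),
    "evidence mischaracterized": (107, 386),
    "unproven therapies": (103, 386),
    "harmful inaction": (111, 358),
    "economic harm": (99, 358),
    "harmful action": (61, 358),
    "harmful interactions": (58, 358),
}


def percent(numerator, denominator):
    """Percentage rounded to one decimal place, as reported in the article."""
    return round(100 * numerator / denominator, 1)


percentages = {name: percent(k, n) for name, (k, n) in reported_counts.items()}
```

The denominators 386 and 358 exceed the number of articles because reviewers could select multiple reasons per article.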

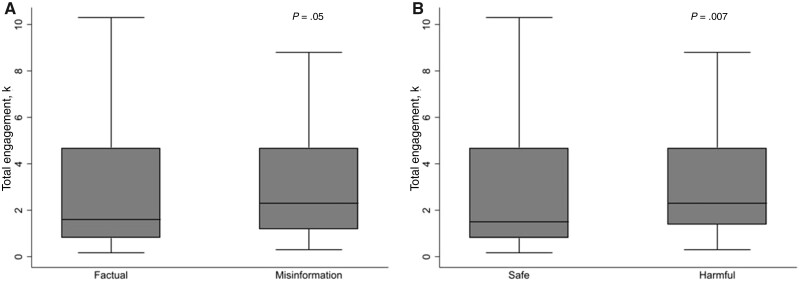

The median number of engagements was 1900 (interquartile range [IQR] = 941-4700), and 96.7% were Facebook engagements. The median engagement for articles with misinformation was greater than that for factual articles (median [IQR] = 2300 [1200-4700] vs 1600 [819-4700], P = .05) (Figure 1, A; Table 1). The median engagement for articles with harmful information was statistically significantly greater than that for safe articles (median [IQR] = 2300 [1400-4700] vs 1500 [810-4700], P = .007) (Figure 1, B; Table 1). These findings were consistent for Facebook engagements (Table 1). Reddit and Twitter engagements were statistically significantly associated with misinformation and harm (all P < .05). However, Pinterest engagements were associated with neither misinformation nor harm (all P > .63).
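The median and IQR summaries used above can be computed as in the following sketch. It uses Python's `statistics.quantiles` with its default "exclusive" method; statistical packages define quartiles in slightly different ways, so this is not guaranteed to reproduce the values Stata reports. The engagement counts and the function name are hypothetical.

```python
import statistics


def median_iqr(values):
    """Return (median, first quartile, third quartile) for a list of counts."""
    q1, _q2, q3 = statistics.quantiles(values, n=4)  # default: "exclusive"
    return statistics.median(values), q1, q3


# Hypothetical engagement counts, for illustration only (not study data).
engagements = [810, 1400, 1500, 2300, 4700]
median, q1, q3 = median_iqr(engagements)
```

Reporting the median with the IQR rather than the mean with the standard deviation is the usual choice for skewed counts such as these, where a few viral articles would otherwise dominate the summary.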

Figure 1.

Box plots showing the association of total online engagements with cancer articles defined as A) factual and misinformation, and B) safe and harmful. P values were calculated using a 2-sided 2-sample Wilcoxon rank-sum (Mann-Whitney) test. Medians are shown within boxes that represent the interquartile ranges, and the error bars represent the ranges with outside values excluded.

Table 1.

The association between cancer article misinformation, harm, and total engagements and Facebook engagements

| Analysis and categories | Articles, No. (%) | Total engagements, median No. (IQR) | Facebook engagements, median No. (IQR) |
|---|---|---|---|
| Total | 200 (100) | 1900 (941-4700) | 1800 (903-4650) |
| Misinformation analysis | | | |
| Factual | 135 (67.5) | 1600 (819-4700) | 1500 (769-4700) |
| Misinformation | 65 (32.5) | 2300 (1200-4700) | 2300 (1200-4600) |
| P^a | | .05 | .03 |
| Harm analysis | | | |
| Safe | 139 (69.5) | 1500 (810-4700) | 1500 (746-4700) |
| Harmful | 61 (30.5) | 2300 (1400-4700) | 2300 (1400-4600) |
| P^a | | .007 | .005 |

^a Two-sided 2-sample Wilcoxon rank-sum (Mann-Whitney) test. Expert reviewers demonstrated substantial agreement on presence of misinformation (ĸ = 0.63; 95% confidence interval = 0.50 to 0.77) and harmful information (ĸ = 0.66; 95% confidence interval = 0.52 to 0.80). IQR = interquartile range.

Between 2018 and 2019, nearly one-third of popular social media cancer articles contained misinformation and 76.9% of these contained harmful information. These data show that cancer information on social media is often inconsistent with expert opinion. This leaves patients in the confusing and uncomfortable position of determining the veracity of online information themselves or by talking to their physician. Most concerning, among the most popular articles on Facebook, articles containing misinformation and harmful information received statistically significantly more online engagement. This could result in a perpetuation of harmful misinformation, particularly within information silos curated for individuals susceptible to this influence.

Limitations of this study are that we included only the most popular English language cancer articles. Furthermore, although BuzzSumo data are obtained directly from social media platforms’ Application Programming Interface (12), there is a small possibility that this dataset is incomplete or that engagements do not match those recorded internally by the platforms. The data lack important qualitative information, but this was determined to be beyond the scope of this report. Lastly, reviewer bias towards conventional cancer treatments is possible; however, questions were structured to avoid stigmatization of nontraditional cancer treatments.

Collectively, these data show that one-third of popular cancer articles on social media from 2018 and 2019 contained misinformation, and the majority of these contained the potential for harm. Research on patient discriminatory ability, identification of populations at risk of misinformation adoption, and predictors of belief in online cancer misinformation is underway. Further research is needed to address who is engaging with cancer misinformation; its impact on scientific belief, trust, and decision making; and the role of physician–patient communication in correcting misinformation. These findings could help lay the groundwork for future patient-specific tools and behavioral interventions to counter online cancer misinformation.

Funding

This study was funded, in part, by the Huntsman Cancer Institute. The effort of Briony Swire-Thompson, PhD, was funded by an NIH Pathway to Independence Award.

Notes

Role of the funder: The funders did not participate in the design of the study; the collection, analysis, and interpretation of the data; the writing of the manuscript; or the decision to submit the manuscript for publication.

Disclosures: Dr Johnson and Dr Parsons had full access to all the data in the study and take responsibility for the integrity of the data and the accuracy of the data analysis. Skyler B. Johnson, MD, has no financial conflicts of interest. Jessica Bauman, MD, is on the advisory boards of Pfizer and Kura. Stacey Cohen, MD, is on the advisory boards of Kallyope, Acrotech, and Natera and receives grant money from NIH P30-CA015704-44 and through institutional research funds including Boston Biomedical/Sumitomo Dainippon Pharma Oncology, Isofol, and Polaris. Tanya Dorff, MD, is on the advisory boards of Abbvie, Advanced Accelerator Applications, Bayer, BMS, Exelixis, Janssen, and Seattle Genetics. Joleen Hubbard, MD, is on the advisory boards of Bayer and Taiho and receives research funding to her institution from Merck, Boston Biomedical, Treos Bio, Taiho, Senhwa Pharmaceuticals, Bayer, Incyte, TriOncology, Seattle Genetics, and Hutchinson MediPharma. Daniel Spratt, MD, receives personal fees from Janssen, Bayer, AstraZeneca, and Blue Earth, and funding from Janssen. All other researchers reported no financial conflicts of interest.

Author contributions: SBJ, MP, BST, LDS, JT, and AF conceptualized the study and methodology. JT and AF provided expertise, feedback, and supervision. SBJ and MP verified research outputs, conducted formal analysis, and maintained the research data. SBJ, TD, MSM, JHW, SAC, WA, JB, JH, and DES conducted research and investigations. Writing of the original draft was conducted by SBJ, MP, CLB, BST, TO, LDS, JT, and AF, and review and editing was conducted by SBJ, MP, TD, MSM, JHW, SAC, WA, JB, JH, DES, CLB, BST, TO, LDS, JT, and AF.

Data Availability

The data underlying this article are available online and the datasets were derived from sources in the public domain: buzzsumo.com.

Contributor Information

Skyler B Johnson, Department of Radiation Oncology, University of Utah School of Medicine, Salt Lake City, UT, USA; Cancer Control and Population Sciences, Huntsman Cancer Institute, Salt Lake City, UT, USA.

Matthew Parsons, Department of Radiation Oncology, University of Utah School of Medicine, Salt Lake City, UT, USA.

Tanya Dorff, Department of Medical Oncology and Developmental Therapeutics, City of Hope, Duarte, CA, USA; Department of Medicine, University of Southern California (USC) Keck School of Medicine and Norris Comprehensive Cancer Center (NCCC), Los Angeles, CA, USA.

Meena S Moran, Department of Therapeutic Radiology, Yale School of Medicine, Yale University, New Haven, CT, USA.

John H Ward, Oncology Division, Department of Internal Medicine, University of Utah School of Medicine, Salt Lake City, UT, USA.

Stacey A Cohen, Division of Oncology, University of Washington, Seattle, WA, USA; Clinical Research Division, Fred Hutchinson Cancer Research Center, Seattle, WA, USA.

Wallace Akerley, Huntsman Cancer Institute, University of Utah, Salt Lake City, UT, USA.

Jessica Bauman, Department of Hematology/Oncology, Fox Chase Cancer Center, Philadelphia, PA, USA.

Joleen Hubbard, Department of Medical Oncology, Mayo Clinic, Rochester, MN, USA.

Daniel E Spratt, Department of Radiation Oncology, University Hospitals, Case Western Reserve University, Cleveland, OH, USA.

Carma L Bylund, Division of Hematology and Oncology, College of Medicine, University of Florida, Gainesville, FL, USA; Department of Public Relations, College of Journalism and Communications, University of Florida, Gainesville, FL, USA.

Briony Swire-Thompson, Network Science Institute, Northeastern University, Boston, MA, USA; Institute for Quantitative Social Science, Harvard University, Cambridge, MA, USA.

Tracy Onega, Department of Population Sciences, University of Utah, Salt Lake City, UT, USA.

Laura D Scherer, Department of Medicine, Division of Cardiology, University of Colorado, Denver, CO, USA; VA Denver Center of Innovation, Denver, CO, USA.

Jonathan Tward, Department of Radiation Oncology, University of Utah School of Medicine, Salt Lake City, UT, USA.

Angela Fagerlin, Department of Population Sciences, University of Utah, Salt Lake City, UT, USA; VA Salt Lake City Health Care System, Salt Lake City, UT, USA.

References

- 1. Zhang Y, Sun Y, Xie B. Quality of health information for consumers on the web: a systematic review of indicators, criteria, tools, and evaluation results. J Assn Inf Sci Tec. 2015;66(10):2071–2084.

- 2. Vosoughi S, Roy D, Aral S. The spread of true and false news online. Science. 2018;359(6380):1146–1151.

- 3. Chou W-YS, Oh A, Klein WM. Addressing health-related misinformation on social media. JAMA. 2018;320(23):2417–2418.

- 4. Armstrong PW, Naylor CD. Counteracting health misinformation: a role for medical journals? JAMA. 2019;321(19):1863–1864.

- 5. Merchant RM, Asch DA. Protecting the value of medical science in the age of social media and “fake news”. JAMA. 2018;320(23):2415–2416.

- 6. Swire-Thompson B, Lazer D. Public health and online misinformation: challenges and recommendations. Annu Rev Public Health. 2020;41:433–451.

- 7. Hill JA, Agewall S, Baranchuk A, et al. Medical misinformation: vet the message! J Am Heart Assoc. 2019;8(3):e011838.

- 8. Bauchner H. Trust in health care. JAMA. 2019;321(6):547.

- 9. Johnson SB, Park HS, Gross CP, et al. Complementary medicine, refusal of conventional cancer therapy, and survival among patients with curable cancers. JAMA Oncol. 2018;4(10):1375–1381.

- 10. Johnson SB, Park HS, Gross CP, et al. Use of alternative medicine for cancer and its impact on survival. J Natl Cancer Inst. 2018;110(1):121–124.

- 11. Chou W-YS, Gaysynsky A. A prologue to the special issue: health misinformation on social media. Am J Public Health. 2020;110(S3):S270–S272.

- 12. Allcott H, Gentzkow M, Yu C. Trends in the diffusion of misinformation on social media. Res Polit. 2019;6(2):205316801984855.

- 13. Saurí R, Pustejovsky J. FactBank: a corpus annotated with event factuality. Lang Res Eval. 2009;43(3):227–268.

- 14. Mitra T, Gilbert E. Credbank: a large-scale social media corpus with associated credibility annotations. In: Ninth international AAAI conference on web and social media; 2015.
