Summary

Recently proposed deep multilayer perceptron (MLP) models have stirred up considerable interest in the vision community. Historically, the availability of larger datasets combined with increased computing capacity has led to paradigm shifts. This review discusses in detail whether MLPs can become a new paradigm for computer vision. We compare the intrinsic connections and differences between convolution, the self-attention mechanism, and the token-mixing MLP. We present the advantages and limitations of the token-mixing MLP, followed by a careful analysis of recent MLP-like variants, from module design to network architecture, and their applications. In the graphics processing unit (GPU) era, locally and globally weighted summations, represented by convolution, the self-attention mechanism, and MLPs, are the current mainstreams. We suggest that further development of the paradigm be considered alongside next-generation computing devices.

Keywords: computer vision, neural network, deep MLP, paradigm shift

The bigger picture

The last decade has been called the third spring of deep learning, a period of extraordinary progress in both theory and applications. This progress has attracted enthusiasm from academia and investment from society as a whole. During this period, networks have grown tremendously in scale, and new paradigms have emerged constantly. People wonder how long this trend will continue and what the next network will be.

We point out that existing paradigms blossom in a riot of color on a tree rooted in weighted summation with GPU computing. In terms of computation and power, applications of these paradigms remain promising, but simply scaling networks with more GPUs is not sustainable. We recognize that the brilliance of this generation of networks rests on the switch of computing hardware from CPU to GPU. Similarly, we expect the next paradigm to be brought about by new computing hardware based on physical systems, paired with a network that does not rely on weighted summation, such as the Boltzmann machine.

In the past decade, deep learning has boomed with three mainstream paradigms: convolution, self-attention, and fully connected layers. We compare these paradigms, comprehensively review MLPs and their vision applications, and conclude that models within different paradigms achieve competitive performance. We point out that, whatever the paradigm, all deep architectures are weighted-summation networks computed on GPUs. Their applications remain promising, but we expect the next paradigm to go beyond weighted summation and to arrive with the next generation of computing devices.

Introduction

In computer vision, the ambition to create a system that imitates how the brain perceives and understands visual information fueled the initial development of neural networks.1,2 Subsequently, convolutional neural networks (CNNs),3, 4, 5 multilayer perceptrons (MLPs),6 and Boltzmann machines7,8 were proposed and achieved fruitful theoretical results9, 10, 11, 12, 13, 14 in the last century. In the contest to replace hand-crafted features, CNNs stood out thanks to their computational efficiency relative to MLPs and deep Boltzmann machines, and they dominated a vast range of visual tasks in the 2010s. Since 2020, Transformer-based models, introduced from natural language processing into vision, have reached a new peak. With the introduction of MLP-Mixer15 in 2021, a new question became a hot topic in the vision community: Will MLP become a new paradigm and push computer vision to new heights? This survey aims to offer opinions on this issue.

From a historical perspective, the availability of larger datasets, combined with the transition from CPU-based to graphics processing unit (GPU)-based training, has led to paradigm shifts and a gradual reduction in human intervention. Locally weighted summation, represented by convolution, and globally weighted summation, represented by self-attention, are the current mainstreams. The token-mixing MLP15 in MLP-Mixer goes further: it abandons the hand-designed self-attention mechanism and lets the model learn the global weight matrix autonomously from raw data, seemingly in line with this historical trend.

We review MLP-Mixer in detail and compare the intrinsic connections and differences between convolution, the self-attention mechanism, and the token-mixing MLP. We observe that the token-mixing MLP is an enlarged version of depthwise convolution16 whose weights are shared across channels, and that it faces challenges such as high computational complexity and resolution sensitivity. Exhaustive analysis reveals not only that recent MLP-like variant designs are gradually converging toward CNNs, but also that their performance in visual tasks still lags behind CNN- and Transformer-based designs. At present, MLP is not a new paradigm that can push computer vision to new heights. In fact, computing paradigms and computing hardware evolve together. The current weighted-sum paradigms have driven the boom of GPU-based computing and of deep learning itself, and we believe the next paradigm, possibly a Boltzmann-like one, will likewise grow up with a new generation of computing hardware.

The rest of the paper is organized as follows. Preliminary reviews MLP, CNN, and Transformer, as well as their corresponding paradigms from a historical perspective. Pioneering model and new paradigm reviews the design of the latest MLP pioneering models, describes the differences and connections between token-mixing MLP, convolution, and self-attention mechanism, and presents the bottlenecks and challenges faced by the seemingly new paradigm. Block of MLP variants and architecture of MLP variants discuss the block evolution and network architecture of MLP-like variants. Applications of MLP variants sheds light on applications of MLP-like variants. Summary and outlook gives our summary and discusses potential future research directions.

Preliminary

For completeness and to provide helpful insight into the visual deep MLP presented in the subsequent sections, we briefly introduce MLP, CNN, and Transformer, including their brief histories and corresponding paradigms.

Multilayer perceptron and Boltzmann machine

The original “Perceptron” model was developed by Frank Rosenblatt in 1958,2 and can be viewed as a fully connected layer with only one output element. In 1969, the famous book Perceptrons by Marvin Minsky and Seymour Papert17 critically analyzed the perceptron and pointed out several of its weaknesses, including its inability to learn the XOR function. For a while, interest in the perceptron waned.

Interest in the perceptron revived in 1985, when Hinton and co-workers6 recognized that a feedforward neural network with two or more layers had a greater fitting ability than a single-layer perceptron. They proposed the MLP, a network composed of multiple layers of perceptrons with activation functions, to solve the XOR problem, and they provided a reasonably effective training algorithm for neural networks called backpropagation. As shown in 1989 by Cybenko,9 Hornik et al.,10 and Funahashi,11 MLPs are universal function approximators and can be used to construct mathematical models for classification and regression analysis.
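To make the XOR argument concrete, here is a minimal NumPy sketch of a two-layer perceptron that computes XOR. The weights are picked by hand for illustration (an assumption of this sketch; they are not the result of backpropagation): one hidden unit acts as OR, the other as AND, and the output fires when OR holds but AND does not. No single linear threshold unit can reproduce this truth table.

```python
import numpy as np

def step(x):
    # Heaviside step activation, as in the classic perceptron
    return (x > 0).astype(int)

def xor_mlp(x):
    """Two-layer perceptron with hand-picked (not learned) weights that computes XOR."""
    # Hidden unit 1 fires for OR(x1, x2); hidden unit 2 fires for AND(x1, x2)
    W1 = np.array([[1.0, 1.0],
                   [1.0, 1.0]])
    b1 = np.array([-0.5, -1.5])
    h = step(x @ W1.T + b1)
    # Output fires for "OR and not AND", i.e., XOR
    w2 = np.array([1.0, -1.0])
    b2 = -0.5
    return step(h @ w2 + b2)

inputs = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
print(xor_mlp(inputs))  # [0 1 1 0]
```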

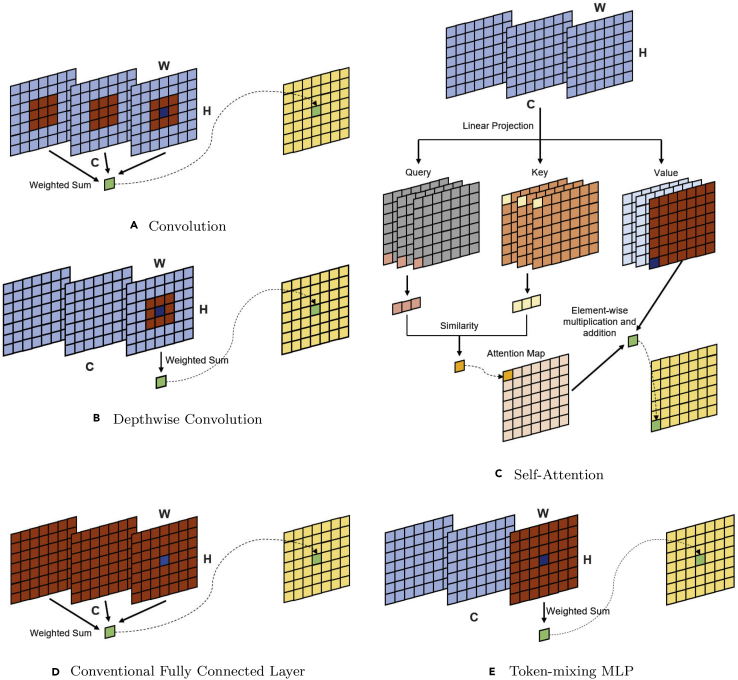

In MLP, the fully connected layer can be viewed as a paradigm for extracting features. As the name implies, its main feature is full connectivity, i.e., every neuron in one layer is connected to every neuron in the following layer (Figure 1D). One problem with fully connected layers is sensitivity to input resolution: the number of neurons is tied to the input size. Another significant problem with full connectivity is the enormous number of parameters and computational cost, which grow quadratically with the number of input pixels.
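The following NumPy sketch illustrates both problems, assuming a single-channel input and a fully connected layer with as many output neurons as input pixels (`make_fc` is a hypothetical helper): the weight matrix grows quadratically with the number of pixels, and a matrix built for one resolution cannot be applied to another.

```python
import numpy as np

def make_fc(h, w, rng):
    """Weights of a fully connected map from all H*W input pixels to H*W output neurons
    (single channel, bias omitted)."""
    n = h * w
    return rng.standard_normal((n, n)) * 0.01

rng = np.random.default_rng(0)
W16 = make_fc(16, 16, rng)
W32 = make_fc(32, 32, rng)
print(W16.size)  # 65536
print(W32.size)  # 1048576: doubling the side length multiplies the parameters by 16

x = rng.standard_normal(24 * 24)  # a 24 x 24 input has 576 pixels, not 256
try:
    W16 @ x
    resolution_ok = True
except ValueError:
    resolution_ok = False  # the layer is tied to the 16 x 16 resolution it was built for
print(resolution_ok)  # False
```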

Figure 1.

Illustrative shift between different weighted-sum paradigms

Illustrative shift between different weighted-sum paradigms in CNN (A and B), Transformer (C), and MLP (D and E). The input feature map is $\mathbf{X} \in \mathbb{R}^{H \times W \times C}$, where H, W, and C are the feature map’s height, width, and number of channels, respectively. The light blue part highlights the input features, and the yellow part the output features. The dark blue dot represents the position of interest, dark orange denotes other features used in the calculation, and the green dot represents the corresponding output feature. The token-mixing MLP is reduced to one fully connected layer to facilitate understanding. Linear projection performs a 1×1 convolution along the channel dimension, and weighted sum means the elements are multiplied by the weights and then summed.

The Boltzmann machine,7 proposed in 1985, is more theoretically intriguing because its dynamics are analogous to simple physical processes. A restricted Boltzmann machine (RBM)8 comprises a layer of visible neurons and a layer of hidden neurons, with connections only between visible and hidden neurons. RBMs can be stacked,18 and they are driven toward a minimum-energy state defined by a predetermined energy function. Stacked RBMs are more computationally expensive than MLPs, especially during inference. Computing power has been the main factor limiting the development of both MLPs and RBMs.
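For readers less familiar with RBMs, the sketch below assumes the standard binary RBM with energy $E(v, h) = -a^\top v - b^\top h - v^\top W h$. Because only visible-hidden connections exist, hidden units are conditionally independent given the visible layer (and vice versa), so inference alternates block Gibbs sweeps that sample states with probability proportional to $\exp(-E)$, favoring low-energy configurations. Needing many such sweeps, rather than a single forward pass, is one way to see why stacked RBMs are costlier than MLPs at inference. The layer sizes, number of sweeps, and initialization are arbitrary choices for illustration.

```python
import numpy as np

def rbm_energy(v, h, W, a, b):
    """Energy of a binary RBM: E(v, h) = -a^T v - b^T h - v^T W h."""
    return -(a @ v) - (b @ h) - (v @ W @ h)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gibbs_step(v, W, a, b, rng):
    """One block-Gibbs sweep v -> h -> v'. With only visible-hidden weights,
    all hidden units can be sampled at once given v, and vice versa."""
    p_h = sigmoid(b + v @ W)                 # p(h_j = 1 | v)
    h = (rng.random(p_h.shape) < p_h).astype(float)
    p_v = sigmoid(a + W @ h)                 # p(v_i = 1 | h)
    v_new = (rng.random(p_v.shape) < p_v).astype(float)
    return v_new, h

rng = np.random.default_rng(0)
W = rng.normal(scale=0.1, size=(6, 3))       # 6 visible units, 3 hidden units
a, b = np.zeros(6), np.zeros(3)
v = rng.integers(0, 2, size=6).astype(float)
for _ in range(100):                         # inference needs many sweeps, not one pass
    v, h = gibbs_step(v, W, a, b, rng)
print(rbm_energy(v, h, W, a, b))
```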

Convolution neural network

CNN was first proposed by Fukushima3,19,20 in an architecture called Neocognitron, which involved multiple pooling and convolutional layers and inspired later CNNs. In 1989, LeCun et al.4 proposed a multilayered CNN for handwritten zip code recognition, the prototype of the architecture later called LeNet. After years of research, LeCun5 proposed LeNet-5, which outperformed all other models on handwritten character recognition. In the CPU era, given the limited computational power of CPUs, it was widely believed that backpropagation could not effectively converge to the global minimum of the error surface, and hand-crafted features were generally considered better than CNN-based extractors.21

In 2007, NVIDIA developed the CUDA programming platform,22,23 and in 2009, ImageNet,24 a large image dataset, was proposed, providing the raw material for networks to learn image features autonomously. Three years later, AlexNet25 won the ImageNet competition, a symbolic event marking the first paradigm shift. CNN-based architectures were gradually adopted to extract image features automatically in place of hand-crafted features. In traditional computer vision algorithms, features such as gradient, texture, and color are extracted locally; hence, the inductive biases inherent to CNNs, such as local connectivity and translation invariance, significantly help image feature extraction. The development of self-supervision26, 27, 28, 29 and training strategies30, 31, 32, 33, 34, 35 further drove the continuous improvement of CNNs. Beyond classification, CNNs outperform traditional algorithms on almost all computer vision tasks, such as object detection,36,37 segmentation,38,39 demosaicing,40 super-resolution,41, 42, 43 and deblurring.44 CNNs became the de facto standard for computer vision.

A CNN architecture typically comprises alternating convolutional and pooling layers followed by several fully connected layers, where the standard locally connected convolutional layer is the paradigm (Figure 1A). Depthwise convolution is a variant of convolution that applies a separate convolutional filter to each individual channel (Figure 1B). In the convolutional operation, a kernel with a single set of weights slides over the entire image and extracts the same feature at every position, making the convolutional layer far more parameter efficient than the fully connected layer.
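A quick parameter count makes the efficiency argument concrete. The sizes below (a 3×3 kernel, 64 input and output channels, a 56×56 feature map) are arbitrary illustrative assumptions, and biases are ignored.

```python
def conv_params(k, c_in, c_out):
    # One k x k x C_in kernel per output channel, reused at every spatial position
    return k * k * c_in * c_out

def depthwise_params(k, c):
    # One k x k kernel per channel; channels are never mixed
    return k * k * c

def fc_params(h, w, c_in, c_out):
    # Every input element is connected to every output element
    return (h * w * c_in) * (h * w * c_out)

H = W = 56
C, k = 64, 3
print(conv_params(k, C, C))       # 36864, independent of H and W
print(depthwise_params(k, C))     # 576
print(fc_params(H, W, C, C))      # 40282095616
```

The convolutional counts do not depend on H or W at all, whereas the fully connected count is over a million times larger here and grows with the square of the feature-map area.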

Vision Transformer

Keeping pace with Moore’s law,45 computing capability has increased steadily with each new generation of chips. In 2020, vision researchers noted the success of the Transformer46 in natural language processing and proposed moving beyond the limits of local connectivity toward global connectivity. Vision Transformer (ViT47) is the first work to promote research on Transformers in vision. It stacks transformer blocks of the same architecture to extract visual features, where each block comprises two parts, a multi-head self-attention layer and a two-layer MLP, with layer normalization and residual paths added. Since then, Transformer-based architectures have been widely accepted in the vision community, outperforming CNN-based architectures in tasks such as object detection48,49 and segmentation,49, 50, 51 and achieving state-of-the-art performance on denoising,52 deraining,52 and super-resolution.52, 53, 54, 55 Furthermore, several works56, 57, 58, 59 demonstrate that Transformer-based architectures are more robust than CNN-based methods. All these developments over the last two years indicate that the Transformer has become another de facto standard for computer vision.

The paradigm in Transformer can be boiled down to the self-attention layer, where each input vector is linearly mapped into three different vectors, called the query $q$, key $k$, and value $v$. The query, key, and value vectors of all inputs are then stacked into three corresponding matrices, denoted $Q$, $K$, and $V$ (Figure 1C). Self-attention computes the similarity between each query vector and all key vectors as a scaled dot product, and the softmax function normalizes these similarities into the attention matrix, i.e., $\mathrm{Attention}(Q, K, V) = \mathrm{softmax}(QK^{\top}/\sqrt{d_k})\,V$, where $d_k$ is the dimension of the key vectors. Each output feature is thus a weighted sum of all value vectors across the entire space, with weights given by the attention matrix. Compared with the convolutional layer, which focuses only on local characteristics, the self-attention layer can capture long-range dependencies and readily derive global information.
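A minimal single-head NumPy sketch of the computation just described. Multi-head splitting, the output projection, and biases are omitted, and the random weights are placeholders. The sketch also makes two properties visible: the attention matrix is computed from the input, and the same weights work for any number of tokens.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention over N tokens.
    X: (N, C); Wq, Wk, Wv: (C, d)."""
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    A = softmax(Q @ K.T / np.sqrt(Q.shape[-1]))  # (N, N) attention matrix, rows sum to 1
    return A @ V, A                              # each output is a weighted sum of all values

rng = np.random.default_rng(0)
N, C = 196, 64
X = rng.standard_normal((N, C))
Wq, Wk, Wv = (rng.standard_normal((C, C)) / np.sqrt(C) for _ in range(3))
out, A = self_attention(X, Wq, Wk, Wv)
print(out.shape, A.shape)  # (196, 64) (196, 196)

X2 = rng.standard_normal((100, C))
out2, A2 = self_attention(X2, Wq, Wk, Wv)
print(out2.shape)  # (100, 64): no weight depends on the number of tokens
```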

Pioneering model and new paradigm

The success of ViT marks the paradigm shift to the era of the global receptive field in computer vision and raises a follow-up question: Can we further abandon the hand-designed self-attention mechanism and let the model learn the global weight matrix autonomously from raw data? This motivation reminded researchers of the simplest, long-neglected structure, the MLP. After a long slumber, MLP finally reappeared in May 2021, when the first deep MLP, MLP-Mixer,15 was launched.

This section reviews in detail the structure of the latest so-called pioneering MLP model, MLP-Mixer,15 followed by a brief review of the contemporaneous ResMLP60 as well as FeedForward.61 After that, we strip the new paradigm, token-mixing MLP, from the network and elaborate its differences and connections with convolution and self-attention mechanisms. Finally, we explore the bottlenecks of token-mixing MLP and lay the foundation for introducing subsequent variants.

Structure of pioneering model

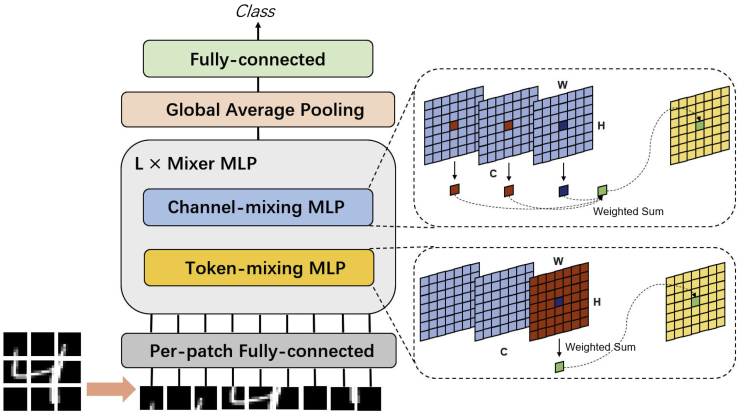

MLP-Mixer15 is the first proposed visual deep MLP network and is regarded by the vision community as the pioneering MLP model. Compared with the conventional MLP, it is deeper and differs in several respects. In detail, MLP-Mixer comprises three modules: a per-patch fully connected layer for patch embedding, a stack of L mixer layers for feature extraction, and a classification head (Figure 2).

Figure 2.

MLP-Mixer structure

We omit the layer normalization, non-linear activation function, and the residual path to improve readability. The token-mixing and channel-mixing MLP are reduced to one fully connected layer to ease understanding. The expression in the dashed box is consistent with Figure 1.

Patch embedding

The patch embedding is inherited from ViT47 and comprises three steps: (1) cut the image into non-overlapping patches, (2) flatten the patches, and (3) linearly project the flattened patches. Specifically, an input image of size $H \times W \times 3$ is split into $S = HW/p^2$ non-overlapping patches, where H and W are the image’s height and width, respectively, S denotes the number of patches, and p represents the patch size (typically 14 or 16). A patch is also called a token. Each patch is then unfolded into a vector $\mathbf{x}_i \in \mathbb{R}^{3p^2}$. In total, we obtain a set of flattened patches $\{\mathbf{x}_i\}_{i=1}^{S}$, which are the input of MLP-Mixer. For each $\mathbf{x}_i$, the per-patch fully connected layer maps it into a C-dimensional embedding vector:

$$\mathbf{z}_i = \mathbf{W}_e\,\mathbf{x}_i, \quad i = 1, \dots, S \qquad \text{(Equation 1)}$$

where $\mathbf{z}_i \in \mathbb{R}^{C}$ is the embedding vector of $\mathbf{x}_i$ and $\mathbf{W}_e \in \mathbb{R}^{C \times 3p^2}$ represents the weights of the per-patch fully connected layer (the bias is omitted). In practice, the three steps above can be combined into a single step using a 2D convolution whose kernel size and stride both equal the patch size.
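The equivalence between the three-step patch embedding and a strided convolution can be checked numerically. This NumPy sketch assumes channels-last images, a bias-free projection, and arbitrary sizes; `patchify` and `strided_conv` are hypothetical helper names, and the convolution is written as cross-correlation, as in common deep learning frameworks.

```python
import numpy as np

def patchify(img, p):
    """Split an (H, W, 3) image into S = HW/p^2 flattened patches of length 3p^2."""
    H, W, c = img.shape
    assert H % p == 0 and W % p == 0, "image size must be divisible by the patch size"
    x = img.reshape(H // p, p, W // p, p, c)   # (H/p, p, W/p, p, 3)
    x = x.transpose(0, 2, 1, 3, 4)             # (H/p, W/p, p, p, 3)
    return x.reshape(-1, p * p * c)            # (S, 3p^2)

def patch_embed(img, We, p):
    # Equation 1 applied to every patch at once: row i is z_i = We x_i
    return patchify(img, p) @ We.T             # (S, C)

def strided_conv(img, kernels, p):
    """Naive 2D convolution with kernel size = stride = p and no padding.
    kernels: (C, p, p, 3)."""
    H, W, _ = img.shape
    out = np.zeros((H // p, W // p, kernels.shape[0]))
    for i in range(H // p):
        for j in range(W // p):
            window = img[i * p:(i + 1) * p, j * p:(j + 1) * p, :]
            out[i, j] = np.tensordot(kernels, window, axes=([1, 2, 3], [0, 1, 2]))
    return out.reshape(-1, kernels.shape[0])

rng = np.random.default_rng(0)
p, C = 16, 32
img = rng.standard_normal((64, 64, 3))
We = rng.standard_normal((C, 3 * p * p))
kernels = We.reshape(C, p, p, 3)  # the same weights viewed as convolution kernels
print(np.allclose(patch_embed(img, We, p), strided_conv(img, kernels, p)))  # True
```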

Mixer layers

The MLP-Mixer stacks L mixer layers of the same architecture, where a single mixer layer consists of a token-mixing MLP and a channel-mixing MLP. Let the patch features at the input of each mixer layer be $\mathbf{X} \in \mathbb{R}^{S \times C}$, where S is the number of patches and C is the dimension of each patch feature, i.e., the number of channels. The token-mixing MLP acts on each column of $\mathbf{X}$, mapping $\mathbb{R}^{S} \to \mathbb{R}^{S}$, with weights shared among all columns. The channel-mixing MLP acts on each row of $\mathbf{X}$, mapping $\mathbb{R}^{C} \to \mathbb{R}^{C}$, with weights shared among all rows. Both MLPs comprise two fully connected layers with a non-linear activation function between them. Thus, a mixer layer can be written as follows (omitting layer indices):

$$\mathbf{U}_{*,i} = \mathbf{X}_{*,i} + \mathbf{W}_2\,\sigma\big(\mathbf{W}_1\,\mathrm{LN}(\mathbf{X})_{*,i}\big), \quad i = 1, \dots, C,$$
$$\mathbf{Y}_{j,*} = \mathbf{U}_{j,*} + \mathbf{W}_4\,\sigma\big(\mathbf{W}_3\,\mathrm{LN}(\mathbf{U})_{j,*}\big), \quad j = 1, \dots, S \qquad \text{(Equation 2)}$$

where σ is the GELU62 activation function and $\mathrm{LN}(\cdot)$ denotes the layer normalization63 widely used in Transformer-based models. $\mathbf{W}$ represents the weights of a fully connected layer, where $\mathbf{W}_1 \in \mathbb{R}^{rS \times S}$, $\mathbf{W}_2 \in \mathbb{R}^{S \times rS}$, $\mathbf{W}_3 \in \mathbb{R}^{rC \times C}$, and $\mathbf{W}_4 \in \mathbb{R}^{C \times rC}$, and r is the expansion ratio (e.g., the channel-mixing MLPs of MLP-Mixer use a hidden width of 4C). Note that every mixer layer takes an input of the same size, an isotropic design most similar to Transformers or deep recurrent neural networks in other domains. This contrasts with most CNNs, which have a pyramidal structure: deeper layers take lower-resolution inputs with more channels.
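Equation 2 translates almost line for line into NumPy. The sketch below omits biases and the learnable LayerNorm parameters, uses the tanh approximation of GELU, and uses random weights with r = 4 for both MLPs; all of these are simplifying assumptions rather than MLP-Mixer's exact configuration.

```python
import numpy as np

def layer_norm(X, eps=1e-6):
    # Normalize each token (row) over its C channels; learnable scale and shift omitted
    mu = X.mean(axis=-1, keepdims=True)
    var = X.var(axis=-1, keepdims=True)
    return (X - mu) / np.sqrt(var + eps)

def gelu(x):
    # tanh approximation of GELU
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))

def mixer_layer(X, W1, W2, W3, W4):
    """One mixer layer following Equation 2 (biases omitted). X: (S, C)."""
    # Token mixing: W1 (rS x S) and W2 (S x rS) act on every column of LN(X)
    U = X + W2 @ gelu(W1 @ layer_norm(X))
    # Channel mixing: W3 (rC x C) and W4 (C x rC) act on every row of LN(U)
    return U + gelu(layer_norm(U) @ W3.T) @ W4.T

rng = np.random.default_rng(0)
S, C, r = 196, 64, 4
X = rng.standard_normal((S, C))
W1 = rng.standard_normal((r * S, S)) * 0.02
W2 = rng.standard_normal((S, r * S)) * 0.02
W3 = rng.standard_normal((r * C, C)) * 0.02
W4 = rng.standard_normal((C, r * C)) * 0.02
Y = mixer_layer(X, W1, W2, W3, W4)
print(Y.shape)  # (196, 64): same size as the input
```

Because W1 and W2 are sized by S, this layer only accepts inputs with exactly S tokens, which foreshadows the resolution sensitivity discussed below.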

Classification head

After L stacked mixer layers, S patch features are generated. A global vector is then computed from these features by global average pooling and forwarded to a fully connected layer for classification.

FeedForward61 and ResMLP60 were proposed a few days after MLP-Mixer. FeedForward61 adopts essentially the same structure as MLP-Mixer but swaps the order of the channel-mixing and token-mixing MLPs. ResMLP,60 another contemporaneous work, simplifies the token-mixing MLP of MLP-Mixer from two fully connected layers to one. ResMLP also replaces the layer normalization in MLP-Mixer with an element-wise affine transformation to stabilize training.
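To illustrate how ResMLP's choices differ from Equation 2, here is a sketch of its token-mixing sublayer as we read it from Touvron et al.:60 a single S×S linear layer with no hidden layer or activation, wrapped by per-channel affine transforms in place of LayerNorm. The exact placement of the affine transforms and the parameter names are assumptions of this sketch.

```python
import numpy as np

def aff(X, alpha, beta):
    # Element-wise affine transform with one (alpha, beta) pair per channel.
    # Unlike LayerNorm, it computes no statistics from the input.
    return X * alpha + beta

def resmlp_token_mixing(X, A, alpha1, beta1, alpha2, beta2):
    """Token-mixing sublayer: one S x S linear layer between two affine transforms,
    plus a residual path. X: (S, C); A: (S, S); alphas and betas: (C,)."""
    return X + aff(A @ aff(X, alpha1, beta1), alpha2, beta2)

rng = np.random.default_rng(0)
S, C = 196, 64
X = rng.standard_normal((S, C))
A = rng.standard_normal((S, S)) * 0.02
ones, zeros = np.ones(C), np.zeros(C)
Y = resmlp_token_mixing(X, A, ones, zeros, ones, zeros)
print(Y.shape)  # (196, 64)
```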

Experimentally, these pioneering MLP models achieve performance comparable to CNNs and ViT for image classification (see Image classification). These empirical results break with past perceptions and prompt the community to rethink whether convolutional or self-attention layers are necessary at all. This, in turn, leads us to explore whether a pure MLP stack will become the new paradigm for computer vision.

Token-mixing MLP, a new paradigm?

To find out whether pure MLPs stacked into a mixer layer will become a new paradigm, we first need to reveal how they differ from convolution and self-attention. Both the channel-mixing MLP in MLP-Mixer and the MLP in ViT are simply the 1×1 convolution commonly used in CNNs, which allows communication between different channels. The comparison therefore centers on the token-mixing MLP, self-attention, and convolution, which allow communication between different spatial locations. A detailed comparison of the three is reported in Table 1.

Table 1.

Comparison between convolution, self-attention, and token-mixing MLP

| Operation | Information aggregation | Receptive field | Resolution sensitive | Spatial | Channel | Params | FLOPs |
|---|---|---|---|---|---|---|---|
| Convolution | static | local | false | agnostic | specific | $k^2C^2$ | $k^2HWC^2$ |
| Depthwise convolution | static | local | false | agnostic | specific | $k^2C$ | $k^2HWC$ |
| Self-attention47 | dynamic | global | false | agnostic | specific | $3C^2$ | $2H^2W^2C + 3HWC^2$ |
| Token-mixing MLP15 | static | global | true | specific | agnostic | $H^2W^2$ | $H^2W^2C$ |

H, W, and C are the height, width, and number of channels of the feature map, respectively, and k is the convolutional kernel size. “Information aggregation” refers to whether the weights are fixed or dynamically generated from the input during inference. “Resolution sensitive” refers to whether the operation is sensitive to the input resolution. “Spatial” refers to whether feature extraction is sensitive to the spatial location of objects: “specific” means sensitive, and “agnostic” means insensitive. For “Channel,” “specific” means no weights are shared between channels, and “agnostic” means weights are shared between channels.

Convolution usually aggregates spatial information within a local region but models long-range dependencies poorly. The token-mixing MLP (Figure 1E) can be viewed as an unusual type of convolution whose kernel covers the entire space. To improve efficiency, it aggregates spatial information within each single channel and shares its weights across all channels. This is very close to the depthwise convolution16 (Figure 1B) used in CNNs, which applies a convolution to each channel independently. However, the kernels in depthwise convolution are usually small and are not shared across channels. The self-attention mechanism also considers all patches, but its weights are dynamically generated from the input, whereas the weights of the token-mixing MLP and the convolutional layer are fixed and input independent.
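The relationship between token mixing and depthwise convolution can be verified numerically. In this NumPy sketch (a single fully connected layer, no bias, arbitrary sizes), a depthwise operator whose per-channel kernel spans all HW positions reproduces token mixing exactly once every channel is forced to use the same kernel. `depthwise_global` is a hypothetical name for this whole-map depthwise operator.

```python
import numpy as np

def token_mixing(X, Wt):
    """Single-layer token mixing. X: (H, W, C); Wt: (HW, HW), shared by all channels."""
    H, W, C = X.shape
    return (Wt @ X.reshape(H * W, C)).reshape(H, W, C)

def depthwise_global(X, kernels):
    """Depthwise operator whose kernel covers the whole map, one kernel per channel.
    kernels: (C, HW, HW); channel c is mixed only by kernels[c]."""
    H, W, C = X.shape
    flat = X.reshape(H * W, C)
    out = np.stack([kernels[c] @ flat[:, c] for c in range(C)], axis=1)
    return out.reshape(H, W, C)

rng = np.random.default_rng(0)
H, W, C = 4, 4, 8
X = rng.standard_normal((H, W, C))
Wt = rng.standard_normal((H * W, H * W))
# Tying all per-channel kernels to one shared matrix recovers token mixing
shared = np.broadcast_to(Wt, (C, H * W, H * W))
print(np.allclose(token_mixing(X, Wt), depthwise_global(X, shared)))  # True
```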

We now turn to another important metric, namely complexity. Without loss of generality, we assume that the input feature map is $\mathbf{X} \in \mathbb{R}^{HW \times C}$ (or $\mathbb{R}^{H \times W \times C}$ in CNNs), where H and W are the spatial resolution and C is the number of channels. Intuitively, local computation has the lowest computational complexity, i.e., $O(k^2HWC)$ for depthwise convolution and $O(k^2HWC^2)$ for dense convolution. In contrast, both self-attention and the token-mixing MLP, which have a global receptive field, have a higher complexity of $O(H^2W^2C)$. Fortunately, the token-mixing MLP is less computationally intensive than the self-attention layer because it does not need to compute, for example, the attention matrix. As for the number of parameters, the token-mixing MLP requires $O(H^2W^2)$, which is strongly tied to the image resolution. Consequently, once the network is fixed, the input resolution is fixed as well. The other paradigms are more parameter efficient in comparison. Newly proposed MLP-like variants reduce this complexity; see Complexity analysis.
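The following Python helper evaluates the complexity expressions discussed above for concrete sizes. It assumes that biases, softmax, and normalization are ignored and that self-attention counts only the query, key, and value projections (no output projection); the 14×14 token grid with 384 channels is an arbitrary choice.

```python
def complexity(H, W, C, k):
    """(parameters, multiply-accumulates) per operation; biases, softmax,
    and normalization are ignored."""
    N = H * W  # number of tokens
    return {
        "convolution": (k * k * C * C, k * k * N * C * C),
        "depthwise convolution": (k * k * C, k * k * N * C),
        # Q, K, V projections (3NC^2) plus the QK^T and AV products (2N^2C)
        "self-attention": (3 * C * C, 3 * N * C * C + 2 * N * N * C),
        # one N x N weight matrix applied to each of the C channels
        "token-mixing MLP": (N * N, N * N * C),
    }

for name, (params, macs) in complexity(H=14, W=14, C=384, k=3).items():
    print(f"{name:22s} params={params:>12,d} MACs={macs:>14,d}")
```

Doubling H and W leaves the convolution parameters unchanged but multiplies the token-mixing parameters by 16.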

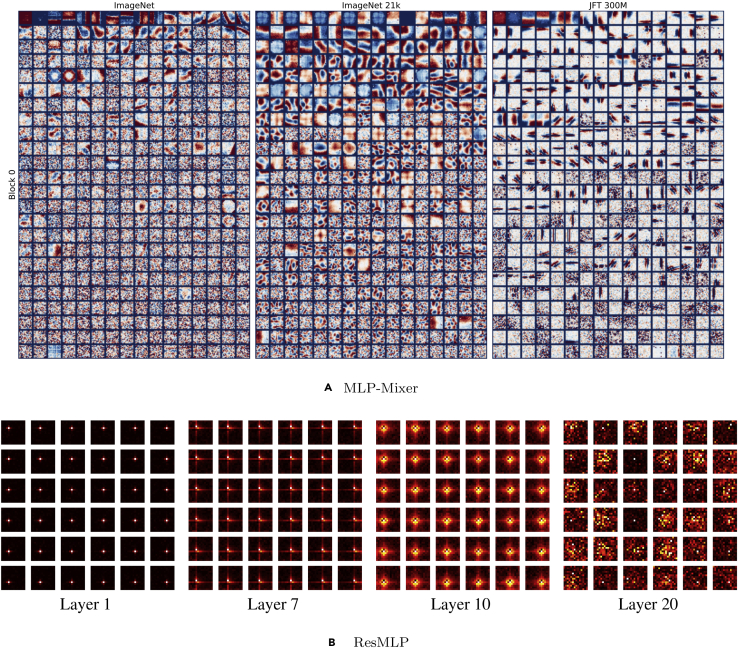

The above comparative analysis suggests that the token-mixing MLP is seemingly a new paradigm. But what does the new paradigm bring to the learned weights? Figure 3 visualizes the weights of the fully connected layers (FC kernels) of MLP-Mixer and ResMLP trained on ImageNet24 or JFT-300M.64 ImageNet-1k contains 1.2 million labeled images, ImageNet-21k 14 million images, and JFT-300M 300 million images. As the amount of training data increases, more of MLP-Mixer’s FC kernels perform local computation. ResMLP’s shallow FC kernels also exhibit some local connectivity. These fully connected layers therefore still perform local computation, which convolutional layers could carry out instead. We conclude that the shallow fully connected layers of deep MLPs implement a convolution-like scheme. As the network becomes deeper, the effective receptive field grows and the weights become less organized. However, it is unclear whether this is due to a lack of training data or is inherent to the model. Notably, although MLP-Mixer and ResMLP share a highly similar structure (except for the normalization layer), their learned weights are vastly different. This raises the question of whether MLPs learn generic visual features. Moreover, the interpretability of MLPs lags far behind.

Figure 3.

Visualizing the weights of the fully connected layers in MLP-Mixer and ResMLP

Visualizing the weights of the fully connected layers in MLP-Mixer (A) and ResMLP (B). Each weight matrix is resized into a pixel image. In MLP-Mixer, white denotes a weight of 0, red denotes positive weights, blue denotes negative weights, and brighter colors indicate larger magnitudes. In ResMLP, black indicates a weight of 0, and brighter pixels indicate larger absolute values. The results are from Tolstikhin et al.15 and Touvron et al.,60 respectively.

Bottlenecks

Based on the above analysis and comparison, it is evident that the seemingly new paradigm still faces several bottlenecks:

1. Without the inductive biases of local connectivity and self-attention, the token-mixing MLP is more flexible and has a stronger fitting capability. However, it has a greater risk of overfitting than convolutional and self-attention layers, so large-scale datasets are needed to narrow the classification accuracy gap between MLP-Mixer, ViT, and CNNs.

2. The complexity of the token-mixing MLP is quadratic in the number of tokens (i.e., in the image area), which, with current computing capability, makes existing MLP-like models intractable on high-resolution images.

3. The token-mixing MLP is resolution sensitive: once the number of neurons in the fully connected layer is set, the network cannot handle a flexible input resolution. However, some tasks adopt a multi-scale training strategy48 or use different input resolutions in the training and evaluation stages.65,66 In these cases, MLP models are non-transferable and impractical.

To address these challenges, the vision community has developed many MLP-like variants. Their main contributions are modifications to the token-mixing MLP, including reducing computational cost, removing resolution sensitivity, and reintroducing local receptive fields. These variants are described in detail in the subsequent sections.

Block of MLP variants

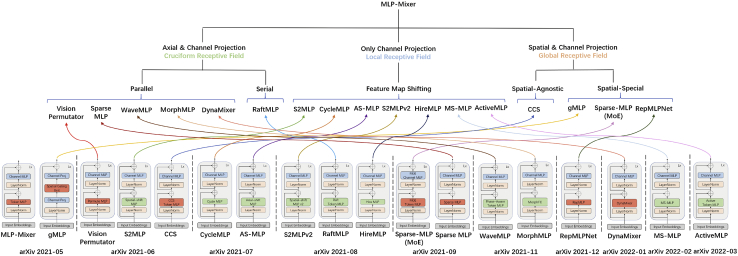

To overcome the challenges faced by MLP-Mixer, the vision community has proposed many MLP-like variants. The improvements focus on redesigning the network’s interior parts, i.e., the block module. The lower part of Figure 4 illustrates the block designs of the latest MLP-like variants, showing that they primarily modify the token-mixing MLP. Except for gMLP,67 all blocks retain a spatial MLP and a channel MLP in tandem. Moreover, most of the improvements reduce the spatial MLP’s sensitivity to image resolution (green rectangles).

Figure 4.

Block comparison of MLP-Mixer variants

Sorted from left to right by model release month (“arXiv 2021-05” denotes “May, 2021”). Red-orange, token-mixing modules are resolution sensitive; green, token-mixing modules are resolution insensitive.

In this work, we reproduce most MLP-like variants in Jittor68,69 and PyTorch.70 This section first details the redesigned blocks of the latest MLP-like variants, then compares their properties and receptive fields, and finally discusses the findings.

MLP block variants

We divide the MLP block variants into three categories: (1) mappings along the axial directions and the channel dimension, (2) mappings along the channel dimension only, and (3) mappings over the entire spatial and channel dimensions. The upper part of Figure 4 categorizes the network variants. Since the channel-mixing MLP is essentially unchanged (except in gMLP), we focus on the changes to the token-mixing MLP.

Axial and channel projection blocks

The global receptive field of the original token-mixing MLP is heavily parameterized and computationally expensive. Some researchers have therefore proposed decomposing the spatial projection into orthogonal (height and width) projections, which maintains long-range dependencies without encoding spatial information over the flattened spatial dimension.

Hou et al.71 present the Vision Permutator (ViP), which separately encodes feature representations along the height and width dimensions with linear projections. This design allows ViP to capture long-range dependencies along one spatial direction while preserving precise positional information along the other. As illustrated in Figure 5A, Permute-MLP comprises three branches that encode information along the height, width, and channel dimensions, respectively. Specifically, the height branch first splits the feature map $\mathbf{X} \in \mathbb{R}^{H \times W \times C}$ into g segments $\mathbf{X}_i \in \mathbb{R}^{H \times W \times N}$ along the channel dimension, where $C = gN$, and then concatenates the segments along the height dimension into a tensor of size $gH \times W \times N$. It thus maps $\mathbb{R}^{gH} \to \mathbb{R}^{gH}$, with weights shared across the width and the N channels within each segment. After mapping, the feature map is restored to its original shape. The width branch is treated in the same way as the height branch. The third branch is a simple mapping along the channel dimension, which can be regarded as a 1×1 convolution. Finally, the outputs of the three branches are fused using split attention.72
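A NumPy sketch of Permute-MLP following the description above, assuming channels-last tensors and no biases. The height branch implements the split, concatenate, project, and restore steps; the width branch reuses it on the transposed map. Fusing the branches by plain summation is our simplification: ViP itself fuses them with split attention, which is omitted here.

```python
import numpy as np

def vip_height_branch(X, Wh, g):
    """X: (H, W, C) with C = g * N; Wh: (g*H, g*H), shared across the width
    and across the N channels within each segment."""
    segs = np.split(X, g, axis=2)                # g segments, each (H, W, N)
    Z = np.concatenate(segs, axis=0)             # (g*H, W, N): stacked along height
    Z = np.einsum("ij,jwn->iwn", Wh, Z)          # linear map R^{gH} -> R^{gH}
    return np.concatenate(np.split(Z, g, axis=0), axis=2)  # restore (H, W, C)

def vip_width_branch(X, Ww, g):
    # Same treatment along the width axis; Ww: (g*W, g*W)
    return vip_height_branch(X.transpose(1, 0, 2), Ww, g).transpose(1, 0, 2)

def permute_mlp(X, Wh, Ww, Wc, g):
    """Three branches fused by summation (a simplification of split attention).
    Wc: (C, C) is the channel branch, i.e., a 1x1 convolution."""
    return vip_height_branch(X, Wh, g) + vip_width_branch(X, Ww, g) + X @ Wc

rng = np.random.default_rng(0)
H, W, C, g = 8, 8, 32, 4
X = rng.standard_normal((H, W, C))
Wh = rng.standard_normal((g * H, g * H)) * 0.05
Ww = rng.standard_normal((g * W, g * W)) * 0.05
Wc = rng.standard_normal((C, C)) * 0.05
Y = permute_mlp(X, Wh, Ww, Wc, g)
print(Y.shape)  # (8, 8, 32)
```

Wh and Ww are sized by H and W, so this block, like the original token-mixing MLP, remains resolution sensitive.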

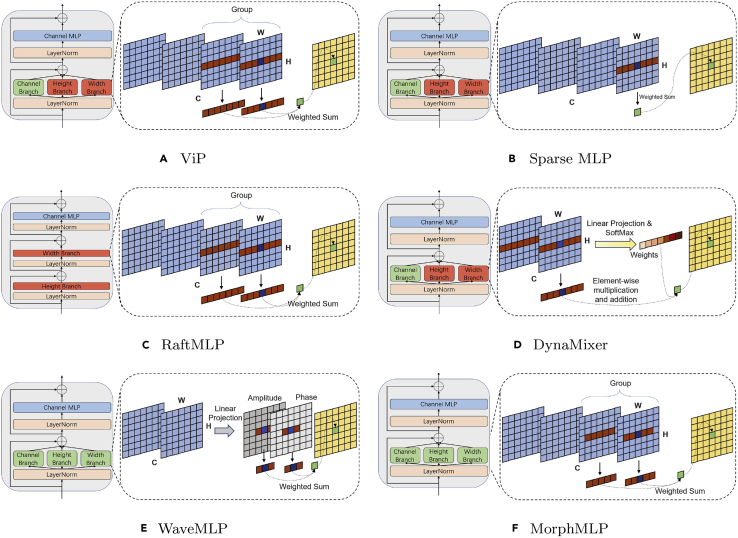

Figure 5.

Visualizing the module diagram of the MLP-like variants

Visualizing the module diagram of the MLP-like variants, including ViP (A), Sparse MLP (B), RaftMLP (C), DynaMixer (D), WaveMLP (E), and MorphMLP (F). Except for RaftMLP, the remaining block designs adopt three-branch parallelism. Red-orange branch, image resolution sensitive; green branch, resolution insensitive. The channel branch and linear projection both perform a linear projection along the channel dimension. Group means that the channel is split into different groups. The expression in the dashed box is consistent with Figure 1.

Tang et al.73 adopt a strategy consistent with ViP for spatial information extraction and build an attention-free network called Sparse MLP. As illustrated in Figure 5B, the block contains three parallel branches. Unlike ViP, Sparse MLP does not split the feature map along the channel dimension; it directly maps $\mathbb{R}^{H} \to \mathbb{R}^{H}$ along the height dimension and $\mathbb{R}^{W} \to \mathbb{R}^{W}$ along the width dimension. Instead of split attention, Sparse MLP fuses the branches by concatenating the three outputs along the channel dimension and passing the result through a fully connected layer for dimensionality reduction. As a minor modification, Sparse MLP also places a depthwise convolution in front of each block.
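A NumPy sketch of the Sparse MLP token mixing described above, with biases and the leading depthwise convolution omitted. We assume the third branch is an identity path, which is our reading of Tang et al.;73 the function name is hypothetical.

```python
import numpy as np

def sparse_mlp_mixing(X, Wh, Ww, Wfuse):
    """X: (H, W, C). Wh: (H, H) mixes tokens along the height axis and Ww: (W, W)
    along the width axis; both are shared across all channels and across the other
    spatial axis. Wfuse: (3C, C) fuses the concatenated branches."""
    x_h = np.einsum("ij,jwc->iwc", Wh, X)          # height branch
    x_w = np.einsum("ij,hjc->hic", Ww, X)          # width branch
    cat = np.concatenate([x_h, x_w, X], axis=2)    # (H, W, 3C); third branch assumed identity
    return cat @ Wfuse                             # fully connected layer: 3C -> C

rng = np.random.default_rng(0)
H, W, C = 8, 12, 16
X = rng.standard_normal((H, W, C))
Y = sparse_mlp_mixing(X, rng.standard_normal((H, H)), rng.standard_normal((W, W)),
                      rng.standard_normal((3 * C, C)))
print(Y.shape)  # (8, 12, 16)
```

The two axial matrices hold $H^2 + W^2$ weights instead of the $H^2W^2$ of a full token-mixing layer, although both are still sized by the input resolution.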

RaftMLP74 applies mappings along the height and width dimensions in series to form a raft-token-mixing block (Figure 5C), in contrast to the parallel branches of ViP and Sparse MLP. In its implementation, the raft-token-mixing block also splits the feature map along the channel dimension, as ViP does.

DynaMixer75 dynamically generates a mixing matrix for each set of tokens to be mixed, based on their contents. DynaMixer mixes tokens row-wise and column-wise in parallel branches to speed up computation (Figure 5D). The DynaMixer operation first reduces the channel dimension and flattens the tokens, then uses a linear function to estimate an $H \times H$ or $W \times W$ mixing matrix. Softmax is applied to each row of the mixing matrix to obtain the mixing weights, and the output is the product of the mixing weights and the input.
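A NumPy sketch of the DynaMixer operation for one set of N tokens (e.g., one row), following the steps above. The reduced dimension d, the single linear estimator, and the names `Wr` and `Wm` are our assumptions; DynaMixer's multi-head details are omitted.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def dynamixer_mix(tokens, Wr, Wm):
    """tokens: (N, C); Wr: (C, d) reduces channels; Wm: (N*d, N*N) estimates the mixing matrix."""
    N = tokens.shape[0]
    z = (tokens @ Wr).reshape(-1)                # reduce C -> d, then flatten to length N*d
    P = softmax((z @ Wm).reshape(N, N), axis=1)  # row-wise softmax gives the mixing weights
    return P @ tokens                            # outputs are content-dependent weighted sums

def dynamixer_rows(X, Wr, Wm):
    # Row-wise mixing of an (H, W, C) map; the column-wise branch is analogous
    return np.stack([dynamixer_mix(X[h], Wr, Wm) for h in range(X.shape[0])])

rng = np.random.default_rng(0)
H, W, C, d = 8, 8, 32, 2
X = rng.standard_normal((H, W, C))
Wr = rng.standard_normal((C, d)) * 0.1
Wm = rng.standard_normal((W * d, W * W)) * 0.1   # sized by W, hence resolution sensitive
Y = dynamixer_rows(X, Wr, Wm)
print(Y.shape)  # (8, 8, 32)
```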

WaveMLP76 addresses both dynamic token aggregation and resolution sensitivity. It represents each token as a wave with both amplitude and phase information (Figure 5E). Tokens are aggregated according to their contents, which vary across input images, through the dynamically produced phase. Two parallel paths aggregate spatial information along the horizontal and vertical directions, respectively. To address sensitivity to image resolution, WaveMLP adopts a simple strategy that restricts the fully connected layers to connect only tokens within a local window.49 However, the local window limits long-range dependencies.
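A NumPy sketch of phase-aware token mixing along one axis, in the spirit of WaveMLP. Following our reading of Tang et al.,76 each token is treated as an amplitude times $e^{i\theta}$, and the output sums the real part through one weight matrix and the imaginary part through another. The linear phase estimator `Wtheta` is a hypothetical stand-in (WaveMLP estimates the phase with its own learned module), and the windowing mirrors the local-window strategy mentioned above.

```python
import numpy as np

def patm_1d(X, Wt, Wi, Wtheta):
    """Phase-aware mixing of N tokens. X: (N, C) amplitudes; Wt, Wi: (N, N) weights for the
    real (cos) and imaginary (sin) parts; Wtheta: (C, C) hypothetical linear phase estimator."""
    theta = X @ Wtheta                      # input-dependent phase per token and channel
    return Wt @ (X * np.cos(theta)) + Wi @ (X * np.sin(theta))

def patm_windowed(X, Wt, Wi, Wtheta):
    """Mix only within non-overlapping windows of w = Wt.shape[0] tokens, so the same
    weights apply to any sequence length divisible by w."""
    w = Wt.shape[0]
    assert X.shape[0] % w == 0, "length must be divisible by the window size"
    return np.concatenate([patm_1d(X[s:s + w], Wt, Wi, Wtheta)
                           for s in range(0, X.shape[0], w)], axis=0)

rng = np.random.default_rng(0)
w, C = 4, 16
Wt, Wi = rng.standard_normal((w, w)), rng.standard_normal((w, w))
Wtheta = rng.standard_normal((C, C)) * 0.1
for n in (8, 16):  # two "resolutions", one set of weights
    print(patm_windowed(rng.standard_normal((n, C)), Wt, Wi, Wtheta).shape)
```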

MorphMLP77 considers both long-range and short-range dependencies while retaining the static aggregation strategy (Figure 5F). It focuses on local details in the early stages of the network and gradually shifts to long-range modeling in the later stages. A local window is used to address image resolution sensitivity, and the window size increases with the number of layers. The authors find that this feature extraction scheme benefits both images and videos.
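The local-window remedy can be sketched for the width axis as follows. The weights are sized by the window length L rather than by the image width, so one set of weights serves any width that is a multiple of L; the price is that tokens in different windows never interact. This is a hypothetical illustration, not WaveMLP's or MorphMLP's code; MorphMLP, for instance, additionally enlarges the window in deeper layers.

```python
import numpy as np


def windowed_width_mixing(x, w_local):
    """Mix tokens along the width axis only inside non-overlapping windows of length L.

    x:       (H, W, C) feature map, with W a multiple of L
    w_local: (L, L) weights shared by every window
    """
    h, w, c = x.shape
    l = w_local.shape[0]
    if w % l != 0:
        raise ValueError("width must be a multiple of the window size")
    windows = x.reshape(h, w // l, l, c)                  # split each row into W/L windows
    mixed = np.einsum("jk,nmkc->nmjc", w_local, windows)  # same (L, L) weights in every window
    return mixed.reshape(h, w, c)


rng = np.random.default_rng(4)
w_local = rng.standard_normal((4, 4))
# The same weights accept two different resolutions
print(windowed_width_mixing(rng.standard_normal((8, 8, 3)), w_local).shape)    # (8, 8, 3)
print(windowed_width_mixing(rng.standard_normal((12, 16, 3)), w_local).shape)  # (12, 16, 3)
```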

Discussion

ViP, Sparse MLP, RaftMLP, and DynaMixer encode spatial information along the axial directions instead of over the entire plane, which preserves long-range dependencies to a certain extent while reducing the number of parameters and the computational cost. However, they remain sensitive to image resolution. WaveMLP and MorphMLP adopt a local window strategy but discard long-range dependencies. Furthermore, none of these variants can mix tokens both globally and locally.

Channel-only projection blocks

The mainstream method adopts Swin's proposal49 and uses a local window to achieve resolution insensitivity. Another approach replaces all the spatial fully connected layers with channel projection, i.e., 1 × 1 convolution. However, the tokens then no longer interact with each other, and the concept of the receptive field disappears. To reintroduce a receptive field, many works align features from different spatial locations to the same channel by shifting (or moving) the feature maps, and then exchange spatial information through channel projection. This operation gives an MLP-like architecture the same local receptive field as a CNN-like architecture.

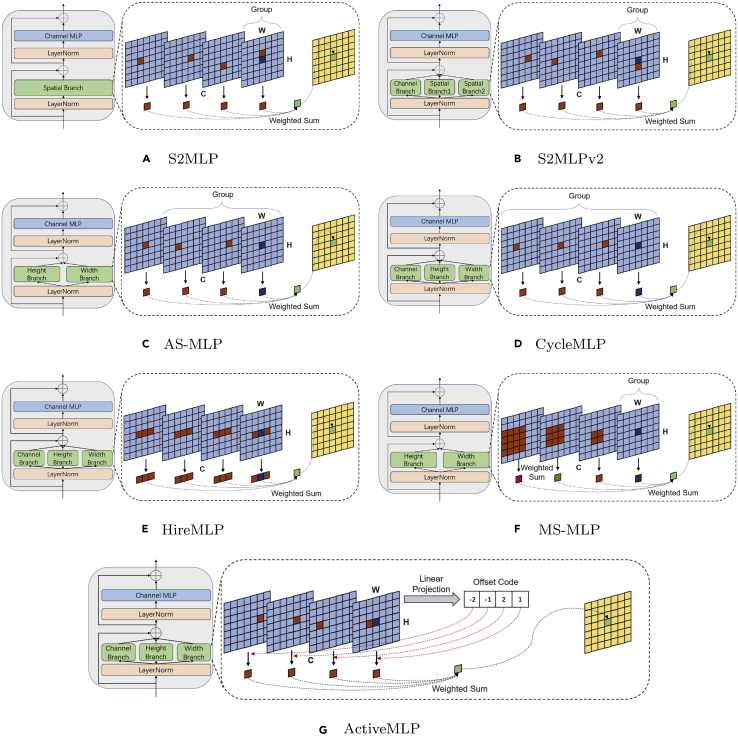

Yu et al.78 propose a spatial-shift MLP-like architecture for vision called S2MLP. The practice is quite simple. As shown in Figure 6A, the spatial-shift module splits the C channels into several groups and shifts different channel groups in different directions. After the shift, features of different tokens are aligned in the same channel, so the subsequent channel projection exchanges spatial information. Building on this simple approach, Yu et al.79 borrow the idea of ViP to extend the spatial-shift module into three parallel branches and fuse the branch features with a split attention module, further improving performance (Figure 6B). This new network is called S2MLPv2.
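A minimal sketch of the spatial shift, assuming four channel groups, one-pixel shifts, and borders that keep their original values (the directions assigned to each group and the border handling are illustrative assumptions, not taken from the S2MLP code):

```python
import numpy as np


def spatial_shift(x):
    """Shift four channel groups by one pixel in four directions (S2MLP-style sketch).

    x: (H, W, C) with C divisible by 4. Border pixels keep their original values.
    """
    g = x.shape[2] // 4
    out = x.copy()
    out[:, 1:, 0 * g:1 * g] = x[:, :-1, 0 * g:1 * g]  # group 0 reads its left neighbor
    out[:, :-1, 1 * g:2 * g] = x[:, 1:, 1 * g:2 * g]  # group 1 reads its right neighbor
    out[1:, :, 2 * g:3 * g] = x[:-1, :, 2 * g:3 * g]  # group 2 reads the pixel above
    out[:-1, :, 3 * g:4 * g] = x[1:, :, 3 * g:4 * g]  # group 3 reads the pixel below
    return out


def shift_then_project(x, w_channel):
    """After the shift, a channel projection (1 x 1 convolution) mixes the four neighbors."""
    return spatial_shift(x) @ w_channel


x = np.arange(5 * 5 * 4, dtype=float).reshape(5, 5, 4)
s = spatial_shift(x)
# At pixel (2, 2), the four channels now hold features from four different neighbors
print(s[2, 2, 0] == x[2, 1, 0], s[2, 2, 1] == x[2, 3, 1], s[2, 2, 2] == x[1, 2, 2], s[2, 2, 3] == x[3, 2, 3])
# True True True True
```

The shift itself has no parameters; all learnable weights sit in the channel projection `w_channel`, which is why the cost no longer depends on the image resolution.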

Figure 6.

Visualizing the module diagram of the MLP-like variants

Visualizing the module diagram of the MLP-like variants, including S2MLP (A), S2MLPv2 (B), AS-MLP (C), CycleMLP (D), HireMLP (E), MS-MLP (F), and ActiveMLP (G). Green branch, resolution insensitive. The channel branch and linear projection both perform a linear projection along the channel dimension. Group means that the channel is split into different groups. The expression in the dashed box is consistent with Figure 1.

Rather than applying a single shift to each group, the Axial Shifted MLP (AS-MLP)80 applies different shifts within each group of a few channels, e.g., among every three feature maps along the channel direction, one is shifted left, one is not shifted, and one is shifted right (Figure 6C). In addition, AS-MLP uses two parallel branches for horizontal and vertical shifting, whose outputs are added element-wise and projected along the channel dimension to integrate information. It is worth mentioning that AS-MLP is also extended with different shifting strategies, giving it a receptive field similar to that of dilated (atrous) convolution.81,82
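A minimal sketch of the axial shift, assuming contiguous channel groups and zero padding at the borders (both are assumptions; AS-MLP's exact grouping, padding, and dilation options may differ). Group g reads from an offset of g - shift_size // 2, so with a shift size of 3 the groups read from offsets -1, 0, and +1.

```python
import numpy as np


def axial_shift(x, shift_size=3, axis=1):
    """Split channels into `shift_size` contiguous groups and shift group g by g - shift_size // 2.

    x: (H, W, C). axis=1 shifts along width, axis=0 along height. Out-of-image positions are zero.
    """
    pad = shift_size // 2
    pad_width = [(0, 0)] * 3
    pad_width[axis] = (pad, pad)
    padded = np.pad(x, pad_width)
    n = x.shape[axis]
    out = np.empty_like(x)
    for g, idx in enumerate(np.array_split(np.arange(x.shape[2]), shift_size)):
        offset = g - pad  # -1, 0, +1 when shift_size == 3
        # Along the chosen axis, out[..., j, idx] = x[..., j + offset, idx]
        window = np.take(padded, np.arange(pad + offset, pad + offset + n), axis=axis)
        out[:, :, idx] = window[:, :, idx]
    return out


def as_mlp_mixing(x, w_channel, shift_size=3):
    """Horizontal and vertical shift branches, summed, then projected along channels."""
    horizontal = axial_shift(x, shift_size, axis=1)
    vertical = axial_shift(x, shift_size, axis=0)
    return (horizontal + vertical) @ w_channel


x = np.arange(12, dtype=float).reshape(1, 4, 3)  # one row, four columns, three channels
print(axial_shift(x)[0].T)  # each printed row is one channel across the four columns
```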

CycleMLP83 was published three days after AS-MLP. Although CycleMLP does not shift feature maps directly, it integrates features from different spatial locations along the channel direction by employing deformable convolution,84 an approach equivalent to shifting the feature map. As illustrated in Figure 6D, CycleMLP differs slightly from AS-MLP in that it has three branches, whereas AS-MLP has only two. Furthermore, CycleMLP relies on split attention to fuse the branch features.

ActiveMLP85 dynamically estimates the offset, rather than manually setting it like AS-MLP and CycleMLP do (Figure 6G). It first predicts the spatial locations of helpful contextual features along each direction at the channel level, and then finds and fuses them. This is equivalent to a deformable convolution.84

HireMLP86 adopts inner-region and cross-region rearrangements before channel projection to communicate spatial information. The inner-region rearrangement expands the feature map along the height or width direction, and the cross-region rearrangement moves the feature map cyclically along the width or height direction. The HireMLP block still comprises three parallel branches, and the output feature is obtained by adding the branched features (Figure 6E).
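The two rearrangements can be sketched as follows, assuming an (H, W, C) layout and hypothetical names; HireMLP's actual block also includes normalization, several branches, and other details omitted here. The inner-region step stacks spatial neighbors into the channel dimension so that a channel projection can mix them, and the cross-region step is a cyclic shift implemented with `np.roll`.

```python
import numpy as np


def inner_region_height(x, region, w_fc):
    """Inner-region rearrangement along height (simplified sketch).

    x: (H, W, C) with H divisible by `region`; w_fc: (region * C, region * C).
    Every `region` consecutive rows are stacked into the channel dimension, so a
    channel projection can mix tokens inside each region; the layout is then restored.
    """
    h, w, c = x.shape
    y = x.reshape(h // region, region, w, c).transpose(0, 2, 1, 3)  # (H/r, W, r, C)
    y = y.reshape(h // region, w, region * c) @ w_fc                  # mix within each region
    y = y.reshape(h // region, w, region, c).transpose(0, 2, 1, 3)    # back to (H/r, r, W, C)
    return y.reshape(h, w, c)


def cross_region_shift(x, step, axis=1):
    """Cross-region rearrangement: cyclically move the feature map along one axis."""
    return np.roll(x, step, axis=axis)


rng = np.random.default_rng(5)
x = rng.standard_normal((6, 4, 2))
w_fc = rng.standard_normal((3 * 2, 3 * 2))
y = inner_region_height(x, 3, w_fc)
print(y.shape)  # (6, 4, 2)
```

The cyclic shift moves tokens across region borders, so that the next inner-region mixing can combine tokens that previously belonged to different regions.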

The six models above can exchange only local information through feature map movement. MS-MLP87 effectively expands the receptive field by mixing neighboring and distant tokens from fine- to coarse-grained levels and then gathering them via a shifting operation. In terms of implementation, MS-MLP applies depthwise convolutions of different sizes before channel alignment (Figure 6F). Compared with a global receptive field or a local window, the receptive field of MS-MLP still has gaps.

Discussion

After the feature maps are shifted, channel projection is equivalent to sampling features at different locations in different channels and aggregating them. In other words, this strategy is a manually designed deformable convolution. Thus, it may be more accurate to call these models CNN-like, as they can perform only local feature extraction.
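The equivalence can be checked numerically. In this sketch (four channel groups, one-pixel shifts, and zero padding are assumptions chosen for illustration), shifting followed by a channel projection gives exactly the same output as a 3 × 3 convolution whose kernel is nonzero at only one fixed tap per input channel, i.e., a convolution with a hand-designed sampling pattern.

```python
import numpy as np

# (dy, dx) offset read by each of the four channel groups
OFFSETS = [(0, -1), (0, 1), (-1, 0), (1, 0)]


def shift_zero_pad(x):
    """Channel group k reads x[i + dy, j + dx]; positions outside the image read 0."""
    h, w, c = x.shape
    g = c // 4
    p = np.pad(x, ((1, 1), (1, 1), (0, 0)))
    out = np.empty_like(x)
    for k, (dy, dx) in enumerate(OFFSETS):
        sl = slice(k * g, (k + 1) * g)
        out[:, :, sl] = p[1 + dy:1 + dy + h, 1 + dx:1 + dx + w, sl]
    return out


def equivalent_kernel(w_channel):
    """A 3x3 kernel (k_h, k_w, C_in, C_out) in which each input channel uses exactly one tap."""
    c_in, c_out = w_channel.shape
    g = c_in // 4
    kernel = np.zeros((3, 3, c_in, c_out))
    for k, (dy, dx) in enumerate(OFFSETS):
        sl = slice(k * g, (k + 1) * g)
        kernel[1 + dy, 1 + dx, sl, :] = w_channel[sl, :]
    return kernel


def conv3x3_same(x, kernel):
    """Plain 3x3 cross-correlation with zero padding, as computed by deep learning 'conv' layers."""
    h, w, _ = x.shape
    p = np.pad(x, ((1, 1), (1, 1), (0, 0)))
    out = np.zeros((h, w, kernel.shape[3]))
    for dy in range(3):
        for dx in range(3):
            out += p[dy:dy + h, dx:dx + w, :] @ kernel[dy, dx]
    return out


rng = np.random.default_rng(6)
x = rng.standard_normal((5, 6, 8))
w_channel = rng.standard_normal((8, 3))
lhs = shift_zero_pad(x) @ w_channel
rhs = conv3x3_same(x, equivalent_kernel(w_channel))
print(np.allclose(lhs, rhs))  # True
```

In this configuration the center tap is never used and each channel samples a single fixed location; a deformable convolution would instead learn or predict these locations, as ActiveMLP does with its offsets.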

Spatial and channel projection blocks

Some variants still retain full spatial and channel projection. Their module designs are inventive and improve performance. Nevertheless, these methods are resolution sensitive, which prevents them from serving as general vision backbones.

gMLP67 is the first proposed MLP-Mixer variant. gMLP was developed by Liu et al., who experimented with several design choices for the token-mixing architecture and found that spatial projections work well when they are linear and paired with multiplicative gating. In detail, the spatial gating unit first linearly projects the input $X \in \mathbb{R}^{n \times d}$ across its n tokens, $f_{W,b}(X) = WX + b$, where $W \in \mathbb{R}^{n \times n}$. The output of the spatial gating unit is then $s(X) = X \odot f_{W,b}(X)$, where $\odot$ denotes the element-wise product. The authors found it effective to split X into two independent parts along the channel dimension, $(X_1, X_2)$, for the multiplicative bypass and the gating function: $s(X) = X_1 \odot f_{W,b}(X_2)$. Note that the gMLP block has a channel projection before and after the spatial gating unit but no longer has a channel-mixing MLP. Notably, gMLP achieves good performance in both computer vision and natural language processing tasks.
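The spatial gating unit can be written in a few lines. This NumPy sketch assumes tokens are the rows of Z and omits the normalization that gMLP applies to the gating half before the projection. The gMLP authors initialize W near zero and b to ones so that the unit starts close to an identity mapping on Z1, which the final check reproduces.

```python
import numpy as np


def spatial_gating_unit(z, w_spatial, b_spatial):
    """gMLP-style spatial gating: s(Z) = Z1 * (W Z2 + b) (normalization omitted).

    z:         (n, d) token features with n tokens and an even channel count d
    w_spatial: (n, n) projection across tokens
    b_spatial: (n,) bias, one value per token
    """
    z1, z2 = np.split(z, 2, axis=-1)            # split along the channel dimension
    gate = w_spatial @ z2 + b_spatial[:, None]  # f_{W,b}(Z2): linear projection over tokens
    return z1 * gate                            # element-wise (multiplicative) gating


rng = np.random.default_rng(7)
n, d = 9, 6
z = rng.standard_normal((n, d))
out = spatial_gating_unit(z, rng.standard_normal((n, n)), rng.standard_normal(n))
print(out.shape)  # (9, 3)

# With W = 0 and b = 1 the unit passes Z1 through unchanged
print(np.allclose(spatial_gating_unit(z, np.zeros((n, n)), np.ones(n)), z[:, :3]))  # True
```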

Lou et al.88 consider how to scale the MLP-Mixer to more parameters at comparable computational cost, making the model more computationally efficient and better performing. Specifically, they introduce the mixture-of-experts (MoE)89 scheme into the MLP-Mixer and propose Sparse-MLP(MoE). A few months earlier, Riquelme et al.90 had already applied MoE to the MLP of the Transformer block, the counterpart of the channel-mixing MLP in MLP-Mixer. Continuing this line of work, Lou et al. extend MoE from the channel-mixing MLP to the token-mixing MLP and achieve some performance gains over the original MLP-Mixer. More details about MoE can be found in Shazeer et al.89 and Riquelme et al.90
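A generic top-1 mixture-of-experts layer can be sketched as below. It illustrates the principle that the parameter count grows with the number of experts while each input still passes through only one expert. It is not Sparse-MLP(MoE)'s implementation; practical MoE layers typically add load-balancing losses and expert capacity limits, which are omitted here, and all names are hypothetical.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def top1_moe(inputs, w_gate, experts):
    """Route each input vector to a single expert (top-1 gating).

    inputs:  (m, d_in) vectors, e.g., the per-channel token vectors a token-mixing MLP consumes
    w_gate:  (d_in, E) router weights
    experts: list of E weight matrices of shape (d_in, d_out)
    """
    probs = softmax(inputs @ w_gate)     # (m, E) routing probabilities
    choice = probs.argmax(axis=-1)       # index of the selected expert per input
    out = np.zeros((inputs.shape[0], experts[0].shape[1]))
    for e, w_e in enumerate(experts):
        mask = choice == e
        # Only the chosen expert runs, scaled by its gate probability
        out[mask] = (inputs[mask] @ w_e) * probs[mask, e][:, None]
    return out, choice


rng = np.random.default_rng(8)
m, d_in, d_out, num_experts = 10, 16, 16, 4
inputs = rng.standard_normal((m, d_in))
experts = [rng.standard_normal((d_in, d_out)) for _ in range(num_experts)]
out, choice = top1_moe(inputs, rng.standard_normal((d_in, num_experts)), experts)
print(out.shape)  # (10, 16)
```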

Yu et al.91 impose a circulant-structure constraint on the token-mixing MLP weights, reducing the spatial projection's sensitivity to spatial translation while preserving the global receptive field. It should be noted that the model is still resolution sensitive. The circulant constraint reduces the number of parameters from quadratic to linear in the number of tokens, but it does not by itself reduce the computational cost. The authors therefore employ a fast Fourier transform to reduce the FLOPs and improve computational efficiency.
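The parameter and FLOP savings of a circulant constraint can be seen in a 1D sketch over a flattened token sequence. This is an assumed simplification (CCS's actual structure over spatial axes and channel groups is not reproduced): n weights define an n × n matrix, and by the convolution theorem the product can be computed with FFTs in O(n log n) instead of O(n²).

```python
import numpy as np


def circulant(c):
    """Dense n x n circulant matrix with first column c: C[i, j] = c[(i - j) % n]."""
    n = len(c)
    idx = (np.arange(n)[:, None] - np.arange(n)[None, :]) % n
    return c[idx]


def circulant_mix_fft(c, x):
    """Compute circulant(c) @ x for x of shape (n, channels) via FFT along the token axis."""
    fc = np.fft.fft(c)[:, None]
    return np.real(np.fft.ifft(fc * np.fft.fft(x, axis=0), axis=0))


rng = np.random.default_rng(9)
n = 16                                # number of tokens, e.g., a flattened 4 x 4 grid
c = rng.standard_normal(n)            # n weights instead of n * n
x = rng.standard_normal((n, 3))
print(np.allclose(circulant(c) @ x, circulant_mix_fft(c, x)))  # True
```

Cyclically shifting the input sequence cyclically shifts the output by the same amount, which is the property behind the reduced sensitivity to translation.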

Finally, and most ingeniously, Ding et al.92 propose a novel structural re-parameterization technique that merges convolutional layers into fully connected layers. During training, the proposed RepMLPNet learns parallel fully connected layers (global receptive field) and convolutional layers (local receptive field), and the two are then combined by transforming the parameters. Compared with the fully connected weights before merging, the re-parameterized weights have larger values around a specific position, suggesting that the model focuses more on the neighborhood. Although large images can be sliced and fed to RepMLPNet for feature extraction, its resolution sensitivity prevents it from being a general vision backbone.
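The core of structural re-parameterization can be shown in 1D: a zero-padded convolution over n tokens is a linear map, so it can be written as a banded n × n matrix and simply added to the fully connected weights. This sketch is an assumed simplification of RepMLPNet, which works on 2D feature maps and also folds batch normalization into the weights; the function names are hypothetical.

```python
import numpy as np


def conv1d_same(x, kernel):
    """Zero-padded 'same' 1D cross-correlation with an odd-length kernel."""
    r = len(kernel) // 2
    p = np.pad(x, r)
    return np.array([p[i:i + len(kernel)] @ kernel for i in range(len(x))])


def conv1d_as_matrix(kernel, n):
    """Matrix T such that T @ x == conv1d_same(x, kernel) for length-n inputs."""
    r = len(kernel) // 2
    t = np.zeros((n, n))
    for i in range(n):
        for j, weight in enumerate(kernel):
            col = i + j - r
            if 0 <= col < n:
                t[i, col] = weight
    return t


rng = np.random.default_rng(10)
n = 8
x = rng.standard_normal(n)
w_fc = rng.standard_normal((n, n))   # global branch (fully connected over n tokens)
kernel = rng.standard_normal(3)      # local branch (3-tap convolution)

train_time = w_fc @ x + conv1d_same(x, kernel)   # two parallel branches
w_merged = w_fc + conv1d_as_matrix(kernel, n)    # re-parameterized single FC layer
print(np.allclose(train_time, w_merged @ x))     # True
```

The convolution contributes only to a band around the diagonal of the merged matrix, which is consistent with the observation above that the re-parameterized weights emphasize each token's neighborhood.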

Others

ConvMLP93 is another special variant: a lightweight, stage-wise design that incorporates convolutional layers. ConvMLP replaces the token-mixing MLP with a depthwise convolution, and we therefore consider ConvMLP a pure CNN model rather than an MLP-like model.

In addition, LIT94 replaces the self-attention layers with MLPs in the first two stages of a pyramid ViT. UniNet95 jointly searches for the optimal combination of convolution, self-attention, and MLP to build a series of all-operator network architectures with high performance on visual tasks. However, these methods are beyond the scope of Vision MLP.

Receptive field and complexity analysis

The main novelty of MLP is that it lets the model learn a global receptive field autonomously from raw data. However, do these so-called MLP-like variants still hold to this original intent? Following the module designs above, we compare and analyze the receptive fields of these blocks to give a more in-depth picture of the MLP-like variants. We then compare and analyze the complexity of the different modules, with the corresponding results reported in Table 2, which also compares each block's spatial sensitivity, channel sensitivity, and resolution sensitivity.

Table 2.

Comparison of different spatial information fusion modules

| Spatial operation | Block | Spatial | Channel | Resolution sensitive | Params | FLOPs |
|---|---|---|---|---|---|---|
| Spatial projection | MLP-Mixer15 | specific | agnostic | true | | |
| | ResMLP60 | specific | agnostic | true | | |
| | FeedForward61 | specific | agnostic | true | | |
| | gMLP67 | specific | agnostic | true | | |
| | Sparse-MLP(MoE)88 | specific | agnostic | true | | |
| | CCS91 | agnostic | group specific | true | | |
| | RepMLPNet92 | specific | group specific | true | | |
| Axial projection | RaftMLP74 | specific | agnostic | true | | |
| | ViP71 | specific | specific | true | | |
| | Sparse MLP73 | specific | specific | true | | |
| | DynaMixer75 | specific | specific | true | | |
| | WaveMLP76 | specific | specific | false | | |
| | MorphMLP77 | specific | specific | false | | |
| Shifting & channel projection | S2MLP78 | agnostic | specific | false | | |
| | S2MLPv279 | agnostic | specific | false | | |
| | AS-MLP80 | agnostic | specific | false | | |
| | CycleMLP83 | agnostic | specific | false | | |
| | HireMLP86 | agnostic | specific | false | | |
| | MS-MLP87 | agnostic | specific | false | | |
| | ActiveMLP85 | agnostic | specific | false | | |

H, W, and C are the feature map's height, width, and number of channels, respectively. L is the local window size. "Spatial" refers to whether feature extraction is sensitive to the spatial location of objects: "specific" means true, while "agnostic" means false. "Channel" refers to whether weights are shared between channels: "agnostic" shares weights among all channels, "group specific" shares weights within groups, and "specific" does not share weights. "Resolution sensitive" refers to whether the module is sensitive to image resolution.

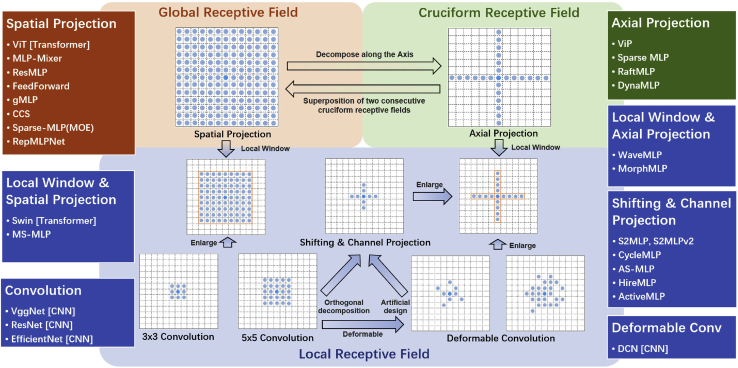

Receptive field

The term receptive field was first used by Sherrington96 in 1906 to describe the skin area that could trigger a scratch reflex in a dog. Today the term is also used for artificial neural networks, where it denotes the region of the input that produces a given output value; it measures how relevant an output feature is to the input region. Different information aggregation methods generate different receptive fields, which we divide into three categories: global, cruciform, and local. CNNs have local receptive fields, while vision Transformers have global receptive fields. Swin Transformer49 introduces the concept of the local window, which reduces the global receptive field to a fixed region independent of the image resolution but still larger than that of a standard convolutional layer. Figure 7 shows schematic diagrams of the different receptive fields and the corresponding MLP-like variants in detail.

Figure 7.

Schematic diagram of different receptive fields, including global, cruciform, and local

The bright blue dot indicates the position of the encoded token, and the light blue dots are the other locations involved in the calculation. The range of the blue dots constitutes the receptive field. The orange rectangle is the local window, which covers a larger region than commonly used convolutional kernels. The transitions between different receptive fields are marked next to the arrows. The corresponding networks are listed on the left and right sides. Although MS-MLP87 uses shifting and channel projection, its receptive field is most similar to that of a local window with spatial projection because of the depthwise convolution applied before shifting the feature maps. Because the local receptive fields formed by these MLP-like variants can also be realized by convolution, these variants are not essentially different from CNNs.

Similar to MLP-Mixer,15 the full spatial projections in gMLP,67 Sparse-MLP(MoE),88 CCS,91 and RepMLPNet92 still retain the global receptive field, i.e., the encoded feature of each token is a weighted sum of all input token features. It must be acknowledged that this global receptive field is at the patch level, not at the pixel level in the traditional sense. In other words, globality is approximated through patch partition, as in Transformer-based methods. The patch size affects both the final result and the network's computational complexity. Notably, patch partition is a strong artificial assumption that is often overlooked.

To balance long-range dependence and computational cost, axial projection decomposes the full spatial projection orthogonally, i.e., along the horizontal and vertical directions. The projections on the two axes are applied serially (RaftMLP74) or in parallel (ViP,71 Sparse MLP,73 and DynaMixer75). Thus, in a single projection, the encoded token interacts only with tokens in the same row or column, forming a cruciform receptive field that retains horizontal and vertical long-range dependence. However, if the token of interest and the current token do not share a row or column, the two tokens cannot interact within that projection.

Global and cruciform receptive fields must cover the entire height and width of the space, which ties the number of neurons in the fully connected layer one-to-one to the image resolution and restricts the model to images of a specific size. To eliminate resolution sensitivity, many MLP-like variants (blue box in Figure 7) use local receptive fields. Two approaches are mainly adopted: local windows, and channel projection after shifting feature maps. However, these operations can also be achieved by enlarging the convolutional kernel and using deformable convolution, so these variants are not fundamentally different from CNNs. A concern is that these MLP-like variants abandon the global receptive field, a defining feature of MLPs.

In fact, cruciform and local receptive fields are special cases of the global receptive field in which the weights are 0 outside a specific area. These two receptive field types are therefore equivalent to learning only a small part of the weights of the global receptive field and fixing all other weights at 0. This is also an artificial inductive bias, similar to the locality introduced by convolutional layers.
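The claim that a cruciform receptive field is a global one with most weights fixed at 0 can be checked directly. The sketch below (hypothetical helper name; parallel height and width branches summed, as an assumed simplification of the axial variants) builds the equivalent dense HW × HW matrix and shows that, for any token, only the H + W - 1 weights in its row and column can be nonzero.

```python
import numpy as np


def parallel_axial_matrix(w_h, w_w):
    """Dense (HW, HW) matrix equivalent to summing a height branch and a width branch.

    Tokens are flattened row-major: token (i, j) has index i * W + j.
    kron(w_h, I_W) mixes tokens in the same column; kron(I_H, w_w) mixes tokens in the same row.
    """
    h, w = w_h.shape[0], w_w.shape[0]
    return np.kron(w_h, np.eye(w)) + np.kron(np.eye(h), w_w)


H, W = 5, 6
m = parallel_axial_matrix(np.ones((H, H)), np.ones((W, W)))
row = m[2 * W + 3].reshape(H, W)   # weights used to encode token (2, 3)
print((row != 0).astype(int))      # a cross through row 2 and column 3
print(np.count_nonzero(row), H * W)  # 10 30
```

Only 10 of the 30 possible weights for this token are free; the rest are fixed at 0 by design, which is the inductive bias described above.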

Complexity analysis

Since all the network blocks above contain a channel-mixing MLP module with $\mathcal{O}(C^2)$ parameters and $\mathcal{O}(HWC^2)$ FLOPs, we ignore this term in the following analysis and focus on the modules used for spatial information fusion, i.e., the token-mixing MLP and its variants. Table 2 compares the attributes and complexity of the different spatial information fusion modules, where each module is named after its network for ease of understanding. The complexity figures follow the analysis in the original papers.

The full spatial projection in MLP-Mixer15 and its variants has $\mathcal{O}(H^2W^2)$ parameters and $\mathcal{O}(H^2W^2C)$ FLOPs, both quadratic in the number of tokens and hence in the image resolution. In theory, this makes it difficult to apply the network to high-resolution images with current computing power. A compromise to improve computational efficiency is therefore to enlarge the patch size significantly, e.g., to 16 × 16, but the extracted information is then too coarse to discriminate small objects. The only exception is CCS,91 which constructs an $HW \times HW$ weight matrix from weight vectors of length $HW$ and uses a fast Fourier transform to reduce the computational cost.

In contrast, and as in RaftMLP,74 axial projection, the orthogonal decomposition of the full spatial projection, reduces the number of parameters from $\mathcal{O}(H^2W^2)$ to $\mathcal{O}(H^2+W^2)$ and the FLOPs from $\mathcal{O}(H^2W^2C)$ to $\mathcal{O}(HWC(H+W))$. If three parallel branches (height, width, and channel) are used, the number of parameters and the FLOPs are $\mathcal{O}(H^2+W^2+C^2)$ and $\mathcal{O}(HWC(H+W+C))$, respectively. Note that fusing information from multiple branches, e.g., by split attention or by dimensionality reduction after channel concatenation, does not increase the computational complexity. With a local window of size L, and assuming a channel branch exists, the number of parameters and FLOPs are further reduced to $\mathcal{O}(L^2+C^2)$ and $\mathcal{O}(HWC(L+C))$. DynaMixer75 must estimate its weight matrices dynamically, which leads to higher complexity.

Approaches based on shifting feature maps and channel projection reduce the computational complexity further, with $\mathcal{O}(C^2)$ parameters and $\mathcal{O}(HWC^2)$ FLOPs. MS-MLP87 adds some depthwise convolutions and ActiveMLP85 adds some channel projections, but neither changes the overall complexity. Moreover, the number of weights is decoupled from the image resolution, so the resolution no longer constrains these variants.
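The orders of growth above can be made concrete with a small calculator. The formulas are leading-order terms only, with constants, biases, and expansion ratios dropped, and the example sizes (a 56 × 56 token grid with 96 channels, resembling an early pyramid stage) are assumptions rather than any particular model's configuration.

```python
def token_mixing_cost(h, w, c, kind):
    """Leading-order parameter and multiply-accumulate counts of the spatial mixing step."""
    n = h * w  # number of tokens
    if kind == "full":    # one (HW x HW) matrix applied to every channel
        return n * n, n * n * c
    if kind == "axial":   # height, width, and channel branches: (H x H), (W x W), (C x C)
        return h * h + w * w + c * c, n * c * (h + w + c)
    if kind == "shift":   # shifting is free; one (C x C) channel projection
        return c * c, n * c * c
    raise ValueError(f"unknown kind: {kind}")


for kind in ("full", "axial", "shift"):
    print(kind, token_mixing_cost(56, 56, 96, kind))
# full (9834496, 944111616)
# axial (15488, 62619648)
# shift (9216, 28901376)
```

Doubling H and W multiplies the full projection's parameters by 16 but leaves the shift-based design's parameters unchanged, which is the decoupling from resolution noted above.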

It is worth noting that computational complexity is only one determinant of inference time, as reshaping and shifting feature maps are also time-consuming. In addition, lower complexity does not mean that the proposed network has fewer parameters; on the contrary, the various networks retain comparable numbers of parameters (Table 4). Lower complexity allows these networks to have more layers and more channels.

Table 4.

Image classification results of MLP-like models on ImageNet-1K benchmark without extra data

| Model | Date | Structure | Top 1 (%) | Params (M) | FLOPs (G) | Open source code |
|---|---|---|---|---|---|---|
| Small models | | | | | | |
| Sparse-MLP(MoE)-S 88 | 2021.09 | single stage | 71.3 | 21 | – | false |
| RepMLPNet-T224 92 | 2021.12 | pyramid | 76.4 | 15.2 | 2.8 | true |
| ResMLP-12 60 | 2021.05 | single stage | 76.6 | 15 | 3.0 | true |
| Hire-MLP-Ti 86 | 2021.08 | pyramid | 78.9 | 17 | 2.1 | falseᵇ |
| gMLP-S 67 | 2021.05 | single stage | 79.4 | 20 | 4.5 | true |
| AS-MLP-T 80 | 2021.07 | pyramid | 81.3 | 28 | 4.4 | true |
| ViP-small/7 71 | 2021.06 | two stage | 81.5 | 25 | 6.9 | true |
| CycleMLP-B2 83 | 2021.07 | pyramid | 81.6 | 27 | 3.9 | true |
| MorphMLP-T 77 | 2021.11 | pyramid | 81.6 | 23 | 3.9 | false |
| Sparse MLP-T 73 | 2021.09 | pyramid | 81.9 | 24.1 | 5.0 | false |
| ActiveMLP-T 85 | 2022.03 | pyramid | 82.0 | 27 | 4.0 | false |
| S2-MLPv2-small/7 79 | 2021.08 | two stage | 82.0 | 25 | 6.9 | false |
| MS-MLP-T 87 | 2022.02 | pyramid | 82.1 | 28 | 4.9 | true |
| WaveMLP-S 76 | 2021.11 | pyramid | 82.6 | 30.0 | 4.5 | falseᵇ |
| DynaMixer-S 75 | 2022.01 | two stage | 82.7∗ | 26 | 7.3 | false |
| Medium models | | | | | | |
| FeedForward 61 | 2021.05 | single stage | 74.9 | 62 | 11.4 | true |
| Mixer-B/16 15 | 2021.05 | single stage | 76.4 | 59 | 11.7 | true |
| Sparse-MLP(MoE)-B 88 | 2021.09 | single stage | 77.9 | 69 | – | false |
| RaftMLP-12 74 | 2021.08 | single stage | 78.0 | 58 | 12.0 | false |
| ResMLP-36 60 | 2021.05 | single stage | 79.7 | 45 | 8.9 | true |
| Mixer-B/16 + CCS 91 | 2021.06 | single stage | 79.8 | 57 | 11 | false |
| RepMLPNet-B224 92 | 2021.12 | pyramid | 80.1 | 68.2 | 6.7 | true |
| S2-MLP-deep 78 | 2021.06 | single stage | 80.7 | 51 | 9.7 | false |
| ViP-medium/7 71 | 2021.06 | two stage | 82.7 | 55 | 16.3 | true |
| CycleMLP-B4 83 | 2021.07 | pyramid | 83.0 | 52 | 10.1 | true |
| AS-MLP-S 80 | 2021.07 | pyramid | 83.1 | 50 | 8.5 | true |
| Hire-MLP-B 86 | 2021.08 | pyramid | 83.1 | 58 | 8.1 | falseᵇ |
| MorphMLP-B 77 | 2021.11 | pyramid | 83.2 | 58 | 10.2 | false |
| Sparse MLP-B 73 | 2021.09 | pyramid | 83.4 | 65.9 | 14.0 | false |
| MS-MLP-S 87 | 2022.02 | pyramid | 83.4 | 50 | 9.0 | true |
| ActiveMLP-B 85 | 2022.03 | pyramid | 83.5 | 52 | 10.1 | false |
| S2-MLPv2-medium/7 79 | 2021.08 | two stage | 83.6 | 55 | 16.3 | false |
| WaveMLP-B 76 | 2021.11 | pyramid | 83.6 | 63.0 | 10.2 | falseᵇ |
| DynaMixer-M 75 | 2022.01 | two stage | 83.7∗ | 57 | 17.0 | false |
| Large models | | | | | | |
| Sparse-MLP(MoE)-L 88 | 2021.09 | single stage | 79.2 | 130 | – | false |
| S2-MLP-wide 78 | 2021.06 | single stage | 80.0 | 71 | 14.0 | false |
| gMLP-B 67 | 2021.05 | single stage | 81.6 | 73 | 15.8 | true |
| RepMLPNet-L256ᵃ 92 | 2021.12 | pyramid | 81.8 | 117.7 | 11.5 | true |
| ViP-large/7 71 | 2021.06 | two stage | 83.2 | 88 | 24.3 | true |
| CycleMLP-B5 83 | 2021.07 | pyramid | 83.2 | 76 | 12.3 | true |
| AS-MLP-B 80 | 2021.07 | pyramid | 83.3 | 88 | 15.2 | true |
| Hire-MLP-L 86 | 2021.08 | pyramid | 83.4 | 96 | 13.5 | falseᵇ |
| MorphMLP-L 77 | 2021.11 | pyramid | 83.4 | 76 | 12.5 | false |
| ActiveMLP-L 85 | 2022.03 | pyramid | 83.6 | 76 | 12.3 | false |
| MS-MLP-B 87 | 2022.02 | pyramid | 83.8 | 88 | 16.1 | true |
| DynaMixer-L 75 | 2022.01 | two stage | 84.3∗ | 97 | 27.4 | false |

The training and testing size is 224 × 224. "Date" means the initial release date on arXiv, where 2021.05 denotes May 2021. "Open source code" refers to whether official open-source code is available.

ᵃ The training and testing size is 256 × 256.

ᵇ Unofficial code and weights are open sourced at https://github.com/sithu31296/image-classification.

∗ The best performance.

Discussion on block

The bottlenecks of the token-mixing MLP (see Bottlenecks) have led researchers to redesign the block. Recently released MLP-like variants reduce the model's computational complexity, aggregate information dynamically, and resolve image resolution sensitivity. Specifically, researchers decompose the full spatial projection orthogonally, restrict interactions to a local window, apply channel projection after shifting feature maps, and introduce other manual designs. These careful and clever designs show that researchers recognize that the current amount of data and computing power is insufficient for pure MLPs. Comparing computational complexity is theoretically meaningful, but complexity is not the only determinant of inference time and final model efficiency. The receptive field analysis shows that the new paradigm is instead moving toward the old one. To put it more bluntly, the development of MLPs is heading back toward CNNs. Hence, further effort is needed to balance long-range dependence against image resolution sensitivity.

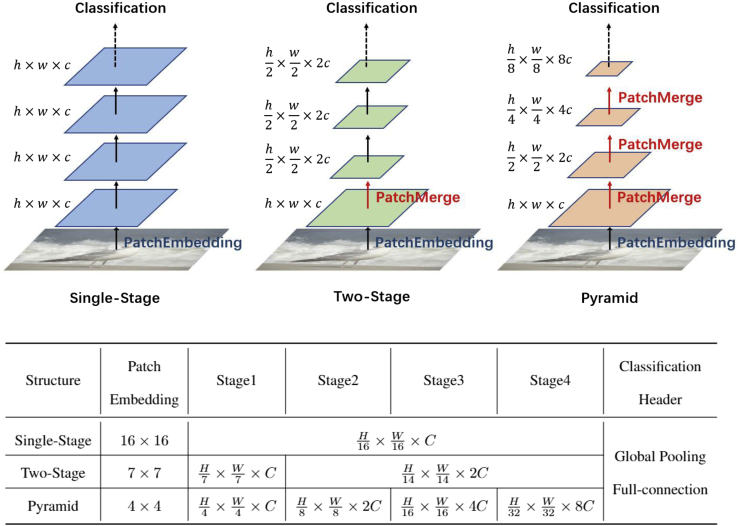

Architecture of MLP variants

An architecture is formed by stacking multiple blocks according to a chosen network structure. According to our investigation, the traditional structures for classification models also apply to MLP-like architectures, which fall into three categories (Figure 8): (1) a single-stage structure inherited from ViT,47 (2) a two-stage structure with smaller patches in the early stage and larger patches in the later stage, and (3) a CNN-like pyramid structure. Each stage contains multiple identical blocks. Table 3 summarizes the stacking structures of MLP-Mixer and its variants.

Figure 8.

Comparison of different hierarchical architectures for classification models

After patch embedding, the feature map size is $h \times w \times c$, where h, w, and c are the height, width, and number of channels. Between every two stages there is a patch merging operation, in which 2 × 2 neighboring patches are usually merged and the number of channels doubles. The resulting feature map resolutions, expressed relative to the input image height H and width W, differ among the single-stage, two-stage, and pyramid structures.

Table 3.

Stacking structures for MLP-Mixer and its variants

| Spatial operation | Single stage | Two stage | Pyramid |
|---|---|---|---|
| Spatial projection | MLP-Mixer,15 ResMLP,60 gMLP,67 FeedForward,61 CCS,91 Sparse-MLP(MoE)88 | – | RepMLPNet92 |
| Axial projection | RaftMLP74 | ViP,71 DynaMixer75 | Sparse MLP,73 WaveMLP,76 MorphMLP77 |
| Shifting and channel projection | S2MLP78 | S2MLPv279 | CycleMLP,83 AS-MLP,80 HireMLP,86 MS-MLP,87 ActiveMLP85 |

From single stage to pyramid

MLP-Mixer15 inherits the "isotropic" design of ViT,47 i.e., after patch embedding, no block changes the size of the feature map. This is called a single-stage structure. Models of this design include FeedForward,61 ResMLP,60 gMLP,67 S2MLP,78 CCS,91 RaftMLP,74 and Sparse-MLP(MoE).88 Because of limited computing resources, the patches used in the patch embedding of single-stage models are usually large, e.g., 16 × 16, and this coarse-grained patch partition limits the fineness of subsequent features. Although the impact is not significant for single-object classification, it affects many downstream tasks, such as object detection and instance segmentation, especially for small targets.

Intuitively, smaller patches help model fine-grained details in images and tend to achieve higher recognition accuracy. ViP71 further proposes a two-stage configuration. Specifically, the network uses fine 7 × 7 patch slices in the initial patch embedding and performs patch merging after a few layers. During patch merging, the height and width of the feature map are halved while the number of channels doubles. Compared with a coarser patch embedding, encoding fine-level patch representations brings a slight top 1 accuracy improvement on ImageNet-1k (from 80.6% to 81.5%). S2MLPv279 follows ViP and achieves a similar top 1 accuracy improvement on ImageNet-1k (from 80.9% to 82.0%), and DynaMixer75 also adopts a two-stage configuration.
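Patch merging between stages can be sketched as follows, assuming an (H, W, C) layout and a learned 4C → 2C projection. This is a common Swin-style choice with hypothetical names; ViP's own downsampling layer may differ, and the order in which the four neighbors are concatenated is arbitrary here.

```python
import numpy as np


def merge_neighborhoods(x):
    """Concatenate the channels of each 2x2 neighborhood: (H, W, C) -> (H/2, W/2, 4C)."""
    h, w, c = x.shape
    y = x.reshape(h // 2, 2, w // 2, 2, c).transpose(0, 2, 1, 3, 4)  # (H/2, W/2, 2, 2, C)
    return y.reshape(h // 2, w // 2, 4 * c)


def patch_merging(x, w_reduce):
    """Halve height and width, then project 4C -> 2C so that the channel count doubles."""
    return merge_neighborhoods(x) @ w_reduce


rng = np.random.default_rng(12)
x = rng.standard_normal((8, 8, 16))
y = patch_merging(x, rng.standard_normal((64, 32)))
print(x.shape, "->", y.shape)  # (8, 8, 16) -> (4, 4, 32)
```

The number of tokens drops by a factor of 4 at each merge, which is what keeps later stages affordable when early stages use small patches.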

If the initial patch size is reduced further, e.g., to 4 × 4, more patch merging is subsequently required to reduce the number of patches (or tokens), which leads the network to adopt a pyramid structure. Specifically, the entire structure contains four stages, in which the feature resolution decreases from H/4 × W/4 to H/32 × W/32 and the output dimension increases accordingly. Almost all recently proposed MLP-like variants adopt the pyramid structure (right column of Table 3). It is worth mentioning that a convolutional layer whose kernel size and stride both equal the patch size is equivalent to patch embedding. In the pyramid structure, researchers find that an overlapping patch embedding gives better results, i.e., a 7 × 7 convolution with stride 4 instead of a 4 × 4 convolution with stride 4, which is similar to ResNet (whose initial embedding layers are a 7 × 7 convolutional layer with stride 2 followed by a max-pooling layer).83,97
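The equivalence between patch embedding and a strided convolution, and the output size of an overlapping embedding, can be checked with a small NumPy sketch. Function names are hypothetical, and the padding of 2 used for the 7 × 7, stride-4 case is an assumption chosen so that a 224 × 224 input still yields a 56 × 56 token grid.

```python
import numpy as np


def patch_embed_linear(img, patch, w_embed):
    """Split (H, W, C_in) into non-overlapping patch x patch tiles and project each to D dims."""
    h, w, c = img.shape
    tiles = img.reshape(h // patch, patch, w // patch, patch, c).transpose(0, 2, 1, 3, 4)
    return tiles.reshape(h // patch, w // patch, patch * patch * c) @ w_embed


def conv2d(img, kernel, stride, padding=0):
    """Naive strided cross-correlation; kernel has shape (k, k, C_in, D)."""
    k = kernel.shape[0]
    x = np.pad(img, ((padding, padding), (padding, padding), (0, 0)))
    h_out = (x.shape[0] - k) // stride + 1
    w_out = (x.shape[1] - k) // stride + 1
    out = np.zeros((h_out, w_out, kernel.shape[3]))
    for i in range(h_out):
        for j in range(w_out):
            window = x[i * stride:i * stride + k, j * stride:j * stride + k, :]
            out[i, j] = np.tensordot(window, kernel, axes=([0, 1, 2], [0, 1, 2]))
    return out


def conv_output_size(n, kernel, stride, padding):
    return (n + 2 * padding - kernel) // stride + 1


rng = np.random.default_rng(13)
img = rng.standard_normal((8, 8, 3))
w_embed = rng.standard_normal((4 * 4 * 3, 5))
tokens = patch_embed_linear(img, 4, w_embed)
conv_out = conv2d(img, w_embed.reshape(4, 4, 3, 5), stride=4)
print(np.allclose(tokens, conv_out))  # True
print(conv_output_size(224, 4, 4, 0), conv_output_size(224, 7, 4, 2))  # 56 56
```

The same formula gives 112 for a 7 × 7, stride-2 convolution with padding 3, the first layer of ResNet; the subsequent max pooling then halves the resolution again.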

Discussion on architecture

The architecture of MLP-like variants gradually evolves from single stage to pyramid, with ever smaller patch sizes and finer features. We believe this trend serves not only to fit downstream task frameworks, such as FPN,98 but also to balance the original intention against computing power. When the patch size decreases, the number of tokens increases accordingly, so token interactions are limited to a small range to control the amount of computation. With token interactions limited to a small range, a global receptive field can only be preserved by reducing the size of the feature map, and hence the pyramid structure appears. This is consistent with the CNN concept, in which convolutional and pooling layers have been used alternately since 1979!3

We would like to point out that it is unfair to compare single-stage and pyramid models directly under current configurations (bottom half of Figure 8). What if a single-stage model used a patch partition as fine as that of a pyramid model? Would it perform worse than the pyramid model? Currently, this is unknown. What is known is that the computational cost would increase significantly and would be constrained by current computing devices.

Applications of MLP variants

This section reviews the applications of MLP-like variants in computer vision, including image classification, object detection and semantic segmentation, low-level vision, video analysis, and point clouds. Because MLPs have been developed for only a short time, we focus on the first two aspects and give an intuitive comparison of MLP-, CNN-, and Transformer-based models. For the latter three aspects, only a few works are currently available, so we limit ourselves to brief introductions.

Image classification

ImageNet24 is a large vision dataset designed for visual object classification. Since its release, it has been used as a benchmark for evaluating models in computer vision. Classification performance on ImageNet is often regarded as a reflection of a network's ability to extract visual features. After training on ImageNet, the model can be transferred well to other datasets and downstream tasks, e.g., object detection and segmentation, where the transferred part is usually called the vision backbone.

Table 4 compares the performance of current Vision MLP models on ImageNet-1k, including top 1 accuracy, parameters, and FLOPs; all results are taken from the cited papers. We further divide the MLP models into three size groups based on the number of parameters, and the rows within each group are sorted by top 1 accuracy. The results highlight that, under the same training configuration, the recently proposed variants bring good performance gains. Table 5 provides more detailed and comprehensive information. Compared with the latest CNN and Transformer models, MLP-like variants still lag behind. Without extra training data, both CNNs and Transformers exceed 87% top 1 accuracy, while the best MLP-like variant currently achieves only 84.3%. The higher performance may benefit from better architecture-specific training strategies, e.g., PeCo,99 but there is not yet a training scheme specific to MLPs. With additional training data, the gap between MLPs and other networks widens further.

Table 5.

Image classification results of representative CNN, ViT, and MLP-like models on ImageNet-1K benchmark

| Model | Pre-trained dataset | Top 1 (%) | Params (M) | FLOPs (G) |
|---|---|---|---|---|
| CNN based | | | | |
| VGG-16 100 | – | 71.5 | 134 | 15.5 |
| Xception 16 | – | 79.0 | 22.9 | – |
| Inception-ResNet-V2 101 | – | 80.1 | – | – |
| ResNet-50 97,102 | – | 80.4 | 25.6 | 4.1 |
| ResNet-152 97,102 | – | 82.0 | 60.2 | 11.5 |
| RegNetY-8GF 102,103 | – | 82.2 | 39 | 8.0 |
| RegNetY-16GF 103 | – | 82.9 | 84 | 15.9 |
| ConvNeXt-B 104 | – | 83.8 | 89.0 | 15.4 |
| VAN-Huge 105 | – | 84.2 | 60.3 | 12.2 |
| EfficientNetV2-M 106 | – | 85.1 | 54 | 24.0 |
| EfficientNetV2-L 106 | – | 85.7 | 120 | 53.0 |
| PolyLoss(EfficientNetV2-L) 107 | – | 87.2¹ | – | – |
| EfficientNetV2-XL 106 | ImageNet-21k | 87.3 | 208 | 94.0 |
| RepLKNet-XL 108 | ImageNet-21k | 87.8² | 335 | 128.7 |
| Meta pseudo labels (EfficientNet-L2) 109 | JFT-300M | 90.2³ | 480 | – |
| Transformer based | | | | |
| ViT-B/16 47 | – | 77.9 | 86 | 55.5 |
| DeiT-B/16 110 | – | 81.8 | 86 | 17.6 |
| T2T-ViT-24 111 | – | 82.3 | 64.1 | 13.8 |
| PVT-large 112 | – | 82.3 | 61 | 9.8 |
| Swin-B 49 | – | 83.5 | 88 | 15.4 |
| Nest-B 113 | – | 83.8 | 68 | 17.9 |
| PyramidTNT-B 114 | – | 84.1 | 157 | 16.0 |
| CSWin-B 115 | – | 84.2 | 78 | 15.0 |
| CaiT-M-48-448 116 | – | 86.5 | 356 | 330 |
| PeCo(ViT-H) 99 | – | 88.3¹ | 635 | – |
| ViT-L/16 47 | ImageNet-21k | 85.3 | 307 | – |
| SwinV1-L 49 | ImageNet-21k | 87.3 | 197 | 103.9 |
| SwinV2-G 117 | ImageNet-21k | 90.2² | 3000 | – |
| V-MoE 90 | JFT-300M | 90.4 | 14,700 | – |
| ViT-G/14 47 | JFT-300M | 90.5³ | 1843 | – |
| CNN + Transformer | | | | |
| Twins-SVT-B 118 | – | 83.2 | 56 | 8.6 |
| Shuffle-B 119 | – | 84.0 | 88 | 15.6 |
| CMT-B 120 | – | 84.5 | 45.7 | 9.3 |
| CoAtNet-3 121 | – | 84.5 | 168 | 34.7 |
| VOLO-D3 122 | – | 85.4 | 86 | 20.6 |
| VOLO-D5 122 | – | 87.1¹ | 296 | 69.0 |
| CoAtNet-4 121 | ImageNet-21k | 88.1² | 275 | 360.9 |
| CoAtNet-7 121 | JFT-300M | 90.9³ | 2440 | – |
| MLP based | | | | |
| DynaMixer-L 75 | – | 84.3¹ | 97 | 27.4 |
| ResMLP-B24/8 60 | ImageNet-21k | 84.4² | 129.1 | 100.2 |
| Mixer-H/14 15 | JFT-300M | 86.3³ | 431 | – |

The Pre-trained dataset column gives the extra data used for pre-training. PolyLoss, PeCo, and meta pseudo labels are training strategies; the model used is given in parentheses.

¹ The best performance on ImageNet-1k without a pre-training dataset.

² The best performance on ImageNet-1k with ImageNet-21k pre-training.

³ The best performance on ImageNet-1k with JFT-300M pre-training.

From Tables 4 and 5, we conclude that: (1) MLP-like models can achieve performance competitive with CNN-based and Transformer-based architectures under the same training strategy and data volume; (2) the performance gains from increasing the data volume and using architecture-specific training strategies may exceed those from module redesign; and (3) the vision community is encouraged to develop self-supervised methods and appropriate training strategies for pure MLPs.
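The gap discussed above can be checked directly against Table 5. The short script below is a sketch that transcribes a subset of the rows without extra pre-training data (the dictionary `NO_EXTRA_DATA` and both helper functions are illustrative names, not part of any cited work), picks the best model per family, and reports how far each family's best result lies above the best MLP.

```python
# A transcribed subset of Table 5: ImageNet-1k top-1 accuracy (%) for rows whose
# "Pre-trained dataset" entry is "–" (no extra training data).
NO_EXTRA_DATA = {
    "CNN": {"ResNet-152": 82.0, "ConvNeXt-B": 83.8, "EfficientNetV2-L": 85.7,
            "PolyLoss(EfficientNetV2-L)": 87.2},
    "Transformer": {"Swin-B": 83.5, "CSWin-B": 84.2, "CaiT-M-48-448": 86.5,
                    "PeCo(ViT-H)": 88.3},
    "CNN + Transformer": {"CoAtNet-3": 84.5, "VOLO-D3": 85.4, "VOLO-D5": 87.1},
    "MLP": {"DynaMixer-L": 84.3},
}


def best_per_family(table):
    """Return {family: (model, top1)} for the highest top-1 entry of each family."""
    return {fam: max(rows.items(), key=lambda kv: kv[1]) for fam, rows in table.items()}


def gap_to_mlp(table):
    """Top-1 gap in percentage points between each family's best model and the best MLP."""
    best = best_per_family(table)
    mlp_top1 = best["MLP"][1]
    return {fam: round(top1 - mlp_top1, 1)
            for fam, (_, top1) in best.items() if fam != "MLP"}


if __name__ == "__main__":
    for fam, (model, top1) in best_per_family(NO_EXTRA_DATA).items():
        print(f"{fam:18s} {model:28s} {top1:.1f}")
    print(gap_to_mlp(NO_EXTRA_DATA))
```

On these rows, the best Transformer (PeCo) leads the best MLP by about 4 points and the best CNN by about 3 points, matching the 87% versus 84.3% comparison in the text.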

Object detection and semantic segmentation

Some MLP-like variants76,77,80,83,85,86,87 pre-trained on ImageNet have been transferred to downstream tasks, such as object detection and semantic segmentation. These tasks are more challenging than classification because a single input image can contain multiple objects of interest. However, no pure MLP framework for object detection and segmentation exists yet. Instead, these MLP variants serve as backbones in traditional CNN-based frameworks, such as Mask R-CNN38 and UperNet,123 which requires the variant to have a pyramidal structure and to be insensitive to the input resolution.
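Why resolution insensitivity is a requirement can be seen from the weight shapes alone. In a Mixer-style layer the token-mixing weight is an N × N matrix, where N is the number of patches, so N is fixed once the layer is built; the channel-mixing weight depends only on the channel count. The sketch below uses a hypothetical helper class (`TokenMixingShape`, not from any library) and a simplified square channel weight to make this concrete.

```python
def num_tokens(height, width, patch):
    """Number of non-overlapping p x p patches (tokens) for an H x W input."""
    if height % patch or width % patch:
        raise ValueError("input size must be divisible by the patch size")
    return (height // patch) * (width // patch)


class TokenMixingShape:
    """Hypothetical helper recording the weight shapes of one Mixer-style layer."""

    def __init__(self, height, width, patch, channels):
        self.patch = patch
        self.tokens = num_tokens(height, width, patch)
        # The token-mixing weight is N x N, so it is tied to the training resolution.
        self.token_weight = (self.tokens, self.tokens)
        # The channel-mixing weight (simplified to C x C here) ignores the resolution.
        self.channel_weight = (channels, channels)

    def accepts(self, height, width):
        """True only if an H x W input yields the token count the weights were built for."""
        return num_tokens(height, width, self.patch) == self.tokens


if __name__ == "__main__":
    layer = TokenMixingShape(224, 224, 16, 512)
    print(layer.token_weight, layer.accepts(224, 224), layer.accepts(512, 512))
```

A layer built for 224 × 224 inputs with 16 × 16 patches holds a 196 × 196 token-mixing matrix, while a larger 512 × 512 input produces 1,024 tokens that this matrix cannot process. Convolution kernels and channel-mixing weights have no such dependence, which is why CNN-like, pyramidal designs transfer more easily to detection and segmentation.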

Table 6 reports object detection and instance segmentation results of different backbones on the COCO val2017 dataset.65 Because we restrict the comparison to the Mask R-CNN 1x schedule,38 the results are not state of the art on COCO. Table 7 reports semantic segmentation results of different backbones on the ADE20K124 validation set, using the Semantic FPN125 and UperNet123 frameworks. The empirical results show that MLP-like variants still perform worse on object detection and semantic segmentation than the most advanced CNN-based and Transformer-based backbones.

Table 6.

Object detection and instance segmentation results of different backbones on the COCO val2017 dataset

| Backbone | AP^b | AP^b_50 | AP^b_75 | AP^m | AP^m_50 | AP^m_75 | Params | FLOPs |
|---|---|---|---|---|---|---|---|---|
| CNN based | | | | | | | | |
| ResNet101 [97] | 40.4 | 61.1 | 44.2 | 36.4 | 57.7 | 38.8 | 63.2M | 336G |
| ResNeXt101 [126] | 42.8 | 63.8 | 47.3 | 38.4 | 60.6 | 41.3 | 101.9M | 493G |
| VAN-large [105] | 47.1 | 67.9 | 51.9 | 42.2 | 65.4 | 45.5 | 64.4M | – |
| Transformer based | | | | | | | | |
| PVT-large [112] | 42.9 | 65.0 | 46.6 | 39.5 | 61.9 | 42.5 | 81M | 364G |
| Swin-B [49] | 46.9 | – | – | 42.3 | – | – | 107M | 496G |
| CSWin-B [115] | 48.7∗ | 70.4∗ | 53.9∗ | 43.9∗ | 67.8∗ | 47.3 | 97M | 526G |
| MLP based | | | | | | | | |
| CycleMLP-B5 [83] | 44.1 | 65.5 | 48.4 | 40.1 | 62.8 | 43.0 | 95.3M | 421G |
| WaveMLP-B [76] | 45.7 | 67.5 | 50.1 | 27.8 | 49.2 | 59.7∗ | 75.1M | 353G |
| HireMLP-L [86] | 45.9 | 67.2 | 50.4 | 41.7 | 64.7 | 45.3 | 115.2M | 443G |
| MS-MLP-B [87] | 46.4 | 67.2 | 50.7 | 42.4 | 63.6 | 46.4 | 107.5M | 557G |
| ActiveMLP-L [85] | 47.4 | 69.9 | 52.0 | 43.2 | 67.3 | 46.5 | 96.0M | – |

All backbones employ Mask R-CNN,38 where “1x” denotes the standard 12-epoch schedule with single-scale training. AP^b and AP^m are box and mask average precision; the subscripts 50 and 75 indicate IoU thresholds of 0.5 and 0.75.

∗ The best performance.

Table 7.

Semantic segmentation results of different backbones on the ADE20K validation set

| Backbone | Semantic FPN [125] Params | Semantic FPN FLOPs | Semantic FPN mIoU (%) | UperNet [123] Params | UperNet FLOPs | UperNet mIoU (%) |
|---|---|---|---|---|---|---|
| CNN based | | | | | | |
| ResNet101 [97] | 47.5M | 260G | 38.8 | 86M | 1029G | 44.9 |
| ResNeXt101 [126] | 86.4M | – | 40.2 | – | – | – |
| VAN-large [105] | 49.0M | – | 48.1 | 75M | – | 50.1 |
| ConvNeXt-XL [104] | – | – | – | 391M | 3335G | 54.0 |
| RepLKNet-XL [108] | – | – | – | 374M | 3431G | 56.0 |
| Transformer based | | | | | | |
| PVT-medium [112] | 48.0M | 219G | 41.6 | – | – | – |
| Swin-B [49] | 53.2M | 274G | 45.2 | 121M | 1188G | 49.7 |
| CSWin-B [115] | 81.2M | 464G | 49.9∗ | 109.2M | 1222G | 52.2 |
| BEiT-L [127] | – | – | – | – | – | 57.0 |
| SwinV2-G [117] | – | – | – | – | – | 59.9∗ |
| MLP based | | | | | | |
| MorphMLP-B [77] | 59.3M | – | 45.9 | – | – | – |
| CycleMLP-B5 [83] | 79.4M | 343G | 45.6 | – | – | – |
| Wave-MLP-M [76] | 43.3M | 231G | 46.8 | – | – | – |
| AS-MLP-B [80] | – | – | – | 121M | 1166G | 49.5 |
| HireMLP-L [86] | – | – | – | 127M | 1125G | 49.9 |
| MS-MLP-B [87] | – | – | – | 122M | 1172G | 49.9 |
| ActiveMLP-L [85] | 79.8M | – | 48.1 | 108M | 1106G | 51.1 |

Currently, Transformer-based backbones are the best choice, followed by CNNs. Because of their resolution sensitivity, pure MLPs have not been used for these downstream tasks. Transformer-based detection frameworks, e.g., DETR,48 have recently been proposed, and we anticipate that a pure MLP framework will follow. To this end, MLPs still need further exploration in these fields.

Low-level vision

Research on applying MLPs to low-level vision domains, such as image generation and processing, is just beginning. These tasks output images instead of labels or boxes, making them more challenging than high-level vision tasks such as image classification, object detection, and semantic segmentation.

Cazenavette and Guevara128 propose MixerGAN for unpaired image-to-image translation. Specifically, MixerGAN adopts the CycleGAN129 framework but replaces its convolution-based residual blocks with the mixer layer of MLP-Mixer. Their experiments show that MLP-Mixer can succeed at generative objectives; although only an initial exploration, the work suggests that MLP-based architectures can be extended further to image composition tasks.
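For readers unfamiliar with the building block that MixerGAN swaps in, the following is a minimal pure-Python sketch of one MLP-Mixer layer: a token-mixing MLP applied along the token axis, then a channel-mixing MLP applied along the channel axis, each preceded by LayerNorm and wrapped in a skip connection. It shows only the layer, not MixerGAN or CycleGAN; the LayerNorm omits its learnable scale and shift, and the initialization in `make_mlp` is an illustrative assumption.

```python
import math
import random


def gelu(x):
    # exact GELU, x * Phi(x), via the error function
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def layer_norm(vec, eps=1e-6):
    # LayerNorm without learnable scale/shift (equivalent to gamma = 1, beta = 0)
    mean = sum(vec) / len(vec)
    var = sum((v - mean) ** 2 for v in vec) / len(vec)
    return [(v - mean) / math.sqrt(var + eps) for v in vec]


def linear(vec, weight, bias):
    # weight has shape (out, in): returns weight @ vec + bias
    return [sum(w * v for w, v in zip(row, vec)) + b for row, b in zip(weight, bias)]


def mlp(vec, params):
    # two-layer MLP: Linear -> GELU -> Linear
    hidden = [gelu(h) for h in linear(vec, params["w1"], params["b1"])]
    return linear(hidden, params["w2"], params["b2"])


def transpose(matrix):
    return [list(col) for col in zip(*matrix)]


def mixer_layer(x, token_params, channel_params):
    """One Mixer layer; x is a list of N tokens, each a list of C channel values."""
    # Token mixing: normalize each token over its channels, run the token MLP along
    # the token axis (one call per channel column), then add a skip connection.
    normed = [layer_norm(tok) for tok in x]
    mixed = transpose([mlp(col, token_params) for col in transpose(normed)])
    x = [[a + b for a, b in zip(tok, upd)] for tok, upd in zip(x, mixed)]
    # Channel mixing: normalize again, run the channel MLP on every token, add a skip.
    return [[a + b for a, b in zip(tok, mlp(layer_norm(tok), channel_params))] for tok in x]


def make_mlp(dim, hidden, rng):
    # hypothetical small Gaussian initialization, for illustration only
    def mat(rows, cols):
        return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]
    return {"w1": mat(hidden, dim), "b1": [0.0] * hidden,
            "w2": mat(dim, hidden), "b2": [0.0] * dim}


if __name__ == "__main__":
    rng = random.Random(0)
    n_tokens, n_channels = 4, 3
    x = [[rng.gauss(0.0, 1.0) for _ in range(n_channels)] for _ in range(n_tokens)]
    y = mixer_layer(x, make_mlp(n_tokens, 8, rng), make_mlp(n_channels, 6, rng))
    print(len(y), len(y[0]))  # the layer preserves the N x C shape
```

Because the layer maps an N × C input to an N × C output, it can stand in for a residual block that also preserves feature-map shape, which is what makes the replacement in MixerGAN structurally straightforward.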

Tu et al.130 propose MAXIM, a UNet-shaped hierarchical architecture that supports long-range interactions through spatially gated MLPs. MAXIM contains two MLP-based building blocks, a multi-axis gated MLP and a cross-gating block, both of which are variants of the gMLP block.67 By applying gMLP to low-level vision tasks to capture global information, the MAXIM family achieves state-of-the-art performance with moderate complexity on multiple image processing tasks, including image dehazing, deblurring, denoising, deraining, and enhancement.
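The gating idea that MAXIM builds on can be sketched with the spatial gating unit of gMLP: the channels are split into two halves u and v, v is normalized and projected along the token axis with an N × N weight W and a per-token bias b, and the result gates u element-wise, giving u ⊙ (Wv + b). The pure-Python sketch below shows only this basic unit under those assumptions; MAXIM's multi-axis (block and grid) partitioning and its cross-gating block are not reproduced.

```python
import math


def layer_norm(vec, eps=1e-6):
    # LayerNorm over one token's channels, without learnable scale/shift
    mean = sum(vec) / len(vec)
    var = sum((v - mean) ** 2 for v in vec) / len(vec)
    return [(v - mean) / math.sqrt(var + eps) for v in vec]


def spatial_gating_unit(z, weight, bias):
    """gMLP-style spatial gating on z, a list of N tokens with 2d channels each.

    Returns u * (W v + b), where u and v are the two channel halves of z,
    W is N x N (mixes information across tokens), and b has one entry per token.
    """
    n, d = len(z), len(z[0]) // 2
    u = [tok[:d] for tok in z]
    v = [layer_norm(tok[d:]) for tok in z]
    # spatial projection along the token axis: gate[i][c] = sum_j W[i][j] * v[j][c] + b[i]
    gate = [[sum(weight[i][j] * v[j][c] for j in range(n)) + bias[i] for c in range(d)]
            for i in range(n)]
    return [[a * g for a, g in zip(u_row, g_row)] for u_row, g_row in zip(u, gate)]


def near_identity_init(n):
    # W starts at zero and b at one, so the unit initially passes u through unchanged
    return [[0.0] * n for _ in range(n)], [1.0] * n


if __name__ == "__main__":
    z = [[1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 7.0, 8.0], [-1.0, 0.5, 2.0, 0.0]]
    w, b = near_identity_init(len(z))
    print(spatial_gating_unit(z, w, b))
```

Starting from W = 0 and b = 1 makes the unit behave like an identity on u at initialization, so global token interactions are introduced gradually as W is learned; this is the near-identity initialization described for gMLP.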

Video analysis

Several works extend MLPs to temporal modeling and video analysis. MorphMLP77 achieves performance competitive with recent state-of-the-art methods on the Kinetics-400131 dataset, demonstrating that MLP-like backbones are also suitable for video recognition. Skating-Mixer132 extends the MLP-Mixer-based framework to learn from video; it was used to score figure skating at the Beijing 2022 Winter Olympic Games and demonstrated satisfactory performance. However, these methods do not increase the number of frames per input compared with other methods. Their advantage may therefore lie in a larger spatial receptive field rather than in capturing long-term temporal information.

Point cloud

Point cloud analysis can be considered a special vision task, which is increasingly used in real time by robots and self-driving vehicles to understand and navigate their environment. Unlike images, point clouds are inherently sparse, unordered, and irregular. The unordered nature is one of the biggest challenges for CNNs based on local receptive fields, because adjacency in the input order does not imply spatial adjacency. In contrast, an MLP shared across points, followed by a symmetric aggregation such as max pooling, is naturally invariant to permutation, which fits the characteristics of point clouds well.133 This property underlies classical MLP-based frameworks, such as PointNet134 and PointNet++.135
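The permutation argument can be made concrete with a small sketch in the spirit of PointNet-style networks. It assumes a single shared layer with ReLU and a max over points (real networks stack several layers); the function names are illustrative. Shuffling the points leaves the pooled global feature unchanged, whereas a linear layer on the concatenated coordinates changes its output when the points are reordered.

```python
import random


def shared_mlp(point, weight, bias):
    # one layer of the per-point MLP: ReLU(W p + b), with the same W and b for every point
    return [max(0.0, sum(w * p for w, p in zip(row, point)) + b)
            for row, b in zip(weight, bias)]


def global_feature(points, weight, bias):
    # apply the shared MLP to each point, then aggregate with a symmetric max over points
    feats = [shared_mlp(p, weight, bias) for p in points]
    return [max(col) for col in zip(*feats)]


def flattened_linear(points, weights):
    # contrast: a linear layer on the concatenated coordinates depends on point order
    flat = [c for p in points for c in p]
    return sum(w * x for w, x in zip(weights, flat))


if __name__ == "__main__":
    rng = random.Random(0)
    pts = [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(16)]
    w = [[rng.gauss(0.0, 1.0) for _ in range(3)] for _ in range(8)]
    b = [0.1] * 8
    shuffled = pts[:]
    rng.shuffle(shuffled)
    print(global_feature(pts, w, b) == global_feature(shuffled, w, b))  # True
```

The invariance comes from the combination of weight sharing across points and the order-independent max; neither alone is sufficient.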

Choe et al.136 design PointMixer, which embeds geometric relations between point features into the MLP-Mixer framework. It uses relative position vectors to process unstructured 3D points and replaces the token-mixing MLP with a softmax function. Ma et al.133 construct a pure residual MLP network, called PointMLP. It introduces a lightweight geometric affine module that transforms local points toward a normal distribution, and then employs simple residual MLPs, which are permutation invariant and straightforward, to represent local points. PointMLP achieves new state-of-the-art performance on multiple datasets. In addition, recently proposed Transformer-based networks137,138 show competitive performance, because self-attention is permutation equivariant (reordering the input points simply reorders the outputs), making it well suited to point cloud learning.
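The geometric affine module can be sketched as follows, based on our reading of the PointMLP paper rather than its official code: every neighbor feature is expressed as an offset from its group's sampled (center) point, the offsets are divided by a single scalar standard deviation computed over all groups, neighbors, and channels, and a per-channel scale α and shift β are applied. In the real module α and β are learnable; here they are plain lists, and the function name is illustrative.

```python
import math


def geometric_affine(groups, centers, alpha, beta, eps=1e-5):
    """Normalize local neighborhoods (a sketch of a PointMLP-style affine module).

    groups[i][j] is the d-dim feature of the j-th neighbor of sampled point i,
    centers[i] is the d-dim feature of sampled point i, alpha and beta are d-dim.
    """
    # offsets of every neighbor from its own group center
    diffs = [[[f - c for f, c in zip(nb, centers[i])] for nb in grp]
             for i, grp in enumerate(groups)]
    # one scalar standard deviation over all groups, neighbors and channels
    flat = [x for grp in diffs for nb in grp for x in nb]
    sigma = math.sqrt(sum(x * x for x in flat) / len(flat))
    # scale to roughly unit spread, then apply the per-channel affine parameters
    return [[[a * x / (sigma + eps) + b for x, a, b in zip(nb, alpha, beta)] for nb in grp]
            for grp in diffs]


if __name__ == "__main__":
    groups = [[[1.0, 2.0], [3.0, 0.0]], [[10.0, 10.0], [12.0, 9.0]]]
    centers = [[2.0, 1.0], [11.0, 10.0]]
    print(geometric_affine(groups, centers, [1.0, 1.0], [0.0, 0.0]))
```

Subtracting the center makes the output independent of where a neighborhood sits in space, and the shared scalar σ brings groups with very different spreads onto a comparable scale before the residual MLPs process them.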

The above analysis shows that Transformers and MLPs are appealing solutions for unordered data, for which the lack of order makes it difficult to design hand-crafted inductive biases.
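One subtlety is worth spelling out: the MLPs that suit point clouds are the shared per-point ones, whereas a token-mixing MLP of the MLP-Mixer kind assigns a fixed learned weight to each input position. The sketch below contrasts the two weighting schemes on a handful of tokens. It assumes single-head self-attention with the query, key, and value projections omitted (Q = K = V = x) for brevity; all names are illustrative.

```python
import math


def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Single-head self-attention on N tokens with Q = K = V = x (projections omitted)."""
    d = len(x[0])
    # weights are pairwise similarities, recomputed from every new input
    scores = [[sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in x] for q in x]
    weights = [softmax(row) for row in scores]
    return [[sum(w * v[c] for w, v in zip(row, x)) for c in range(d)] for row in weights]


def token_mixing(x, weight):
    """Token mixing with a fixed N x N weight, learned once and independent of the input."""
    n, d = len(x), len(x[0])
    return [[sum(weight[i][j] * x[j][c] for j in range(n)) for c in range(d)]
            for i in range(n)]


def permute(rows, order):
    return [rows[i] for i in order]


if __name__ == "__main__":
    x = [[0.5, -1.0], [2.0, 0.3], [-0.7, 1.1]]
    order = [2, 0, 1]
    # self-attention: permuting the input permutes the output (up to rounding)
    print(self_attention(permute(x, order)))
    print(permute(self_attention(x), order))
```

Attention weights are recomputed from the data, so reordering the tokens only reorders the result; a fixed token-mixing matrix ties each output to specific input positions, so the same reordering generally changes the result.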

Discussion on application

MLP-like variants have been applied to diverse vision tasks, such as image classification, image generation, object detection, semantic segmentation, and video analysis, achieving promising performance thanks to hand-crafted redesigns of the MLP block. Nevertheless, building dedicated MLP frameworks and employing MLP-specific training strategies may improve performance further. In addition, pure MLPs have already demonstrated their advantages in point cloud analysis, which encourages applying MLPs to visual tasks with unordered data.

Summary and outlook

As the history of computer vision attests, the availability of larger datasets along with increased computational power often triggers a paradigm shift, and each shift brings a gradual reduction in human intervention, i.e., removing hand-crafted inductive biases and allowing the model to learn more freely from raw data.15 The MLP and the Boltzmann machine, proposed in the last century, demanded more computation than was available at the time and were not widely used. In contrast, computationally efficient CNNs became popular and replaced manual feature extraction. From CNNs to Transformers, the receptive field of models has expanded step by step, and the spatial range considered when encoding features has grown ever larger. From Transformers to deep MLPs, similarity is no longer used as the weight matrix; instead, the model learns the weights from raw data. The latest MLP works all seem to suggest that deep MLPs are making a strong comeback as the new paradigm. However, the latest MLP developments also involve compromises, such as:

1. The latest deep MLP-based models use patch partition instead of flattening the entire input, in order to reduce computational cost. This allows full connectivity and a global receptive field to be approximated at the patch level. The patch partition forms a two-dimensional matrix $X \in \mathbb{R}^{\frac{HW}{p^2} \times p^2 C}$, instead of the one-dimensional vector $x \in \mathbb{R}^{HWC}$ obtained by flattening the entire input, where $H \times W$ is the input resolution, $p$ is the patch size, and $C$ is the number of input channels. Fully connected projections are then applied alternately along the spatial and channel dimensions. This is an orthogonal decomposition of the traditional fully connected projection, just as the full spatial projection can be further decomposed into horizontal and vertical directions.

2. At the module design level, there are two main improvement routes. One type of MLP-like variant focuses on reducing computational complexity, a compromise with limited computing power that comes at the expense of decoupling full spatial connectivity. Another type addresses the resolution sensitivity problem, making it possible to transfer pre-trained models to downstream tasks. These works adopt CNN-like improvements, but the full connectivity and global receptive field of MLPs are eroded. In these models, the receptive field evolves in the opposite direction, becoming smaller and smaller and reverting to the CNN approach.

3. At the architecture level, the traditional block-stacking patterns also apply to MLPs, and the pyramid structure still seems to be the best choice, with a smaller initial patch size helping to obtain finer features. Note that this comparison is unfair, because the initial patch of current single-stage models is larger (e.g., 16 × 16), whereas that of pyramid models is smaller (e.g., 4 × 4). The pyramid structure strikes a compromise between small patches and low computational cost. What if a small-patch partition is used in a single-stage model? Would it perform worse than the pyramid model? These questions remain open. What is known is that the computational cost would increase significantly, straining current computing devices.
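The compromises listed above can be quantified with simple arithmetic on the patch matrix $X \in \mathbb{R}^{\frac{HW}{p^2} \times p^2 C}$. The sketch below counts only the weights of a single square linear map (ignoring biases, hidden expansion, and channel mixing), which is a simplifying assumption; the function name and the example sizes (a 224 × 224 × 3 input with 16 × 16 or 4 × 4 patches) are illustrative.

```python
def spatial_mixing_cost(height, width, patch, channels):
    """Sizes involved in mixing an H x W x C input cut into p x p patches.

    Counts only the weights of one square (input size = output size) linear map,
    ignoring biases, hidden expansion and channel mixing.
    """
    if height % patch or width % patch:
        raise ValueError("input size must be divisible by the patch size")
    rows, cols = height // patch, width // patch
    tokens = rows * cols                                   # HW / p^2 rows of X
    return {
        "tokens": tokens,
        "patch_dim": patch * patch * channels,             # p^2 C columns of X
        "flattened_fc": (height * width * channels) ** 2,  # one FC on the flattened input
        "token_mixing": tokens ** 2,                       # one N x N token-mixing matrix
        "axial_mixing": rows ** 2 + cols ** 2,             # separate vertical and horizontal matrices
    }


if __name__ == "__main__":
    for p in (16, 4):  # example single-stage vs pyramid first-stage patch sizes
        print(p, spatial_mixing_cost(224, 224, p, 3))
```

For a 224 × 224 × 3 input, one fully connected layer on the flattened vector would need about 2.3 × 10^10 weights, while 16 × 16 patches give 196 tokens and a 196 × 196 token-mixing matrix (38,416 weights). Shrinking the patches to 4 × 4 multiplies the token count by 16 and the token-mixing matrix by 256 (9,834,496 weights); splitting spatial mixing into height and width directions cuts this to 6,272. This is the cost pressure that pushes single-stage models toward large patches and motivates axial decompositions and pyramid designs.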

The results of our research suggest that the current amount of data and computing capability are still insufficient for pure Vision MLP models to learn effectively. Moreover, human intervention still plays an important role. Based on this conclusion, we elaborate on potential future research directions.

Vision-tailored designs