Abstract

Purpose

The detection of abdominal free fluid or hemoperitoneum can provide critical information for clinical diagnosis and treatment, particularly in emergencies. This study investigates the use of deep learning (DL) to identify peritoneal free fluid in ultrasonography (US) images of the abdominal cavity, an approach that could assist inexperienced physicians or non‐professionals with diagnosis. It focuses specifically on first‐response scenarios involving the focused assessment with sonography for trauma (FAST) technique.

Methods

A total of 2985 US images were collected from ascites patients treated from 1 January 2016 to 31 December 2017 at the Shenzhen Second People's Hospital. The images were categorized as Ascites‐1, Ascites‐2, or Ascites‐3 based on the surrounding anatomy. Positive samples were identified using a uniform standard for annotating regions of interest (ROIs) that excluded areas obscured by acoustic shadows. The images were then divided into training (90%) and test (10%) datasets to evaluate the performance of a U‐net model, developed as part of the study, with an encoder–decoder architecture comprising contracting and expansive paths.

Results

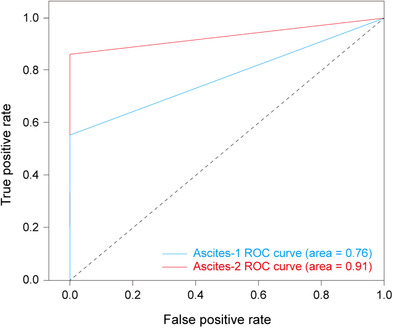

Test results produced sensitivity and specificity values of 94.38% and 68.13%, respectively, in the diagnosis of Ascites‐1 US images, with an average Dice coefficient of 0.65 (standard deviation [SD] = 0.21). Similarly, the sensitivity and specificity for Ascites‐2 were 97.12% and 86.33%, respectively, with an average Dice coefficient of 0.79 (SD = 0.14). The accuracy and area under the curve (AUC) were 81.25% and 0.76 for Ascites‐1 and 91.73% and 0.91 for Ascites‐2.

Conclusion

The results produced by the U‐net demonstrate the viability of DL for automated ascites diagnosis. This suggests the proposed technique could be highly valuable for improving FAST‐based preliminary diagnoses, particularly in emergency scenarios.

Keywords: abdominal free fluid, deep learning, emergency, ultrasonography

1. INTRODUCTION

Abdominal trauma is a common injury 1 , 2 , 3 , 4 , 5 , 6 , 7 , 8 , 9 , 10 that can lead to active bleeding from liver or spleen damage, a leading cause of death after trauma. 11 Patients with intra‐abdominal injuries can be divided into hemodynamically stable and unstable categories. 10 Those with obvious signs of hemodynamic instability require active intervention, often a laparotomy, whereas a variety of alternative examination methods are available for hemodynamically stable individuals. 12 The current clinical consensus is that hemodynamic stability is the only determining factor for non‐surgical treatment. However, approximately 10% of initially stable patients ultimately require surgery during treatment. 13

Consequently, a rapid and accurate diagnosis that supports sound decisions about the need for surgery would be of significant clinical benefit. In some blunt abdominal trauma patients, such as those with peritonitis or open pelvic fractures, physical examination alone indicates the need for emergency surgical consultation and laparotomy. However, abdominal injuries (liver or spleen rupture, gastrointestinal perforations, etc.) are often difficult to diagnose by physical examination, and clinical signs typically do not provide sufficient information about the need for an operation. In addition, patients with a normal physical examination and normal vital signs may still have abdominal injuries. 12 , 14 , 15 , 16 , 17 As a result, the assessment of abdominal trauma, particularly blunt abdominal trauma, remains challenging.

Early image‐based examination is critical for identifying trauma: for patients who require a laparotomy, mortality increases by approximately 1% for every 3 min of treatment delay. Focused assessment with sonography for trauma (FAST) is a non‐invasive examination technique that has been widely used to detect abdominal trauma. 18 It examines specific abdominal regions for free fluid, a strong indication of severe intra‐abdominal injuries that may require an emergency laparotomy. 16 Several studies have shown that FAST can guide clinical decision‐making and determine the need for angiography or surgery, particularly for children, pregnant women, and hemodynamically unstable patients. 3 , 10 , 17 , 19 , 20 , 21 , 22 , 23 , 24 , 25 FAST can be performed at the bedside in 3‐4 min, repeated if necessary, and avoids the risks of patient transport and radiation exposure. 3 , 14 , 26 In this setting, free fluid in the abdominal cavity is typically considered secondary to serious abdominal trauma. 27 In the supine position, free fluid typically accumulates in dependent areas such as the hepatorenal fossa. Peritoneal free fluid detected by FAST may also inform diagnosis and treatment in stable patients, for example regarding the need for blood products. 16 , 28 , 29 , 30 , 31 The early detection of abdominal free fluid is therefore critical for the treatment of trauma patients in a variety of situations.

The use of artificial intelligence (AI) in medicine has expanded across multiple fields in recent years, and deep learning (DL) in particular has become one of the most popular computer‐aided diagnosis (CAD) techniques for ultrasonography (US) images. 32 , 33 , 34 , 35 , 36 , 37 , 38 DL has previously been applied to the CAD of ascites. 21 DL is a data‐driven methodology that extracts and learns nonlinear features directly from data, without requiring hand‐crafted features based on domain expertise. 39 This is particularly beneficial in first‐response and emergency situations, where access to specialists is limited. The U‐net, first proposed in 2015, is a DL model with an encoder–decoder architecture. 40 , 41 , 42 U‐nets have been applied to biomedical image processing problems such as liver and tumor segmentation in computed tomography (CT) scans, mass and calcification detection in digital mammograms, and skin lesion segmentation. 40 , 42 , 43 , 44 , 45 These models are composed of multiple layers, with successive layers learning increasingly abstract hierarchical features. 46 Researchers have since extended the basic U‐net structure, proposing more powerful frameworks such as UNet++, UNet 3+, and H‐DenseUNet. 41 , 43 , 47

A physician experienced in ultrasound can readily identify peritoneal free fluid in US images. However, this task can be time consuming for novice physicians, clinicians without US imaging expertise, or non‐professionals. An automated technique could rapidly identify and locate peritoneal free fluid, thereby reducing examination times and enabling faster intervention. In addition, portable ultrasound is becoming more widely available, and AI could enable those without a diagnostic background, such as emergency medical technicians, to use ultrasound in decision‐making. Such a system could also help novice physicians learn and progress. The primary objective of this study is therefore to determine the viability of DL algorithms for timely CAD of abdominal free fluid in US images collected using FAST.

2. MATERIALS AND METHODS

A total of 2985 US images were collected from 845 ascites patients (45.43 ± 20.68 years old) between 1 January 2016 and 31 December 2017 from the ultrasound picture archiving and communication system (PACS) of the Shenzhen Second People's Hospital. The intended application environments for this study are primarily emergency and teaching settings, so we did not assume uniform ultrasound equipment, interface software, scanning parameters, and so forth. In other words, the collected images come from multivendor equipment, different doctors, and different scanning parameters. Ascites images were classified according to the following predefined standard. (1) The images must clearly exhibit abdominal free fluid (the affected region must be visible). (2) Images containing the liver or spleen are classified as Ascites‐1; images in which none of the liver, spleen, uterus, or bladder can be seen are classified as Ascites‐2; and images containing the uterus or bladder are classified as Ascites‐3 (not included in the final analysis). Images were anonymized and all personal information was removed during processing; as a result, the need for informed consent was waived.
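The anatomy‐based labelling rule in criterion (2) can be written as a small decision function. This is a hypothetical sketch, not the authors' software: the function name and boolean inputs are assumptions, and because the standard does not say how to label an image that shows both the liver or spleen and the uterus or bladder, the sketch assumes the liver/spleen check takes precedence.

```python
def assign_category(liver_or_spleen_visible: bool,
                    uterus_or_bladder_visible: bool) -> str:
    """Hypothetical encoding of the anatomy-based category rule.

    Assumption: when both organ groups are visible, the liver/spleen rule
    is applied first (the source standard does not cover this case).
    """
    if liver_or_spleen_visible:
        return "Ascites-1"
    if uterus_or_bladder_visible:
        return "Ascites-3"  # excluded from the final analysis
    # None of the liver, spleen, uterus, or bladder is visible
    return "Ascites-2"


if __name__ == "__main__":
    print(assign_category(True, False))   # Ascites-1
    print(assign_category(False, False))  # Ascites-2
    print(assign_category(False, True))   # Ascites-3
```

Making the precedence explicit in this way exposes the one case the written standard leaves open.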

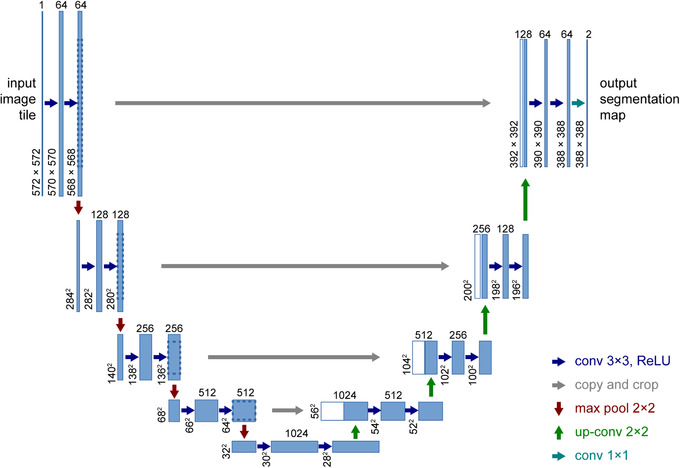

A U‐net model with contracting and expansive paths, the basis of many biomedical image segmentation networks, was developed as part of the study. Each step of the contracting path applied two 3 × 3 convolutions, each followed by a rectified linear unit (ReLU), and then a max‐pooling layer with a 2 × 2 kernel. Each step of the expansive path consisted of an upsampling layer, concatenation with the features from the corresponding layer of the contracting path, and two convolution layers (see Figure 1). The 2 × 2 kernel size of the upsampling layers matched that of the max‐pooling layers in the contracting path. The U‐net included 23 convolutional layers in total, the last of which used a 1 × 1 kernel, and was trained for 200 epochs with a batch size of 16. 40 , 42 Training used a binary cross‐entropy loss function, the Adam optimizer with a learning rate of 0.0001, and He‐normal weight initialization. 40 , 48 , 49 All experiments were performed on an Intel Core i5 (12G) computer with a single GeForce GTX 1080 Ti GPU. Python (version 3.6) running on Windows 10 was used to process the images.
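The layer arithmetic behind the 23‐convolution count, and the "32 × 32 pixels" in the Figure 1 caption, can be checked with a short trace. This sketch assumes the unpadded ("valid") 3 × 3 convolutions, 572 × 572 input, and four pooling steps of the original U‐net by Ronneberger et al.; 42 the input size and padding used in this study are not stated, and up‐convolutions are counted here as convolutional layers.

```python
def trace_unet(input_size: int = 572, depth: int = 4):
    """Trace feature-map sizes through the original (unpadded) U-net.

    Assumed configuration (Ronneberger et al., 2015): each level applies two
    3x3 'valid' convolutions (each shrinks the map by 2 px), 2x2 max pooling
    halves the size, and each expansive step uses a 2x2 up-convolution
    (doubling the size) followed by two 3x3 convolutions.
    Returns (lowest_resolution, output_size, conv_layer_count).
    """
    size, convs = input_size, 0
    for _ in range(depth):          # contracting path
        size -= 4                   # two 3x3 valid convolutions
        convs += 2
        assert size % 2 == 0, "size must be even before 2x2 pooling"
        size //= 2                  # 2x2 max pooling (not a conv layer)
    lowest = size                   # resolution entering the bottleneck
    size -= 4                       # bottleneck: two 3x3 convolutions
    convs += 2
    for _ in range(depth):          # expansive path
        size *= 2                   # 2x2 up-convolution
        convs += 1
        size -= 4                   # two 3x3 convolutions after concatenation
        convs += 2
    convs += 1                      # final 1x1 convolution to class scores
    return lowest, size, convs


if __name__ == "__main__":
    print(trace_unet())  # (32, 388, 23)
```

Under these assumptions the count is 8 convolutions in the contracting path, 2 at the bottleneck, 12 in the expansive path (4 up‐convolutions plus 8 3 × 3 convolutions), and the final 1 × 1 convolution. With "same" padding the output would match the input size, but the layer count would not change.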

FIGURE 1.

The U‐net architecture (example for 32 × 32 pixels in the lowest resolution). Reprinted with permission from Springer Nature Customer Service Center GmbH 42

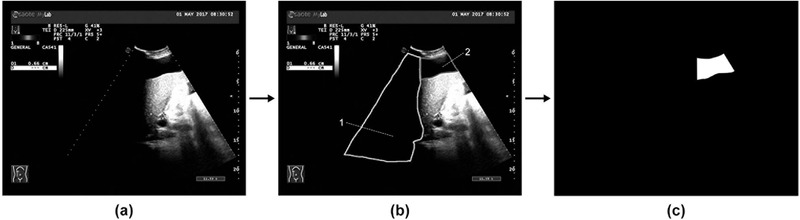

Supervised learning was used for training and testing, 50 , 51 which requires the images to be annotated (pre‐processed). Areas exhibiting clear free fluid in positive images were manually delineated as regions of interest (ROIs), excluding areas that could not be identified because of acoustic shadows (see Figure 2). ROI identification and annotation were performed by an experienced physician.

FIGURE 2.

(a) A sample image collected from the picture archiving and communication system (PACS). (b) Area‐2 denotes the region of interest (ROI), while Area‐1 indicates an area obscured by acoustic shadows. (c) The image after annotation. Images (a) and (c) were input to the U‐net.

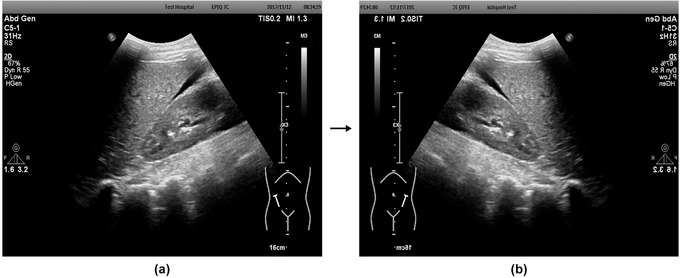

The demand for large quantities of annotated data is an obstacle to the development of robust AI systems. 52 Data augmentation offers a way to mitigate this: by producing new images from the originals through manipulations such as flipping, rotation, translation, and noise injection, it can reduce overfitting and improve performance. 42 , 52 , 53 , 54 , 55 In this study, the images were augmented by horizontal flipping. Horizontal flipping retains boundaries, echo details, and other important structural features; because it leaves the depth (vertical) axis unchanged, the posterior acoustic enhancement that fluid always produces in US images still appears beneath the fluid (see Figure 3). GNU Image Manipulation Program (GIMP, version 2.10.22) and Adobe Photoshop CS6 were used to process the annotated results. Data augmentation doubled the number of images to 5970.
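The mirroring step can be sketched with NumPy array slicing. This is an illustration, not the authors' pipeline (which used GIMP and Photoshop for the annotations); the function name and the assumption that images and masks are stored as (N, H, W) arrays are hypothetical. The key point is that each mask is flipped together with its image and only the width axis is reversed, so the depth axis along which posterior acoustic enhancement appears is untouched.

```python
import numpy as np


def augment_with_hflip(images: np.ndarray, masks: np.ndarray):
    """Double a dataset by horizontal mirroring (hypothetical helper).

    images, masks: arrays of shape (N, H, W), where H is the depth axis.
    The same flip is applied to each image and its mask so the annotation
    stays aligned. Only the last (width) axis is reversed.
    """
    if images.shape != masks.shape:
        raise ValueError("images and masks must have the same shape")
    flipped_images = images[:, :, ::-1]
    flipped_masks = masks[:, :, ::-1]
    return (np.concatenate([images, flipped_images]),
            np.concatenate([masks, flipped_masks]))
```

Applied to N original images, the function returns 2N image/mask pairs, consistent with the doubling from 2985 to 5970 described above.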

FIGURE 3.

(a) An image prior to horizontal mirroring. (b) The image after horizontal mirroring (data augmentation)

After data augmentation, positive and negative ascites images were randomly assigned to training and test sets in a ratio of about 90/10. Validation with data not involved in model development (here, the test set described above) was used to assess U‐net performance and reduce overestimation of diagnostic accuracy. 56 The receiver operating characteristic (ROC) curve, sensitivity, specificity, accuracy, area under the curve (AUC), and average Dice coefficient were used as evaluation metrics. The Dice coefficient measures the spatial overlap between two segmentations of the same region, ranging from 0 (no overlap) to 1 (perfect overlap), and can be calculated as:

$$\mathrm{Dice}(X, Y) = \frac{2\,|X \cap Y|}{|X| + |Y|}$$

where |X| and |Y| indicate the number of pixels (voxels, for 3D data) in segmentations X and Y, and X ∩ Y is the set of pixels that overlap between X and Y. 57 , 58 The annotated ascites images served as the reference against which the U‐net results were compared.
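A direct NumPy implementation of the Dice formula for 2D binary masks is sketched below. Returning 1.0 when both masks are empty is a common convention assumed here; the source does not specify how that case was handled.

```python
import numpy as np


def dice_coefficient(pred: np.ndarray, ref: np.ndarray) -> float:
    """Dice = 2|X ∩ Y| / (|X| + |Y|) for two binary masks of the same shape."""
    x = pred.astype(bool)
    y = ref.astype(bool)
    total = int(x.sum() + y.sum())
    if total == 0:
        return 1.0  # both masks empty: treated as perfect agreement (assumed convention)
    return 2.0 * int(np.logical_and(x, y).sum()) / total


if __name__ == "__main__":
    ref = np.zeros((4, 4), dtype=np.uint8)
    ref[0:2, 0:2] = 1              # 4 reference pixels
    pred = np.zeros((4, 4), dtype=np.uint8)
    pred[0:2, 0:3] = 1             # 6 predicted pixels, 4 of them overlapping
    print(dice_coefficient(pred, ref))  # 2*4 / (6 + 4) = 0.8
```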

3. PROCESSES AND RESULTS

In the first test, 900 US images exhibiting peritoneal free fluid (including Ascites‐1, Ascites‐2, and Ascites‐3) formed the training set and 182 images formed the test set. The model achieved an average Dice coefficient of 0.61 (standard deviation [SD] = 0.26). Although this value is relatively low, the location and segmentation of ROIs were secondary to diagnosing the presence of ascites, the primary goal of the study. Because US images of peritoneal effusion differ substantially between anatomical regions, the images were then grouped by category and testing was repeated. Model training used 627 Ascites‐1, 640 Ascites‐2, and 422 Ascites‐3 images, and testing used 71 Ascites‐1, 72 Ascites‐2, and 47 Ascites‐3 images. The average Dice coefficients were 0.51 (SD = 0.26), 0.76 (SD = 0.17), and 0.60 (SD = 0.25) for Ascites‐1, Ascites‐2, and Ascites‐3, respectively. Segmentation performance was lower for Ascites‐1 and Ascites‐3, suggesting that the U‐net is best suited to Ascites‐2.

To reduce error, the ROIs of all positive images were reviewed and re‐annotated according to a uniform predefined standard, and data augmentation was applied at this stage. The U‐net was then further trained using 2872 Ascites‐1 images (1436 positive and 1436 negative) and 2500 Ascites‐2 images (1250 positive and 1250 negative). The corresponding test set comprised 320 Ascites‐1 images (160 positive and 160 negative) and 278 Ascites‐2 images (139 positive and 139 negative). Diagnostic sensitivity and specificity for Ascites‐1 were 94.38% and 68.13%, respectively, with an average Dice coefficient of 0.65 (SD = 0.21). The sensitivity and specificity for Ascites‐2 were 97.12% and 86.33%, respectively, with an average Dice coefficient of 0.79 (SD = 0.14). The accuracy was 81.25% for Ascites‐1 and 91.73% for Ascites‐2 (see Tables 1 and 2). The AUC was 0.76 for Ascites‐1 and 0.91 for Ascites‐2 (see Figure 4).

TABLE 1.

Numbers for training and test images used in the final training and test

|  | Ascites‐1 training set | Ascites‐1 test set | Ascites‐2 training set | Ascites‐2 test set | Total |
|---|---|---|---|---|---|
| Positive | 1436 | 160 | 1250 | 139 | 2985 |
| Negative | 1436 | 160 | 1250 | 139 | 2985 |
| All | 2872 | 320 | 2500 | 278 | 5970 |

TABLE 2.

Results of the final test

|  | Sensitivity (%) | Specificity (%) | Average Dice coefficient | Minimum Dice coefficient | Maximum Dice coefficient | Accuracy (%) | AUC |
|---|---|---|---|---|---|---|---|
| Ascites‐1 | 94.38 | 68.13 | 0.65 (SD = 0.21) | <0.01 | 0.93 | 81.25 | 0.76 |
| Ascites‐2 | 97.12 | 86.33 | 0.79 (SD = 0.14) | 0.35 | 0.98 | 91.73 | 0.91 |

AUC, area under the curve; SD, standard deviation.

A minimum Dice coefficient of <0.01 indicates that the U‐net identified the wrong region.
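Because the final test sets were balanced (Table 1), the percentages in Table 2 can be traced back to integer confusion‐matrix counts. The counts below are back‐calculated from the reported sensitivity and specificity and are not reported in the source; they reproduce the published sensitivity, specificity, and accuracy to within rounding. The helper function is a hypothetical illustration.

```python
def binary_metrics(tp: int, fn: int, tn: int, fp: int) -> dict:
    """Sensitivity, specificity, and accuracy (in %) from confusion-matrix counts."""
    return {
        "sensitivity": 100 * tp / (tp + fn),
        "specificity": 100 * tn / (tn + fp),
        "accuracy": 100 * (tp + tn) / (tp + fn + tn + fp),
    }


# Back-calculated counts (not reported in the source).
# Ascites-1 test set: 160 positive, 160 negative images.
ascites1 = binary_metrics(tp=151, fn=9, tn=109, fp=51)
# Ascites-2 test set: 139 positive, 139 negative images.
ascites2 = binary_metrics(tp=135, fn=4, tn=120, fp=19)

for name, metrics in (("Ascites-1", ascites1), ("Ascites-2", ascites2)):
    print(name, {k: round(v, 3) for k, v in metrics.items()})
```

For Ascites‐1, for example, 151 of 160 positive and 109 of 160 negative images classified correctly give an accuracy of 260/320 = 81.25%.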

FIGURE 4.

A receiver operating characteristic (ROC) curve for Ascites‐1 and Ascites‐2
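An ROC curve requires a continuous per‐image score, and the source does not state which score was derived from the U‐net output (the maximum predicted pixel probability would be one option; this is an assumption). Given such scores, the AUC equals the probability that a randomly chosen positive image scores higher than a randomly chosen negative one, which the following sketch computes directly, counting ties as one half.

```python
from typing import Sequence


def auc_rank(pos_scores: Sequence[float], neg_scores: Sequence[float]) -> float:
    """Empirical ROC AUC via pairwise comparison (Mann-Whitney formulation).

    Counts, over all positive/negative pairs, how often the positive image
    has the higher score; ties contribute 0.5. O(P * N), which is adequate
    for test sets of a few hundred images.
    """
    if len(pos_scores) == 0 or len(neg_scores) == 0:
        raise ValueError("need at least one positive and one negative score")
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


if __name__ == "__main__":
    # Hypothetical per-image scores, not study data
    positives = [0.9, 0.8, 0.4]
    negatives = [0.7, 0.3]
    print(auc_rank(positives, negatives))  # 5 of 6 pairs ranked correctly
```

This pairwise form is equivalent to the trapezoidal area under the empirical ROC curve and avoids choosing thresholds explicitly.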

4. DISCUSSION

Test results showed that the U‐net offers high sensitivity but low specificity in the diagnosis of Ascites‐1 US images, with moderate accuracy in contouring ascites ROIs. For Ascites‐2, in contrast, the U‐net exhibited both high sensitivity and high specificity, with high accuracy in delineating ascites ROIs. On reviewing the output data, we found that recognition was more accurate for large ascites areas (large ROIs), where lesion segmentation was also consistent. Conversely, recognition was less accurate for small ascites areas (small ROIs), which were more prone to misidentification. Ascites areas were relatively small in the Ascites‐1 images and relatively large in the Ascites‐2 images, which may explain why the U‐net performed better for Ascites‐2 than for Ascites‐1. In addition, the selected model parameters may not have been optimal. Moreover, for some images counted as positive (true or false positives), the predicted region was wrong. Further study is needed to assess the ability of a U‐net to diagnose and segment small amounts of intraperitoneal fluid. More powerful frameworks such as UNet 3+ may be suitable for Ascites‐1 or Ascites‐3, but this has yet to be investigated.
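One factor that may contribute to poorer results for small ascites areas, although it was not analyzed in the study, is that the Dice coefficient penalizes a fixed boundary error more heavily in a small region. In the toy example below (synthetic squares, not study data; function names are illustrative), shifting a square of side s sideways by one pixel gives a Dice coefficient of (s - 1)/s.

```python
import numpy as np


def dice(a: np.ndarray, b: np.ndarray) -> float:
    """Dice coefficient of two non-empty binary masks."""
    a, b = a.astype(bool), b.astype(bool)
    return float(2.0 * np.logical_and(a, b).sum() / (a.sum() + b.sum()))


def shifted_square_dice(side: int, shift: int = 1, canvas: int = 128) -> float:
    """Dice between a square 'reference' region and a copy shifted sideways.

    Assumes 10 + side + shift <= canvas so both squares fit on the canvas.
    """
    ref = np.zeros((canvas, canvas), dtype=bool)
    pred = np.zeros_like(ref)
    ref[10:10 + side, 10:10 + side] = True
    pred[10:10 + side, 10 + shift:10 + shift + side] = True
    return dice(pred, ref)


if __name__ == "__main__":
    for side in (5, 50):
        # Same 1-pixel error in both cases: Dice = (side - 1) / side
        print(side, shifted_square_dice(side))
```

The same one‐pixel offset yields 0.8 for a 5‐pixel square but 0.98 for a 50‐pixel square.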

This study also has other limitations. It used US images from a single hospital, so the model is not necessarily robust to all equipment versions or manufacturers. Participants were also primarily from the surrounding area and thus represent a limited population sample. Examination parameters were not controlled and varied slightly between individual doctors and treatment environments. In addition, the performance of an AI model is highly dependent on the quantity and quality of training data. Recent developments in US equipment have produced images with higher resolution and lower noise; since the U‐net developed in this study was trained with existing US images, its diagnostic performance on higher‐quality data needs to be verified. 52 Furthermore, our primary goal is to identify the presence of intraperitoneal injuries, yet the ascites US images selected in this study were not all from patients with abdominal trauma, which may have affected trauma recognition accuracy. The effects of fluid volume, intestinal fluid, and physiological peritoneal effusion were not considered. The US images also contain non‐image information (such as on‐screen text), which may affect detection. Some human factors, such as the exclusion of unclear images during the study, may have led to overestimation of model performance. Thus, the differential diagnostic capabilities of the developed U‐net for pathological ascites in US images should be investigated further.

AI is not expected to fully match the diagnostic capabilities of experienced doctors. Although correctly outlining lesion borders is valuable for treatment planning, determining the presence or absence of abdominal free fluid is the first step in our proposed methodology. The Dice coefficients achieved in this study reflect the difficulty of delineating ascites areas. 58 A further issue is the limited sensitivity of US for lesions in abdominal parenchymal organs. Thus, although ultrasound is generally accepted as playing a significant role in the treatment of unstable patients, its use with stable patients remains controversial because it cannot rule out abdominal organ lesions. For patients with blunt trauma, the inability to rule out bleeding or delayed bleeding in the abdominal cavity has proven to be a primary limitation of US, often requiring patients to undergo contrast‐enhanced computed tomography. 21 , 29 , 59 Nevertheless, the results of this study suggest that incorporating AI could support earlier intervention, more precise treatment, and improved outcomes for trauma patients, particularly in emergency situations.

5. CONCLUSIONS

The results indicate that the proposed DL‐based method can accurately identify the presence of abdominal free fluid and aid doctors in diagnosing ascites. Despite the varied resolution of the US images, the reported AUC values are comparable to those of recent studies of automated diagnosis of edema using various modalities. 60 Further clinical validation of the U‐net is needed to demonstrate its effect on patient outcomes, beyond the reported performance metrics (sensitivities of 94.38% and 97.12% for Ascites‐1 and Ascites‐2, respectively). A robust clinical assessment would require external testing in diverse cohorts that fully represent potential ascites patient groups and images, to avoid performance overestimation caused by overfitting. 56 In a future study, we will collect US images from additional sources, including various manufacturers, equipment types, scanning parameters, and populations, to improve U‐net diagnostic performance. We will also evaluate the potential for differential diagnosis and develop relevant software for use in emergency centers and medical colleges.

CONFLICT OF INTEREST

The authors declare no conflict of interest.

ETHICS STATEMENT

This retrospective study was approved by the Clinical Research Ethics Committee of Shenzhen Second People's Hospital.

AUTHOR CONTRIBUTIONS

Zhanye Lin designed the methodology, acquired the US images, conducted the segmentation, collected patient data, performed the experiments, and wrote the paper. Zhengyi Li proposed the study, guided the methodology and experimental design, and revised the paper. Peng Cao and Yingying Lin provided the U‐net model and assisted in the statistical analysis. Fengting Liang and Libing Huang assisted in the acquisition and segmentation of US images and the collection of patient data. Jiajun He aided in U‐net interpretation and result analysis. All authors read and approved the final manuscript.

ACKNOWLEDGMENTS

We thank LetPub (www.letpub.com) for linguistic assistance and pre‐submission expert review. Figure 1 is reprinted by permission from Springer Nature Customer Service Center GmbH: Springer Nature, International Conference on Medical Image Computing and Computer‐Assisted Intervention. Ronneberger O, Fischer P, Brox T. U‐net: convolutional networks for biomedical image segmentation, October 5‐9, 2015.

Lin Z, Li Z, Cao P, et al. Deep learning for emergency ascites diagnosis using ultrasonography images. J Appl Clin Med Phys. 2022;23:e13695. 10.1002/acm2.13695

Zhanye Lin and Zhengyi Li are joint first authors and contributed equally to this work.

DATA AVAILABILITY STATEMENT

The data that support the findings of this study are available on request from the corresponding author. The data are not publicly available due to privacy or ethical restrictions.

REFERENCES

- 1. Armstrong LB, Mooney DP, Paltiel H, et al. Contrast enhanced ultrasound for the evaluation of blunt pediatric abdominal trauma. J Pediatr Surg. 2018;53(3):548‐552.

- 2. Becker C, Mentha G, Terrier F. Blunt abdominal trauma in adults: role of CT in the diagnosis and management of visceral injuries. Part 1: liver and spleen. Eur Radiol. 1998;8(4):553‐562.

- 3. Desai N, Harris T. Extended focused assessment with sonography in trauma. BJA Educ. 2018;18(2):57.

- 4. Glen J, Constanti M, Brohi K. Assessment and initial management of major trauma: summary of NICE guidance. BMJ. 2016;353:i3051.

- 5. Liu M, Lee C‐H, P'eng F‐K. Prospective comparison of diagnostic peritoneal lavage, computed tomographic scanning, and ultrasonography for the diagnosis of blunt abdominal trauma. J Trauma. 1993;35(2):267‐270.

- 6. Soyuncu S, Cete Y, Bozan H, Kartal M, Akyol A. Accuracy of physical and ultrasonographic examinations by emergency physicians for the early diagnosis of intraabdominal haemorrhage in blunt abdominal trauma. Injury. 2007;38(5):564‐569.

- 7. Tang J, Li W, Lv F, et al. Comparison of gray‐scale contrast‐enhanced ultrasonography with contrast‐enhanced computed tomography in different grading of blunt hepatic and splenic trauma: an animal experiment. Ultrasound Med Biol. 2009;35(4):566‐575.

- 8. Valentino M, Ansaloni L, Catena F, Pavlica P, Pinna A, Barozzi L. Contrast‐enhanced ultrasonography in blunt abdominal trauma: considerations after 5 years of experience. Radiol Med. 2009;114(7):1080‐1093.

- 9. Wening J. Evaluation of ultrasound, lavage, and computed tomography in blunt abdominal trauma. Surg Endosc. 1989;3(3):152‐158.

- 10. Nishijima DK, Simel DL, Wisner DH, Holmes JF. Does this adult patient have a blunt intra‐abdominal injury? JAMA. 2012;307(14):1517‐1527.

- 11. Lv F, Tang J, Luo Y, et al. Contrast‐enhanced ultrasound imaging of active bleeding associated with hepatic and splenic trauma. Radiol Med. 2011;116(7):1076‐1082.

- 12. Boulanger BR, McLellan BA. Blunt abdominal trauma. Emerg Med Clin North Am. 1996;14(1):151‐169.

- 13. Fang J‐F, Wong Y‐C, Lin B‐C, Hsu Y‐P, Chen M‐F. The CT risk factors for the need of operative treatment in initially hemodynamically stable patients after blunt hepatic trauma. J Trauma Acute Care Surg. 2006;61(3):547‐554.

- 14. Brown MA, Casola G, Sirlin CB, Patel NY, Hoyt DB. Blunt abdominal trauma: screening US in 2,693 patients. Radiology. 2001;218(2):352‐358.

- 15. Hoffmann R, Nerlich M, Muggia‐Sullam M, et al. Blunt abdominal trauma in cases of multiple trauma evaluated by ultrasonography: a prospective analysis of 291 patients. J Trauma. 1992;32(4):452‐458.

- 16. Rudralingam V, Footitt C, Layton B. Ascites matters. Ultrasound. 2017;25(2):69‐79.

- 17. Walcher F, Weinlich M, Conrad G, et al. Prehospital ultrasound imaging improves management of abdominal trauma. Br J Surg. 2006;93(2):238‐242.

- 18. Savatmongkorngul S, Wongwaisayawan S, Kaewlai R. Focused assessment with sonography for trauma: current perspectives. Open Access Emerg Med. 2017;9:57‐62.

- 19. Blaivas M, Sierzenski P, Theodoro D. Significant hemoperitoneum in blunt trauma victims with normal vital signs and clinical examination. Am J Emerg Med. 2002;20(3):218‐221.

- 20. Byars D, Devine A, Maples C, Yeats A, Greene K. Physical examination combined with focused assessment with sonography for trauma examination to clear hemodynamically stable blunt abdominal trauma patients. Am J Emerg Med. 2013;31(10):1527‐1528.

- 21. Jiang H, Deng W, Zhou J, et al. Machine learning algorithms to predict the 1 year unfavourable prognosis for advanced schistosomiasis. Int J Parasitol. 2021;51(11):959‐965.

- 22. Lingawi SS, Buckley AR. Focused abdominal US in patients with trauma. Radiology. 2000;217(2):426‐429.

- 23. Pinto F, Miele V, Scaglione M, Pinto A. The use of contrast‐enhanced ultrasound in blunt abdominal trauma: advantages and limitations. Acta Radiol. 2014;55(7):776‐784.

- 24. Stengel D, Rademacher G, Ekkernkamp A, Güthoff C, Mutze S. Emergency ultrasound‐based algorithms for diagnosing blunt abdominal trauma. Cochrane Database Syst Rev. 2015;2015(9):CD004446.

- 25. Whitson MR, Mayo PH. Ultrasonography in the emergency department. Crit Care. 2016;20(1):1‐8.

- 26. McGahan JP, Wang L, Richards JR. From the RSNA refresher courses: focused abdominal US for trauma. Radiographics. 2001;21(suppl 1):S191‐S199.

- 27. Williams JW, Simel DL. Does this patient have ascites? How to divine fluid in the abdomen. JAMA. 1992;267(19):2645‐2648.

- 28. Brenner M, Hicks C. Major abdominal trauma: critical decisions and new frontiers in management. Emerg Med Clin North Am. 2018;36(1):149‐160.

- 29. Brown MA, Casola G, Sirlin CB, Hoyt DB. Importance of evaluating organ parenchyma during screening abdominal ultrasonography after blunt trauma. J Ultrasound Med. 2001;20(6):577‐583.

- 30. Hahn DD, Offerman SR, Holmes JF. Clinical importance of intraperitoneal fluid in patients with blunt intra‐abdominal injury. Am J Emerg Med. 2002;20(7):595‐600.

- 31. Meyers MA. The spread and localization of acute intraperitoneal effusions. Radiology. 1970;95(3):547‐554.

- 32. Banzato T, Bonsembiante F, Aresu L, Gelain M, Burti S, Zotti A. Use of transfer learning to detect diffuse degenerative hepatic diseases from ultrasound images in dogs: a methodological study. Vet J. 2018;233:35‐40.

- 33. Chiappa V, Interlenghi M, Salvatore C, et al. Using rADioMIcs and machine learning with ultrasonography for the differential diagnosis of myometRiAL tumors (the ADMIRAL pilot study). Radiomics and differential diagnosis of myometrial tumors. Gynecol Oncol. 2021;161(3):838‐844.

- 34. Eaton JE, Vesterhus M, McCauley BM, et al. Primary sclerosing cholangitis risk estimate tool (PREsTo) predicts outcomes of the disease: a derivation and validation study using machine learning. Hepatology. 2020;71(1):214‐224.

- 35. Li W, Huang Y, Zhuang B‐W, et al. Multiparametric ultrasomics of significant liver fibrosis: a machine learning‐based analysis. Eur Radiol. 2019;29(3):1496‐1506.

- 36. Martínez‐Más J, Bueno‐Crespo A, Khazendar S, et al. Evaluation of machine learning methods with Fourier Transform features for classifying ovarian tumors based on ultrasound images. PLoS ONE. 2019;14(7):e0219388.

- 37. Yan Y, Li Y, Fan C, et al. A novel machine learning‐based radiomic model for diagnosing high bleeding risk esophageal varices in cirrhotic patients. Hepatol Int. 2022;16(2):423‐432.

- 38. Liu Y, Liu X, Wang S, Song J, Zhang J. A novel method for accurate extraction of liver capsule and auxiliary diagnosis of liver cirrhosis based on high‐frequency ultrasound images. Comput Biol Med. 2020;125:104002.

- 39. Song KD. Current status of deep learning applications in abdominal ultrasonography. Ultrasonography. 2021;40(2):177.

- 40. Behboodi B, Rivaz H. Ultrasound segmentation using u‐net: learning from simulated data and testing on real data. Paper presented at: 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); July 23‐27, 2019; Berlin.

- 41. Huang H, Lin L, Tong R, et al. Unet 3+: a full‐scale connected Unet for medical image segmentation. Paper presented at: ICASSP 2020‐2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP); May 4‐8, 2020; Barcelona (virtual).

- 42. Ronneberger O, Fischer P, Brox T. U‐net: convolutional networks for biomedical image segmentation. Paper presented at: International Conference on Medical Image Computing and Computer‐Assisted Intervention; October 5‐9, 2015; Munich, Germany.

- 43. Li X, Chen H, Qi X, Dou Q, Fu C‐W, Heng P‐A. H‐DenseUNet: hybrid densely connected UNet for liver and tumor segmentation from CT volumes. IEEE Trans Med Imaging. 2018;37(12):2663‐2674.

- 44. Sathyan A, Martis D, Cohen K. Mass and calcification detection from digital mammograms using Unets. Paper presented at: 2020 7th International Conference on Soft Computing & Machine Intelligence (ISCMI); November 14‐15, 2020; Stockholm, Sweden.

- 45. Tang P, Liang Q, Yan X, et al. Efficient skin lesion segmentation using separable‐Unet with stochastic weight averaging. Comput Methods Programs Biomed. 2019;178:289‐301.

- 46. Shin TY, Kim H, Lee J‐H, et al. Expert‐level segmentation using deep learning for volumetry of polycystic kidney and liver. Investig Clin Urol. 2020;61(6):555.

- 47. Zhou Z, Siddiquee MMR, Tajbakhsh N, Liang J. Unet++: a nested u‐net architecture for medical image segmentation. In: Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support. Springer; 2018:3‐11.

- 48. He K, Zhang X, Ren S, Sun J. Delving deep into rectifiers: surpassing human‐level performance on imagenet classification. Paper presented at: 2015 IEEE International Conference on Computer Vision (ICCV); December 7‐13, 2015; Santiago, Chile.

- 49. Kingma DP, Ba J. Adam: a method for stochastic optimization. arXiv:1412.6980. 2014.

- 50. Litjens G, Kooi T, Bejnordi BE, et al. A survey on deep learning in medical image analysis. Med Image Anal. 2017;42:60‐88.

- 51. Norgeot B, Quer G, Beaulieu‐Jones BK, et al. Minimum information about clinical artificial intelligence modeling: the MI‐CLAIM checklist. Nat Med. 2020;26(9):1320‐1324. doi:10.1038/s41591-020-1041-y

- 52. Kim J, Kim HJ, Kim C, Kim WH. Artificial intelligence in breast ultrasonography. Ultrasonography. 2021;40(2):183.

- 53. Shorten C, Khoshgoftaar TM. A survey on image data augmentation for deep learning. J Big Data. 2019;6(1):1‐48.

- 54. Yu AC, Eng J. One algorithm may not fit all: how selection bias affects machine learning performance. Radiographics. 2020;40(7):1932‐1937.

- 55. Zhu Y‐C, AlZoubi A, Jassim S, et al. A generic deep learning framework to classify thyroid and breast lesions in ultrasound images. Ultrasonics. 2021;110:106300.

- 56. Park SH, Han K. Methodologic guide for evaluating clinical performance and effect of artificial intelligence technology for medical diagnosis and prediction. Radiology. 2018;286(3):800‐809.

- 57. Dice LR. Measures of the amount of ecologic association between species. Ecology. 1945;26(3):297‐302.

- 58. Schelb P, Tavakoli AA, Tubtawee T, et al. Comparison of prostate MRI lesion segmentation agreement between multiple radiologists and a fully automatic deep learning system. Rofo. 2021;193(5):559‐573.

- 59. Poletti PA, Kinkel K, Vermeulen B, Irmay F, Unger P‐F, Terrier F. Blunt abdominal trauma: should US be used to detect both free fluid and organ injuries? Radiology. 2003;227(1):95‐103.

- 60. Dong TS, Kalani A, Aby ES, et al. Machine learning‐based development and validation of a scoring system for screening high‐risk esophageal varices. Clin Gastroenterol Hepatol. 2019;17(9):1894‐1901.e1.
