Abstract

Esophageal squamous cell carcinoma (ESCC) is one of the highest incidence and mortality cancers in the world. An effective survival prediction model can improve the quality of patients' survival. Therefore, a parameter-optimized deep belief network based on the improved Archimedes optimization algorithm is proposed in this paper for the survival prediction of patients with ESCC. Firstly, a combination of features significantly associated with the survival of patients is found by the minimum redundancy and maximum relevancy (MRMR) algorithm. Secondly, a DBN network is introduced to make predictions for survival of patients. Aiming at the problem that the deep belief network model is affected by parameters in the construction process, this paper uses the Archimedes optimization algorithm to optimize the learning rate α and batch size β of DBN. In order to overcome the problem that AOA is prone to fall into local optimum and low search accuracy, an improved Archimedes optimization algorithm (IAOA) is proposed. On this basis, a survival prediction model for patients with ESCC is constructed. Finally, accuracy comparison tests are carried out on IAOA-DBN, AOA-DBN, SSA-DBN, PSO-DBN, BES-DBN, IAOA-SVM, and IAOA-BPNN models. The results show that the IAOA-DBN model can effectively predict the five-year survival rate of patients and provide a reference for the clinical judgment of patients with ESCC.

1. Introduction

Cancer is the second leading cause of death in the world and poses a great danger to human health [1, 2]. There will be approximately 19.29 million new cancer cases and 9.95 million cancer deaths worldwide in 2021 [3]. Esophageal cancer is the sixth most common cause of cancer-related death worldwide, including esophageal squamous cell carcinoma and esophageal adenocarcinoma [4]. More than 90% of esophageal cancers are esophageal squamous cell carcinoma (ESCC). The pathology of esophageal squamous cell carcinoma is complex, and it is often found at an advanced stage, which brings a huge burden to the patient's family [5, 6]. In recent years, the incidence of esophageal squamous cell carcinoma has been increasing [7], and the mortality rate is still high [8, 9].

One of the most fundamental difficulties in the treatment of ESCC is the lack of effective methods for predicting survival risk [10, 11]. Currently, with the more in-depth research on ESCC and the continuous development of medical technology [12], the use of various types of intelligent systems in esophageal cancer diagnosis is increasing [13]. The treatment methods and treatment concepts for patients with ESCC have continued to rise [14]. However, as with other malignancies, the incidence of patients with ESCC is increasing. Even for professional doctors, it is difficult to judge the patient's ultimate risk of survival [15].

Generally, blood indicators, age, and TNM stage information are considered related to the survival rate of cancer patients, and they are often used to predict the survival status of patients [16–18]. In recent years, with the continuous progress of machine learning technology, more and more intelligent algorithms are proposed and applied in multiple fields [19–21]. In the medical field, the research on the survival risk of cancer patients has become a popular research content [22]. A reasonable survival prediction model will effectively improve the survival of cancer patients. Essentially, the cancer patient survival prediction model is a classification problem [23], including the screening of datasets and analyzing the connections between the data. So far, many data mining methods have been proposed in the literature to predict the survival status of esophageal cancer patients [24, 25]. In [26], 90 breast cancer risk miRNAs are predicted based on the proposed DMTN by using the SVM classifier, which obtained an AUV of 0.9633. The method of backpropagation artificial neural network is adopted to predict whether postoperative fatigue occurred in patients undergoing gastrointestinal tumor surgery in [27], and the accuracy rate reached 0.872.

The above approach based on shallow architecture achieves good performance in cancer prediction problems. However, since the classification accuracy of shallow learning depends largely on the quality of the extracted features, it may cause problems when dealing with more complex applications [28]. In fact, for high latitude and complex cancer patient data, it is not sufficient to use simple traditional shallow architecture to solve it [29]. Correspondingly, the deep learning model has multiple nonlinear network structures, which enable it to extract the features of the original data from the hidden layer step by step and improve the classification and prediction accuracy of the model [30, 31]. Therefore, a network structure with deeper layers is preferred.

Deep learning is a new direction in the field of machine learning that models high-level abstractions in input data with hierarchies and multiple layers [32, 33]. Through the establishment of artificial neural network with a network hierarchy, multiple layers gradually extract higher-level features from the original input for learning. Different types of deep neural networks for classification prediction have been used in multiple literatures [34–36]. DBN is a probabilistic generative network, which is considered more suitable for prediction of cancer classification with high feature similarity and complexity [37]. However, in the process of building DBN, improper parameter setting will lead to the instability of the model and the problem of poor classification accuracy. Often, the selection of parameters still relies on the experience of experts to be manually tuned. Aiming at the above problems, a cancer patient survival prediction model based on the improved Archimedes optimization algorithm (IAOA) to optimize DBN parameters is proposed.

In this paper, seventeen blood indicators, age, and TNM staging information of 298 patients with ESCC are studied. Firstly, the clinical data of cancer patients are selected by the minimum redundancy and maximum relevancy algorithm, and the feature indexes are sorted according to their importance. A combination of eleven indicators is selected that is significantly associated with patient survival, which is verified by the Cox regression method in the SPSS software. Secondly, the IAOA is introduced to optimize the parameters in the DBN network training process to improve the stability and classification accuracy of the DBN model. Finally, a survival prediction model of patients with ESCC based on IAOA-DBN is established. The above eleven related indicators are used as inputs, and the five-year survival rate of the patient is used as output. The prediction accuracy rate of IAOA-DBN is better than the existing AOA-DBN, SSA-DBN, PSO-DBN, BES-DBN, IAOA-SVM, and IAOA-BPNN. Therefore, the method for survival diagnosis of patients with ESCC proposed in this paper can accurately predict the survival level of patients. The main contributions of this article can be summarized as follows:

A combination of eleven indicators is found based on minimum redundancy and maximum relevancy feature selection, which is verified to be significantly associated with survival in patients with ESCC

The proposed method uses IAOA to optimize the parameters of the DBN, which effectively improves the stability and classification accuracy of the DBN network. The problem that AOA tends to fall into local optimum and low convergence accuracy is effectively improved by the IAOA. Through the establishment of the IAOA-DBN model, the five-year survival rate of patients with ESCC is effectively predicted

This work is presented as follows. In Section 2, the original data is analyzed, and a combination of multiple indicators that is significantly related to patient survival is found based on minimum redundancy and maximum relevancy algorithm. An improved Archimedes algorithm is proposed in Section 3, which can effectively improve the optimization accuracy and stability of AOA. In Section 4, a survival prediction model based on IAOA-DBN is proposed, which can effectively predict the five-year survival rate of patients with ESCC. In Section 5, the conclusions of this article are presented.

2. Dataset Analysis

2.1. Data Introduction

The clinical data of 298 patients with ESCC used in this article are from patients who were treated in the First Affiliated Hospital of Zhengzhou University from January 2007 to December 2018. The clinical information includes seventeen blood indicators, age, and TNM staging information. The seventeen blood indicators are basophil count (BASO), eosinophil count (EO), fibrinogen (FIB), platelet count (PTL), albumin (ALB), hemoglobin concentration (HGB), white blood cell count (WBC), monocyte count (MONO), activated partial thromboplastin time (APTT), globulin (GLOB), red blood cell count (RBC), prothrombin time (PT), lymphocyte count (LYMPH), neutrophil count (NEUT), total protein (TP), international normalized ratio (INR), and thrombin time (TT). The population proportion information of the dataset is shown in Table 1. Information of seventeen blood indicators is shown in Table 2.

Table 1.

Population proportion information of the dataset.

| Project | Category | Number of population | Percentage of population |

|---|---|---|---|

| Genders | Male | 186 | 62.4% |

| Female | 112 | 37.6% | |

| Ages | ≤61.5 | 192 | 64.4% |

| 61.5 | 112 | 37.6% | |

| T stages | T1 | 42 | 14.1% |

| T2 | 89 | 29.9% | |

| T3 | 165 | 55.4% | |

| T4 | 2 | 0.6% | |

| N stages | N0 | 170 | 57.1% |

| N1 | 80 | 26.8% | |

| N2 | 34 | 11.4% | |

| N3 | 14 | 4.7% | |

| TNM stages | 1 | 37 | 12.4% |

| 2 | 139 | 46.6% | |

| 3 | 106 | 35.6% | |

| 4 | 16 | 5.4% |

Table 2.

Basic information about seventeen blood indicators.

| Variable | Mean | Median (range) | Variance | Standard deviation |

|---|---|---|---|---|

| BASO | 0.050 | 0 (0-1) | 0.014 | 0.118 |

| EO | 0.144 | 0.1 (0-3) | 0.074 | 0.272 |

| FIB | 379.262 | 362.811 (167.613-909.725) | 924.038 | 30.398 |

| PTL | 226.289 | 227.5 (45-448) | 62.902 | 7.931 |

| ALB | 42.077 | 42 (27-59) | 25.055 | 5.005 |

| HGB | 137.742 | 139 (95-189) | 227.216 | 15.074 |

| WBC | 6.564 | 6.1 (2.18-15.3) | 4.077 | 2.019 |

| MONO | 0.406 | 0 (0-1) | 0.091 | 0.301 |

| APTT | 35.929 | 35.1 (15.4-78.5) | 62.479 | 7.904 |

| GLOB | 29.077 | 29 (17-45) | 26.240 | 5.122 |

| RBC | 4.452 | 4.5 (2.93-6.04) | 0.224 | 0.473 |

| PT | 10.322 | 10.2 (7-16.5) | 2.834 | 1.684 |

| LYMPH | 1.930 | 1.905 (0-8) | 0.479 | 0.692 |

| NEUT | 3.864 | 3.5 (0-10.6) | 2.829 | 1.682 |

| TP | 71.070 | 71 (50-92) | 51.971 | 7.209 |

| INR | 0.796 | 0.78 (0.45-1.64) | 0.034 | 0.185 |

| TT | 15.569 | 15.7 (1.3-46.5) | 6.629 | 2.575 |

The unit of WBC, LYMPH, GLOB, ALB, RBC, BASO, EO, NEUT, TP, HGB, and PLT is g/L. The unit of PT, TT, APTT is second(s). The unit of FIB is mg/L.

Among all patients, 147 patients survived more than five years, 151 patients survived less than five years, and the data are evenly distributed. The age distribution of the patients ranged from 38 to 82 years, including 190 male patients and 108 female patients. In addition, the selected patients should have complete treatment records and be followed up for more than six months.

2.2. Minimum Redundancy and Maximum Relevancy Algorithm

The minimum redundancy and maximum relevancy (MRMR) algorithm [38] is a typical feature selection method. The purpose of MRMR is to select the features with the minimal redundancy and the maximal relevance with the class label. The relevance between features and class labels is represented by mutual information. The mutual information is calculated as Equation (1).

| (1) |

where x and y are given two random variables, p(x, y) is the joint probability density function of x and y, p(x) and p(y) are the probability density functions of x and y, respectively. The minimum redundancy and maximum relevancy are calculated as follows, respectively.

| (2) |

| (3) |

where S and |S| are feature subsets and the number of features contained therein, respectively, C is the class label, I(xi; c) is the mutual information between feature i and class label C, I(xi; xj) is the mutual information between feature i and feature j, D is the mean between each feature in the feature set S and the class label C, indicating the relevance between the feature set and the corresponding class label, and R is the size of the mutual information between the features in the feature set S, which represents the redundancy between the features.

The goal of the MRMR algorithm is to maximize the classification performance of the selected feature subset while minimizing the feature dimension. Therefore, it is required that the relevance between the feature subset and the label is the largest, and the redundancy between the features is the least. The minimum redundancy and maximum relevancy are constructed as follows.

| (4) |

The main process of minimum redundancy and maximum relevancy (MRMR) algorithm is as follows.

2.2.1. Step 1: The First Feature Is Selected

The mutual information between all candidate variables and target variables in the clinical data of esophageal cancer patients is calculated. The feature variable with the largest mutual information is the first feature variable selected.

2.2.2. Step 2: The Second Feature Is Selected

The redundancy between the selected first feature and the other features is calculated. The feature variable with the least redundancy is the second feature variable.

2.2.3. Step 3: Sequential Selection of Other Features

Based on the selected two feature variables, the selection of the next feature variable is required to make the selected feature subset have the largest relevance with the target variable and the least redundancy with the selected feature. Therefore, it is necessary to satisfy the minimum redundancy and maximum relevancy criterion of Equation (4). Repeat the calculation of the criteria shown in Equation (4), and add the variables that meet the requirements to the selected feature subset in turn. When the number of selected features meets the requirements, the algorithm ends.

In order to clearly express the MRMR process, the framework of MRMR is shown in Algorithm 1.

Algorithm 1.

Framework of MRMR.

2.3. Selection of Optimal Subset Combinations

The patients' 17 blood indicators, age, and TNM staging information are used as input and five-year survival status as output. The patients' indicators are reordered according to their importance by the MRMR method. The reordered dataset is put into the BP neural network [39], and the classification accuracy of the feature combination is verified by tenfold cross-validation. When the highest classification accuracy is achieved, the combination with the smallest number of features is the optimal feature combination. The result is shown in Figure 1. When the highest classification accuracy is achieved, the number of features is eleven. Therefore, the features selected in this paper are the first eleven features. The eleven features are TNM stage, BASO, Age, PT, FIB, LYMPH, RBC, TT, PLT, T stage, and GLOB.

Figure 1.

The change of classification accuracy with the number of feature variables.

2.4. Cox Regression Analysis to Verify the Correlation of Indicators

Cox regression models [40] are widely used in the medical field to analyze the effects of multiple variables on survival status and survival time. In this section, Cox regression models are used to further validate the correlation of selected features with a 5-year survival status and survival time of patients with ESCC. The SPSS 26.0 statistical software is used to make the Cox model. The survival time and survival outcome of patients with ESCC are used as dependent variables. The above eleven indicators are independent variables. The survival function at the mean of the covariate is shown in Figure 2. The results show that the p value of the overall score of the eleven indicators is much less than 0.05. The combination of these eleven indicators is significantly related to the survival rate of patients.

Figure 2.

Survival function at the mean of the covariate. The survival years are taken as the time, the eleven indicators obtained from minimum redundancy and maximum relevancy algorithm.

3. Improving the Archimedes Optimization Algorithm

3.1. Basic Archimedes Optimization Algorithm

The Archimedes optimization algorithm [41] (AOA) is a new metaheuristic algorithm proposed in 2020. In this algorithm, the population individuals are submerged objects, and the population position is updated by adjusting the density, volume, and acceleration of the objects. According to whether the objects collide in the liquid, AOA is divided into a global exploration stage and a local search stage. If the objects do not collide, the global exploration phase is performed. Instead, a partial development phase is performed.

3.1.1. Initial Stage

In the initialization phase, AOA randomly initializes the density (den), volume (vol), and acceleration (acc) of individuals in the population. The current optimal individual (xbest), optimal density (denbest), optimal volume (volbest), and optimal acceleration (accbest) are selected. In the AOA, the individual density, volume and transfer factor TF are calculated as Equations (5)–(7), respectively.

| (5) |

| (6) |

where rand is a random number between (0,1). denit and denit+1 are the densities of the individual i for the generation t and the generation t + 1, respectively. volit and volit+1 are the volumes of the individual i in the generation t and the generation t + 1, respectively.

| (7) |

where t is the current iteration number and tmax is the maximum iteration number.

When TF ≤ 0.5, AOA performs a global search, and the update of the individual acceleration is calculated as follows.

| (8) |

When TF = 0.5, AOA is developed locally, and the individual acceleration is updated to the following:

| (9) |

The acceleration of the individual is normalized to obtain Equation (10).

| (10) |

where acci−normt+1 is the normalized acceleration of the individual i in the t generation, u and l are the parameters for adjusting the normalization range.

During the global search phase, the individual positions are updated by Equation (11).

| (11) |

where xit+1 and xit are the positions of individuals in the t + 1 and t generations and xrand is the positions of random individuals in the generation t. rand ∈ (0, 1) is a random number. C1 is a fixed constant. d is the density factor, which is calculated as follows.

| (12) |

During the local development stage, the individual position is updated by Equation (13).

| (13) |

where c2 is a fixed constant and F is the direction factor that determines the update direction of the individual position, which is constructed as follows:

| (14) |

where p = 2 × rand − C4 and C4 is a fixed constant. T = C3 × TF, and T ∈ [C3 × 0.3,1.]

3.2. Improved Archimedean Optimization Algorithm

In the basic AOA, the update of the optimal individual of the population depends on the update of the population in each iteration. After each iteration, the optimal individual is replaced by the individual with the best fitness, and the algorithm does not actively disturb the optimal individual. When the optimal individual of the population falls into the local extremum space, the algorithm will fall into the local optimum, and the phenomenon of premature convergence will occur [42]. Therefore, this paper introduces the corresponding improvement strategy to improve the defects of the basic AOA. Firstly, Sine chaos mapping and reverse learning strategies are used to initialize the population, which can enhance the population diversity and improve the solving efficiency. Secondly, Gaussian variation and superior selection strategies are used to perturb the positions of optimal individuals, which can enhance the global search ability and help the population to jump out of the local optimum. In this paper, the improved AOA is called IAOA. The specific strategy is as follows.

3.2.1. Sine Chaos Reverse Learning Initialization Strategy

The population of AOA is initialized by random generation. This leads to uneven distribution of individuals in the initial population, which affects the later iterative optimization. The Sine chaotic model [43] is a chaotic model with good randomness and ergodicity with infinite number of map foldings. Reverse learning [44] can obtain its corresponding reverse solution through the current solution. The optimal initial solution can be obtained by comparing and selecting a better solution. In this paper, the Sine chaotic strategy is used to generate an initial population with better diversity. Second, the reverse population is generated according to reverse learning. Finally, the fitness of the obtained population is calculated, and the solution with low fitness is selected as the initial population to improve the probability of obtaining the optimal initial solution. The 1-dimensional mapping expression of Sine chaos is calculated as the follows.

| (15) |

where t = 0, 1, 2 ⋯ , T and Xn ≠ 0.

The population X = {Xi, i = 1, 2, ⋯.T}, Xj = {Xj, j = 1, 2, ⋯dim} is obtained by mapping the Sine chaos into the solution space. The population individuals are represented as follows.

| (16) |

where Xi+1,j is the dimensional j value of the population i + 1.

The reverse population can be represented as X∗ = {Xi∗, i = 1, 2, ⋯T}, Xi∗ = {Xij∗, j = 1, 2, ⋯dim}. The reverse population individual Xij∗ can be calculated by the following.

| (17) |

where [Xminj, Xmaxj] is the population search dynamic boundary.

The new population {X ∪ X∗} is formed by the Sine chaotic population X and the reverse population X∗. The fitness values of the new population are ranked, and N individuals with the best fitness values are selected to form the initial population.

3.2.2. Gaussian Operator and Superior Selection Strategy

The Gaussian operator [45, 46] is introduced in this paper in order to avoid AOA from falling into local optimum and to maintain the diversity of individuals in the population. The current optimal solution Xtbest is subjected to Gaussian variation with certain probability p, and a meritocratic selection strategy is taken. The expression of the Gaussian variational operator is calculated as follows:

| (18) |

where Xit+1 denotes the individual position after variation and Gauss(δ) is a random variable satisfying a Gaussian distribution. The global optimal solution position is updated as follows.

| (19) |

where rand1 is a random variable between [0, 1], p is the probability of superior selection, and f(.) is the individual fitness value. Therefore, variational operations on the global optimal solution can avoid the algorithm from falling into a local optimum and effectively improve the search efficiency.

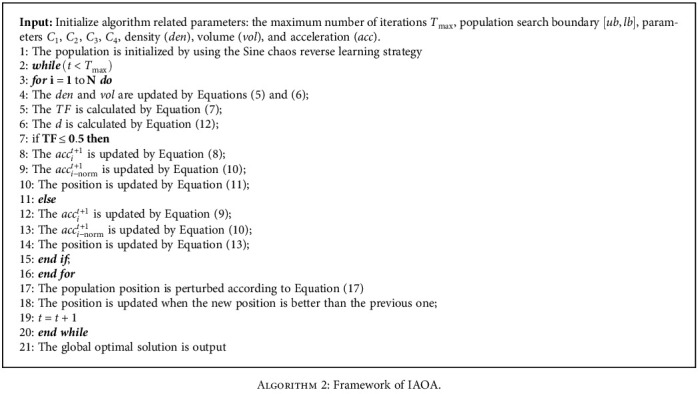

In order to clearly express the IAOA process, the framework of IAOA is shown in Algorithm 2.

Algorithm 2.

Framework of IAOA.

3.2.3. IAOA Validation and Comparison

In order to fully verify the effectiveness of the IAOA proposed in this paper, the improved Archimedes optimization algorithm, Archimedes optimization algorithm, sparrow search algorithm [47], and bald eagle search algorithm [48] are compared and tested under thirteen benchmark functions at the same time. The selected benchmark functions are classified into three categories. The first category is the single-peak benchmark function, as shown in F1-F5 in Table 3. The second category is the multipeak benchmark function, as shown in F6-F10 in Table 3. The third category is the multimodal benchmark function with fixed dimension, as shown in F11-F13 in Table 3. The basic parameters of the algorithm are as follows: the population size is 30, and the maximum number of iterations is 500. The other parameters within the algorithm are shown in Table 4. The experimental results are presented in Tables 5 and 6. The optimization ability of the algorithm is reflected by the optimal value and the average value, and the stability of the algorithm is reflected by the standard deviation. Firstly, for the five single-peaked functions, IAOA has higher convergence accuracy and stability compared to other algorithms. Secondly, F6 and F8 are able to reach the theoretical optimum when solving for the multipeak function. For other multipeaked functions, IAOA has the best search accuracy and stability. For fixed dimensional functions, IAOA is also better than other algorithms. Therefore, the improvement strategy proposed in this paper has improved the performance of the algorithm to some extent.

Table 3.

Baseline test functions.

| Funs | Name | Range | Optimum |

|---|---|---|---|

| F1 | Sphere | [−100,100]D | Min = 0 |

| F2 | Schwefel2.22 | [−10, 10]D | Min = 0 |

| F3 | Schwefel1.20 | [−100,100]D | Min = 0 |

| F4 | Schwefel2.21 | [−100,100]D | Min = 0 |

| F5 | Quartic | [−1.28,1.28]D | Min = 0 |

| F6 | Rastraign | [−32, 32]D | Min = 0 |

| F7 | Ackley | [−600,600]D | Min = 0 |

| F8 | Griewank | [−600,600]D | Min = 0 |

| F9 | Penalized 1 | [−50, 50]D | Min = 0 |

| F10 | Penalized 2 | [−50, 50]D | Min = 0 |

| F11 | Kowalik's | [−5, 5]2 | 3.08E − 04 |

| F12 | Six-hump | [−5, 5]2 | −1.03E + 00 |

| F13 | Branin | [-5,10]∪[0, 15]2 | 3.98E − 01 |

Table 4.

Algorithm parameter settings.

| Algorithm | Main parameters |

|---|---|

| AOA | C1 = 2, C2 = 6, C3 = 1, C4 = 2 |

| IAOA | C 1 = 2, C2 = 6, C3 = 1, C4 = 2 |

| SSA | ST = 0.6, PD = 0.7, SD = 0.2 |

| BES | a = 10; R = 1.5 |

Table 5.

Comparison with the results of 3 metaheuristic algorithms.

| Statistics | Algorithm | F1 | F2 | F3 | F4 | F5 | F6 | F7 |

|---|---|---|---|---|---|---|---|---|

| Best | IAOA | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 5.26E − 06 | 0.00E + 00 | 8.88E − 16 |

| AOA | 4.93E − 126 | 5.61E − 60 | 1.27E − 100 | 1.55E − 59 | 9.09E − 05 | 0.00E + 00 | 8.88E − 16 | |

| SSA | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 3.54E − 05 | 0.00E + 00 | 8.88E − 16 | |

| BES | 5.31E − 46 | 8.70E − 29 | 8.10E − 19 | 8.13E − 14 | 5.36E − 05 | 0.00E + 00 | 8.88E − 16 | |

| Mean | IAOA | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 9.89E − 05 | 0.00E + 00 | 8.88E − 16 |

| AOA | 2.96E − 87 | 3.69E − 45 | 9.36E − 73 | 1.23E − 40 | 6.76E − 04 | 5.46E + 00 | 2.31E − 15 | |

| SSA | 1.58E − 64 | 3.17E − 29 | 7.63E − 42 | 1.62E − 45 | 7.50E − 04 | 0.00E + 00 | 8.88E − 16 | |

| BES | 4.38E − 41 | 1.17E − 25 | 4.47E − 04 | 1.51E − 01 | 2.39E − 03 | 3.64E + 01 | 8.03E − 03 | |

| Worst | IAOA | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 4.39E − 04 | 0.00E + 00 | 8.88E − 16 |

| AOA | 5.17E − 86 | 1.06E − 43 | 2.74E − 71 | 2.28E − 39 | 2.35E − 03 | 1.64E + 02 | 4.44E − 15 | |

| SSA | 4.74E − 63 | 9.51E − 28 | 2.29E − 40 | 4.87E − 44 | 2.04E − 03 | 0.00E + 00 | 8.88E − 16 | |

| BES | 4.59E − 40 | 1.93E − 24 | 1.02E − 02 | 6.02E − 01 | 8.91E − 03 | 1.49E + 02 | 2.41E − 01 | |

| Std | IAOA | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 0.00E + 00 | 1.03E − 04 | 0.00E + 00 | 0.00E + 00 |

| AOA | 8.66E − 64 | 1.94E − 44 | 5.00E − 72 | 4.44E − 40 | 5.35E − 04 | 2.99E + 01 | 1.60E − 15 | |

| SSA | 1.55E − 39 | 1.74E − 28 | 4.18E − 41 | 8.89E − 45 | 5.47E − 04 | 0.00E + 00 | 0.00E + 00 | |

| BES | 1.08E − 40 | 3.55E − 25 | 1.93E − 03 | 2.23E − 01 | 2.11E − 03 | 5.61E + 01 | 4.40E − 02 |

Table 6.

Continuation of Table 5.

| Statistics | Algorithm | F8 | F9 | F10 | F11 | F12 | F13 |

|---|---|---|---|---|---|---|---|

| Best | IAOA | 0.00E + 00 | 1.04E − 13 | 4.32E − 12 | 3.19E − 04 | −1.03E + 00 | 3.98E − 01 |

| AOA | 0.00E + 00 | 4.85E − 01 | 2.60E + 00 | 3.35E − 04 | −1.03E + 00 | 3.98E − 01 | |

| SSA | 0.00E + 00 | 5.02E − 06 | 2.21E − 05 | 3.08E − 04 | −1.03E + 00 | 3.98E − 01 | |

| BES | 0.00E + 00 | 7.53E − 23 | 8.05E − 05 | 3.07E − 04 | −1.03E + 00 | 3.98E − 01 | |

| Mean | IAOA | 0.00E + 00 | 7.03E − 09 | 6.96E − 08 | 4.68E − 04 | −1.03E + 00 | 3.98E − 01 |

| AOA | 0.00E + 00 | 8.16E − 01 | 2.89E + 00 | 9.10E − 04 | −1.03E + 00 | 3.98E − 01 | |

| SSA | 0.00E + 00 | 2.42E − 05 | 1.49E − 03 | 3.74E − 04 | −1.03E + 00 | 3.98E − 01 | |

| BES | 0.00E + 00 | 1.04E − 02 | 5.03E − 02 | 3.18E − 03 | −1.03E + 00 | 3.98E − 01 | |

| Worst | IAOA | 0.00E + 00 | 3.86E − 08 | 3.93E − 07 | 1.32E − 03 | −1.03E + 00 | 3.98E − 01 |

| AOA | 0.00E + 00 | 1.15E + 00 | 2.99E + 00 | 5.91E − 03 | −1.03E + 00 | 3.98E − 01 | |

| SSA | 0.00E + 00 | 8.30E − 05 | 1.32E − 02 | 1.22E − 03 | −1.03E + 00 | 3.98E − 01 | |

| BES | 0.00E + 00 | 1.04E − 01 | 2.33E − 01 | 2.04E − 02 | −1.03E + 00 | 3.98E − 01 | |

| Std | IAOA | 0.00E + 00 | 1.09E − 08 | 1.16E − 07 | 1.68E − 04 | 1.79E − 10 | 5.48E − 07 |

| AOA | 0.00E + 00 | 1.78E − 01 | 8.39E − 02 | 9.73E − 04 | 3.93E − 04 | 3.89E − 06 | |

| SSA | 0.00E + 00 | 1.87E − 05 | 3.88E − 03 | 2.53E − 04 | 4.72E − 08 | 8.16E − 07 | |

| BES | 0.00E + 00 | 3.16E − 02 | 7.47E − 02 | 6.86E − 03 | 1.65E − 08 | 7.18E − 07 |

4. Survival Prediction Model of Patients with ESCC Based on IAOA-DBN

4.1. An Overview of DBN

The deep belief network (DBN) is a probabilistic generative network. It is composed by a bunch of restricted Boltzmann machines (RBMs) and a backpropagation (BP) neural network [49]. The learning process of DBN can be divided into pretraining and fine-tuning. During the pretraining process, each RBM is trained individually by an unsupervised learning algorithm in turn, and the network parameters of each layer are gradually adjusted. In the fine-tuning process, the classification labels are used as the output layer of the DBN. The BP neural network is trained sequentially from top to bottom, and the training error is propagated back to the RBM to fine-tune the parameters of all layers to reach the global optimal parameters of the DBN. The structure of DBN is shown in Figure 3.

Figure 3.

Schematic diagram of the DBN structure.

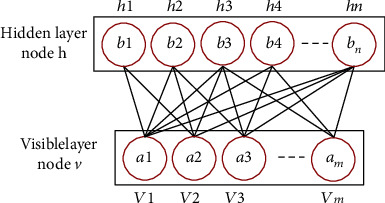

4.1.1. Pretraining of RBM

The restricted Boltzmann machine (RBM) is a neural perceptron consisting of a visible layer (v) and a hidden layer (h). Its structure is shown in Figure 4. There are bidirectional connections between the visible and hidden layers, while there is no connection between units in the same layer. In RBM, there is a weight w between any two connected neurons in the visible layer and the hidden layer to represent the connection strength. Each neuron has a bias coefficient a (for the neurons in the visible layer) and b (for the neurons in the hidden layer) to represent its own weight. Therefore, the energy function contained in each RBM is calculated as follows:

| (20) |

Figure 4.

Schematic diagram of RBM structure.

where θ represents the parameter set of RBM, including the state vi and bias ai of the visible layer and the state hj and bias bj of the hidden layer, ωij is the connection weight between the visible layer node and the hidden layer node, and n and m represent the number of neurons in the visible layer and the hidden layer, respectively.

According to the energy function of the RBM, the joint distribution of the visible layer and the hidden layer is calculated as follows.

| (21) |

where T(θ) = ∑v,he−E(v, h; θ), called the normalization factor.

The independent probability distribution of the visible layer is calculated as follows.

| (22) |

There is no connection between nodes in the same layer in the RBM, so the conditional probability distribution of each neuron in the visible layer and the hidden layer is as follows:

| (23) |

where ε(x) = 1/(1 + exp(x)) is the sigmoid function.

The goal of RBM training learning is to make the Gibbs distribution of the RBM network representation as close as possible to the distribution of the original data so that p(v) is maximized.

The network structure parameters θ = {ai, bj, ωij} of the RBM can be obtained using the maximum likelihood estimation method, and the parameter set θ can be updated by the comparative scattering method, as expressed by the following.

| (24) |

| (25) |

where 〈〉p(v|θ) represents the expected value of the partial derivative under the distribution of p(v | θ).

The model parameter update method is as follows:

| (26) |

where 〈.〉data represents the expectation of p(h | v) defined by the current RBM model, 〈.〉remodel represents the expectation of p(h | v) defined by the reconstructed RBM model, α represents the learning rate, and β is the batch size.

The pretraining of DBN starts from the bottom layer. After the first RBM is trained, the current hidden layer is transformed into the visible layer of the next RBM. The network is trained layer by layer from bottom to top to avoid falling into local optimum.

4.1.2. Fine-Tuning of RBM

In the fine-tuning stage, a BP neural network is constructed using the hidden layer of the last RBM and the output layer of the DBN for supervised training. The parameters of each layer are optimized from the top to the bottom to obtain the final model parameters.

4.2. The Proposed Parameter Optimization of DBN Based on IAOA

During the construction of DBN, the choice of hyperparameters such as learning rate α and batch size β has an important impact on the training results of DBN. However, the selection of hyperparameters in traditional DBNs mainly relies on subjective experience, which also causes the problem of insufficient training efficiency. This also leads to a decrease in the classification accuracy and model stability of DBNs. In this paper, in order to reduce the influence of human interference factors and improve the classification accuracy of DBN, the IAOA is proposed to optimize the learning rate α and batch size β of DBN. The classification error rate of DBN is used as the objective function of IAOA optimization, and the objective function is fitnessfunction = 1 − classification error rate. The larger the fitness value, the higher the classification effect of DBN. In order to clearly express the IAOA-DBN process, the framework of IAOA-DBN is shown in Figure 5.

Figure 5.

The framework of IAOA-DBN.

4.3. Survival Prediction Model of Patients with ESCC

In this paper, eleven indicators significantly related to the survival rate of patients with ESCC are obtained through the MRMR algorithm, and these indicators are TNM stage, BASO, age, PT, FIB, LYMPH, RBC, TT, PLT, T stage, and GLOB, respectively. Eleven indicators and all indicators of the patients are used as inputs to the IAOA-DBN model, respectively, and the five-year survival rate of the patients is used as the output. A survival prediction model for esophageal cancer patients is established. The established survival prediction model for patients with ESCC is shown in Figure 6. To verify the validity of this model, the Archimedes optimization algorithm-deep belief network (AOA-DBN), sparrow search algorithm-deep belief network (SSA-DBN), particle swarm optimization-deep belief network (PSO-DBN) [50], bald eagle search-deep belief network (BES-DBN), improved Archimedean optimization algorithm-support vector machines (IAOA-SVM), and improved Archimedean optimization algorithm-backpropagation neural networks (IAOA-BPNN) are used for comparison. The initial population of AOA, SSA, PSO, and BES is uniformly set to 20, and the maximum number of iterations is 500. The dataset is divided into ten parts, and the tenfold cross-validation method is used to verify the classification accuracy of the model. The prediction results of the DBN optimized by the five optimization algorithms, IAOA-SVM, and IAOA-BPNN model are shown in Table 7.

Figure 6.

ESCC patient survival prediction model.

Table 7.

Comparison of different algorithms for predicting five-year survival of patients with esophageal squamous cell carcinoma.

| Algorithm | 10-fold cross-validation accuracy | |

|---|---|---|

| Eleven indicators | All indicators | |

| IAOA-DBN | 89.66% | 88.13% |

| AOA-DBN | 87.46% | 86.24% |

| SSA-DBN | 88.14% | 86.93% |

| PSO-DBN | 86.78% | 85.46% |

| BES-DBN | 87.29% | 86.12% |

| IAOA-SVM | 86.27% | 85.19% |

| IAOA-BPNN | 86.61% | 85.32% |

When eleven patient indicators are used as input, the Tables 5 and 6 show that the prediction results of IAOA-DBN, AOA-DBN, SSA-DBN, PSO-DBN, BES-DBN, IAOA-SVM, and IAOA-BPNN are 89.66%, 87.46%, 88.14%, 86.78%, 87.29%, 86.27%, and 86.61%, respectively. When all patient indicators are used as input, Table 7 shows that the prediction results of IAOA-DBN, AOA-DBN, SSA-DBN, PSO-DBN, BES-DBN, IAOA-SVM, and IAOA-BPNN are 88.13%, 86.24%, 86.93%, 85.46%, 86.12%, 85.19%, and 85.32%, respectively. The comparison shows that IAOA-DBN has a high accuracy rate and can accurately predict the five-year survival rate of ESCC patients. In addition, when the input to the model is eleven indicators, the prediction results are better than using all indicators. Therefore, the MRMR-IAOA-DBN model proposed in this paper can better predict the five-year survival of patients with ESCC.

To better demonstrate the effectiveness of the proposed model, the Wisconsin Diagnostic Breast Cancer (WBCD) dataset is used for testing. In Wisconsin Diagnostic Breast Cancer (WBCD) dataset, 30 indexes of patients are used as input, and the benign and malignant tumors of patients are used as output. The dataset is divided into ten parts, and the tenfold cross-validation method is used to verify the performance of the model. The test results are shown in Table 8. From the test results, it can be seen that IAOA-DBN has higher prediction accuracy than other models. Therefore, the survival prediction model proposed in this paper can effectively predict the prognosis of cancer patients.

Table 8.

Comparison of the results of different algorithms.

| Algorithm | 10-fold cross-validation accuracy |

|---|---|

| IAOA-DBN | 97.538% |

| AOA-DBN | 96.974% |

| SSA-DBN | 97.177% |

| PSO-DBN | 96.629% |

| BES-DBN | 96.829% |

| IAOA-SVM | 95.602% |

| IAOA-BPNN | 95.038% |

5. Conclusions

A novel survival prediction model for patients with ESCC is presented in this paper. Firstly, a minimum redundancy and maximum relevancy algorithm is used to screen out indicators significantly correlated with survival in patients, which is validated by the Cox regression analysis. Secondly, an IAOA-DBN model is proposed. The model uses IAOA to optimize the parameters in the DBN training process, which improves the stability and classification accuracy of the DBN model. Finally, the model is applied to the survival prediction model for patients with ESCC. The results of comparison with four methods verify the validity and superiority of the model. The key conclusions are expressed as follows.

The patients' clinical indicators are ranked by importance using the minimum redundancy and maximum relevancy algorithm, and a new subset of features is selected. The experimental results show that the new feature subset is with better prediction results than the all-feature set

Aiming at the problem that poor convergence accuracy and easy to fall into local optimum of AOA, an improved AOA (IAOA) is proposed in this paper. The experimental results show that the improved strategy proposed in this paper improves the performance of AOA to a certain extent

The learning rate α and batch size β of DBN are optimized using IAOA to obtain the optimal parameters, which improved the classification prediction accuracy and stability of the DBN model. Compared with AOA-DBN, SSA-DBN, PSO-DBN, and BES-DBN, the results verify the effectiveness and superiority of the IAOA-DBN model

Acknowledgments

This work was supported in part by the Joint Funds of the National Natural Science Foundation of China under grant U1804262, in part by the Foundation of Young Key Teachers from University of Henan Province under grant 2018GGJS092, in part by the Youth Talent Lifting Project of Henan Province under grant 2018HYTP016, in part by the Henan Province University Science and Technology Innovation Talent Support Plan under grant 20HASTIT027, in part by the Zhongyuan Thousand Talents Program under grant 204200510003, and in part by the Open Fund of State Key Laboratory of Esophageal Cancer Prevention and Treatment under grant K2020-0010 and grant K2020-0011.

Data Availability

The datasets presented in this article are not readily available because the data used in the study are private and confidential data. Requests to access the datasets should be directed to Junwei Sun, junweisun@yeah.net.

Conflicts of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- 1.Ferlay J., Colombet M., Soerjomataram I., et al. Cancer statistics for the year 2020: an overview. International Journal of Cancer . 2021;149(4):778–789. doi: 10.1002/ijc.33588. [DOI] [PubMed] [Google Scholar]

- 2.Cao W., Chen H. D., Yu Y. W., Li N., Chen W. Q. Changing profiles of cancer burden worldwide and in China: a secondary analysis of the global cancer statistics 2020. Chinese Medical Journal . 2021;134(7):783–791. doi: 10.1097/CM9.0000000000001474. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Knight S. R., Shaw C. A., Pius R., et al. Global variation in postoperative mortality and complications after cancer surgery: a multicentre, prospective cohort study in 82 countries. The Lancet . 2021;397(10272):387–397. doi: 10.1016/S0140-6736(21)00001-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Urakawa S., Makino T., Yamasaki M., et al. Lymph node response to neoadjuvant chemotherapy as an independent prognostic factor in metastatic esophageal cancer. Annals of Surgery . 2021;273(6):1141–1149. doi: 10.1097/SLA.0000000000003445. [DOI] [PubMed] [Google Scholar]

- 5.Wells J. C., Sharma S., Del Paggio J. C., et al. An analysis of contemporary oncology randomized clinical trials from low/middle-income vs high-income countries. JAMA Oncology . 2021;7(3):379–385. doi: 10.1001/jamaoncol.2020.7478. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Huang J., Koulaouzidis A., Marlicz W., et al. Global burden, risk factors, and trends of esophageal cancer: an analysis of cancer registries from 48 countries. Cancers . 2021;13(1):p. 141. doi: 10.3390/cancers13010141. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Sakamoto T., Fujiogi M., Matsui H., Fushimi K., Yasunaga H. Comparing perioperative mortality and morbidity of minimally invasive esophagectomy versus open esophagectomy for esophageal cancer: a nationwide retrospective analysis. Annals of Surgery . 2021;274(2):324–330. doi: 10.1097/SLA.0000000000003500. [DOI] [PubMed] [Google Scholar]

- 8.Ajani J. A., D’Amico T. A., Bentrem D. J., et al. Esophageal and esophagogastric junction cancers, version 2.2019, NCCN Clinical Practice Guidelines in Oncology. Journal of the National Comprehensive Cancer Network . 2019;17(7):855–883. doi: 10.6004/jnccn.2019.0033. [DOI] [PubMed] [Google Scholar]

- 9.Fransen L. F., Berkelmans G. H., Asti E., et al. The effect of postoperative complications after minimally invasive esophagectomy on long-term survival: an international multicenter cohort study. Annals of Surgery . 2021;274(6):e1129–e1137. doi: 10.1097/SLA.0000000000003772. [DOI] [PubMed] [Google Scholar]

- 10.Huang F. L., Yu S. J. Esophageal cancer: risk factors, genetic association, and treatment. Asian Journal of Surgery . 2018;41(3):210–215. doi: 10.1016/j.asjsur.2016.10.005. [DOI] [PubMed] [Google Scholar]

- 11.Kelly R. J., Ajani J. A., Kuzdzal J., et al. Adjuvant nivolumab in resected esophageal or gastroesophageal junction cancer. New England Journal of Medicine . 2021;384(13):1191–1203. doi: 10.1056/NEJMoa2032125. [DOI] [PubMed] [Google Scholar]

- 12.Lin S. H., Hobbs B. P., Verma V., et al. Randomized phase iib trial of proton beam therapy versus intensity-modulated radiation therapy for locally advanced esophageal cancer. Journal of Clinical Oncology . 2020;38(14):1569–1579. doi: 10.1200/JCO.19.02503. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Hashimoto R., Requa J., Dao T., et al. Artificial intelligence using convolutional neural networks for real-time detection of early esophageal neoplasia in Barrett’s esophagus (with video) Gastrointestinal Endoscopy . 2020;91(6):1264–1271.e1. doi: 10.1016/j.gie.2019.12.049. [DOI] [PubMed] [Google Scholar]

- 14.Vollenbrock S. E., Voncken F. E., Bartels L. W., Beets-Tan R. G., Bartels-Rutten A. Diffusion-weighted MRI with ADC mapping for response prediction and assessment of oesophageal cancer: a systematic review. Radiotherapy and Oncology . 2020;142:17–26. doi: 10.1016/j.radonc.2019.07.006. [DOI] [PubMed] [Google Scholar]

- 15.Nobel T. B., Dave N., Eljalby M., et al. Incidence and risk factors for isolated esophageal cancer recurrence to the brain. The Annals of Thoracic Surgery . 2020;109(2):329–336. doi: 10.1016/j.athoracsur.2019.09.028. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Okadome K., Baba Y., Yagi T., et al. Prognostic nutritional index, tumor-infiltrating lymphocytes, and prognosis in patients with esophageal cancer. Annals of Surgery . 2020;271(4):693–700. doi: 10.1097/SLA.0000000000002985. [DOI] [PubMed] [Google Scholar]

- 17.Napier K. J., Scheerer M., Misra S. Esophageal cancer: a review of epidemiology, pathogenesis, staging workup and treatment modalities. World journal of gastrointestinal oncology . 2014;6(5):112–120. doi: 10.4251/wjgo.v6.i5.112. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Martincorena I., Fowler J. C., Wabik A., et al. Somatic mutant clones colonize the human esophagus with age. Science . 2018;362(6417):911–917. doi: 10.1126/science.aau3879. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Sun J., Han J., Wang Y., Liu P. Memristor-based neural network circuit of emotion congruent memory with mental fatigue and emotion inhibition. IEEE Transactions on Biomedical Circuits and Systems . 2021;15(3):606–616. doi: 10.1109/TBCAS.2021.3090786. [DOI] [PubMed] [Google Scholar]

- 20.Sun J., Han J., Liu P., Wang Y. Memristor-based neural network circuit of Pavlov associative memory with dual mode switching. AEUinternational Journal of Electronics and Communications . 2021;129, article 153552 [Google Scholar]

- 21.Sun J., Han G., Zeng Z., Wang Y. Memristor-based neural network circuit of full-function Pavlov associative memory with time delay and variable learning rate. IEEE transactions on cybernetics . 2020;50(7):2935–2945. doi: 10.1109/TCYB.2019.2951520. [DOI] [PubMed] [Google Scholar]

- 22.Azad T. D., Chaudhuri A. A., Fang P., et al. Circulating tumor DNA analysis for detection of minimal residual disease after chemoradiotherapy for localized esophageal cancer. Gastroenterology . 2020;158(3):494–505.e6. doi: 10.1053/j.gastro.2019.10.039. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Jiang Y., Liang X., Wang W., et al. Noninvasive prediction of occult peritoneal metastasis in gastric cancer using deep learning. JAMA Network Open . 2021;4(1):e2032269–e2032269. doi: 10.1001/jamanetworkopen.2020.32269. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Doja M., Kaur I., Ahmad T. Current state of the art for survival prediction in cancer using data mining techniques. Current Bioinformatics . 2020;15(3):174–186. doi: 10.2174/1574893614666190902152142. [DOI] [Google Scholar]

- 25.Kaviarasi R., Gandhi R. R. Accuracy enhanced lung cancer prognosis for improving patient survivability using proposed Gaussian classifier system. Journal of Medical Systems . 2019;43(7):1–9. doi: 10.1007/s10916-019-1297-2. [DOI] [PubMed] [Google Scholar]

- 26.Qiu M., Fu Q., Jiang C., Liu D. Machine learning based network analysis determined clinically relevant miRNAs in breast cancer. Frontiers in Genetics . 2020;11:p. 1467. doi: 10.3389/fgene.2020.615864. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Xu X. Y., Lu J. L., Xu Q., Hua H. X., Xu L., Chen L. Risk factors and the utility of three different kinds of prediction models for postoperative fatigue after gastrointestinal tumor surgery. Supportive Care in Cancer . 2021;29(1):203–211. doi: 10.1007/s00520-020-05483-0. [DOI] [PubMed] [Google Scholar]

- 28.Xu Y., Zhou Y., Sekula P., Ding L. Machine learning in construction: from shallow to deep learning. Developments in the Built Environment . 2021;6, article 100045 doi: 10.1016/j.dibe.2021.100045. [DOI] [Google Scholar]

- 29.Ronoud S., Asadi S. An evolutionary deep belief network extreme learning-based for breast cancer diagnosis. Soft Computing . 2019;23(24):13139–13159. doi: 10.1007/s00500-019-03856-0. [DOI] [Google Scholar]

- 30.Xiao Y., Wu J., Lin Z., Zhao X. A deep learning-based multi-model ensemble method for cancer prediction. Computer Methods and Programs in Biomedicine . 2018;153:1–9. doi: 10.1016/j.cmpb.2017.09.005. [DOI] [PubMed] [Google Scholar]

- 31.de Groof A. J., Struyvenberg M. R., van der Putten J., et al. Deep-learning system detects neoplasia in patients with Barrett’s esophagus with higher accuracy than endoscopists in a multistep training and validation study with benchmarking. Gastroenterology . 2020;158(4):915–929.e4. doi: 10.1053/j.gastro.2019.11.030. [DOI] [PubMed] [Google Scholar]

- 32.Takeuchi M., Seto T., Hashimoto M., et al. Performance of a deep learning-based identification system for esophageal cancer from CT images. Esophagus . 2021;18(3):612–620. doi: 10.1007/s10388-021-00826-0. [DOI] [PubMed] [Google Scholar]

- 33.Ramachandram D., Taylor G. W. Deep multimodal learning: a survey on recent advances and trends. IEEE Signal Processing Magazine . 2017;34(6):96–108. doi: 10.1109/MSP.2017.2738401. [DOI] [Google Scholar]

- 34.Yasaka K., Akai H., Kunimatsu A., Kiryu S., Abe O. Deep learning with convolutional neural network in radiology. Japanese Journal of Radiology . 2018;36(4):257–272. doi: 10.1007/s11604-018-0726-3. [DOI] [PubMed] [Google Scholar]

- 35.Lamba M., Munjal G., Gigras Y. A hybrid gene selection model for molecular breast cancer classification using a deep neural network. International Journal of Applied Pattern Recognition . 2021;6(3):195–216. doi: 10.1504/IJAPR.2021.117203. [DOI] [Google Scholar]

- 36.Shanid M., Anitha A. Adaptive optimisation driven deep belief networks for lung cancer detection and severity level classification. International Journal of Bio-Inspired Computation . 2021;18(2):114–121. doi: 10.1504/IJBIC.2021.118101. [DOI] [Google Scholar]

- 37.Gai J., Zhong K., Du X., Yan K., Shen J. Detection of gear fault severity based on parameter-optimized deep belief network using sparrow search algorithm. Measurement . 2021;185, article 110079 doi: 10.1016/j.measurement.2021.110079. [DOI] [Google Scholar]

- 38.Qasim M., Algamal Z., Ali H. M. A binary qsar model for classifying neuraminidase inhibitors of influenza a viruses (h1n1) using the combined minimum redundancy maximum relevancy criterion with the sparse support vector machine. SAR and QSAR in Environmental Research . 2018;29(7):517–527. doi: 10.1080/1062936X.2018.1491414. [DOI] [PubMed] [Google Scholar]

- 39.Zhang H., Chen C., Gao R., et al. Rapid identification of cervical adenocarcinoma and cervical squamous cell carcinoma tissue based on Raman spectroscopy combined with multiple machine learning algorithms. Photodiagnosis and Photodynamic Therapy . 2021;33, article 102104 doi: 10.1016/j.pdpdt.2020.102104. [DOI] [PubMed] [Google Scholar]

- 40.Li H., Zhang S., Guo J., Zhang L. Hepatic metastasis in newly diagnosed esophageal cancer: a population-based study. Frontiers in Oncology . 2021;11:p. 1151. doi: 10.3389/fonc.2021.644860. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Hashim F. A., Hussain K., Houssein E. H., Mabrouk M. S., Al-Atabany W. Archimedes optimization algorithm: a new metaheuristic algorithm for solving optimization problems. Applied Intelligence . 2021;51(3):1531–1551. doi: 10.1007/s10489-020-01893-z. [DOI] [Google Scholar]

- 42.Desuky A. S., Hussain S., Kausar S., Islam M. A., El Bakrawy L. M. EAOA: an enhanced Archimedes optimization algorithm for feature selection in classification. IEEE Access . 2021;9:120795–120814. doi: 10.1109/ACCESS.2021.3108533. [DOI] [Google Scholar]

- 43.Zhu H., Qi W., Ge J., Liu Y. Analyzing devaney chaos of a sine-cosine compound function system. International Journal of Bifurcation and Chaos . 2018;28(14):p. 1850176. doi: 10.1142/S0218127418501766. [DOI] [Google Scholar]

- 44.Lin P., Wang A., Zhang L., et al. An improved cuckoo search with reverse learning and invasive weed operators for suppressing sidelobe level of antenna arrays. International Journal of Numerical Modelling: Electronic Networks, Devices and Fields . 2021;34(2, article e2829) doi: 10.1002/jnm.2829. [DOI] [Google Scholar]

- 45.Huo M., Deng Y., Duan H. Cauchy-Gaussian pigeon-inspired optimisation for electromagnetic inverse problem. International Journal of Bio-Inspired Computation . 2021;17(3):182–188. doi: 10.1504/IJBIC.2021.114875. [DOI] [Google Scholar]

- 46.Sun J., Zang M., Liu P., Wang Y. A secure communication scheme of three-variable chaotic coupling synchronization based on DNA chemical reaction networks. IEEE Transactions on Signal Processing . 2022;70:2362–2373. doi: 10.1109/TSP.2022.3173154. [DOI] [Google Scholar]

- 47.Xue J., Shen B. A novel swarm intelligence optimization approach: sparrow search algorithm. Systems Science & Control Engineering . 2020;8(1):22–34. doi: 10.1080/21642583.2019.1708830. [DOI] [Google Scholar]

- 48.Alsattar H., Zaidan A., Zaidan B. Novel metaheuristic bald eagle search optimisation algorithm. Artificial Intelligence Review . 2020;53(3):2237–2264. doi: 10.1007/s10462-019-09732-5. [DOI] [Google Scholar]

- 49.Hinton G. E., Osindero S., Teh Y. W. A fast learning algorithm for deep belief nets. Neural Computation . 2006;18(7):1527–1554. doi: 10.1162/neco.2006.18.7.1527. [DOI] [PubMed] [Google Scholar]

- 50.Shao H., Jiang H., Zhang X., Niu M. Rolling bearing fault diagnosis using an optimization deep belief network. Measurement Science and Technology . 2015;26(11, article 115002) doi: 10.1088/0957-0233/26/11/115002. [DOI] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

The datasets presented in this article are not readily available because the data used in the study are private and confidential data. Requests to access the datasets should be directed to Junwei Sun, junweisun@yeah.net.