Abstract

Introduction

The Royal Australian and New Zealand College of Radiologists (RANZCR) led the medical community in Australia and New Zealand in considering the impact of machine learning and artificial intelligence (AI) in health care. RANZCR identified that medical leadership was largely absent from these discussions, with a notable absence of activity from governments in the Australasian region up to 2019. The clinical radiology and radiation oncology sectors were considered ripe for the adoption of AI, and this raised a range of concerns about how to ensure the ethical application of AI and to guide its safe and appropriate use in our two specialties.

Methods

RANZCR’s Artificial Intelligence Committee undertook a landscape review in 2019 and determined that AI within clinical radiology and radiation oncology had the potential to grow rapidly and significantly impact the professions. To address this, RANZCR drafted ethical principles on the use of AI and standards to guide deployment, and engaged in extensive stakeholder consultation to ensure a range of perspectives were received and considered.

Results

RANZCR published two key bodies of work: the Ethical Principles for Artificial Intelligence in Medicine, and the Standards of Practice for Artificial Intelligence in Clinical Radiology.

Conclusion

RANZCR’s publications in this area have established a solid foundation to prepare for the application of AI; however, more work is needed. We will continue to assess the evolution of AI and ML within our professions, strive to guide the upskilling of clinical radiologists and radiation oncologists, advocate for appropriate regulation and produce guidance to ensure that patient care is delivered safely.

Keywords: artificial intelligence, clinical radiology, ethics, machine learning, radiation oncology

Introduction

The Royal Australian and New Zealand College of Radiologists (RANZCR) initiated some of the first discussions 1 within the medical community in Australia and New Zealand on the impact of machine learning (ML) and artificial intelligence (AI) in health care.

After analysing global developments in AI for over twelve months, particularly those relating to medical imaging, RANZCR identified throughout 2018 that medical leadership was largely absent from these discussions. RANZCR also observed a notable absence of activity from governments in the Australasian region, which contrasted with a pro‐active approach in similar countries in North America and Europe. 2 , 3 , 4 Given that AI technologies were reported to be making advances within medical imaging, the clinical radiology and radiation oncology sectors were considered ripe for the adoption of AI. This raised a range of concerns about how to ensure ethical application of AI and how to guide its safe and appropriate use in our two specialties.

RANZCR determined to take a pro‐active approach to shaping the adoption of AI in clinical radiology and radiation oncology in Australia and New Zealand, so that it would be deployed safely into care pathways. RANZCR was aware of the potential for AI to greatly impact patient care in clinical radiology and radiation oncology and wanted to see AI integrated in a manner that respected the ethics and standards required to deliver safe and quality care to the people of Australia and New Zealand and beyond. When commencing our work, we concentrated on areas within our remit and influence: to ensure AI would be used ethically in medicine in our jurisdictions, to support quality service provision using AI and to prepare our trainees and members for the transition to practice in an AI‐rich environment.

Overarching governance

As the lead representative organisation for clinical radiology and radiation oncology, RANZCR is bestowed with a range of responsibilities and objectives under its Articles of Association 5 and Faculty by‐laws 6 , 7 including the following:

Setting registration standards for clinical radiologists and radiation oncologists,

Running a training and assessment programme,

Providing a framework and opportunities for continuing professional development,

Supporting research in clinical radiology and radiation oncology,

Ensuring provision of quality care in both specialties,

Advocating for better access to services. 8

These responsibilities are detailed further in RANZCR’s Strategic Plan (2018–2021) 9 and the pillars contained within. Familiarity with this broad range of responsibilities and strategic priorities allowed RANZCR to look holistically at the issue of AI in medicine.

RANZCR's Faculties of Clinical Radiology (FCR) and Radiation Oncology (FRO) are governed and overseen by Faculty Councils, 10 which have powers to create committees and working groups to further their objectives.

In March 2018, RANZCR established an AI Working Group (AIWG), which later evolved into an advisory committee, known as the Artificial Intelligence Committee (AIC) under the FCR in partnership with FRO. The working group was tasked with considering the implications of AI and ML on the disciplines, particularly the appropriate education for members, stakeholders and the public. 11 The AIC sought to complement existing medical ethical codes and standards frameworks in Australia and New Zealand, while providing specific guidance for novel clinical issues pertaining to AI.

The AIC held a workshop in November 2018 to assess the risks and opportunities posed by AI and consider how they might be best managed through ethics, standards, policies and upskilling for clinical radiologists (and subsequently for radiation oncologists). A landscape review was undertaken, and it was concluded that AI is a rapidly evolving area which presents a broad range of opportunities and risks and is likely to result in significant changes to existing practice of clinical radiology and radiation oncology. At this point, the AIC reviewed existing definitions and determined to utilise some already in existence for the basis of its work, determining that AI be defined as follows: ‘Technologies with the ability to perform tasks that would otherwise require human intelligence, such as visual perception, speech recognition, and language translation’ (Her Majesty's Government, 2017), and ML be defined as follows: ‘One particular form of AI, which gives computers the ability to learn from and improve with experience, without being explicitly programmed. When provided with sufficient data, a machine learning algorithm can learn to make predictions or solve problems, such as identifying objects in pictures or winning at particular games, for example’ (Authority of the House of Lords, 2018).

In April 2019, RANZCR published a position statement to outline anticipated issues and chart the path forward for our sectors titled ‘Artificial Intelligence in Radiology and Radiation Oncology: The State of Play 2019’. 12

When RANZCR’s AIC started to consider ethics in detail, ethical questions regarding the application of AI to a variety of industries were already being posed. 3 , 13 , 14 , 15 , 16 , 17 At the time, the discussion on ethics of AI was largely driven by software vendors with no real consideration given to the field of medicine. Moreover, there were glaring gaps in standards and regulation; AI did not meet traditional definitions of software as a medical device and hence risked slipping through the usual device regulations. Considered carefully, AI presents opportunities but also has the potential to cause significant harm to patients if it is not implemented safely. As a consequence, RANZCR decided to initiate some of the first discussions of AI in medicine in Australasia. It is now apparent that AI should be core business for us all as individuals, clinicians, consumers, policymakers, regulators and industry partners.

Broader questions about the ethical use of AI across the economy were taken up in a contemporary white paper, 18 auspiced jointly by the Australian Human Rights Commission and the World Economic Forum, which considered what responsible innovation would look like in the Australian landscape. The Commonwealth Scientific and Industrial Research Organisation (CSIRO) was also commissioned by the Australian government to write a discussion paper, ‘Artificial Intelligence, Australia’s Ethics Framework’. 19 Both of these works contain exceptionally sound advice to governments and regulators, much of which has not yet been put into practice. Even so, the broad definition of ‘software as a medical device’ by the Therapeutic Goods Administration means that the nuances of introducing ML and AI to patient care present significant challenges to ensuring robust ethical frameworks.

Partnerships between clinicians, consumers and industry with governance oversight by people who really understand the requirement for an ethical and standard‐driven framework are essential in getting this right. Government and regulators have been tardy in appreciating the need for having strong frameworks in place for assessment and deployment.

RANZCR does not wish to slow down the development of clinically advantageous ML and AI and sees that this can be done in parallel with strong ethical and standards frameworks.

Adopting AI in clinical radiology and radiation oncology

AI is used widely as a catchall phrase; however, most AI applications so far are actually ML.

There is no true artificial intelligence as yet, anywhere, but machine learning, complex neural networks and associated data analyses are becoming more complex and more common. Algorithms are the building blocks on which all AI is built. Getting the algorithms correct, asking questions and quantifying outcomes that really matter, with ethics and standards overlaid, gives us the opportunity to provide significant benefits for our whole clinical community and our patients. We have a chance to build systems that are helpful and which, if they contain sufficient well‐curated information, can be an extremely valuable resource. On the other hand, getting this wrong for the population in question gives significant potential for harm.

We need to understand the source of the data, the manner in which it was acquired and whether permission was sought to do so. Was it accurate and well curated? Was the population comparable to that we are looking to apply the algorithms to? Were the questions being asked realistic and of value to us all? Could accurate answers help us do better at reaching an accurate diagnosis and prognosis? Are they accurate enough – do we know how accurate they really are in the real world? All of these questions and more need to be understood and answered. Clinicians need to be involved in ensuring the quality of data for inclusion in ML algorithms or in assessing data in externally provided algorithms. It should be part of the professional responsibility of doctors to take the lead in this area, in the structuring and collection of routine clinical information to provide accurate and quality‐assured data.

As a diagnostic example, if algorithms are developed to interrogate lung masses and we do not have insight into the development of these, we have no way of ascertaining their validity or worth. If the algorithms have been developed in populations with high rates of smoking and high rates of tuberculosis or opportunistic lung infections, and we then try to use this to interrogate chest CT scans in Australia and New Zealand, the limitations and concerns become apparent. When we then start applying this algorithm to First Nations peoples, or to other sub‐populations within our nations, the potential to do harm by misinterpretation becomes extremely concerning. We usually have no insight into the development of such algorithms and little visibility of their background, the data they were trained on, their validity or their relevance.

In the world of radiation oncology, the use of predictive AI has begun to evolve planning and delivery systems and is in the main a highly collaborative iterative process. Nonetheless, the ethical and standards framework must remain upfront and central. Without such a framework, we run a risk of AI falling into disrepute and this has the potential to set us back many years.

Repeatedly, our Fellows and their departments are asked (by researchers or commercial operators) to provide enormous amounts of digital data on prior medical images and reports, often without curation or accompanying contextual information or without consent from the patient. This may be without much thought or insight from the requestors as to what this really means. Radiology and radiation oncology are data rich, and their data are usually stored in digital format, which makes them highly desirable for those seeking to develop ML tools. The unlabelled data on their own, however, are meaningless, no matter how voluminous. The rest of the critical information, curated properly and with consent, provides the richness that is required to start to build algorithms that matter.

Methodology and development

RANZCR’s AIC considered how best to approach the wider subject and determined to commence its work with the development of ethical principles that would guide the development of standards, regulatory advice and upskilling for clinical radiologists and radiation oncologists. The AIC oversaw the development of two seminal pieces of work, the Ethical Principles for Artificial Intelligence in Medicine 20 and the Standards of Practice for Artificial Intelligence in Clinical Radiology. 21 Both were among the first publications of their kind for healthcare bodies globally.

The AIC followed a standard methodology of policy formation for the development of the ethical principles and standards, as outlined in Figure 1.

Figure 1.

Policy development process

An initial list of eight ethical principles was drafted following the AIC’s workshop, which had encapsulated the specific themes to cover and demonstrated clearly the links to established frameworks of medical ethics, namely: respect for the patient’s autonomy, beneficence (act in the patient’s best interest), non‐maleficence (do no harm) and justice (equity). It was clear that all ought to be applied to AI, albeit tailored to the emerging circumstances.

The draft ethical principles were refined by the AIC and published for stakeholder and member consultation in February 2019. The consultation document was downloaded almost 2000 times, and 15 responses were received (Table 1).

Table 1.

Ethical principles for artificial intelligence in medicine respondents

| Respondent type | Number |
|---|---|
| Regulators | 2 |
| Australian Medical Colleges | 3 |
| Australian Associations/Societies | 4 |
| Australian Universities | 2 |
| Other | 2 |
| Individuals | 2 |

A review of the consultation feedback helped RANZCR to clarify the intended audiences, refine each principle to be both normative and action directing and revise the order in which the principles were presented for a more coherent flow.

Meanwhile, RANZCR continued to review subsequent publications globally during the consultation period which saw some highly beneficial and complementary documents, namely the Joint North American and European Multisociety document 22 and the European Union’s AI Guidelines. 23 Key concepts from both were considered alongside the consultation feedback in the refinement of RANZCR’s inaugural document into nine ethical principles. This subsequent review and contemporary discussion with AI experts inspired an additional principle covering ‘teamwork’ to underline the importance of cross‐disciplinary collaboration and understanding the skills and contribution that each member of the team would bring.

RANZCR’s Standards of Practice for Artificial Intelligence in Clinical Radiology were subsequently developed and followed a similar process. This began following the publication of the draft Ethical Principles. An initial draft of standards was developed, aligned to the format of the existing RANZCR Standards of Practice for Clinical Radiology, which outlines the expected standard to meet, with indicators that demonstrate it is being met. The draft was presented to the AIC for discussion, subsequently refined and prepared for stakeholder and member consultation, which began in September 2019.

The consultation document was downloaded 700 times, with responses received as detailed in Table 2:

Table 2.

Standards of practice for artificial intelligence in clinical radiology respondents

| Respondent type | Number |
|---|---|
| Australian Associations/Societies | 4 |
| Australian Colleges | 2 |
| Australian Government | 3 |
| Medical Indemnity providers | 1 |
| International colleges | 1 |
| Individuals | 4 |
| Industry | 2 |

The consultation feedback helped the AIC to clarify further the intended audience and the precise remit of the document. The ability of large and small radiology service providers to adopt each standard was considered further. Other changes included refinement of the definitions, terminology used, indicators and evidence to demonstrate compliance.

Following this period of refinement by the AIC, the final version of RANZCR’s Standards of Practice for AI in Clinical Radiology was published in September 2020.

Both frameworks are detailed below in brief with extracts from the documents published by RANZCR and ought to be considered companion documents.

Box 1. Ethical Principles for Artificial Intelligence in Medicine (Royal Australian and New Zealand College of Radiologists, 2019).

Ethical Principles for Artificial Intelligence in Medicine

PRINCIPLE 1: SAFETY

Although ML and AI have enormous potential, a range of new risks will emerge from ML and AI or through their implementation.

The first and foremost consideration in the development, deployment or utilisation of ML or AI must be patient safety and quality of care, with the evidence base to support this.

PRINCIPLE 2: PRIVACY AND PROTECTION OF DATA

Healthcare data are among the most sensitive data that can be held about an individual. Patient data must not be transferred from the clinical environment at which care is provided without the patient’s consent, approval from an ethics board or where otherwise required or permitted by law. Where data are transferred or otherwise used for AI research, it must be de‐identified such that the patient’s identity cannot be reconstructed.

A patient’s data must be stored securely and in line with relevant laws and best practice.

PRINCIPLE 3: AVOIDANCE OF BIAS

ML and AI are limited by their algorithmic design and the data they have access to, making them prone to bias. As a general rule, ML and AI trained on greater volumes and varieties of data should be less biased. Moreover, bias in algorithmic design should be minimised by involving a range of perspectives and skill sets in the design process and by considering how to avoid bias.

The data on which ML and AI are based should be representative of the target patient population on which the system or tool is being used. The characteristics of the training data set and the environment in which it was tested must be clearly stated when marketing an AI tool to provide transparency and facilitate implementation in appropriate clinical settings. Particular care must be taken when applying an AI tool to a population, demographic or ethnic group for which it has not been proven effective.

To minimise bias, the same standard of evidence used for other clinical interventions must be applied when regulating ML and AI, and their limitations must be transparently stated.

PRINCIPLE 4: TRANSPARENCY AND EXPLAINABILITY

ML and AI can produce results that are difficult to interpret or replicate. When used in medicine, the doctor must be capable of interpreting the basis on which a result was reached, weighing up the potential for bias and exercising clinical judgement regarding findings.

When designing or implementing ML or AI, consideration must be given to how a result that can impact patient care can be understood and explained by a discerning medical practitioner.

PRINCIPLE 5: APPLICATION OF HUMAN VALUES

The development of ML and AI for medicine should ultimately benefit the patient and society. ML and AI are programmed to operate in line with a specific worldview (Note: Specific world view implies the values, learnings and experience of those who contributed to its development.); however, the use of ML and AI should function without unfair discrimination and not exacerbate existing disparities in health outcomes. Any shortcomings or risks of ML or AI should be considered and weighed against the benefits of enhanced decision‐making for specific patient groups.

The doctor must apply humanitarian values (from their training and the ethical framework in which they operate) to any circumstances in which ML or AI is used in medicine, but they also must consider the personal values and preferences of their patient in this situation.

PRINCIPLE 6: DECISION‐MAKING ON DIAGNOSIS AND TREATMENT

Fundamental to quality health care is the relationship between the doctor and the patient. The doctor is the trusted advisor on complex medical conditions, test results, procedures and treatments who then communicates findings to the patient clearly and sensitively, answers questions and provides advice on the next steps.

While ML and AI can enhance decision‐making capability, final decisions about care are made after a discussion between the doctor and patient, taking into account the patient’s presentation, history, options and preferences.

PRINCIPLE 7: TEAMWORK

ML and AI will necessitate new skill sets and teams forming in research and medicine. It is imperative that all team members get to know each other’s strengths, capabilities and integral role in the team.

To deliver the best care for patients, each team member must understand the role and contribution of their colleagues and leverage them through collaboration.

PRINCIPLE 8: RESPONSIBILITY FOR DECISIONS MADE

Responsibility for decisions made about patient care rests principally with the medical practitioner in conjunction with the patient. Medical practitioners need to be aware of the limitations of ML and AI and must exercise solid clinical judgement at all times. However, given the multiple potential applications of ML and AI in the patient journey, there may be instances where responsibility is shared between:

The medical practitioner caring for the patient;

The hospital or practice management who took the decision to use the systems or tools; and

The manufacturer that developed the ML or AI.

The potential for shared responsibility when using ML or AI must be identified, recognised by the relevant party and recorded upfront when researching or implementing ML or AI.

PRINCIPLE 9: GOVERNANCE

ML and AI are fast‐moving areas with potential to add great value, but also to do harm. The implementation of ML and AI requires consideration of a broad range of factors, including how the ML or AI will be adopted across a hospital or practice and to which patient groups, and how it might align with patients’ goals of care and values.

A hospital or practice using or developing ML or AI for patient care applications must have accountable governance to oversee implementation and monitoring of performance and use, to ensure practice is compliant with ethical principles, standards and legal requirements.

Artificial intelligence standards for clinical radiology

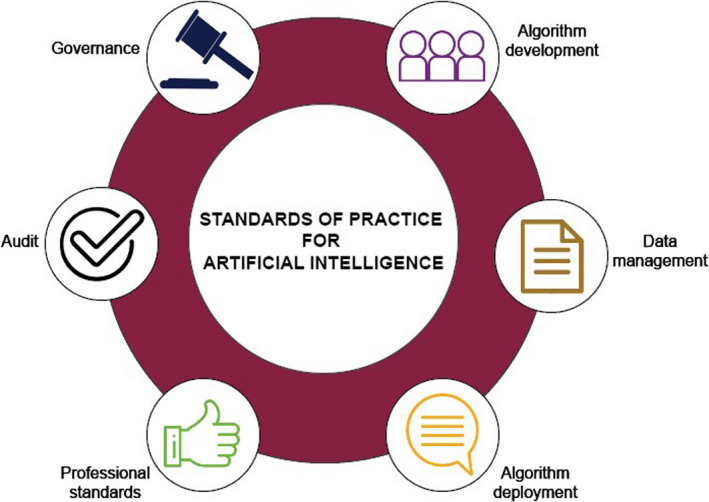

There are six standards domains, and all have detailed applicable indicators. An excerpt is provided here for brevity (Figure 2).

Figure 2.

Standards of practice for artificial intelligence in clinical radiology

Box 2. Standards of Practice for Artificial Intelligence in Clinical Radiology (Royal Australian and New Zealand College of Radiologists, 2020).

STANDARD 1: ALGORITHM DEVELOPMENT

ML systems and AI tools used in clinical radiology practices must be developed in line with RANZCR’s Ethical Principles on the Use of Artificial Intelligence in Medicine. The developer has a responsibility to develop ML and AI ethically, and in particular to minimise bias. A developer may be employed within the practice group or be external (e.g. employed by a medical device manufacturer). If the developer is employed by the practice group, they must also meet the standards outlined below. Practices procuring AI tools developed by external vendors should satisfy themselves that standards, expert advice, design appropriate for clinical use, and transparency and explainability have been met by the developer.

There must be a clear distinction between mature tools that are deemed appropriate (and likely registered) for clinical use, and those that are under development. The latter should not be used to inform clinical management.

A team‐based approach is imperative to the safe development of ML systems and AI tools for clinical radiology. Each team member will have specific professional expertise, and input from a range of professions is required to ensure optimal patient care outcomes.

STANDARD 2: INFORMATION MANAGEMENT

Healthcare data are among the most sensitive types of information that can be held about an individual. ML and AI will use data in a new range of ways. These techniques may be most powerful when applied to raw imaging data, before it has been post‐processed into pixel data. Specific care must be taken to comply with all relevant laws and best practice in information management when accessing, storing and transferring healthcare data.

Due to the new opportunities and risks that could emerge with the use of ML and AI, developers and practices will need to consider upfront the robust information management policies that will be required, including those pertaining to the retention or otherwise of raw data. Patients have a right to sensitive health data being used judiciously in ML or AI. These Standards state RANZCR’s expectations relating to the information management practices to be followed when AI techniques are being developed or used in AI in clinical radiology. Information security, consent and privacy, and establishing secure data transfer are essential.

STANDARD 3: ALGORITHM DEPLOYMENT

Algorithms have been in use in certain formats for some time; however, the introduction of more sophisticated neural networks and deep learning in new ML systems and AI tools will have significant impacts on patient care. These are difficult to fully anticipate in the early stages of deployment of ML and AI; therefore, these Standards take a conservative approach to deployment in patient and clinical care. Appropriate governance measures must be in place in a practice prior to ML systems or AI tools being deployed.

RANZCR recommends that practices ensure clinical teams are appropriately trained in the use of any new technologies prior to their use in patient care. RANZCR also recommends strong clinical leadership with a designated clinician (referred to here as the Chief Radiologist Information Officer (CRIO)) providing oversight for ML, AI and related health informatics considerations. Algorithm deployment and implementation into practice need to account for suitability and appropriateness, compliance with record keeping, integration into the practice and workflow, risk management and audit.

STANDARD 4: PROFESSIONAL STANDARDS

The Practice and all clinical staff employed by it are responsible for both understanding the principles behind ML systems and AI tools, and for relaying relevant information to patients.

The clinical team should play a central role in the deployment and use of ML and AI at the Practice. There is a clear focus on patient safety and training of staff in ML and AI, and the understanding that practitioners must apply clinical decision judgement incorporating ML and AI with other clinical information.

The CRIO will have defined responsibility and authority for implementing and maintaining ML and AI tools within the Practice.

STANDARD 5: AUDIT

Audit is a critical part of deployment of ML and AI tools. It is required to ensure optimal function of programmes and that they are operating as intended and all regulatory reporting requirements are fulfilled.

STANDARD 6: GOVERNANCE

Appropriate governance measures to oversee the deployment and use of ML and AI must be in place in a Practice prior to ML systems or AI tools being deployed.

Special consideration should be given to the interaction of the ML or AI with clinical decision‐making, the responsibilities of the different parties, how conflicts of interest are managed and ensuring the completion of due diligence. There is a shared responsibility for patient safety and the whole of the professional team must be involved.

Discussion

Both clinical radiology and radiation oncology have fundamental building blocks that make them ripe for the adoption of AI: use of sophisticated IT, large and reasonably well‐structured data sets and a relatively well‐funded and competitive landscape. Both specialties therefore have numerous incentives to adopt AI and ML technologies, either to improve patient care or cost‐effectiveness, and were anticipated to be early adopters in health care. Notwithstanding this, several voices were urging caution that AI technology was being over‐hyped, particularly in respect of clinical diagnoses or other medical applications.

As noted above, RANZCR was uniquely well positioned to develop ethical principles, standards of practice and professional standards, and to advocate for regulatory change, all with a view to ensuring safe and high‐quality service provision in clinical radiology and radiation oncology.

While valuable in guiding medicine in the right direction on AI, a set of ethical principles alone cannot guarantee safe and quality care in practice. For this reason, RANZCR sought to embed those principles through its clinical radiology practice standards and associated revisions to the training curricula for clinical radiologists and radiation oncologists. RANZCR’s Standards of Practice for Artificial Intelligence in Clinical Radiology also consider other members of the radiology team (such as radiographers, medical physicists and nurses) and require their upskilling prior to AI being adopted in a practice or hospital (detailed in Standard 4). The professional bodies overseeing those professions will have a key role to play in that arena.

RANZCR plans to keep refining both the Ethical Principles and the AI Standards of Practice to ensure their currency as AI use evolves across medicine. Suggestions or comments should be sent to the Faculty of Clinical Radiology at: fcr@ranzcr.edu.au.

The AIC will also consider whether additional accompanying guidance is required to assist implementation at hospitals and practices, for example on how to implement a robust governance framework (relating to Standard 6). RANZCR also plans to develop Standards of Practice for AI in Radiation Oncology in the near future and will utilise some of the principles inherent in the AI Standards for Clinical Radiology in drafting them. Although specific to radiology, the risk management framework therein would likely benefit any area of medicine looking to develop similar standards.

RANZCR’s work in this area will enable our Fellows and trainees to become competent to use AI tools in their practice, both safely and optimally. The college is developing training, CPD modules and content at our annual scientific meetings to guide and develop members to safely interact with these technologies. Furthermore, we envisage a cohort of our members will develop deep and broad expertise in AI and become Chief Radiologist Information Officers (and similar roles in radiation oncology). The college will develop resources and provide access to materials to support their journey.

The consultation feedback revealed a shortcoming in the scope of RANZCR’s Standards of Practice: they were not applicable to the supply chain. The AIC therefore modified Standard 1 so that it focused on procurement of AI and ML by practices and hospitals. While this contains the risks to clinical radiology patients from inadequate regulation of AI, it does not fully address them. RANZCR is therefore developing a position statement on regulation of AI as a medical device and will work collaboratively with industry and advocate strongly to governments for best practice and patient safety to be reflected in regulation of AI and ML in medicine.

In conclusion, RANZCR’s Ethical Principles for Use of AI in Medicine and Standards of Practice for AI in Clinical Radiology were developed with input from a range of experts on the AIC and subject to extensive consultation across health care and industry. These two seminal documents will guide the safe deployment of AI and ML and quality care in medicine as these tools and their applications evolve.

LM Kenny MBBS, FRANZCR, FACR (hon), FBIR (hon), FRCR (hon), FCIRSE; M Nevin BSc, MSc; K Fitzpatrick.

Conflict of interest: Lizbeth Kenny: Employee, Royal Brisbane and Women's Hospital, Employee, School of Medicine, University of Queensland. Mark Nevin: Employee, Royal Australian and New Zealand College of Radiologists. Kirsten Fitzpatrick: Employee, Royal Australian and New Zealand College of Radiologists.

References

- 1. Coiera E. The price of artificial intelligence. Yearb Med Inform 2019; 28: 14–5.

- 2. Executive Order 13960 Promoting the Use of Trustworthy Artificial Intelligence in the Federal Government. [Cited 10 May 2021.] Available from URL: https://www.federalregister.gov/documents/2020/12/08/2020‐27065/promoting‐the‐use‐of‐trustworthy‐artificial‐intelligence‐in‐the‐federal‐government

- 3. AI in the UK: Ready, Willing and Able? [Cited 3 March 2021.] Available from URL: https://publications.parliament.uk/pa/ld201719/ldselect/ldai/100/100.pdf

- 4. The European AI Landscape Workshop Report. [Cited 3 March 2021.] Available from URL: https://ec.europa.eu/jrc/communities/sites/jrccties/files/reportontheeuropeanailandscapeworkshop.pdf

- 5. RANZCR Articles of Association. [Cited 3 March 2021.] Available from URL: https://www.ranzcr.com/documents/1837‐ranzcr‐articles‐of‐association/file

- 6. RANZCR Faculty of Radiation Oncology By‐laws. [Cited 3 March 2021.] Available from URL: https://www.ranzcr.com/documents/1604‐faculty‐of‐radiation‐oncology‐by‐laws/file

- 7. RANZCR Faculty of Clinical Radiology By‐laws. [Cited 3 March 2021.] Available from URL: https://www.ranzcr.com/documents/1605‐faculty‐of‐clinical‐radiology‐by‐laws/file

- 8. 2019 RANZCR Reaccreditation Submission to AMC and MCNZ. [Cited 3 March 2021.] Available from URL: https://www.ranzcr.com/documents/4896‐2019‐ranzcr‐reaccreditation‐submission‐to‐amc‐and‐mcnz‐pdf/file

- 9. RANZCR Strategy to 2021. [Cited 3 March 2021.] Available from URL: https://www.ranzcr.com/documents/4758‐ranzcr‐strategy‐to‐2021/file

- 10. RANZCR Structure and Governance. [Cited 3 March 2021.] Available from URL: https://www.ranzcr.com/college/about/structure‐governance

- 11. RANZCR Artificial Intelligence Advisory Committee Terms of Reference. [Cited 3 March 2021.] Available from URL: https://www.ranzcr.com/documents/5040‐ai‐reference‐group‐terms‐of‐reference‐1/file

- 12. RANZCR Artificial Intelligence: The State of Play 2019. [Cited 3 March 2021.] Available from URL: https://www.ranzcr.com/documents/4868‐artificial‐intelligence‐the‐state‐of‐play‐2019/file

- 13. Artificial Intelligence and Life in 2030. [Cited 3 March 2021.] Available from URL: https://ai100.stanford.edu/sites/g/files/sbiybj9861/f/ai_100_report_0831fnl.pdf

- 14. Ethical Standards are Important. Here’s Why. [Cited 3 March 2021.] Available from URL: https://www.linkedin.com/pulse/ethical‐standards‐artificial‐intelligence‐important‐heres‐guillen/

- 15. For a Meaningful Artificial Intelligence. [Cited 3 March 2021.] Available from URL: https://www.aiforhumanity.fr/pdfs/MissionVillani_Report_ENG‐VF.pdf

- 16. RANZCR Response to the Australian Human Rights Commission’s Artificial Intelligence: Governance and Leadership Consultation. [Cited 3 March 2021.] Available from URL: https://www.ranzcr.com/documents/4846‐response‐to‐australian‐human‐rights‐commission‐ai‐consultation/file

- 17. ISO Information Technology – Big Data Reference Architecture. [Cited 3 March 2021.] Available from URL: https://www.iso.org/standard/68305.html

- 18. Artificial Intelligence: Governance and Leadership. [Cited 3 March 2021.] Available from URL: https://tech.humanrights.gov.au/sites/default/files/2019‐02/AHRC_WEF_AI_WhitePaper2019.pdf

- 19. Australia’s Ethics Framework: A Discussion Paper. [Cited 3 March 2021.] Available from URL: https://consult.industry.gov.au/strategic‐policy/artificial‐intelligence‐ethics‐framework/supporting_documents/ArtificialIntelligenceethicsframeworkdiscussionpaper.pdf?utm_source=Data61_website&utm_medium=link_click&utm_campaign=AI_Ethics_Framework&utm_term=Data61_AI_Ethics_Framework&utm_content=AI_Ethics_Framework

- 20. Ethical Principles for Artificial Intelligence in Medicine. [Cited 30 June 2021.] Available from URL: https://www.ranzcr.com/documents/4952‐ethical‐principles‐for‐ai‐in‐medicine/file

- 21. Standards of Practice for Clinical Radiology. [Cited 3 March 2021.] Available from URL: https://www.ranzcr.com/documents/510‐ranzcr‐standards‐of‐practice‐for‐diagnostic‐and‐interventional‐radiology/file

- 22. Ethics of AI in Radiology. [Cited 3 March 2021.] Available from URL: https://www.acr.org/‐/media/ACR/Files/Informatics/Ethics‐of‐AI‐in‐Radiology‐European‐and‐North‐American‐Multisociety‐Statement‐‐6‐13‐2019.pdf

- 23. Ethics guidelines for trustworthy AI. [Cited 3 March 2021.] Available from URL: https://ec.europa.eu/digital‐single‐market/en/news/ethics‐guidelines‐trustworthy‐ai