Abstract

In human communication, social intentions and meaning are often revealed in the way we move. In this study, we investigate the flexibility of human communication in terms of kinematic modulation in a clinical population, namely, autistic individuals. The aim of this study was twofold: to assess (a) whether communicatively relevant kinematic features of gestures differ between autistic and neurotypical individuals, and (b) whether autistic individuals use communicative kinematic modulation to support gesture recognition. We tested autistic and neurotypical individuals on a silent gesture production task and a gesture comprehension task. We measured movement during the gesture production task using a Kinect motion tracking device in order to determine whether autistic individuals differed from neurotypical individuals in their gesture kinematics. For the gesture comprehension task, we assessed whether autistic individuals used communicatively relevant kinematic cues to support recognition. This was done by using stick‐light figures as stimuli and testing for a correlation between the kinematics of these videos and recognition performance. We found that (a) silent gestures produced by autistic and neurotypical individuals differ in communicatively relevant kinematic features, such as the number of meaningful holds between movements, and (b) while autistic individuals are overall unimpaired at recognizing gestures, they processed repetition and complexity, measured as the number of submovements perceived, differently than neurotypicals do. These findings highlight how subtle aspects of neurotypical behavior can be experienced differently by autistic individuals. They further demonstrate the relationship between movement kinematics and social interaction in high‐functioning autistic individuals.

Lay Summary

Hand gestures are an important part of how we communicate, and the way that we move when gesturing can influence how easy a gesture is to understand. We studied how autistic and typical individuals produce and recognize hand gestures, and how this relates to movement characteristics. We found that autistic individuals moved differently when gesturing compared to typical individuals. In addition, while autistic individuals were not worse at recognizing gestures, they differed from typical individuals in how they interpreted certain movement characteristics.

Keywords: autism, gesture, kinematics, motion tracking, movement

INTRODUCTION

In everyday communication, humans often use visual signals such as iconic hand gestures (i.e., gestures that represent objects, actions, or ideas). The specific kinematic characteristics of these gestures, such as their size, complexity, and speed of movement, are highly communicatively relevant: they influence how understandable the meaning of the gesture is, and they signal the underlying social intention of the gesturer (Trujillo et al., 2018, 2020). However, less is known about whether these flexible aspects of visible communication differ in neurodiverse individuals, for example those diagnosed with autism spectrum disorder (ASD), also referred to as autism spectrum conditions (ASC). Even though this possibility has been proposed for individuals with ASC (Cook, 2016), little research has compared autistic individuals with neurotypical individuals (i.e., those not diagnosed with ASC) in terms of how they produce and understand iconic gestures, particularly at the kinematic level.

Gesture and communication

Gestures form an integrated and important aspect of communication and social interaction (Holler & Levinson, 2019; Özyürek, 2014). However, it is not just whether one uses gestures, but also how one produces them, that matters. Previous research has demonstrated that the kinematics of iconic gestures (i.e., those that represent objects, actions or ideas; Kendon, 2004; McNeill, 1992) play an important role in communication. This can be seen in studies of gesture production showing that the kinematics of iconic gestures are modulated by the communicative context in which they are produced. For example, co‐speech iconic gestures produced for children are larger and more complex than those produced for adults (Campisi & Özyürek, 2013). Similarly, silent gestures (i.e., gestures produced in the absence of speech) that are produced when there is more incentive to be communicative are larger, contain more constituent submovements (e.g., have more repetitions of a relevant movement, or are more complex in their depiction), and have faster peak velocities compared to those produced in a less‐communicative context (Trujillo et al., 2018). These studies suggest that gestures are shaped to current communicative demands.

The communicative relevance of kinematics has also been demonstrated by gesture comprehension studies. Specifically, the kinematics of an observed gesture are important to how an addressee interprets the gesture at multiple levels. When trying to understand the meaning of a silent gesture, observers rely on the temporal kinematic structure of the gesture (i.e., contrasts in movement velocity and holds that make the constituent movements of a gesture clear), with more highly segmented gestures being easier to understand than those with fewer holds to segment the gesture (Trujillo et al., 2020). Beyond just understanding the meaning of a gesture, observers can also use the kinematics of a silent gesture to infer the underlying social intention. For example, greater gesture size and use of space can signal the intention to communicate (Trujillo et al., 2018; Trujillo et al., 2020). Gesture kinematics are therefore important for communication, with some kinematic features influencing the readability of a gesture, and others influencing how an observer interprets social intention.

The importance of kinematics for expressing meaning and intention, as well as for interpreting the gestures of others, could therefore have important implications for neurodiverse populations with different styles of movement. Autistic individuals in particular move differently from neurotypicals, and this motoric difference, which also leads to a different visual perceptual experience of movement, has been proposed to be (partially) responsible for the difficulties in social interaction that autistic individuals frequently experience (Cook, 2016).

Gesture production and autism

Atypical gesture production (i.e., problems with using gestures) in autism has been repeatedly reported in clinical literature (Asperger, 1944; Kanner, 1943; Lord et al., 2002), and even forms part of the diagnostic criteria for tools such as the Autism Diagnostic Observation Schedule (ADOS; Lord et al., 2002) and the Autism Diagnostic Interview (Lord, Rutter, & Le Couteur, 1994), as well as the official DSM‐5 diagnostic criteria (American Psychiatric Association, 2013). However, recent research challenges the claim of an absence of, or reduction in, the overall frequency of gestures per se. Instead, autistic individuals may use different types of gestures more frequently than neurotypicals, and may produce these gestures differently. For example, de Marchena and Eigsti (2014) have found that autistic individuals do produce gestures during conversation, but that these gestures may be functionally different: the communicative co‐speech gestures that autistic individuals use are more often so‐called interactive gestures that regulate turn‐taking, rather than gestures that explicitly represent semantic information (de Marchena et al., 2019). These gestures were also motorically different, being more frequently unilateral than bilateral compared to the same kinds of gestures produced by neurotypicals (de Marchena et al., 2019). While this is evidence for a difference in handedness and type of gesture produced, an open question is whether gesture kinematics, particularly for more complex, iconic gestures, also differ.

Although there have been no studies specifically investigating the kinematics of iconic gesture production in autistic adults, there is some evidence for differences in the way autistic individuals produce simple arm movements outside of a communicative context (Cook et al., 2013), as seen in the low‐level kinematics of acceleration and jerk profiles. This is similar to the finding of a generally increased variability in motor output when comparing autistic to neurotypical individuals (Gowen & Hamilton, 2013). An open question is thus whether such low‐level kinematic differences are also apparent in communicative gestures.

Gesture perception and autism

Similar to gesture production, autistic individuals also show differences in gesture comprehension. Aldaqre and colleagues found that autistic adults are sensitive to explicitly communicative gestures (e.g., pointing) during a spatial cueing task, but processing the communicative relevance required more effort (Aldaqre et al., 2016). This increase in required effort may be due to perceptual processing differences between autistic and neurotypical individuals. In terms of processing differences, some early studies showed impaired perception of biological motion in autistic children (Blake et al., 2003; Moore et al., 1997). Whether this holds for adults is unclear, as some studies have reported that, at least in the case of so‐called “high‐functioning” autistic adults,1 action recognition as well as the sensitivity to low‐level kinematics (Edey et al., 2019) seems to be intact (Cusack et al., 2015; Murphy et al., 2009; von der Lühe et al., 2016), while others have reported that action (or biological motion) recognition is impaired in autistic adults (Hsiung et al., 2019; Nackaerts et al., 2012). More generally, there seems to be a difference in how autistic adults process and utilize movement information. For example, autistic children and young adults may have an impaired ability to recognize subtle differences in action kinematics (Di Cesare et al., 2017; Rochat et al., 2013). Similarly, Amoruso and colleagues found that autistic adults are less likely to integrate top‐down contextual expectations with kinematics when observing actions (Amoruso et al., 2018). In line with this, studies have shown that while autistic adults can understand an observed action, they do not use that action to predict the actions of others in response to the original action (Chambon et al., 2017; von der Lühe et al., 2016). In other words, there seems to be an impairment of, or at least a less automatic, processing of contextually meaningful kinematics.

Altered kinematic processing could have several potential causes. Very generally, it could result from autistic individuals having a different style of processing perceptual information (Keehn et al., 2013; Lawson et al., 2017; Van de Cruys et al., 2014). One influential model suggests that autistic individuals actually perceive the world more accurately than neurotypicals, because they rely less on top‐down prior knowledge and more on sensory input (Pellicano & Burr, 2012). More recent studies suggest that the ability to use prior knowledge is intact in ASC, but that the learning and adjustment of this knowledge may be atypical (Sapey‐Triomphe et al., 2020; Van de Cruys et al., 2014). Furthermore, the way autistic individuals orient, engage, and disengage their attention in response to cues in the perceptual environment may differ from that of neurotypical individuals (Keehn et al., 2013). Such perceptual processing differences have been proposed to explain both adverse symptoms of autism, such as difficulties processing social information (Dawson et al., 2004; Pierce et al., 1997), and symptoms that are often considered a strength in autistic individuals, such as enhanced visual search abilities (O'Riordan, 2004). While it can be difficult to disentangle these competing theories of perceptual differences, we can conclude that differences in the way autistic and neurotypical individuals process communicatively relevant kinematic information need not reflect broad impairments. Instead, autistic individuals may be using different prior knowledge (e.g., due to a different sensorimotor experience throughout life), or they may simply orient their attention differently towards incoming kinematic information. Regardless of the underlying cause, differences in perceptual processing style could lead to an action being interpreted differently with regard to its relevance to the observer, for example due to an atypical interpretation of kinematic cues.

Current study

In sum, recent studies suggest that autistic adults are sensitive to human movement kinematics and unimpaired in the general recognition of actions, but may differ from neurotypicals in how they process or interpret the social relevance of an action. Given that people utilize subtle kinematic cues in order to signal their intentions, this difference in kinematic processing could have important consequences for interactions between neurotypical and autistic individuals.

The current study aims to investigate how high‐functioning autistic adults produce and perceive iconic gestures (in this case, gestures that visually depict instrumental actions), at the level of communicatively relevant kinematic features. We had three main research questions. First, do iconic gestures produced by autistic and neurotypical individuals differ in (communicatively relevant) kinematics? Second, do autistic individuals differ from neurotypicals in their recognition accuracy for iconic gestures? And finally, do autistic and neurotypical individuals use a similar set of observed kinematic cues to support their recognition of iconic gestures? To this end, we present data from two experiments involving the same set of high‐functioning autistic adults and matched neurotypical controls.

In the first experiment, participants took part in a silent, iconic gesture elicitation task while being recorded using a Kinect motion tracking device. To address our first research question, we quantified communicative kinematic features to determine whether the two groups differed in their gesture kinematics. We hypothesized that kinematics would differ in autistic individuals, specifically in temporal features such as holdtime and peak velocity, as well as in segmental features such as submovements. This would be in line with previous studies showing differences in the timing or smoothness of movements (Cook et al., 2013; Glazebrook et al., 2006; Gowen & Hamilton, 2013; Papadopoulos et al., 2012) when comparing autistic and neurotypical individuals, but would extend these findings to communicatively relevant kinematic features. To determine whether any kinematic differences relate to more basic traits of ASC, we also tested whether gesture kinematics were correlated with motor coordination or symptom severity scores. Additionally, we tested whether motor cognition scores correlated with gesture kinematics. This was done as an exploratory test of whether such differences in communicative kinematics could be predicted by one's self‐awareness related to movement, as would be predicted by Cook (2016).

In the second experiment, participants carried out an iconic gesture recognition task using visually impoverished stick‐light figures as stimuli. The stimuli showed iconic gestures produced with various kinematic profiles. To address our second research question, we calculated accuracy and response time and tested whether autistic individuals recognize iconic gestures with similar accuracy to neurotypicals. Our final research question concerned whether autistic and neurotypical individuals use a similar set of kinematic cues to support their recognition. To this end, we used the kinematic features of the observed gestures (quantified in Trujillo et al., 2020) and tested whether these kinematics were correlated with task performance, and whether the same features were related to performance in both groups. We hypothesized that autistic individuals would recognize gestures with similar accuracy to neurotypicals, but would either use different kinematic features to do so, or would not be supported by these kinematic cues. These hypotheses are in line with studies showing atypical interpretation of biological motion kinematics on the one hand (predicting the use of different kinematic features; e.g., Cook et al., 2013), and, on the other hand, studies showing that autistic individuals may not process subtle kinematic differences in how an action is performed (Di Cesare et al., 2017). As in the production experiment, we additionally tested whether motor cognition scores predicted recognition performance. This study can provide novel insights into how motoric and perceptual differences in autistic individuals can contribute to social difficulties, thus providing a better understanding of how autistic individuals interact with and perceive the social world.
Furthermore, determining whether autistic individuals utilize the same kinematic features to support gesture recognition is also informative about the generalizability of communicative kinematic cues used by neurotypical individuals.

METHODS

Participants

A total of 25 autistic individuals (15 female; 22 right‐handed) and 25 neurotypical individuals (14 female; 21 right‐handed) participated in the study. Autistic participants were recruited via the Psychiatry Department of the Radboud University Medical Centre. Patients were recruited via two routes. In the first route, patients were contacted by their psychiatrist at the Radboud UMC with general, global information about the study and asked whether they agreed to be approached by researchers. In the second route, a message was posted in a private, organization‐specific social network, where Radboud UMC psychiatrists have message board style contact with past patients. All participants were clinically diagnosed with Autism Spectrum Condition according to the criteria defined in the DSM‐5. Potential participants were excluded if they had a history of any other (neuro‐) psychiatric disorders, brain surgery or brain trauma, or used anti‐psychotic medication. The neurotypical control group was recruited via the Radboud University SONA system, which allows for pre‐signup screening of several participant characteristics. By starting recruitment of the ASC group first, we were able to pre‐screen our control group in an attempt to match age and gender between the two groups. This was done by changing the filter settings in the SONA recruitment system to match the ASC group sign‐ups. We additionally collected data on education and handedness for further group matching. The study was approved by a local ethics committee (CMO Arnhem‐Nijmegen) and all procedures were performed in accordance with the Declaration of Helsinki. The power analyses used to calculate sample size are described in Appendix I.

Demographics and neuropsychological measures

We collected self‐report information on age (years) and handedness, and asked participants to fill out questionnaires for the autism quotient (AQ), the Actions and Feelings Questionnaire (AFQ; Williams et al., 2016), and level of education (based on highest level of completed education as defined by Verhage (1964) and updated by Hendriks et al. (2014)). The AQ was collected in order to quantify autism symptom severity. The Dutch version of the AFQ (van der Meer et al., 2021), which quantifies the relation between motor cognition and empathy, was collected as a secondary measure to determine whether it is a valid predictor of participants' gesture production or gesture‐recognition performance. We also administered the Dutch short form of the Wechsler Abbreviated Intelligence Test (WAIS‐II; Wechsler, 2011) as well as the Purdue Pegboard Test (Tiffin & Asher, 1948), in order to obtain estimates of IQ and general motor coordination that could be used to ensure that the two groups were matched in these general domains. Demographic information is provided in Table 1. To check group matching, we used equivalence testing following the two one‐sided t‐tests (TOST) approach (Lakens et al., 2018). We set the smallest effect size of interest (SESOI) for age to five years, as age differences of less than five years likely still fall within the same general age category. We set the SESOI for IQ to eight points, following the suggestion of Lakens, Scheel, and Isager (2018) to use half an SD, with the SD of 16 taken from a large sample of Dutch adults performing the WAIS (van Ool et al., 2018). The SESOI was set to three for the Purdue pegboard, as this is the minimal detectable change in this test (Lee et al., 2013). We used Welch's t‐tests to determine whether the two groups differed in the clinical scores where we expected them to differ (i.e., AFQ and AQ).
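The equivalence logic above can be sketched as two one‐sided Welch t‐tests against raw‐unit bounds of ±SESOI (e.g., ±8 IQ points, half of an SD of 16). This is a minimal illustration that assumes SciPy is available; the function names are hypothetical and it is not the code used for the reported analyses. The same Welch statistic and Welch–Satterthwaite degrees of freedom underlie the AFQ and AQ group comparisons.

```python
import math

from scipy import stats


def welch_t(x, y):
    """Return mean(x) - mean(y), its Welch standard error, and the Welch-Satterthwaite df."""
    nx, ny = len(x), len(y)
    mx, my = sum(x) / nx, sum(y) / ny
    vx = sum((v - mx) ** 2 for v in x) / (nx - 1)  # sample variances
    vy = sum((v - my) ** 2 for v in y) / (ny - 1)
    se2x, se2y = vx / nx, vy / ny
    se = math.sqrt(se2x + se2y)
    df = (se2x + se2y) ** 2 / (se2x ** 2 / (nx - 1) + se2y ** 2 / (ny - 1))
    return mx - my, se, df


def tost_welch(x, y, sesoi):
    """Two one-sided Welch t-tests (TOST) with equivalence bounds [-sesoi, +sesoi] in raw units.

    Equivalence is concluded when the larger of the two one-sided p-values is below alpha.
    """
    diff, se, df = welch_t(x, y)
    t_lower = (diff + sesoi) / se        # H0: diff <= -sesoi
    t_upper = (diff - sesoi) / se        # H0: diff >= +sesoi
    p_lower = stats.t.sf(t_lower, df)    # P(T >= t_lower)
    p_upper = stats.t.cdf(t_upper, df)   # P(T <= t_upper)
    return diff, df, max(p_lower, p_upper)


# Hypothetical IQ estimates, for illustration only (not the study data).
asc_iq = [104, 118, 97, 125, 110, 112, 101, 121]
nt_iq = [109, 115, 108, 117, 111, 106, 119, 113]
diff, df, p = tost_welch(asc_iq, nt_iq, sesoi=8)
print(f"difference = {diff:.2f}, df = {df:.1f}, TOST p = {p:.3f}")
```

Using raw‐unit bounds mirrors how the SESOIs are stated in the text (years, IQ points, pegboard items); a standardized‐bound variant would divide by a pooled SD instead.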

TABLE 1.

Overview of demographic information

| Measure | ASC Mean (or N) | ASC SD (or %) | NT Mean (or N) | NT SD (or %) |
|---|---|---|---|---|
| Age (years) | 28.29 | 3.722 | 24.24 | 4.75 |
| Gender (female) | N = 13 | 56% | N = 14 | 56% |
| Handedness (right‐handed) | N = 20 | 87% | N = 21 | 84% |
| Purdue assembly (motor coordination) | 9.00 | 1.75 | 9.92 | 1.55 |
| IQ (estimate) | 111.12 | 23.31 | 112.99 | 10.39 |
| AFQ | 22.00 | 5.99 | 30.34 | 6.73 |
| AQ | 30.5 | 7.32 | 14.00 | 6.12 |

Abbreviations: AFQ, actions and feelings questionnaire; AQ, autism quotient; ASC, autism spectrum conditions; IQ, intelligence quotient; NT, neurotypical.

For the demographics that we expected to be matched (i.e., age, IQ, and motor coordination), autistic and neurotypical individuals differed significantly in age (t[45.39] = 3.493, p = 0.001), but did not differ significantly in IQ (t[33.17] = −0.682, p = 0.500) or in Purdue (motor coordination) scores (t[47.26] = 1.458, p = 0.151). For the clinical measures, AFQ scores were significantly higher in neurotypicals than in autistic individuals (t[41.48] = 4.961, p < 0.001), and AQ scores were higher in autistic individuals than in neurotypicals (t[41.704] = 7.650, p < 0.001).

Due to the difference in age between our two groups, we carried out additional tests to ensure that age did not influence any outcome measures on either task (tasks described below). We found no evidence for age influencing our outcome measures, and the results for these analyses can be found in Appendix II.

Tasks and data acquisition

Gesture production

Physical set‐up

In the Gesture Production task, participants were seated at a table with a 24″ computer monitor and two button‐boxes. The button boxes were placed at a distance halfway between the participant and the monitor, and at the approximate width where one would rest their hands, ensuring a comfortable resting position. The button‐boxes were used to ensure accurate time‐stamps for the gesture recordings. A Microsoft Kinect V2 was placed approximately 1 m away from the participant, at the corner of the desk. Kinect data was collected using an in‐house developed Presentation (Neurobehavioral Systems, Inc., Berkeley, CA, www.neurobs.com) script.

Task

Participants were first presented with a set of instructions. After this, they completed two practice rounds of the task and had the opportunity to ask clarification questions. During the task, participants were presented with written instructions describing instrumental actions, together with images of the objects involved (e.g., the participant might read “cut the paper with the scissors” and see an image of a sheet of paper and an image of a pair of scissors). Text and images were presented together, with the text above the images. Participants were instructed to act out the action as if they had the objects directly in front of them. Once the images and text were displayed, participants could start acting out the action (i.e., producing an iconic gesture). After completing a gesture, participants were instructed to return their hands to the button boxes. Once both hands activated the button‐boxes, the screen went blank for 2 s before the next stimulus was given. In total, 31 stimuli were used, plus two unique stimuli for the practice rounds. Stimuli were presented using an in‐house developed PsychoPy (Peirce et al., 2019) script. These are the same action prompts as those used in Trujillo et al. (2018), and they are provided in Table S1.

Gesture comprehension

Physical set‐up

As this task is part of a larger project involving neuroimaging data (under review), participants performed the task in a magnetic resonance imaging (MRI) scanner. Participants lay supine in the scanner with an adjustable mirror attached to the head coil, through which they could see a projection screen outside the scanner. Participants were given an MRI‐compatible response box, which they operated by pressing a button on the right with the index finger of their right hand and a button on the left with the index finger of their left hand. Button locations corresponded to the two response options given on the screen. The resolution of the projector was 1024 × 768 pixels, with a projection size of 454 × 340 mm and a 755‐mm distance between the participant and the mirror. Video size on the projection was adjusted such that the stick figures in the videos were seen at a size of 60 × 60 pixels. This ensured that the entire figure fell on the fovea, reducing eye movements during image acquisition. Stimuli were presented using an in‐house developed PsychoPy (Peirce et al., 2019) script.
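As a rough check on the foveal‐presentation claim, the visual angle of the 60‐pixel figure can be computed from the projector geometry above. This sketch assumes that the reported 755 mm is the full viewing distance; any additional mirror‐to‐screen path would lengthen that distance and make the angle smaller, so the result is best read as an upper bound. The helper names are hypothetical.

```python
import math


def pixels_to_mm(pixels, screen_px, screen_mm):
    """Convert an on-screen extent in projector pixels to millimetres on the projection screen."""
    return pixels * screen_mm / screen_px


def visual_angle_deg(size_mm, distance_mm):
    """Full visual angle (degrees) subtended by an object of size_mm viewed at distance_mm."""
    return math.degrees(2 * math.atan(size_mm / (2 * distance_mm)))


# Geometry from the set-up description; 755 mm as the viewing distance is an assumption.
width_mm = pixels_to_mm(60, screen_px=1024, screen_mm=454)
angle = visual_angle_deg(width_mm, distance_mm=755)
print(f"{width_mm:.1f} mm -> {angle:.2f} deg")  # 26.6 mm -> 2.02 deg
```

The vertical extent gives essentially the same value (60 × 340 / 768 ≈ 26.6 mm), since the projection keeps the 4:3 aspect ratio of the 1024 × 768 image.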

Stimuli

For our video stimuli, we used recordings from Trujillo et al. (2018). These recordings came from a gesture production task similar to the one performed in the present study, although with real objects placed in front of the participants rather than images on a computer screen. Following the methodology of Trujillo et al. (2020), we used the motion tracking data from the 2018 recordings to reconstruct the movements of the upper‐body joints (Trujillo et al., 2018). Videos consisted of these reconstructions, based on the x, y, z coordinates of these joints acquired at 30 frames per second (see Figure 1 for an illustration of the joints utilized). No finger joints were visually represented, which ensured that hand shape was not a feature that an observer could identify. Lines were drawn between the individual points to create a stick‐light figure representing the recorded participant's kinematic skeleton. Skeletons were centered in space on the screen, with the viewing angle adjusted to an azimuth of 20° and an elevation of 45° relative to the center of the skeleton.
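The fixed viewpoint described above (skeleton centered, azimuth 20°, elevation 45°) amounts to an orthographic view transform of the joint coordinates. The sketch below assumes a z‐up coordinate frame and an azimuth/elevation convention like that of MATLAB's `view(az, el)`; the original rendering code is not described, and Kinect's native frame (y up) would need its axes reordered first. `project_view` and the example joints are hypothetical.

```python
import math


def project_view(points, azimuth_deg=20.0, elevation_deg=45.0):
    """Orthographically project 3D joint positions (x, y, z; z pointing up) onto a 2D view plane.

    Joints are centred on their mean position first, so the skeleton sits in the middle of the
    view. Returns one (horizontal, vertical) screen coordinate per joint.
    """
    n = len(points)
    centre = [sum(p[i] for p in points) / n for i in range(3)]
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    projected = []
    for p in points:
        x, y, z = (p[i] - centre[i] for i in range(3))
        # Screen-horizontal axis: the x-axis rotated about the vertical by the azimuth.
        u = math.cos(az) * x + math.sin(az) * y
        # Screen-vertical axis: mixes height (z) with horizontal depth as elevation increases.
        v = (-math.sin(el) * math.sin(az) * x
             + math.sin(el) * math.cos(az) * y
             + math.cos(el) * z)
        projected.append((u, v))
    return projected


# Hypothetical three-joint frame (metres): left hand, head, right hand.
frame = [(-0.3, 0.0, 1.0), (0.0, 0.0, 1.6), (0.3, 0.1, 1.0)]
for u, v in project_view(frame):
    print(f"{u:+.3f} {v:+.3f}")
```

Applying the same transform to every frame (30 per second) and drawing lines between connected joints would yield a stick‐light animation of the kind described.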

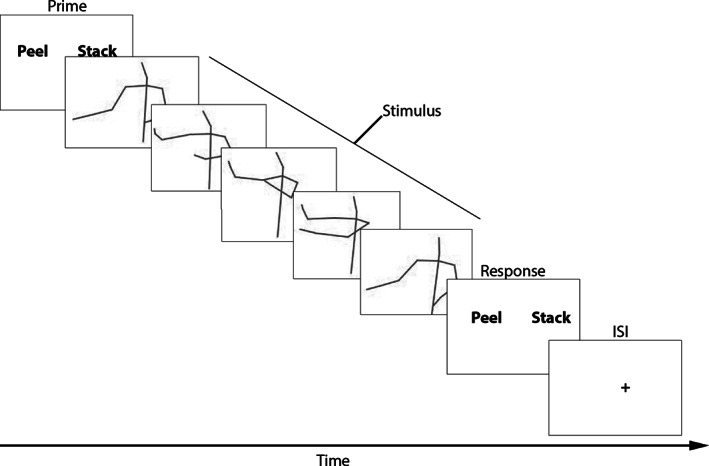

FIGURE 1.

Schematic overview of gesture comprehension task and stimuli. Trials start with a prime, followed by the stimulus video, after which the two response options are shown again and the participant is able to respond. After this, there is a short interstimulus interval (ISI).

Task

During the task, participants were first presented with the two response options in random order, one on the left and one on the right. This Prime phase ensured that, when participants saw the gesture video, they knew which potential actions they were looking for. A stimulus video was then displayed on the screen. After this Stimulus phase, the two response options were presented on screen again, one on the left and one on the right. During this Response phase, participants used the left and right buttons on the button‐box to pick the left or right answer, respectively. Participants were given 2500 ms to respond. Answers consisted of one verb that captured the action (e.g., the correct answer to the item “peel the banana” was “peel”). Correct answers were randomly assigned to one of the two sides. The second (distractor) option was always one of the possible answers from the total set, such that all options were presented equally often as the correct answer and as the distractor. After responding, there was a variable interstimulus interval (ISI), during which participants saw a fixation cross for 1000 ms with a jitter of 250 ms. See Figure 1 for a schematic overview of a trial. Accuracy and response time (RT) were recorded for each video.
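One way to meet the counterbalancing constraint above (every verb appears once as the correct answer and once as the distractor, and never against itself) is to pair each item with its neighbor in a shuffled order. This is a hypothetical sketch: `build_trials`, the uniform jitter distribution, and the assumption of one unique verb per item are illustrative choices, not a description of the original PsychoPy script.

```python
import random


def build_trials(verbs, isi_ms=1000, jitter_ms=250, seed=None):
    """Build a hypothetical trial list in which each verb is the target once and the distractor once.

    Distractors come from a cyclic shift of a shuffled verb order, so no trial pairs a verb with
    itself. Correct-answer side and ISI (uniform within +/- jitter_ms) are randomised per trial.
    """
    order = list(verbs)
    if len(order) < 2 or len(set(order)) != len(order):
        raise ValueError("need at least two unique verbs")
    rng = random.Random(seed)
    rng.shuffle(order)  # also randomises presentation order
    trials = []
    for i, target in enumerate(order):
        distractor = order[(i + 1) % len(order)]
        options = [target, distractor]
        rng.shuffle(options)  # random left/right placement of the correct answer
        trials.append({
            "target": target,
            "left": options[0],
            "right": options[1],
            "isi_ms": rng.uniform(isi_ms - jitter_ms, isi_ms + jitter_ms),
        })
    return trials


# "peel" comes from the example item; the other verbs are hypothetical.
for trial in build_trials(["peel", "cut", "pour", "stir", "fold"], seed=3):
    print(trial)
```

A cyclic shift is the simplest derangement; any permutation without fixed points would satisfy the same constraint.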

Analyses

See Table 2 for an overview of the analyses and their corresponding research questions for the two experiments.

TABLE 2.

Analyses employed and their corresponding research questions

| Test | Research question |
|---|---|
| Production | |
| Linear discriminant analysis (LDA) | Are there kinematic differences between the two groups? |
| Permutation testing | Is the accuracy of the LDA model above chance? |
| Multidimensional scaling | What is the (visual) relationship between kinematics and group membership? |
| Mixed modeling and chi‐square tests of model fit | Are group differences in kinematics explained by other neuropsychological measures? |
| Comprehension | |
| Mixed model of accuracy | Is there a difference in accuracy between the groups? Do the observed kinematics influence their accuracy? |
| Mixed model of response time | Is there a difference in response time between the groups? Do the observed kinematics influence their response time? |
| Mixed models of neuropsychological measures and gesture recognition | Can accuracy and/or response time be explained by autism symptom severity or self‐report motor cognition (AFQ)? |

Gesture production

The primary analysis for the gesture production data was to determine whether the two groups differed substantially in the kinematics of their gestures. We first calculated several kinematic features: total duration (i.e., the time from initial movement to returning the hands to their resting position), peak velocity (i.e., the highest velocity achieved by either hand), holdtime (i.e., the amount of time spent holding a static position), holdcount (i.e., the number of times a static position is held before moving again), max size (i.e., the maximum distance of either hand from its starting point), and submovements (i.e., the number of individual movements comprising the gesture). To test for differences between groups, we used a machine learning approach, implementing linear discriminant analysis and permutation testing with the PredPsych R package. This approach is advantageous as it allows us to use all available kinematic information in one model, rather than, for example, testing separate mixed‐effects models for each feature. Linear discriminant analysis uses the input data, in this case kinematic data, to build discriminant functions based on linear combinations of the features that provide the best discrimination between, or classification of, the target groups. The model was tested using k‐fold cross‐validation with k = 10: the dataset is split into 10 folds, the model is trained on nine folds and tested on the held‐out fold, and this is repeated so that each fold serves once as the test set. This ensures that the model is trained and tested on separate subsets of the data.
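The analysis itself was run with PredPsych in R. As a language‐neutral illustration, the sketch below implements two‐class LDA (pooled within‐group covariance, equal priors) with 10‐fold cross‐validation in NumPy. Rows of `X` are observations and columns are the six kinematic features; the function names, the unstratified fold assignment, and the simulated data are assumptions, and this is not PredPsych's implementation.

```python
import numpy as np


def fit_lda(X, y):
    """Two-class Fisher LDA with a pooled within-class covariance and equal priors."""
    X0, X1 = X[y == 0], X[y == 1]
    mu0, mu1 = X0.mean(axis=0), X1.mean(axis=0)
    # Pooled within-class covariance matrix.
    S = ((X0 - mu0).T @ (X0 - mu0) + (X1 - mu1).T @ (X1 - mu1)) / (len(X) - 2)
    w = np.linalg.solve(S, mu1 - mu0)  # discriminant direction
    b = -w @ (mu0 + mu1) / 2           # threshold halfway between the projected class means
    return w, b


def predict_lda(model, X):
    """Assign class 1 when the discriminant score is positive, else class 0."""
    w, b = model
    return (X @ w + b > 0).astype(int)


def kfold_accuracy(X, y, k=10, seed=0):
    """Mean test accuracy over k folds; each fold is held out once while training on the rest."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(y))
    accuracies = []
    for test_idx in np.array_split(idx, k):
        train_idx = np.setdiff1d(idx, test_idx)
        model = fit_lda(X[train_idx], y[train_idx])
        accuracies.append(np.mean(predict_lda(model, X[test_idx]) == y[test_idx]))
    return float(np.mean(accuracies))


# Simulated data: 50 observations x 6 kinematic features, two groups of 25.
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0.0, 1.0, (25, 6)), rng.normal(0.5, 1.0, (25, 6))])
y = np.repeat([0, 1], 25)
print(f"10-fold CV accuracy: {kfold_accuracy(X, y):.2f}")
```

Because the model is refit inside every fold, the held‐out observations never influence the discriminant direction used to classify them.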

While model fitting provides a measure of test accuracy (as a percentage), we also tested whether the features have significant discriminatory power using permutation testing, also implemented in PredPsych (Koul et al., 2018). This method randomly shuffles the group labels and refits the model many times. This repeated shuffling and refitting builds a probability density curve of the model accuracy values that are likely to occur simply by chance. The accuracy of the “real” model is compared to this curve, yielding a p‐value that indicates whether the observed accuracy is likely to have occurred by chance, or only when the group labels are accurate. In this study, we applied permutation testing with 1000 simulations (re‐shuffles).
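The label‐shuffling procedure can be sketched generically for any function that returns a cross‐validated accuracy. The `(count + 1) / (n_perm + 1)` p‐value is a common convention and an assumption here, not necessarily PredPsych's exact formula. To keep the block short and self‐contained, the demo uses a leave‐one‐out nearest‐centroid classifier as a stand‐in for LDA; all names and data are hypothetical.

```python
import numpy as np


def permutation_test(accuracy_fn, X, y, n_perm=1000, seed=0):
    """Compare an observed accuracy with a null distribution obtained by shuffling group labels.

    Returns (observed accuracy, p-value, null accuracies).
    """
    rng = np.random.default_rng(seed)
    observed = accuracy_fn(X, y)
    null = np.array([accuracy_fn(X, rng.permutation(y)) for _ in range(n_perm)])
    p_value = (np.count_nonzero(null >= observed) + 1) / (n_perm + 1)
    return observed, float(p_value), null


def loo_centroid_accuracy(X, y):
    """Stand-in classifier: leave-one-out accuracy of a nearest-class-centroid rule."""
    correct = 0
    for i in range(len(y)):
        keep = np.arange(len(y)) != i
        Xt, yt = X[keep], y[keep]
        c0, c1 = Xt[yt == 0].mean(axis=0), Xt[yt == 1].mean(axis=0)
        pred = int(np.linalg.norm(X[i] - c1) < np.linalg.norm(X[i] - c0))
        correct += int(pred == y[i])
    return correct / len(y)


# Simulated two-group data (25 per group, 6 features).
rng = np.random.default_rng(2)
X = np.vstack([rng.normal(0.0, 1.0, (25, 6)), rng.normal(1.5, 1.0, (25, 6))])
y = np.repeat([0, 1], 25)
acc, p, null = permutation_test(loo_centroid_accuracy, X, y, n_perm=1000)
print(f"accuracy = {acc:.2f}, permutation p = {p:.3f}")
```

Shuffling preserves the group sizes, so the null distribution reflects only the loss of the true feature‐label pairing.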

To visualize the data, we used multidimensional scaling (MDS), which projects the multidimensional data (i.e., all of the kinematic features) into a two‐dimensional space, making the relationship between group membership and the kinematic features easier to see. We then quantified the relative contribution of each kinematic feature to classification accuracy using Fisher scores (F‐scores), which capture how well a given feature, by itself, discriminates between the two groups (Chen & Lin, 2006; Koul et al., 2018).
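
The F‐score of Chen and Lin (2006) compares the separation of the two group means with the spread within each group. A minimal Python sketch follows; we assume, but have not verified, that PredPsych computes the same quantity, and `fisher_score` and `rank_features` are hypothetical names.

```python
from statistics import mean, variance


def fisher_score(group_a, group_b):
    """F-score of a single feature (Chen & Lin, 2006).

    Numerator: squared deviations of each group mean from the overall mean.
    Denominator: sum of the two within-group sample variances (n - 1 denominators).
    """
    overall = mean(list(group_a) + list(group_b))
    between = (mean(group_a) - overall) ** 2 + (mean(group_b) - overall) ** 2
    within = variance(group_a) + variance(group_b)
    return between / within


def rank_features(features):
    """features: {name: (values_group_a, values_group_b)} -> names by F-score, highest first."""
    scores = {name: fisher_score(a, b) for name, (a, b) in features.items()}
    return sorted(scores, key=scores.get, reverse=True)
```

A feature whose group means are far apart relative to its within‐group variability receives a high score, regardless of the other features, which is why F‐scores describe each feature's contribution on its own.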

Finally, to determine whether any group differences are explained by more general clinical measures, we tested whether gesture kinematics were related to autism symptom severity (AQ), action awareness (AFQ), or motor coordination (Purdue pegboard assembly). For this, we calculated mean kinematic scores for each participant and constructed separate linear models, with each neuropsychological measure (i.e., AQ, AFQ, Purdue assembly) as the dependent variable and the mean kinematic scores as the independent variables. We followed a maximal model approach, starting with a model that included all kinematic features plus their interactions with group, and then removing one term at a time, using chi‐square tests to determine whether the removal affected model fit. If it did not, the term was left out and we continued. Once an optimal model was found, or only one term (plus group) remained, this final model was compared, again using a chi‐square test, against a “null model” that included only group. This comparison therefore shows whether gesture kinematics explain variance in the neuropsychological measures beyond that explained by group membership.
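
The exact test statistic is not stated; assuming maximum‐likelihood fits, the chi‐square comparison of two nested models would take the standard likelihood‐ratio form (notation ours):

```latex
\Lambda = 2\left(\ell_{\mathrm{full}} - \ell_{\mathrm{reduced}}\right),
\qquad
p = \Pr\!\left(\chi^2_{k_{\mathrm{full}} - k_{\mathrm{reduced}}} \ge \Lambda\right)
```

Here ℓ is the maximized log‐likelihood of each model and k its number of estimated parameters. A term is dropped when p is not significant, that is, when removing it does not detectably worsen model fit.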

Gesture comprehension

Gesture comprehension analyses focused on whether the two groups differed in accuracy or response time (RT), and how the observed kinematics affected task performance. To quantify the effects of the observed kinematics, we took the raw kinematic values of the stimuli, obtained in Trujillo et al. (2018), and calculated z‐scores for each kinematic feature per item (i.e., gesture), following the procedure of the original paper but using only the values in the current stimulus set. This ensures that the z‐scores reflect the magnitude of each item's kinematics relative to the gestures that our participants observed in the current experiment. The kinematic features were the same as those used for gesture production: total duration, peak velocity, holdtime, holdcount, max size, and submovements (defined above).
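
A minimal Python sketch of this per‐feature standardization, assuming each feature is z‐scored across the items of the current stimulus set. The dictionary layout, the item names in any example, and the choice of the sample (n − 1) standard deviation are assumptions, since the procedure of the original paper is not restated here.

```python
from statistics import mean, stdev


def zscore_features(items):
    """Standardize each kinematic feature across the items of the current stimulus set.

    items: {item_name: {feature_name: raw_value}} (hypothetical layout).
    Returns the same layout with z-scores. Uses the sample SD (n - 1), as R's
    scale() does; whether the original procedure did the same is an assumption.
    """
    features = list(next(iter(items.values())))
    z = {name: {} for name in items}
    for f in features:
        values = [items[name][f] for name in items]
        mu, sd = mean(values), stdev(values)
        for name in items:
            z[name][f] = (items[name][f] - mu) / sd
    return z
```

Because the mean and SD come only from the items actually shown, a z‐score of +1 means "one SD above the average stimulus in this experiment", not above the larger set from which the stimuli were drawn.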

We tested for effects on performance (i.e., accuracy and RT) using (generalized) linear mixed models, implemented in the R package lme4 (Bates et al., 2015). Following Barr et al. (2013), we first fitted maximally defined models with accuracy or RT as the dependent variable, fixed‐effect terms for each of the observed kinematic values, video duration as a covariate, and random intercepts for participant, actor, and item. We additionally included group (ASC and neurotypical) as a fixed effect, interacting with each kinematic term. Because including a by‐participant random slope for group in the maximal model led to convergence issues, this slope was added at a later step (see below). The maximal model for accuracy, for example, was defined as: glmer(accuracy ~ duration + group * ZHoldtime + group * ZSize + group * Zright_submovements + group * Zleft_submovements + group * Zright_Velocity + group * Zleft_Velocity + (1 + group | participant) + (1 | actor) + (1 | item)).

To select the best‐fitting model, we used likelihood ratio model comparisons: we removed the term with the lowest coefficient and tested whether this significantly changed model fit. If it did, the term was kept in the model; otherwise it was removed, and we proceeded to the next term. After trimming the model, we compared this best‐fit model against a null model that included only the random terms and video duration. If this comparison was significant, we then added group as a by‐participant random slope, allowing the model to better account for within‐group, inter‐individual variance. We report the chi‐square tests for this final best‐fit versus null model comparison. For the accuracy model terms, we report the p‐values given by the lme4 summary function, while for the RT model terms, we calculated p‐values using Type II Wald chi‐square tests, implemented in the R package car (Fox et al., 2012).
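
Each chi‐square statistic in these comparisons is turned into a p‐value through the upper tail of the chi‐square distribution, which in R is `pchisq(x, df, lower.tail = FALSE)`. The dependency‐free Python function below is a hypothetical stand‐in for that call, restricted to integer degrees of freedom; it builds the regularized upper incomplete gamma function Q(df/2, x/2) by the standard recurrence rather than calling a statistics library.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability P(X >= x) for a chi-square variable with integer df >= 1.

    Uses Q(s, y), the regularized upper incomplete gamma function with s = df/2
    and y = x/2, built up via Q(s + 1, y) = Q(s, y) + y**s * exp(-y) / Gamma(s + 1).
    """
    if x <= 0:
        return 1.0
    y = x / 2.0
    if df % 2 == 0:
        s, q = 1.0, math.exp(-y)             # Q(1, y) for even df
    else:
        s, q = 0.5, math.erfc(math.sqrt(y))  # Q(1/2, y) for odd df
    while s < df / 2.0:
        q += y ** s * math.exp(-y) / math.gamma(s + 1)
        s += 1.0
    return q
```

For example, `chi2_sf(10.807, 6)` gives roughly 0.095, in line with the p‐value reported for the RT similarity comparison (χ2(6) = 10.807).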

As for gesture production, we also tested whether gesture recognition accuracy or RT was related to clinical or neuropsychological measures. We again modeled AQ and AFQ as dependent variables, with mean accuracy or mean RT as the independent variable, in a separate model for each combination of independent and dependent variable. Chi‐square tests were used to determine significance.

Finally, as an additional exploratory analysis, we followed up on any model indicating that observed kinematics significantly contributed to gesture recognition. Specifically, we calculated the difference between the observed and executed kinematic features (e.g., observed number of submovements minus executed number of submovements) for each item (i.e., action). We then added this observed/executed similarity score to the best‐fit model, together with an interaction term between observed/executed similarity and the observed kinematics, as we expected that similarity (or dissimilarity) would also modulate the influence of the observed kinematics on performance.
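
The construction of the observed/executed similarity predictors can be sketched as follows. This is a hypothetical Python helper, not the authors' code: the column names are assumptions, and the interaction column is simply the product term that an observed‐by‐similarity interaction contributes to a linear predictor (in an R model formula, `observed * similarity` adds both main effects and this product).

```python
def add_similarity_terms(rows, feature):
    """Add observed-minus-executed similarity and its interaction with the observed value.

    rows: list of dicts, one per trial (participant x item), holding
    'observed_<feature>' and 'executed_<feature>' (hypothetical column names).
    Both values are assumed to be on the same scale.
    """
    for row in rows:
        observed = row["observed_" + feature]
        similarity = observed - row["executed_" + feature]
        row["similarity_" + feature] = similarity
        row["observed_x_similarity_" + feature] = observed * similarity
    return rows
```

A similarity value of zero means the participant's own execution matched the observed stimulus on that feature; larger absolute values indicate greater dissimilarity.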

RESULTS

Gesture production

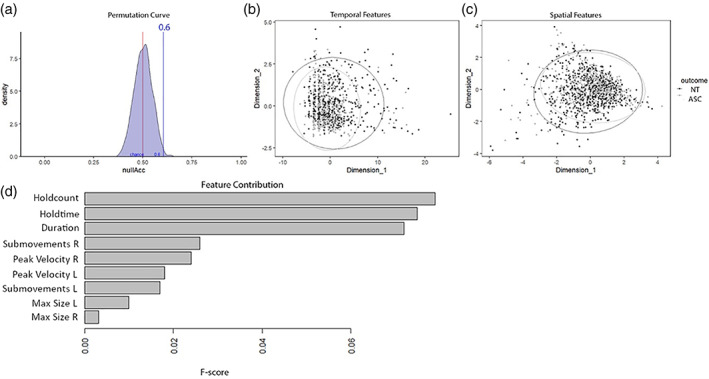

The first analysis assessed whether there were kinematic differences between the two groups. A linear discriminant analysis of the kinematic features classified group membership (ASC vs. NT) with an accuracy of 60%, which permutation testing showed to be significantly above chance (p = 0.008; see Figure 2(a)). The confusion matrix for this analysis (Table 3) shows that the NT group was classified more accurately than the ASC group, which likely reflects the higher variability in the ASC group (see Figure 2(b,c)). F‐score analysis revealed that the features with the highest discriminatory power were holdcount (ASC mean = 4.60, NT mean = 3.78), holdtime (ASC mean = 0.47 s, NT mean = 0.46 s), and duration (ASC mean = 8.72 s, NT mean = 7.11 s), followed by submovements (ASC mean = 20.88, NT mean = 18.24) and then by peak velocity (ASC mean = 0.80 m/s, NT mean = 0.82 m/s) (see Figure 2 for an overview of the F‐scores). The dominance of the temporal features (i.e., those capturing aspects of timing, such as static holds and overall duration) is also evident in Figure 2(b,c).

FIGURE 2.

Results from the linear discriminant analysis. Panel (a) shows the permutation curve for significance testing of the model discriminatory power. The distribution curve shows the accuracy of the model under the shuffled‐data simulations, with chance‐level (50%) indicated by a red vertical line. The accuracy of the real model (60%) is indicated with the blue line, showing that it falls outside of the distribution of randomly shuffled data. Panels (b and c) show the MDS scatterplots, with panel (b) using data from the temporal kinematic features (i.e., peak velocity, holdcount, holdtime), and panel (c) using data from the spatial kinematic features (i.e., submovements, max size). In both plots, the x‐ and y‐axes represent the two components of the dimensionality reduction. Dark points show the ASC group, while gray points show the NT group. Circles indicate the boundaries of group classification. ASC, autism spectrum conditions; NT, neurotypical

TABLE 3.

Confusion matrix from linear discriminant analysis, classifying group membership based on kinematic features

|  | ASC | NT | Total |
|---|---|---|---|
| ASC | 282 (43%) | 382 (57%) | 664 (100%) |
| NT | 230 (33%) | 460 (67%) | 690 (100%) |

Note: Cells on the diagonal (ASC/ASC and NT/NT) show the cases that were correctly classified.

Abbreviations: ASC, autism spectrum conditions; NT, neurotypical.

The next set of analyses assessed whether kinematic features were related to neuropsychological or motor coordination measures. Gesture kinematics were not related to AQ, F(1) = 0.363, p = 0.550, or to general motor coordination, F(1) = 0.815, p = 0.371. However, we found a relation between kinematics and AFQ, F(1) = 5.92, p = 0.019: longer holdtime was associated with lower AFQ scores. This suggests that the kinematic differences between ASC and NT individuals may provide a relatively independent marker of autism, one that is not directly related to general motor coordination or autism symptom severity but may be related specifically to the action‐awareness aspect of motor cognition captured by the AFQ.

Gesture comprehension

In order to determine whether gesture recognition performance differed between the two groups, and whether the observed kinematics influenced performance, we tested mixed models for both accuracy and RT.

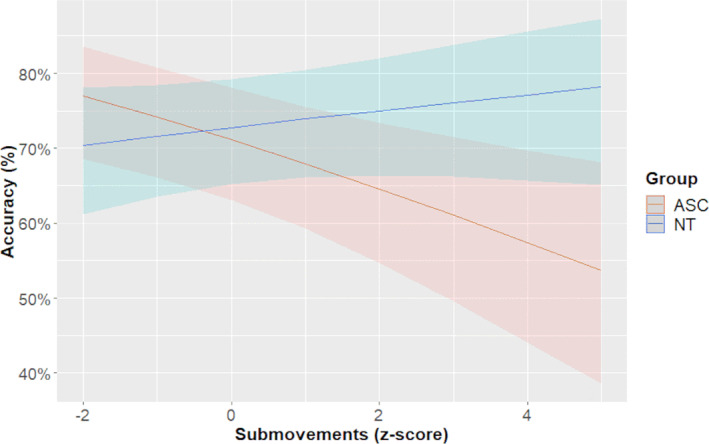

For recognition accuracy, the best‐fit model included duration, group, and a submovement*group interaction, as well as a by‐participant random slope for group, χ2(5) = 33.465, p < 0.001. The two groups did not differ significantly in accuracy (z = 0.416, p = 0.678). However, there was a significant main effect of submovements (β = −0.152, z = −2.858, p = 0.004) as well as a significant group*submovement interaction (β = 0.198, z = 2.710, p = 0.007). This model indicates that while NT individuals benefitted from more submovements, ASC individuals showed lower accuracy when there were more submovements (see Figure 3). We found no evidence that similarity between the observed and executed number of submovements modulated the influence of submovements on accuracy, χ2(2) = 3.513, p = 0.173.

FIGURE 3.

Gesture recognition accuracy per group as a function of observed kinematics (submovements). Submovements are given on the x‐axis as z‐scores, and accuracy is given on the y‐axis in percent. The blue line represents neurotypical individuals and the red line autistic individuals. The shaded band around each line depicts the standard error of the regression line.

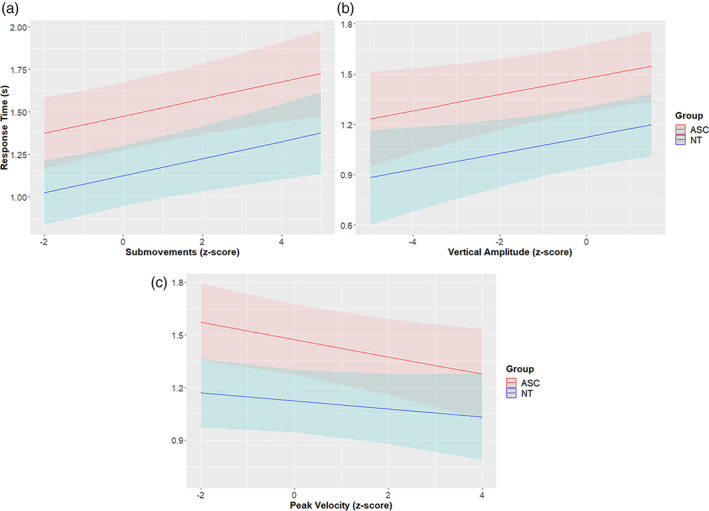

For recognition RT, the best‐fit model included duration, group, vertical amplitude, submovements, peak velocity, and a group*peak velocity interaction, without by‐participant random slopes, χ2(5) = 27.033, p < 0.001. The two groups differed significantly in RT (χ2(1) = 6.927, p = 0.008), with NT individuals responding 351 ± 133 ms faster than ASC individuals. We additionally found that, regardless of group, vertical amplitude slowed RT by 48 ± 22 ms (χ2(1) = 5.067, p = 0.024), submovements slowed RT by 50 ± 16 ms (χ2(1) = 9.703, p = 0.002), and peak velocity sped up RT by 49 ± 22 ms (χ2(1) = 5.652, p = 0.017). The group*peak velocity interaction was not significant (χ2(1) = 0.793, p = 0.373). See Figure 4 for an overview of these results. We found no evidence that observed/executed similarity in vertical amplitude, submovements, or peak velocity influenced RT, χ2(6) = 10.807, p = 0.095.

FIGURE 4.

Response times per group as a function of kinematic features. In each plot, the kinematic feature is given on the x‐axis and response time, in seconds, on the y‐axis. The blue line represents neurotypical individuals and the red line autistic individuals. The shaded band around each line depicts the standard error of the regression line. Panel (a) shows response time by submovements, panel (b) by vertical amplitude, and panel (c) by peak velocity.

Regarding neuropsychological measures, recognition accuracy was not related to AQ, F(2) = 0.247, p = 0.783, and showed only a non‐significant trend toward an association with AFQ, F(2) = 2.59, p = 0.089. Similarly, RT was not associated with AQ, F(2) = 0.239, p = 0.788, or with AFQ, F(2) = 0.971, p = 0.389. This suggests that gesture recognition performance is not strongly related to autism symptom severity or action‐based empathy.

DISCUSSION

This study set out to compare how autistic and neurotypical individuals use gesture kinematics, investigating both gesture production and gesture recognition in the same individuals. We found that (a) gestures produced by autistic individuals were kinematically different from those produced by neurotypical individuals, and (b) while autistic individuals were unimpaired in their ability to recognize iconic gestures, they processed repetition and complexity (i.e., number of submovements) differently than neurotypical individuals did. These results suggest that, in producing and recognizing iconic gestures, autistic individuals may take different routes to achieve similar results.

Gesture production: High variability of gesture kinematics and increased holdtime in ASC

In gesture production, we found that gesture kinematics, and particularly temporal features such as holdtime (i.e., the meaningful pauses between individual movements) and duration, differed between gestures produced by autistic and neurotypical individuals. These features can be interpreted as the degree to which a gesture is segmented by brief pauses between movements (i.e., holdtime and holdcount), as well as the overall time spent gesturing. This is in line with previous results showing that autistic adults differ from neurotypicals in the temporal kinematics of simple, non‐communicative arm movements (Cook et al., 2013), as well as with more coarse‐grained studies of gesture production in autistic adults, which have shown differences both in the types of gestures that autistic individuals preferentially produce and in the handedness (i.e., left or right versus bilateral) of these gestures (de Marchena et al., 2019). Our study brings these previous results together by showing that communicatively relevant kinematic features of iconic (silent) gestures differ quantitatively between autistic and neurotypical individuals. These findings are relevant for understanding the difficulties experienced by autistic individuals during social interaction, as kinematic differences in communicative gestures can affect how these gestures are interpreted by an addressee (Trujillo et al., 2018, 2020).

While our results show that gesture kinematics differ between autistic and neurotypical individuals, inspection of the classification results reveals that this is not because the two groups have entirely separate kinematic profiles. Instead, classification was more accurate for neurotypical than for autistic participants. This could be because neurotypicals have a relatively uniform kinematic profile, while autistic individuals show much more variability: autistic individuals sometimes show kinematic profiles similar to those of neurotypical participants, but frequently also show more extreme values. The extreme values are then likely to be correctly classified as belonging to the autistic group, while the more “typical” values are likely to be misclassified as neurotypical. This idea is in line with the review by Gowen and Hamilton showing higher variability in the motor output of autistic compared to neurotypical individuals (Cavallo et al., 2018; Gowen & Hamilton, 2013). Interestingly, the features describing the temporal qualities of gesture, such as holdtime, had the highest discriminatory power. A previous study using a similar setup found that holdtime in particular is strongly related to gesture comprehension in neurotypical participants (Trujillo et al., 2020). As gesture holds segment a movement into its constituent parts and can mark the salience of a particular hand/arm configuration, changes in this feature could affect the communicative efficacy of these gestures.

Importantly, we found no evidence that gesture kinematics were related to general motor coordination or symptom severity. However, the AFQ, a self‐report measure of action awareness and (social) motor cognition, was significantly related to gesture holdtime, even when controlling for group differences. This suggests that these gestural differences are specifically related to (or at least predicted by) motor cognition, and may not be picked up by general screening or assessment tools.

Gesture comprehension: Different perceptual processing strategy in the ASC group

In gesture comprehension, we found no difference in accuracy between the groups. Interestingly, while the number of observed submovements affected recognition accuracy in both groups, it had opposite effects in the two groups. Higher modulation values, which were considered “more communicative” in the original studies from which these stimuli were derived (Trujillo et al., 2018), were associated with higher accuracy in the neurotypical group. In autistic individuals, however, higher modulation values were associated with lower accuracy. This effect was specific to increases in gesture submovements, which reflect more repetitions of relevant movements (e.g., more “hammering” movements) or greater complexity of a depiction (e.g., first pantomiming reaching for and grasping the hammer before depicting the hammering motion itself). Differences in how these kinematics are interpreted may stem from different perceptual processing strategies in neurotypical and autistic individuals. One hypothesis is that, for autistic individuals, prior expectations may be weaker (Pellicano & Burr, 2012) or less flexible (Sapey‐Triomphe et al., 2020) relative to sensory information. A weak or inflexible expectation of how a particular gesture should appear could then cause communicative modulations to confuse, rather than support, the interpretation of the kinematic information. In neurotypical individuals, the prior expectation may be more flexible, so additional communicative modulations can help trigger recognition of the gesture. Alternatively, the effect could result from differences in how the two groups orient their attention (Keehn et al., 2013) and interpret the salience of different aspects of an observed gesture, or of individual movements within it. In this case, the communicatively relevant and visually salient kinematic features that neurotypicals use to better understand an iconic gesture may not be salient to autistic individuals. This would fit well with accounts relating sensory differences in autism to atypical connectivity between visual and salience networks (Jao Keehn et al., 2021; Zalla & Sperduti, 2013). Notably, despite this difference in processing, there was no significant group difference in accuracy. This suggests that although neurotypical and autistic individuals may use different perceptual strategies that affect how well a specific gesture is recognized, such differences may not be evident when only task accuracy is assessed.

Several studies have suggested that how one performs an action can influence how one interprets that same action performed by someone else (Schuster et al., 2021; Cook et al., 2013; Edey et al., 2017). However, we found no evidence for kinematic similarity modulating how specific kinematic features influenced gesture recognition. This may be because these previous studies have primarily investigated mental or emotional state attribution, rather than action recognition per se. Our results may therefore suggest that at least in gesture recognition, one's own gesture kinematics do not influence how easily others' gestures are recognized.

LIMITATIONS

One limitation of the current study is that the gestures being produced and recognized were removed from an interactive, social context. In the production study, this lack of interaction could have led to gestures that are less representative of what would have been produced during a social interaction. Similarly, gestures are typically observed as an integrated aspect of communication (Holler & Levinson, 2019), rather than in isolation. However, removing gestures from the social interaction context also allowed us to focus on gesture kinematics while controlling the myriad other variables that would have influenced both the production and interpretation of the gestures had they been contained within the more complex context of social interaction. Future studies should build on these results by investigating how differences in gesture kinematics affect social interaction, both in terms of how autistic individuals produce gestures, as well as how they utilize kinematic information in the gestures of others. A second potential limitation relates to the generalizability of these findings, given that we specifically tested high‐functioning autistic participants. Given the complex, multidimensional nature of ASC, we cannot say whether our results can be generalized to all autistic individuals. It will therefore be important for future studies to test how these findings hold in individuals on other parts of the autism spectrum.

CONCLUSION

This study builds on previous studies of autism spectrum conditions by showing that both the production and the comprehension of communicative movements are altered in autistic individuals. Specifically, autistic individuals differ from neurotypical individuals in their gesture kinematics, and this difference was not explained by symptom severity or motor coordination. Furthermore, autistic individuals process the kinematics of others' gestures differently than neurotypical individuals do. This may affect how certain communicative modulations are interpreted, but it does not lead to a general impairment in gesture recognition. These results shed light on the relationship between movement and social interaction in high‐functioning autistic individuals, and highlight the flexible nature of human communication.

CONFLICT OF INTEREST

The authors declare no conflicts of interest.

Supporting information

Appendix S1: Supporting information.

ACKNOWLEDGMENTS

The authors would like to thank everyone who took part in the study, and in particular the autistic participants, who also provided feedback regarding the study and its implications. We would also like to thank Hedwig van der Meer, Bas Leijh, Anne‐Marie van der Meer, and Olympia Colizoli for their contribution to translating the AFQ into Dutch. This research was supported by an NWO Language in Interaction Gravitation Grant (024.001.006).

Trujillo, J. P., Özyürek, A., Kan, C. C., Sheftel‐Simanova, I., & Bekkering, H. (2021). Differences in the production and perception of communicative kinematics in autism. Autism Research, 14(12), 2640–2653. 10.1002/aur.2611

Irina Sheftel‐Simanova and Harold Bekkering contributed equally to this study.

Funding information: NWO Language in Interaction Gravitation Grant, Grant/Award Number: 024.001.006

Footnotes

In the current paper, we use the term “high‐functioning” to refer to those with an autism diagnosis, but without any intellectual disability. In other words, it refers to those individuals with an IQ higher than 70. The term is not meant to say anything about overall autism symptom severity, but rather to make a distinction between groups that may have very different characteristics.

REFERENCES

- Aldaqre, I. , Schuwerk, T. , Daum, M. M. , Sodian, B. , & Paulus, M. (2016). Sensitivity to communicative and non‐communicative gestures in adolescents and adults with autism spectrum disorder: Saccadic and pupillary responses. Experimental Brain Research, 234(9), 2515–2527. [DOI] [PubMed] [Google Scholar]

- American Psychiatric Association . (2013). Diagnostic and statistical manual of mental disorders (DSM‐5®). American Psychiatric Pub.

- Amoruso, L. , Finisguerra, A. , & Urgesi, C. (2018). Autistic traits predict poor integration between top‐down contextual expectations and movement kinematics during action observation. Scientific Reports, 8(1), 1–10. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Asperger, H. (1944). Die “Autistischen Psychopathen” im Kindesalter. Archiv für Psychiatrie und Nervenkrankheiten, 117(1), 76–136. [Google Scholar]

- Barr, D. J. , Levy, R. , Scheepers, C. , & Tily, H. J. (2013). Random effects structure for confirmatory hypothesis testing: Keep it maximal. Journal of Memory and Language, 68(3), 255–278. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bates, D. , Maechler, M. , Bolker, B. , & Walker, S. (2015). Fitting linear mixed‐effects models using lme4. Journal of Statistical Software, 67(1), 1–48. [Google Scholar]

- Blake, R. , Turner, L. M. , Smoski, M. J. , Pozdol, S. L. , & Stone, W. L. (2003). Visual recognition of biological motion is impaired in children with autism. Psychological Science, 14(2), 151–157. [DOI] [PubMed] [Google Scholar]

- Campisi, E. , & Özyürek, A. (2013). Iconicity as a communicative strategy: Recipient design in multimodal demonstrations for adults and children. Journal of Pragmatics, 47(1), 14–27. [Google Scholar]

- Cavallo, A. , Romeo, L. , Ansuini, C. , Podda, J. , Battaglia, F. , Veneselli, E. , Pontil, M. , & Becchio, C. (2018). Prospective motor control obeys to idiosyncratic strategies in autism. Scientific Reports, 8(1), 717. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chambon, V. , Farrer, C. , Pacherie, E. , Jacquet, P. O. , Leboyer, M. , & Zalla, T. (2017). Reduced sensitivity to social priors during action prediction in adults with autism spectrum disorders. Cognition, 160, 17–26. [DOI] [PubMed] [Google Scholar]

- Chen, Y.‐W. , & Lin, C.‐J. (2006). Combining SVMs with various feature selection strategies. In Guyon I., Nikravesh M., Gunn S., & Zadeh L. A. (Eds.), Feature Extraction: Foundations and Applications (pp. 315–324). Springer. [Google Scholar]

- Cook, J. L. (2016). From movement kinematics to social cognition: The case of autism. Philosophical Transactions of the Royal Society, B: Biological Sciences, 371(1693), 372. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cook, J. L. , Blakemore, S.‐J. , & Press, C. (2013). Atypical basic movement kinematics in autism spectrum conditions. Brain, 136(9), 2816–2824. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cusack, J. P. , Williams, J. H. G. , & Neri, P. (2015). Action perception is intact in autism Spectrum disorder. Journal of Neuroscience, 35(5), 1849–1857. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dawson, G. , Toth, K. , Abbott, R. , Osterling, J. , Munson, J. , Estes, A. , & Liaw, J. (2004). Early social attention impairments in autism: Social orienting, joint attention, and attention to distress. Developmental Psychology, 40(2), 271. [DOI] [PubMed] [Google Scholar]

- de Marchena, A. B. , Kim, E. S. , Bagdasarov, A. , Parish‐Morris, J. , Maddox, B. B. , Brodkin, E. S. , & Schultz, R. T. (2019). Atypicalities of gesture form and function in autistic adults. Journal of Autism and Developmental Disorders, 49(4), 1438–1454. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Di Cesare, G. , Sparaci, L. , Pelosi, A. , Mazzone, L. , Giovagnoli, G. , Menghini, D. , Ruffaldi, E. , & Vicari, S. (2017). Differences in action style recognition in children with autism spectrum disorders. Frontiers in Psychology, 8, 1456. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Edey, R. , Cook, J. L. , Brewer, R. , Bird, G. , & Press, C. (2019). Adults with autism spectrum disorder are sensitive to the kinematic features defining natural human motion. Autism Research, 12(2), 284–294. [DOI] [PubMed] [Google Scholar]

- Edey, R. , Yon, D. , Cook, J. L. , Dumontheil, I. , & Press, C. (2017). Our own action kinematics predict the perceived affective states of others. Journal of Experimental Psychology: Human Perception and Performance, 43(7), 1263–1268. [DOI] [PubMed] [Google Scholar]

- Fox, J. , Friendly, M. , & Weisberg, S. (2012). Hypothesis tests for multivariate linear models using the car package. The R Journal, 5(1), 39–52. [Google Scholar]

- Glazebrook, C. M. , Elliott, D. , & Lyons, J. (2006). A kinematic analysis of how young adults with and without autism plan and control goal‐directed movements. Motor Control, 10(3), 244–264. [DOI] [PubMed] [Google Scholar]

- Gowen, E. , & Hamilton, A. F. de C. (2013). Motor abilities in autism: A review using a computational context. Journal of Autism and Developmental Disorders, 43(2), 323–344. [DOI] [PubMed] [Google Scholar]

- Hendriks, M. P. H. , Kessels, R. P. C. , Gorissen, M. E. E. , Schmand, B. A. , & Duits, A. A. (2014). Neuropsychologische diagnostiek: De klinische praktijk. Boom; https://repository.ubn.ru.nl/handle/2066/133321. [Google Scholar]

- Holler, J. , & Levinson, S. C. (2019). Multimodal language processing in human communication. Trends in Cognitive Sciences, 23(8), 639–652. [DOI] [PubMed] [Google Scholar]

- Hsiung, E.‐Y. , Chien, S. H.‐L. , Chu, Y.‐H. , & Ho, M. W.‐R. (2019). Adults with autism are less proficient in identifying biological motion actions portrayed with point‐light displays. Journal of Intellectual Disability Research, 63(9), 1111–1124. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jao Keehn, R. J. , Pueschel, E. B. , Gao, Y. , Jahedi, A. , Alemu, K. , Carper, R. , Fishman, I. , & Müller, R.‐A. (2021). Underconnectivity between visual and salience networks and links with sensory abnormalities in autism spectrum disorders. Journal of the American Academy of Child & Adolescent Psychiatry, 60(2), 274–285. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kanner, L. (1943). Autistic disturbances of affective contact. The Nervous Child, 2, 217–250. [Google Scholar]

- Keehn, B. , Müller, R.‐A. , & Townsend, J. (2013). Atypical attentional networks and the emergence of autism. Neuroscience & Biobehavioral Reviews, 37(2), 164–183. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kendon, A. (2004). Gesture: Visible Action as Utterance. Cambridge University Press; https://books.google.nl/books?hl=en&lr=&id=hDXnnzmDkOkC&oi=fnd&pg=PR6&dq=kendon+2004&ots=RK4Txd6XgG&sig=WJXG_VR0o‐FXWjdCRsXbudT_lvA#v=onepage&q=kendon%202004&f=false [Google Scholar]

- Koul, A. , Becchio, C. , & Cavallo, A. (2018). PredPsych: A toolbox for predictive machine learning‐based approach in experimental psychology research. Behavior Research Methods, 50(4), 1657–1672. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lakens, D. , Scheel, A. M. , & Isager, P. M. (2018). Equivalence testing for psychological research: A tutorial. Advances in Methods and Practices in Psychological Science, 1(2), 259–269. [Google Scholar]

- Lawson, R. P. , Mathys, C. , & Rees, G. (2017). Adults with autism overestimate the volatility of the sensory environment. Nature Neuroscience, 20(9), 1293–1299. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lee, P. , Liu, C.‐H. , Fan, C.‐W. , Lu, C.‐P. , Lu, W.‐S. , & Hsieh, C.‐L. (2013). The test‐retest reliability and the minimal detectable change of the Purdue pegboard test in schizophrenia. Journal of the Formosan Medical Association = Taiwan Yi Zhi, 112(6), 332–337. [DOI] [PubMed] [Google Scholar]

- Lord, C. , Rutter, M. , DiLavore, P. C. , & Risi, S. (2002). Autism diagnostic observation schedule‐WPS (ADOS‐WPS). Western Psychological Services. https://psycnet.apa.org/doiLanding?doi=10.1037%2Ft17256-000

- Lord, C., Rutter, M., & Le Couteur, A. (1994). Autism diagnostic interview‐revised: A revised version of a diagnostic interview for caregivers of individuals with possible pervasive developmental disorders. Journal of Autism and Developmental Disorders, 24(5), 659–685.

- McNeill, D. (1992). Hand and mind: What gestures reveal about thought. University of Chicago Press.

- Moore, D. G., Hobson, R. P., & Lee, A. (1997). Components of person perception: An investigation with autistic, non‐autistic retarded and typically developing children and adolescents. British Journal of Developmental Psychology, 15(4), 401–423.

- Murphy, P., Brady, N., Fitzgerald, M., & Troje, N. F. (2009). No evidence for impaired perception of biological motion in adults with autistic spectrum disorders. Neuropsychologia, 47(14), 3225–3235.

- Nackaerts, E., Wagemans, J., Helsen, W., Swinnen, S. P., Wenderoth, N., & Alaerts, K. (2012). Recognizing biological motion and emotions from point‐light displays in autism spectrum disorders. PLoS One, 7(9), 473.

- O'Riordan, M. A. (2004). Superior visual search in adults with autism. Autism, 8(3), 229–248.

- Özyürek, A. (2014). Hearing and seeing meaning in speech and gesture: Insights from brain and behaviour. Philosophical Transactions of the Royal Society, B: Biological Sciences, 369(1651), 96.

- Papadopoulos, N., McGinley, J., Tonge, B. J., Bradshaw, J. L., Saunders, K., & Rinehart, N. J. (2012). An investigation of upper limb motor function in high functioning autism and Asperger's disorder using a repetitive Fitts' aiming task. Research in Autism Spectrum Disorders, 6(1), 286–292.

- Pellicano, E., & Burr, D. (2012). When the world becomes “too real”: A Bayesian explanation of autistic perception. Trends in Cognitive Sciences, 16(10), 504–510.

- Pierce, K., Glad, K. S., & Schreibman, L. (1997). Social perception in children with autism: An attentional deficit? Journal of Autism and Developmental Disorders, 27(3), 265–282.

- Rochat, M. J., Veroni, V., Bruschweiler‐Stern, N., Pieraccini, C., Bonnet‐Brilhault, F., Barthélémy, C., Malvy, J., Sinigaglia, C., Stern, D. N., & Rizzolatti, G. (2013). Impaired vitality form recognition in autism. Neuropsychologia, 51(10), 1918–1924.

- Sapey‐Triomphe, L.‐A., Timmermans, L., & Wagemans, J. (2020). Priors bias perceptual decisions in autism, but are less flexibly adjusted to the context. Autism Research, 14(6), 1134–1146. 10.1002/aur.2452

- Schuster, B. A., Fraser, D. S., Gordon, A. S., Huh, D., & Cook, J. L. (2021). Attributing minds to triangles: Kinematics and observer‐animator kinematic similarity predict mental state attribution in the animations task. Research Square. 10.21203/rs.3.rs-208776/v2

- Tiffin, J., & Asher, E. J. (1948). The Purdue pegboard: Norms and studies of reliability and validity. Journal of Applied Psychology, 32(3), 234–247.

- Trujillo, J. P., Simanova, I., Bekkering, H., & Özyürek, A. (2018). Communicative intent modulates production and comprehension of actions and gestures: A Kinect study. Cognition, 180, 38–51.

- Trujillo, J. P., Simanova, I., Bekkering, H., & Özyürek, A. (2020). The communicative advantage: How kinematic signaling supports semantic comprehension. Psychological Research, 84, 1897–1911. 10.1007/s00426-019-01198-y

- Trujillo, J. P., Simanova, I., Özyürek, A., & Bekkering, H. (2020). Seeing the unexpected: How brains read communicative intent through kinematics. Cerebral Cortex, 30(3), 1056–1067.

- Van de Cruys, S., Evers, K., Van der Hallen, R., Van Eylen, L., Boets, B., de‐Wit, L., & Wagemans, J. (2014). Precise minds in uncertain worlds: Predictive coding in autism. Psychological Review, 121(4), 649.

- van der Meer, H. A., Sheftel‐Simanova, I., Kan, C. C., & Trujillo, J. P. (2021). Translation, cross‐cultural adaptation, and validation of a Dutch version of the actions and feelings questionnaire in autistic and neurotypical adults. Journal of Autism and Developmental Disorders. 10.1007/s10803-021-05082-w

- van Ool, J. S., Hurks, P. P. M., Snoeijen‐Schouwenaars, F. M., Tan, I. Y., Schelhaas, H. J., Klinkenberg, S., Aldenkamp, A. P., & Hendriksen, J. G. M. (2018). Accuracy of WISC‐III and WAIS‐IV short forms in patients with neurological disorders. Developmental Neurorehabilitation, 21(2), 101–107.

- Verhage, F. (1964). Intelligentie en leeftijd: Onderzoek bij Nederlanders van twaalf tot zevenenzeventig jaar [Intelligence and age: Research in Dutch persons age twelve to seventy‐seven years].

- von der Lühe, T., Manera, V., Barisic, I., Becchio, C., Vogeley, K., & Schilbach, L. (2016). Interpersonal predictive coding, not action perception, is impaired in autism. Philosophical Transactions of the Royal Society, B: Biological Sciences, 371(1693), 373.

- Wechsler, D. (2011). WASI‐II: Wechsler abbreviated scale of intelligence. Psychological Corporation.

- Williams, J. H. G., Cameron, I. M., Ross, E., Braadbaart, L., & Waiter, G. D. (2016). Perceiving and expressing feelings through actions in relation to individual differences in empathic traits: The action and feelings questionnaire (AFQ). Cognitive, Affective, & Behavioral Neuroscience, 16(2), 248–260.

- Zalla, T., & Sperduti, M. (2013). The amygdala and the relevance detection theory of autism: An evolutionary perspective. Frontiers in Human Neuroscience, 7, 894. 10.3389/fnhum.2013.00894

Supplementary Materials

Appendix S1: Supporting information.